Model evaluation and optimization

- Slides: 34


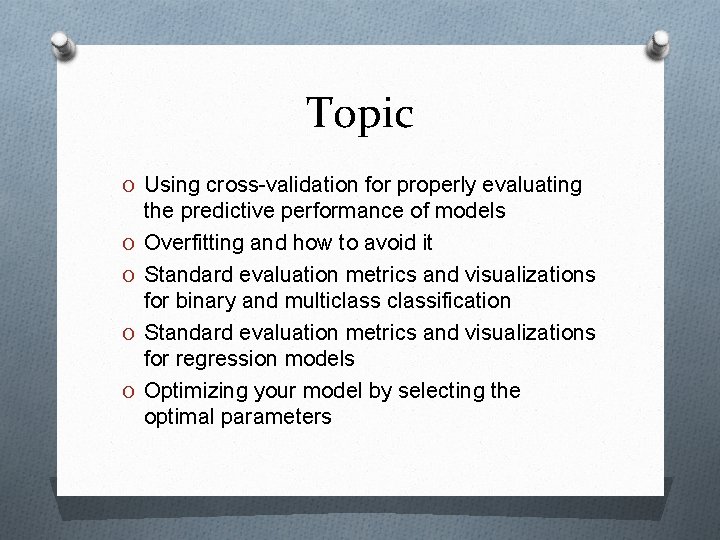

Topic

- Using cross-validation for properly evaluating the predictive performance of models
- Overfitting and how to avoid it
- Standard evaluation metrics and visualizations for binary and multiclass classification
- Standard evaluation metrics and visualizations for regression models
- Optimizing your model by selecting the optimal parameters

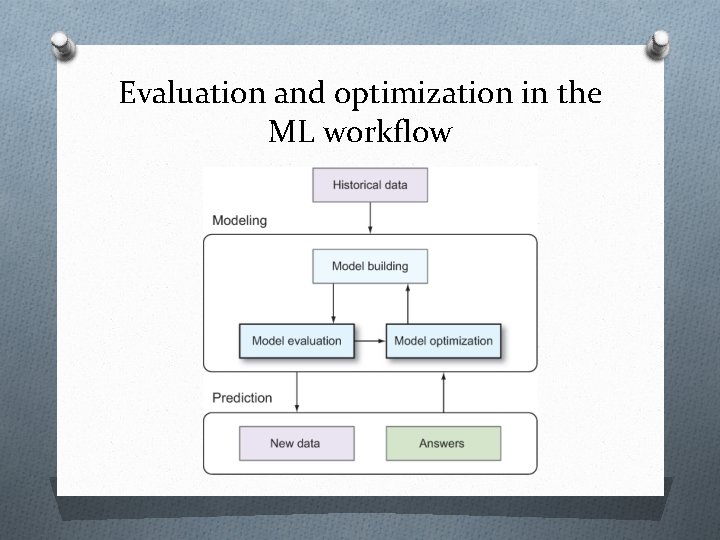

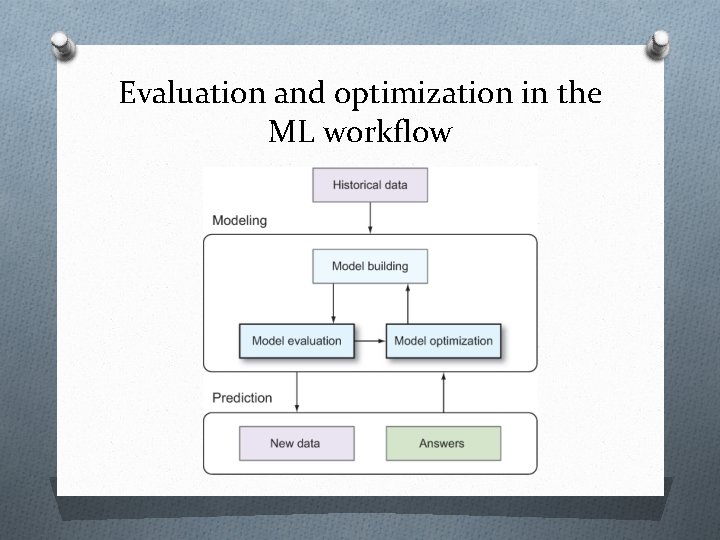

Evaluation and optimization in the ML workflow

Model generalization: assessing predictive accuracy for new data

- The problem: overfitting and model optimism
- The solution: cross-validation
- Recommendations when using cross-validation

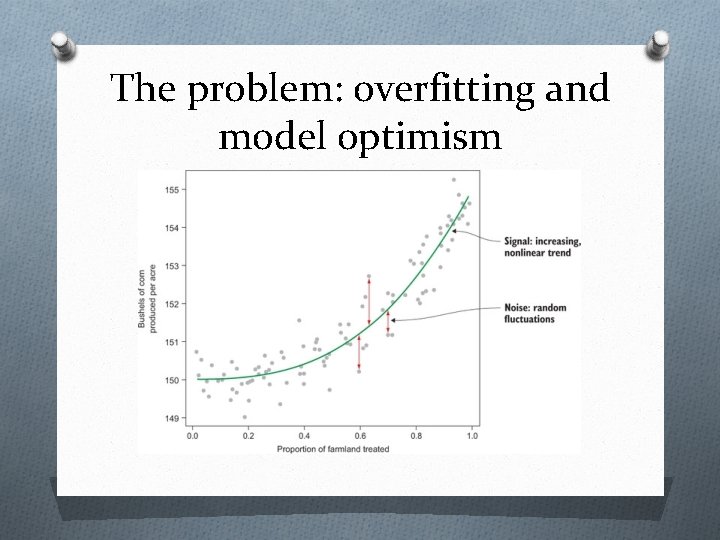

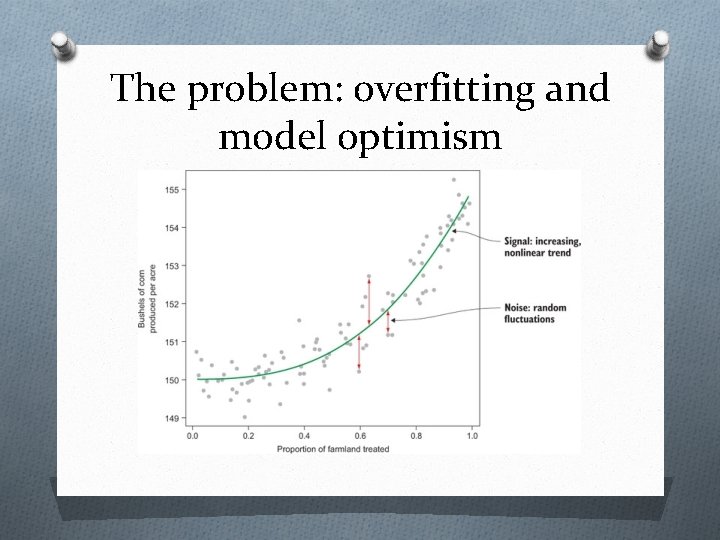

The problem: overfitting and model optimism

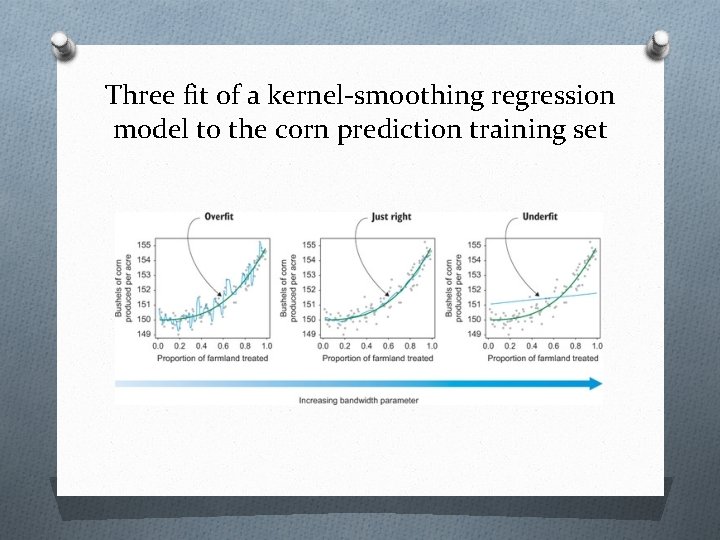

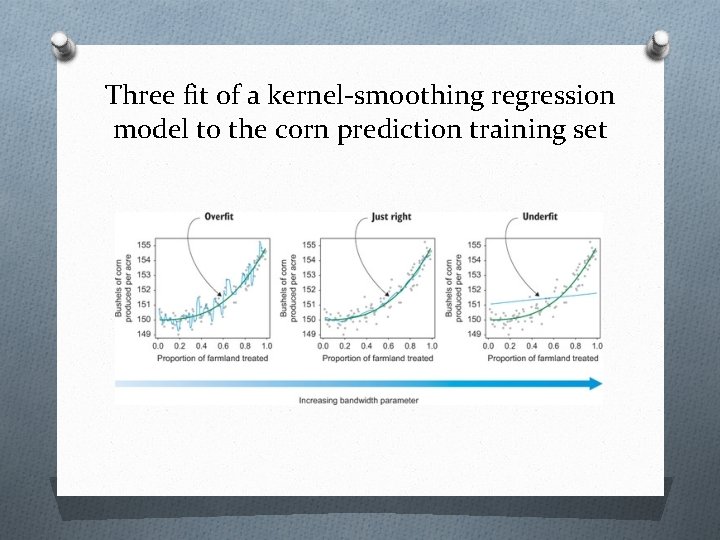

Three fits of a kernel-smoothing regression model to the corn prediction training set
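To make the three fits concrete, here is a minimal sketch of one common form of kernel-smoothing regression, the Nadaraya-Watson estimator. The Gaussian kernel, the function name `kernel_smooth`, and the five toy points are assumptions for illustration; the slides do not say which kernel or data were used. The bandwidth is the tuning parameter: a very small bandwidth makes each fitted value collapse onto its own training target (a wiggly, overfit curve), while a very large one flattens the fit toward the overall mean (an underfit curve).

```python
import math

def kernel_smooth(x_train, y_train, x_query, bandwidth):
    """Nadaraya-Watson estimate at x_query: a kernel-weighted average of y_train."""
    # Gaussian kernel weights (assumed kernel); nearby training points count more.
    weights = [math.exp(-0.5 * ((x_query - xi) / bandwidth) ** 2) for xi in x_train]
    return sum(w * yi for w, yi in zip(weights, y_train)) / sum(weights)

# Hypothetical toy data standing in for the corn training set.
x_train = [1.0, 2.0, 3.0, 4.0, 5.0]
y_train = [2.0, 4.0, 3.0, 5.0, 4.0]

for bw in (0.1, 1.0, 10.0):
    fitted = [kernel_smooth(x_train, y_train, x, bw) for x in x_train]
    train_mse = sum((y - f) ** 2 for y, f in zip(y_train, fitted)) / len(y_train)
    print(f"bandwidth={bw}: training MSE={train_mse:.4f}")
```

With a tiny bandwidth, querying far from every training point can underflow all weights to zero, so a more careful version would need a guard for that case.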

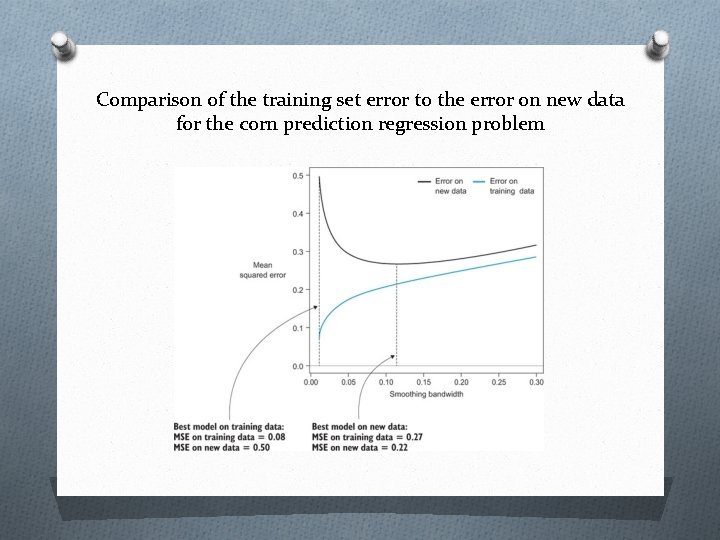

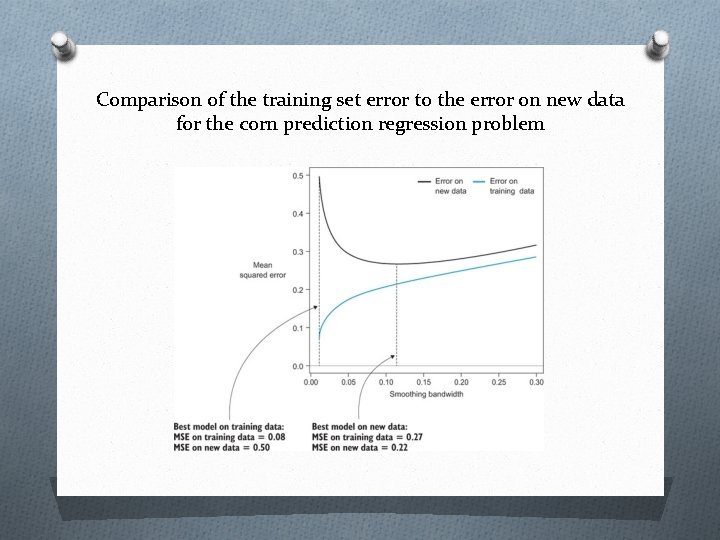

Comparison of the training set error to the error on new data for the corn prediction regression problem
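The gap between training error and error on new data can be reproduced with any model flexible enough to memorize its training set. The sketch below swaps in a 1-nearest-neighbor regressor (not the kernel smoother from the slides) on synthetic data drawn from an assumed noisy line, y = 2x plus noise; every name and number in it is hypothetical. Because each training point is its own nearest neighbor, the training MSE is exactly zero, which says nothing about how well the model predicts new data.

```python
import random

def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def one_nn_predict(x_train, y_train, x):
    """Return the target of the training point closest to x (memorizes the training set)."""
    nearest = min(range(len(x_train)), key=lambda j: abs(x_train[j] - x))
    return y_train[nearest]

rng = random.Random(0)

def make_data(n):
    """Hypothetical data: y = 2x plus Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + rng.gauss(0, 1) for x in xs]
    return xs, ys

x_train, y_train = make_data(50)
x_new, y_new = make_data(50)

train_mse = mse(y_train, [one_nn_predict(x_train, y_train, x) for x in x_train])
new_mse = mse(y_new, [one_nn_predict(x_train, y_train, x) for x in x_new])
print(f"training MSE: {train_mse:.3f}, new-data MSE: {new_mse:.3f}")
```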

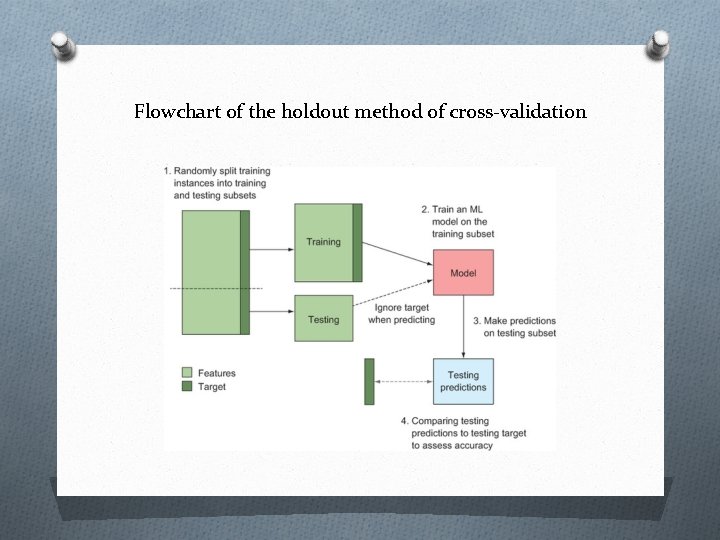

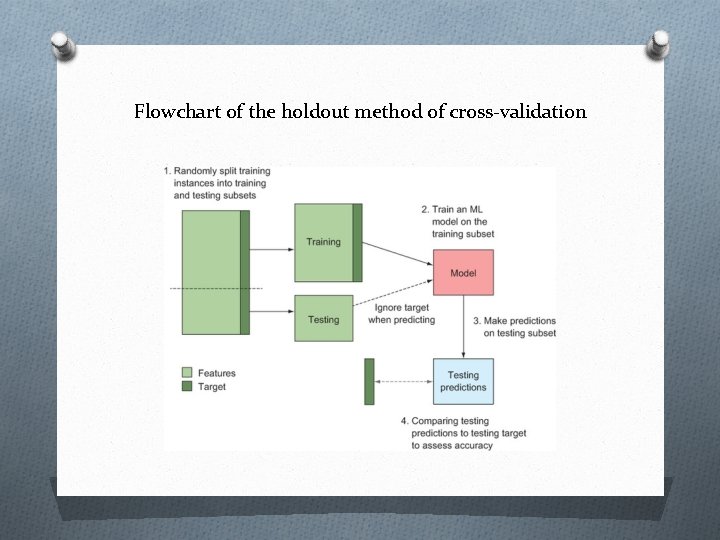

Flowchart of the holdout method of cross-validation
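A minimal sketch of the holdout method, assuming a simple least-squares line as the model and a 20% held-out fraction (both are illustrative choices, not taken from the slides). The rows are shuffled once, the held-out rows never touch the fitting routine, and the MSE is computed only on them.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def holdout_mse(xs, ys, test_fraction=0.2, seed=0):
    """Fit on the training part only, then return the MSE on the held-out part."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_test = max(1, int(len(xs) * test_fraction))
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    slope, intercept = fit_line([xs[i] for i in train_idx], [ys[i] for i in train_idx])
    errors = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in test_idx]
    return sum(errors) / len(errors)

# Hypothetical noisy linear data.
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [3 * x + 1 + rng.gauss(0, 2) for x in xs]
print(f"holdout MSE: {holdout_mse(xs, ys):.3f}")
```

The estimate depends on which rows happen to land in the held-out set, so changing `seed` changes the number; letting every row be held out once is one motivation for k-fold cross-validation.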

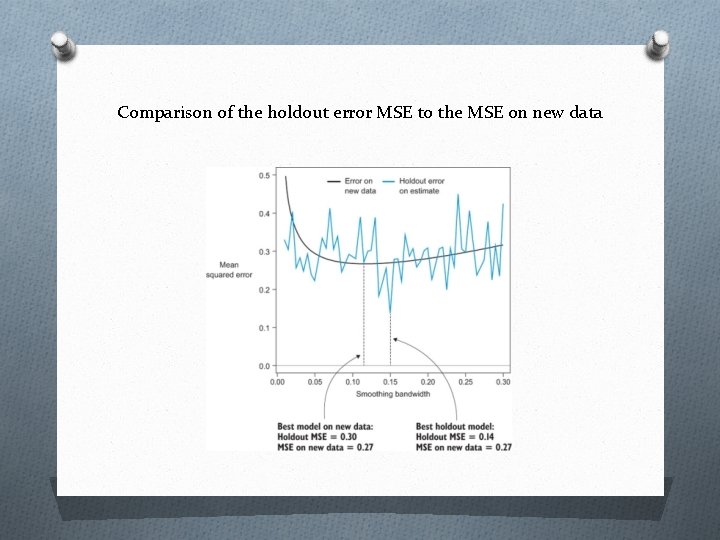

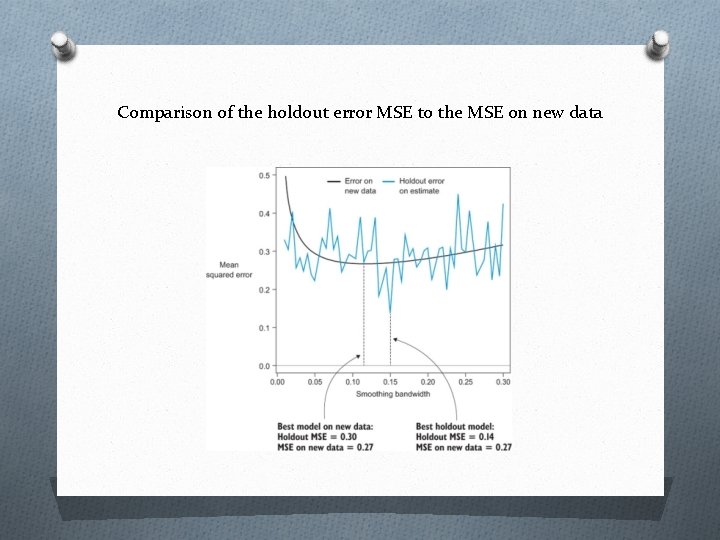

Comparison of the holdout error MSE to the MSE on new data

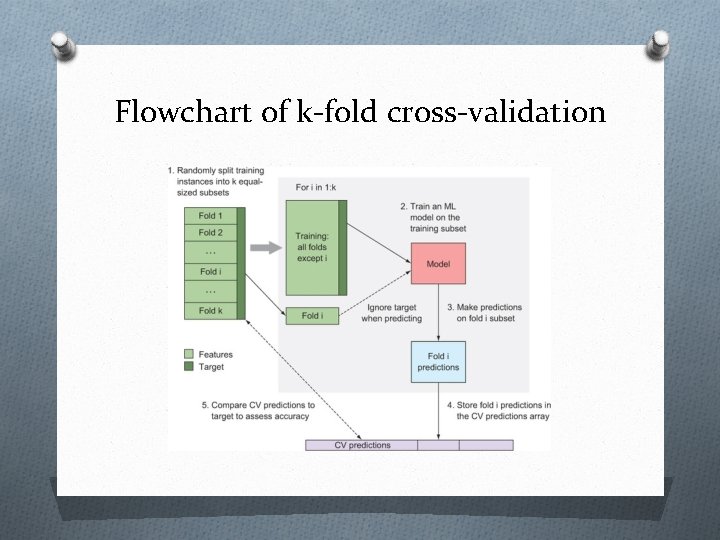

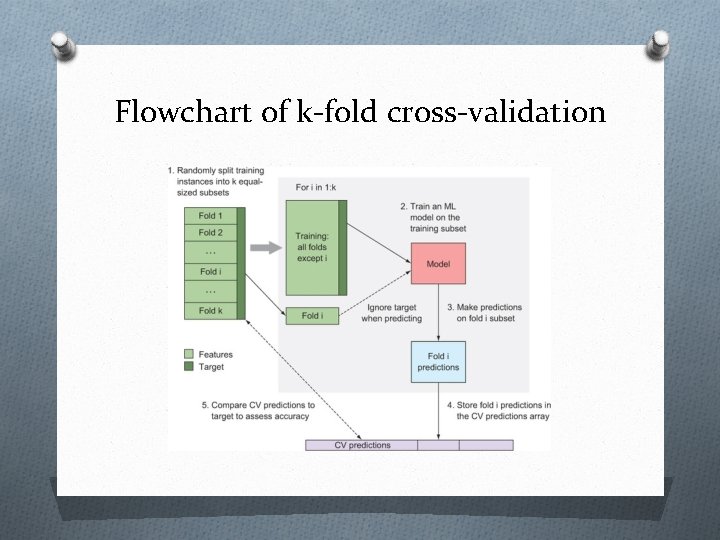

Flowchart of k-fold cross-validation
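A sketch of k-fold cross-validation under similar assumptions (a least-squares line as the model; all helper names are hypothetical). Each row is assigned to exactly one of k disjoint folds; each fold is held out in turn, the model is refit on the remaining folds, and the held-out rows receive cross-validated predictions. The MSE of those predictions is the k-fold estimate.

```python
import random

def kfold_splits(n, k, seed=0):
    """Yield (train_idx, test_idx) pairs; every index appears in exactly one test fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k disjoint, nearly equal-sized folds
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def predict_line(model, x):
    slope, intercept = model
    return slope * x + intercept

def cross_val_predictions(xs, ys, fit, predict, k=5, seed=0):
    """Return one prediction per row, each made by a model that never saw that row."""
    preds = [None] * len(xs)
    for train, test in kfold_splits(len(xs), k, seed):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in test:
            preds[i] = predict(model, xs[i])
    return preds

# Hypothetical noisy linear data.
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [3 * x + 1 + rng.gauss(0, 2) for x in xs]
preds = cross_val_predictions(xs, ys, fit_line, predict_line, k=10)
cv_mse = sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)
print(f"10-fold cross-validated MSE: {cv_mse:.3f}")
```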

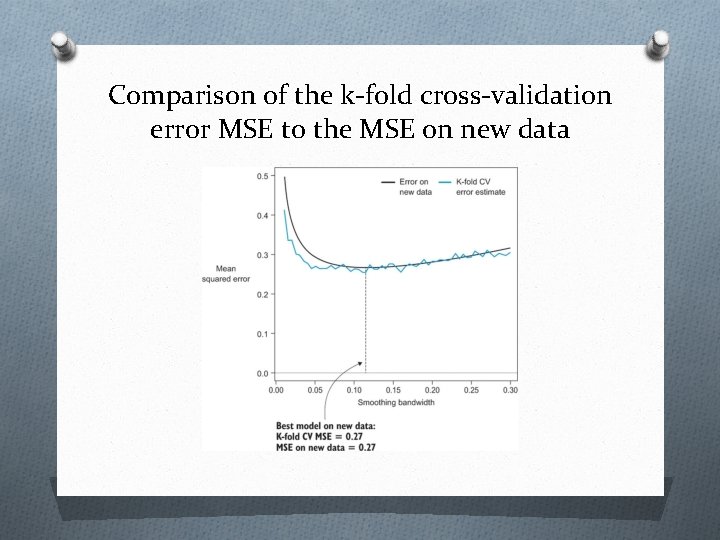

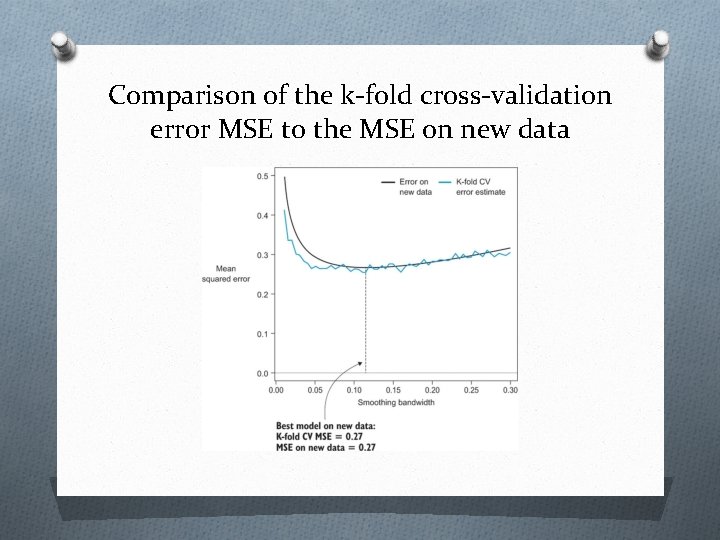

Comparison of the k-fold cross-validation error MSE to the MSE on new data

Evaluation of classification models

- Class-wise accuracy and the confusion matrix
- Accuracy trade-offs and ROC curves
- Multiclass classification

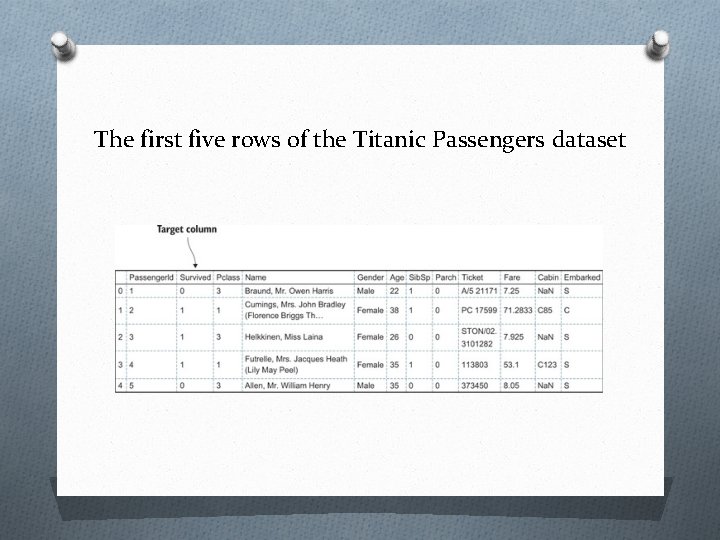

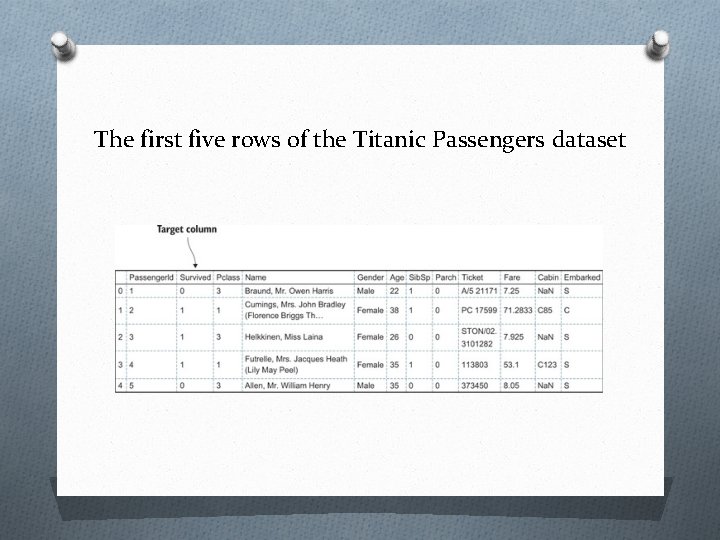

The first five rows of the Titanic Passengers dataset

Splitting the full dataset into training and testing sets

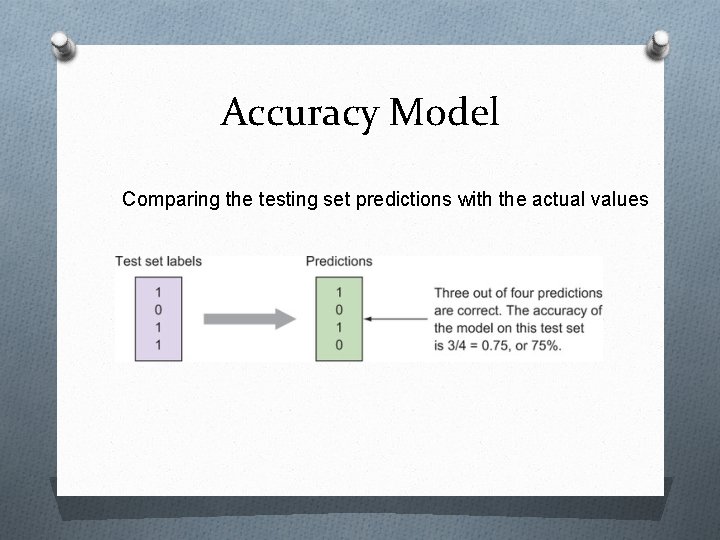

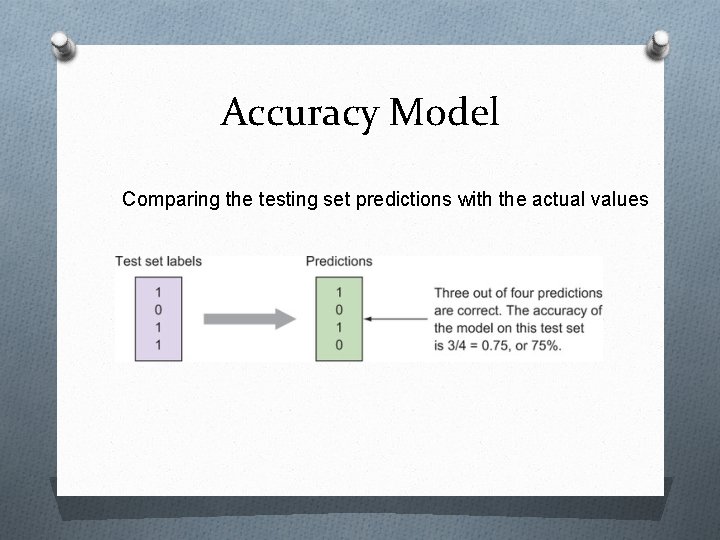

Model accuracy: comparing the testing-set predictions with the actual values
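Accuracy, in the comparison above, is the fraction of testing-set predictions that match the actual values. A minimal sketch with hypothetical labels (the encoding 1 = survived, 0 = did not survive is an assumption):

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the actual labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

# Hypothetical test-set labels and predictions.
actual = [1, 0, 0, 1, 0]
predicted = [1, 0, 1, 1, 0]
print(f"accuracy: {accuracy(actual, predicted):.2f}")  # prints "accuracy: 0.80"
```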

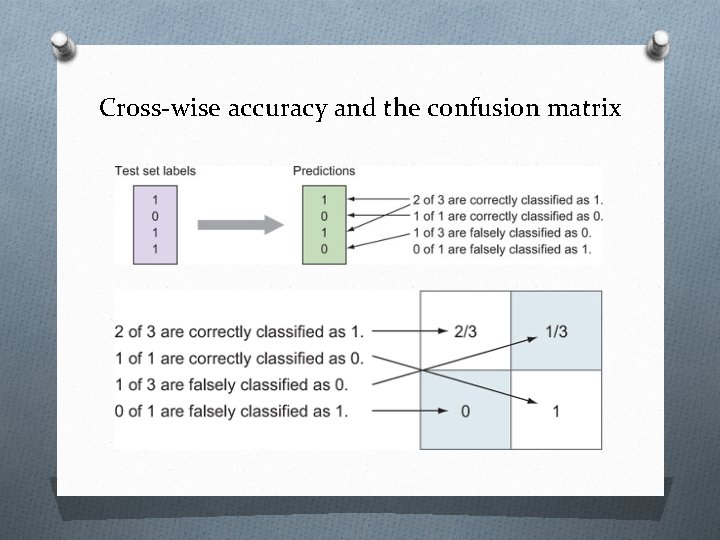

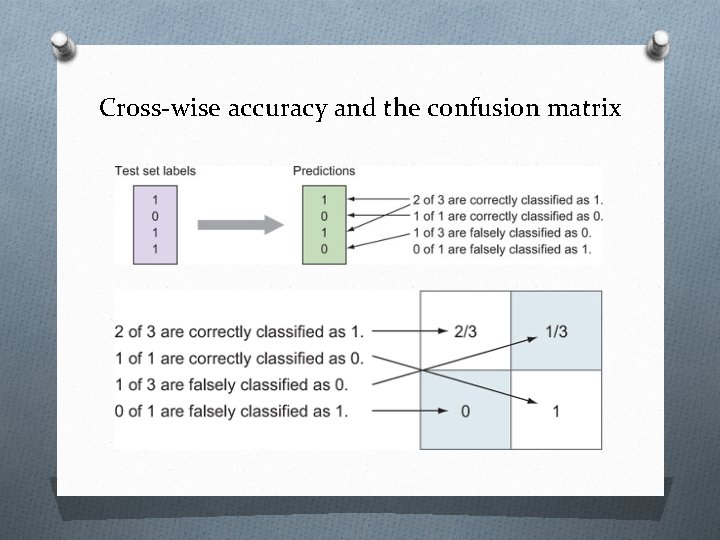

Class-wise accuracy and the confusion matrix

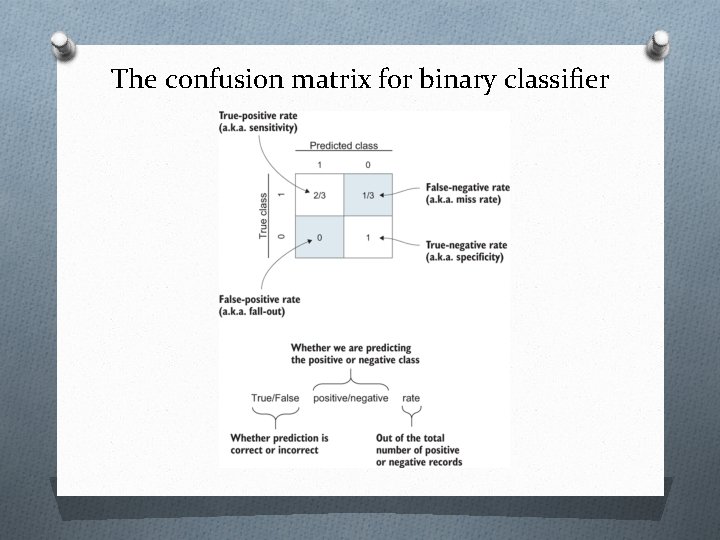

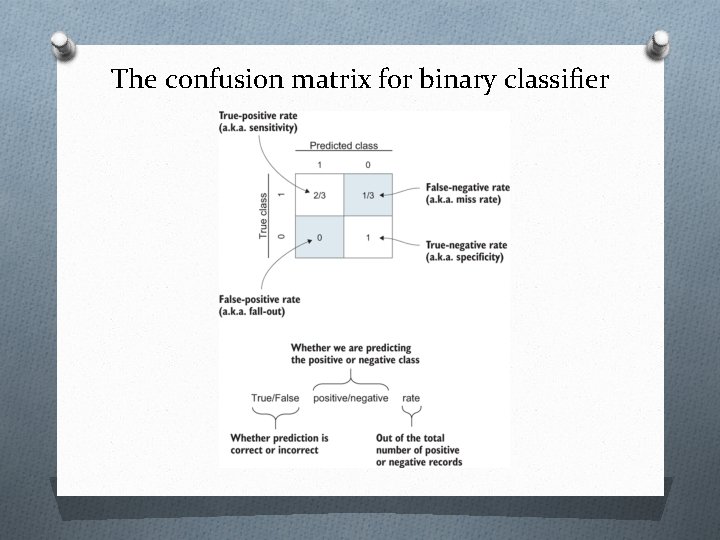

The confusion matrix for a binary classifier
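The four cells of a binary confusion matrix, and the class-wise accuracies derived from them, can be counted directly. In this sketch the labels are hypothetical, class 1 is assumed to be the positive class, and class-wise accuracy is taken as the fraction of each actual class that was predicted correctly.

```python
def binary_confusion(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for t, p in zip(y_true, y_pred):
        if p == positive:
            counts["TP" if t == positive else "FP"] += 1
        else:
            counts["FN" if t == positive else "TN"] += 1
    return counts

# Hypothetical actual labels and predictions.
y_true = [1, 0, 0, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
cm = binary_confusion(y_true, y_pred)

# Class-wise accuracy: share of each actual class that was predicted correctly.
positive_accuracy = cm["TP"] / (cm["TP"] + cm["FN"])
negative_accuracy = cm["TN"] / (cm["TN"] + cm["FP"])
print(cm, f"positive: {positive_accuracy:.2f}", f"negative: {negative_accuracy:.2f}")
```

Reporting the two class-wise accuracies separately exposes a classifier that looks accurate overall only because it favors the more common class.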

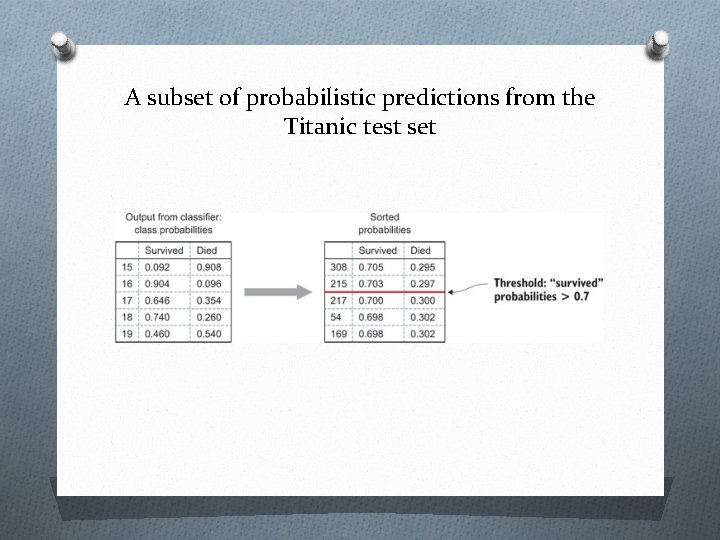

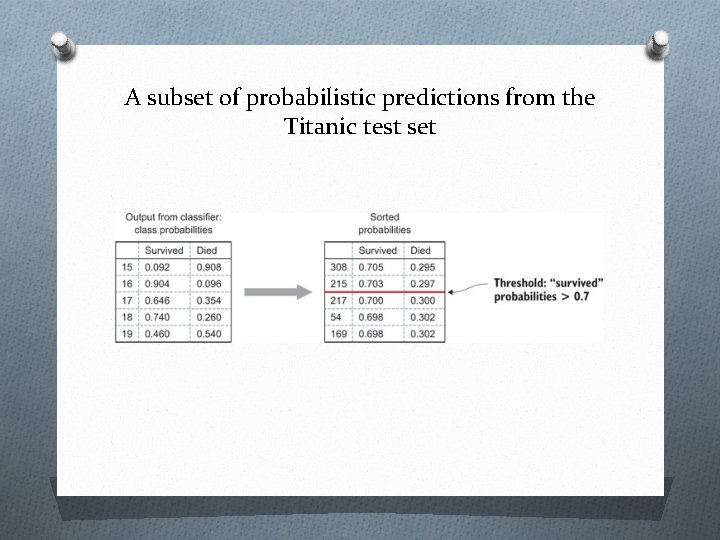

A subset of probabilistic predictions from the Titanic test set

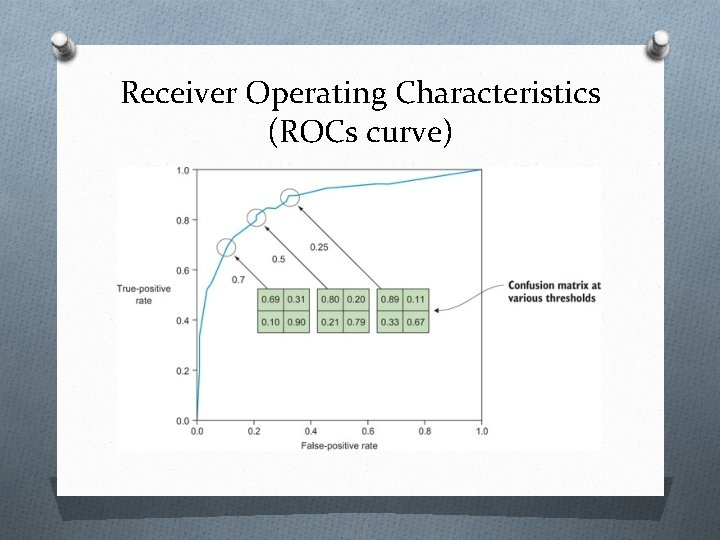

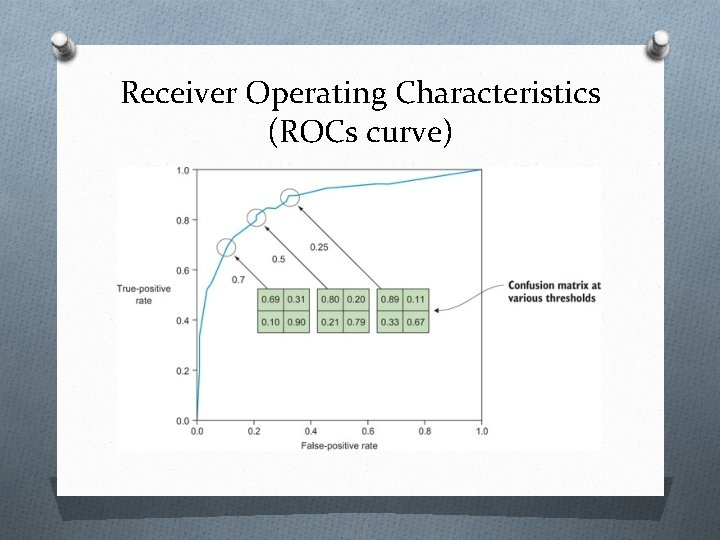

Receiver Operating Characteristic (ROC) curve
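An ROC curve traces the trade-off between true-positive rate (TPR) and false-positive rate (FPR) as the probability threshold for calling a prediction positive is lowered. The sketch below assumes binary labels coded 0/1, both classes present, and probabilistic scores like those in the Titanic subset above; the function name and the four-point example are hypothetical. It computes one (FPR, TPR) point per distinct score, starting from (0, 0), where nothing is predicted positive.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, one per distinct score used as a threshold."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        # Predict positive whenever the score reaches the threshold.
        tp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points

labels = [0, 0, 1, 1]
probs = [0.1, 0.4, 0.35, 0.8]
for fpr, tpr in roc_points(labels, probs):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```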

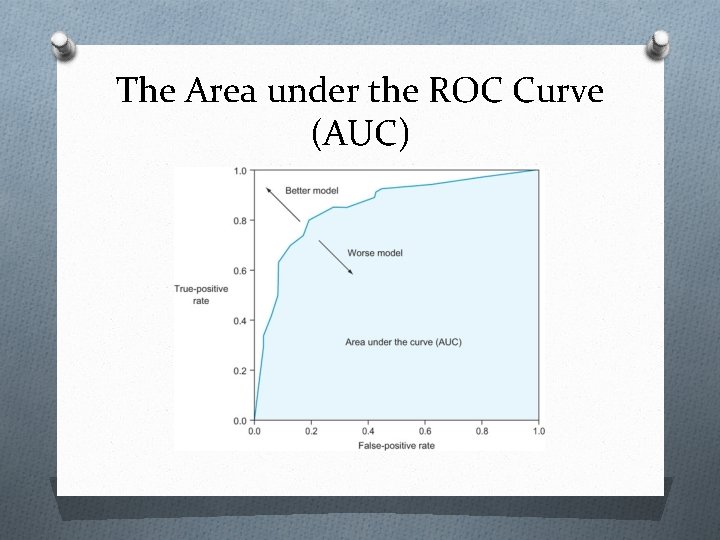

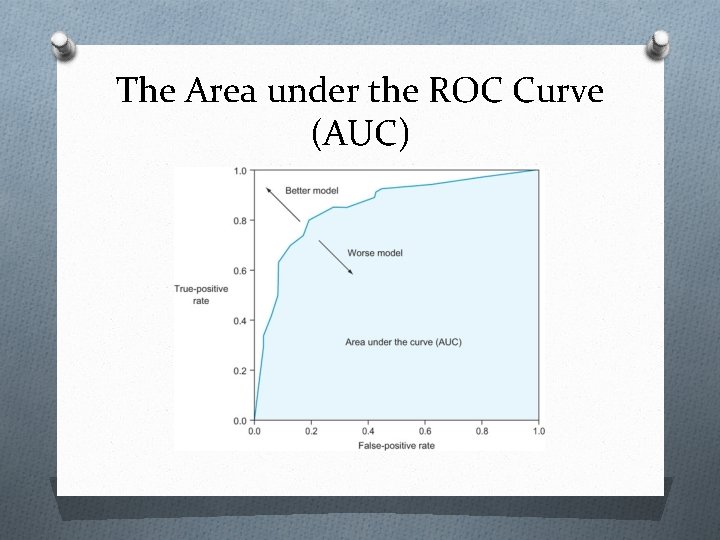

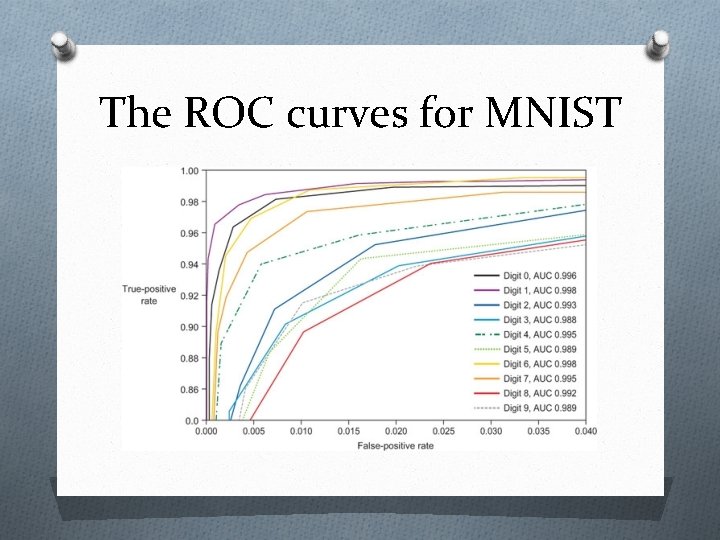

The Area under the ROC Curve (AUC)
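The AUC can be computed by integrating the ROC curve or, equivalently, as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one (ties counted as one half). The sketch below uses that pairwise-ranking form, a swapped-in technique rather than anything shown on the slide; its cost grows with (number of positives) × (number of negatives), so it suits small examples only. The function name is hypothetical.

```python
def auc_by_ranking(y_true, scores):
    """AUC as P(score of random positive > score of random negative), ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

print(auc_by_ranking([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # prints 0.75
```

A classifier that ranks examples at random scores about 0.5, and one that ranks every positive above every negative scores 1.0.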

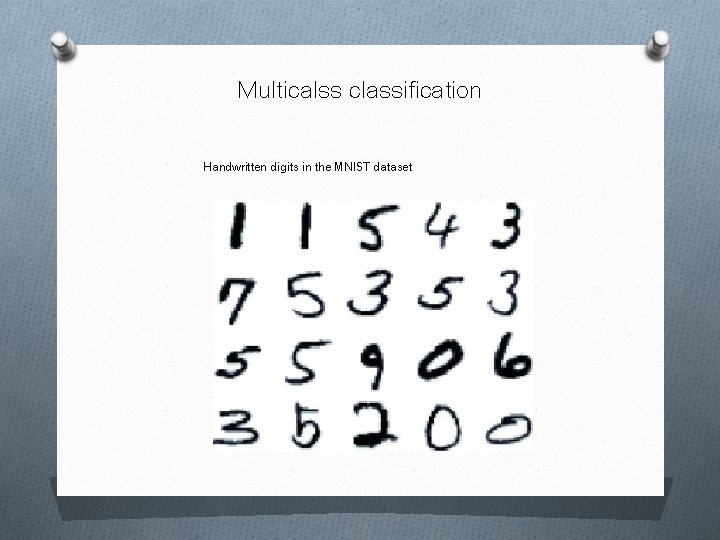

Multiclass classification

Handwritten digits in the MNIST dataset

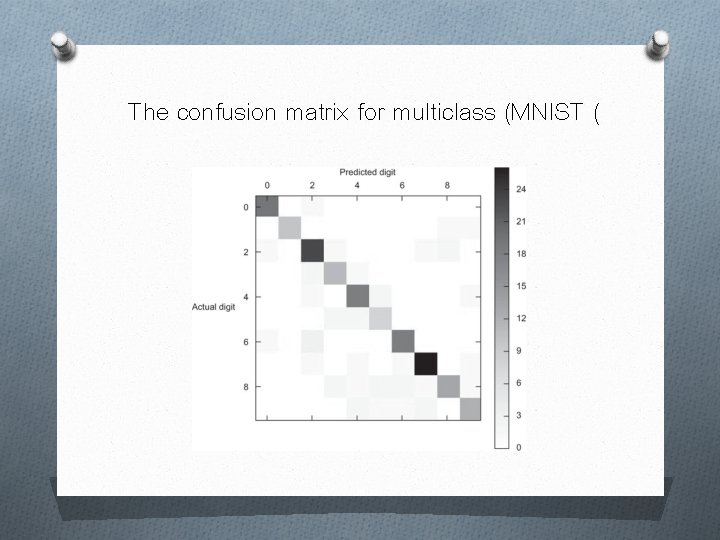

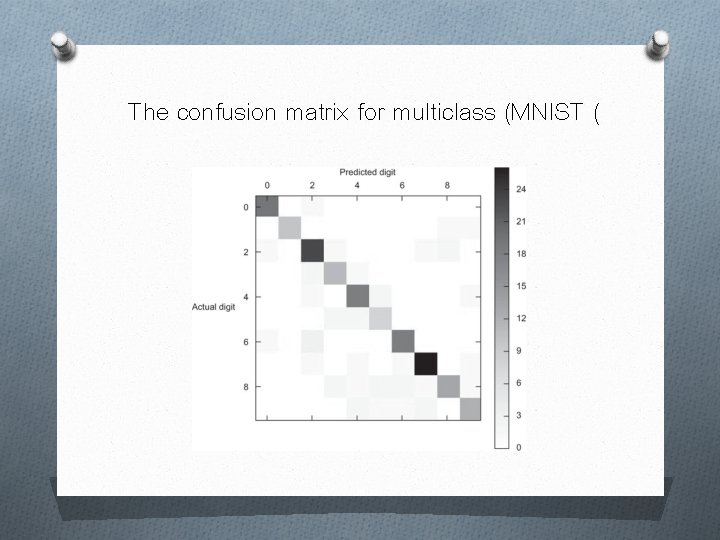

The confusion matrix for multiclass classification (MNIST)
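For more than two classes the confusion matrix becomes a K × K table. In this sketch rows are actual classes and columns are predicted classes (an assumed orientation; plots differ), and the labels are hypothetical digits rather than real MNIST output. The diagonal holds the correct predictions, so overall accuracy is the diagonal sum divided by the total count.

```python
def confusion_matrix(y_true, y_pred, labels):
    """K x K count matrix: rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

# Hypothetical digit labels and predictions.
labels = [0, 1, 2]
y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 2, 2, 2, 1, 1]
cm = confusion_matrix(y_true, y_pred, labels)
for label, row in zip(labels, cm):
    print(label, row)
overall_accuracy = sum(cm[i][i] for i in range(len(labels))) / len(y_true)
print(f"overall accuracy: {overall_accuracy:.2f}")
```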

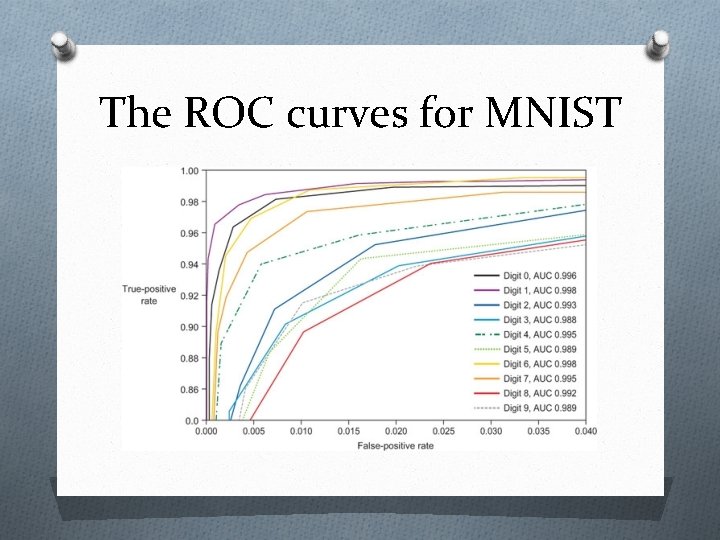

The ROC curves for MNIST

Evaluation of regression models

- Using simple regression performance metrics
- Examining residuals

Model optimization

- ML algorithms and their tuning parameters
- Grid search

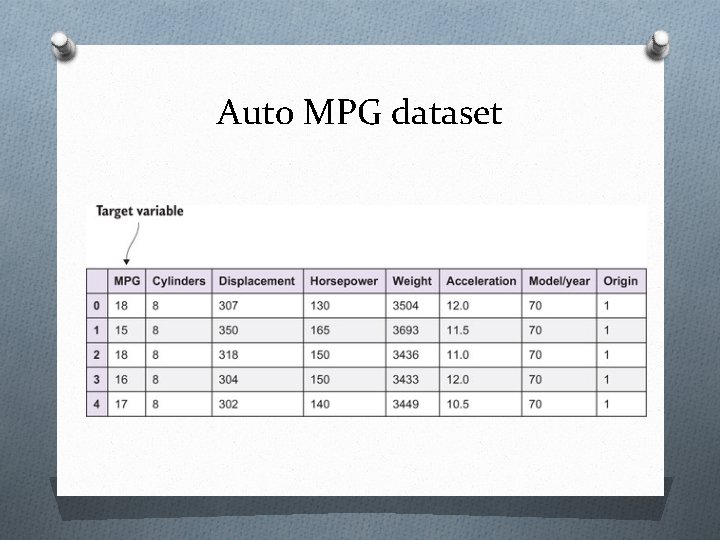

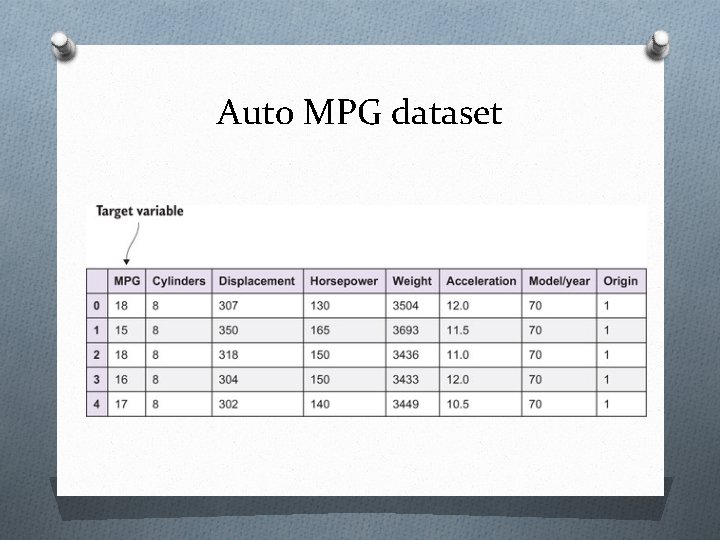

Auto MPG dataset

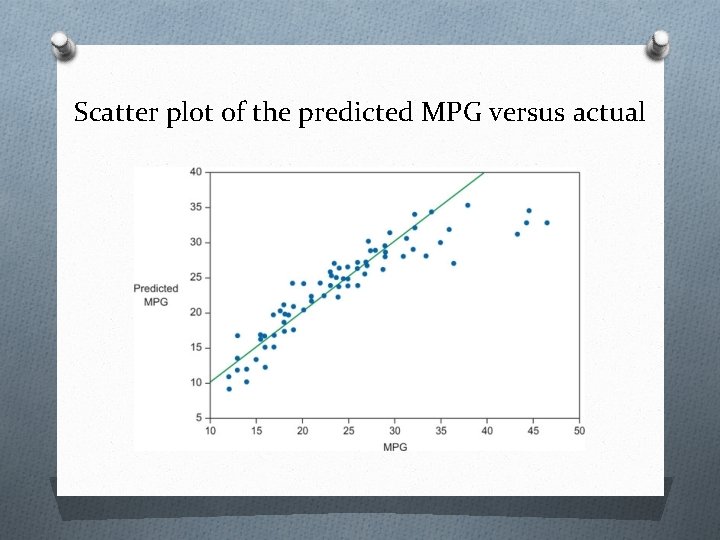

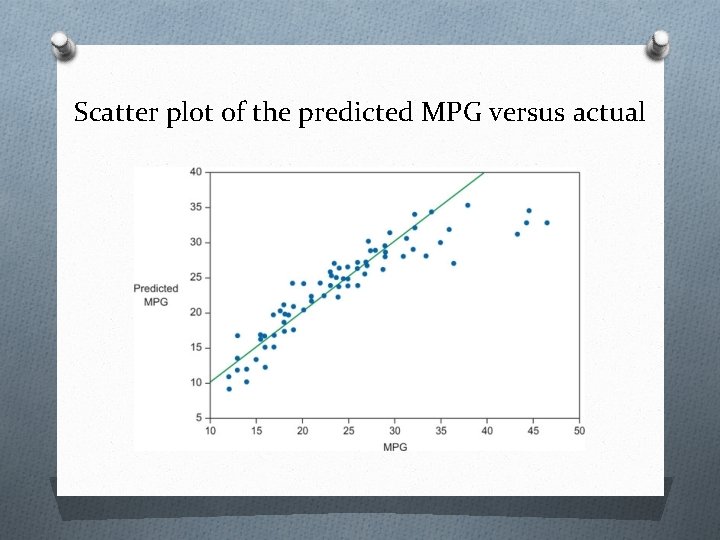

Scatter plot of the predicted MPG versus the actual MPG

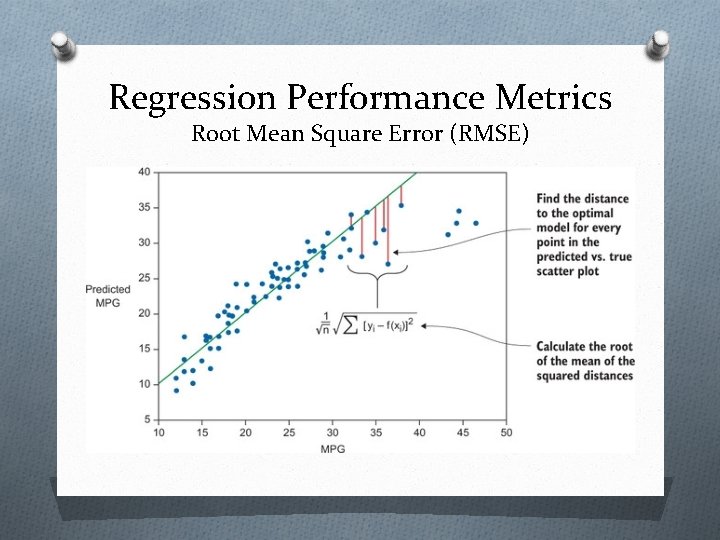

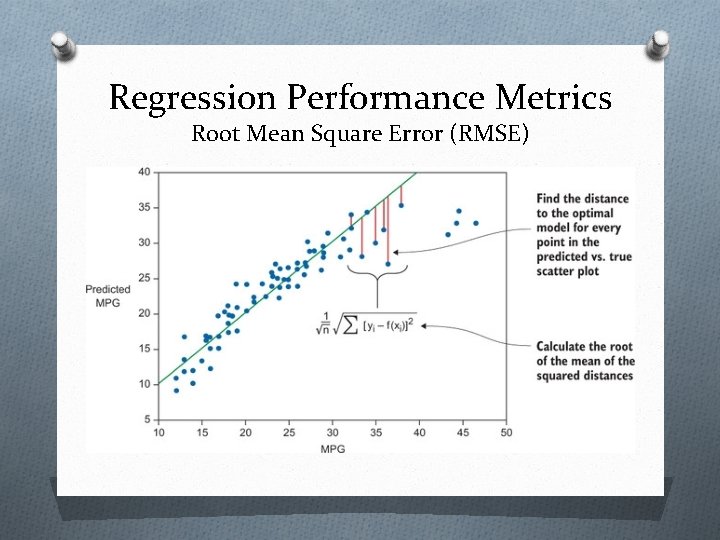

Regression Performance Metrics: Root-Mean-Square Error (RMSE)
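RMSE is the square root of the mean squared difference between actual and predicted values, RMSE = sqrt((1/n) Σᵢ (yᵢ − ŷᵢ)²), and it is expressed in the same units as the target (miles per gallon here). A minimal sketch with hypothetical MPG values:

```python
import math

def rmse(y_true, y_pred):
    """Root-mean-square error: square root of the mean squared residual."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical actual vs. predicted MPG values.
actual_mpg = [18.0, 15.0, 36.0, 24.0]
predicted_mpg = [19.5, 14.0, 33.0, 25.0]
print(f"RMSE: {rmse(actual_mpg, predicted_mpg):.2f} MPG")
```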

Regression Performance Metrics

Keep the following in mind:

- Always use cross-validation to assess the model, so that overfitting does not produce an overly optimistic estimate of performance.
- The evaluation metric should align with the problem at hand.

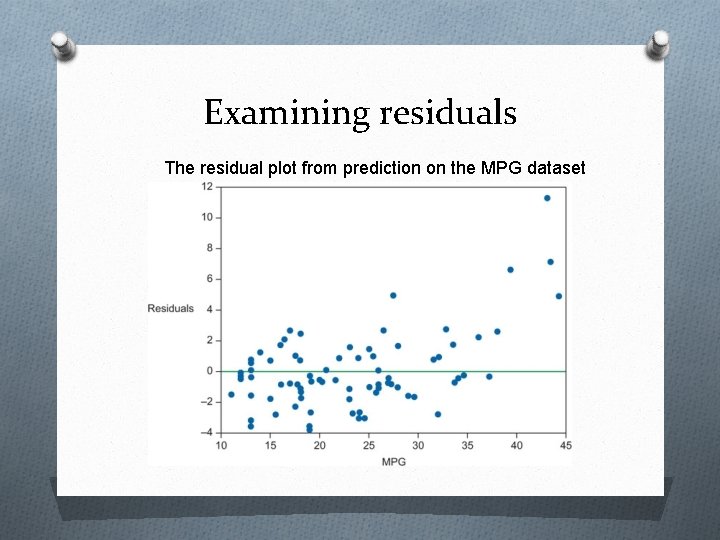

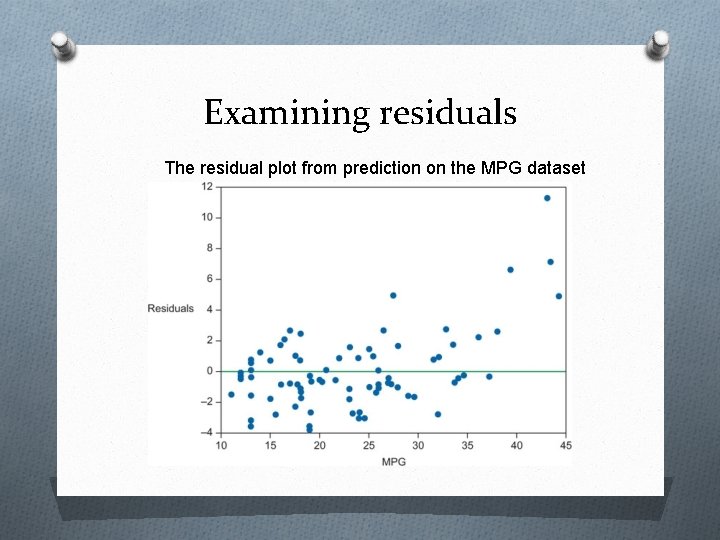

Examining residuals

The residual plot from predictions on the MPG dataset
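A residual plot shows, for each prediction, the residual (actual minus predicted) against the predicted value; a model without systematic error should scatter its residuals around zero with no visible trend. The sketch below only prepares the (predicted, residual) pairs and the mean residual; the helper name and MPG-like values are hypothetical, and the plotting itself is left to whatever library is at hand.

```python
def residual_pairs(y_true, y_pred):
    """(predicted, residual) pairs sorted by prediction; residual = actual - predicted."""
    return sorted((p, t - p) for t, p in zip(y_true, y_pred))

# Hypothetical actual vs. predicted MPG values.
actual_mpg = [18.0, 15.0, 36.0, 24.0]
predicted_mpg = [19.5, 14.0, 33.0, 25.0]
pairs = residual_pairs(actual_mpg, predicted_mpg)
mean_residual = sum(r for _, r in pairs) / len(pairs)
for predicted, residual in pairs:
    print(f"predicted={predicted:5.1f}  residual={residual:+.1f}")
print(f"mean residual: {mean_residual:+.2f}")
```

A positive residual means the model under-predicted that car's MPG; a run of same-signed residuals over one range of predictions hints at a pattern the model has missed.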

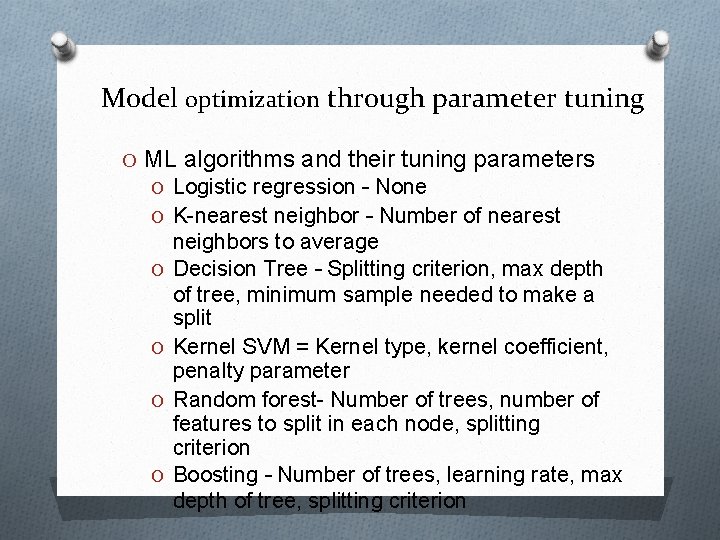

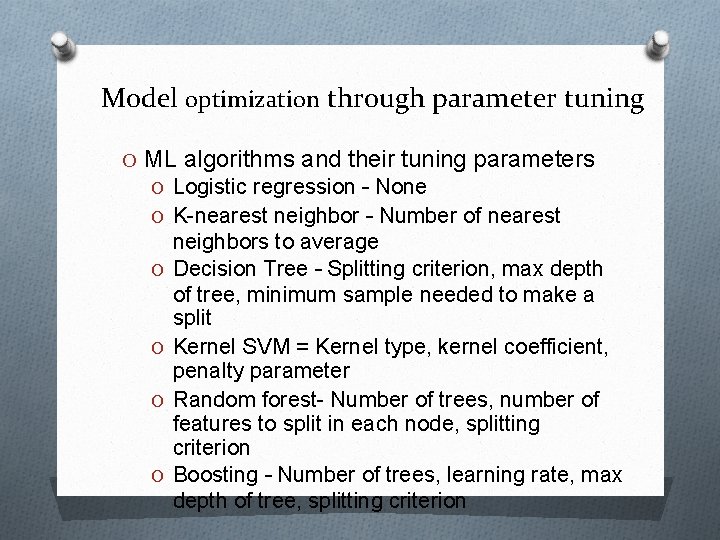

Model optimization through parameter tuning

ML algorithms and their tuning parameters:

- Logistic regression: none
- K-nearest neighbors: number of nearest neighbors to average
- Decision tree: splitting criterion, maximum depth of the tree, minimum number of samples needed to make a split
- Kernel SVM: kernel type, kernel coefficient, penalty parameter
- Random forest: number of trees, number of features to split in each node, splitting criterion
- Boosting: number of trees, learning rate, maximum depth of the tree, splitting criterion

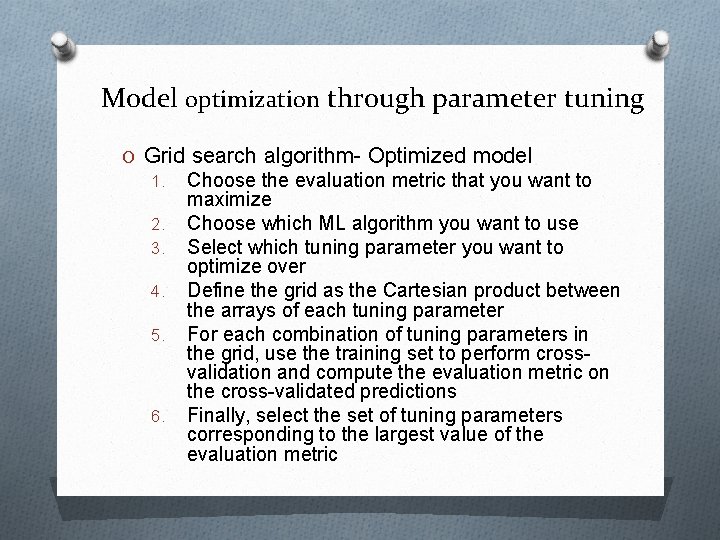

Model optimization through parameter tuning

Grid search algorithm for an optimized model:

1. Choose the evaluation metric that you want to maximize.
2. Choose which ML algorithm you want to use.
3. Select which tuning parameters you want to optimize over.
4. Define the grid as the Cartesian product between the arrays of each tuning parameter.
5. For each combination of tuning parameters in the grid, use the training set to perform cross-validation and compute the evaluation metric on the cross-validated predictions.
6. Finally, select the set of tuning parameters corresponding to the largest value of the evaluation metric.
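The six steps map almost line for line onto a short loop. In this sketch `itertools.product` builds the Cartesian product of the parameter arrays (step 4), and `cv_score` stands for any function that returns a cross-validated metric to maximize (step 5). The `toy_cv_auc` function is a hypothetical stand-in that peaks at gamma = 0.1 and C = 10; a real one would run k-fold cross-validation of, say, a kernel SVM on the training set.

```python
import itertools
import math

def grid_search(param_grid, cv_score):
    """Evaluate every combination in the grid and keep the one with the highest score."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = cv_score(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_cv_auc(gamma, C):
    # Hypothetical stand-in for a cross-validated AUC; not a real model evaluation.
    return 1.0 - (math.log10(gamma) + 1) ** 2 / 10 - (math.log10(C) - 1) ** 2 / 10

grid = {"gamma": [0.001, 0.01, 0.1, 1.0], "C": [0.1, 1.0, 10.0, 100.0]}
best, score = grid_search(grid, toy_cv_auc)
print(best, round(score, 3))
```

The grid grows multiplicatively with each added parameter, which is why grid search is described as a brute-force strategy.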

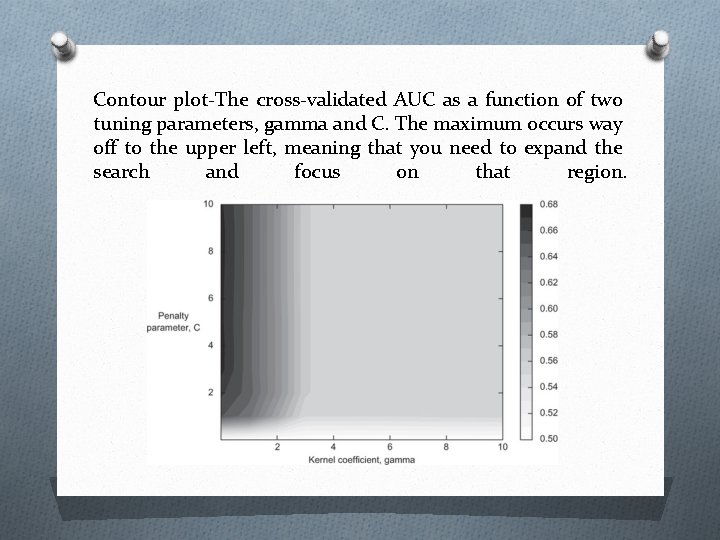

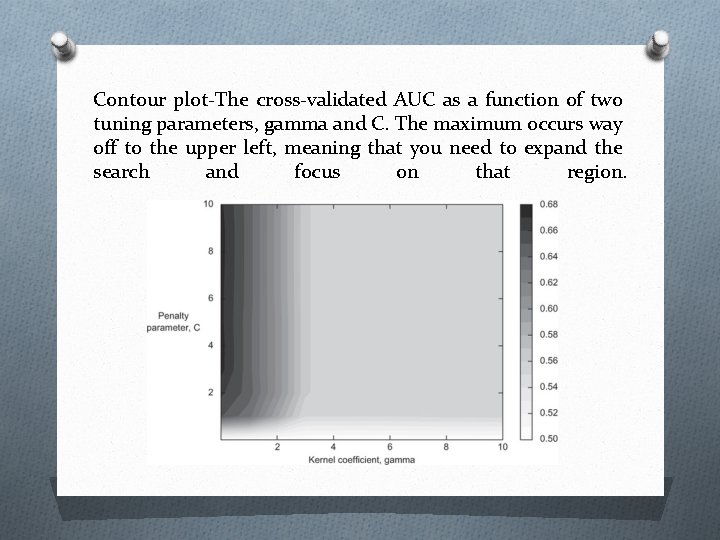

Contour plot: the cross-validated AUC as a function of two tuning parameters, gamma and C. The maximum occurs far off to the upper left of the searched grid, meaning that you need to expand the search and focus on that region.
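The situation in this caption can also be detected without looking at the plot: if the best grid point sits on the boundary of the grid, the true optimum may lie outside it. The helper below (hypothetical name `on_grid_edge`) is a minimal sketch of that check, assuming numeric parameter arrays; it returns the parameters whose best value is the smallest or largest value searched.

```python
def on_grid_edge(best_params, param_grid):
    """Names of parameters whose best value is at either end of its searched array."""
    return [
        name
        for name, values in param_grid.items()
        if best_params[name] in (min(values), max(values))
    ]

grid = {"gamma": [0.01, 0.1, 1.0], "C": [1.0, 10.0, 100.0]}
print(on_grid_edge({"gamma": 0.01, "C": 10.0}, grid))  # prints ['gamma']
```

Any parameter it reports is a candidate for extending its array in that direction before rerunning the search.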

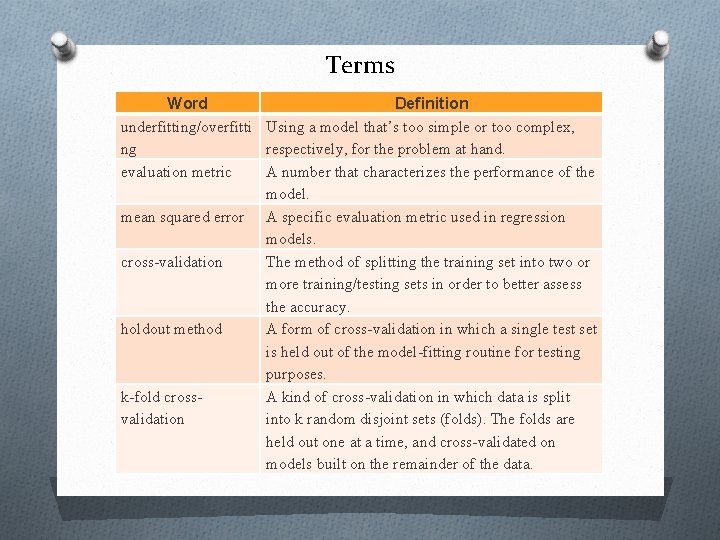

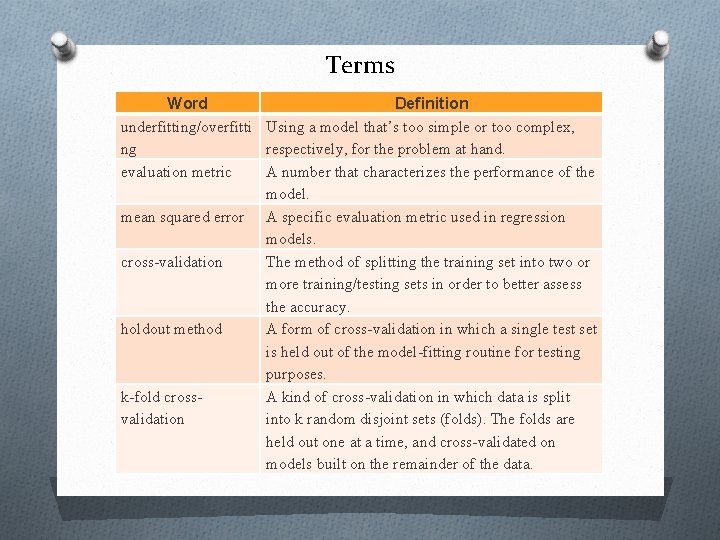

Terms

| Word | Definition |
|---|---|
| underfitting/overfitting | Using a model that's too simple or too complex, respectively, for the problem at hand. |
| evaluation metric | A number that characterizes the performance of the model. |
| mean squared error | A specific evaluation metric used in regression models. |
| cross-validation | The method of splitting the training set into two or more training/testing sets in order to better assess the accuracy. |
| holdout method | A form of cross-validation in which a single test set is held out of the model-fitting routine for testing purposes. |
| k-fold cross-validation | A kind of cross-validation in which data is split into k random disjoint sets (folds). The folds are held out one at a time, and cross-validated on models built on the remainder of the data. |

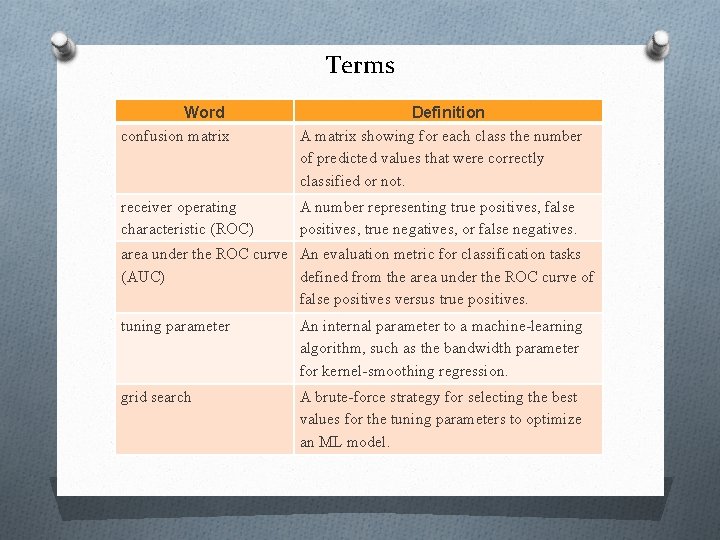

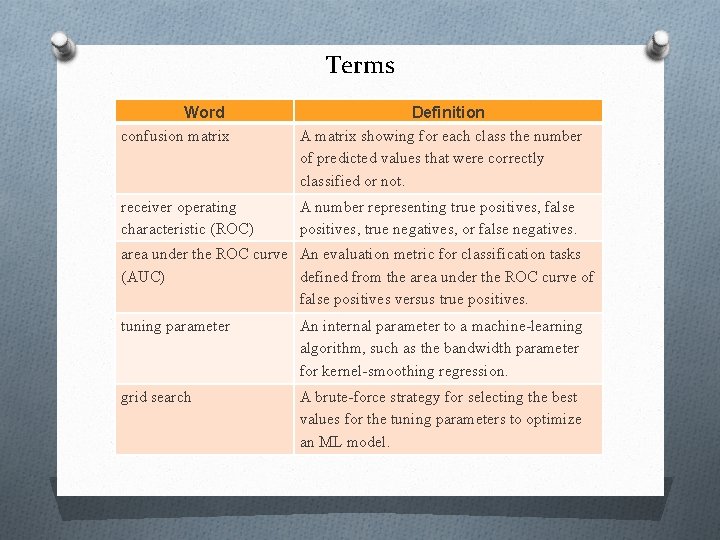

Terms

| Word | Definition |
|---|---|
| confusion matrix | A matrix showing, for each class, the number of predicted values that were correctly or incorrectly classified. |
| receiver operating characteristic (ROC) | A curve showing a classifier's true-positive rate versus its false-positive rate as the decision threshold is varied. |
| area under the ROC curve (AUC) | An evaluation metric for classification tasks, defined as the area under the ROC curve of true-positive rate versus false-positive rate. |
| tuning parameter | An internal parameter to a machine-learning algorithm, such as the bandwidth parameter for kernel-smoothing regression. |
| grid search | A brute-force strategy for selecting the best values for the tuning parameters to optimize an ML model. |