Model-based RL, Part 1

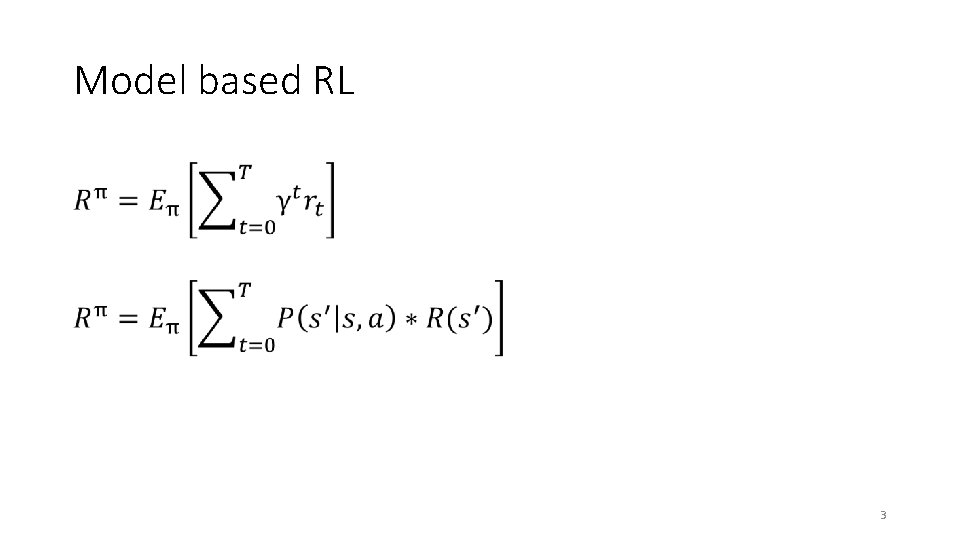

Model-based RL

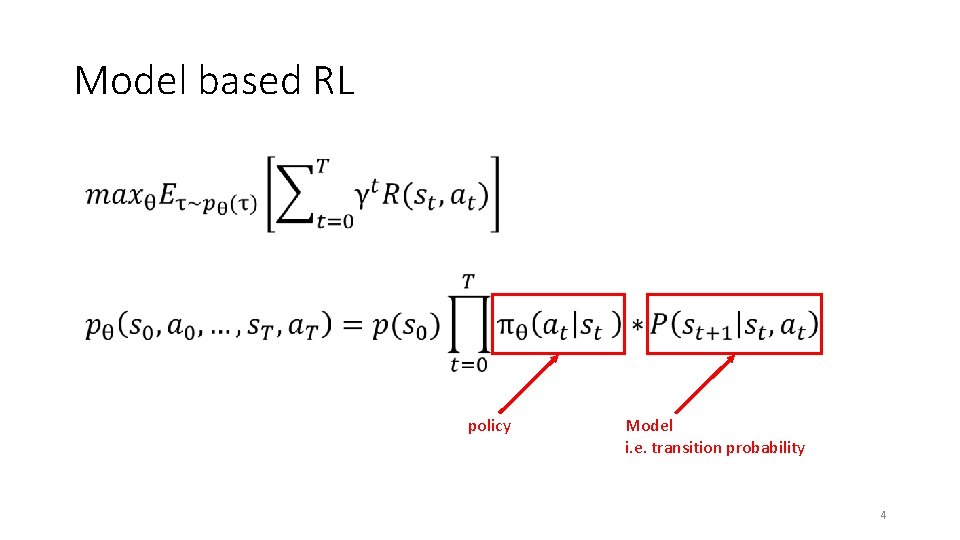

Model-based RL • Policy • Model, i.e. transition probability

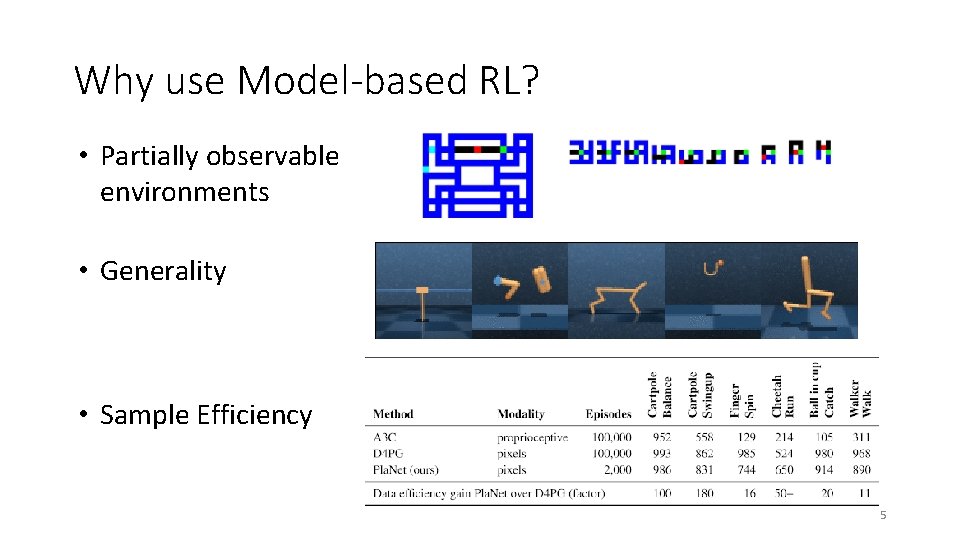
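A model in this sense is a transition probability P(s' | s, a) that the agent can sample from instead of querying the real environment. The following is a minimal sketch of that idea; the two-state table `P`, the state and action names, and the helper functions are all hypothetical and chosen only for illustration.

```python
import random

# Hypothetical toy model: P[(state, action)] maps each next state to its probability.
P = {
    ("s0", "go"): {"s0": 0.2, "s1": 0.8},
    ("s0", "stay"): {"s0": 1.0},
    ("s1", "go"): {"s1": 1.0},
    ("s1", "stay"): {"s0": 0.1, "s1": 0.9},
}

def sample_next_state(state, action, rng):
    """Draw s' from the model's distribution P(s' | s, a)."""
    dist = P[(state, action)]
    states = list(dist)
    weights = [dist[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]

def imagine(state, policy, steps, rng):
    """Roll a policy forward inside the model, without a real environment."""
    trajectory = [state]
    for _ in range(steps):
        state = sample_next_state(state, policy(state), rng)
        trajectory.append(state)
    return trajectory

rng = random.Random(0)
print(imagine("s0", lambda s: "go", 5, rng))
```

In practice the table would be replaced by a learned function approximator, but the interface (sample a next state given a state and an action) stays the same.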

Why use Model-based RL? • Partially observable environments • Generality • Sample efficiency

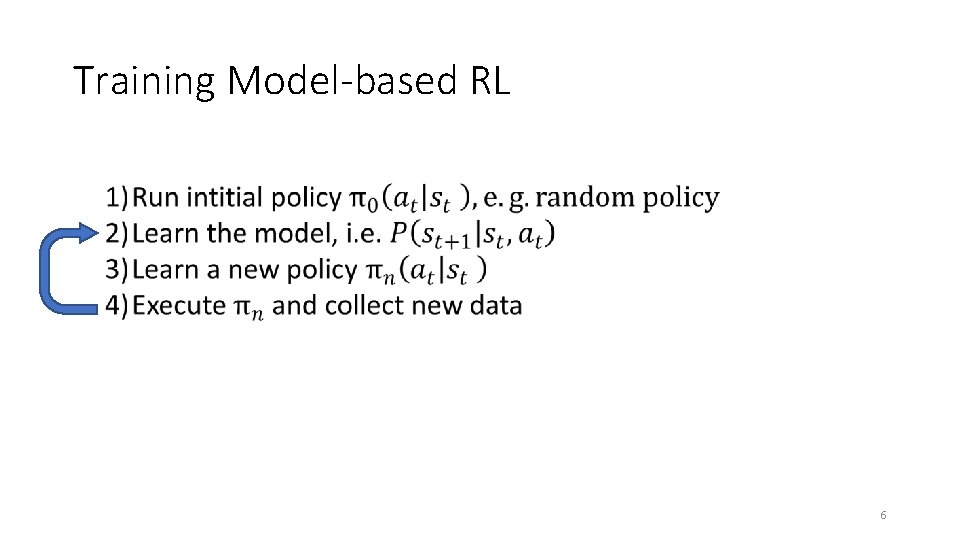

Training Model-based RL

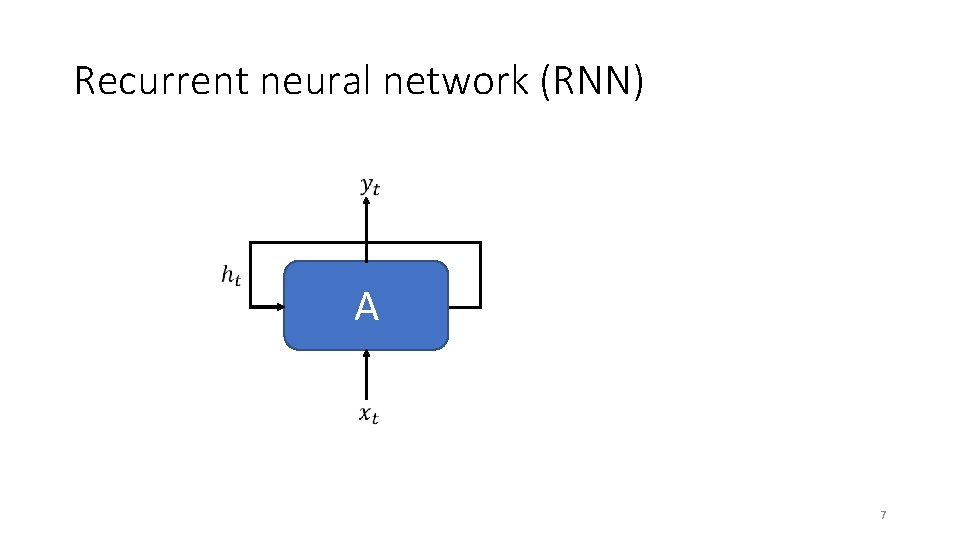

Recurrent neural network (RNN)

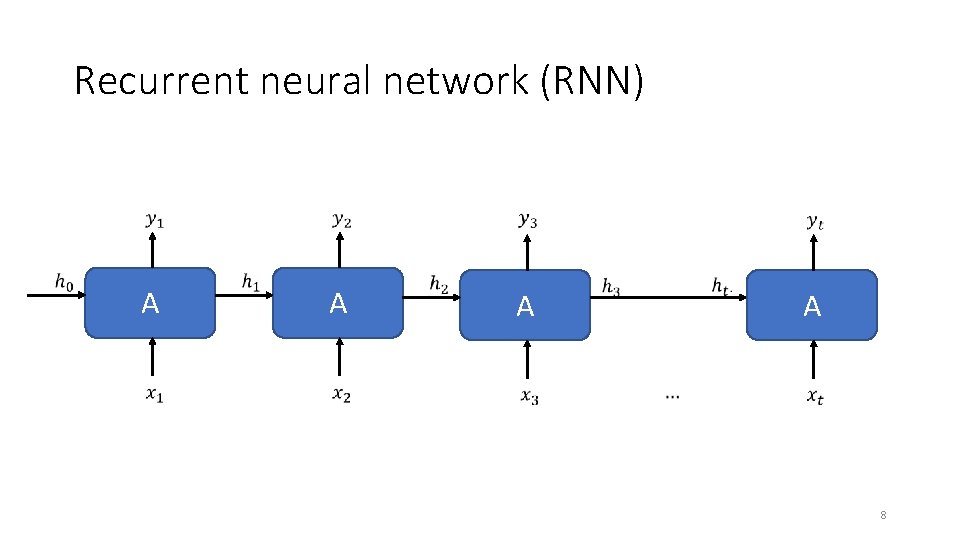

Recurrent neural network (RNN)

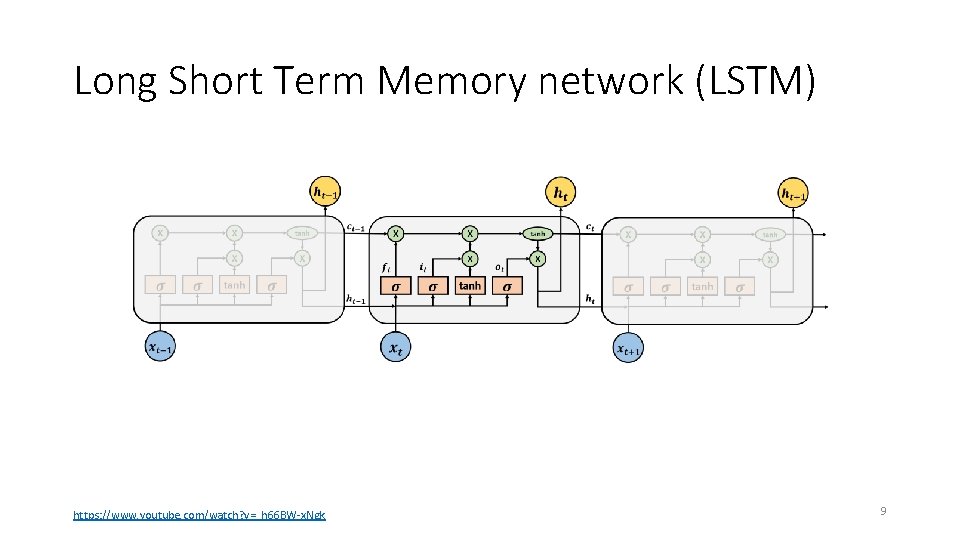

Long Short-Term Memory network (LSTM) • https://www.youtube.com/watch?v=_h66BW-xNgk

World Models • David Ha, Google Brain • Jürgen Schmidhuber, AI Lab, IDSIA (USI & SUPSI) • https://worldmodels.github.io/

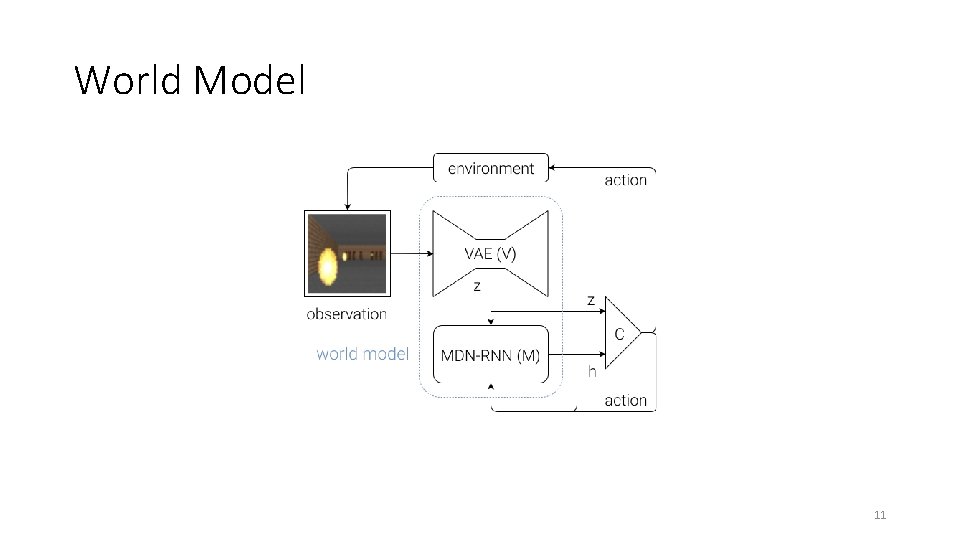

World Model

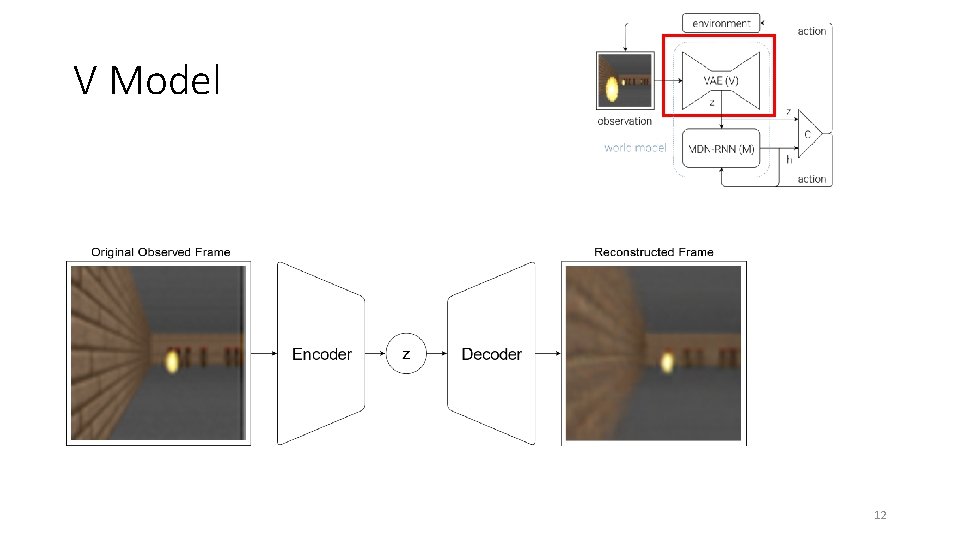

V Model

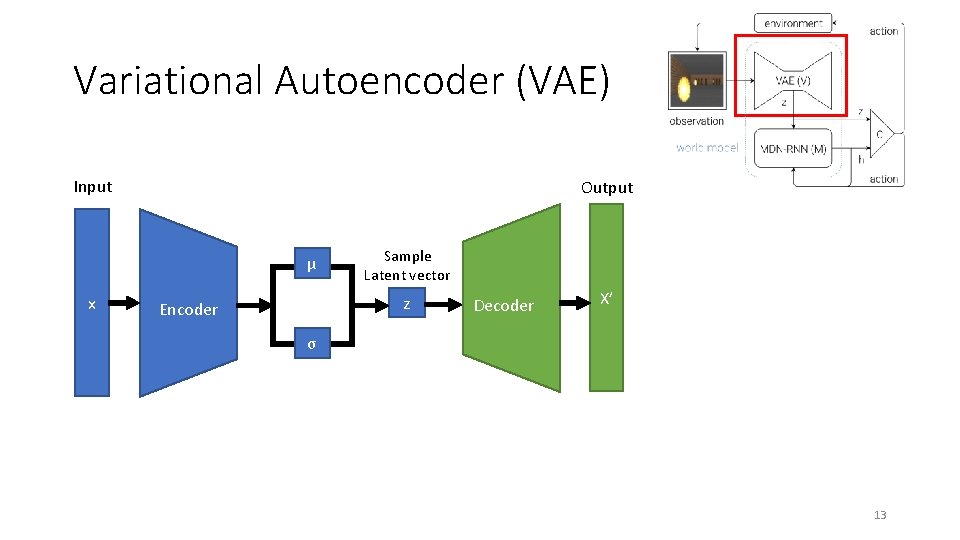

Variational Autoencoder (VAE) • Input x → Encoder → µ, σ → Sample → Latent vector z → Decoder → Output x’

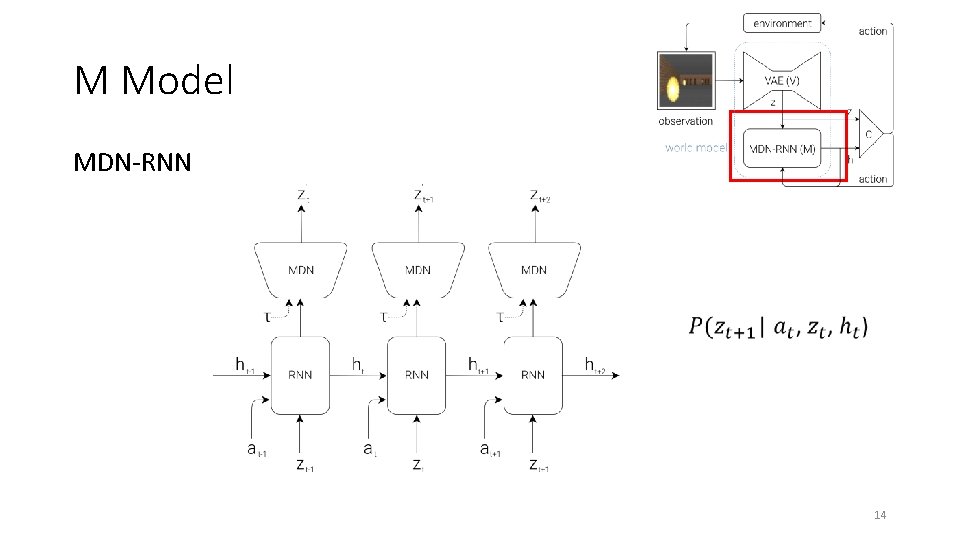
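The sampling step between encoder and decoder can be sketched as follows. This is a minimal illustration, assuming the encoder outputs a mean and a log-variance per latent dimension (a common parameterization; the slide itself only names µ and σ). The function names and the example numbers are hypothetical.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

mu = [0.5, -1.0, 0.0]        # hypothetical encoder output (means)
log_var = [0.0, -2.0, 0.0]   # hypothetical encoder output (log variances)
z = sample_latent(mu, log_var, random.Random(0))
print(z, kl_to_standard_normal(mu, log_var))
```

The KL term is the usual regularizer that keeps the latent distribution close to a standard normal; the reconstruction loss between x and x’ is omitted here.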

M Model: MDN-RNN

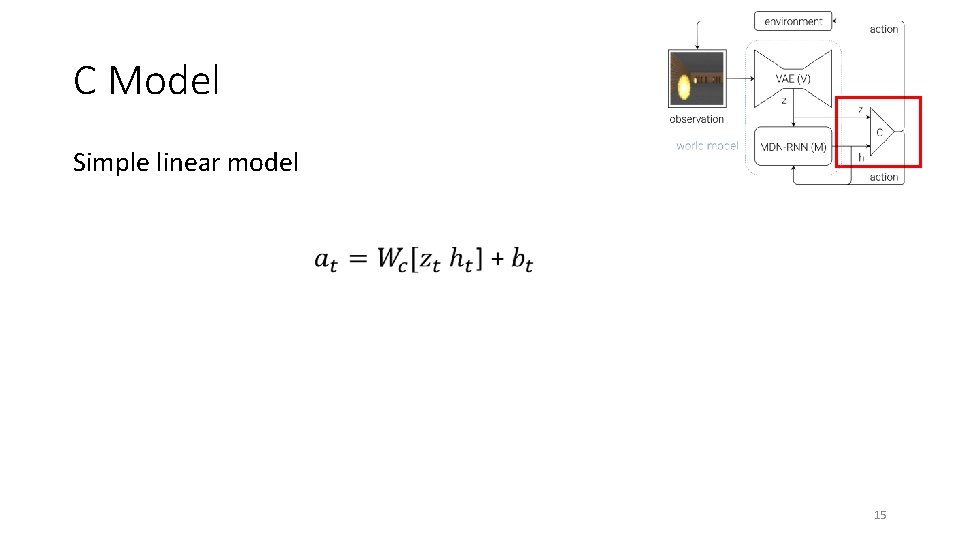

C Model: simple linear model

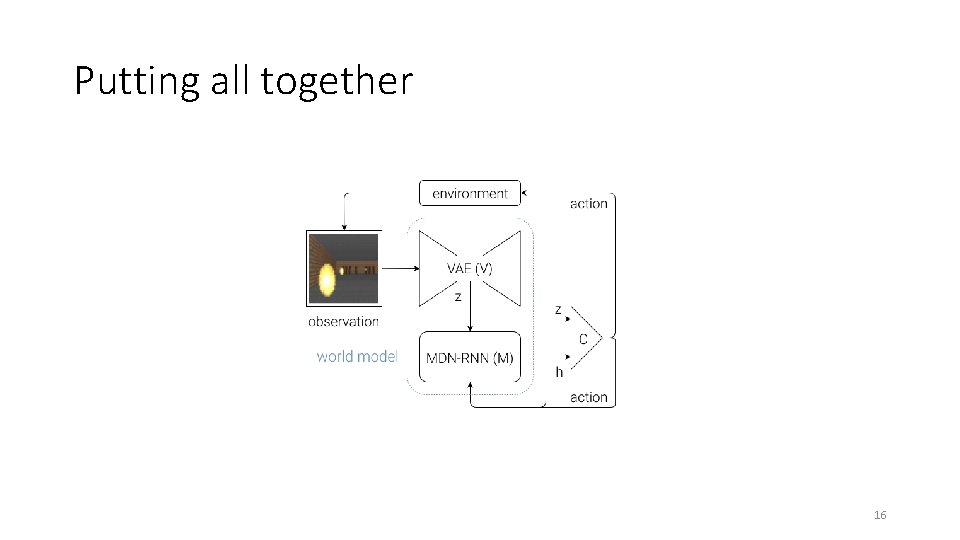
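A simple linear controller can be sketched as a single affine map. The sketch below assumes, following the World Models paper, that the controller acts on the concatenation of the latent vector z and the RNN hidden state h, i.e. a = W_c [z, h] + b_c. The dimensions and weights are hypothetical toy values.

```python
def controller_action(z, h, W_c, b_c):
    """Linear controller: a = W_c @ concat(z, h) + b_c."""
    x = list(z) + list(h)
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(W_c, b_c)]

# Hypothetical toy sizes: 2 latent dims, 1 hidden dim, 2 action dims.
z = [1.0, 2.0]
h = [0.5]
W_c = [[1.0, 0.0, 2.0],
       [0.0, 1.0, 0.0]]
b_c = [0.1, -0.1]
print(controller_action(z, h, W_c, b_c))
```

Keeping the controller this small leaves most of the model capacity in V and M, which makes the controller's few parameters practical to optimize with black-box methods such as evolution strategies.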

Putting it all together

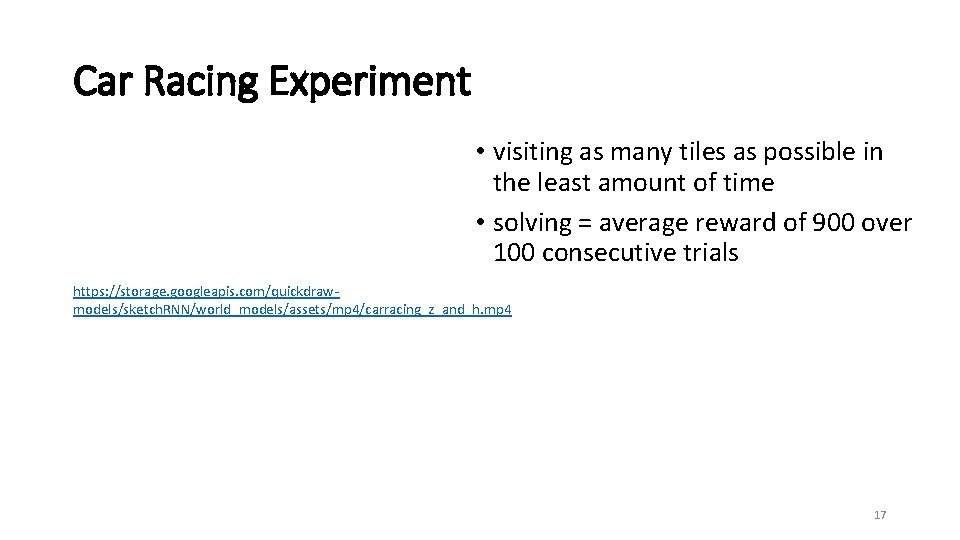
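How V, M and C interact during one episode can be sketched as a rollout loop. The loop structure follows the pseudocode in the World Models paper; the classes `ToyEnv`, `ToyV`, `ToyM` and `ToyC` are hypothetical stand-ins so that the example runs on its own, and they do not represent a real environment or trained networks.

```python
class ToyEnv:
    """Hypothetical environment: numeric observation, episode lasts 5 steps."""
    def reset(self):
        self.t = 0
        return 0.0

    def step(self, action):
        self.t += 1
        reward = 1.0 if action > 0 else 0.0
        return float(self.t), reward, self.t >= 5

class ToyV:
    def encode(self, obs):
        return [obs / 10.0]          # stand-in for the VAE encoder

class ToyM:
    def initial_state(self):
        return [0.0]

    def forward(self, action, z, h):
        return [0.5 * h[0] + z[0] + 0.1 * action]   # stand-in for the RNN update

class ToyC:
    def action(self, z, h):
        return z[0] + h[0] + 1.0     # stand-in for the linear controller

def rollout(env, v, m, c):
    """Run one episode: encode, act, step the environment, update the memory."""
    obs = env.reset()
    h = m.initial_state()
    done = False
    total_reward = 0.0
    while not done:
        z = v.encode(obs)            # V: compress the observation
        a = c.action(z, h)           # C: choose an action from z and h
        obs, reward, done = env.step(a)
        total_reward += reward
        h = m.forward(a, z, h)       # M: update the hidden state
    return total_reward

print(rollout(ToyEnv(), ToyV(), ToyM(), ToyC()))
```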

Car Racing Experiment • Visit as many tiles as possible in the least amount of time • Solving = average reward of 900 over 100 consecutive trials • https://storage.googleapis.com/quickdraw-models/sketchRNN/world_models/assets/mp4/carracing_z_and_h.mp4

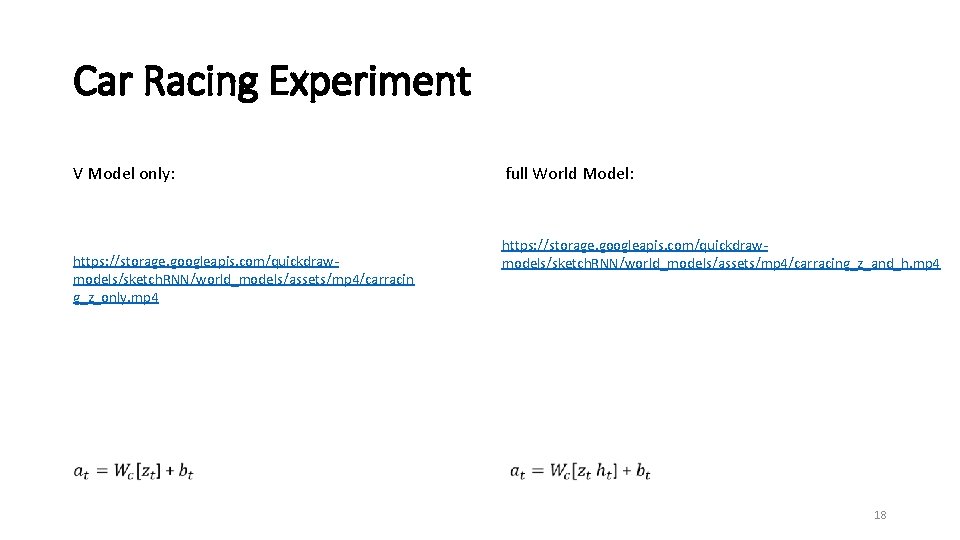

Car Racing Experiment • V Model only: https://storage.googleapis.com/quickdraw-models/sketchRNN/world_models/assets/mp4/carracing_z_only.mp4 • Full World Model: https://storage.googleapis.com/quickdraw-models/sketchRNN/world_models/assets/mp4/carracing_z_and_h.mp4

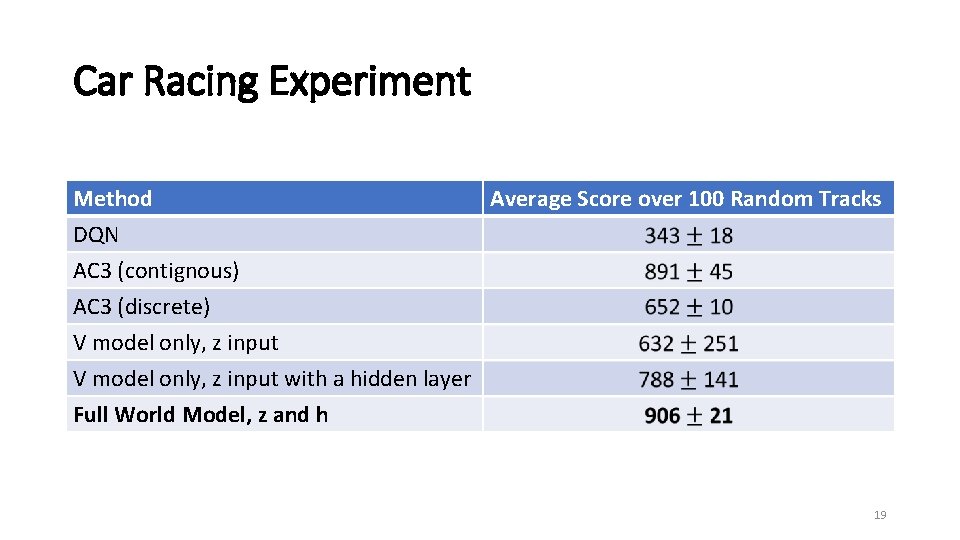

Car Racing Experiment: average score over 100 random tracks, by method • DQN • A3C (continuous) • A3C (discrete) • V model only, z input with a hidden layer • Full World Model, z and h

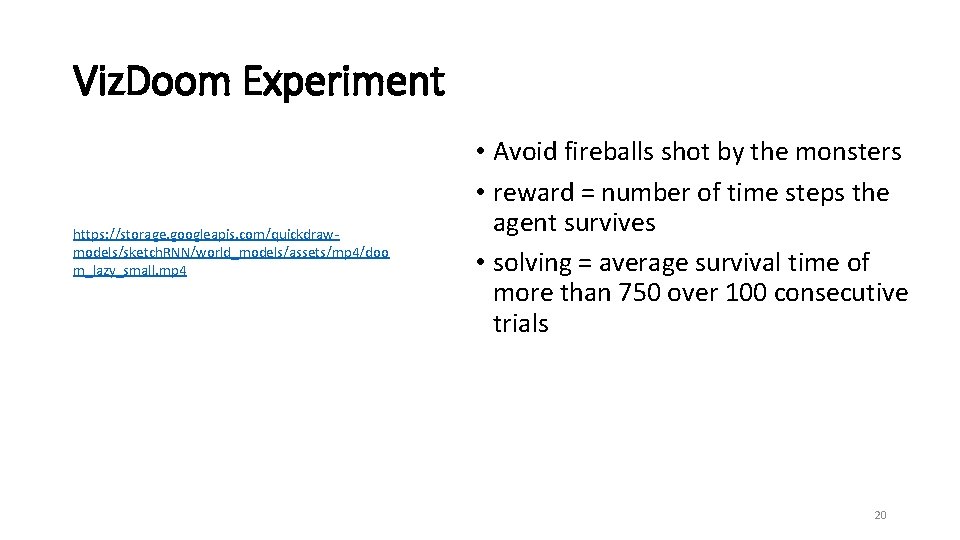

VizDoom Experiment • https://storage.googleapis.com/quickdraw-models/sketchRNN/world_models/assets/mp4/doom_lazy_small.mp4 • Avoid fireballs shot by the monsters • Reward = number of time steps the agent survives • Solving = average survival time of more than 750 over 100 consecutive trials

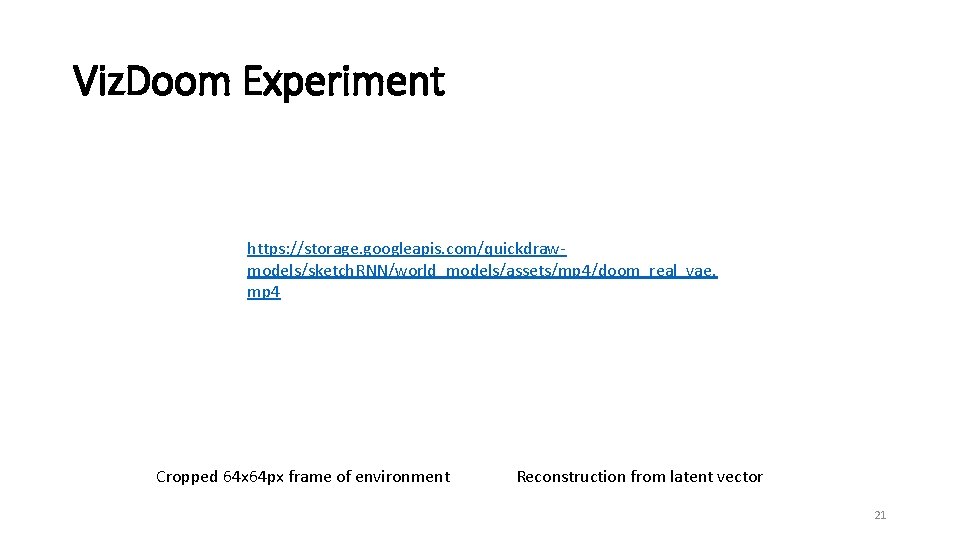

VizDoom Experiment • https://storage.googleapis.com/quickdraw-models/sketchRNN/world_models/assets/mp4/doom_real_vae.mp4 • Cropped 64 x 64 px frame of environment • Reconstruction from latent vector

VizDoom Experiment • https://storage.googleapis.com/quickdraw-models/sketchRNN/world_models/assets/mp4/doom_adversarial.mp4

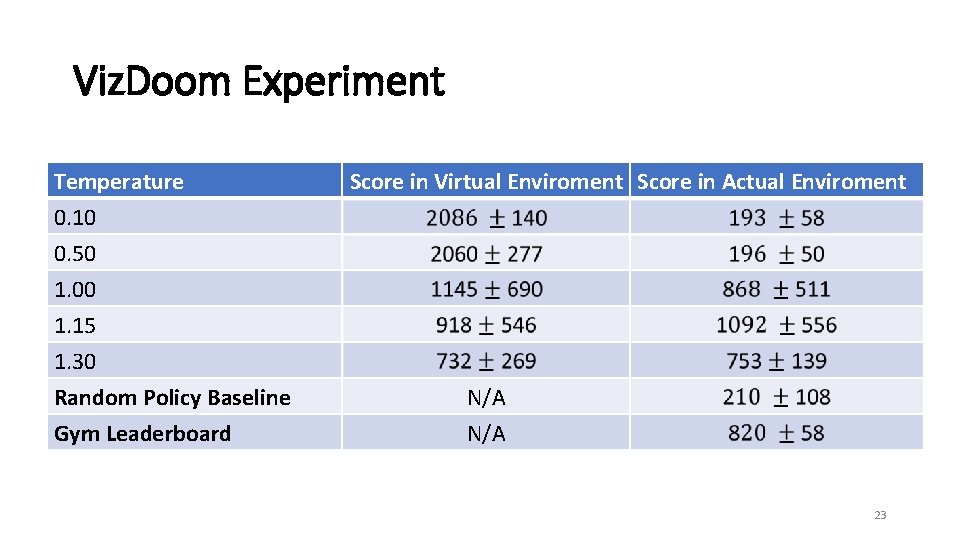

VizDoom Experiment: score in virtual environment vs. score in actual environment (some entries N/A) • Temperature 0.10 • 0.50 • 1.00 • 1.15 • 1.30 • Random Policy Baseline • Gym Leaderboard

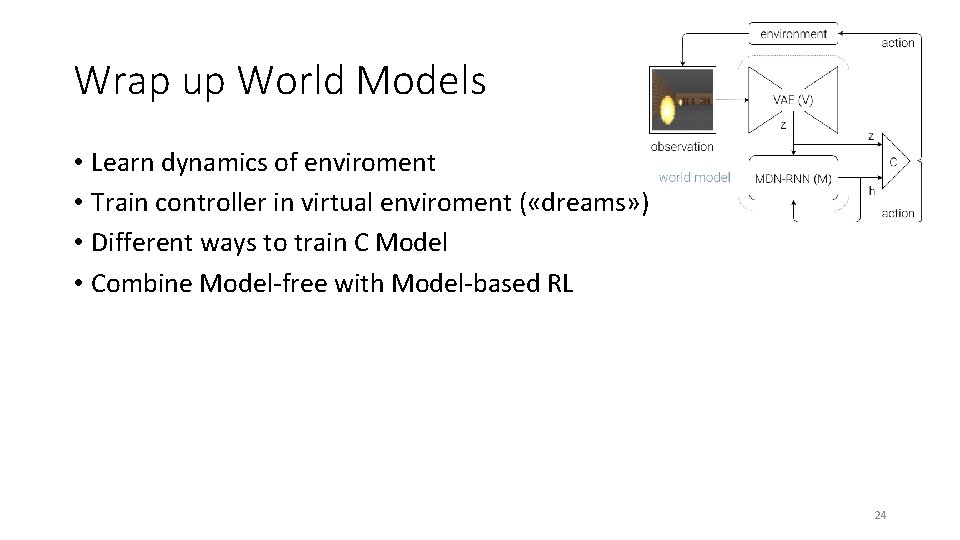
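The temperature controls how much randomness the M model injects when it samples the next latent state in the virtual environment. The sketch below shows one way temperature can be applied to a Gaussian mixture; the exact scaling (mixture logits divided by τ, standard deviations multiplied by √τ) is an assumption borrowed from the sketch-rnn sampling convention, and all function names and numbers are hypothetical.

```python
import math
import random

def adjust_mixture(logits, sigmas, tau):
    """Apply temperature tau to mixture weights and standard deviations."""
    scaled = [l / tau for l in logits]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]   # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    return weights, [s * math.sqrt(tau) for s in sigmas]

def sample_mdn(logits, mus, sigmas, tau, rng):
    """Pick a mixture component, then sample from its (scaled) Gaussian."""
    weights, scaled_sigmas = adjust_mixture(logits, sigmas, tau)
    k = rng.choices(range(len(weights)), weights=weights, k=1)[0]
    return rng.gauss(mus[k], scaled_sigmas[k])

logits = [2.0, 1.0, 0.0]     # hypothetical mixture logits
mus = [-1.0, 0.0, 1.0]       # hypothetical component means
sigmas = [0.1, 0.2, 0.3]     # hypothetical component std devs
rng = random.Random(0)
for tau in (0.10, 0.50, 1.00, 1.15, 1.30):
    print(tau, sample_mdn(logits, mus, sigmas, tau, rng))
```

A low temperature makes the virtual environment nearly deterministic, while a higher temperature makes it noisier and therefore harder for the controller to exploit.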

Wrap up World Models • Learn dynamics of the environment • Train controller in a virtual environment ("dreams") • Different ways to train the C Model • Combine model-free with model-based RL

Temporal Difference Variational Auto-Encoder • Karol Gregor, George Papamakarios, Frederic Besse, Lars Buesing, Théophane Weber • DeepMind

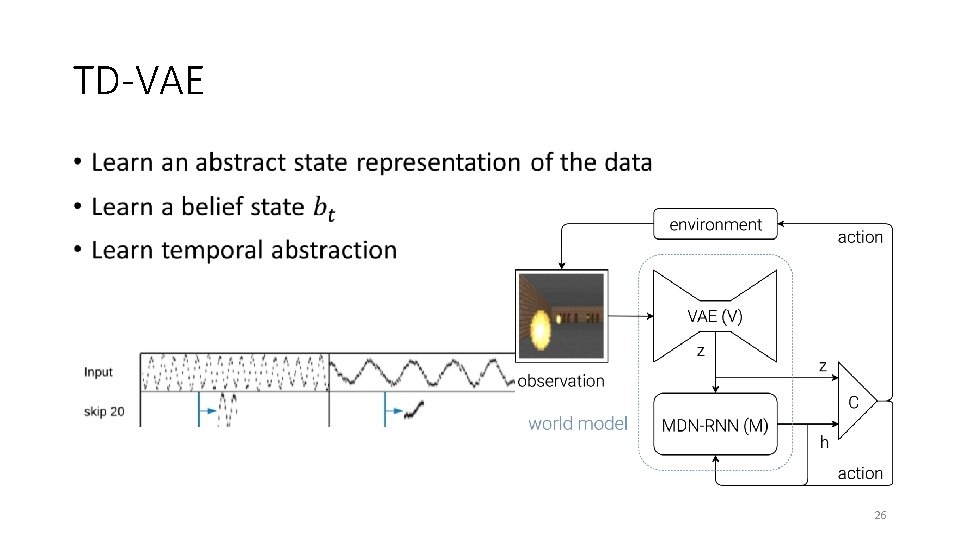

TD-VAE

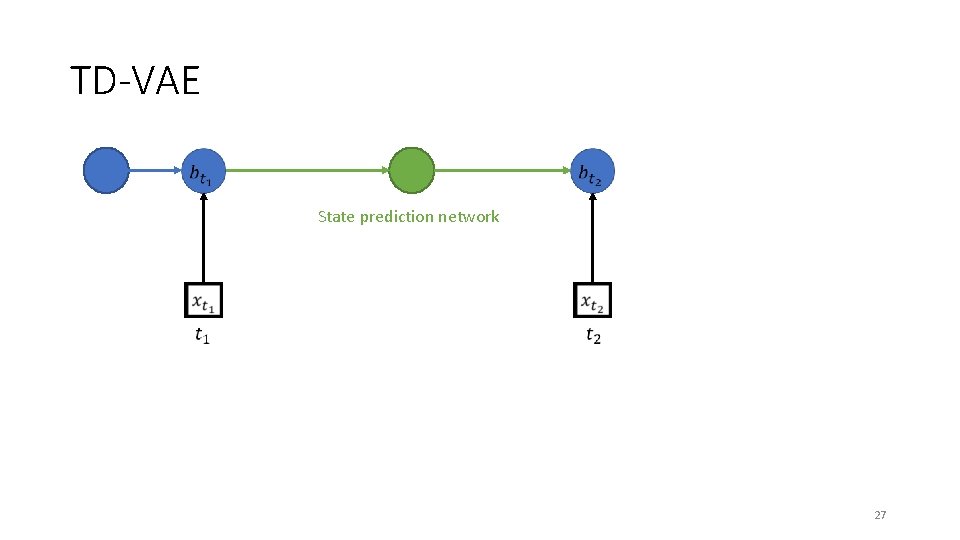

TD-VAE • State prediction network

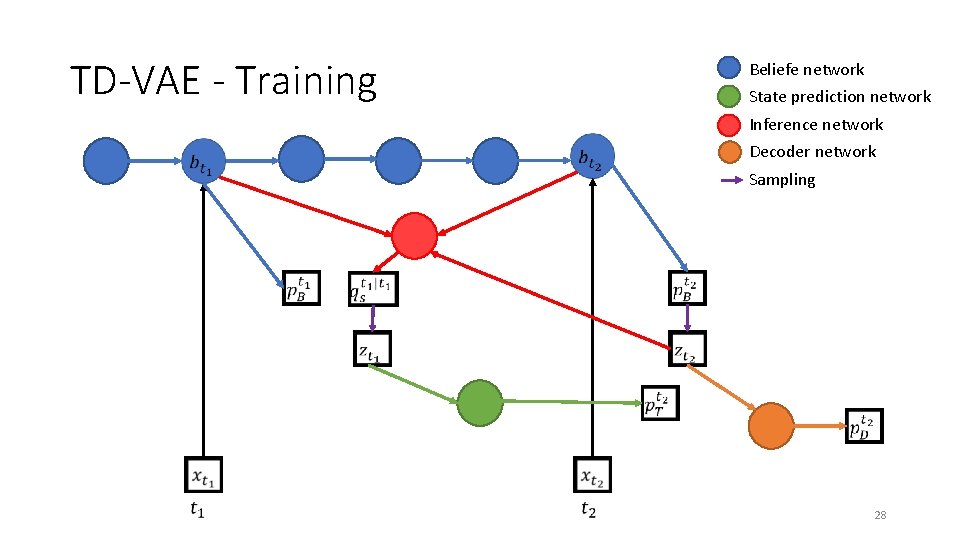

TD-VAE - Training • Belief network • State prediction network • Inference network • Decoder network • Sampling

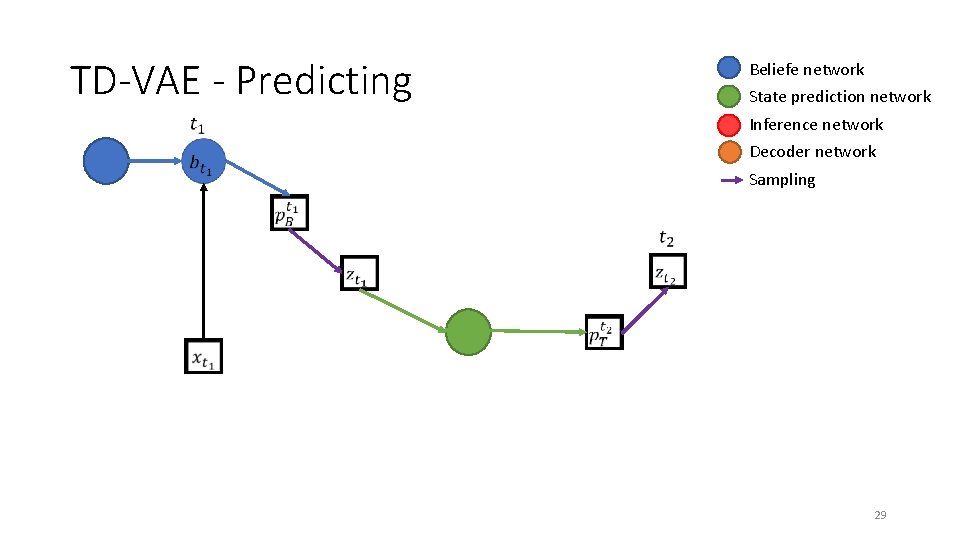

TD-VAE - Predicting • Belief network • State prediction network • Inference network • Decoder network • Sampling

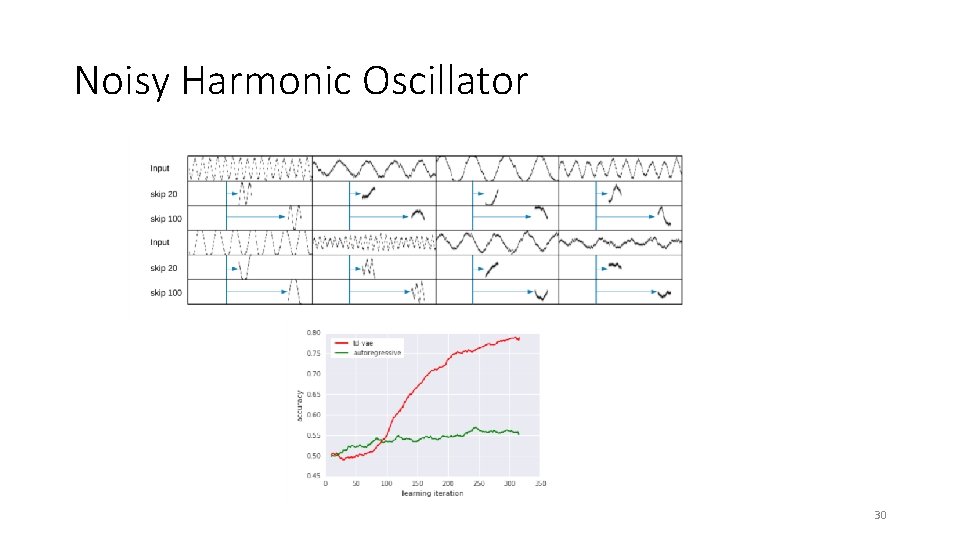

Noisy Harmonic Oscillator

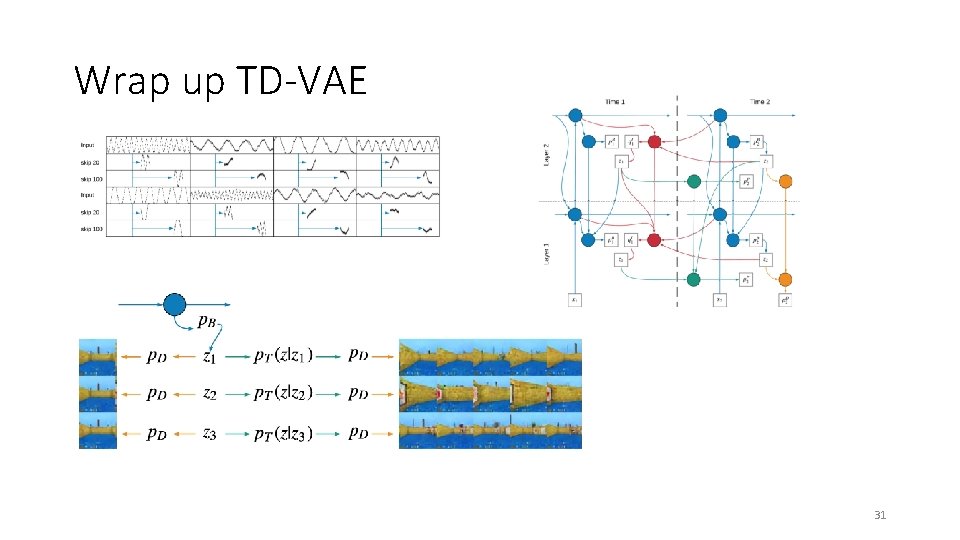
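To make the task concrete, a noisy harmonic oscillator sequence can be generated as below. This is only a sketch under stated assumptions: the observation is taken to be the oscillator position plus Gaussian noise, and the frequency, phase, noise level and function name are hypothetical values, not the settings used in the TD-VAE experiments.

```python
import math
import random

def noisy_oscillator(steps, omega, phase, noise_std, rng):
    """Observed positions: cos(omega * t + phase) plus Gaussian noise."""
    return [math.cos(omega * t + phase) + rng.gauss(0.0, noise_std)
            for t in range(steps)]

rng = random.Random(0)
observations = noisy_oscillator(steps=20, omega=0.3, phase=0.0,
                                noise_std=0.2, rng=rng)
print(observations[:5])
```

A single noisy position says little about the underlying phase and frequency, so a model has to aggregate information over many time steps before it can predict future positions well.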

Wrap up TD-VAE

Thank you for your attention

Questions?
