Missing Data in Randomized Control Trials John W

- Slides: 40

Missing Data in Randomized Control Trials
John W. Graham
The Prevention Research Center and Department of Biobehavioral Health
Penn State University
jgraham@psu.edu
IES/NCER Summer Research Training Institute, August 2, 2010

Sessions in Three Parts
- (1) Introduction: Missing Data Theory
- (2) Attrition: Bias and Lost Power

After the break...
- (3) Hands-on with Multiple Imputation
  - Multiple Imputation with NORM
  - SPSS Automation Utility (New!)
  - SPSS Regression
  - HLM Automation Utility (New!)
  - 2-Level Regression with HLM 6

Recent Papers
- Graham, J. W. (2009). Missing data analysis: Making it work in the real world. Annual Review of Psychology, 60, 549-576.
- Graham, J. W., Cumsille, P. E., & Elek-Fisk, E. (2003). Methods for handling missing data. In J. A. Schinka & W. F. Velicer (Eds.), Research Methods in Psychology (pp. 87-114). Volume 2 of Handbook of Psychology (I. B. Weiner, Editor-in-Chief). New York: John Wiley & Sons.
- Graham, J. W. (2010, forthcoming). Missing Data: Analysis and Design. New York: Springer.
  - Chapter 4: Multiple Imputation with NORM 2.03
  - Chapter 6: Multiple Imputation and Analysis with SPSS 17/18
  - Chapter 7: Multiple Imputation and Analysis with Multilevel (Cluster) Data

Recent Papers
- Collins, L. M., Schafer, J. L., & Kam, C. M. (2001). A comparison of inclusive and restrictive strategies in modern missing data procedures. Psychological Methods, 6, 330-351.
- Schafer, J. L., & Graham, J. W. (2002). Missing data: Our view of the state of the art. Psychological Methods, 7, 147-177.
- Graham, J. W., Taylor, B. J., Olchowski, A. E., & Cumsille, P. E. (2006). Planned missing data designs in psychological research. Psychological Methods, 11, 323-343.

Part 1: A Brief Introduction to Analysis with Missing Data

Problem with Missing Data
- Analysis procedures were designed for complete data...

Solution 1
- Design new model-based procedures
- Missing data handling + parameter estimation in one step
- Full Information Maximum Likelihood (FIML)
- SEM and other latent variable programs (Amos, LISREL, Mplus, Mx, LTA)

Solution 2
- Data-based procedures
  - e.g., Multiple Imputation (MI)
- Two steps
  - Step 1: Deal with the missing data (e.g., replace missing values with plausible values), producing a product
  - Step 2: Analyze the product as if there were no missing data

FAQ
- Aren't you somehow helping yourself with imputation?...

NO. Missing data imputation...
- does NOT give you something for nothing
- DOES let you make use of all the data you have...

FAQ
- Is the imputed value what the person would have given?

NO. When we impute a value...
- We do not impute for the sake of the value itself
- We impute to preserve important characteristics of the whole data set...

We want...
- Unbiased parameter estimation
  - e.g., b-weights
- Good estimates of variability
  - e.g., standard errors
- Best statistical power

Causes of Missingness
- Ignorable
  - MCAR: Missing Completely At Random
  - MAR: Missing At Random
- Non-Ignorable
  - MNAR: Missing Not At Random

MCAR (Missing Completely At Random)
- MCAR 1: The cause of missingness is a completely random process (like a coin flip)
- MCAR 2 (essentially MCAR): The cause is uncorrelated with the variables of interest
  - Example: parents move
- No bias if the cause is omitted

MAR (Missing At Random)
- Missingness may be related to measured variables
- But no residual relationship with unmeasured variables
  - Example: reading speed
- No bias if you control for the measured variables

MNAR (Missing Not At Random)
- Even after controlling for measured variables...
- There is a residual relationship with unmeasured variables
  - Example: drug use is the reason for absence
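A small simulation can make the three mechanisms concrete. The sketch below is a hypothetical setup, not from the slides: x and y are standard normal with correlation 0.6, and about half of y goes missing by a coin flip (MCAR), by the measured x (MAR), or by y itself (MNAR). The "x-adjusted" estimate is a simple regression stand-in for "controlling for measured variables", not MI or FIML.

```python
import numpy as np

def simulate_mechanisms(n=100_000, seed=1):
    """Complete-case vs x-adjusted estimates of mean(y) under MCAR, MAR, MNAR.

    Hypothetical setup: x, y standard normal with correlation 0.6, so the
    true mean of y is 0; roughly half of y is missing under each mechanism.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n)
    y = 0.6 * x + np.sqrt(1 - 0.6**2) * rng.standard_normal(n)

    missing = {
        "MCAR": rng.random(n) < 0.5,  # coin flip, unrelated to x or y
        "MAR": x > 0,                 # depends only on the measured x
        "MNAR": y > 0,                # depends on the unobserved y itself
    }

    results = {}
    for name, miss in missing.items():
        obs = ~miss
        cc_mean = y[obs].mean()  # listwise (complete-case) estimate
        # Control for x: regress y on x among observed cases, then
        # average the predictions over ALL cases (x is never missing).
        slope, intercept = np.polyfit(x[obs], y[obs], 1)
        adjusted_mean = (intercept + slope * x).mean()
        results[name] = (cc_mean, adjusted_mean)
    return results

for name, (cc, adj) in simulate_mechanisms().items():
    print(f"{name}: complete-case mean = {cc:+.3f}, x-adjusted mean = {adj:+.3f}")
```

Under MCAR both estimates land near 0; under MAR the complete-case mean is biased but controlling for x removes the bias; under MNAR controlling for x reduces but does not remove it.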

MNAR Causes
- The recommended methods assume missingness is MAR
- But what if the cause of missingness is not MAR?
- Should these methods be used when MAR assumptions are not met?

YES! These Methods Work!
- Suggested methods work better than "old" methods
- Multiple causes of missingness
  - Only a small part of the missingness may be MNAR
- Suggested methods usually work very well

Methods: "Old" vs MAR vs MNAR
- MAR methods (MI and ML)
  - are ALWAYS at least as good as, and usually better than, "old" methods (e.g., listwise deletion)
- Methods designed to handle MNAR missingness are NOT always better than MAR methods

Analysis: Old and New

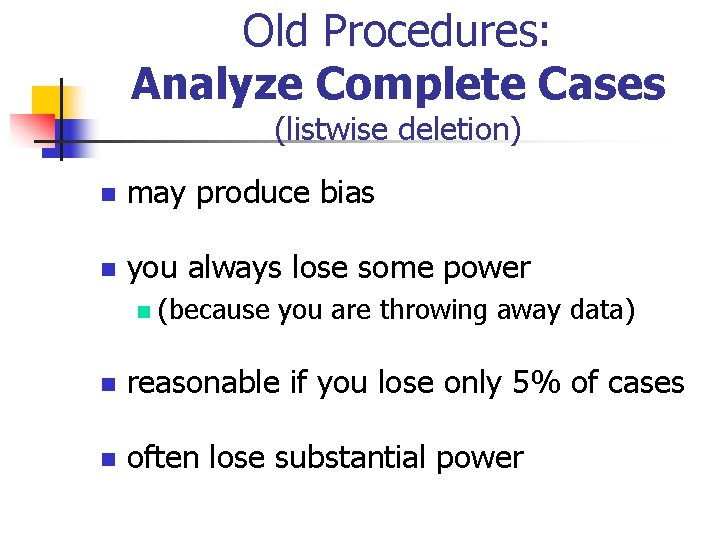

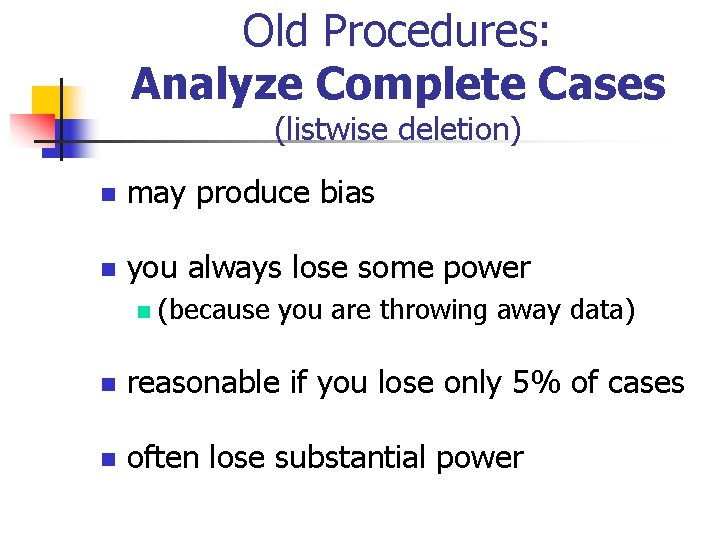

Old Procedures: Analyze Complete Cases (listwise deletion)
- may produce bias
- you always lose some power
  - (because you are throwing away data)
- reasonable if you lose only 5% of cases
- often lose substantial power

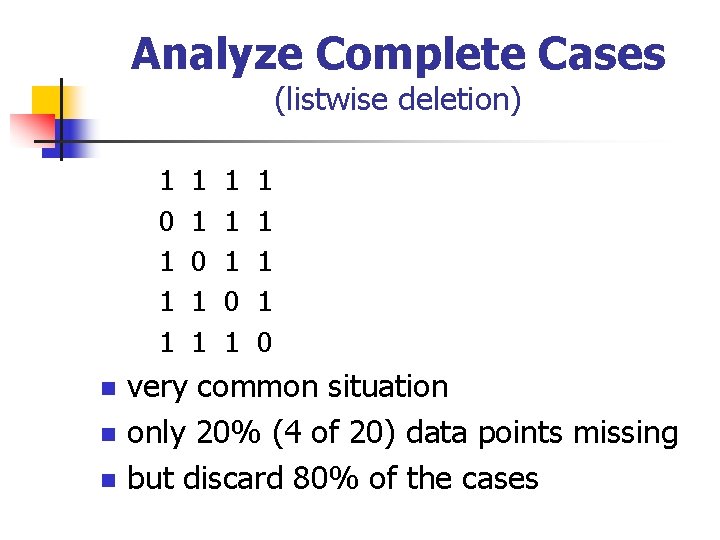

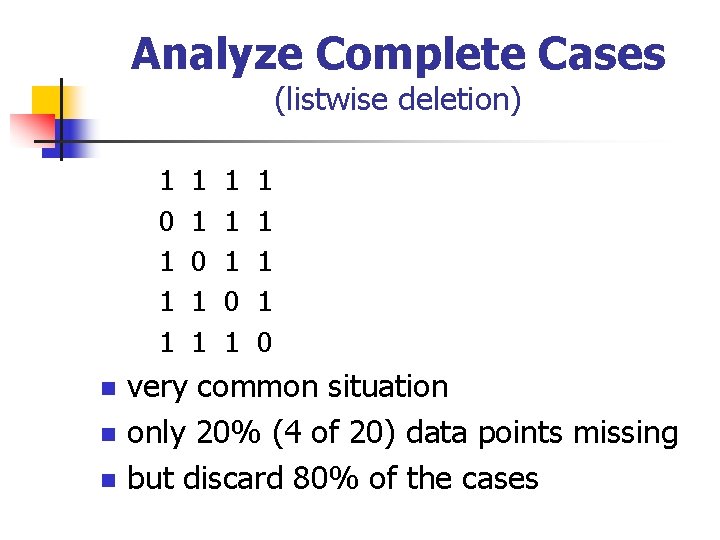

Analyze Complete Cases (listwise deletion)
- Very common situation
- Only 20% (4 of 20) of data points missing
- But discard 80% of the cases
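The arithmetic above can be checked on a small hypothetical layout. The actual pattern is in the slide figure; the 5-case by 4-variable matrix below is an assumption chosen to match "4 of 20" data points and "80%" of cases, with each of four cases missing a different variable.

```python
import numpy as np

# Hypothetical 5 cases x 4 variables; np.nan marks a missing value.
# Each of the first four cases is missing a different variable.
data = np.array([
    [np.nan, 2.0, 3.0, 4.0],
    [1.0, np.nan, 3.0, 4.0],
    [1.0, 2.0, np.nan, 4.0],
    [1.0, 2.0, 3.0, np.nan],
    [1.0, 2.0, 3.0, 4.0],
])

missing = np.isnan(data)
frac_values_missing = missing.mean()           # share of data points missing
complete_rows = ~missing.any(axis=1)           # cases with no missing value
frac_cases_dropped = 1 - complete_rows.mean()  # share of cases listwise deletion drops

print(f"data points missing: {missing.sum()} of {missing.size} ({frac_values_missing:.0%})")
print(f"cases discarded by listwise deletion: {frac_cases_dropped:.0%}")
```

Running it prints 4 of 20 (20%) data points missing and 80% of cases discarded: one missing value per case is enough to throw the whole case away.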

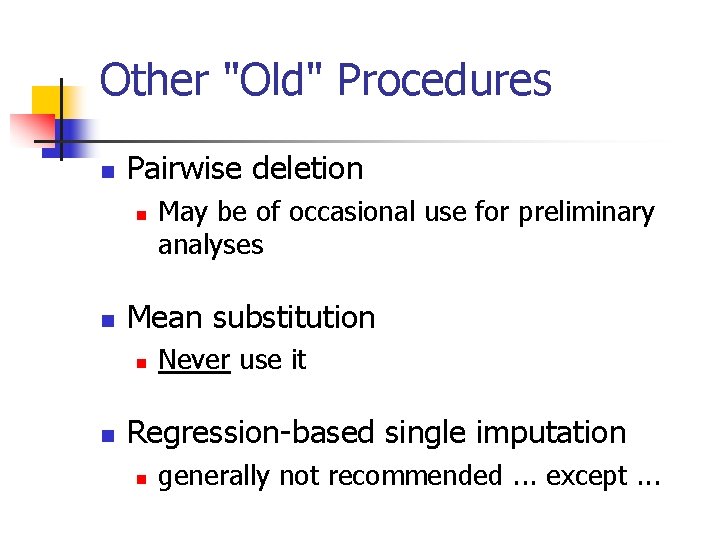

Other "Old" Procedures
- Pairwise deletion
  - May be of occasional use for preliminary analyses
- Mean substitution
  - Never use it
- Regression-based single imputation
  - Generally not recommended... except...
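One reason to never use mean substitution: it shrinks variances and attenuates correlations even when data are MCAR, because every filled-in value sits exactly at the mean. The sketch below uses hypothetical simulated data (correlation about 0.6, 40% of y deleted completely at random).

```python
import numpy as np

rng = np.random.default_rng(7)
n = 10_000
x = rng.standard_normal(n)
y = 0.6 * x + 0.8 * rng.standard_normal(n)  # corr(x, y) is about 0.6

# Delete 40% of y completely at random, then fill each hole with the observed mean.
miss = rng.random(n) < 0.4
y_mean_sub = y.copy()
y_mean_sub[miss] = y[~miss].mean()

print(f"var(y): full = {y.var():.3f}, mean-substituted = {y_mean_sub.var():.3f}")
print(f"corr(x, y): full = {np.corrcoef(x, y)[0, 1]:.3f}, "
      f"mean-substituted = {np.corrcoef(x, y_mean_sub)[0, 1]:.3f}")
```

With 40% filled in, the variance of y drops to roughly 60% of its true value and the correlation with x is pulled toward zero.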

Recommended Model-Based Procedures
- Multiple Group SEM (Structural Equation Modeling)
- Latent Transition Analysis (Collins et al.)
  - A latent class procedure

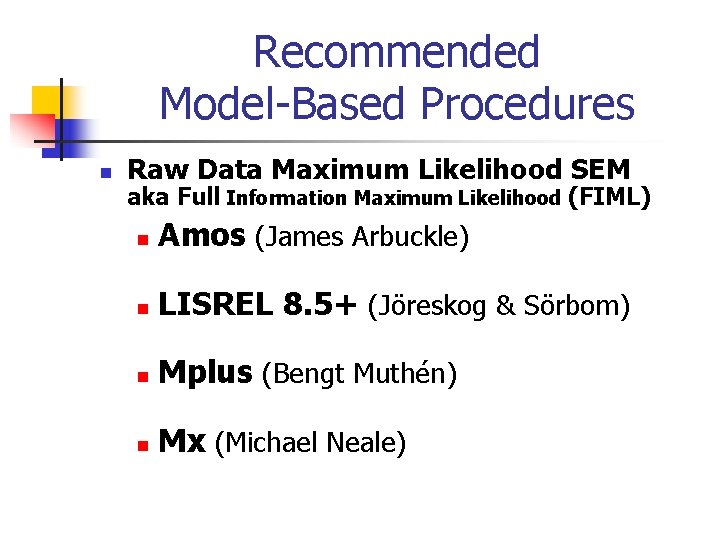

Recommended Model-Based Procedures
- Raw Data Maximum Likelihood SEM, aka Full Information Maximum Likelihood (FIML)
  - Amos (James Arbuckle)
  - LISREL 8.5+ (Jöreskog & Sörbom)
  - Mplus (Bengt Muthén)
  - Mx (Michael Neale)

Amos, Mx, Mplus, LISREL 8.8
- Structural Equation Modeling (SEM) programs
- In a single analysis...
  - Good estimation
  - Reasonable standard errors
- Windows graphical interface

Limitation with Model-Based Procedures
- That particular model must be what you want

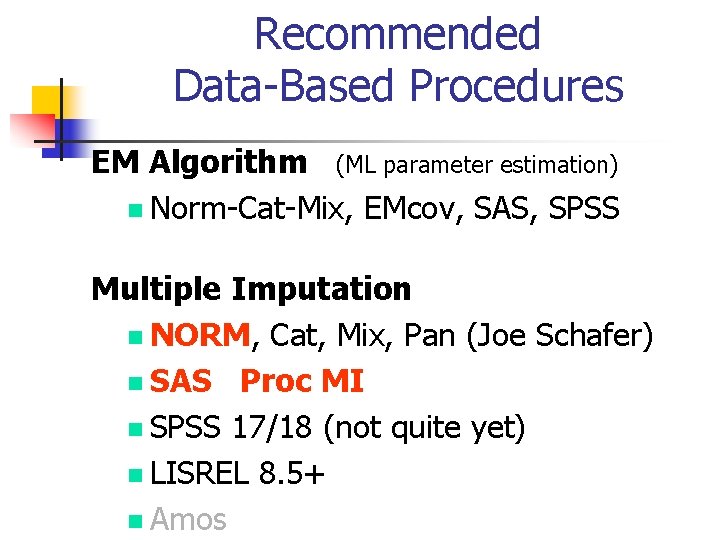

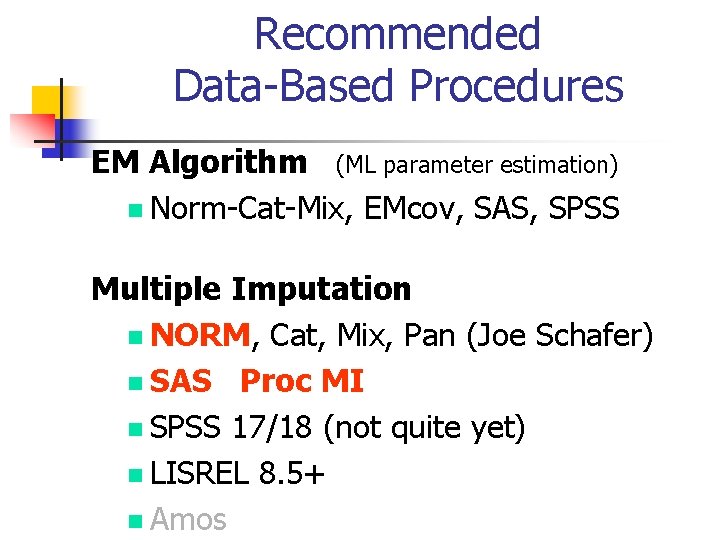

Recommended Data-Based Procedures
- EM Algorithm (ML parameter estimation)
  - Norm-Cat-Mix, EMcov, SAS, SPSS
- Multiple Imputation
  - NORM, Cat, Mix, Pan (Joe Schafer)
  - SAS Proc MI
  - SPSS 17/18 (not quite yet)
  - LISREL 8.5+
  - Amos

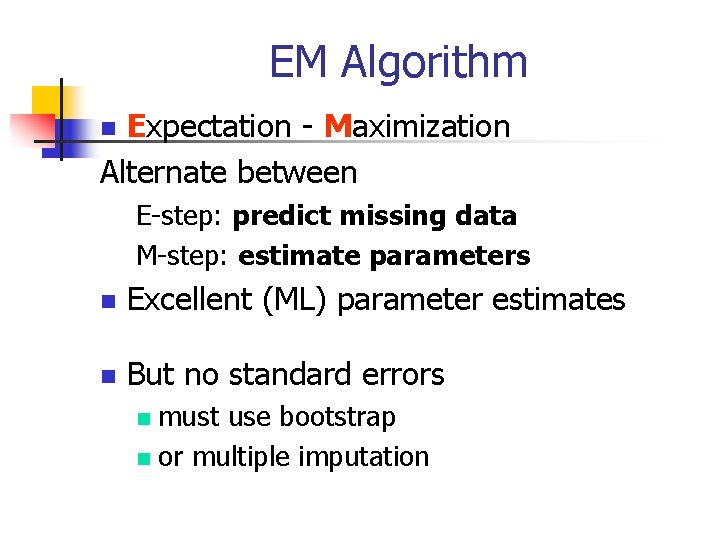

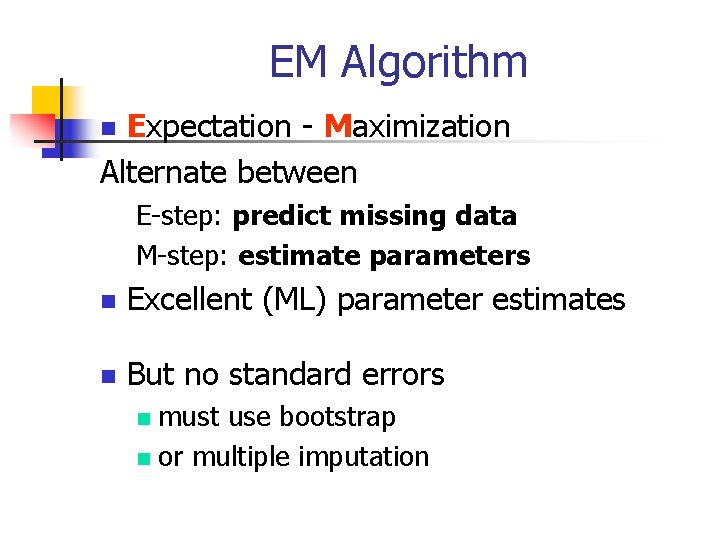

EM Algorithm
- Expectation-Maximization
- Alternate between
  - E-step: predict missing data
  - M-step: estimate parameters
- Excellent (ML) parameter estimates
- But no standard errors
  - must use bootstrap
  - or multiple imputation
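The E-step/M-step alternation can be sketched for the simplest case: two variables, x fully observed and y partly missing, assumed bivariate normal with missingness MAR on x. This is an illustration of the idea, not the EM routine in NORM, EMcov, SAS, or SPSS; `em_bivariate` is a hypothetical name.

```python
import numpy as np

def em_bivariate(x, y, max_iter=500, tol=1e-10):
    """EM estimates of the mean vector and covariance matrix of (x, y), where x
    is fully observed and missing y values are np.nan (assumed MAR on x)."""
    miss = np.isnan(y)
    # x is complete, so its ML mean and variance never change.
    mu_x, s_xx = x.mean(), x.var()
    # Start y's parameters at complete-case values.
    mu_y, s_yy = np.nanmean(y), np.nanvar(y)
    s_xy = np.cov(x[~miss], y[~miss], bias=True)[0, 1]
    for _ in range(max_iter):
        # E-step: expected y and y^2 for the missing cases, given x and current parameters.
        beta = s_xy / s_xx
        resid_var = s_yy - beta * s_xy
        ey = np.where(miss, mu_y + beta * (x - mu_x), y)
        ey2 = np.where(miss, ey**2 + resid_var, y**2)
        # M-step: ML estimates computed from the expected sufficient statistics.
        new_mu_y = ey.mean()
        new_s_yy = ey2.mean() - new_mu_y**2
        new_s_xy = (x * ey).mean() - mu_x * new_mu_y
        change = max(abs(new_mu_y - mu_y), abs(new_s_yy - s_yy), abs(new_s_xy - s_xy))
        mu_y, s_yy, s_xy = new_mu_y, new_s_yy, new_s_xy
        if change < tol:
            break
    return np.array([mu_x, mu_y]), np.array([[s_xx, s_xy], [s_xy, s_yy]])
```

The function returns point estimates only. As the slide says, EM by itself gives no standard errors; those would have to come from bootstrapping or from multiple imputation.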

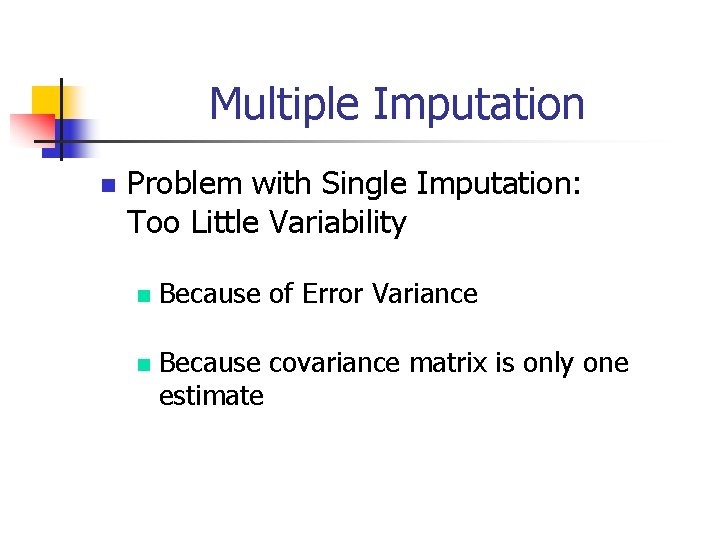

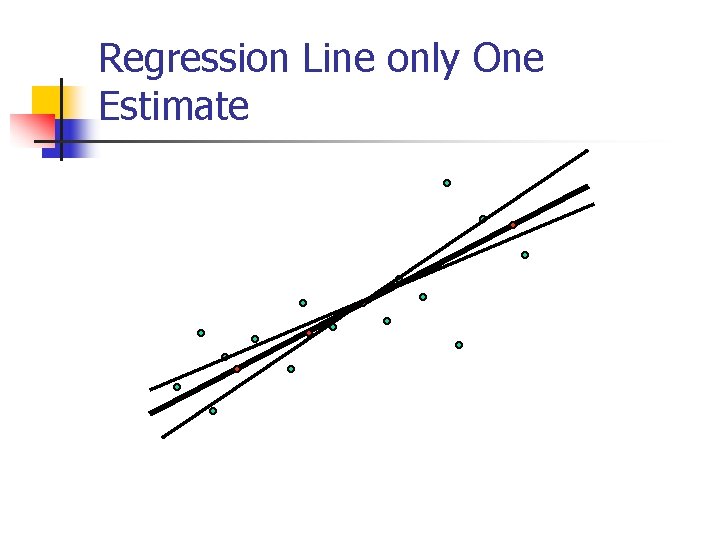

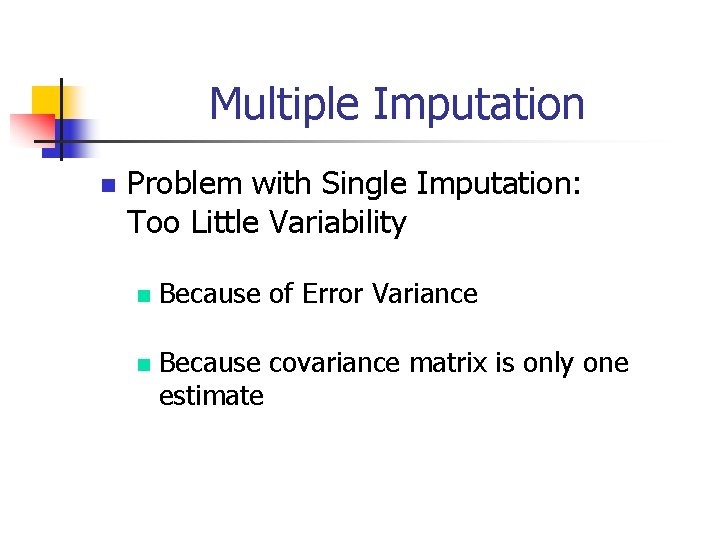

Multiple Imputation
- Problem with single imputation: too little variability
  - Because of error variance
  - Because the covariance matrix is only one estimate

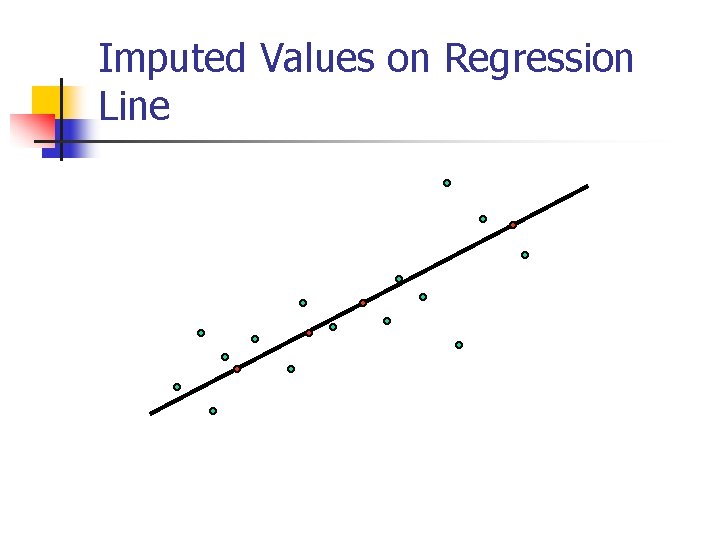

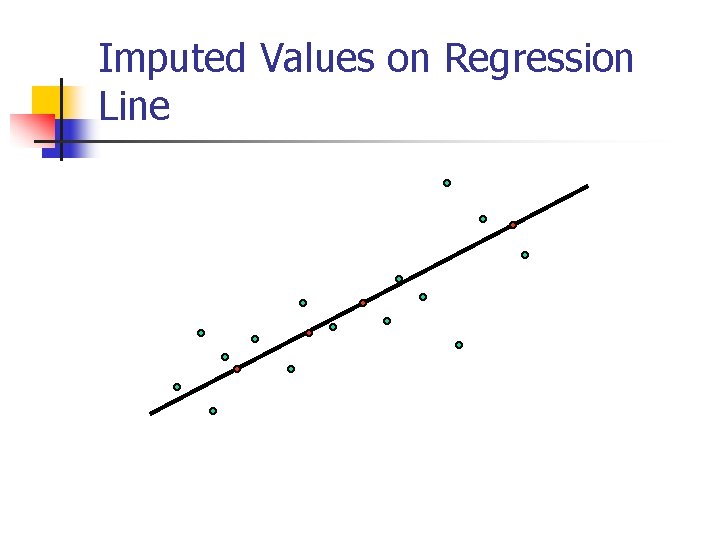

Too Little Error Variance
- Imputed value lies on the regression line

Imputed Values on Regression Line

Restore Error...
- Add a random normal residual
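The difference between putting imputed values on the regression line and adding a random normal residual shows up directly in the variance of the imputed variable. A sketch on hypothetical simulated data (true variance of y is 1, 40% of y missing completely at random):

```python
import numpy as np

rng = np.random.default_rng(3)
n = 20_000
x = rng.standard_normal(n)
y = 0.6 * x + 0.8 * rng.standard_normal(n)
miss = rng.random(n) < 0.4  # MCAR, for simplicity
obs = ~miss

# Fit the regression of y on x among observed cases.
slope, intercept = np.polyfit(x[obs], y[obs], 1)
resid_sd = np.std(y[obs] - (intercept + slope * x[obs]), ddof=2)

# Regression imputation: imputed values lie exactly on the line.
y_reg = y.copy()
y_reg[miss] = intercept + slope * x[miss]

# Stochastic regression imputation: add a random normal residual.
y_stoch = y_reg.copy()
y_stoch[miss] += rng.normal(0.0, resid_sd, size=miss.sum())

print(f"var(y): true = {y.var():.3f}, on the line = {y_reg.var():.3f}, "
      f"with residual = {y_stoch.var():.3f}")
```

Adding the residual restores the error variance, but this is still a single imputation built on one estimated regression line.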

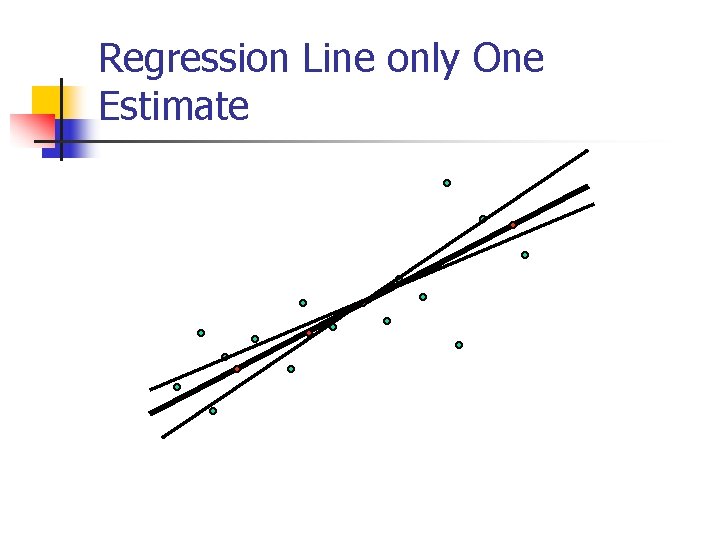

Regression Line Is Only One Estimate

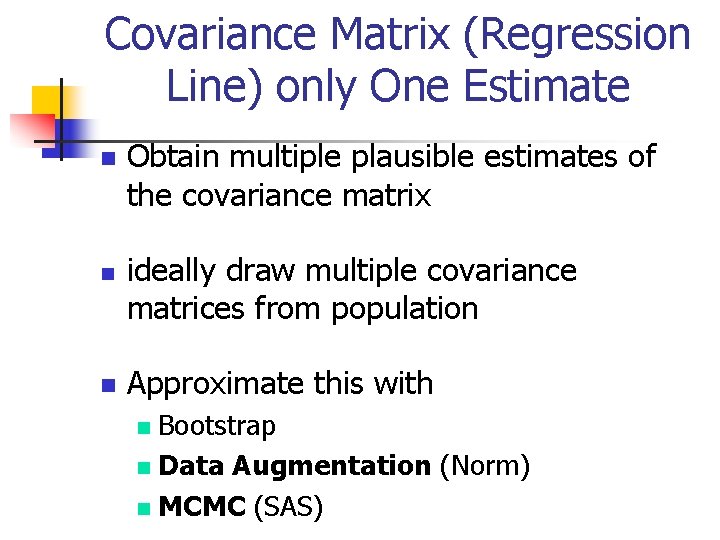

Covariance Matrix (Regression Line) Is Only One Estimate
- Obtain multiple plausible estimates of the covariance matrix
  - ideally, draw multiple covariance matrices from the population
- Approximate this with
  - Bootstrap
  - Data Augmentation (NORM)
  - MCMC (SAS)
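The bootstrap route listed above can be sketched for one variable with missing values and one complete predictor: each imputed data set uses a regression line fitted to a different bootstrap resample of the complete cases, plus a random residual. The function name `bootstrap_impute` and the single-predictor linear model are assumptions for illustration; NORM and SAS use data augmentation and MCMC instead.

```python
import numpy as np

def bootstrap_impute(x, y, m=5, seed=0):
    """Return m imputed copies of y (np.nan = missing), assuming y depends
    linearly on a fully observed x. Each copy uses regression parameters from
    a different bootstrap resample of the complete cases, so the imputations
    also reflect uncertainty in the regression line itself."""
    rng = np.random.default_rng(seed)
    miss = np.isnan(y)
    xo, yo = x[~miss], y[~miss]
    imputations = []
    for _ in range(m):
        # Resample complete cases with replacement and refit the line.
        idx = rng.integers(0, len(xo), size=len(xo))
        slope, intercept = np.polyfit(xo[idx], yo[idx], 1)
        resid_sd = np.std(yo[idx] - (intercept + slope * xo[idx]), ddof=2)
        # Impute: point on this resample's line plus a random normal residual.
        y_imp = y.copy()
        y_imp[miss] = intercept + slope * x[miss] + rng.normal(0.0, resid_sd, miss.sum())
        imputations.append(y_imp)
    return imputations
```

Because each copy starts from a different plausible regression line, the imputed values differ across data sets by more than residual noise alone would produce.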

Data Augmentation
- Stochastic version of EM
- EM
  - E (expectation) step: predict missing data
  - M (maximization) step: estimate parameters
- Data Augmentation
  - I (imputation) step: simulate missing data
  - P (posterior) step: simulate parameters

Data Augmentation
- Parameters from consecutive steps... too related
  - i.e., not enough variability
- After 50 or 100 steps of DA... covariance matrices are like random draws from the population
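The I-step/P-step cycle, and the practice of keeping only widely spaced draws, can be sketched for a simple regression model y = b0 + b1*x + e with x complete and y partly missing. This is an assumed simplification, not NORM's multivariate normal implementation: the P-step draws regression parameters under the standard noninformative prior (flat on the coefficients, proportional to 1/s2 on the residual variance). `data_augmentation` and its defaults are hypothetical.

```python
import numpy as np

def data_augmentation(x, y, m=5, burn_in=100, spacing=100, seed=0):
    """DA sketch for y = b0 + b1*x + e, e ~ N(0, s2), with x fully observed and
    some y values missing (np.nan). Saves one imputed copy of y every `spacing`
    steps after `burn_in` steps and returns the m saved copies."""
    rng = np.random.default_rng(seed)
    miss = np.isnan(y)
    X = np.column_stack([np.ones_like(x), x])
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    # Start the chain at complete-case least-squares estimates.
    beta, *_ = np.linalg.lstsq(X[~miss], y[~miss], rcond=None)
    s2 = np.var(y[~miss] - X[~miss] @ beta)
    y_cur = y.copy()
    saved = []
    for step in range(1, burn_in + spacing * m + 1):
        # I-step: simulate the missing y values from the current parameters.
        y_cur[miss] = X[miss] @ beta + rng.normal(0.0, np.sqrt(s2), miss.sum())
        # P-step: simulate parameters from their posterior given the completed data.
        beta_hat = XtX_inv @ (X.T @ y_cur)
        sse = np.sum((y_cur - X @ beta_hat) ** 2)
        s2 = sse / rng.chisquare(n - p)
        beta = rng.multivariate_normal(beta_hat, s2 * XtX_inv)
        # Consecutive draws are too related; keep only every `spacing`-th one.
        if step > burn_in and (step - burn_in) % spacing == 0:
            saved.append(y_cur.copy())
    return saved
```

The `spacing` argument plays the role of the "50 or 100 steps" on the slide: draws that far apart in the chain behave much more like independent draws than consecutive ones do.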

Multiple Imputation Allows:
- Unbiased estimation
- Good standard errors
  - provided the number of imputations (m) is large enough
  - too few imputations: reduced power with small effect sizes
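The standard errors above come from combining the analyses of the m imputed data sets. The usual combining rules are Rubin's rules (Rubin, 1987); the slides do not spell them out, so this is a sketch of the standard formulas, and `pool_rubin` is a hypothetical name.

```python
import numpy as np

def pool_rubin(estimates, variances):
    """Combine m per-imputation results with Rubin's rules.

    estimates: the parameter estimate (e.g., a b-weight) from each imputed data set
    variances: the squared standard error of that estimate from each data set
    Returns the pooled estimate, its standard error, and Rubin's degrees of freedom.
    """
    q = np.asarray(estimates, dtype=float)
    u = np.asarray(variances, dtype=float)
    m = len(q)
    q_bar = q.mean()             # pooled point estimate
    w = u.mean()                 # within-imputation variance
    b = q.var(ddof=1)            # between-imputation variance
    t = w + (1 + 1 / m) * b      # total variance
    if b == 0:
        df = np.inf              # no between-imputation variability
    else:
        r = (1 + 1 / m) * b / w  # relative increase in variance due to missing data
        df = (m - 1) * (1 + 1 / r) ** 2
    return q_bar, np.sqrt(t), df
```

For example, `pool_rubin([1, 2, 3], [0.5, 0.5, 0.5])` gives a pooled estimate of 2, a total variance of 0.5 + (4/3)(1), and about 3.78 degrees of freedom. The between-imputation term B is itself estimated from only m values, which is one reason very small m costs precision.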

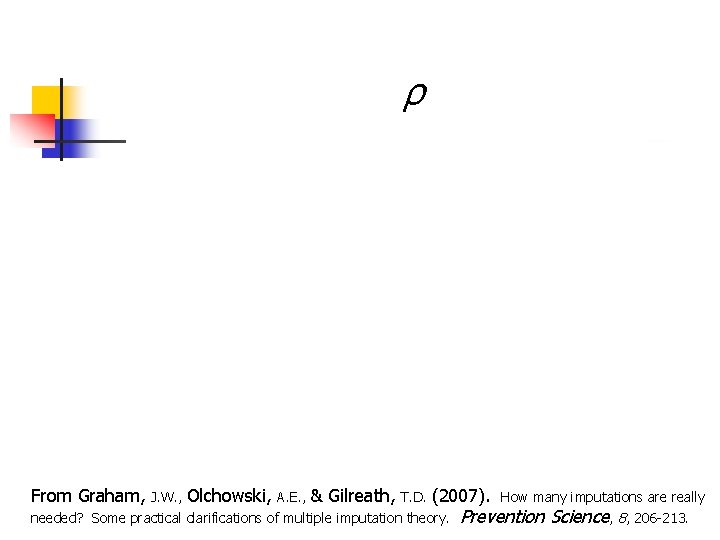

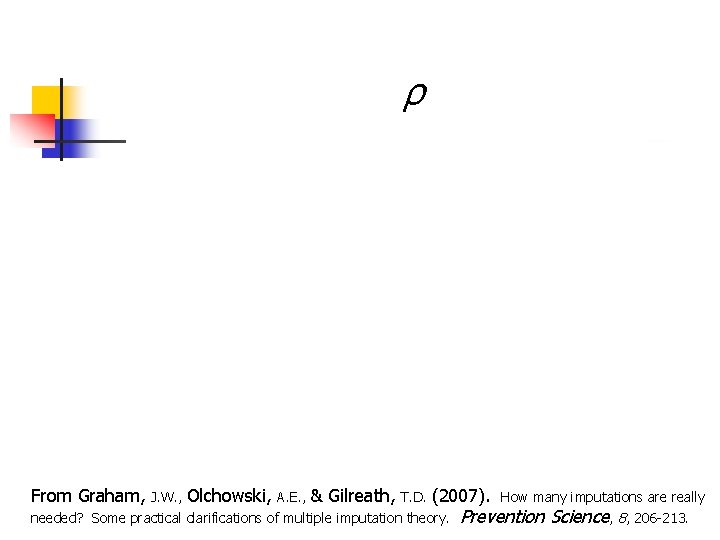

From Graham, J. W., Olchowski, A. E., & Gilreath, T. D. (2007). How many imputations are really needed? Some practical clarifications of multiple imputation theory. Prevention Science, 8, 206-213.
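A common starting point for choosing m is Rubin's (1987) large-sample relative efficiency, (1 + γ/m)^-1, where γ is the fraction of missing information. The paper cited above looks at statistical power rather than efficiency and argues that efficiency figures can make small m look adequate when it is not, so the numbers below are a lower bound on what is needed rather than a recommendation. `relative_efficiency` is a hypothetical helper.

```python
def relative_efficiency(gamma, m):
    """Rubin's large-sample efficiency of an MI estimate based on m imputations,
    relative to infinitely many, for fraction of missing information gamma."""
    return 1.0 / (1.0 + gamma / m)

for m in (3, 5, 10, 20, 40, 100):
    print(f"gamma = 0.5, m = {m:3d}: efficiency = {relative_efficiency(0.5, m):.3f}")
```

Even with half the information missing, m = 5 gives an efficiency near 0.91, which is why efficiency alone can make small m look sufficient.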