Mirror File System: A Multiple Server File System

- Slides: 32

Mirror File System: A Multiple Server File System
John Wong, CTO, Twin Peaks Software Inc. (John.Wong@TwinPeakSoft.com)

Multiple Server File System
- Conventional File System
  - UFS, EXT3 and NFS
  - Manage and store files on a single server and its storage devices
- Multiple Server File System
  - Manage and store files on multiple servers and their storage devices

Problems
- A single resource is vulnerable
- Redundancy provides a safety net at each level:
  - Disk level => RAID
  - Storage level => Storage Replication
  - TCP/IP level => SNDR
  - File System level => CFS, MFS
  - Application level => Clustering system, Database

Why MFS?
- Many advantages over existing technologies

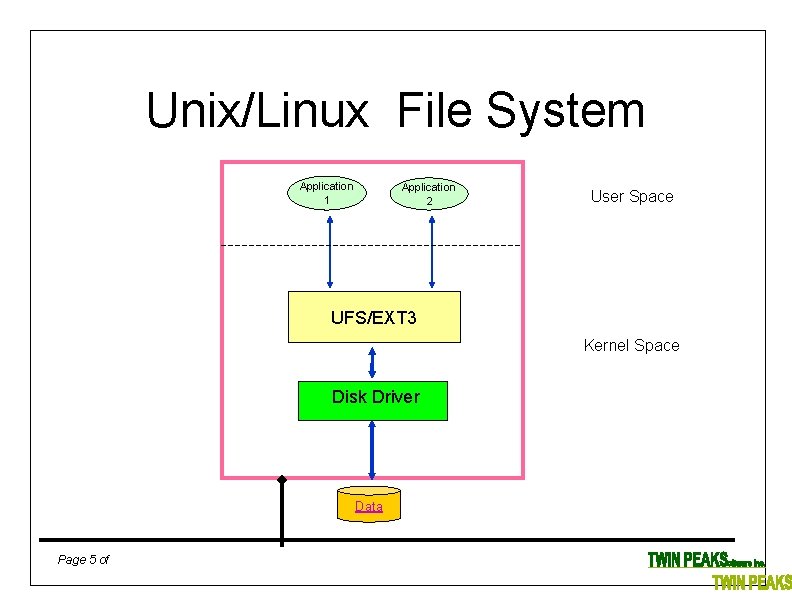

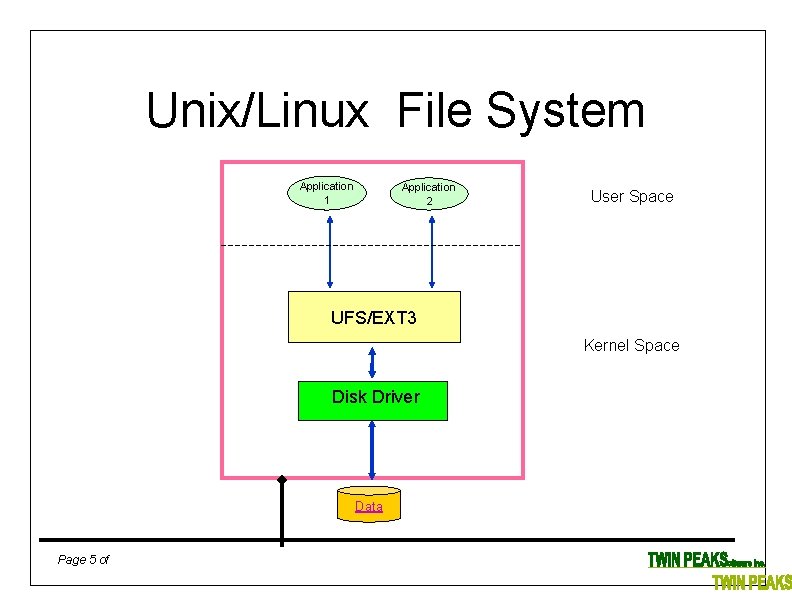

Unix/Linux File System (diagram): Application 1 and Application 2 in user space; UFS/EXT3 and the disk driver in kernel space; Data on disk.

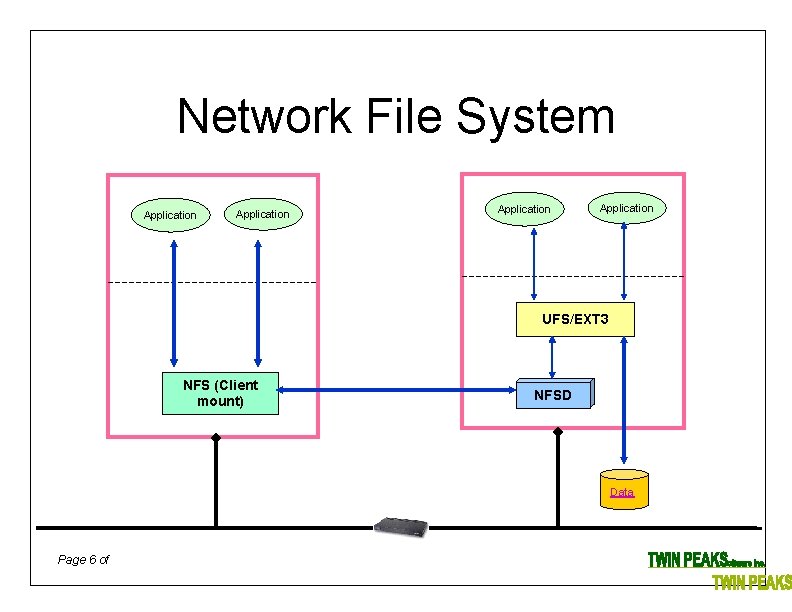

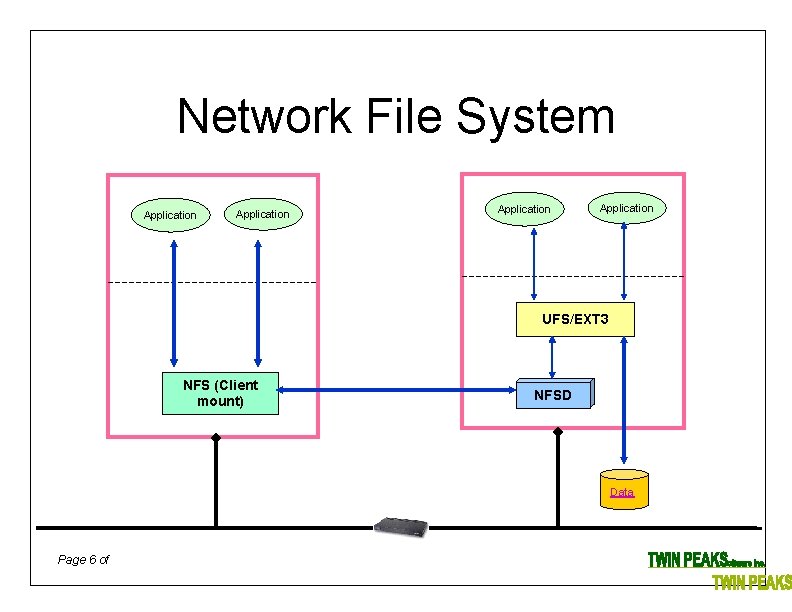

Network File System (diagram labels): Application, NFS (client mount), NFSD, UFS/EXT3, Data.

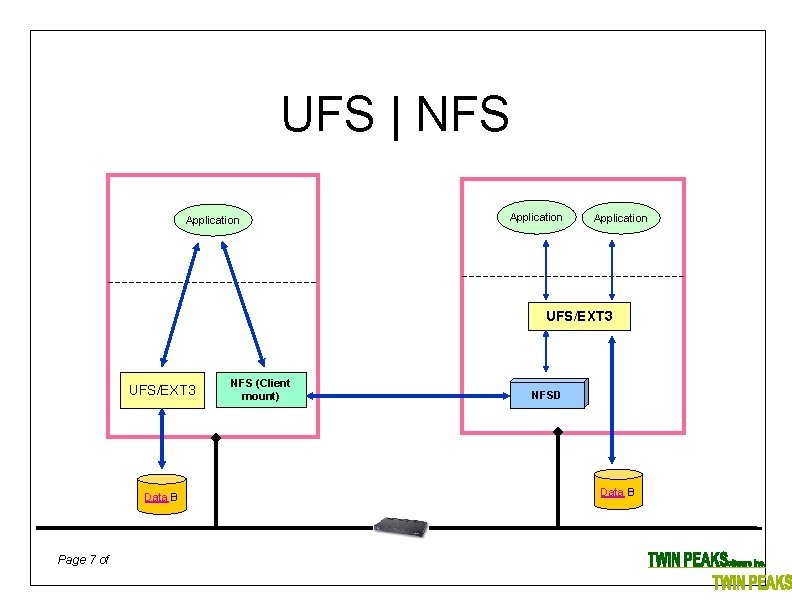

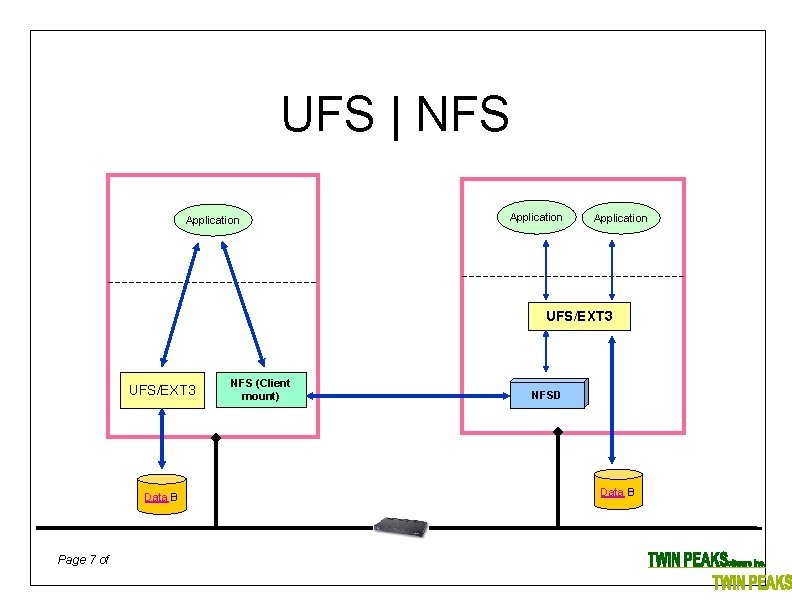

UFS | NFS (diagram labels): on the UFS side, Application, UFS/EXT3, Data B; on the NFS side, NFS (client mount), NFSD, Data B.

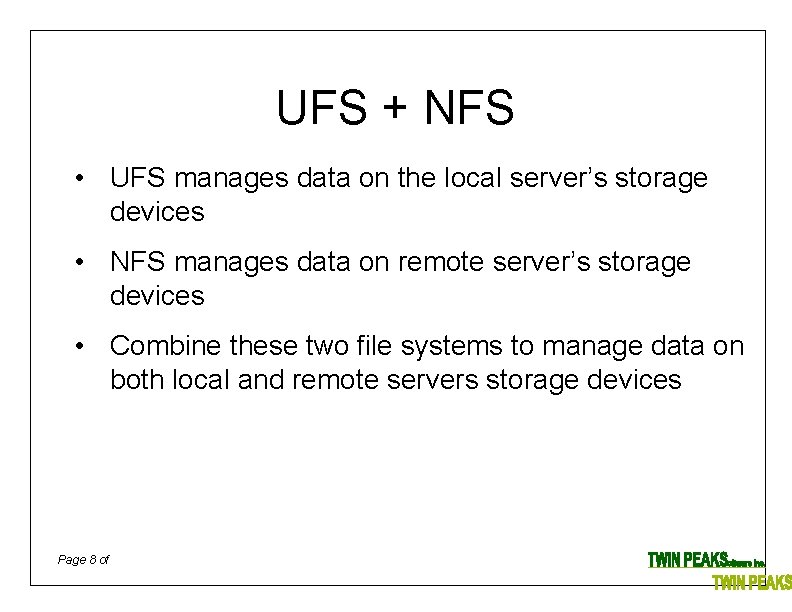

UFS + NFS
- UFS manages data on the local server's storage devices
- NFS manages data on a remote server's storage devices
- Combining these two file systems manages data on both the local and the remote servers' storage devices

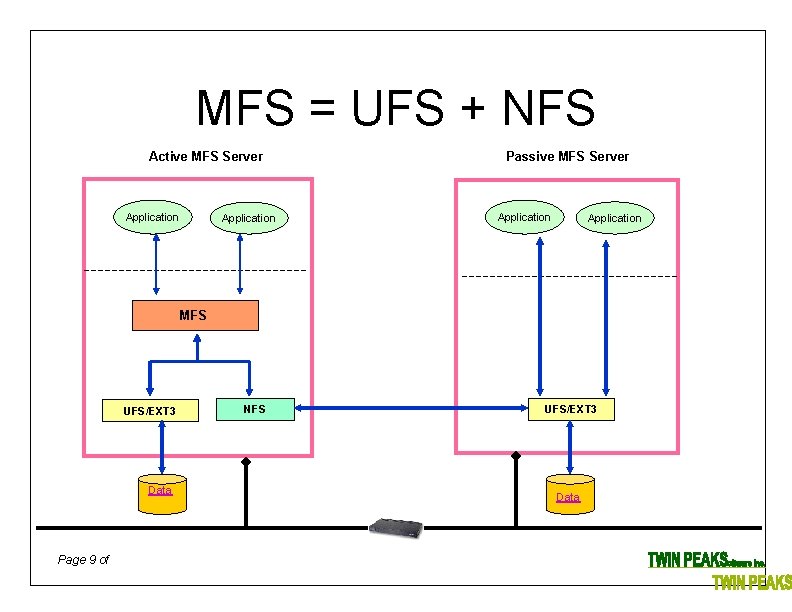

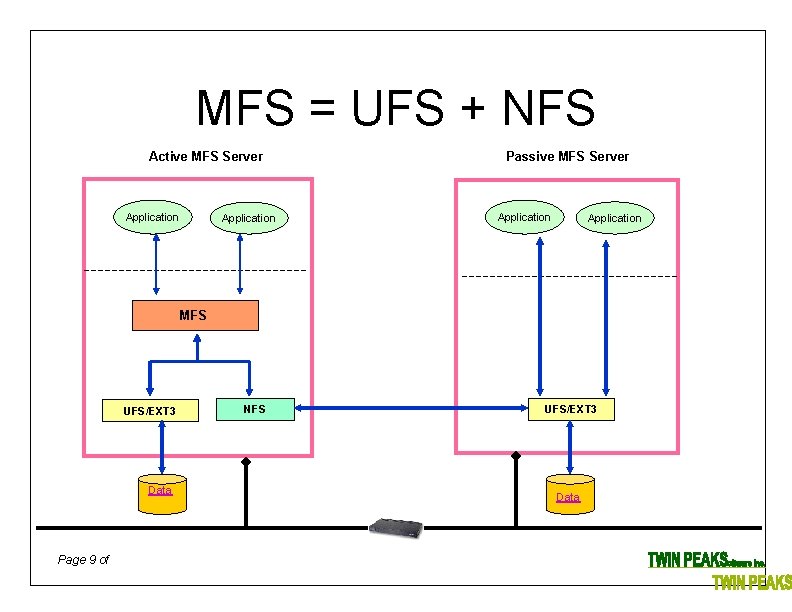

MFS = UFS + NFS (diagram labels): Active MFS Server and Passive MFS Server, each with an Application, UFS/EXT3 and Data; MFS and NFS connect the two.

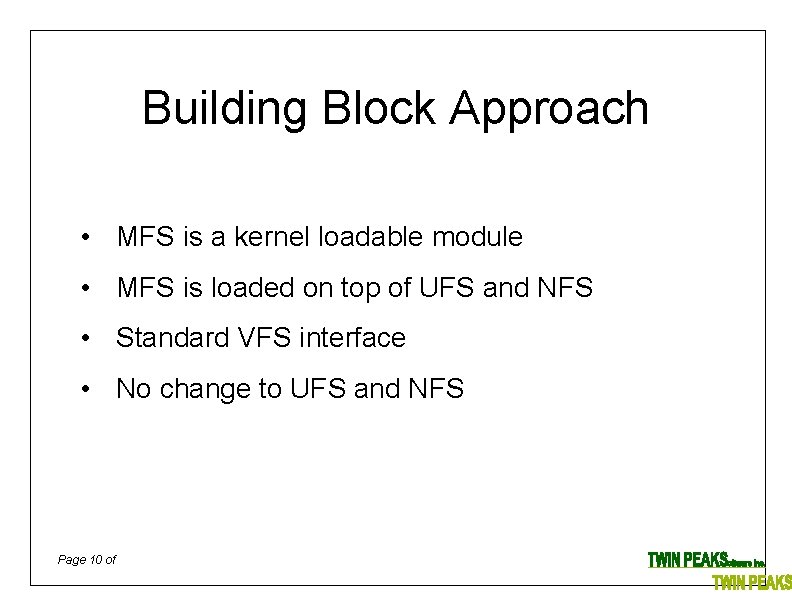

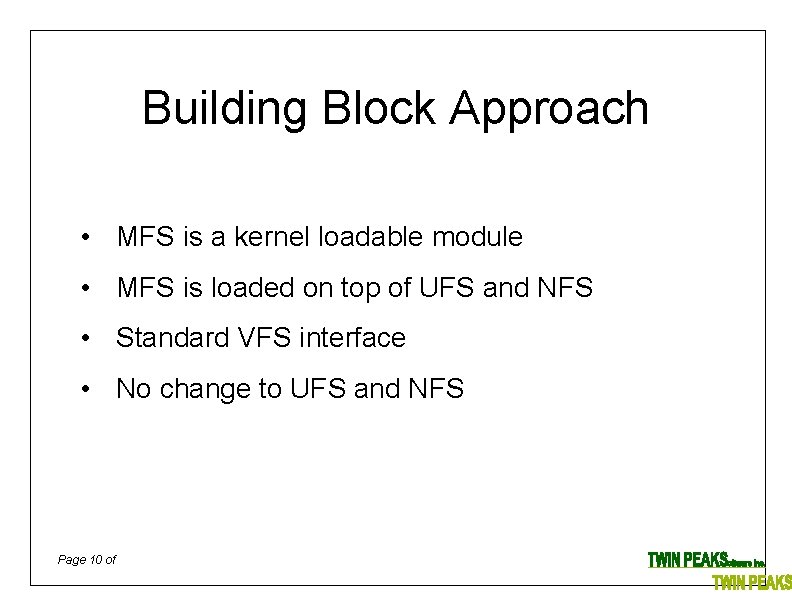

Building Block Approach
- MFS is a loadable kernel module
- MFS is loaded on top of UFS and NFS
- It uses the standard VFS interface
- No change to UFS or NFS is required

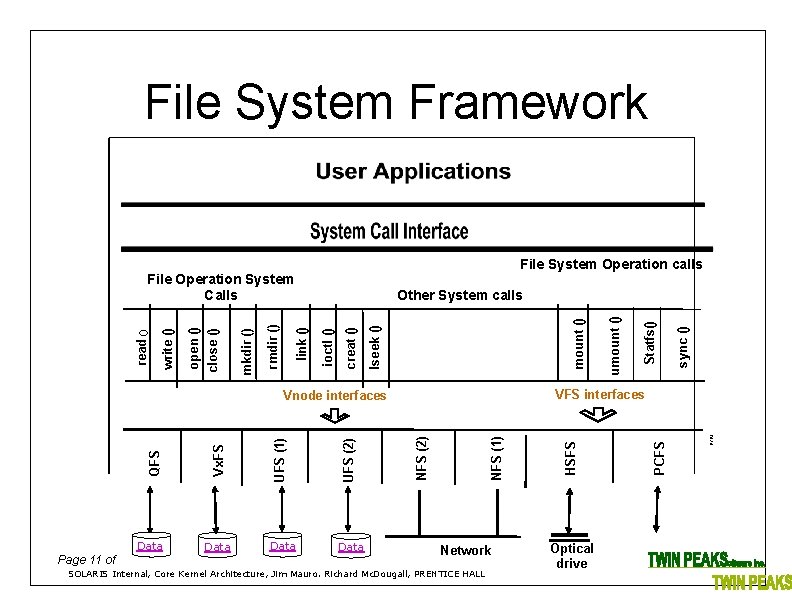

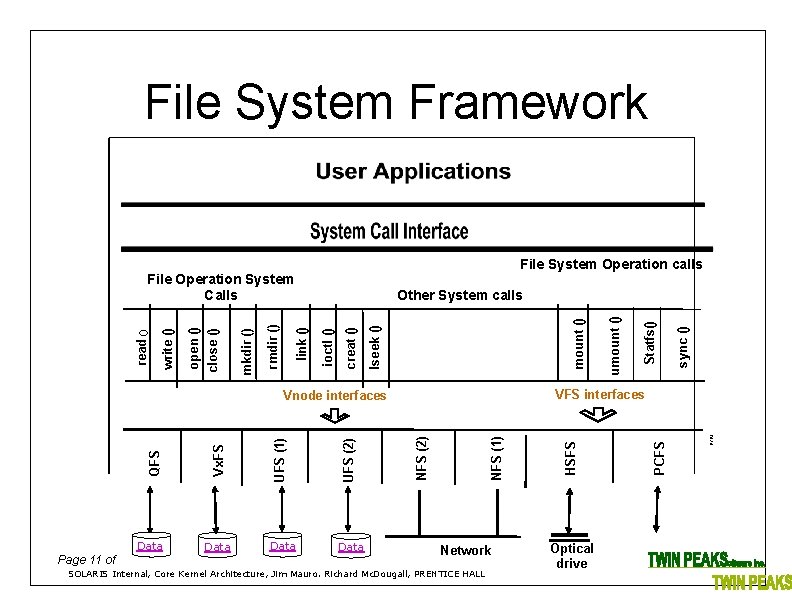

File System Framework (diagram, after Solaris Internals: Core Kernel Architecture, Jim Mauro and Richard McDougall, Prentice Hall): file operation system calls (open(), close(), read(), write(), lseek(), creat(), ioctl(), link(), mkdir(), rmdir()) and other system calls pass through the vnode interfaces; file system operation calls (statfs(), sync(), umount()) pass through the VFS interfaces. Below the interfaces sit UFS (1), UFS (2), NFS (1), NFS (2), VxFS, QFS, PCFS and HSFS, backed by Data disks, the network and an optical drive.

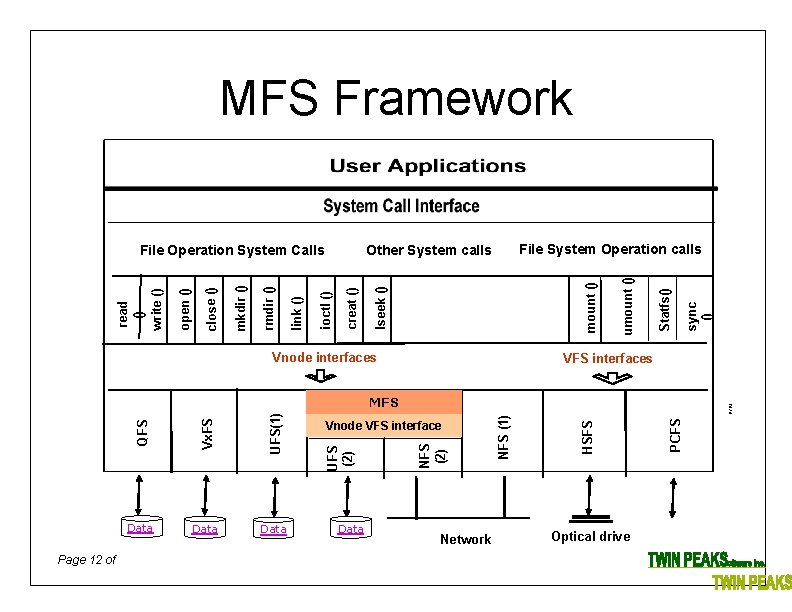

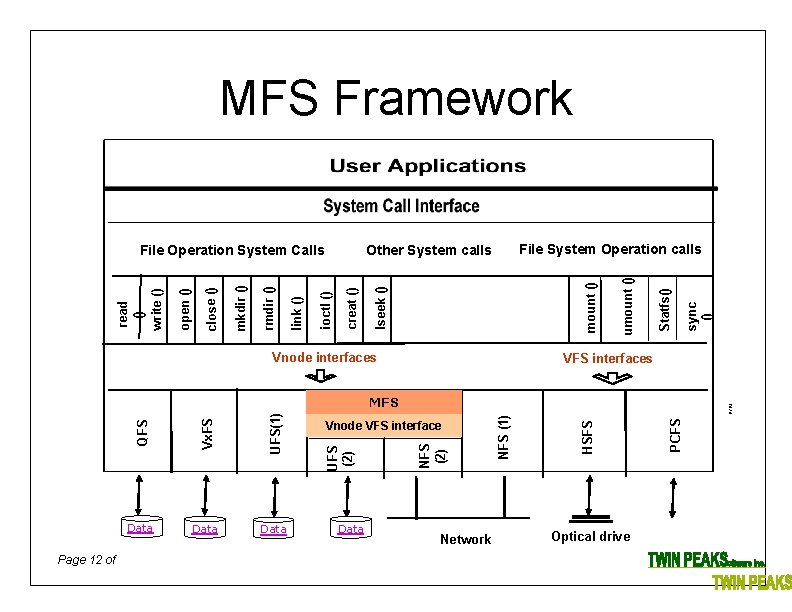

MFS Framework (diagram): the same framework with MFS added. The file operation system calls, other system calls and file system operation calls still enter through the vnode and VFS interfaces; MFS sits below them beside UFS (1), UFS (2), NFS (1), NFS (2), VxFS, QFS, PCFS and HSFS, and has its own vnode/VFS interface to the file systems beneath it.

Transparency
- Transparent to users and applications
  - No recompilation or relinking needed
- Transparent to existing file structures
  - Same pathname access
- Transparent to underlying file systems
  - UFS, NFS

Mount Mechanism
- Conventional Mount
  - One directory, one file system
- MFS Mount
  - One directory, two or more file systems

Mount Mechanism

    # mount -F mfs host:/ndir1/ndir2 /udir1/udir2

- First mount the NFS file system on a UFS directory
- Then mount MFS on top of UFS and NFS
- The existing UFS tree /udir1/udir2 becomes the local copy of MFS
- The newly mounted host:/ndir1/ndir2 becomes the remote copy of MFS
- Same mount options as NFS, except that there is no '-o hard' option

MFS mfsck Command

    # /usr/lib/fs/mfsck mfs_dir

- After an MFS mount succeeds, the local copy may not be identical to the remote copy.
- Use mfsck (the MFS fsck) to synchronize them.
- mfs_dir can be any directory under the MFS mount point.
- Multiple mfsck commands can be invoked at the same time.
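To make the synchronization step concrete, here is a minimal user-space sketch in Python. It is not the mfsck implementation: the slides do not say which copy wins, so the sketch assumes the local copy is authoritative, and `sync_tree` is a hypothetical name. Both copies are represented by ordinary directories.

```python
import filecmp
import os
import shutil


def sync_tree(local_dir, remote_dir):
    """Copy files that are missing or different in remote_dir.

    Assumption (not stated in the slides): the local copy is authoritative.
    Returns the sorted relative paths of the files that were copied.
    """
    copied = []
    for root, _dirs, files in os.walk(local_dir):
        rel_root = os.path.relpath(root, local_dir)
        target_root = os.path.normpath(os.path.join(remote_dir, rel_root))
        os.makedirs(target_root, exist_ok=True)
        for name in files:
            src = os.path.join(root, name)
            dst = os.path.join(target_root, name)
            # Compare file contents, not just size and timestamps.
            if not os.path.exists(dst) or not filecmp.cmp(src, dst, shallow=False):
                shutil.copy2(src, dst)
                copied.append(os.path.normpath(os.path.join(rel_root, name)))
    return sorted(copied)
```

Because the function takes any directory pair, it can be started on a subdirectory rather than the mount point, which mirrors the statement that mfs_dir can be any directory under the MFS mount point.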

READ/WRITE Vnode Operation
- MFS receives all VFS/vnode operations
- READ-related operations (read, getattr, ...) only need to go to the local copy (UFS)
- WRITE-related operations (write, setattr, ...) go to both the local (UFS) and the remote (NFS) copy simultaneously, using threads
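The read/write rule above can be illustrated with a small user-space sketch in Python. MFS itself is a kernel module working on vnodes; the `MirroredFile` class, its method names and the use of two ordinary file paths as stand-ins for the UFS and NFS copies are assumptions made for illustration only.

```python
import os
import threading


class MirroredFile:
    """Reads use the local copy only; writes go to both copies in parallel."""

    def __init__(self, local_path, remote_path):
        self.local_path = local_path    # stand-in for the UFS copy
        self.remote_path = remote_path  # stand-in for the NFS copy

    def read(self, offset, size):
        # READ-related operation: served from the local copy only.
        with open(self.local_path, "rb") as f:
            f.seek(offset)
            return f.read(size)

    def write(self, offset, data):
        # WRITE-related operation: one thread per copy, then wait for both.
        errors = {}

        def write_one(label, path):
            try:
                mode = "r+b" if os.path.exists(path) else "w+b"
                with open(path, mode) as f:
                    f.seek(offset)
                    f.write(data)
            except OSError as exc:
                errors[label] = exc

        threads = [
            threading.Thread(target=write_one, args=("local", self.local_path)),
            threading.Thread(target=write_one, args=("remote", self.remote_path)),
        ]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        return errors  # an empty dict means both copies were updated
```

Returning the per-copy errors instead of raising lets the caller decide what to do when only the remote write fails.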

Mirroring Granularity
- Directory Level
  - Mirror any UFS directory instead of the entire UFS file system
  - Directory A mirrored to Server A
  - Directory B mirrored to Server B
- Block Level Update
  - Only the changed block is mirrored
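Directory-level granularity amounts to a mapping from mirrored directories to mirror targets. The Python sketch below shows one way to resolve a file path against such a mapping; the table contents, the paths and the `mirror_target` function are hypothetical and do not come from MFS.

```python
import posixpath

# Hypothetical mapping: each mirrored directory has its own target server.
MIRROR_MAP = {
    "/export/dirA": "serverA:/mirror/dirA",
    "/export/dirB": "serverB:/mirror/dirB",
}


def mirror_target(path, mirror_map=MIRROR_MAP):
    """Return the remote location for path, or None if it is not mirrored."""
    path = posixpath.normpath(path)
    best = None
    for local_dir in mirror_map:
        inside = path == local_dir or path.startswith(local_dir + "/")
        # Prefer the longest matching directory if mirrors are nested.
        if inside and (best is None or len(local_dir) > len(best)):
            best = local_dir
    if best is None:
        return None
    rel = posixpath.relpath(path, best)
    remote = mirror_map[best]
    return remote if rel == "." else remote + "/" + rel
```

Files outside every mirrored directory resolve to `None`, which corresponds to the rest of the UFS file system staying unmirrored.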

MFS msync Command

    # /usr/lib/fs/msync mfs_root_dir

- A daemon that synchronizes an MFS pair after the remote MFS partner fails.
- Upon a write failure, MFS:
  - Logs the name of the file to which the write operation failed
  - Starts a heartbeat thread to detect when the remote MFS server is back online
- Once the remote MFS server is back online, msync uses the log to sync the missing files to the remote server.
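The log-then-resync flow can be sketched in Python as follows. This is an illustration under stated assumptions, not the msync daemon: `FailedWriteLog`, `resync` and the `remote_is_up` callable (a stand-in for the heartbeat check) are hypothetical names, and whole files are recopied because the log records file names.

```python
import os
import shutil


class FailedWriteLog:
    """Remembers the files whose remote write failed, without duplicates."""

    def __init__(self):
        self._paths = []

    def record(self, rel_path):
        if rel_path not in self._paths:
            self._paths.append(rel_path)

    def drain(self):
        paths, self._paths = self._paths, []
        return paths


def resync(log, local_root, remote_root, remote_is_up):
    """Replay the log once the remote side answers the heartbeat."""
    if not remote_is_up():
        return []  # remote still down: keep the log untouched
    synced = []
    for rel_path in log.drain():
        src = os.path.join(local_root, rel_path)
        if not os.path.exists(src):
            continue  # file was removed locally in the meantime
        dst = os.path.join(remote_root, rel_path)
        os.makedirs(os.path.dirname(dst), exist_ok=True)
        shutil.copy2(src, dst)
        synced.append(rel_path)
    return synced
```

A real daemon would call `resync` periodically from its heartbeat loop; the sketch leaves the scheduling to the caller.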

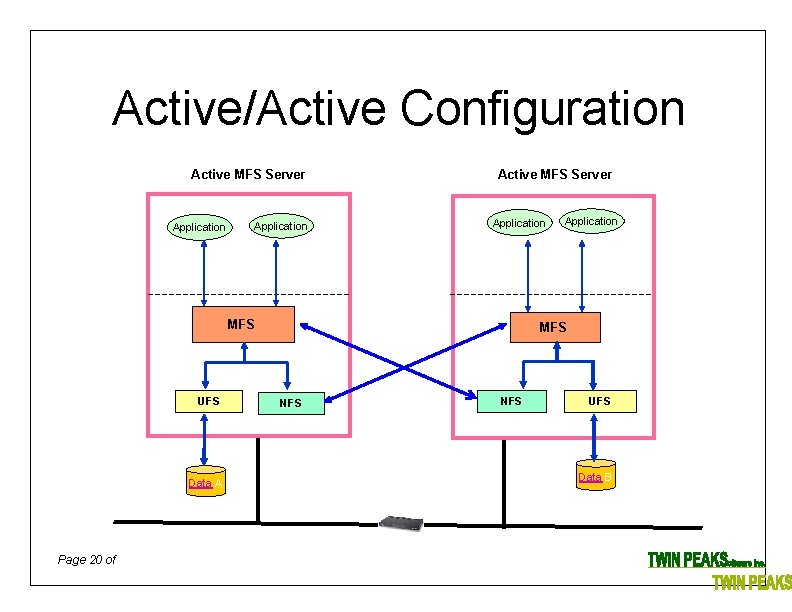

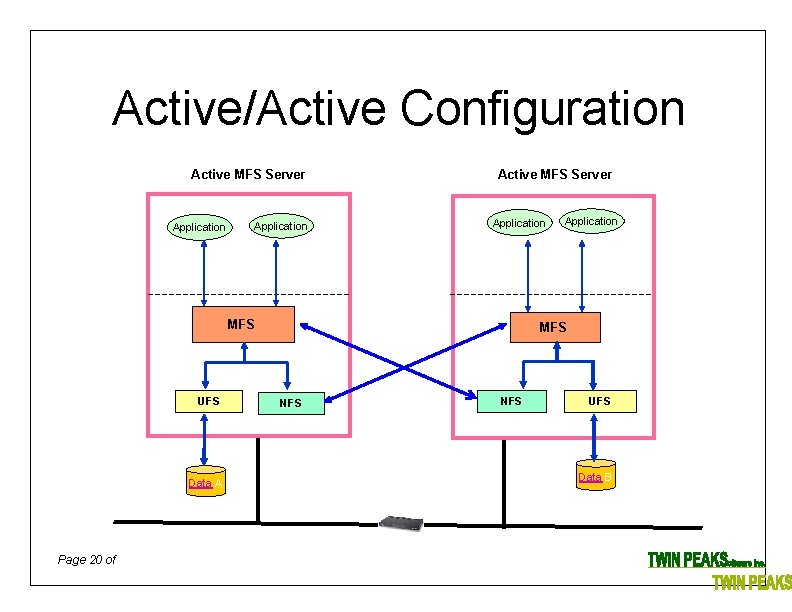

Active/Active Configuration (diagram labels): two active MFS servers; one with Application, MFS, UFS, Data A; the other with Application, MFS, NFS, UFS, Data B.

MFS Locking Mechanism
- MFS uses UFS and NFS file record locks.
- Locking is required for the active-active configuration.
- Locking makes write-related vnode operations atomic.
- Locking is enabled by default.
- Locking is not necessary in the active-passive configuration.
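As a rough user-space analogue of a record lock around a write, the Python sketch below takes an exclusive POSIX byte-range lock with `fcntl.lockf`, writes, and releases the lock. The `locked_write` helper is hypothetical; the slides do not describe how MFS orders or combines the locks on the two copies, so the sketch covers a single file only and runs on Unix-like systems.

```python
import fcntl
import os


def locked_write(path, offset, data):
    """Write data at offset while holding an exclusive lock on that byte range.

    Returns the number of bytes written.
    """
    if not data:
        return 0  # a zero-length lock would mean "to end of file"
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        # Blocks until no other process holds a conflicting record lock.
        fcntl.lockf(fd, fcntl.LOCK_EX, len(data), offset, os.SEEK_SET)
        try:
            return os.pwrite(fd, data, offset)
        finally:
            fcntl.lockf(fd, fcntl.LOCK_UN, len(data), offset, os.SEEK_SET)
    finally:
        os.close(fd)
```

Locking only the affected byte range, rather than the whole file, lets writers to different regions of the same file proceed concurrently.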

Real-Time and Scheduled
- Real-time
  - Replicate files in real time
- Scheduled
  - Log the file path, offset and size
  - Replicate only the changed portion of a file
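Scheduled mode can be pictured as replaying a log of (path, offset, size) records. The Python sketch below is a simplified illustration with hypothetical names (`ChangeRecord`, `replay`) and ordinary directories standing in for the two copies; the actual MFS log format is not described in the slides.

```python
import os
from collections import namedtuple

# One logged change: which file, where in the file, and how many bytes.
ChangeRecord = namedtuple("ChangeRecord", "path offset size")


def replay(change_log, local_root, remote_root):
    """Copy only the logged byte ranges from the local to the remote copy.

    Returns the total number of bytes transferred.
    """
    sent = 0
    for rec in change_log:
        src = os.path.join(local_root, rec.path)
        dst = os.path.join(remote_root, rec.path)
        with open(src, "rb") as f:
            f.seek(rec.offset)
            chunk = f.read(rec.size)
        os.makedirs(os.path.dirname(dst), exist_ok=True)
        mode = "r+b" if os.path.exists(dst) else "w+b"
        with open(dst, mode) as f:
            f.seek(rec.offset)
            f.write(chunk)
        sent += len(chunk)
    return sent
```

The amount of data sent depends on the size of the logged changes, not on the size of the files they belong to.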

Applications
- Online File Backup
- Server File Backup (active-passive)
- Server/NAS Clustering (active-active)

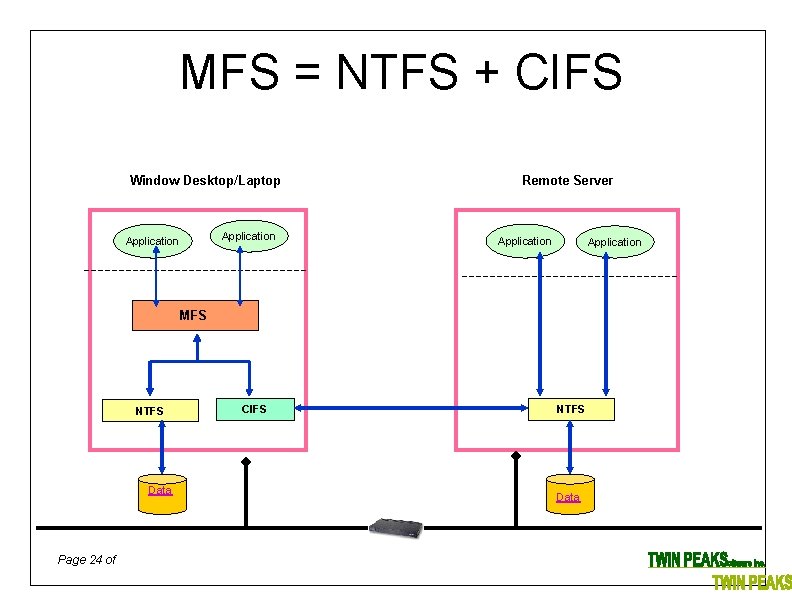

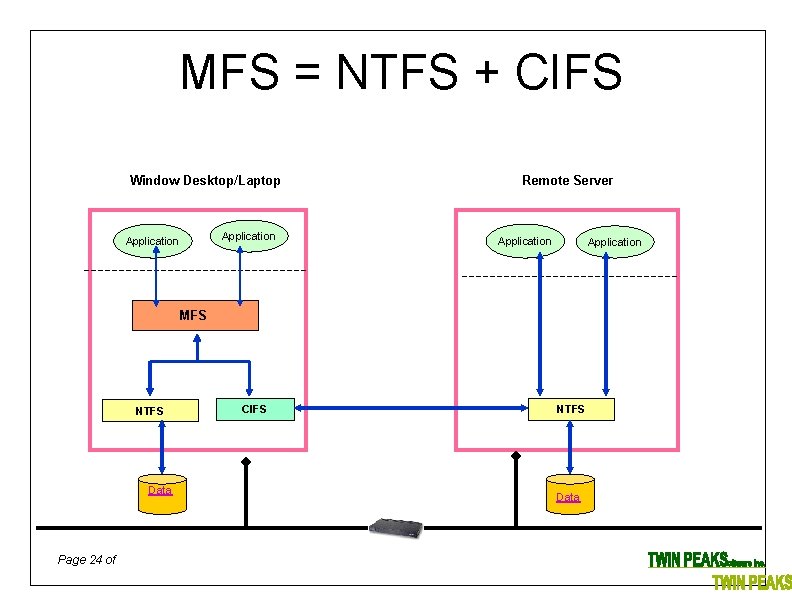

MFS = NTFS + CIFS (diagram labels): Windows Desktop/Laptop and Remote Server, each with an Application, NTFS and Data; MFS and CIFS connect the two.

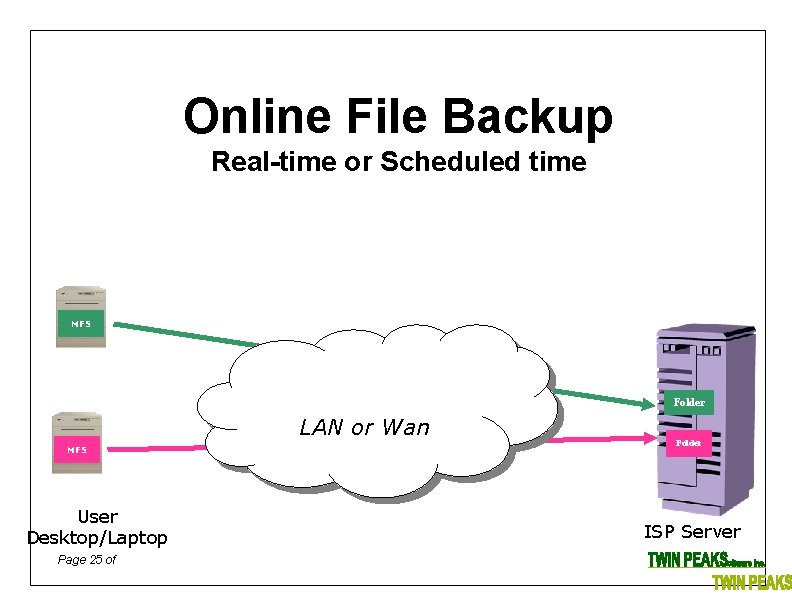

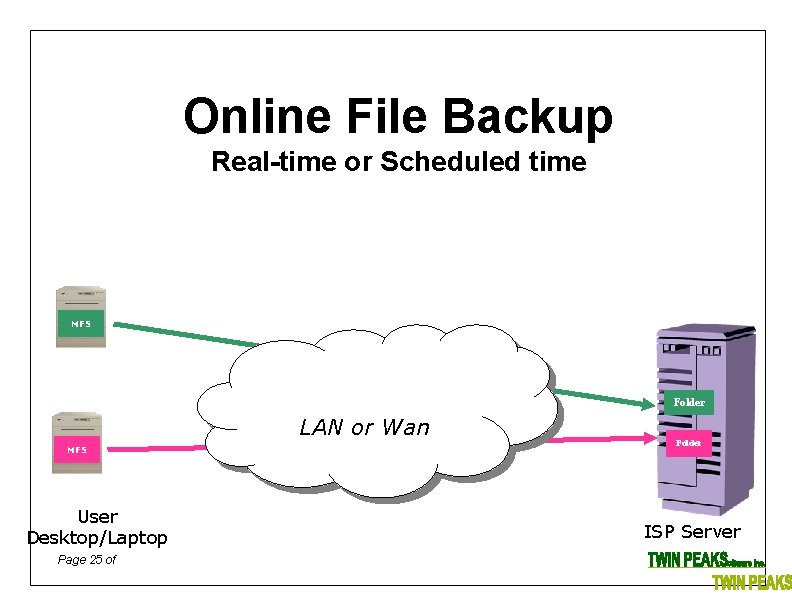

Online File Backup (diagram): an MFS folder on the user's desktop/laptop is mirrored, in real time or at scheduled times, over a LAN or WAN to an MFS folder on an ISP server.

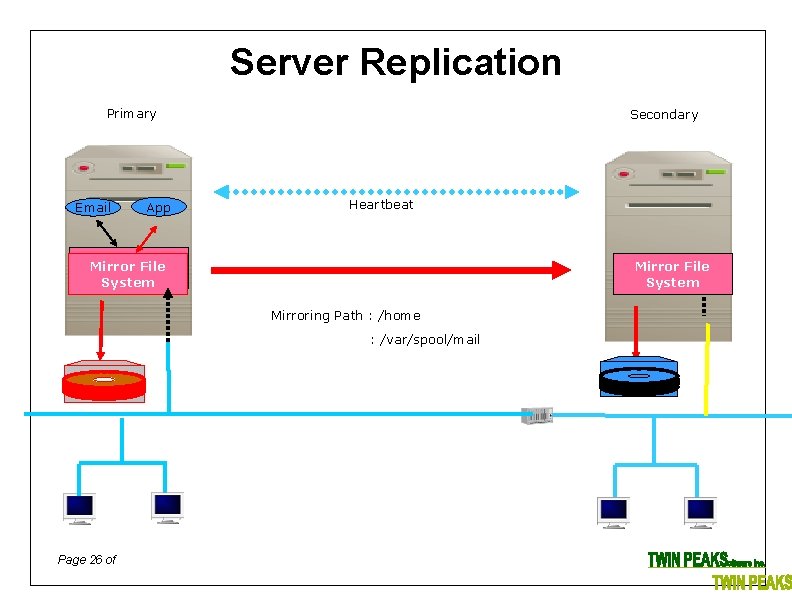

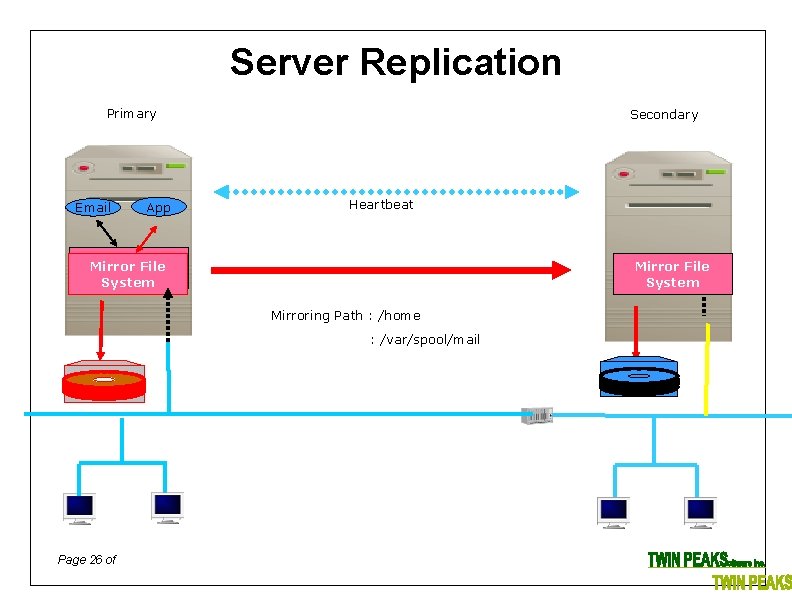

Server Replication (diagram): a primary and a secondary server, each running Mirror File System, linked by a heartbeat and a mirroring path; the example shows an email application with mirrored paths /home and /var/spool/mail.

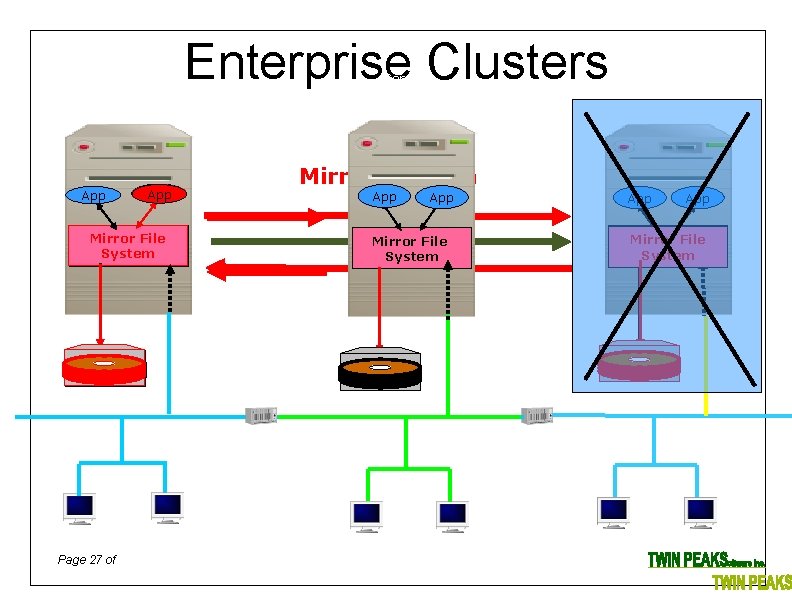

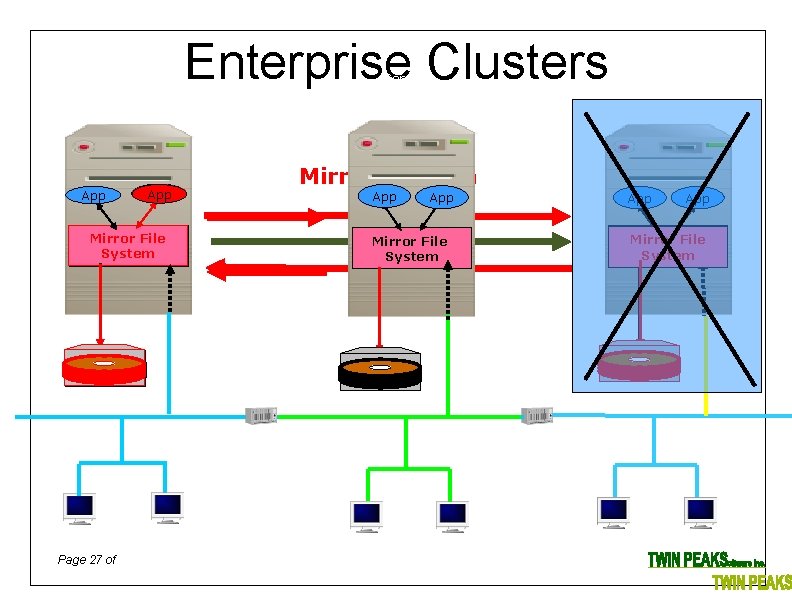

Enterprise Clusters (diagram labels): a central server and several App servers running Mirror File System, linked by mirroring paths.

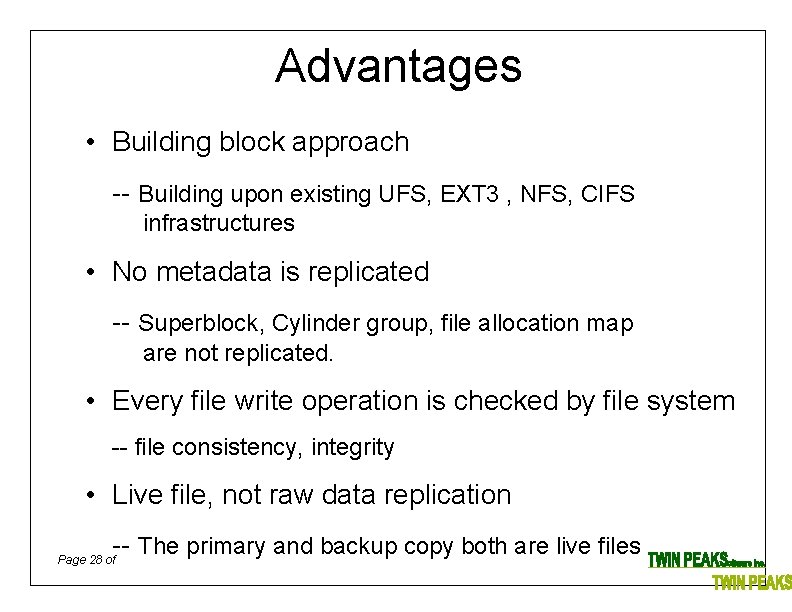

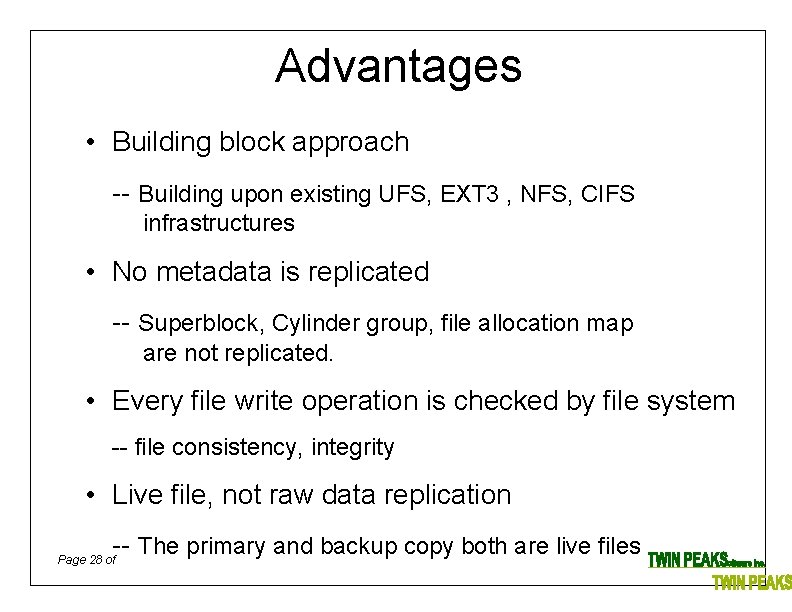

Advantages
- Building block approach
  - Builds upon existing UFS, EXT3, NFS and CIFS infrastructures
- No metadata is replicated
  - Superblock, cylinder group and file allocation map are not replicated
- Every file write operation is checked by the file system
  - File consistency, integrity
- Live file replication, not raw data replication
  - The primary and backup copies are both live files

Advantages
- Interoperability
  - The two nodes can be different systems
  - The storage systems can be different
- Small granularity
  - Directory level, not entire file system
- One-to-many or many-to-one replication

Advantages
- Fast replication
  - Replication happens in the kernel file system module
- Immediate failover
  - No fsck or mount operation is needed
- Geographically dispersed clustering
  - The two nodes can be separated by hundreds of miles
- Easy to deploy and manage
  - Only one copy of MFS, running on the primary server, is needed for replication

Why MFS?
- Better Data Protection
- Better Disaster Recovery
- Better RAS
- Better Scalability
- Better Performance
- Better Resource Utilization

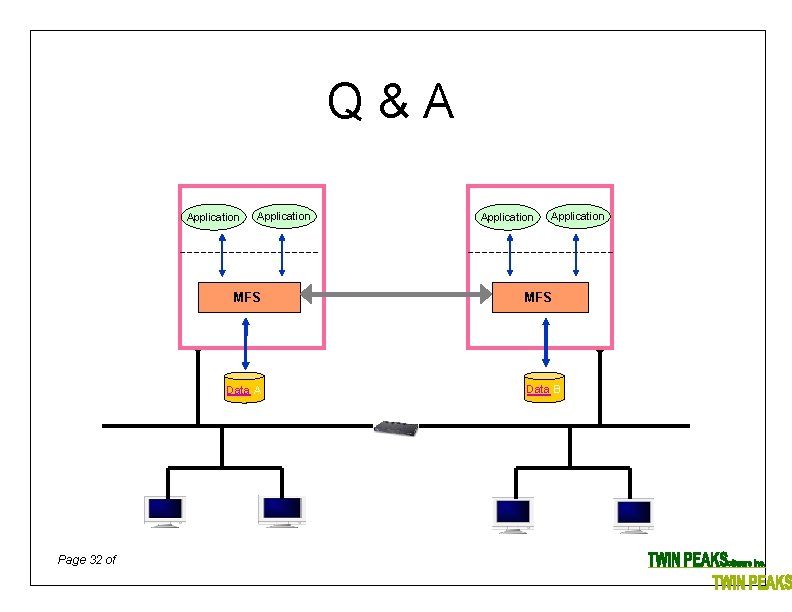

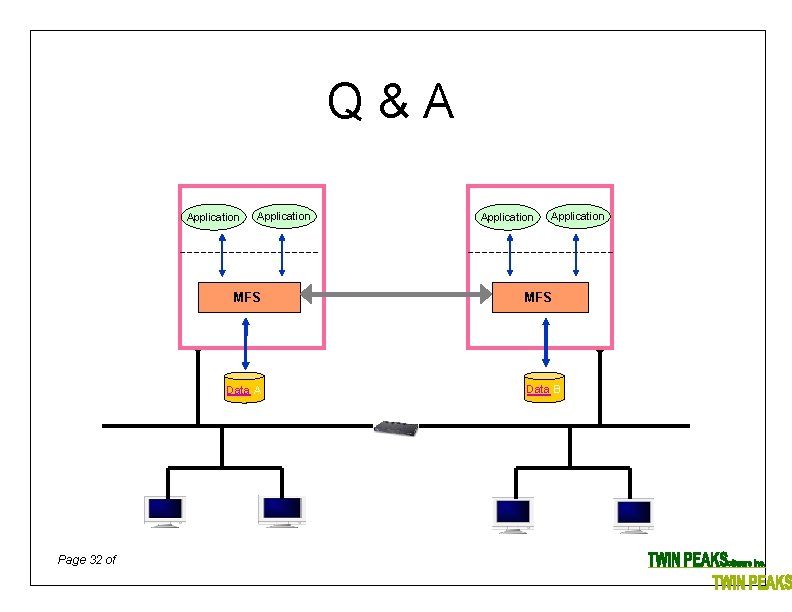

Q&A (diagram labels): two servers, each with Application and MFS, holding Data A and Data B.