MIPS Pipelining Part I Dr Anilkumar K G

- Slides: 92


Textbook and References

Textbook(s):

1. Computer Architecture: A Quantitative Approach, 4th Edition, David A. Patterson and John L. Hennessy, Morgan Kaufmann Publishers, 2005 (ISBN: 0-12-370490-1)
2. Digital Design and Computer Architecture, 2nd Edition, David M. Harris and Sarah L. Harris, Morgan Kaufmann, Elsevier, 2013 (ISBN: 978-0-12-394424-5)

Reference(s):

1. Computer Architecture and Implementation, Harvey G. Cragon, Cambridge University Press, 2000 (ISBN: 0-521-65168-9)

Simulator: Tasm (Turbo Assembler)

Link: https://en.wikipedia.org/wiki/MIPS_instruction_set

Objective(s)

- One objective of this study is to familiarize each student with the pipeline architecture of a commercial microprocessor such as the MIPS system.
- Once students understand the pipelined hardware structure of a microprocessor, they can apply that knowledge in any microprocessor-related field.
- Finally, students will gain an understanding of the complete operation of a computer system.

Introduction

- This section describes the features of a five-stage RISC (Reduced Instruction Set Computer) pipelined machine, the 32-bit MIPS system, together with its hazards and performance problems.

What is Pipelining?

- Pipelining is a performance improvement technique in which multiple instructions are overlapped in execution
  - A pipeline takes advantage of the parallelism that exists among the actions needed to execute an instruction
- Today, pipelining is the key performance technique used to make fast CPUs
- A pipeline is like an automobile assembly line
- In a computer pipeline, each step completes a part of an instruction; each of these steps is called a pipe stage or a pipe segment
  - The stages are connected one to the next to form a pipe

Pipelining

- In an automobile assembly line, throughput is defined as the number of vehicles produced per hour
- The throughput of an instruction pipeline is determined by how often an instruction exits the pipeline
  - Because the pipeline stages are hooked together, all the stages must be ready to proceed at the same time
  - The time required to move an instruction from one stage to the next is a processor cycle (or pipeline cycle)
- The length of a processor cycle is determined by the time required for the slowest pipe stage

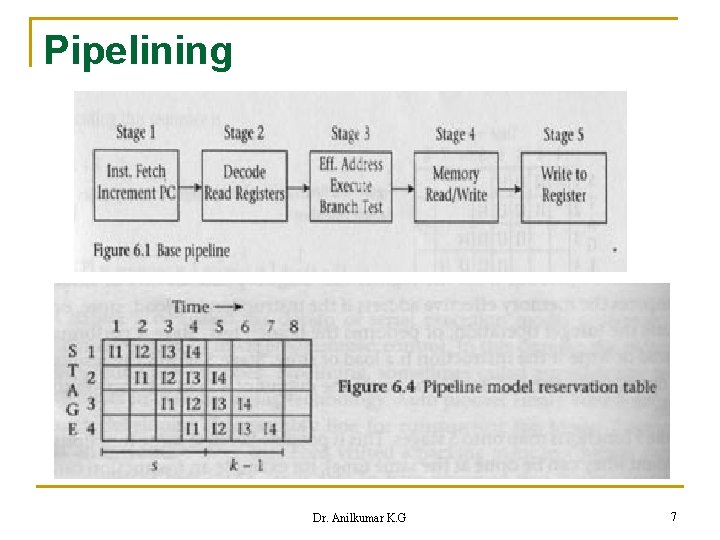

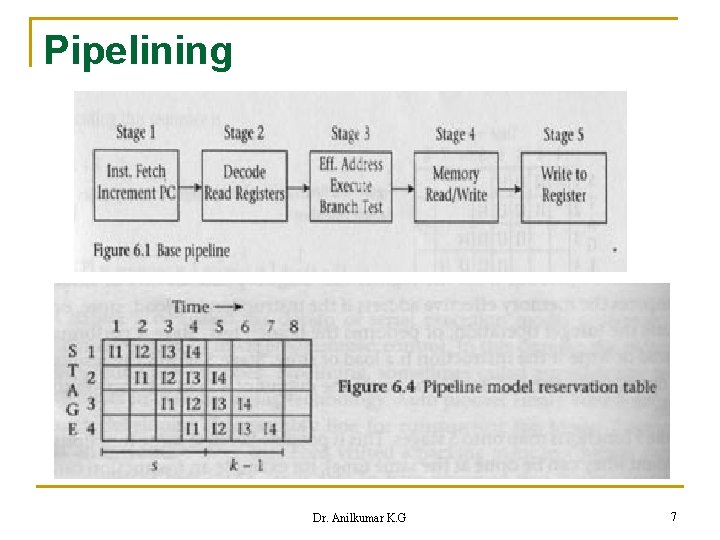

Pipelining

Goal of Pipelining Designer

- The goal of a pipeline designer is to balance the length of each pipeline stage
- If the stages are perfectly balanced, the time per instruction on the pipelined processor is

$$\text{Time per instruction}_{\text{pipelined}} = \frac{\text{Time per instruction on unpipelined machine}}{\text{Number of pipeline stages}}$$

- Under these conditions, the speedup from pipelining equals the number of pipe stages (only when the pipeline CPI is 1)

Goal of Pipelining Designer

- Pipelining yields a reduction in the average execution time per instruction
  - Pipelining does not reduce the execution time of an individual instruction
  - The reduction can be viewed as decreasing the CPI, the clock cycle time, or a combination of both
- Pipelining is an implementation technique that exploits parallelism among the instructions in a sequential instruction stream
  - It is not visible to the programmer

The Basic MIPS Instruction Set

- MIPS architectures are characterized by a few key properties:
  - All operations on data apply to data in registers and typically change the entire register (32 bits per register in a 32-bit MIPS system)
  - The only operations that affect memory are load and store operations
    - Load and store operations load or store a full register
  - The size of an instruction is fixed
- These properties simplify the implementation of pipelining in RISC processors (MIPS is a RISC processor)

Five Stages of MIPS Pipeline Unit

- IF (Instruction fetch) stage:
  - Send the PC (Program Counter) to memory and fetch the current instruction from memory
  - Update the PC for the next sequential instruction by adding 4 to the PC (each instruction is 4 bytes in size)
- ID (Instruction decode/register fetch) stage:
  - Decode the instruction and read the operand registers from the register file
  - Do the equality test on the registers, for a possible branch
  - Compute the branch target address by adding the offset to the incremented PC

A Simple Implementation of a RISC Instruction Set

- ID stage, continued:
  - Instruction decoding is done in parallel with reading registers, because the register specifiers are at a fixed location in a RISC architecture; this is called fixed-field decoding
- EX (Execution/effective address cycle):
  - Memory reference operation: the ALU adds the base register and the offset to form the effective address for a load/store
  - Register-Register operation: the ALU performs the operation specified by the ALU opcode on the values given by the register operands
  - Register-Immediate operation: the ALU performs the operation specified by the ALU opcode on the register operand and the immediate value

A Simple Implementation of a RISC Instruction Set

- MEM (Memory access) stage:
  - If the operation is a load, the memory read uses the effective address computed in the previous cycle
  - If it is a store, the memory write uses the same effective address
- WB (Write-back result to register file) stage:
  - The WB stage is used only by ALU (register-register and register-immediate) and load instructions
  - Write the result into the register file, whether it comes from memory (load) or from the ALU

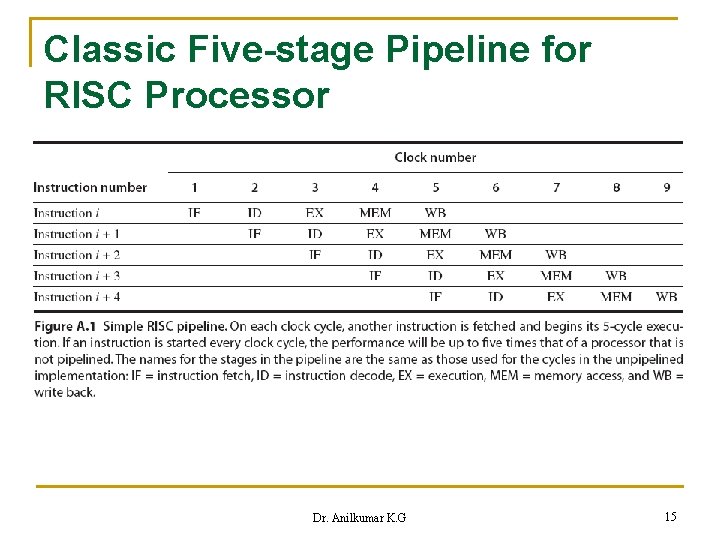

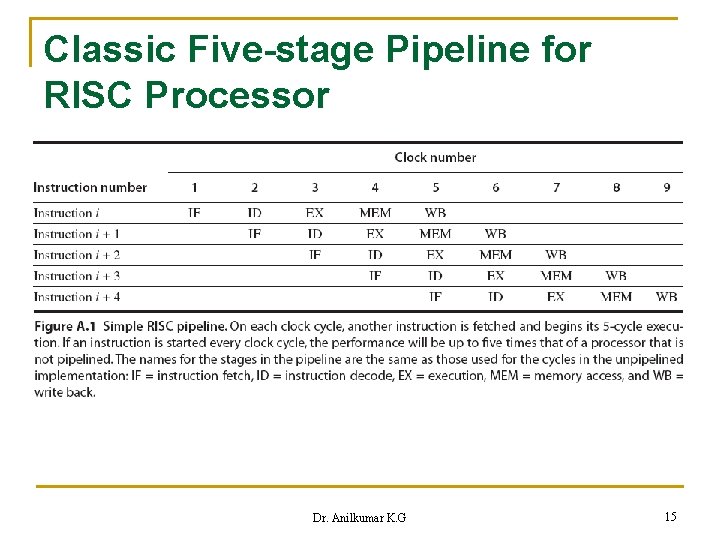

Classic Five-stage Pipeline for a RISC Processor

- Each of the clock cycles from the previous section becomes a pipe stage, that is, a cycle in the pipeline
  - The pipe stages are shown in Figure A.1
- Although each instruction takes 5 clock cycles to complete (each stage needs 1 clock cycle), during each clock cycle the HW initiates a new instruction
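The overlap described on this slide can be sketched in a few lines of Python. This is only an illustration of the staggered schedule, assuming an ideal pipeline with no stalls; the function name `pipeline_diagram` and the row layout are made up for this sketch and are not part of the slides.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]

def pipeline_diagram(num_instructions, stages=STAGES):
    """Return one row per instruction; row[c] is the stage occupied in clock cycle c + 1."""
    total_cycles = num_instructions + len(stages) - 1
    rows = []
    for i in range(num_instructions):
        row = [""] * total_cycles
        for s, name in enumerate(stages):
            # Instruction i is fetched in cycle i + 1 and advances one stage per cycle.
            row[i + s] = name
        rows.append(row)
    return rows

for i, row in enumerate(pipeline_diagram(5)):
    print(f"instruction i+{i}: " + " ".join(f"{cell:>3}" for cell in row))
```

Reading the printed table column by column shows that, once the pipeline is full, every clock cycle has one instruction in each of the five stages.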

Classic Five-stage Pipeline for RISC Processor

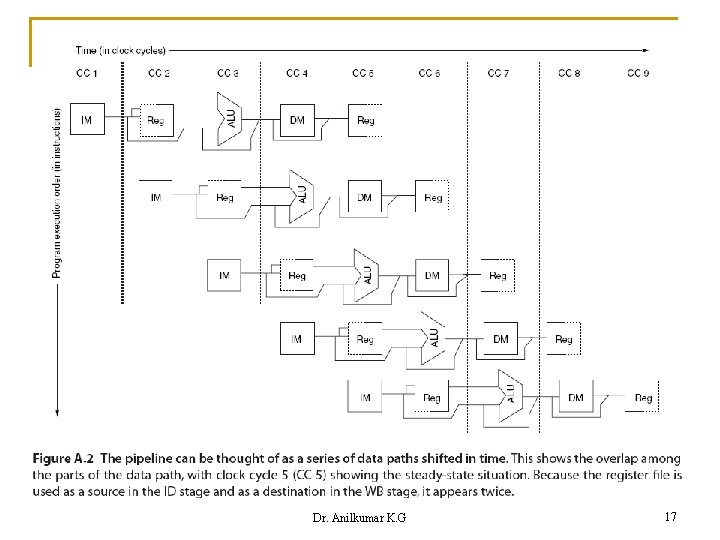

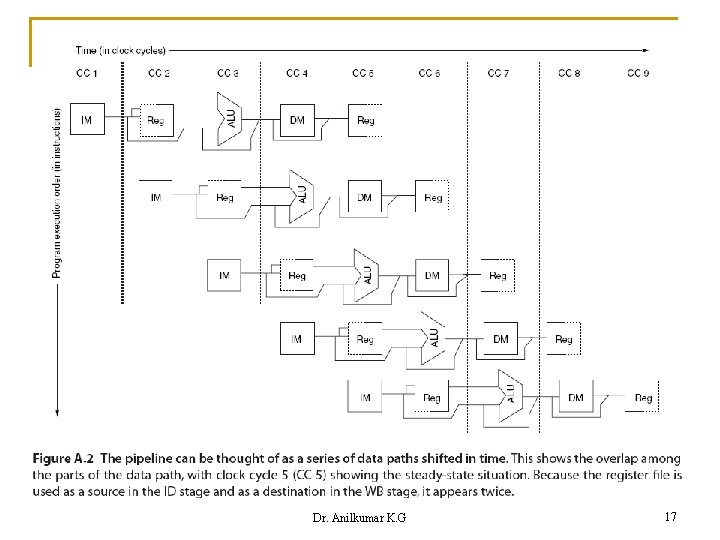

Classic Five-stage Pipeline for RISC Processor

- In a pipeline, we must not try to perform two different operations with the same data path resource in the same clock cycle
  - A single ALU cannot be asked to compute an effective address and perform a subtract operation at the same time
  - We must ensure that the overlap of instructions in the pipeline cannot cause such a conflict
  - Figure A.2 shows a simplified version of a RISC data path drawn in pipeline fashion


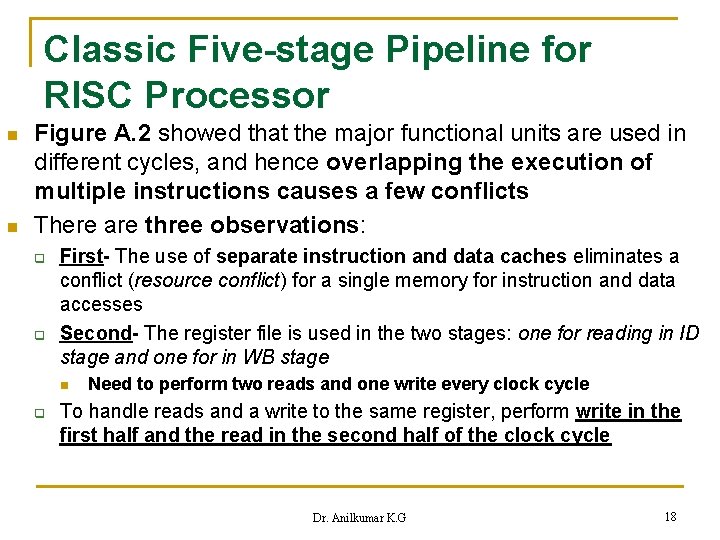

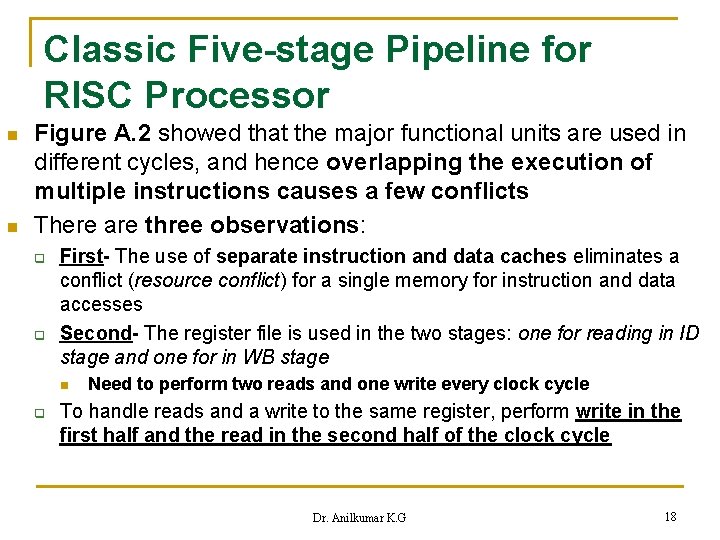

Classic Five-stage Pipeline for RISC Processor

- Figure A.2 showed that the major functional units are used in different cycles, and hence overlapping the execution of multiple instructions causes only a few conflicts
- There are three observations:
  - First: the use of separate instruction and data caches eliminates the resource conflict that a single memory would cause between instruction fetches and data accesses
  - Second: the register file is used in two stages, for reading in the ID stage and for writing in the WB stage
    - It needs to perform two reads and one write every clock cycle
    - To handle reads and a write to the same register, perform the write in the first half of the clock cycle and the read in the second half

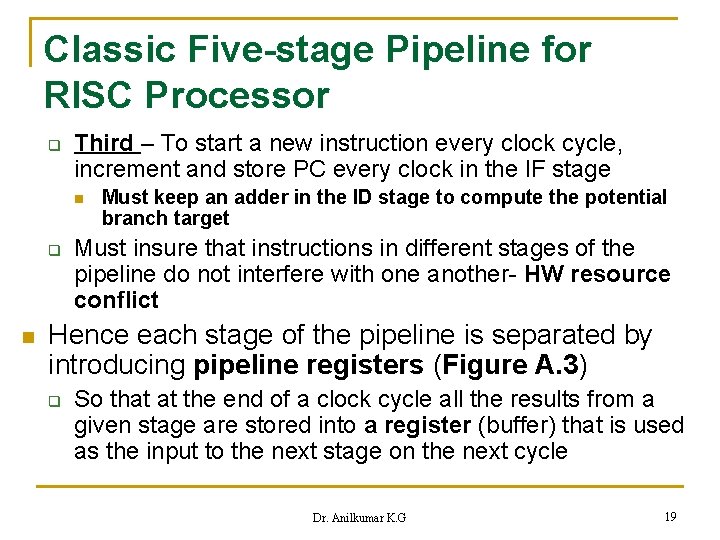

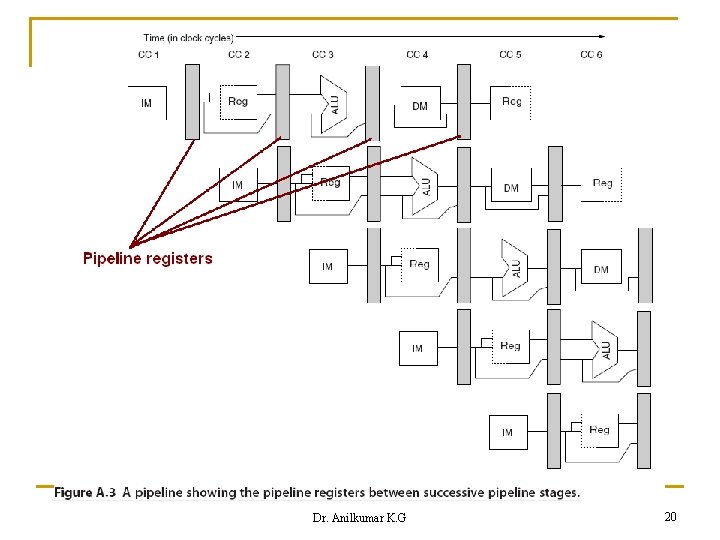

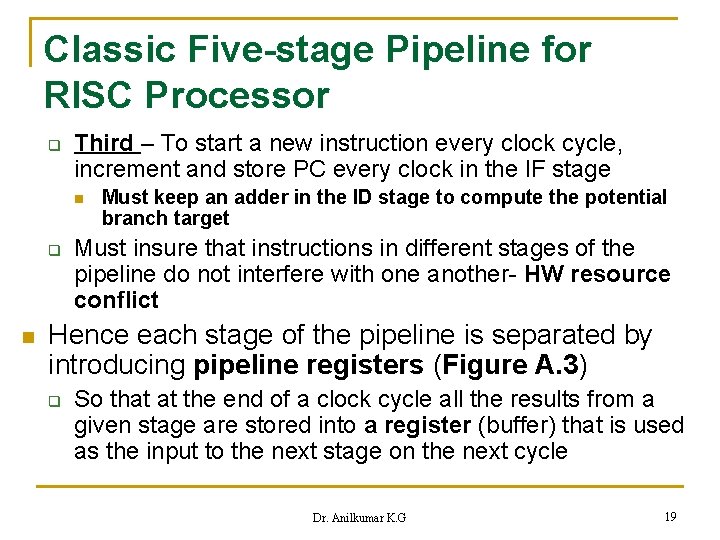

Classic Five-stage Pipeline for RISC Processor

- Third: to start a new instruction every clock cycle, the PC must be incremented and stored every clock cycle in the IF stage
  - An adder must be kept in the ID stage to compute the potential branch target
- We must ensure that instructions in different stages of the pipeline do not interfere with one another (HW resource conflicts)
- Hence each stage of the pipeline is separated from the next by pipeline registers (Figure A.3)
  - At the end of a clock cycle, all the results from a given stage are stored into a register (buffer) that is used as the input to the next stage on the next cycle


Classic Five-stage Pipeline for RISC Processor

- The pipeline registers play the key role of carrying intermediate results from one stage to another where the source and destination may not be directly adjacent
  - For example, the register value to be stored by a store instruction is read during ID but not actually used until MEM; it is passed through two pipeline registers to reach the data memory during the MEM stage
  - Likewise, the result of an ALU instruction is computed during EX but not actually stored until WB; it arrives there by passing through two pipeline registers
- Look at the details of a four-stage pipeline to get a clear idea of pipelining!

Basic Performance Issue in Pipelining

- Pipelining increases the CPU's instruction throughput
  - The number of instructions completed per unit time increases
- Pipelining does not reduce the execution time of an individual instruction
- In fact, it slightly increases the execution time of each instruction because of overhead in the control of the pipeline
- Because of pipelining, a program runs faster even though no single instruction runs faster
- Issues that arise from pipelining are latency, imbalance among the pipe stages, and pipeline overhead

Basic Performance Issue in Pipelining

- Imbalance among the pipe stages reduces performance, since the clock cannot run faster than the time needed for the slowest stage (such as memory access)
- Pipeline overhead arises from the combination of pipeline register delay and clock skew
  - That is, pipeline registers add setup time and propagation delay to the system
  - Clock skew is the maximum delay between the times at which the clock arrives at any two pipeline registers
- In a pipeline, the average instruction execution time can be given as:

$$\text{Average instruction execution time} = \text{Clock cycle time} \times \text{Average CPI}$$
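A small numeric sketch may help connect stage imbalance and overhead to the formula above. All numbers here are hypothetical and chosen only for illustration, and the unpipelined machine is assumed to execute each instruction in one long cycle equal to the sum of the stage delays; the function names are likewise made up for this sketch.

```python
def pipelined_cycle_time(stage_delays_ns, overhead_ns):
    """Clock period of the pipeline: slowest stage plus register delay and clock skew."""
    return max(stage_delays_ns) + overhead_ns

def avg_instruction_time(cycle_time_ns, avg_cpi):
    """Average instruction execution time = clock cycle time x average CPI."""
    return cycle_time_ns * avg_cpi

# Hypothetical stage delays (ns) for IF, ID, EX, MEM, WB and a hypothetical overhead.
stage_delays = [1.0, 0.8, 1.0, 1.0, 0.7]
overhead = 0.2

# Assumption: the unpipelined machine needs one cycle as long as all stages together.
unpipelined = avg_instruction_time(sum(stage_delays), 1.0)
pipelined = avg_instruction_time(pipelined_cycle_time(stage_delays, overhead), 1.0)
speedup = unpipelined / pipelined

print(f"unpipelined: {unpipelined:.2f} ns, pipelined: {pipelined:.2f} ns, speedup: {speedup:.2f}")
```

With these assumed values the speedup is noticeably below the pipeline depth of 5, because the clock is set by the slowest stage and the overhead is paid on every cycle.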

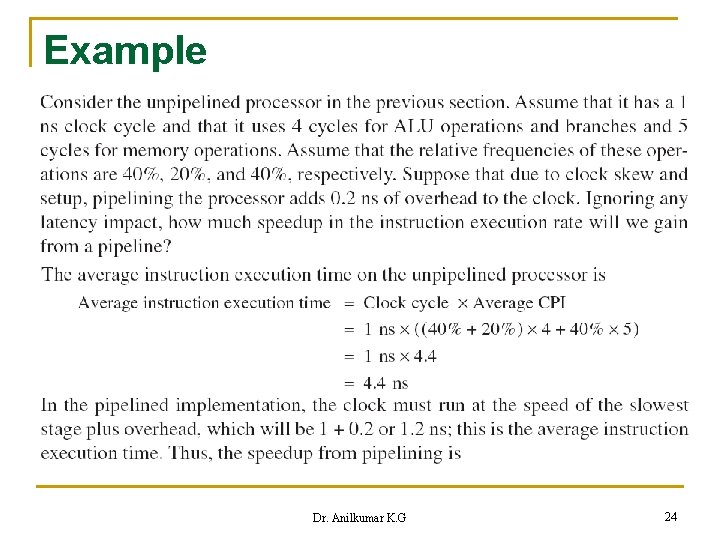

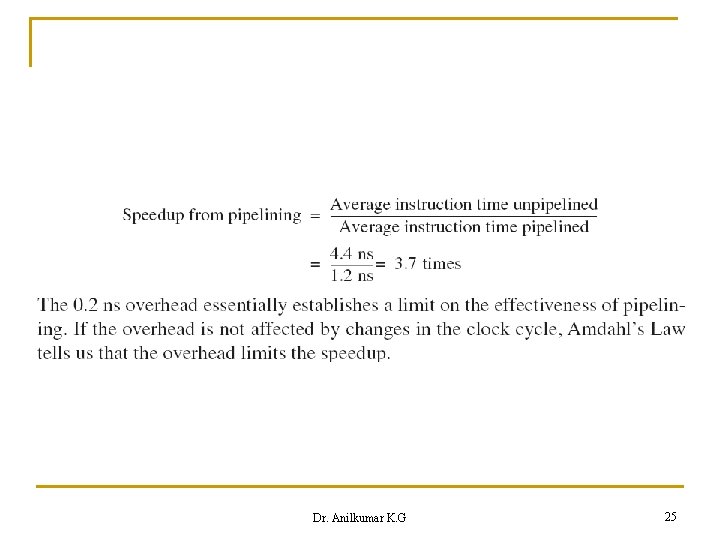

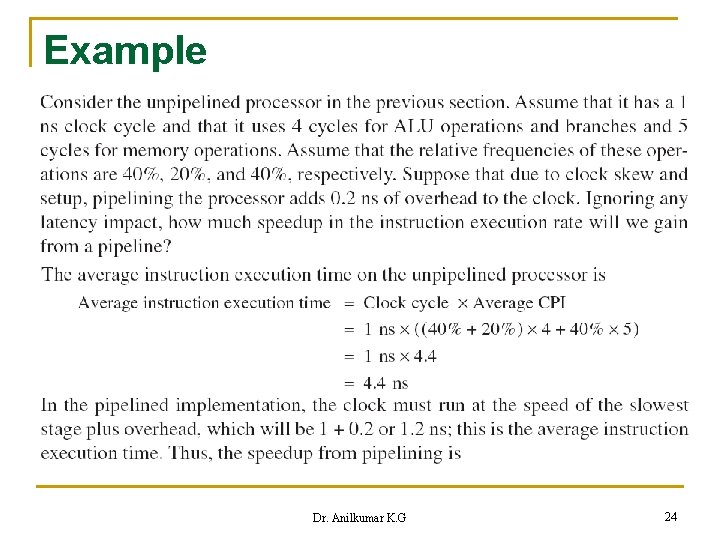

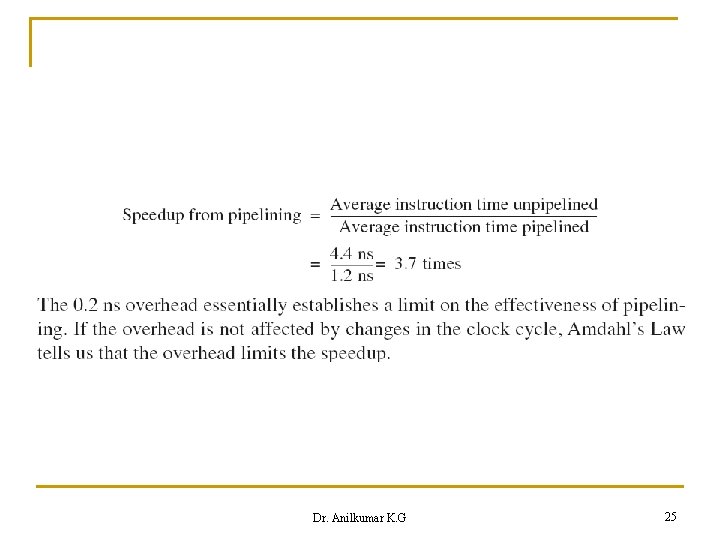

Example


The Major Hurdle of Pipelining: Pipeline Hazards

- In a pipeline there are situations, called hazards, that prevent the next instruction in the instruction stream from executing during its designated clock cycle
  - Hazards reduce the performance gained from pipelining

Classes of Pipeline Hazards

- There are three classes of hazards:
  - Structural hazards arise from resource conflicts when the HW cannot support all possible combinations of instructions simultaneously in overlapped (pipelined) execution
  - Data hazards arise when an instruction depends on the result of a previous instruction in a way that is exposed by the overlapping of instructions in the pipeline
  - Control hazards arise from the pipelining of branch instructions and other instructions that change the PC
- Hazards can make it necessary to stall the pipeline

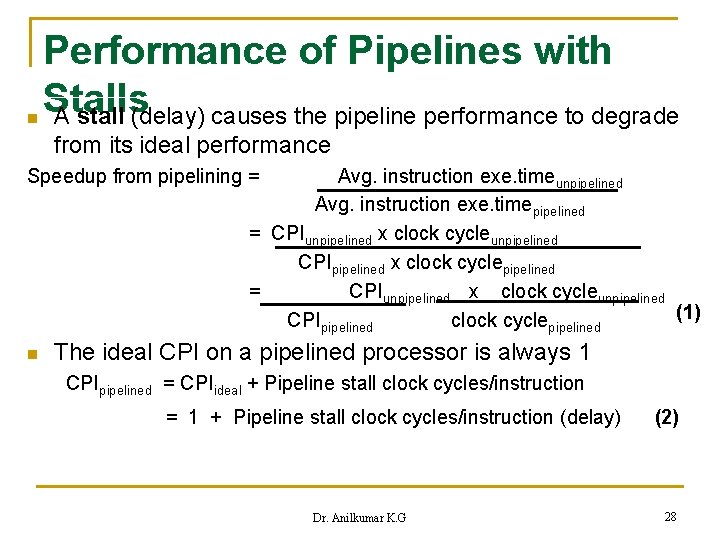

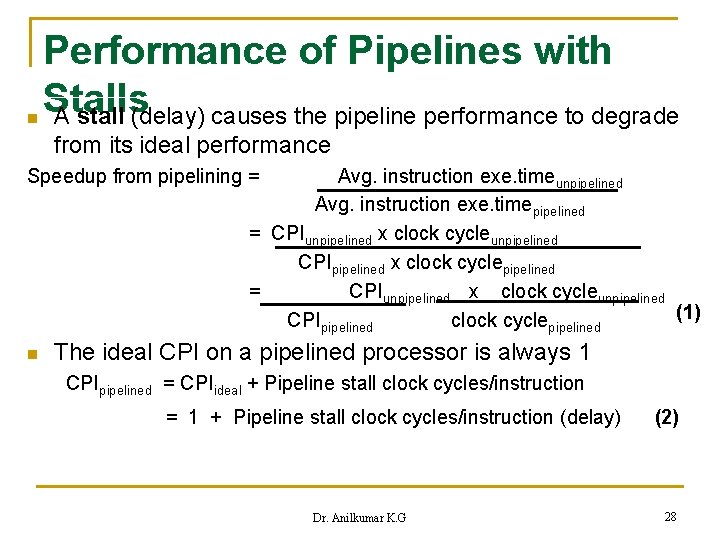

Performance of Pipelines with Stalls

- A stall (delay) causes the pipeline performance to degrade from its ideal performance

$$\text{Speedup from pipelining} = \frac{\text{Avg. instruction exe. time}_{\text{unpipelined}}}{\text{Avg. instruction exe. time}_{\text{pipelined}}} = \frac{CPI_{\text{unpipelined}} \times \text{clock cycle}_{\text{unpipelined}}}{CPI_{\text{pipelined}} \times \text{clock cycle}_{\text{pipelined}}} = \frac{CPI_{\text{unpipelined}}}{CPI_{\text{pipelined}}} \times \frac{\text{clock cycle}_{\text{unpipelined}}}{\text{clock cycle}_{\text{pipelined}}} \qquad (1)$$

- The ideal CPI on a pipelined processor is always 1

$$CPI_{\text{pipelined}} = CPI_{\text{ideal}} + \text{Pipeline stall clock cycles per instruction} = 1 + \text{Pipeline stall clock cycles per instruction} \qquad (2)$$

Performance of Pipelines with Stalls

- If we ignore the cycle time overhead and assume the stages are perfectly balanced, then the clock cycle times of the pipelined and unpipelined processors are equal, hence the speedup is

$$\text{Speedup} = \frac{CPI_{\text{unpipelined}}}{1 + \text{Pipeline stall cycles per instruction}} \qquad (3)$$

- Consider the case where all instructions take the same number of cycles, which must also equal the number of pipeline stages, called the pipeline depth
- In this case, $CPI_{\text{unpipelined}}$ is equal to the depth of the pipeline, hence

$$\text{Speedup} = \frac{\text{Pipeline depth}}{1 + \text{Pipeline stall cycles per instruction}} \qquad (4)$$
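Equation (4) is easy to evaluate directly. The following is a minimal sketch under the same assumptions as the slide (balanced stages, no cycle time overhead); the function name and the stall rates used in the loop are illustrative only.

```python
def pipeline_speedup(depth, stall_cycles_per_instr):
    """Speedup over the unpipelined machine, Eq. (4): depth / (1 + stalls per instruction)."""
    return depth / (1.0 + stall_cycles_per_instr)

# Illustrative stall rates for a five-stage pipeline.
for stalls in (0.0, 0.25, 1.0):
    print(f"stalls/instr = {stalls:.2f} -> speedup = {pipeline_speedup(5, stalls):.2f}")
```

With no stalls the speedup equals the pipeline depth; one stall cycle per instruction on average already halves it.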

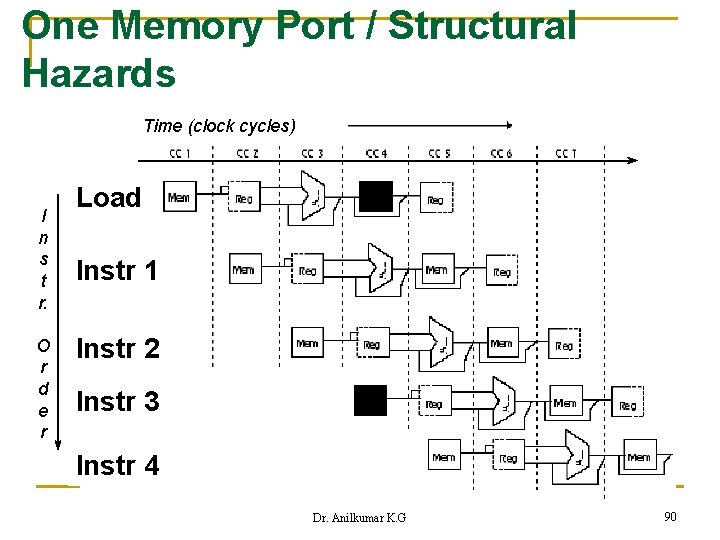

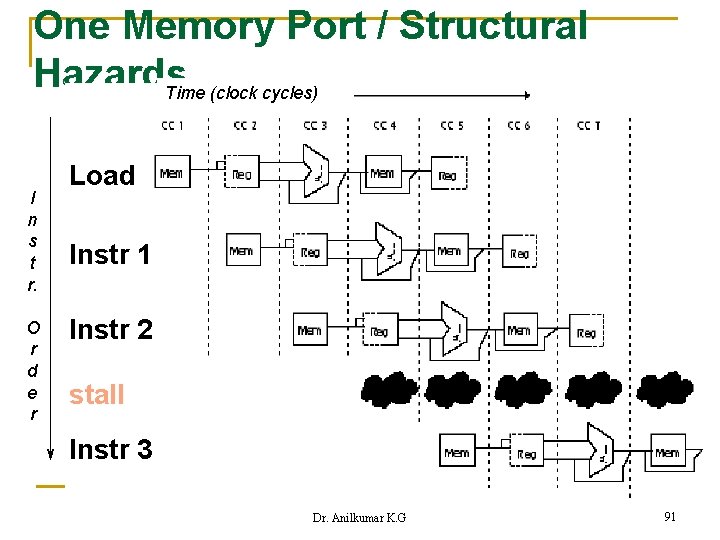

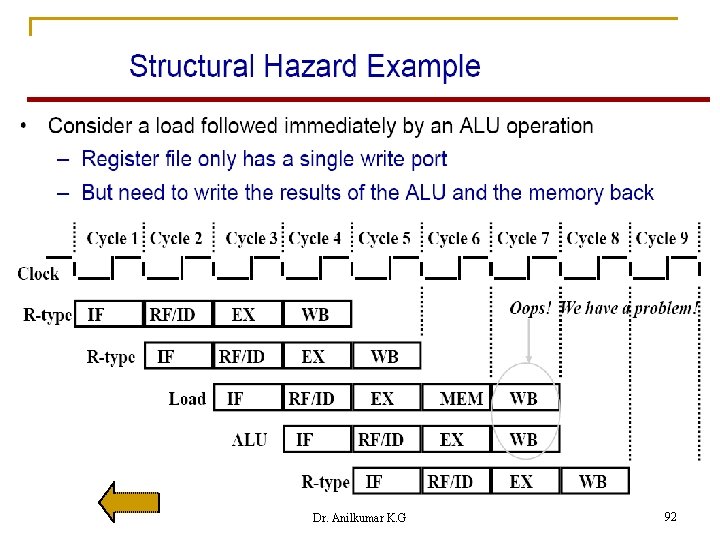

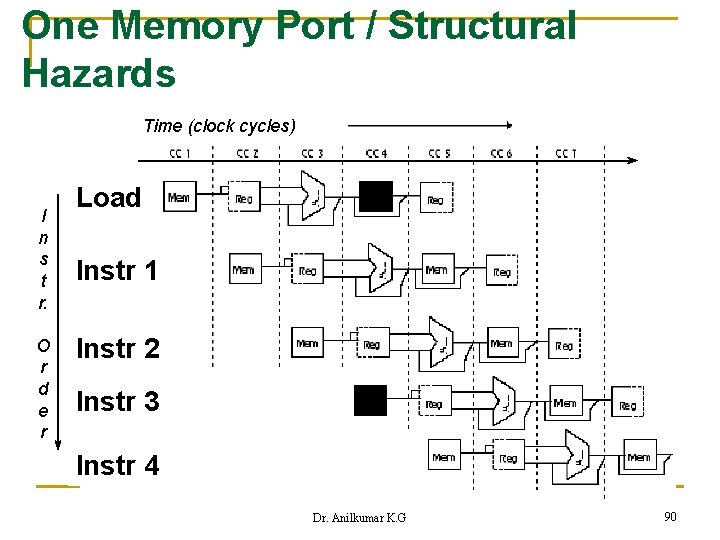

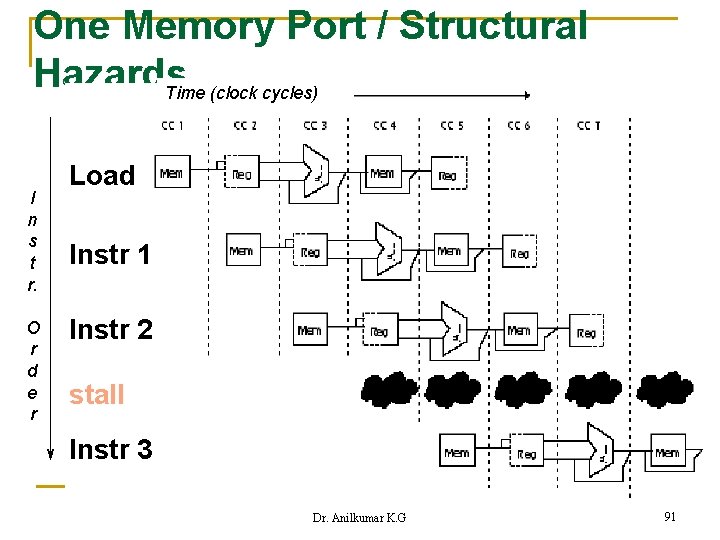

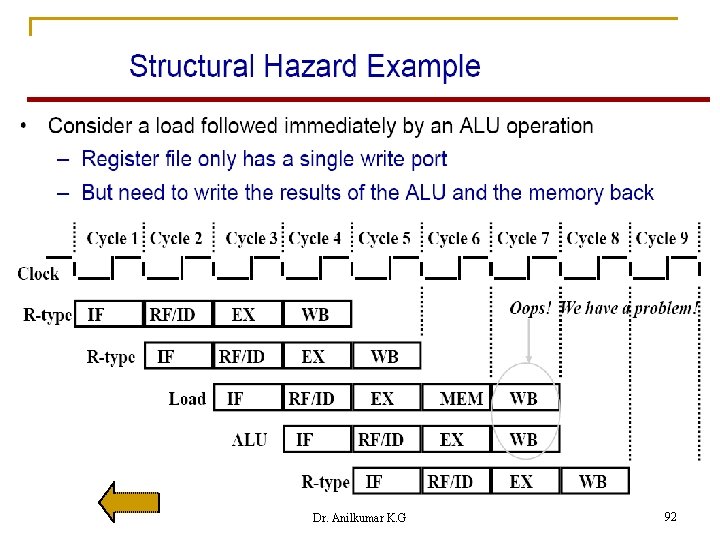

Structural Hazards

- When a processor is pipelined, the overlapped execution of instructions requires pipelining of its functional units (stages)
- If some combination of instructions cannot be accommodated because of resource conflicts, the processor is said to have a structural hazard
  - That is, structural hazards arise when some functional unit is not fully pipelined
  - Hence a sequence of instructions using that HW resource cannot proceed at the rate of one per clock cycle
- Structural hazards also arise when some HW resource has not been duplicated enough to allow all combinations of instructions in the pipeline
  - For example, if a processor has only one register-file write port and the pipeline wants to perform two writes in one clock cycle, a structural hazard results

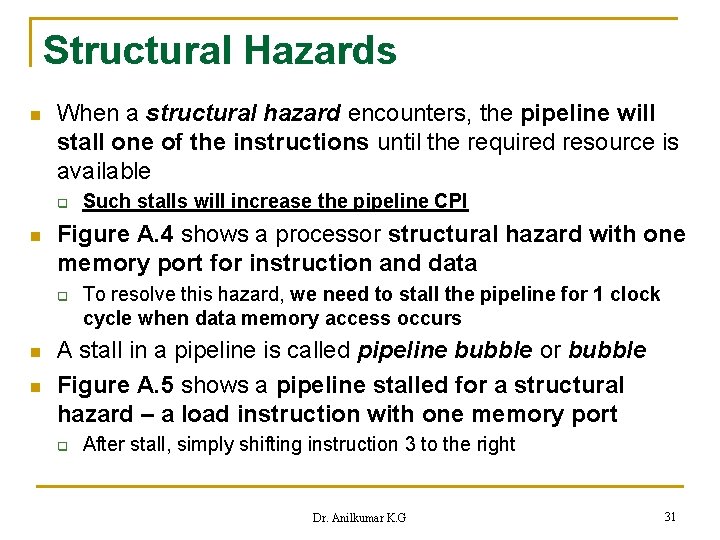

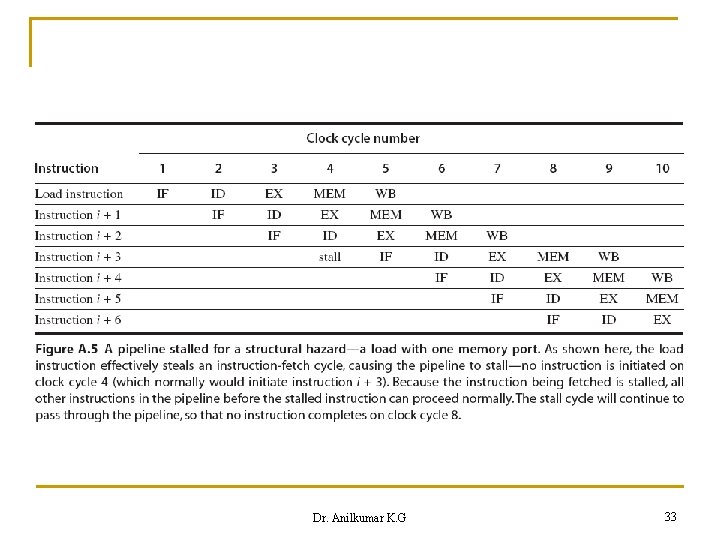

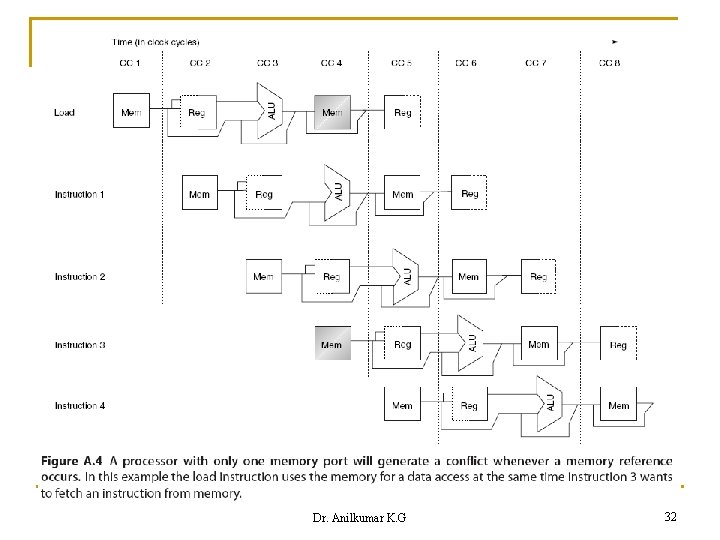

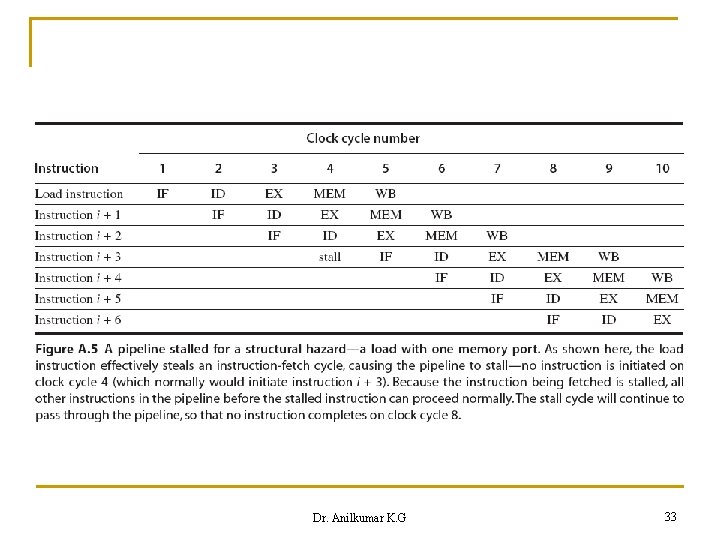

Structural Hazards

- When a structural hazard is encountered, the pipeline stalls one of the instructions until the required resource is available
  - Such stalls increase the pipeline CPI
- Figure A.4 shows a structural hazard in a processor with one memory port for both instructions and data
  - To resolve this hazard, we need to stall the pipeline for 1 clock cycle when a data memory access occurs
- A stall in a pipeline is called a pipeline bubble, or simply a bubble
- Figure A.5 shows a pipeline stalled for a structural hazard: a load instruction with one memory port
  - After the stall, instruction 3 is simply shifted to the right
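The one-memory-port stall can be sketched as a tiny scheduling model. This is an illustration only: it assumes the five-stage pipeline above, that a load or store uses the shared port in its MEM stage (three cycles after its fetch), and that no other hazards occur. The function name `fetch_cycles` is hypothetical.

```python
def fetch_cycles(is_mem_access):
    """Return the fetch cycle of each instruction when IF and MEM share one memory port.

    is_mem_access: list of booleans in program order, True for a load or store.
    """
    busy = set()   # clock cycles in which the port is taken by a data access
    fetch = []
    cycle = 1
    for mem in is_mem_access:
        while cycle in busy:      # port is in use by an earlier load/store: insert a bubble
            cycle += 1
        fetch.append(cycle)
        if mem:
            busy.add(cycle + 3)   # IF, ID, EX, then MEM three cycles after the fetch
        cycle += 1
    return fetch

# A load followed by four instructions that do not access data memory.
print(fetch_cycles([True, False, False, False, False]))
```

In this model the load's MEM stage falls in cycle 4, so the instruction that would have been fetched in cycle 4 (instruction 3) is fetched one cycle later, which is the shift to the right described above.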


Structural Hazards

- A pipelined processor without the structural hazard will run faster
- The designer should provide a separate memory access path for instructions, either by splitting the cache into instruction and data caches or by using instruction buffers to hold instructions

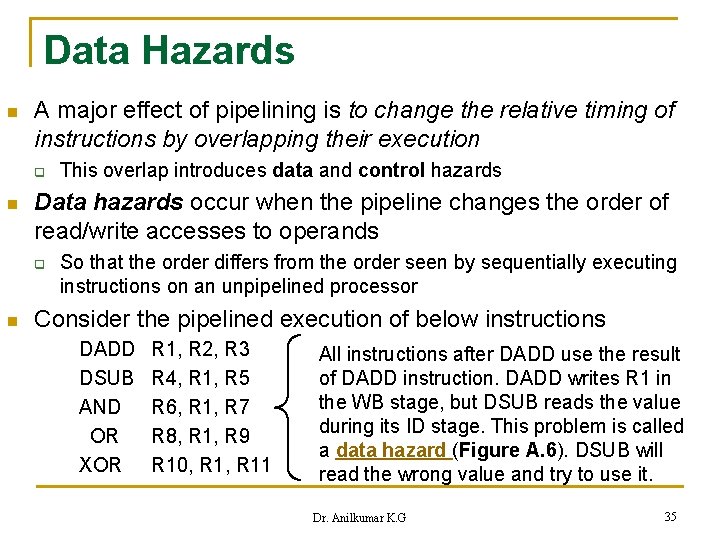

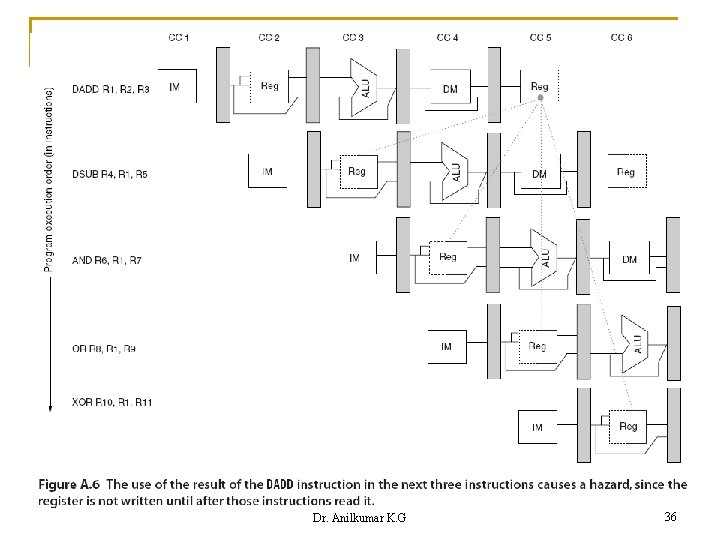

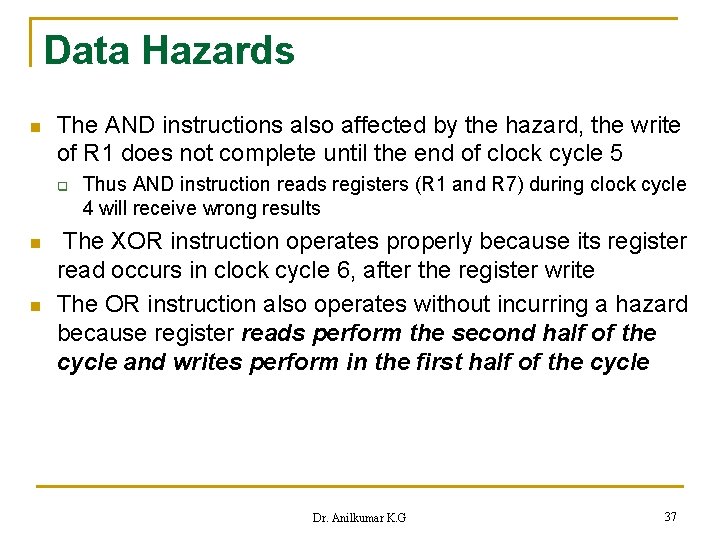

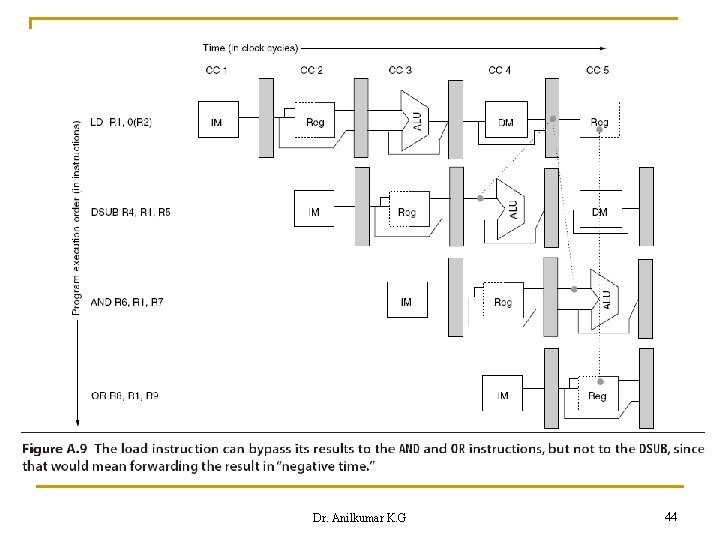

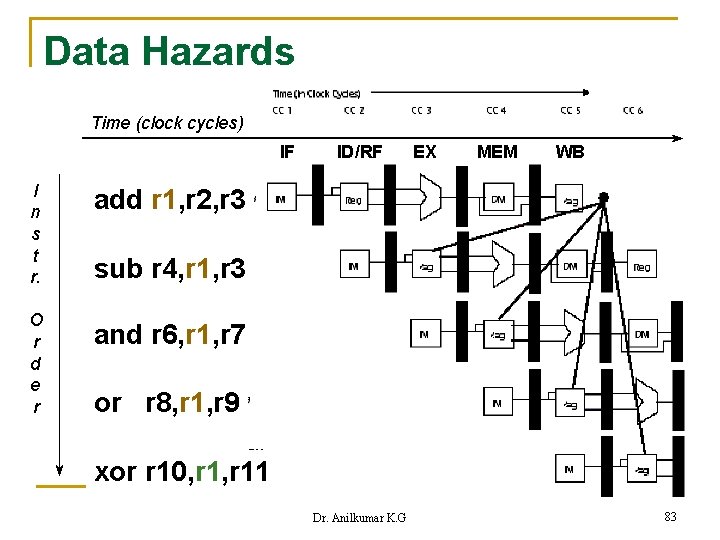

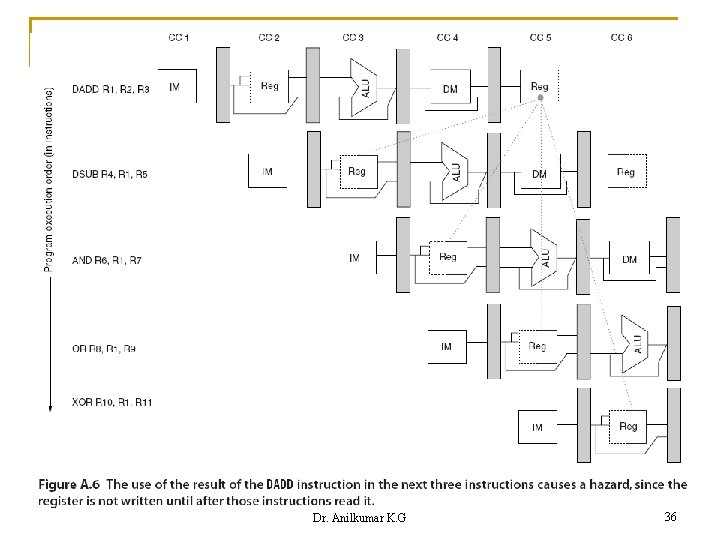

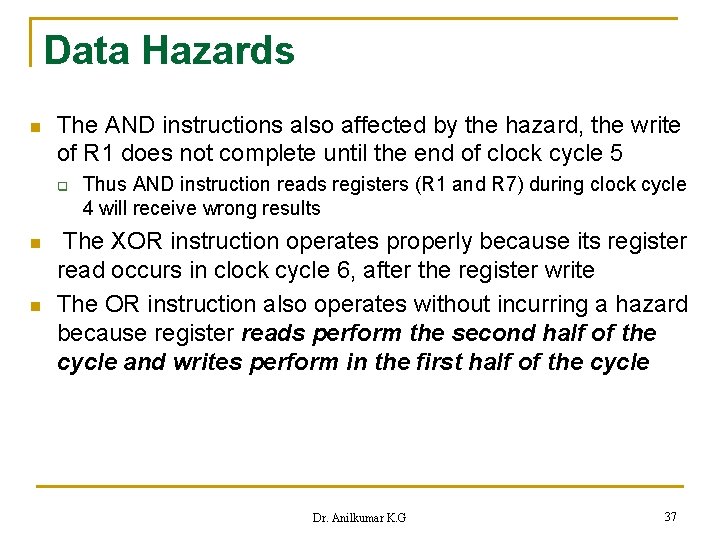

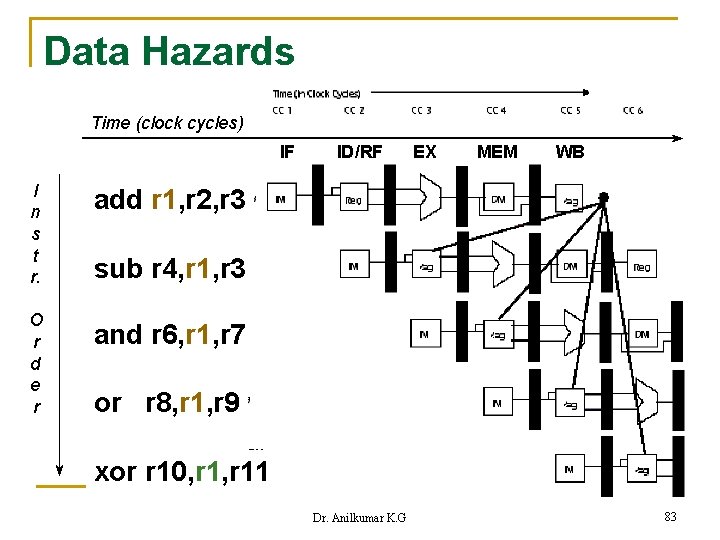

Data Hazards

- A major effect of pipelining is to change the relative timing of instructions by overlapping their execution
  - This overlap introduces data and control hazards
- Data hazards occur when the pipeline changes the order of read/write accesses to operands
  - So that the order differs from the order seen by sequentially executing instructions on an unpipelined processor
- Consider the pipelined execution of the instructions below

      DADD R1, R2, R3
      DSUB R4, R1, R5
      AND  R6, R1, R7
      OR   R8, R1, R9
      XOR  R10, R1, R11

- All instructions after DADD use the result of the DADD instruction. DADD writes R1 in the WB stage, but DSUB reads the value during its ID stage. This problem is called a data hazard (Figure A.6). DSUB will read the wrong value and try to use it.


Data Hazards

- The AND instruction is also affected by the hazard, because the write of R1 does not complete until the end of clock cycle 5
  - Thus the AND instruction, which reads its registers (R1 and R7) during clock cycle 4, will receive the wrong value
- The XOR instruction operates properly because its register read occurs in clock cycle 6, after the register write
- The OR instruction also operates without incurring a hazard, because register reads are performed in the second half of the cycle and writes in the first half
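The cycle arithmetic behind these observations can be checked with a short sketch. It assumes no forwarding, that instruction number i (counting from 0) is fetched in cycle i + 1, reads registers in ID (cycle i + 2) and writes in WB (cycle i + 5), and that a write and a read in the same cycle are safe because of the split-cycle register file. The function name and tuple layout are made up for this illustration; the XOR line is assumed to read R1, as the statement that all later instructions use the DADD result implies.

```python
def stale_readers(program, writer_index=0):
    """Names of later instructions that read the writer's destination before it is written.

    program: list of (name, dest, sources) tuples in program order.
    """
    _, dest, _ = program[writer_index]
    wb_cycle = writer_index + 5          # fetched in cycle index + 1, WB four cycles later
    stale = []
    for j in range(writer_index + 1, len(program)):
        name, _, sources = program[j]
        id_cycle = j + 2                 # register read happens in the ID stage
        # Same cycle is safe: the write uses the first half, the read the second half.
        if dest in sources and id_cycle < wb_cycle:
            stale.append(name)
    return stale

program = [
    ("DADD", "R1", ["R2", "R3"]),
    ("DSUB", "R4", ["R1", "R5"]),
    ("AND", "R6", ["R1", "R7"]),
    ("OR", "R8", ["R1", "R9"]),
    ("XOR", "R10", ["R1", "R11"]),
]
print(stale_readers(program))
```

Under these assumptions only DSUB and AND read R1 too early; OR reads it in the same cycle as the write, and XOR reads it one cycle later.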

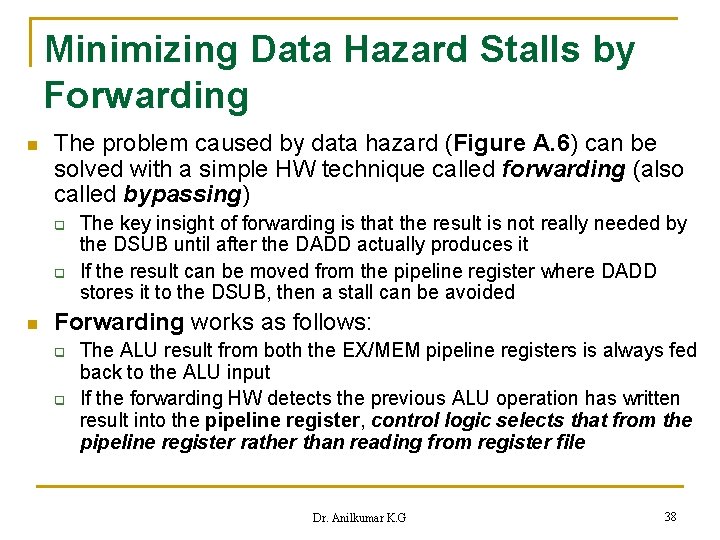

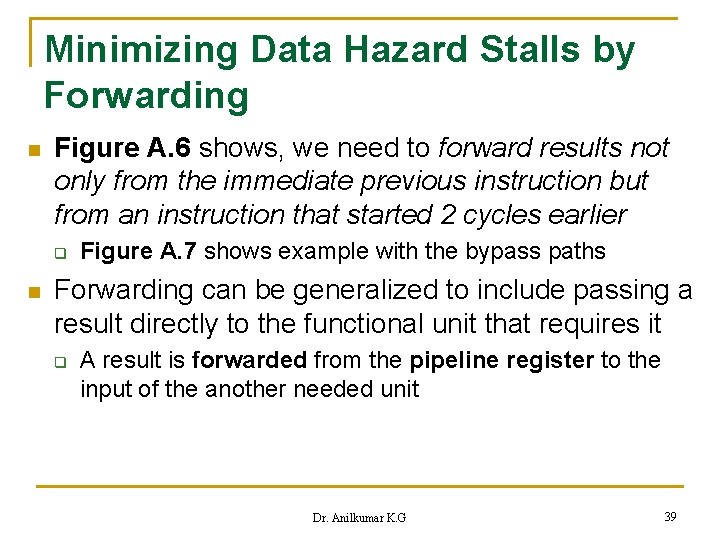

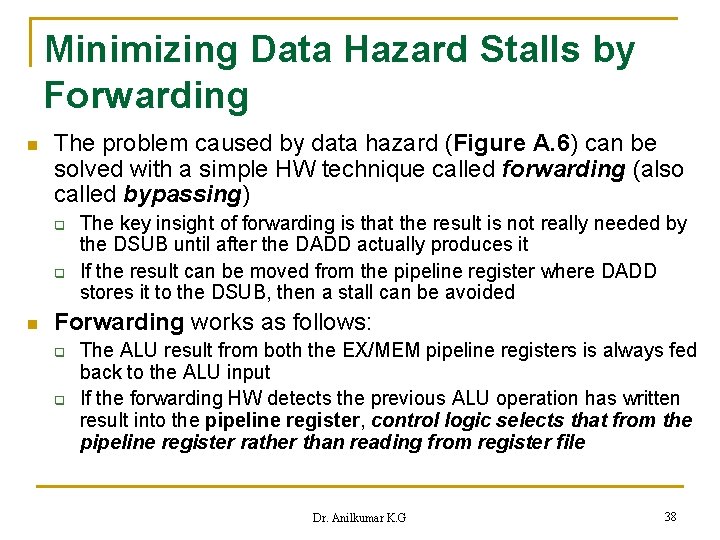

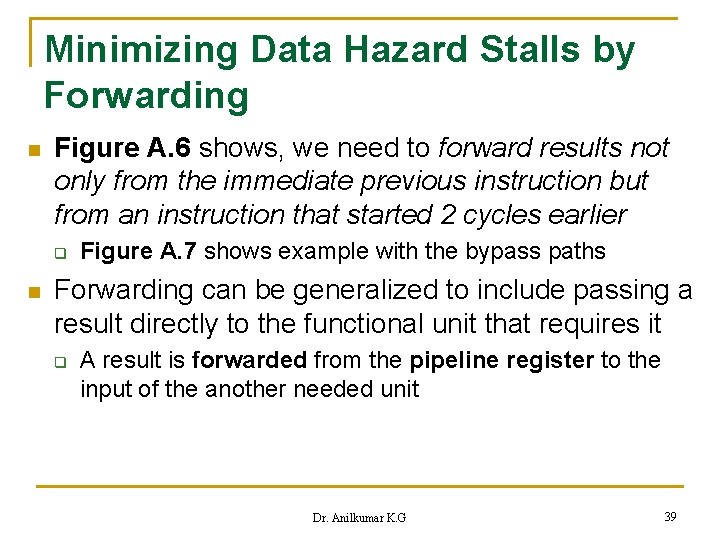

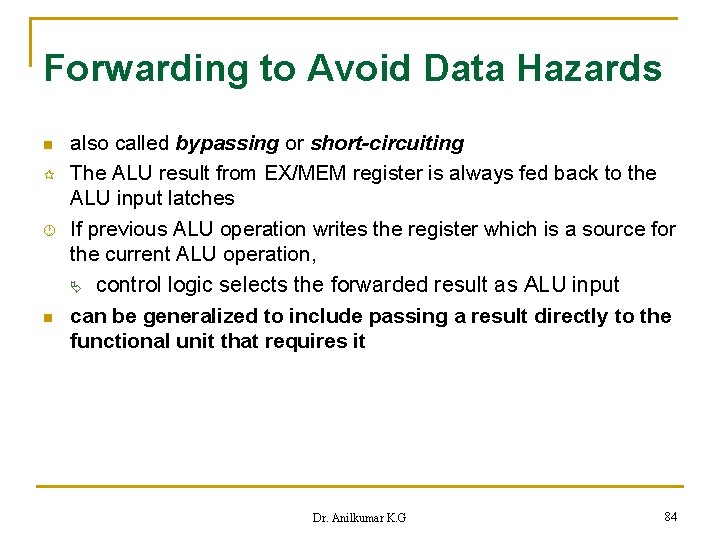

Minimizing Data Hazard Stalls by Forwarding

- The problem caused by the data hazard (Figure A.6) can be solved with a simple HW technique called forwarding (also called bypassing)
  - The key insight of forwarding is that the result is not really needed by the DSUB until after the DADD actually produces it
  - If the result can be moved from the pipeline register where DADD stores it to where the DSUB needs it, then a stall can be avoided
- Forwarding works as follows:
  - The ALU result from both the EX/MEM and MEM/WB pipeline registers is always fed back to the ALU inputs
  - If the forwarding HW detects that a previous ALU operation has written the register corresponding to a source of the current ALU operation, control logic selects the forwarded result from the pipeline register rather than the value read from the register file
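The selection made by the forwarding control logic can be sketched as a simple priority check. This is a simplified model, not the actual MIPS control logic: pipeline registers are represented as dictionaries with hypothetical keys `rd` and `value`, and special cases such as a hard-wired zero register are ignored.

```python
def select_alu_operand(src_reg, regfile_value, ex_mem=None, mem_wb=None):
    """Pick the value for one ALU input and report where it came from.

    ex_mem, mem_wb: None if the pipeline register holds no register result,
    otherwise a dict {"rd": destination register, "value": result}.
    """
    if ex_mem is not None and ex_mem["rd"] == src_reg:
        return ex_mem["value"], "EX/MEM"      # result of the immediately preceding instruction
    if mem_wb is not None and mem_wb["rd"] == src_reg:
        return mem_wb["value"], "MEM/WB"      # result of the instruction two ahead
    return regfile_value, "register file"     # no pending write to this register

# DSUB R4, R1, R5 right after DADD R1, R2, R3: R1 is still in the EX/MEM register.
print(select_alu_operand("R1", regfile_value=0, ex_mem={"rd": "R1", "value": 42}))
print(select_alu_operand("R5", regfile_value=7, ex_mem={"rd": "R1", "value": 42}))
```

Checking EX/MEM before MEM/WB makes the most recent result win when both pipeline registers hold a value for the same register.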

Minimizing Data Hazard Stalls by Forwarding

- As Figure A.6 shows, we need to forward results not only from the immediately preceding instruction but also from an instruction that started 2 cycles earlier
  - Figure A.7 shows the example with the bypass paths
- Forwarding can be generalized to include passing a result directly to the functional unit that requires it
  - A result is forwarded from the pipeline register at the output of one unit to the input of another unit that needs it


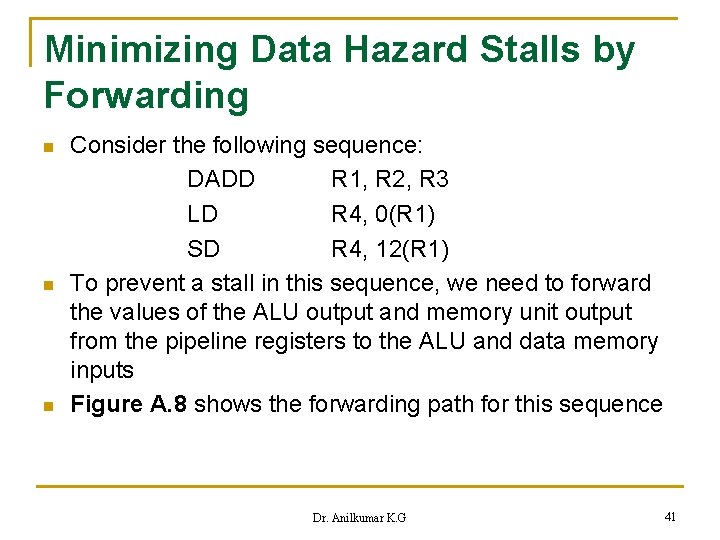

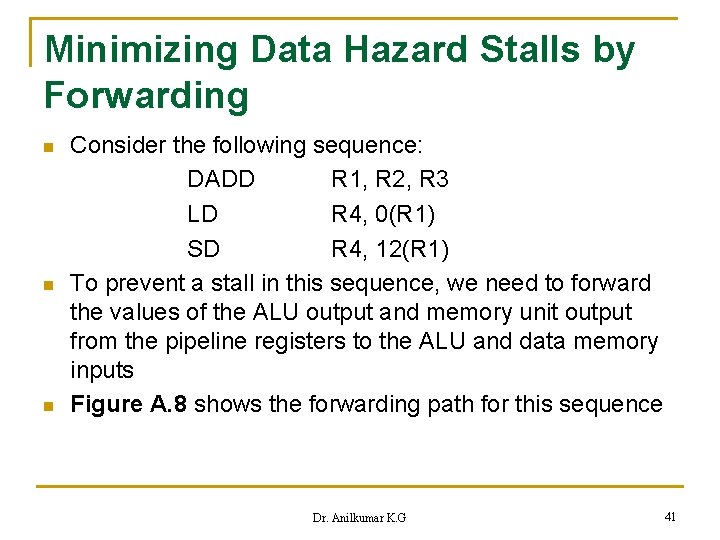

Minimizing Data Hazard Stalls by Forwarding

- Consider the following sequence:

      DADD R1, R2, R3
      LD   R4, 0(R1)
      SD   R4, 12(R1)

- To prevent a stall in this sequence, we need to forward the values of the ALU output and the memory unit output from the pipeline registers to the ALU and data memory inputs
- Figure A.8 shows the forwarding path for this sequence


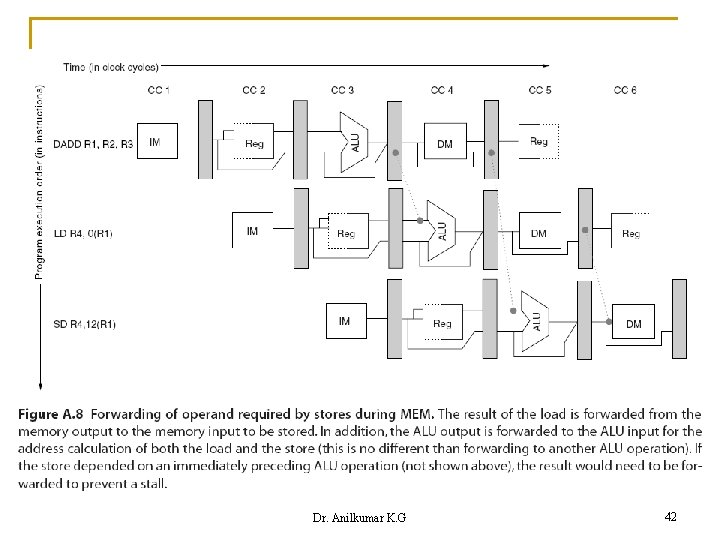

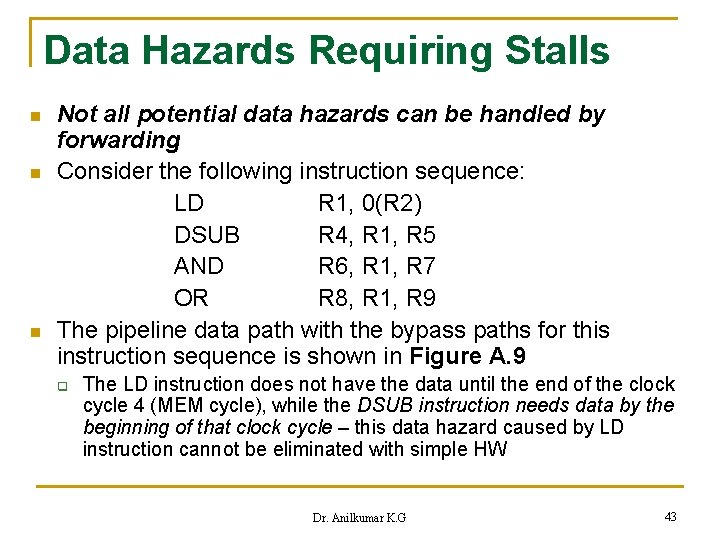

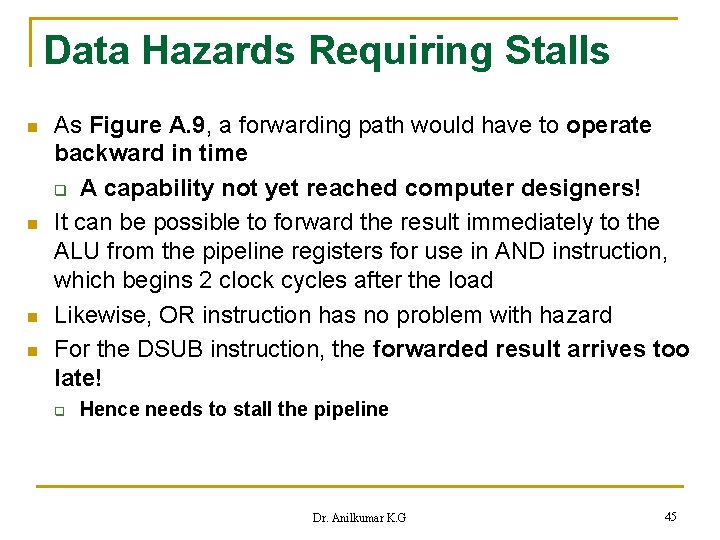

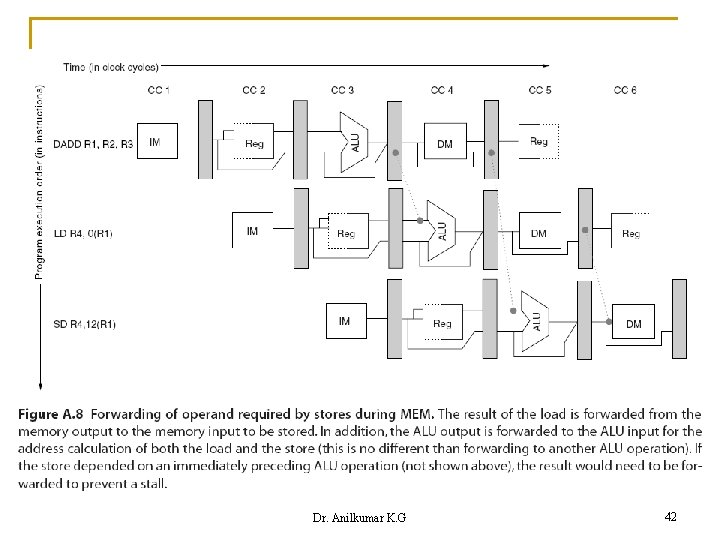

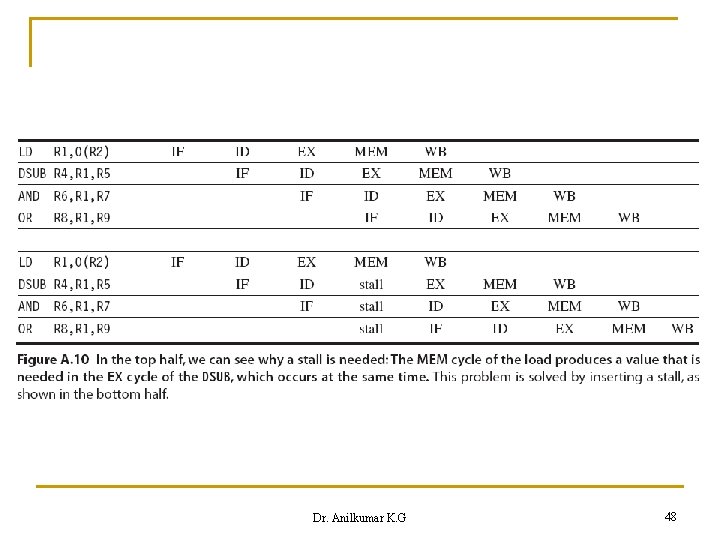

Data Hazards Requiring Stalls

- Not all potential data hazards can be handled by forwarding
- Consider the following instruction sequence:

      LD   R1, 0(R2)
      DSUB R4, R1, R5
      AND  R6, R1, R7
      OR   R8, R1, R9

- The pipeline data path with the bypass paths for this instruction sequence is shown in Figure A.9
  - The LD instruction does not have the data until the end of clock cycle 4 (its MEM cycle), while the DSUB instruction needs the data by the beginning of that clock cycle; this data hazard caused by the LD instruction cannot be eliminated with simple HW


Data Hazards Requiring Stalls

- As Figure A.9 shows, such a forwarding path would have to operate backward in time
  - A capability not yet available to computer designers!
- The result can be forwarded immediately to the ALU from the pipeline registers for use in the AND instruction, which begins 2 clock cycles after the load
- Likewise, the OR instruction has no problem with the hazard
- For the DSUB instruction, the forwarded result arrives too late!
  - Hence the pipeline needs to be stalled

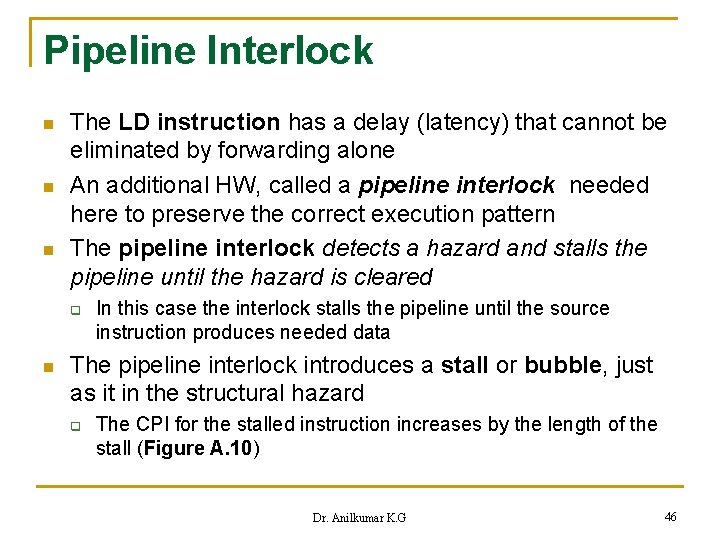

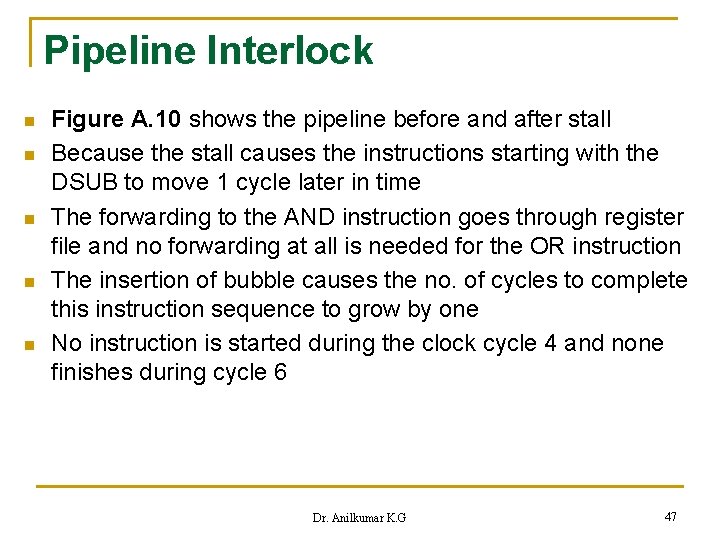

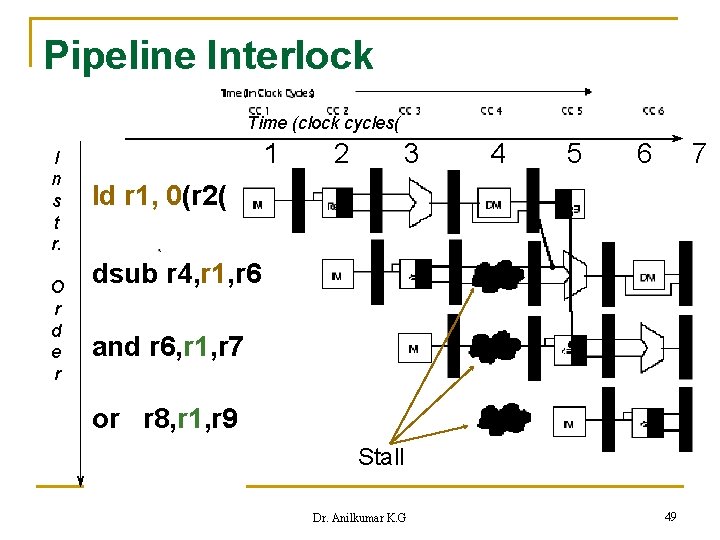

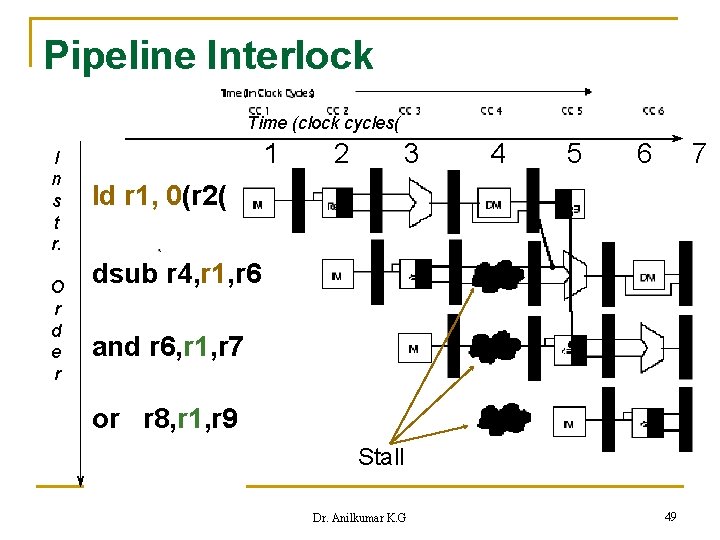

Pipeline Interlock

- The LD instruction has a delay (latency) that cannot be eliminated by forwarding alone
- Additional HW, called a pipeline interlock, is needed here to preserve the correct execution pattern
- The pipeline interlock detects a hazard and stalls the pipeline until the hazard is cleared
  - In this case the interlock stalls the pipeline until the source instruction produces the needed data
- The pipeline interlock introduces a stall or bubble, just as in the structural hazard case
  - The CPI for the stalled instruction increases by the length of the stall (Figure A.10)

Pipeline Interlock

- Figure A.10 shows the pipeline before and after the stall
- Because the stall causes the instructions starting with the DSUB to move 1 cycle later in time, the forwarding to the AND instruction now goes through the register file, and no forwarding at all is needed for the OR instruction
- The insertion of the bubble causes the number of cycles needed to complete this instruction sequence to grow by one
- No instruction is started during clock cycle 4, and none finishes during cycle 6
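The effect of the interlock on the cycle count can be sketched as follows. The model assumes a five-stage pipeline with full forwarding, so the only stall is one bubble when a load is immediately followed by an instruction that reads the loaded register; the function names and the tuple layout are hypothetical.

```python
def load_use_stalls(program):
    """Count bubbles: one for each load immediately followed by a consumer of its result.

    program: list of (opcode, dest, sources) tuples in program order.
    """
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        prev_op, prev_dest, _ = prev
        _, _, cur_sources = cur
        if prev_op == "LD" and prev_dest in cur_sources:
            stalls += 1
    return stalls

def total_cycles(program, depth=5):
    """Cycles to drain the whole sequence: fill time plus one per instruction plus bubbles."""
    return len(program) + depth - 1 + load_use_stalls(program)

program = [
    ("LD", "R1", ["R2"]),
    ("DSUB", "R4", ["R1", "R5"]),
    ("AND", "R6", ["R1", "R7"]),
    ("OR", "R8", ["R1", "R9"]),
]
print(load_use_stalls(program), total_cycles(program))
```

For this four-instruction sequence the model gives one bubble, so the sequence takes one cycle more than the 8 cycles it would need without the stall.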


Pipeline Interlock (figure: pipeline timing diagram with time in clock cycles 1 to 7 across and instructions in program order down: ld r1, 0(r2); dsub r4, r1, r6; and r6, r1, r7; or r8, r1, r9; a stall is inserted after the load)

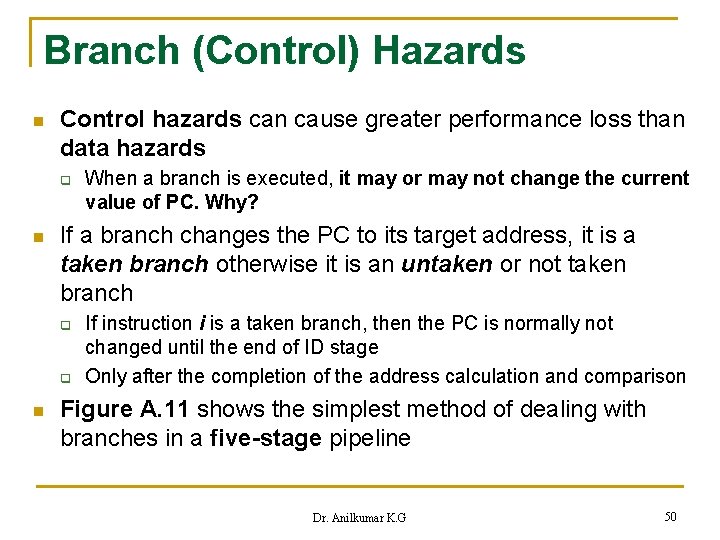

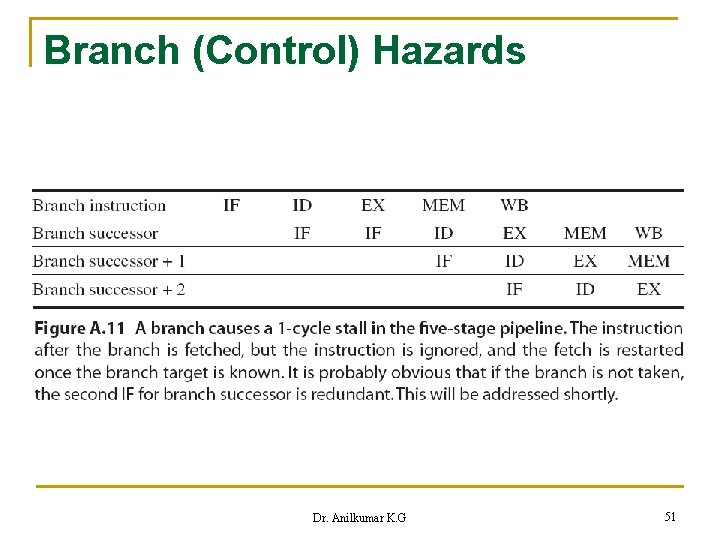

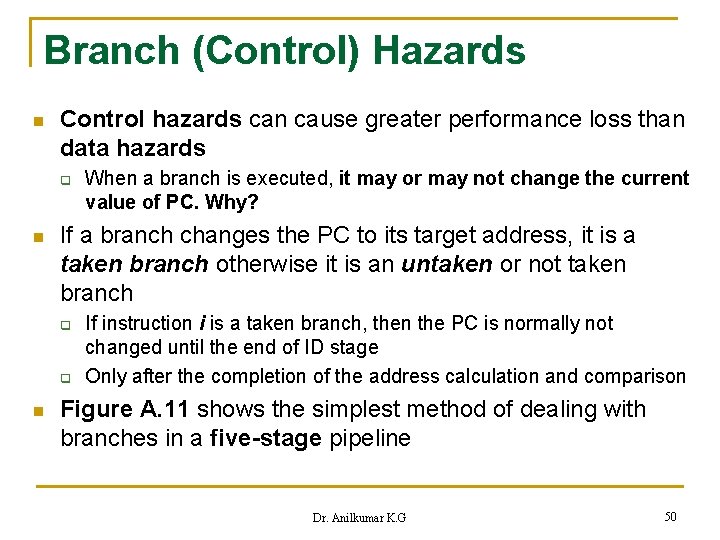

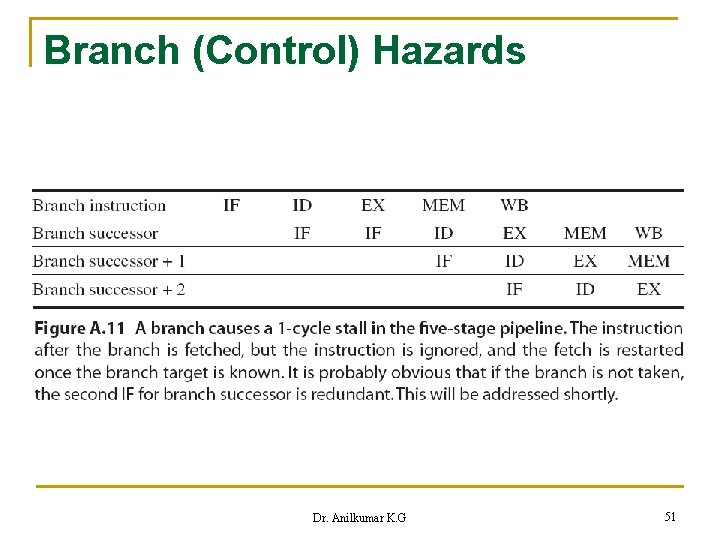

Branch (Control) Hazards

- Control hazards can cause a greater performance loss than data hazards
  - When a branch is executed, it may or may not change the current value of the PC. Why?
- If a branch changes the PC to its target address, it is a taken branch; otherwise it is an untaken (not taken) branch
  - If instruction i is a taken branch, then the PC is normally not changed until the end of the ID stage
  - That is, only after the completion of the address calculation and comparison
- Figure A.11 shows the simplest method of dealing with branches in a five-stage pipeline

Branch (Control) Hazards

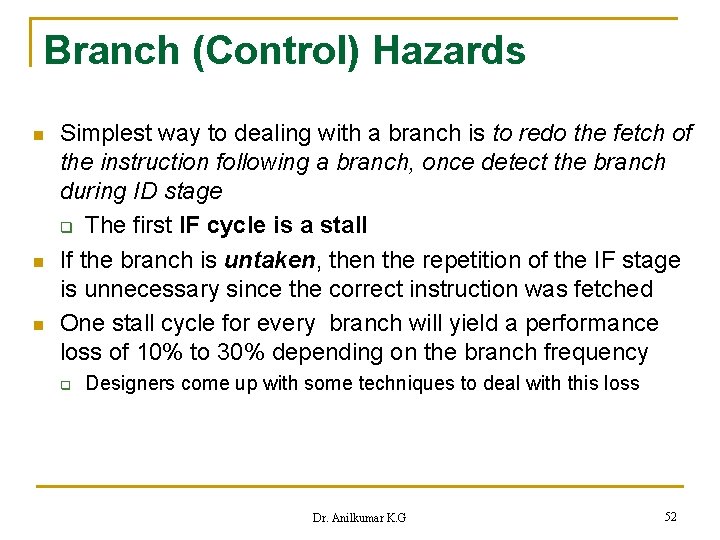

Branch (Control) Hazards

- The simplest way of dealing with a branch is to redo the fetch of the instruction following the branch once the branch is detected during the ID stage
  - The first IF cycle is essentially a stall
- If the branch is untaken, the repetition of the IF stage is unnecessary, since the correct instruction was already fetched
- One stall cycle for every branch yields a performance loss of 10% to 30%, depending on the branch frequency
  - Designers have come up with several techniques to deal with this loss

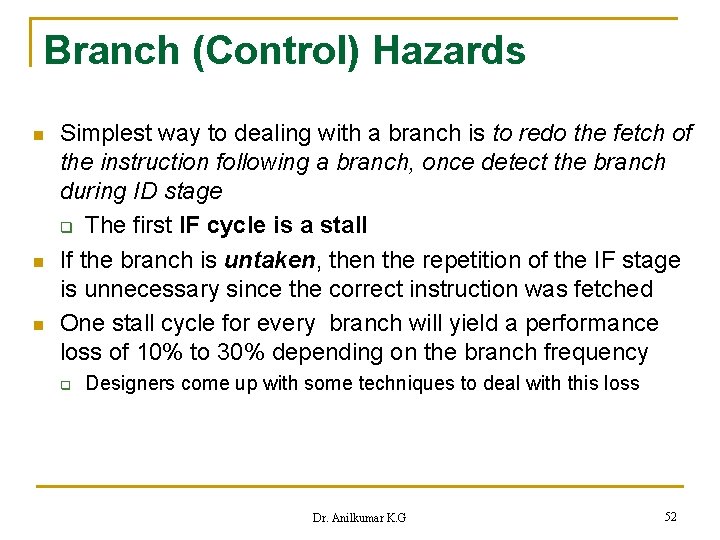

Reducing Pipeline Branch Penalties

- There are four simple compile-time schemes for dealing with the pipeline stalls caused by branch delay
  - The SW can try to minimize the branch penalty using knowledge of the HW scheme and of branch behavior

1) The simplest scheme to handle branches is to freeze or flush the pipeline:

- Freeze the pipeline, holding or deleting any instructions after the branch until the branch destination is known
- It is very simple to implement in both SW and HW
- In this case the branch penalty is fixed and cannot be reduced by SW

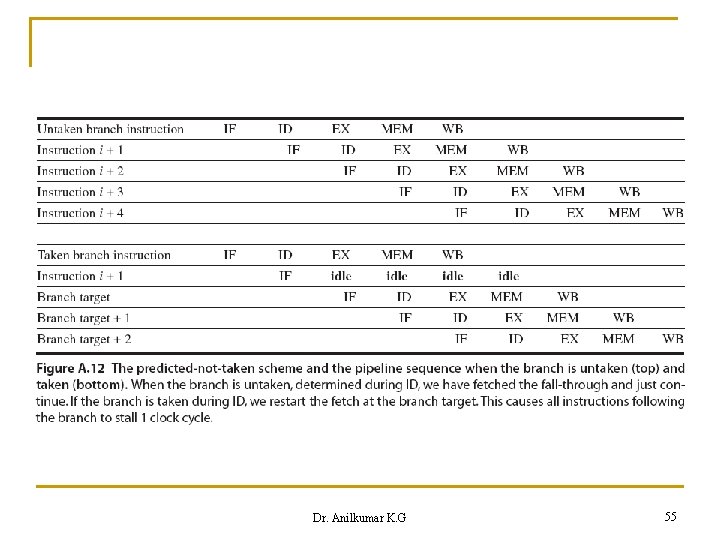

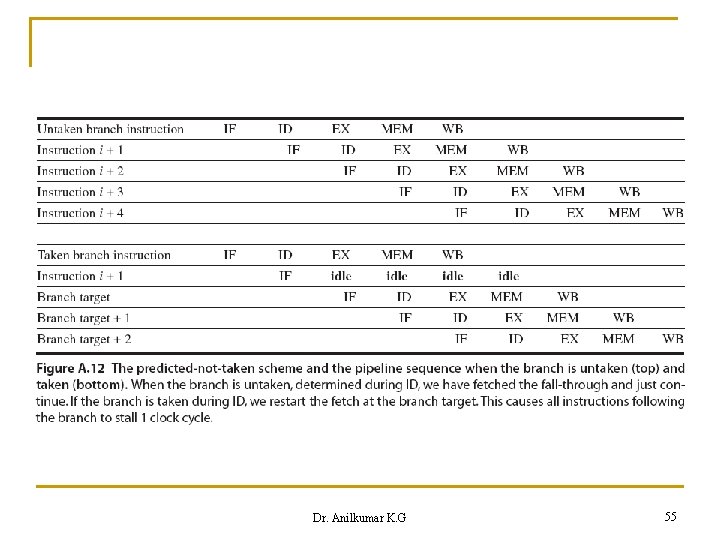

Reducing Pipeline Branch Penalties

2) A higher-performance, slightly more complex scheme is to treat every branch as not taken

- Simply allow the HW to continue as if the branch were not taken
- Do not change the processor state until the branch outcome is definitely known during the EXE stage
- If the branch is taken, the processor must cancel the fetched non-target instructions
- It then fetches the target instructions (this causes the branch penalty!)
- Figure A.12 shows a predicted-not-taken pipeline scheme


Reducing Pipeline Branch Penalties

3) An alternative scheme is to treat every branch as taken

- As soon as the branch is decoded and the target address is computed in ID, treat the branch as taken and begin to fetch and execute its target instruction:
  - In this five-stage pipeline, it is not possible to know the target address earlier than the branch outcome
  - In some processors, for example those with implicitly set condition codes, the branch target is known before the branch outcome, and a predicted-taken scheme makes sense
  - If the branch is not taken, a branch penalty is incurred by refetching the instructions after the branch instruction!
- In either the predicted-taken or the predicted-not-taken scheme, the compiler can improve performance by organizing the code

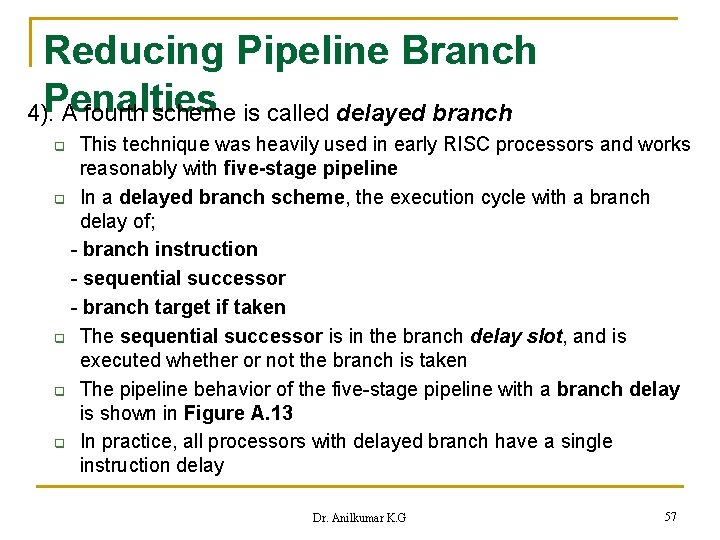

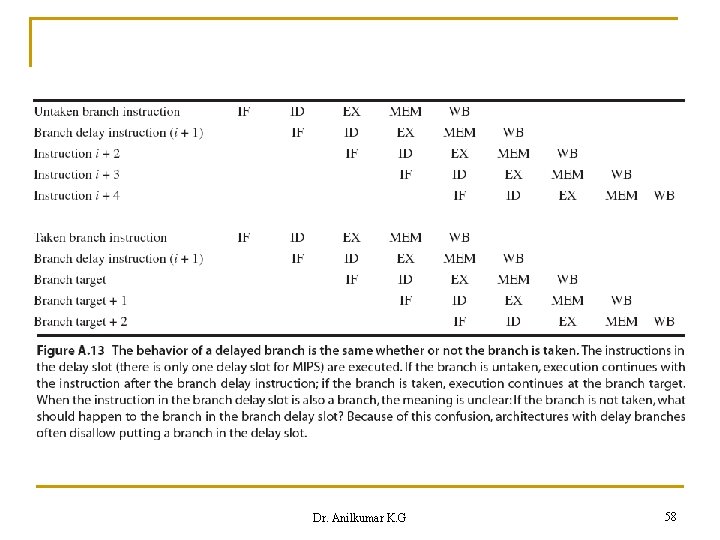

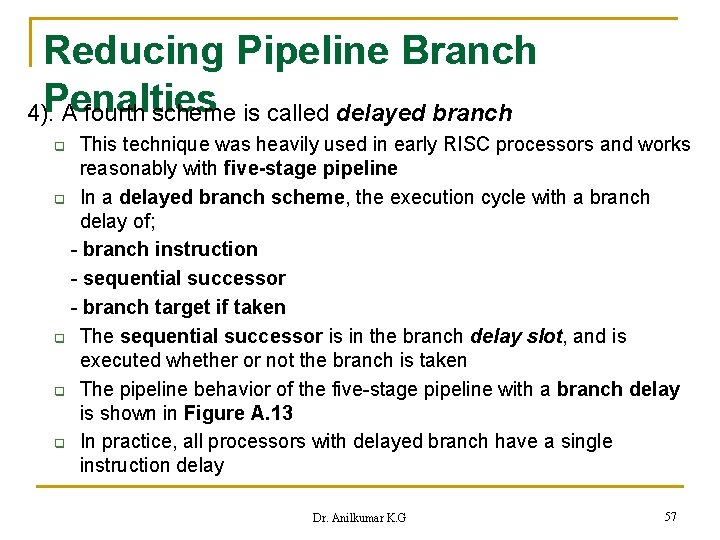

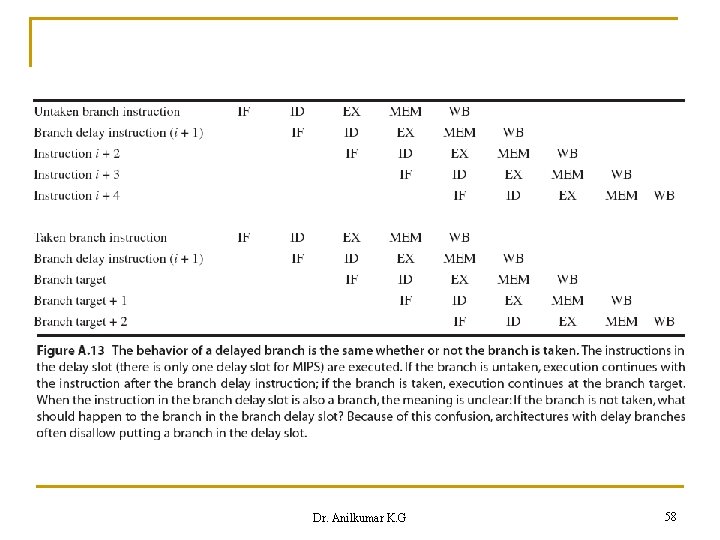

Reducing Pipeline Branch Penalties

4) A fourth scheme is called delayed branch

- This technique was heavily used in early RISC processors and works reasonably well with the five-stage pipeline
- In a delayed branch scheme, the execution cycle with a branch delay of one instruction is:

      branch instruction
      sequential successor
      branch target if taken

- The sequential successor is in the branch delay slot and is executed whether or not the branch is taken
- The behavior of the five-stage pipeline with a branch delay is shown in Figure A.13
- In practice, all processors with delayed branch have a single instruction delay


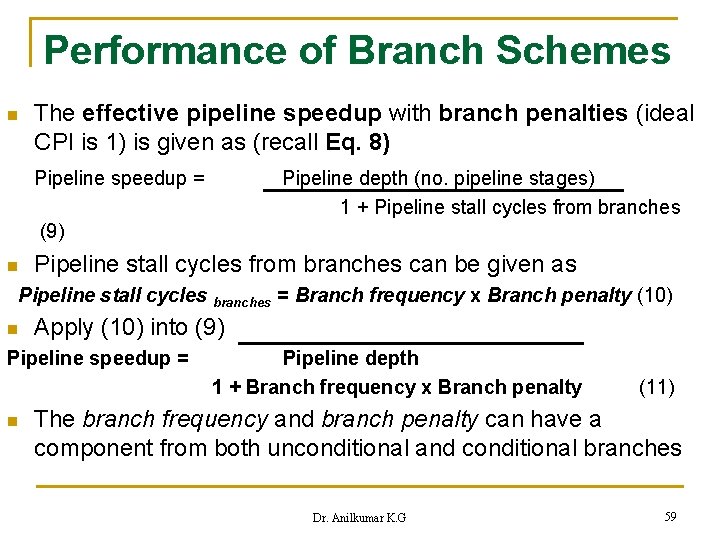

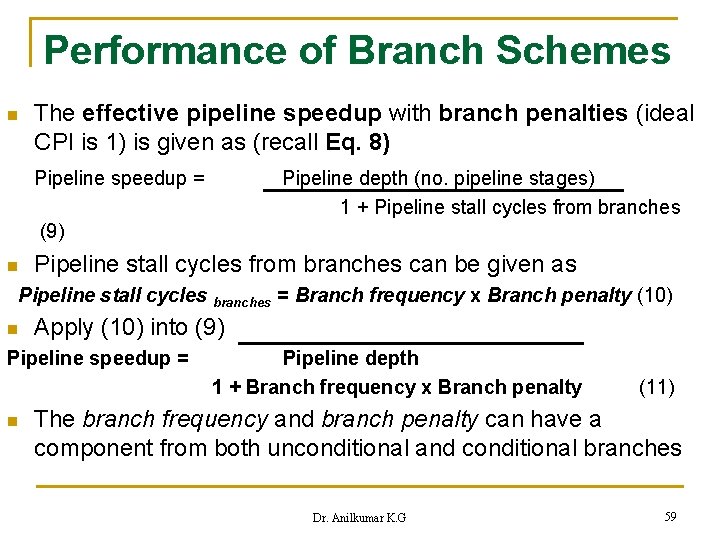

Performance of Branch Schemes

- The effective pipeline speedup with branch penalties (ideal CPI is 1) is given as (recall Eq. 8)

$$\text{Pipeline speedup} = \frac{\text{Pipeline depth (no. of pipeline stages)}}{1 + \text{Pipeline stall cycles from branches}} \qquad (9)$$

- The pipeline stall cycles from branches can be given as

$$\text{Pipeline stall cycles from branches} = \text{Branch frequency} \times \text{Branch penalty} \qquad (10)$$

- Applying (10) to (9):

$$\text{Pipeline speedup} = \frac{\text{Pipeline depth}}{1 + \text{Branch frequency} \times \text{Branch penalty}} \qquad (11)$$

- The branch frequency and branch penalty can have a component from both unconditional and conditional branches
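Equations (10) and (11) can be evaluated with a short function. This is only a sketch: the function name is made up, and the branch frequency and penalty in the example call are illustrative values, not figures from the slides.

```python
def branch_speedup(depth, branch_frequency, branch_penalty):
    """Pipeline speedup with branch stalls, following Eq. (10) and Eq. (11)."""
    stall_cycles = branch_frequency * branch_penalty   # Eq. (10)
    return depth / (1.0 + stall_cycles)                # Eq. (11)

# Illustrative case: five stages, 20% branches, 1-cycle penalty.
print(f"{branch_speedup(5, 0.20, 1):.2f}")
```

Because the stall term sits in the denominator, the same branch frequency costs proportionally more on a deeper pipeline with a longer branch penalty.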

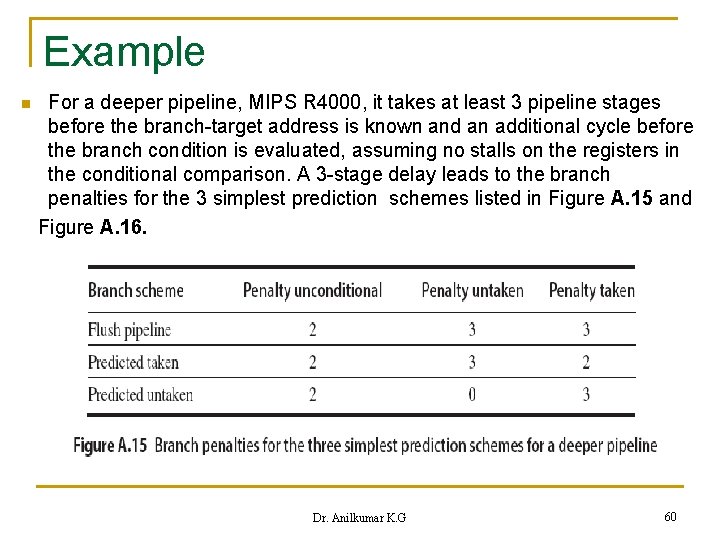

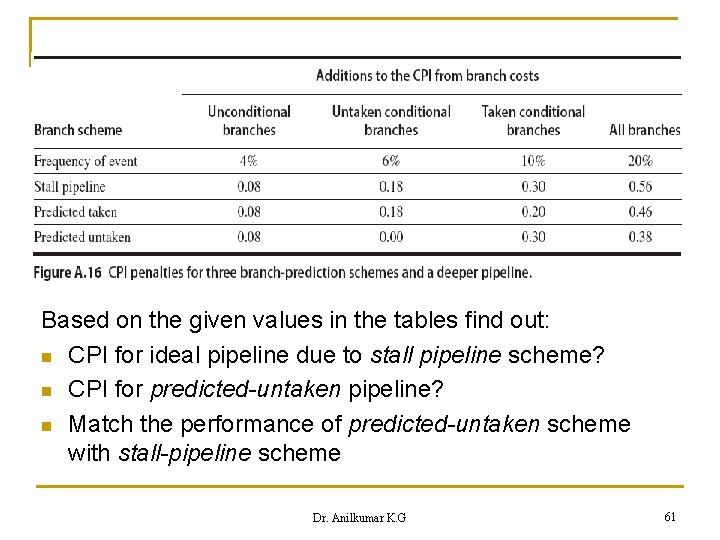

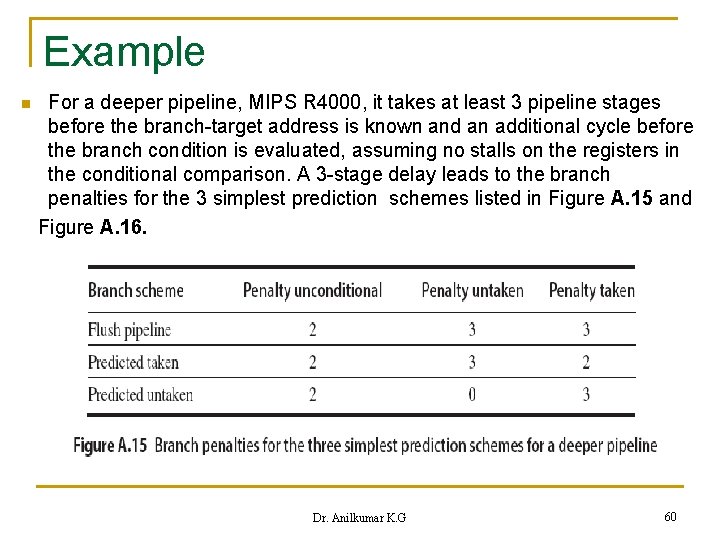

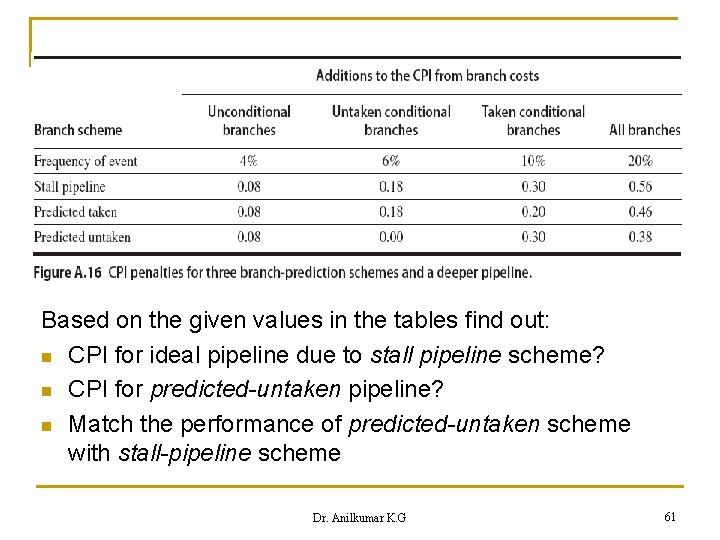

Example

- For a deeper pipeline, such as that of the MIPS R4000, it takes at least 3 pipeline stages before the branch-target address is known and an additional cycle before the branch condition is evaluated, assuming no stalls on the registers in the conditional comparison. A 3-stage delay leads to the branch penalties for the 3 simplest prediction schemes listed in Figure A.15 and Figure A.16.

Based on the values given in the tables, find:

- The CPI (relative to an ideal pipeline) for the stall-pipeline scheme
- The CPI for the predicted-untaken pipeline
- How the performance of the predicted-untaken scheme compares with that of the stall-pipeline scheme
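One way to organize this calculation is sketched below. The tables from Figures A.15 and A.16 are not reproduced in the text of these slides, so every penalty and frequency in the code is a placeholder assumed for illustration and should be replaced with the values from the figures; the dictionary keys and function names are also hypothetical.

```python
# Placeholder inputs (assumptions, NOT taken from the slides): penalty in cycles per
# branch class for each scheme, and the fraction of all instructions in each class.
penalties = {
    "stall pipeline": {"unconditional": 2, "untaken": 3, "taken": 3},
    "predicted untaken": {"unconditional": 2, "untaken": 0, "taken": 3},
}
frequency = {"unconditional": 0.04, "untaken": 0.06, "taken": 0.10}

def branch_stall_cpi(penalty, freq):
    """Stall cycles per instruction: sum over branch classes of frequency x penalty."""
    return sum(freq[kind] * penalty[kind] for kind in freq)

def effective_cpi(penalty, freq, ideal_cpi=1.0):
    """CPI including branch stalls, assuming branches are the only source of stalls."""
    return ideal_cpi + branch_stall_cpi(penalty, freq)

cpi_stall = effective_cpi(penalties["stall pipeline"], frequency)
cpi_untaken = effective_cpi(penalties["predicted untaken"], frequency)
print(f"stall pipeline CPI: {cpi_stall:.2f}")
print(f"predicted-untaken CPI: {cpi_untaken:.2f}")
print(f"predicted-untaken is faster by a factor of {cpi_stall / cpi_untaken:.2f}")
```

The comparison in the last line is simply the ratio of the two CPIs, since the clock cycle time is the same for both schemes.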

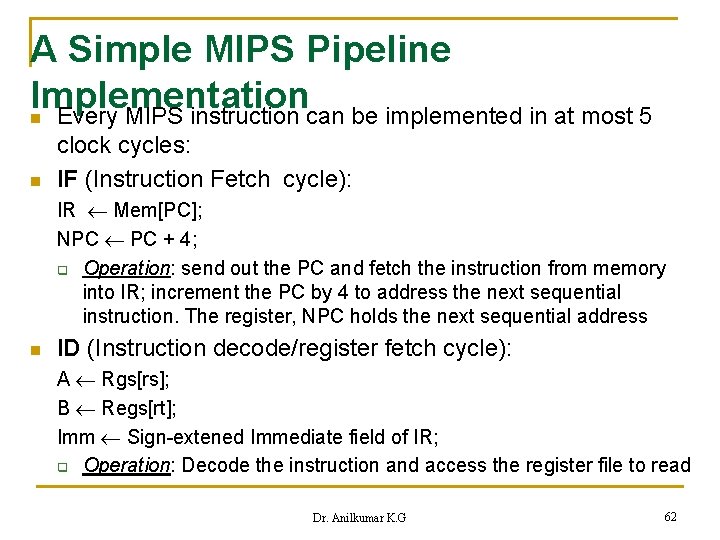

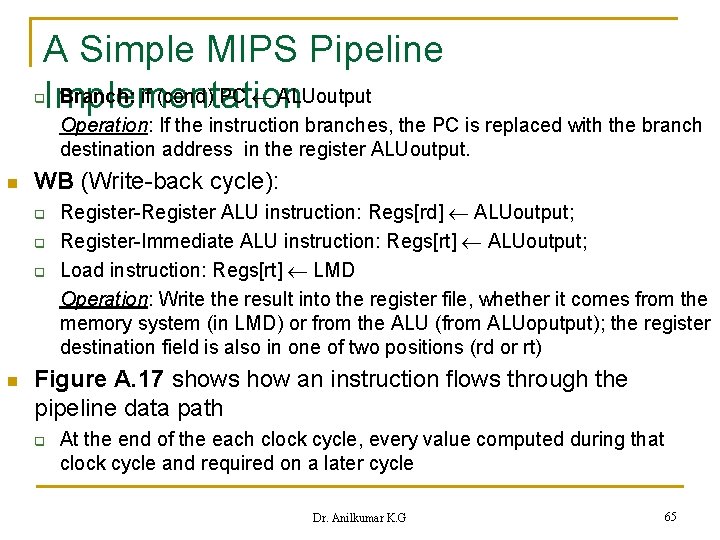

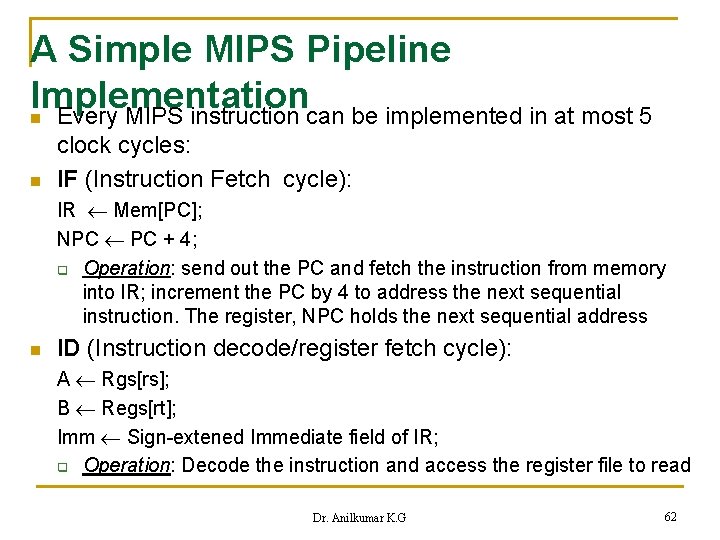

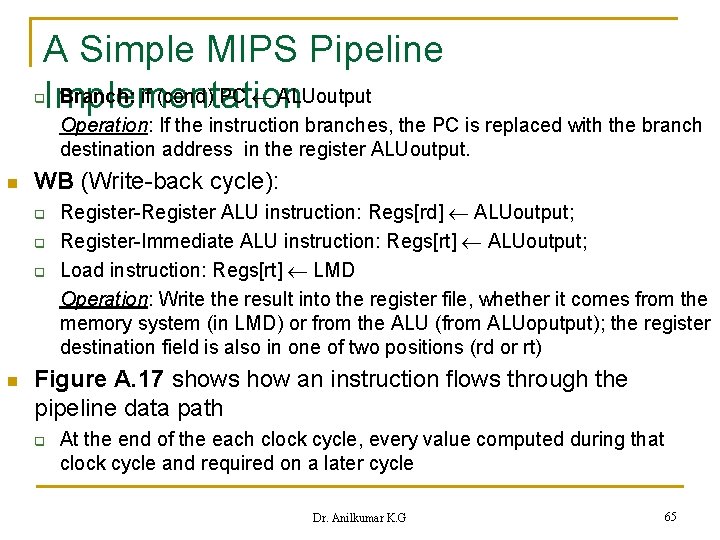

A Simple MIPS Pipeline Implementation n Every MIPS instruction can be implemented in at most 5 n clock cycles: IF (Instruction Fetch cycle): IR Mem[PC]; NPC PC + 4; q Operation: send out the PC and fetch the instruction from memory into IR; increment the PC by 4 to address the next sequential instruction. The register, NPC holds the next sequential address n ID (Instruction decode/register fetch cycle): A Rgs[rs]; B Regs[rt]; Imm Sign-extened Immediate field of IR; q Operation: Decode the instruction and access the register file to read Dr. Anilkumar K. G 62

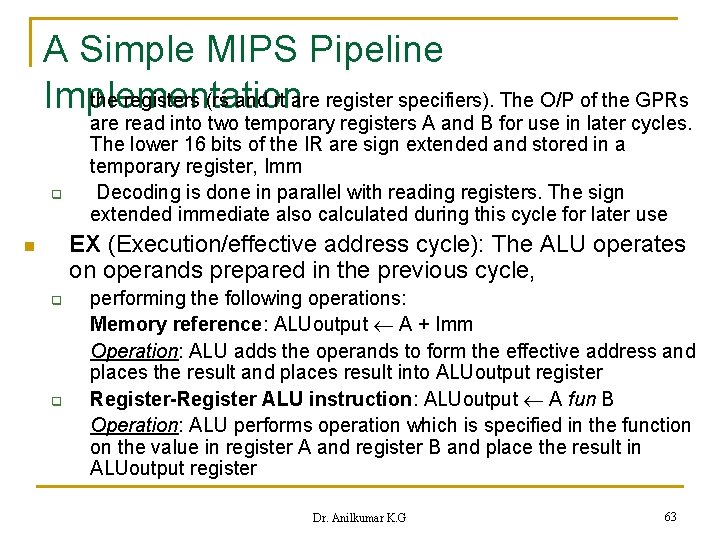

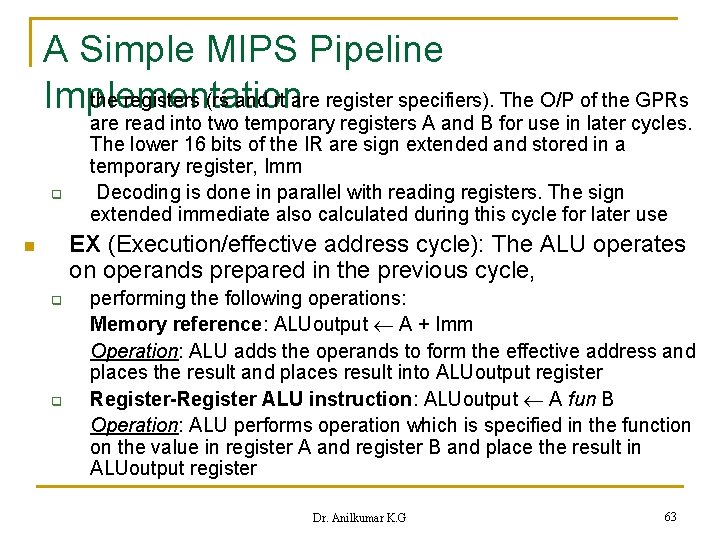

A Simple MIPS Pipeline the registers (rs and rt are register specifiers). The O/P of the GPRs Implementation q are read into two temporary registers A and B for use in later cycles. The lower 16 bits of the IR are sign extended and stored in a temporary register, Imm Decoding is done in parallel with reading registers. The sign extended immediate also calculated during this cycle for later use EX (Execution/effective address cycle): The ALU operates on operands prepared in the previous cycle, n q q performing the following operations: Memory reference: ALUoutput A + Imm Operation: ALU adds the operands to form the effective address and places the result and places result into ALUoutput register Register-Register ALU instruction: ALUoutput A fun B Operation: ALU performs operation which is specified in the function on the value in register A and register B and place the result in ALUoutput register Dr. Anilkumar K. G 63

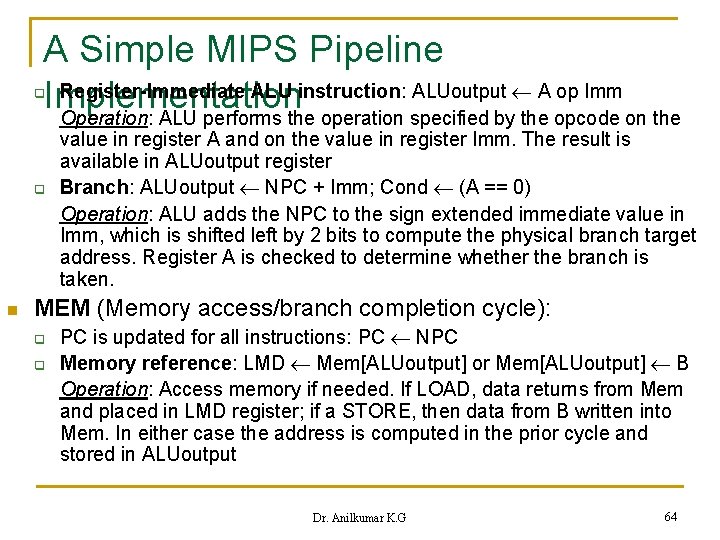

A Simple MIPS Pipeline Implementation

- EX (Execution/effective address cycle), continued:
  - Register-Immediate ALU instruction: ALUoutput ← A op Imm
    - Operation: the ALU performs the operation specified by the opcode on the value in register A and the value in register Imm. The result is available in the ALUoutput register
  - Branch: ALUoutput ← NPC + (Imm << 2); Cond ← (A == 0)
    - Operation: the ALU adds the NPC to the sign-extended immediate value in Imm, which is shifted left by 2 bits, to compute the branch target address. Register A is checked to determine whether the branch is taken
- MEM (Memory access/branch completion cycle):
  - The PC is updated for all instructions: PC ← NPC
  - Memory reference: LMD ← Mem[ALUoutput] or Mem[ALUoutput] ← B
    - Operation: access memory if needed. For a load, data returns from memory and is placed in the LMD register; for a store, the data from B is written into memory. In either case the address is the one computed in the prior cycle and stored in ALUoutput

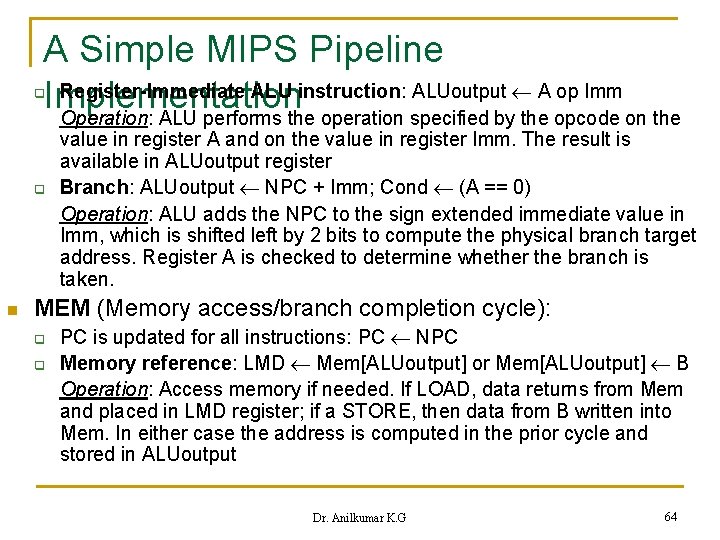

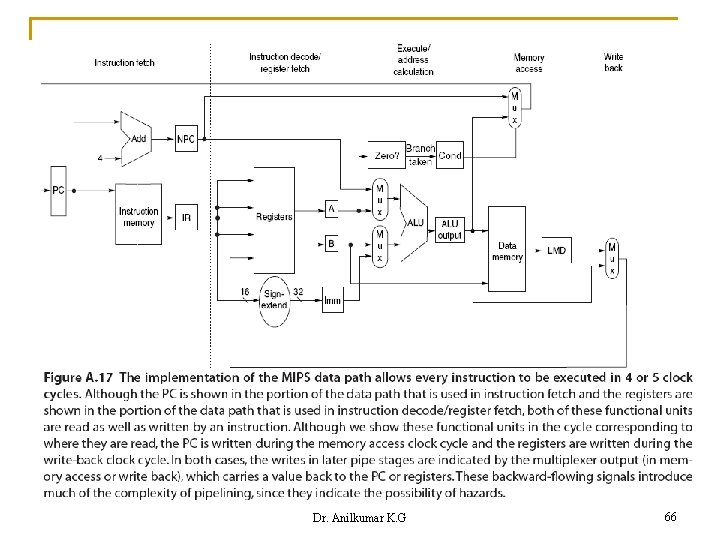

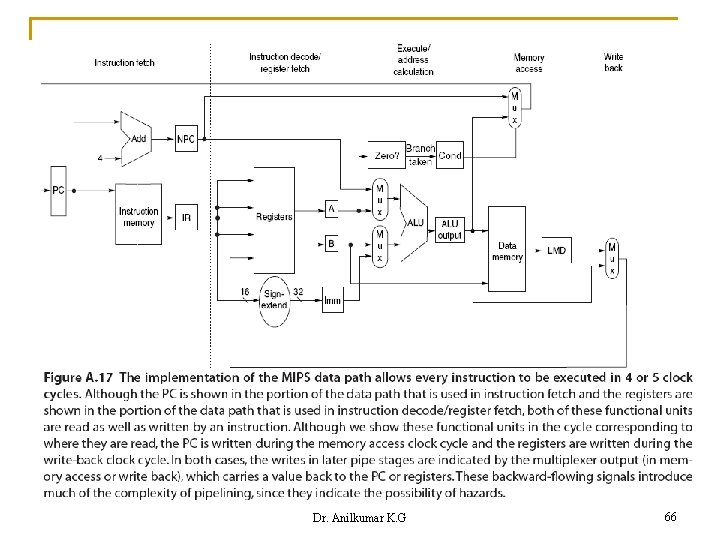

A Simple MIPS Pipeline Implementation

- MEM (Memory access/branch completion cycle), continued:
  - Branch: if (Cond) PC ← ALUoutput
    - Operation: if the instruction branches, the PC is replaced with the branch destination address held in the register ALUoutput
- WB (Write-back cycle):
  - Register-Register ALU instruction: Regs[rd] ← ALUoutput
  - Register-Immediate ALU instruction: Regs[rt] ← ALUoutput
  - Load instruction: Regs[rt] ← LMD
  - Operation: write the result into the register file, whether it comes from the memory system (in LMD) or from the ALU (in ALUoutput); the register destination field is in one of two positions (rd or rt)
- Figure A.17 shows how an instruction flows through the pipeline data path
  - At the end of each clock cycle, every value computed during that clock cycle and required on a later cycle is stored in a pipeline register
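The five register-transfer steps above can be traced with a small, unpipelined sketch that pushes one already-decoded instruction through IF, ID, EX, MEM and WB. Everything about the representation is an assumption made for illustration: the instruction is a Python dictionary rather than a 32-bit word, only a handful of operations (`add`, `addi`, `lw`, `sw`, `beqz`) are modeled, memory is a dictionary keyed by address, and the function name `execute` is hypothetical.

```python
def execute(instr, regs, mem, pc):
    """Run one decoded instruction through the five steps and return the new PC."""
    op = instr["op"]

    # IF: the instruction is already decoded in this sketch; compute NPC <- PC + 4.
    npc = pc + 4

    # ID: A <- Regs[rs]; B <- Regs[rt]; Imm <- immediate field.
    a = regs[instr.get("rs", 0)]
    b = regs[instr.get("rt", 0)]
    imm = instr.get("imm", 0)

    # EX: effective address, ALU operation, or branch target and condition.
    cond = False
    if op in ("lw", "sw", "addi"):
        alu_output = a + imm
    elif op == "add":
        alu_output = a + b
    elif op == "beqz":
        alu_output = npc + (imm << 2)
        cond = (a == 0)
    else:
        raise ValueError(f"unsupported operation: {op}")

    # MEM: PC update (branch completion) and memory access.
    new_pc = alu_output if cond else npc
    lmd = None
    if op == "lw":
        lmd = mem[alu_output]
    elif op == "sw":
        mem[alu_output] = b

    # WB: write the result to rd (register-register) or rt (immediate, load).
    if op == "add":
        regs[instr["rd"]] = alu_output
    elif op == "addi":
        regs[instr["rt"]] = alu_output
    elif op == "lw":
        regs[instr["rt"]] = lmd
    return new_pc

regs = [0] * 32
regs[2] = 100
mem = {108: 7}
pc = execute({"op": "lw", "rs": 2, "rt": 1, "imm": 8}, regs, mem, 0)
pc = execute({"op": "add", "rs": 1, "rt": 2, "rd": 3}, regs, mem, pc)
print(regs[1], regs[3], pc)
```

In the pipelined machine the same temporaries (NPC, A, B, Imm, ALUoutput, LMD) live in the pipeline registers between stages instead of in local variables.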


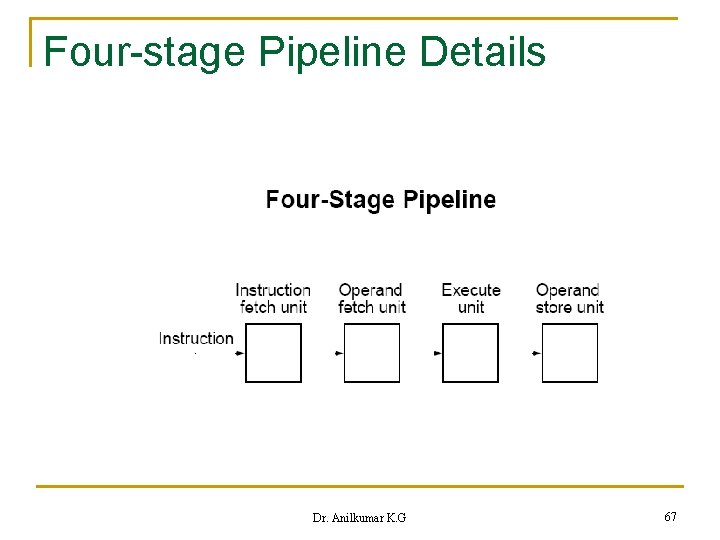

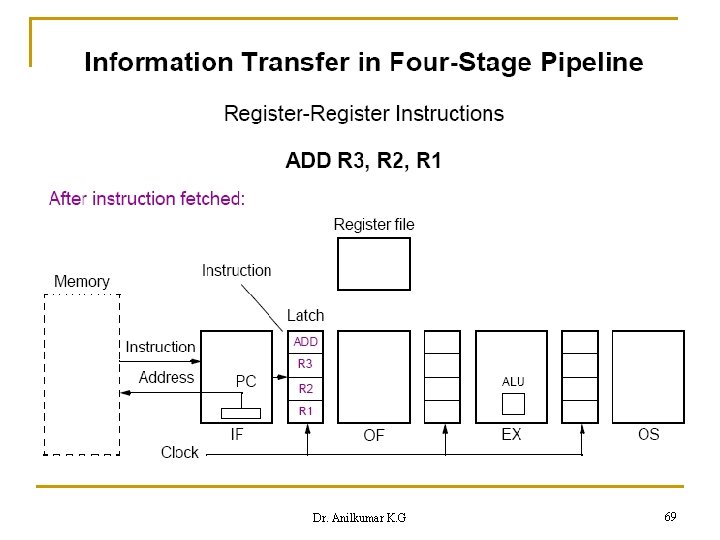

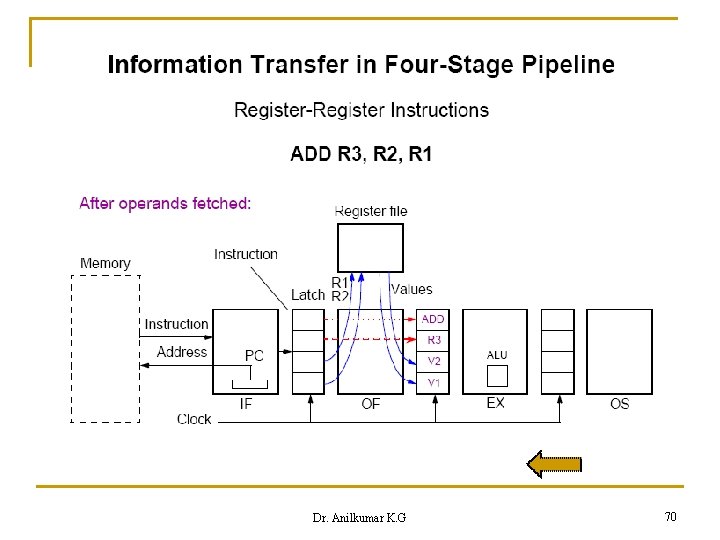

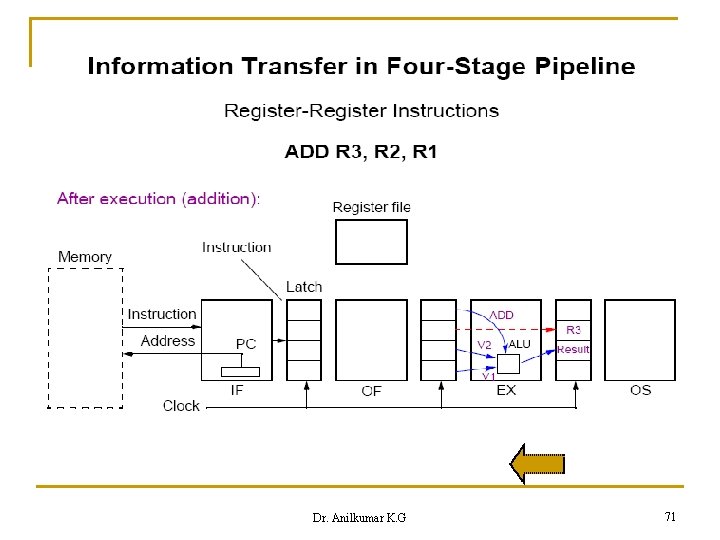

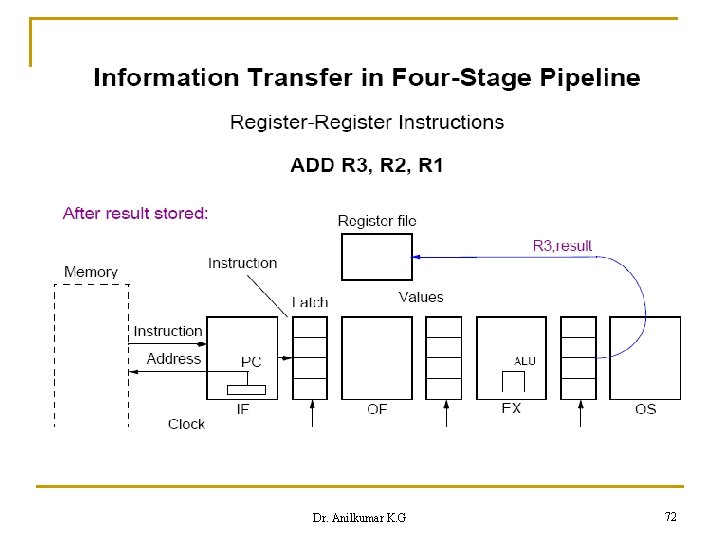

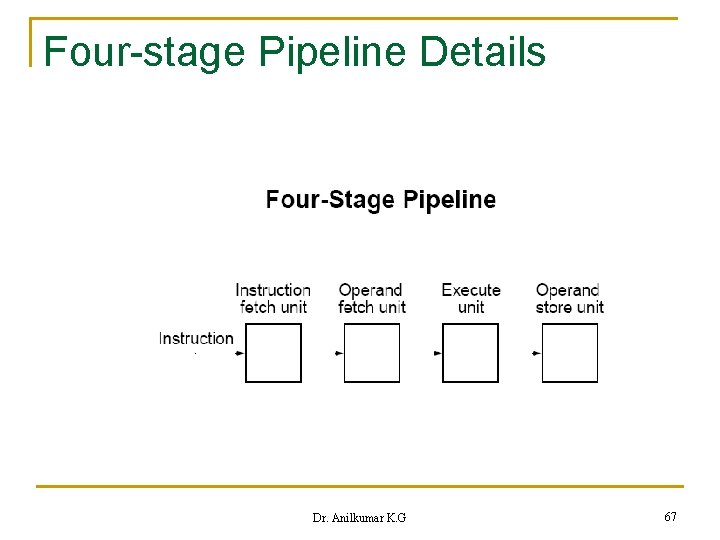

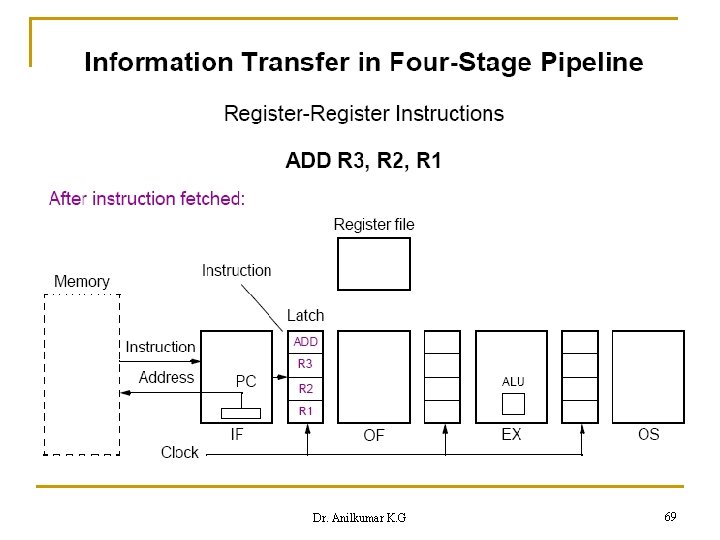

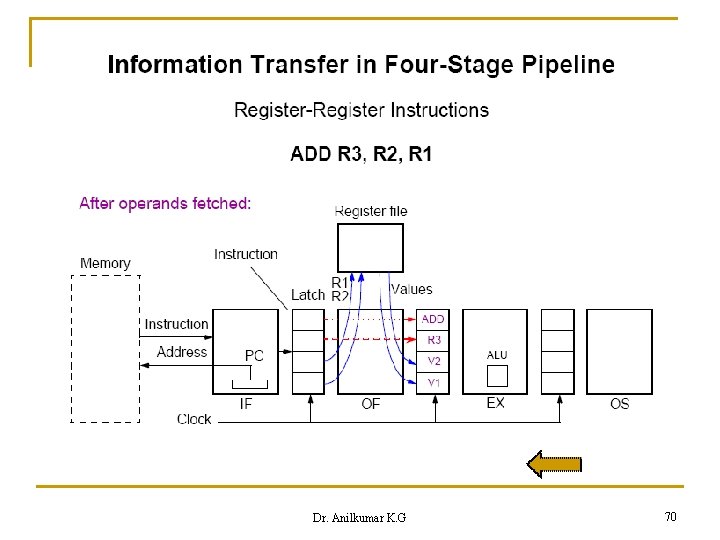

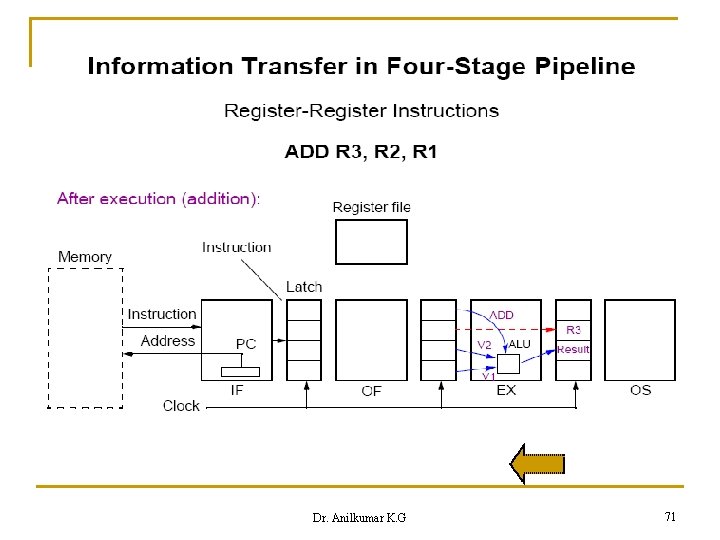

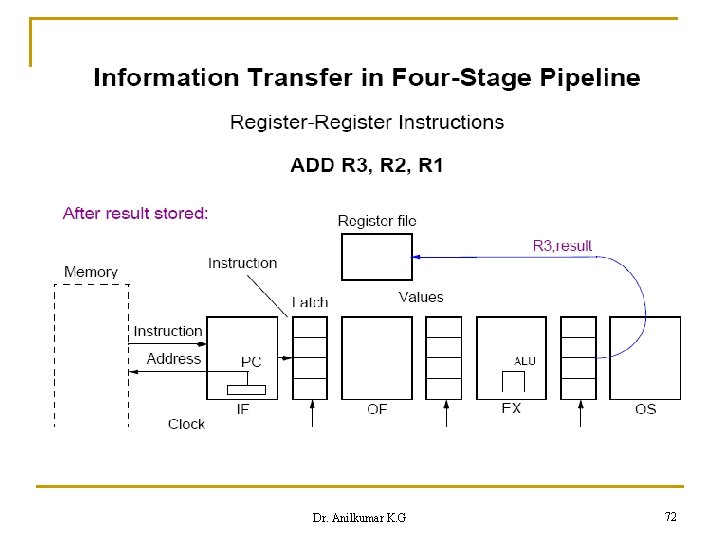

Four-stage Pipeline Details

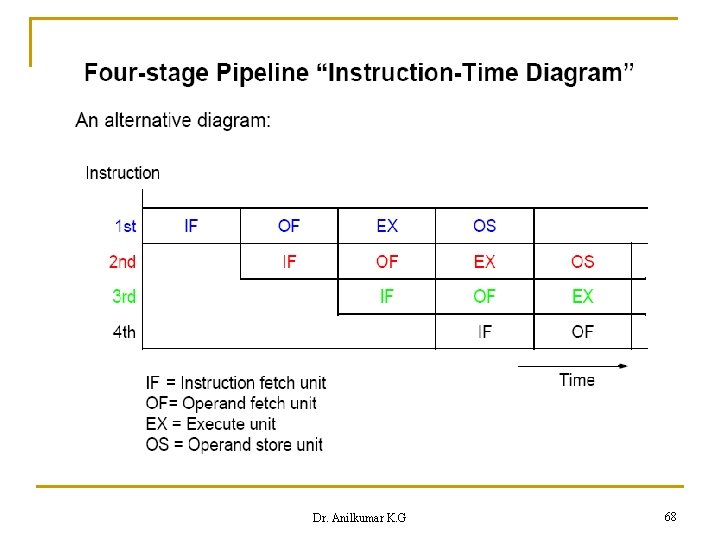

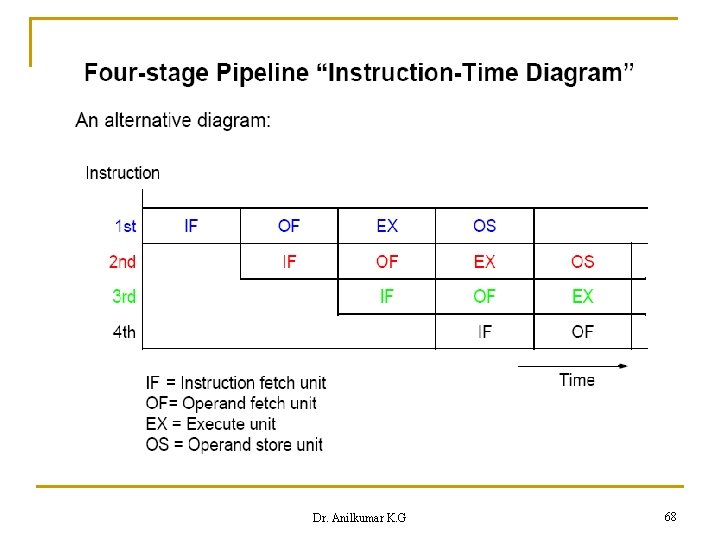


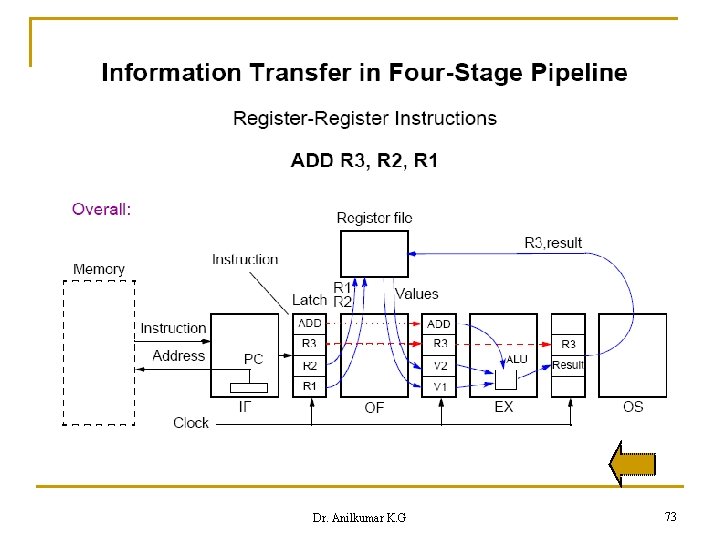

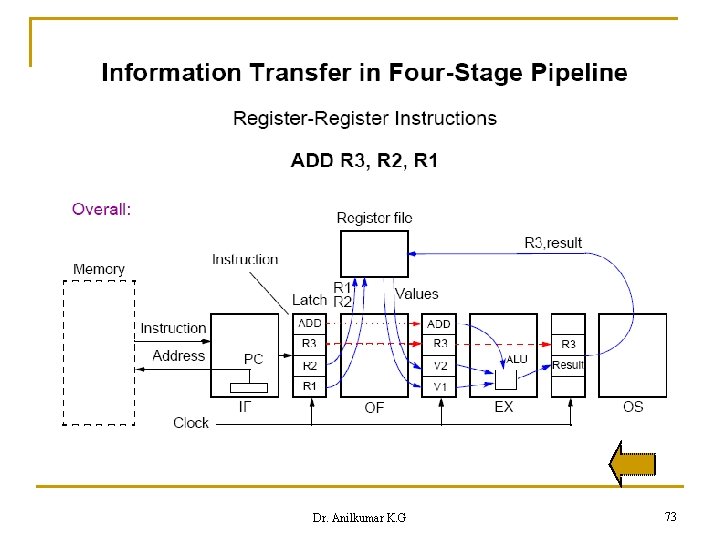


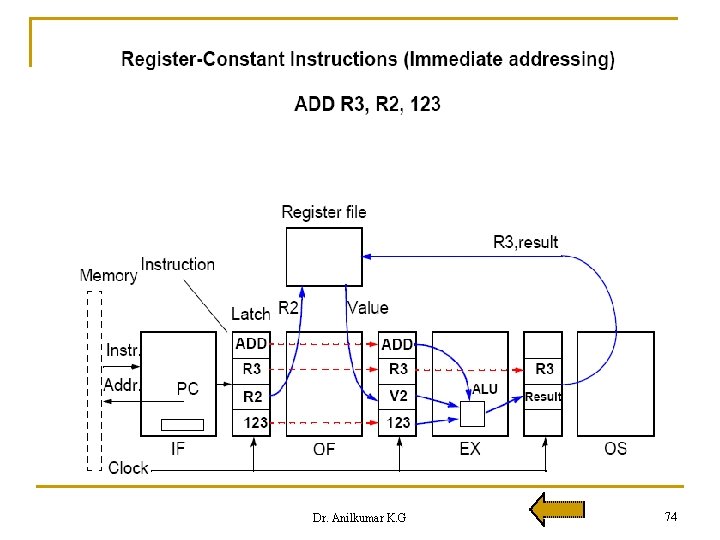

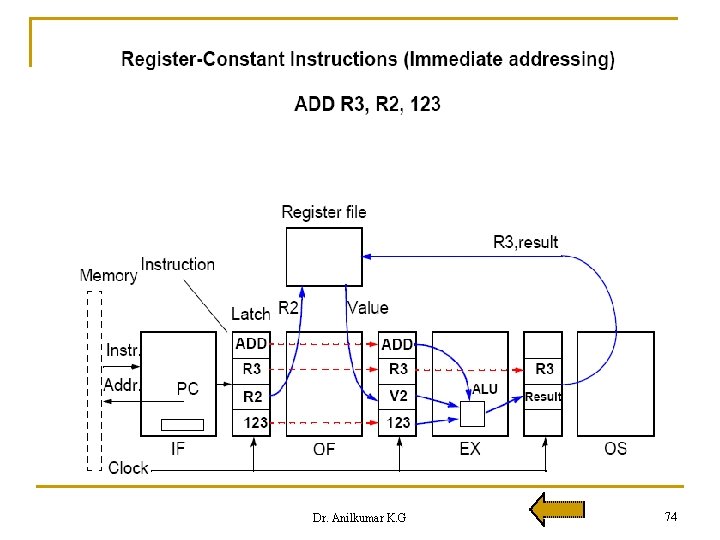


Pipeline Hazards: A detailed description!

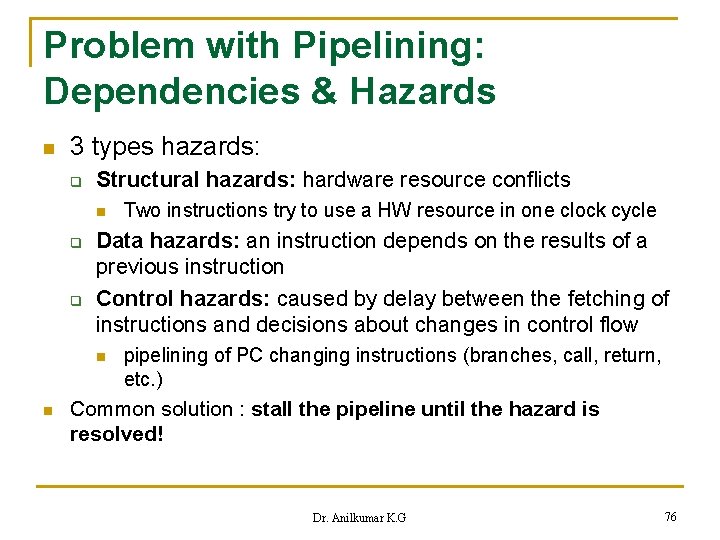

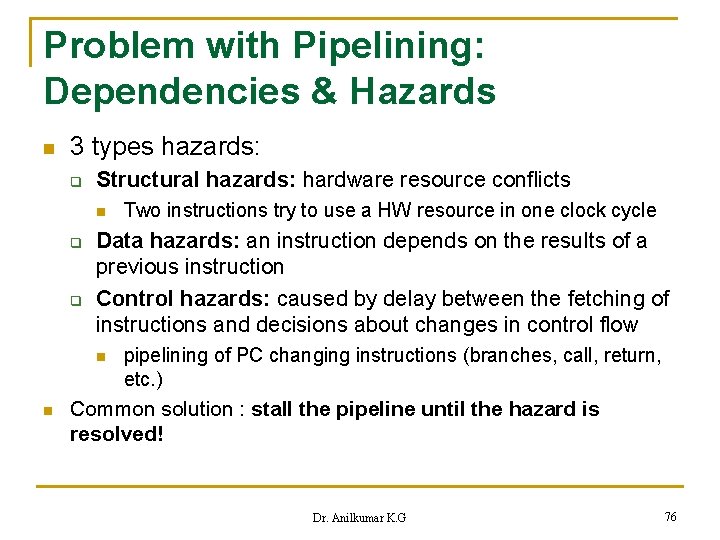

Problem with Pipelining: Dependencies & Hazards

- 3 types of hazards:
  - Structural hazards: hardware resource conflicts
    - Two instructions try to use the same HW resource in one clock cycle
  - Data hazards: an instruction depends on the results of a previous instruction
  - Control hazards: caused by the delay between the fetching of instructions and decisions about changes in control flow
    - Pipelining of PC-changing instructions (branches, calls, returns, etc.)
- Common solution: stall the pipeline until the hazard is resolved!

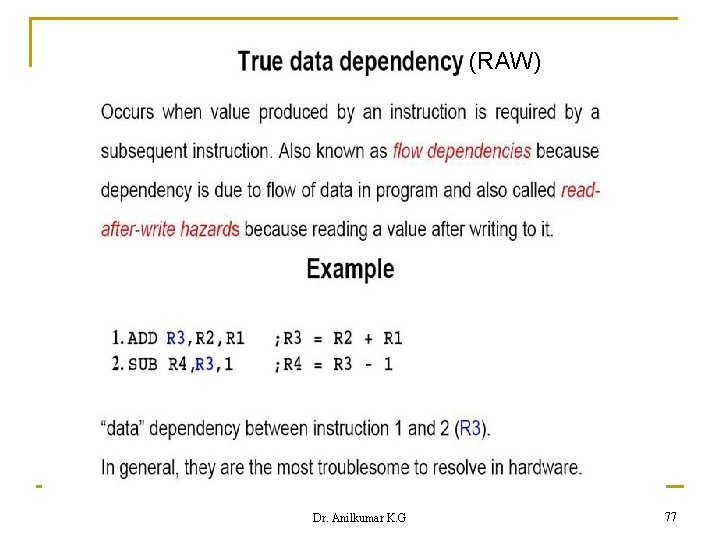

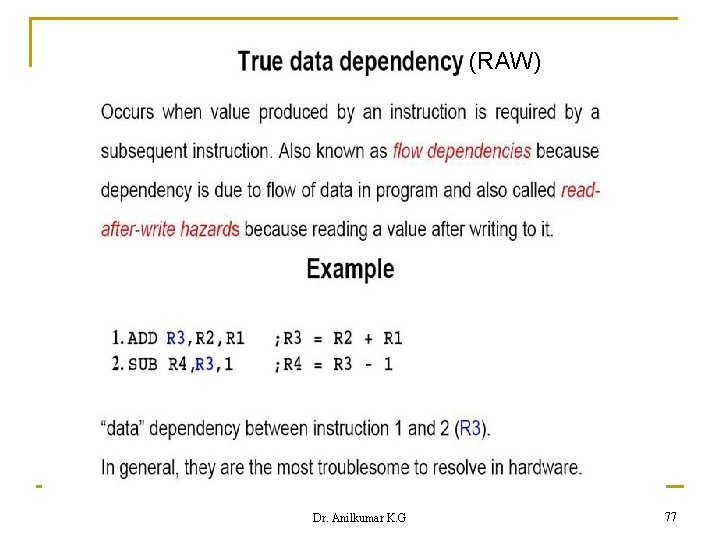

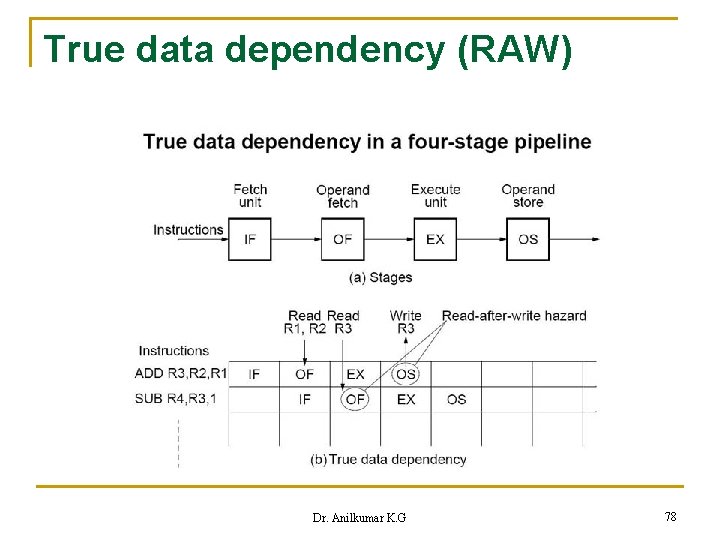

(RAW)

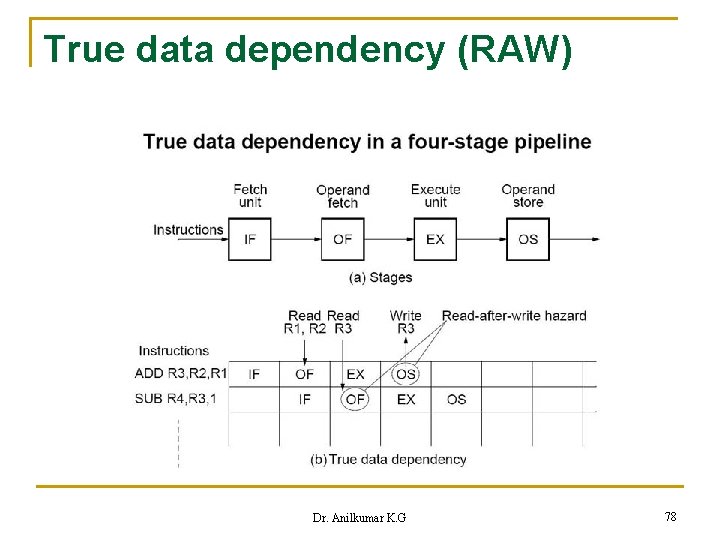

True data dependency (RAW)

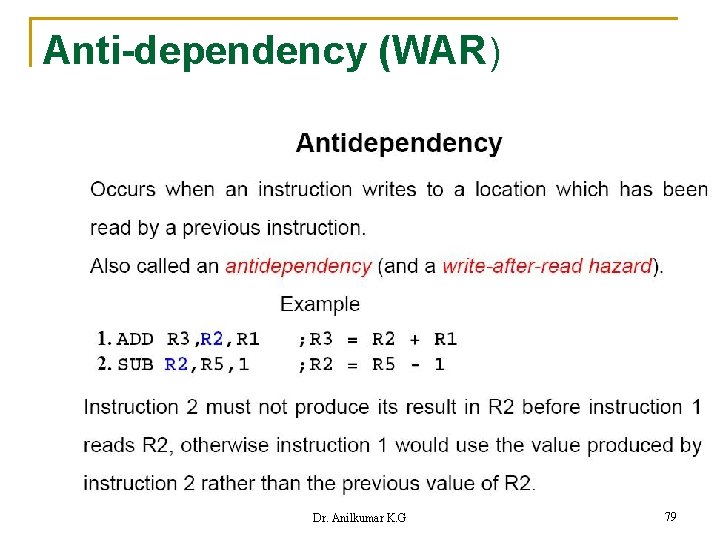

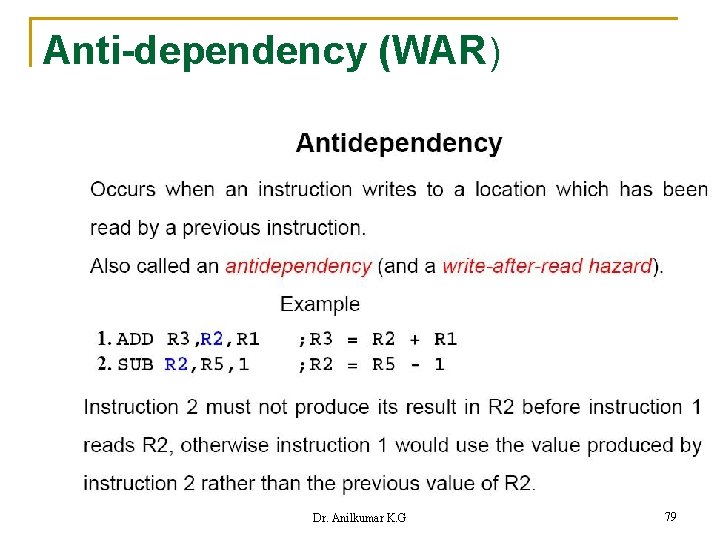

Anti-dependency (WAR)

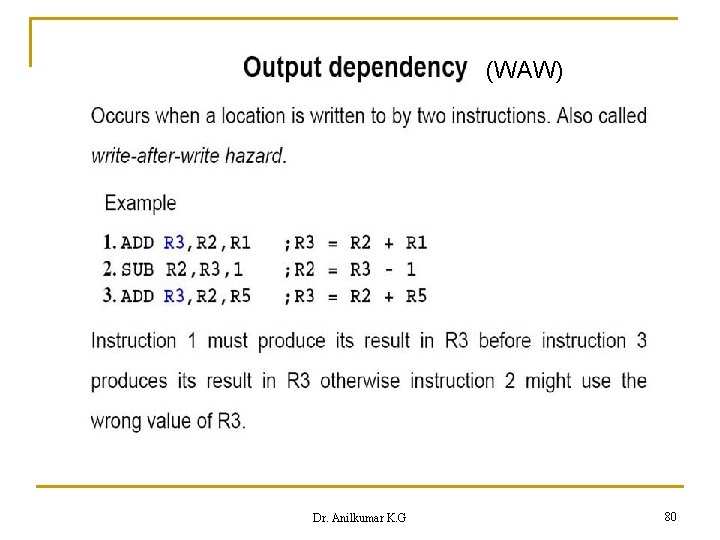

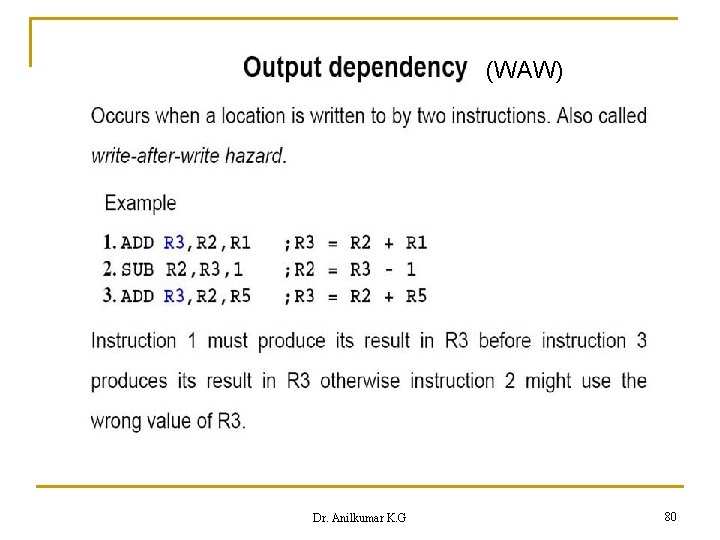

(WAW)

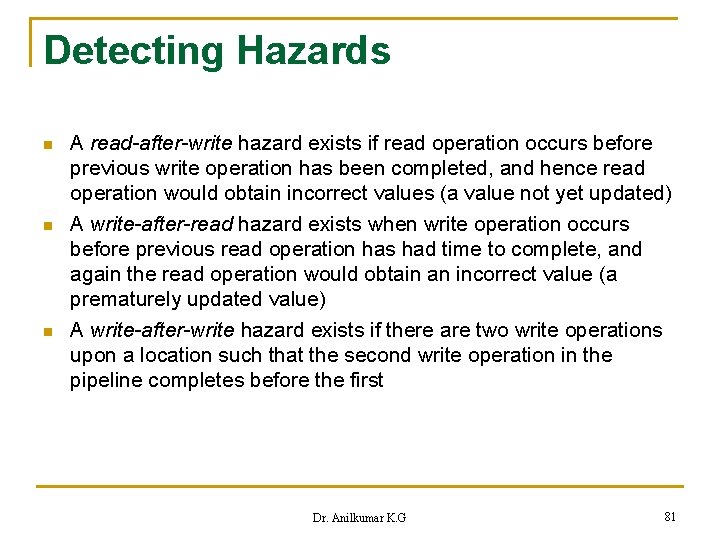

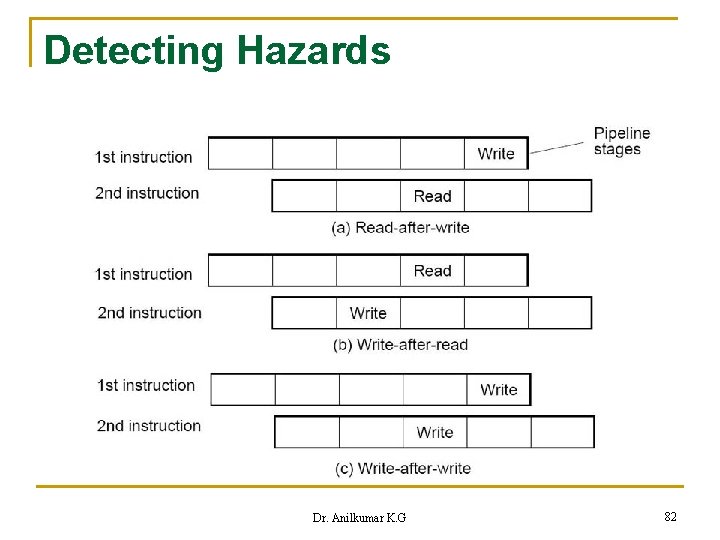

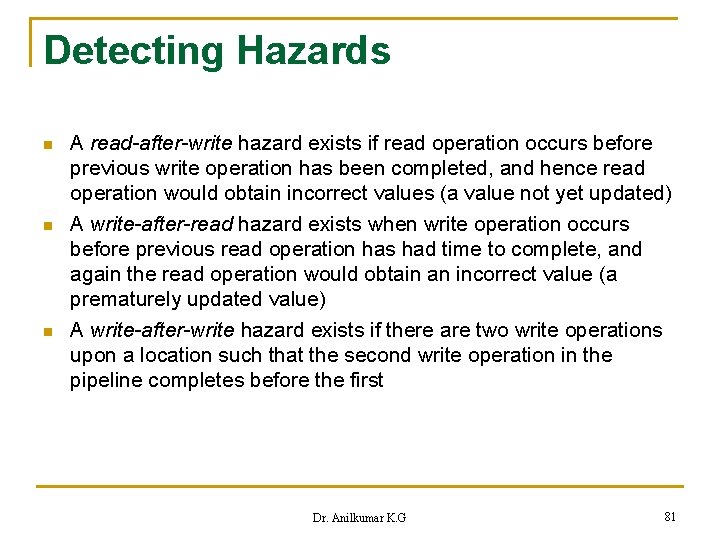

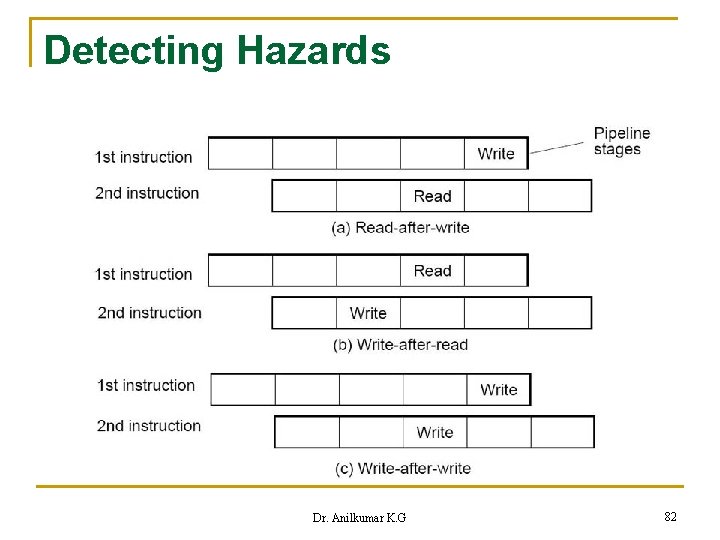

Detecting Hazards

- A read-after-write (RAW) hazard exists if a read operation occurs before a previous write operation has completed, so the read would obtain an incorrect value (a value not yet updated)
- A write-after-read (WAR) hazard exists when a write operation occurs before a previous read operation has had time to complete, so again the read would obtain an incorrect value (a prematurely updated value)
- A write-after-write (WAW) hazard exists if there are two write operations on a location such that the second write operation in the pipeline completes before the first
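The three definitions can be turned into a register-level check between two instructions in program order. This sketch only detects the dependences that could become hazards; whether a hazard actually occurs depends on the pipeline timing. The function name and the `(dest, sources)` representation are assumptions made for this illustration.

```python
def classify_dependences(first, second):
    """Return the set of dependence types from `first` to `second` (program order).

    Each instruction is a (dest, sources) pair; dest may be None (e.g. for a store).
    """
    dest1, sources1 = first
    dest2, sources2 = second
    kinds = set()
    if dest1 is not None and dest1 in sources2:
        kinds.add("RAW")   # second reads what first writes
    if dest2 is not None and dest2 in sources1:
        kinds.add("WAR")   # second writes what first reads
    if dest1 is not None and dest1 == dest2:
        kinds.add("WAW")   # both write the same location
    return kinds

add_instr = ("R3", {"R2", "R0"})   # ADD R3, R2, R0
sub_instr = ("R4", {"R3"})         # SUB R4, R3, 8
print(classify_dependences(add_instr, sub_instr))
```

In the five-stage pipeline described in these slides, only the RAW case causes trouble, because all register writes happen in WB and all register reads in ID, in program order.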

Detecting Hazards

Data Hazards (figure: pipeline timing diagram with time in clock cycles across, stages IF, ID/RF, EX, MEM, WB, and instructions in program order down: add r1, r2, r3; sub r4, r1, r3; and r6, r1, r7; or r8, r1, r9; xor r10, r1, r11)

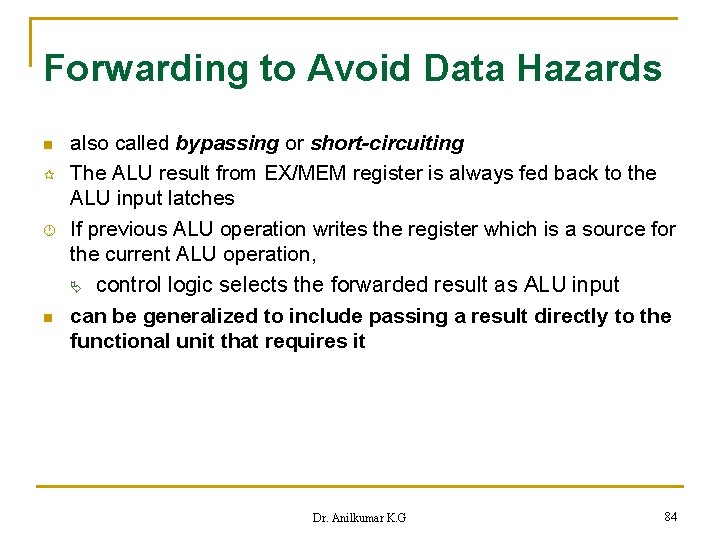

Forwarding to Avoid Data Hazards

- Forwarding is also called bypassing or short-circuiting
  1. The ALU result from the EX/MEM register is always fed back to the ALU input latches
  2. If the previous ALU operation writes the register that is a source for the current ALU operation, control logic selects the forwarded result as the ALU input
- Forwarding can be generalized to include passing a result directly to the functional unit that requires it

Forwarding to Avoid Data Hazards

- Forwarding refers to passing the result of one instruction directly to another instruction to eliminate the use of intermediate storage locations
- It can be applied at the compiler level to eliminate unnecessary references to memory locations by forwarding values through registers
- It can be applied at the HW level to eliminate pipeline cycles spent reading registers updated in a previous pipeline stage

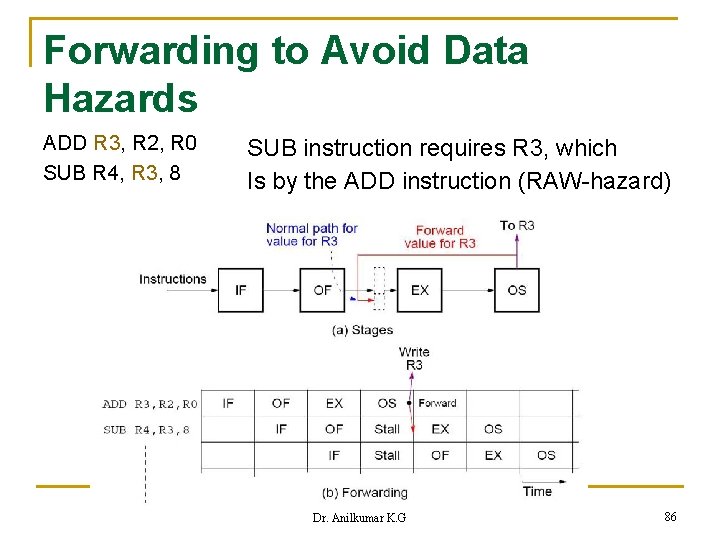

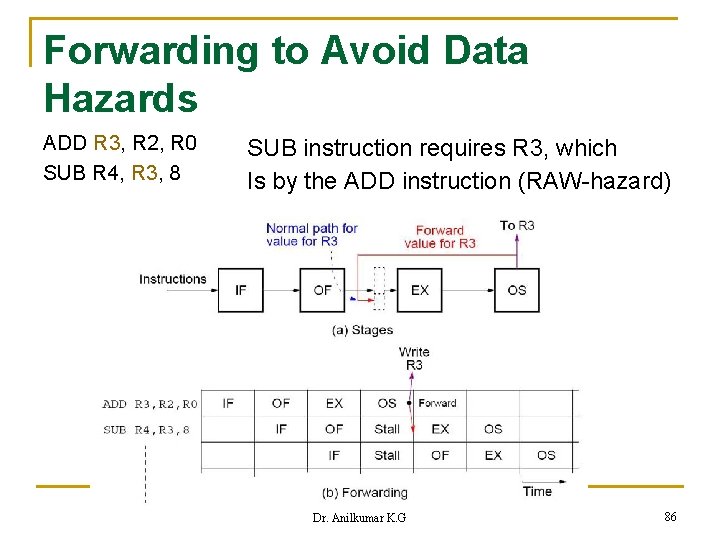

Forwarding to Avoid Data Hazards

    ADD R3, R2, R0
    SUB R4, R3, 8

The SUB instruction requires R3, which is written by the ADD instruction (RAW hazard)

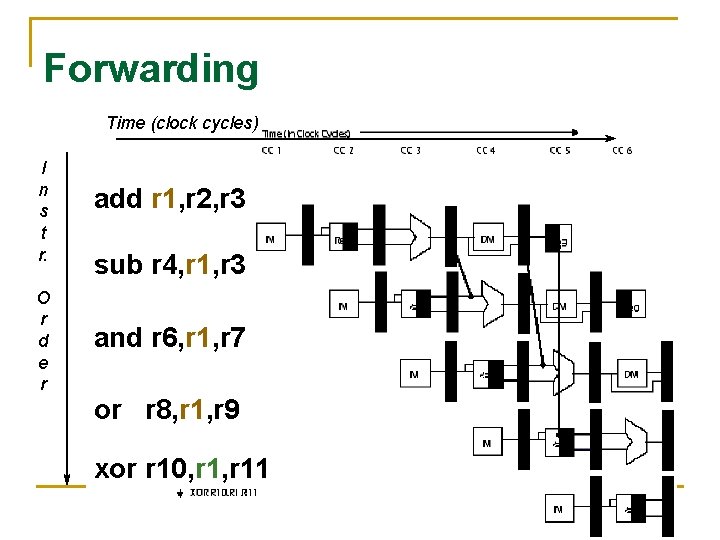

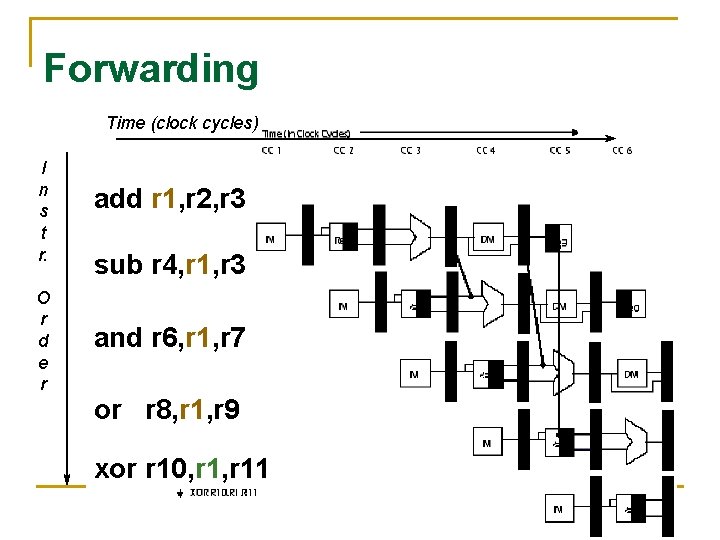

Forwarding (figure: pipeline timing diagram with time in clock cycles across and instructions in program order down: add r1, r2, r3; sub r4, r1, r3; and r6, r1, r7; or r8, r1, r9; xor r10, r1, r11)

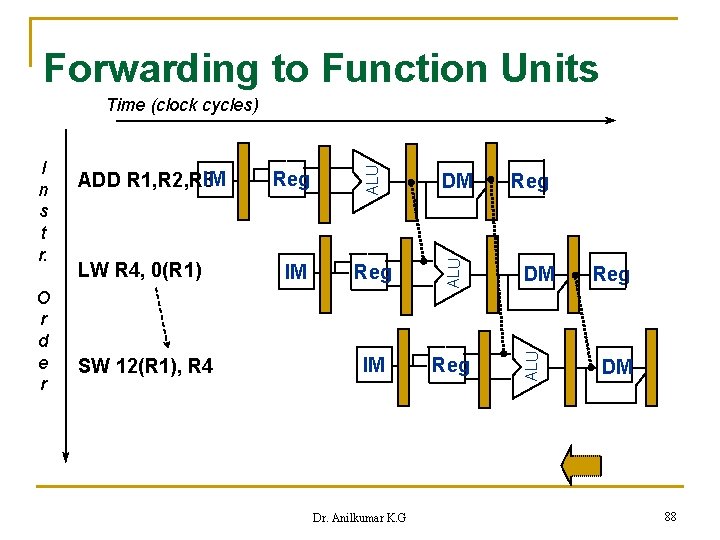

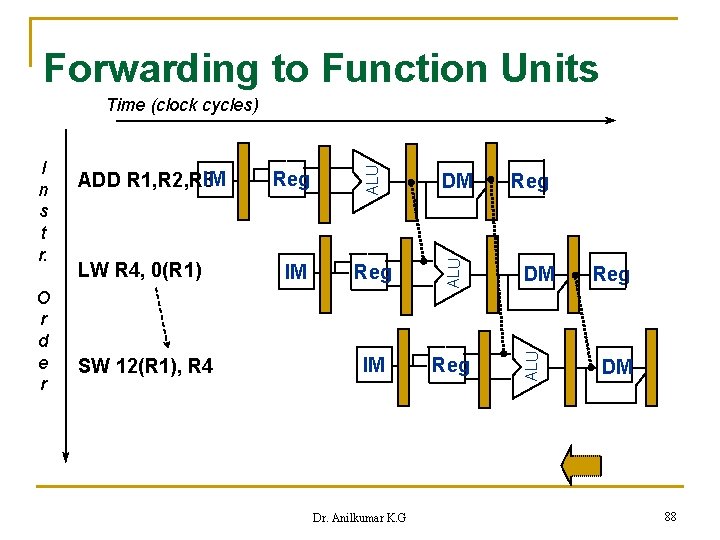

Forwarding to Function Units (figure: pipeline timing diagram with time in clock cycles across, stages IM, Reg, ALU, DM, Reg, and instructions in program order down: ADD R1, R2, R3; LW R4, 0(R1); SW 12(R1), R4)

Structural Hazards

- Structural hazards arise when
  - some functional unit is not fully pipelined
  - there is not enough duplication of some resource
- Example: a single memory for both data and instructions
  - Conflicts occur between a data-memory reference and an instruction reference
  - Resolve: stall the pipeline for one clock cycle when a data memory access occurs
- A stall is a pipeline bubble

One Memory Port / Structural Hazards (figure: pipeline timing diagram with time in clock cycles across and instructions in program order down: Load, Instr 1, Instr 2, Instr 3, Instr 4)

One Memory Port / Structural Hazards (figure: pipeline timing diagram with time in clock cycles across and instructions in program order down: Load, Instr 1, Instr 2, stall, Instr 3)
