Mining Reference Tables for Automatic Text Segmentation E

Mining Reference Tables for Automatic Text Segmentation E. Agichtein Columbia Univ. V. Ganti Microsoft R. KDD’ 04 Shui-Lung Chuang Oct 27, 2004

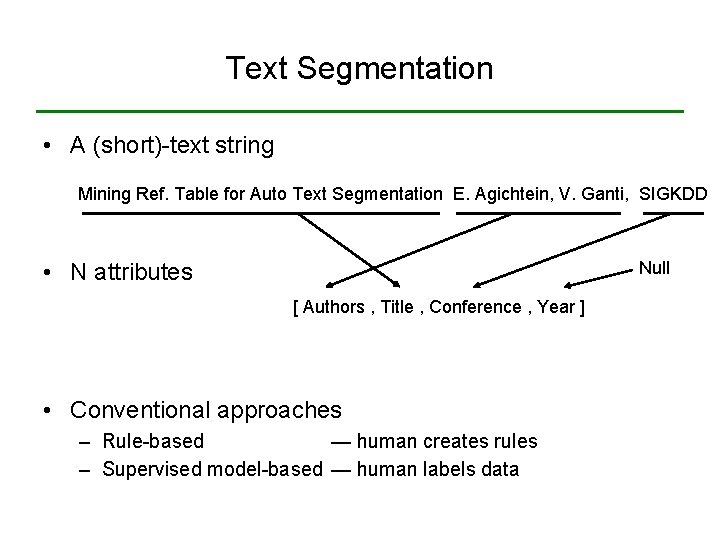

Text Segmentation • A (short)-text string Mining Ref. Table for Auto Text Segmentation E. Agichtein, V. Ganti, SIGKDD Null • N attributes [ Authors , Title , Conference , Year ] • Conventional approaches – Rule-based — human creates rules – Supervised model-based — human labels data

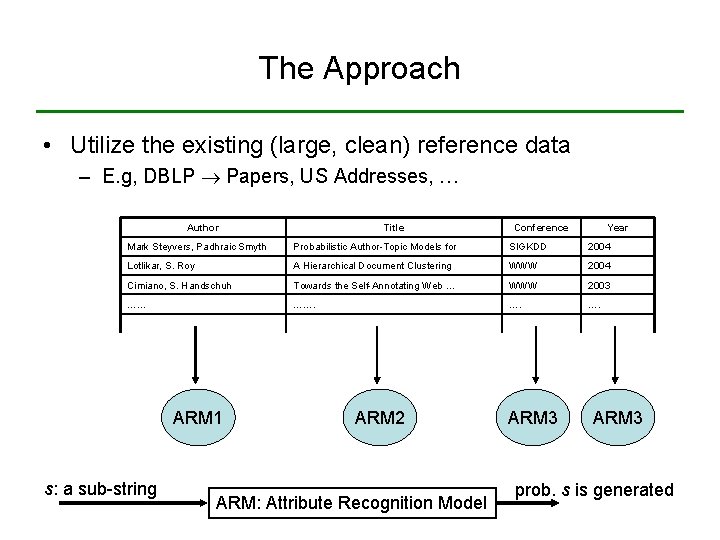

The Approach • Utilize the existing (large, clean) reference data – E. g, DBLP Papers, US Addresses, … Author Title Conference Year Mark Steyvers, Padhraic Smyth Probabilistic Author-Topic Models for SIGKDD 2004 Lotlikar, S. Roy A Hierarchical Document Clustering WWW 2004 Cimiano, S. Handschuh Towards the Self-Annotating Web … WWW 2003 …… ……. …. ARM 1 s: a sub-string ARM 2 ARM: Attribute Recognition Model ARM 3 prob. s is generated

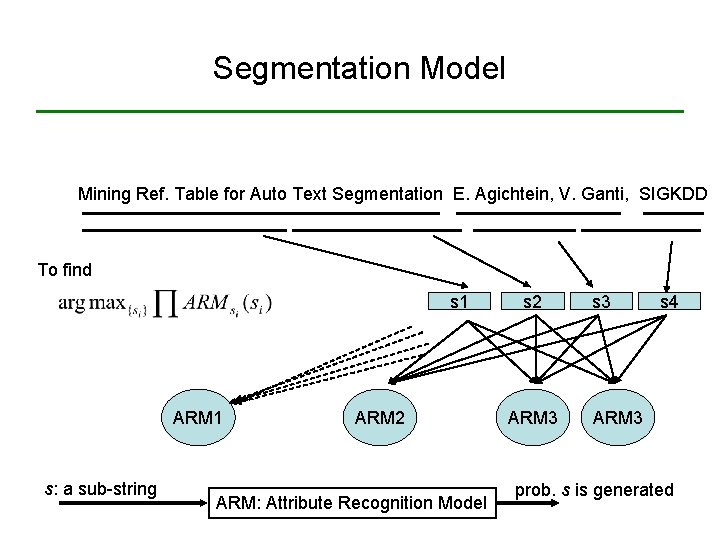

Segmentation Model Mining Ref. Table for Auto Text Segmentation E. Agichtein, V. Ganti, SIGKDD To find s 1 ARM 1 s: a sub-string ARM 2 ARM: Attribute Recognition Model s 2 ARM 3 s 4 ARM 3 prob. s is generated

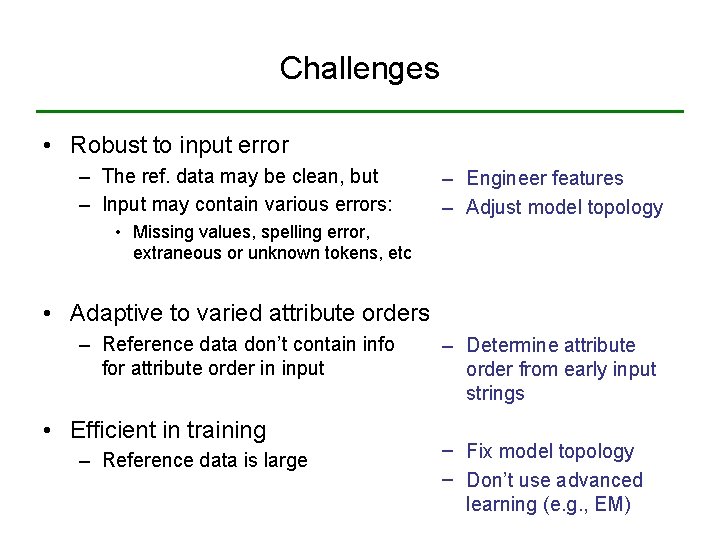

Challenges • Robust to input error – The ref. data may be clean, but – Input may contain various errors: – Engineer features – Adjust model topology • Missing values, spelling error, extraneous or unknown tokens, etc • Adaptive to varied attribute orders – Reference data don’t contain info for attribute order in input • Efficient in training – Reference data is large – Determine attribute order from early input strings – Fix model topology – Don’t use advanced learning (e. g. , EM)

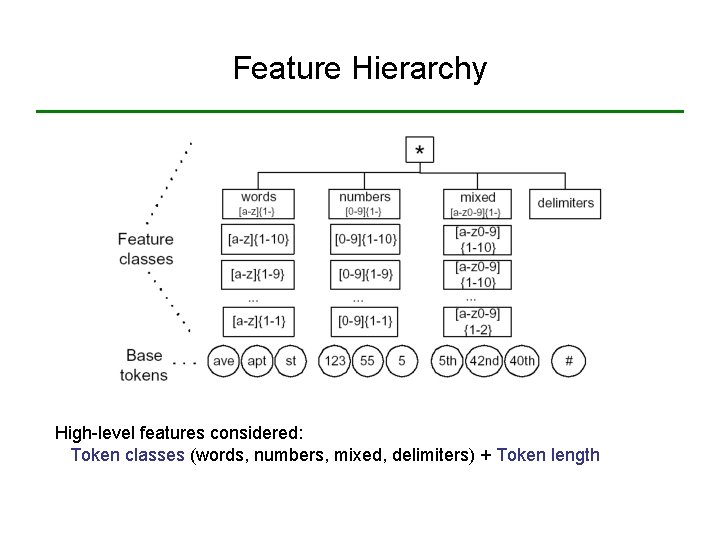

Feature Hierarchy High-level features considered: Token classes (words, numbers, mixed, delimiters) + Token length

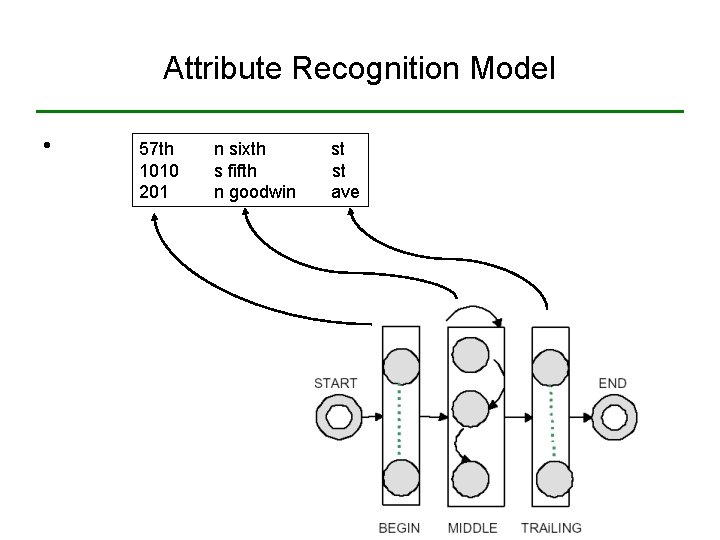

Attribute Recognition Model • 57 th 1010 201 n sixth s fifth n goodwin st st ave

![Model Training • 57 th 1010 201 Mixed [a-z 0 -9]{1, -} …… [a-z Model Training • 57 th 1010 201 Mixed [a-z 0 -9]{1, -} …… [a-z](http://slidetodoc.com/presentation_image_h2/a790f29781b6ad77408e7c1d0f2c574b/image-8.jpg)

Model Training • 57 th 1010 201 Mixed [a-z 0 -9]{1, -} …… [a-z 0 -9]{1, 5} [a-z 0 -9]{1, 4} 57 th n sixth s fifth n goodwin st st ave Emission: p(x|e)=(x=e) ? 1 : 0 Transition: B { M, T, END } M { M, T, END } T { T, END }

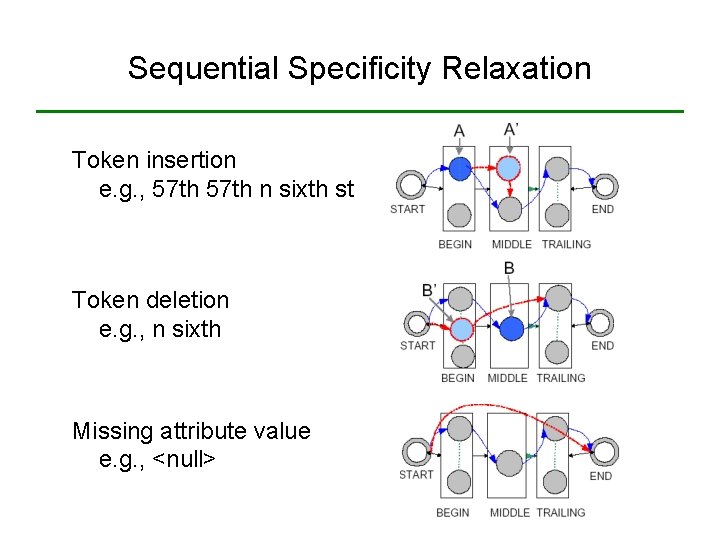

Sequential Specificity Relaxation Token insertion e. g. , 57 th n sixth st Token deletion e. g. , n sixth Missing attribute value e. g. , <null>

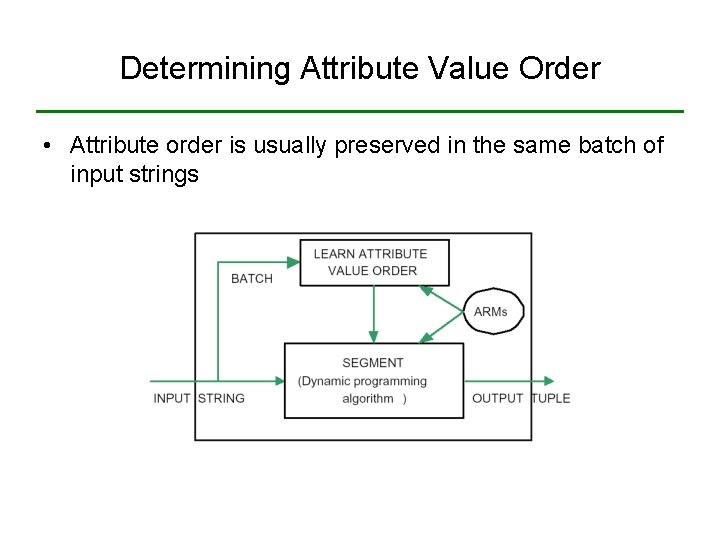

Determining Attribute Value Order • Attribute order is usually preserved in the same batch of input strings

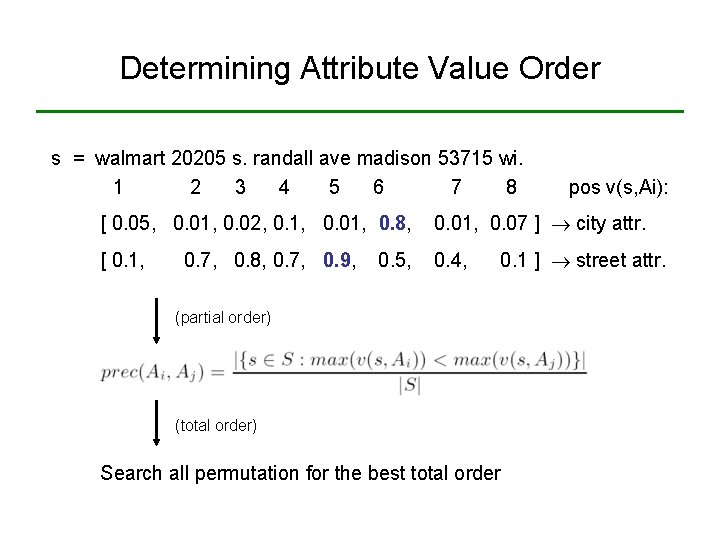

Determining Attribute Value Order s = walmart 20205 s. randall ave madison 53715 wi. 1 2 3 4 5 6 7 8 pos v(s, Ai): [ 0. 05, 0. 01, 0. 02, 0. 1, 0. 01, 0. 8, 0. 01, 0. 07 ] city attr. [ 0. 1, 0. 4, 0. 7, 0. 8, 0. 7, 0. 9, 0. 5, 0. 1 ] street attr. (partial order) (total order) Search all permutation for the best total order

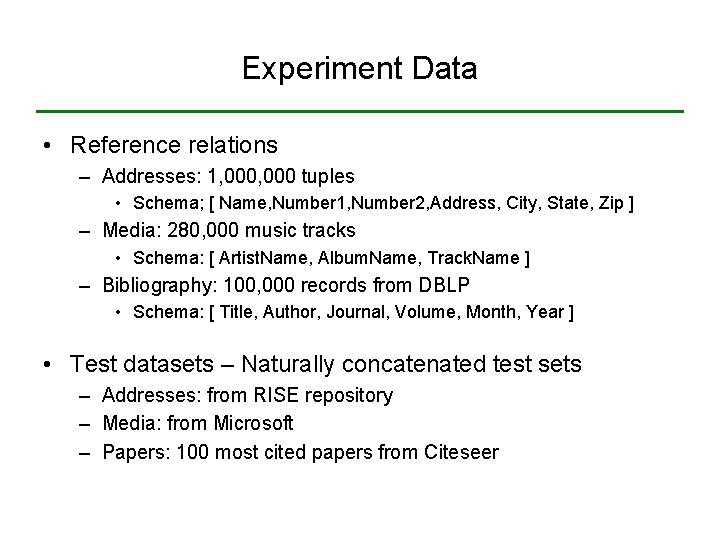

Experiment Data • Reference relations – Addresses: 1, 000 tuples • Schema; [ Name, Number 1, Number 2, Address, City, State, Zip ] – Media: 280, 000 music tracks • Schema: [ Artist. Name, Album. Name, Track. Name ] – Bibliography: 100, 000 records from DBLP • Schema: [ Title, Author, Journal, Volume, Month, Year ] • Test datasets – Naturally concatenated test sets – Addresses: from RISE repository – Media: from Microsoft – Papers: 100 most cited papers from Citeseer

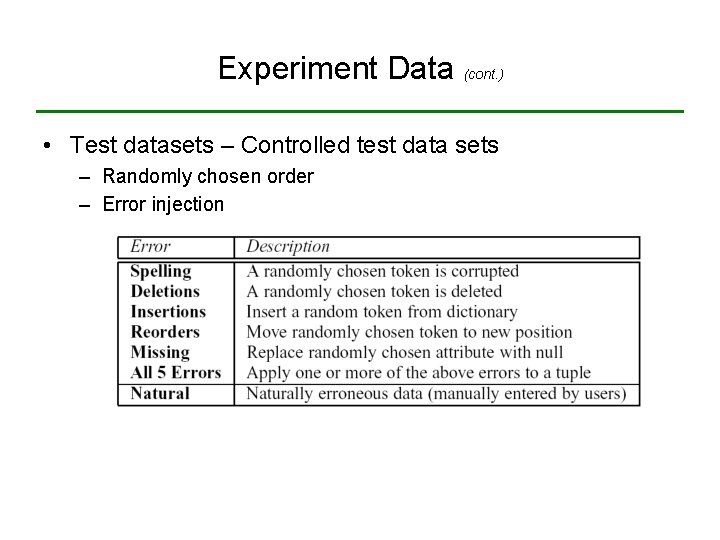

Experiment Data (cont. ) • Test datasets – Controlled test data sets – Randomly chosen order – Error injection

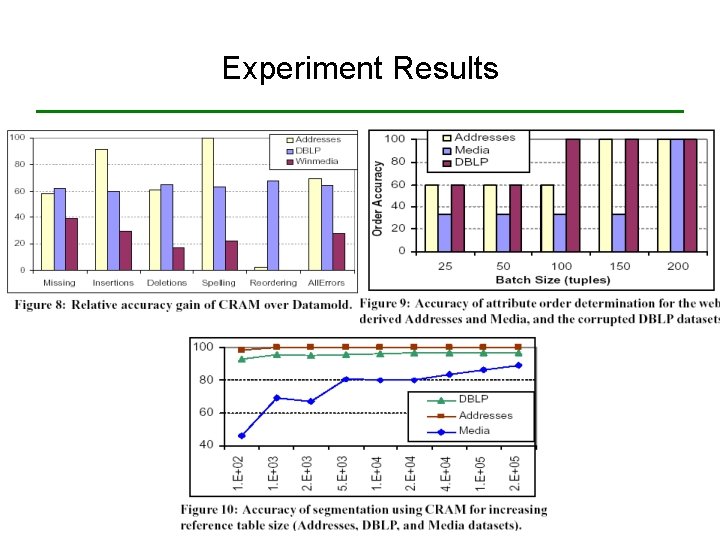

Experiment Results

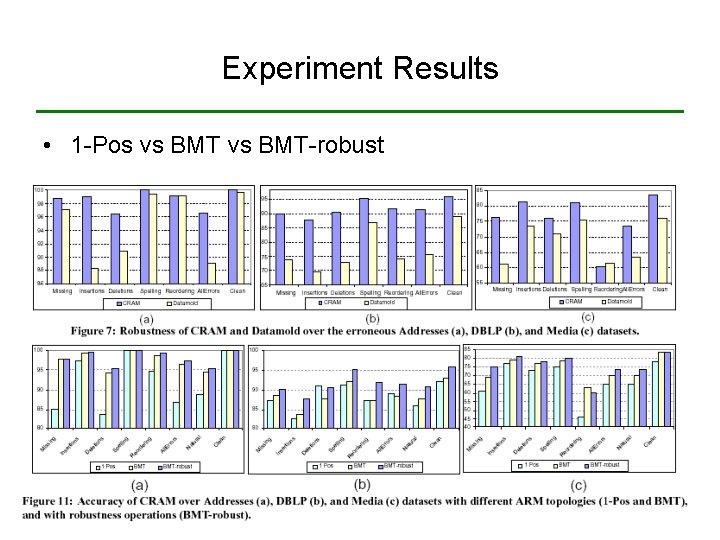

Experiment Results • 1 -Pos vs BMT-robust

Comments • The idea of using reference tables is good • The approach is well engineered to deal with issues of robustness and efficiency • Experiment is thorough • The approach is somewhat still ad hoc, and every component seems replaceable

- Slides: 16