MINING OPTIMAL DECISION TREES FROM ITEMSET LATTICES

- Slides: 16

MINING OPTIMAL DECISION TREES FROM ITEMSET LATTICES
- Authors: Siegfried Nijssen, Elisa Fromont
- Venue: KDD '07
- Speaker: Li, Huei-Jyun
- Advisor: Koh, Jia-Ling
- Date: 2008/3/13

OUTLINE
- Introduction
- Queries for decision trees
- The DL8 algorithm
- Experiments
- Conclusions

INTRODUCTION
- Most well-known algorithms for building decision trees (for instance, C4.5) use a top-down induction paradigm in which a good split is chosen heuristically.
- If such an algorithm does not find a tree that satisfies the specified constraints, this does not mean that no such tree exists.
- For a sufficiently large number of datasets, we do not know what the true optimum under given constraints is.
- For small, mostly artificial datasets, small decision trees are not always preferable in terms of generalization ability.

INTRODUCTION
- The paper proposes an exact algorithm for building decision trees that does not rely on the traditional approach of heuristic top-down induction.
- It addresses the problem of finding exact optimal decision trees under constraints.

QUERIES FOR DECISION TREES
- The problems can be seen as queries to a database.
- These queries consist of three parts.
- The first part specifies the constraints on the nodes of decision trees:
  - T1: the set of locally constrained decision trees
  - DecisionTrees: the set of all possible decision trees
  - paths(T): the itemsets that correspond to paths in the tree T
  - p(I): a constraint on paths, for example p(I) := (freq(I) ≥ minfreq)
- The evaluation of p(I) must be independent of the tree T of which I is part.
- p must be anti-monotonic: if p holds for an itemset, it also holds for every subset of that itemset.

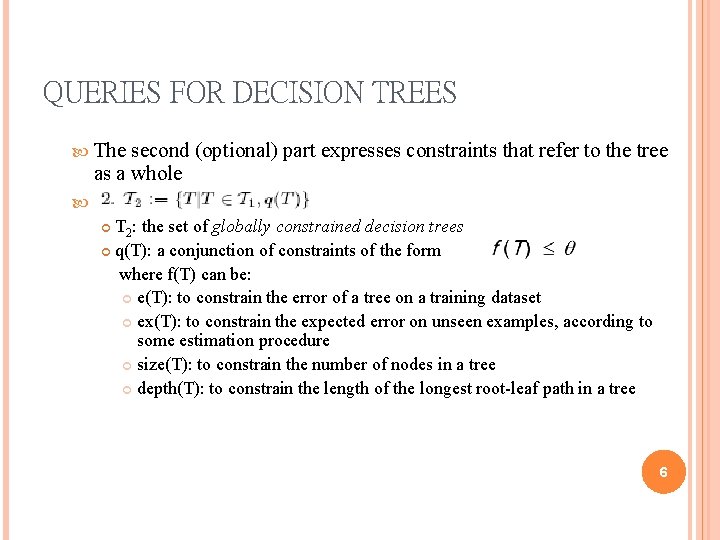

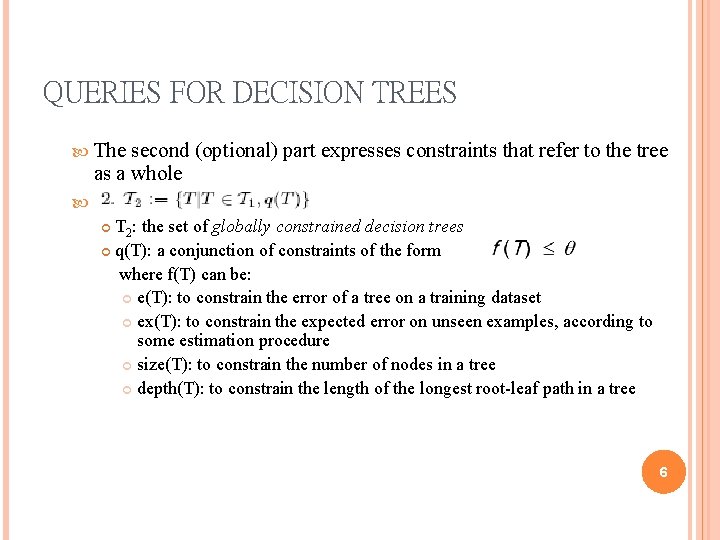
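The frequency constraint and its anti-monotonicity can be illustrated with a minimal Python sketch. The toy transactions, the default `minfreq=2`, and the helper names `freq`, `p`, and `is_anti_monotonic` are assumptions made for illustration; they do not come from the slides.

```python
from itertools import combinations

# Hypothetical toy data: each transaction is a set of items.
TRANSACTIONS = [
    {"a", "b"},
    {"a", "b", "c"},
    {"a", "c"},
    {"b"},
]

def freq(itemset, transactions=TRANSACTIONS):
    """Number of transactions that contain every item of the itemset."""
    return sum(1 for t in transactions if itemset <= t)

def p(itemset, minfreq=2):
    """Local path constraint p(I) := freq(I) >= minfreq (minfreq is assumed)."""
    return freq(itemset) >= minfreq

def is_anti_monotonic(constraint, items):
    """Check on this data that whenever the constraint holds for an itemset,
    it also holds for every subset of that itemset."""
    items = sorted(items)
    all_sets = [
        frozenset(c)
        for r in range(len(items) + 1)
        for c in combinations(items, r)
    ]
    return all(
        constraint(sub)
        for s in all_sets if constraint(s)
        for sub in all_sets if sub <= s
    )
```

Because `p` looks only at the itemset and the data, its value does not depend on the tree in which the path appears, which matches the independence requirement stated above.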

QUERIES FOR DECISION TREES
- The second (optional) part expresses constraints that refer to the tree as a whole.
- T2: the set of globally constrained decision trees
- q(T): a conjunction of constraints, each bounding a function f(T) by a threshold (for example, size(T) ≤ maxsize), where f(T) can be:
  - e(T): to constrain the error of a tree on a training dataset
  - ex(T): to constrain the expected error on unseen examples, according to some estimation procedure
  - size(T): to constrain the number of nodes in a tree
  - depth(T): to constrain the length of the longest root-leaf path in a tree

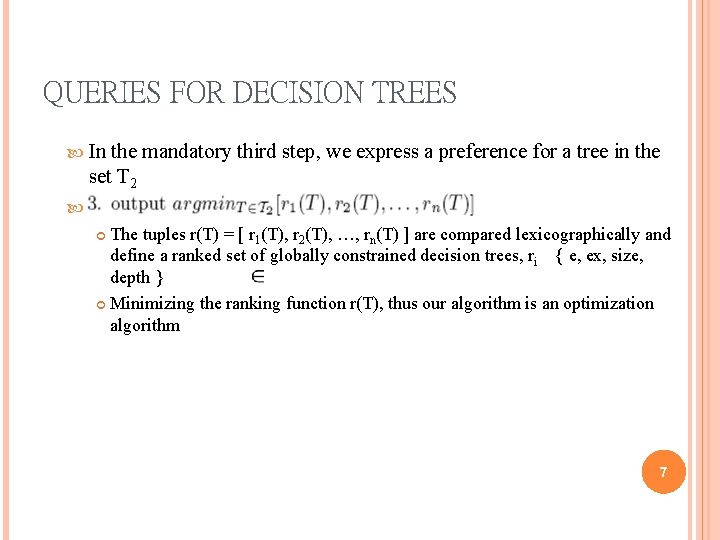

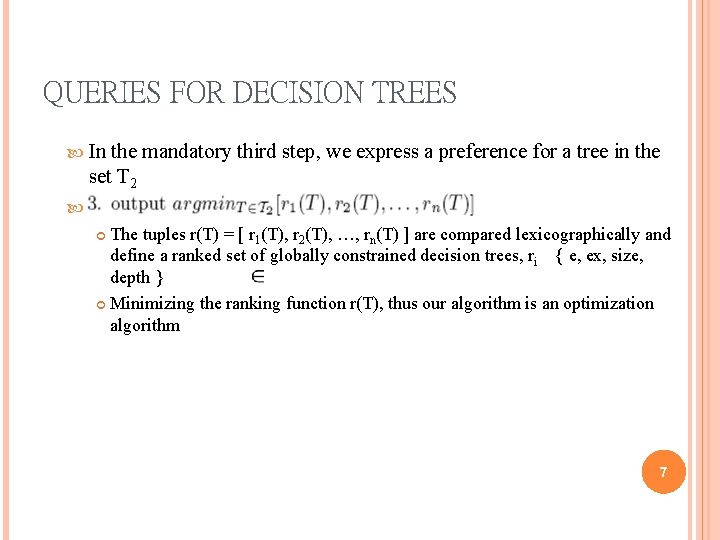
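To make the global measures concrete, here is a small Python sketch of size(T), depth(T), and e(T) on a hand-built tree, together with a conjunction q(T). The `Leaf` and `Node` classes, the toy data, and the choice to count leaves as nodes in `size` are assumptions for illustration, not definitions taken from the slides.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Leaf:
    label: int

@dataclass
class Node:
    item: str        # item tested at this node
    yes: "Tree"      # subtree for examples that contain the item
    no: "Tree"       # subtree for examples that do not

Tree = Union[Leaf, Node]

def size(t):
    """Number of nodes, counting leaves (an assumption of this sketch)."""
    return 1 if isinstance(t, Leaf) else 1 + size(t.yes) + size(t.no)

def depth(t):
    """Length of the longest root-leaf path, measured in edges."""
    return 0 if isinstance(t, Leaf) else 1 + max(depth(t.yes), depth(t.no))

def predict(t, items):
    """Follow the tests down to a leaf and return its label."""
    while isinstance(t, Node):
        t = t.yes if t.item in items else t.no
    return t.label

def e(t, data):
    """Number of training examples the tree misclassifies."""
    return sum(1 for items, label in data if predict(t, items) != label)

def q(t, data, maxsize, maxerror):
    """Global constraint: a conjunction of threshold constraints."""
    return size(t) <= maxsize and e(t, data) <= maxerror

# Hypothetical training data: (set of items, class label).
DATA = [({"a"}, 1), ({"a", "b"}, 1), ({"b"}, 0), (set(), 0)]
TREE = Node("a", Leaf(1), Leaf(0))
```

With this data, `TREE` has three nodes, depth 1, and no training errors, so `q(TREE, DATA, maxsize=3, maxerror=0)` holds while `q(TREE, DATA, maxsize=2, maxerror=0)` does not.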

QUERIES FOR DECISION TREES
- In the mandatory third part, we express a preference for a tree in the set T2.
- The tuples r(T) = [r1(T), r2(T), …, rn(T)] are compared lexicographically and define a ranked set of globally constrained decision trees, where each ri ∈ {e, ex, size, depth}.
- The algorithm minimizes the ranking function r(T), so it is an optimization algorithm.
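Lexicographic comparison of ranking tuples maps directly onto Python tuple comparison. The sketch below is illustrative only: the candidate names and their statistics are made-up values, and `r` and `best` are hypothetical helpers.

```python
# Hypothetical candidate trees with precomputed statistics.
CANDIDATES = {
    "T_a": {"e": 3, "size": 5, "depth": 2},
    "T_b": {"e": 2, "size": 9, "depth": 3},
    "T_c": {"e": 2, "size": 7, "depth": 4},
}

def r(stats, order):
    """Ranking tuple r(T) for the chosen ordering of criteria."""
    return tuple(stats[name] for name in order)

def best(candidates, order):
    """Candidate whose ranking tuple is lexicographically smallest."""
    return min(candidates, key=lambda name: r(candidates[name], order))
```

Ranking by `("e", "size")` selects `T_c`: it ties with `T_b` on error and wins on size. Ranking by `("size", "e")` selects `T_a` instead, which shows that the order of the criteria matters.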

QUERIES FOR DECISION TREES
- This query (Query 1) investigates all decision trees in which each leaf covers at least minfreq examples of the training data.
- Among these trees, we find the smallest one.
- To retrieve accurate trees of bounded size, Query 1 can be extended such that q(T) := size(T) ≤ maxsize.

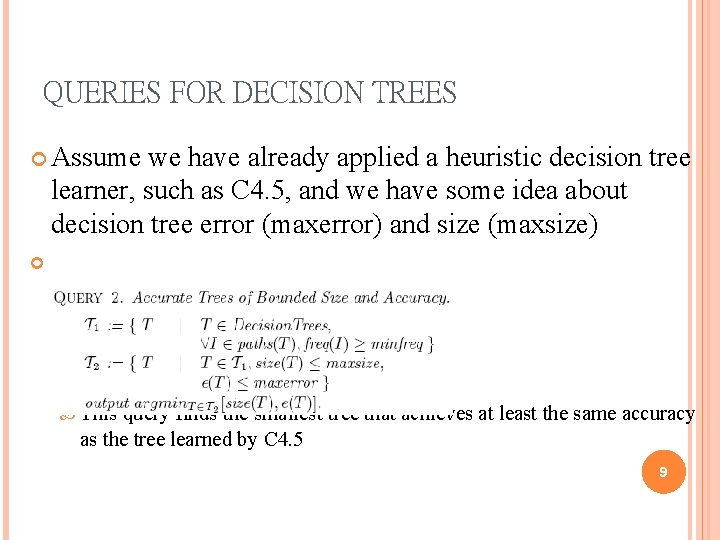

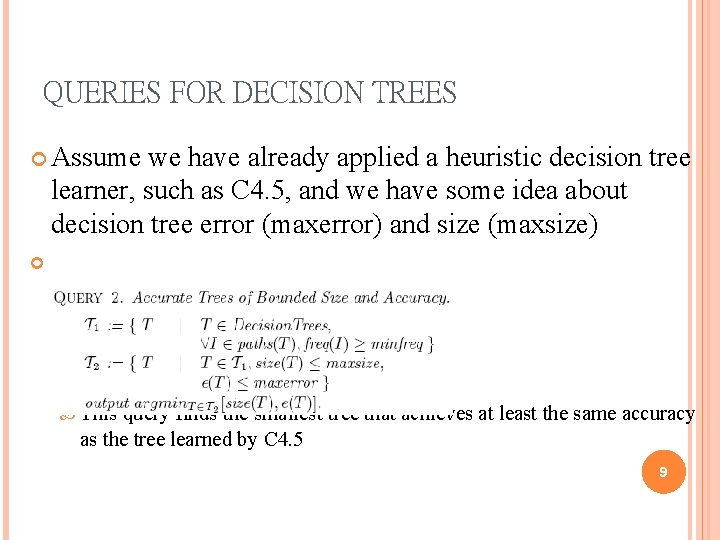

QUERIES FOR DECISION TREES
- Assume we have already applied a heuristic decision tree learner, such as C4.5, and have some idea about decision tree error (maxerror) and size (maxsize).
- This query finds the smallest tree that achieves at least the same accuracy as the tree learned by C4.5.

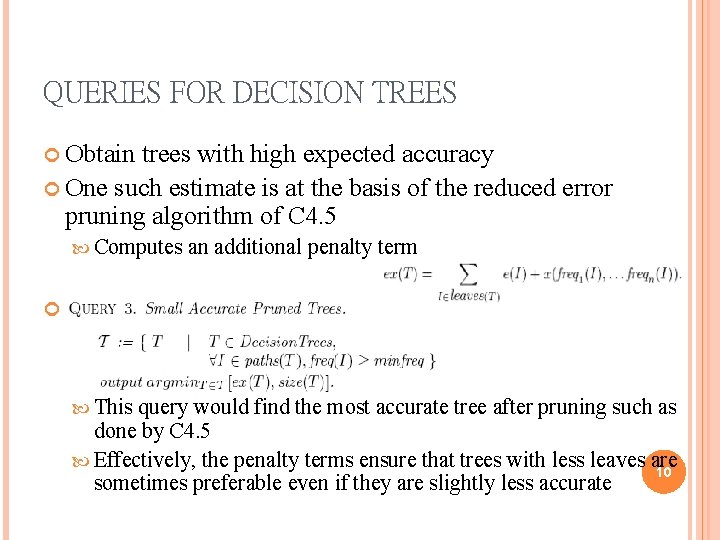

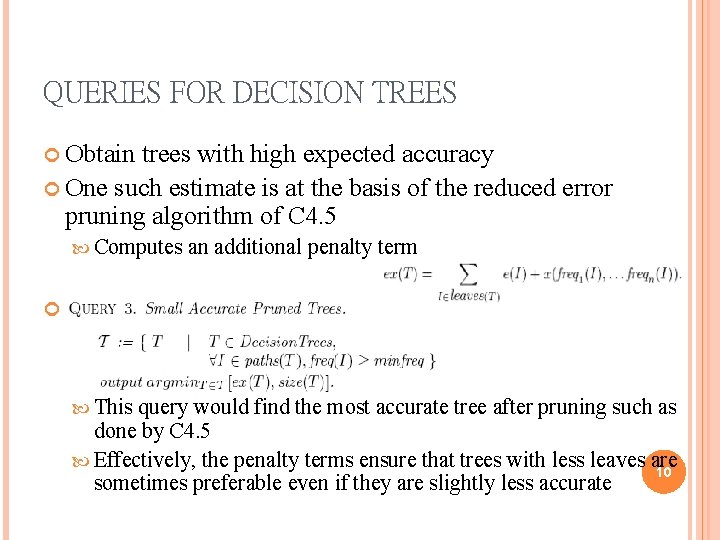
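The effect of such a query can be sketched as a filter followed by a minimization over an explicit candidate list. This only illustrates the query semantics and is not how DL8 searches; the candidates and the helper `smallest_within` are hypothetical.

```python
# Hypothetical candidates: name -> (e(T), size(T)).
CANDIDATES = {"T_a": (4, 3), "T_b": (2, 9), "T_c": (2, 5), "T_d": (1, 15)}

def smallest_within(candidates, maxerror, maxsize):
    """Smallest candidate with e(T) <= maxerror and size(T) <= maxsize,
    or None when no candidate satisfies both constraints."""
    feasible = {
        name: (err, sz)
        for name, (err, sz) in candidates.items()
        if err <= maxerror and sz <= maxsize
    }
    if not feasible:
        return None  # the global constraint q(T) rules out every tree
    return min(feasible, key=lambda name: feasible[name][1])
```

If the heuristic learner produced a tree with 2 errors and 9 nodes, `smallest_within(CANDIDATES, maxerror=2, maxsize=9)` returns `T_c`, a tree that is at least as accurate and smaller.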

QUERIES FOR DECISION TREES
- Goal: obtain trees with high expected accuracy.
- One such estimate of expected error is at the basis of the reduced error pruning algorithm of C4.5. This estimate computes an additional penalty term.
- This query would find the most accurate tree after pruning, as done by C4.5.
- Effectively, the penalty terms ensure that trees with fewer leaves are sometimes preferable even if they are slightly less accurate.

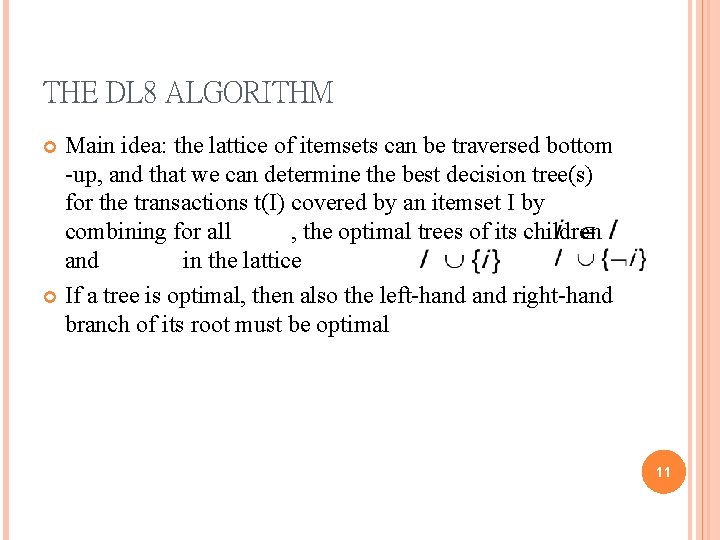

THE DL8 ALGORITHM
- Main idea: the lattice of itemsets can be traversed bottom-up, and we can determine the best decision tree(s) for the transactions t(I) covered by an itemset I by combining, for each item i not yet in I, the optimal trees of the two children of I in the lattice (I extended with i and I extended with the negation of i).
- If a tree is optimal, then the left-hand and right-hand branches of its root must also be optimal.

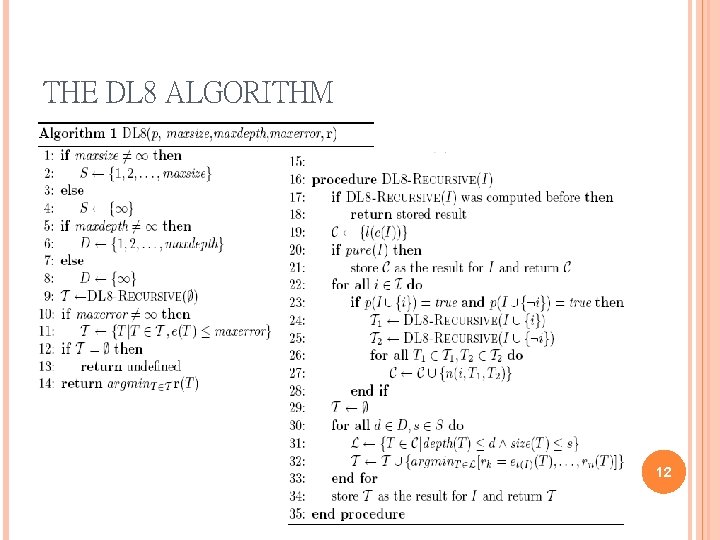

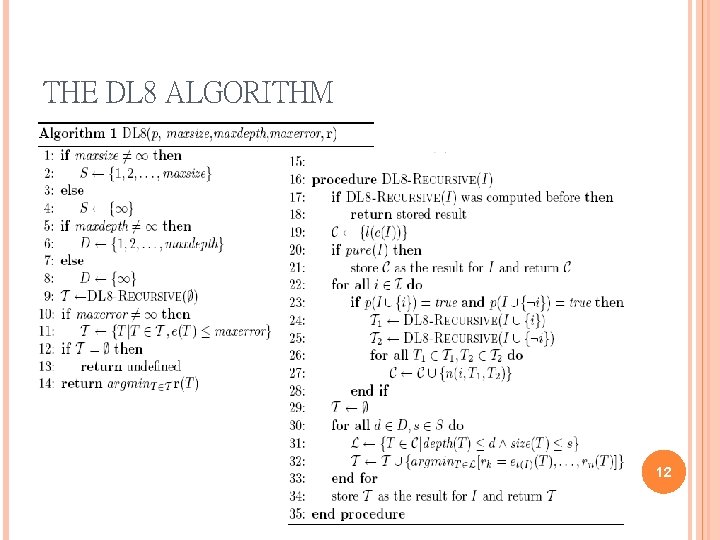
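The recursion behind the main idea can be sketched in a few lines of Python. This is a simplified illustration, not the authors' implementation: it uses memoized top-down recursion over itemsets instead of an explicit bottom-up lattice traversal, it ranks trees by (error, size), and the toy dataset, `MINFREQ`, and all function names are assumptions.

```python
from collections import Counter
from functools import lru_cache

# Hypothetical toy dataset: (binary attribute values, class label).
# The label is the XOR of the two attributes.
DATA = [
    ((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0),
    ((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0),
]
N_ATTRS = 2
MINFREQ = 1  # assumed minimum number of examples covered by a path

def covered(itemset):
    """t(I): examples consistent with every (attribute, value) item in I."""
    return [(x, y) for x, y in DATA if all(x[a] == v for a, v in itemset)]

@lru_cache(maxsize=None)
def best_tree(itemset=frozenset()):
    """Return (error, size, tree) of the best tree for t(I),
    or None when I violates the frequency constraint."""
    rows = covered(itemset)
    if len(rows) < MINFREQ:
        return None
    # Option 1: a single leaf that predicts the majority class.
    label, count = Counter(y for _, y in rows).most_common(1)[0]
    best = (len(rows) - count, 1, ("leaf", label))
    # Option 2: split on an unused attribute and combine the optimal
    # trees of the two children of I in the lattice.
    used = {a for a, _ in itemset}
    for a in range(N_ATTRS):
        if a in used:
            continue
        left = best_tree(itemset | {(a, 0)})
        right = best_tree(itemset | {(a, 1)})
        if left is None or right is None:
            continue
        cand = (
            left[0] + right[0],
            1 + left[1] + right[1],
            ("split", a, left[2], right[2]),
        )
        if cand[:2] < best[:2]:  # lexicographic comparison on (error, size)
            best = cand
    return best
```

Memoizing on the itemset is what lets different paths that lead to the same itemset share one subproblem. On this toy data a single split cannot separate the classes, so the best tree returned by `best_tree()` splits on both attributes and reaches zero training error with seven nodes.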

THE DL8 ALGORITHM

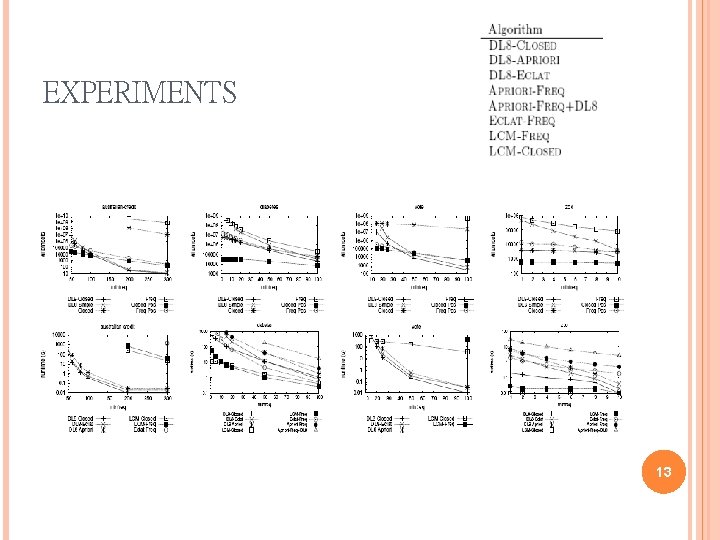

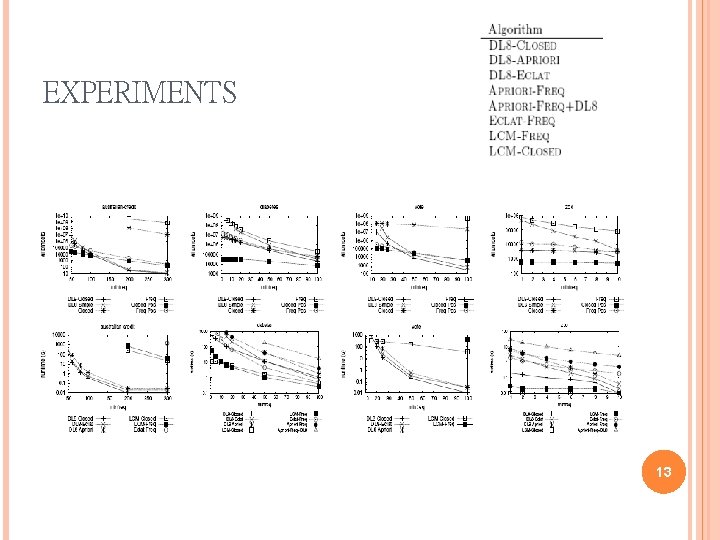

EXPERIMENTS

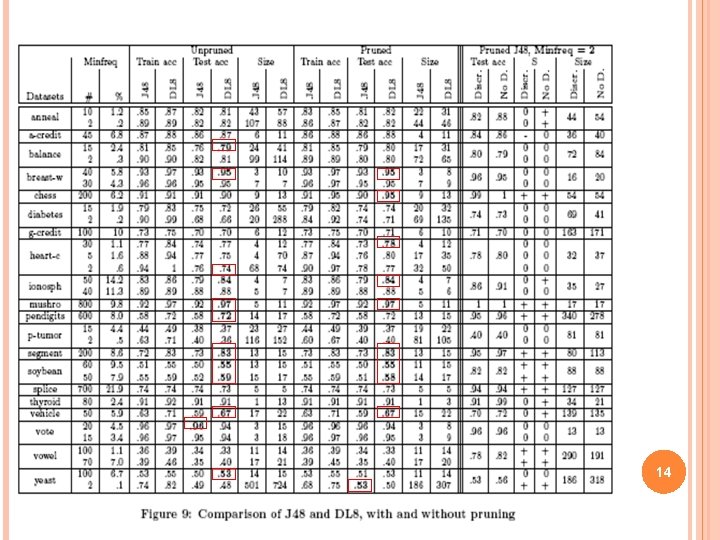

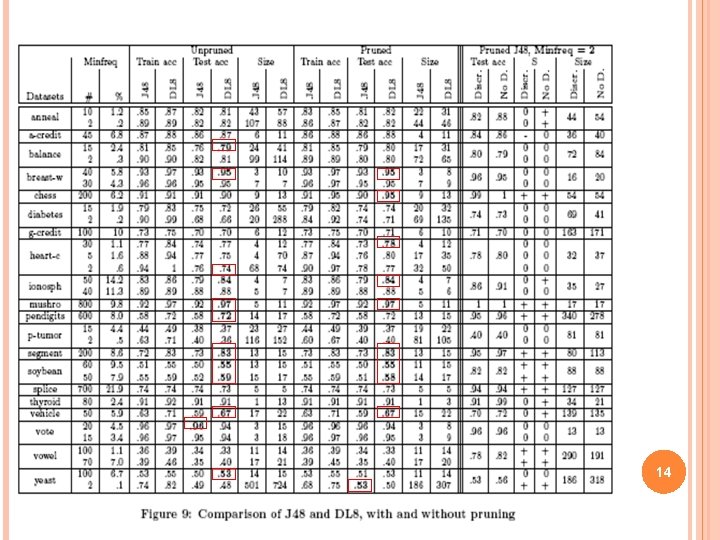


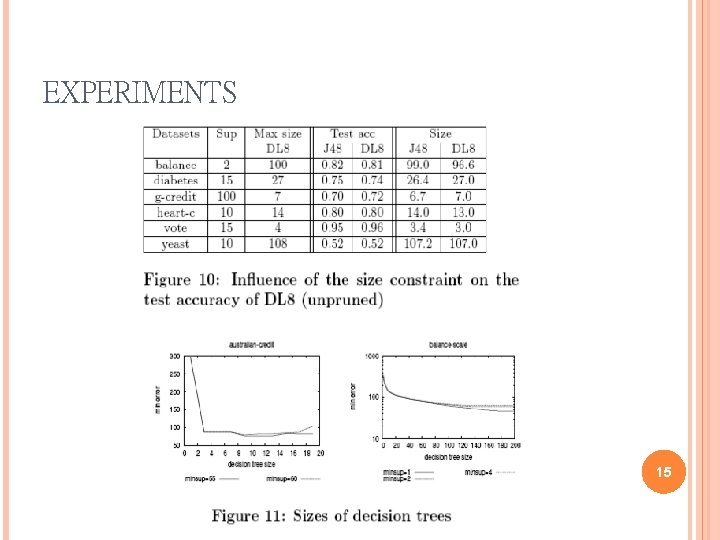

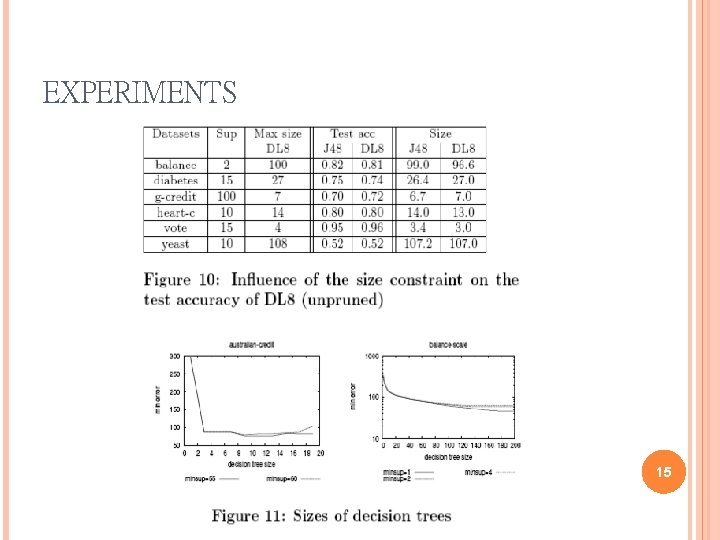

EXPERIMENTS

CONCLUSIONS
- The paper presented DL8, an algorithm for finding decision trees that maximize an optimization criterion under constraints.
- The algorithm was successfully applied to a large number of datasets.