Mining of Massive Datasets: Knowledge discovery from data

- Slides: 23


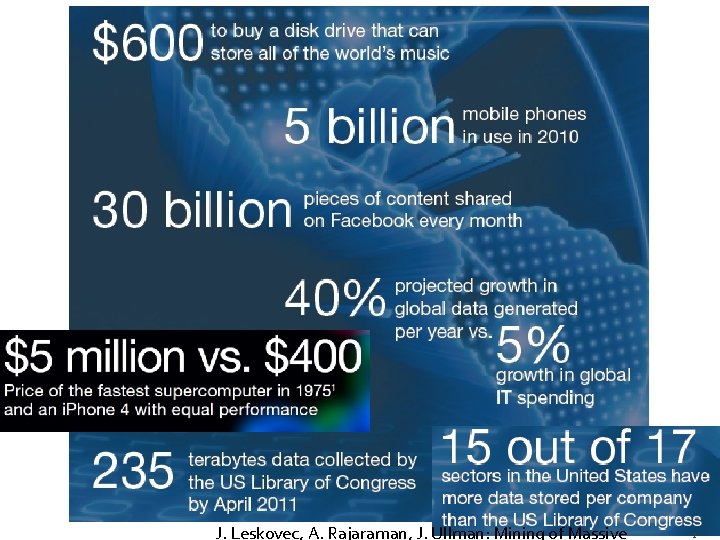

J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets

Data contains value and knowledge

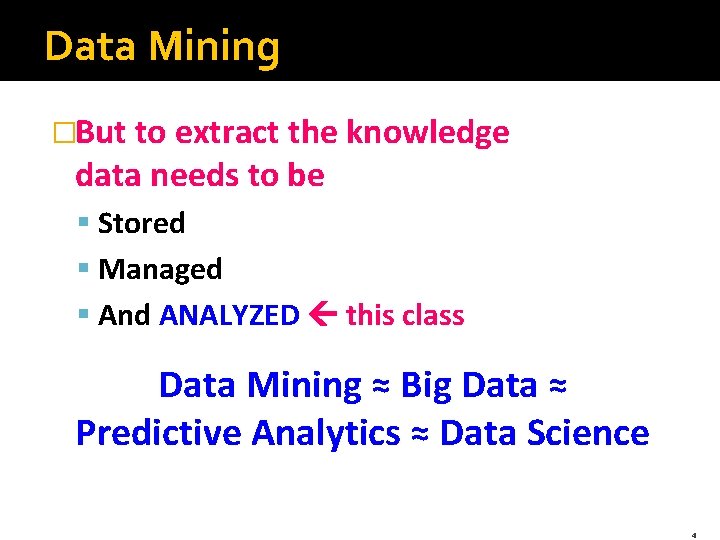

Data Mining

- But to extract the knowledge, data needs to be:
  - Stored
  - Managed
  - And ANALYZED (this class)

Data Mining ≈ Big Data ≈ Predictive Analytics ≈ Data Science

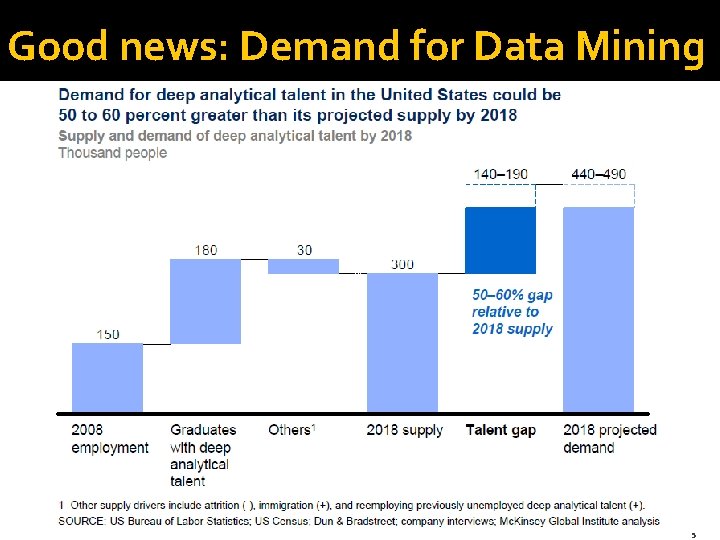

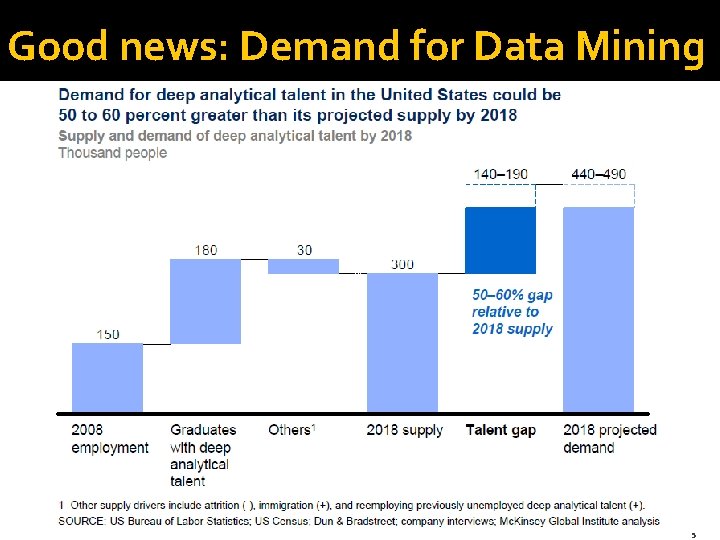

Good news: Demand for Data Mining

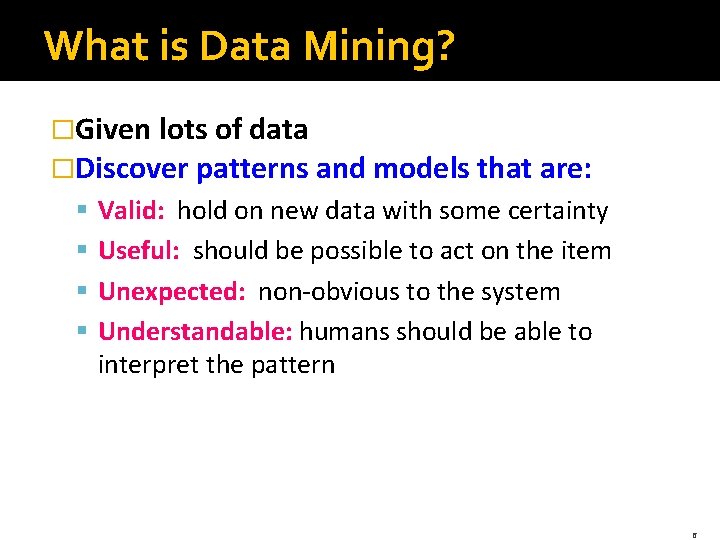

What is Data Mining?

- Given lots of data
- Discover patterns and models that are:
  - Valid: hold on new data with some certainty
  - Useful: should be possible to act on the item
  - Unexpected: non-obvious to the system
  - Understandable: humans should be able to interpret the pattern

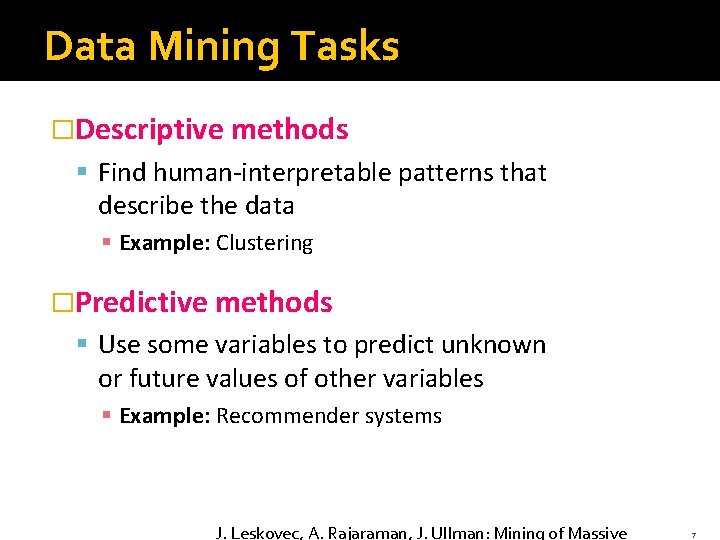

Data Mining Tasks

- Descriptive methods
  - Find human-interpretable patterns that describe the data
  - Example: Clustering
- Predictive methods
  - Use some variables to predict unknown or future values of other variables
  - Example: Recommender systems

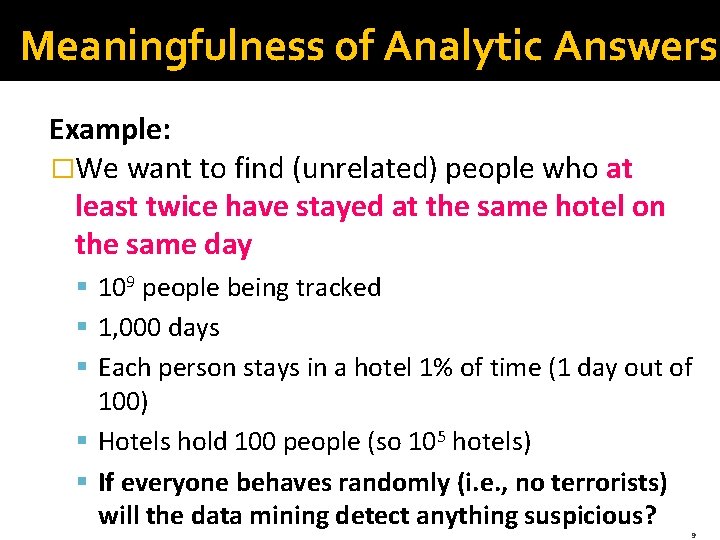

Meaningfulness of Analytic Answers

- A risk with “Data mining” is that an analyst can “discover” patterns that are meaningless
- Statisticians call it Bonferroni’s principle:
  - Roughly, if you look in more places for interesting patterns than your amount of data will support, you are bound to find garbage

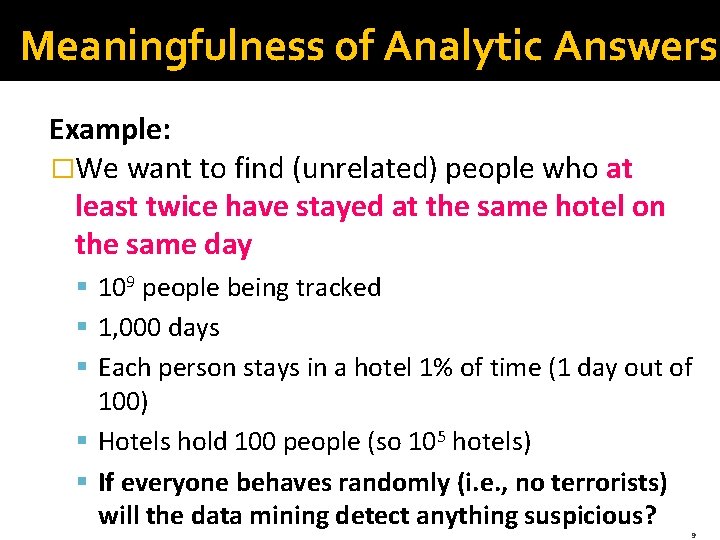

Meaningfulness of Analytic Answers

Example:

- We want to find (unrelated) people who at least twice have stayed at the same hotel on the same day
  - $10^9$ people being tracked
  - 1,000 days
  - Each person stays in a hotel 1% of the time (1 day out of 100)
  - Hotels hold 100 people (so $10^5$ hotels)
  - If everyone behaves randomly (i.e., no terrorists), will the data mining detect anything suspicious?

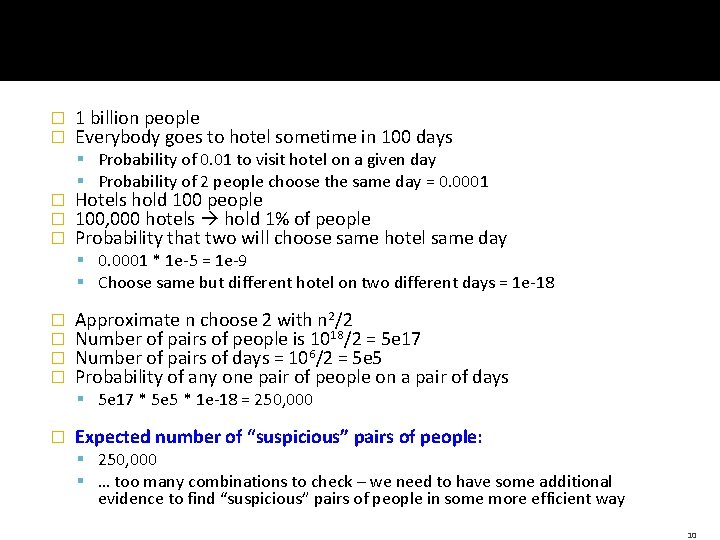

- 1 billion people
- Everybody goes to a hotel one day in every 100 days
  - Probability that a given person visits a hotel on a given day: $0.01$
  - Probability that two given people both visit a hotel on the same given day: $0.01 \times 0.01 = 10^{-4}$
- Hotels hold 100 people, so 100,000 hotels hold the 1% of people who are in a hotel on any given day
- Probability that two given people choose the same hotel on the same day:
  - $10^{-4} \times 10^{-5} = 10^{-9}$
  - Probability that they do so on each of two given different days (the hotel may differ between the two days): $10^{-9} \times 10^{-9} = 10^{-18}$
- Approximate $\binom{n}{2}$ by $n^2/2$:
  - Number of pairs of people: $10^{18}/2 = 5 \times 10^{17}$
  - Number of pairs of days: $10^{6}/2 = 5 \times 10^{5}$
- Expected number of “suspicious” pairs of people (pairs of people × pairs of days × probability for one pair of people on one pair of days):
  - $5 \times 10^{17} \times 5 \times 10^{5} \times 10^{-18} = 250{,}000$
  - … too many combinations to check, even though everyone behaves randomly; we need some additional evidence to find “suspicious” pairs of people in a more efficient way
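The arithmetic of the hotel example can be checked with a short script. This is only a sketch under the slide's assumptions (independent, uniformly random behavior); the function name `expected_suspicious_pairs` and its parameter names are made up for illustration and do not come from the course material.

```python
from math import comb


def expected_suspicious_pairs(people, days, p_hotel, hotels):
    """Expected number of (pair of people, pair of days) coincidences
    when everyone picks hotel days and hotels independently at random."""
    # Two given people are both in some hotel on a given day.
    p_both_in_hotel = p_hotel ** 2
    # ... and they happen to be in the same one of `hotels` hotels.
    p_same_hotel_same_day = p_both_in_hotel / hotels
    # The coincidence happens on each of two given different days.
    p_two_days = p_same_hotel_same_day ** 2
    return comb(people, 2) * comb(days, 2) * p_two_days


# Exact binomial coefficients instead of the n^2/2 approximation.
exact = expected_suspicious_pairs(10**9, 1000, 0.01, 10**5)

# The slide's approximation: n choose 2 is roughly n^2 / 2.
approx = ((10**9) ** 2 / 2) * (1000**2 / 2) * 1e-18

print(f"exact  ~ {exact:,.0f}")
print(f"approx ~ {approx:,.0f}")
```

The exact count comes out slightly below the approximation because $\binom{1000}{2} = 499{,}500$ rather than $500{,}000$; either way, about a quarter of a million purely coincidental pairs would be flagged.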

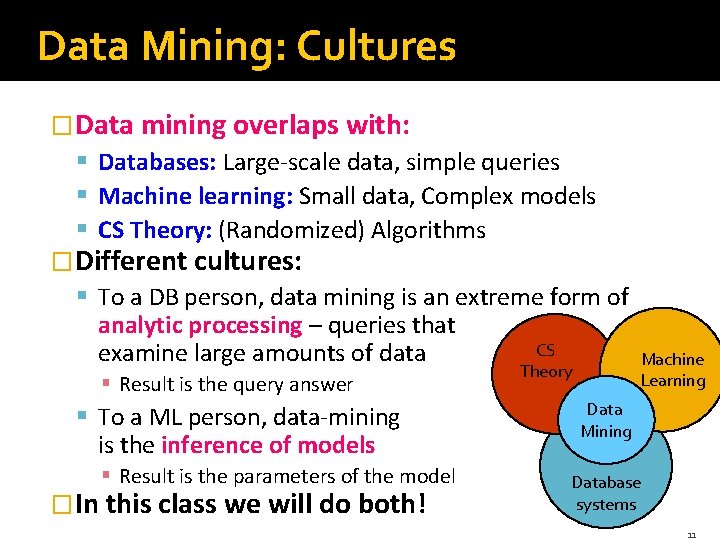

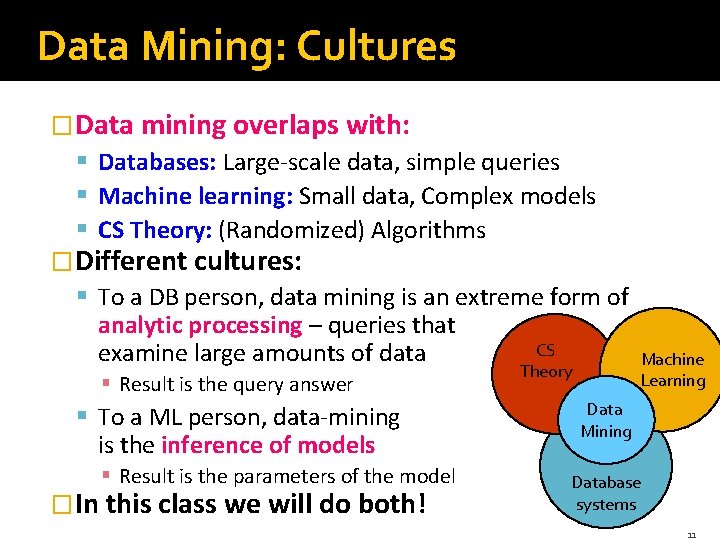

Data Mining: Cultures

- Data mining overlaps with:
  - Databases: Large-scale data, simple queries
  - Machine learning: Small data, complex models
  - CS Theory: (Randomized) Algorithms
- Different cultures:
  - To a DB person, data mining is an extreme form of analytic processing: queries that examine large amounts of data
    - Result is the query answer
  - To a ML person, data mining is the inference of models
    - Result is the parameters of the model
- In this class we will do both!

(Diagram labels: CS Theory, Machine Learning, Data Mining, Database systems)

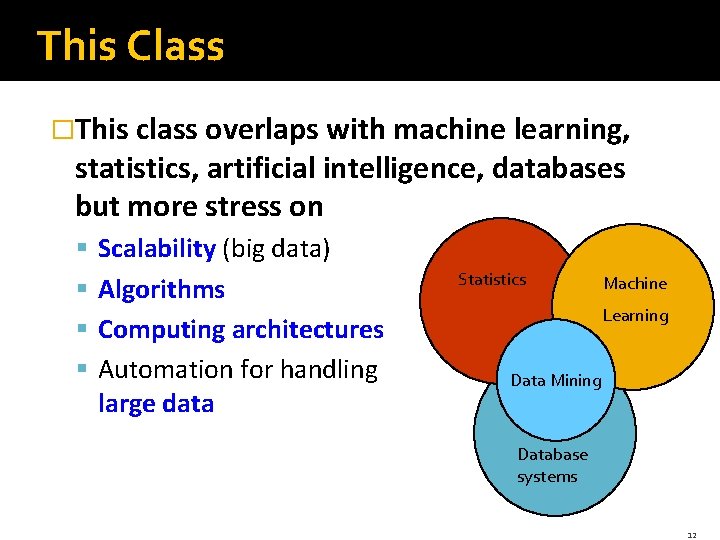

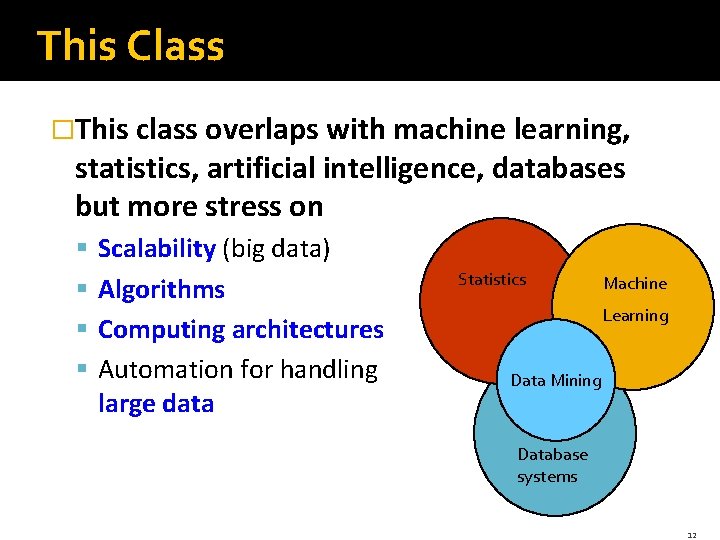

This Class

- This class overlaps with machine learning, statistics, artificial intelligence, and databases, but puts more stress on:
  - Scalability (big data)
  - Algorithms
  - Computing architectures
  - Automation for handling large data

(Diagram labels: Statistics, Machine Learning, Data Mining, Database systems)

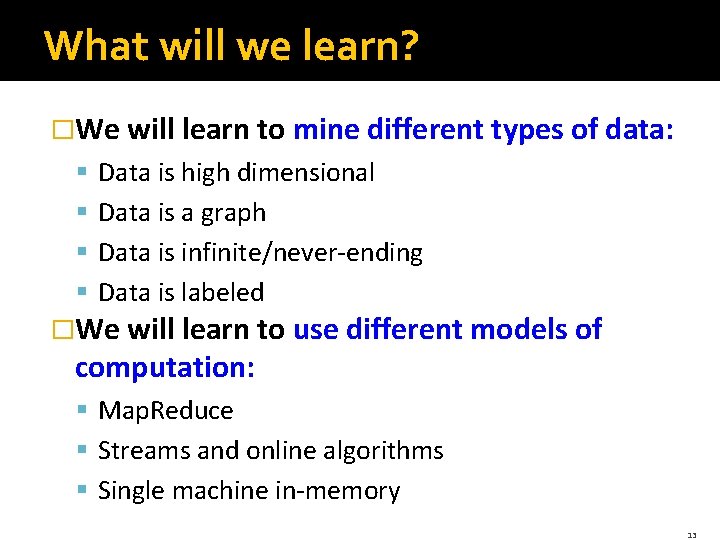

What will we learn?

- We will learn to mine different types of data:
  - Data is high dimensional
  - Data is a graph
  - Data is infinite/never-ending
  - Data is labeled
- We will learn to use different models of computation:
  - MapReduce
  - Streams and online algorithms
  - Single machine in-memory

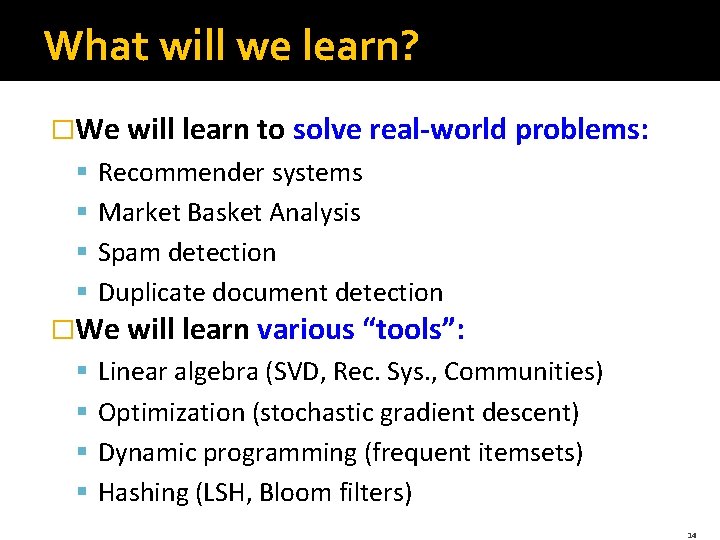

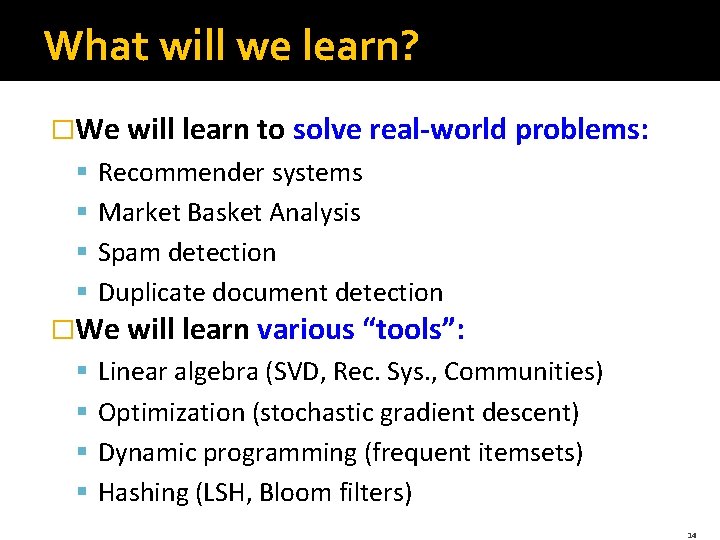

What will we learn?

- We will learn to solve real-world problems:
  - Recommender systems
  - Market Basket Analysis
  - Spam detection
  - Duplicate document detection
- We will learn various “tools”:
  - Linear algebra (SVD, Rec. Sys., Communities)
  - Optimization (stochastic gradient descent)
  - Dynamic programming (frequent itemsets)
  - Hashing (LSH, Bloom filters)

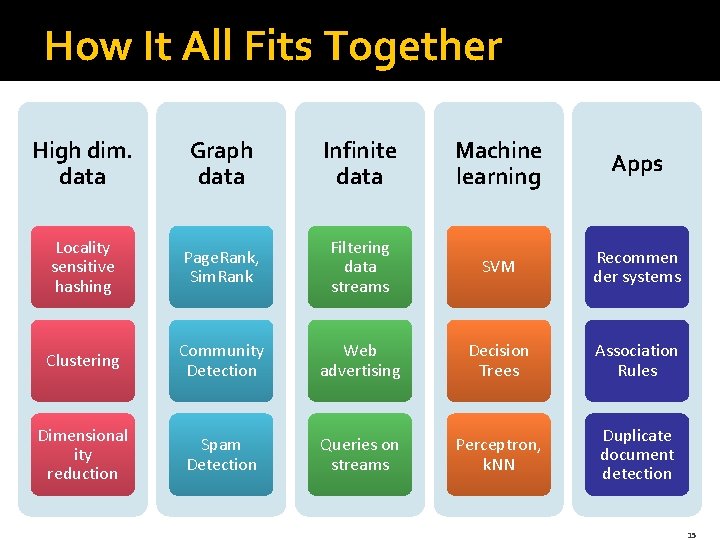

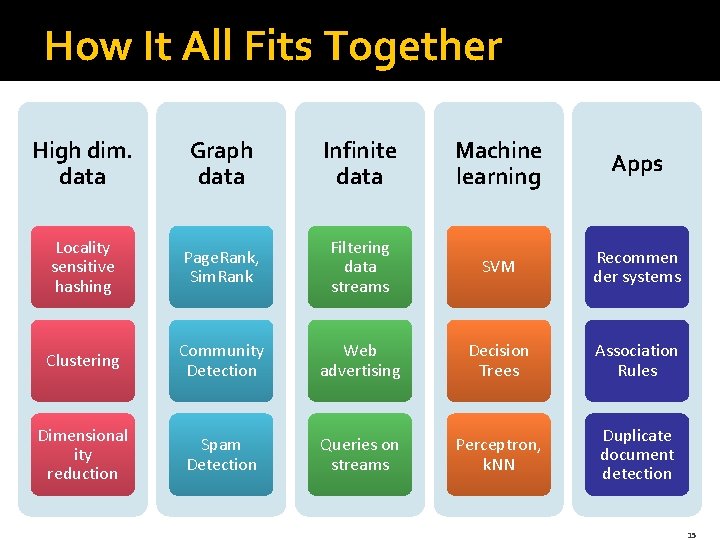

How It All Fits Together

| High dim. data | Graph data | Infinite data | Machine learning | Apps |
| --- | --- | --- | --- | --- |
| Locality sensitive hashing | PageRank, SimRank | Filtering data streams | SVM | Recommender systems |
| Clustering | Community Detection | Web advertising | Decision Trees | Association Rules |
| Dimensionality reduction | Spam Detection | Queries on streams | Perceptron, kNN | Duplicate document detection |

I ♥ data

How do you want that data?

About the Course

- Professor
  - Quinn Snell
  - 3366 TMCB
  - 801-422-5098
  - snell@cs.byu.edu
- Office hours:
  - By appointment

Prerequisites

- Algorithms (CS 312)
  - Dynamic programming, basic data structures
- Basic probability
  - Moments, typical distributions, MLE, …
- Programming (CS 240)
  - Your choice, but C++/Java will be very useful
- We provide some background, but the class will be fast paced

Course Logistics

- Course website: http://bigdatalab.cs.byu.edu/cs476
  - Lecture slides
  - Homeworks, solutions
  - Readings
- Readings:
  - Mining of Massive Datasets
    - Free online: http://www.mmds.org
  - CRISP-DM 1.0 by Pete Chapman et al.
    - ftp://ftp.software.ibm.com/software/analytics/spss/support/Modeler/Documentation/14/UserManual/CRISP-DM.pdf

Logistics: Communication

- For e-mailing assignments, always use:
  - bigdata.snell@gmail.com
- How to submit?
  - Homework write-up:
    - 1-2 pages
    - Email PDF
- Reading Quizzes
  - Noted on schedule with a Q

Work for the Course

- Short reading quizzes: 5%
- Midterm & Final exams: 40%
- Homework & Labs: 55%
- It’s going to be fun and hard work.

What’s after the class

- I have big-data RA positions open!
  - I will post details