Mining Association Rules Association rules Association rules can

- Slides: 13

Mining Association Rules

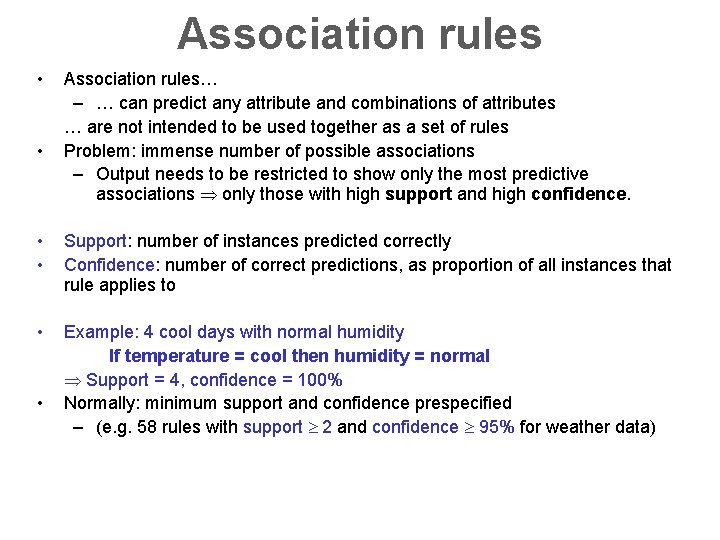

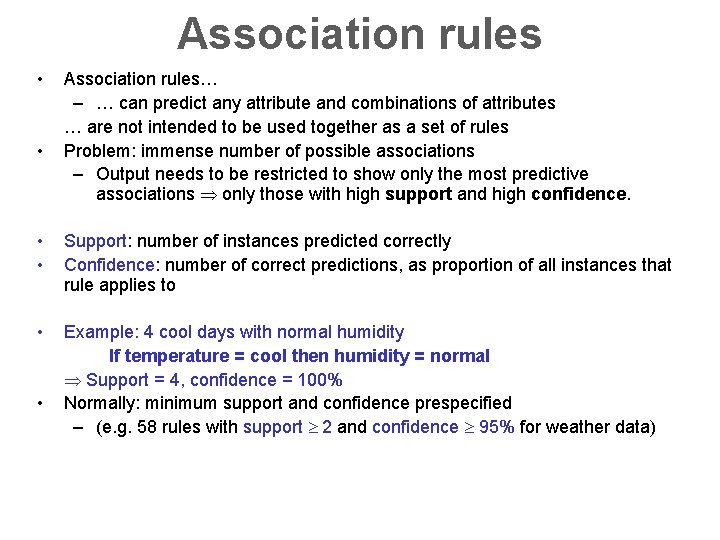

Association rules • • Association rules… – … can predict any attribute and combinations of attributes … are not intended to be used together as a set of rules Problem: immense number of possible associations – Output needs to be restricted to show only the most predictive associations Þ only those with high support and high confidence. • • Support: number of instances predicted correctly Confidence: number of correct predictions, as proportion of all instances that rule applies to • Example: 4 cool days with normal humidity If temperature = cool then humidity = normal Þ Support = 4, confidence = 100% Normally: minimum support and confidence prespecified – (e. g. 58 rules with support ³ 2 and confidence ³ 95% for weather data) •

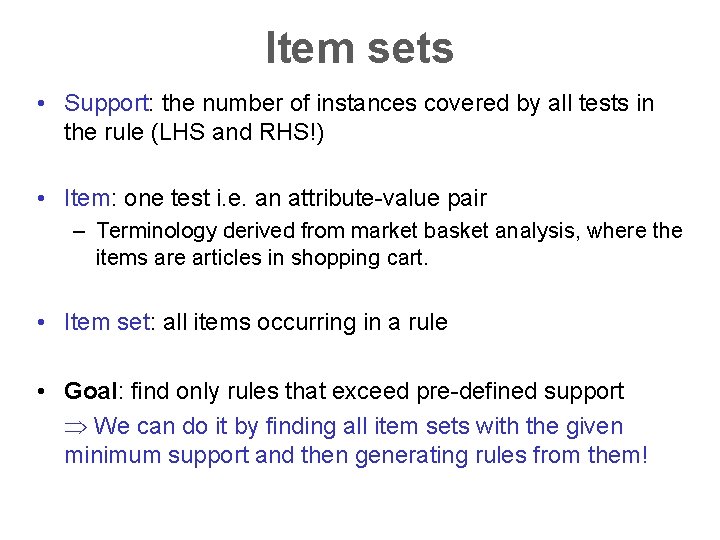

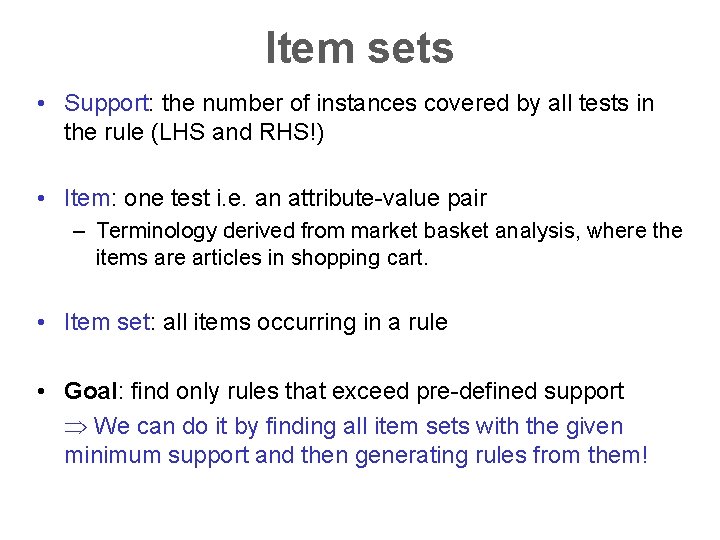

Item sets • Support: the number of instances covered by all tests in the rule (LHS and RHS!) • Item: one test i. e. an attribute-value pair – Terminology derived from market basket analysis, where the items are articles in shopping cart. • Item set: all items occurring in a rule • Goal: find only rules that exceed pre-defined support Þ We can do it by finding all item sets with the given minimum support and then generating rules from them!

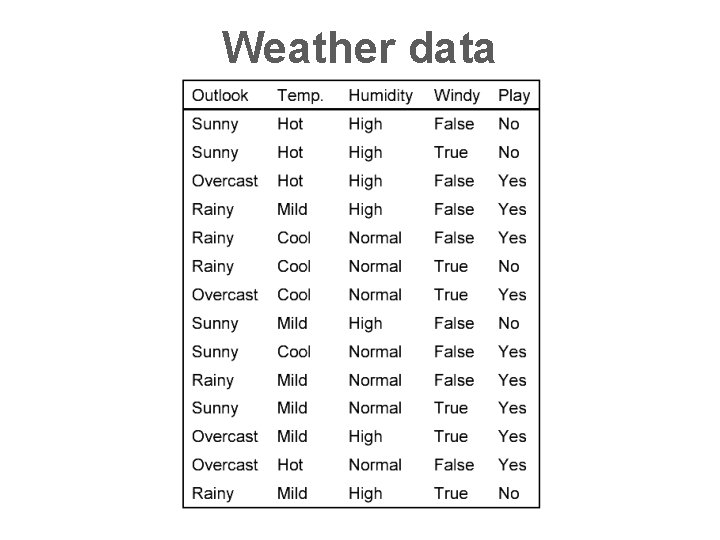

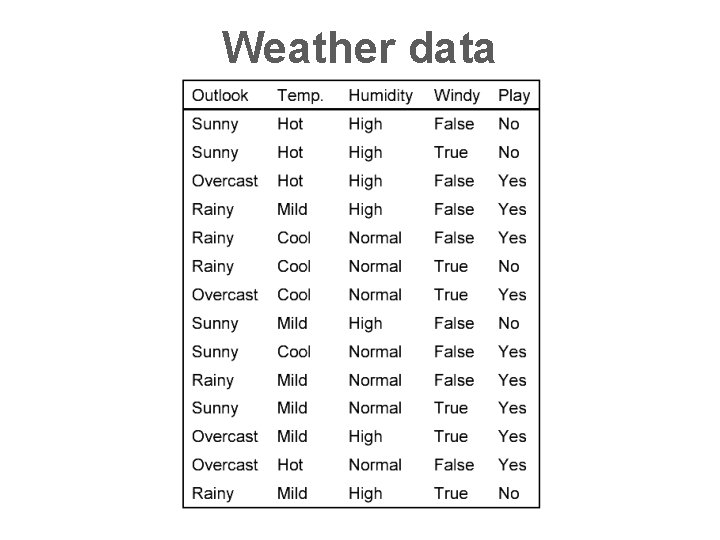

Weather data

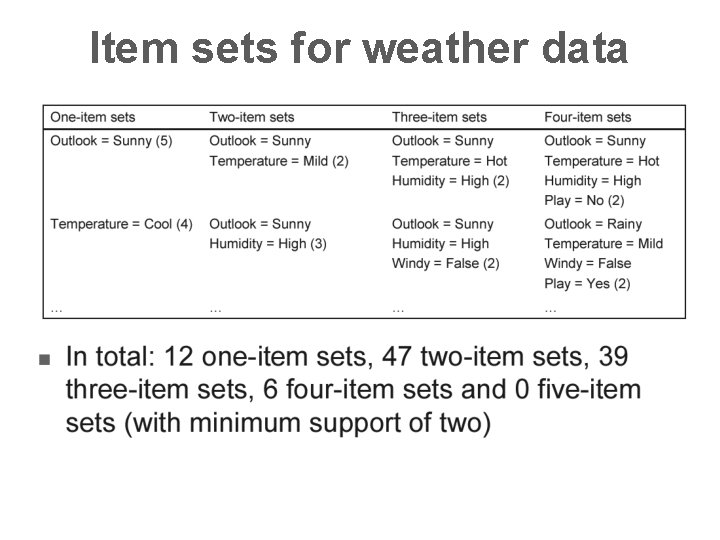

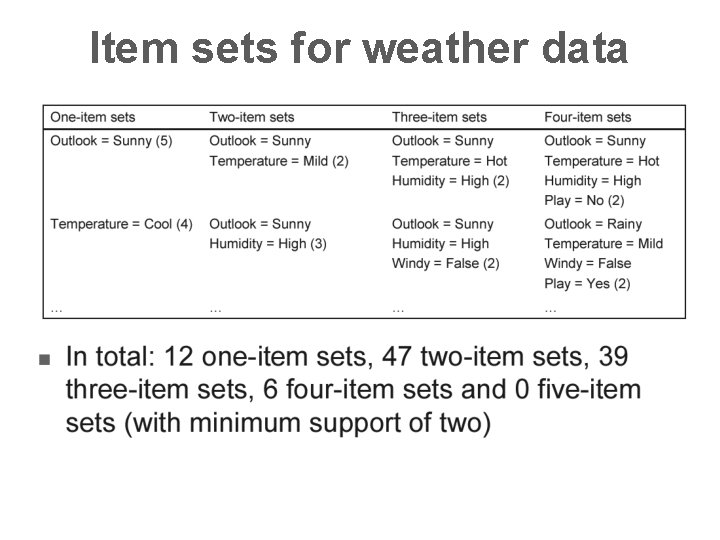

Item sets for weather data

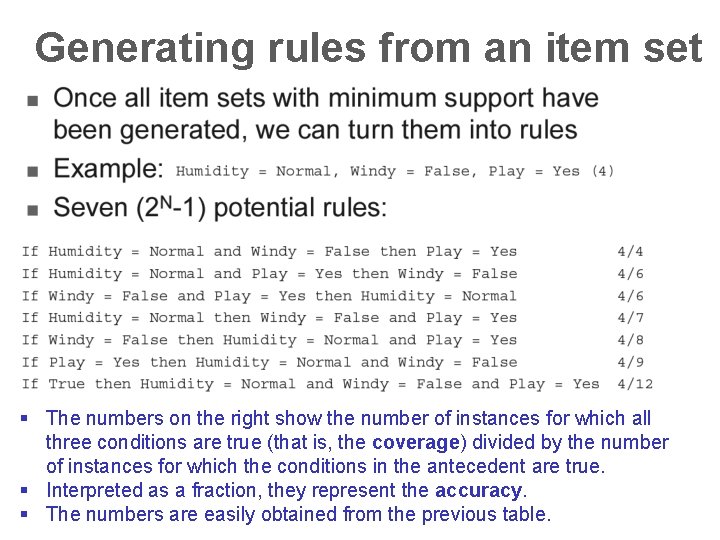

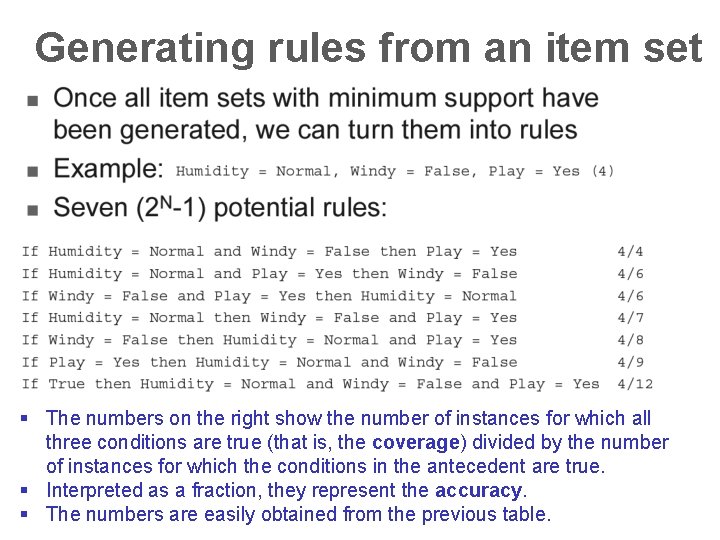

Generating rules from an item set § The numbers on the right show the number of instances for which all three conditions are true (that is, the coverage) divided by the number of instances for which the conditions in the antecedent are true. § Interpreted as a fraction, they represent the accuracy. § The numbers are easily obtained from the previous table.

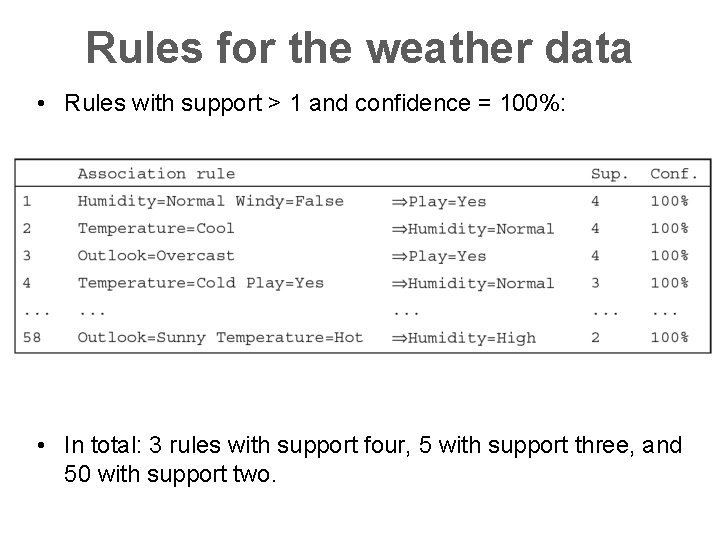

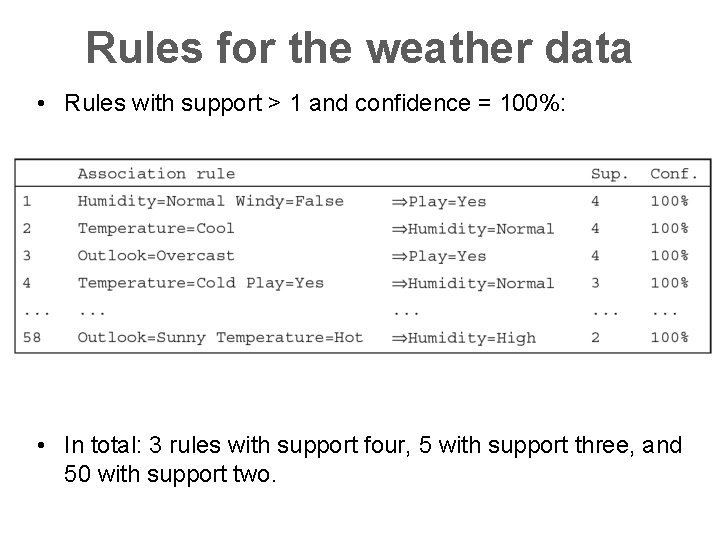

Rules for the weather data • Rules with support > 1 and confidence = 100%: • In total: 3 rules with support four, 5 with support three, and 50 with support two.

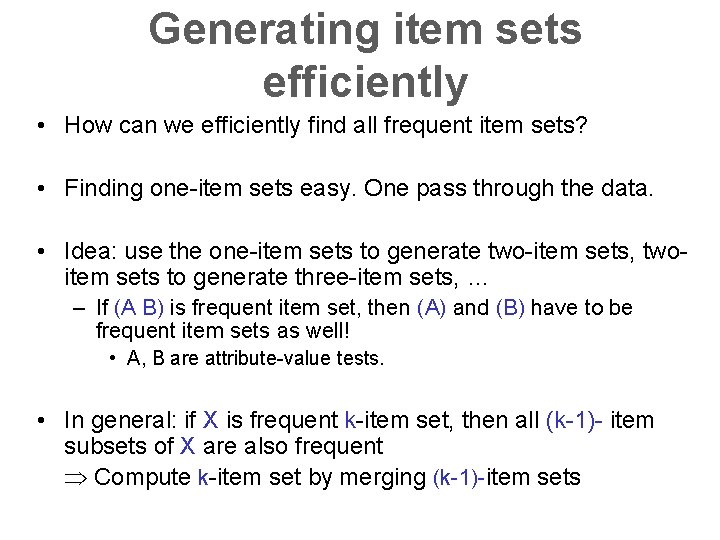

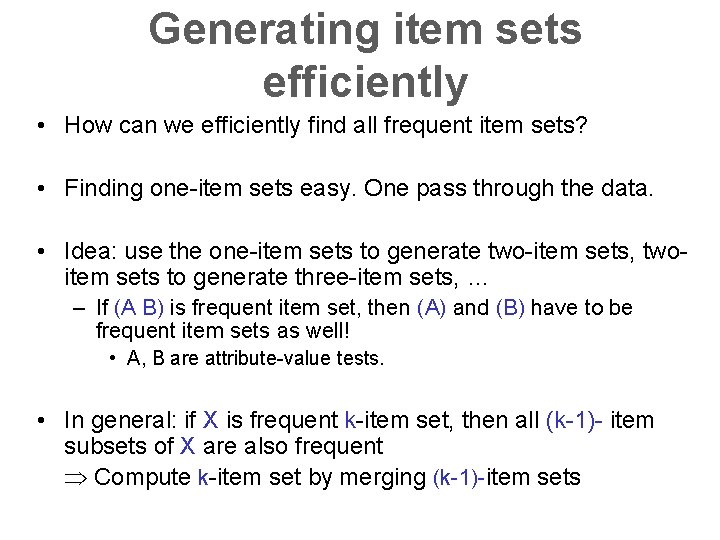

Generating item sets efficiently • How can we efficiently find all frequent item sets? • Finding one-item sets easy. One pass through the data. • Idea: use the one-item sets to generate two-item sets, twoitem sets to generate three-item sets, … – If (A B) is frequent item set, then (A) and (B) have to be frequent item sets as well! • A, B are attribute-value tests. • In general: if X is frequent k-item set, then all (k-1)- item subsets of X are also frequent Þ Compute k-item set by merging (k-1)-item sets

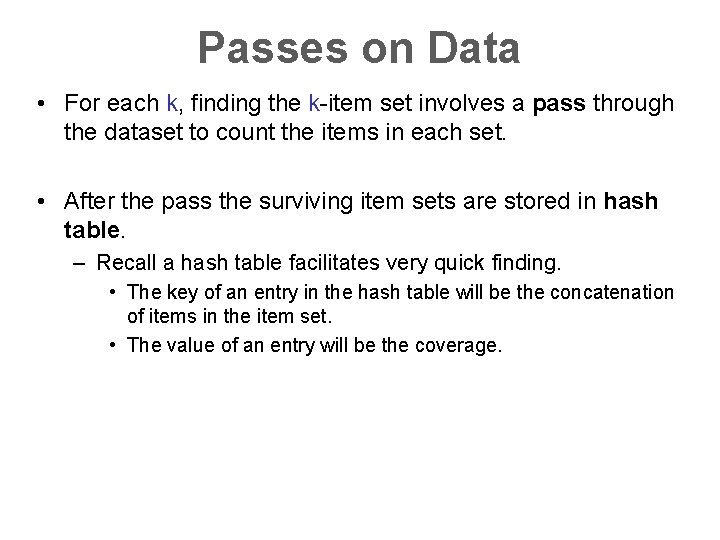

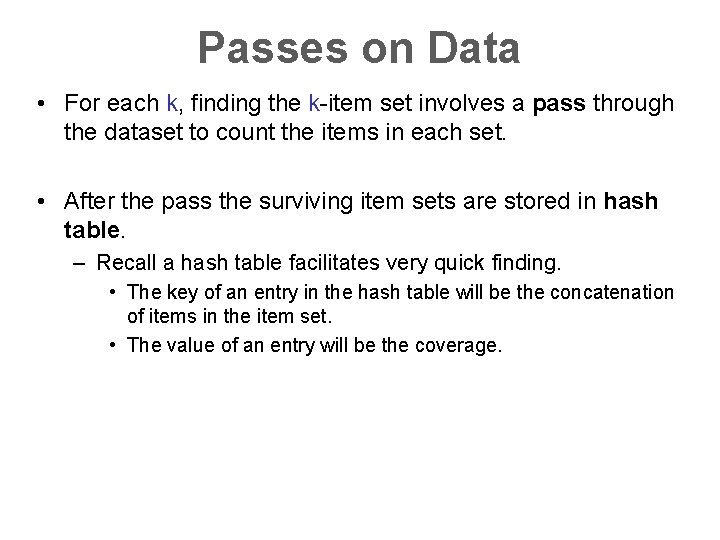

Passes on Data • For each k, finding the k-item set involves a pass through the dataset to count the items in each set. • After the pass the surviving item sets are stored in hash table. – Recall a hash table facilitates very quick finding. • The key of an entry in the hash table will be the concatenation of items in the item set. • The value of an entry will be the coverage.

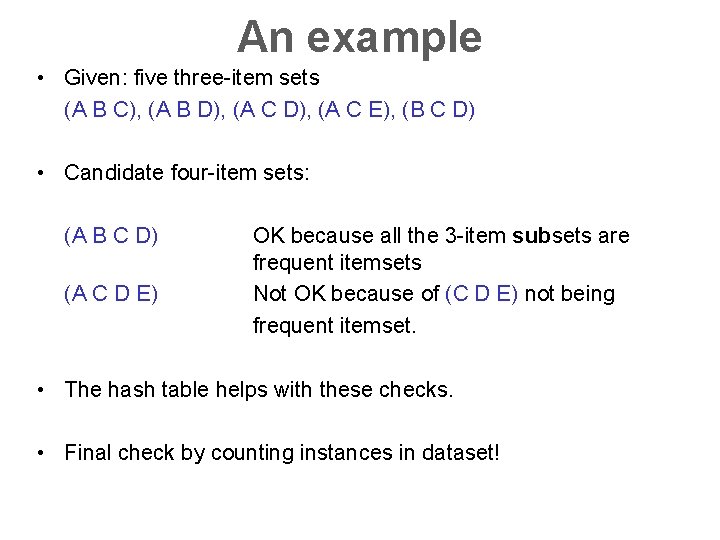

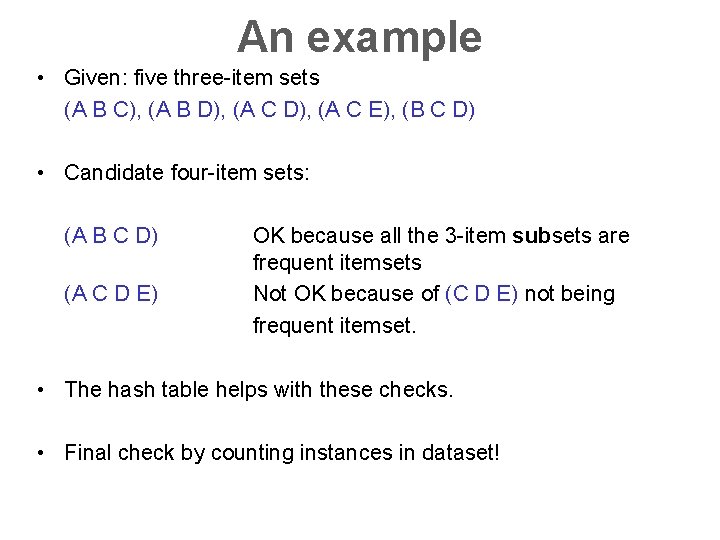

An example • Given: five three-item sets (A B C), (A B D), (A C E), (B C D) • Candidate four-item sets: (A B C D) (A C D E) OK because all the 3 -item subsets are frequent itemsets Not OK because of (C D E) not being frequent itemset. • The hash table helps with these checks. • Final check by counting instances in dataset!

Generating rules efficiently • Suppose we found the frequent itemsets. • Now, we are looking for all high-confidence rules – Support of antecedent obtained from hash table – But: brute-force method is (2 N-1) • Better way: building (c+1)-consequent rules from -consequent ones c • Observation: (c+1)-consequent rule can only hold if all corresponding c-consequent rules also hold • Resulting algorithm similar to procedure for large item sets

Discussion of association rules • Above method makes one pass through the data for each different size item set • Other possibility: generate (k+2)-item sets just after (k+1)item sets have been generated without validating first the (k+1)-item sets. – Result: more ( k+2)-item sets than necessary will be considered but less passes through the data – Makes sense if data too large for main memory

Tabular format • Tabular format isn’t always appropriate. • Association rules are often used in situations where attributes are unary – either present or not. – E. g. in supermarket basket analysis, • an instance is a particular shopping cart • attributes are all the items for sale • attribute values are present if that item appears in the cart and missing otherwise. • Most carts contain far fewer items than there are in the supermarket, and it’s more efficient to represent each instance as a list of attributes whose value is present.