

Mining a Year of Speech: a “Digging into Data” project http://www.phon.ox.ac.uk/mining/

Mining (a) Year(s) of Speech: a “Digging into Data” project http://www.phon.ox.ac.uk/mining/

John Coleman, Greg Kochanski, Ladan Ravary, Sergio Grau (Oxford University Phonetics Laboratory), Lou Burnard, Jonathan Robinson (The British Library)

Mark Liberman, Jiahong Yuan, Chris Cieri (Phonetics Laboratory and Linguistic Data Consortium, University of Pennsylvania)

With support from our “Digging into Data” competition funders, and with thanks for pump-priming support from the Oxford University John Fell Fund and from the British Library

The “Digging into Data” challenge

“The creation of vast quantities of Internet accessible digital data and the development of techniques for large-scale data analysis and visualization have led to remarkable new discoveries in genetics, astronomy and other fields ... With books, newspapers, journals, films, artworks, and sound recordings being digitized on a massive scale, it is possible to apply data analysis techniques to large collections of diverse cultural heritage resources as well as scientific data.”

In “Mining a Year of Speech” we addressed the challenges of working with very large audio collections of spoken language.

Challenges of very large audio collections of spoken language

- How does a researcher find audio segments of interest?
- How do audio corpus providers mark them up to facilitate searching and browsing?
- How can very large-scale audio collections be made accessible?

Challenges

- Amount of material
- Storage
  - CD-quality audio: 635 MB/hour
  - Uncompressed .wav files: 115 MB/hour
  - 2.8 GB/day
  - 85 GB/month
  - 1.02 TB/year
  - Library/archive .wav files: 1 GB/hr, 9 TB/yr
- Spoken audio = 250 times XML
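The storage figures above can be checked with a few lines of arithmetic. This is a minimal sketch, not the project's own calculation: it assumes decimal megabytes, that "CD quality" means 44.1 kHz 16-bit stereo, and that the 115 MB/hour figure corresponds to 16 kHz 16-bit mono PCM (the slides do not state the sampling parameters).

```python
SECONDS_PER_HOUR = 3600
HOURS_PER_YEAR = 24 * 365


def mb_per_hour(sample_rate_hz, bytes_per_sample, channels):
    """Size of one hour of uncompressed PCM audio, in decimal megabytes."""
    return sample_rate_hz * bytes_per_sample * channels * SECONDS_PER_HOUR / 1e6


cd_quality = mb_per_hour(44_100, 2, 2)  # 44.1 kHz, 16-bit, stereo
speech_wav = mb_per_hour(16_000, 2, 1)  # assumed: 16 kHz, 16-bit, mono

# One year of continuous audio at the speech rate, in decimal terabytes.
tb_per_year = speech_wav * HOURS_PER_YEAR / 1e6

print(f"CD quality: {cd_quality:.0f} MB/hour")
print(f"Speech .wav: {speech_wav:.0f} MB/hour, {tb_per_year:.2f} TB/year")
```

Under these assumptions the yearly total comes out within rounding of the 1.02 TB quoted on the slide, which multiplies the rounded 2.8 GB/day figure by 365.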

Challenges

- Storing 1.02 TB/year: not really a problem in the 21st century
- 1 TB (1000 GB) hard drive: c. £65 (now £39.95!)
- Computing (distance measures, alignments, labels, etc.): multiprocessor cluster

Challenges

- Amount of material
- Computing
  - distance measures, etc.
  - alignment of labels
  - searching and browsing
  - Just reading or copying 9 TB takes >1 day
  - Download time: days or weeks

Challenges

To make large corpora practical, you need:

- A detailed index, so users can find the parts they need
- A way of using the index to access slices of the corpus

    <w c5="AV0" hw="well" pos="ADV">Well </w>
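As a rough illustration of the two requirements above, the sketch below builds a word index over time-aligned records and turns a hit into a range of audio frames that could be read without touching the rest of the file. The record layout, the recording identifiers, and the 16 kHz sample rate are all hypothetical; the project's actual index format is not described in these slides.

```python
from collections import defaultdict

# Hypothetical aligned records: (recording id, word, start in s, end in s).
ALIGNED = [
    ("rec_001", "well", 1.6925, 2.1125),
    ("rec_001", "yeah", 2.1125, 2.3125),
    ("rec_002", "well", 10.50, 10.80),
]


def build_index(records):
    """Map each lower-cased word to the places where it occurs."""
    index = defaultdict(list)
    for rec_id, word, start, end in records:
        index[word.lower()].append((rec_id, start, end))
    return index


def frame_range(start_s, end_s, sample_rate_hz=16_000, padding_s=0.0):
    """Convert a time span into (first, last) frame offsets, with optional padding."""
    first = max(0, int(round((start_s - padding_s) * sample_rate_hz)))
    last = int(round((end_s + padding_s) * sample_rate_hz))
    return first, last


index = build_index(ALIGNED)
for rec_id, start, end in index["well"]:
    print(rec_id, frame_range(start, end))
```

The point of the design is that a search only ever needs the small index; the large audio files are opened afterwards, and only at the offsets the index returns.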

Potential users

- Members of the public interested in specific bits of content
- Scientists with broader interests, e.g. law scholars, political scientists: text searches
- Phoneticians and speech engineers: retrieval based on pronunciation and sound

Searching audio: some kinds of questions you might ask

1. When did X say Y? For example, "find the video clip where George Bush said 'read my lips'."
2. Are there changes in dialects, or in their social status, that are tied to the new social media?
3. How do arguments work? For example, how do different people handle interruptions?
4. How frequent are linguistic features such as phrase-final rising intonation ("uptalk") across different age groups, genders, social classes, and regions?

Some large(ish) speech corpora

- Switchboard corpus: 13 days of audio
- Spoken Dutch Corpus: 1 month, but only a fraction is phonetically transcribed
- Spoken Spanish: 4.6 days, orthographically transcribed
- Buckeye Corpus (OSU): c. 2 days
- Wellington Corpus of Spoken New Zealand English: c. 3 days transcribed
- Digital Archive of Southern Speech (American)

The “Year of Speech”

- A grove of corpora, held at various sites with a common indexing scheme and search tools
- US English material:
  - 2,240 hrs of telephone conversations
  - 1,255 hrs of broadcast news
  - As-yet unpublished talk show conversations (1000 hrs), Supreme Court oral arguments (5000 hrs), political speeches and debates
- British English: spoken part of the British National Corpus, >7.4 million words of transcribed speech
  - Recently digitized in collaboration with the British Library

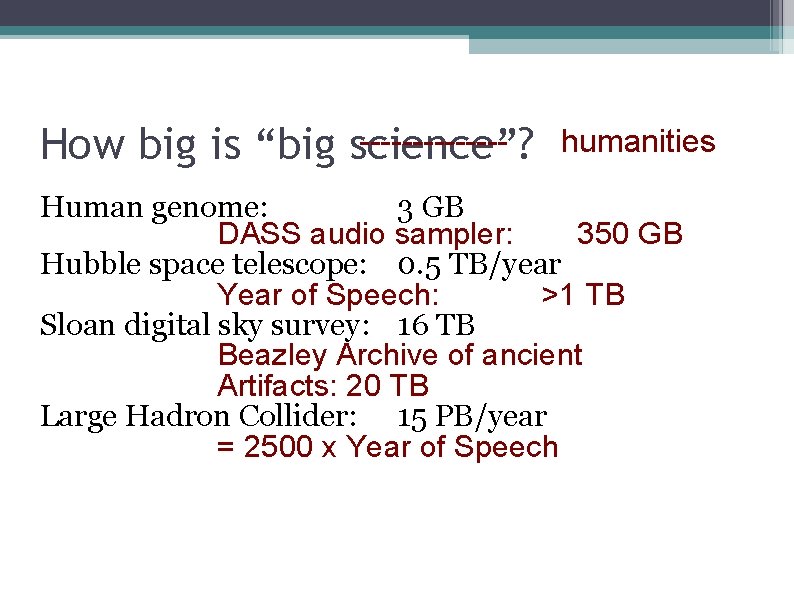

How big is “big science”? How big is “big humanities”?

- Human genome: 3 GB
- DASS audio sampler: 350 GB
- Hubble space telescope: 0.5 TB/year
- Year of Speech: >1 TB
- Sloan digital sky survey: 16 TB
- Beazley Archive of ancient artifacts: 20 TB
- Large Hadron Collider: 15 PB/year = 2500 × Year of Speech

Analogue audio in libraries

- British Library: >1 m disks and tapes, 5% digitized
- Library of Congress Recorded Sound Reference Center: >2 m items, including …
- International Storytelling Foundation: >8000 hrs of audio and video
- European broadcast archives: >20 m hrs (2,283 years); cf. Large Hadron Collider
  - 75% on ¼” tape
  - 20% shellac and vinyl
  - 7% digital

Analogue audio in libraries

- Worldwide: ~100 m hours (11,415 yrs) of analogue audio, i.e. 4-5 Large Hadron Colliders!
- Cost of professional digitization: ~£20/$32 per tape (e.g. C-90 cassette)
- Using speech recognition and natural language technologies (e.g. summarization) could provide more detailed cataloguing/indexing without time-consuming human listening

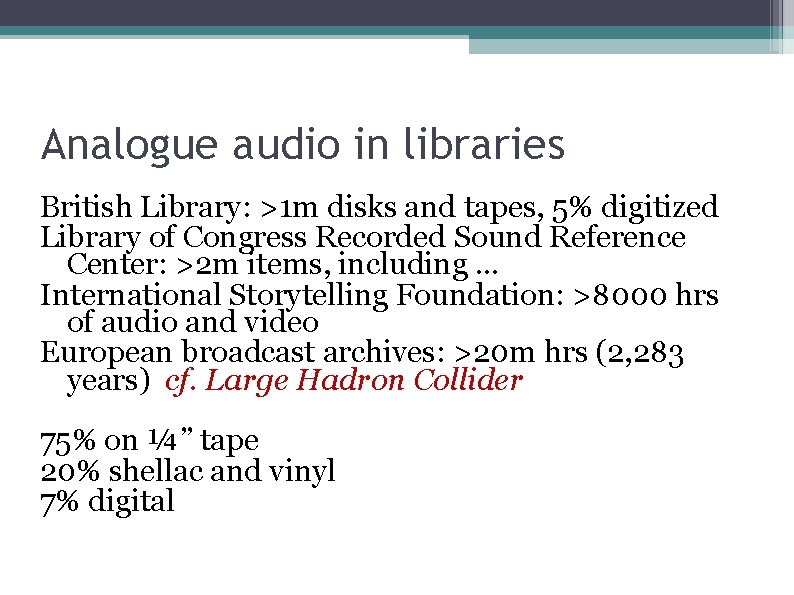

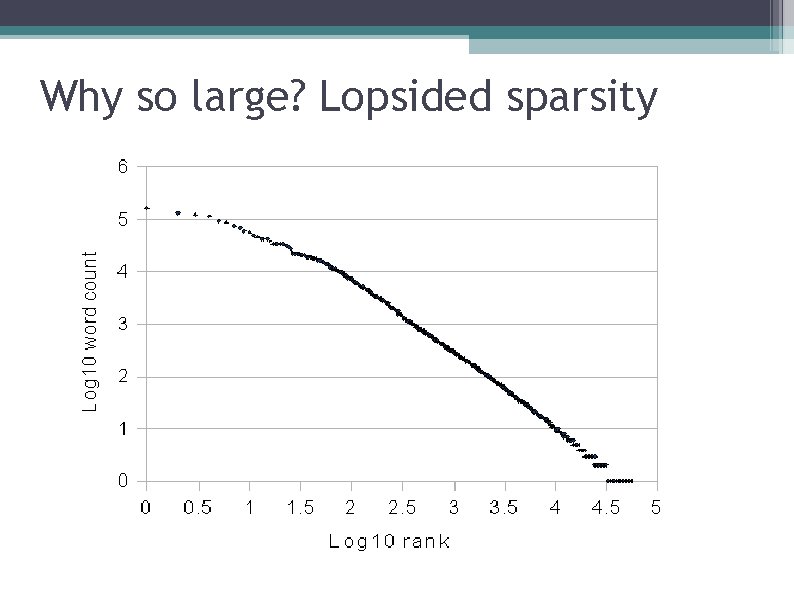

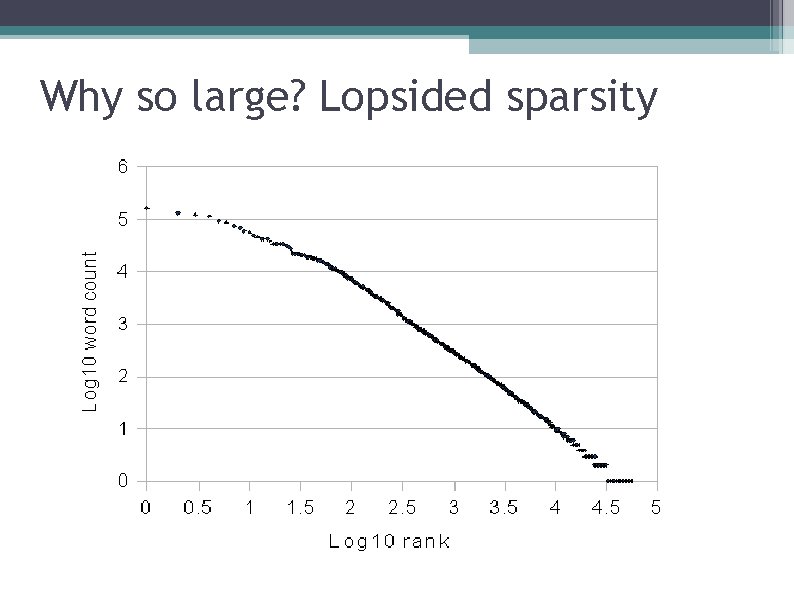

Why so large? Lopsided sparsity

- The top ten words (I, you, it, the, 's, and, n't, a, that, yeah) each occur 58,000 times
- 12,400 words (23%) occur only once
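The two statistics on this slide, the most frequent words and the share of word types that occur only once, can be computed from any tokenized transcript. The sketch below is illustrative only: the toy sentence is invented, and the function name `sparsity_summary` is not from the project.

```python
from collections import Counter


def sparsity_summary(tokens, top_n=10):
    """Summarize how lopsided a word-frequency distribution is."""
    counts = Counter(tokens)
    hapaxes = [word for word, count in counts.items() if count == 1]
    return {
        "types": len(counts),                  # number of distinct words
        "top": counts.most_common(top_n),      # the few very frequent words
        "hapax_share": len(hapaxes) / len(counts) if counts else 0.0,
    }


# Invented toy input; a real run would use the corpus transcriptions.
toy = "i know you know it 's a gronnie yeah i know".split()
summary = sparsity_summary(toy, top_n=3)
print(summary)
```

Even in a toy example most word types occur once, which is the pattern that forces corpora to be very large before rare items show up at all.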

Why so large? Lopsided sparsity


Lopsided sparsity and size

Fox and Robles (2010): 22 examples of It's like-enactments [e.g. it's like 'mmmmmm'] in 10 hours of data

Lopsided sparsity and size

Rare phonetic word-joins (tokens per ~10 million):

- I'm trying: 60
- seem/n to: 310
- alarng clock: 18
- swimmim pool: 44
- gettim paid: 19
- weddim present: 15

7 of the 'swimming pool' examples are from one family on one occasion

Lopsided sparsity and size

Final -t/-d deletion:

- just: 19563 tokens
- want: 5221
- left: 432
- slammed: 6

A rule of thumb

To catch most:

- English sounds, you need minutes of audio
- common words of English … a few hours
- a typical person's vocabulary … >100 hrs
- pairs of common words … >1000 hrs
- arbitrary word-pairs … >100 years

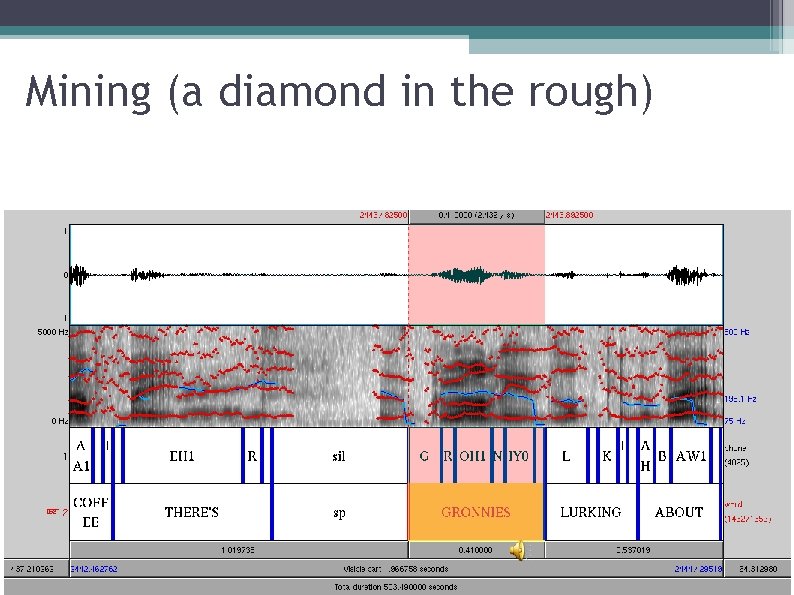

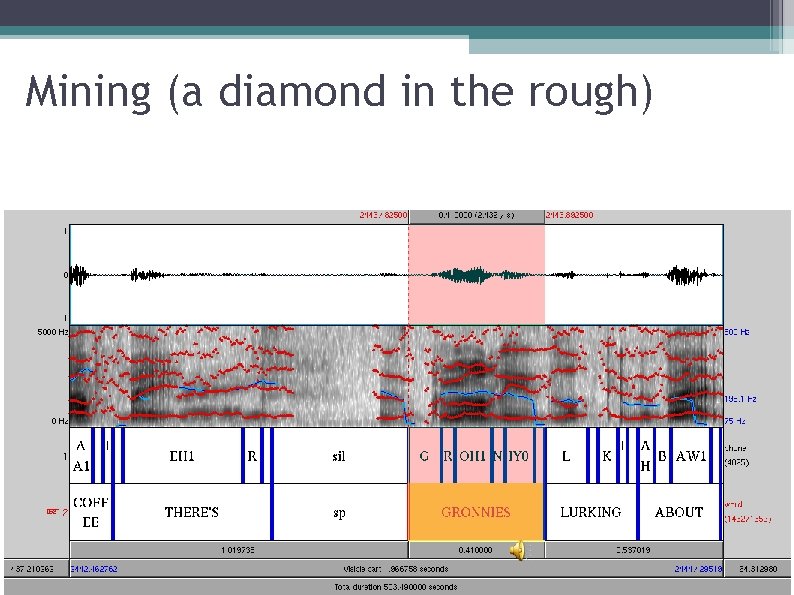

Rare and unique wonders

aqualunging, boringest, chambermaiding, de-grandfathered, europeaney, gronnies, hoptastic, lawnmowing, mellies, noseless, punny, regurgitate-arianism, scunny, smackerooney, tooked, weppings, yak-chucker, zombieness

Not just repositories of words

- Specific phrases or constructions
- Particularities of people's voices and speaking habits
- Dog-directed speech
- Parrot-directed speech

Language(?) in the wild

- A parrot
- Talking to a dog
- Try transcribing this!
- There’s gronnies lurking about

Unusual voices

- Circumstances of use
- How is the 'voice' selected?
- Do men do it more than women? Young more than old?
- How do the speaker's and listener's brains produce, interpret or store “odd voice” pronunciations and strange intonations?

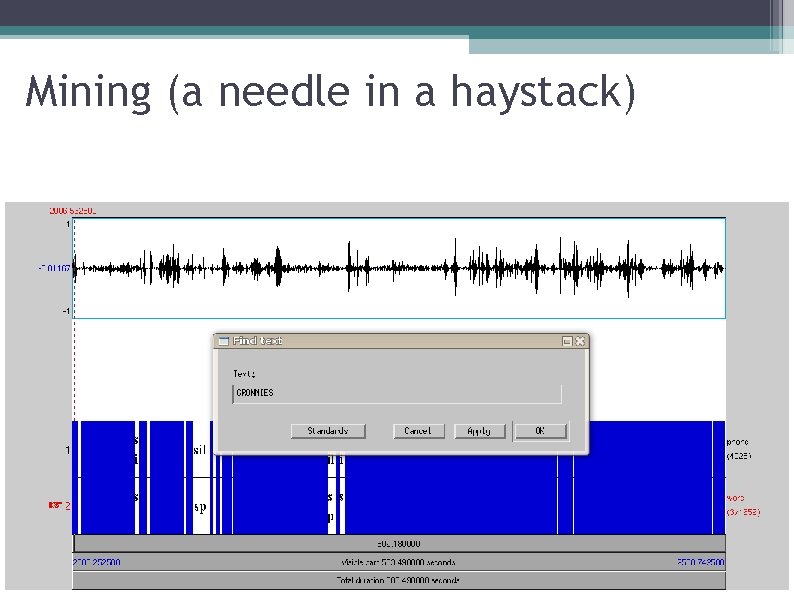

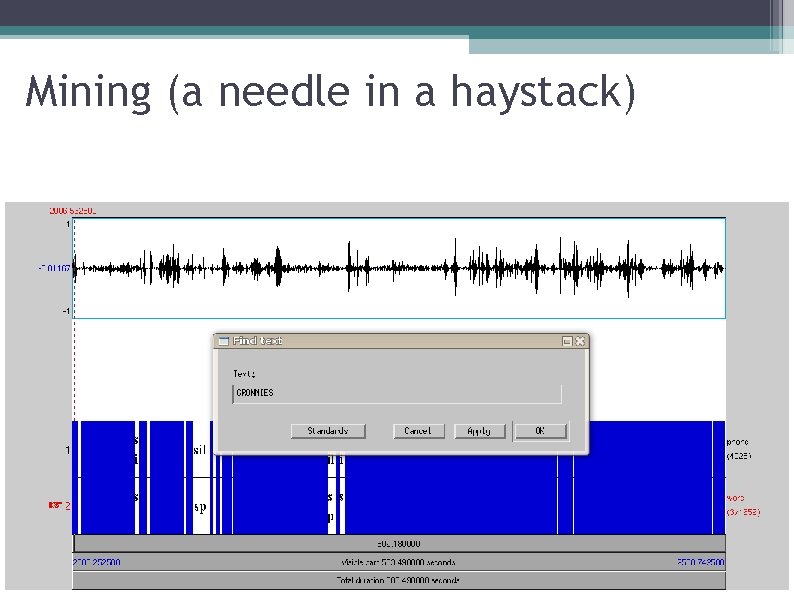

Main problem in large corpora

Finding needles in the haystack. To address that challenge, we think there are two “killer apps”:

- Forced alignment
- Data linking: open exposure of digital material, coupled with cross-searching

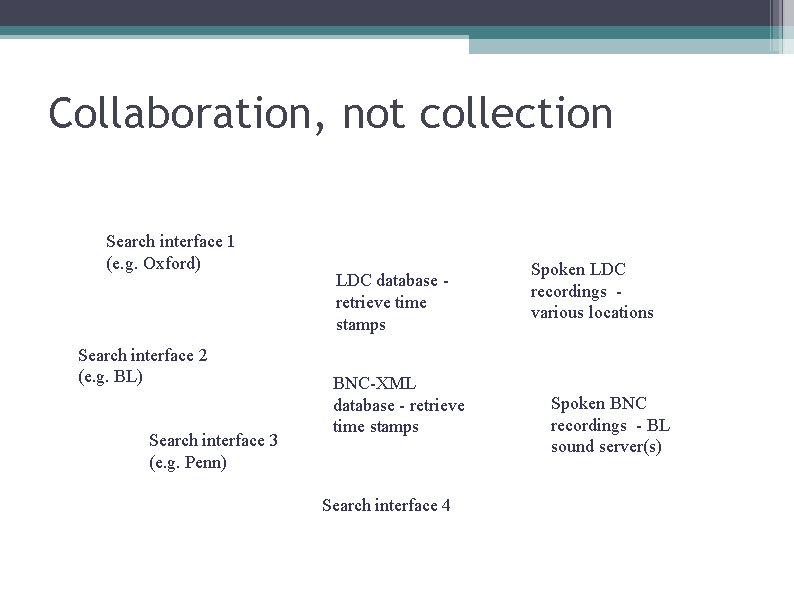

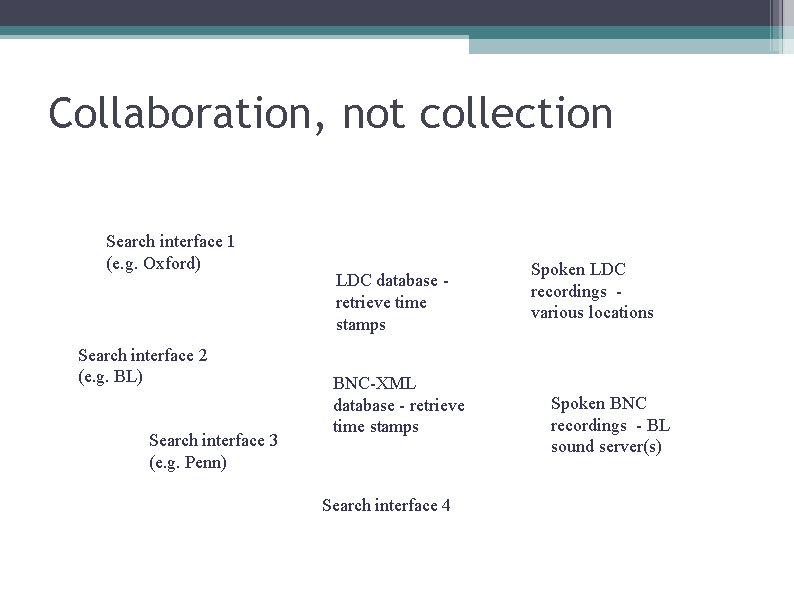

Collaboration, not collection

- Search interface 1 (e.g. Oxford)
- Search interface 2 (e.g. BL)
- Search interface 3 (e.g. Penn)
- Search interface 4 (e.g. Lancaster?)
- LDC database: retrieve time stamps
- BNC-XML database: retrieve time stamps
- Spoken LDC recordings: various locations
- Spoken BNC recordings: BL sound server(s)

Corpora in the Year of Speech

- Spontaneous speech
- Spoken BNC, ~1400 hrs
- Conversational telephone speech
- Read text
- LibriVox audio books
- Broadcast news
- US Supreme Court oral arguments
- Political discourse
- Oral history interviews
- US vernacular dialects/sociolinguistic interviews

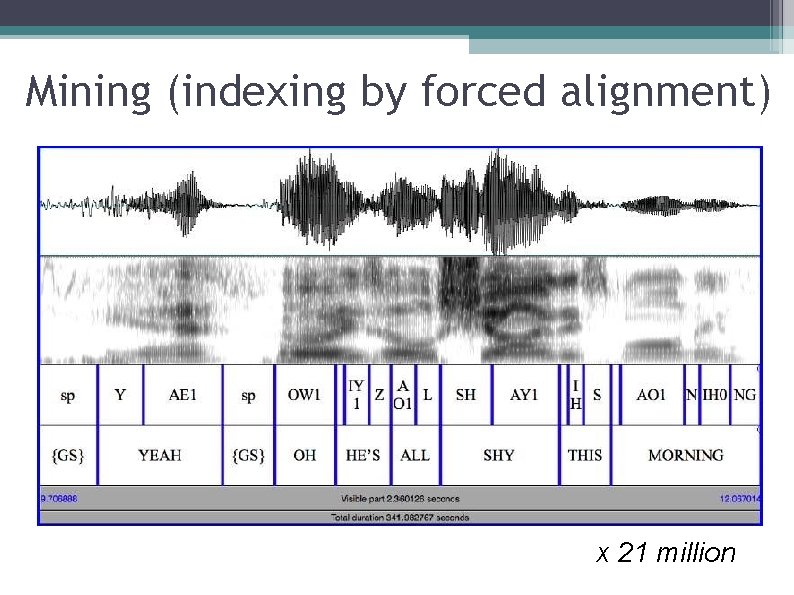

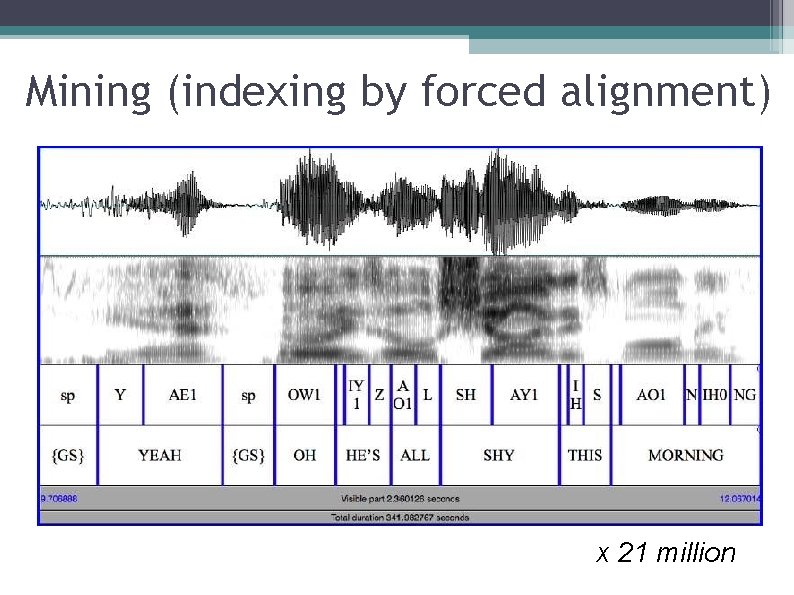

Practicalities

- To be of much practical use, such very large corpora must be indexed at word and segment level
- All included speech corpora must therefore have associated text transcriptions
- We’re using the Penn Phonetics Laboratory Forced Aligner to associate each word and segment with the corresponding start and end points in the sound files

Mining (indexing by forced alignment) x 21 million

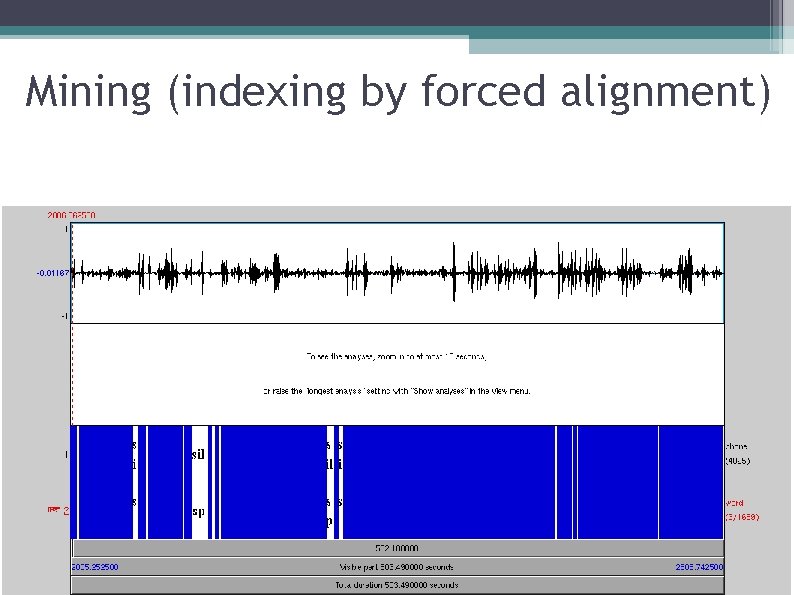

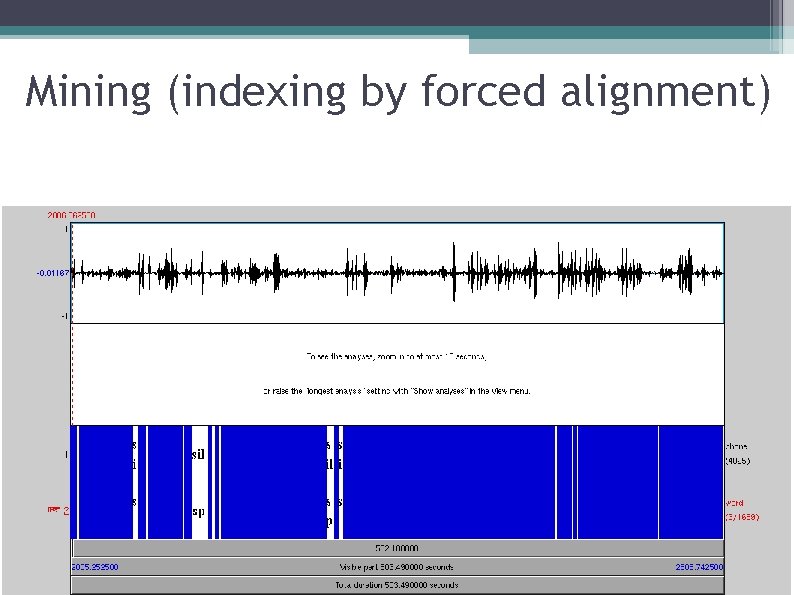

Mining (indexing by forced alignment)

Mining (a needle in a haystack)

Mining (a diamond in the rough)


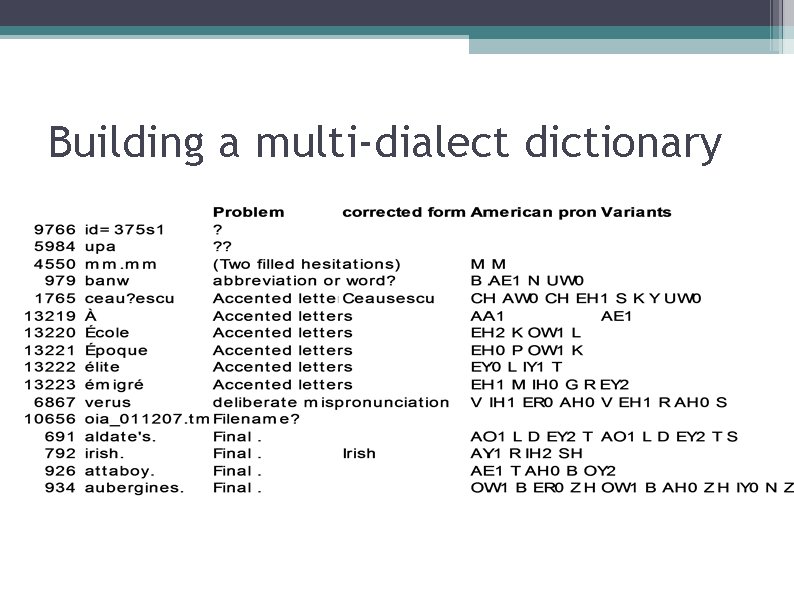

American and English

- Same set of acoustic models, e.g. same [ɑ] for US “Bob” and UK “Ba(r)b”
- Pronunciation differences between different varieties were dealt with by listing multiple phonetic transcriptions

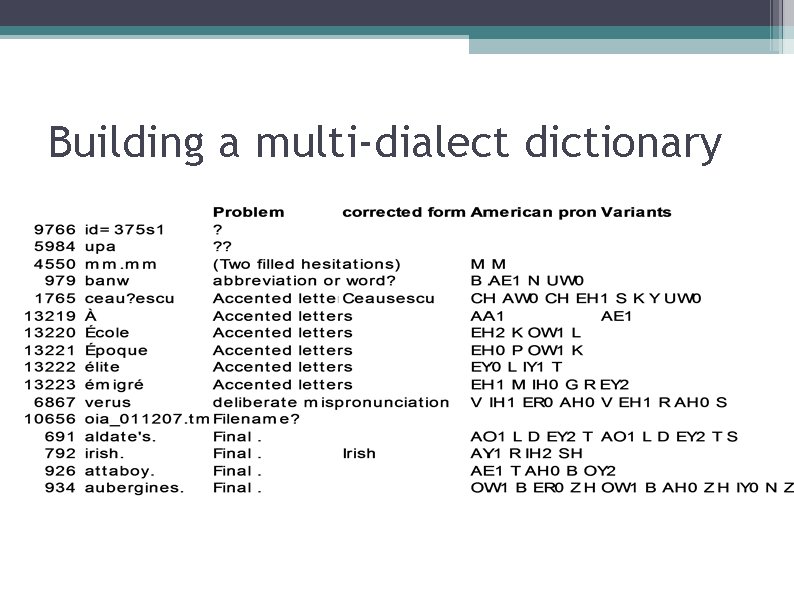

Building a multi-dialect dictionary


Tools/user interfaces

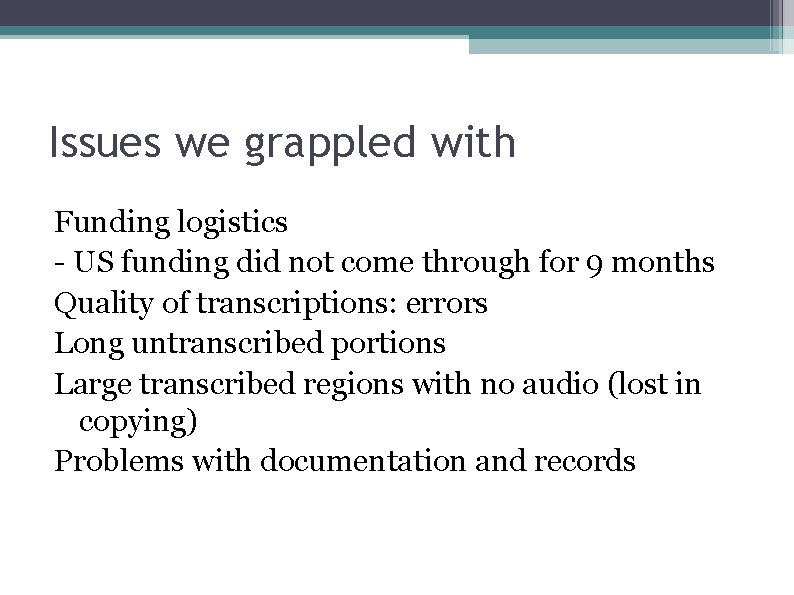

Issues we grappled with

- Funding logistics: US funding did not come through for 9 months
- Quality of transcriptions: errors
- Long untranscribed portions
- Large transcribed regions with no audio (lost in copying)
- Problems with documentation and records

Issues we grappled with

- Broadcast recordings may include untranscribed commercials
- Transcripts generally edit out dysfluencies
- Political speeches may extemporize, departing from the published script

Issues we grappled with

Some causes of difficulty in forced alignment:

- Overlapping speakers
- Background noise/music/babble
- Transcription errors
- Variable signal loudness
- Reverberation
- Distortion
- Poor speaker vocal health/voice quality
- Unexpected accents

Issues we’re still grappling with

- No standards for adding phonemic transcriptions and timing information to XML transcriptions
- Many different possible schemes
- How to decide?

Anonymization

- The text transcriptions in the published BNC have already been anonymized
- Some parts of the audio (e.g. COLT) have also been published
- Full names, personal addresses and telephone numbers were replaced by <gap> tags
- We use the location of all such tags to mute (silence) the corresponding portions of audio
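The muting step described above amounts to zeroing the samples that fall inside each anonymized interval. The following is a minimal sketch under stated assumptions: the audio is held as a plain list of PCM sample values, the gap locations have already been converted to (start, end) times in seconds, and the function name `mute_intervals` is hypothetical rather than part of the project's tooling.

```python
def mute_intervals(samples, intervals, sample_rate_hz):
    """Return a copy of samples with every (start_s, end_s) interval set to zero."""
    muted = list(samples)
    for start_s, end_s in intervals:
        # Clamp to the bounds of the recording so bad alignments cannot overrun.
        first = max(0, int(round(start_s * sample_rate_hz)))
        last = min(len(muted), int(round(end_s * sample_rate_hz)))
        for i in range(first, last):
            muted[i] = 0
    return muted


# Toy signal: ten samples at 10 Hz, with two gaps to silence.
audio = [100] * 10
gaps = [(0.2, 0.4), (0.8, 2.0)]
muted = mute_intervals(audio, gaps, sample_rate_hz=10)
print(muted)
```

Because the result is only as good as the gap times, a misaligned gap silences the wrong stretch of audio, which is why the alignment of every gap has to be checked.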

Intellectual property responsibilities

- All <gap>s must be checked to ensure accuracy
- This is a much bigger job than we had anticipated (>13,000 anonymization 'gaps')
- Checking the alignment of gaps is labour-intensive and slow
- Compounded by poor automatic alignments

Rare and unique wonders

aeriated, bolshiness, canoodling, drownded, even-stevens, gakky, kiddy-fied, mindblank, noggin, pythonish, re-snogged, sameyness, stripesey, tuitioning, watermanship, yukkified

Collaboration, not collection

- Search interface 1 (e.g. Oxford)
- Search interface 2 (e.g. BL)
- Search interface 3 (e.g. Penn)
- Search interface 4
- LDC database: retrieve time stamps
- BNC-XML database: retrieve time stamps
- Spoken LDC recordings: various locations
- Spoken BNC recordings: BL sound server(s)

Publication/release plans: LDC

Publication/release plans: BNC

- Until we complete the checking of the alignment of anonymization gaps, we cannot release the full BNC Spoken Audio corpus
- In the meantime, we have prepared a BNC Spoken Audio Sampler (release imminent!)
  - Including the audio, alignments, and our proposed XML extensions
  - plus the multi-dialect pronouncing dictionary
- Later: full release as linked data via the British Library Archival Sound Recordings server

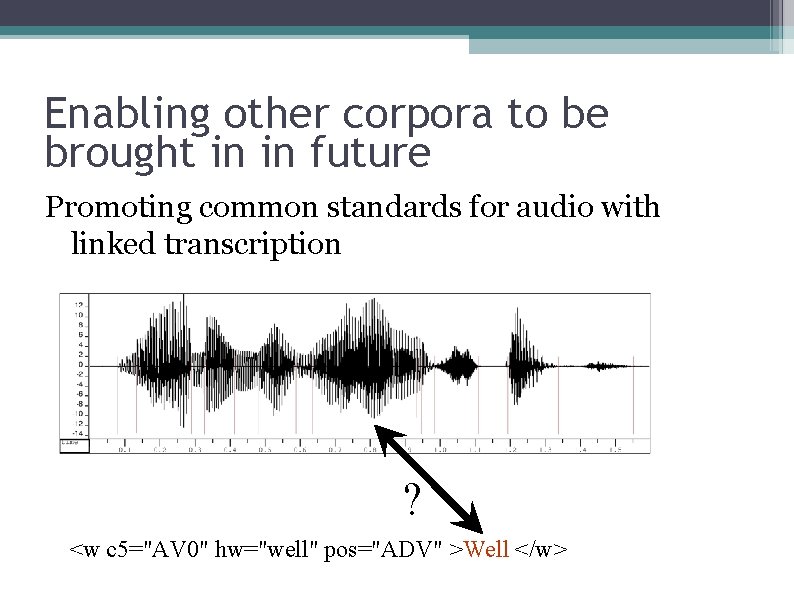

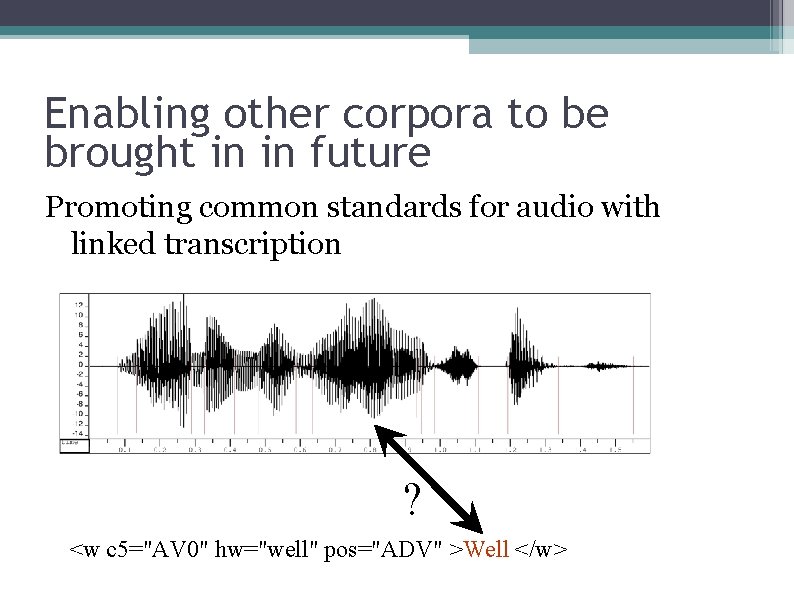

Enabling other corpora to be brought in in future

Promoting common standards for audio with linked transcription

    <w c5="AV0" hw="well" pos="ADV">Well </w>

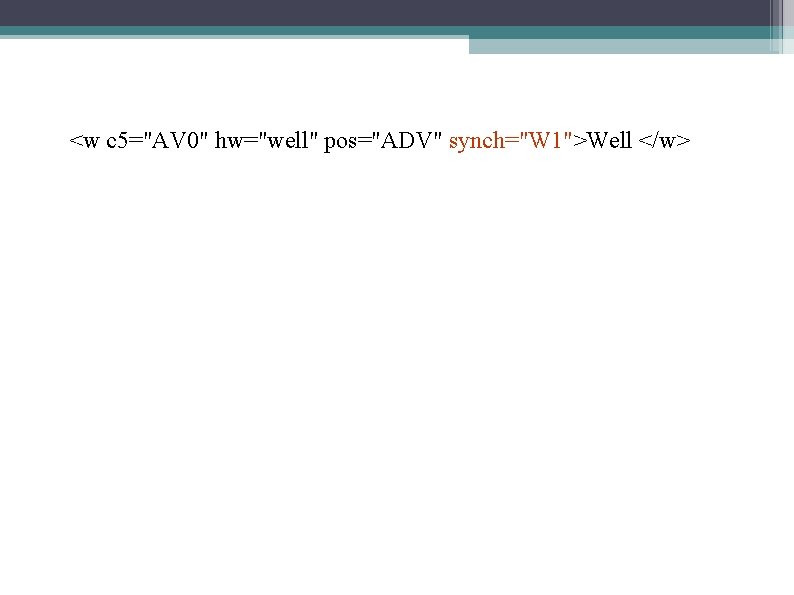

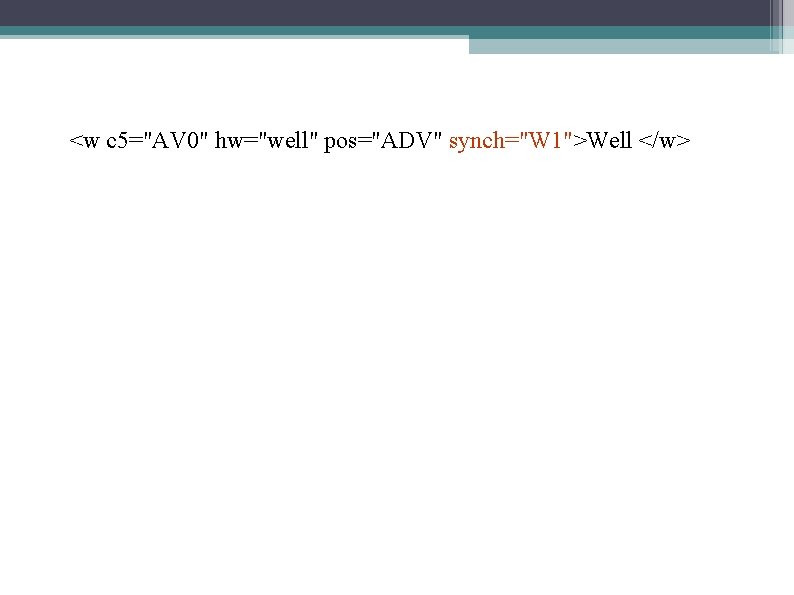

    <w c5="AV0" hw="well" pos="ADV" synch="W1">Well </w>

    <w c5="AV0" hw="well" pos="ADV" synch="W1">Well </w>
    <fs type="word">
      <f name="transcription">
        <string>Well </string>
      </f>
      <f name="phonemes">
        <fs type="phoneme">
          <f name="W" fVal="#W" synch="P1.1"/>
          <f name="EH1" fVal="#EH1" synch="P1.2"/>
          <f name="L" fVal="#L" synch="P1.3"/>
        </fs>
      </f>
    </fs>

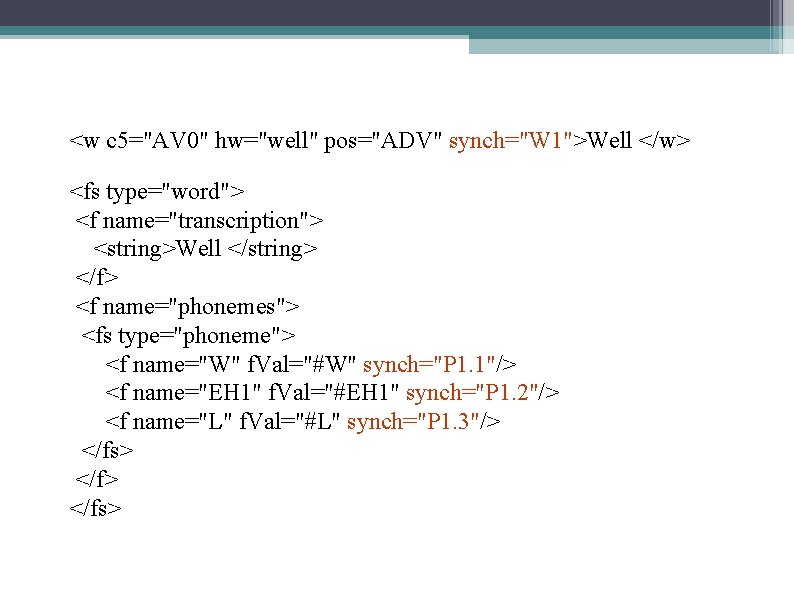

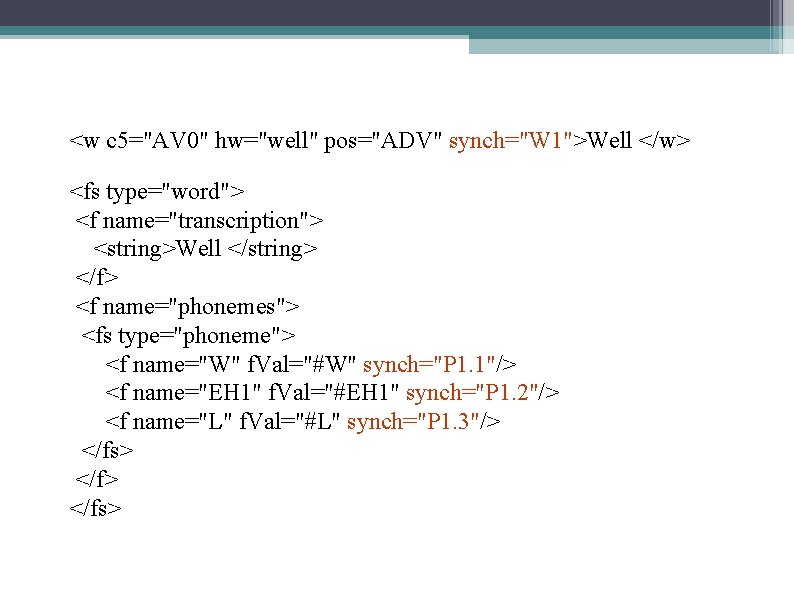

    <timeline origin="0" unit="s" xml:id="TL0">
      <when xml:id="W1" from="1.6925" to="2.1125"/>
      <when xml:id="W2" from="2.1125" to="2.3125"/>
      <when xml:id="P1.1" from="1.6925" to="1.8225"/>
      <when xml:id="P1.2" from="1.8225" to="1.9225"/>
      <when xml:id="P1.3" from="1.9225" to="2.1125"/>
      <when xml:id="P2.1" from="2.1125" to="2.1825"/>
      <when xml:id="P2.2" from="2.1825" to="2.3125"/>
      ...
    </timeline>
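To show how the `synch` pointers on words might be resolved against the timeline, here is a small sketch using Python's standard `xml.etree.ElementTree`. The `<doc>` wrapper element and the helper names are assumptions made so the fragment parses on its own; the element and attribute names inside it follow the proposed markup shown on the slides.

```python
import xml.etree.ElementTree as ET

# ElementTree exposes xml:id under the predefined XML namespace.
XML_ID = "{http://www.w3.org/XML/1998/namespace}id"

# <doc> is a hypothetical wrapper; the rest mirrors the slide markup.
SAMPLE = """
<doc>
  <w c5="AV0" hw="well" pos="ADV" synch="W1">Well </w>
  <timeline origin="0" unit="s" xml:id="TL0">
    <when xml:id="W1" from="1.6925" to="2.1125"/>
    <when xml:id="P1.1" from="1.6925" to="1.8225"/>
    <when xml:id="P1.2" from="1.8225" to="1.9225"/>
    <when xml:id="P1.3" from="1.9225" to="2.1125"/>
  </timeline>
</doc>
"""


def load_timeline(root):
    """Map each <when> identifier to its (start, end) times in seconds."""
    return {
        when.get(XML_ID): (float(when.get("from")), float(when.get("to")))
        for when in root.iter("when")
    }


def word_times(root):
    """Pair each <w> element's text with the interval its synch attribute names."""
    timeline = load_timeline(root)
    return [
        (w.text.strip(), *timeline[w.get("synch")])
        for w in root.iter("w")
        if w.get("synch") in timeline
    ]


root = ET.fromstring(SAMPLE)
print(word_times(root))
```

Keeping the times in a separate timeline means the original transcription markup gains only one extra attribute per word, which is one reason a pointer-based scheme is attractive.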

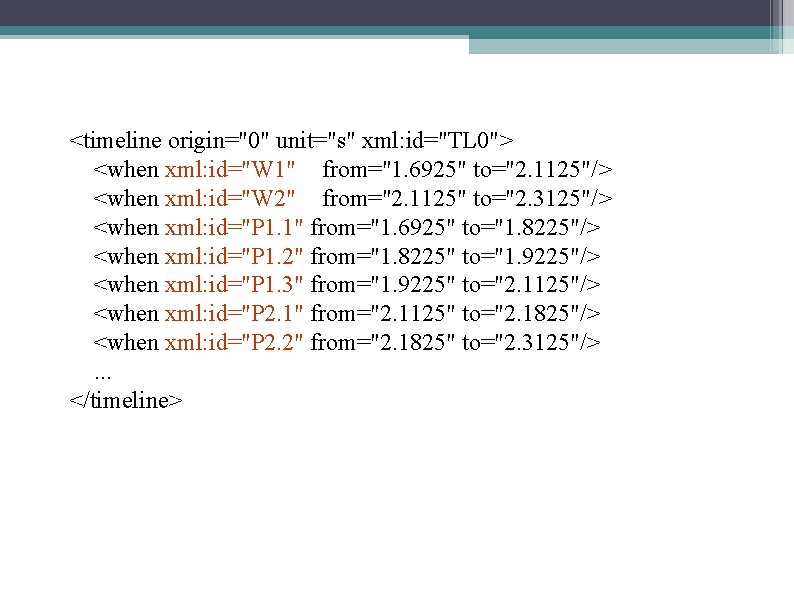

Enabling other corpora to be brought in in future

- Negotiating with other speech corpus providers to join the “federation”
- Accumulating transcriptions (in ordinary spelling) by crowdsourcing

New Insights

- How does the large scale change our research?
- What did we learn at the large scale that we couldn't see before?
- We present an illustrative selection of pilot studies

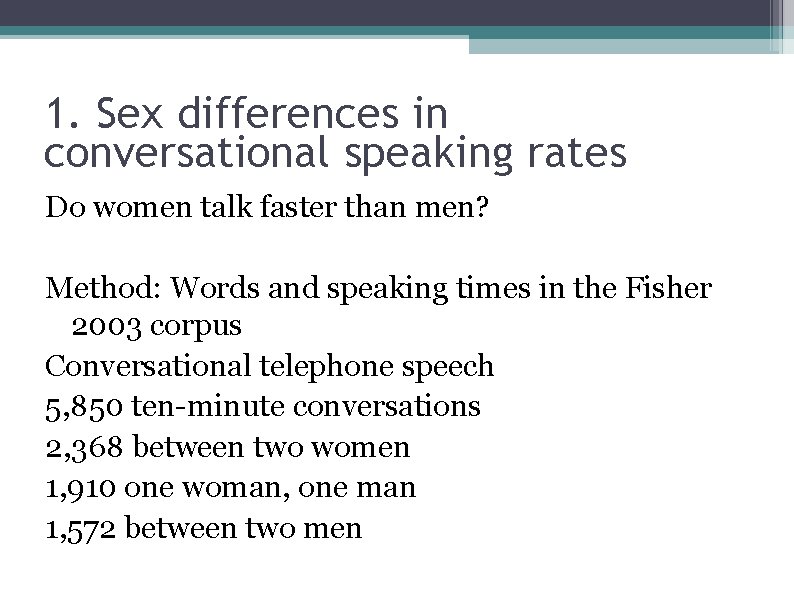

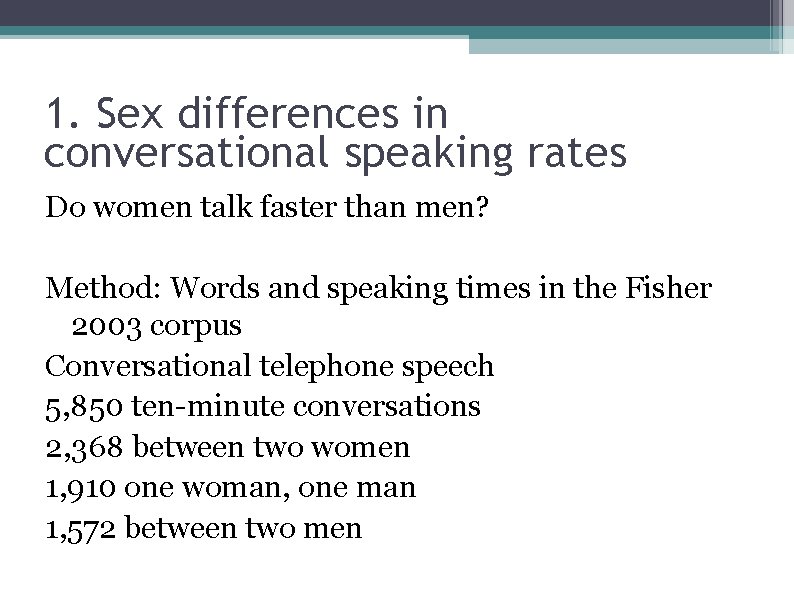

1. Sex differences in conversational speaking rates

Do women talk faster than men?

- Method: words and speaking times in the Fisher 2003 corpus
- Conversational telephone speech
- 5,850 ten-minute conversations
  - 2,368 between two women
  - 1,910 one woman, one man
  - 1,572 between two men
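The method named above, words divided by speaking time, could be sketched as follows. The per-speaker totals are invented purely to exercise the arithmetic and say nothing about the Fisher corpus; the function names are hypothetical.

```python
from statistics import mean

# Invented per-speaker totals: (group label, word count, speaking time in s).
SIDES = [
    ("F", 900, 300.0),
    ("F", 700, 280.0),
    ("M", 620, 248.0),
    ("M", 810, 270.0),
]


def words_per_minute(word_count, speaking_seconds):
    """Speaking rate over the time a speaker actually holds the floor."""
    return 60.0 * word_count / speaking_seconds


def mean_rate_by_group(sides):
    """Average the per-speaker rates within each group."""
    rates = {}
    for group, words, seconds in sides:
        rates.setdefault(group, []).append(words_per_minute(words, seconds))
    return {group: mean(values) for group, values in rates.items()}


print(mean_rate_by_group(SIDES))
```

Dividing by speaking time rather than call length matters here, since otherwise a speaker who simply talks for more of the conversation would appear to talk faster.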

Do women talk faster than men? No

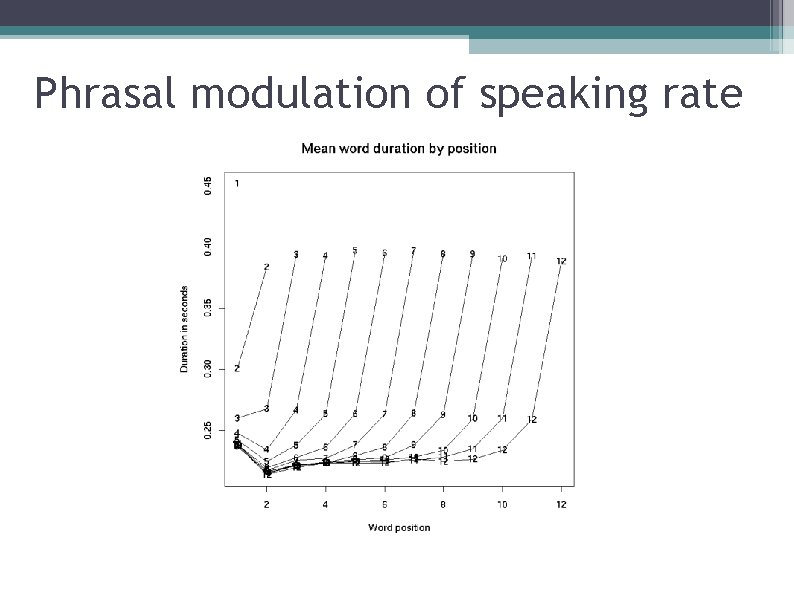

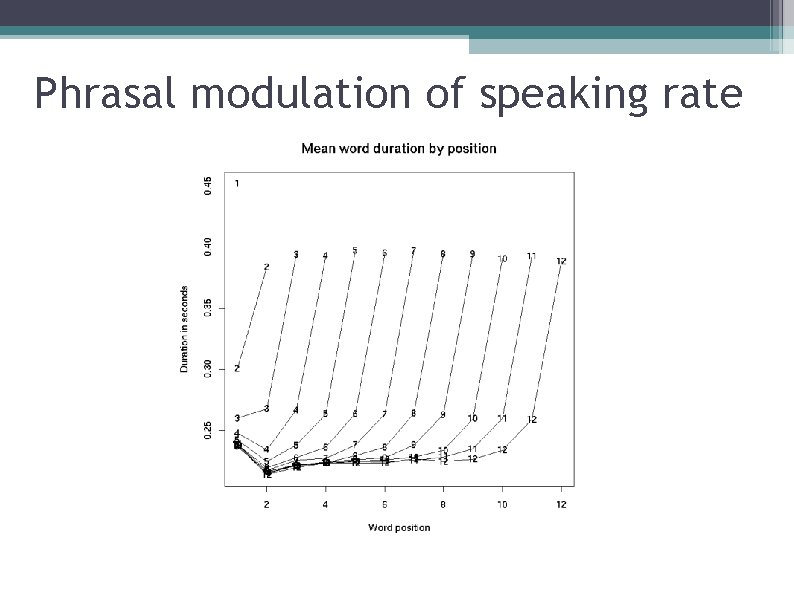

2. Phrasal modulation of speaking rate

- “Phrase-final lengthening” (rallentando)
- What is a spoken “phrase”?
- Method: word duration by position between pauses
- Data: Switchboard (conversational telephone speech)
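One way to read "word duration by position between pauses" is sketched below: split the aligned words into stretches separated by silence, then average word duration by distance from the end of each stretch. The 0.2 s pause threshold, the toy word timings, and the function names are all assumptions for illustration, not the study's settings.

```python
from collections import defaultdict
from statistics import mean


def split_phrases(words, min_pause_s=0.2):
    """Group (word, start, end) tuples into pause-delimited phrases."""
    phrases, current = [], []
    for word in words:
        # A silence of at least min_pause_s since the last word ends the phrase.
        if current and word[1] - current[-1][2] >= min_pause_s:
            phrases.append(current)
            current = []
        current.append(word)
    if current:
        phrases.append(current)
    return phrases


def mean_duration_by_final_position(phrases):
    """Mean word duration keyed by distance from phrase end (0 = last word)."""
    buckets = defaultdict(list)
    for phrase in phrases:
        for offset, (_, start, end) in enumerate(reversed(phrase)):
            buckets[offset].append(end - start)
    return {offset: mean(durs) for offset, durs in sorted(buckets.items())}


# Invented timings: two phrases separated by a 0.5 s pause.
words = [
    ("so", 0.00, 0.15), ("we", 0.15, 0.30), ("went", 0.30, 0.70),
    ("and", 1.20, 1.35), ("stayed", 1.35, 1.85),
]
phrases = split_phrases(words)
print(mean_duration_by_final_position(phrases))
```

Defining a phrase operationally as the words between pauses sidesteps the question of what a spoken "phrase" is, at the cost of depending on the chosen pause threshold.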

Phrasal modulation of speaking rate


3. How does speaking rate reflect the ebb and flow of conversations?

Method: word or syllable count in a moving window over time-aligned transcripts
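A moving-window count over aligned word start times might look like the sketch below. The window and step sizes are arbitrary assumptions, and the implementation favours clarity over speed; it is not the project's code.

```python
def windowed_word_rate(word_starts, window_s=10.0, step_s=5.0):
    """Return (window start, words per second) pairs for a sliding window."""
    if not word_starts:
        return []
    rates = []
    last = max(word_starts)
    t = 0.0
    while t <= last:
        # Count the words whose start time falls inside [t, t + window_s).
        n = sum(1 for s in word_starts if t <= s < t + window_s)
        rates.append((t, n / window_s))
        t += step_s
    return rates


# Invented start times: a burst of talk, then a lull, then one more word.
starts = [0.0, 1.0, 2.0, 3.0, 12.0]
profile = windowed_word_rate(starts)
print(profile)
```

Plotting such a profile for each speaker over the course of a conversation gives a simple picture of where talk speeds up and slows down.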

Ebb and flow of conversation

4. Previously unattested (“impossible”) assimilations of word-final consonants

Rare phonetic word-joins: I'm trying, seem/n to, alarng clock, swimmim pool, gettim paid, weddim present

5. Integration of language and other behavior


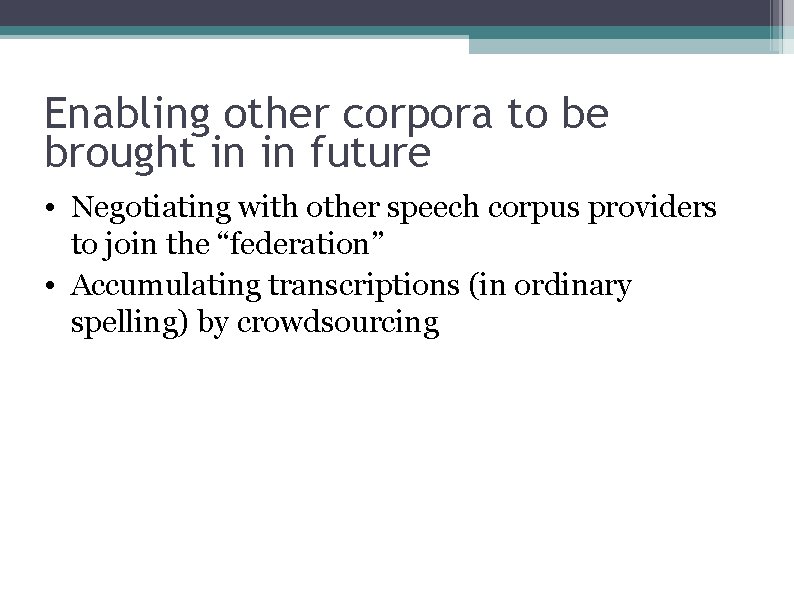

“Speech in the wild”

- Listen they were going [belch] that ain't a burp he said
- Like I'd be talking like this and suddenly it'll go [mimics microphone noises]
- He simply went [sound effect] through his nose
- Come on then shitbox


Not just for linguists

Tausczik and Pennebaker (2010): “We [social psychologists] are in the midst of a technological revolution whereby, for the first time, researchers can link daily word use to a broad array of real-world behaviors … results using LIWC [text analysis software] demonstrate its ability to detect meaning in a wide variety of settings, to show attentional focus, emotionality, social relationships, thinking styles, and individual differences.”

Not just for linguists

Ireland et al. (2011): Similarity in how people talk with one another on speed dates (measured by their usage of function words) predicts “increased likelihood of mutual romantic interest”, “mutually desired future contact” and “relationship stability at a 3-month follow-up.”

Not just for linguists

Black et al. (forthcoming): “when the justices focus more unpleasant language toward one attorney, the side he represents is more likely to lose.”

Finally

- Some parts of the Year of Speech corpora are extremely challenging to align automatically with transcriptions; other parts are relatively easy
- For “cleaner” recordings, forced alignment of far larger corpora (e.g. the 20-year C-SPAN archive) is already possible, in principle
- As more and more audiovisual material is digitized, automatic alignment of transcriptions is essential for users to navigate through these libraries of the future

Thank you very much!