MIKE 2 Method for an Integrated Knowledge Environment

- Slides: 16

MIKE 2 (Method for an Integrated Knowledge Environment) for Data Governance and Management

Informatica Webinar: "Data Governance and Management: How Ready Are You?"

Sean McClowry, Solutions Architect, BearingPoint, May 2006

Business and Systems Aligned. Business Empowered.™

MIKE 2 Overview: Implementing an Overall Data Governance Program

What are the guiding principles of a successful Data Governance program?

- Achieve alignment of information models with business models from the get-go
  - Enable people with the right skills to build and manage new information systems
  - Improve processes around information compliance, policies, practices and measurement
  - Create a culture of information excellence and an information management organization structured to yield results
- Deliver solutions that meet the needs of today's highly federated organizations
  - Quantitatively identify data governance problems and resolve them
  - Perform root cause analysis of the issues that led to poor data governance
  - Remove complexity by ensuring all information is exchanged through standards
  - Increase automation for the exchange of information across systems
- Advance to a model focused on information development, just as we have models for developing applications and infrastructure

© 2005 BearingPoint, Inc.

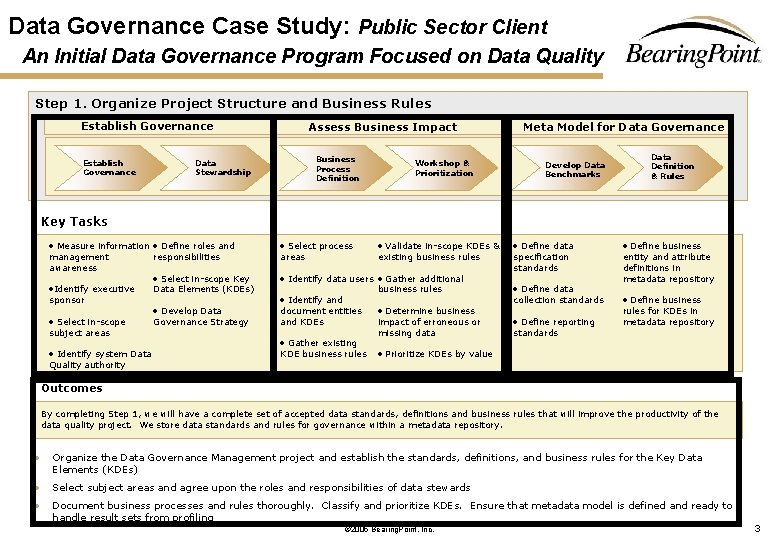

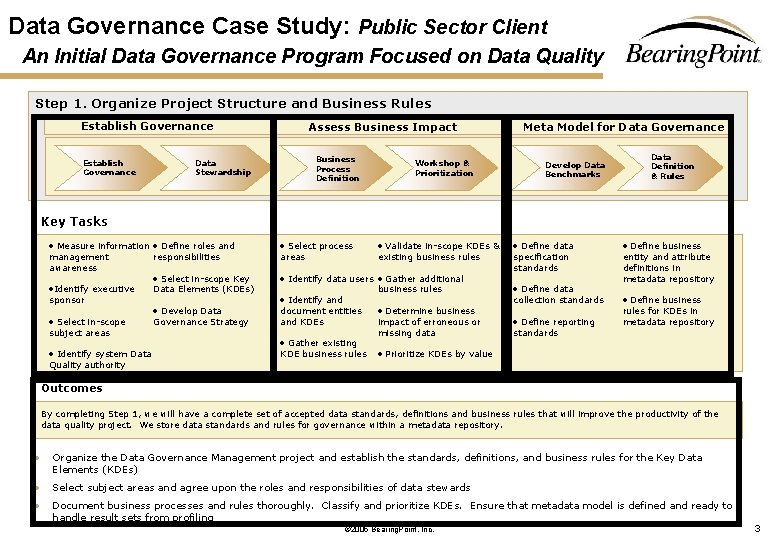

Data Governance Case Study: Public Sector Client

An Initial Data Governance Program Focused on Data Quality

Step 1. Organize Project Structure and Business Rules

Activities: Establish Governance; Assess Business Impact; Business Process Definition; Data Stewardship; Workshop & Prioritization; Meta Model for Data Governance; Develop Data Benchmarks; Data Definition & Rules

Key Tasks:
- Measure information management awareness
- Define roles and responsibilities
- Identify executive sponsor
- Identify system authority
- Develop Data Governance Strategy
- Select in-scope subject areas
- Select in-scope Key Data Elements (KDEs) for data quality
- Select process areas
- Identify data users
- Identify and document entities and KDEs
- Gather existing KDE business rules
- Gather additional business rules
- Validate in-scope KDEs and existing business rules
- Determine business impact of erroneous or missing data
- Define data specification standards
- Define data collection standards
- Define reporting standards
- Define business entity and attribute definitions in the metadata repository
- Define business rules for KDEs in the metadata repository
- Prioritize KDEs by value

Outcomes: By completing Step 1, we will have a complete set of accepted data standards, definitions and business rules that will improve the productivity of the data quality project. Data standards and rules for governance are stored in a metadata repository.
- Organize the Data Governance Management project and establish the standards, definitions, and business rules for the Key Data Elements (KDEs)
- Select subject areas and agree on the roles and responsibilities of data stewards
- Document business processes and rules thoroughly; classify and prioritize KDEs
- Ensure that the metadata model is defined and ready to handle result sets from profiling
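Step 1 keeps each KDE's definition, business rules and priority together in a metadata repository. The sketch below illustrates that idea under stated assumptions: the `KeyDataElement` record, the in-memory `repository` dictionary, the 1-to-5 `business_value` scale and the example rules are all hypothetical, not part of MIKE 2 or of any metadata product.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical record for one Key Data Element (KDE); not a MIKE 2 artifact.
@dataclass
class KeyDataElement:
    name: str
    definition: str
    business_value: int  # assumed scale: 1 (low) to 5 (high), agreed in the prioritization workshop
    rules: dict[str, Callable[[object], bool]] = field(default_factory=dict)

# Hypothetical in-memory stand-in for the metadata repository.
repository: dict[str, KeyDataElement] = {}

def register(kde: KeyDataElement) -> None:
    """Store a KDE definition and its business rules in the repository."""
    repository[kde.name] = kde

def prioritized() -> list[str]:
    """Return KDE names ordered by business value, highest first."""
    ranked = sorted(repository.values(), key=lambda k: k.business_value, reverse=True)
    return [k.name for k in ranked]

register(KeyDataElement(
    name="date_of_birth",
    definition="Client's date of birth as recorded at registration",
    business_value=3,
    rules={"not_null": lambda v: v is not None},
))
register(KeyDataElement(
    name="client_id",
    definition="Unique identifier assigned to each client",
    business_value=5,
    rules={
        "not_null": lambda v: v is not None,
        "eight_digits": lambda v: isinstance(v, str) and v.isdigit() and len(v) == 8,
    },
))

print(prioritized())  # ['client_id', 'date_of_birth']
```

Holding rules as data next to the definitions means later profiling and monitoring can evaluate exactly the rules that were agreed, rather than re-coding them per tool.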

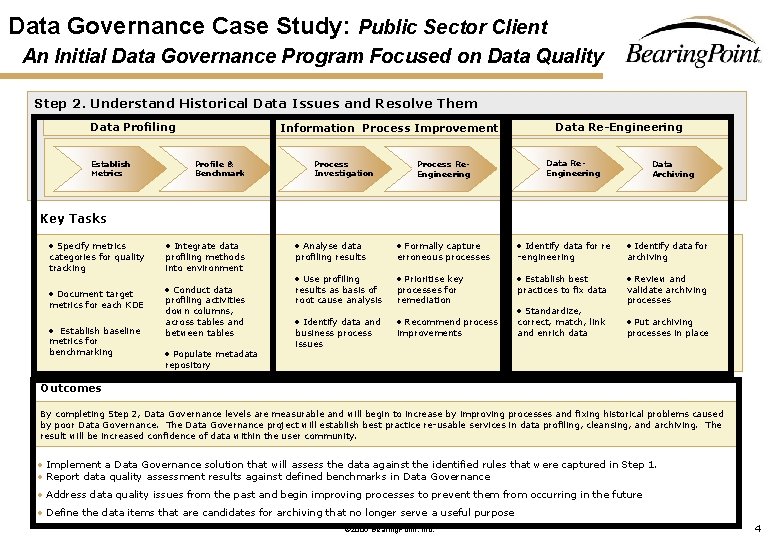

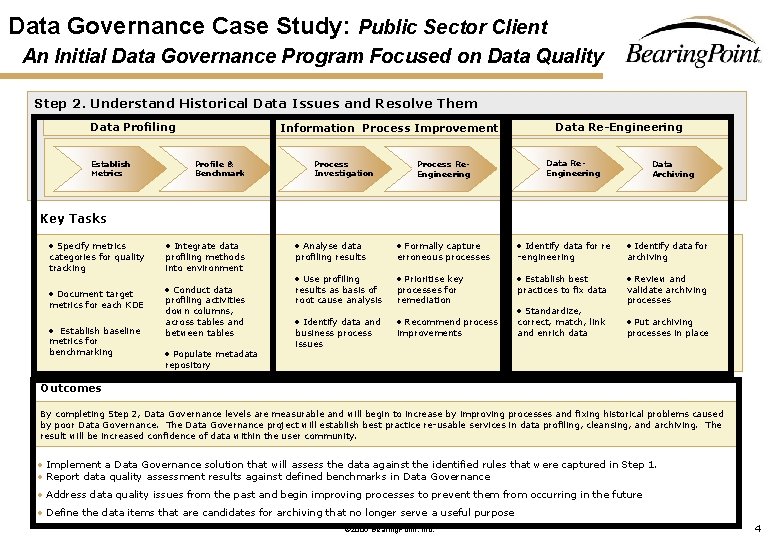

Data Governance Case Study: Public Sector Client

An Initial Data Governance Program Focused on Data Quality

Step 2. Understand Historical Data Issues and Resolve Them

Activities: Profile & Benchmark (Data Profiling, Establish Metrics); Information Process Improvement (Process Investigation, Process Re-Engineering); Data Re-Engineering; Data Archiving

Key Tasks:
- Specify metric categories for quality tracking
- Document target metrics for each KDE
- Establish baseline metrics for benchmarking
- Integrate data profiling methods into the environment
- Conduct data profiling activities down columns, across tables and between tables
- Populate the metadata repository
- Analyse data profiling results and use them as the basis of root cause analysis
- Formally capture business process issues
- Prioritise key erroneous processes for remediation
- Identify data and process improvements
- Recommend process re-engineering
- Establish best practices to fix data
- Standardize, correct, match, link and enrich data
- Identify data for archiving
- Review and validate archiving processes
- Put archiving processes in place

Outcomes: By completing Step 2, Data Governance levels become measurable and will begin to increase as processes improve and historical problems caused by poor Data Governance are fixed. The Data Governance project will establish re-usable best-practice services for data profiling, cleansing, and archiving. The result will be increased confidence in the data within the user community.
- Implement a Data Governance solution that assesses the data against the rules captured in Step 1
- Report data quality assessment results against the benchmarks defined in Data Governance
- Address past data quality issues and begin improving processes to prevent them from recurring
- Identify data items that no longer serve a useful purpose as candidates for archiving
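Step 2 profiles data "down columns ... and between tables" to set baselines. A minimal sketch of two of those checks follows, assuming rows arrive as Python dictionaries; `profile_column`, `orphan_keys`, the 9/A shape notation and the sample data are hypothetical illustrations, not the behavior of any particular profiling tool.

```python
import re
from collections import Counter

def value_pattern(value: str) -> str:
    """Reduce a value to its shape: digits become 9, letters become A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"[0-9]", "9", value))

def profile_column(rows: list[dict], column: str) -> dict:
    """Column profiling: completeness, cardinality and the most common value shapes."""
    values = [row.get(column) for row in rows]
    present = [str(v) for v in values if v not in (None, "")]
    return {
        "rows": len(values),
        "null_or_blank": len(values) - len(present),
        "distinct": len(set(present)),
        "top_patterns": Counter(value_pattern(v) for v in present).most_common(3),
    }

def orphan_keys(child: list[dict], fk: str, parent: list[dict], pk: str) -> set:
    """Between-table profiling: foreign-key values with no matching parent row."""
    parent_keys = {row[pk] for row in parent}
    return {row[fk] for row in child if row.get(fk) is not None and row[fk] not in parent_keys}

# Illustrative sample data (hypothetical).
clients = [
    {"client_id": "10000001", "postcode": "2000"},
    {"client_id": "10000002", "postcode": ""},
    {"client_id": "1000003", "postcode": "2ooo"},
]
accounts = [
    {"account_id": "A1", "client_id": "10000001"},
    {"account_id": "A2", "client_id": "99999999"},
]

print(profile_column(clients, "client_id"))
print(profile_column(clients, "postcode"))
print(orphan_keys(accounts, "client_id", clients, "client_id"))  # {'99999999'}
```

The shape patterns surface anomalies such as a seven-digit identifier or letters in a postcode, and the recorded counts can serve as the baseline metrics against which later runs are benchmarked.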

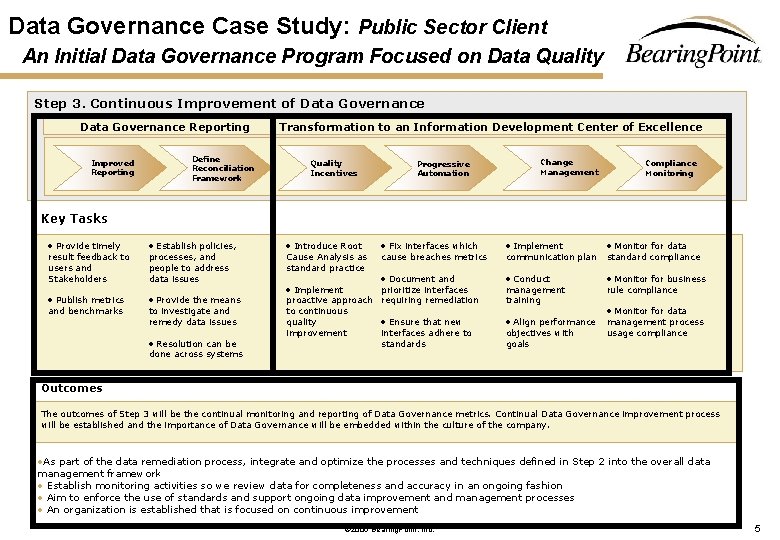

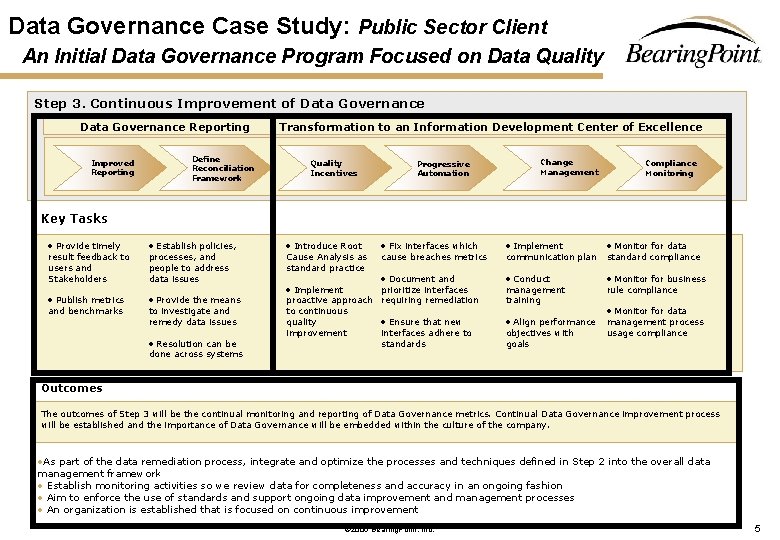

Data Governance Case Study: Public Sector Client

An Initial Data Governance Program Focused on Data Quality

Step 3. Continuous Improvement of Data Governance

Activities: Improved Reporting; Define Reconciliation Framework; Transformation to an Information Development Center of Excellence (Quality Incentives, Progressive Automation, Change Management, Compliance Monitoring)

Key Tasks:
- Provide timely result feedback to users and stakeholders
- Publish metrics and benchmarks
- Establish policies, processes, and people to address data issues
- Provide the means to investigate and remedy data issues, with resolution possible across systems
- Introduce root cause analysis as standard practice
- Implement metrics
- Align performance objectives with goals
- Fix interfaces which cause breaches
- Document and prioritize interfaces requiring remediation
- Ensure that new interfaces adhere to standards
- Implement a proactive approach to continuous quality improvement
- Implement a communication plan and conduct management training
- Monitor for data standard compliance
- Monitor for business rule compliance
- Monitor for data management process usage compliance

Outcomes: Step 3 delivers continual monitoring and reporting of Data Governance metrics. A continual Data Governance improvement process will be established, and the importance of Data Governance will be embedded in the culture of the company.
- As part of the data remediation process, integrate and optimize the processes and techniques defined in Step 2 into the overall data management framework
- Establish monitoring activities so that data is reviewed for completeness and accuracy on an ongoing basis
- Enforce the use of standards and support ongoing data improvement and management processes
- Establish an organization focused on continuous improvement
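Continual monitoring in Step 3 amounts to comparing each run's rule results with the published benchmarks and reporting breaches. The sketch below assumes a simple pass-rate metric per KDE and rule; the `MetricResult` structure, the `breaches` function and the target values are hypothetical, and treating an empty run as fully passing is an assumption made for illustration.

```python
from dataclasses import dataclass

@dataclass
class MetricResult:
    """One rule evaluated against one KDE in a monitoring run (hypothetical structure)."""
    kde: str
    rule: str
    passed: int
    checked: int

    @property
    def pass_rate(self) -> float:
        # Assumption: a run that checked no records is treated as fully passing.
        return self.passed / self.checked if self.checked else 1.0

def breaches(results: list[MetricResult], targets: dict[tuple[str, str], float]) -> list[str]:
    """Report every metric whose pass rate falls below its agreed target."""
    report = []
    for r in results:
        target = targets.get((r.kde, r.rule))
        if target is not None and r.pass_rate < target:
            report.append(f"{r.kde}.{r.rule}: {r.pass_rate:.1%} < target {target:.1%}")
    return report

# Hypothetical targets (benchmarks) and one monitoring run.
targets = {("client_id", "not_null"): 0.99, ("postcode", "valid_format"): 0.95}
run = [
    MetricResult("client_id", "not_null", passed=9_990, checked=10_000),
    MetricResult("postcode", "valid_format", passed=9_100, checked=10_000),
]

for line in breaches(run, targets):
    print(line)  # postcode.valid_format: 91.0% < target 95.0%
```

Run on a schedule, a report like this gives stakeholders the timely feedback the Key Tasks call for, and each breach becomes an input to root cause analysis.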

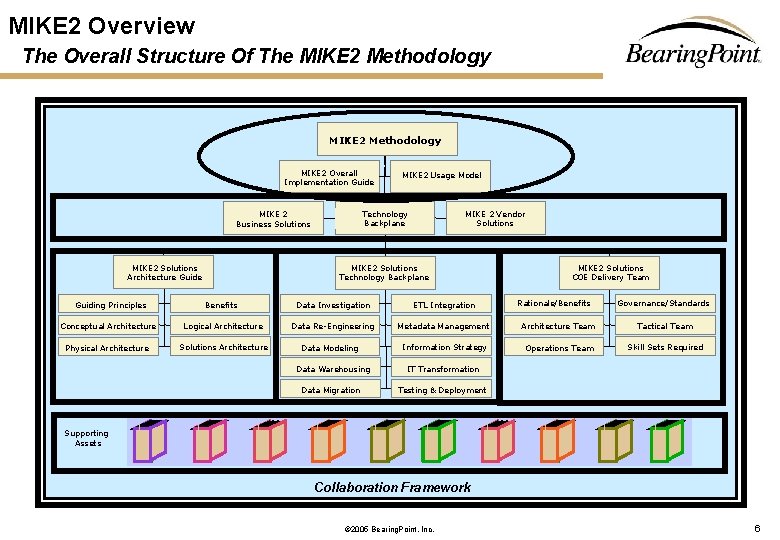

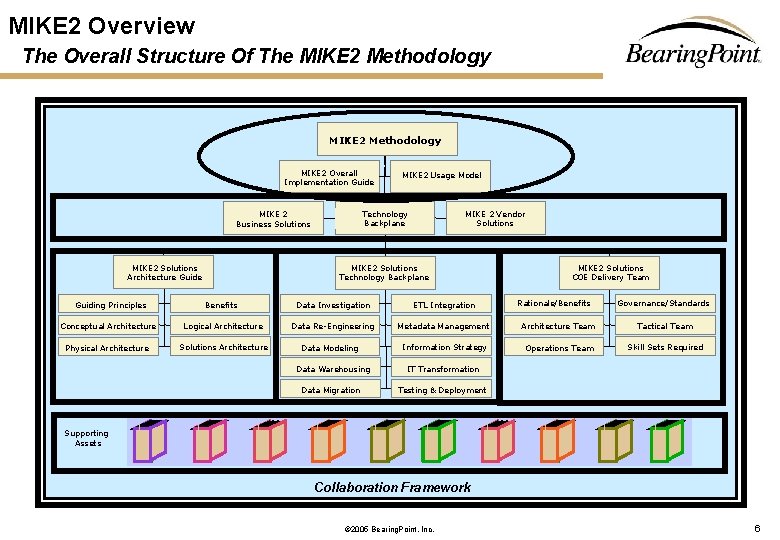

MIKE 2 Overview: The Overall Structure of the MIKE 2 Methodology

Diagram components: MIKE 2 Overall Implementation Guide; MIKE 2 Usage Model; MIKE 2 Solutions Architecture Guide (Conceptual, Logical, Physical and Solutions Architecture); MIKE 2 Business Solutions; MIKE 2 Technology Backplane Solutions; MIKE 2 Vendor Solutions; COE Delivery Team (Architecture Team, Tactical Team, Operations Team, Skill Sets Required); Rationale/Benefits; Governance/Standards; Guiding Principles; Data Investigation; Data Re-Engineering; ETL Integration; Metadata Management; Data Modeling; Data Warehousing; Data Migration; Information Strategy; IT Transformation; Testing & Deployment; Supporting Assets; Collaboration Framework.

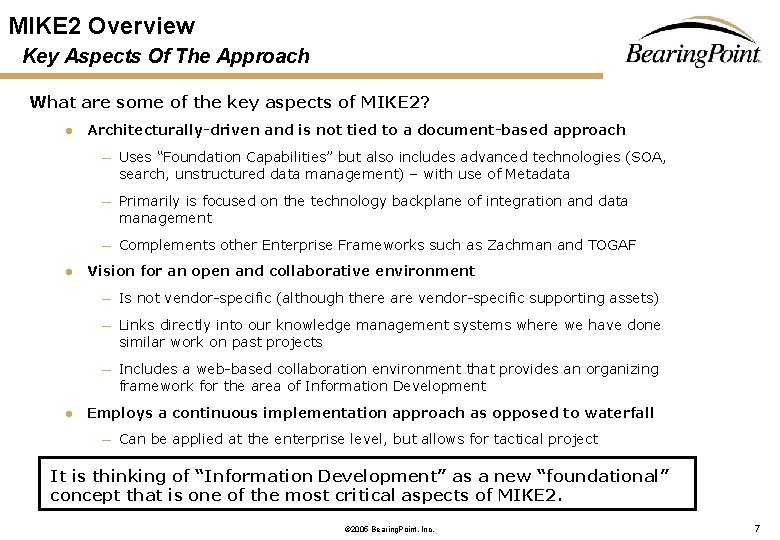

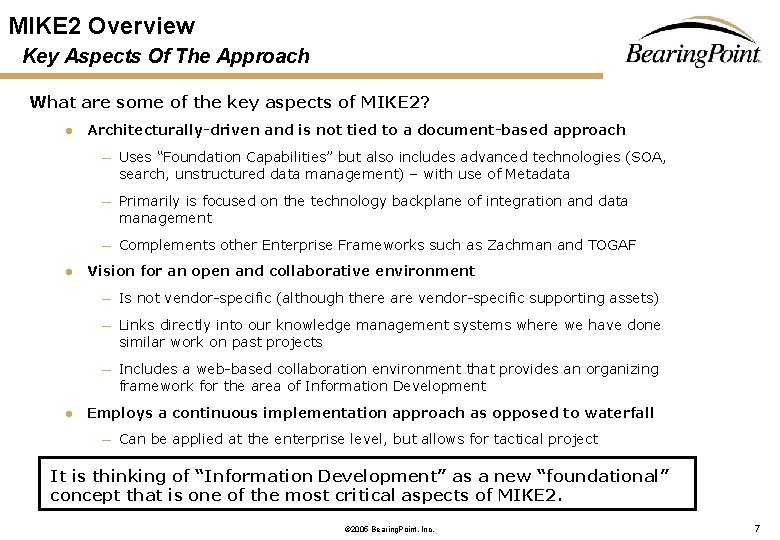

MIKE 2 Overview: Key Aspects of the Approach

What are some of the key aspects of MIKE 2?
- Architecturally driven rather than tied to a document-based approach
  - Uses "Foundation Capabilities" but also includes advanced technologies (SOA, search, unstructured data management), with use of metadata
  - Primarily focused on the technology backplane of integration and data management
  - Complements other enterprise frameworks such as Zachman and TOGAF
- Vision for an open and collaborative environment
  - Is not vendor-specific (although there are vendor-specific supporting assets)
  - Links directly into our knowledge management systems, where we have done similar work on past projects
  - Includes a web-based collaboration environment that provides an organizing framework for the area of Information Development
- Employs a continuous implementation approach as opposed to waterfall
  - Can be applied at the enterprise level, but also allows for tactical projects

Treating "Information Development" as a new "foundational" concept is one of the most critical aspects of MIKE 2.

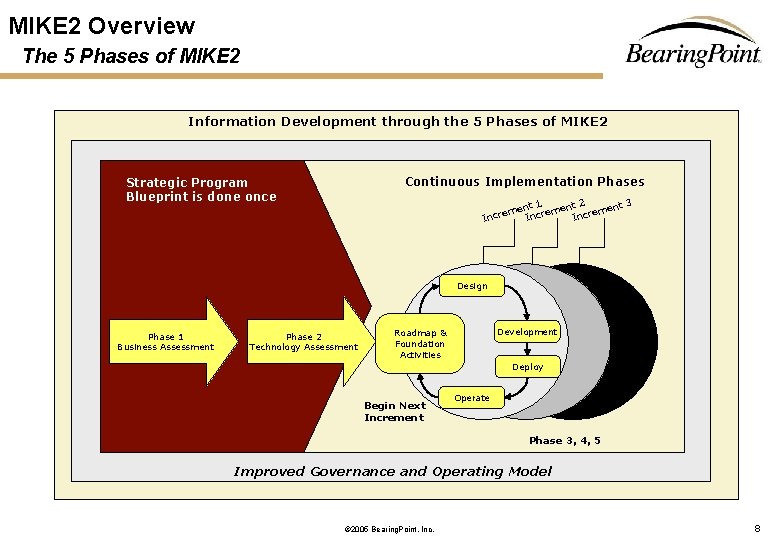

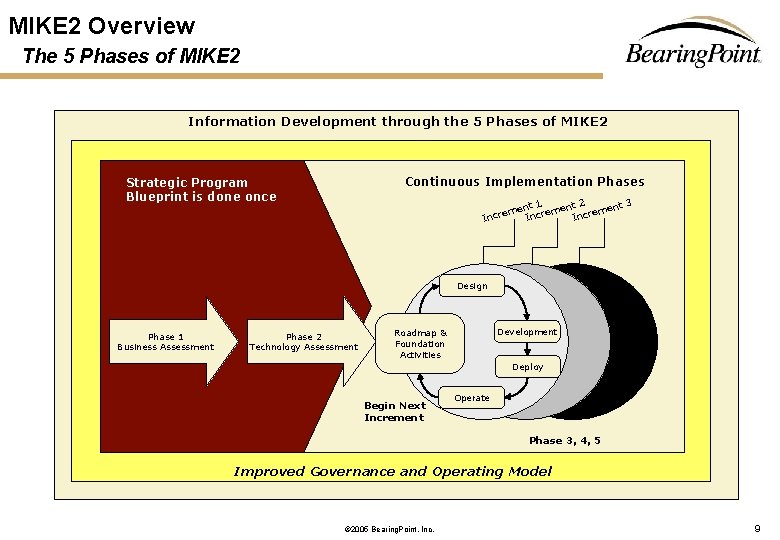

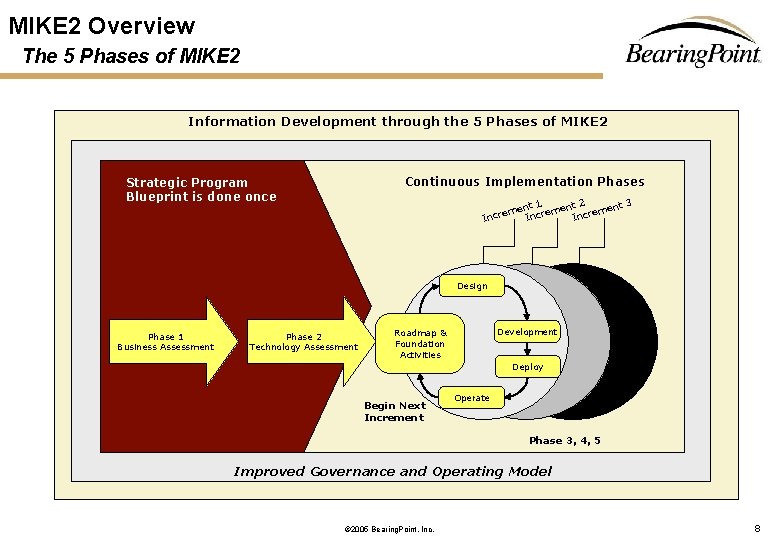

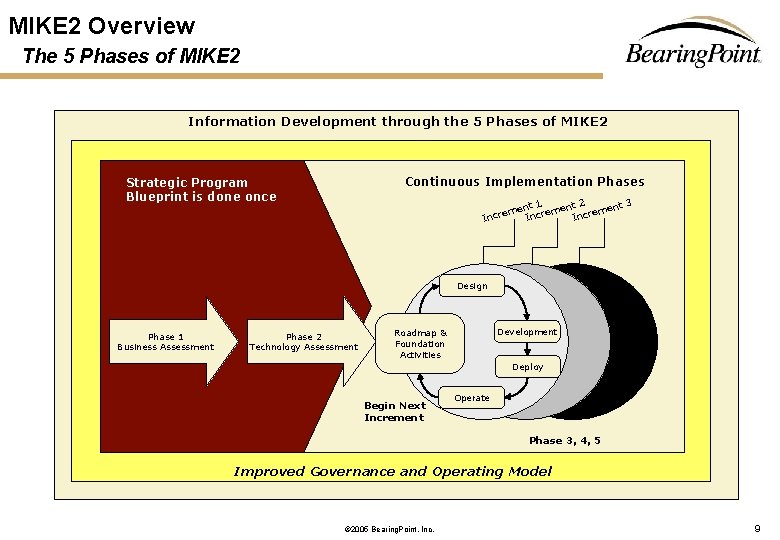

MIKE 2 Overview: The 5 Phases of MIKE 2

Information Development through the 5 Phases of MIKE 2
- Strategic Program Blueprint (done once): Phase 1, Business Assessment; Phase 2, Technology Assessment
- Continuous Implementation Phases (Phases 3, 4 and 5, repeated for Increment 1, Increment 2, Increment 3): Roadmap & Foundation Activities, Design, Development, Deploy and Operate, then Begin Next Increment
- Outcome: Improved Governance and Operating Model


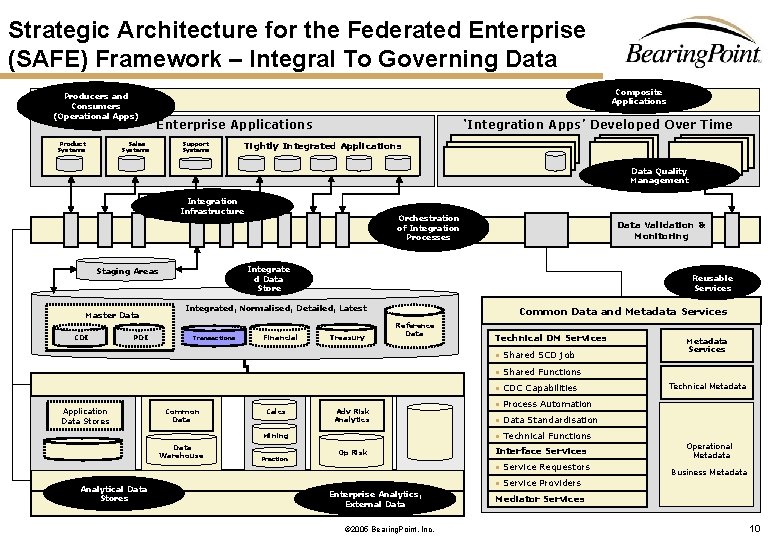

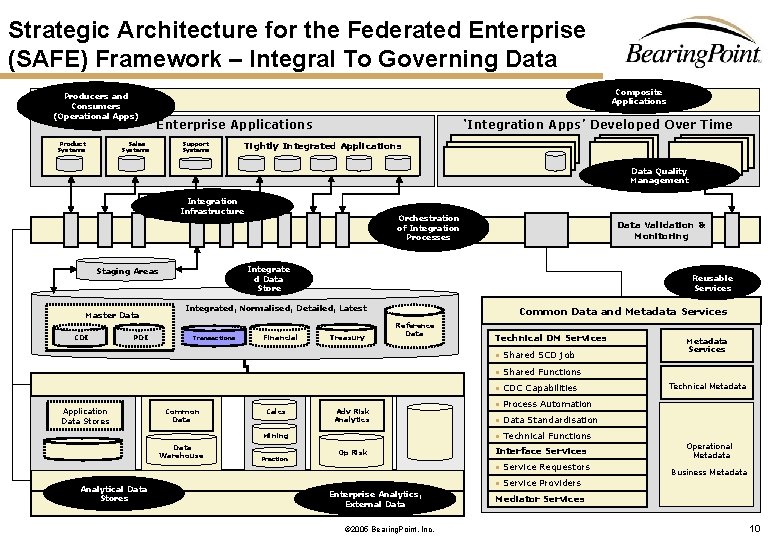

Strategic Architecture for the Federated Enterprise (SAFE) Framework: Integral to Governing Data

Diagram components:
- Producers and Consumers (Operational Apps): Product Systems, Sales Systems, Support Systems, Enterprise Applications, Composite Applications, Tightly Integrated Applications, 'Integration Apps' Developed Over Time
- Integration Infrastructure: Orchestration of Integration Processes, Data Quality Management, Data Validation & Monitoring, Reusable Services, Common Data and Metadata Services, CDI, PDI
- Data stores: Application Data Stores, Staging Areas, Integrated Data Store (Integrated, Normalised, Detailed, Latest Transactions), Master Data, Reference Data, Common Data, Data Warehouse, Analytical Data Stores, External Data
- Enterprise Analytics: Financial Calculations, Treasury, Advanced Risk Analytics, Op Risk, Prediction, Mining
- Technical DM Services: Shared SCD Job, Shared Functions, CDC Capabilities, Process Automation, Data Standardisation, Technical Functions
- Interface Services: Service Requestors, Service Providers
- Metadata Services: Technical Metadata, Operational Metadata, Business Metadata
- Mediator Services
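Among its Technical DM Services the framework lists CDC (change data capture) capabilities. As a rough illustration only, the sketch below uses the simplest CDC variant, comparing two keyed snapshots of a table; production CDC is often log-based instead, and the `capture_changes` function and sample data are hypothetical.

```python
def capture_changes(before: dict[str, dict], after: dict[str, dict]) -> dict[str, list[str]]:
    """Snapshot-comparison CDC: classify primary keys as inserted, updated or deleted."""
    inserted = sorted(k for k in after if k not in before)
    deleted = sorted(k for k in before if k not in after)
    updated = sorted(k for k in after if k in before and after[k] != before[k])
    return {"inserted": inserted, "updated": updated, "deleted": deleted}

# Hypothetical snapshots of a customer table keyed by customer ID.
yesterday = {
    "C1": {"name": "Acme Pty Ltd", "state": "NSW"},
    "C2": {"name": "Birch & Co", "state": "VIC"},
}
today = {
    "C1": {"name": "Acme Pty Ltd", "state": "QLD"},
    "C3": {"name": "Cedar Ltd", "state": "WA"},
}

print(capture_changes(yesterday, today))
# {'inserted': ['C3'], 'updated': ['C1'], 'deleted': ['C2']}
```

Offering change sets like these as a shared service lets downstream consumers, such as a slowly changing dimension (SCD) load, process only what changed instead of reloading whole tables.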

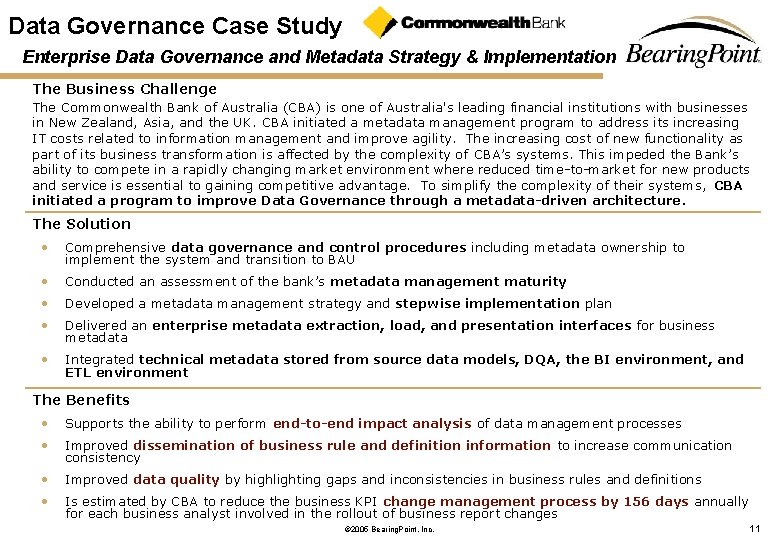

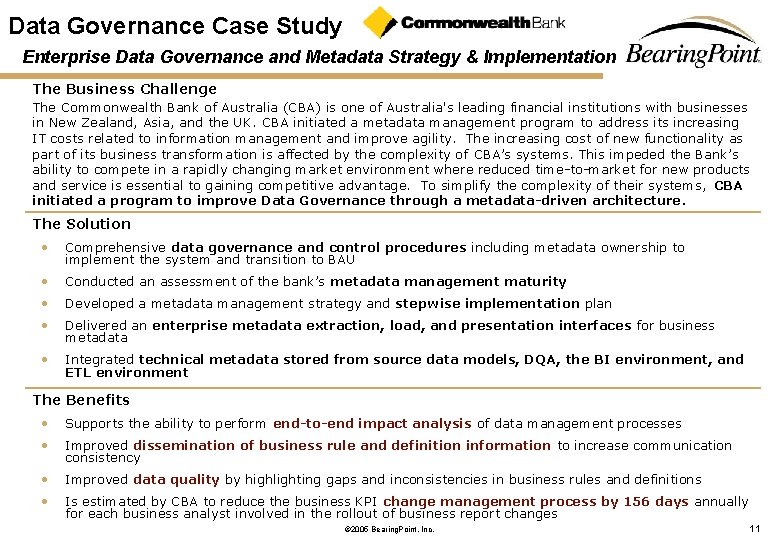

Data Governance Case Study: Enterprise Data Governance and Metadata Strategy & Implementation

The Business Challenge

The Commonwealth Bank of Australia (CBA) is one of Australia's leading financial institutions, with businesses in New Zealand, Asia, and the UK. CBA initiated a metadata management program to address rising IT costs related to information management and to improve agility. The complexity of CBA's systems was driving up the cost of new functionality delivered as part of its business transformation. This impeded the Bank's ability to compete in a rapidly changing market, where reduced time-to-market for new products and services is essential to gaining competitive advantage. To reduce this complexity, CBA initiated a program to improve Data Governance through a metadata-driven architecture.

The Solution
- Established comprehensive data governance and control procedures, including metadata ownership, to implement the system and transition it to business-as-usual (BAU)
- Conducted an assessment of the bank's metadata management maturity
- Developed a metadata management strategy and stepwise implementation plan
- Delivered enterprise metadata extraction, load, and presentation interfaces for business metadata
- Integrated technical metadata sourced from source data models, DQA, the BI environment, and the ETL environment

The Benefits
- Supports end-to-end impact analysis of data management processes
- Improved dissemination of business rule and definition information, increasing communication consistency
- Improved data quality by highlighting gaps and inconsistencies in business rules and definitions
- Estimated by CBA to reduce the business KPI change management process by 156 days annually for each business analyst involved in the rollout of business report changes
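End-to-end impact analysis depends on lineage metadata that records which data objects feed which. A minimal sketch, assuming lineage is held as a simple adjacency list, walks that graph breadth-first to list everything downstream of a changed object. The object names and the `downstream_impact` function are hypothetical and do not describe CBA's actual systems.

```python
from collections import deque

# Hypothetical lineage metadata: each key feeds the objects in its list.
lineage = {
    "crm.customer": ["staging.customer"],
    "staging.customer": ["dw.dim_customer"],
    "dw.dim_customer": ["bi.customer_kpi_report", "dw.fact_sales"],
    "dw.fact_sales": ["bi.sales_dashboard"],
}

def downstream_impact(node: str, edges: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk of the lineage graph: every object affected by a change to `node`."""
    seen, queue, impacted = {node}, deque([node]), []
    while queue:
        current = queue.popleft()
        for nxt in edges.get(current, []):
            if nxt not in seen:  # guard against revisiting shared or cyclic paths
                seen.add(nxt)
                impacted.append(nxt)
                queue.append(nxt)
    return impacted

print(downstream_impact("staging.customer", lineage))
# ['dw.dim_customer', 'bi.customer_kpi_report', 'dw.fact_sales', 'bi.sales_dashboard']
```

Because the lineage is captured as metadata rather than in analysts' heads, the same walk answers "which reports change if this column changes?" for any object in the graph.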

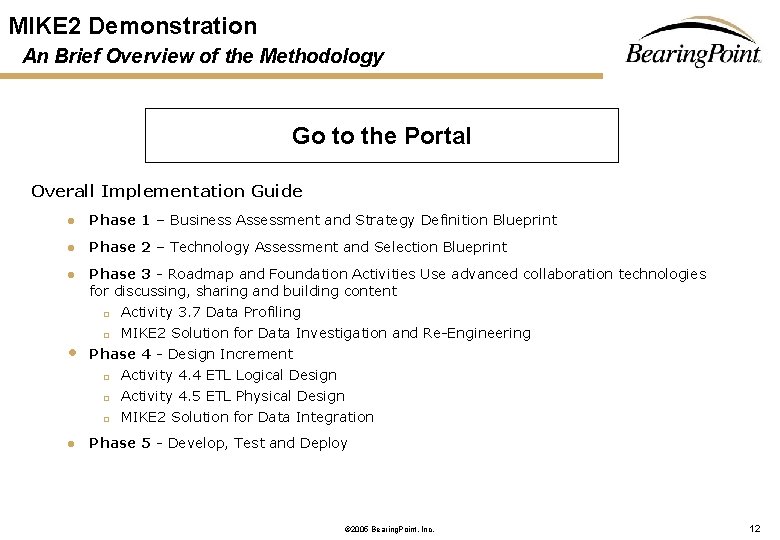

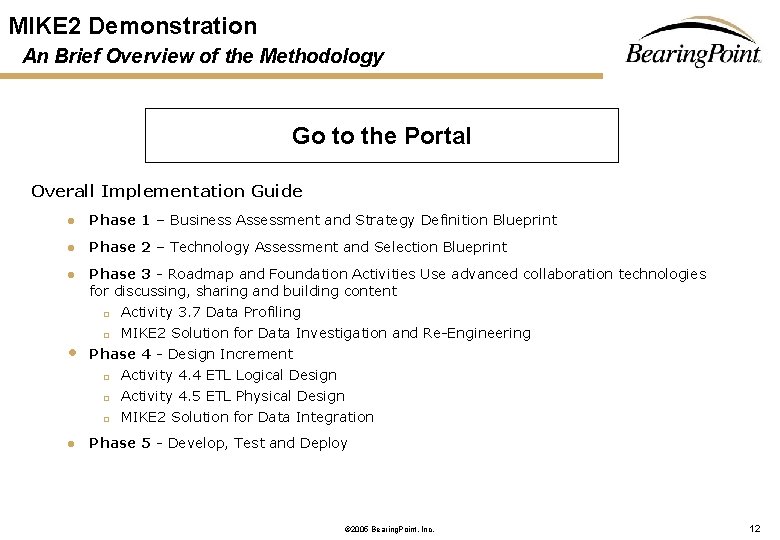

MIKE 2 Demonstration: A Brief Overview of the Methodology

Go to the Portal
- Overall Implementation Guide
  - Phase 1: Business Assessment and Strategy Definition Blueprint
  - Phase 2: Technology Assessment and Selection Blueprint
  - Phase 3: Roadmap and Foundation Activities
    - Activity 3.7 Data Profiling
    - MIKE 2 Solution for Data Investigation and Re-Engineering
  - Phase 4: Design Increment
    - Activity 4.4 ETL Logical Design
    - Activity 4.5 ETL Physical Design
    - MIKE 2 Solution for Data Integration
  - Phase 5: Develop, Test and Deploy
- Use advanced collaboration technologies for discussing, sharing and building content

Discussion Question 1: Metadata Management Best Practice

How can you move to the metadata-driven approach that you have described?
- What are the steps that you go through?
  - Define a strategic architecture that provides a blueprint for moving towards a metadata-driven architecture. Metadata management crosses all components in the architecture
  - Get foundational capabilities in place through data modeling best practices and the use of a well-defined Data Dictionary
  - Take an active approach to metadata management, which means making it part of the SDLC; be ready to move to a model-driven approach
- How do you measure whether you are on track?
  - Assess the impact of changes in definitive terms: tables, columns, entities, classes of systems, hours of development time, etc.
  - Maximize automation in documenting data relationships and flows, and subsequent changes
  - Capture operational metadata to understand the impact of changes at design time
  - Be mindful of efficiency in the development and analysis lifecycle; a deployment plan, data classification, and team-based development with proper read/write access control are key
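One way to read "a well-defined Data Dictionary" plus "a model-driven approach" is that physical artifacts are generated from dictionary entries rather than written by hand. The sketch below assumes that reading; the `ColumnDef` structure, the `generate_ddl` function and the customer columns are hypothetical, and the output is generic SQL rather than any specific database's dialect.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ColumnDef:
    """One data dictionary entry (hypothetical structure)."""
    name: str
    sql_type: str
    nullable: bool
    description: str

def generate_ddl(table: str, columns: list[ColumnDef]) -> str:
    """Generate a CREATE TABLE statement, carrying each description along as a SQL comment."""
    lines = []
    for i, col in enumerate(columns):
        null_clause = "" if col.nullable else " NOT NULL"
        separator = "," if i < len(columns) - 1 else ""
        lines.append(f"    {col.name} {col.sql_type}{null_clause}{separator}  -- {col.description}")
    return f"CREATE TABLE {table} (\n" + "\n".join(lines) + "\n);"

# Hypothetical data dictionary entries for a customer table.
customer_columns = [
    ColumnDef("customer_id", "CHAR(8)", False, "Unique customer identifier"),
    ColumnDef("full_name", "VARCHAR(200)", False, "Customer legal name"),
    ColumnDef("postcode", "CHAR(4)", True, "Postcode of residential address"),
]

print(generate_ddl("customer", customer_columns))
```

Since the DDL is derived from the dictionary, a change made in the dictionary is the change that ships, and the difference between two dictionary versions states the impact in the definitive terms (tables, columns) the slide recommends.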

Discussion Question 2: Master Data Management Across the Enterprise

What are the most significant issues that need to be addressed regarding Master Data Management (MDM), as it pertains to Data Governance and Management?
- How do you manage a high degree of overlap in master data?
  - First, understand where systems overlap on the enterprise data model, and the relationships between primary masters, secondary masters and slaves of master data
  - Then seek common agreement on domain values that are stored across a number of systems. Generally, a combination of standardizing to common domain values and making integration metadata-driven is the key to success
- How do you treat complex data quality issues in master data, especially customer and locality data from legacy systems?
  - Start with a quantitative analysis using a data profiling tool; this is critical to defining the scope of the problem
  - Then design the information governance requirements (stewardship, ownership, policies) around master data. Combine preventive, detective, and policy-based enforcement to implement governance objectives
  - Integrate policies and standards, architectural considerations, and process design practices to effectively address the increasing federation of our systems and the growth in data volumes
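Standardizing to common domain values while keeping integration metadata-driven can be pictured as a crosswalk table: the mapping from each system's local codes to the agreed values lives in data, not in integration code. A minimal sketch under that assumption follows; the systems, codes, `standardize` function and "UNMAPPED" policy are all hypothetical.

```python
# Hypothetical crosswalk metadata: (source system, local code) -> agreed common domain value.
STATUS_CROSSWALK = {
    ("billing", "A"): "ACTIVE",
    ("billing", "C"): "CLOSED",
    ("crm", "01"): "ACTIVE",
    ("crm", "02"): "DORMANT",
    ("crm", "09"): "CLOSED",
}

def standardize(system: str, code: str, crosswalk: dict[tuple[str, str], str]) -> str:
    """Translate a source-system code into the common domain value.

    Codes missing from the crosswalk come back as "UNMAPPED" so a data steward can
    review them (an assumed policy, chosen for illustration).
    """
    return crosswalk.get((system, code.strip().upper()), "UNMAPPED")

def unmapped_codes(records: list[tuple[str, str]], crosswalk: dict[tuple[str, str], str]) -> set[tuple[str, str]]:
    """Collect (system, code) pairs that need a steward decision before integration."""
    return {(s, c) for s, c in records if standardize(s, c, crosswalk) == "UNMAPPED"}

incoming = [("billing", "a"), ("crm", "02"), ("crm", "05"), ("billing", " C ")]
print([standardize(s, c, STATUS_CROSSWALK) for s, c in incoming])
# ['ACTIVE', 'DORMANT', 'UNMAPPED', 'CLOSED']
print(unmapped_codes(incoming, STATUS_CROSSWALK))  # {('crm', '05')}
```

Adding a system or a new code then means adding crosswalk rows that stewards own, while the integration logic stays unchanged.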

Discussion Question 3: Prioritizing Your Data Integration Investments

How do you scope your project and prioritize data integration investments?
- What should we keep in mind in an initial phase?
  - Establish the overall strategic technology blueprint that outlines the capabilities you need for the next 3 to 5 years. Define your common technology needs for data integration to make enterprise purchases
  - Leverage compliance mandates such as SOX, IFRS and Basel II as a mobilizing force to launch a transformation around Data Governance and Management
  - It is often sensible to select a department to show business value beyond IT cost reductions. Data quality initiatives typically deliver the fastest ROI and the easiest to quantify
- What are your views on a centralized versus distributed approach, and on incremental expansion from departmental to organization-wide?
  - Move to a centralized model for information management and integration, complementing business models, in the areas with the highest degree of shared elements
  - Optimize resources through a hybrid model that combines centralized and distributed resources
  - Don't over-design: get into it by prototyping and profiling. Progressively automate after understanding data management issues

MIKE 2 Methodology: Data Governance and Management Lessons Learned

Driving Incremental Value Proportional to Business Demand
- Align the Data Governance and Management program with a key business initiative that will contribute to your strategic goals
- Don't try to fix everything at once. Score quick wins along the way

Standards-Driven, with Care for the Existing Environment
- Data standards are the cornerstone of an effective Data Governance and Management program
- Applications come and go, but the data largely stays the same. The Data Governance and Management decisions you make today will have a profound impact on your business

Rigorous Approach to Organizational Alignment
- A data governance and management program is not an IT-only initiative. It requires active involvement and leadership from the business as well as within IT
- The Executive Sponsor must provide leadership, and senior data stewards must accept accountability
- Building an integrated and accurate enterprise view won't happen on its own; align initiatives across business units

Diagram labels: Information Development spanning Strategy, Process, Organization, Technology and People.