
- Slides: 25

Midterm review

Announcement

- Midterm
  - Time: Oct. 27th, 7-9 PM
  - Location: GB 404 & GB 405
  - Policy: closed book
  - Coverage: lec. 1 through lec. 7 (dynamic memory management)

2021-09-14

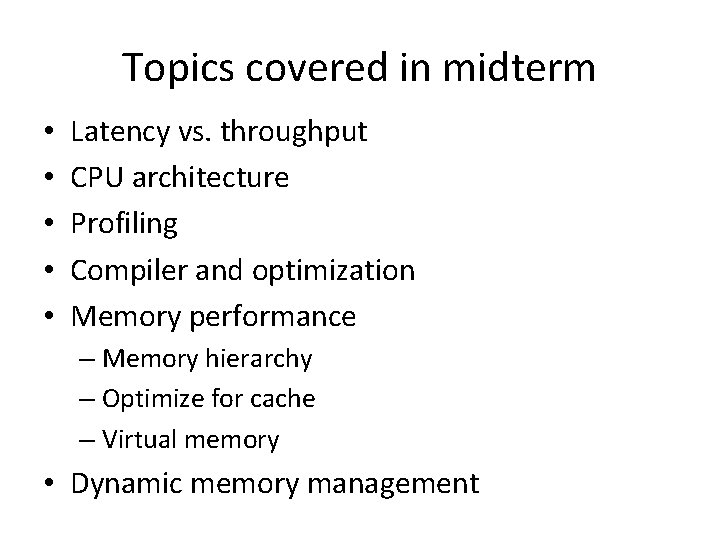

Topics covered in midterm

- Latency vs. throughput
- CPU architecture
- Profiling
- Compiler and optimization
- Memory performance
  - Memory hierarchy
  - Optimize for cache
  - Virtual memory
- Dynamic memory management

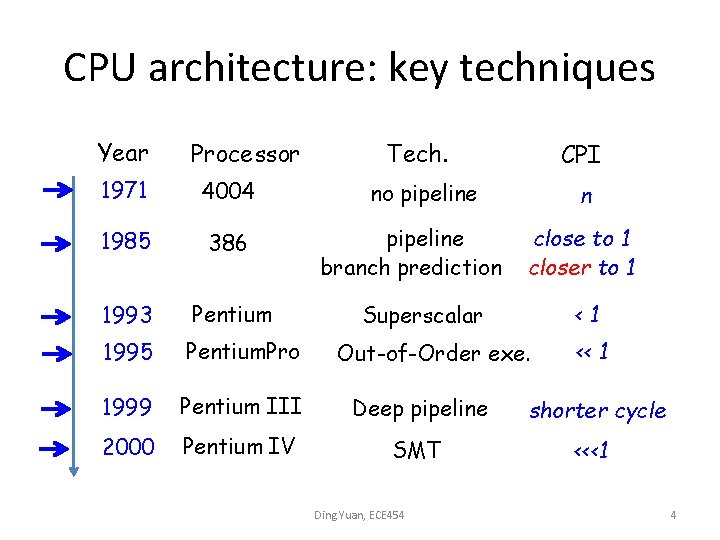

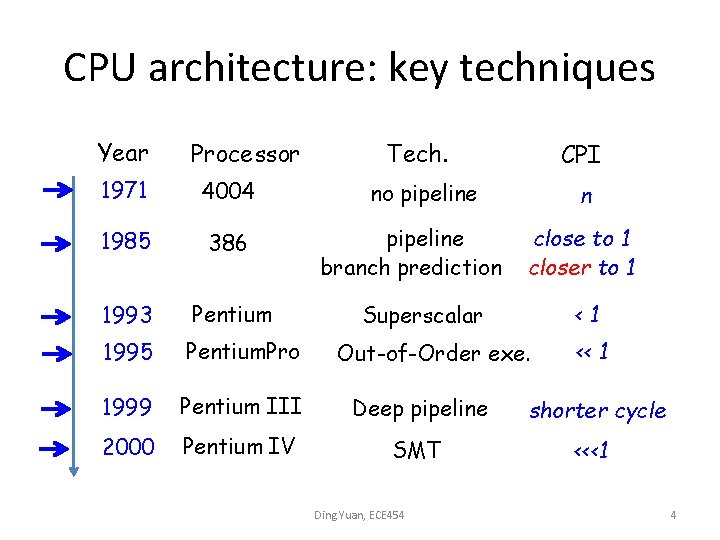

CPU architecture: key techniques

| Year | Processor | Tech. | CPI |
|------|-----------|-------|-----|
| 1971 | 4004 | no pipeline | n |
| 1985 | 386 | pipeline | close to 1 |
| | | branch prediction | closer to 1 |
| 1993 | Pentium | Superscalar | < 1 |
| 1995 | Pentium Pro | Out-of-Order exe. | << 1 |
| 1999 | Pentium III | Deep pipeline | shorter cycle |
| 2000 | Pentium IV | SMT | <<< 1 |

Ding Yuan, ECE 454

Profiling

- Why do we need profiling?
- Amdahl's law: speedup = OldTime / NewTime
- Example problem: If an optimization makes loops go 4 times faster, and applying the optimization to my program makes it go twice as fast, what fraction of my program is loops?

Solution, where x is the fraction of oldtime spent in loops:

    looptime = x * oldtime
    newtime  = looptime/4 + othertime
             = x*oldtime/4 + (1 - x)*oldtime
    speedup  = oldtime/newtime
             = 1/(x/4 + 1 - x)
             = 1/(1 - 0.75x)
             = 2

Solving 1 - 0.75x = 0.5 gives x = 2/3, so about 67% of the original run time is spent in loops.
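The arithmetic in this example can be checked with a few lines of C. This is a minimal sketch, not course code: the function names `amdahl_speedup` and `fraction_for_speedup` are made up for illustration, and `x` and `k` stand for the fraction of time affected and the local speedup factor.

```c
/* Overall speedup when a fraction x of the old run time becomes k times faster.
 * newtime = x*oldtime/k + (1 - x)*oldtime, so speedup = 1 / (x/k + 1 - x). */
double amdahl_speedup(double x, double k)
{
    return 1.0 / (x / k + (1.0 - x));
}

/* Inverse question: which fraction x must be sped up k times to reach an
 * overall speedup s?  From 1/s = 1 - x*(1 - 1/k):
 *     x = (1 - 1/s) / (1 - 1/k).
 * Requires k != 1 (hypothetical helper, no error handling). */
double fraction_for_speedup(double s, double k)
{
    return (1.0 - 1.0 / s) / (1.0 - 1.0 / k);
}
```

Calling `fraction_for_speedup(2.0, 4.0)` evaluates to 2/3, which matches the solution, and `amdahl_speedup(2.0 / 3.0, 4.0)` gives back a speedup of 2.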

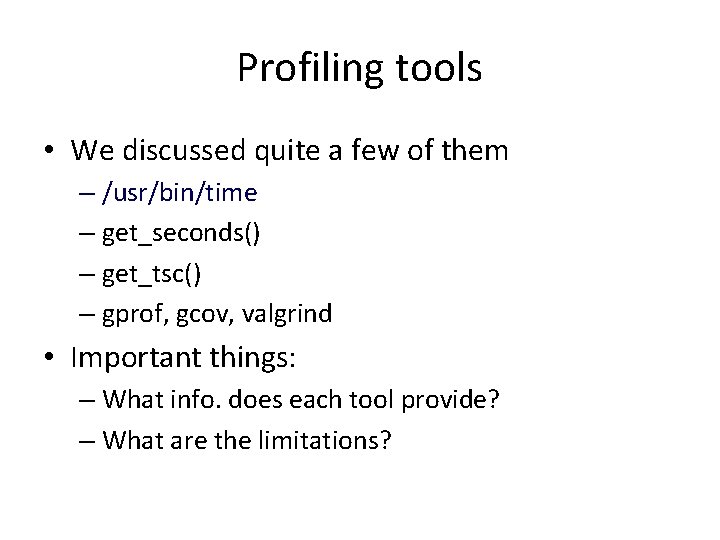

Profiling tools

- We discussed quite a few of them
  - /usr/bin/time
  - get_seconds()
  - get_tsc()
  - gprof, gcov, valgrind
- Important things:
  - What info. does each tool provide?
  - What are the limitations?

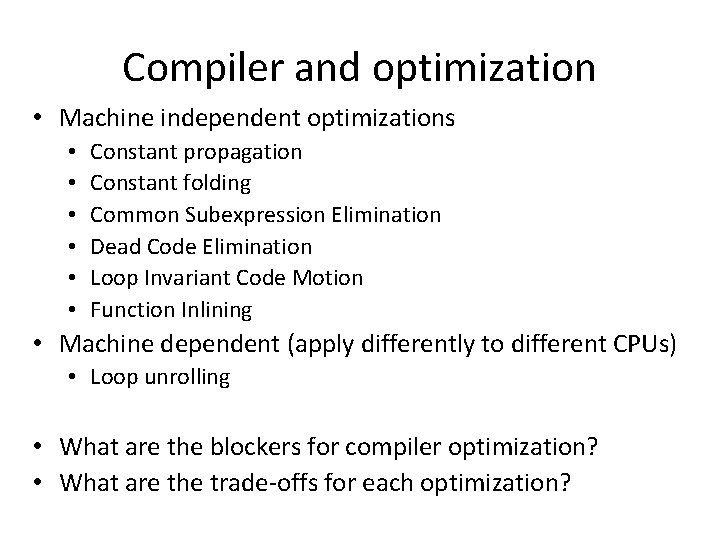

Compiler and optimization

- Machine independent optimizations
  - Constant propagation
  - Constant folding
  - Common Subexpression Elimination
  - Dead Code Elimination
  - Loop Invariant Code Motion
  - Function Inlining
- Machine dependent (apply differently to different CPUs)
  - Loop unrolling
- What are the blockers for compiler optimization?
- What are the trade-offs for each optimization?
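One commonly cited blocker is possible memory aliasing. The sketch below is an illustration in that spirit, not taken from the slides; the names `twiddle1` and `twiddle2` are placeholders. The two functions look interchangeable, but they differ when both pointers refer to the same location, so a compiler that cannot rule that out must not rewrite the first into the second.

```c
/* Adds *yp to *xp twice: reads *yp twice and writes *xp twice. */
void twiddle1(long *xp, long *yp)
{
    *xp += *yp;
    *xp += *yp;
}

/* Cheaper: reads *yp once and writes *xp once.
 * Equivalent to twiddle1 only when xp and yp do not alias. */
void twiddle2(long *xp, long *yp)
{
    *xp += 2 * *yp;
}
```

With distinct variables both functions produce the same result. With `xp == yp` and a starting value of 1, `twiddle1` leaves 4 (1 doubles to 2, then 2 doubles to 4) while `twiddle2` leaves 3 (1 + 2*1).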

Q9 from midterm 2013

Consider the following functions:

    int max(int x, int y) { return x < y ? y : x; }
    void incr(int *xp, int v) { *xp += v; }
    int add(int i, int j) { return i + j; }

The following code fragment calls these functions:

    int max_sum(int m, int n) {   // m and n are large integers
        int i;
        int sum = 0;

        for (i = 0; i < max(m, n); incr(&i, 1)) {
            sum = add(data[i], sum);   // data is an integer array
        }

        return sum;
    }

A) Identify all of the optimization opportunities for this code and explain each one. Also discuss whether it can be performed by the compiler or not. (6 marks)
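As a study aid, here is one possible hand-optimized form of the loop; it is a sketch, not the official solution. It applies two of the optimizations listed earlier: function inlining for `max`, `incr`, and `add`, and loop-invariant code motion for `max(m, n)`. The array `data`, its length `DATA_LEN`, and the name `max_sum_opt` are assumptions added only so the example compiles. Whether a compiler performs the same steps on its own depends, among other things, on whether it can see the bodies of the called functions.

```c
#define DATA_LEN 8                       /* assumed size, for illustration only */
int data[DATA_LEN] = {1, 2, 3, 4, 5, 6, 7, 8};

/* Helper functions as given in the question. */
int max(int x, int y) { return x < y ? y : x; }
void incr(int *xp, int v) { *xp += v; }
int add(int i, int j) { return i + j; }

/* Original loop: three function calls on every iteration. */
int max_sum(int m, int n)
{
    int i;
    int sum = 0;
    for (i = 0; i < max(m, n); incr(&i, 1))
        sum = add(data[i], sum);
    return sum;
}

/* Hand-optimized: max(m, n) is loop-invariant, so compute it once;
 * incr and add are replaced by the statements they contain. */
int max_sum_opt(int m, int n)
{
    int limit = m < n ? n : m;
    int sum = 0;
    for (int i = 0; i < limit; i++)
        sum += data[i];
    return sum;
}
```

Both versions return the same sum for any `m` and `n` whose maximum does not exceed `DATA_LEN`.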

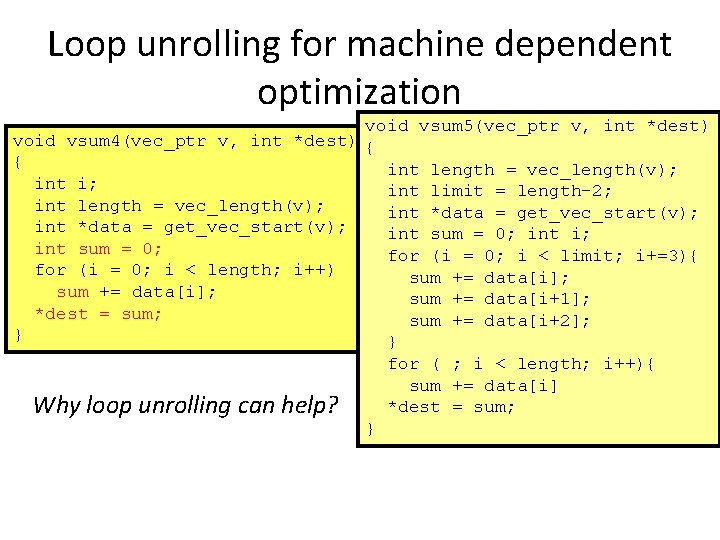

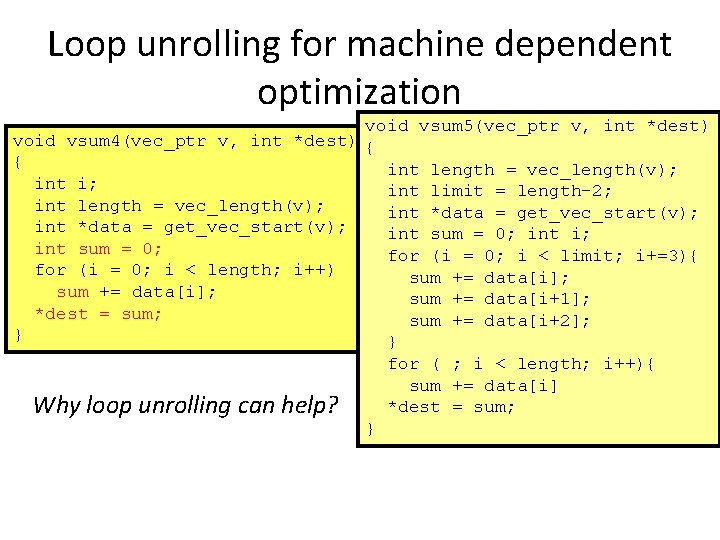

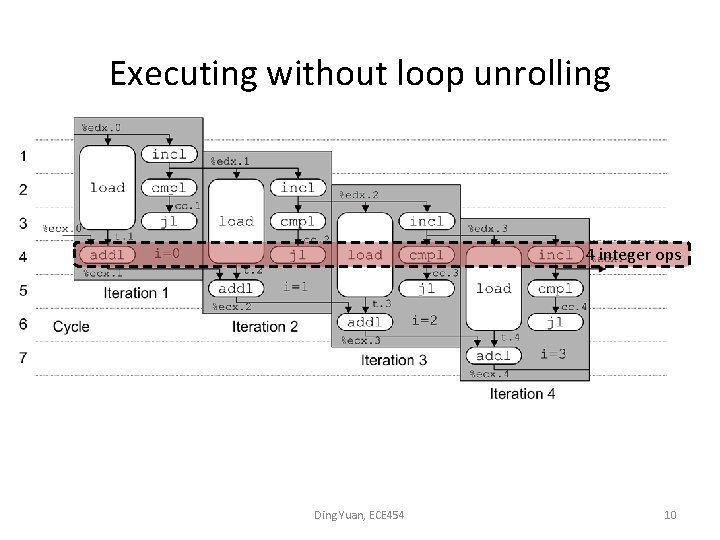

Loop unrolling for machine dependent optimization

Original loop:

    void vsum4(vec_ptr v, int *dest)
    {
        int i;
        int length = vec_length(v);
        int *data = get_vec_start(v);
        int sum = 0;
        for (i = 0; i < length; i++)
            sum += data[i];
        *dest = sum;
    }

Unrolled by 3:

    void vsum5(vec_ptr v, int *dest)
    {
        int length = vec_length(v);
        int limit = length - 2;
        int *data = get_vec_start(v);
        int sum = 0;
        int i;
        /* main loop: three elements per iteration */
        for (i = 0; i < limit; i += 3) {
            sum += data[i];
            sum += data[i+1];
            sum += data[i+2];
        }
        /* finish the remaining elements */
        for ( ; i < length; i++) {
            sum += data[i];
        }
        *dest = sum;
    }

Why can loop unrolling help?
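The same transformation can be tried on a plain array without the `vec_ptr` helpers. The sketch below is an assumption-laden stand-in: `sum_simple` and `sum_unrolled3` are hypothetical names, and the index type is `size_t` rather than `int`, which is why the loop bound is written as `i + 2 < length` instead of precomputing `length - 2`.

```c
#include <stddef.h>

/* Baseline: each element costs one add plus the loop overhead
 * (index increment, comparison, branch). */
int sum_simple(const int *data, size_t length)
{
    int sum = 0;
    for (size_t i = 0; i < length; i++)
        sum += data[i];
    return sum;
}

/* Unrolled by 3: the loop overhead is paid once per three elements. */
int sum_unrolled3(const int *data, size_t length)
{
    int sum = 0;
    size_t i = 0;
    /* i + 2 < length avoids the unsigned wrap-around that
     * length - 2 would cause when length is 0 or 1. */
    for (; i + 2 < length; i += 3) {
        sum += data[i];
        sum += data[i + 1];
        sum += data[i + 2];
    }
    /* Finish the 0 to 2 leftover elements. */
    for (; i < length; i++)
        sum += data[i];
    return sum;
}
```

Both functions return the same value for every length, including lengths that are not a multiple of 3.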

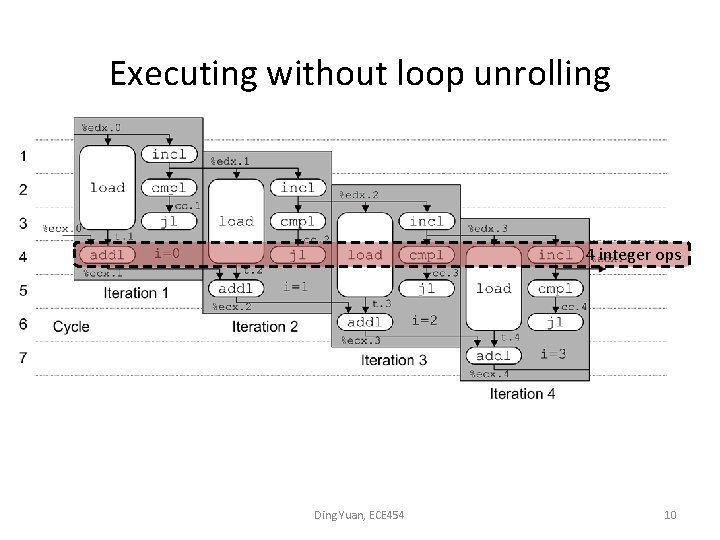

Executing without loop unrolling

- 4 integer ops

Q11 from midterm 2013

Memory performance: cache

- Motivation
  - L1 cache reference: 0.5 ns
  - Main memory reference: 100 ns
    - 200x slower!

Why Caches Work

- Locality: programs tend to use data and instructions with addresses near or equal to those they have used recently
- Temporal locality:
  - Recently referenced items are likely to be referenced again in the near future
- Spatial locality:
  - Items with nearby addresses tend to be referenced close together in time
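A small illustration of both kinds of locality, as a sketch with made-up names and sizes (`ROWS`, `COLS`, `sum_rows`, `sum_cols`). C stores 2D arrays row by row, so the first traversal touches adjacent addresses while the second jumps `COLS` elements between accesses.

```c
#define ROWS 4
#define COLS 8

/* Row-by-row traversal: consecutive accesses are adjacent in memory
 * (stride 1), which is good spatial locality. The variable sum is
 * reused on every iteration, which is temporal locality. */
long sum_rows(int a[ROWS][COLS])
{
    long sum = 0;
    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++)
            sum += a[i][j];
    return sum;
}

/* Column-by-column traversal: consecutive accesses are COLS ints apart,
 * so for large arrays each access may fall in a different cache block. */
long sum_cols(int a[ROWS][COLS])
{
    long sum = 0;
    for (int j = 0; j < COLS; j++)
        for (int i = 0; i < ROWS; i++)
            sum += a[i][j];
    return sum;
}
```

The two functions compute the same sum; only the order of memory accesses differs. At this toy size the whole array fits in cache, so a timing difference would only show up with much larger dimensions.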

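Cache hierarchy: example 1

Direct mapped: one block per set. Assume: cache block size 8 bytes.

- S = 2^s sets; each line holds a valid bit (v), a tag, and bytes 0-7 of the block
- Address of int: t tag bits | set index 0…01 | block offset 100
- Find set: the set index bits select the set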
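The address split can be written out with shifts and masks. This is a sketch under stated assumptions: the 8-byte block size comes from the slide, while the number of set index bits (`SET_BITS`, here 2) and the names `addr_parts` and `split_address` are chosen only for illustration.

```c
#include <stdint.h>

#define OFFSET_BITS 3u   /* 8-byte blocks, as on the slide */
#define SET_BITS    2u   /* assumed: S = 2^2 = 4 sets */

typedef struct {
    uint32_t tag;      /* remaining high-order bits */
    uint32_t set;      /* which set to look in */
    uint32_t offset;   /* byte position inside the block */
} addr_parts;

/* Splits an address into | tag | set index | block offset |. */
addr_parts split_address(uint32_t addr)
{
    addr_parts p;
    p.offset = addr & ((1u << OFFSET_BITS) - 1u);
    p.set    = (addr >> OFFSET_BITS) & ((1u << SET_BITS) - 1u);
    p.tag    = addr >> (OFFSET_BITS + SET_BITS);
    return p;
}
```

For example, address `0x2C` is binary `1 01 100`: tag 1, set index 1, block offset 4.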

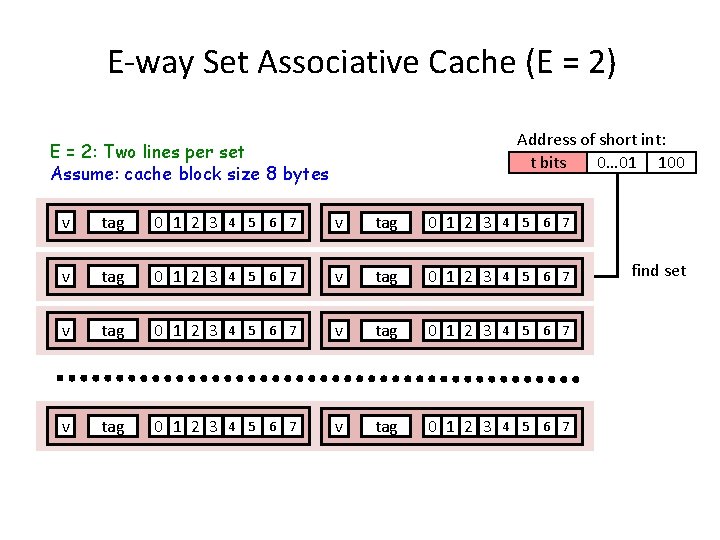

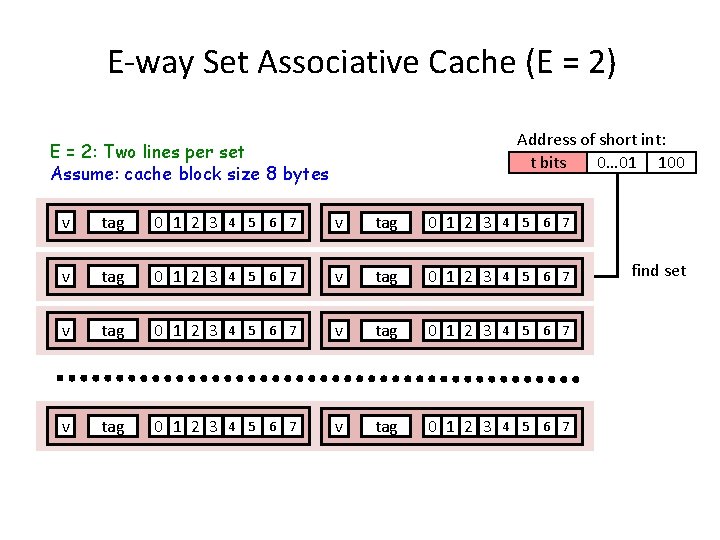

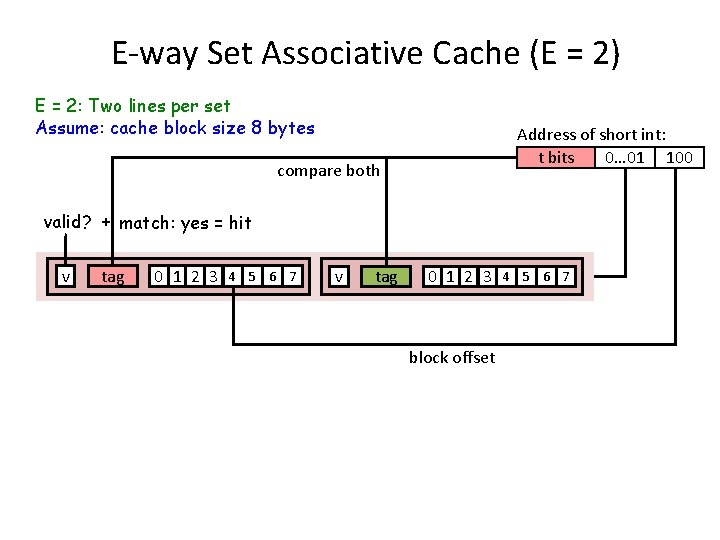

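E-way Set Associative Cache (E = 2)

E = 2: two lines per set. Assume: cache block size 8 bytes.

- Address of short int: t tag bits | set index 0…01 | block offset 100
- Each of the two lines in a set holds a valid bit (v), a tag, and bytes 0-7 of the block
- Find set: the set index bits select the set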

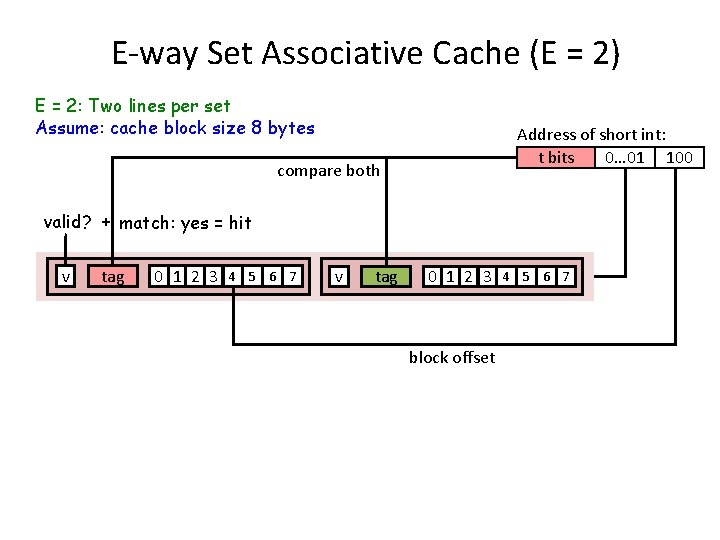

E-way Set Associative Cache (E = 2)

E = 2: two lines per set. Assume: cache block size 8 bytes.

- Address of short int: t tag bits | set index 0…01 | block offset 100
- Compare both lines in the set: valid? + tag match: yes = hit
- On a hit, the block offset (100) locates the data: the short int (2 bytes) starts at byte 4 of the block
- No match:
  - One line in the set is selected for eviction and replacement
  - Replacement policies: random, least recently used (LRU), …
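The lookup and replacement steps can be sketched as a tiny simulator that tracks only valid bits and tags. Everything beyond E = 2 and the 8-byte block size is an assumption for illustration: the number of sets, the choice of LRU as the replacement policy, and the names `cache`, `cache_set`, `cache_line`, and `cache_access`.

```c
#include <stdbool.h>
#include <stdint.h>

#define OFFSET_BITS 3u                 /* 8-byte blocks, as on the slide */
#define SET_BITS    2u                 /* assumed: 4 sets */
#define NUM_SETS    (1u << SET_BITS)
#define WAYS        2u                 /* E = 2 */

typedef struct {
    bool     valid;
    uint32_t tag;
} cache_line;

typedef struct {
    cache_line line[WAYS];
    unsigned   lru;                    /* index of the least recently used line */
} cache_set;

typedef struct {
    cache_set set[NUM_SETS];
} cache;                               /* must start zeroed: all lines invalid */

/* Returns true on a hit. On a miss the LRU line of the set is replaced.
 * Starting from a zeroed cache, the LRU line is an invalid one until the
 * set is full, so no separate search for an empty line is needed. */
bool cache_access(cache *c, uint32_t addr)
{
    uint32_t set_idx = (addr >> OFFSET_BITS) & (NUM_SETS - 1u);
    uint32_t tag     = addr >> (OFFSET_BITS + SET_BITS);
    cache_set *s     = &c->set[set_idx];

    /* Compare both lines: valid and matching tag means hit. */
    for (unsigned w = 0; w < WAYS; w++) {
        if (s->line[w].valid && s->line[w].tag == tag) {
            s->lru = 1u - w;           /* the other line is now least recent */
            return true;
        }
    }

    /* No match: evict the LRU line and install the new tag. */
    unsigned victim = s->lru;
    s->line[victim].valid = true;
    s->line[victim].tag   = tag;
    s->lru = 1u - victim;
    return false;
}
```

Addresses `0x0C`, `0x2C`, and `0x4C` all map to set 1 with tags 0, 1, and 2. Accessing them in the order `0x0C`, `0x2C`, `0x0C`, `0x4C` evicts the block holding `0x2C`, because `0x0C` was touched more recently.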

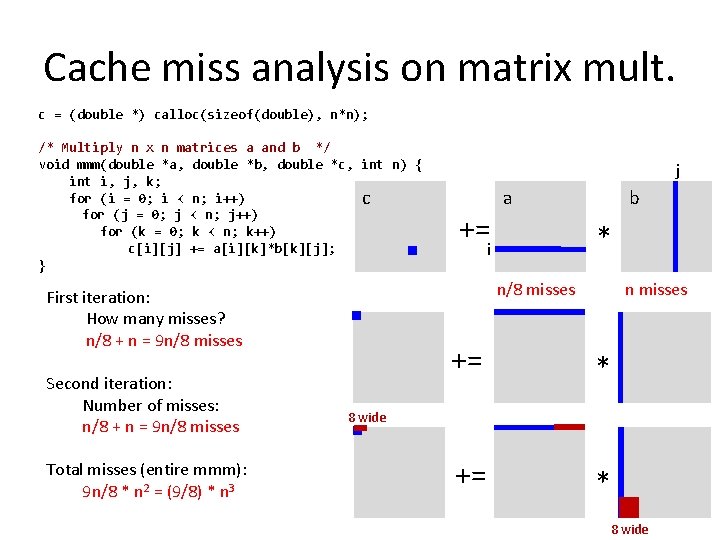

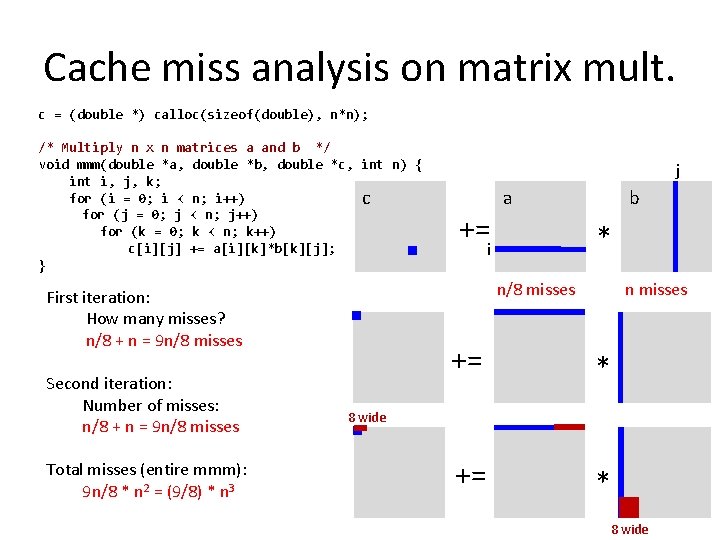

Cache miss analysis on matrix mult.

    c = (double *) calloc(n*n, sizeof(double));

    /* Multiply n x n matrices a and b (row-major, stored as flat arrays) */
    void mmm(double *a, double *b, double *c, int n) {
        int i, j, k;
        for (i = 0; i < n; i++)
            for (j = 0; j < n; j++)
                for (k = 0; k < n; k++)
                    c[i*n + j] += a[i*n + k] * b[k*n + j];
    }

- First iteration (one element of c): how many misses?
  - Row i of a: n/8 misses (a cache block is 8 doubles wide)
  - Column j of b: n misses
  - n/8 + n = 9n/8 misses
- Second iteration: number of misses: n/8 + n = 9n/8 misses
- Total misses (entire mmm): 9n/8 * n^2 = (9/8) * n^3

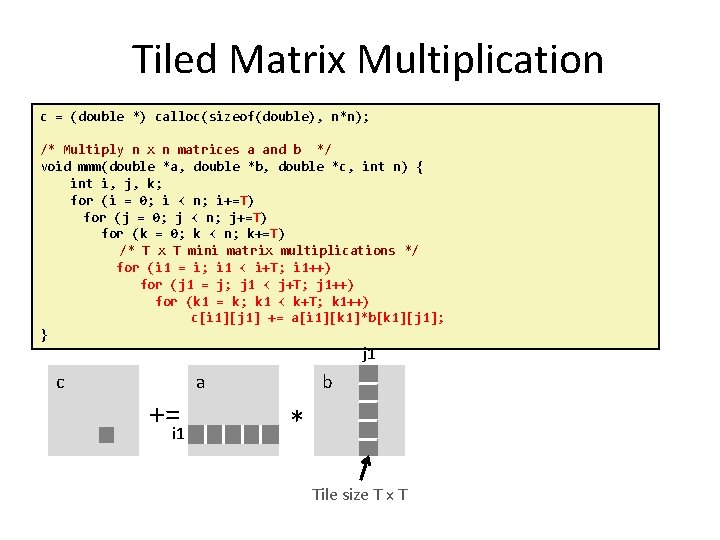

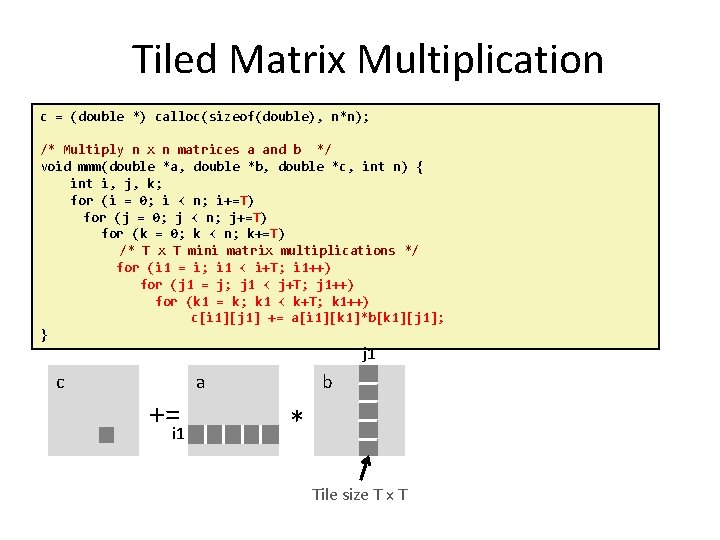

Tiled Matrix Multiplication

    c = (double *) calloc(n*n, sizeof(double));

    /* Multiply n x n matrices a and b (row-major, stored as flat arrays).
       T is the tile size and is assumed to divide n. */
    void mmm(double *a, double *b, double *c, int n) {
        int i, j, k;
        int i1, j1, k1;
        for (i = 0; i < n; i += T)
            for (j = 0; j < n; j += T)
                for (k = 0; k < n; k += T)
                    /* T x T mini matrix multiplications */
                    for (i1 = i; i1 < i+T; i1++)
                        for (j1 = j; j1 < j+T; j1++)
                            for (k1 = k; k1 < k+T; k1++)
                                c[i1*n + j1] += a[i1*n + k1] * b[k1*n + j1];
    }

Tile size: T x T
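The tiled loop nest only stays in bounds when the tile size divides n. A variant that clamps each tile at the matrix edge handles any n; the sketch below shows one way to do it and includes an untiled reference for checking results. The tile size `T = 4` and the names `mmm_tiled_any`, `mmm_ref`, and `min_int` are assumptions for illustration.

```c
#define T 4   /* tile size; an assumed value, normally tuned to the cache */

static int min_int(int x, int y) { return x < y ? x : y; }

/* c += a * b for row-major n x n matrices; the caller zeroes c first.
 * Each tile is clamped to the matrix edge, so n need not be a multiple of T. */
void mmm_tiled_any(const double *a, const double *b, double *c, int n)
{
    for (int i = 0; i < n; i += T)
        for (int j = 0; j < n; j += T)
            for (int k = 0; k < n; k += T) {
                int i_end = min_int(i + T, n);
                int j_end = min_int(j + T, n);
                int k_end = min_int(k + T, n);
                /* mini matrix multiplication on one tile */
                for (int i1 = i; i1 < i_end; i1++)
                    for (int j1 = j; j1 < j_end; j1++)
                        for (int k1 = k; k1 < k_end; k1++)
                            c[i1*n + j1] += a[i1*n + k1] * b[k1*n + j1];
            }
}

/* Untiled reference with the same semantics, used to check the tiled version. */
void mmm_ref(const double *a, const double *b, double *c, int n)
{
    for (int i = 0; i < n; i++)
        for (int j = 0; j < n; j++)
            for (int k = 0; k < n; k++)
                c[i*n + j] += a[i*n + k] * b[k*n + j];
}
```

For each element of `c`, both versions add the products in the same order of `k`, so they produce identical results, not just approximately equal ones.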

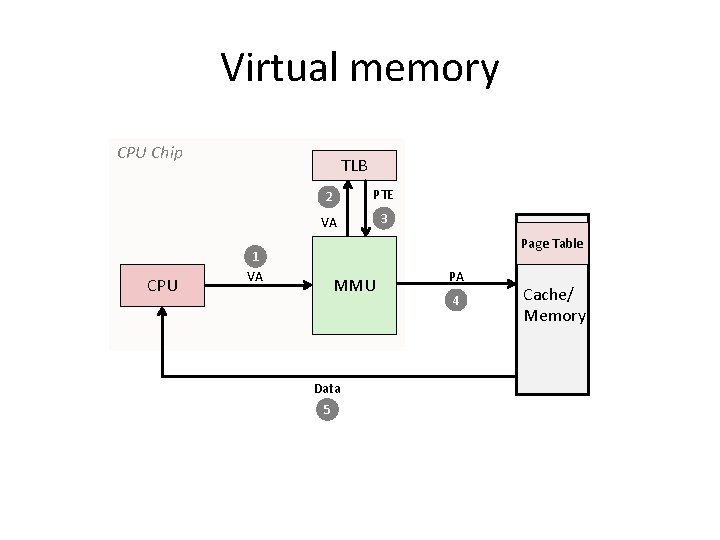

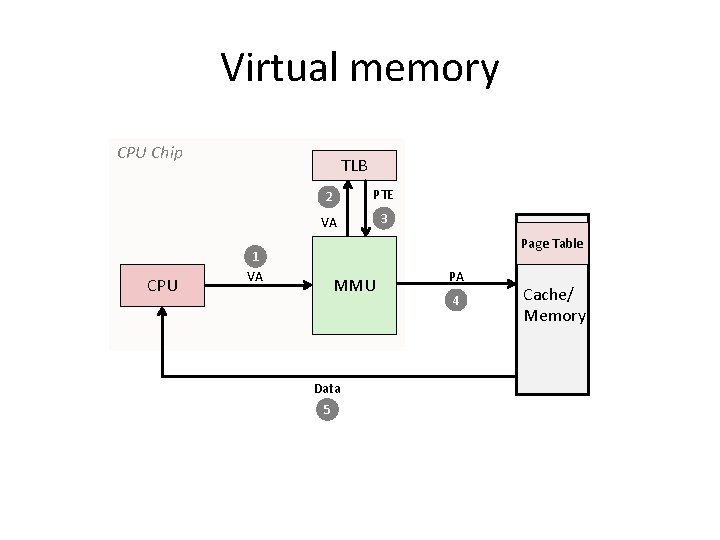

Virtual memory

[Figure: address translation. Components: CPU chip (CPU, MMU, TLB), page table, cache/memory. Arrows numbered 1-5 carry the VA, the PTE, the PA, and the data.]
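The translation path can be sketched in code: split the virtual address into a page number and an offset, try the TLB, fall back to the page table on a TLB miss, then form the physical address. This is a toy model under loudly stated assumptions, not the mechanism of any particular CPU: 4 KB pages, a 16-page single-level page table, a 4-entry direct-mapped TLB, and the names `mmu_t`, `pte_t`, `tlb_entry_t`, and `translate` are all invented for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_BITS   12u   /* assumed 4 KB pages */
#define NUM_PAGES   16u   /* assumed tiny virtual address space */
#define TLB_ENTRIES 4u    /* assumed direct-mapped TLB */

typedef struct { bool valid; uint32_t ppn; } pte_t;
typedef struct { bool valid; uint32_t vpn; uint32_t ppn; } tlb_entry_t;

typedef struct {
    pte_t       page_table[NUM_PAGES];
    tlb_entry_t tlb[TLB_ENTRIES];
    unsigned    tlb_hits, tlb_misses;
} mmu_t;                  /* must start zeroed: nothing mapped, TLB empty */

/* Translates va into *pa. Returns false if the page is not mapped
 * (a page fault in a real system). */
bool translate(mmu_t *m, uint32_t va, uint32_t *pa)
{
    uint32_t vpn    = va >> PAGE_BITS;
    uint32_t offset = va & ((1u << PAGE_BITS) - 1u);
    if (vpn >= NUM_PAGES)
        return false;

    tlb_entry_t *e = &m->tlb[vpn % TLB_ENTRIES];
    if (e->valid && e->vpn == vpn) {
        m->tlb_hits++;                   /* PTE found in the TLB */
    } else {
        m->tlb_misses++;                 /* consult the page table */
        if (!m->page_table[vpn].valid)
            return false;
        e->valid = true;                 /* cache the translation */
        e->vpn   = vpn;
        e->ppn   = m->page_table[vpn].ppn;
    }
    *pa = (e->ppn << PAGE_BITS) | offset;
    return true;
}
```

With virtual page 2 mapped to physical page 7, translating `0x2ABC` yields `0x7ABC` and counts one TLB miss; a second access to the same page is a TLB hit.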

Complete data reference analysis

- Q7 from midterm 2013

Dynamic mem. management

- Alignment
  - What is alignment? Why alignment?
- Q6 from midterm 2013
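In allocator code, alignment usually shows up as rounding sizes up to a multiple of the alignment and checking that addresses are multiples of it. The sketch below assumes 8-byte alignment; the constant `ALIGNMENT` and the names `align_up` and `is_aligned` are illustrative, not part of any specific allocator.

```c
#include <stddef.h>
#include <stdint.h>

#define ALIGNMENT 8u   /* assumed; must be a power of two for the masks below */

/* Rounds size up to the next multiple of ALIGNMENT. */
size_t align_up(size_t size)
{
    return (size + (ALIGNMENT - 1u)) & ~(size_t)(ALIGNMENT - 1u);
}

/* Returns nonzero when the address p is a multiple of ALIGNMENT. */
int is_aligned(const void *p)
{
    return ((uintptr_t)p & (ALIGNMENT - 1u)) == 0;
}
```

For example, `align_up(13)` is 16 and `align_up(8)` stays 8.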

malloc/free

- How do we know how much memory to free given just a pointer?
- How do we keep track of the free blocks?
- How do we pick a block to use for allocation when many might fit?
- How do we reinsert a freed block?
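A common answer to the first question is to store a header word just before each payload, holding the block size and an allocated flag. The slide only poses the question, so the layout below is an assumption: sizes are multiples of 8, which leaves the low 3 bits of the size free for the flag. The names `pack`, `block_size`, `is_allocated`, and `size_from_payload` are hypothetical.

```c
#include <stddef.h>

/* Header word: block size in the upper bits, allocated flag in bit 0. */
size_t pack(size_t size, int alloc)
{
    return size | (size_t)(alloc != 0);
}

/* Size stored in a header; masks off the 3 low flag bits. */
size_t block_size(size_t header)
{
    return header & ~(size_t)7;
}

/* Allocated flag stored in a header. */
int is_allocated(size_t header)
{
    return (int)(header & 1);
}

/* Given a payload pointer (what the allocator handed out), read the
 * header word that sits immediately before it. */
size_t size_from_payload(const void *payload)
{
    const size_t *header = (const size_t *)payload - 1;
    return block_size(*header);
}
```

With this layout, freeing needs only the pointer: step back one word, read the size, and clear the allocated bit.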

Keeping Track of Free Blocks

- Method 1: Implicit list using lengths -- links all blocks
  - [Figure: a row of blocks with size labels 4, 4, 6, 6, 4, 4]
- Method 2: Explicit list among the free blocks using pointers within the free blocks
  - [Figure: free blocks A, B, and C connected by successor links and predecessor links; size labels 4, 4, 4, 4, 6, 6, 4, 4]
- Method 3: Segregated free list
  - Different free lists for different size classes
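Method 2 can be sketched as a doubly linked list whose links live inside the free blocks themselves. This is a minimal illustration under assumptions the slide does not fix: freed blocks are inserted at the head of the list (a LIFO policy), allocation uses first fit, and the names `free_block`, `free_list_insert`, `free_list_remove`, and `first_fit` are invented here.

```c
#include <stddef.h>

/* The payload area of a free block is reused to hold the list links. */
typedef struct free_block {
    size_t             size;   /* block size */
    struct free_block *succ;   /* successor link */
    struct free_block *pred;   /* predecessor link */
} free_block;

/* Reinsert a freed block at the head of the list (LIFO policy). */
void free_list_insert(free_block **head, free_block *b)
{
    b->pred = NULL;
    b->succ = *head;
    if (*head != NULL)
        (*head)->pred = b;
    *head = b;
}

/* Unlink a block, for example when it is chosen for an allocation. */
void free_list_remove(free_block **head, free_block *b)
{
    if (b->pred != NULL)
        b->pred->succ = b->succ;
    else
        *head = b->succ;
    if (b->succ != NULL)
        b->succ->pred = b->pred;
    b->succ = NULL;
    b->pred = NULL;
}

/* First fit: the first free block that is large enough, or NULL. */
free_block *first_fit(free_block *head, size_t size)
{
    for (free_block *b = head; b != NULL; b = b->succ)
        if (b->size >= size)
            return b;
    return NULL;
}
```

Compared with an implicit list, the search visits only free blocks, at the cost of two extra pointers per free block. A segregated free list would keep one such list head per size class.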

Final remarks

- Time/location/policy
  - Time: Oct. 27th, 7-9 PM
  - Location: GB 404 & GB 405
  - Policy: closed book
- Make sure you understand the practice midterm
- Best of luck!