
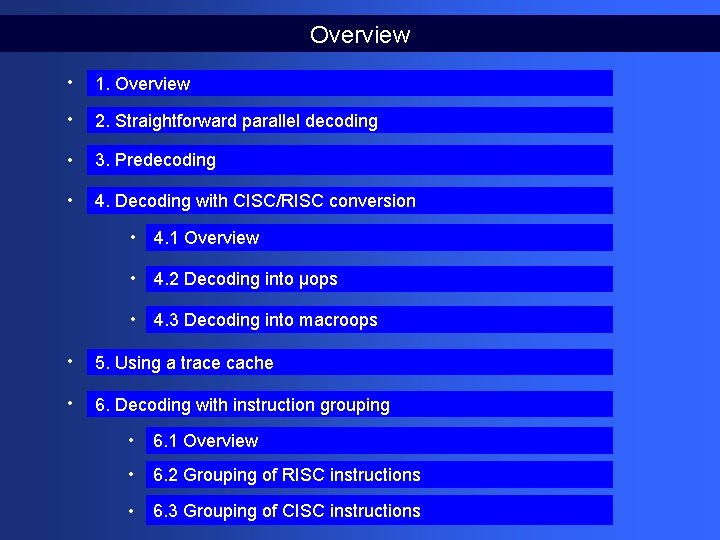

Microarchitecture of Superscalars (4): Decoding

Dezső Sima, Fall 2007 (Ver. 2.0)

Dezső Sima, 2007

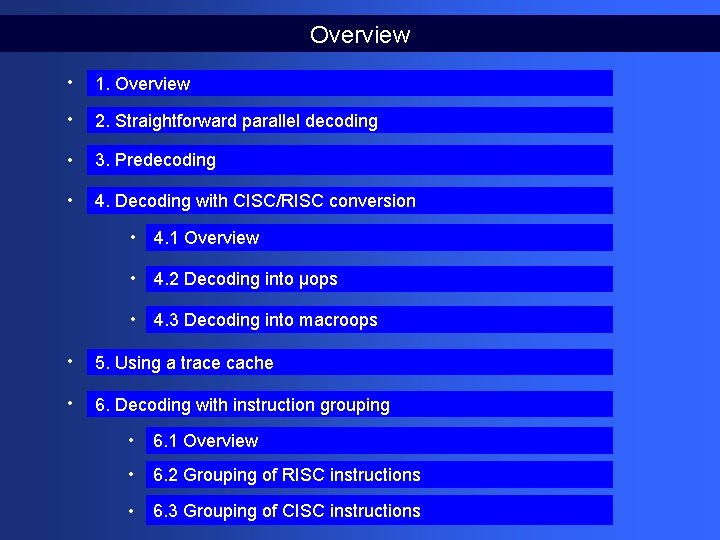

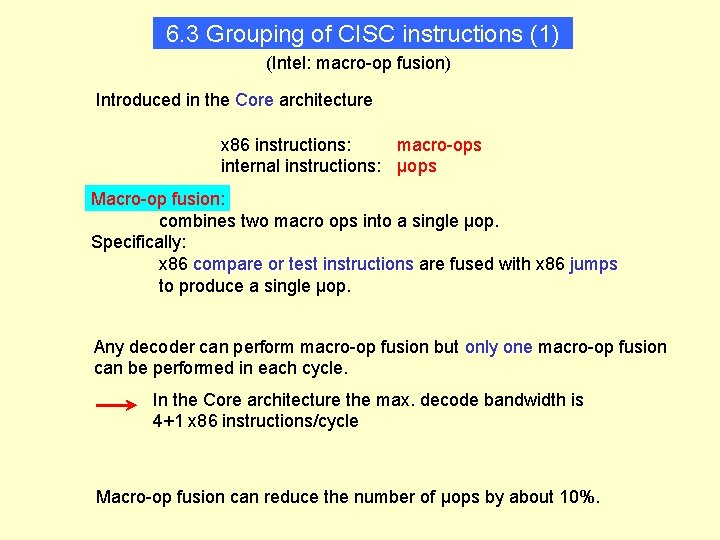

Overview

• 1. Overview
• 2. Straightforward parallel decoding
• 3. Predecoding
• 4. Decoding with CISC/RISC conversion
  • 4.1 Overview
  • 4.2 Decoding into µops
  • 4.3 Decoding into macroops
• 5. Using a trace cache
• 6. Decoding with instruction grouping
  • 6.1 Overview
  • 6.2 Grouping of RISC instructions
  • 6.3 Grouping of CISC instructions

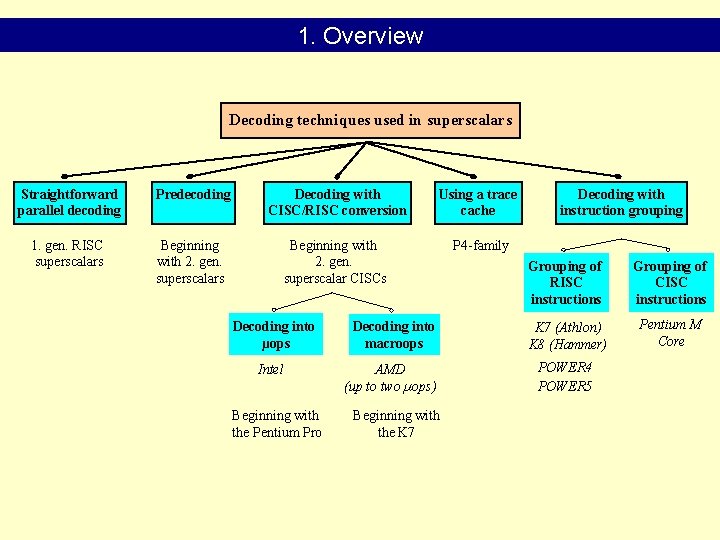

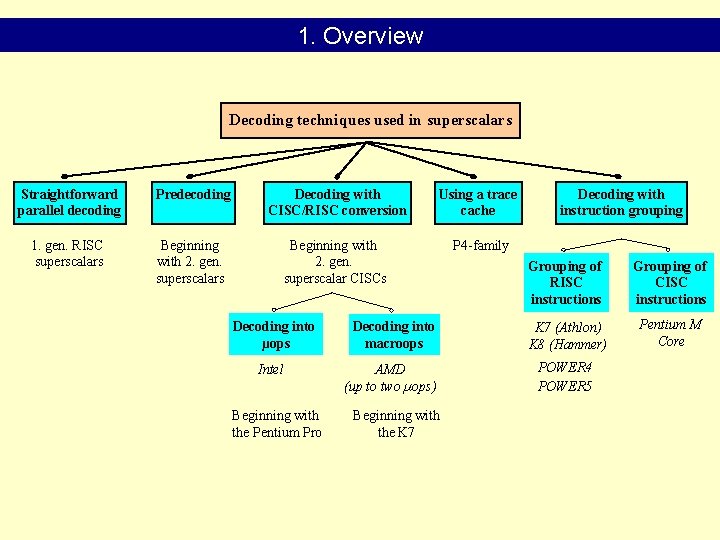

1. Overview

Decoding techniques used in superscalars:

• Straightforward parallel decoding: 1st-generation RISC superscalars
• Predecoding: beginning with 2nd-generation superscalars
• Decoding with CISC/RISC conversion (CISCs)
  • Decoding into µops: Intel, beginning with the Pentium Pro
  • Decoding into macroops (up to two µops): AMD, beginning with the K7
• Using a trace cache: P4 family
• Decoding with instruction grouping
  • Grouping of RISC instructions: K7 (Athlon), K8 (Hammer), POWER4, POWER5
  • Grouping of CISC instructions: Pentium M, Core

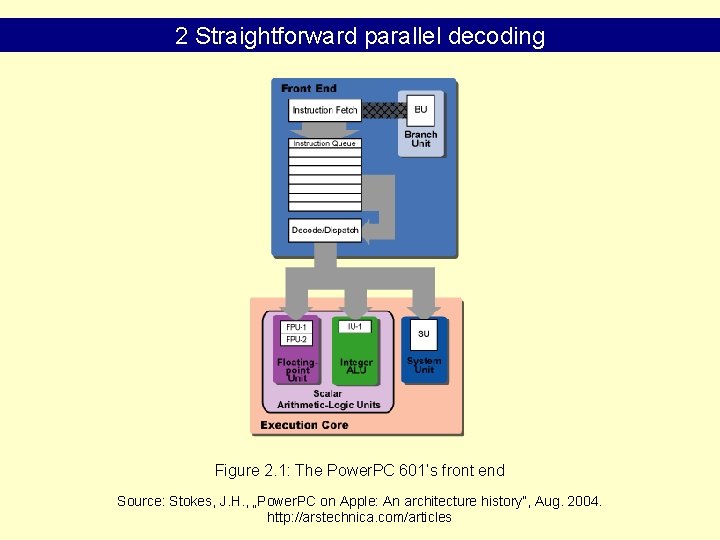

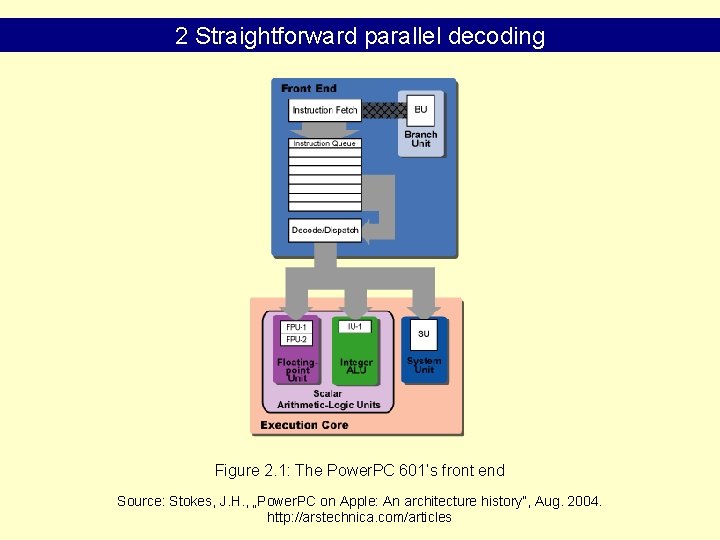

2. Straightforward parallel decoding

Figure 2.1: The PowerPC 601's front end

Source: Stokes, J. H., "PowerPC on Apple: An architecture history", Aug. 2004. http://arstechnica.com/articles

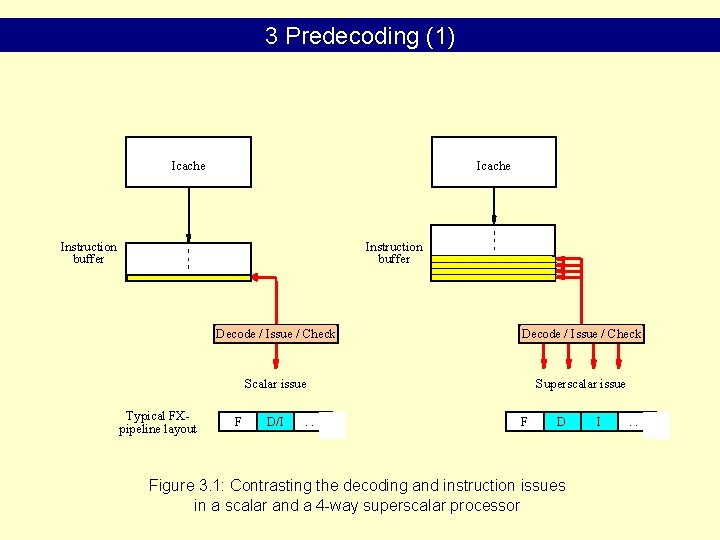

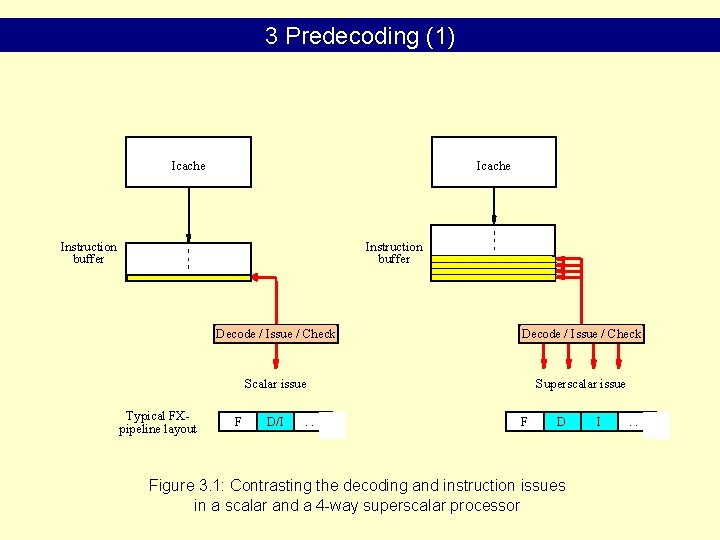

3. Predecoding (1)

Figure 3.1: Contrasting the decoding and instruction issues in a scalar and a 4-way superscalar processor

[Figure labels: I-cache, instruction buffer, Decode / Issue / Check. Typical FX pipeline layout: scalar issue F, D/I, ...; superscalar issue F, D, I, ...]

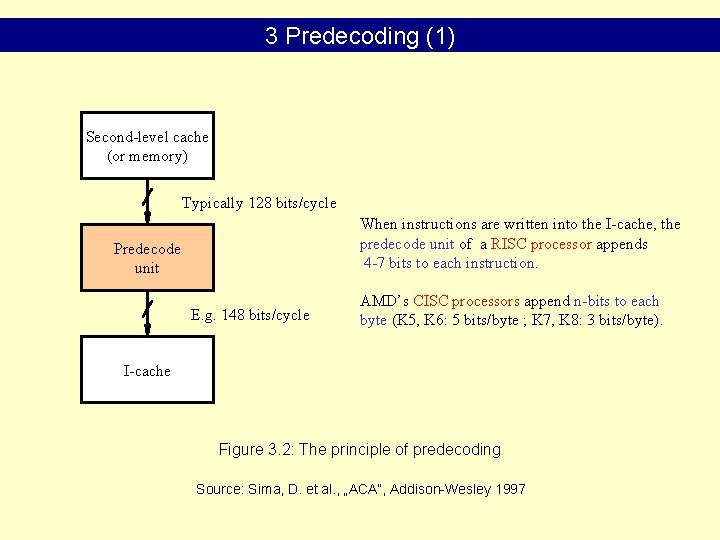

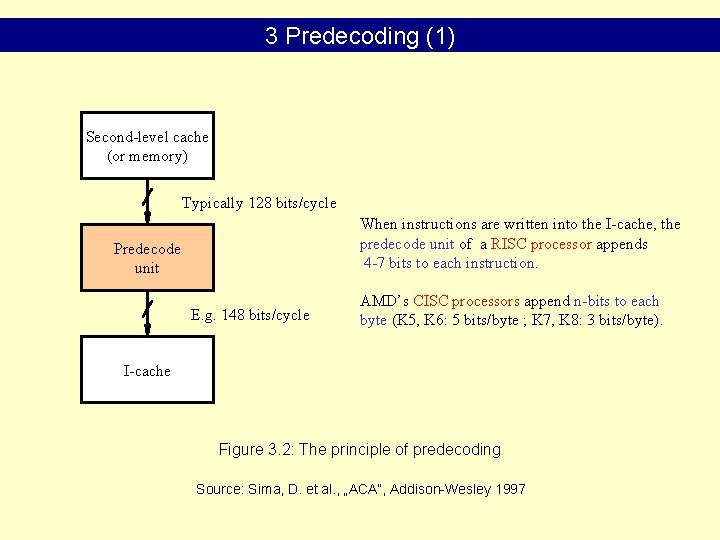

3. Predecoding (1)

Second-level cache (or memory) → (typically 128 bits/cycle) → predecode unit → (e.g. 148 bits/cycle) → I-cache

• When instructions are written into the I-cache, the predecode unit of a RISC processor appends 4-7 bits to each instruction.
• AMD's CISC processors append n bits to each byte (K5, K6: 5 bits/byte; K7, K8: 3 bits/byte).

Figure 3.2: The principle of predecoding

Source: Sima, D. et al., "ACA", Addison-Wesley, 1997
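The bandwidth figures on this slide can be checked with a little arithmetic. The sketch below assumes 32-bit RISC instructions with 5 predecode bits each (one value from the 4-7 bit range), which turns a 128-bit fetch into 148 bits. The 16-byte block used for the AMD cases is an assumption chosen only for illustration, and `predecoded_bits` is a hypothetical helper name.

```python
def predecoded_bits(units: int, unit_bits: int, tag_bits: int) -> int:
    """Width of a fetch block after tag_bits are appended to each unit."""
    return units * (unit_bits + tag_bits)


# RISC case: four 32-bit instructions (128 bits), 5 predecode bits each (assumed).
risc = predecoded_bits(units=4, unit_bits=32, tag_bits=5)

# AMD case: tags are appended per byte; the 16-byte block is an assumption.
k5_k6 = predecoded_bits(units=16, unit_bits=8, tag_bits=5)
k7_k8 = predecoded_bits(units=16, unit_bits=8, tag_bits=3)

print(risc, k5_k6, k7_k8)  # 148 208 176
```

Under these assumptions the per-byte tagging of the K5/K6 adds over 60% to the stored block, which suggests why the later K7/K8 figure of 3 bits/byte matters for I-cache area.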

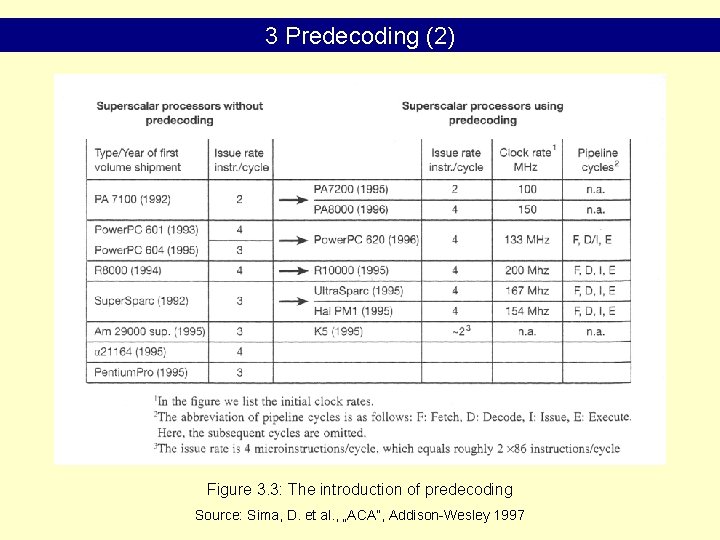

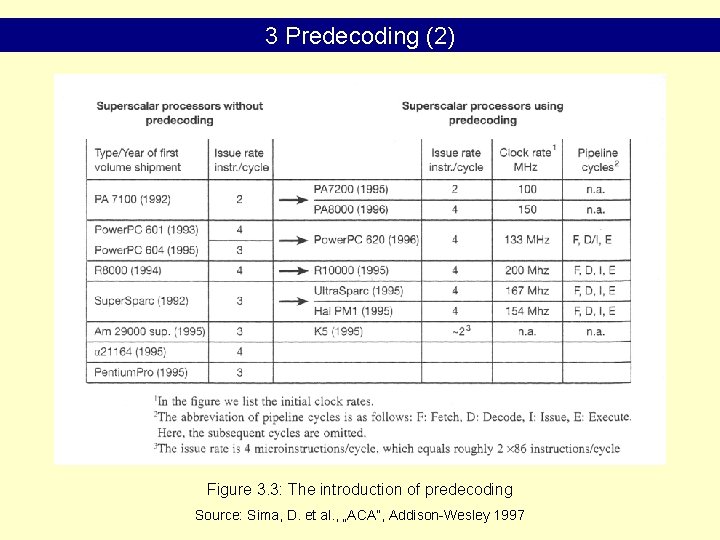

3. Predecoding (2)

Figure 3.3: The introduction of predecoding

Source: Sima, D. et al., "ACA", Addison-Wesley, 1997

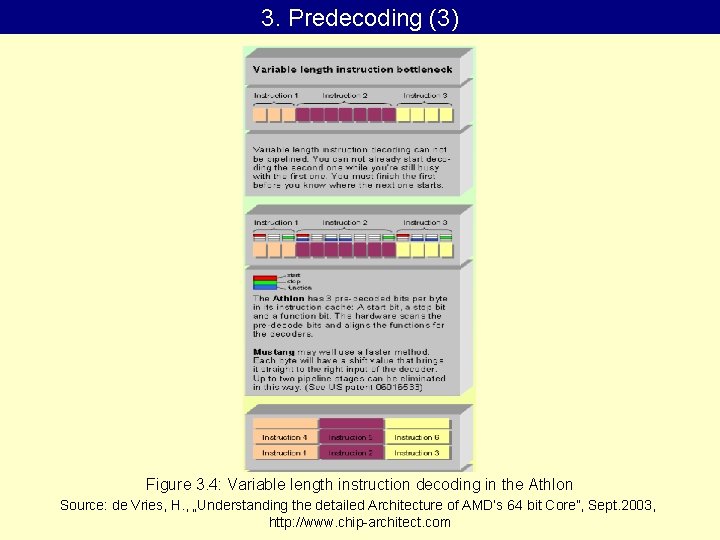

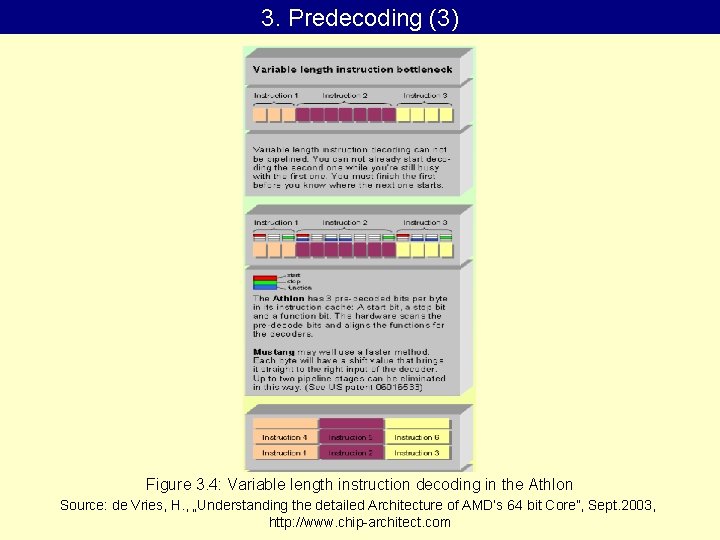

3. Predecoding (3)

Figure 3.4: Variable length instruction decoding in the Athlon

Source: de Vries, H., "Understanding the detailed Architecture of AMD's 64 bit Core", Sept. 2003, http://www.chip-architect.com

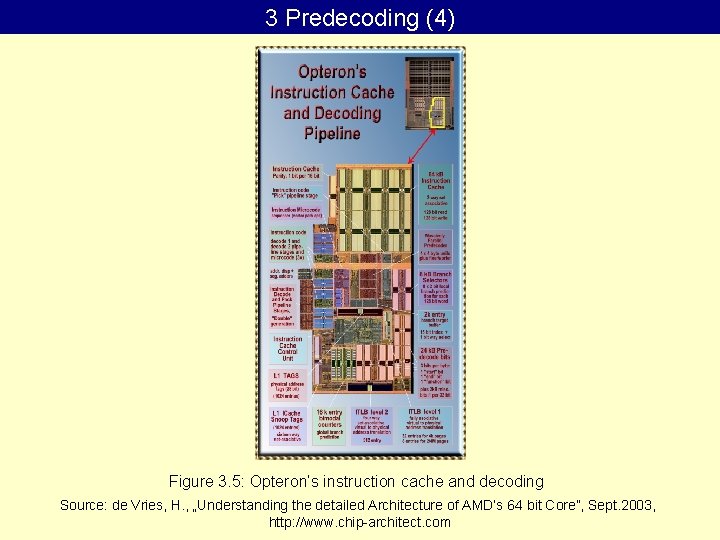

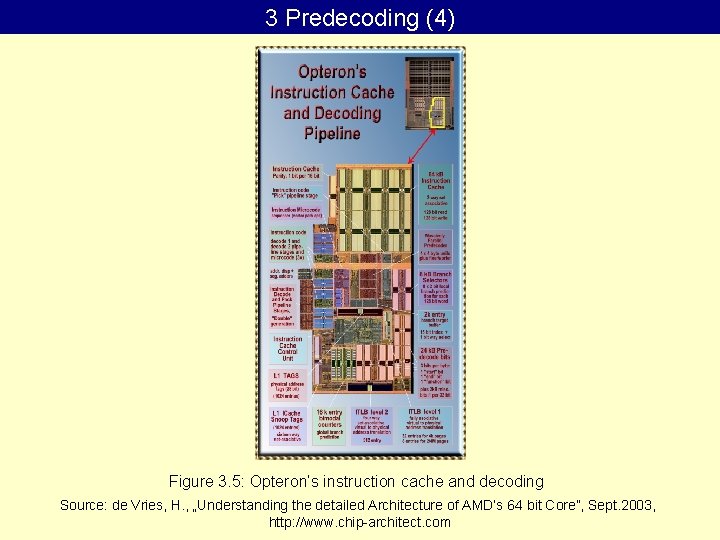

3. Predecoding (4)

Figure 3.5: Opteron's instruction cache and decoding

Source: de Vries, H., "Understanding the detailed Architecture of AMD's 64 bit Core", Sept. 2003, http://www.chip-architect.com

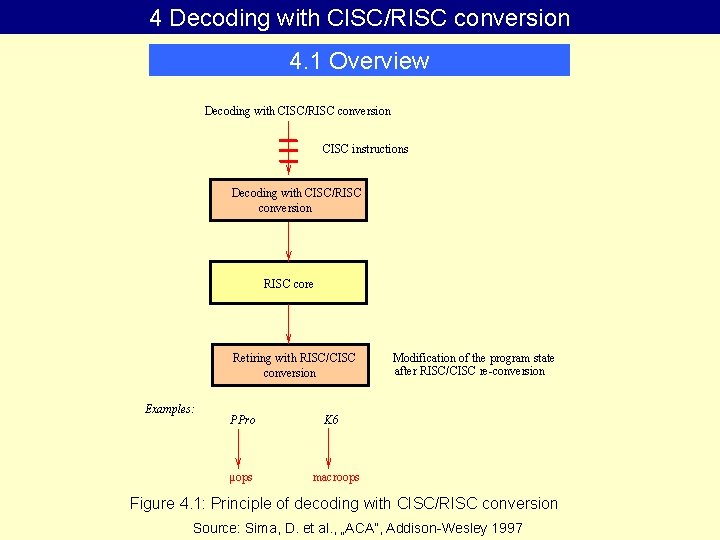

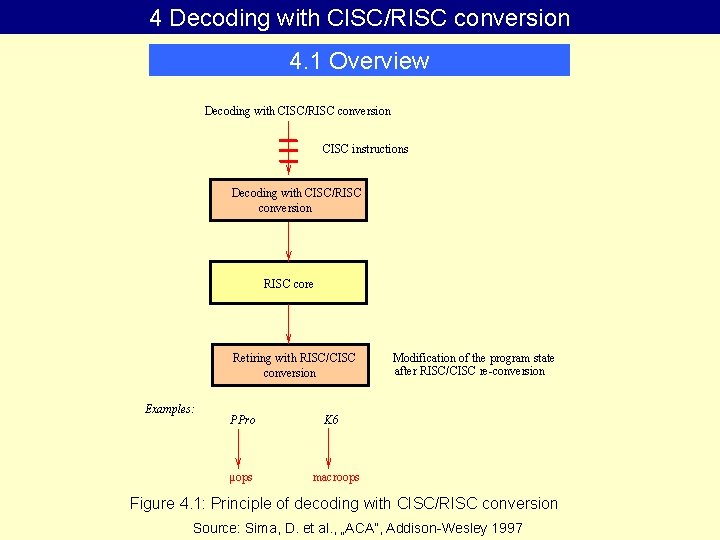

4. Decoding with CISC/RISC conversion

4.1 Overview

CISC instructions → decoding with CISC/RISC conversion → RISC core → retiring with RISC/CISC conversion (modification of the program state after RISC/CISC re-conversion)

Examples: PPro µops, K6 macroops

Figure 4.1: Principle of decoding with CISC/RISC conversion

Source: Sima, D. et al., "ACA", Addison-Wesley, 1997

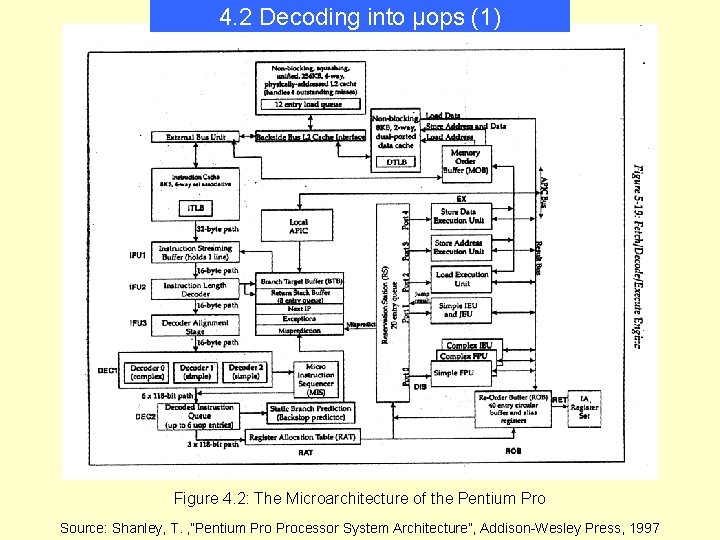

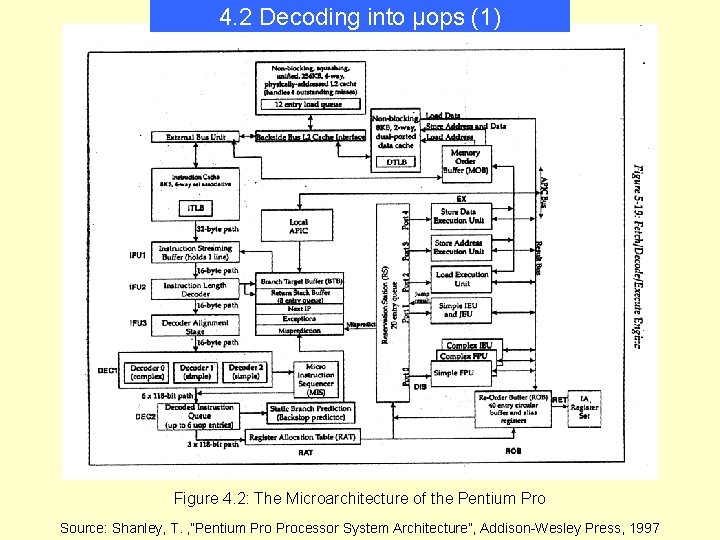

4.2 Decoding into µops (1)

Figure 4.2: The microarchitecture of the Pentium Pro

Source: Shanley, T., "Pentium Processor System Architecture", Addison-Wesley Press, 1997

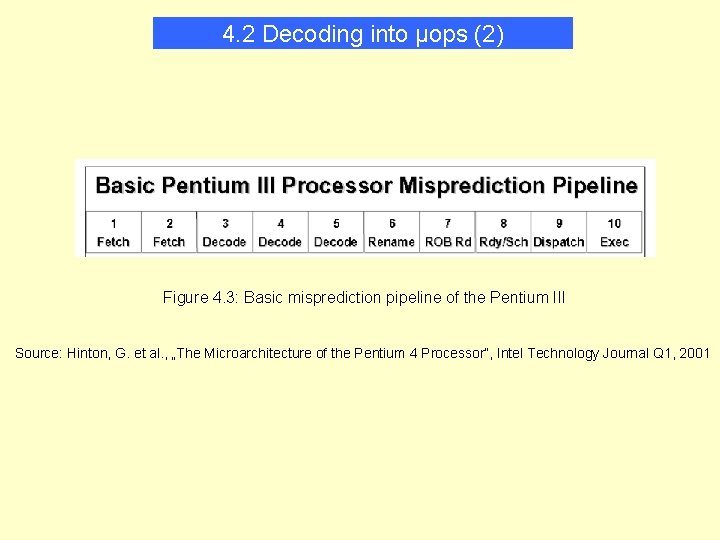

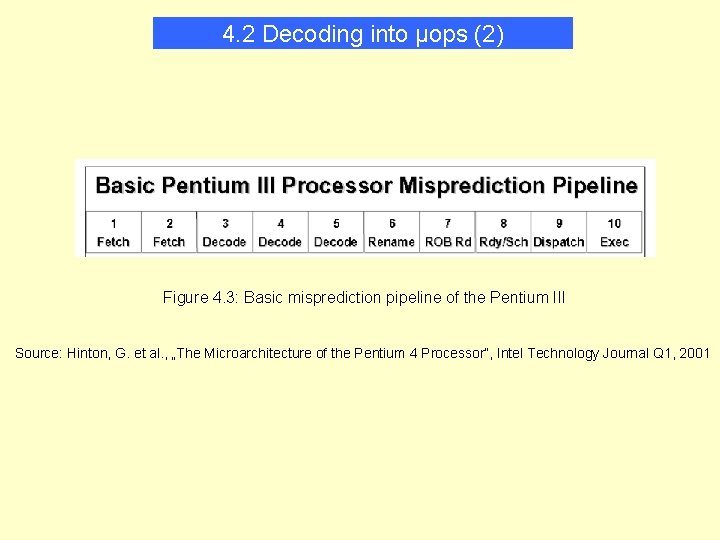

4.2 Decoding into µops (2)

Figure 4.3: Basic misprediction pipeline of the Pentium III

Source: Hinton, G. et al., "The Microarchitecture of the Pentium 4 Processor", Intel Technology Journal, Q1 2001

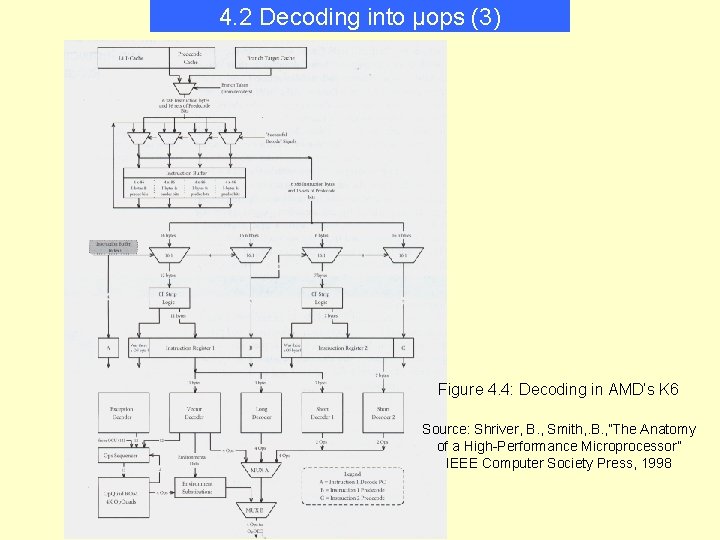

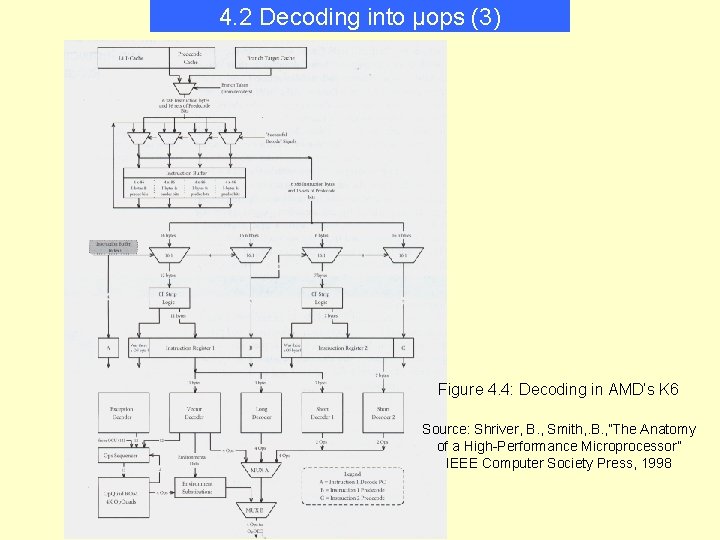

4.2 Decoding into µops (3)

Figure 4.4: Decoding in AMD's K6

Source: Shriver, B., Smith, B., "The Anatomy of a High-Performance Microprocessor", IEEE Computer Society Press, 1998

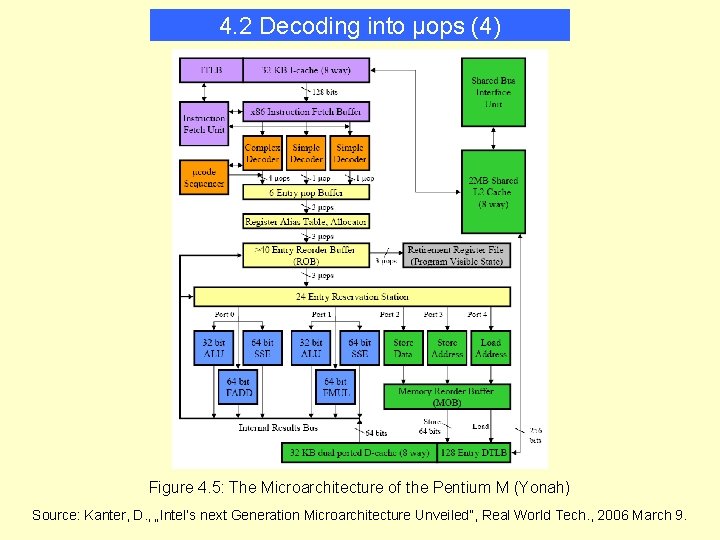

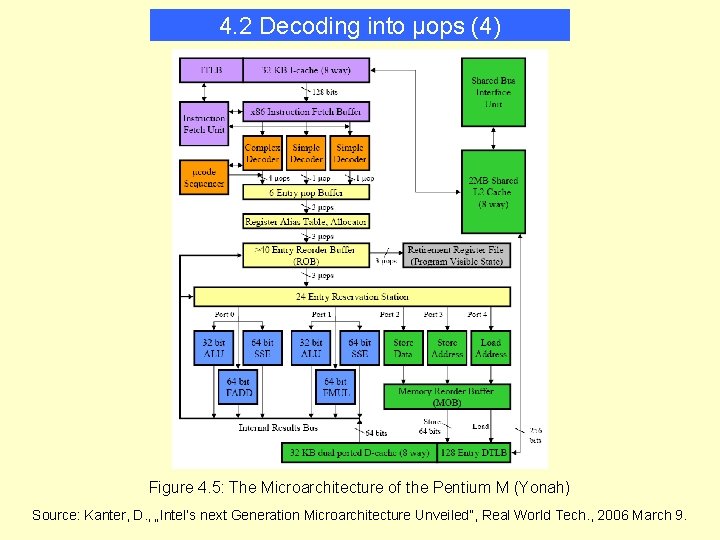

4.2 Decoding into µops (4)

Figure 4.5: The microarchitecture of the Pentium M (Yonah)

Source: Kanter, D., "Intel's next Generation Microarchitecture Unveiled", Real World Tech., March 9, 2006

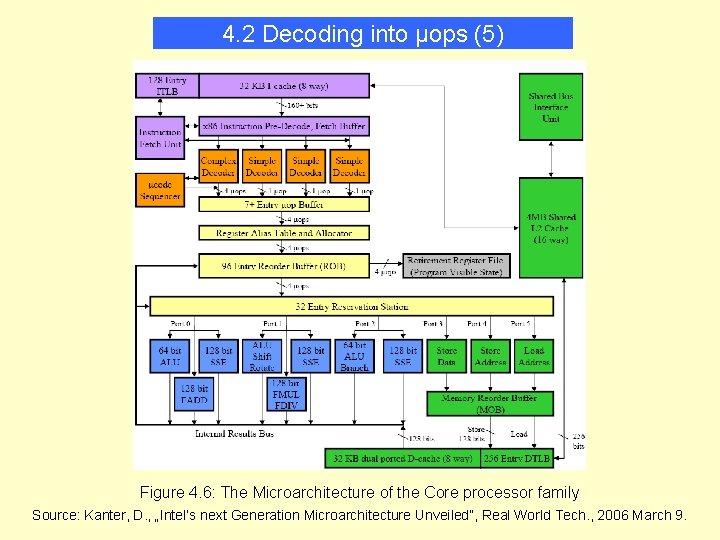

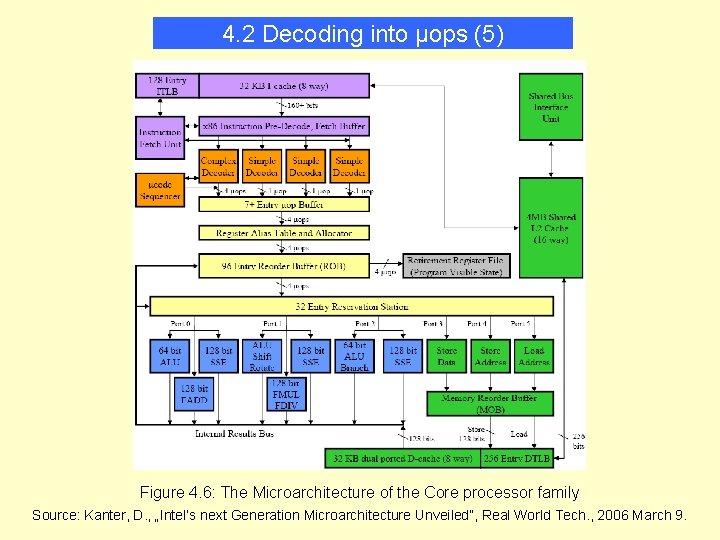

4.2 Decoding into µops (5)

Figure 4.6: The microarchitecture of the Core processor family

Source: Kanter, D., "Intel's next Generation Microarchitecture Unveiled", Real World Tech., March 9, 2006

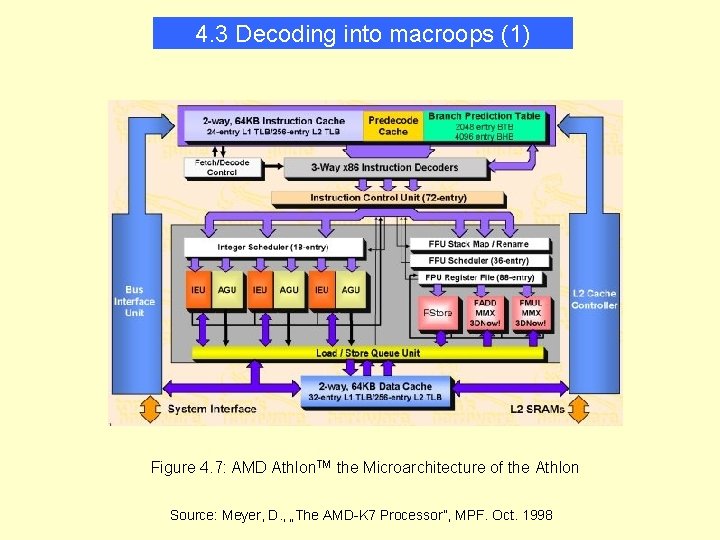

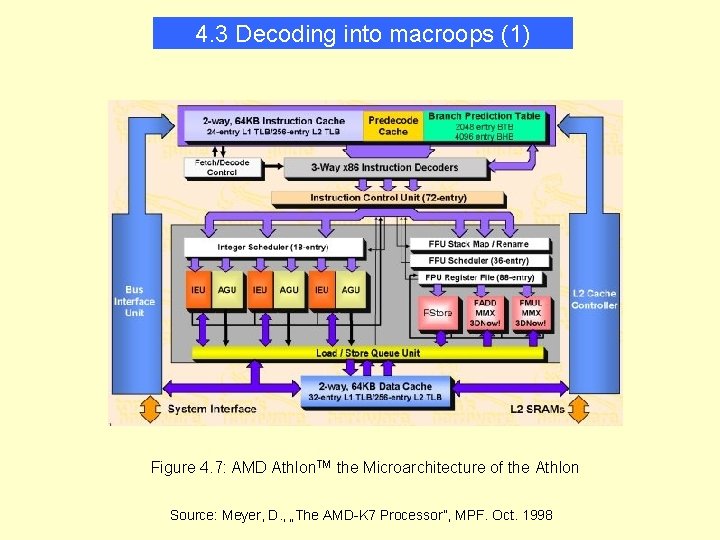

4.3 Decoding into macroops (1)

Figure 4.7: The microarchitecture of the AMD Athlon™

Source: Meyer, D., "The AMD-K7 Processor", MPF, Oct. 1998

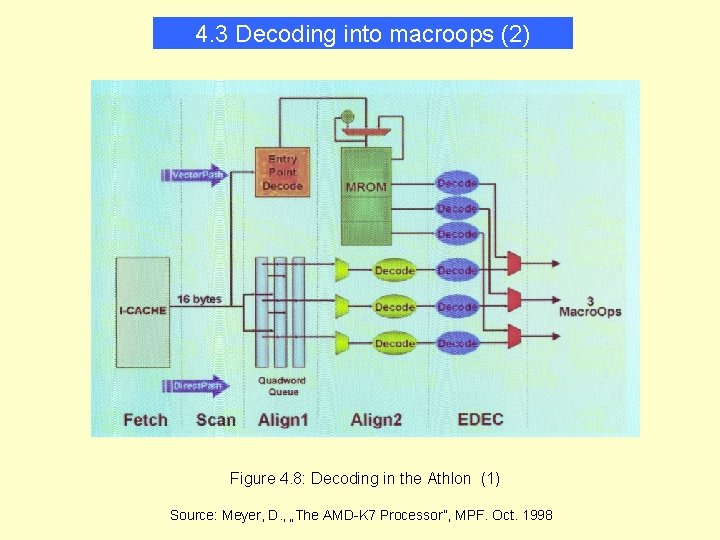

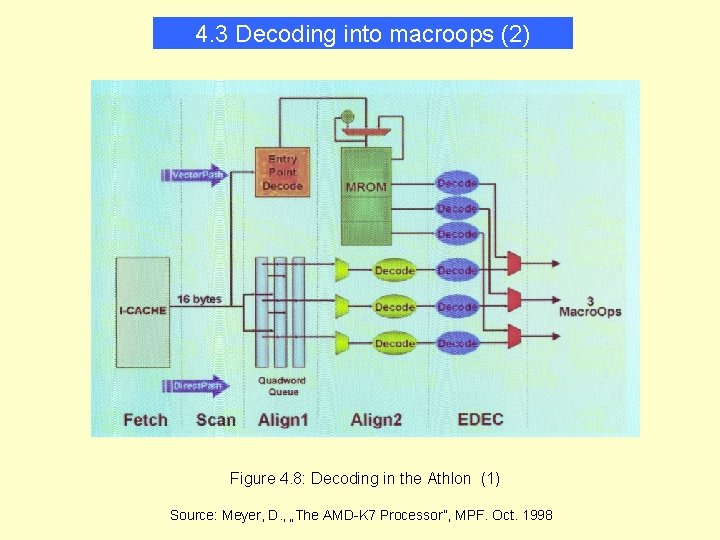

4.3 Decoding into macroops (2)

Figure 4.8: Decoding in the Athlon (1)

Source: Meyer, D., "The AMD-K7 Processor", MPF, Oct. 1998

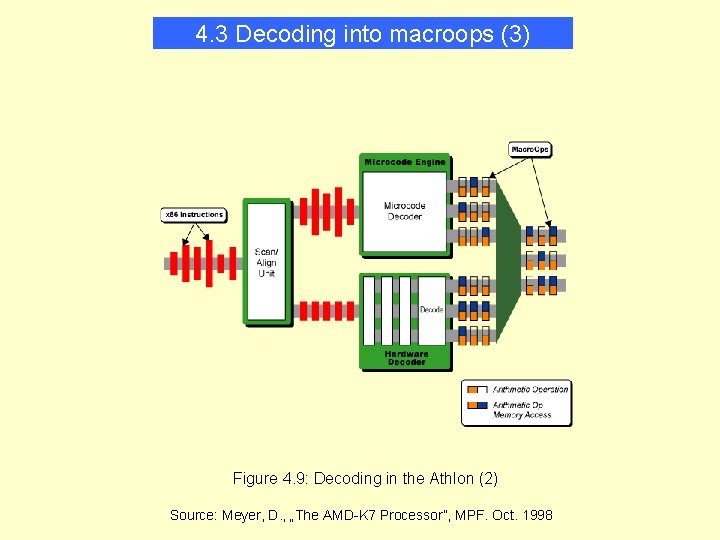

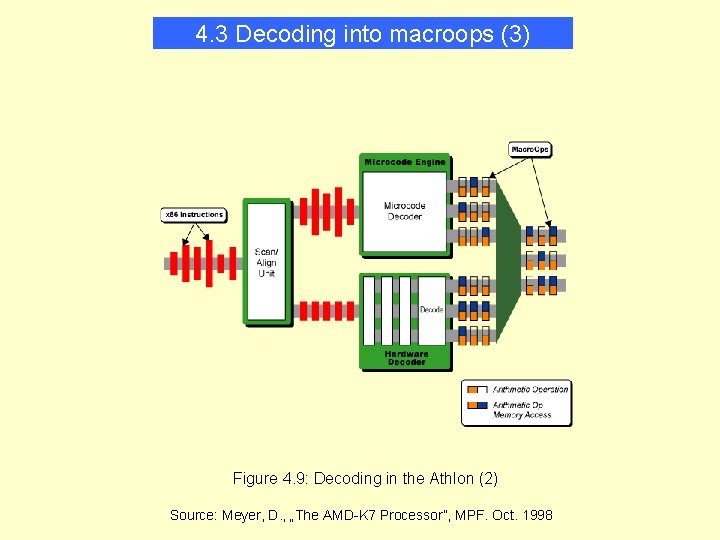

4.3 Decoding into macroops (3)

Figure 4.9: Decoding in the Athlon (2)

Source: Meyer, D., "The AMD-K7 Processor", MPF, Oct. 1998

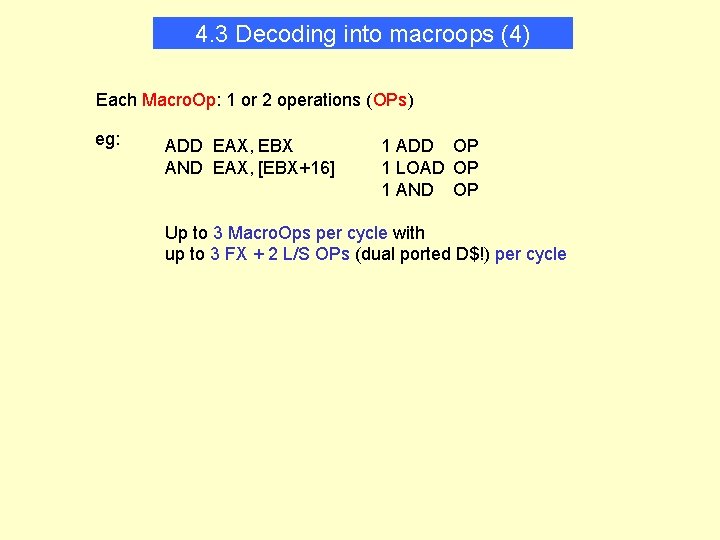

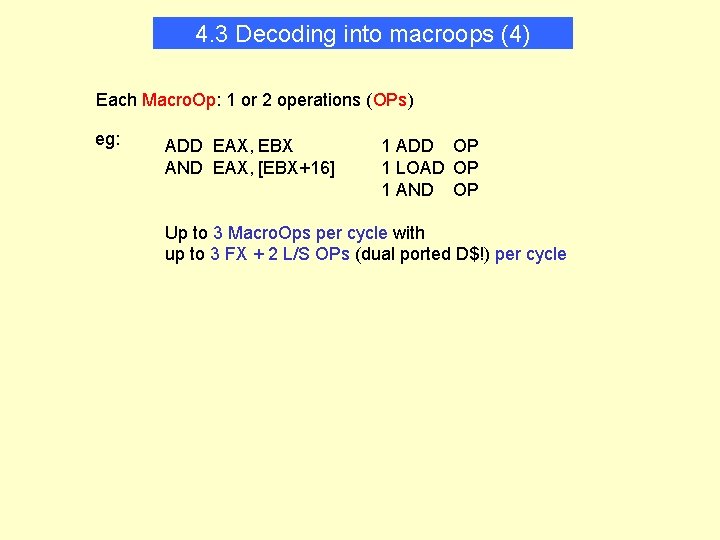

4.3 Decoding into macroops (4)

Each MacroOp: 1 or 2 operations (OPs), e.g.:

• ADD EAX, EBX → 1 ADD OP
• AND EAX, [EBX+16] → 1 LOAD OP + 1 AND OP

Up to 3 MacroOps per cycle, with up to 3 FX + 2 L/S OPs (dual-ported D$!) per cycle
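The two examples above can be expressed as a toy decoder. This is a minimal sketch, not AMD's actual decode logic: it assumes that a bracketed memory source operand adds one LOAD OP and that everything else maps to a single OP named after the mnemonic. Stores and more complex instructions are not modeled, and `MacroOp`, `decode`, and `fits_in_cycle` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class MacroOp:
    text: str
    ops: list  # one or two OPs


def decode(instr: str) -> MacroOp:
    """Toy decoder: a memory operand adds a LOAD OP in front of the ALU OP."""
    mnemonic, operands = instr.split(maxsplit=1)
    ops = []
    if "[" in operands:  # assumed: brackets mark a memory source operand
        ops.append("LOAD")
    ops.append(mnemonic.upper())
    return MacroOp(instr, ops)


def fits_in_cycle(macro_ops, max_macro_ops=3, max_fx=3, max_ls=2) -> bool:
    """Check the per-cycle limits quoted on the slide: 3 MacroOps, 3 FX, 2 L/S."""
    fx = sum(1 for m in macro_ops for op in m.ops if op != "LOAD")
    ls = sum(1 for m in macro_ops for op in m.ops if op == "LOAD")
    return len(macro_ops) <= max_macro_ops and fx <= max_fx and ls <= max_ls


print(decode("ADD EAX, EBX").ops)       # ['ADD']
print(decode("AND EAX, [EBX+16]").ops)  # ['LOAD', 'AND']
```

With these limits, a group of three MacroOps fits into one cycle only if at most two of them carry a LOAD OP.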

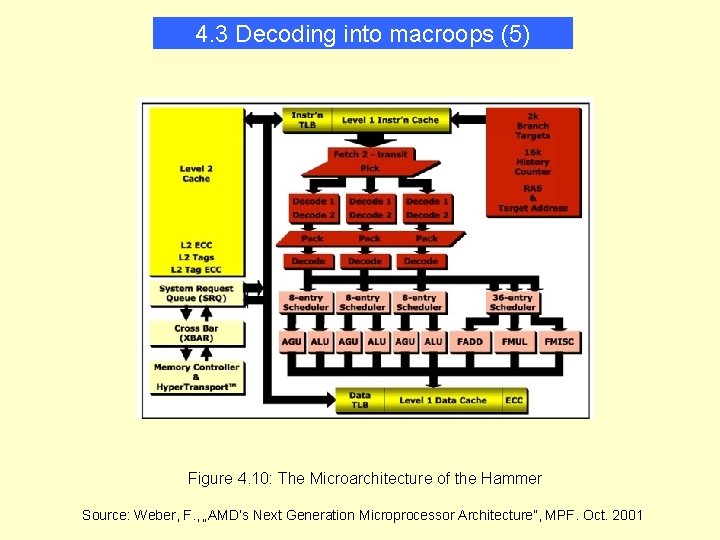

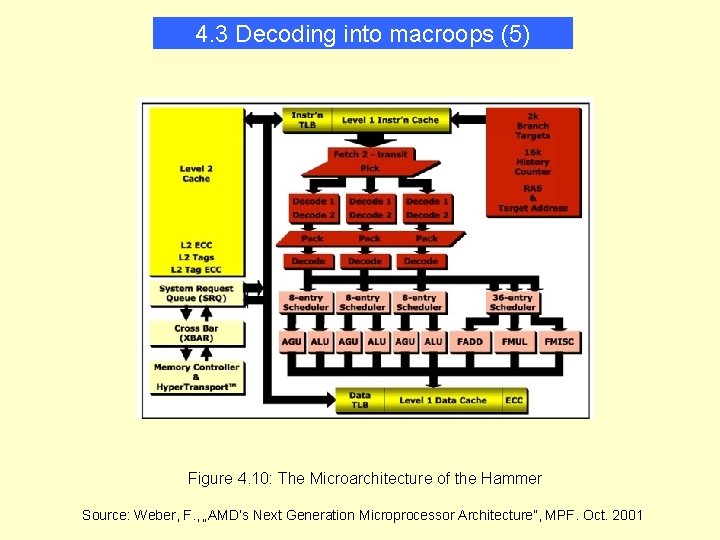

4.3 Decoding into macroops (5)

Figure 4.10: The microarchitecture of the Hammer

Source: Weber, F., "AMD's Next Generation Microprocessor Architecture", MPF, Oct. 2001

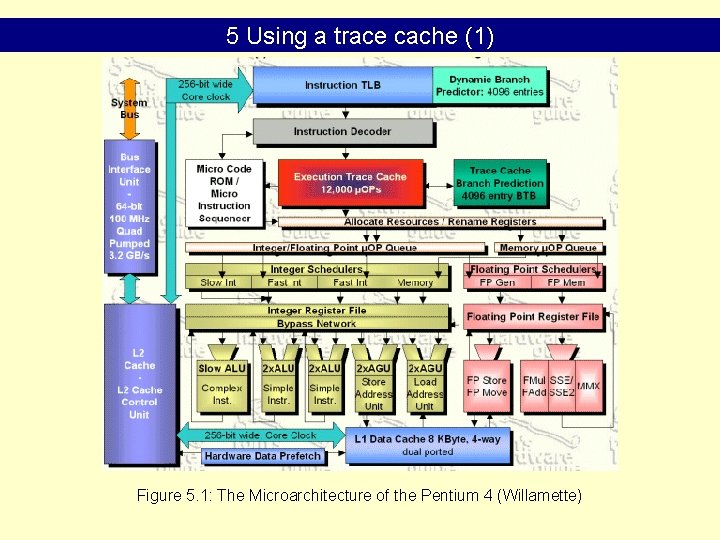

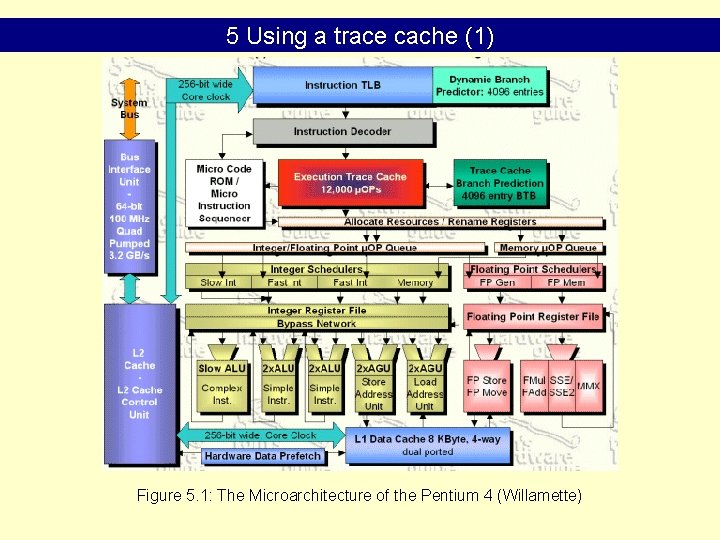

5. Using a trace cache (1)

Figure 5.1: The microarchitecture of the Pentium 4 (Willamette)

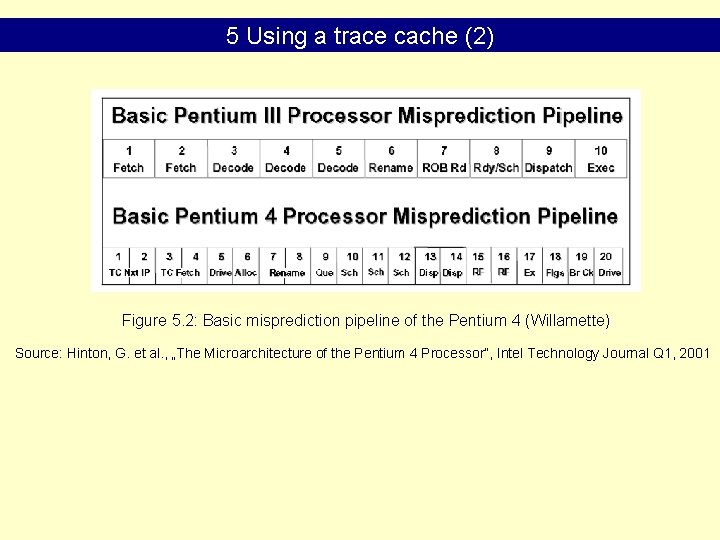

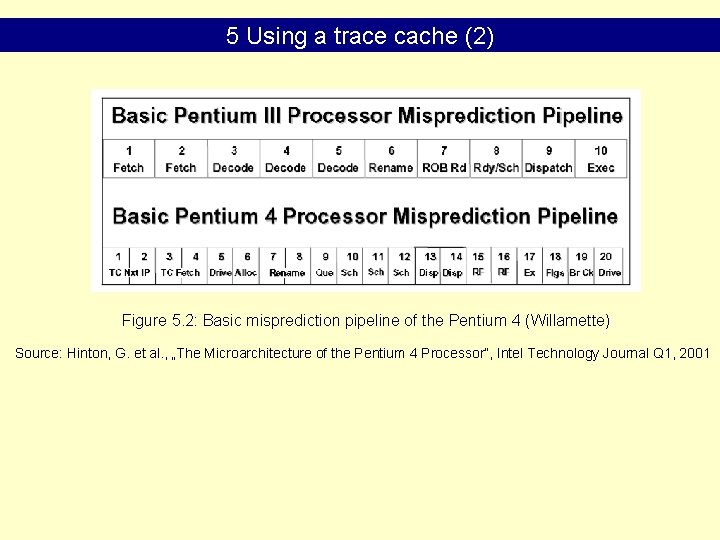

5. Using a trace cache (2)

Figure 5.2: Basic misprediction pipeline of the Pentium 4 (Willamette)

Source: Hinton, G. et al., "The Microarchitecture of the Pentium 4 Processor", Intel Technology Journal, Q1 2001

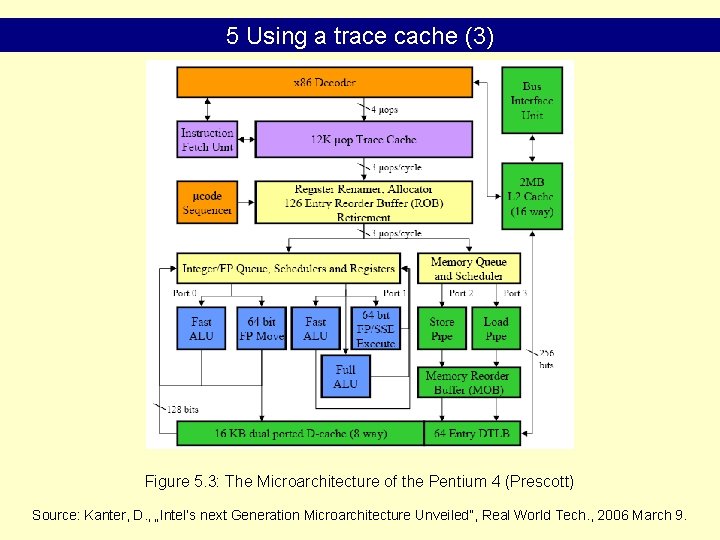

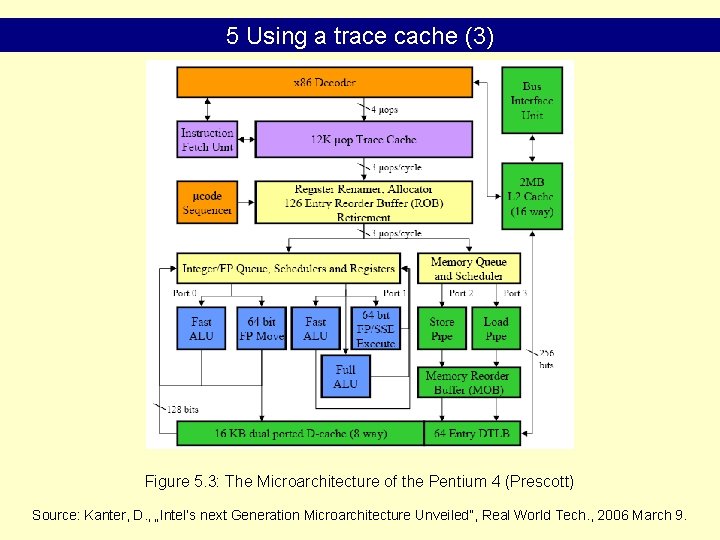

5. Using a trace cache (3)

Figure 5.3: The microarchitecture of the Pentium 4 (Prescott)

Source: Kanter, D., "Intel's next Generation Microarchitecture Unveiled", Real World Tech., March 9, 2006

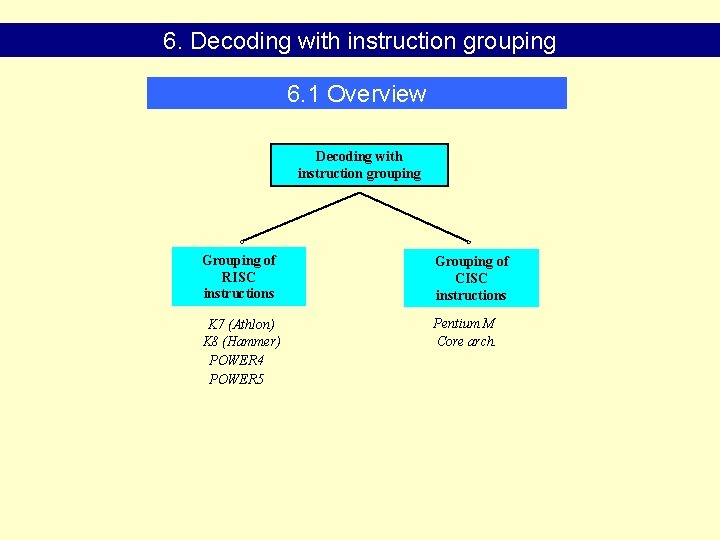

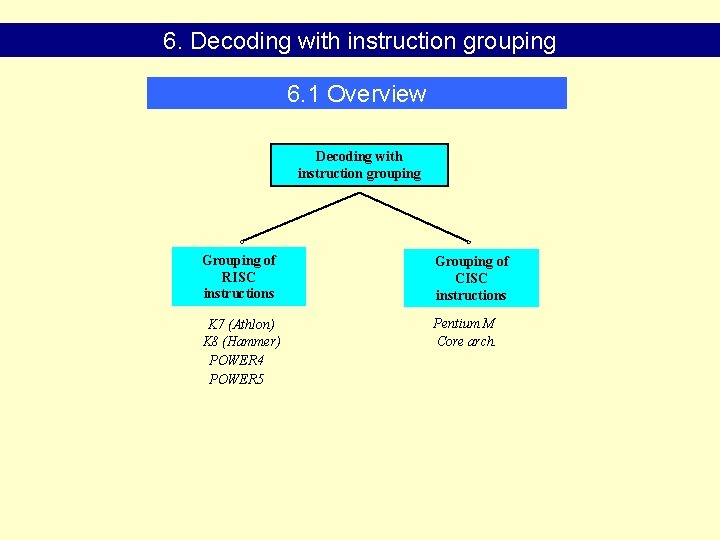

6. Decoding with instruction grouping

6.1 Overview

Decoding with instruction grouping:

• Grouping of RISC instructions: K7 (Athlon), K8 (Hammer), POWER4, POWER5
• Grouping of CISC instructions: Pentium M, Core arch.

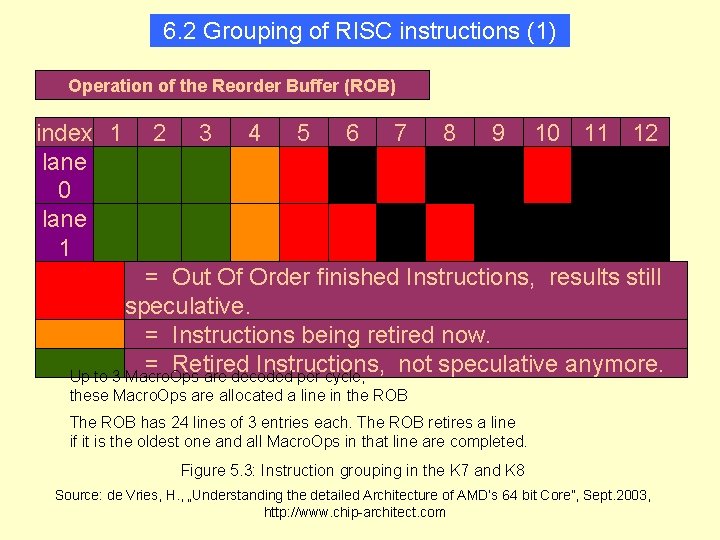

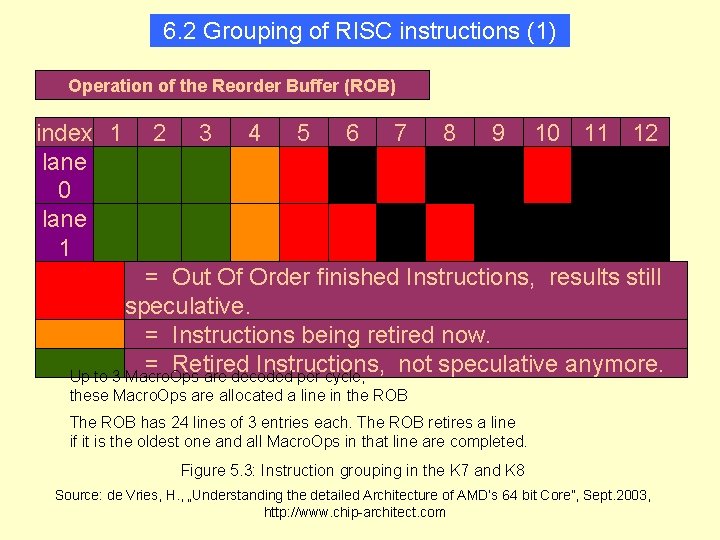

6.2 Grouping of RISC instructions (1)

Operation of the Reorder Buffer (ROB)

[Figure: ROB lines (index 1 to 12 shown), each with lane 0, lane 1 and lane 2. Legend:]

• Out-of-order finished instructions, results still speculative.
• Instructions being retired now.
• Retired instructions, not speculative anymore.

Up to 3 MacroOps are decoded per cycle; these MacroOps are allocated a line in the ROB. The ROB has 24 lines of 3 entries each. The ROB retires a line if it is the oldest one and all MacroOps in that line are completed.

Figure 5.3: Instruction grouping in the K7 and K8

Source: de Vries, H., "Understanding the detailed Architecture of AMD's 64 bit Core", Sept. 2003, http://www.chip-architect.com
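The line-based retirement rule can be sketched as a small model. The sizes (24 lines, 3 lanes) are taken from the slide; the class name `ReorderBuffer`, its methods, and the use of unique string names for MacroOps are assumptions made for illustration, not a description of AMD's implementation.

```python
from collections import deque

LINES, LANES = 24, 3  # from the slide: 24 lines of 3 entries each


class ReorderBuffer:
    def __init__(self):
        self.lines = deque()  # oldest line sits at the left end

    def allocate(self, macro_ops):
        """Allocate one line for the up-to-3 MacroOps decoded in a cycle."""
        if not 1 <= len(macro_ops) <= LANES:
            raise ValueError("a line holds 1 to 3 MacroOps")
        if len(self.lines) == LINES:
            return False  # ROB full, decoding would have to stall
        self.lines.append({name: False for name in macro_ops})
        return True

    def complete(self, name):
        """Mark a MacroOp as finished; completion may happen out of order."""
        for line in self.lines:
            if name in line:
                line[name] = True
                return
        raise KeyError(name)

    def retire(self):
        """Retire the oldest line only if all of its MacroOps are completed."""
        if self.lines and all(self.lines[0].values()):
            return list(self.lines.popleft())
        return None


rob = ReorderBuffer()
rob.allocate(["a", "b", "c"])
rob.allocate(["d", "e"])
rob.complete("d")
rob.complete("e")
print(rob.retire())  # None: the younger line is done, but the oldest is not
for name in ("a", "b", "c"):
    rob.complete(name)
print(rob.retire())  # ['a', 'b', 'c']
print(rob.retire())  # ['d', 'e']
```

Tracking completion per line rather than per MacroOp is what makes this a grouping technique: retirement bookkeeping is done once for up to three MacroOps.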

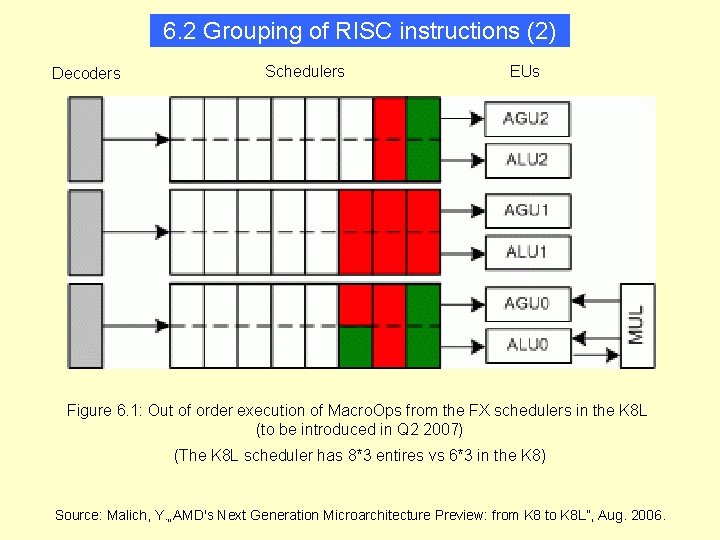

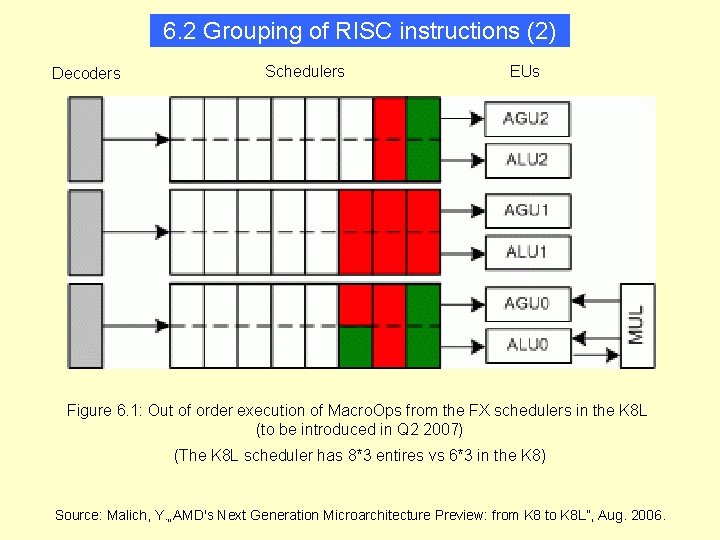

6.2 Grouping of RISC instructions (2)

[Figure labels: Decoders, Schedulers, EUs]

Figure 6.1: Out-of-order execution of MacroOps from the FX schedulers in the K8L (to be introduced in Q2 2007). The K8L scheduler has 8*3 entries vs. 6*3 in the K8.

Source: Malich, Y., "AMD's Next Generation Microarchitecture Preview: from K8 to K8L", Aug. 2006

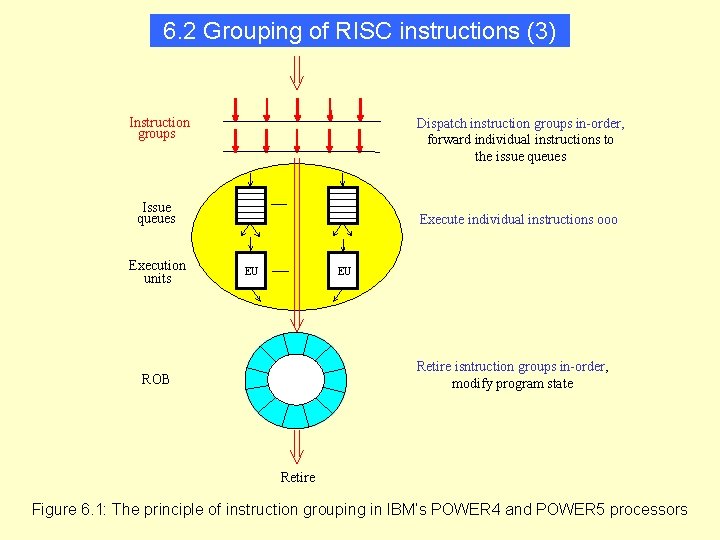

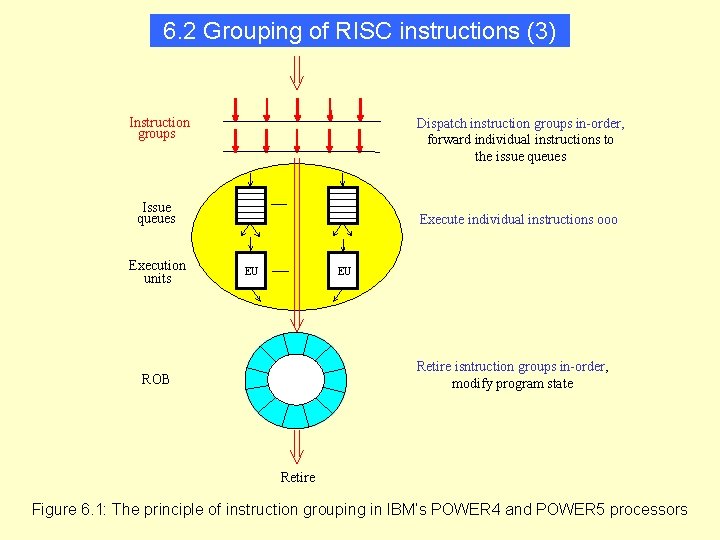

6.2 Grouping of RISC instructions (3)

• Instruction groups → issue queues: dispatch instruction groups in order, forward individual instructions to the issue queues
• Issue queues → execution units (EUs): execute individual instructions out of order (ooo)
• ROB → retire: retire instruction groups in order, modify the program state

Figure 6.1: The principle of instruction grouping in IBM's POWER4 and POWER5 processors

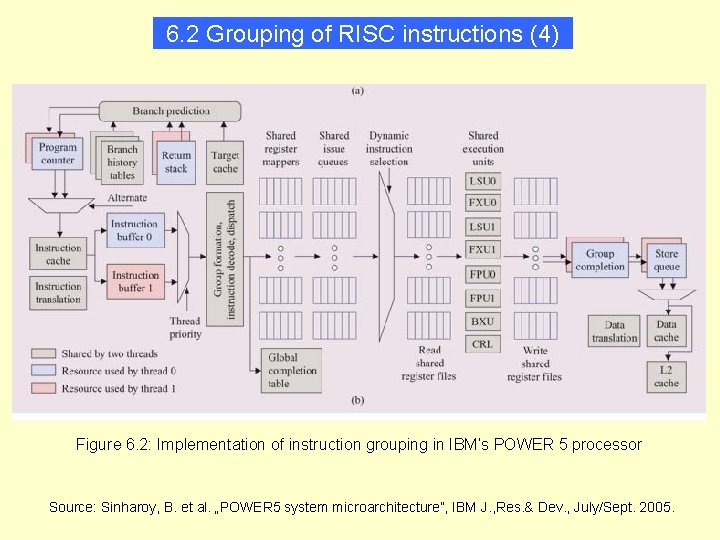

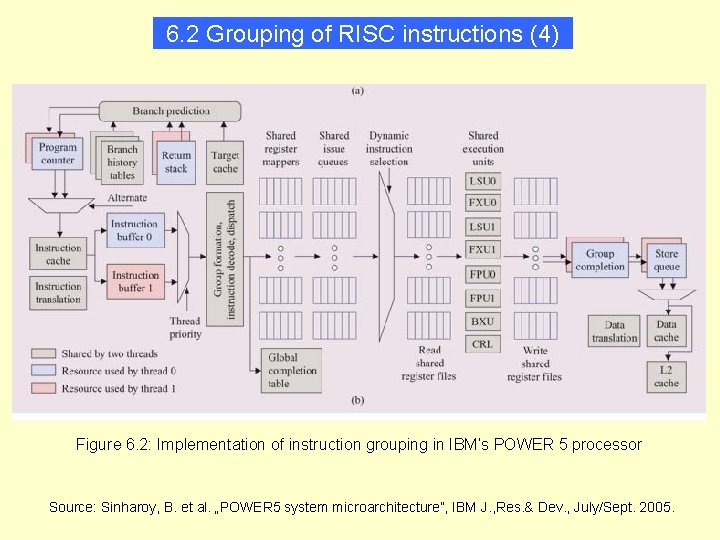

6.2 Grouping of RISC instructions (4)

Figure 6.2: Implementation of instruction grouping in IBM's POWER5 processor

Source: Sinharoy, B. et al., "POWER5 system microarchitecture", IBM J. Res. & Dev., July/Sept. 2005

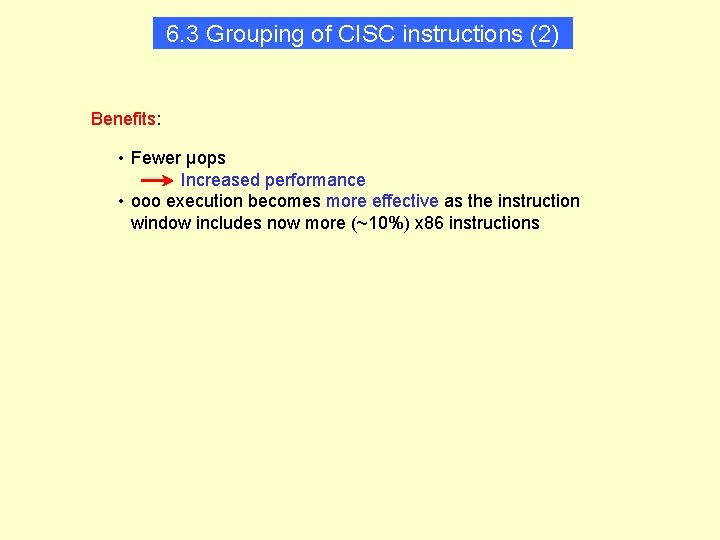

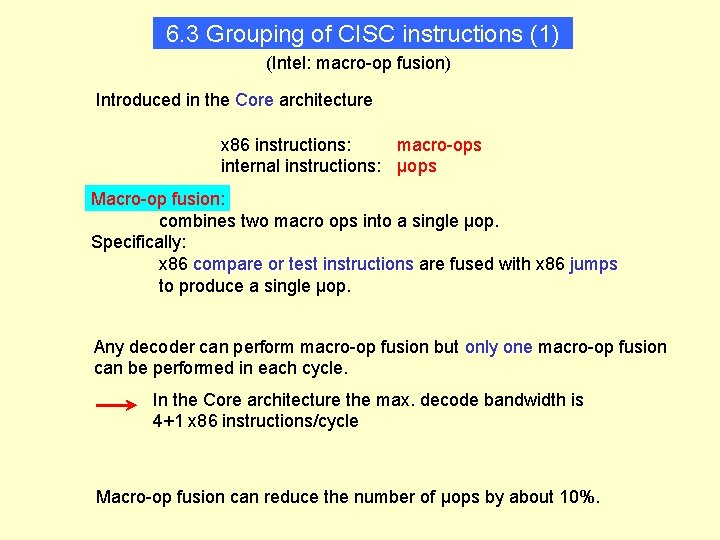

6.3 Grouping of CISC instructions (1)

(Intel: macro-op fusion)

Introduced in the Core architecture.

• x86 instructions: macro-ops
• Internal instructions: μops

Macro-op fusion combines two macro-ops into a single μop. Specifically, x86 compare or test instructions are fused with x86 jumps to produce a single μop.

Any decoder can perform macro-op fusion, but only one macro-op fusion can be performed in each cycle. In the Core architecture the maximum decode bandwidth is therefore 4+1 x86 instructions/cycle.

Macro-op fusion can reduce the number of μops by about 10%.
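The "4+1" decode bandwidth follows from the one-fusion-per-cycle rule, as the toy model below illustrates. It is a sketch under simplifying assumptions: every x86 instruction is represented by its mnemonic only and yields exactly one μop, four decoders are available per cycle, and only conditional jumps (any `J..` mnemonic except `JMP`) are treated as fusible. `decode_cycle` and `is_cond_jump` are hypothetical names.

```python
COMPARES = {"CMP", "TEST"}


def is_cond_jump(mnemonic: str) -> bool:
    # Assumed: only conditional jumps take part in fusion.
    m = mnemonic.upper()
    return m.startswith("J") and m != "JMP"


def decode_cycle(instrs, decoders=4, fusions_per_cycle=1):
    """Decode one cycle's worth of instructions.

    Returns (uops, consumed): the uops produced and the number of
    x86 instructions consumed from the front of instrs.
    """
    uops, i, fusions = [], 0, 0
    while i < len(instrs) and len(uops) < decoders:
        cur = instrs[i].upper()
        nxt = instrs[i + 1].upper() if i + 1 < len(instrs) else None
        if (cur in COMPARES and nxt is not None and is_cond_jump(nxt)
                and fusions < fusions_per_cycle):
            uops.append(f"{cur}+{nxt}")  # two macro-ops, one fused uop
            fusions += 1
            i += 2
        else:
            uops.append(cur)
            i += 1
    return uops, i


print(decode_cycle(["ADD", "CMP", "JNE", "MOV", "SUB", "XOR"]))
# (['ADD', 'CMP+JNE', 'MOV', 'SUB'], 5)
```

In this model a cycle consumes five x86 instructions only when a fusible pair is present; a second compare-and-jump pair in the same cycle is decoded as two separate μops.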

6.3 Grouping of CISC instructions (2)

Benefits:

• Fewer μops → increased performance
• ooo execution becomes more effective, as the instruction window now includes more (~10%) x86 instructions