Methods and Tools for Data Intensive Science on Distributed Resources

- Slides: 44

Methods and Tools for Data Intensive Science on Distributed Resources. Marian Bubak, Department of Computer Science and Cyfronet, AGH University of Science and Technology, Krakow, Poland, http://dice.cyfronet.pl; Informatics Institute, System and Network Engineering, University of Amsterdam, www.science.uva.nl/~gvlam/wsvlam/

Coauthors • Daniel Harezlak • Jan Meizner • Piotr Nowakowski (dice.cyfronet.pl) • Adam Belloum • Mikolaj Baranowski • Reggie Cushing • Spiros Koulouzis (www.science.uva.nl/~gvlam/wsvlam)

Outline • Motivation and research objectives • Federating data resources • An approach to data-centric processing • A framework for developing complex applications • Conclusion • References

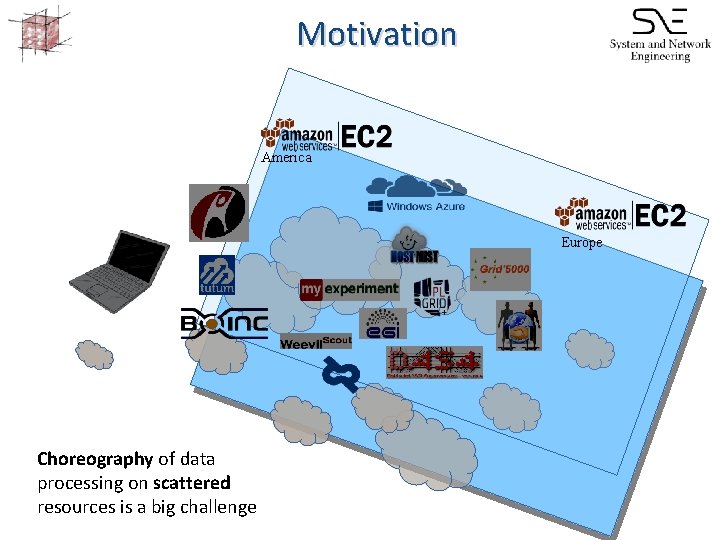

Motivation [Figure labels: America, Europe] • Choreographing data processing on scattered resources is a big challenge

Motivation and main goal • Recent trends – Enhanced scientific discovery is becoming collaborative and analysis-focused – In-silico experiments are increasingly complex – Available compute and data resources are distributed and heterogeneous • Main goal – How can we create an ecosystem where scientific data and processes are linked through semantics and used as an alternative to the current manual composition of e-Science applications?

Federating distributed data - challenges • The heterogeneity of data access technologies and the distribution of datasets make sharing and unified access difficult • Ad-hoc client implementations are often developed to consume data from different sources • It is impractical to force storage providers to install and maintain large software stacks so that different clients can have access • In VPH-Share, datasets are not located in a single storage infrastructure and are available through different technologies • Vendor lock-in is an issue when using clouds • A variety of legacy applications can only access data from a local disk

Federating distributed data - objectives • We want to build on top of existing infrastructure to provide large-scale advanced storage capabilities • This requires flexible, distributed, decentralized, scalable, fault-tolerant, self-optimizing solutions that address the issues of scientific communities • Issues • Data volume • Data availability • Data retrievability • Data integrity • Sharing • Privacy • Federation of datasets

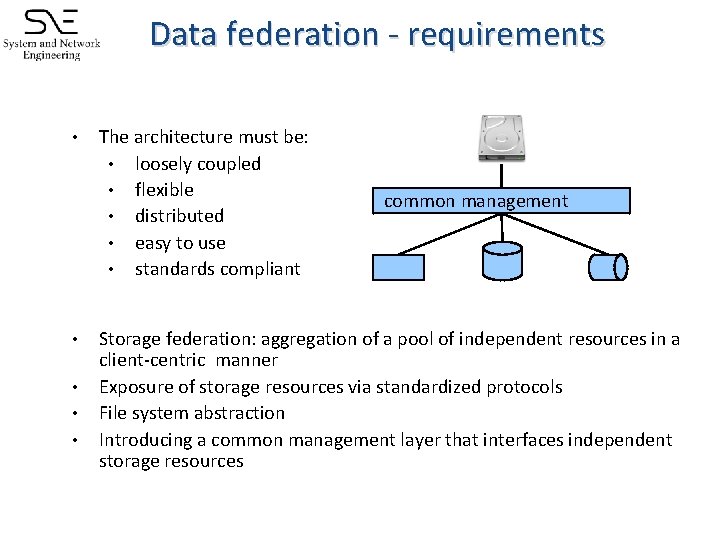

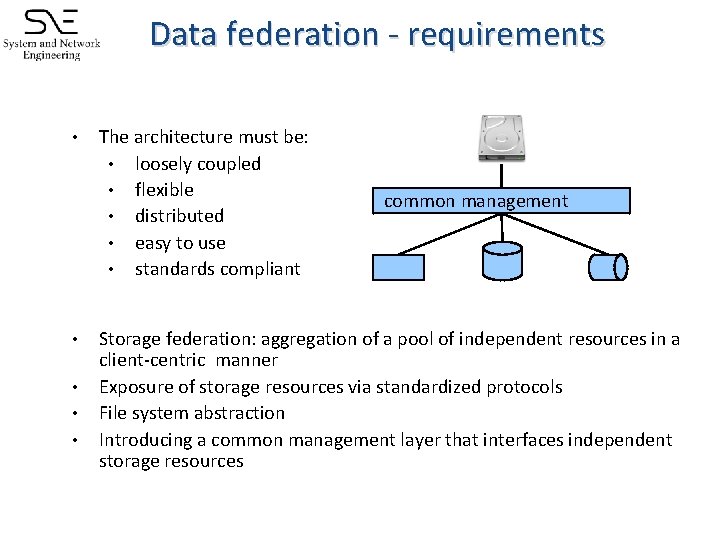

Data federation - requirements • The architecture must be loosely coupled, flexible, distributed, easy to use and standards-compliant, with common management • Storage federation: aggregation of a pool of independent resources in a client-centric manner • Exposure of storage resources via standardized protocols • File system abstraction • A common management layer that interfaces independent storage resources

LOBCDER – a solution for data federation The Large OBject Cloud Data storagE fedeRation (LOBCDER) is a lightweight, easily deployable streaming service that • loosely couples a variety of storage technologies • transparently presents a distributed file system, so that applications perceive a system which mimics a local FS • handles locating files and transporting data, providing • Access transparency • Location transparency • Concurrency transparency • Heterogeneity • Replication transparency • Migration transparency

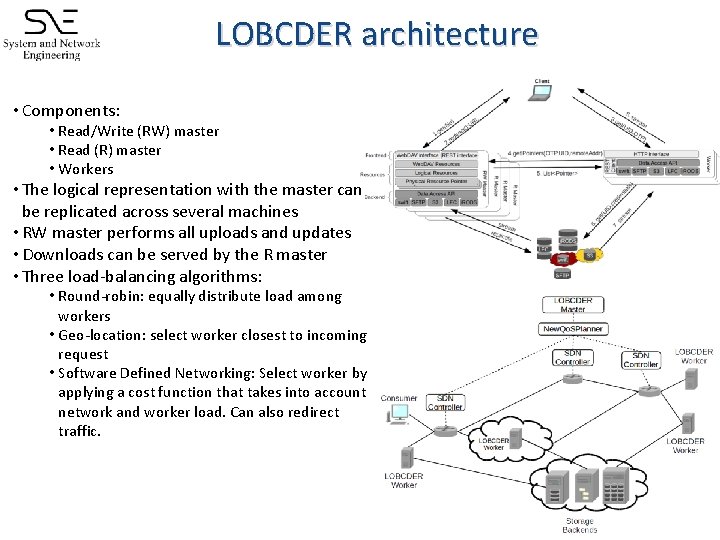

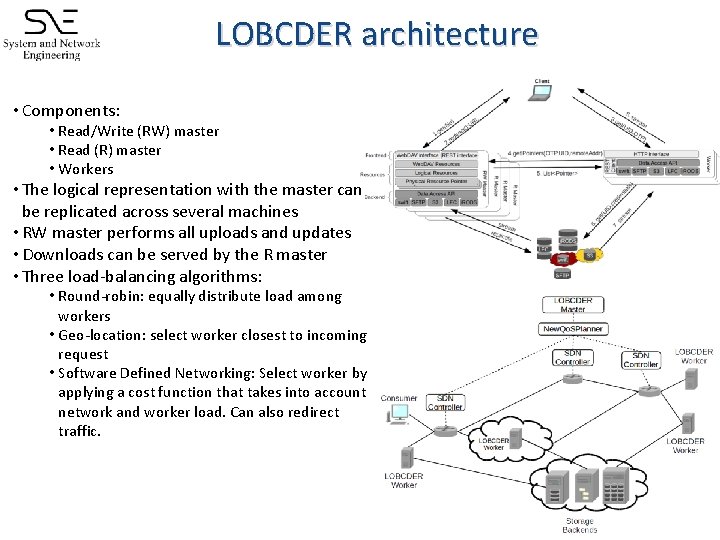

LOBCDER architecture • Components: • Read/Write (RW) master • Read (R) master • Workers • The master, together with the logical representation it holds, can be replicated across several machines • The RW master performs all uploads and updates • Downloads can be served by the R master • Three load-balancing algorithms: • Round-robin: distribute load equally among workers • Geo-location: select the worker closest to the incoming request • Software Defined Networking (SDN): select a worker by applying a cost function that takes into account network and worker load; this policy can also redirect traffic
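The three worker-selection policies can be sketched as follows. This is a minimal illustration, not LOBCDER's code: the `Worker` fields, the haversine distance used for geo-location, and the weighted-sum cost (with a hypothetical `alpha` trading link utilisation against worker load) are all assumptions. The real SDN policy also uses network-level information and can redirect traffic, which is not modelled here.

```python
import itertools
import math
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    lat: float        # worker location, degrees
    lon: float
    load: float       # hypothetical normalised worker load in [0, 1]
    link_util: float  # hypothetical normalised link utilisation in [0, 1]


def round_robin(workers):
    """Endless iterator handing out workers in a fixed cyclic order."""
    return itertools.cycle(workers)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def geo_select(workers, req_lat, req_lon):
    """Geo-location policy: the worker closest to the requesting client."""
    return min(workers, key=lambda w: haversine_km(w.lat, w.lon, req_lat, req_lon))


def sdn_select(workers, alpha=0.5):
    """SDN-style policy: lowest weighted cost of network and worker load."""
    return min(workers, key=lambda w: alpha * w.link_util + (1 - alpha) * w.load)
```

Geo-location and SDN selection both reduce to taking the minimum of a per-worker cost, so other policies can be added by swapping the key function.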

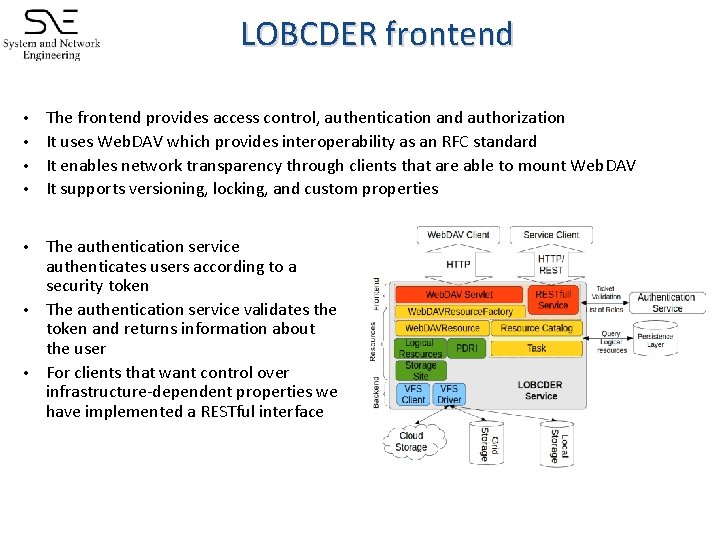

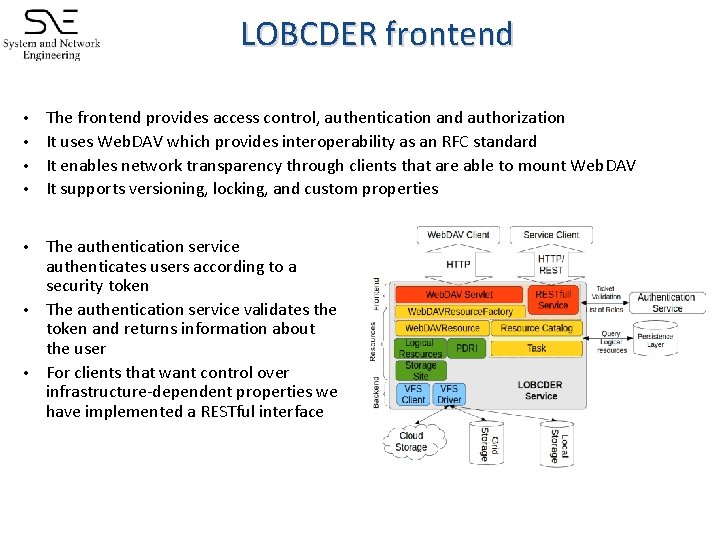

LOBCDER frontend The frontend provides access control, authentication and authorization • It uses WebDAV, which provides interoperability as an RFC standard • It enables network transparency through clients that are able to mount WebDAV • It supports versioning, locking, and custom properties • The authentication service authenticates users according to a security token: it validates the token and returns information about the user • For clients that want control over infrastructure-dependent properties, we have implemented a RESTful interface
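Because the frontend speaks plain WebDAV, any HTTP library can talk to it. The sketch below uses only the Python standard library to build a PROPFIND request, the WebDAV method for listing a collection's properties. The endpoint URL is hypothetical. Passing the security token as a `Bearer` credential in the `Authorization` header is also an assumption, since the slides do not say how the token is transmitted.

```python
import urllib.request

# Standard WebDAV PROPFIND body asking for size and modification time.
PROPFIND_BODY = b"""<?xml version="1.0" encoding="utf-8"?>
<D:propfind xmlns:D="DAV:">
  <D:prop>
    <D:getcontentlength/>
    <D:getlastmodified/>
  </D:prop>
</D:propfind>"""


def build_propfind(url: str, token: str, depth: str = "1") -> urllib.request.Request:
    """Build (but do not send) a PROPFIND request for a WebDAV collection."""
    req = urllib.request.Request(url, data=PROPFIND_BODY, method="PROPFIND")
    req.add_header("Depth", depth)  # "1": the collection and its direct children
    req.add_header("Content-Type", 'application/xml; charset="utf-8"')
    # Assumption: the security token travels as a Bearer credential.
    req.add_header("Authorization", f"Bearer {token}")
    return req
```

To send it, call `urllib.request.urlopen(req)` against a reachable server. A successful listing comes back as a 207 Multi-Status response whose XML body holds the requested properties.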

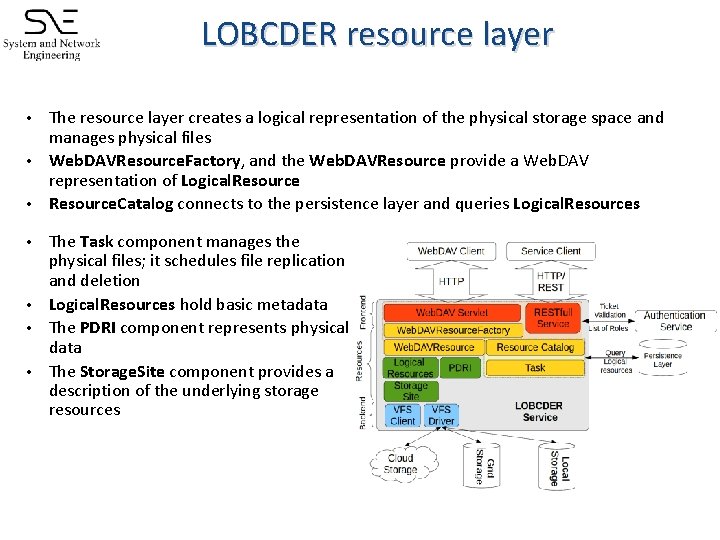

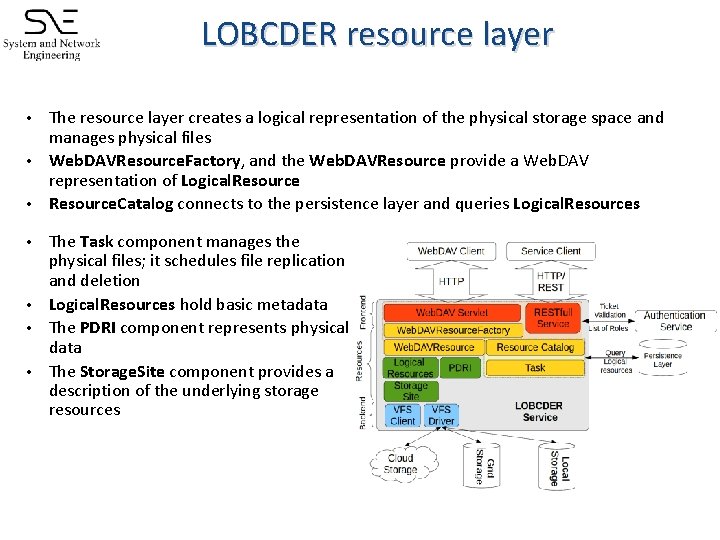

LOBCDER resource layer The resource layer creates a logical representation of the physical storage space and manages physical files • WebDAVResourceFactory and WebDAVResource provide a WebDAV representation of LogicalResource • ResourceCatalog connects to the persistence layer and queries LogicalResources • The Task component manages the physical files; it schedules file replication and deletion • LogicalResources hold basic metadata • The PDRI component represents physical data • The StorageSite component provides a description of the underlying storage resources
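The relationships between the resource-layer components can be pictured as plain data structures. In this hypothetical sketch, a `LogicalResource` owns a list of `PDRI` replicas, each pointing at a `StorageSite`, and an in-memory `ResourceCatalog` stands in for the relational persistence layer. The field names, the replication helper and the `/lobcder` replica path scheme are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StorageSite:
    """Description of an underlying storage resource (hypothetical fields)."""
    name: str
    endpoint: str


@dataclass(frozen=True)
class PDRI:
    """Represents one physical copy of the data on a storage site."""
    site: StorageSite
    physical_path: str


@dataclass
class LogicalResource:
    """Logical file with basic metadata and its physical replicas."""
    logical_path: str
    size: int
    replicas: list = field(default_factory=list)


class ResourceCatalog:
    """In-memory stand-in for the catalogue backed by the persistence layer."""

    def __init__(self):
        self._by_path = {}

    def register(self, res: LogicalResource) -> None:
        self._by_path[res.logical_path] = res

    def lookup(self, logical_path: str):
        return self._by_path.get(logical_path)

    def schedule_replication(self, logical_path: str, target: StorageSite):
        """Task-like step: add a replica on `target` unless one exists there."""
        res = self._by_path[logical_path]
        if any(p.site == target for p in res.replicas):
            return None
        pdri = PDRI(target, f"/lobcder{logical_path}")  # hypothetical path scheme
        res.replicas.append(pdri)
        return pdri
```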

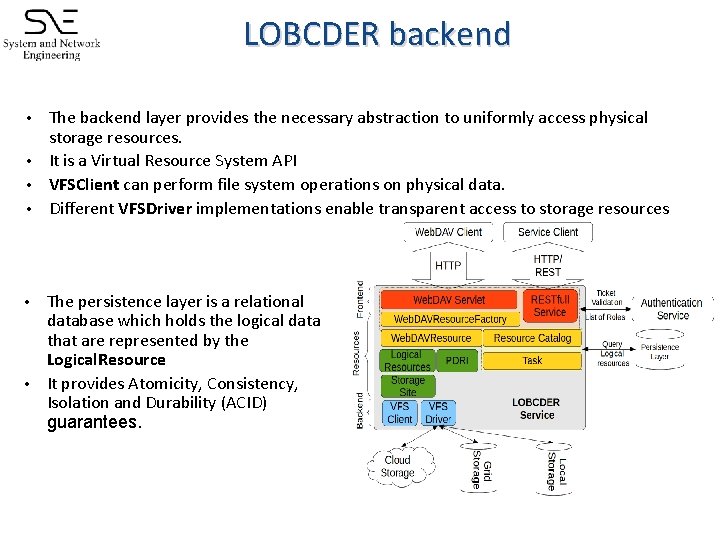

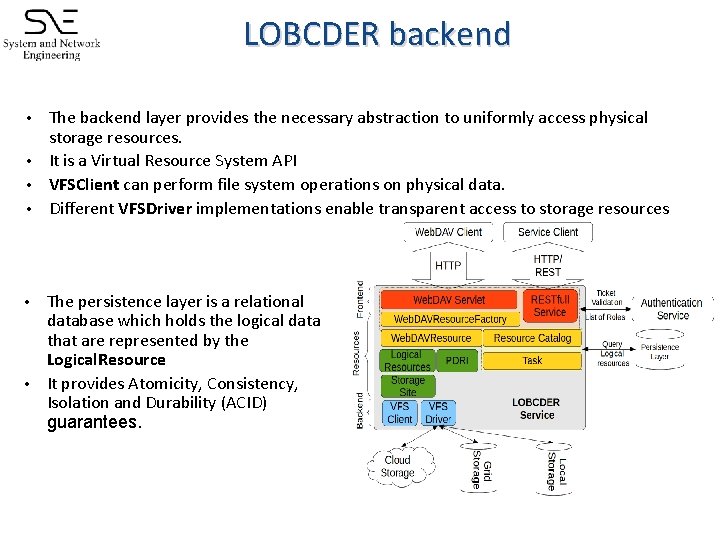

LOBCDER backend The backend layer provides the abstraction needed to access physical storage resources uniformly • It is a Virtual Resource System API • VFSClient can perform file system operations on physical data • Different VFSDriver implementations enable transparent access to storage resources • The persistence layer is a relational database which holds the logical data represented by LogicalResource • It provides Atomicity, Consistency, Isolation and Durability (ACID) guarantees
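The driver pattern behind `VFSClient` and `VFSDriver` can be sketched as a scheme-based dispatch: the client parses the URI scheme and delegates to whichever driver registered for it. The method names, the `mem://` scheme and `InMemoryDriver` are hypothetical stand-ins for real drivers (such as Swift or S3). This is not the actual VRS API.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class VFSDriver(ABC):
    """One driver per storage technology (hypothetical minimal interface)."""

    scheme: str = ""

    @abstractmethod
    def read(self, path: str) -> bytes: ...

    @abstractmethod
    def write(self, path: str, data: bytes) -> None: ...


class InMemoryDriver(VFSDriver):
    """Toy driver standing in for, e.g., a Swift or S3 driver."""

    scheme = "mem"

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def read(self, path: str) -> bytes:
        return self._blobs[path]

    def write(self, path: str, data: bytes) -> None:
        self._blobs[path] = bytes(data)


class VFSClient:
    """Dispatches file operations to the driver registered for a URI scheme."""

    def __init__(self, drivers: list[VFSDriver]) -> None:
        self._drivers = {d.scheme: d for d in drivers}

    def _resolve(self, uri: str) -> tuple[VFSDriver, str]:
        scheme, sep, path = uri.partition("://")
        if not sep or scheme not in self._drivers:
            raise ValueError(f"no VFS driver registered for {uri!r}")
        return self._drivers[scheme], path

    def read(self, uri: str) -> bytes:
        driver, path = self._resolve(uri)
        return driver.read(path)

    def write(self, uri: str, data: bytes) -> None:
        driver, path = self._resolve(uri)
        driver.write(path, data)

    def copy(self, src: str, dst: str) -> None:
        """Copy between any two registered backends, same or different."""
        self.write(dst, self.read(src))
```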

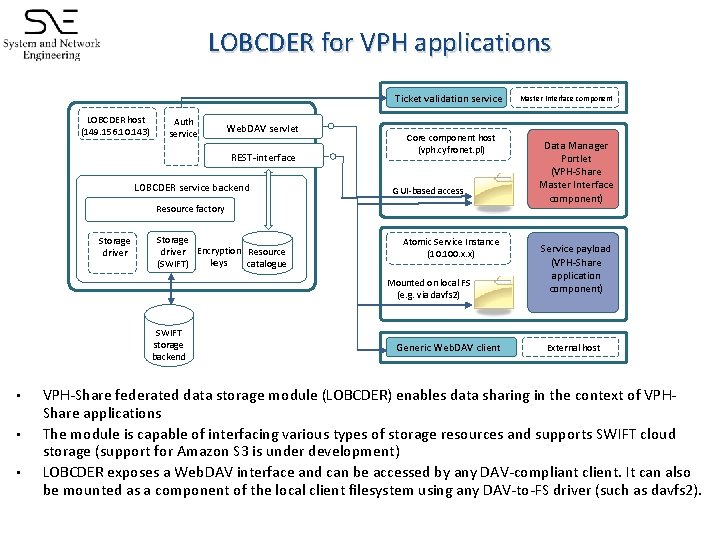

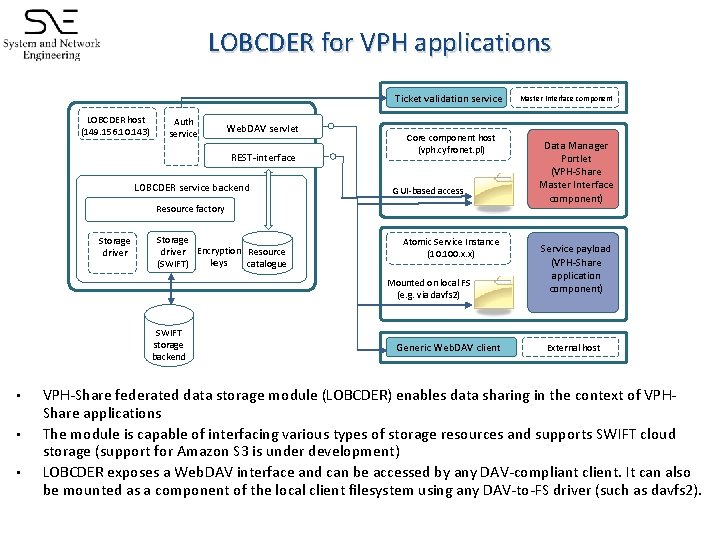

LOBCDER for VPH applications [Figure labels: Ticket validation service; LOBCDER host (149.156.10.143); Auth service; WebDAV servlet; REST interface; LOBCDER service backend; Core component host (vph.cyfronet.pl); GUI-based access; Resource factory; Storage driver (SWIFT); Encryption keys; Resource catalogue; Atomic Service Instance (10.100.x.x); Mounted on local FS (e.g. via davfs2); SWIFT storage backend; Generic WebDAV client; Master Interface component; Data Manager Portlet (VPH-Share Master Interface component); Service payload (VPH-Share application component); External host] • The VPH-Share federated data storage module (LOBCDER) enables data sharing in the context of VPH-Share applications • The module can interface various types of storage resources and supports SWIFT cloud storage (support for Amazon S3 is under development) • LOBCDER exposes a WebDAV interface and can be accessed by any DAV-compliant client. It can also be mounted as a component of the local client filesystem using any DAV-to-FS driver (such as davfs2).

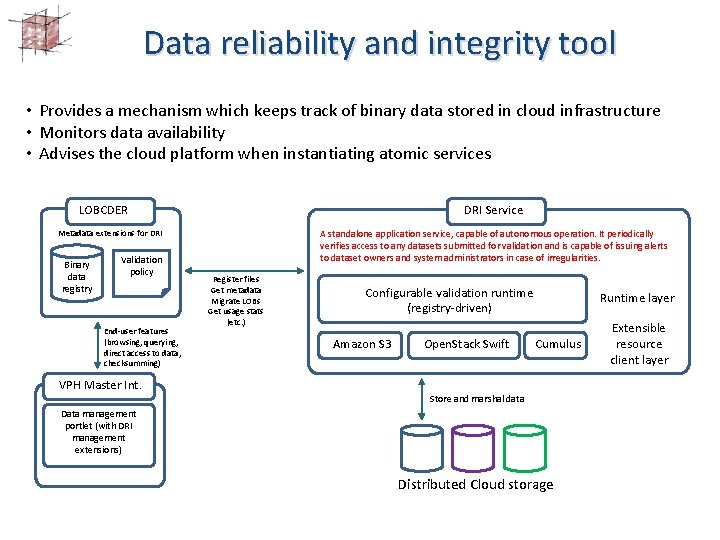

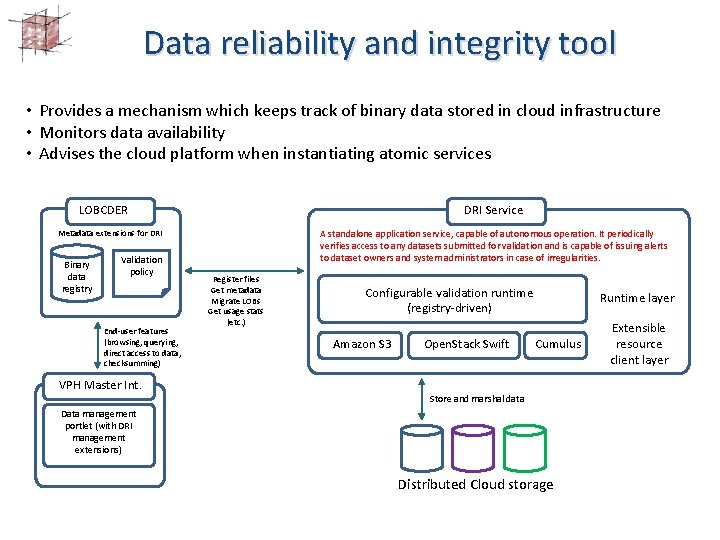

Data reliability and integrity tool • Provides a mechanism which keeps track of binary data stored in cloud infrastructure • Monitors data availability • Advises the cloud platform when instantiating atomic services • The DRI service is a standalone application service, capable of autonomous operation. It periodically verifies access to any datasets submitted for validation and can issue alerts to dataset owners and system administrators in case of irregularities [Figure labels: LOBCDER; DRI Service; Metadata extensions for DRI; Binary data registry; Validation policy; End-user features (browsing, querying, direct access to data, checksumming); VPH Master Int.; Register files; Get metadata; Migrate LOBs; Get usage stats (etc.); Configurable validation runtime (registry-driven); Amazon S3; OpenStack Swift; Runtime layer; Cumulus; Store and marshal data; Data management portlet (with DRI management extensions); Distributed Cloud storage; Extensible resource client layer]
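One validation pass of such a service might look like the sketch below. It assumes the registry stores a SHA-256 checksum per dataset (the slides mention checksumming but not the algorithm). It also assumes a caller-supplied `fetch` function that raises `OSError` when data cannot be reached. A real deployment would run `validate` on a schedule and forward the returned alerts to owners and administrators.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RegistryEntry:
    """A dataset registered for validation (hypothetical fields)."""
    dataset: str
    owner: str
    sha256: str


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def validate(registry, fetch: Callable[[str], bytes]) -> list:
    """One validation pass over the registry.

    Returns (owner, dataset, problem) alerts for datasets that are
    unreachable or whose content no longer matches the stored checksum."""
    alerts = []
    for entry in registry:
        try:
            data = fetch(entry.dataset)
        except OSError as exc:                   # availability check
            alerts.append((entry.owner, entry.dataset, f"unavailable: {exc}"))
            continue
        if sha256_hex(data) != entry.sha256:     # integrity check
            alerts.append((entry.owner, entry.dataset, "checksum mismatch"))
    return alerts
```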

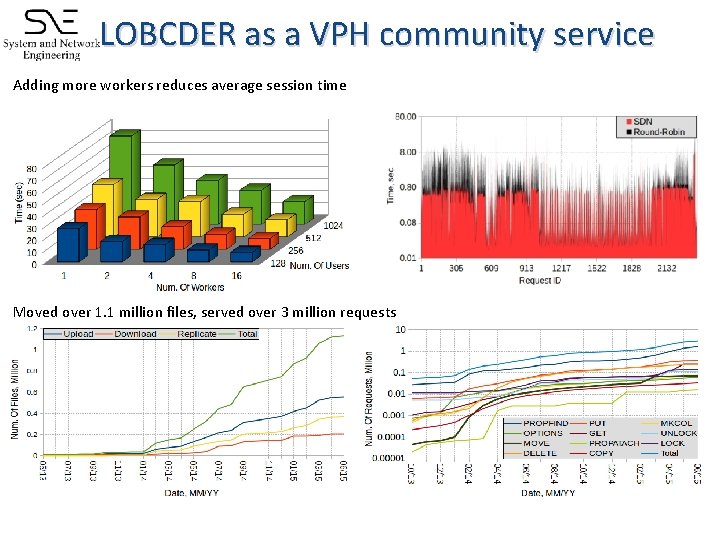

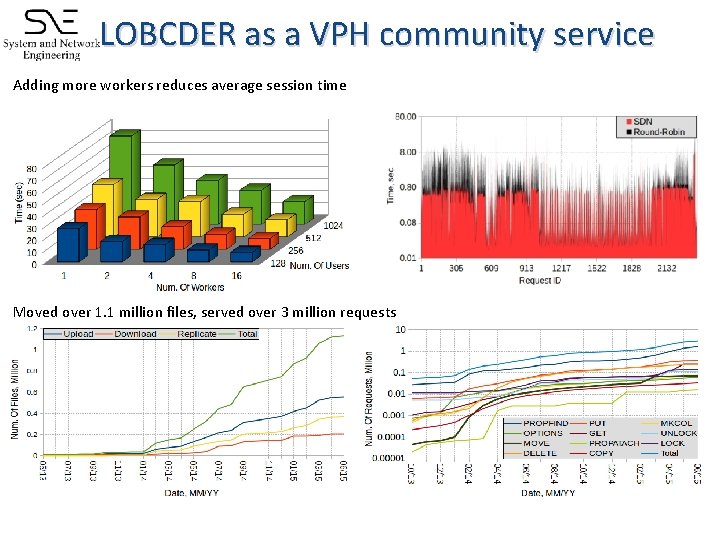

LOBCDER as a VPH community service • Adding more workers reduces average session time • LOBCDER has moved over 1.1 million files and served over 3 million requests

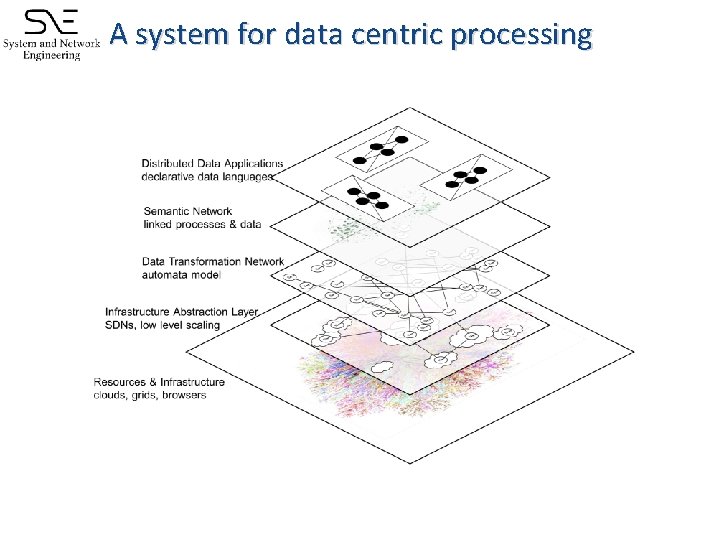

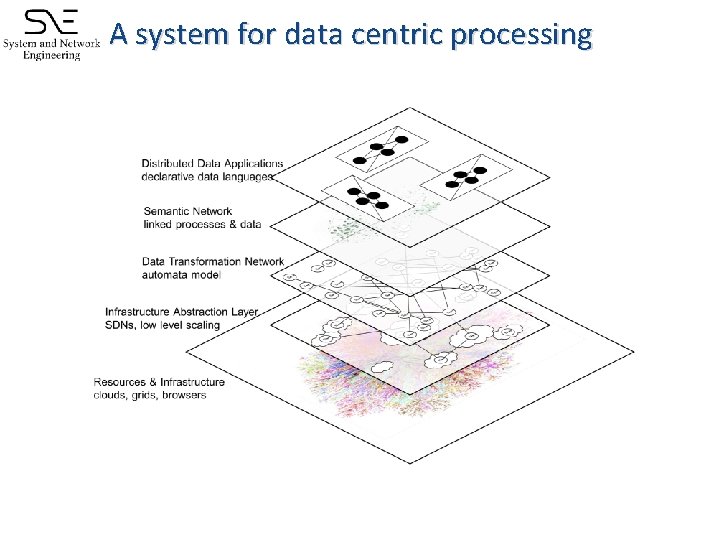

A system for data centric processing

Automata-based dynamic data processing • A data processing schema can be considered as a state transformation graph • Data automata can be considered as a concurrency model for data processing – a runtime knows when data can or cannot be processed in parallel – memory managers can assign memory ownership to threads based on states • Compared to dataflow, stateflow graphs add more data processing expressiveness – tasks fire not only on data availability but also on the state (value) of data • The graph facilitates data processing in many ways – data state can be easily tracked – using the graph as a protocol header, a virtual data processing network layer is achieved – data becomes self-routable to processing nodes – collaboration can be achieved by joining the virtual network

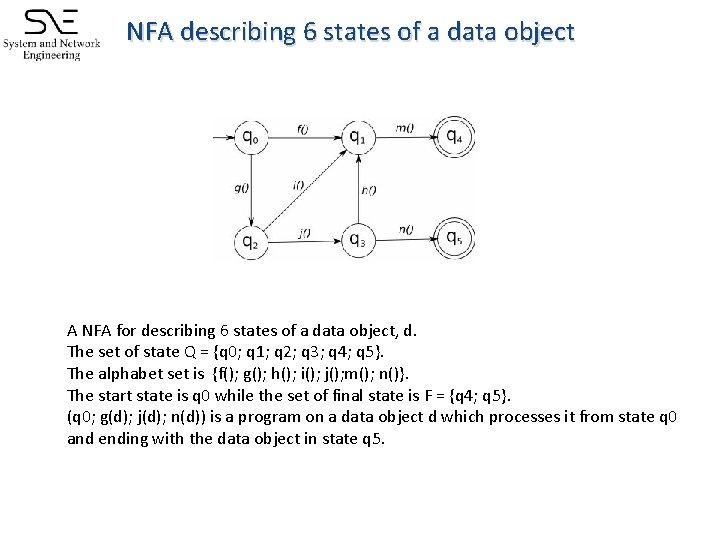

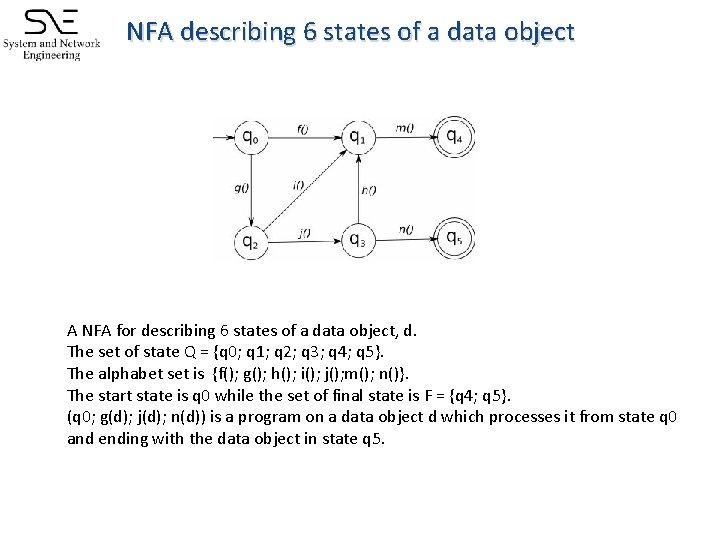

NFA describing 6 states of a data object An NFA describing 6 states of a data object d. The set of states is Q = {q0, q1, q2, q3, q4, q5}. The alphabet is {f(), g(), h(), i(), j(), m(), n()}. The start state is q0 and the set of final states is F = {q4, q5}. (q0; g(d); j(d); n(d)) is a program on a data object d which processes it starting from state q0 and ends with the data object in state q5.
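The transition function of the NFA is only given in the figure, so the table below is a hypothetical one over the same states and alphabet. It is chosen so that the program g, j, n takes d from q0 to q5. The sketch simulates an NFA in the standard way, by tracking the set of states reachable after each d-op.

```python
# Hypothetical transition table over the slide's states q0..q5 and d-ops
# f, g, h, i, j, m, n; the figure's actual edges are not reproduced here.
DELTA = {
    ("q0", "f"): {"q1"},
    ("q0", "g"): {"q1", "q2"},   # nondeterministic: g may lead to q1 or q2
    ("q1", "h"): {"q4"},
    ("q1", "i"): {"q3"},
    ("q2", "j"): {"q3"},
    ("q3", "m"): {"q4"},
    ("q3", "n"): {"q5"},
}
START = "q0"
FINALS = {"q4", "q5"}


def run_nfa(delta, start, finals, program):
    """Apply a sequence of d-op names to a data object starting in `start`.

    Returns (reachable states, accepted), where accepted means at least one
    reachable state is final."""
    current = {start}
    for op in program:
        current = set().union(*(delta.get((q, op), set()) for q in current))
        if not current:          # no transition applies: program rejected
            break
    return current, bool(current & finals)
```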

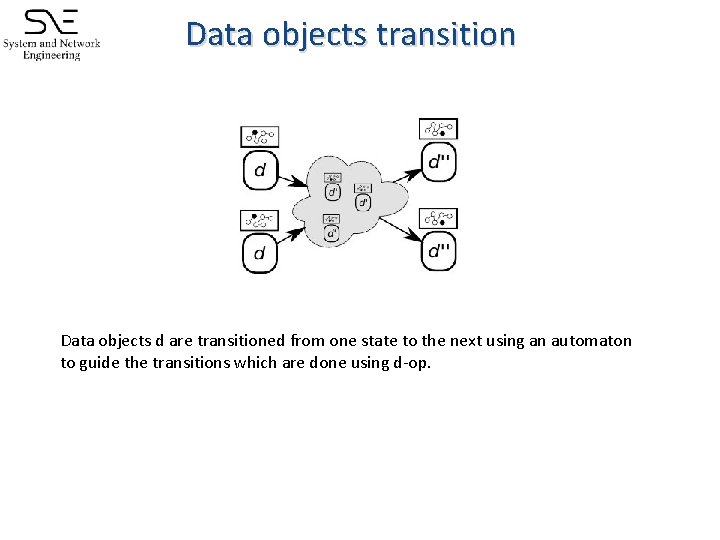

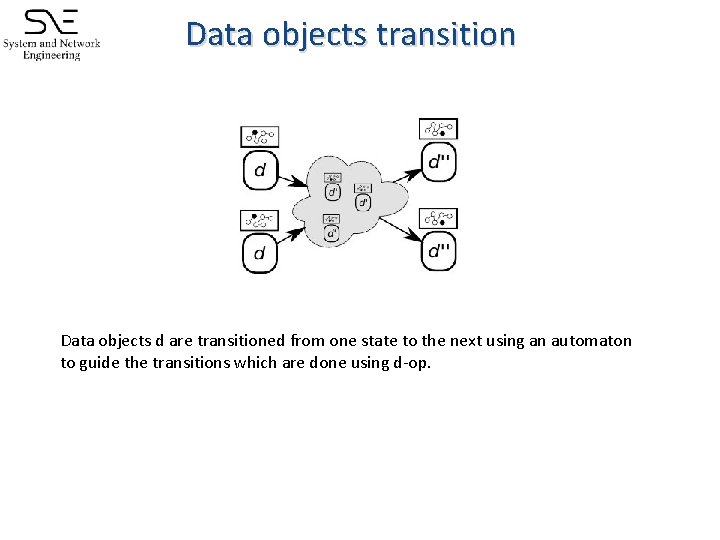

Data objects transition Data objects d are moved from one state to the next by applying d-ops, with an automaton guiding which transitions are allowed.

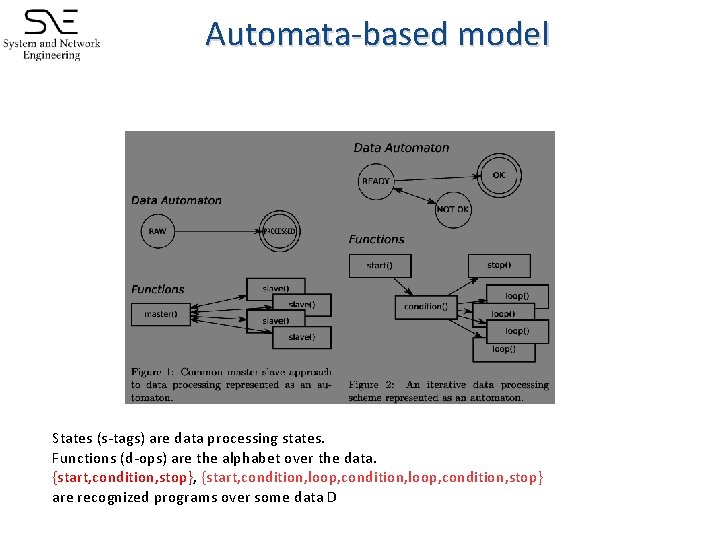

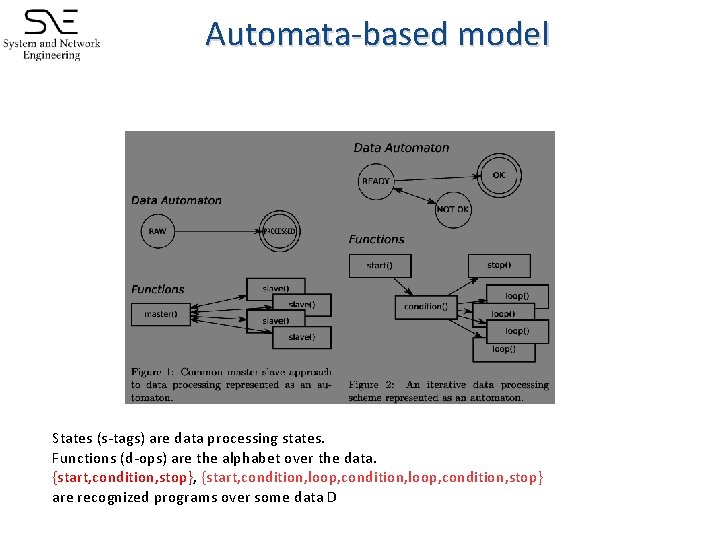

Automata-based model States (s-tags) are data processing states. Functions (d-ops) form the alphabet over the data. {start, condition, stop} and {start, condition, loop, condition, stop} are recognized programs over some data D.
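Read as a language, the two example programs suggest an automaton in which `loop` returns to the point before `condition`. Assuming that shape (start, condition, then zero or more loop/condition pairs, then stop), a deterministic recognizer is a small transition table. The state names below are hypothetical.

```python
# Assumed automaton: start -> condition -> (loop -> condition)* -> stop.
TRANSITIONS = {
    ("init", "start"): "started",
    ("started", "condition"): "checked",
    ("checked", "loop"): "started",    # loop goes back to re-evaluate the condition
    ("checked", "stop"): "stopped",
}
ACCEPTING = {"stopped"}


def recognizes(ops):
    """True if the sequence of d-ops is a program accepted by the automaton."""
    state = "init"
    for op in ops:
        state = TRANSITIONS.get((state, op))
        if state is None:              # no transition: not a valid program
            return False
    return state in ACCEPTING
```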

A Data Processing Protocol ● Describe data processing as a state graph ● Package the graph, code and data into a packet ● Use a protocol that routes the packets between processing nodes ● Decentralize data processing. A TCP/IP approach to data processing interoperability

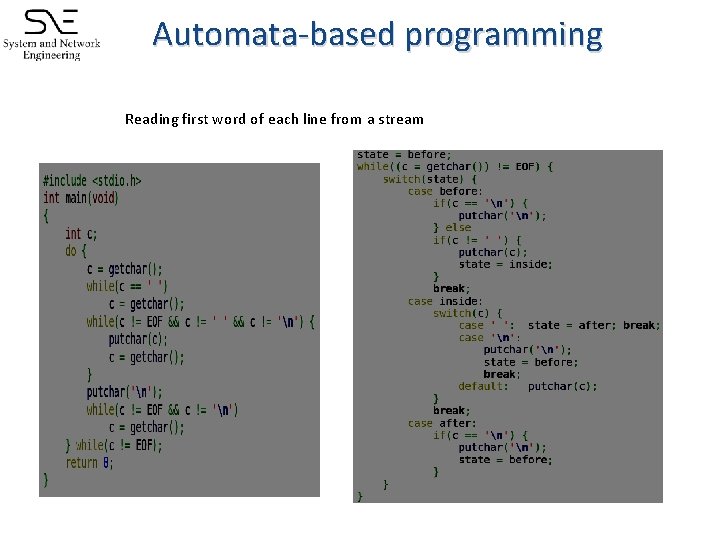

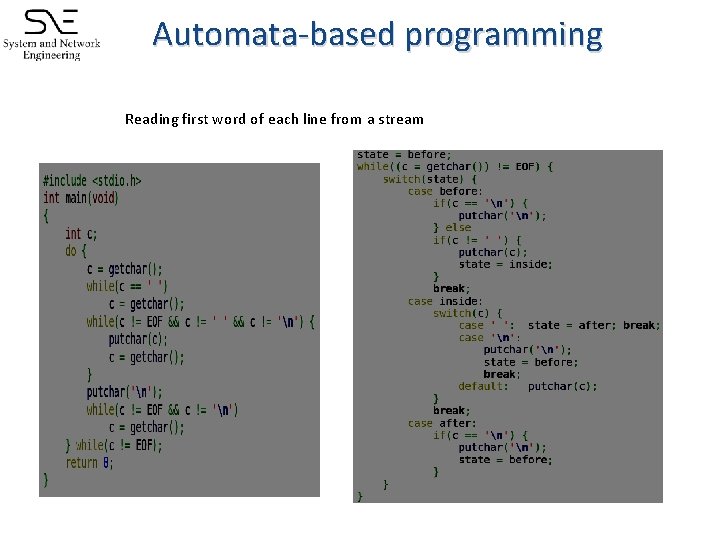

Automata-based programming: reading the first word of each line from a stream
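The classic automata-based solution to this task uses three states: skipping whitespace before the first word, copying the word, and skipping the rest of the line. Below is a Python rendering of that state machine. It is our own sketch, not the code shown in the slide's figure.

```python
import io
from enum import Enum, auto


class State(Enum):
    BEFORE = auto()  # skipping whitespace before the first word of a line
    INSIDE = auto()  # copying characters of the first word
    AFTER = auto()   # skipping the rest of the line


def first_words(stream):
    """Return the first word of every line, reading one character at a time."""
    words, current, state = [], [], State.BEFORE
    for ch in iter(lambda: stream.read(1), ""):
        if ch == "\n":
            if state is State.INSIDE:       # word ended at end of line
                words.append("".join(current))
            current, state = [], State.BEFORE
        elif state is State.BEFORE:
            if not ch.isspace():
                current.append(ch)
                state = State.INSIDE
        elif state is State.INSIDE:
            if ch.isspace():
                words.append("".join(current))
                current, state = [], State.AFTER
            else:
                current.append(ch)
        # State.AFTER: ignore characters until the next newline
    if state is State.INSIDE:               # last line without trailing newline
        words.append("".join(current))
    return words


if __name__ == "__main__":
    print(first_words(io.StringIO("  hello world\nfoo\n\n   bar baz")))
```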

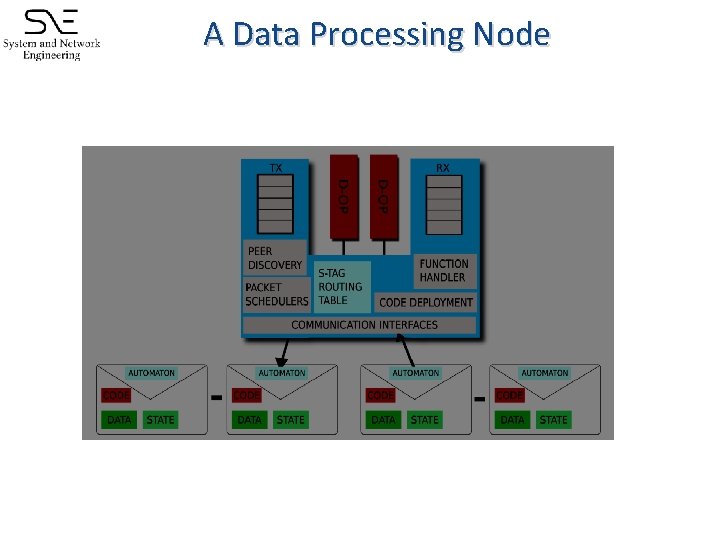

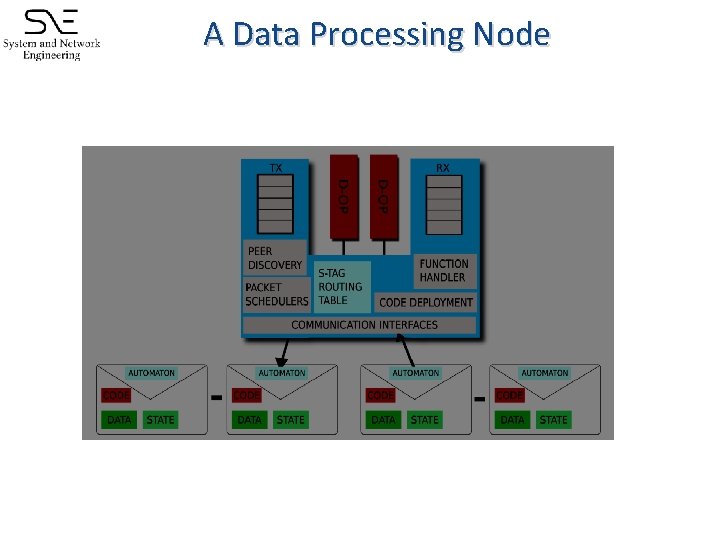

A Data Processing Node

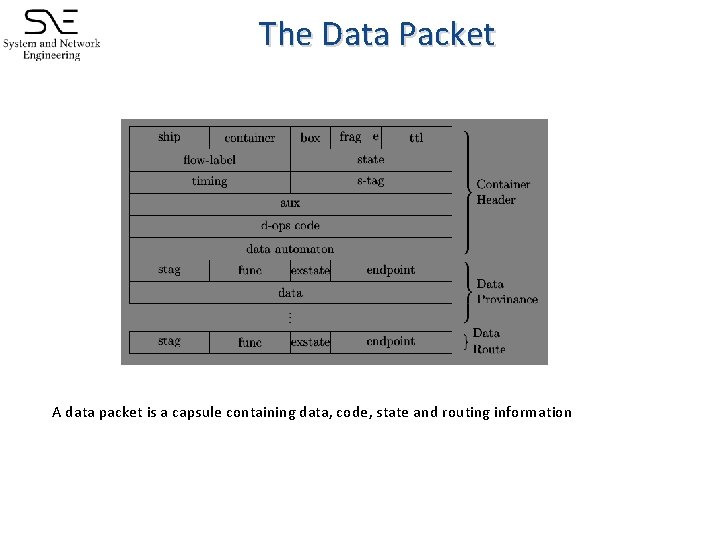

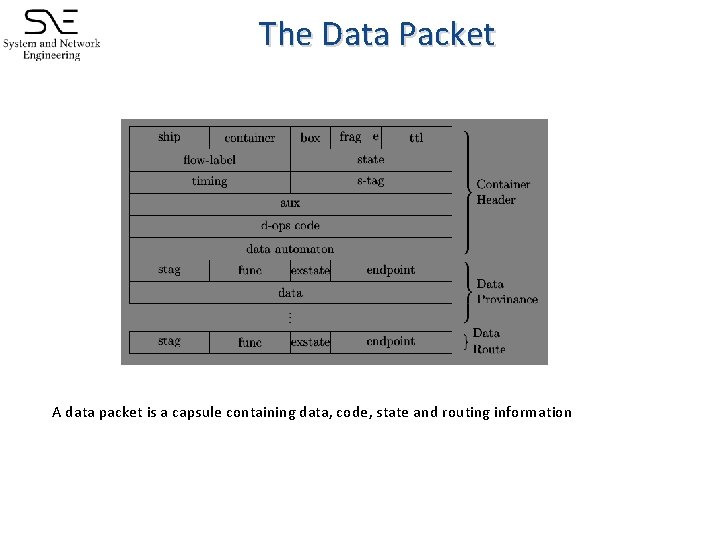

The Data Packet A data packet is a capsule containing data, code, state and routing information
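A toy model of the packet idea follows. The packet carries its own data, its current state, the code for each d-op, and the state graph that acts as its routing header. Any node that handles the packet's current state can therefore advance it. The classes, the `handles` field and the first-match node selection are assumptions made for illustration; they are not Pumpkin's packet format.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class DataPacket:
    """Capsule carrying data, code, state and routing information."""
    data: Any
    state: str
    code: dict[str, Callable[[Any], Any]]   # d-op name -> implementation
    graph: dict[str, tuple[str, str]]       # state -> (d-op to apply, next state)
    route: list[str] = field(default_factory=list)  # nodes visited so far


class Node:
    """A processing node that advertises which packet states it handles."""

    def __init__(self, name: str, handles: set[str]) -> None:
        self.name = name
        self.handles = handles

    def process(self, pkt: DataPacket) -> None:
        op, next_state = pkt.graph[pkt.state]
        pkt.data = pkt.code[op](pkt.data)
        pkt.state = next_state
        pkt.route.append(self.name)


def deliver(pkt, nodes, final_states, max_hops=100):
    """Route the packet hop by hop until it reaches a final state."""
    hops = 0
    while pkt.state not in final_states:
        if hops == max_hops:
            raise RuntimeError("hop limit exceeded")
        node = next((n for n in nodes if pkt.state in n.handles), None)
        if node is None:
            raise LookupError(f"no node handles state {pkt.state!r}")
        node.process(pkt)
        hops += 1
    return pkt
```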

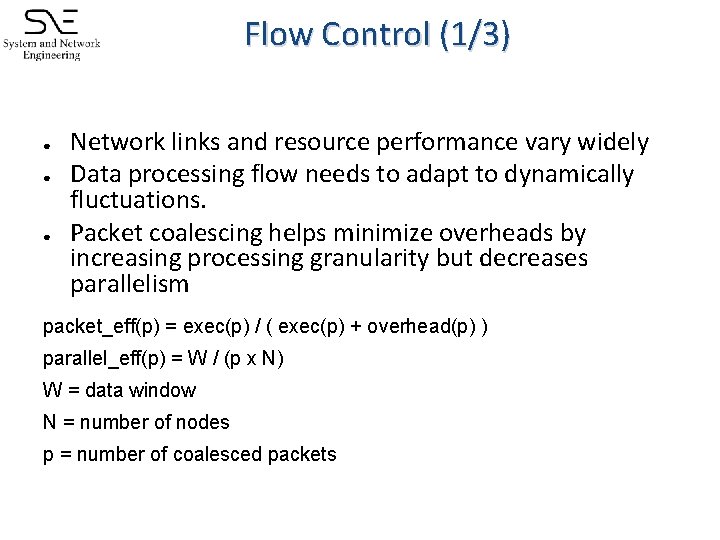

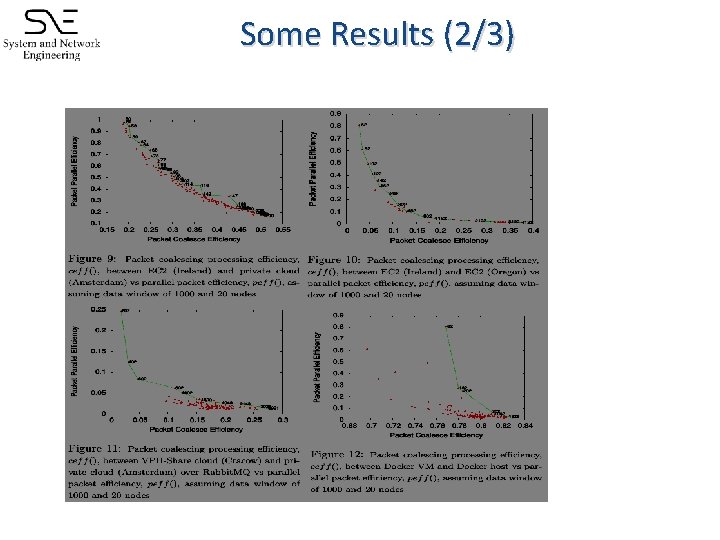

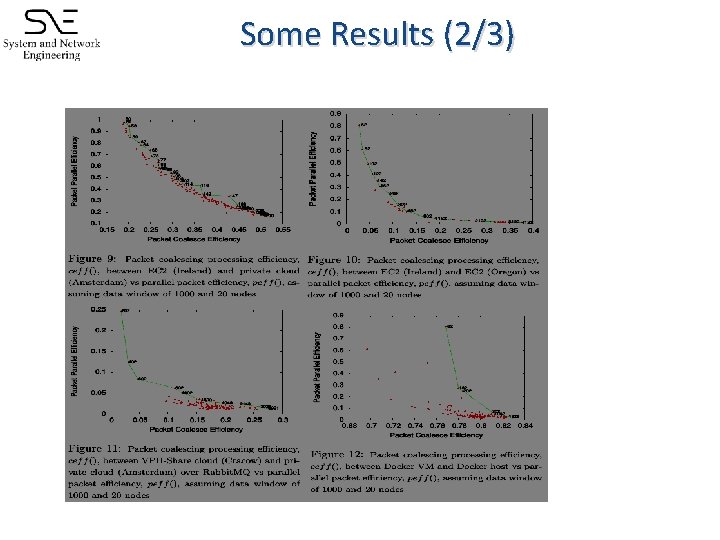

Flow Control (1/3) ● Network links and resource performance vary widely ● The data processing flow needs to adapt to dynamic fluctuations ● Packet coalescing helps minimize overheads by increasing processing granularity, but it decreases parallelism

$$\text{packet\_eff}(p) = \frac{\text{exec}(p)}{\text{exec}(p) + \text{overhead}(p)} \qquad \text{parallel\_eff}(p) = \frac{W}{p \times N}$$

where W is the data window, N is the number of nodes and p is the number of coalesced packets.
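The two formulas pull in opposite directions, and a few lines of code make the trade-off concrete. The sketch assumes a hypothetical cost model in which exec(p) = p × t_exec and the overhead is fixed per coalesced packet. It caps parallel_eff at 1: when there are at least as many coalesced packets as nodes, no node is idle. It then picks p by maximising the product of the two efficiencies; using the product as the selection criterion is also an assumption.

```python
def packet_eff(exec_time: float, overhead: float) -> float:
    """packet_eff(p) = exec(p) / (exec(p) + overhead(p))."""
    return exec_time / (exec_time + overhead)


def parallel_eff(p: int, window: int, nodes: int) -> float:
    """parallel_eff(p) = W / (p x N), capped at 1 (assumption)."""
    return min(1.0, window / (p * nodes))


def best_coalescing(window: int, nodes: int, t_exec: float, overhead: float) -> int:
    """Pick p under the hypothetical model exec(p) = p * t_exec,
    overhead(p) = overhead, maximising packet_eff * parallel_eff."""
    def score(p: int) -> float:
        return packet_eff(p * t_exec, overhead) * parallel_eff(p, window, nodes)
    return max(range(1, window + 1), key=score)
```

With W = 1000, N = 10, t_exec = 2 s and 1 s of overhead, the best choice is p = 100. Beyond that point parallel efficiency drops faster than per-packet efficiency improves.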

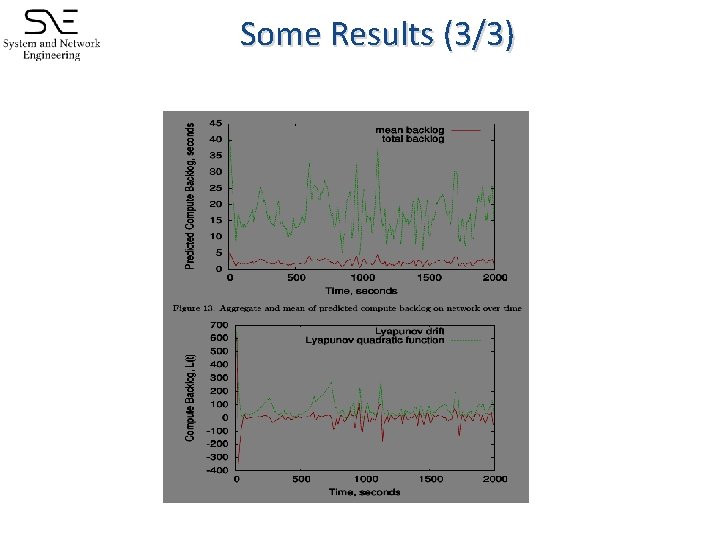

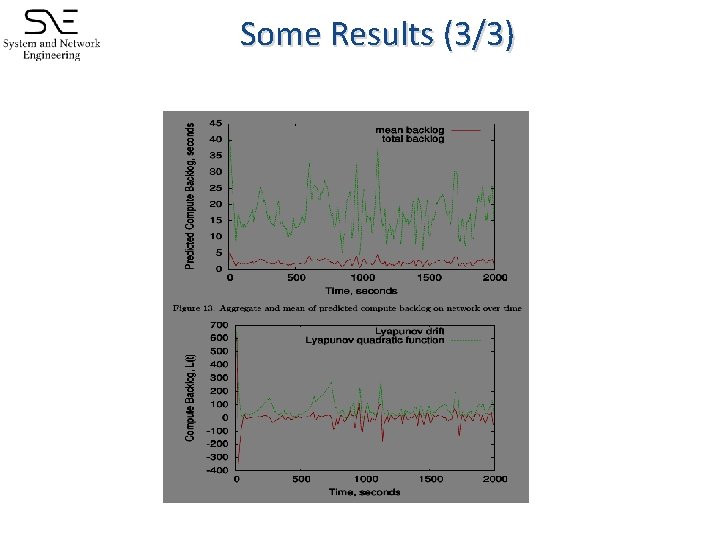

Flow Control (2/3) ● The distributed network is a queuing network ● Backlogs tend to degrade the network ● Lyapunov function for network backlogs, where $Q_i(t)$ is the backlog of queue i at time t:

$$L(t) = \frac{1}{2}\sum_i Q_i(t)^2 \qquad \Delta(t) = L(t+1) - L(t)$$
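Computing the Lyapunov function and its one-step drift is straightforward. As an illustration (a hypothetical routing rule, not necessarily the authors' controller), sending the next packet to the queue that minimises the drift reduces to joining the shortest queue, because adding one packet to queue i raises L by Q_i + 1/2.

```python
def lyapunov(backlogs):
    """L(t) = 1/2 * sum_i Q_i(t)^2."""
    return 0.5 * sum(q * q for q in backlogs)


def drift(before, after):
    """Delta(t) = L(t+1) - L(t); negative drift means backlogs are shrinking."""
    return lyapunov(after) - lyapunov(before)


def route_min_drift(backlogs):
    """Hypothetical rule: index of the queue whose +1 packet gives least drift."""
    def drift_if_added(i):
        after = [q + 1 if j == i else q for j, q in enumerate(backlogs)]
        return drift(backlogs, after)
    return min(range(len(backlogs)), key=drift_if_added)
```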

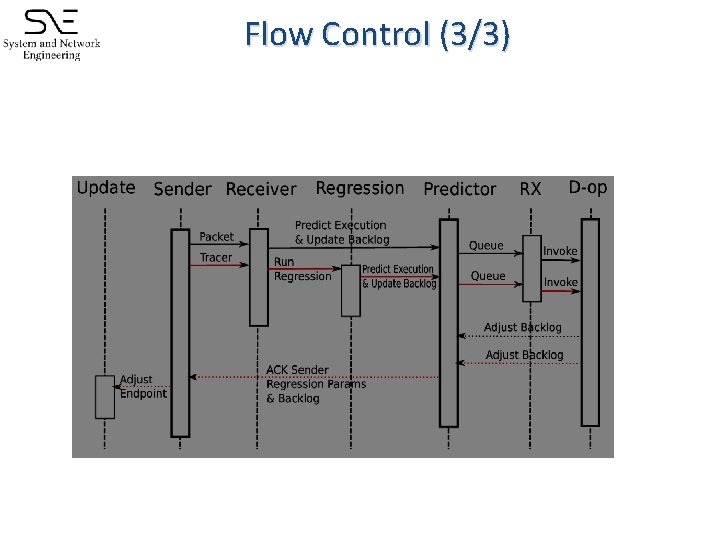

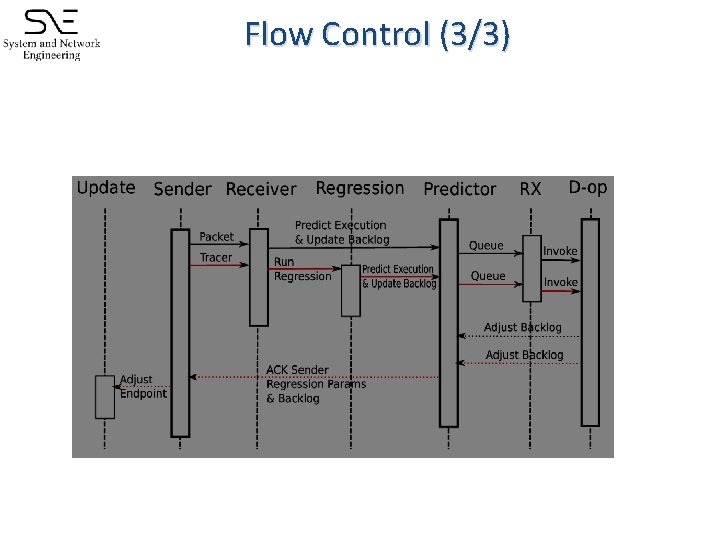

Flow Control (3/3)

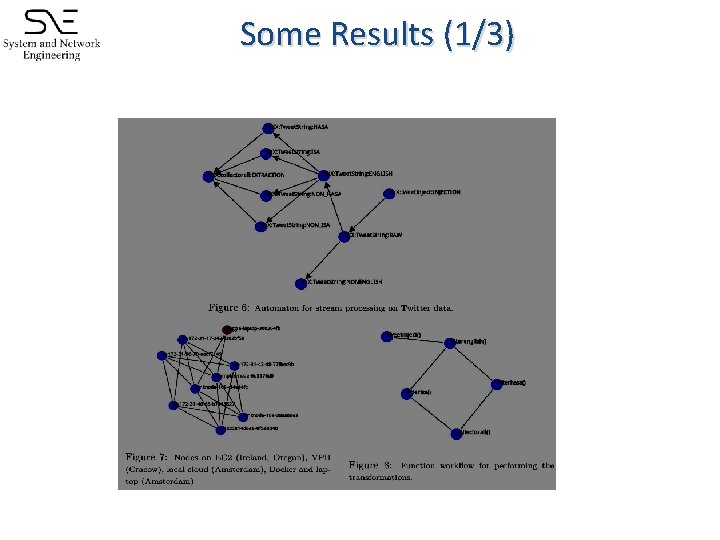

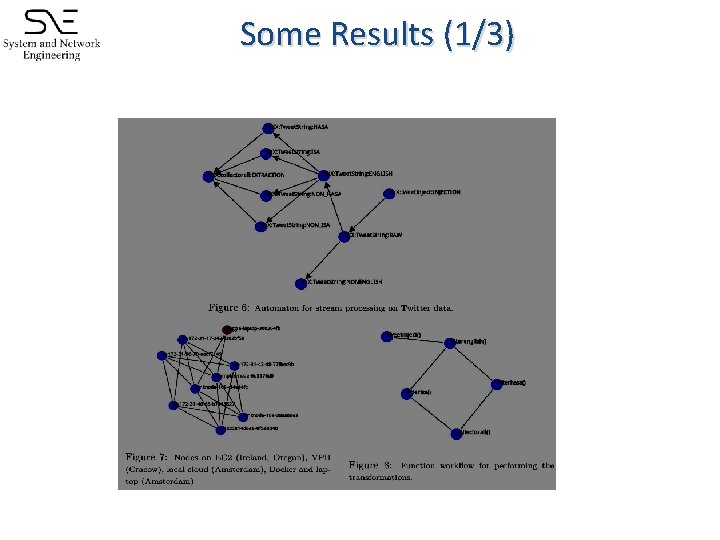

Some Results (1/3)

Some Results (2/3)

Some Results (3/3)

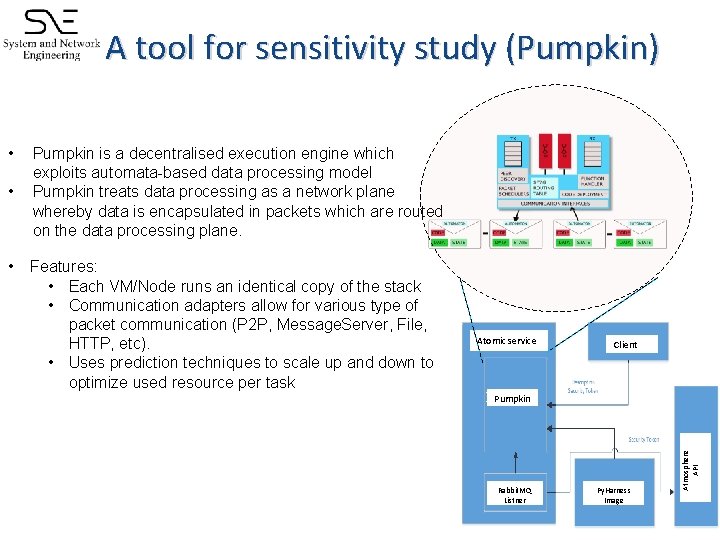

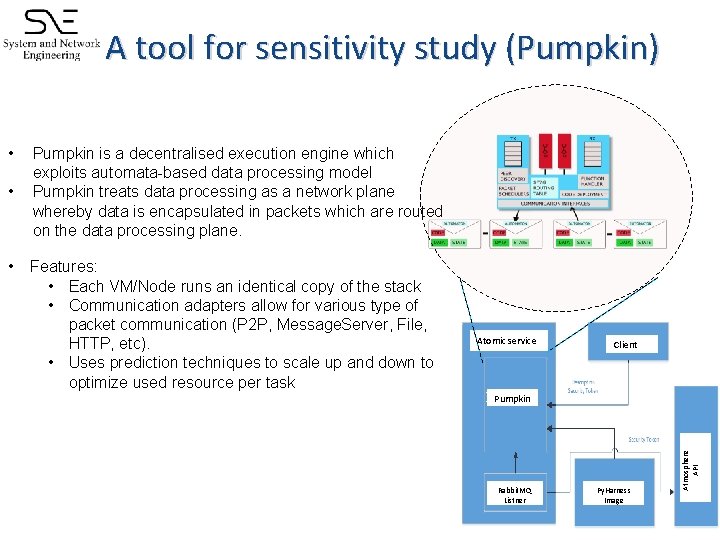

A tool for sensitivity studies (Pumpkin) • Pumpkin is a decentralised execution engine which exploits the automata-based data processing model • Pumpkin treats data processing as a network plane, whereby data is encapsulated in packets which are routed on the data processing plane. Features: • Each VM/node runs an identical copy of the stack • Communication adapters allow various types of packet communication (P2P, MessageServer, File, HTTP, etc.) • Uses prediction techniques to scale up and down, optimizing the resources used per task [Figure labels: Atomic service; Client; Pumpkin; RabbitMQ; Listener; PyHarness; Image; Atmosphere API]

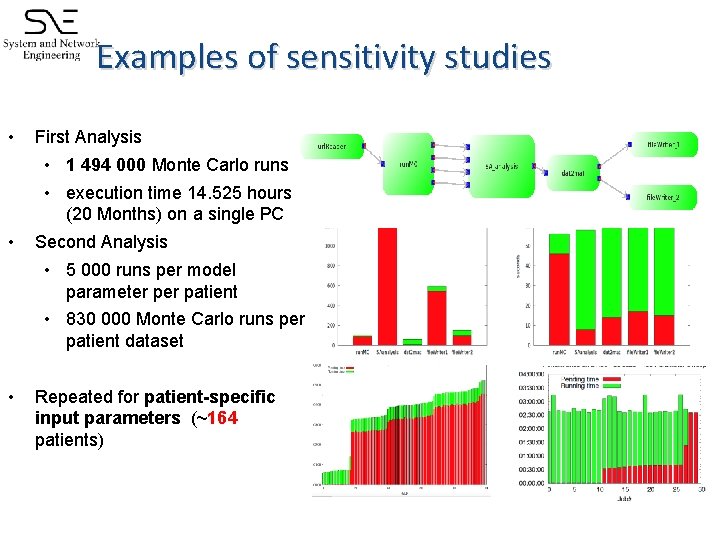

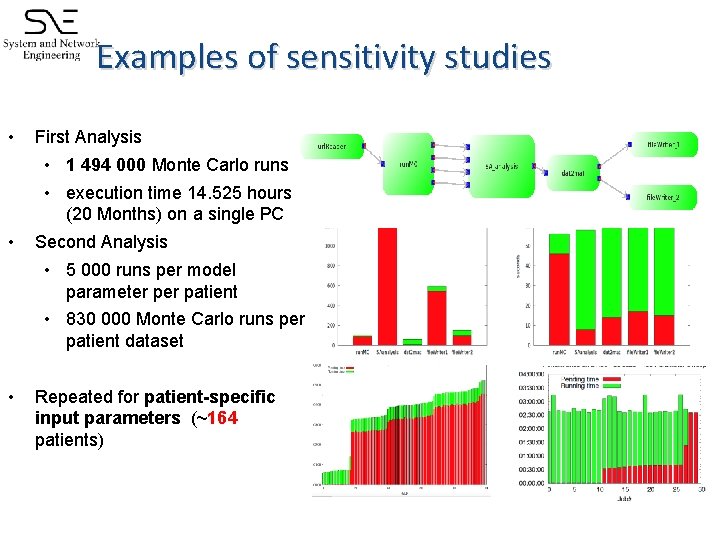

Examples of sensitivity studies • First analysis • 1,494,000 Monte Carlo runs • execution time of 14,525 hours (20 months) on a single PC • Second analysis • 5,000 runs per model parameter per patient • 830,000 Monte Carlo runs per patient dataset • Repeated for patient-specific input parameters (~164 patients)
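A quick consistency check of these figures follows. The first analysis averages 35 s per Monte Carlo run, and 14,525 hours is roughly 605 days, which matches the quoted 20 months. If the 830,000 runs per patient dataset are 5,000 runs for each model parameter, this implies 166 parameters; that is our inference and is not stated on the slide.

$$
\frac{14\,525\ \text{h} \times 3600\ \text{s/h}}{1\,494\,000\ \text{runs}} = \frac{52\,290\,000\ \text{s}}{1\,494\,000\ \text{runs}} = 35\ \text{s/run},
\qquad
\frac{14\,525\ \text{h}}{24\ \text{h/day}} \approx 605\ \text{days} \approx 19.9\ \text{months},
\qquad
\frac{830\,000}{5\,000} = 166
$$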

A framework for building DSLs Motivation: • Issues with applications based on graph representation: closely coupled, a large number of dependencies, hard to share • Imbalance between how complex services are (not that complex) and how easy it is to use them from a programming point of view (not that easy) • Many data sources and execution environments • Diversity of libraries • Different authentication methods Goals: • Reproducibility • Find a notation that allows application sharing, reuse and extension • Hide complexity behind an abstraction

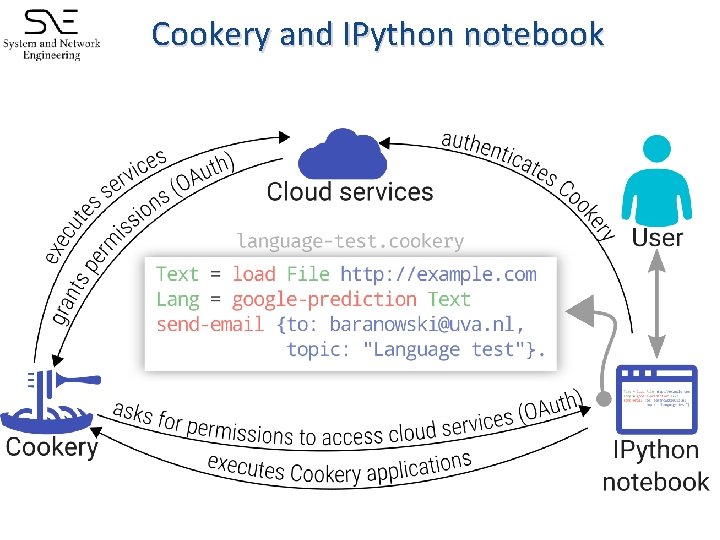

Cookery • A framework for developing applications in a cloud environment, with design patterns and techniques for accessing data sources and services: • Cookery language (in IPython notebook) • Cookery middleware • Cookery backend

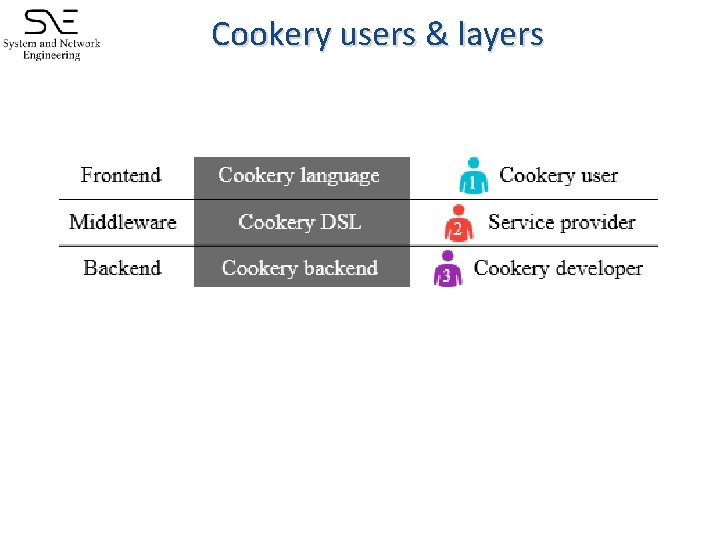

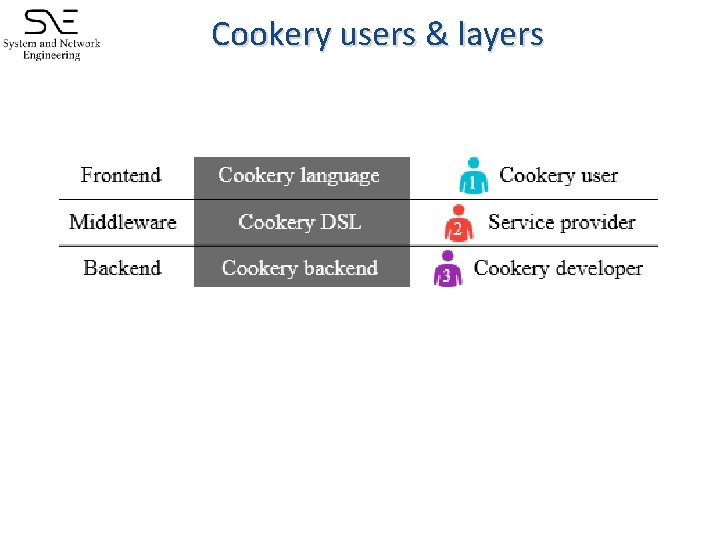

Cookery users & layers

Cookery language • Based on English syntax: variable – action – subject – condition • Variable – represents data flow • Action – represents a remote operation • Subject – represents data • Condition – represents a local operation (context given by "with" or "if")

Reproducibility in clouds with Cookery Separation between the application logic and the implementation: • Cookery developer – implements access to libraries and protocols required by cloud providers with the Cookery backend • Service provider – provides a service or a software container (e.g., Docker) – provides Cookery middleware for a particular service (data transformations) • Cookery user – grants privileges to Cookery to deploy/access the required cloud services (the user pays for them) – develops application logic with the Cookery language

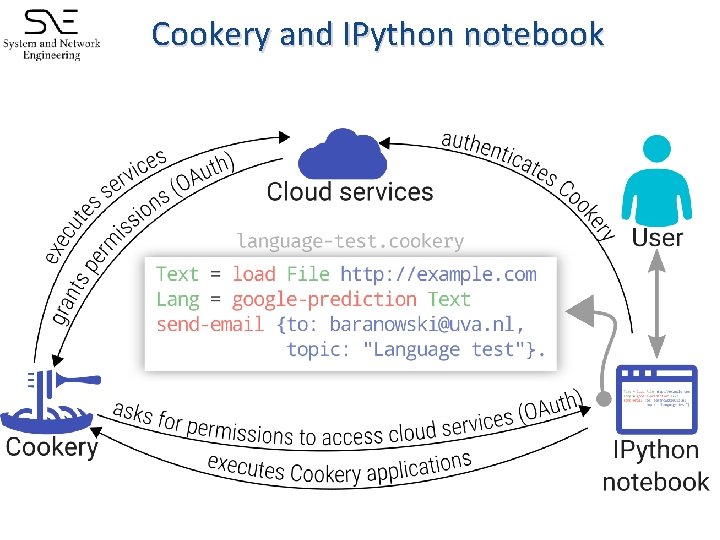

Cookery and IPython notebook

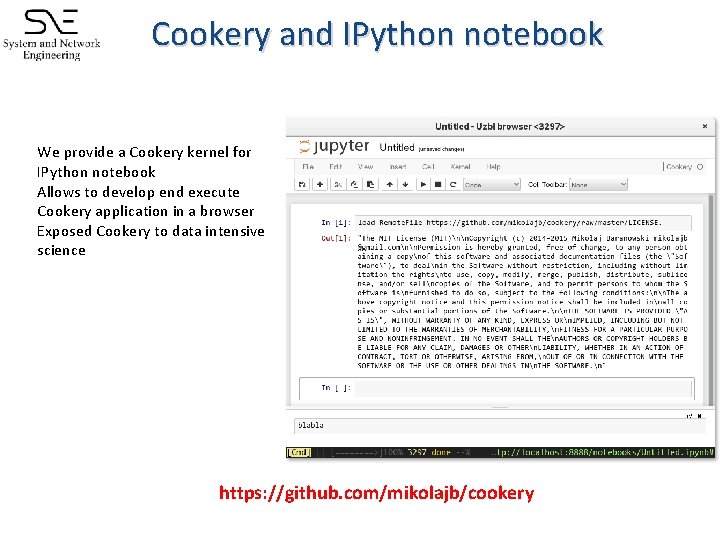

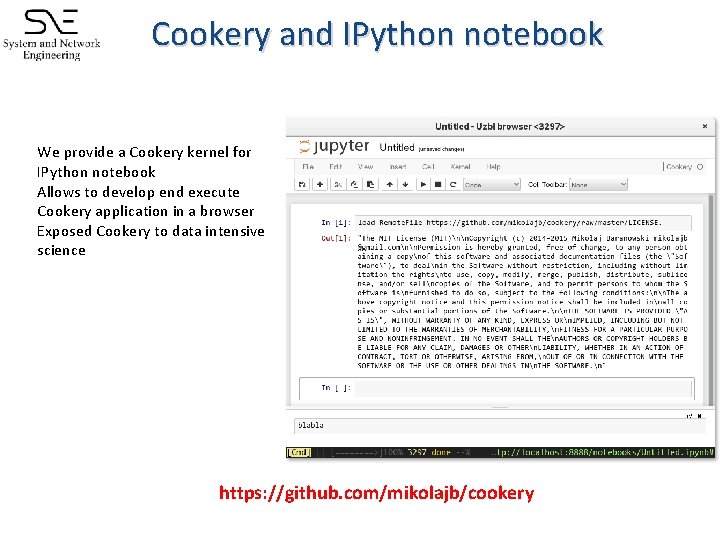

Cookery and IPython notebook • We provide a Cookery kernel for IPython notebook • It allows developing and executing Cookery applications in a browser • It exposes Cookery to data-intensive science • https://github.com/mikolajb/cookery

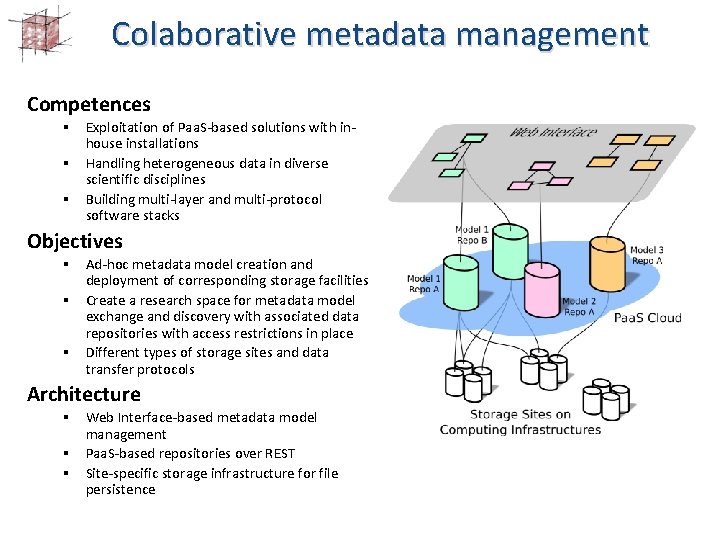

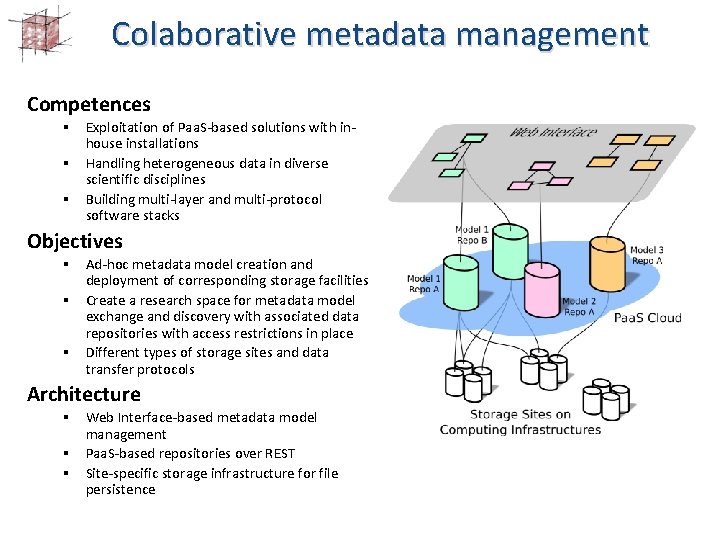

Collaborative metadata management Competences • Exploitation of PaaS-based solutions with in-house installations • Handling heterogeneous data in diverse scientific disciplines • Building multi-layer and multi-protocol software stacks Objectives • Ad-hoc metadata model creation and deployment of corresponding storage facilities • Create a research space for metadata model exchange and discovery, with associated data repositories and access restrictions in place • Support for different types of storage sites and data transfer protocols Architecture • Web interface-based metadata model management • PaaS-based repositories over REST • Site-specific storage infrastructure for file persistence

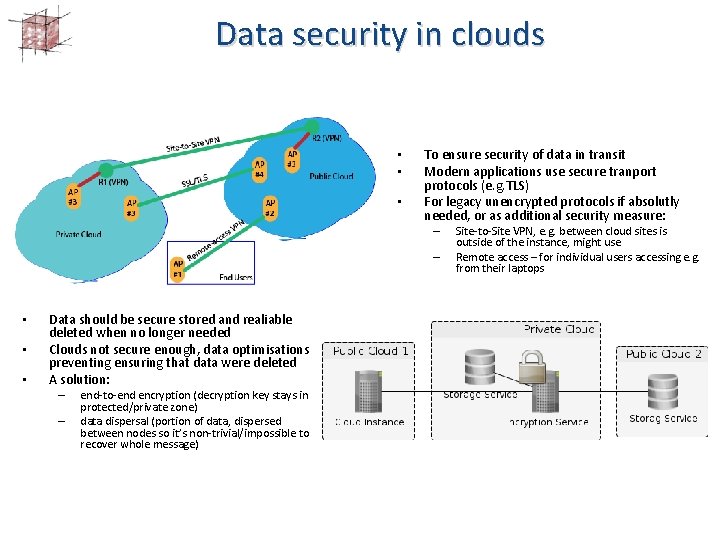

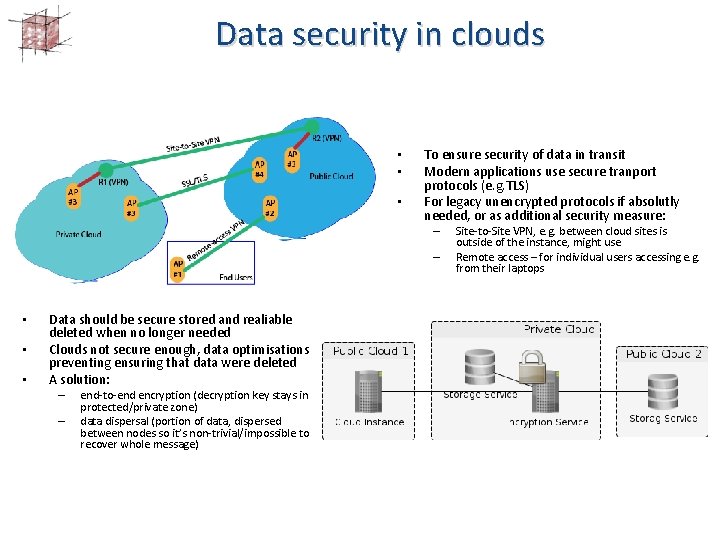

Data security in clouds • To ensure the security of data in transit – modern applications use secure transport protocols (e.g. TLS) – for legacy unencrypted protocols, if absolutely needed, or as an additional security measure: Site-to-Site VPN, e.g. between cloud sites (outside of the instance), and remote access for individual users, e.g. connecting from their laptops • Data should be securely stored and reliably deleted when no longer needed • Clouds are not secure enough: data optimisations prevent ensuring that data were deleted • A solution – end-to-end encryption (the decryption key stays in a protected/private zone) – data dispersal (portions of data are dispersed between nodes so that recovering the whole message is non-trivial or impossible)
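The data dispersal idea can be illustrated with the simplest possible scheme, n-of-n XOR splitting. Here n-1 shares are random pads and the last share is the message XORed with all of them, so any subset of fewer than n shares is statistically independent of the message. This textbook technique is swapped in purely for illustration. Practical dispersal usually relies on threshold schemes (e.g. Shamir secret sharing or Rabin's information dispersal) so that data also survives the loss of some nodes.

```python
import os
from functools import reduce


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def disperse(message: bytes, n: int) -> list:
    """Split `message` into n shares; all n are needed to rebuild it."""
    if n < 2:
        raise ValueError("need at least two shares")
    pads = [os.urandom(len(message)) for _ in range(n - 1)]  # random shares
    last = reduce(_xor, pads, message)                       # message XOR every pad
    return pads + [last]


def recover(shares: list) -> bytes:
    """XOR all shares together; the pads cancel and the message remains."""
    return reduce(_xor, shares)
```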

Conclusion Data-centric distributed computing should be tackled jointly on three fronts (abstract data processing, semantic models and infrastructure) so as to increase the knowledge and availability of data. This requires us to • investigate new and emerging resources and resource compositions • investigate and propose new scaling techniques to fit contemporary data and resource setups • study new models of data processing and their applicability to concrete data processing • study the role and implications of semantics in data processing. In the future, the shift from data to knowledge will move the focus of processing from the current data-centric processing to knowledge-centric processing.

References

- Spiros Koulouzis, Reggie Cushing, Kostas Karasavvas, Adam Belloum, and Marian Bubak. Enabling web services to consume and produce large datasets. IEEE Internet Computing, 16(1):52–60, 2012.
- Spiros Koulouzis, Dmitry Vasyunin, Reginald Cushing, Adam Belloum, and Marian Bubak. Cloud data federation for scientific applications. In Euro-Par 2013: Parallel Processing Workshops, LNCS 8374, pp. 13–22. Springer, 2014.
- Spiros Koulouzis, Adam Belloum, Marian Bubak, Pablo Lamata, Daniel Nolte, Dmitry Vasyunin, and Cees de Laat. Distributed Data Management Service for VPH Applications. IEEE Internet Computing, accepted, 2015.
- Spiros Koulouzis, Adam S. Z. Belloum, Marian T. Bubak, Zhiming Zhao, Miroslav Zivkovic, and Cees T. A. M. de Laat. SDN-Aware Federation of Distributed Data. Future Generation Computer Systems, in review, 2015.
- Reginald Cushing, Spiros Koulouzis, Adam Belloum, and Marian Bubak. Applying workflow as a service paradigm to application farming. Concurrency and Computation: Practice and Experience, 26(6):1297–1312, 2014.
- Reginald Cushing, Adam Belloum, Marian Bubak, and Cees de Laat. Automata-based dynamic data processing for clouds. In Euro-Par 2014: Parallel Processing Workshops, LNCS 8805, pp. 93–104, 2014.
- Reginald Cushing, Adam Belloum, Marian Bubak, and Cees de Laat. Towards Computing Without Borders: Data Processing Plane. Future Generation Computer Systems, in review, 2015.
- Reginald Cushing, Marian Bubak, Adam Belloum, and Cees de Laat. Beyond Scientific Workflows: Networked Open Processes. IEEE 9th International Conference on e-Science, pp. 357–364, 2013.
- Mikolaj Baranowski, Adam Belloum, and Marian Bubak. Cookery: a Framework for Developing Cloud Applications. HPCS Conference, Amsterdam, 2015.
- D. Harężlak, M. Kasztelnik, M. Pawlik, B. Wilk, and M. Bubak. A Lightweight Method of Metadata and Data Management with DataNet. In: M. Bubak, J. Kitowski, K. Wiatr (Eds.): eScience on Distributed Computing Infrastructure, LNCS 8500, pp. 164–177. Springer, 2014.
- J. Meizner, M. Bubak, M. Malawski, and P. Nowakowski. Secure Storage and Processing of Confidential Data on Public Clouds. In: PPAM 2013, LNCS 8384, pp. 272–282. Springer, 2014.