Meta Optimization: Improving Compiler Heuristics with Machine Learning

- Slides: 34

Mark Stephenson, Una-May O’Reilly, Martin, and Saman Amarasinghe
MIT Computer Architecture Group
Presented by Utku Aydonat

Motivation
• Compiler writers are faced with many challenges:
  – Many compiler problems are NP-hard
  – Modern architectures are inextricably complex
    • Simple models can’t capture architectural intricacies
  – Micro-architectures change quickly

Motivation
• Heuristics alleviate complexity woes
  – Find good approximate solutions for a large class of applications
  – Find solutions quickly
• Unfortunately…
  – They require a lot of trial-and-error tweaking to achieve suitable performance
  – Fine tuning is a tedious process

Priority Functions
• A single priority or cost function often dictates the efficacy of a heuristic
• Priority function: a function of the factors that affect a given problem
• Priority functions rank the options available to a compiler heuristic
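As a minimal sketch of what "ranking the options" means, the snippet below treats a priority function as any callable that maps a candidate to a number, and the heuristic simply consumes candidates in descending priority. The `Candidate` fields and the `hand_tuned` formula are hypothetical placeholders, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    name: str
    frequency: float  # hypothetical: how often the candidate executes
    cost: float       # hypothetical: cost of choosing it


PriorityFn = Callable[[Candidate], float]


def rank(options: List[Candidate], priority: PriorityFn) -> List[Candidate]:
    """Return the options in the order a heuristic would consider them (highest first)."""
    return sorted(options, key=priority, reverse=True)


def hand_tuned(c: Candidate) -> float:
    """A hand-written priority function: favor frequent, cheap candidates."""
    return c.frequency / (1.0 + c.cost)


opts = [Candidate("a", 10, 4), Candidate("b", 8, 0), Candidate("c", 1, 1)]
print([c.name for c in rank(opts, hand_tuned)])  # ['b', 'a', 'c']
```

Swapping `hand_tuned` for a different callable changes the heuristic's decisions without touching the heuristic itself, which is the separation that makes the priority function a natural target for automated search.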

Priority Functions
• Graph coloring register allocation (selecting nodes to spill)
• List scheduling (identifying which instructions in the worklist to schedule first)
• Hyperblock formation (selecting paths to include)
• Data prefetching (inserting prefetch instructions)

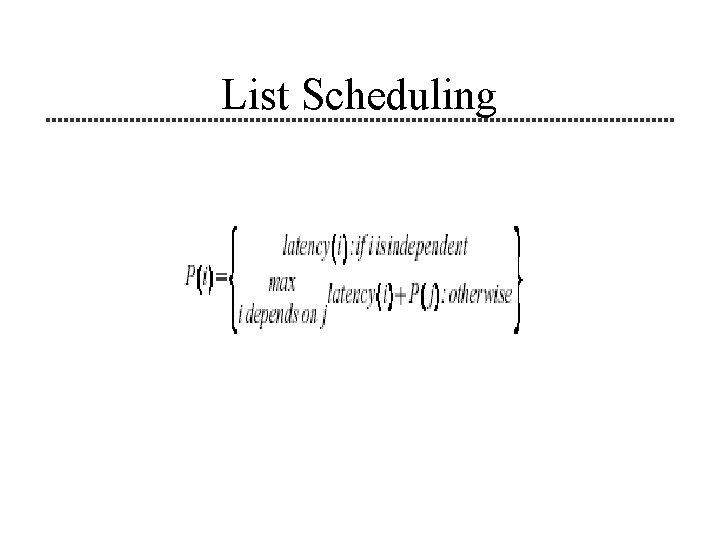

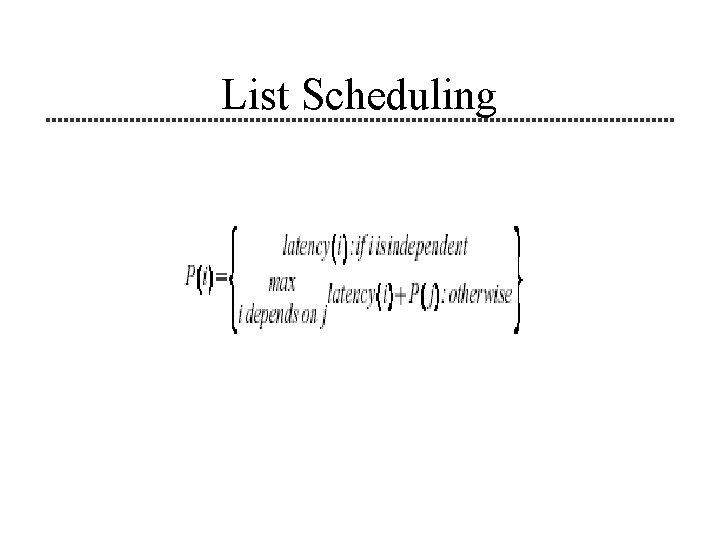

List Scheduling
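For list scheduling, the priority function decides which ready instruction in the worklist issues first. The sketch below assumes a dependence DAG given as a successor map, fixed per-operation latencies, and a single issue width; it uses critical-path height as the priority, a common textbook choice that is not necessarily the one used in the paper's compiler. The operation names are hypothetical.

```python
from typing import Dict, List


def heights(succs: Dict[str, List[str]], latency: Dict[str, int]) -> Dict[str, int]:
    """Priority = critical-path height: own latency plus the tallest successor chain."""
    memo: Dict[str, int] = {}

    def h(n: str) -> int:
        if n not in memo:
            memo[n] = latency[n] + max((h(s) for s in succs[n]), default=0)
        return memo[n]

    for n in succs:
        h(n)
    return memo


def list_schedule(succs: Dict[str, List[str]], latency: Dict[str, int], width: int) -> Dict[str, int]:
    """Cycle-by-cycle list scheduling; ready ops issue in descending priority order."""
    remaining_preds = {n: 0 for n in succs}
    for n in succs:
        for s in succs[n]:
            remaining_preds[s] += 1
    prio = heights(succs, latency)
    earliest = {n: 0 for n in succs}  # earliest cycle each op may issue
    worklist = [n for n in succs if remaining_preds[n] == 0]
    issue_cycle: Dict[str, int] = {}
    cycle = 0
    while worklist:
        ready = [n for n in worklist if earliest[n] <= cycle]
        ready.sort(key=lambda n: prio[n], reverse=True)  # the priority function at work
        for n in ready[:width]:
            worklist.remove(n)
            issue_cycle[n] = cycle
            for s in succs[n]:
                earliest[s] = max(earliest[s], cycle + latency[n])
                remaining_preds[s] -= 1
                if remaining_preds[s] == 0:
                    worklist.append(s)
        cycle += 1
    return issue_cycle


succs = {"load": ["add"], "mul": ["add"], "add": ["store"], "store": [], "inc": []}
latency = {"load": 3, "mul": 2, "add": 1, "store": 1, "inc": 1}
print(list_schedule(succs, latency, width=1))
# {'load': 0, 'mul': 1, 'inc': 2, 'add': 3, 'store': 4}
```

Because `load` sits on the longest chain, it issues first, and the independent `inc` fills the stall while `add` waits for its operands.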

Machine Learning
• The authors propose using machine learning techniques to automatically search the priority function space
  – Feedback-directed optimization
  – Find a function that works well for a broad range of applications
  – Needs to be applied only once
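The "feedback" in feedback-directed optimization is a measurement of the compiled code. A minimal sketch, assuming fitness is the arithmetic mean of speedups over a baseline across training benchmarks (the paper's exact averaging may differ; a geometric mean is also common). `run_cycles` is a hypothetical stand-in for "compile benchmark `b` with this candidate, run it, and report cycles", and the cycle counts are made up.

```python
from statistics import mean
from typing import Callable, Dict, Iterable


def fitness(candidate: object, benchmarks: Iterable[str],
            run_cycles: Callable[[object, str], float],
            baseline_cycles: Dict[str, float]) -> float:
    """Average speedup of code built with `candidate` relative to the baseline heuristic."""
    return mean(baseline_cycles[b] / run_cycles(candidate, b) for b in benchmarks)


# Hypothetical measurements standing in for real compile-and-run feedback
baseline = {"compress": 1000.0, "toast": 500.0}
measured = {("cand", "compress"): 800.0, ("cand", "toast"): 500.0}

score = fitness("cand", ["compress", "toast"], lambda c, b: measured[(c, b)], baseline)
print(score)  # 1.125
```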

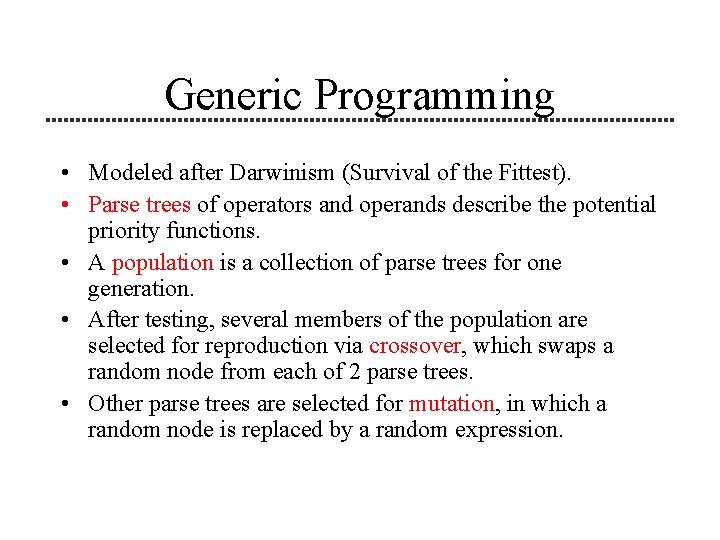

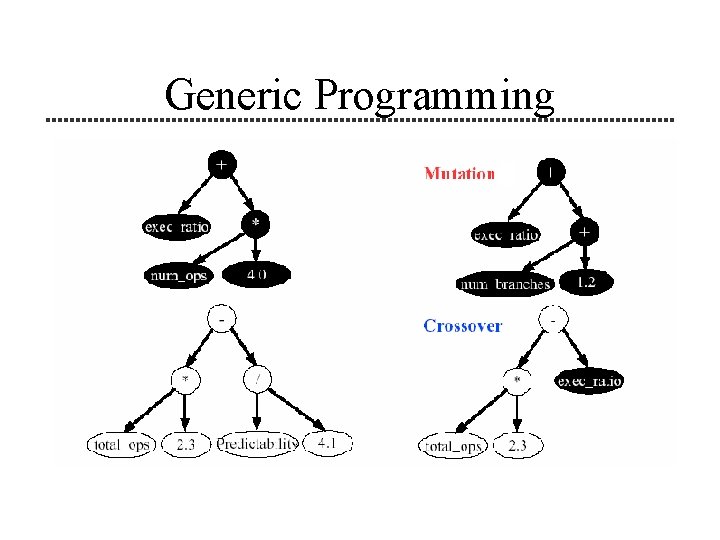

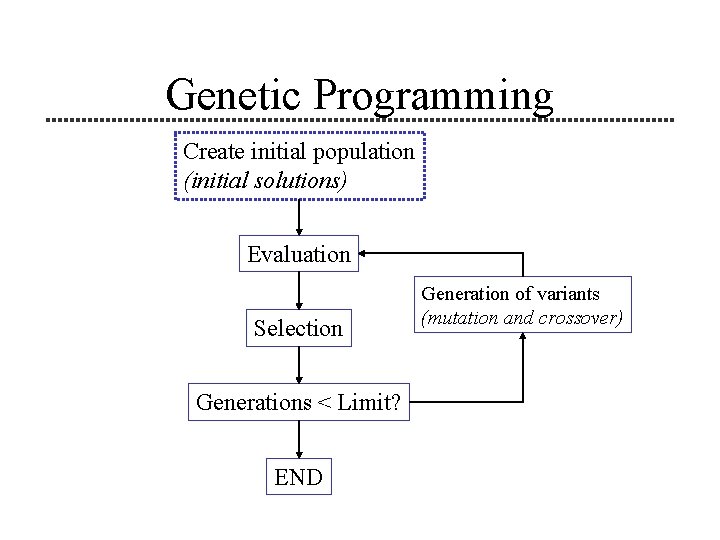

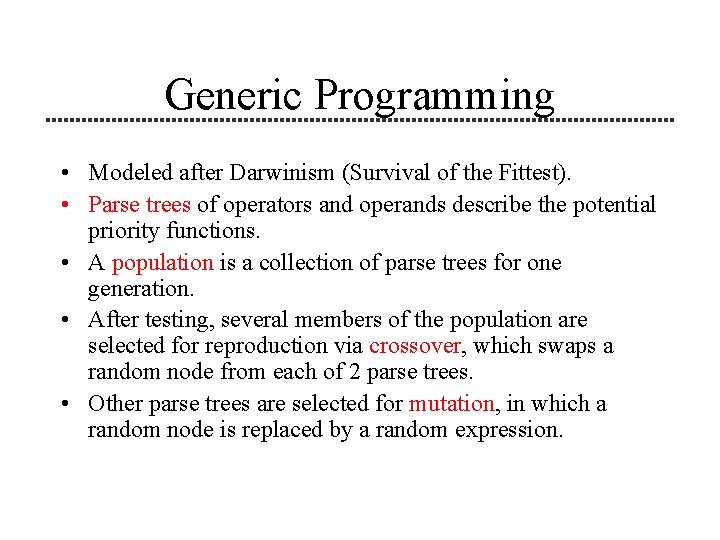

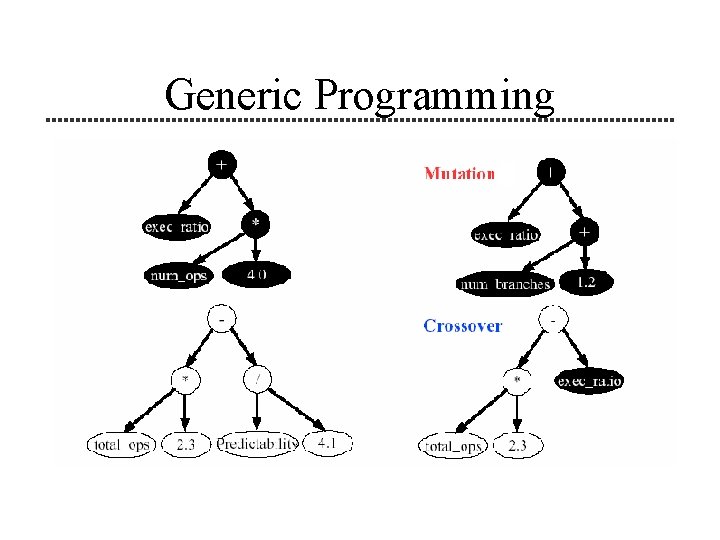

Genetic Programming
• Modeled after Darwinian evolution (survival of the fittest).
• Parse trees of operators and operands describe the candidate priority functions.
• A population is the collection of parse trees in one generation.
• After testing, several members of the population are selected for reproduction via crossover, which swaps a randomly chosen subtree of one parse tree with a randomly chosen subtree of another.
• Other parse trees are selected for mutation, in which a randomly chosen node is replaced by a random expression.
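The two variation operators can be sketched on parse trees stored as nested lists `[op, left, right]`, with feature names or constants as leaves. This is a reduced, hypothetical setup: only binary arithmetic operators and a three-feature subset, whereas the paper's operator and feature sets are richer (boolean features, conditional operators, and more).

```python
import copy
import random
from typing import List, Tuple, Union

Tree = Union[str, float, list]  # ["op", left, right] or a leaf (feature name / constant)
OPS = ["add", "sub", "mul", "div"]                         # binary operators only (simplification)
FEATURES = ["num_ops", "dependence_height", "exec_ratio"]  # hypothetical feature subset


def node_paths(t: Tree, path: Tuple[int, ...] = ()) -> List[Tuple[int, ...]]:
    """Positions of every node, each as a tuple of child indices from the root."""
    out = [path]
    if isinstance(t, list):
        for i, child in enumerate(t[1:], start=1):
            out += node_paths(child, path + (i,))
    return out


def get(t: Tree, path: Tuple[int, ...]) -> Tree:
    for i in path:
        t = t[i]
    return t


def replace(t: Tree, path: Tuple[int, ...], new: Tree) -> Tree:
    """Return a copy of t with the subtree at `path` replaced by `new` (t is not modified)."""
    if not path:
        return copy.deepcopy(new)
    t = copy.deepcopy(t)
    get(t, path[:-1])[path[-1]] = copy.deepcopy(new)
    return t


def random_expr(rng: random.Random, depth: int = 2) -> Tree:
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(FEATURES) if rng.random() < 0.7 else round(rng.uniform(0.0, 2.0), 4)
    return [rng.choice(OPS), random_expr(rng, depth - 1), random_expr(rng, depth - 1)]


def crossover(a: Tree, b: Tree, rng: random.Random) -> Tuple[Tree, Tree]:
    """Swap a randomly chosen subtree of `a` with a randomly chosen subtree of `b`."""
    pa, pb = rng.choice(node_paths(a)), rng.choice(node_paths(b))
    sa, sb = get(a, pa), get(b, pb)
    return replace(a, pa, sb), replace(b, pb, sa)


def mutate(t: Tree, rng: random.Random) -> Tree:
    """Replace a randomly chosen node with a freshly generated random expression."""
    return replace(t, rng.choice(node_paths(t)), random_expr(rng))


rng = random.Random(42)
a = ["mul", "exec_ratio", ["div", "num_ops", "dependence_height"]]
b = ["sub", 1.0, "num_ops"]
child1, child2 = crossover(a, b, rng)
mutant = mutate(a, rng)
print(child1, child2, mutant, sep="\n")
```

Crossover conserves the total number of nodes across the two children, while mutation can grow or shrink a tree; that growth is one reason the system needs the size pressure described on the following slides.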

Genetic Programming

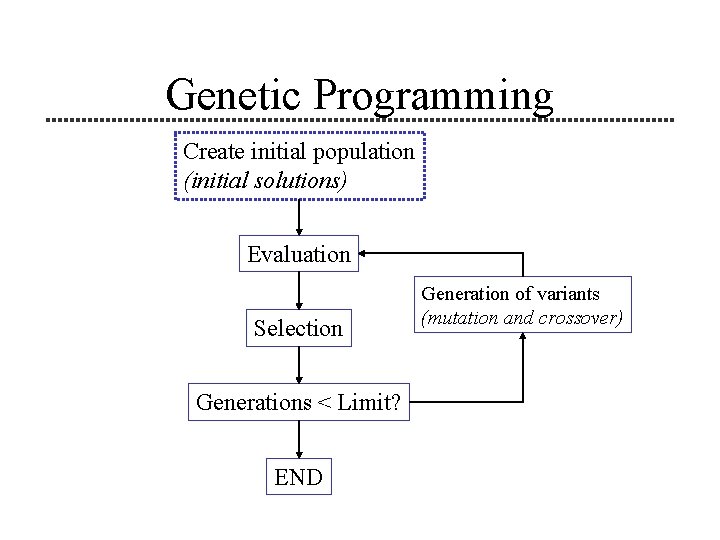

Genetic Programming (flowchart)
Create initial population (initial solutions) → Evaluation → Selection → Generations < Limit?
  – Yes: generation of variants (mutation and crossover), then back to Evaluation
  – No: END
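The flowchart's loop does not depend on the representation, so it can be sketched once with pluggable operators. In the demo below, a bit-string individual with a count-the-ones fitness is only a stand-in, which makes the demo a plain genetic algorithm rather than GP; in meta optimization the individuals would be parse trees and fitness would be measured speedup. Population size, generation limit, tournament size, and mutation rate are arbitrary assumptions.

```python
import random
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def evolve(random_individual: Callable[[random.Random], T],
           fitness: Callable[[T], float],
           mutate: Callable[[T, random.Random], T],
           crossover: Callable[[T, T, random.Random], T],
           pop_size: int = 40, generations: int = 25,
           tournament: int = 3, mutation_rate: float = 0.3,
           seed: int = 0) -> T:
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]  # create initial population
    best = max(population, key=fitness)
    for _ in range(generations):                                    # Generations < Limit?
        scored: List[Tuple[float, T]] = [(fitness(x), x) for x in population]  # evaluation

        def select() -> T:                                          # selection (tournament)
            return max(rng.sample(scored, tournament), key=lambda p: p[0])[1]

        children: List[T] = []                                      # generation of variants
        while len(children) < pop_size:
            child = crossover(select(), select(), rng)
            if rng.random() < mutation_rate:
                child = mutate(child, rng)
            children.append(child)
        population = children
        best = max([best] + population, key=fitness)                # keep best seen so far
    return best


N = 20


def rand_bits(rng: random.Random) -> List[int]:
    return [rng.randint(0, 1) for _ in range(N)]


def one_point(a: List[int], b: List[int], rng: random.Random) -> List[int]:
    cut = rng.randrange(1, N)
    return a[:cut] + b[cut:]


def flip(x: List[int], rng: random.Random) -> List[int]:
    i = rng.randrange(N)
    return x[:i] + [1 - x[i]] + x[i + 1:]


best = evolve(rand_bits, sum, flip, one_point)
print(sum(best), "of", N)
```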

Genetic Programming
• Among several equally fit expressions, the system selects the smallest, so that the parse trees do not grow exponentially.
• Tournament selection is used repeatedly to select parse trees for crossover:
  – Choose N expressions at random from the population and select the one with the highest fitness.
• Dynamic subset selection (DSS) reduces the number of fitness evaluations needed to reach a suitable solution.
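Tournament selection with a size-based tie-break can be sketched in a few lines, assuming the population is stored as `(fitness, tree)` pairs with trees as nested lists `[op, child, ...]`. Breaking ties by node count is one straightforward reading of "selects the smaller of several expressions that are equally fit"; the paper's exact rule may differ.

```python
import random
from typing import List, Tuple, Union

Tree = Union[str, float, list]


def size(t: Tree) -> int:
    """Number of nodes in a parse tree ([op, child, ...] or a leaf)."""
    return 1 + sum(size(c) for c in t[1:]) if isinstance(t, list) else 1


def tournament_select(scored: List[Tuple[float, Tree]], n: int, rng: random.Random) -> Tree:
    """Pick n entrants at random; the fittest wins, and among equally fit entrants the smallest wins."""
    entrants = rng.sample(scored, n)
    return max(entrants, key=lambda p: (p[0], -size(p[1])))[1]


scored = [
    (1.5, ["add", "x", ["mul", "y", 0.0]]),  # as fit as "x" but carries dead weight
    (1.5, "x"),
    (1.2, "y"),
]
print(tournament_select(scored, 3, random.Random(1)))  # x
```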

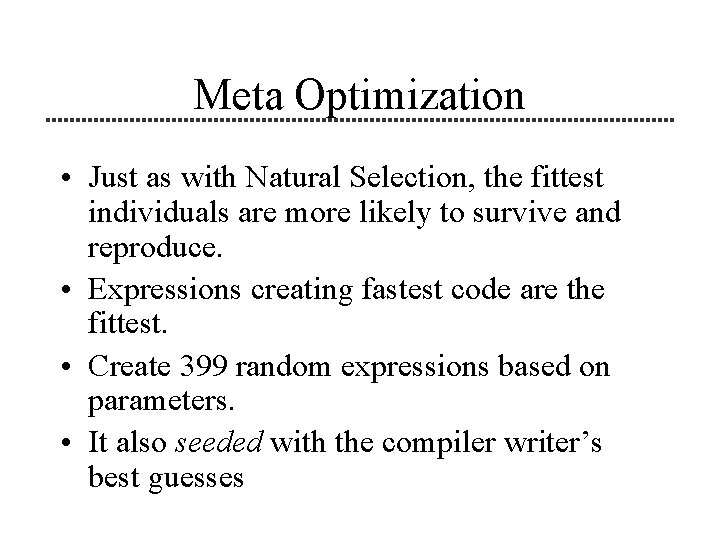

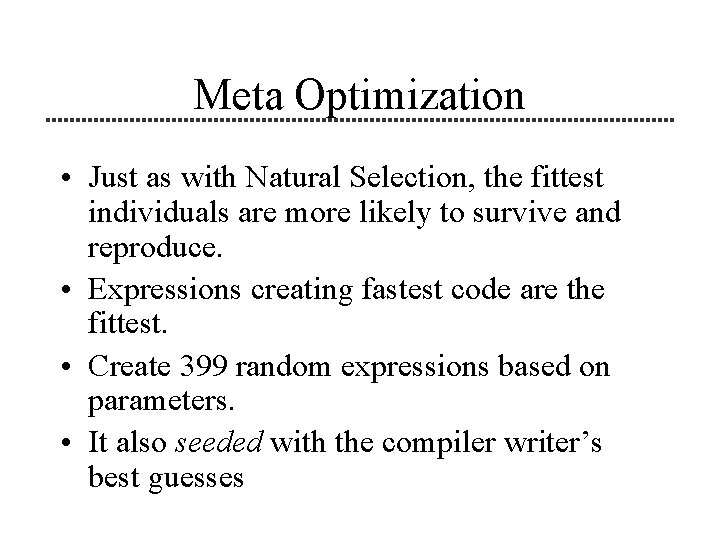

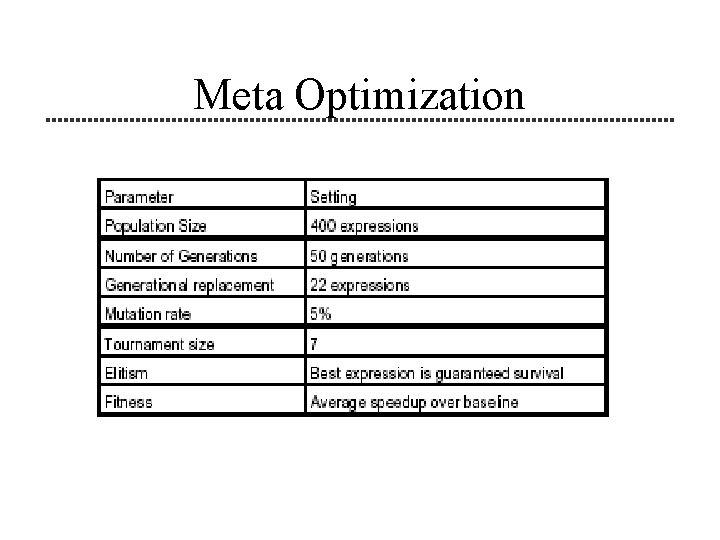

Meta Optimization
• Just as with natural selection, the fittest individuals are more likely to survive and reproduce.
• Expressions that produce the fastest code are the fittest.
• The initial population contains 399 random expressions built from the parameters.
• It is also seeded with the compiler writer’s best guesses.
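Seeding can be sketched as "hand-written expressions first, then random ones". In this sketch the operator set, the feature subset, the tree-growing probabilities, and the `hand_written` expression are all hypothetical stand-ins; only the count of 399 random expressions comes from the slide.

```python
import random
from typing import List, Union

Tree = Union[str, float, list]
OPS = ["add", "sub", "mul", "div"]
FEATURES = ["exec_ratio", "num_ops", "dependence_height", "num_branches"]  # hypothetical subset


def random_expr(rng: random.Random, depth: int = 3) -> Tree:
    """Grow a random parse tree over the feature names and small constants."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(FEATURES) if rng.random() < 0.7 else round(rng.uniform(0.0, 2.0), 4)
    return [rng.choice(OPS), random_expr(rng, depth - 1), random_expr(rng, depth - 1)]


def initial_population(seeds: List[Tree], n_random: int = 399, seed: int = 0) -> List[Tree]:
    """Compiler writer's hand-written expressions plus n_random random ones."""
    rng = random.Random(seed)
    return list(seeds) + [random_expr(rng) for _ in range(n_random)]


# Hypothetical stand-in for a hand-tuned heuristic: favor frequent, parallel paths
hand_written = ["mul", "exec_ratio", ["div", "num_ops", "dependence_height"]]
population = initial_population([hand_written])
print(len(population))  # 400
```

Including the existing heuristic means the search starts no worse than the baseline, provided the best individual is carried forward between generations.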

Meta Optimization

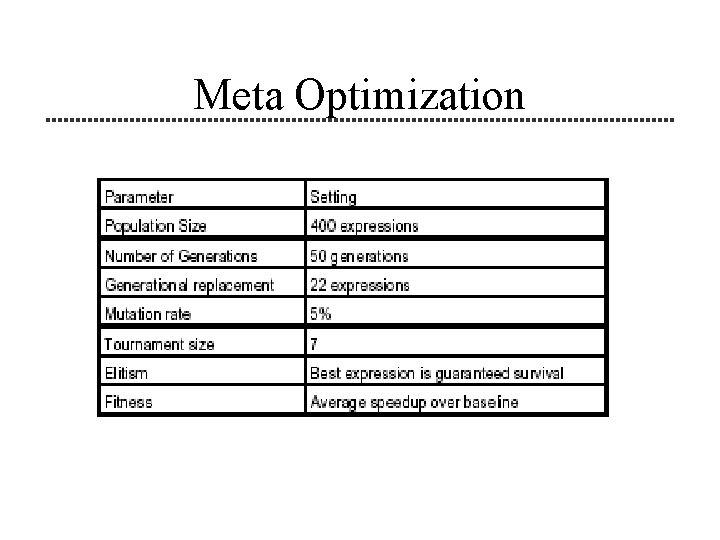

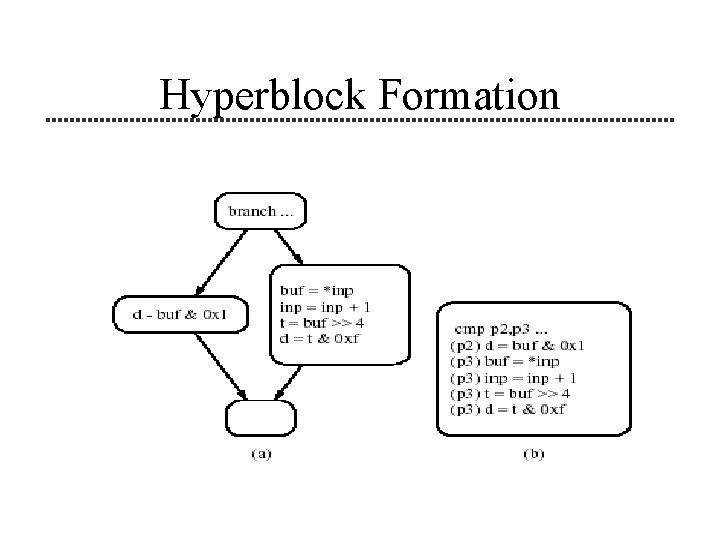

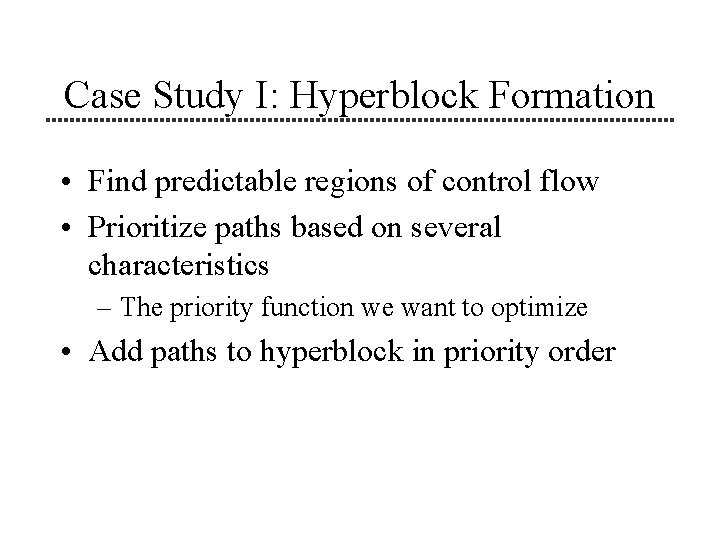

Case Study I: Hyperblock Formation
• Find predictable regions of control flow
• Prioritize paths based on several characteristics
  – The priority function we want to optimize
• Add paths to hyperblock in priority order
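The last step, "add paths to hyperblock in priority order", can be sketched as a greedy loop. Assumptions, all hypothetical: a simple operation budget stands in for the real resource checks (and summing per-path ops overcounts shared blocks), and the example priority is just exec ratio times a hazard penalty. The path names and the 0.25 hazard factor mirror the IMPACT example table in this deck.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Path:
    name: str
    exec_ratio: float
    num_ops: int
    has_hazard: bool


def form_hyperblock(paths: List[Path], priority: Callable[[Path], float], max_ops: int) -> List[str]:
    """Greedily include paths in descending priority while a (hypothetical) op budget allows."""
    chosen: List[str] = []
    used = 0
    for p in sorted(paths, key=priority, reverse=True):
        if used + p.num_ops <= max_ops:
            chosen.append(p.name)
            used += p.num_ops
    return chosen


def example_priority(p: Path) -> float:
    """Hypothetical priority: frequent paths first, hazardous paths penalized."""
    return p.exec_ratio * (0.25 if p.has_hazard else 1.0)


paths = [
    Path("A-B-F-G", 0.14, 10, False),
    Path("A-C-F-G", 0.79, 9, False),
    Path("A-C-E-F-G", 0.07, 13, True),
]
print(form_hyperblock(paths, example_priority, max_ops=20))  # ['A-C-F-G', 'A-B-F-G']
```

Only `example_priority` would change during meta optimization; the greedy loop stays fixed.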

Hyperblock Formation

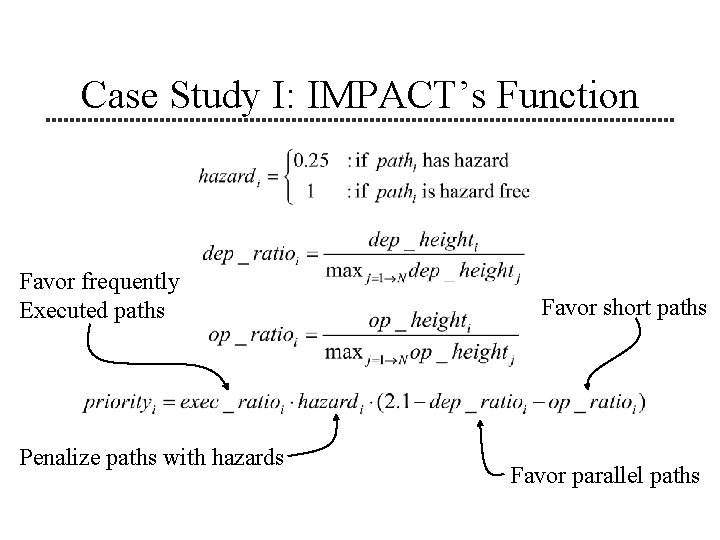

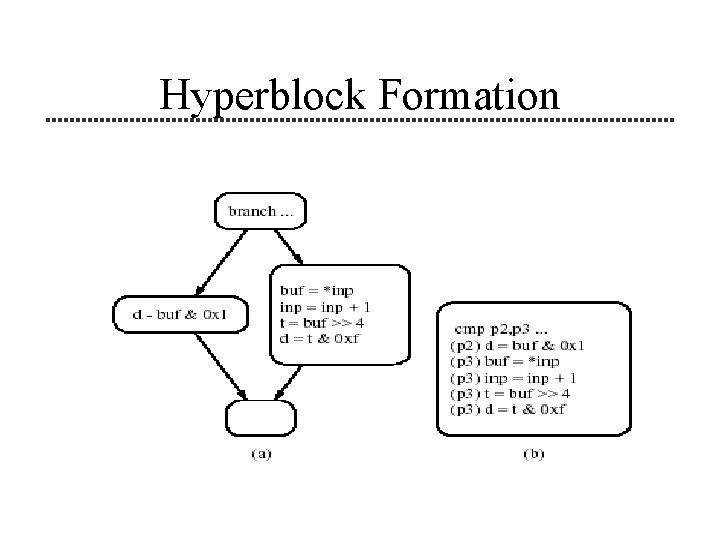

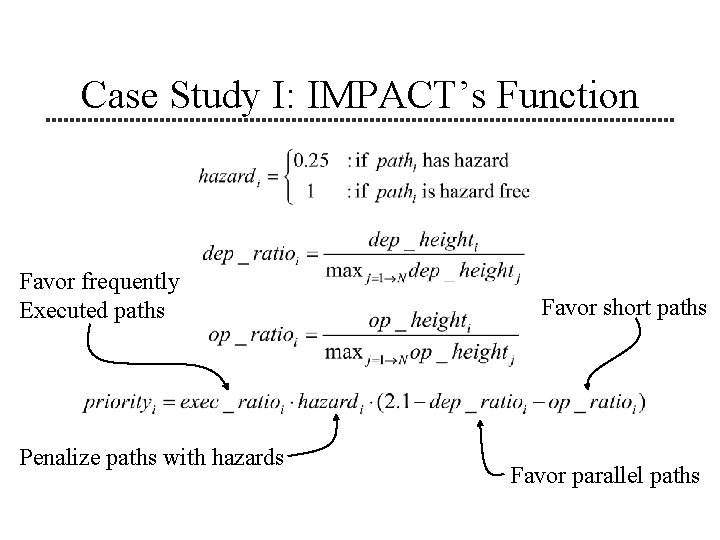

Case Study I: IMPACT’s Function
• Favor frequently executed paths
• Penalize paths with hazards
• Favor short paths
• Favor parallel paths

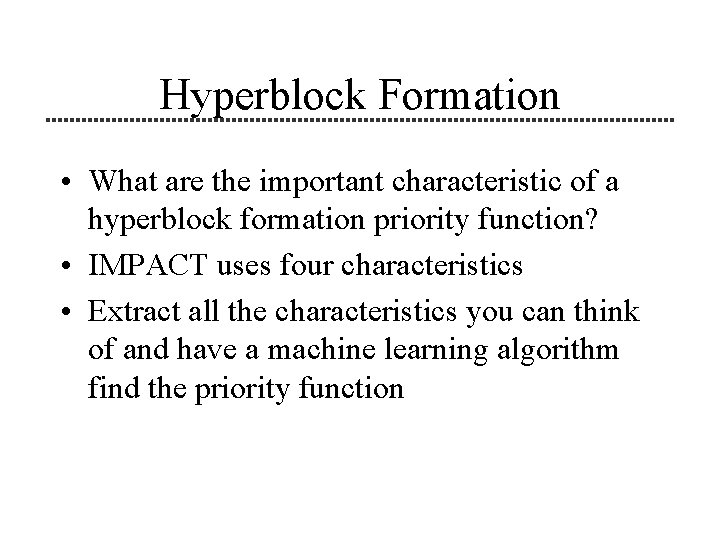

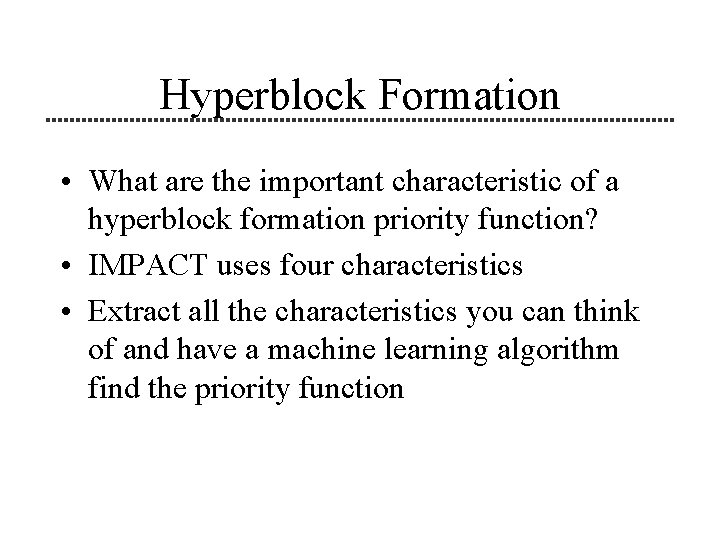

Hyperblock Formation
• What are the important characteristics of a hyperblock formation priority function?
• IMPACT uses four characteristics
• Extract all the characteristics you can think of and have a machine learning algorithm find the priority function

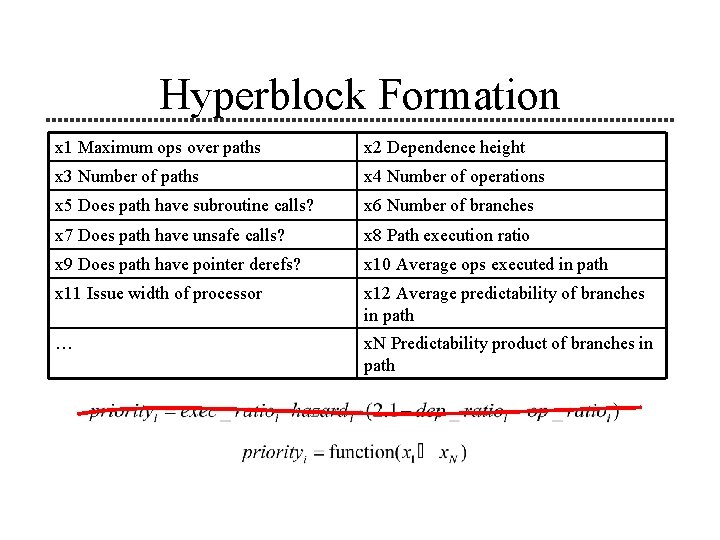

Hyperblock Formation
x1: Maximum ops over paths
x2: Dependence height
x3: Number of paths
x4: Number of operations
x5: Does path have subroutine calls?
x6: Number of branches
x7: Does path have unsafe calls?
x8: Path execution ratio
x9: Does path have pointer derefs?
x10: Average ops executed in path
x11: Issue width of processor
x12: Average predictability of branches in path
…
xN: Predictability product of branches in path

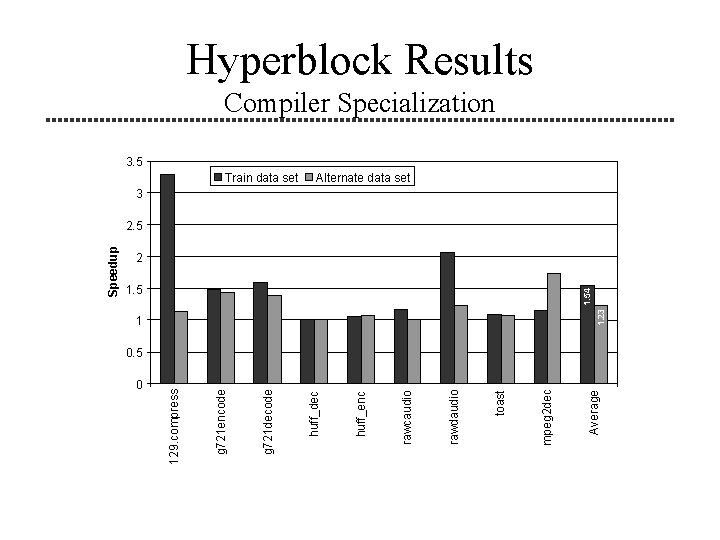

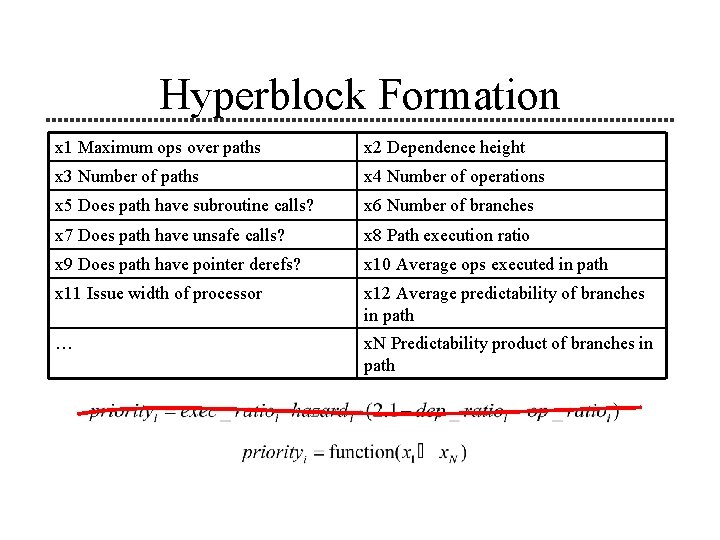

Hyperblock Results
Compiler Specialization
(Bar chart: speedup on the training data set and on an alternate data set for 129.compress, g721encode, g721decode, huff_dec, huff_enc, rawcaudio, rawdaudio, toast, mpeg2dec, and the average.)

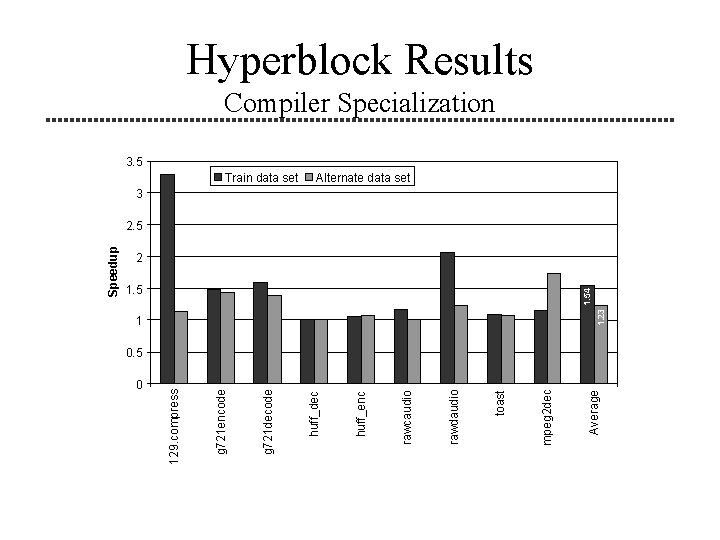

Hyperblock Results
A General-Purpose Priority Function

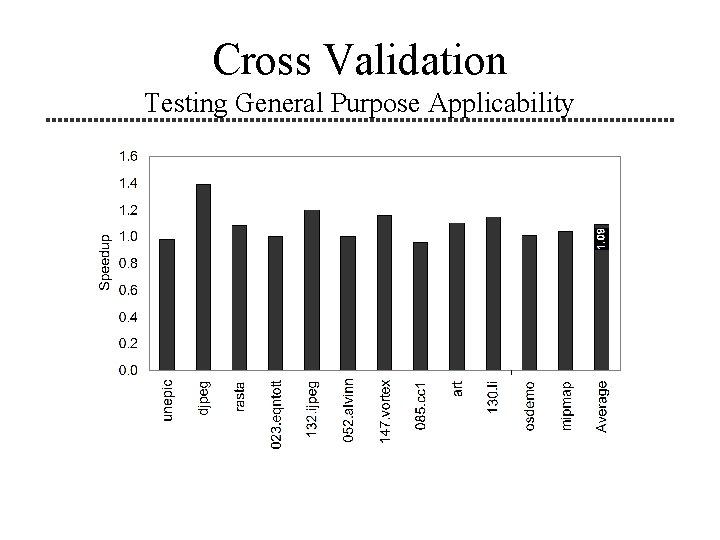

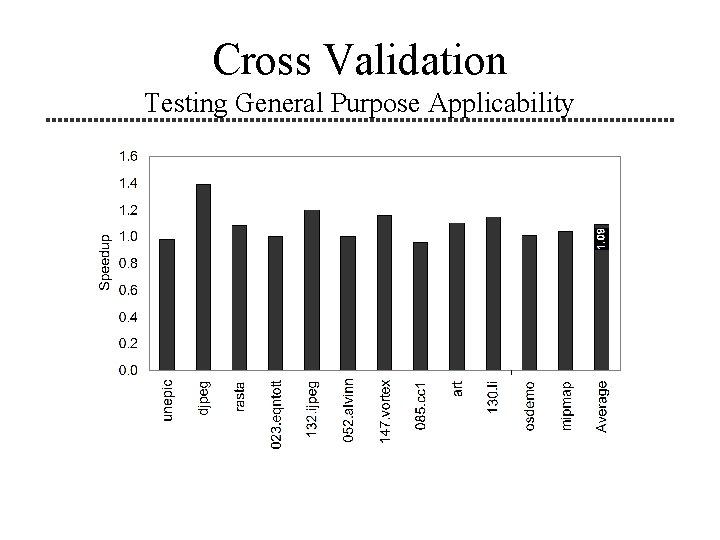

Cross Validation
Testing General-Purpose Applicability

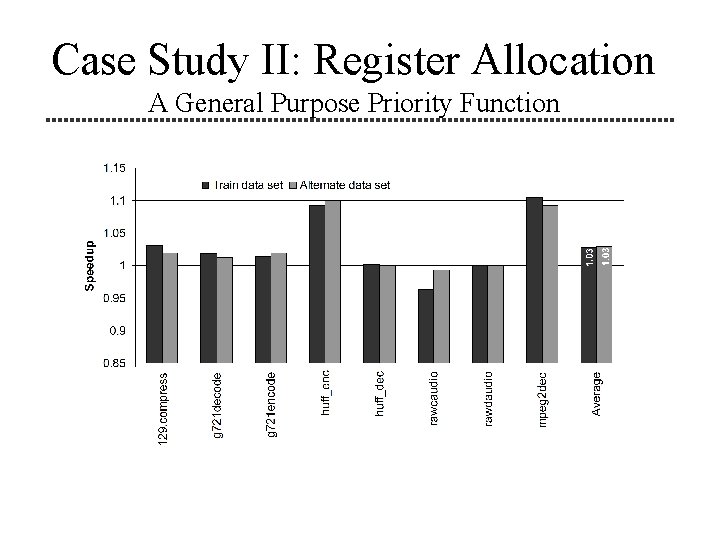

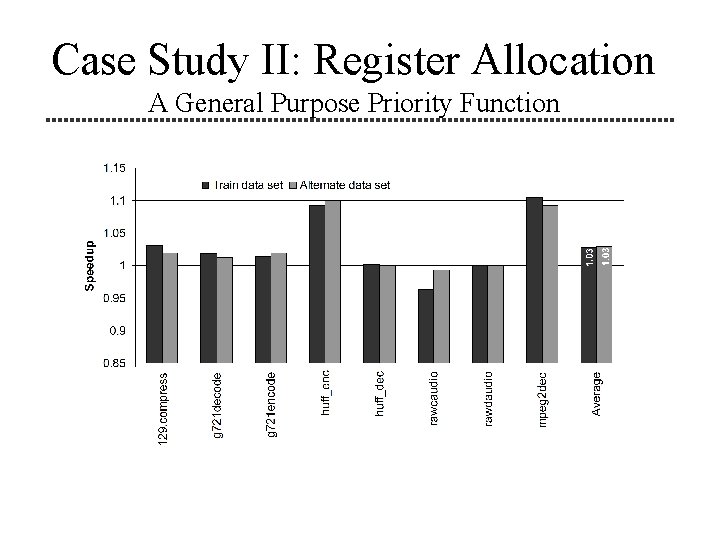

Case Study II: Register Allocation
A General-Purpose Priority Function

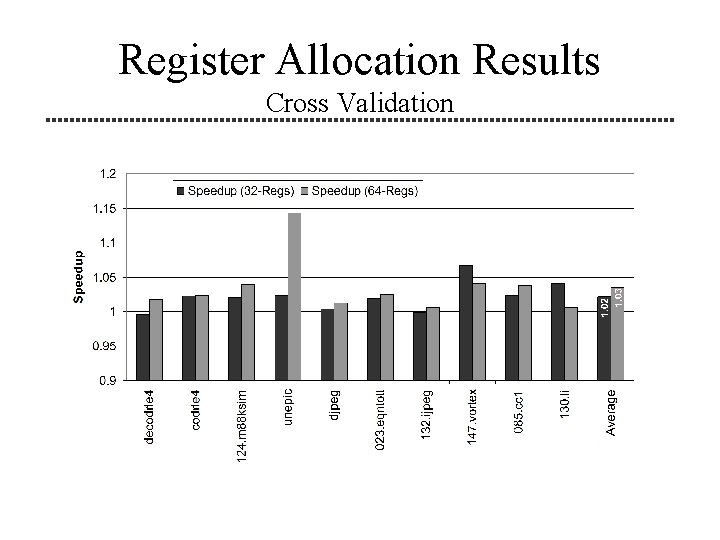

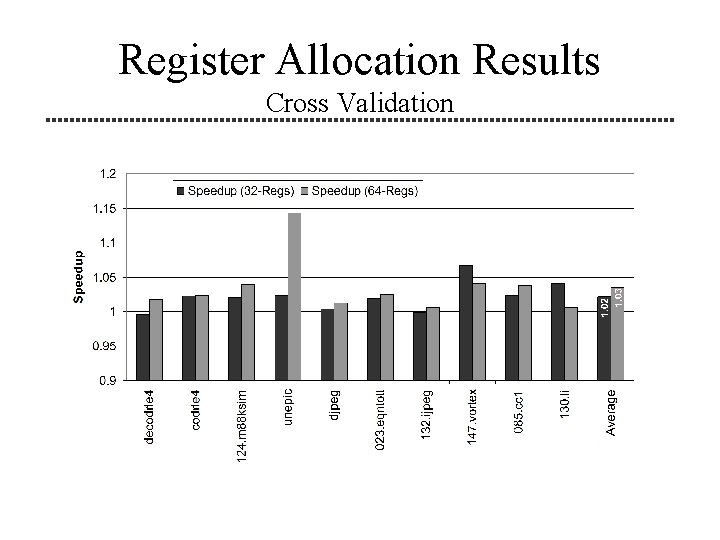

Register Allocation Results
Cross Validation

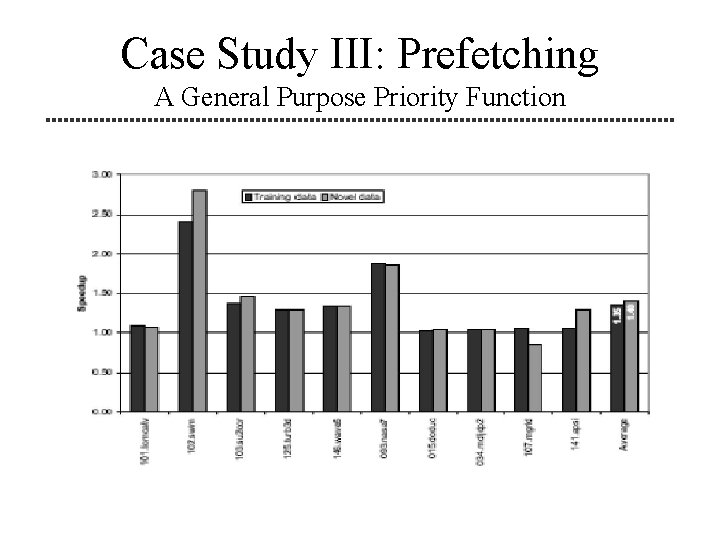

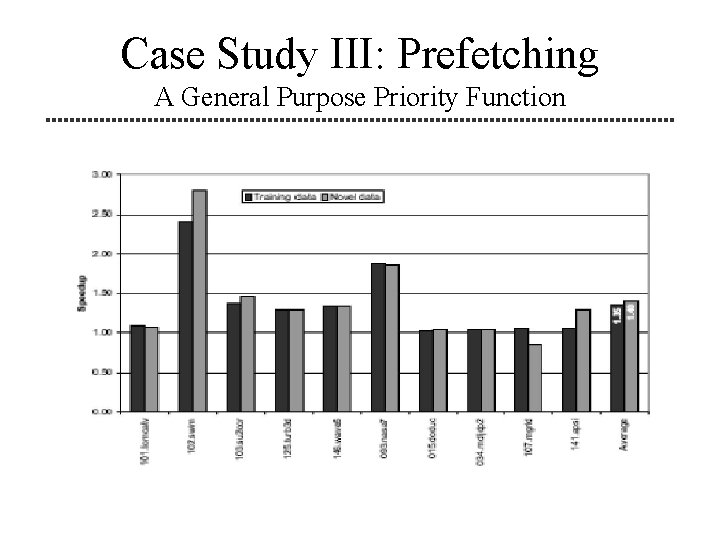

Case Study III: Prefetching
A General-Purpose Priority Function

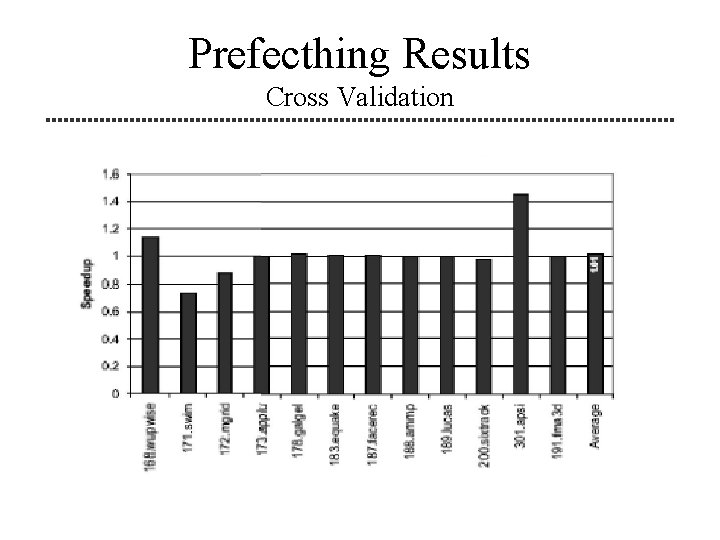

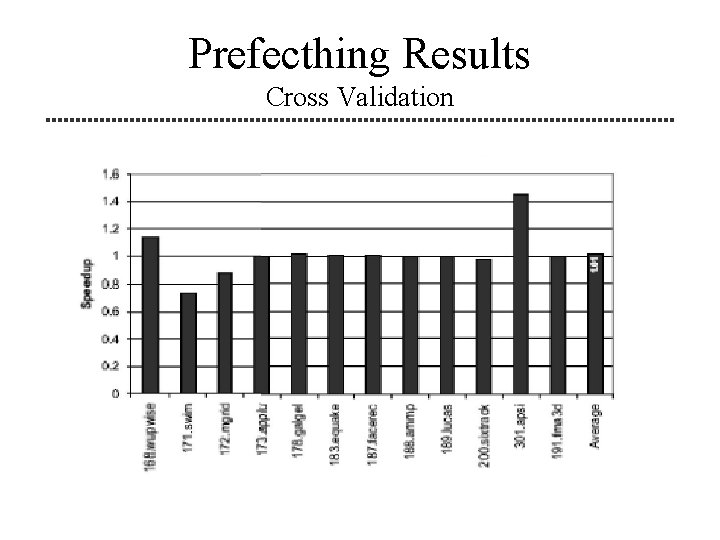

Prefetching Results
Cross Validation

Conclusion
• Machine learning techniques can identify effective priority functions
• ‘Proof of concept’ by evolving three well-known priority functions
• Human cycles v. computer cycles

My Conclusions
• Heuristics to improve heuristics
  – How do we choose the population size, mutation rate, and tournament size?
• Does it guarantee better results?
• It requires a lot of experiments for the results to converge.
  – Do we have that opportunity?
• It is very effective for optimizing a specific application space.

Thank you!

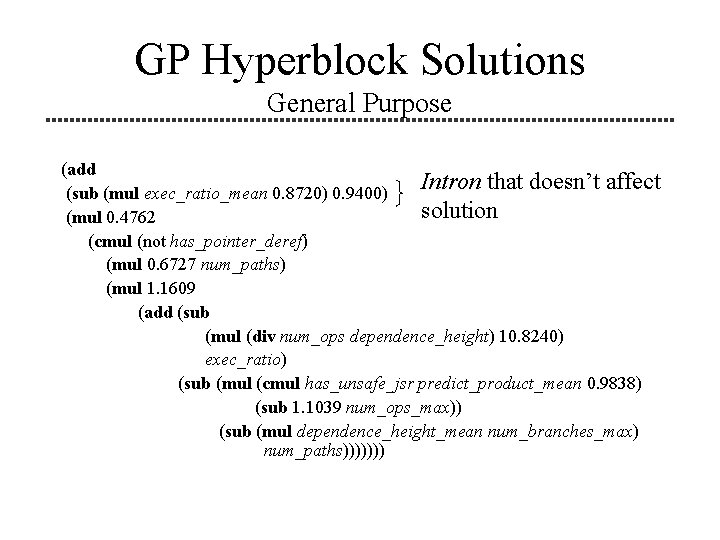

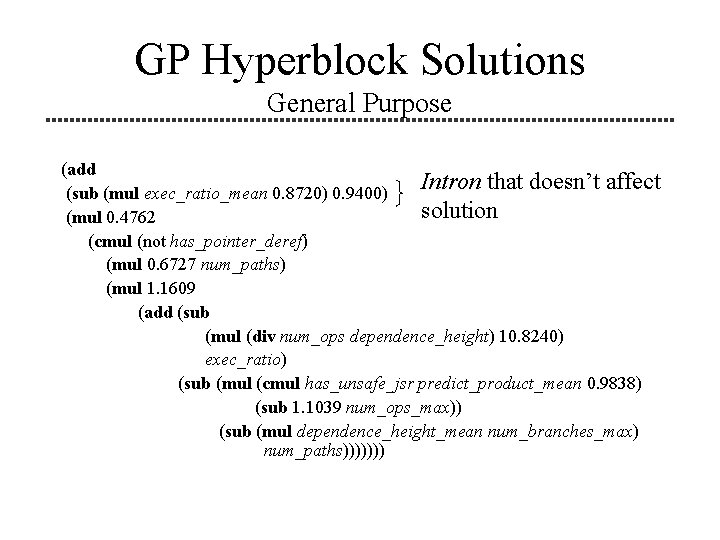

GP Hyperblock Solutions: General Purpose
Callout on (sub (mul exec_ratio_mean 0.8720) 0.9400): intron that doesn’t affect the solution

(add (sub (mul exec_ratio_mean 0.8720) 0.9400)
     (mul 0.4762
          (cmul (not has_pointer_deref)
                (mul 0.6727 num_paths)
                (mul 1.1609
                     (add (sub (mul (div num_ops dependence_height) 10.8240)
                               exec_ratio)
                          (sub (mul (cmul has_unsafe_jsr predict_product_mean 0.9838)
                                    (sub 1.1039 num_ops_max))
                               (sub (mul dependence_height_mean num_branches_max)
                                    num_paths)))))))
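To see why the callout calls the first term an intron, the sketch below parses the general-purpose expression and ranks some paths with and without that term. Several things are assumptions, not stated on the slides: `div` is protected (returns 0 on a zero denominator); `(cmul c a b)` is read as "a × b if c is true, otherwise b"; `exec_ratio_mean` is a region-wide value that is identical for every path; and all feature values are hypothetical. Under the last assumption the intron adds the same constant to every path's score, so it cannot change the ranking, whatever `cmul` actually means.

```python
import re
from typing import Dict, Union

Expr = Union[float, str, list]

GENERAL_PURPOSE = """
(add (sub (mul exec_ratio_mean 0.8720) 0.9400)
     (mul 0.4762
          (cmul (not has_pointer_deref)
                (mul 0.6727 num_paths)
                (mul 1.1609
                     (add (sub (mul (div num_ops dependence_height) 10.8240)
                               exec_ratio)
                          (sub (mul (cmul has_unsafe_jsr predict_product_mean 0.9838)
                                    (sub 1.1039 num_ops_max))
                               (sub (mul dependence_height_mean num_branches_max)
                                    num_paths)))))))
"""


def parse(text: str) -> Expr:
    """Turn an S-expression into nested lists; numeric tokens become floats."""
    tokens = re.findall(r"[()]|[^\s()]+", text)
    pos = 0

    def read() -> Expr:
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        if tok == "(":
            node = []
            while tokens[pos] != ")":
                node.append(read())
            pos += 1  # skip the closing ")"
            return node
        try:
            return float(tok)
        except ValueError:
            return tok  # operator or feature name

    return read()


def evaluate(expr: Expr, env: Dict[str, float]) -> float:
    if isinstance(expr, float):
        return expr
    if isinstance(expr, str):
        return float(env[expr])  # feature lookup; booleans become 1.0 / 0.0
    op, *args = expr
    v = [evaluate(a, env) for a in args]
    if op == "add":
        return v[0] + v[1]
    if op == "sub":
        return v[0] - v[1]
    if op == "mul":
        return v[0] * v[1]
    if op == "div":
        return v[0] / v[1] if v[1] != 0 else 0.0  # ASSUMPTION: protected division
    if op == "not":
        return 0.0 if v[0] else 1.0
    if op == "cmul":
        return v[1] * v[2] if v[0] else v[2]  # ASSUMPTION: (cmul c a b) = a*b if c else b
    raise ValueError(f"unknown operator: {op}")


tree = parse(GENERAL_PURPOSE)
intron, body = tree[1], tree[2]  # tree is ["add", intron, body]

# Hypothetical region-level features (the same for every path in the region)
region = {"exec_ratio_mean": 0.33, "num_paths": 3, "num_ops_max": 13,
          "dependence_height_mean": 3.7, "num_branches_max": 4, "predict_product_mean": 0.9}
# Hypothetical per-path features
paths = {
    "p1": {"exec_ratio": 0.79, "num_ops": 9, "dependence_height": 2,
           "has_pointer_deref": False, "has_unsafe_jsr": False},
    "p2": {"exec_ratio": 0.14, "num_ops": 10, "dependence_height": 4,
           "has_pointer_deref": True, "has_unsafe_jsr": False},
    "p3": {"exec_ratio": 0.07, "num_ops": 13, "dependence_height": 5,
           "has_pointer_deref": False, "has_unsafe_jsr": True},
}
full = {n: evaluate(tree, {**region, **f}) for n, f in paths.items()}
no_intron = {n: evaluate(body, {**region, **f}) for n, f in paths.items()}
print(sorted(full, key=full.get, reverse=True))
print(sorted(no_intron, key=no_intron.get, reverse=True))  # same order as above
```

GP tends to accumulate such neutral subtrees; they cost evaluation time but leave the heuristic's decisions unchanged.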

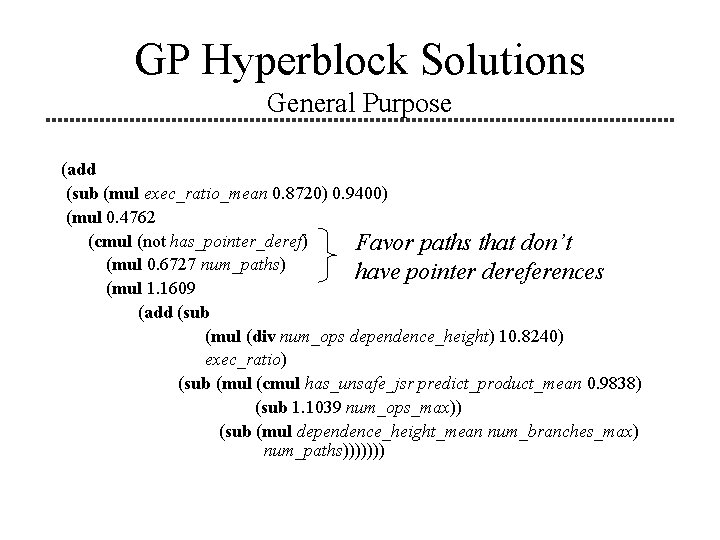

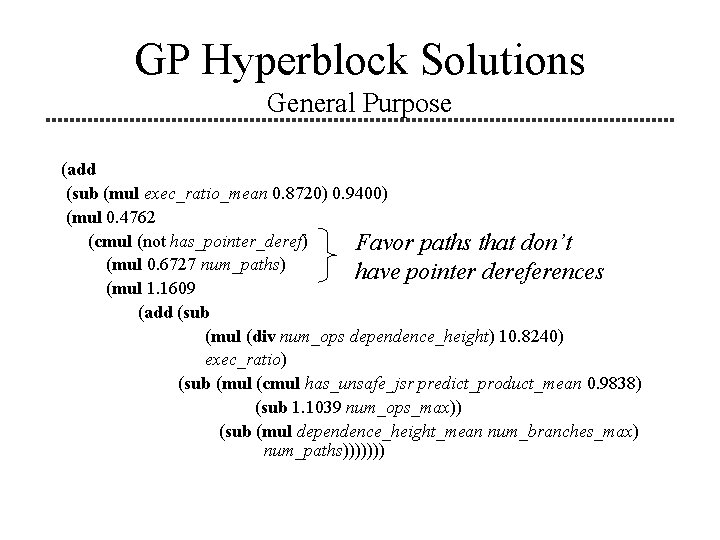

GP Hyperblock Solutions: General Purpose (same expression)
Callout on the (not has_pointer_deref) condition: Favor paths that don’t have pointer dereferences

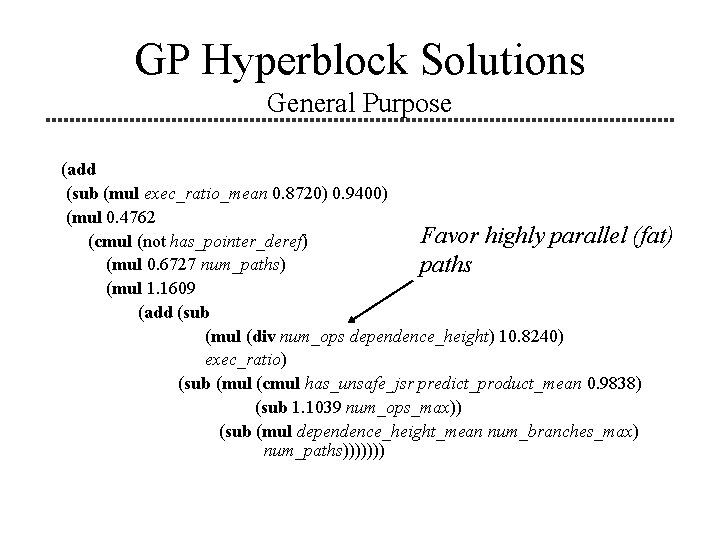

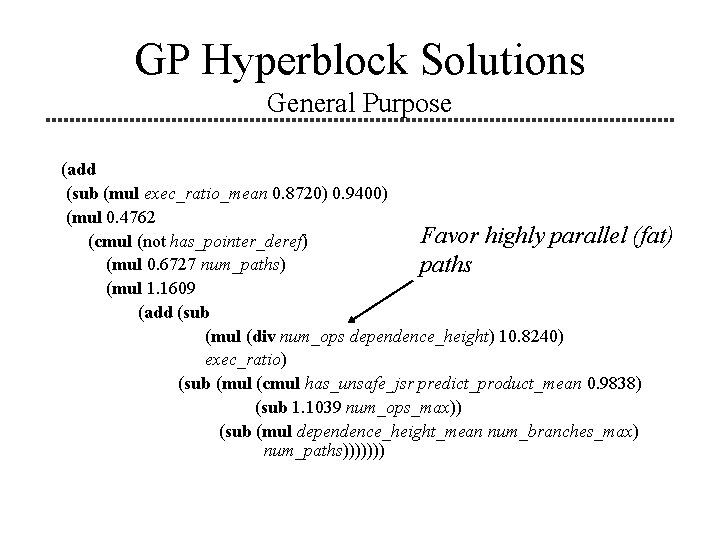

GP Hyperblock Solutions: General Purpose (same expression)
Callout: Favor highly parallel (fat) paths

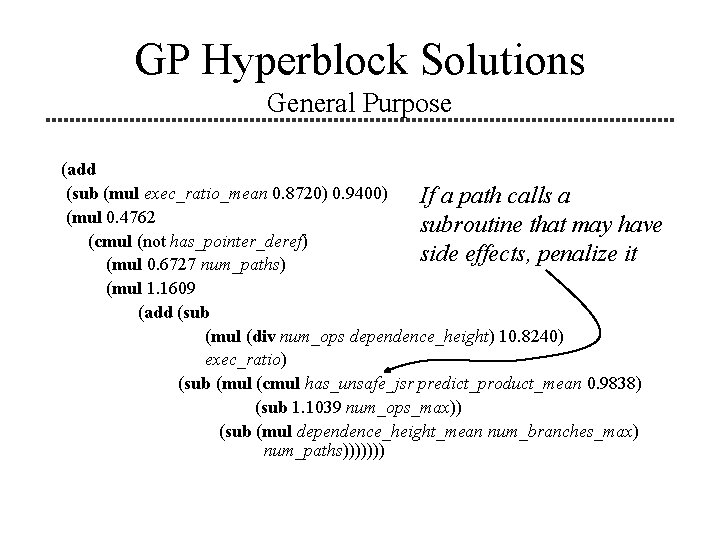

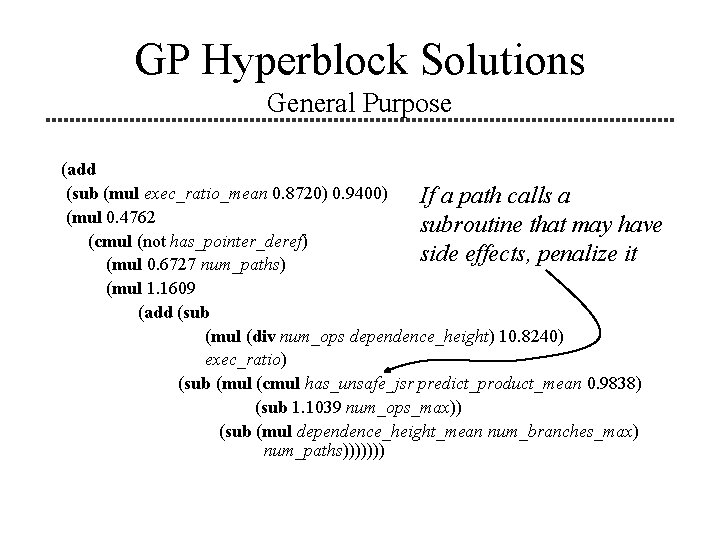

GP Hyperblock Solutions: General Purpose (same expression)
Callout on the has_unsafe_jsr term: If a path calls a subroutine that may have side effects, penalize it

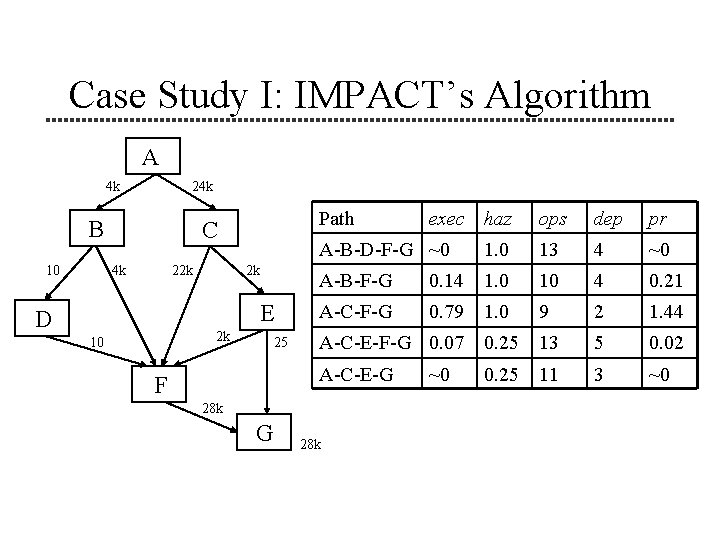

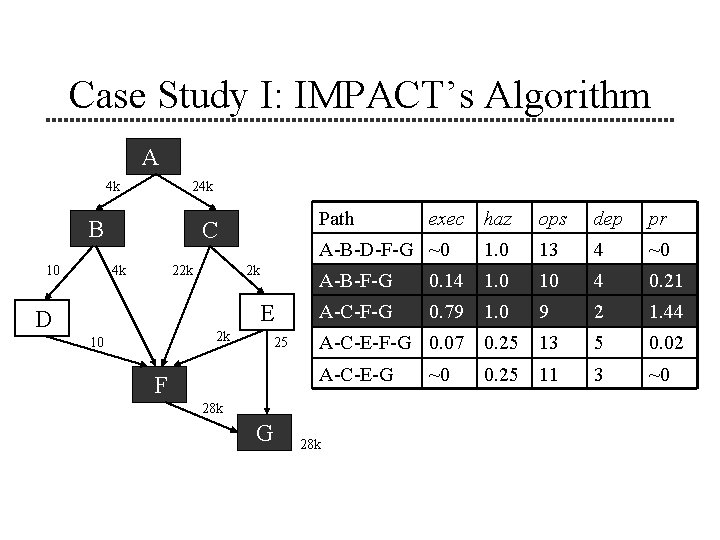

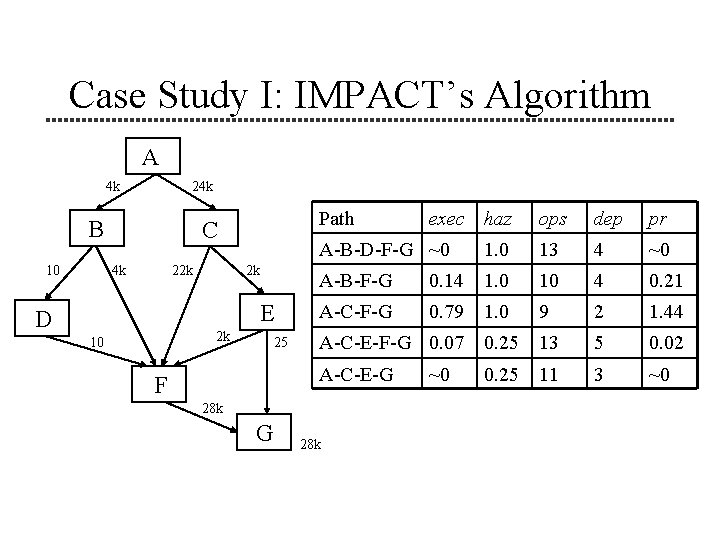

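Case Study I: IMPACT’s Algorithm
(Figure: control-flow region with blocks A through G; edges are annotated with execution counts such as 4k, 24k, 22k, 2k, and 28k.)

| Path | exec | haz | ops | dep | pr |
|---|---|---|---|---|---|
| A-B-D-F-G | ~0 | 1.0 | 13 | 4 | ~0 |
| A-B-F-G | 0.14 | 1.0 | 10 | 4 | 0.21 |
| A-C-F-G | 0.79 | 1.0 | 9 | 2 | 1.44 |
| A-C-E-F-G | 0.07 | 0.25 | 13 | 5 | 0.02 |
| A-C-E-G | ~0 | 0.25 | 11 | 3 | ~0 |

(exec: path execution ratio; haz: hazard factor; ops: number of operations; dep: dependence height; pr: priority)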
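A quick arithmetic check on the exec column, assuming it is a path's execution count divided by the region's entry count (28k), and reading the path counts 4k, 22k, and 2k off the garbled figure (our interpretation; the slide does not state the definition):

$$
\begin{aligned}
\text{exec}(\text{A-B-F-G}) &\approx \tfrac{4\text{k}}{28\text{k}} \approx 0.14\\
\text{exec}(\text{A-C-F-G}) &\approx \tfrac{22\text{k}}{28\text{k}} \approx 0.79\\
\text{exec}(\text{A-C-E-F-G}) &\approx \tfrac{2\text{k}}{28\text{k}} \approx 0.07
\end{aligned}
$$

These three ratios sum to 1.0, which is consistent with the remaining two paths having exec ≈ 0.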

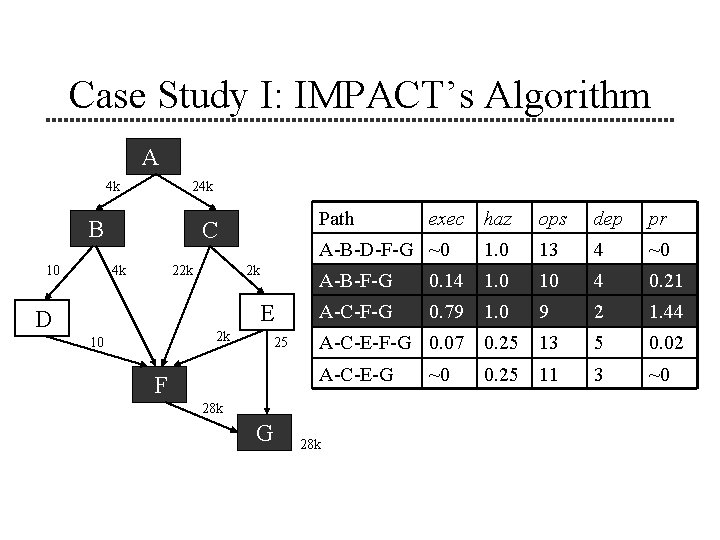
