

- Slides: 32

Messaging layer software

- An application programming interface (API) generally provides access to the services of the messaging layer through a set of library procedures and functions.
- The Message Passing Interface (MPI) is a standard message-passing API that evolved out of the collective efforts of many people.
- Transmission of a message is initiated by a call to a message-passing procedure such as send(buf, nbytes, dest).

Distributed Processing Theory 2 (No. 10)

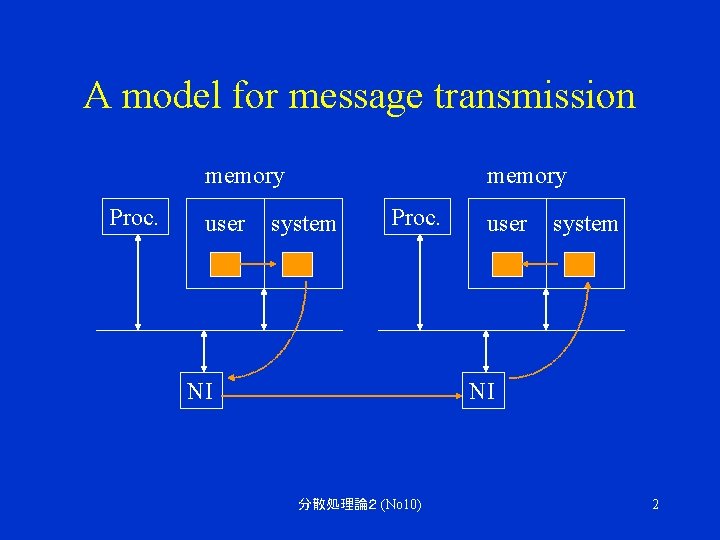

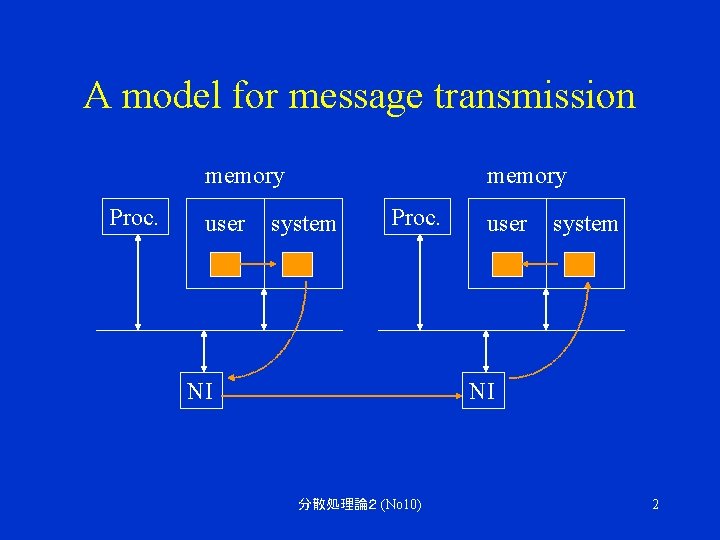

A model for message transmission

[Figure: two nodes, each with a processor (Proc.), memory divided into user and system space, and a network interface (NI).]

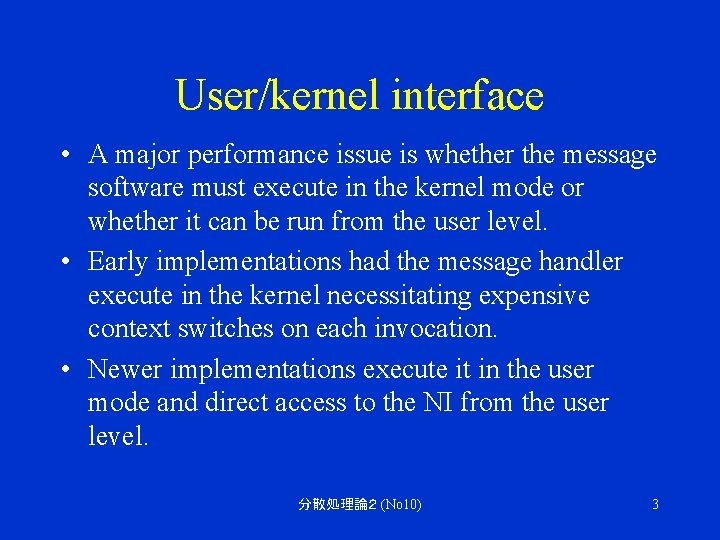

User/kernel interface

- A major performance issue is whether the messaging software must execute in kernel mode or can run at the user level.
- Early implementations executed the message handler in the kernel, necessitating an expensive context switch on each invocation.
- Newer implementations execute it in user mode and access the NI directly from the user level.

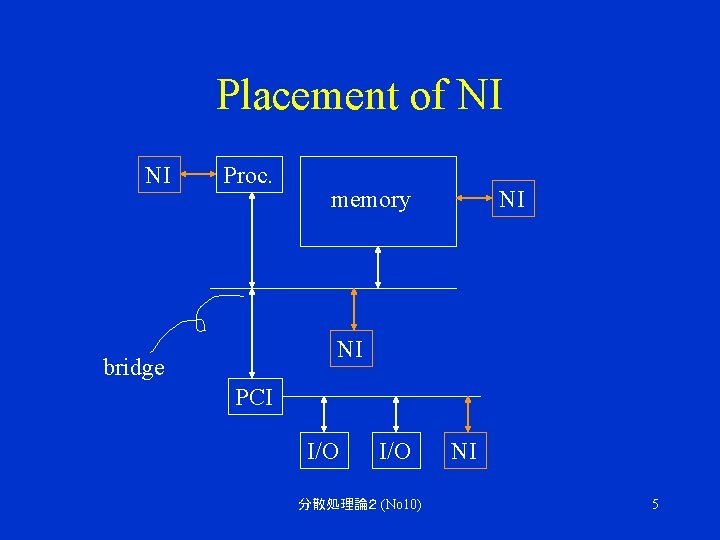

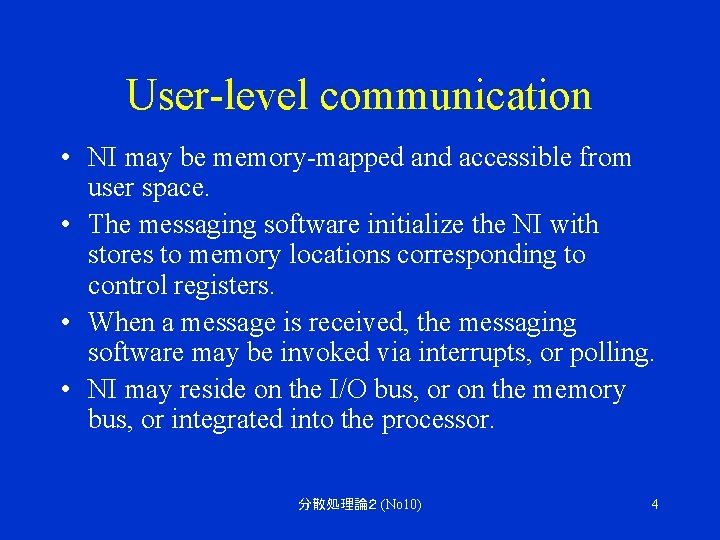

User-level communication

- The NI may be memory-mapped and accessible from user space.
- The messaging software initializes the NI with stores to the memory locations that correspond to its control registers.
- When a message is received, the messaging software may be invoked via interrupts or by polling.
- The NI may reside on the I/O bus, on the memory bus, or be integrated into the processor.

Placement of NI

[Figure: possible NI placements relative to the processor (Proc.), memory, the bus bridge, and the PCI I/O bus.]

Network interface (NI) control

- When direct memory access (DMA) is used, the software must initialize the appropriate DMA channel and initiate the transfer (e.g., load the starting address and the counter).
- Pages used as targets of DMA, or used to hold NI data structures such as queues, should be pinned down to prevent them from being swapped out.

NI coprocessor

- Some node architectures (e.g., the Intel Paragon) use a coprocessor to execute all message-passing functions.
- The interaction of the coprocessor with the compute processor may be interrupt-driven or polled.
- This permits significant overlap between message processing and computation, but does little to reduce latency.

Packetization

- A message packet must be created (packetization) with a header containing the information required to route the packet correctly to its destination.
- Most MPP network interfaces implement the majority of packetization functions in hardware (table lookup to generate the routing tag, and computation of cyclic redundancy check (CRC) checksums).
- Packetization overheads have been largely minimized in modern machines by this hardware support.

Copying and buffering

- Routing protocols remain deadlock-free under the consumption assumption: all messages destined for a node are eventually consumed.
- Memory is limited, and therefore some flow control between senders and receivers is necessary.
- The Fast Messages library [SC95] uses a return-to-sender optimistic flow control protocol for buffer management (a packet is returned to the sender when it cannot be received).
- Packets are transmitted optimistically after buffer space for the packet has been allocated at the source.

In-order delivery

- Adaptive routing and the use of virtual channels (VCs) may cause packets to arrive out of order, since packets may be routed along different paths or may overtake one another.
- The message layer may have to buffer out-of-order packets so that they can be delivered to the user program in order.
- Reordering message packets may require sequence numbers in the headers to enable reconstruction.

Reliable message delivery

- Most message-passing programs are predicated upon the reliable delivery of messages to ensure correct execution.
- Reliable delivery may be ensured by acknowledgements from the destination, and may require buffering messages until those acknowledgements are received.
- The cost of managing these buffers and processing the acknowledgements is overhead attributable to reliable delivery.

Protection

- When message handlers operate in the user address space, each program must be prevented from interfering with the message-passing state of other programs.
- One approach is to have a user-level scheduler that manages the network interface [Martin, '94].
- When a message handler receives a message, a unique ID in the message is checked.
- If the message is not destined for the currently active process, it is stored in a separate queue.

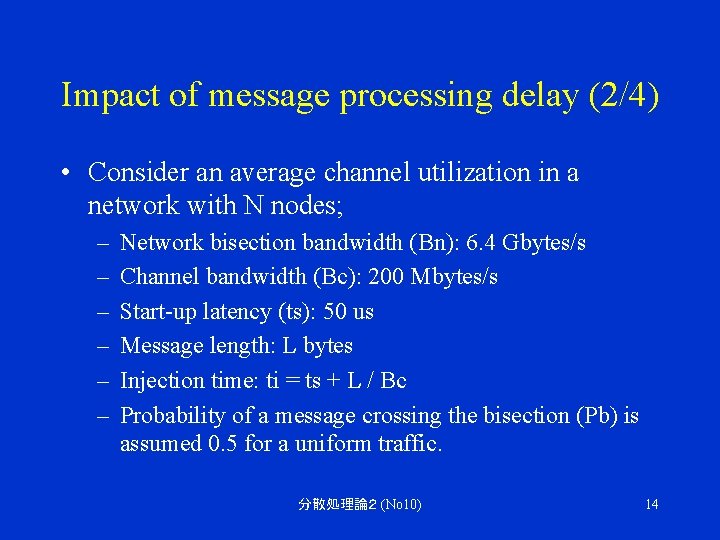

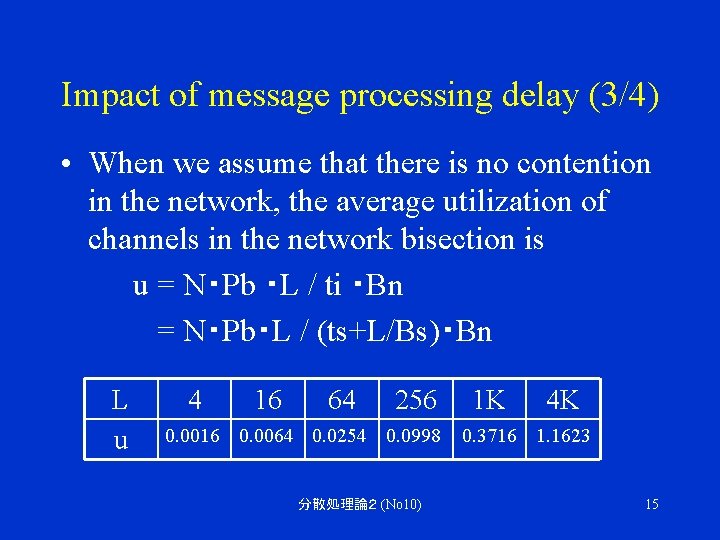

Impact of message processing delay (1/4)

- The aggregate of the overheads introduced by the software messaging layer has a considerable impact on the performance of the interconnection network.
- An increase in the software overhead directly increases the communication latency.
- It can also limit the throughput achieved by the network, since the injection rate decreases as the software overhead increases.

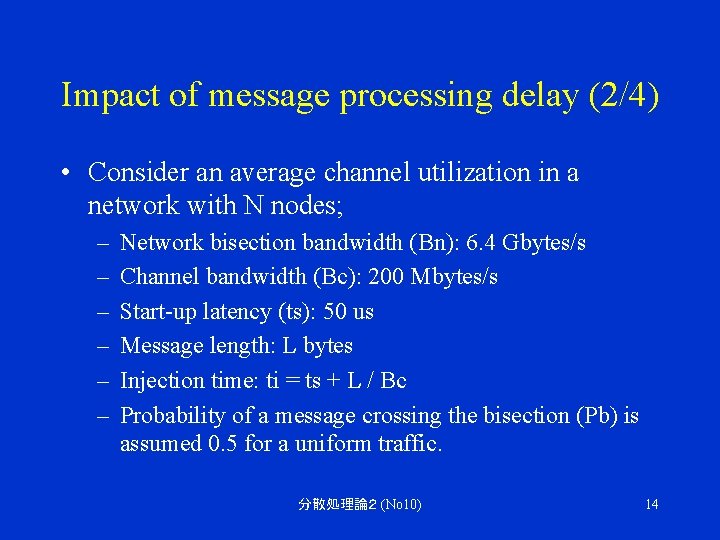

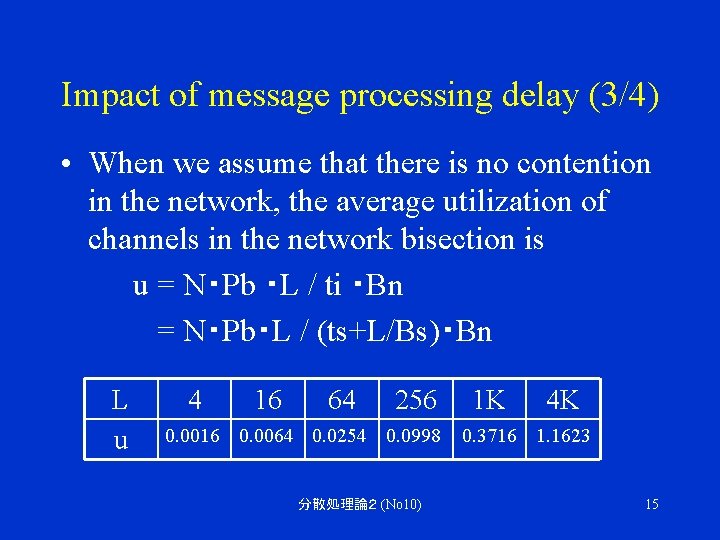

Impact of message processing delay (2/4)

- Consider the average channel utilization in a network with N nodes:
  - Network bisection bandwidth (Bn): 6.4 Gbytes/s
  - Channel bandwidth (Bc): 200 Mbytes/s
  - Start-up latency (ts): 50 µs
  - Message length: L bytes
  - Injection time: ti = ts + L / Bc
  - The probability of a message crossing the bisection (Pb) is assumed to be 0.5 for uniform traffic.

Impact of message processing delay (3/4)

- Assuming that there is no contention in the network, the average utilization of the channels in the network bisection is

$$u = \frac{N \, P_b \, L}{t_i \, B_n} = \frac{N \, P_b \, L}{(t_s + L / B_c) \, B_n}$$

| L (bytes) | 4 | 16 | 64 | 256 | 1K | 4K |
| --- | --- | --- | --- | --- | --- | --- |
| u | 0.0016 | 0.0064 | 0.0254 | 0.0998 | 0.3716 | 1.1623 |

Impact of message processing delay (4/4)

- These values are upper bounds because they assume that there is no contention in the network.
- The channel utilization for short messages is very low.
- This means that network performance is limited by software overhead, so increasing the channel bandwidth brings no benefit.
- For traffic exhibiting a high degree of locality (small Pb), full channel utilization cannot be achieved regardless of message length.

![Low-overhead messaging layer: Active Messages and Illinois Fast Messages](https://slidetodoc.com/presentation_image_h2/434d7cd3f455e490ebbc68c533e59fe1/image-17.jpg)

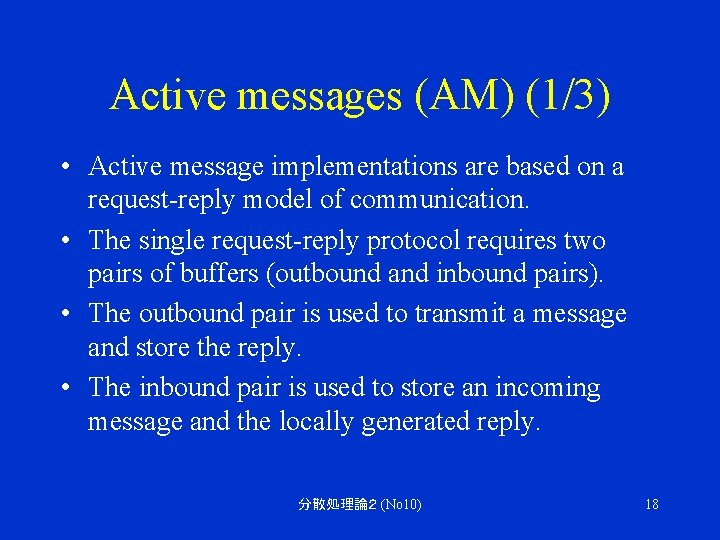

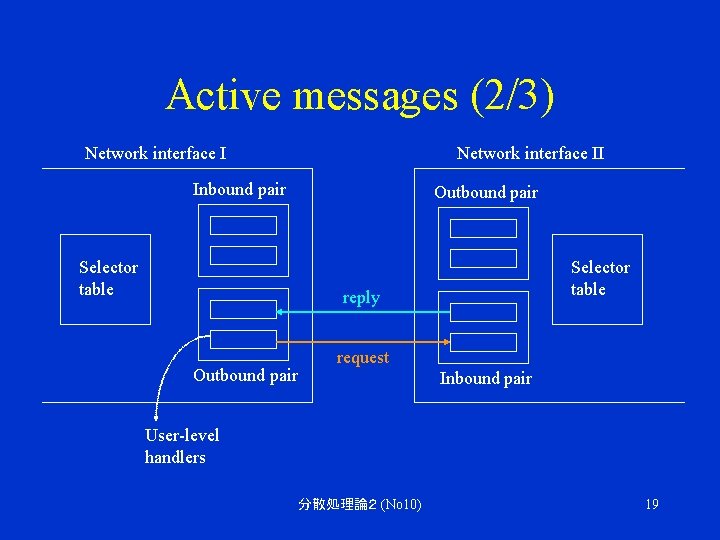

Low-overhead messaging layer

- Active Messages [Eicken, '95] and Illinois Fast Messages [Pakin et al., '95] are good examples of low-overhead messaging layers.
- The basic principle underlying both is that the message itself contains the address of a user-level handler, which is executed on message reception.
- The network interface (NI) is mapped into the user address space so that user programs have access to the control registers and buffer memory within the NI.

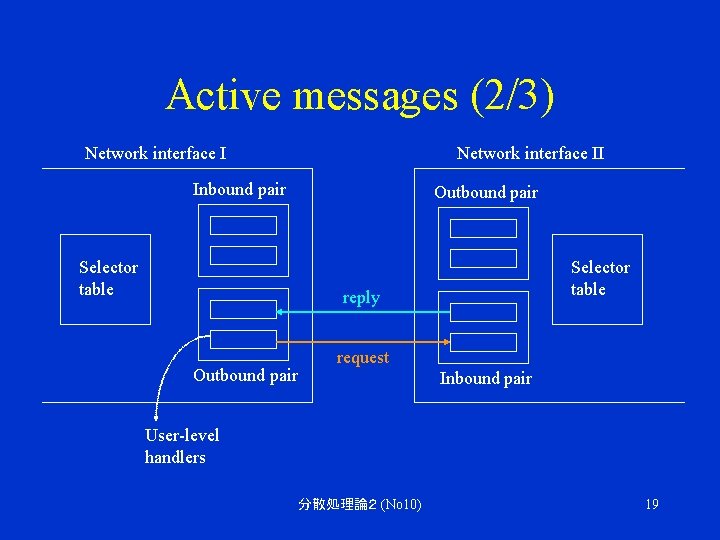

Active messages (AM) (1/3)

- Active message implementations are based on a request-reply model of communication.
- A single request-reply exchange requires two pairs of buffers (an outbound pair and an inbound pair).
- The outbound pair is used to transmit a request and to store its reply.
- The inbound pair is used to store an incoming request and the locally generated reply.

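Active messages (2/3)

[Figure: two network interfaces, each with an inbound buffer pair, an outbound buffer pair, and a selector table; a request travels from one node's outbound pair to the other's inbound pair, the reply travels back, and user-level handlers are invoked at each end.]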

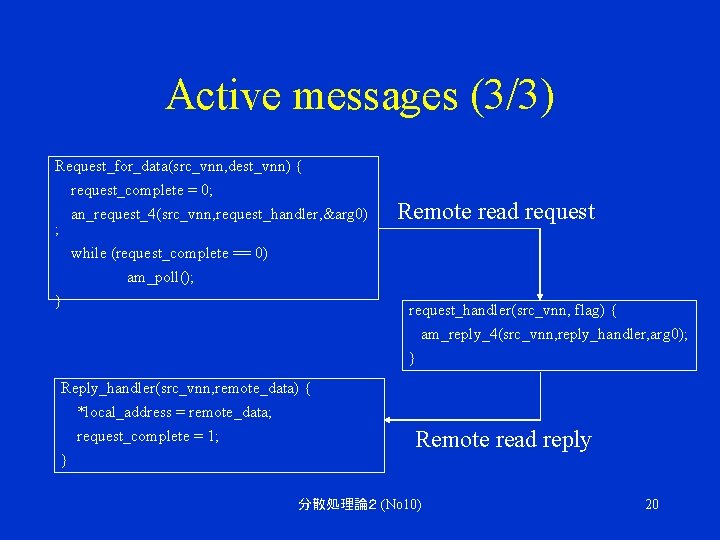

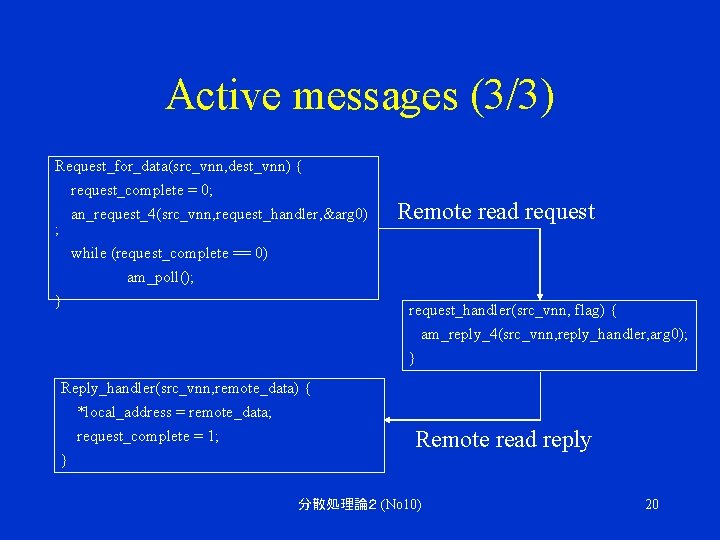

Active messages (3/3)

    /* Remote read request: issued by the requesting node */
    Request_for_data(src_vnn, dest_vnn)
    {
        request_complete = 0;
        am_request_4(src_vnn, request_handler, &arg0);
        while (request_complete == 0)
            am_poll();          /* service the network until the reply arrives */
    }

    /* Runs on the remote node when the request arrives */
    request_handler(src_vnn, flag)
    {
        am_reply_4(src_vnn, reply_handler, arg0);
    }

    /* Remote read reply: runs on the requesting node when the reply arrives */
    reply_handler(src_vnn, remote_data)
    {
        *local_address = remote_data;
        request_complete = 1;
    }

Illinois Fast Messages (FM)

- The FM procedures are similar to AM in that a handler is specified within the message; however, there is no notion of request-reply message pairs.
- Program properties such as deadlock freedom are the responsibility of the programmer, who must guarantee them.
- These reduced constraints can lead to very efficient implementations.

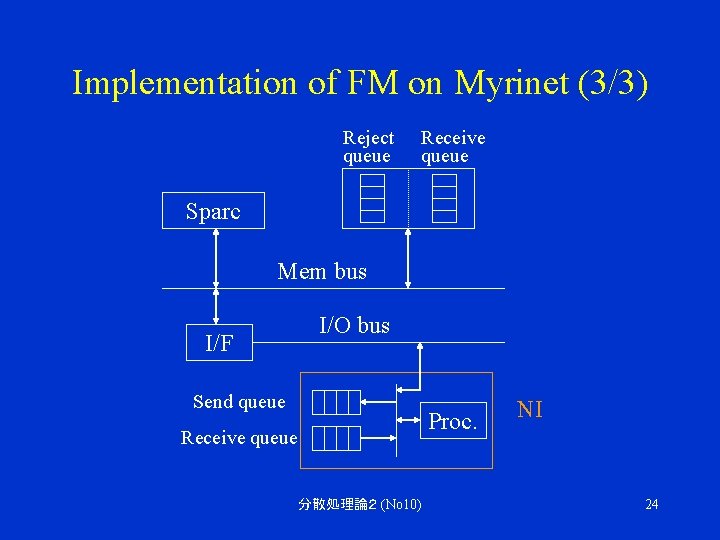

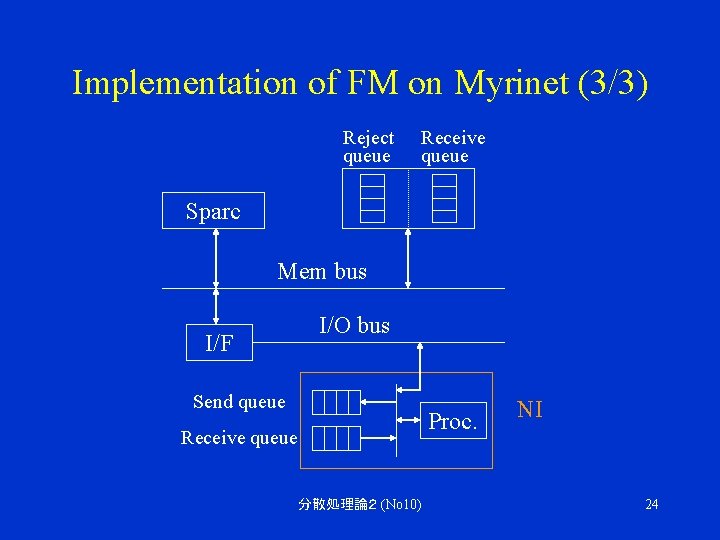

Implementation of FM on Myrinet (1/3)

- The Myrinet interface (LANai) incorporates a processor datapath that executes a control program for injecting messages into and ejecting messages from the network interface, and for setting up transfers to and from the host.
- The LANai is mapped into the host address space.
- The message functions are implemented with four queues (send/receive and reject/receive).

Implementation of FM on Myrinet (2/3)

- The FM send functions write directly into the send queue in the network interface, initiating transmission.
- On a receive, the interface transfers the message by DMA into the receive queue in kernel memory (on Sun workstations, DMA is allowed only to and from kernel pages).
- The reject queue implements the optimistic buffer allocation: a message is rejected when no buffer space is available for it.

Implementation of FM on Myrinet (3/3)

[Figure: a SPARC processor (Proc.) on the memory bus with a reject queue and a receive queue; a bus interface (I/F) connects to the I/O bus, where the NI holds a send queue and a receive queue.]

Message passing interface (MPI) (1/3)

- Through an open, participatory process, the first MPI standard was completed in June 1994.
- MPI-2 was defined in July 1997 with extensions such as dynamic process creation.
- The MPI standard is an application programming interface, not an implementation.
- Data type information appears in the interface to permit support across heterogeneous environments, where the value of an operand may have distinct representations on different machines (e.g., byte ordering).

Message passing interface (MPI) (2/3)

- The stated goals of MPI can be summarized as follows:
  - The interface should reflect the needs of applications.
  - The interface should not constrain the implementation, i.e., it should permit optimizations that may eliminate buffering, use extensive buffering, support concurrent communication and computation, etc.
  - The semantics should be language-independent.
  - The user should be relieved of the responsibility for reliable communication.
  - Usage should not require much deviation from well-understood current practice.
  - The interface design should be supportive of thread safety.

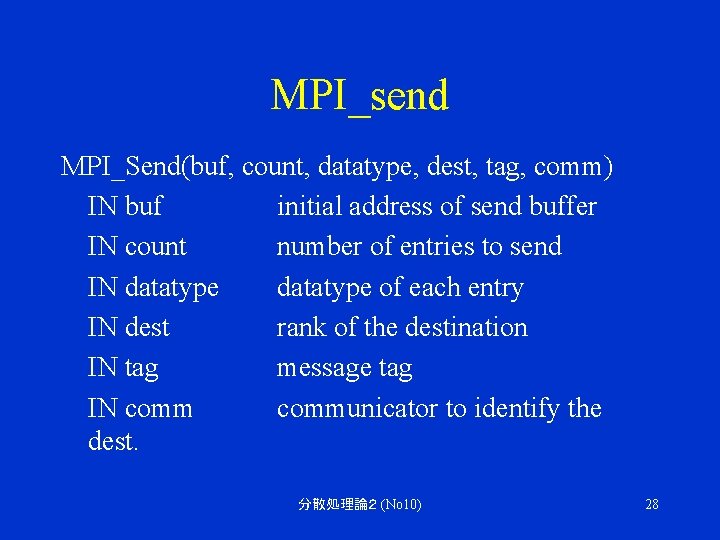

Message passing interface (MPI) (3/3)

- An MPI program consists of autonomous processes, each executing its own code in either the MIMD or the SPMD programming paradigm.
- Each process usually executes in its own address space and communicates via MPI communication primitives.
- The basic communication mechanism within MPI is point-to-point communication between pairs of processes.
- MPI also supports collective communications, which are frequently used in parallel algorithms.

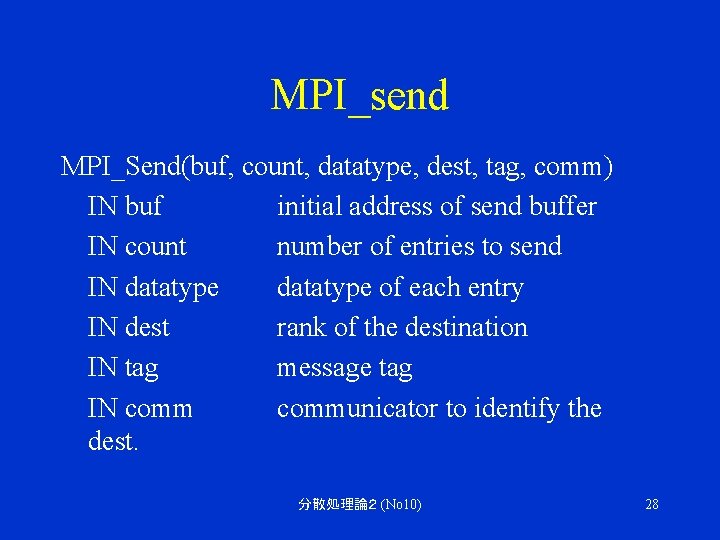

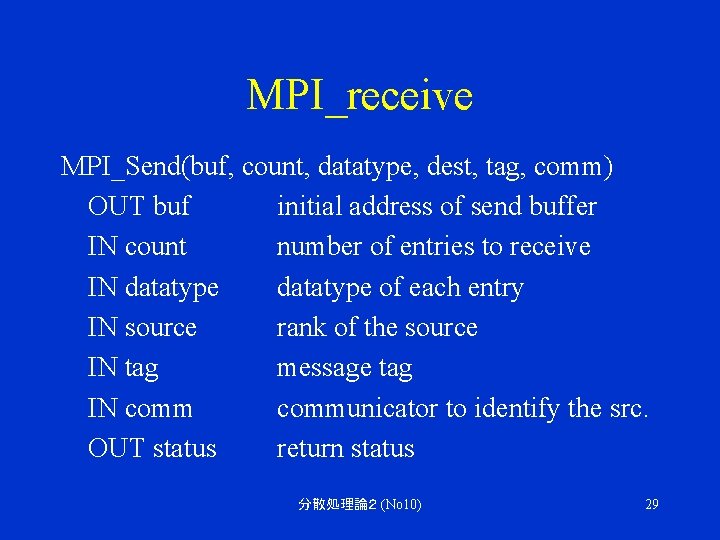

MPI_Send

MPI_Send(buf, count, datatype, dest, tag, comm)

| Direction | Parameter | Description |
| --- | --- | --- |
| IN | buf | initial address of the send buffer |
| IN | count | number of entries to send |
| IN | datatype | datatype of each entry |
| IN | dest | rank of the destination |
| IN | tag | message tag |
| IN | comm | communicator, used to identify the destination |

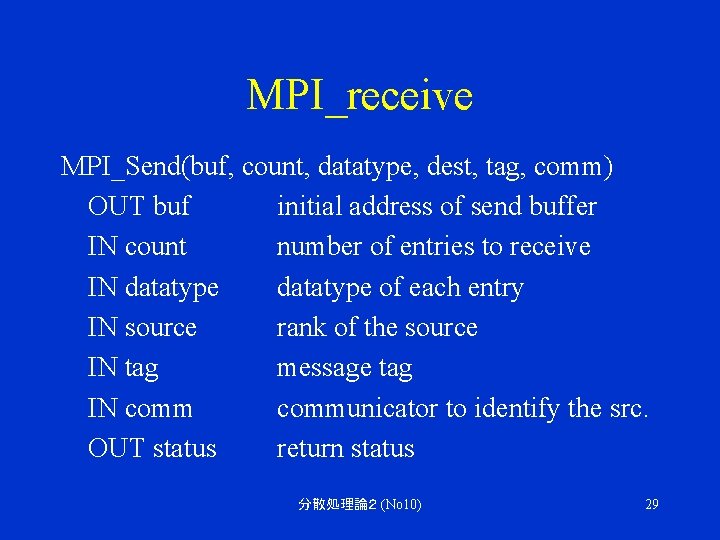

MPI_Recv

MPI_Recv(buf, count, datatype, source, tag, comm, status)

| Direction | Parameter | Description |
| --- | --- | --- |
| OUT | buf | initial address of the receive buffer |
| IN | count | number of entries to receive |
| IN | datatype | datatype of each entry |
| IN | source | rank of the source |
| IN | tag | message tag |
| IN | comm | communicator, used to identify the source |
| OUT | status | return status |
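A minimal point-to-point exchange using these two calls might look as follows. This is a sketch that assumes an MPI implementation is installed and that the program is started with at least two processes; the value 42 and the tag 0 are arbitrary choices for the example.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv)
{
    int rank, size, value = 0;
    MPI_Status status;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);   /* this process's rank */
    MPI_Comm_size(MPI_COMM_WORLD, &size);   /* number of processes */

    if (size >= 2) {
        if (rank == 0) {
            value = 42;
            /* buf, count, datatype, dest, tag, comm */
            MPI_Send(&value, 1, MPI_INT, 1, 0, MPI_COMM_WORLD);
        } else if (rank == 1) {
            /* buf, count, datatype, source, tag, comm, status */
            MPI_Recv(&value, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
            printf("rank 1 received %d from rank %d\n",
                   value, status.MPI_SOURCE);
        }
    }

    MPI_Finalize();
    return 0;
}
```

The datatype argument (here MPI_INT) is what lets an implementation convert representations between heterogeneous machines. With many installations the program is built with a wrapper compiler such as mpicc and launched with mpirun or mpiexec, though the exact commands depend on the MPI distribution.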

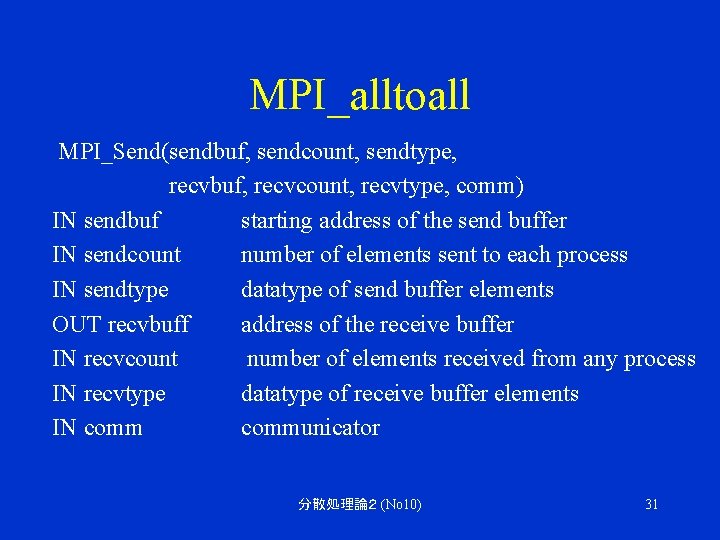

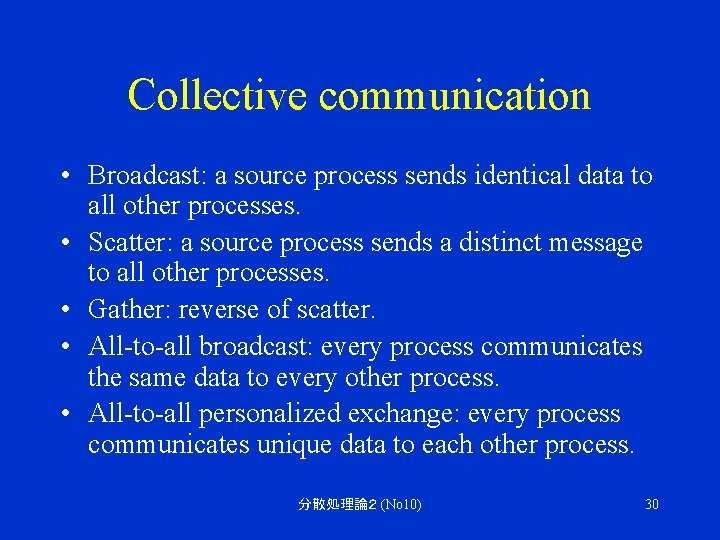

Collective communication

- Broadcast: a source process sends identical data to all other processes.
- Scatter: a source process sends a distinct message to each of the other processes.
- Gather: the reverse of scatter.
- All-to-all broadcast: every process sends the same data to every other process.
- All-to-all personalized exchange: every process sends distinct data to each other process.

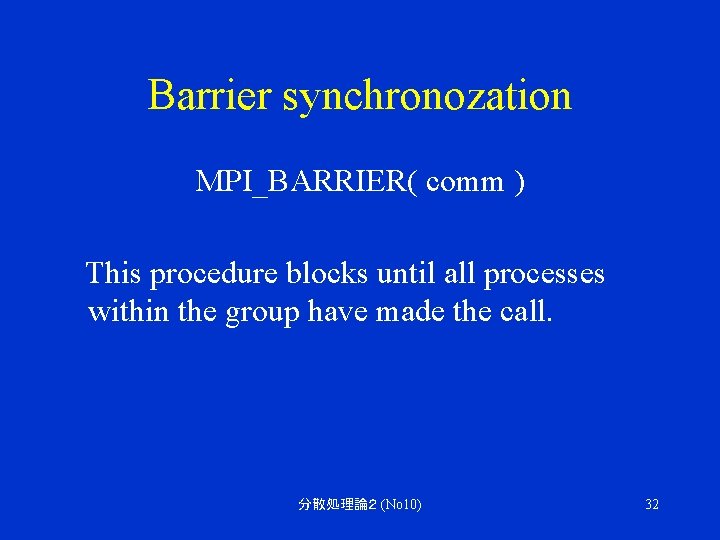

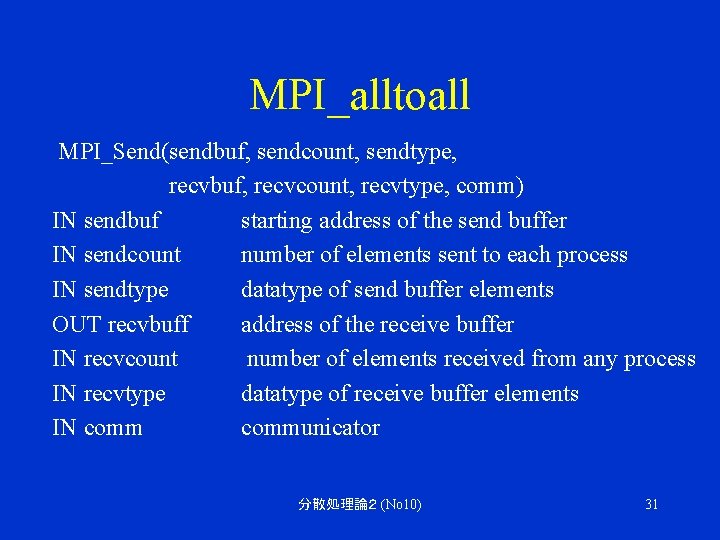

MPI_Alltoall

MPI_Alltoall(sendbuf, sendcount, sendtype, recvbuf, recvcount, recvtype, comm)

| Direction | Parameter | Description |
| --- | --- | --- |
| IN | sendbuf | starting address of the send buffer |
| IN | sendcount | number of elements sent to each process |
| IN | sendtype | datatype of send buffer elements |
| OUT | recvbuf | address of the receive buffer |
| IN | recvcount | number of elements received from any process |
| IN | recvtype | datatype of receive buffer elements |
| IN | comm | communicator |

Barrier synchronization

MPI_BARRIER(comm)

- This procedure blocks until all processes within the group have made the call.