Message-Passing Computing: Collective patterns and MPI routines 2

- Slides: 35

Synchronizing Computations

• Barrier implementations
• Safety and deadlock
• Safe MPI routines

ITCS 4/5145 Parallel Computing, UNC-Charlotte, B. Wilkinson, Jan 4, 2014. slides6d.ppt

Recap on synchronization

Our collective data transfer patterns do not specify whether the processes are synchronized (that is an implementation detail). MPI routines that implement these patterns do not generally synchronize processes. Collective data transfer MPI routines have the same semantics as if individual MPI_Send()s and MPI_Recv()s were used (according to the MPI standard). This is unfortunate, because any synchronization then depends on how the routines are implemented! Different implementations may do things differently, and with different network configurations. (Check with the new MPI version 3.)

Synchronizing processes

Synchronization is needed in many applications to ensure that parallel processes start at the same point and that complete data is available for the processes to work on. Synchronization should be avoided where possible because it delays processes, but sometimes it is unavoidable. Some algorithms, such as iterative computations, require the values of the previous iteration to compute the values of the next iteration, so the previous values need to be computed first. (This constraint can be relaxed for increased performance in some cases, see later in course.)

Synchronous Message Passing

Routines that return when the message transfer has completed.

Synchronous send routine
• Returns only after the message has been received (matching receive posted). In MPI, the MPI_Ssend() routine.

Synchronous receive routine
• Waits until the message it is expecting arrives. In MPI, this is actually the regular MPI_Recv() routine.

Synchronous Message Passing

Synchronous message-passing routines intrinsically perform two actions:
• They transfer data, and
• They synchronize processes.

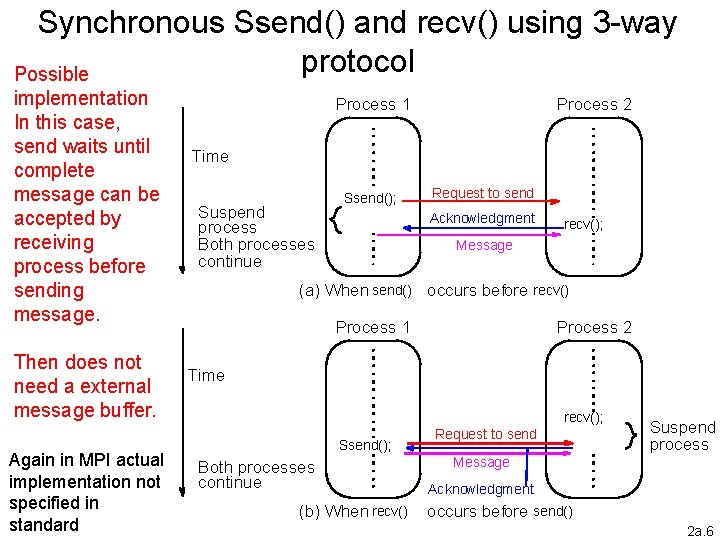

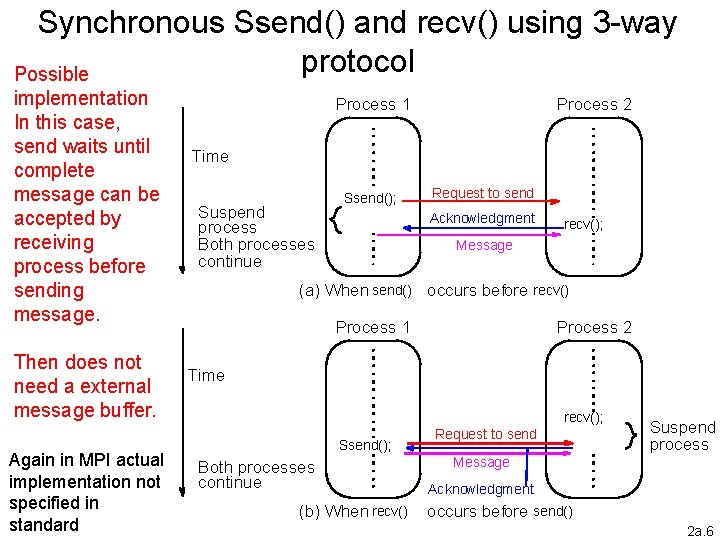

Synchronous Ssend() and recv() using 3-way protocol

Possible implementation. In this case, the send waits until the complete message can be accepted by the receiving process before sending the message. It then does not need an external message buffer. Again, in MPI the actual implementation is not specified in the standard.

Figure (time runs downward, Process 1 on the left, Process 2 on the right):
(a) When Ssend() occurs before recv(): Process 1 issues a request to send and is suspended. When Process 2 reaches recv(), it returns an acknowledgment, the message is transferred, and both processes continue.
(b) When recv() occurs before Ssend(): Process 2 is suspended at recv(). When Process 1 reaches Ssend(), the request to send, the acknowledgment and the message are exchanged, and both processes continue.

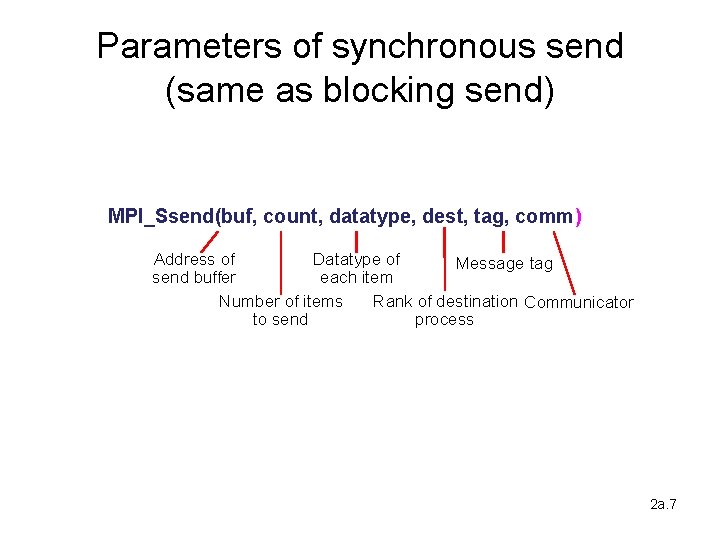

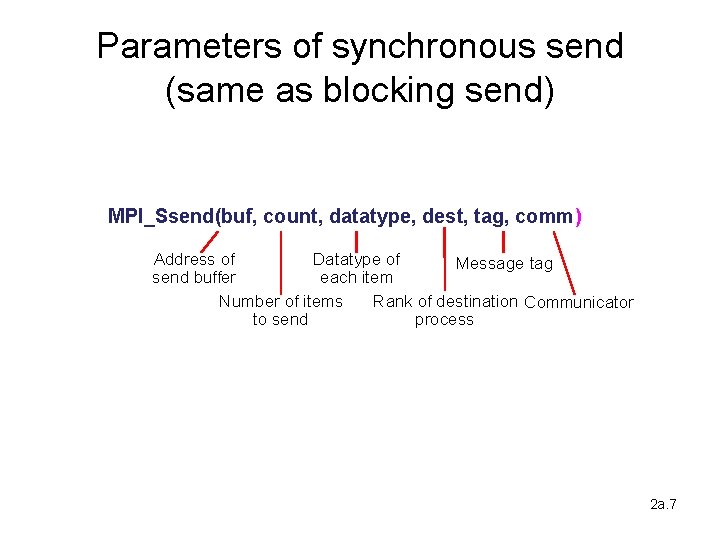

Parameters of synchronous send (same as blocking send)

MPI_Ssend(buf, count, datatype, dest, tag, comm)

• buf: address of send buffer
• count: number of items to send
• datatype: datatype of each item
• dest: rank of destination process
• tag: message tag
• comm: communicator

Asynchronous Message Passing

• Routines that do not wait for actions to complete before returning. They usually require local storage for messages.
• More than one version exists, depending upon the actual semantics for returning.
• In general, they do not synchronize processes but allow processes to move forward sooner.
• Must be used with care.

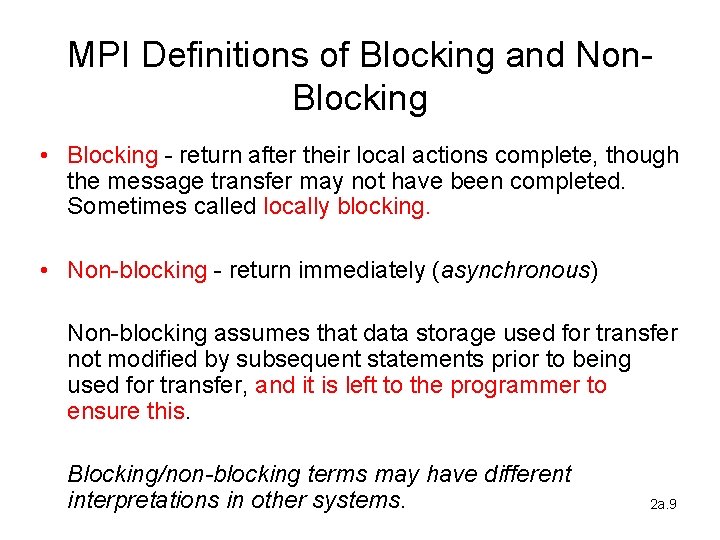

MPI Definitions of Blocking and Non-Blocking

• Blocking - return after their local actions complete, though the message transfer may not have been completed. Sometimes called locally blocking.
• Non-blocking - return immediately (asynchronous).

Non-blocking assumes that the data storage used for the transfer is not modified by subsequent statements before it has been used for the transfer, and it is left to the programmer to ensure this.

The terms blocking and non-blocking may have different interpretations in other systems.

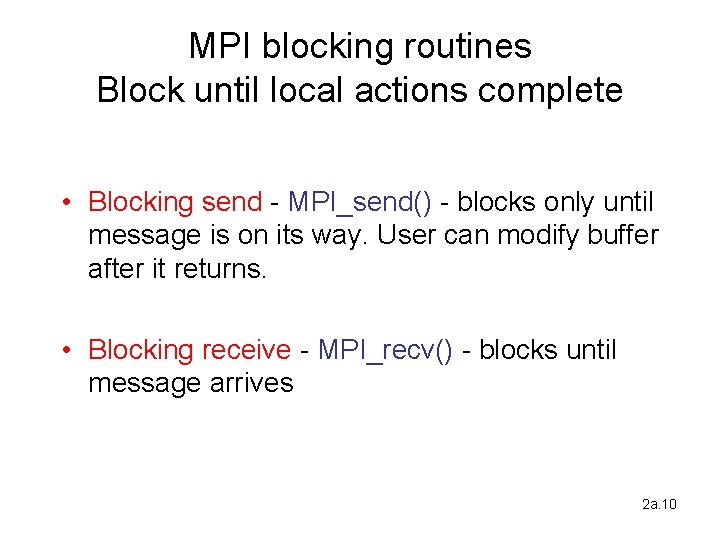

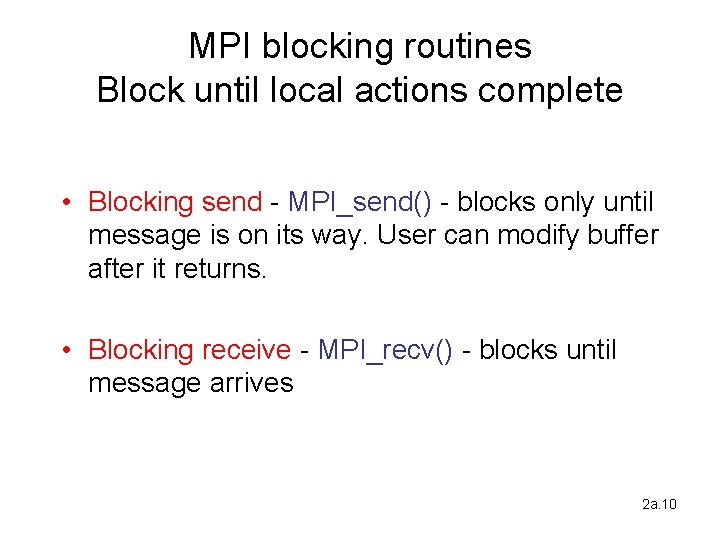

MPI blocking routines

Block until local actions complete.

• Blocking send - MPI_Send() - blocks only until the message is on its way. The user can modify the buffer after it returns.
• Blocking receive - MPI_Recv() - blocks until the message arrives.

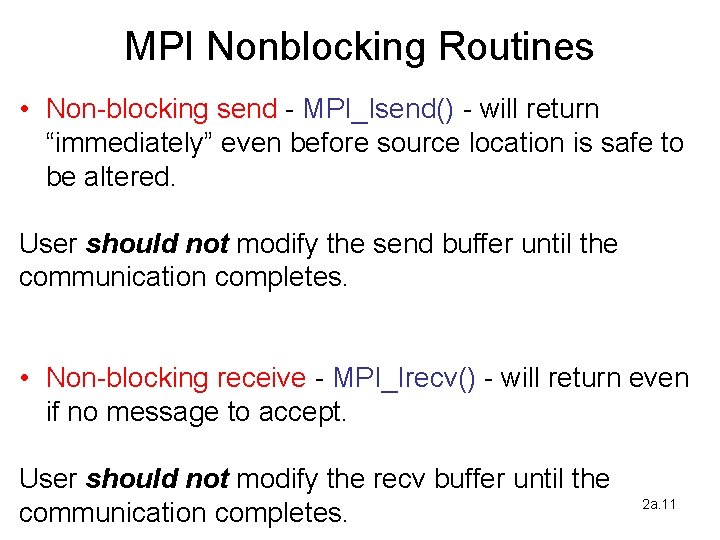

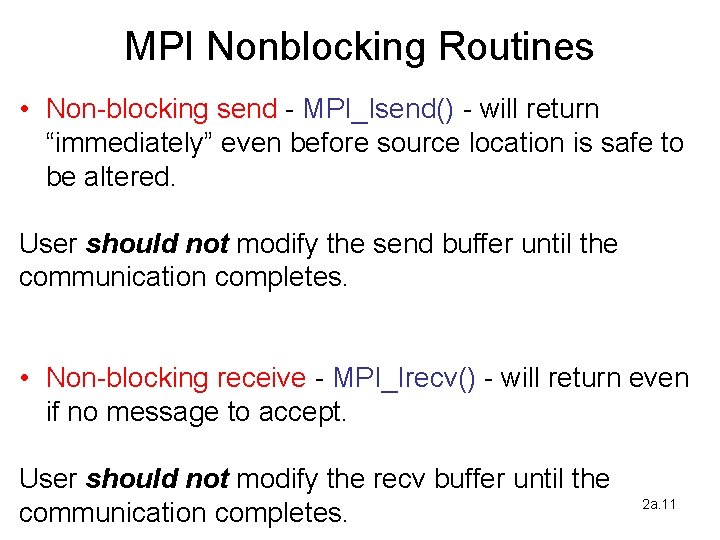

MPI Nonblocking Routines

• Non-blocking send - MPI_Isend() - will return "immediately", even before the source location is safe to be altered. The user should not modify the send buffer until the communication completes.
• Non-blocking receive - MPI_Irecv() - will return even if there is no message to accept. The user should not modify the recv buffer until the communication completes.

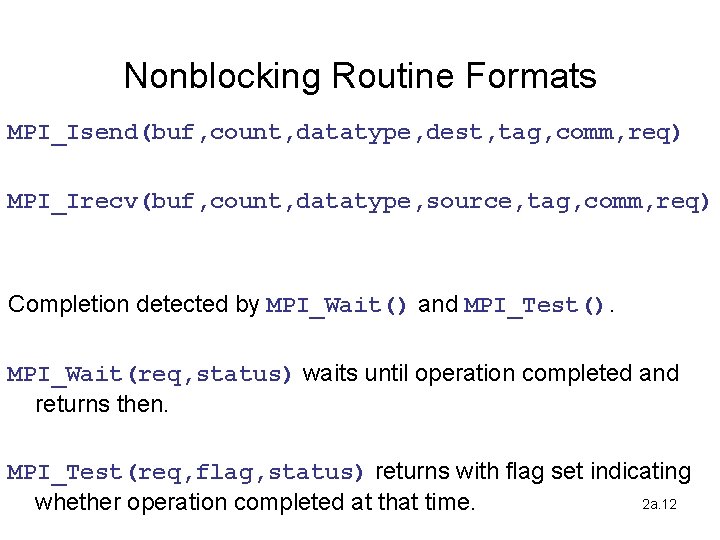

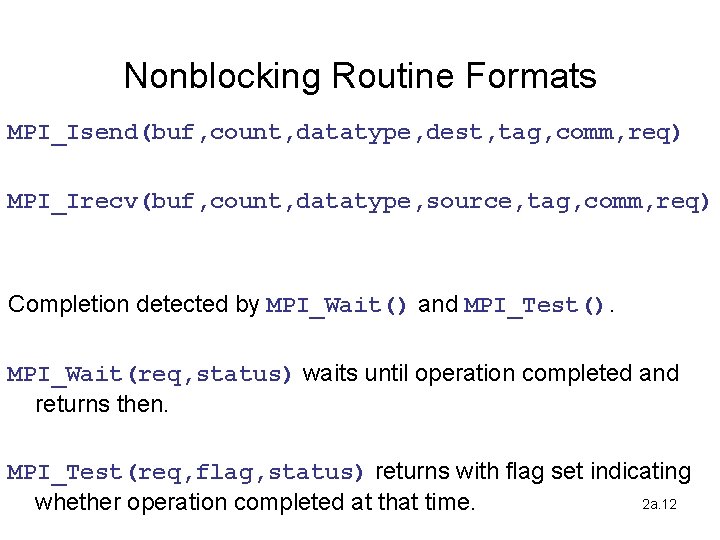

Nonblocking Routine Formats

MPI_Isend(buf, count, datatype, dest, tag, comm, req)
MPI_Irecv(buf, count, datatype, source, tag, comm, req)

Completion is detected by MPI_Wait() and MPI_Test().

MPI_Wait(req, status) waits until the operation has completed and returns then.
MPI_Test(req, flag, status) returns with flag set, indicating whether the operation had completed at that time.

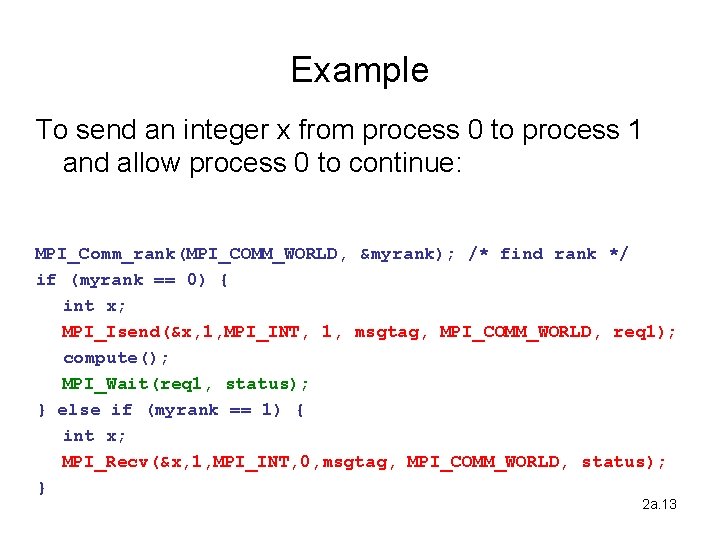

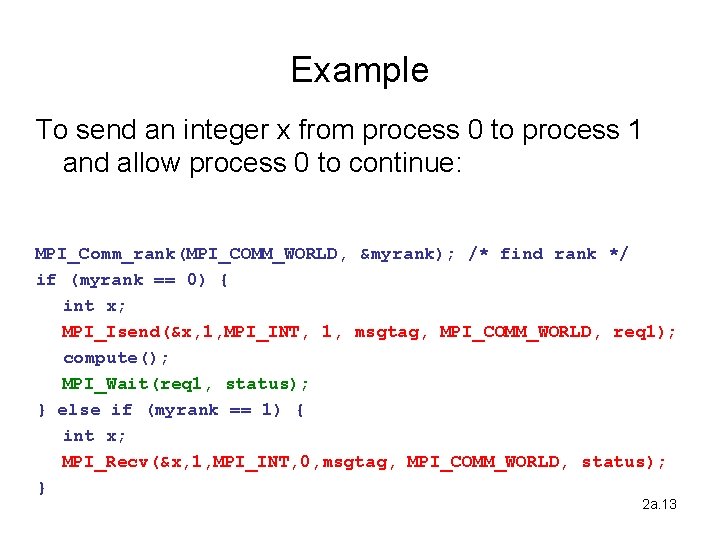

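Example

To send an integer x from process 0 to process 1 and allow process 0 to continue (msgtag and compute() are assumed to be defined elsewhere):

    int myrank;
    MPI_Request req1;
    MPI_Status status;

    MPI_Comm_rank(MPI_COMM_WORLD, &myrank);   /* find rank */
    if (myrank == 0) {
        int x;                                /* value to send, set before the send */
        MPI_Isend(&x, 1, MPI_INT, 1, msgtag, MPI_COMM_WORLD, &req1);
        compute();                            /* must not modify x */
        MPI_Wait(&req1, &status);             /* x may be reused after this */
    } else if (myrank == 1) {
        int x;
        MPI_Recv(&x, 1, MPI_INT, 0, msgtag, MPI_COMM_WORLD, &status);
    }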

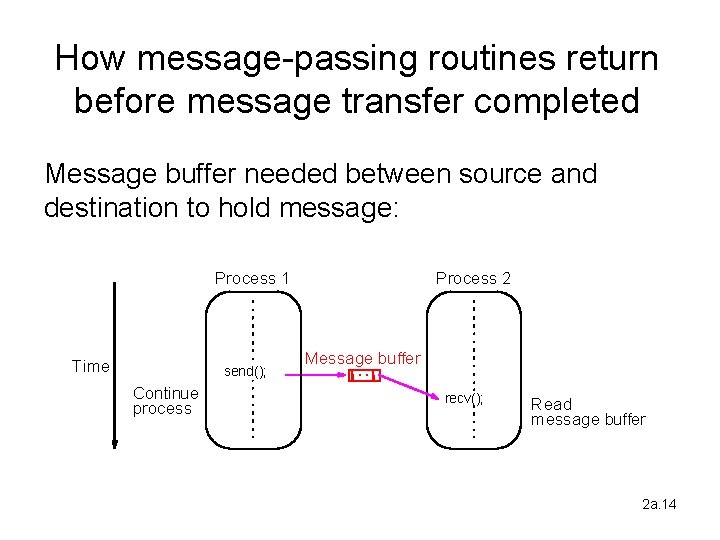

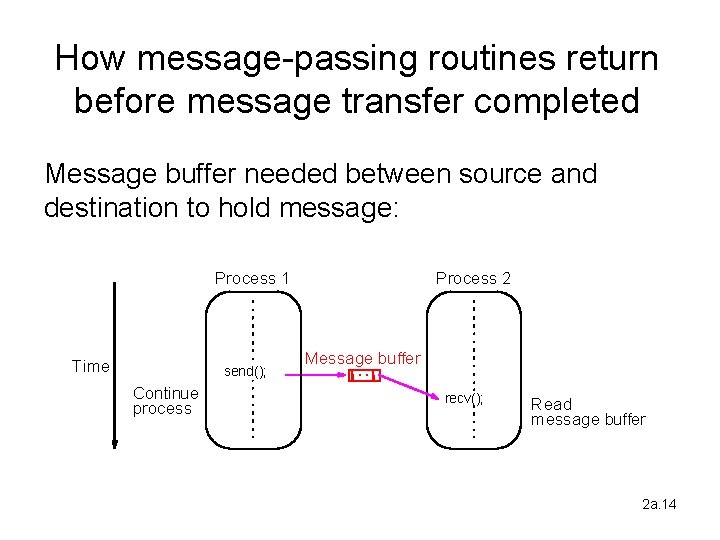

How message-passing routines return before message transfer completed

A message buffer is needed between source and destination to hold the message.

Figure (time runs downward): Process 1 calls send(), the message is placed in the message buffer, and Process 1 continues. Later, Process 2 calls recv() and reads the message buffer.

Asynchronous (blocking) routines changing to synchronous routines

• Message buffers are only of finite length.
• A point could be reached when the send routine is held up because all available buffer space is exhausted.
• Then the send routine will wait until storage becomes available again, i.e. the routine will behave as a synchronous routine.

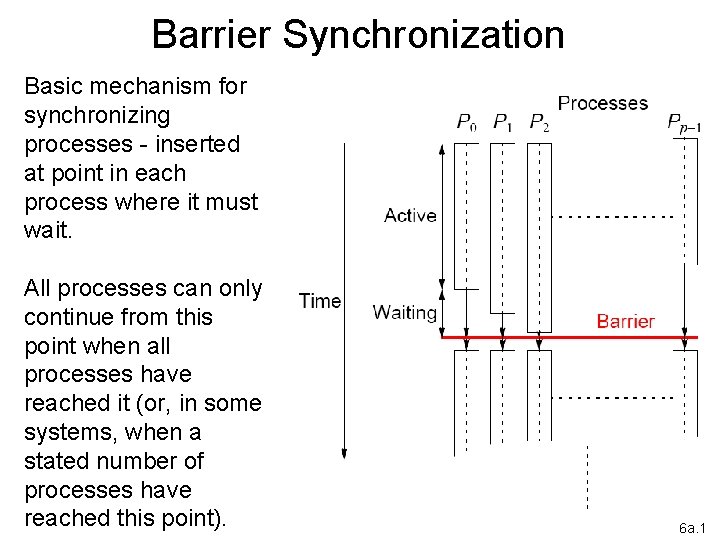

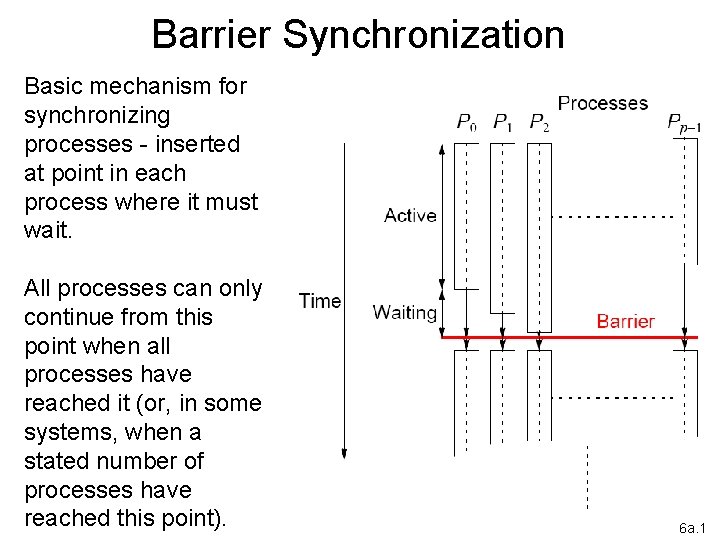

Barrier Synchronization

Basic mechanism for synchronizing processes - inserted at the point in each process where it must wait. All processes can only continue from this point when all processes have reached it (or, in some systems, when a stated number of processes have reached this point).

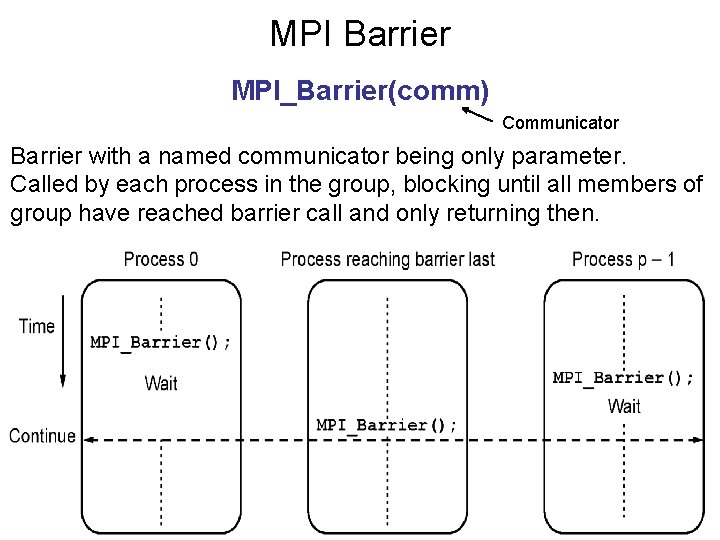

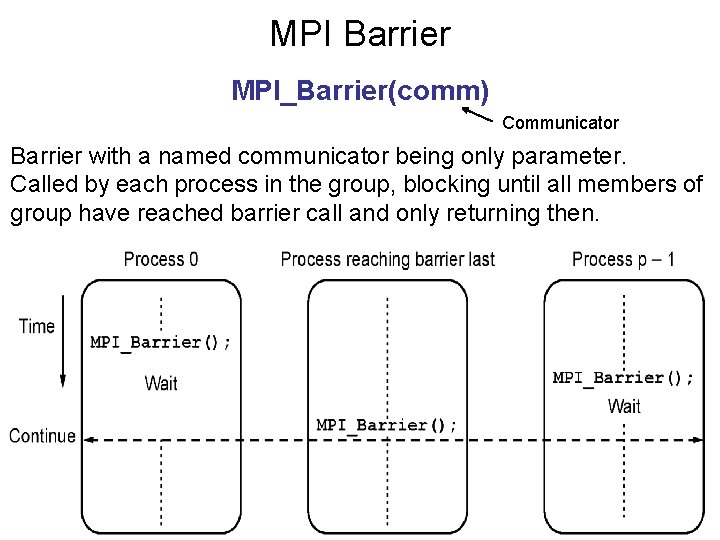

MPI Barrier

MPI_Barrier(comm)

• comm: communicator

Barrier with a named communicator being the only parameter. Called by each process in the group, blocking until all members of the group have reached the barrier call and only returning then.

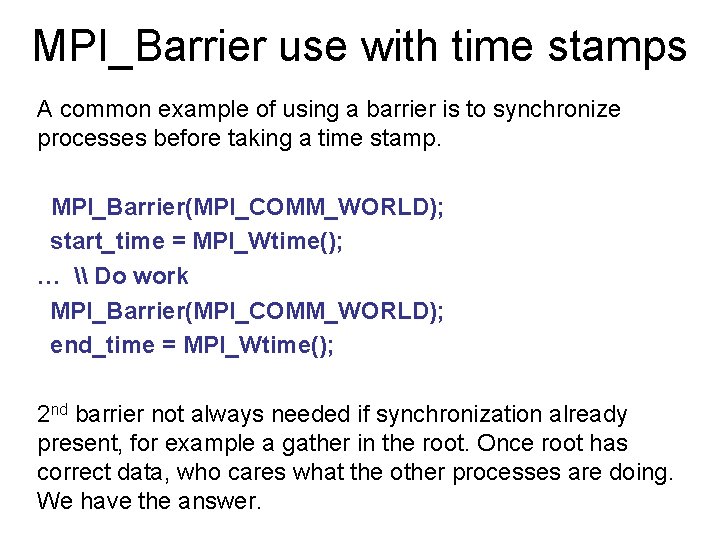

MPI_Barrier use with time stamps

A common example of using a barrier is to synchronize processes before taking a time stamp.

    MPI_Barrier(MPI_COMM_WORLD);
    start_time = MPI_Wtime();
    …                               /* Do work */
    MPI_Barrier(MPI_COMM_WORLD);
    end_time = MPI_Wtime();

The second barrier is not always needed if synchronization is already present, for example a gather in the root. Once the root has the correct data, who cares what the other processes are doing. We have the answer.

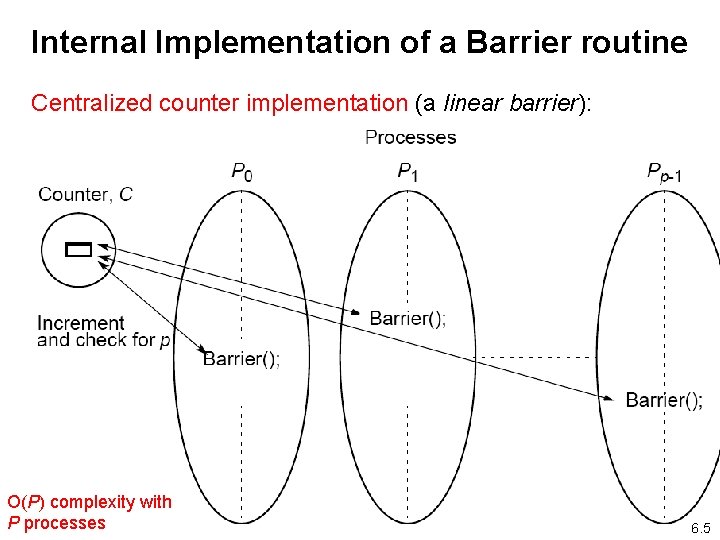

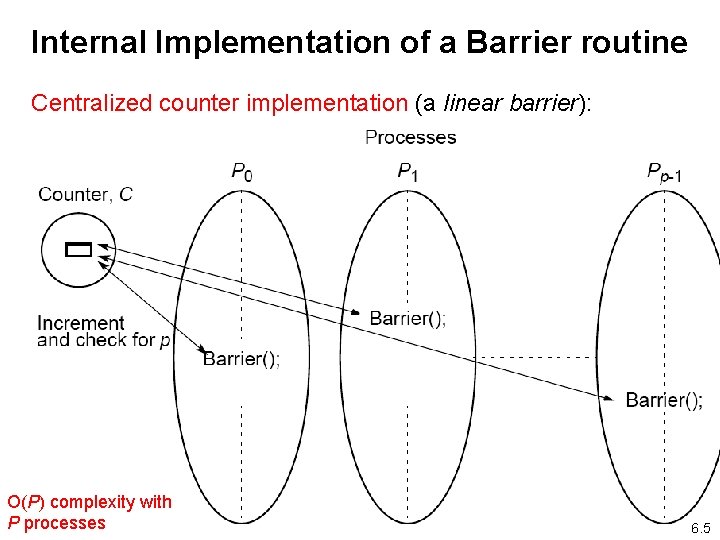

Internal Implementation of a Barrier routine

Centralized counter implementation (a linear barrier): O(P) complexity with P processes.

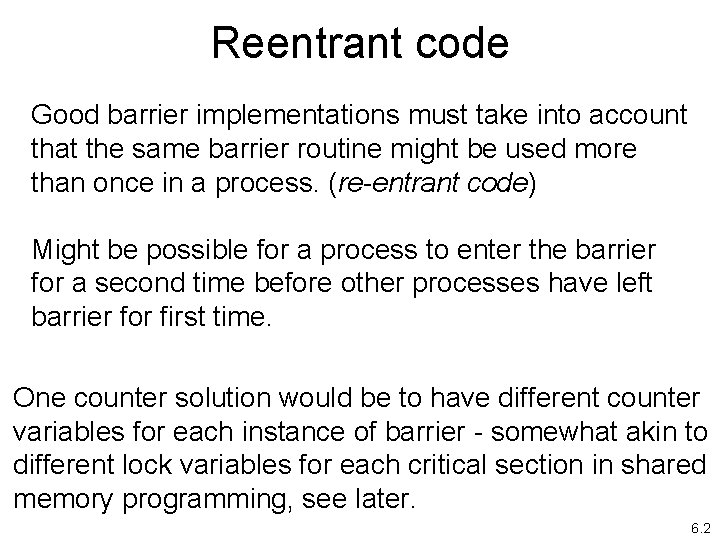

Reentrant code

Good barrier implementations must take into account that the same barrier routine might be used more than once in a process (re-entrant code). It might be possible for a process to enter the barrier for a second time before other processes have left the barrier for the first time.

One counter solution would be to have a different counter variable for each instance of the barrier, somewhat akin to having different lock variables for each critical section in shared memory programming (see later).

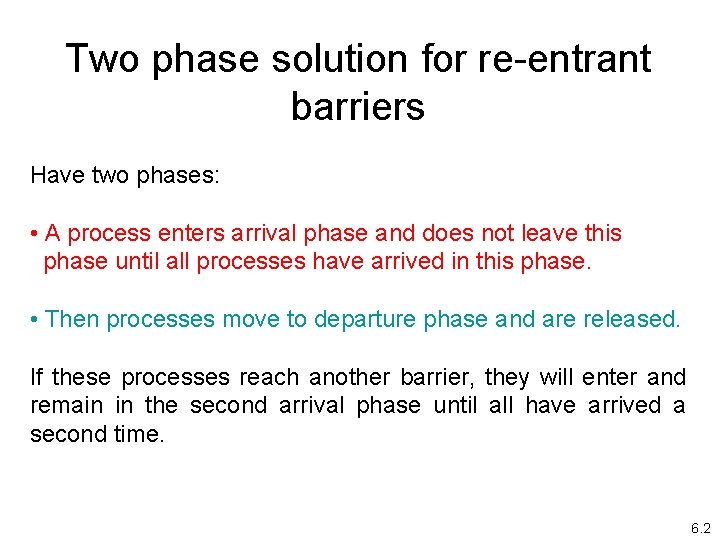

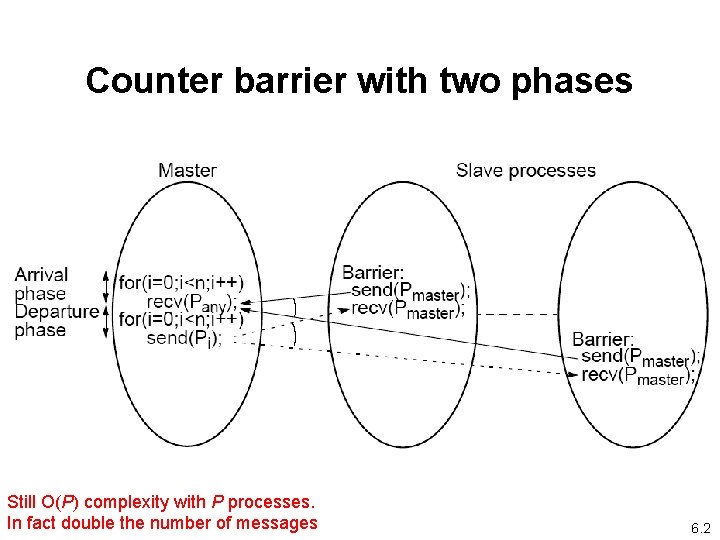

Two phase solution for re-entrant barriers

Have two phases:
• A process enters the arrival phase and does not leave this phase until all processes have arrived in this phase.
• Then processes move to the departure phase and are released.

If these processes reach another barrier, they will enter and remain in the second arrival phase until all have arrived a second time.

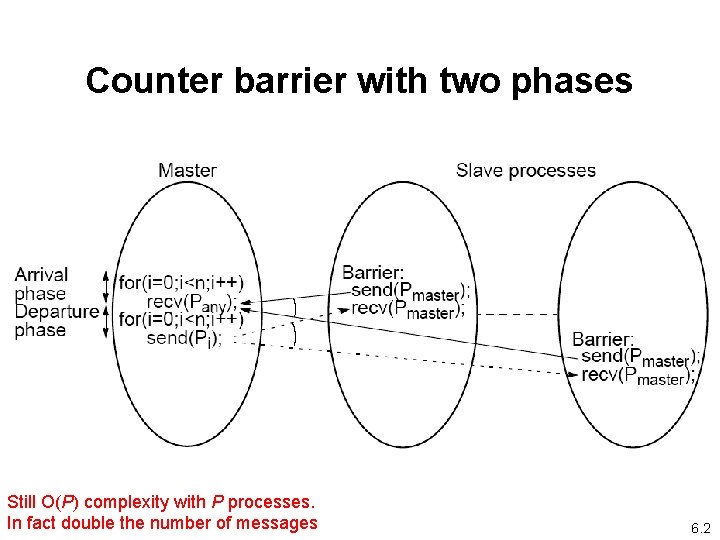

Counter barrier with two phases

Still O(P) complexity with P processes. In fact, the departure phase doubles the number of messages.

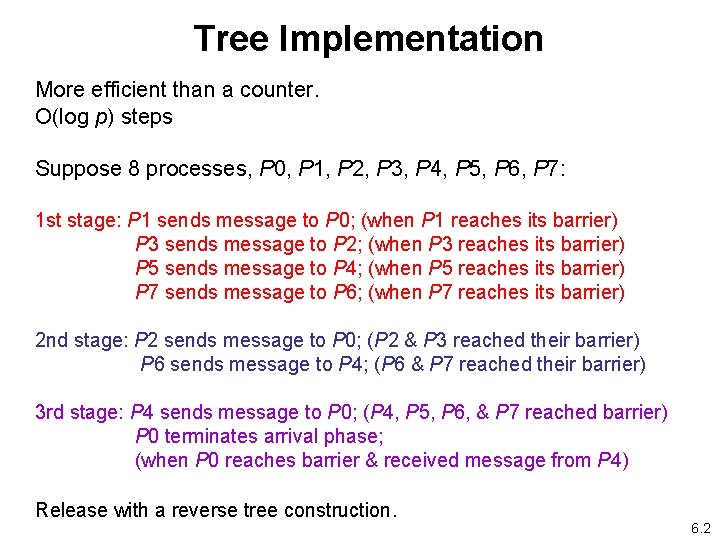

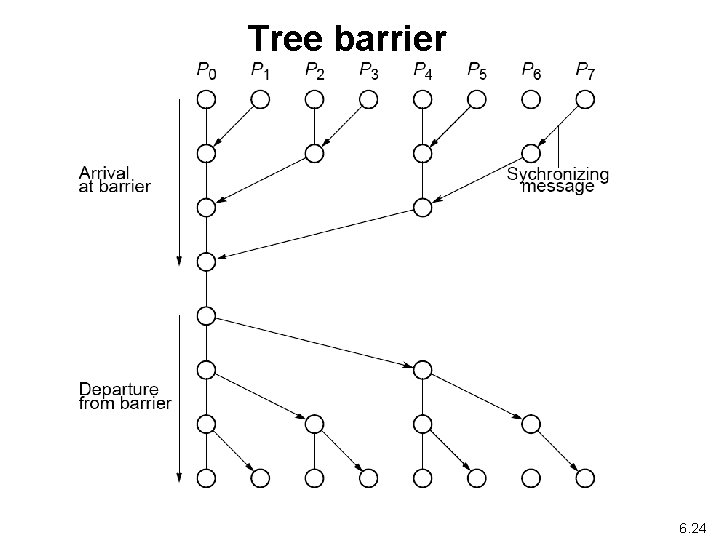

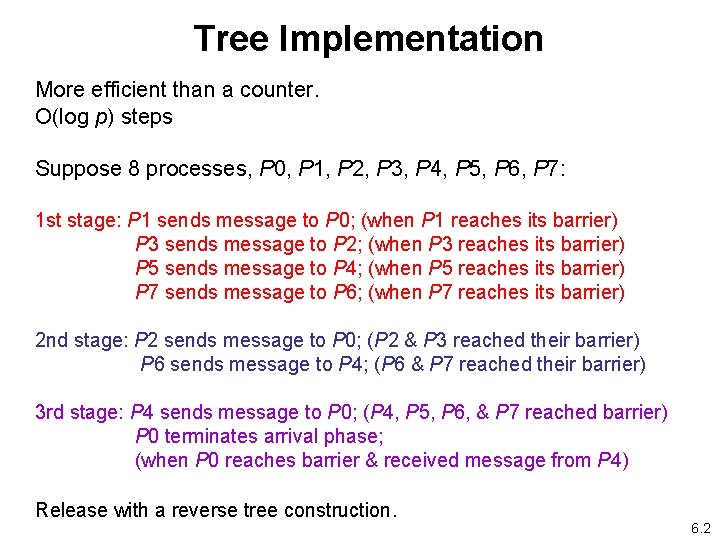

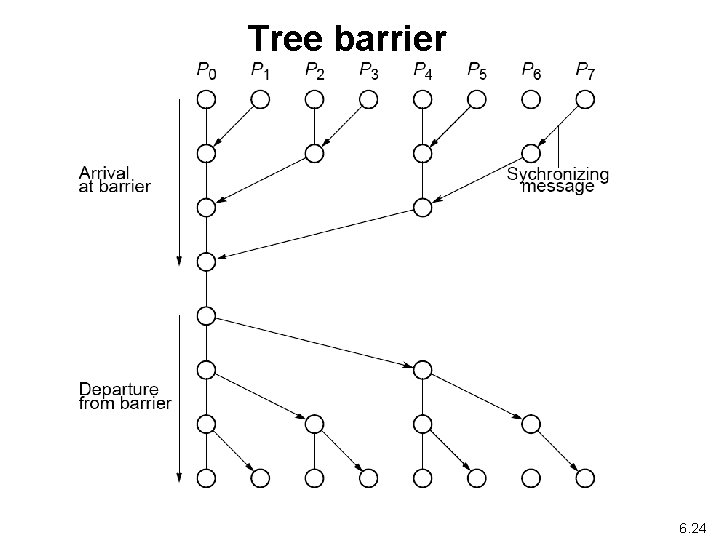

Tree Implementation

More efficient than a counter: O(log P) steps.

Suppose 8 processes, P0, P1, P2, P3, P4, P5, P6, P7:

1st stage:
• P1 sends message to P0 (when P1 reaches its barrier)
• P3 sends message to P2 (when P3 reaches its barrier)
• P5 sends message to P4 (when P5 reaches its barrier)
• P7 sends message to P6 (when P7 reaches its barrier)

2nd stage:
• P2 sends message to P0 (P2 and P3 reached their barrier)
• P6 sends message to P4 (P6 and P7 reached their barrier)

3rd stage:
• P4 sends message to P0 (P4, P5, P6 and P7 reached barrier)
• P0 terminates arrival phase (when P0 reaches barrier and has received message from P4)

Release with a reverse tree construction.
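The sender and receiver in each stage above follow a simple arithmetic rule, sketched here. The function names are made up for this example, stages are numbered from 0, and the number of processes is assumed to be a power of two.

```c
/* Rank that `rank` sends its arrival message to in `stage` (0-based),
   or -1 if `rank` does not send in that stage. */
int tree_send_target(int rank, int stage)
{
    int step = 1 << stage;                       /* 1, 2, 4, ... */
    return (rank % (2 * step) == step) ? rank - step : -1;
}

/* Number of arrival stages needed for p processes (p a power of two). */
int tree_stages(int p)
{
    int stages = 0;
    while ((1 << stages) < p)
        stages++;
    return stages;
}
```

For 8 processes, tree_stages(8) is 3, and tree_send_target(6, 1) is 4, matching "P6 sends message to P4" in the 2nd stage.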

Tree barrier

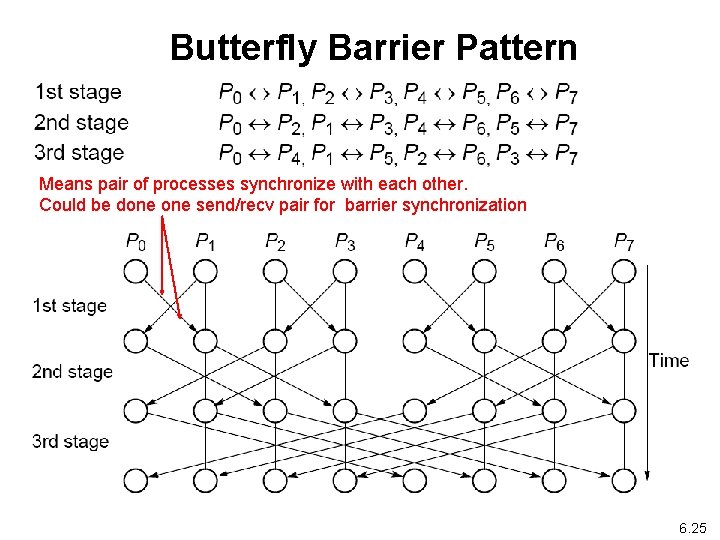

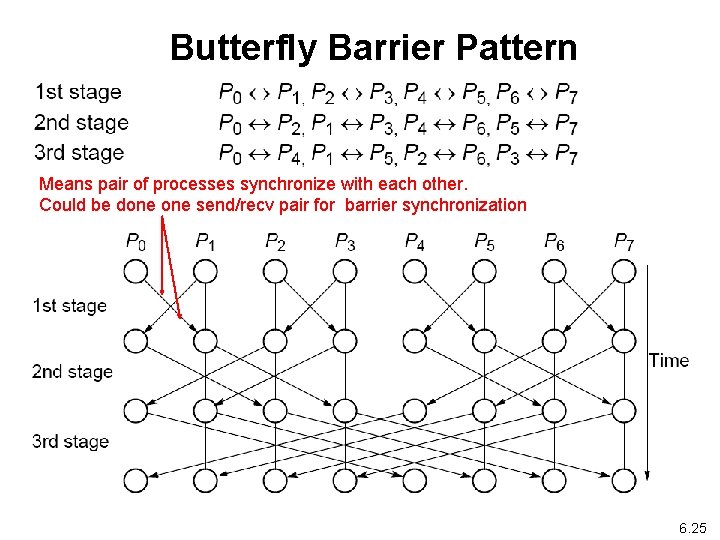

Butterfly Barrier Pattern

Each link in the pattern means that a pair of processes synchronize with each other. This could be done with a send/recv pair for barrier synchronization.
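A small sketch of which process pairs up with which at each stage. It assumes the usual butterfly pairing, where the partner in stage s (numbered from 0) is found by flipping bit s of the rank, and a power-of-two number of processes. The function name is hypothetical.

```c
/* Partner of `rank` in butterfly stage `stage` (0-based):
   flip bit number `stage` of the rank. */
int butterfly_partner(int rank, int stage)
{
    return rank ^ (1 << stage);
}
```

With 8 processes, P0 synchronizes with P1, then P2, then P4, so every process has heard directly or indirectly from all others after 3 stages.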

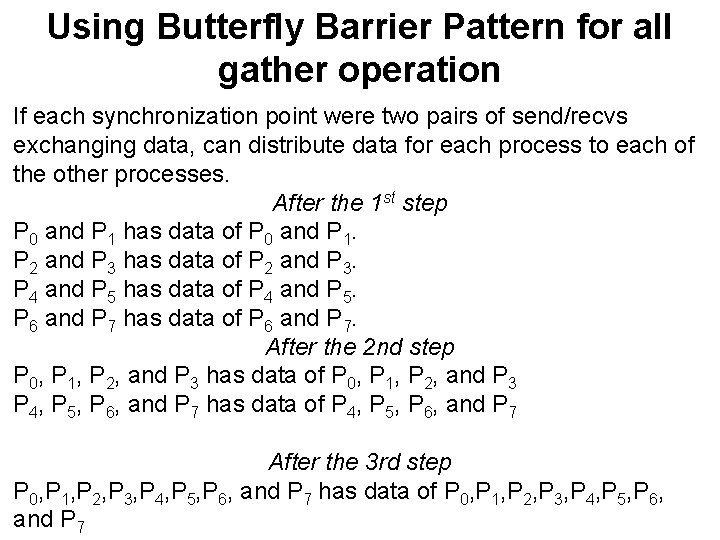

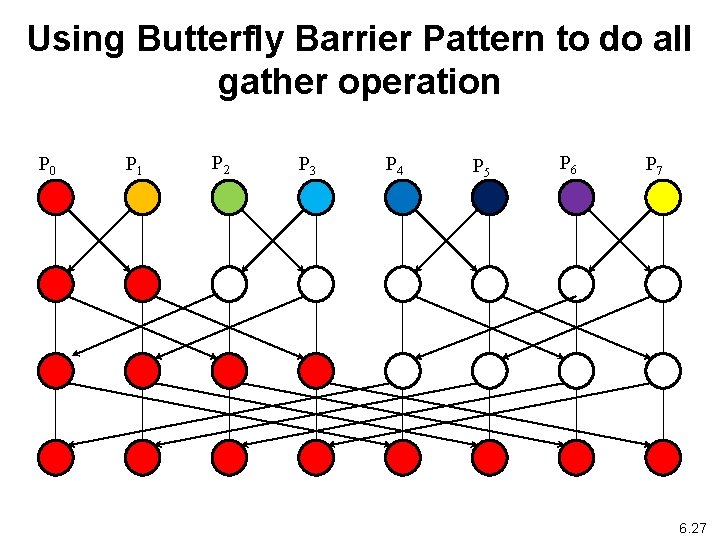

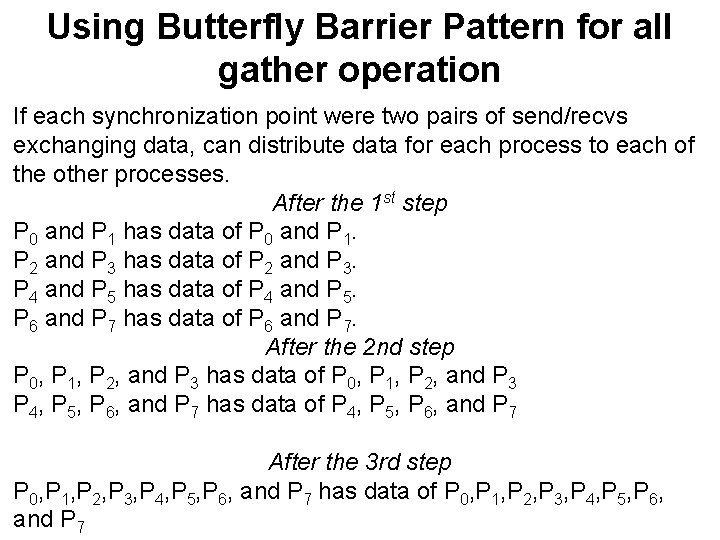

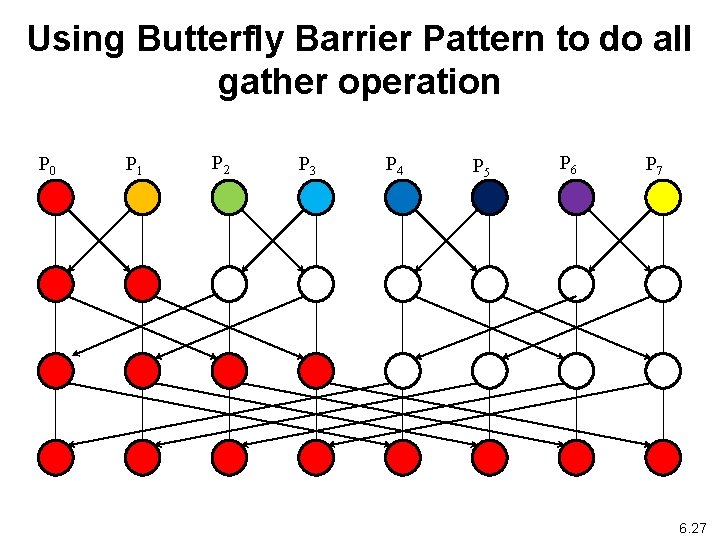

Using Butterfly Barrier Pattern for all gather operation

If each synchronization point were two pairs of send/recvs exchanging data, the data of each process can be distributed to each of the other processes.

After the 1st step:
• P0 and P1 have the data of P0 and P1.
• P2 and P3 have the data of P2 and P3.
• P4 and P5 have the data of P4 and P5.
• P6 and P7 have the data of P6 and P7.

After the 2nd step:
• P0, P1, P2 and P3 have the data of P0, P1, P2 and P3.
• P4, P5, P6 and P7 have the data of P4, P5, P6 and P7.

After the 3rd step:
• P0, P1, P2, P3, P4, P5, P6 and P7 have the data of P0, P1, P2, P3, P4, P5, P6 and P7.
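The steps above can be checked with a small sequential simulation. This is a sketch, not MPI code: each process's holdings are modelled as a bitmask, the pairing is assumed to be the usual "flip bit s" butterfly, and the names NPROCS and butterfly_allgather_sim() are invented for the example.

```c
#define NPROCS 8

/* held[p] is a bitmask: bit q set means process p holds the data of process q.
   Runs the first `steps` butterfly exchange steps starting from the state in
   which every process holds only its own data. */
void butterfly_allgather_sim(unsigned held[NPROCS], int steps)
{
    for (int p = 0; p < NPROCS; p++)
        held[p] = 1u << p;

    for (int s = 0; s < steps; s++) {
        unsigned next[NPROCS];
        /* Each pair swaps everything it holds, so both end up with the union. */
        for (int p = 0; p < NPROCS; p++)
            next[p] = held[p] | held[p ^ (1 << s)];
        for (int p = 0; p < NPROCS; p++)
            held[p] = next[p];
    }
}
```

After one step P0 holds mask 0x03 (data of P0 and P1), after two steps 0x0F, and after three steps every process holds 0xFF, the data of all eight processes.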

Using Butterfly Barrier Pattern to do all gather operation (figure: processes P0 to P7)

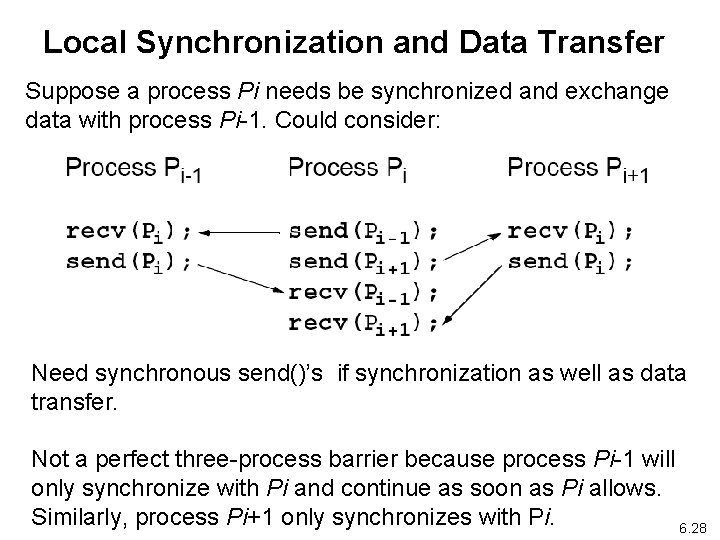

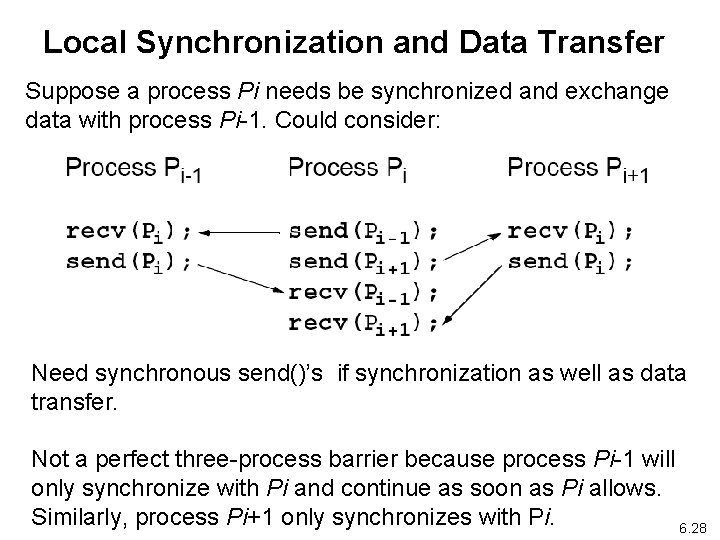

Local Synchronization and Data Transfer

Suppose a process Pi needs to be synchronized and to exchange data with process Pi-1 and process Pi+1. Could consider the arrangement shown in the figure.

Synchronous send()s are needed if synchronization as well as data transfer is required. This is not a perfect three-process barrier, because process Pi-1 will only synchronize with Pi and will continue as soon as Pi allows. Similarly, process Pi+1 only synchronizes with Pi.

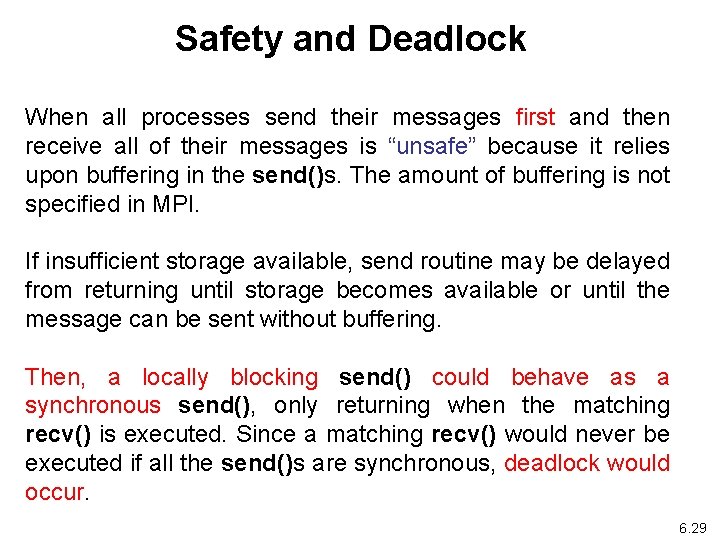

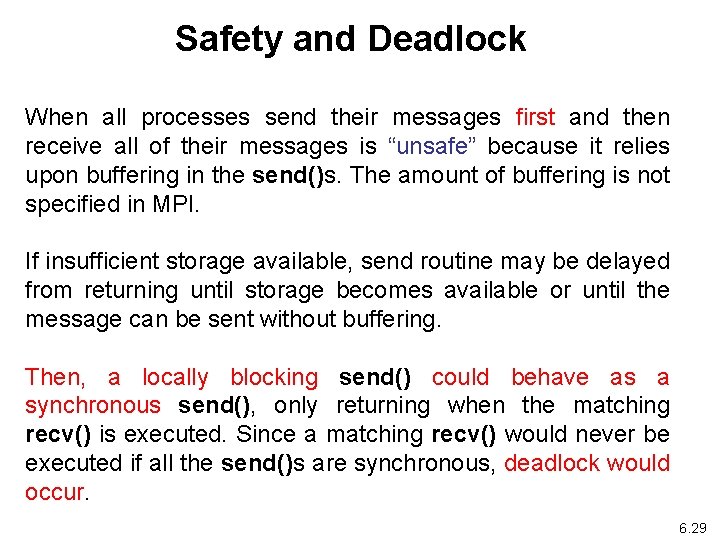

Safety and Deadlock

Having all processes send their messages first and then receive all of their messages is "unsafe", because it relies upon buffering in the send()s. The amount of buffering is not specified in MPI.

If insufficient storage is available, the send routine may be delayed from returning until storage becomes available or until the message can be sent without buffering. Then a locally blocking send() could behave as a synchronous send(), only returning when the matching recv() is executed. Since a matching recv() would never be executed if all the send()s are synchronous, deadlock would occur.

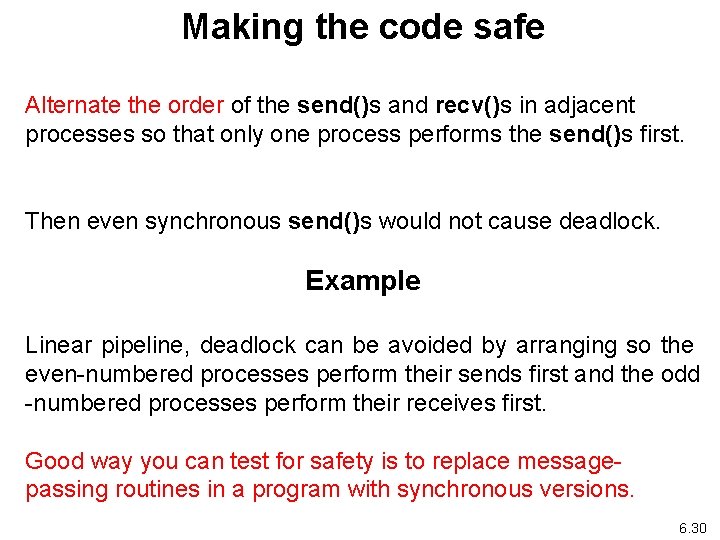

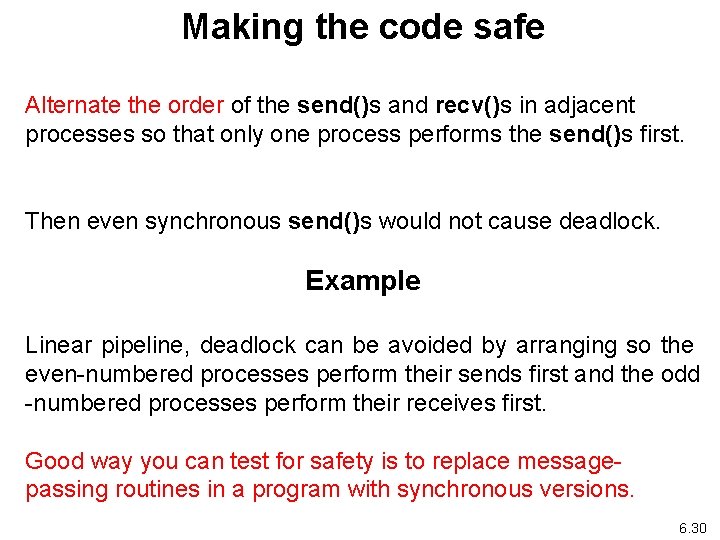

Making the code safe

Alternate the order of the send()s and recv()s in adjacent processes so that only one process performs the send()s first. Then even synchronous send()s would not cause deadlock.

Example: in a linear pipeline, deadlock can be avoided by arranging for the even-numbered processes to perform their sends first and the odd-numbered processes to perform their receives first.

A good way to test for safety is to replace the message-passing routines in a program with their synchronous versions.

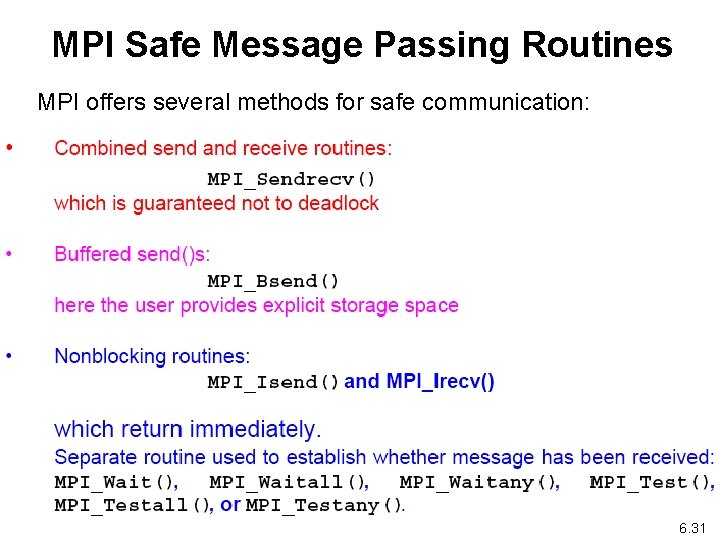

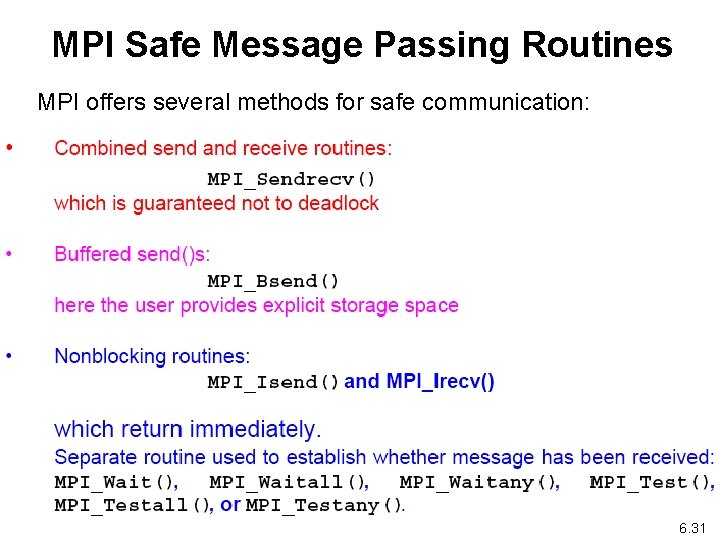

MPI Safe Message Passing Routines

MPI offers several methods for safe communication:

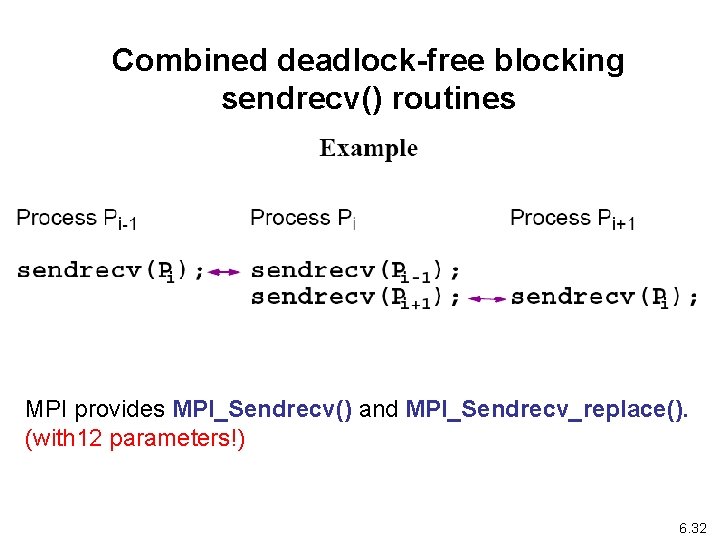

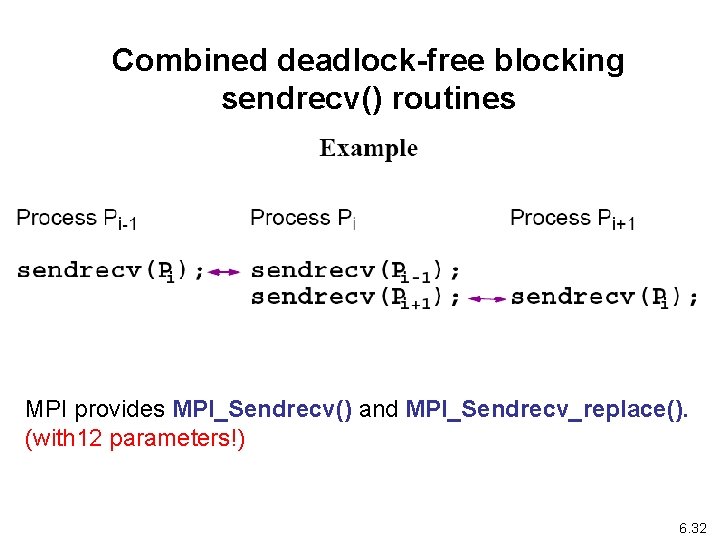

Combined deadlock-free blocking sendrecv() routines

MPI provides MPI_Sendrecv() and MPI_Sendrecv_replace(). (MPI_Sendrecv() has 12 parameters!)

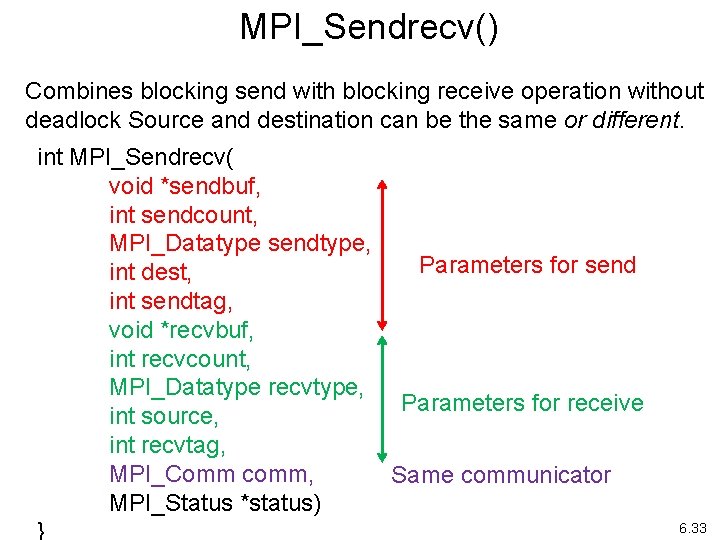

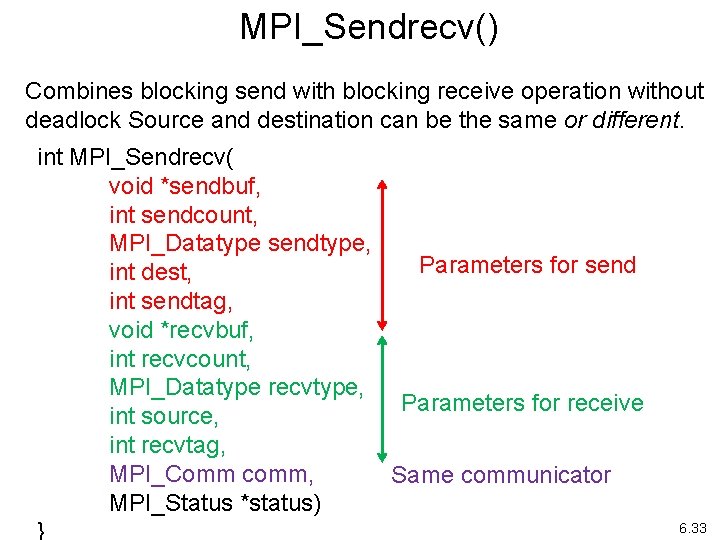

MPI_Sendrecv()

Combines a blocking send with a blocking receive operation without deadlock. Source and destination can be the same or different.

    int MPI_Sendrecv(
        void *sendbuf, int sendcount, MPI_Datatype sendtype,   /* parameters for send    */
        int dest, int sendtag,
        void *recvbuf, int recvcount, MPI_Datatype recvtype,   /* parameters for receive */
        int source, int recvtag,
        MPI_Comm comm,                                         /* same communicator      */
        MPI_Status *status)

MPI Version 3

• Approved Sept 21, 2012: http://www.mpi-forum.org/docs.html
• Extension of previous MPI 2.2
• Major update of MPI. Routines added including:
  – Nonblocking Collective Operations
  – New One-sided Communication Operations
  – Neighborhood Collectives
• C++ bindings dropped as they added little! (Not done in class. Can still use C routines in C++ programs.)

Questions