MEMORY SYSTEMS Chapter 12 Memory Hierarchy Cache Memory

- Slides: 46


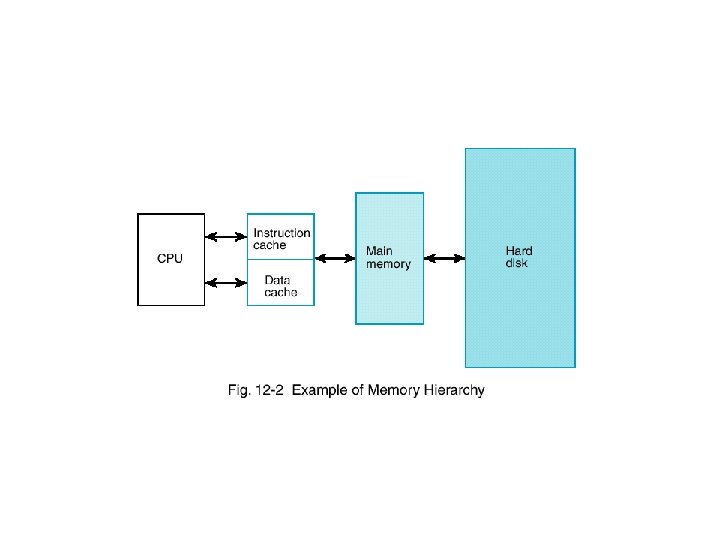

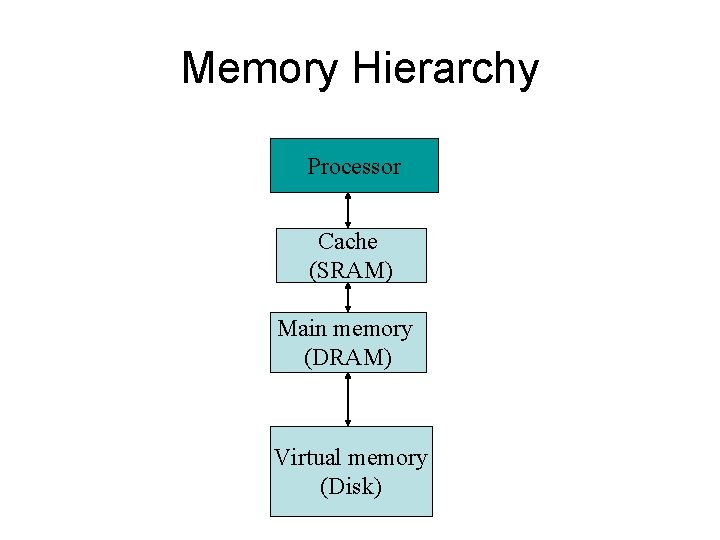

Memory Hierarchy

Cache Memory
• The aim is to provide operands to the CPU at the speed at which it can process them.
• Memory systems lag behind the processor in both access time and bandwidth.

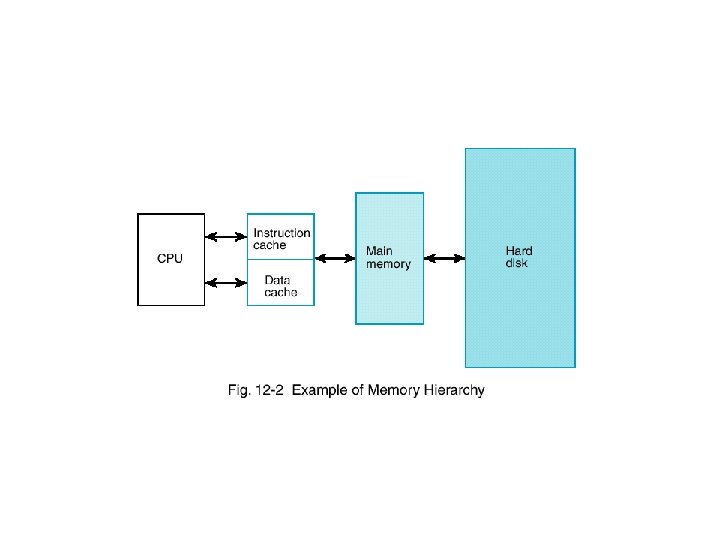

Cache Memory
One way to attack this problem is to use cache memories. A cache holds the most recently used memory words in a small, fast memory, speeding up access to them. One of the most effective techniques for improving both bandwidth and latency is the use of multiple caches. For example, a split cache uses separate caches for instructions and data.

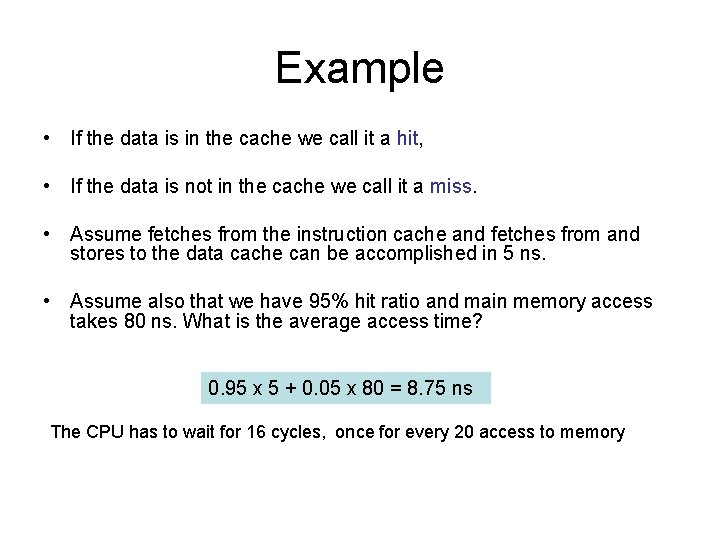

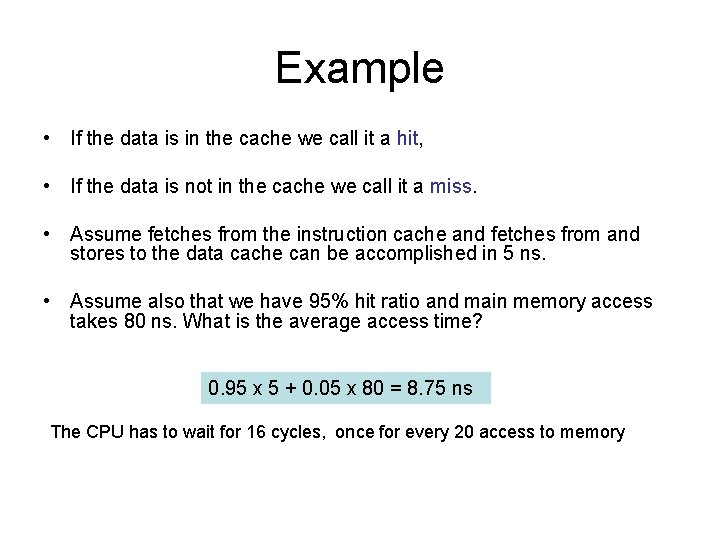

Example
• If the data is in the cache, we call it a hit.
• If the data is not in the cache, we call it a miss.
• Assume that fetches from the instruction cache, and fetches from and stores to the data cache, can be accomplished in 5 ns.
• Assume also that we have a 95% hit ratio and that a main memory access takes 80 ns. What is the average access time?

$$0.95 \times 5 + 0.05 \times 80 = 8.75\ \text{ns}$$

On a miss, the CPU has to wait 80 ns, that is, 16 cache cycles of 5 ns, and this happens about once in every 20 memory accesses.
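The averaging above can be checked with a few lines of Python. This is a minimal sketch using the slide's numbers; the function name `average_access_time` is hypothetical, and, like the slide, it charges a miss the full 80 ns main memory time rather than 5 + 80 ns.

```python
def average_access_time(hit_ratio, t_cache_ns, t_memory_ns):
    """Weighted average: hits cost t_cache_ns, misses cost t_memory_ns (the slide's model)."""
    return hit_ratio * t_cache_ns + (1 - hit_ratio) * t_memory_ns

t_avg = average_access_time(0.95, 5, 80)
stall_cycles = 80 // 5                          # one miss costs 16 cache cycles of 5 ns
accesses_per_miss = round(1 / (1 - 0.95))       # a 5% miss rate is one miss per 20 accesses

print(f"{t_avg:.2f} ns, {stall_cycles} cycles per miss, 1 miss per {accesses_per_miss} accesses")
```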

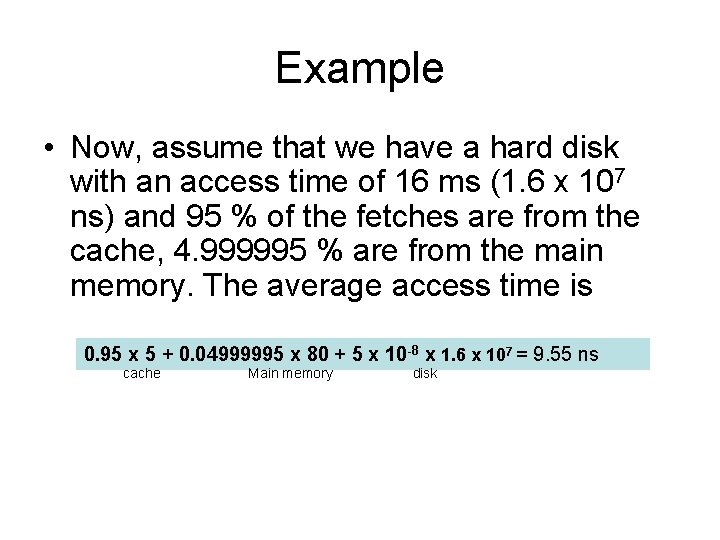

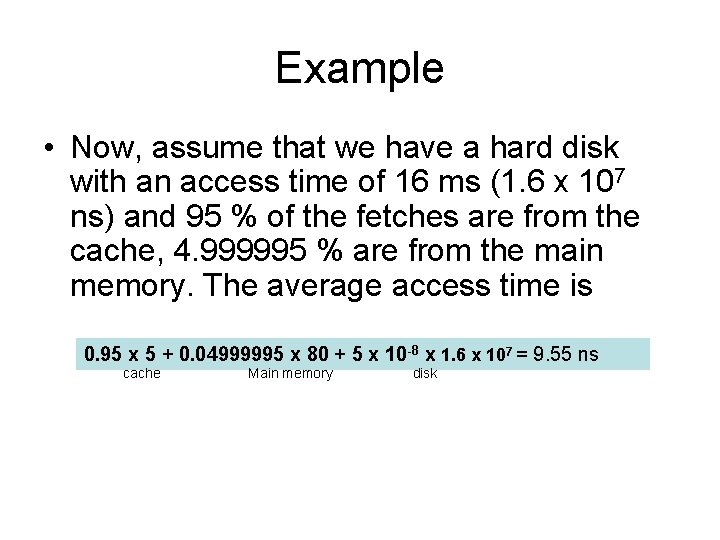

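Example
• Now, assume that we also have a hard disk with an access time of 16 ms ($1.6 \times 10^{7}$ ns), that 95% of the fetches are from the cache, 4.999995% are from the main memory, and the remaining 0.000005% are from the disk. The average access time is

$$\underbrace{0.95 \times 5}_{\text{cache}} + \underbrace{0.04999995 \times 80}_{\text{main memory}} + \underbrace{5 \times 10^{-8} \times 1.6 \times 10^{7}}_{\text{disk}} \approx 9.55\ \text{ns}$$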
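The same weighted sum extends to any number of levels. The sketch below (the function name `hierarchy_access_time` is hypothetical) takes one (fraction, access time) pair per level and reproduces the three-level result; it assumes, as the slide does, that each access is charged only the time of the level that serves it.

```python
def hierarchy_access_time(levels):
    """levels: (fraction_of_accesses, access_time_ns) pairs, one per memory level."""
    if abs(sum(f for f, _ in levels) - 1.0) > 1e-9:
        raise ValueError("the fractions must add up to 1")
    return sum(f * t for f, t in levels)

levels = [
    (0.95, 5),          # served by the cache
    (0.04999995, 80),   # served by main memory
    (5e-8, 1.6e7),      # served by the disk: 16 ms = 1.6 x 10^7 ns
]
print(f"{hierarchy_access_time(levels):.2f} ns")  # -> 9.55 ns
```

Note that the disk, although it serves only 5 in 10^8 accesses, still contributes 0.8 ns to the average.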

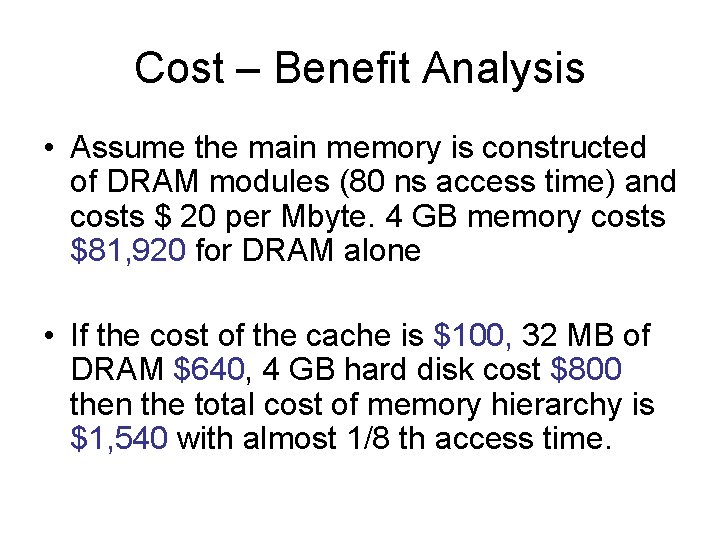

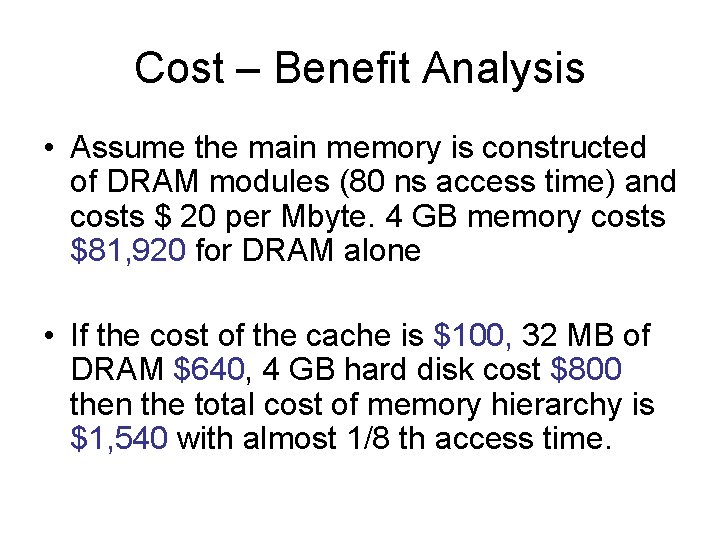

Cost-Benefit Analysis
• Assume the main memory is constructed of DRAM modules (80 ns access time) costing $20 per MB. A 4 GB memory then costs $81,920 for the DRAM alone.
• If the cache costs $100, 32 MB of DRAM costs $640, and a 4 GB hard disk costs $800, then the total cost of the memory hierarchy is $1,540, with an average access time of roughly 1/8 of the 80 ns DRAM access time.
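The cost comparison is plain arithmetic; this sketch recomputes it from the slide's prices. The speedup line assumes the 9.55 ns average from the disk example above and compares it with the 80 ns of an all-DRAM memory.

```python
PRICE_DRAM_PER_MB = 20                          # dollars per MB, from the slide

all_dram_cost = 4 * 1024 * PRICE_DRAM_PER_MB    # 4 GB = 4096 MB of DRAM

hierarchy_cost = {
    "cache": 100,
    "DRAM (32 MB)": 32 * PRICE_DRAM_PER_MB,
    "hard disk (4 GB)": 800,
}
total = sum(hierarchy_cost.values())

speedup = 80 / 9.55                             # all-DRAM time / hierarchy average time
print(all_dram_cost, total, round(speedup, 1))  # -> 81920 1540 8.4
```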

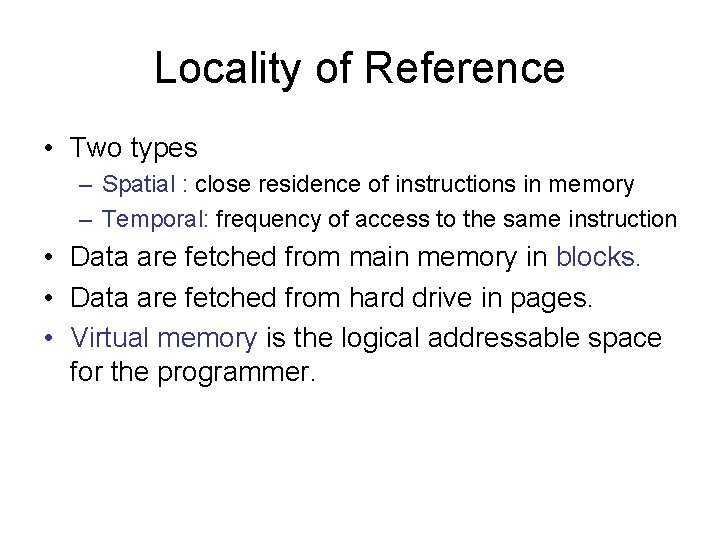

Locality of Reference
• Two types:
  – Spatial: instructions and data that reside close together in memory tend to be accessed close together in time.
  – Temporal: the same instruction or data item tends to be accessed repeatedly within a short time.
• Data are fetched from main memory in blocks.
• Data are fetched from the hard drive in pages.
• Virtual memory is the logical address space available to the programmer.

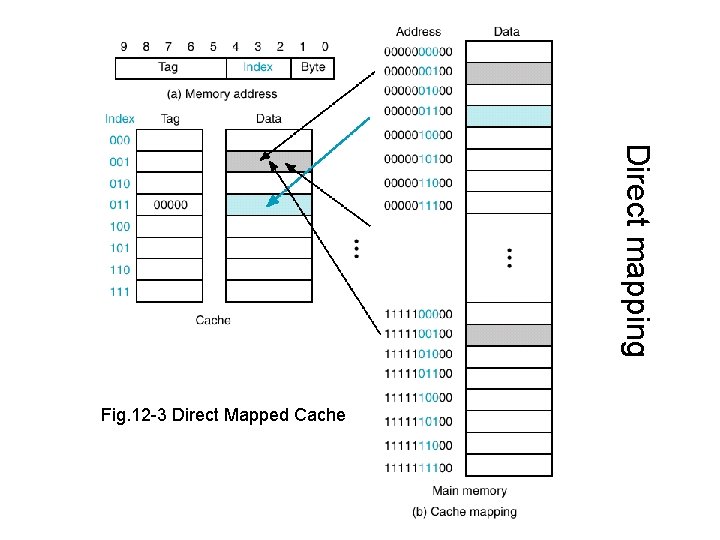

Direct Mapped Cache
• Assume that we have a very small cache of eight 32-bit words and a small main memory of 1 KB (256 words). We need:
  – 3 bits to address the cache words
  – 8 bits to address the main memory words
• How are we going to map memory addresses onto cache addresses?

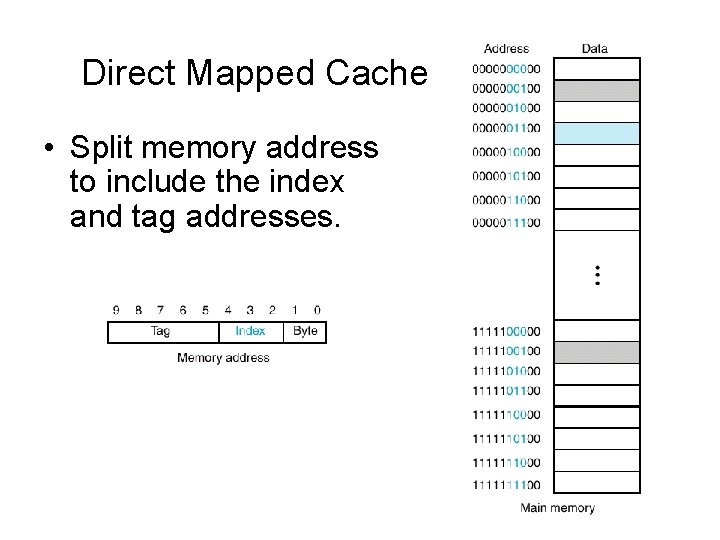

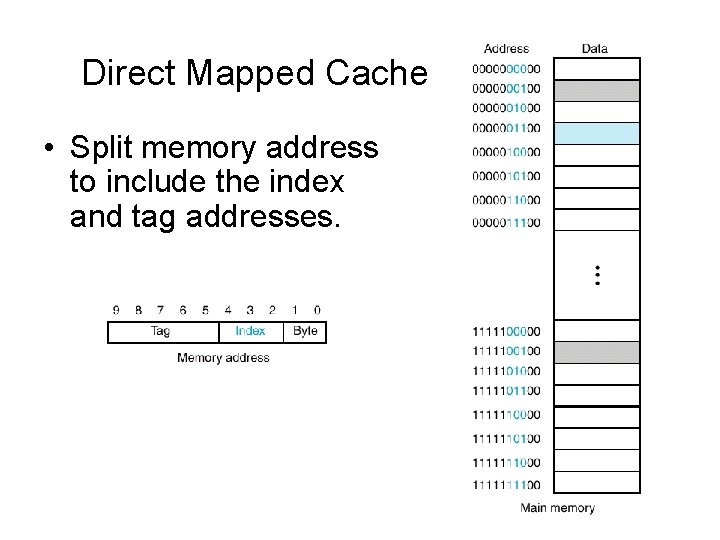

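Direct Mapped Cache
• Split the memory address into an index field and a tag field. With the sizes above, the 3-bit index selects one of the eight cache words and the remaining 8 − 3 = 5 bits form the tag.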
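A minimal sketch of the split for the 8-word cache and 256-word memory, assuming (as is usual) that the index is taken from the low-order bits of the word address; `split_address` is a hypothetical helper name.

```python
CACHE_WORDS = 8                                          # 8 cache words -> 3 index bits
MEMORY_WORDS = 256                                       # 256 memory words -> 8 address bits
INDEX_BITS = (CACHE_WORDS - 1).bit_length()              # 3
TAG_BITS = (MEMORY_WORDS - 1).bit_length() - INDEX_BITS  # 5

def split_address(addr):
    """Return (tag, index) for an 8-bit word address."""
    index = addr & (CACHE_WORDS - 1)   # low 3 bits select the cache word
    tag = addr >> INDEX_BITS           # high 5 bits are stored as the tag
    return tag, index

print(split_address(0b10110101))  # -> (22, 5)
```

With a 5-bit tag, 2^5 = 32 different memory words compete for each cache word.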

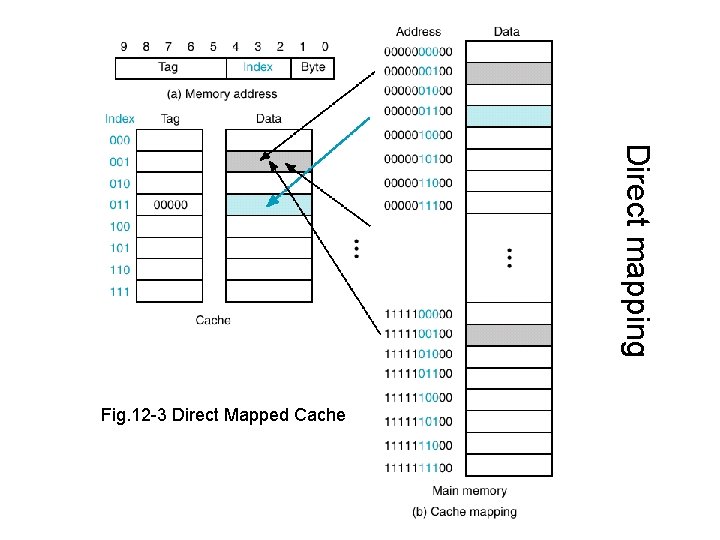

Direct Mapping
Fig. 12-3 Direct Mapped Cache

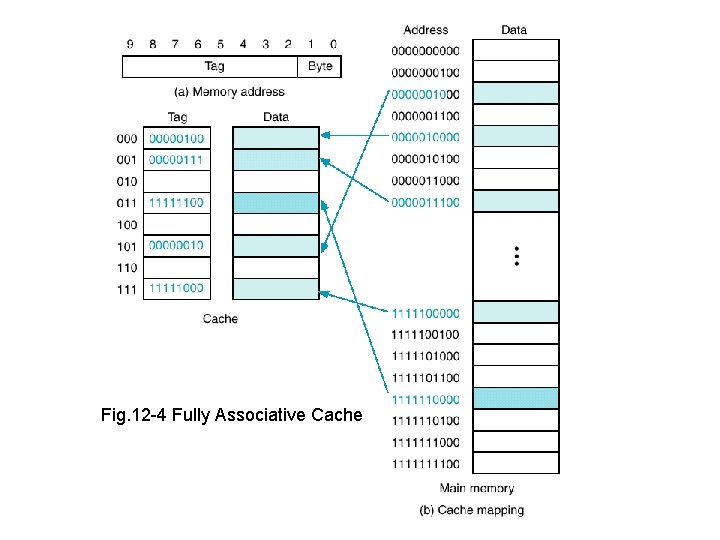

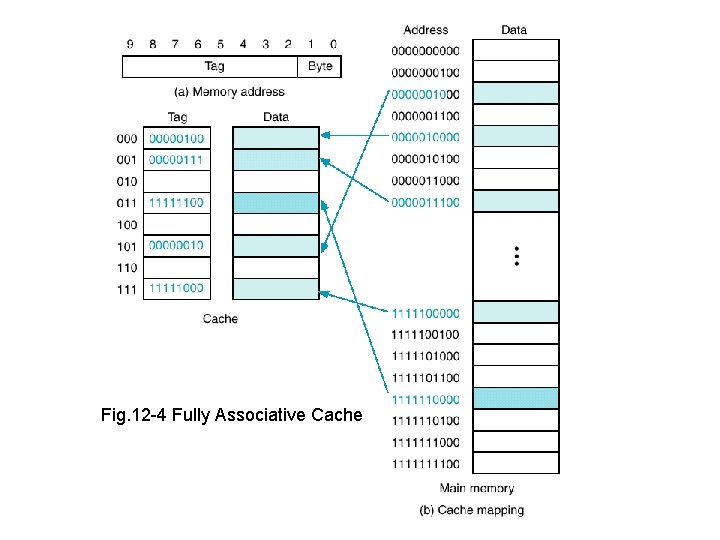

Associative Mapping
• In the direct mapping example, there are 32 possible memory locations for every cache location.
• An extreme example would be an instruction and its operand whose addresses have the same index but different tags: they map to the same cache location and keep replacing each other.
• In associative mapping, any word from main memory can be stored anywhere in the cache.
• This requires that the whole address be stored as the tag in the cache. This is called fully associative mapping.
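The conflict described above can be reproduced with a small simulation. This sketch assumes hypothetical word addresses 5 and 13, which share index 5 in the 8-word direct mapped cache; the fully associative version stores the whole address as the tag and uses LRU replacement (an assumption; any policy gives the same result for this short trace).

```python
from collections import OrderedDict

def direct_mapped_misses(trace, num_lines=8):
    lines = [None] * num_lines              # each entry holds the stored tag
    misses = 0
    for addr in trace:
        index, tag = addr % num_lines, addr // num_lines
        if lines[index] != tag:
            misses += 1
            lines[index] = tag              # evict whatever was in this location
    return misses

def fully_associative_misses(trace, num_lines=8):
    stored = OrderedDict()                  # whole address used as the tag
    misses = 0
    for addr in trace:
        if addr in stored:
            stored.move_to_end(addr)
        else:
            misses += 1
            if len(stored) == num_lines:
                stored.popitem(last=False)  # evict the least recently used word
            stored[addr] = True
    return misses

trace = [5, 13] * 10                        # instruction and operand accessed alternately
print(direct_mapped_misses(trace), fully_associative_misses(trace))  # -> 20 2
```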

Fig. 12-4 Fully Associative Cache

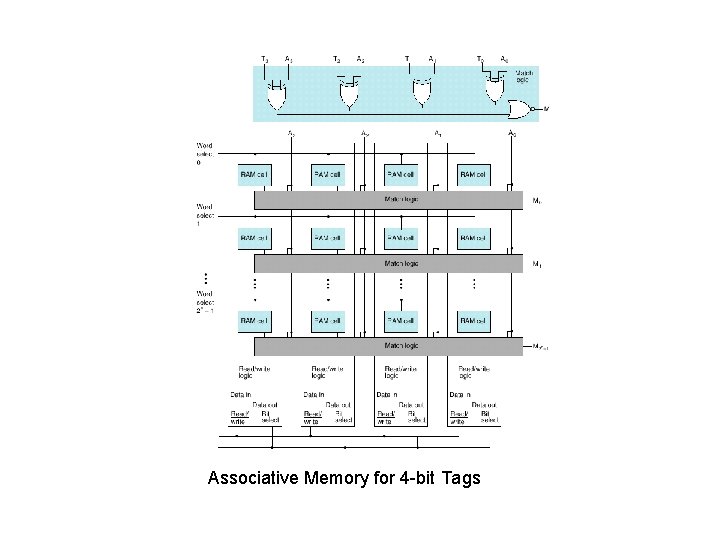

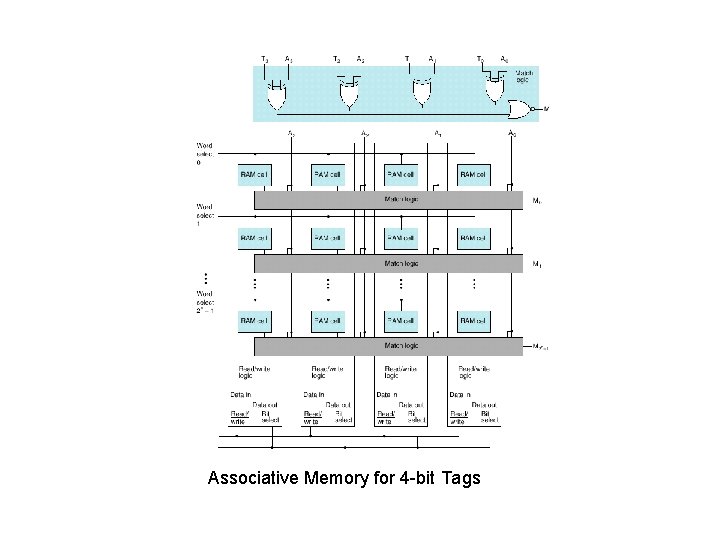

Associative Memory for 4-bit Tags

Associative Mapping
• Replacement policy:
  – Random: replace a randomly chosen entry
  – FIFO: replace the oldest entry
  – LRU: replace the least recently used entry
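The difference between FIFO and LRU shows up as soon as a frequently used word is also an old one. This sketch counts misses for both policies on a hypothetical address trace with a 3-entry fully associative cache; random replacement is left out because its result depends on the random choices.

```python
from collections import OrderedDict, deque

def fifo_misses(trace, capacity):
    order, present, misses = deque(), set(), 0
    for addr in trace:
        if addr not in present:
            misses += 1
            if len(present) == capacity:
                present.remove(order.popleft())   # evict the oldest entry
            order.append(addr)
            present.add(addr)
    return misses

def lru_misses(trace, capacity):
    cache, misses = OrderedDict(), 0
    for addr in trace:
        if addr in cache:
            cache.move_to_end(addr)               # mark as most recently used
        else:
            misses += 1
            if len(cache) == capacity:
                cache.popitem(last=False)         # evict the least recently used entry
            cache[addr] = True
    return misses

trace = [1, 2, 3, 1, 4, 1, 2]                     # hypothetical word addresses
print(fifo_misses(trace, 3), lru_misses(trace, 3))  # -> 6 5
```

FIFO evicts word 1 when word 4 arrives even though 1 was just used; LRU keeps it.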

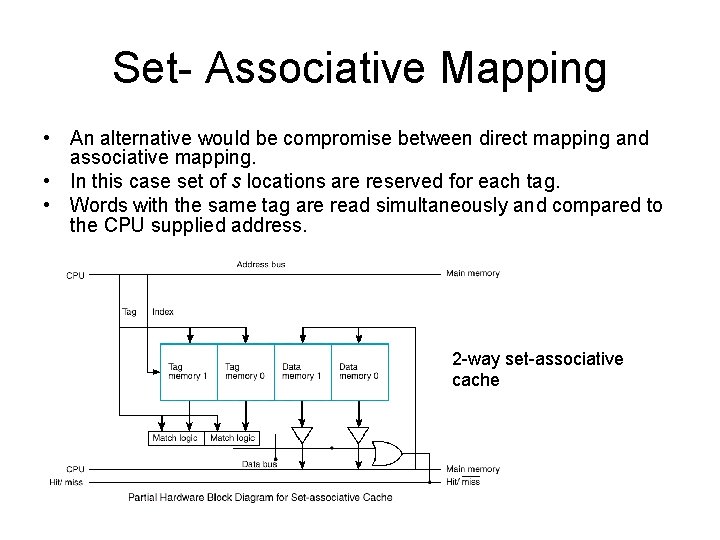

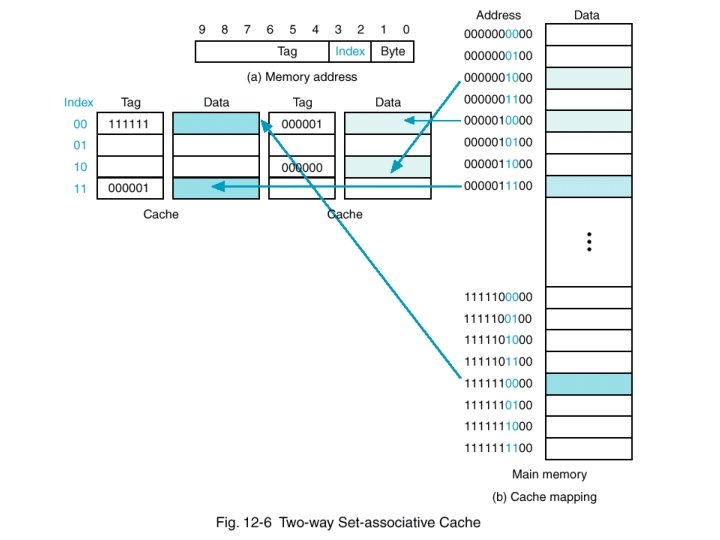

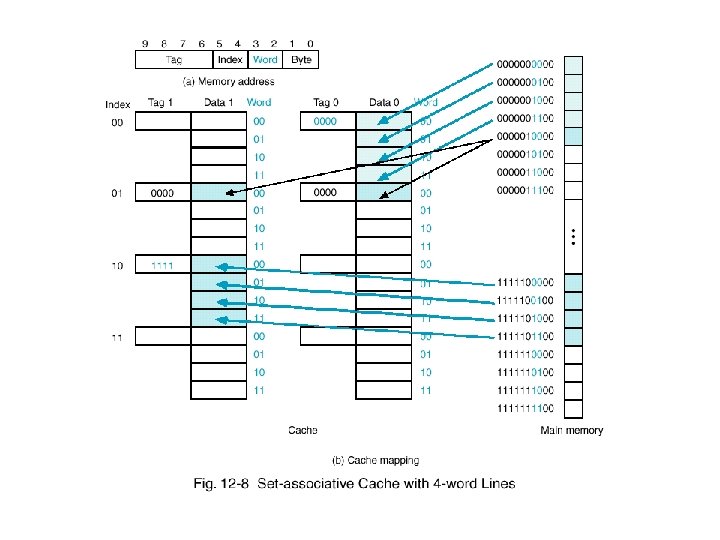

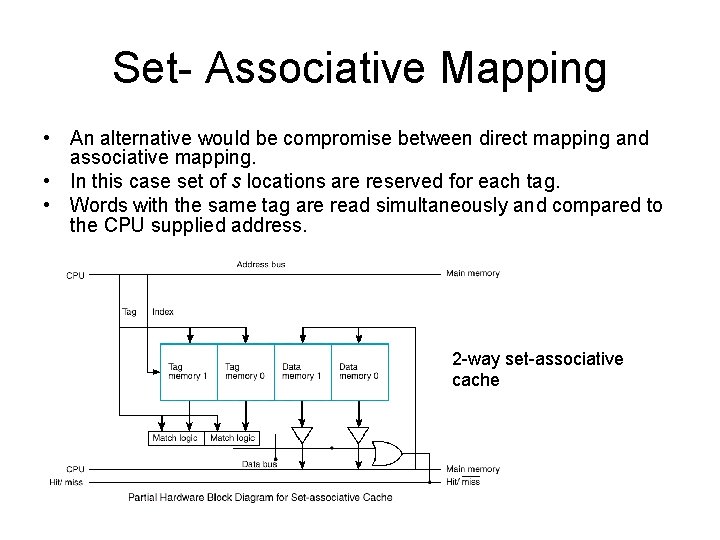

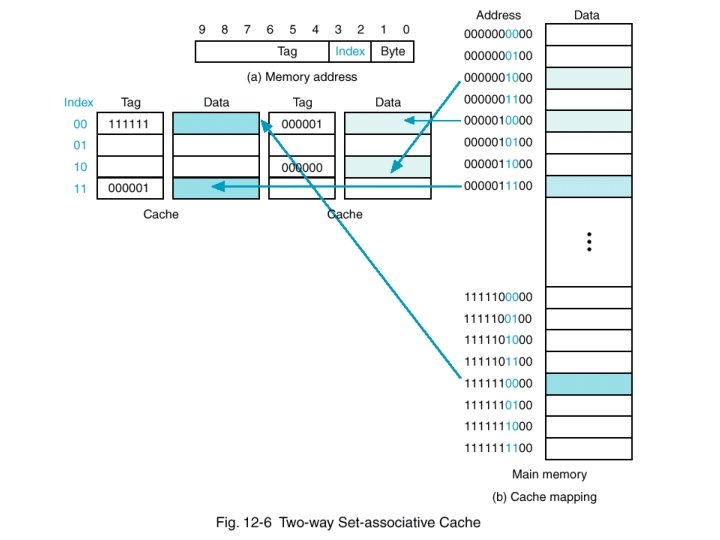

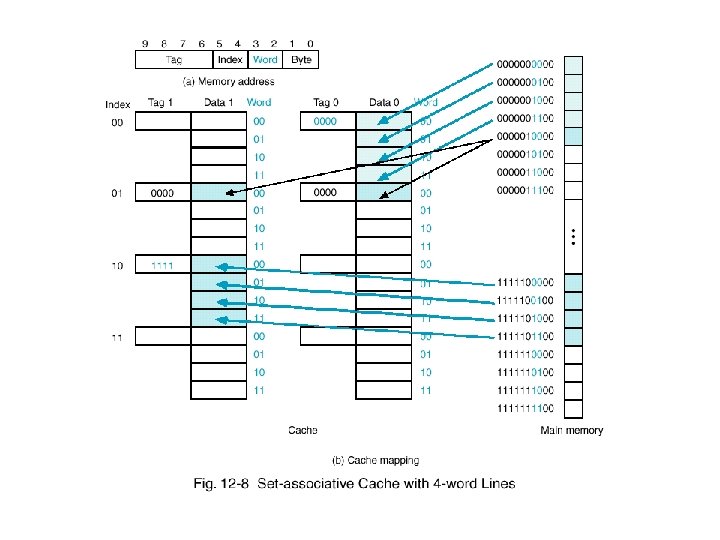

Set-Associative Mapping
• An alternative is a compromise between direct mapping and associative mapping.
• In this case, a set of s locations is reserved for each index.
• The s words of the selected set are read simultaneously, and their tags are compared with the tag of the CPU-supplied address.
2-way set-associative cache
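A minimal sketch of a 2-way set-associative lookup over the same eight words (4 sets of 2), assuming low-order index bits and LRU replacement within each set; the class name is hypothetical. Addresses 5 and 13, which collided in the direct mapped cache, now share one set without evicting each other.

```python
class SetAssociativeCache:
    """s-way set-associative cache of word addresses, LRU replacement inside each set."""

    def __init__(self, num_sets, ways):
        self.num_sets, self.ways = num_sets, ways
        self.sets = [[] for _ in range(num_sets)]   # tags per set, most recently used last

    def access(self, addr):
        index, tag = addr % self.num_sets, addr // self.num_sets
        tags = self.sets[index]                     # all s tags of the set are compared
        if tag in tags:
            tags.remove(tag)
            tags.append(tag)                        # hit: mark as most recently used
            return True
        if len(tags) == self.ways:
            tags.pop(0)                             # set full: evict the LRU way
        tags.append(tag)
        return False

cache = SetAssociativeCache(num_sets=4, ways=2)
hits = [cache.access(a) for a in [5, 13, 5, 13]]
print(hits)  # -> [False, False, True, True]
```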

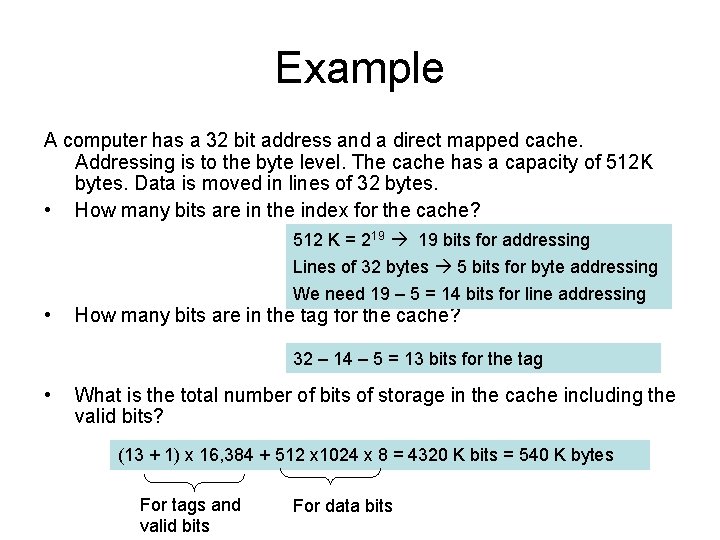

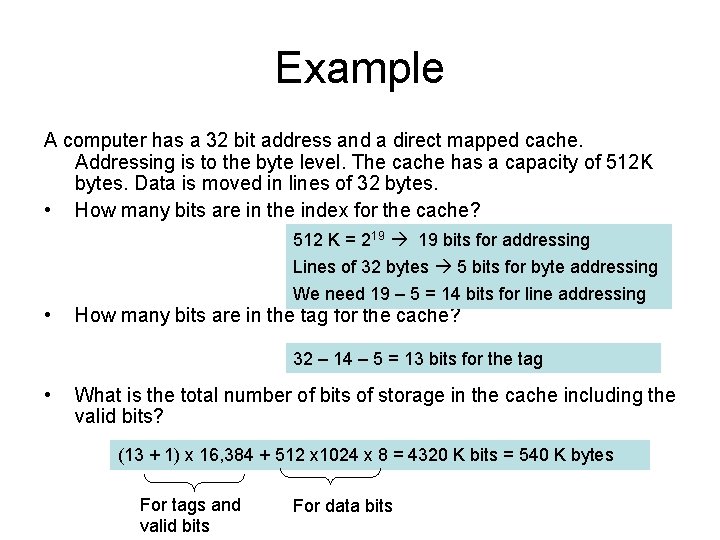

Example
A computer has a 32-bit address and a direct mapped cache. Addressing is to the byte level. The cache has a capacity of 512 KB. Data is moved in lines of 32 bytes.
• How many bits are in the index for the cache?
  512 K = $2^{19}$, so 19 bits address a byte in the cache. Lines of 32 bytes need 5 bits for byte addressing within a line, so we need 19 − 5 = 14 bits for line addressing.
• How many bits are in the tag for the cache?
  32 − 14 − 5 = 13 bits for the tag.
• What is the total number of bits of storage in the cache, including the valid bits?

$$\underbrace{(13 + 1) \times 16{,}384}_{\text{tags and valid bits}} + \underbrace{512 \times 1024 \times 8}_{\text{data bits}} = 4320\ \text{K bits} = 540\ \text{K bytes}$$
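The field widths and storage total can be recomputed mechanically. This sketch (hypothetical function name) assumes all sizes are powers of two, so `bit_length() - 1` gives the base-2 logarithm.

```python
def direct_mapped_storage(address_bits, capacity_bytes, line_bytes):
    offset_bits = line_bytes.bit_length() - 1            # 32-byte lines -> 5 bits
    num_lines = capacity_bytes // line_bytes             # 2^14 = 16,384 lines
    index_bits = num_lines.bit_length() - 1              # 14 bits
    tag_bits = address_bits - index_bits - offset_bits   # 13 bits
    tag_valid_bits = (tag_bits + 1) * num_lines          # one valid bit per line
    data_bits = capacity_bytes * 8
    return index_bits, tag_bits, tag_valid_bits + data_bits

index_bits, tag_bits, total_bits = direct_mapped_storage(32, 512 * 1024, 32)
print(index_bits, tag_bits, total_bits // 1024, "K bits =", total_bits // 8 // 1024, "K bytes")
# -> 14 13 4320 K bits = 540 K bytes
```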

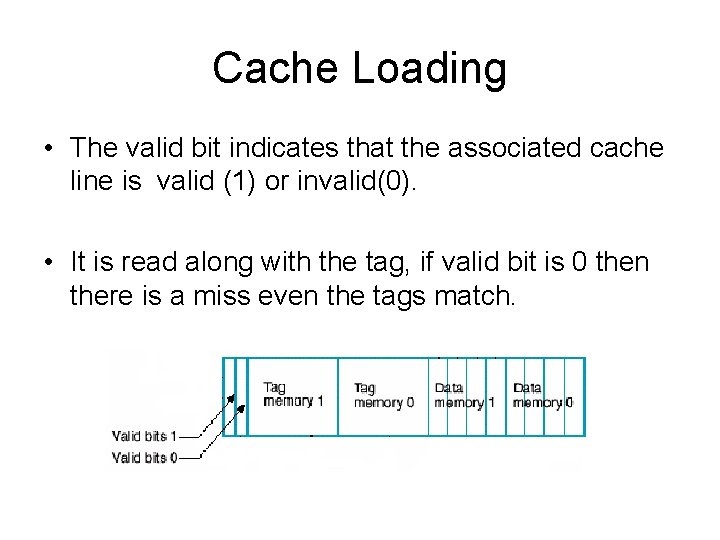

Cache Loading
• The valid bit indicates whether the associated cache line is valid (1) or invalid (0).
• It is read along with the tag; if the valid bit is 0, there is a miss even if the tags match.
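The role of the valid bit can be seen in a direct mapped cache whose tag storage starts with leftover values. In this sketch (hypothetical class name, one word per line), the tags start at 0, so a first access to address 3 has a matching tag but still misses because its valid bit is 0.

```python
class DirectMappedCache:
    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.valid = [0] * num_lines   # all lines start invalid (0)
        self.tag = [0] * num_lines     # stale tags: meaningless while valid == 0

    def access(self, addr):
        index, tag = addr % self.num_lines, addr // self.num_lines
        if self.valid[index] and self.tag[index] == tag:
            return True                # hit: line valid and tags match
        self.tag[index] = tag          # miss: load the line, store its tag,
        self.valid[index] = 1          # and mark it valid
        return False

cache = DirectMappedCache(8)
print(cache.access(3), cache.access(3))  # -> False True
```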

Write Methods
• How should writes be treated?
• There are three possibilities:
  1. Write the result into the main memory.
  2. Write the result into the cache.
  3. Write the result into both the main memory and the cache.

Write Methods
• Write through: the result is always written into the main memory.
• A write buffering technique is used to avoid slowing down memory writes, by buffering the word to be written and its address in a register.
• In most designs the word is also written into the cache if there is a hit.
• The memory is always up to date, but there is a lot of write traffic.

Write Methods
• Write back (also called write deferred): the CPU performs a write only to the cache in the case of a cache hit.
• If there is a miss, the CPU performs a write to main memory. There are two possibilities:
  1. Write allocate: read the line containing the word to be written into the cache, with the new word written into both the cache and the main memory.
  2. No-write allocate: on a write miss, simply write into main memory.
• The disadvantage of write back is that the main memory and the cache can be inconsistent.

Write Methods
• Write back is required whenever a new line is to be brought in from main memory on a read miss: if the cache location has been written into, its contents have to be written back into the main memory before the location is released.
• To avoid a write back on every read miss, a dirty bit is used. If this bit is 1, the line has been written and needs a write back; if it is 0, the line can be replaced without a write back.
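A small model makes the traffic difference concrete by counting words written to main memory under each policy. This is a sketch under simplifying assumptions: one-word lines, a direct mapped cache, and a hypothetical trace in which every write hits (write misses are not modeled).

```python
def memory_writes(trace, num_lines, policy):
    """trace: ('R' or 'W', word_address) pairs. Returns words written to main memory."""
    tags = [None] * num_lines
    dirty = [False] * num_lines
    writes = 0
    for op, addr in trace:
        index, tag = addr % num_lines, addr // num_lines
        hit = tags[index] == tag
        if op == "W":
            if not hit:
                raise ValueError("write misses are not modeled in this sketch")
            if policy == "write-through":
                writes += 1                    # every write also updates main memory
            else:
                dirty[index] = True            # write back: update the cache, set the dirty bit
        elif not hit:                          # read miss: the line is replaced
            if dirty[index]:
                writes += 1                    # modified line is written back before release
            tags[index], dirty[index] = tag, False
    return writes

# Read word 0, write it four times, then read word 8 (same index, so word 0 is evicted).
trace = [("R", 0)] + [("W", 0)] * 4 + [("R", 8)]
print(memory_writes(trace, 8, "write-through"), memory_writes(trace, 8, "write-back"))  # -> 4 1
```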

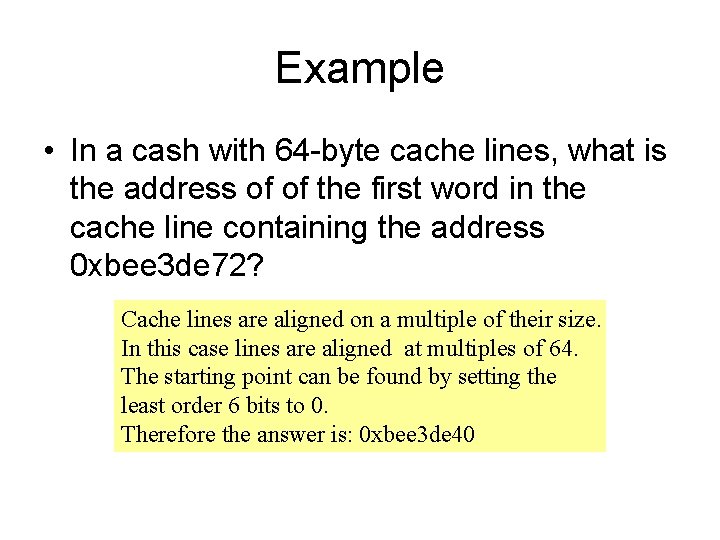

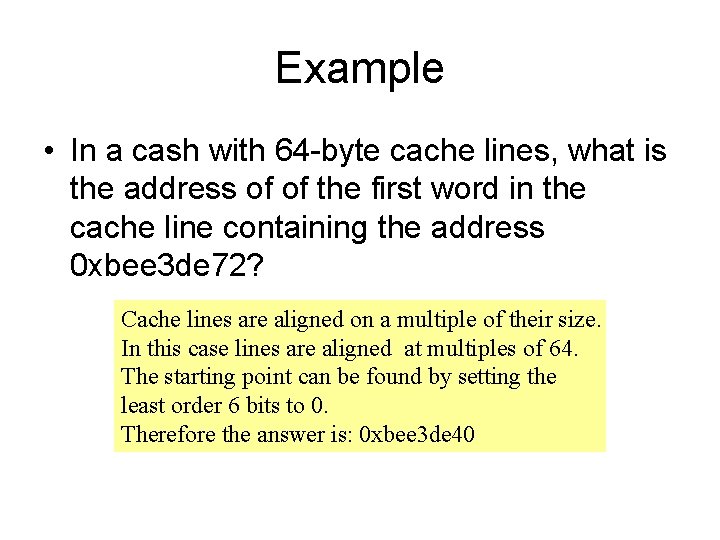

Example
• In a cache with 64-byte cache lines, what is the address of the first word in the cache line containing the address 0xbee3de72?
Cache lines are aligned on a multiple of their size; in this case, lines are aligned at multiples of 64. The starting address can be found by setting the 6 lowest-order bits to 0. Therefore the answer is 0xbee3de40.
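Clearing the low-order bits is a single mask operation. This sketch assumes the line size is a power of two; `line_start` is a hypothetical helper name.

```python
LINE_SIZE = 64                          # bytes; must be a power of two

def line_start(addr):
    return addr & ~(LINE_SIZE - 1)      # clear the 6 lowest-order bits

print(hex(line_start(0xBEE3DE72)))      # -> 0xbee3de40
```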

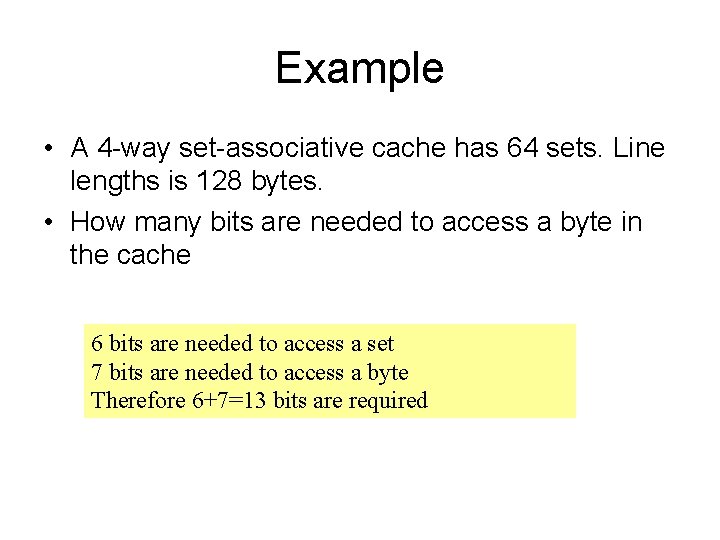

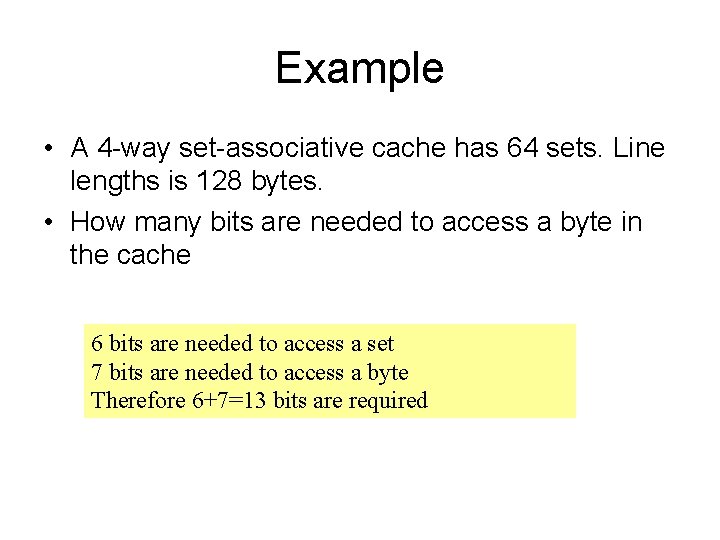

Example
• A 4-way set-associative cache has 64 sets. The line length is 128 bytes.
• How many bits are needed to access a byte in the cache?
  6 bits are needed to select a set and 7 bits are needed to select a byte within a line. Therefore 6 + 7 = 13 bits are required.
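A sketch of how those 13 bits are taken from an address: the low 7 bits give the byte offset and the next 6 bits give the set. The way within the set is not selected by address bits but by tag comparison. The capacity it prints (32 KB) is derived from 64 × 4 × 128 and is not stated on the slide; `set_and_offset` is a hypothetical helper.

```python
SETS, WAYS, LINE_BYTES = 64, 4, 128
SET_BITS = SETS.bit_length() - 1            # 6
OFFSET_BITS = LINE_BYTES.bit_length() - 1   # 7

def set_and_offset(addr):
    offset = addr & (LINE_BYTES - 1)                 # low 7 bits: byte within the line
    set_index = (addr >> OFFSET_BITS) & (SETS - 1)   # next 6 bits: which set
    return set_index, offset

print(SET_BITS + OFFSET_BITS, SETS * WAYS * LINE_BYTES)  # -> 13 32768
```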

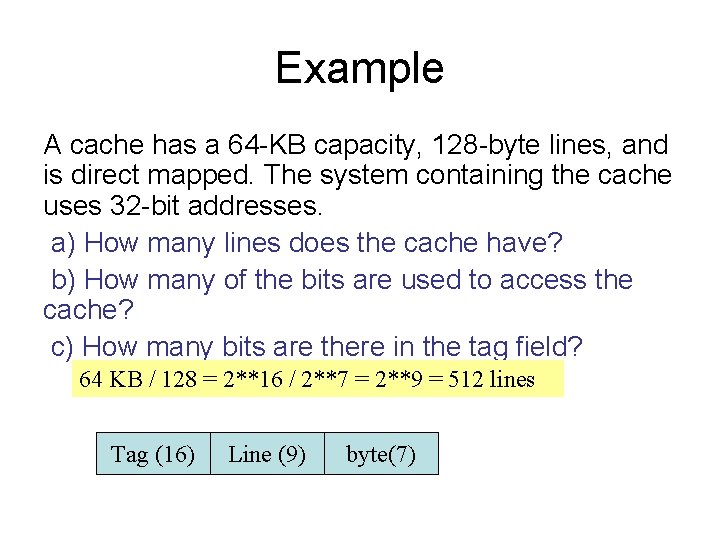

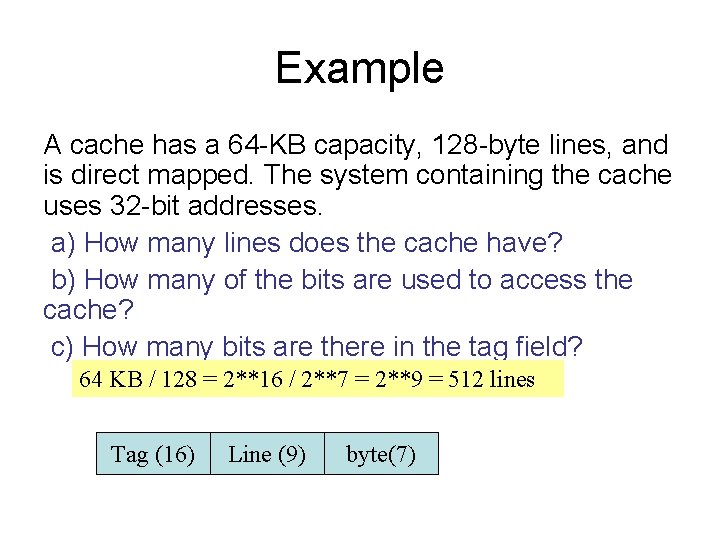

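Example
A cache has a 64-KB capacity, 128-byte lines, and is direct mapped. The system containing the cache uses 32-bit addresses.
a) How many lines does the cache have?
b) How many of the bits are used to access the cache?
c) How many bits are there in the tag field?
a) 64 KB / 128 B = $2^{16} / 2^{7} = 2^{9}$ = 512 lines.
b) 9 bits select the line and 7 bits select the byte within the line, so 9 + 7 = 16 bits are used to access the cache.
c) 32 − 16 = 16 bits in the tag field.
Address fields: Tag (16 bits) | Line (9 bits) | Byte (7 bits)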
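The three fields can be extracted from a 32-bit address with shifts and masks. This sketch uses a hypothetical `fields` helper and an arbitrary example address; the tag, line, and byte values reassemble into the original address.

```python
ADDRESS_BITS, CAPACITY, LINE_BYTES = 32, 64 * 1024, 128

num_lines = CAPACITY // LINE_BYTES                  # 512 lines
line_bits = num_lines.bit_length() - 1              # 9
byte_bits = LINE_BYTES.bit_length() - 1             # 7
tag_bits = ADDRESS_BITS - line_bits - byte_bits     # 16

def fields(addr):
    """Split a 32-bit byte address into (tag, line, byte)."""
    byte = addr & (LINE_BYTES - 1)
    line = (addr >> byte_bits) & (num_lines - 1)
    tag = addr >> (byte_bits + line_bits)
    return tag, line, byte

print(num_lines, line_bits + byte_bits, tag_bits)   # -> 512 16 16
```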

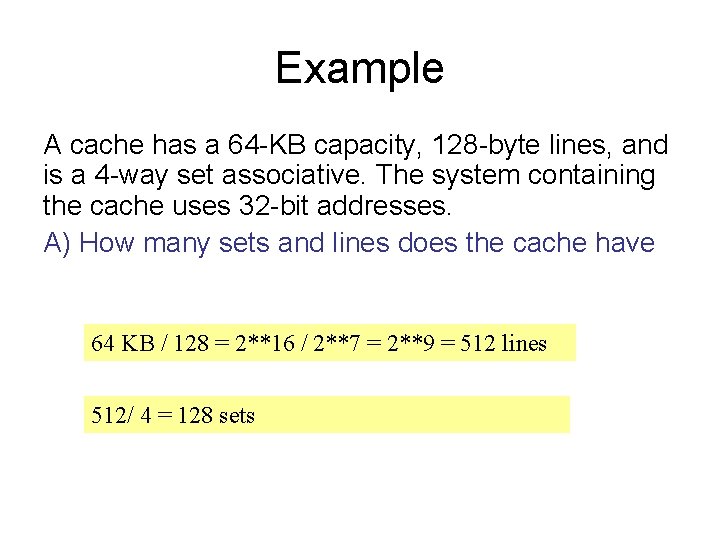

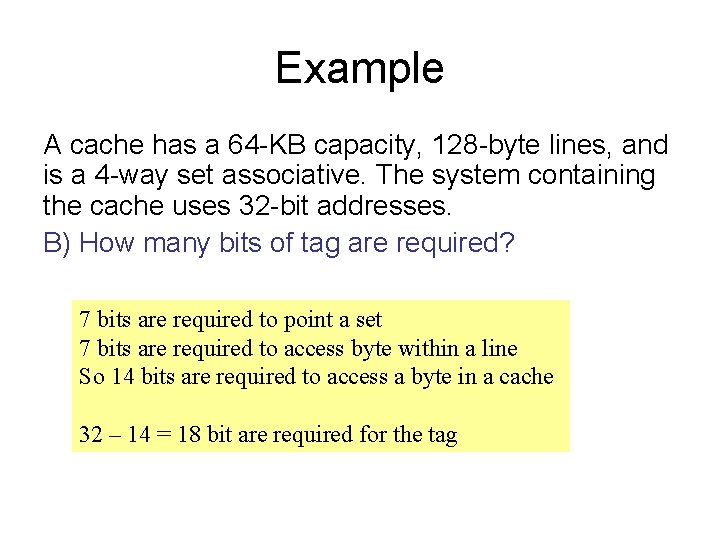

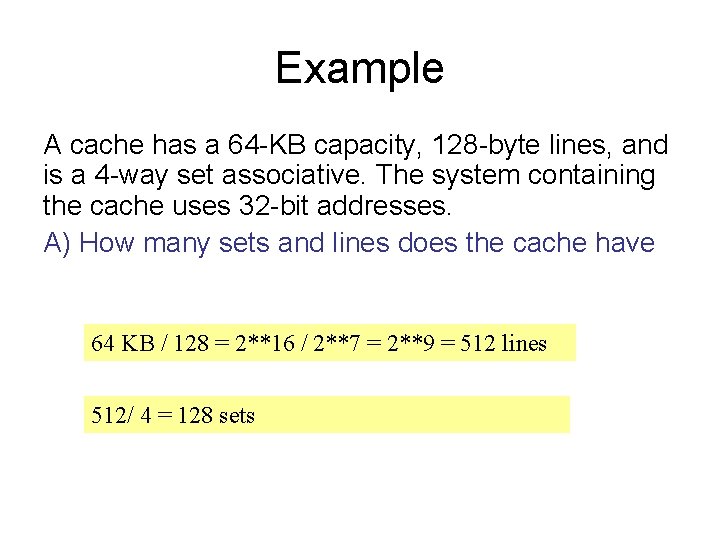

Example
A cache has a 64-KB capacity, 128-byte lines, and is 4-way set-associative. The system containing the cache uses 32-bit addresses.
a) How many sets and lines does the cache have?
64 KB / 128 B = $2^{16} / 2^{7} = 2^{9}$ = 512 lines; 512 / 4 = 128 sets.

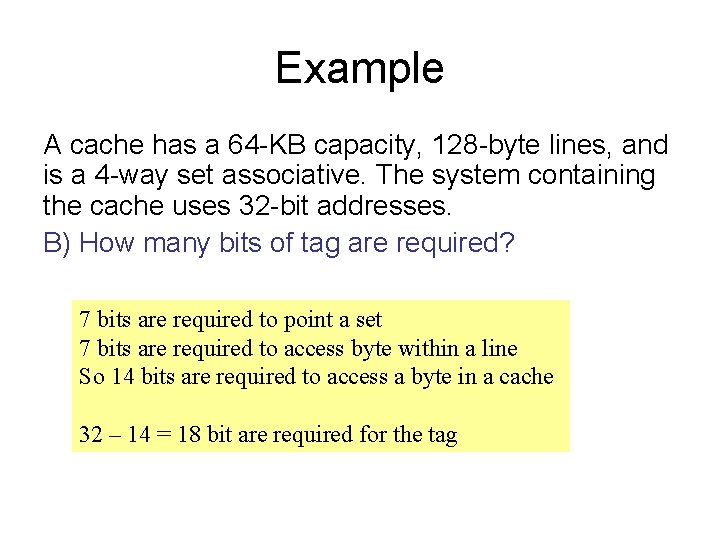

Example
A cache has a 64-KB capacity, 128-byte lines, and is 4-way set-associative. The system containing the cache uses 32-bit addresses.
b) How many bits of tag are required?
7 bits are required to select a set (128 = $2^{7}$ sets) and 7 bits are required to access a byte within a line, so 14 bits are required to access a byte in the cache. 32 − 14 = 18 bits are required for the tag.
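A general sketch (hypothetical function name, power-of-two sizes assumed) that covers both versions of this cache: with 4 ways it gives 128 sets and an 18-bit tag, and with 1 way it reduces to the direct mapped case with a 16-bit tag. Going from 1 to 4 ways moves 2 bits from the index into the tag.

```python
def set_associative_fields(address_bits, capacity, line_bytes, ways):
    num_lines = capacity // line_bytes
    num_sets = num_lines // ways
    set_bits = num_sets.bit_length() - 1
    byte_bits = line_bytes.bit_length() - 1
    tag_bits = address_bits - set_bits - byte_bits
    return num_lines, num_sets, set_bits, byte_bits, tag_bits

print(set_associative_fields(32, 64 * 1024, 128, 4))  # -> (512, 128, 7, 7, 18)
```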

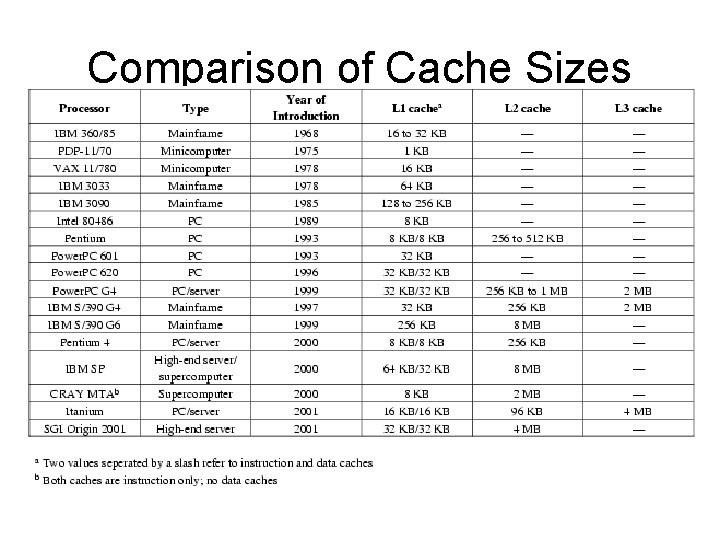

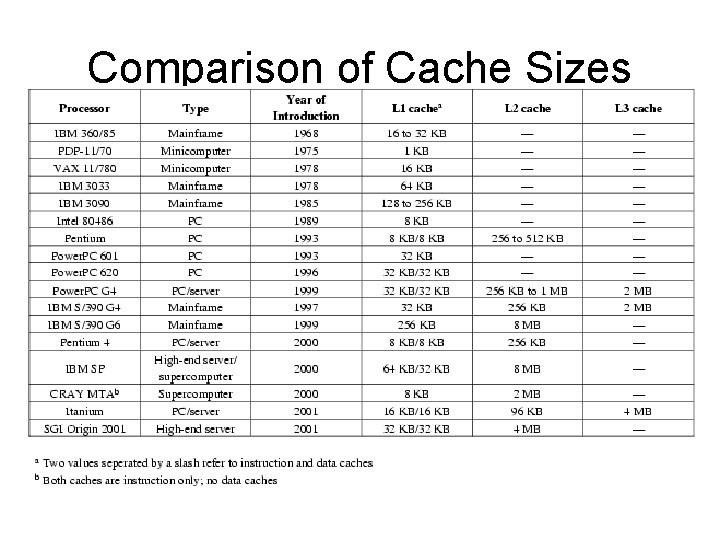

Comparison of Cache Sizes

Virtual Memory

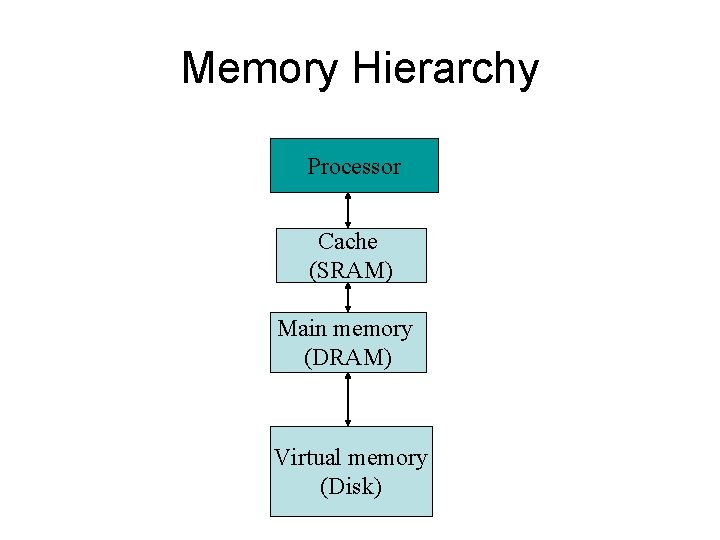

Memory Hierarchy: Processor → Cache (SRAM) → Main memory (DRAM) → Virtual memory (disk)

Virtual Memory
• Programs see the whole address space and do not need to be concerned with physical addresses.
• The virtual address space is the logical address space seen by the program.
• The physical address space is the memory that is actually accessible.
• Hardware and software mechanisms are needed to map logical addresses to physical addresses.

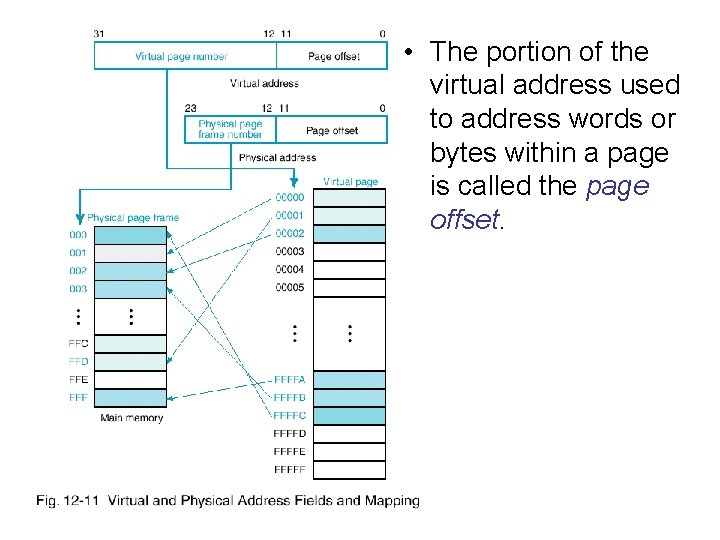

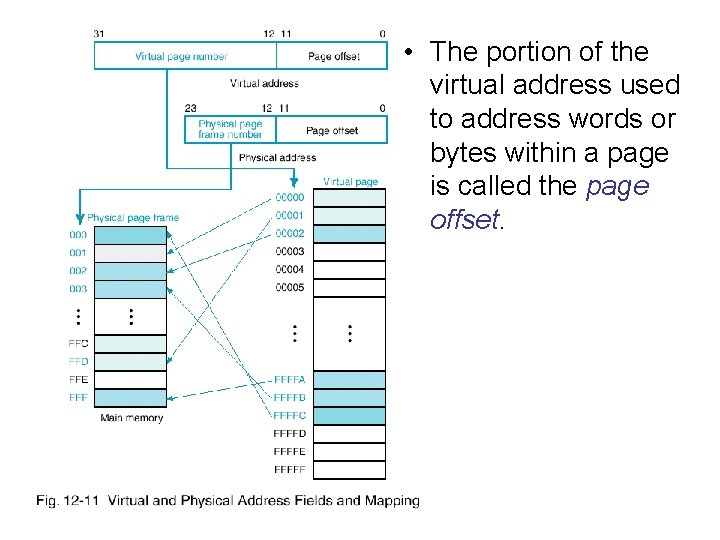

Virtual Memory
• To facilitate mapping virtual addresses to physical addresses, the virtual address space is divided into pages.
• Main memory is likewise divided into fixed blocks of the same size, called page frames.
Example: Assume that a page consists of 4 KB ($2^{12}$ bytes) and that 32 bits are used to address the virtual address space. The page number then has 32 − 12 = 20 bits, giving $2^{20}$ pages. If the main memory is 16 MB ($2^{24}$ bytes), the page frame number has 24 − 12 = 12 bits, giving $2^{12}$ page frames.

• The portion of the virtual address used to address words or bytes within a page is called the page offset.
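A sketch of the page arithmetic from the example: the page offset is the low 12 bits of the virtual address and the virtual page number is the remaining 20 bits. `split_virtual` and the address 0x00403ABC are hypothetical.

```python
PAGE_SIZE = 4 * 1024                            # 4 KB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1        # 12 bits of page offset
VIRTUAL_BITS = 32
PHYSICAL_BYTES = 16 * 1024 * 1024               # 16 MB main memory

num_pages = 2 ** (VIRTUAL_BITS - OFFSET_BITS)   # 2^20 virtual pages
num_frames = PHYSICAL_BYTES // PAGE_SIZE        # 2^12 page frames

def split_virtual(vaddr):
    """Return (virtual page number, page offset)."""
    return vaddr >> OFFSET_BITS, vaddr & (PAGE_SIZE - 1)

print(num_pages, num_frames, split_virtual(0x00403ABC))  # -> 1048576 4096 (1027, 2748)
```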

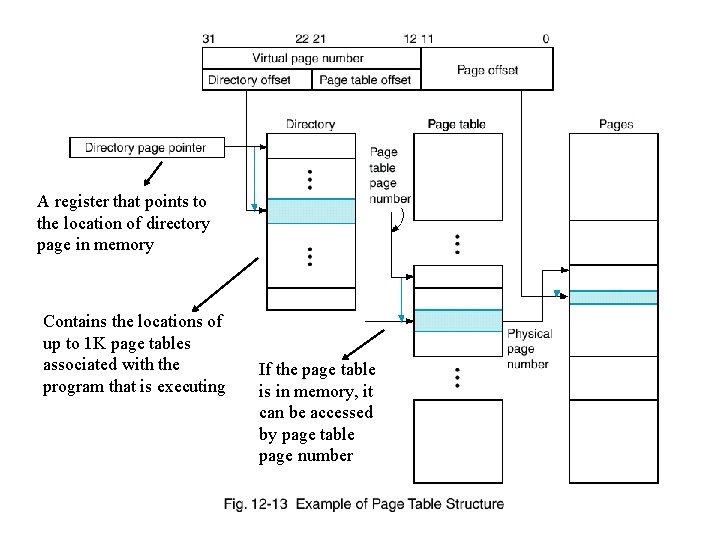

Page Tables
• Page tables are used to map virtual addresses to physical addresses.
• A special table for each program, called the directory page, provides the mappings used to locate the program's 4 KB page tables.

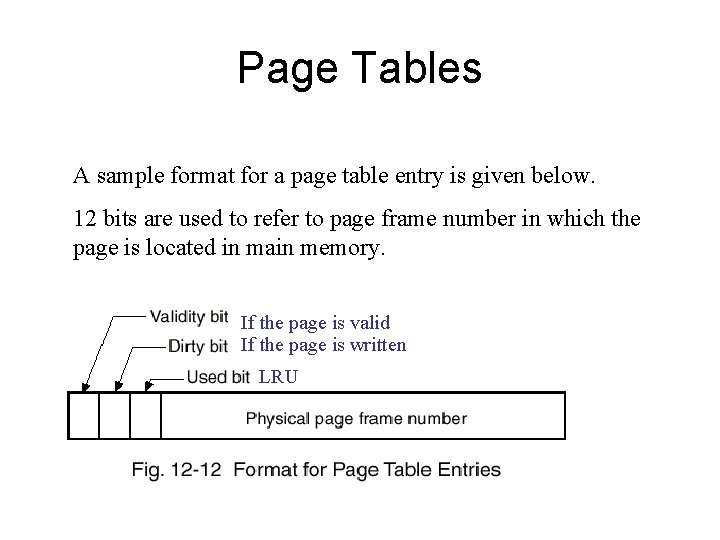

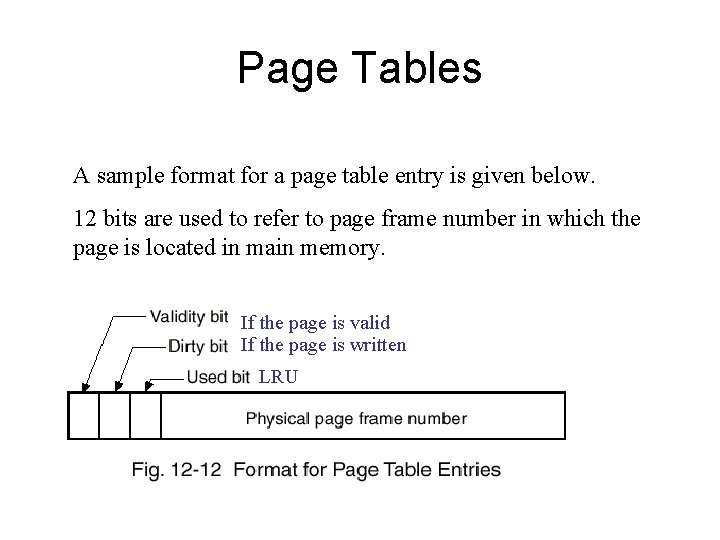

Page Tables
A sample format for a page table entry is shown in the figure. 12 bits hold the number of the page frame in which the page is located in main memory. The remaining fields record whether the page is valid, whether the page has been written, and LRU information for replacement.

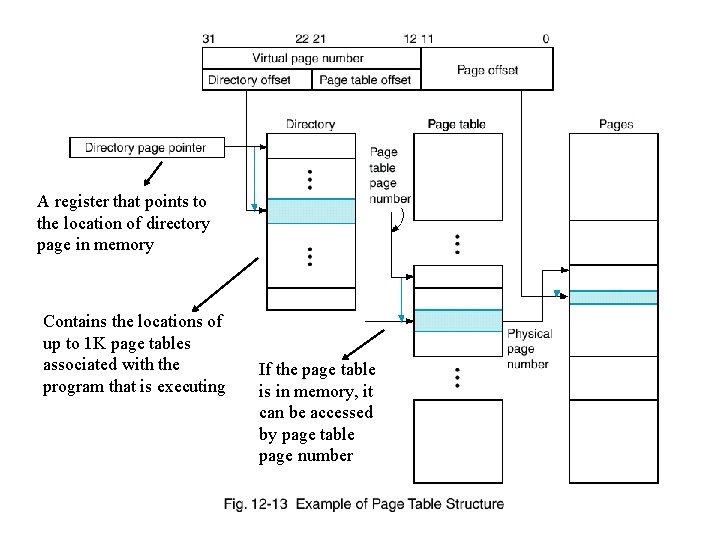

• Directory page register: points to the location of the directory page in memory.
• Directory page: contains the locations of up to 1 K page tables associated with the program that is executing.
• Page table: if it is in memory, it can be accessed by the page table page number.
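The directory-then-page-table lookup can be sketched as two dictionary lookups. The field split is an assumption consistent with the slides (32-bit virtual address, 4 KB pages, up to 1 K page tables, 4-byte entries so each table holds 1 K entries): a 10-bit directory index, a 10-bit page table index, and a 12-bit offset. The dictionaries, `translate`, and `PageFault` are hypothetical stand-ins for the tables in memory and the hardware fault.

```python
class PageFault(Exception):
    pass

def translate(vaddr, directory):
    """Two-level translation: directory page -> page table -> page frame."""
    dir_index = (vaddr >> 22) & 0x3FF      # top 10 bits select a page table
    table_index = (vaddr >> 12) & 0x3FF    # next 10 bits select an entry in it
    offset = vaddr & 0xFFF                 # low 12 bits: offset within the 4 KB page
    page_table = directory.get(dir_index)
    if page_table is None:
        raise PageFault(f"no page table for directory entry {dir_index}")
    frame = page_table.get(table_index)    # page frame number, or None if not in memory
    if frame is None:
        raise PageFault(f"page {vaddr >> 12:#x} is not in main memory")
    return (frame << 12) | offset

directory = {1: {3: 0x2A}}                 # hypothetical: one page table with one valid entry
print(hex(translate(0x00403ABC, directory)))  # -> 0x2aabc
```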

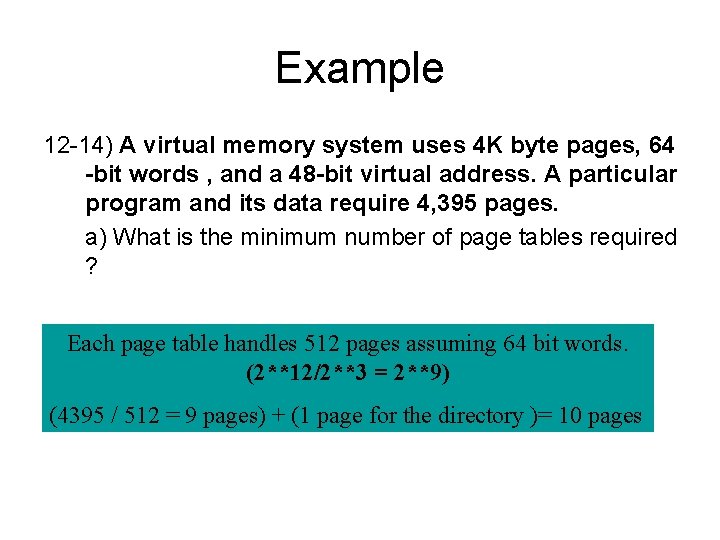

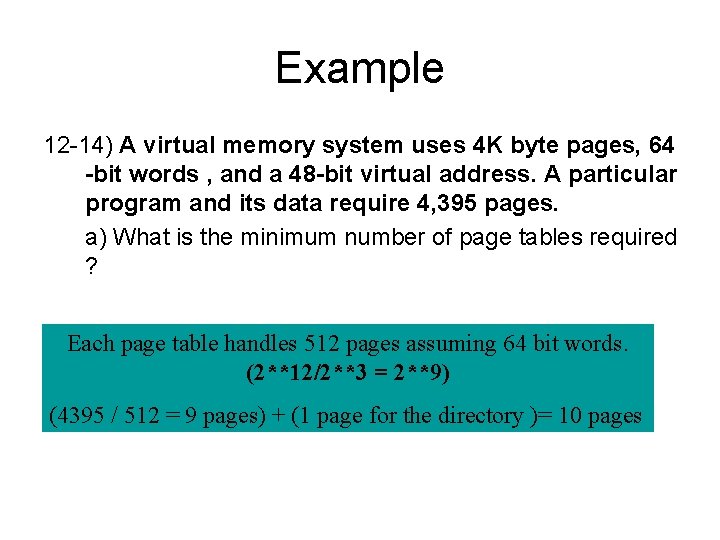

Example 12-14) A virtual memory system uses 4 KB pages, 64-bit words, and a 48-bit virtual address. A particular program and its data require 4,395 pages.
a) What is the minimum number of page tables required?
Assuming 64-bit (8-byte) page table entries, each page table handles $2^{12} / 2^{3} = 2^{9} = 512$ pages. So $\lceil 4395 / 512 \rceil = 9$ page tables are required; together with 1 page for the directory, this gives 10 pages.

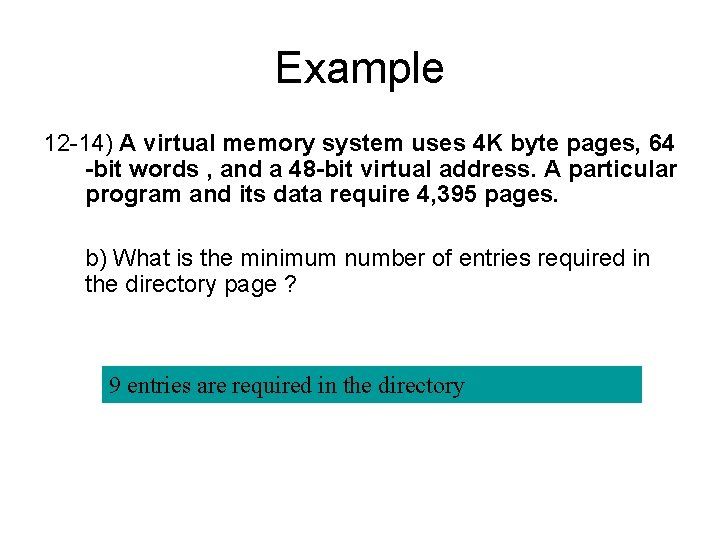

Example 12-14) (continued)
b) What is the minimum number of entries required in the directory page?
9 entries are required in the directory, one for each page table.

Example 12-14) (continued)
c) Based on your answers to a and b, how many entries are there in the last page table?
4395 − 8 × 512 = 299 entries in the last page table.
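All three answers follow from one division with rounding up. A sketch, assuming as the solution does that each page table entry occupies one 64-bit word:

```python
import math

PAGE_BYTES, WORD_BYTES, PROGRAM_PAGES = 4096, 8, 4395

entries_per_table = PAGE_BYTES // WORD_BYTES                 # 2^12 / 2^3 = 512
page_tables = math.ceil(PROGRAM_PAGES / entries_per_table)   # 9
directory_entries = page_tables                              # one entry per page table
last_table_entries = PROGRAM_PAGES - (page_tables - 1) * entries_per_table  # 299
total_pages = page_tables + 1                                # plus the directory page

print(page_tables, directory_entries, last_table_entries, total_pages)  # -> 9 9 299 10
```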

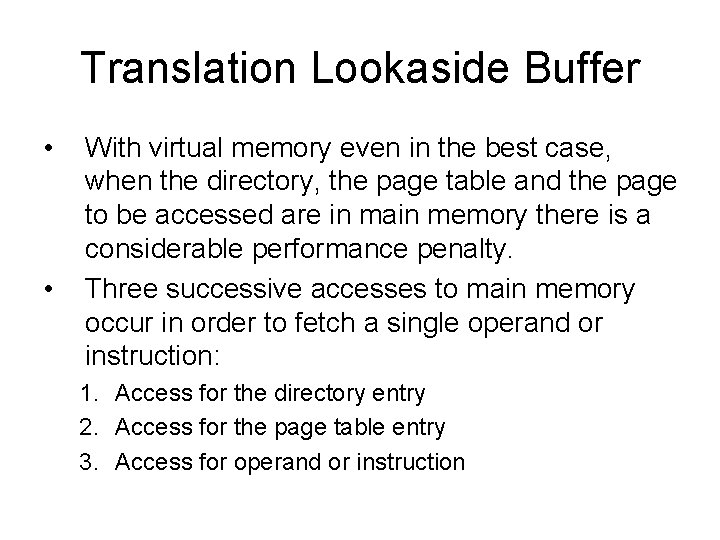

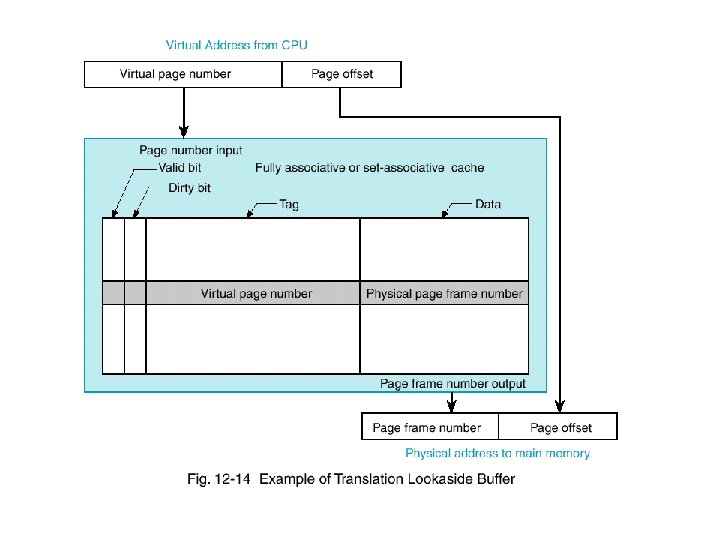

Translation Lookaside Buffer
• With virtual memory, even in the best case, when the directory, the page table, and the page to be accessed are all in main memory, there is a considerable performance penalty.
• Three successive accesses to main memory occur in order to fetch a single operand or instruction:
  1. Access for the directory entry
  2. Access for the page table entry
  3. Access for the operand or instruction

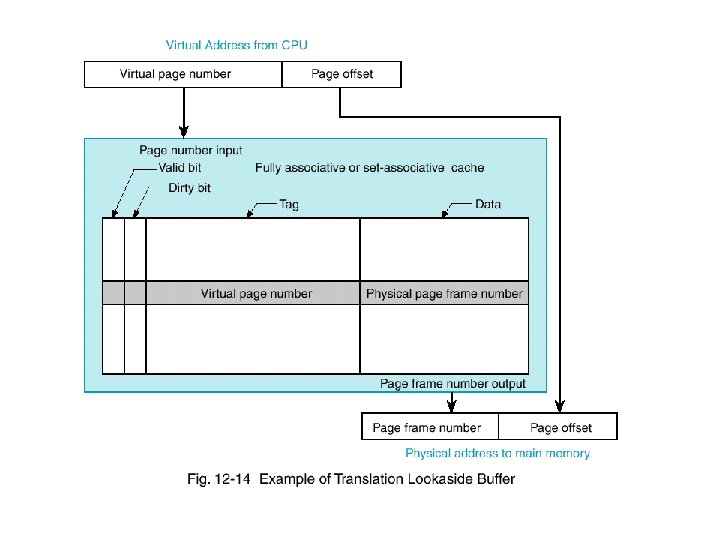

Translation Lookaside Buffer
• A translation lookaside buffer (TLB) is a cache used to translate a virtual address directly into a physical address.

Translation Lookaside Buffer
• If there is a TLB miss, main memory is accessed for the directory entry and the page table entry.
• If the page is present in main memory, its page table entry is brought into the TLB.
• If the page is not present in main memory, a page fault occurs.
  – A software-implemented action fetches the page from the disk into main memory.
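A sketch of the saving, counting main memory accesses per operand fetch under the three-access model above: a TLB hit needs only the operand access, while a miss also needs the directory and page table entries. The flat `page_table` dictionary stands in for the directory and page tables, and the TLB capacity and LRU replacement are assumptions; all names are hypothetical.

```python
from collections import OrderedDict

class TLB:
    """Tiny TLB sketch: virtual page number -> page frame number, LRU replacement (assumed)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()

    def memory_accesses(self, vpn, page_table):
        """Main memory accesses needed to fetch one operand from virtual page vpn."""
        if vpn in self.entries:
            self.entries.move_to_end(vpn)      # TLB hit: translation already cached
            return 1                           # only the operand access remains
        if vpn not in page_table:
            raise LookupError(f"page fault on page {vpn}: fetch it from disk")
        if len(self.entries) == self.capacity:
            self.entries.popitem(last=False)   # evict the least recently used translation
        self.entries[vpn] = page_table[vpn]    # bring the page table entry into the TLB
        return 3                               # directory entry + page table entry + operand

page_table = {0: 7, 1: 9}                      # hypothetical mapping of resident pages
tlb = TLB(capacity=2)
costs = [tlb.memory_accesses(vpn, page_table) for vpn in [0, 0, 1, 0]]
print(costs, sum(costs))                       # -> [3, 1, 3, 1] 8
```

Without a TLB the same four fetches would need 4 × 3 = 12 main memory accesses.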