Memory Management

Tom Roeder, CS 215, Fall 2006


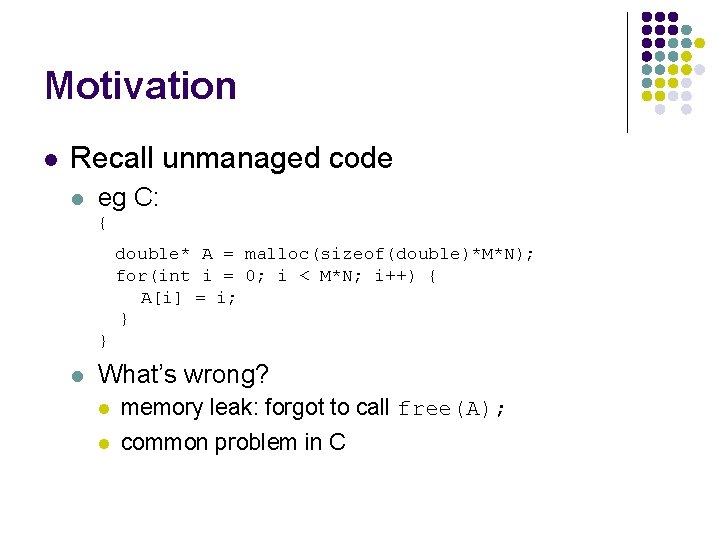

Motivation

- Recall unmanaged code
  - e.g. in C: `{ double* A = malloc(sizeof(double)*M*N); for(int i = 0; i < M*N; i++) { A[i] = i; } }`
- What's wrong?
  - memory leak: forgot to call `free(A);`
  - common problem in C
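A minimal sketch of the leak-free version of the snippet above. The helper names `make_filled` and `sum_and_release` are hypothetical and chosen only for illustration; the point is that every successful `malloc` is paired with exactly one `free`.

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical helper: allocate an m*n array and fill it as on the slide.
   Returns NULL if the allocation fails. The caller owns the result. */
double *make_filled(size_t m, size_t n) {
    double *a = malloc(sizeof(double) * m * n);
    if (a == NULL) {
        return NULL;
    }
    for (size_t i = 0; i < m * n; i++) {
        a[i] = (double)i;
    }
    return a;
}

/* Use the array, then release it. The free() call is what the slide's
   code forgot. Returns -1.0 if the allocation failed. */
double sum_and_release(size_t m, size_t n) {
    double *a = make_filled(m, n);
    if (a == NULL) {
        return -1.0;
    }
    double sum = 0.0;
    for (size_t i = 0; i < m * n; i++) {
        sum += a[i];
    }
    free(a); /* pairs with the malloc in make_filled */
    return sum;
}
```

The ownership rule ("whoever receives the pointer must free it") lives only in a comment, which is exactly the kind of manual bookkeeping the rest of the lecture tries to remove.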


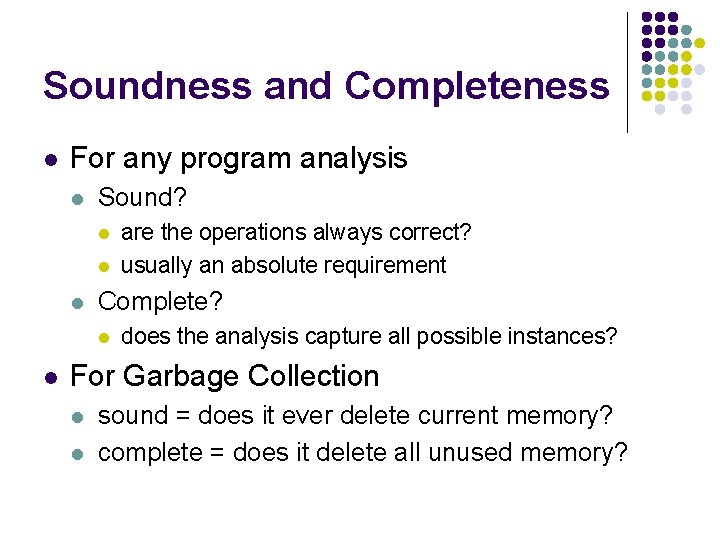

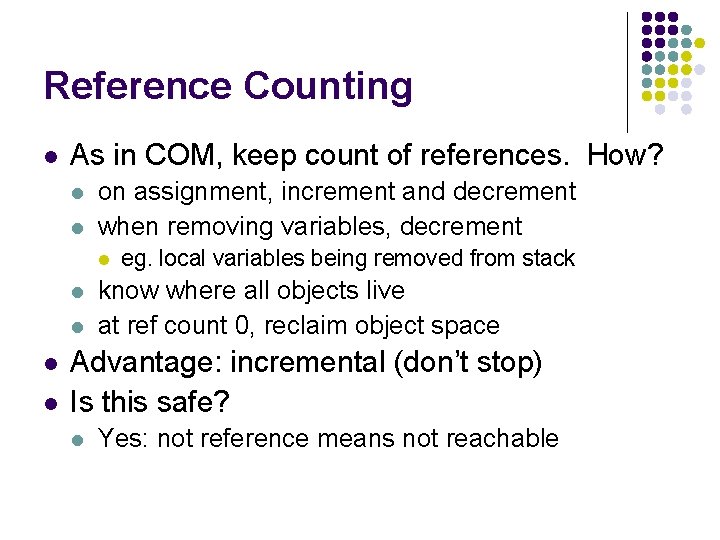

Motivation

- What's wrong here? `char* f(){ char c[100]; for(int i = 0; i < 100; i++) { c[i] = i; } return c; }`
  - Returning memory allocated on the stack
- Can you still do this in C#?
  - no: array sizes must be specified in `new` expressions
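Two common ways to repair `f` in C, sketched under the assumption that the caller only needs the 100 filled bytes. The names `make_bytes` and `fill_bytes` are hypothetical.

```c
#include <stddef.h>
#include <stdlib.h>

/* Option 1: allocate on the heap, so the storage outlives the call.
   The caller must free() the result. Returns NULL on failure. */
char *make_bytes(size_t n) {
    char *c = malloc(n);
    if (c == NULL) {
        return NULL;
    }
    for (size_t i = 0; i < n; i++) {
        c[i] = (char)i; /* fine for n <= 128, as in the slide's n = 100 */
    }
    return c;
}

/* Option 2: the caller supplies the storage, so nothing allocated
   inside the function has to outlive it. */
void fill_bytes(char *buf, size_t n) {
    for (size_t i = 0; i < n; i++) {
        buf[i] = (char)i;
    }
}
```

Option 1 reintroduces the leak risk from the previous slide; option 2 avoids allocation but pushes the size decision to the caller.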


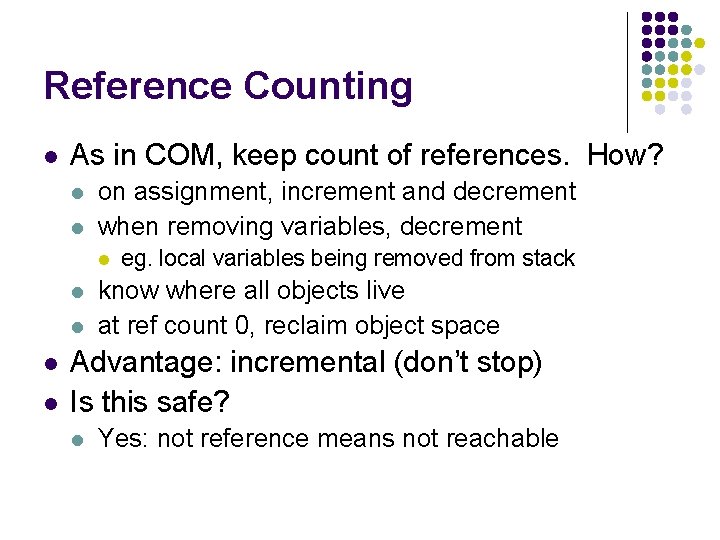

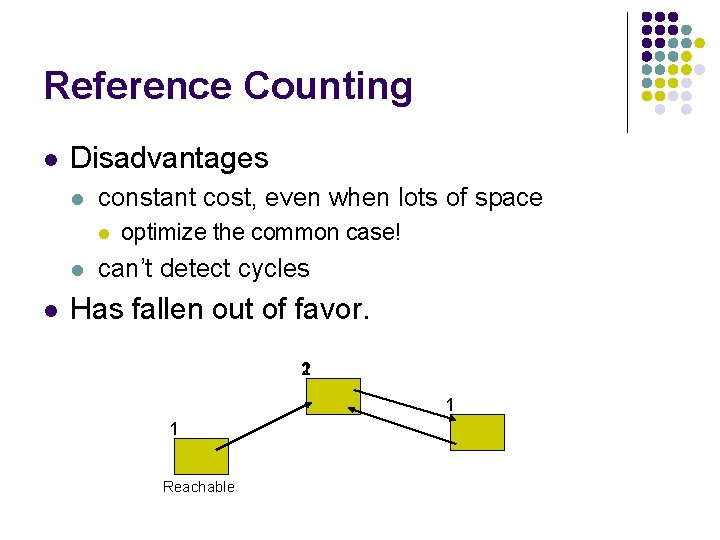

Motivation

- Solution: no explicit malloc/free (new/delete)
  - e.g. in Java/C#: `{ double[] A = new double[M*N]; for(int i = 0; i < M*N; i++) { A[i] = i; } }`
  - No leak: memory is "lost" but freed later
- A garbage collector tries to free memory
  - keeps track of used information somehow

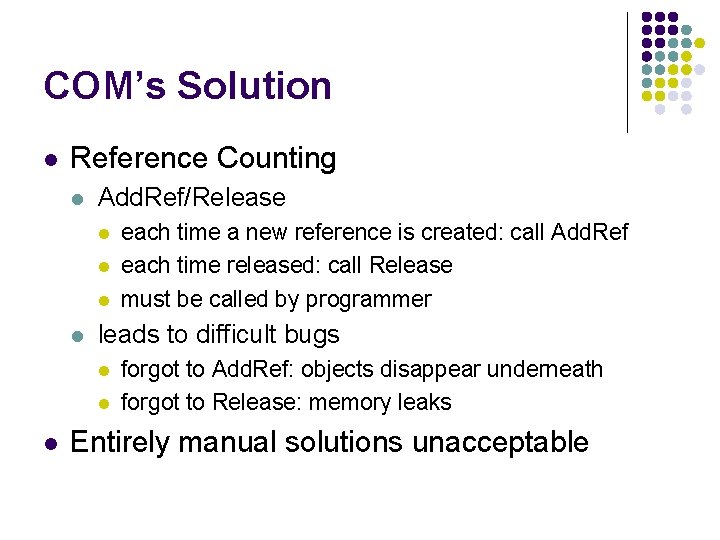

COM's Solution

- Reference counting
  - AddRef/Release
  - each time a new reference is created: call AddRef
  - each time a reference is released: call Release
  - must be called by the programmer
- Leads to difficult bugs
  - forgot to AddRef: objects disappear underneath you
  - forgot to Release: memory leaks
- Entirely manual solutions are unacceptable

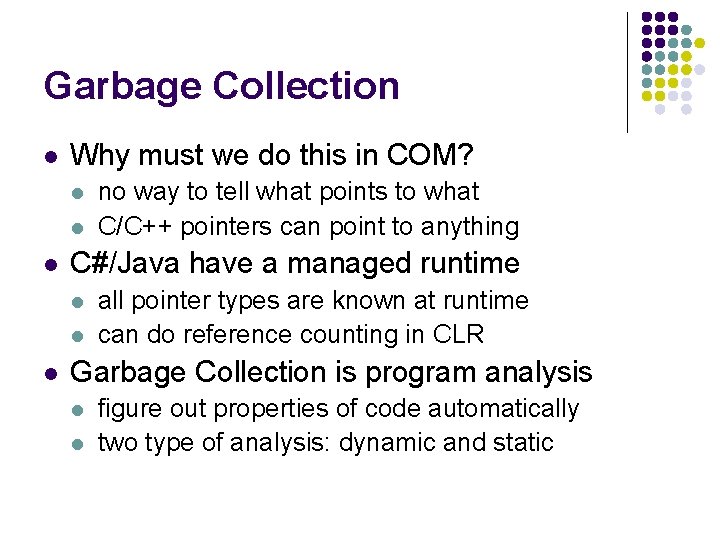

Garbage Collection

- Why must we do this in COM?
  - no way to tell what points to what
  - C/C++ pointers can point to anything
- C#/Java have a managed runtime
  - all pointer types are known at runtime
  - can do reference counting in the CLR
- Garbage collection is program analysis
  - figure out properties of code automatically
  - two types of analysis: dynamic and static

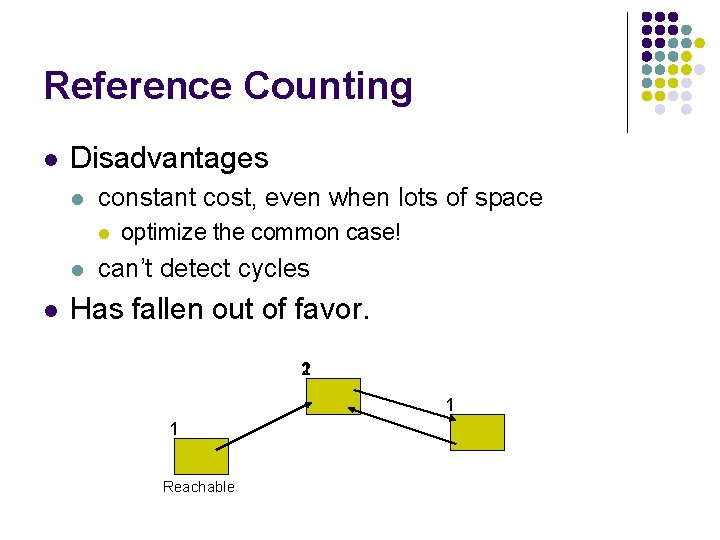

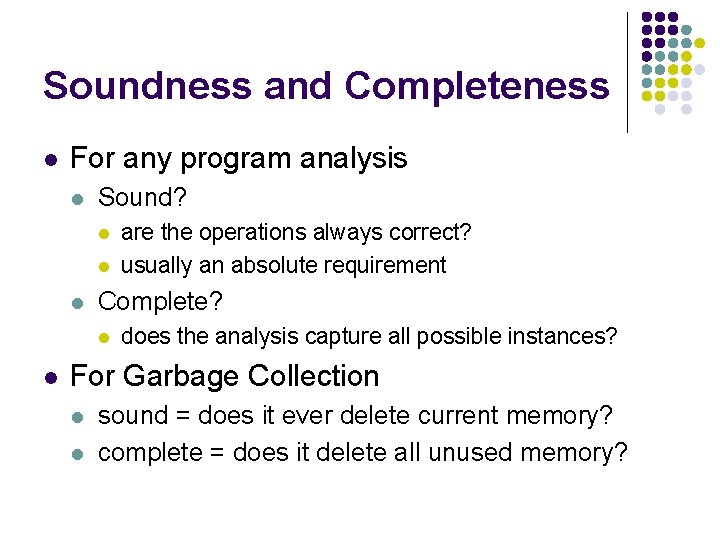

Soundness and Completeness

- For any program analysis
  - Sound?
    - are the operations always correct?
    - usually an absolute requirement
  - Complete?
    - does the analysis capture all possible instances?
- For garbage collection
  - sound = it never deletes memory that is still in use
  - complete = it deletes all unused memory

Reference Counting

- As in COM, keep a count of references. How?
  - on assignment, increment (new target) and decrement (old target)
  - when removing variables, decrement
    - e.g. local variables being removed from the stack
    - requires knowing where all objects live
  - at ref count 0, reclaim the object's space
- Advantage: incremental (don't stop)
- Is this safe?
  - Yes: not referenced means not reachable
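The counting rules can be sketched in C as follows. This is an illustrative toy, not COM's or the CLR's implementation: the `Obj` layout, the single `child` field, and all function names are assumptions made for the example.

```c
#include <stdlib.h>

typedef struct Obj {
    int refs;          /* number of live references to this object */
    struct Obj *child; /* one owned reference to another object, or NULL */
} Obj;

static int live_objects = 0; /* bookkeeping for demonstration only */

int obj_live_count(void) { return live_objects; }

/* A new object starts with one reference: the variable receiving it. */
Obj *obj_new(void) {
    Obj *o = malloc(sizeof *o);
    if (o == NULL) {
        return NULL;
    }
    o->refs = 1;
    o->child = NULL;
    live_objects++;
    return o;
}

void obj_retain(Obj *o) {
    if (o != NULL) {
        o->refs++;
    }
}

/* Decrement; at count 0 reclaim the object and release what it owned. */
void obj_release(Obj *o) {
    while (o != NULL && --o->refs == 0) {
        Obj *next = o->child;
        free(o);
        live_objects--;
        o = next;
    }
}

/* Assignment "*slot = value": increment the new target, decrement the old. */
void obj_assign(Obj **slot, Obj *value) {
    obj_retain(value); /* retain first so self-assignment is safe */
    obj_release(*slot);
    *slot = value;
}
```

Every pointer store pays for an increment and a decrement, which is the constant cost that the trade-off discussion of reference counting refers to.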

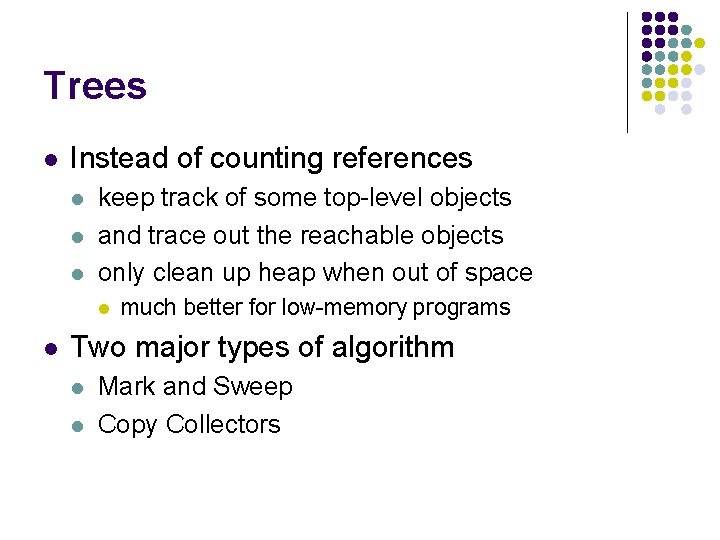

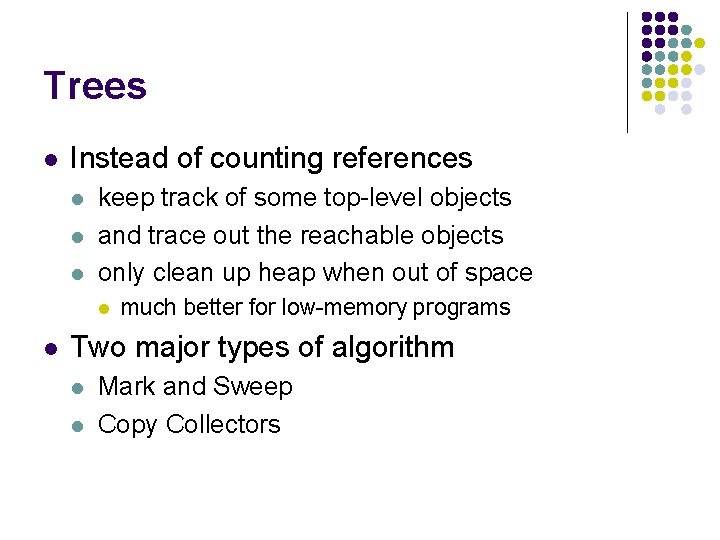

Reference Counting

- Disadvantages
  - constant cost, even when there is lots of space
    - optimize the common case!
  - can't detect cycles
- Has fallen out of favor.

[Figure: object graph annotated with reference counts 1, 2, 1, 1 and a "Reachable" label]
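The cycle problem can be shown with a few lines of C. This sketch uses a hypothetical `Node` type with one outgoing reference; it deliberately leaks two nodes to demonstrate the failure.

```c
#include <stdlib.h>

typedef struct Node {
    int refs;
    struct Node *next; /* counted reference, or NULL */
} Node;

int live_nodes = 0; /* bookkeeping for demonstration only */

Node *node_new(void) {
    Node *n = malloc(sizeof *n);
    if (n == NULL) {
        return NULL;
    }
    n->refs = 1;
    n->next = NULL;
    live_nodes++;
    return n;
}

void node_release(Node *n) {
    while (n != NULL && --n->refs == 0) {
        Node *next = n->next;
        free(n);
        live_nodes--;
        n = next;
    }
}

void node_set_next(Node *n, Node *target) {
    if (target != NULL) {
        target->refs++;
    }
    node_release(n->next);
    n->next = target;
}

/* Build a -> b -> a, drop both external references, and report how
   many nodes are still allocated. Returns -1 if allocation failed. */
int leak_a_cycle(void) {
    Node *a = node_new();
    Node *b = node_new();
    if (a == NULL || b == NULL) {
        node_release(a);
        node_release(b);
        return -1;
    }
    node_set_next(a, b); /* b: 2 references */
    node_set_next(b, a); /* a: 2 references */
    node_release(a);     /* a: 1, held only by b */
    node_release(b);     /* b: 1, held only by a */
    return live_nodes;   /* both unreachable, neither reclaimed */
}
```

Neither count ever reaches 0, so the two nodes are unreachable garbage that pure reference counting cannot reclaim.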

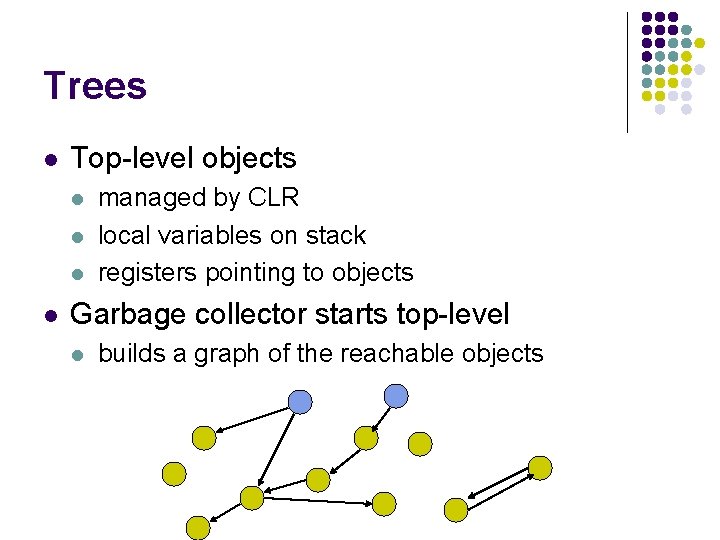

Trees

- Instead of counting references
  - keep track of some top-level objects
  - and trace out the reachable objects
  - only clean up the heap when out of space
    - much better for low-memory programs
- Two major types of algorithm
  - Mark and Sweep
  - Copy Collectors

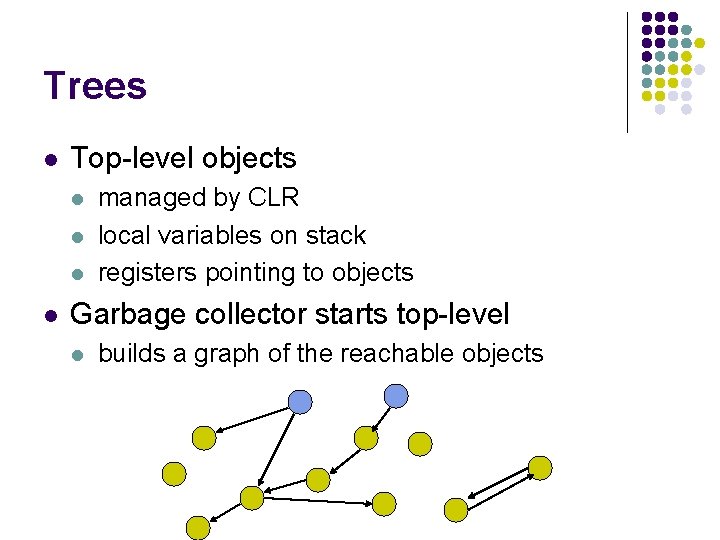

Trees

- Top-level objects
  - managed by the CLR
  - local variables on the stack
  - registers pointing to objects
- Garbage collector starts from the top-level objects
  - builds a graph of the reachable objects

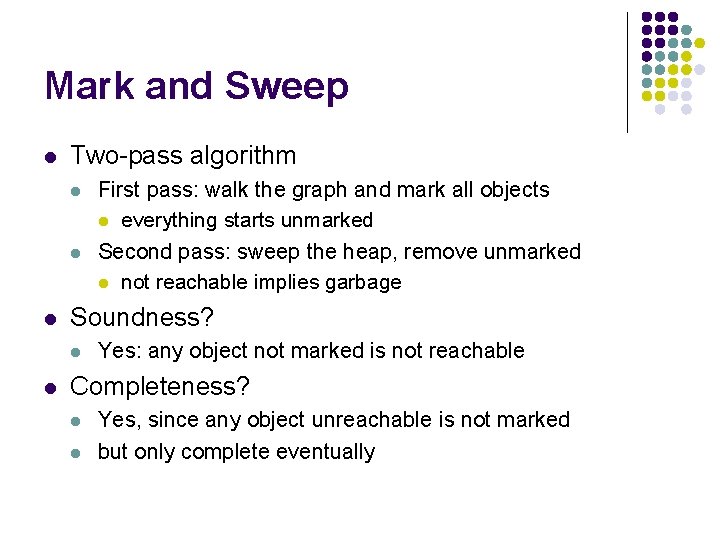

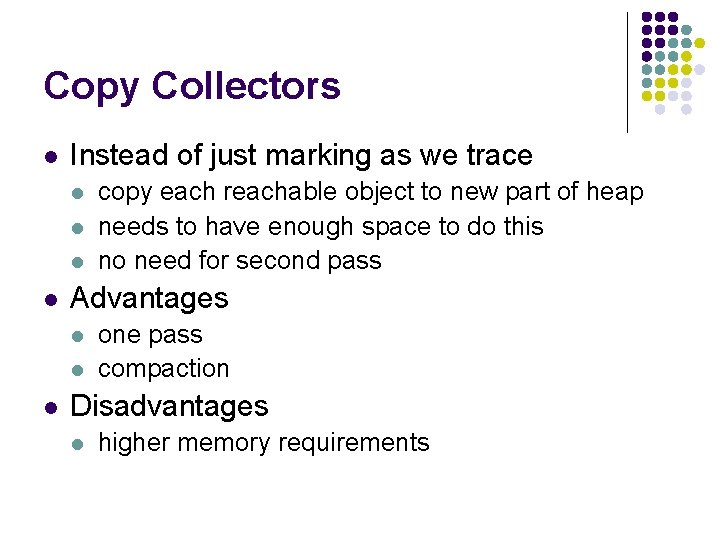

Mark and Sweep

- Two-pass algorithm
  - First pass: walk the graph and mark all objects
    - everything starts unmarked
  - Second pass: sweep the heap, remove unmarked objects
    - not reachable implies garbage
- Soundness?
  - Yes: any object not marked is not reachable
- Completeness?
  - Yes, since any unreachable object is not marked
  - but only complete eventually
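The two passes can be sketched over a small fixed pool of cells. The pool size, the `Cell` layout with two outgoing references, and the function names are assumptions for illustration; the recursive `mark` is fine for a toy but a real collector would avoid unbounded recursion.

```c
#include <stdbool.h>
#include <stddef.h>

#define HEAP_SIZE 8
#define MAX_REFS 2

typedef struct Cell {
    bool in_use;
    bool marked;
    struct Cell *refs[MAX_REFS]; /* outgoing references, NULL if unused */
} Cell;

static Cell heap[HEAP_SIZE];

/* Hand out the first unused cell, or NULL if the pool is full. */
Cell *cell_alloc(void) {
    for (size_t i = 0; i < HEAP_SIZE; i++) {
        if (!heap[i].in_use) {
            heap[i] = (Cell){ .in_use = true };
            return &heap[i];
        }
    }
    return NULL;
}

/* First pass: walk the graph from one object and mark what it reaches. */
static void mark(Cell *c) {
    if (c == NULL || c->marked) {
        return; /* the marked check also terminates on cycles */
    }
    c->marked = true;
    for (size_t j = 0; j < MAX_REFS; j++) {
        mark(c->refs[j]);
    }
}

/* Run both passes; returns the number of cells reclaimed. */
size_t collect(Cell **roots, size_t nroots) {
    for (size_t i = 0; i < HEAP_SIZE; i++) {
        heap[i].marked = false; /* everything starts unmarked */
    }
    for (size_t r = 0; r < nroots; r++) {
        mark(roots[r]);
    }
    size_t freed = 0;
    for (size_t i = 0; i < HEAP_SIZE; i++) { /* second pass: sweep */
        if (heap[i].in_use && !heap[i].marked) {
            heap[i].in_use = false;
            freed++;
        }
    }
    return freed;
}
```

Unlike reference counting, an unreachable cycle is reclaimed here because nothing in it is ever marked.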

Mark and Sweep

- Can be expensive
  - e.g. emacs
  - everything stops and collection happens
  - this is a general problem for garbage collection
- At the end of the first phase, we know all reachable objects
  - should use this information
  - how could we use it?

Copy Collectors

- Instead of just marking as we trace
  - copy each reachable object to a new part of the heap
  - needs to have enough space to do this
- Advantages
  - no need for a second pass
  - one-pass compaction
- Disadvantages
  - higher memory requirements

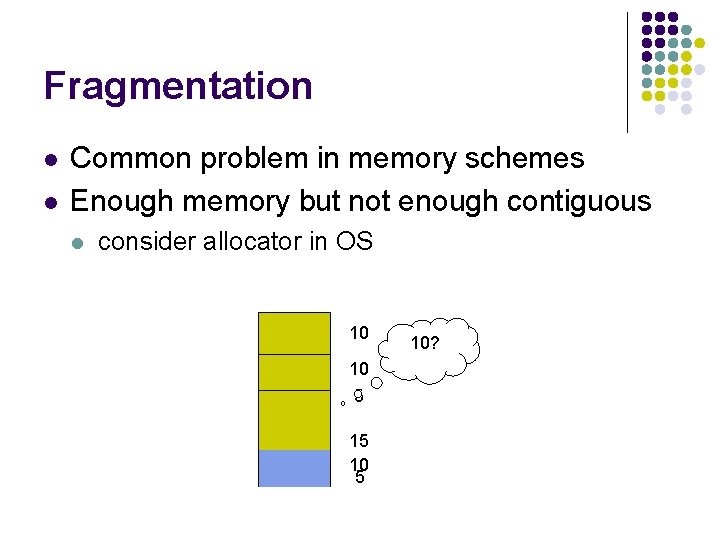

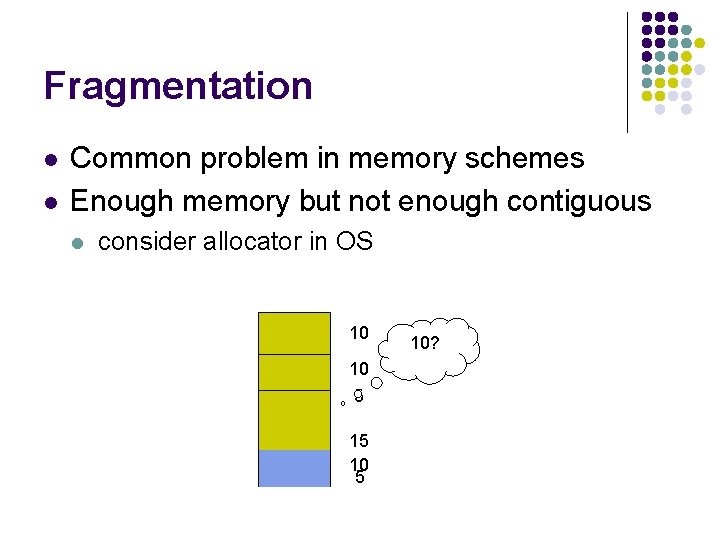

Fragmentation

- Common problem in memory schemes
- Enough memory but not enough contiguous
  - consider the allocator in an OS

[Figure: memory blocks labeled 10, 10, 5, 15 and a request labeled "10?"]

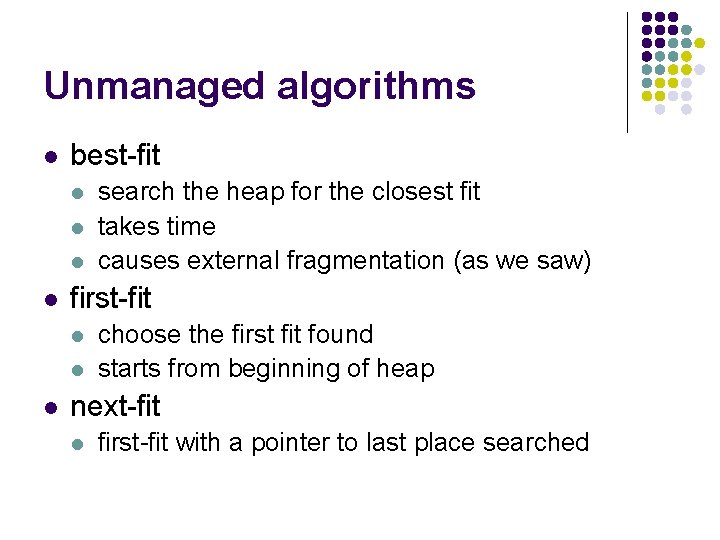

Unmanaged algorithms

- best-fit
  - search the heap for the closest fit
  - takes time
  - causes external fragmentation (as we saw)
- first-fit
  - choose the first fit found
  - starts from the beginning of the heap
- next-fit
  - first-fit with a pointer to the last place searched
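The three placement policies differ only in which hole they pick. A minimal sketch, assuming the free list is just an array of hole sizes (a simplification; real allocators also track addresses and split holes):

```c
#include <stddef.h>

/* Each function returns the index of the chosen hole, or -1 if no hole
   is large enough. holes[i] is the size of free hole i. */

/* first-fit: scan from the beginning, take the first hole that fits. */
int first_fit(const size_t *holes, int n, size_t req) {
    for (int i = 0; i < n; i++) {
        if (holes[i] >= req) {
            return i;
        }
    }
    return -1;
}

/* best-fit: scan everything, take the smallest hole that still fits. */
int best_fit(const size_t *holes, int n, size_t req) {
    int best = -1;
    for (int i = 0; i < n; i++) {
        if (holes[i] >= req && (best < 0 || holes[i] < holes[best])) {
            best = i;
        }
    }
    return best;
}

/* next-fit: first-fit, but resume from where the last search stopped.
   *cursor must be in [0, n) and is updated on success. */
int next_fit(const size_t *holes, int n, size_t req, int *cursor) {
    for (int k = 0; k < n; k++) {
        int i = (*cursor + k) % n;
        if (holes[i] >= req) {
            *cursor = i;
            return i;
        }
    }
    return -1;
}
```

With holes of sizes 20, 8, 12, 30 and a request of 10, first-fit picks the 20, while best-fit scans the whole list and picks the 12.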

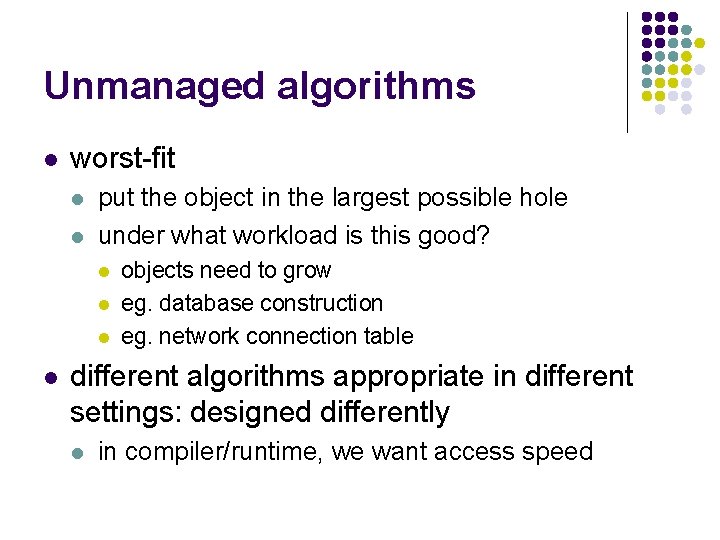

Unmanaged algorithms

- worst-fit
  - put the object in the largest possible hole
  - under what workload is this good?
    - objects need to grow
    - e.g. database construction
    - e.g. network connection table
- Different algorithms are appropriate in different settings: designed differently
  - in a compiler/runtime, we want access speed

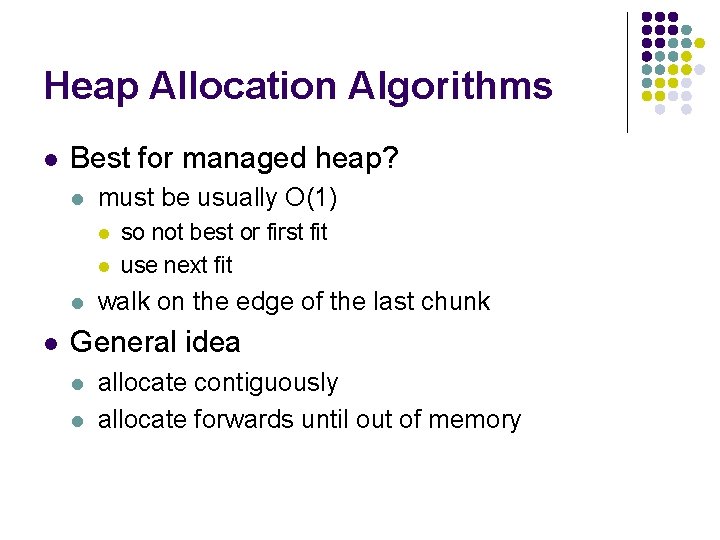

Heap Allocation Algorithms

- Best for a managed heap?
  - must usually be O(1)
  - so not best-fit or first-fit
  - use next-fit
    - walk on the edge of the last chunk
- General idea
  - allocate contiguously
  - allocate forwards until out of memory
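"Allocate forwards until out of memory" amounts to bumping a single pointer. A minimal sketch, assuming a fixed 1 KiB arena and 16-byte alignment (both arbitrary choices for the example; `bump_alloc` is a hypothetical name):

```c
#include <stddef.h>

#define ARENA_BYTES 1024

static _Alignas(16) unsigned char arena[ARENA_BYTES];
static size_t next_free = 0; /* offset of the first unused byte */

/* O(1) allocation: round the size up, hand out the next chunk, advance.
   Returns NULL when the arena is exhausted, which is the point where a
   managed runtime would trigger a collection. */
void *bump_alloc(size_t size) {
    if (size == 0) {
        size = 1;
    }
    size_t aligned = (size + 15u) & ~(size_t)15u;
    if (aligned < size || aligned > ARENA_BYTES - next_free) {
        return NULL; /* overflow in rounding, or not enough room left */
    }
    void *p = &arena[next_free];
    next_free += aligned;
    return p;
}
```

There is no search at all, so consecutive allocations are adjacent in memory; the cost is that freed space cannot be reused until something compacts the heap.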

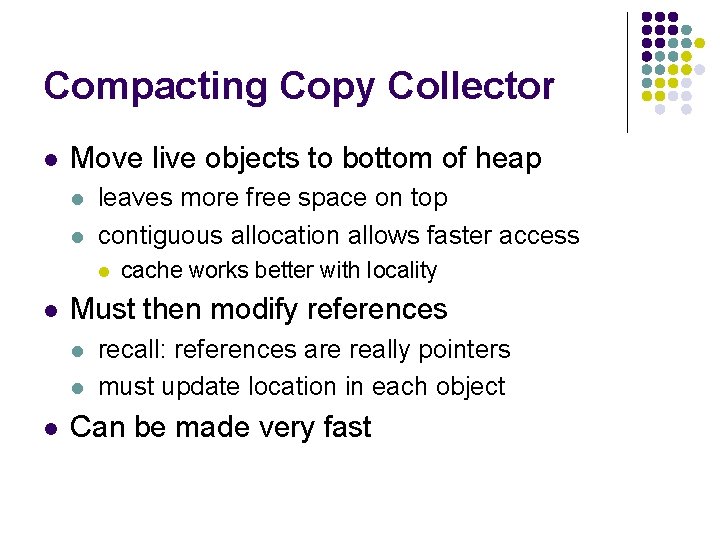

Compacting Copy Collector

- Move live objects to the bottom of the heap
  - leaves more free space on top
  - contiguous allocation allows faster access
    - cache works better with locality
- Must then modify references
  - recall: references are really pointers
  - must update the location in each object
- Can be made very fast

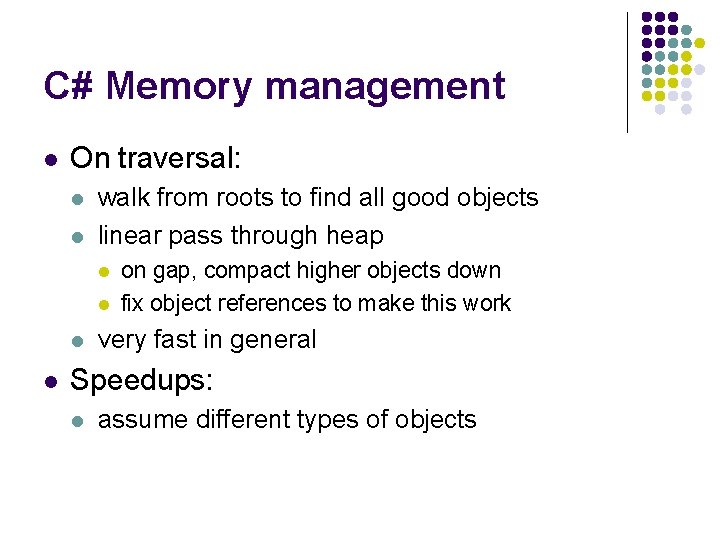

Compacting Copy Collector

- Another possible collector:
  - divide memory into two halves
  - fill up one half before doing any collection
  - on full:
    - walk the trees and copy to the other side
    - work from the new side
- Needs twice the memory of other collectors
- But don't need to find space in the old side
  - contiguous allocation is easy
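A two-halves collector can be sketched with array indices standing in for pointers. The breadth-first scan below follows Cheney's well-known scheme, which is one possible way to "walk the trees and copy"; the slides do not name a specific algorithm. The `Obj` layout and all names are assumptions.

```c
#include <stddef.h>

#define SPACE 8
#define NIL (-1)

typedef struct {
    int value;
    int left, right; /* indices into the active half, or NIL */
    int forward;     /* index in the new half once copied, else NIL */
} Obj;

static Obj space_a[SPACE], space_b[SPACE];
static Obj *from = space_a, *to = space_b;
static int from_top = 0; /* next free slot in the active half */

Obj *obj_get(int i) { return &from[i]; }
int live_count(void) { return from_top; }

/* Contiguous allocation in the active half; NIL when it is full. */
int obj_alloc(int value) {
    if (from_top == SPACE) {
        return NIL; /* a real runtime would collect here */
    }
    from[from_top] = (Obj){ value, NIL, NIL, NIL };
    return from_top++;
}

/* Copy one object to the new half unless already copied; return its
   new index. The forwarding index keeps shared objects and cycles from
   being copied twice. */
static int copy(int i, int *to_top) {
    if (i == NIL) {
        return NIL;
    }
    if (from[i].forward == NIL) {
        to[*to_top] = from[i];
        from[i].forward = (*to_top)++;
    }
    return from[i].forward;
}

/* Copy everything reachable from roots, update roots, swap halves. */
void collect(int *roots, size_t nroots) {
    int to_top = 0;
    for (size_t r = 0; r < nroots; r++) {
        roots[r] = copy(roots[r], &to_top);
    }
    for (int scan = 0; scan < to_top; scan++) { /* fix copied objects */
        to[scan].left = copy(to[scan].left, &to_top);
        to[scan].right = copy(to[scan].right, &to_top);
    }
    Obj *tmp = from;
    from = to;
    to = tmp;
    from_top = to_top; /* survivors are now packed at the bottom */
}
```

After `collect`, the survivors sit contiguously at the start of the new half, so allocation can continue by bumping `from_top` with no search for free space.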

C# Memory management

- Related to next-fit, copy-collector
  - keep a NextObjPointer to the next free space
  - use it for new objects until there is no more space
- Keep knowledge of root objects
  - global and static object pointers
  - all thread stack local variables
  - registers pointing to objects
  - maintained by the JIT compiler and runtime
    - e.g. the JIT keeps a table of roots

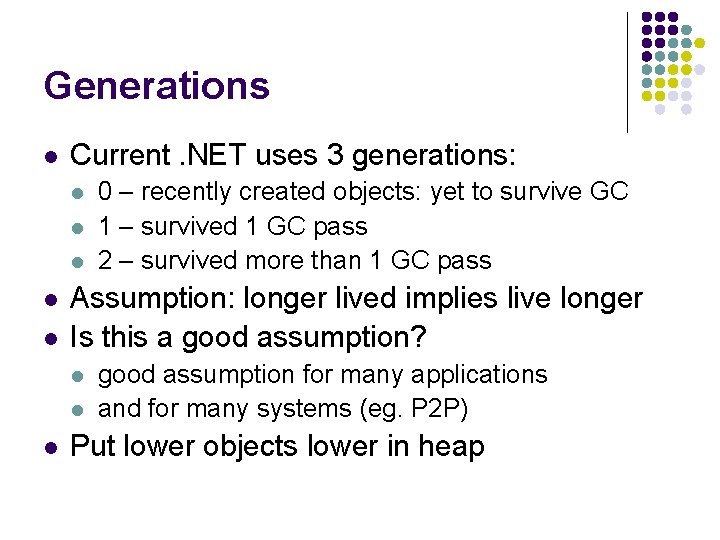

C# Memory management

- On traversal:
  - walk from roots to find all good objects
  - linear pass through the heap
    - on a gap, compact higher objects down
    - fix object references to make this work
  - very fast in general
- Speedups:
  - assume different types of objects
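The linear compaction pass can be sketched over an array of slots, assuming an earlier traversal has already set each slot's `live` flag. This is an illustrative toy, not the CLR's algorithm; the `Slot` layout, index-based references, and the name `compact` are assumptions.

```c
#include <assert.h>
#include <stdbool.h>

#define HEAP 8
#define NIL (-1)

typedef struct {
    bool live; /* set by an earlier walk from the roots */
    int value;
    int ref;   /* index of another slot, or NIL */
} Slot;

/* Slide live slots down over the gaps and rewrite every reference to
   the target's new position. Returns the new top of the heap. */
int compact(Slot *heap, int top, int *roots, int nroots) {
    assert(top >= 0 && top <= HEAP);
    int new_index[HEAP];
    int next = 0;
    for (int i = 0; i < top; i++) { /* where will each survivor land? */
        new_index[i] = heap[i].live ? next++ : NIL;
    }
    for (int r = 0; r < nroots; r++) { /* fix references held by roots */
        if (roots[r] != NIL) {
            roots[r] = new_index[roots[r]];
        }
    }
    for (int i = 0; i < top; i++) { /* move objects, fixing their refs */
        if (!heap[i].live) {
            continue;
        }
        Slot s = heap[i];
        if (s.ref != NIL) {
            s.ref = new_index[s.ref];
        }
        heap[new_index[i]] = s; /* new_index[i] <= i, so nothing live is lost */
    }
    return next;
}
```

Objects keep their relative order, and everything above the returned top is free, contiguous space for the next allocations.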

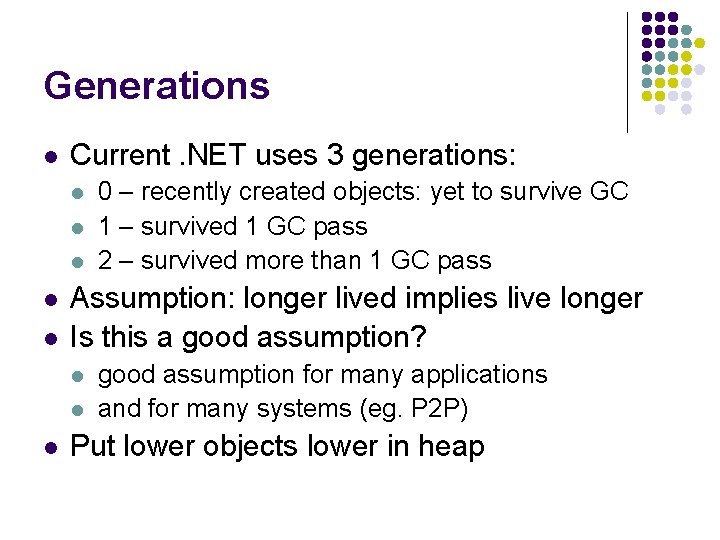

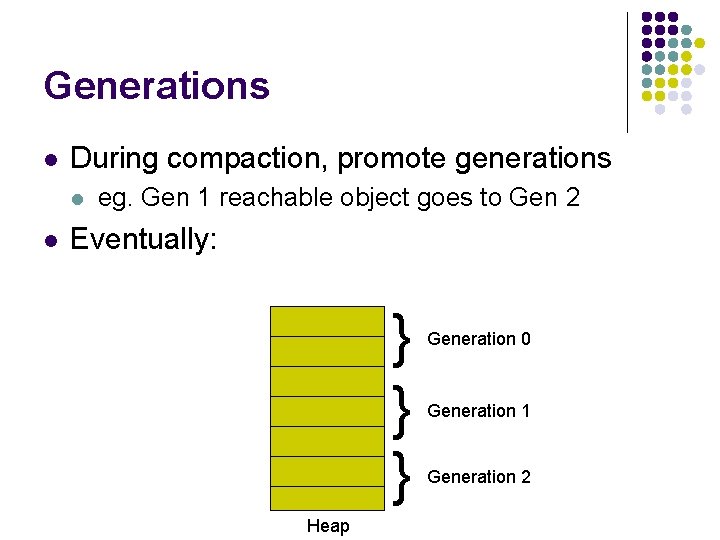

Generations

- Current .NET uses 3 generations:
  - 0: recently created objects, yet to survive a GC
  - 1: survived 1 GC pass
  - 2: survived more than 1 GC pass
- Assumption: longer lived implies will live longer
- Is this a good assumption?
  - good assumption for many applications
  - and for many systems (e.g. P2P)
- Put lower objects lower in heap

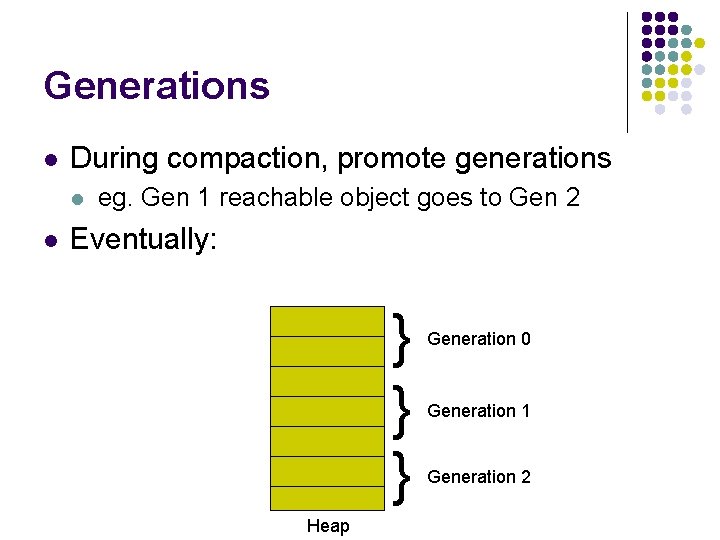

Generations

- During compaction, promote generations
  - e.g. a reachable Gen 1 object goes to Gen 2
- Eventually:

[Figure: heap divided into Generation 0, Generation 1, and Generation 2]

More Generation Optimization

- Don't trace references in old objects. Why?
  - speed improvement
  - but they could refer to young objects
- Use Write-Watch support. How?
  - note if an old object has some field set
  - then can trace through its references
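The idea of "note if an old object has some field set" can be illustrated with a software write barrier and a remembered set. This is a swapped-in technique for illustration only: Write-Watch as named on the slide is a runtime/OS facility, and nothing below reflects its actual API. The `GObj` layout and all names are assumptions.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_REMEMBERED 16

typedef struct GObj {
    int generation;     /* 0 = young, higher = older */
    struct GObj *field; /* a single reference field, or NULL */
    bool dirty;         /* already recorded in the remembered set? */
} GObj;

static GObj *remembered[MAX_REMEMBERED];
static size_t n_remembered = 0;

size_t remembered_count(void) { return n_remembered; }

/* Every reference store goes through this barrier. An old object that
   has a field set is recorded once, so a young-generation collection
   can treat it as an extra root instead of tracing all old objects.
   Returns false if the table is full; a real collector would then
   fall back to scanning the old generation. */
bool write_field(GObj *owner, GObj *value) {
    owner->field = value;
    if (owner->generation > 0 && !owner->dirty) {
        if (n_remembered == MAX_REMEMBERED) {
            return false;
        }
        owner->dirty = true;
        remembered[n_remembered++] = owner;
    }
    return true;
}
```

A young-generation collection would then trace from the usual roots plus the fields of the remembered objects, and clear the set afterwards.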

Large Objects Heap

- Area of the heap dedicated to large objects
  - never compacted. Why?
    - copy cost outweighs any locality benefit
  - automatically generation 2
    - rarely collected
    - large objects are likely to have a long lifetime
- Commonly used for DataGrid objects
  - results from database queries
  - 20k or more

Object Pinning

- Can require that an object not move
  - could hurt GC performance
  - useful for unsafe operations
  - in fact, needed to make pointers work
- Syntax: `fixed(…) { … }`
  - objects named in the declaration will not move while inside the block

Finalization

- Recall C++ destructors: `~MyClass() { /* cleanup */ }`
  - called when the object is deleted
  - does cleanup for this object
- Don't do this in C# (or Java)
  - a similar construct exists
  - but it is only called on GC
  - no guarantees when

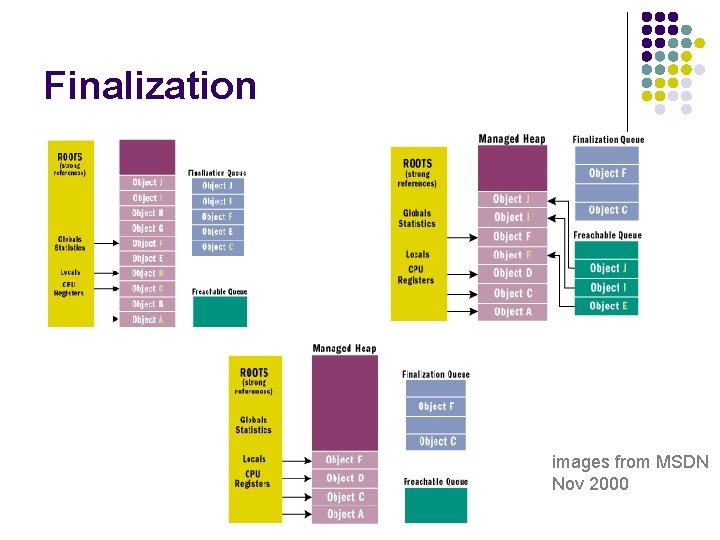

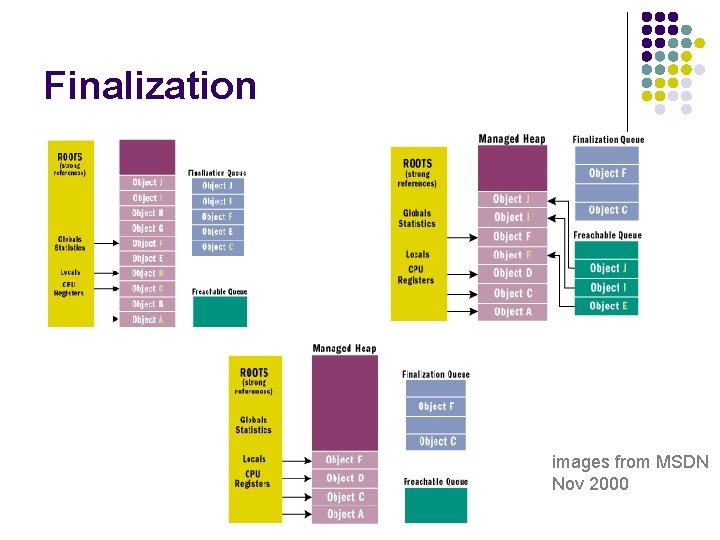

Finalization

- More common idiom: `public void Finalize() { base.Finalize(); Dispose(false); }`
  - maybe needed for unmanaged resources
  - slows down GC significantly
- Finalization in GC:
  - when an object with a Finalize method is created
    - add it to the Finalization Queue
  - when it is about to be GC'ed, add it to the Freachable Queue

Finalization

- Images from MSDN, November 2000