Memory Management Operating Systems Spring 2003 Gregory Grisha


Memory Management. Operating Systems, Spring 2003. Gregory (Grisha) Chokler, Ittai Abraham, Zinovi Rabinovich

Background • A compiler creates executable code • Executable code must be brought into main memory to run – The operating system maps the executable code onto main memory – The “mapping” depends on the hardware • The hardware accesses memory locations in the course of execution

Memory management • Principal operation: bringing programs into memory for execution by the processor • Address binding: – Compile time, e.g., MS-DOS .com programs – Load time: relocatable code – Execution time: code can be moved around in memory while it runs

Memory management (II) • Requirements and issues: – Relocation – Protection – Sharing – Logical and physical organization

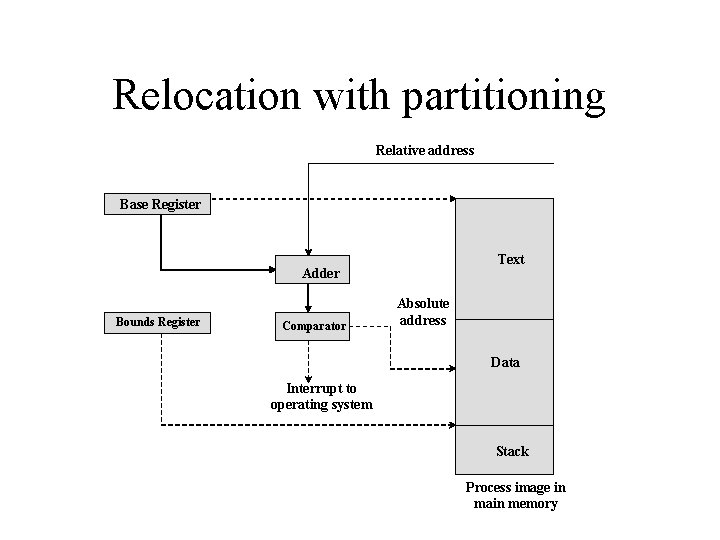

Relocation • Compiler-generated addresses are relative • A process can be (dynamically) loaded at different memory locations – The actual physical mapping is not known at compile time • Simple if processes are allocated contiguous memory ranges • Complicated otherwise, e.g., when segmentation or paging is used

Protection • Processes must be unable to reference addresses of other processes or those of the operating system • Cannot be enforced at compile time (especially if relocation is present) • Each memory access must be checked for validity – Enforced by hardware

Sharing • Protection must be flexible enough to allow controlled sharing of the same portion of main memory – E.g., shared libraries: loaded once into main memory and accessed by several programs simultaneously

Logical organization • Memory is organized as a linear, one-dimensional address space • Programs are typically organized into modules – modules can be written and compiled independently, can have different degrees of protection, and can be shared • A mechanism for supporting the logical structure of user programs is desirable

Physical organization • Two-level hierarchical storage – Main memory: fast, expensive, scarce, volatile – Secondary storage: slow, cheap, abundant, persistent • Memory management involves a bidirectional flow of information between the two levels – Swapping

Memory management (III) • Management: how do the physical and virtual address spaces of multiple processes correlate? • Allocation of physical memory for virtual space intervals – Placement algorithms – Replacement algorithms

Allocator Issues • Patterns of memory reuse – Is there a preference for reusing recently freed intervals? – Is there a preference for intervals in certain regions? • Splitting and coalescing – Are large free intervals split, or allocated as a whole? – Are adjacent free intervals merged into one? • Fits – If several free intervals could satisfy a request, which one is used? • Splitting thresholds – If part of an allocated interval is not used by its virtual counterpart, does the remainder become a separate free interval?

More Problems • Fragmentation: inability to reuse memory that is free – External fragmentation: free, unused fragments exist in physical memory outside the allocated intervals – Internal fragmentation: free, unused space exists inside an allocated interval

Fragmentation: Sources • Isolated deaths – If the allocator places objects that “die” (expire) together next to each other in memory, fragmentation stays low • Time-varying behavior – For example, a program frees many small blocks and then requests larger ones • Ramps/Peaks/Plateaus – If these changes in memory use follow a known pattern, allocations can be optimized for it

Memory management: Allocation • Partitioning – now obsolete, but useful for demonstration of basic principles – Fixed – Dynamic • By list of intervals • By hierarchy of intervals • Paging and segmentation – without and with virtual memory

Allocation by Fixed partitioning • Main memory is divided into a number of static partitions – all partitions of the same size, or – a collection of partitions of different sizes • A process can be loaded into a partition of equal or greater size – A process cannot be scattered among several partitions

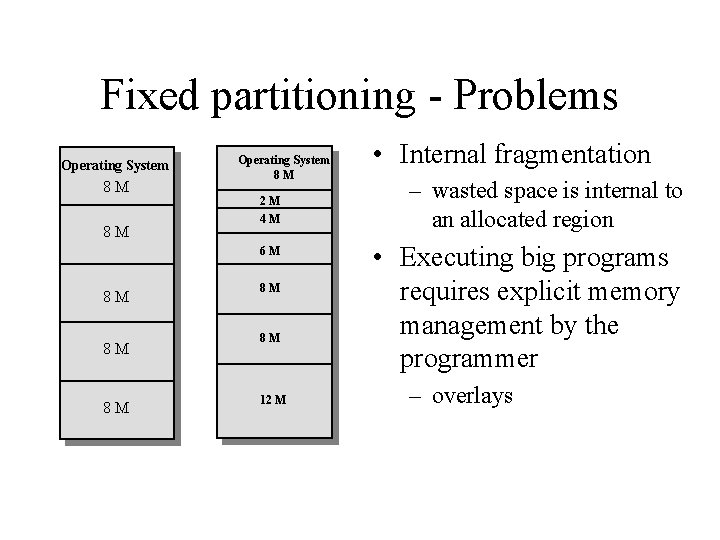

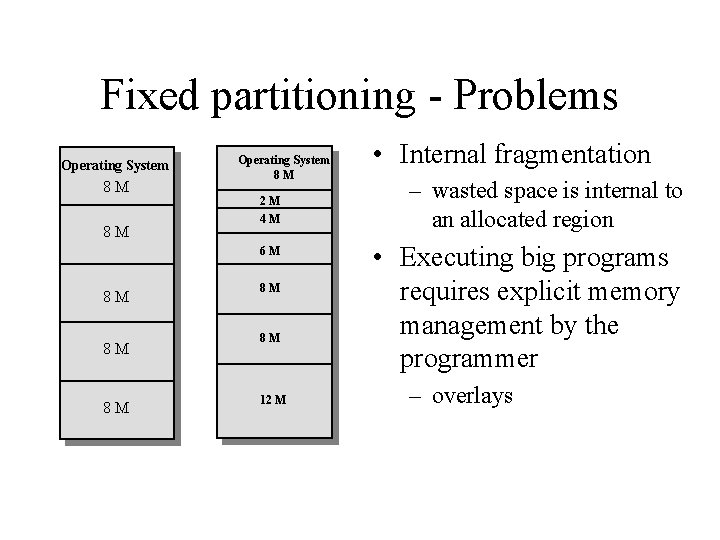

Fixed partitioning - Problems [Figure: equal-size partitions (Operating System 8 M, then 8 M partitions) and unequal-size partitions (Operating System 8 M, then partitions of 2 M, 4 M, 6 M, 8 M, 8 M, 12 M)] • Internal fragmentation – wasted space is internal to an allocated region • Executing programs larger than a partition requires explicit memory management by the programmer – overlays
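To make internal fragmentation concrete, the hypothetical helper below (an illustration, not part of the original slides) computes how much of a fixed partition is wasted when one process is loaded into it. Sizes are in M, like the partition sizes listed above.

```python
def internal_fragmentation(partition_size: int, process_size: int) -> int:
    """Unused space inside a fixed partition holding one process (sizes in M).

    Hypothetical helper for illustration only.
    """
    if process_size > partition_size:
        # The process does not fit; the programmer would need overlays.
        raise ValueError("process is larger than the partition")
    return partition_size - process_size


# With equal-size 8 M partitions, a 2 M process still occupies a whole partition.
print(internal_fragmentation(8, 2))  # 6
```

Unequal partition sizes reduce this waste only when a partition close to the process size happens to be free.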

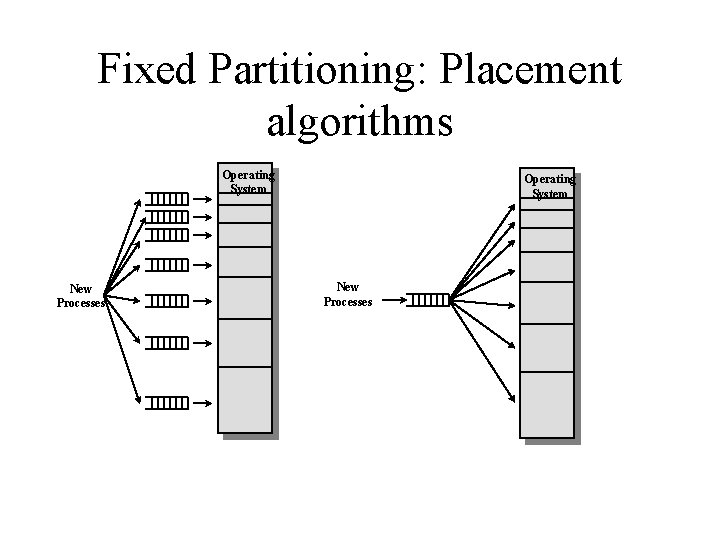

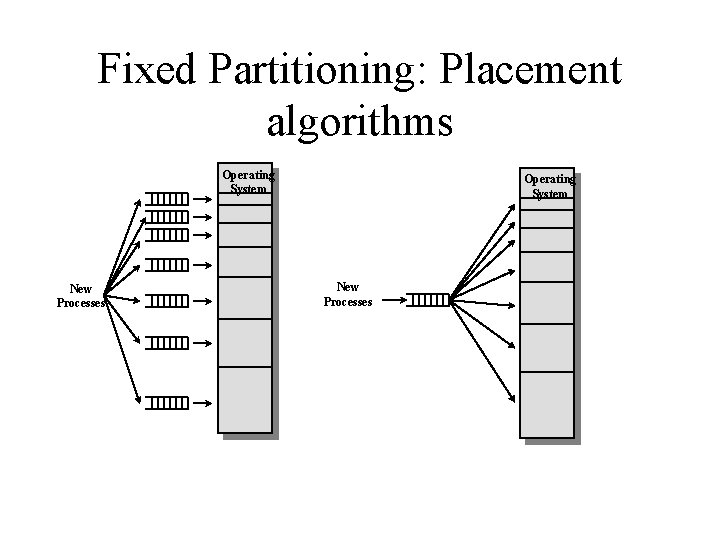

Fixed Partitioning: Placement algorithms [Figure: Operating System and partitions; new processes waiting to be placed]

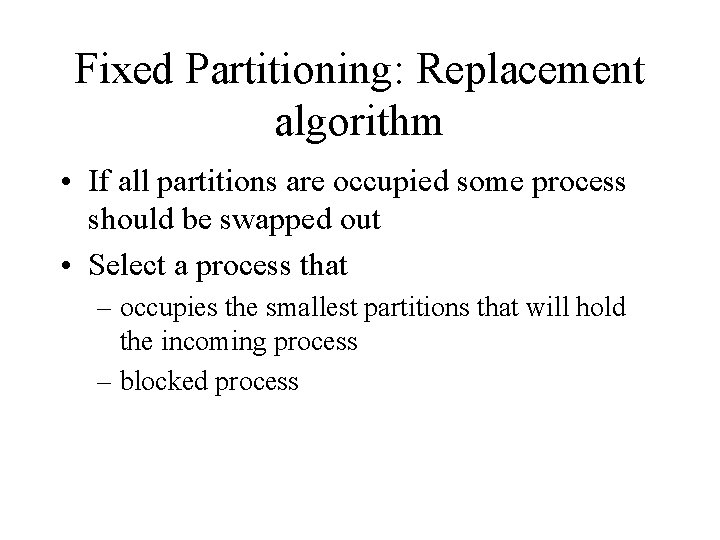

Fixed Partitioning: Replacement algorithm • If all partitions are occupied, some process must be swapped out • Select a process that – occupies the smallest partition that will hold the incoming process – is blocked
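The replacement rule can be written as a selection function. The slide lists two criteria without ranking them, so this sketch assumes that partition size comes first and that a blocked process breaks ties; it also assumes it is called only when no free partition can hold the incoming process. `Partition` and `choose_victim` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Partition:
    size: int                  # partition size in M
    pid: Optional[int] = None  # occupying process, or None if the partition is free
    blocked: bool = False      # whether the occupying process is blocked


def choose_victim(partitions: list[Partition], need: int) -> Optional[int]:
    """Index of the partition whose process should be swapped out, or None."""
    candidates = [i for i, p in enumerate(partitions)
                  if p.pid is not None and p.size >= need]
    if not candidates:
        return None
    # Smallest partition that holds the incoming process; prefer a blocked one on ties.
    return min(candidates, key=lambda i: (partitions[i].size, not partitions[i].blocked))


parts = [Partition(4, 1), Partition(8, 2), Partition(8, 3, blocked=True), Partition(12, 4)]
print(choose_victim(parts, 6))  # 2: an 8 M partition whose process is blocked
```

Ranking the criteria the other way round (blocked first, then size) only changes the sort key.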

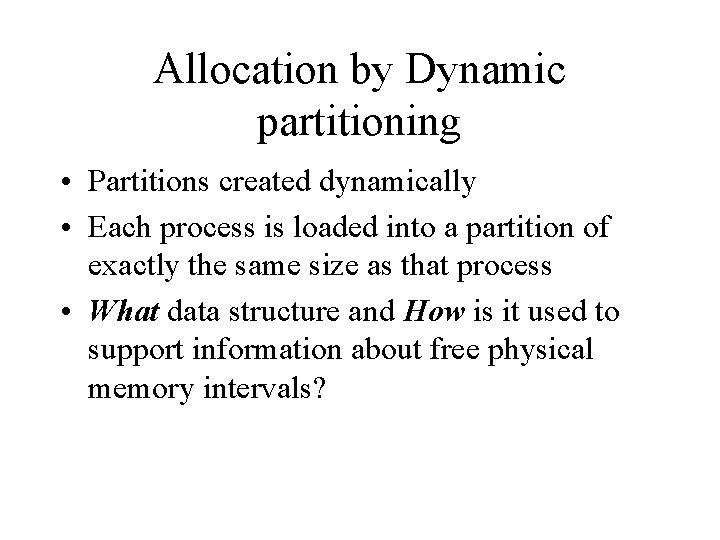

Allocation by Dynamic partitioning • Partitions are created dynamically • Each process is loaded into a partition of exactly its own size • Which data structure keeps track of the free physical memory intervals, and how is it used?

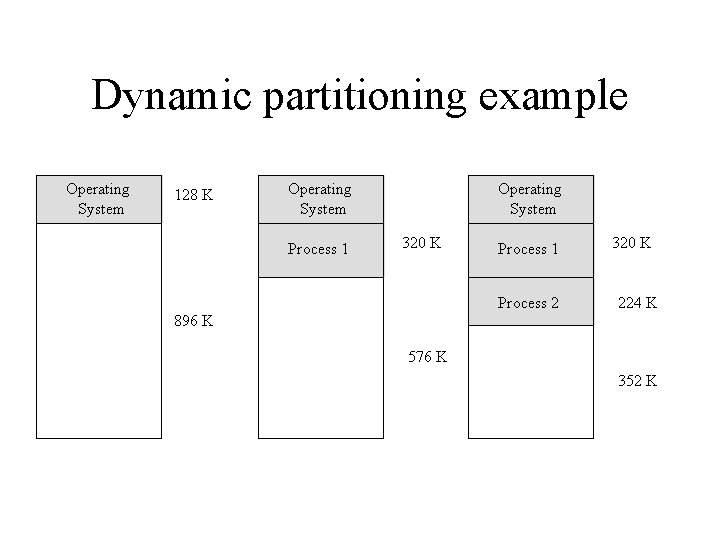

Dynamic partitioning example [Figure: the Operating System occupies 128 K and 896 K is free; Process 1 (320 K) is loaded, leaving 576 K free; Process 2 (224 K) is loaded, leaving 352 K free]

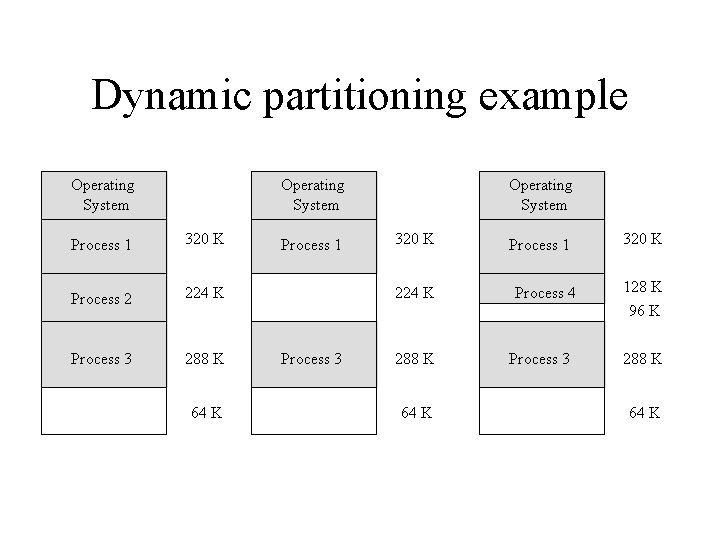

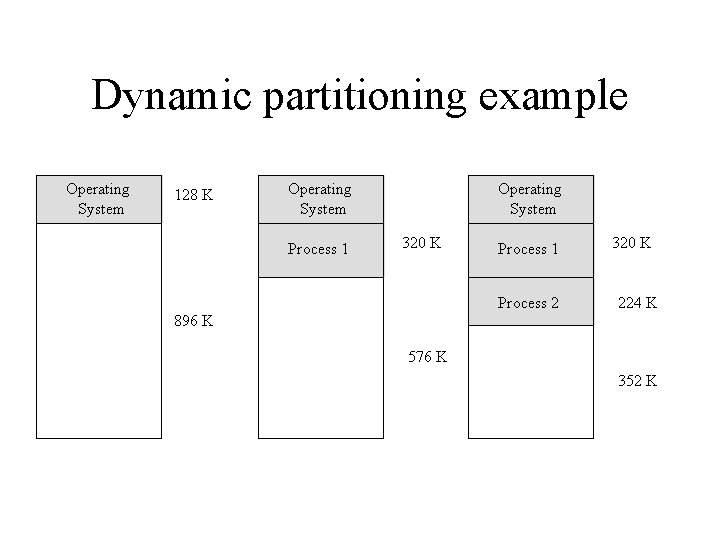

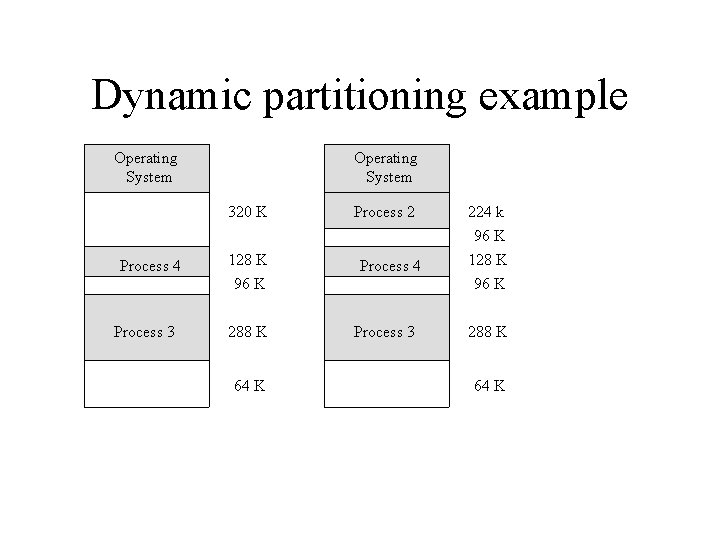

Dynamic partitioning example [Figure, continued: Process 3 (288 K) is loaded, leaving 64 K free; Process 2 is swapped out, leaving a 224 K hole; Process 4 (128 K) is loaded into that hole, leaving 96 K free]

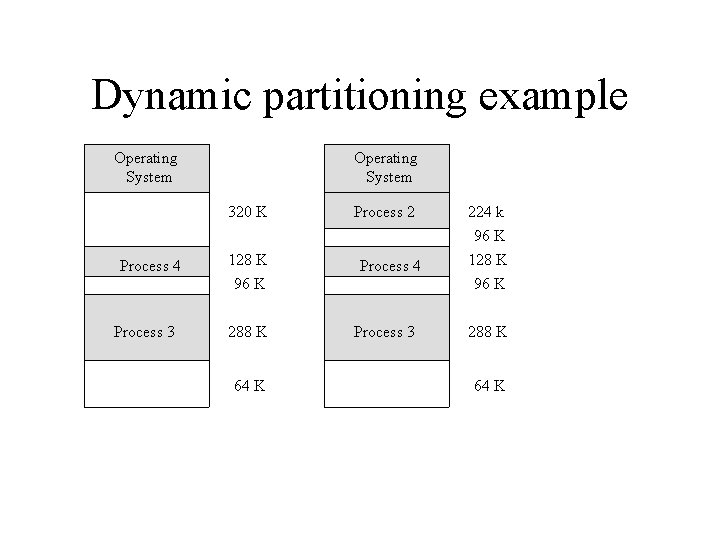

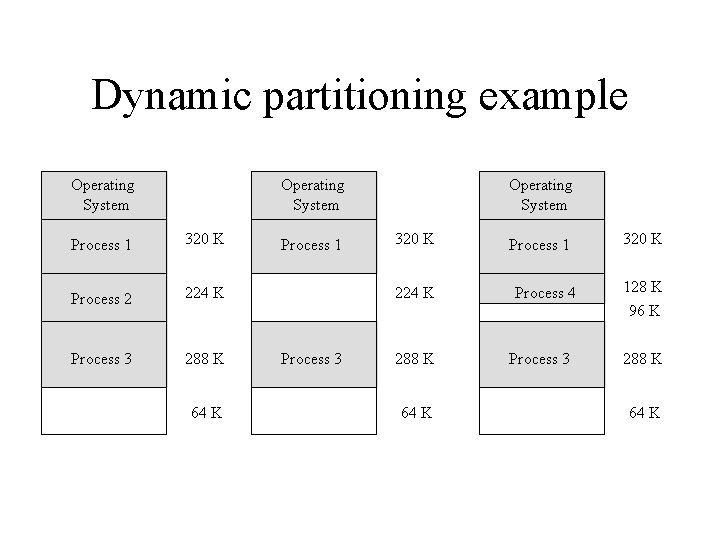

Dynamic partitioning example [Figure, continued: Process 1 is swapped out, leaving a 320 K hole; Process 2 (224 K) is swapped back in there, leaving 96 K free; memory now holds free fragments of 96 K, 96 K, and 64 K]
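The dynamic partitioning example can be replayed in a few lines. This sketch rests on two assumptions not stated on the slides: total memory is 1024 K (the 128 K operating system plus the initial 896 K free region), and each process is placed with first fit into an address-ordered list of holes. Under these assumptions it ends with the same free fragments of 96 K, 96 K, and 64 K.

```python
MEMORY_K = 1024   # 128 K operating system + 896 K initially free (assumed total)
OS_K = 128

free = [(OS_K, MEMORY_K - OS_K)]   # holes as (start, size), in address order
placed = {}                        # process name -> (start, size)


def load(name, size):
    """First fit: carve the process out of the first hole that is big enough."""
    for i, (start, hole) in enumerate(free):
        if hole >= size:
            placed[name] = (start, size)
            if hole == size:
                del free[i]                            # hole used up exactly
            else:
                free[i] = (start + size, hole - size)  # shrink the hole
            return start
    raise MemoryError(f"no hole large enough for {name}")


def swap_out(name):
    """Release a process's partition and coalesce adjacent holes."""
    free.append(placed.pop(name))
    free.sort()
    merged = []
    for start, size in free:
        if merged and merged[-1][0] + merged[-1][1] == start:
            merged[-1] = (merged[-1][0], merged[-1][1] + size)
        else:
            merged.append((start, size))
    free[:] = merged


load("P1", 320)
load("P2", 224)
load("P3", 288)
swap_out("P2")
load("P4", 128)
swap_out("P1")
load("P2", 224)
print([size for _, size in free])  # [96, 96, 64]
```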

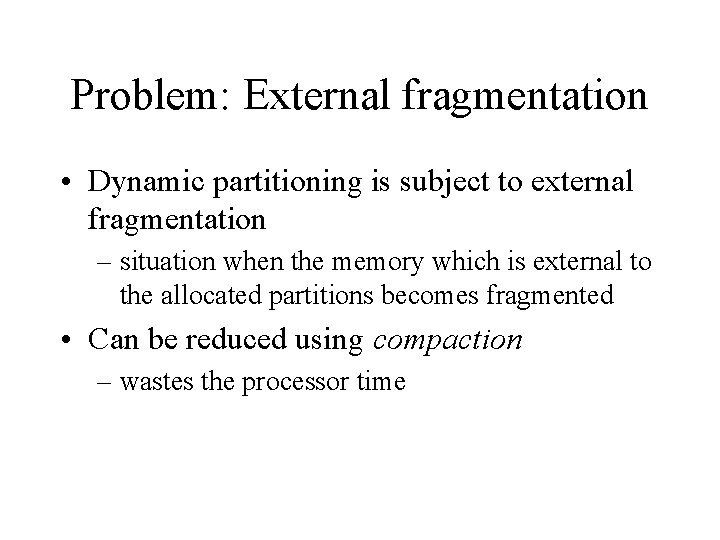

Problem: External fragmentation • Dynamic partitioning is subject to external fragmentation – the memory outside the allocated partitions becomes fragmented into small free holes • It can be reduced using compaction – but compaction wastes processor time
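Compaction can be sketched as sliding every allocated partition toward low memory so that all free space forms a single hole. The hypothetical `compact` function below also reports how much memory had to be copied, a rough proxy for the processor time compaction costs. The usage line assumes the layout left by the dynamic partitioning example under 1024 K of memory and first-fit placement (Process 2 at 128 K, Process 4 at 448 K, Process 3 at 672 K).

```python
def compact(partitions, base, limit):
    """Slide allocated partitions toward low memory so free space forms one hole.

    partitions: list of (name, start, size) tuples, sizes in K.
    base: lowest address available to processes (just above the OS).
    limit: end of memory.
    Returns the relocated partitions, the single free hole (start, size),
    and how much memory had to be copied.
    """
    relocated = []
    next_free = base
    copied = 0
    for name, start, size in sorted(partitions, key=lambda p: p[1]):
        if start != next_free:
            copied += size        # this partition's contents must be moved
        relocated.append((name, next_free, size))
        next_free += size
    return relocated, (next_free, limit - next_free), copied


layout = [("P2", 128, 224), ("P4", 448, 128), ("P3", 672, 288)]
moved, hole, copied = compact(layout, base=128, limit=1024)
print(hole, copied)   # (768, 256) 416
```

Every moved process needs its base address updated afterwards, so compaction is only possible when addresses are bound at execution time.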

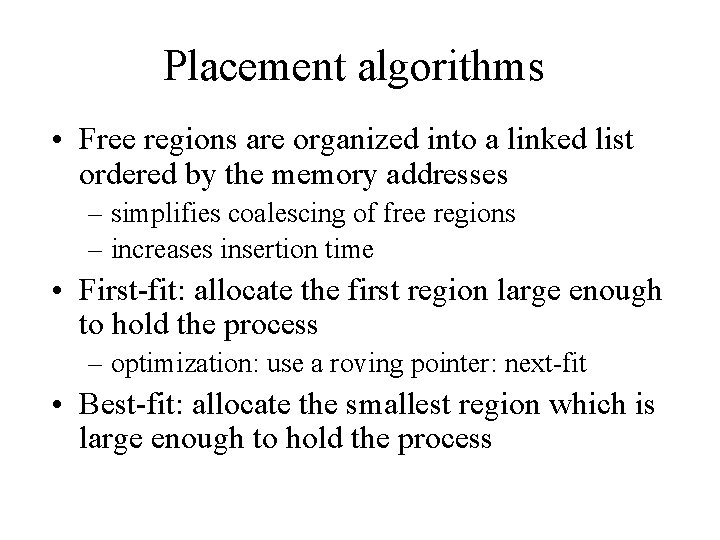

Placement algorithms • Free regions are organized into a linked list ordered by memory address – simplifies coalescing of free regions – increases insertion time • First-fit: allocate the first region large enough to hold the process – optimization: start each search where the previous one ended, using a roving pointer: next-fit • Best-fit: allocate the smallest region that is large enough to hold the process
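The three placement policies differ only in which hole they pick. This minimal sketch represents the free list as a Python list of hole sizes in address order and returns the index of the chosen hole; splitting the hole and advancing the roving pointer after an allocation are left out. The hole sizes in the usage line are hypothetical.

```python
from typing import Optional


def first_fit(holes: list[int], request: int) -> Optional[int]:
    """Index of the first hole (in address order) large enough for the request."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None


def next_fit(holes: list[int], request: int, rover: int) -> Optional[int]:
    """First fit that starts at the roving pointer and wraps around the list."""
    n = len(holes)
    for k in range(n):
        i = (rover + k) % n
        if holes[i] >= request:
            return i
    return None


def best_fit(holes: list[int], request: int) -> Optional[int]:
    """Index of the smallest hole that is still large enough (scans the whole list)."""
    fits = [i for i, size in enumerate(holes) if size >= request]
    return min(fits, key=lambda i: holes[i]) if fits else None


holes = [8, 12, 22, 18, 6, 36]   # hypothetical hole sizes in K, address order
print(first_fit(holes, 16), best_fit(holes, 16), next_fit(holes, 16, rover=4))  # 2 3 5
```

Best fit leaves a 2 K remainder here (18 K - 16 K), the kind of small unusable fragment the discussion below attributes to it.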

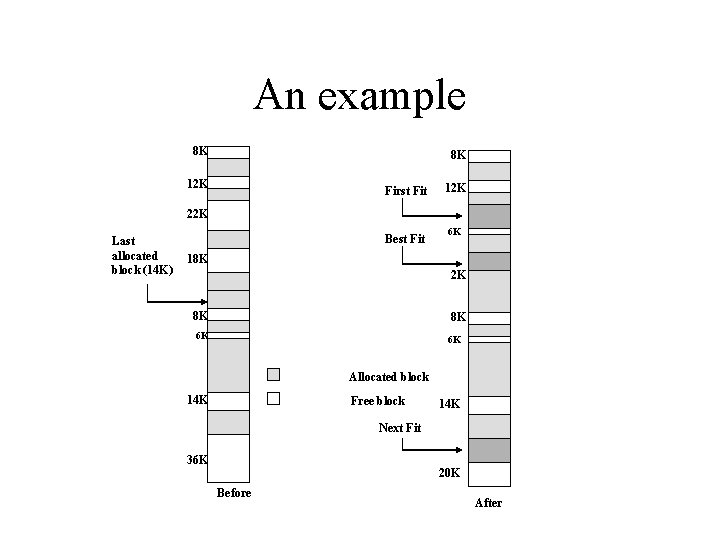

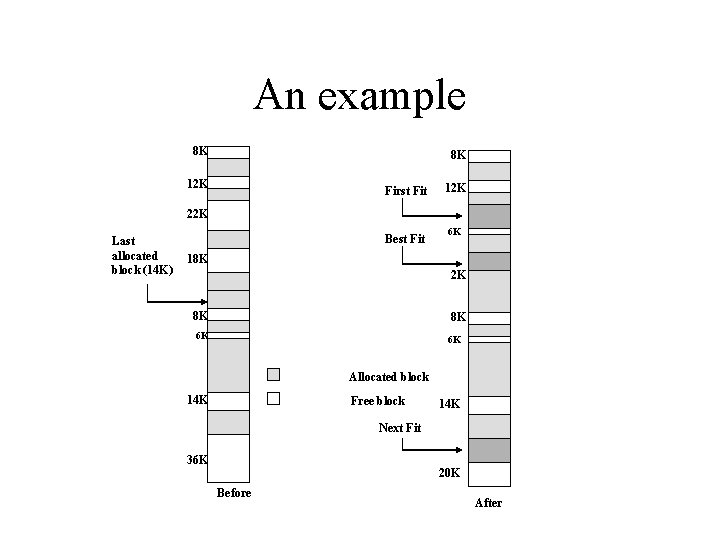

An example [Figure: allocating a 16 K block, before and after. First fit takes the 22 K free block, leaving 6 K; best fit takes the 18 K block, leaving 2 K; next fit, starting after the last allocated block (14 K), takes the 36 K block, leaving 20 K]

Placement algorithms discussion • First-fit: – efficient and simple – tends to create more fragmentation near the beginning of the list • solved by next-fit • Best-fit: – less efficient (might search the whole list) – tends to create small unusable fragments

Buddy systems • Hierarchy of free intervals • Approximates the best-fit principle • Efficient search, whereas first (best) fit has linear complexity • Simplified coalescing

Buddy Systems: Variations • Binary buddies – the system allocates memory in blocks whose sizes are powers of 2 • Fibonacci buddies – block sizes are Fibonacci numbers (recall that every Fibonacci number is the sum of the previous two) • Weighted buddies – block sizes are either a power of two or three times a power of two: 2, 3, 4, 6, 8, 12… • Double buddies – two different buddy systems with staggered sizes, e.g., one with 2, 4, 8, 16… and a second with 3, 6, 12, 24…
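The size sequences of the four variations can be generated directly. This is an illustrative sketch: the Fibonacci starting sizes (1 and 2) and the default smallest binary size (2) are assumptions, since the slides do not fix them.

```python
def binary_sizes(count: int, smallest: int = 2) -> list[int]:
    """Binary buddies: smallest, 2*smallest, 4*smallest, ..."""
    return [smallest * 2**k for k in range(count)]


def fibonacci_sizes(count: int, a: int = 1, b: int = 2) -> list[int]:
    """Fibonacci buddies: each size is the sum of the previous two."""
    sizes = []
    for _ in range(count):
        sizes.append(a)
        a, b = b, a + b
    return sizes


def weighted_sizes(count: int) -> list[int]:
    """Weighted buddies: powers of two interleaved with three times a power of two."""
    sizes = []
    k = 1
    while len(sizes) < count:
        sizes += [2**k, 3 * 2**(k - 1)]   # 2, 3, then 4, 6, then 8, 12, ...
        k += 1
    return sizes[:count]


def double_sizes(count: int) -> tuple[list[int], list[int]]:
    """Double buddies: two binary systems staggered by a factor of 3/2."""
    return binary_sizes(count, 2), binary_sizes(count, 3)


print(weighted_sizes(6))   # [2, 3, 4, 6, 8, 12]
print(double_sizes(4))     # ([2, 4, 8, 16], [3, 6, 12, 24])
```

The finer-grained sequences give more size classes and therefore less internal fragmentation, at the cost of more complicated splitting and coalescing.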

Binary buddy system • Memory blocks are available in sizes 2^K, where L ≤ K ≤ U and – 2^L = smallest size of block allocated – 2^U = largest size of block allocated (generally, the entire available memory) • Initially, all the available space is treated as a single block of size 2^U

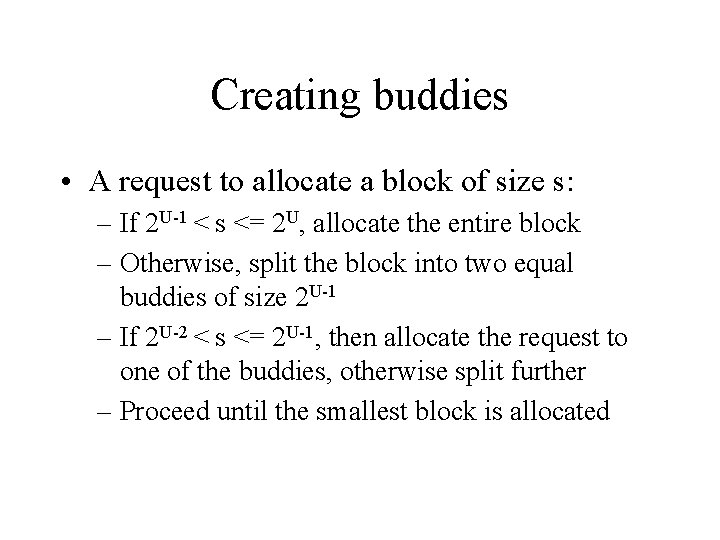

Creating buddies • A request to allocate a block of size s: – If 2^(U-1) < s ≤ 2^U, allocate the entire block – Otherwise, split the block into two equal buddies of size 2^(U-1) – If 2^(U-2) < s ≤ 2^(U-1), allocate the request to one of the buddies; otherwise split further – Proceed until the smallest block that can hold s is allocated
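The splitting rule boils down to finding the smallest K with s ≤ 2^K (but not below L), then halving the 2^U block down to that size. A minimal sketch with hypothetical function names; the usage line assumes 1024 K of memory (U = 10, sizes in K) and a smallest block of 16 K (L = 4).

```python
def block_order(s: int, L: int, U: int):
    """Smallest K with L <= K <= U and s <= 2**K, or None if s > 2**U."""
    if s <= 0:
        raise ValueError("request size must be positive")
    if s > 2**U:
        return None
    # (s - 1).bit_length() is the smallest K with 2**K >= s
    return max(L, (s - 1).bit_length())


def split_sequence(s: int, L: int, U: int):
    """Sizes of the blocks visited, from 2**U down to the block that is allocated."""
    k = block_order(s, L, U)
    if k is None:
        return None
    return [2**j for j in range(U, k - 1, -1)]


print(split_sequence(100, 4, 10))  # [1024, 512, 256, 128]
```

A 100 K request thus receives a 128 K block, and the 28 K difference is internal fragmentation.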

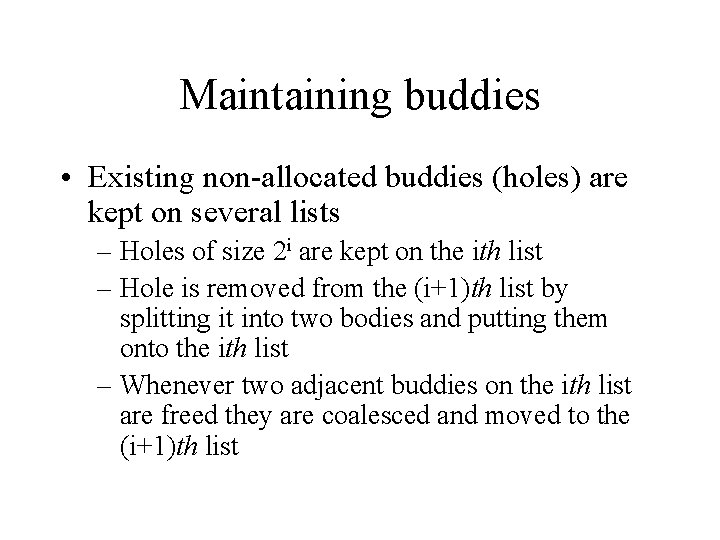

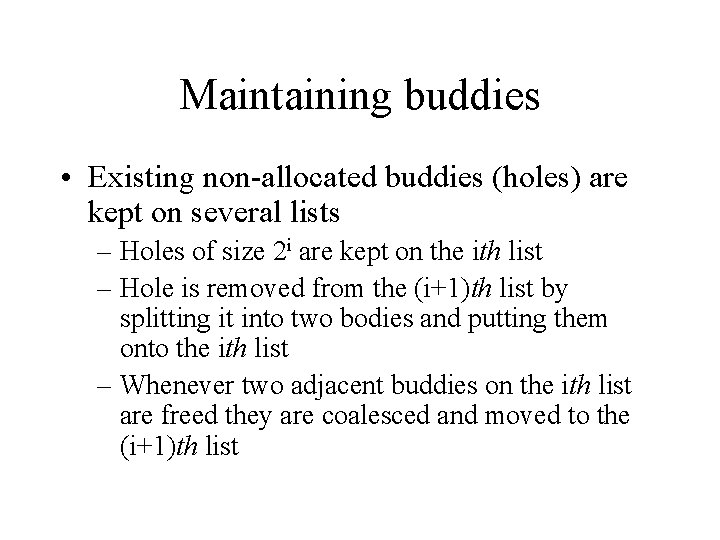

Maintaining buddies • Existing non-allocated buddies (holes) are kept on several lists – Holes of size 2^i are kept on the ith list – A hole is removed from the (i+1)th list by splitting it into two buddies and putting them onto the ith list – Whenever both buddies of a pair on the ith list are free, they are coalesced and moved to the (i+1)th list
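The “simplified coalescing” of binary buddies comes from the fact that a block's buddy can be computed directly from its address. A minimal sketch, assuming block offsets are measured from the start of the managed region, so that every block of size 2^i starts at a multiple of 2^i: the two buddies of a pair then differ only in bit i. Two blocks can be adjacent without being buddies, e.g. the size-16 blocks at offsets 16 and 32.

```python
def buddy_of(addr: int, i: int) -> int:
    """Offset of the buddy of the size-2**i block at offset addr."""
    return addr ^ (1 << i)          # flip bit i


def parent_of(addr: int, i: int) -> int:
    """Offset of the size-2**(i+1) block formed when the pair is coalesced."""
    return addr & ~(1 << i)         # clear bit i: the lower buddy's offset


print(buddy_of(32, 4), buddy_of(48, 4), parent_of(48, 4))  # 48 32 32
print(buddy_of(16, 4))  # 0, not 32: blocks at 16 and 32 are adjacent but not buddies
```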

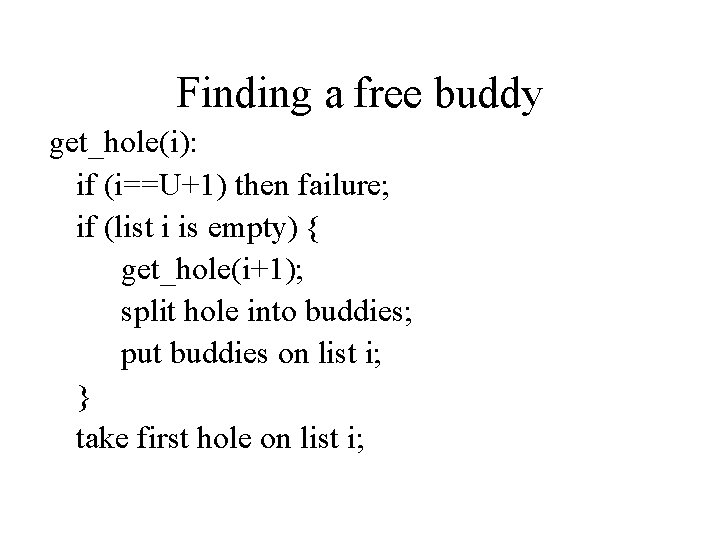

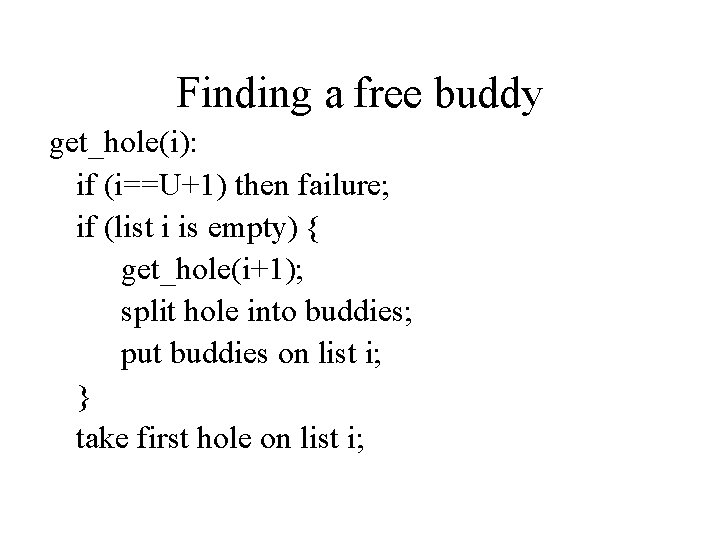

Finding a free buddy
get_hole(i):
  if (i == U+1) then failure;
  if (list i is empty) {
    get_hole(i+1);
    split hole into buddies;
    put buddies on list i;
  }
  take first hole on list i;
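A runnable Python version of get_hole, together with a matching free operation that coalesces buddies, might look as follows. It is a sketch rather than a production allocator: it manages offsets only (no real memory), uses Python lists as the per-size hole lists, and the names `BuddyAllocator`, `alloc`, and `free` are hypothetical.

```python
class BuddyAllocator:
    """Binary buddy allocator over offsets 0 .. 2**U - 1 (a sketch)."""

    def __init__(self, L: int, U: int):
        self.L, self.U = L, U
        # holes[i] holds the offsets of free blocks of size 2**i (the ith list)
        self.holes = {i: [] for i in range(L, U + 1)}
        self.holes[U].append(0)                  # initially one block of size 2**U

    def get_hole(self, i: int) -> int:
        """Return the offset of a free block of size 2**i, splitting as needed."""
        if i == self.U + 1:
            raise MemoryError("no hole large enough")
        if not self.holes[i]:
            big = self.get_hole(i + 1)           # take a hole from list i+1
            # split it into two buddies and put both on list i
            self.holes[i] += [big, big + (1 << i)]
        return self.holes[i].pop(0)              # take the first hole on list i

    def alloc(self, s: int):
        """Allocate a block for a request of size s; return (offset, i)."""
        i = max(self.L, (s - 1).bit_length())   # block size 2**i >= s
        if i > self.U:
            raise MemoryError("request larger than the whole memory")
        return self.get_hole(i), i

    def free(self, addr: int, i: int) -> None:
        """Return a block, coalescing it with its buddy as long as the buddy is free."""
        while i < self.U:
            buddy = addr ^ (1 << i)
            if buddy not in self.holes[i]:
                break
            self.holes[i].remove(buddy)
            addr &= ~(1 << i)                    # merged block starts at the lower buddy
            i += 1
        self.holes[i].append(addr)


a = BuddyAllocator(L=4, U=10)   # 1024 units, smallest block 16
p = a.alloc(100)                # (0, 7): a 128-unit block at offset 0
q = a.alloc(240)                # (256, 8)
r = a.alloc(64)                 # (128, 6)
for block in (p, r, q):
    a.free(*block)
print(a.holes[10])              # [0]: everything coalesced back into one block
```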

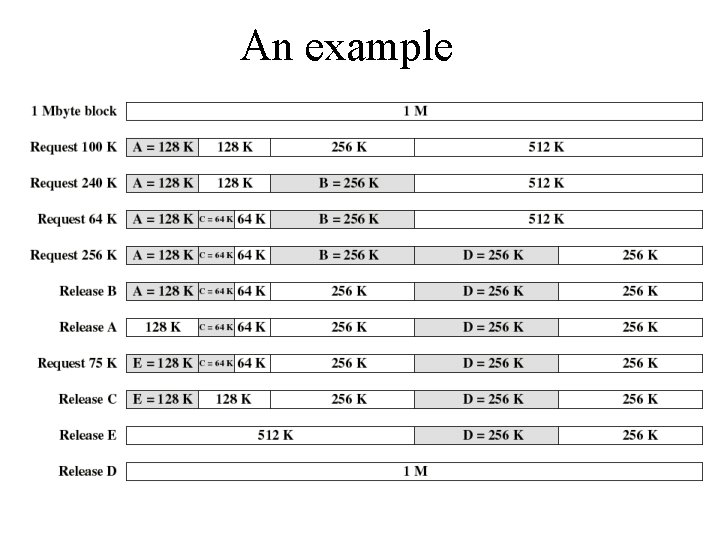

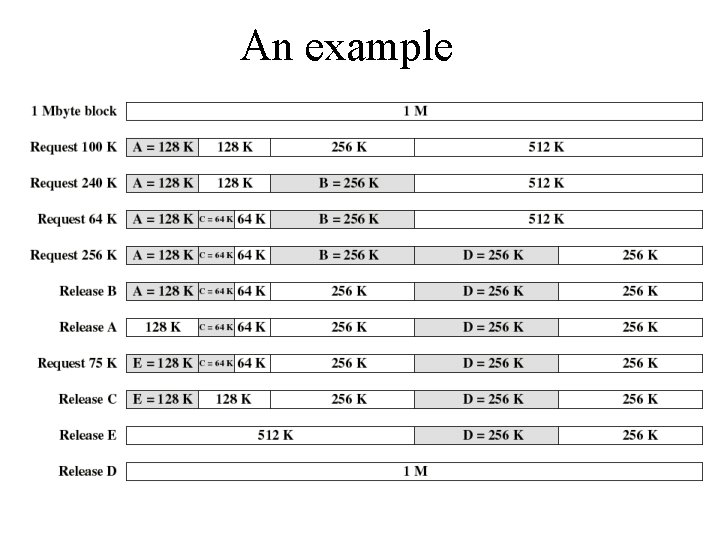

An example

Remarks • The buddy system is a reasonable compromise between fixed and dynamic partitioning • Internal fragmentation is a problem – the expected case is about 28%, which is high • The buddy system is not used for (system) memory management nowadays • It is used for memory management by user-level libraries (malloc)

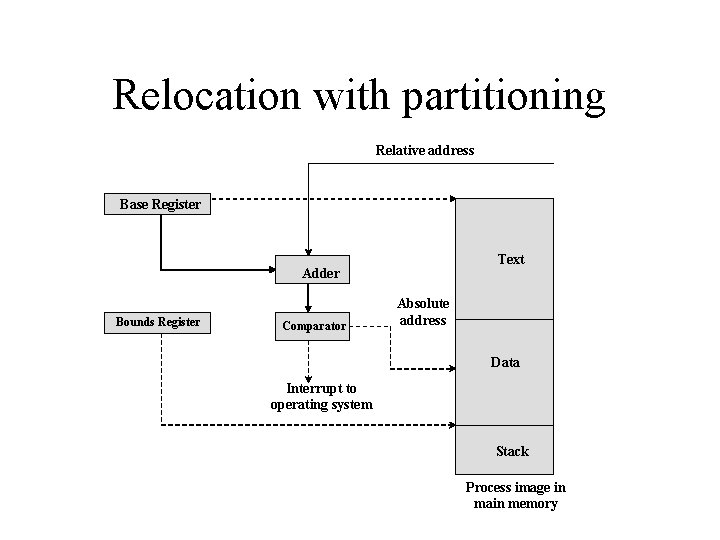

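Relocation with partitioning [Figure: a relative address is added to the Base Register by an adder to give the absolute address; a comparator checks the absolute address against the Bounds Register and, on a violation, raises an interrupt to the operating system; the process image in main memory consists of Text, Data, and Stack]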
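The base/bounds relocation hardware can be sketched in software as below. Two assumptions, since the slide does not fix them: the bounds register holds the first address past the end of the process image, and a Python exception stands in for the interrupt to the operating system. The names `relocate` and `ProtectionFault` are hypothetical.

```python
class ProtectionFault(Exception):
    """Stands in for the interrupt raised to the operating system."""


def relocate(relative: int, base: int, bounds: int) -> int:
    """Translate a relative address using base and bounds registers."""
    absolute = base + relative              # the adder
    if relative < 0 or absolute >= bounds:  # the comparator
        raise ProtectionFault(f"address {absolute:#x} outside the process image")
    return absolute


print(hex(relocate(0x100, base=0x4000, bounds=0x5000)))  # 0x4100
```

Because the check happens on every access, moving the process only requires reloading the two registers.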

Paging • The most efficient and flexible method of memory allocation • Process memory is divided into fixed-size chunks, all of the same size, called pages • Pages are mapped onto frames of the same size in main memory • Internal fragmentation is possible with paging, but it is negligible: only the last page of a process can be partially empty
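Why internal fragmentation is negligible with paging can be checked with a little arithmetic: only the last page of a process is partially used, so the waste is always less than one page. A minimal sketch, assuming a hypothetical 4096-byte page size; the function names are illustrative.

```python
def pages_needed(size: int, page_size: int) -> int:
    """Number of pages (and frames) a process of `size` bytes occupies."""
    return -(-size // page_size)          # ceiling division


def paging_waste(size: int, page_size: int) -> int:
    """Internal fragmentation: unused bytes in the last, partially filled page."""
    return pages_needed(size, page_size) * page_size - size


print(pages_needed(10_000, 4096), paging_waste(10_000, 4096))  # 3 2288
```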

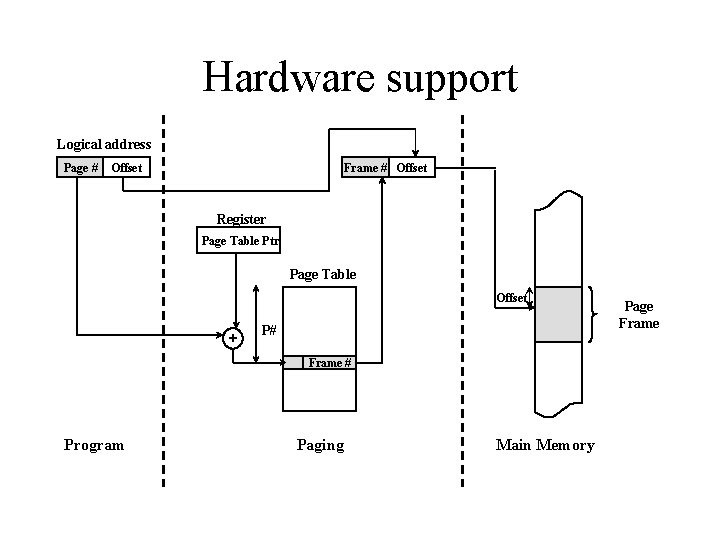

Paging support • Process pages can be scattered all over main memory • A per-process page table maintains the mapping of process pages onto frames • Relocation becomes complicated – hardware support is needed to translate relative addresses within a program into memory addresses

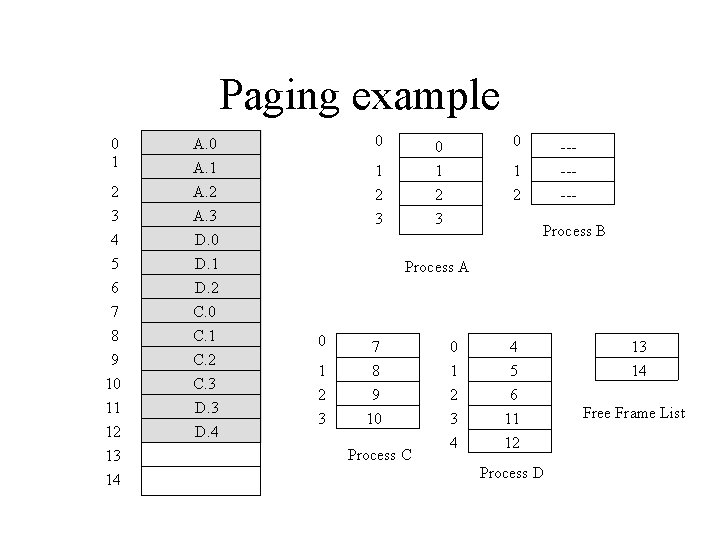

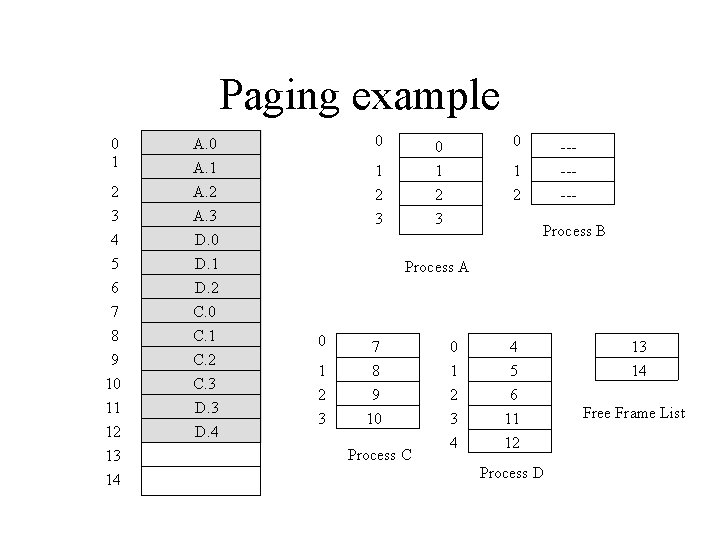

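Paging example [Figure: main memory of 15 frames (0 to 14). Frames 0 to 3 hold A.0 to A.3, frames 4 to 6 hold D.0 to D.2, frames 7 to 10 hold C.0 to C.3, frames 11 and 12 hold D.3 and D.4, and frames 13 and 14 are free. Page tables: Process A → frames 0, 1, 2, 3; Process B → pages 0, 1, 2 not in memory (entries marked ---); Process C → frames 7, 8, 9, 10; Process D → frames 4, 5, 6, 11, 12. Free frame list: 13, 14]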

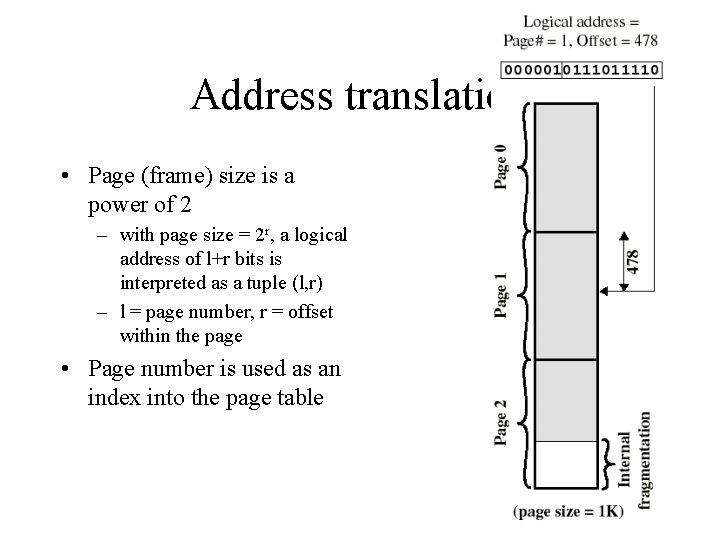

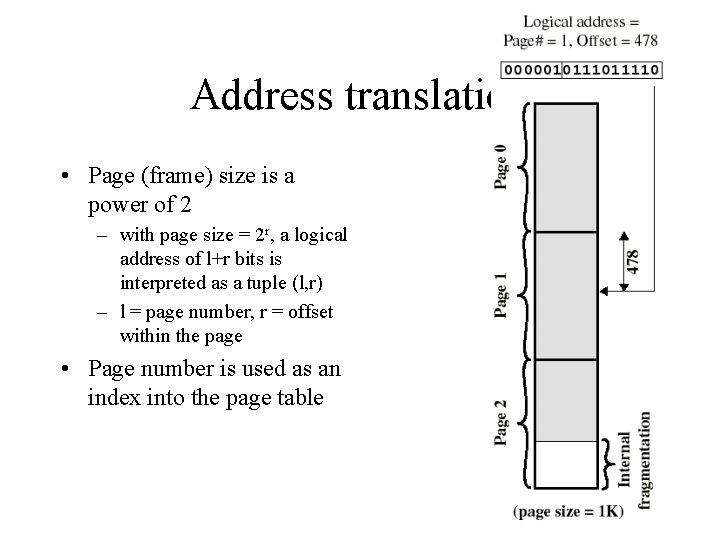

Address translation • Page (frame) size is a power of 2 – with page size = 2^r, a logical address of l+r bits is interpreted as a pair (l, r) – l = page number (the high-order bits), r = offset within the page (the low-order r bits) • The page number is used as an index into the page table
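Address translation with pages is pure bit manipulation: split the logical address, look up the frame, and put the frame number in front of the unchanged offset. A minimal sketch using Process D's page table from the paging example (frames 4, 5, 6, 11, 12) and an assumed page size of 1 K (r = 10); `page_translate` is a hypothetical name.

```python
def page_translate(logical: int, r: int, page_table: list[int]) -> int:
    """Translate a logical address with 2**r-byte pages via a page table."""
    page = logical >> r                  # high-order bits: page number l
    offset = logical & ((1 << r) - 1)    # low-order r bits: offset
    if page >= len(page_table):
        raise IndexError(f"page {page} is outside the process")
    frame = page_table[page]             # the page number indexes the page table
    return (frame << r) | offset         # frame number followed by the same offset


process_d = [4, 5, 6, 11, 12]            # Process D's page table
logical = (3 << 10) | 42                 # page 3, offset 42
print(page_translate(logical, 10, process_d))  # 11306 = 11 * 1024 + 42
```

Because page size is a power of 2, no addition is needed: the frame number simply replaces the page number bits.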

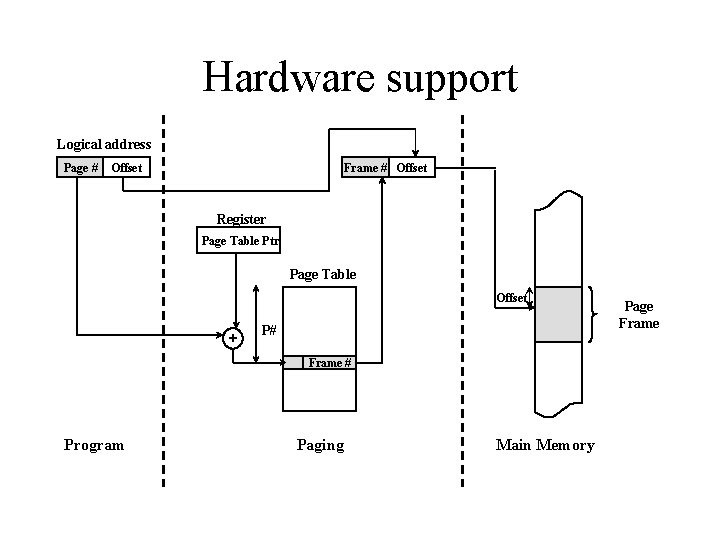

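Hardware support [Figure: paging address translation. The logical address is split into Page # and Offset; the Page Table Ptr register plus the page number (P#) locates the page table entry holding the Frame #; Frame # and Offset together form the physical address of a location in a page frame in main memory]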

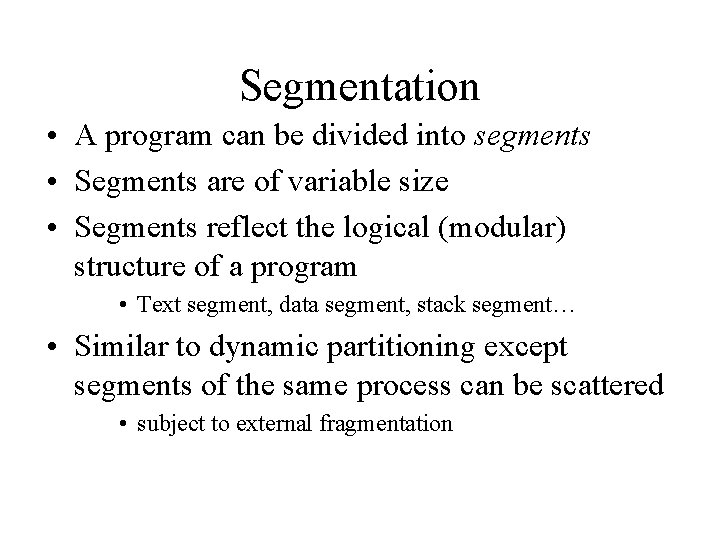

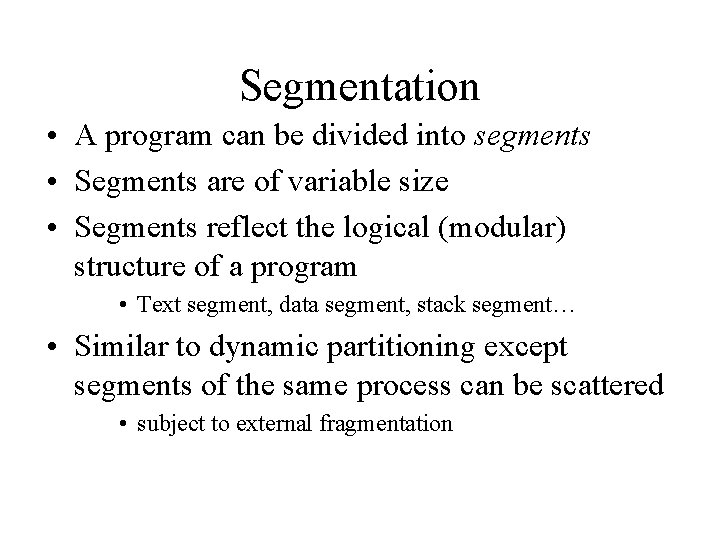

Segmentation • A program can be divided into segments • Segments are of variable size • Segments reflect the logical (modular) structure of a program • Text segment, data segment, stack segment… • Similar to dynamic partitioning except segments of the same process can be scattered • subject to external fragmentation

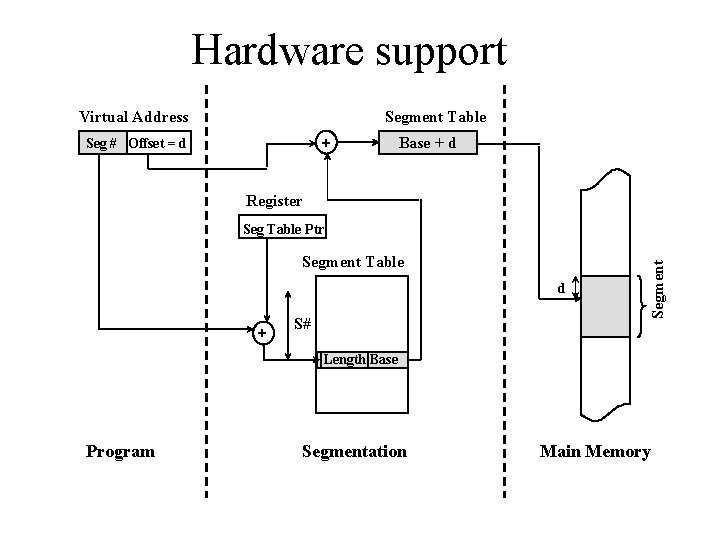

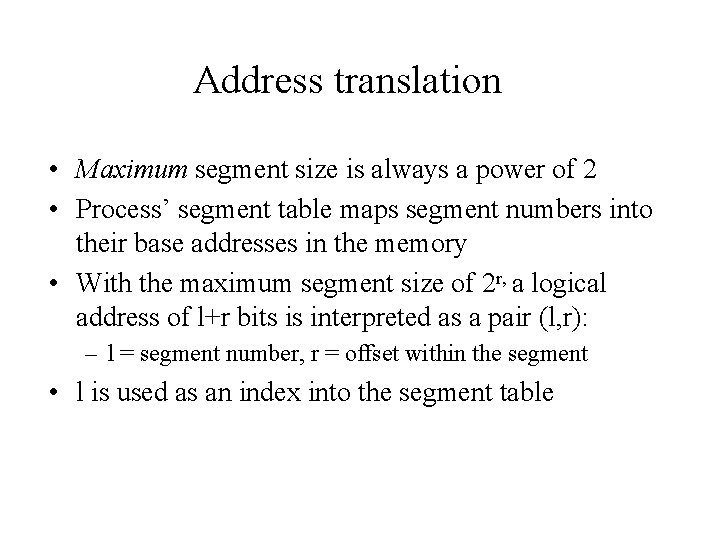

Address translation • The maximum segment size is always a power of 2 • The process’s segment table maps segment numbers to their base addresses in memory • With a maximum segment size of 2^r, a logical address of l+r bits is interpreted as a pair (l, r): – l = segment number (the high-order bits), r = offset within the segment (the low-order r bits) • l is used as an index into the segment table
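Segment translation differs from paging in two ways: each segment table entry also records the segment's length, which must be checked, and the physical address is base + offset (an addition), since a segment can start at any address. A minimal sketch; the segment table contents, r = 16, and the names `segment_translate` and `SegmentationFault` are hypothetical.

```python
class SegmentationFault(Exception):
    """Stands in for the trap raised when an offset exceeds the segment length."""


def segment_translate(logical: int, r: int, segment_table: list[tuple[int, int]]) -> int:
    """Translate a logical address using a table of (base, length) entries."""
    seg = logical >> r                   # high-order bits: segment number l
    offset = logical & ((1 << r) - 1)    # low-order r bits: offset d
    base, length = segment_table[seg]    # the segment number indexes the table
    if offset >= length:
        raise SegmentationFault(f"offset {offset:#x} beyond segment {seg}")
    return base + offset                 # Base + d


table = [(0x8000, 0x1200), (0x2000, 0x400)]   # hypothetical (base, length) pairs
print(hex(segment_translate((1 << 16) | 0x10, 16, table)))  # 0x2010
```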

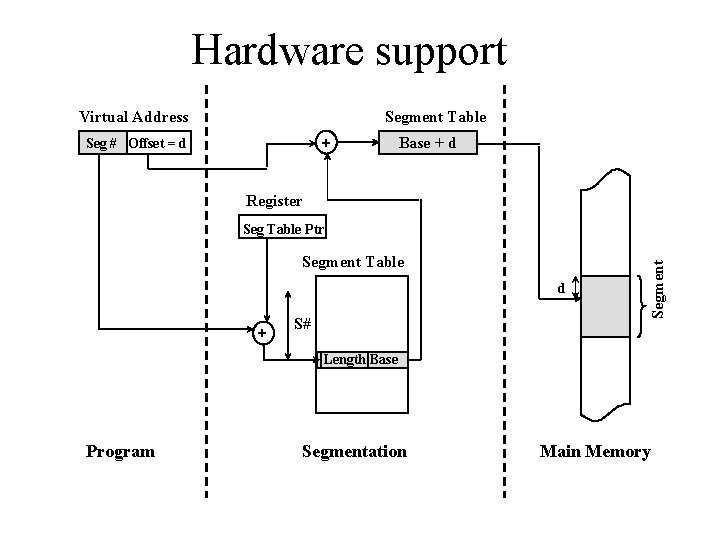

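Hardware support [Figure: segmentation address translation. The virtual address is split into Seg # and Offset = d; the Seg Table Ptr register plus the segment number (S#) locates the segment table entry holding the segment's Length and Base; Base + d is the address of the location within the segment in main memory]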

Remarks • The price of paging/segmentation is sophisticated hardware • But the advantages far outweigh this cost • Paging decouples address translation from memory allocation – not all logical addresses are necessarily mapped into physical memory at any given moment: virtual memory • Paging and segmentation are often combined to get the benefits of both