MEMORY MANAGEMENT In Mono Programming or Multi programming

- Slides: 71

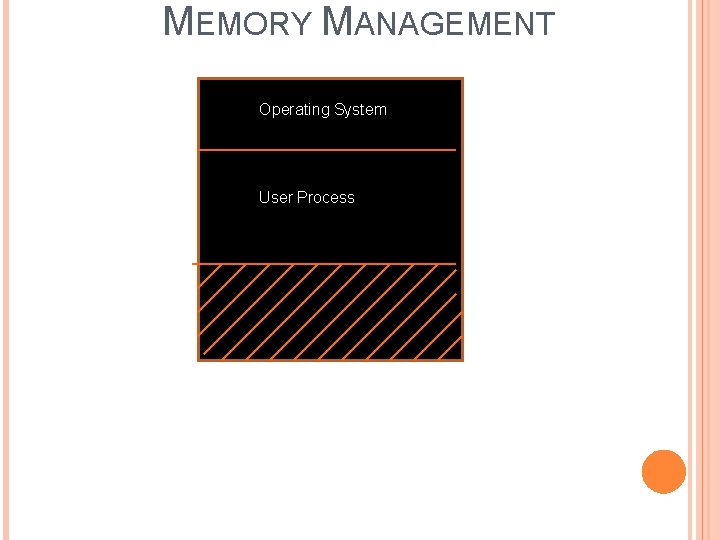

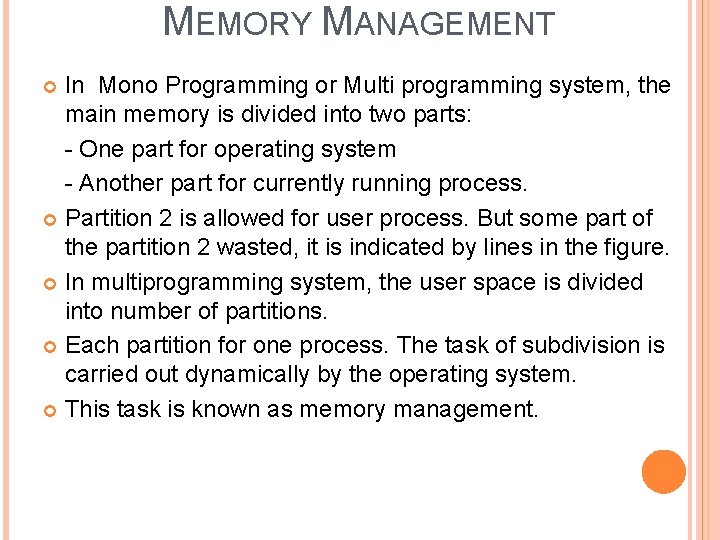

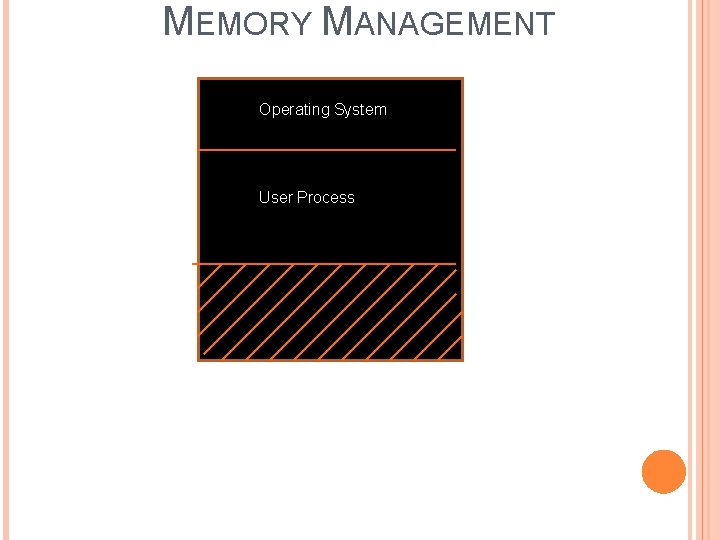

MEMORY MANAGEMENT In Mono Programming or Multi programming system, the main memory is divided into two parts: - One part for operating system - Another part for currently running process. Partition 2 is allowed for user process. But some part of the partition 2 wasted, it is indicated by lines in the figure. In multiprogramming system, the user space is divided into number of partitions. Each partition for one process. The task of subdivision is carried out dynamically by the operating system. This task is known as memory management.

MEMORY MANAGEMENT Operating System User Process

ADDRESS BINDING The processes on the disk that are waiting to be brought into memory for execution form the input queue. The process of mapping program’s address space in CPU to its corresponding address space in memory is called address binding. A compiler typically bind these addresses to relocatable addresses. The loader will in turn bind the relocatable addresses to absolute addresses. Each binding is a mapping from one address to another.

COMPILE TIME BINDING Location of program in physical memory must be known at compile time. Compiler generates absolute code. Compiler binds names to actual physical addresses. Loading ≡ copying executable file to appropriate location in memory. If starting location changes, program will have to be recompiled. Example: . COM programs in MS-DOS

LOAD TIME BINDING Compiler generates relocatable code � compiler binds names to relative addresses (offsets from starting address) � compiler also generates relocation table. Linker resolves external names and combines object files into one loadable module. (Linking) loader converts relative addresses to physical addresses. No relocation allowed during execution

EXECUTION TIME BINDING Programs/compiled units may need to be moved during execution from one memory segment to another. So binding is delayed to the time of execution. CPU generates relative addresses. Relative addresses bound are to physical addresses at runtime, based on location of translated units. Suitable hardware support required. This hardware device is called Memory-Management Unit(MMU) The compile-time and load-time address binding methods generate identical logical and physical addresses, but in execution-time binding they are different.

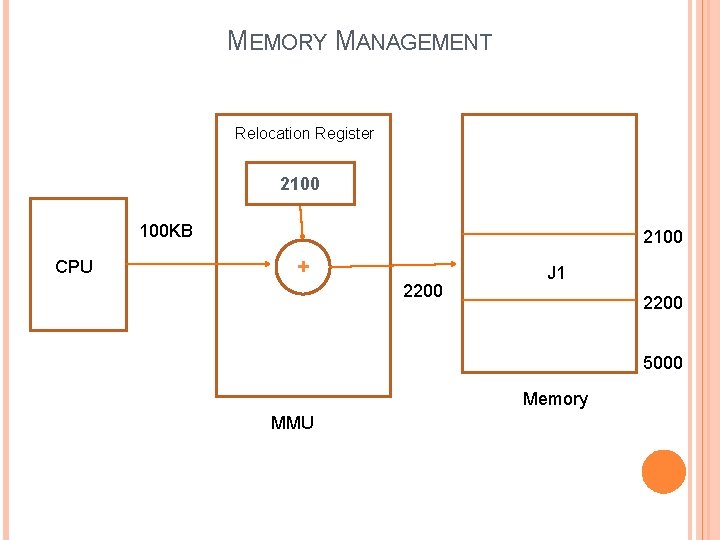

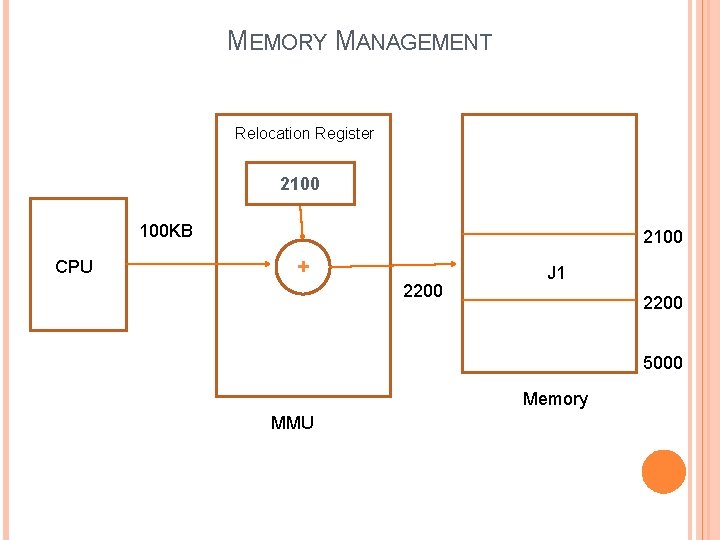

LOGICAL ADDRESS VERSUS PHYSICAL ADDRESS An Address generated by CPU is called logical address. An address generated by memory management unit—that is, the one loaded into the memory-address register of the memory is called physical address. For example, J 1 is a program, written by a user, the size of program is 100 KB. But program is dynamically loaded in the main memory from 2100 to 2200 KB, this is actual loaded address in main memory is called physical address. The set of all logical address are generated by a program is referred to as a “Logical Address Space”. The set of all physical address corresponding these logical address referred to as a “Physical Address Space”. Physical Address Space= Logical Address Space + Contents of the relocation register.

MEMORY MANAGEMENT Relocation Register 2100 100 KB CPU 2100 + 2200 J 1 2200 5000 Memory MMU

DYNAMIC LOADING The size of the process executing is limited to the size of physical memory. In dynamic loading, a routine is not loaded until it is called. All routines are kept on disk in a relocatable load format. The main program is loaded into memory and is executed. When that program needs to call another routine, the calling routine first checks to see whether the other routine has been loaded. If the routine is not in memory, loader loads it into memory and update the program’s address tables and pass control to newly loaded routine.

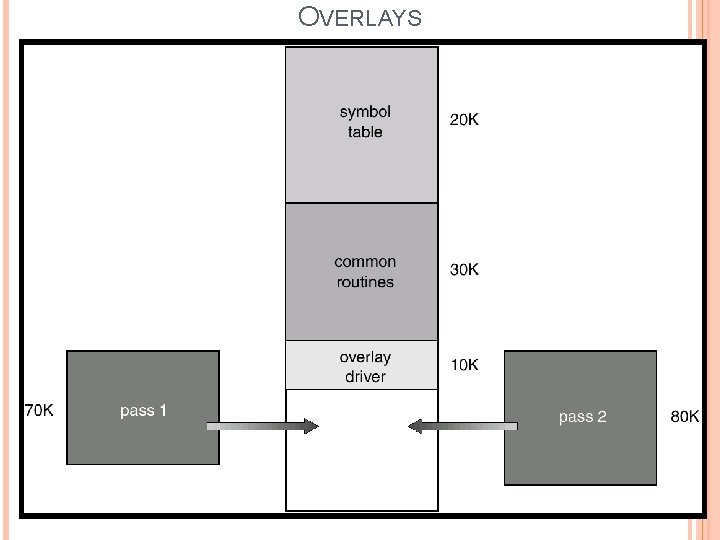

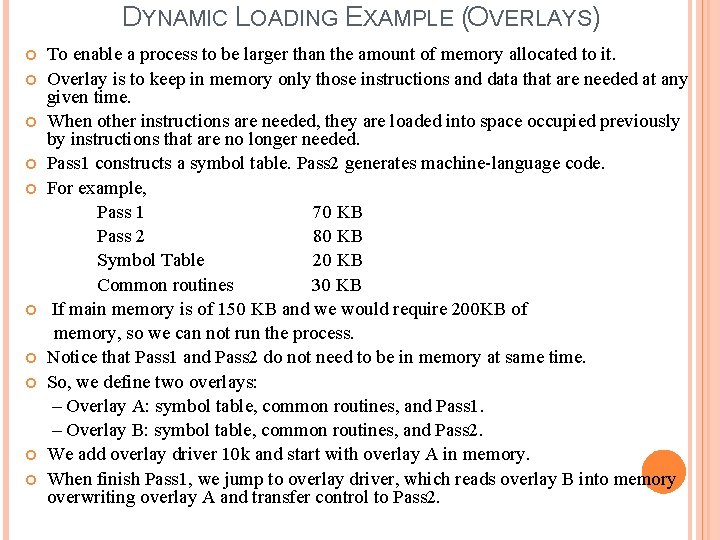

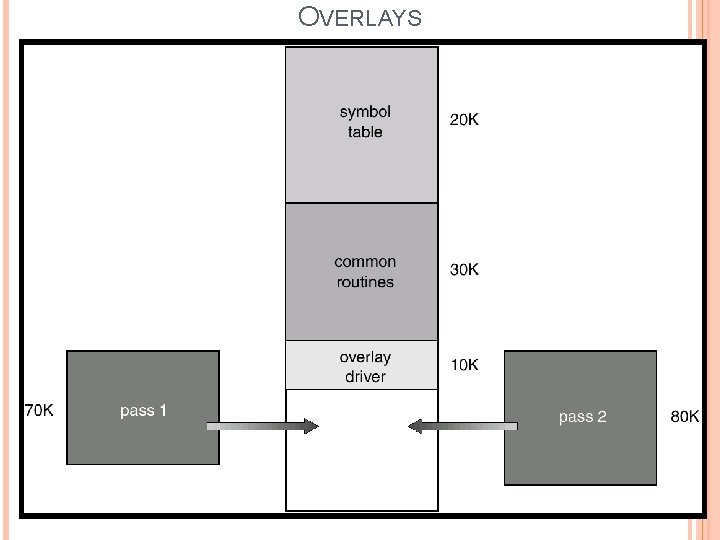

DYNAMIC LOADING EXAMPLE (OVERLAYS) To enable a process to be larger than the amount of memory allocated to it. Overlay is to keep in memory only those instructions and data that are needed at any given time. When other instructions are needed, they are loaded into space occupied previously by instructions that are no longer needed. Pass 1 constructs a symbol table. Pass 2 generates machine-language code. For example, Pass 1 70 KB Pass 2 80 KB Symbol Table 20 KB Common routines 30 KB If main memory is of 150 KB and we would require 200 KB of memory, so we can not run the process. Notice that Pass 1 and Pass 2 do not need to be in memory at same time. So, we define two overlays: – Overlay A: symbol table, common routines, and Pass 1. – Overlay B: symbol table, common routines, and Pass 2. We add overlay driver 10 k and start with overlay A in memory. When finish Pass 1, we jump to overlay driver, which reads overlay B into memory overwriting overlay A and transfer control to Pass 2.

OVERLAYS

DYNAMIC LINKING Dynamic linking is used with system libraries, such as language subroutine libraries. Without this facility, each program on a system must include a copy of its language libraries. Thus, it wastes both disk space and main memory.

DYNAMIC LINKING—STUB The stub is a small piece of code which is included in the executable image of the program. When stub is executed, it checks to see whether the needed routine is already in memory. If not, it locates the library and loads it into memory. The stub replace itself with the address of the routine and executes the routine. The next time that particular code segment is reached, the library routine is executed directly. Thus, all processes that use a library execute only one copy of the library code.

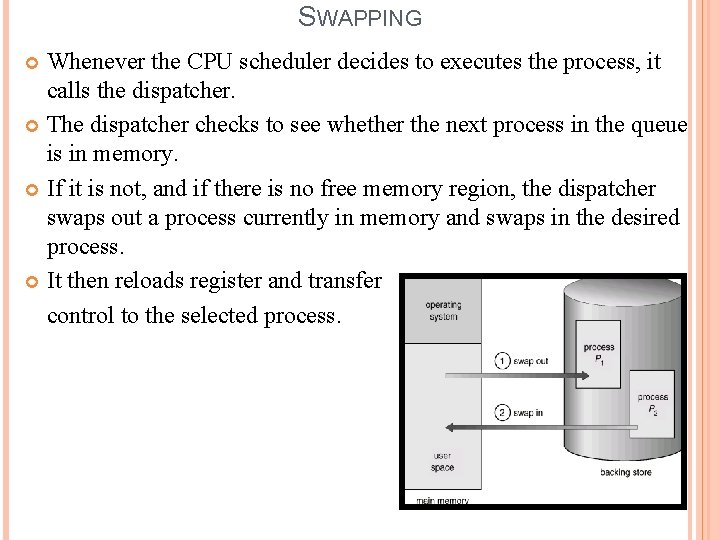

SWAPPING Swapping is the method to improve the main memory utilization. Switch a process from main memory to disk is said to be “swap out” and “switch from disk to main memory is said to be “swap in”. This type of mechanism is said to be “swapping”. We can achieve the efficient memory utilization with swapping. The swapping require backing store. The backing store is commonly a fast disk. It must be large enough to accommodate the copies of all process images for all users. When process is swapped out, its executable image is copied into backing store. The system maintains a ready queue consisting of all processes whose memory images are on the backing store and are ready to run.

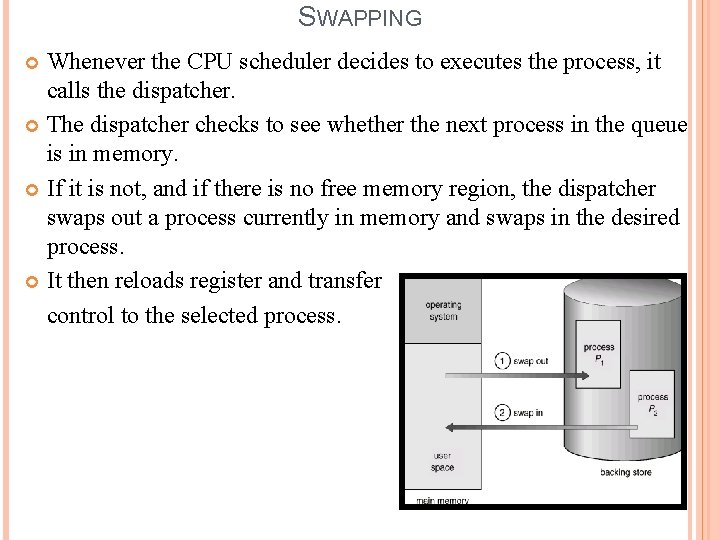

SWAPPING Whenever the CPU scheduler decides to executes the process, it calls the dispatcher. The dispatcher checks to see whether the next process in the queue is in memory. If it is not, and if there is no free memory region, the dispatcher swaps out a process currently in memory and swaps in the desired process. It then reloads register and transfer control to the selected process.

SWAPPING A process that is swapped out will be swapped back into the same memory space it occupied previously. This is dependent on the method used by address binding. If binding is done at compile or load time, then the process cannot be easily moved to a different location. If execution-time binding is used, then a process can be swapped into different memory space, because physical addresses are computed during execution time.

SWAP TIME The context switch time must not be very higher. Example: the size of user process is 100 MB and the transfer rate for baking store is 50 MB per second. Thus the 100 MB process can take 100 MB/50 MB per sec=2 seconds Consider average latency time as 8 milliseconds. Thus, the swap time is 2008 milliseconds and total swap in and swap out time will be 4016 milliseconds. (Latency is the delay from input into a system to desired outcome)

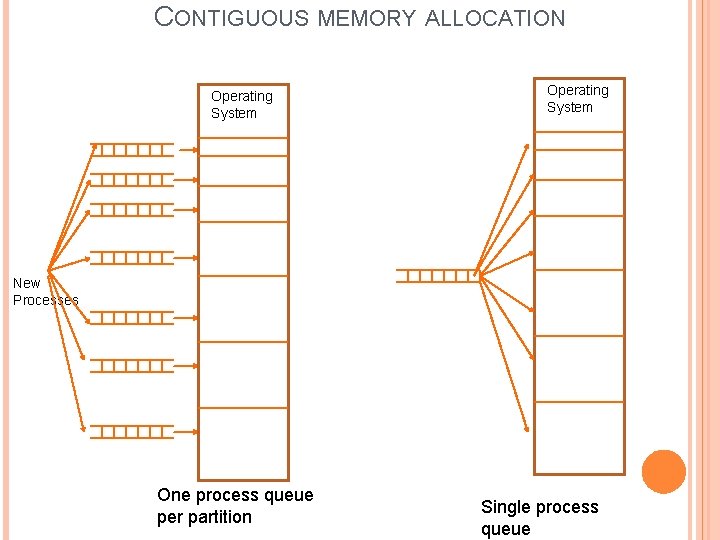

CONTIGUOUS MEMORY ALLOCATION Several user processes residing in memory at the same time. Memory can be divided into a number of specific-sized partitions, where each partition may contain exactly one process. Therefore, the degree of multiprogramming is bound by the number of partitions. Or all memory is available for user processes as one large block (hole). When a process arrives and needs memory, we search for a hole large enough for this process. If we find a space, we allocate only as much memory as is needed, keeping the rest available to satisfy future requests.

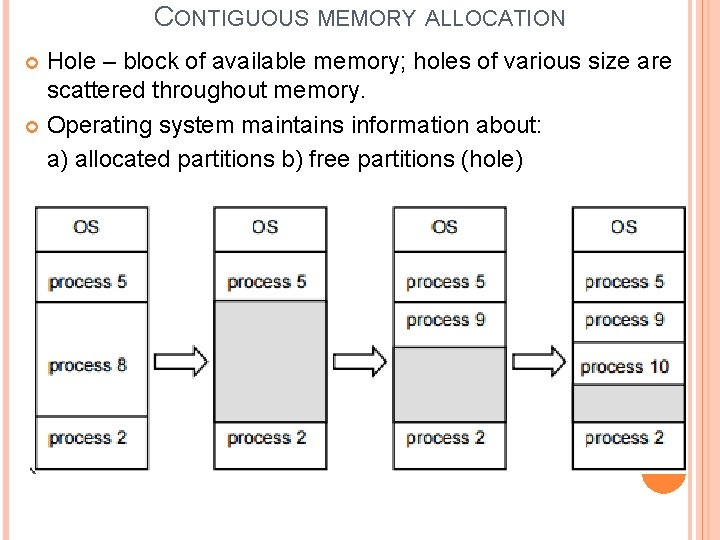

CONTIGUOUS MEMORY ALLOCATION Hole – block of available memory; holes of various size are scattered throughout memory. Operating system maintains information about: a) allocated partitions b) free partitions (hole)

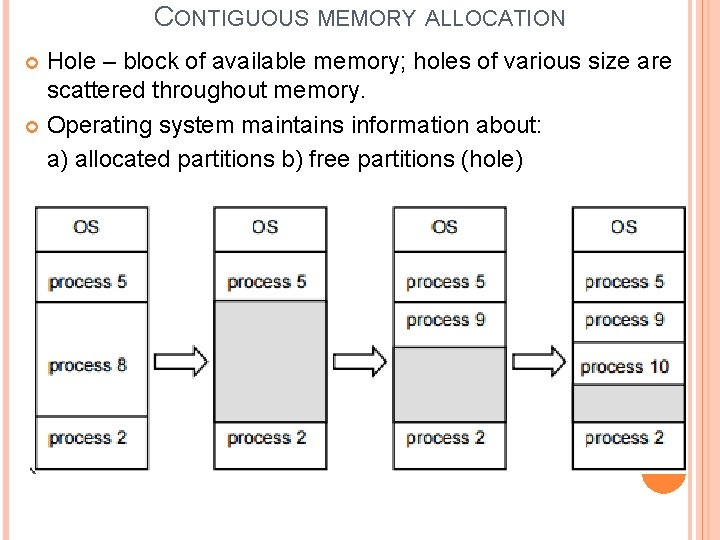

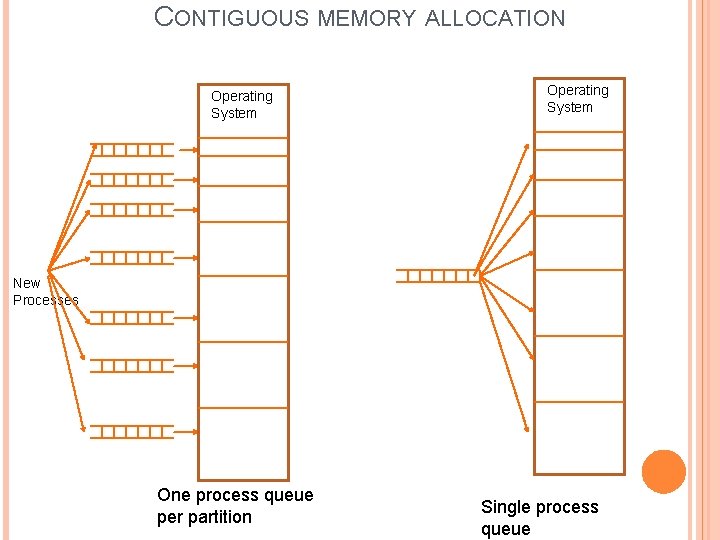

CONTIGUOUS MEMORY ALLOCATION Operating System New Processes One process queue per partition Single process queue

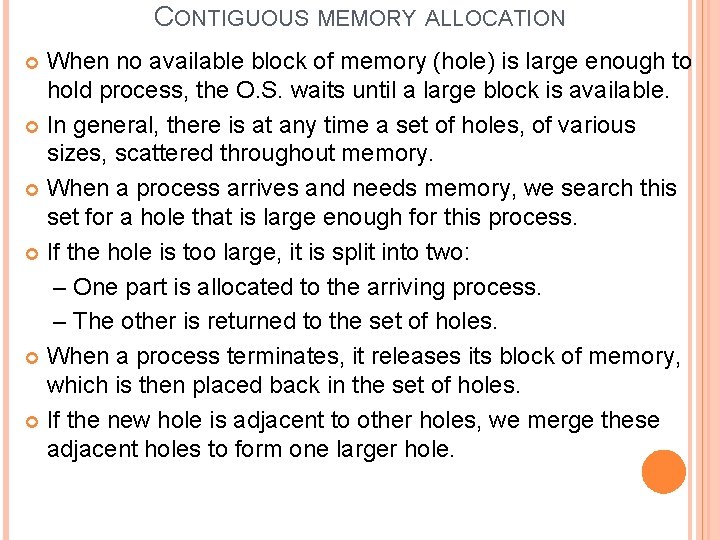

CONTIGUOUS MEMORY ALLOCATION When no available block of memory (hole) is large enough to hold process, the O. S. waits until a large block is available. In general, there is at any time a set of holes, of various sizes, scattered throughout memory. When a process arrives and needs memory, we search this set for a hole that is large enough for this process. If the hole is too large, it is split into two: – One part is allocated to the arriving process. – The other is returned to the set of holes. When a process terminates, it releases its block of memory, which is then placed back in the set of holes. If the new hole is adjacent to other holes, we merge these adjacent holes to form one larger hole.

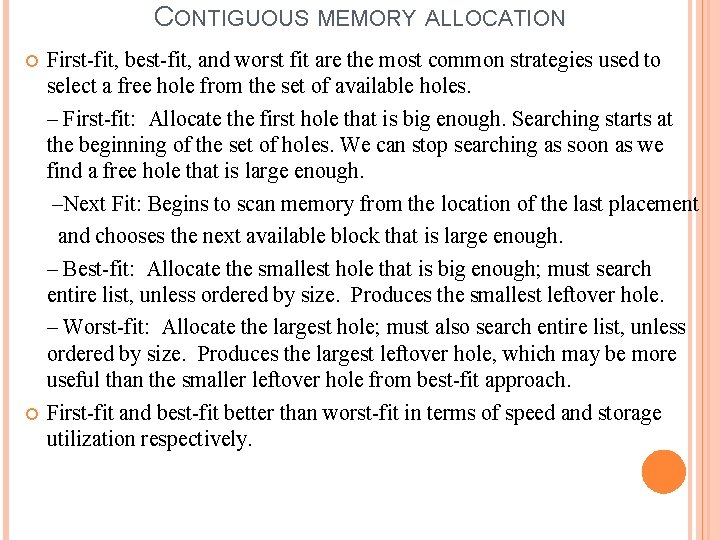

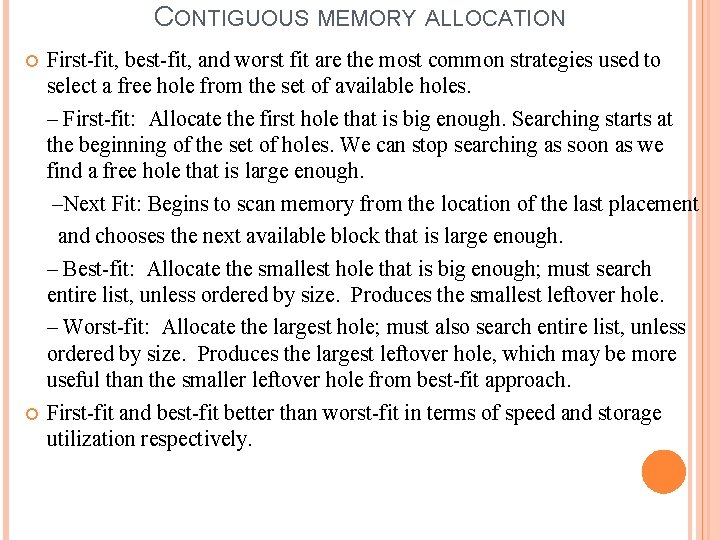

CONTIGUOUS MEMORY ALLOCATION First-fit, best-fit, and worst fit are the most common strategies used to select a free hole from the set of available holes. – First-fit: Allocate the first hole that is big enough. Searching starts at the beginning of the set of holes. We can stop searching as soon as we find a free hole that is large enough. –Next Fit: Begins to scan memory from the location of the last placement and chooses the next available block that is large enough. – Best-fit: Allocate the smallest hole that is big enough; must search entire list, unless ordered by size. Produces the smallest leftover hole. – Worst-fit: Allocate the largest hole; must also search entire list, unless ordered by size. Produces the largest leftover hole, which may be more useful than the smaller leftover hole from best-fit approach. First-fit and best-fit better than worst-fit in terms of speed and storage utilization respectively.

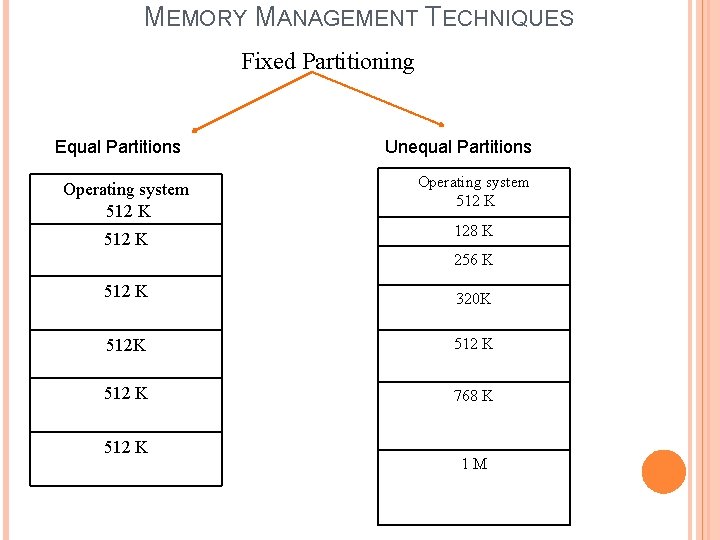

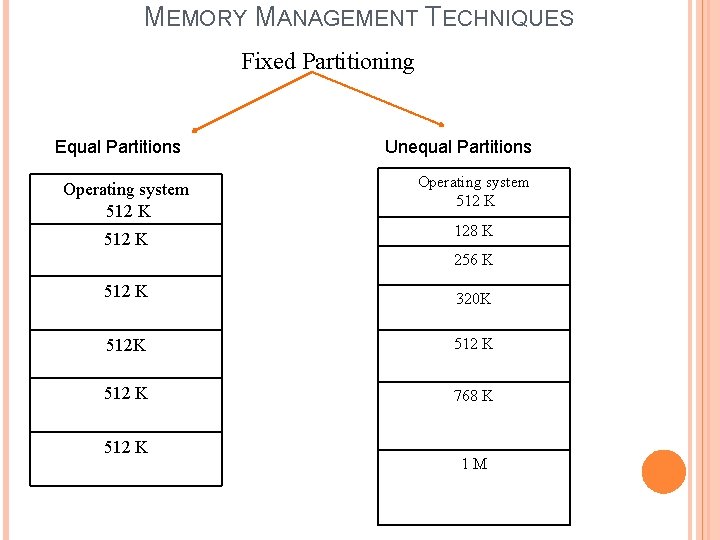

MEMORY MANAGEMENT TECHNIQUES Fixed Partitioning Equal Partitions Unequal Partitions Operating system 512 K 128 K 256 K 512 K 320 K 512 K 768 K 512 K 1 M

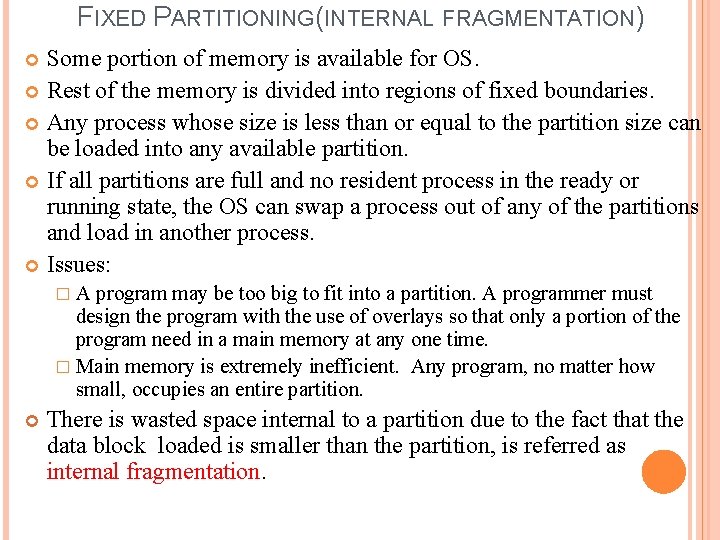

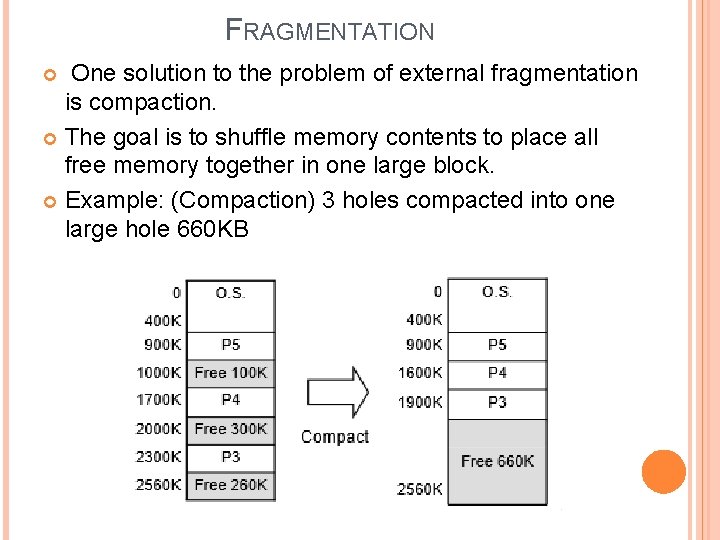

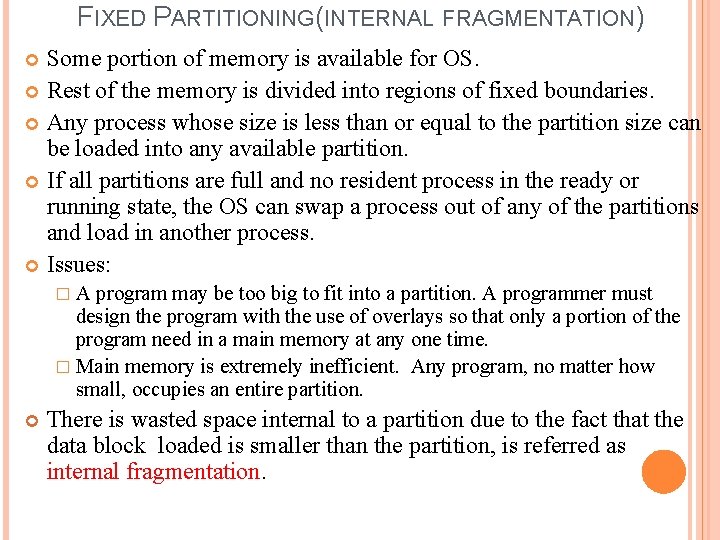

FIXED PARTITIONING(INTERNAL FRAGMENTATION) Some portion of memory is available for OS. Rest of the memory is divided into regions of fixed boundaries. Any process whose size is less than or equal to the partition size can be loaded into any available partition. If all partitions are full and no resident process in the ready or running state, the OS can swap a process out of any of the partitions and load in another process. Issues: �A program may be too big to fit into a partition. A programmer must design the program with the use of overlays so that only a portion of the program need in a main memory at any one time. � Main memory is extremely inefficient. Any program, no matter how small, occupies an entire partition. There is wasted space internal to a partition due to the fact that the data block loaded is smaller than the partition, is referred as internal fragmentation.

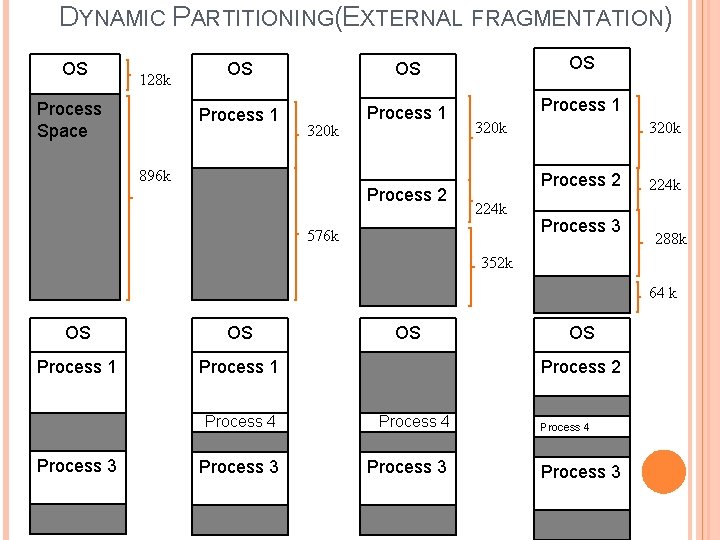

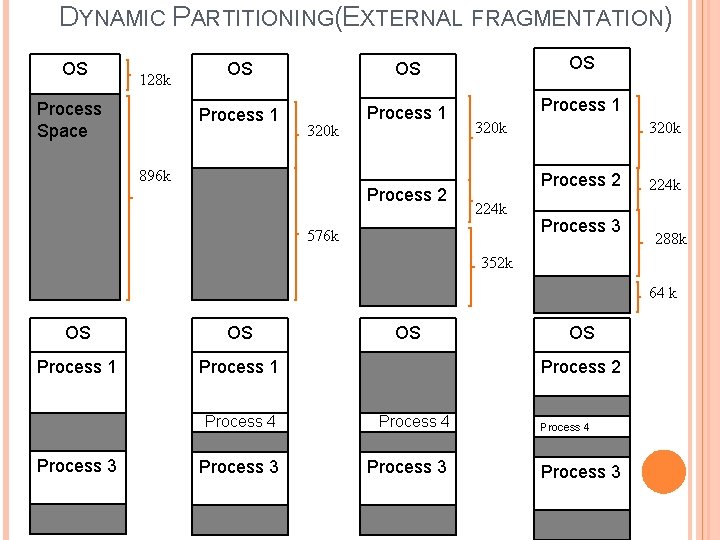

DYNAMIC PARTITIONING(EXTERNAL FRAGMENTATION) OS 128 k Process Space OS OS OS Process 1 320 k 896 k Process 2 320 k Process 2 224 k 576 k Process 3 224 k 288 k 352 k 64 k OS OS Process 1 Process 3 OS OS Process 2 Process 4 Process 3

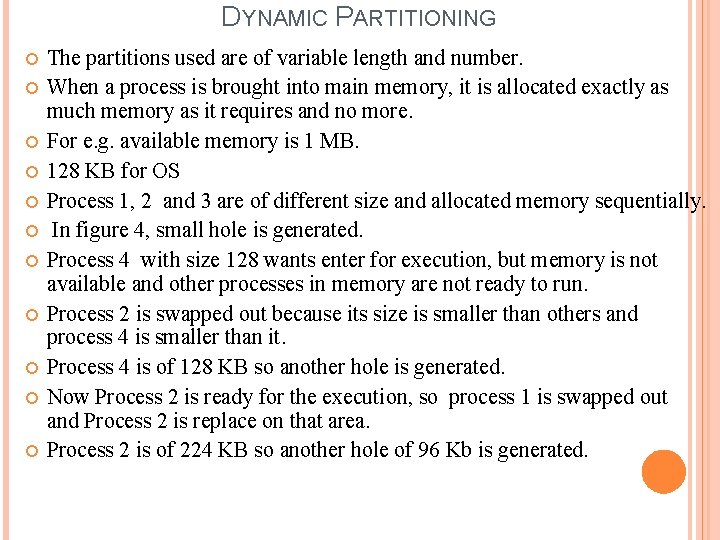

DYNAMIC PARTITIONING The partitions used are of variable length and number. When a process is brought into main memory, it is allocated exactly as much memory as it requires and no more. For e. g. available memory is 1 MB. 128 KB for OS Process 1, 2 and 3 are of different size and allocated memory sequentially. In figure 4, small hole is generated. Process 4 with size 128 wants enter for execution, but memory is not available and other processes in memory are not ready to run. Process 2 is swapped out because its size is smaller than others and process 4 is smaller than it. Process 4 is of 128 KB so another hole is generated. Now Process 2 is ready for the execution, so process 1 is swapped out and Process 2 is replace on that area. Process 2 is of 224 KB so another hole of 96 Kb is generated.

DYNAMIC PARTITIONING This method starts out well, but as time goes on, memory becomes more and more fragmented. This phenomenon is called external fragmentation. Compaction: � It is technique to overcome external fragmentation. � From time to time, the operating system shifts the processes so that they are contagious and all the free memory is combined together into one block. � It is very time consuming and wasting processor time.

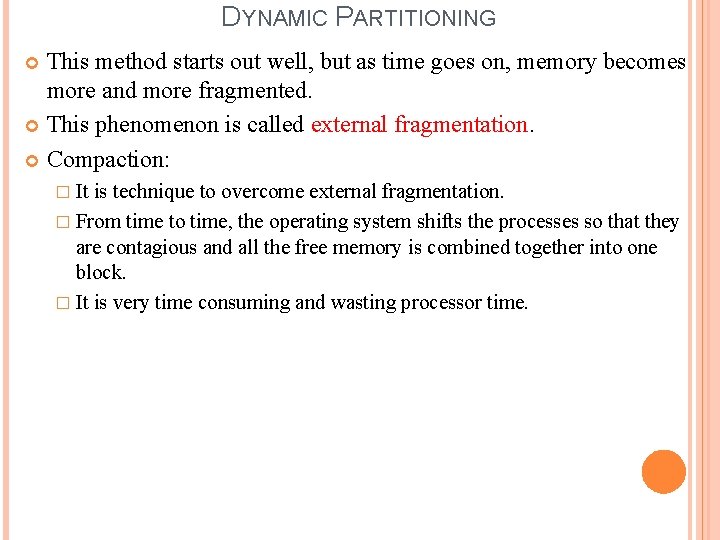

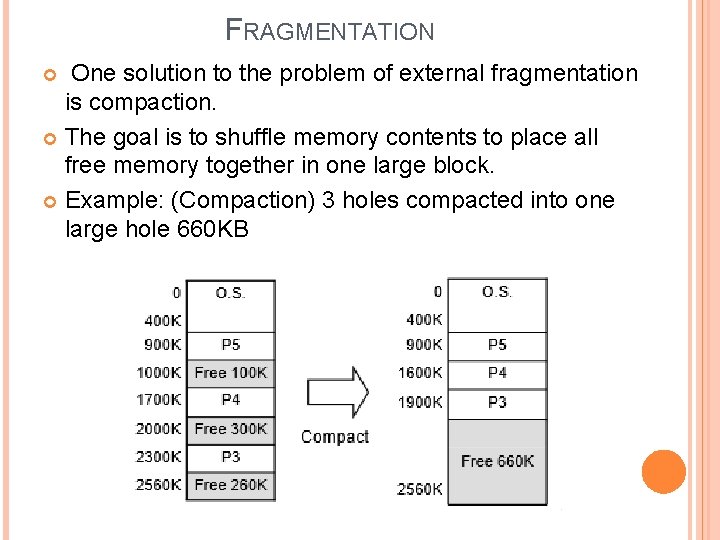

FRAGMENTATION One solution to the problem of external fragmentation is compaction. The goal is to shuffle memory contents to place all free memory together in one large block. Example: (Compaction) 3 holes compacted into one large hole 660 KB

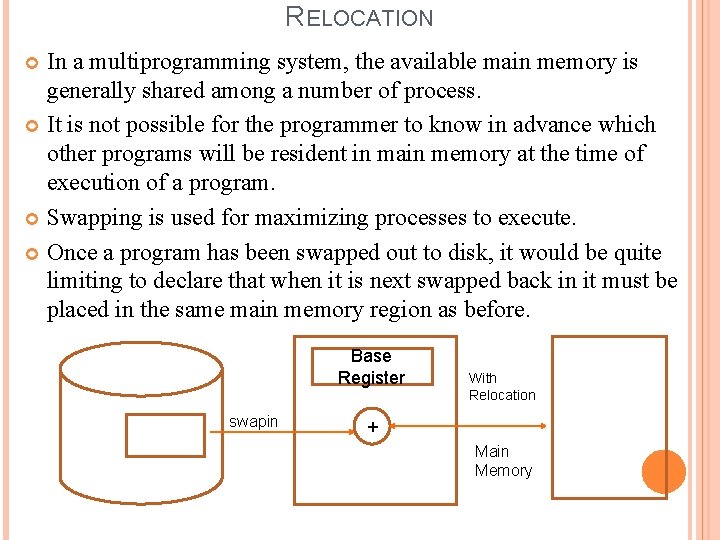

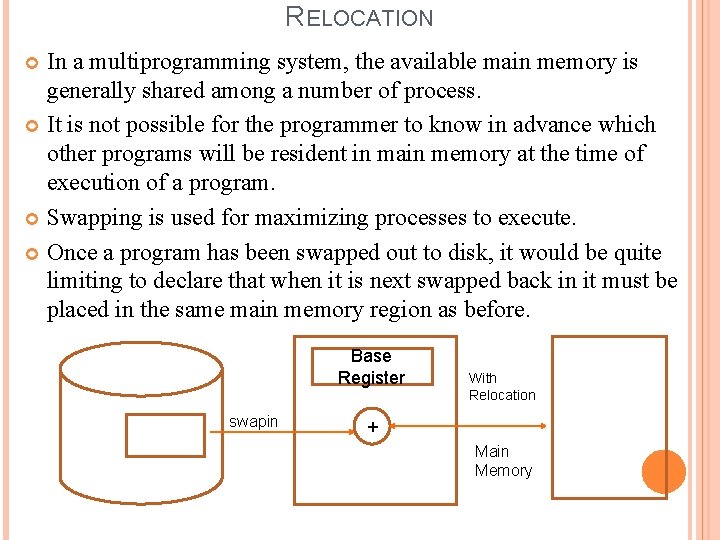

RELOCATION In a multiprogramming system, the available main memory is generally shared among a number of process. It is not possible for the programmer to know in advance which other programs will be resident in main memory at the time of execution of a program. Swapping is used for maximizing processes to execute. Once a program has been swapped out to disk, it would be quite limiting to declare that when it is next swapped back in it must be placed in the same main memory region as before. Base Register swapin With Relocation + Main Memory

RELOCATION The base register holds the smallest legal physical memory address. The Limit register contains the size of the process. If the logical address is greater than the contents of the base register, then it is authorized access, other wise it is trap to OS. If the physical address(Logical + base) is less than the base + limit , it causes no problem otherwise it is trap to the operating system.

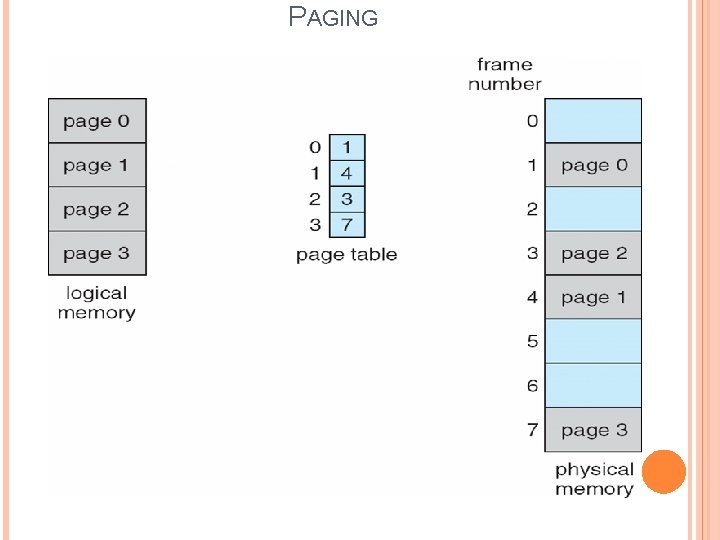

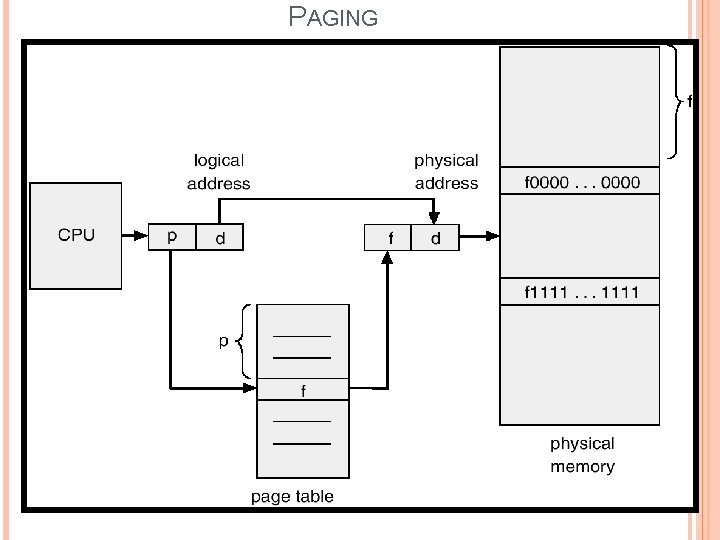

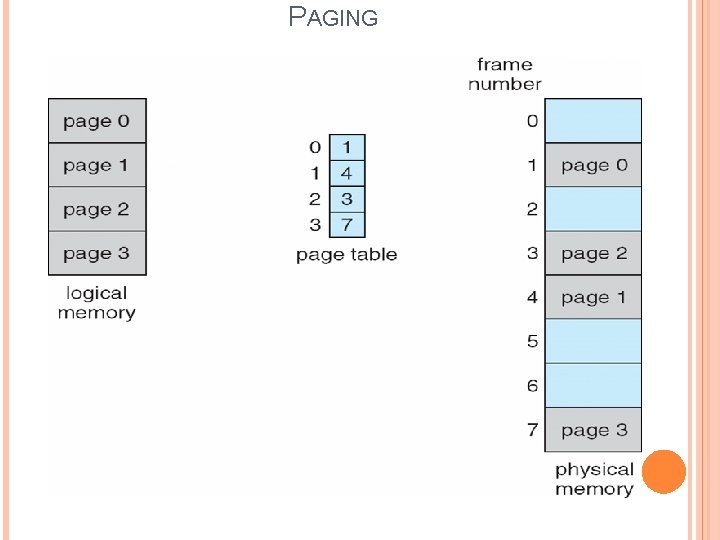

PAGING Non contagious Memory Allocation Scheme. Paging avoids external fragmentation and need for compaction. Paging means dividing physical memory into fixed size blocks called frames. The Process is divided into fixed size blocks called pages. Page size and frame size must be equal. The size of the frame or page is depends on operating system.

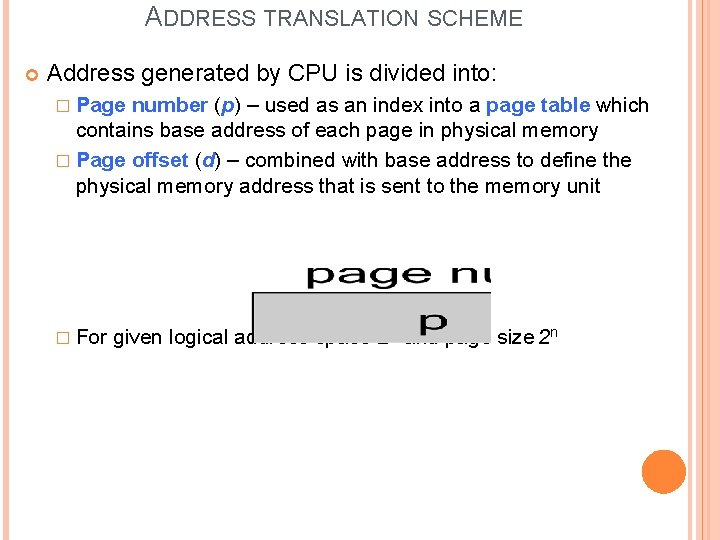

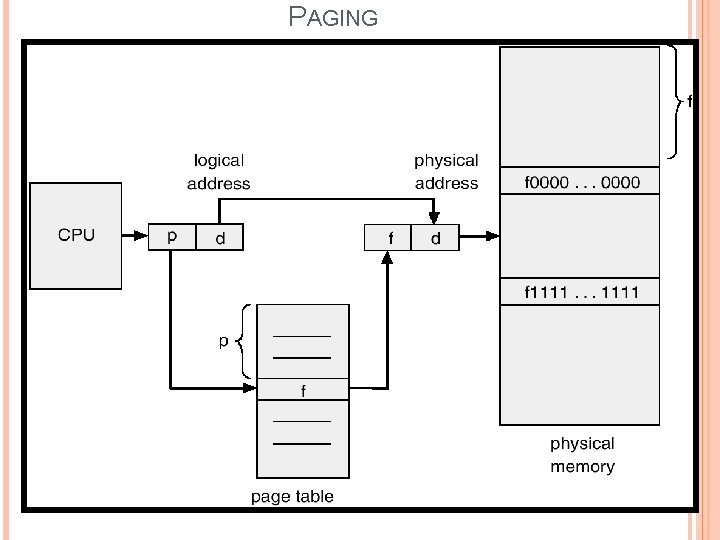

ADDRESS TRANSLATION SCHEME Address generated by CPU is divided into: � Page number (p) – used as an index into a page table which contains base address of each page in physical memory � Page offset (d) – combined with base address to define the physical memory address that is sent to the memory unit � For given logical address space 2 m and page size 2 n

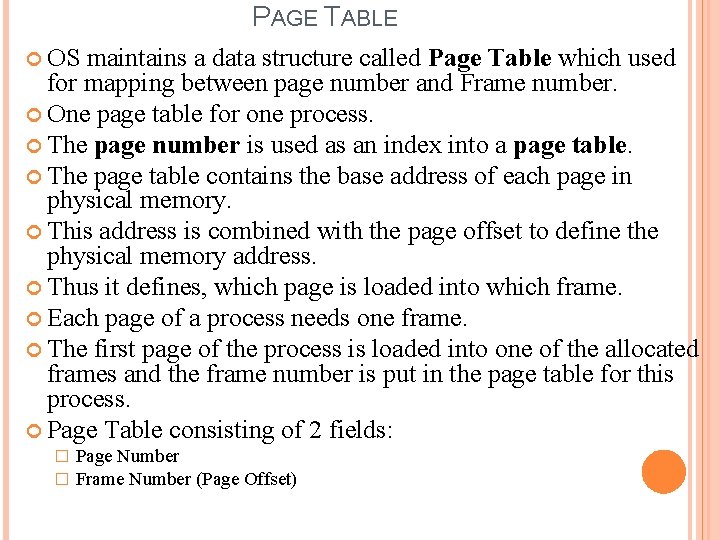

PAGE TABLE OS maintains a data structure called Page Table which used for mapping between page number and Frame number. One page table for one process. The page number is used as an index into a page table. The page table contains the base address of each page in physical memory. This address is combined with the page offset to define the physical memory address. Thus it defines, which page is loaded into which frame. Each page of a process needs one frame. The first page of the process is loaded into one of the allocated frames and the frame number is put in the page table for this process. Page Table consisting of 2 fields: � � Page Number Frame Number (Page Offset)

PAGING

PAGING

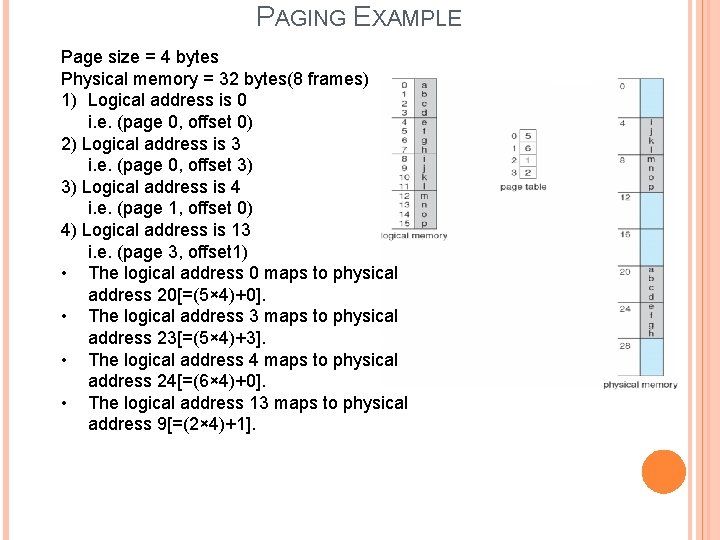

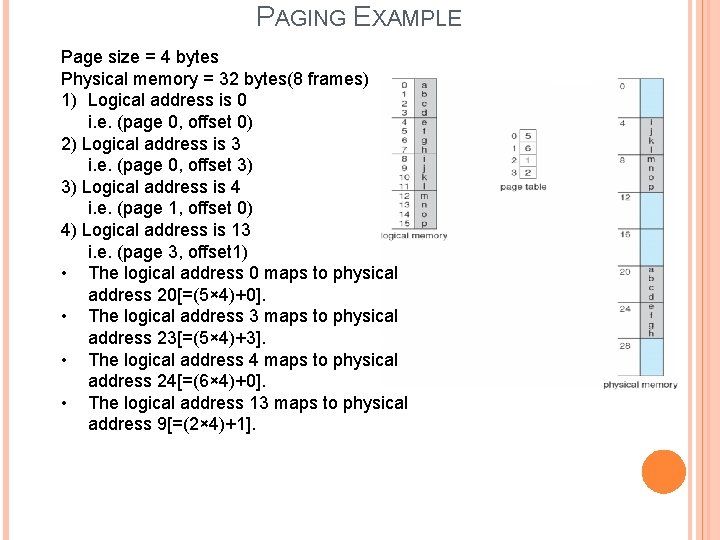

PAGING EXAMPLE Page size = 4 bytes Physical memory = 32 bytes(8 frames) 1) Logical address is 0 i. e. (page 0, offset 0) 2) Logical address is 3 i. e. (page 0, offset 3) 3) Logical address is 4 i. e. (page 1, offset 0) 4) Logical address is 13 i. e. (page 3, offset 1) • The logical address 0 maps to physical address 20[=(5× 4)+0]. • The logical address 3 maps to physical address 23[=(5× 4)+3]. • The logical address 4 maps to physical address 24[=(6× 4)+0]. • The logical address 13 maps to physical address 9[=(2× 4)+1].

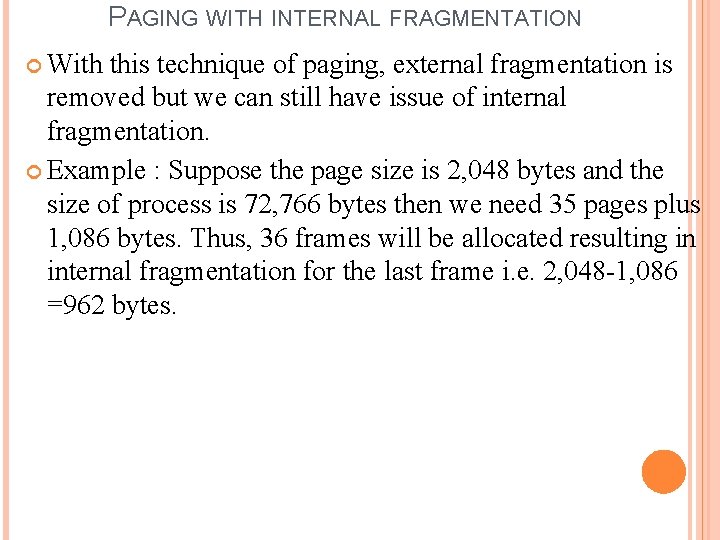

PAGING WITH INTERNAL FRAGMENTATION With this technique of paging, external fragmentation is removed but we can still have issue of internal fragmentation. Example : Suppose the page size is 2, 048 bytes and the size of process is 72, 766 bytes then we need 35 pages plus 1, 086 bytes. Thus, 36 frames will be allocated resulting in internal fragmentation for the last frame i. e. 2, 048 -1, 086 =962 bytes.

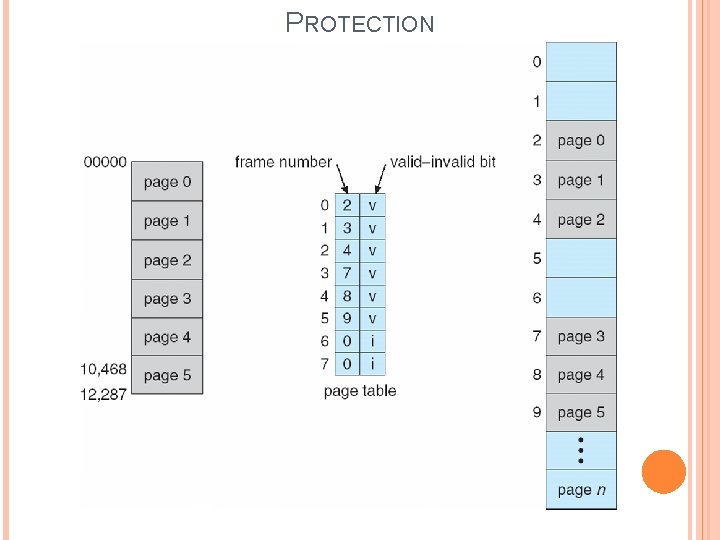

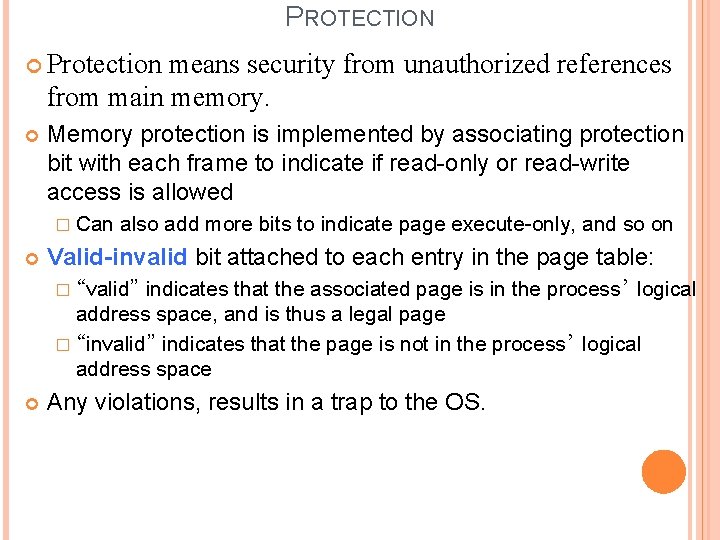

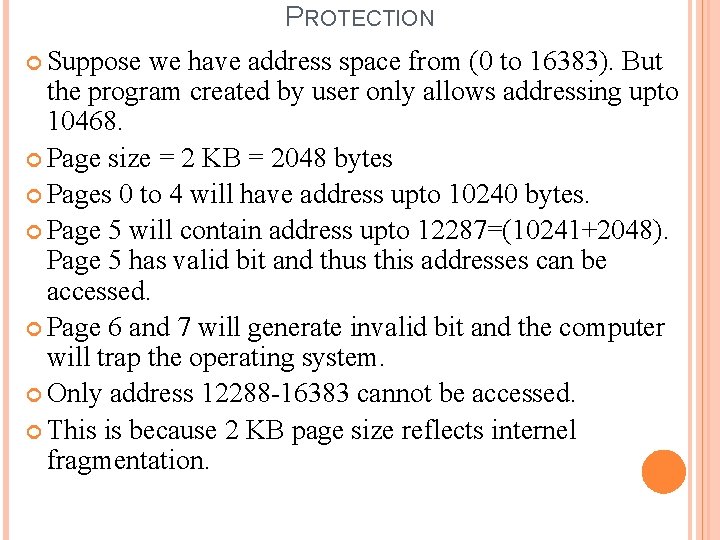

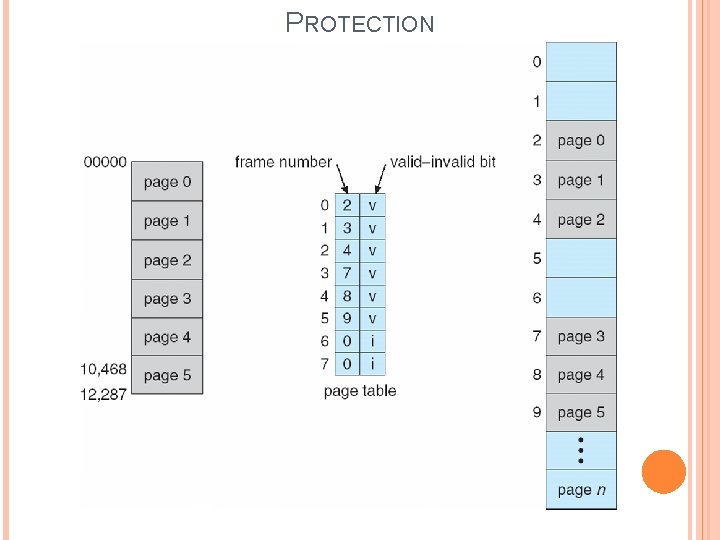

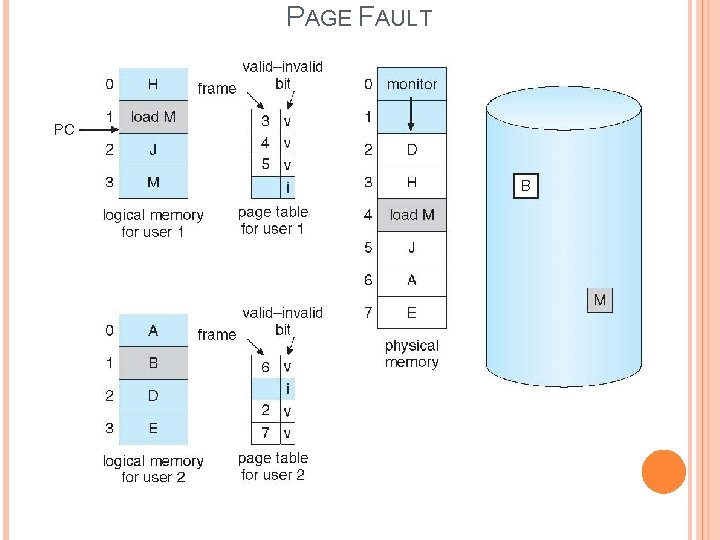

PROTECTION Protection means security from unauthorized references from main memory. Memory protection is implemented by associating protection bit with each frame to indicate if read-only or read-write access is allowed � Can also add more bits to indicate page execute-only, and so on Valid-invalid bit attached to each entry in the page table: indicates that the associated page is in the process’ logical address space, and is thus a legal page � “invalid” indicates that the page is not in the process’ logical address space � “valid” Any violations, results in a trap to the OS.

PROTECTION Suppose we have address space from (0 to 16383). But the program created by user only allows addressing upto 10468. Page size = 2 KB = 2048 bytes Pages 0 to 4 will have address upto 10240 bytes. Page 5 will contain address upto 12287=(10241+2048). Page 5 has valid bit and thus this addresses can be accessed. Page 6 and 7 will generate invalid bit and the computer will trap the operating system. Only address 12288 -16383 cannot be accessed. This is because 2 KB page size reflects internel fragmentation.

PROTECTION

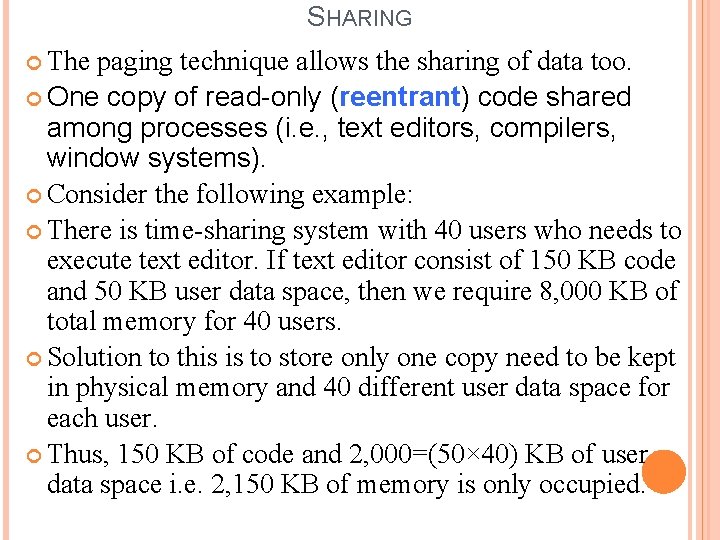

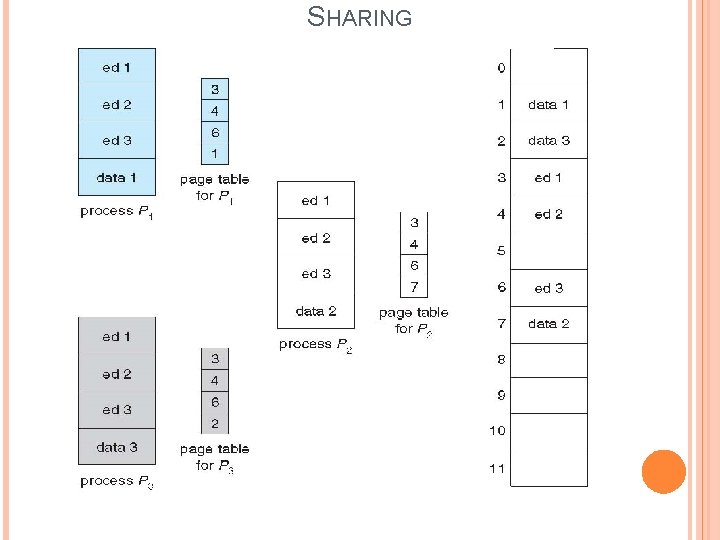

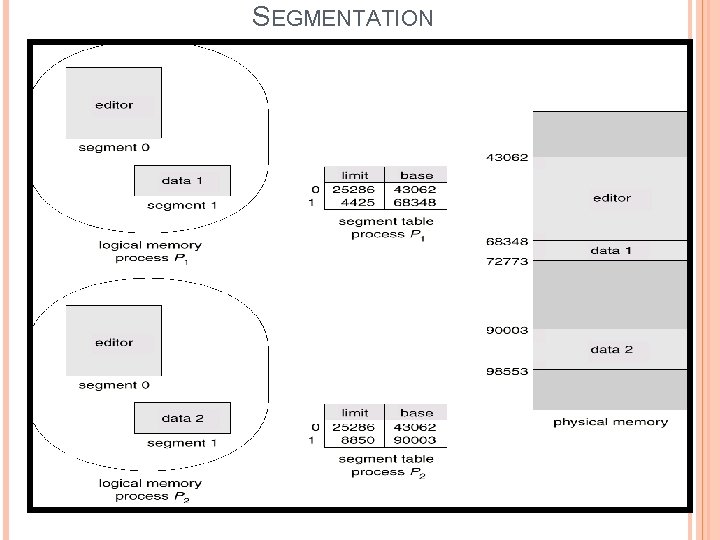

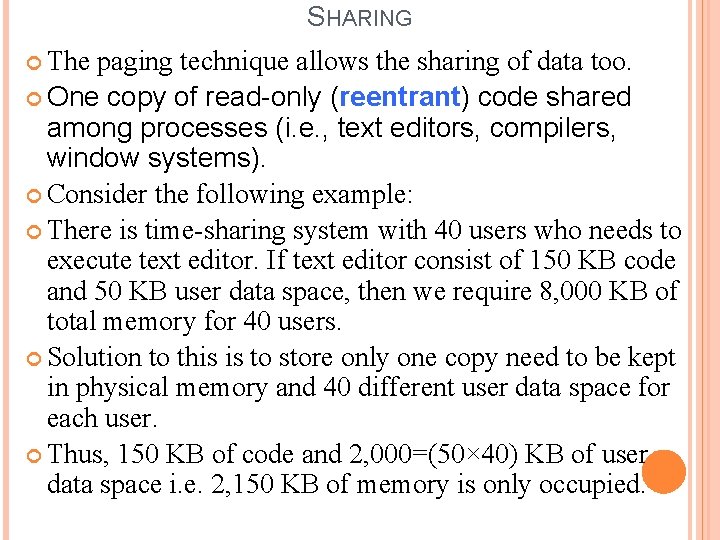

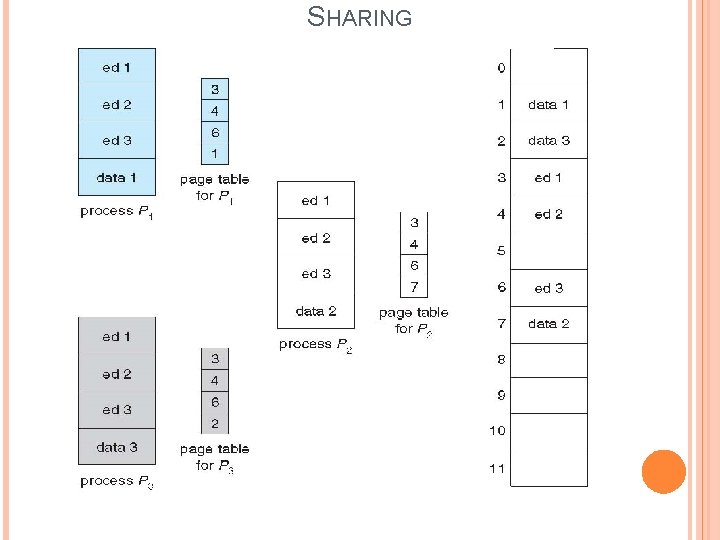

SHARING The paging technique allows the sharing of data too. One copy of read-only (reentrant) code shared among processes (i. e. , text editors, compilers, window systems). Consider the following example: There is time-sharing system with 40 users who needs to execute text editor. If text editor consist of 150 KB code and 50 KB user data space, then we require 8, 000 KB of total memory for 40 users. Solution to this is to store only one copy need to be kept in physical memory and 40 different user data space for each user. Thus, 150 KB of code and 2, 000=(50× 40) KB of user data space i. e. 2, 150 KB of memory is only occupied.

SHARING

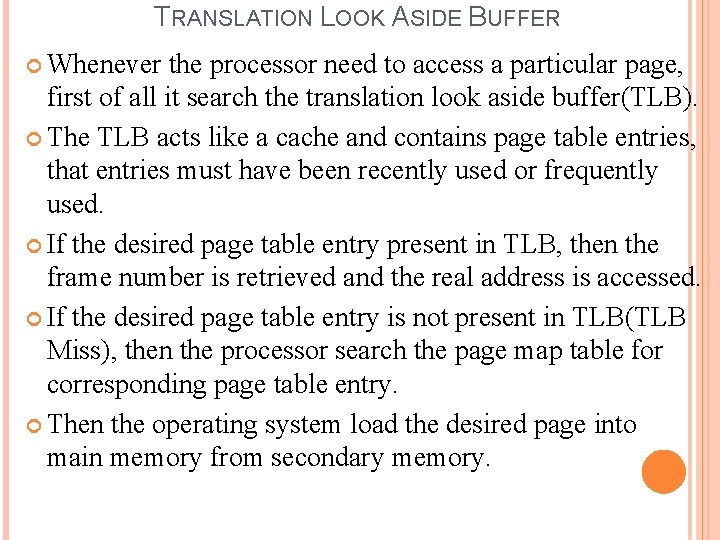

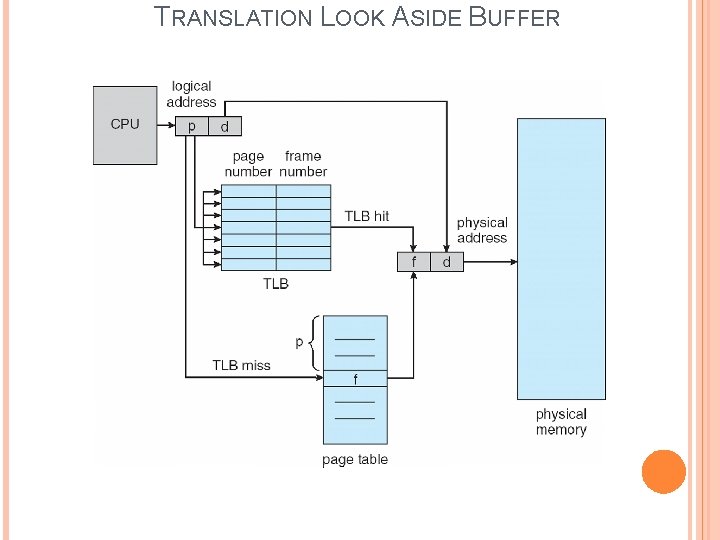

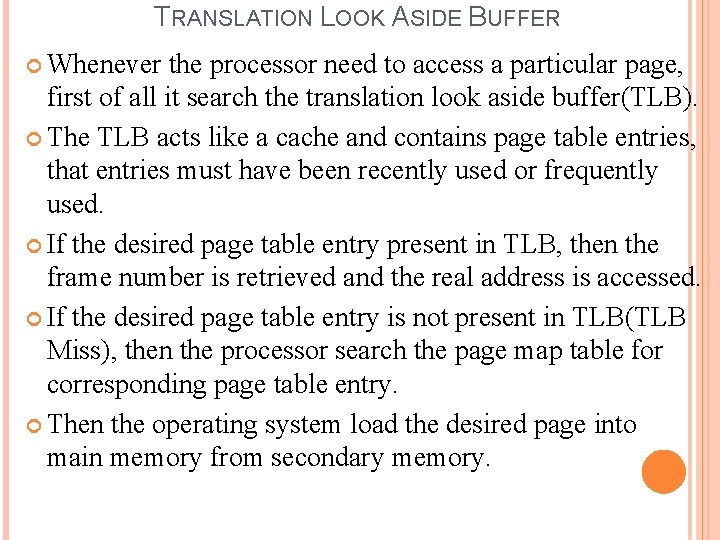

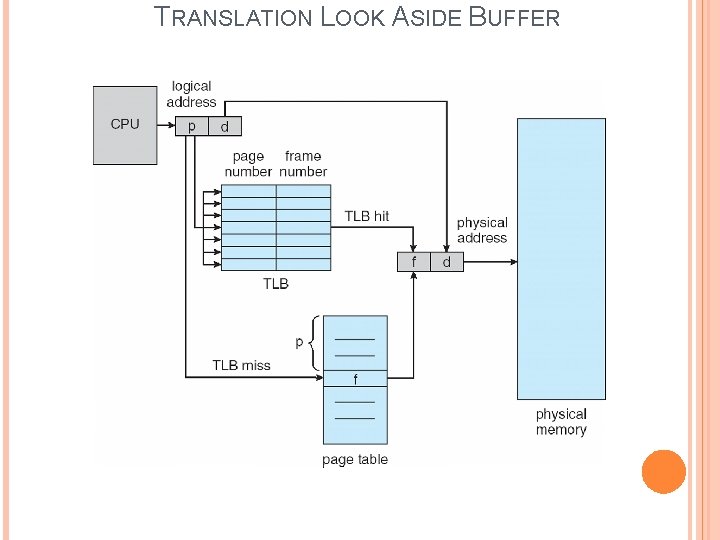

TRANSLATION LOOK ASIDE BUFFER Whenever the processor need to access a particular page, first of all it search the translation look aside buffer(TLB). The TLB acts like a cache and contains page table entries, that entries must have been recently used or frequently used. If the desired page table entry present in TLB, then the frame number is retrieved and the real address is accessed. If the desired page table entry is not present in TLB(TLB Miss), then the processor search the page map table for corresponding page table entry. Then the operating system load the desired page into main memory from secondary memory.

TRANSLATION LOOK ASIDE BUFFER

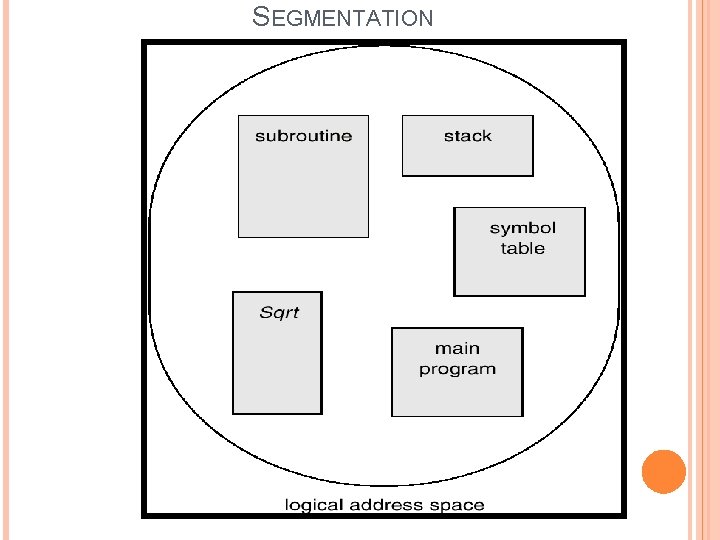

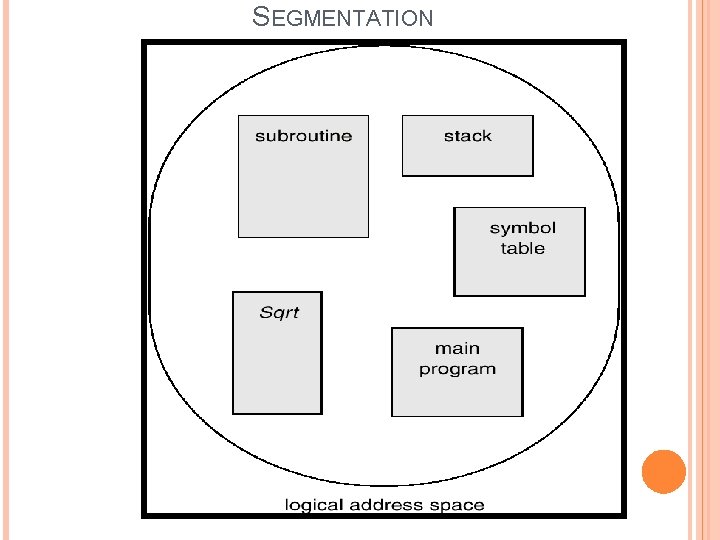

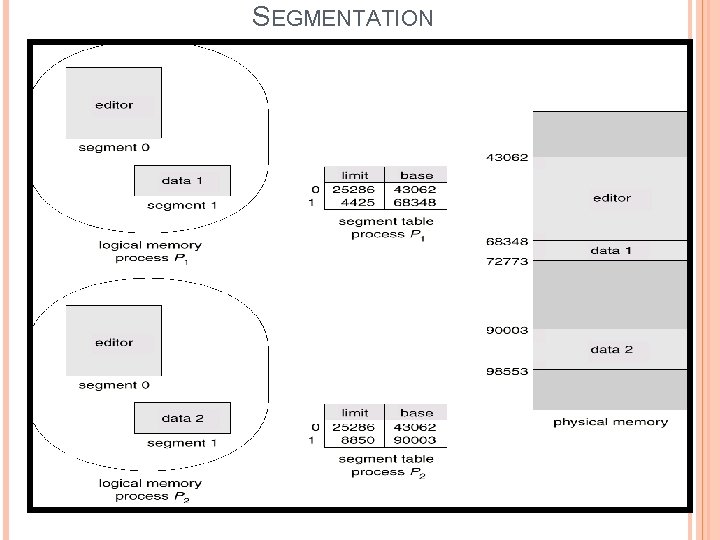

SEGMENTATION A segment can be defined as a logical grouping of instructions such as a subroutines, array, or a data area. Every program(process) is a collection of these segments. Technique of managing this segments is called segmentation. A program is a collection of segments such as: main program, procedure, function, method, object, local variables, global variables, common block, stack, symbol table, arrays

SEGMENTATION

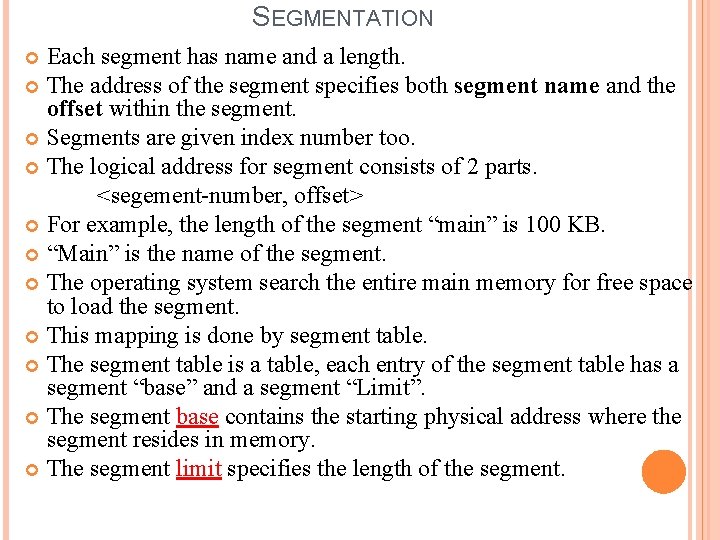

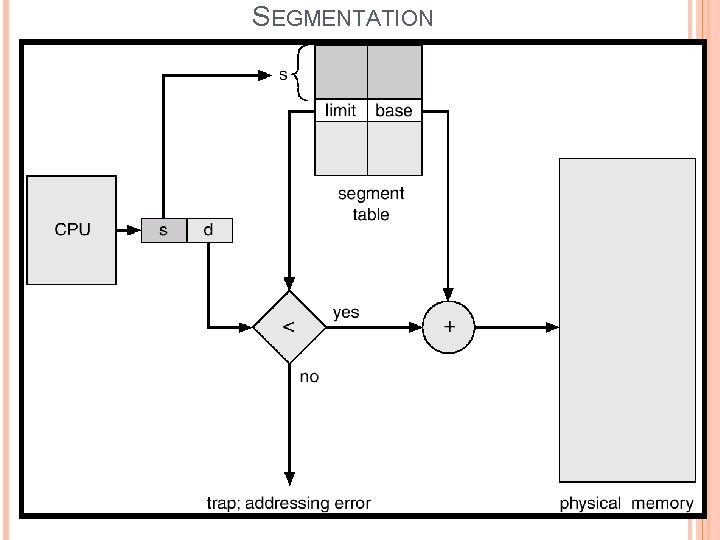

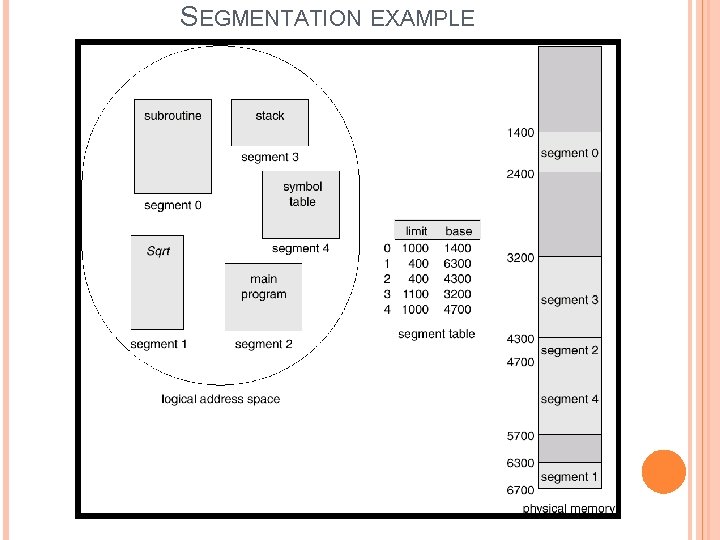

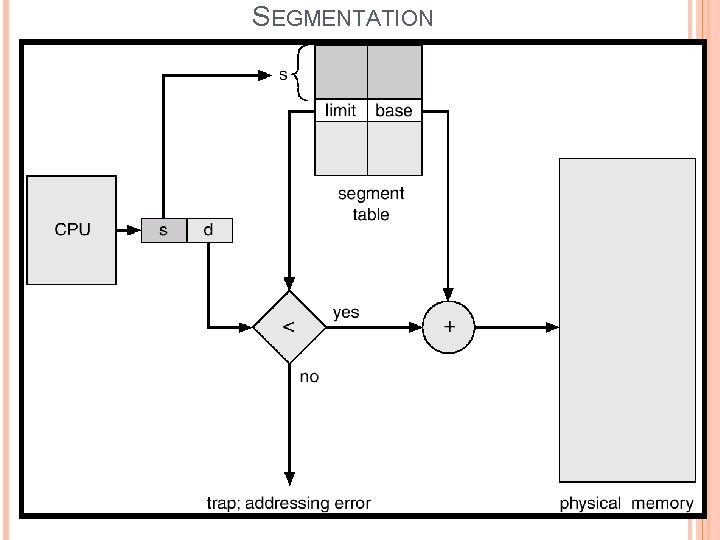

SEGMENTATION Each segment has name and a length. The address of the segment specifies both segment name and the offset within the segment. Segments are given index number too. The logical address for segment consists of 2 parts. <segement-number, offset> For example, the length of the segment “main” is 100 KB. “Main” is the name of the segment. The operating system search the entire main memory for free space to load the segment. This mapping is done by segment table. The segment table is a table, each entry of the segment table has a segment “base” and a segment “Limit”. The segment base contains the starting physical address where the segment resides in memory. The segment limit specifies the length of the segment.

SEGMENTATION

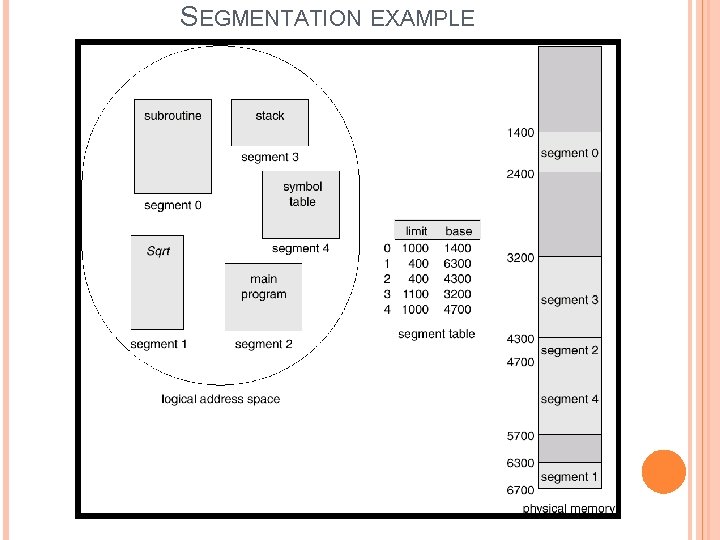

SEGMENTATION EXAMPLE

SEGMENTATION

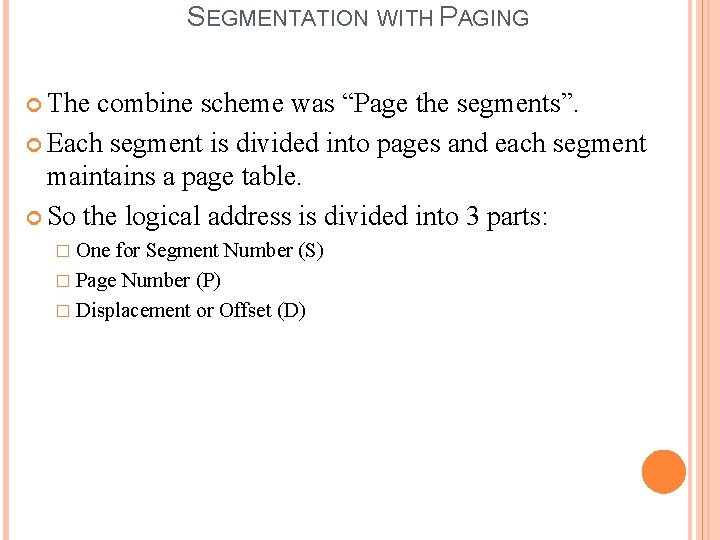

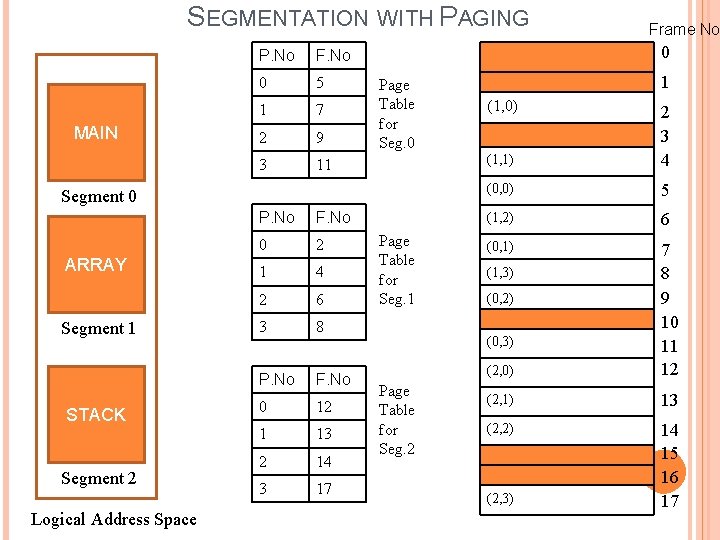

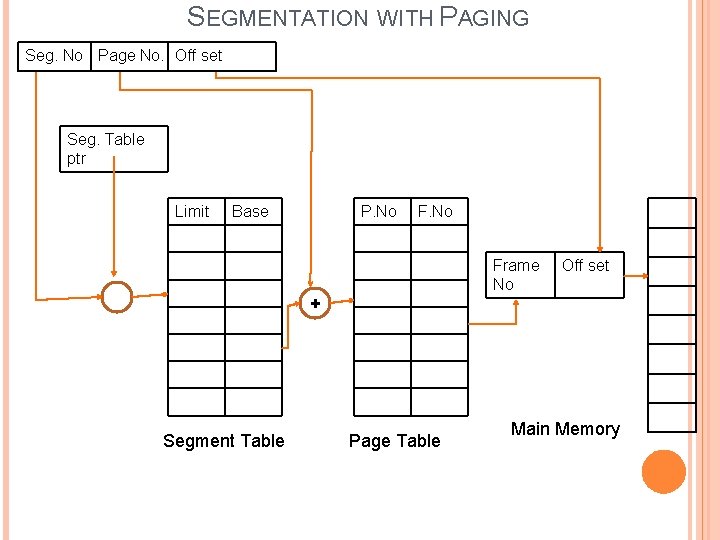

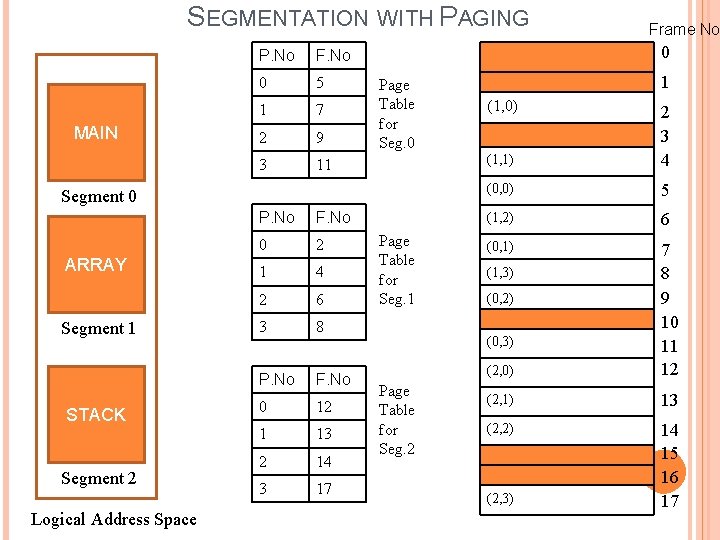

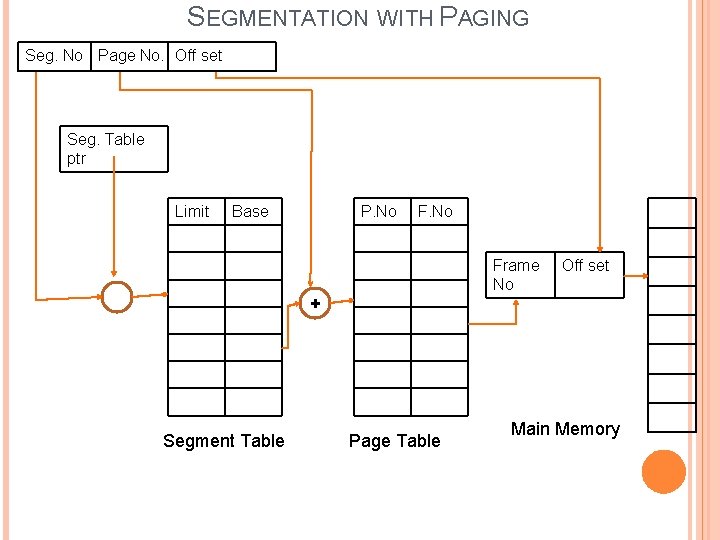

SEGMENTATION WITH PAGING The combine scheme was “Page the segments”. Each segment is divided into pages and each segment maintains a page table. So the logical address is divided into 3 parts: � One for Segment Number (S) � Page Number (P) � Displacement or Offset (D)

SEGMENTATION WITH PAGING MAIN P. No F. No 0 5 1 7 2 9 3 11 0 Page Table for Seg. 0 Segment 0 ARRAY Segment 1 STACK Segment 2 Logical Address Space P. No F. No 0 2 1 4 2 6 3 8 P. No F. No 0 12 1 13 2 14 3 17 Frame No Page Table for Seg. 1 1 (1, 0) (1, 1) 2 3 4 (0, 0) 5 (1, 2) 6 (0, 1) (2, 0) 7 8 9 10 11 12 (2, 1) 13 (2, 2) 14 15 16 17 (1, 3) (0, 2) (0, 3) Page Table for Seg. 2 (2, 3)

SEGMENTATION WITH PAGING Seg. No Page No. Off set Seg. Table ptr Limit Base P. No Frame No + Segment Table Page Table Off set Main Memory

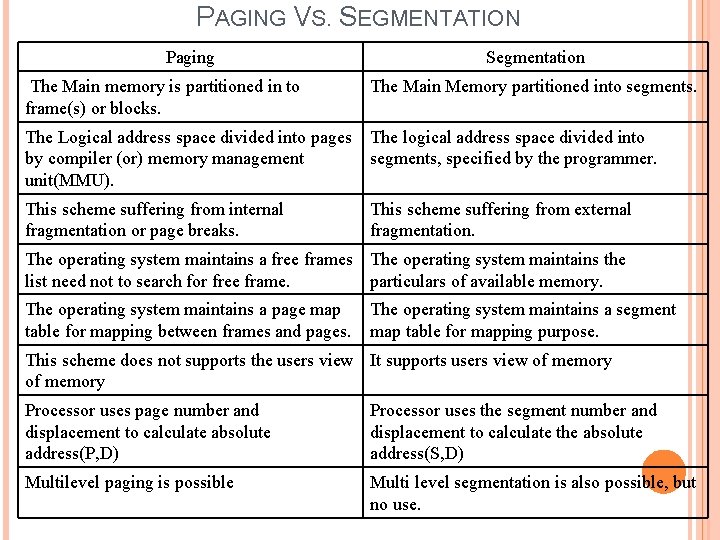

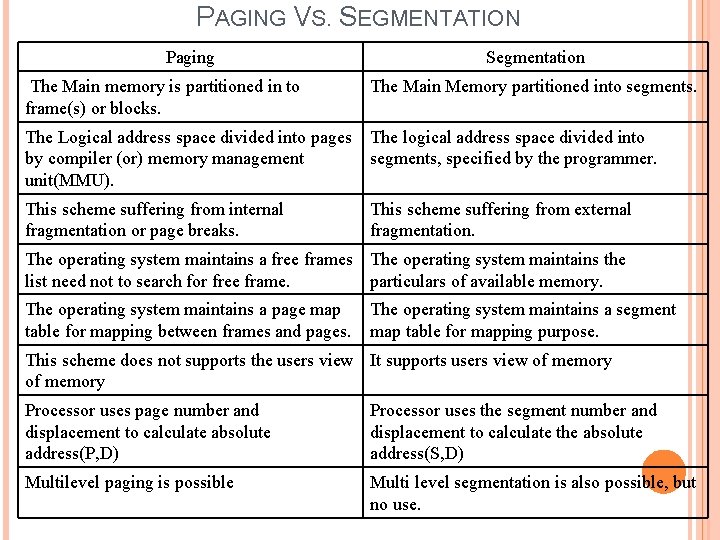

PAGING VS. SEGMENTATION Paging Segmentation The Main memory is partitioned in to frame(s) or blocks. The Main Memory partitioned into segments. The Logical address space divided into pages by compiler (or) memory management unit(MMU). The logical address space divided into segments, specified by the programmer. This scheme suffering from internal fragmentation or page breaks. This scheme suffering from external fragmentation. The operating system maintains a free frames The operating system maintains the list need not to search for free frame. particulars of available memory. The operating system maintains a page map table for mapping between frames and pages. The operating system maintains a segment map table for mapping purpose. This scheme does not supports the users view It supports users view of memory Processor uses page number and displacement to calculate absolute address(P, D) Processor uses the segment number and displacement to calculate the absolute address(S, D) Multilevel paging is possible Multi level segmentation is also possible, but no use.

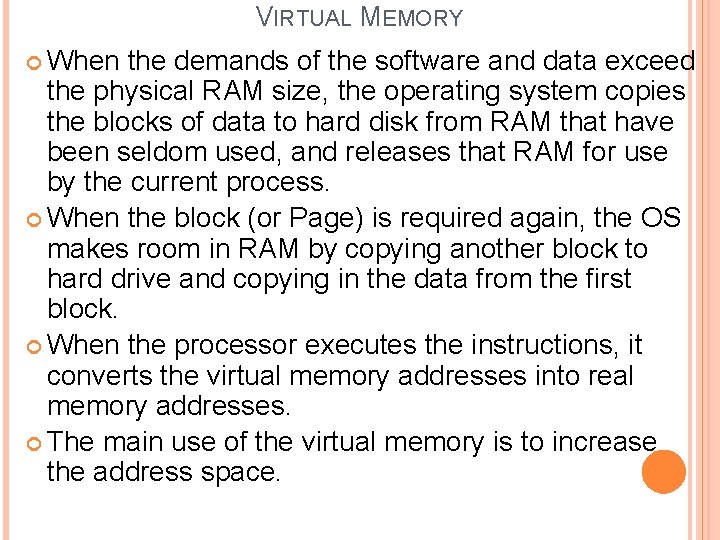

VIRTUAL MEMORY When the demands of the software and data exceed the physical RAM size, the operating system copies the blocks of data to hard disk from RAM that have been seldom used, and releases that RAM for use by the current process. When the block (or Page) is required again, the OS makes room in RAM by copying another block to hard drive and copying in the data from the first block. When the processor executes the instructions, it converts the virtual memory addresses into real memory addresses. The main use of the virtual memory is to increase the address space.

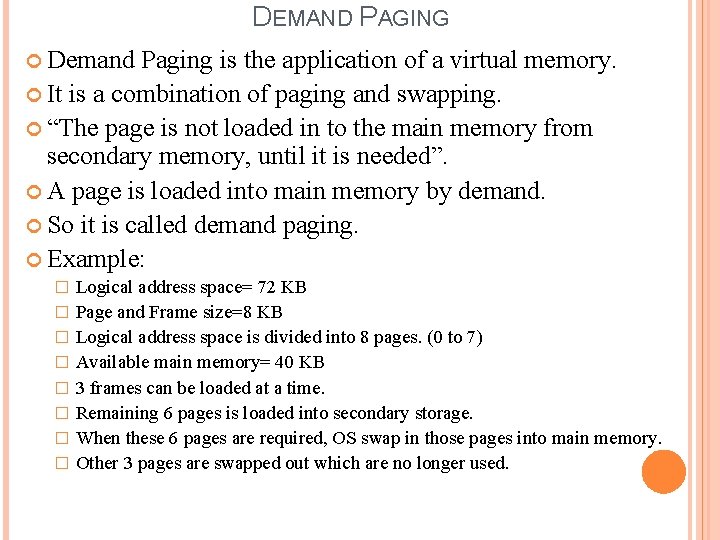

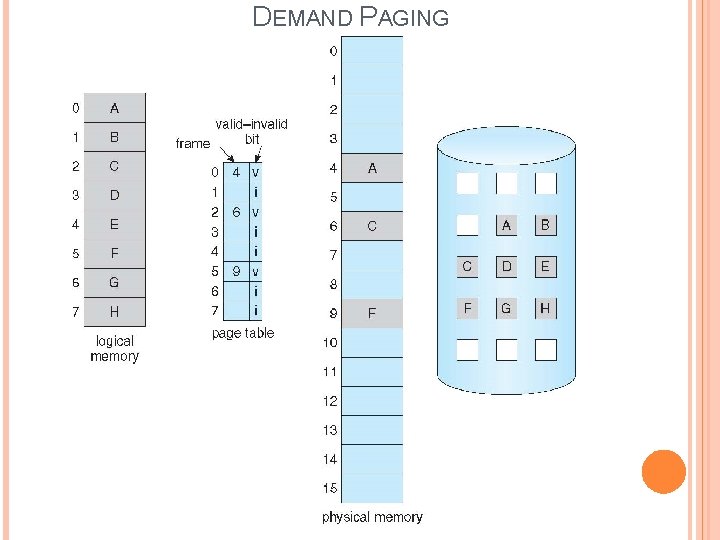

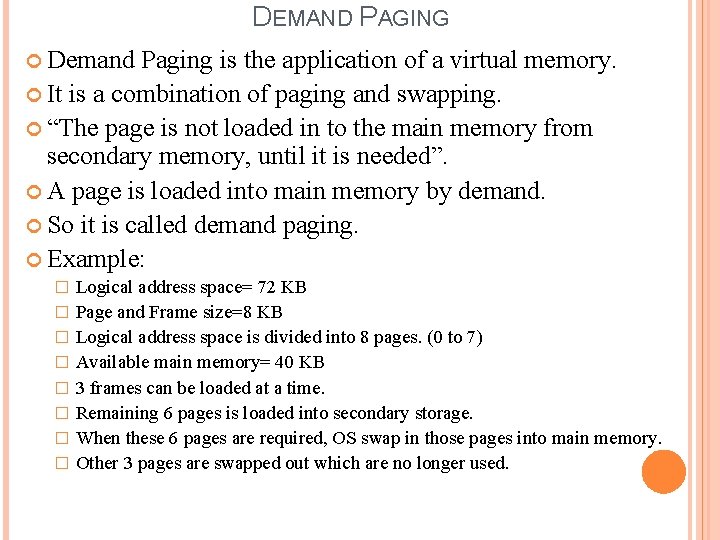

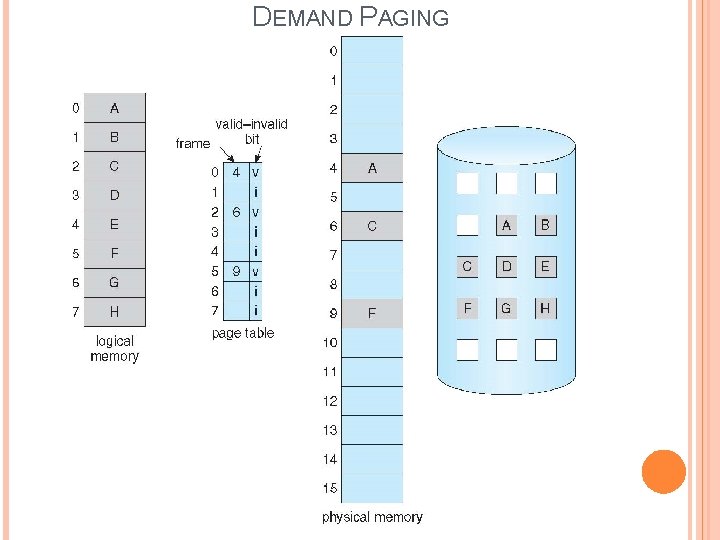

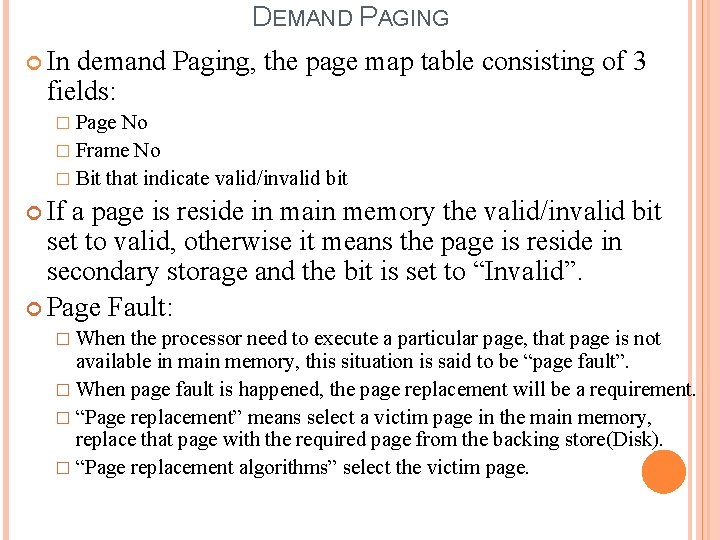

DEMAND PAGING Demand Paging is the application of a virtual memory. It is a combination of paging and swapping. “The page is not loaded in to the main memory from secondary memory, until it is needed”. A page is loaded into main memory by demand. So it is called demand paging. Example: � � � � Logical address space= 72 KB Page and Frame size=8 KB Logical address space is divided into 8 pages. (0 to 7) Available main memory= 40 KB 3 frames can be loaded at a time. Remaining 6 pages is loaded into secondary storage. When these 6 pages are required, OS swap in those pages into main memory. Other 3 pages are swapped out which are no longer used.

DEMAND PAGING

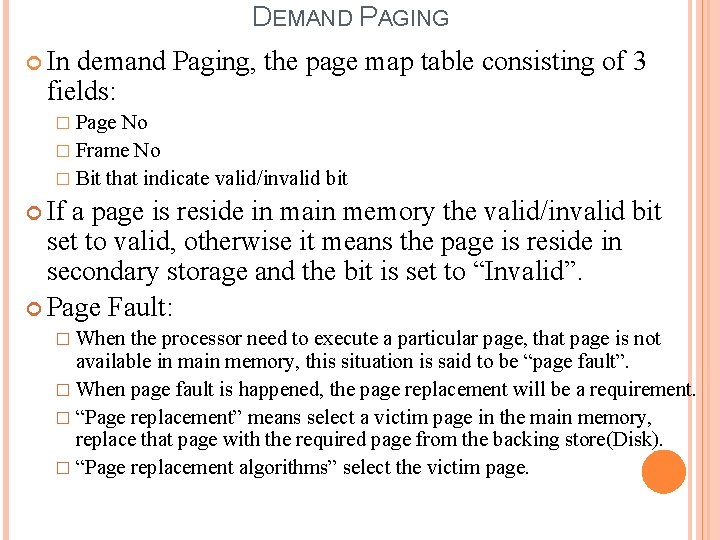

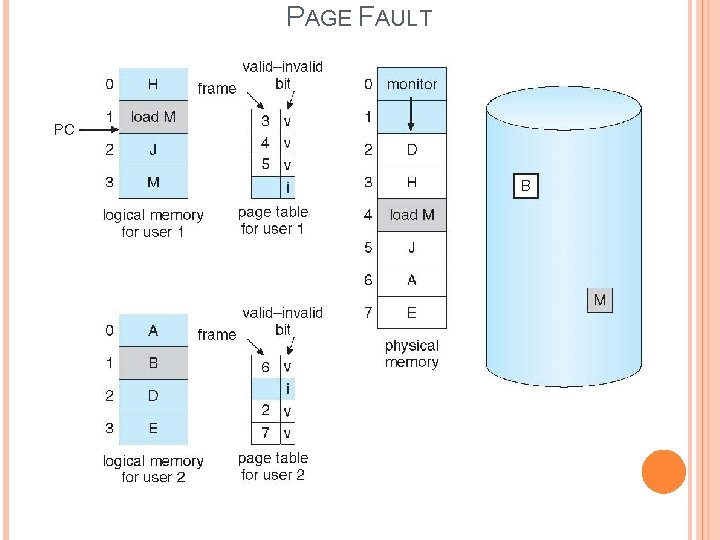

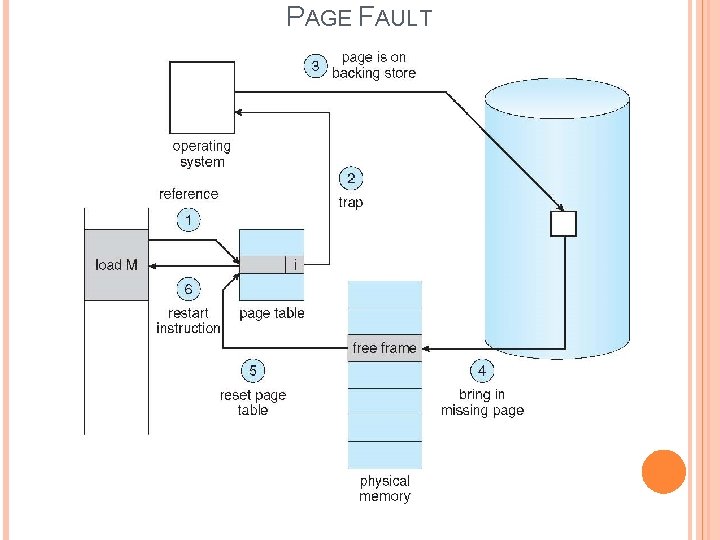

DEMAND PAGING In demand Paging, the page map table consisting of 3 fields: � Page No � Frame No � Bit that indicate valid/invalid bit If a page is reside in main memory the valid/invalid bit set to valid, otherwise it means the page is reside in secondary storage and the bit is set to “Invalid”. Page Fault: � When the processor need to execute a particular page, that page is not available in main memory, this situation is said to be “page fault”. � When page fault is happened, the page replacement will be a requirement. � “Page replacement” means select a victim page in the main memory, replace that page with the required page from the backing store(Disk). � “Page replacement algorithms” select the victim page.

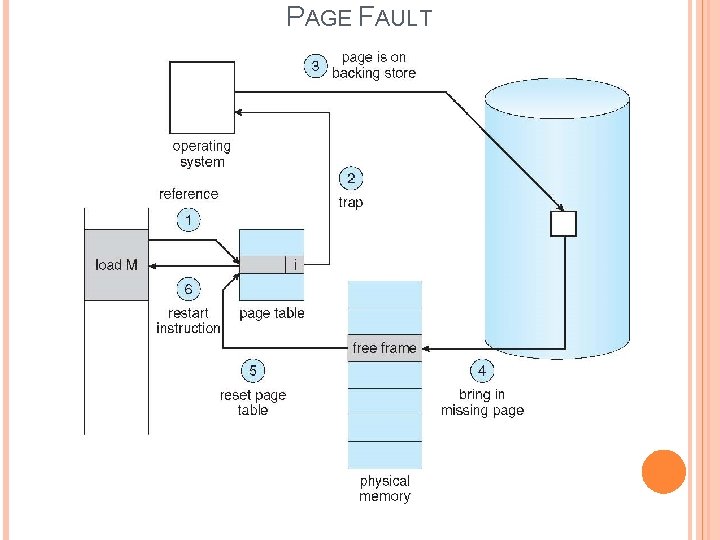

PAGE FAULT

PAGE FAULT

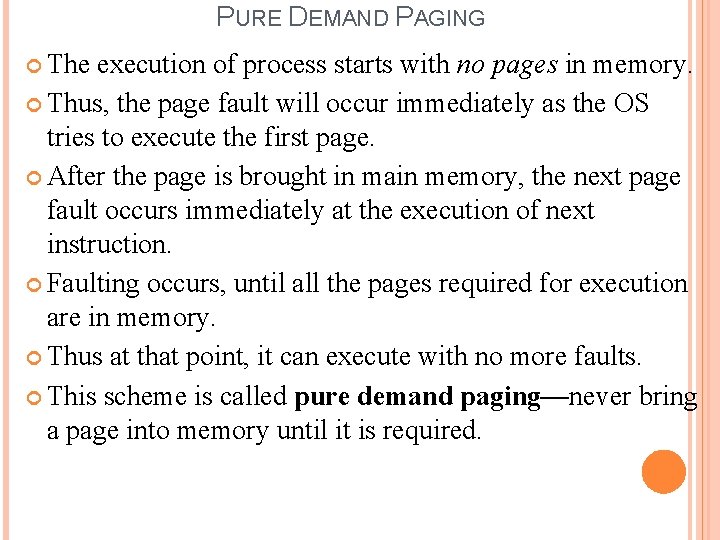

PURE DEMAND PAGING The execution of process starts with no pages in memory. Thus, the page fault will occur immediately as the OS tries to execute the first page. After the page is brought in main memory, the next page fault occurs immediately at the execution of next instruction. Faulting occurs, until all the pages required for execution are in memory. Thus at that point, it can execute with no more faults. This scheme is called pure demand paging—never bring a page into memory until it is required.

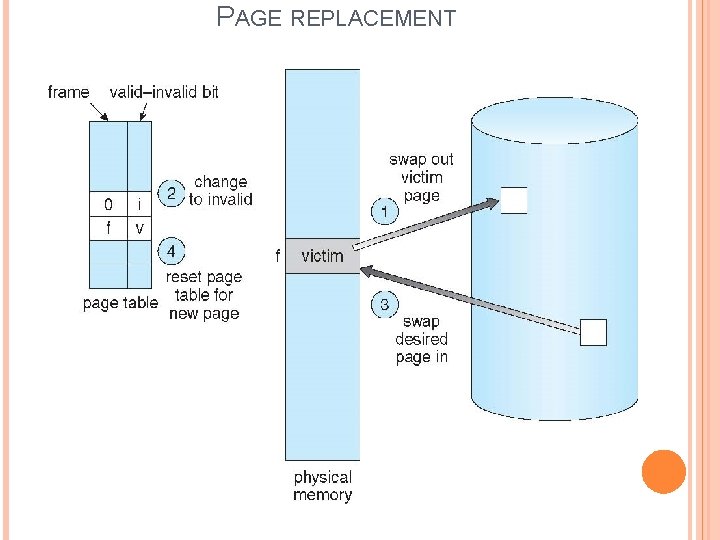

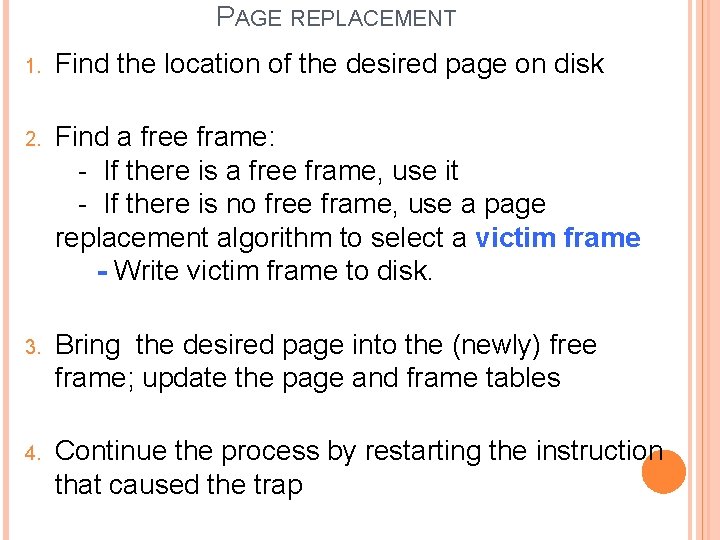

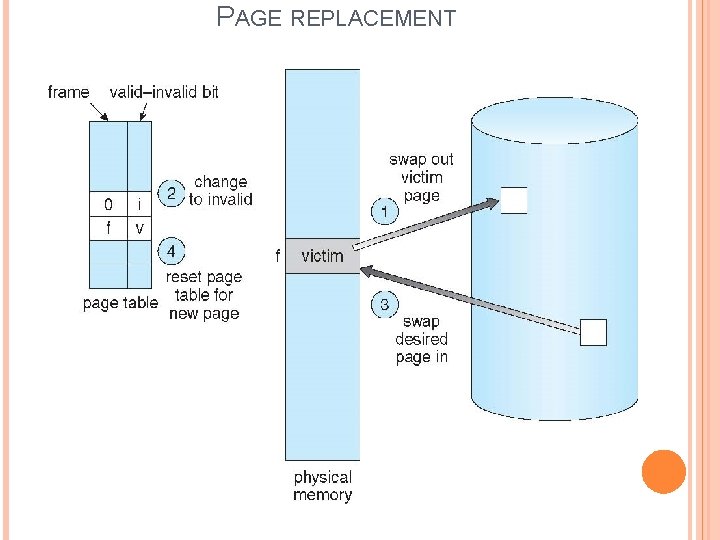

PAGE REPLACEMENT 1. Find the location of the desired page on disk 2. Find a free frame: - If there is a free frame, use it - If there is no free frame, use a page replacement algorithm to select a victim frame - Write victim frame to disk. 3. Bring the desired page into the (newly) free frame; update the page and frame tables 4. Continue the process by restarting the instruction that caused the trap

PAGE REPLACEMENT

DIRTY BIT (OR MODIFY BIT) Each page has a modify bit associated with it. Whenever data is written on the page, the modify bit is set to indicate that the page is being modified. When we select page for replacement, modify bit is checked. If the bit is set, it means the page has been modified as it was already in main memory. If the bit is not set, it means the page has not been modified since it entered the main memory.

PAGE REPLACEMENT ALGORITHM FIFO ( First in First Out) OP (Optimal Page) LRU (Least Recently Used)

FIFO (FIRST IN FIRST OUT) FIFO is the simplest page replacement, the idea behind this is “Replace a page that is oldest page of all the pages of main memory” or “Replace the page that has been in memory longest”. FIFO focuses on the length of a time a page has been in memory rather than how much the page is being used.

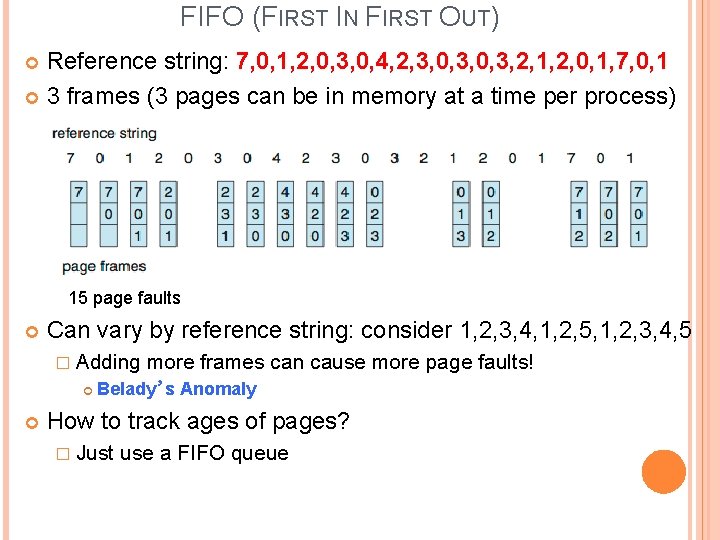

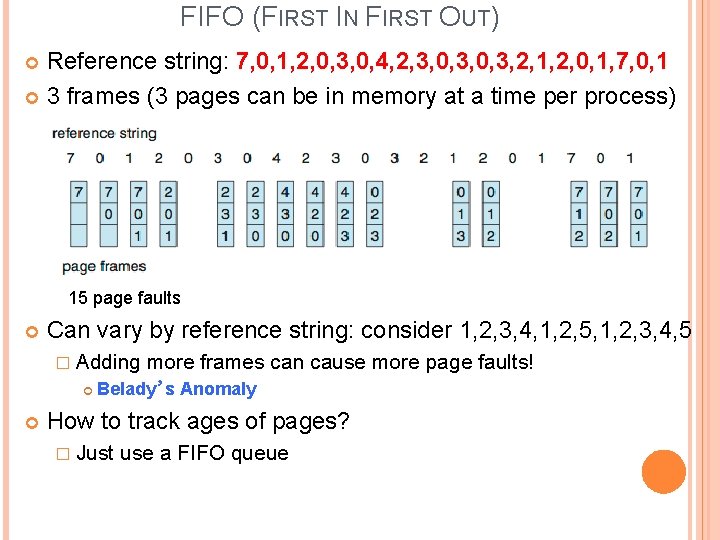

FIFO (FIRST IN FIRST OUT) Reference string: 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1 3 frames (3 pages can be in memory at a time per process) 15 page faults Can vary by reference string: consider 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 � Adding more frames can cause more page faults! Belady’s Anomaly How to track ages of pages? � Just use a FIFO queue

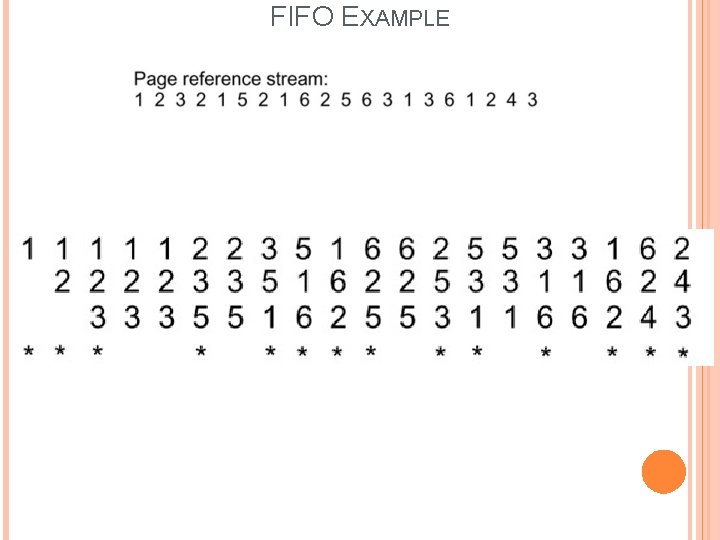

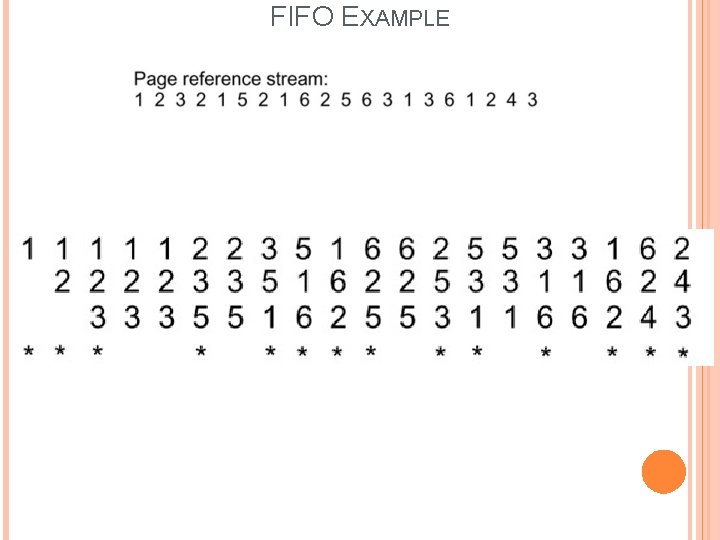

FIFO EXAMPLE

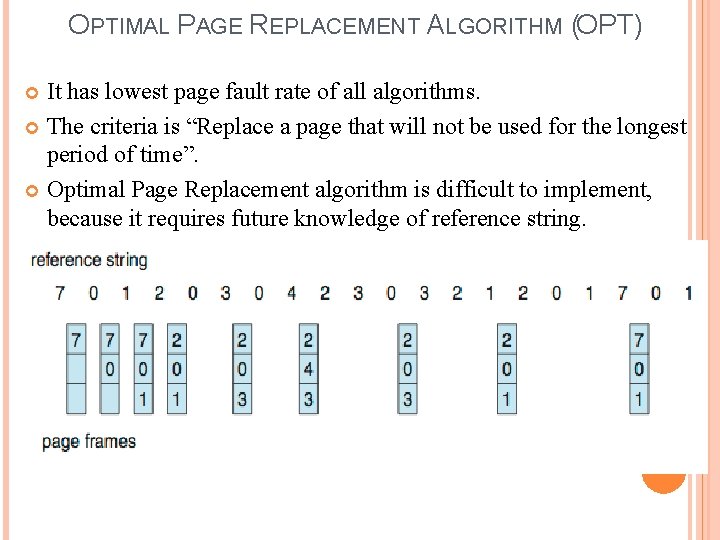

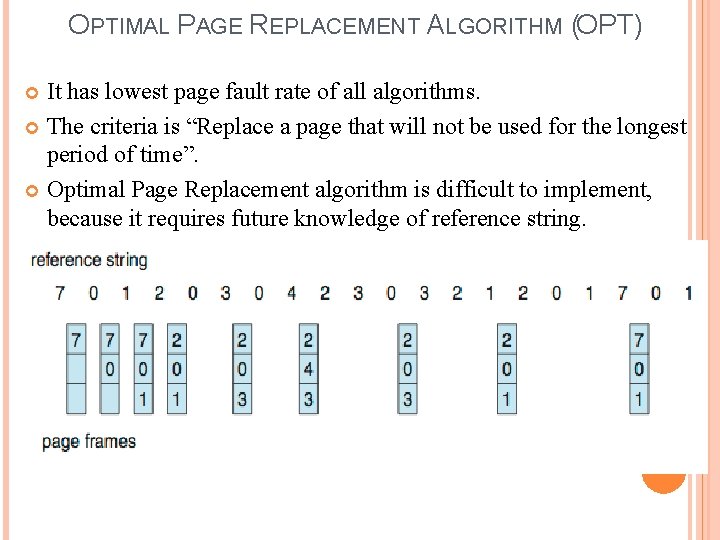

OPTIMAL PAGE REPLACEMENT ALGORITHM (OPT) It has lowest page fault rate of all algorithms. The criteria is “Replace a page that will not be used for the longest period of time”. Optimal Page Replacement algorithm is difficult to implement, because it requires future knowledge of reference string.

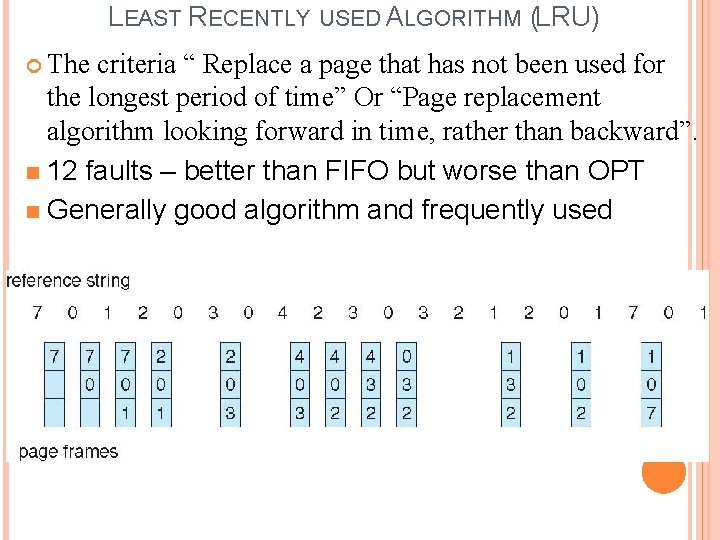

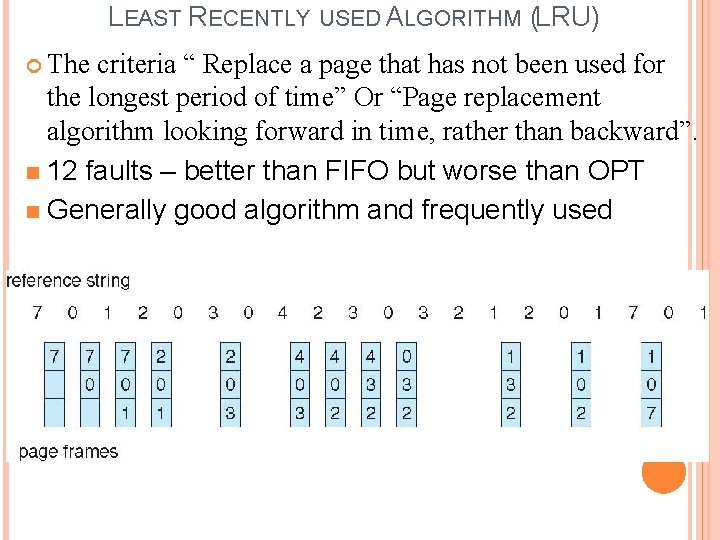

LEAST RECENTLY USED ALGORITHM (LRU) The criteria “ Replace a page that has not been used for the longest period of time” Or “Page replacement algorithm looking forward in time, rather than backward”. n 12 faults – better than FIFO but worse than OPT n Generally good algorithm and frequently used

EXAMPLE