Memory Management Chapter 4 Memory hierarchy Programmers want

- Slides: 27

Memory Management Chapter 4

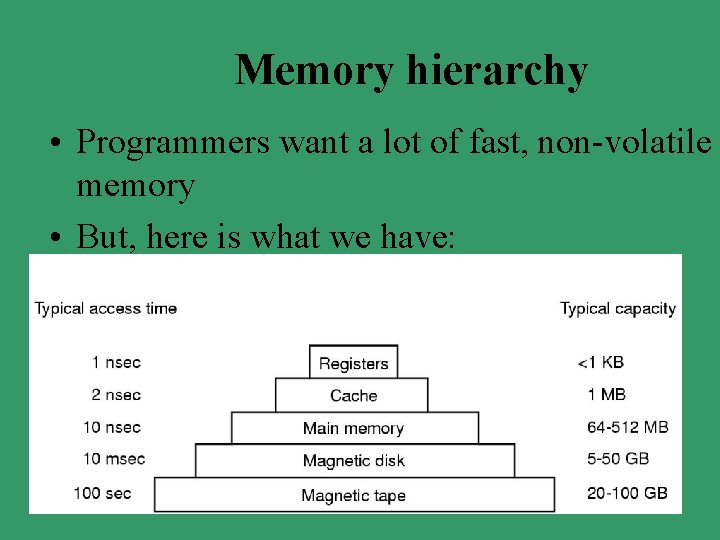

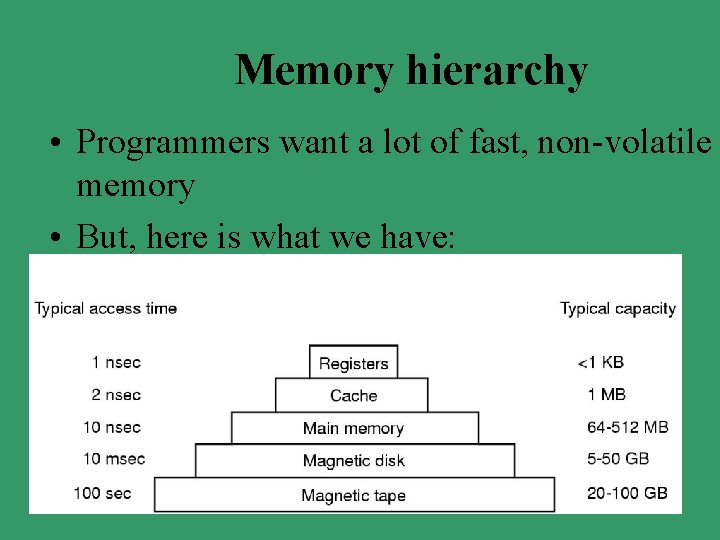

Memory hierarchy • Programmers want a lot of fast, non-volatile memory • But, here is what we have:

Memory manager • Tracks which parts of memory are in use • Allocates memory to processes – Long-term scheduling • De-allocates memory from processes • Swaps between main memory and disk when main memory is too small to hold all of the processes – Intermediate scheduling

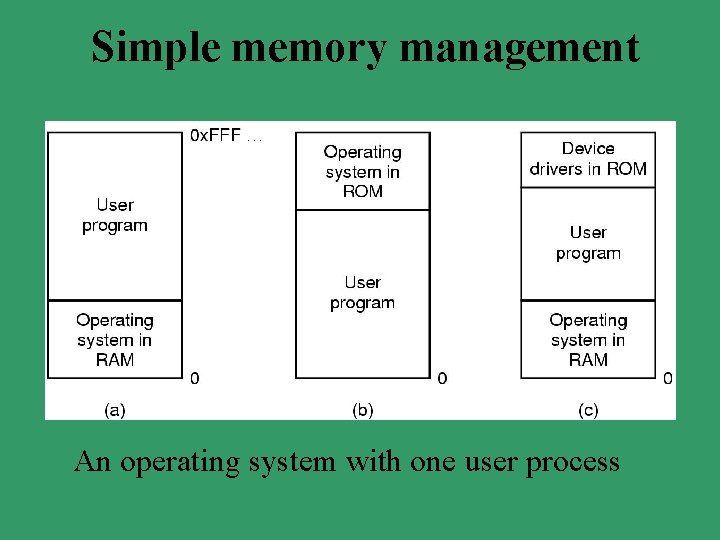

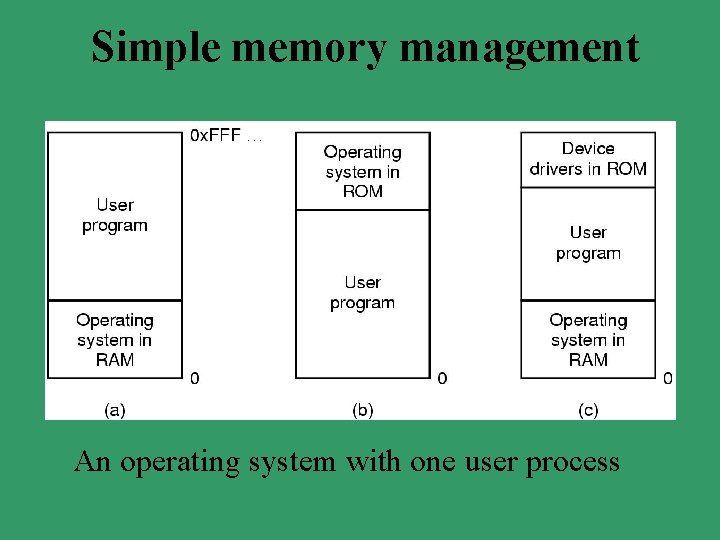

Simple memory management An operating system with one user process

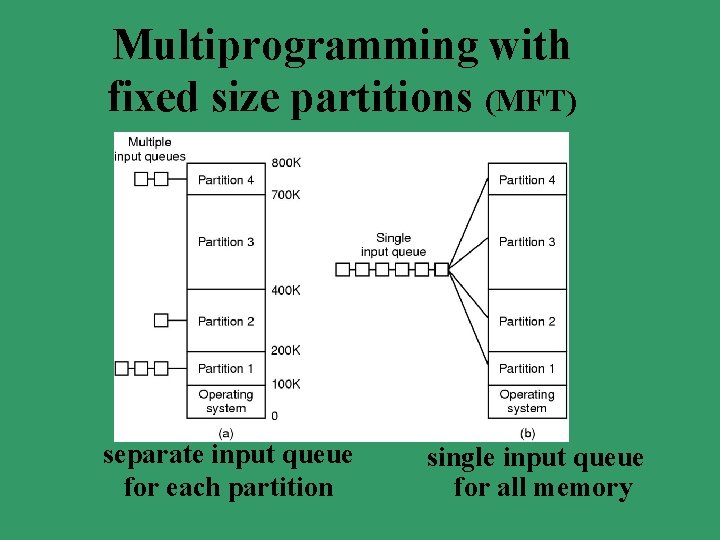

Multiprogramming • Use fixed-sized partitions (MFT) – Each partition contained only one process – Very simple • More flexibility is achieved with MVT – OS knows which parts of memory are available – When a process is to be loaded, it needs a large enough block of contiguous memory

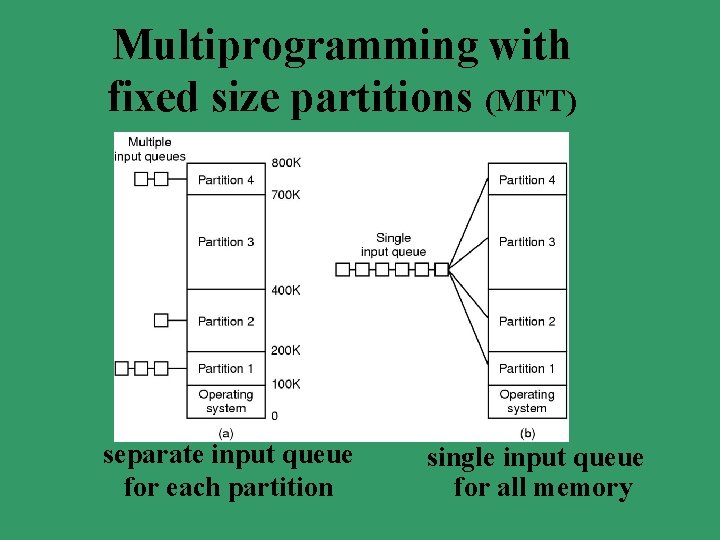

Multiprogramming with fixed size partitions (MFT) separate input queue for each partition single input queue for all memory

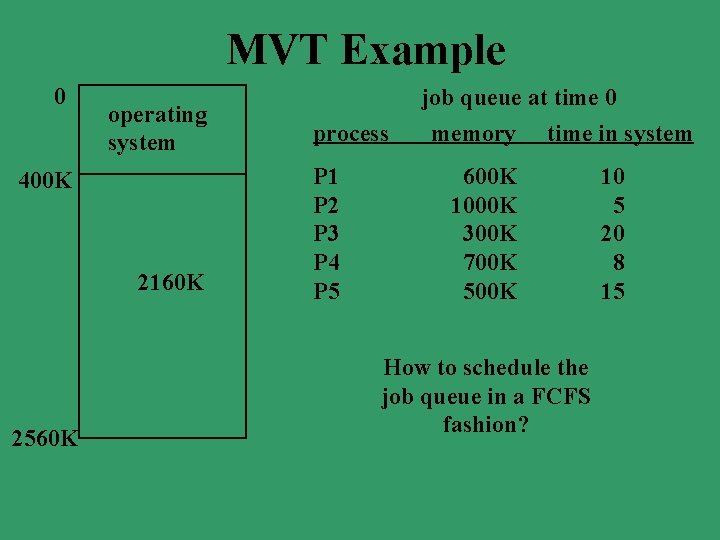

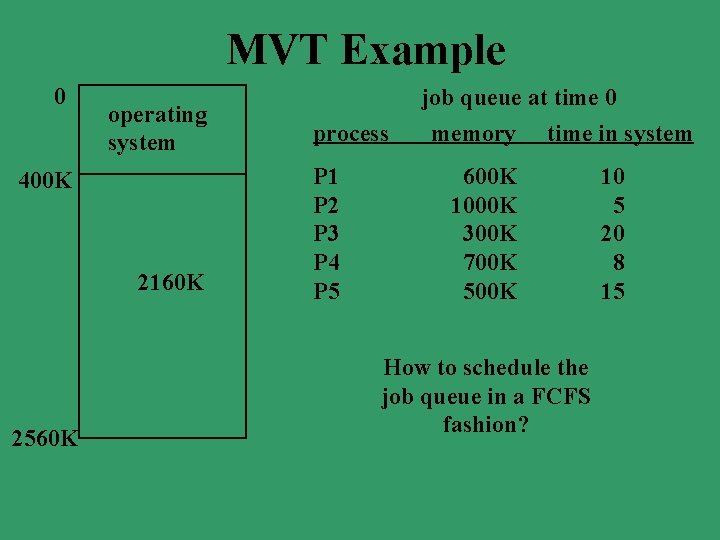

MVT Example 0 operating system 400 K 2160 K 2560 K process P 1 P 2 P 3 P 4 P 5 job queue at time 0 memory time in system 600 K 1000 K 300 K 700 K 500 K How to schedule the job queue in a FCFS fashion? 10 5 20 8 15

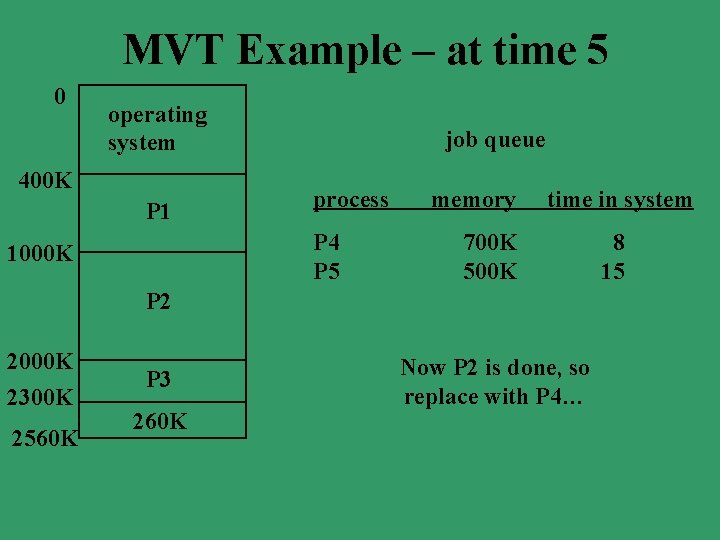

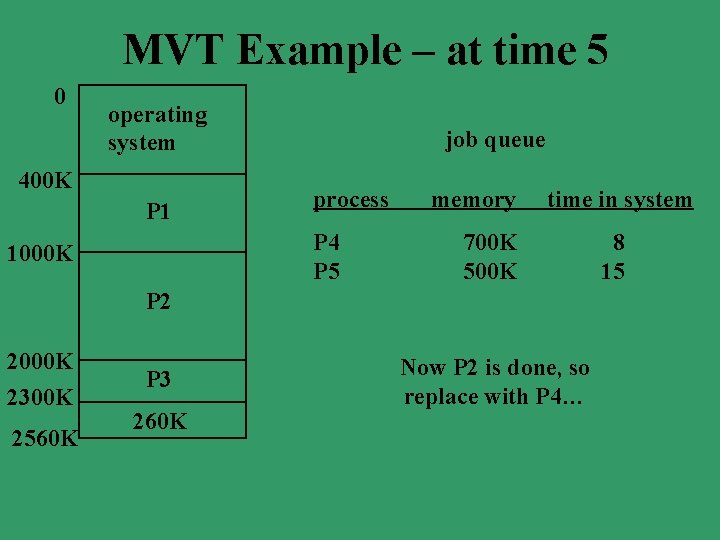

MVT Example – at time 5 0 operating system 400 K P 1 job queue process P 4 P 5 1000 K memory time in system 700 K 500 K P 2 2000 K 2300 K 2560 K P 3 260 K Now P 2 is done, so replace with P 4… 8 15

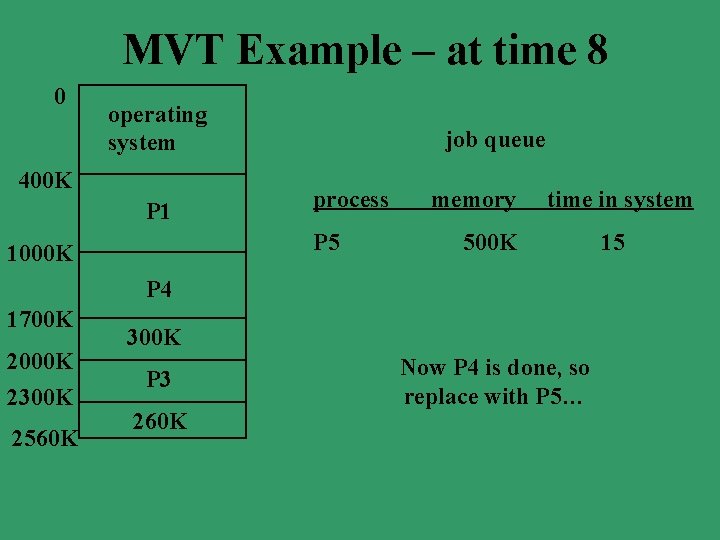

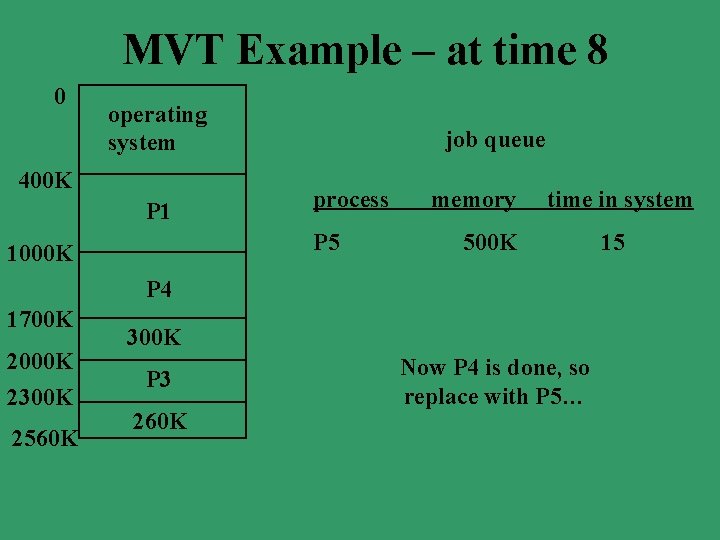

MVT Example – at time 8 0 operating system 400 K P 1 job queue process P 5 1000 K memory time in system 500 K P 4 1700 K 2000 K 2300 K 2560 K 300 K P 3 260 K Now P 4 is done, so replace with P 5… 15

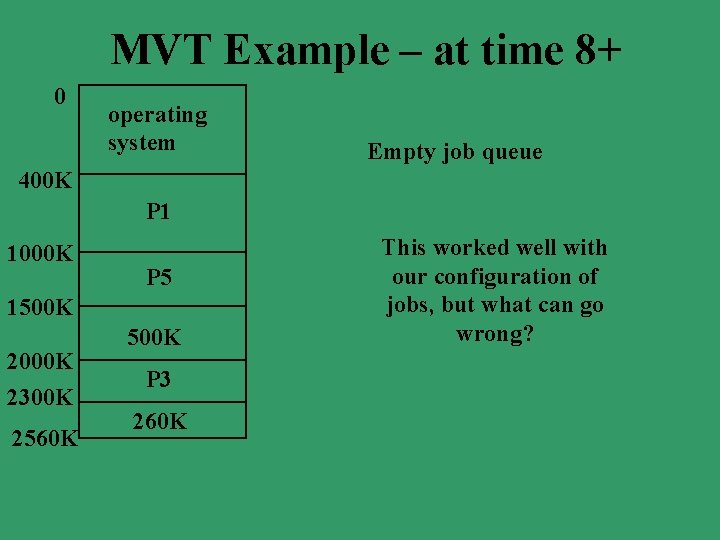

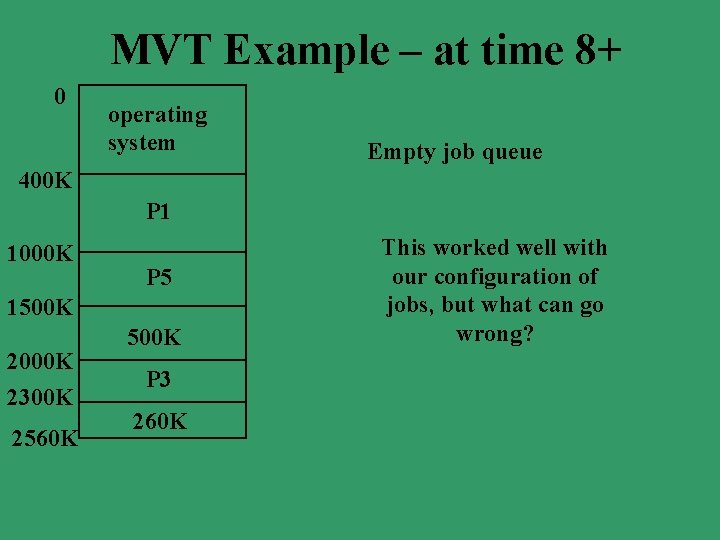

MVT Example – at time 8+ 0 operating system Empty job queue 400 K P 1 1000 K P 5 1500 K 2000 K 2300 K 2560 K 500 K P 3 260 K This worked well with our configuration of jobs, but what can go wrong?

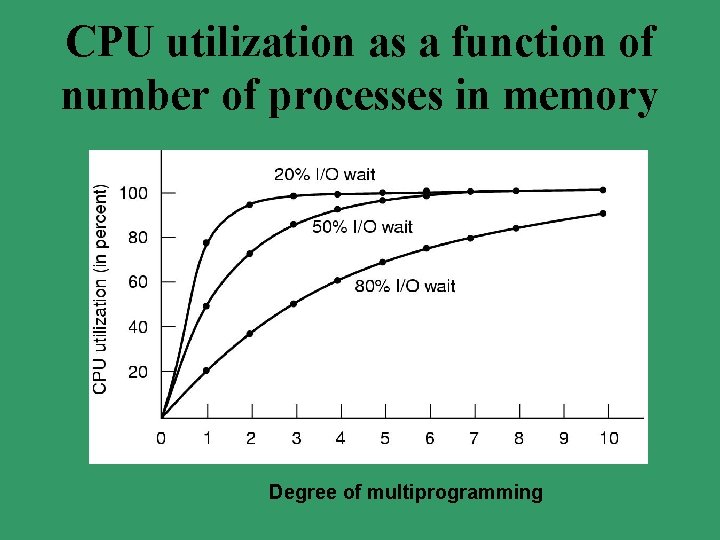

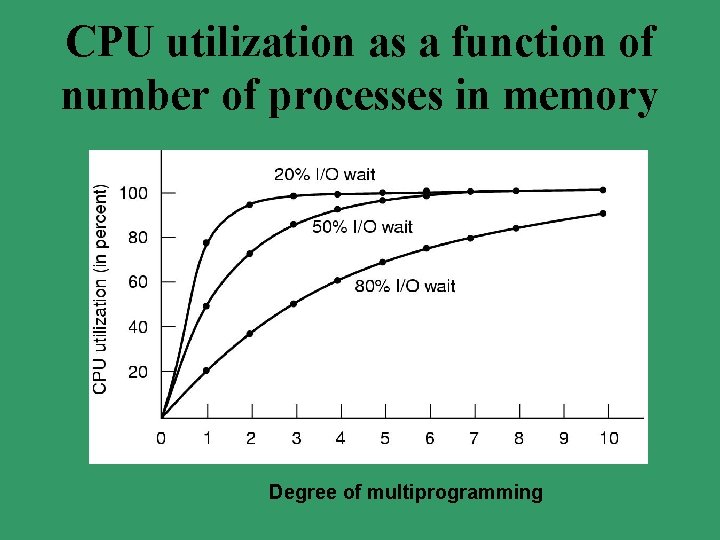

CPU utilization as a function of number of processes in memory Degree of multiprogramming

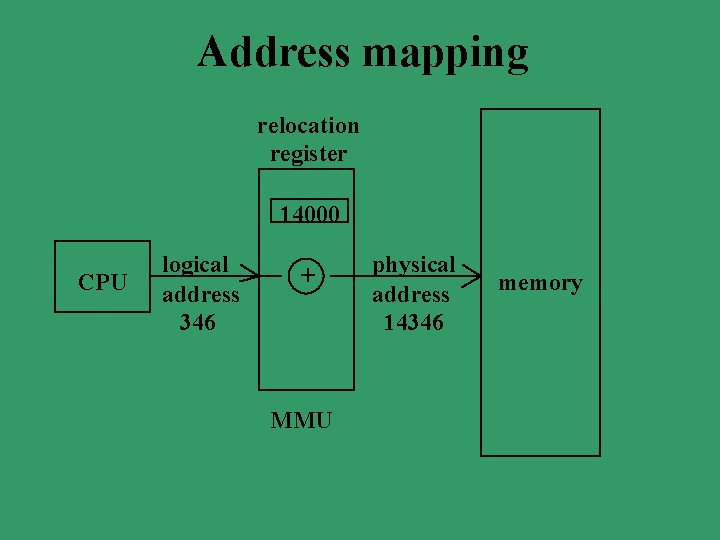

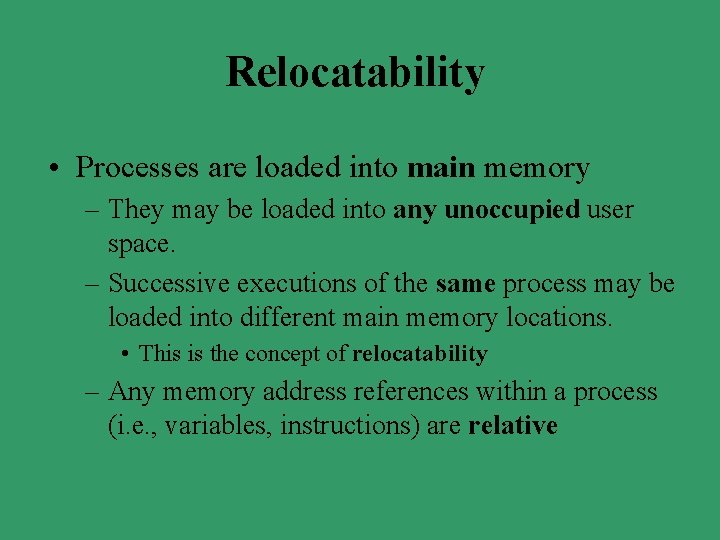

Relocatability • Processes are loaded into main memory – They may be loaded into any unoccupied user space. – Successive executions of the same process may be loaded into different main memory locations. • This is the concept of relocatability – Any memory address references within a process (i. e. , variables, instructions) are relative

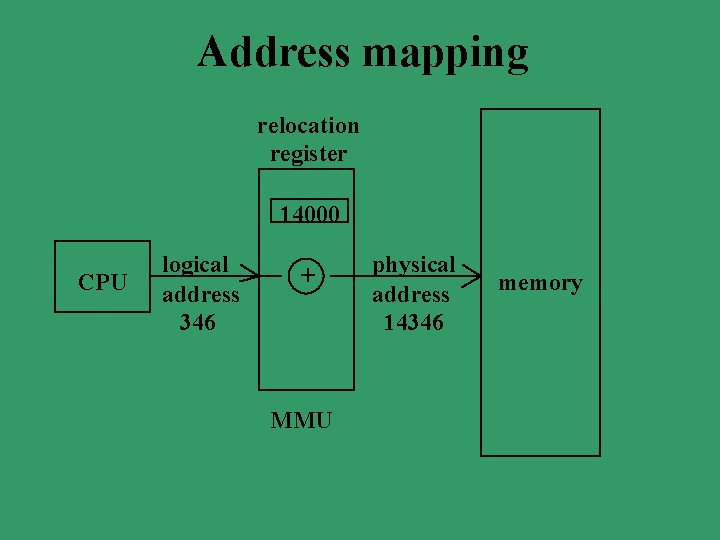

Address mapping relocation register 14000 CPU logical address 346 + MMU physical address 14346 memory

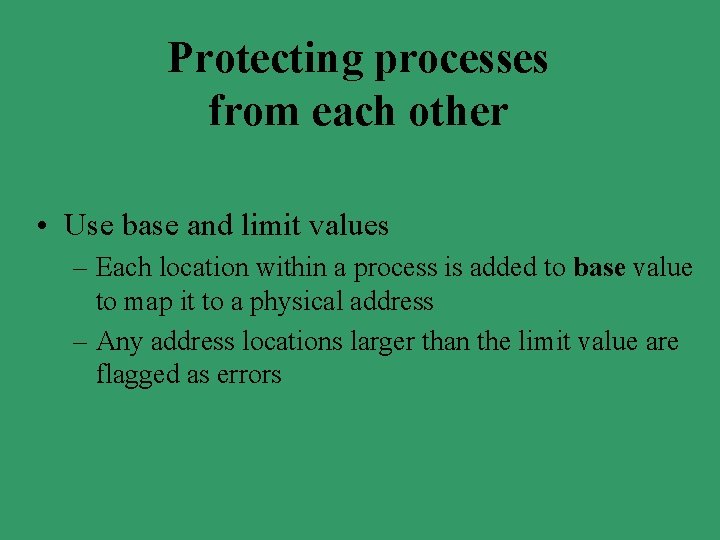

Protecting processes from each other • Use base and limit values – Each location within a process is added to base value to map it to a physical address – Any address locations larger than the limit value are flagged as errors

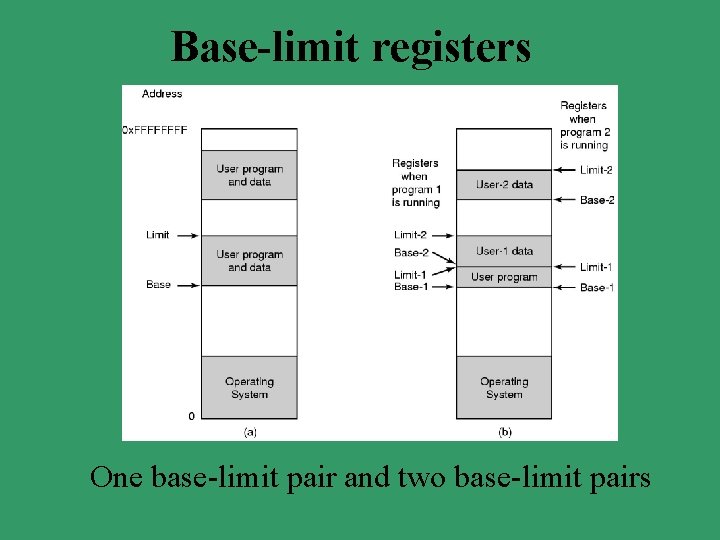

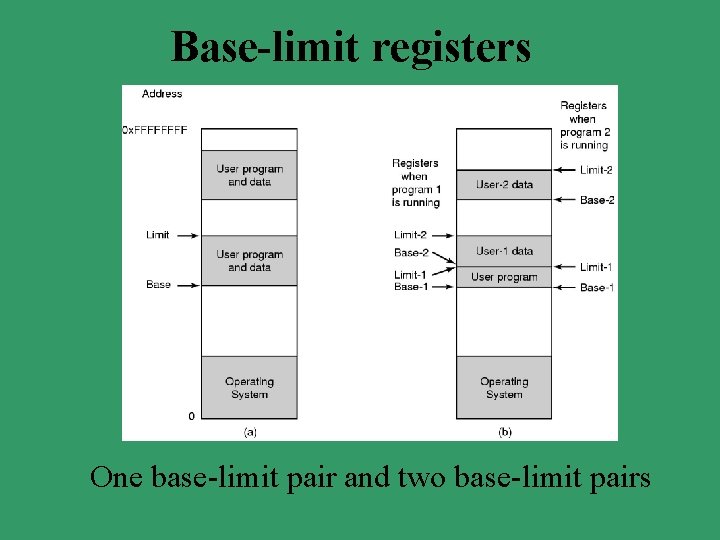

Base-limit registers One base-limit pair and two base-limit pairs

Swapping • What to do if there is not enough memory to store all active processes? – Swap certain processes out and then back in • An executing process must be in main memory, but can be temporarily swapped out & then back into memory – Consider a preemptive CPU scheduling algorithm such as RR

About swapping. . . • When the CPU is ready for the next process, it selects it from the ready queue. – If it has been swapped out, the memory manager brings it back in. – If there is no room for it, another process is swapped out first. – Context-switch time is high. • If a process is to be swapped out of main memory, it must be completely idle – If it is waiting for I/O, then another candidate is found, if possible

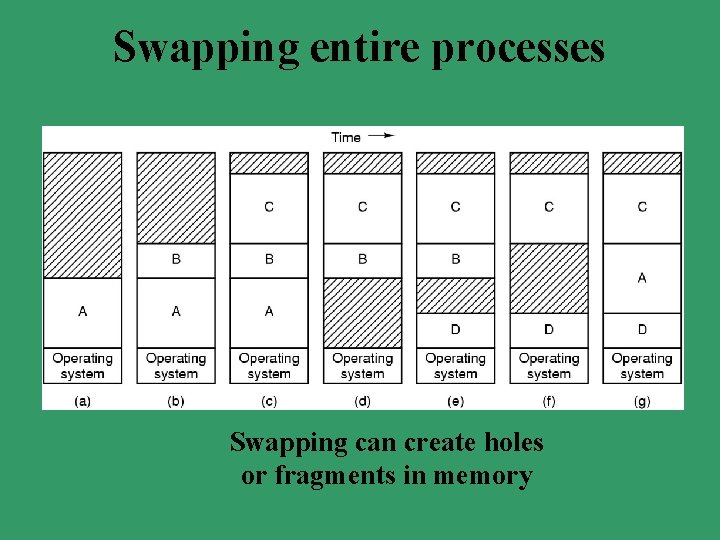

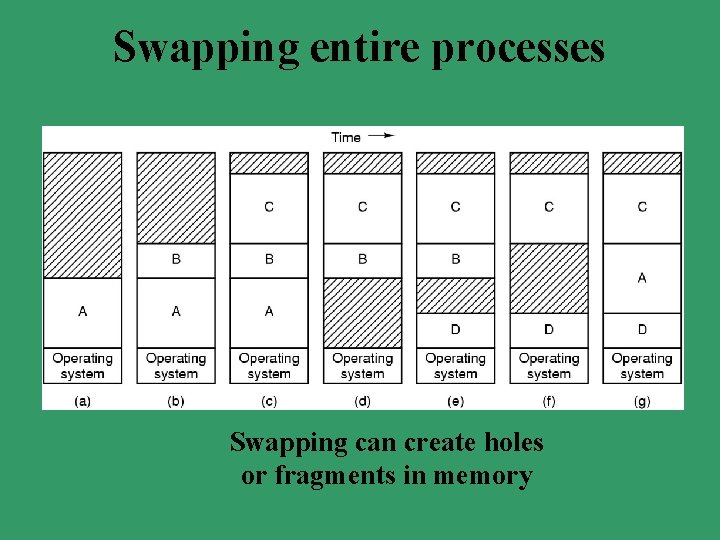

Swapping entire processes Swapping can create holes or fragments in memory

Fragmentation • As processes are loaded and removed from memory, available memory space is broken into pieces • When there is enough memory space to satisfy a request, but it is not contiguous, we say there is external fragmentation • When there is wasted space within a process’ address space, we have internal fragmentation (later on this)

Compaction • A solution to external fragmentation. • Partitions are rearranged to collect all the fragments into one large block. – Requires all internal addresses to be remapped to new physical addresses – All partitions are moved to one end of memory and all base and limit registers are altered. • Very costly

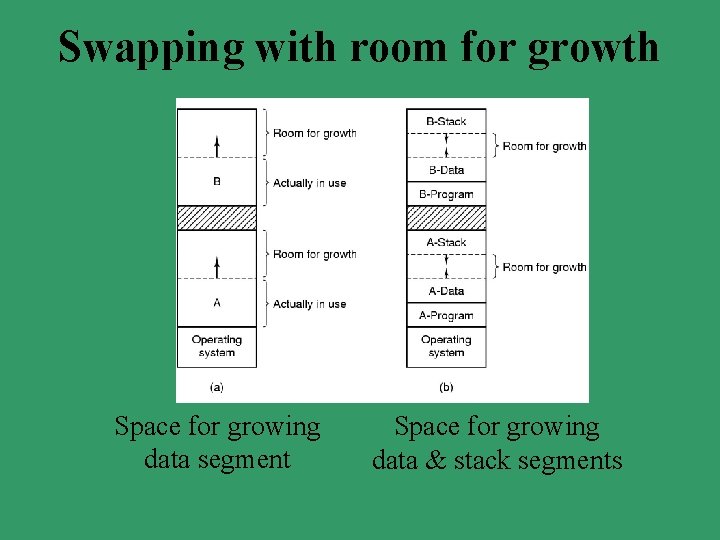

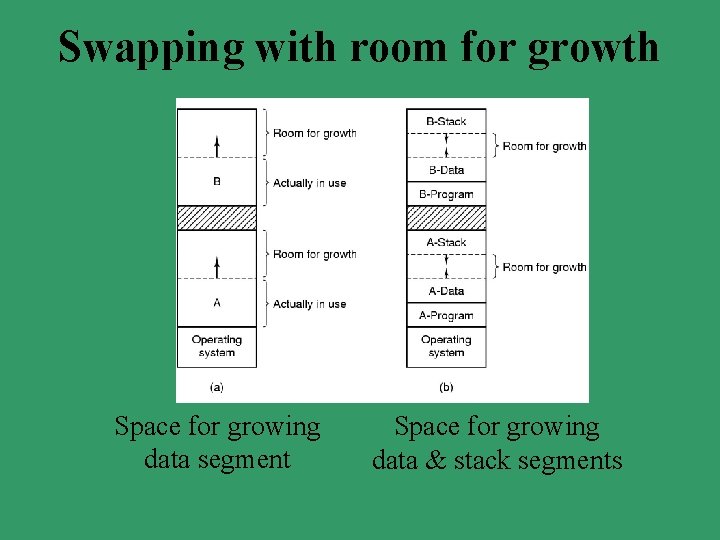

Swapping with room for growth Space for growing data segment Space for growing data & stack segments

Implementation • How is dynamic memory allocation actually implemented? • Two approaches – Using bitmaps – Using linked lists • Strategies for assignment of processes to memory spaces

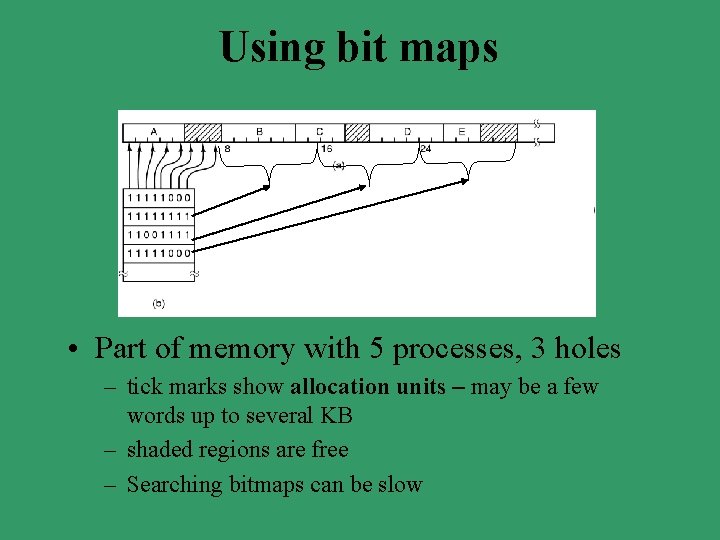

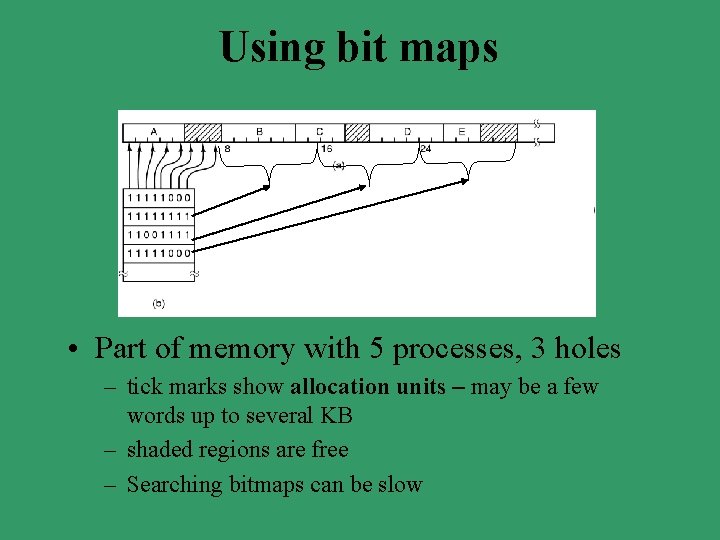

Using bit maps • Part of memory with 5 processes, 3 holes – tick marks show allocation units – may be a few words up to several KB – shaded regions are free – Searching bitmaps can be slow

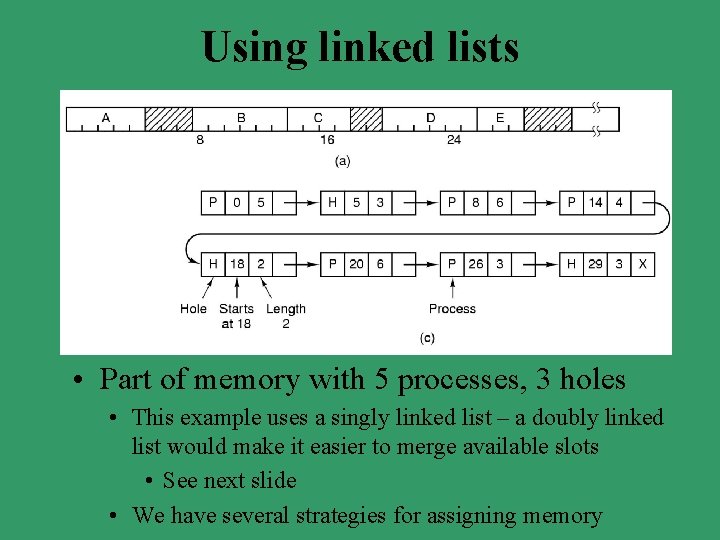

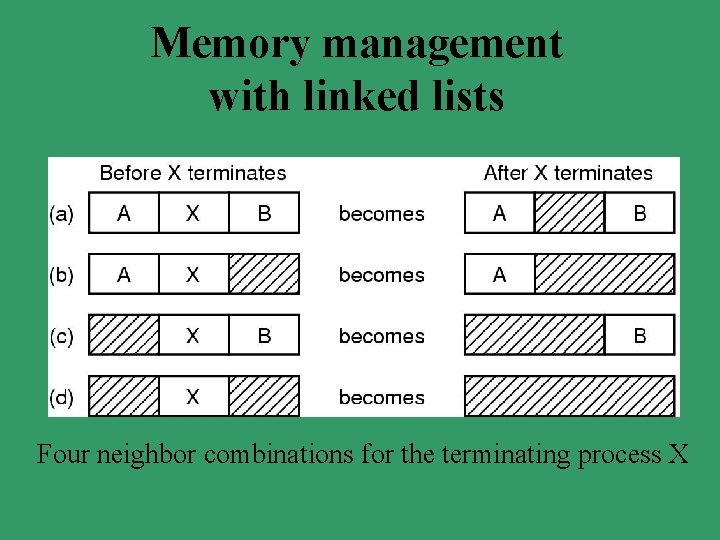

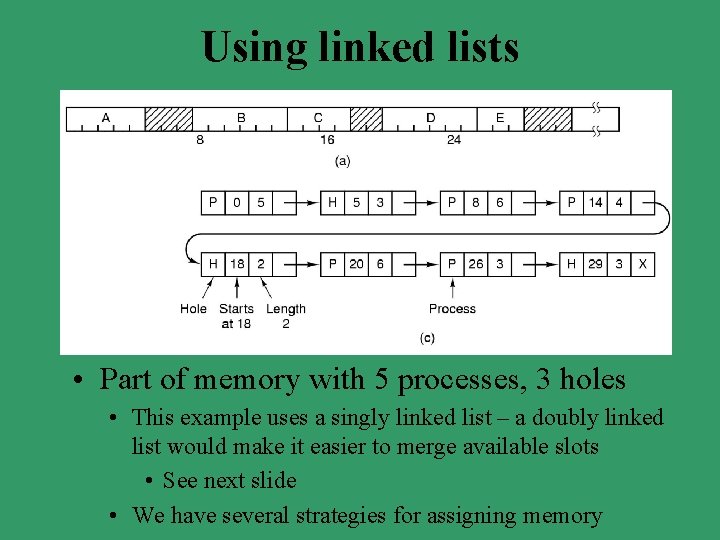

Using linked lists • Part of memory with 5 processes, 3 holes • This example uses a singly linked list – a doubly linked list would make it easier to merge available slots • See next slide • We have several strategies for assigning memory

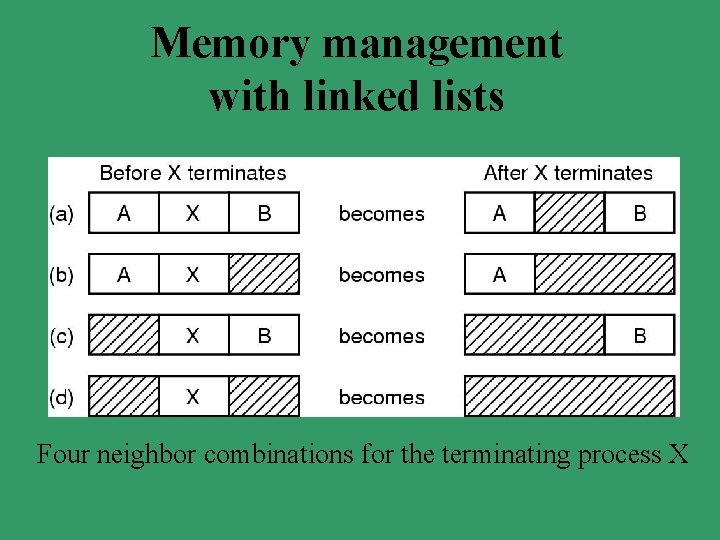

Memory management with linked lists Four neighbor combinations for the terminating process X

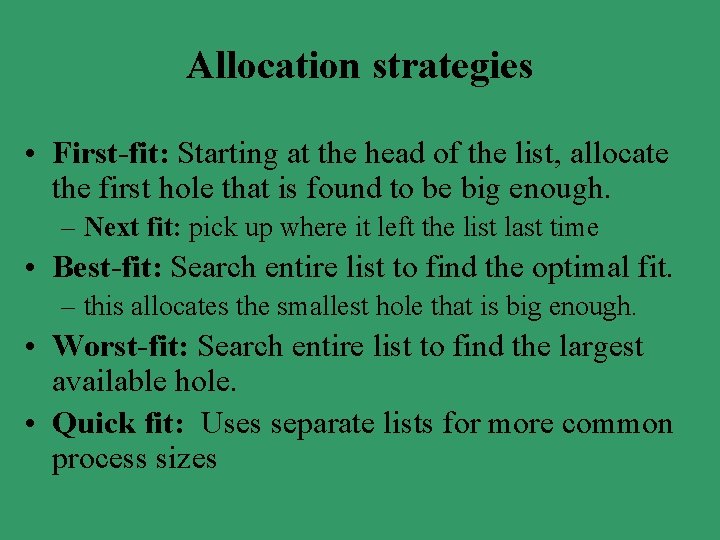

Allocation strategies • First-fit: Starting at the head of the list, allocate the first hole that is found to be big enough. – Next fit: pick up where it left the list last time • Best-fit: Search entire list to find the optimal fit. – this allocates the smallest hole that is big enough. • Worst-fit: Search entire list to find the largest available hole. • Quick fit: Uses separate lists for more common process sizes

Assignment • In-class: – p. 264 - #5 • HW: – Given memory partitions of 100 K, 500 K, 200 K, 300 K, 600 K (in order), how would each of firstfit, best-fit, worst-fit, and next-fit algorithms place processes of 212 K, 417 K, 112 K, 426 K (in this order)? – Read Sections 4. 3 & 4. 4