Memory Management: Caching ◦ Operating Systems ◦ CS 550

CPU Caching

- Cache is on-chip memory that the CPU uses to decrease the average time to access memory.
- Modern CPUs generally have three types of cache:
  - Data cache: holds the data (variables) that the program reads and writes
  - Instruction cache: holds the instructions to be executed
  - Translation lookaside buffer (TLB): speeds up virtual-to-physical address translation for both instructions and data
- Cache hit: the requested data is already in the cache, so the processor immediately reads from or writes to the cache
- Cache miss: the requested data is not in the cache, so the cache allocates a new entry and reads the data in from memory
- Cache line: the fixed-size block of memory that a single cache entry holds

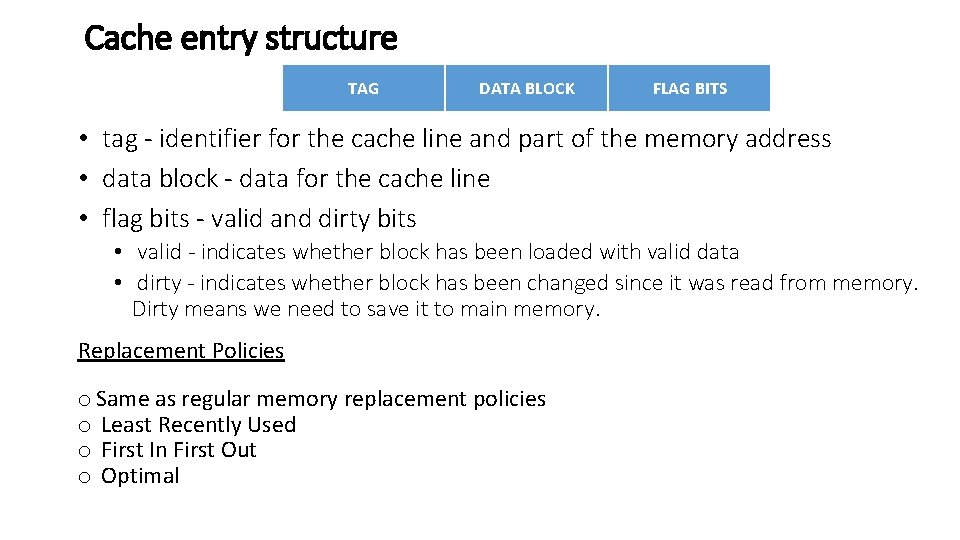

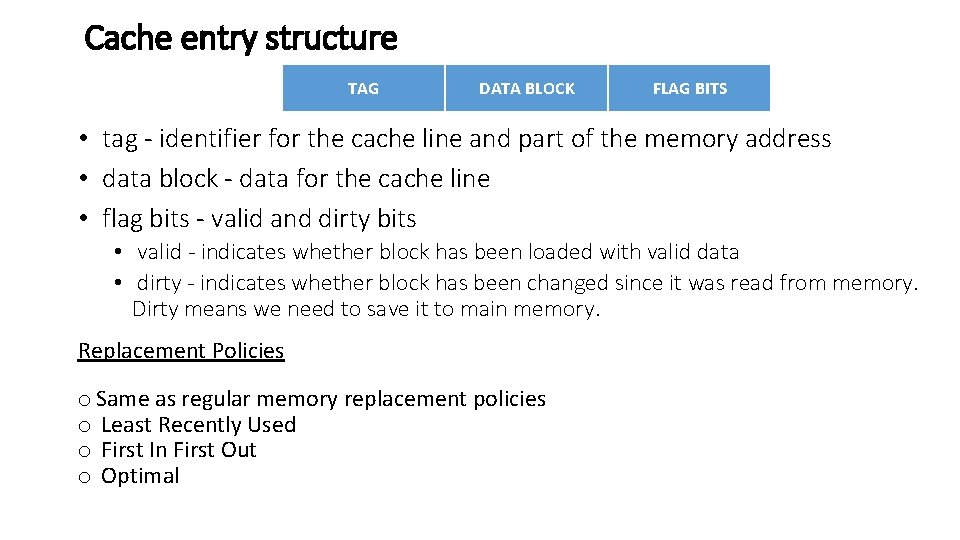

Cache Entry Structure

TAG | DATA BLOCK | FLAG BITS

- Tag: identifier for the cache line, taken from part of the memory address
- Data block: the data held by the cache line
- Flag bits: valid and dirty bits
  - Valid: indicates whether the block has been loaded with valid data
  - Dirty: indicates whether the block has been changed since it was read from memory. A dirty block must be saved to main memory before it is replaced.

Replacement Policies

- Same as the regular memory replacement policies
  - Least Recently Used (LRU)
  - First In First Out (FIFO)
  - Optimal

Associative Cache

- Associativity determines where in the cache a memory entry may be placed.
- See http://upload.wikimedia.org/wikipedia/commons/9/93/Cache%2Cassociative-fill-both.png
- Fully associative: a memory entry may go anywhere, so the replacement policy may choose any entry
- Set associative: each memory entry is mapped to one specific set of locations in the cache, and the replacement policy chooses among the entries of that set
- Important in programming for high-performance systems, because the way data is laid out and interleaved in loops determines which sets are used and can reduce the number of cache misses

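Translation Lookaside Buffer (TLB)

- Memory-management hardware that caches recent virtual-to-physical address translations
- Improves the speed of translating virtual addresses, because a TLB hit avoids a page-table lookup in memory
- Each entry holds basic memory information: a reference from a virtual page to the physical frame that backs it, typically along with protection and status bits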

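Memory Access

- Uniform memory access (UMA)
  - The time to access a memory location is independent of which processor makes the request
- Non-uniform memory access (NUMA)
  - Systems where memory access times vary widely
  - The time to access a memory location depends on where it is relative to the processor: memory local to a processor is faster than memory attached to another processor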

Cache Coherency

- Consistency of the data held in the caches of a shared resource, when several local clients (CPUs) cache the same memory.
- Three levels of coherence
  - Every write operation appears to occur instantly
  - All processors see the same sequence of changes of values for each operand
  - Different processors might see an operation and assume different sequences of values (non-coherence)
- Implemented by
  - Snooping: each cache monitors memory accesses made by the other caches for locations it holds
  - Snarfing: the cache controller watches both addresses and data, and updates its own copy of a location when another processor modifies that location in memory
- Coherency protocol: defines how coherence is implemented
- ccNUMA (cache-coherent NUMA)
  - Allows for a consistent memory image across sockets
  - Inter-processor communication between cache controllers keeps the memory image consistent when more than one cache stores the same memory location

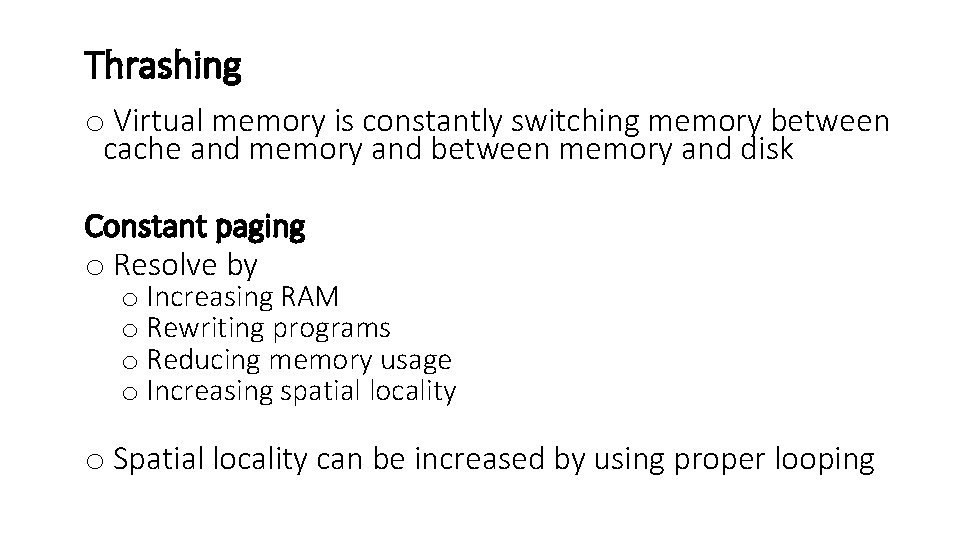

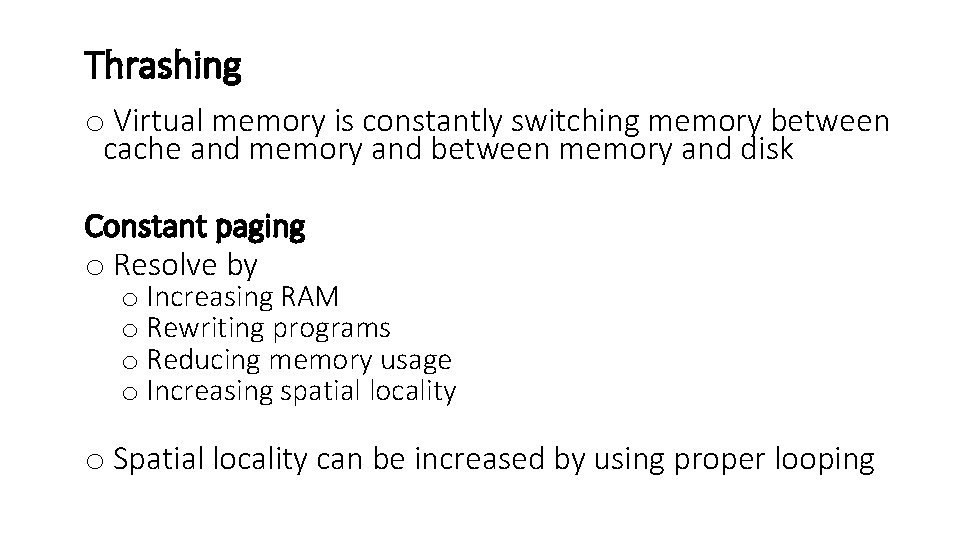

Thrashing

- The system is constantly moving data between cache and memory and between memory and disk (constant paging), so little useful work gets done
- Resolve by
  - Increasing RAM
  - Rewriting programs
    - Reducing memory usage
    - Increasing spatial locality
- Spatial locality can be increased by using proper looping

![Looping: improper vs. proper loop order](https://slidetodoc.com/presentation_image_h/fe8b440199e94c000086696befed48db/image-9.jpg)

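Looping

- Improper: the inner loop walks down a column, so consecutive accesses are a full row (1000 elements) apart in memory

      int arr[1000][1000];
      for (i = 0; i < 1000; i++) {
          for (j = 0; j < 1000; j++)
              arr[j][i] = getValue();  /* stride of one row per access */
      }

- Proper: the inner loop walks along a row, so consecutive accesses are adjacent in memory

      int arr[1000][1000];
      for (i = 0; i < 1000; i++) {
          for (j = 0; j < 1000; j++)
              arr[i][j] = getValue();  /* stride of one element per access */
      }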