
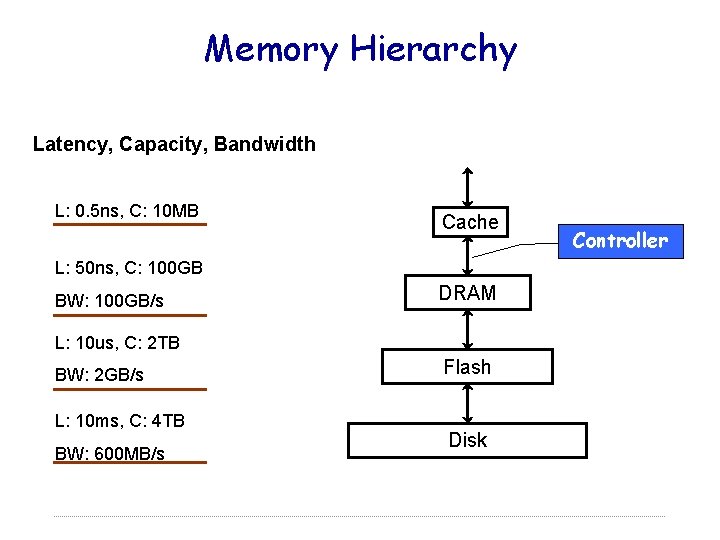

Memory Hierarchy: Latency, Capacity, Bandwidth

| Level | Latency | Capacity | Bandwidth |
|---|---|---|---|
| Cache | 0.5 ns | 10 MB | n/a |
| DRAM | 50 ns | 100 GB | 100 GB/s |
| Flash | 10 µs | 2 TB | 2 GB/s |
| Disk | 10 ms | 4 TB | 600 MB/s |

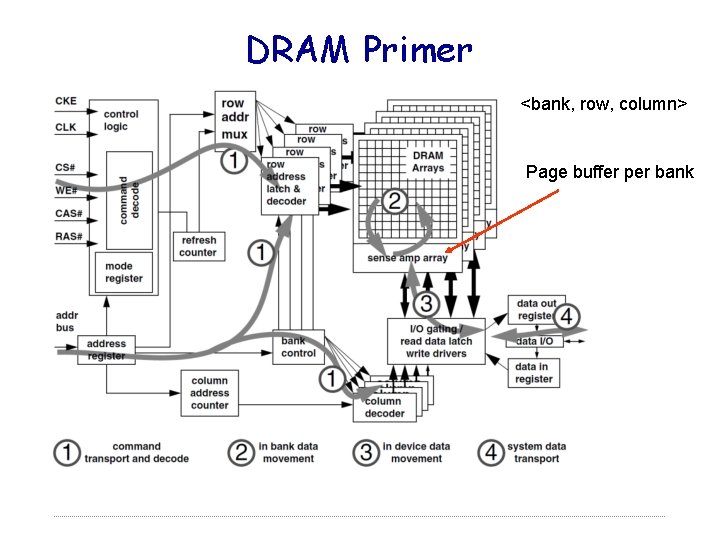

DRAM Primer
- Address: <bank, row, column>
- One page buffer per bank
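To make the <bank, row, column> tuple concrete, here is a minimal Python sketch that decodes a physical address and checks whether an access hits the page currently open in its bank's page buffer. The bit layout (11 column bits, 3 bank bits, 14 row bits) and the names `decode` and `page_hit` are hypothetical; real controllers use device-specific mappings, often with bank-address hashing.

```python
from typing import Dict, NamedTuple

# Hypothetical geometry, for illustration only.
COL_BITS = 11   # 2 KiB page per bank
BANK_BITS = 3   # 8 banks
ROW_BITS = 14   # 16 K rows per bank


class DramAddr(NamedTuple):
    bank: int
    row: int
    column: int


def decode(addr: int) -> DramAddr:
    """Split a byte address into <bank, row, column> (column in the low bits)."""
    column = addr & ((1 << COL_BITS) - 1)
    bank = (addr >> COL_BITS) & ((1 << BANK_BITS) - 1)
    row = (addr >> (COL_BITS + BANK_BITS)) & ((1 << ROW_BITS) - 1)
    return DramAddr(bank, row, column)


def page_hit(open_rows: Dict[int, int], addr: int) -> bool:
    """Return True if addr hits the row held in its bank's page buffer.

    open_rows maps bank -> currently open row and is updated in place,
    modelling an open-page policy (the row stays open after the access).
    """
    a = decode(addr)
    hit = open_rows.get(a.bank) == a.row
    open_rows[a.bank] = a.row
    return hit


open_rows: Dict[int, int] = {}
print([page_hit(open_rows, x) for x in (0x0, 0x40, 0x4000, 0x800, 0x4080)])
```

The last line prints `[False, True, False, False, True]`: the second access reuses bank 0's open row, 0x4000 moves bank 0 to row 1, 0x800 opens bank 1, and 0x4080 hits bank 0's new row.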

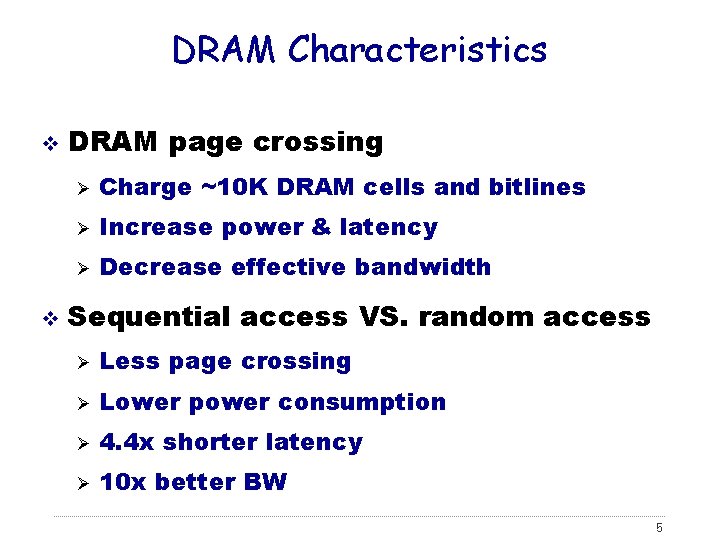

DRAM Characteristics
- DRAM page crossing
  - Charges ~10K DRAM cells and bitlines
  - Increases power and latency
  - Decreases effective bandwidth
- Sequential access vs. random access
  - Less page crossing
  - Lower power consumption
  - 4.4x shorter latency
  - 10x better BW
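A minimal Python sketch of why sequential access crosses fewer pages: it counts row activations for a single bank under an open-page policy. The 2 KiB row, 64-byte access size, 256 MiB address range, and the name `count_activations` are hypothetical; the 4.4x latency and 10x bandwidth figures above are the slide's measurements and are not reproduced by this model.

```python
import random

ROW_BYTES = 2048    # hypothetical page (row) size
ACCESS_BYTES = 64   # hypothetical access granularity


def count_activations(addresses, row_bytes=ROW_BYTES):
    """Count row activations for one bank with an open-page policy."""
    open_row = None
    activations = 0
    for addr in addresses:
        row = addr // row_bytes
        if row != open_row:
            # Page crossing: precharge the old row, activate the new one.
            activations += 1
            open_row = row
    return activations


n = 1024
sequential = [i * ACCESS_BYTES for i in range(n)]
rng = random.Random(42)
random_addrs = [rng.randrange(0, 256 * 2**20, ACCESS_BYTES) for _ in range(n)]

print(count_activations(sequential))   # 32
print(count_activations(random_addrs))
```

With 1024 sequential 64-byte accesses only 32 activations occur (one per 2 KiB row), while random accesses activate a new row almost every time.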

Takeaway: DRAM = Disk

Embedded Controller
- Bad news: none available like those in general-purpose processors
- Good news: opportunities for customization

Agenda
- Overview
- Multi-Port Memory Controller (MPMC) Design
- “Out-of-Core” Algorithmic Exploration

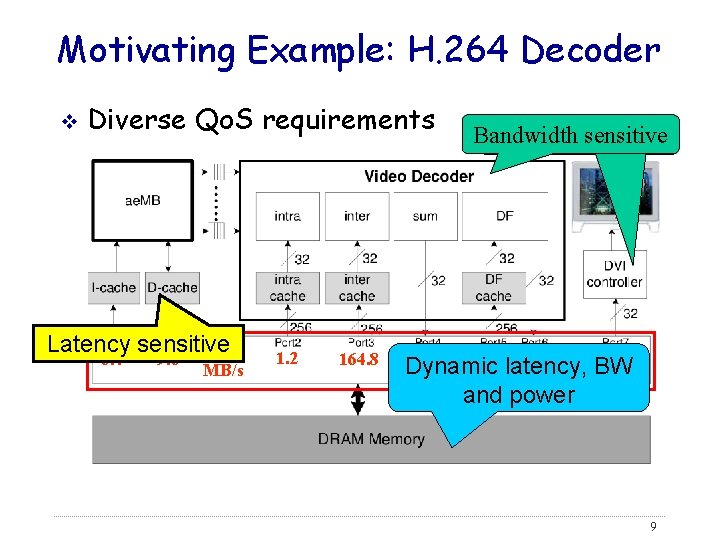

Motivating Example: H.264 Decoder
- Diverse QoS requirements
  - Latency sensitive
  - Bandwidth sensitive
- Dynamic latency, BW, and power requirements

[Figure: decoder ports annotated with bandwidth demands in MB/s: 6.4, 9.6, 1.2, 164.8, 0.09, 31.0, 156.7, 94]

Wanted
- Bandwidth guarantee
- Prioritized access
- Reduced page crossing

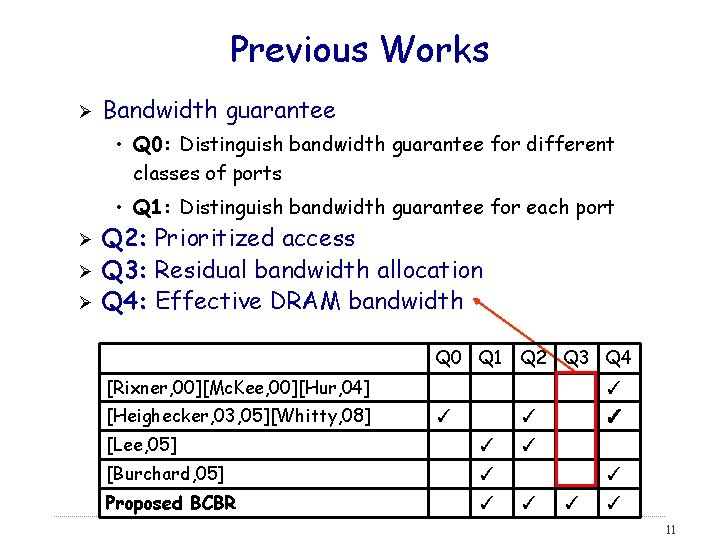

Previous Works
- Bandwidth guarantee
  - Q0: Distinguish bandwidth guarantees for different classes of ports
  - Q1: Distinguish bandwidth guarantees for each port
- Q2: Prioritized access
- Q3: Residual bandwidth allocation
- Q4: Effective DRAM bandwidth

[Table: which of Q0-Q4 are addressed (✓) by [Rixner, 00], [McKee, 00], [Hur, 04], [Heithecker, 03, 05], [Whitty, 08], [Lee, 05], [Burchard, 05], and the proposed BCBR]

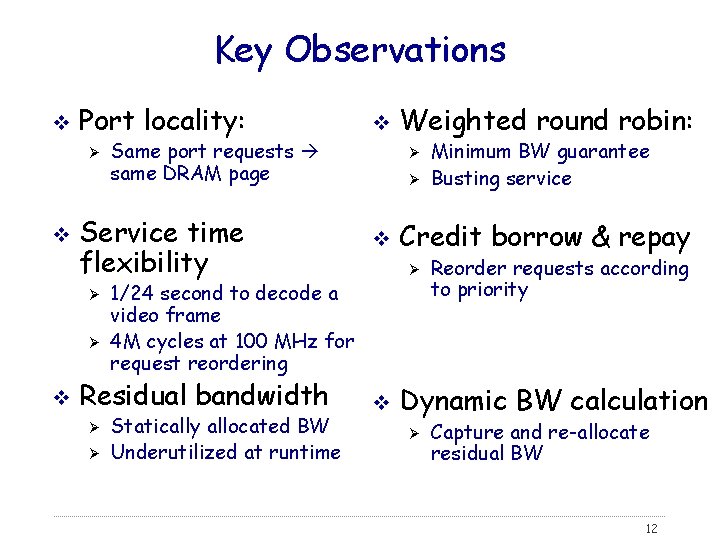

Key Observations
- Port locality: the same port requests the same DRAM page
  - Weighted round robin: minimum BW guarantee, bursting service
- Service time flexibility: 1/24 second to decode a video frame, i.e., about 4M cycles at 100 MHz for request reordering
  - Credit borrow & repay: reorder requests according to priority
- Residual bandwidth: statically allocated BW is underutilized at runtime
  - Dynamic BW calculation: capture and re-allocate residual BW

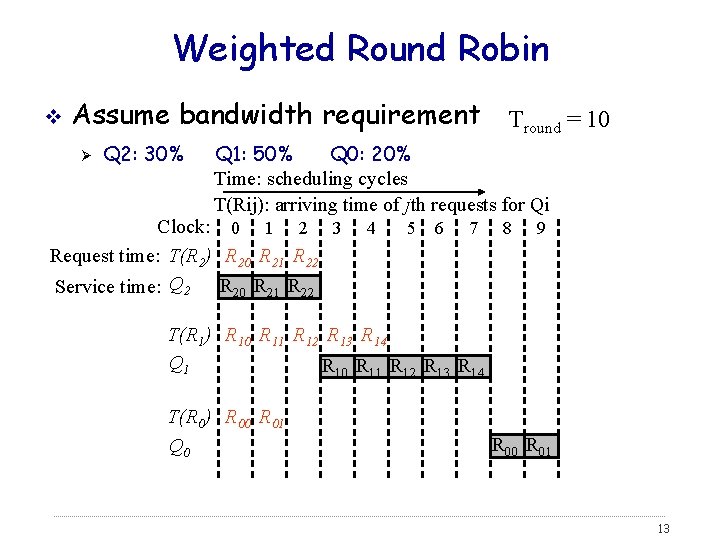

Weighted Round Robin
- Assume bandwidth requirements over T_round = 10 scheduling cycles:
  - Q2: 30%, Q1: 50%, Q0: 20%
- Time is measured in scheduling cycles; T(Rij) is the arrival time of the jth request of Qi
- Service order in one round (clocks 0-9): Q2 serves R20, R21, R22 (clocks 0-2); Q1 serves R10-R14 (clocks 3-7); Q0 serves R00, R01 (clocks 8-9)

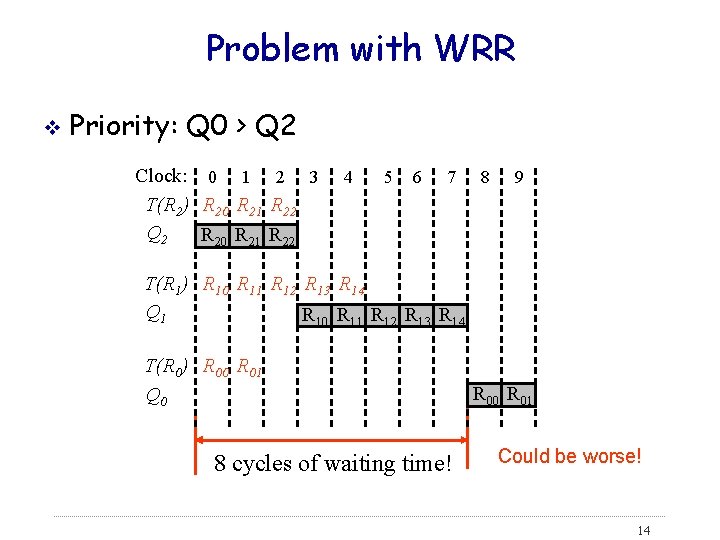

Problem with WRR
- Priority: Q0 > Q2
- Same round: Q2 (clocks 0-2), Q1 (clocks 3-7), Q0 (clocks 8-9)
- Q0's requests R00 and R01 must wait for Q0's turn: 8 cycles of waiting time! Could be worse!
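The round above, and the 8-cycle wait, can be reproduced with a small Python simulation. It is a sketch under stated assumptions: each port owns consecutive slots in the visit order Q2, Q1, Q0, all requests arrive at clock 0, and `wrr_schedule` is a hypothetical helper, not the controller's hardware.

```python
from collections import deque


def wrr_schedule(weights, order, arrivals):
    """Serve one WRR round; each port owns weights[port] consecutive slots.

    arrivals maps port -> list of (request_name, arrival_clock).
    Returns a list of (clock, request_name, waiting_time).
    """
    queues = {p: deque(sorted(reqs, key=lambda r: r[1])) for p, reqs in arrivals.items()}
    slots = [p for p in order for _ in range(weights[p])]
    served = []
    for clock, port in enumerate(slots):
        q = queues[port]
        if q and q[0][1] <= clock:
            name, arrival = q.popleft()
            served.append((clock, name, clock - arrival))
        # An idle slot is simply wasted: plain WRR does not reassign it.
    return served


weights = {"Q2": 3, "Q1": 5, "Q0": 2}   # 30%, 50%, 20% of T_round = 10
order = ["Q2", "Q1", "Q0"]
arrivals = {
    "Q2": [("R20", 0), ("R21", 0), ("R22", 0)],
    "Q1": [(f"R1{j}", 0) for j in range(5)],
    "Q0": [("R00", 0), ("R01", 0)],      # high-priority requests, assumed to arrive at clock 0
}
for clock, name, wait in wrr_schedule(weights, order, arrivals):
    print(clock, name, wait)
```

The high-priority request R00 is printed as `8 R00 8`: the bandwidth shares are met, but priority is ignored.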

Borrow Credits
- Zero waiting time for Q0!
- Q0* borrows Q2's slots to serve R00 and R01 right away; debtQ0 records the debt as Q2, Q2

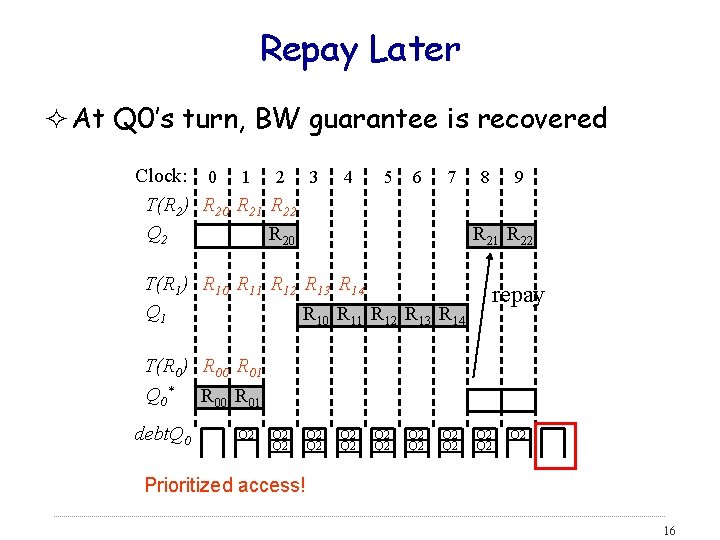

Repay Later
- At Q0's turn, the BW guarantee is recovered: Q0's slots repay debtQ0, so Q2 serves R21 and R22 after Q1 serves R10-R14
- Prioritized access!

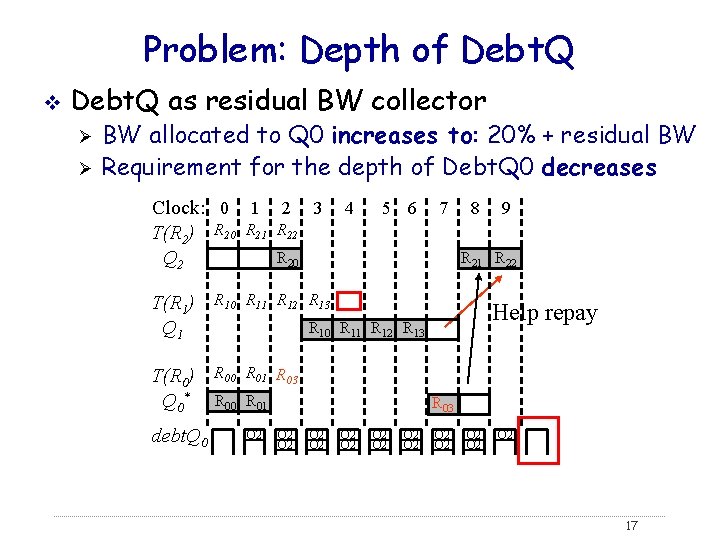

Problem: Depth of DebtQ
- DebtQ as residual BW collector
  - BW allocated to Q0 increases to 20% + residual BW
  - The required depth of debtQ0 decreases
- When Q1 issues only R10-R13, its unused slot helps repay Q2 (R21, R22)
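A minimal Python sketch of the borrow, repay, and residual-collection steps, layered on the same WRR slot layout. Assumptions not stated on the slides: Q0 is the priority port, only Q2 lends slots, the debt queue holds at most `max_debt` entries, the priority port repays at its own turn before serving itself, and a slot whose owner is idle first repays debt and otherwise goes to the priority port. `borrow_repay_schedule` and its policy order are hypothetical and not necessarily how BCBR resolves these cases.

```python
from collections import deque


def borrow_repay_schedule(weights, order, arrivals, priority, lenders, max_debt):
    """One WRR round extended with credit borrow & repay (illustrative only).

    Returns (served, outstanding_debt); served holds (clock, name, wait, how).
    """
    queues = {p: deque(sorted(reqs, key=lambda r: r[1])) for p, reqs in arrivals.items()}
    slots = [p for p in order for _ in range(weights[p])]
    debt = deque()   # debtQ: creditor port per borrowed slot, oldest first
    served = []

    def ready(port, clock):
        q = queues[port]
        return bool(q) and q[0][1] <= clock

    def serve(port, clock, how):
        name, arrival = queues[port].popleft()
        served.append((clock, name, clock - arrival, how))

    for clock, owner in enumerate(slots):
        if owner in lenders and ready(priority, clock) and len(debt) < max_debt:
            debt.append(owner)                           # borrow the lender's slot
            serve(priority, clock, "borrow")
        elif owner == priority and debt and ready(debt[0], clock):
            serve(debt.popleft(), clock, "repay")        # repay at the priority port's turn
        elif ready(owner, clock):
            serve(owner, clock, "own")
        elif debt and ready(debt[0], clock):
            serve(debt.popleft(), clock, "residual-repay")  # idle slot repays debt
        elif ready(priority, clock):
            serve(priority, clock, "residual")           # leftover BW goes to the priority port
    return served, list(debt)


weights = {"Q2": 3, "Q1": 5, "Q0": 2}
order = ["Q2", "Q1", "Q0"]
arrivals = {
    "Q2": [("R20", 0), ("R21", 0), ("R22", 0)],
    "Q1": [(f"R1{j}", 0) for j in range(4)],   # only 4 requests: one residual slot
    "Q0": [("R00", 0), ("R01", 0)],
}
served, debt = borrow_repay_schedule(weights, order, arrivals, "Q0", {"Q2"}, max_debt=2)
for row in served:
    print(*row)
```

Here R00 is served at clock 0 through a borrowed slot, Q1's unused slot at clock 7 repays one credit (R21), and Q0's own turn repays the other (R22), leaving the debt queue empty. With five Q1 requests, both repayments fall on Q0's turn (clocks 8 and 9), which is why residual collection reduces the debt queue depth needed.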

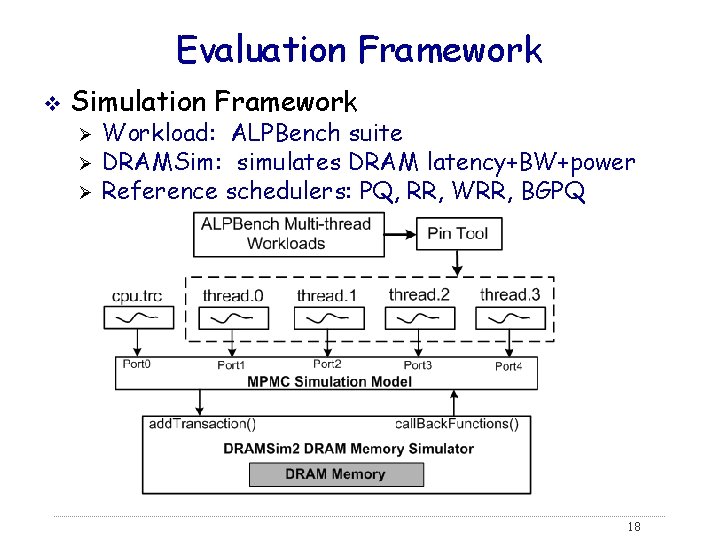

Evaluation Framework
- Simulation framework
  - Workload: ALPBench suite
  - DRAMSim: simulates DRAM latency + BW + power
  - Reference schedulers: PQ, RR, WRR, BGPQ

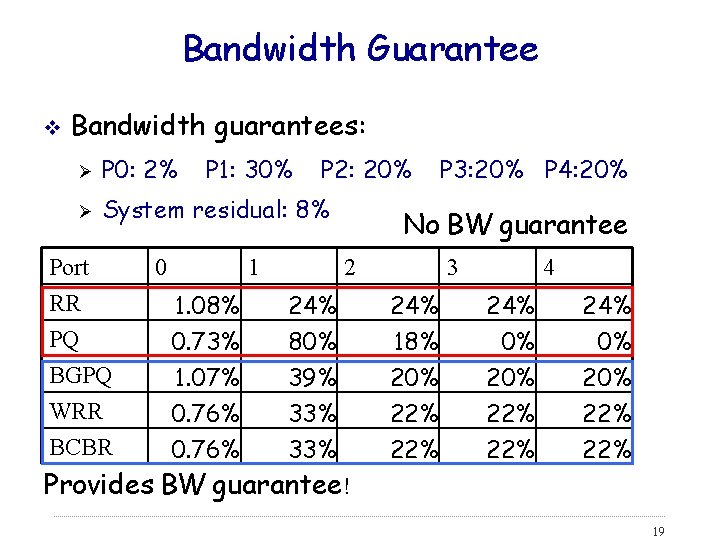

Bandwidth Guarantee
- Bandwidth guarantees: P0: 2%, P1: 30%, P2: 20%, P3: 20%, P4: 20%; system residual: 8%
- Some schedulers give no BW guarantee; BCBR provides the BW guarantee!

[Table: bandwidth share received by ports 0-4 under RR, PQ, BGPQ, WRR, and BCBR]

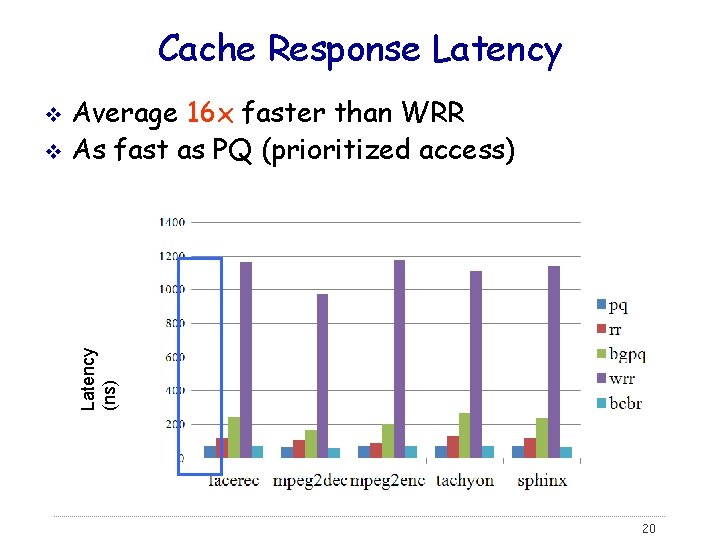

Cache Response Latency
- On average 16x faster than WRR
- As fast as PQ (prioritized access)

[Figure: cache response latency (ns) per scheduler]

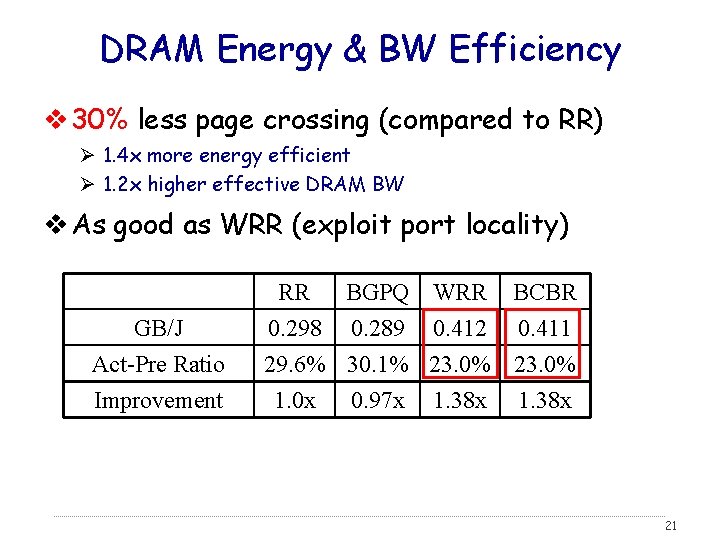

DRAM Energy & BW Efficiency
- 30% less page crossing (compared to RR)
  - 1.4x more energy efficient
  - 1.2x higher effective DRAM BW
- As good as WRR (exploits port locality)

| Metric | RR | BGPQ | WRR | BCBR |
|---|---|---|---|---|
| Energy efficiency (GB/J) | 0.298 | 0.289 | 0.412 | 0.411 |
| Improvement over RR | 1.0x | 0.97x | 1.38x | 1.38x |

Act-Pre ratio values shown: 29.6%, 30.1%, 23.0%

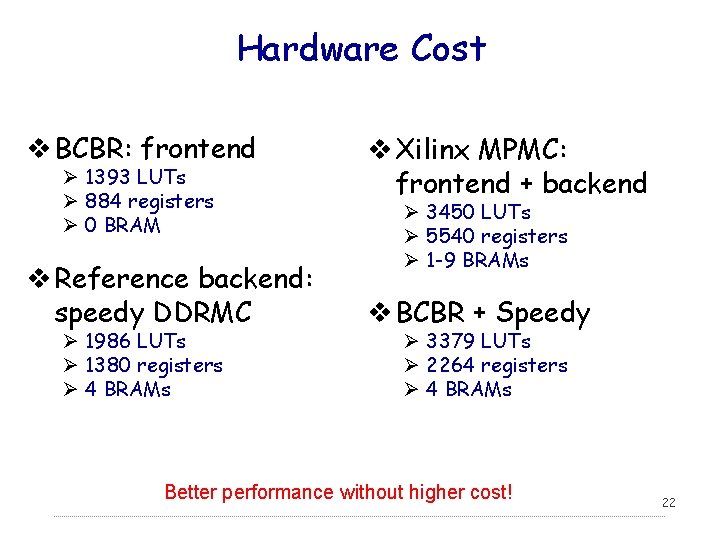

Hardware Cost

| Design | LUTs | Registers | BRAMs |
|---|---|---|---|
| BCBR (frontend) | 1393 | 884 | 0 |
| Speedy DDRMC (reference backend) | 1986 | 1380 | 4 |
| Xilinx MPMC (frontend + backend) | 3450 | 5540 | 1-9 |
| BCBR + Speedy | 3379 | 2264 | 4 |

Better performance without higher cost!

Agenda
- Overview
- Multi-Port Memory Controller (MPMC) Design
- “Out-of-Core” Algorithm / Architecture Exploration

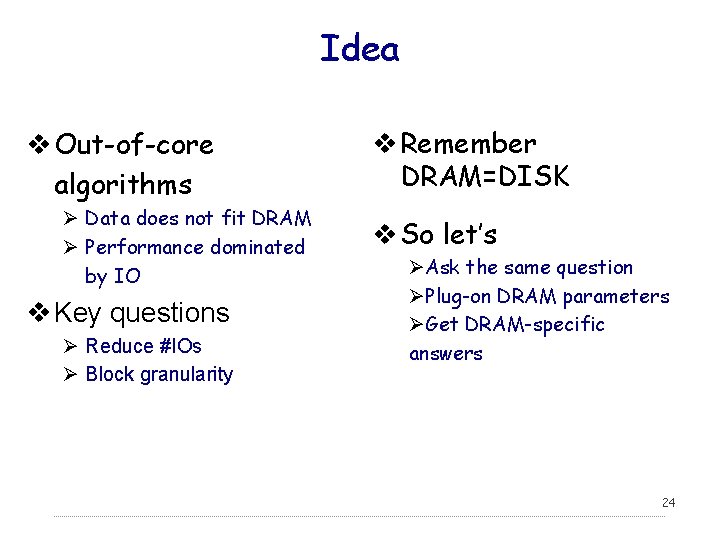

Idea
- Out-of-core algorithms
  - Data does not fit in DRAM
  - Performance dominated by IO
- Key questions
  - Reduce #IOs
  - Block granularity
- Remember DRAM = Disk
- So let's
  - Ask the same questions
  - Plug in DRAM parameters
  - Get DRAM-specific answers

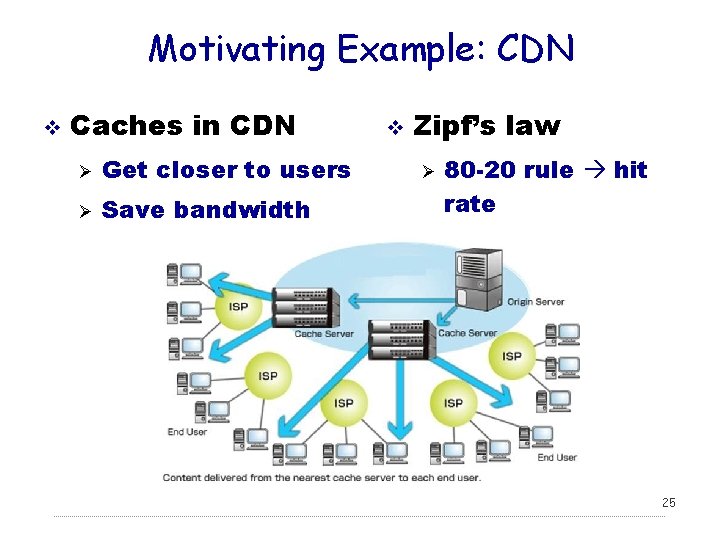

Motivating Example: CDN
- Caches in a CDN
  - Get closer to users
  - Save bandwidth
- Zipf's law
  - 80-20 rule hit rate
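Zipf's law is what makes the 80-20 hit rate possible. A minimal Python sketch, assuming the popularity of the item with rank r is proportional to 1/r^alpha and an idealized cache that always holds the most popular items; `zipf_hit_rate` is a hypothetical helper.

```python
def zipf_hit_rate(n_items, cache_fraction, alpha=1.0):
    """Hit rate of a cache that always holds the most popular items.

    Item popularity follows Zipf's law: P(rank r) is proportional to 1 / r**alpha.
    """
    weights = [1.0 / rank ** alpha for rank in range(1, n_items + 1)]
    k = int(n_items * cache_fraction)   # number of cached items
    return sum(weights[:k]) / sum(weights)


print(round(zipf_hit_rate(10_000, 0.20), 3))
```

For 10,000 items with alpha = 1, caching the top 20% serves about 84% of requests, close to the 80-20 rule.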

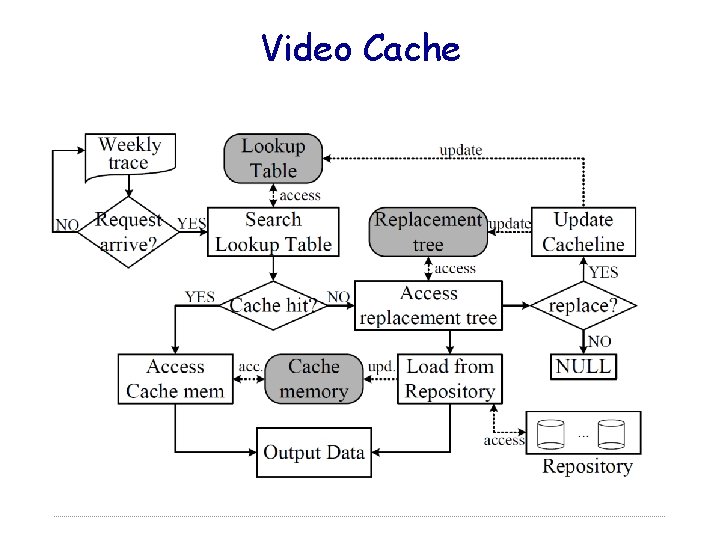

Video Cache

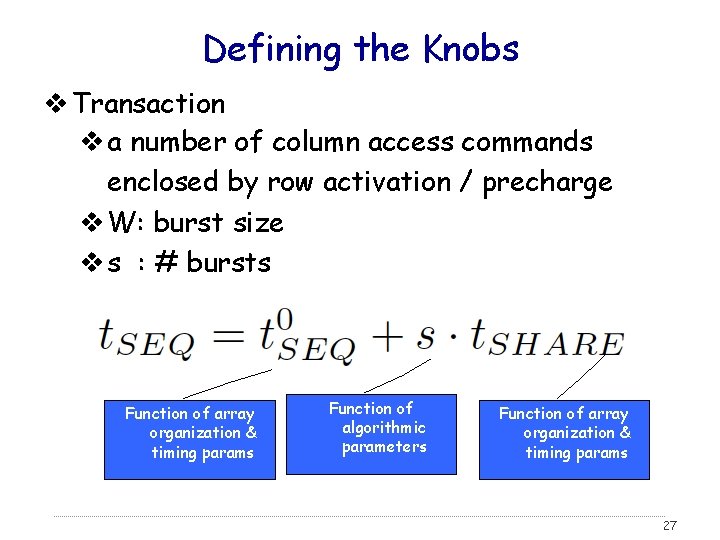

Defining the Knobs
- Transaction: a number of column access commands enclosed by a row activation / precharge
- W: burst size
- s: # bursts
- Row activation/precharge cost and W are functions of array organization and timing parameters; s is a function of algorithmic parameters
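A first-order cost model for one transaction, written as a Python sketch. It assumes a fixed activation/precharge overhead per transaction plus s bursts of W bytes each; the parameter values and function names are hypothetical, and real DRAM timing (tRCD, tRP, tRAS, bank overlap, refresh) is more involved.

```python
def transaction_time_ns(s, t_burst_ns, t_act_pre_ns):
    """One transaction: a row activation/precharge pair plus s column bursts."""
    return t_act_pre_ns + s * t_burst_ns


def effective_bw_gbs(s, w_bytes, t_burst_ns, t_act_pre_ns):
    """Effective bandwidth in GB/s (1 byte/ns == 1 GB/s)."""
    return s * w_bytes / transaction_time_ns(s, t_burst_ns, t_act_pre_ns)


# Hypothetical parameters, for illustration only.
W_BYTES = 64         # bytes per burst
T_BURST_NS = 5.0     # time per burst
T_ACT_PRE_NS = 30.0  # activation + precharge overhead per transaction

for s in (1, 4, 16, 64):
    print(s, round(effective_bw_gbs(s, W_BYTES, T_BURST_NS, T_ACT_PRE_NS), 2))
```

With these numbers the peak is 64 B / 5 ns = 12.8 GB/s; s = 1 reaches only about 1.83 GB/s, while s = 64 gets about 11.7 GB/s. The overhead is amortized over s bursts, which is why the algorithmic parameter s matters.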

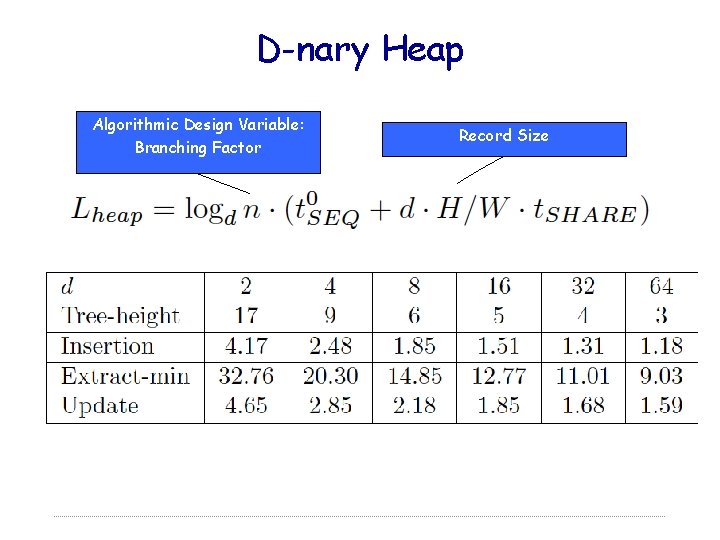

D-nary Heap
- Algorithmic design variables: branching factor, record size
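To connect the two design variables to memory traffic, here is a Python sketch of a d-ary min-heap. It stores the d children of node i contiguously at indices d*i+1 through d*i+d, so each sift-down level touches one contiguous group of up to d records. Counting those group reads (`child_group_reads`, a hypothetical metric) as a proxy for DRAM transactions is an assumption, not the slides' derivation.

```python
import random


class DaryHeap:
    """Min-heap with branching factor d; the d children of node i are contiguous."""

    def __init__(self, d):
        if d < 2:
            raise ValueError("branching factor must be at least 2")
        self.d = d
        self.items = []
        self.child_group_reads = 0   # proxy for memory transactions (assumption)

    def push(self, x):
        a = self.items
        a.append(x)
        i = len(a) - 1
        while i > 0:                 # sift up toward the root
            parent = (i - 1) // self.d
            if a[parent] <= a[i]:
                break
            a[parent], a[i] = a[i], a[parent]
            i = parent

    def pop(self):
        a = self.items
        if not a:
            raise IndexError("pop from empty heap")
        top = a[0]
        last = a.pop()
        if a:
            a[0] = last
            i, n = 0, len(a)
            while True:              # sift down, one child group per level
                first = self.d * i + 1
                if first >= n:
                    break
                self.child_group_reads += 1
                end = min(first + self.d, n)
                child = min(range(first, end), key=a.__getitem__)
                if a[i] <= a[child]:
                    break
                a[i], a[child] = a[child], a[i]
                i = child
        return top


data = random.Random(0).sample(range(100_000), 1000)
reads = {}
for d in (2, 4, 8):
    h = DaryHeap(d)
    for x in data:
        h.push(x)
    out = [h.pop() for _ in range(len(data))]
    assert out == sorted(data)
    reads[d] = h.child_group_reads
print(reads)
```

A larger branching factor means fewer levels and fewer group reads, but each group is d times the record size in bytes, so the best d depends on how that group lines up with a transaction of s bursts of W bytes.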

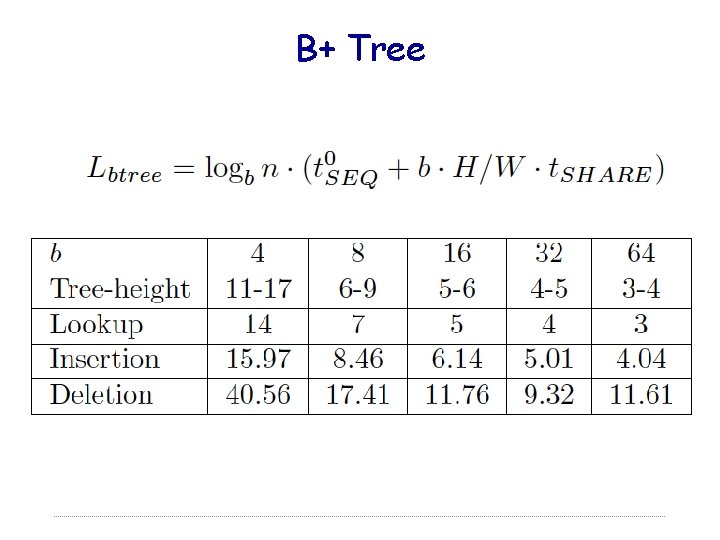

B+ Tree
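For a B+ tree the analogous knob is node size. A minimal Python sketch, assuming fully packed nodes, 8-byte keys and pointers, 64-byte records, and one transaction per node fetch (all hypothetical): `bplus_height` returns the number of node fetches per point lookup.

```python
import math


def bplus_height(n_records, node_bytes, key_bytes=8, ptr_bytes=8, record_bytes=64):
    """Levels of a fully packed B+ tree whose nodes are node_bytes large.

    Each level costs one node fetch per point lookup, so the height is the
    number of transactions per lookup in this simple model.
    """
    fanout = node_bytes // (key_bytes + ptr_bytes)   # children per inner node
    leaf_capacity = node_bytes // record_bytes       # records per leaf
    if fanout < 2 or leaf_capacity < 1:
        raise ValueError("node too small for the given key/record sizes")
    nodes = math.ceil(n_records / leaf_capacity)     # leaf level
    height = 1
    while nodes > 1:
        nodes = math.ceil(nodes / fanout)            # one more inner level
        height += 1
    return height


for node_bytes in (64, 256, 2048):
    print(node_bytes, bplus_height(10**6, node_bytes))
```

For one million records the height drops from 11 levels with 64-byte nodes to 4 levels with 2 KiB nodes; each fetch, however, costs more as nodes grow, which is the trade-off the transaction model above captures.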

Lessons Learned
- The optimal result can be derived beautifully!
- Big O does not matter in some cases
  - Depending on data input characteristics
