
- Slides: 47

Memory 1

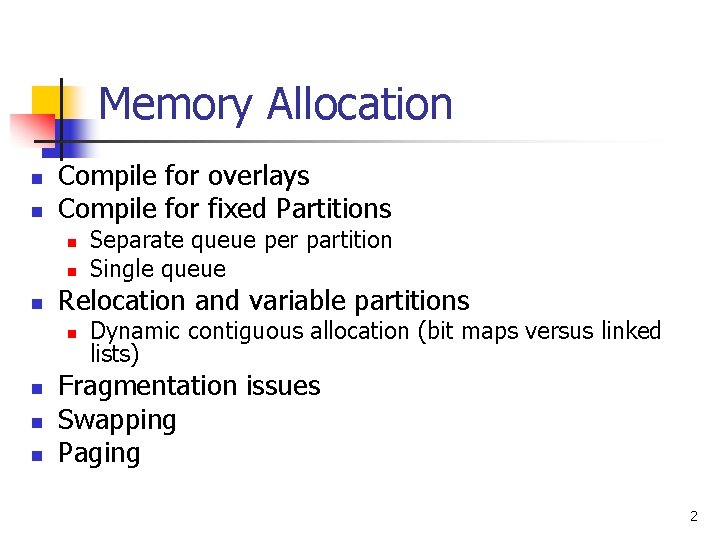

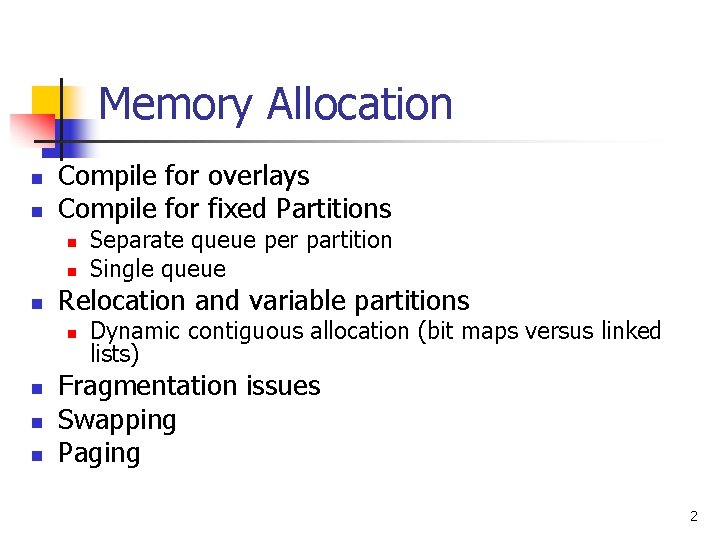

Memory Allocation

- Compile for overlays
- Compile for fixed partitions
  - Separate queue per partition
  - Single queue
- Relocation and variable partitions
  - Dynamic contiguous allocation (bit maps versus linked lists)
  - Fragmentation issues
- Swapping
- Paging

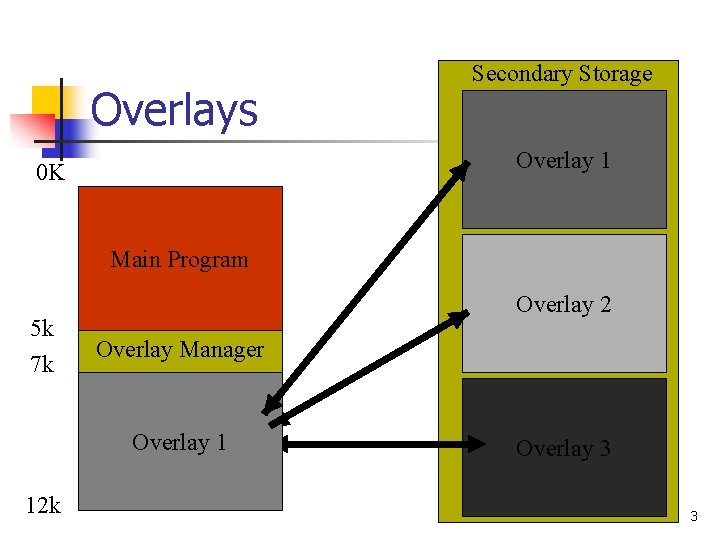

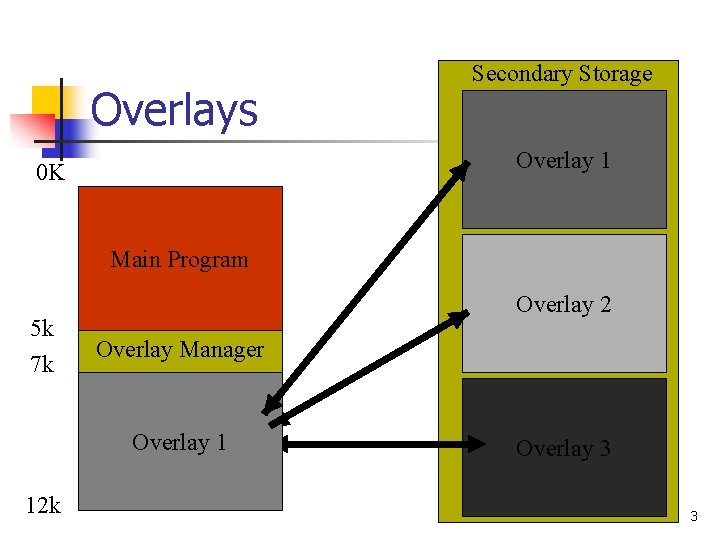

Overlays

[Figure: memory map with address marks at 0 K, 5 K, 7 K, and 12 K, showing the main program, the overlay manager, and the overlay area; Overlays 1, 2, and 3 are held in secondary storage.]

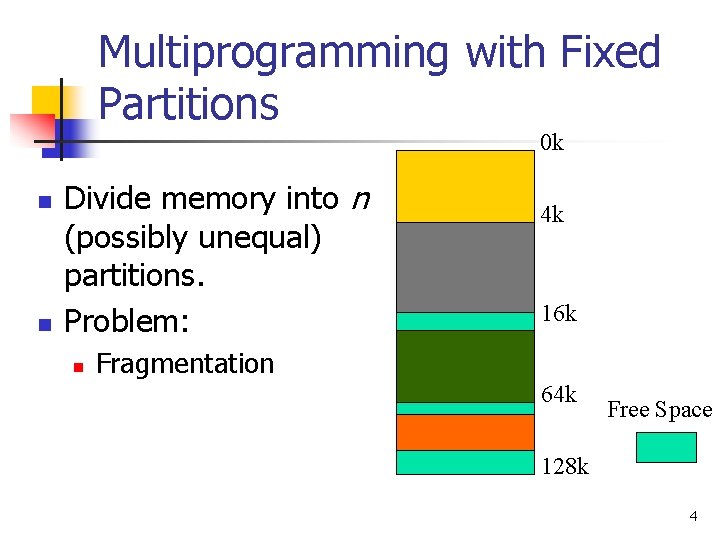

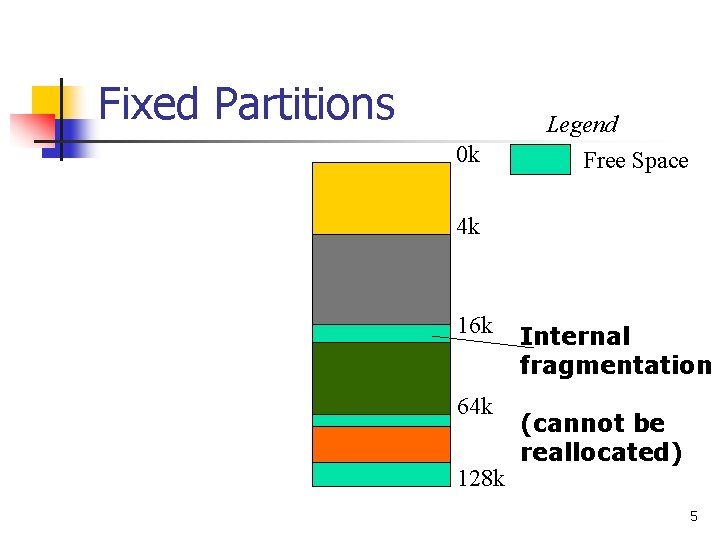

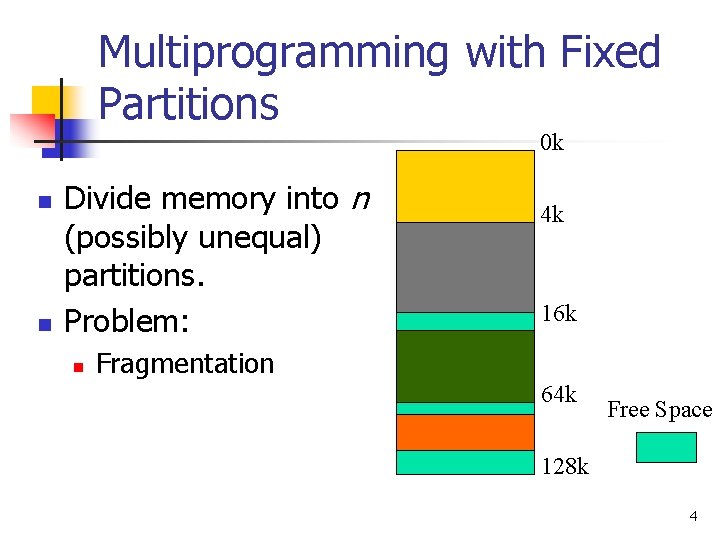

Multiprogramming with Fixed Partitions

- Divide memory into n (possibly unequal) partitions.
- Problem:
  - Fragmentation

[Figure: memory map with partition boundaries at 0 K, 4 K, 16 K, 64 K, and 128 K; legend: free space.]

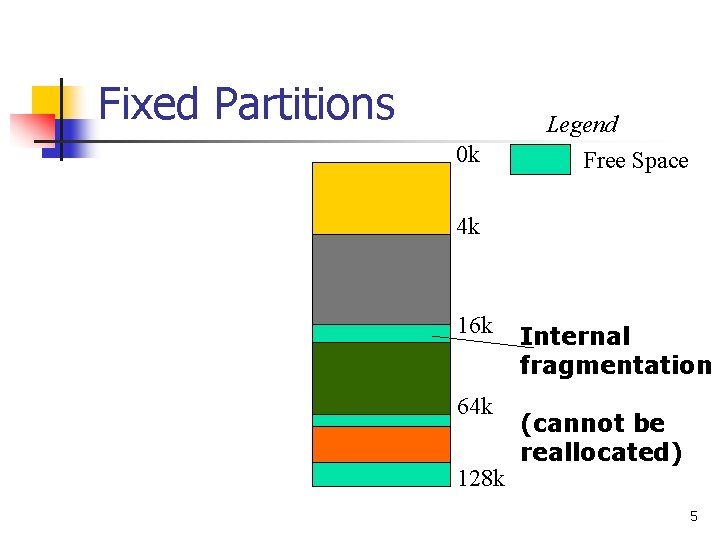

Fixed Partitions

[Figure: memory map with partition boundaries at 0 K, 4 K, 16 K, 64 K, and 128 K; legend: free space, and internal fragmentation (cannot be reallocated).]

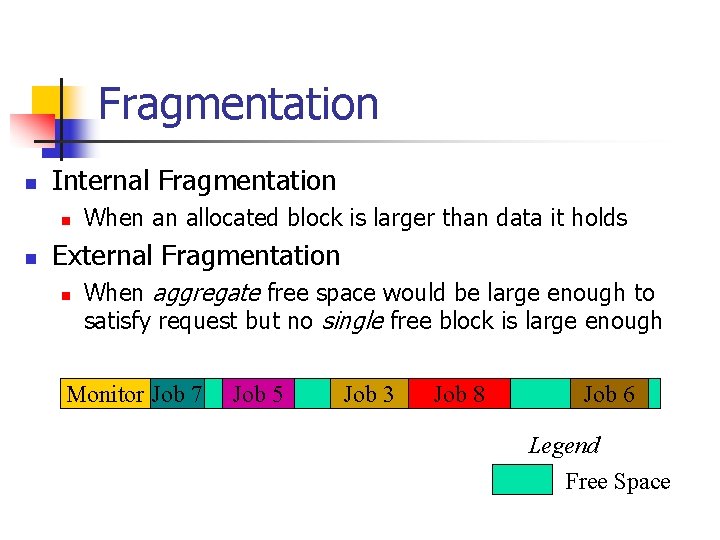

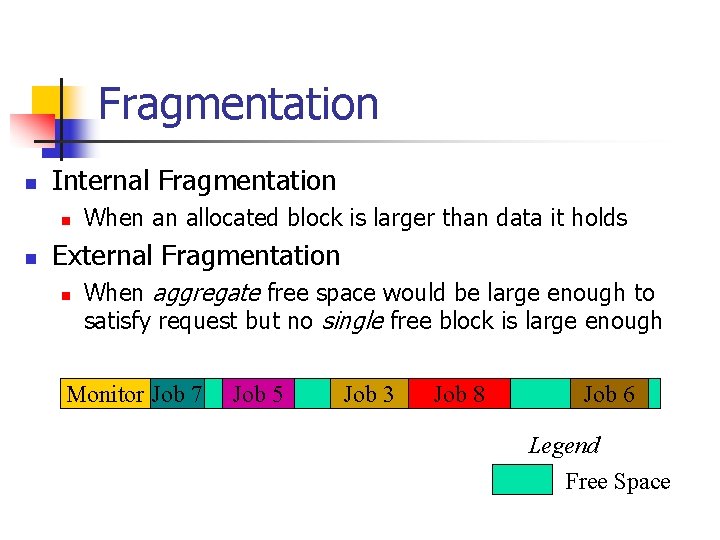

Fragmentation

- Internal fragmentation
  - When an allocated block is larger than the data it holds
- External fragmentation
  - When the aggregate free space would be large enough to satisfy a request, but no single free block is large enough

[Figure: memory map showing Monitor, Job 7, Job 5, Job 3, Job 8, Job 6, and free regions; legend: free space.]

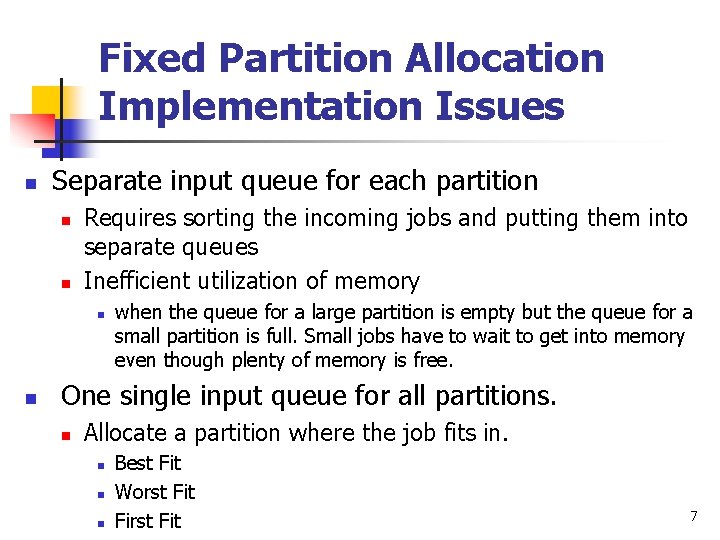

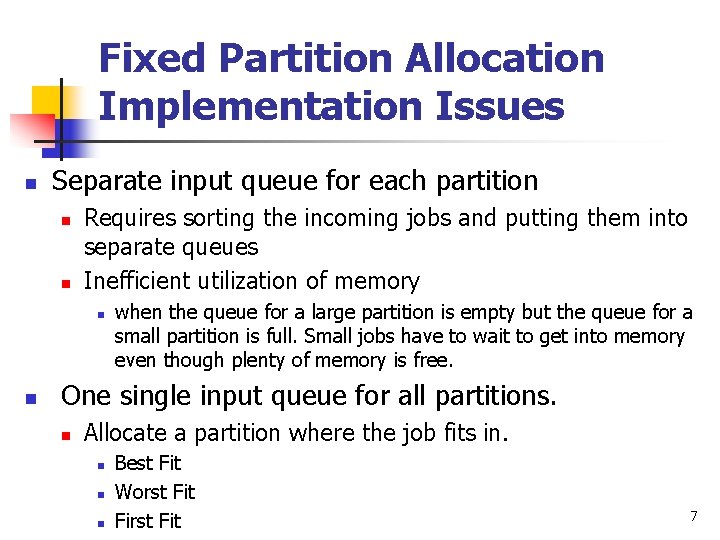

Fixed Partition Allocation: Implementation Issues

- Separate input queue for each partition
  - Requires sorting the incoming jobs and putting them into separate queues
  - Inefficient utilization of memory when the queue for a large partition is empty but the queue for a small partition is full: small jobs have to wait to get into memory even though plenty of memory is free.
- One single input queue for all partitions
  - Allocate a partition where the job fits in:
    - Best fit
    - Worst fit
    - First fit

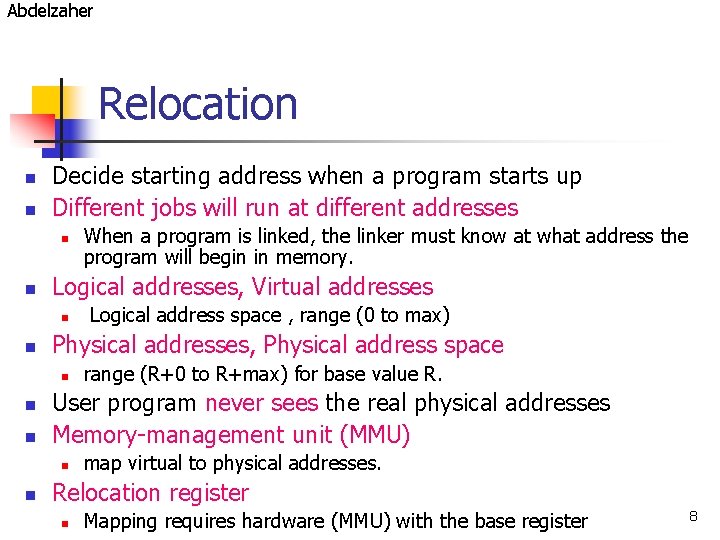

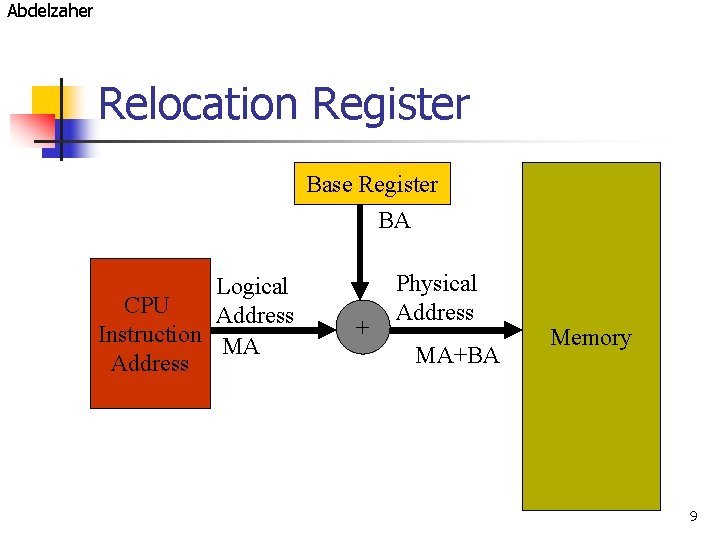

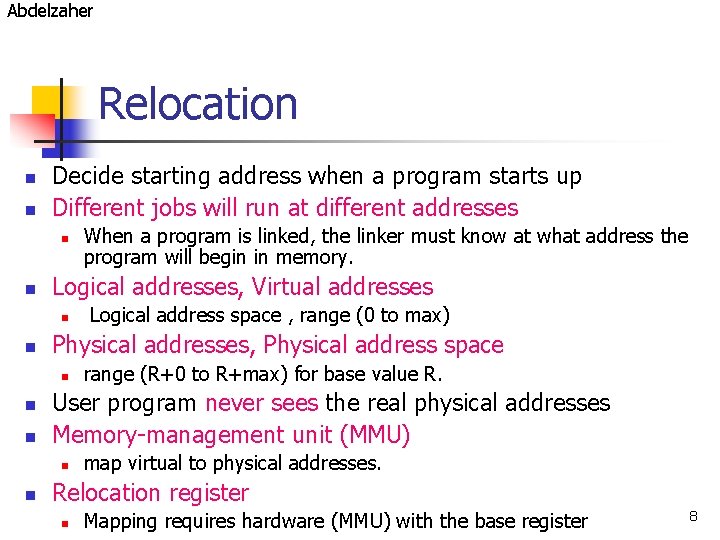

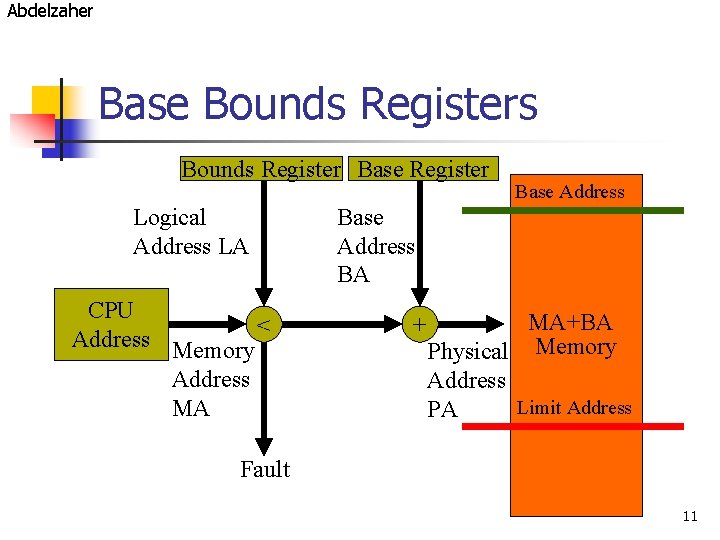

Abdelzaher

Relocation

- Decide the starting address when a program starts up
- Different jobs will run at different addresses
  - When a program is linked, the linker must know at what address the program will begin in memory.
- Logical addresses, virtual addresses
  - Logical address space, range (0 to max)
- Physical addresses, physical address space
  - Range (R+0 to R+max) for base value R
- User program never sees the real physical addresses
- Memory-management unit (MMU)
  - Maps virtual to physical addresses
- Relocation register
  - Mapping requires hardware (MMU) with the base register

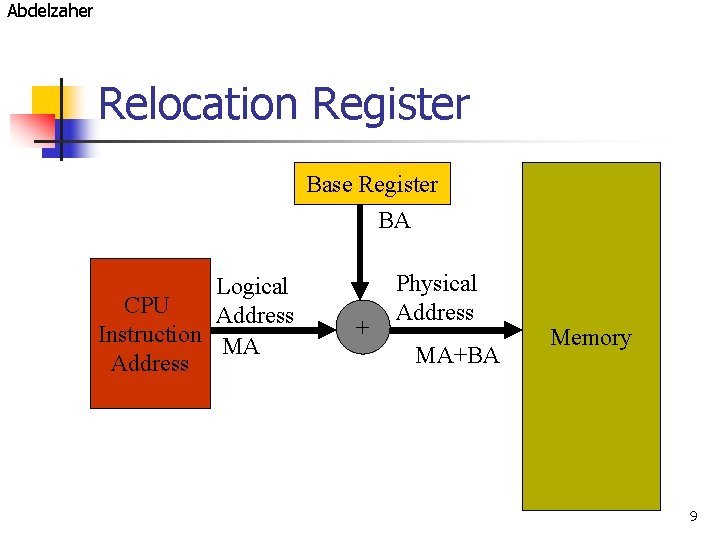

Relocation Register

[Figure: the CPU issues an instruction with logical address MA; the base register value BA is added to it, and the resulting physical address MA+BA is sent to memory.]

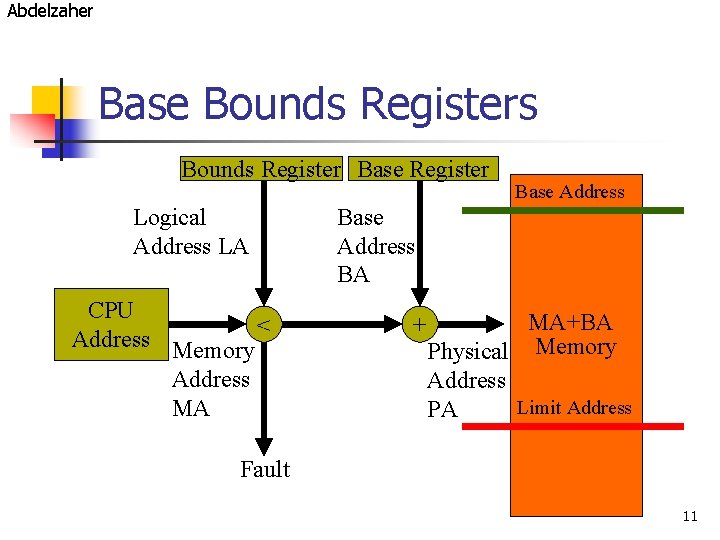

Question 1 - Protection

- Problem:
  - How to prevent a malicious process from writing or jumping into other users' partitions or the OS's partition
- Solution:
  - Base and bounds registers

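Base Bounds Registers

[Figure: the CPU issues logical address LA (memory address MA). It is compared (<) against the limit address in the bounds register; if the check fails, a fault is raised. Otherwise the base address BA from the base register is added, and the physical address PA = MA+BA is sent to physical memory.]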
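
The base and bounds check can be sketched in a few lines. This is a minimal illustration, not a model of any particular MMU; the names `translate_base_bounds` and `ProtectionFault` are made up for the example.

```python
class ProtectionFault(Exception):
    """Raised when a logical address falls outside the process's bounds."""


def translate_base_bounds(logical_addr: int, base: int, bounds: int) -> int:
    """Map a logical address to a physical one using base and bounds registers."""
    # The bounds check is applied to the logical address, before relocation.
    if not 0 <= logical_addr < bounds:
        raise ProtectionFault(f"address {logical_addr} outside [0, {bounds})")
    # Relocation: physical address = logical address + base.
    return logical_addr + base
```

With base 4096 and bounds 1024, logical address 100 maps to physical address 4196, while logical address 1024 raises a fault instead of reaching another partition.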

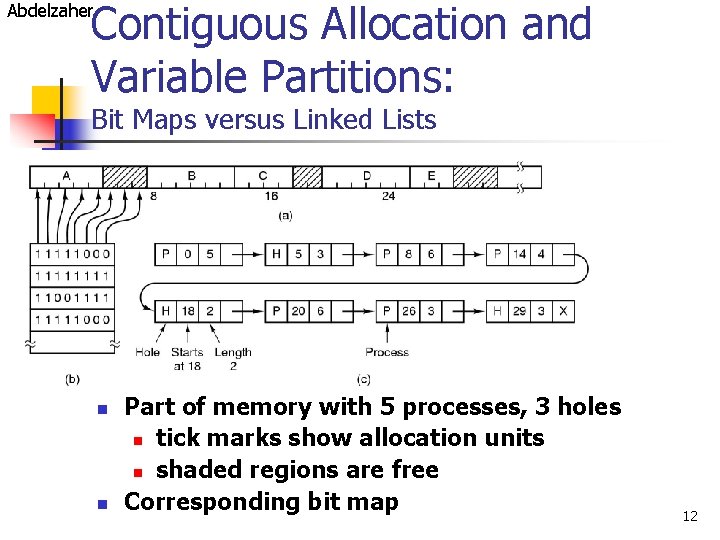

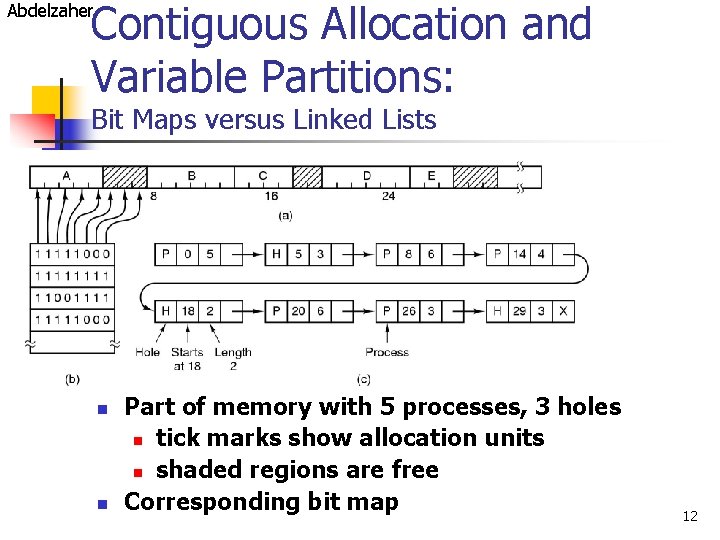

Contiguous Allocation and Variable Partitions: Bit Maps versus Linked Lists

- Part of memory with 5 processes, 3 holes
  - Tick marks show allocation units
  - Shaded regions are free
- Corresponding bit map

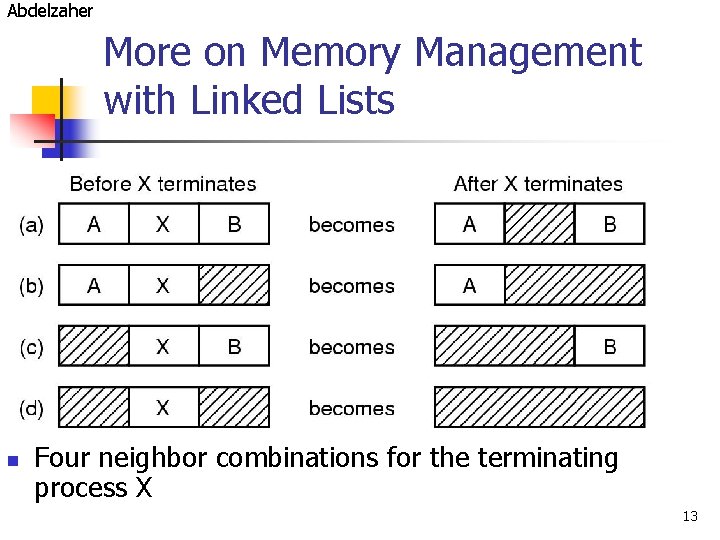

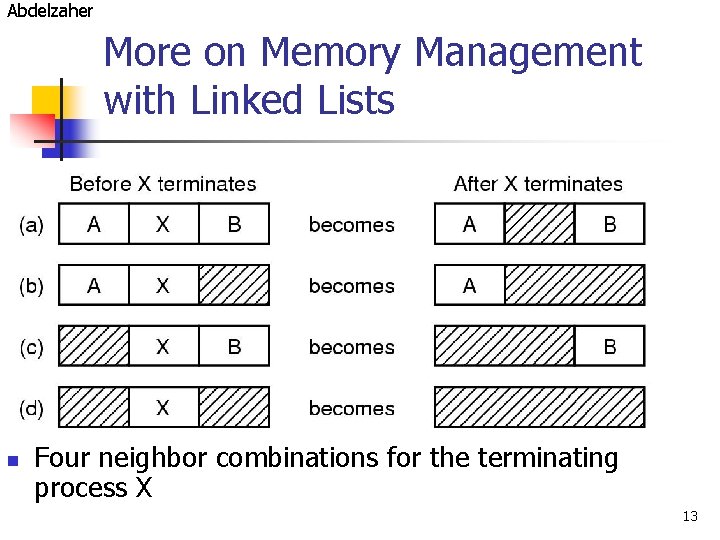

More on Memory Management with Linked Lists

- Four neighbor combinations for the terminating process X
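
The four neighbor combinations (process on both sides, hole after, hole before, holes on both sides) can be handled by two merge steps. The sketch below assumes a Python list stands in for the linked list, and that each segment is a `[kind, start, length]` triple; both are choices made for this illustration.

```python
def free_segment(segments, index):
    """Mark segments[index] as a hole and merge it with adjacent holes.

    Each segment is a list [kind, start, length], where kind is "P" (process)
    or "H" (hole). The list is kept sorted by start address.
    """
    segments[index][0] = "H"
    # Merge with the following segment if it is a hole.
    if index + 1 < len(segments) and segments[index + 1][0] == "H":
        segments[index][2] += segments[index + 1][2]
        del segments[index + 1]
    # Merge with the preceding segment if it is a hole.
    if index > 0 and segments[index - 1][0] == "H":
        segments[index - 1][2] += segments[index][2]
        del segments[index]
    return segments
```

When X sits between two holes, both merges fire and three list entries collapse into one.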

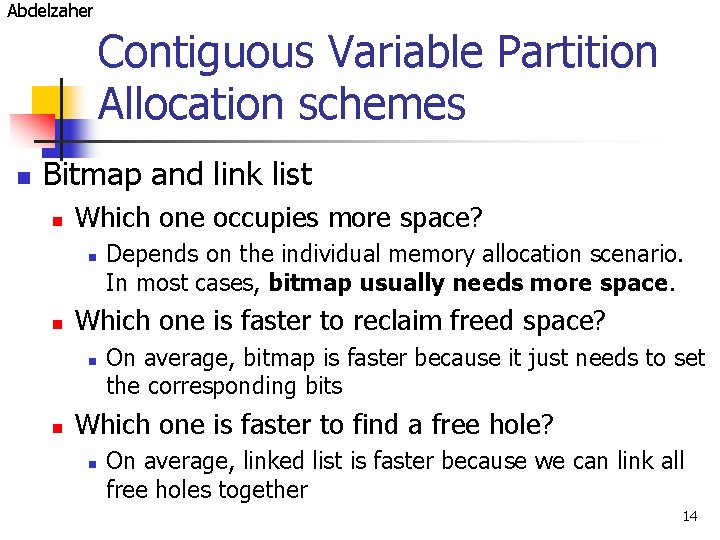

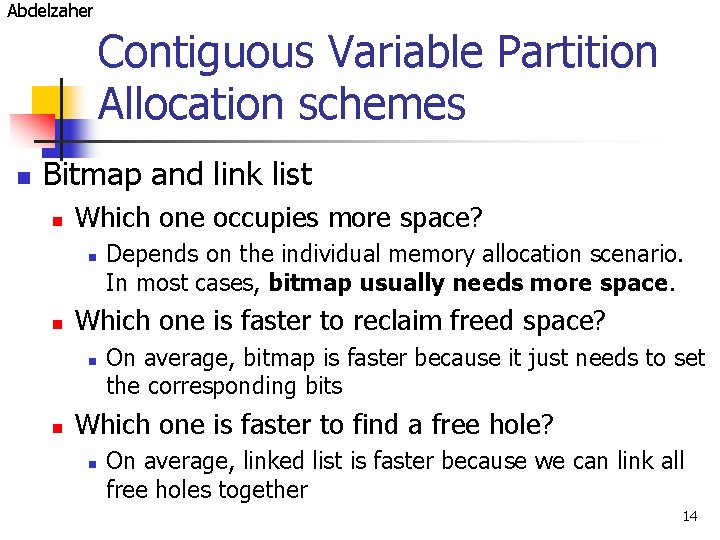

Contiguous Variable Partition Allocation Schemes

- Bitmap and linked list
- Which one occupies more space?
  - Depends on the individual memory allocation scenario. In most cases, the bitmap needs more space.
- Which one is faster to reclaim freed space?
  - On average, the bitmap is faster because it just needs to set the corresponding bits.
- Which one is faster to find a free hole?
  - On average, the linked list is faster because we can link all free holes together.
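
To make the trade-off concrete, here is a minimal bitmap sketch, assuming one list element per allocation unit (0 = free, 1 = used). The function names are illustrative. Finding a hole requires a linear scan for a run of zeros, while reclaiming space only touches the bits of the freed region.

```python
def bitmap_allocate(bitmap, units):
    """Find the first run of `units` free (0) allocation units and mark it used.

    Returns the index of the first unit in the run, or None if no run is long enough.
    """
    run_start, run_len = 0, 0
    for i, bit in enumerate(bitmap):
        if bit == 0:
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len == units:
                for j in range(run_start, run_start + units):
                    bitmap[j] = 1
                return run_start
        else:
            run_len = 0  # the run of free units was interrupted
    return None


def bitmap_free(bitmap, start, units):
    """Reclaim a region by clearing its bits; no neighbor merging is needed."""
    for j in range(start, start + units):
        bitmap[j] = 0
```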

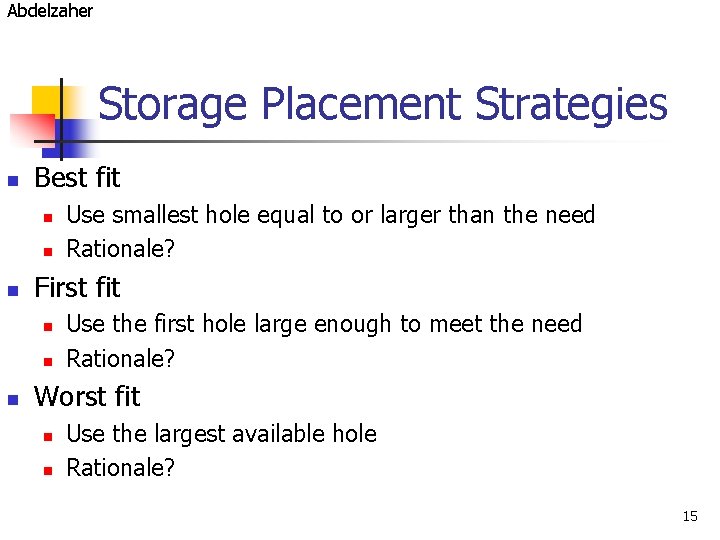

Storage Placement Strategies

- Best fit
  - Use the smallest hole equal to or larger than the need
  - Rationale?
- First fit
  - Use the first hole large enough to meet the need
  - Rationale?
- Worst fit
  - Use the largest available hole
  - Rationale?

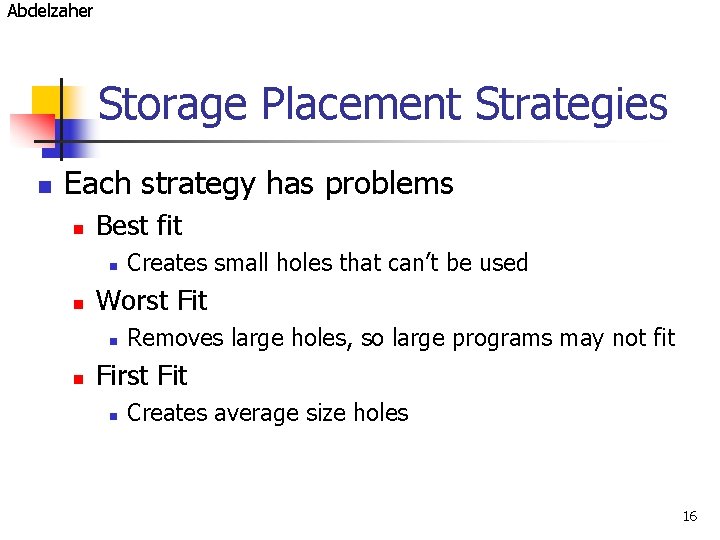

Storage Placement Strategies

- Each strategy has problems
  - Best fit
    - Creates small holes that can't be used
  - Worst fit
    - Removes large holes, so large programs may not fit
  - First fit
    - Creates average-size holes
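
The three placement strategies differ only in which adequate hole they pick. The sketch below assumes holes are given as a list of sizes in address order; `choose_hole` and its string-valued `strategy` parameter are hypothetical names for this example.

```python
def choose_hole(holes, need, strategy):
    """Return the index of the hole to use, or None if no hole is large enough.

    `holes` is a list of hole sizes in address order.
    """
    candidates = [(size, i) for i, size in enumerate(holes) if size >= need]
    if not candidates:
        return None
    if strategy == "first":
        # First hole in address order that is large enough.
        return min(i for _, i in candidates)
    if strategy == "best":
        # Smallest adequate hole; ties go to the lowest address.
        return min(candidates)[1]
    if strategy == "worst":
        # Largest hole; ties go to the lowest address.
        return max(candidates, key=lambda c: (c[0], -c[1]))[1]
    raise ValueError(f"unknown strategy: {strategy}")
```

For holes of sizes 20, 12, 30, and 15 and a request of 14, first fit picks the 20, best fit the 15, and worst fit the 30.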

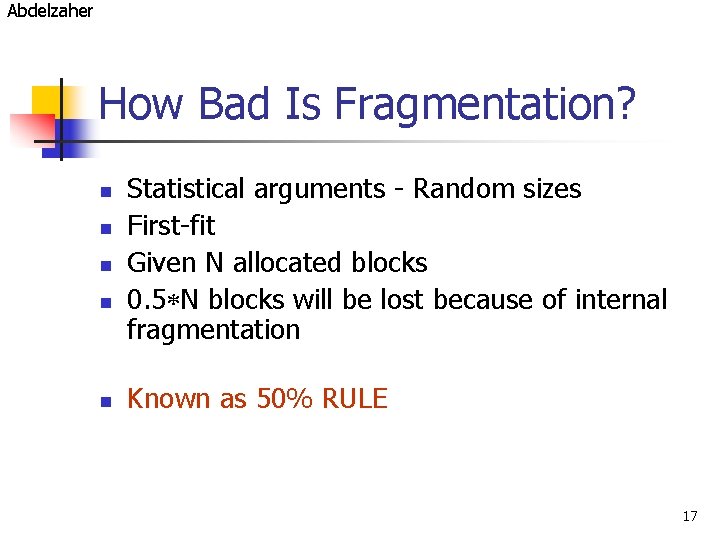

How Bad Is Fragmentation?

- Statistical arguments: random sizes
- First fit
- Given N allocated blocks, another 0.5 N blocks will be lost to fragmentation (unusable holes between allocated blocks)
- Known as the 50% rule

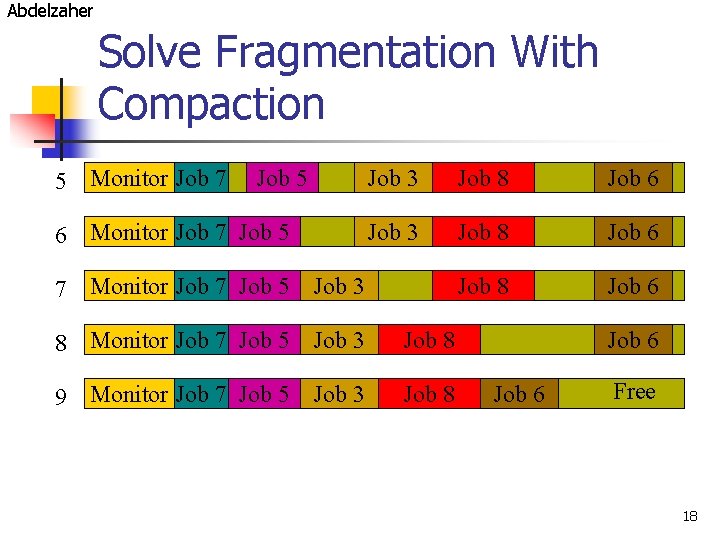

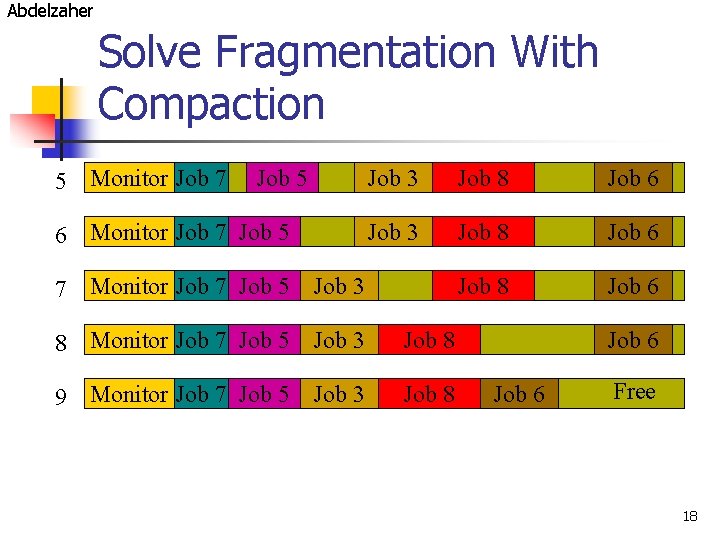

Solve Fragmentation With Compaction

[Figure: a sequence of memory maps numbered 5 to 9, showing Monitor, Job 7, Job 5, Job 3, Job 8, Job 6, and free space as compaction proceeds.]
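
Compaction can be sketched as sliding every allocated segment toward address 0 so that the free space merges into one block. The representation below, a list of `(name, length)` pairs with `None` for free space, is an assumption made for this example. A real system would also have to copy memory contents and update each job's base register, which is where the overhead comes from.

```python
def compact(segments):
    """Slide all allocated segments toward address 0 and merge the free space.

    `segments` is a list of (name, length) in address order; name None means free.
    Returns a new list of (name, start, length) with at most one trailing free block.
    """
    total = sum(length for _, length in segments)
    result, next_start = [], 0
    for name, length in segments:
        if name is not None:
            result.append((name, next_start, length))
            next_start += length
    if next_start < total:
        result.append((None, next_start, total - next_start))
    return result
```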

Storage Management Problems

- Fixed partitions suffer from
  - internal fragmentation
- Variable partitions suffer from
  - external fragmentation
- Compaction suffers from
  - overhead

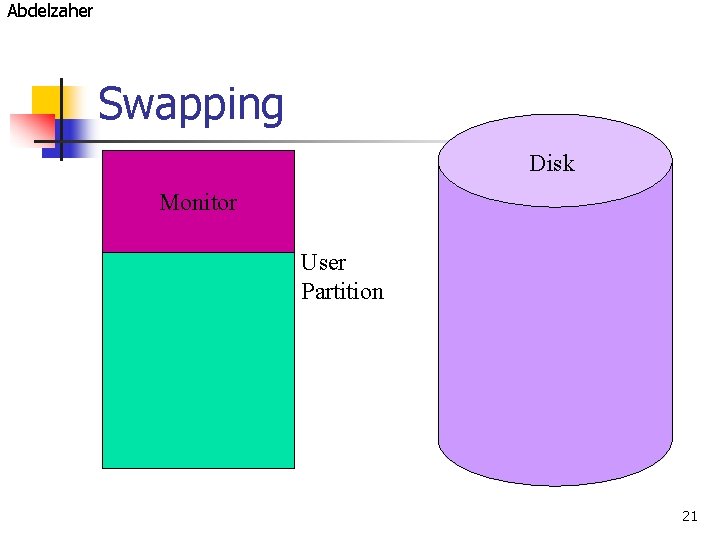

Question

- What if there are more processes than can fit into memory?

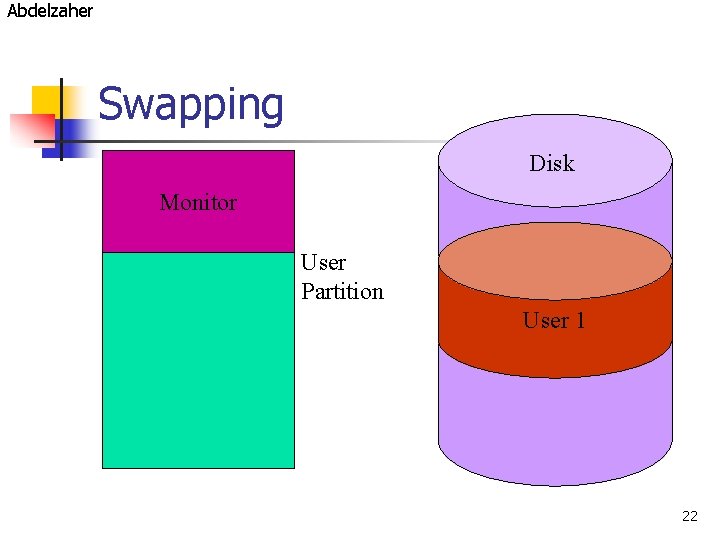

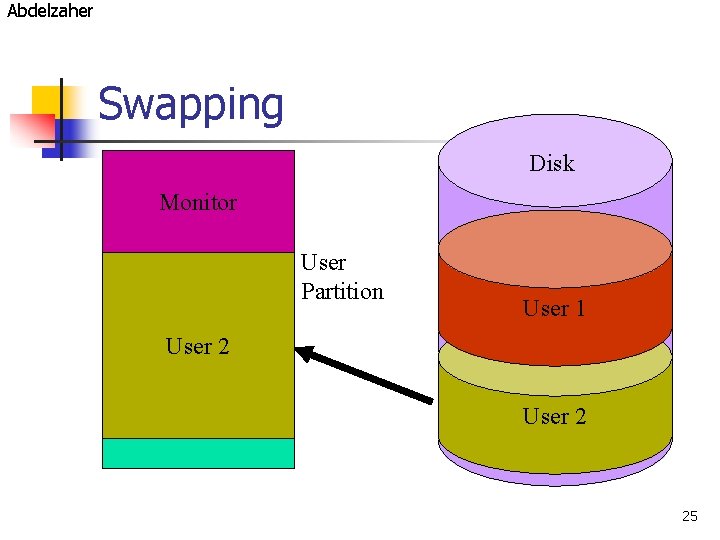

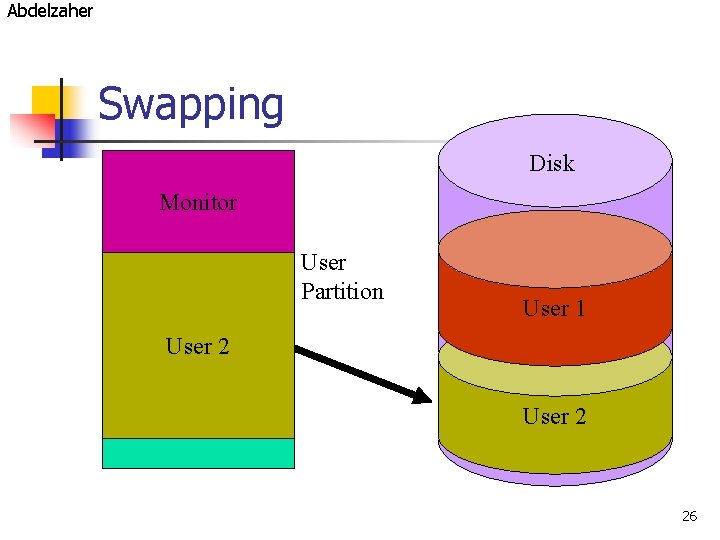

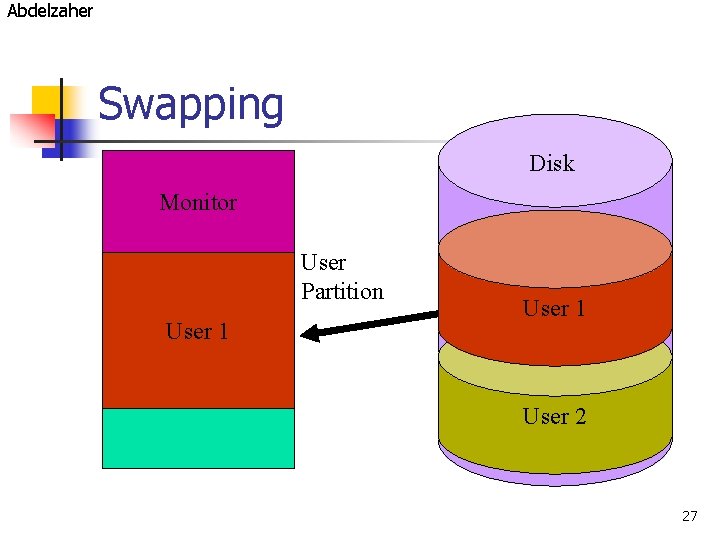

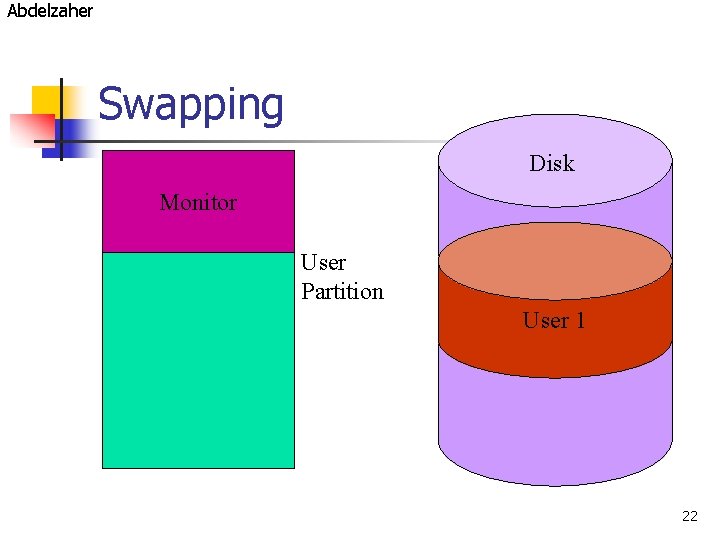

Swapping

[Figure: main memory holds the monitor and a single user partition; a disk serves as backing store.]

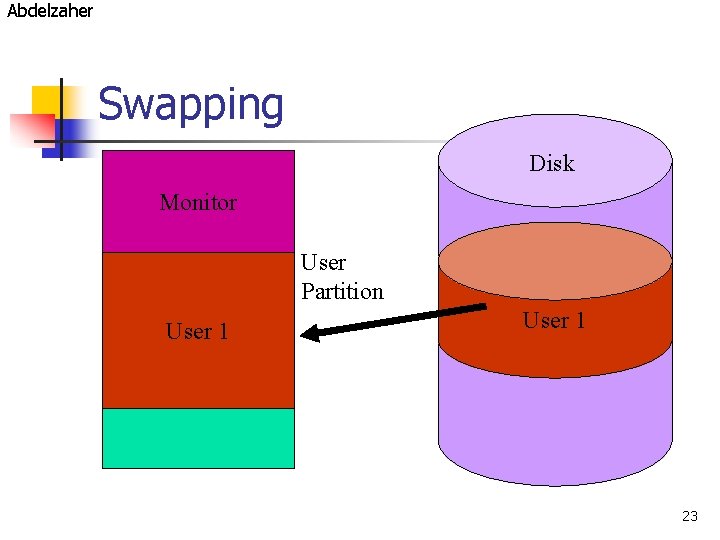

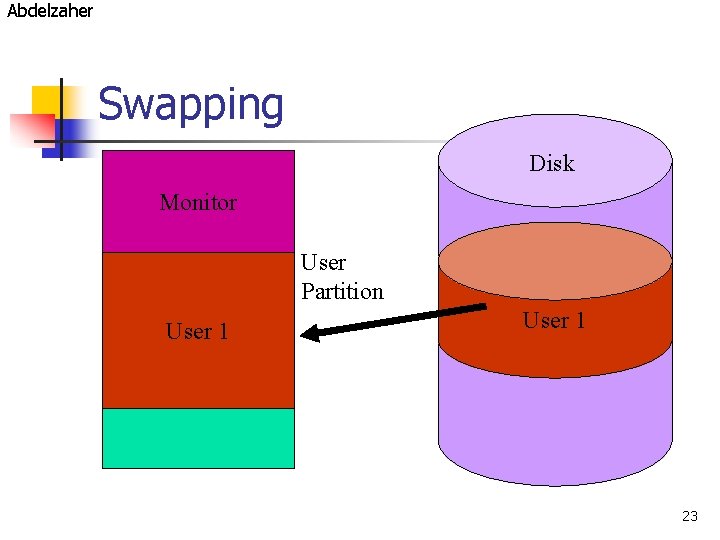

Swapping

[Figure: main memory holds the monitor and a single user partition; a disk serves as backing store. User 1 is shown.]

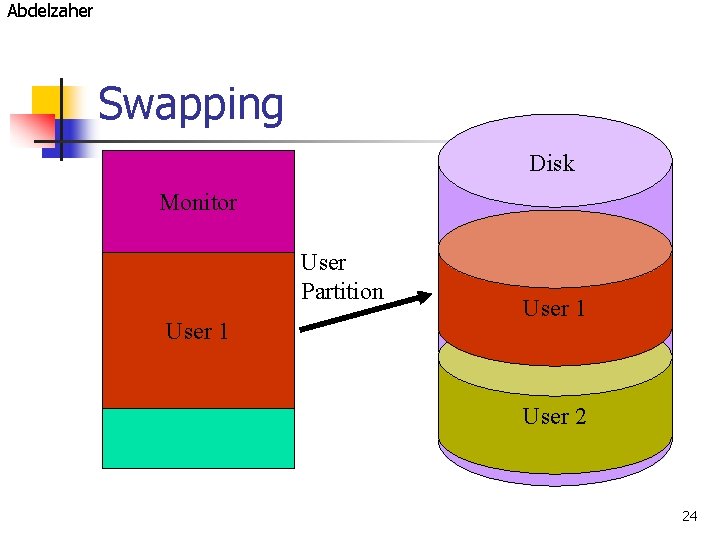

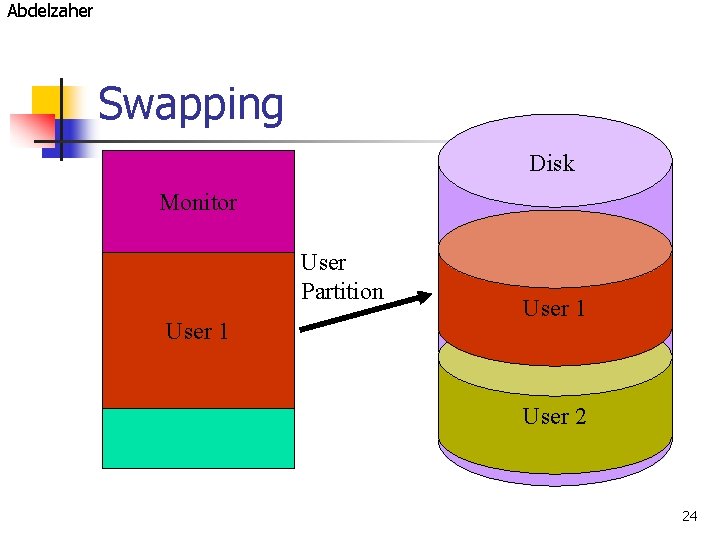


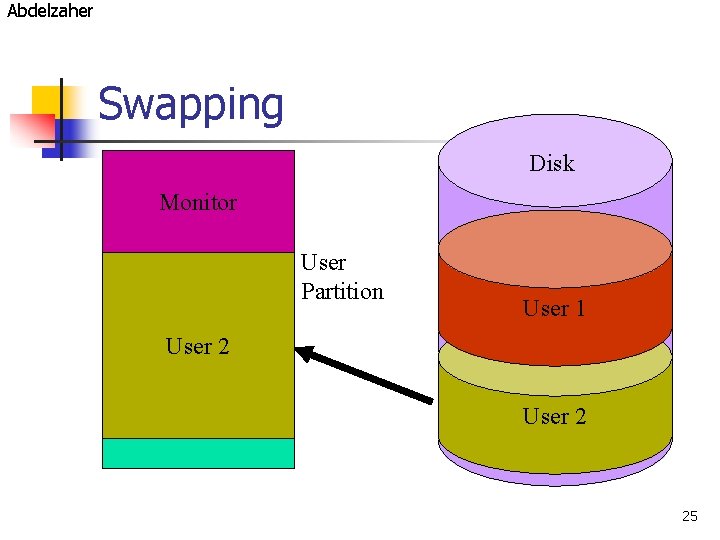

Swapping

[Figure: main memory holds the monitor and a single user partition; a disk serves as backing store. User 1 and User 2 are shown.]


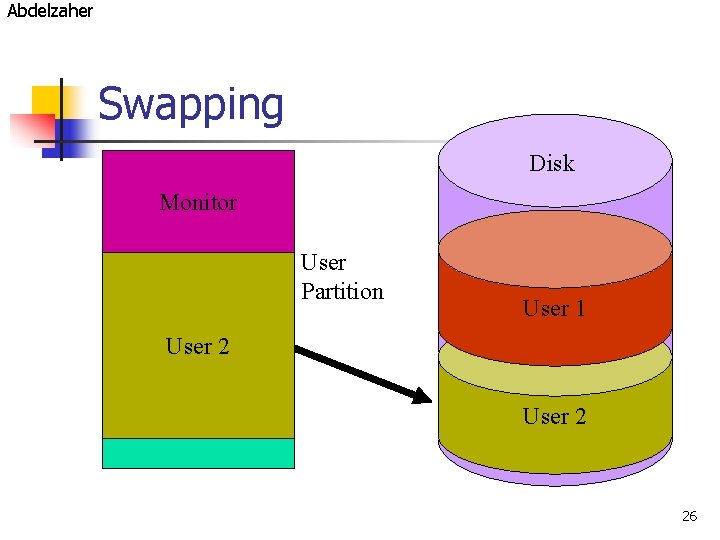


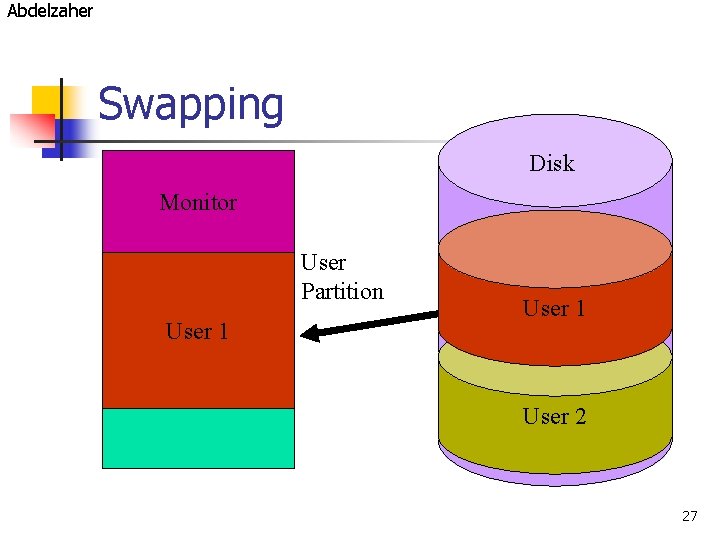


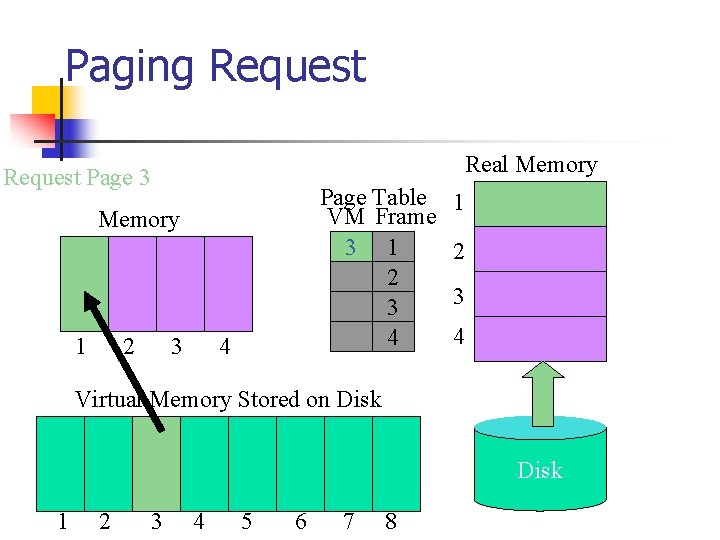

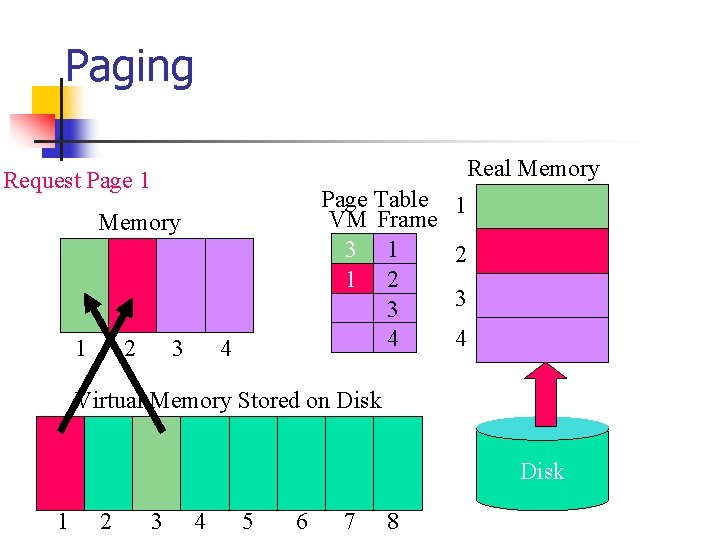

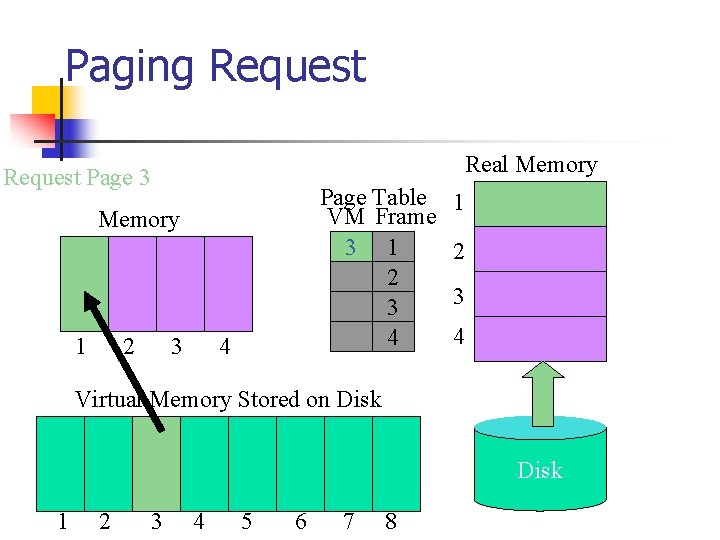

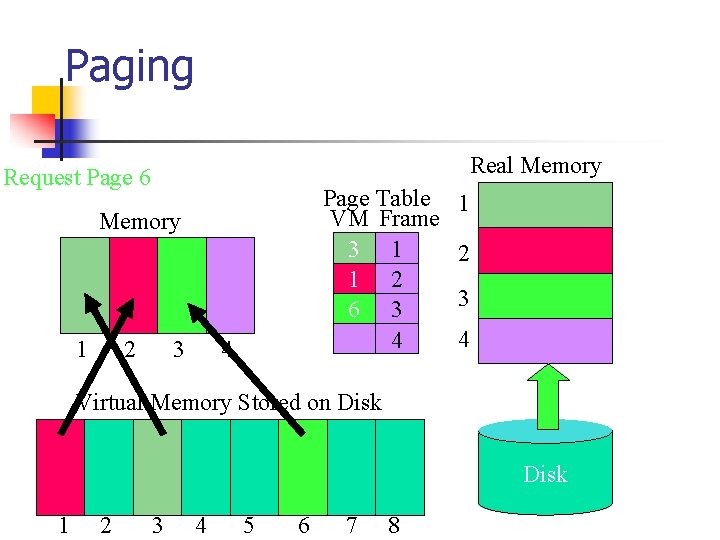

Paging

[Figure: request for page 3. Page table (VM page → frame): 3 → 1. Real memory has frames 1 to 4; virtual memory pages 1 to 8 are stored on disk.]

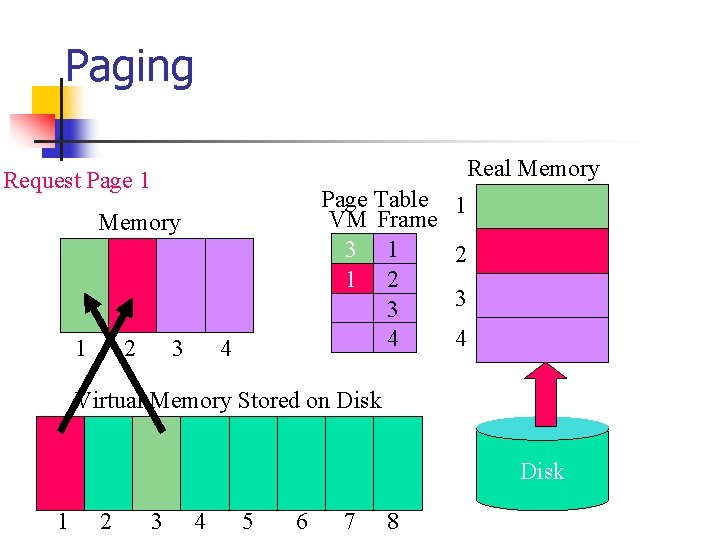

Paging

[Figure: request for page 1. Page table (VM page → frame): 3 → 1, 1 → 2. Real memory has frames 1 to 4; virtual memory pages 1 to 8 are stored on disk.]

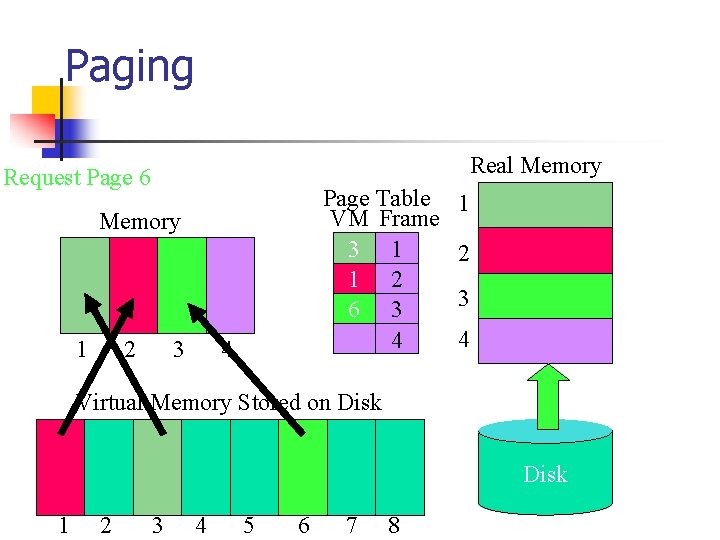

Paging

[Figure: request for page 6. Page table (VM page → frame): 3 → 1, 1 → 2, 6 → 3. Real memory has frames 1 to 4; virtual memory pages 1 to 8 are stored on disk.]

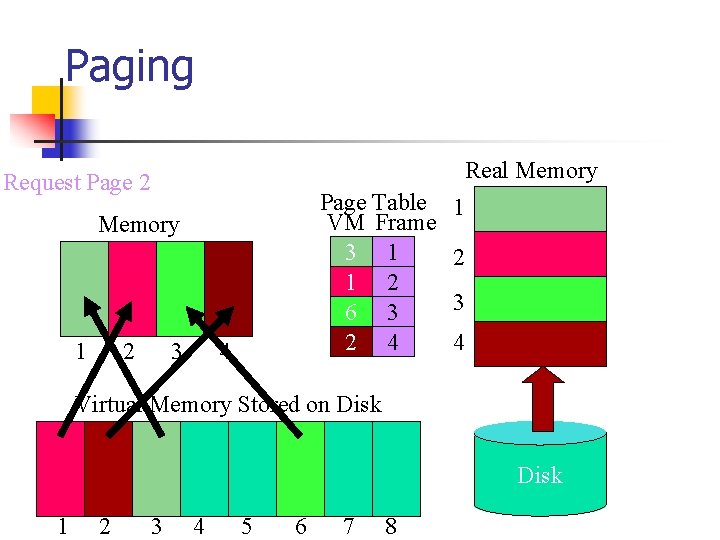

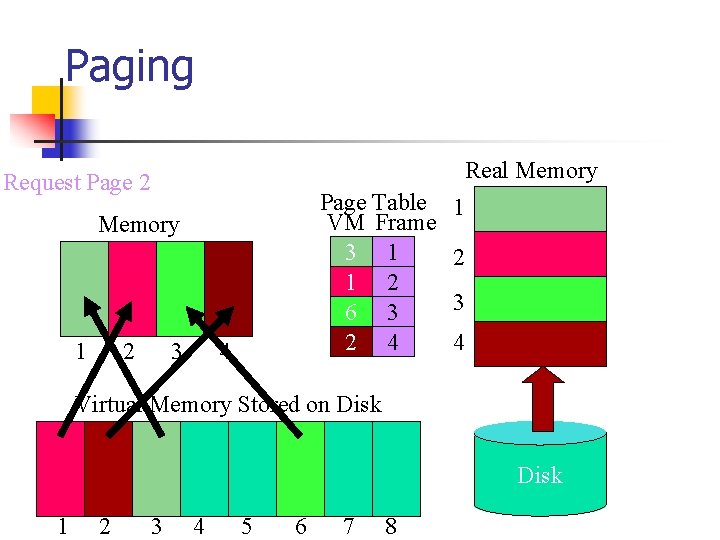

Paging

[Figure: request for page 2. Page table (VM page → frame): 3 → 1, 1 → 2, 6 → 3, 2 → 4. Real memory has frames 1 to 4; virtual memory pages 1 to 8 are stored on disk.]

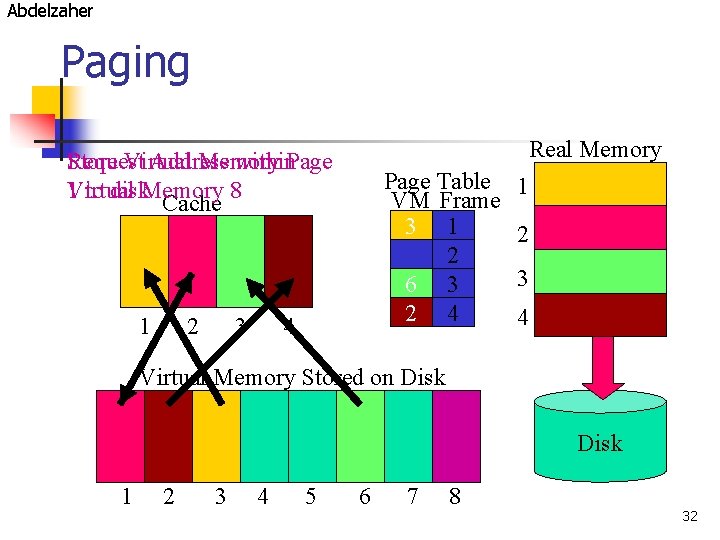

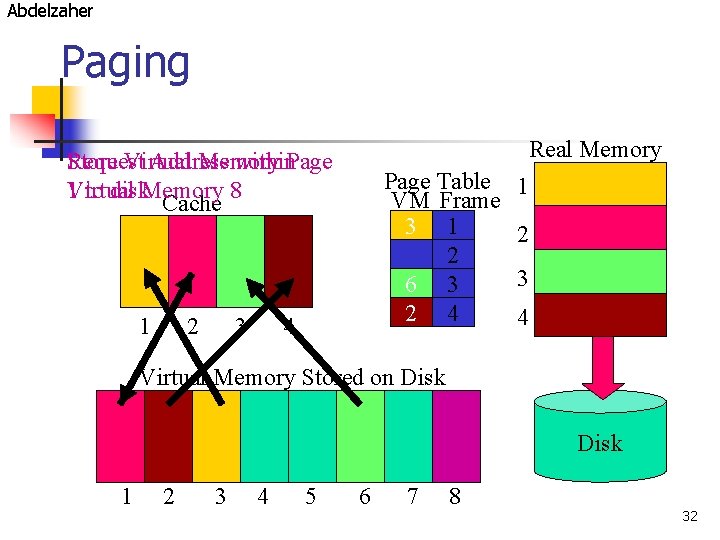

Paging

[Figure: request for an address within virtual memory page 8; virtual memory page 1 is stored to disk. Page table (VM page → frame): 3 → 1, 1 → 2, 6 → 3, 2 → 4. Real memory (the virtual memory cache) has frames 1 to 4; virtual memory pages 1 to 8 are stored on disk.]

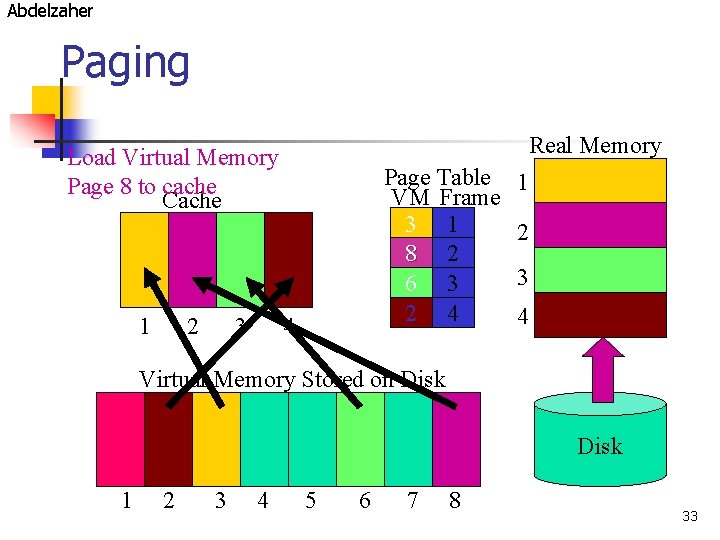

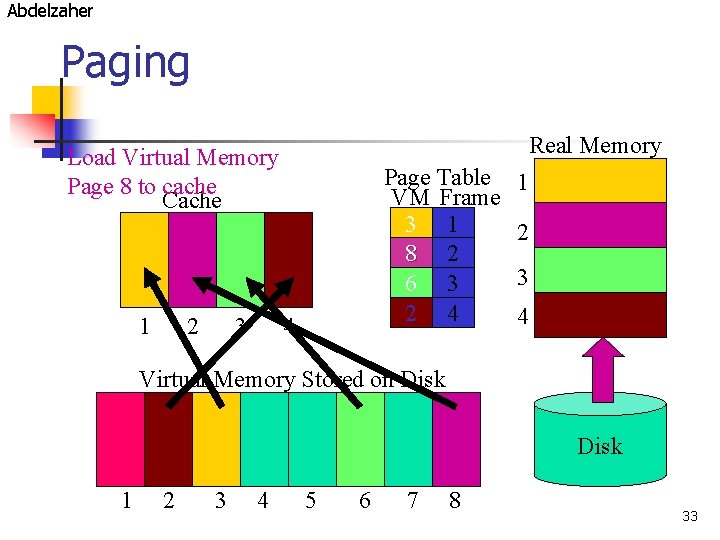

Paging

[Figure: virtual memory page 8 is loaded into the cache. Page table (VM page → frame): 3 → 1, 8 → 2, 6 → 3, 2 → 4. Real memory (the virtual memory cache) has frames 1 to 4; virtual memory pages 1 to 8 are stored on disk.]

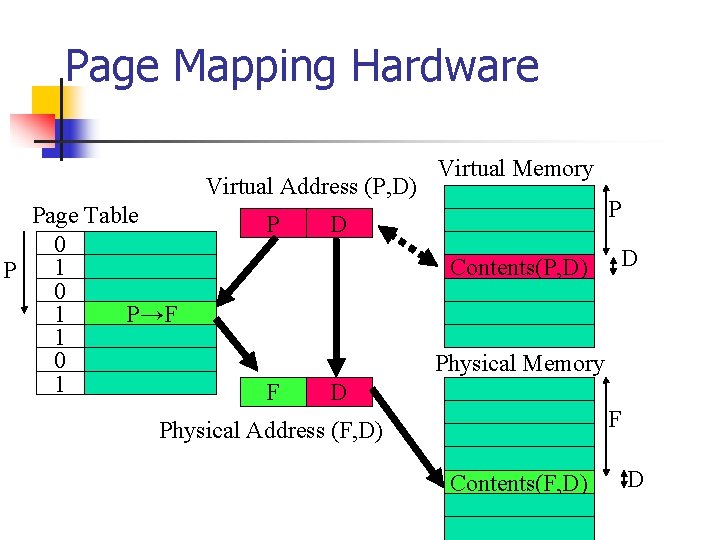

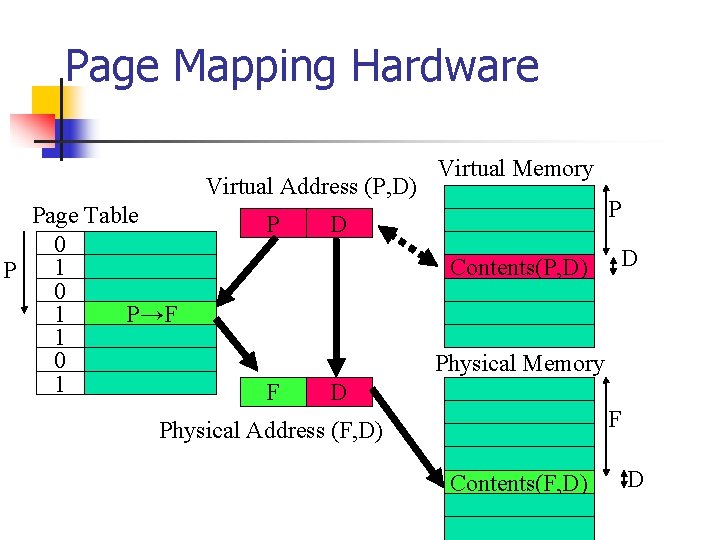

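Page Mapping Hardware

[Figure: a virtual address (P, D) is split into page number P and offset D. The page table entry for P gives frame F (P → F), and the physical address is (F, D). Contents(P, D) in virtual memory is the same data as Contents(F, D) in physical memory.]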

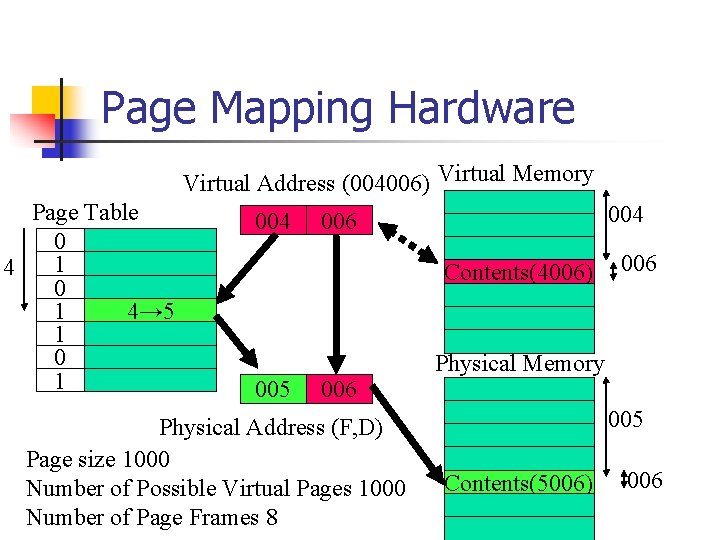

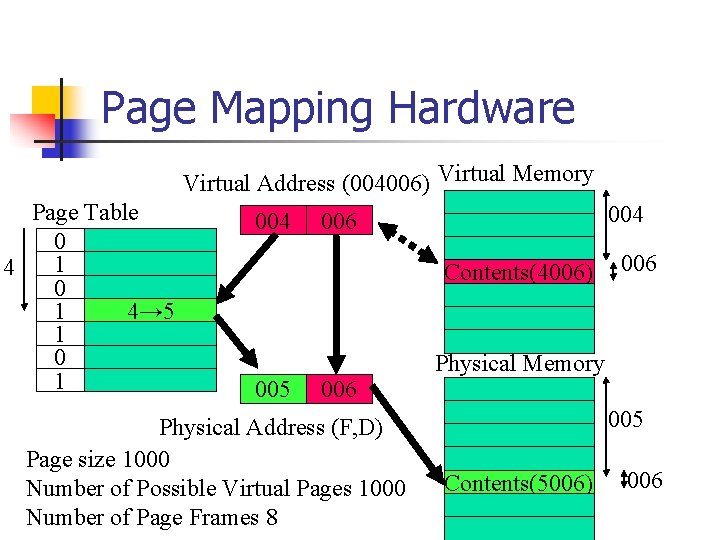

Page Mapping Hardware

[Figure: worked example. Virtual address 004006 is split into page 004 and offset 006. The page table maps 4 → 5, giving physical address 005006, so Contents(4006) in virtual memory is Contents(5006) in physical memory. Page size: 1000. Number of possible virtual pages: 1000. Number of page frames: 8.]
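
The worked example can be reproduced directly. The sketch keeps the slide's decimal page size of 1000 so that the page number and offset can be read off the digits; real hardware uses a power-of-two page size and bit fields instead of division. The function name and the dictionary page table are assumptions for illustration.

```python
PAGE_SIZE = 1000  # decimal page size, as in the slide's example


def translate_paged(virtual_addr, page_table):
    """Translate a virtual address using a page table mapping page -> frame."""
    page, offset = divmod(virtual_addr, PAGE_SIZE)
    frame = page_table[page]  # a KeyError here stands in for a page fault
    return frame * PAGE_SIZE + offset
```

With the mapping 4 → 5, `translate_paged(4006, {4: 5})` returns 5006, matching the figure.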

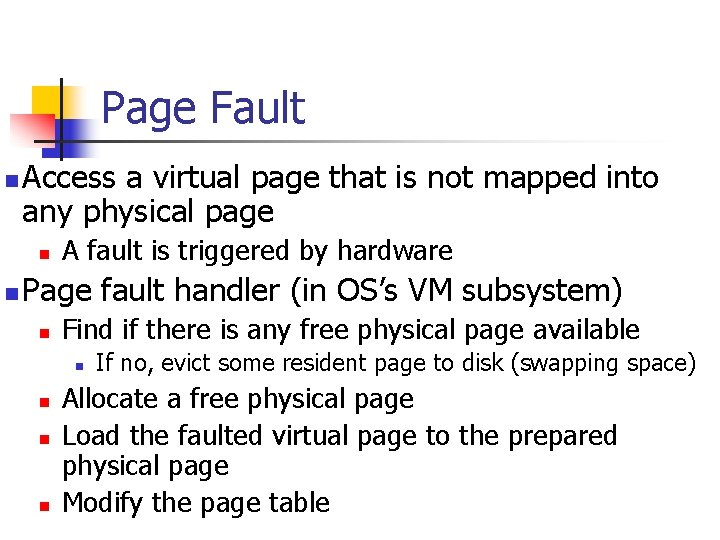

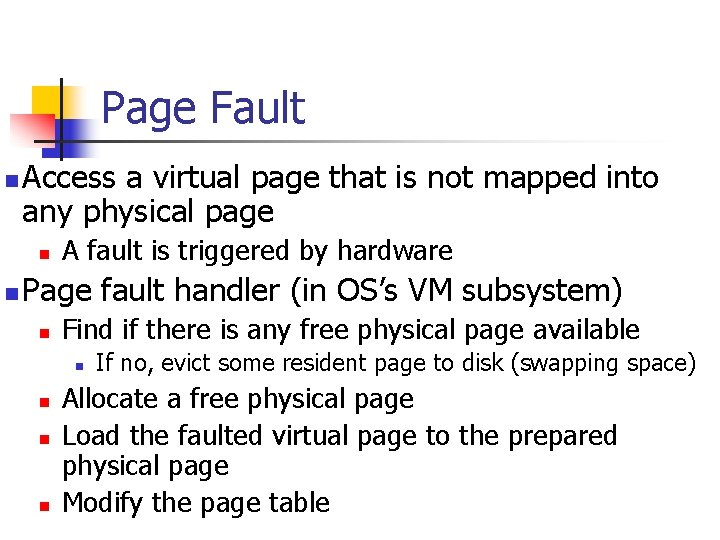

Page Fault

- Access a virtual page that is not mapped into any physical page
  - A fault is triggered by hardware
- Page fault handler (in the OS's VM subsystem)
  - Find if there is any free physical page available
    - If not, evict some resident page to disk (swap space)
  - Allocate a free physical page
  - Load the faulted virtual page into the prepared physical page
  - Modify the page table
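
The handler steps above can be sketched as a small simulation. The class name, the 1-based frame numbering, and especially the FIFO eviction policy are assumptions for this example; the slides do not specify a replacement policy, and the paging animation picks a different victim than FIFO would.

```python
from collections import OrderedDict


class PagedMemory:
    """Toy model of demand paging with a fixed number of physical frames."""

    def __init__(self, num_frames):
        self.free_frames = list(range(1, num_frames + 1))
        self.page_table = OrderedDict()  # resident page -> frame, oldest first
        self.faults = 0

    def access(self, page):
        """Return the frame holding `page`, handling a page fault if needed."""
        if page in self.page_table:
            return self.page_table[page]
        # Page fault: the page is not mapped to any physical frame.
        self.faults += 1
        if not self.free_frames:
            # No free frame: evict the oldest resident page (assumed FIFO policy).
            _victim, frame = self.page_table.popitem(last=False)
            self.free_frames.append(frame)
        frame = self.free_frames.pop(0)  # allocate a free frame
        self.page_table[page] = frame    # "load" the page and update the page table
        return frame
```

With four frames, accessing pages 3, 1, 6, and 2 fills frames 1 to 4. A later access to page 8 then faults and, under the FIFO assumption, reuses the frame of page 3.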

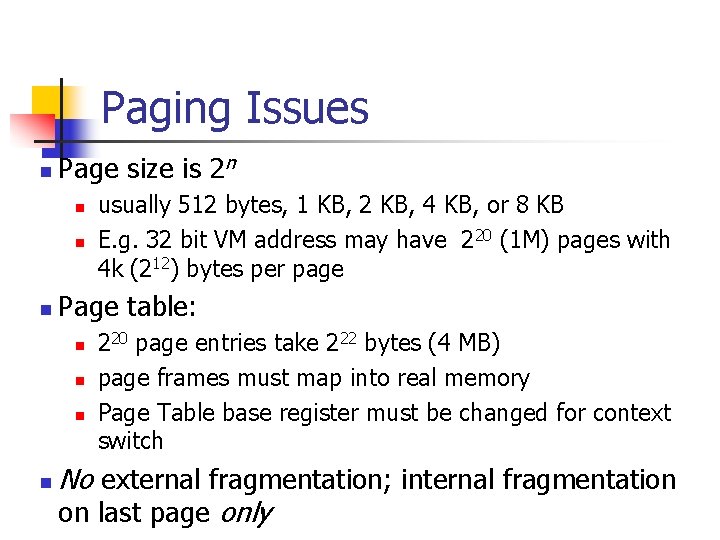

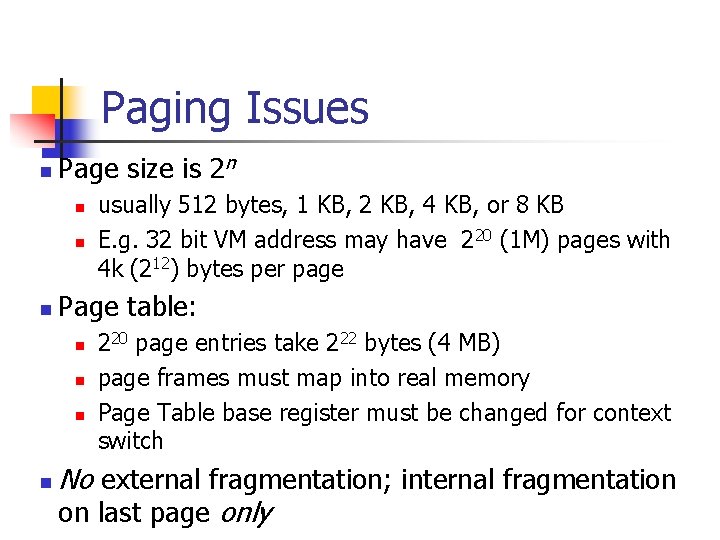

Paging Issues

- Page size is $2^n$
  - Usually 512 bytes, 1 KB, 2 KB, 4 KB, or 8 KB
  - E.g. a 32-bit VM address may have $2^{20}$ (1 M) pages with 4 KB ($2^{12}$ bytes) per page
- Page table:
  - $2^{20}$ page entries take $2^{22}$ bytes (4 MB), at 4 bytes per entry
  - Page frames must map into real memory
  - Page table base register must be changed on a context switch
- No external fragmentation; internal fragmentation on the last page only
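
The page table size quoted above follows from simple arithmetic. The sketch below assumes a flat, single-level page table and 4-byte entries (the entry size implied by 4 MB for 1 M entries); `page_table_size` is a hypothetical helper name.

```python
def page_table_size(address_bits, page_size_bytes, entry_bytes):
    """Return (number of pages, page table size in bytes) for a flat page table."""
    if page_size_bytes <= 0 or page_size_bytes & (page_size_bytes - 1):
        raise ValueError("page size must be a power of two")
    offset_bits = page_size_bytes.bit_length() - 1  # log2 of the page size
    num_pages = 1 << (address_bits - offset_bits)
    return num_pages, num_pages * entry_bytes
```

For a 32-bit address and 4 KB pages, the offset uses 12 bits, leaving 20 bits of page number: 1 M entries and 4 MB of page table per process.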

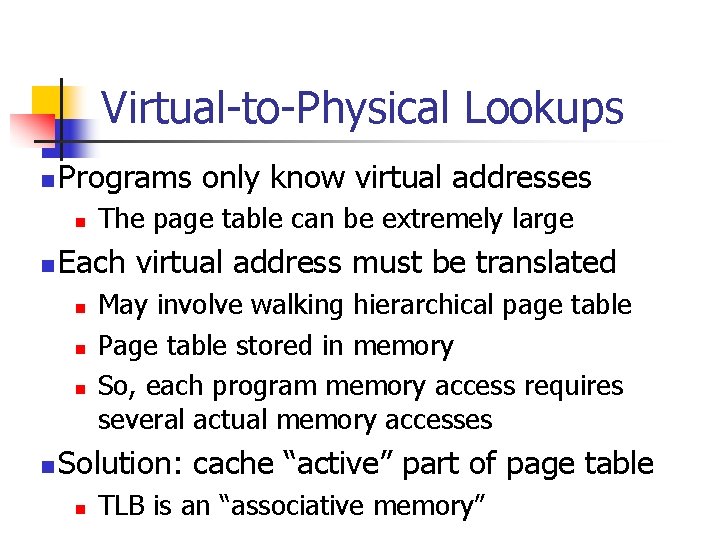

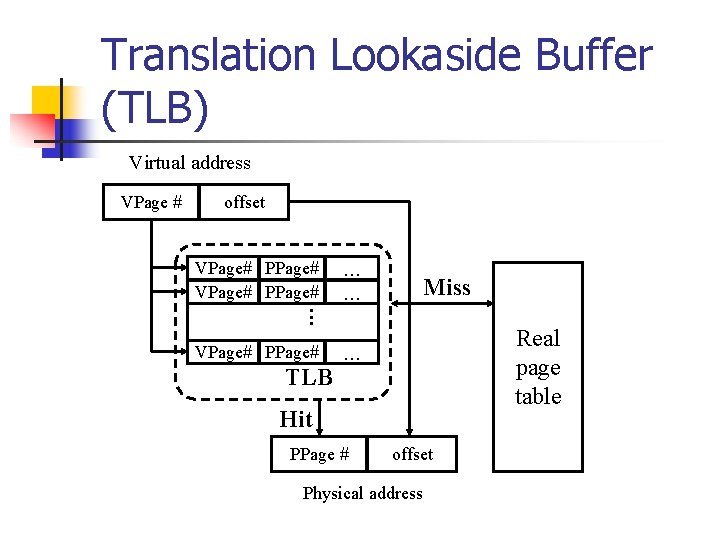

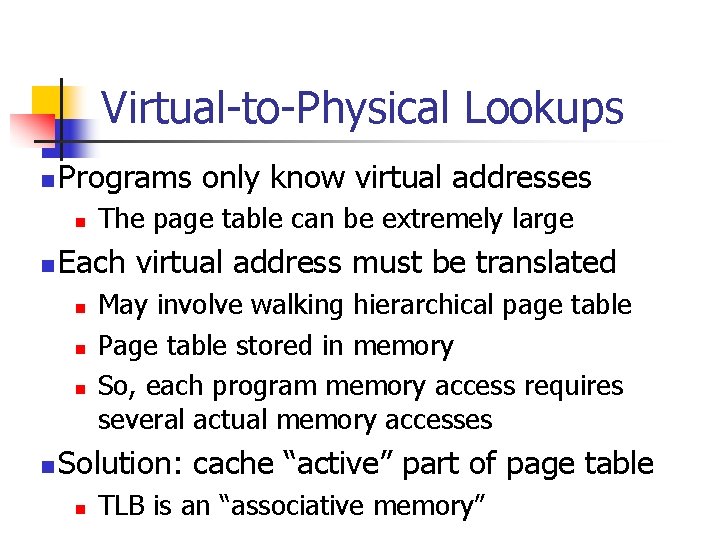

Virtual-to-Physical Lookups

- Programs only know virtual addresses
  - The page table can be extremely large
- Each virtual address must be translated
  - May involve walking a hierarchical page table
  - Page table stored in memory
  - So, each program memory access requires several actual memory accesses
- Solution: cache the "active" part of the page table
  - TLB is an "associative memory"

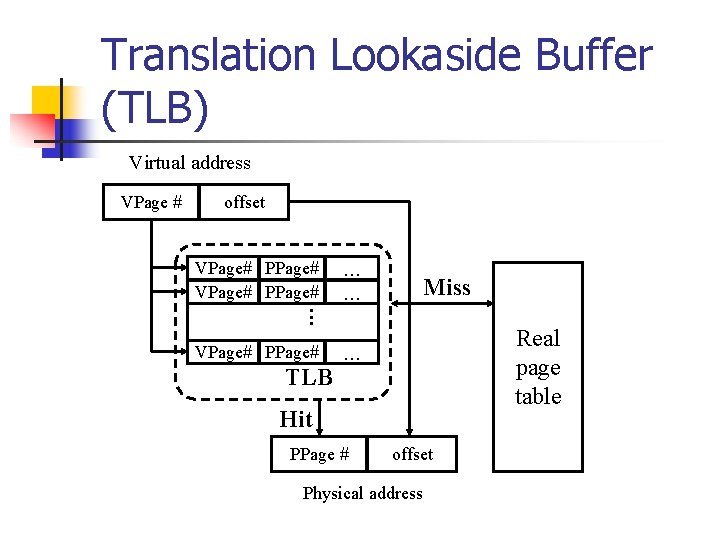

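Translation Lookaside Buffer (TLB)

[Figure: the virtual address is split into VPage # and offset. The TLB holds (VPage#, PPage#) pairs. On a hit, the PPage # from the TLB is combined with the offset to form the physical address; on a miss, the real page table is consulted.]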

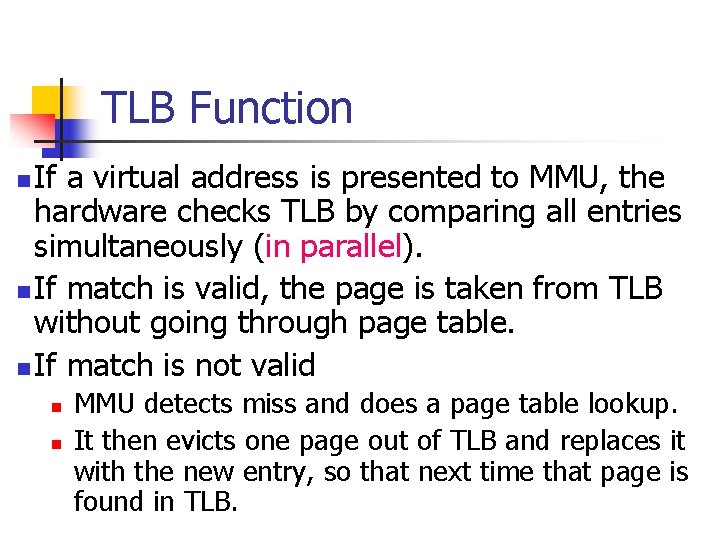

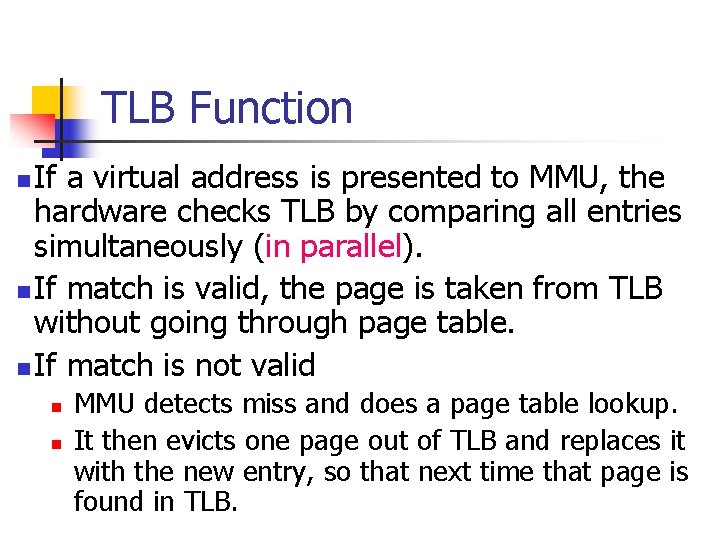

TLB Function

- When a virtual address is presented to the MMU, the hardware checks the TLB by comparing all entries simultaneously (in parallel).
- If a valid match is found, the page frame is taken from the TLB without going through the page table.
- If no valid match is found
  - The MMU detects the miss and does a page table lookup.
  - It then evicts one entry from the TLB and replaces it with the new entry, so that next time that page is found in the TLB.
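
The hit and miss paths can be sketched in software. Real TLBs compare all entries in parallel in hardware; the dictionary lookup below only models the outcome. The class name, the dictionary page table, and the oldest-entry eviction policy are assumptions for this example.

```python
from collections import OrderedDict


class TLB:
    """Toy TLB that caches virtual-page to physical-page translations."""

    def __init__(self, capacity, page_table):
        self.capacity = capacity
        self.page_table = page_table   # full mapping: vpage -> ppage
        self.entries = OrderedDict()   # cached subset, oldest entry first
        self.hits = 0
        self.misses = 0

    def lookup(self, vpage):
        if vpage in self.entries:
            # Hit: translation comes from the TLB, no page table access.
            self.hits += 1
            return self.entries[vpage]
        # Miss: fall back to the page table, then cache the translation.
        self.misses += 1
        ppage = self.page_table[vpage]
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the oldest entry (assumed policy)
        self.entries[vpage] = ppage
        return ppage
```

The ratio `hits / (hits + misses)` over a run of lookups gives the hit ratio of that run.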

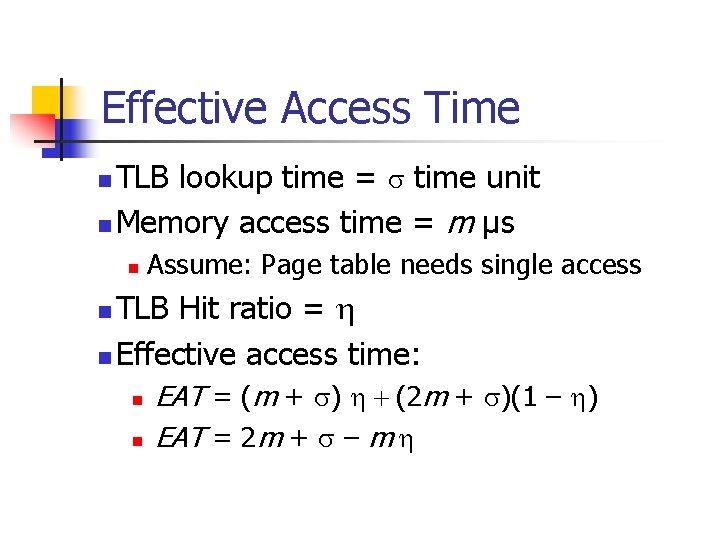

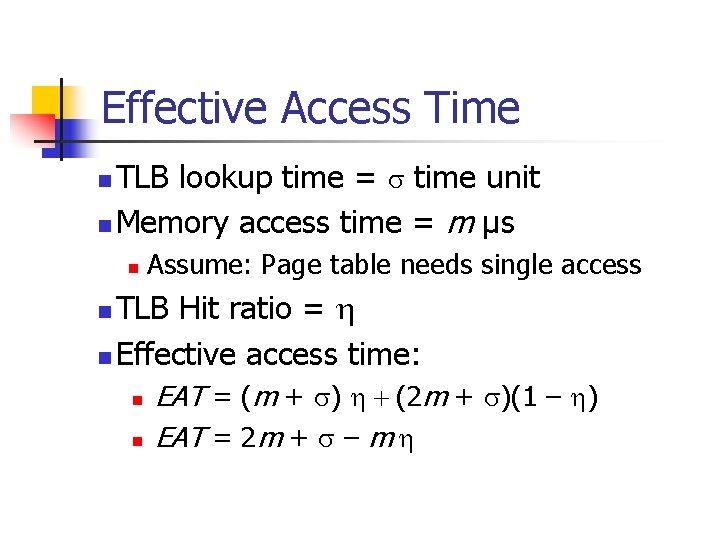

Effective Access Time

- TLB lookup time = s time units
- Memory access time = m time units (e.g. µs)
- Assume: page table needs a single access
- TLB hit ratio = h
- Effective access time:
  - $\mathrm{EAT} = (m + s)\,h + (2m + s)(1 - h)$
  - $\mathrm{EAT} = 2m + s - mh$
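
The two forms of the formula can be checked numerically. This is a small sketch with illustrative values (m = 100, s = 20, h = 0.8); the function name is made up for the example.

```python
import math


def effective_access_time(m, s, h):
    """EAT with a single-access page table: hit costs m + s, miss costs 2m + s."""
    return (m + s) * h + (2 * m + s) * (1 - h)


# The expanded form and the simplified form 2m + s - mh agree.
m, s, h = 100, 20, 0.8
assert math.isclose(effective_access_time(m, s, h), 2 * m + s - m * h)
```

With these values a hit costs 120 and a miss costs 220, so the weighted average is 96 + 44 = 140 time units. A hit ratio of 1 gives m + s and a hit ratio of 0 gives 2m + s.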

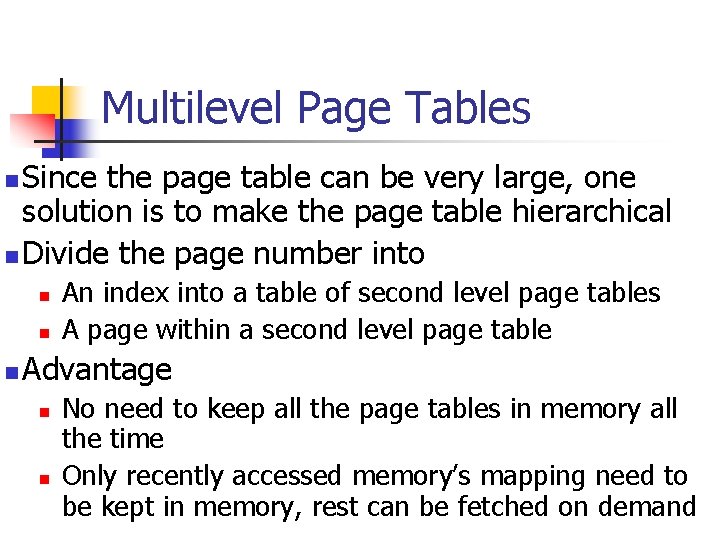

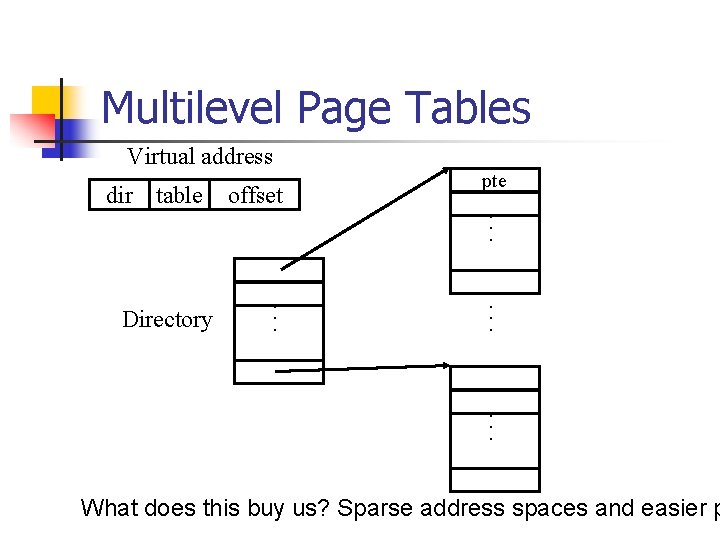

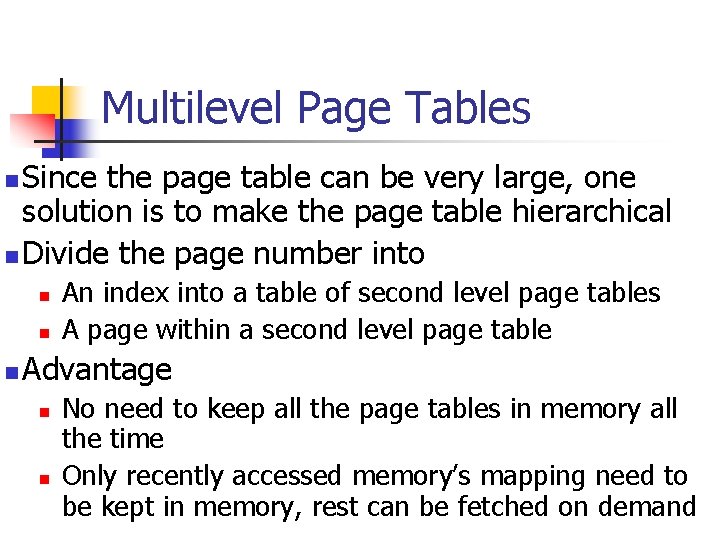

Multilevel Page Tables

- Since the page table can be very large, one solution is to make the page table hierarchical
- Divide the page number into
  - An index into a table of second-level page tables
  - A page within a second-level page table
- Advantage
  - No need to keep all the page tables in memory all the time
  - Only the mappings for recently accessed memory need to be kept in memory; the rest can be fetched on demand

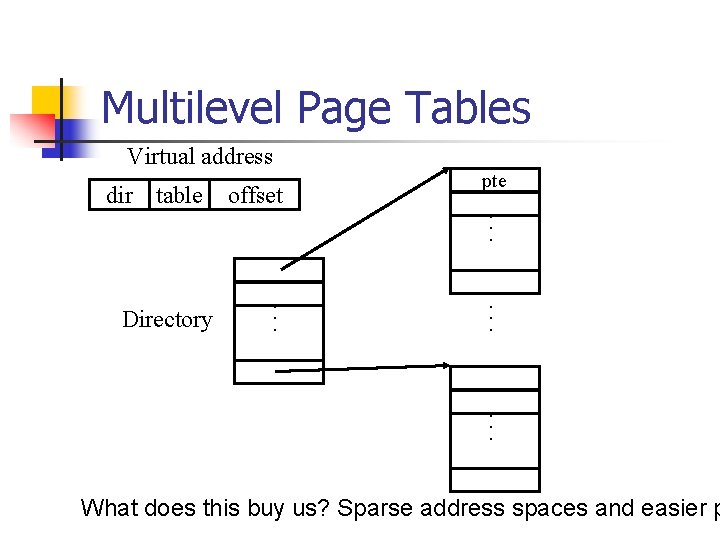

Multilevel Page Tables

[Figure: the virtual address is split into dir, table, and offset fields; dir indexes the directory, and table indexes a page table to reach the pte.]

What does this buy us? Sparse address spaces and easier p

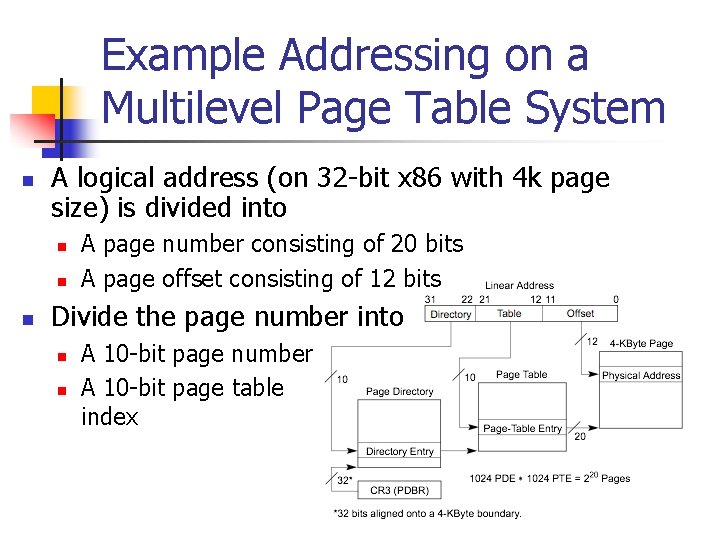

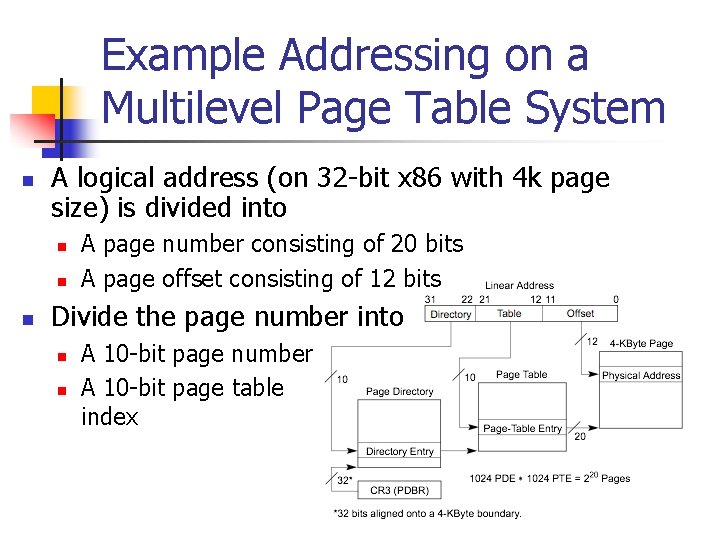

Example Addressing on a Multilevel Page Table System

- A logical address (on 32-bit x86 with 4 KB page size) is divided into
  - A page number consisting of 20 bits
  - A page offset consisting of 12 bits
- Divide the page number into
  - A 10-bit page number (the index into the top-level table)
  - A 10-bit page table index
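
The 10/10/12 split can be expressed with shifts and masks. This is a minimal sketch; the function and field names are illustrative.

```python
def split_address(addr):
    """Split a 32-bit address into (top-level index, page table index, offset)."""
    offset = addr & 0xFFF                # low 12 bits
    table_index = (addr >> 12) & 0x3FF   # next 10 bits
    dir_index = (addr >> 22) & 0x3FF     # top 10 bits
    return dir_index, table_index, offset
```

For example, address `0x00403004` splits into top-level index 1, page table index 3, and offset 4, since `(1 << 22) + (3 << 12) + 4 == 0x00403004`.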

Multilevel Paging and Performance

- Each level is stored as a separate table
- Assume: n-level page table
- Converting a logical to a physical address:
  - This may take _____ memory accesses
  - (TLB access is not a memory access)

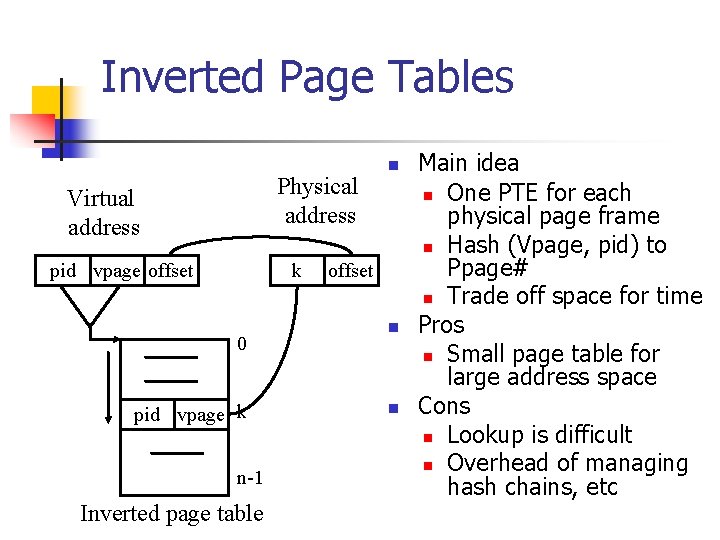

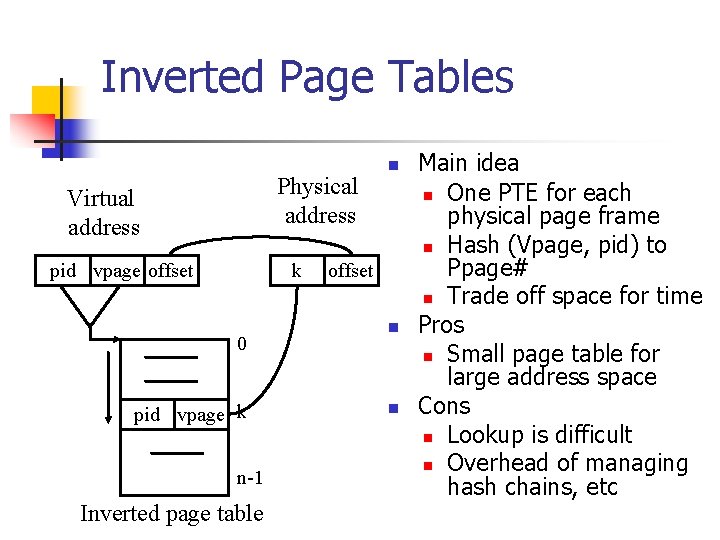

Inverted Page Tables

[Figure: the virtual address (pid, vpage, offset) is looked up in the inverted page table, whose entries 0 to n-1 each hold a (pid, vpage) pair. The index k of the matching entry is combined with the offset to form the physical address (k, offset).]

- Main idea
  - One PTE for each physical page frame
  - Hash (Vpage, pid) to Ppage#
  - Trade off space for time
- Pros
  - Small page table for large address space
- Cons
  - Lookup is difficult
  - Overhead of managing hash chains, etc.
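
A minimal sketch of the idea: one table entry per physical frame, plus a hash from (pid, vpage) to the frame number. A Python dictionary stands in for the hash table here, so the hash-chain management listed under "Cons" is hidden inside it. The class and method names, and the 4096-byte page size, are assumptions for this example.

```python
class InvertedPageTable:
    """One entry per physical frame, with a hash index keyed by (pid, vpage)."""

    def __init__(self, num_frames, page_size=4096):
        self.page_size = page_size
        self.frames = [None] * num_frames  # frames[k] = (pid, vpage) or None
        self.index = {}                    # hash: (pid, vpage) -> k

    def map(self, pid, vpage, frame):
        """Record that frame `frame` now holds page `vpage` of process `pid`."""
        key = (pid, vpage)
        old_key = self.frames[frame]
        if old_key is not None:
            del self.index[old_key]        # the frame's previous page is unmapped
        previous_frame = self.index.get(key)
        if previous_frame is not None:
            self.frames[previous_frame] = None  # the page moved to a new frame
        self.frames[frame] = key
        self.index[key] = frame

    def translate(self, pid, vpage, offset):
        """Return the physical address, or raise KeyError (a page fault)."""
        frame = self.index[(pid, vpage)]
        return frame * self.page_size + offset
```

The table size depends on the number of physical frames, not on the size of the virtual address space.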

Paging

- Provide the user with virtual memory that is as big as the user needs
- Store virtual memory on disk
- Cache the parts of virtual memory being used in real memory
- Load and store cached virtual memory without user program intervention