Measuring UNL Research: the use and interpretation of bibliometric indicators

- Slides: 68

Measuring UNL Research: the use and interpretation of bibliometric indicators
Martijn S. Visser
Lisbon, 29 June 2012

Contents
1. Role of citation analysis in research evaluation
2. Coverage of bibliometric databases
3. Bibliometric indicators
4. Challenges and Future Work

1. Role of citation analysis in research evaluation
• What do citations measure?
• Citation analysis and peer review

Citation motivations (Garfield, 1962)
• Paying homage to pioneers
• Giving credit for related work (homage to peers)
• Identifying methodology, equipment, etc.
• Providing background reading
• Correcting one’s own work
• Correcting the work of others
• Criticizing previous work
• Substantiating claims
• Alerting to forthcoming work
• Providing leads to poorly disseminated, poorly indexed, or uncited work
• Authenticating data and classes of fact (physical constants, etc.)
• Identifying original publications in which an idea or concept was discussed
• Identifying original publication or other work describing an eponymic concept or term (...)
• Disclaiming work or ideas of others (negative claims)
• Disputing priority claims of others (negative homage)

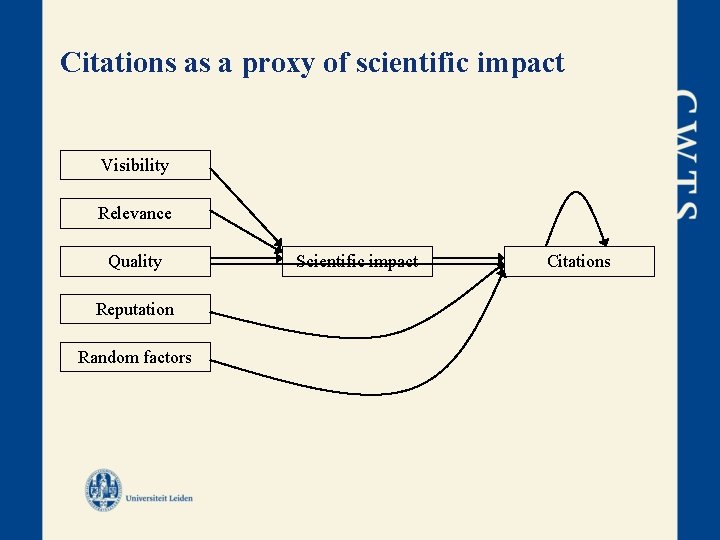

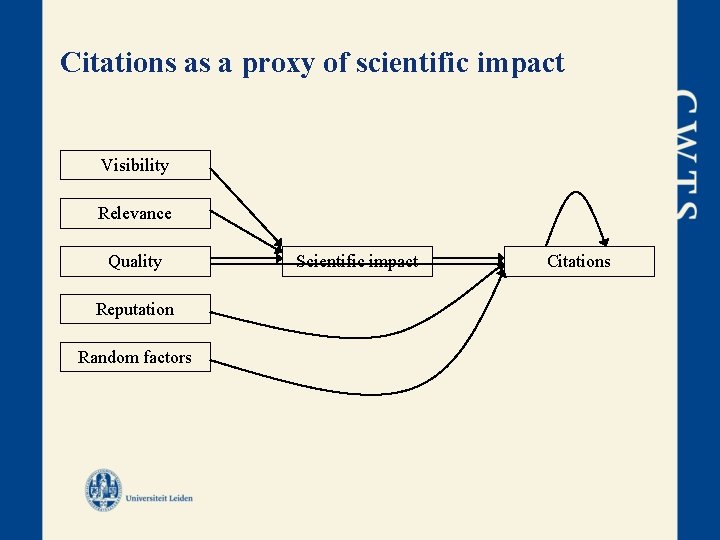

Citations as a proxy of scientific impact
Diagram labels: Visibility, Relevance, Quality, Reputation, Random factors, Scientific impact, Citations

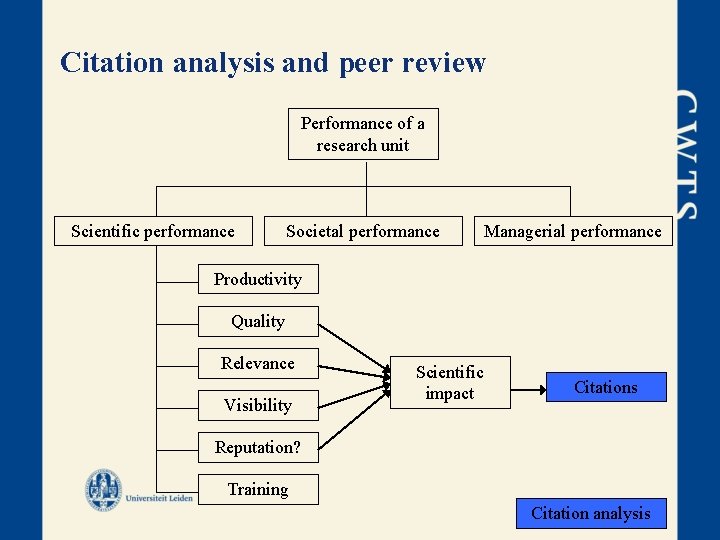

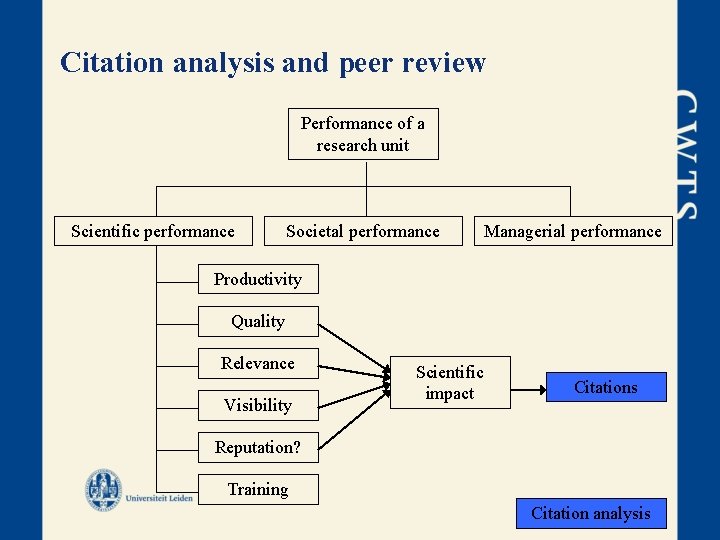

Citation analysis and peer review
Diagram labels: Performance of a research unit; Scientific performance, Societal performance, Managerial performance; Productivity, Quality, Relevance, Visibility, Scientific impact, Citations, Reputation?, Training; Citation analysis

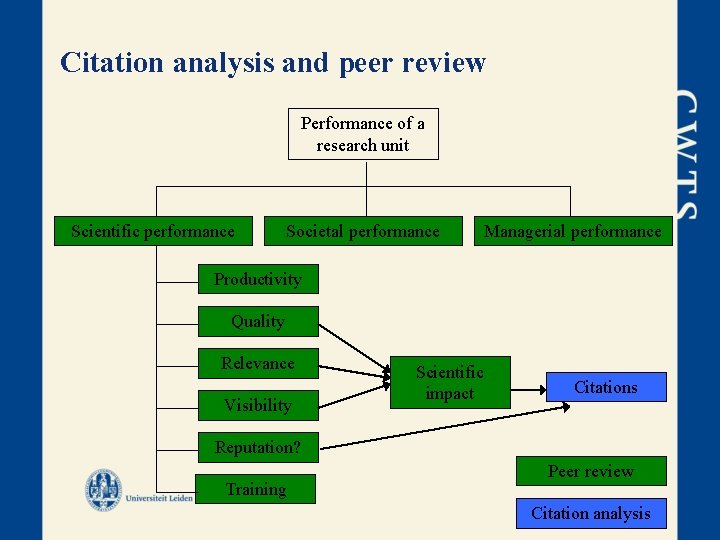

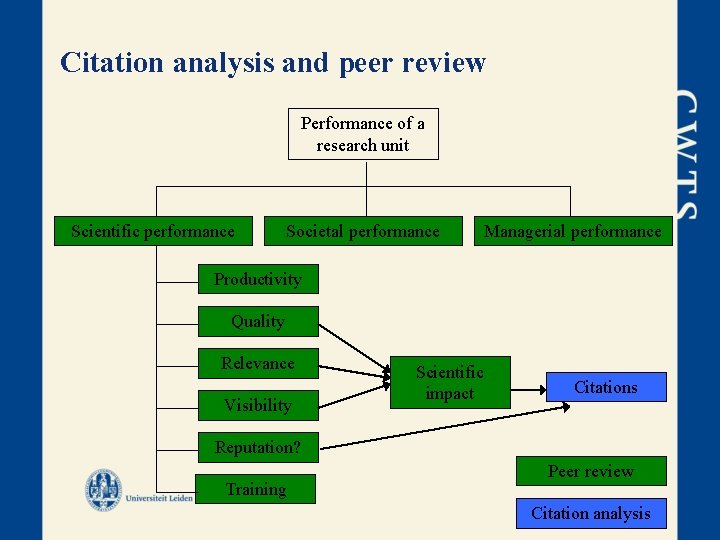

Citation analysis and peer review
Diagram labels: Performance of a research unit; Scientific performance, Societal performance, Managerial performance; Productivity, Quality, Relevance, Visibility, Scientific impact, Citations, Reputation?, Training; Peer review; Citation analysis

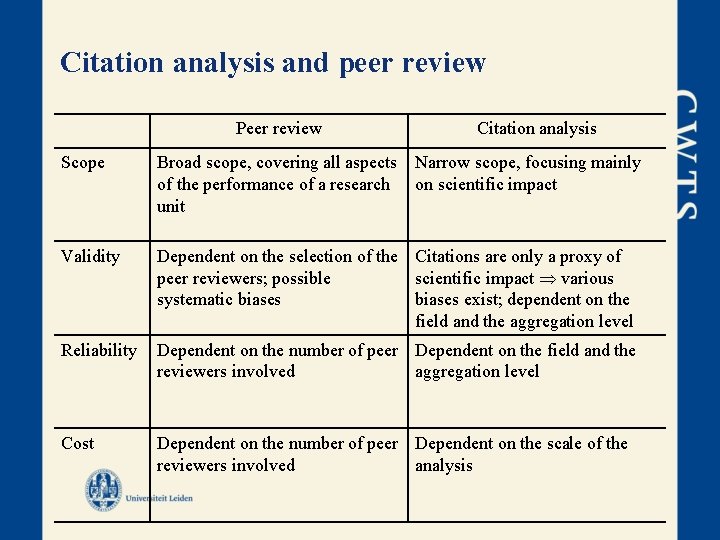

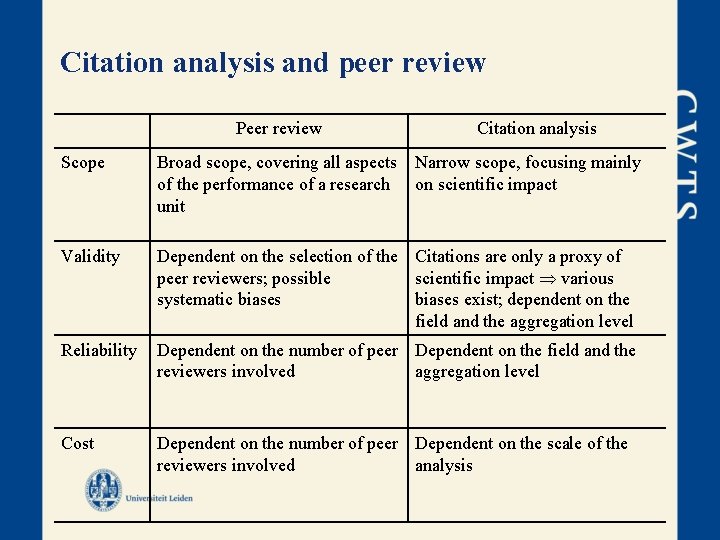

Citation analysis and peer review

| | Peer review | Citation analysis |
|---|---|---|
| Scope | Broad scope, covering all aspects of the performance of a research unit | Narrow scope, focusing mainly on scientific impact |
| Validity | Dependent on the selection of the peer reviewers; possible biases | Citations are only a proxy of scientific impact; various systematic biases exist; dependent on the field and the aggregation level |
| Reliability | Dependent on the number of peer reviewers involved | Dependent on the field and the aggregation level |
| Cost | Dependent on the number of peer reviewers involved | Dependent on the scale of the analysis |

2. Coverage of the Citation Index
• Measuring coverage
• UNL coverage

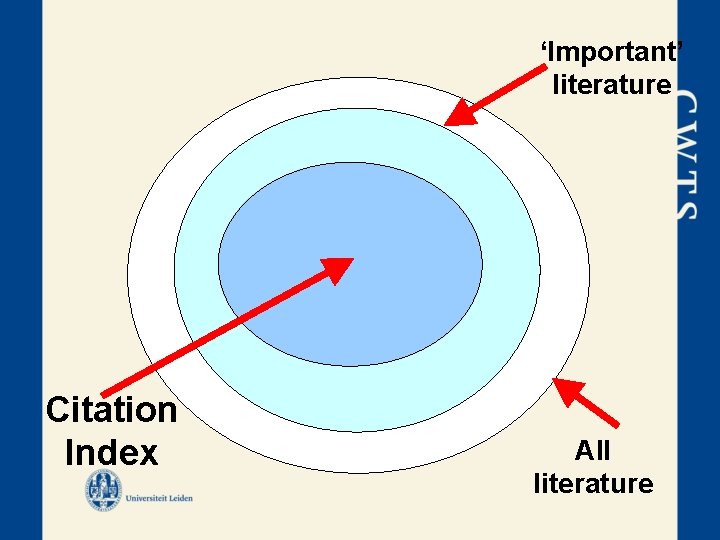

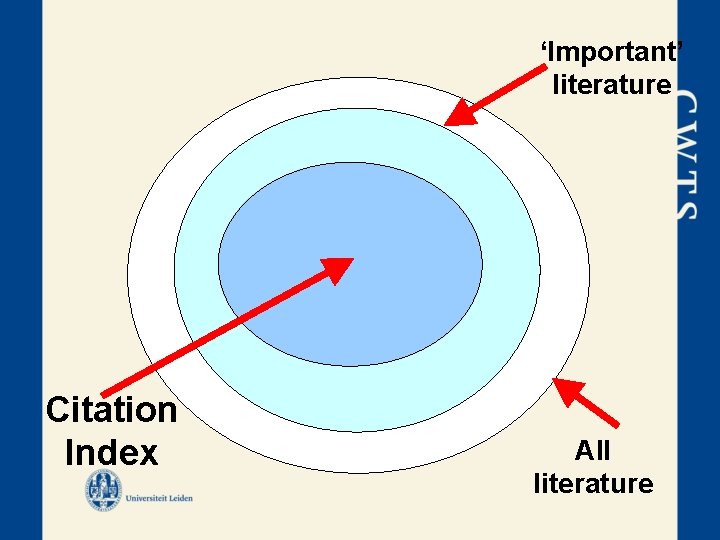

Diagram labels: ‘Important’ literature, Citation Index, All literature

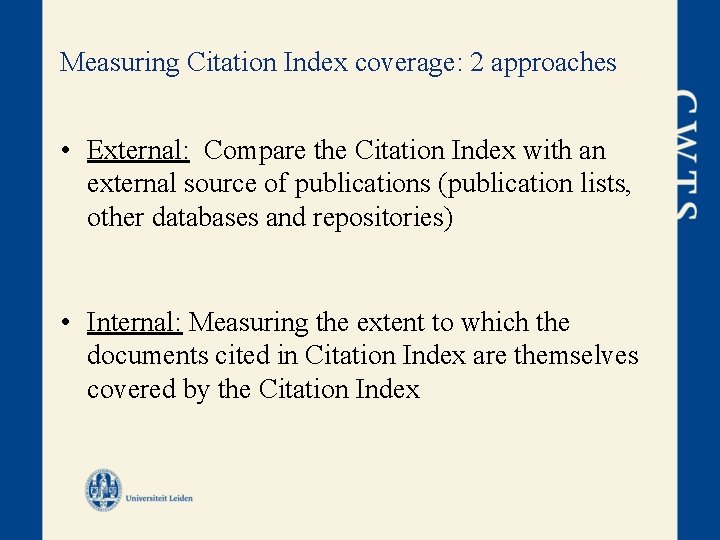

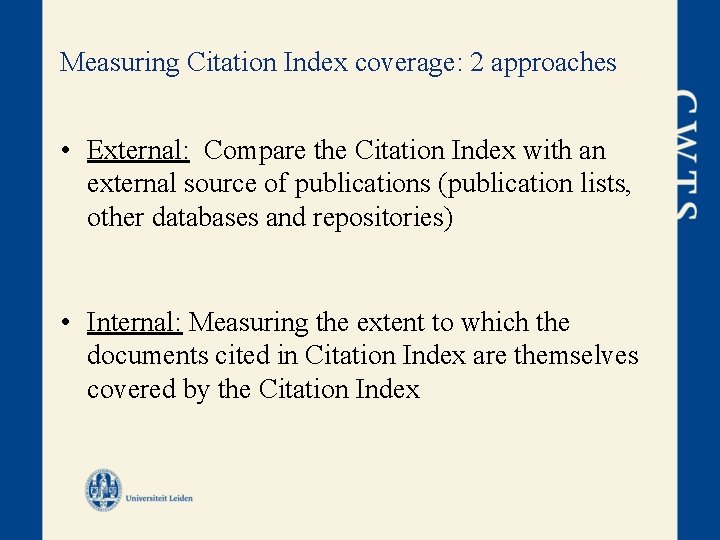

Measuring Citation Index coverage: 2 approaches
• External: compare the Citation Index with an external source of publications (publication lists, other databases and repositories)
• Internal: measure the extent to which the documents cited in the Citation Index are themselves covered by the Citation Index

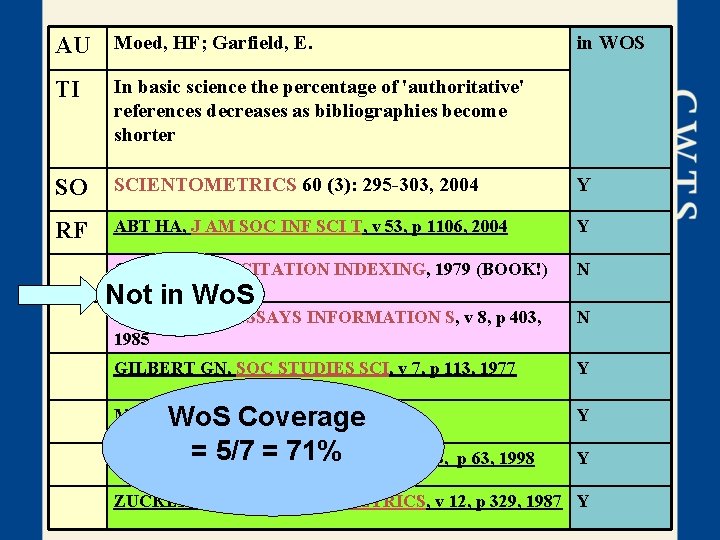

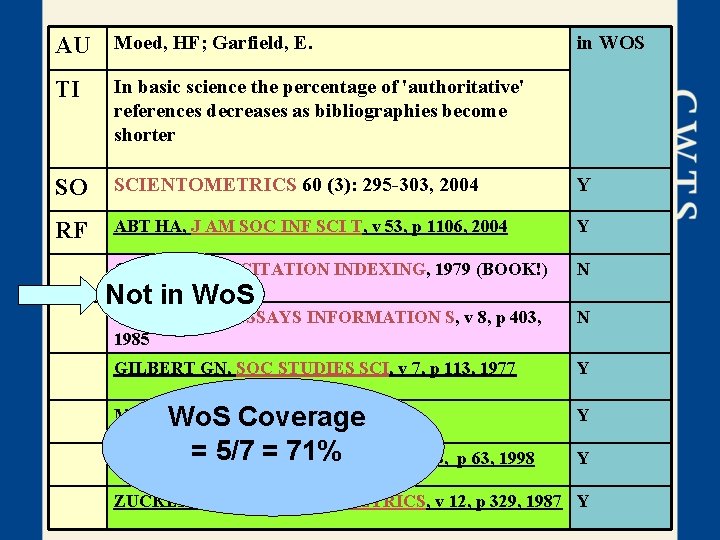

AU Moed, HF; Garfield, E.
TI In basic science the percentage of 'authoritative' references decreases as bibliographies become shorter
SO SCIENTOMETRICS 60 (3): 295-303, 2004

| RF (cited reference) | In WoS |
|---|---|
| ABT HA, J AM SOC INF SCI T, v 53, p 1106, 2004 | Y |
| GARFIELD, E. CITATION INDEXING, 1979 (BOOK!) | N (not in WoS) |
| GARFIELD E, ESSAYS INFORMATION S, v 8, p 403, 1985 | N |
| GILBERT GN, SOC STUDIES SCI, v 7, p 113, 1977 | Y |
| MERTON RK, ISIS, v 79, p 606, 1988 | Y |
| ROUSSEAU R, SCIENTOMETRICS, v 43, p 63, 1998 | Y |
| ZUCKERMAN H, SCIENTOMETRICS, v 12, p 329, 1987 | Y |

WoS coverage = 5/7 = 71%
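The internal coverage figure in this example can be reproduced with a few lines of Python. This is only a sketch: the function name `internal_coverage` is hypothetical, and the Y/N flags are transcribed from the example under the assumption that the five "Y" marks belong to the five journal references other than the two Garfield items, which is the assignment consistent with the stated 5/7.

```python
def internal_coverage(in_index_flags):
    """Share of cited references that are themselves covered by the index."""
    flags = list(in_index_flags)
    if not flags:
        raise ValueError("no references to evaluate")
    return sum(flags) / len(flags)


# (reference, covered by WoS?) pairs transcribed from the example above.
references = [
    ("ABT HA, J AM SOC INF SCI T, 2004", True),
    ("GARFIELD E, CITATION INDEXING, 1979 (book)", False),
    ("GARFIELD E, ESSAYS INFORMATION S, 1985", False),
    ("GILBERT GN, SOC STUDIES SCI, 1977", True),
    ("MERTON RK, ISIS, 1988", True),
    ("ROUSSEAU R, SCIENTOMETRICS, 1998", True),
    ("ZUCKERMAN H, SCIENTOMETRICS, 1987", True),
]

coverage = internal_coverage(flag for _, flag in references)
print(f"WoS coverage = {coverage:.0%}")
```

In a real analysis the flags would come from matching each cited reference against the index, which is a separate (and harder) step not shown here.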

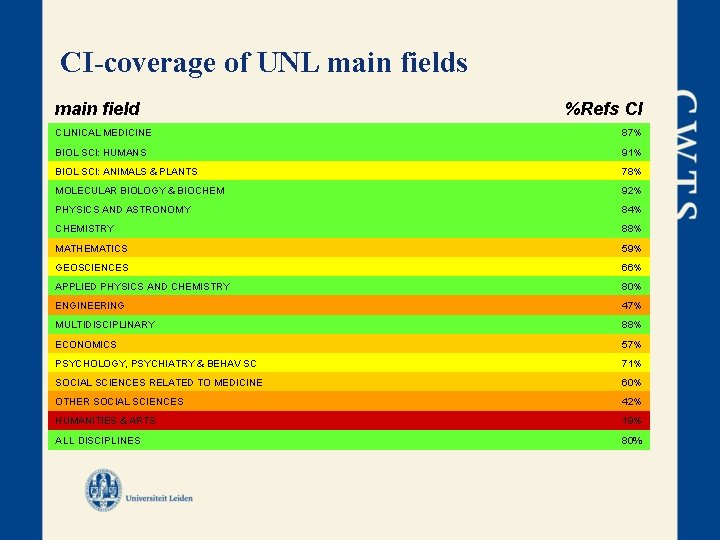

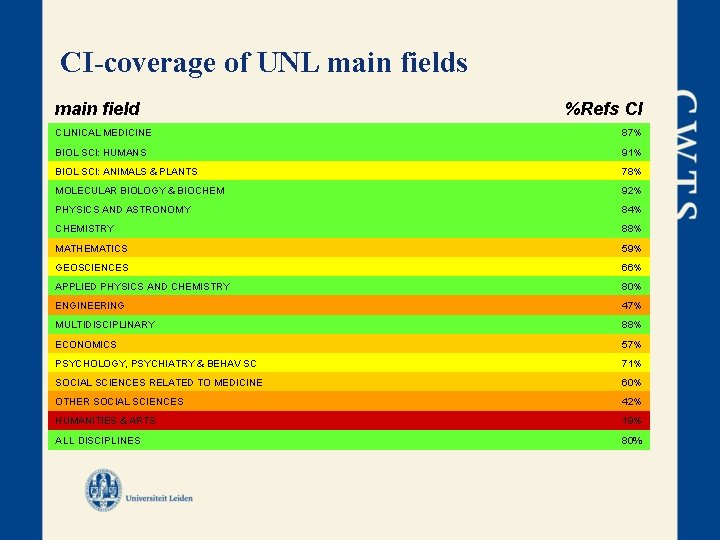

CI-coverage of UNL main fields

| Main field | % refs in CI |
|---|---|
| CLINICAL MEDICINE | 87% |
| BIOL SCI: HUMANS | 91% |
| BIOL SCI: ANIMALS & PLANTS | 78% |
| MOLECULAR BIOLOGY & BIOCHEM | 92% |
| PHYSICS AND ASTRONOMY | 84% |
| CHEMISTRY | 88% |
| MATHEMATICS | 59% |
| GEOSCIENCES | 66% |
| APPLIED PHYSICS AND CHEMISTRY | 80% |
| ENGINEERING | 47% |
| MULTIDISCIPLINARY | 88% |
| ECONOMICS | 57% |
| PSYCHOLOGY, PSYCHIATRY & BEHAV SC | 71% |
| SOCIAL SCIENCES RELATED TO MEDICINE | 60% |
| OTHER SOCIAL SCIENCES | 42% |
| HUMANITIES & ARTS | 19% |
| ALL DISCIPLINES | 80% |

3. Bibliometric Indicators
• Size-dependent vs size-independent indicators
• Normalized indicators
• Dimensions of scientific performance

Unnormalized indicators
• Indicators:
– P: Number of publications
– TCS: Total citation score
– MCS: Mean citation score
• Calculation:
– Only documents classified as ‘article’, ‘review’, or ‘letter’
– Self-citations are ignored
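A minimal Python sketch of how P, TCS and MCS could be computed under the two calculation rules listed above. The `Publication` record, its field names, and the sample data are assumptions made for illustration; they are not taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class Publication:
    doc_type: str        # e.g. "article", "review", "letter", "editorial"
    citations: int       # all citations received
    self_citations: int  # the part of `citations` that are self-citations


# Only these document types are counted.
COUNTED_TYPES = {"article", "review", "letter"}


def unnormalized_indicators(pubs):
    """Return (P, TCS, MCS), ignoring self-citations and other document types."""
    counted = [p for p in pubs if p.doc_type in COUNTED_TYPES]
    p_count = len(counted)
    tcs = sum(p.citations - p.self_citations for p in counted)
    mcs = tcs / p_count if p_count else 0.0
    return p_count, tcs, mcs


# Hypothetical publication set.
pubs = [
    Publication("article", 10, 2),
    Publication("review", 5, 0),
    Publication("editorial", 7, 1),  # excluded: not a counted document type
    Publication("letter", 3, 1),
]

P, TCS, MCS = unnormalized_indicators(pubs)
print(P, TCS, MCS)
```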

Size dependence vs size independence (2)
• Size-dependent and size-independent indicators address different questions
• Size-independent indicators (MCS):
– How does UNL perform compared with other Portuguese universities?
– How ‘prestigious’ is UNL?
• Size-dependent indicators (P, TCS):
– Is the subscription fee of this journal reasonable?
– How influential has this research group been during a given period?

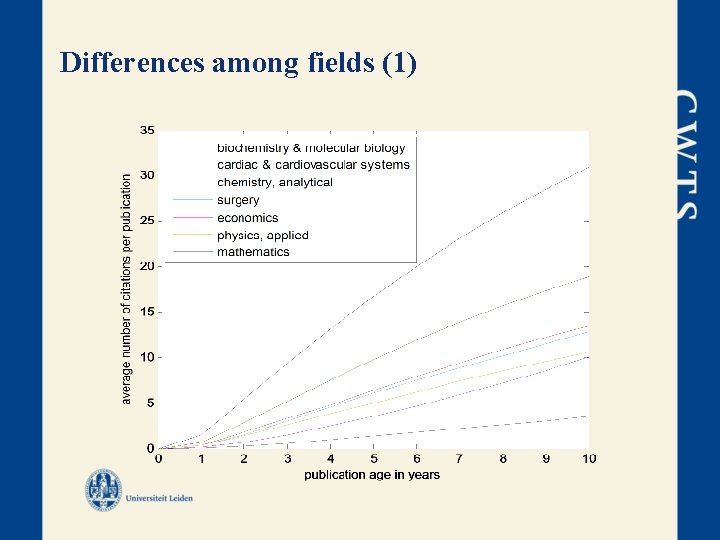

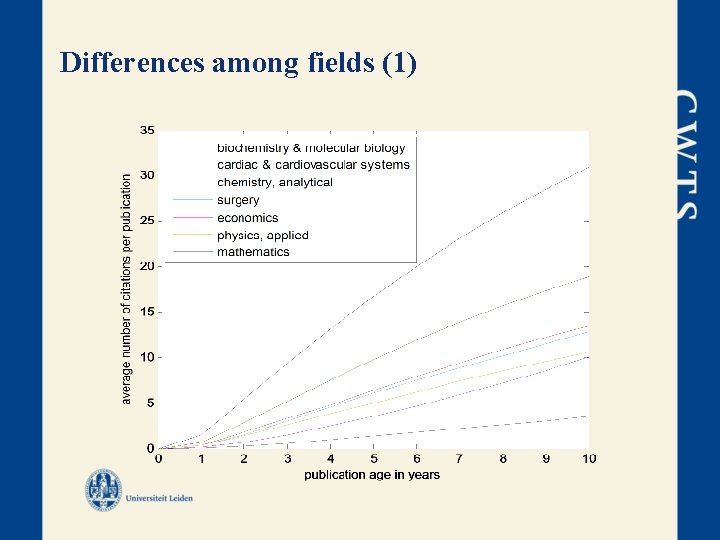

Differences among fields (1)

Normalized indicators
• Indicators:
– MNCS: Mean normalized citation score
– MNJS: Mean normalized journal score
– A/E Ptop 10%: Actual to expected ratio of publications in top 10%
• Calculation:
– Documents classified as ‘letter’ have a weight of 0.25
– Citation window length must be at least 12 months

Expected number of citations
• The expected number of citations of a publication is defined as the average number of citations of all publications
– published in the same field,
– published in the same year, and
– having the same document type
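The definition above amounts to averaging citations within each (field, year, document type) cell. A minimal Python sketch follows; the function name `expected_citations`, the tuple layout, and the sample records are assumptions for illustration only.

```python
from collections import defaultdict


def expected_citations(publications):
    """Map (field, year, doc_type) to the mean citations of all publications in that cell."""
    totals = defaultdict(lambda: [0, 0])  # cell -> [citation sum, publication count]
    for field, year, doc_type, citations in publications:
        cell = totals[(field, year, doc_type)]
        cell[0] += citations
        cell[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


# Hypothetical reference set: (field, year, document type, citations).
world = [
    ("chemistry", 2008, "article", 4),
    ("chemistry", 2008, "article", 8),
    ("chemistry", 2008, "review", 30),
    ("mathematics", 2008, "article", 1),
    ("mathematics", 2008, "article", 3),
]

expected = expected_citations(world)
print(expected[("chemistry", 2008, "article")])    # mean of 4 and 8
print(expected[("mathematics", 2008, "article")])  # mean of 1 and 3
```

A publication's normalized citation score is then its own citation count divided by the expected value of its cell.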

Dimensions of scientific profile
• Output
• Impact
• Journal impact
• Collaboration
• Scientific profile
• Knowledge user profile

4. Challenges and work in progress
• Definition of fields
• Increasing coverage of bibliometric database
• Stability intervals
• Increasing number of authors / collaboration

Thank you for your attention!

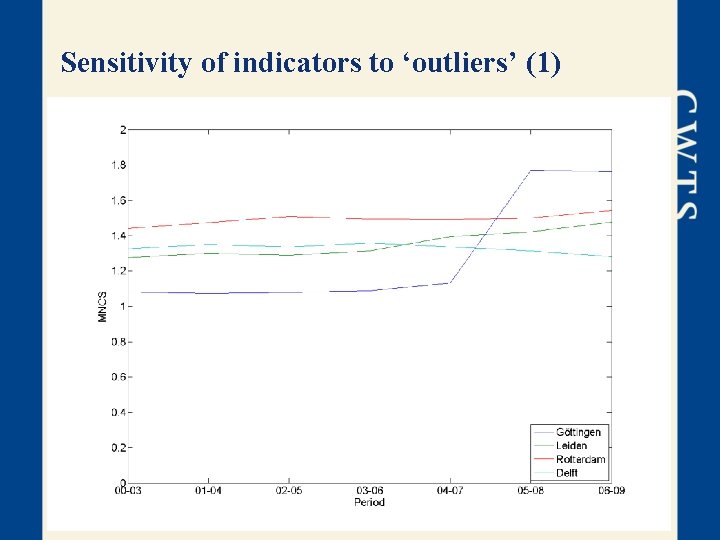

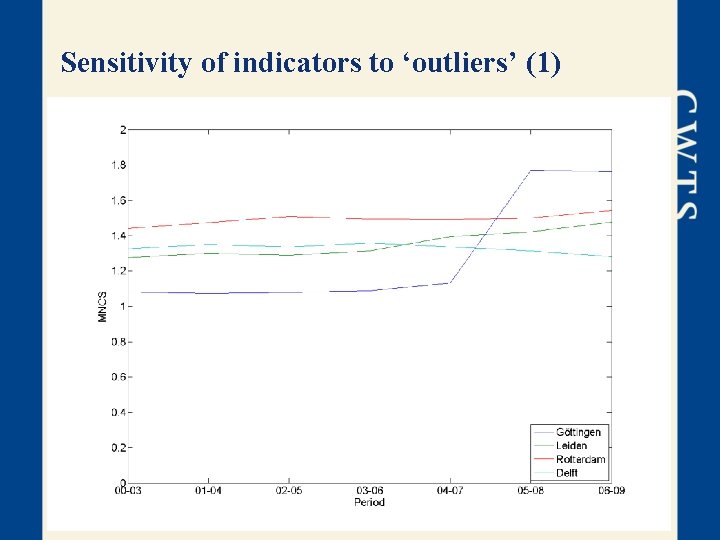

Sensitivity of indicators to ‘outliers’ (1)

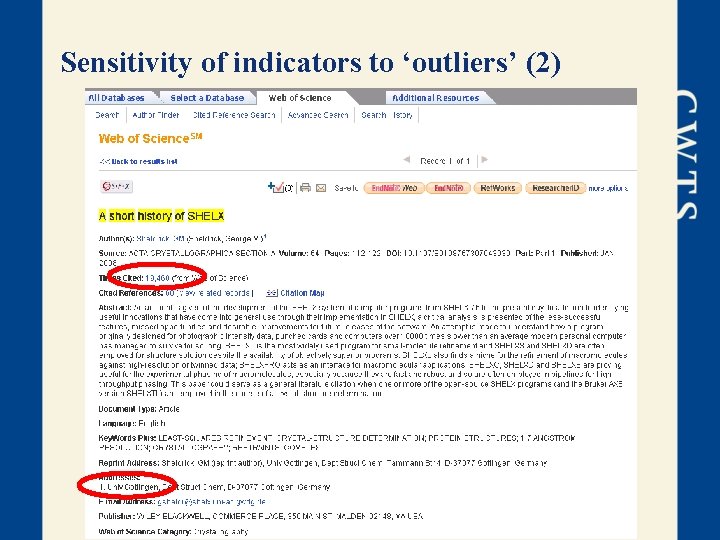

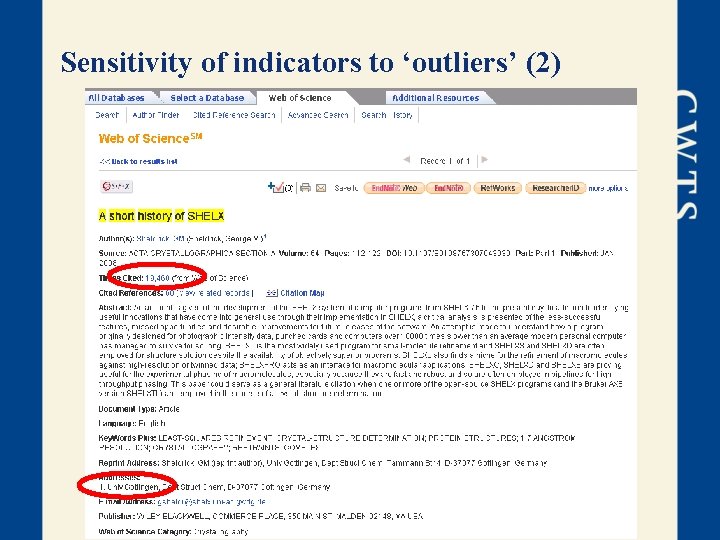

Sensitivity of indicators to ‘outliers’ (2)

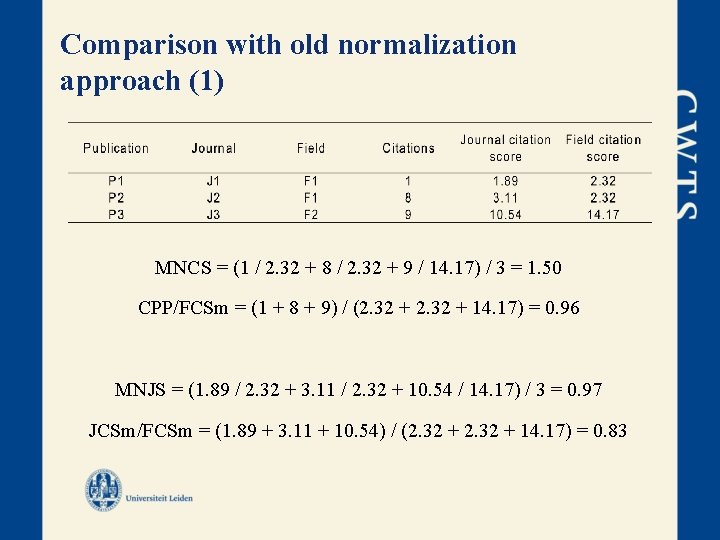

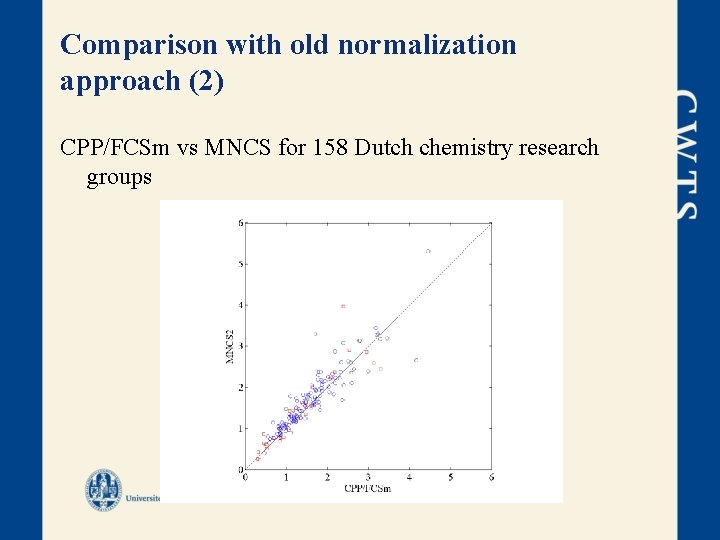

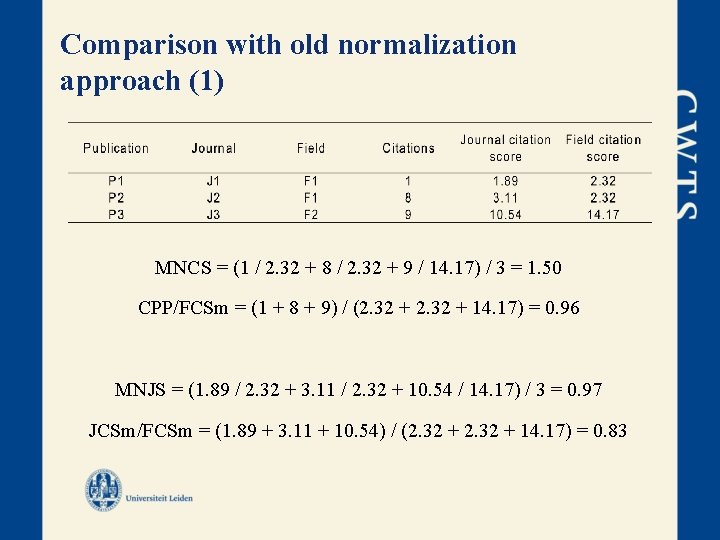

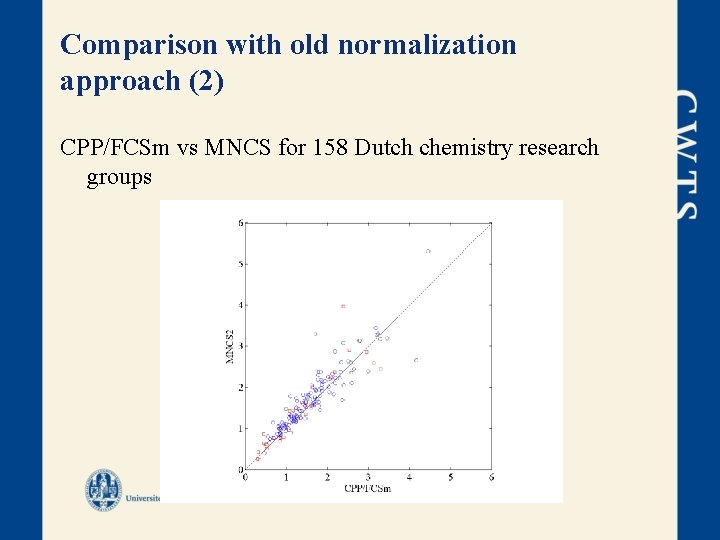

Comparison with old normalization approach (1)
MNCS = (1 / 2.32 + 8 / 2.32 + 9 / 14.17) / 3 = 1.50
CPP/FCSm = (1 + 8 + 9) / (2.32 + 2.32 + 14.17) = 0.96
MNJS = (1.89 / 2.32 + 3.11 / 2.32 + 10.54 / 14.17) / 3 = 0.97
JCSm/FCSm = (1.89 + 3.11 + 10.54) / (2.32 + 2.32 + 14.17) = 0.83
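The difference between the two approaches is that MNCS and MNJS average the per-publication ratios, whereas CPP/FCSm and JCSm/FCSm divide one sum by another. The Python sketch below reproduces the four values, taking the field expectation once per publication (2.32, 2.32, 14.17), which is what yields the quoted 0.96 and 0.83. The function names are hypothetical.

```python
def mean_of_ratios(actual, expected):
    """New approach (MNCS, MNJS): normalize each publication, then average."""
    return sum(a / e for a, e in zip(actual, expected)) / len(actual)


def ratio_of_sums(actual, expected):
    """Old approach (CPP/FCSm, JCSm/FCSm): sum first, then divide."""
    return sum(actual) / sum(expected)


citations = [1, 8, 9]                # citations of the three publications
journal_means = [1.89, 3.11, 10.54]  # mean citations of each publication's journal
field_means = [2.32, 2.32, 14.17]    # expected citations in each publication's field

mncs = mean_of_ratios(citations, field_means)
cpp_fcsm = ratio_of_sums(citations, field_means)
mnjs = mean_of_ratios(journal_means, field_means)
jcsm_fcsm = ratio_of_sums(journal_means, field_means)

print(f"MNCS = {mncs:.2f}, CPP/FCSm = {cpp_fcsm:.2f}")
print(f"MNJS = {mnjs:.2f}, JCSm/FCSm = {jcsm_fcsm:.2f}")
```

In the ratio-of-sums version the publication from the high-citation field (expected 14.17) dominates the denominator, whereas the mean-of-ratios version gives every publication equal weight.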

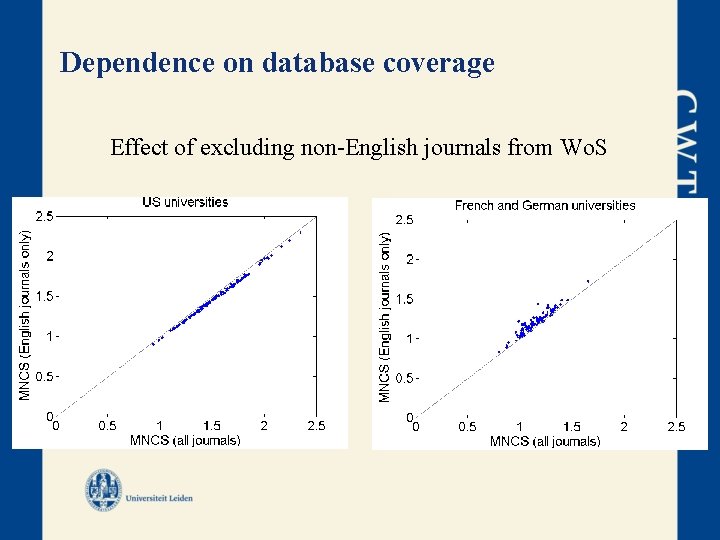

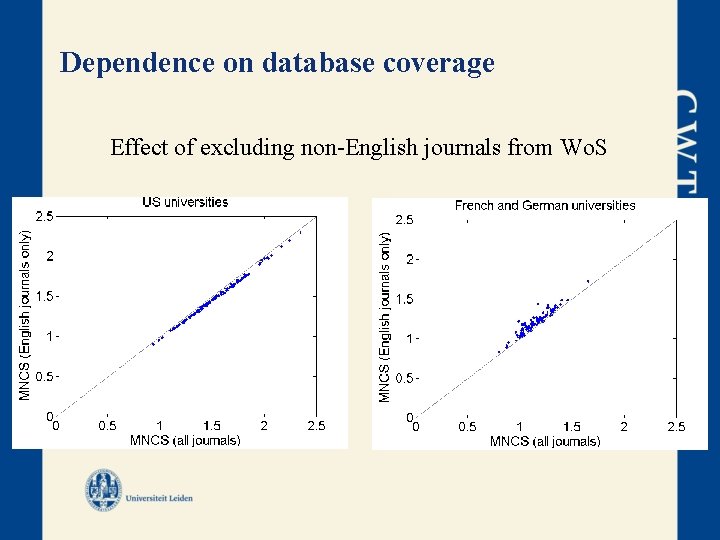

Dependence on database coverage
Effect of excluding non-English journals from WoS

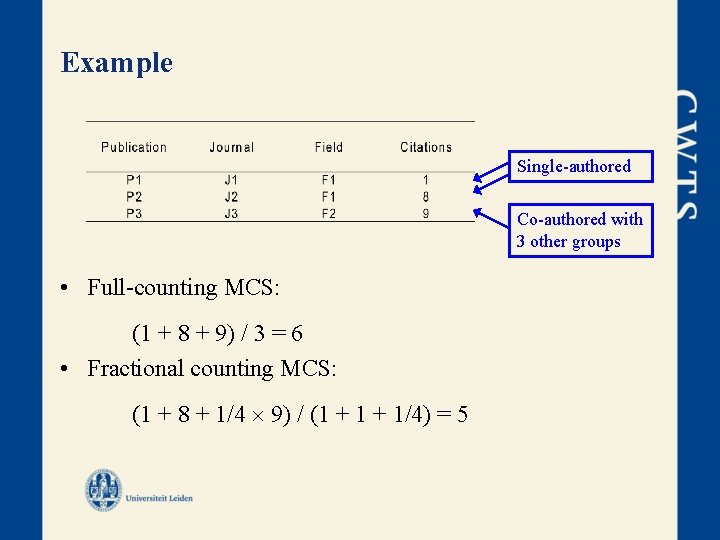

Full counting vs fractional counting
• Full counting means that all publications have the same weight
• Fractional counting means that the weight of a publication is inversely proportional to the number of collaborators

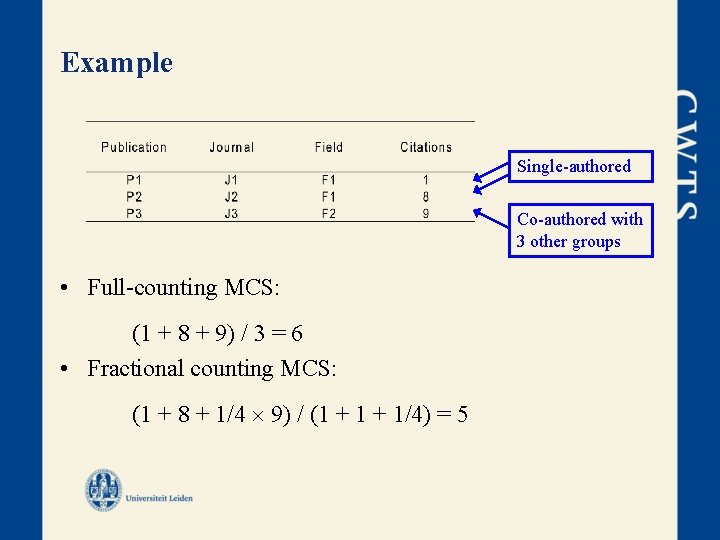

Example
Figure labels: Single-authored; Co-authored with 3 other groups (weight 1/4)
• Full counting MCS: (1 + 8 + 9) / 3 = 6
• Fractional counting MCS: (1 + 8 + 1/4 × 9) / (1 + 1 + 1/4) = 5
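The two MCS values in this example are both weighted means and differ only in the weights. The sketch below assumes, as the arithmetic implies, that the publications with 1 and 8 citations are single-authored (weight 1) and the one with 9 citations is shared among 4 groups (weight 1/4). The function name is hypothetical.

```python
def weighted_mean_citation_score(citations, weights):
    """MCS as a weighted mean: sum(w * c) / sum(w)."""
    return sum(w * c for w, c in zip(weights, citations)) / sum(weights)


citations = [1, 8, 9]

# Full counting: every publication counts fully.
full_weights = [1, 1, 1]
# Fractional counting: the third publication is shared with 3 other groups.
fractional_weights = [1, 1, 1 / 4]

mcs_full = weighted_mean_citation_score(citations, full_weights)
mcs_fractional = weighted_mean_citation_score(citations, fractional_weights)
print(mcs_full, mcs_fractional)
```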

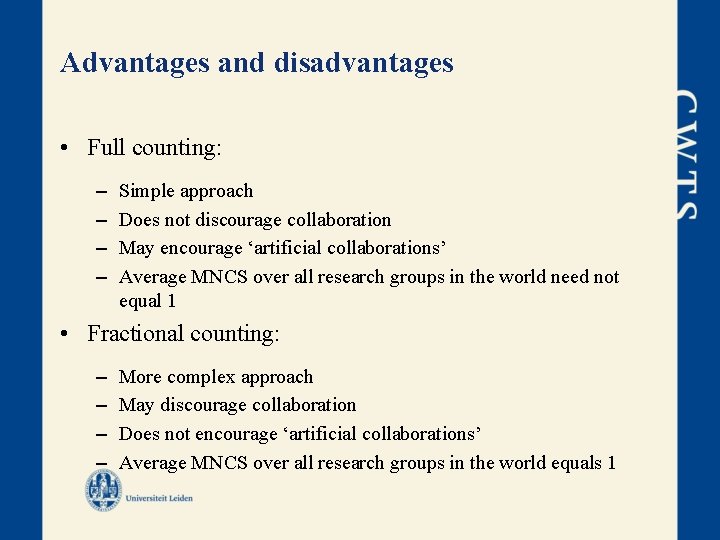

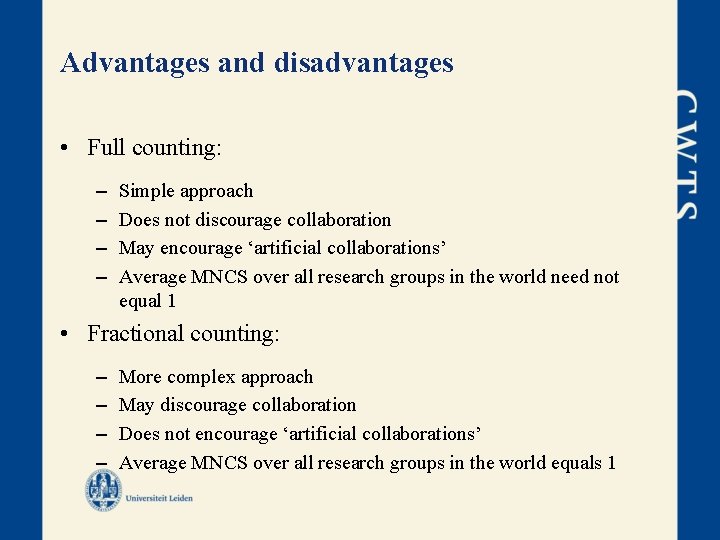

Advantages and disadvantages
• Full counting:
– Simple approach
– Does not discourage collaboration
– May encourage ‘artificial collaborations’
– Average MNCS over all research groups in the world need not equal 1
• Fractional counting:
– More complex approach
– May discourage collaboration
– Does not encourage ‘artificial collaborations’
– Average MNCS over all research groups in the world equals 1

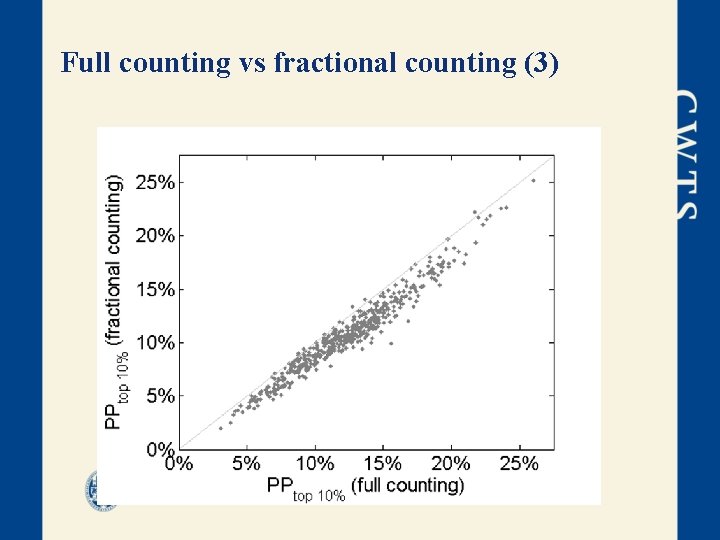

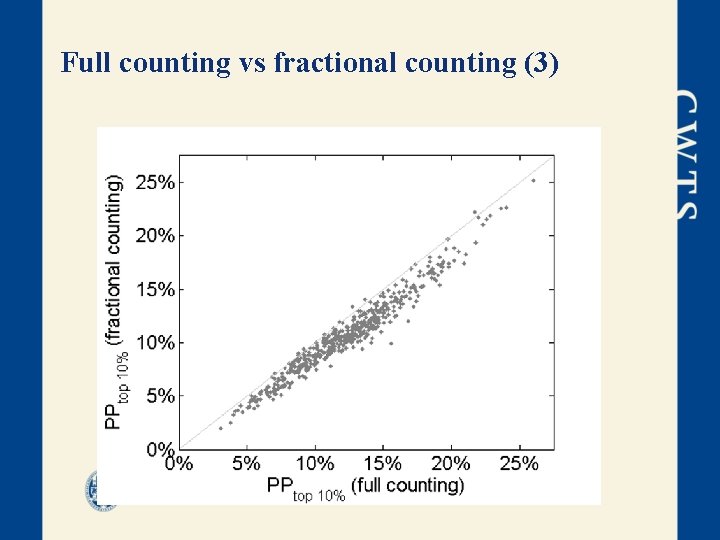

Full counting vs fractional counting (3)

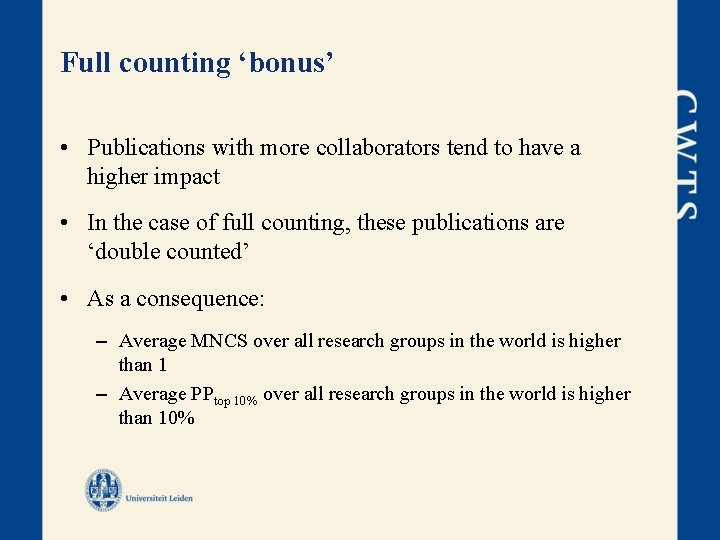

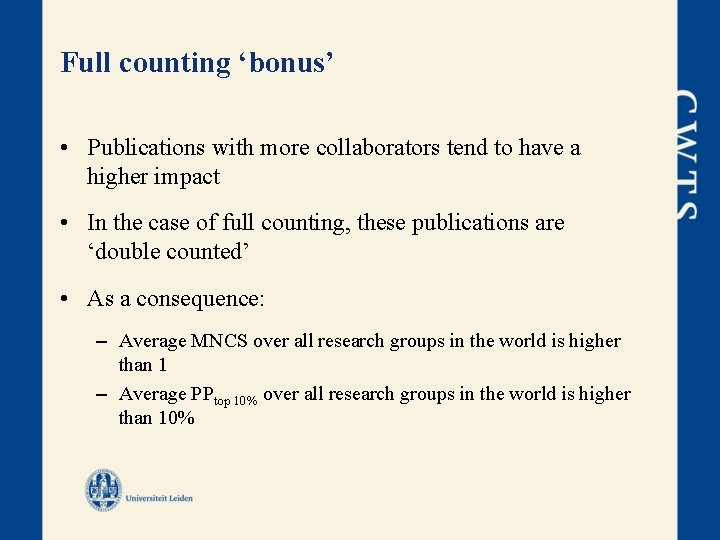

Full counting ‘bonus’
• Publications with more collaborators tend to have a higher impact
• In the case of full counting, these publications are ‘double counted’
• As a consequence:
– Average MNCS over all research groups in the world is higher than 1
– Average PPtop 10% over all research groups in the world is higher than 10%

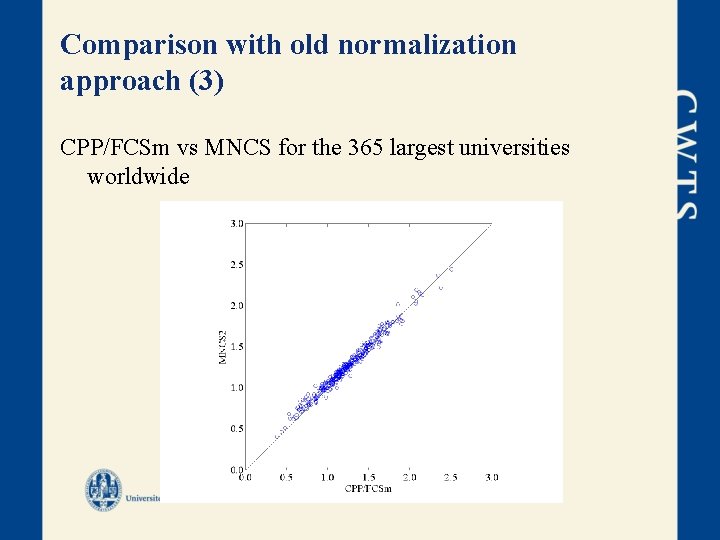

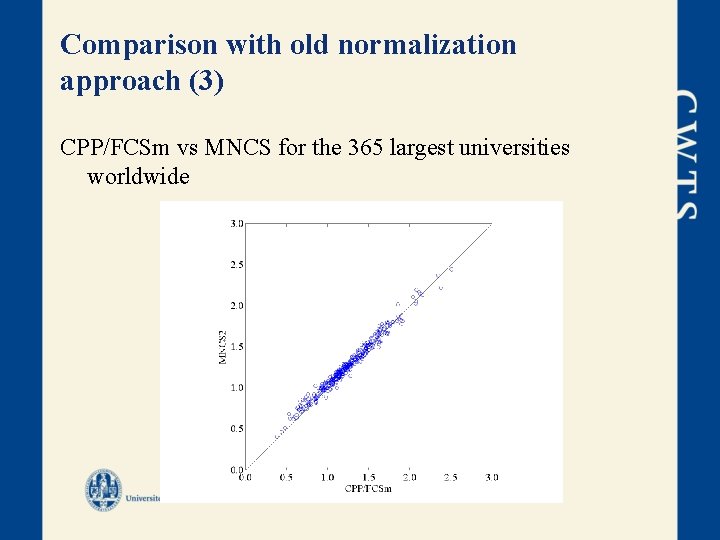

Comparison with old normalization approach (2) CPP/FCSm vs MNCS for 158 Dutch chemistry research groups

Comparison with old normalization approach (3) CPP/FCSm vs MNCS for the 365 largest universities worldwide

Productivity is not rewarded
• Two equally-sized research groups
• Group 1:
– 100 publications with 20 citations each
– Mean citation score: (100 × 20) / 100 = 20
• Group 2:
– 100 publications with 20 citations each and 50 publications with 10 citations each
– Mean citation score: (100 × 20 + 50 × 10) / (100 + 50) = 16.67
• Group 2 has a lower mean citation score, even though this group seems to have performed better
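The same numbers in a short Python sketch, which also prints the size-dependent indicators P and TCS to show where Group 2's extra output does register. The variable names are illustrative.

```python
def mean_citation_score(citations):
    """Size-independent indicator: average citations per publication."""
    return sum(citations) / len(citations)


group1 = [20] * 100              # 100 publications, 20 citations each
group2 = [20] * 100 + [10] * 50  # the same, plus 50 publications with 10 citations each

mcs1, mcs2 = mean_citation_score(group1), mean_citation_score(group2)
tcs1, tcs2 = sum(group1), sum(group2)

print(f"Group 1: P={len(group1)}, TCS={tcs1}, MCS={mcs1:.2f}")
print(f"Group 2: P={len(group2)}, TCS={tcs2}, MCS={mcs2:.2f}")
```

Group 2 leads on P and TCS but trails on MCS, which is why size-dependent and size-independent indicators are best read together.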

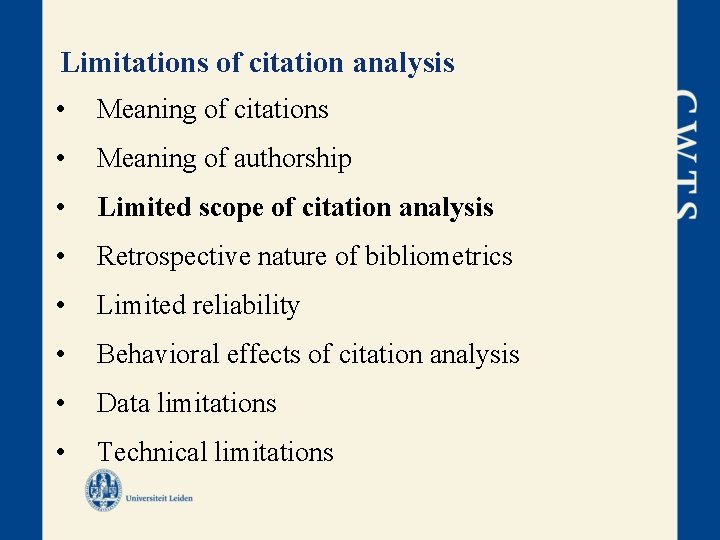

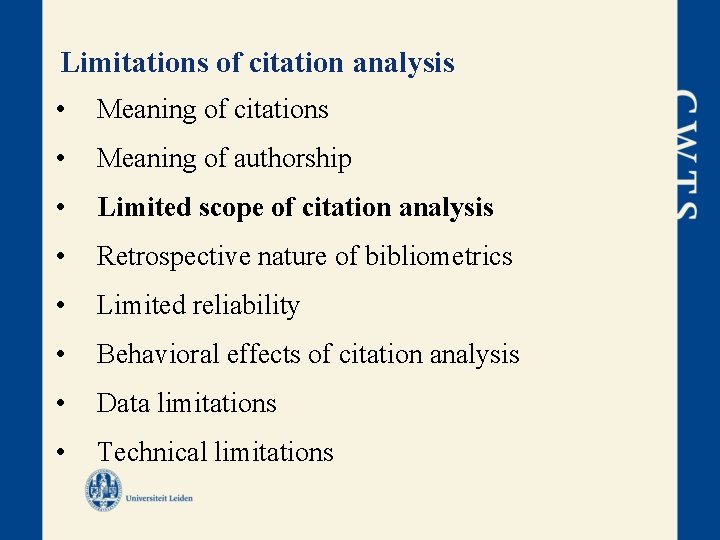

Limitations of citation analysis
• It is important to be aware of them
• 2 main categories of limitations:
– Conceptual: limitations related to the concept of citations
– Practical: data and technical issues in the calculation and use of bibliometric indicators

Limitations of citation analysis
• Meaning of citations
• Meaning of authorship
• Limited scope of citation analysis
• Retrospective nature of bibliometrics
• Limited reliability
• Behavioral effects of citation analysis
• Data limitations
• Technical limitations

1) Meaning of citations
• Citations are assumed to measure scientific influence
• Other factors influence the meaning of citations
• Do all citations measure the same concept?
• Let’s discuss an example…

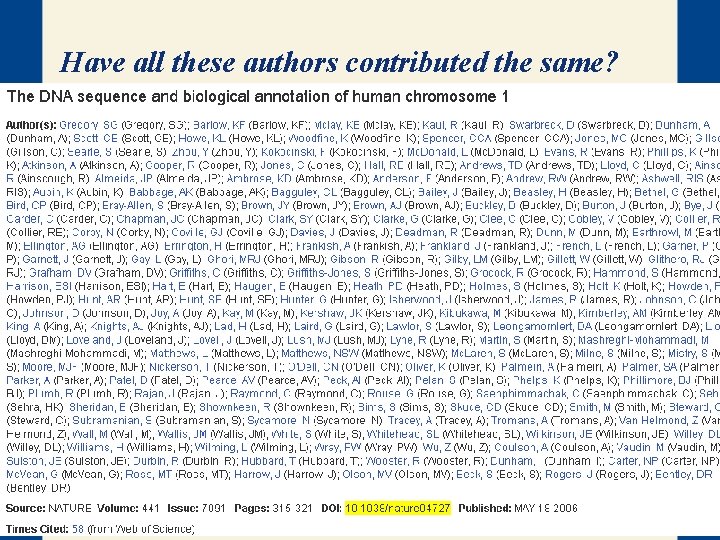

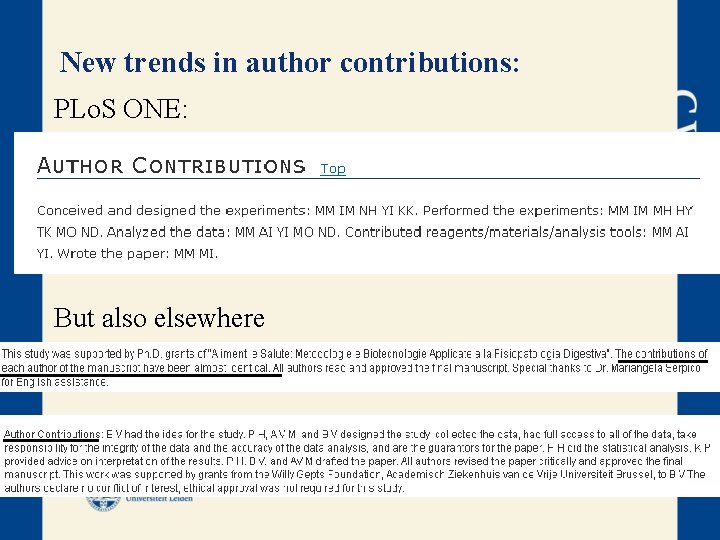

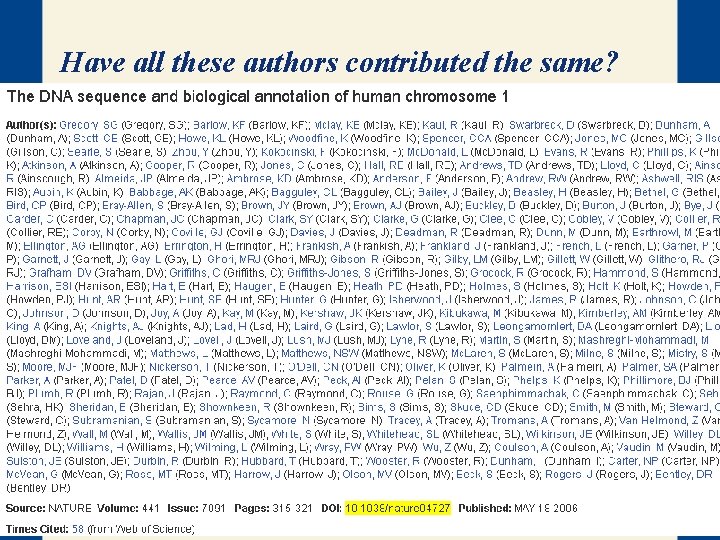

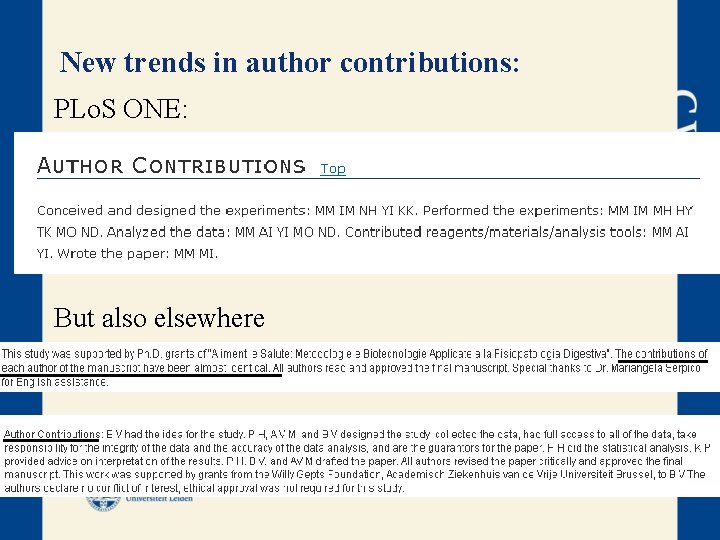

2) Meaning of authorship
• Most publications have multiple authors
• How much should each author be credited for the citations of their publications?
• Let’s see an example:

Have all these authors contributed the same?

Citation 1. “The h-index, introduced only 2 years ago, has become a real hype in and even outside informetrics: Ball (2005, 2007), Bornmann and Daniel (2005, 2007a), […] Rao and Rousseau (2007), Vinkler (2007), Vanclay (2007) and see also the papers in the special issue on the Hirsch index in Journal of Informetrics 1(3), 2007: Schubert and Glänzel (2007), Beirlant, Glänzel, Carbonez and Leemans (2007), Costas and Bordons (2007) and Bornmann and Daniel (2007b).”

Citation 2. “Costas and Bordons (2007) analyze the relationship of the h-index with other bibliometric indicators... The authors suggest that the h-index tends to underestimate the achievement of scientists with a "selective publication strategy", that is, those who do not publish a high number of documents but who achieve a very important international impact. In addition, a good correlation is found between the h-index and [...] absolute indicators of quantity. Finally, they notice that the widespread use of the h-index in the assessment of scientists' careers might […] foster productivity instead of promoting quality […] since the maximum h-index an author can obtain is that of his/her total number of publications”

New trends in author contributions: PLoS ONE, but also elsewhere

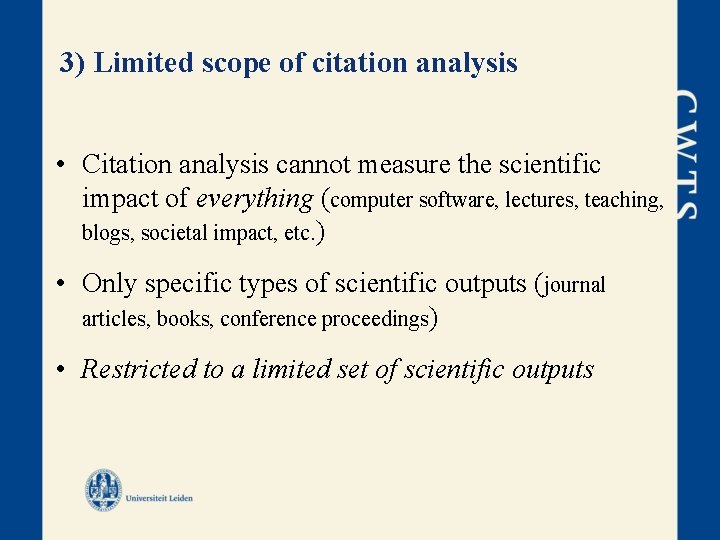

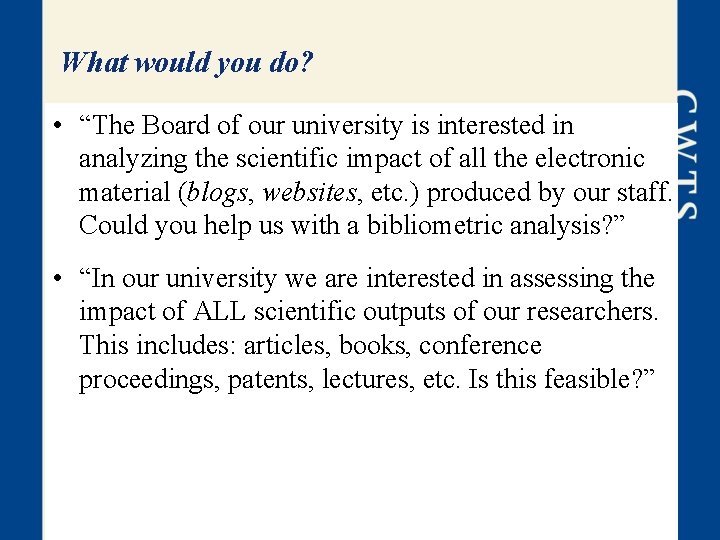

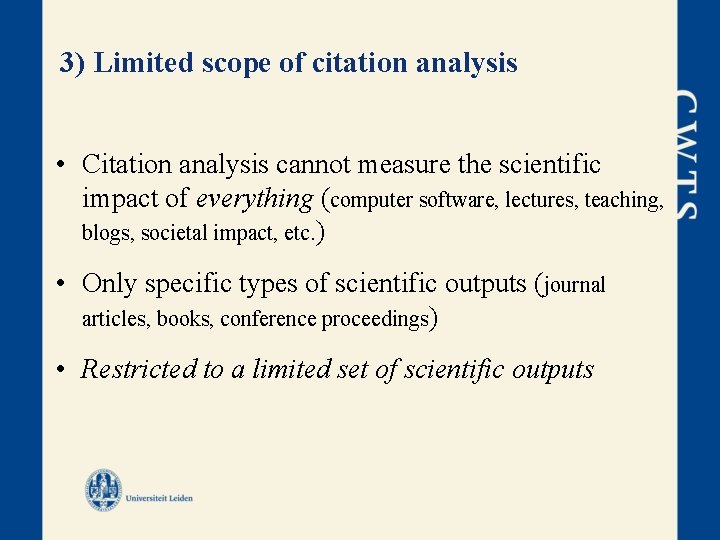

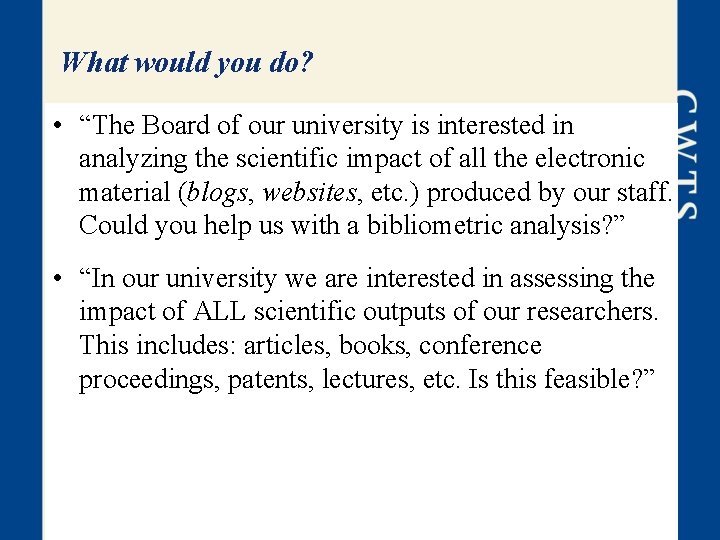

3) Limited scope of citation analysis
• Citation analysis cannot measure the scientific impact of everything (computer software, lectures, teaching, blogs, societal impact, etc.)
• Only specific types of scientific outputs (journal articles, books, conference proceedings)
• Restricted to a limited set of scientific outputs

What would you do?
• “The Board of our university is interested in analyzing the scientific impact of all the electronic material (blogs, websites, etc.) produced by our staff. Could you help us with a bibliometric analysis?”
• “In our university we are interested in assessing the impact of ALL scientific outputs of our researchers. This includes: articles, books, conference proceedings, patents, lectures, etc. Is this feasible?”

4) Retrospective nature of bibliometrics
• Backward-looking
• Sometimes only short-term impact (e.g. recent publications)
• Using recent publications can be problematic
• Let’s discuss an example.

What would you say?
• Institute created in October 2011
• Very young researchers appointed (age ~30)
• Since then ~50 publications have been produced
• Is a citation analysis useful?

5) Limited reliability
• Dependence on the volume of citations and publications
• Small numbers of publications introduce noise (individual level)
• Some disciplines have a low ‘citation density’ (e.g. mathematics, engineering, and most social sciences)
• This limitation cannot be solved
• Let’s discuss an example.

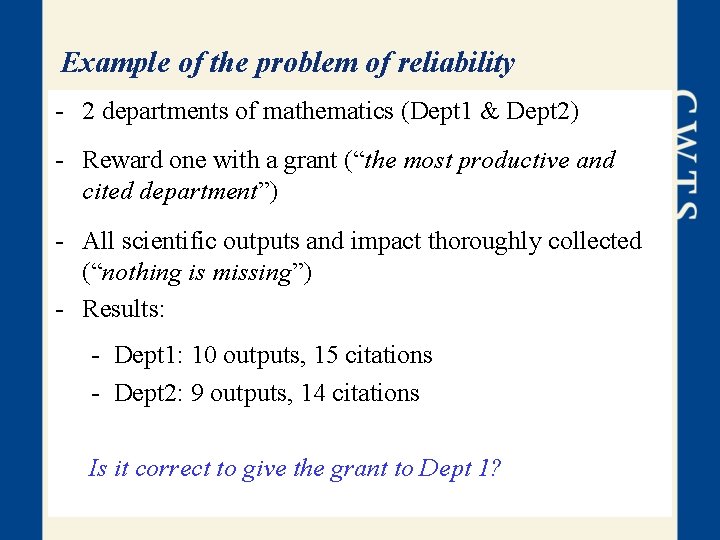

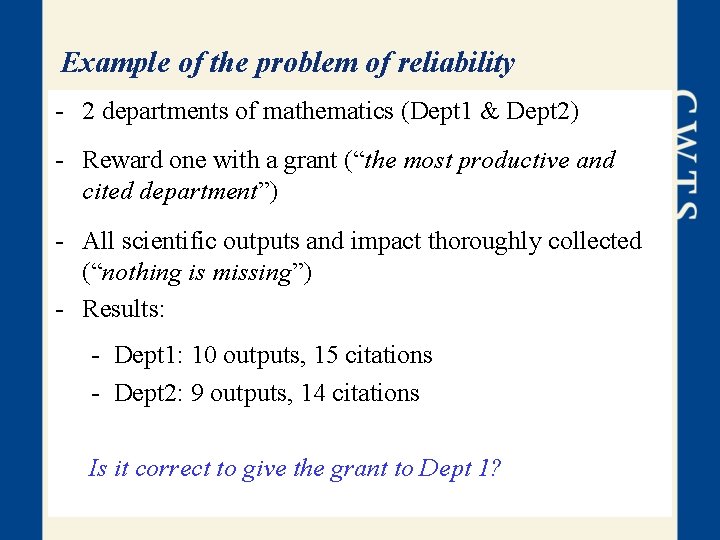

Example of the problem of reliability
• 2 departments of mathematics (Dept 1 & Dept 2)
• Reward one with a grant (“the most productive and cited department”)
• All scientific outputs and impact thoroughly collected (“nothing is missing”)
• Results:
– Dept 1: 10 outputs, 15 citations
– Dept 2: 9 outputs, 14 citations
Is it correct to give the grant to Dept 1?

6) Behavioral effects of citation analysis
• Researchers may change their behaviour
• Some of these changes are desired
• Others are not:
– salami slicing, multiple publication, citation cliques, self-citations, etc.

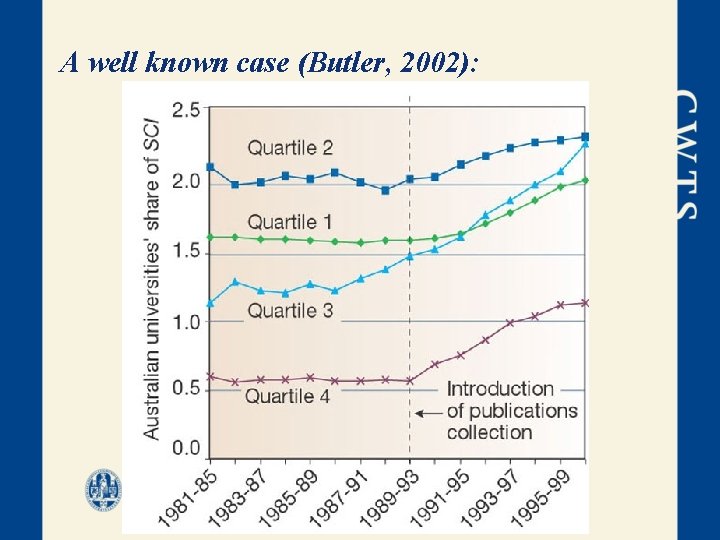

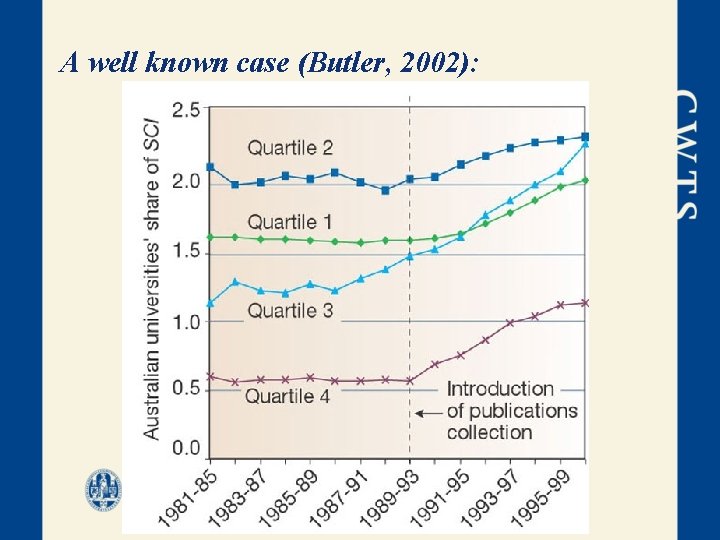

A well-known case (Butler, 2002):
• In 1993 the Australian government changed its policy for research funding allocation
• Greater emphasis was placed on the number of publications in the SCI
• What do you think happened to scientific production in Australia after 1993?

A well known case (Butler, 2002):

7) Data limitations
• Coverage limitations (e.g. WoS/Scopus)
• No books / local journals covered
• No data on the ‘input side’
– Number of scientists; money spent; etc.

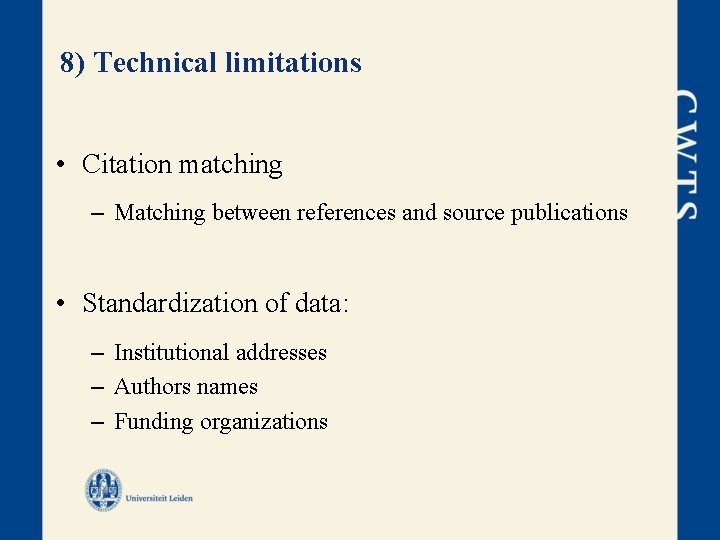

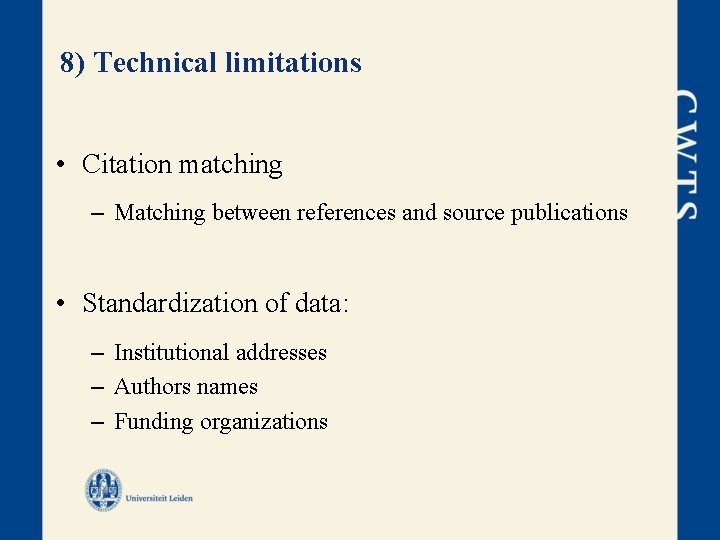

8) Technical limitations
• Citation matching
– Matching between references and source publications
• Standardization of data:
– Institutional addresses
– Author names
– Funding organizations

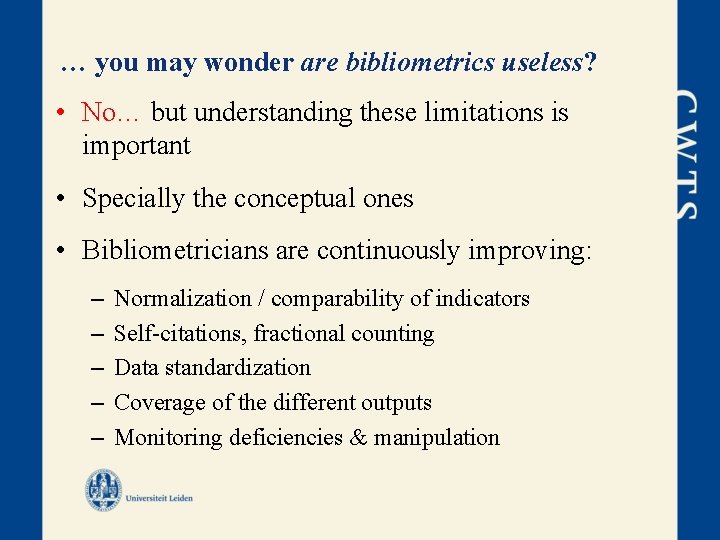

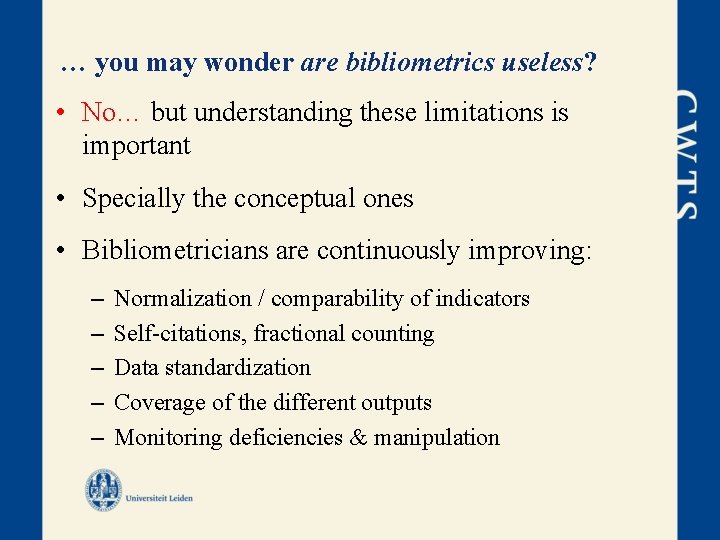

… you may wonder: are bibliometrics useless?
• No… but understanding these limitations is important
• Especially the conceptual ones
• Bibliometricians are continuously improving:
– Normalization / comparability of indicators
– Self-citations, fractional counting
– Data standardization
– Coverage of the different outputs
– Monitoring deficiencies & manipulation

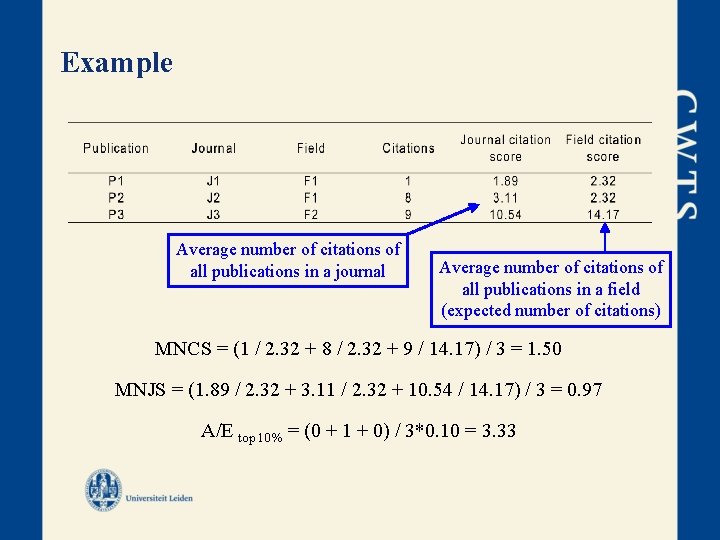

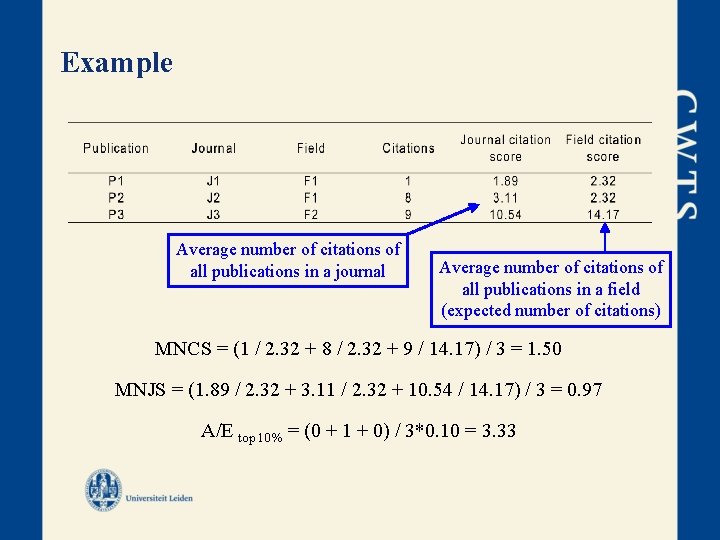

Example
Column labels: average number of citations of all publications in a journal; average number of citations of all publications in a field (expected number of citations)
MNCS = (1 / 2.32 + 8 / 2.32 + 9 / 14.17) / 3 = 1.50
MNJS = (1.89 / 2.32 + 3.11 / 2.32 + 10.54 / 14.17) / 3 = 0.97
A/E top 10% = (0 + 1 + 0) / (3 × 0.10) = 3.33
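The A/E top 10% value compares the number of publications that actually fall in the top 10% of their field with the number expected by chance, which is 10% of the publication count. A minimal Python sketch, with a hypothetical function name and the three flags read off the example (only the second publication is in the top 10%):

```python
def actual_to_expected_top10(in_top10_flags, top_share=0.10):
    """Actual number of top-10% publications divided by the expected number."""
    flags = list(in_top10_flags)
    expected = top_share * len(flags)
    return sum(flags) / expected


# 0 / 1 / 0 in the example: only the second publication is in its field's top 10%.
flags = [False, True, False]
a_e_top10 = actual_to_expected_top10(flags)
print(f"A/E top 10% = {a_e_top10:.2f}")
```

With only three publications the expected count is 0.3, so a single highly cited paper produces a large ratio; values based on so few publications are not stable.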

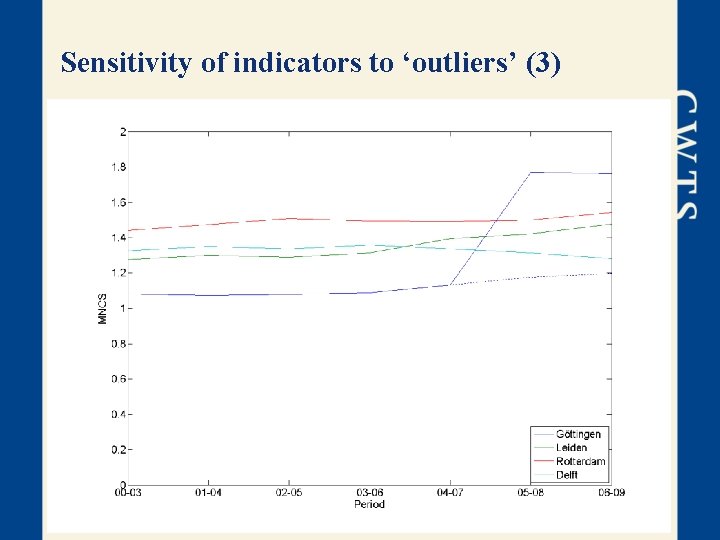

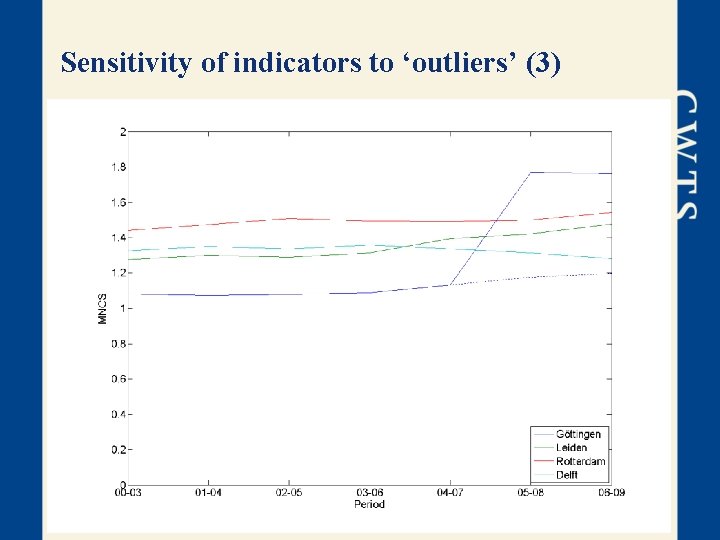

Sensitivity of indicators to ‘outliers’ (3)

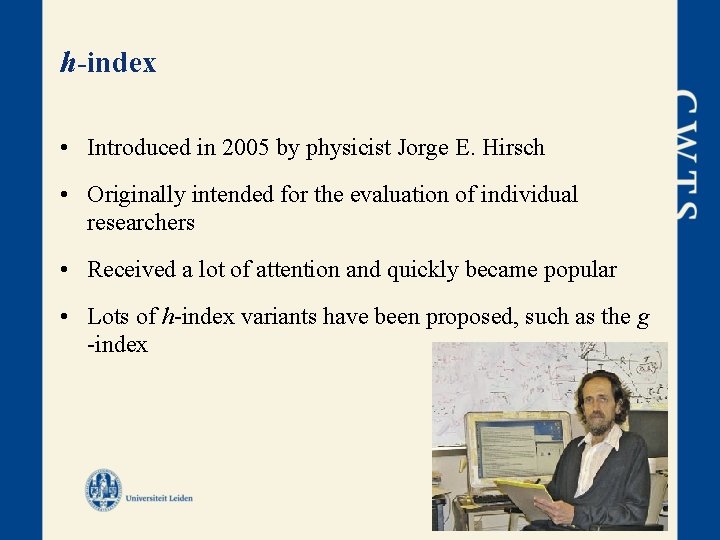

h-index
• Introduced in 2005 by physicist Jorge E. Hirsch
• Originally intended for the evaluation of individual researchers
• Received a lot of attention and quickly became popular
• Lots of h-index variants have been proposed, such as the g-index

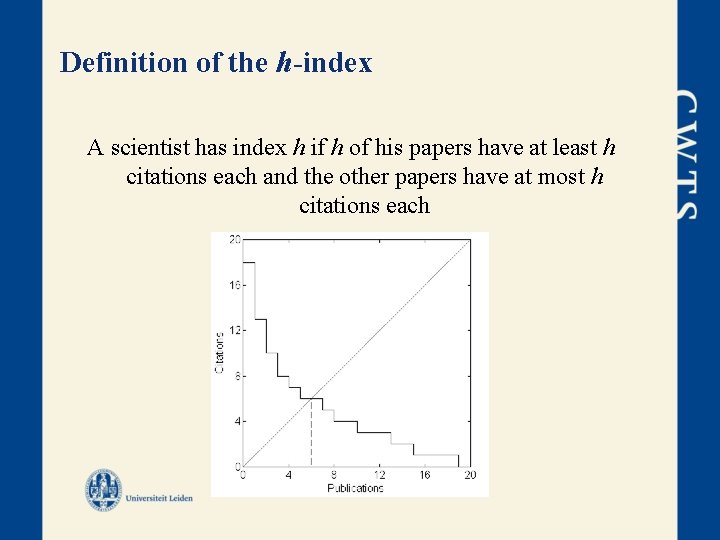

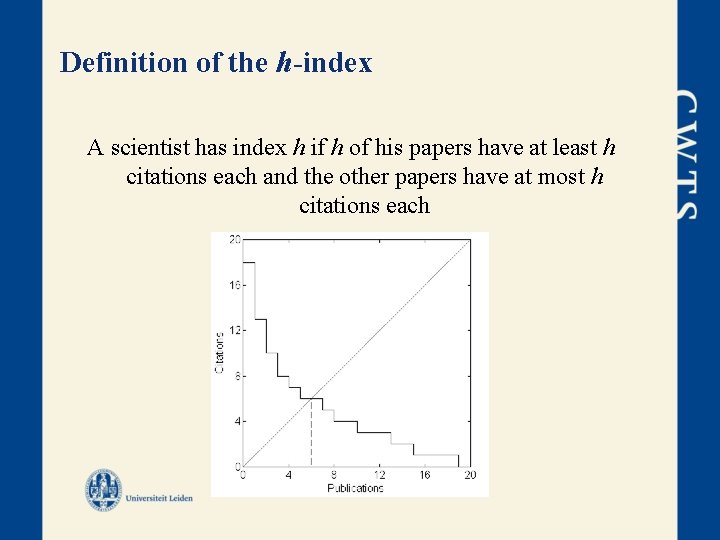

Definition of the h-index A scientist has index h if h of his papers have at least h citations each and the other papers have at most h citations each
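The definition translates directly into code: sort the citation counts in descending order and find the last rank at which the count is still at least the rank. A minimal Python sketch with made-up citation lists:

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break  # counts only decrease from here, so no later rank can qualify
    return h


# Hypothetical citation records.
print(h_index([10, 8, 5, 4, 3]))
print(h_index([25, 8, 5, 3, 3]))
print(h_index([]))
```

In the second list the paper with 25 citations contributes no more than one with 3 would, so very highly cited papers carry little weight in the h-index.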

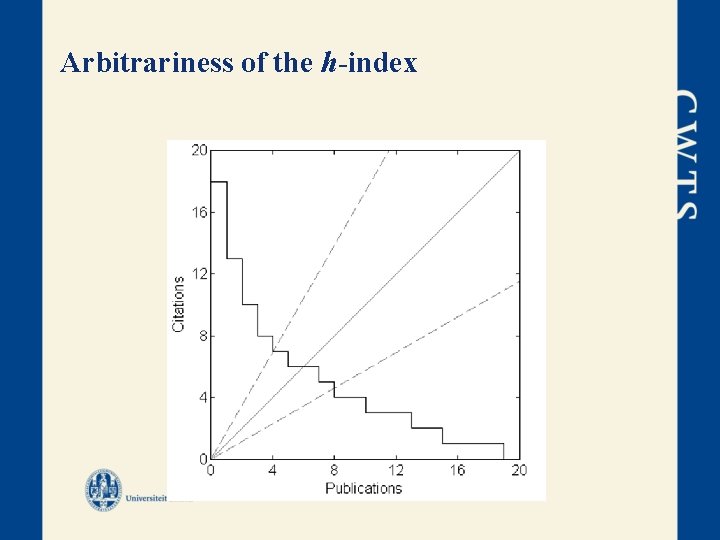

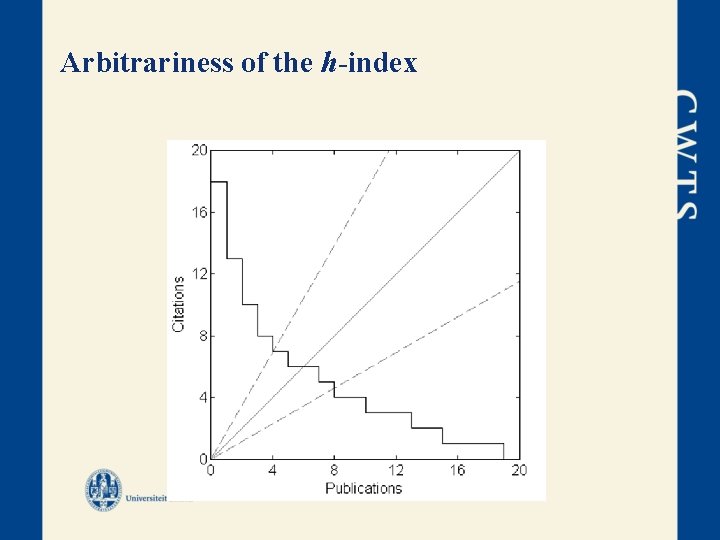

Arbitrariness of the h-index

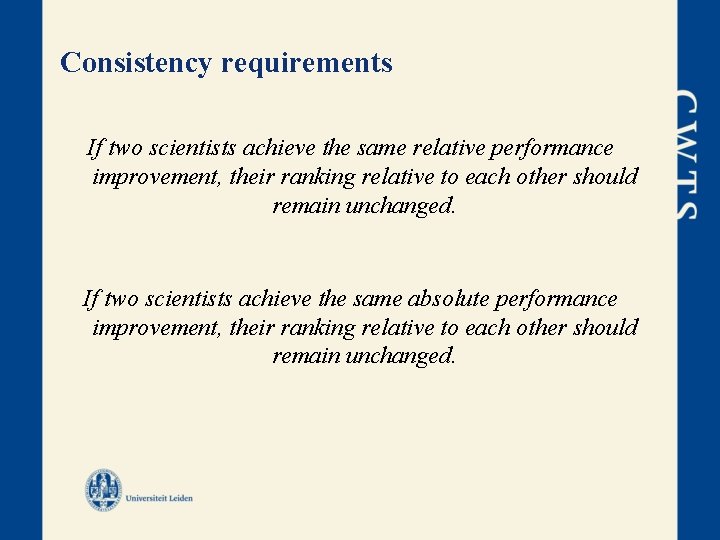

Consistency requirements
• If two scientists achieve the same relative performance improvement, their ranking relative to each other should remain unchanged.
• If two scientists achieve the same absolute performance improvement, their ranking relative to each other should remain unchanged.
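To make the second requirement concrete, here is a small Python sketch in which two scientists gain exactly the same new papers and their h-index ranking nonetheless flips. The scientists X and Y and their citation counts are constructed for illustration and are not taken from the slides.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


# Hypothetical scientists.
x_before = [4, 4, 4, 4]  # 4 papers with 4 citations each
y_before = [6, 6, 6]     # 3 papers with 6 citations each

# Same absolute improvement for both: 2 new papers with 6 citations each.
improvement = [6, 6]
x_after = x_before + improvement
y_after = y_before + improvement

print("before:", h_index(x_before), h_index(y_before))  # X ranks above Y
print("after: ", h_index(x_after), h_index(y_after))    # Y now ranks above X
```

X is ahead of Y before the improvement and behind afterwards, although both added identical papers, which violates the absolute-improvement requirement.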

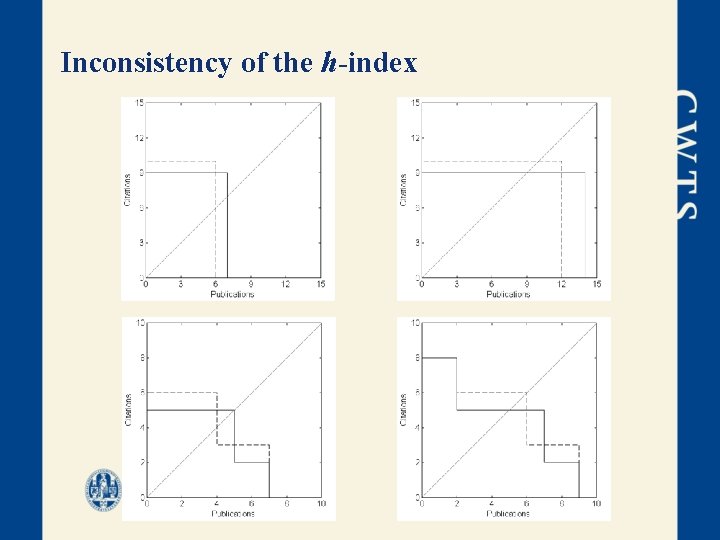

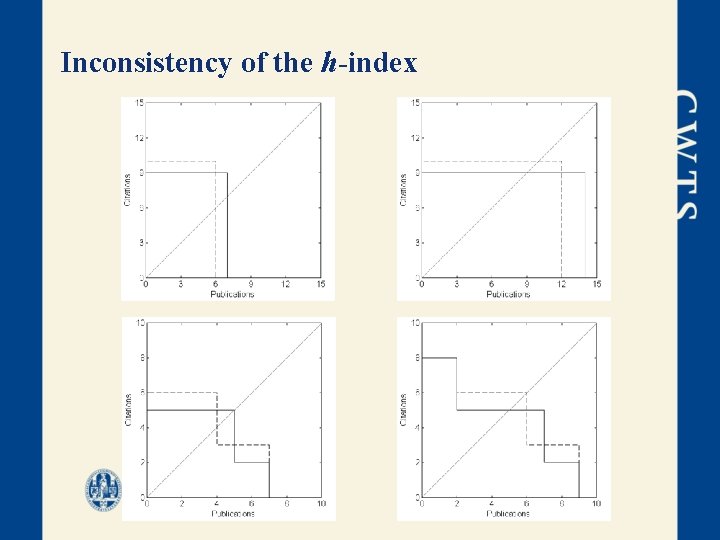

Inconsistency of the h-index

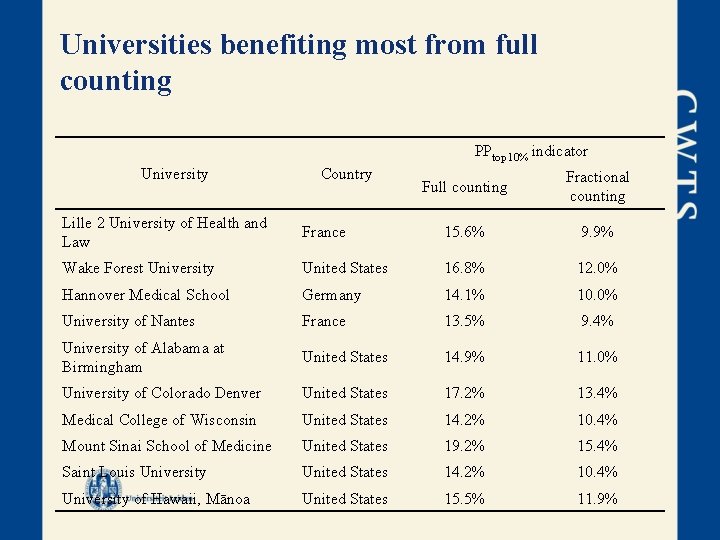

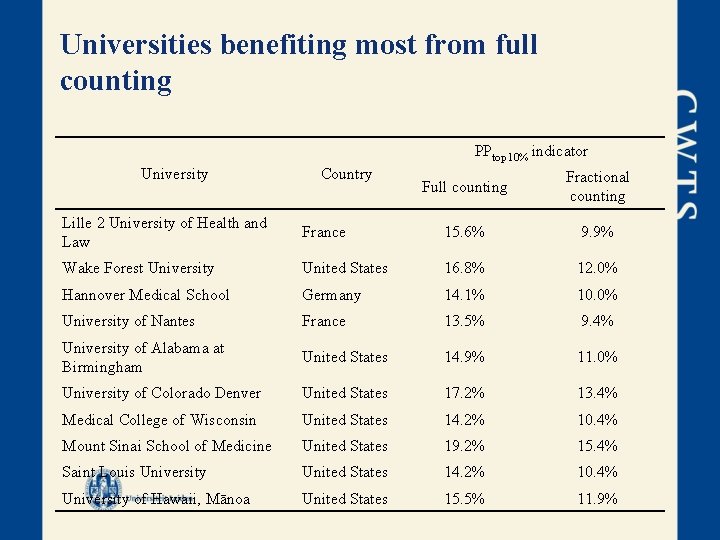

Universities benefiting most from full counting (PPtop 10% indicator)

| University | Country | Full counting | Fractional counting |
|---|---|---|---|
| Lille 2 University of Health and Law | France | 15.6% | 9.9% |
| Wake Forest University | United States | 16.8% | 12.0% |
| Hannover Medical School | Germany | 14.1% | 10.0% |
| University of Nantes | France | 13.5% | 9.4% |
| University of Alabama at Birmingham | United States | 14.9% | 11.0% |
| University of Colorado Denver | United States | 17.2% | 13.4% |
| Medical College of Wisconsin | United States | 14.2% | 10.4% |
| Mount Sinai School of Medicine | United States | 19.2% | 15.4% |
| Saint Louis University | United States | 14.2% | 10.4% |
| University of Hawaii, Mānoa | United States | 15.5% | 11.9% |

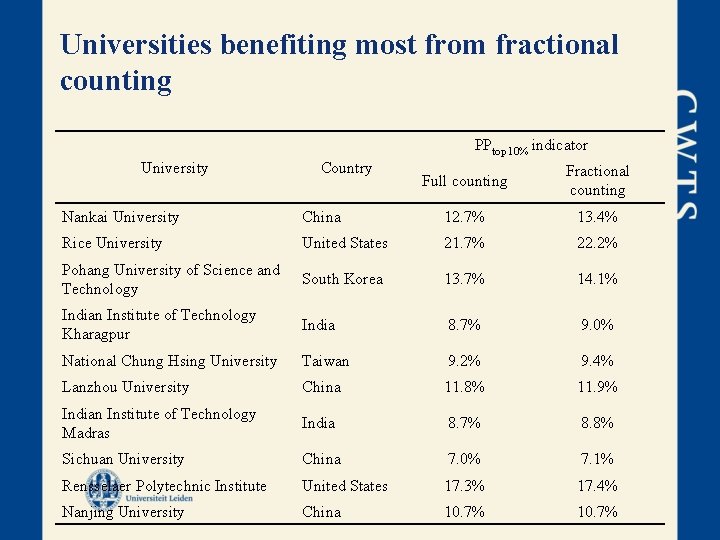

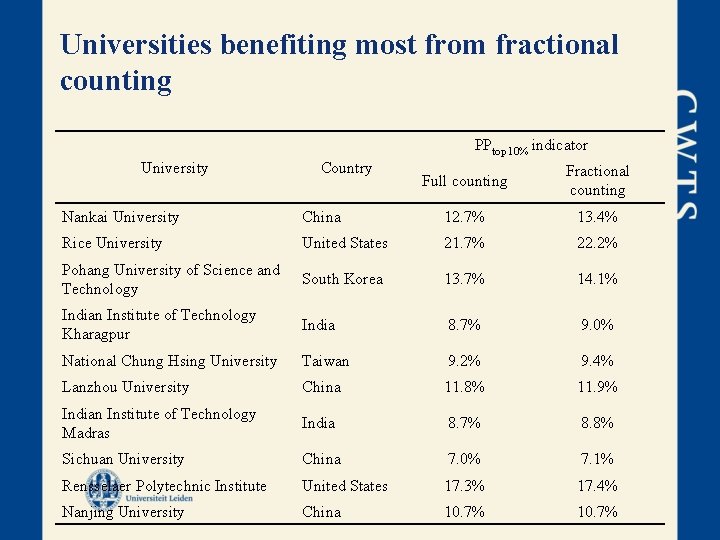

Universities benefiting most from fractional counting (PPtop 10% indicator)

| University | Country | Full counting | Fractional counting |
|---|---|---|---|
| Nankai University | China | 12.7% | 13.4% |
| Rice University | United States | 21.7% | 22.2% |
| Pohang University of Science and Technology | South Korea | 13.7% | 14.1% |
| Indian Institute of Technology Kharagpur | India | 8.7% | 9.0% |
| National Chung Hsing University | Taiwan | 9.2% | 9.4% |
| Lanzhou University | China | 11.8% | 11.9% |
| Indian Institute of Technology Madras | India | 8.7% | 8.8% |
| Sichuan University | China | 7.0% | 7.1% |
| Rensselaer Polytechnic Institute | United States | 17.3% | 17.4% |
| Nanjing University | China | 10.7% | |