MEASURING TEACHERS' USE OF FORMATIVE ASSESSMENT: A LEARNING PROGRESSIONS APPROACH

- Slides: 43

MEASURING TEACHERS' USE OF FORMATIVE ASSESSMENT: A LEARNING PROGRESSIONS APPROACH BRENT DUCKOR (SJSU) DIANA WILMOT (PAUSD) BILL CONRAD & JIMMY SCHERRER (SCCOE) AMY DRAY (UCB)

WHY FORMATIVE ASSESSMENT?

- Black and Wiliam (1998) report that studies of formative assessment (FA) show an effect size on standardized tests between 0.4 and 0.7, far larger than most educational interventions and equivalent to approximately 8 months of additional instruction;
- Further, FA is particularly effective for low-achieving students, narrowing the gap between them and high achievers while raising overall achievement;
- Enhancing FA would be an extremely cost-effective way to improve teaching practice;
- Unfortunately, they also find that FA is relatively rare in the classroom, and that most teachers lack effective FA skills.
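For readers unfamiliar with the term, an effect size of this kind is commonly computed as a standardized mean difference (Cohen's d). The sketch below is only an illustration: the function name `cohens_d` and both score lists are hypothetical and are not taken from Black and Wiliam's data.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_t, n_c = len(treatment), len(control)
    pooled_var = (
        (n_t - 1) * variance(treatment) + (n_c - 1) * variance(control)
    ) / (n_t + n_c - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


# Hypothetical test scores, invented for illustration only.
fa_class = [72, 75, 78, 81, 84]
comparison_class = [70, 73, 76, 79, 82]

d = cohens_d(fa_class, comparison_class)
print(f"Effect size d = {d:.2f}")
```

With these invented scores, a 2-point difference in means against a pooled standard deviation of about 4.7 points falls at the low end of the 0.4 to 0.7 range cited on the slide.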

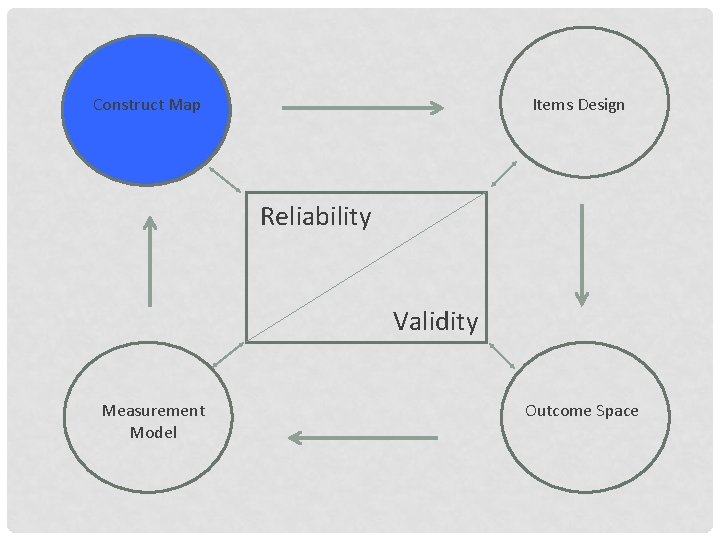

MEASURING EFFECTIVE FA PRACTICE: TOWARD A CYCLE OF PSYCHOMETRIC INQUIRY

- Define constructs in multi-dimensional space
- Design items and observation protocols
- Iterate scoring strategy in alignment with construct maps
- Apply measurement model to validate teacher and item-level claims and to warrant inferences about effective practice

FA

Ask an expert

TEACHERS WHO PRACTICE ASSESSMENT FOR LEARNING KNOW AND CAN

- understand and articulate, in advance of teaching, the achievement targets that their students are to hit;
- inform their students about those learning goals, in terms that students understand;
- translate classroom assessment results into frequent descriptive feedback for students, providing them with specific insights as to how to improve;
- continuously adjust instruction based on the results of classroom assessments.

FA 1.0 RESEARCH SUGGESTS

| Novice | Expert |
| --- | --- |
| Poses closed ended questions | Frames open ended, probative questions |
| Calls on first hand in the air | Allows sufficient wait time |
| Looks to usual suspects | Systematically uses response routines to increase participation |
| Ignores and/or fails to uncover student thinking | Anticipates student thinking patterns and greets them |

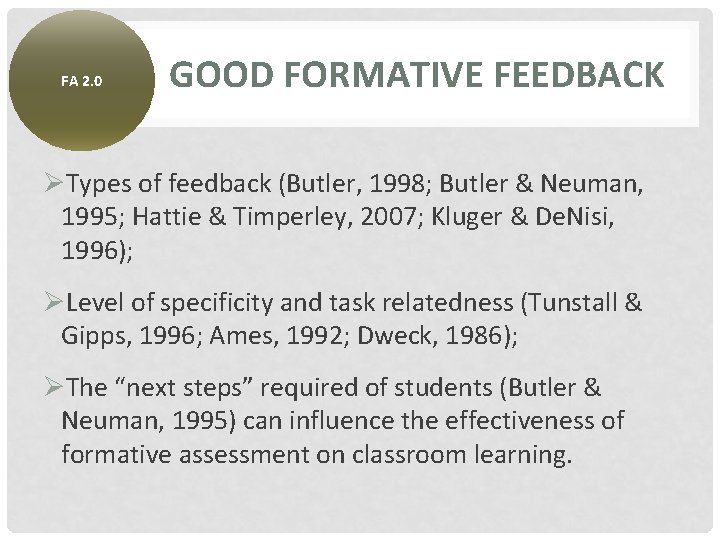

FA 2.0 GOOD FORMATIVE FEEDBACK

- Types of feedback (Butler, 1998; Butler & Neuman, 1995; Hattie & Timperley, 2007; Kluger & DeNisi, 1996);
- Level of specificity and task relatedness (Tunstall & Gipps, 1996; Ames, 1992; Dweck, 1986);
- The “next steps” required of students (Butler & Neuman, 1995)

can influence the effectiveness of formative assessment on classroom learning.

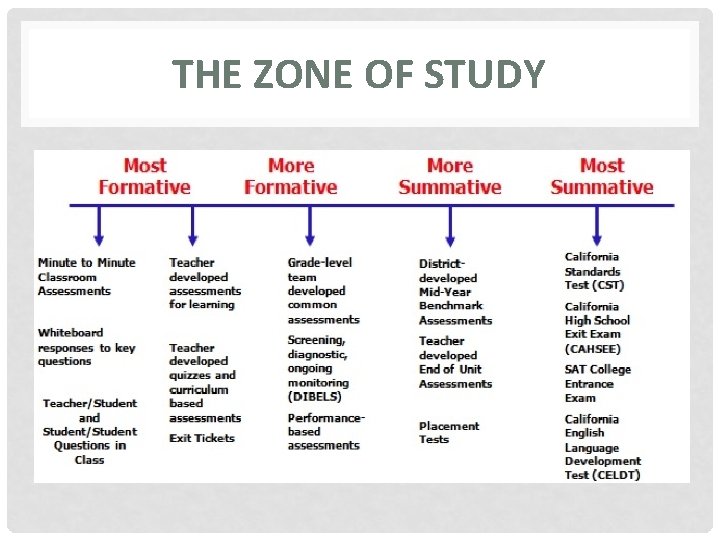

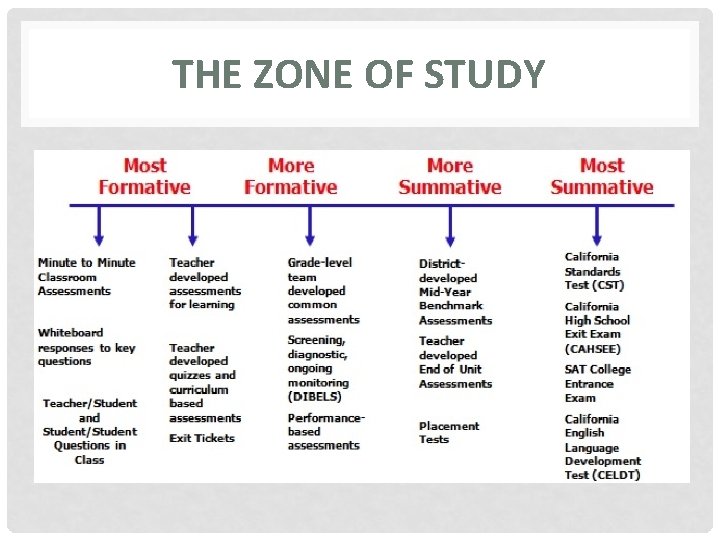

THE ZONE OF STUDY

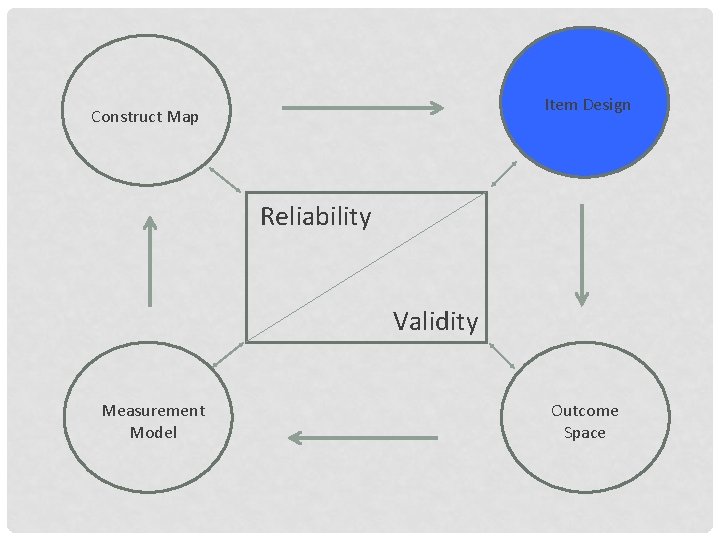

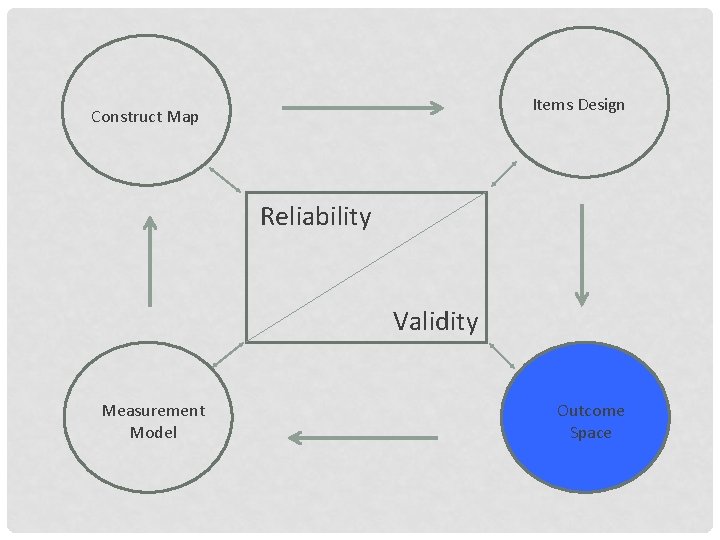

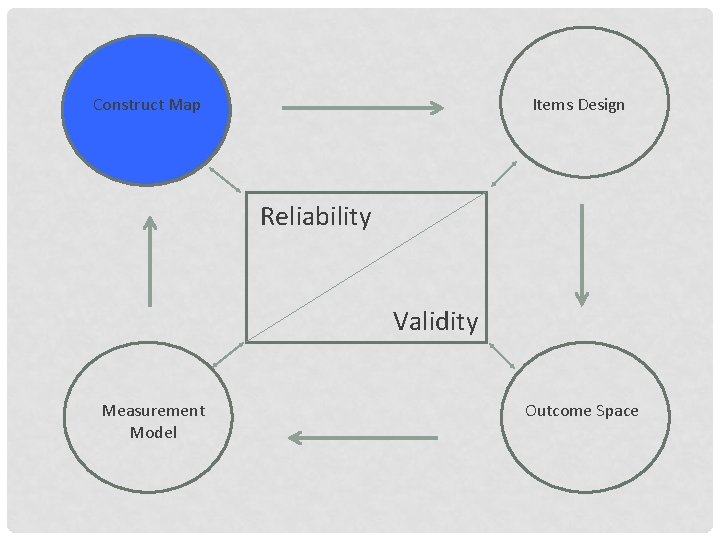

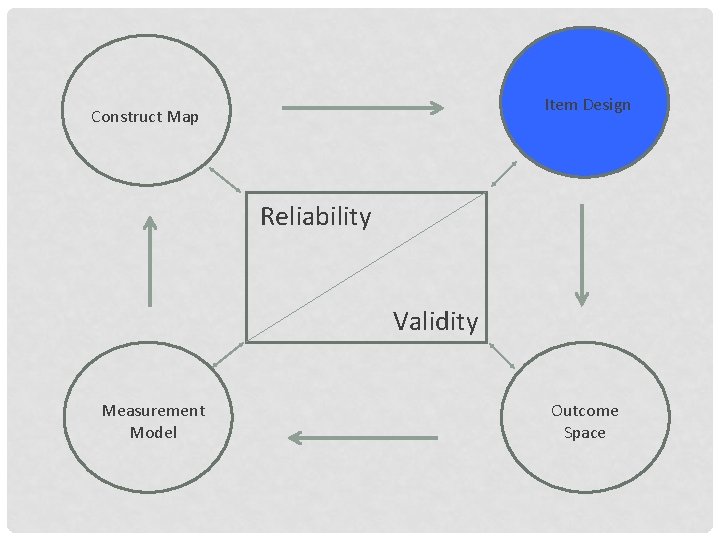

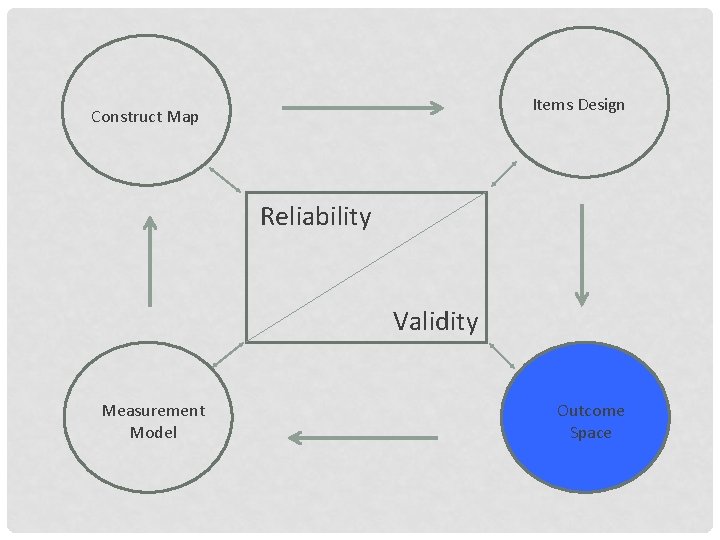

Construct Map Items Design Reliability Validity Measurement Model Outcome Space

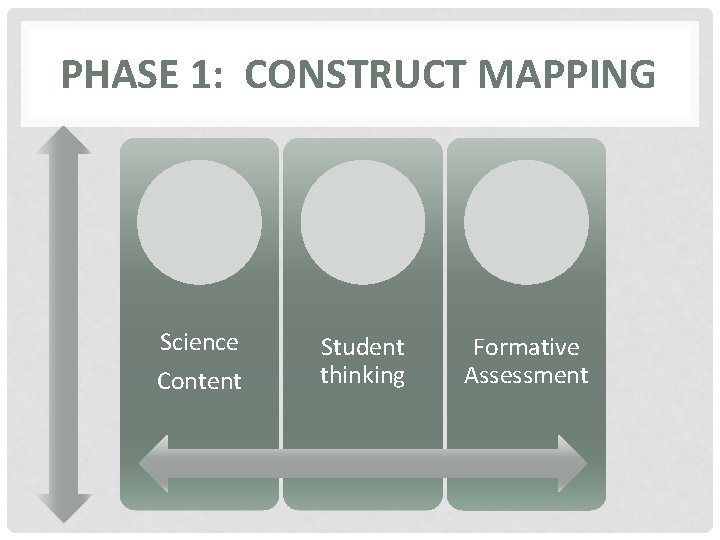

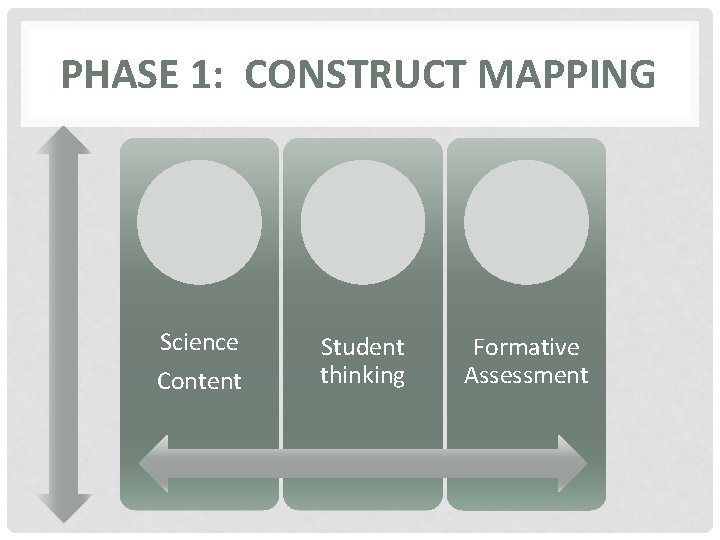

PHASE 1: CONSTRUCT MAPPING

- Science Content
- Student thinking
- Formative Assessment

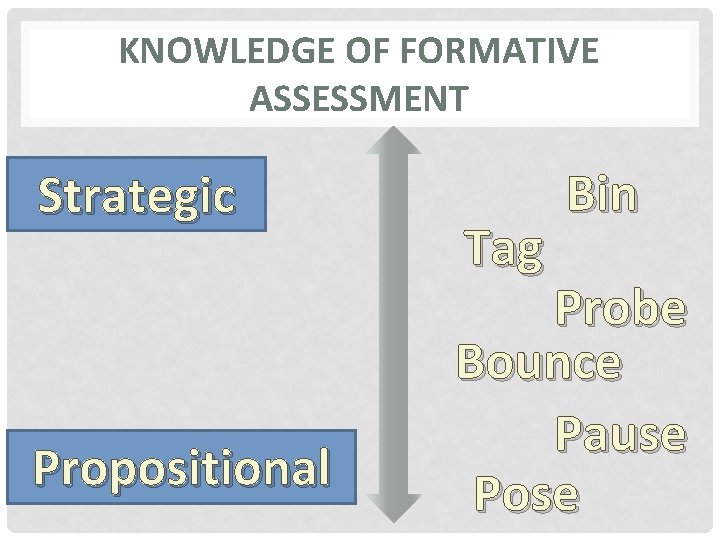

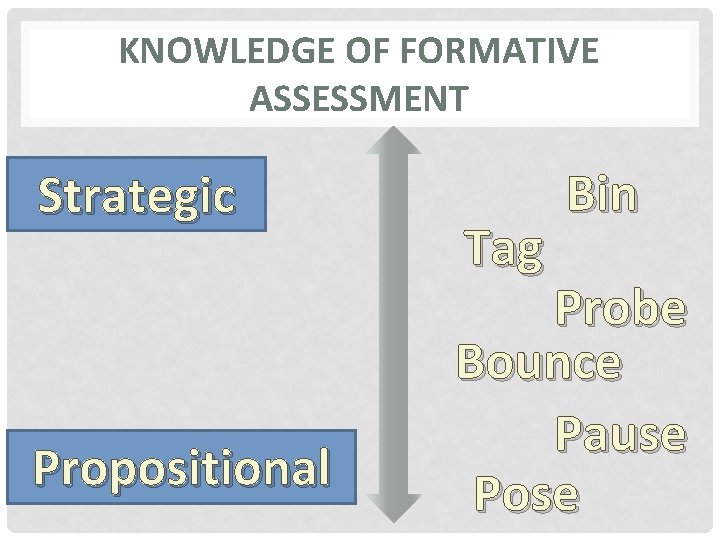

KNOWLEDGE OF FORMATIVE ASSESSMENT Strategic Propositional Tag Bin Probe Bounce Pause Pose

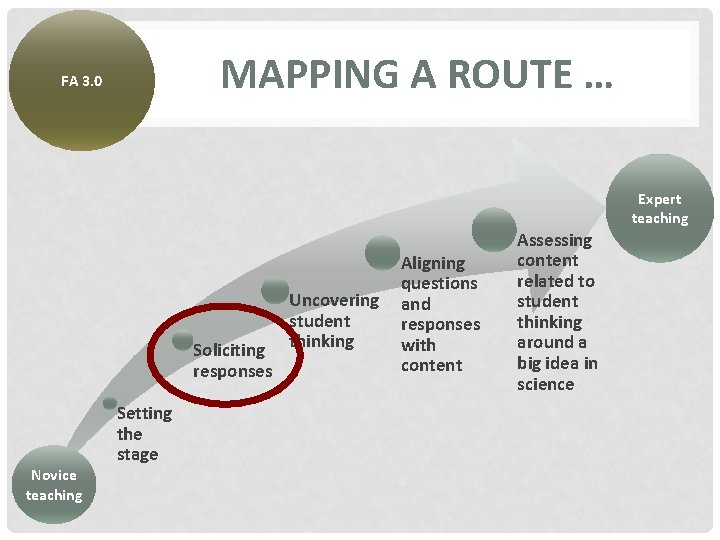

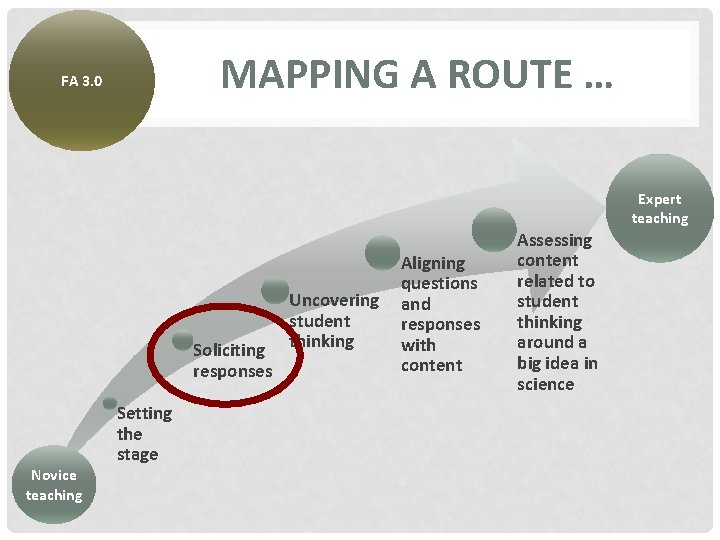

MAPPING A ROUTE … FA 3.0

Expert teaching

- Assessing content related to student thinking around a big idea in science
- Aligning questions and student responses with content
- Uncovering thinking
- Soliciting responses
- Setting the stage

Novice teaching

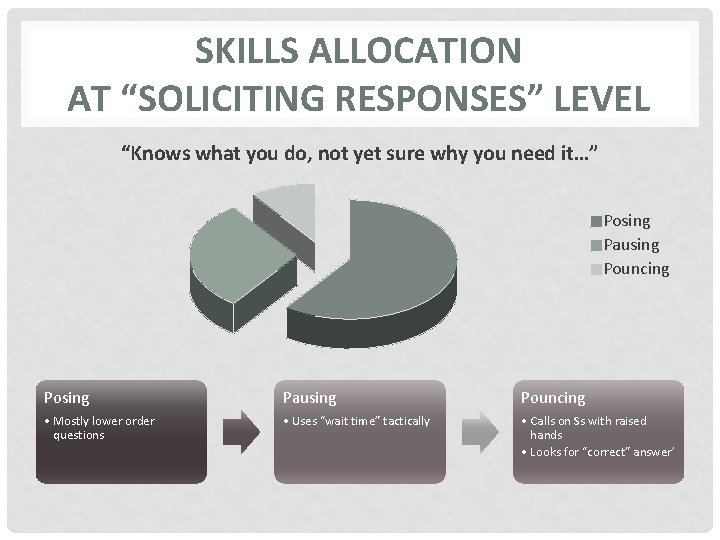

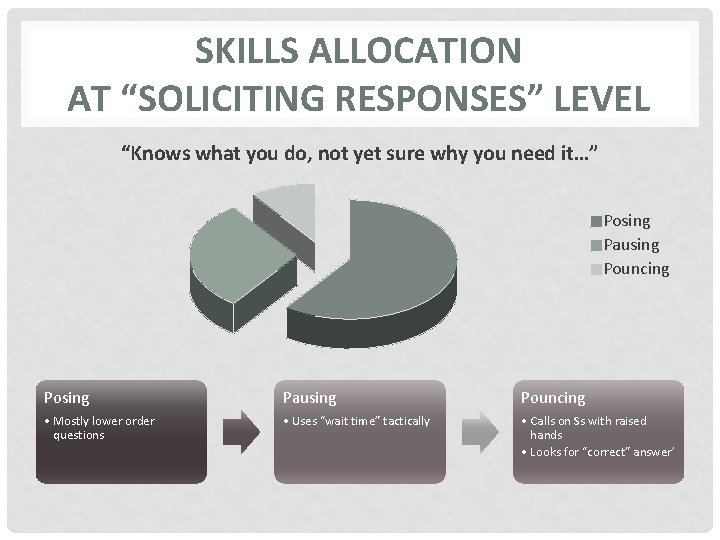

SKILLS ALLOCATION AT “SOLICITING RESPONSES” LEVEL

“Knows what you do, not yet sure why you need it…”

Posing, Pausing, Pouncing

- Mostly lower order questions
- Uses “wait time” tactically
- Calls on Ss with raised hands
- Looks for “correct” answer

Item Design Construct Map Reliability Validity Measurement Model Outcome Space

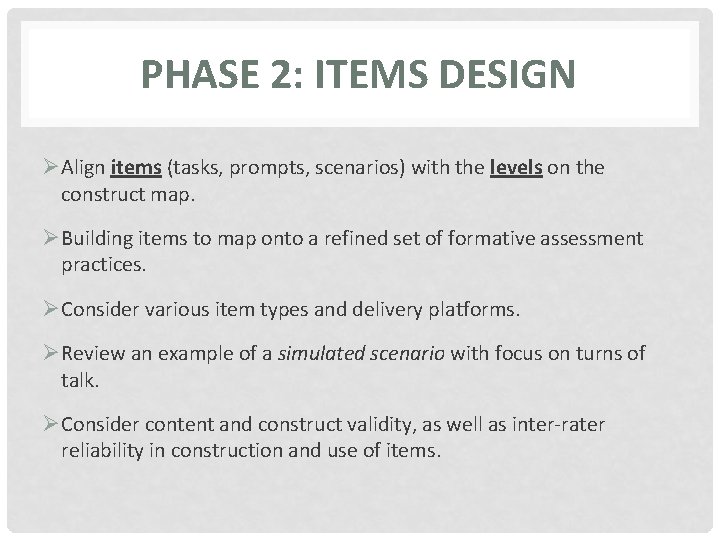

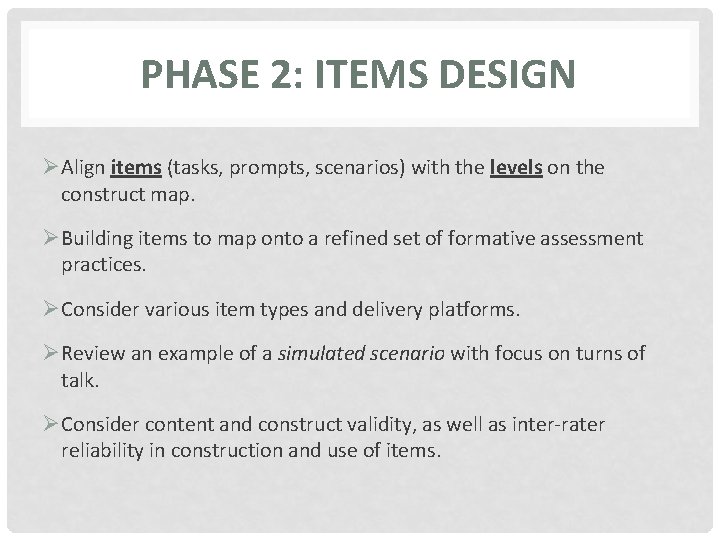

PHASE 2: ITEMS DESIGN

- Align items (tasks, prompts, scenarios) with the levels on the construct map.
- Build items to map onto a refined set of formative assessment practices.
- Consider various item types and delivery platforms.
- Review an example of a simulated scenario with a focus on turns of talk.
- Consider content and construct validity, as well as inter-rater reliability, in the construction and use of items.

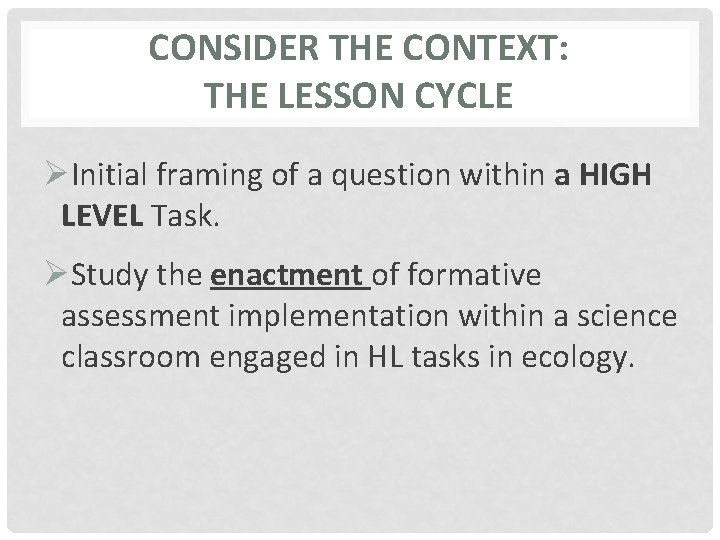

CONSIDER THE CONTEXT: THE LESSON CYCLE

- Initial framing of a question within a HIGH LEVEL Task.
- Study the enactment of formative assessment implementation within a science classroom engaged in HL tasks in ecology.

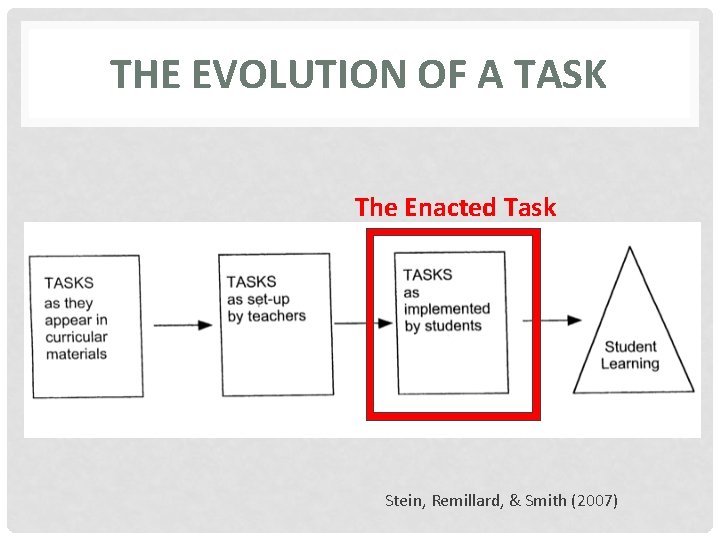

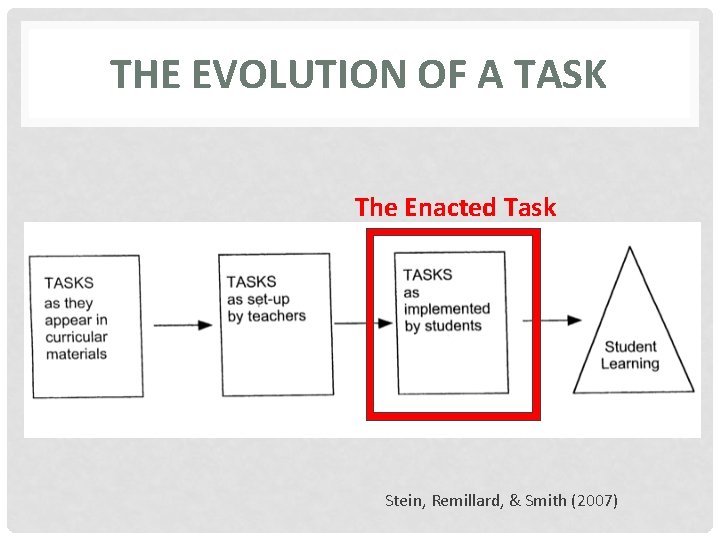

THE EVOLUTION OF A TASK

The Enacted Task

Stein, Remillard, & Smith (2007)

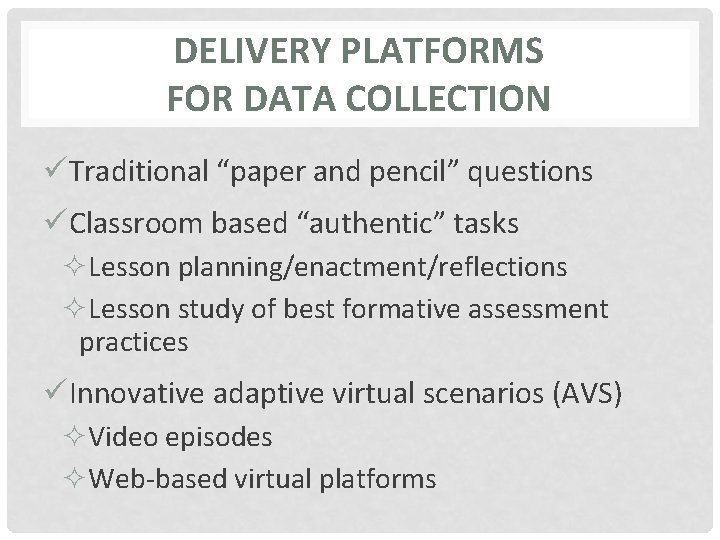

DELIVERY PLATFORMS FOR DATA COLLECTION

- Traditional “paper and pencil” questions
- Classroom based “authentic” tasks
  - Lesson planning/enactment/reflections
  - Lesson study of best formative assessment practices
- Innovative adaptive virtual scenarios (AVS)
  - Video episodes
  - Web-based virtual platforms

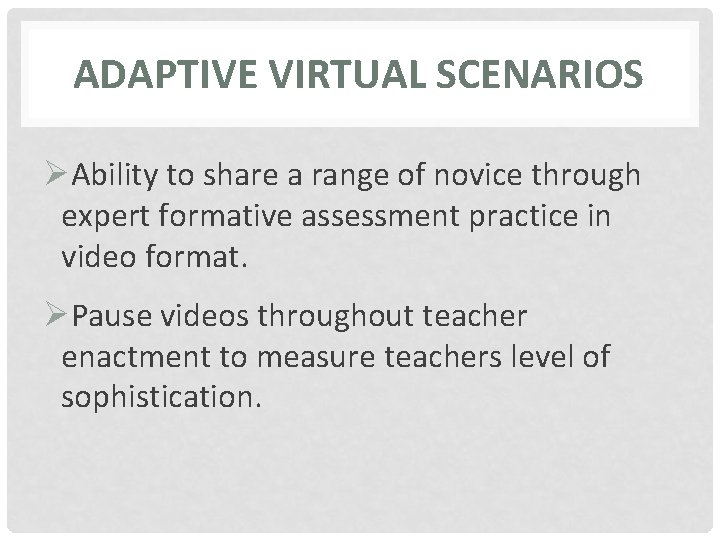

ADAPTIVE VIRTUAL SCENARIOS

- Ability to share a range of novice through expert formative assessment practice in video format.
- Pause videos throughout teacher enactment to measure teachers' level of sophistication.

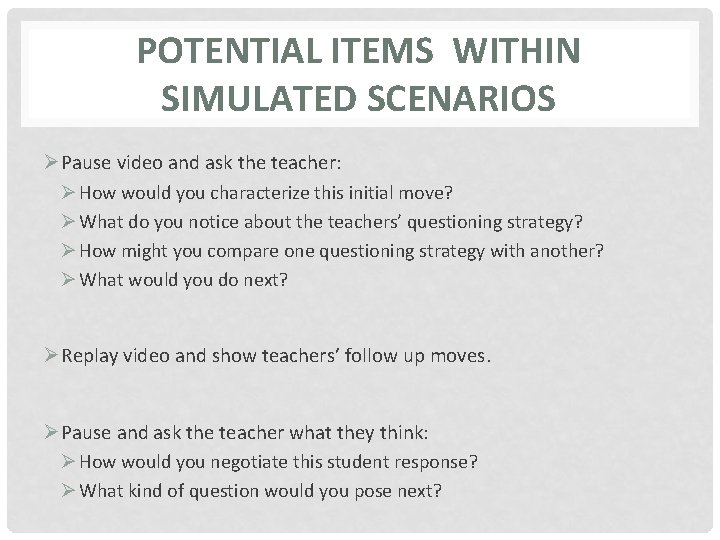

POTENTIAL ITEMS WITHIN SIMULATED SCENARIOS

- Pause video and ask the teacher:
  - How would you characterize this initial move?
  - What do you notice about the teacher's questioning strategy?
  - How might you compare one questioning strategy with another?
  - What would you do next?
- Replay video and show the teacher's follow-up moves.
- Pause and ask the teacher what they think:
  - How would you negotiate this student response?
  - What kind of question would you pose next?

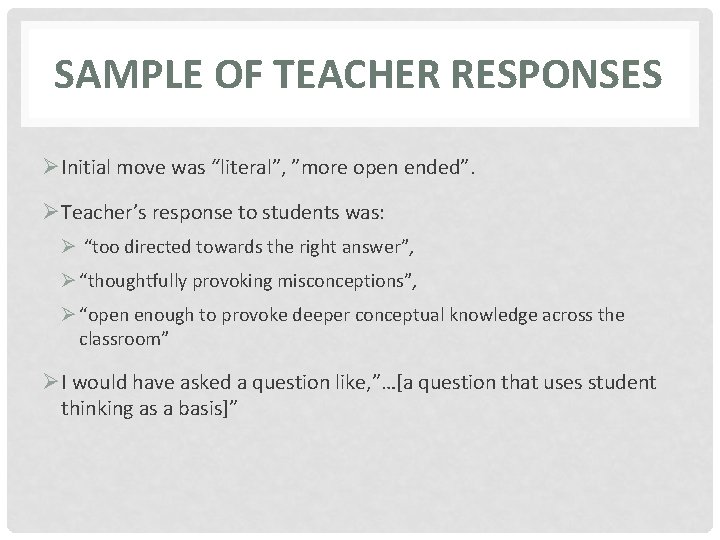

SAMPLE OF TEACHER RESPONSES

- Initial move was “literal”, “more open ended”.
- Teacher’s response to students was:
  - “too directed towards the right answer”,
  - “thoughtfully provoking misconceptions”,
  - “open enough to provoke deeper conceptual knowledge across the classroom”
- I would have asked a question like, “…[a question that uses student thinking as a basis]”

ADMINISTRATION OF TOOLS

- Administer tools with the widest range of teachers possible
  - Pre-service
  - Induction years
  - Veteran 5-9 years
  - Veteran 10+ years
- Use adaptive technology to capture teachers' zone of proximal development in their expertise of formative assessment practices
- Examine the relationship (if any) between scores on multiple tools

Items Design Construct Map Reliability Validity Measurement Model Outcome Space

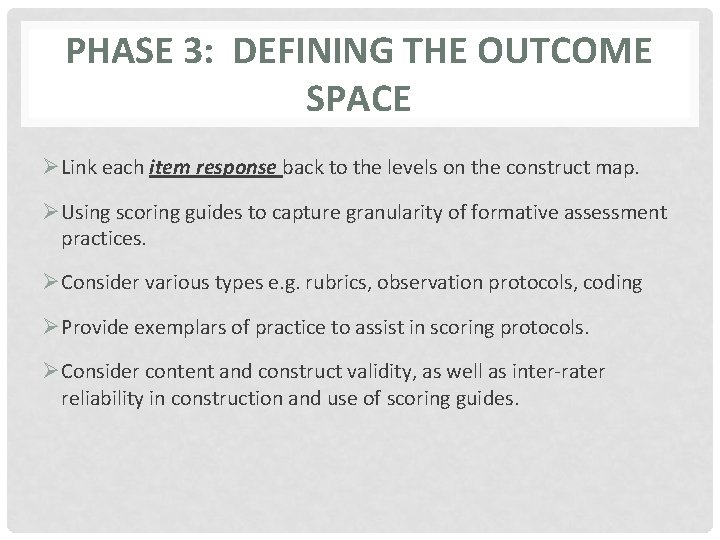

PHASE 3: DEFINING THE OUTCOME SPACE

- Link each item response back to the levels on the construct map.
- Use scoring guides to capture the granularity of formative assessment practices.
- Consider various types, e.g., rubrics, observation protocols, coding.
- Provide exemplars of practice to assist in scoring protocols.
- Consider content and construct validity, as well as inter-rater reliability, in the construction and use of scoring guides.
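One common way to check the inter-rater reliability mentioned above is Cohen's kappa, which corrects raw agreement between two raters for agreement expected by chance. The sketch below is an illustration only: the slides do not say which reliability statistic the team uses, and the function name `cohens_kappa` and both rating lists are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters on the same responses."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of responses")
    n = len(rater_a)
    # Proportion of responses on which the raters assigned the same level.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal level counts.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both raters used a single identical level throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical level assignments for eight responses, invented for illustration.
rater_a = ["L0", "L1", "L2", "L2", "L3", "L4", "L1", "L3"]
rater_b = ["L0", "L1", "L2", "L3", "L3", "L4", "L2", "L3"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa = {kappa:.2f}")
```

Here the raters agree on 6 of 8 responses (0.75), but kappa is lower because some of that agreement would occur by chance.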

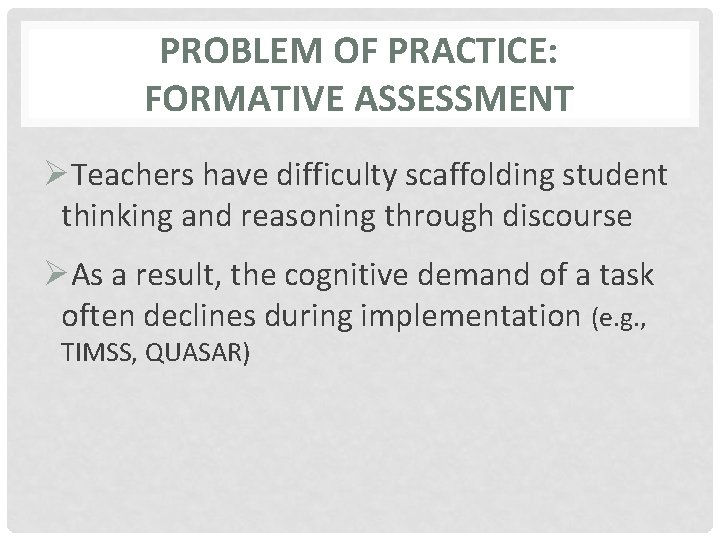

PROBLEM OF PRACTICE: FORMATIVE ASSESSMENT

- Teachers have difficulty scaffolding student thinking and reasoning through discourse
- As a result, the cognitive demand of a task often declines during implementation (e.g., TIMSS, QUASAR)

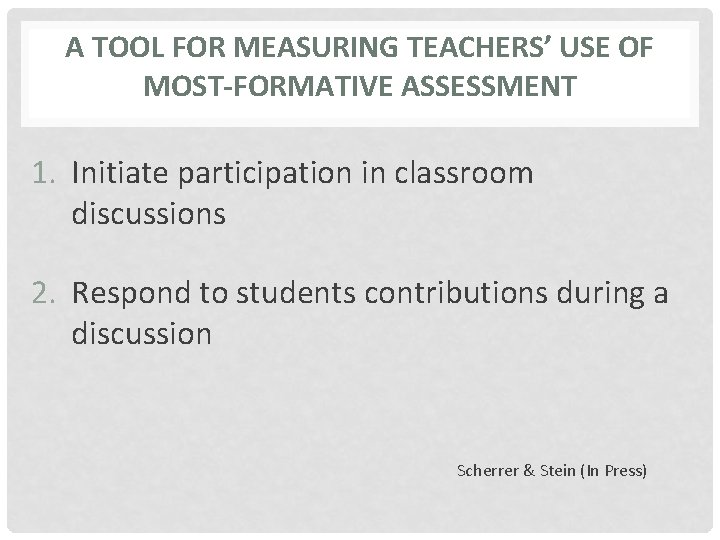

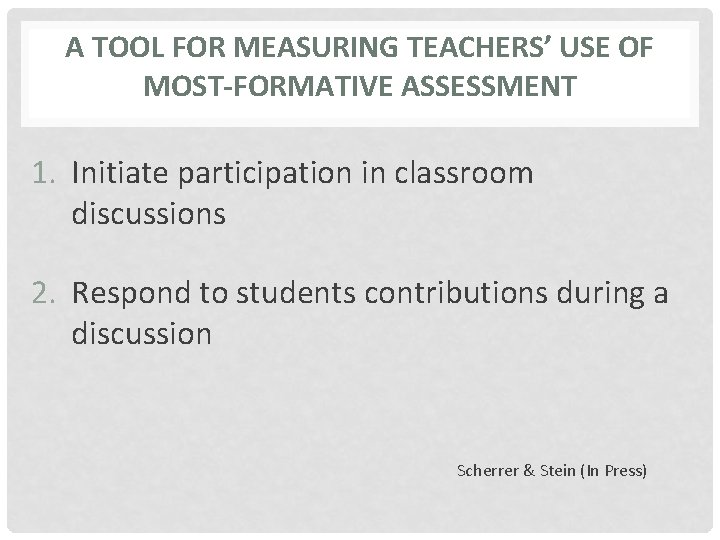

A TOOL FOR MEASURING TEACHERS’ USE OF MOST-FORMATIVE ASSESSMENT

1. Initiate participation in classroom discussions
2. Respond to students’ contributions during a discussion

Scherrer & Stein (In Press)

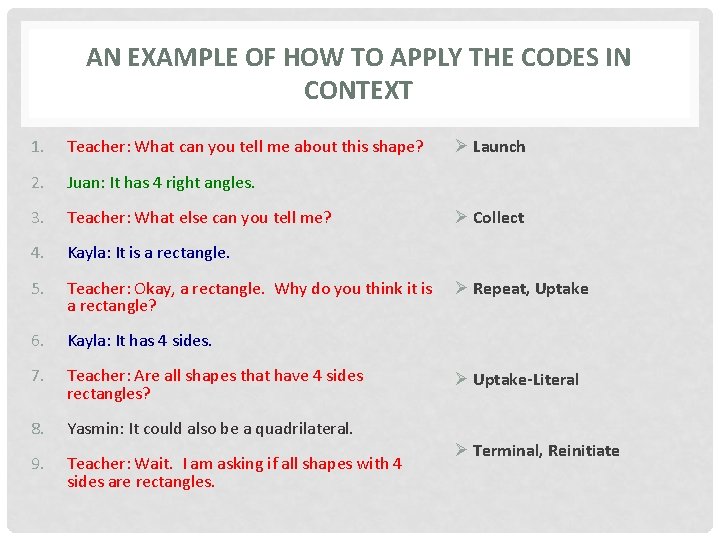

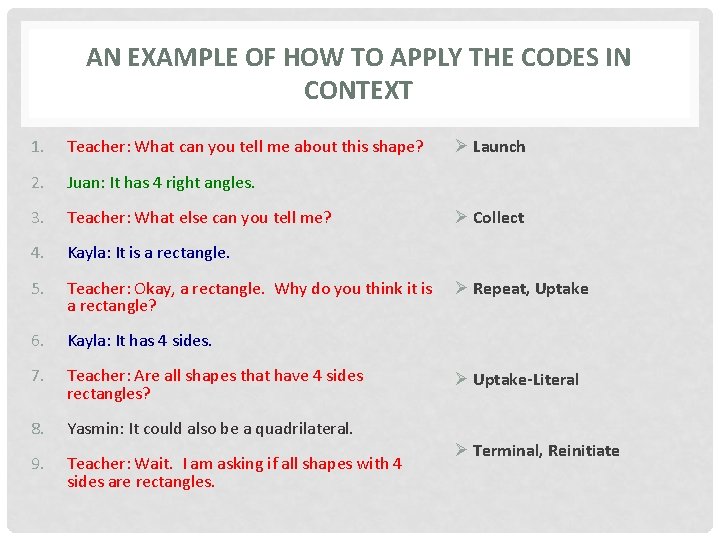

AN EXAMPLE OF HOW TO APPLY THE CODES IN CONTEXT

| Turn | Speaker | Utterance | Code |
| --- | --- | --- | --- |
| 1 | Teacher | What can you tell me about this shape? | Launch |
| 2 | Juan | It has 4 right angles. | |
| 3 | Teacher | What else can you tell me? | Collect |
| 4 | Kayla | It is a rectangle. | |
| 5 | Teacher | Okay, a rectangle. Why do you think it is a rectangle? | Repeat, Uptake |
| 6 | Kayla | It has 4 sides. | |
| 7 | Teacher | Are all shapes that have 4 sides rectangles? | Uptake-Literal |
| 8 | Yasmin | It could also be a quadrilateral. | |
| 9 | Teacher | Wait. I am asking if all shapes with 4 sides are rectangles. | Terminal, Reinitiate |

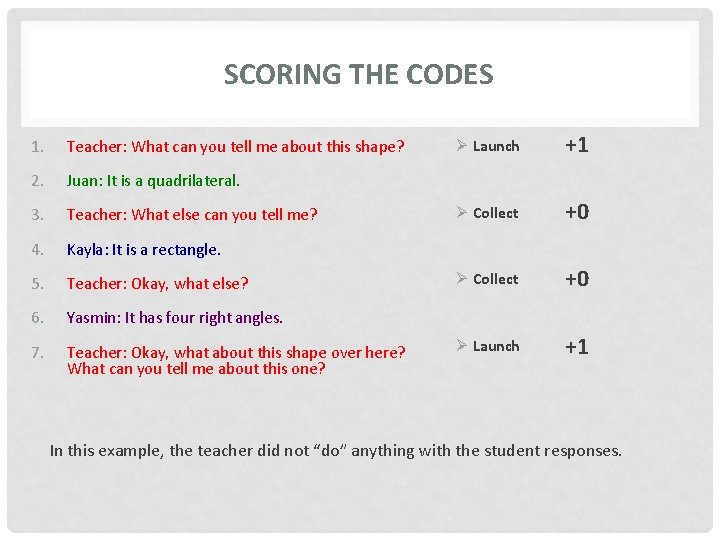

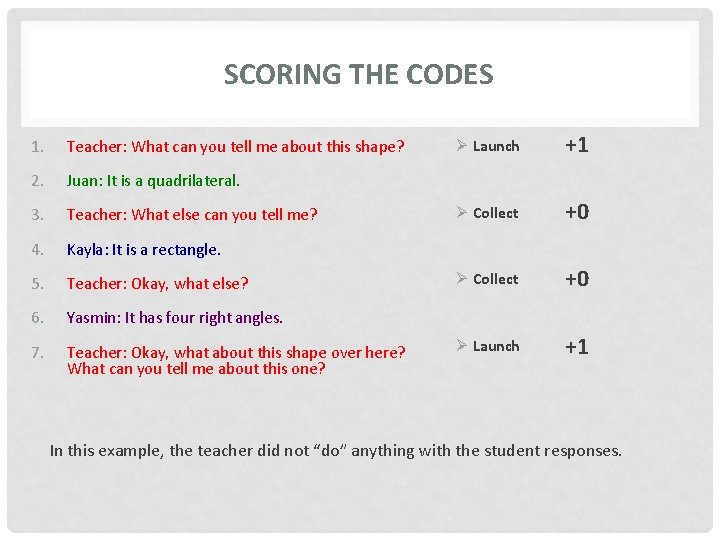

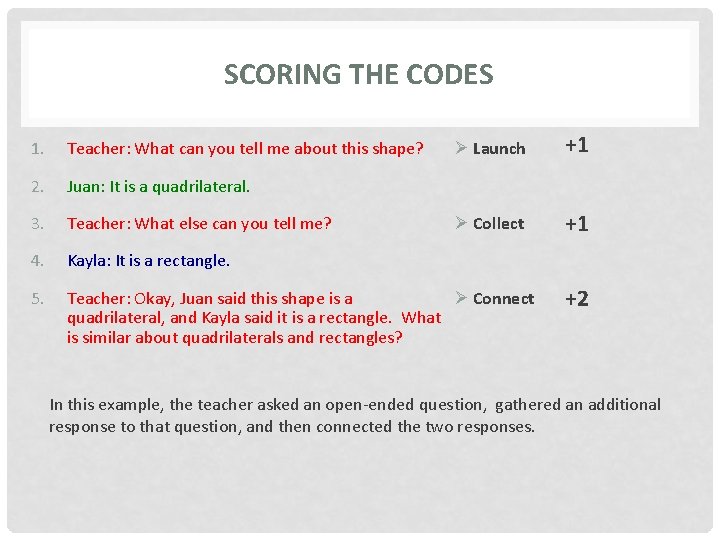

SCORING THE CODES

1. Teacher: What can you tell me about this shape?
2. Juan: It is a quadrilateral.
3. Teacher: What else can you tell me?
4. Kayla: It is a rectangle.
5. Teacher: Okay, what else?
6. Yasmin: It has four right angles.
7. Teacher: Okay, what about this shape over here? What can you tell me about this one?

Codes:

- Launch +1
- Collect +0
- Launch +1

In this example, the teacher did not “do” anything with the student responses.

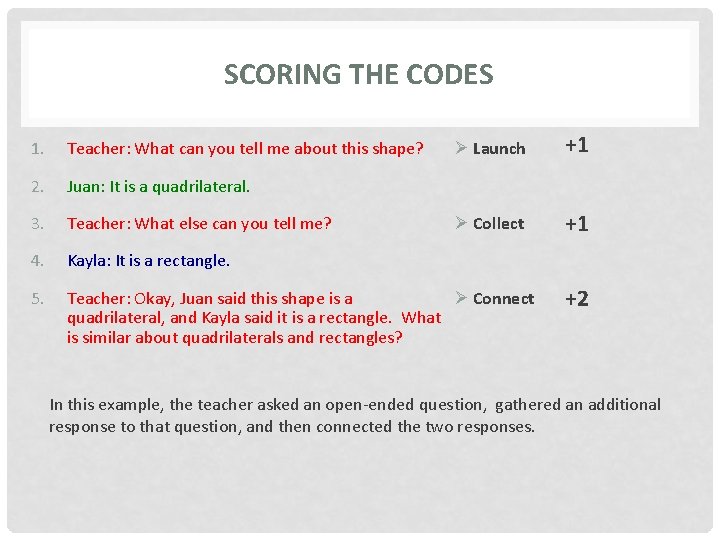

SCORING THE CODES

1. Teacher: What can you tell me about this shape?
2. Juan: It is a quadrilateral.
3. Teacher: What else can you tell me?
4. Kayla: It is a rectangle.
5. Teacher: Okay, Juan said this shape is a quadrilateral, and Kayla said it is a rectangle. What is similar about quadrilaterals and rectangles?

Codes:

- Launch +1
- Collect +1
- Connect +2

In this example, the teacher asked an open-ended question, gathered an additional response to that question, and then connected the two responses.
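The two scored examples above can be reproduced with a small tally function. This is a sketch under a loud assumption: the rule that a Collect earns +1 only when the same episode also contains a Connect is inferred from these two slides and is not stated by the authors. The function name `score_codes`, the idea of an "episode" beginning at each Launch, and the summed totals are likewise hypothetical.

```python
SCORED_CODES = {"Launch", "Collect", "Connect"}


def score_codes(codes):
    """Tally points for a sequence of teacher-move codes (inferred rule, see lead-in)."""
    # Group the codes into episodes; each Launch starts a new episode (assumption).
    episodes = []
    for code in codes:
        if code not in SCORED_CODES:
            raise ValueError(f"No point value is shown on the slides for {code!r}")
        if code == "Launch" or not episodes:
            episodes.append([])
        episodes[-1].append(code)

    total = 0
    for episode in episodes:
        # Assumption: collected responses count only if the teacher connects them.
        responses_were_used = "Connect" in episode
        for code in episode:
            if code == "Launch":
                total += 1
            elif code == "Connect":
                total += 2
            elif responses_were_used:  # code == "Collect"
                total += 1
    return total


first_example = score_codes(["Launch", "Collect", "Launch"])
second_example = score_codes(["Launch", "Collect", "Connect"])
print(first_example, second_example)
```

Under this inferred rule the first example totals 2 points (+1, +0, +1) and the second totals 4 points (+1, +1, +2), matching the per-code values on the slides.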

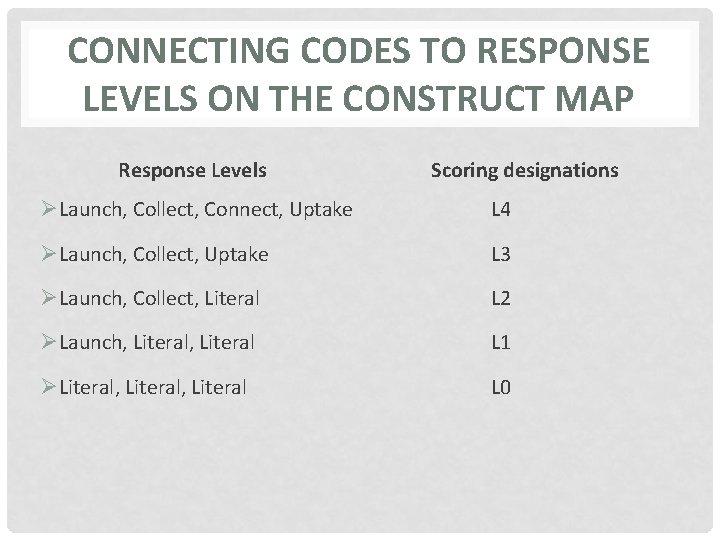

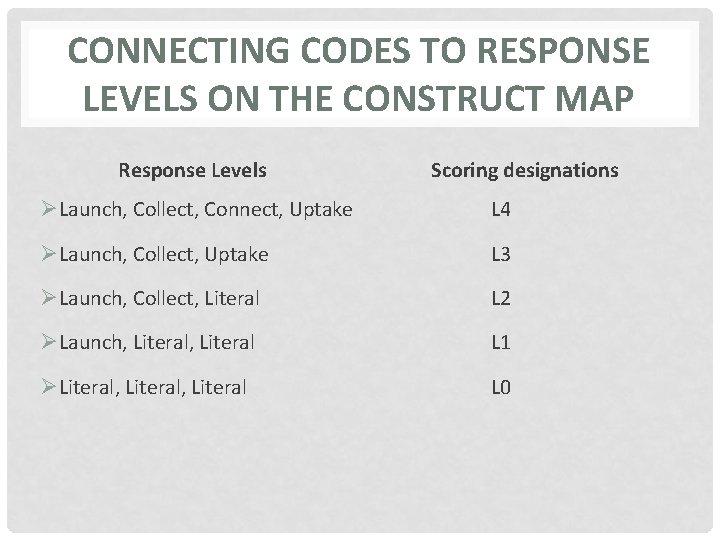

CONNECTING CODES TO RESPONSE LEVELS ON THE CONSTRUCT MAP

| Response Levels | Scoring designations |
| --- | --- |
| Launch, Collect, Connect, Uptake | L4 |
| Launch, Collect, Uptake | L3 |
| Launch, Collect, Literal | L2 |
| Launch, Literal | L1 |
| Literal, Literal | L0 |
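The level designations listed above can be expressed as a simple lookup from a code sequence to a construct map level. This is a minimal sketch: the names `LEVEL_BY_CODES` and `response_level` are hypothetical, and sequences that do not appear on the slide return `None` because the slide does not say how they should be scored.

```python
# Code sequences and levels exactly as listed on the slide.
LEVEL_BY_CODES = {
    ("Launch", "Collect", "Connect", "Uptake"): 4,
    ("Launch", "Collect", "Uptake"): 3,
    ("Launch", "Collect", "Literal"): 2,
    ("Launch", "Literal"): 1,
    ("Literal", "Literal"): 0,
}


def response_level(codes):
    """Return the construct map level for a code sequence, or None if unlisted."""
    return LEVEL_BY_CODES.get(tuple(codes))


print(response_level(["Launch", "Collect", "Uptake"]))
print(response_level(["Launch", "Connect"]))
```

Returning `None` for unlisted sequences keeps the sketch from guessing at levels the scoring guide does not define.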

Items Design Construct Map Reliability Validity Measurement Model Outcome Space

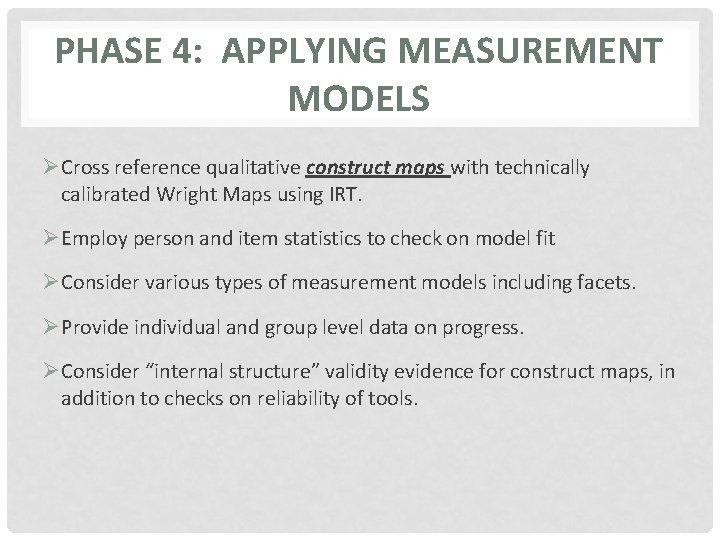

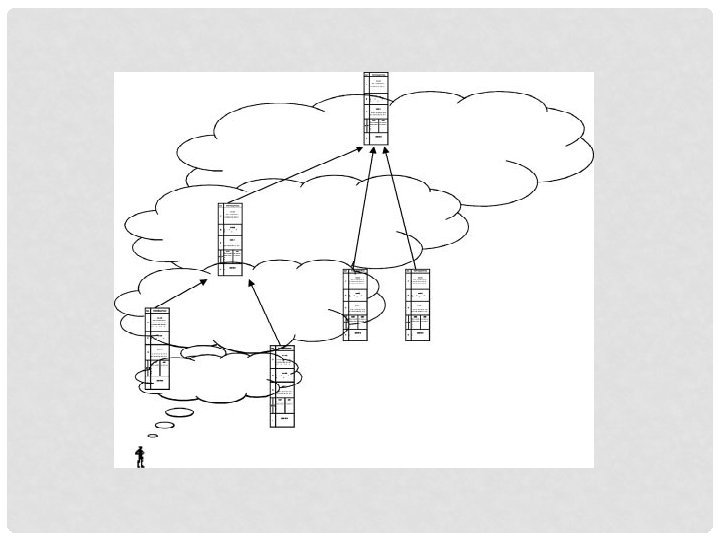

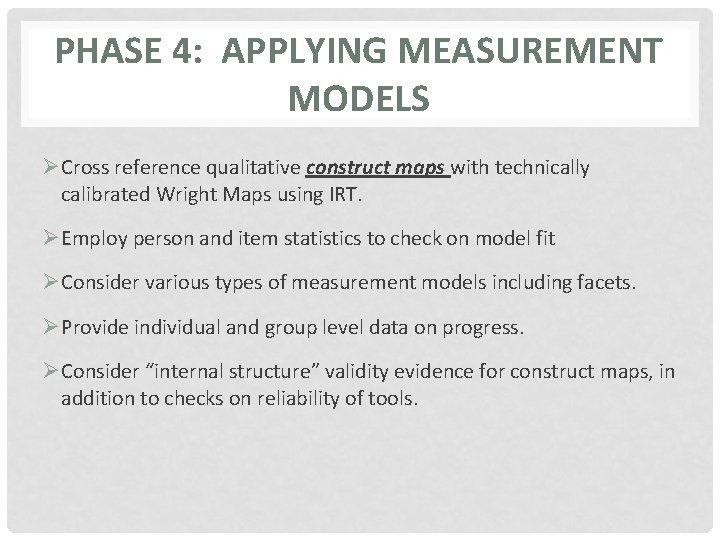

PHASE 4: APPLYING MEASUREMENT MODELS

- Cross-reference qualitative construct maps with technically calibrated Wright Maps using IRT.
- Employ person and item statistics to check on model fit.
- Consider various types of measurement models, including facets.
- Provide individual and group level data on progress.
- Consider “internal structure” validity evidence for construct maps, in addition to checks on reliability of tools.
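As background for the Wright Maps mentioned above, the simplest IRT model (the dichotomous Rasch model) places persons and items on one logit scale and gives the probability of success as a function of their difference. The sketch below assumes that model purely for illustration; the slides do not specify which model the team fits, and scores with several levels (such as L0 to L4) would call for a polytomous extension such as the partial credit model. All teacher and item values are hypothetical.

```python
from math import exp


def rasch_probability(theta, delta):
    """Probability of success for ability theta on an item of difficulty delta (logits)."""
    return 1.0 / (1.0 + exp(-(theta - delta)))


# Hypothetical logit values, invented for illustration only.
teacher_abilities = {"Teacher A": -1.0, "Teacher B": 0.5, "Teacher C": 2.0}
item_difficulties = {"Item 1": -0.5, "Item 2": 0.5, "Item 3": 1.5}

# Persons and items share one scale, which is what a Wright map displays.
for teacher, theta in sorted(teacher_abilities.items(), key=lambda kv: kv[1]):
    row = ", ".join(
        f"{item}: {rasch_probability(theta, delta):.2f}"
        for item, delta in item_difficulties.items()
    )
    print(f"{teacher} (theta={theta:+.1f}) -> {row}")
```

When a teacher's location equals an item's difficulty the probability is 0.5, which is why items located above a teacher on a Wright map are the ones that teacher is less likely to succeed on.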

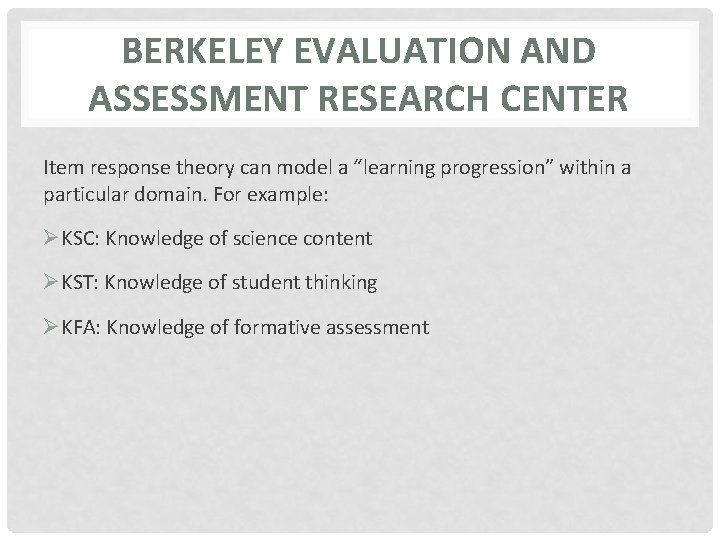

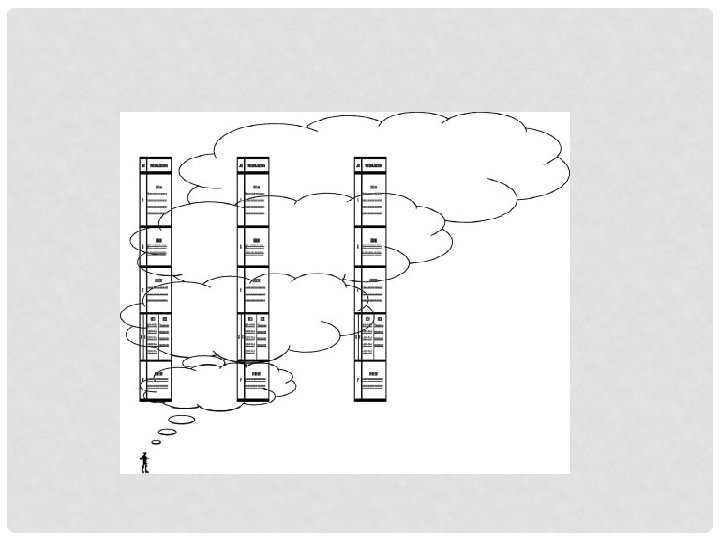

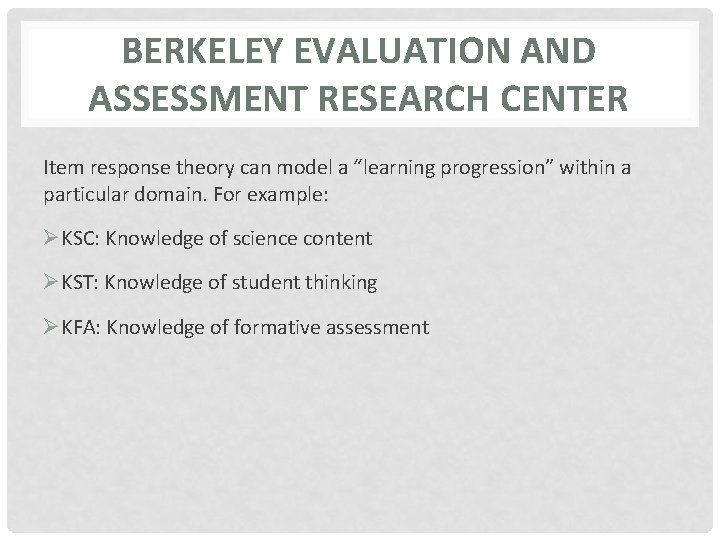

BERKELEY EVALUATION AND ASSESSMENT RESEARCH CENTER

Item response theory can model a “learning progression” within a particular domain. For example:

- KSC: Knowledge of science content
- KST: Knowledge of student thinking
- KFA: Knowledge of formative assessment

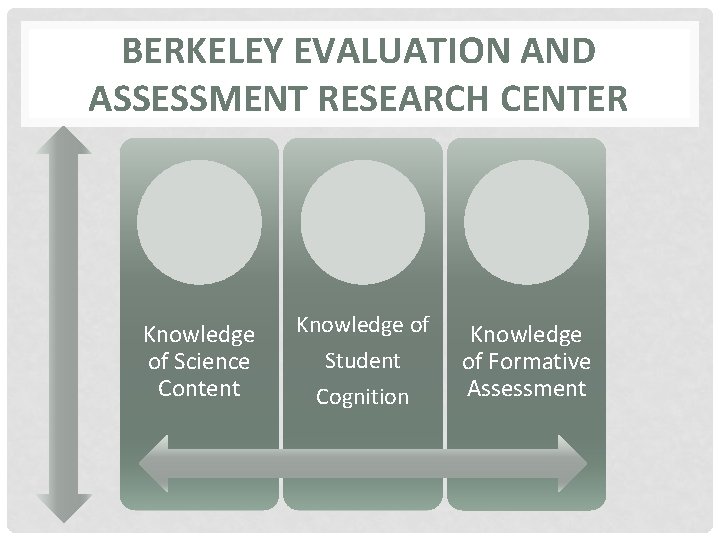

BERKELEY EVALUATION AND ASSESSMENT RESEARCH CENTER

- Knowledge of Science Content
- Knowledge of Student Cognition
- Knowledge of Formative Assessment

CASE STUDY: DEVELOPING AN INTEGRATED ASSESSMENT SYSTEM (DIAS) FOR TEACHER EDUCATION

Pamela Moss, University of Michigan
Mark Wilson, University of California, Berkeley

Goal is to develop an assessment system that:

- focuses on teaching practice grounded in professional and disciplinary knowledge as it develops over time;
- addresses multiple purposes of a broad array of stakeholders working in different contexts; and
- creates the foundation for programmatic coherence and professional development across time and institutional contexts.

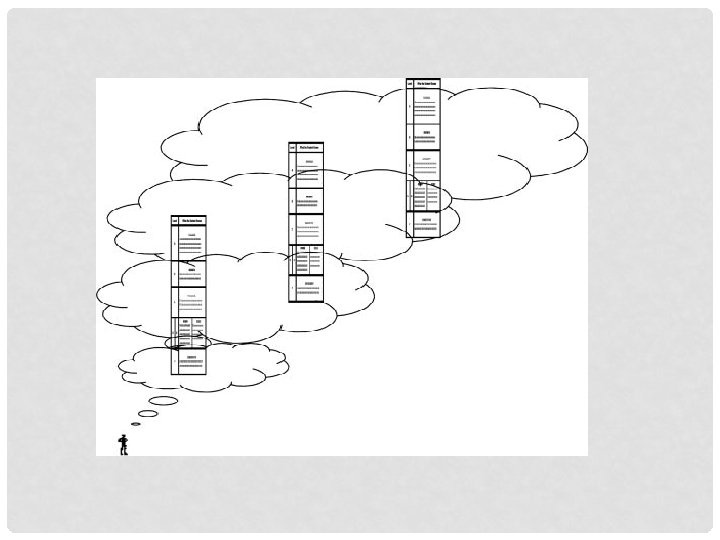

CASE STUDY: DIAS

The research team identified the ways in which student teachers can learn to use formative and summative assessment to guide their students’ learning.

- Developed a construct map that outlined a progression of learning in “Assessment.”
- Described the different aspects of “Assessment,” such as:
  - Identifying the mathematical target to be assessed;
  - Understanding the purposes of the assessment;
  - Designing appropriate and feasible tasks (such as end of class checks);
  - Developing accurate inferences about individual student and whole class learning.

CASE STUDY: DIAS

- The research team collected data from student teachers enrolled in the elementary mathematics teacher education program at the University of Michigan.
- Designed scoring guides based on our construct map.
- Coded videotapes from over 100 student teachers as they conducted lessons in the classroom.
- Coded associated collected data, such as lesson plans and reflections, since these documents contain information about what the student teachers hope to learn from the assessment(s), and what they infer about the students in their classroom.
- Using item response methods to determine which aspects of assessment practice are easier or more difficult for the student teachers and to thereby inform the teacher education program.

SYNOPSIS

- Incredible partnership
- Filling an important educational research space
- Identified the assessment space
- Focus on the content
- Emphasis on student thinking
- Contributions to instrumentation and methodology
- Marry qualitative and quantitative data using IRT framework
- Next steps: pilot study

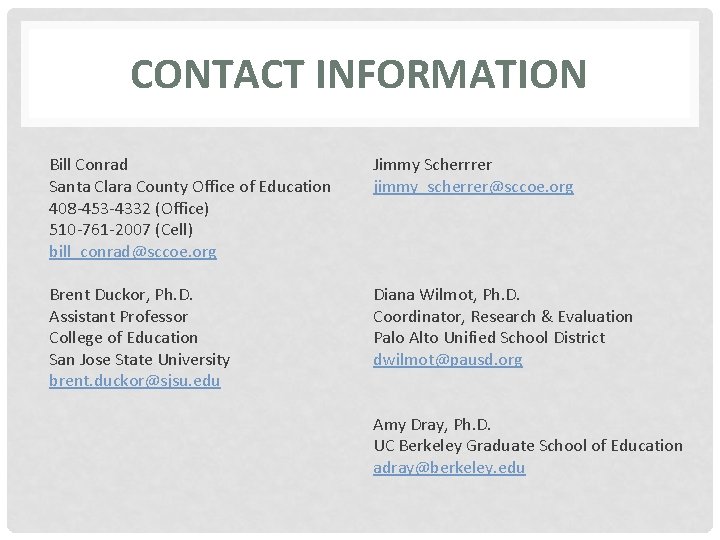

CONTACT INFORMATION

- Bill Conrad, Santa Clara County Office of Education, 408-453-4332 (Office), 510-761-2007 (Cell), bill_conrad@sccoe.org
- Jimmy Scherrer, jimmy_scherrer@sccoe.org
- Brent Duckor, Ph.D., Assistant Professor, College of Education, San Jose State University, brent.duckor@sjsu.edu
- Diana Wilmot, Ph.D., Coordinator, Research & Evaluation, Palo Alto Unified School District, dwilmot@pausd.org
- Amy Dray, Ph.D., UC Berkeley Graduate School of Education, adray@berkeley.edu
