Measuring Performance
• How should the performance of a parallel computation be measured?
  – Traditional measures like MIPS and MFLOPS really don’t cut it
• New ways to measure parallel performance are needed
  – Speedup
  – Efficiency
10/28/2020 ICSS 531 - Speedup 1

Speedup
• Speedup is the most often used measure of parallel performance
• If
  – Ts is the best possible serial time
  – Tn is the time taken by a parallel algorithm on n processors
• Then
  – Speedup = Ts / Tn

Read Between the Lines
• Exactly what is meant by Ts (i.e., the time taken to run the fastest serial algorithm on one processor)?
  – One processor of the parallel computer?
  – The fastest serial machine available?
  – A parallel algorithm run on a single processor?
  – Is the serial algorithm the best one?
• To keep things fair, Ts should be the best possible time in the serial world

Speedup’
• A slightly different definition of speedup also exists
  – The time taken by the parallel algorithm on one processor divided by the time taken by the parallel algorithm on N processors
  – However, this is misleading, since many parallel algorithms contain extra operations to accommodate the parallelism (e.g., communication)
  – The result is that the one-processor time used in place of Ts is inflated, which exaggerates the speedup
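The gap between the two definitions can be made concrete with a small sketch. The timings below are hypothetical values chosen only for illustration, and the variable names are not from the slides.

```python
def speedup(t_reference: float, t_parallel: float) -> float:
    """Speedup is the reference time divided by the parallel time."""
    return t_reference / t_parallel


# Hypothetical timings in seconds (assumptions, not measurements).
t_best_serial = 100.0    # Ts: best serial algorithm on one processor
t_parallel_on_1 = 125.0  # parallel algorithm on one processor, including its extra operations
t_parallel_on_n = 25.0   # parallel algorithm on N processors

# Speedup measured against the best serial time.
absolute = speedup(t_best_serial, t_parallel_on_n)

# Speedup' measured against the parallel algorithm on one processor.
relative = speedup(t_parallel_on_1, t_parallel_on_n)

print(f"Speedup  (vs. best serial):        {absolute:.2f}")
print(f"Speedup' (vs. parallel on 1 proc): {relative:.2f}")
```

With these assumed numbers the second definition reports a larger value than the first, purely because its reference time includes the overhead of the parallel algorithm.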

Factors That Limit Speedup
• Software Overhead
  – Even with a completely equivalent algorithm, software overhead arises in the concurrent implementation
• Load Balancing
  – Speedup is generally limited by the speed of the slowest node, so an important consideration is to ensure that each node performs the same amount of work
• Communication Overhead
  – Assuming that communication and calculation cannot be overlapped, any time spent communicating data between processors directly degrades the speedup
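A rough model of the load balancing and communication effects, as a minimal sketch. It assumes that the parallel time is set by the most heavily loaded node, that communication time is simply added on top (no overlap), and that the serial time equals the total work. The work values are hypothetical.

```python
def parallel_time(work_per_node, comm_time=0.0):
    """Time for the parallel run: the slowest node sets the compute time,
    and communication is assumed not to overlap with computation."""
    return max(work_per_node) + comm_time


def speedup(work_per_node, comm_time=0.0):
    """Speedup relative to one processor doing all the work with no overhead."""
    serial_time = sum(work_per_node)
    return serial_time / parallel_time(work_per_node, comm_time)


# Hypothetical work distributions over 4 nodes (100 time units in total).
balanced = speedup([25, 25, 25, 25])
unbalanced = speedup([40, 20, 20, 20])
with_comm = speedup([25, 25, 25, 25], comm_time=5)

print(f"balanced:           {balanced:.2f}")
print(f"unbalanced:         {unbalanced:.2f}")
print(f"balanced + 5 comm.: {with_comm:.2f}")
```

In this model, only the perfectly balanced run with no communication reaches the ideal speedup of 4 on 4 nodes.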

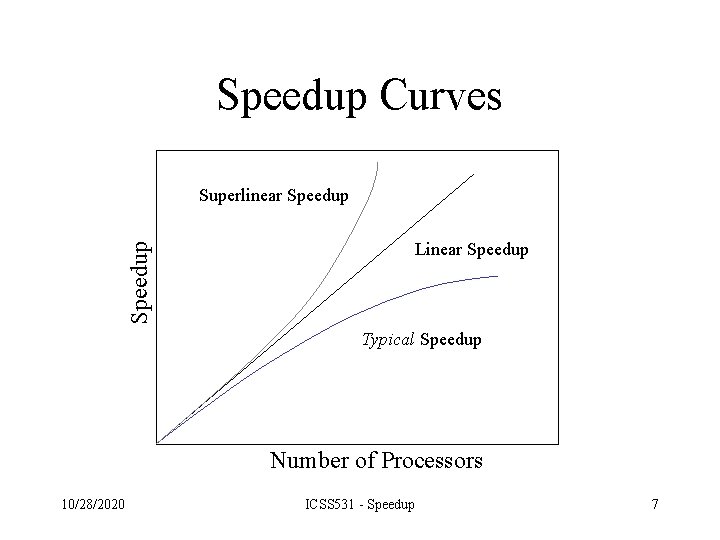

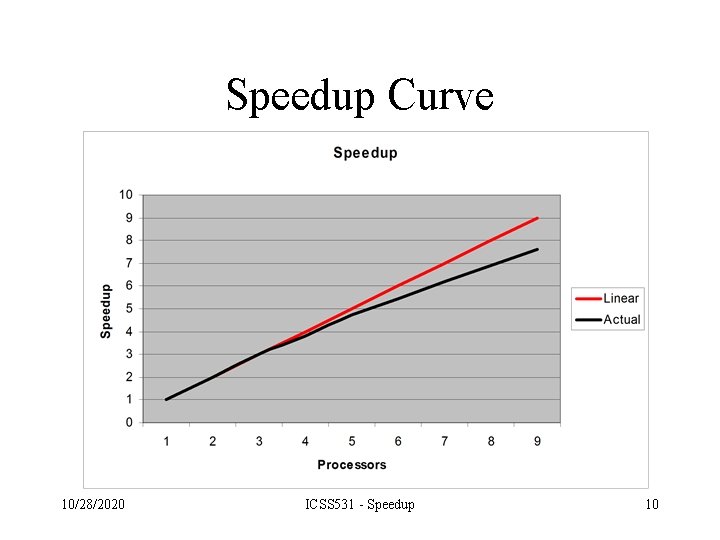

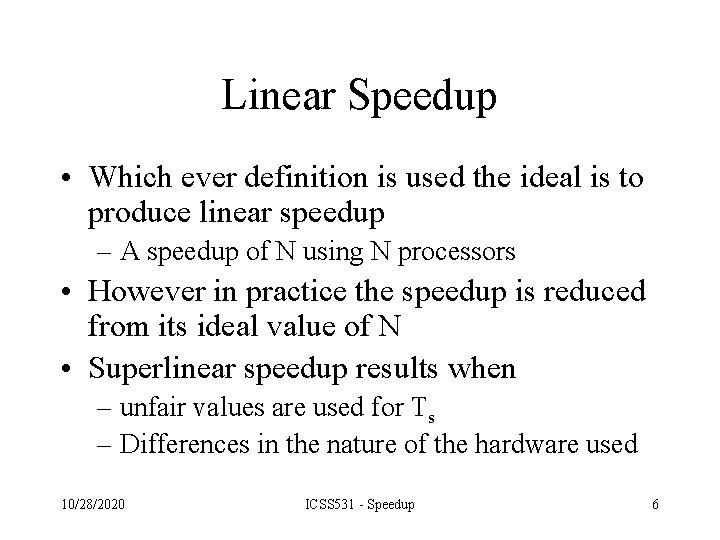

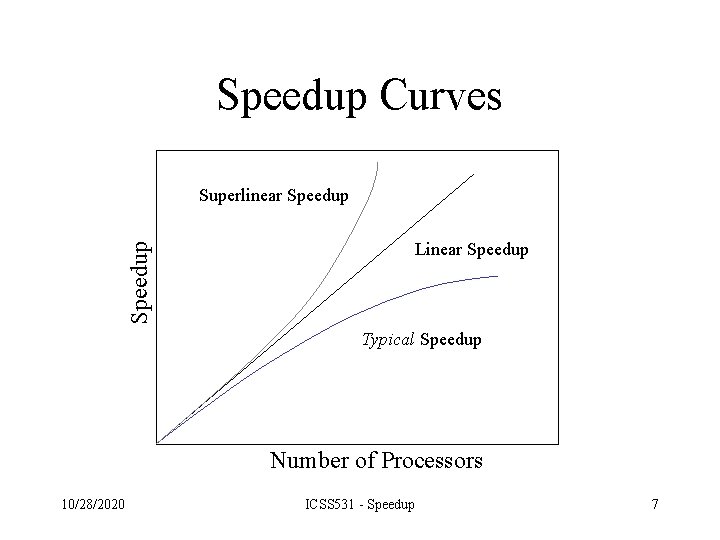

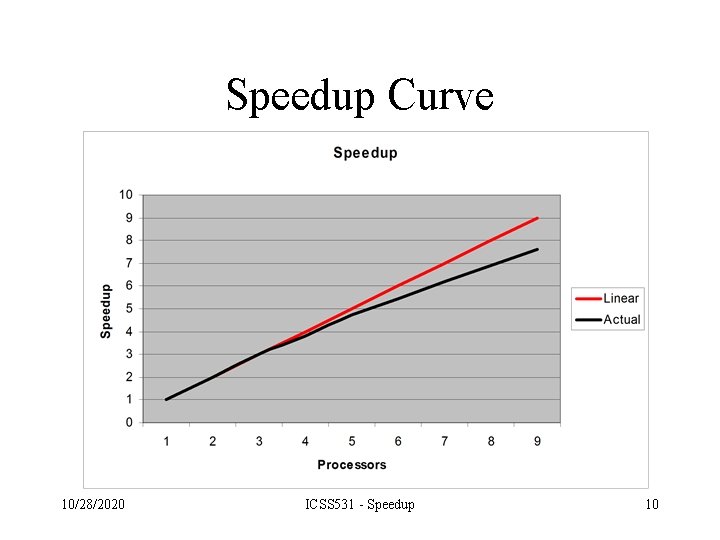

Linear Speedup
• Whichever definition is used, the ideal is to produce linear speedup
  – A speedup of N using N processors
• However, in practice the speedup falls short of its ideal value of N
• Superlinear speedup results when
  – Unfair values are used for Ts
  – There are differences in the nature of the hardware used

Speedup Curves
[Figure: curves for superlinear, linear, and typical speedup, plotted against number of processors]

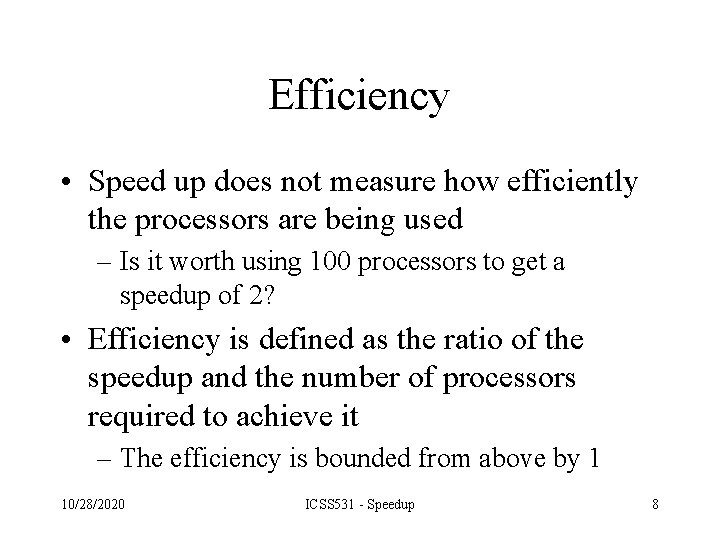

Efficiency
• Speedup does not measure how efficiently the processors are being used
  – Is it worth using 100 processors to get a speedup of 2?
• Efficiency is defined as the ratio of the speedup to the number of processors required to achieve it
  – Efficiency = Speedup / N
  – The efficiency is bounded from above by 1 (barring superlinear speedup)

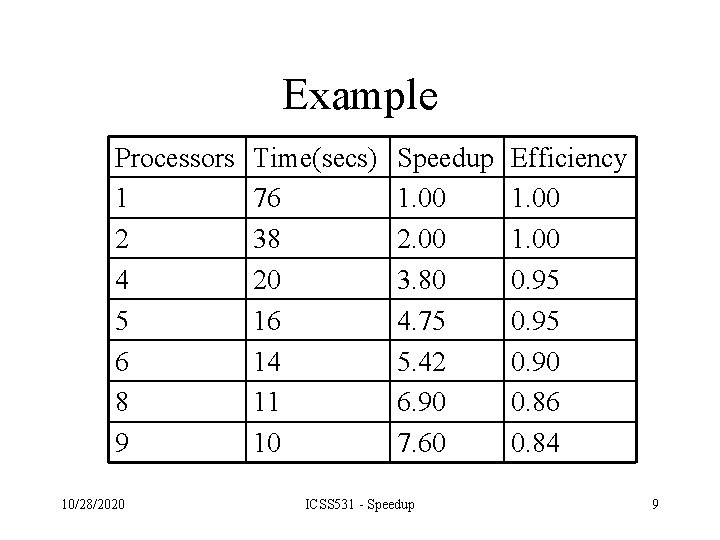

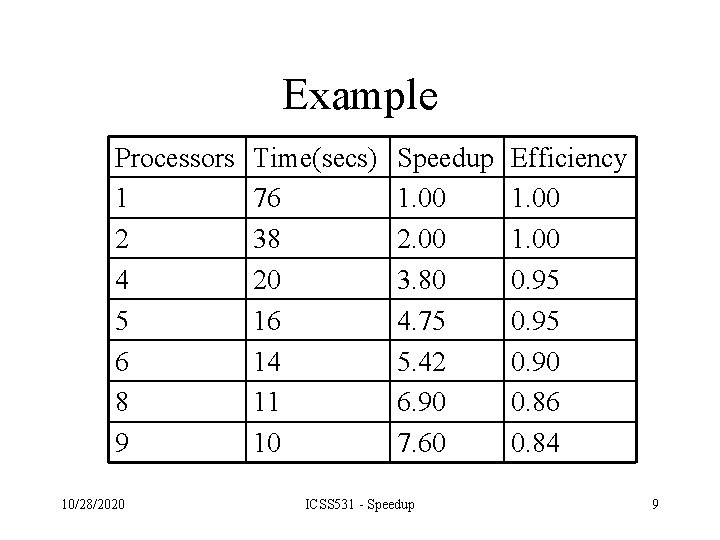

Example

| Processors | Time (secs) | Speedup | Efficiency |
|---|---|---|---|
| 1 | 76 | 1.00 | 1.00 |
| 2 | 38 | 2.00 | 1.00 |
| 4 | 20 | 3.80 | 0.95 |
| 5 | 16 | 4.75 | 0.95 |
| 6 | 14 | 5.42 | 0.90 |
| 8 | 11 | 6.90 | 0.86 |
| 9 | 10 | 7.60 | 0.84 |
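The speedup and efficiency values in the example above can be recomputed from the processor counts and times alone. This sketch assumes the one-processor time (76 secs) is used as Ts, which matches the speedup of 1.00 in the first row. The last printed digit may differ slightly from the slide for some rows (e.g., 76/14), since the slide's values appear to be truncated rather than rounded.

```python
processors = [1, 2, 4, 5, 6, 8, 9]
times = [76, 38, 20, 16, 14, 11, 10]  # seconds, from the example

t1 = times[0]  # assumed reference time Ts: the run on one processor

rows = []
print(f"{'Procs':>5} {'Time':>5} {'Speedup':>8} {'Efficiency':>10}")
for n, t in zip(processors, times):
    s = t1 / t  # speedup = Ts / Tn
    e = s / n   # efficiency = speedup / number of processors
    rows.append((n, t, s, e))
    print(f"{n:>5} {t:>5} {s:>8.2f} {e:>10.2f}")
```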

Speedup Curve

Amdahl’s Law
• A parallel computation has two types of operations
  – Those which must be executed in serial
  – Those which can be executed in parallel
• Amdahl’s law states that the speedup of a parallel algorithm is effectively limited by the number of operations which must be performed sequentially

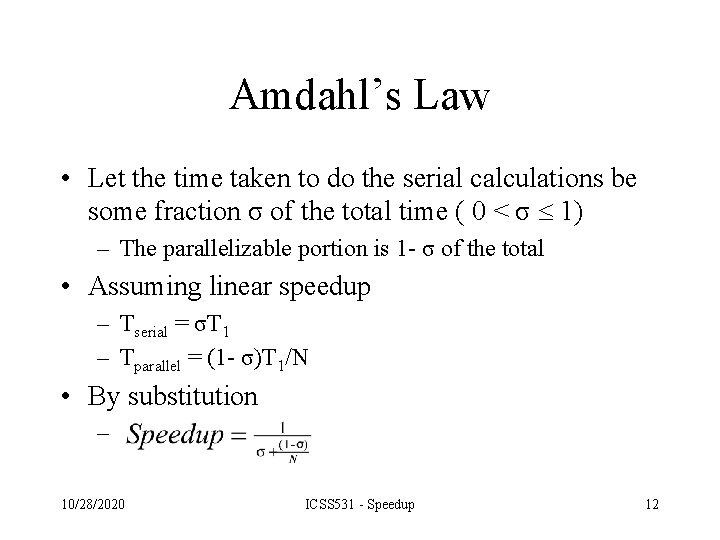

Amdahl’s Law
• Let the time taken to do the serial calculations be some fraction σ of the total time (0 < σ ≤ 1)
  – The parallelizable portion is 1 - σ of the total
• Assuming linear speedup of the parallelizable portion
  – Tserial = σT1
  – Tparallel = (1 - σ)T1/N
• By substitution
  – Speedup = T1 / (σT1 + (1 - σ)T1/N) = 1 / (σ + (1 - σ)/N)
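The substitution can be written out step by step. This is a sketch using the symbols above, where T_N (the total time on N processors) and S_N (the speedup on N processors) are names introduced here for convenience:

```latex
\begin{align*}
T_N &= T_{\text{serial}} + T_{\text{parallel}}
     = \sigma T_1 + \frac{(1-\sigma)\,T_1}{N} \\[4pt]
S_N &= \frac{T_1}{T_N}
     = \frac{T_1}{\sigma T_1 + (1-\sigma)\,T_1/N}
     = \frac{1}{\sigma + \dfrac{1-\sigma}{N}} \\[4pt]
\lim_{N \to \infty} S_N &= \frac{1}{\sigma}
\end{align*}
```

The T_1 terms cancel, so the predicted speedup depends only on the serial fraction σ and the number of processors N.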

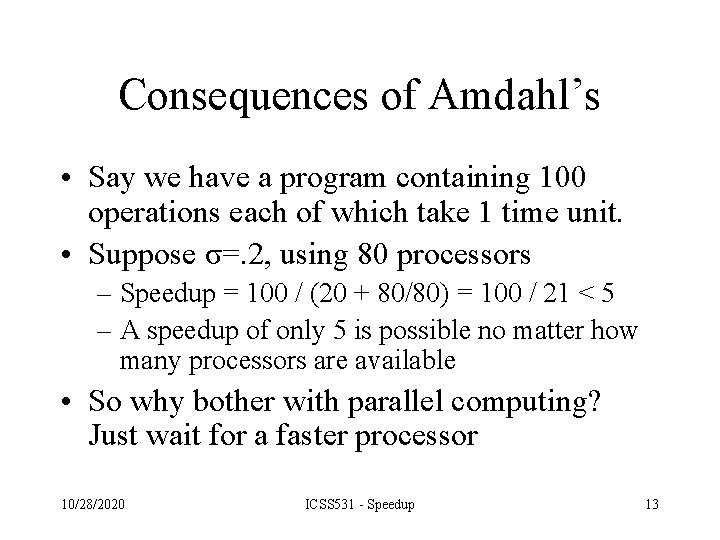

Consequences of Amdahl’s Law
• Say we have a program containing 100 operations, each of which takes 1 time unit
• Suppose σ = 0.2, using 80 processors
  – Speedup = 100 / (20 + 80/80) = 100 / 21 < 5
  – A speedup of at most 5 (= 1/σ) is possible no matter how many processors are available
• So why bother with parallel computing? Just wait for a faster processor
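The ceiling can be checked numerically. This is a minimal sketch of Amdahl's formula, Speedup = 1 / (σ + (1 - σ)/N), evaluated for σ = 0.2. The function name and the processor counts other than 80 are chosen here for illustration.

```python
def amdahl_speedup(sigma: float, n: int) -> float:
    """Amdahl's law: the serial fraction sigma runs at full cost,
    the remaining (1 - sigma) is divided evenly over n processors."""
    return 1.0 / (sigma + (1.0 - sigma) / n)


sigma = 0.2
for n in (1, 8, 80, 800, 8000):
    print(f"N = {n:>4}: speedup = {amdahl_speedup(sigma, n):.3f}")

# The speedup approaches, but never reaches, 1 / sigma.
limit = 1.0 / sigma
print(f"limit as N grows: {limit:.1f}")
```

For N = 80 this reproduces the 100/21 figure from the slide, and adding processors beyond that buys very little.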

Avoiding Amdahl
• There are several ways to avoid Amdahl’s law
  – Concentrate on parallel algorithms with small serial components
  – Amdahl’s law is not complete in that it does not take into account problem size
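One way to see the problem-size point is a sketch under a strong assumption: the serial work stays fixed while the parallelizable work grows with the problem. That assumption is not from the slides and will not hold for every program. Under it, the serial fraction σ shrinks as the problem grows and the attainable speedup rises. The operation counts are hypothetical.

```python
def speedup(serial_ops: float, parallel_ops: float, n: int) -> float:
    """Speedup on n processors when only parallel_ops can be divided up."""
    t1 = serial_ops + parallel_ops      # time on one processor
    tn = serial_ops + parallel_ops / n  # time on n processors
    return t1 / tn


serial_ops = 20  # assumed fixed, independent of problem size
n = 80

for parallel_ops in (80, 800, 8000, 80000):
    sigma = serial_ops / (serial_ops + parallel_ops)
    s = speedup(serial_ops, parallel_ops, n)
    print(f"parallel ops = {parallel_ops:>6}: sigma = {sigma:.4f}, speedup = {s:.2f}")
```

The smallest case matches the 100-operation example (speedup 100/21), while the larger problems come much closer to the ideal of 80.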

Classifying Parallel Programs
• Parallel programs can be placed into broad categories based on expected speedups
  – Trivial Parallel
    • Assumes complete parallelism with no overhead due to communication
  – Divide and Conquer
    • N / log N speedup
  – Communication Bound Parallelism