
- Slides: 14

Measurement Concepts and scale evaluation

Contents

- Identifying and deciding on the variables to be measured
- Development of measurement scales
- Types of measurement scales
- Scale evaluation

- Measurement is the process of assigning numbers or labels to the objects under study so as to represent them quantitatively or qualitatively.
- Scaling involves generating a continuum on which the measured objects are located.

Identifying and deciding on the variables to be measured

- The first step in the measurement process is to identify the area or concept that is of interest for the study.
- When conducting business research, the researcher must first define the concept of interest, what is to be measured, and how it will be measured.

Development of measurement scales

- A scale is a set of numbers or symbols constructed so that they can be assigned, according to certain rules, to the units under research.

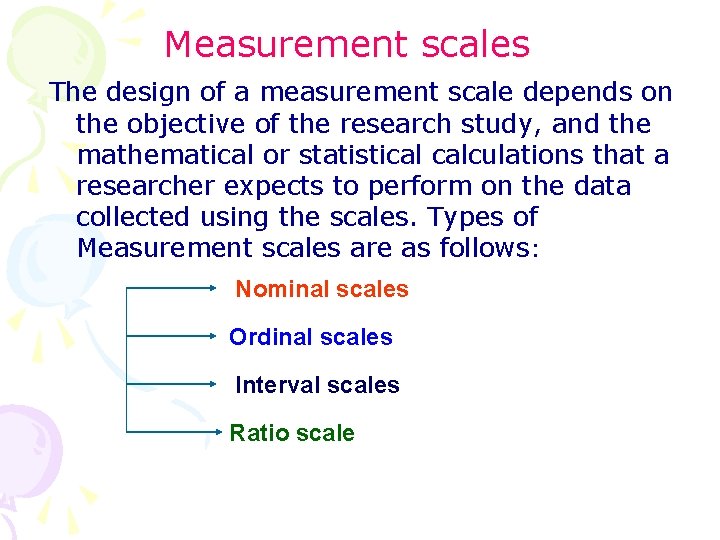

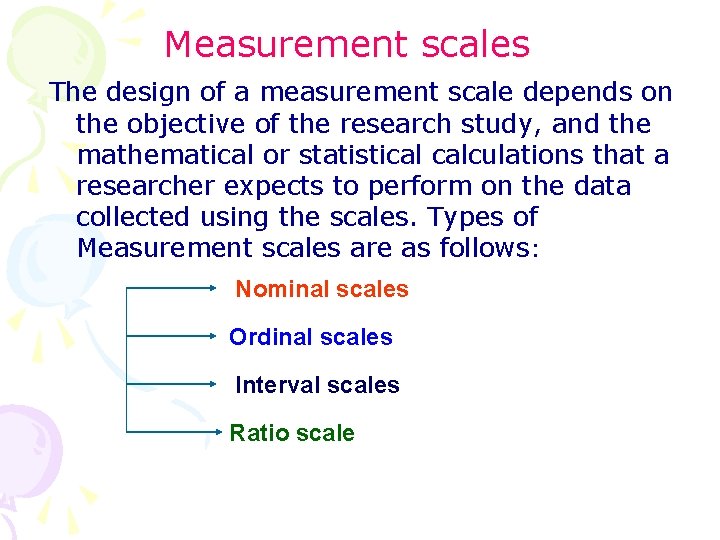

Measurement scales

The design of a measurement scale depends on the objective of the research study and on the mathematical or statistical calculations that the researcher expects to perform on the data collected with the scale. The types of measurement scales are:

- Nominal scales
- Ordinal scales
- Interval scales
- Ratio scales
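To make the link between scale type and permissible calculations concrete, here is a minimal Python sketch. The pairing of each scale with a summary statistic follows the conventional textbook reading and is an assumption, not something taken from the slides; all data values and variable names are invented for illustration.

```python
from statistics import mean, median, mode

# Hypothetical survey data, invented purely for illustration.
brand_chosen = ["A", "B", "A", "C", "A"]   # nominal: labels only, no order
preference_rank = [1, 3, 2, 5, 4]          # ordinal: order matters, gaps do not
temperature_c = [20.0, 25.0, 30.0]         # interval: equal gaps, arbitrary zero
monthly_spend = [100.0, 200.0, 400.0]      # ratio: equal gaps and a true zero

# Each scale supports the statistics of the scales before it, plus one more.
nominal_summary = mode(brand_chosen)         # most frequent label
ordinal_summary = median(preference_rank)    # middle rank
interval_summary = mean(temperature_c)       # arithmetic mean
ratio_comparison = monthly_spend[2] / monthly_spend[0]  # "how many times as much"

print(nominal_summary, ordinal_summary, interval_summary, ratio_comparison)
```

A ratio such as the last line is only meaningful on a ratio scale: 30 °C is not "1.5 times as hot" as 20 °C, because the zero point of the Celsius scale is arbitrary.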

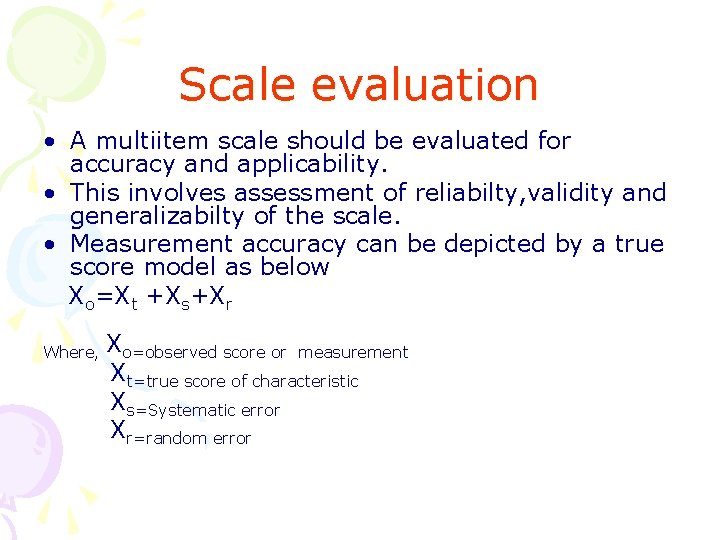

Scale evaluation

- A multi-item scale should be evaluated for accuracy and applicability.
- This involves assessing the reliability, validity, and generalizability of the scale.
- Measurement accuracy can be depicted by the true score model:

$$X_o = X_t + X_s + X_r$$

where $X_o$ is the observed score or measurement, $X_t$ is the true score of the characteristic, $X_s$ is the systematic error, and $X_r$ is the random error.
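The true score model can be illustrated with a small simulation. The sketch below assumes, purely for illustration, that random error is normally distributed with mean zero and that systematic error is a constant offset; the numeric values and the helper name `observe` are hypothetical.

```python
import random

def observe(true_score, systematic_error, random_sd, rng):
    """Return one observed score: X_o = X_t + X_s + X_r."""
    random_error = rng.gauss(0.0, random_sd)  # X_r: varies from one measurement to the next
    return true_score + systematic_error + random_error

rng = random.Random(42)   # fixed seed so the run is repeatable
X_T = 50.0                # hypothetical true score
X_S = 3.0                 # hypothetical constant systematic error
RANDOM_SD = 5.0           # hypothetical spread of the random error

observations = [observe(X_T, X_S, RANDOM_SD, rng) for _ in range(10_000)]
mean_observed = sum(observations) / len(observations)
bias = mean_observed - X_T   # what remains after the random error averages out

print(f"mean observed score: {mean_observed:.2f}, remaining bias: {bias:.2f}")
```

Under these assumptions, repeating the measurement many times averages the random error away, while the systematic error stays in the mean as a bias of roughly the size of `X_S`.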

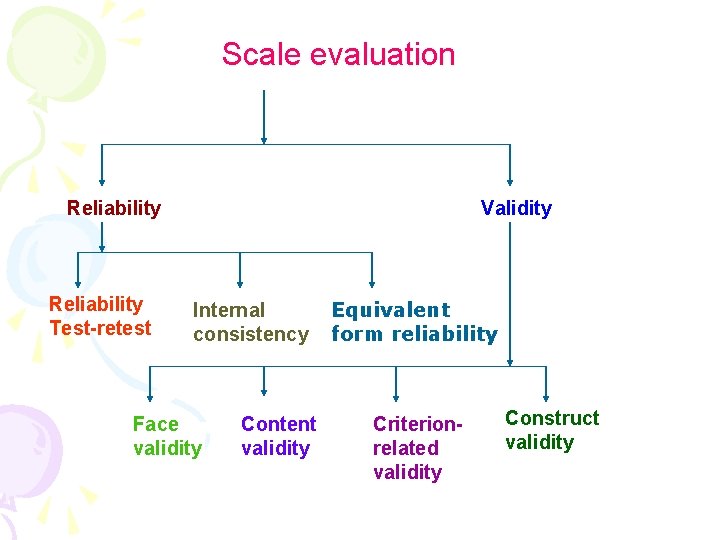

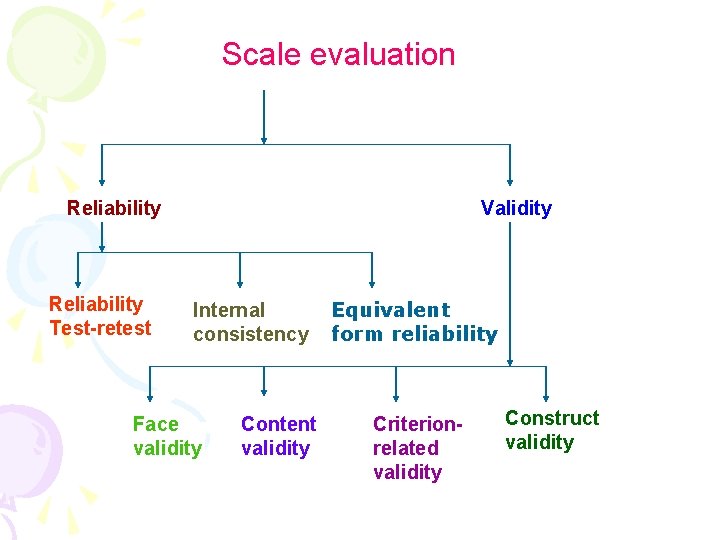

Scale evaluation

- Reliability
  - Test-retest
  - Equivalent form reliability
  - Internal consistency
- Validity
  - Face validity
  - Content validity
  - Criterion-related validity
  - Construct validity

Scale evaluation (contd.)

Reliability refers to the extent to which a scale produces consistent results when measurements are made repeatedly.

- Test-retest reliability: respondents are administered identical sets of scale items at two different times, under conditions that are as nearly equivalent as possible.
- Alternative forms reliability (also called equivalent form reliability): two equivalent forms of the scale are constructed and administered to the same respondents, so that the two sets of scores can be compared.
- Internal consistency reliability: used to assess the reliability of a summated scale, in which several items are summed to form a total score. In split-half reliability, the items of the scale are divided into two equal halves and the resulting half scores are correlated.
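The split-half procedure described above can be sketched in Python as follows. The response data are hypothetical, and the odd/even split is only one possible way to form two equal halves. The Spearman-Brown step-up is a commonly used adjustment that the slides do not mention; it is included here as an assumption and labeled as such in the code.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def split_half(responses):
    """responses: one list of item scores per respondent (even number of items)."""
    first_half = [sum(r[0::2]) for r in responses]   # items 1, 3, ...
    second_half = [sum(r[1::2]) for r in responses]  # items 2, 4, ...
    r_half = pearson(first_half, second_half)
    # Spearman-Brown step-up (not in the slides): estimated full-length reliability.
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full

# Hypothetical data: six respondents answering a four-item summated scale.
responses = [
    [5, 4, 5, 4],
    [2, 2, 1, 2],
    [4, 4, 3, 4],
    [1, 2, 2, 1],
    [3, 3, 4, 3],
    [5, 5, 4, 5],
]

r_half, r_full = split_half(responses)
print(f"split-half correlation: {r_half:.3f}, stepped-up estimate: {r_full:.3f}")
```

The same `pearson` helper could be applied to total scores from two administrations of the scale to estimate test-retest reliability, or to scores from two equivalent forms.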

Scale evaluation (contd.)

The validity of a scale may be defined as the extent to which differences in observed scores reflect true differences among objects on the characteristic being measured. Perfect validity means there is no measurement error, i.e. $X_o = X_t$ with $X_s = X_r = 0$.

- Content validity is a systematic evaluation of how well the content of a scale represents the measurement task, that is, whether the scale items cover the entire domain of the construct being measured.

Scale evaluation (contd.)

Criterion validity reflects whether a scale performs as expected in relation to other variables selected as meaningful criteria. It has two forms:

- Concurrent validity is assessed when data on the scale being evaluated and on the criterion variables are collected at the same time.
- Predictive validity is assessed when data on the scale are collected at one point in time and data on the criterion variables at a later time.

Construct validity

Construct validity aims first to define the concept or construct explicitly, and then to show that the measurement, or operational definition, logically connects the empirical phenomenon with the construct. Its two basic types are:

- Convergent validity is the extent to which the scale correlates positively with other measures of the same construct.
- Discriminant validity is the extent to which a measure does not correlate with measures of other constructs from which it is supposed to differ.
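Convergent and discriminant validity are usually examined through correlations, which the following minimal Python sketch illustrates. All scores and variable names are hypothetical, and the slides give no cut-off values, so the code only reports the two correlations without judging them against a threshold.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same eight respondents.
new_scale = [12, 15, 9, 20, 17, 11, 14, 18]             # scale being evaluated
same_construct = [13, 16, 10, 19, 18, 10, 15, 17]       # another measure of the same construct
different_construct = [7, 3, 6, 6, 4, 4, 4, 6]          # measure of a construct expected to differ

convergent_r = pearson(new_scale, same_construct)          # expected to be strongly positive
discriminant_r = pearson(new_scale, different_construct)   # expected to be near zero

print(f"convergent r = {convergent_r:.2f}, discriminant r = {discriminant_r:.2f}")
```

With these invented numbers the scale tracks the other measure of the same construct closely and is almost uncorrelated with the measure of the different construct, which is the pattern the two definitions describe.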

- Sensitivity: an instrument’s ability to accurately measure variability in stimuli or responses. Sensitivity is not high in instruments that offer only ‘Agree’ or ‘Disagree’ types of response.
- Generalizability: the amount of flexibility in interpreting the data in different research designs.
- Relevance: the appropriateness of using a particular scale for measuring a variable. Relevance = reliability × validity