MAXIMUM ENTROPY AND FOURIER TRANSFORMATION

Hirophysics.com

Nicole Rogers

An Introduction to Entropy

Entropy is known as the 'law of disorder.' It is a measure of the uncertainty associated with a random variable, and it measures the 'multiplicity' associated with the state of objects.

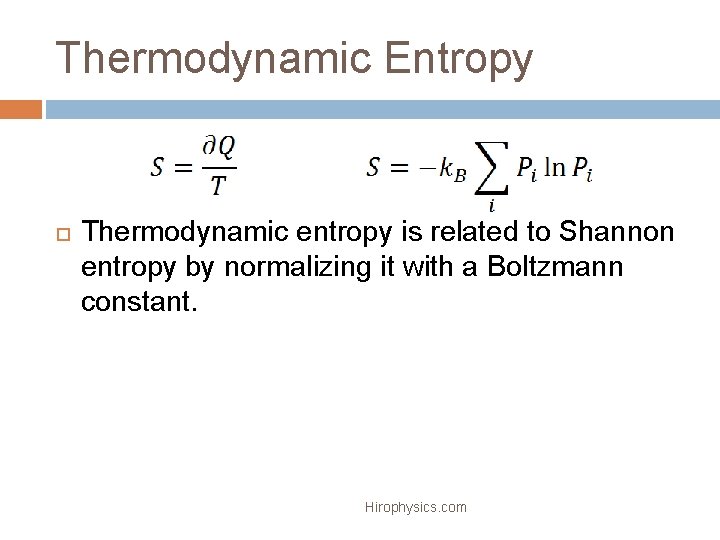

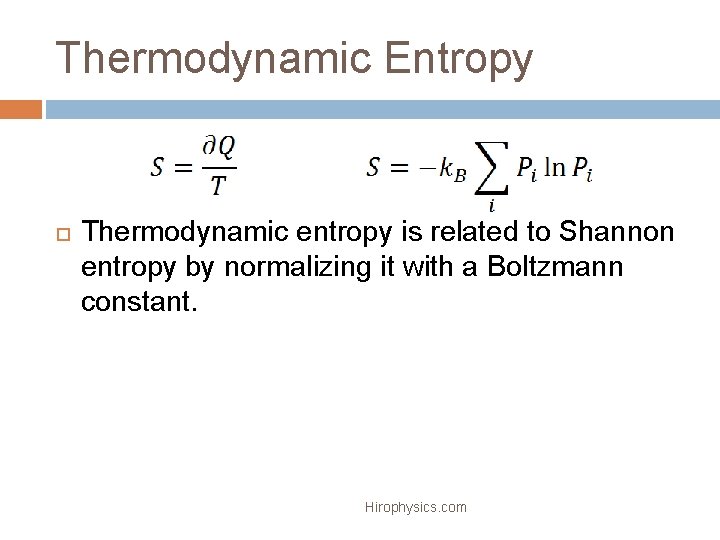

Thermodynamic Entropy

Thermodynamic entropy is related to Shannon entropy by scaling it with the Boltzmann constant $k_B$ (with the logarithm taken to base $e$): $S = -k_B \sum_i P_i \ln P_i$.

Shannon entropy measures how undetermined a state is, that is, how much uncertainty it carries. The higher the Shannon entropy, the more undetermined the system is.

Shannon Entropy Example

Let's use the example of a dog race. Four dogs have various chances of winning the race. We apply the entropy equation, with logarithms taken to base 2 so that the result is in bits:

$H = -\sum_i P_i \log_2 P_i$

| Racers | Chance to Win (P) | -log(P) | -P log(P) |
|---|---|---|---|
| Fido | 0.08 | 3.64 | 0.29 |
| Ms Fluff | 0.17 | 2.56 | 0.43 |
| Spike | 0.25 | 2.00 | 0.50 |
| Woofers | 0.50 | 1.00 | 0.50 |

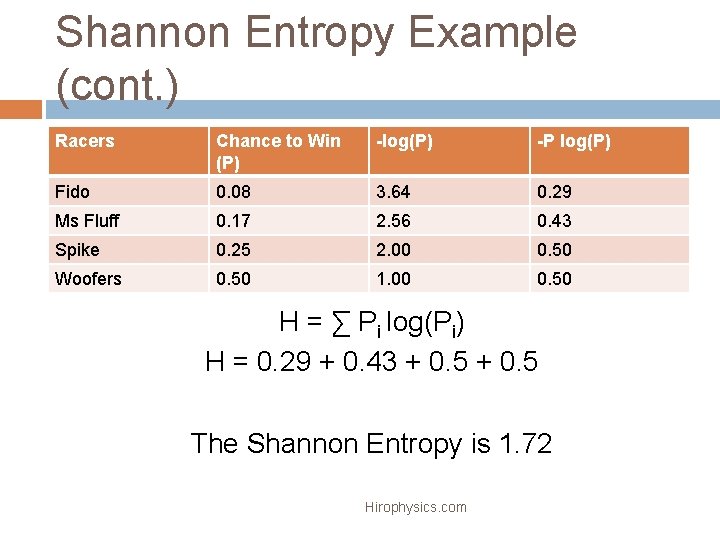

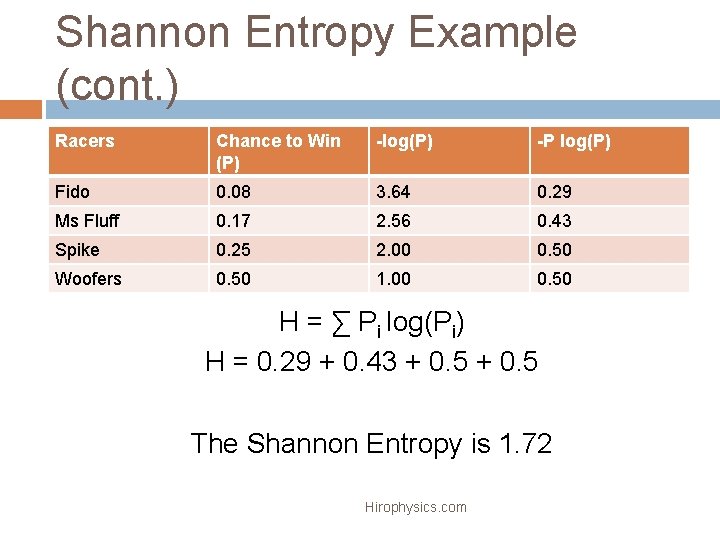

Shannon Entropy Example (cont.)

| Racers | Chance to Win (P) | -log(P) | -P log(P) |
|---|---|---|---|
| Fido | 0.08 | 3.64 | 0.29 |
| Ms Fluff | 0.17 | 2.56 | 0.43 |
| Spike | 0.25 | 2.00 | 0.50 |
| Woofers | 0.50 | 1.00 | 0.50 |

$H = -\sum_i P_i \log_2 P_i$

H = 0.29 + 0.43 + 0.50 + 0.50

The Shannon entropy is 1.72.

Things to Notice

If you add the chance of each dog to win from the table above (0.08 + 0.17 + 0.25 + 0.50), the total is one. This is because the chances are normalized: together they form a probability distribution.

The more uncertain a situation, the higher the Shannon entropy. This will be demonstrated in the next example.

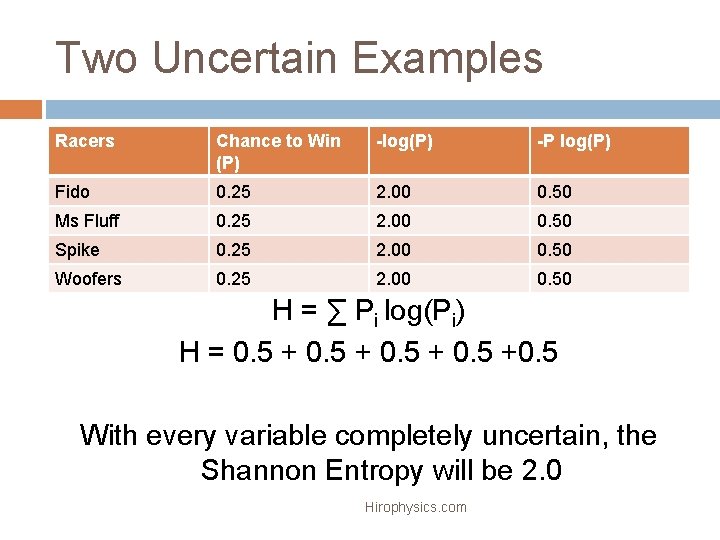

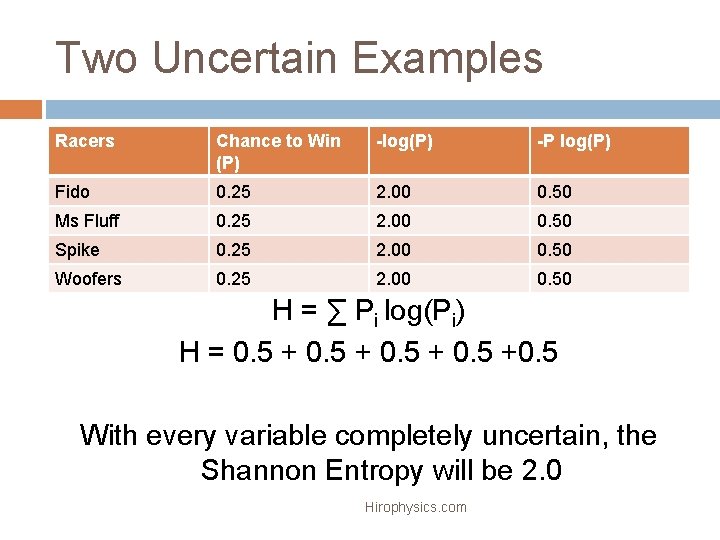

Two Uncertain Examples

| Racers | Chance to Win (P) | -log(P) | -P log(P) |
|---|---|---|---|
| Fido | 0.25 | 2.00 | 0.50 |
| Ms Fluff | 0.25 | 2.00 | 0.50 |
| Spike | 0.25 | 2.00 | 0.50 |
| Woofers | 0.25 | 2.00 | 0.50 |

$H = -\sum_i P_i \log_2 P_i$

H = 0.5 + 0.5 + 0.5 + 0.5

With every outcome equally likely, the result is completely uncertain and the Shannon entropy is 2.0.

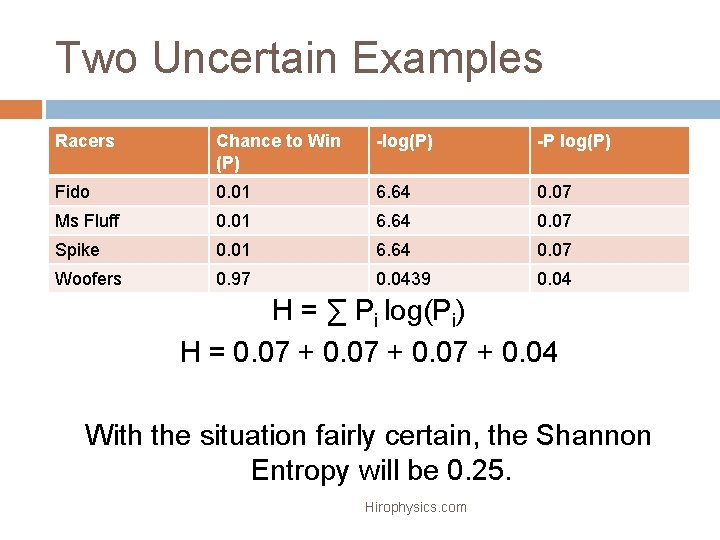

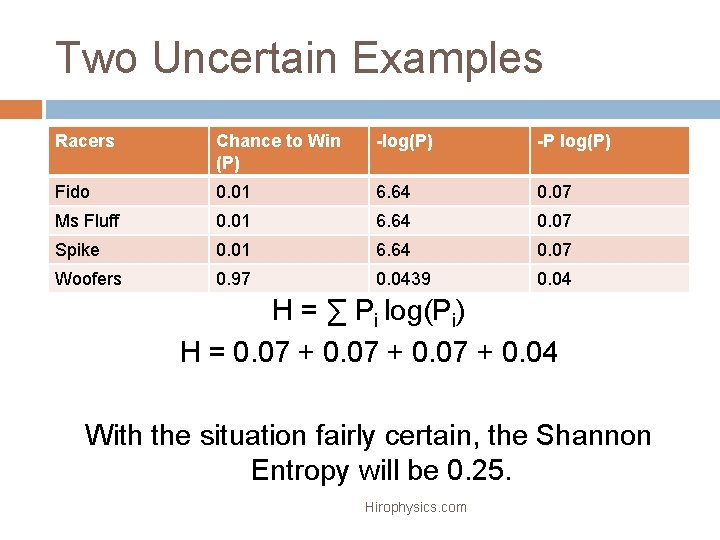

Two Uncertain Examples

| Racers | Chance to Win (P) | -log(P) | -P log(P) |
|---|---|---|---|
| Fido | 0.01 | 6.64 | 0.07 |
| Ms Fluff | 0.01 | 6.64 | 0.07 |
| Spike | 0.01 | 6.64 | 0.07 |
| Woofers | 0.97 | 0.0439 | 0.04 |

$H = -\sum_i P_i \log_2 P_i$

H = 0.07 + 0.07 + 0.07 + 0.04

With the situation fairly certain, the Shannon entropy is about 0.25 (0.24 if the terms are not rounded before adding).

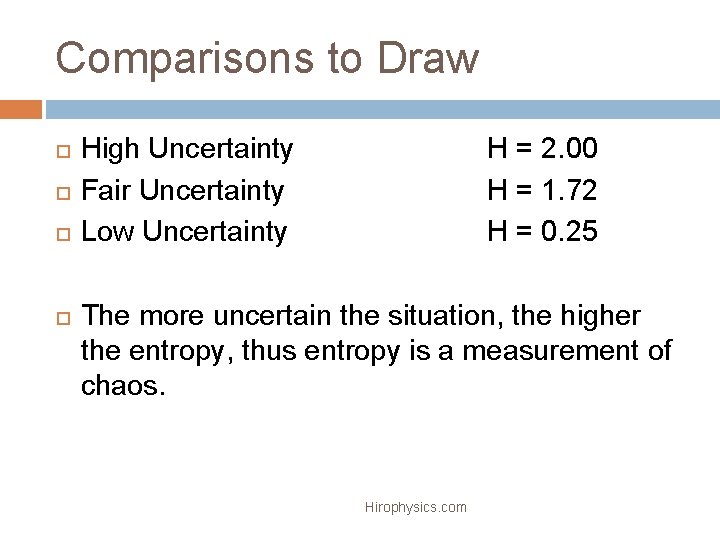

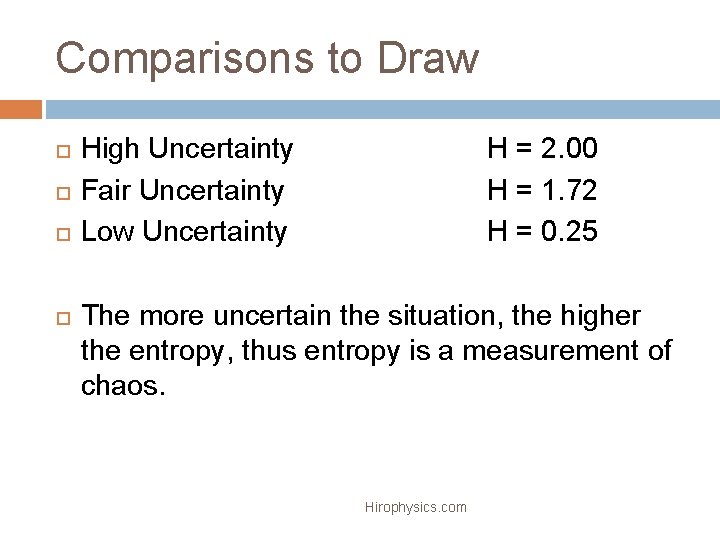

Comparisons to Draw

| High Uncertainty | Fair Uncertainty | Low Uncertainty |
|---|---|---|
| H = 2.00 | H = 1.72 | H = 0.25 |

The more uncertain the situation, the higher the entropy; thus entropy is a measurement of chaos.

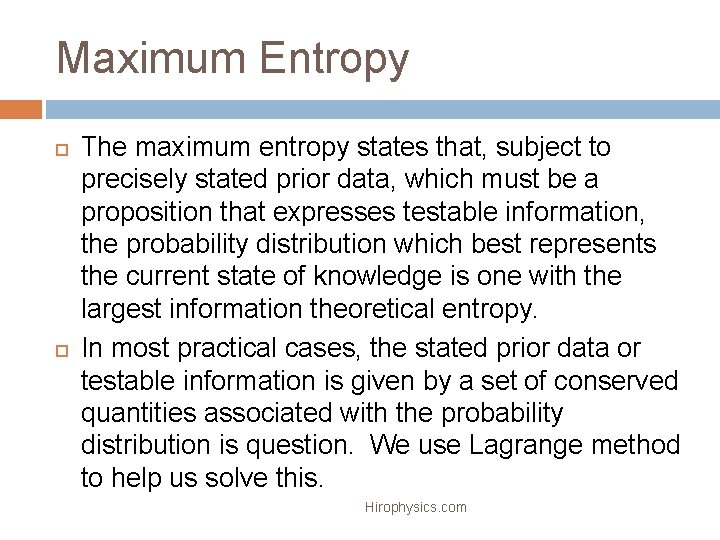

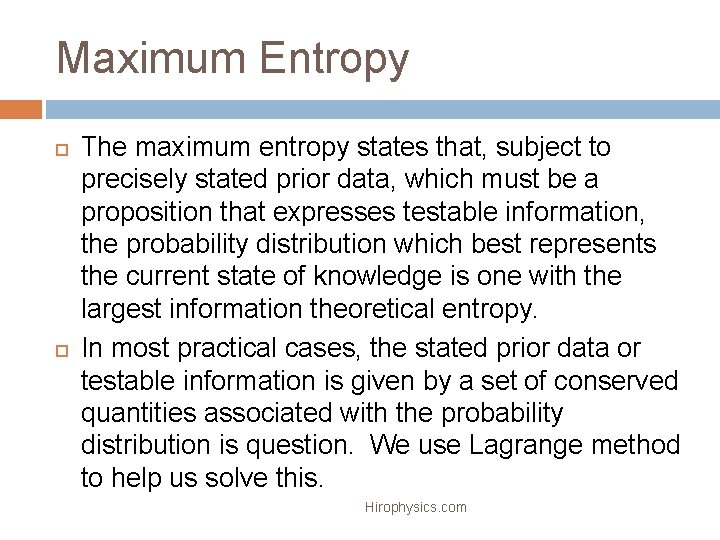
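The three dog-race entropies can be checked with a few lines of Python. This is a minimal sketch, assuming base-2 logarithms (which is what the table values imply); the function name `shannon_entropy` and the dictionary labels are illustrative choices, not part of the slides.

```python
import math


def shannon_entropy(probabilities):
    """Return H = -sum(p * log2(p)) in bits.

    Terms with p == 0 are skipped, since p * log2(p) tends to 0 as p -> 0.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Chances to win for Fido, Ms Fluff, Spike, Woofers in the three slides.
races = {
    "high uncertainty": [0.25, 0.25, 0.25, 0.25],
    "fair uncertainty": [0.08, 0.17, 0.25, 0.50],
    "low uncertainty": [0.01, 0.01, 0.01, 0.97],
}

for label, chances in races.items():
    print(f"{label}: H = {shannon_entropy(chances):.2f} bits")
```

Because the code sums unrounded terms, the middle and last values come out near 1.73 and 0.24 rather than the 1.72 and 0.25 obtained by adding the rounded table entries; the ordering of the three cases is unchanged.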

Maximum Entropy

The principle of maximum entropy states that, subject to precisely stated prior data, which must be a proposition that expresses testable information, the probability distribution which best represents the current state of knowledge is the one with the largest information-theoretic entropy.

In most practical cases, the stated prior data or testable information is given by a set of conserved quantities associated with the probability distribution in question.

We use the method of Lagrange multipliers to help us solve this.

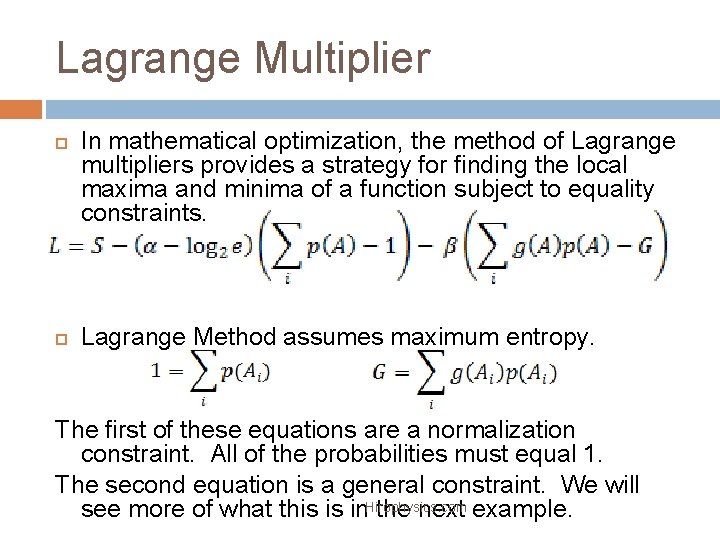

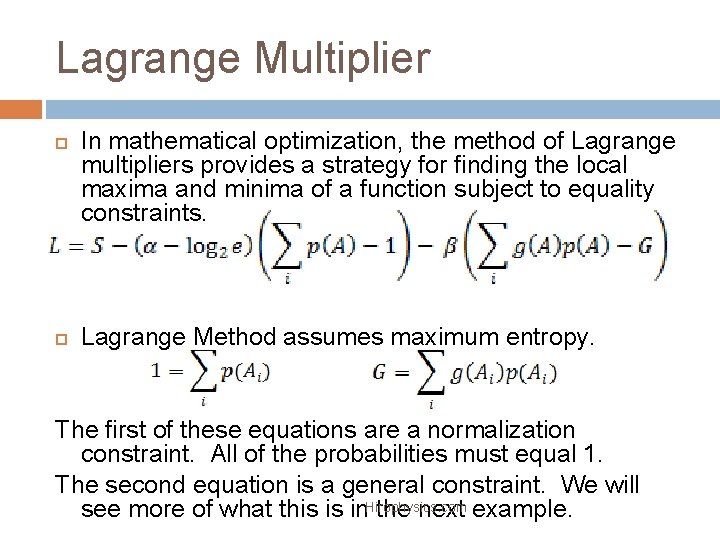

Lagrange Multiplier

In mathematical optimization, the method of Lagrange multipliers provides a strategy for finding the local maxima and minima of a function subject to equality constraints. Here the Lagrange method is applied under the assumption of maximum entropy.

The first of these equations is a normalization constraint: all of the probabilities must sum to 1. The second equation is a general constraint. We will see more of what this is in the next example.

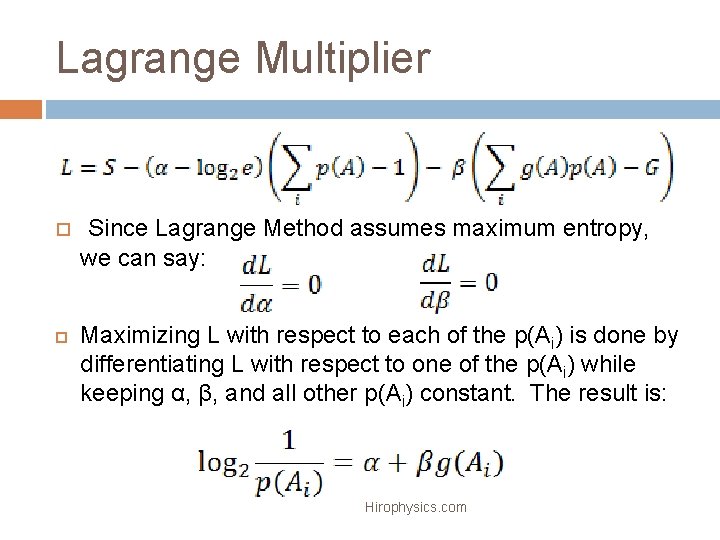

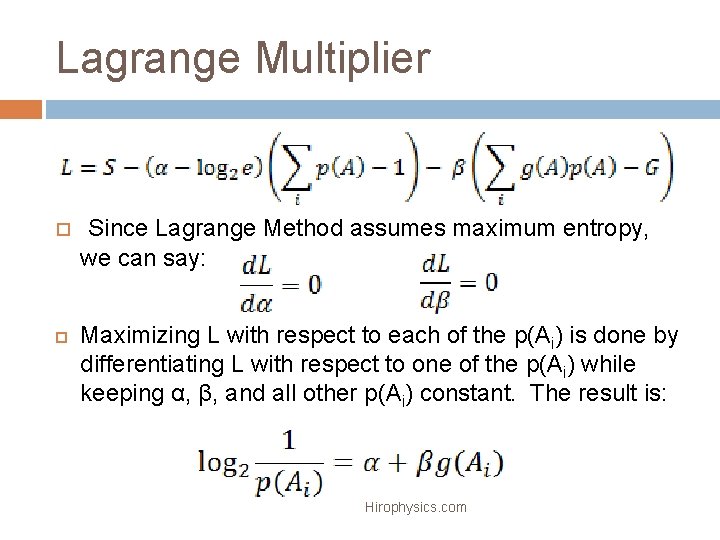

Lagrange Multiplier

Since the Lagrange method assumes maximum entropy, we can say:

Maximizing L with respect to each of the p(A_i) is done by differentiating L with respect to one of the p(A_i) while keeping α, β, and all other p(A_i) constant. The result is:

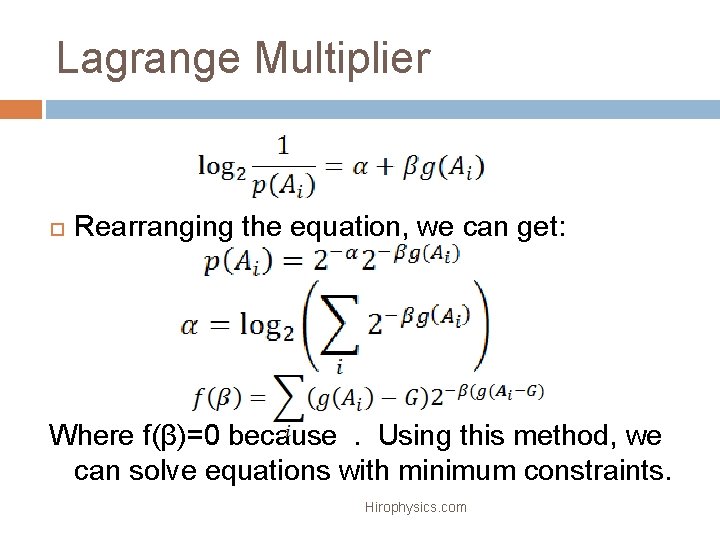

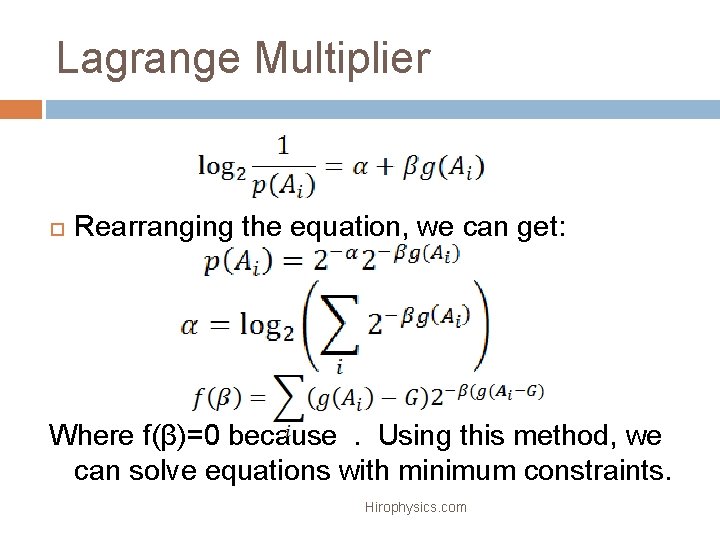

Lagrange Multiplier

Rearranging the equation, we get the expression shown in the slide image, where f(β) = 0.

Using this method, we can solve problems with a minimal set of constraints.

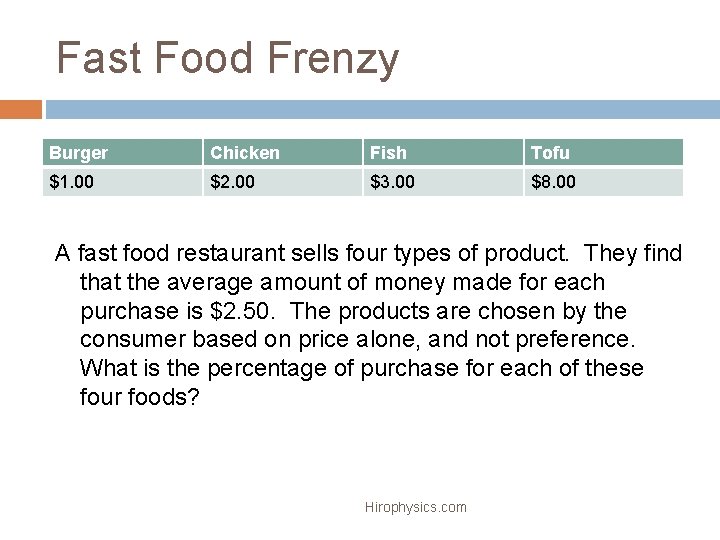

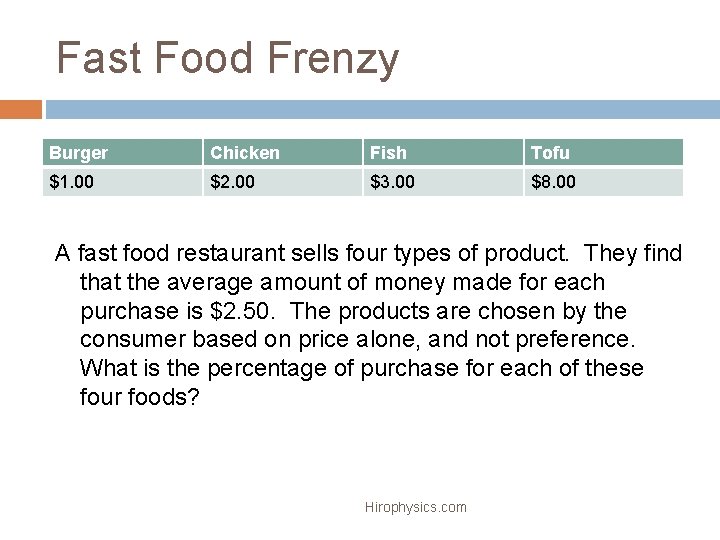
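The slide equations survive only as images, so the following is one standard way to write the derivation rather than a transcription of them. It assumes base-2 logarithms, a single general constraint with values $g(A_i)$, and an expected value $G$; the symbols $g$ and $G$ are notation chosen here, while $L$, $\alpha$, $\beta$, $p(A_i)$ and $f(\beta)$ follow the text.

```latex
\begin{align}
  % Constraints: normalization and one expected-value constraint
  1 &= \sum_i p(A_i), \qquad G = \sum_i g(A_i)\, p(A_i) \\
  % Lagrangian: entropy minus multipliers times the constraints
  L &= -\sum_i p(A_i) \log_2 p(A_i)
       - (\alpha - \log_2 e)\Big(\sum_i p(A_i) - 1\Big)
       - \beta \Big(\sum_i g(A_i)\, p(A_i) - G\Big) \\
  % Setting dL/dp(A_i) = 0 with alpha, beta and the other p(A_j) held fixed
  0 &= -\log_2 p(A_i) - \alpha - \beta\, g(A_i)
  \quad\Longrightarrow\quad
  p(A_i) = 2^{-\alpha}\, 2^{-\beta g(A_i)} \\
  % Normalization fixes alpha
  \alpha &= \log_2 \sum_i 2^{-\beta g(A_i)} \\
  % The expected-value constraint becomes a single equation in beta
  f(\beta) &= \sum_i \big(g(A_i) - G\big)\, 2^{-\beta\,(g(A_i) - G)} = 0
\end{align}
```

Writing the first multiplier as $\alpha - \log_2 e$ is only a convenience: it cancels the $\log_2 e$ produced by differentiating $p \log_2 p$. Once $\beta$ is found from $f(\beta) = 0$, the remaining quantities follow directly.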

Fast Food Frenzy

| Burger | Chicken | Fish | Tofu |
|---|---|---|---|
| $1.00 | $2.00 | $3.00 | $8.00 |

A fast food restaurant sells four types of product. They find that the average amount of money made for each purchase is $2.50. The products are chosen by the consumer based on price alone, and not preference. What is the percentage of purchase for each of these four foods?

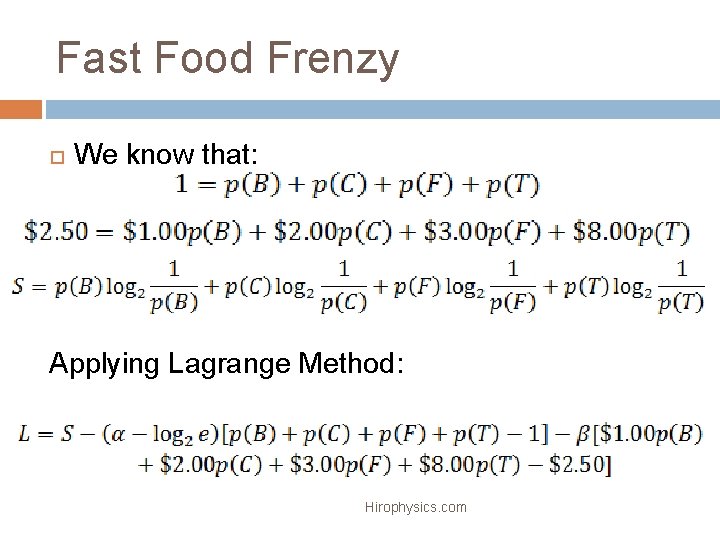

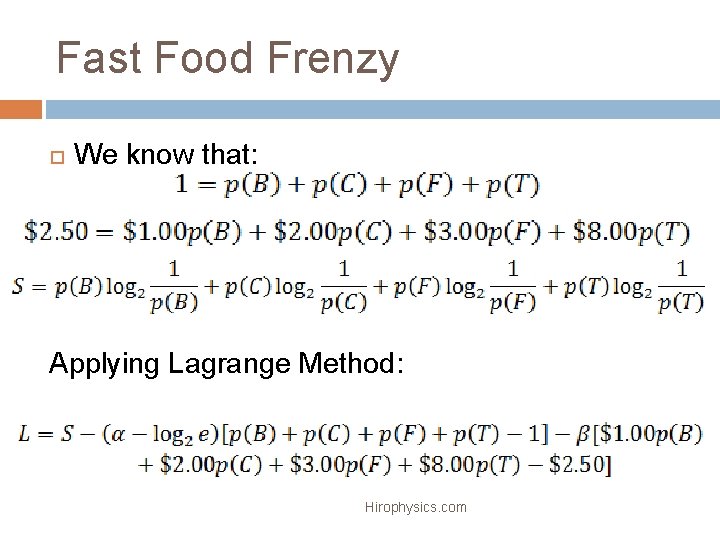

Fast Food Frenzy

We know that:

Applying the Lagrange method:

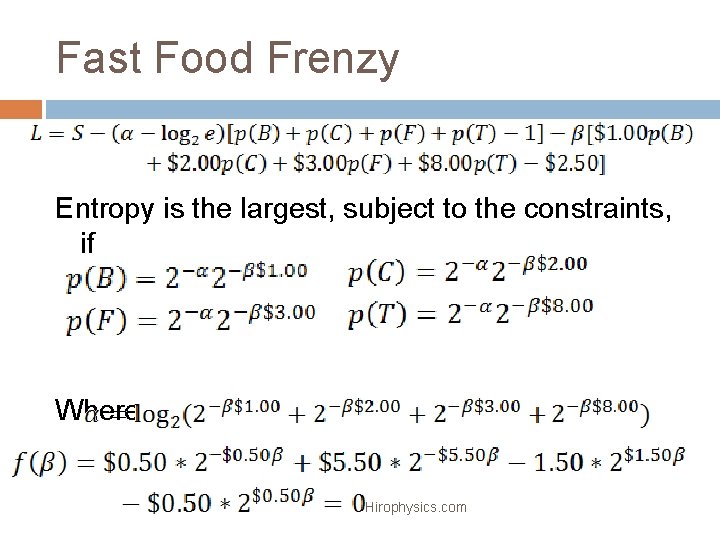

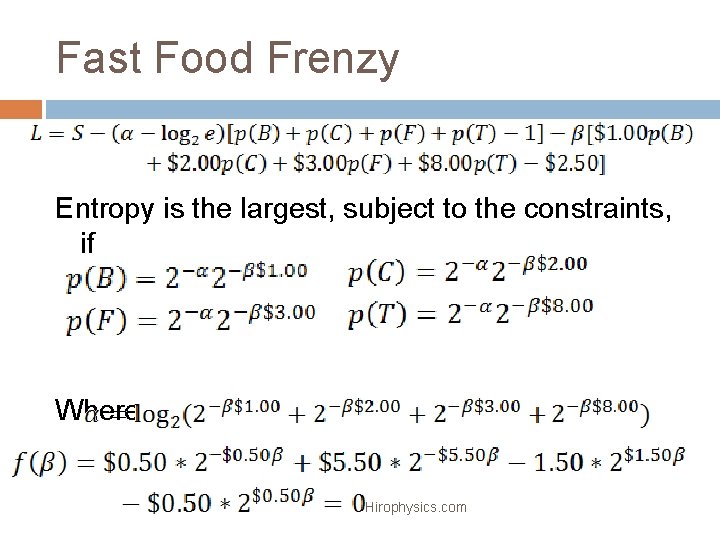

Fast Food Frenzy

Entropy is the largest, subject to the constraints, if [equation not recovered] where [equation not recovered]

Fast Food Frenzy

A zero-finding program was used to find the variables in these equations. The results were:

| Food | Probability of Purchase |
|---|---|
| Burger | 0.3546 |
| Chicken | 0.2964 |
| Fish | 0.2477 |
| Tofu | 0.1011 |

0.3546 + 0.2964 + 0.2477 + 0.1011 = 0.9998

This rounds to one, and therefore the distribution is normalized.

The Lagrange method and maximum entropy can determine probabilities using only a small set of constraints. This answer makes sense because the probabilities of each food being chosen are consistent with the price constraint given to them.

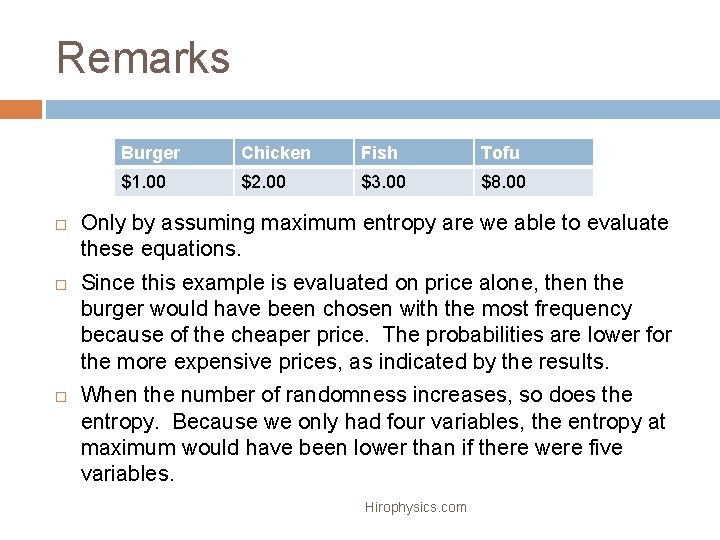

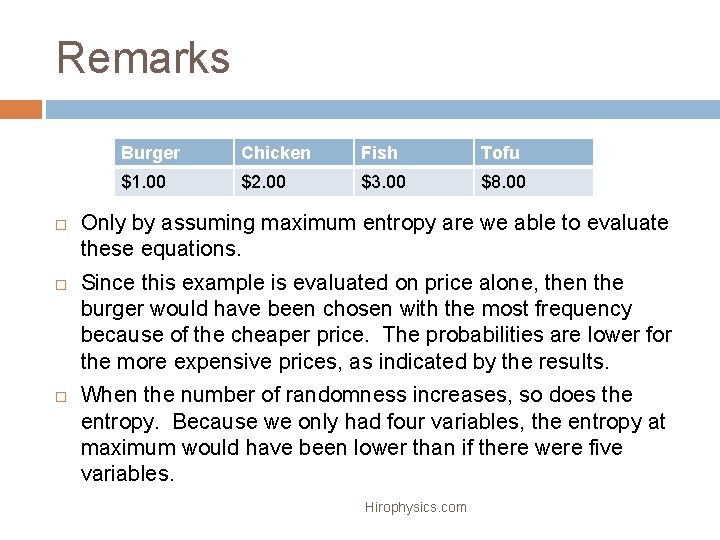
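The zero-finding step can be sketched in Python. The slides do not say which program or method was used, so this version makes several assumptions: the probabilities have the exponential form p_i ∝ 2^(-β·price_i) that the maximum entropy derivation yields, β is found by simple bisection, and the names `f`, `bisect`, and `max_entropy_probabilities` are hypothetical helpers written for this illustration.

```python
PRICES = {"Burger": 1.00, "Chicken": 2.00, "Fish": 3.00, "Tofu": 8.00}
AVERAGE_PRICE = 2.50


def f(beta):
    """Constraint function whose zero gives the multiplier beta (assumed form)."""
    return sum(
        (price - AVERAGE_PRICE) * 2.0 ** (-beta * (price - AVERAGE_PRICE))
        for price in PRICES.values()
    )


def bisect(func, lo, hi, tol=1e-12):
    """Find a zero of func in [lo, hi] by bisection; func must change sign."""
    f_lo = func(lo)
    if f_lo * func(hi) > 0:
        raise ValueError("func must have opposite signs at lo and hi")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = func(mid)
        if f_lo * f_mid <= 0:
            hi = mid  # the zero lies in the lower half
        else:
            lo, f_lo = mid, f_mid  # the zero lies in the upper half
    return 0.5 * (lo + hi)


def max_entropy_probabilities():
    """Return the purchase probabilities implied by the two constraints."""
    beta = bisect(f, 0.0, 5.0)  # f(0) > 0 and f(5) < 0 for these prices
    weights = {name: 2.0 ** (-beta * price) for name, price in PRICES.items()}
    total = sum(weights.values())  # dividing by the total enforces normalization
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    for name, p in max_entropy_probabilities().items():
        print(f"{name}: {p:.4f}")
```

Under these assumptions the computed probabilities agree with the slide's table to about three decimal places, and their price-weighted average reproduces the $2.50 constraint.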

Remarks

Prices: Burger $1.00, Chicken $2.00, Fish $3.00, Tofu $8.00.

Only by assuming maximum entropy are we able to evaluate these equations.

Since this example is evaluated on price alone, the burger is chosen most frequently because it is the cheapest. The probabilities are lower for the more expensive items, as indicated by the results.

As the number of possible outcomes increases, so does the maximum attainable entropy. Because we only had four variables, the entropy at maximum is lower than it would be with five variables (with no other constraints, at most $\log_2 4 = 2$ bits versus $\log_2 5 \approx 2.32$ bits).

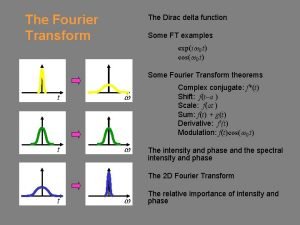

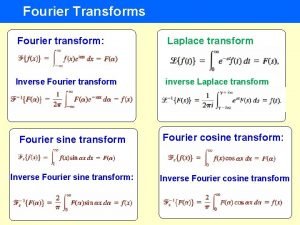

Fourier Transformation

The Fourier transform is a mathematical operation with many applications in physics and engineering that expresses a mathematical function of time as a function of frequency. The function is represented as a combination of sine and cosine functions at different frequencies.

Fourier transforms and maximum entropy can both be utilized to find the specific frequencies of a sine/cosine wave.

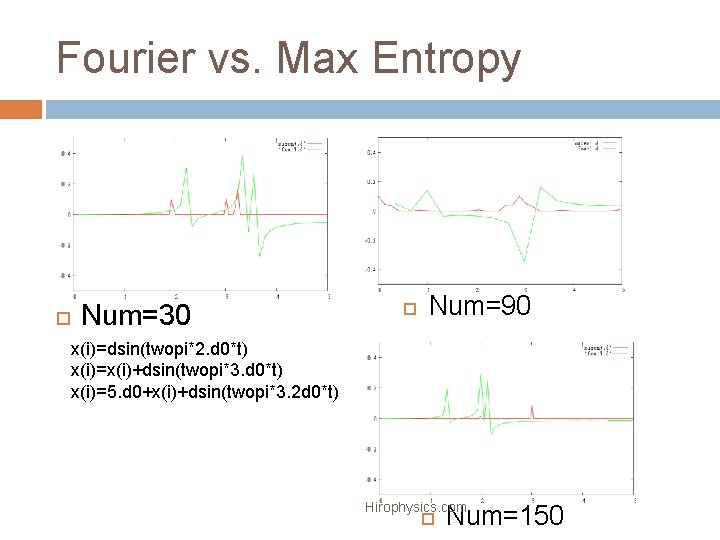

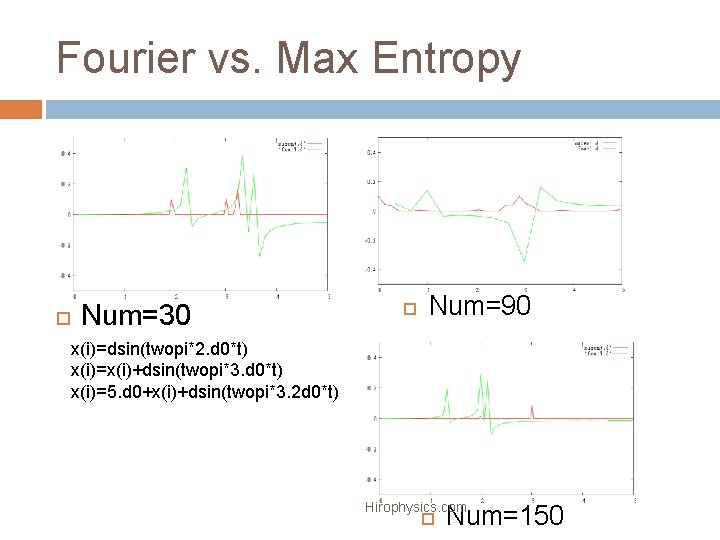

Fourier vs. Max Entropy

Test signal, built up term by term:

    x(i)=dsin(twopi*2.d0*t)
    x(i)=x(i)+dsin(twopi*3.d0*t)
    x(i)=5.d0+x(i)+dsin(twopi*3.2d0*t)

[Figure panels: Num=30, Num=90, Num=150]

Fourier vs. Max Entropy

Since we were looking for the frequencies 2.0, 3.0, and 3.2 in our sine waves (the arguments are 2π·2.0·t, 2π·3.0·t, and 2π·3.2·t), maximum entropy was consistently better at determining these numbers on the graphs.

Maximum entropy works better than Fourier over the range of 30 to 150 data points. This is because it calculates an average using a small amount of data. If the amount of data were dramatically increased, the Fourier method would work better.
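The Fourier side of this comparison can be illustrated with a plain discrete Fourier transform. This is a sketch under stated assumptions, not the program behind the slides: the sampling step `dt = 0.1` is a hypothetical value (the slides do not give one), the three signal lines are assumed to accumulate into a single signal, and the maximum entropy spectral estimate itself is not implemented here.

```python
import cmath
import math

FREQUENCIES = (2.0, 3.0, 3.2)  # frequencies of the three sine terms in the signal


def signal(n, dt):
    """Samples of 5 + sin(2*pi*2.0*t) + sin(2*pi*3.0*t) + sin(2*pi*3.2*t)."""
    two_pi = 2.0 * math.pi
    return [
        5.0 + sum(math.sin(two_pi * freq * i * dt) for freq in FREQUENCIES)
        for i in range(n)
    ]


def dft_magnitudes(x):
    """Magnitudes of the DFT for bins 0 .. n//2 (direct O(n^2) sum)."""
    n = len(x)
    return [
        abs(sum(x[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n)))
        for k in range(n // 2 + 1)
    ]


def frequency_resolution(n, dt):
    """Spacing between adjacent DFT bins: one over the record length."""
    return 1.0 / (n * dt)


def strongest_frequencies(n, dt, count=3):
    """Frequencies of the `count` largest non-DC DFT bins, in ascending order."""
    mags = dft_magnitudes(signal(n, dt))
    bins = sorted(range(1, len(mags)), key=lambda k: mags[k], reverse=True)
    return sorted(k * frequency_resolution(n, dt) for k in bins[:count])


if __name__ == "__main__":
    for n in (30, 90, 150):
        print(n, frequency_resolution(n, 0.1), strongest_frequencies(n, 0.1))
```

With this assumed step, 30 points give a bin spacing of about 0.33, which is wider than the 0.2 gap between the 3.0 and 3.2 components, so the DFT cannot place them in separate bins. At 150 points the spacing drops to about 0.067 and all three components fall on their own bins, which is consistent with the remark that the Fourier method improves as the amount of data grows.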

Sources

http://en.wikipedia.org/wiki/Entropy
http://www.eoht.info/page/High+entropy+state
http://en.wikipedia.org/wiki/Second_law_of_thermodynamics
http://www.entropylaw.com/

Dậy thổi cơm mua thịt cá

Dậy thổi cơm mua thịt cá Cơm

Cơm Minimum enthalpy and maximum entropy

Minimum enthalpy and maximum entropy Absolute max vs local max

Absolute max vs local max Maximum likelihood vs maximum parsimony

Maximum likelihood vs maximum parsimony Maximum likelihood vs maximum parsimony

Maximum likelihood vs maximum parsimony Fourier transform time shift

Fourier transform time shift Fourier transform

Fourier transform Fourier sorok

Fourier sorok Koeffizient mathe

Koeffizient mathe Fourier transform definition

Fourier transform definition Fourier delta function

Fourier delta function Enthalpy vs entropy

Enthalpy vs entropy Q system = -q surroundings

Q system = -q surroundings What is enthalpy and entropy

What is enthalpy and entropy If gibbs free energy is negative

If gibbs free energy is negative Enthalpy entropy free energy

Enthalpy entropy free energy Ap chem spontaneity entropy and free energy

Ap chem spontaneity entropy and free energy Entropy system and surroundings

Entropy system and surroundings Entropy order parameters and complexity

Entropy order parameters and complexity Entropy equation

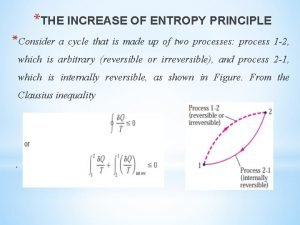

Entropy equation Increase in entropy principle

Increase in entropy principle Energi bebas gibbs

Energi bebas gibbs What is reversible process

What is reversible process