Mathematical Foundation Chapter 4 Complexity Analysis Part I

- Slides: 31

Mathematical Foundation Chapter 4

Complexity Analysis (Part I)
- Data Structure, Algorithm, and Complexity Definitions
- Motivations for Complexity Analysis
- Example of Basic Operations
- Average, Best, and Worst Cases
- Simple Complexity Analysis Examples

Definitions
- Data Structure: the way in which the data of a program are stored.
- Algorithm: a well-defined sequence of computational steps to solve a problem. It often accepts a set of values as input and produces a set of values as output.
- Algorithm Analysis: determining the number of steps (instructions) and memory locations needed to solve a certain problem for any input of a particular size.

Motivations for Complexity Analysis
There are often many different algorithms that can be used to solve the same problem. Thus, it makes sense to develop techniques that allow us to:
- compare different algorithms with respect to their "efficiency"
- choose the most efficient algorithm for the problem

The efficiency of any algorithmic solution to a problem is a measure of its:
- Time efficiency: the time it takes to execute.
- Space efficiency: the space (primary or secondary memory) it uses.

We will focus on an algorithm's efficiency with respect to time.

Machine independence
The evaluation of efficiency should be as machine independent as possible. It is not useful to measure how fast the algorithm runs, because this depends on which particular computer, OS, programming language, compiler, and kind of inputs are used in testing. Instead,
- we count the number of basic operations the algorithm performs.
- we calculate how this number depends on the size of the input.

A basic operation is an operation that takes a constant amount of time to execute. Hence, the efficiency of an algorithm is measured by the number of basic operations it performs. This number is a function of the input size n.

Example of Basic Operations:
- Arithmetic operations: *, /, %, +, -
- Assignment statements of simple data types
- Reading of a primitive type
- Writing of a primitive type
- Simple conditional tests: if (x < 12) ...
- A method call (Note: the execution time of the method itself may depend on the value of the parameter, and it may not be constant)
- A method's return statement
- Memory access

We consider an operation such as ++, +=, and *= as consisting of two basic operations.
Note: To simplify complexity analysis, we will not count memory access (fetch or store) operations.

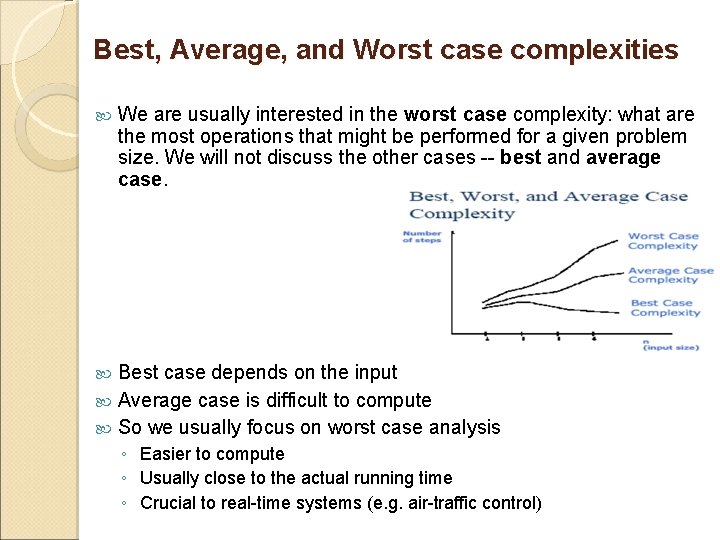

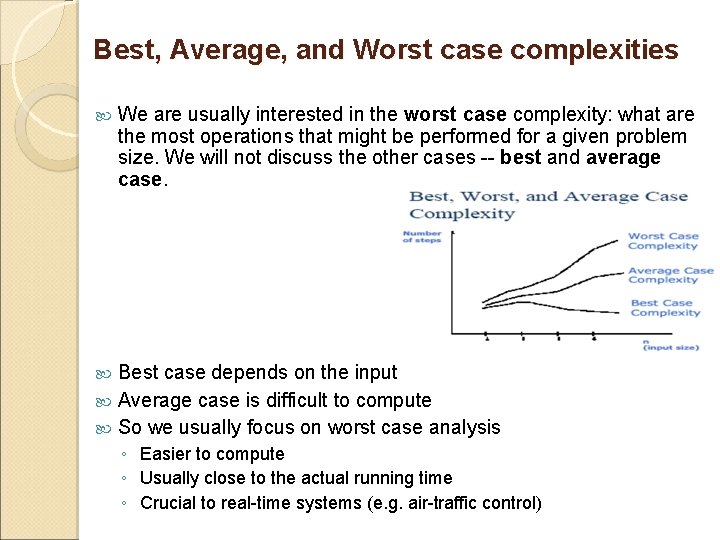

Best, Average, and Worst case complexities
We are usually interested in the worst case complexity: the largest number of operations that might be performed for a given problem size. We will not discuss the other cases, best and average case:
- Best case depends on the input
- Average case is difficult to compute

So we usually focus on worst case analysis:
- Easier to compute
- Usually close to the actual running time
- Crucial to real-time systems (e.g. air-traffic control)

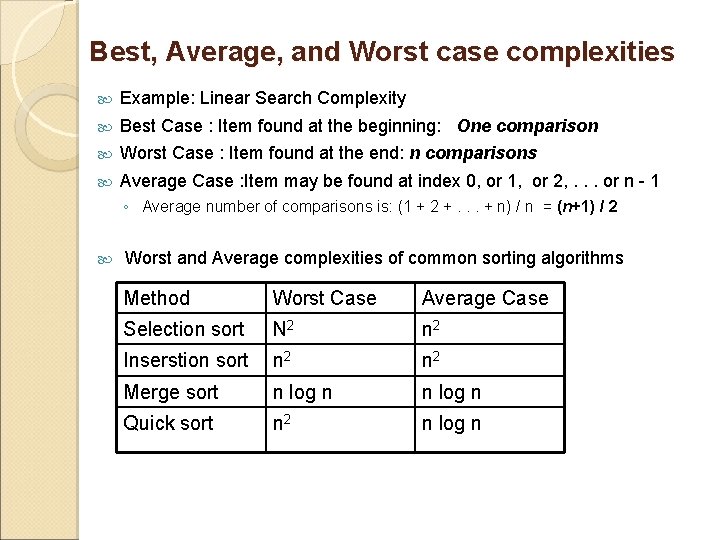

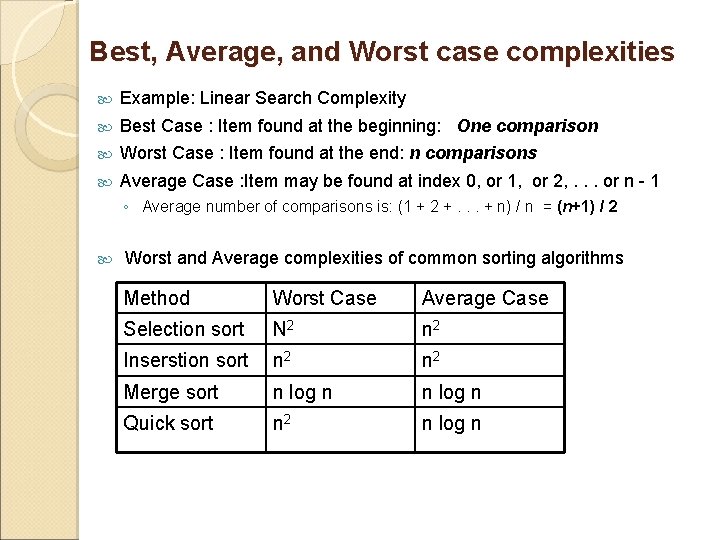

Best, Average, and Worst case complexities
Example: Linear Search Complexity
- Best Case: Item found at the beginning: one comparison
- Worst Case: Item found at the end: n comparisons
- Average Case: Item may be found at index 0, or 1, or 2, ... or n - 1
  - Average number of comparisons is: (1 + 2 + ... + n) / n = (n + 1) / 2

Worst and Average complexities of common sorting algorithms

| Method | Worst Case | Average Case |
| --- | --- | --- |
| Selection sort | $n^2$ | $n^2$ |
| Insertion sort | $n^2$ | $n^2$ |
| Merge sort | $n \log n$ | $n \log n$ |
| Quick sort | $n^2$ | $n \log n$ |
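The linear search counts above can be checked empirically. The following is a minimal sketch, assuming the item is present and equally likely to be at any index; the class and method names (`LinearSearchCount`, `comparisons`, `averageComparisons`) are hypothetical and do not come from the slides.

```java
// Hypothetical helper class for illustration; not part of the slides.
class LinearSearchCount {
    // Returns how many element comparisons a linear search performs
    // before it finds target (or arr.length if target is absent).
    static int comparisons(int[] arr, int target) {
        int count = 0;
        for (int value : arr) {
            count++;                 // one comparison per inspected element
            if (value == target) {
                break;
            }
        }
        return count;
    }

    // Averages the comparison count over all n possible positions
    // of a successful search in an array holding 0, 1, ..., n - 1.
    static double averageComparisons(int n) {
        int[] arr = new int[n];
        for (int i = 0; i < n; i++) {
            arr[i] = i;
        }
        long total = 0;
        for (int target = 0; target < n; target++) {
            total += comparisons(arr, target);
        }
        return (double) total / n;
    }

    public static void main(String[] args) {
        int n = 9;
        System.out.println("measured average: " + averageComparisons(n));
        System.out.println("(n + 1) / 2     : " + (n + 1) / 2.0);
    }
}
```

For n = 9 the measured average and (n + 1) / 2 agree, since (1 + 2 + ... + 9) / 9 = 45 / 9.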

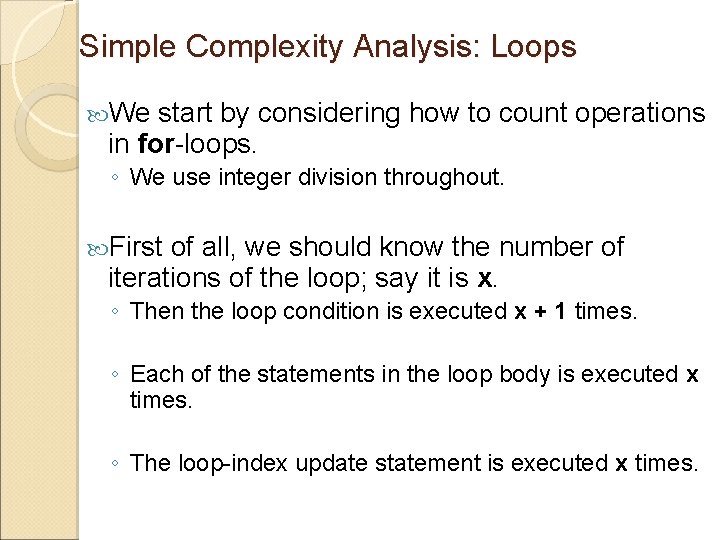

Simple Complexity Analysis: Loops
We start by considering how to count operations in for-loops.
- We use integer division throughout.

First of all, we should know the number of iterations of the loop; say it is x.
- Then the loop condition is executed x + 1 times.
- Each of the statements in the loop body is executed x times.
- The loop-index update statement is executed x times.

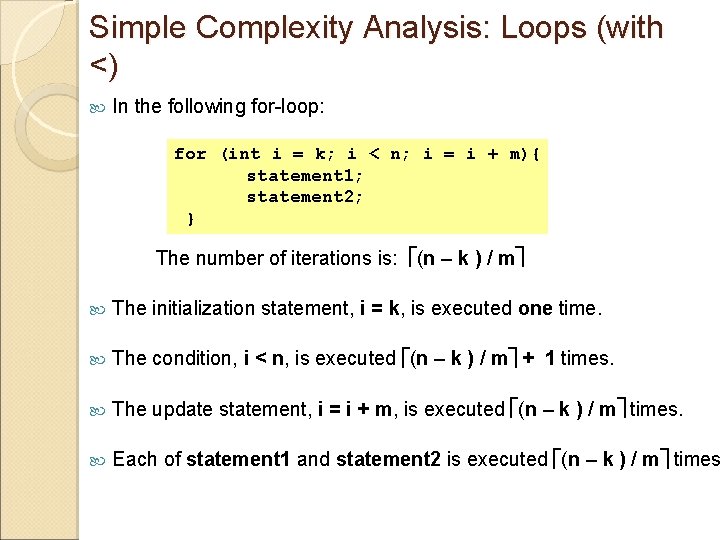

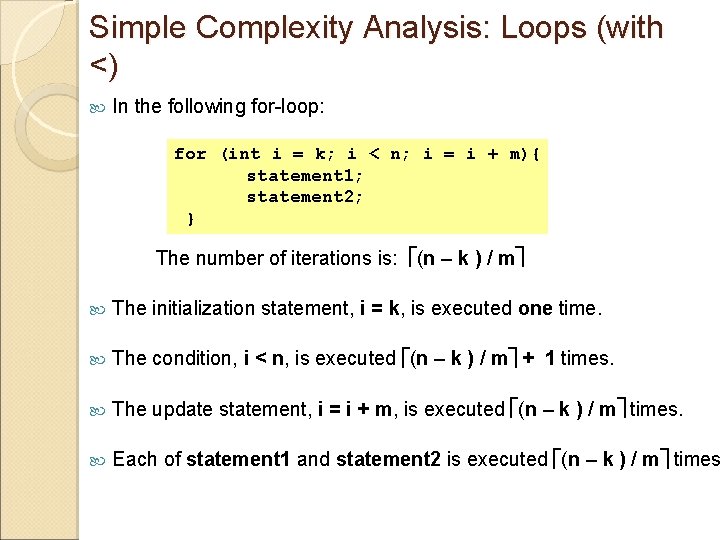

Simple Complexity Analysis: Loops (with <)
In the following for-loop:

    for (int i = k; i < n; i = i + m) {
        statement1;
        statement2;
    }

- The number of iterations is: (n - k) / m (exact when m divides n - k; otherwise the quotient must be rounded up).
- The initialization statement, i = k, is executed one time.
- The condition, i < n, is executed (n - k) / m + 1 times.
- The update statement, i = i + m, is executed (n - k) / m times.
- Each of statement1 and statement2 is executed (n - k) / m times.

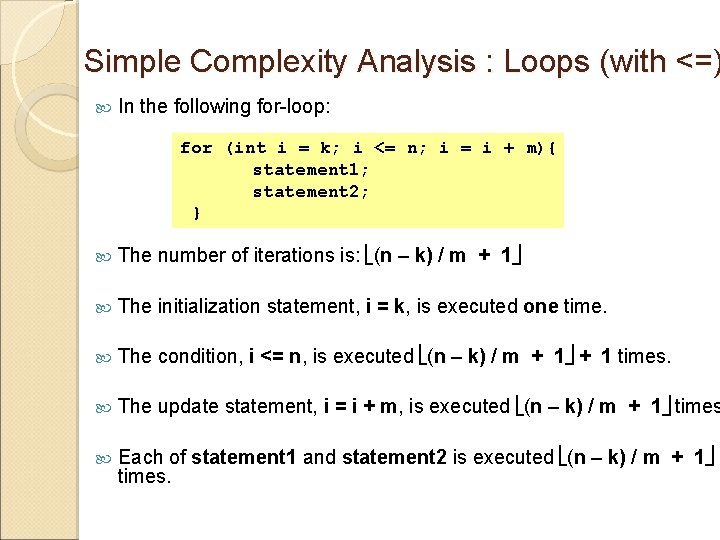

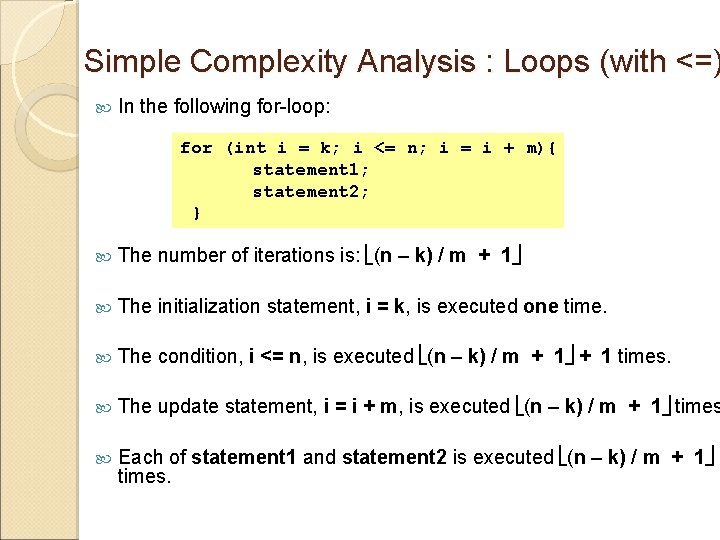

Simple Complexity Analysis: Loops (with <=)
In the following for-loop:

    for (int i = k; i <= n; i = i + m) {
        statement1;
        statement2;
    }

- The number of iterations is: (n - k) / m + 1
- The initialization statement, i = k, is executed one time.
- The condition, i <= n, is executed (n - k) / m + 2 times (one more than the number of iterations).
- The update statement, i = i + m, is executed (n - k) / m + 1 times.
- Each of statement1 and statement2 is executed (n - k) / m + 1 times.
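The iteration counts for the two additive loops can be compared with the formulas by simply running the loops. This is a minimal sketch assuming m > 0 and k <= n; the class and method names are hypothetical. Note that when m does not divide n - k, the `<` loop runs one more time than the integer quotient (n - k) / m suggests (for example, k = 0, n = 5, m = 2 visits i = 0, 2, 4).

```java
// Hypothetical helper class for illustration; not part of the slides.
class AdditiveLoopCount {
    // Counts iterations of: for (int i = k; i < n; i = i + m)
    static int countLessThan(int k, int n, int m) {
        int iterations = 0;
        for (int i = k; i < n; i = i + m) {
            iterations++;
        }
        return iterations;
    }

    // Counts iterations of: for (int i = k; i <= n; i = i + m)
    static int countLessOrEqual(int k, int n, int m) {
        int iterations = 0;
        for (int i = k; i <= n; i = i + m) {
            iterations++;
        }
        return iterations;
    }

    public static void main(String[] args) {
        int k = 0, n = 10, m = 2;   // m divides n - k, so both formulas are exact
        System.out.println("i <  n: counted " + countLessThan(k, n, m)
                + ", formula " + (n - k) / m);
        System.out.println("i <= n: counted " + countLessOrEqual(k, n, m)
                + ", formula " + ((n - k) / m + 1));
    }
}
```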

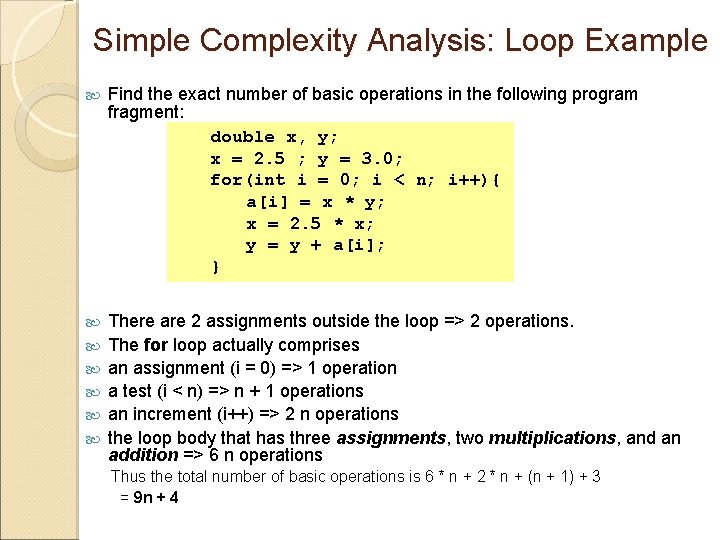

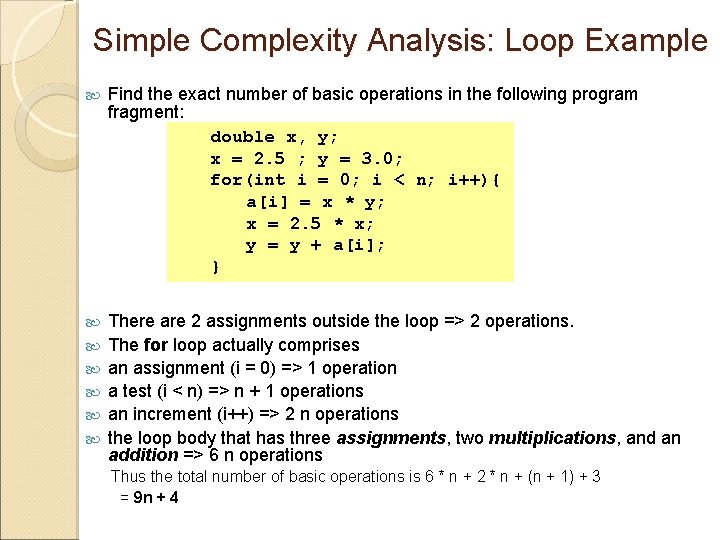

Simple Complexity Analysis: Loop Example
Find the exact number of basic operations in the following program fragment:

    double x, y;
    x = 2.5;
    y = 3.0;
    for (int i = 0; i < n; i++) {
        a[i] = x * y;
        x = 2.5 * x;
        y = y + a[i];
    }

There are 2 assignments outside the loop => 2 operations.
The for loop comprises:
- an assignment (i = 0) => 1 operation
- a test (i < n) => n + 1 operations
- an increment (i++) => 2n operations
- the loop body, which has three assignments, two multiplications, and an addition => 6n operations

Thus the total number of basic operations is 6n + 2n + (n + 1) + 3 = 9n + 4
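The total of 9n + 4 can be reproduced by instrumenting the fragment with a counter that follows the counting convention of these slides (an assignment, an arithmetic operation, and a test each count as one operation; i++ counts as two). This is only a sketch; the class name `LoopOperationCount` and the counter variable `ops` are hypothetical.

```java
// Hypothetical instrumented version of the loop example; not part of the slides.
class LoopOperationCount {
    // Returns the number of basic operations the fragment performs for input size n.
    static long countOperations(int n) {
        long ops = 0;
        double[] a = new double[n];
        double x, y;
        x = 2.5; ops++;                 // assignment
        y = 3.0; ops++;                 // assignment
        int i = 0; ops++;               // loop initialization
        while (true) {
            ops++;                      // test i < n (runs n + 1 times)
            if (!(i < n)) {
                break;
            }
            a[i] = x * y; ops += 2;     // multiplication + assignment
            x = 2.5 * x;  ops += 2;     // multiplication + assignment
            y = y + a[i]; ops += 2;     // addition + assignment
            i++;          ops += 2;     // i++ counts as two operations
        }
        return ops;
    }

    public static void main(String[] args) {
        for (int n : new int[] {0, 1, 10, 100}) {
            System.out.println("n = " + n + ": counted " + countOperations(n)
                    + ", formula " + (9L * n + 4));
        }
    }
}
```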

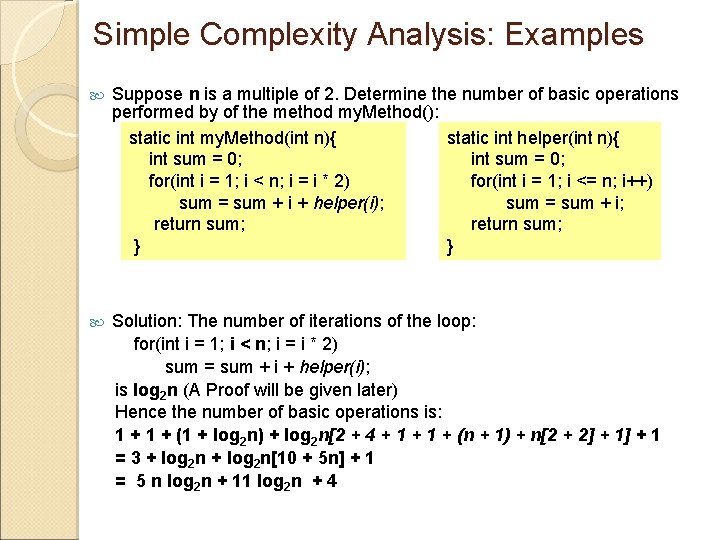

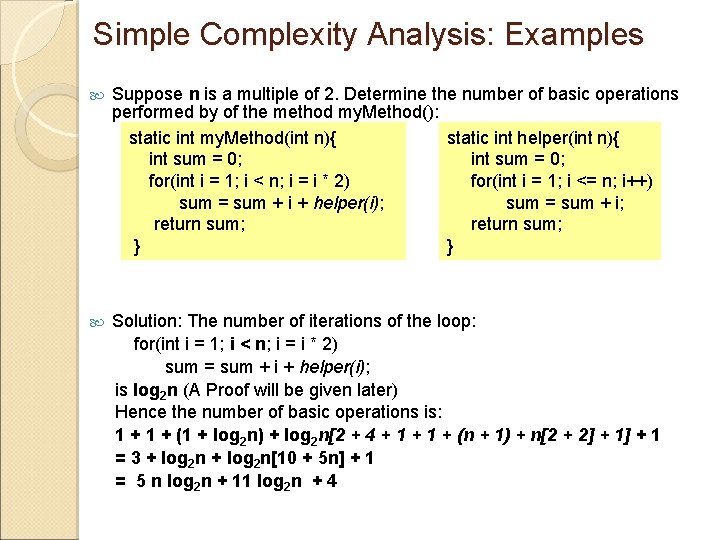

Simple Complexity Analysis: Examples
Suppose n is a power of 2. Determine the number of basic operations performed by the method myMethod():

    static int myMethod(int n) {
        int sum = 0;
        for (int i = 1; i < n; i = i * 2)
            sum = sum + i + helper(i);
        return sum;
    }

    static int helper(int n) {
        int sum = 0;
        for (int i = 1; i <= n; i++)
            sum = sum + i;
        return sum;
    }

Solution: The number of iterations of the loop

    for (int i = 1; i < n; i = i * 2)
        sum = sum + i + helper(i);

is $\log_2 n$ (a proof will be given later).
Hence the number of basic operations is:

$1 + 1 + (1 + \log_2 n) + \log_2 n \, [2 + 4 + 1 + 1 + (n + 1) + n[2 + 2] + 1] + 1$
$= 3 + \log_2 n + \log_2 n \, [10 + 5n] + 1$
$= 5n \log_2 n + 11 \log_2 n + 4$

Note: this count charges every call helper(i) as if its loop ran n times, so it is an upper bound on the number of operations actually performed.

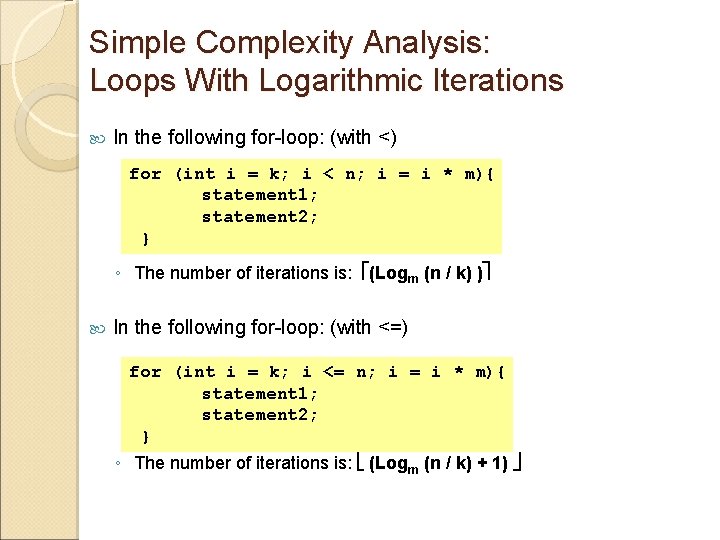

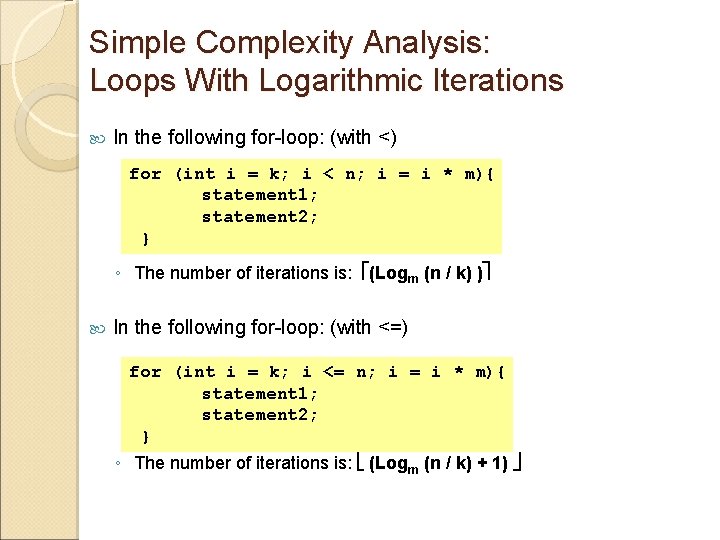

Simple Complexity Analysis: Loops With Logarithmic Iterations
In the following for-loop (with <):

    for (int i = k; i < n; i = i * m) {
        statement1;
        statement2;
    }

- The number of iterations is: $\log_m (n / k)$

In the following for-loop (with <=):

    for (int i = k; i <= n; i = i * m) {
        statement1;
        statement2;
    }

- The number of iterations is: $\log_m (n / k) + 1$

Both counts are exact when n / k is a power of m.
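The logarithmic iteration counts can be observed directly by running the two multiplicative loops. This is a minimal sketch assuming k >= 1 and m >= 2 (otherwise the loops would not terminate); the class and method names are hypothetical, and `long` is used for the loop index only to avoid overflow.

```java
// Hypothetical helper class for illustration; not part of the slides.
class MultiplicativeLoopCount {
    // Counts iterations of: for (i = k; i < n; i = i * m), assuming k >= 1 and m >= 2.
    static int countLessThan(long k, long n, long m) {
        int iterations = 0;
        for (long i = k; i < n; i = i * m) {
            iterations++;
        }
        return iterations;
    }

    // Counts iterations of: for (i = k; i <= n; i = i * m), assuming k >= 1 and m >= 2.
    static int countLessOrEqual(long k, long n, long m) {
        int iterations = 0;
        for (long i = k; i <= n; i = i * m) {
            iterations++;
        }
        return iterations;
    }

    public static void main(String[] args) {
        // n / k = 16 is a power of m = 2, so log_2(16) = 4 is exact.
        System.out.println("i <  n: " + countLessThan(1, 16, 2));
        System.out.println("i <= n: " + countLessOrEqual(1, 16, 2));
    }
}
```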

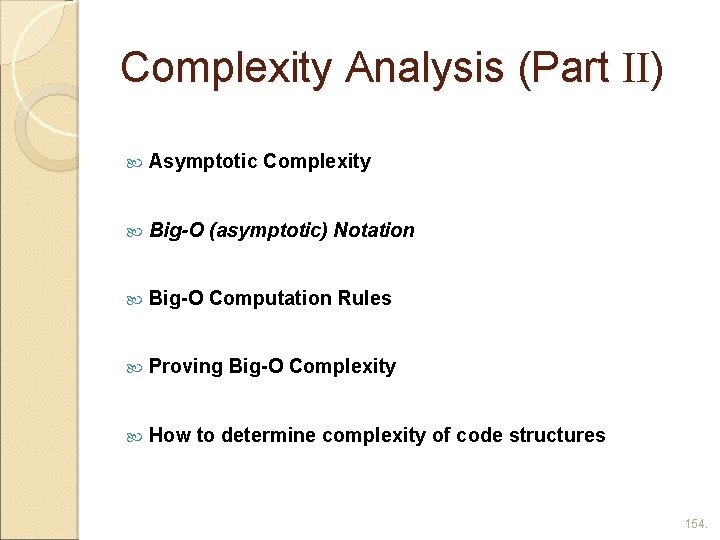

Complexity Analysis (Part II)
- Asymptotic Complexity
- Big-O (asymptotic) Notation
- Big-O Computation Rules
- Proving Big-O Complexity
- How to determine complexity of code structures

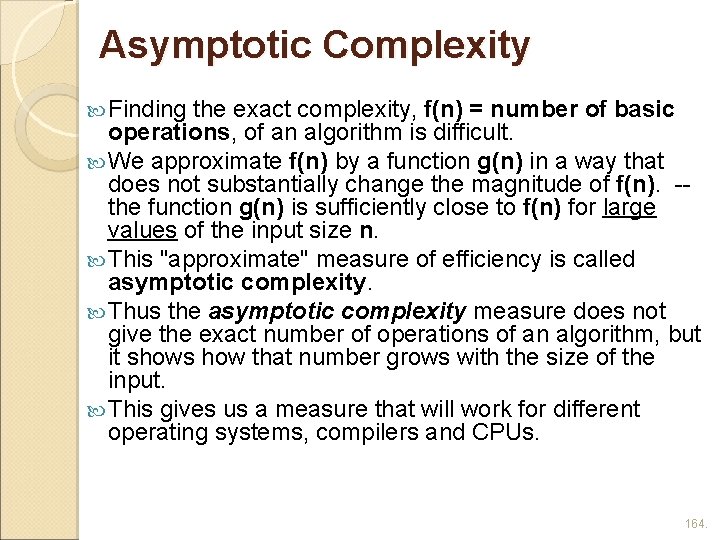

Asymptotic Complexity
Finding the exact complexity, f(n) = number of basic operations, of an algorithm is difficult. We approximate f(n) by a function g(n) in a way that does not substantially change the magnitude of f(n).
- The function g(n) is sufficiently close to f(n) for large values of the input size n.

This "approximate" measure of efficiency is called asymptotic complexity. Thus the asymptotic complexity measure does not give the exact number of operations of an algorithm, but it shows how that number grows with the size of the input. This gives us a measure that works across different operating systems, compilers, and CPUs.

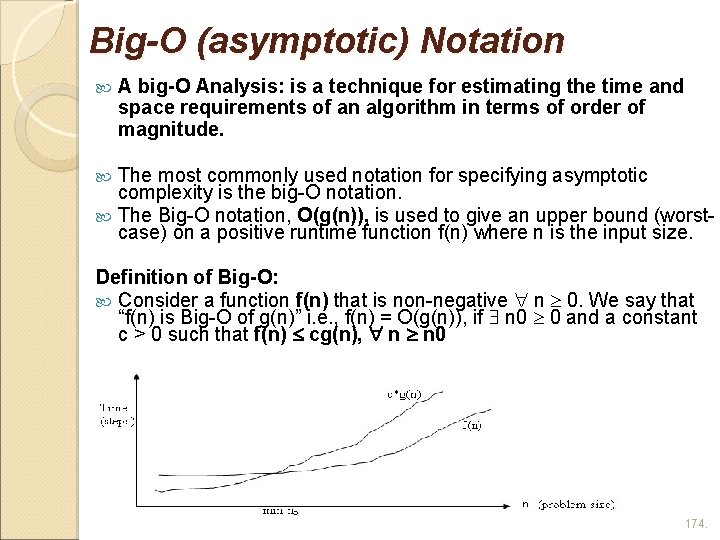

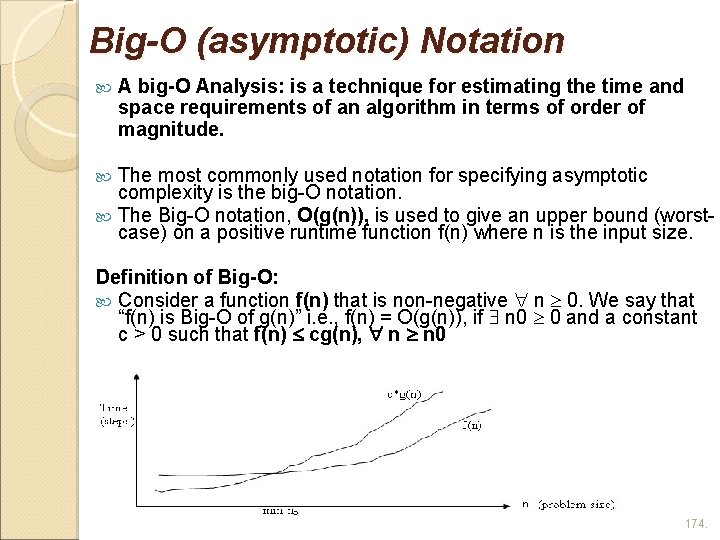

Big-O (asymptotic) Notation
A big-O analysis is a technique for estimating the time and space requirements of an algorithm in terms of order of magnitude. The most commonly used notation for specifying asymptotic complexity is the big-O notation. The Big-O notation, O(g(n)), is used to give an upper bound (worst-case) on a positive runtime function f(n), where n is the input size.

Definition of Big-O: Consider a function f(n) that is non-negative for all $n \ge 0$. We say that "f(n) is Big-O of g(n)", i.e., f(n) = O(g(n)), if there exist $n_0 \ge 0$ and a constant $c > 0$ such that

$f(n) \le c \, g(n)$ for all $n \ge n_0$

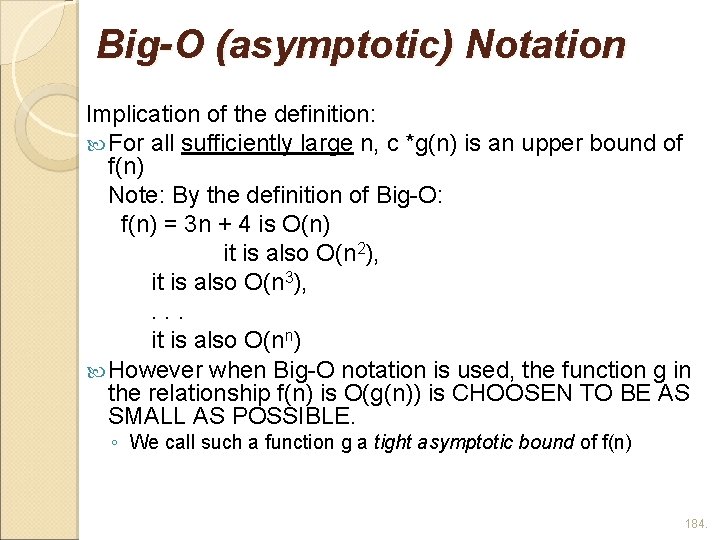

Big-O (asymptotic) Notation
Implication of the definition: For all sufficiently large n, $c \cdot g(n)$ is an upper bound of f(n).
Note: By the definition of Big-O, f(n) = 3n + 4 is O(n); it is also $O(n^2)$, it is also $O(n^3)$, ..., it is also $O(n^n)$.
However, when Big-O notation is used, the function g in the relationship "f(n) is O(g(n))" is chosen to be as small as possible.
- We call such a function g a tight asymptotic bound of f(n).

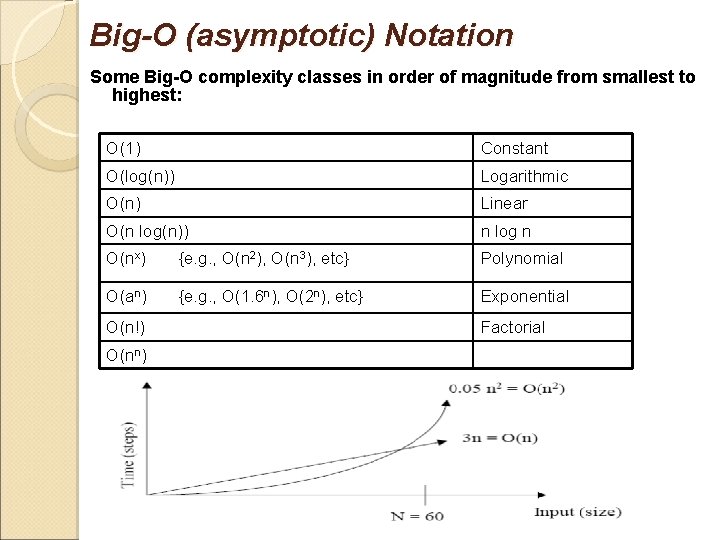

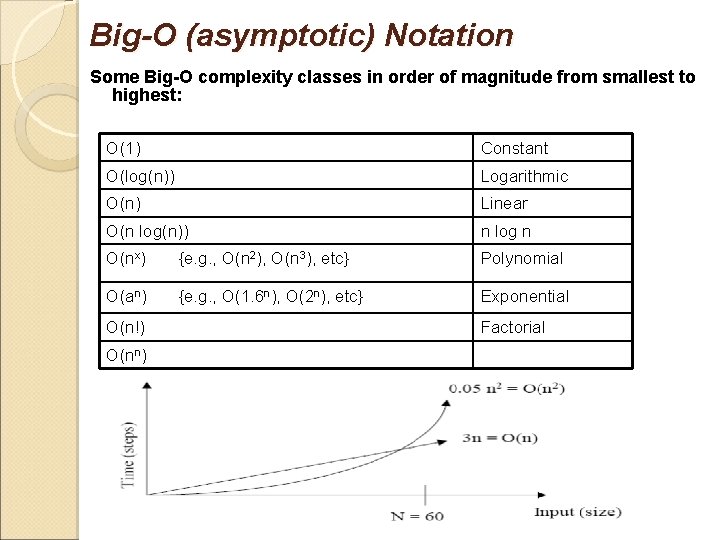

Big-O (asymptotic) Notation
Some Big-O complexity classes, in order of magnitude from smallest to largest:

| Class | Name |
| --- | --- |
| $O(1)$ | Constant |
| $O(\log n)$ | Logarithmic |
| $O(n)$ | Linear |
| $O(n \log n)$ | n log n |
| $O(n^x)$, e.g. $O(n^2)$, $O(n^3)$, etc. | Polynomial |
| $O(a^n)$, e.g. $O(1.6^n)$, $O(2^n)$, etc. | Exponential |
| $O(n!)$ | Factorial |
| $O(n^n)$ | |

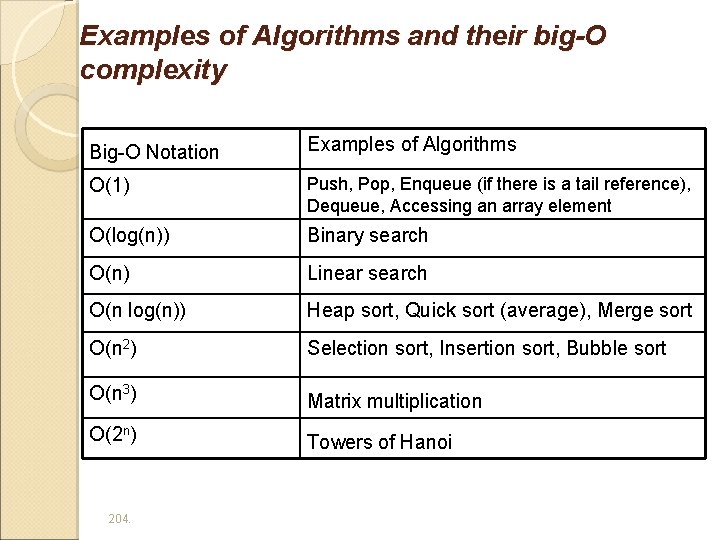

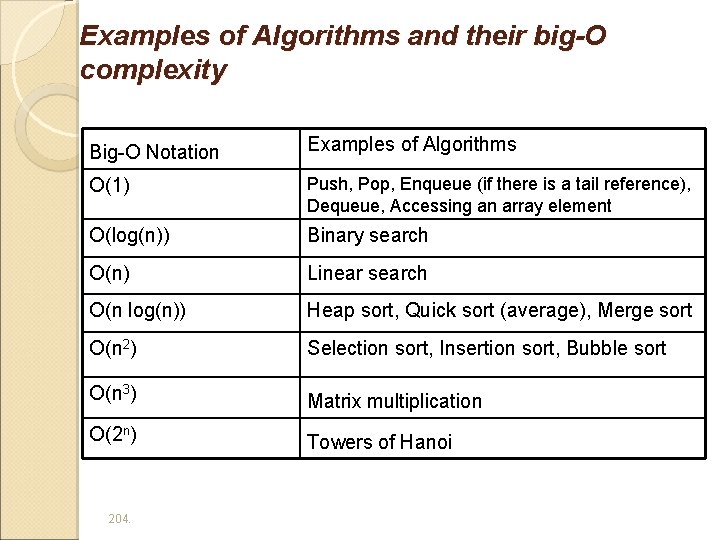

Examples of Algorithms and their big-O complexity

| Big-O Notation | Examples of Algorithms |
| --- | --- |
| $O(1)$ | Push, Pop, Enqueue (if there is a tail reference), Dequeue, Accessing an array element |
| $O(\log n)$ | Binary search |
| $O(n)$ | Linear search |
| $O(n \log n)$ | Heap sort, Quick sort (average), Merge sort |
| $O(n^2)$ | Selection sort, Insertion sort, Bubble sort |
| $O(n^3)$ | Matrix multiplication |
| $O(2^n)$ | Towers of Hanoi |

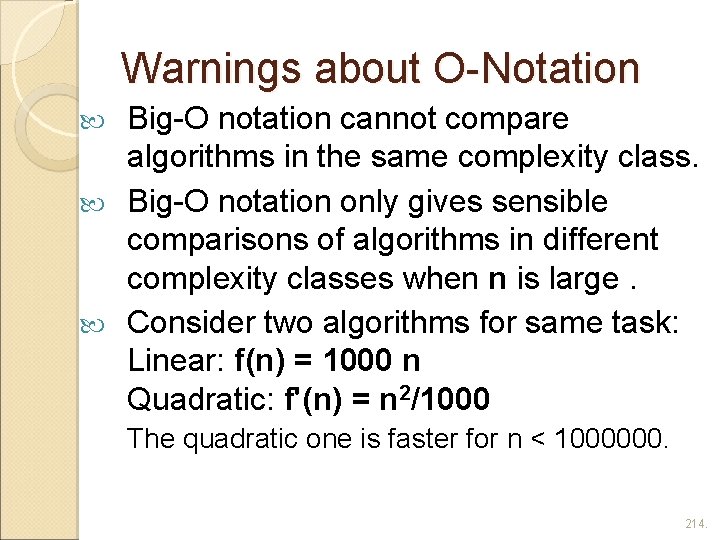

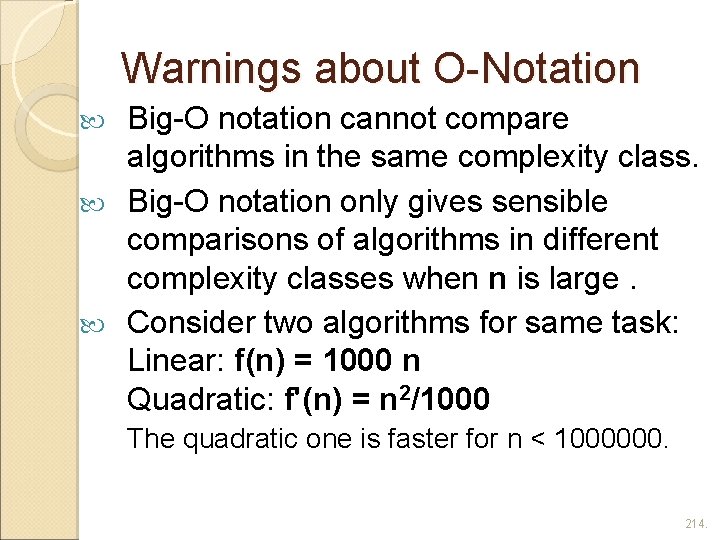

Warnings about O-Notation
- Big-O notation cannot compare algorithms in the same complexity class.
- Big-O notation only gives sensible comparisons of algorithms in different complexity classes when n is large.

Consider two algorithms for the same task:
- Linear: $f(n) = 1000n$
- Quadratic: $f'(n) = n^2 / 1000$

The quadratic one is faster for n < 1000000.
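The crossover point in this warning can be checked with a few lines of arithmetic. The sketch below compares the two cost functions using exact `long` arithmetic (both sides are multiplied by 1000 to avoid fractions); the class and method names are hypothetical.

```java
// Hypothetical helper class for illustration; not part of the slides.
class GrowthCrossover {
    // True when the quadratic cost n^2 / 1000 is strictly below the linear cost 1000 n.
    // Multiplying both sides by 1000 gives the equivalent test n^2 < 1,000,000 n.
    static boolean quadraticIsCheaper(long n) {
        return n * n < 1_000_000L * n;
    }

    public static void main(String[] args) {
        long[] sizes = {10L, 1_000L, 999_999L, 1_000_000L, 2_000_000L};
        for (long n : sizes) {
            System.out.println("n = " + n + ": quadratic cheaper? " + quadraticIsCheaper(n));
        }
    }
}
```

At n = 1000000 the two costs are equal, and beyond that point the linear algorithm is cheaper, which is the regime that Big-O comparisons describe.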

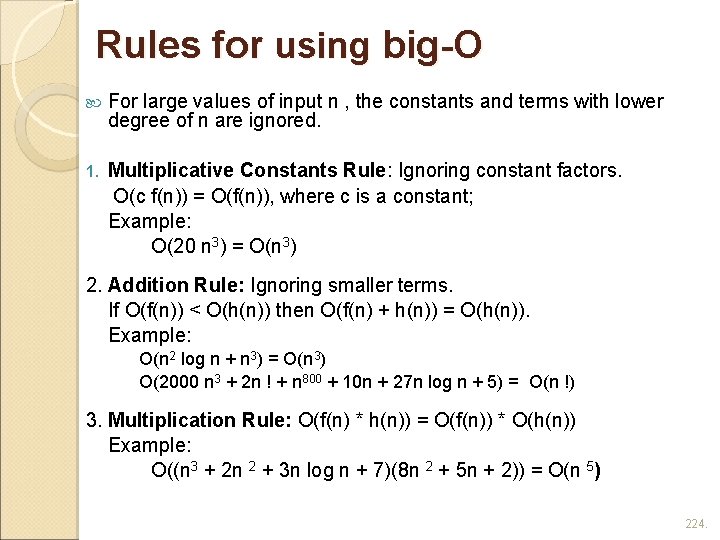

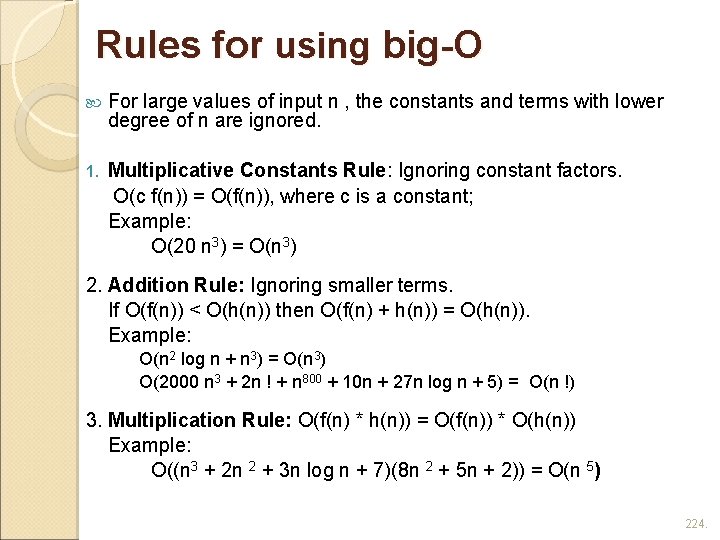

Rules for using big-O
For large values of input n, the constants and terms with lower degree of n are ignored.

1. Multiplicative Constants Rule: Ignoring constant factors.
   $O(c \cdot f(n)) = O(f(n))$, where c is a constant.
   Example: $O(20 n^3) = O(n^3)$
2. Addition Rule: Ignoring smaller terms.
   If $O(f(n)) < O(h(n))$ then $O(f(n) + h(n)) = O(h(n))$.
   Examples:
   $O(n^2 \log n + n^3) = O(n^3)$
   $O(2000 n^3 + 2 n! + n^{800} + 10^n + 27 n \log n + 5) = O(n!)$
3. Multiplication Rule:
   $O(f(n) \cdot h(n)) = O(f(n)) \cdot O(h(n))$
   Example: $O((n^3 + 2 n^2 + 3 n \log n + 7)(8 n^2 + 5 n + 2)) = O(n^5)$

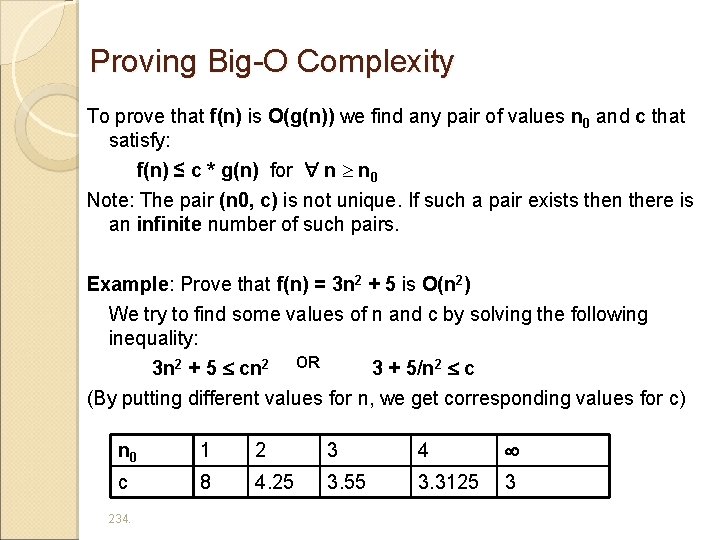

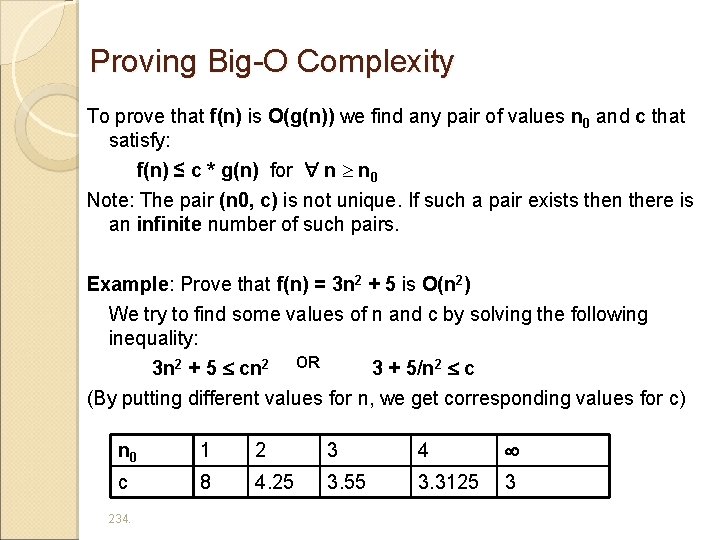

Proving Big-O Complexity
To prove that f(n) is O(g(n)), we find any pair of values $n_0$ and c that satisfy:

$f(n) \le c \cdot g(n)$ for all $n \ge n_0$

Note: The pair $(n_0, c)$ is not unique. If such a pair exists, then there is an infinite number of such pairs.

Example: Prove that $f(n) = 3n^2 + 5$ is $O(n^2)$.
We try to find some values of n and c by solving the following inequality:

$3n^2 + 5 \le c n^2$, or equivalently $3 + 5/n^2 \le c$

(By putting different values for n, we get corresponding values for c.)

| $n_0$ | 1 | 2 | 3 | 4 | $\to \infty$ |
| --- | --- | --- | --- | --- | --- |
| c | 8 | 4.25 | 3.55 | 3.3125 | $\to 3$ |

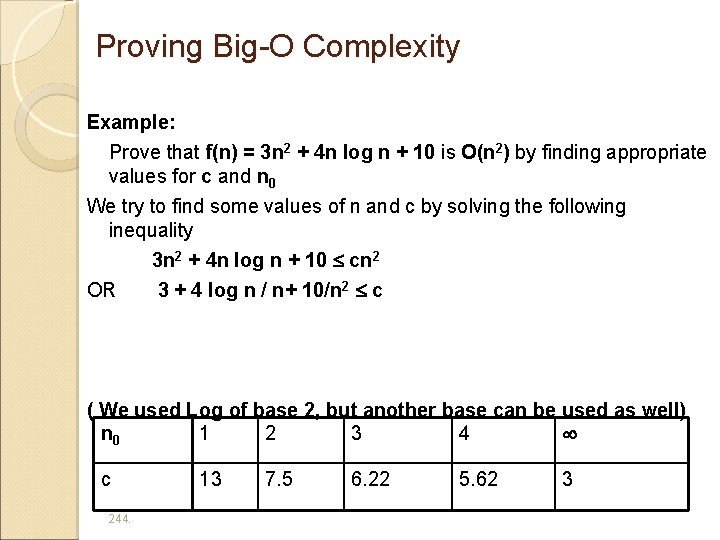

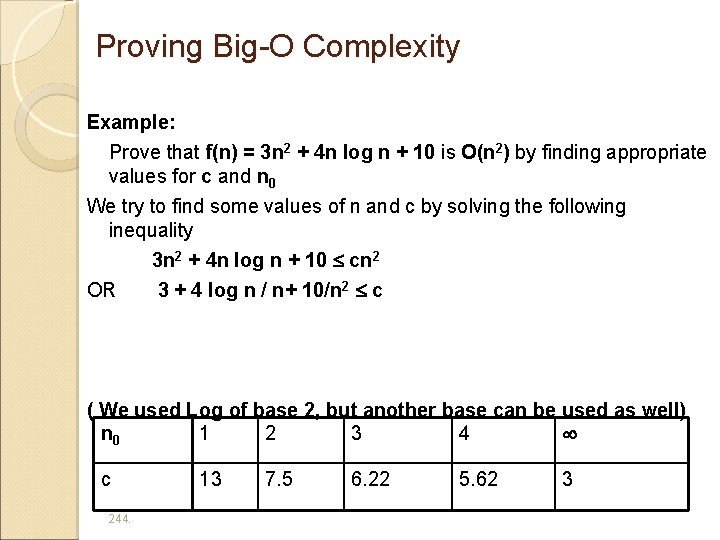

Proving Big-O Complexity
Example: Prove that $f(n) = 3n^2 + 4n \log n + 10$ is $O(n^2)$ by finding appropriate values for c and $n_0$.
We try to find some values of n and c by solving the following inequality:

$3n^2 + 4n \log n + 10 \le c n^2$, or equivalently $3 + 4 \log n / n + 10/n^2 \le c$

(We used log of base 2, but another base can be used as well.)

| $n_0$ | 1 | 2 | 3 | 4 | $\to \infty$ |
| --- | --- | --- | --- | --- | --- |
| c | 13 | 7.5 | 6.22 | 5.62 | $\to 3$ |
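A candidate pair $(n_0, c)$ from the table can be sanity-checked numerically before writing the proof. The sketch below tests $f(n) \le c \cdot g(n)$ over a finite range only, so it can reject a bad pair but does not replace the algebraic argument; the class `BigOWitnessCheck` and its methods are hypothetical names.

```java
import java.util.function.DoubleUnaryOperator;

// Hypothetical helper class for illustration; not part of the slides.
class BigOWitnessCheck {
    // Base-2 logarithm, as used in the example.
    static double log2(double n) {
        return Math.log(n) / Math.log(2);
    }

    // f(n) = 3n^2 + 4n log2(n) + 10 from the example.
    static double f(double n) {
        return 3 * n * n + 4 * n * log2(n) + 10;
    }

    // g(n) = n^2, the proposed bound.
    static double nSquared(double n) {
        return n * n;
    }

    // Returns true if f(n) <= c * g(n) for every integer n in [n0, upTo].
    // A finite check like this is evidence, not a proof.
    static boolean holds(DoubleUnaryOperator f, DoubleUnaryOperator g,
                         double c, int n0, int upTo) {
        for (int n = n0; n <= upTo; n++) {
            if (f.applyAsDouble(n) > c * g.applyAsDouble(n)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println("(n0 = 1, c = 13): "
                + holds(BigOWitnessCheck::f, BigOWitnessCheck::nSquared, 13.0, 1, 100_000));
        System.out.println("(n0 = 1, c = 5):  "
                + holds(BigOWitnessCheck::f, BigOWitnessCheck::nSquared, 5.0, 1, 100_000));
    }
}
```

The pair (1, 13) passes the check, while (1, 5) fails at n = 1, where f(1) = 13.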

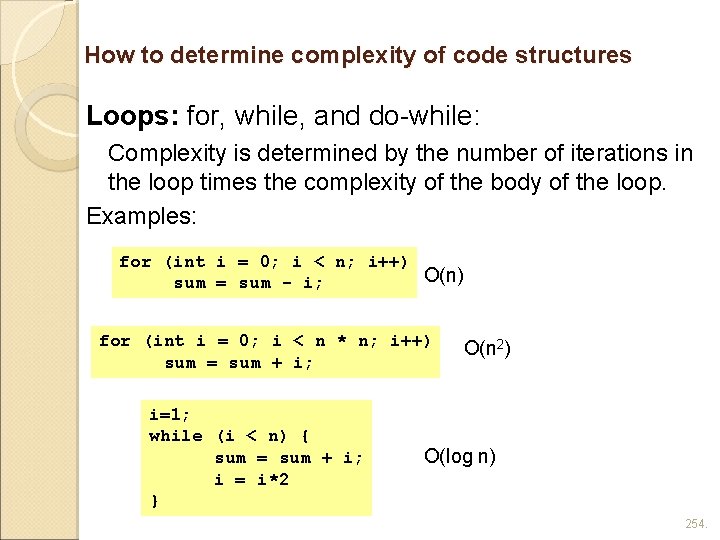

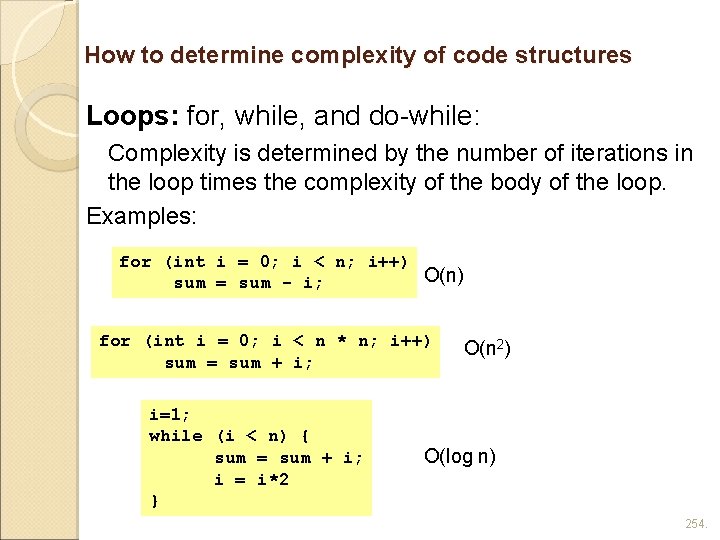

How to determine complexity of code structures
Loops: for, while, and do-while:
Complexity is determined by the number of iterations in the loop times the complexity of the body of the loop.
Examples:

    for (int i = 0; i < n; i++)          // O(n)
        sum = sum - i;

    for (int i = 0; i < n * n; i++)      // O(n^2)
        sum = sum + i;

    i = 1;
    while (i < n) {                      // O(log n)
        sum = sum + i;
        i = i * 2;
    }

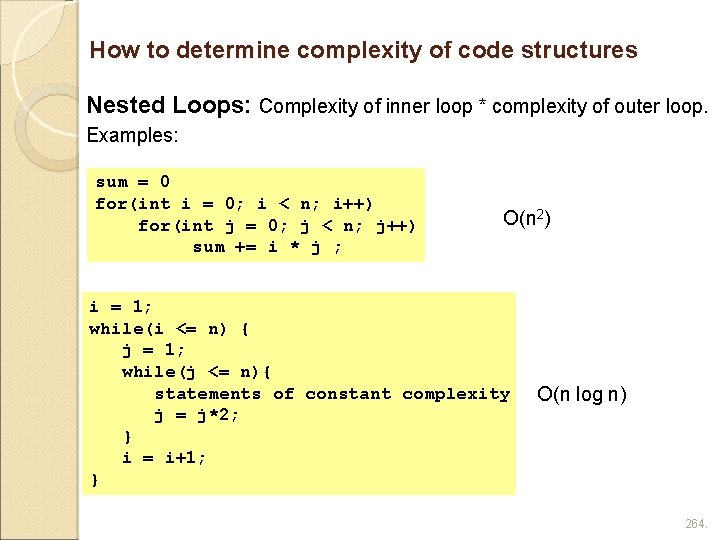

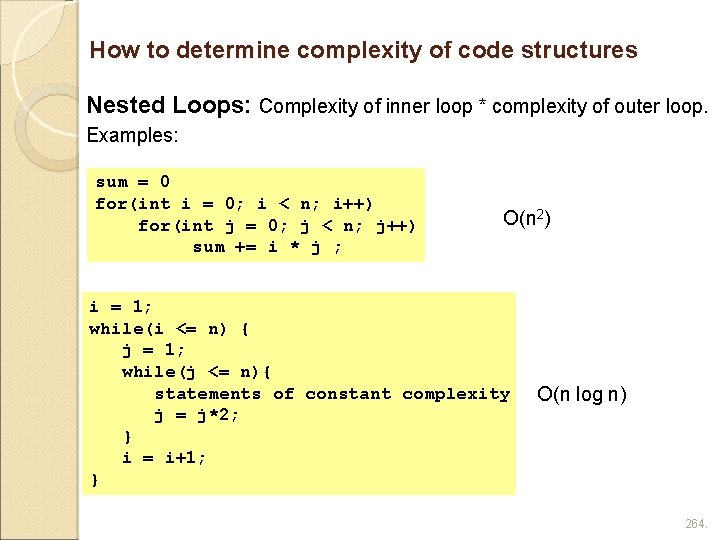

How to determine complexity of code structures
Nested Loops: Complexity of inner loop * complexity of outer loop.
Examples:

    sum = 0;
    for (int i = 0; i < n; i++)          // O(n^2)
        for (int j = 0; j < n; j++)
            sum += i * j;

    i = 1;
    while (i <= n) {                     // O(n log n)
        j = 1;
        while (j <= n) {
            // statements of constant complexity
            j = j * 2;
        }
        i = i + 1;
    }
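The two nested-loop examples can be instrumented to count how often the innermost body runs. This is a minimal sketch with hypothetical class and method names; the inner index of the second loop is a `long` only to avoid overflow when doubling.

```java
// Hypothetical helper class for illustration; not part of the slides.
class NestedLoopCount {
    // Inner-body executions of the doubly nested for-loop: n * n.
    static long countQuadratic(int n) {
        long count = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                count++;
            }
        }
        return count;
    }

    // Inner-body executions of the while/while example: the outer loop runs n times,
    // and the inner loop doubles j from 1 until it exceeds n.
    static long countLinearLog(int n) {
        long count = 0;
        int i = 1;
        while (i <= n) {
            long j = 1;
            while (j <= n) {
                count++;
                j = j * 2;
            }
            i = i + 1;
        }
        return count;
    }

    public static void main(String[] args) {
        int n = 8;
        System.out.println("quadratic:  " + countQuadratic(n));
        System.out.println("linear-log: " + countLinearLog(n));
    }
}
```

For n = 8 the inner loop of the second example runs for j = 1, 2, 4, 8, so its count is n times (log2 n + 1), which is O(n log n).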

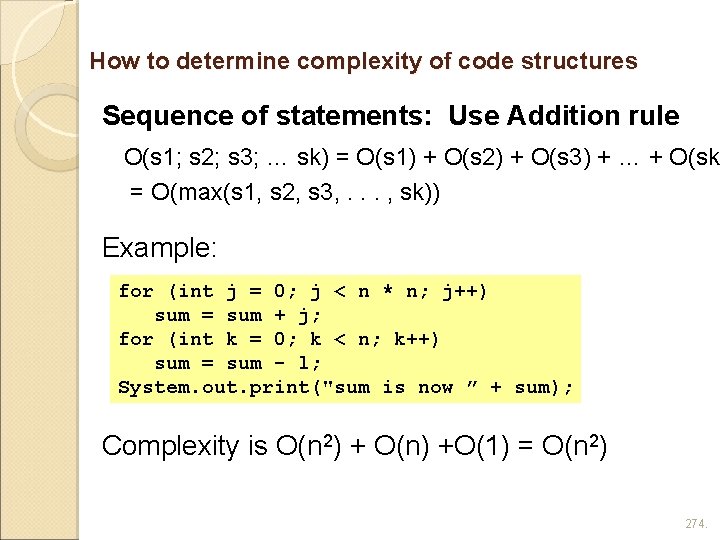

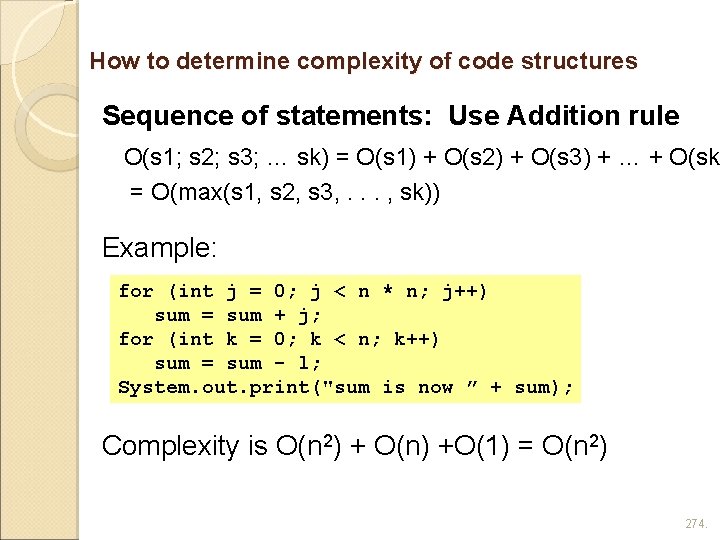

How to determine complexity of code structures
Sequence of statements: Use the Addition rule.

$O(s_1; s_2; s_3; \ldots; s_k) = O(s_1) + O(s_2) + O(s_3) + \ldots + O(s_k) = O(\max(s_1, s_2, s_3, \ldots, s_k))$

Example:

    for (int j = 0; j < n * n; j++)
        sum = sum + j;
    for (int k = 0; k < n; k++)
        sum = sum - k;
    System.out.print("sum is now " + sum);

Complexity is $O(n^2) + O(n) + O(1) = O(n^2)$

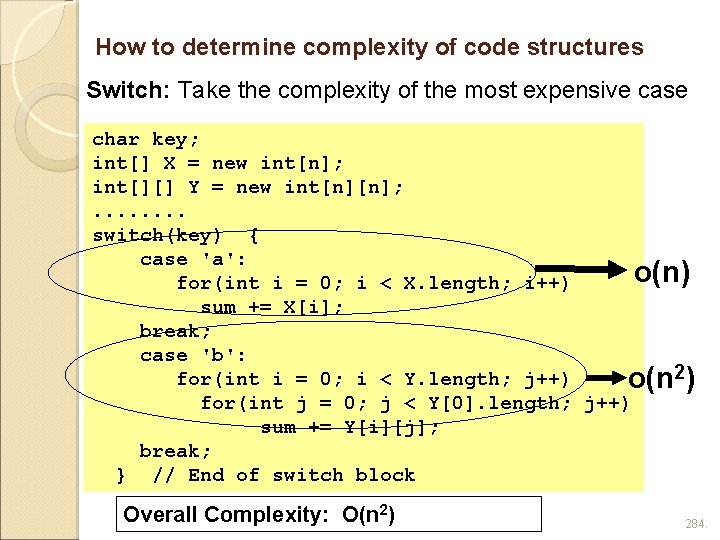

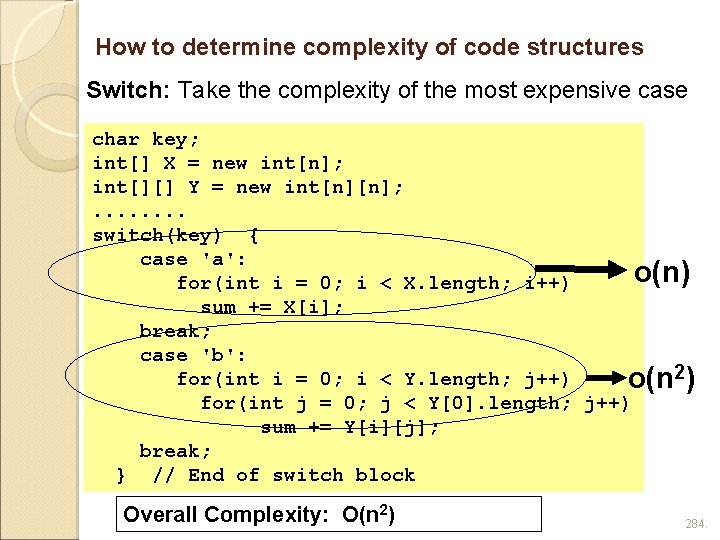

How to determine complexity of code structures
Switch: Take the complexity of the most expensive case.

    char key;
    int[] X = new int[n];
    int[][] Y = new int[n][n];
    ....
    switch (key) {
        case 'a':
            for (int i = 0; i < X.length; i++)            // O(n)
                sum += X[i];
            break;
        case 'b':
            for (int i = 0; i < Y.length; i++)            // O(n^2)
                for (int j = 0; j < Y[0].length; j++)
                    sum += Y[i][j];
            break;
    } // End of switch block

Overall Complexity: $O(n^2)$

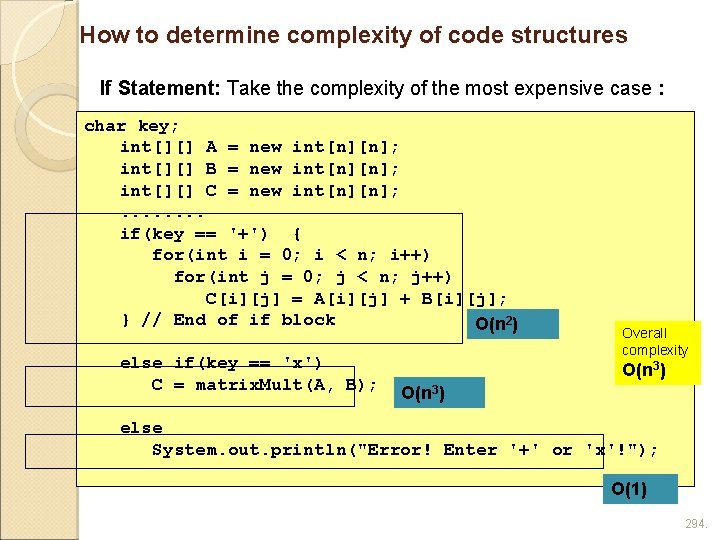

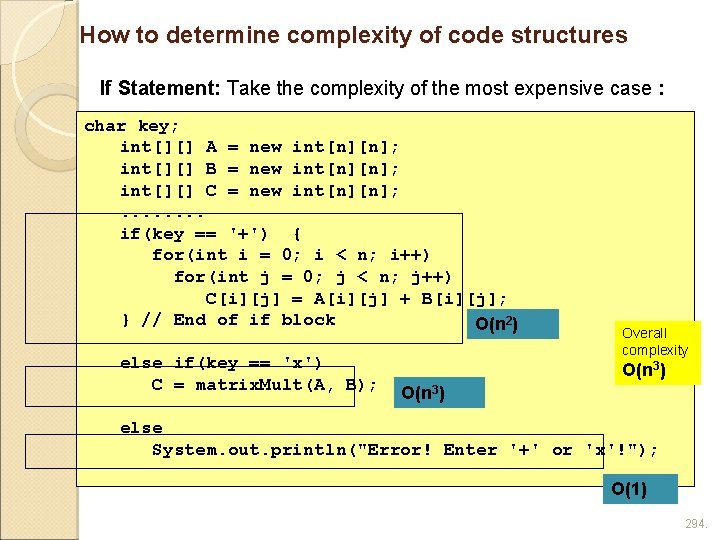

How to determine complexity of code structures
If Statement: Take the complexity of the most expensive case:

    char key;
    int[][] A = new int[n][n];
    int[][] B = new int[n][n];
    int[][] C = new int[n][n];
    ....
    if (key == '+') {
        for (int i = 0; i < n; i++)                       // O(n^2)
            for (int j = 0; j < n; j++)
                C[i][j] = A[i][j] + B[i][j];
    } // End of if block
    else if (key == 'x')
        C = matrixMult(A, B);                             // O(n^3)
    else
        System.out.println("Error! Enter '+' or 'x'!");   // O(1)

Overall complexity: $O(n^3)$

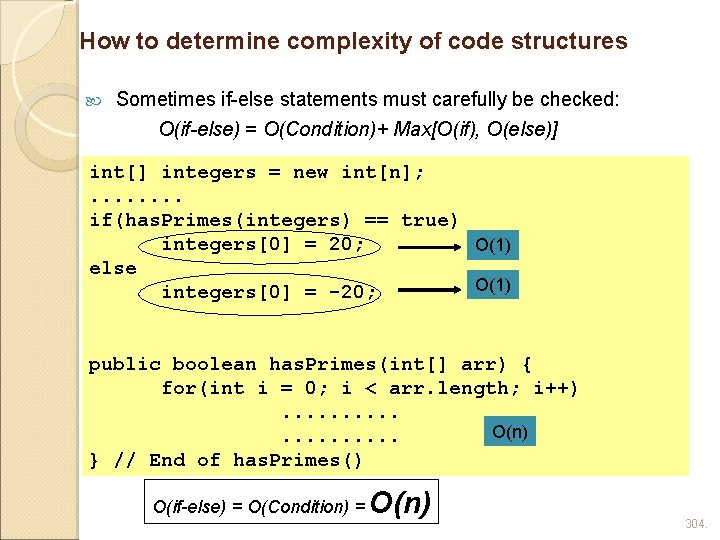

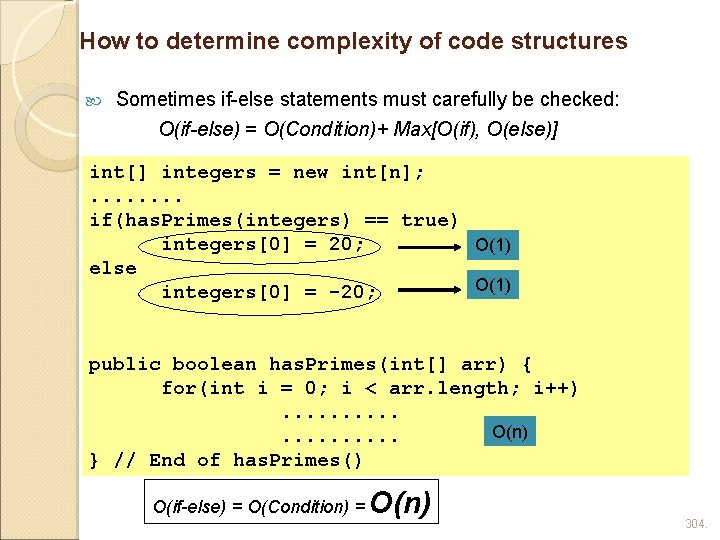

How to determine complexity of code structures
Sometimes if-else statements must be checked carefully:
O(if-else) = O(Condition) + Max[O(if), O(else)]

    int[] integers = new int[n];
    ....
    if (hasPrimes(integers) == true)
        integers[0] = 20;                                 // O(1)
    else
        integers[0] = -20;                                // O(1)

    public boolean hasPrimes(int[] arr) {
        for (int i = 0; i < arr.length; i++)
            ..                                            // O(n)
    } // End of hasPrimes()

O(if-else) = O(Condition) = O(n)

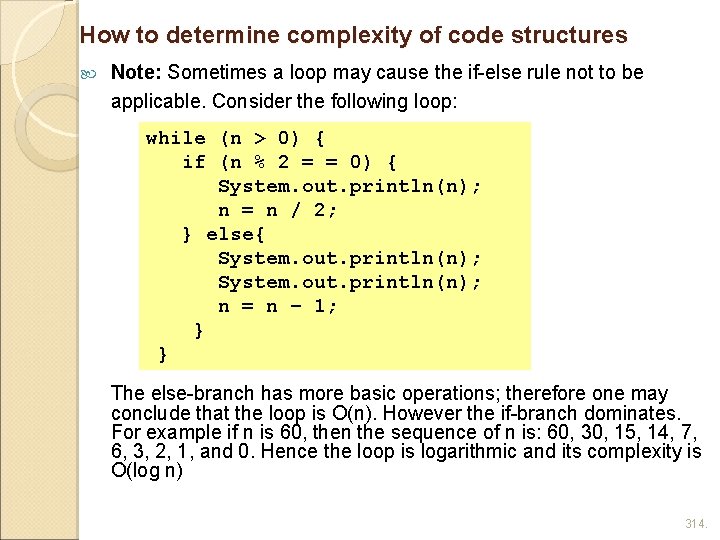

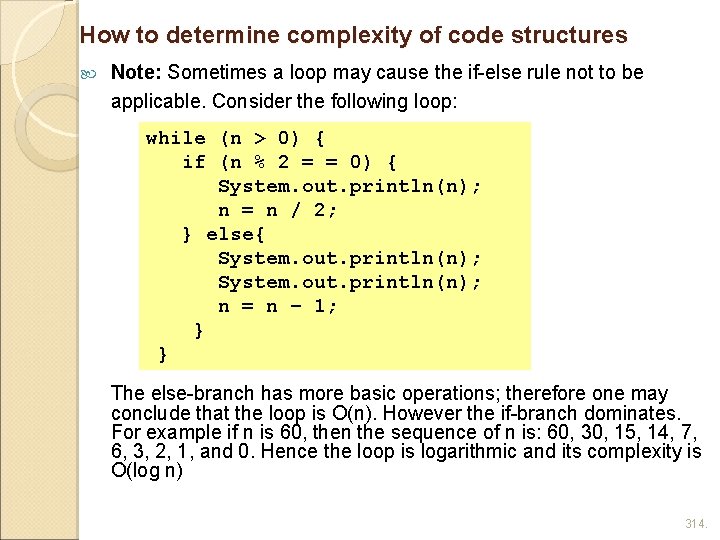

How to determine complexity of code structures
Note: Sometimes a loop may cause the if-else rule not to be applicable. Consider the following loop:

    while (n > 0) {
        if (n % 2 == 0) {
            System.out.println(n);
            n = n / 2;
        } else {
            System.out.println(n);
            n = n - 1;
        }
    }

If the else-branch were taken on every iteration, the loop would run n times; therefore one may conclude that the loop is O(n). However, the if-branch dominates: after each else-branch step n is even, so the next step halves it. For example, if n is 60, then the sequence of values of n is: 60, 30, 15, 14, 7, 6, 3, 2, 1, and 0. Hence the loop is logarithmic and its complexity is O(log n).
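The logarithmic behaviour of this loop can be observed by counting its iterations. The sketch below drops the print statements and returns the count instead; the class name `HalvingLoopCount` is hypothetical.

```java
// Hypothetical helper class for illustration; not part of the slides.
class HalvingLoopCount {
    // Returns how many times the body of the halve-or-decrement loop runs for a given n.
    static int iterations(int n) {
        int count = 0;
        while (n > 0) {
            if (n % 2 == 0) {
                n = n / 2;      // even: halve
            } else {
                n = n - 1;      // odd: decrement, which makes n even
            }
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        for (int n : new int[] {60, 1_000, 1_000_000}) {
            System.out.println("n = " + n + ": " + iterations(n) + " iterations");
        }
    }
}
```

Since every decrement is followed by a halving, the count stays within about twice $\log_2 n$, far below n.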