Markov Logic

Parag Singla, Dept. of Computer Science, University of Texas, Austin

Overview
- Motivation
- Background
- Markov logic
- Inference
- Learning
- Applications

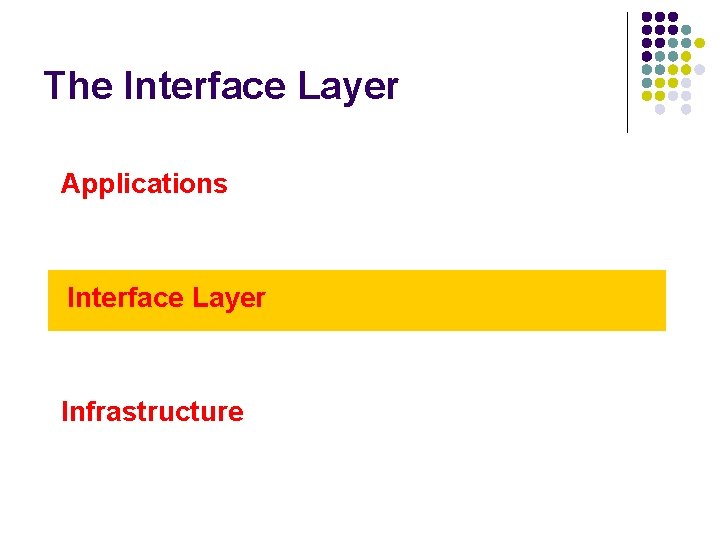

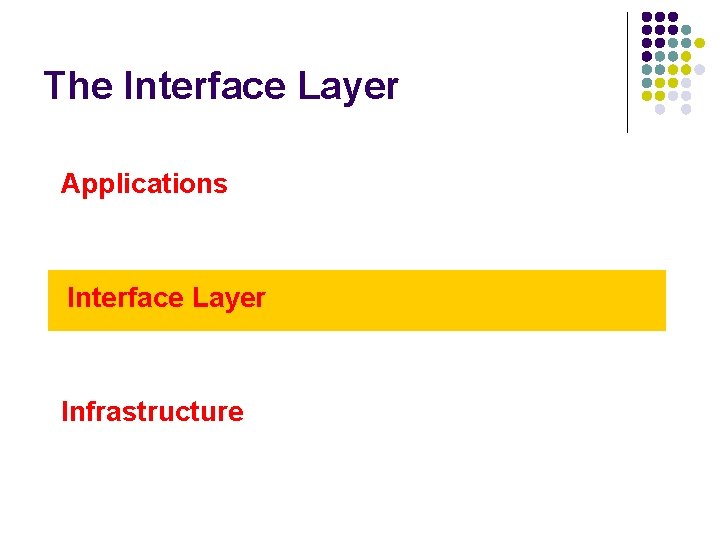

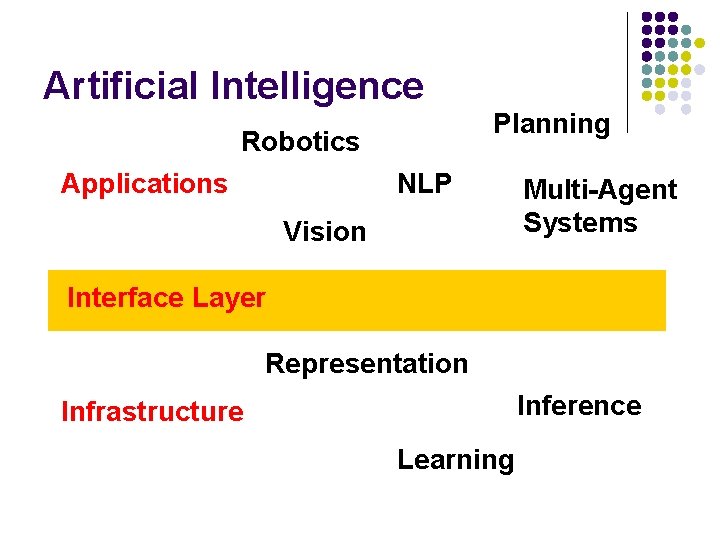

The Interface Layer
- Applications
- Interface Layer
- Infrastructure

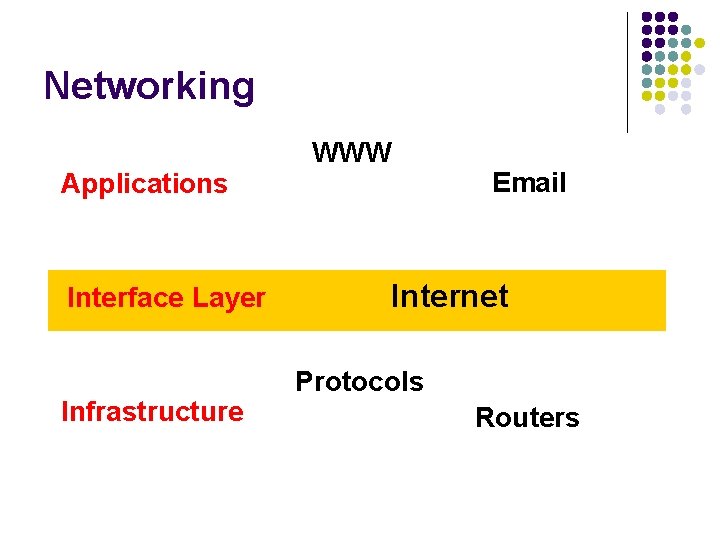

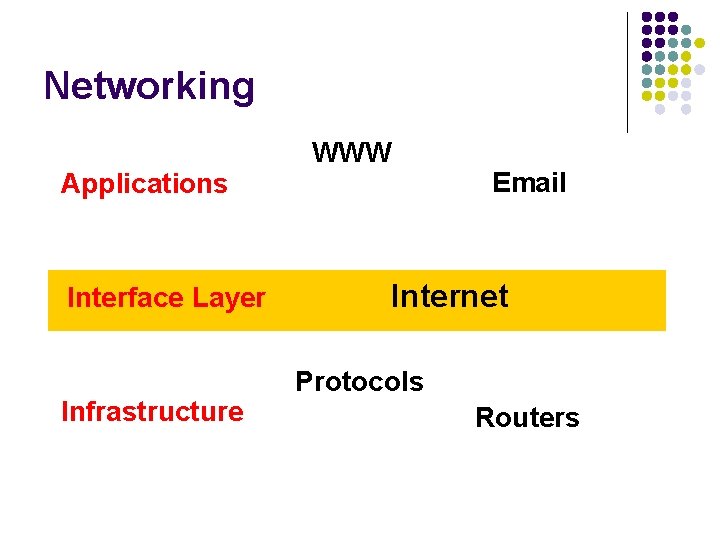

Networking
- Applications: WWW, Email
- Interface Layer: Internet
- Infrastructure: Protocols, Routers

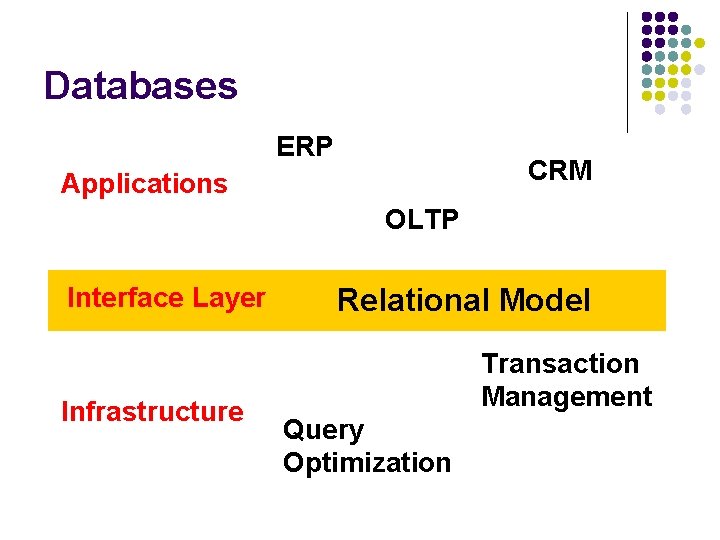

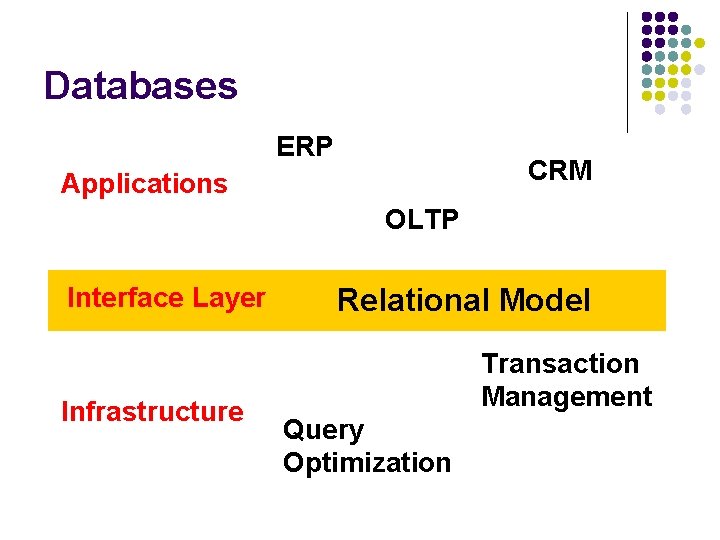

Databases
- Applications: ERP, CRM, OLTP
- Interface Layer: Relational Model
- Infrastructure: Transaction Management, Query Optimization

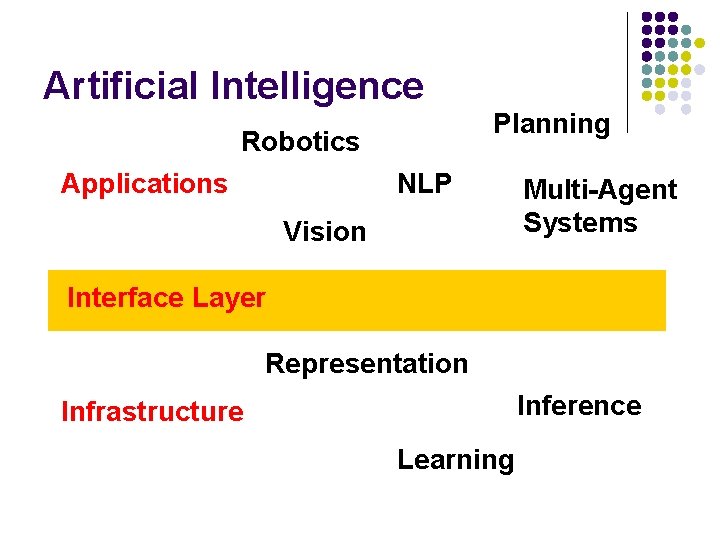

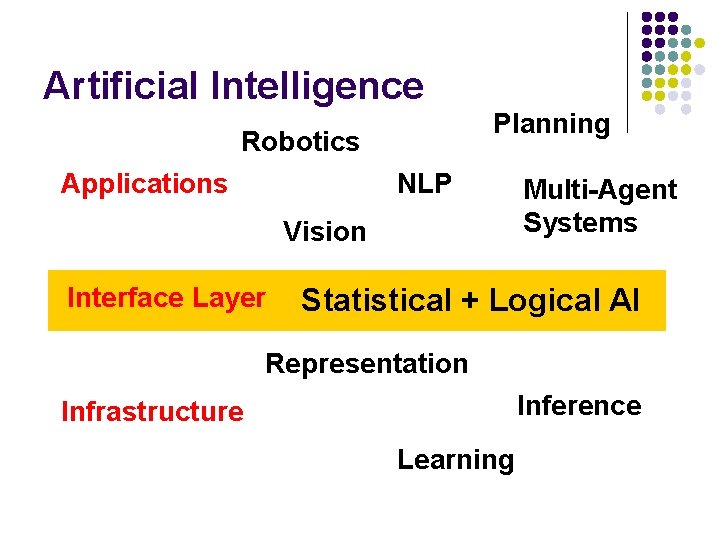

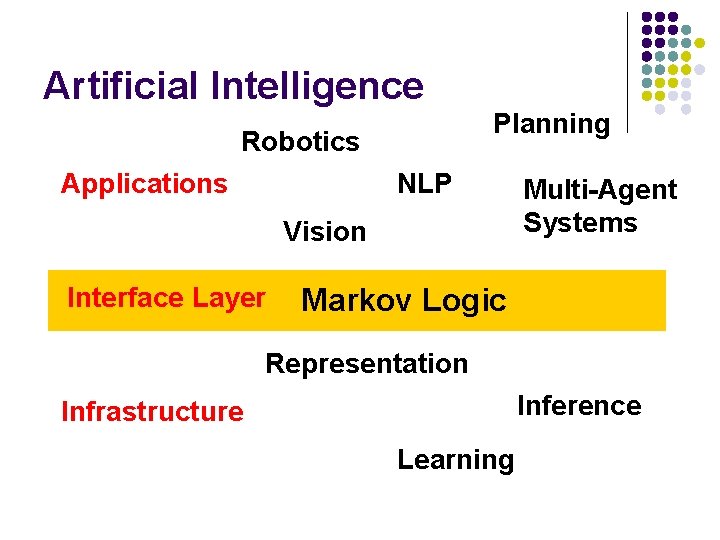

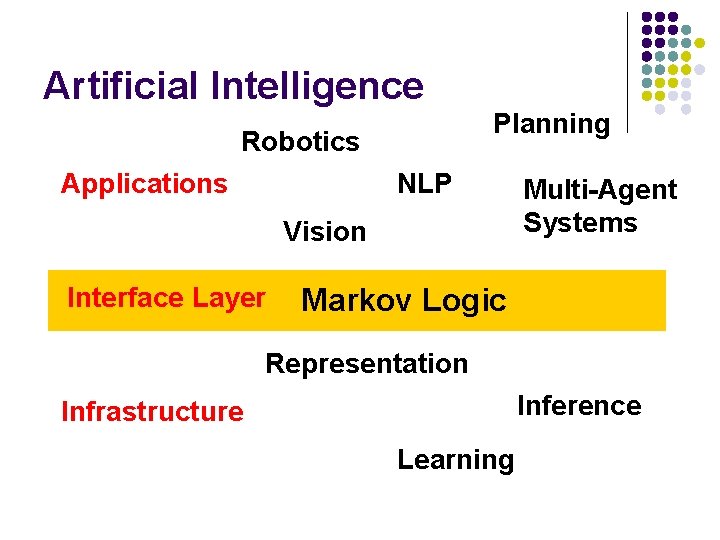

Artificial Intelligence
- Applications: Planning, Robotics, NLP, Vision, Multi-Agent Systems
- Interface Layer
- Infrastructure: Representation, Inference, Learning

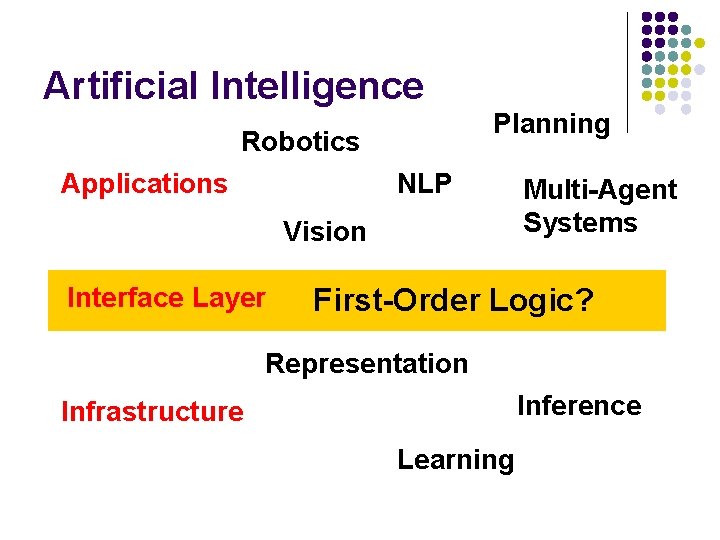

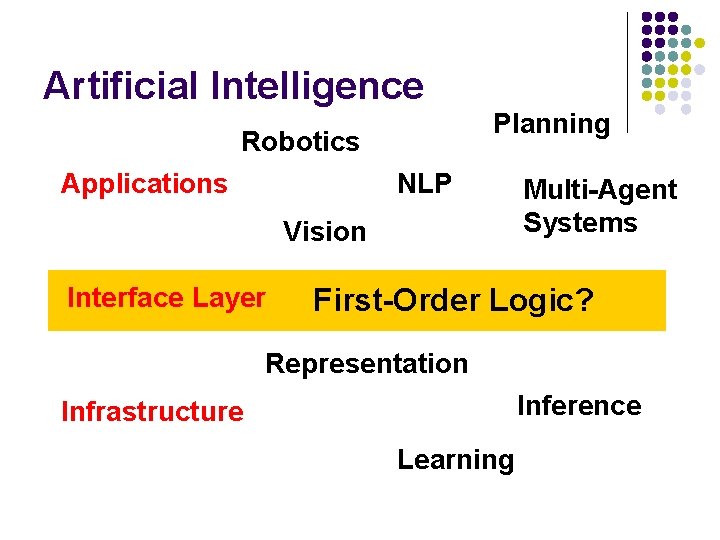

Artificial Intelligence
- Applications: Planning, Robotics, NLP, Vision, Multi-Agent Systems
- Interface Layer: First-Order Logic?
- Infrastructure: Representation, Inference, Learning

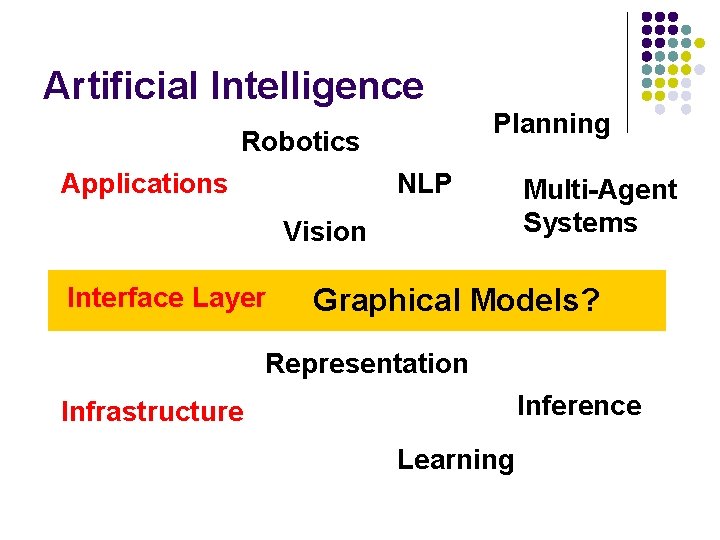

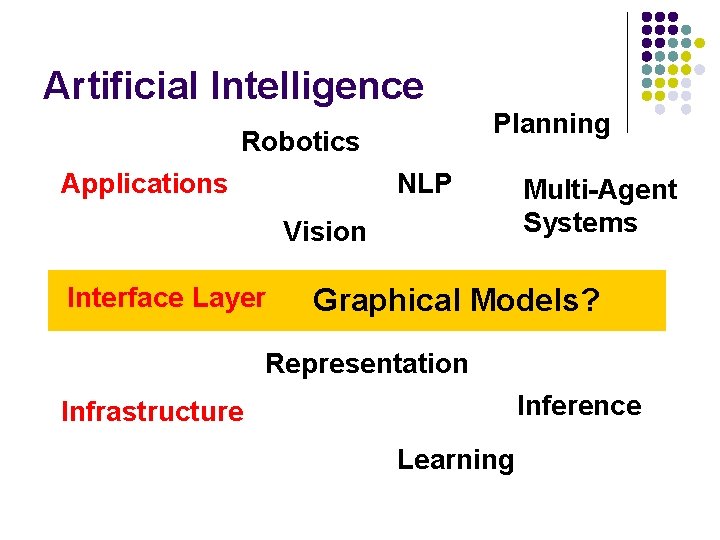

Artificial Intelligence
- Applications: Planning, Robotics, NLP, Vision, Multi-Agent Systems
- Interface Layer: Graphical Models?
- Infrastructure: Representation, Inference, Learning

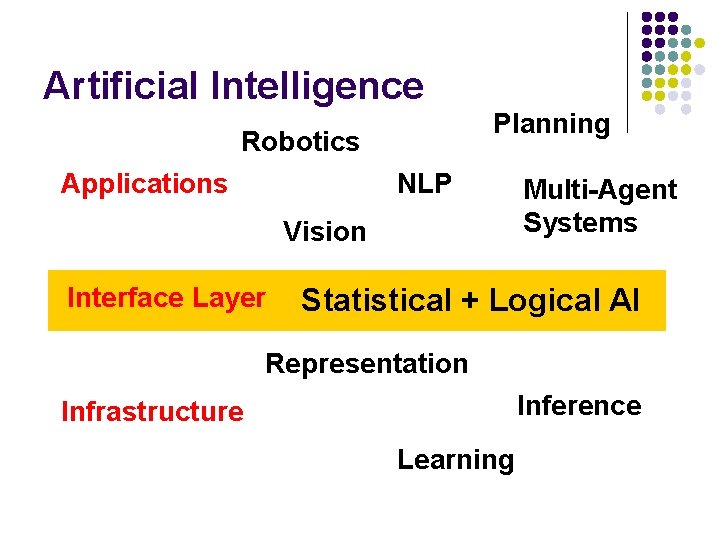

Artificial Intelligence
- Applications: Planning, Robotics, NLP, Vision, Multi-Agent Systems
- Interface Layer: Statistical + Logical AI
- Infrastructure: Representation, Inference, Learning

Artificial Intelligence
- Applications: Planning, Robotics, NLP, Vision, Multi-Agent Systems
- Interface Layer: Markov Logic
- Infrastructure: Representation, Inference, Learning

Overview
- Motivation
- Background
- Markov logic
- Inference
- Learning
- Applications

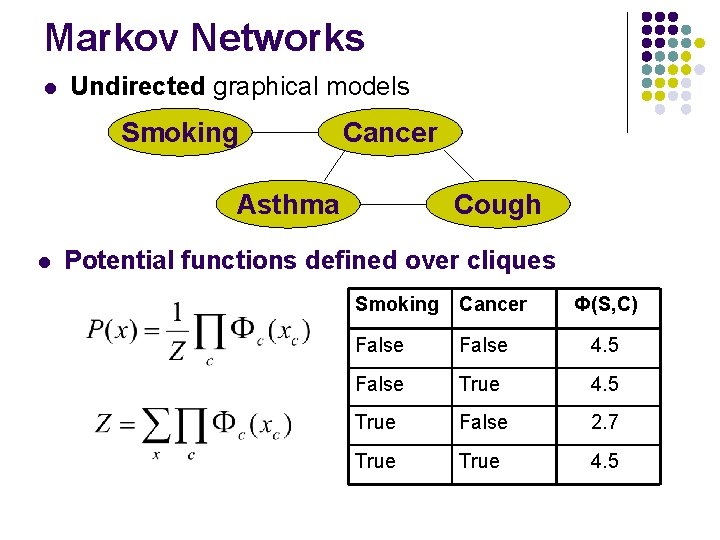

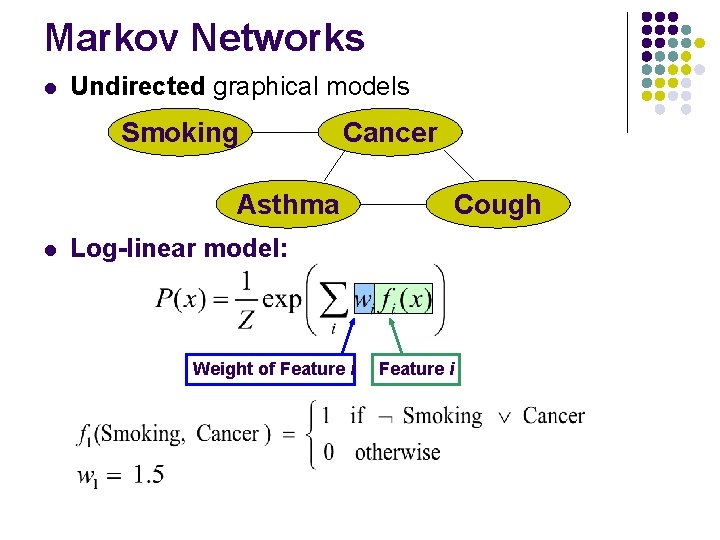

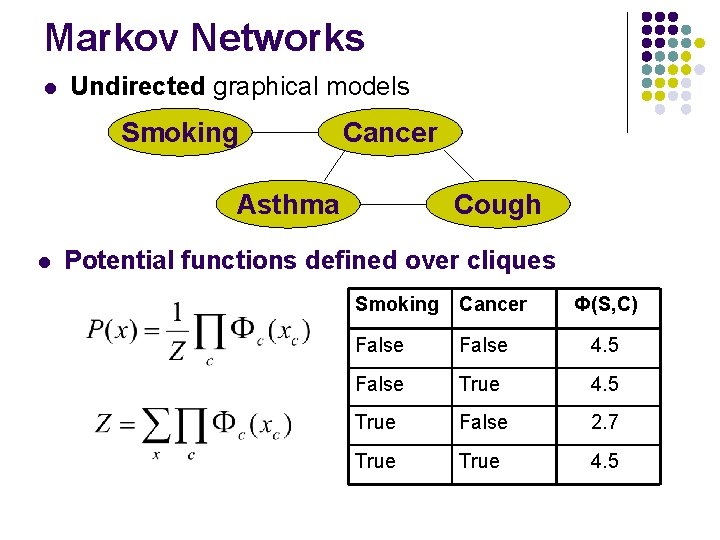

Markov Networks
- Undirected graphical models (example network: Smoking, Cancer, Asthma, Cough)
- Potential functions defined over cliques

| Smoking | Cancer | Φ(S, C) |
|---|---|---|
| False | False | 4.5 |
| False | True | 4.5 |
| True | False | 2.7 |
| True | True | 4.5 |
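To make the table concrete, here is a minimal Python sketch (our illustration, not taken from the slides) that normalizes the single Smoking-Cancer potential into a joint distribution, $P(s, c) = \Phi(s, c)/Z$ with $Z = \sum_{s, c} \Phi(s, c)$. It assumes a network containing only this one clique; Asthma and Cough are left out.

```python
from itertools import product

# Clique potential Phi(S, C) from the slide, keyed by (smoking, cancer).
PHI = {
    (False, False): 4.5,
    (False, True): 4.5,
    (True, False): 2.7,
    (True, True): 4.5,
}


def joint_distribution(phi):
    """Return P(s, c) = Phi(s, c) / Z and the partition function Z."""
    states = list(product([False, True], repeat=2))
    z = sum(phi[s] for s in states)  # Z sums the potential over every state
    return {s: phi[s] / z for s in states}, z


dist, z = joint_distribution(PHI)
for state, p in dist.items():
    print(state, round(p, 4))
```

Only ratios between potentials matter: Z = 16.2, so the state (Smoking = true, Cancer = false) gets 2.7/16.2 = 1/6 and each of the other three states gets 4.5/16.2.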

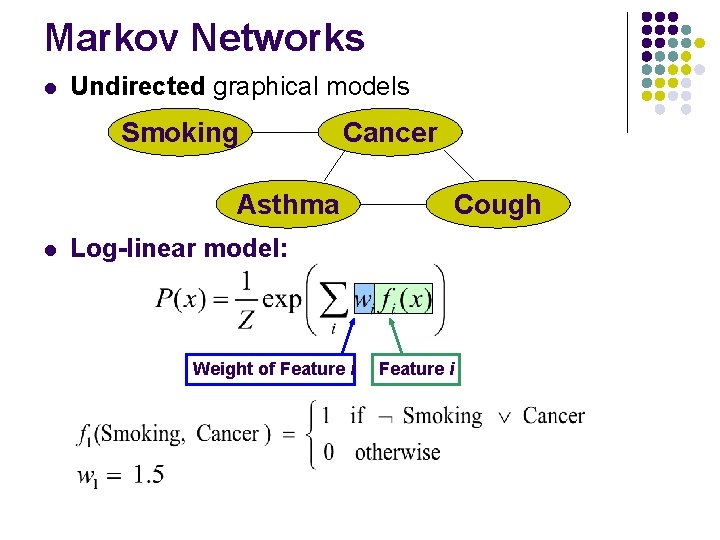

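Markov Networks
- Undirected graphical models (example network: Smoking, Cancer, Asthma, Cough)
- Log-linear model:

$$P(x) = \frac{1}{Z}\exp\Big(\sum_i w_i\, f_i(x)\Big)$$

where $w_i$ is the weight of feature i and $f_i(x)$ is the value of feature i in state x.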
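The four-row potential table above can also be written in this log-linear form. A minimal sketch, assuming one binary feature f(S, C) = 1 when Smoking ⇒ Cancer holds and a weight w = ln(4.5/2.7) that we derived ourselves so that e^w matches the potential ratio; the slide's own features and weights are in the figure and may differ. Because the constant factor 2.7 cancels in Z, this reproduces the table-based distribution exactly.

```python
import math
from itertools import product


def smoking_implies_cancer(smoking, cancer):
    """Binary feature: 1.0 if Smoking => Cancer holds, else 0.0."""
    return 1.0 if (not smoking) or cancer else 0.0


def log_linear(weights, features):
    """P(x) = exp(sum_i w_i * f_i(x)) / Z over all (smoking, cancer) states."""
    states = list(product([False, True], repeat=2))
    scores = {
        s: math.exp(sum(w * f(*s) for w, f in zip(weights, features)))
        for s in states
    }
    z = sum(scores.values())
    return {s: v / z for s, v in scores.items()}


# Weight chosen (our assumption) so that exp(w) equals the potential ratio 4.5 / 2.7.
W = math.log(4.5 / 2.7)
dist = log_linear([W], [smoking_implies_cancer])
print(dist)
```

The design point is that a potential table and a weighted feature are two encodings of the same factor; the log-linear one needs a single parameter here because three of the four table entries are equal.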

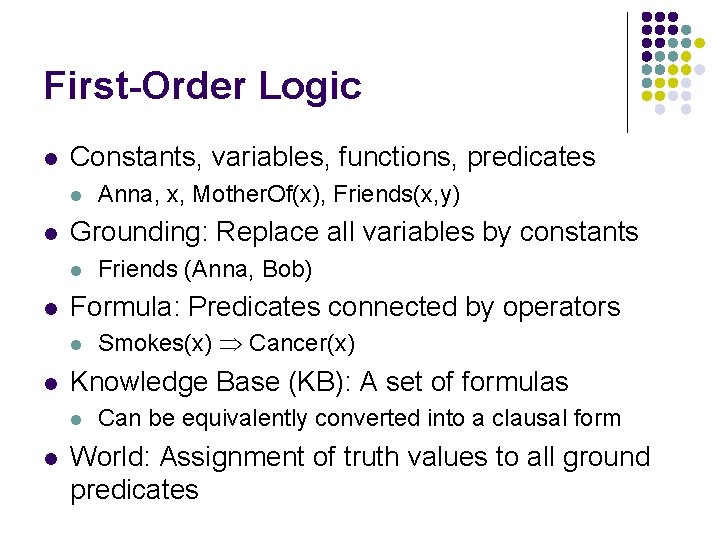

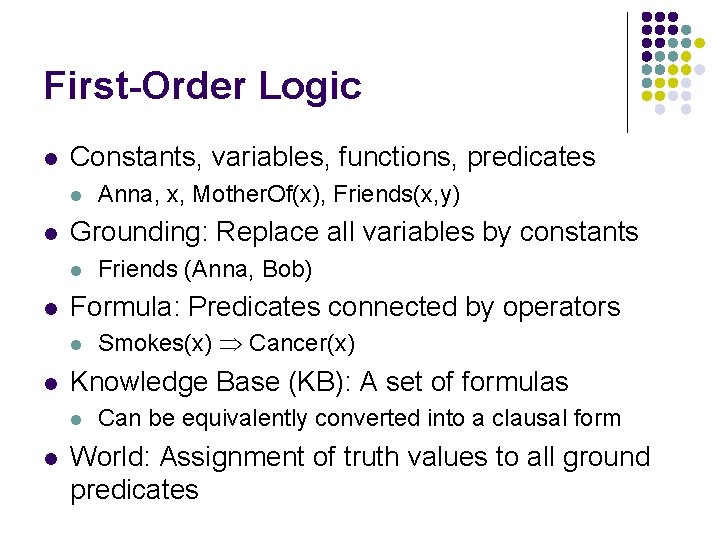

First-Order Logic
- Constants, variables, functions, predicates, e.g., Anna, x, MotherOf(x), Friends(x, y)
- Grounding: Replace all variables by constants, e.g., Friends(Anna, Bob)
- Formula: Predicates connected by operators, e.g., Smokes(x) ⇒ Cancer(x)
- Knowledge Base (KB): A set of formulas; can be equivalently converted into clausal form
- World: Assignment of truth values to all ground predicates
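Grounding can be sketched in a few lines of Python. The string representation of atoms and the helper names `substitutions` and `ground_atom` are hypothetical, chosen for illustration: every substitution of constants for variables yields one ground atom or ground formula.

```python
from itertools import product


def substitutions(variables, constants):
    """Yield every mapping of the variables to constants."""
    for values in product(constants, repeat=len(variables)):
        yield dict(zip(variables, values))


def ground_atom(predicate, args, theta):
    """Replace variables by constants, e.g. Friends(x, y) -> Friends(Anna, Bob)."""
    return f"{predicate}({', '.join(theta.get(a, a) for a in args)})"


constants = ["Anna", "Bob"]

# Groundings of the formula Smokes(x) => Cancer(x): one per constant.
groundings = [
    f"{ground_atom('Smokes', ['x'], t)} => {ground_atom('Cancer', ['x'], t)}"
    for t in substitutions(["x"], constants)
]

# Ground atoms of the binary predicate Friends(x, y): one per pair of constants.
friend_atoms = [
    ground_atom("Friends", ["x", "y"], t) for t in substitutions(["x", "y"], constants)
]
print(groundings)
print(friend_atoms)
```

A predicate of arity k over n constants has n^k groundings, which is why ground networks grow quickly with the number of constants.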

Overview
- Motivation
- Background
- Markov logic
- Inference
- Learning
- Applications

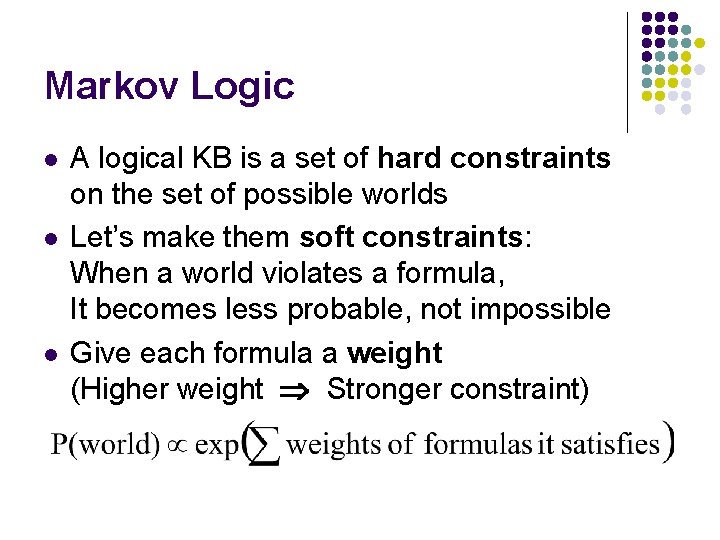

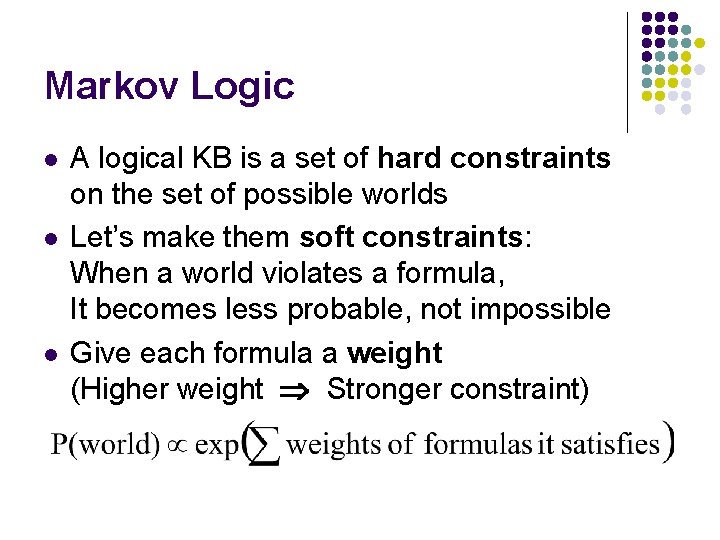

Markov Logic
- A logical KB is a set of hard constraints on the set of possible worlds
- Let’s make them soft constraints: when a world violates a formula, it becomes less probable, not impossible
- Give each formula a weight (higher weight ⇒ stronger constraint)

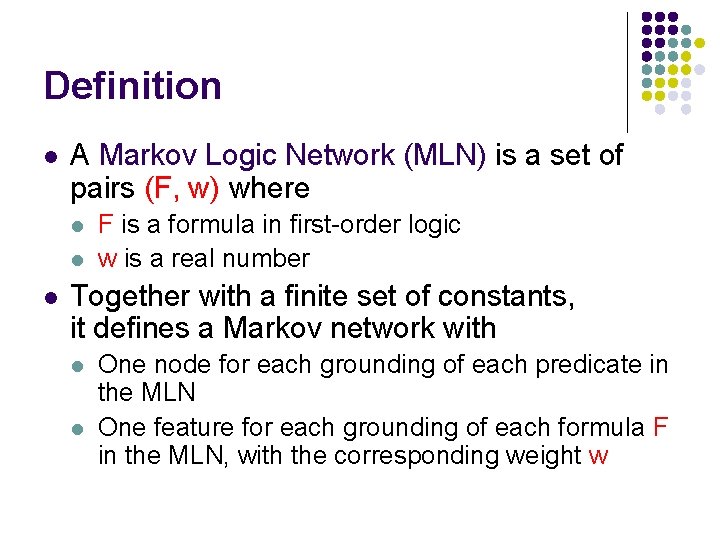

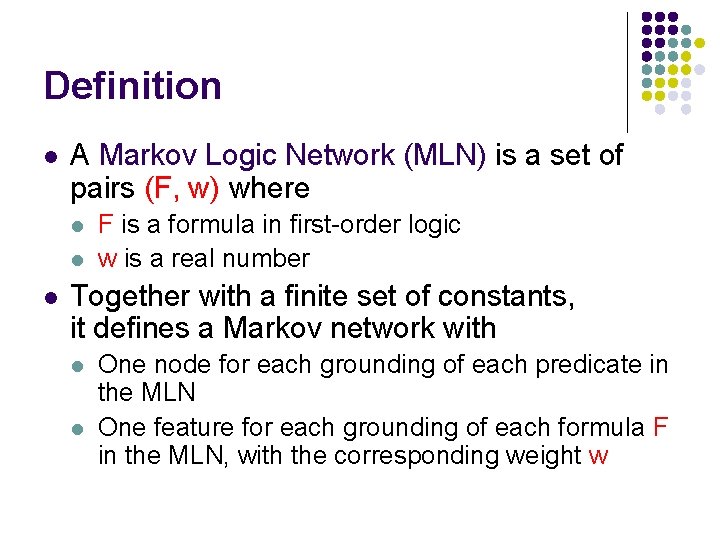

Definition
- A Markov Logic Network (MLN) is a set of pairs (F, w), where
  - F is a formula in first-order logic
  - w is a real number
- Together with a finite set of constants, it defines a Markov network with
  - one node for each grounding of each predicate in the MLN
  - one feature for each grounding of each formula F in the MLN, with the corresponding weight w
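A minimal sketch of this definition, assuming the two formulas usually shown for the Friends & Smokers example, Smokes(x) ⇒ Cancer(x) and Friends(x, y) ⇒ (Smokes(x) ⇔ Smokes(y)), with illustrative weights 1.5 and 1.1 and the constants A and B. The function names are hypothetical. With two constants the ground network has 8 nodes (ground atoms) and 6 features (ground formulas).

```python
from itertools import product

CONSTANTS = ["A", "B"]
PREDICATES = {"Smokes": 1, "Cancer": 1, "Friends": 2}  # predicate -> arity


def smoking_causes_cancer(t):
    """Grounding of Smokes(x) => Cancer(x): (atoms involved, truth function)."""
    s, c = ("Smokes", t["x"]), ("Cancer", t["x"])
    return [s, c], lambda world: (not world[s]) or world[c]


def friends_smoke_alike(t):
    """Grounding of Friends(x, y) => (Smokes(x) <=> Smokes(y))."""
    f = ("Friends", t["x"], t["y"])
    sx, sy = ("Smokes", t["x"]), ("Smokes", t["y"])
    return [f, sx, sy], lambda world: (not world[f]) or (world[sx] == world[sy])


# The MLN: (weight, variables, grounding function). Weights are illustrative.
MLN = [(1.5, ["x"], smoking_causes_cancer), (1.1, ["x", "y"], friends_smoke_alike)]


def ground_markov_network(mln, predicates, constants):
    """One node per ground atom; one weighted feature per ground formula."""
    nodes = [(p, *args) for p, arity in predicates.items()
             for args in product(constants, repeat=arity)]
    features = []
    for weight, variables, formula in mln:
        for values in product(constants, repeat=len(variables)):
            atoms, truth = formula(dict(zip(variables, values)))
            features.append((weight, atoms, truth))
    return nodes, features


nodes, features = ground_markov_network(MLN, PREDICATES, CONSTANTS)
# Two atoms are neighbours in the ground network if they appear in a common ground formula.
edges = {frozenset((a, b)) for _, atoms, _ in features for a in atoms for b in atoms if a != b}
print(len(nodes), len(features), len(edges))
```

Each ground formula becomes a clique over the atoms it mentions, so the same MLN yields a different (larger) network when more constants are supplied.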

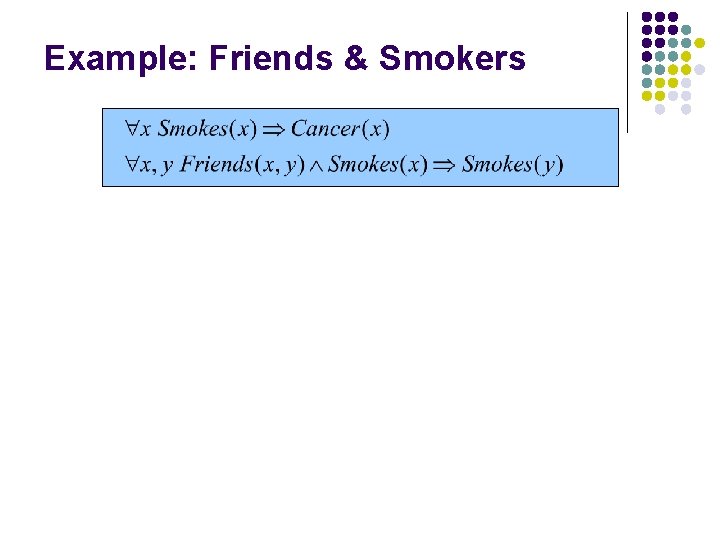

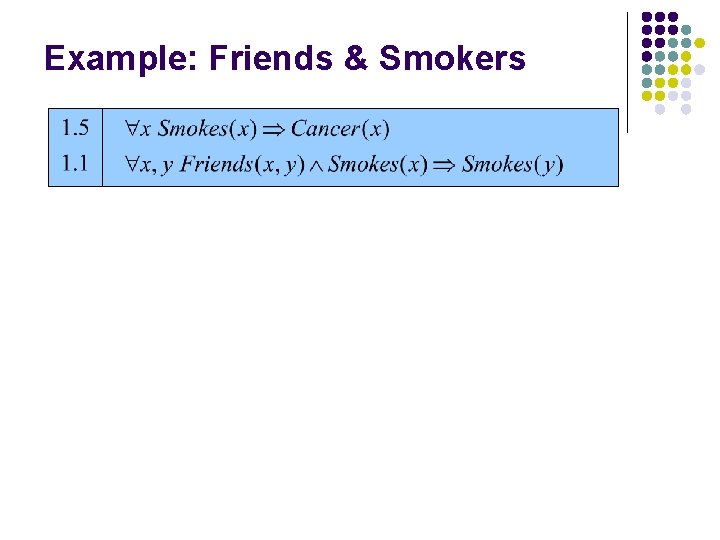

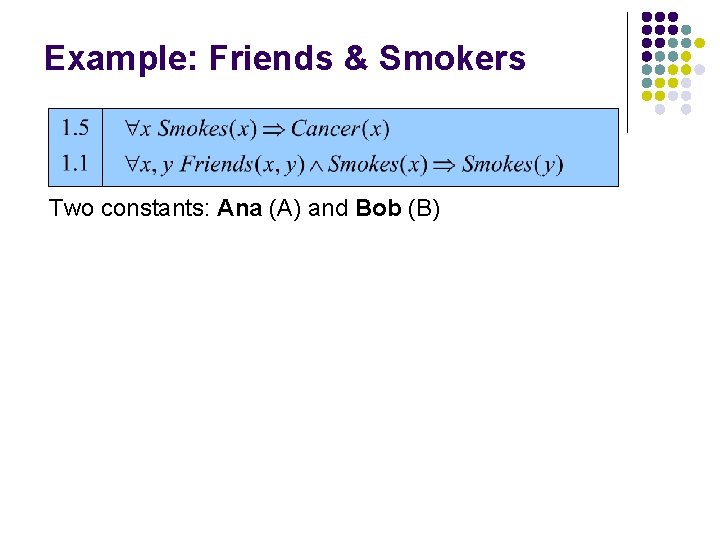

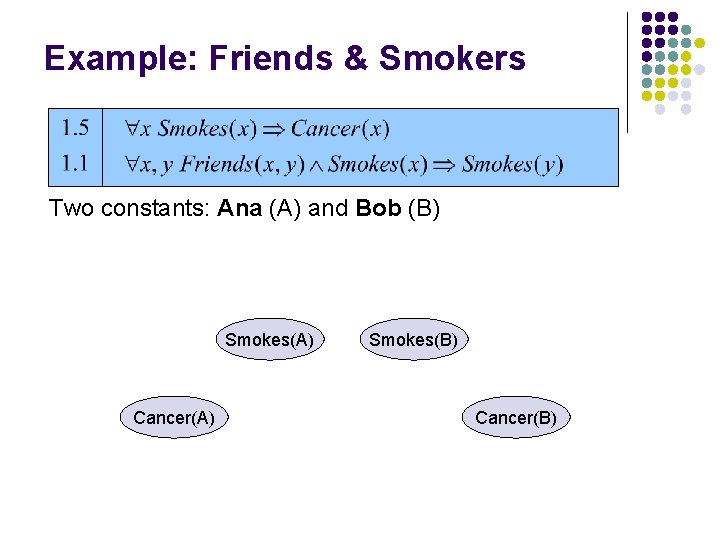

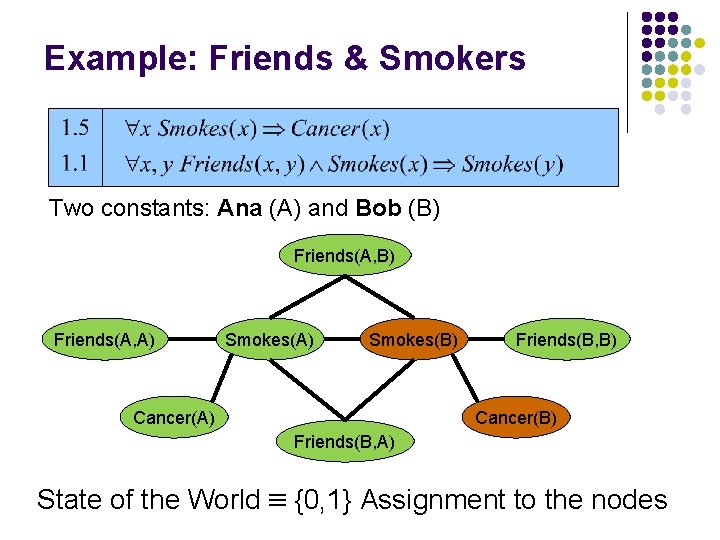

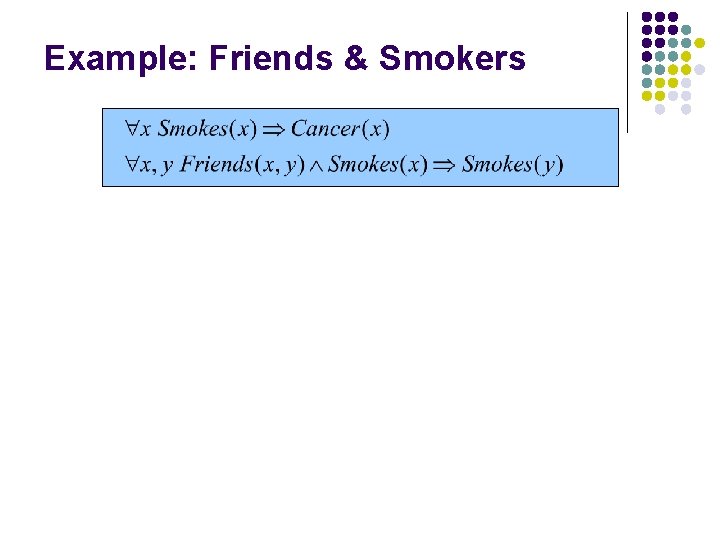

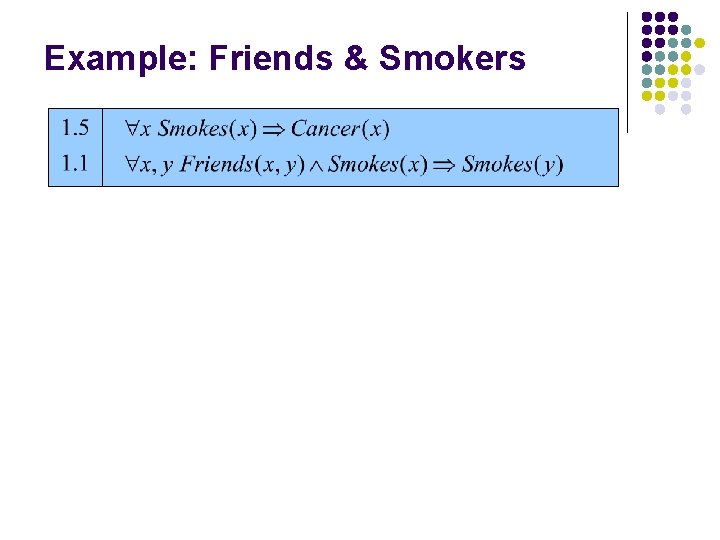

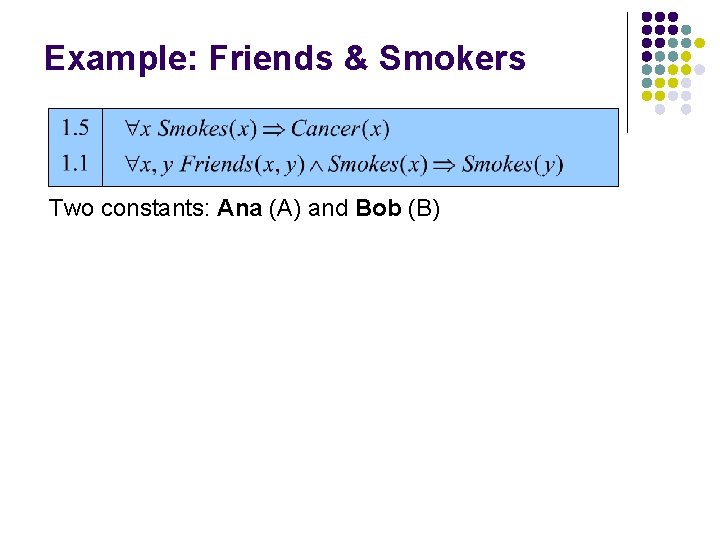

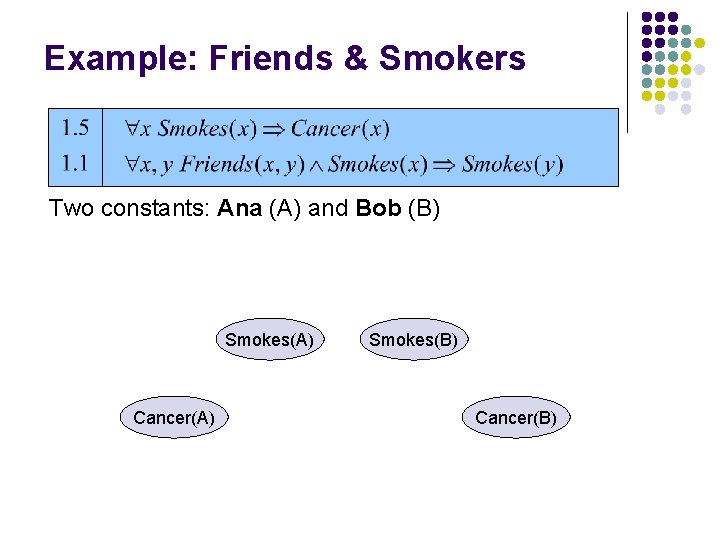

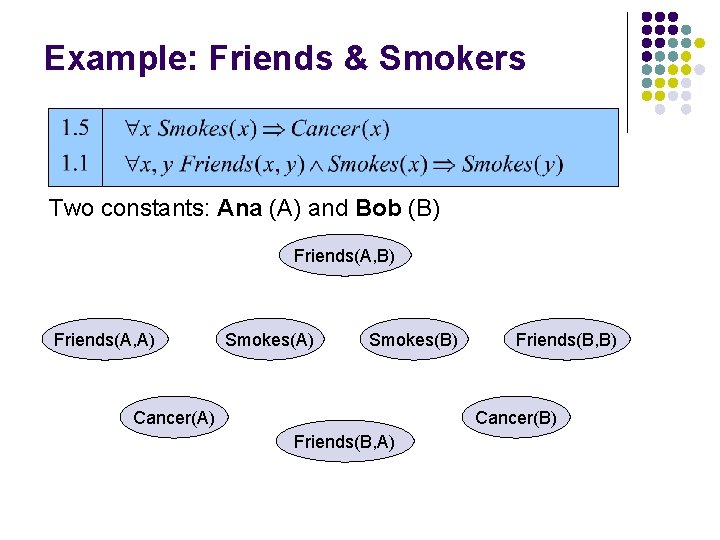

Example: Friends & Smokers

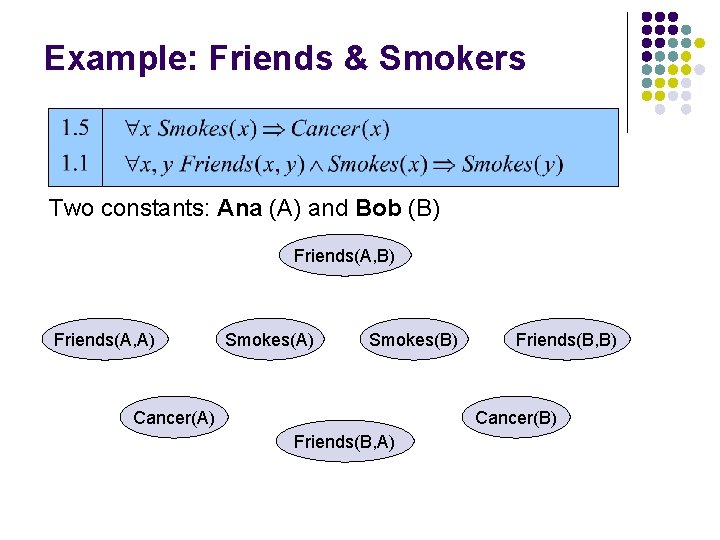

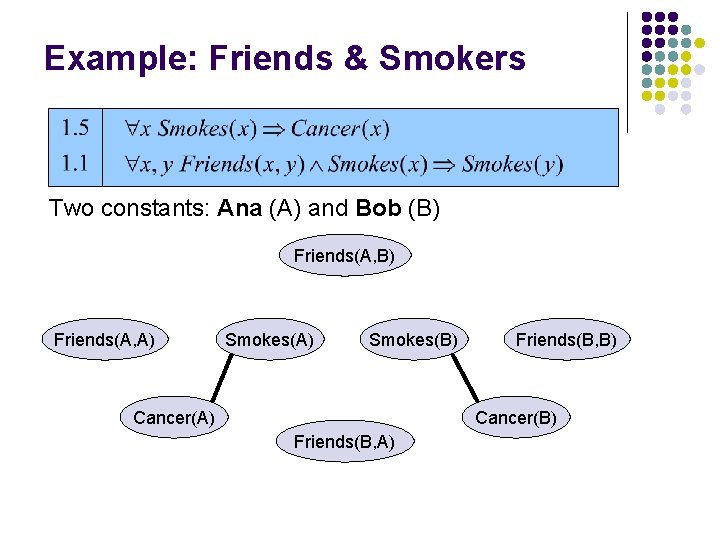

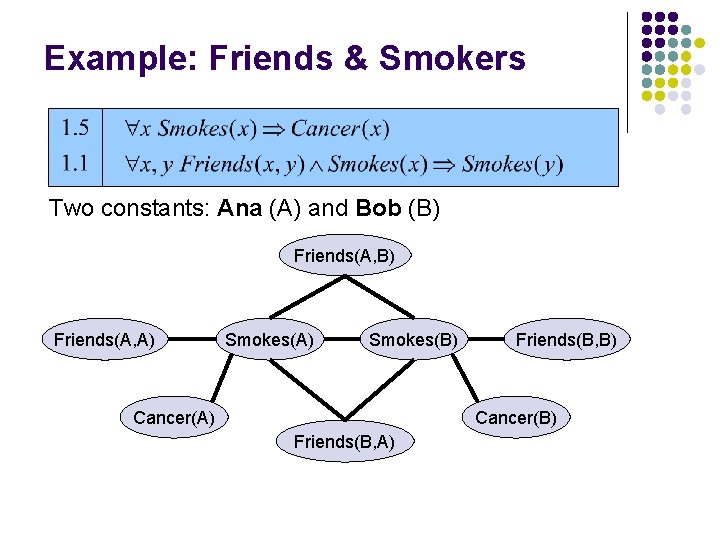

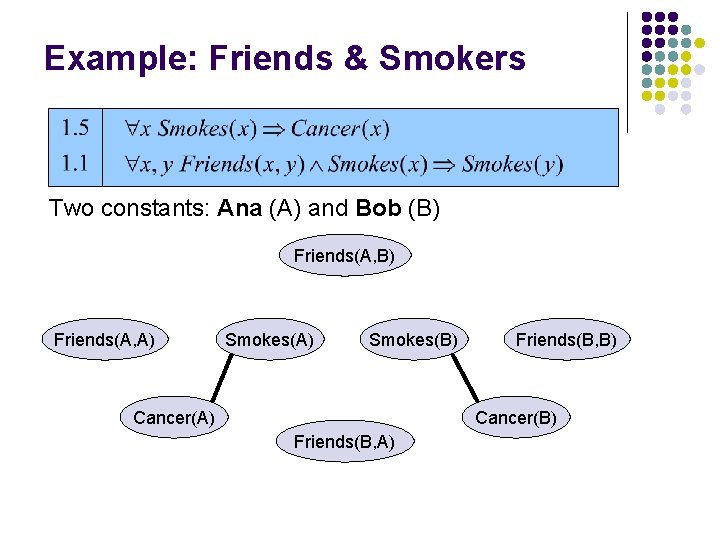

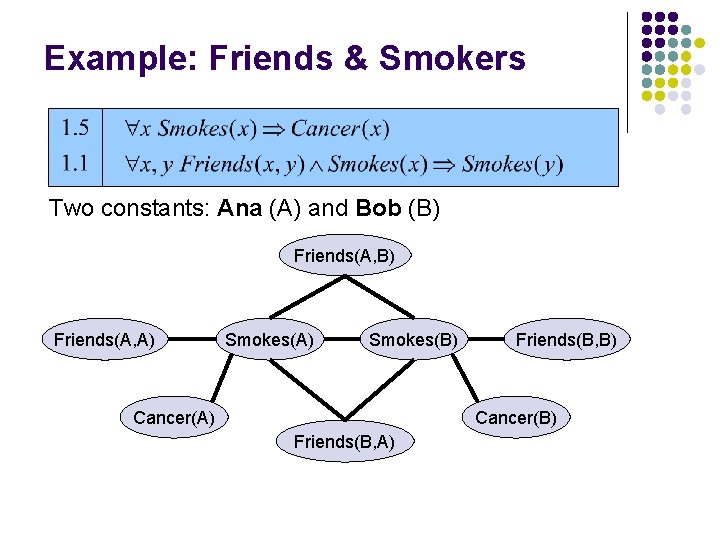

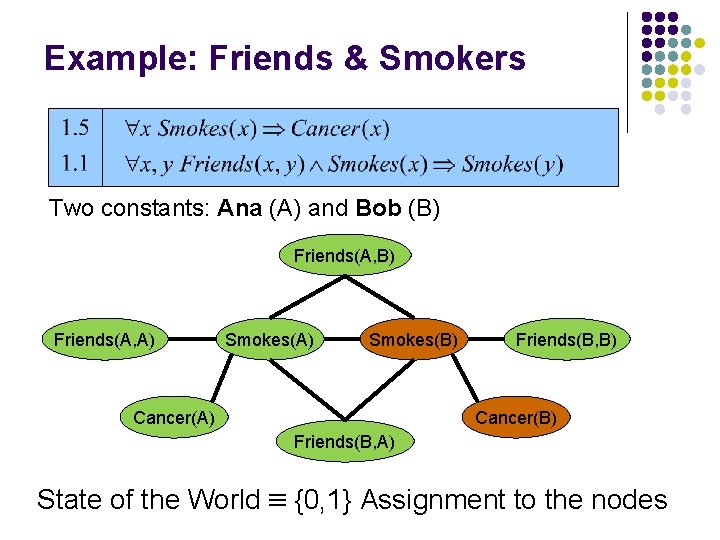

Example: Friends & Smokers
- Two constants: Ana (A) and Bob (B)

Example: Friends & Smokers
- Two constants: Ana (A) and Bob (B)
- Ground atoms: Smokes(A), Smokes(B), Cancer(A), Cancer(B)

Example: Friends & Smokers
- Two constants: Ana (A) and Bob (B)
- Ground atoms: Friends(A, A), Friends(A, B), Friends(B, A), Friends(B, B), Smokes(A), Smokes(B), Cancer(A), Cancer(B)

Example: Friends & Smokers
- Two constants: Ana (A) and Bob (B)
- Ground atoms: Friends(A, A), Friends(A, B), Friends(B, A), Friends(B, B), Smokes(A), Smokes(B), Cancer(A), Cancer(B)
- State of the world: a {0, 1} assignment to the nodes

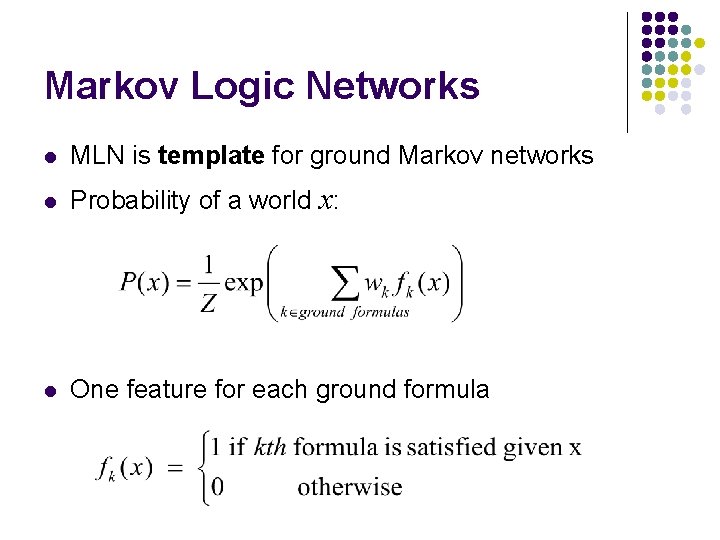

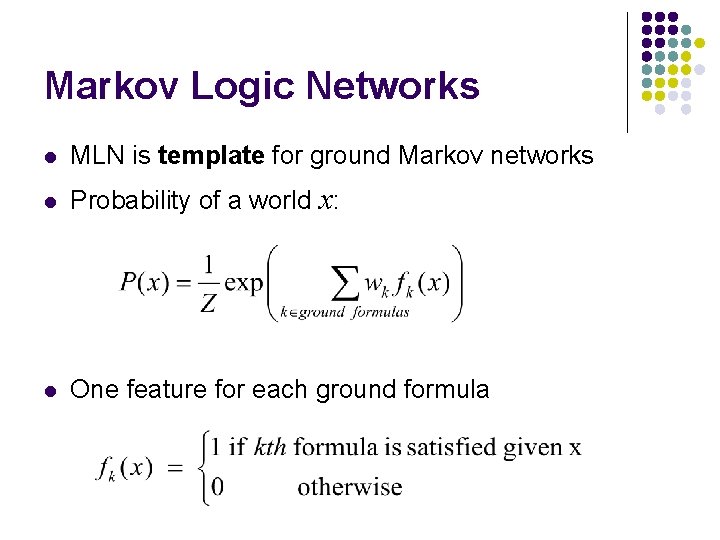

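Markov Logic Networks
- An MLN is a template for ground Markov networks
- Probability of a world x:

$$P(x) = \frac{1}{Z}\exp\Big(\sum_{i}\sum_{j \in G_i} w_i\, g_j(x)\Big)$$

with one feature for each ground formula: $G_i$ is the set of groundings of formula i, and $g_j(x) = 1$ if ground formula j is true in x, else 0.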

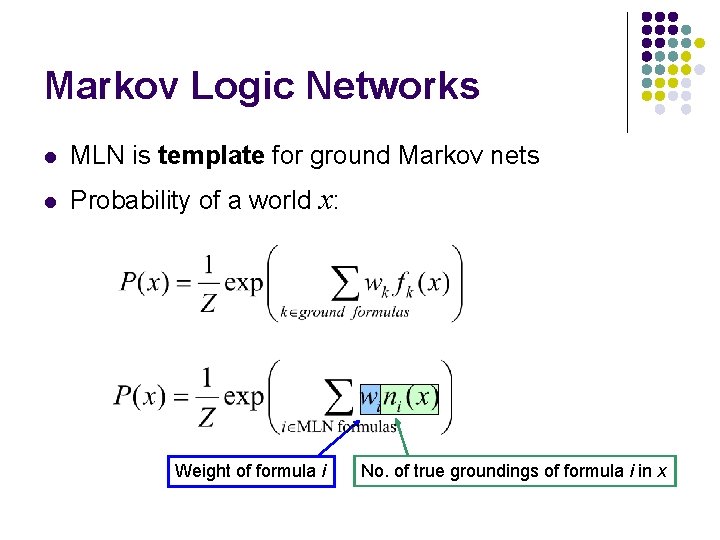

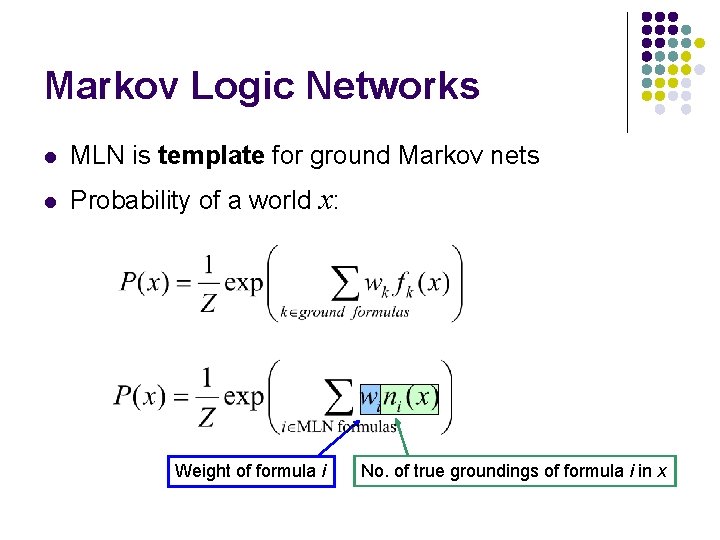

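Markov Logic Networks
- An MLN is a template for ground Markov nets
- Probability of a world x:

$$P(x) = \frac{1}{Z}\exp\Big(\sum_i w_i\, n_i(x)\Big)$$

where $w_i$ is the weight of formula i and $n_i(x)$ is the number of true groundings of formula i in x.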
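A brute-force sketch of this formula for the Friends & Smokers MLN, assuming the formulas Smokes(x) ⇒ Cancer(x) and Friends(x, y) ⇒ (Smokes(x) ⇔ Smokes(y)) with illustrative weights 1.5 and 1.1 and constants A and B. It enumerates all $2^8 = 256$ worlds to compute Z, which is only feasible for tiny domains. In a world where Smokes(A) holds, making Cancer(A) true adds one true grounding of the first formula, so the probability ratio is exactly $e^{1.5}$: violating a formula makes a world less probable, not impossible.

```python
import math
from itertools import product

CONSTANTS = ["A", "B"]
ATOMS = ([("Smokes", c) for c in CONSTANTS] + [("Cancer", c) for c in CONSTANTS]
         + [("Friends", a, b) for a in CONSTANTS for b in CONSTANTS])


def n_smoking_cancer(world):
    """n_1(x): true groundings of Smokes(x) => Cancer(x)."""
    return sum((not world[("Smokes", c)]) or world[("Cancer", c)] for c in CONSTANTS)


def n_friends_smoke(world):
    """n_2(x): true groundings of Friends(x, y) => (Smokes(x) <=> Smokes(y))."""
    return sum((not world[("Friends", a, b)]) or (world[("Smokes", a)] == world[("Smokes", b)])
               for a in CONSTANTS for b in CONSTANTS)


FORMULAS = [(1.5, n_smoking_cancer), (1.1, n_friends_smoke)]  # illustrative weights


def unnormalized(world):
    """exp(sum_i w_i n_i(x))."""
    return math.exp(sum(w * n(world) for w, n in FORMULAS))


WORLDS = [dict(zip(ATOMS, bits)) for bits in product([False, True], repeat=len(ATOMS))]
Z = sum(unnormalized(x) for x in WORLDS)  # 2^8 = 256 terms


def probability(world):
    return unnormalized(world) / Z


# Smokes(A) true: compare Cancer(A) false (one violated grounding) with Cancer(A) true.
violating = {atom: False for atom in ATOMS}
violating[("Smokes", "A")] = True
satisfying = dict(violating)
satisfying[("Cancer", "A")] = True
print(probability(satisfying) / probability(violating), math.exp(1.5))
```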

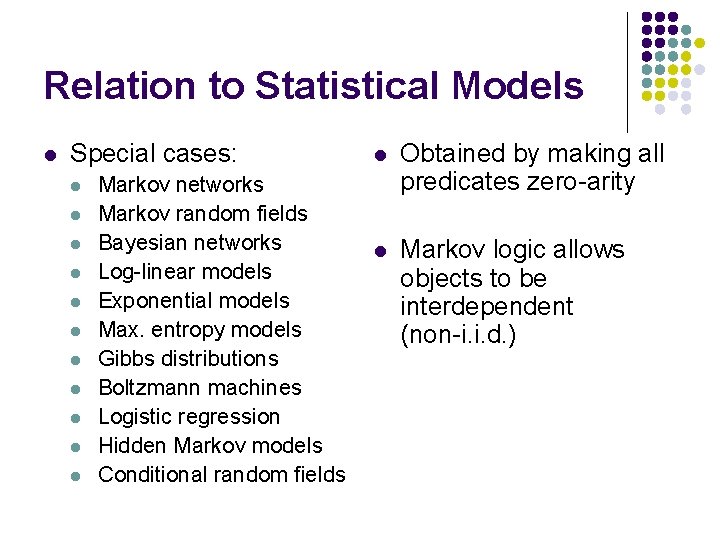

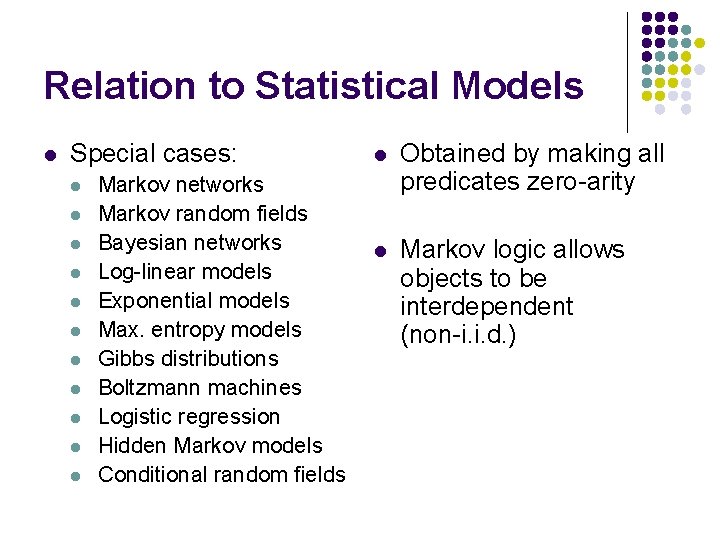

Relation to Statistical Models
- Special cases: Markov networks, Markov random fields, Bayesian networks, log-linear models, exponential models, maximum entropy models, Gibbs distributions, Boltzmann machines, logistic regression, hidden Markov models, conditional random fields
- Obtained by making all predicates zero-arity
- Markov logic allows objects to be interdependent (non-i.i.d.)
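One way to see the zero-arity special case, as our own illustration: take zero-arity predicates X_1 to X_n (evidence) and Y (query), with formulas Y (weight b) and X_j ∧ Y (weight w_j). Worlds with Y false satisfy none of these formulas, so $P(Y = 1 \mid x) = 1/(1 + e^{-(b + \sum_j w_j x_j)})$, which is logistic regression. The sketch checks this numerically; the function names and weights are hypothetical.

```python
import math


def mln_conditional(x, bias, weights):
    """P(Y = 1 | x) for an MLN over zero-arity predicates X_1..X_n and Y with
    formulas  Y  (weight: bias)  and  X_j AND Y  (weight: weights[j])."""
    def score(y):
        # Weighted count of true formulas; formulas over X alone would cancel out.
        total = bias * y + sum(w * (xj and y) for w, xj in zip(weights, x))
        return math.exp(total)
    return score(1) / (score(0) + score(1))


def logistic(x, bias, weights):
    """Standard logistic regression: sigma(bias + sum_j w_j x_j)."""
    z = bias + sum(w * xj for w, xj in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, mln_conditional(x, -0.5, [1.2, -0.7]), logistic(x, -0.5, [1.2, -0.7]))
```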

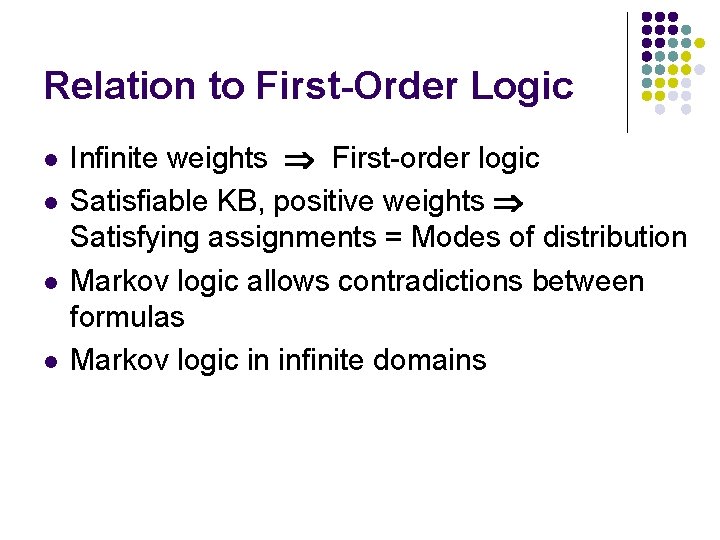

Relation to First-Order Logic
- Infinite weights ⇒ first-order logic
- Satisfiable KB, positive weights ⇒ satisfying assignments = modes of distribution
- Markov logic allows contradictions between formulas
- Markov logic in infinite domains
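A small numerical illustration of the infinite-weight limit, on a hypothetical zero-arity KB {A, A ⇒ B} where every formula gets the same weight w. The only satisfying world (A = B = true) has probability $e^{w}/(3 + e^{w})$: it is the mode for any w > 0, and its probability tends to 1 as w grows, so the distribution approaches the hard KB.

```python
import math
from itertools import product

# Hypothetical zero-arity KB: the formulas A and A => B, as truth functions of (a, b).
FORMULAS = [lambda a, b: a, lambda a, b: (not a) or b]


def prob_satisfying(weight):
    """Probability mass on worlds satisfying every formula, each formula with `weight`."""
    worlds = list(product([False, True], repeat=2))
    scores = {x: math.exp(weight * sum(f(*x) for f in FORMULAS)) for x in worlds}
    z = sum(scores.values())
    satisfying = [x for x in worlds if all(f(*x) for f in FORMULAS)]
    return sum(scores[x] for x in satisfying) / z


for w in (0.0, 1.0, 5.0, 20.0):
    print(w, prob_satisfying(w))
```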

Overview
- Motivation
- Background
- Markov logic
- Inference
- Learning
- Applications

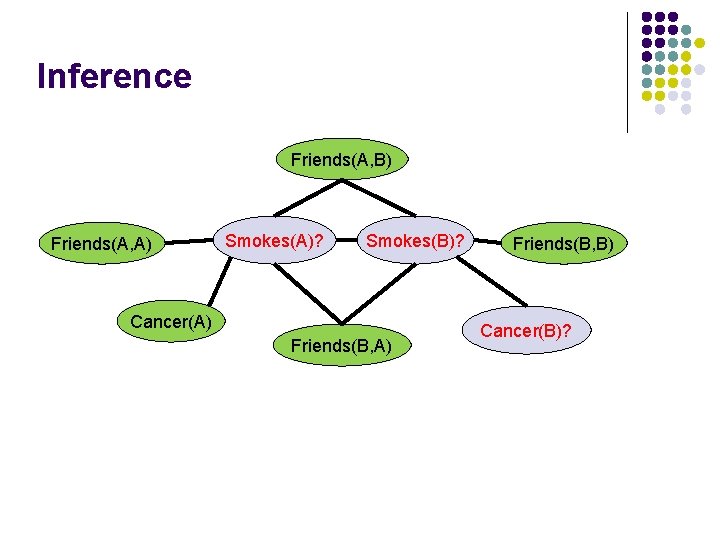

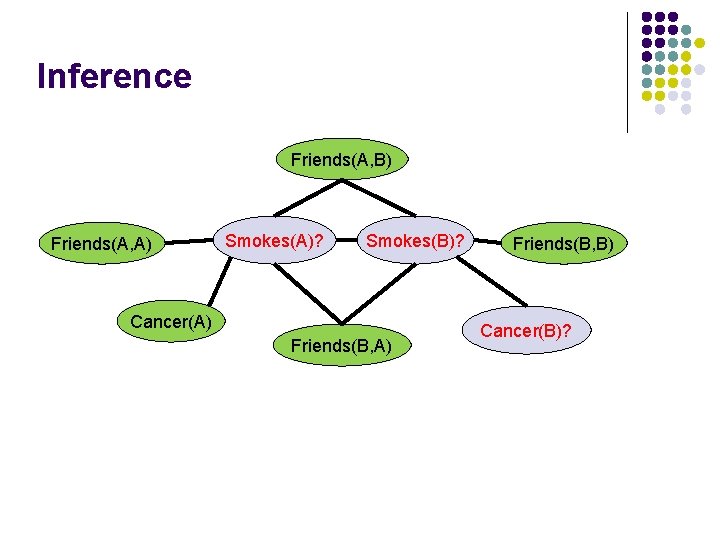

Inference
- Evidence: Friends(A, A), Friends(A, B), Friends(B, B), Cancer(A)
- Query (unknown, marked ?): Smokes(A)?, Smokes(B)?, Cancer(B)?

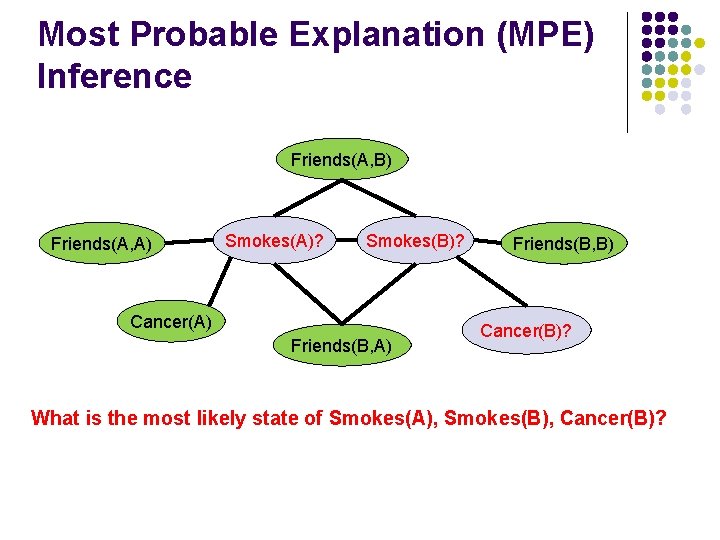

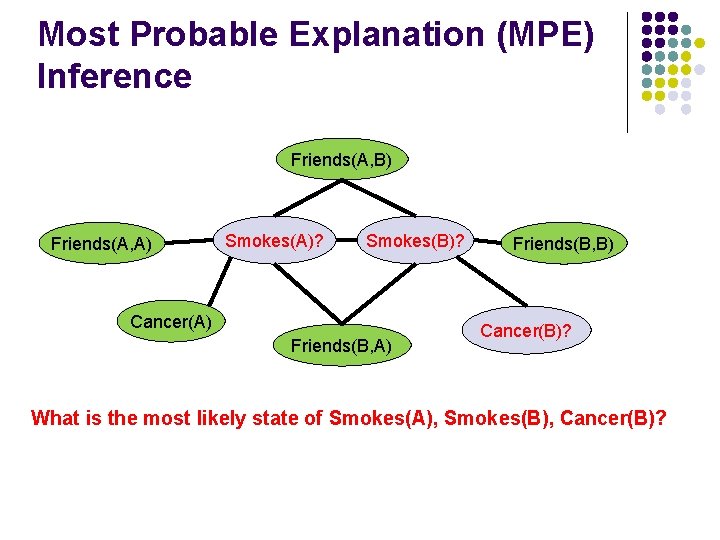

Most Probable Explanation (MPE) Inference
- Evidence: Friends(A, A), Friends(A, B), Friends(B, B), Cancer(A)
- Query: Smokes(A)?, Smokes(B)?, Cancer(B)?
- What is the most likely state of Smokes(A), Smokes(B), Cancer(B)?

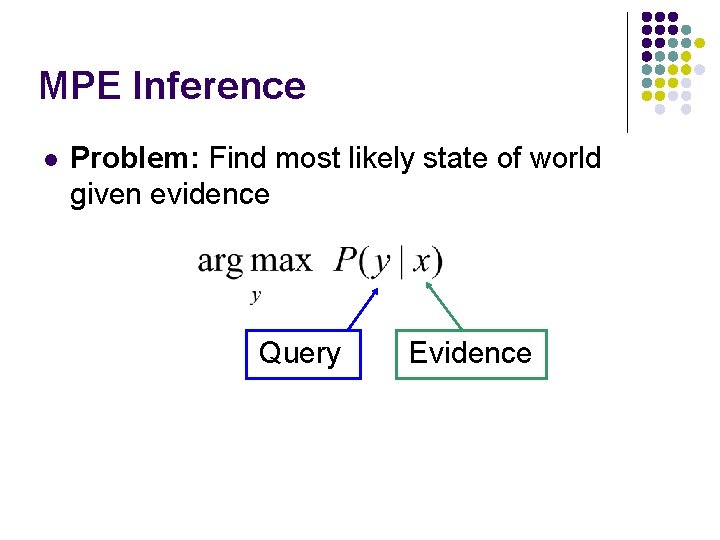

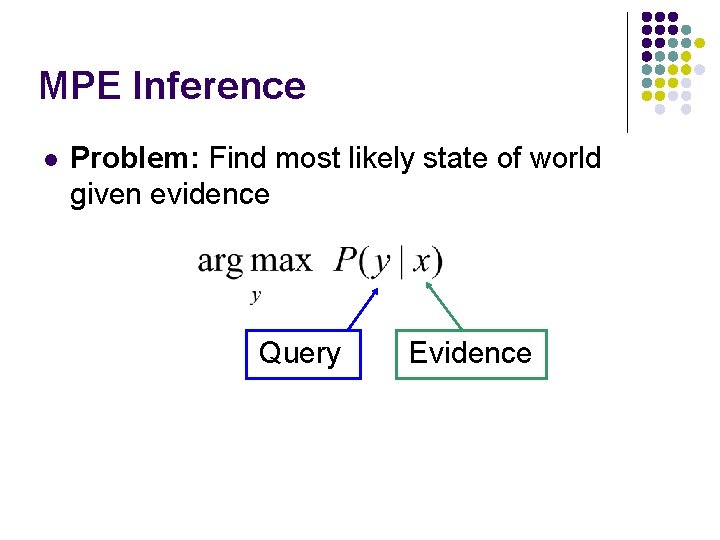

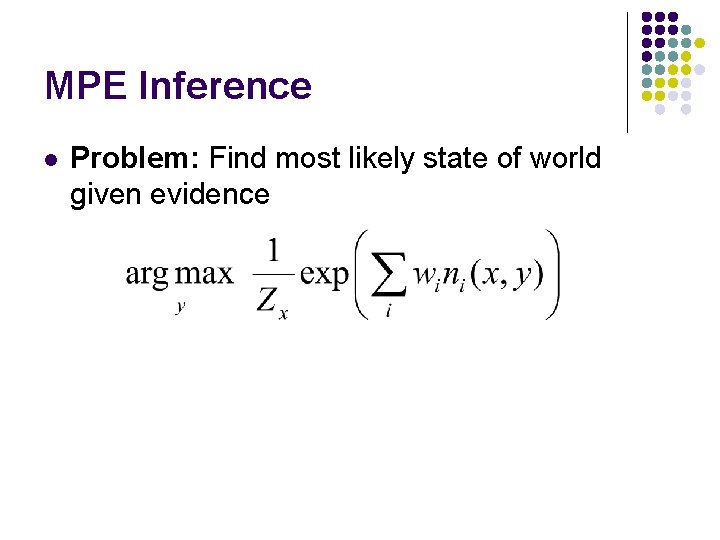

MPE Inference
- Problem: Find the most likely state of the world given evidence:

$$\max_y P(y \mid x)$$

where y is the query and x is the evidence.

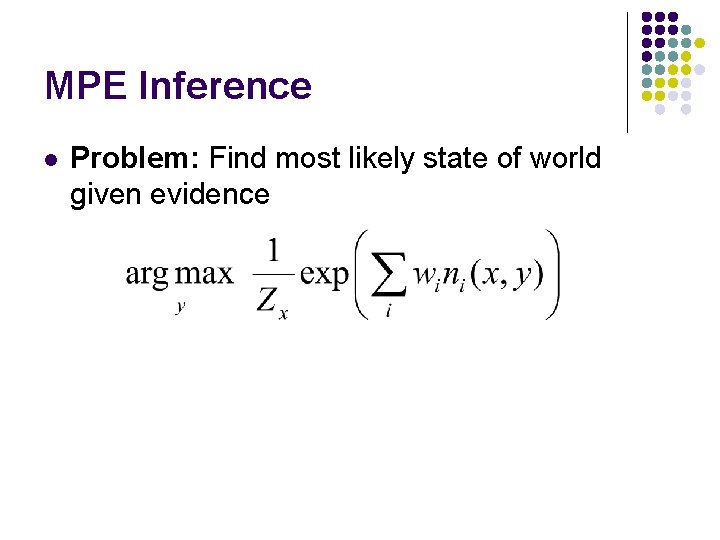

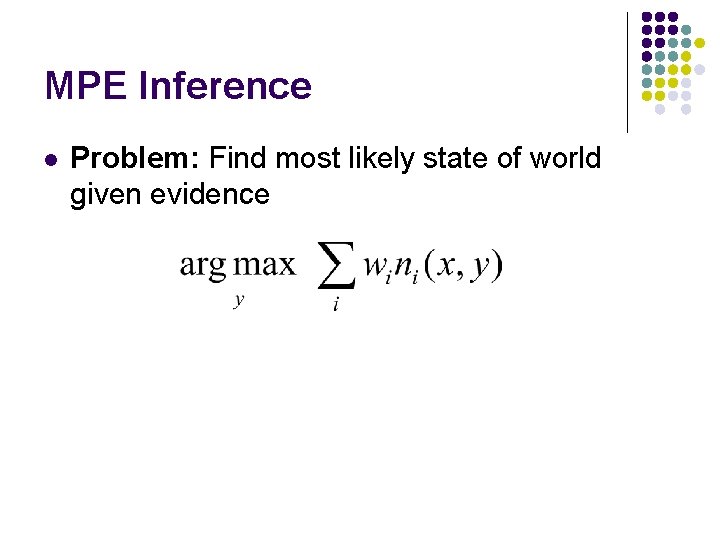

MPE Inference
- Problem: Find the most likely state of the world given evidence

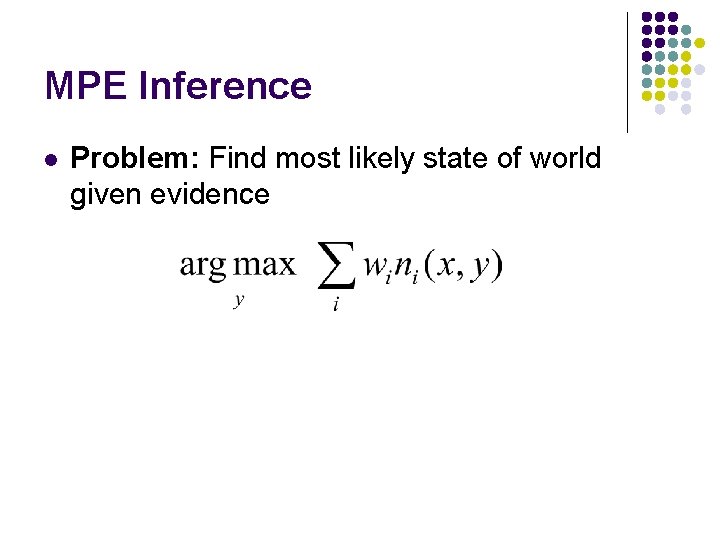

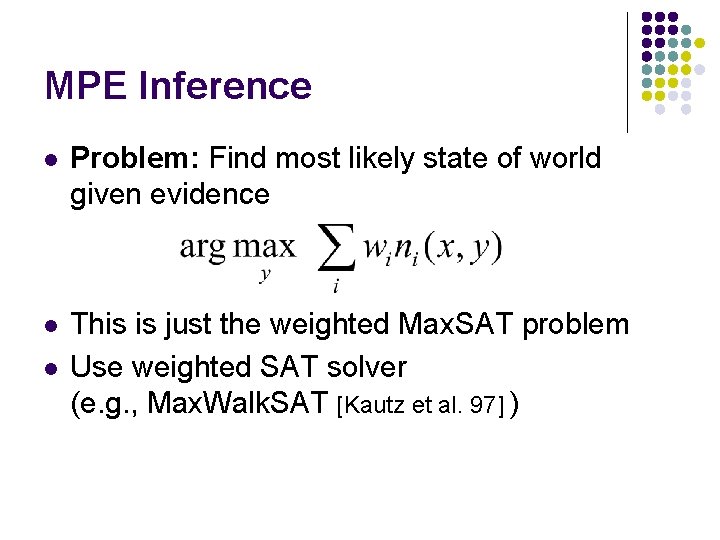

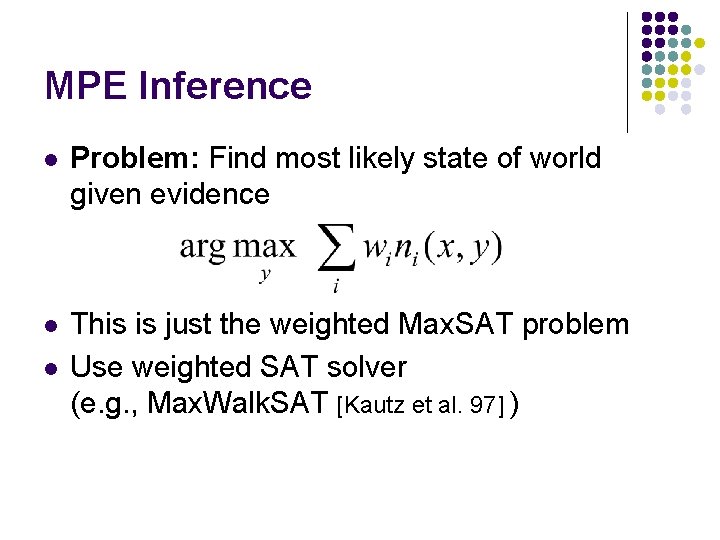

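MPE Inference
- Problem: Find the most likely state of the world given evidence
- Since $P(y \mid x) = \frac{1}{Z_x}\exp\big(\sum_i w_i\, n_i(x, y)\big)$ and $Z_x$ does not depend on y, this means maximizing $\sum_i w_i\, n_i(x, y)$, the total weight of satisfied ground clauses
- This is just the weighted MaxSAT problem
- Use a weighted SAT solver (e.g., MaxWalkSAT [Kautz et al. 97])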
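A compact sketch of a MaxWalkSAT-style local search, written from the general description of the algorithm rather than from any particular implementation. Assumptions: positive clause weights, clauses given as (weight, literals) with literals (variable index, sign), and the parameter names `max_tries`, `max_flips` and `p` (the probability of a random-walk move) are our own. It keeps the assignment with the lowest total weight of unsatisfied clauses seen so far, which approximates the MPE state.

```python
import random


def satisfied(literals, assign):
    """A clause holds if any literal (var, sign) matches the assignment."""
    return any(assign[v] == sign for v, sign in literals)


def cost(clauses, assign):
    """Total weight of unsatisfied clauses (to be minimized)."""
    return sum(w for w, lits in clauses if not satisfied(lits, assign))


def flip_cost(clauses, assign, v):
    """Cost after flipping variable v (the assignment itself is left unchanged)."""
    flipped = assign[:]
    flipped[v] = not flipped[v]
    return cost(clauses, flipped)


def max_walk_sat(clauses, n_vars, max_tries=10, max_flips=1000, p=0.5, seed=0):
    rng = random.Random(seed)
    best, best_cost = None, float("inf")
    for _ in range(max_tries):
        assign = [rng.random() < 0.5 for _ in range(n_vars)]  # random restart
        for step in range(max_flips + 1):
            current = cost(clauses, assign)
            if current < best_cost:
                best, best_cost = assign[:], current
            unsat = [lits for _, lits in clauses if not satisfied(lits, assign)]
            if not unsat or step == max_flips:
                break
            lits = rng.choice(unsat)  # pick an unsatisfied clause at random
            if rng.random() < p:
                var = rng.choice(lits)[0]  # random-walk move
            else:  # greedy move: flip the variable in that clause that lowers cost most
                var = min((v for v, _ in lits), key=lambda v: flip_cost(clauses, assign, v))
            assign[var] = not assign[var]
        if best_cost == 0:
            break  # every clause satisfied: cannot do better with positive weights
    return best, best_cost


# Variables: 0 = Smokes(A), 1 = Cancer(A); clause weights are illustrative.
clauses = [
    (1.5, [(0, False), (1, True)]),  # !Smokes(A) v Cancer(A)
    (2.0, [(0, True)]),              # Smokes(A)
    (0.5, [(1, False)]),             # !Cancer(A)
]
best, best_cost = max_walk_sat(clauses, 2)
print(best, best_cost)
```

The random-walk moves let the search escape local optima that a purely greedy flip rule would get stuck in; here the best state sacrifices the lightest clause (!Cancer(A), weight 0.5).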

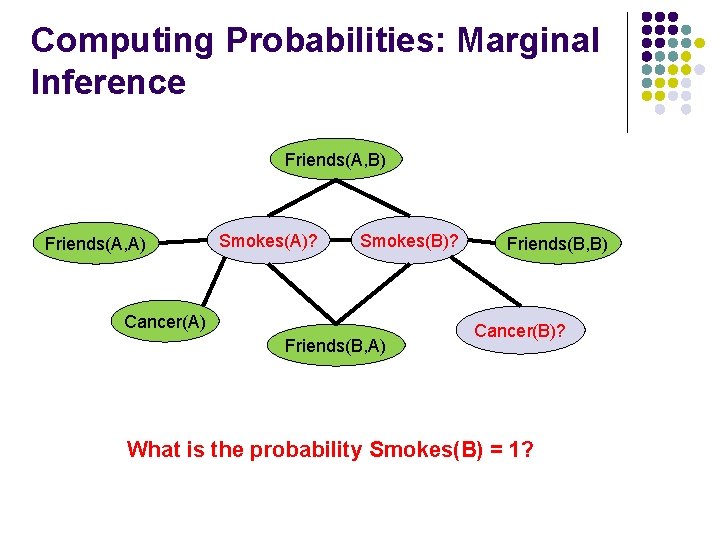

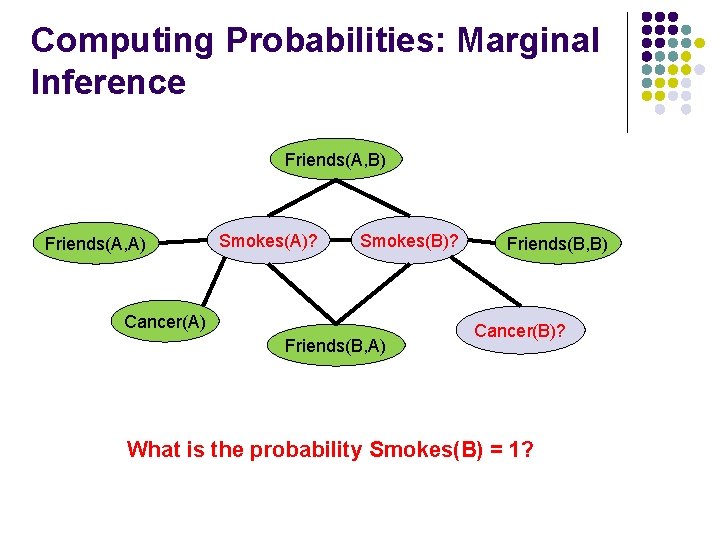

Computing Probabilities: Marginal Inference
- Evidence: Friends(A, A), Friends(A, B), Friends(B, B), Cancer(A)
- Query: Smokes(A)?, Smokes(B)?, Cancer(B)?
- What is the probability that Smokes(B) = 1?

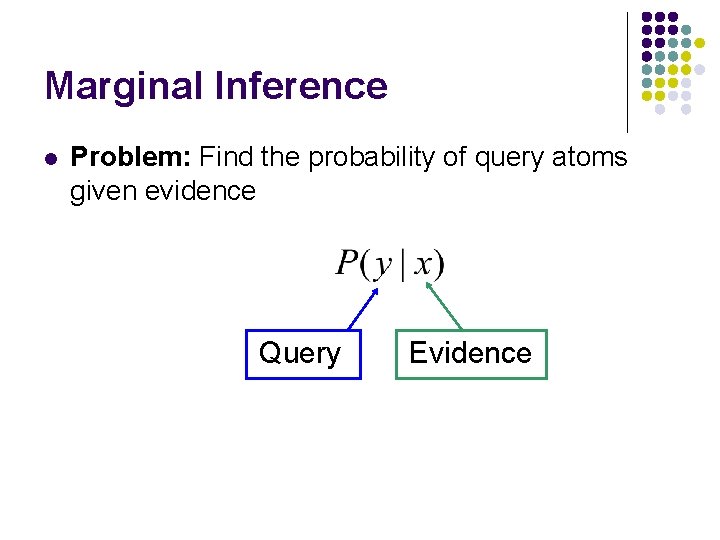

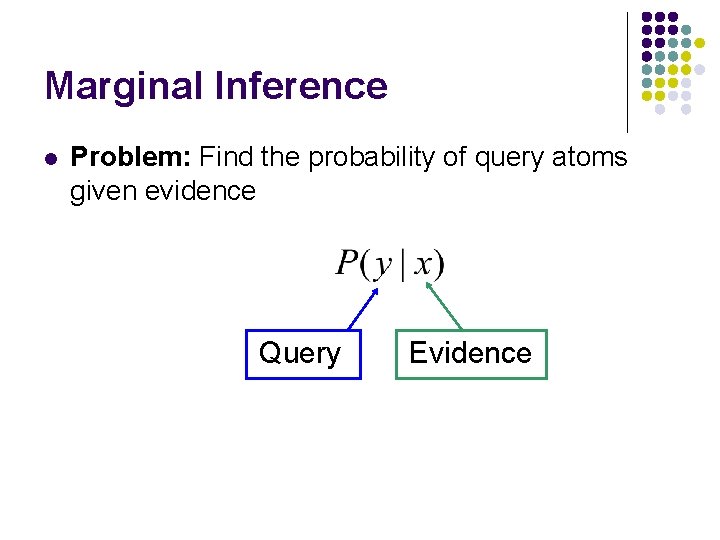

Marginal Inference
- Problem: Find the probability of query atoms given evidence, $P(y \mid x)$, where y is the query and x is the evidence

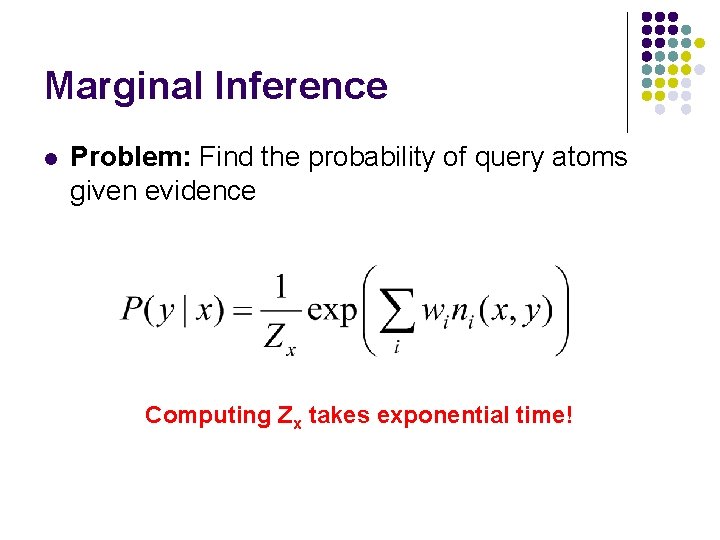

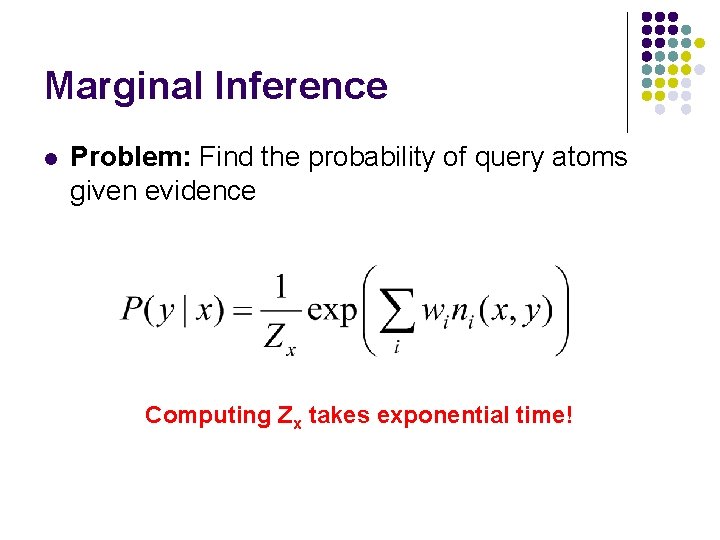

Marginal Inference
- Problem: Find the probability of query atoms given evidence
- Computing $Z_x$, the normalizer that sums over every joint assignment to the query atoms, takes exponential time!
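Since exact computation is exponential, marginals are usually approximated. As one illustration we swap in plain Gibbs sampling (a standard MCMC method, not the technique these slides go on to present) and compare it with exact enumeration on a tiny hypothetical query: evidence Cancer(A) and all four Friends atoms true, query atoms Smokes(A), Smokes(B), Cancer(B), and the Friends & Smokers formulas with illustrative weights 1.5 and 1.1 (our assumption). Gibbs sampling can mix poorly when an MLN contains near-deterministic formulas.

```python
import math
import random
from itertools import product

W_SC, W_FS = 1.5, 1.1  # illustrative weights (assumption)
PEOPLE = ["A", "B"]
EVIDENCE = {("Cancer", "A"): True}
EVIDENCE.update({("Friends", p, q): True for p in PEOPLE for q in PEOPLE})
QUERY = [("Smokes", "A"), ("Smokes", "B"), ("Cancer", "B")]


def log_score(world):
    """sum_i w_i n_i(x, y) for Smokes(p) => Cancer(p) and Friends(p, q) => (Smokes(p) <=> Smokes(q))."""
    n_sc = sum((not world[("Smokes", p)]) or world[("Cancer", p)] for p in PEOPLE)
    n_fs = sum((not world[("Friends", p, q)]) or (world[("Smokes", p)] == world[("Smokes", q)])
               for p in PEOPLE for q in PEOPLE)
    return W_SC * n_sc + W_FS * n_fs


def exact_marginal(atom):
    """Enumerate all 2^|query| states: the exponential computation of Z_x."""
    num = den = 0.0
    for bits in product([False, True], repeat=len(QUERY)):
        world = {**EVIDENCE, **dict(zip(QUERY, bits))}
        weight = math.exp(log_score(world))
        den += weight
        if world[atom]:
            num += weight
    return num / den


def gibbs_marginal(atom, n_samples=20000, burn_in=1000, seed=0):
    """Estimate P(atom = true | evidence) by resampling each query atom from its conditional."""
    rng = random.Random(seed)
    world = {**EVIDENCE, **{q: rng.random() < 0.5 for q in QUERY}}
    hits = 0
    for step in range(burn_in + n_samples):
        for q in QUERY:
            world[q] = True
            s_true = log_score(world)
            world[q] = False
            s_false = log_score(world)
            world[q] = rng.random() < 1.0 / (1.0 + math.exp(s_false - s_true))
        if step >= burn_in:
            hits += world[atom]
    return hits / n_samples


target = ("Smokes", "B")
print(exact_marginal(target), gibbs_marginal(target))
```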

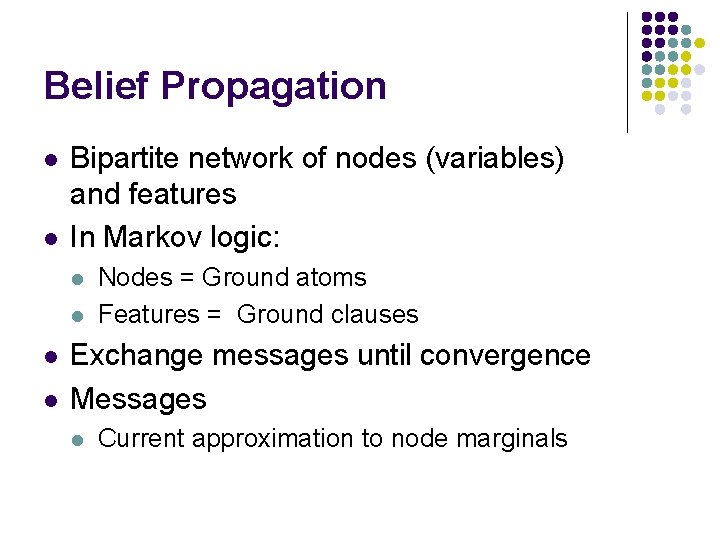

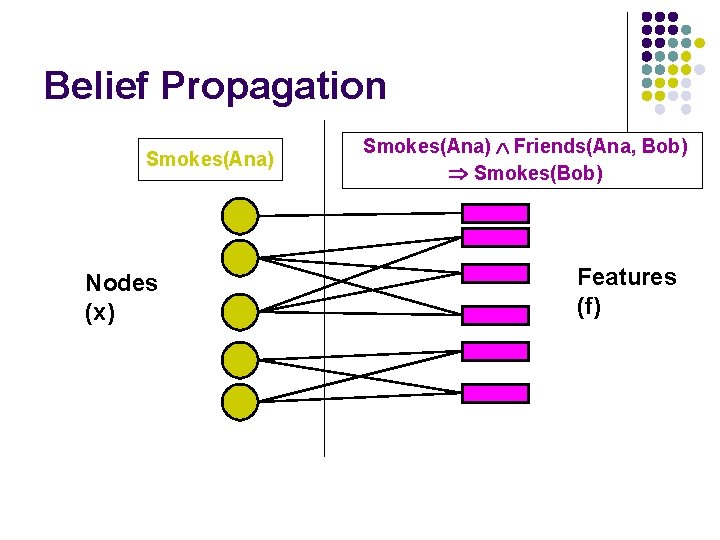

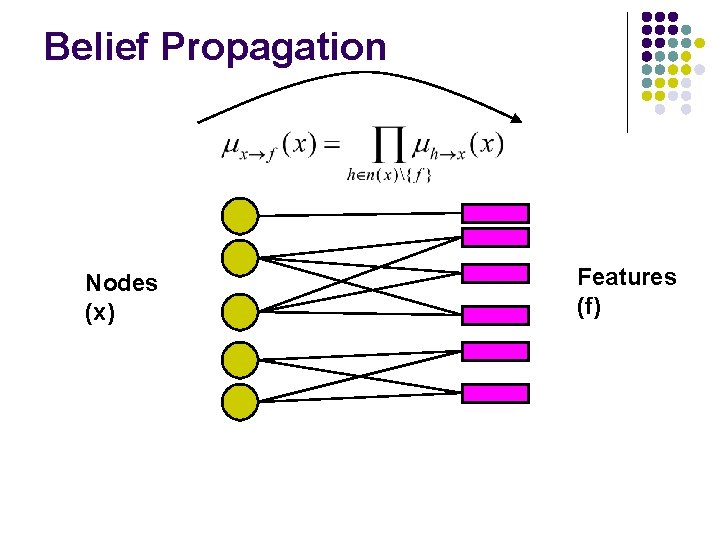

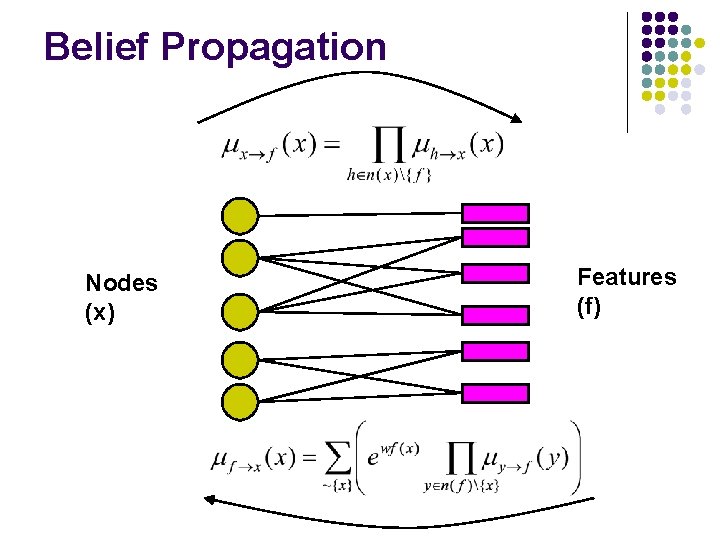

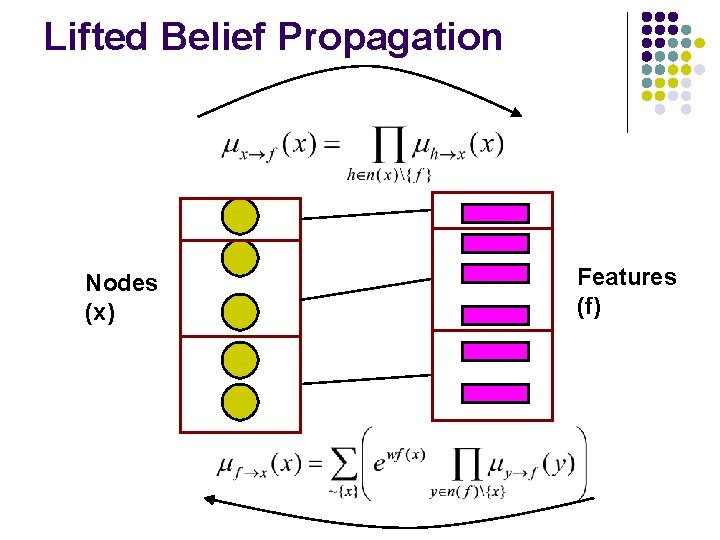

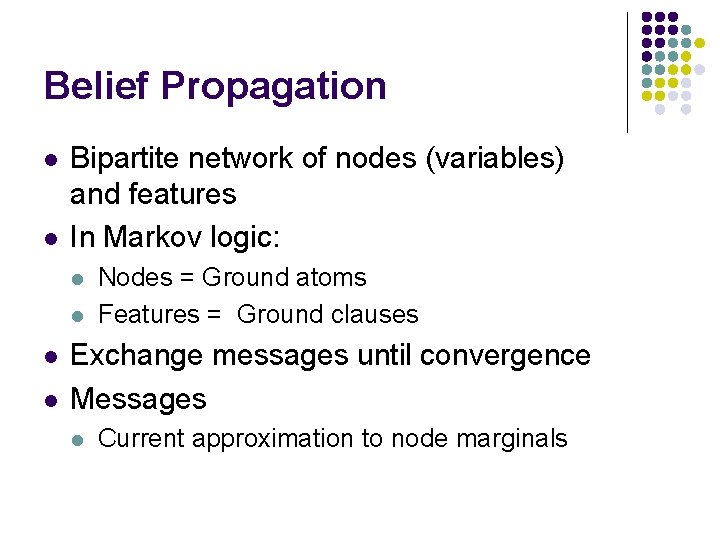

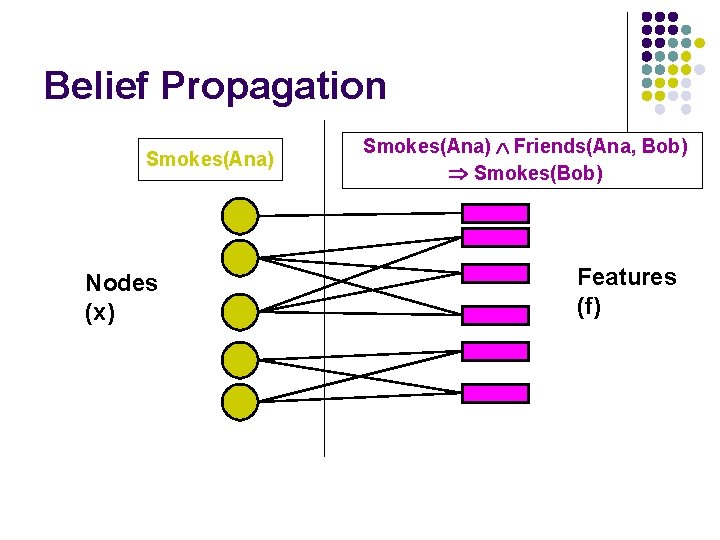

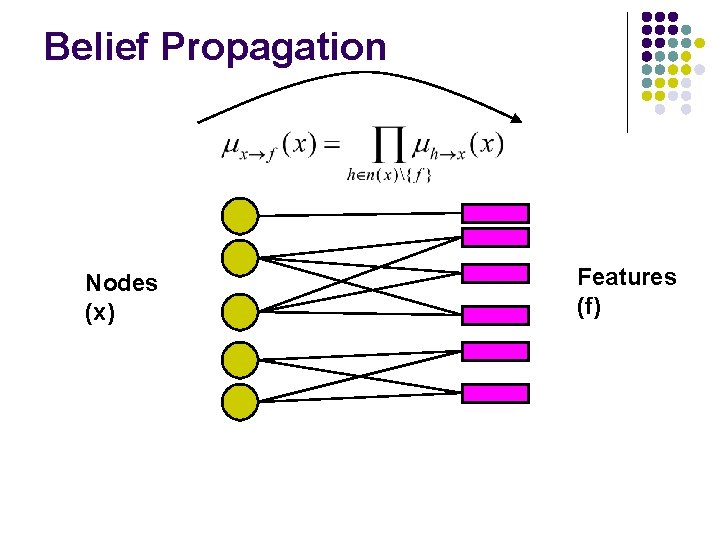

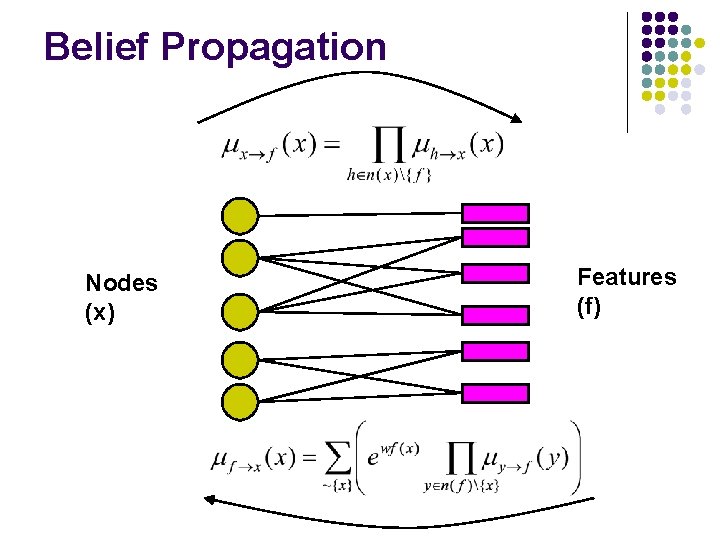

Belief Propagation
- Bipartite network of nodes (variables) and features
- In Markov logic:
  - Nodes = ground atoms
  - Features = ground clauses
- Exchange messages until convergence
- Messages: current approximation to node marginals
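A minimal loopy belief propagation (sum-product) sketch over a bipartite graph of binary nodes and ground-clause features, where a satisfied clause with weight w contributes potential $e^{w}$ and an unsatisfied one contributes 1. The node indices, weights and function names are hypothetical, and each clause is assumed to mention a node at most once. The example graph is a tree, where BP is exact, so the result can be checked against brute-force enumeration; on graphs with cycles the marginals are only approximate and convergence is not guaranteed.

```python
import math
from itertools import product


def clause_factor(weight, literals):
    """Ground clause as a feature: potential exp(weight) if satisfied, else 1.
    literals: list of (node index, sign); (v, False) means NOT v."""
    nodes = [v for v, _ in literals]

    def potential(values):
        sat = any(val == sign for val, (_, sign) in zip(values, literals))
        return math.exp(weight) if sat else 1.0

    return nodes, potential


def belief_propagation(n_nodes, features, iters=50):
    """Loopy sum-product BP; returns approximate P(node = True) for each node."""
    edges = [(i, v) for i, (vs, _) in enumerate(features) for v in vs]
    f2n = {e: [1.0, 1.0] for e in edges}  # feature -> node messages over (False, True)
    n2f = {e: [1.0, 1.0] for e in edges}  # node -> feature messages
    for _ in range(iters):
        for i, v in edges:  # node -> feature: product of the node's other incoming messages
            msg = [1.0, 1.0]
            for j, u in edges:
                if u == v and j != i:
                    msg = [msg[0] * f2n[(j, u)][0], msg[1] * f2n[(j, u)][1]]
            n2f[(i, v)] = [m / sum(msg) for m in msg]
        for i, v in edges:  # feature -> node: sum out the feature's other nodes
            vs, potential = features[i]
            msg = [0.0, 0.0]
            for values in product([False, True], repeat=len(vs)):
                term = potential(values)
                for u, val in zip(vs, values):
                    if u != v:
                        term *= n2f[(i, u)][val]
                msg[values[vs.index(v)]] += term
            f2n[(i, v)] = [m / sum(msg) for m in msg]
    marginals = []
    for v in range(n_nodes):
        b = [1.0, 1.0]
        for j, u in edges:
            if u == v:
                b = [b[0] * f2n[(j, u)][0], b[1] * f2n[(j, u)][1]]
        marginals.append(b[1] / (b[0] + b[1]))
    return marginals


def exact_marginals(n_nodes, features):
    """Brute-force marginals over all 2^n states (for checking BP on small graphs)."""
    z, totals = 0.0, [0.0] * n_nodes
    for state in product([False, True], repeat=n_nodes):
        weight = 1.0
        for vs, potential in features:
            weight *= potential(tuple(state[v] for v in vs))
        z += weight
        for v in range(n_nodes):
            if state[v]:
                totals[v] += weight
    return [t / z for t in totals]


# Nodes: 0 = Smokes(Ana), 1 = Smokes(Bob), 2 = Cancer(Ana); weights are hypothetical.
features = [
    clause_factor(1.0, [(0, True)]),              # Smokes(Ana)
    clause_factor(1.1, [(0, False), (1, True)]),  # !Smokes(Ana) v Smokes(Bob)
    clause_factor(1.5, [(0, False), (2, True)]),  # !Smokes(Ana) v Cancer(Ana)
]
approx = belief_propagation(3, features)
exact = exact_marginals(3, features)
print(approx, exact)
```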

Belief Propagation
- Nodes (x): Smokes(Ana), Friends(Ana, Bob), Smokes(Bob)
- Features (f): ground clauses connecting them

Belief Propagation
- Nodes (x), Features (f)

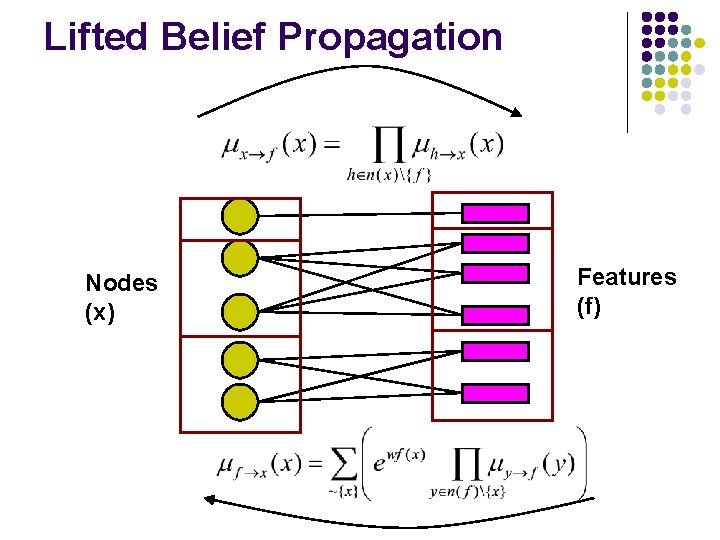

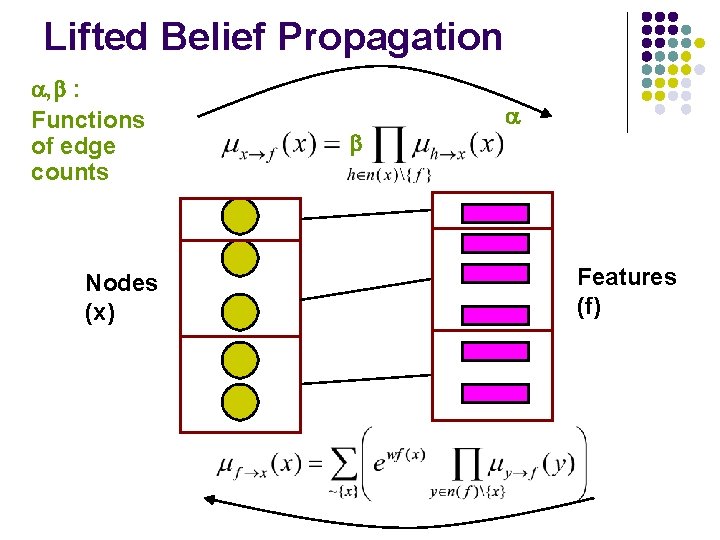

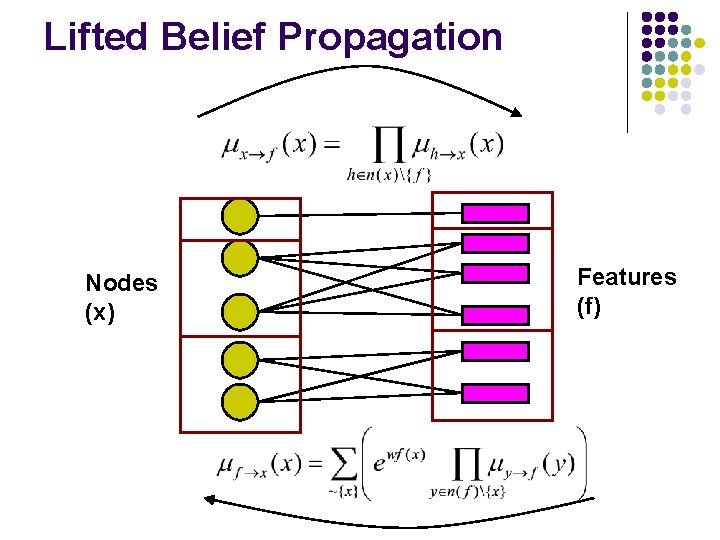

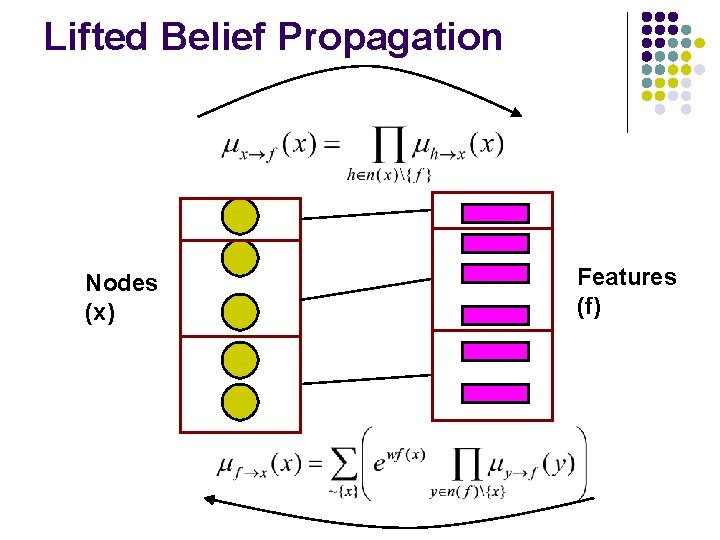

Lifted Belief Propagation
- Nodes (x), Features (f)

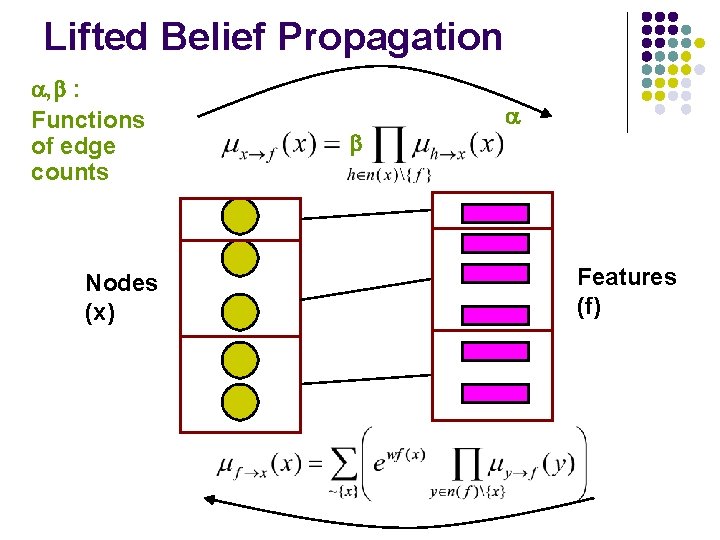

Lifted Belief Propagation , : Functions of edge counts Nodes (x) Features (f)

Overview
- Motivation
- Background
- Markov logic
- Inference
- Learning
- Applications

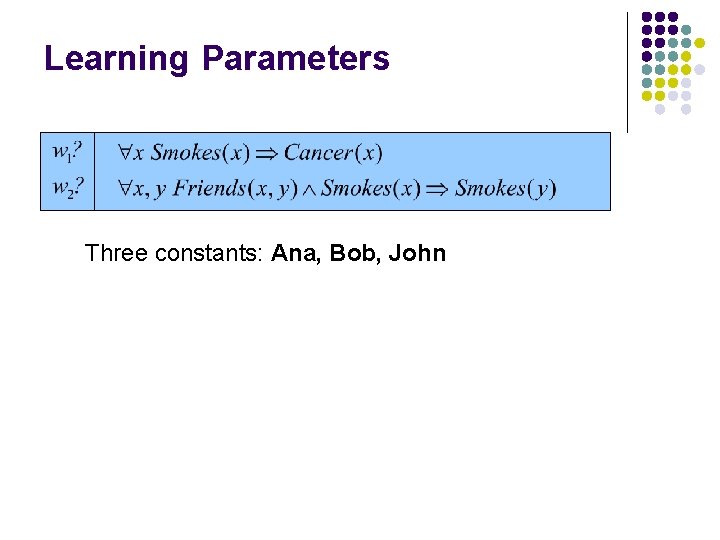

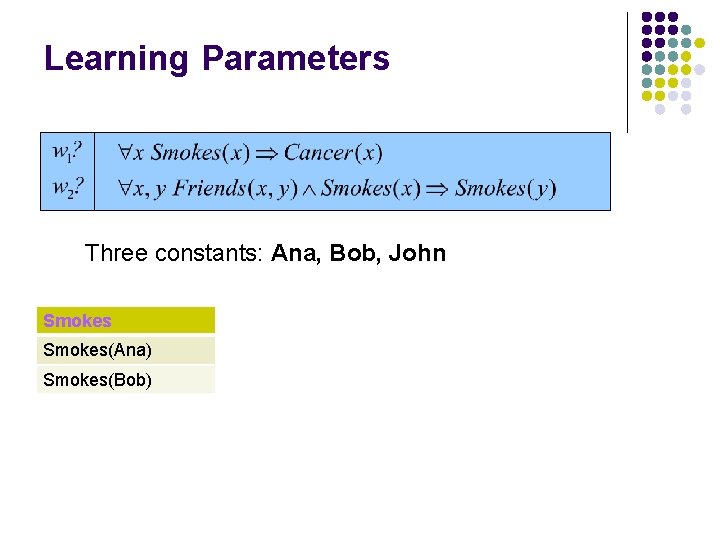

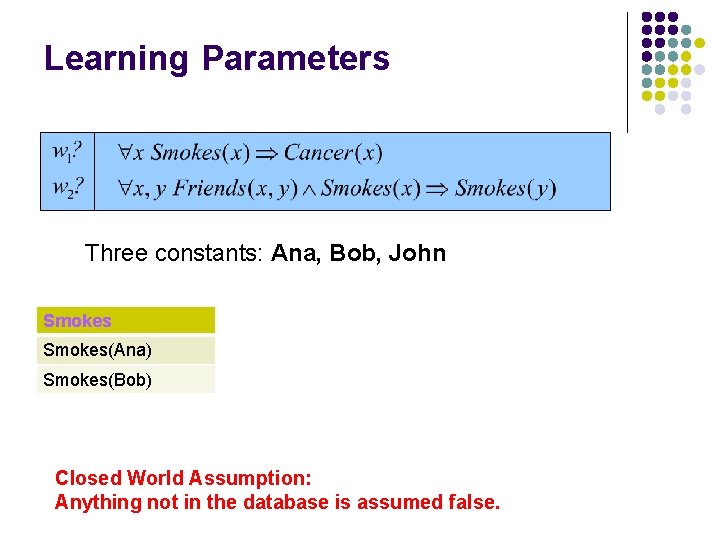

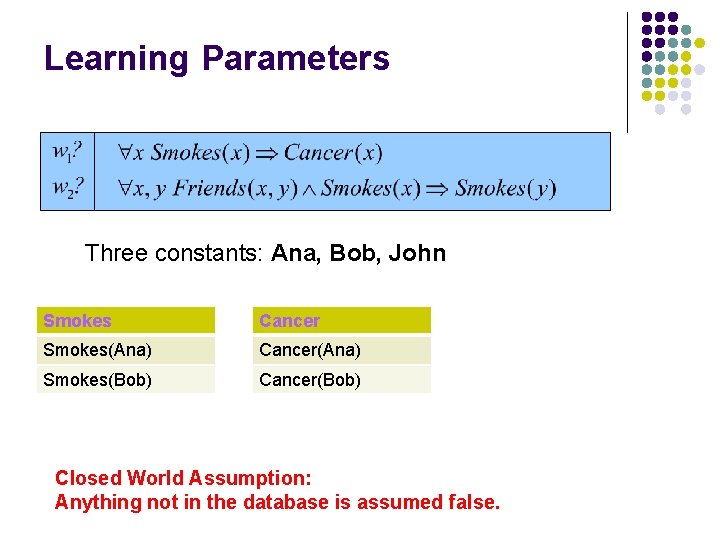

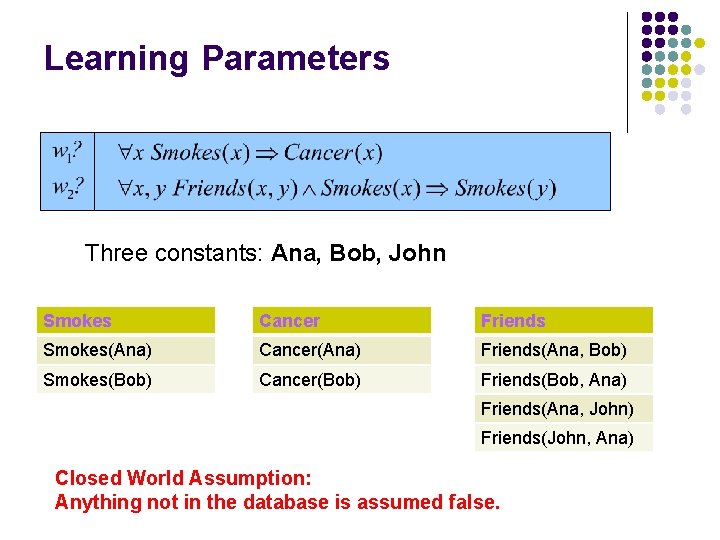

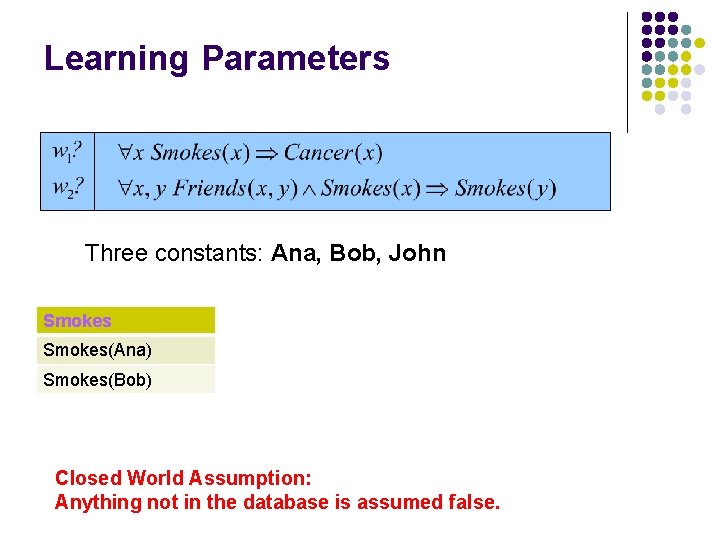

Learning Parameters
- Three constants: Ana, Bob, John

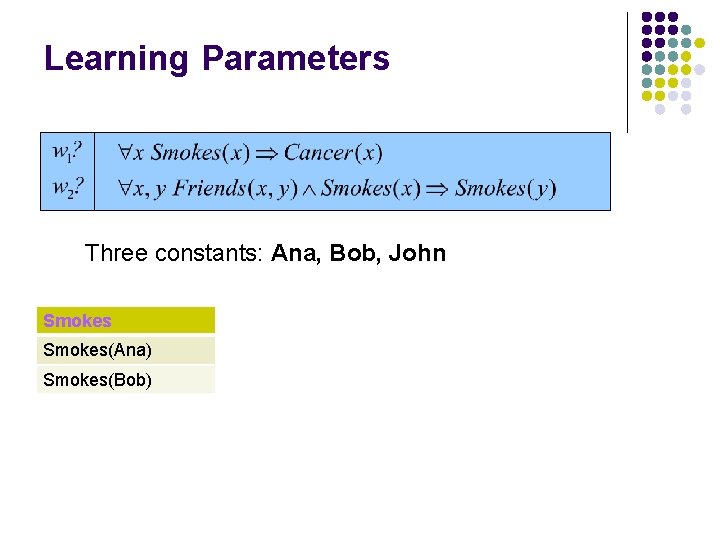

Learning Parameters
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)

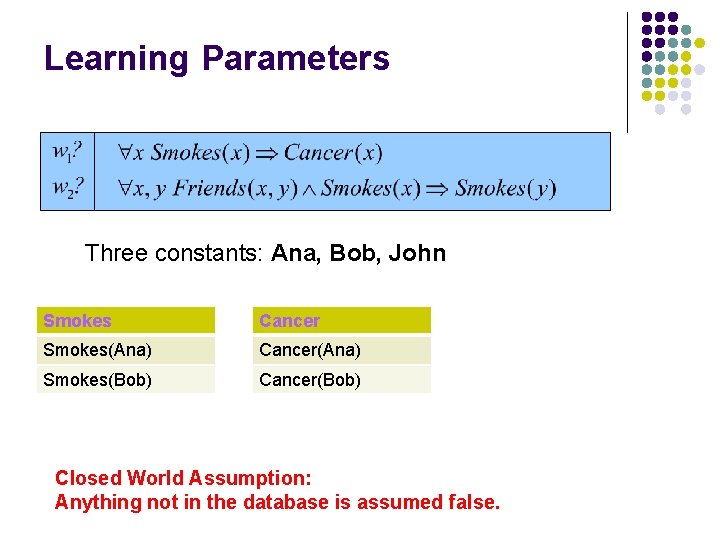

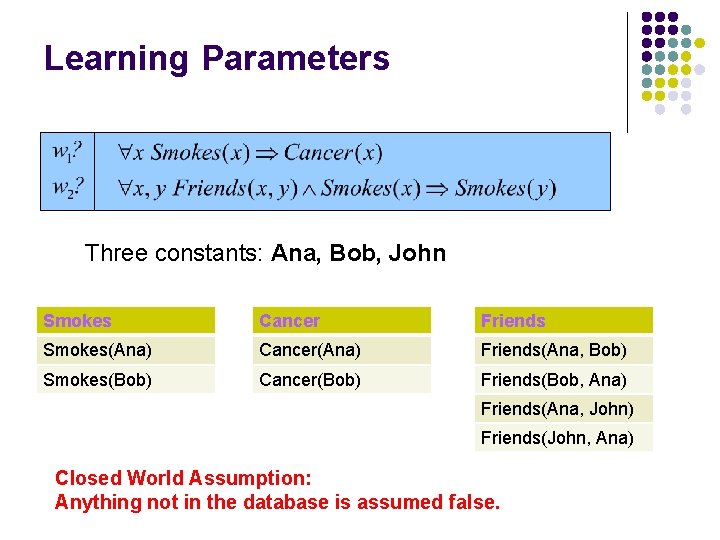

Learning Parameters
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)
- Closed World Assumption: Anything not in the database is assumed false.

Learning Parameters
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)
- Cancer: Cancer(Ana), Cancer(Bob)
- Closed World Assumption: Anything not in the database is assumed false.

Learning Parameters
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)
- Cancer: Cancer(Ana), Cancer(Bob)
- Friends: Friends(Ana, Bob), Friends(Bob, Ana), Friends(Ana, John), Friends(John, Ana)
- Closed World Assumption: Anything not in the database is assumed false.
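The closed world assumption is easy to state in code. A minimal sketch using the three constants and the eight facts in the database above (the function name is hypothetical): every ground atom not listed is set to false, giving 15 ground atoms of which 8 are true.

```python
from itertools import product

CONSTANTS = ["Ana", "Bob", "John"]
PREDICATES = {"Smokes": 1, "Cancer": 1, "Friends": 2}  # predicate -> arity
DATABASE = {  # the true facts listed on the slide
    ("Smokes", "Ana"), ("Smokes", "Bob"),
    ("Cancer", "Ana"), ("Cancer", "Bob"),
    ("Friends", "Ana", "Bob"), ("Friends", "Bob", "Ana"),
    ("Friends", "Ana", "John"), ("Friends", "John", "Ana"),
}


def closed_world(database, predicates, constants):
    """Truth value of every ground atom: true iff it is listed in the database."""
    return {
        (pred, *args): (pred, *args) in database
        for pred, arity in predicates.items()
        for args in product(constants, repeat=arity)
    }


world = closed_world(DATABASE, PREDICATES, CONSTANTS)
print(len(world), sum(world.values()))
```

Without this assumption the unlisted atoms would be unknown rather than false, and weight learning would have to treat them as hidden variables (hence EM).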

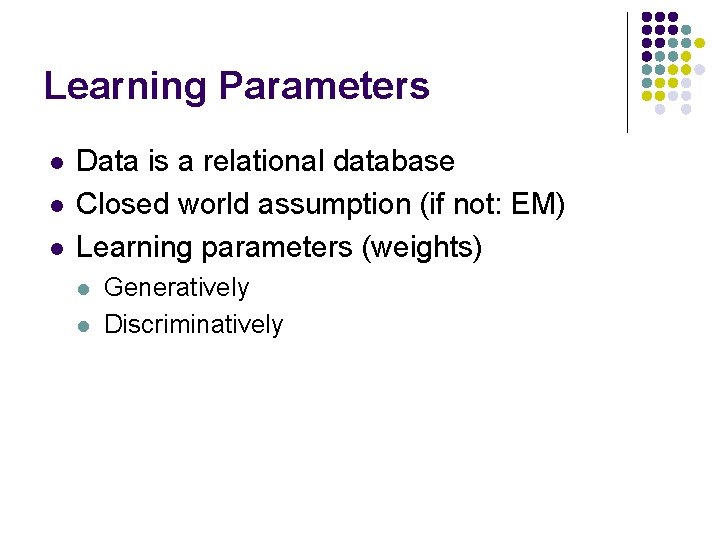

Learning Parameters
- Data is a relational database
- Closed world assumption (if not: EM)
- Learning parameters (weights)
  - Generatively
  - Discriminatively

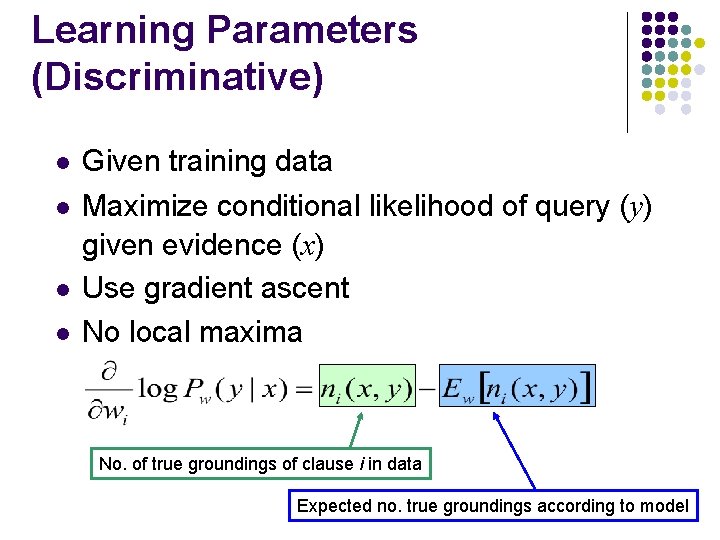

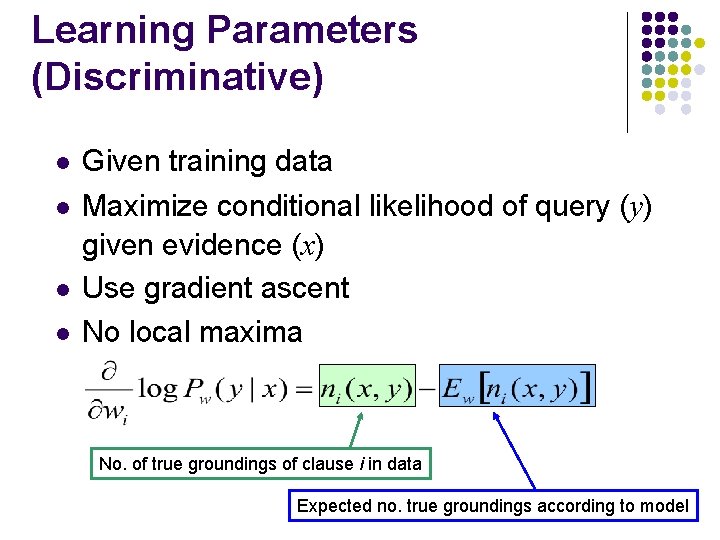

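Learning Parameters (Discriminative)
- Given training data
- Maximize conditional likelihood of query (y) given evidence (x)
- Use gradient ascent
- No local maxima (the conditional log-likelihood is concave in the weights)

$$\frac{\partial}{\partial w_i}\log P_w(y \mid x) = n_i(x, y) - E_w\big[n_i(x, y)\big]$$

where $n_i(x, y)$ is the number of true groundings of clause i in the data and $E_w[n_i(x, y)]$ is the expected number of true groundings according to the model.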
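A minimal sketch of discriminative learning by gradient ascent on a single formula, Smokes(p) ⇒ Cancer(p), with hypothetical training data in which all three people smoke but John does not have cancer. The expected count $E_w[n]$ is computed by enumerating every query state, which is exactly the inference step that becomes expensive at scale. Here $n = 2$ in the data and $E_w[n] = 3\sigma(w)$, so the gradient vanishes at $w = \ln 2$.

```python
import math
from itertools import product

PEOPLE = ["Ana", "Bob", "John"]
SMOKES = {"Ana": True, "Bob": True, "John": True}    # evidence x (hypothetical)
CANCER = {"Ana": True, "Bob": True, "John": False}   # query y in the training data


def n_true(cancer):
    """True groundings of Smokes(p) => Cancer(p) for a query state `cancer`."""
    return sum((not SMOKES[p]) or cancer[p] for p in PEOPLE)


def expected_n(w):
    """E_w[n(x, y)] under P_w(y | x): inference by enumerating every query state."""
    states = [dict(zip(PEOPLE, bits)) for bits in product([False, True], repeat=len(PEOPLE))]
    scores = [math.exp(w * n_true(y)) for y in states]
    return sum(s * n_true(y) for s, y in zip(scores, states)) / sum(scores)


def learn_weight(lr=0.5, steps=500):
    """Gradient ascent on the conditional log-likelihood (concave, so no local maxima)."""
    w = 0.0
    for _ in range(steps):
        w += lr * (n_true(CANCER) - expected_n(w))  # data count minus expected count
    return w


print(learn_weight(), math.log(2))
```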

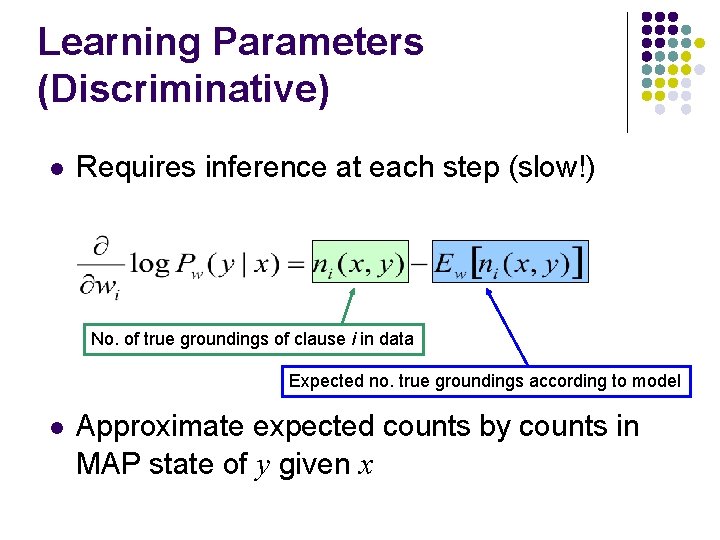

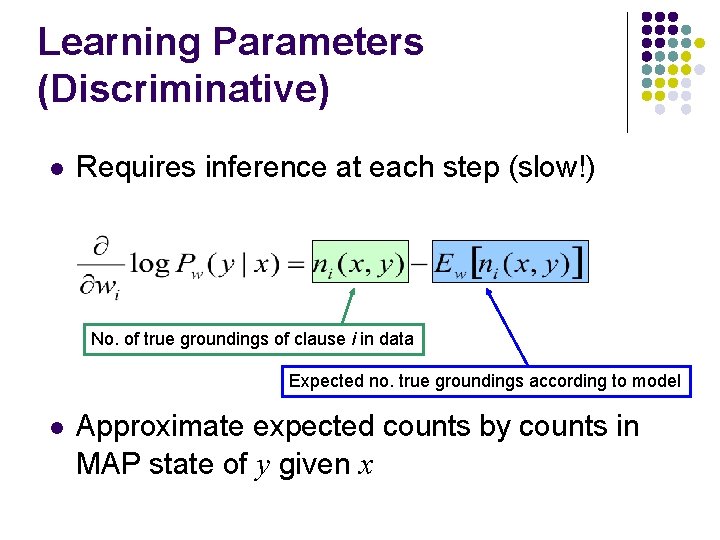

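Learning Parameters (Discriminative)
- Requires inference at each step (slow!)

$$\frac{\partial}{\partial w_i}\log P_w(y \mid x) = n_i(x, y) - E_w\big[n_i(x, y)\big]$$

where $n_i(x, y)$ is the number of true groundings of clause i in the data and $E_w[n_i(x, y)]$ is the expected number of true groundings according to the model.
- Approximate expected counts by counts in the MAP state of y given x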
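A sketch of the MAP-count approximation using a perceptron-style update, $w_i \leftarrow w_i + \eta\,(n_i^{\text{data}} - n_i^{\text{MAP}})$, with the weights averaged over iterations as in a voted perceptron. This update rule is our choice for illustration; the two formulas, the data and the names are hypothetical, and the MAP state is found by brute force where a real system would call a weighted MaxSAT solver. The update is zero whenever the MAP state found has the same counts as the data.

```python
from itertools import product

PEOPLE = ["Ana", "John"]
SMOKES = {"Ana": True, "John": False}   # evidence x (hypothetical)
CANCER = {"Ana": True, "John": False}   # query y observed in the data (hypothetical)
STATES = [dict(zip(PEOPLE, bits)) for bits in product([False, True], repeat=len(PEOPLE))]


def counts(cancer):
    """(n_1, n_2): true groundings of Smokes(p) => Cancer(p) and !Smokes(p) => !Cancer(p)."""
    n1 = sum((not SMOKES[p]) or cancer[p] for p in PEOPLE)
    n2 = sum(SMOKES[p] or (not cancer[p]) for p in PEOPLE)
    return (n1, n2)


def score(w, cancer):
    """sum_i w_i n_i(x, y), the log of the unnormalized probability."""
    return sum(wi * ni for wi, ni in zip(w, counts(cancer)))


def map_state(w):
    """Brute-force MAP state of y given x (a weighted MaxSAT solver in practice)."""
    return max(STATES, key=lambda y: score(w, y))


def learn_with_map_counts(lr=1.0, epochs=10):
    """Perceptron-style updates with MAP counts in place of expected counts;
    returns the weights averaged over epochs."""
    w, history = [0.0, 0.0], []
    for _ in range(epochs):
        data_n, map_n = counts(CANCER), counts(map_state(w))
        w = [wi + lr * (d - m) for wi, d, m in zip(w, data_n, map_n)]
        history.append(w)
    return [sum(ws) / len(history) for ws in zip(*history)]


weights = learn_with_map_counts()
print(weights, map_state(weights))
```

No inference over the full distribution is needed; each step costs one MAP computation, which is why this approximation is much cheaper than computing expected counts.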

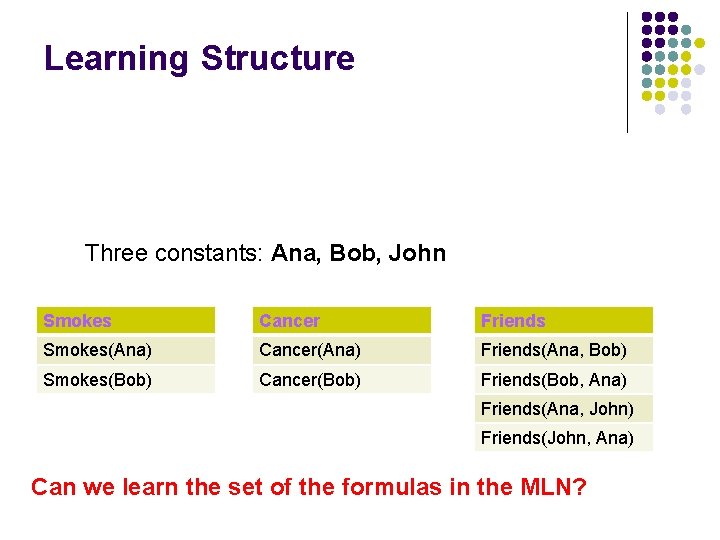

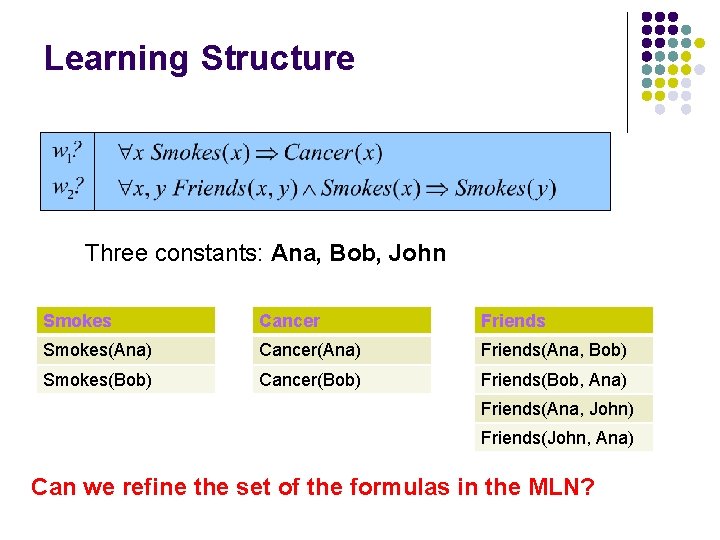

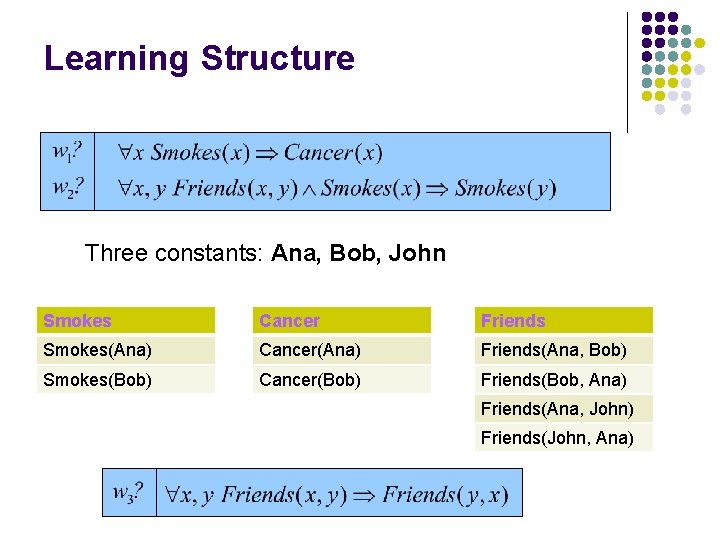

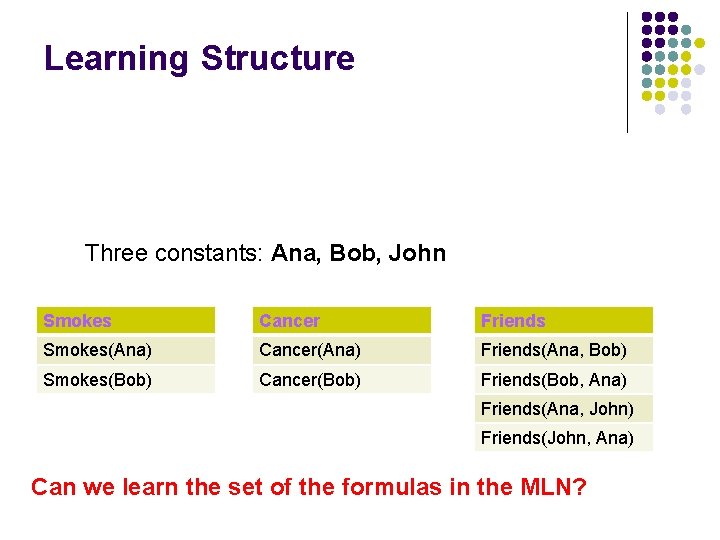

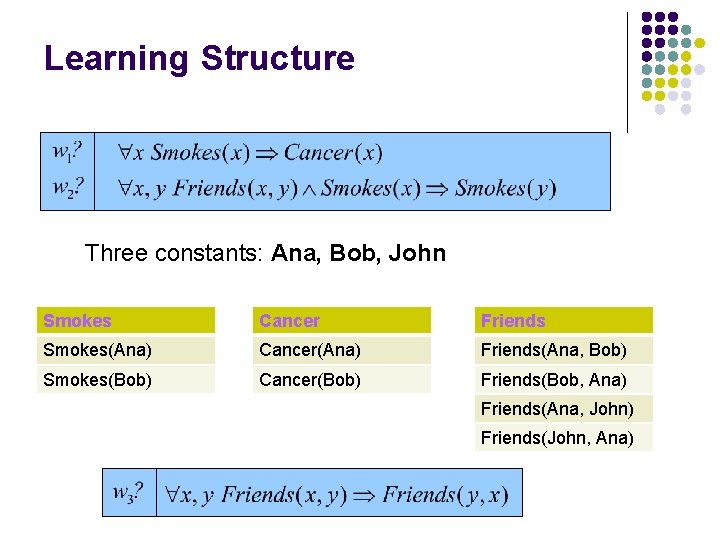

Learning Structure
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)
- Cancer: Cancer(Ana), Cancer(Bob)
- Friends: Friends(Ana, Bob), Friends(Bob, Ana), Friends(Ana, John), Friends(John, Ana)
- Can we learn the set of formulas in the MLN?

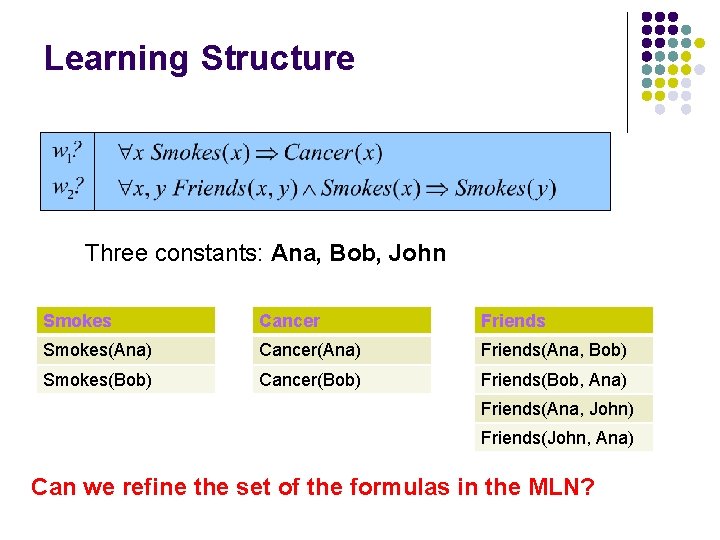

Learning Structure
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)
- Cancer: Cancer(Ana), Cancer(Bob)
- Friends: Friends(Ana, Bob), Friends(Bob, Ana), Friends(Ana, John), Friends(John, Ana)
- Can we refine the set of formulas in the MLN?

Learning Structure
- Three constants: Ana, Bob, John
- Smokes: Smokes(Ana), Smokes(Bob)
- Cancer: Cancer(Ana), Cancer(Bob)
- Friends: Friends(Ana, Bob), Friends(Bob, Ana), Friends(Ana, John), Friends(John, Ana)

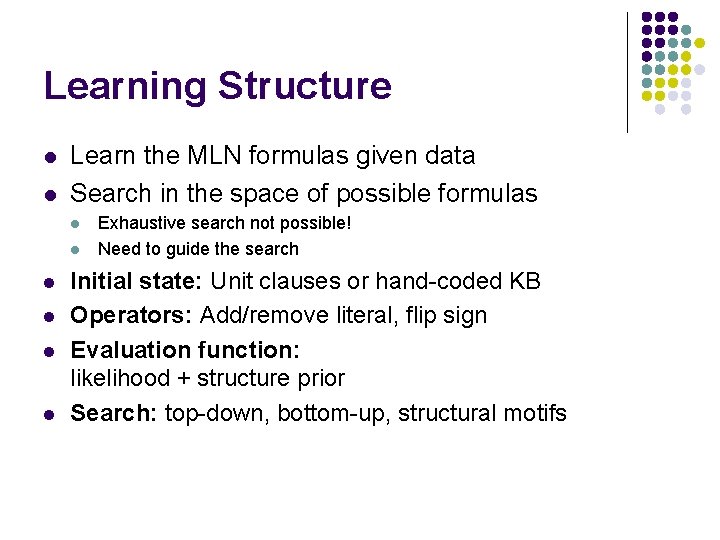

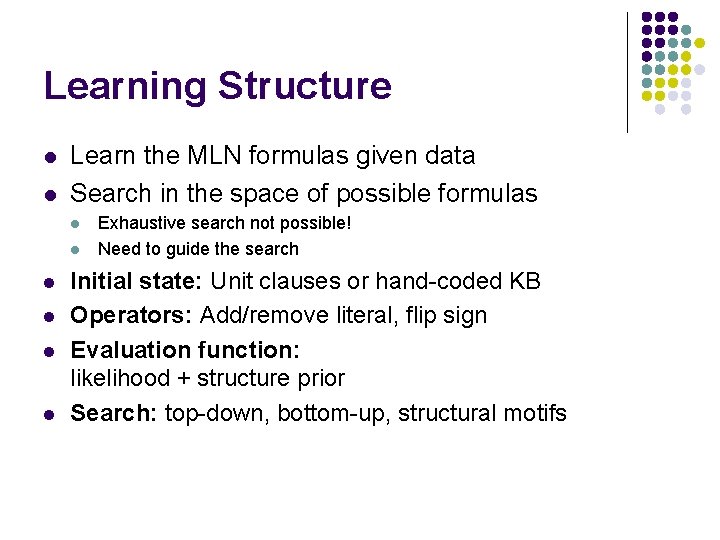

Learning Structure
- Learn the MLN formulas given data
- Search in the space of possible formulas
  - Exhaustive search not possible!
  - Need to guide the search
- Initial state: unit clauses or hand-coded KB
- Operators: add/remove literal, flip sign
- Evaluation function: likelihood + structure prior
- Search: top-down, bottom-up, structural motifs
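The three search operators can be sketched as a neighbor generator. The clause representation (a frozenset of (atom, sign) literals) and the candidate-literal list are hypothetical; scoring by likelihood plus a structure prior, and the choice of top-down or bottom-up search, are left out.

```python
def neighbors(clause, candidate_literals):
    """Clauses one operator away from `clause`: add a literal, remove a literal,
    or flip the sign of a literal. A literal is (atom, is_positive)."""
    result = set()
    atoms_in_clause = {atom for atom, _ in clause}
    for atom, sign in candidate_literals:  # operator 1: add a literal
        if atom not in atoms_in_clause:
            result.add(clause | {(atom, sign)})
    for literal in clause:
        if len(clause) > 1:  # operator 2: remove a literal (keep the clause non-empty)
            result.add(clause - {literal})
        atom, sign = literal  # operator 3: flip its sign
        result.add((clause - {literal}) | {(atom, not sign)})
    result.discard(clause)
    return result


# !Smokes(x) v Cancer(x), i.e. Smokes(x) => Cancer(x)
start = frozenset({("Smokes(x)", False), ("Cancer(x)", True)})
candidates = [("Friends(x, y)", False), ("Friends(x, y)", True),
              ("Smokes(y)", False), ("Smokes(y)", True)]
for clause in sorted(neighbors(start, candidates), key=len):
    print(sorted(clause))
```

Even from a two-literal clause with four candidate literals there are eight neighbors, which illustrates why the search must be guided by the evaluation function rather than run exhaustively.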

Overview
- Motivation
- Markov logic
- Inference
- Learning
- Applications
- Conclusion & Future Work

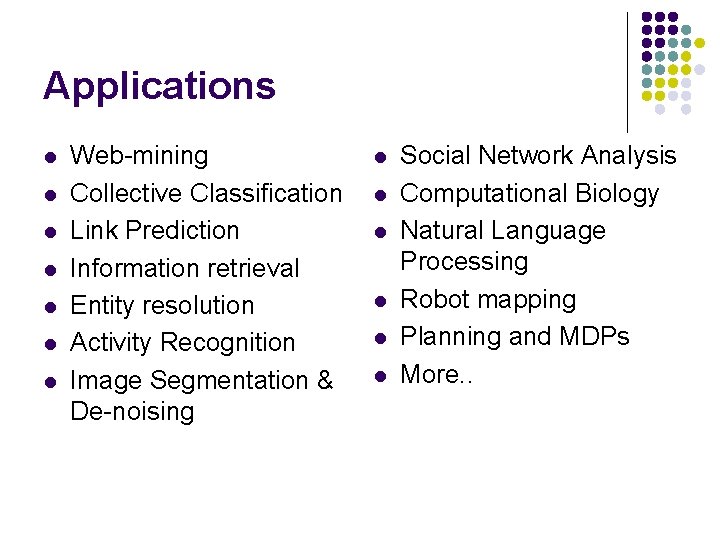

Applications
- Web-mining
- Collective Classification
- Link Prediction
- Information retrieval
- Entity resolution
- Activity Recognition
- Image Segmentation & De-noising
- Social Network Analysis
- Computational Biology
- Natural Language Processing
- Robot mapping
- Planning and MDPs
- More...

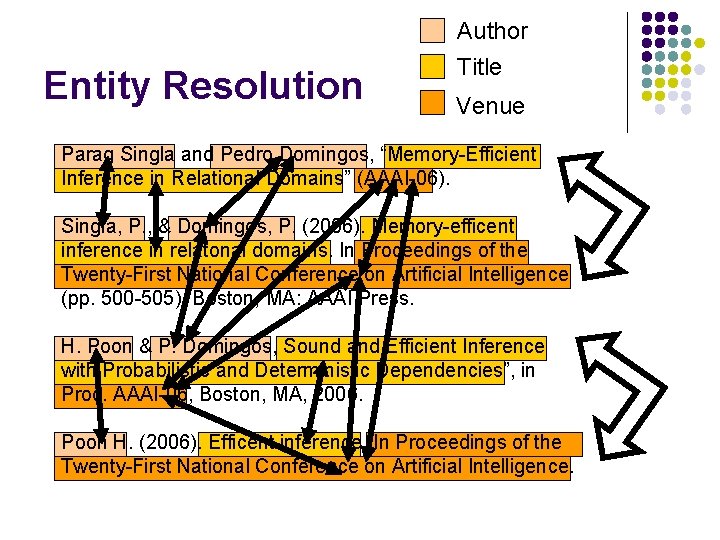

Entity Resolution
- Data cleaning and integration: the first step in the data-mining process
- Merging of data from multiple sources results in duplicates
- Entity resolution: the problem of identifying which records/fields refer to the same underlying entity

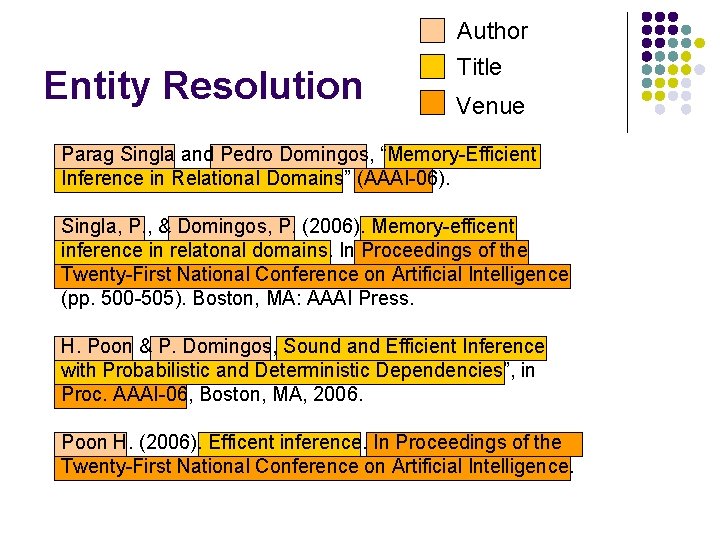

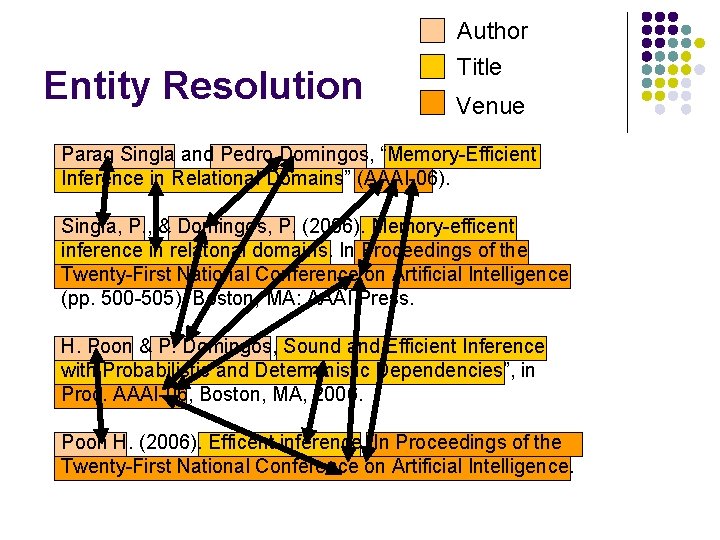

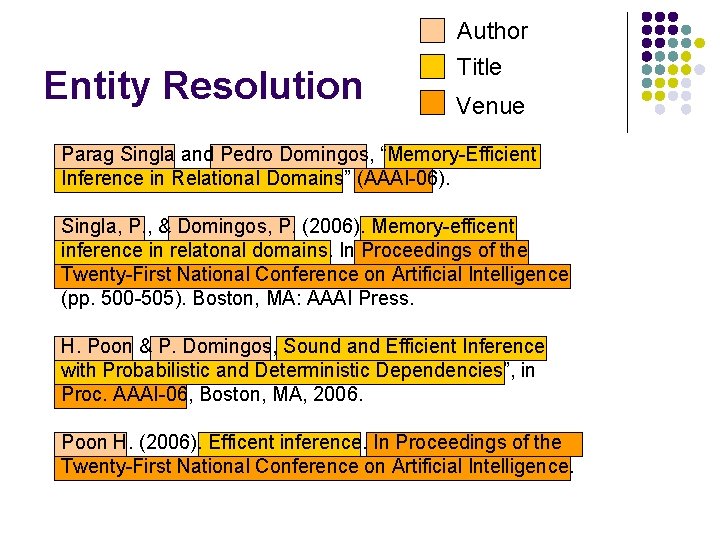

Entity Resolution (fields: Author, Title, Venue)
- Parag Singla and Pedro Domingos, “Memory-Efficient Inference in Relational Domains” (AAAI-06).
- Singla, P., & Domingos, P. (2006). Memory-efficent inference in relatonal domains. In Proceedings of the Twenty-First National Conference on Artificial Intelligence (pp. 500-505). Boston, MA: AAAI Press.
- H. Poon & P. Domingos, Sound and Efficient Inference with Probabilistic and Deterministic Dependencies”, in Proc. AAAI-06, Boston, MA, 2006.
- Poon H. (2006). Efficent inference. In Proceedings of the Twenty-First National Conference on Artificial Intelligence.

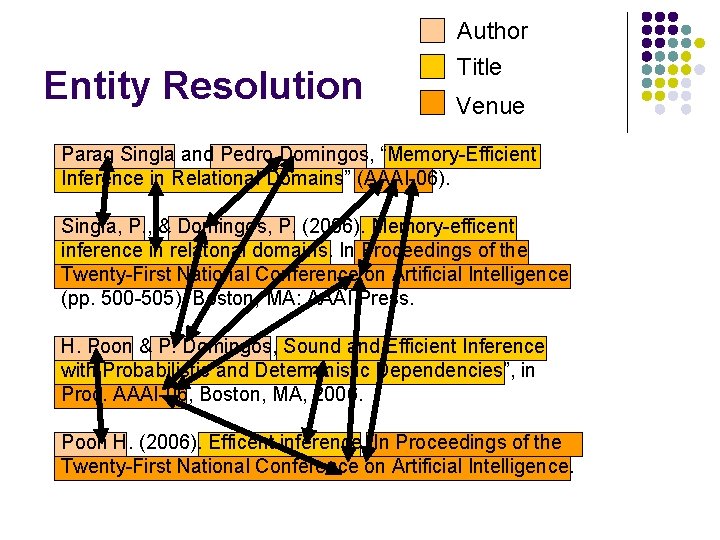

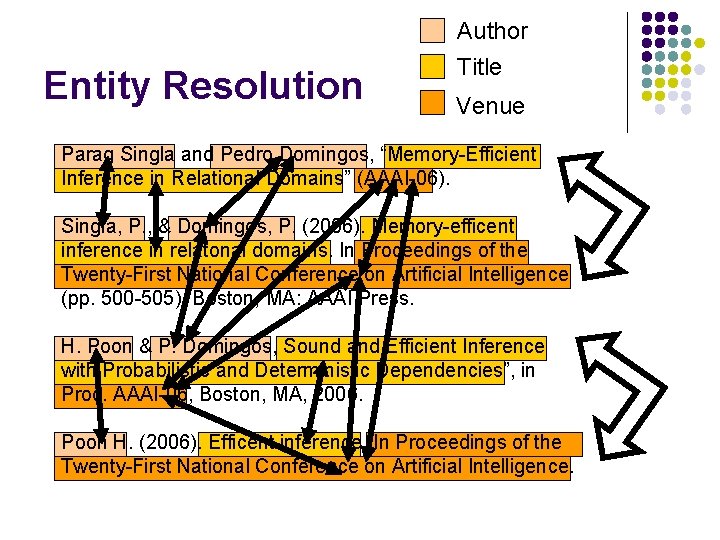

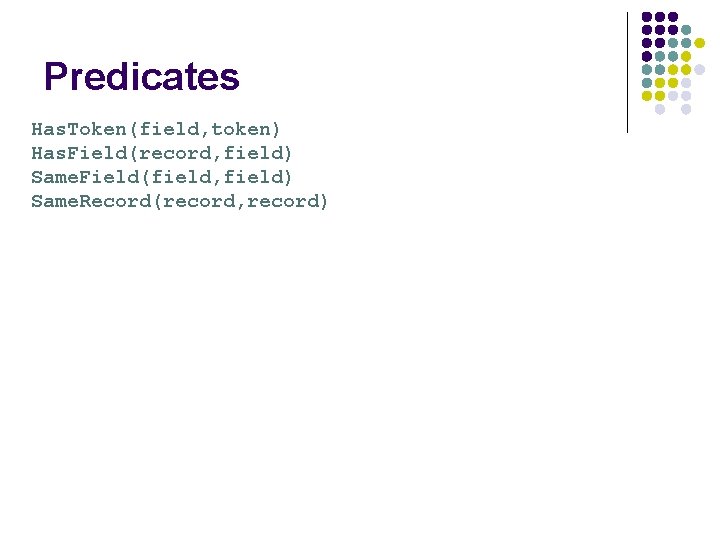

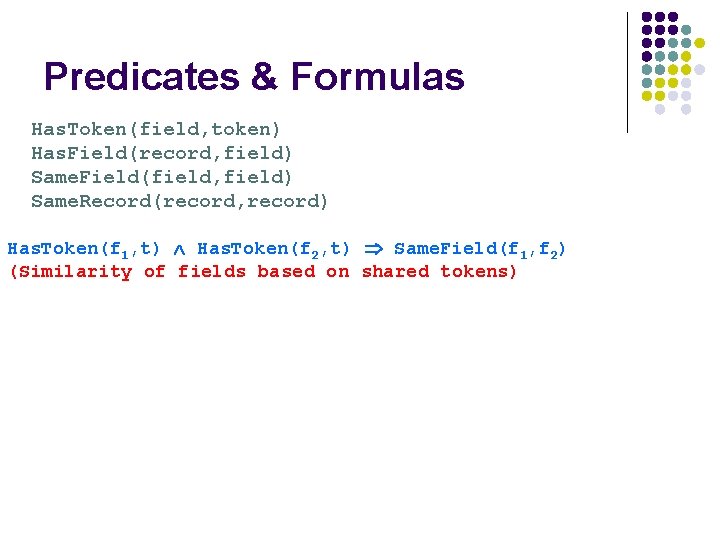

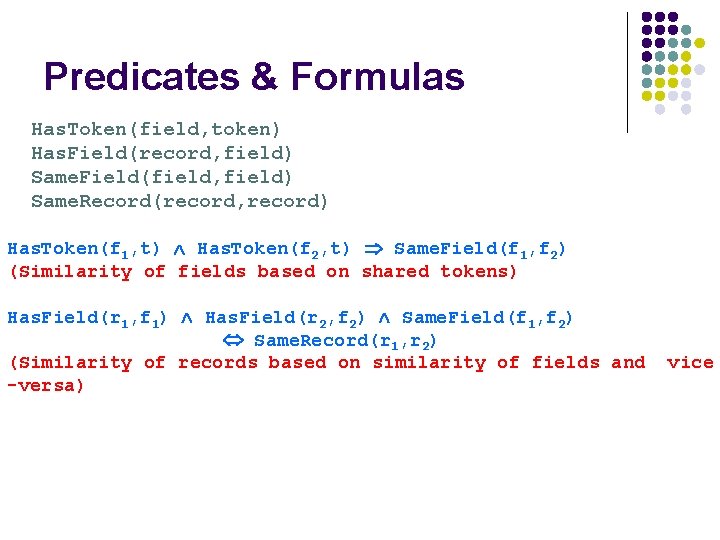

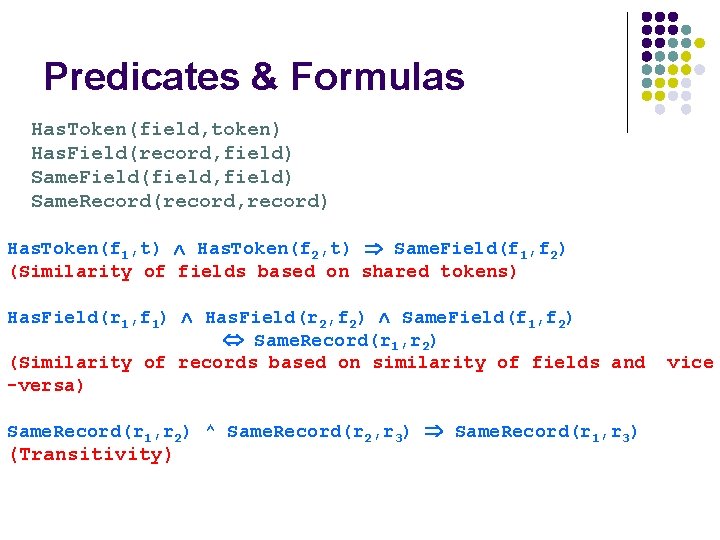

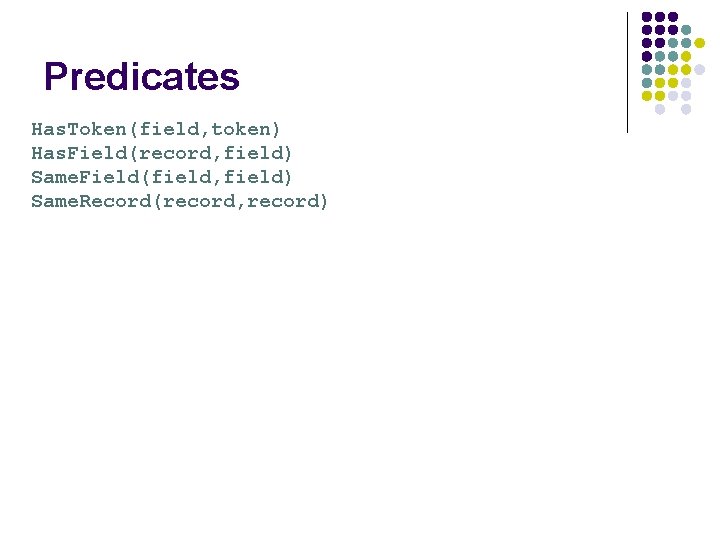

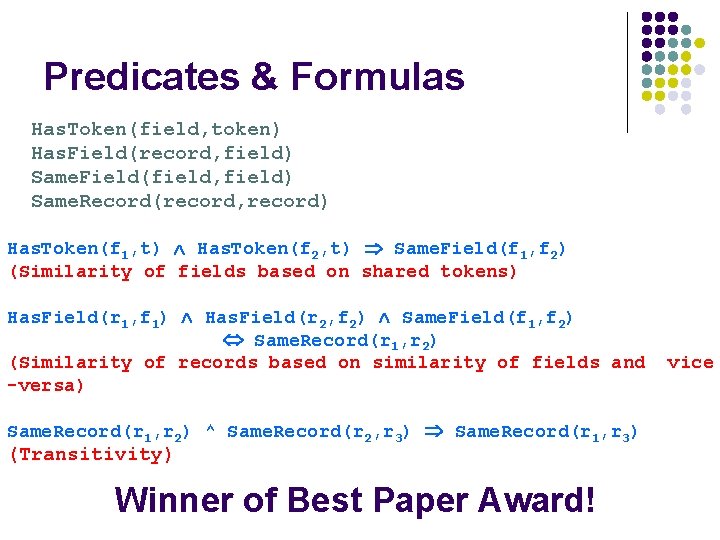

Predicates
- HasToken(field, token)
- HasField(record, field)
- SameField(field, field)
- SameRecord(record, record)

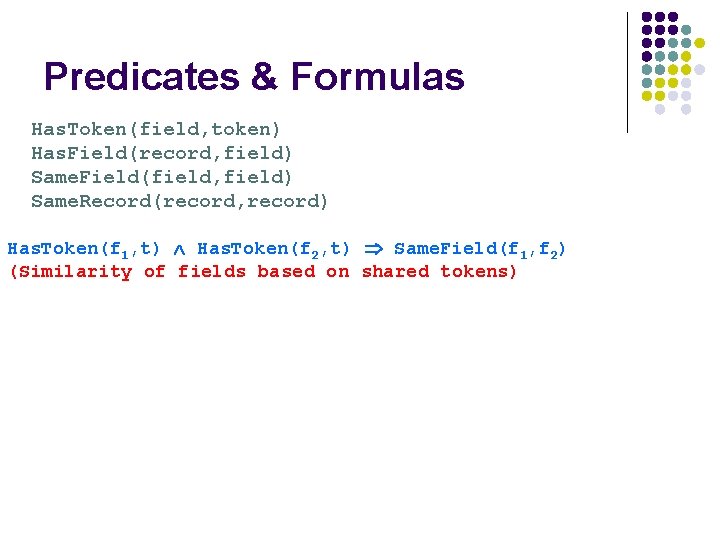

Predicates & Formulas
- Predicates: HasToken(field, token), HasField(record, field), SameField(field, field), SameRecord(record, record)
- HasToken(f1, t) ∧ HasToken(f2, t) ⇒ SameField(f1, f2) (similarity of fields based on shared tokens)

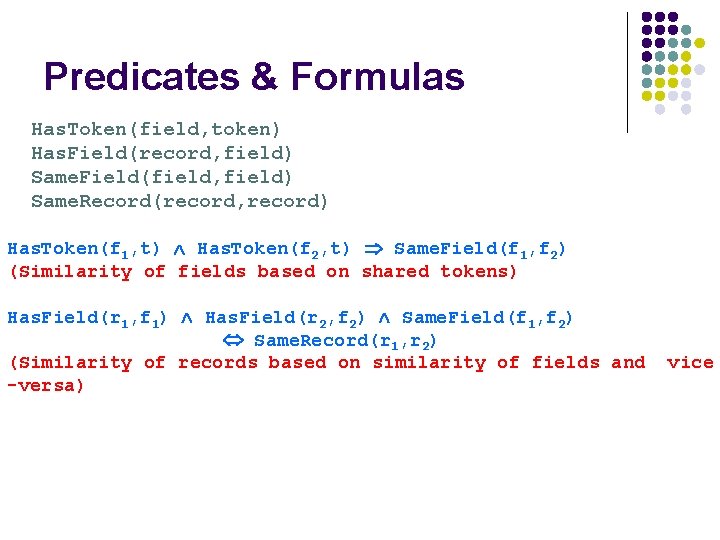

Predicates & Formulas
- Predicates: HasToken(field, token), HasField(record, field), SameField(field, field), SameRecord(record, record)
- HasToken(f1, t) ∧ HasToken(f2, t) ⇒ SameField(f1, f2) (similarity of fields based on shared tokens)
- HasField(r1, f1) ∧ HasField(r2, f2) ∧ SameField(f1, f2) ⇔ SameRecord(r1, r2) (similarity of records based on similarity of fields and vice versa)

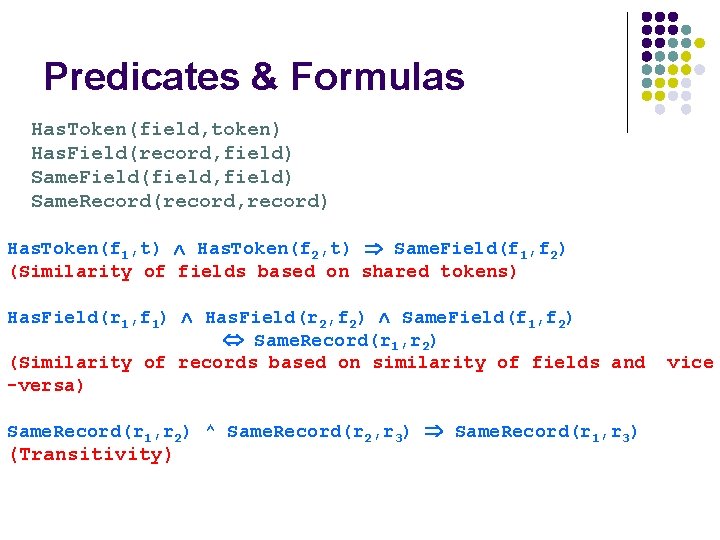

Predicates & Formulas
- Predicates: HasToken(field, token), HasField(record, field), SameField(field, field), SameRecord(record, record)
- HasToken(f1, t) ∧ HasToken(f2, t) ⇒ SameField(f1, f2) (similarity of fields based on shared tokens)
- HasField(r1, f1) ∧ HasField(r2, f2) ∧ SameField(f1, f2) ⇔ SameRecord(r1, r2) (similarity of records based on similarity of fields and vice versa)
- SameRecord(r1, r2) ∧ SameRecord(r2, r3) ⇒ SameRecord(r1, r3) (transitivity)

Predicates & Formulas
- Predicates: HasToken(field, token), HasField(record, field), SameField(field, field), SameRecord(record, record)
- HasToken(f1, t) ∧ HasToken(f2, t) ⇒ SameField(f1, f2) (similarity of fields based on shared tokens)
- HasField(r1, f1) ∧ HasField(r2, f2) ∧ SameField(f1, f2) ⇔ SameRecord(r1, r2) (similarity of records based on similarity of fields and vice versa)
- SameRecord(r1, r2) ∧ SameRecord(r2, r3) ⇒ SameRecord(r1, r3) (transitivity)
- Winner of Best Paper Award!
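A sketch of how the first formula scores a pair of fields, using the four titles from the citation records shown earlier, one shared illustrative weight and a simplistic tokenizer (both our assumptions). A grounding HasToken(f1, t) ∧ HasToken(f2, t) ⇒ SameField(f1, f2) whose body is true is satisfied only if SameField(f1, f2) is true, so, holding the other formulas fixed, each shared token adds the weight w to the log-odds of SameField(f1, f2). Note that a common word such as "in" counts as much as a rare one, which is one reason a practical model may want token-specific weights.

```python
from itertools import combinations

# Title fields of the four citation records on the slide (typos kept on purpose).
TITLES = {
    "t1": "Memory-Efficient Inference in Relational Domains",
    "t2": "Memory-efficent inference in relatonal domains",
    "t3": "Sound and Efficient Inference with Probabilistic and Deterministic Dependencies",
    "t4": "Efficent inference",
}


def tokens(text):
    """Lower-cased alphanumeric tokens (a deliberately simple tokenizer)."""
    return set("".join(ch if ch.isalnum() else " " for ch in text.lower()).split())


def shared_token_evidence(fields, weight):
    """Weight added to the log-odds of SameField(f1, f2) by the true-body groundings of
    HasToken(f1, t) ^ HasToken(f2, t) => SameField(f1, f2): one per shared token t."""
    toks = {name: tokens(text) for name, text in fields.items()}
    return {(a, b): weight * len(toks[a] & toks[b])
            for a, b in combinations(sorted(fields), 2)}


for pair, evidence in shared_token_evidence(TITLES, weight=1.0).items():
    print(pair, evidence)
```

Token overlap alone cannot link "Efficent inference" to the first title strongly; the record-level and transitivity formulas are what let evidence propagate between pairs.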

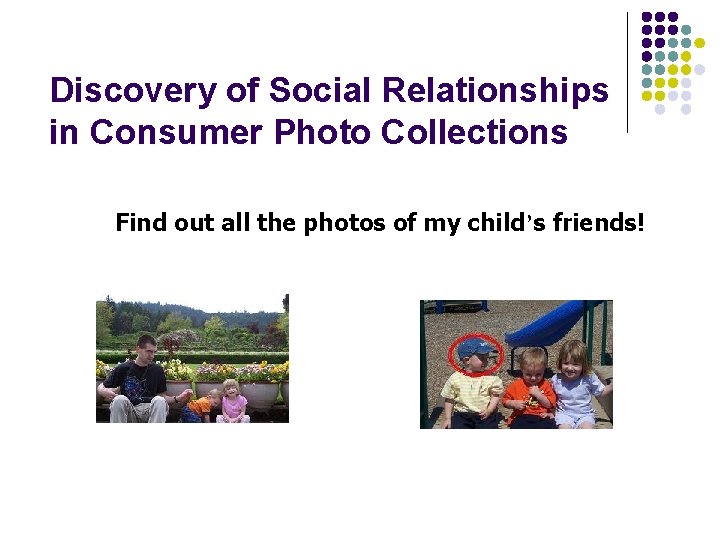

Discovery of Social Relationships in Consumer Photo Collections
- Find out all the photos of my child’s friends!

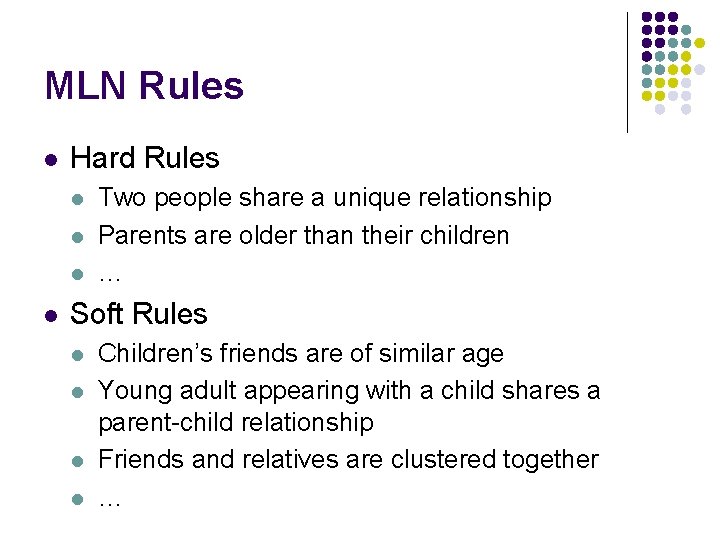

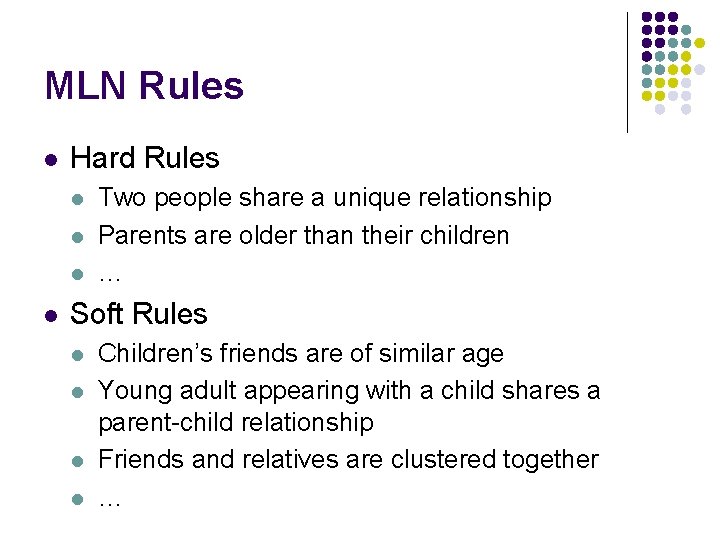

MLN Rules
- Hard rules
  - Two people share a unique relationship
  - Parents are older than their children
  - …
- Soft rules
  - Children’s friends are of similar age
  - Young adult appearing with a child shares a parent-child relationship
  - Friends and relatives are clustered together
  - …

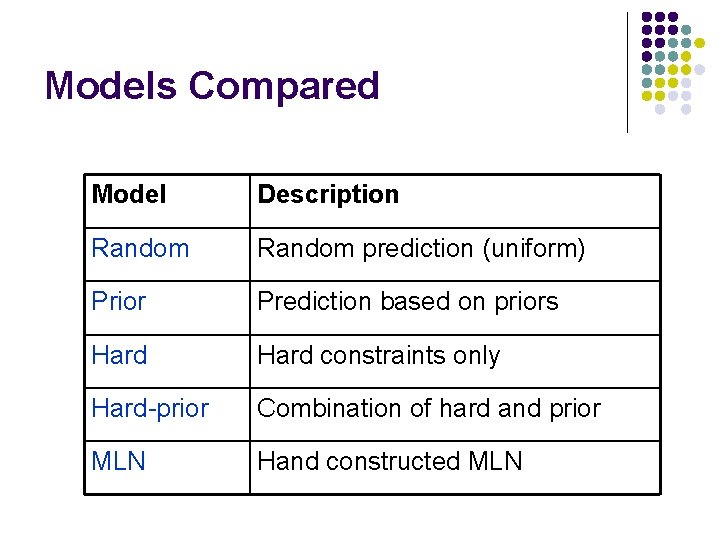

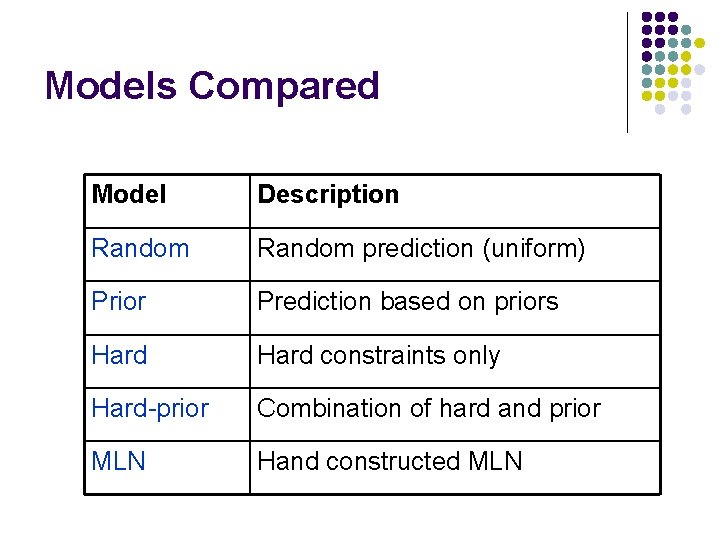

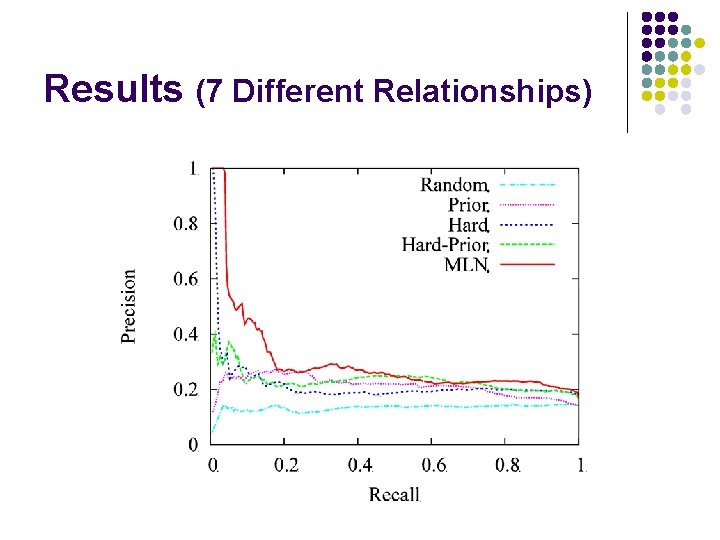

Models Compared

| Model | Description |
|---|---|
| Random | Random prediction (uniform) |
| Prior | Prediction based on priors |
| Hard | Hard constraints only |
| Hard-prior | Combination of hard and prior |
| MLN | Hand-constructed MLN |

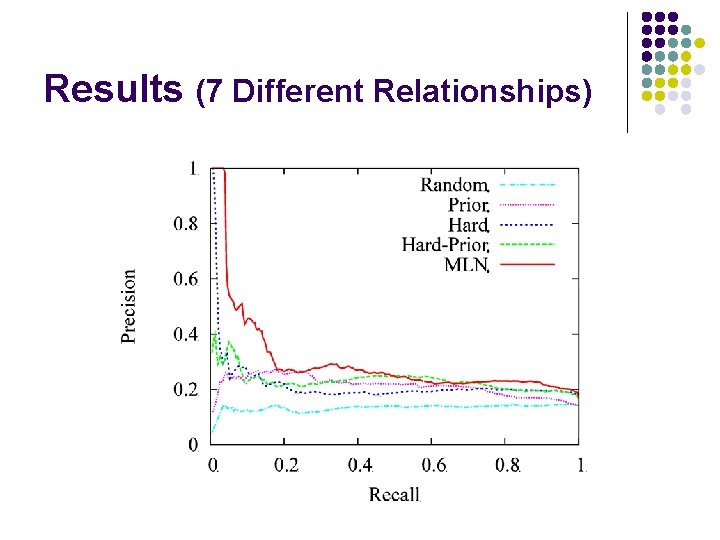

Results (7 Different Relationships)

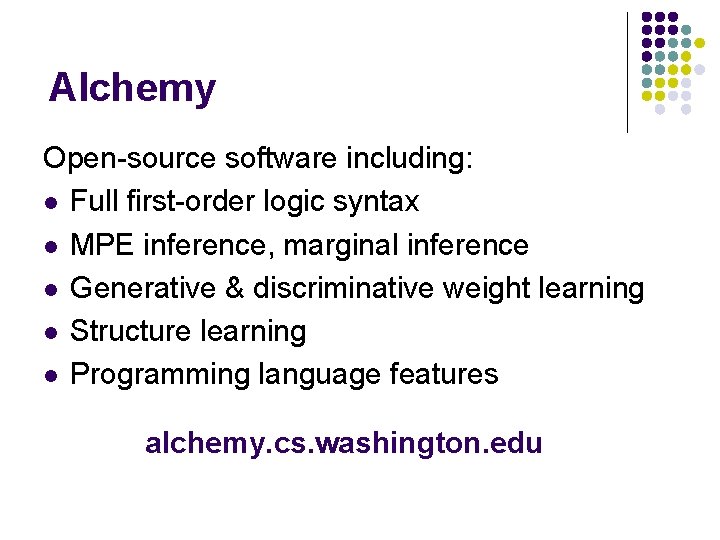

Alchemy

Open-source software including:
- Full first-order logic syntax
- MPE inference, marginal inference
- Generative & discriminative weight learning
- Structure learning
- Programming language features

alchemy.cs.washington.edu