Markov Decision Processes: Reactive Planning to Maximize Reward


Markov Decision Processes: Reactive Planning to Maximize Reward

Brian C. Williams, 16.410, November 8th, 2004

Slides adapted from: Manuela Veloso, Reid Simmons, & Tom Mitchell, CMU

Reading and Assignments

- Markov Decision Processes: read AIMA Chapter 17, Sections 1–3.
- This lecture is based on the development in "Machine Learning" by Tom Mitchell, Chapter 13: Reinforcement Learning.

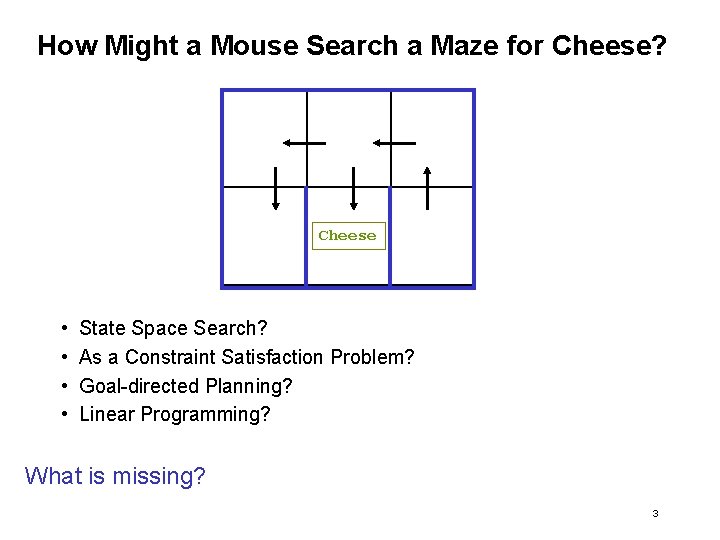

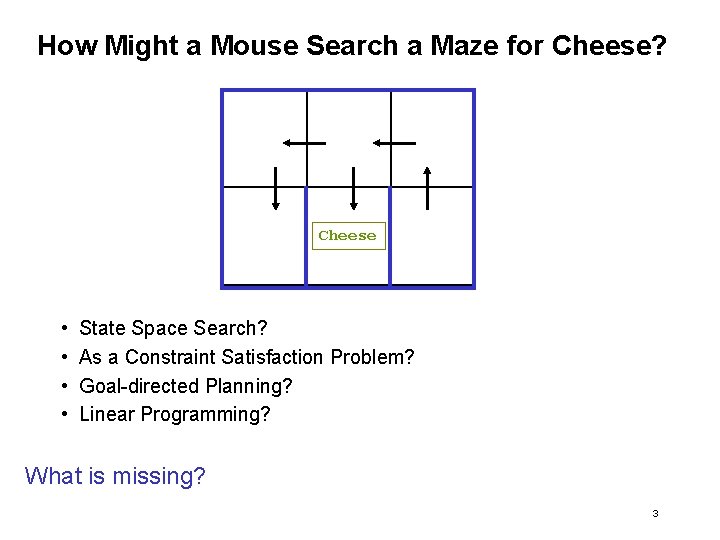

How Might a Mouse Search a Maze for Cheese?

- State space search?
- As a constraint satisfaction problem?
- Goal-directed planning?
- Linear programming?

What is missing?

Ideas in this lecture

- The problem is to accumulate rewards, rather than to achieve goal states.
- The approach is to generate reactive policies for how to act in all situations, rather than plans for a single starting situation.
- Policies fall out of value functions, which describe the greatest lifetime reward achievable at every state.
- Value functions are iteratively approximated.

![MDP Examples: TD-Gammon [Tesauro, 1995]](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-5.jpg)

MDP Examples: TD-Gammon [Tesauro, 1995]

Learning through reinforcement: learns to play Backgammon.

- States: board configurations ($10^{20}$)
- Actions: moves
- Rewards:
  - +100 if win
  - -100 if lose
  - 0 for all other states
- Trained by playing 1.5 million games against itself.
- Currently roughly equal to the best human player.

![MDP Examples: Aerial Robotics [Feron et al.]](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-6.jpg)

MDP Examples: Aerial Robotics [Feron et al.]

Computing a solution from a continuous model.

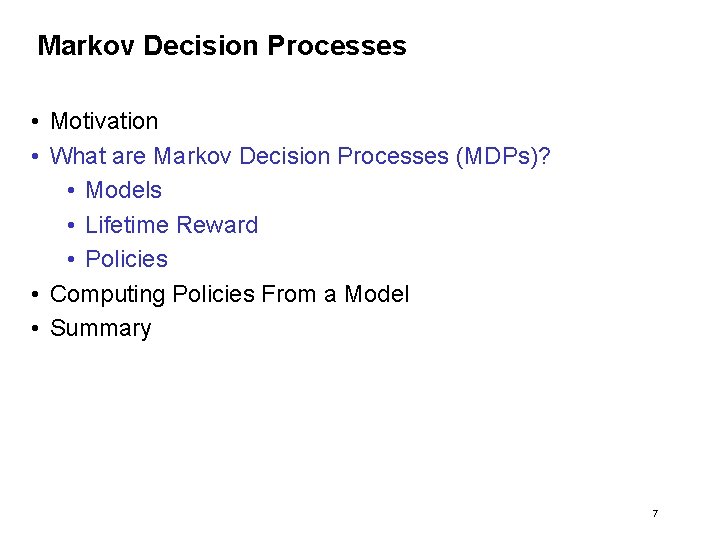

Markov Decision Processes

- Motivation
- What are Markov Decision Processes (MDPs)?
  - Models
  - Lifetime Reward
  - Policies
- Computing Policies From a Model
- Summary

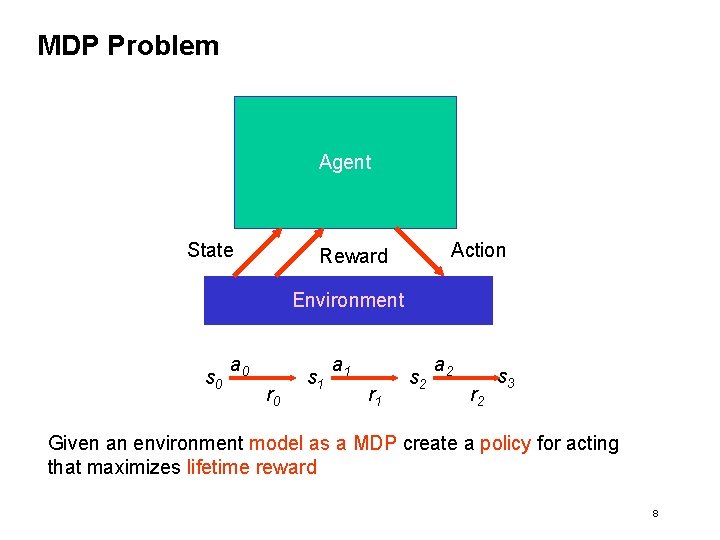

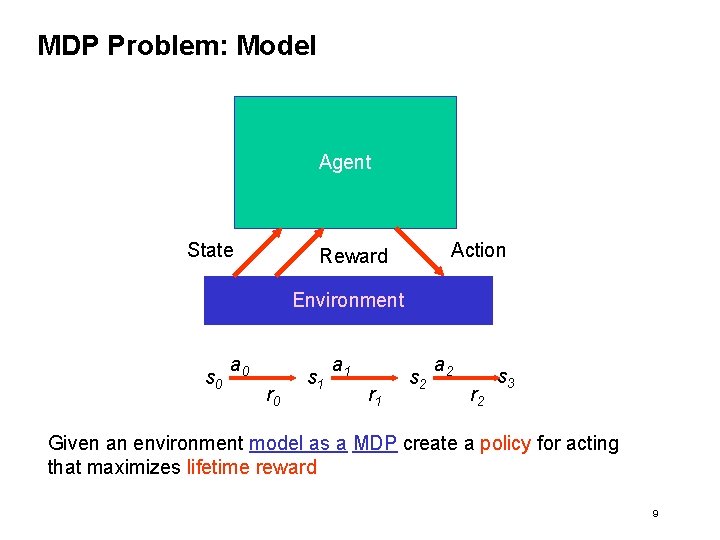

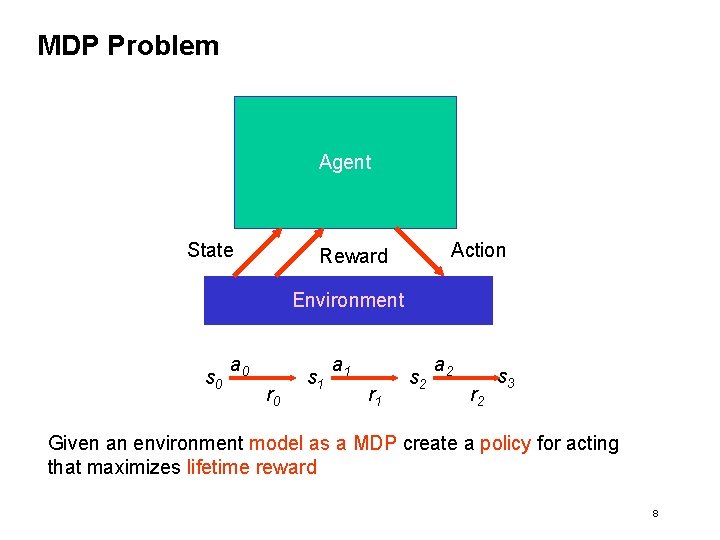

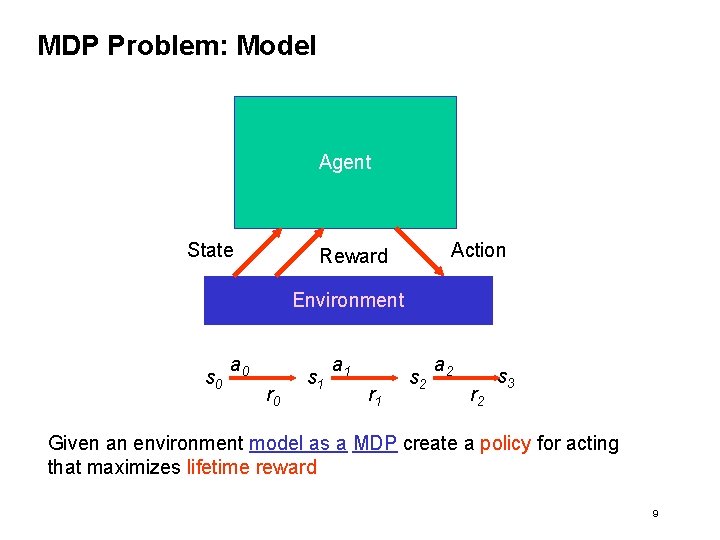

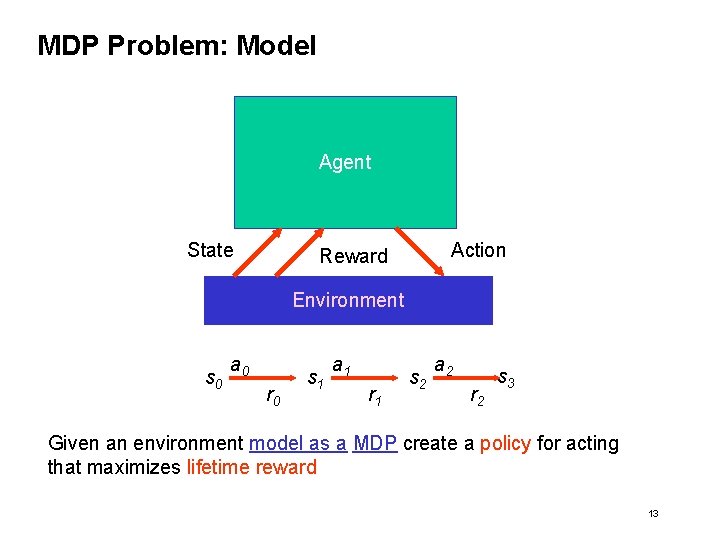

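MDP Problem

[Figure: agent-environment loop. The agent sends an action to the environment and receives the next state and a reward, producing $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$]

Given an environment model as an MDP, create a policy for acting that maximizes lifetime reward.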

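MDP Problem: Model

[Figure: agent-environment loop. The agent sends an action to the environment and receives the next state and a reward, producing $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$]

Given an environment model as an MDP, create a policy for acting that maximizes lifetime reward.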

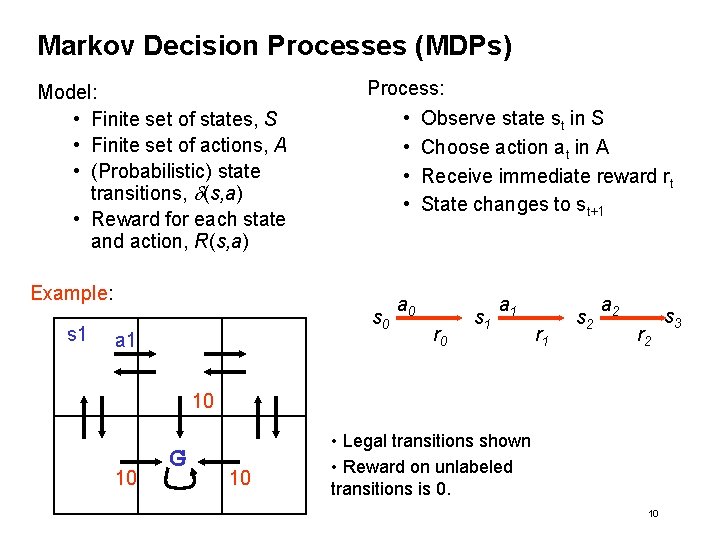

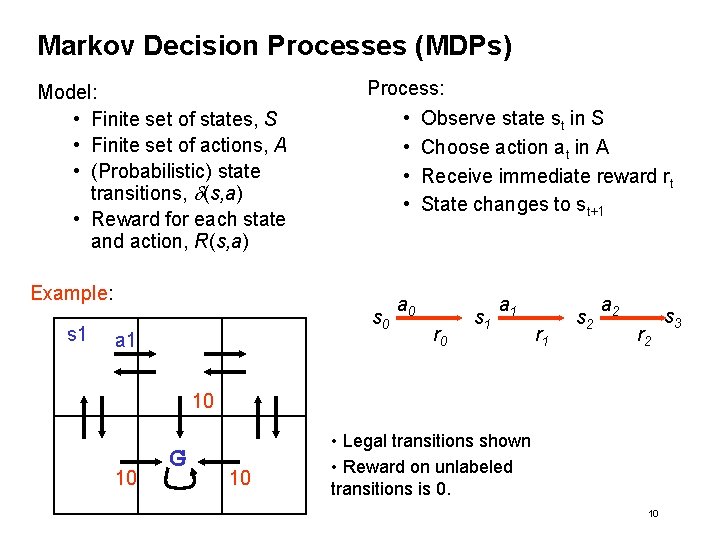

Markov Decision Processes (MDPs)

Model:

- Finite set of states, $S$
- Finite set of actions, $A$
- (Probabilistic) state transitions, $\delta(s, a)$
- Reward for each state and action, $r(s, a)$

Process:

- Observe state $s_t$ in $S$
- Choose action $a_t$ in $A$
- Receive immediate reward $r_t$
- State changes to $s_{t+1}$

Example: $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$

[Figure: grid world with goal state G; some transitions are labeled with reward 10]

- Legal transitions are shown.
- Reward on unlabeled transitions is 0.
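To make the model concrete, here is a minimal Python sketch of a deterministic MDP written down as plain data and functions. It assumes the 2×3 grid world from Mitchell's Chapter 13, which the later mapping and value-iteration slides appear to use: G sits in the top-right cell and is absorbing, the agent moves up, down, left or right between adjacent cells, and only moves into G earn a reward (100, as in the later slides, rather than the 10 on this slide's figure). `STATES`, `actions`, `delta` and `reward` are illustrative names, not taken from the slides.

```python
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    """Legal moves from s; G is absorbing, so it has none."""
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    """Deterministic transition function delta(s, a)."""
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    """r(s, a): 100 for a move into G, 0 for every other move."""
    return 100 if delta(s, a) == GOAL else 0


# Print every legal transition together with its reward.
for s in STATES:
    for a in actions(s):
        print(f"{s} --{a}--> {delta(s, a)}  r = {reward(s, a)}")
```

Keeping $\delta$ and $r$ as functions of $(s, a)$ mirrors the model definition directly; a stochastic version would return a distribution over next states instead.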

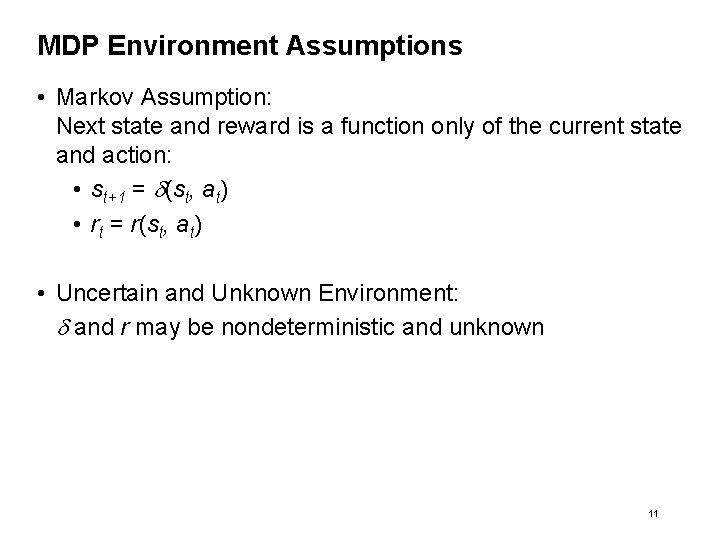

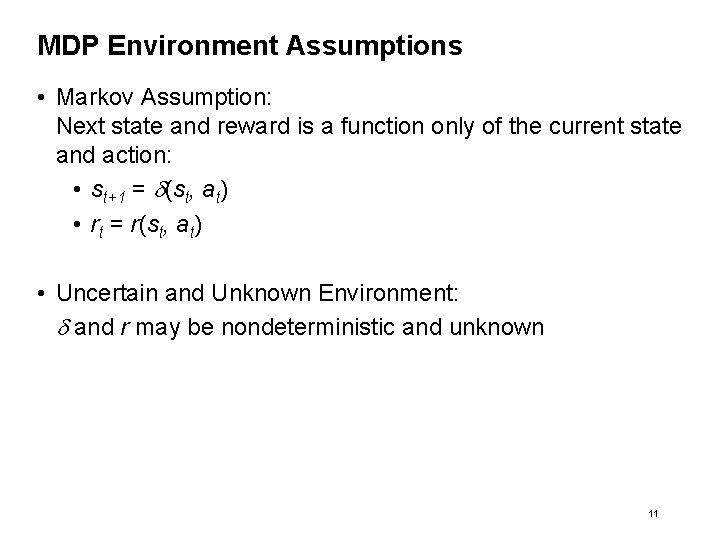

MDP Environment Assumptions

- Markov assumption: the next state and reward are a function only of the current state and action:
  - $s_{t+1} = \delta(s_t, a_t)$
  - $r_t = r(s_t, a_t)$
- Uncertain and unknown environment: $\delta$ and $r$ may be nondeterministic and unknown.
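Under the Markov assumption a trajectory can be generated one step at a time from nothing but the current state and action. The Python sketch below reuses an assumed 2×3 grid world (G in the top-right cell and absorbing, reward 100 for moves into G, 0 otherwise); `rollout` and the `detour` policy are hypothetical names for illustration. The environment here is deterministic, matching the case these slides focus on.

```python
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    """Legal moves from s; G is absorbing, so it has none."""
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def rollout(policy, s0, max_steps=20):
    """Generate (s_t, a_t, r_t) triples; each step looks only at (s_t, a_t)."""
    trajectory, s = [], s0
    for _ in range(max_steps):
        if not actions(s):                       # reached the absorbing goal
            break
        a = policy[s]                            # a_t depends on s_t only
        trajectory.append((s, a, reward(s, a)))  # r_t = r(s_t, a_t)
        s = delta(s, a)                          # s_{t+1} = delta(s_t, a_t)
    return trajectory, s


# A hypothetical policy that detours through the bottom row.
detour = {(0, 0): "down", (1, 0): "right", (1, 1): "right",
          (1, 2): "up", (0, 1): "right"}
trajectory, final = rollout(detour, (0, 0))
for s, a, r in trajectory:
    print(s, a, r)
```

Nothing in the loop consults earlier states, which is exactly what the Markov assumption permits.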

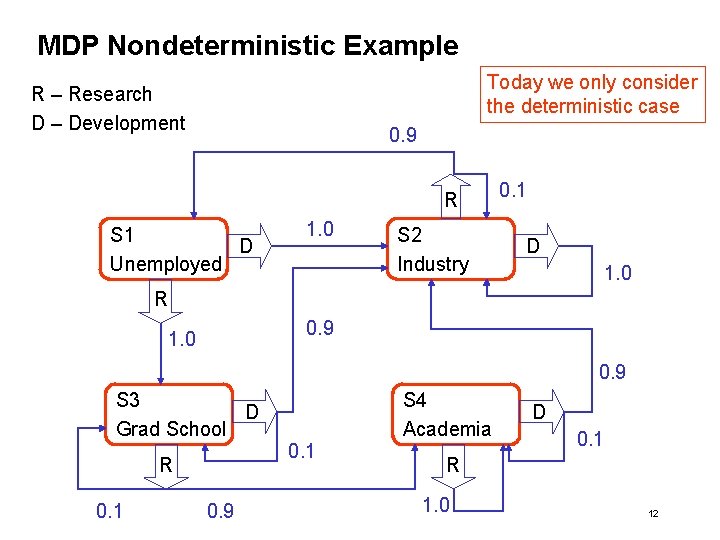

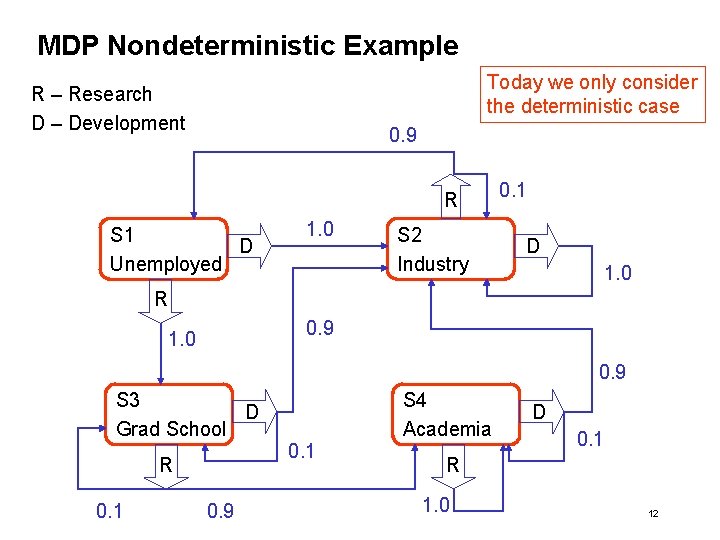

MDP Nondeterministic Example

Today we only consider the deterministic case.

[Figure: states S1 Unemployed, S2 Industry, S3 Grad School and S4 Academia; actions R (Research) and D (Development); transitions labeled with probabilities 0.9, 0.1 and 1.0]

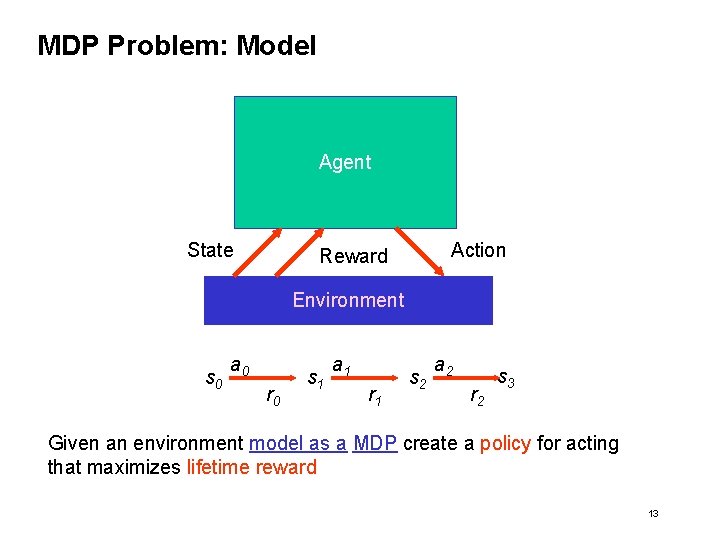


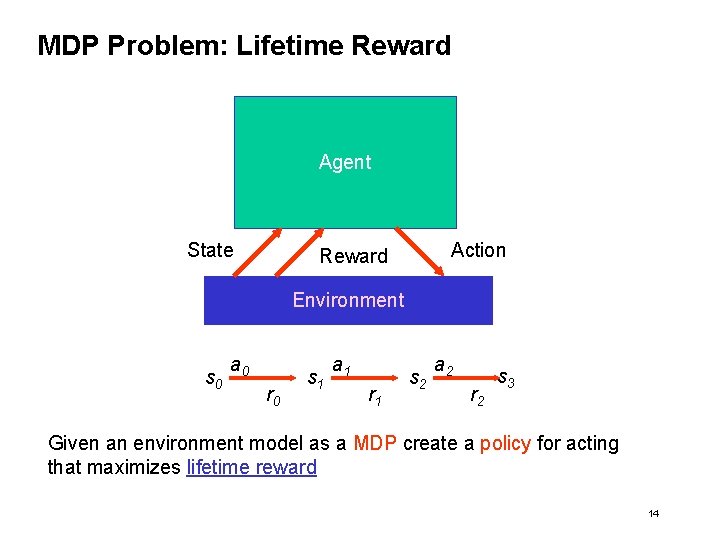

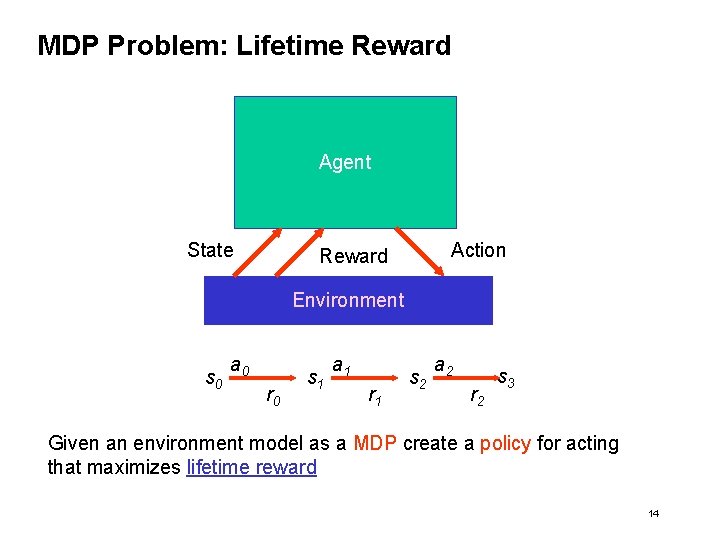

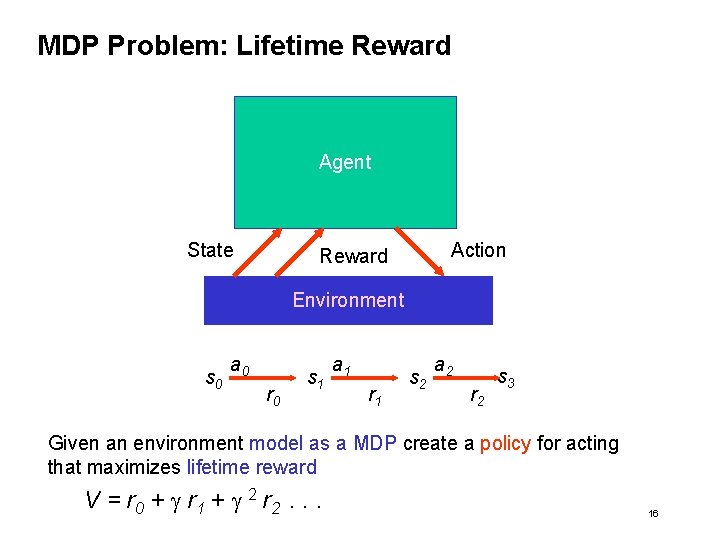

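MDP Problem: Lifetime Reward

[Figure: agent-environment loop. The agent sends an action to the environment and receives the next state and a reward, producing $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$]

Given an environment model as an MDP, create a policy for acting that maximizes lifetime reward.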

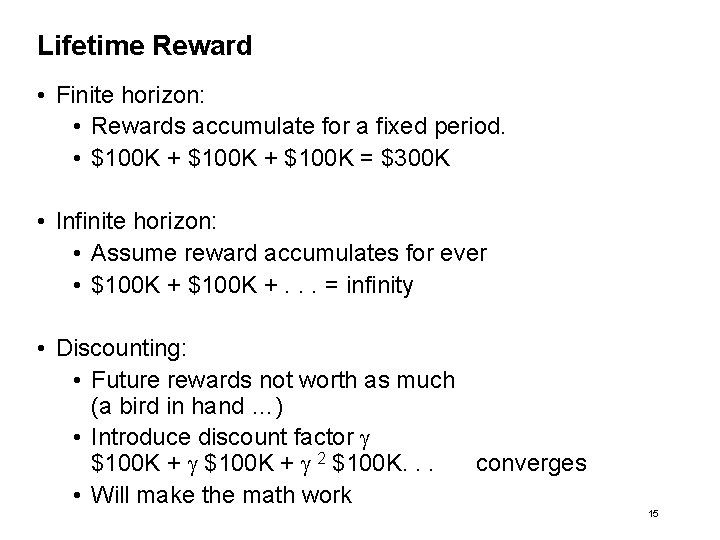

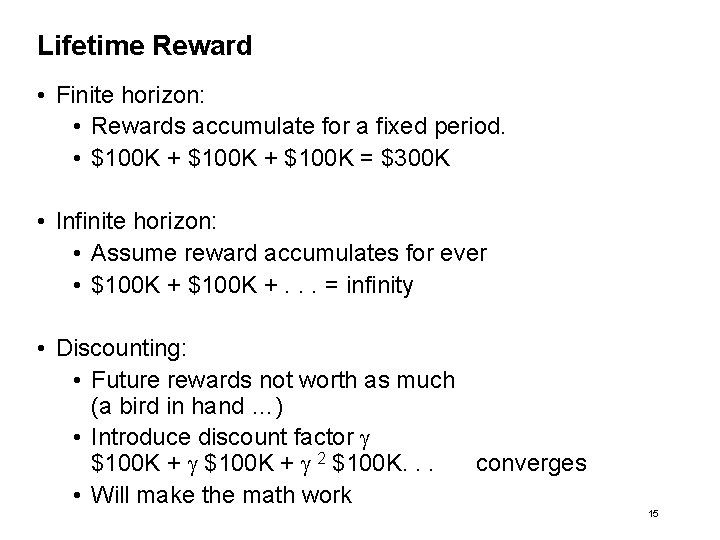

Lifetime Reward

- Finite horizon:
  - Rewards accumulate for a fixed period.
  - \$100K + \$100K + \$100K = \$300K
- Infinite horizon:
  - Assume reward accumulates forever.
  - \$100K + \$100K + ... = infinity
- Discounting:
  - Future rewards are not worth as much (a bird in the hand ...).
  - Introduce a discount factor $\gamma$: $100\text{K} + \gamma \cdot 100\text{K} + \gamma^2 \cdot 100\text{K} + \cdots$ (in dollars) converges, to $100\text{K}/(1-\gamma)$ for $0 \le \gamma < 1$.
  - This will make the math work.
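The three ways of totalling reward can be compared numerically. This small Python sketch uses a hypothetical helper `discounted_return`: with `gamma = 1.0` it is the plain finite- or infinite-horizon sum, and with `gamma = 0.9` the partial sums level off near the geometric-series limit $r/(1-\gamma)$.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**i * r_i for a reward sequence r_0, r_1, r_2, ..."""
    return sum(gamma ** i * r for i, r in enumerate(rewards))


# Finite horizon, no discounting: three periods of $100K.
print(discounted_return([100_000] * 3, gamma=1.0))

# Infinite horizon, undiscounted: partial sums grow without bound.
for n in (10, 100, 1000):
    print(n, discounted_return([100_000] * n, gamma=1.0))

# Discounted: partial sums approach 100_000 / (1 - 0.9) = 1_000_000.
for n in (10, 100, 1000):
    print(n, round(discounted_return([100_000] * n, gamma=0.9), 2))
```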

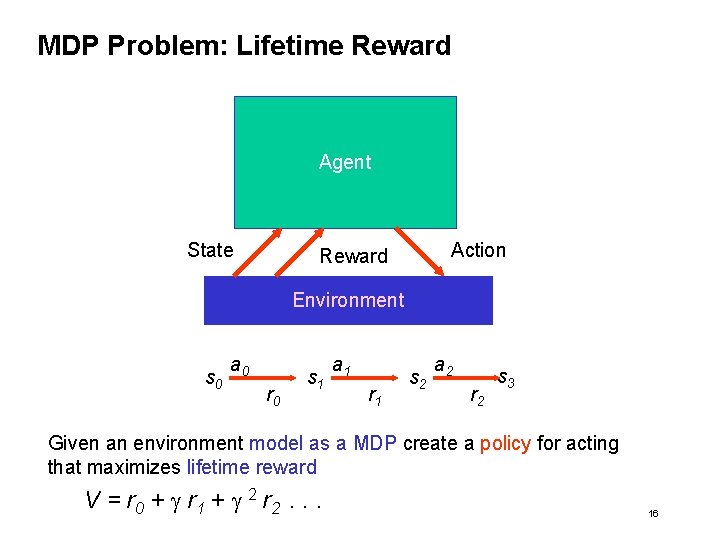

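MDP Problem: Lifetime Reward

[Figure: agent-environment loop. The agent sends an action to the environment and receives the next state and a reward, producing $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$]

Given an environment model as an MDP, create a policy for acting that maximizes lifetime reward:

$V = r_0 + \gamma r_1 + \gamma^2 r_2 + \cdots$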

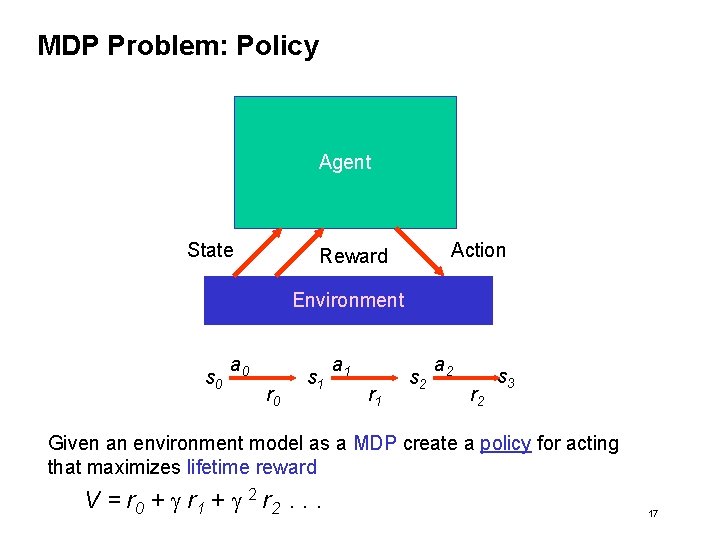

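MDP Problem: Policy

[Figure: agent-environment loop. The agent sends an action to the environment and receives the next state and a reward, producing $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$]

Given an environment model as an MDP, create a policy for acting that maximizes lifetime reward:

$V = r_0 + \gamma r_1 + \gamma^2 r_2 + \cdots$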

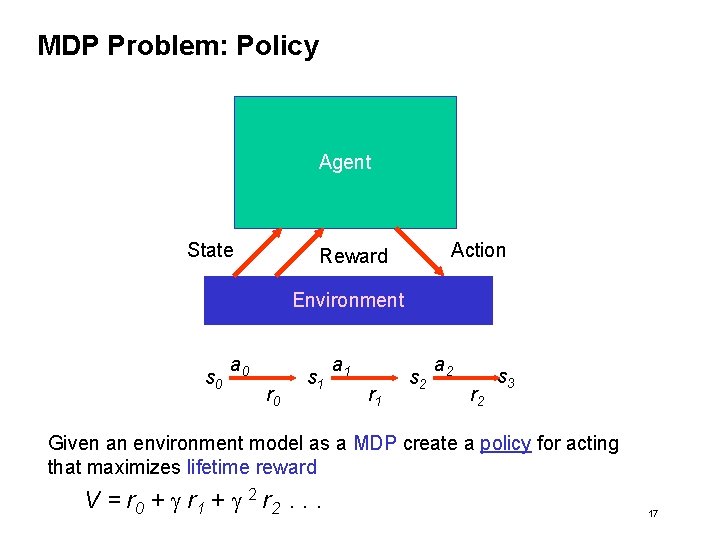

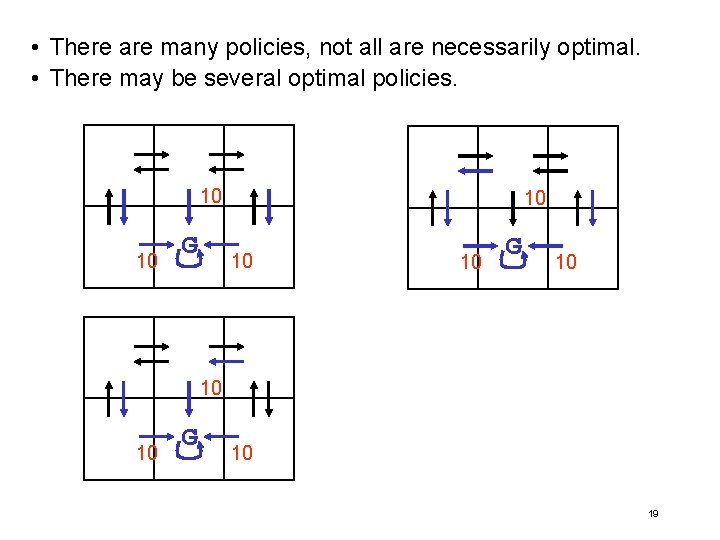

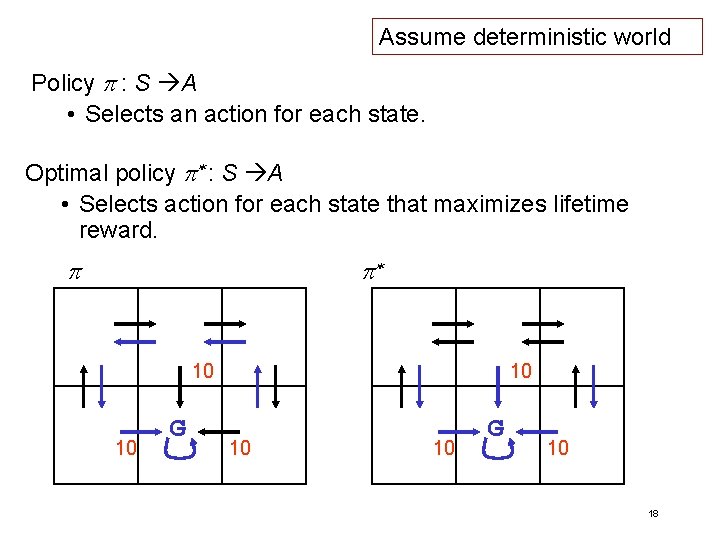

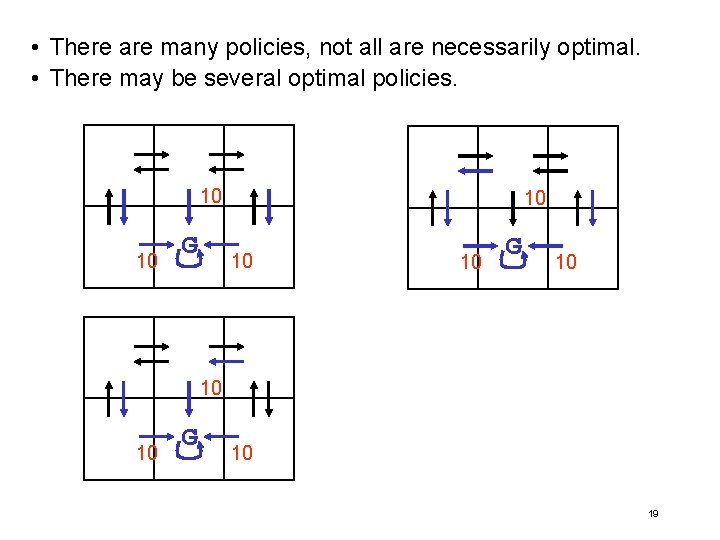

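Assume a deterministic world.

- Policy $\pi: S \to A$
  - Selects an action for each state.
- Optimal policy $\pi^*: S \to A$
  - Selects the action for each state that maximizes lifetime reward.

[Figure: a policy $\pi$ and an optimal policy $\pi^*$ drawn on the grid world with goal G; some transitions are labeled with reward 10]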

- There are many policies; not all are necessarily optimal.
- There may be several optimal policies.

[Figure: several policies drawn on the grid world with goal G]
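To see both points on a small example, the Python sketch below enumerates every deterministic policy in an assumed 2×3 grid world ($\gamma = 0.9$, G in the top-right cell and absorbing, reward 100 for moves into G), evaluates each one, and counts those that achieve the best value in every state. `evaluate` runs a fixed number of iterative sweeps, an assumption that is ample for this tiny discounted example; all names are hypothetical.

```python
from itertools import product

GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def evaluate(policy, sweeps=500):
    """Iterative evaluation: V(s) <- r(s, pi(s)) + gamma * V(delta(s, pi(s)))."""
    V = {s: 0.0 for s in STATES}
    for _ in range(sweeps):
        V = {s: reward(s, policy[s]) + GAMMA * V[delta(s, policy[s])]
             if actions(s) else 0.0            # G is absorbing: value 0
             for s in STATES}
    return V


# Every deterministic policy: one legal action per non-goal state.
decision_states = [s for s in STATES if actions(s)]
policies = [dict(zip(decision_states, choice))
            for choice in product(*(actions(s) for s in decision_states))]
values = [evaluate(p) for p in policies]

# Best achievable value per state, and the policies that reach it everywhere.
best = {s: max(v[s] for v in values) for s in STATES}
optimal = [p for p, v in zip(policies, values)
           if all(abs(v[s] - best[s]) < 1e-6 for s in STATES)]
print(len(policies), "policies,", len(optimal), "optimal")  # 72 policies, 4 optimal
```

The four optimal policies differ only in states where two moves tie (for example, going up or right from the bottom-middle cell).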

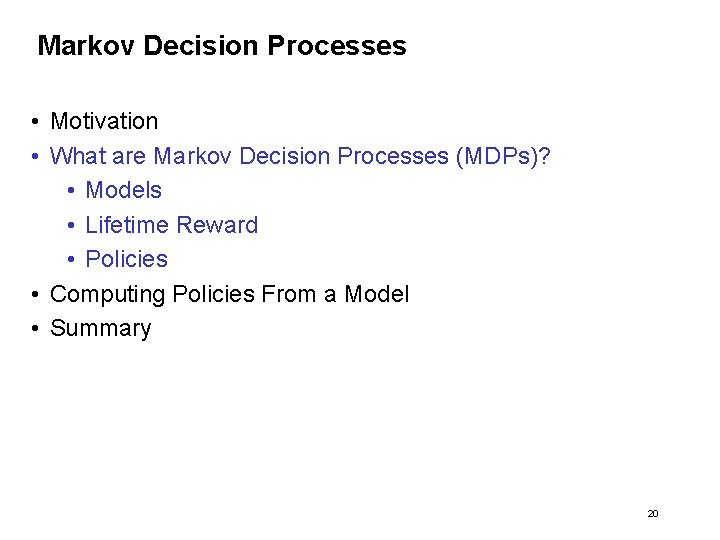


Markov Decision Processes

- Motivation
- Markov Decision Processes
- Computing Policies From a Model
  - Value Functions
  - Mapping Value Functions to Policies
  - Computing Value Functions through Value Iteration
  - An Alternative: Policy Iteration (appendix)
- Summary

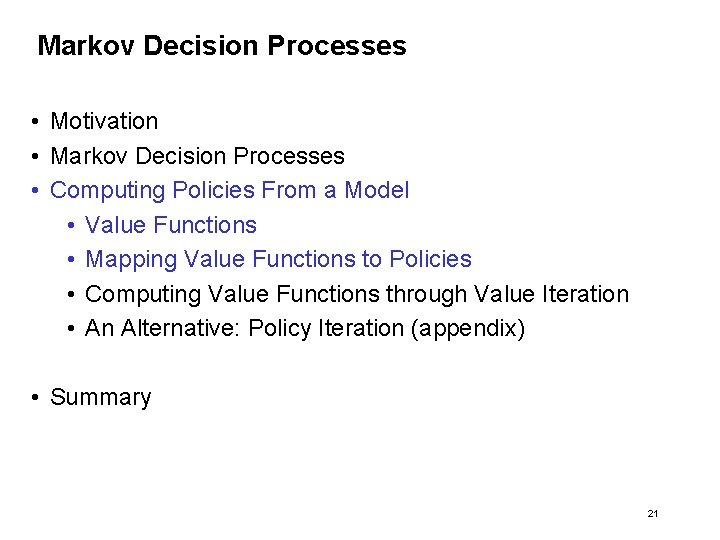

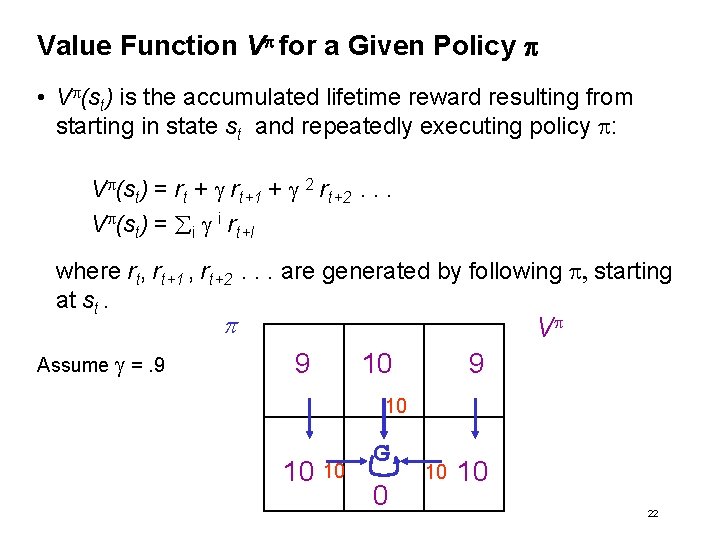

Value Function $V^\pi$ for a Given Policy $\pi$

- $V^\pi(s_t)$ is the accumulated lifetime reward resulting from starting in state $s_t$ and repeatedly executing policy $\pi$:

  $V^\pi(s_t) = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \cdots = \sum_{i=0}^{\infty} \gamma^i r_{t+i}$

  where $r_t, r_{t+1}, r_{t+2}, \ldots$ are generated by following $\pi$, starting at $s_t$.

[Figure: a policy $\pi$ on the grid world with goal G and its values $V^\pi$, assuming $\gamma = 0.9$]
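The sum can be computed directly by following $\pi$ from $s_t$ and accumulating discounted rewards. This Python sketch assumes a 2×3 grid world with G in the top-right cell, reward 100 for moves into G (not the reward-10 figure on this slide) and $\gamma = 0.9$; it truncates the infinite sum after a hypothetical `horizon` of 200 steps, beyond which $\gamma^i$ is negligible. `value_of_policy`, `direct` and `detour` are illustrative names.

```python
GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def value_of_policy(policy, s, horizon=200):
    """V^pi(s) = r_t + gamma r_{t+1} + gamma^2 r_{t+2} + ..., cut off after `horizon` steps."""
    total, discount = 0.0, 1.0
    for _ in range(horizon):
        if not actions(s):         # absorbing goal: every later reward is 0
            break
        a = policy[s]
        total += discount * reward(s, a)
        discount *= GAMMA
        s = delta(s, a)
    return total


direct = {(0, 0): "right", (0, 1): "right", (1, 0): "up",
          (1, 1): "up", (1, 2): "up"}
detour = {(0, 0): "down", (1, 0): "right", (1, 1): "right",
          (1, 2): "up", (0, 1): "right"}
print(value_of_policy(direct, (0, 0)))   # 0 + 0.9 * 100
print(value_of_policy(detour, (0, 0)))   # 0 + 0.9*0 + 0.81*0 + 0.729*100
```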

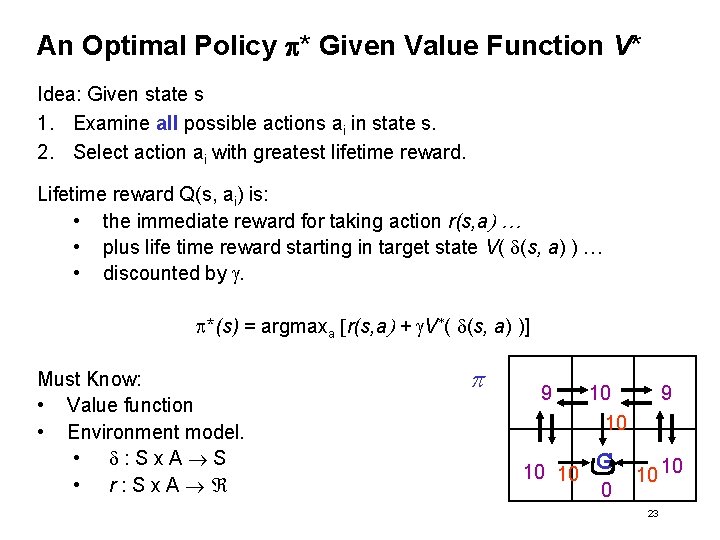

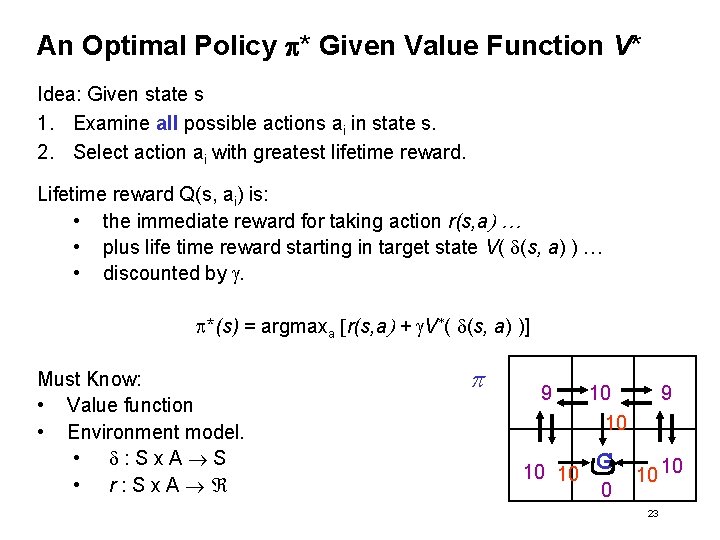

An Optimal Policy $\pi^*$ Given Value Function $V^*$

Idea: given state $s$,

1. Examine all possible actions $a_i$ in state $s$.
2. Select the action $a_i$ with the greatest lifetime reward.

The lifetime reward $Q(s, a_i)$ is:

- the immediate reward for taking the action, $r(s, a)$ ...
- plus the lifetime reward starting in the target state, $V(\delta(s, a))$, discounted by $\gamma$.

$\pi^*(s) = \arg\max_a \left[ r(s, a) + \gamma V^*(\delta(s, a)) \right]$

Must know:

- the value function;
- the environment model:
  - $\delta: S \times A \to S$
  - $r: S \times A \to \mathbb{R}$

[Figure: grid world with goal G annotated with its values]
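One-step lookahead needs both $V^*$ and the model ($\delta$, $r$), which is exactly what this Python sketch takes as input. It assumes a 2×3 grid world with G in the top-right cell (absorbing, reward 100 for moves into G, $\gamma = 0.9$) and the $V^*$ values 90, 100, 0 (G) / 81, 90, 100 that appear in the mapping and value-iteration examples; `q_value` and `greedy_policy` are hypothetical helper names.

```python
GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


# Assumed V* for this grid (top row, then bottom row).
V_STAR = {(0, 0): 90, (0, 1): 100, (0, 2): 0,
          (1, 0): 81, (1, 1): 90, (1, 2): 100}


def q_value(V, s, a):
    """Q(s, a) = r(s, a) + gamma * V(delta(s, a))."""
    return reward(s, a) + GAMMA * V[delta(s, a)]


def greedy_policy(V):
    """pi(s) = argmax_a Q(s, a); ties go to the first action listed."""
    return {s: max(actions(s), key=lambda a: q_value(V, s, a))
            for s in STATES if actions(s)}


pi_star = greedy_policy(V_STAR)
for s, a in sorted(pi_star.items()):
    print(s, a, round(q_value(V_STAR, s, a), 1))
```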

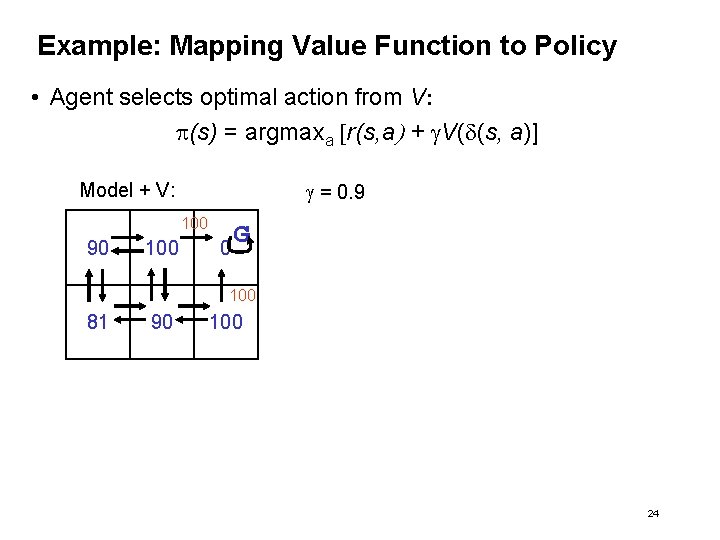

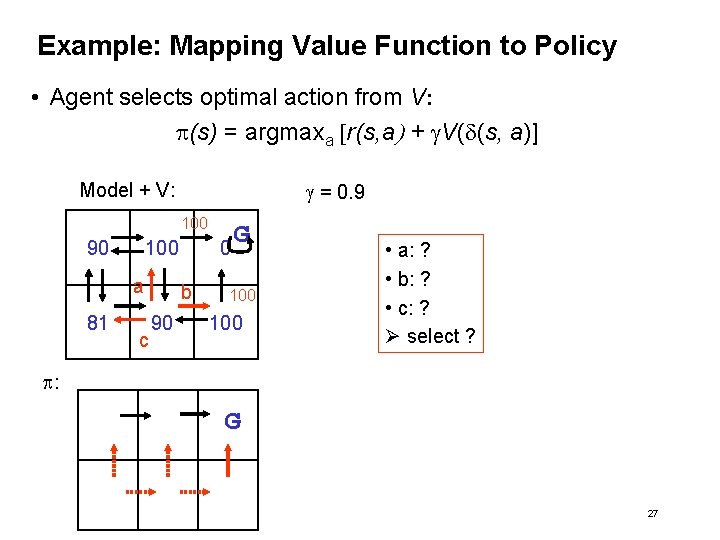

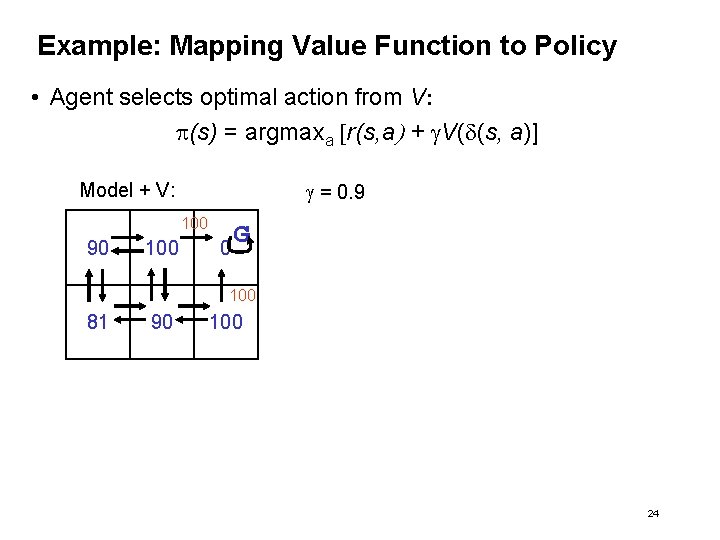

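Example: Mapping Value Function to Policy

- The agent selects the optimal action from $V$: $\pi(s) = \arg\max_a \left[ r(s, a) + \gamma V(\delta(s, a)) \right]$, with $\gamma = 0.9$.

[Figure: model + $V$ for the grid world with goal G; transitions into G have reward 100; values 90, 100, 0 (G) in the top row and 81, 90, 100 in the bottom row]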

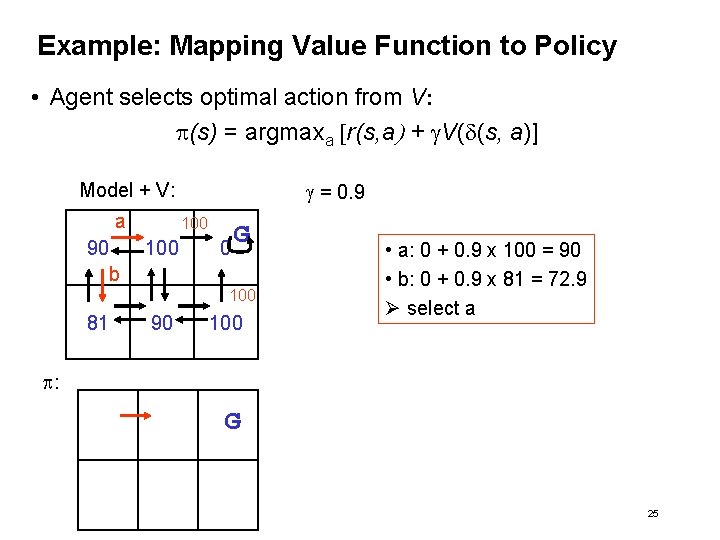

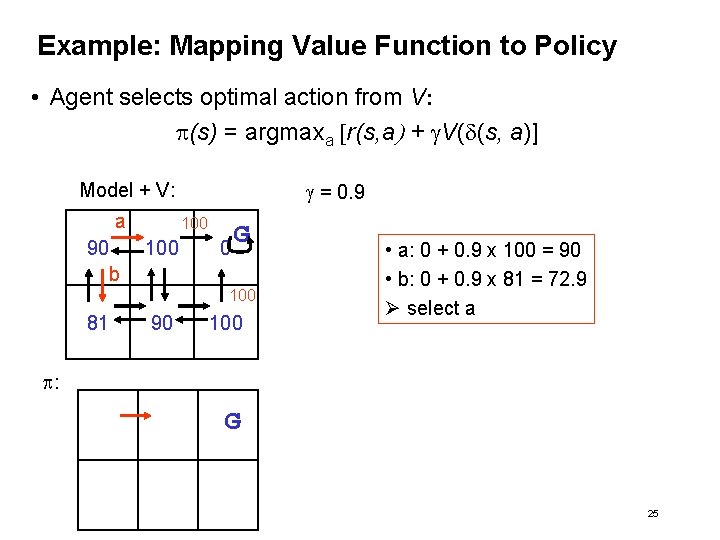

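Example: Mapping Value Function to Policy

- The agent selects the optimal action from $V$: $\pi(s) = \arg\max_a \left[ r(s, a) + \gamma V(\delta(s, a)) \right]$, with $\gamma = 0.9$.

[Figure: model + $V$; from the highlighted state, action a leads to a state with value 100 and action b to a state with value 81]

- a: $0 + 0.9 \times 100 = 90$
- b: $0 + 0.9 \times 81 = 72.9$
- Select a.

[Figure: the resulting policy $\pi$ toward G]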

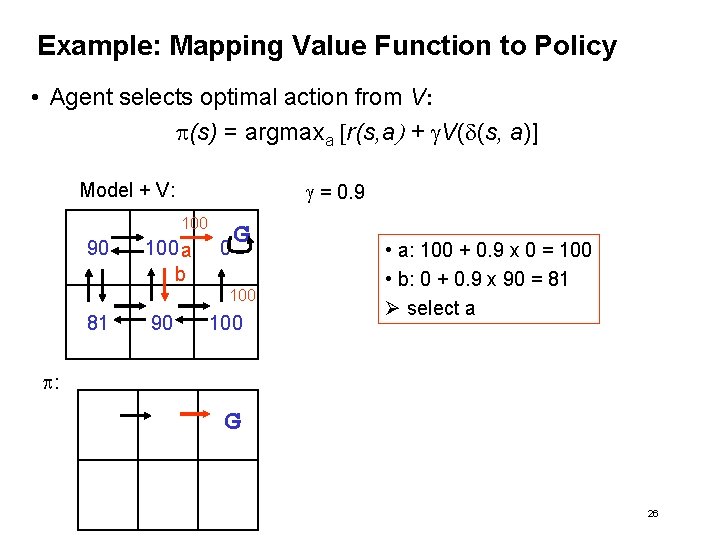

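Example: Mapping Value Function to Policy

- The agent selects the optimal action from $V$: $\pi(s) = \arg\max_a \left[ r(s, a) + \gamma V(\delta(s, a)) \right]$, with $\gamma = 0.9$.

[Figure: model + $V$; action a enters G (reward 100, $V(G) = 0$) and action b leads to a state with value 90]

- a: $100 + 0.9 \times 0 = 100$
- b: $0 + 0.9 \times 90 = 81$
- Select a.

[Figure: the resulting policy $\pi$ toward G]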
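The arithmetic in the two worked examples reduces to comparing $r + \gamma V(\text{next})$ across actions. This tiny Python sketch (a hypothetical `lookahead` helper, $\gamma = 0.9$) takes each action's immediate reward and successor value straight from the two slides and reproduces both choices; it needs no model beyond those numbers.

```python
def lookahead(options, gamma=0.9):
    """options maps each action to (immediate reward, V of its target state)."""
    q = {a: r + gamma * v_next for a, (r, v_next) in options.items()}
    return max(q, key=q.get), q


# First example: a reaches a state worth 100, b a state worth 81.
best1, q1 = lookahead({"a": (0, 100), "b": (0, 81)})
# Second example: a enters G (reward 100, V(G) = 0), b reaches a state worth 90.
best2, q2 = lookahead({"a": (100, 0), "b": (0, 90)})
print(best1, q1)   # a is chosen (90 vs 72.9)
print(best2, q2)   # a is chosen (100 vs 81)
```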

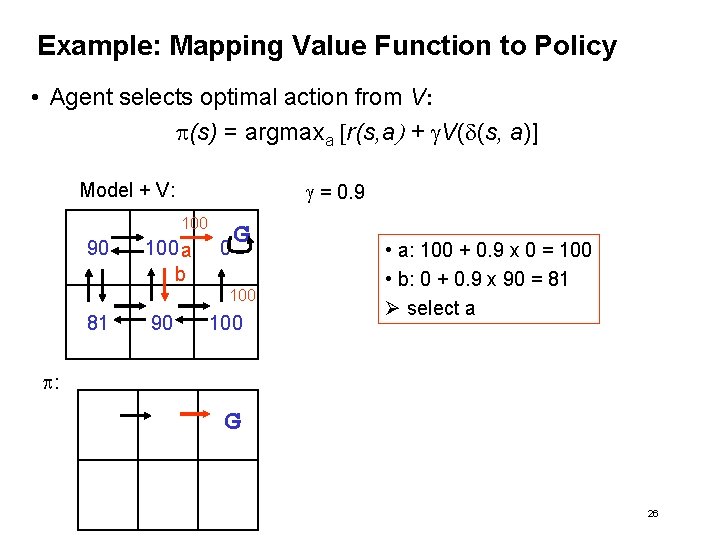

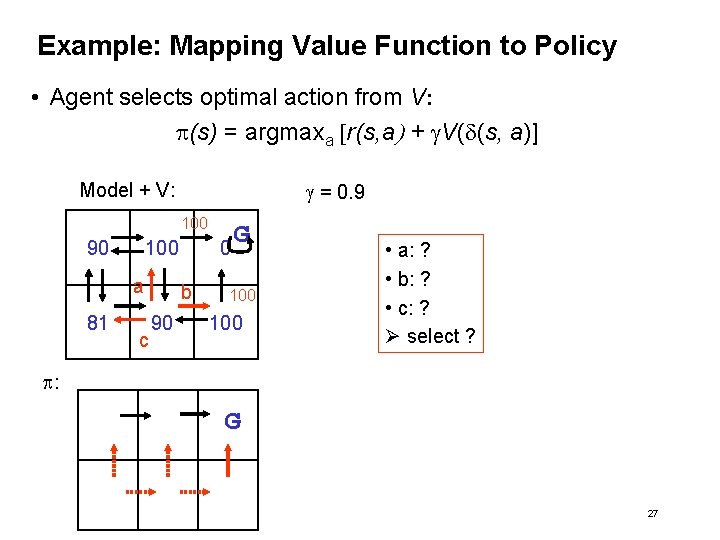

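Example: Mapping Value Function to Policy

- The agent selects the optimal action from $V$: $\pi(s) = \arg\max_a \left[ r(s, a) + \gamma V(\delta(s, a)) \right]$, with $\gamma = 0.9$.

[Figure: model + $V$; the highlighted state now has three actions, a, b and c]

- a: ?
- b: ?
- c: ?
- Select ?

[Figure: the resulting policy $\pi$ toward G]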

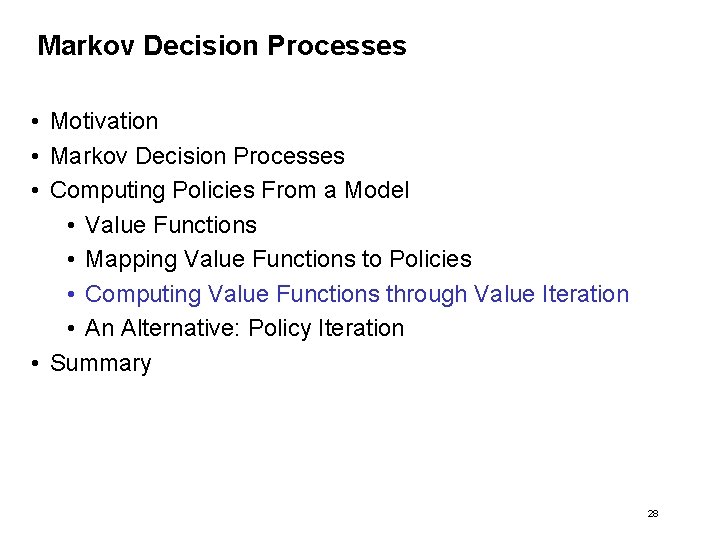

Markov Decision Processes

- Motivation
- Markov Decision Processes
- Computing Policies From a Model
  - Value Functions
  - Mapping Value Functions to Policies
  - Computing Value Functions through Value Iteration
  - An Alternative: Policy Iteration
- Summary

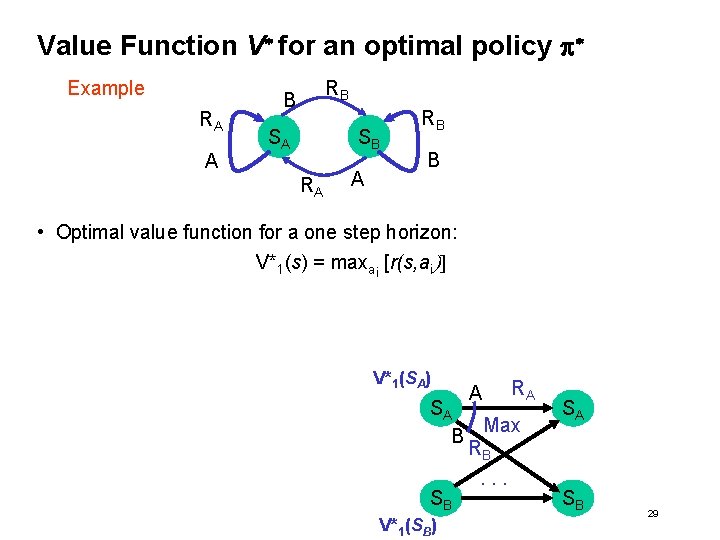

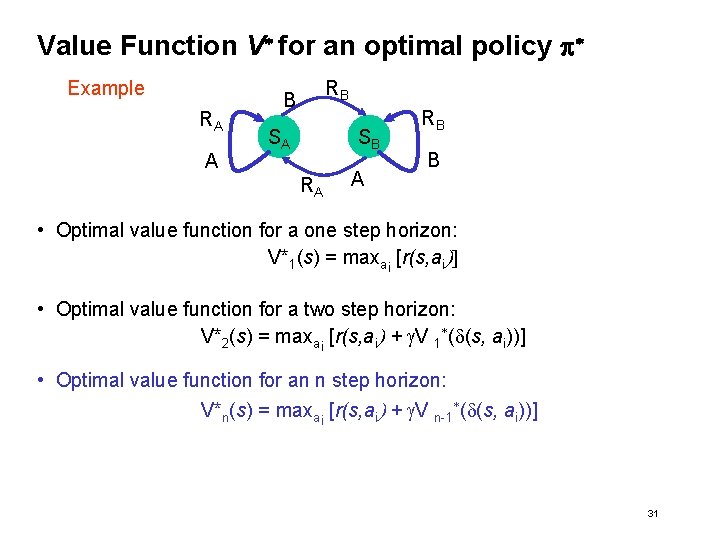

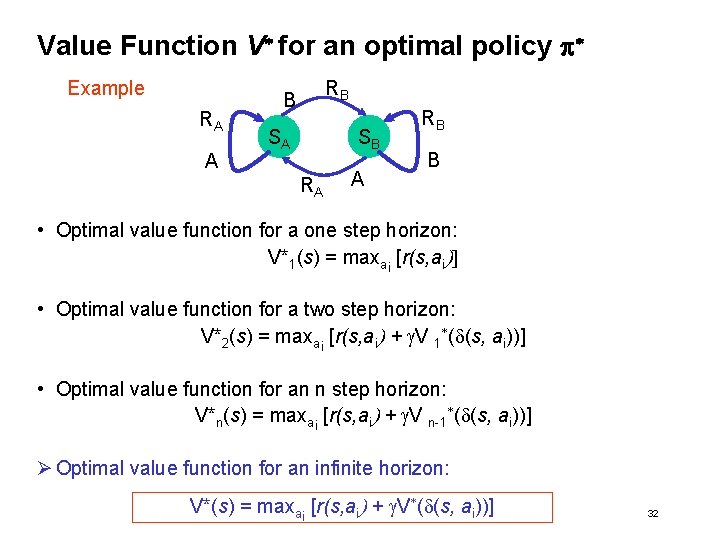

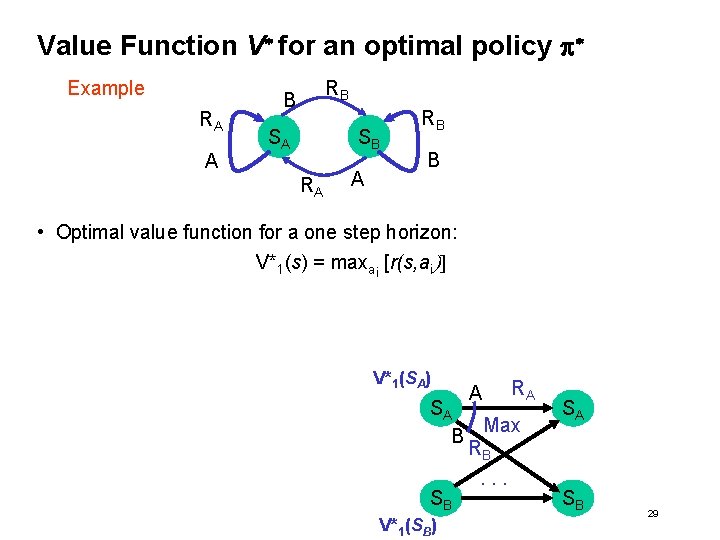

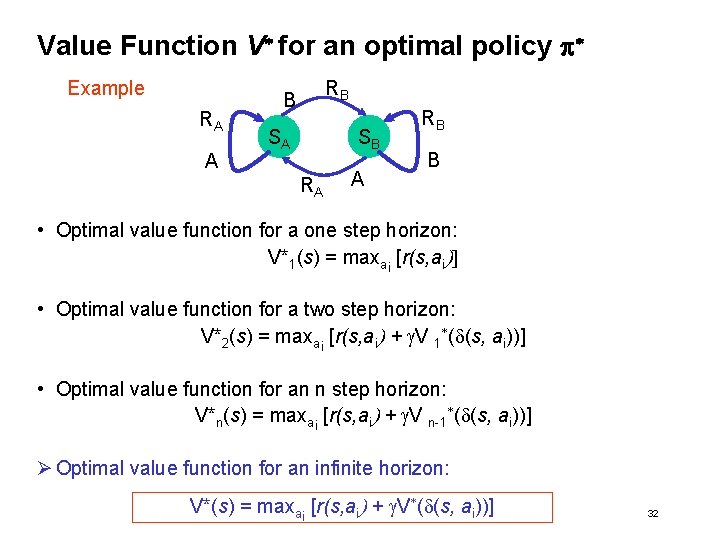

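Value Function $V^*$ for an Optimal Policy $\pi^*$

[Figure: two states, SA and SB; from each, action A earns reward RA and action B earns reward RB]

- Optimal value function for a one-step horizon: $V^*_1(s) = \max_{a_i} \left[ r(s, a_i) \right]$

[Figure: $V^*_1(SA)$ and $V^*_1(SB)$, each the max over the rewards of actions A and B]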

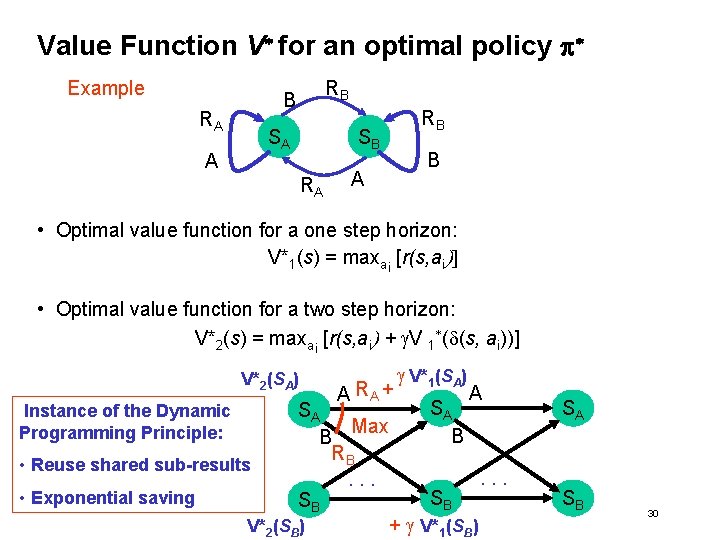

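Value Function $V^*$ for an Optimal Policy $\pi^*$

[Figure: two states, SA and SB; from each, action A earns reward RA and action B earns reward RB]

- Optimal value function for a one-step horizon: $V^*_1(s) = \max_{a_i} \left[ r(s, a_i) \right]$
- Optimal value function for a two-step horizon: $V^*_2(s) = \max_{a_i} \left[ r(s, a_i) + \gamma V^*_1(\delta(s, a_i)) \right]$

[Figure: $V^*_2(SA)$ and $V^*_2(SB)$, each the max over actions A and B of the reward plus $\gamma$ times $V^*_1$ of the successor state]

Instance of the dynamic programming principle:

- Reuse shared sub-results: each $V^*_1$ value is computed once and shared by every $V^*_2$ that needs it.
- Exponential saving over expanding the full tree of action sequences.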

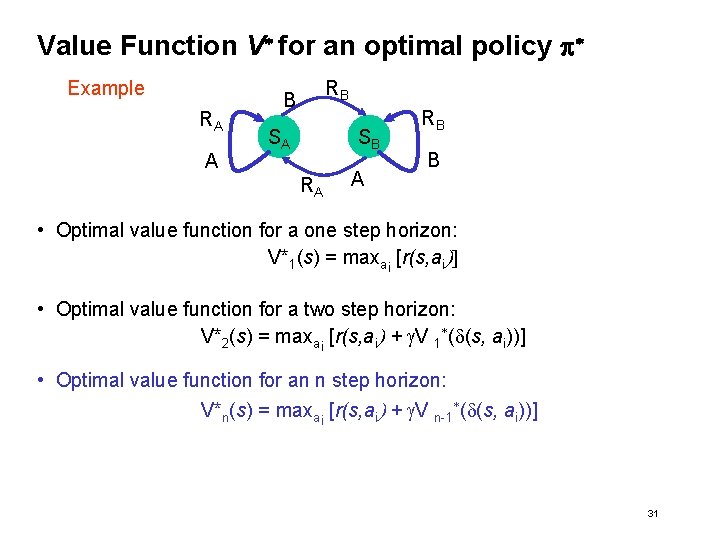

Value Function $V^*$ for an Optimal Policy $\pi^*$

[Figure: two states, SA and SB; from each, action A earns reward RA and action B earns reward RB]

- Optimal value function for a one-step horizon: $V^*_1(s) = \max_{a_i} \left[ r(s, a_i) \right]$
- Optimal value function for a two-step horizon: $V^*_2(s) = \max_{a_i} \left[ r(s, a_i) + \gamma V^*_1(\delta(s, a_i)) \right]$
- Optimal value function for an $n$-step horizon: $V^*_n(s) = \max_{a_i} \left[ r(s, a_i) + \gamma V^*_{n-1}(\delta(s, a_i)) \right]$

Value Function $V^*$ for an Optimal Policy $\pi^*$

[Figure: two states, SA and SB; from each, action A earns reward RA and action B earns reward RB]

- Optimal value function for a one-step horizon: $V^*_1(s) = \max_{a_i} \left[ r(s, a_i) \right]$
- Optimal value function for a two-step horizon: $V^*_2(s) = \max_{a_i} \left[ r(s, a_i) + \gamma V^*_1(\delta(s, a_i)) \right]$
- Optimal value function for an $n$-step horizon: $V^*_n(s) = \max_{a_i} \left[ r(s, a_i) + \gamma V^*_{n-1}(\delta(s, a_i)) \right]$
- Optimal value function for an infinite horizon: $V^*(s) = \max_{a_i} \left[ r(s, a_i) + \gamma V^*(\delta(s, a_i)) \right]$
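The $n$-step recursion can be run bottom-up, reusing each $V^*_{n-1}$ table instead of re-expanding the tree below it, which is the dynamic-programming saving named on the two-step slide. This Python sketch assumes a 2×3 grid world (G in the top-right cell and absorbing, reward 100 for moves into G, $\gamma = 0.9$) rather than the two-state SA/SB example; `finite_horizon_values` is a hypothetical name.

```python
GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def finite_horizon_values(n):
    """V*_n(s) = max_a [r(s, a) + gamma V*_{n-1}(delta(s, a))], starting from V*_0 = 0."""
    V = {s: 0.0 for s in STATES}   # V*_0: no steps left, no reward
    for _ in range(n):
        V = {s: max((reward(s, a) + GAMMA * V[delta(s, a)] for a in actions(s)),
                    default=0.0)   # G has no actions, so its value stays 0
             for s in STATES}
    return V


for n in range(1, 5):
    V = finite_horizon_values(n)
    print(n, [[round(V[(r, c)], 1) for c in range(COLS)] for r in range(ROWS)])
```

With $V^*_0 = 0$, the first pass reduces to $\max_a r(s, a)$, matching the one-step formula.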

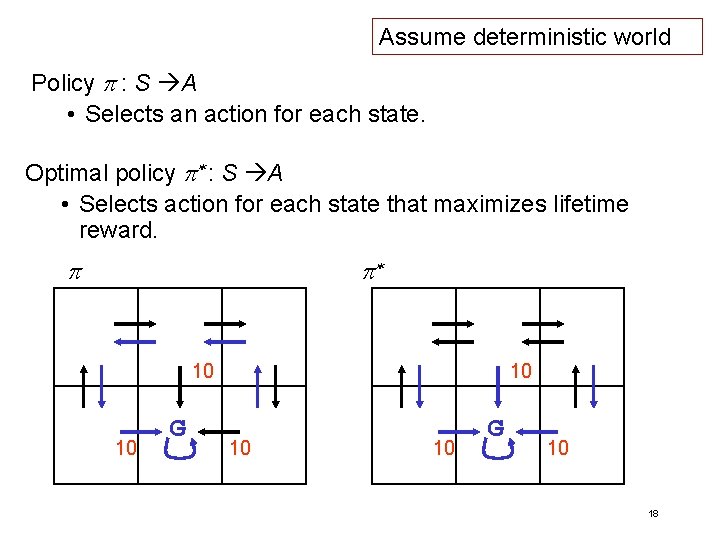

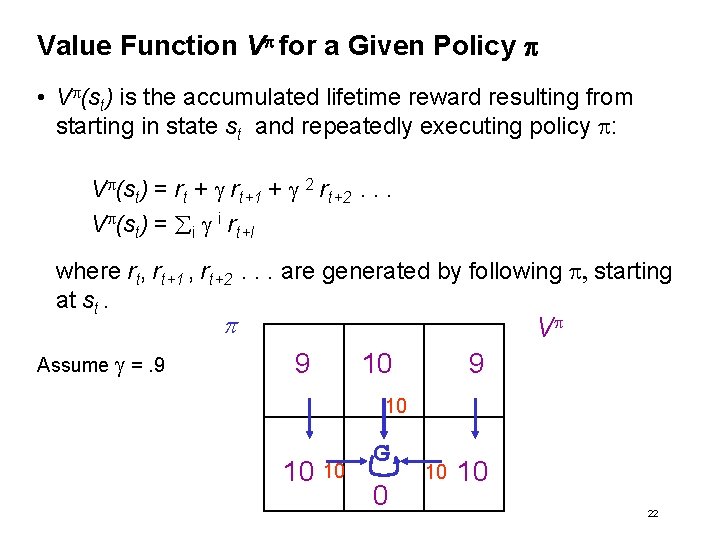

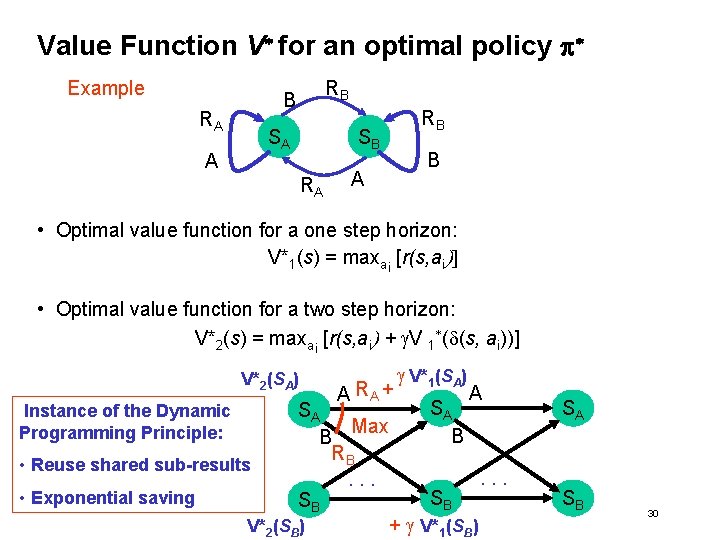

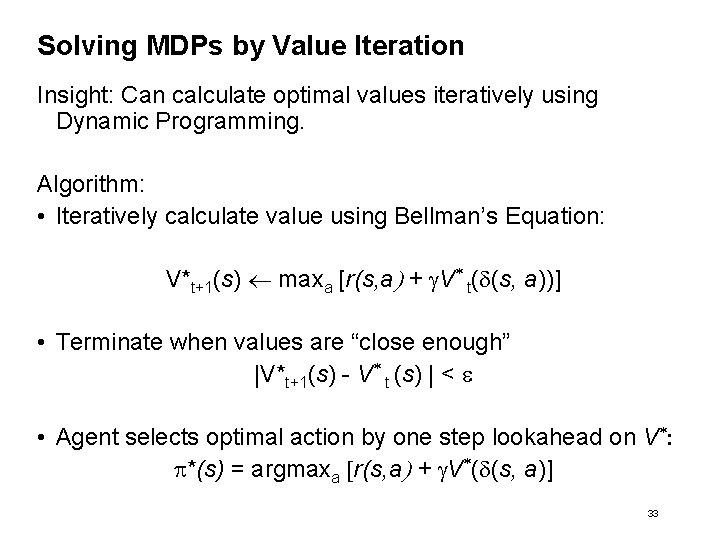

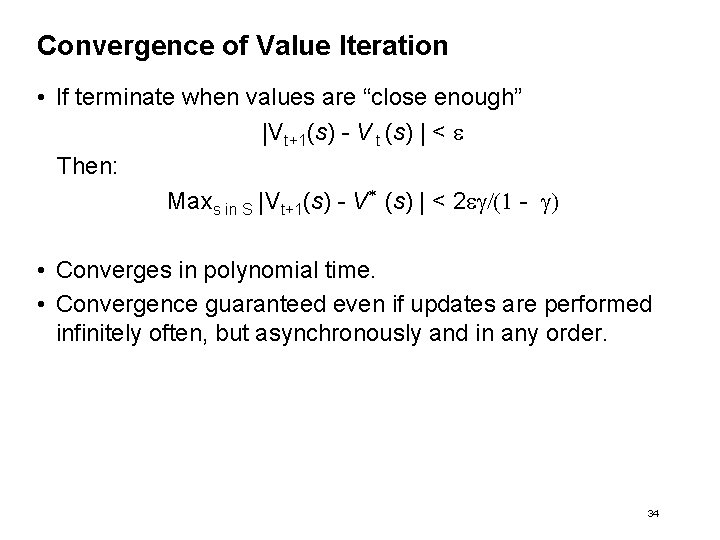

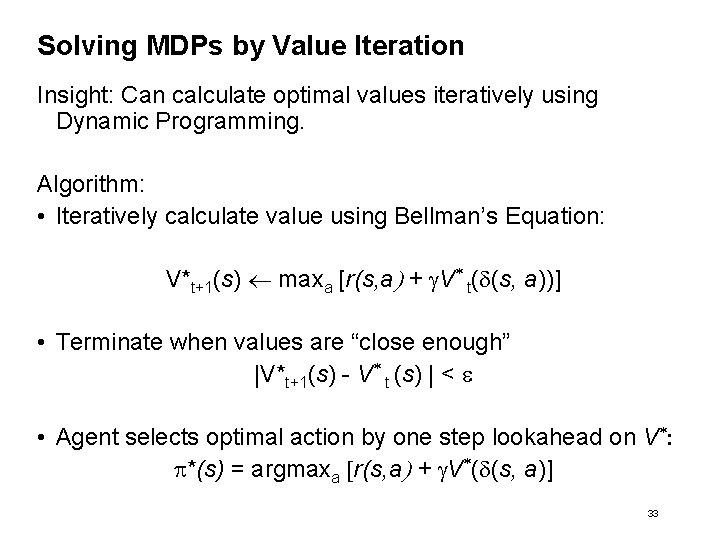

Solving MDPs by Value Iteration

Insight: optimal values can be calculated iteratively using dynamic programming.

Algorithm:

- Iteratively calculate values using Bellman's equation: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$
- Terminate when the values are "close enough": $|V^*_{t+1}(s) - V^*_t(s)| < \epsilon$ for every state $s$.
- The agent selects the optimal action by one-step lookahead on $V^*$: $\pi^*(s) = \arg\max_a \left[ r(s, a) + \gamma V^*(\delta(s, a)) \right]$
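Putting the three bullets together gives a compact value-iteration loop. This Python sketch assumes a 2×3 grid world (G in the top-right cell and absorbing, reward 100 for moves into G, $\gamma = 0.9$); `bellman_backup`, `value_iteration` and `greedy_policy` are hypothetical names, and the stopping test uses the largest change over all states.

```python
GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def bellman_backup(V, s):
    """max_a [r(s, a) + gamma * V(delta(s, a))]; 0 for the absorbing goal."""
    return max((reward(s, a) + GAMMA * V[delta(s, a)] for a in actions(s)),
               default=0.0)


def value_iteration(epsilon=1e-6):
    """Repeat Bellman backups until no state's value changes by epsilon or more."""
    V = {s: 0.0 for s in STATES}
    while True:
        V_next = {s: bellman_backup(V, s) for s in STATES}
        if max(abs(V_next[s] - V[s]) for s in STATES) < epsilon:
            return V_next
        V = V_next


def greedy_policy(V):
    """One-step lookahead: pi(s) = argmax_a [r(s, a) + gamma * V(delta(s, a))]."""
    return {s: max(actions(s), key=lambda a: reward(s, a) + GAMMA * V[delta(s, a)])
            for s in STATES if actions(s)}


V_star = value_iteration()
pi_star = greedy_policy(V_star)
print({s: round(v, 1) for s, v in V_star.items()})
print(pi_star)
```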

Convergence of Value Iteration

- If we terminate when the values are "close enough", $|V_{t+1}(s) - V_t(s)| < \epsilon$ for all $s$, then $\max_{s \in S} |V_{t+1}(s) - V^*(s)| < 2\epsilon\gamma/(1-\gamma)$.
- Converges in polynomial time.
- Convergence is guaranteed even if updates are performed asynchronously and in any order, provided every state is updated infinitely often.
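The asynchronous case can be tried directly: instead of full sweeps, the Python sketch below picks states at random and overwrites each value in place. It assumes a 2×3 grid world (G in the top-right cell and absorbing, reward 100 for moves into G, $\gamma = 0.9$); `async_value_iteration` and its `updates` and `seed` parameters are hypothetical. With enough updates every state is backed up many times, and the values settle on the same $V^*$ as synchronous sweeps.

```python
import random

GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def async_value_iteration(updates=2000, seed=0):
    """In-place Bellman backups on randomly chosen states, one state at a time."""
    rng = random.Random(seed)
    V = {s: 0.0 for s in STATES}
    for _ in range(updates):
        s = rng.choice(STATES)     # any state, in any order
        V[s] = max((reward(s, a) + GAMMA * V[delta(s, a)] for a in actions(s)),
                   default=0.0)    # overwrite immediately (asynchronous)
    return V


V = async_value_iteration()
print({s: round(v, 1) for s, v in V.items()})
```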

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-35.jpg)

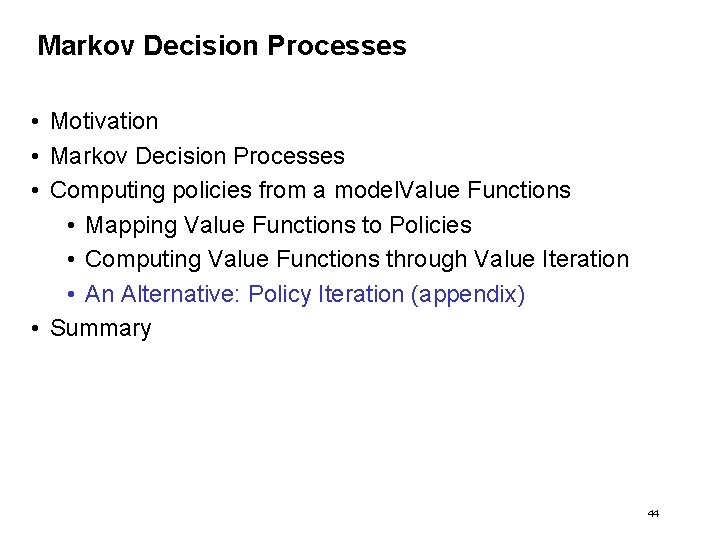

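Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids for the grid world with goal G (transitions into G have reward 100); all values are still 0; the state being updated has actions a and b]

- a: $0 + 0.9 \times 0 = 0$
- b: $0 + 0.9 \times 0 = 0$
- Max = 0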

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-36.jpg)

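Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids; the state being updated has actions a (into G), b and c]

- a: $100 + 0.9 \times 0 = 100$
- b: $0 + 0.9 \times 0 = 0$
- c: $0 + 0.9 \times 0 = 0$
- Max = 100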

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-37.jpg)

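Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids; the state being updated has a single action a]

- a: $0 + 0.9 \times 0 = 0$
- Max = 0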

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-38.jpg)

Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids as the sweep continues]

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-39.jpg)

Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids as the sweep continues]

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-40.jpg)

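Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids at the end of the sweep; two states now have value 100]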

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-41.jpg)

Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids; values of 90 now appear]

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-42.jpg)

Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids; a value of 81 now appears]

![Example of Value Iteration](https://slidetodoc.com/presentation_image_h2/60753b4eadd3aea156ba00783cc66808/image-43.jpg)

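Example of Value Iteration: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$, $\gamma = 0.9$

[Figure: $V^*_t$ and $V^*_{t+1}$ grids now agree: 90, 100, 0 (G) in the top row and 81, 90, 100 in the bottom row]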
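The slides above appear to update one state at a time; the Python sketch below instead performs whole synchronous sweeps on an assumed 2×3 grid world (G in the top-right cell and absorbing, reward 100 for moves into G, $\gamma = 0.9$) and prints the value table after each sweep. On this example the sweeps reach the values 90, 100, 0 / 81, 90, 100 after three sweeps, and a fourth sweep changes nothing. `sweep` and `history` are hypothetical names.

```python
GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def sweep(V):
    """One synchronous sweep: every state is backed up from the old table V."""
    return {s: max((reward(s, a) + GAMMA * V[delta(s, a)] for a in actions(s)),
                   default=0.0)
            for s in STATES}


history = [{s: 0.0 for s in STATES}]     # V*_0 = 0 everywhere
for _ in range(4):
    history.append(sweep(history[-1]))

for t, V in enumerate(history):
    print(t, [[round(V[(r, c)], 1) for c in range(COLS)] for r in range(ROWS)])
# t = 1: [[0.0, 100.0, 0.0], [0.0, 0.0, 100.0]]
# t = 2: [[90.0, 100.0, 0.0], [0.0, 90.0, 100.0]]
# t = 3: [[90.0, 100.0, 0.0], [81.0, 90.0, 100.0]]  (unchanged at t = 4)
```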


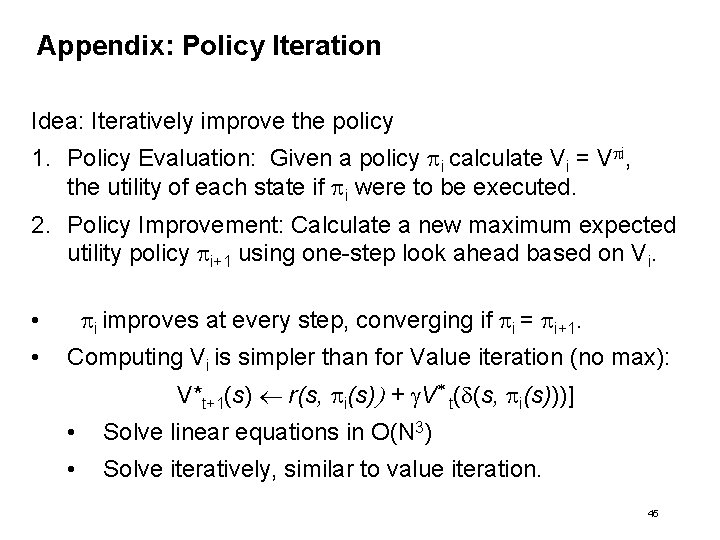

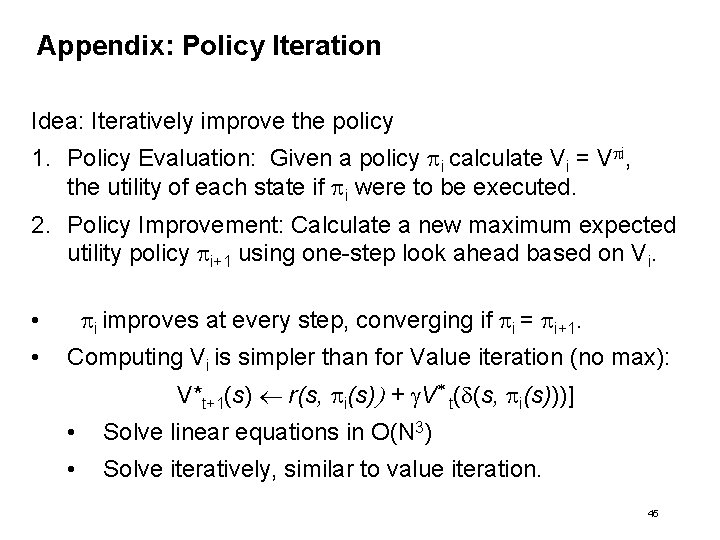

Appendix: Policy Iteration

Idea: iteratively improve the policy.

1. Policy evaluation: given a policy $\pi_i$, calculate $V_i = V^{\pi_i}$, the utility of each state if $\pi_i$ were to be executed.
2. Policy improvement: calculate a new maximum expected utility policy $\pi_{i+1}$ using one-step lookahead based on $V_i$.

$\pi_i$ improves at every step; the algorithm has converged when $\pi_{i+1} = \pi_i$.

- Computing $V_i$ is simpler than in value iteration (no max): $V_{t+1}(s) \leftarrow r(s, \pi_i(s)) + \gamma V_t(\delta(s, \pi_i(s)))$
  - Solve the linear equations in $O(N^3)$ for $N$ states, or
  - Solve iteratively, similar to value iteration.
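A compact Python sketch of the two alternating steps, on an assumed 2×3 grid world (G in the top-right cell and absorbing, reward 100 for moves into G, $\gamma = 0.9$). Policy evaluation here uses the iterative option with a fixed, hypothetical number of sweeps rather than solving the linear equations; improvement keeps the current action on ties so the loop cannot flip between equally good actions forever. `evaluate`, `improve` and `policy_iteration` are illustrative names.

```python
GAMMA = 0.9
ROWS, COLS = 2, 3
GOAL = (0, 2)                      # assumed: G in the top-right cell
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def actions(s):
    if s == GOAL:
        return []
    return [a for a, (dr, dc) in MOVES.items()
            if 0 <= s[0] + dr < ROWS and 0 <= s[1] + dc < COLS]


def delta(s, a):
    dr, dc = MOVES[a]
    return (s[0] + dr, s[1] + dc)


def reward(s, a):
    return 100 if delta(s, a) == GOAL else 0


def evaluate(policy, sweeps=500):
    """Policy evaluation (no max): V(s) <- r(s, pi(s)) + gamma * V(delta(s, pi(s)))."""
    V = {s: 0.0 for s in STATES}
    for _ in range(sweeps):
        V = {s: reward(s, policy[s]) + GAMMA * V[delta(s, policy[s])]
             if actions(s) else 0.0
             for s in STATES}
    return V


def improve(policy, V, tol=1e-9):
    """One-step lookahead; keep the current action unless another is strictly better."""
    def q(s, a):
        return reward(s, a) + GAMMA * V[delta(s, a)]
    new_policy = {}
    for s, current in policy.items():
        best = max(actions(s), key=lambda a: q(s, a))
        new_policy[s] = best if q(s, best) > q(s, current) + tol else current
    return new_policy


def policy_iteration():
    policy = {s: actions(s)[0] for s in STATES if actions(s)}   # arbitrary start
    while True:
        V = evaluate(policy)
        new_policy = improve(policy, V)
        if new_policy == policy:           # pi_{i+1} = pi_i: converged
            return policy, V
        policy = new_policy


pi_star, V_star = policy_iteration()
print(pi_star)
print({s: round(v, 1) for s, v in V_star.items()})
```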

Markov Decision Processes

- Motivation
- Markov Decision Processes
- Computing policies from a model
  - Value Iteration
  - Policy Iteration
- Summary

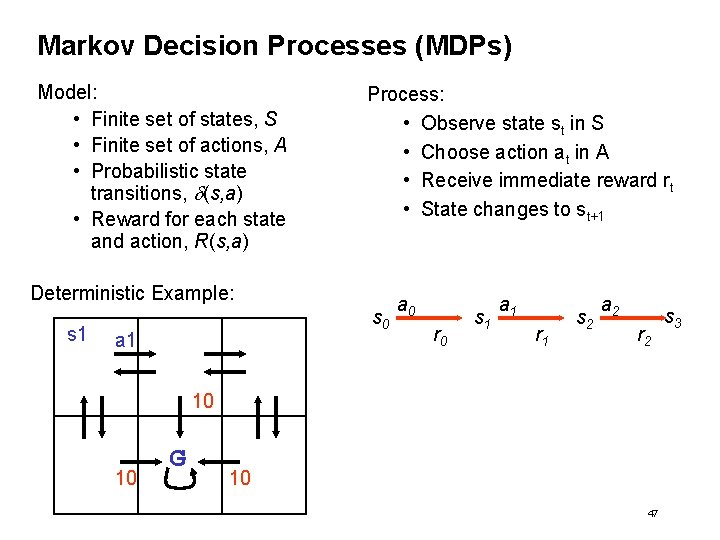

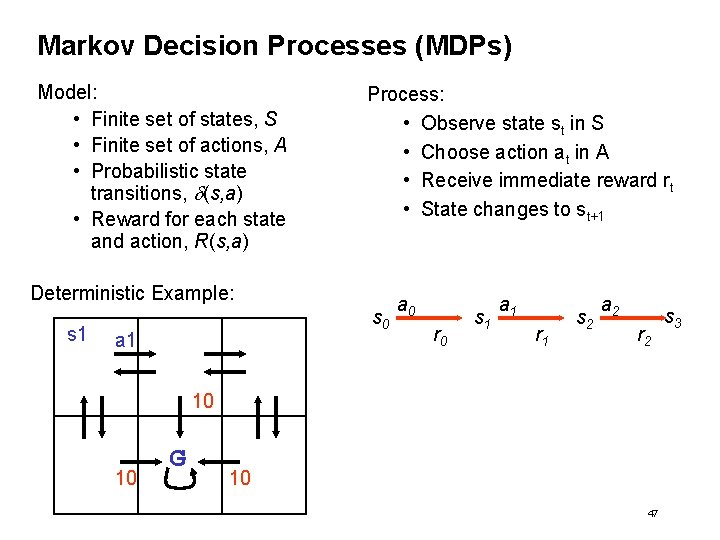

Markov Decision Processes (MDPs)

Model:

- Finite set of states, $S$
- Finite set of actions, $A$
- Probabilistic state transitions, $\delta(s, a)$
- Reward for each state and action, $r(s, a)$

Process:

- Observe state $s_t$ in $S$
- Choose action $a_t$ in $A$
- Receive immediate reward $r_t$
- State changes to $s_{t+1}$

Deterministic example: $s_0 \xrightarrow{a_0,\ r_0} s_1 \xrightarrow{a_1,\ r_1} s_2 \xrightarrow{a_2,\ r_2} s_3$

[Figure: grid world with goal state G; some transitions are labeled with reward 10]

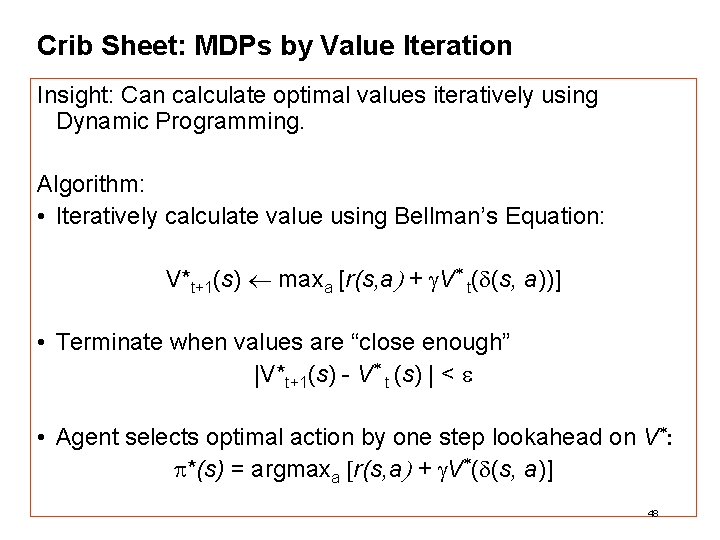

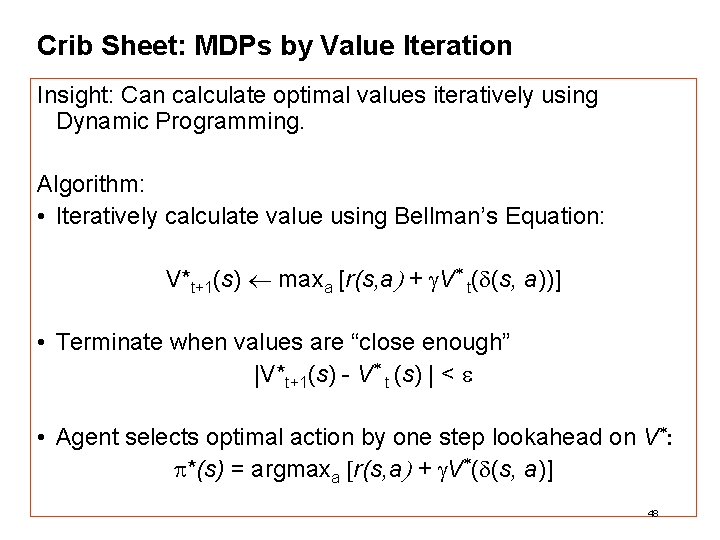

Crib Sheet: MDPs by Value Iteration

Insight: optimal values can be calculated iteratively using dynamic programming.

Algorithm:

- Iteratively calculate values using Bellman's equation: $V^*_{t+1}(s) \leftarrow \max_a \left[ r(s, a) + \gamma V^*_t(\delta(s, a)) \right]$
- Terminate when the values are "close enough": $|V^*_{t+1}(s) - V^*_t(s)| < \epsilon$ for every state $s$.
- The agent selects the optimal action by one-step lookahead on $V^*$: $\pi^*(s) = \arg\max_a \left[ r(s, a) + \gamma V^*(\delta(s, a)) \right]$

Ideas in this lecture

- The objective is to accumulate rewards, rather than to achieve goal states.
- Objectives are achieved along the way, rather than at the end.
- The task is to generate policies for how to act in all situations, rather than a plan for a single starting situation.
- Policies fall out of value functions, which describe the greatest lifetime reward achievable at every state.
- Value functions are iteratively approximated.

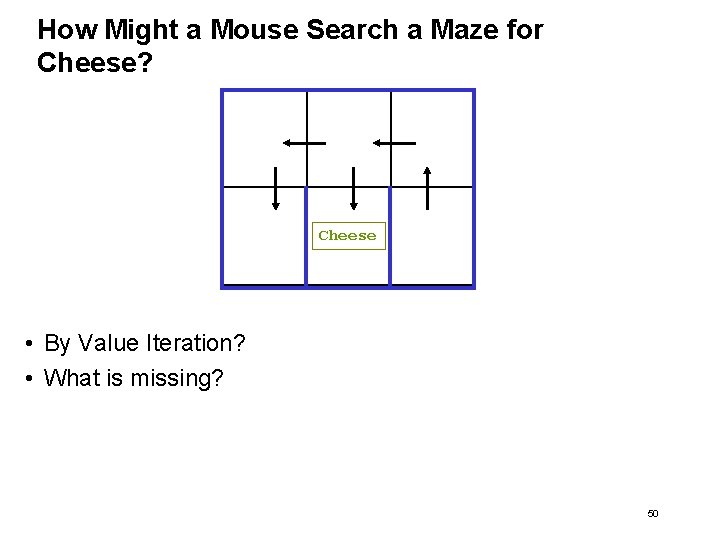

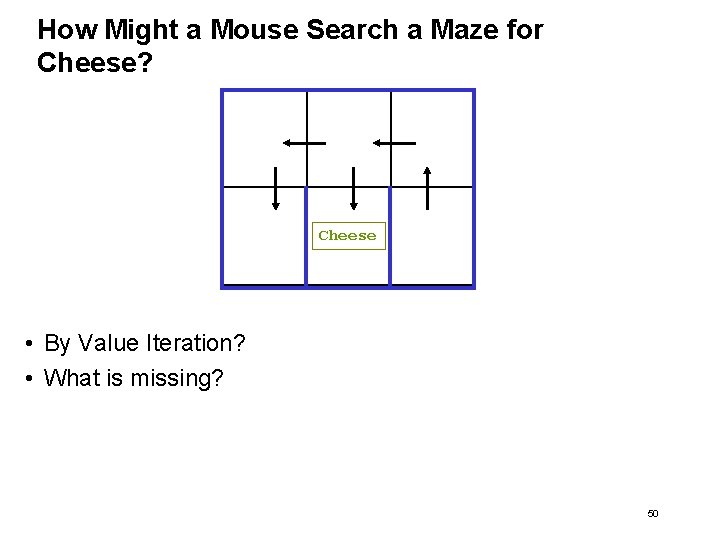

How Might a Mouse Search a Maze for Cheese?

- By value iteration?
- What is missing?