Map Reduce for Machine Learning on Multicore ChengTao

![Valiant Model [Valiant’ 84] x is the input y is a function of x Valiant Model [Valiant’ 84] x is the input y is a function of x](https://slidetodoc.com/presentation_image/1895bb461ef81ac7d61514e5595355ec/image-21.jpg)

![Statistical Query Model [Kearns’ 98] A restriction on Valiant model A learning algorithm uses Statistical Query Model [Kearns’ 98] A restriction on Valiant model A learning algorithm uses](https://slidetodoc.com/presentation_image/1895bb461ef81ac7d61514e5595355ec/image-22.jpg)

- Slides: 49

Map. Reduce for Machine Learning on Multicore Cheng-Tao Chu and Sang Kyun Kim et al. Stanford University, Stanford CA Presented by Inna Rytsareva 1

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 2

Motivation Problem: lots of data Example: 20+ billion web pages x 20 KB = 400+ terabytes One computer can read 30 -35 MB/sec from disk ~four months to read the web ~1, 000 hard drives just to store the web Even more to do something with the data 3

Motivation Solution: spread the work over many machines Same problem with 1000 machines, < 3 hours programming work communication and coordination recovering from machine failure status reporting debugging optimization locality repeat for every problem you want to solve 4

Cluster Computing Many racks of computers, thousands of machines per cluster Limited bisection bandwidth between racks http: //upload. wikimedia. org/wikipedia/commons/d/d 3/IBM_Blue_Gene_ P_supercomputer. jpg 5

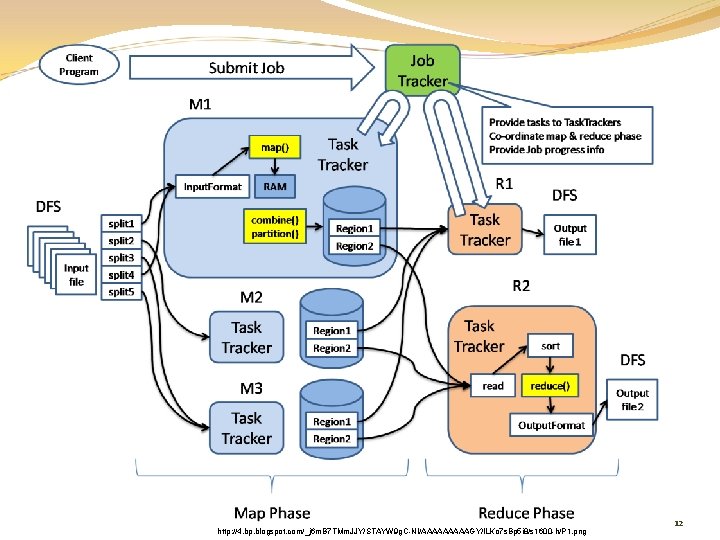

Map. Reduce A simple programming model that applies to many large-scale computing problems Hide messy details in Map. Reduce runtime library: automatic parallelization load balancing network and disk transfer optimization handling of machine failures robustness 6

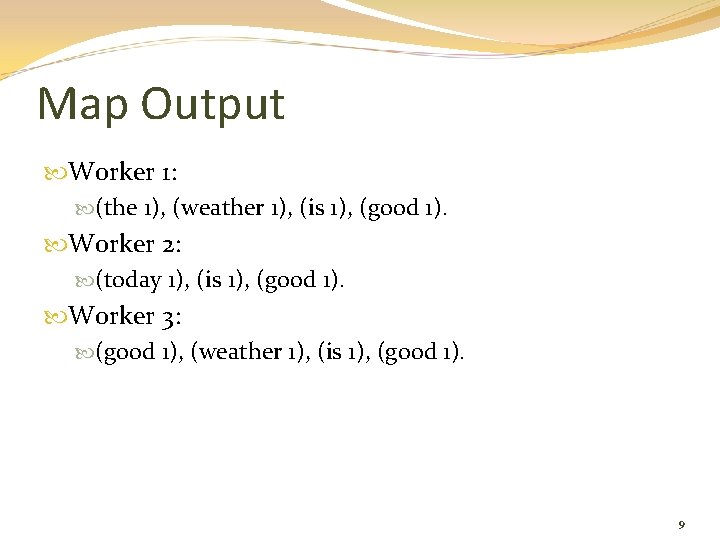

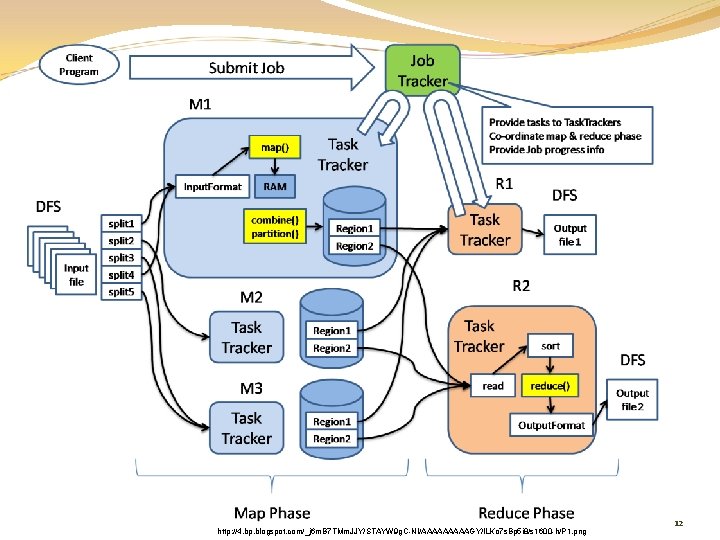

Programming model Input & Output: each a set of key/value pairs Programmer specifies two functions: map() reduce() map (in_key, in_value) -> list(out_key, intermediate_value) Processes input key/value pair Produces set of intermediate pairs reduce (out_key, list(intermediate_value)) -> list(out_value) Combines all intermediate values for a particular key Produces a set of merged output values (usually just one) 7

Example Page 1: the weather is good Page 2: today is good Page 3: good weather is good. 8

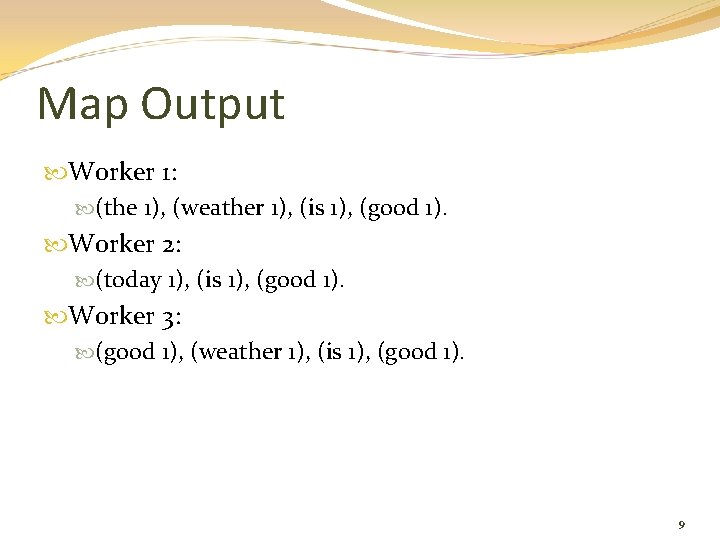

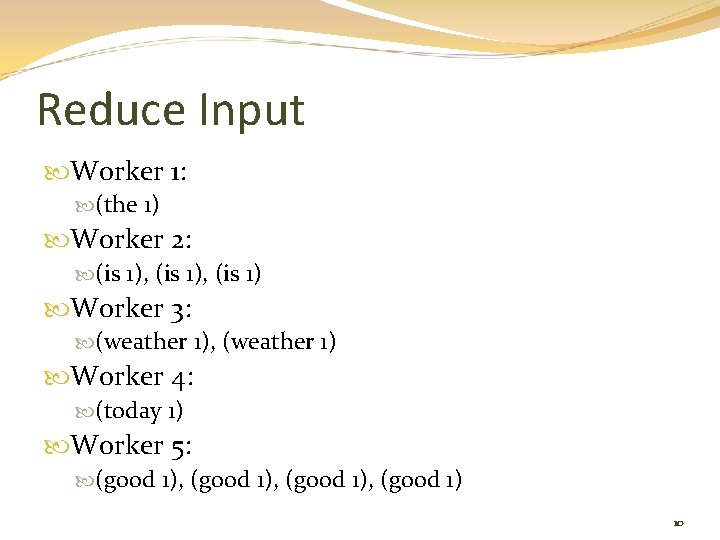

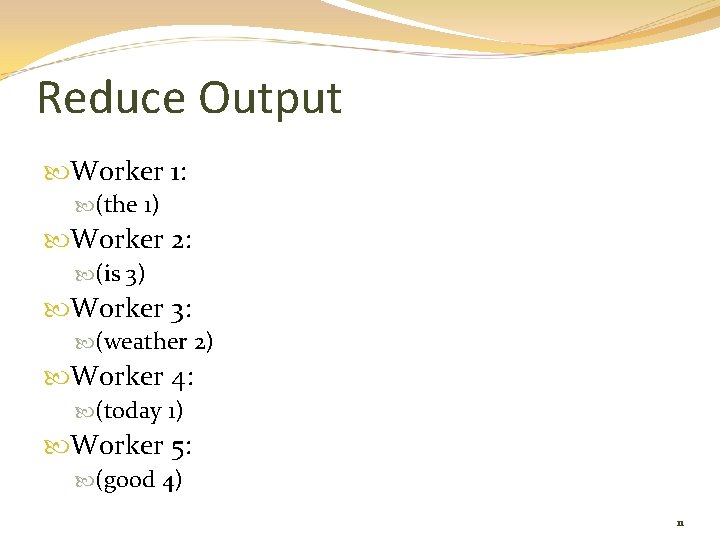

Map Output Worker 1: (the 1), (weather 1), (is 1), (good 1). Worker 2: (today 1), (is 1), (good 1). Worker 3: (good 1), (weather 1), (is 1), (good 1). 9

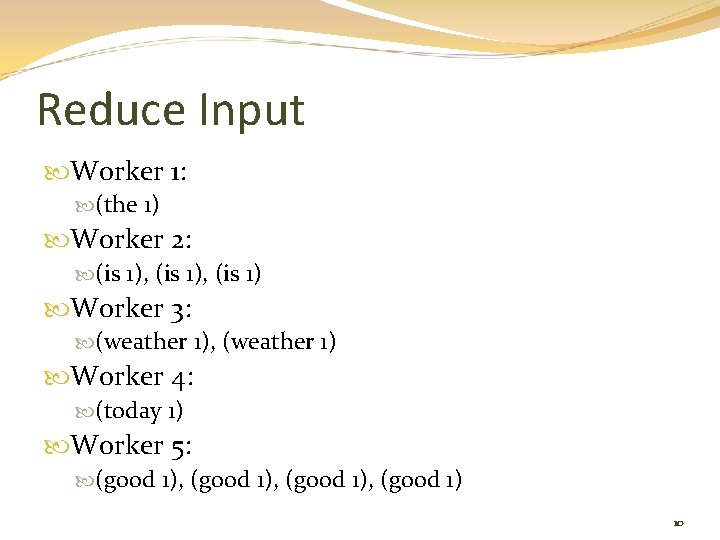

Reduce Input Worker 1: (the 1) Worker 2: (is 1), (is 1) Worker 3: (weather 1), (weather 1) Worker 4: (today 1) Worker 5: (good 1), (good 1) 10

Reduce Output Worker 1: (the 1) Worker 2: (is 3) Worker 3: (weather 2) Worker 4: (today 1) Worker 5: (good 4) 11

http: //4. bp. blogspot. com/_j 6 m. B 7 TMm. JJY/STAYW 9 g. C-NI/AAAAAGY/l. LKo 7 s. Bp 5 i 8/s 1600 -h/P 1. png 12

Fault tolerance On worker failure: Detect failure via periodic heartbeats (ping) Re-execute completed and in-progress map tasks Re-execute in progress reduce tasks Task completion committed through master Master failure: Could handle, but don't yet (master failure unlikely) 13

Map. Reduce Transparencies Parallelization Fault-tolerance Locality optimization Load balancing 14

Suitable for your task if Have a cluster Working with large dataset Working with independent data (or assumed) Can be cast into map and reduce 15

References Original paper J. Dean and S. Ghemawat, “Map. Reduce: Simplified Data Processing on Large Clusters, ” Communications of the ACM, vol. 51, 2008, pp. 107 -113. (http: //labs. google. com/papers/mapreduce. html) On wikipedia (http: //en. wikipedia. org/wiki/Map. Reduce) Hadoop – Map. Reduce in Java (http: //lucene. apache. org/hadoop/) Starfish - Map. Reduce in Ruby (http: //rufy. com/starfish/) 16

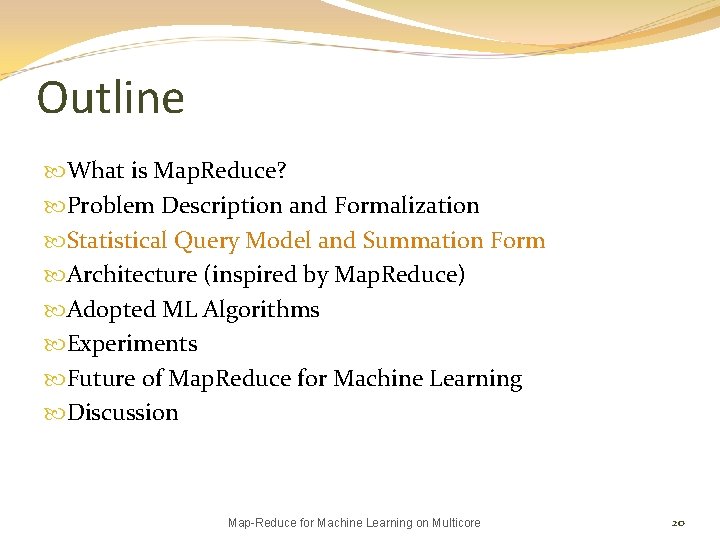

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 17

Motivations Industry-wide shift to multicore No good framework for parallelize ML algorithms Goal: develop a general and exact technique for parallel programming of a large class of ML algorithms for multicore processors http: //upload. wikimedia. org/wikipedia/commons/a/af/E 6750 bs 8. jpg 18

Idea … … … • Statistical Query Model • Summation Form • Map. Reduce 19

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 20

![Valiant Model Valiant 84 x is the input y is a function of x Valiant Model [Valiant’ 84] x is the input y is a function of x](https://slidetodoc.com/presentation_image/1895bb461ef81ac7d61514e5595355ec/image-21.jpg)

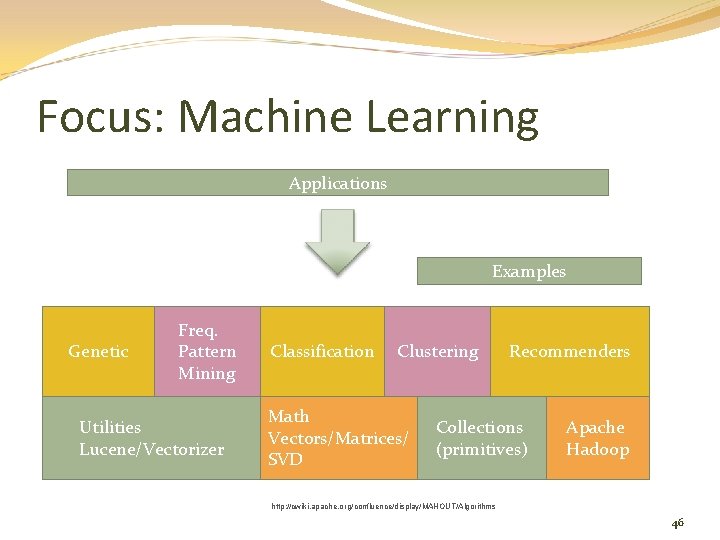

Valiant Model [Valiant’ 84] x is the input y is a function of x that we want to learn In Valiant model, the learning algorithm uses randomly drawn examples <x, y> to learn the target function 21

![Statistical Query Model Kearns 98 A restriction on Valiant model A learning algorithm uses Statistical Query Model [Kearns’ 98] A restriction on Valiant model A learning algorithm uses](https://slidetodoc.com/presentation_image/1895bb461ef81ac7d61514e5595355ec/image-22.jpg)

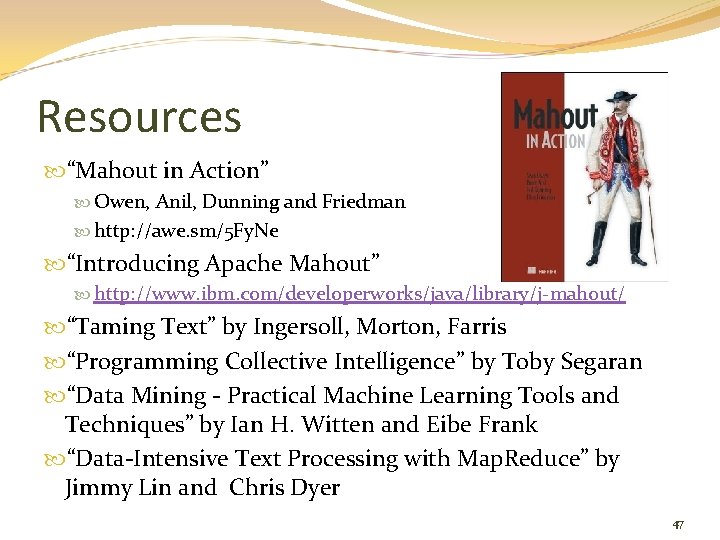

Statistical Query Model [Kearns’ 98] A restriction on Valiant model A learning algorithm uses some aggregates over the examples, not the individual examples Given a function f(x, y) over instances (data points x and labels y), a statistical oracle will return an estimate of the expectation of f(x, y) Any model that computes gradients or sufficient statistics over f(x, y) fits this model Typically this is achieved by summing over the data. 22

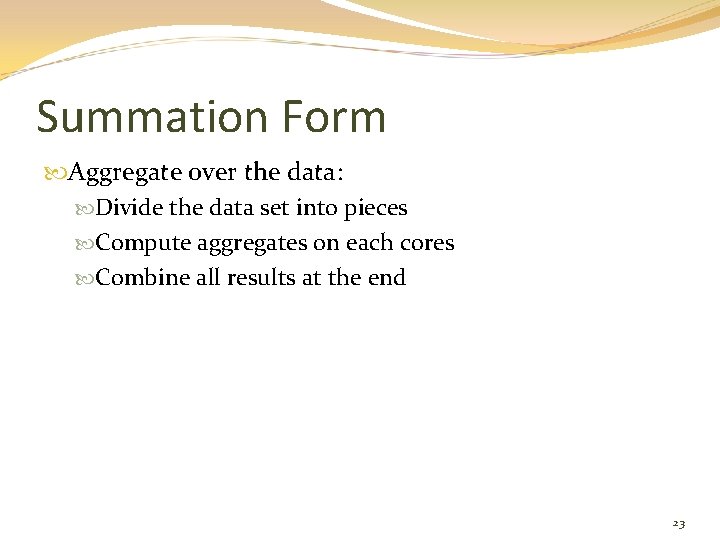

Summation Form Aggregate over the data: Divide the data set into pieces Compute aggregates on each cores Combine all results at the end 23

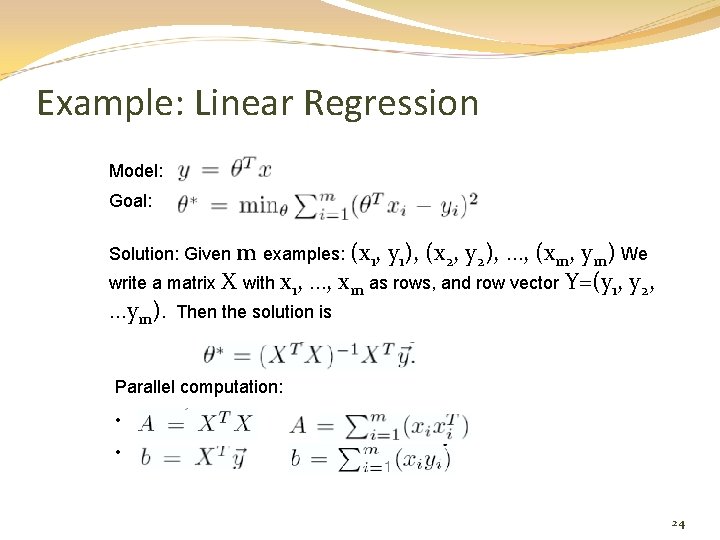

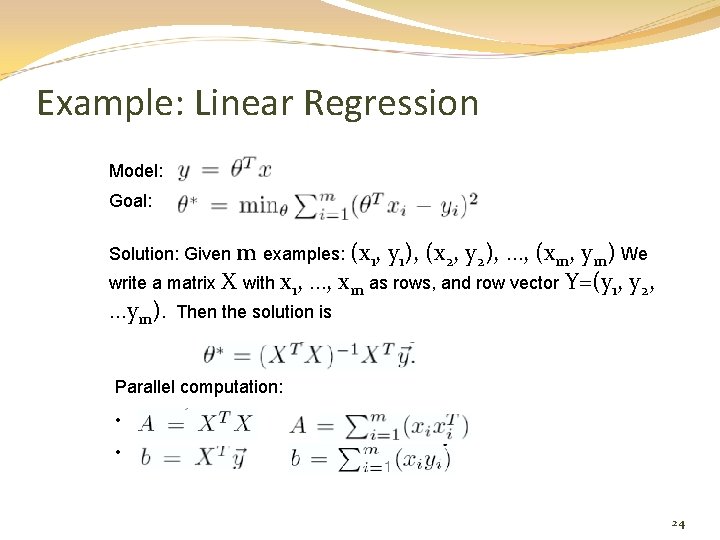

Example: Linear Regression Model: Goal: Solution: Given m examples: (x 1, y 1), (x 2, y 2), …, (xm, ym) We write a matrix X with x 1, …, xm as rows, and row vector Y=(y 1, y 2, …ym). Then the solution is Parallel computation: • • 24

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 25

Lighter Weight Map. Reduce for Multicore 26

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Conclusion and Discussion Map-Reduce for Machine Learning on Multicore 27

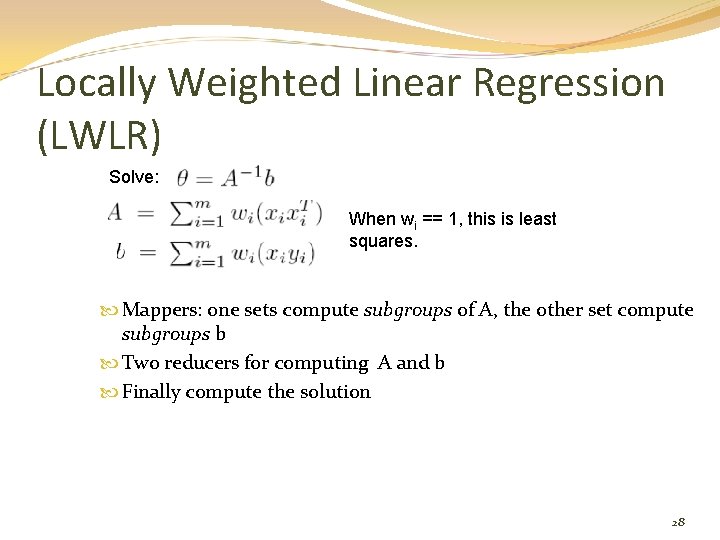

Locally Weighted Linear Regression (LWLR) Solve: When wi == 1, this is least squares. Mappers: one sets compute subgroups of A, the other set compute subgroups b Two reducers for computing A and b Finally compute the solution 28

Naïve Bayes (NB) Goal: estimate P(xj=k|y=1) and P(xj=k|y=0) and P(y) Computation: count the occurrence of (xj=k, count the occurrence of (y=1) and (y=0) y=1) and (xj=k, y=0), Mappers: count a subgroup of training samples Reducer: aggregate the intermediate counts, and calculate the final result 29

Gaussian Discriminative Analysis (GDA) Goal: classification of x into classes of y assuming each class is a Gaussian Mixture model with different means but same covariance. Computation: Mappers: compute for a subgroup of training samples Reducer: aggregate intermediate results 30

K-means Computing the Euclidean distance between sample vectors and centroids Recalculating the centroids Divide the computation to subgroups to be handled by map-reduce 31

Neural Network (NN) Back-propagation, 3 -layer network Input, middle, 2 output nodes Goal: compute the weights in the NN by back propagation Mapper: propagate its set of training data through the network, and propagate errors to calculate the partial gradient for weights Reducer: sums the partial gradients and does a batch gradient descent to update the weights 32

Principal Components Analysis (PCA) Compute the principle eigenvectors of the covariance matrix Clearly, we can compute the summation form using map-reduce Express the mean vector as a sum 33

Other Algorithms Logistic Regression Independent Component Analysis Support Vector Machine Expectation Maximization (EM) 34

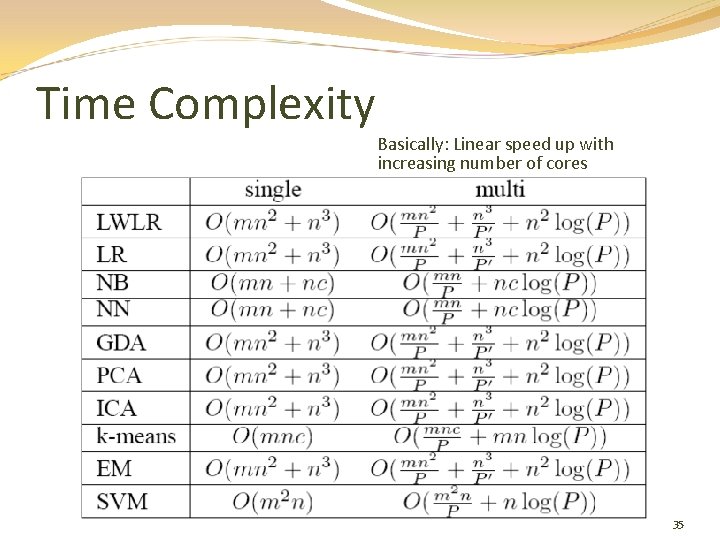

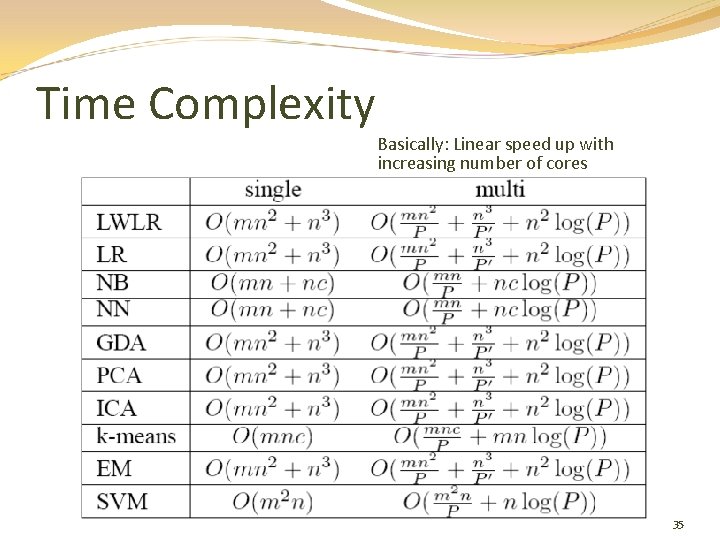

Time Complexity Basically: Linear speed up with increasing number of cores 35

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 36

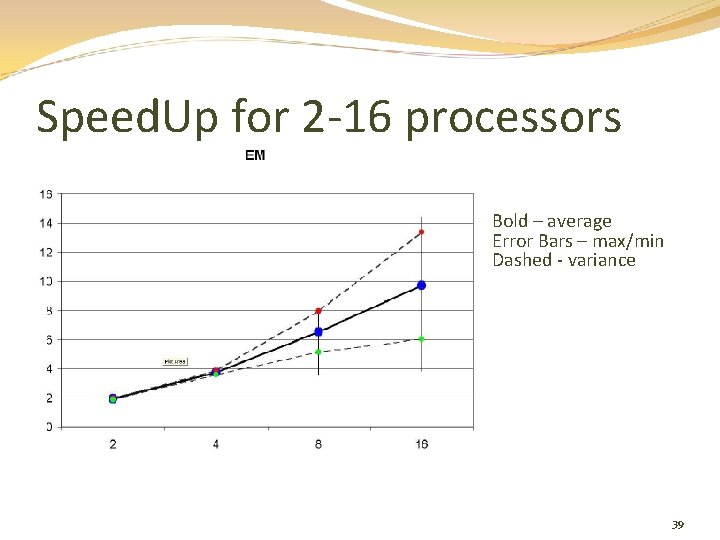

Setup Compare map-reduce version and sequential version 10 data sets Machines: Dual-processor Pentium-III 700 MHz, 1 GB RAM 16 -way Sun Enterprise 6000 37

Dual-Processor Speed. Ups 38

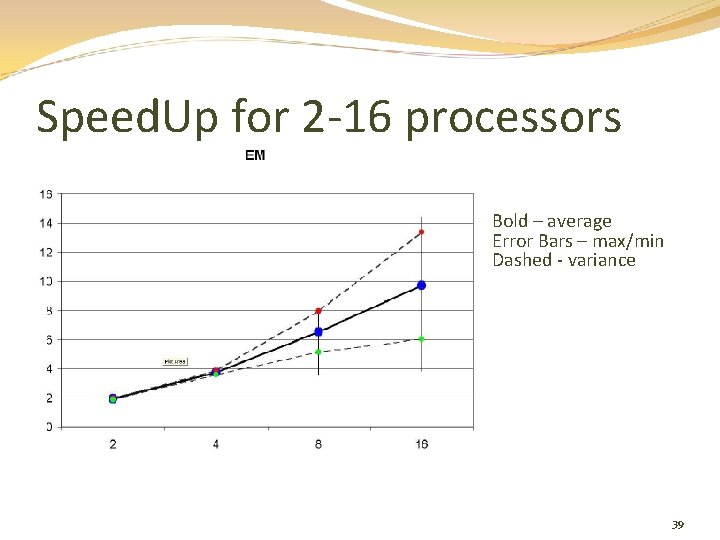

Speed. Up for 2 -16 processors Bold – average Error Bars – max/min Dashed - variance 39

Multicore Simulator Results Multicore simulator over the sensor dataset Better results – reported for NN & LR NN 16 cores 15. 5 x 32 cores 29 x 64 cores 54 x LR 16 cores 15 x 32 cores 29. 5 x 64 cores 53 x Could be because of less communication cost 40

Conclusion Parallelize summation forms NO change in the underlying algorithm NO approximation Use map-reduce on a single machine 41

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 42

Apache Mahout An Apache Software Foundation project to create scalable machine learning libraries under the Apache Software License http: //mahout. apache. org Why Mahout? Community Documentation Scalability and Examples the Apache License Not-specific research-oriented http: //dictionary. reference. com/browse/mahout 43

Focus: Scalable Goal: Be as fast and efficient as the possible given the intrinsic design of the algorithm Some algorithms won’t scale to massive machine clusters Others fit logically on a Map. Reduce framework like Apache Hadoop Still others will need other distributed programming models Most Mahout implementations are Map. Reduce enabled Work in Progress 44

Sampling of Who uses Mahout? https: //cwiki. apache. org/confluence/display/MAHOUT/Powered+By+Mahout 45

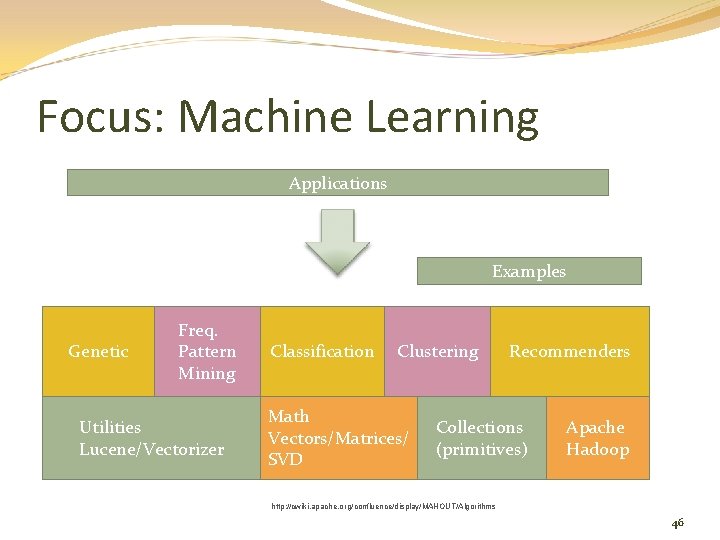

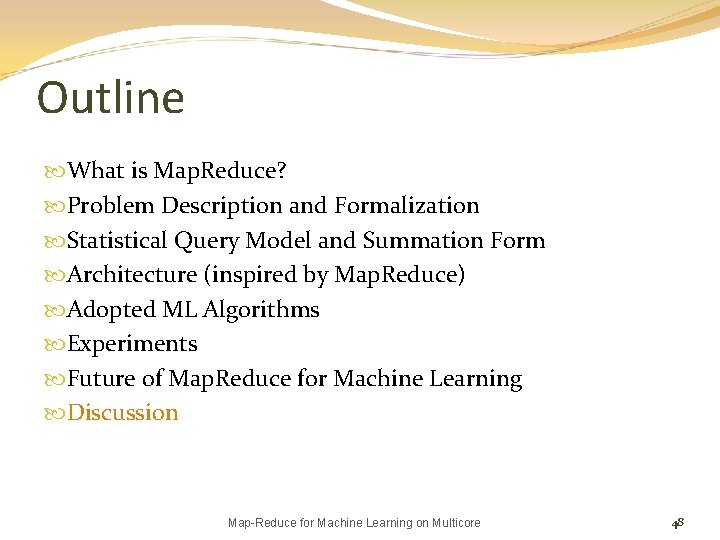

Focus: Machine Learning Applications Examples Genetic Freq. Pattern Mining Utilities Lucene/Vectorizer Classification Clustering Math Vectors/Matrices/ SVD Recommenders Collections (primitives) Apache Hadoop http: //cwiki. apache. org/confluence/display/MAHOUT/Algorithms 46

Resources “Mahout in Action” Owen, Anil, Dunning and Friedman http: //awe. sm/5 Fy. Ne “Introducing Apache Mahout” http: //www. ibm. com/developerworks/java/library/j-mahout/ “Taming Text” by Ingersoll, Morton, Farris “Programming Collective Intelligence” by Toby Segaran “Data Mining - Practical Machine Learning Tools and Techniques” by Ian H. Witten and Eibe Frank “Data-Intensive Text Processing with Map. Reduce” by Jimmy Lin and Chris Dyer 47

Outline What is Map. Reduce? Problem Description and Formalization Statistical Query Model and Summation Form Architecture (inspired by Map. Reduce) Adopted ML Algorithms Experiments Future of Map. Reduce for Machine Learning Discussion Map-Reduce for Machine Learning on Multicore 48

Discussion What are other alternatives to Map. Reduce? What to do if “summation form” is not applicable? Does the dataset quality effect implementation and performance of parallel machine learning algorithms? Multicore processors… future? Predicting Structural and Functional Sites in Proteins by Searching for Maximum-Weight Cliques 49