- Slides: 17

Management-Oriented Evaluation: Evaluation for Decision-Makers
Jing Wang and Faye Jones

Theoretical Basis: Systems Theory
• Systems theorists: Burns and Stalker (1972), Azumi and Hage (1972), Lincoln (1985), Gharajedaghi (1985), Morgan (1986).
• Systems theorists in education: Henry Barnard, Horace Mann, William Harris, Carleton Washburne.
• Mechanical/linear constructions of the world versus organic/systems constructions.
• Closed versus open systems: the role of the environment.
References: Patton, 2002; Fitzpatrick, Sanders, & Worthen, 2004; Scott, 2003.

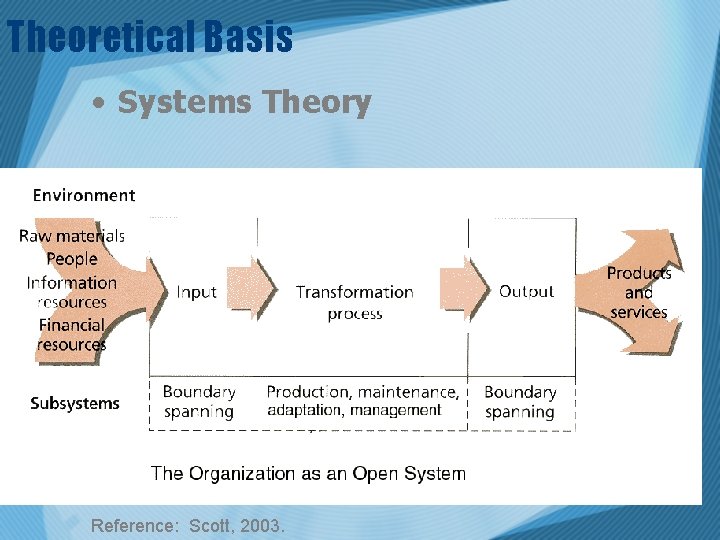

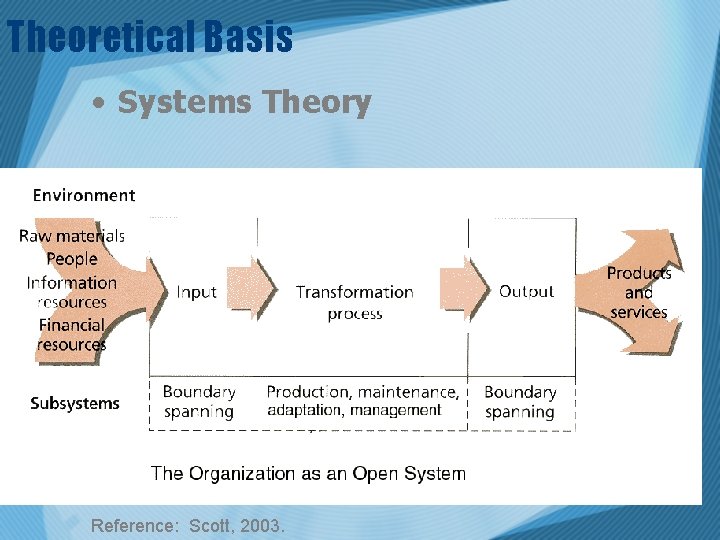

Theoretical Basis: Systems Theory
Reference: Scott, 2003.

Defining and Conceptualizing a System
• A system is a whole that is both greater than and different from its parts.
• Effective management of a system requires managing the interactions of its parts, not the actions of its parts taken separately (Gharajedaghi and Ackoff, 1985).
• Describe that system. Volunteers?
Reference: Patton, 2002.

Management-Oriented Evaluation
• The primary focus of management-oriented evaluation is to serve the decision-maker(s).
• The needs of the decision-makers guide the direction of the evaluation.

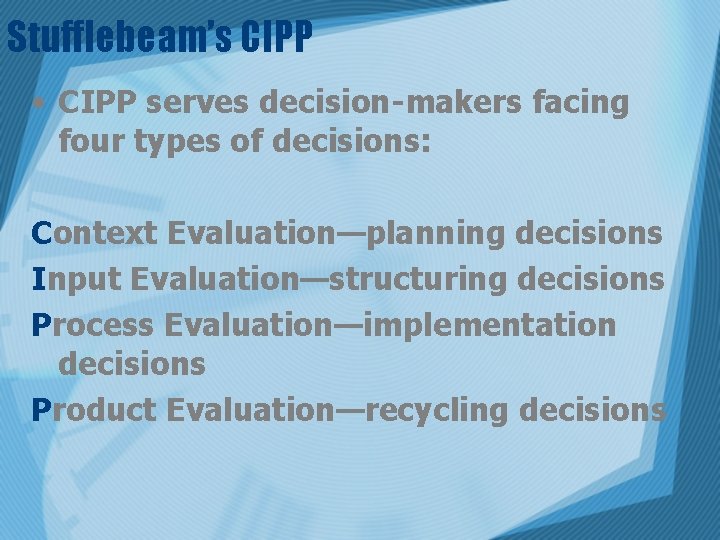

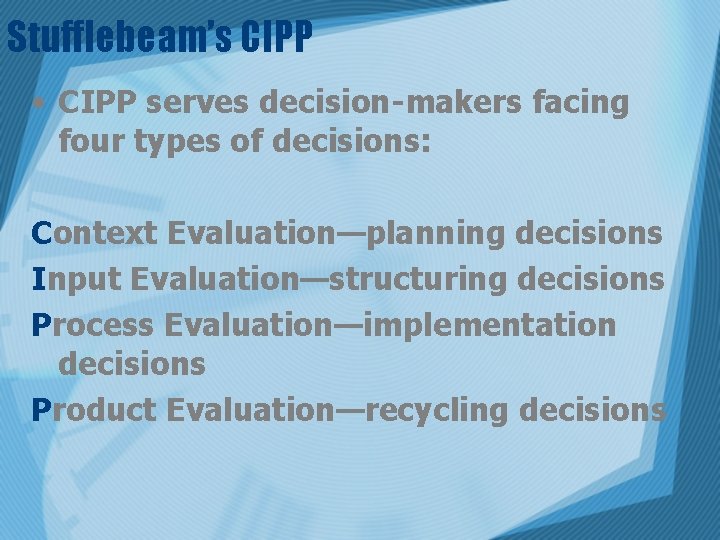

Stufflebeam’s CIPP
• CIPP serves decision-makers facing four types of decisions:
– Context evaluation: planning decisions
– Input evaluation: structuring decisions
– Process evaluation: implementation decisions
– Product evaluation: recycling decisions

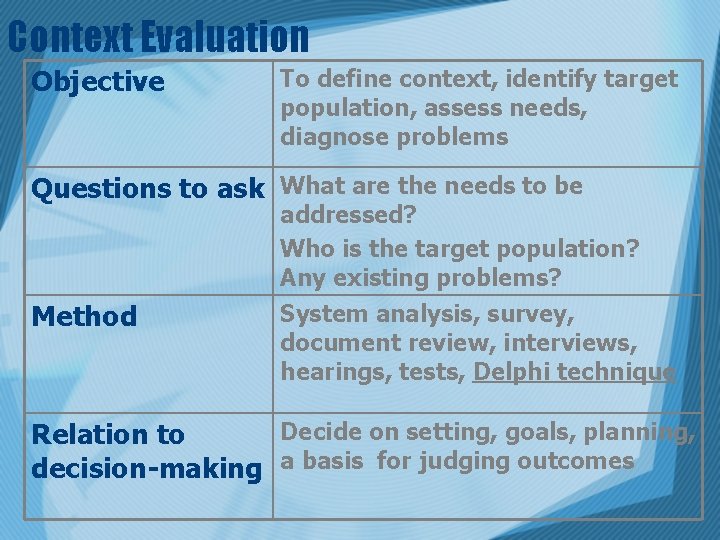

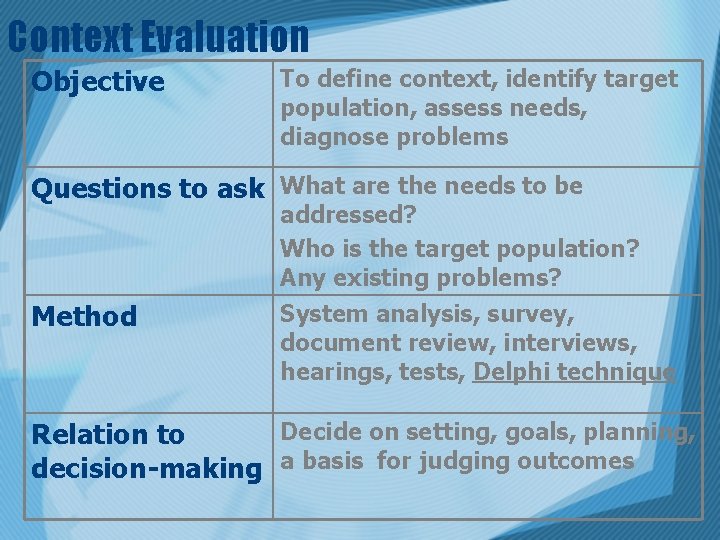

Context Evaluation
• Objective: To define the context, identify the target population, assess needs, and diagnose problems.
• Questions to ask: What needs should be addressed? Who is the target population? Are there existing problems?
• Method: System analysis, surveys, document review, interviews, hearings, tests, Delphi technique.
• Relation to decision-making: Deciding on the setting, goals, and plans; provides a basis for judging outcomes.

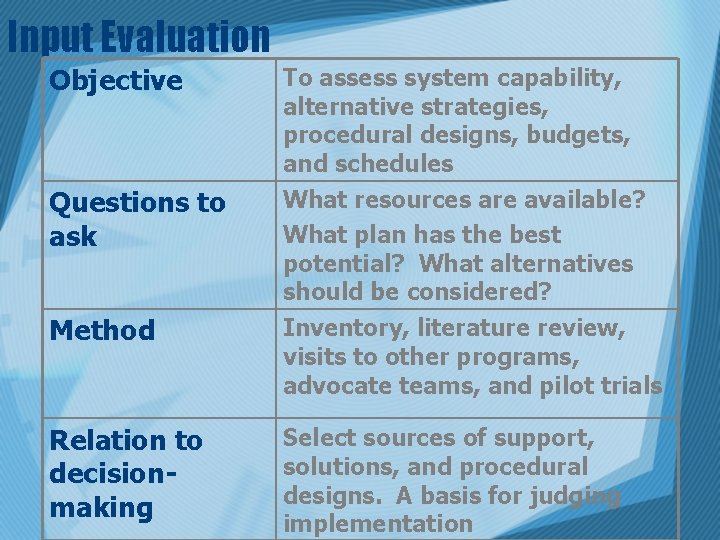

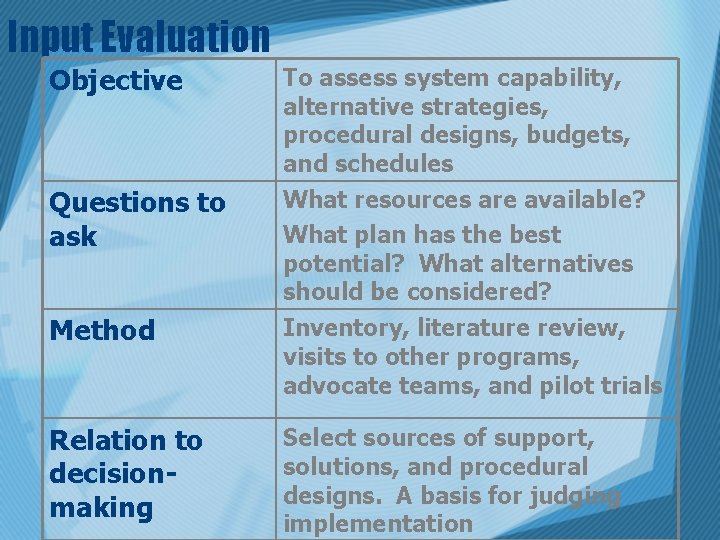

Input Evaluation
• Objective: To assess system capability, alternative strategies, procedural designs, budgets, and schedules.
• Questions to ask: What resources are available? Which plan has the best potential? What alternatives should be considered?
• Method: Inventory, literature review, visits to other programs, advocate teams, pilot trials.
• Relation to decision-making: Selecting sources of support, solutions, and procedural designs; provides a basis for judging implementation.

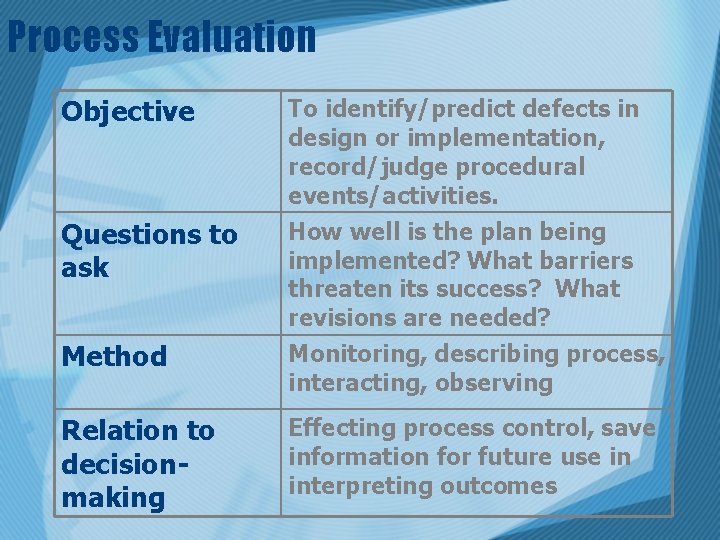

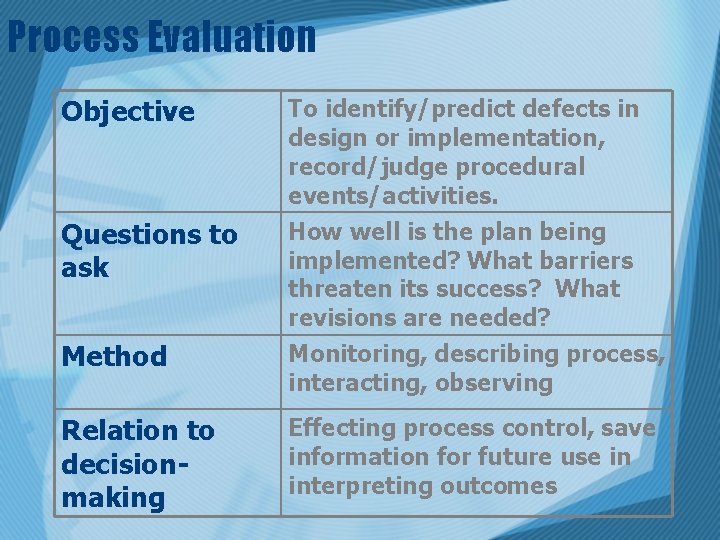

Process Evaluation
• Objective: To identify or predict defects in design or implementation, and to record and judge procedural events and activities.
• Questions to ask: How well is the plan being implemented? What barriers threaten its success? What revisions are needed?
• Method: Monitoring, describing the process, interacting, observing.
• Relation to decision-making: Effecting process control; saving information for later use in interpreting outcomes.

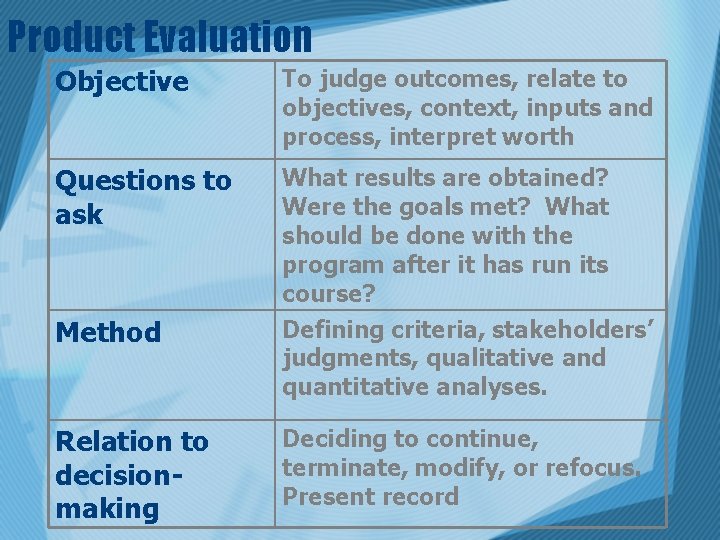

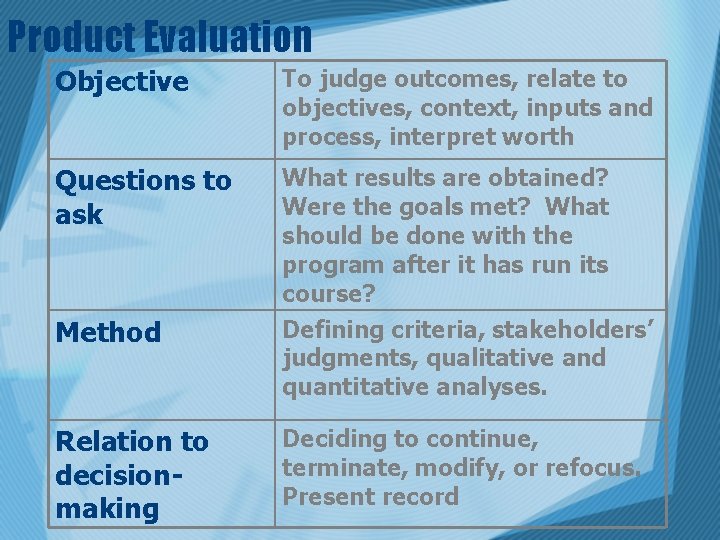

Product Evaluation
• Objective: To judge outcomes, relate them to objectives, context, inputs, and process, and interpret their worth.
• Questions to ask: What results were obtained? Were the goals met? What should be done with the program after it has run its course?
• Method: Defining criteria, stakeholders’ judgments, qualitative and quantitative analyses.
• Relation to decision-making: Deciding whether to continue, terminate, modify, or refocus the program; presenting a record of results.

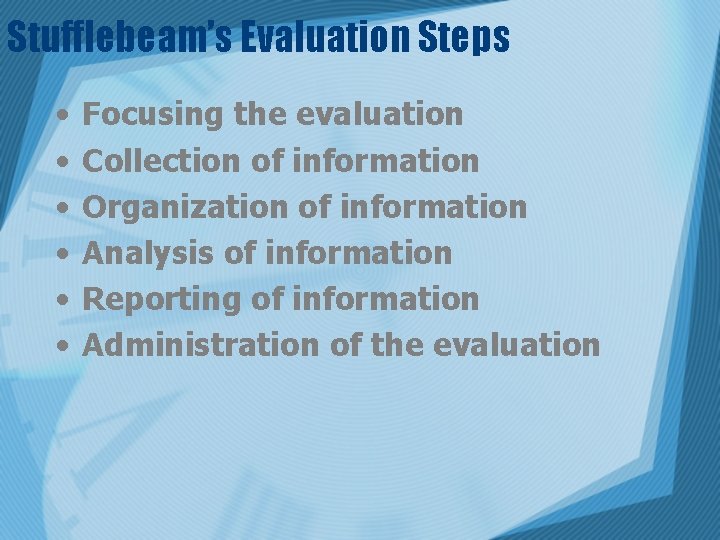

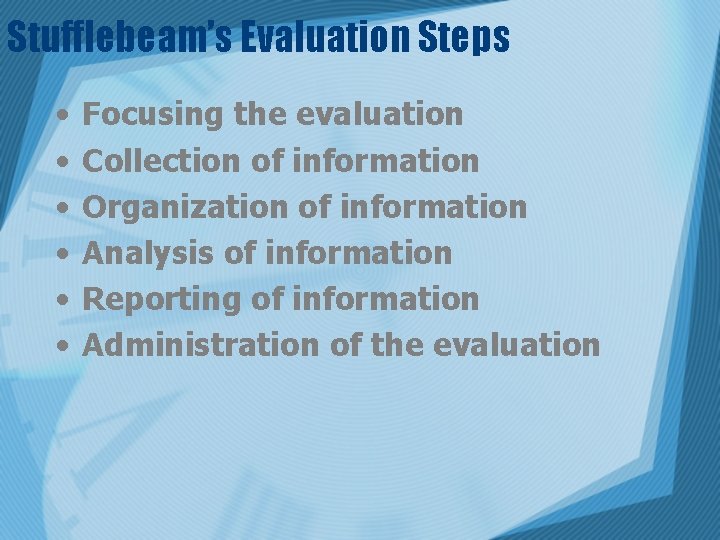

Stufflebeam’s Evaluation Steps
• Focusing the evaluation
• Collection of information
• Organization of information
• Analysis of information
• Reporting of information
• Administration of the evaluation

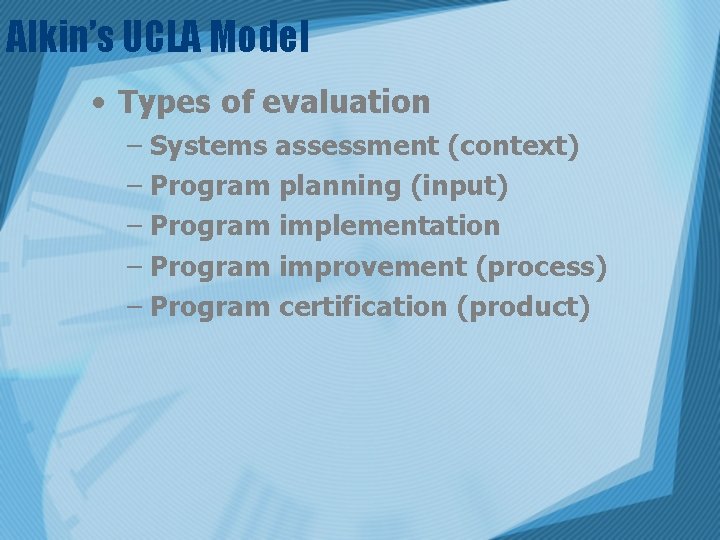

Alkin’s UCLA Model
• Types of evaluation:
– Systems assessment (context)
– Program planning (input)
– Program implementation
– Program improvement (process)
– Program certification (product)

Strengths
• Focuses on the informational needs and pending decisions of decision-makers
• Systematic and comprehensive
• Provides a wide variety of information
• Stresses the importance of the utility of information
• Evaluation happens throughout the program’s life
• Provides timely feedback for improvement
• CIPP is a heuristic tool that helps generate important questions to be answered, and it is easy to explain.

Weaknesses
• Narrow focus
– Inability to respond to issues that clash with the concerns of decision-makers
– Indecisive leaders are unlikely to benefit
• Possibly unfair or undemocratic evaluation
• May be expensive and complex
• Unwarranted assumptions
– That important decisions can be correctly identified up front
– That the decision-making process is orderly and predictable

Application of CIPP
• Evaluation framework for nursing education programs: application of the CIPP model (Singh, 2004)
• Critical success factors:
– Create an evaluation matrix
– Form a program evaluation committee that includes representatives from all partners
– Determine who will conduct the evaluation: internal or external evaluators
– Ensure the evaluators understand and adhere to the program evaluation standards

It’s Time for...
• Management-Oriented Evaluation Trivia
– Split up into two groups.
– Each group selects a team captain.
– The group with the most money wins.

References
• Fitzpatrick, J. L., Sanders, J. R., & Worthen, B. R. (2004). Program evaluation: Alternative approaches and practical guidelines. White Plains, NY: Longman.
• Patton, M. Q. (2002). Qualitative evaluation and research methods (3rd ed.). Newbury Park, CA: Sage.
• Scott, W. R. (2003). Organizations: Rational, natural, and open systems (5th ed.). Upper Saddle River, NJ: Prentice Hall.
• Singh, M. D. (2004). Evaluation framework for nursing education programs: Application of the CIPP model. International Journal of Nursing Education Scholarship, 1(1).