Machine-Independent Virtual Memory Management of Paged Uniprocessor and Multiprocessor Architectures

- Slides: 21

Machine-Independent Virtual Memory Management of Paged Uniprocessor and Multiprocessor Architectures

by Rashid, Tevanian, Young, Golub, Baron, Black, Bolosky, and Chew
Dept. of CS, Carnegie Mellon Univ., Pittsburgh, PA
ACM, October 1987

Presentation by Stephen Karg

The Mach Mission

The Problem: O/S portability suffers from a proliferation of distinct memory architectures, so portable systems offer little VM management beyond simple paging support.

The Goal: Design a memory management system that is readily portable to distinct uniprocessor and multiprocessor computing engines.

Main Features

- Supports virtual memory sharing between tasks (message passing, etc.).
- Allows for user-defined page handlers.
- Supports distributed paging and sharing across networks.
- All hardware dependencies are defined in a single abstraction.

Mach VM Basics

- Task: the execution environment in which multiple threads may run, akin to a process.
- Mach is designed to support large, sparse memory allocation.
- Each task possesses a large virtual address space (e.g. 4 GB).
- An address space typically consists of contiguous blocks of mapped memory surrounded by large areas of unallocated space. No attempt is made to compress the address space; this is currently unnecessary in most cases.
- Page tables reflect only currently allocated regions, not free space (otherwise they would be far too big).
- The resulting page-fault lookup is a little more complex, but a lot of costly address-space maintenance is eliminated.

Primary Data Structures

Machine-independent:

1. Resident page table: cache for physically allocated pages.
2. Virtual memory object: unit of virtual memory that can be mapped into a task address space.
3. Address map: maps a virtual_address_range to a memory_object.offset.

Machine-dependent:

4. pmap: hardware-defined physical address map.

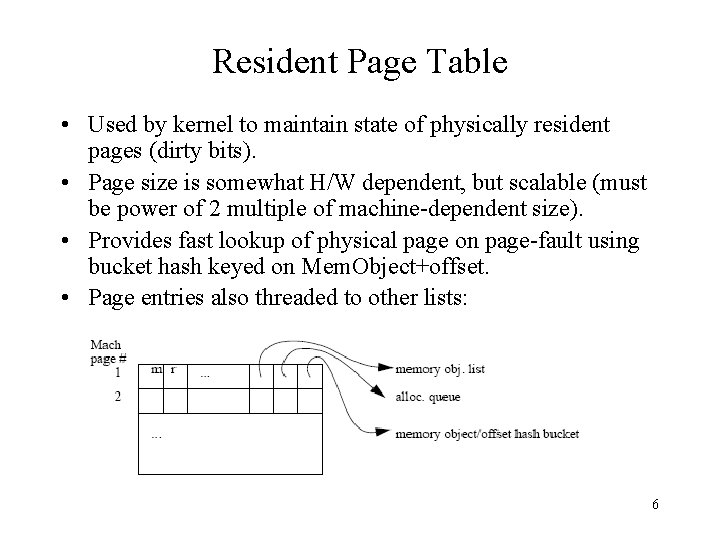

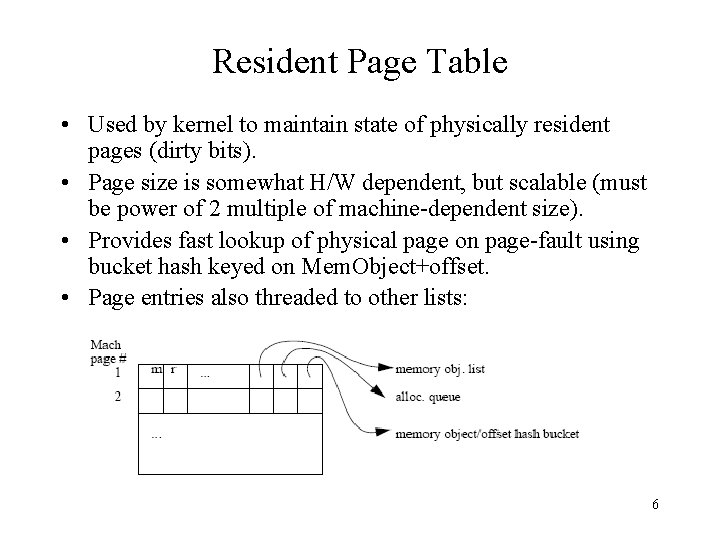

Resident Page Table

- Used by the kernel to maintain the state of physically resident pages (e.g. dirty bits).
- Page size is somewhat hardware dependent, but scalable (it must be a power-of-2 multiple of the machine-dependent size).
- Provides fast lookup of a physical page on a page fault, using a bucket hash keyed on memory object + offset.
- Page entries are also threaded onto other lists (see the slide figure).
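The hashed lookup described on this slide can be sketched as follows. This is a minimal illustration, not Mach's implementation: the class and field names (`Page`, `ResidentPageTable`, `obj_id`, `frame`) and the default sizes are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """One physically resident page (hypothetical fields)."""
    obj_id: int          # identity of the owning memory object
    offset: int          # page-aligned byte offset within that object
    frame: int           # physical frame number
    dirty: bool = False


class ResidentPageTable:
    """Bucketed hash table keyed on (memory object, offset)."""

    def __init__(self, nbuckets: int = 64, page_size: int = 4096):
        self.page_size = page_size
        self.buckets = [[] for _ in range(nbuckets)]

    def _bucket(self, obj_id: int, offset: int) -> list:
        index = hash((obj_id, offset // self.page_size)) % len(self.buckets)
        return self.buckets[index]

    def insert(self, page: Page) -> None:
        # Assumes page.offset is already page-aligned.
        self._bucket(page.obj_id, page.offset).append(page)

    def lookup(self, obj_id: int, offset: int):
        """Return the resident page covering offset, or None on a miss."""
        aligned = offset - offset % self.page_size
        for page in self._bucket(obj_id, aligned):
            if page.obj_id == obj_id and page.offset == aligned:
                return page
        return None
```

A miss (`None`) is the case in which the fault handler would have to ask the object's pager for the data.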

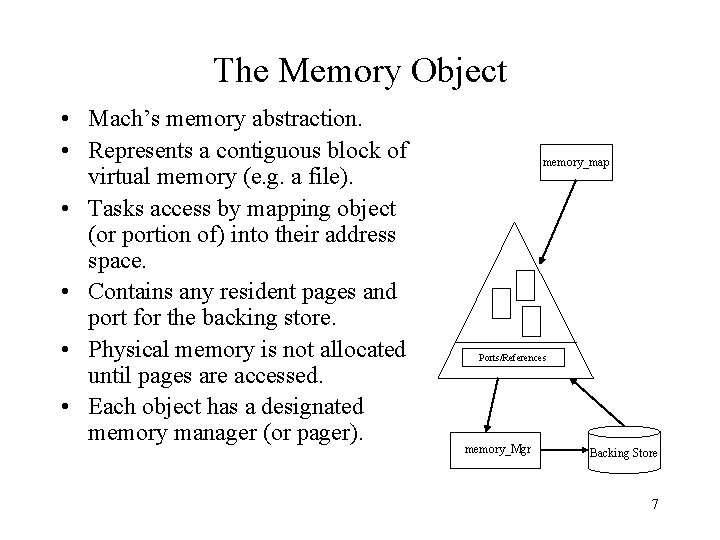

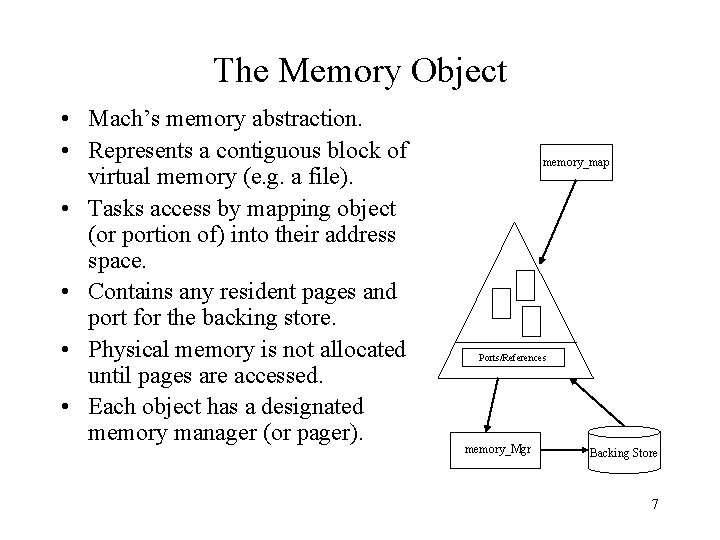

The Memory Object

- Mach's memory abstraction.
- Represents a contiguous block of virtual memory (e.g. a file).
- Tasks access it by mapping the object (or a portion of it) into their address space.
- Contains any resident pages and a port for the backing store.
- Physical memory is not allocated until pages are accessed.
- Each object has a designated memory manager (or pager).

Figure labels: memory_map, Ports/References, memory_Mgr, Backing Store.

The Address Map

- A kernel-maintained, sorted, doubly linked list of (allocated) virtual address ranges.
- Each node maps a contiguous range of virtual addresses to a contiguous region of a memory object.
- Because allocation is sparse (a few large contiguous regions), the list stays relatively short.
- Each mapping carries the inheritance and protection attributes of the region it defines.
- Mach allows the task to specify protection values for its virtual memory pages (r, w, x). These attributes can be used to control access to memory shared between tasks and can be inherited.
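A lookup through such a map can be sketched as below. The slide describes a sorted doubly linked list; this sketch swaps in a sorted Python list with binary search to stay short, and every name in it (`MapEntry`, `AddressMap`, the field names, the inheritance strings) is an assumption for illustration. It also assumes inserted ranges never overlap.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class MapEntry:
    start: int          # first virtual address of the range
    end: int            # one past the last virtual address
    obj_id: int         # memory object backing the range
    obj_offset: int     # offset in the object that `start` maps to
    protection: str     # subset of "rwx"
    inheritance: str    # e.g. "share", "copy", "none" (illustrative values)


class AddressMap:
    def __init__(self):
        self.entries = []   # kept sorted by start address

    def insert(self, entry: MapEntry) -> None:
        starts = [e.start for e in self.entries]
        self.entries.insert(bisect_right(starts, entry.start), entry)

    def lookup(self, vaddr: int):
        """Translate vaddr to (obj_id, offset, protection), or None if unallocated."""
        starts = [e.start for e in self.entries]
        i = bisect_right(starts, vaddr) - 1
        if i >= 0 and self.entries[i].start <= vaddr < self.entries[i].end:
            e = self.entries[i]
            return e.obj_id, e.obj_offset + (vaddr - e.start), e.protection
        return None
```

Only allocated ranges have entries, so the gaps in a sparse address space cost nothing to represent.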

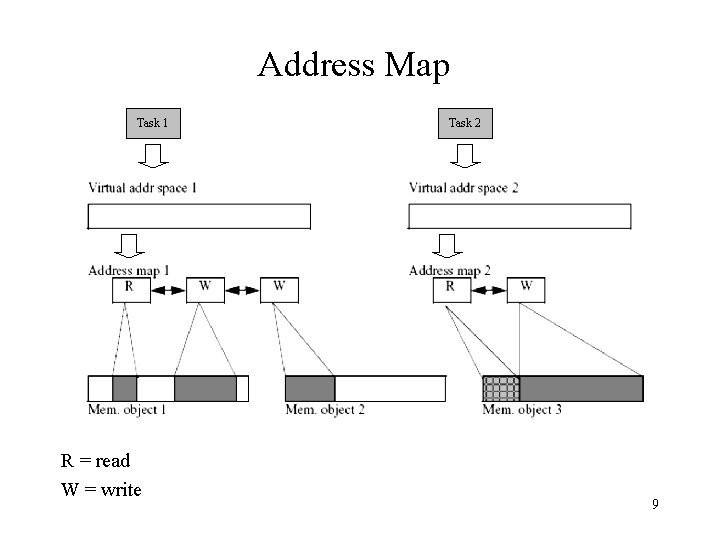

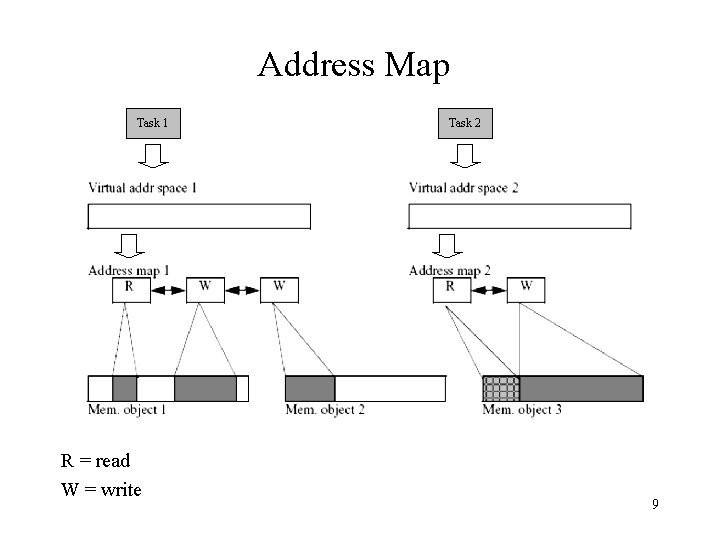

Address Map

Figure: address maps of Task 1 and Task 2 (R = read, W = write).

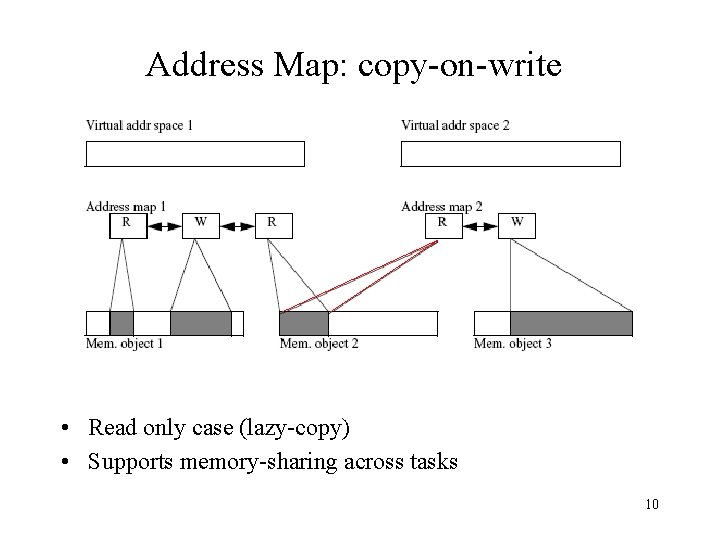

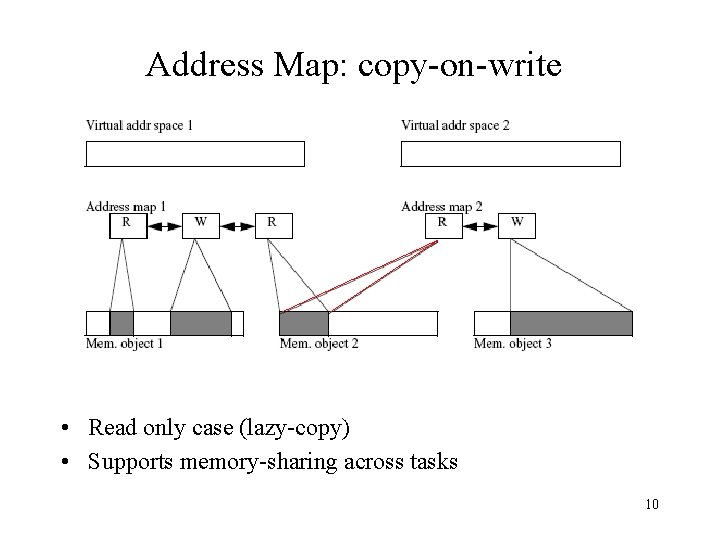

Address Map: copy-on-write

- Read-only case (lazy copy).
- Supports memory sharing across tasks.

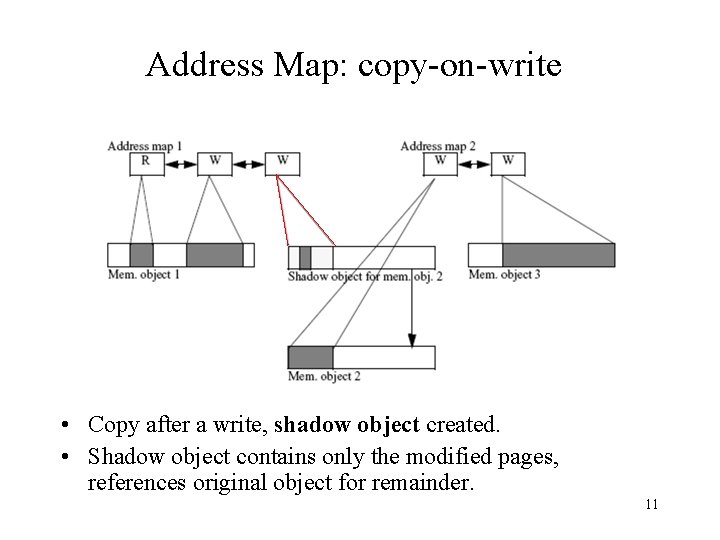

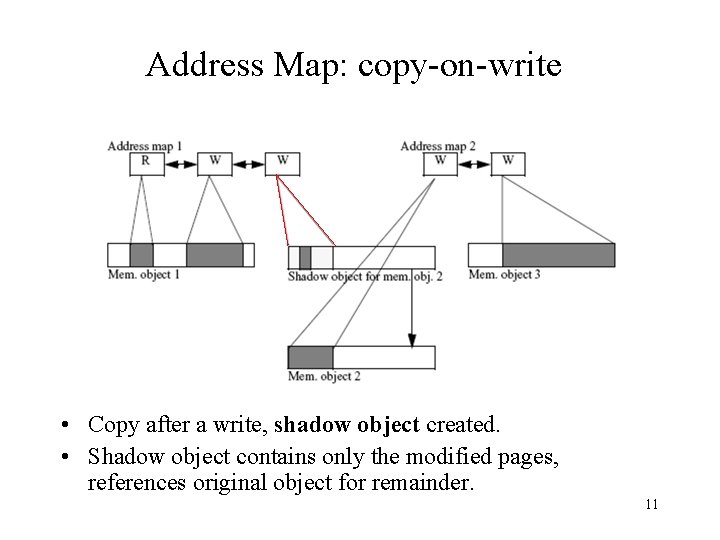

Address Map: copy-on-write

- The copy happens only after a write, at which point a shadow object is created.
- The shadow object contains only the modified pages and references the original object for the remainder.
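The shadow-object behaviour on this slide can be sketched as follows. This is a simplified model under stated assumptions, not Mach's code: `MemoryObject`, `Mapping`, `lazy_copy`, and the `needs_copy` flag are names chosen for the example, and pages are plain dictionary entries.

```python
class MemoryObject:
    """Holds pages by page index; `backing` is the object this one shadows."""

    def __init__(self, backing=None):
        self.pages = {}          # page index -> contents
        self.backing = backing

    def read(self, index):
        """Walk the shadow chain until some object holds the page."""
        obj = self
        while obj is not None:
            if index in obj.pages:
                return obj.pages[index]
            obj = obj.backing
        return None  # page was never written anywhere in the chain


class Mapping:
    """A task's view of an object; needs_copy marks a pending lazy copy."""

    def __init__(self, obj, needs_copy=False):
        self.obj = obj
        self.needs_copy = needs_copy

    def read(self, index):
        return self.obj.read(index)

    def write(self, index, data):
        if self.needs_copy:
            # First write after a lazy copy: put a shadow in front of the shared object.
            self.obj = MemoryObject(backing=self.obj)
            self.needs_copy = False
        self.obj.pages[index] = data


def lazy_copy(mapping):
    """Copy-on-write copy: share the object now, defer copying until a write."""
    mapping.needs_copy = True
    return Mapping(mapping.obj, needs_copy=True)
```

After `lazy_copy`, both mappings point at the same object; a write on either side lands in that side's own shadow, and reads of untouched pages fall through to the original.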

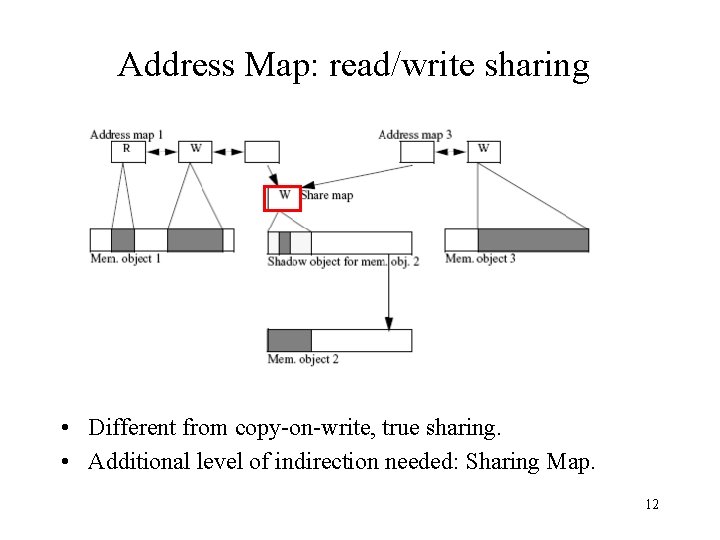

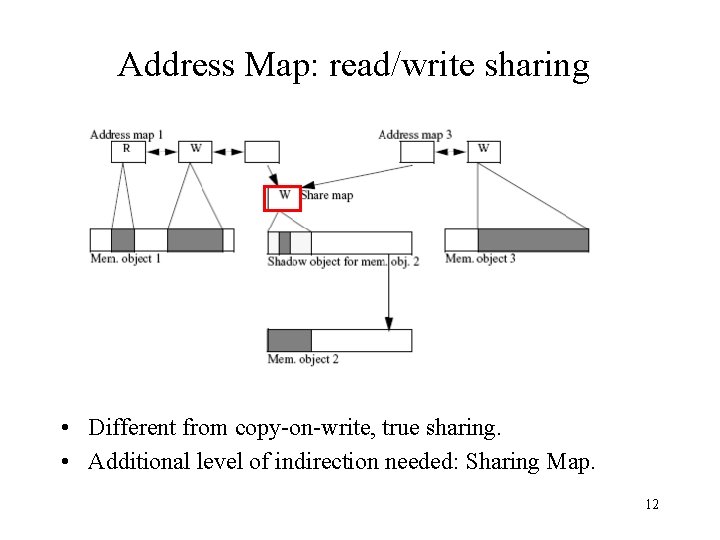

Address Map: read/write sharing

- Different from copy-on-write: this is true sharing.
- An additional level of indirection is needed: the sharing map.

Address Map Problems

- Most of the complexity of Mach's memory management arises from avoiding the long chains of shadow objects that can build up on repeated updates.
- Solution: garbage collection of intermediate shadow objects that are no longer needed (a complex algorithm; object locking is needed on multiple CPUs).
- Long chains sometimes occur during heavy paging and cannot be detected using in-memory data structures alone.
- Thrash-o-rama.
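The idea behind collapsing a shadow chain can be sketched as below. This is a deliberately simplified illustration, assuming single-threaded access and fully resident pages; it ignores the locking and paged-out cases the slide warns about. `ShadowObject`, `refs`, and `collapse` are hypothetical names.

```python
class ShadowObject:
    def __init__(self, backing=None):
        self.pages = {}          # page index -> contents
        self.backing = backing
        self.refs = 1            # number of mappings or shadows pointing at this object


def collapse(obj):
    """Merge singly referenced backing objects into obj, shortening the chain."""
    while obj.backing is not None and obj.backing.refs == 1:
        middle = obj.backing
        for index, data in middle.pages.items():
            obj.pages.setdefault(index, data)   # pages already in obj are newer and win
        obj.backing = middle.backing            # obj takes over middle's reference
    return obj
```

An intermediate object can be absorbed only when nothing else references it; an object still shared with another task (`refs > 1`) stops the collapse.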

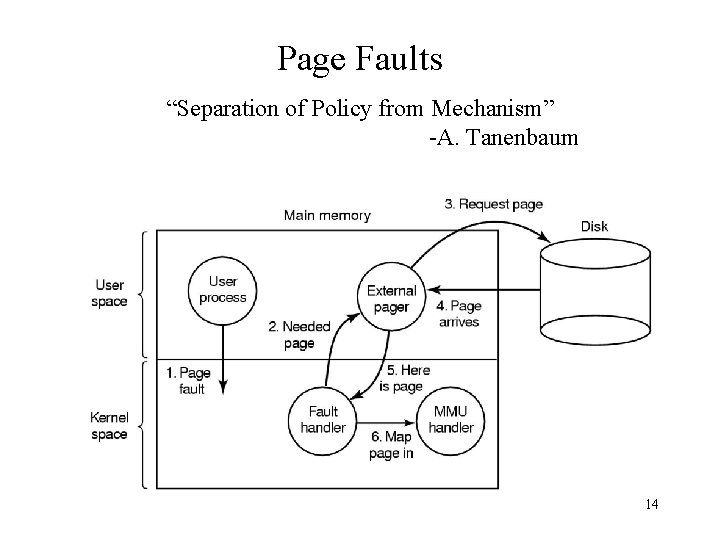

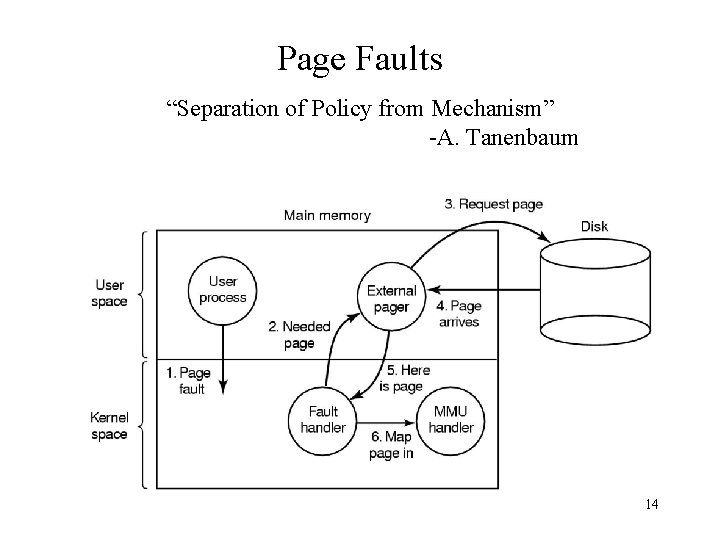

Page Faults

“Separation of Policy from Mechanism” - A. Tanenbaum

Page Faults

1. User process page-faults and traps.
2. Kernel fault handler intercepts and makes an asynchronous upcall to the associated external pager.
3. External pager goes to disk.
4. It copies the requested page into its own address space.
5. It tells the fault handler that the page has arrived and where to find it.
6. Fault handler unmaps the page from the pager's address space and asks the MMU handler (pmap) to map it into the user's address space at the right spot.
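The six steps above can be traced in a small simulation. It is a sketch under several assumptions: the upcall is modelled as an ordinary synchronous method call rather than an asynchronous message, a virtual page number doubles as the offset in the memory object, and all names (`ExternalPager`, `Kernel`, `data_request`) are invented for the example.

```python
class ExternalPager:
    """User-level pager; `disk` stands in for the backing store."""

    def __init__(self, disk):
        self.disk = disk
        self.address_space = {}          # pager's own pages, keyed by offset

    def data_request(self, offset):
        # Steps 3-5: read from disk into the pager's space, then report where it is.
        self.address_space[offset] = self.disk[offset]
        return offset


class Kernel:
    def __init__(self, pager):
        self.pager = pager
        self.pmap = {}                   # virtual page -> page contents

    def access(self, vpage):
        if vpage not in self.pmap:       # step 1: the access faults and traps
            self.handle_fault(vpage)
        return self.pmap[vpage]

    def handle_fault(self, vpage):
        where = self.pager.data_request(vpage)       # step 2: upcall to the pager
        page = self.pager.address_space.pop(where)   # step 6: unmap from the pager...
        self.pmap[vpage] = page                      # ...and map into the faulting task
```

Once a page is mapped, later accesses are served from the task's own mapping and the pager is not consulted again.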

External Pagers

- One is associated with each memory object; it can be user-defined for specific application needs.
- A default Mach page handler is also provided (at user level).
- When the kernel sends a pageout 'request' to a user-level pager, the pager can decide which page to swap out.
- If the pager is uncooperative, the default pager is invoked to perform the necessary pageout.
- Upside:
  - VM 'tuning' based on application needs (e.g. DBMS).
  - Good for maintaining consistency on multiprocessors.
  - Allowed VM sharing to be extended over a distributed network.
- Downside:
  - Upcalls from kernel (response?)
  - Lots of context switching (well amortized?)

Distributed Shared Memory Server

- Manages pagers on multiple machines across the network.
- Physical read-only copies are allowed on multiple machines.
- All related fault handlers are on a group communications channel.
- On a page fault:
  - A message is broadcast to the related handlers: "I need page X".
  - The handler with resident page X sends a copy to the friend in need.
  - Notice: a trip to disk was not required.
- On a write:
  - Invalidate the shared page in all other tasks (another broadcast).
  - Allow the write to the local page.
  - Subsequent accesses page-fault and get a copy of the updated page.
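The read-copy and write-invalidate protocol on this slide can be sketched as below. It is a toy model, not the Mach server: the broadcast is a loop over in-process objects, the fall-back to disk when no peer holds the page is an assumption the slide does not spell out, and the names (`Node`, `SharedMemoryServer`, `disk_reads`) are illustrative.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.pages = {}                      # page id -> contents (local copies)


class SharedMemoryServer:
    """Stands in for the group channel linking the fault handlers."""

    def __init__(self, nodes, disk):
        self.nodes = nodes
        self.disk = disk
        self.disk_reads = 0

    def read(self, node, page):
        if page not in node.pages:           # page fault: "I need page X"
            for peer in self.nodes:
                if peer is not node and page in peer.pages:
                    node.pages[page] = peer.pages[page]   # copy from a peer
                    break
            else:
                self.disk_reads += 1         # assumed: nobody has it, so go to disk
                node.pages[page] = self.disk[page]
        return node.pages[page]

    def write(self, node, page, data):
        for peer in self.nodes:              # invalidate every other copy
            if peer is not node:
                peer.pages.pop(page, None)
        node.pages[page] = data              # then allow the local write
```

After a write, a peer's next read faults and fetches the updated copy from the writer, again without touching the disk.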

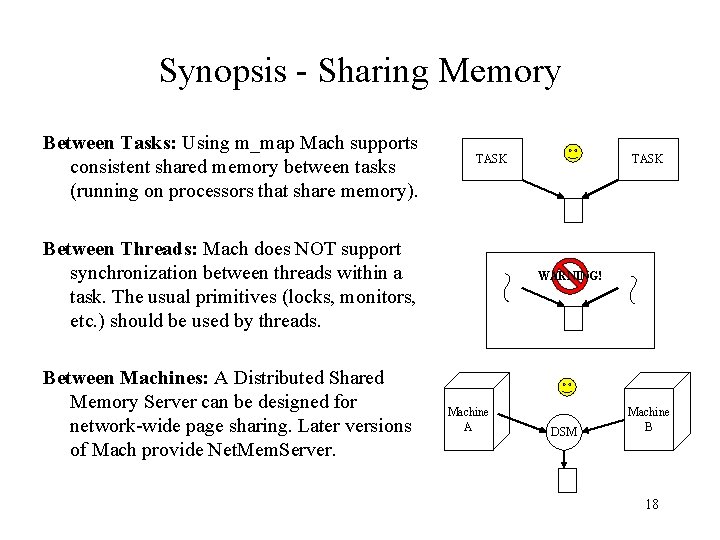

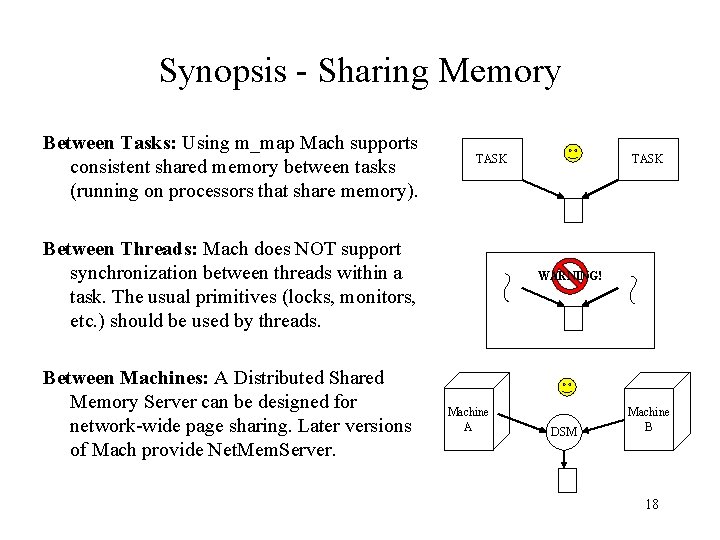

Synopsis - Sharing Memory

- Between Tasks: Using m_map, Mach supports consistent shared memory between tasks (running on processors that share memory).
- Between Threads: Mach does NOT support synchronization between threads within a task. The usual primitives (locks, monitors, etc.) should be used by threads.
- Between Machines: A Distributed Shared Memory Server can be designed for network-wide page sharing. Later versions of Mach provide Net.Mem.Server.

Figure labels: TASK, TASK, WARNING!, Machine A, DSM, Machine B.

pmap Interface

- Where portability and hardware meet.
- Implements only those operations necessary to manage the hardware-required mapping data structures (namely, the page tables).
- Does not need to track all valid mappings, but maintains the current physical mappings of the kernel and of frequently referenced task addresses.
- Can use lazy evaluation for other VM information, which is reconstructed at fault time from the machine-independent data structures.
- The implementer of a pmap "needs to know very little about the way Mach functions", but will need to know a great deal about the underlying architecture.
- The hardware page table used has a significant impact on the implementation and on the relative ease of portability.
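The lazy-evaluation point can be illustrated with a pmap that is treated as a discardable cache. This is a sketch only: the method names echo pmap-style operations (`enter`, `remove`, `extract`) but their signatures here are simplified assumptions, and `Task`, `resident`, and `faults` are invented for the example.

```python
class Pmap:
    """Machine-dependent cache of virtual-to-physical translations."""

    def __init__(self):
        self.table = {}                      # virtual page -> physical frame

    def enter(self, vpage, frame):
        self.table[vpage] = frame

    def remove(self, vpage):
        self.table.pop(vpage, None)

    def extract(self, vpage):
        return self.table.get(vpage)


class Task:
    def __init__(self, resident):
        self.resident = resident             # machine-independent truth: vpage -> frame
        self.pmap = Pmap()
        self.faults = 0

    def translate(self, vpage):
        frame = self.pmap.extract(vpage)
        if frame is None:                    # mapping never entered, or discarded
            self.faults += 1
            frame = self.resident[vpage]     # rebuild from machine-independent state
            self.pmap.enter(vpage, frame)
        return frame
```

Because the machine-independent structures hold the authoritative state, dropping a pmap entry costs only one extra fault the next time that page is referenced.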

Mach VM performance

- Measurements of fine-grained operations, plus some high-volume testing, show impressive results.
- Same or better performance when compared to traditional UNIX, presumably a good benchmark at the time ('87).
- Considering the various complexity issues with shadow chains and user-level page handlers, I would have liked to see more load-bearing tests of Mach paging thresholds.
- With the added OOD complexity and regular context switching, the good performance results were somewhat surprising.

Conclusions

Accomplishments:

- First machine-independent, portable VM design.
- Feature-rich memory management (OOD meets VM).
- User-level pagers are very beneficial and customizable, and opened the door for distributed VM management.
- Allows for unbiased examination of underlying hardware support (a useful research tool).

Drawbacks:

- Usual tradeoff: customizability vs. complexity.
- Since then Mach has been seen to be slow at times, though it is often compared against hardware-tuned, non-portable OSs.