Machine learning techniques for credit risk modeling in practice

- Slides: 30

Machine learning techniques for credit risk modeling in practice

Balaton Attila, OTP Bank, Analysis and Modeling Department, 2017. 02. 23.

"Machine learning is the subfield of computer science that gives computers the ability to learn without being explicitly programmed (Arthur Samuel, 1959). Evolved from the study of pattern recognition and computational learning theory in artificial intelligence, machine learning explores the study and construction of algorithms that can learn from and make predictions on data…" (Wikipedia)

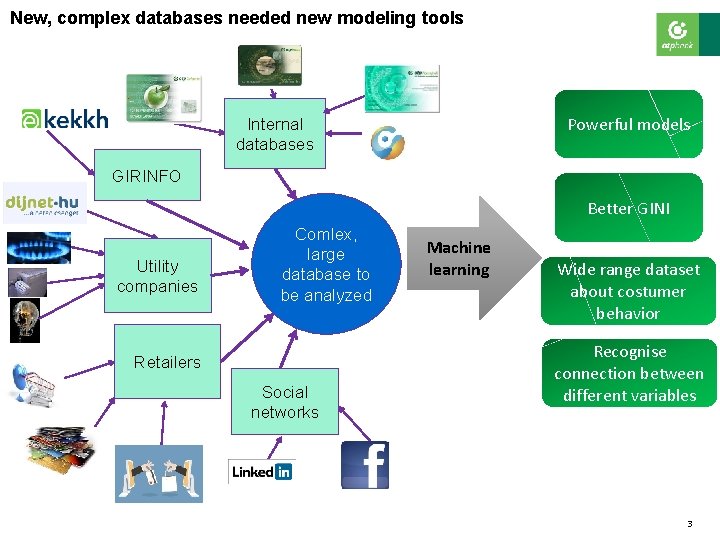

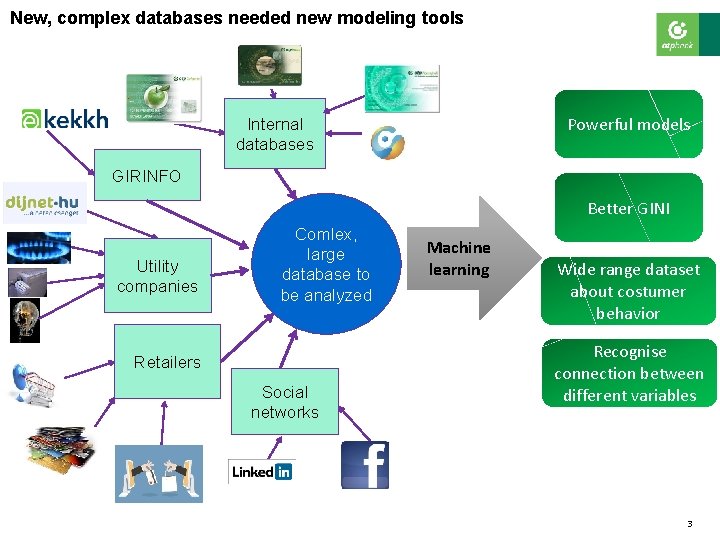

New, complex databases needed new modeling tools

- Data sources: internal databases, GIRINFO, utility companies, retailers, social networks
- A complex, large database to be analyzed: a wide-ranging dataset about customer behavior
- Machine learning: recognizes connections between different variables, giving powerful models and a better Gini

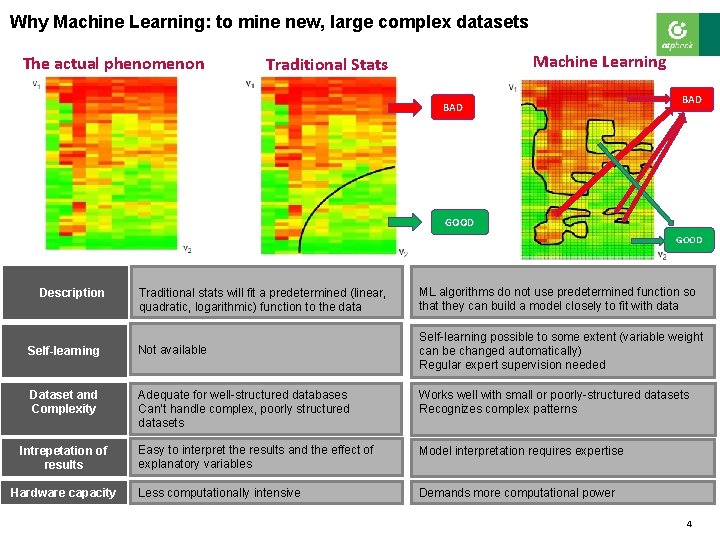

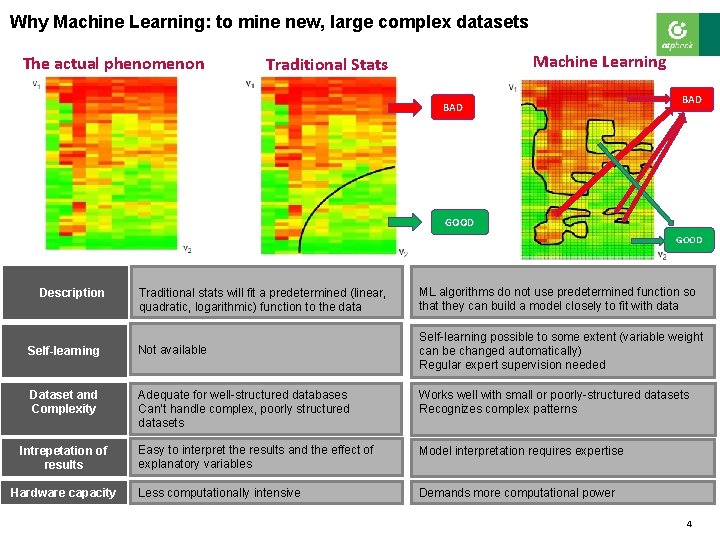

Why Machine Learning: to mine new, large, complex datasets

Figure: the actual phenomenon compared with a Machine Learning fit and a Traditional Stats fit (panels marked BAD and GOOD).

| | Traditional stats | Machine learning |
|---|---|---|
| Description | Fits a predetermined (linear, quadratic, logarithmic) function to the data | Does not use a predetermined function, so it can build a model that closely fits the data |
| Self-learning | Not available | Possible to some extent (variable weights can be changed automatically); regular expert supervision needed |
| Dataset and complexity | Adequate for well-structured databases; can't handle complex, poorly structured datasets | Works well with small or poorly structured datasets; recognizes complex patterns |
| Interpretation of results | Easy to interpret the results and the effect of explanatory variables | Model interpretation requires expertise |
| Hardware capacity | Less computationally intensive | Demands more computational power |

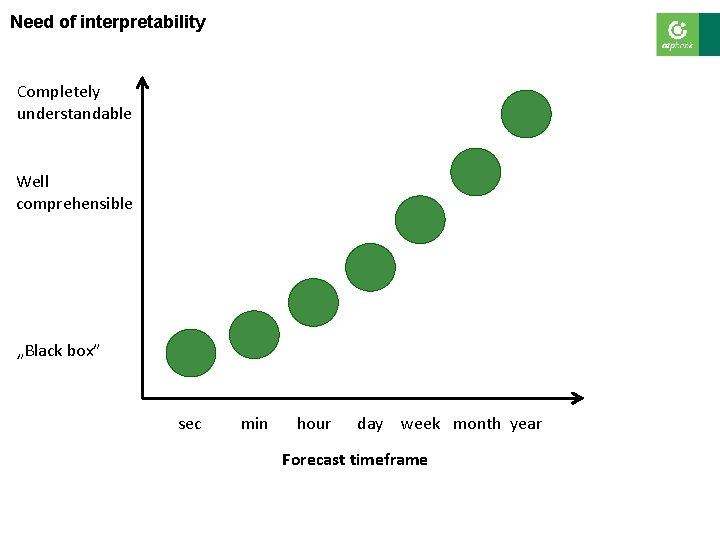

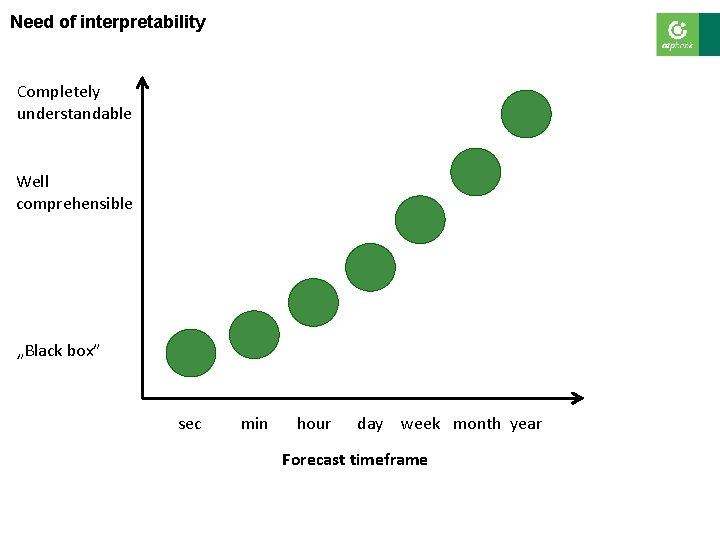

Figure: need for interpretability (completely understandable, well comprehensible, "black box") plotted against forecast timeframe (sec, min, hour, day, week, month, year).

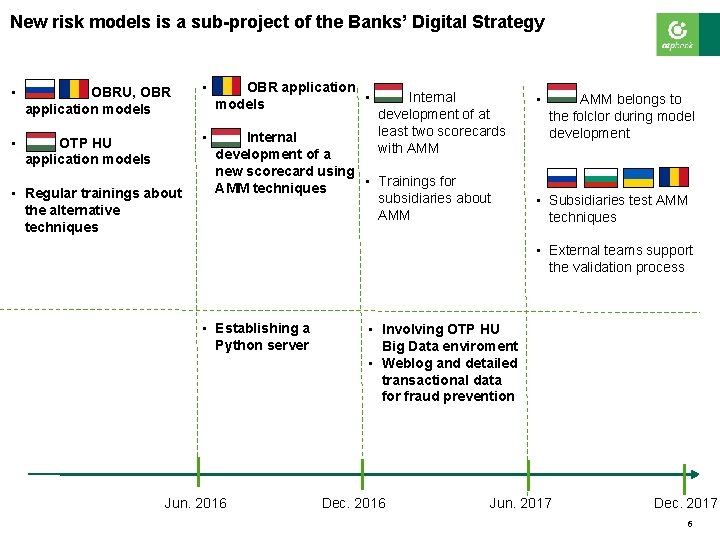

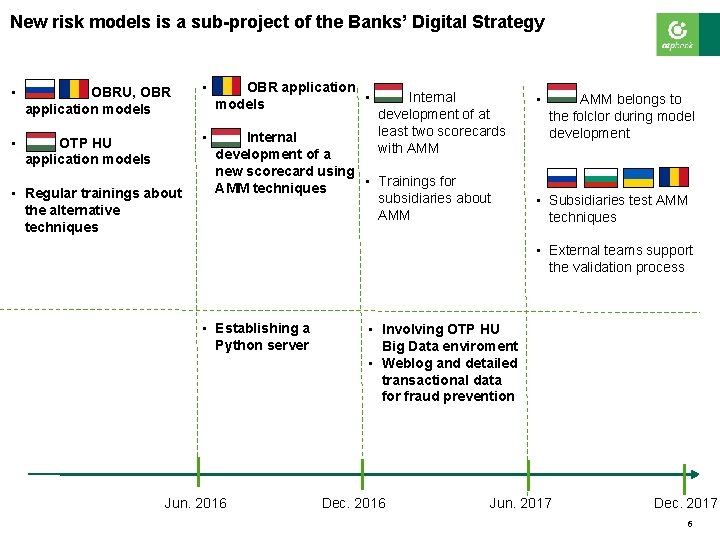

The New risk models project is a sub-project of the Bank's Digital Strategy

Timeline: Jun. 2016, Dec. 2016, Jun. 2017, Dec. 2017

- OBRU, OBR application models
- OTP HU application models
- Regular trainings about the alternative techniques
- OBR application models: internal development of at least two scorecards with AMM
- Internal development of a new scorecard using AMM techniques
- Trainings for subsidiaries about AMM
- AMM becomes part of the standard practice ("folklore") of model development
- Subsidiaries test AMM techniques
- External teams support the validation process
- Establishing a Python server (Jun. 2016)
- Involving the OTP HU Big Data environment
- Weblog and detailed transactional data for fraud prevention

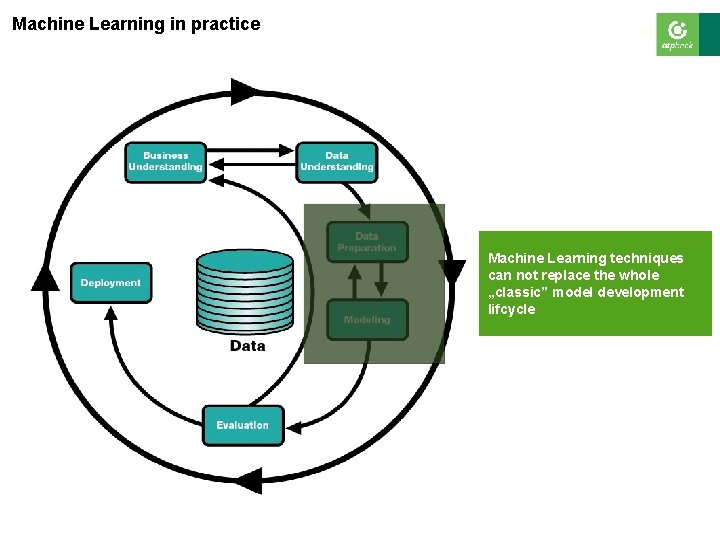

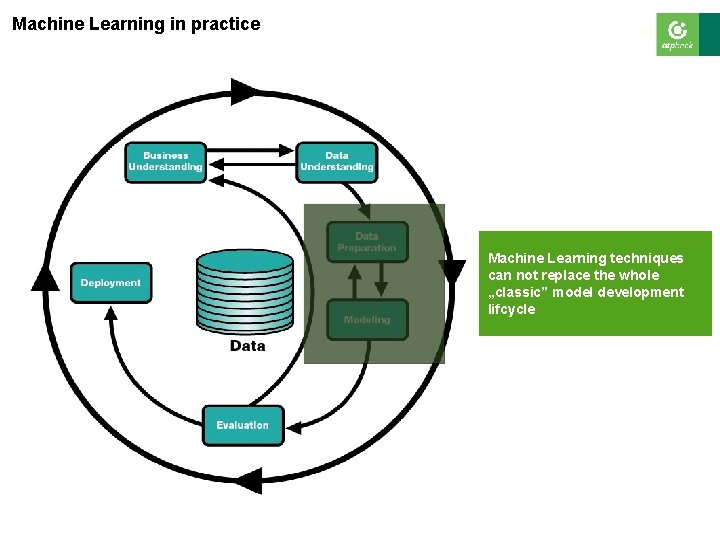

Machine Learning in practice

Machine Learning techniques cannot replace the whole "classic" model development lifecycle.

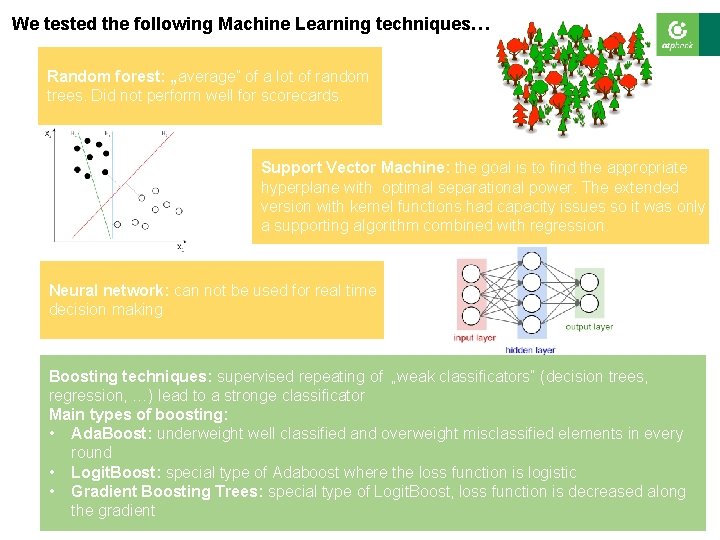

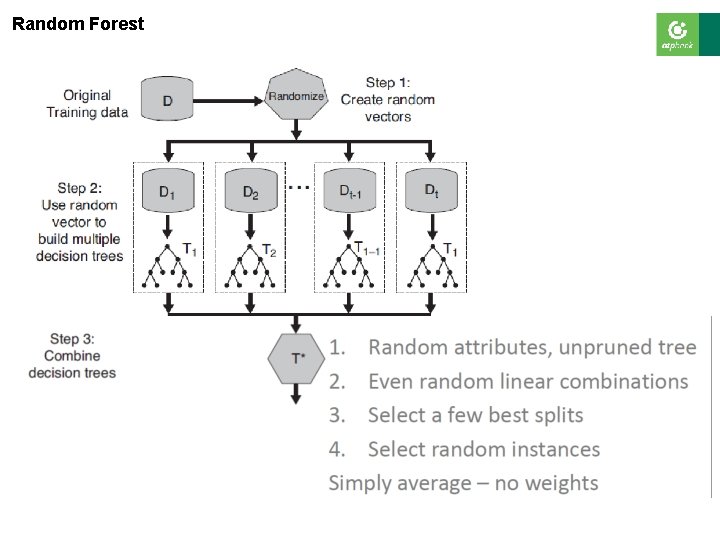

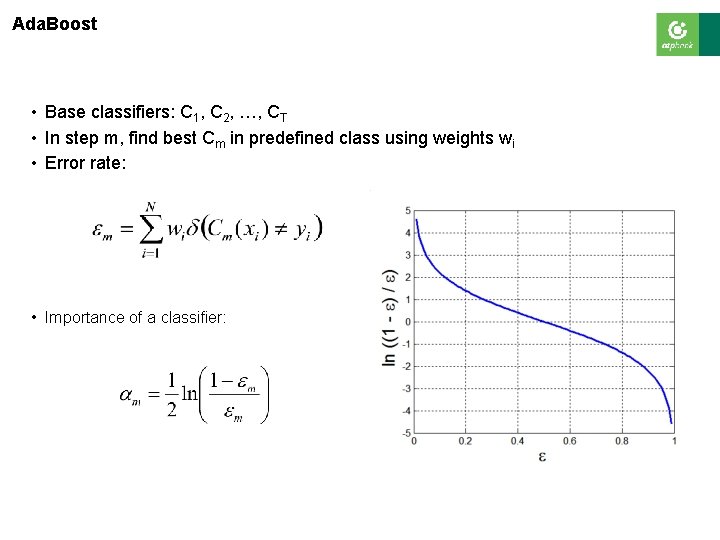

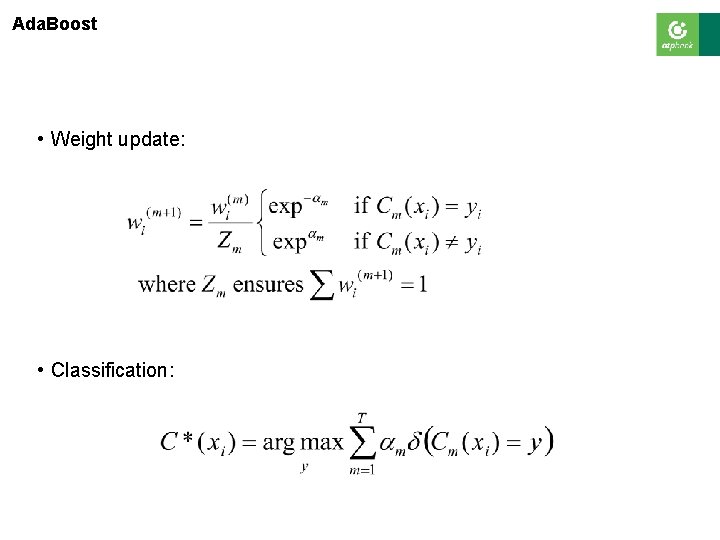

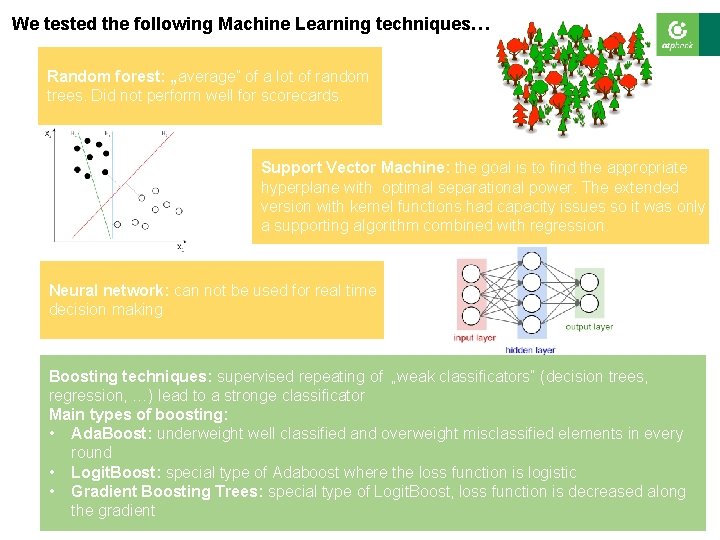

We tested the following Machine Learning techniques:

- Random forest: an "average" of many random trees. It did not perform well for scorecards.
- Support Vector Machine: the goal is to find the hyperplane with optimal separating power. The extended version with kernel functions had capacity issues, so it was used only as a supporting algorithm combined with regression.
- Neural network: cannot be used for real-time decision making.
- Boosting techniques: repeating "weak classifiers" (decision trees, regression, …) in a supervised way leads to a strong classifier.

Main types of boosting:

- AdaBoost: down-weights well-classified and up-weights misclassified elements in every round
- LogitBoost: a variant of AdaBoost where the loss function is logistic
- Gradient Boosting Trees: the loss function is decreased step by step along its negative gradient; with the logistic loss this is closely related to LogitBoost

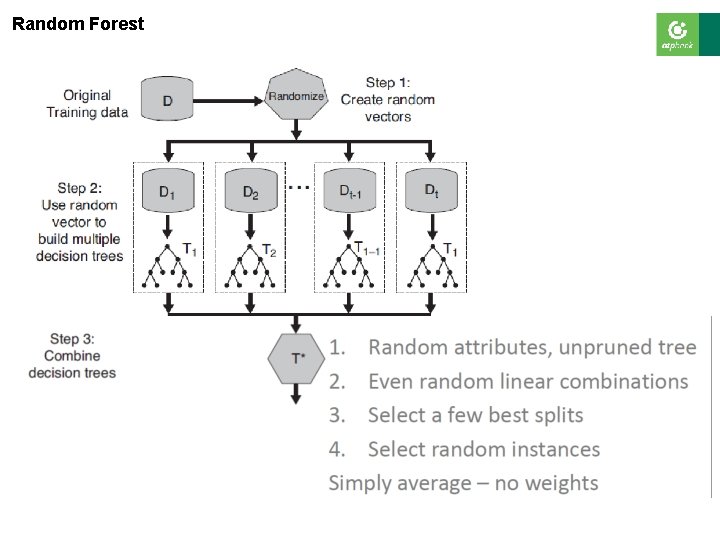

Random Forest

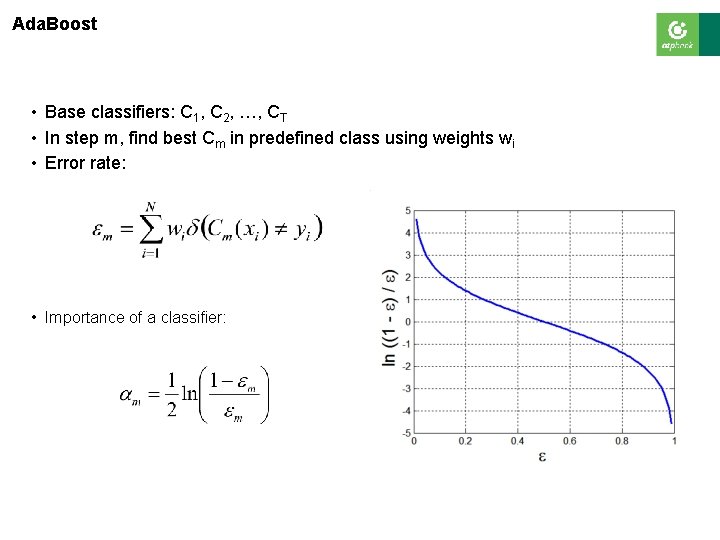
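The "average of many random trees" can be illustrated with a minimal pure-Python sketch. This is not the implementation tested at the bank.

It rests on these assumptions:

- Every tree is a depth-1 tree (a stump) grown on a bootstrap sample.
- Each tree may only split on a random subset of the features.
- The forest score is the share of trees voting for class 1.

All function names and the toy data are hypothetical.

```python
import random
from collections import Counter

def fit_random_stump(X, y, n_candidate_features, rng):
    """Depth-1 tree: try a random subset of features, keep the split with fewest errors."""
    best = None  # (errors, feature, threshold, left label, right label)
    for f in rng.sample(range(len(X[0])), n_candidate_features):
        for t in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= t]
            right = [label for row, label in zip(X, y) if row[f] > t]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = sum(l != left_label for l in left) + sum(l != right_label for l in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    _, f, t, left_label, right_label = best
    return lambda row: left_label if row[f] <= t else right_label

def fit_forest(X, y, n_trees=51, n_candidate_features=1, seed=0):
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        trees.append(fit_random_stump([X[i] for i in idx], [y[i] for i in idx],
                                      n_candidate_features, rng))
    return trees

def forest_score(trees, row):
    """The 'average' of the trees: share of trees voting for class 1."""
    return sum(tree(row) for tree in trees) / len(trees)

# Toy data: feature 0 is informative, feature 1 is noise.
X = [[x, (7 * x) % 5] for x in range(20)]
y = [1 if x >= 10 else 0 for x in range(20)]
forest = fit_forest(X, y)
print(forest_score(forest, [19, 3]), forest_score(forest, [0, 0]))
```

Restricting each split to a random feature subset is what distinguishes a random forest from plain bagging. Here the subset is one of two features. The restriction decorrelates the trees, so averaging them reduces variance.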

AdaBoost

- Base classifiers: $C_1, C_2, \dots, C_T$
- In step $m$, find the best $C_m$ in a predefined class using weights $w_i$
- Error rate:
- Importance of a classifier:

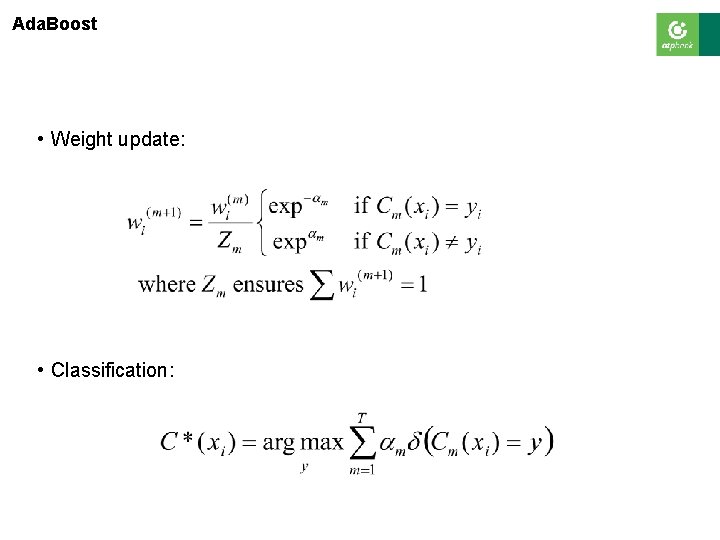
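The slide's formulas appear only in the image. For reference, here is one common formulation, as in Freund and Schapire's tutorial. It assumes labels $y_i \in \{-1, +1\}$ and current instance weights $w_i$:

$$
\varepsilon_m = \frac{\sum_{i=1}^{n} w_i \,\mathbb{1}\left[C_m(x_i) \neq y_i\right]}{\sum_{i=1}^{n} w_i},
\qquad
\alpha_m = \frac{1}{2}\ln\frac{1-\varepsilon_m}{\varepsilon_m}
$$

A classifier with $\varepsilon_m < 1/2$ gets a positive importance $\alpha_m$, and $\alpha_m$ grows as $\varepsilon_m$ shrinks.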

AdaBoost

- Weight update:
- Classification:

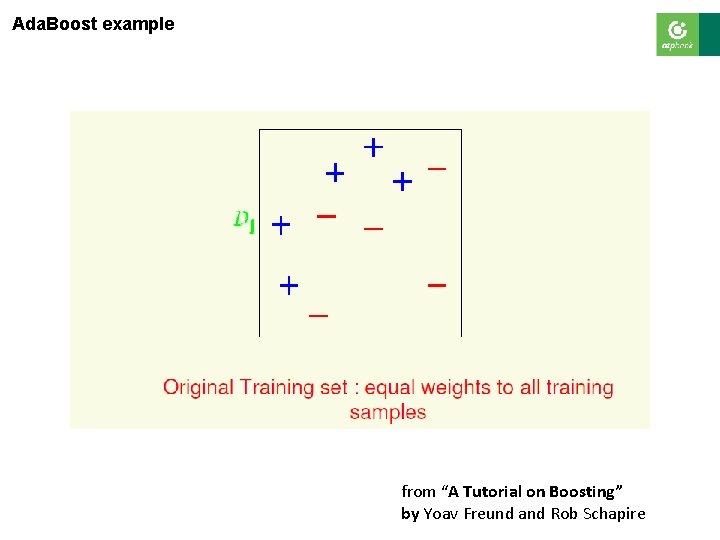

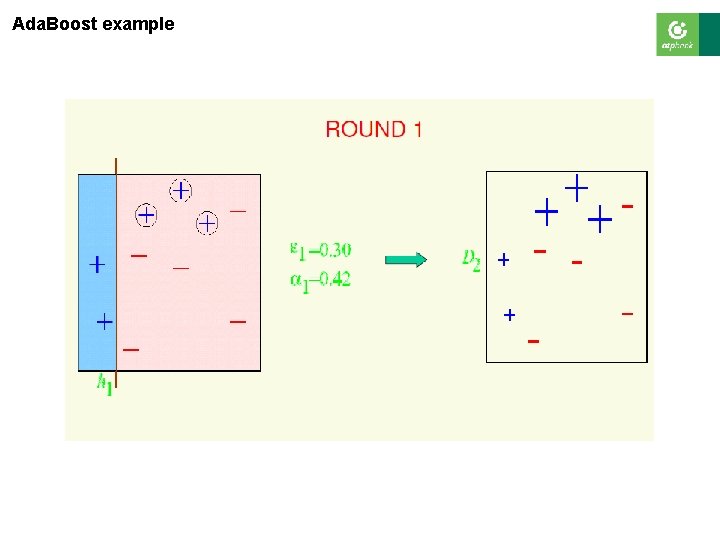

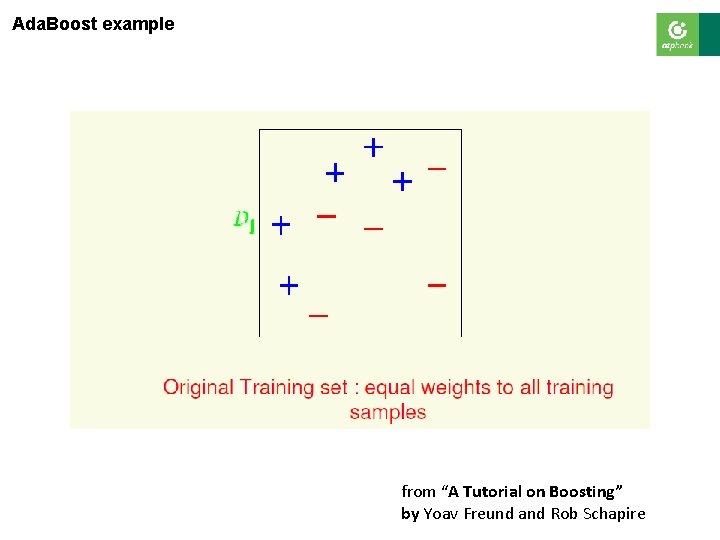
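The weight update and the weighted vote can be sketched in pure Python with decision stumps as base classifiers. This is a minimal illustration, not the project code.

It assumes:

- labels in $\{-1, +1\}$;
- the update $w_i \leftarrow w_i\, e^{-\alpha_m y_i C_m(x_i)} / Z_m$;
- the final classifier $\operatorname{sign}\sum_m \alpha_m C_m(x)$.

Function names and toy data are hypothetical.

```python
import math

def stump_predict(f, t, pol, row):
    """A stump predicts `pol` when row[f] <= t and `-pol` otherwise."""
    return pol if row[f] <= t else -pol

def fit_weighted_stump(X, y, w):
    """Best stump (feature, threshold, polarity) under instance weights w."""
    best = None  # (weighted error, feature, threshold, polarity)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for pol in (1, -1):
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if stump_predict(f, t, pol, row) != yi)
                if best is None or err < best[0]:
                    best = (err, f, t, pol)
    return best

def adaboost(X, y, rounds=10):
    """Labels y must be -1 or +1. Returns a list of (alpha, feature, threshold, polarity)."""
    n = len(X)
    w = [1.0 / n] * n                              # uniform initial weights
    model = []
    for _ in range(rounds):
        err, f, t, pol = fit_weighted_stump(X, y, w)   # weights sum to 1, so err is the error rate
        err = min(max(err, 1e-10), 1 - 1e-10)      # guard against log(0) for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)    # importance of the classifier
        model.append((alpha, f, t, pol))
        # weight update: exp(+alpha) for misclassified points, exp(-alpha) for correct ones
        w = [wi * math.exp(-alpha * yi * stump_predict(f, t, pol, row))
             for wi, yi, row in zip(w, y, X)]
        z = sum(w)                                 # normalisation factor Z_m
        w = [wi / z for wi in w]
    return model

def classify(model, row):
    """Weighted vote: sign of sum_m alpha_m * C_m(x)."""
    score = sum(alpha * stump_predict(f, t, pol, row) for alpha, f, t, pol in model)
    return 1 if score >= 0 else -1

X = [[x] for x in range(10)]
y = [-1] * 5 + [1] * 5
model = adaboost(X, y, rounds=5)
print([classify(model, row) for row in X])
```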

AdaBoost example from "A Tutorial on Boosting" by Yoav Freund and Rob Schapire

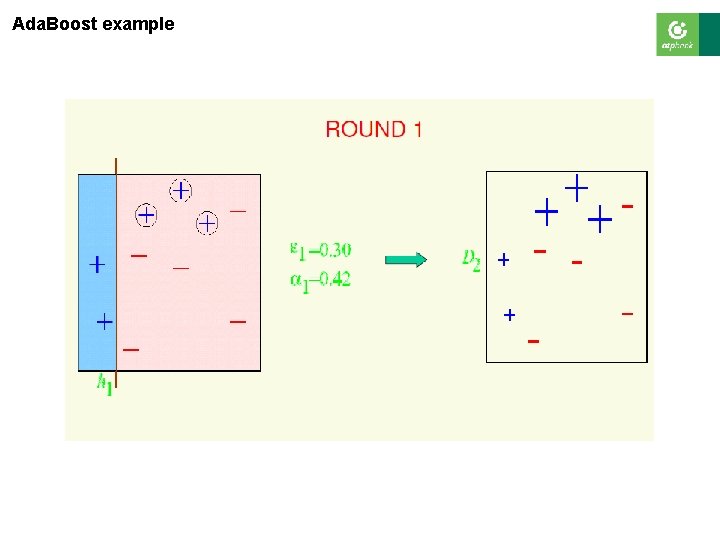

AdaBoost example

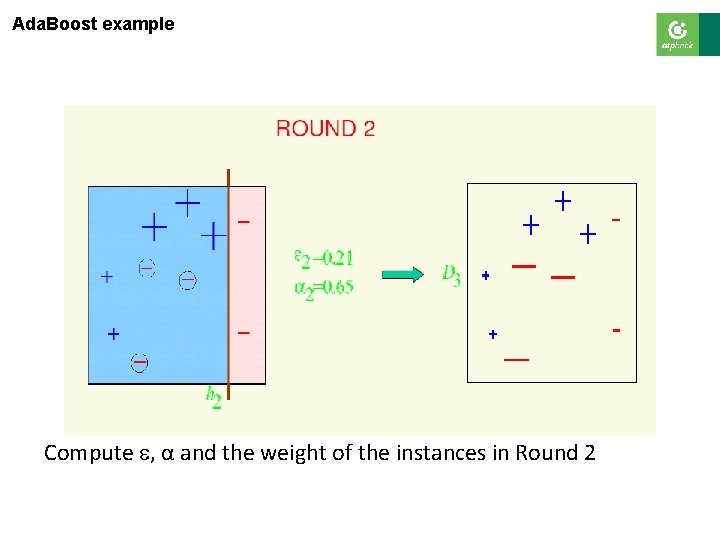

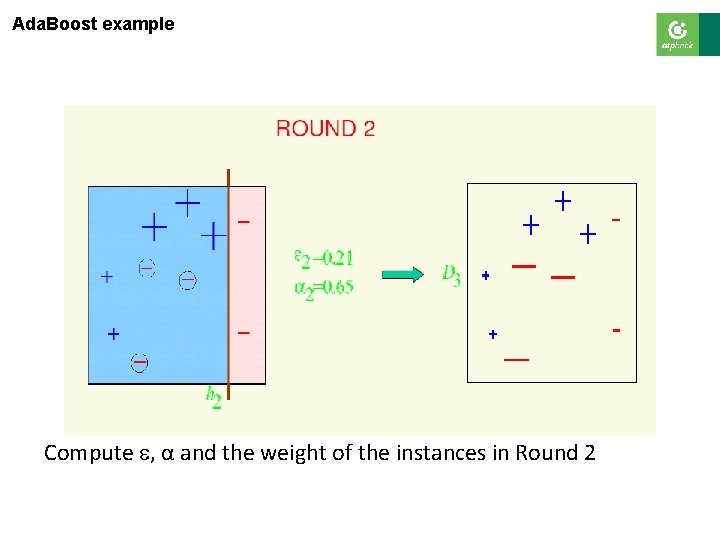

AdaBoost example: compute the error rate ε, α and the weights of the instances in Round 2

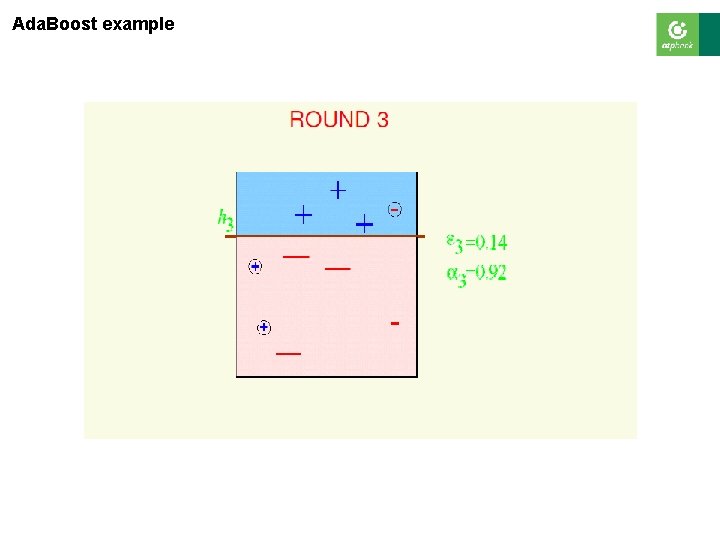
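The actual example data are in the slide images, so the numbers below are hypothetical. The setup is ten equally weighted points, of which the Round 1 stump misclassifies three. Under the usual formulas $\varepsilon = \sum_{\text{wrong}} w_i$ and $\alpha = \tfrac12\ln\frac{1-\varepsilon}{\varepsilon}$, the arithmetic for the Round 2 weights runs as follows:

```python
import math

n = 10
w = [1 / n] * n                      # Round 1: uniform weights
misclassified = {0, 1, 2}            # hypothetical: points the Round 1 stump gets wrong

eps = sum(w[i] for i in misclassified)          # weighted error rate
alpha = 0.5 * math.log((1 - eps) / eps)         # importance of the classifier

# un-normalised update: exp(+alpha) for mistakes, exp(-alpha) for correct points
raw = [w[i] * math.exp(alpha if i in misclassified else -alpha) for i in range(n)]
z = sum(raw)                                    # normalisation factor Z_1
w2 = [r / z for r in raw]                       # weights used in Round 2

print(round(eps, 4), round(alpha, 4), round(w2[0], 4), round(w2[9], 4))
# → 0.3 0.4236 0.1667 0.0714
```

After the update, the misclassified points carry exactly half of the total weight (3 × 1/6 = 0.5). This is a general property of the update: under the new weights, the previous weak learner looks no better than random guessing.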

AdaBoost example

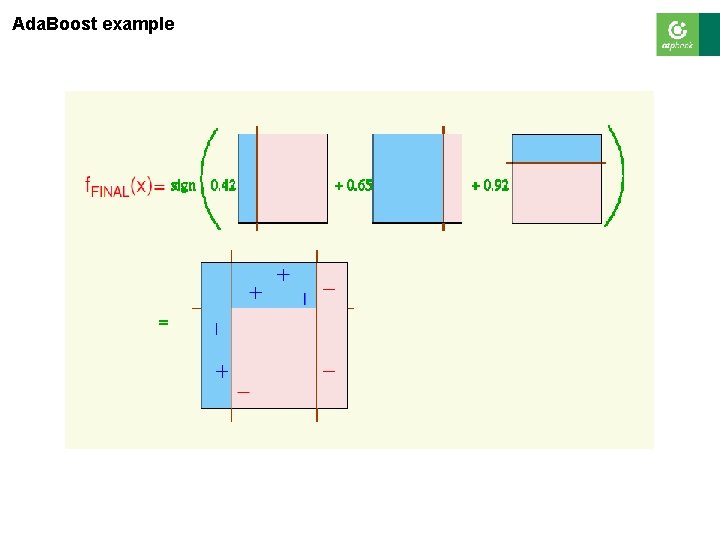

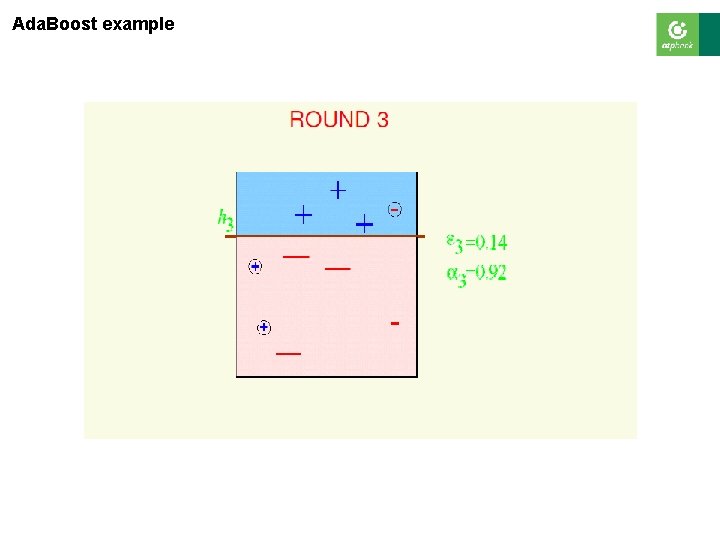

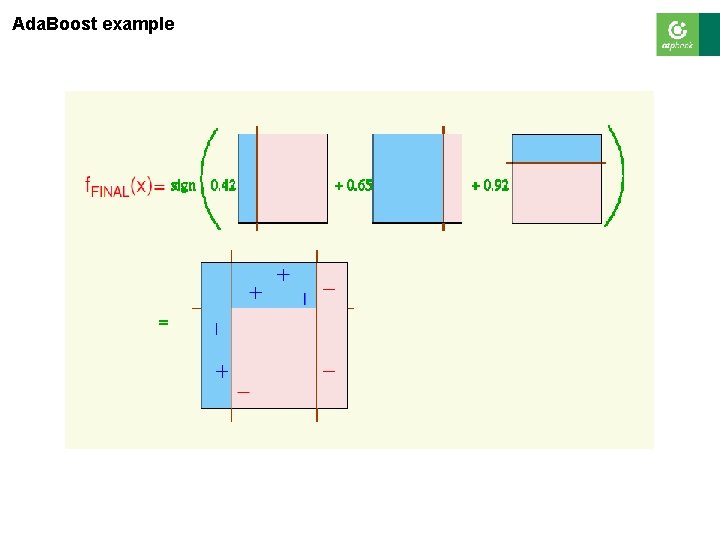

AdaBoost example

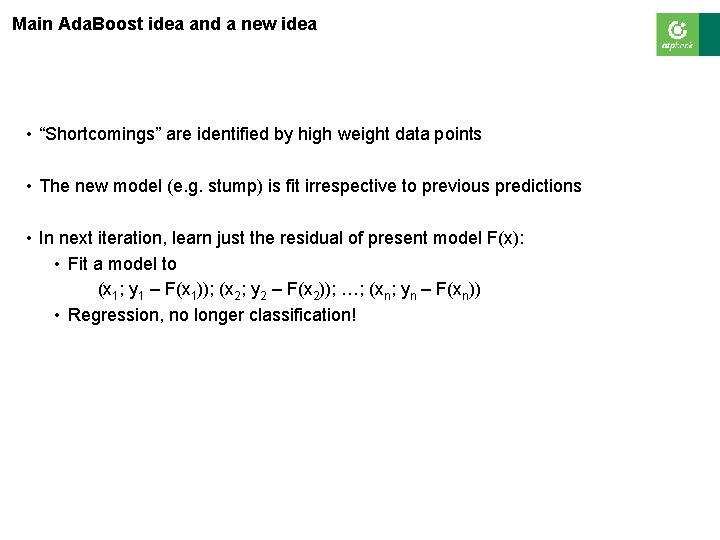

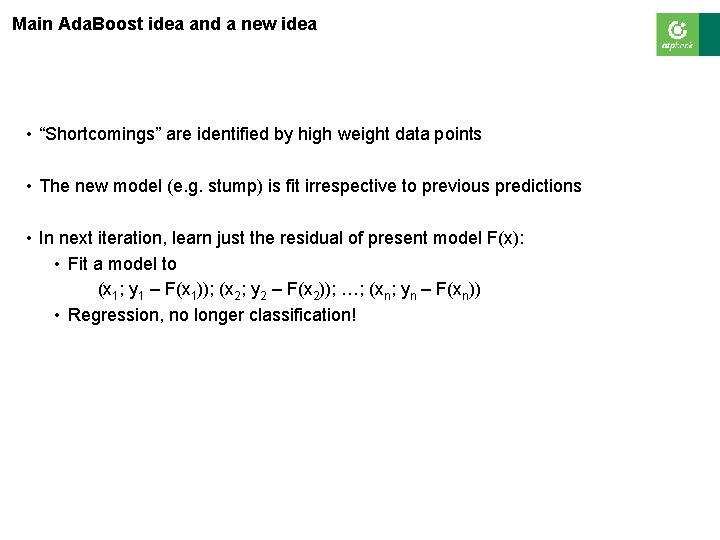

Main AdaBoost idea and a new idea

- "Shortcomings" are identified by high-weight data points
- The new model (e.g. a stump) is fit irrespective of previous predictions
- In the next iteration, learn just the residual of the present model $F(x)$: fit a model to $(x_1, y_1 - F(x_1)), (x_2, y_2 - F(x_2)), \dots, (x_n, y_n - F(x_n))$
- Regression, no longer classification!

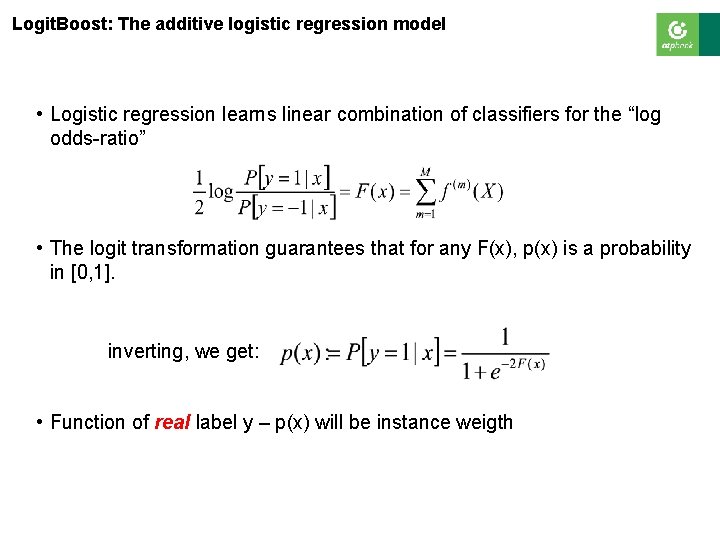

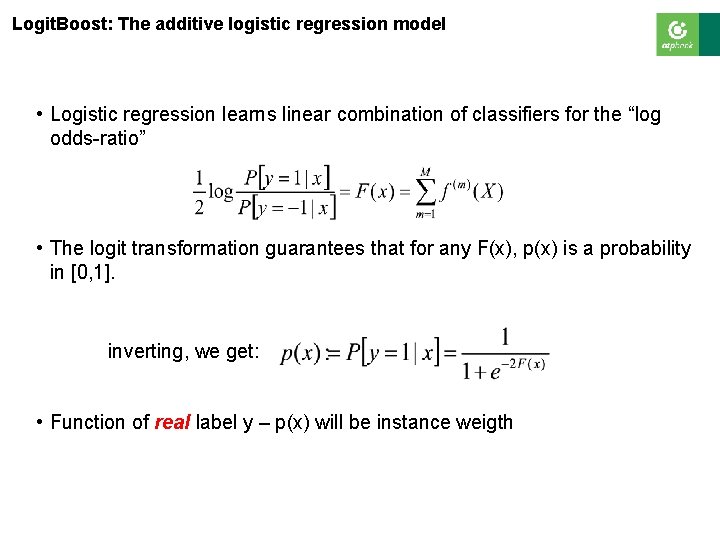
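The "learn the residual" idea is easy to try with least-squares regression stumps on one-dimensional inputs. In this minimal sketch (names and toy data hypothetical), each round fits a stump to $y_i - F(x_i)$ and adds it to $F$. As a result, the training squared error never increases.

```python
def fit_regression_stump(xs, targets):
    """Least-squares stump on 1-D inputs: one threshold, a constant on each side."""
    best = None  # (sum of squared errors, threshold, left value, right value)
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lv = sum(left) / len(left)
        rv = sum(right) / len(right) if right else lv
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv

def boost_on_residuals(xs, ys, rounds=20):
    """Each round fits a new stump to the residuals y_i - F(x_i) of the current model."""
    stumps = []

    def F(x):  # the current additive model: sum of all stumps fitted so far
        return sum(s(x) for s in stumps)

    for _ in range(rounds):
        residuals = [y - F(x) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return F

def sse(F, xs, ys):
    return sum((y - F(x)) ** 2 for x, y in zip(xs, ys))

xs = list(range(10))
ys = [0, 0, 0, 1, 1, 1, 1, 0, 0, 0]   # toy target that no single stump can represent
print(sse(boost_on_residuals(xs, ys, rounds=1), xs, ys),
      sse(boost_on_residuals(xs, ys, rounds=20), xs, ys))
```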

LogitBoost: the additive logistic regression model

- Logistic regression learns a linear combination of classifiers for the "log odds ratio"
- The logit transformation guarantees that for any $F(x)$, $p(x)$ is a probability in $[0, 1]$. Inverting, we get:
- A function of the residual $y - p(x)$ becomes the working response, and $p(x)(1 - p(x))$ becomes the instance weight

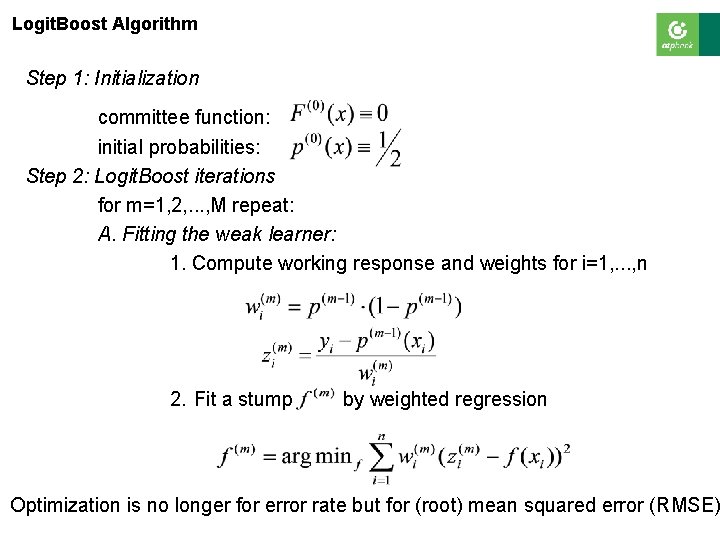

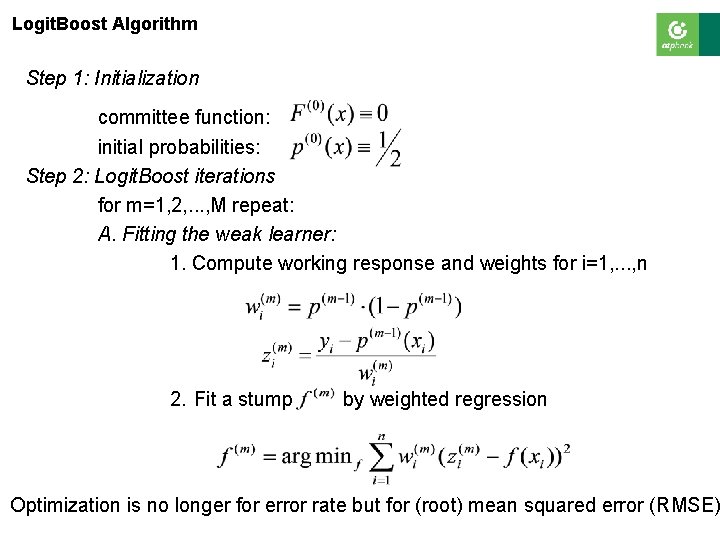
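The formulas on this slide are only in the images. With the standard logit link (an assumption), the additive model and its inverse read:

$$
\ln\frac{p(x)}{1-p(x)} = F(x) = \sum_{m=1}^{M} f_m(x)
\quad\Longleftrightarrow\quad
p(x) = \frac{1}{1+e^{-F(x)}}
$$

Friedman, Hastie and Tibshirani's original LogitBoost uses $F(x) = \tfrac12 \ln\frac{p(x)}{1-p(x)}$ instead, i.e. $p(x) = e^{F(x)}/(e^{F(x)} + e^{-F(x)})$. The two conventions differ only by a factor of 2 in $F$.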

LogitBoost algorithm

Step 1: Initialization of the committee function and the initial probabilities

Step 2: LogitBoost iterations, for $m = 1, 2, \dots, M$ repeat:

A. Fitting the weak learner:

1. Compute the working response and weights for $i = 1, \dots, n$
2. Fit a stump by weighted regression

Optimization is no longer for the error rate but for the (root) mean squared error (RMSE).

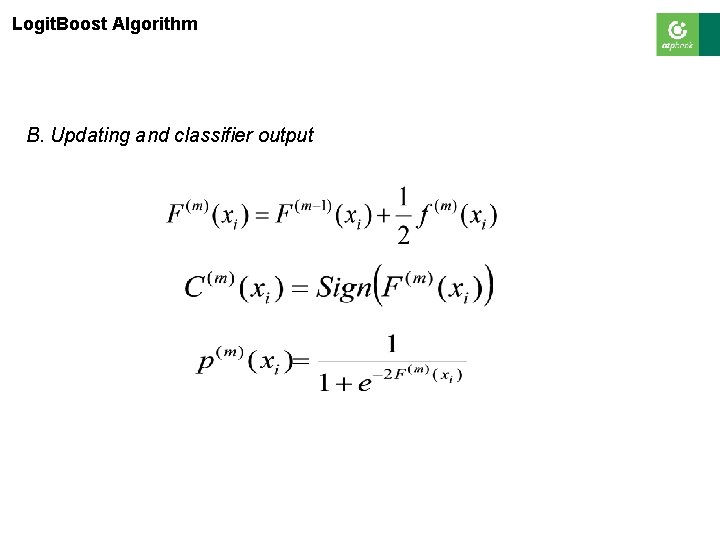

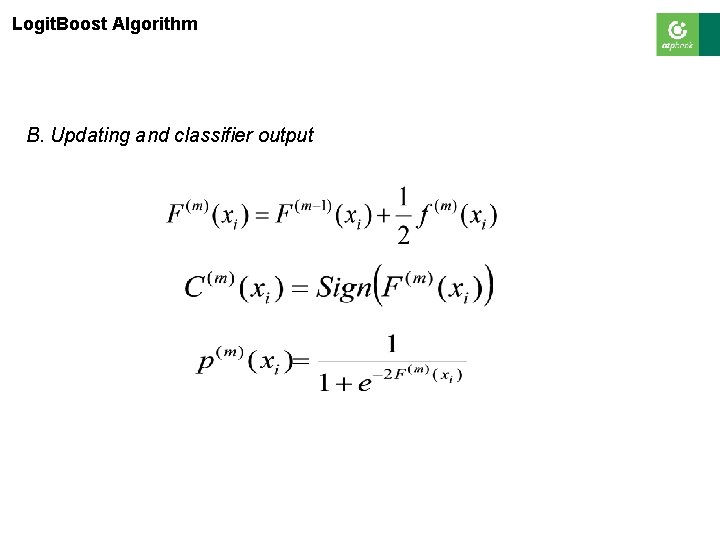
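The slide formulas are images, so the version below is an assumption. It uses labels $y_i \in \{0, 1\}$ and the standard logit parametrization $p(x) = 1/(1+e^{-F(x)})$. Under these assumptions, step 1, step A and the update of step B can be written as follows. Here $z_i$ is a Newton step on the logistic log-likelihood, which is why fitting the weak learner becomes a weighted least-squares regression:

$$
F(x) = 0, \qquad p(x_i) = \tfrac{1}{2} \quad (i = 1, \dots, n)
$$

$$
z_i = \frac{y_i - p(x_i)}{p(x_i)\,\bigl(1 - p(x_i)\bigr)}, \qquad w_i = p(x_i)\,\bigl(1 - p(x_i)\bigr)
$$

$$
f_m = \arg\min_{f} \sum_{i=1}^{n} w_i \bigl(z_i - f(x_i)\bigr)^2, \qquad F(x) \leftarrow F(x) + f_m(x)
$$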

LogitBoost algorithm

B. Updating and classifier output

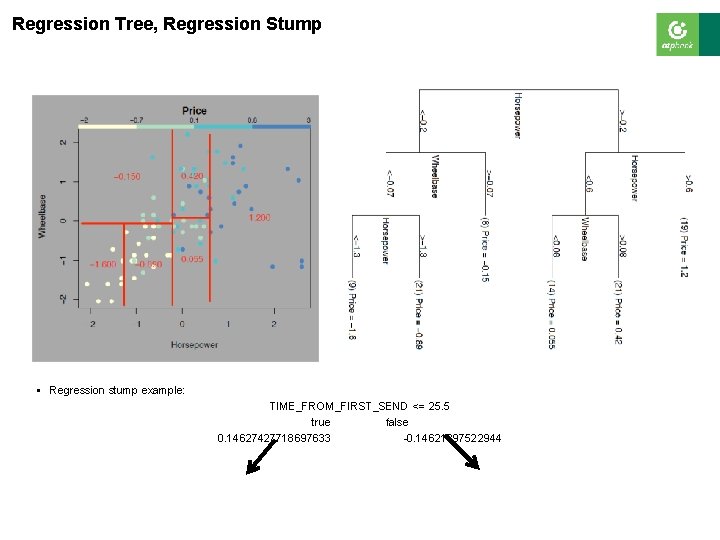

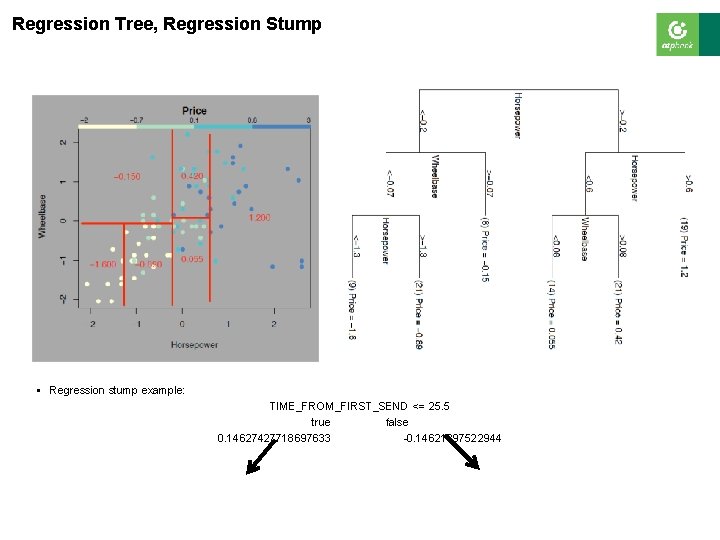
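Steps A and B can be combined into a short pure-Python sketch of two-class LogitBoost with weighted regression stumps.

The following assumptions are not from the slides:

- labels $y_i \in \{0, 1\}$;
- the standard logit link $p(x) = 1/(1+e^{-F(x)})$;
- a full step $F \leftarrow F + f_m$ with no shrinkage;
- the working response capped at `z_max`, a common numerical safeguard.

Names and toy data are hypothetical.

```python
import math

def weighted_mean(pairs):
    """Weighted mean of (value, weight) pairs; 0.0 for an empty side."""
    den = sum(wi for _, wi in pairs)
    return sum(zi * wi for zi, wi in pairs) / den if den > 0 else 0.0

def fit_weighted_regression_stump(xs, z, w):
    """Weighted least-squares stump on 1-D inputs (step A.2)."""
    best = None  # (weighted squared error, threshold, left value, right value)
    for t in sorted(set(xs)):
        left = [(zi, wi) for x, zi, wi in zip(xs, z, w) if x <= t]
        right = [(zi, wi) for x, zi, wi in zip(xs, z, w) if x > t]
        lv, rv = weighted_mean(left), weighted_mean(right)
        err = (sum(wi * (zi - lv) ** 2 for zi, wi in left)
               + sum(wi * (zi - rv) ** 2 for zi, wi in right))
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv

def logitboost(xs, ys, rounds=10, z_max=4.0):
    """Two-class LogitBoost with regression stumps; ys in {0, 1}. Returns (F, p)."""
    stumps = []

    def F(x):                        # committee function; F(x) = 0 while `stumps` is empty
        return sum(s(x) for s in stumps)

    def prob(x):                     # p(x) = 1 / (1 + exp(-F(x))), i.e. 1/2 initially
        return 1.0 / (1.0 + math.exp(-F(x)))

    for _ in range(rounds):
        p = [prob(x) for x in xs]
        w = [max(pi * (1 - pi), 1e-10) for pi in p]          # A.1 weights
        z = [max(-z_max, min(z_max, (y - pi) / wi))          # A.1 working response, capped
             for y, pi, wi in zip(ys, p, w)]
        stumps.append(fit_weighted_regression_stump(xs, z, w))  # A.2
        # B. F <- F + f_m: F sums over `stumps`, so appending the stump is the update
    return F, prob

xs = list(range(10))
ys = [0] * 5 + [1] * 5
F, prob = logitboost(xs, ys)
print(round(prob(0), 3), round(prob(9), 3))
```

The classifier output is the class whose probability exceeds 1/2, i.e. the sign of $F(x)$.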

Regression Tree, Regression Stump

Regression stump example: split on TIME_FROM_FIRST_SEND <= 25.5; true branch: 0.14627427718697633, false branch: -0.14621897522944

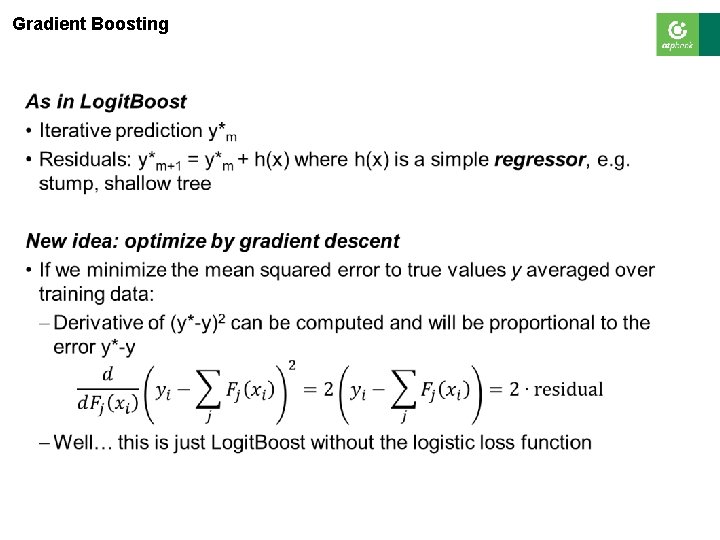

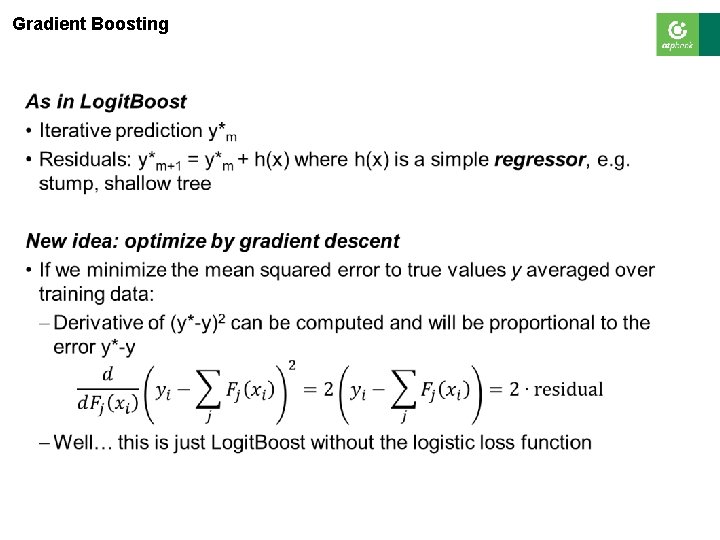
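The stump on this slide can be written directly as a function. How it enters a model is an assumption here. We treat it as one term $f_m$ of the committee function $F(x)$, with the logistic link $p = 1/(1+e^{-F})$. The slide does not give the other terms of $F$, the unit of TIME_FROM_FIRST_SEND, or which class $p$ refers to.

```python
import math

def stump_time_from_first_send(time_from_first_send):
    """Regression stump from the slide: a single split at TIME_FROM_FIRST_SEND <= 25.5."""
    if time_from_first_send <= 25.5:
        return 0.14627427718697633   # "true" branch
    return -0.14621897522944         # "false" branch

def probability(F):
    """Logistic link p = 1 / (1 + exp(-F)) (assumed, not stated on the slide)."""
    return 1.0 / (1.0 + math.exp(-F))

# Hypothetical: a committee function that so far contains only this one stump.
for t in (10, 40):
    f = stump_time_from_first_send(t)
    print(t, f, round(probability(f), 4))
```

A single stump only shifts the log-odds by about ±0.146. The model's discriminating power comes from adding many such terms.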

Gradient Boosting

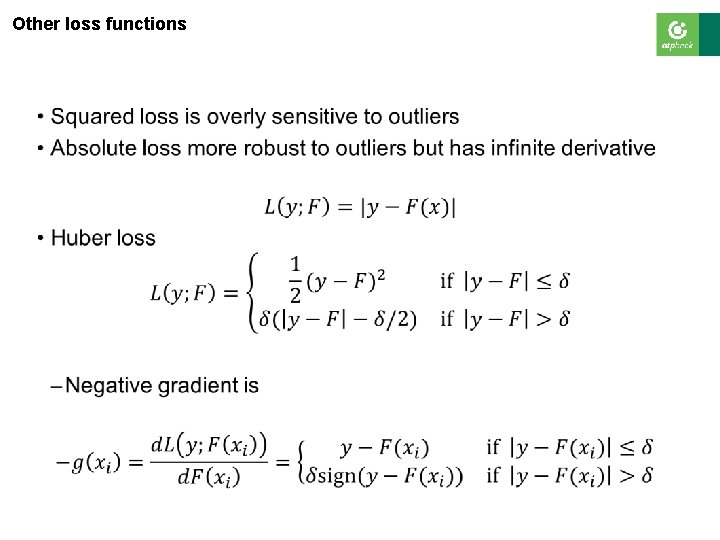

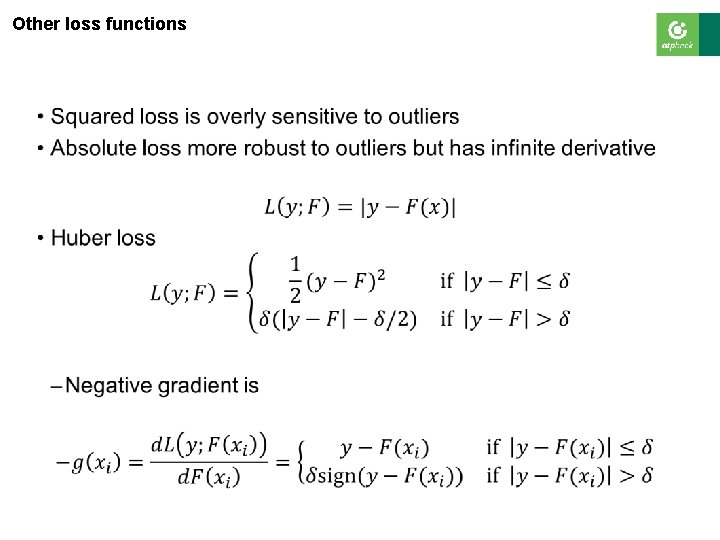
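Here is a minimal sketch of gradient boosting with regression stumps. Each round fits a stump to the pseudo-residuals, i.e. the negative gradient of the loss at the current predictions. Two simplifications apply compared with Friedman's original algorithm: a fixed learning rate (shrinkage) and no per-leaf line search. The logistic loss is used as the example. Names and data are hypothetical.

```python
import math

def fit_regression_stump(xs, targets):
    """Least-squares stump on 1-D inputs: one threshold, a constant on each side."""
    best = None  # (sum of squared errors, threshold, left value, right value)
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lv = sum(left) / len(left)
        rv = sum(right) / len(right) if right else lv
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv

def gradient_boost(xs, ys, negative_gradient, rounds=50, learning_rate=0.1, f0=0.0):
    """Gradient boosting: each stump is fit to the pseudo-residuals of the current model."""
    stumps = []

    def F(x):
        return f0 + learning_rate * sum(s(x) for s in stumps)

    for _ in range(rounds):
        # pseudo-residuals: negative gradient of the loss w.r.t. F, evaluated at F(x_i)
        pseudo = [negative_gradient(y, F(x)) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, pseudo))
    return F

def logistic_negative_gradient(y, f):
    """Loss log(1 + e^f) - y*f for y in {0, 1}; its negative gradient is y - sigmoid(f)."""
    return y - 1.0 / (1.0 + math.exp(-f))

xs = list(range(10))
ys = [0] * 5 + [1] * 5
F = gradient_boost(xs, ys, logistic_negative_gradient)
print(F(0), F(9))
```

With the squared loss the pseudo-residuals are exactly $y_i - F(x_i)$, which recovers plain residual fitting. With the logistic loss they are $y_i - p(x_i)$.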

Other loss functions

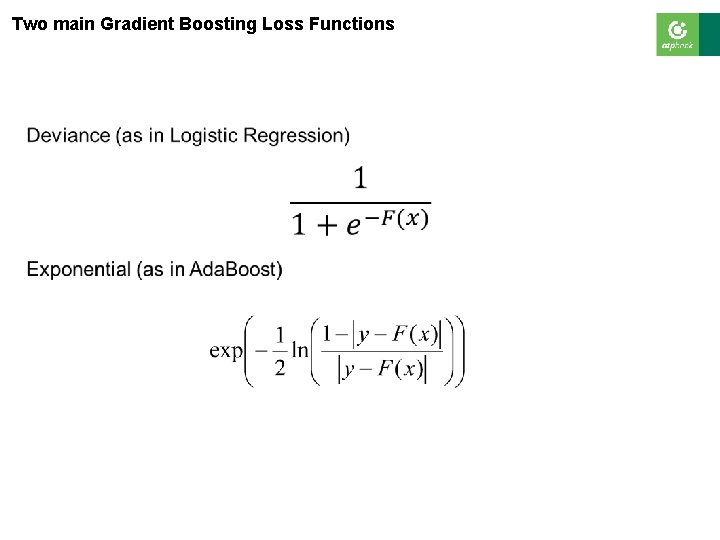

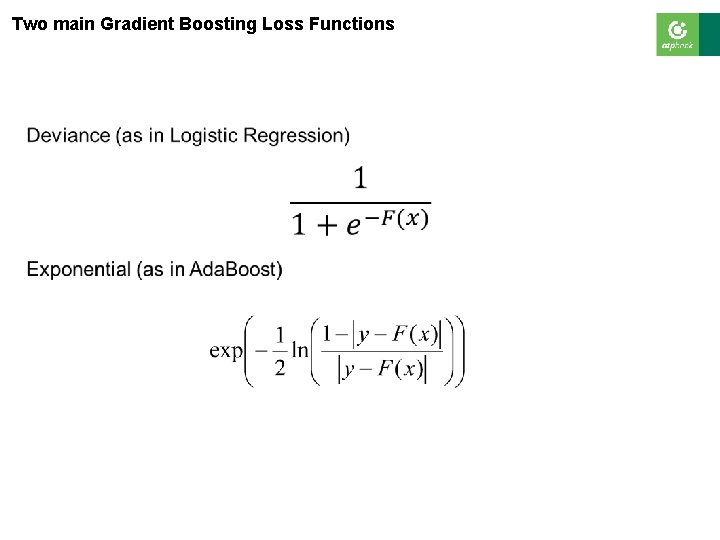
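The loss functions on the slide are only in the images. As an illustration, the sketch below gives the pseudo-residuals (negative gradients) of several commonly used losses. These are standard choices, not a transcription of the slide. In gradient boosting, each new base learner is fit to these values.

```python
import math

def neg_grad_squared(y, f):
    # L = (y - f)^2 / 2  ->  -dL/df = y - f (the plain residual)
    return y - f

def neg_grad_absolute(y, f):
    # L = |y - f|  ->  -dL/df = sign(y - f)
    return (y > f) - (y < f)

def neg_grad_huber(y, f, delta=1.0):
    # Huber loss: quadratic for |y - f| <= delta, linear beyond it
    r = y - f
    return r if abs(r) <= delta else delta * math.copysign(1.0, r)

def neg_grad_logistic(y, f):
    # binomial deviance, y in {0, 1}: L = log(1 + e^f) - y f  ->  y - sigmoid(f)
    return y - 1.0 / (1.0 + math.exp(-f))

def neg_grad_exponential(y, f):
    # AdaBoost's exponential loss, y in {-1, +1}: L = exp(-y f)  ->  y exp(-y f)
    return y * math.exp(-y * f)
```

The absolute and Huber losses cap the pseudo-residual of an outlier, which makes them more robust than the squared loss.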

Two main Gradient Boosting Loss Functions

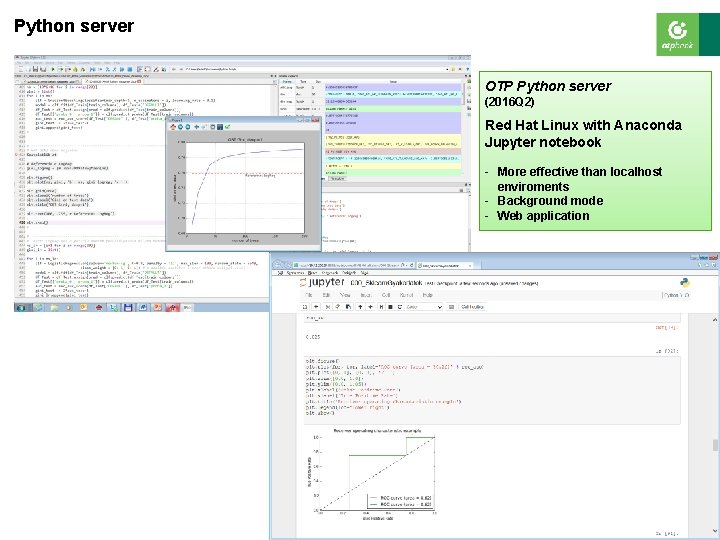

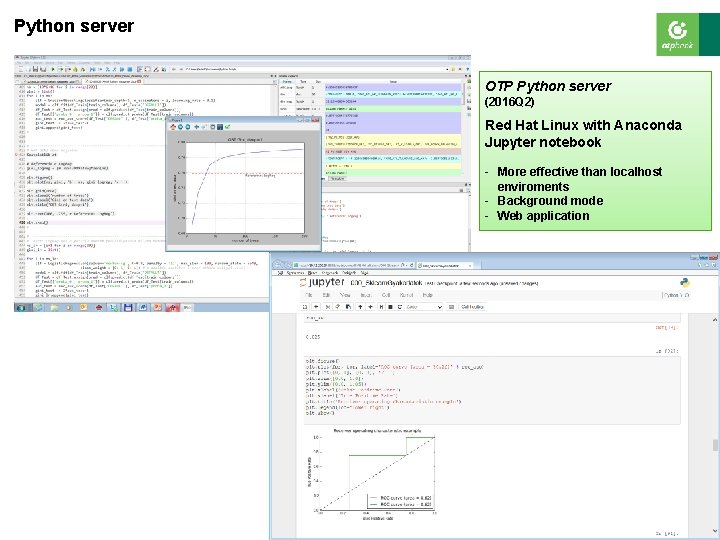

Python server

- OTP Python server (2016 Q2): Red Hat Linux with Anaconda and Jupyter Notebook
- More effective than localhost environments
- Background mode
- Web application

Application credit fraud prevention

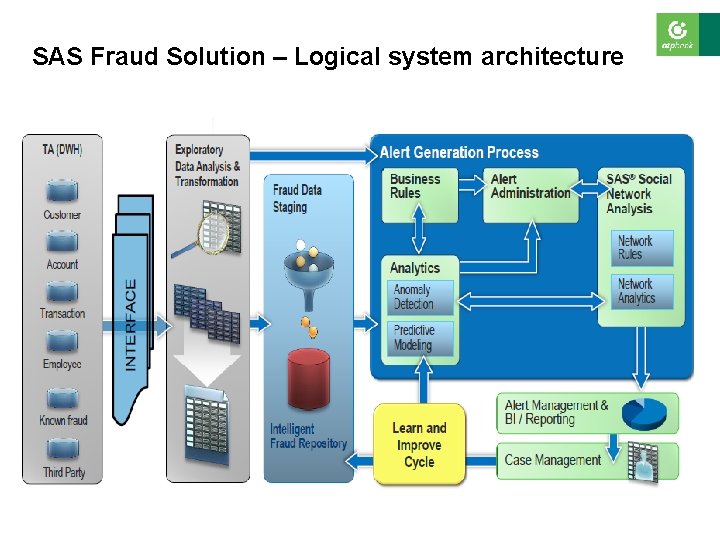

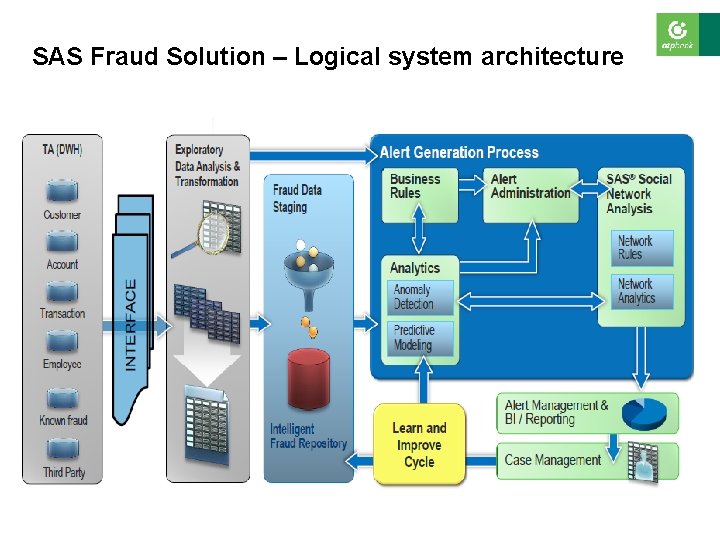

SAS Fraud Solution – Logical system architecture

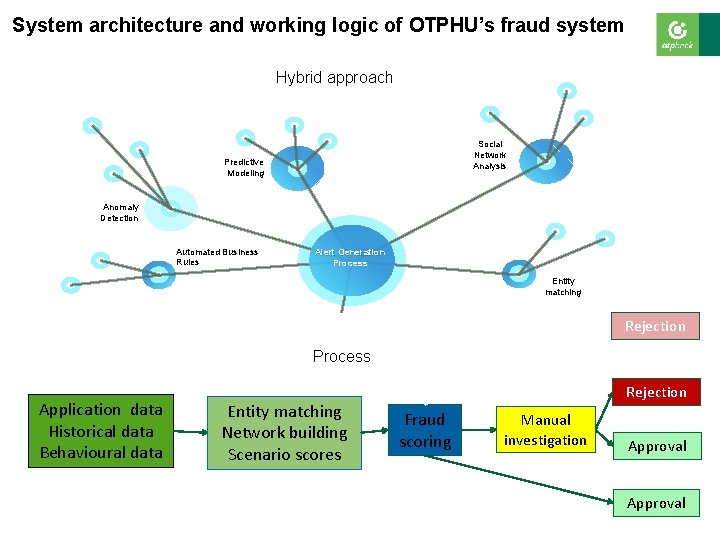

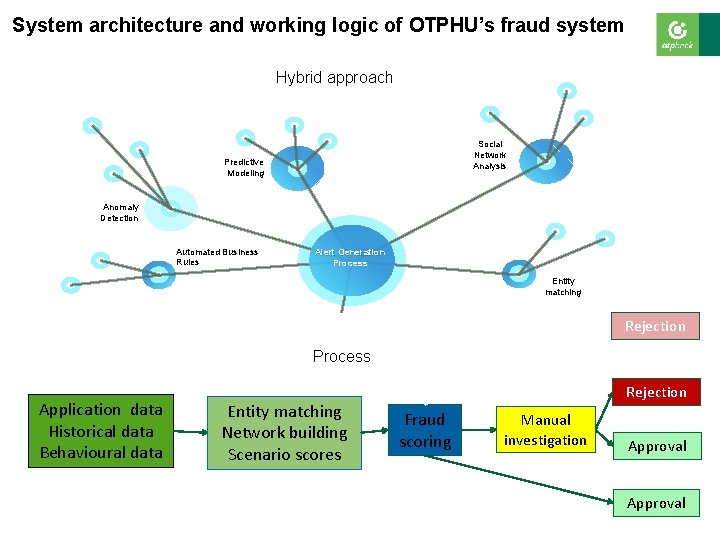

System architecture and working logic of OTP HU's fraud system

- Hybrid approach: Social Network Analysis, Predictive Modeling, Anomaly Detection, Automated Business Rules
- Input data: application data, historical data, behavioural data
- Processing steps: entity matching, network building, scenario scores, fraud scoring
- Outcomes: Alert Generation Process, manual investigation, approval, Rejection Process

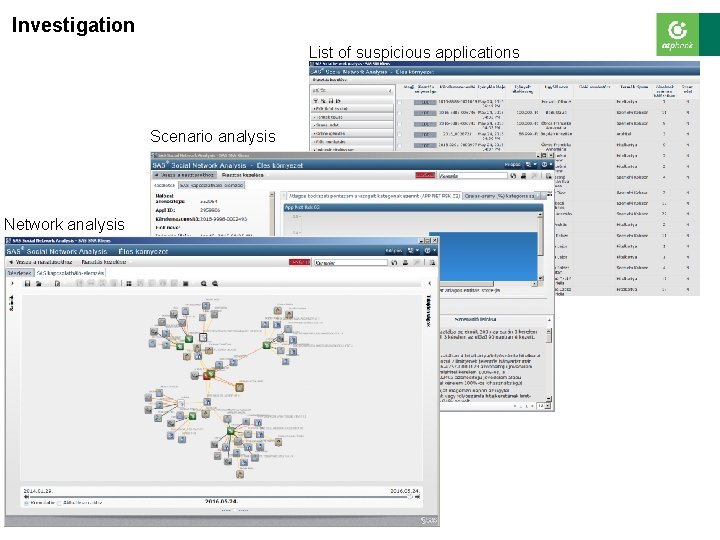
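The entity matching and network building steps can be illustrated with a small union-find sketch. Applications that share an identifier value are linked, and the connected components form the networks handed to investigators. This is not how the SAS Fraud Solution implements these steps. The field names (`id`, `phone`, `email`, `address`) and the data are hypothetical, and real entity matching usually needs fuzzy matching rather than exact equality.

```python
from collections import defaultdict

def build_networks(applications, keys=("phone", "email", "address")):
    """Group applications that share any identifier value into connected components."""
    parent = list(range(len(applications)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path halving keeps the trees shallow
            i = parent[i]
        return i

    def union(i, j):
        parent[find(i)] = find(j)

    first_seen = {}  # (key, value) -> index of the first application with that value
    for i, app in enumerate(applications):
        for key in keys:
            value = app.get(key)
            if not value:
                continue
            if (key, value) in first_seen:
                union(i, first_seen[(key, value)])   # entity match -> edge in the network
            else:
                first_seen[(key, value)] = i

    components = defaultdict(list)
    for i, app in enumerate(applications):
        components[find(i)].append(app["id"])
    return sorted(components.values(), key=len, reverse=True)

apps = [
    {"id": "A1", "phone": "X"},
    {"id": "A2", "phone": "X", "email": "e"},
    {"id": "A3", "email": "e"},
    {"id": "A4", "phone": "Y"},
]
print(build_networks(apps))
```

Network size and the scores of linked applications are typical inputs to the scenario and fraud scores listed above.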

Investigation: list of suspicious applications, scenario analysis, network analysis

Thank you for your attention!