MACHINE LEARNING LECTURE 3 FIND-S AND VERSION SPACES

- Slides: 34

Based on Chapter 2 of Mitchell's book

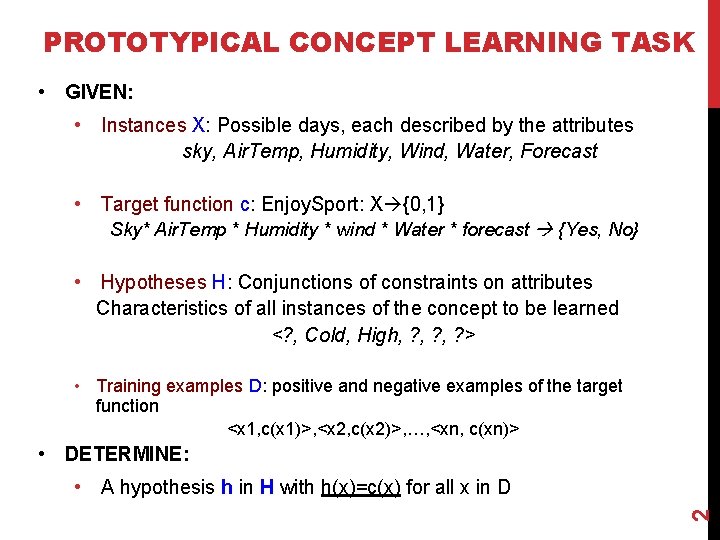

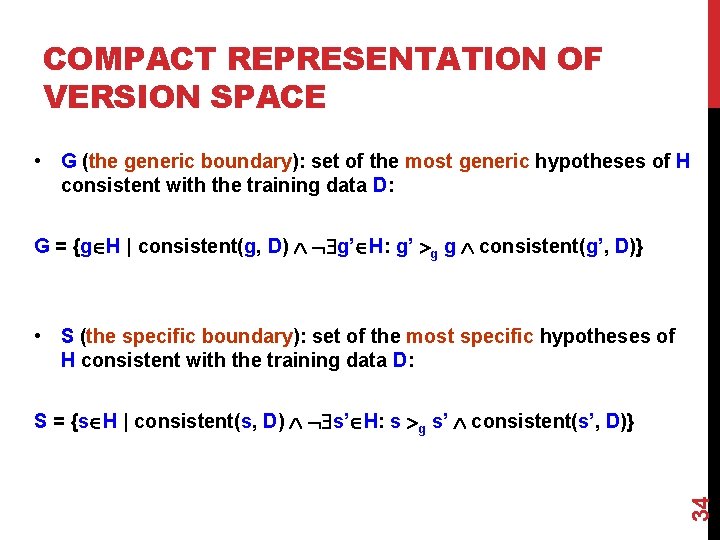

PROTOTYPICAL CONCEPT LEARNING TASK

- GIVEN:
  - Instances X: possible days, each described by the attributes Sky, AirTemp, Humidity, Wind, Water, Forecast
  - Target function c: EnjoySport: X → {0, 1}, i.e. Sky × AirTemp × Humidity × Wind × Water × Forecast → {Yes, No}
  - Hypotheses H: conjunctions of constraints on the attributes (characteristics shared by all instances of the concept to be learned), e.g. `<?, Cold, High, ?, ?, ?>`
  - Training examples D: positive and negative examples of the target function: <x1, c(x1)>, <x2, c(x2)>, …, <xn, c(xn)>
- DETERMINE:
  - A hypothesis h in H such that h(x) = c(x) for all x in D
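A minimal Python sketch of how a conjunctive hypothesis is evaluated on an instance. The encoding is an assumption made for illustration, not something the slides prescribe: a hypothesis is a tuple with one constraint per attribute, where `"?"` accepts any value, `None` stands for the empty constraint ∅ (no value acceptable), and any other string must match exactly. `h_of_x` is a hypothetical helper name.

```python
from typing import Optional, Tuple

# Assumed encoding: "?" accepts any value, None stands for the empty
# constraint (no value acceptable), any other string must match exactly.
Instance = Tuple[str, ...]
Hypothesis = Tuple[Optional[str], ...]


def h_of_x(h: Hypothesis, x: Instance) -> int:
    """Return 1 if every attribute constraint in h accepts x's value, else 0."""
    for constraint, value in zip(h, x):
        if constraint is None:
            return 0                      # empty constraint rejects everything
        if constraint != "?" and constraint != value:
            return 0                      # specific value does not match
    return 1


# "Cold days with high humidity, whatever the other attributes"
h = ("?", "Cold", "High", "?", "?", "?")
print(h_of_x(h, ("Rainy", "Cold", "High", "Strong", "Warm", "Change")))  # 1
print(h_of_x(h, ("Sunny", "Warm", "High", "Strong", "Warm", "Same")))    # 0
```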

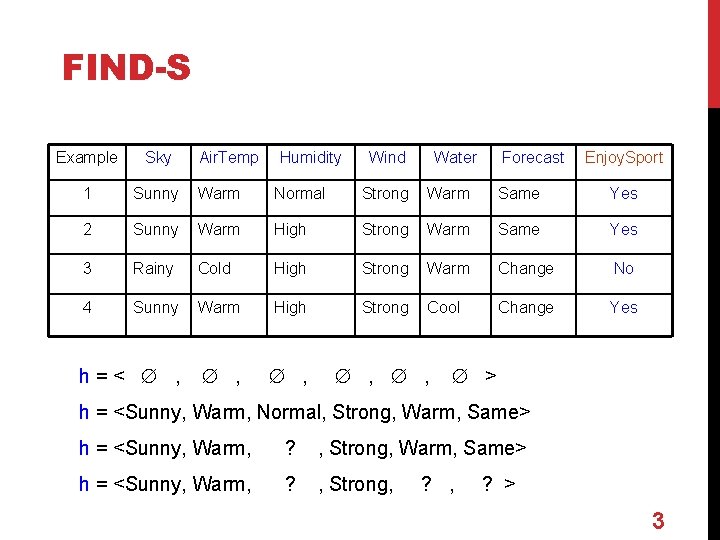

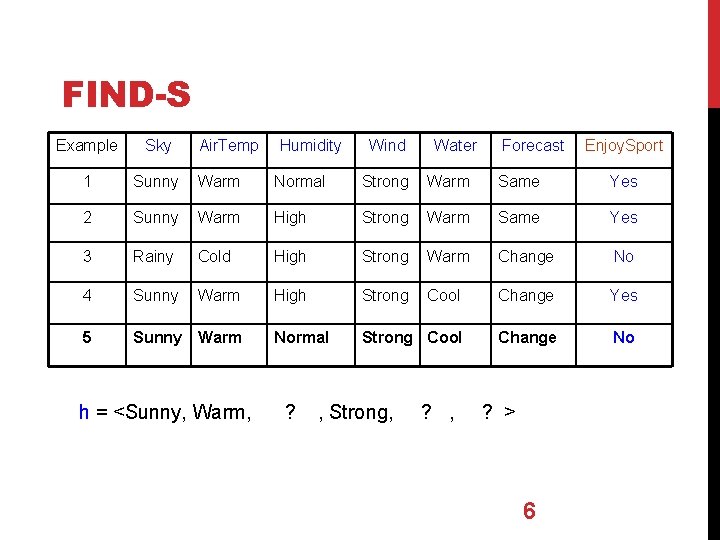

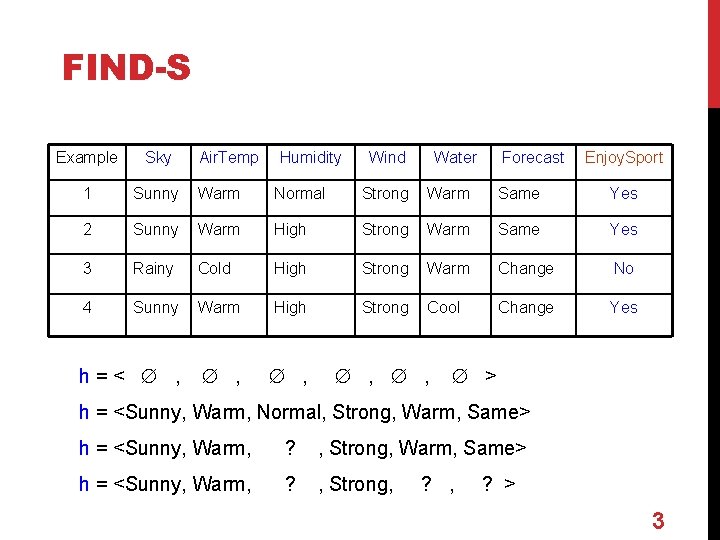

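FIND-S Example

| # | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|-----|---------|----------|------|-------|----------|------------|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rainy | Cold | High | Strong | Warm | Change | No |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |

- Initially: `h = <∅, ∅, ∅, ∅, ∅, ∅>`
- After example 1: `h = <Sunny, Warm, Normal, Strong, Warm, Same>`
- After example 2: `h = <Sunny, Warm, ?, Strong, Warm, Same>`
- After example 3 (negative): h is unchanged
- After example 4: `h = <Sunny, Warm, ?, Strong, ?, ?>`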
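The FIND-S trace on this table can be reproduced with a short Python sketch. It assumes a tuple encoding with `"?"` for "any value" and `None` for ∅, and encodes EnjoySport = Yes as `True`; `find_s` is an illustrative name, not a library function.

```python
# Training examples from the table: (instance, EnjoySport == Yes)
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]


def find_s(examples):
    """FIND-S: start from the most specific hypothesis, generalize on positives."""
    n = len(examples[0][0])
    h = [None] * n                        # None plays the role of the empty constraint
    for x, positive in examples:
        if not positive:
            continue                      # negative examples are ignored
        for i, value in enumerate(x):
            if h[i] is None:
                h[i] = value              # first positive: adopt its value
            elif h[i] != "?" and h[i] != value:
                h[i] = "?"                # conflicting values: relax to "?"
        print(tuple(h))                   # hypothesis after each positive example
    return tuple(h)


h = find_s(D)
# ('Sunny', 'Warm', 'Normal', 'Strong', 'Warm', 'Same')
# ('Sunny', 'Warm', '?', 'Strong', 'Warm', 'Same')
# ('Sunny', 'Warm', '?', 'Strong', '?', '?')
```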

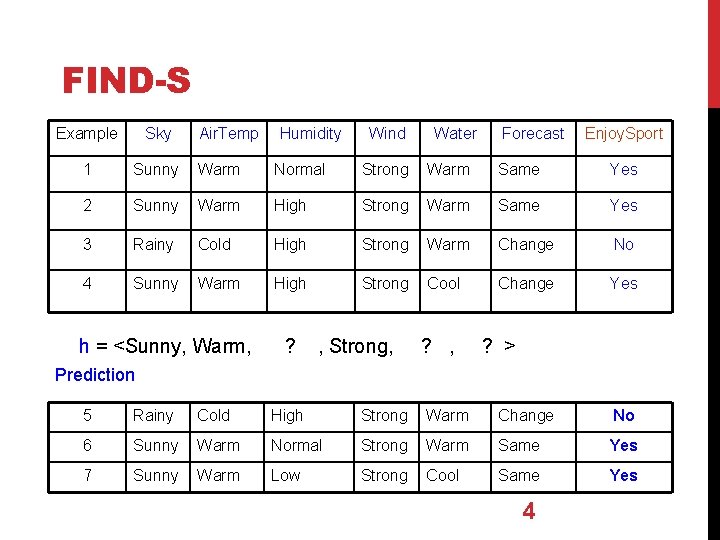

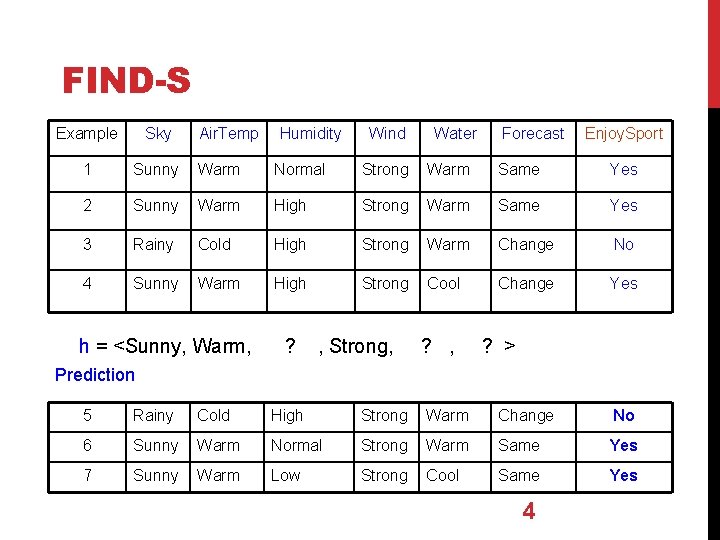

FIND-S Example

After training on examples 1–4 (the same training table as above), FIND-S outputs `h = <Sunny, Warm, ?, Strong, ?, ?>`. Its predictions for new instances:

| # | Sky | AirTemp | Humidity | Wind | Water | Forecast | Prediction |
|---|-----|---------|----------|------|-------|----------|------------|
| 5 | Rainy | Cold | High | Strong | Warm | Change | No |
| 6 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 7 | Sunny | Warm | Low | Strong | Cool | Same | Yes |
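Classifying a new instance with the learned hypothesis is just the coverage test: predict Yes exactly when every constraint accepts the instance's value. A sketch, assuming the tuple encoding where `"?"` means any value; `predict` is a hypothetical helper.

```python
# FIND-S output on examples 1-4 ("?" accepts any value)
h = ("Sunny", "Warm", "?", "Strong", "?", "?")

new_instances = {
    5: ("Rainy", "Cold", "High", "Strong", "Warm", "Change"),
    6: ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
    7: ("Sunny", "Warm", "Low", "Strong", "Cool", "Same"),
}


def predict(h, x):
    """Return "Yes" if every constraint in h accepts the matching value of x."""
    covered = all(c == "?" or c == v for c, v in zip(h, x))
    return "Yes" if covered else "No"


for k, x in new_instances.items():
    print(k, predict(h, x))
# 5 No
# 6 Yes
# 7 Yes
```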

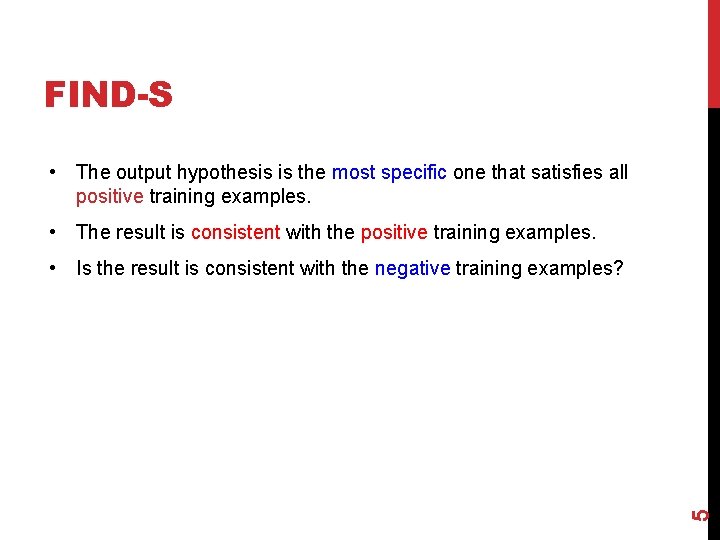

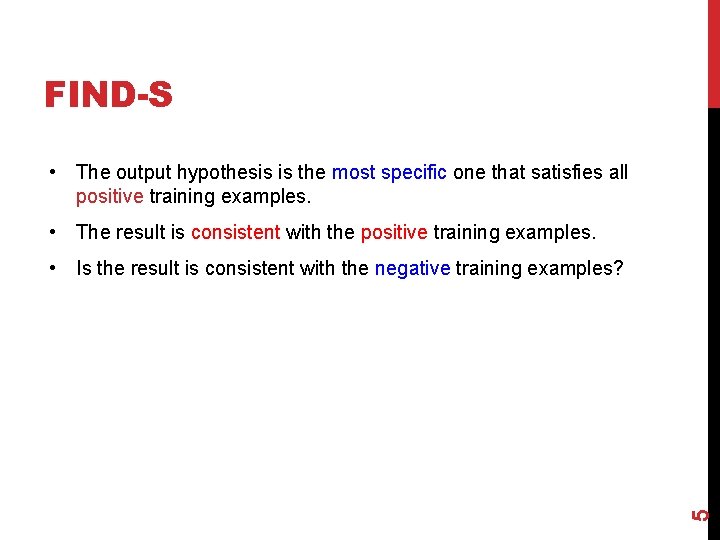

FIND-S

- The output hypothesis is the most specific hypothesis in H that covers all positive training examples.
- The result is therefore consistent with the positive training examples.
- Is the result consistent with the negative training examples?

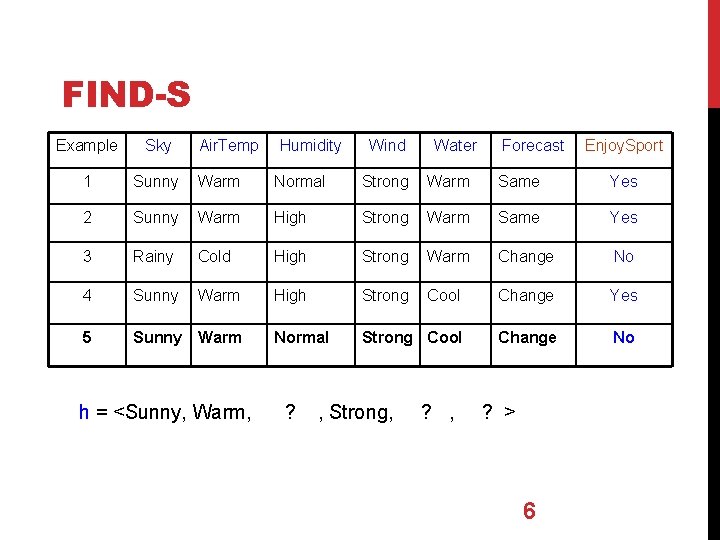

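FIND-S Example

| # | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|-----|---------|----------|------|-------|----------|------------|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rainy | Cold | High | Strong | Warm | Change | No |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |
| 5 | Sunny | Warm | Normal | Strong | Cool | Change | No |

FIND-S still outputs `h = <Sunny, Warm, ?, Strong, ?, ?>` (example 5 is negative and therefore ignored), yet h classifies example 5 as positive.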
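A quick check of the consistency question as a Python sketch. The helpers `covers` and `consistent` are hypothetical names, `"?"` is assumed to mean any value, and labels are `True` for EnjoySport = Yes. The FIND-S output is consistent with examples 1–4 but not once example 5 is added.

```python
h = ("Sunny", "Warm", "?", "Strong", "?", "?")   # FIND-S output on examples 1-4

D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]
example_5 = (("Sunny", "Warm", "Normal", "Strong", "Cool", "Change"), False)


def covers(h, x):
    """h(x) = 1 iff every constraint is "?" or equals x's value."""
    return all(c == "?" or c == v for c, v in zip(h, x))


def consistent(h, examples):
    """True iff h(x) = c(x) for every labelled example (x, c(x))."""
    return all(covers(h, x) == label for x, label in examples)


print(consistent(h, D))                  # True
print(consistent(h, D + [example_5]))    # False: example 5 is negative but covered
```

No conjunctive hypothesis can do better here: any hypothesis covering positives 1, 2 and 4 must be at least as general as `<Sunny, Warm, ?, Strong, ?, ?>`, so it also covers example 5. FIND-S gives no signal of this, because it never looks at negative examples.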

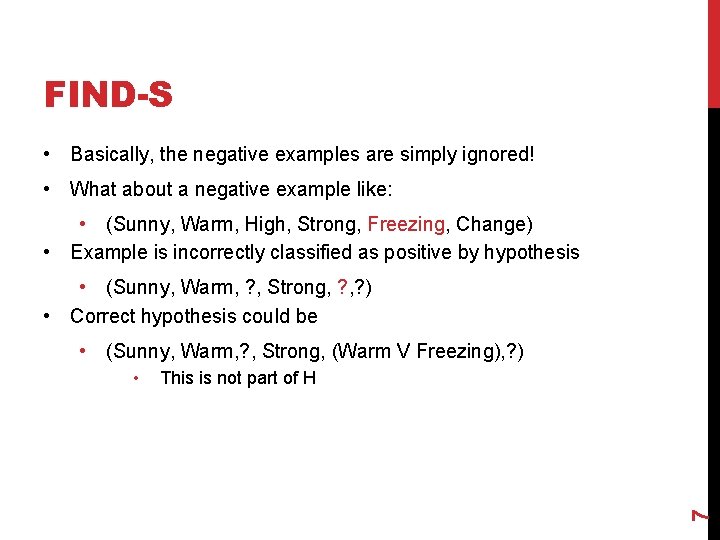

FIND-S

- Basically, the negative examples are simply ignored!
- What about a negative example like:
  - (Sunny, Warm, High, Strong, Freezing, Change)
- This example is incorrectly classified as positive by the hypothesis
  - (Sunny, Warm, ?, Strong, ?, ?)
- The correct hypothesis could be
  - (Sunny, Warm, ?, Strong, (Warm ∨ Cool), ?)
- This is not part of H: a conjunctive hypothesis cannot express the disjunction (Warm ∨ Cool).
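The sketch below checks this on the three positive examples: the conjunctive hypothesis covers the Freezing negative, while a hypothesis that restricts Water to the values seen in the positive examples (Warm or Cool) excludes it. The encoding is an assumption for illustration: `"?"` accepts any value, a `frozenset` accepts any value it contains (a disjunction, which H does not allow), and a plain string must match exactly.

```python
def covers(h, x):
    """True if every constraint of h accepts the corresponding value of x."""
    for c, v in zip(h, x):
        if c == "?":
            continue                      # any value is fine
        if isinstance(c, frozenset):
            if v not in c:                # disjunctive constraint (outside H)
                return False
        elif c != v:                      # single-value constraint
            return False
    return True


positives = [
    ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
    ("Sunny", "Warm", "High", "Strong", "Warm", "Same"),
    ("Sunny", "Warm", "High", "Strong", "Cool", "Change"),
]
negative = ("Sunny", "Warm", "High", "Strong", "Freezing", "Change")

h_conj = ("Sunny", "Warm", "?", "Strong", "?", "?")
h_disj = ("Sunny", "Warm", "?", "Strong", frozenset({"Warm", "Cool"}), "?")

for name, h in [("conjunctive", h_conj), ("disjunctive", h_disj)]:
    ok = all(covers(h, x) for x in positives) and not covers(h, negative)
    print(name, "consistent:", ok)
# conjunctive consistent: False
# disjunctive consistent: True
```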

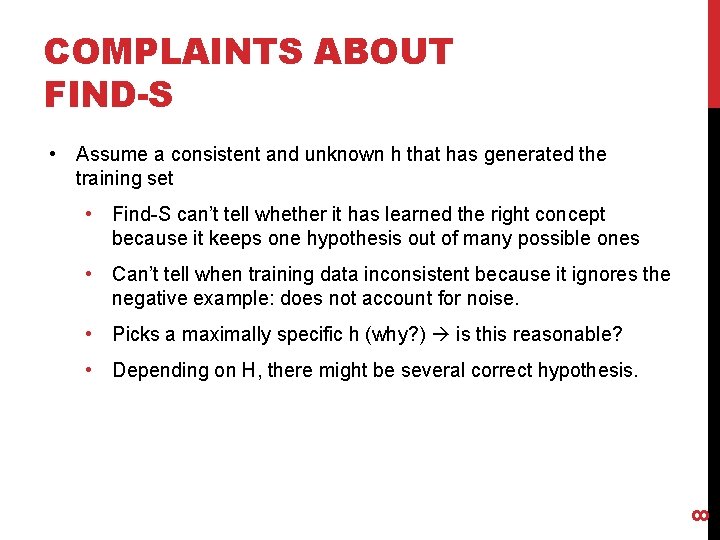

COMPLAINTS ABOUT FIND-S

- Assume a consistent and unknown h that has generated the training set.
- FIND-S can't tell whether it has learned the right concept, because it keeps only one hypothesis out of many possible ones.
- It can't tell when the training data are inconsistent, because it ignores the negative examples: it does not account for noise.
- It picks a maximally specific h (why?). Is this reasonable?
- Depending on H, there might be several correct hypotheses.

LIST-THEN-ELIMINATE ALGORITHM

Version space: the set of all hypotheses in H that are consistent with the training examples.

Algorithm:

- Initial version space = set containing every hypothesis in H
- For each training example <x, c(x)>, remove from the version space any hypothesis h for which h(x) ≠ c(x)
- Output the hypotheses in the version space
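LIST-THEN-ELIMINATE can be run literally on EnjoySport because H is small. The sketch assumes the attribute domains of Mitchell's EnjoySport example (Sky ∈ {Sunny, Cloudy, Rainy}, two values for every other attribute). Under that assumption there are 4 × 3⁵ = 972 conjunctive hypotheses built from `"?"` or a single value per attribute, plus one hypothesis that covers nothing, 973 in total. `list_then_eliminate` and `covers` are hypothetical names.

```python
from itertools import product

# Assumed attribute domains (Mitchell's EnjoySport example; "Cloudy" never
# appears in the training table).
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),   # Sky
    ("Warm", "Cold"),               # AirTemp
    ("Normal", "High"),             # Humidity
    ("Strong", "Weak"),             # Wind
    ("Warm", "Cool"),               # Water
    ("Same", "Change"),             # Forecast
]

D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]


def covers(h, x):
    """h(x) = 1? None is the empty constraint, "?" accepts any value."""
    return all(c is not None and (c == "?" or c == v) for c, v in zip(h, x))


def list_then_eliminate(domains, examples):
    # Initial version space: every conjunctive hypothesis ("?" or one value
    # per attribute) plus the single hypothesis that covers no instance.
    version_space = list(product(*[("?",) + d for d in domains]))
    version_space.append((None,) * len(domains))
    print("initial size:", len(version_space))      # 973
    for x, label in examples:
        # Remove every h with h(x) != c(x)
        version_space = [h for h in version_space if covers(h, x) == label]
    return version_space


for h in list_then_eliminate(DOMAINS, D):
    print(h)
```

On examples 1–4 six hypotheses survive, from `<Sunny, Warm, ?, Strong, ?, ?>` up to `<Sunny, ?, ?, ?, ?, ?>` and `<?, Warm, ?, ?, ?, ?>`. Enumerating H like this is only practical because H is tiny.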

LIST-THEN-ELIMINATE ALGORITHM

- Fundamental assumptions:
  - The data are correct: there are no erroneous instances.
  - A correct description is a conjunction of some of the attributes with values.

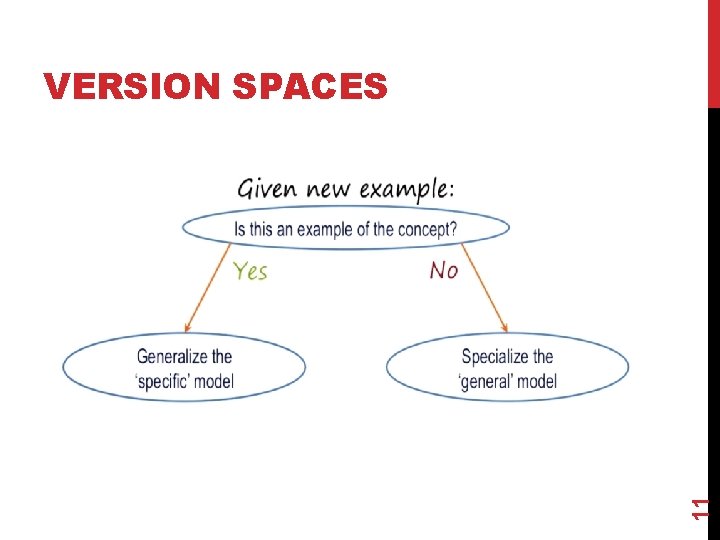

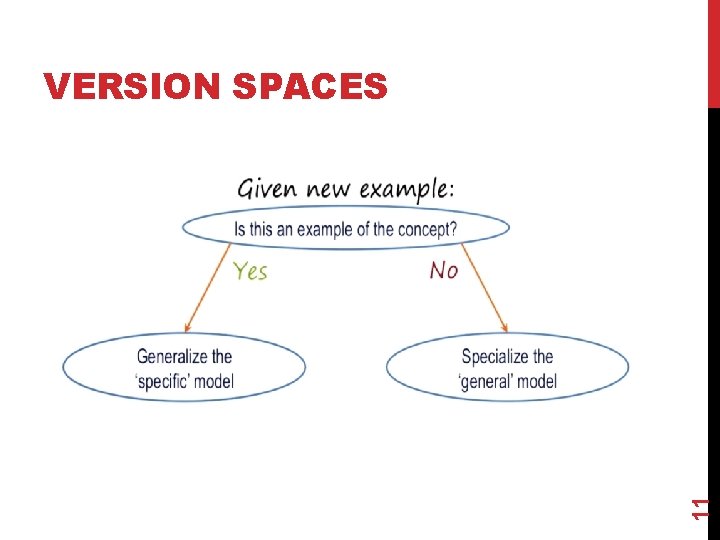

VERSION SPACES

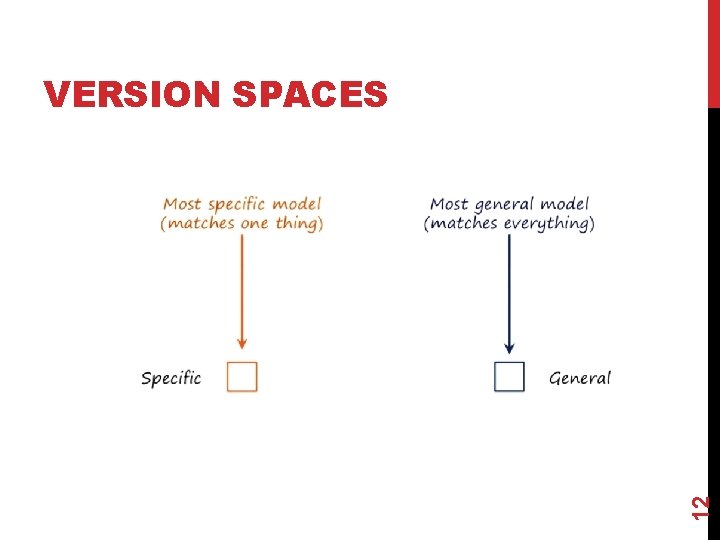

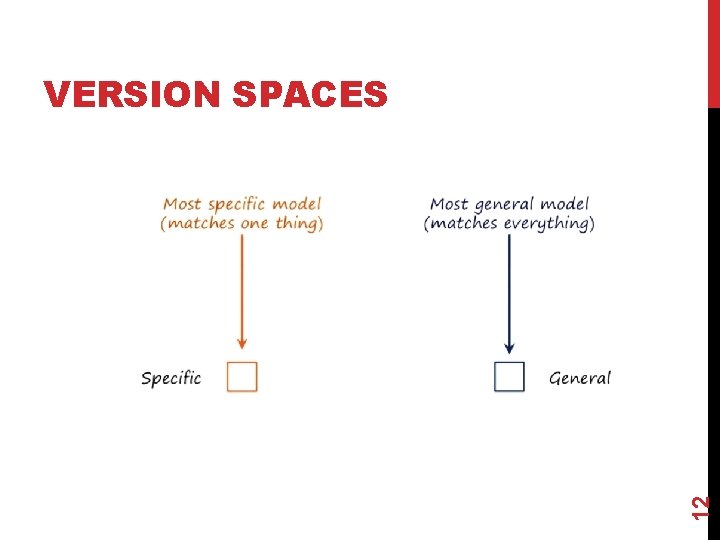

VERSION SPACES

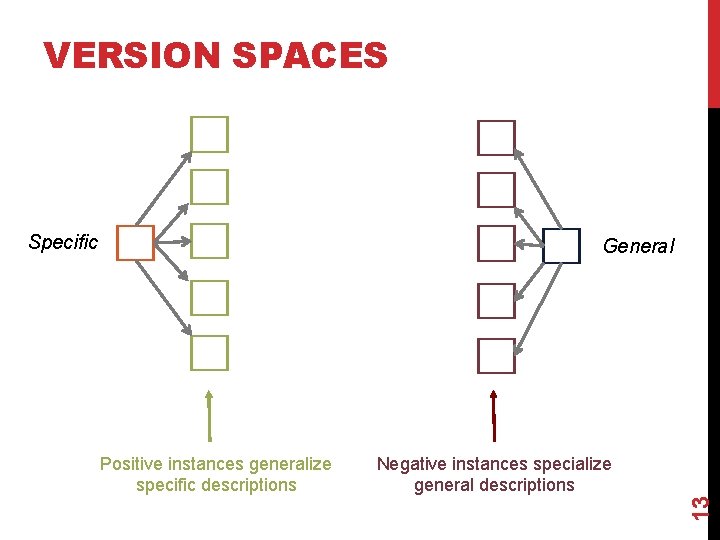

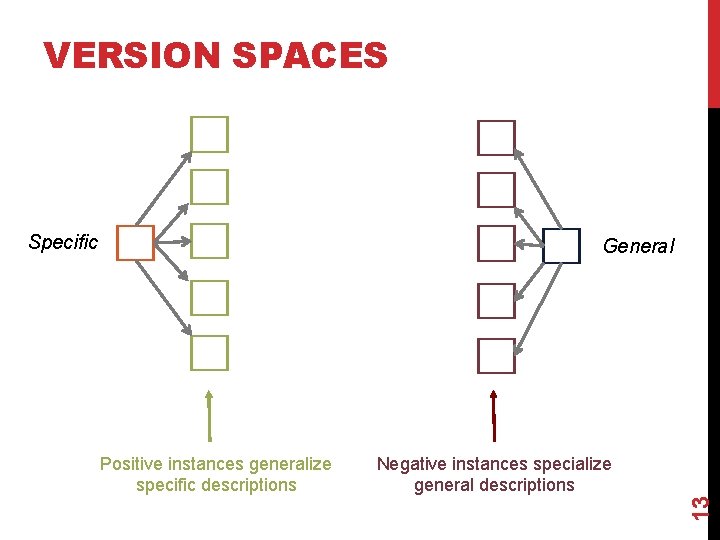

VERSION SPACES

- Negative instances specialize general descriptions.
- Positive instances generalize specific descriptions.

(Diagram axis: General to Specific)
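The two moves can be sketched for conjunctive hypotheses as follows. Assumptions: the tuple encoding (`"?"` = any value, `None` = ∅), Mitchell's EnjoySport attribute domains, and the hypothetical helper names `min_generalize` (a positive instance generalizes a specific description) and `min_specializations` (a negative instance specializes a general description).

```python
# Assumed EnjoySport domains (as in Mitchell's example)
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
    ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change"),
]


def min_generalize(s, x):
    """Minimal generalization of specific hypothesis s that covers positive x."""
    return tuple(
        v if c is None            # empty constraint: adopt x's value
        else c if c in ("?", v)   # already accepts x's value
        else "?"                  # conflict: relax to "any value"
        for c, v in zip(s, x)
    )


def min_specializations(g, x, domains):
    """Minimal specializations of general hypothesis g that exclude negative x."""
    result = []
    for i, (c, v) in enumerate(zip(g, x)):
        if c == "?":
            # Replace one "?" by any value other than x's value
            result.extend(g[:i] + (value,) + g[i + 1:]
                          for value in domains[i] if value != v)
    return result


x1 = ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same")
x2 = ("Sunny", "Warm", "High", "Strong", "Warm", "Same")
x3 = ("Rainy", "Cold", "High", "Strong", "Warm", "Change")   # negative

s = min_generalize(min_generalize((None,) * 6, x1), x2)
print(s)                      # ('Sunny', 'Warm', '?', 'Strong', 'Warm', 'Same')
specs = min_specializations(("?",) * 6, x3, DOMAINS)
print(len(specs))             # 7
```

Only specializations that remain more general than the current specific description are worth keeping; of these seven, that leaves `<Sunny, ?, ?, ?, ?, ?>`, `<?, Warm, ?, ?, ?, ?>` and `<?, ?, ?, ?, ?, Same>`.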

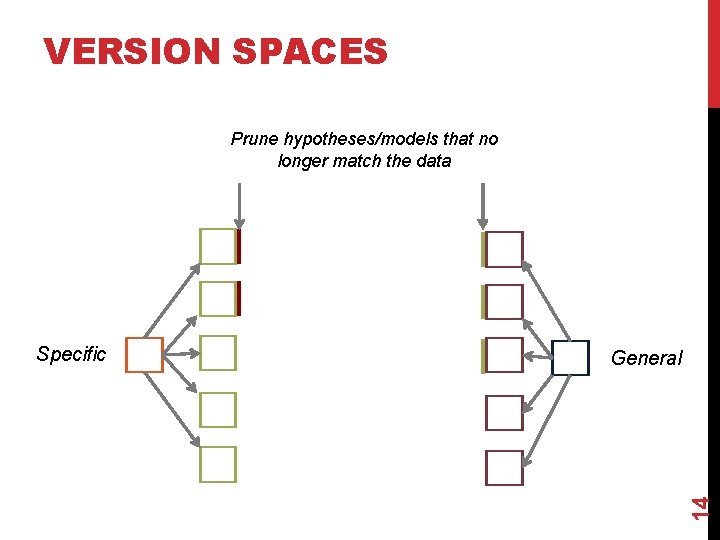

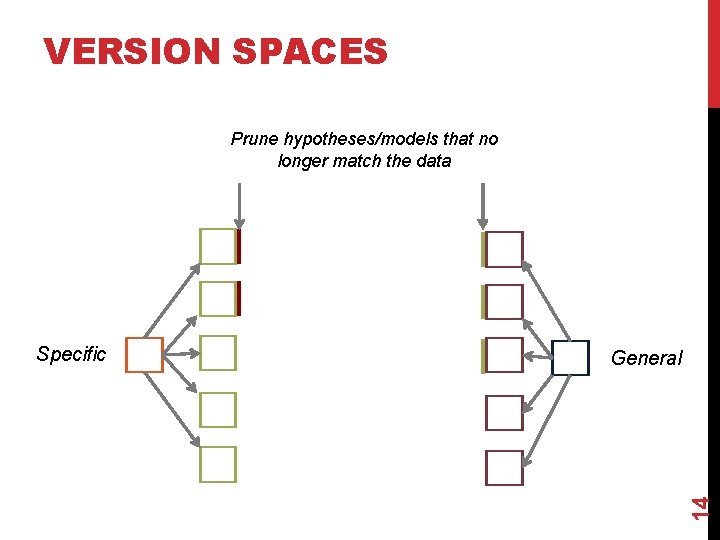

VERSION SPACES

- Prune hypotheses/models that no longer match the data.

(Diagram axis: General to Specific)

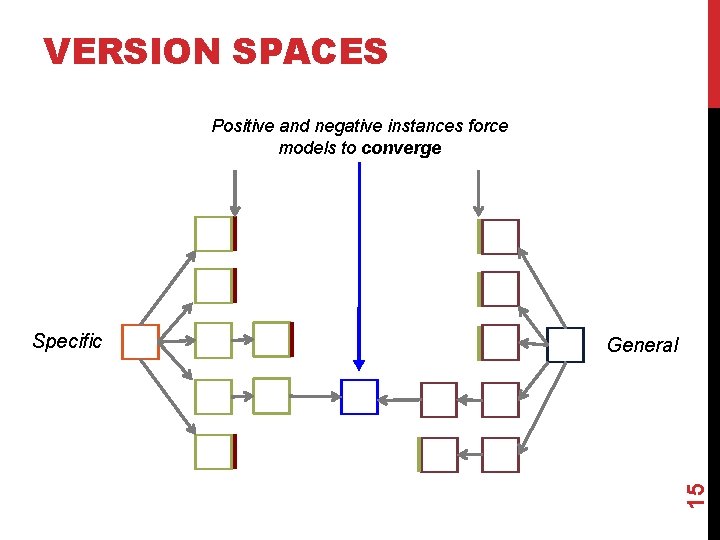

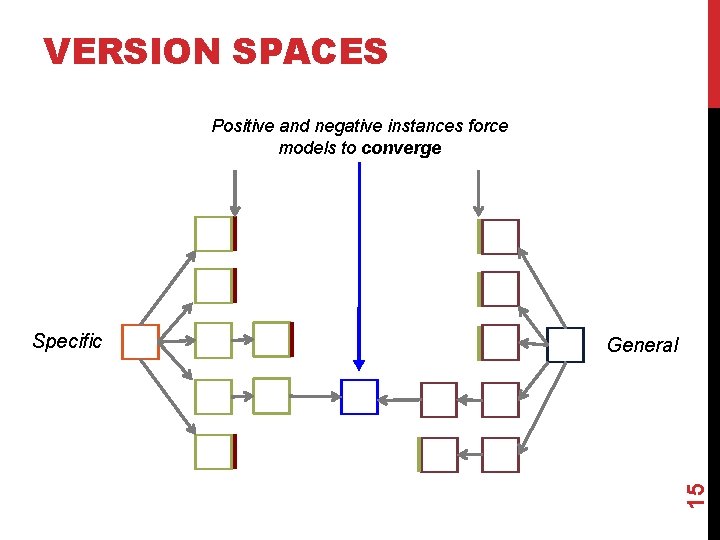

VERSION SPACES

- Positive and negative instances force models to converge.

(Diagram axis: General to Specific)

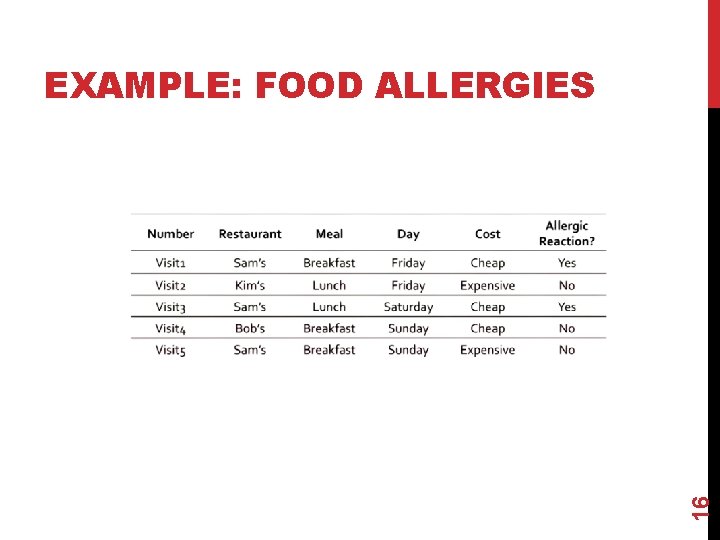

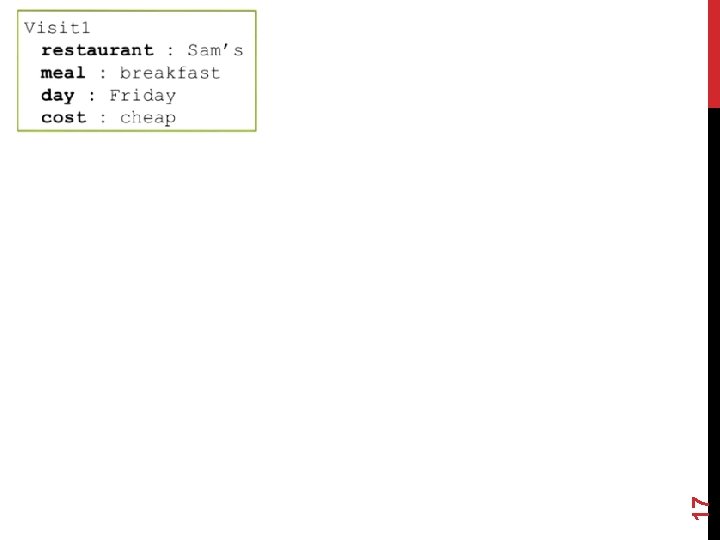

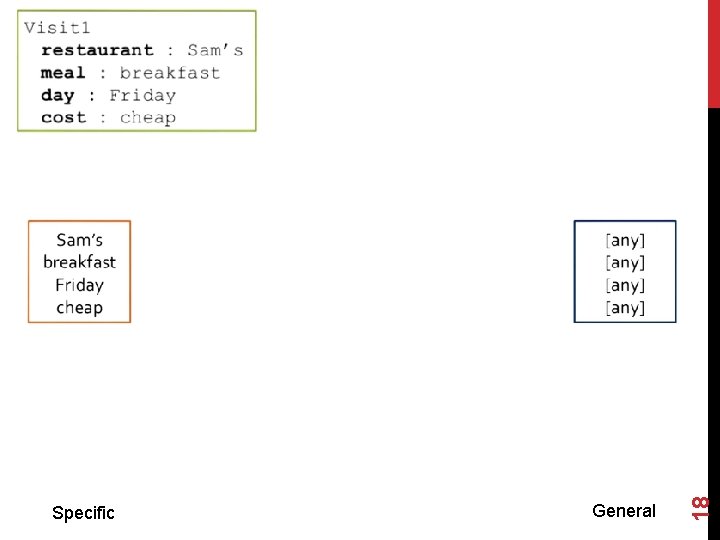

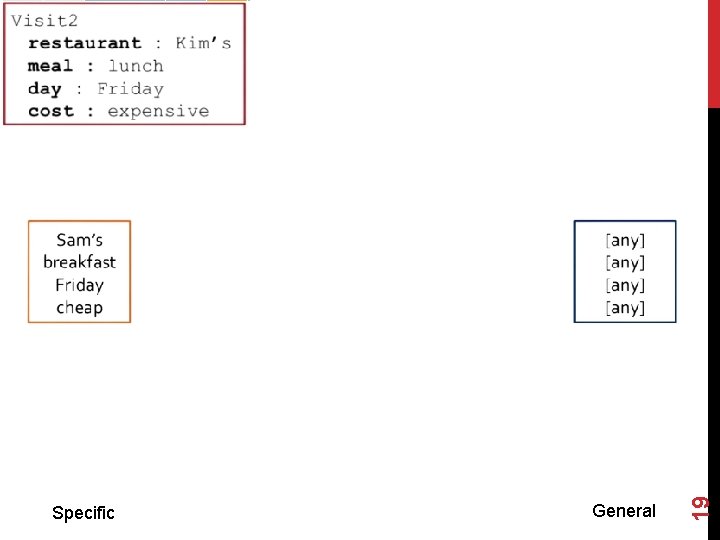

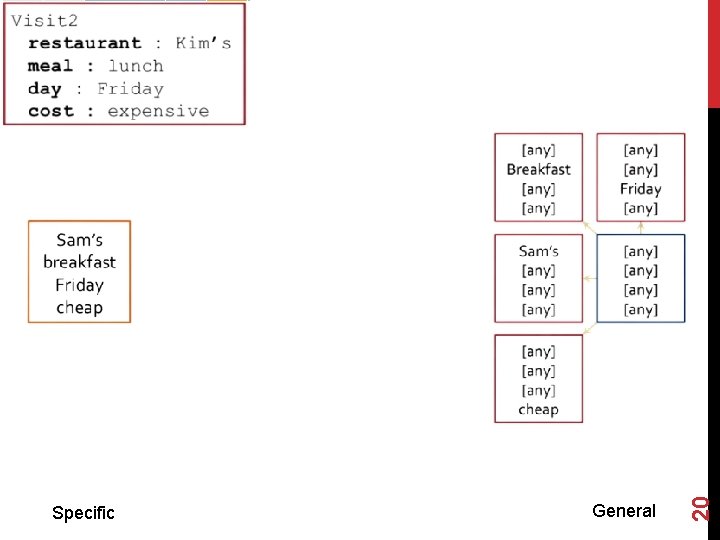

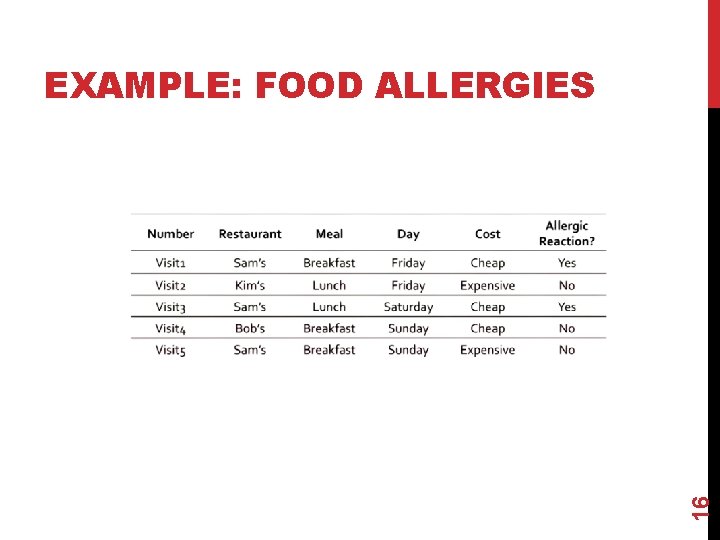

EXAMPLE: FOOD ALLERGIES

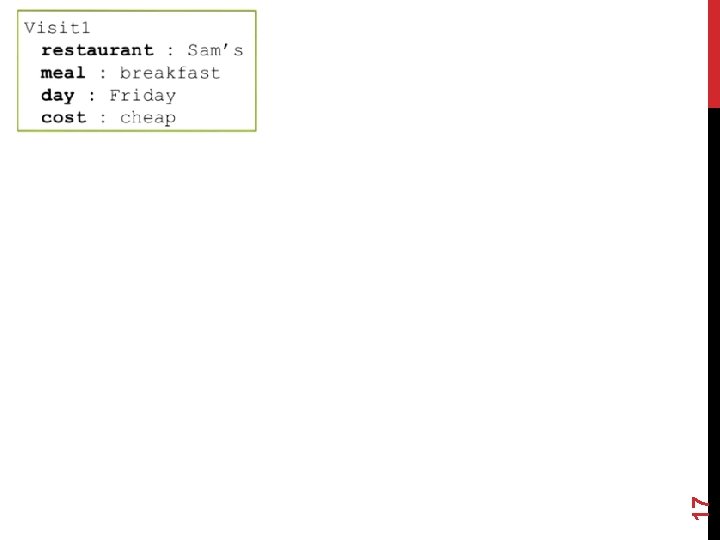


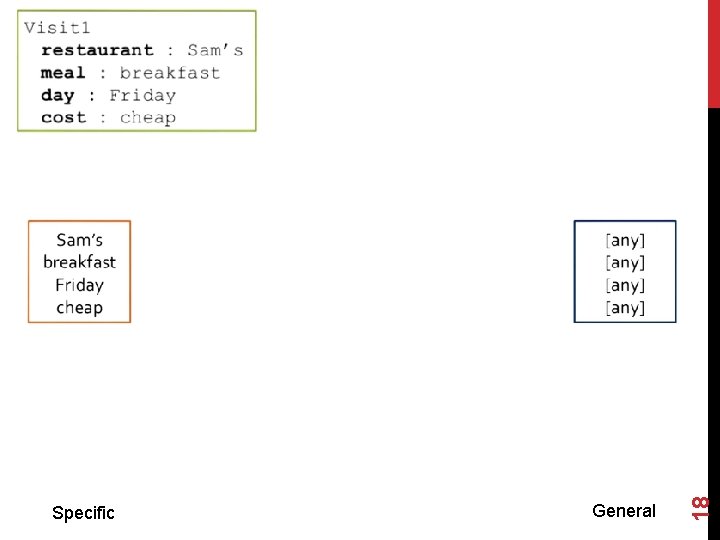

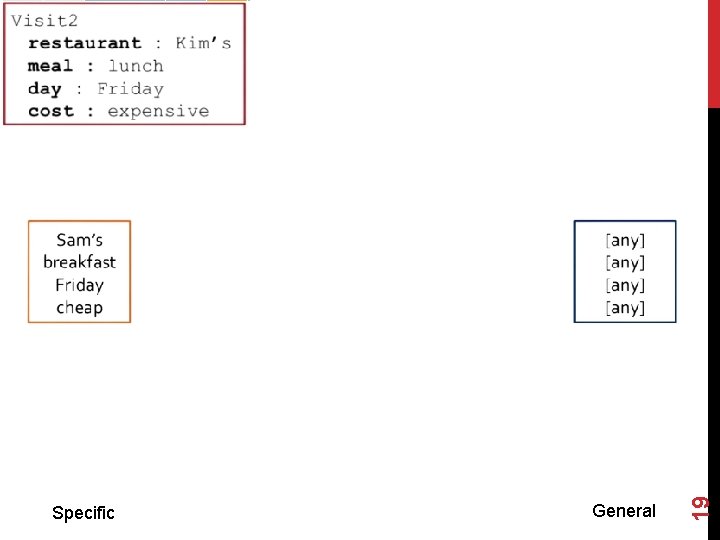


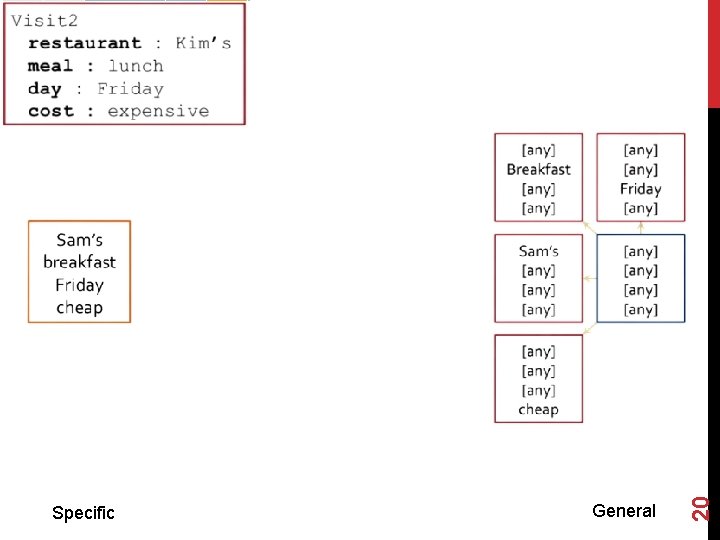


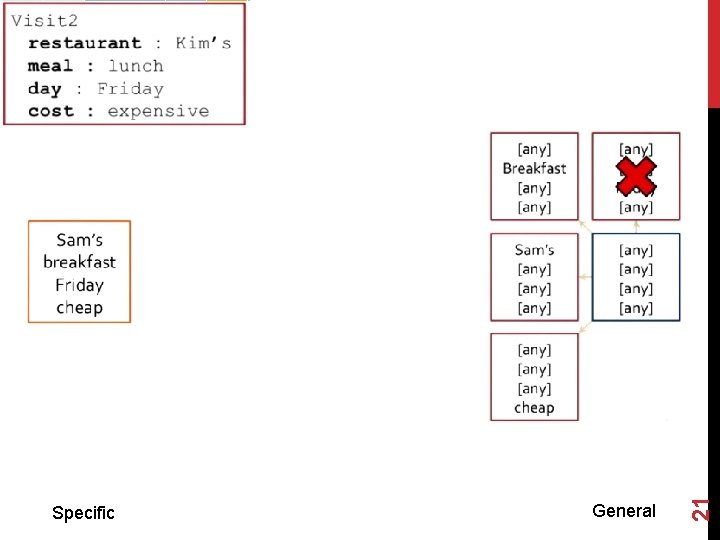


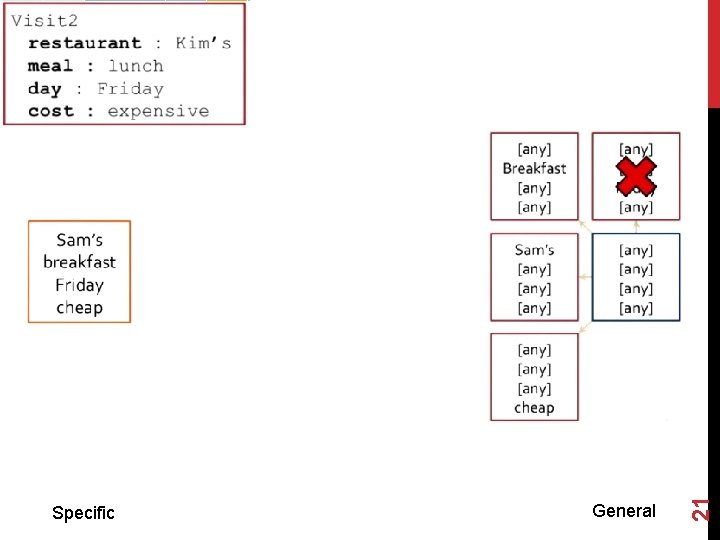

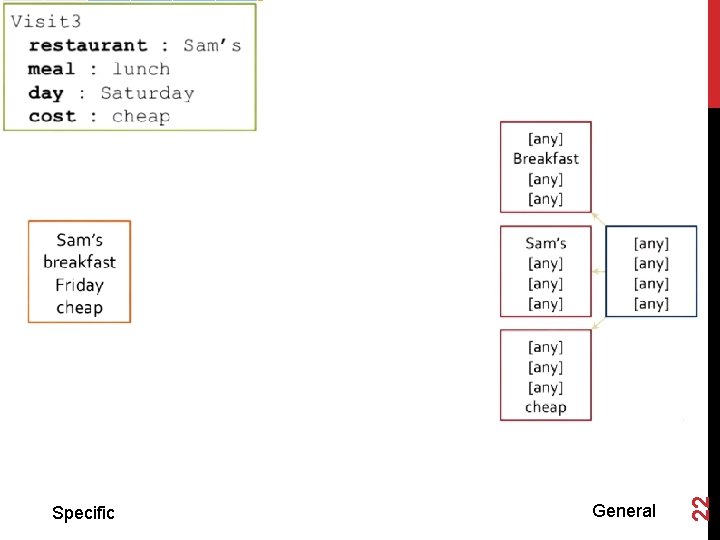


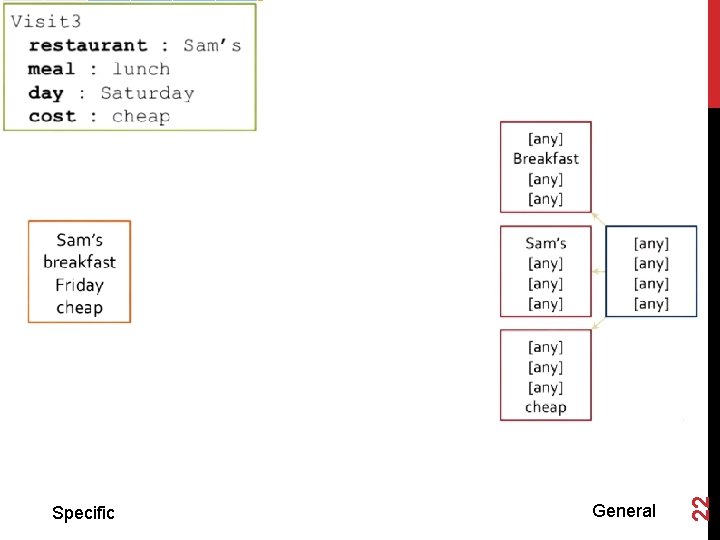


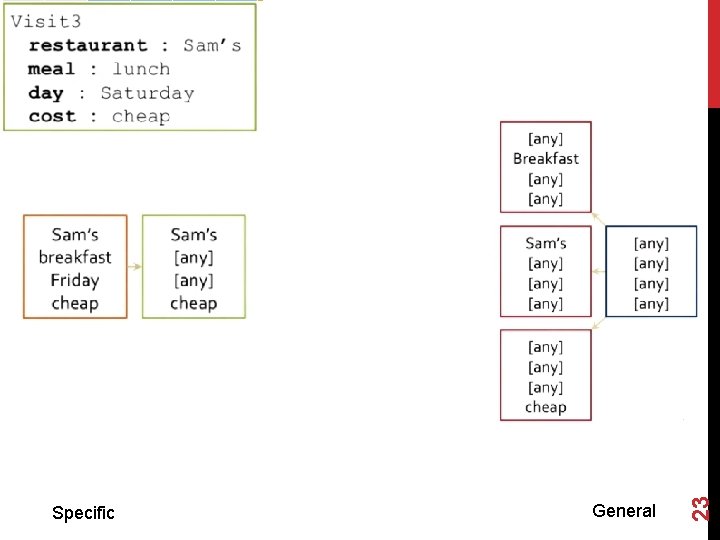

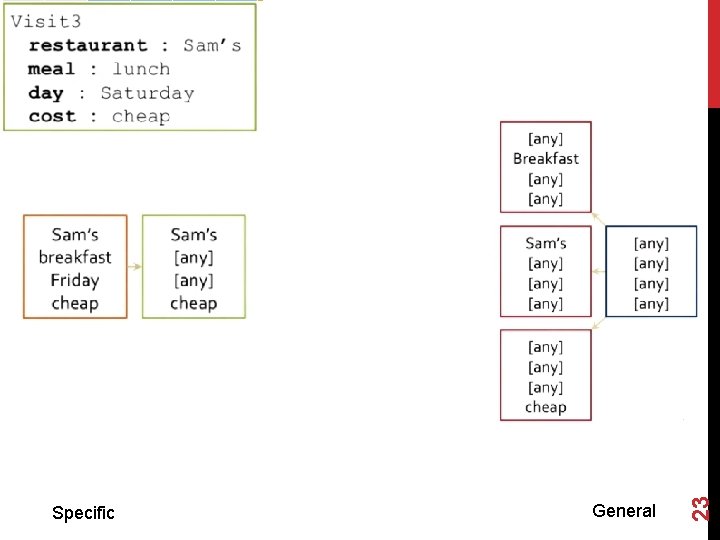


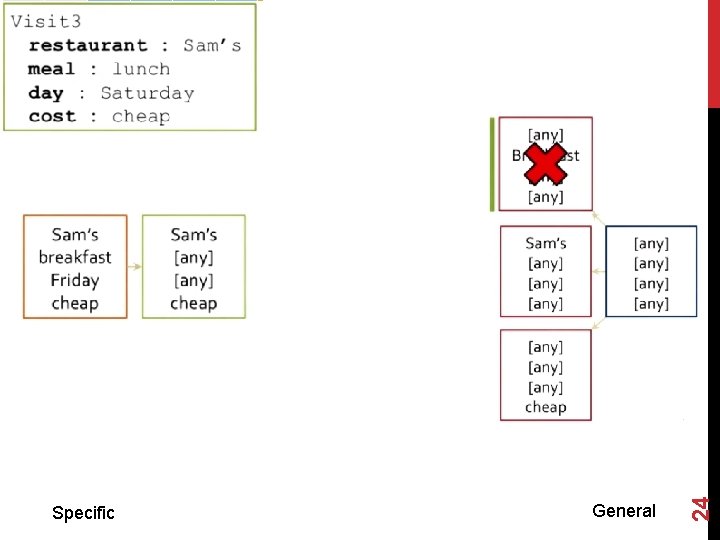

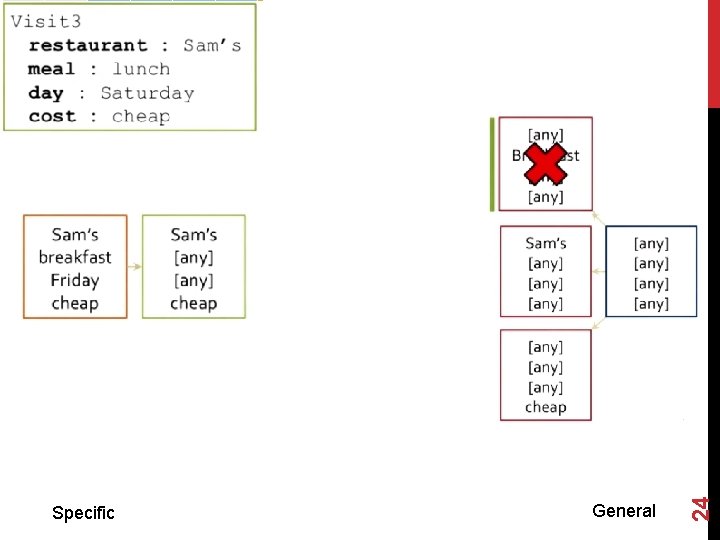


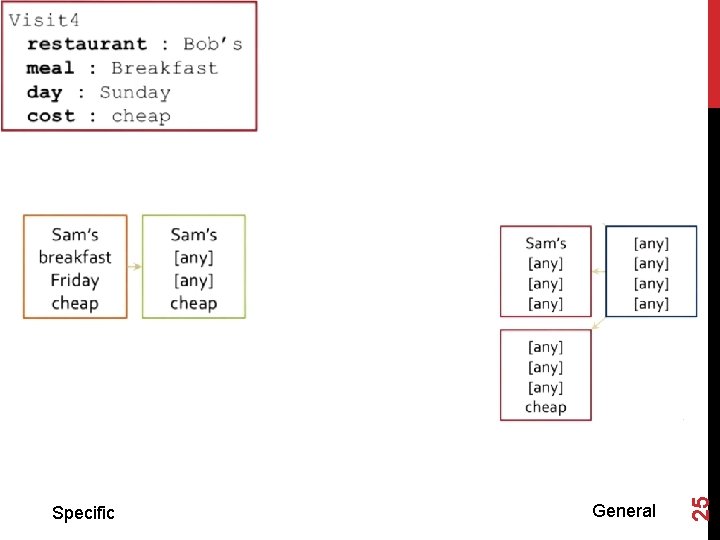

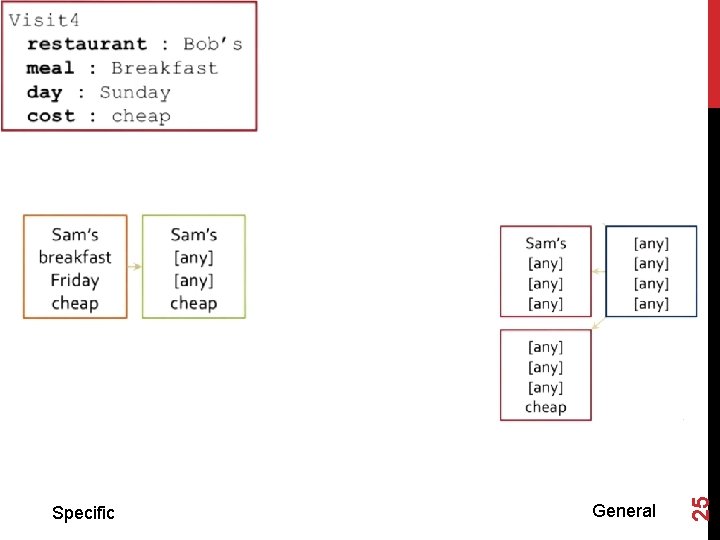


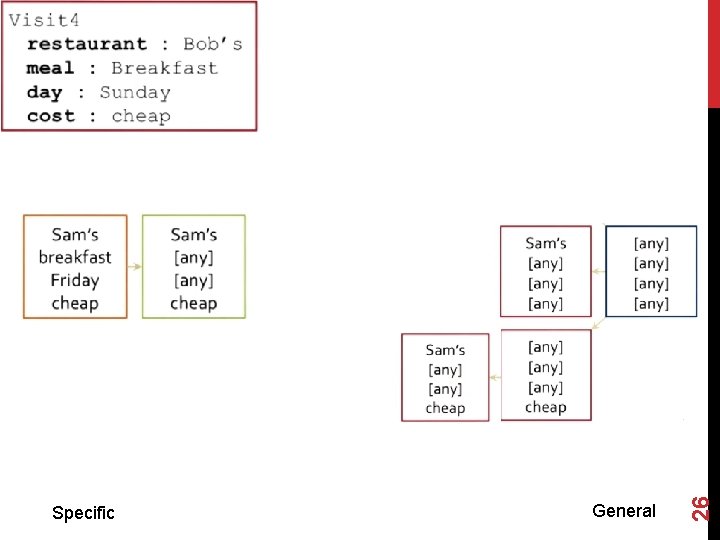

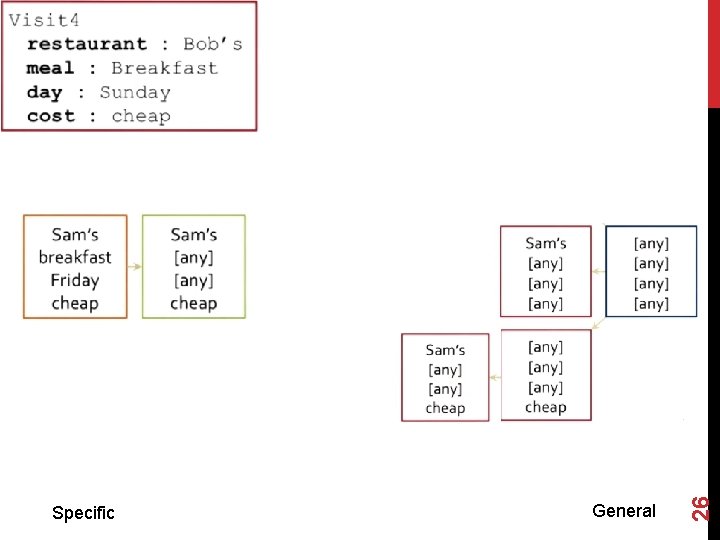


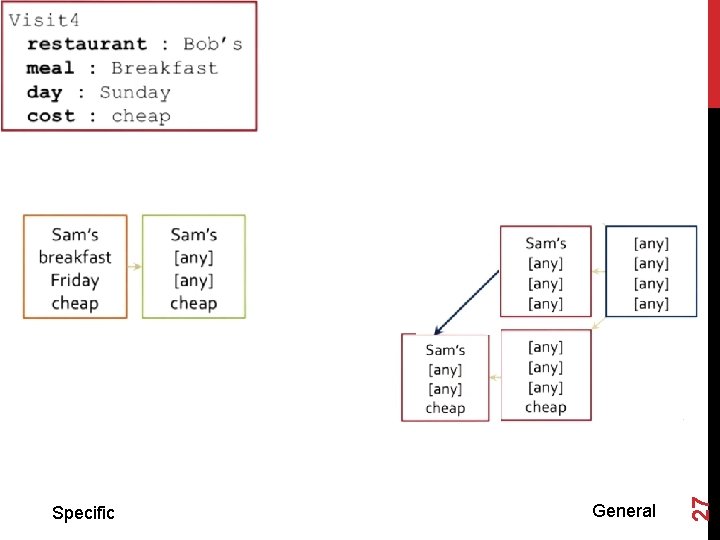

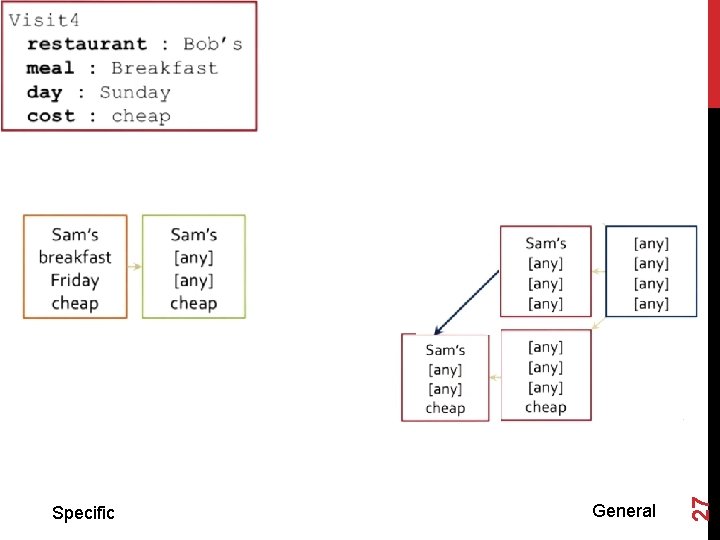


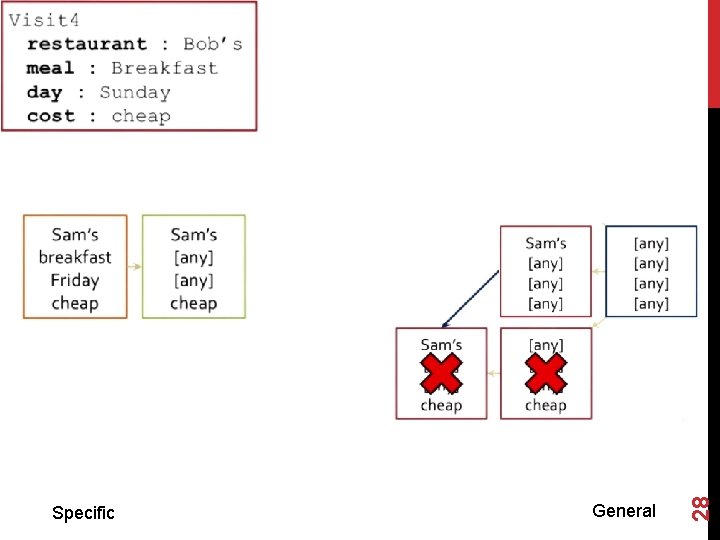

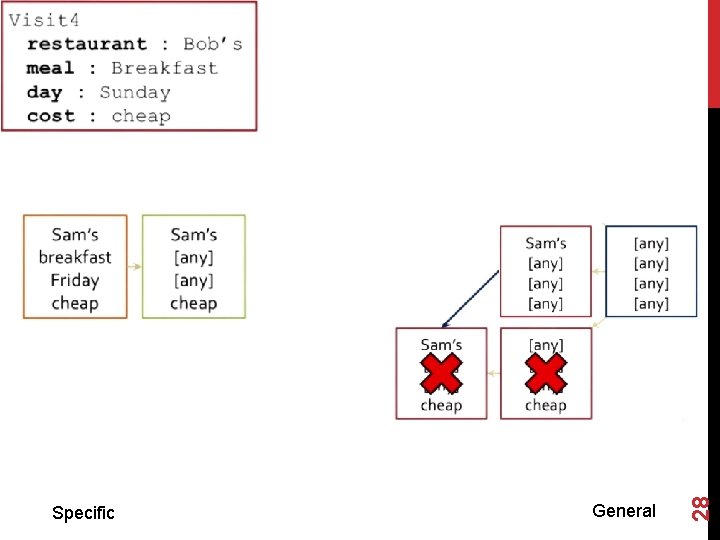


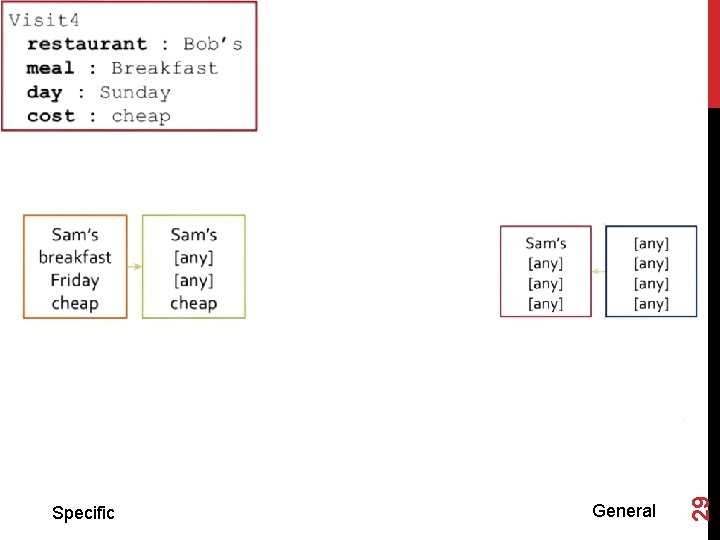

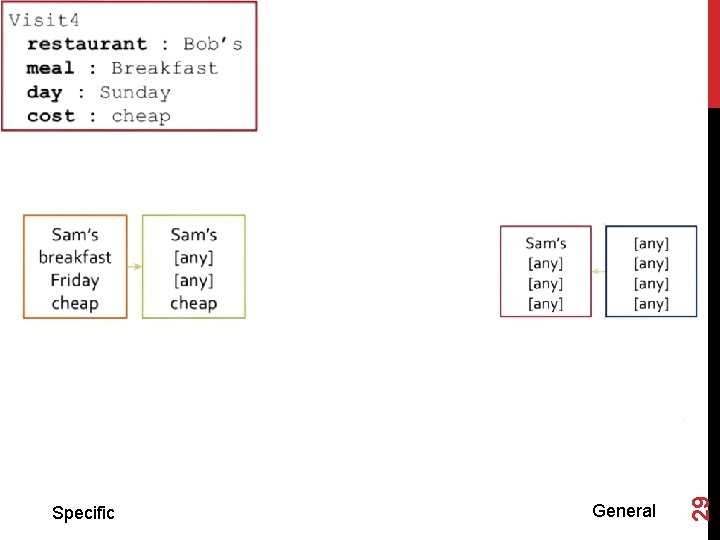


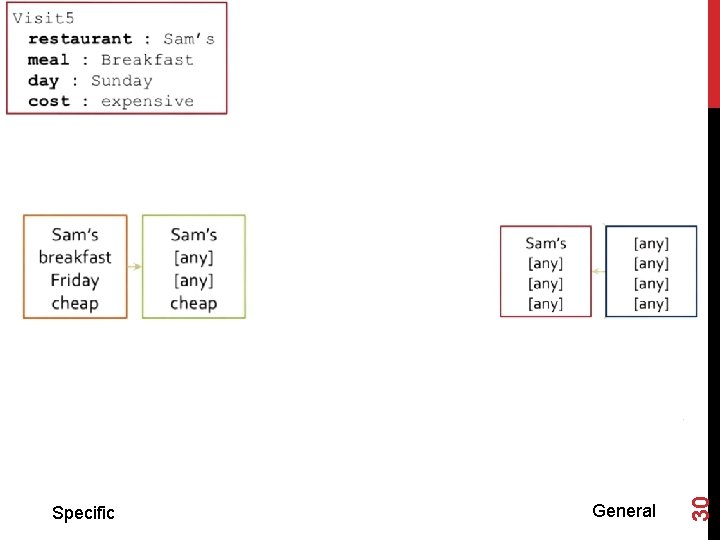

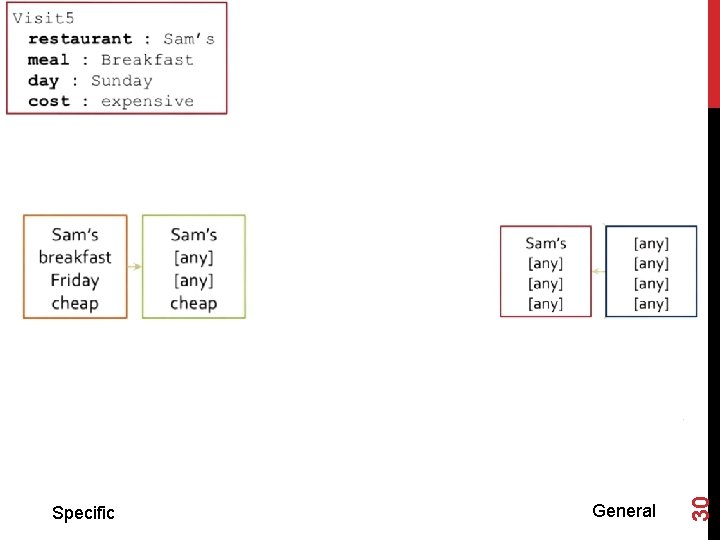


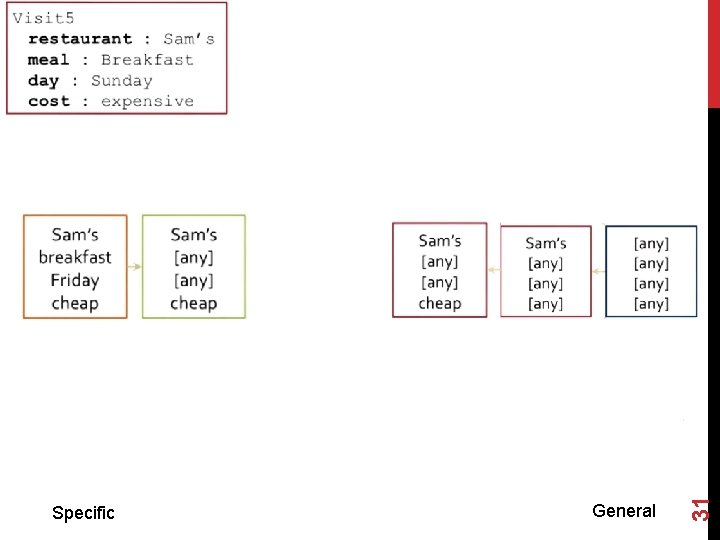

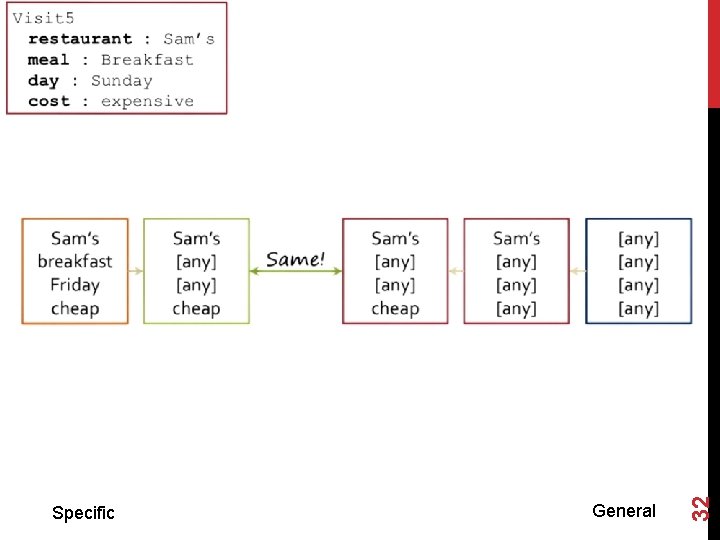

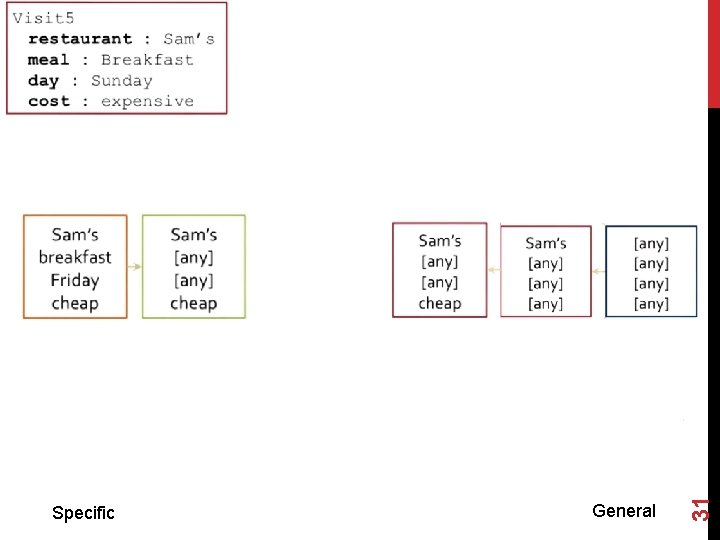

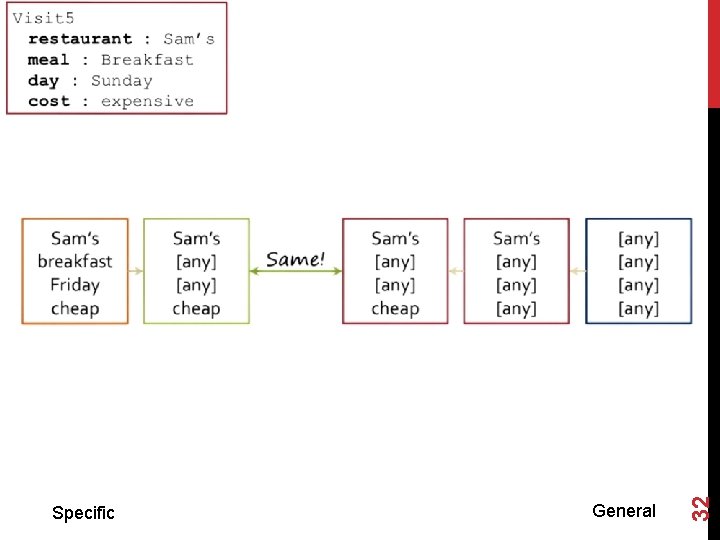


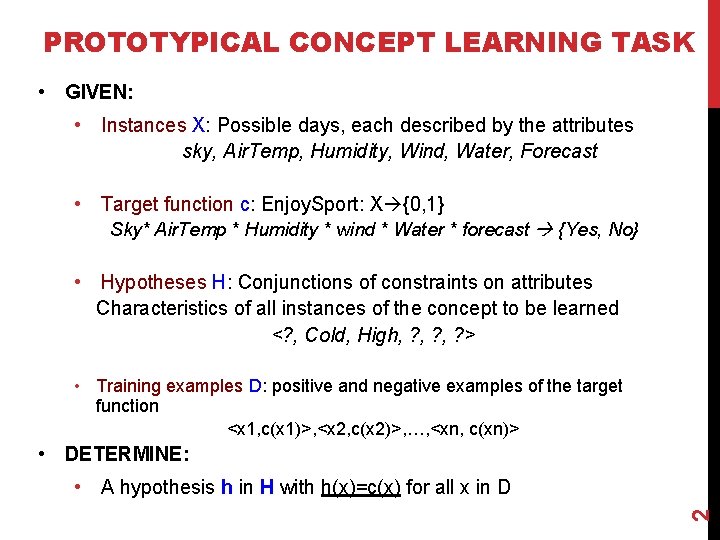

VERSION SPACES ALGORITHM

For each example:

- If the example is positive:
  - generalize all specific models to include it
  - prune away general models that cannot include it
- If the example is negative:
  - specialize all general models to exclude it
  - prune away specific models that include it
- Prune away models subsumed by other models
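A compact Python sketch of this algorithm for conjunctive hypotheses, written in the style of Mitchell's CANDIDATE-ELIMINATION algorithm: it keeps only S (the specific models) and G (the general models). Assumptions: the tuple encoding (`"?"` = any value, `None` = ∅), Mitchell's EnjoySport domains, and EnjoySport examples 1–4; `version_space` and the helper names are hypothetical.

```python
# Assumed EnjoySport domains (Mitchell's example) and examples 1-4
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
    ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change"),
]
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]


def covers(h, x):
    return all(c is not None and (c == "?" or c == v) for c, v in zip(h, x))


def more_general_or_equal(h1, h2):
    """True if h1 covers every instance that h2 covers."""
    if None in h2:
        return True                       # h2 covers nothing
    return all(a == "?" or a == b for a, b in zip(h1, h2))


def min_generalize(s, x):
    return tuple(v if c is None else c if c in ("?", v) else "?"
                 for c, v in zip(s, x))


def min_specializations(g, x, domains):
    return [g[:i] + (value,) + g[i + 1:]
            for i, (c, v) in enumerate(zip(g, x)) if c == "?"
            for value in domains[i] if value != v]


def version_space(examples, domains):
    n = len(domains)
    S = {(None,) * n}                     # most specific model
    G = {("?",) * n}                      # most general model
    for x, positive in examples:
        if positive:
            # Prune away general models that cannot include x
            G = {g for g in G if covers(g, x)}
            # Generalize specific models to include x, keeping those below G.
            # (With conjunctive H, S stays a single hypothesis, so no
            # S-vs-S subsumption pruning is needed here.)
            S = {min_generalize(s, x) for s in S}
            S = {s for s in S if any(more_general_or_equal(g, s) for g in G)}
        else:
            # Prune away specific models that include x
            S = {s for s in S if not covers(s, x)}
            # Specialize general models to exclude x, keeping those above S
            new_G = set()
            for g in G:
                if not covers(g, x):
                    new_G.add(g)
                    continue
                new_G.update(h for h in min_specializations(g, x, domains)
                             if any(more_general_or_equal(h, s) for s in S))
            # Prune away models subsumed by other (more general) models in G
            G = {g for g in new_G
                 if not any(h != g and more_general_or_equal(h, g) for h in new_G)}
    return S, G


S, G = version_space(D, DOMAINS)
print("S:", S)   # {('Sunny', 'Warm', '?', 'Strong', '?', '?')}
print("G:", G)   # <Sunny, ?, ?, ?, ?, ?> and <?, Warm, ?, ?, ?, ?> (set order may vary)
```

Keeping only specializations that remain more general than some member of S guarantees that G never excludes a positive example already seen.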

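COMPACT REPRESENTATION OF VERSION SPACE

- G (the general boundary): the set of the most general hypotheses of H consistent with the training data D:

$$G = \{\, g \in H \mid \mathrm{consistent}(g, D) \wedge \neg\exists g' \in H : (g' >_g g) \wedge \mathrm{consistent}(g', D) \,\}$$

- S (the specific boundary): the set of the most specific hypotheses of H consistent with the training data D:

$$S = \{\, s \in H \mid \mathrm{consistent}(s, D) \wedge \neg\exists s' \in H : (s >_g s') \wedge \mathrm{consistent}(s', D) \,\}$$

Here $h_j >_g h_k$ means that $h_j$ is strictly more general than $h_k$.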
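The two definitions can be checked by brute force on the small EnjoySport space: enumerate H, keep the consistent hypotheses, then take the maximally general ones (G) and the maximally specific ones (S) under $>_g$. A sketch, assuming the tuple encoding (`"?"` = any value, `None` = ∅) and Mitchell's EnjoySport domains; `strictly_more_general` is a hypothetical helper implementing $>_g$ for this encoding.

```python
from itertools import product

# Assumed EnjoySport domains (Mitchell's example) and examples 1-4
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
    ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change"),
]
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]


def covers(h, x):
    return all(c is not None and (c == "?" or c == v) for c, v in zip(h, x))


def more_general_or_equal(h1, h2):
    if None in h2:
        return True                       # h2 covers no instance
    return all(a == "?" or a == b for a, b in zip(h1, h2))


def strictly_more_general(h1, h2):       # h1 >_g h2
    return more_general_or_equal(h1, h2) and not more_general_or_equal(h2, h1)


def consistent(h, examples):
    return all(covers(h, x) == label for x, label in examples)


H = list(product(*[("?",) + d for d in DOMAINS])) + [(None,) * len(DOMAINS)]
consistent_hs = [h for h in H if consistent(h, D)]

# G: consistent hypotheses with no strictly more general consistent hypothesis
G = {g for g in consistent_hs
     if not any(strictly_more_general(g2, g) for g2 in consistent_hs)}
# S: consistent hypotheses with no strictly more specific consistent hypothesis
S = {s for s in consistent_hs
     if not any(strictly_more_general(s, s2) for s2 in consistent_hs)}
print("S:", S)
print("G:", G)
```

It yields S = {`<Sunny, Warm, ?, Strong, ?, ?>`} and G = {`<Sunny, ?, ?, ?, ?, ?>`, `<?, Warm, ?, ?, ?, ?>`}. Each of the six consistent hypotheses lies between a member of S and a member of G, which is why the two boundaries suffice to represent the whole version space.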