MACHINE LEARNING LECTURE 2: CONCEPT LEARNING AND VERSION SPACES

- Slides: 18

Based on Chapter 2 of Mitchell’s book

OUTLINE
• Concept learning from examples
• Hypothesis representation
• General-to-specific ordering of hypotheses
• The Find-S algorithm

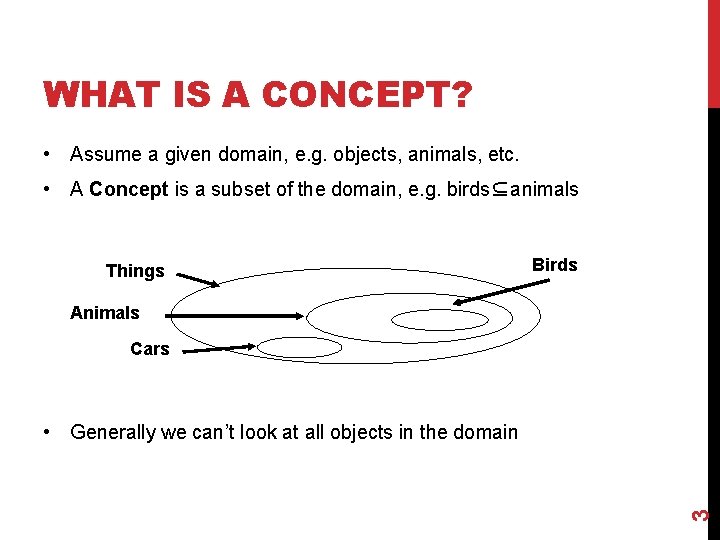

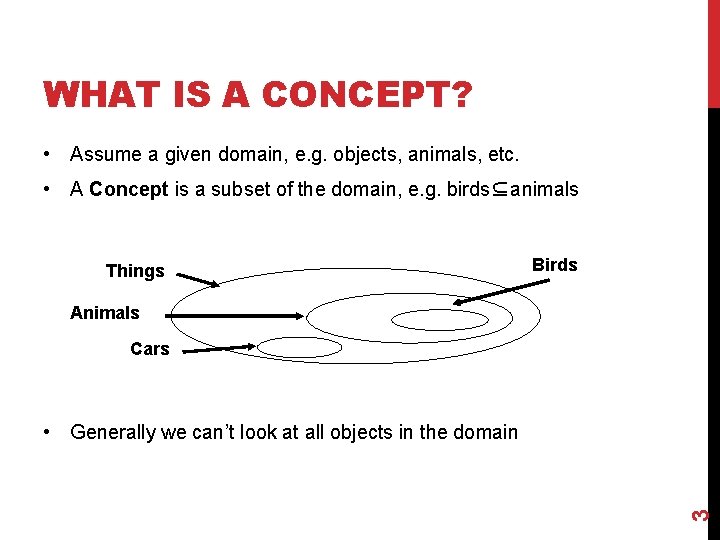

WHAT IS A CONCEPT?
• Assume a given domain, e.g., objects, animals, etc.
• A concept is a subset of the domain, e.g., birds ⊆ animals
[Figure: Birds inside Animals, alongside Cars, all within the set of Things]
• Generally we can’t look at all objects in the domain

WHAT IS A CONCEPT?
• A concept can be defined as a Boolean-valued function c defined over the larger set
• Example: a function defined over all things (animals, cars, ...) whose value is true for birds and false for everything else:
  c(bird) = true, c(cat) = false, c(car) = false, c(pigeon) = true
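As a minimal sketch in Python, assuming a hypothetical toy domain made of just the four things named above, the concept "bird" can be written as a Boolean-valued function; the concept as a subset is then the set of things for which the function returns true. `DOMAIN` and `BIRDS` are illustrative names, not part of the lecture.

```python
# Hypothetical toy domain: the four things used in the example above.
DOMAIN = {"bird", "cat", "car", "pigeon"}

# Hypothetical membership list standing in for real knowledge about birds.
BIRDS = {"bird", "pigeon"}


def c(x: str) -> bool:
    """Target concept 'bird' as a Boolean-valued function over DOMAIN."""
    return x in BIRDS


# The same concept viewed as a subset of the domain.
concept_as_subset = {x for x in DOMAIN if c(x)}

print(c("pigeon"), c("car"))      # True False
print(sorted(concept_as_subset))  # ['bird', 'pigeon']
```

The two views carry the same information: the function picks out the subset, and the subset determines the function.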

WHAT IS CONCEPT LEARNING?
• Given a set of examples labeled as members (positive) or non-members (negative) of a concept:
• Infer the general definition of this concept
• Approximate c from training examples:
• Infer the best concept description from the set of all possible hypotheses

EXAMPLE OF A CONCEPT LEARNING TASK
• Concept: good days on which my friend enjoys water sport (values: Yes, No)
• Task: predict the value of "Enjoy Sport" for an arbitrary day based on the values of the other attributes
• Result: a classifier for days

Each row is an instance; Sky through Forecast are its attributes:

| Sky | Temp | Humid | Wind | Water | Forecast | Enjoy Sport |
|---|---|---|---|---|---|---|
| Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| Rainy | Cold | High | Strong | Warm | Change | No |
| Sunny | Warm | High | Strong | Cool | Change | Yes |

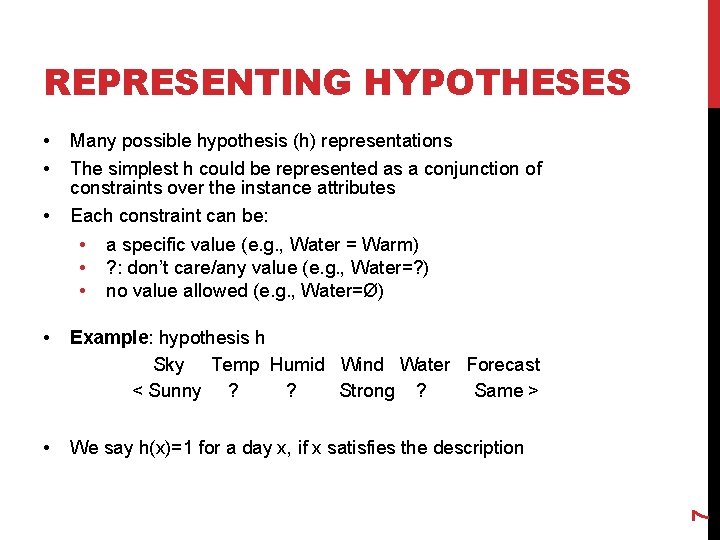

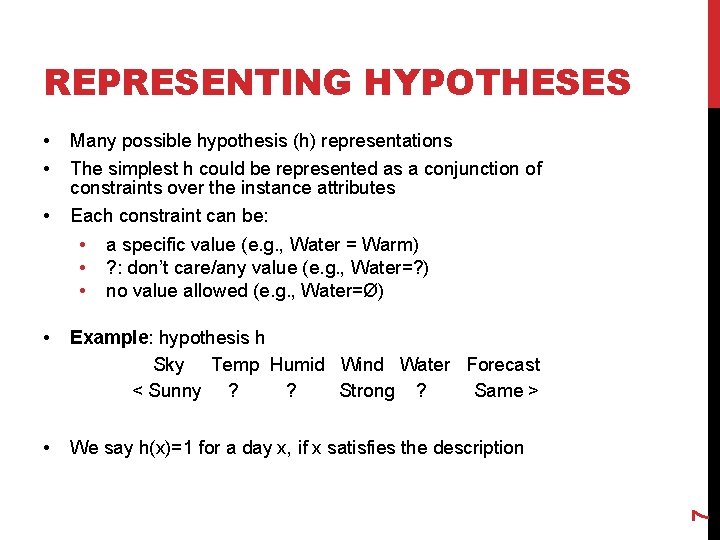

REPRESENTING HYPOTHESES
• Many hypothesis (h) representations are possible
• The simplest h can be represented as a conjunction of constraints over the instance attributes
• Each constraint can be:
  • a specific value (e.g., Water = Warm)
  • ? : don’t care / any value (e.g., Water = ?)
  • Ø : no value allowed (e.g., Water = Ø)
• Example: hypothesis h over the attributes <Sky, Temp, Humid, Wind, Water, Forecast>:
  h = <Sunny, ?, ?, Strong, ?, Same>
• We say h(x) = 1 for a day x if x satisfies the description

MOST GENERAL/SPECIFIC HYPOTHESIS
• Most general hypothesis <?, ?, ?, ?, ?, ?>: every day is a positive example
• Most specific hypothesis <Ø, Ø, Ø, Ø, Ø, Ø>: no day is a positive example
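A minimal Python sketch of the conjunctive representation, assuming hypotheses and days are stored as 6-tuples of strings with "?" and "Ø" as the special constraints (our encoding choice, not the lecture's). The helper `h_of` is a hypothetical name for computing h(x); it also confirms that the most general hypothesis accepts every day and the most specific accepts none.

```python
from itertools import product

ANY, EMPTY = "?", "Ø"  # "don't care" and "no value allowed"

# EnjoySport attribute values in slide order (Water assumed Warm/Cool,
# matching the example days).
VALUES = [
    ["Sunny", "Cloudy", "Rainy"],  # Sky
    ["Warm", "Cold"],              # AirTemp
    ["Normal", "High"],            # Humidity
    ["Strong", "Weak"],            # Wind
    ["Warm", "Cool"],              # Water
    ["Same", "Change"],            # Forecast
]


def h_of(h, x):
    """Return 1 if day x satisfies every constraint of conjunctive hypothesis h, else 0."""
    # A constraint is satisfied if it is "?" or equals the attribute value;
    # "Ø" never equals a value, so it rejects every day.
    return int(all(c == ANY or c == v for c, v in zip(h, x)))


h = ("Sunny", ANY, ANY, "Strong", ANY, "Same")
day = ("Sunny", "Warm", "High", "Strong", "Cool", "Same")
print(h_of(h, day))  # 1

most_general = (ANY,) * 6
most_specific = (EMPTY,) * 6
all_days = list(product(*VALUES))
print(sum(h_of(most_general, x) for x in all_days))   # 96: every day is positive
print(sum(h_of(most_specific, x) for x in all_days))  # 0: no day is positive
```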

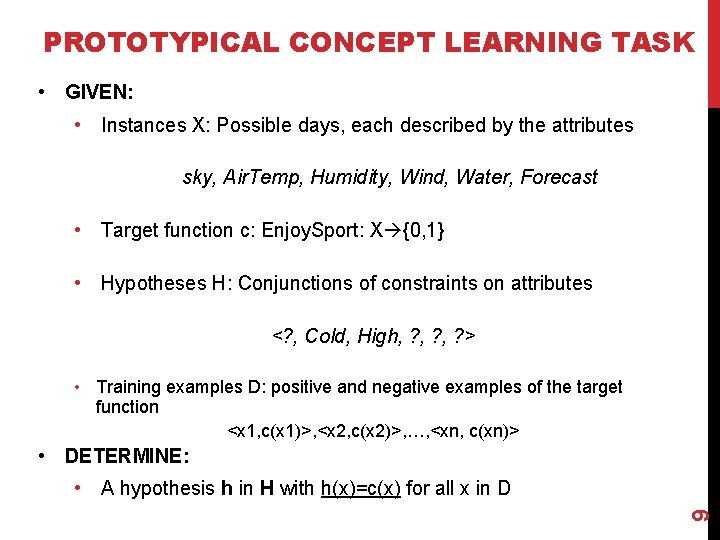

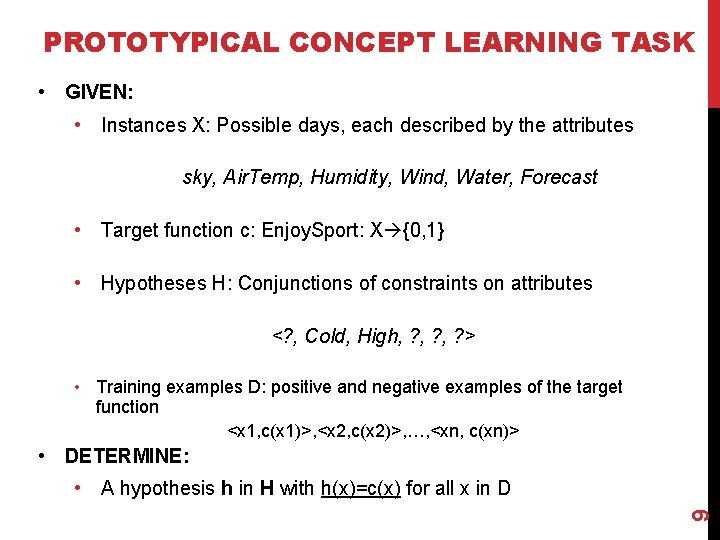

PROTOTYPICAL CONCEPT LEARNING TASK
• GIVEN:
  • Instances X: possible days, each described by the attributes Sky, AirTemp, Humidity, Wind, Water, Forecast
  • Target function c: EnjoySport: X → {0, 1}
  • Hypotheses H: conjunctions of constraints on attributes, e.g., <?, Cold, High, ?, ?, ?>
  • Training examples D: positive and negative examples of the target function: <x1, c(x1)>, <x2, c(x2)>, …, <xn, c(xn)>
• DETERMINE:
  • A hypothesis h in H with h(x) = c(x) for all x in D
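The DETERMINE step asks for a hypothesis consistent with D. A minimal Python sketch of that check, assuming the tuple encoding with "?" for "don't care"; `consistent` and `h_of` are hypothetical helper names, and D here is the four EnjoySport days used in the Find-S example.

```python
ANY = "?"


def h_of(h, x):
    """1 if day x satisfies every constraint of conjunctive hypothesis h, else 0."""
    return int(all(c == ANY or c == v for c, v in zip(h, x)))


def consistent(h, D):
    """True iff h(x) == c(x) for every training example <x, c(x)> in D."""
    return all(h_of(h, x) == cx for x, cx in D)


# Training examples as (x, c(x)); attributes in the order
# Sky, AirTemp, Humidity, Wind, Water, Forecast.
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

print(consistent(("Sunny", "Warm", ANY, "Strong", ANY, ANY), D))  # True
print(consistent((ANY,) * 6, D))  # False: it also accepts the negative day 3
```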

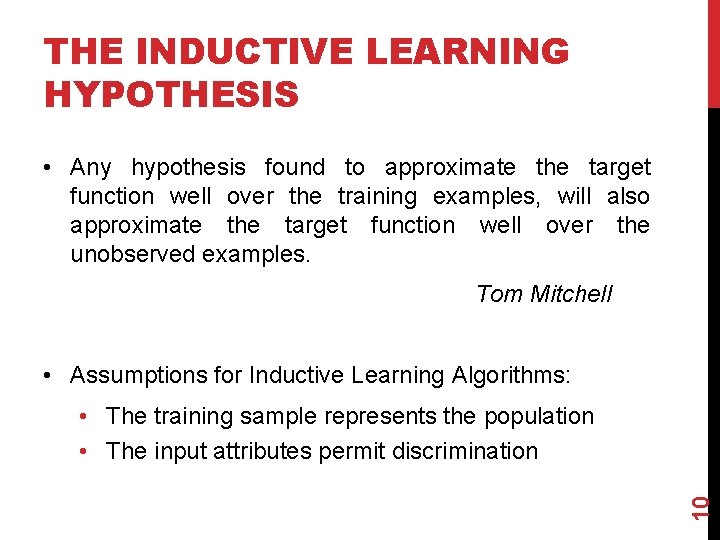

THE INDUCTIVE LEARNING HYPOTHESIS
• "Any hypothesis found to approximate the target function well over a sufficiently large set of training examples will also approximate the target function well over other unobserved examples." (Tom Mitchell)
• Assumptions for inductive learning algorithms:
  • The training sample represents the population
  • The input attributes permit discrimination

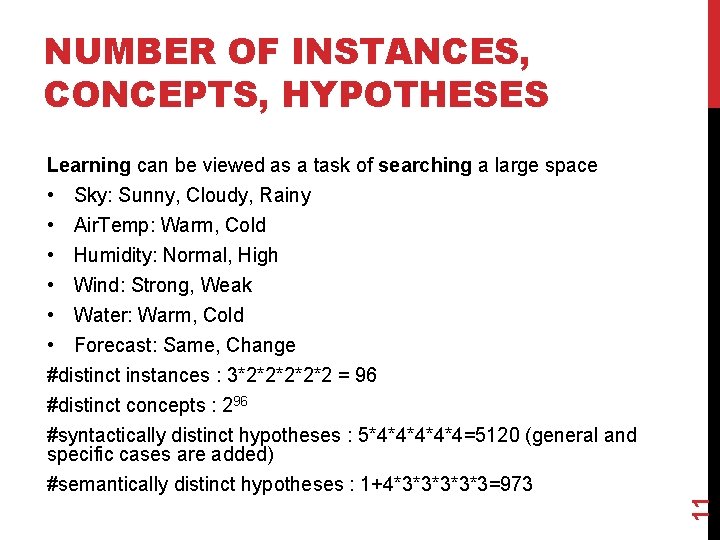

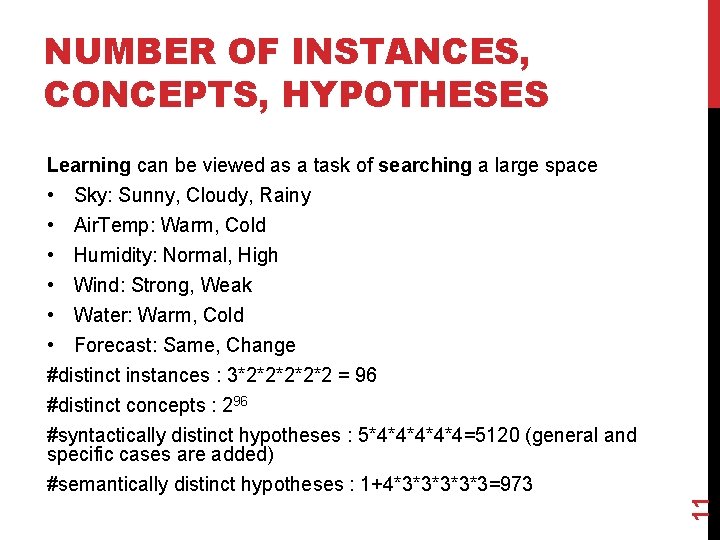

NUMBER OF INSTANCES, CONCEPTS, HYPOTHESES
• Sky: Sunny, Cloudy, Rainy
• AirTemp: Warm, Cold
• Humidity: Normal, High
• Wind: Strong, Weak
• Water: Warm, Cool
• Forecast: Same, Change

#distinct instances: 3·2·2·2·2·2 = 96
#distinct concepts: 2^96 (one for each subset of the instances)
#syntactically distinct hypotheses: 5·4·4·4·4·4 = 5120 (each attribute's values plus ? and Ø)
#semantically distinct hypotheses: 1 + 4·3·3·3·3·3 = 973 (every hypothesis containing Ø classifies all instances as negative, so all of them count as one; the remaining hypotheses use each attribute's values plus ?)
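The counts above can be reproduced with a short Python sketch, assuming "?" and "Ø" are encoded as strings. Besides the closed-form products, it checks the semantic count by brute force: it enumerates all 5120 hypotheses and counts the distinct sets of instances they accept. `VALUES` and `covers` are illustrative names.

```python
import math
from itertools import product

# Attribute values as listed above.
VALUES = {
    "Sky": ["Sunny", "Cloudy", "Rainy"],
    "AirTemp": ["Warm", "Cold"],
    "Humidity": ["Normal", "High"],
    "Wind": ["Strong", "Weak"],
    "Water": ["Warm", "Cool"],
    "Forecast": ["Same", "Change"],
}
sizes = [len(v) for v in VALUES.values()]

n_instances = math.prod(sizes)                    # 3*2*2*2*2*2
n_concepts = 2 ** n_instances                     # one concept per subset of instances
n_syntactic = math.prod(k + 2 for k in sizes)     # each attribute: its values, "?", "Ø"
n_semantic = 1 + math.prod(k + 1 for k in sizes)  # all "Ø" hypotheses count once


def covers(h, x):
    """True if instance x satisfies every constraint of hypothesis h."""
    return all(c == "?" or c == v for c, v in zip(h, x))


# Brute-force check: two hypotheses are semantically the same
# if they accept exactly the same set of instances.
instances = list(product(*VALUES.values()))
hypotheses = product(*[vals + ["?", "Ø"] for vals in VALUES.values()])
extensions = {
    frozenset(i for i, x in enumerate(instances) if covers(h, x)) for h in hypotheses
}

print(n_instances, n_syntactic, n_semantic, len(extensions))  # 96 5120 973 973
```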

CONCEPT LEARNING AS SEARCH
• Learning can be viewed as a task of searching a large space of hypotheses
• Different learning algorithms search this space in different ways!


GENERAL-TO-SPECIFIC ORDERING OF HYPOTHESES
• Many algorithms rely on an ordering of hypotheses
• Consider two hypotheses:
  • h1 = <Sunny, ?, ?, Strong, ?, ?>
  • h2 = <Sunny, ?, ?, ?, ?, ?>
• h2 imposes fewer constraints than h1, so it classifies more instances x as positive (h(x) = 1): h2 is more general than h1!
• How to formalize this?
  • h2 is more general than or equal to h1 iff, for every instance x in X, h1(x) = 1 implies h2(x) = 1
  • We write this as h2 ≥g h1
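A minimal Python sketch of the ≥g relation, assuming the same tuple encoding with "?" and "Ø". `more_general_or_equal_by_definition` follows the definition literally by enumerating all 96 instances. `more_general_or_equal` is our own attribute-wise shortcut for conjunctive hypotheses (each constraint of hj is "?" or equal to hk's), valid here because every attribute has at least two values; neither name comes from the lecture.

```python
from itertools import product

ANY, EMPTY = "?", "Ø"

VALUES = [  # Sky, AirTemp, Humidity, Wind, Water, Forecast
    ["Sunny", "Cloudy", "Rainy"], ["Warm", "Cold"], ["Normal", "High"],
    ["Strong", "Weak"], ["Warm", "Cool"], ["Same", "Change"],
]
INSTANCES = list(product(*VALUES))


def covers(h, x):
    """True if instance x satisfies every constraint of hypothesis h."""
    return all(c == ANY or c == v for c, v in zip(h, x))


def more_general_or_equal_by_definition(hj, hk):
    """hj >=g hk iff every instance that hk classifies positive, hj does too."""
    return all(covers(hj, x) for x in INSTANCES if covers(hk, x))


def more_general_or_equal(hj, hk):
    """Attribute-wise test for conjunctive hypotheses (no instance enumeration)."""
    if EMPTY in hk:  # hk accepts no instance, so any hj is at least as general
        return True
    return all(a == ANY or a == b for a, b in zip(hj, hk))


h1 = ("Sunny", ANY, ANY, "Strong", ANY, ANY)
h2 = ("Sunny", ANY, ANY, ANY, ANY, ANY)
print(more_general_or_equal(h2, h1), more_general_or_equal(h1, h2))  # True False
```

The shortcut avoids enumerating the instance space, which matters once the number of attributes grows.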

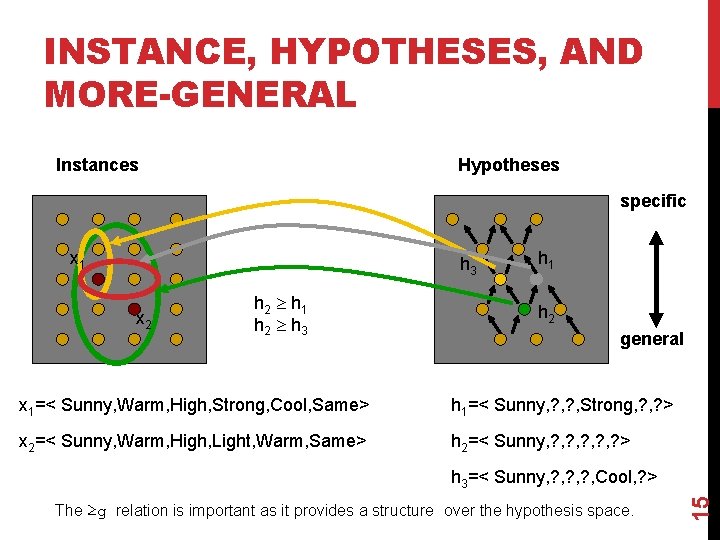

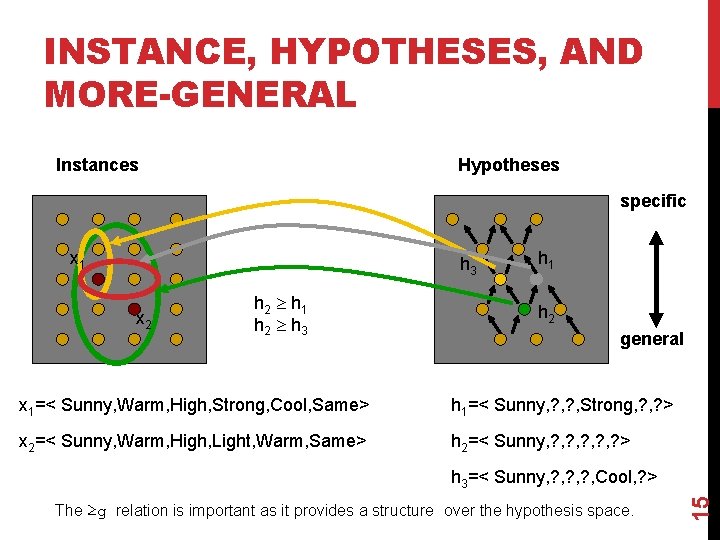

INSTANCES, HYPOTHESES, AND MORE-GENERAL-THAN
[Figure: instances x1, x2 (left) and hypotheses h1, h2, h3 (right), arranged from specific to general]
• x1 = <Sunny, Warm, High, Strong, Cool, Same>
• x2 = <Sunny, Warm, High, Light, Warm, Same>
• h1 = <Sunny, ?, ?, Strong, ?, ?>
• h2 = <Sunny, ?, ?, ?, ?, ?>
• h3 = <Sunny, ?, ?, ?, Cool, ?>
The ≥g relation is important because it provides a structure over the hypothesis space.
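The structure that ≥g provides is a partial order: some pairs of hypotheses are not comparable at all. A minimal Python sketch, assuming the tuple encoding and an attribute-wise ≥g test for conjunctive hypotheses without Ø (our shortcut, not stated in the lecture), shows which instances each hypothesis accepts and that h1 and h3 are incomparable.

```python
ANY = "?"


def covers(h, x):
    """True if instance x satisfies every constraint of hypothesis h."""
    return all(c == ANY or c == v for c, v in zip(h, x))


def more_general_or_equal(hj, hk):
    # Attribute-wise test for conjunctive hypotheses without "Ø".
    return all(a == ANY or a == b for a, b in zip(hj, hk))


x1 = ("Sunny", "Warm", "High", "Strong", "Cool", "Same")
x2 = ("Sunny", "Warm", "High", "Light", "Warm", "Same")
h1 = ("Sunny", ANY, ANY, "Strong", ANY, ANY)
h2 = ("Sunny", ANY, ANY, ANY, ANY, ANY)
h3 = ("Sunny", ANY, ANY, ANY, "Cool", ANY)

for name, h in [("h1", h1), ("h2", h2), ("h3", h3)]:
    print(name, covers(h, x1), covers(h, x2))
# h1 True False
# h2 True True
# h3 True False

print(more_general_or_equal(h2, h1), more_general_or_equal(h2, h3))  # True True
print(more_general_or_equal(h1, h3), more_general_or_equal(h3, h1))  # False False
```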

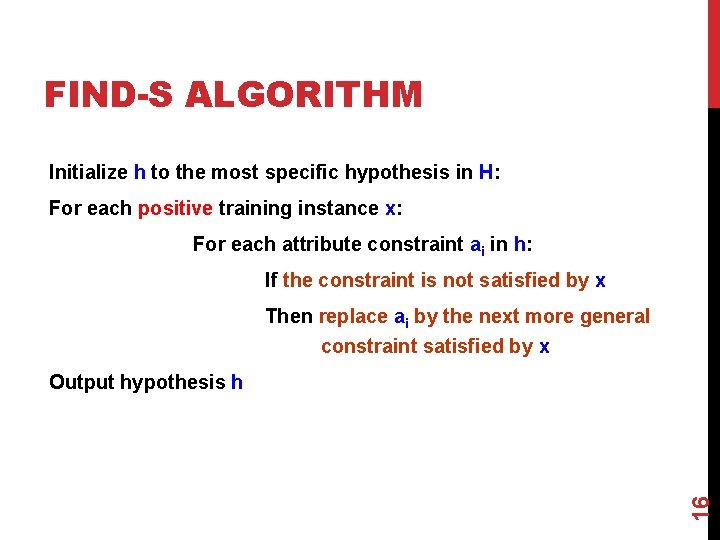

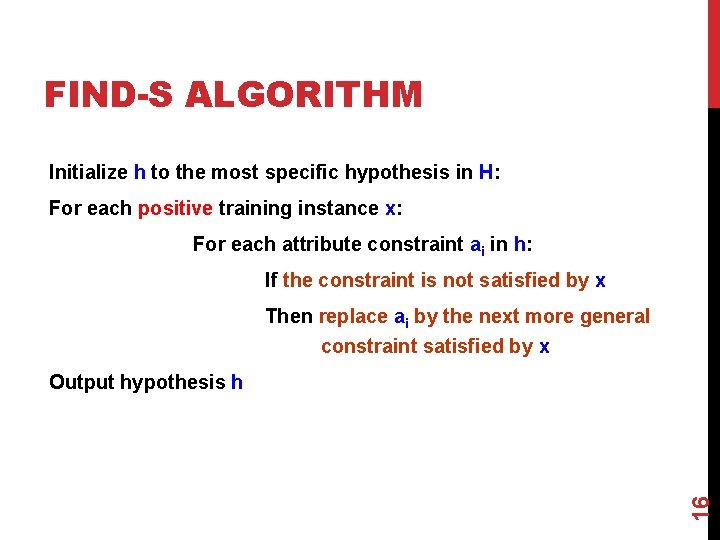

FIND-S ALGORITHM
Initialize h to the most specific hypothesis in H
For each positive training instance x:
    For each attribute constraint ai in h:
        If the constraint ai is not satisfied by x
        Then replace ai in h by the next more general constraint that is satisfied by x
Output hypothesis h
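A minimal Python sketch of Find-S for conjunctive hypotheses, assuming "?" and "Ø" are encoded as strings and examples are (tuple, bool) pairs. The function name `find_s`, the `n_attributes` parameter, and the returned trace are our own choices. For this representation, "the next more general constraint satisfied by x" is the observed value when the constraint is Ø, and "?" when it is a different specific value.

```python
ANY, EMPTY = "?", "Ø"


def find_s(examples, n_attributes):
    """Find-S for conjunctive hypotheses.

    examples: list of (x, positive) pairs, x a tuple of attribute values.
    Returns the final hypothesis and the hypothesis after each example.
    """
    h = [EMPTY] * n_attributes  # most specific hypothesis in H
    trace = []
    for x, positive in examples:
        if positive:  # negative examples are ignored
            for i, value in enumerate(x):
                if h[i] == EMPTY:  # Ø -> the observed value
                    h[i] = value
                elif h[i] != ANY and h[i] != value:
                    h[i] = ANY  # conflicting value -> "?"
        trace.append(tuple(h))
    return tuple(h), trace


# The four EnjoySport training examples
# (Sky, AirTemp, Humidity, Wind, Water, Forecast).
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]
h, trace = find_s(D, n_attributes=6)
for step in trace:
    print(step)
print("Final:", h)  # Final: ('Sunny', 'Warm', '?', 'Strong', '?', '?')
```

Because Find-S only ever generalizes and never looks at negative examples, it returns the most specific hypothesis consistent with the positives.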

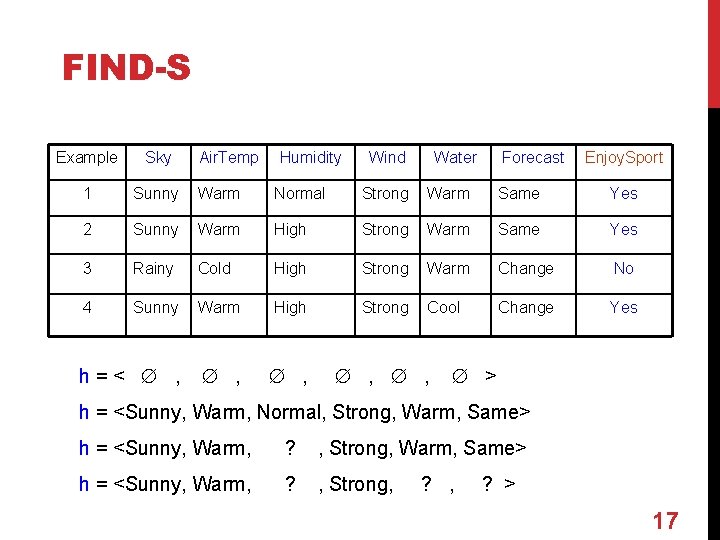

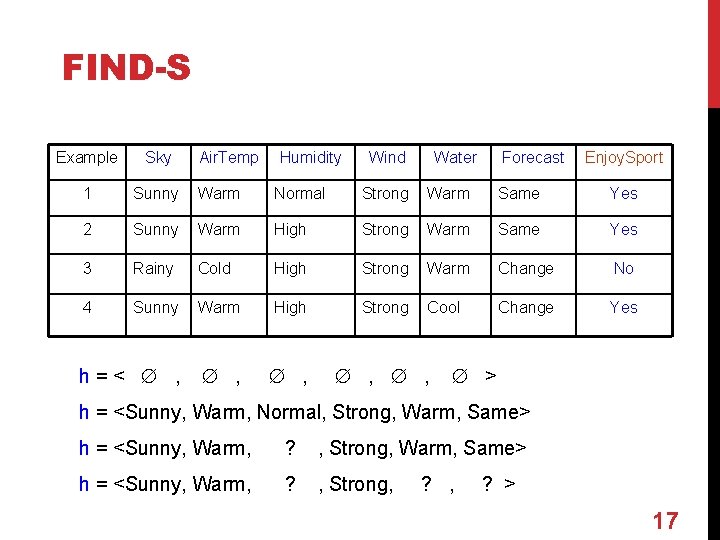

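FIND-S Example

| # | Sky | AirTemp | Humidity | Wind | Water | Forecast | EnjoySport |
|---|---|---|---|---|---|---|---|
| 1 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 2 | Sunny | Warm | High | Strong | Warm | Same | Yes |
| 3 | Rainy | Cold | High | Strong | Warm | Change | No |
| 4 | Sunny | Warm | High | Strong | Cool | Change | Yes |

• Initially: h = <Ø, Ø, Ø, Ø, Ø, Ø>
• After example 1: h = <Sunny, Warm, Normal, Strong, Warm, Same>
• After example 2: h = <Sunny, Warm, ?, Strong, Warm, Same>
• Example 3 is negative, so h is unchanged
• After example 4: h = <Sunny, Warm, ?, Strong, ?, ?>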

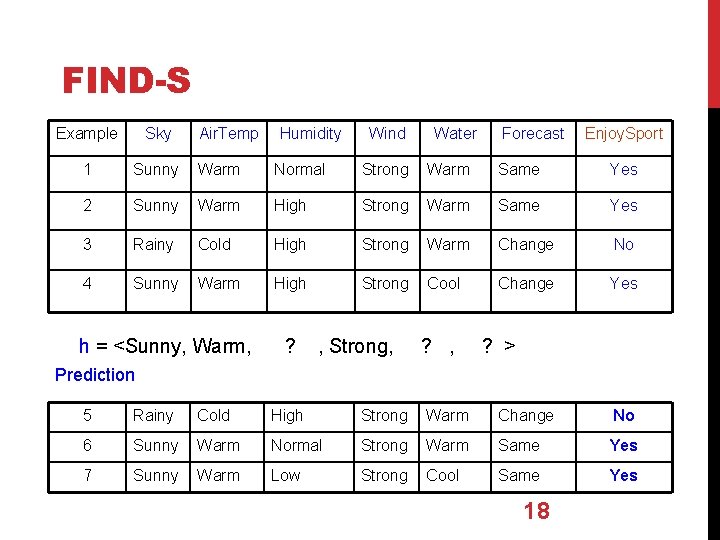

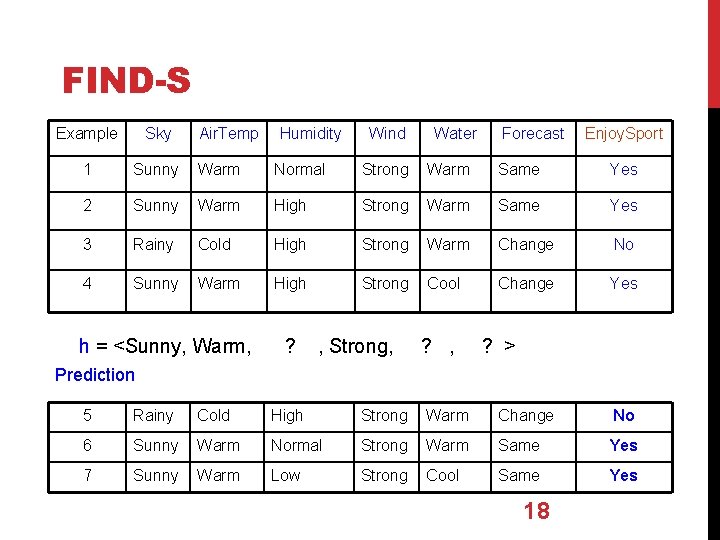

FIND-S Example (prediction)
• Hypothesis learned from training examples 1-4: h = <Sunny, Warm, ?, Strong, ?, ?>
• Predictions of h on new days:

| # | Sky | AirTemp | Humidity | Wind | Water | Forecast | Prediction |
|---|---|---|---|---|---|---|---|
| 5 | Rainy | Cold | High | Strong | Warm | Change | No |
| 6 | Sunny | Warm | Normal | Strong | Warm | Same | Yes |
| 7 | Sunny | Warm | Low | Strong | Cool | Same | Yes |
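A minimal Python sketch of how the learned hypothesis classifies new days, assuming the tuple encoding with "?" for "don't care"; `predict` is a hypothetical helper name and h is hard-coded to the Find-S output.

```python
ANY = "?"


def predict(h, x):
    """Classify day x as 'Yes' if it satisfies every constraint of h, else 'No'."""
    return "Yes" if all(c == ANY or c == v for c, v in zip(h, x)) else "No"


h = ("Sunny", "Warm", ANY, "Strong", ANY, ANY)  # output of Find-S on examples 1-4
new_days = {
    5: ("Rainy", "Cold", "High", "Strong", "Warm", "Change"),
    6: ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
    7: ("Sunny", "Warm", "Low", "Strong", "Cool", "Same"),
}
for k, x in new_days.items():
    print(k, predict(h, x))
# 5 No
# 6 Yes
# 7 Yes
```

Day 7 has Humidity = Low, which is not among the listed Humidity values, but h places no constraint on Humidity, so the prediction is unaffected.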