Machine Learning: Latent Aspect Models (Eric Xing Lecture)

- Slides: 38

Machine Learning: Latent Aspect Models
Eric Xing, Lecture 14, August 15, 2010
Reading: see class homepage
© Eric Xing @ CMU, 2006-2010

Apoptosis + Medicine

Apoptosis + Medicine: probabilistic generative model

Apoptosis + Medicine: statistical inference

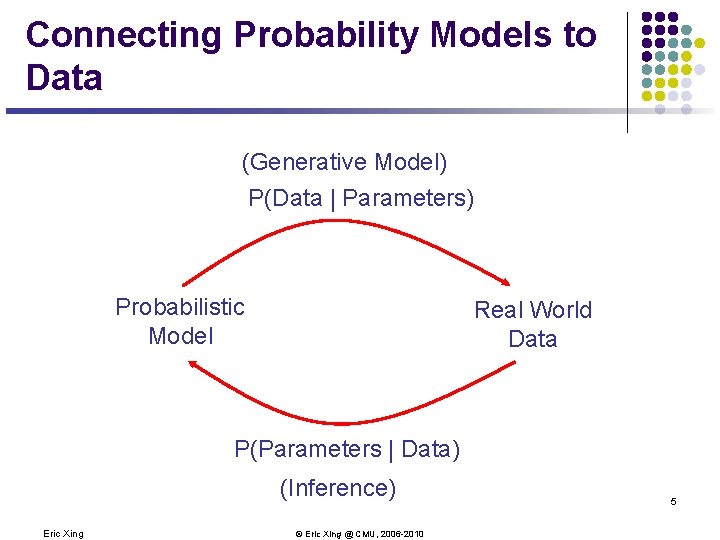

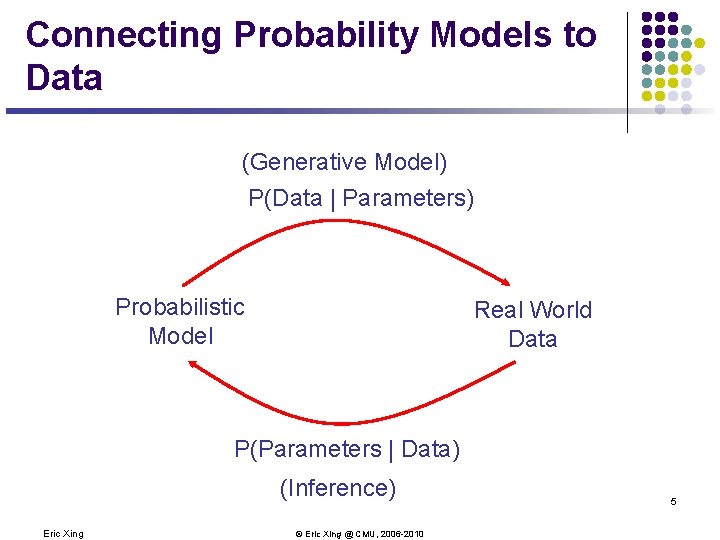

Connecting Probability Models to Data
- Generative model: Probabilistic Model → Real World Data, P(Data | Parameters)
- Inference: Real World Data → Probabilistic Model, P(Parameters | Data)
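The two directions are linked by Bayes' rule. The block below is a standard identity rather than anything specific to this lecture, and it assumes a prior P(Parameters) has been specified. The normalizer P(Data) is the quantity that later makes exact inference in topic models expensive.

```latex
P(\text{Parameters} \mid \text{Data})
  = \frac{P(\text{Data} \mid \text{Parameters})\, P(\text{Parameters})}{P(\text{Data})},
\qquad
P(\text{Data}) = \int P(\text{Data} \mid \text{Parameters})\, P(\text{Parameters})\, d\,\text{Parameters}
```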

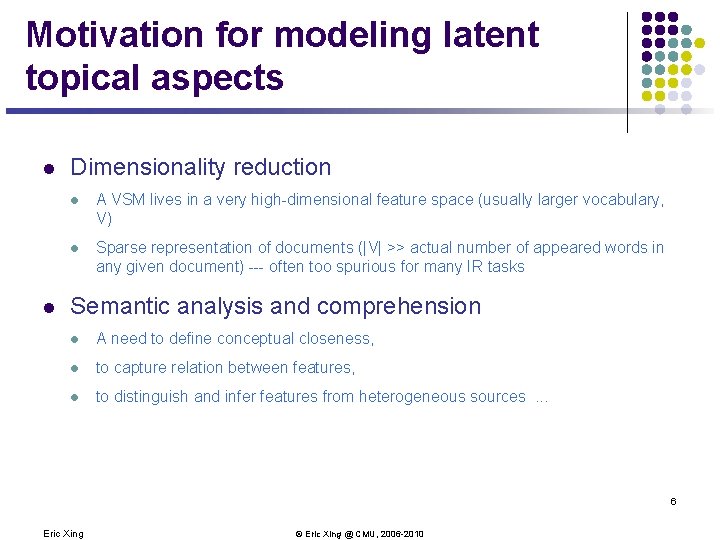

Motivation for modeling latent topical aspects
- Dimensionality reduction
  - A vector space model (VSM) lives in a very high-dimensional feature space: its dimension is the vocabulary size |V|, which is usually large
  - Sparse representation of documents (|V| >> the number of distinct words that actually appear in any given document), often too sparse to be reliable for many IR tasks
- Semantic analysis and comprehension
  - A need to define conceptual closeness,
  - to capture relations between features,
  - to distinguish and infer features from heterogeneous sources, …
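To make the sparsity point concrete, here is a minimal bag-of-words sketch. The three-document corpus is a hypothetical toy; with a realistic vocabulary of tens of thousands of words, the fraction of nonzero entries per document would be far smaller than in this example.

```python
from collections import Counter

# Toy corpus (hypothetical); real collections have much larger vocabularies.
docs = [
    "the opposition labor party fared even worse",
    "money bank loan bank money",
    "river stream bank river",
]

# Vocabulary V = all distinct words in the collection.
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}

def bag_of_words(doc):
    """Return a dense count vector of length |V| for one document."""
    vec = [0] * len(vocab)
    for w, c in Counter(doc.split()).items():
        vec[index[w]] = c
    return vec

vectors = [bag_of_words(d) for d in docs]
for d, v in zip(docs, vectors):
    nonzero = sum(1 for c in v if c > 0)
    print(f"{nonzero}/{len(vocab)} nonzero entries: {d!r}")
```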

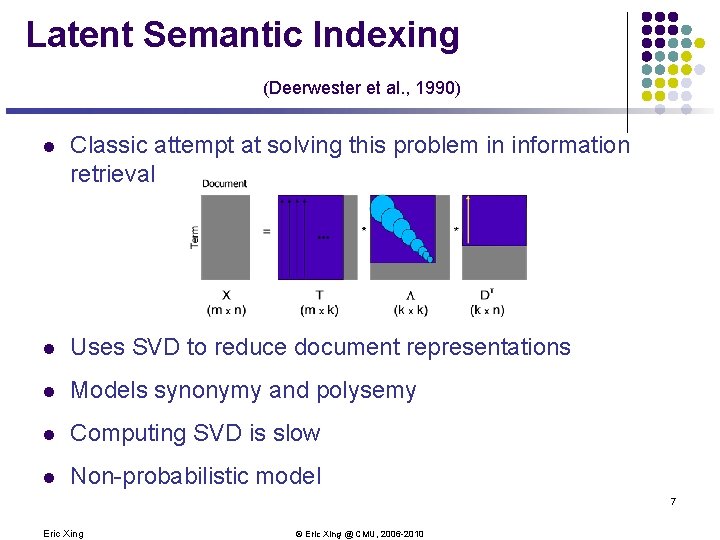

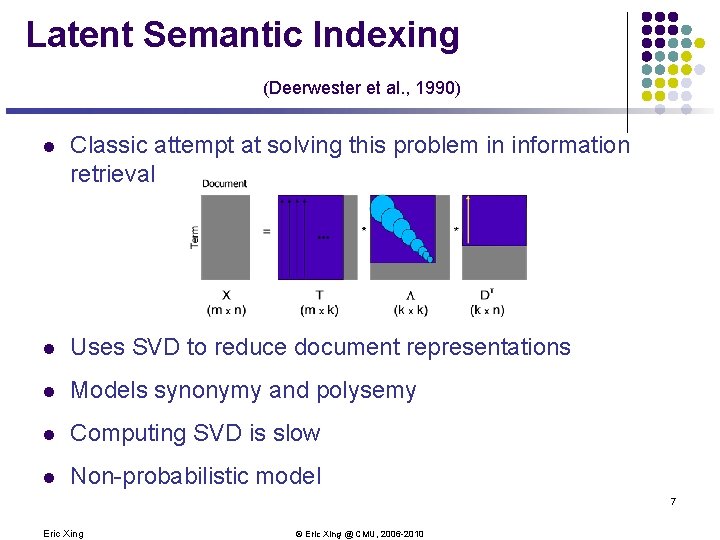

Latent Semantic Indexing (Deerwester et al., 1990)
- Classic attempt at solving this problem in information retrieval
- Uses SVD to reduce document representations
- Models synonymy and polysemy
- Computing SVD is slow
- Non-probabilistic model
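A minimal LSI sketch with NumPy, assuming a small hypothetical term-document count matrix. It keeps the k largest singular values, so each document is reduced from |V| counts to k latent coordinates. The full SVD call is where the cost mentioned above comes from.

```python
import numpy as np

# Hypothetical term-document count matrix C (rows = words, columns = documents).
words = ["money", "bank", "loan", "river", "stream"]
C = np.array([
    [3, 2, 0, 0],   # money
    [2, 3, 2, 3],   # bank
    [2, 1, 0, 0],   # loan
    [0, 0, 3, 2],   # river
    [0, 0, 2, 3],   # stream
], dtype=float)

k = 2  # number of latent dimensions to keep

# Thin SVD: C = U diag(s) Vt; then keep only the k largest singular values.
U, s, Vt = np.linalg.svd(C, full_matrices=False)
U_k, s_k, Vt_k = U[:, :k], s[:k], Vt[:k, :]

# Rank-k reconstruction: the best rank-k approximation of C in Frobenius norm.
C_k = U_k @ np.diag(s_k) @ Vt_k

# Each document becomes a k-dimensional vector (rows of (diag(s_k) @ Vt_k).T).
doc_vectors = (np.diag(s_k) @ Vt_k).T
print(doc_vectors.round(2))
```

Note that the entries of U_k and doc_vectors can be negative, which is one reason the representation has no direct probabilistic reading.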

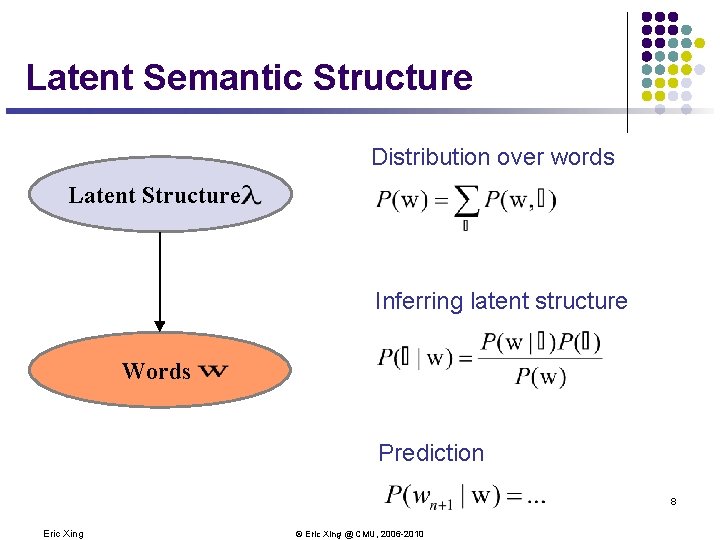

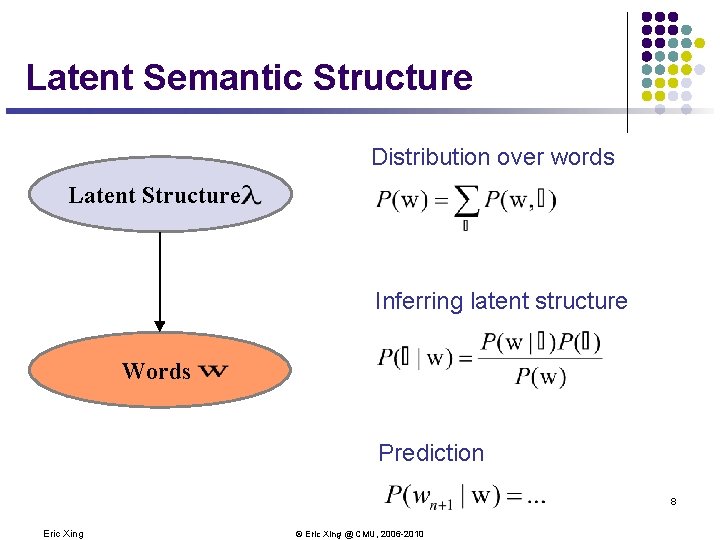

Latent Semantic Structure
- Latent structure → distribution over words → words
- Inferring the latent structure from the observed words
- Prediction

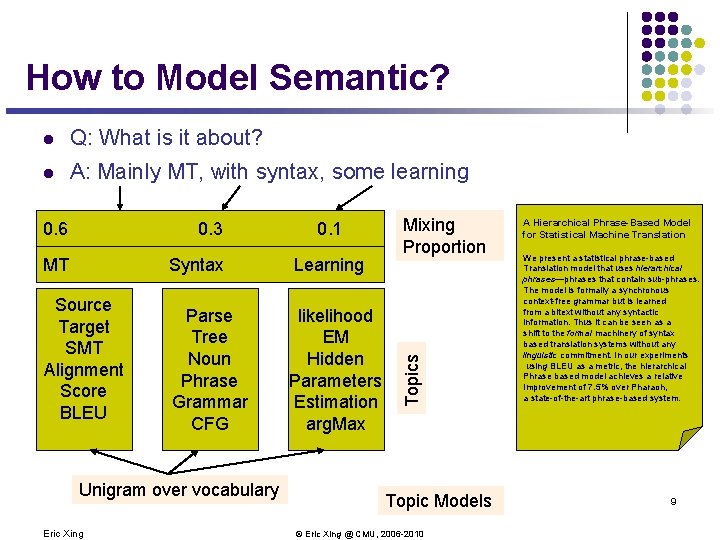

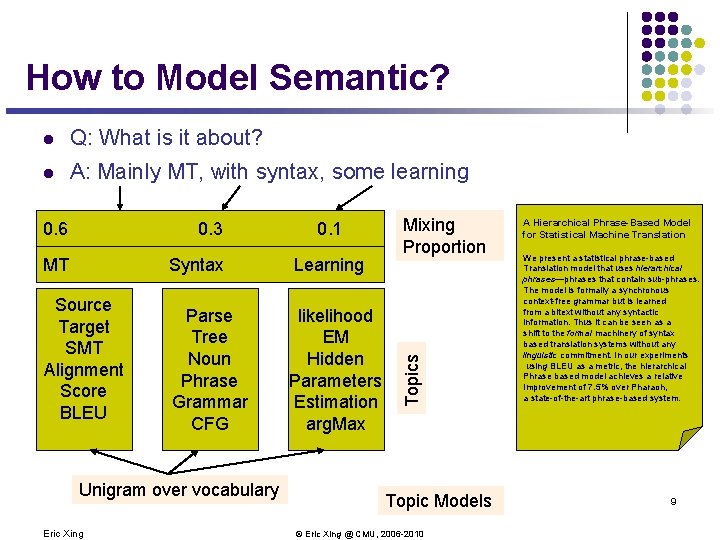

How to Model Semantics?
- Q: What is it about?
- A: Mainly MT, with syntax, some learning

Topics (each a unigram distribution over the vocabulary):
- MT: Source, Target, SMT, Alignment, Score, BLEU
- Syntax: Parse Tree, Noun Phrase, Grammar, CFG
- Learning: likelihood, EM, Hidden, Parameters, Estimation, argMax

Mixing proportion: MT 0.6, Syntax 0.3, Learning 0.1

Example document: "A Hierarchical Phrase-Based Model for Statistical Machine Translation. We present a statistical phrase-based translation model that uses hierarchical phrases: phrases that contain sub-phrases. The model is formally a synchronous context-free grammar but is learned from a bitext without any syntactic information. Thus it can be seen as a shift to the formal machinery of syntax-based translation systems without any linguistic commitment. In our experiments using BLEU as a metric, the hierarchical phrase-based model achieves a relative improvement of 7.5% over Pharaoh, a state-of-the-art phrase-based system."
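The mixing idea can be written as p(w | d) = Σ_k θ_k β_k(w): a document's word distribution is a weighted average of topic unigrams. In the sketch below, the topic word lists and the proportions 0.6 / 0.3 / 0.1 come from the slide. The uniform word probabilities inside each topic are an assumption made for illustration.

```python
# Topics read off the slide; each becomes a unigram distribution over the vocabulary.
# ASSUMPTION: mass is spread uniformly over each topic's listed words
# (real topics would be estimated from data).
topic_words = {
    "MT": ["source", "target", "smt", "alignment", "score", "bleu"],
    "Syntax": ["parse tree", "noun phrase", "grammar", "cfg"],
    "Learning": ["likelihood", "em", "hidden", "parameters", "estimation", "argmax"],
}
mixing = {"MT": 0.6, "Syntax": 0.3, "Learning": 0.1}  # topic proportions from the slide

vocab = sorted({w for words in topic_words.values() for w in words})
beta = {
    k: {w: (1.0 / len(words) if w in words else 0.0) for w in vocab}
    for k, words in topic_words.items()
}

def word_distribution(theta, beta):
    """p(w | d) = sum_k theta_k * beta_k(w): the document's mixture of topic unigrams."""
    return {w: sum(theta[k] * beta[k][w] for k in theta) for w in vocab}

p = word_distribution(mixing, beta)
for w in sorted(p, key=p.get, reverse=True)[:8]:
    print(f"{w:12s} {p[w]:.3f}")
```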

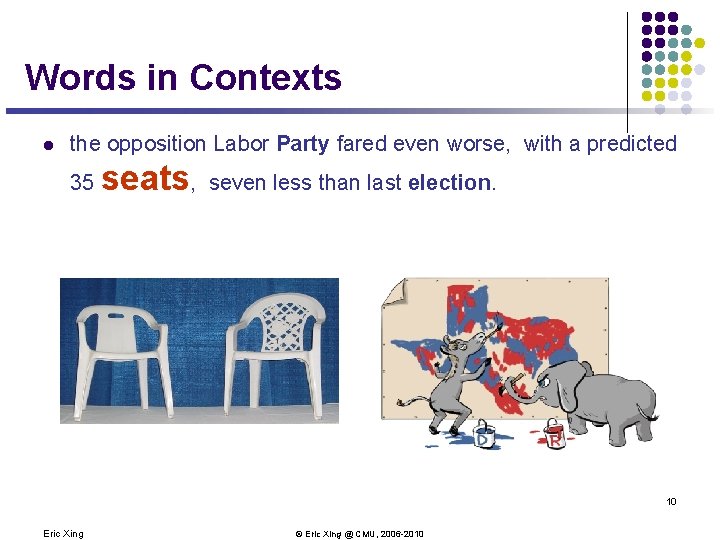

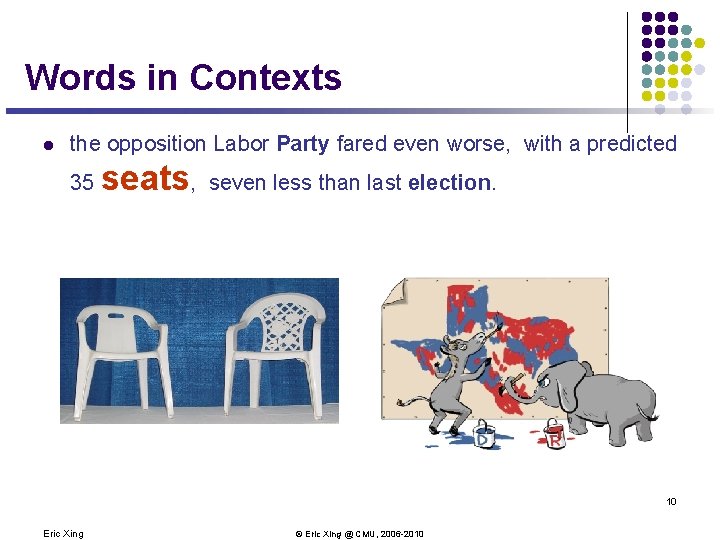

Words in Contexts
- "the opposition Labor Party fared even worse, with a predicted 35 seats, seven less than last election."

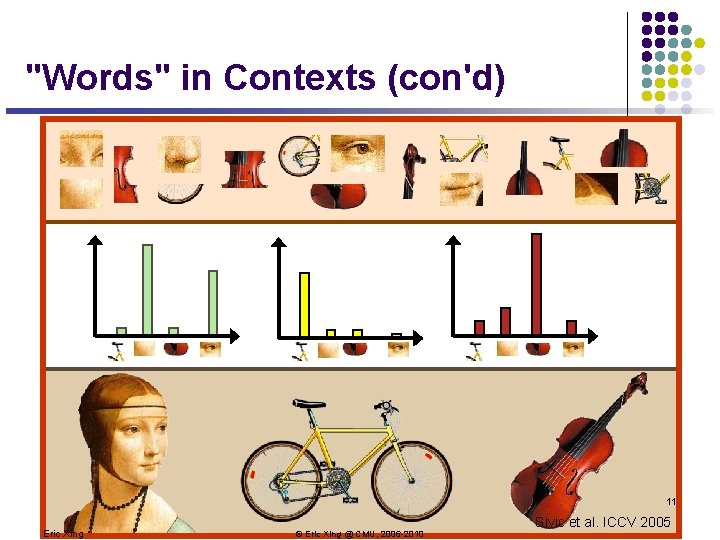

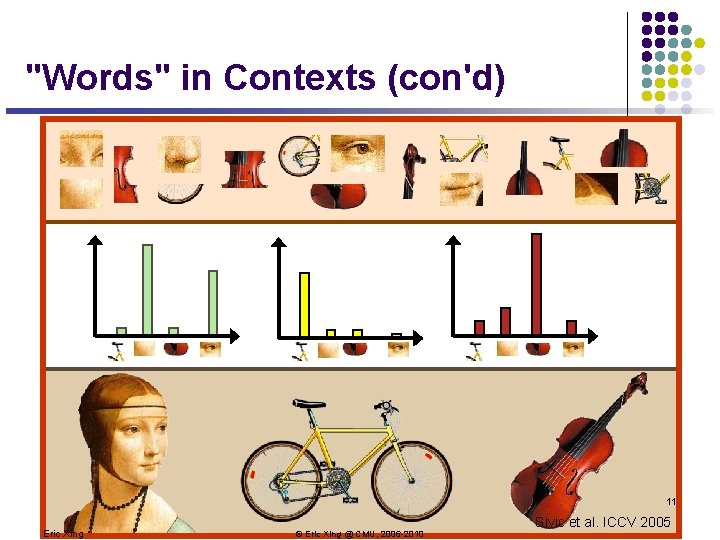

"Words" in Contexts (con'd) 11 Eric Xing © Eric Xing @ CMU, 2006 -2010 Sivic et al. ICCV 2005

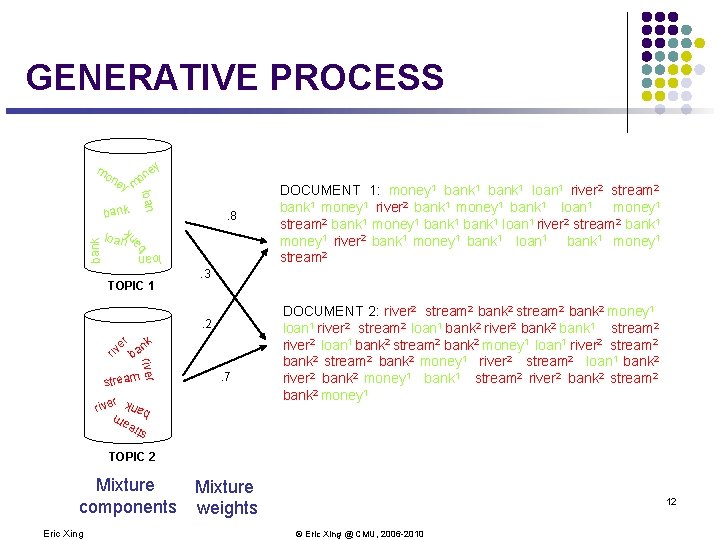

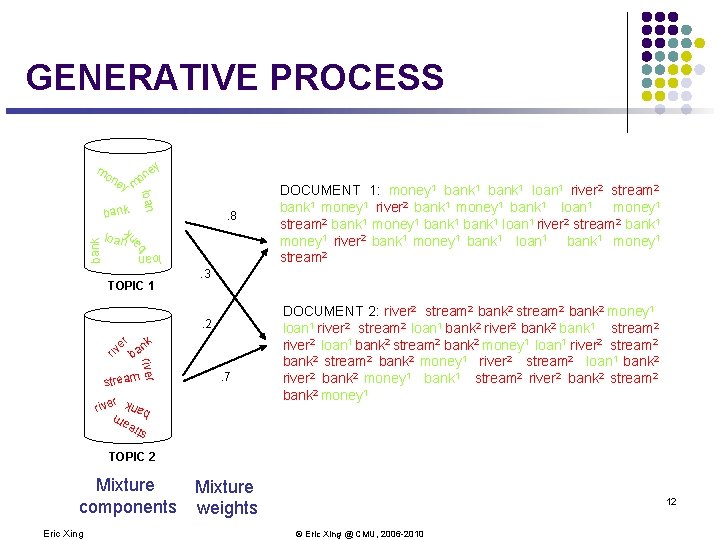

GENERATIVE PROCESS
- TOPIC 1: money, loan, bank
- TOPIC 2: river, stream, bank
- Mixture weights: DOCUMENT 1 uses TOPIC 1 with weight 0.8 and TOPIC 2 with weight 0.2; DOCUMENT 2 uses TOPIC 1 with weight 0.3 and TOPIC 2 with weight 0.7.
- Each word is tagged with the topic (mixture component) that generated it:
  - DOCUMENT 1: money¹ bank¹ loan¹ river² stream² bank¹ money¹ river² bank¹ money¹ bank¹ loan¹ money¹ stream² bank¹ money¹ bank¹ loan¹ river² stream² bank¹ money¹ river² bank¹ money¹ bank¹ loan¹ bank¹ money¹ stream²
  - DOCUMENT 2: river² stream² bank² money¹ loan¹ river² stream² loan¹ bank² river² bank¹ stream² river² loan¹ bank² stream² bank² money¹ loan¹ river² stream² bank² money¹ river² stream² loan¹ bank² river² bank² money¹ bank¹ stream² river² bank² stream² bank² money¹
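The process in the figure can be simulated directly. For each word, pick a topic using the document's mixture weights, then pick a word from that topic. The topics and weights below are read off the figure. The uniform word probabilities within each topic are an assumption, since the figure does not give them.

```python
import random

# Topics and mixture weights from the figure; word probabilities within a
# topic are ASSUMED uniform for this sketch.
topics = {
    1: ["money", "loan", "bank"],
    2: ["river", "stream", "bank"],
}
weights = {
    "DOCUMENT 1": {1: 0.8, 2: 0.2},
    "DOCUMENT 2": {1: 0.3, 2: 0.7},
}

def generate(doc_weights, n_words, rng):
    """Sample (word, topic) pairs: choose a topic per word, then a word from that topic."""
    ks = list(doc_weights)
    probs = [doc_weights[k] for k in ks]
    out = []
    for _ in range(n_words):
        z = rng.choices(ks, weights=probs)[0]  # topic assignment for this word
        w = rng.choice(topics[z])              # word drawn from topic z
        out.append((w, z))
    return out

rng = random.Random(0)
for name, wts in weights.items():
    sample = generate(wts, 16, rng)
    print(name + ":", " ".join(f"{w}{z}" for w, z in sample))
```

Because "bank" belongs to both topics, the same word can carry either label, which is exactly the ambiguity inference must resolve.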

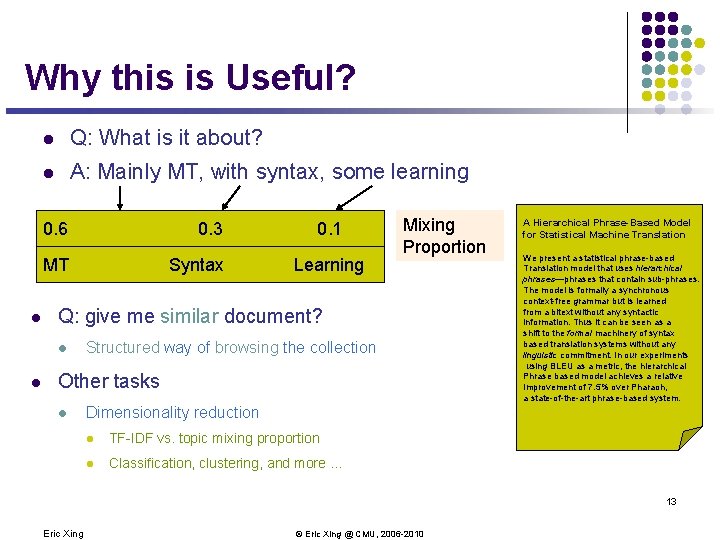

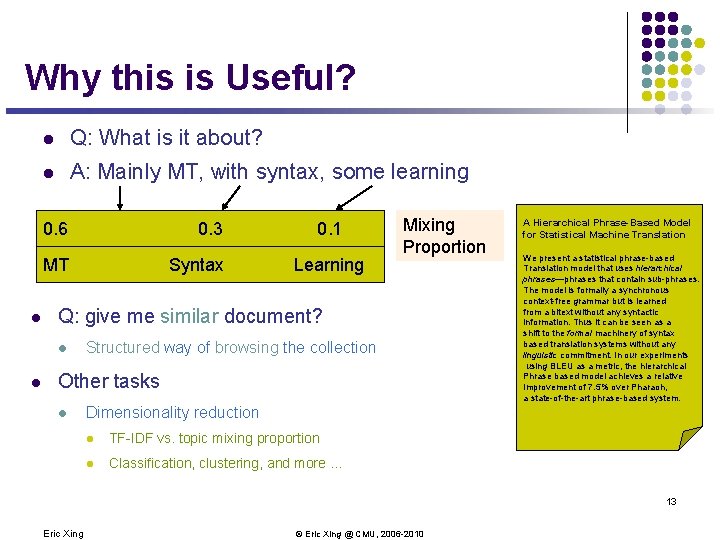

Why Is This Useful?
- Q: What is it about?
  - A: Mainly MT, with syntax, some learning (mixing proportion: MT 0.6, Syntax 0.3, Learning 0.1), for the example abstract "A Hierarchical Phrase-Based Model for Statistical Machine Translation"
- Q: Give me similar documents?
  - Structured way of browsing the collection
- Other tasks
  - Dimensionality reduction: TF-IDF vs. topic mixing proportion
  - Classification, clustering, and more …
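Once each document is summarized by its topic proportions, "give me similar documents" becomes a comparison of K-dimensional distributions instead of |V|-dimensional TF-IDF vectors. The sketch below uses Hellinger distance, which is one reasonable choice for comparing distributions and is not prescribed by the slide. The document names and proportions are hypothetical.

```python
import math

# Hypothetical topic proportions (MT, Syntax, Learning) for a few documents.
theta = {
    "hiero_smt": [0.6, 0.3, 0.1],     # the example abstract
    "phrase_smt": [0.7, 0.1, 0.2],
    "parsing": [0.1, 0.8, 0.1],
    "em_tutorial": [0.05, 0.05, 0.9],
}

def hellinger(p, q):
    """Hellinger distance between discrete distributions (0 = identical, 1 = disjoint)."""
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))

def most_similar(query, k=2):
    """Rank the other documents by Hellinger distance of their topic proportions."""
    others = [d for d in theta if d != query]
    return sorted(others, key=lambda d: hellinger(theta[query], theta[d]))[:k]

print(most_similar("hiero_smt"))
```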

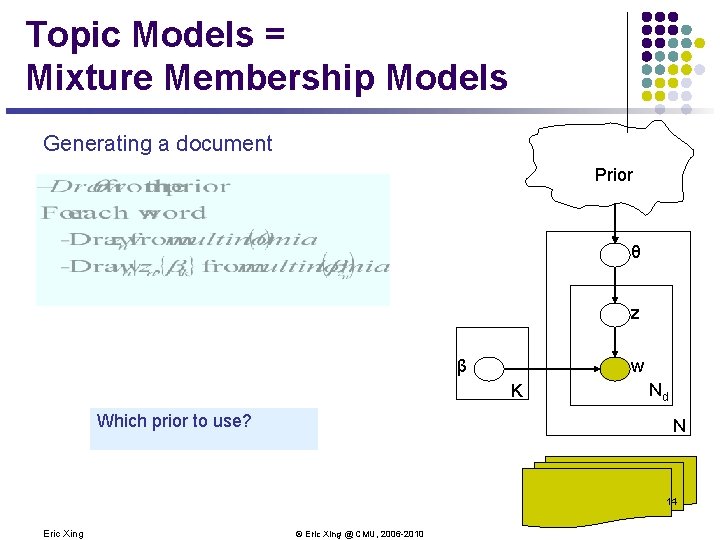

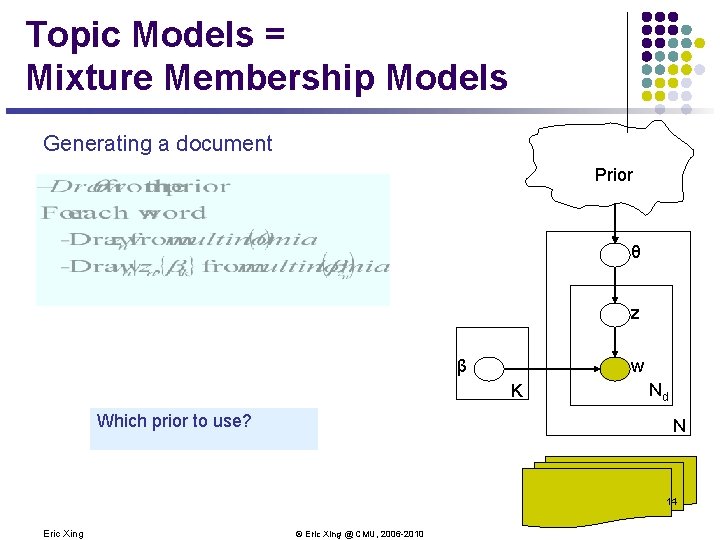

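Topic Models = Mixed Membership Models
- Generating a document: draw topic proportions θ from a prior; for each of the N_d words, draw a topic z from θ, then draw the word w from topic β_z (one of K topics); repeat for each of the N documents.
- Which prior to use?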
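Written out, the generative process above reads as follows. The indices d and n and the Multinomial notation are our own labels for the plate diagram, and the prior on θ is left unspecified, as on the slide. Summing out z gives each word's probability as a θ-weighted mixture of topics.

```latex
\begin{aligned}
\theta_d &\sim \text{Prior}(\cdot) && \text{topic proportions of document } d \\
z_{d,n} \mid \theta_d &\sim \text{Multinomial}(\theta_d) && n = 1,\dots,N_d \\
w_{d,n} \mid z_{d,n}, \beta &\sim \text{Multinomial}(\beta_{z_{d,n}}) && \beta_1,\dots,\beta_K \text{ are the topics} \\
p(w_{d,n} \mid \theta_d, \beta) &= \sum_{k=1}^{K} \theta_{d,k}\, \beta_{k,\, w_{d,n}}
\end{aligned}
```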

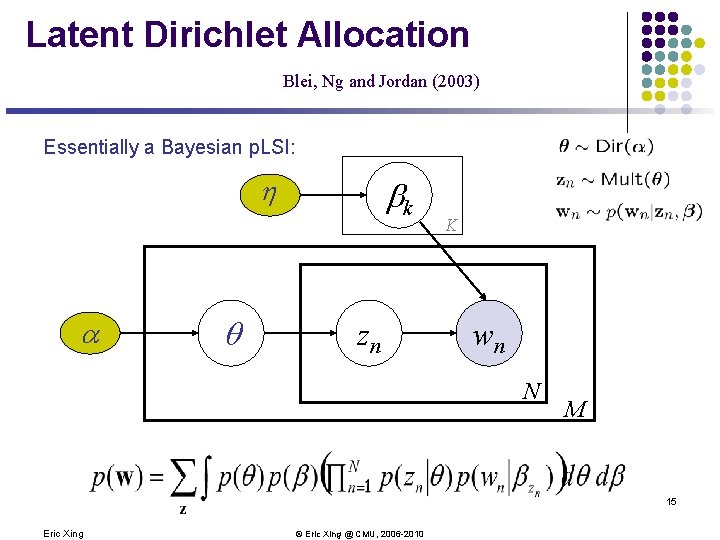

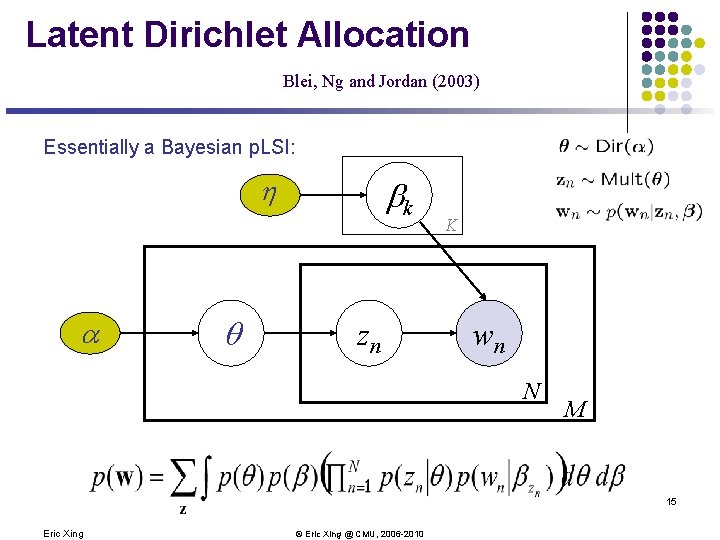

Latent Dirichlet Allocation (Blei, Ng and Jordan, 2003)
- Essentially a Bayesian pLSI. Graphical model: α → θ → z_n → w_n ← β_k ← η, with plates over the N words of a document, the M documents, and the K topics.
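For a single document, the graphical model corresponds to the joint distribution below, in the form given by Blei, Ng and Jordan. The Dirichlet prior η on the topics β_k follows the plate diagram. The M documents are conditionally independent given β.

```latex
\begin{aligned}
\beta_k &\sim \mathrm{Dirichlet}(\eta), \qquad k = 1,\dots,K \\
\theta &\sim \mathrm{Dirichlet}(\alpha) \\
p(\theta, \mathbf{z}, \mathbf{w} \mid \alpha, \beta)
  &= p(\theta \mid \alpha) \prod_{n=1}^{N} p(z_n \mid \theta)\, p(w_n \mid z_n, \beta)
\end{aligned}
```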

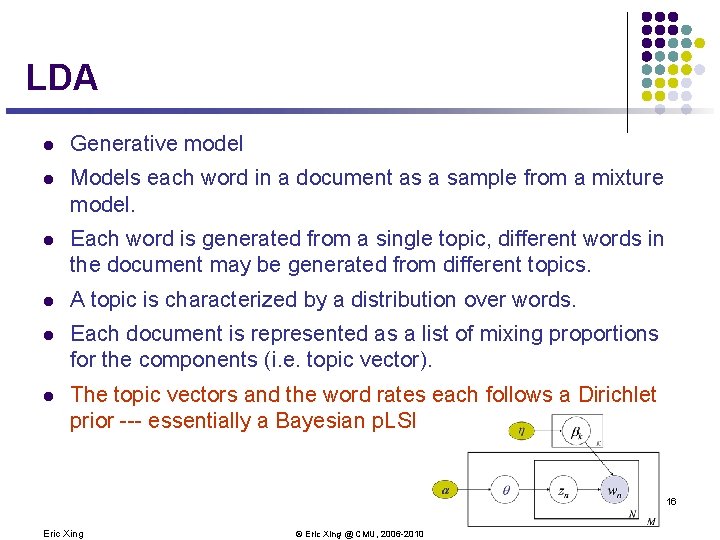

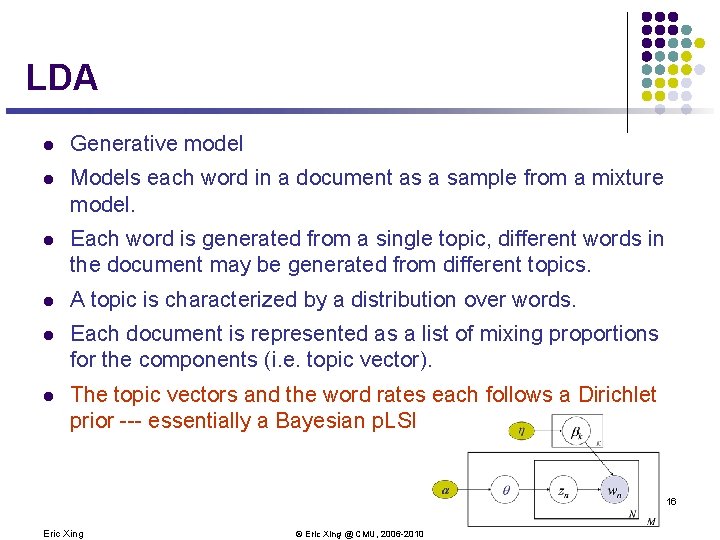

LDA
- Generative model
- Models each word in a document as a sample from a mixture model.
- Each word is generated from a single topic; different words in the same document may be generated from different topics.
- A topic is characterized by a distribution over words.
- Each document is represented as a list of mixing proportions for the components (i.e., a topic vector).
- The topic vectors and the word rates each follow a Dirichlet prior, so LDA is essentially a Bayesian pLSI.
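The Dirichlet prior controls how concentrated the topic vectors are. This sketch draws topic vectors from a symmetric Dirichlet(α) with NumPy and reports the average weight of each document's largest topic. K = 5 and the α values are arbitrary choices for illustration. The same effect applies to the topic-word distributions under their own prior.

```python
import numpy as np

def mean_max_weight(alpha, K=5, n_docs=2000, seed=0):
    """Average weight of the largest topic when theta ~ Dirichlet(alpha, ..., alpha)."""
    rng = np.random.default_rng(seed)
    thetas = rng.dirichlet(np.full(K, alpha), size=n_docs)  # one topic vector per row
    return thetas.max(axis=1).mean()

for alpha in (0.1, 1.0, 10.0):
    # Small alpha: documents dominated by a few topics; large alpha: near-uniform mixtures.
    print(f"alpha = {alpha:4.1f}: mean largest topic weight = {mean_max_weight(alpha):.2f}")
```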

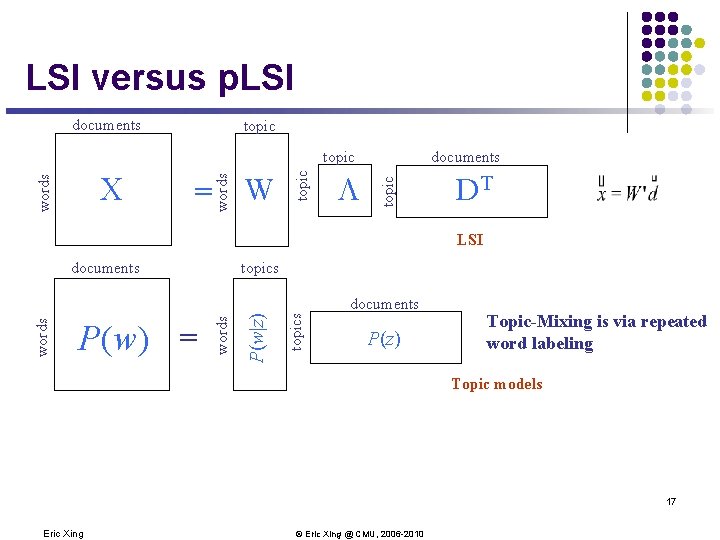

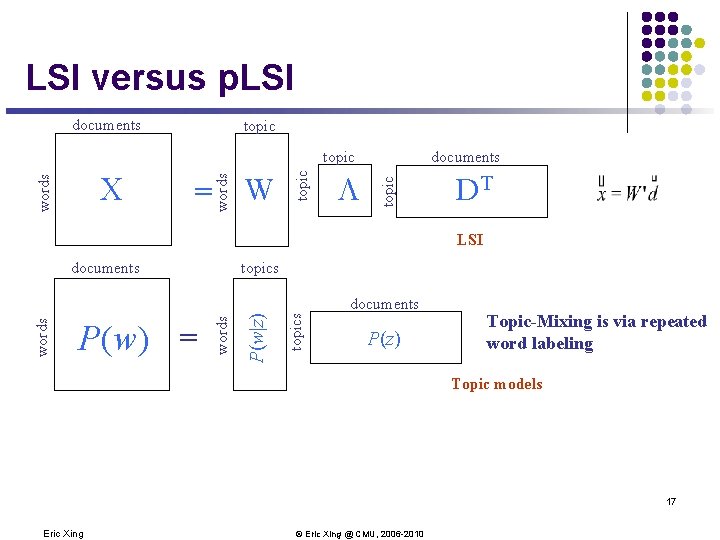

LSI versus pLSI
- LSI: the words × documents matrix C is factorized as C = W Λ D^T, where W is words × topics, Λ is topics × topics, and D^T is topics × documents.
- Topic models (pLSI): the words × documents matrix of P(w) is the product of P(w|z) (words × topics) and P(z) (topics × documents). Topic mixing is via repeated word labeling.

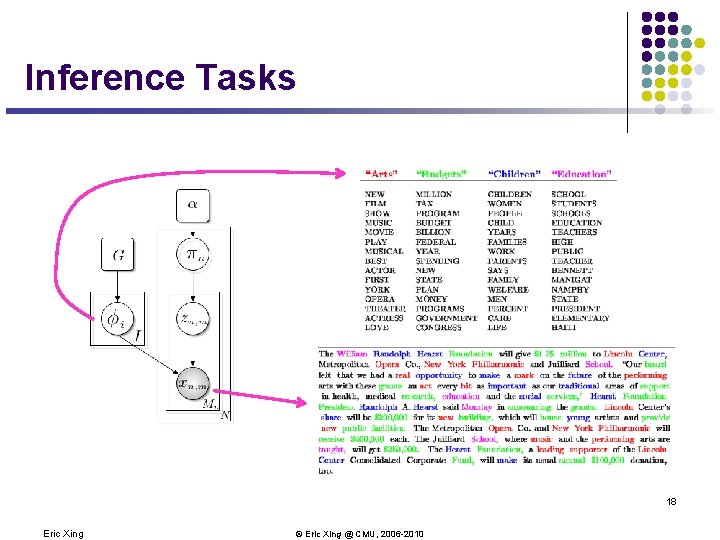

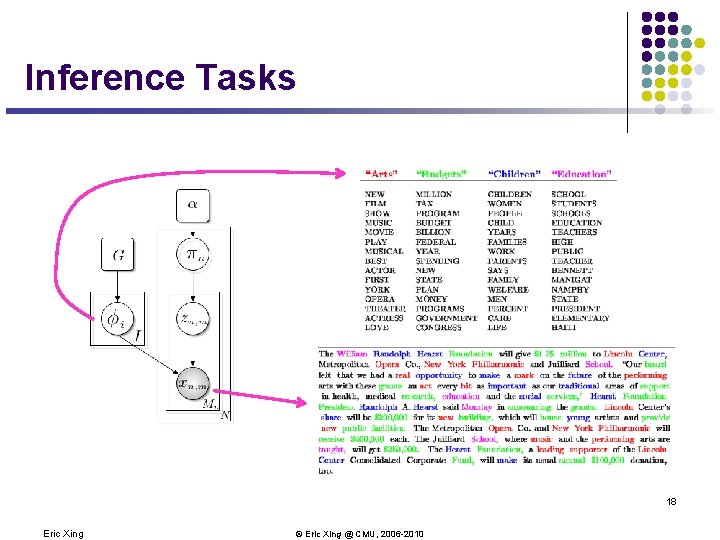

Inference Tasks

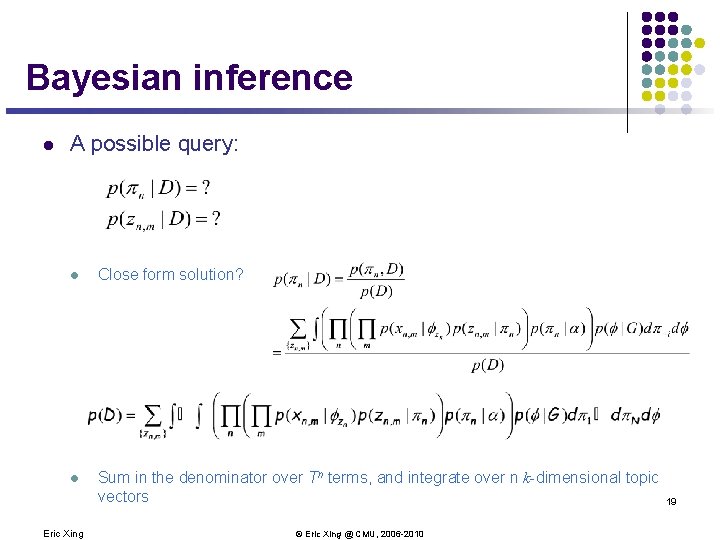

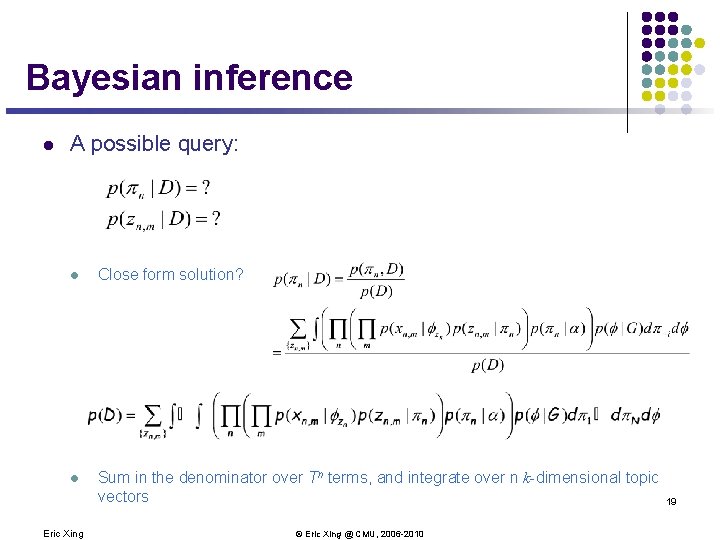

Bayesian inference
- A possible query:
- Closed-form solution?
- The denominator contains a sum over $T^n$ terms and an integral over n k-dimensional topic vectors.
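For a single document of n words and T topics, the obstacle can be written out as follows. We use θ for the topic vector and β_{k,w} for topic-word probabilities as notation for this sketch. Expanding the product of per-word sums yields the T^n terms, and each term still has to be integrated over θ.

```latex
\begin{aligned}
p(\theta, \mathbf{z} \mid \mathbf{w}, \alpha, \beta)
  &= \frac{p(\theta, \mathbf{z}, \mathbf{w} \mid \alpha, \beta)}{p(\mathbf{w} \mid \alpha, \beta)} \\
p(\mathbf{w} \mid \alpha, \beta)
  &= \int p(\theta \mid \alpha)
     \sum_{z_1=1}^{T} \cdots \sum_{z_n=1}^{T}
     \prod_{i=1}^{n} \theta_{z_i}\, \beta_{z_i,\, w_i}\; d\theta
\end{aligned}
```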

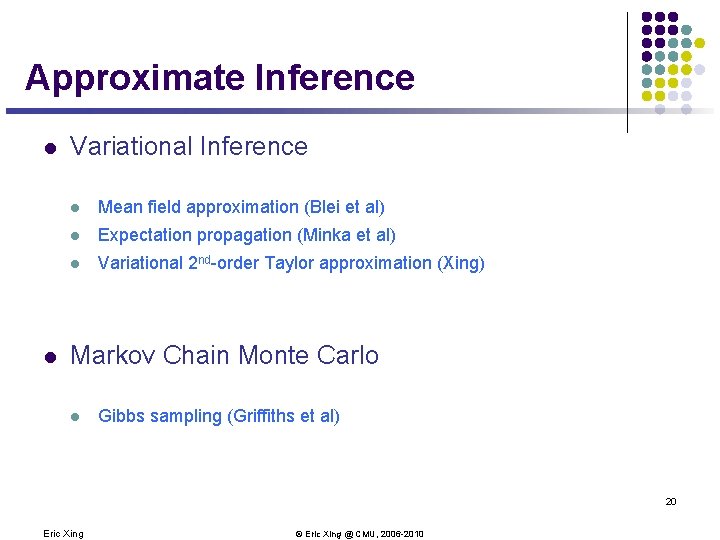

Approximate Inference
- Variational inference
  - Mean field approximation (Blei et al.)
  - Expectation propagation (Minka et al.)
  - Variational 2nd-order Taylor approximation (Xing)
- Markov chain Monte Carlo
  - Gibbs sampling (Griffiths et al.)
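As an example of the mean-field approach, here is a sketch of the per-document variational updates from the LDA paper of Blei et al. The updates are φ_{n,k} ∝ β_{k,w_n} exp(ψ(γ_k)) and γ_k = α_k + Σ_n φ_{n,k}. The sketch assumes the topic matrix β is already known and strictly positive, so only the document-level E-step is shown. The digamma helper is a self-contained approximation; `scipy.special.digamma` could be used instead.

```python
import numpy as np

def digamma(x):
    """Digamma for positive arrays: upward recurrence, then the asymptotic series."""
    x = np.array(x, dtype=float)
    result = np.zeros_like(x)
    small = x < 6.0
    while np.any(small):
        # psi(x) = psi(x + 1) - 1/x shifts small arguments upward.
        result[small] -= 1.0 / x[small]
        x[small] += 1.0
        small = x < 6.0
    inv2 = 1.0 / (x * x)
    result += np.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))
    return result

def lda_mean_field(word_ids, beta, alpha, n_iter=100, tol=1e-6):
    """Mean-field variational inference for one document under LDA.

    word_ids: vocabulary indices of the document's words.
    beta:     K x V topic-word matrix with strictly positive entries (assumed known).
    alpha:    length-K Dirichlet parameter (NumPy array).
    Returns gamma (variational Dirichlet for theta) and phi[n, k] = q(z_n = k).
    """
    word_ids = np.asarray(word_ids)
    K, N = beta.shape[0], len(word_ids)
    gamma = alpha + N / K                       # standard initialization
    phi = np.full((N, K), 1.0 / K)
    for _ in range(n_iter):
        # phi_{n,k} proportional to beta_{k, w_n} * exp(digamma(gamma_k))
        log_phi = np.log(beta[:, word_ids].T) + digamma(gamma)
        log_phi -= log_phi.max(axis=1, keepdims=True)  # numerical stability
        phi = np.exp(log_phi)
        phi /= phi.sum(axis=1, keepdims=True)
        # gamma_k = alpha_k + sum_n phi_{n,k}
        new_gamma = alpha + phi.sum(axis=0)
        converged = np.max(np.abs(new_gamma - gamma)) < tol
        gamma = new_gamma
        if converged:
            break
    return gamma, phi

# Usage with hypothetical topics over the vocabulary [money, loan, bank, river, stream].
beta = np.array([
    [0.40, 0.30, 0.28, 0.01, 0.01],
    [0.01, 0.01, 0.28, 0.35, 0.35],
])
alpha = np.array([0.5, 0.5])
gamma, phi = lda_mean_field([0, 1, 2, 0], beta, alpha)  # "money loan bank money"
print(gamma / gamma.sum())  # approximate posterior mean of theta
```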

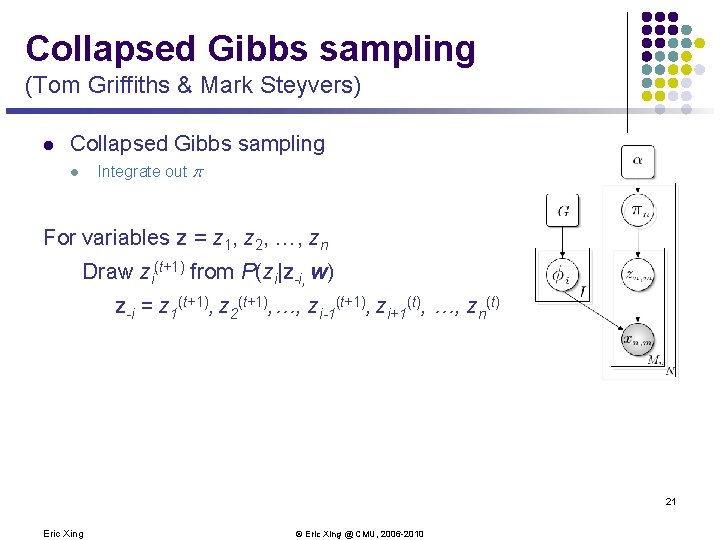

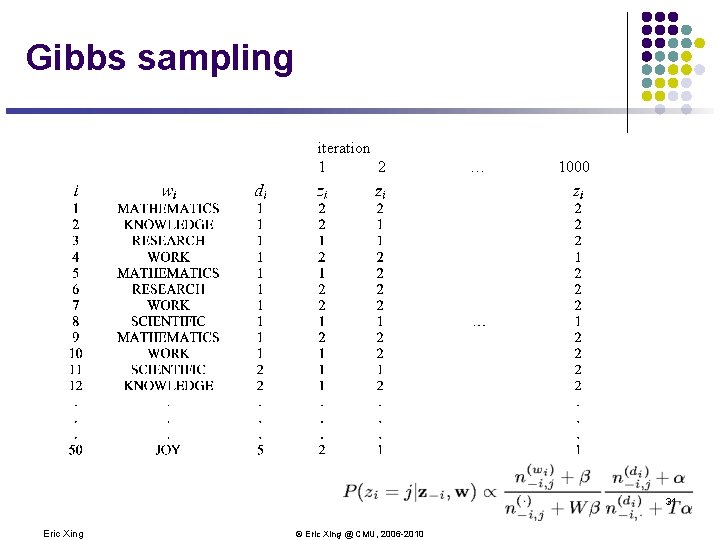

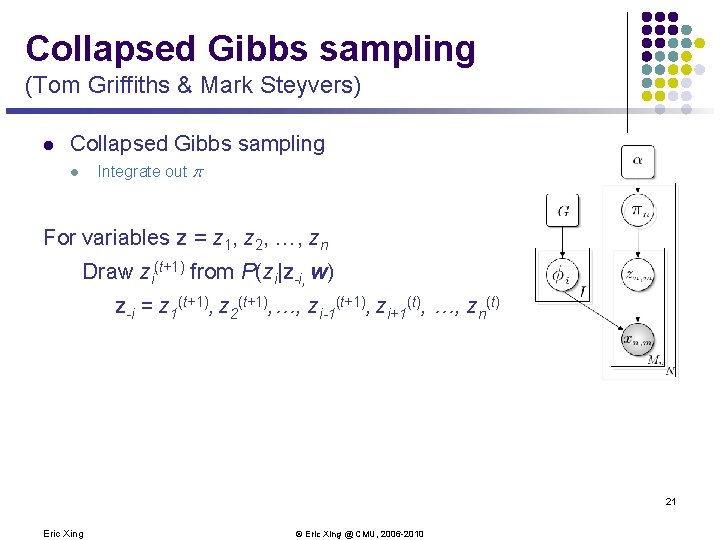

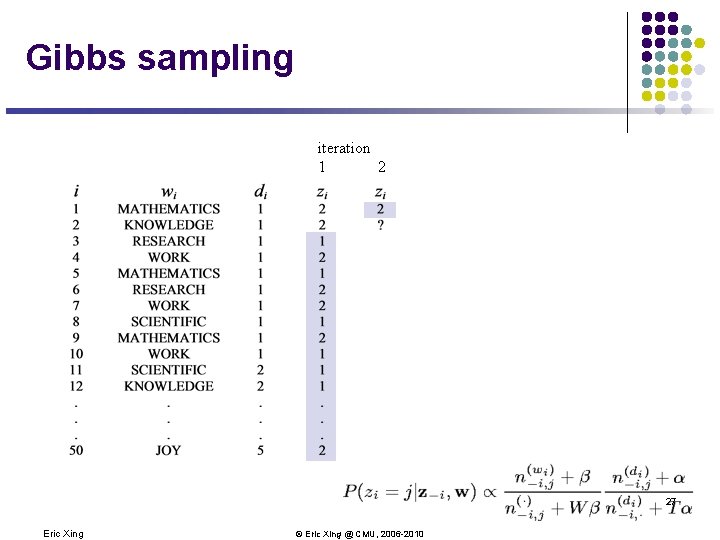

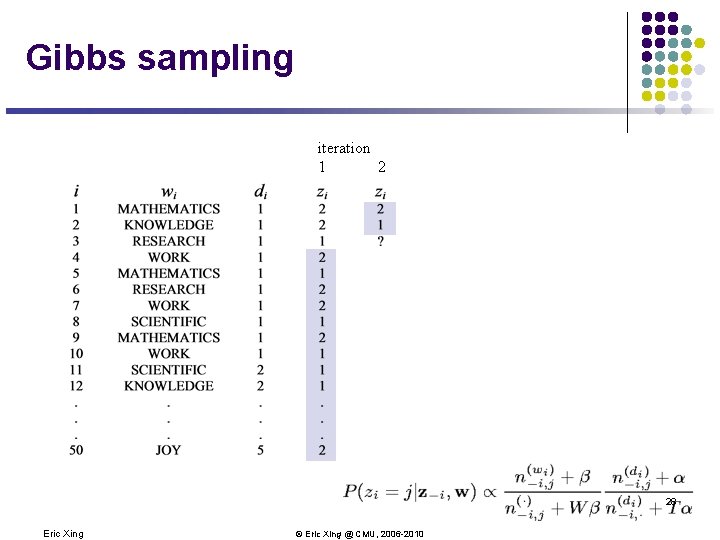

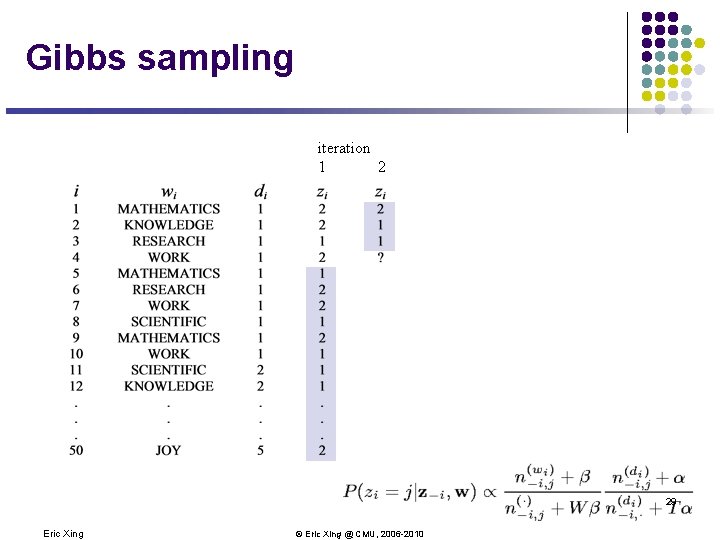

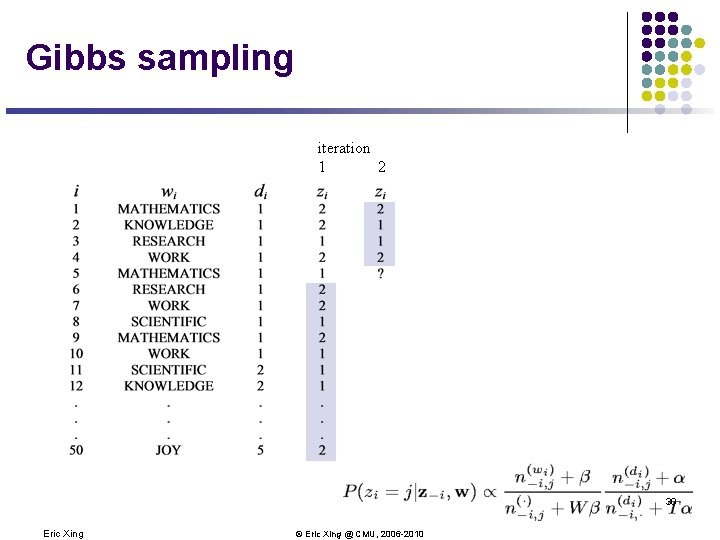

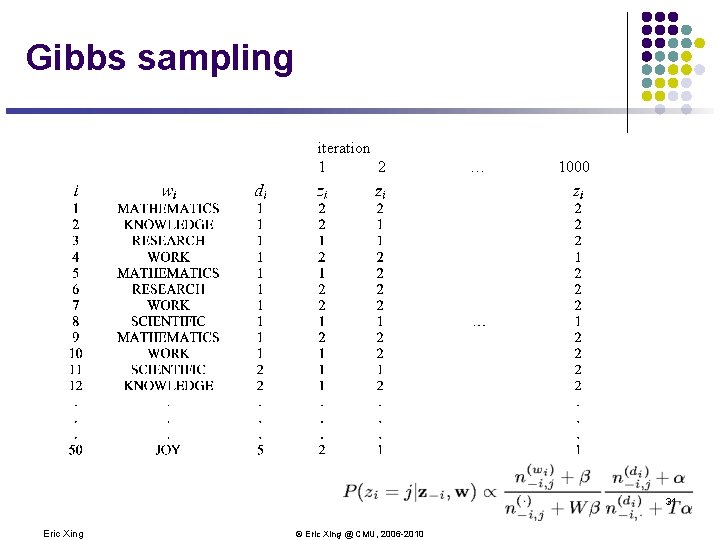

Collapsed Gibbs sampling (Tom Griffiths & Mark Steyvers)
- Collapsed Gibbs sampling: integrate out π
- For variables $\mathbf{z} = z_1, z_2, \dots, z_n$:
  - Draw $z_i^{(t+1)}$ from $P(z_i \mid \mathbf{z}_{-i}, \mathbf{w})$, where $\mathbf{z}_{-i} = z_1^{(t+1)}, z_2^{(t+1)}, \dots, z_{i-1}^{(t+1)}, z_{i+1}^{(t)}, \dots, z_n^{(t)}$

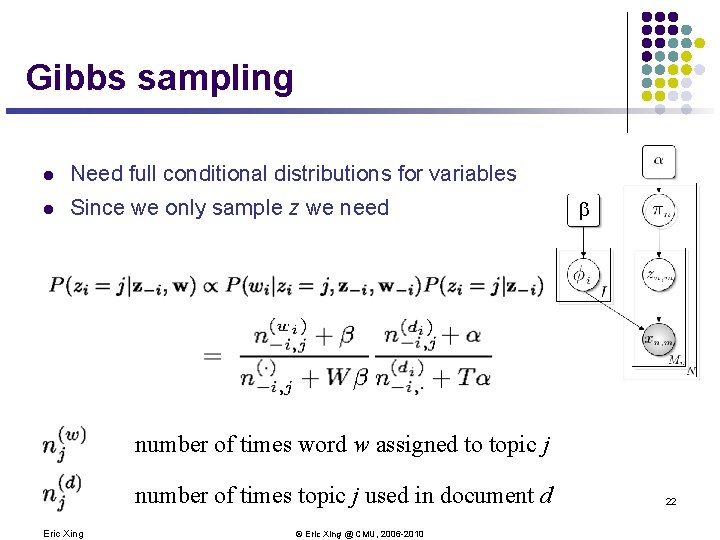

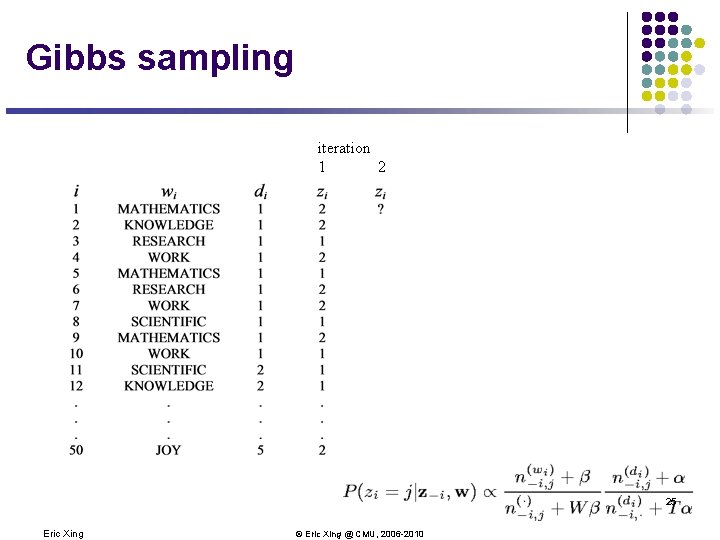

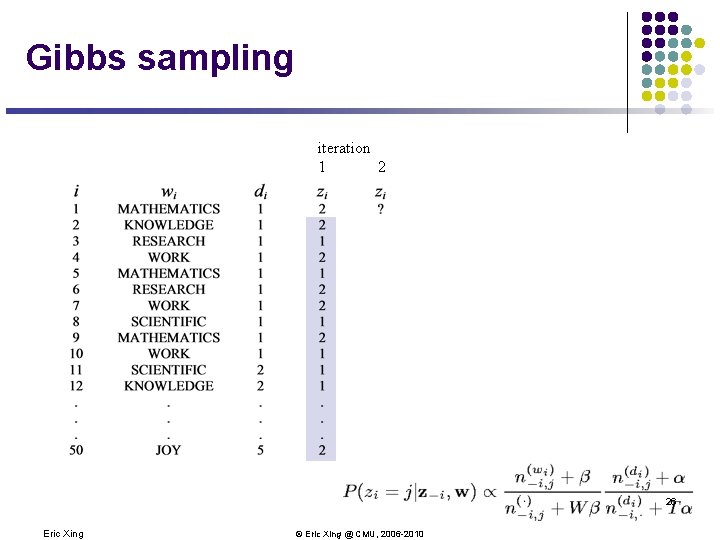

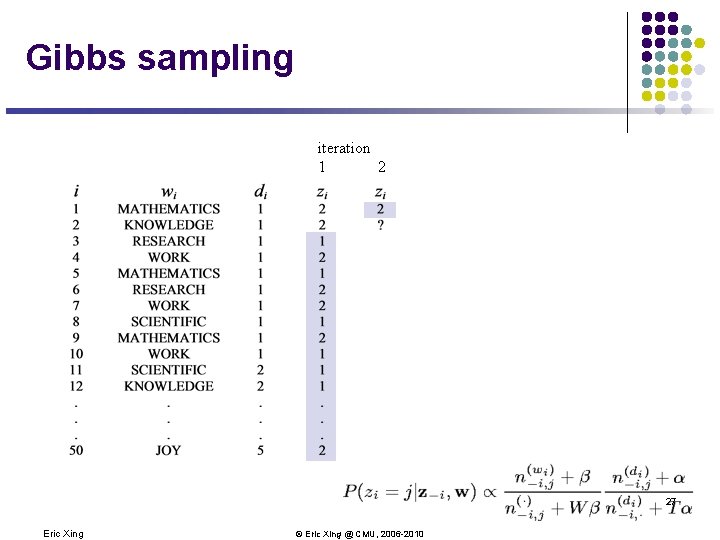

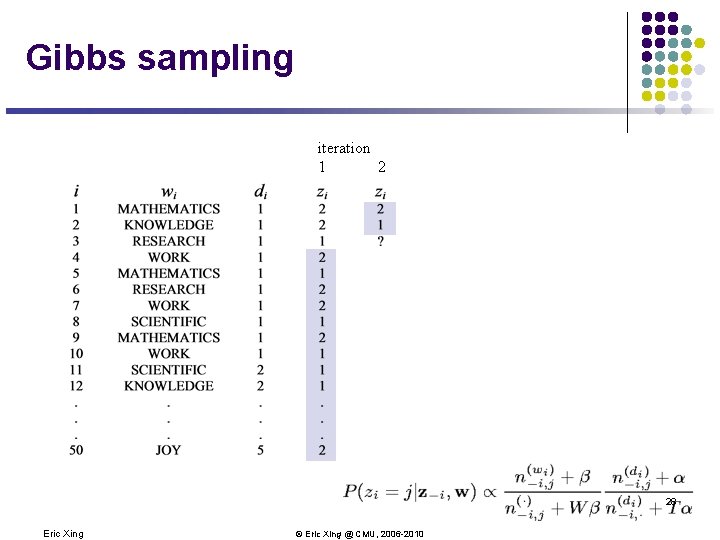

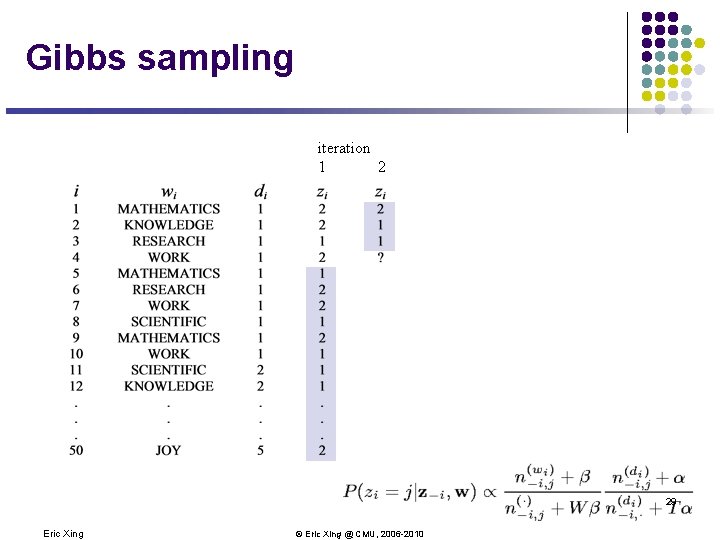

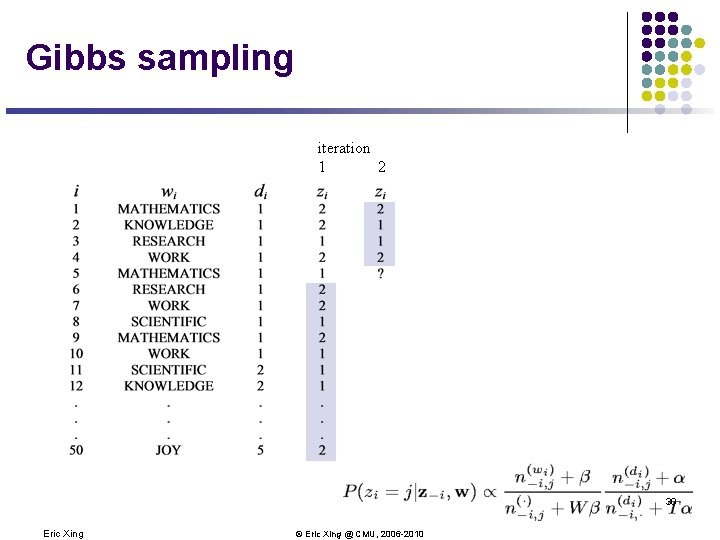

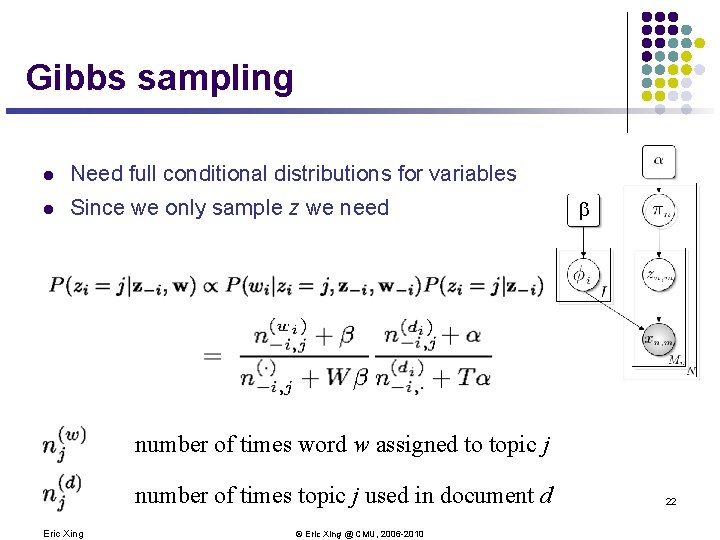

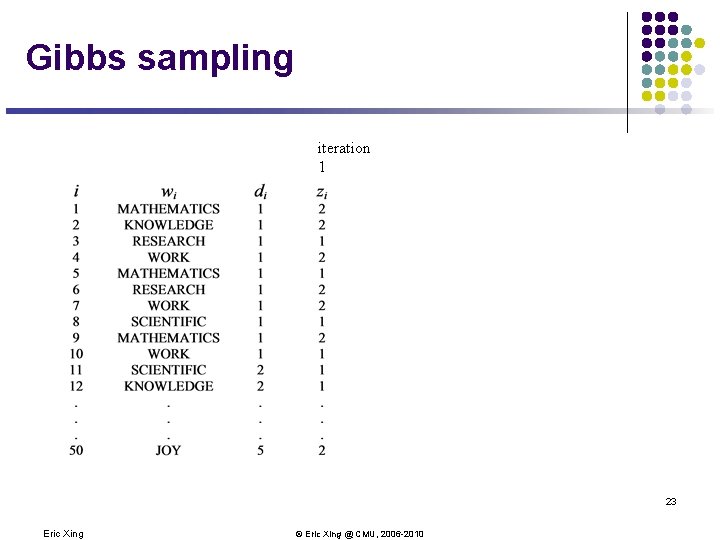

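Gibbs sampling
- Need full conditional distributions for variables
- Since we only sample z, we need

$$P(z_i = j \mid \mathbf{z}_{-i}, \mathbf{w}) \propto \frac{n^{(w_i)}_{-i,j} + \eta}{n^{(\cdot)}_{-i,j} + W\eta} \cdot \frac{n^{(d_i)}_{-i,j} + \alpha}{n^{(d_i)}_{-i,\cdot} + T\alpha}$$

where $n^{(w_i)}_{-i,j}$ is the number of times word $w_i$ is assigned to topic j, and $n^{(d_i)}_{-i,j}$ is the number of times topic j is used in document $d_i$ (both counts exclude the current token i). W is the vocabulary size, T the number of topics, and α, η are symmetric Dirichlet hyperparameters.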
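A compact collapsed Gibbs sampler for LDA is sketched below, following the full conditional used by Griffiths and Steyvers. Each token is removed from the counts, its topic is resampled, and the counts are restored. The hyperparameter names `alpha` and `eta` and the toy money/river corpus are assumptions for this sketch. The per-document denominator is constant in j, so the code drops it.

```python
import numpy as np

def collapsed_gibbs_lda(docs, V, T, alpha=0.1, eta=0.01, n_iter=200, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of documents, each a list of word ids in [0, V).
    T: number of topics; alpha, eta: symmetric Dirichlet hyperparameters.
    Returns topic assignments z and the count matrices n_wt (V x T), n_dt (D x T).
    """
    rng = np.random.default_rng(seed)
    n_wt = np.zeros((V, T))          # times word w is assigned to topic j
    n_dt = np.zeros((len(docs), T))  # times topic j is used in document d
    n_t = np.zeros(T)                # total tokens assigned to topic j
    z = []
    for d, doc in enumerate(docs):   # random initial assignments
        zd = rng.integers(T, size=len(doc))
        z.append(zd)
        for w, j in zip(doc, zd):
            n_wt[w, j] += 1
            n_dt[d, j] += 1
            n_t[j] += 1
    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                j = z[d][i]
                # Remove token i to obtain the "-i" counts.
                n_wt[w, j] -= 1
                n_dt[d, j] -= 1
                n_t[j] -= 1
                # Unnormalized full conditional over the T topics.
                p = (n_wt[w] + eta) / (n_t + V * eta) * (n_dt[d] + alpha)
                j = rng.choice(T, p=p / p.sum())
                z[d][i] = j
                n_wt[w, j] += 1
                n_dt[d, j] += 1
                n_t[j] += 1
    return z, n_wt, n_dt

vocab = ["money", "loan", "bank", "river", "stream"]
ids = {w: i for i, w in enumerate(vocab)}
texts = [
    "money bank loan money bank loan money bank",
    "loan money bank loan money",
    "river stream bank river stream bank river",
    "stream river bank stream river",
]
docs = [[ids[w] for w in t.split()] for t in texts]
z, n_wt, n_dt = collapsed_gibbs_lda(docs, V=len(vocab), T=2)
# Posterior-mean estimate of each topic's word distribution (columns = topics).
phi = (n_wt + 0.01) / (n_wt.sum(axis=0) + len(vocab) * 0.01)
print(np.round(phi, 2))
```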

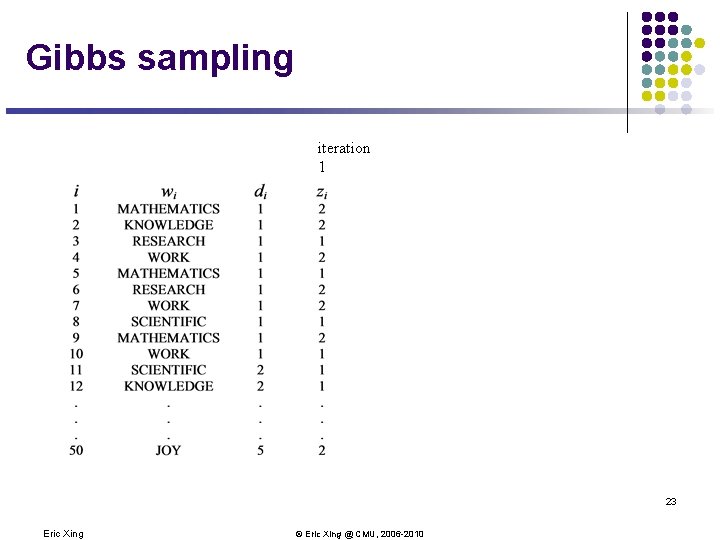

Gibbs sampling iteration 1

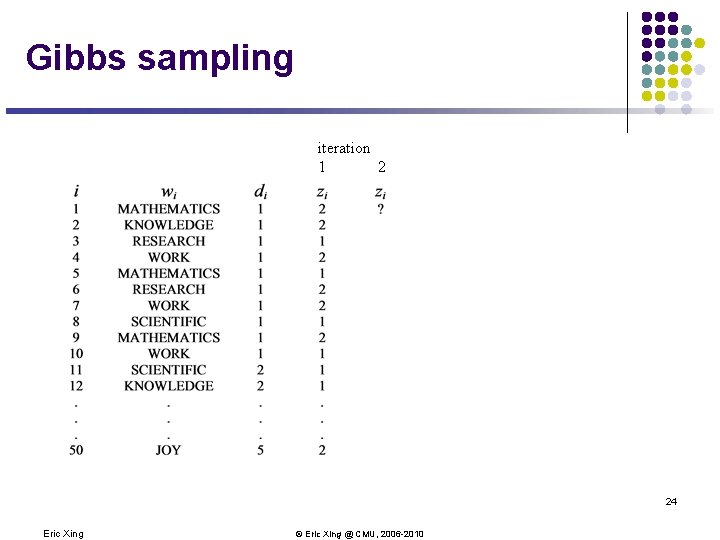

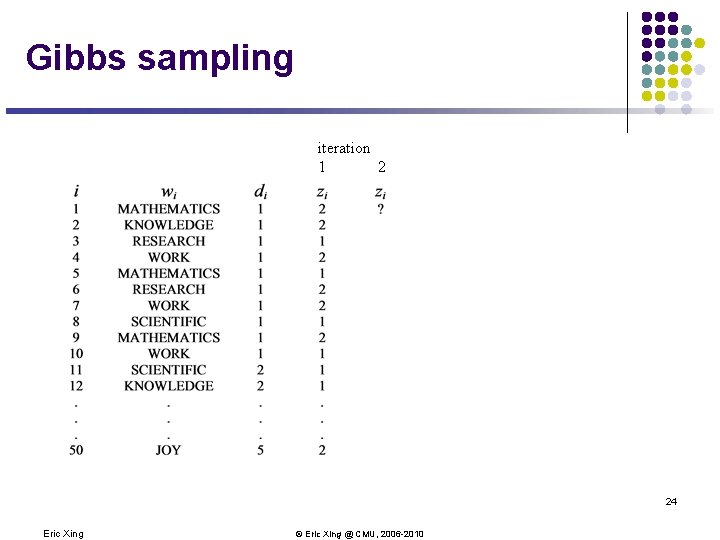

Gibbs sampling iterations 1, 2

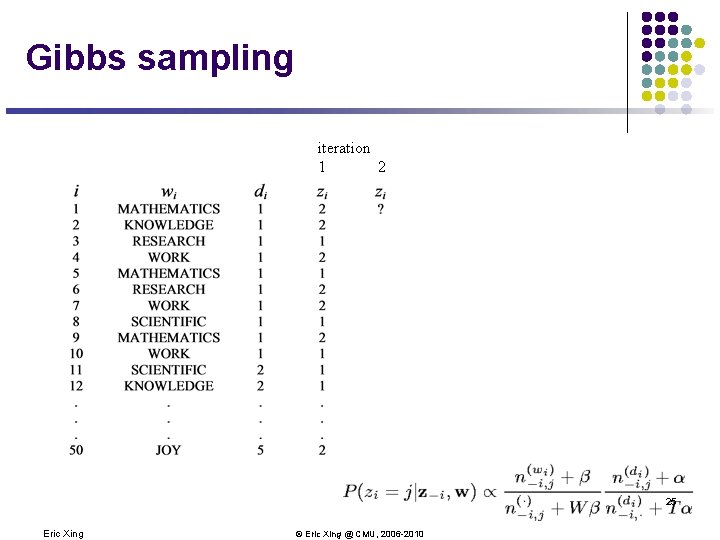

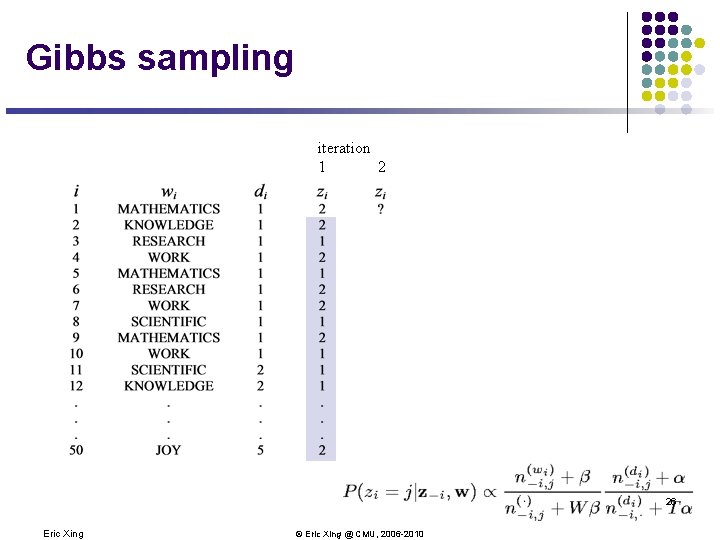

Gibbs sampling iterations 1, 2, …, 1000

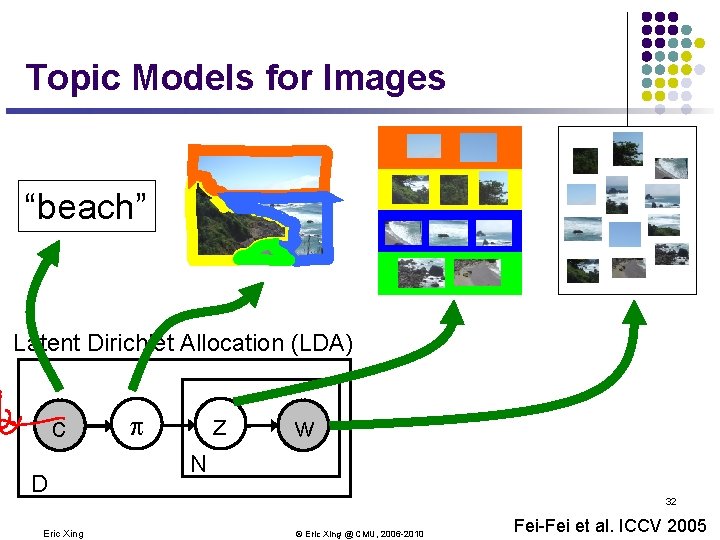

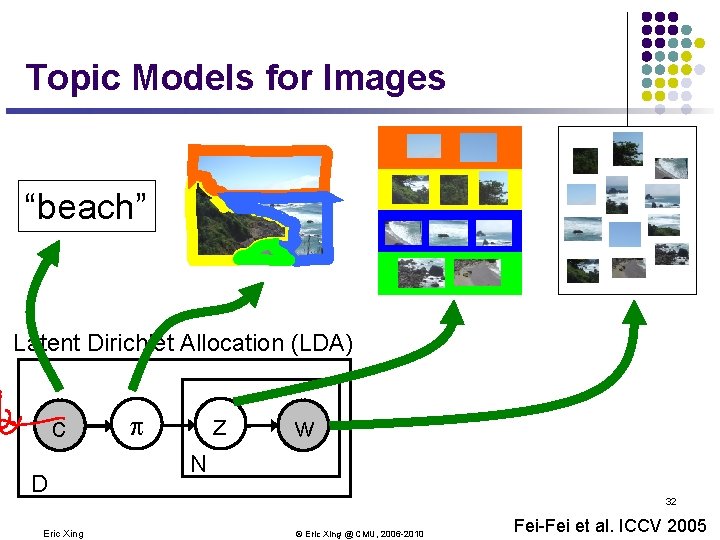

Topic Models for Images
- Example: a "beach" image
- Latent Dirichlet Allocation (LDA) graphical model with nodes c, z, w and plates N and D (Fei-Fei et al., ICCV 2005)

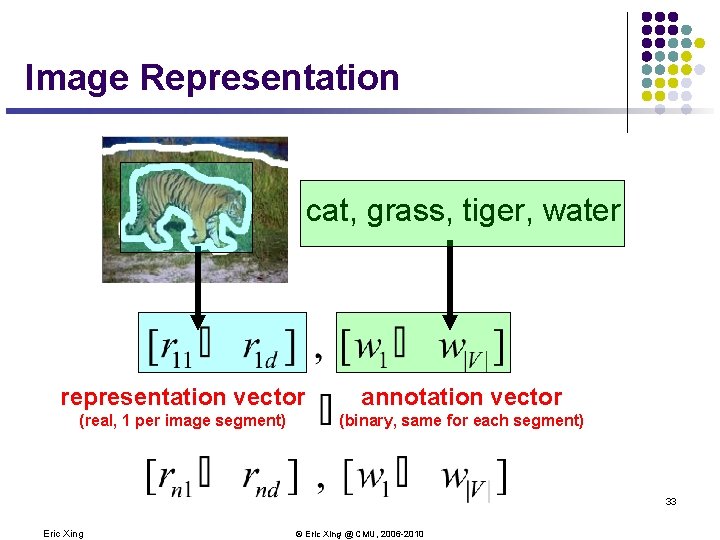

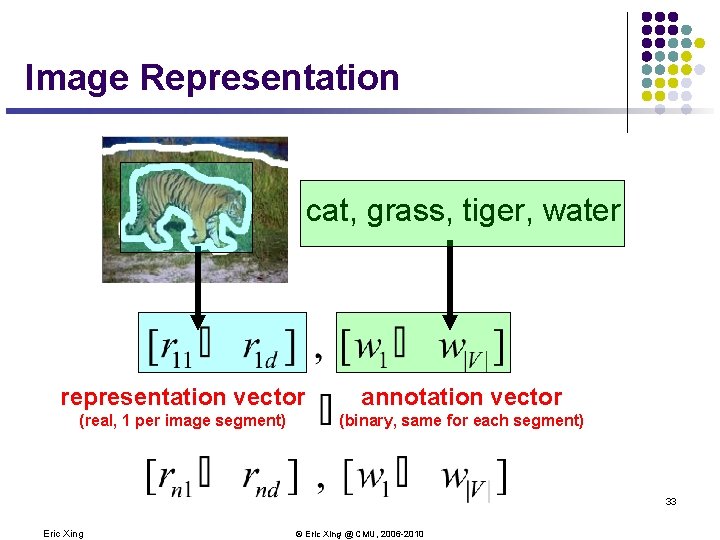

Image Representation
- Caption words: cat, grass, tiger, water
- Representation vector: real-valued, one per image segment
- Annotation vector: binary, the same for each segment

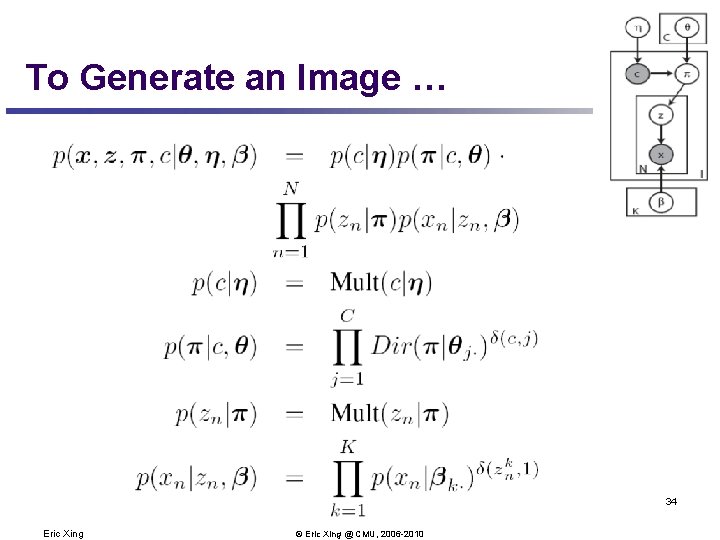

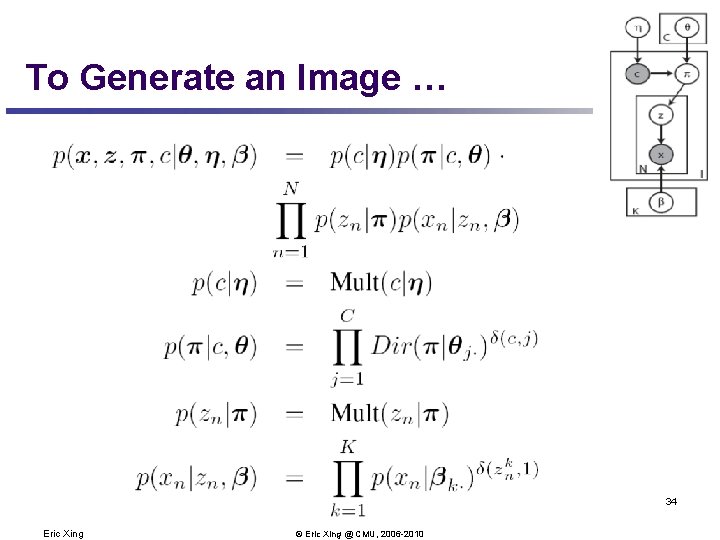

To Generate an Image …

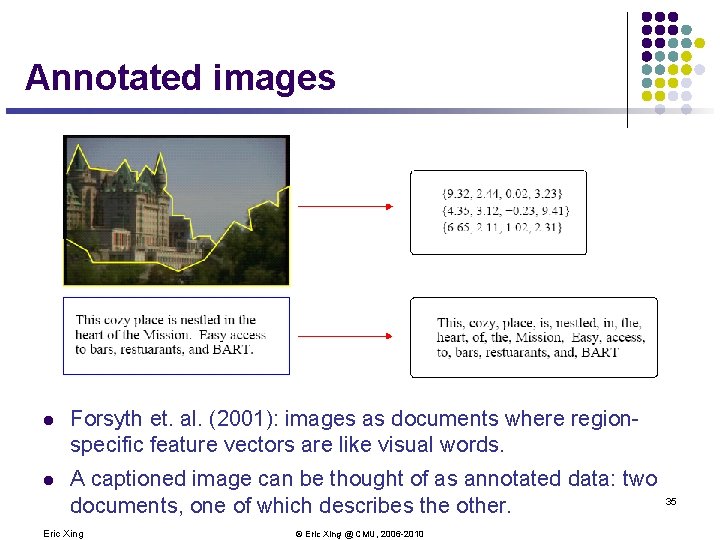

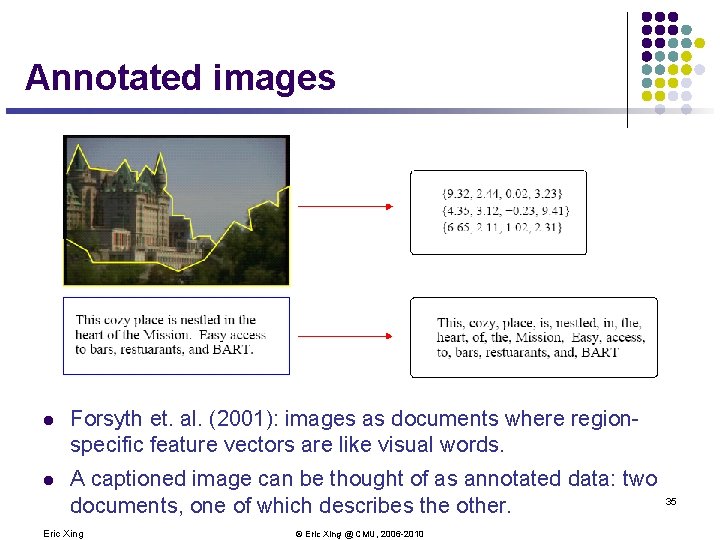

Annotated images
- Forsyth et al. (2001): images as documents, where region-specific feature vectors are like visual words.
- A captioned image can be thought of as annotated data: two documents, one of which describes the other.

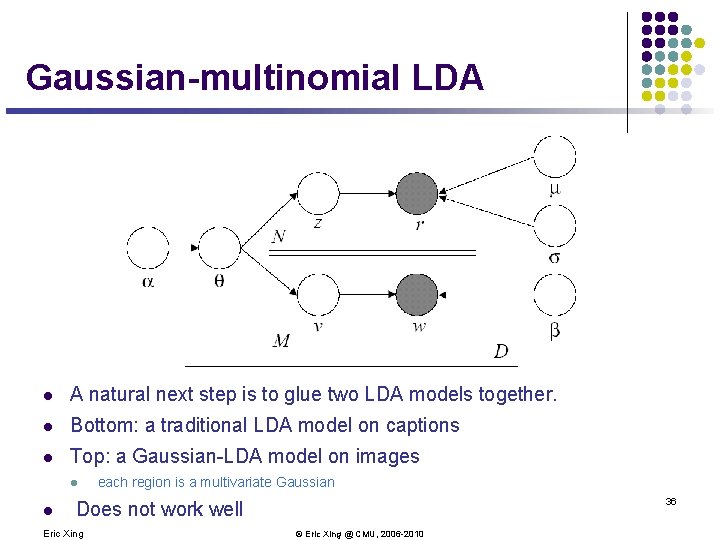

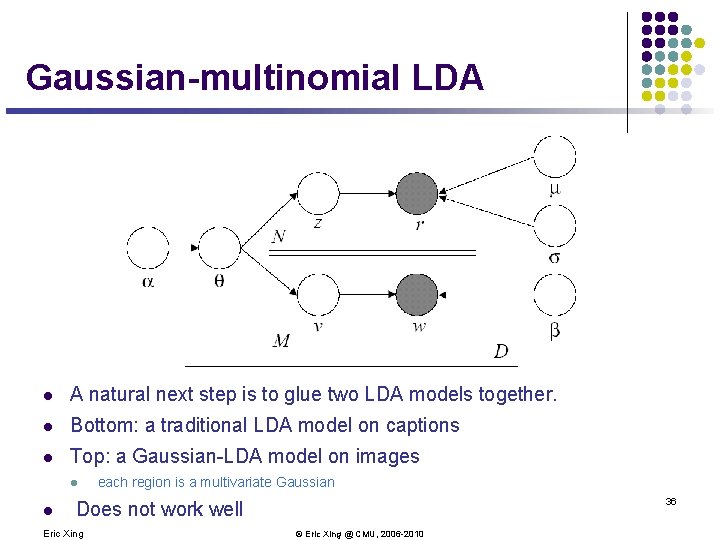

Gaussian-multinomial LDA
- A natural next step is to glue two LDA models together.
- Bottom: a traditional LDA model on captions.
- Top: a Gaussian-LDA model on images; each region is a multivariate Gaussian.
- Does not work well.
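The "glued" model can be sketched generatively, under the assumption that regions and caption words share one θ per image but draw their topics independently from it. This matches how GM-LDA is usually described, but the slide does not give the details. All parameter values below are hypothetical.

```python
import numpy as np

def generate_gm_lda(alpha, means, covs, beta, n_regions, n_words, rng):
    """Sample one captioned image from a Gaussian-multinomial LDA sketch.

    alpha: length-K Dirichlet parameter shared by regions and caption words.
    means, covs: per-topic Gaussian parameters for region feature vectors.
    beta: K x V topic-word matrix for caption words.
    """
    K = len(alpha)
    theta = rng.dirichlet(alpha)                          # image-level topic proportions
    z = rng.choice(K, size=n_regions, p=theta)            # topic of each region
    regions = np.array([rng.multivariate_normal(means[k], covs[k]) for k in z])
    v = rng.choice(K, size=n_words, p=theta)              # topic of each caption word
    words = np.array([rng.choice(beta.shape[1], p=beta[k]) for k in v])
    return theta, z, regions, v, words

rng = np.random.default_rng(0)
alpha = np.array([0.5, 0.5])
means = np.array([[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]])      # hypothetical region-feature means
covs = np.array([np.eye(3), np.eye(3)])
beta = np.array([[0.5, 0.5, 0.0, 0.0],                    # topic 0 caption words
                 [0.0, 0.0, 0.5, 0.5]])                   # topic 1 caption words
theta, z, regions, v, words = generate_gm_lda(alpha, means, covs, beta, 6, 4, rng)
print(z, v, words)
```

Because z and v are drawn independently, nothing forces a caption word to come from a topic that generated any region. This is one plausible reading of why the model does not work well for annotation.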

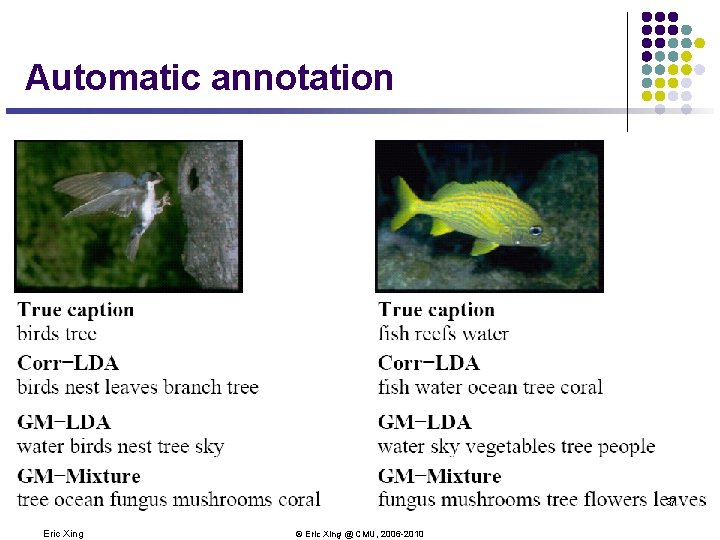

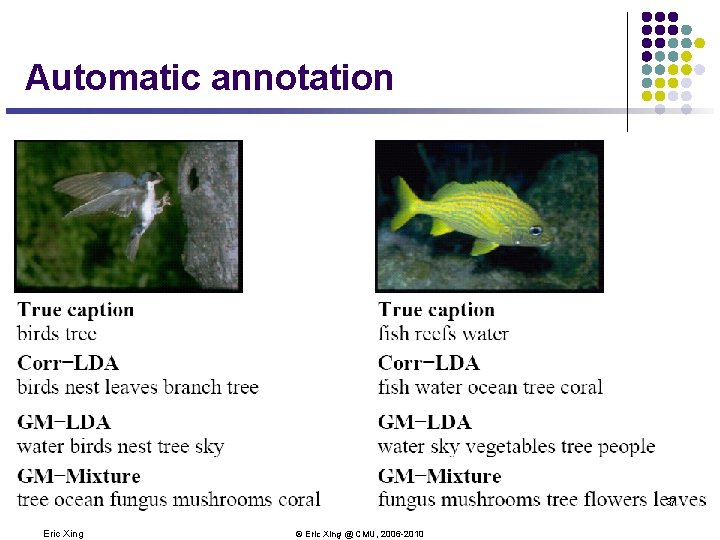

Automatic annotation

Conclusion
- GM-based (graphical-model-based) topic models are cool:
  - Flexible
  - Modular
  - Interactive
  - …