Machine Learning in High Energy Physics

- Slides: 59

Machine Learning in High Energy Physics. Satyaki Bhattacharya, Saha Institute of Nuclear Physics.

Why machine learning? We are always looking for a faint signal against a large background. To do so we work in a high-dimensional (multivariate) space, where we would like to draw the best decision boundaries we can. ML algorithms are “universal approximators” and can do this job well.

Brief history. Machine learning for multivariate analysis has been used since the Large Electron Positron (LEP) collider era, when the Artificial Neural Network (ANN) was the tool of choice. A study by Byron Roe et al. in the MiniBooNE experiment demonstrated better performance with Boosted Decision Trees (BDTs), which have been (almost) the default since then, with many successes. The Higgs discovery was heavily aided by BDTs.

The new era of ML in HEP. The era of deep learning arrived with graphics processing units (GPUs), whose orders-of-magnitude increase in computing power made possible what was not possible before. Since the discovery of the Higgs boson there has been an explosion of machine learning applications in HEP: in calorimetry and tracking, Monte Carlo generation, data-quality monitoring and computing-job monitoring, among others.

In this talk. Two broad areas of machine learning applications, image processing and natural language processing, have both found potential applications in HEP. I will talk about a few interesting use cases; this talk is not an inclusive review.

Resources. Community white paper on machine learning in HEP (https://arxiv.org/pdf/1807.02876.pdf). Review by Kyle Cranmer et al. (https://arxiv.org/pdf/1806.11484v1.pdf).

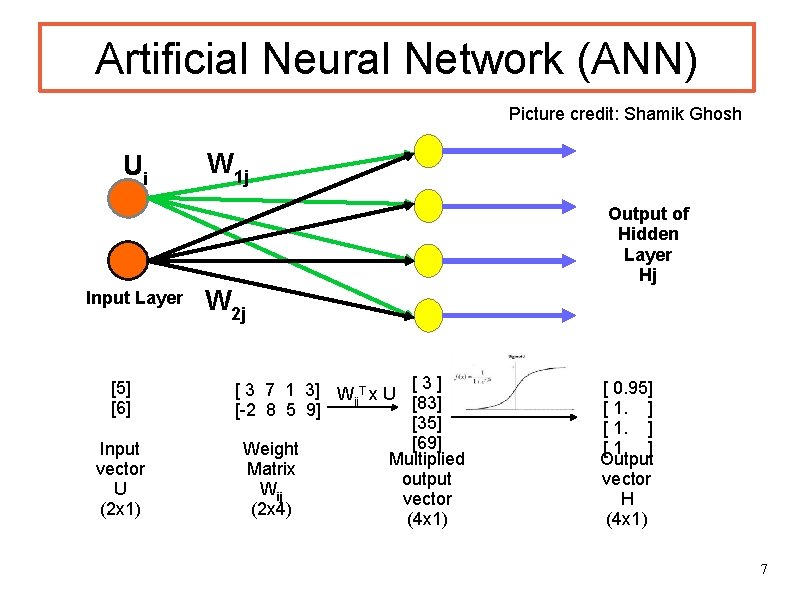

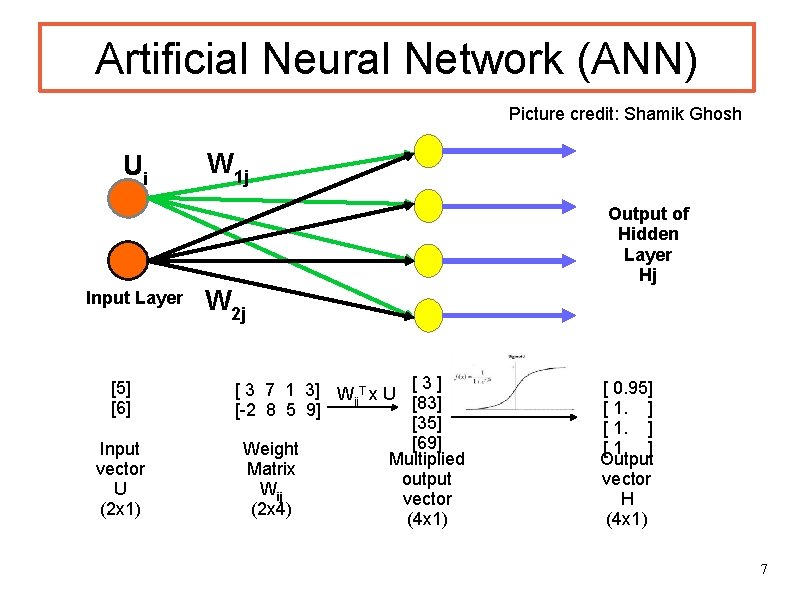

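Artificial Neural Network (ANN). Picture credit: Shamik Ghosh. Each hidden node j receives a weighted sum of the inputs U_i, with weight W_ij connecting input i to hidden node j. In the example, the input vector U (2 x 1) is multiplied by the transpose of the weight matrix W (2 x 4), giving a 4 x 1 vector; an activation function then turns it into the hidden-layer output H (4 x 1). The values of H are consistent with a logistic sigmoid, σ(3) ≈ 0.95:

$$U = \begin{pmatrix} 5 \\ 6 \end{pmatrix}, \quad W = \begin{pmatrix} 3 & 7 & 1 & 3 \\ -2 & 8 & 5 & 9 \end{pmatrix}, \quad W^{T} U = \begin{pmatrix} 3 \\ 83 \\ 35 \\ 69 \end{pmatrix}, \quad H = \sigma\left(W^{T} U\right) \approx \begin{pmatrix} 0.95 \\ 1 \\ 1 \\ 1 \end{pmatrix}$$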
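The single-layer arithmetic of this slide can be reproduced in a few lines of NumPy. This is a minimal sketch that assumes a logistic sigmoid activation and no bias term (neither is stated explicitly on the slide); the function name `sigmoid` is illustrative.

```python
import numpy as np

def sigmoid(a):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-a))

# Input vector U (2 x 1) and weight matrix W (2 x 4) from the slide
U = np.array([5.0, 6.0])
W = np.array([[3.0, 7.0, 1.0, 3.0],
              [-2.0, 8.0, 5.0, 9.0]])

a = W.T @ U      # weighted sums for the 4 hidden nodes: 3, 83, 35, 69
H = sigmoid(a)   # hidden-layer output, approximately 0.95, 1, 1, 1
print(a, H.round(2))
```

Large weighted sums (83, 35, 69) saturate the sigmoid at 1, which is why only the first hidden node shows a value visibly below 1.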

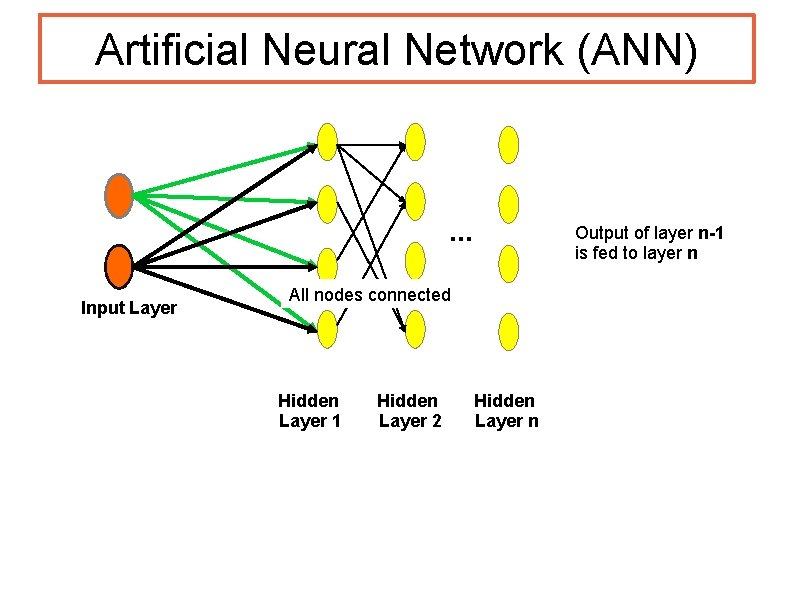

Artificial Neural Network (ANN). The output of layer n-1 is fed to layer n, and all nodes of adjacent layers are connected: input layer, hidden layer 1, hidden layer 2, ..., hidden layer n.
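Stacking fully connected layers is just a loop in which each layer's output becomes the next layer's input. The sketch below assumes hypothetical layer sizes (3 inputs, hidden layers of 5 and 4 nodes), random weights and a tanh activation; none of these come from the slide.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense_layer(x, W, b):
    """One fully connected layer: every input node feeds every output node."""
    return np.tanh(W.T @ x + b)

# Hypothetical architecture: 3 inputs -> 5 hidden nodes -> 4 hidden nodes
sizes = [3, 5, 4]
weights = [rng.normal(size=(n_in, n_out)) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]

x = np.array([0.2, -1.0, 0.5])
for W, b in zip(weights, biases):
    x = dense_layer(x, W, b)   # output of layer n-1 becomes the input of layer n
print(x.shape)  # (4,)
```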

![Artificial Neural Network (ANN): input layer, hidden layers and output probabilities](https://slidetodoc.com/presentation_image/18c5792e0b7d513e85ac52b8d193635b/image-9.jpg)

Artificial Neural Network (ANN): output probabilities. The last layer produces raw scores, here (6, 9), and a softmax, $p_i = e^{z_i} / \sum_j e^{z_j}$, converts them into probabilities, here approximately (0.05, 0.95).
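The softmax step on this slide is a one-liner. This sketch applies it to the scores (6, 9) shown above; subtracting the maximum score first is a standard numerical-stability trick and does not change the result.

```python
import numpy as np

def softmax(z):
    """Convert raw output scores into probabilities that sum to 1."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())   # subtract the max for numerical stability
    return e / e.sum()

p = softmax([6.0, 9.0])
print(p.round(3))  # [0.047 0.953]
```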

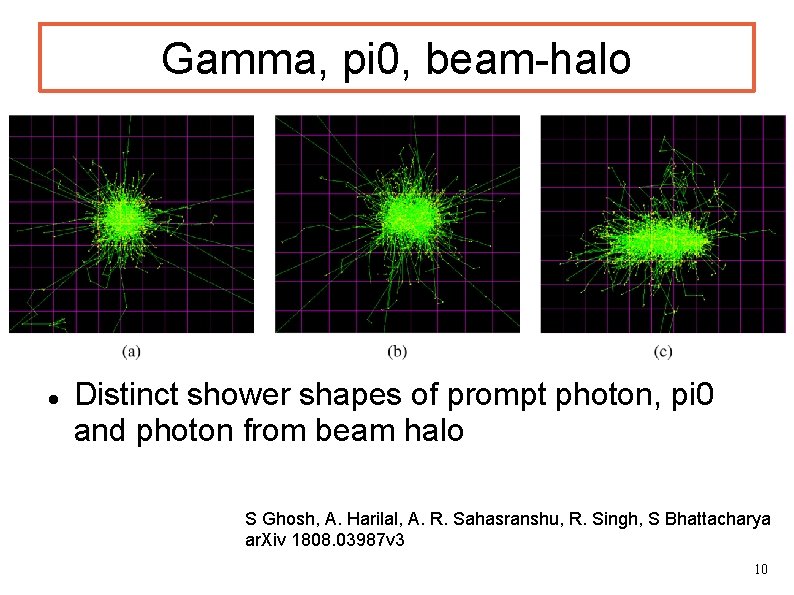

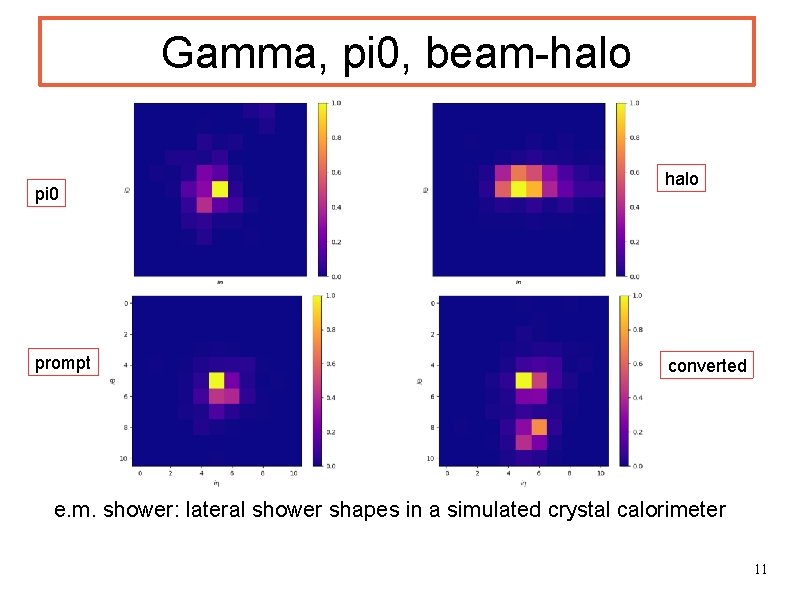

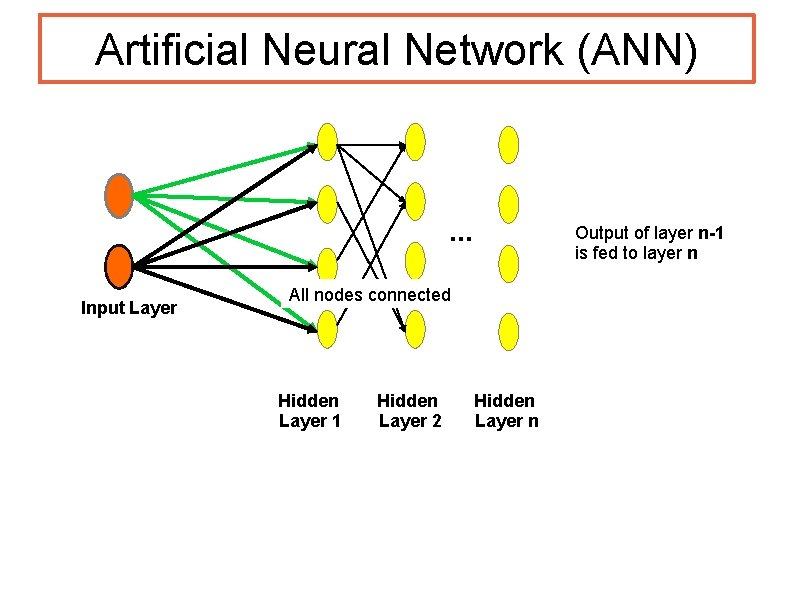

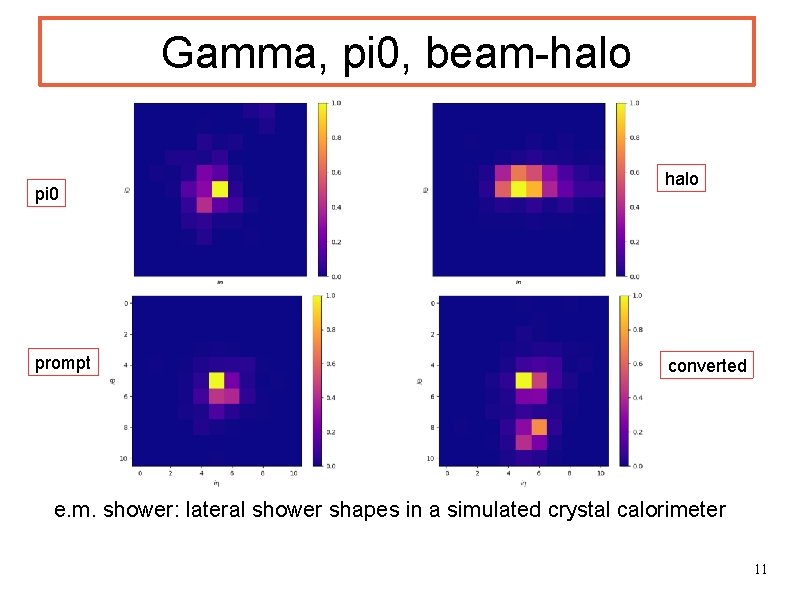

γ, π⁰, beam halo. Prompt photons, π⁰s and photons from beam halo have distinct shower shapes. S. Ghosh, A. Harilal, A. R. Sahasranshu, R. Singh, S. Bhattacharya, arXiv:1808.03987v3.

γ, π⁰, beam halo. Lateral e.m. shower shapes of π⁰, prompt, halo and converted photons in a simulated crystal calorimeter.

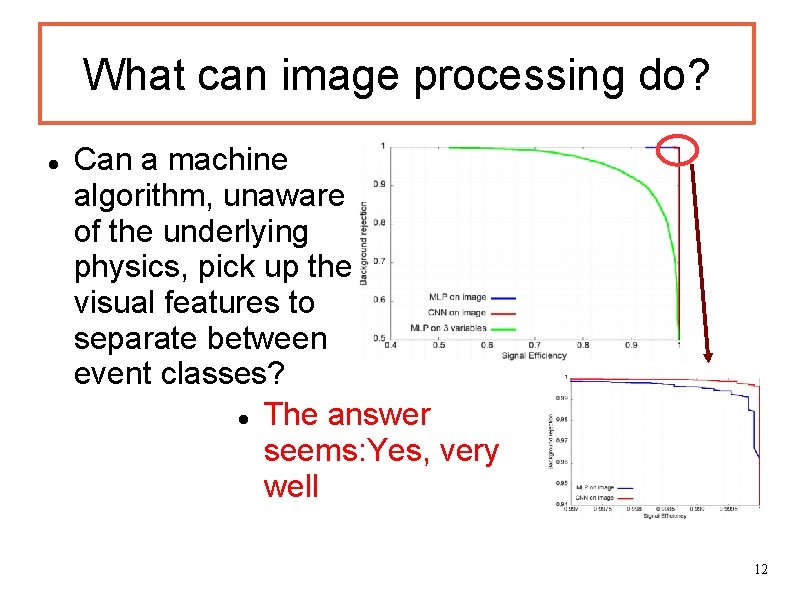

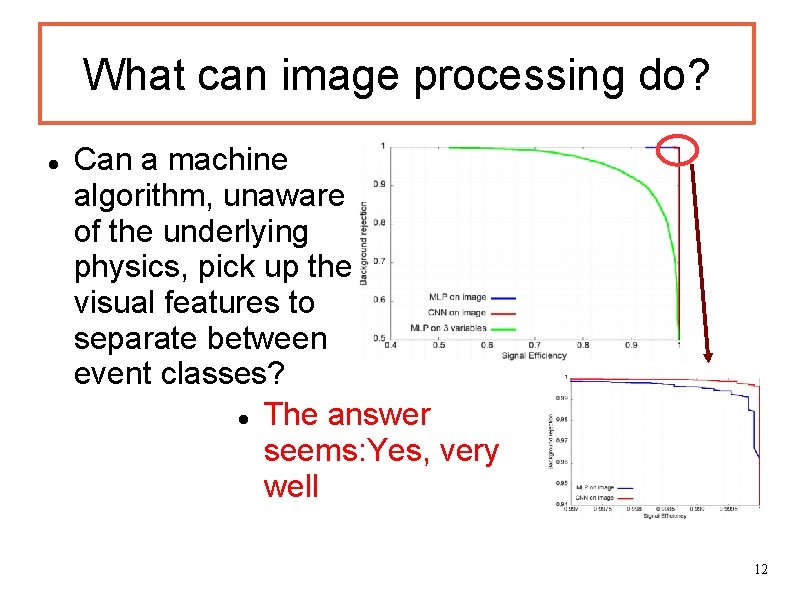

What can image processing do? Can a machine learning algorithm, unaware of the underlying physics, pick up the visual features that separate event classes? The answer seems to be: yes, very well.

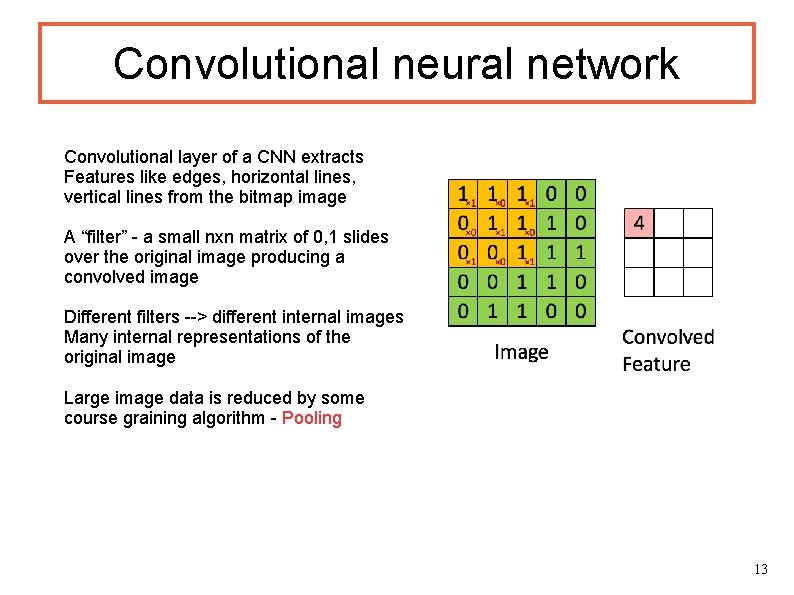

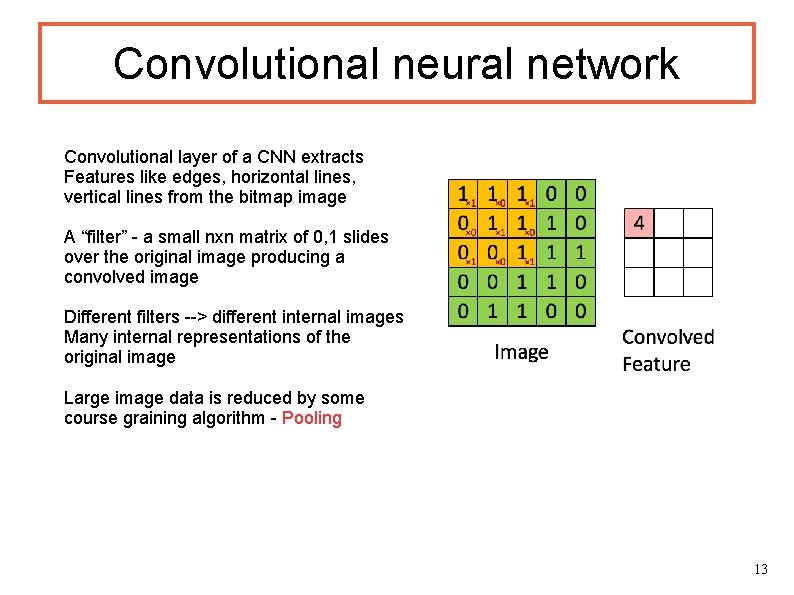

Convolutional neural network. The convolutional layer of a CNN extracts features such as edges, horizontal lines and vertical lines from the bitmap image. A “filter”, a small n x n matrix (of 0s and 1s in the illustration; learned values in a trained network), slides over the original image and produces a convolved image. Different filters give different internal images, so the network builds many internal representations of the original image. The large image data is then reduced by a coarse-graining algorithm called pooling.
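Both operations on this slide, sliding a filter over an image and coarse-graining with pooling, fit in a short NumPy sketch. The toy image, the hand-chosen vertical-edge filter (values -1, 0, 1) and the 2 x 2 max-pooling size are all illustrative assumptions; a trained CNN learns its filter values.

```python
import numpy as np

def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation (what CNN layers call convolution):
    slide the n x m filter over the image and sum element-wise products."""
    n, m = kernel.shape
    H, W = image.shape
    out = np.zeros((H - n + 1, W - m + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + n, j:j + m] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Coarse-grain by keeping the maximum of each non-overlapping size x size block."""
    H, W = feature_map.shape
    H2, W2 = H // size, W // size
    trimmed = feature_map[:H2 * size, :W2 * size]
    return trimmed.reshape(H2, size, W2, size).max(axis=(1, 3))

# Toy 6x6 image: dark on the left, bright on the right (a vertical edge)
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-chosen 3x3 vertical-edge filter
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

fmap = convolve2d(image, kernel)   # 4x4 feature map, large where the edge is
pooled = max_pool(fmap)            # 2x2 coarse-grained map
print(fmap[0], pooled)
```

The feature map responds only in the columns that straddle the edge, which is the sense in which a filter "extracts" a feature.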

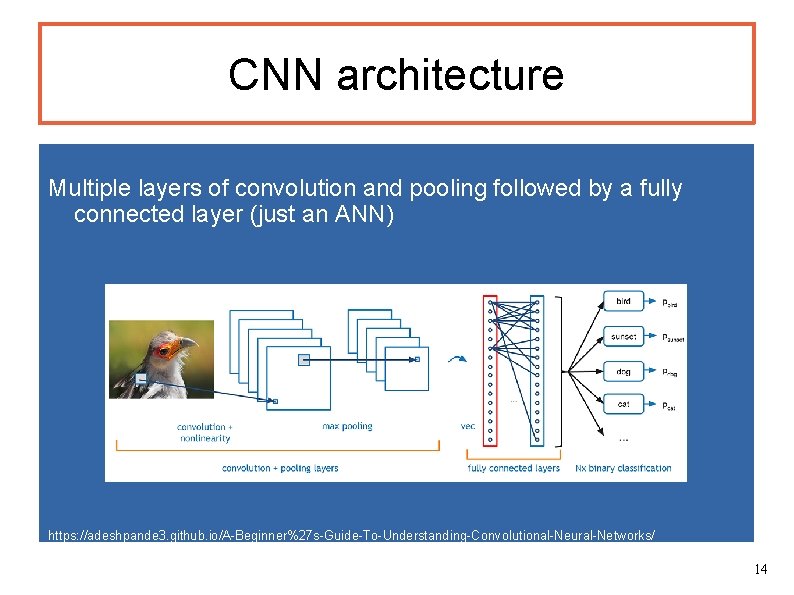

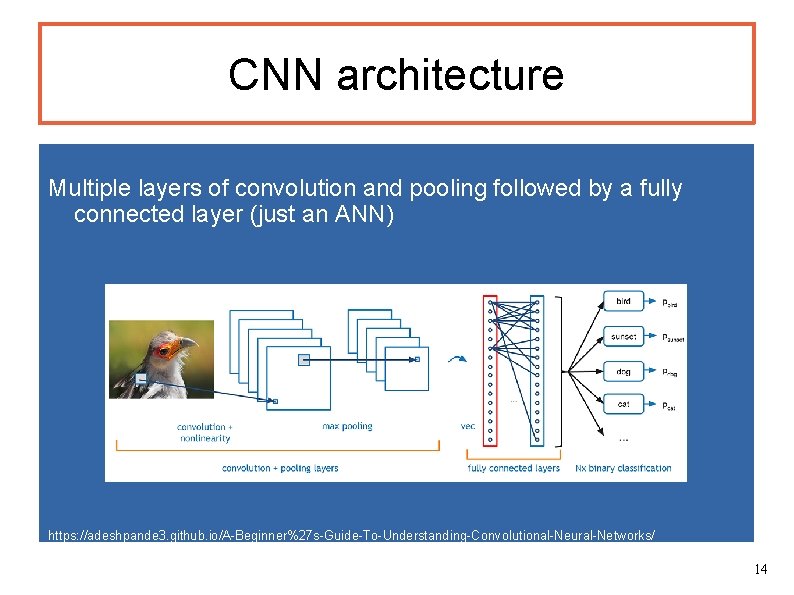

CNN architecture. Multiple layers of convolution and pooling are followed by a fully connected layer (just an ANN). https://adeshpande3.github.io/A-Beginner%27s-Guide-To-Understanding-Convolutional-Neural-Networks/
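A practical detail of this architecture is bookkeeping the image size through each convolution and pooling stage, since it fixes the length of the vector handed to the fully connected ANN. The sketch below uses the standard output-size formula; the 32 x 32 input, the 3 x 3 convolutions with padding 1, the 2 x 2 pooling and the filter counts (16, 32) are hypothetical choices.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer (standard formula)."""
    return (n + 2 * padding - kernel) // stride + 1

# Hypothetical stack: two blocks of [3x3 conv (padding 1) -> 2x2 max-pool]
size = 32
channels = 1
for n_filters in (16, 32):
    size = conv_output_size(size, kernel=3, padding=1)   # convolution keeps the size
    channels = n_filters                                 # one output map per filter
    size = conv_output_size(size, kernel=2, stride=2)    # pooling halves the size
flat = size * size * channels   # length of the vector fed to the fully connected ANN
print(size, channels, flat)  # 8 32 2048
```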


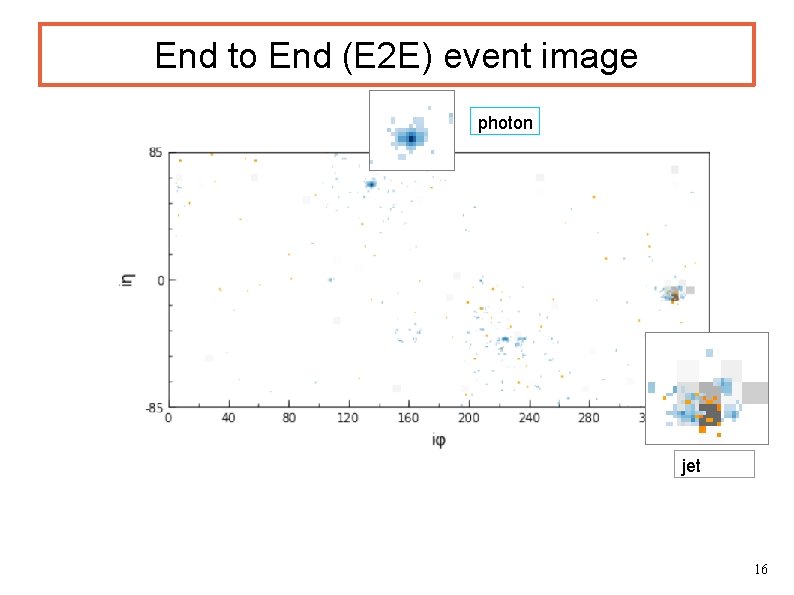

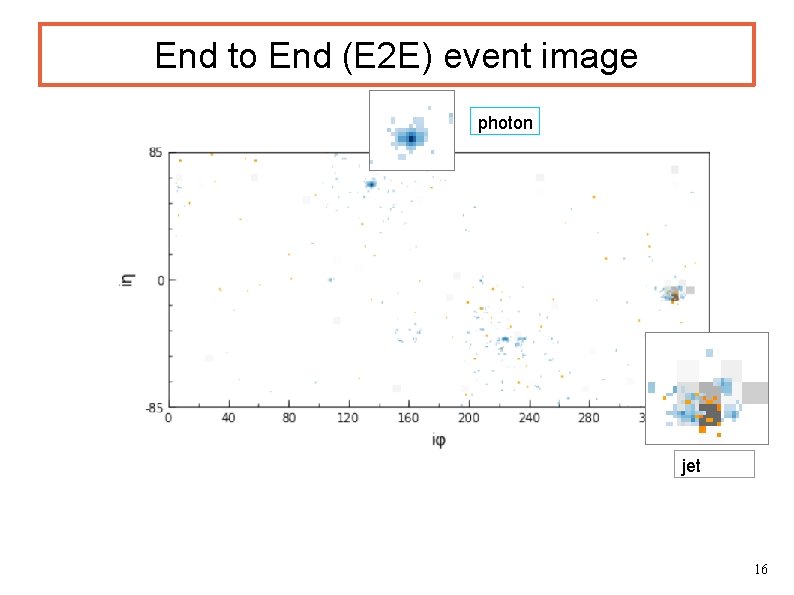

End-to-end (E2E) event image: photon and jet.

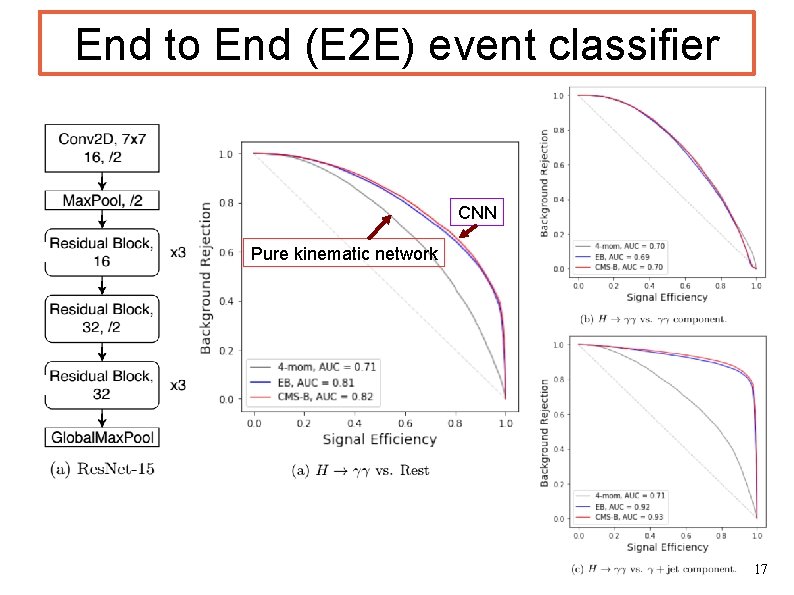

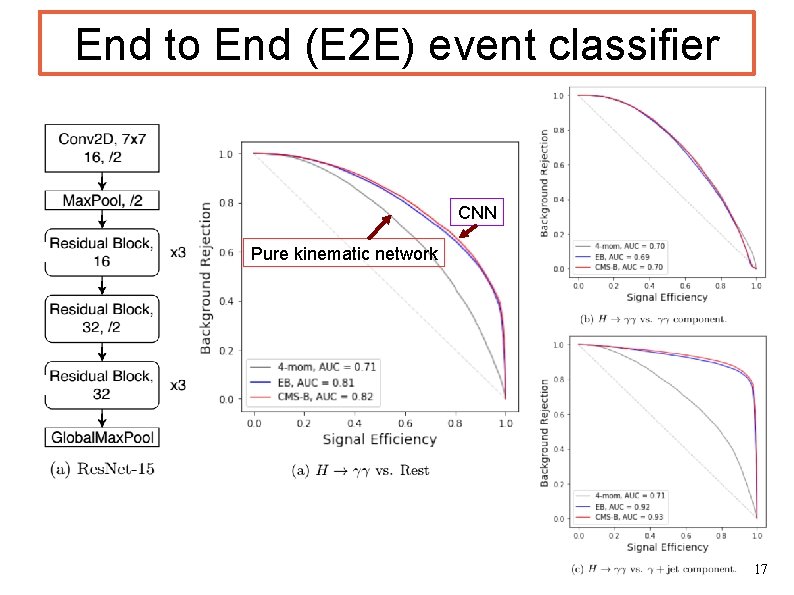

End-to-end (E2E) event classifier: CNN vs. a pure kinematic network.

End-to-end (E2E) event classifier. It can learn the angular distribution as well as the energy scale of the constituent hits, and it can learn about the shower shape. On this simplified analysis example, it performs significantly better than a pure kinematics-based analysis. Its true potential lies in BSM searches with complex final states.
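One way to see why an event image carries both the angular distribution and the energy scale is to build one: pixel position encodes (η, φ) and pixel value encodes deposited energy. This is a minimal sketch assuming a single energy-weighted η-φ grid with hypothetical binning (32 x 32, |η| < 1.5) and a toy event; it is not the E2E authors' actual detector-level input.

```python
import numpy as np

def event_image(eta, phi, energy, n_bins=32, eta_max=1.5):
    """Bin calorimeter hits into an eta-phi grid weighted by deposited energy.
    Pixel position encodes the angular distribution, pixel value the energy scale."""
    image, _, _ = np.histogram2d(
        eta, phi,
        bins=n_bins,
        range=[[-eta_max, eta_max], [-np.pi, np.pi]],
        weights=energy,
    )
    return image

rng = np.random.default_rng(1)
# Toy event: 50 hits with made-up positions and energies (GeV)
eta = rng.uniform(-1.0, 1.0, 50)
phi = rng.uniform(-np.pi, np.pi, 50)
energy = rng.exponential(5.0, 50)
img = event_image(eta, phi, energy)   # 32x32 array, ready to feed a CNN
```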

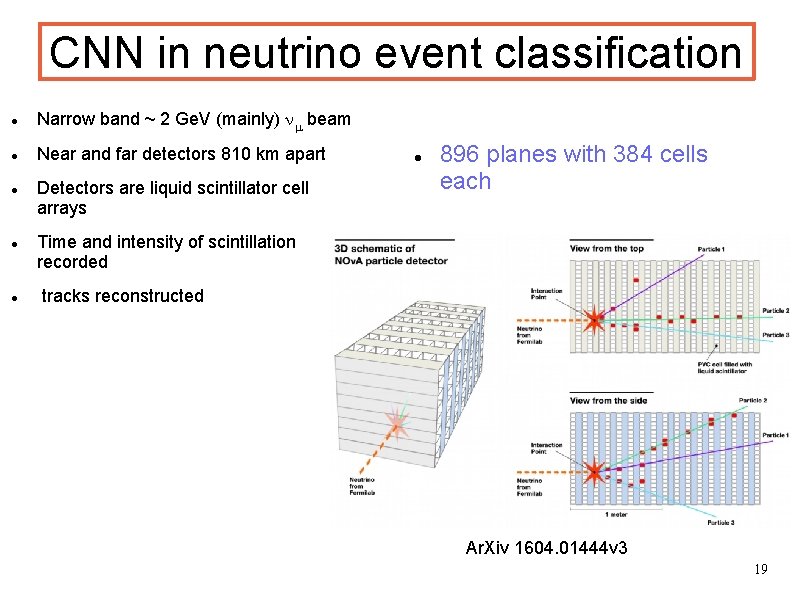

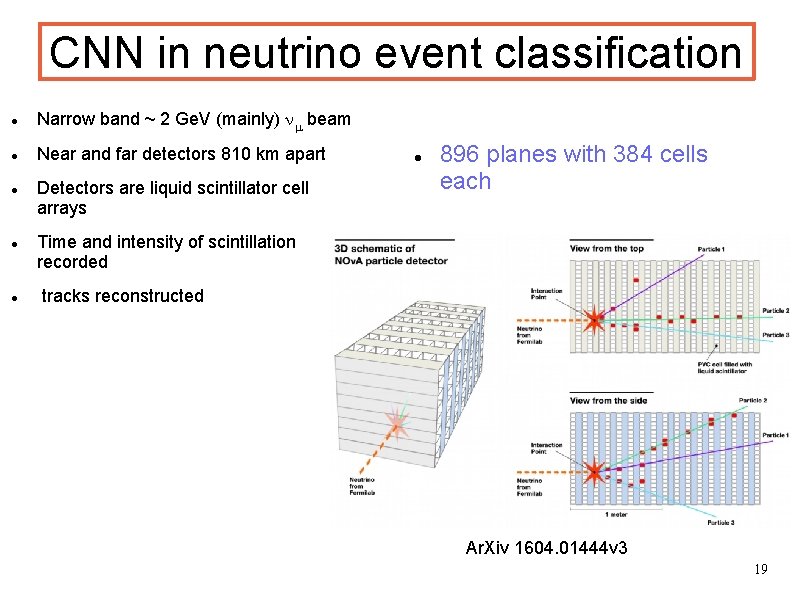

CNN in neutrino event classification (NOvA). Narrow-band beam of (mainly) νμ with energies around 2 GeV. The near and far detectors are 810 km apart. The detectors are arrays of liquid-scintillator cells, with 896 planes of 384 cells each. The time and intensity of the scintillation light are recorded and tracks are reconstructed. arXiv:1604.01444v3.

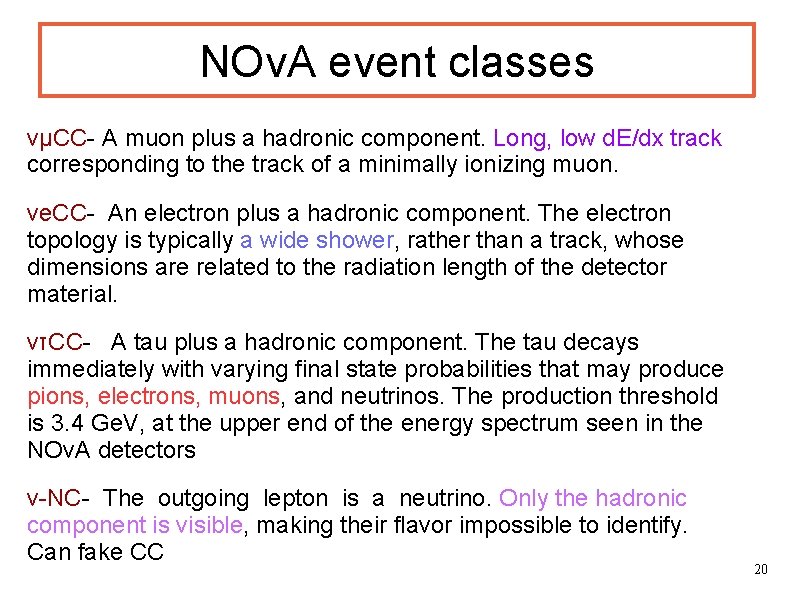

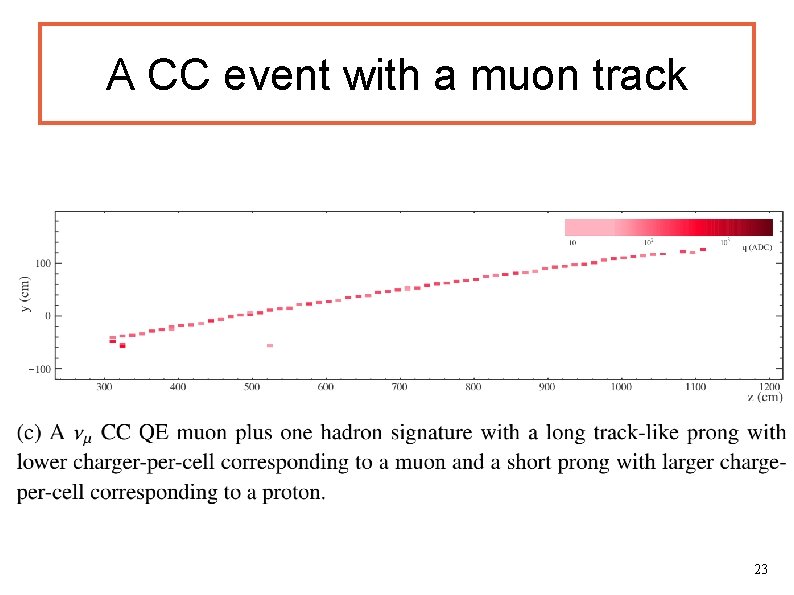

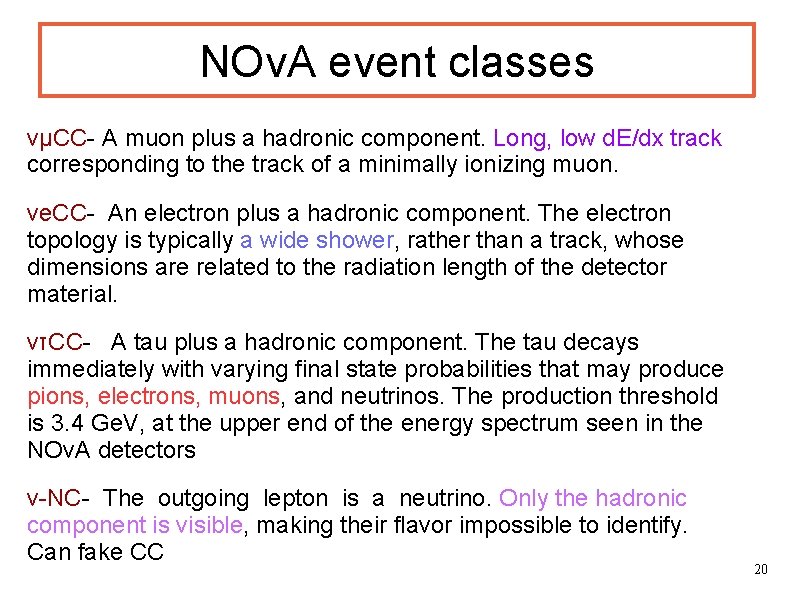

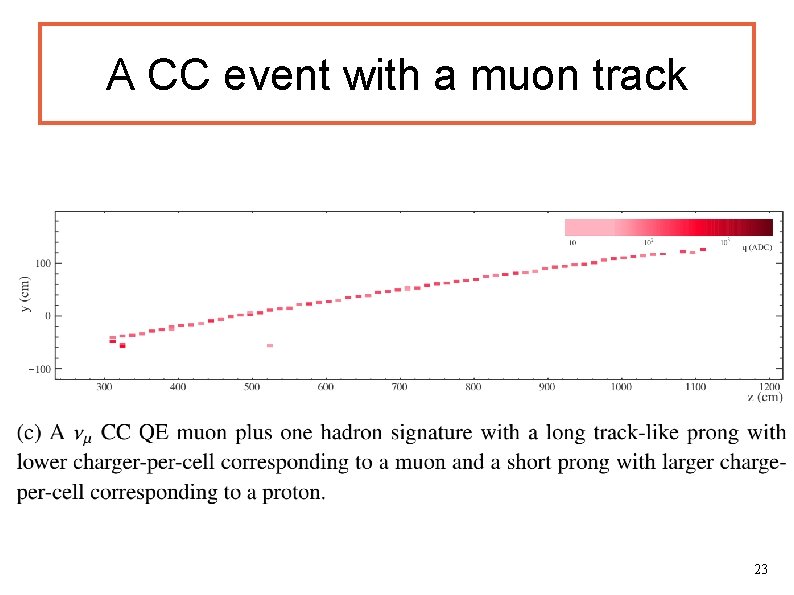

NOvA event classes:
- νμ CC: a muon plus a hadronic component. The muon leaves a long track with low dE/dx, characteristic of a minimum-ionizing particle.
- νe CC: an electron plus a hadronic component. The electron typically produces a wide shower rather than a track, with dimensions related to the radiation length of the detector material.
- ντ CC: a tau plus a hadronic component. The tau decays immediately, with various final-state probabilities, and may produce pions, electrons, muons and neutrinos. The production threshold is 3.4 GeV, at the upper end of the energy spectrum seen in the NOvA detectors.
- ν NC: the outgoing lepton is a neutrino. Only the hadronic component is visible, so the flavor cannot be identified, and NC events can fake CC events.

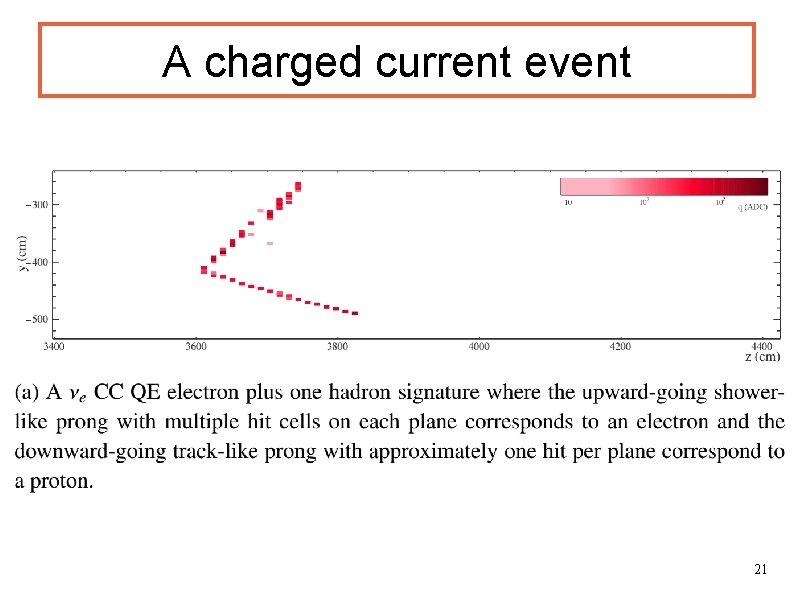

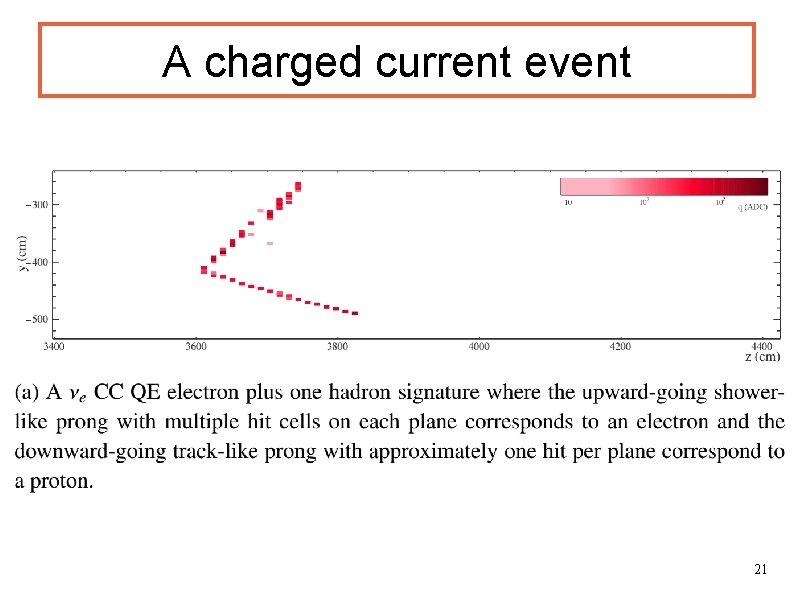

A charged-current event.

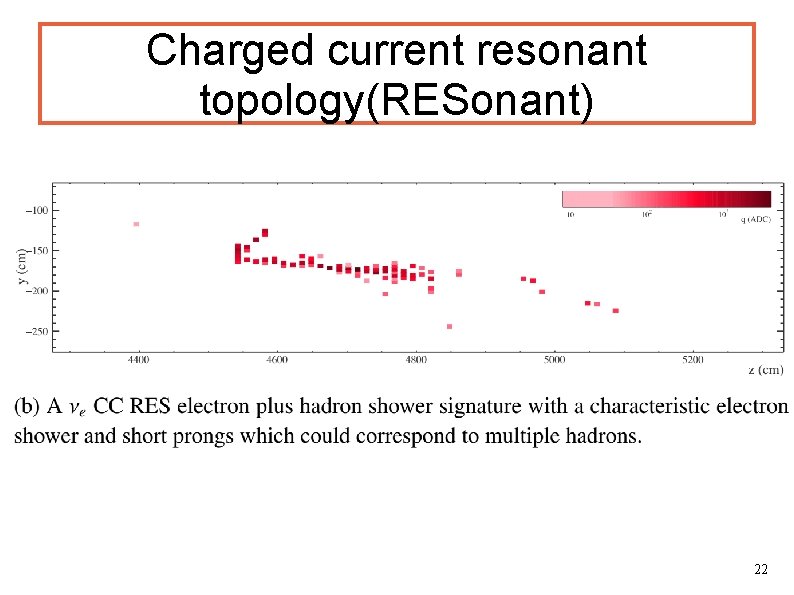

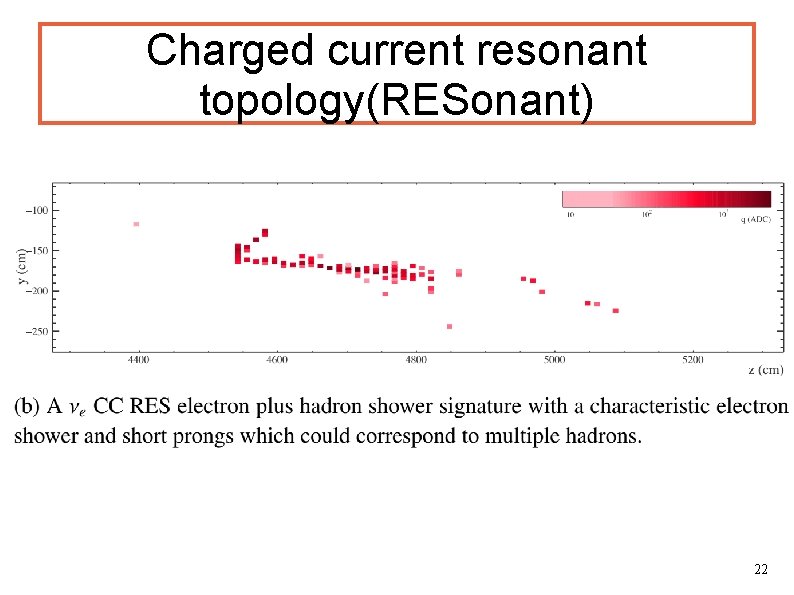

Charged-current resonant (RES) topology.

A CC event with a muon track.

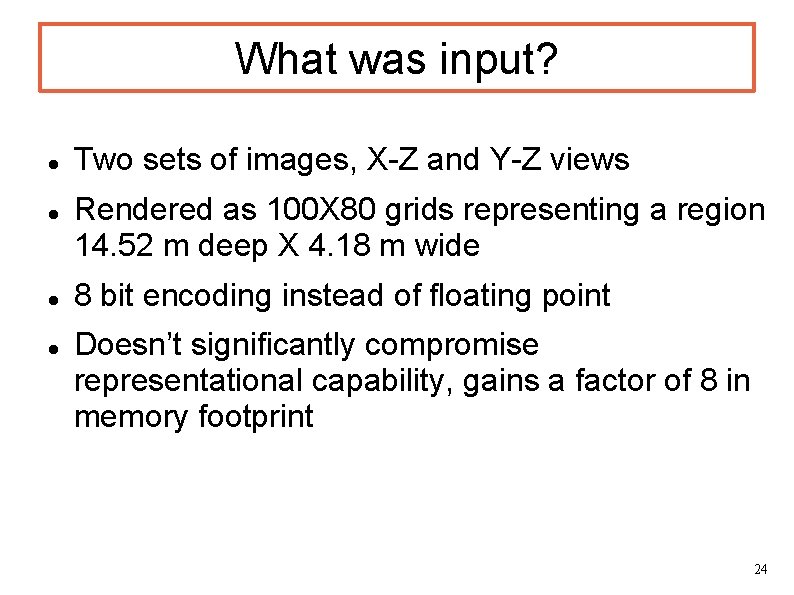

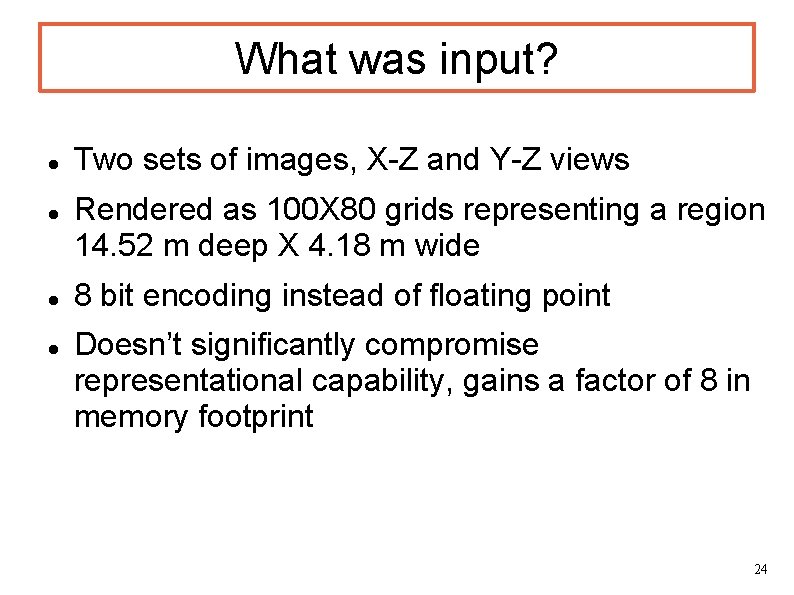

What was the input? Two sets of images, the X-Z and Y-Z views, each rendered as a 100 x 80 grid representing a region 14.52 m deep and 4.18 m wide. Pixels use an 8-bit encoding instead of floating point; this does not significantly compromise the representational capability and reduces the memory footprint by a factor of 8.
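The 8-bit encoding amounts to mapping each pixel's energy linearly onto the integers 0 to 255, saturating above some maximum. This is a minimal sketch with a toy 100 x 80 view and a hypothetical saturation scale `e_max`; the factor of 8 printed here is relative to 64-bit floats (against 32-bit floats it would be 4).

```python
import numpy as np

def to_uint8(pixel_energy, e_max):
    """Quantize energies to 8 bits: 0..e_max maps linearly onto 0..255, saturating above e_max."""
    scaled = np.clip(pixel_energy / e_max, 0.0, 1.0) * 255.0
    return np.round(scaled).astype(np.uint8)

rng = np.random.default_rng(2)
view_xz = rng.exponential(0.05, size=(100, 80))   # toy 100 x 80 pixel map (float64)
e_max = 0.3                                        # hypothetical saturation scale
view_u8 = to_uint8(view_xz, e_max)

print(view_xz.nbytes // view_u8.nbytes)  # 8: float64 -> uint8
```

The price is a quantization step of `e_max / 255` per pixel, which is why the choice of saturation scale matters.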

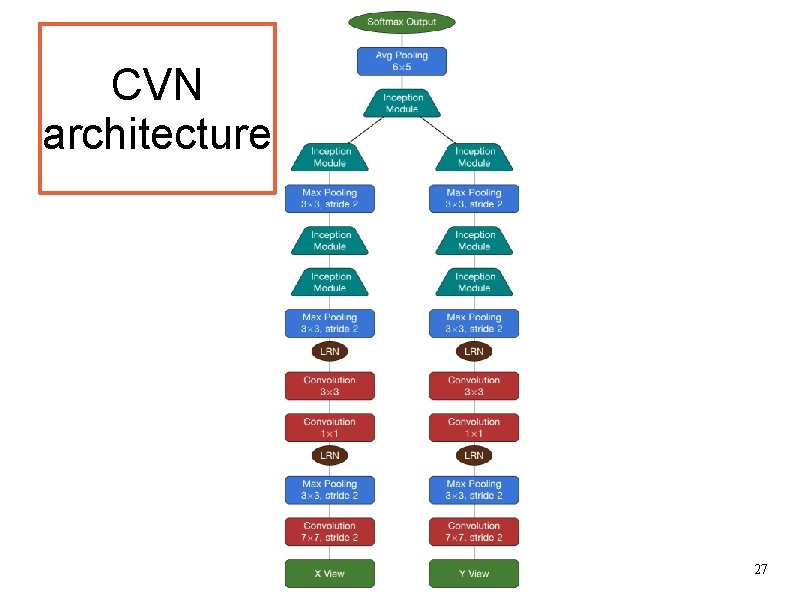

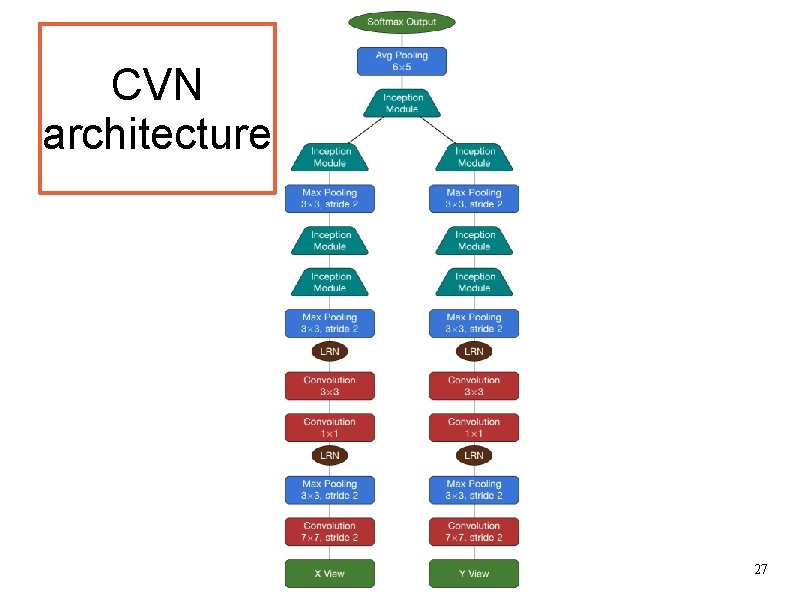

Convolutional Visual Network (CVN). CVN was developed using the Caffe framework. Caffe is an open framework for deep learning applications; it is highly modular and makes accelerated training on graphics processing units (GPUs) straightforward. Common layer types are pre-implemented in Caffe, and new architectures are easy to build by specifying the desired layers and their connections in a configuration file. Caffe is packaged with a configuration file implementing GoogLeNet.

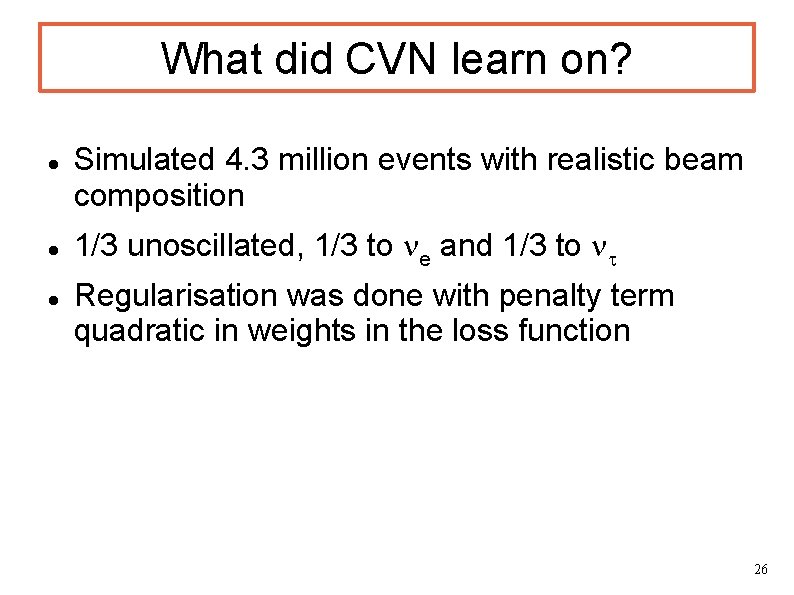

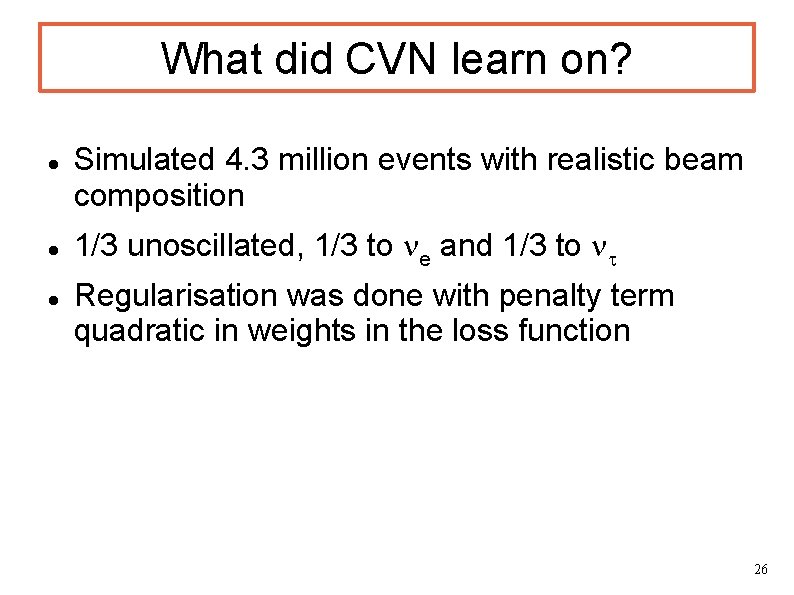

What did CVN learn on? 4.3 million simulated events with realistic beam composition: one third unoscillated, one third oscillated to νe and one third oscillated to ντ. Regularisation was done with a penalty term, quadratic in the weights, added to the loss function.
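A penalty quadratic in the weights (L2 regularisation, or weight decay) is simply added to the usual classification loss. This sketch assumes a cross-entropy loss, a toy 3-class example and a hypothetical regularisation strength `lam`; it illustrates the technique, not CVN's exact Caffe configuration.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true class."""
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))

def l2_regularized_loss(probs, labels, weights, lam=1e-4):
    """Classification loss plus a penalty quadratic in the weights."""
    penalty = sum(np.sum(w ** 2) for w in weights)
    return cross_entropy(probs, labels) + lam * penalty

# Toy predicted class probabilities for two events, and their true classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
labels = np.array([0, 1])
weights = [np.ones((3, 3)), np.full(3, 2.0)]   # toy weight arrays, sum of squares = 21
loss = l2_regularized_loss(probs, labels, weights, lam=0.01)
```

Large weights now cost loss directly, which discourages the network from fitting noise in the training sample.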

CVN architecture.

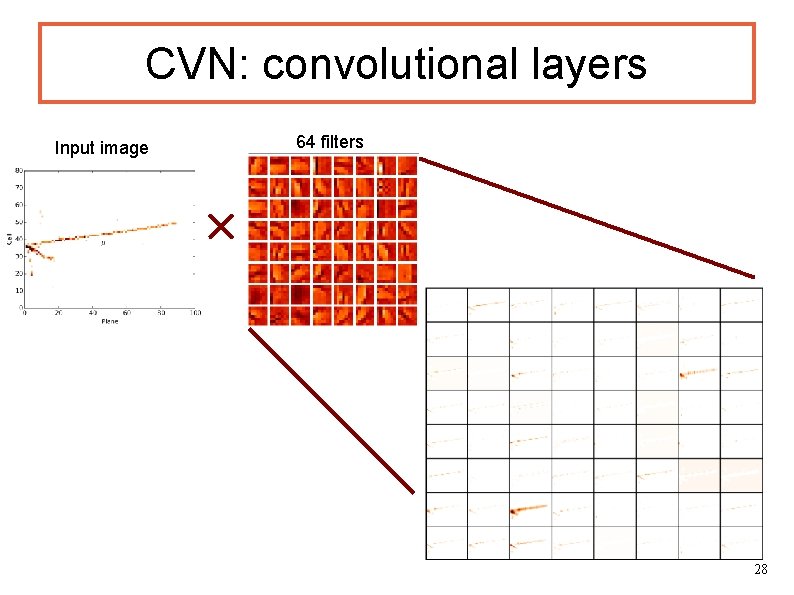

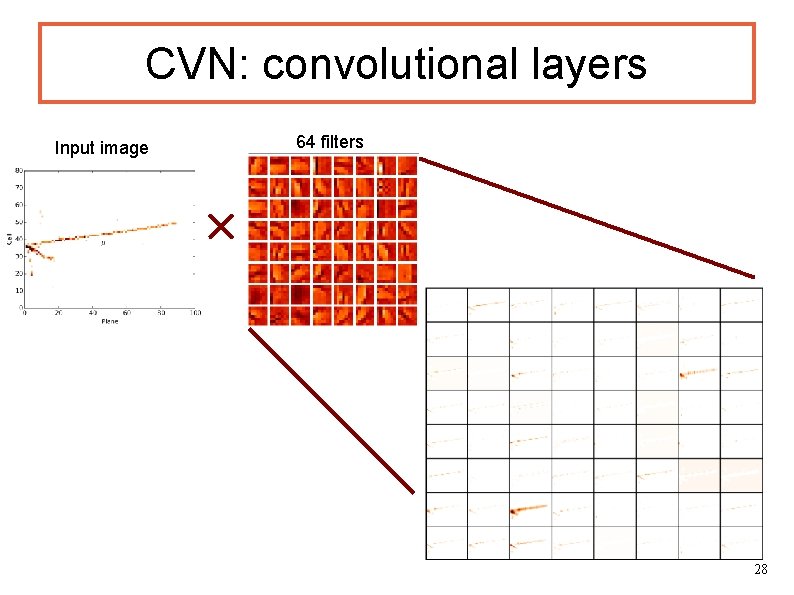

CVN: convolutional layers. Panels: input image; output of 64 filters.

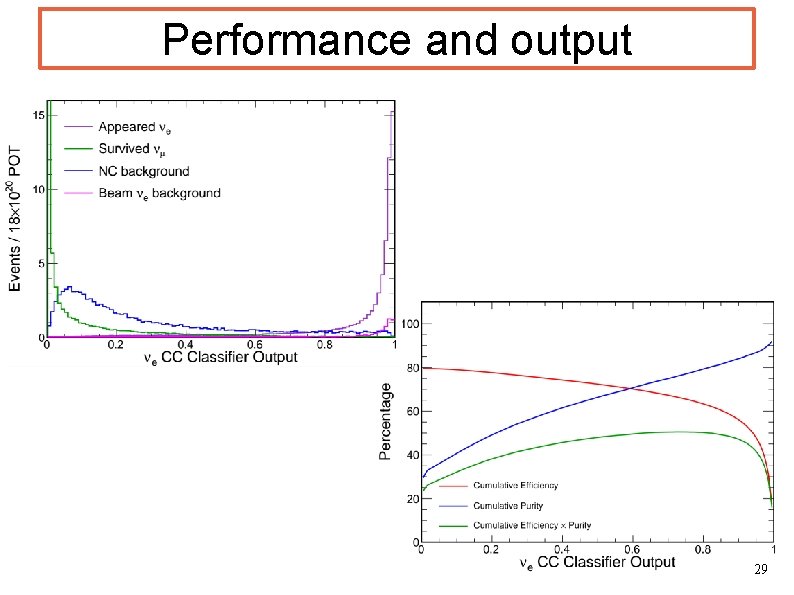

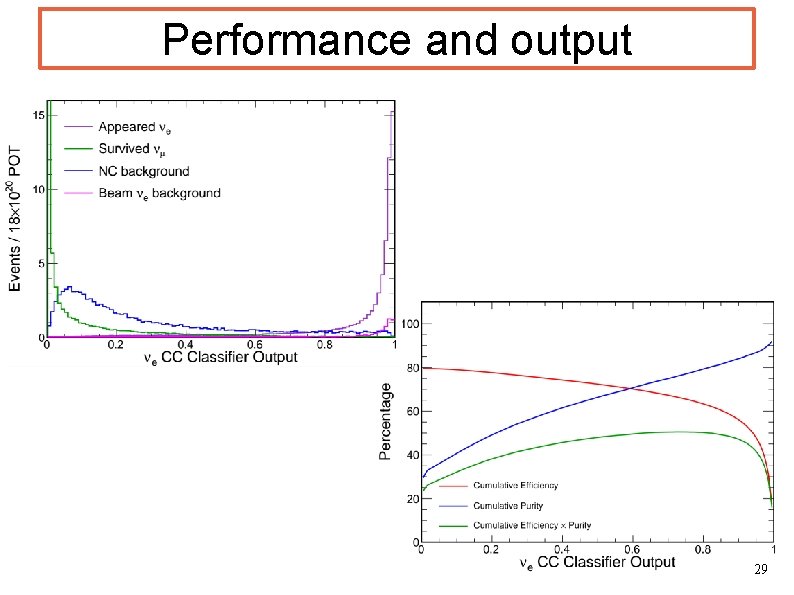

Performance and output.

CVN performs better! For muon-neutrino events the performance is comparable. For electron-neutrino events (harder to separate), CVN achieves 49% efficiency against 35% for the established NOvA analysis, at very similar purity. CVN is not overly sensitive to systematic uncertainties.
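"Efficiency at similar purity" compares classifiers after choosing each one's cut so that the selected samples are equally pure. The definitions are sketched below on a hypothetical 8-event toy sample with made-up scores.

```python
import numpy as np

def efficiency_and_purity(scores, is_signal, cut):
    """Efficiency = selected signal / all signal; purity = selected signal / all selected."""
    scores = np.asarray(scores)
    is_signal = np.asarray(is_signal, dtype=bool)
    selected = scores > cut
    n_sel_sig = np.sum(selected & is_signal)
    efficiency = n_sel_sig / np.sum(is_signal)
    purity = n_sel_sig / np.sum(selected)
    return efficiency, purity

# Toy sample: 4 signal and 4 background events with made-up classifier scores
scores = [0.95, 0.9, 0.6, 0.3, 0.85, 0.4, 0.2, 0.1]
is_signal = [1, 1, 1, 1, 0, 0, 0, 0]
eff, pur = efficiency_and_purity(scores, is_signal, cut=0.5)
```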

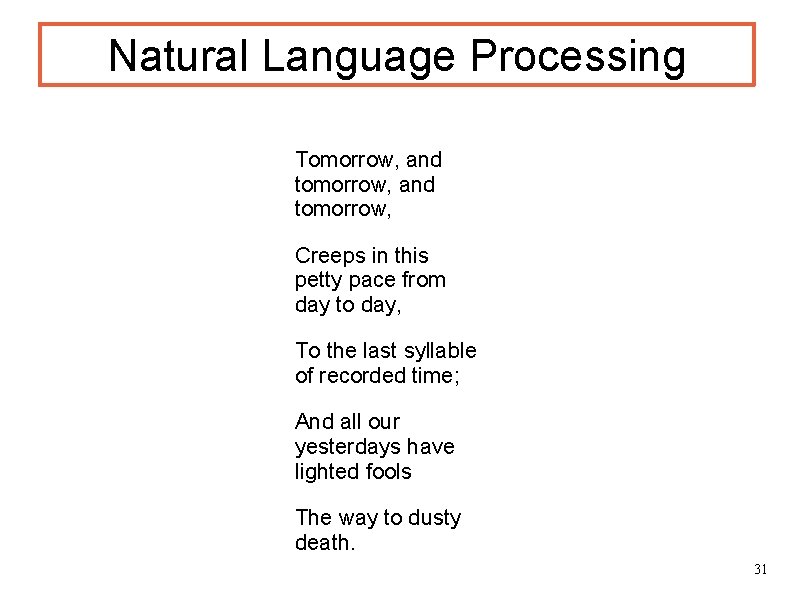

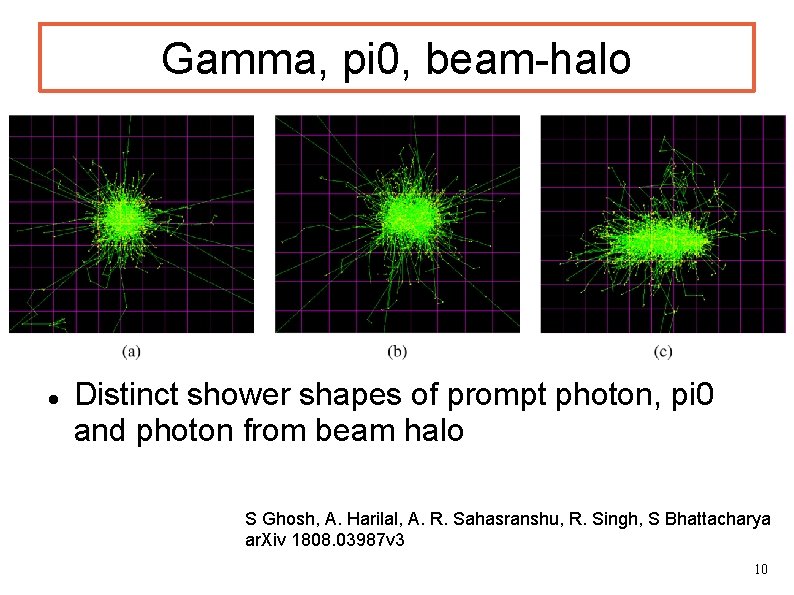

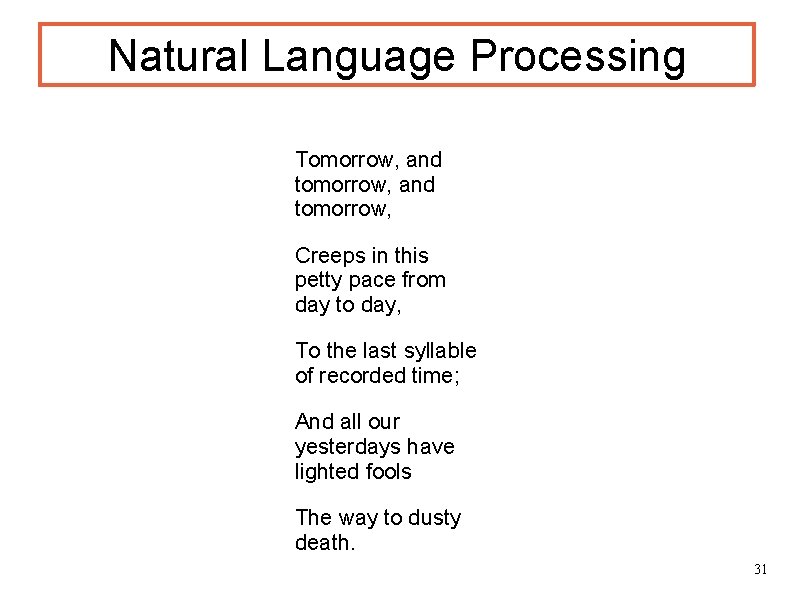

Natural Language Processing. Tomorrow, and tomorrow, Creeps in this petty pace from day to day, To the last syllable of recorded time; And all our yesterdays have lighted fools The way to dusty death.

![Natural Language Processing: nested bracketing of “Tomorrow, and tomorrow, creeps in this petty pace from day to day”](https://slidetodoc.com/presentation_image/18c5792e0b7d513e85ac52b8d193635b/image-32.jpg)

Natural Language Processing. [[[Tomorrow], and tomorrow], [creeps in [this [petty pace]]] [from [day to day]], to the last syllable of recorded time; And all our yesterdays have lighted fools the way to dusty death. Languages have a nested (or recursive) structure.

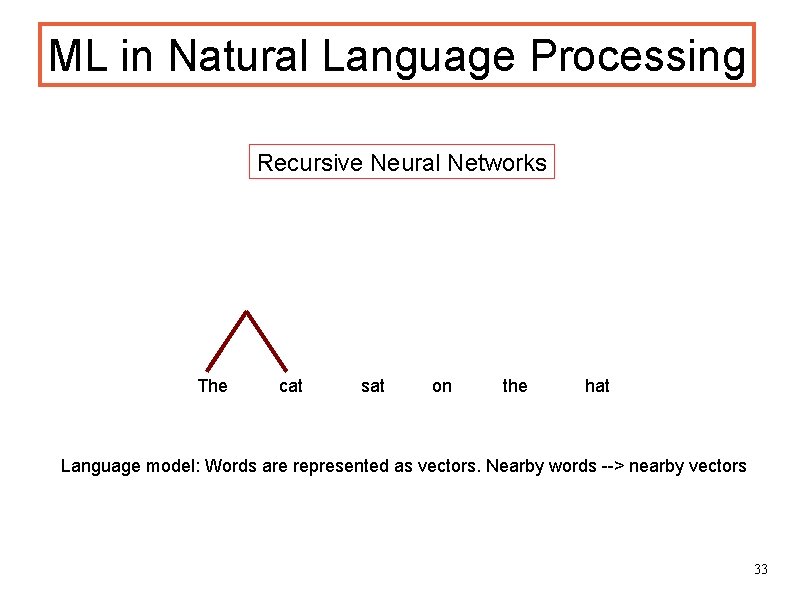

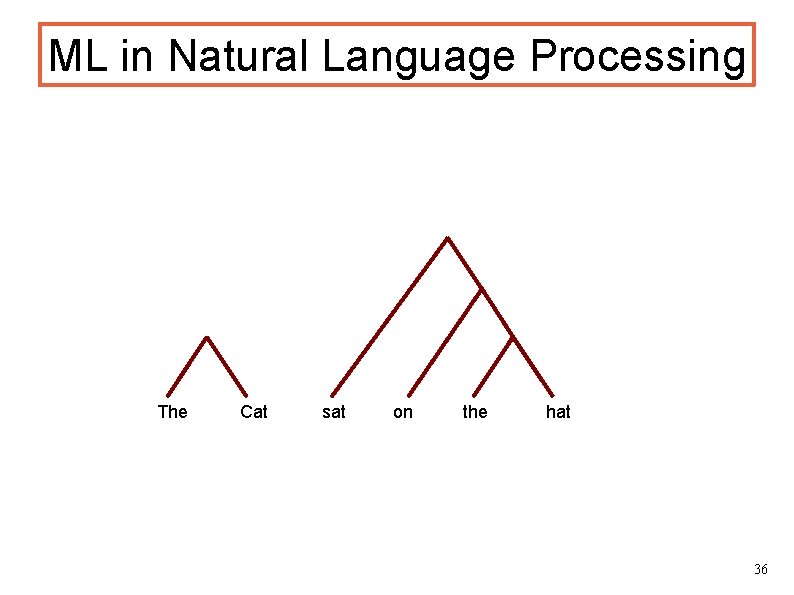

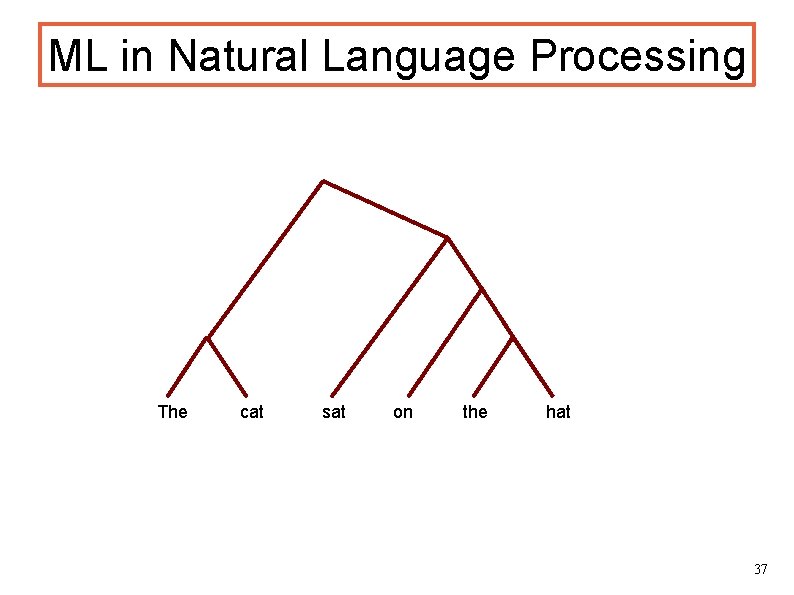

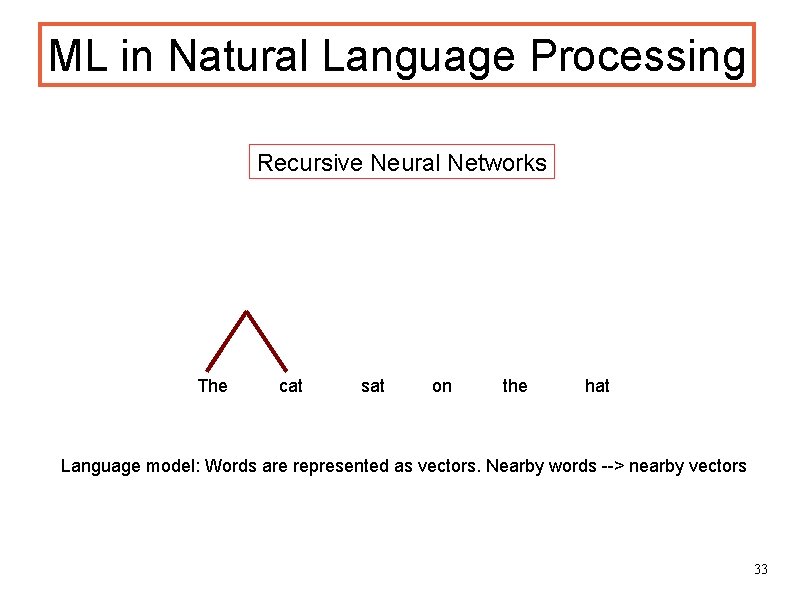

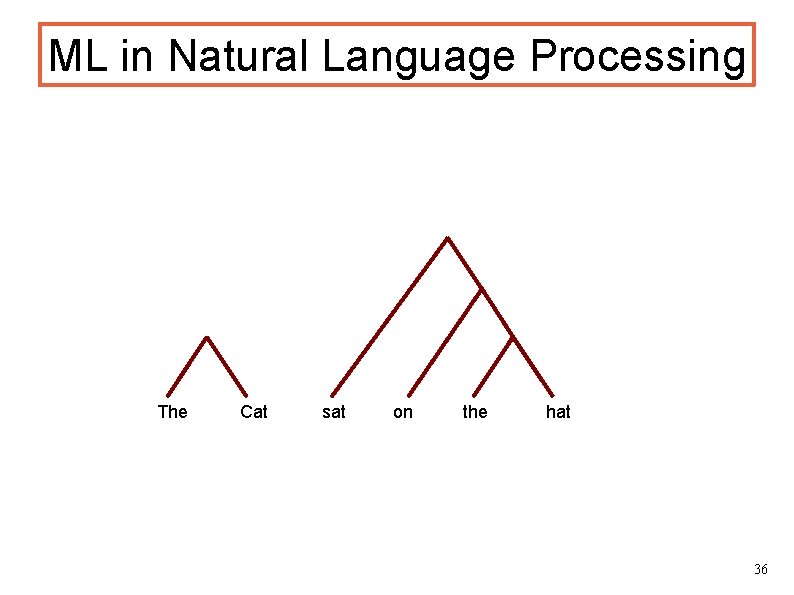

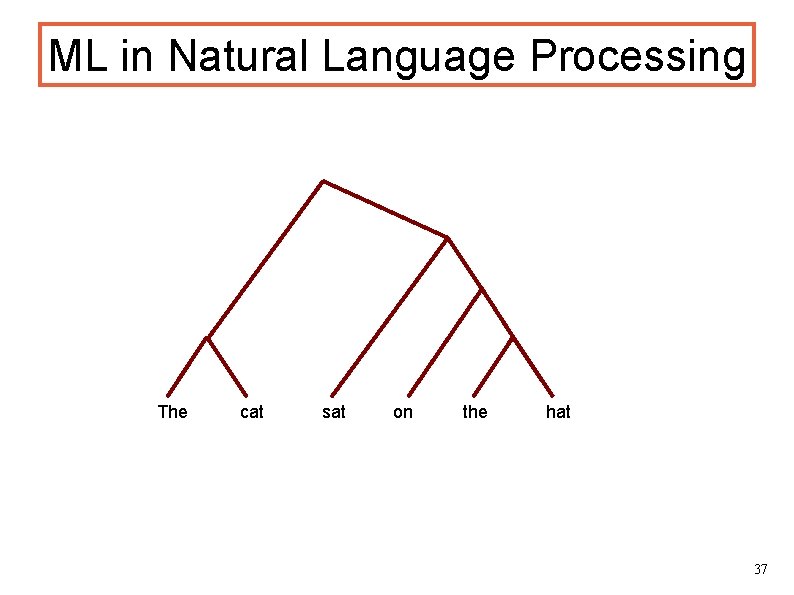

ML in Natural Language Processing: Recursive Neural Networks. Example sentence: “The cat sat on the hat”. Language model: words are represented as vectors, and words that are close in meaning map to nearby vectors.
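"Nearby vectors" is usually measured with cosine similarity. The 3-dimensional embeddings below are hypothetical and hand-picked for illustration; real language models learn vectors with hundreds of dimensions from text.

```python
import numpy as np

def cosine_similarity(u, v):
    """1 for parallel vectors, 0 for orthogonal ones."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Hypothetical hand-picked embeddings
embedding = {
    "cat": np.array([0.9, 0.8, 0.1]),
    "dog": np.array([0.8, 0.9, 0.2]),
    "hat": np.array([0.1, 0.2, 0.9]),
}
print(cosine_similarity(embedding["cat"], embedding["dog"]) >
      cosine_similarity(embedding["cat"], embedding["hat"]))  # True
```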

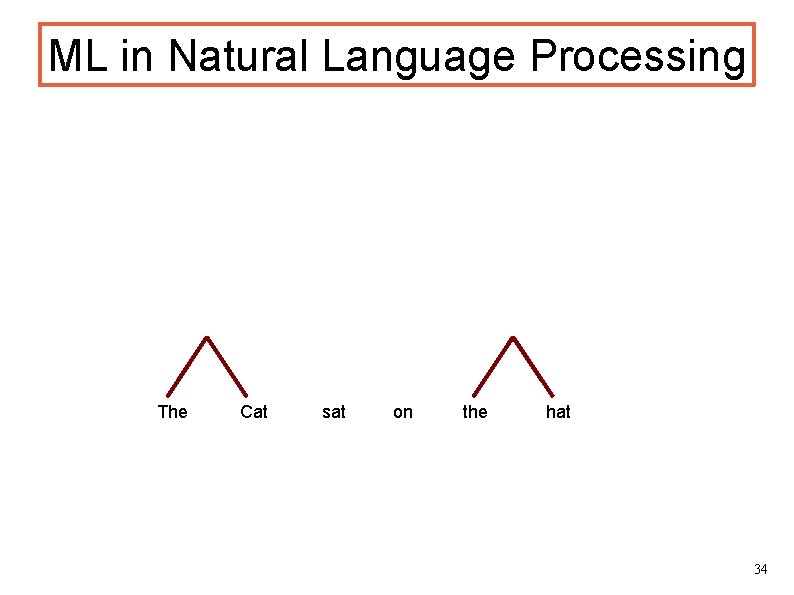

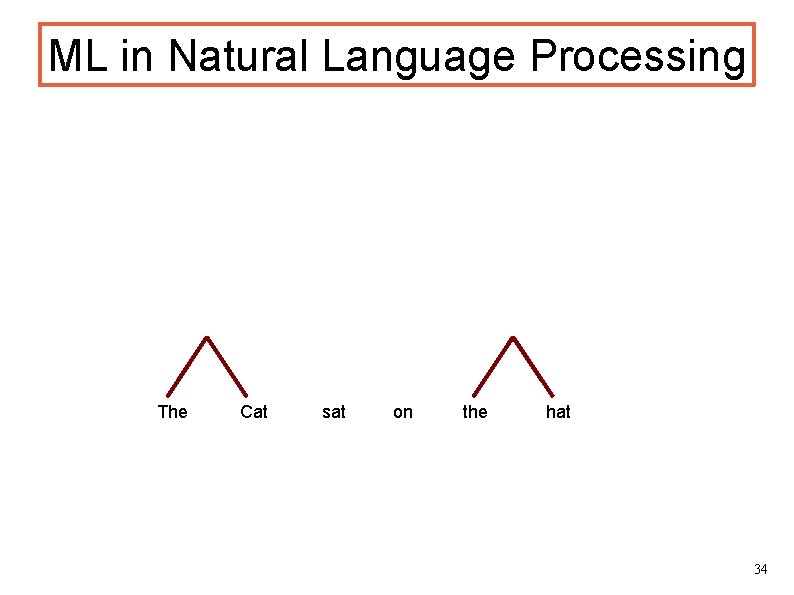


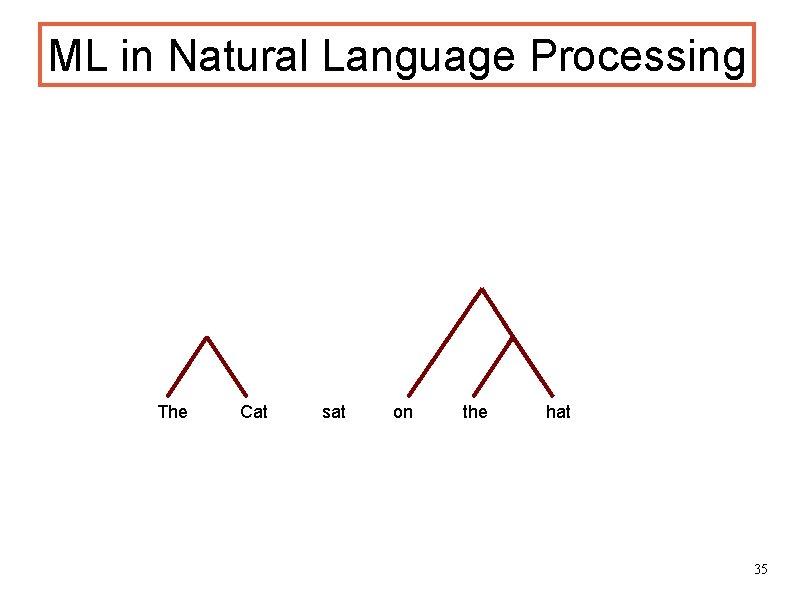

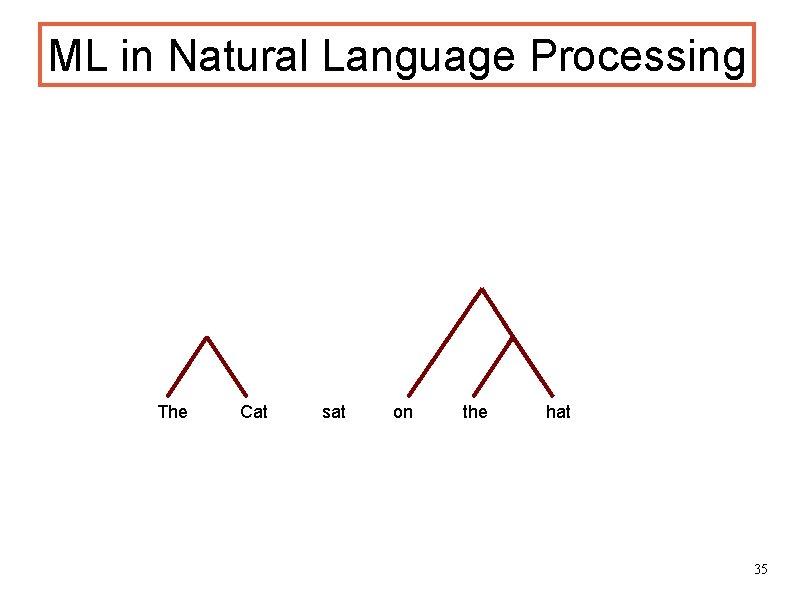



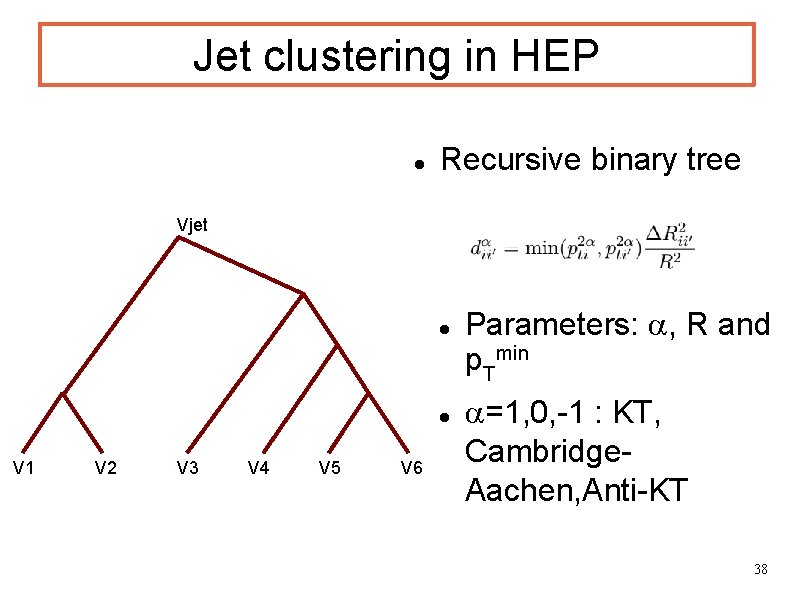

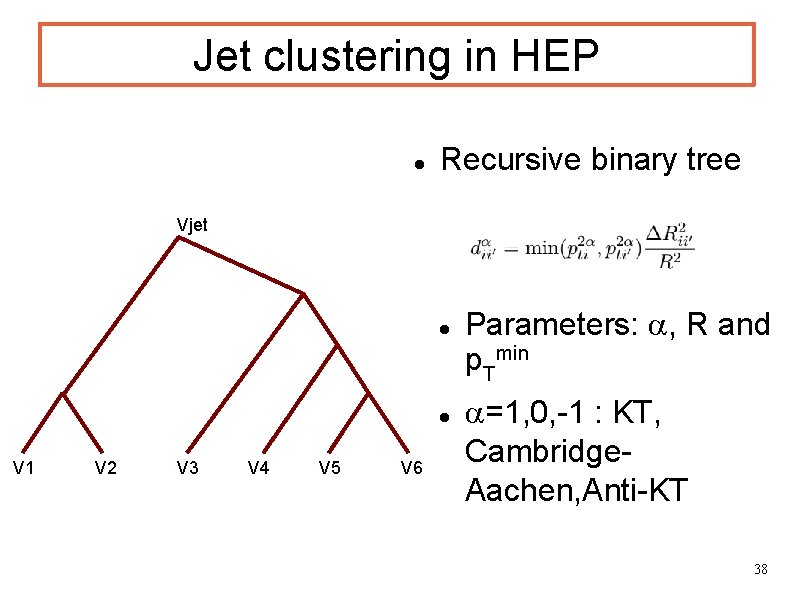

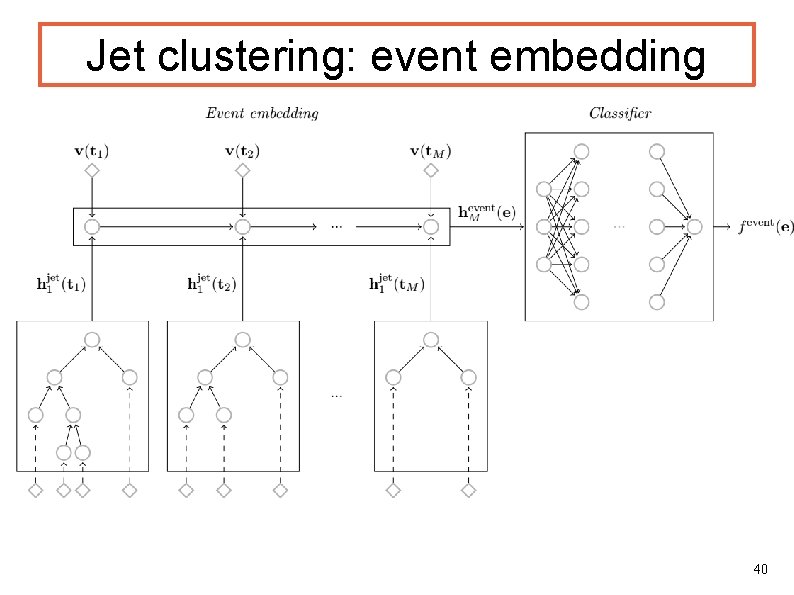

Jet clustering in HEP. Clustering builds a recursive binary tree: constituents v1, ..., v6 are merged step by step into v_jet. Parameters: a, R and pTmin; a = 1, 0, -1 gives the kT, Cambridge/Aachen and anti-kT algorithms, respectively.
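The generalized-kT family can be sketched as a sequential recombination loop with pair distance d_ij = min(pT_i^2a, pT_j^2a) ΔR_ij² / R² and beam distance d_iB = pT_i^2a: repeatedly find the smallest distance, merge the pair or declare a jet. This naive O(n³) version is for illustration only; the function names are hypothetical, and the pT-weighted recombination is a simplification (FastJet, the standard implementation, uses four-momentum addition, the E-scheme, by default).

```python
import math

def delta_r2(p, q):
    """Squared angular distance in (eta, phi), with phi wrapped into [-pi, pi)."""
    deta = p[1] - q[1]
    dphi = (p[2] - q[2] + math.pi) % (2 * math.pi) - math.pi
    return deta ** 2 + dphi ** 2

def merge(p, q):
    """Simplified pT-weighted recombination of two (pt, eta, phi) objects."""
    pt = p[0] + q[0]
    eta = (p[0] * p[1] + q[0] * q[1]) / pt
    dphi = (q[2] - p[2] + math.pi) % (2 * math.pi) - math.pi
    phi = (p[2] + q[0] * dphi / pt + math.pi) % (2 * math.pi) - math.pi
    return (pt, eta, phi)

def cluster(particles, R=0.4, a=-1, pt_min=0.0):
    """Generalized-kT clustering: a = 1 (kT), 0 (Cambridge/Aachen), -1 (anti-kT)."""
    objs = list(particles)
    jets = []
    while objs:
        best = None  # (distance, i, j); j is None for a beam distance
        for i, p_i in enumerate(objs):
            d_ib = p_i[0] ** (2 * a)
            if best is None or d_ib < best[0]:
                best = (d_ib, i, None)
            for j in range(i + 1, len(objs)):
                p_j = objs[j]
                d_ij = min(p_i[0] ** (2 * a), p_j[0] ** (2 * a)) * delta_r2(p_i, p_j) / R ** 2
                if d_ij < best[0]:
                    best = (d_ij, i, j)
        _, i, j = best
        if j is None:
            jets.append(objs.pop(i))   # closest to the beam: declare it a jet
        else:
            p_j = objs.pop(j)          # j > i, so pop j first to keep index i valid
            p_i = objs.pop(i)
            objs.append(merge(p_i, p_j))
    return sorted((jet for jet in jets if jet[0] >= pt_min), key=lambda jet: -jet[0])

# Two nearby particles and one far away; every a-value finds two jets here
particles = [(100.0, 0.0, 0.0), (50.0, 0.1, 0.0), (30.0, 2.0, 1.0)]
jets = cluster(particles, R=0.4, a=-1)
```

The exponent a only changes the order in which merges happen; on well-separated toy input like this, all three algorithms agree.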

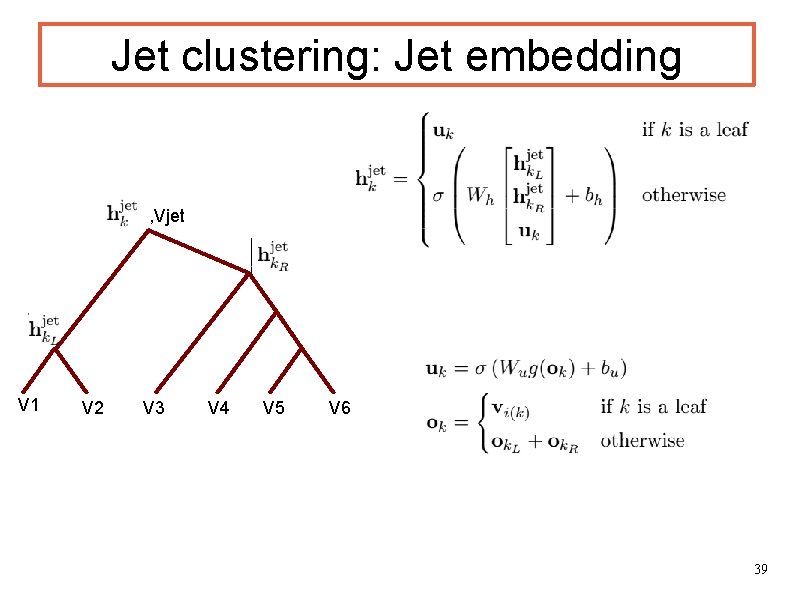

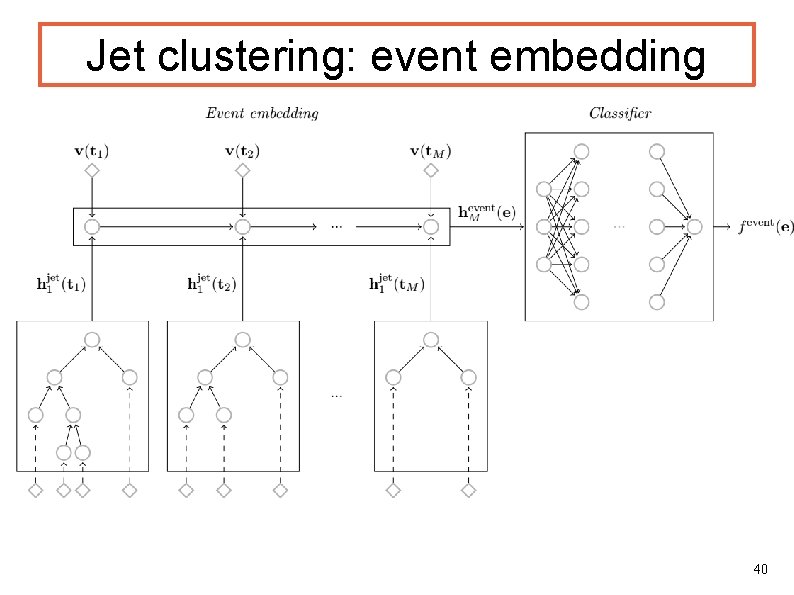

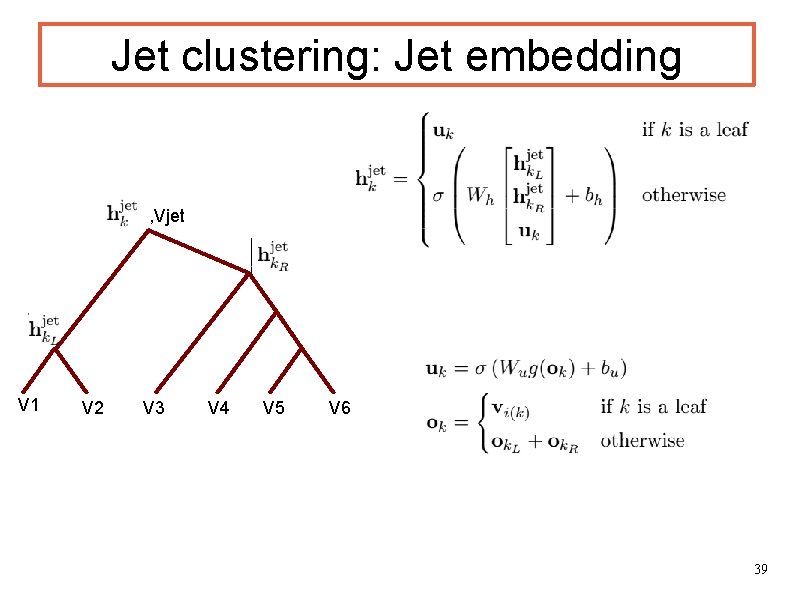

Jet clustering: jet embedding. The jet vector v_jet is built recursively from the constituent vectors v1, ..., v6 along the clustering tree.
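The recursive-network idea from language processing carries over by replacing the sentence parse tree with the jet clustering tree: each inner node combines its two children's vectors into one. This is a minimal sketch with random (untrained) weights, a hypothetical embedding size of 4, toy pre-normalized constituent features and a hand-written tree; the names `embed`, `W_leaf` and `W_node` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 4                                          # hypothetical embedding size
W_leaf = rng.normal(scale=0.5, size=(DIM, 3))    # maps a constituent's 3 features to R^DIM
W_node = rng.normal(scale=0.5, size=(DIM, 2 * DIM))
b = np.zeros(DIM)

def embed(node):
    """Recursively embed a binary clustering tree.
    A leaf is a constituent feature list; an inner node is a (left, right) tuple."""
    if isinstance(node, tuple):
        left, right = node
        h = np.concatenate([embed(left), embed(right)])
        return np.tanh(W_node @ h + b)           # combine the two children
    return np.tanh(W_leaf @ np.asarray(node, dtype=float))

# Toy constituents v1..v6 with already-normalized features
v = [[0.50, 0.1, 0.2], [0.30, 0.0, 0.1], [0.10, 0.2, 0.3],
     [0.05, -0.1, 0.0], [0.03, 0.3, 0.2], [0.01, 0.0, -0.1]]
# Hand-written merge history, as a clustering algorithm might produce it
tree = (((v[0], v[1]), v[2]), ((v[3], v[4]), v[5]))
v_jet = embed(tree)   # fixed-size jet vector, whatever the number of constituents
```

In a real application the weights would be trained so that v_jet is useful for, say, separating boosted W jets from QCD jets.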

Jet clustering: event embedding.

Generative vs. discriminative. Discriminative: features (x) → labels (y); given features, output p(y|x). Generative: labels → features; given a label, generate features according to p(x|y).
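The two views are linked by Bayes' rule, p(y|x) = p(x|y) p(y) / Σ_y' p(x|y') p(y'). The sketch below uses a hypothetical 1-d Gaussian class-conditional model: the same p(x|y) is used generatively (sample x for a given label) and discriminatively (compute the label probability for a given x).

```python
import math
import random

# Hypothetical model: feature x is Gaussian, with a different mean for each label y
MEANS = {0: -1.0, 1: 1.0}
SIGMA = 1.0
PRIOR = {0: 0.5, 1: 0.5}

def likelihood(x, y):
    """p(x|y): density of the features given the label."""
    return math.exp(-0.5 * ((x - MEANS[y]) / SIGMA) ** 2) / (SIGMA * math.sqrt(2 * math.pi))

def generate(y, rng):
    """Generative use: given a label, sample features from p(x|y)."""
    return rng.gauss(MEANS[y], SIGMA)

def posterior(y, x):
    """Discriminative use: p(y|x) from Bayes' rule."""
    evidence = sum(likelihood(x, k) * PRIOR[k] for k in PRIOR)
    return likelihood(x, y) * PRIOR[y] / evidence

rng = random.Random(42)
x = generate(1, rng)        # a synthetic "label 1" event
p1 = posterior(1, x)        # how confidently it would be classified as label 1
```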

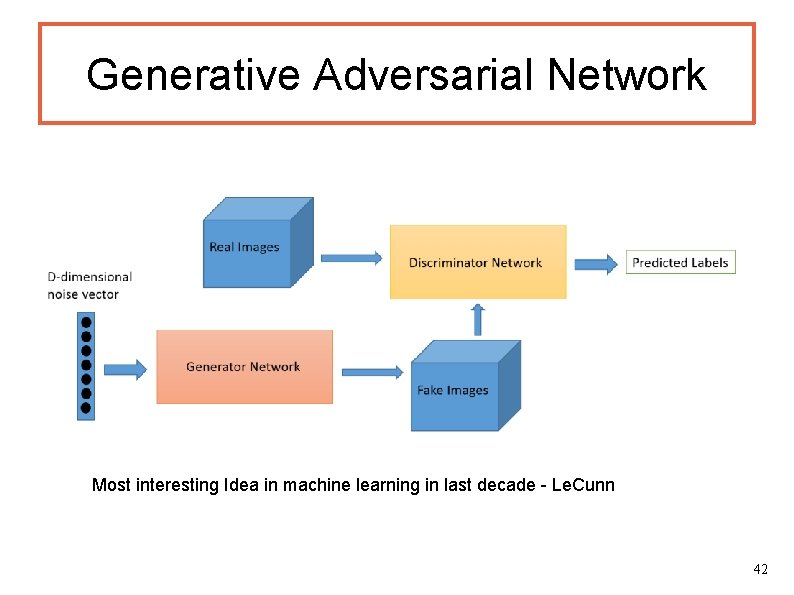

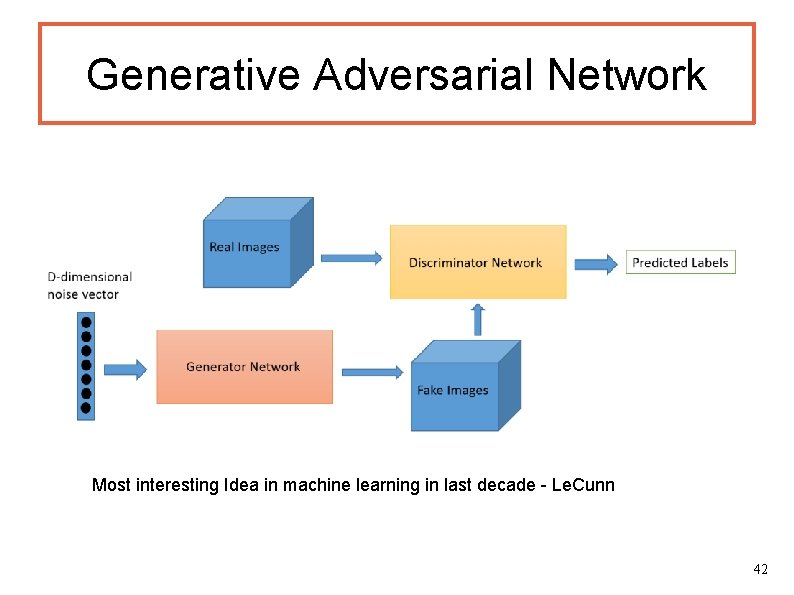

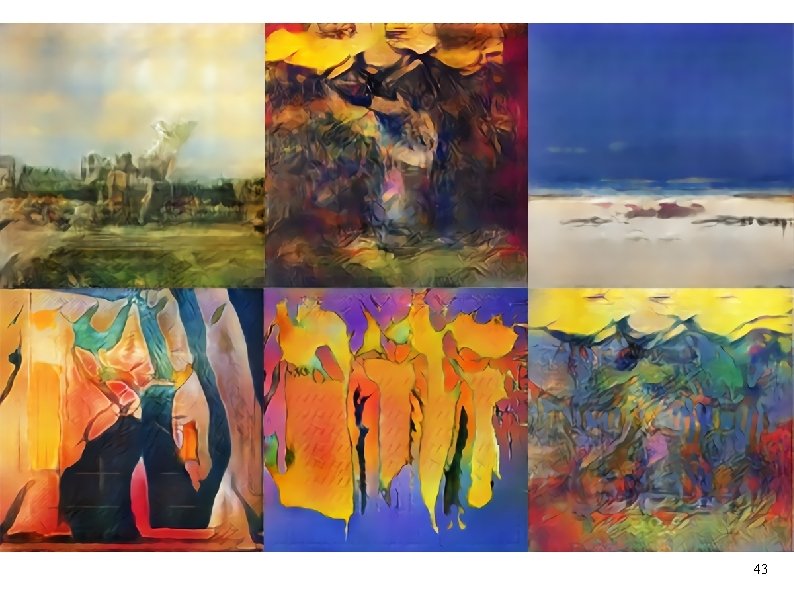

Generative Adversarial Network (GAN). “The most interesting idea in machine learning in the last decade” (Yann LeCun).
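The adversarial idea can be stated compactly with the minimax objective of the original GAN paper (Goodfellow et al., 2014). A generator G maps noise z to fake samples, and a discriminator D outputs the probability that its input is real. This is the standard textbook form, not necessarily the exact loss used in the HEP studies discussed here:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

For a fixed generator with sample density $p_g$, the optimal discriminator is

$$D^{*}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)},$$

so when the generator reproduces the data ($p_g = p_{\text{data}}$), $D^{*} = 1/2$ everywhere and $V = -\log 4$.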


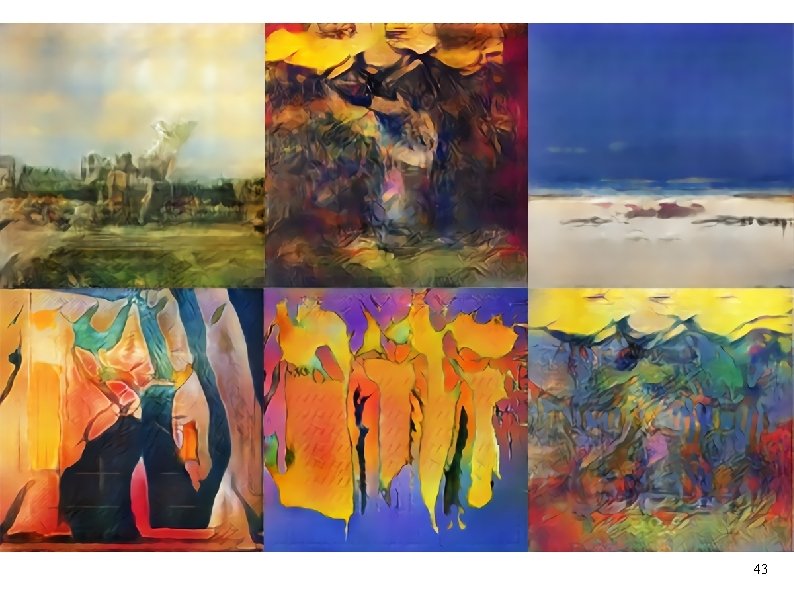

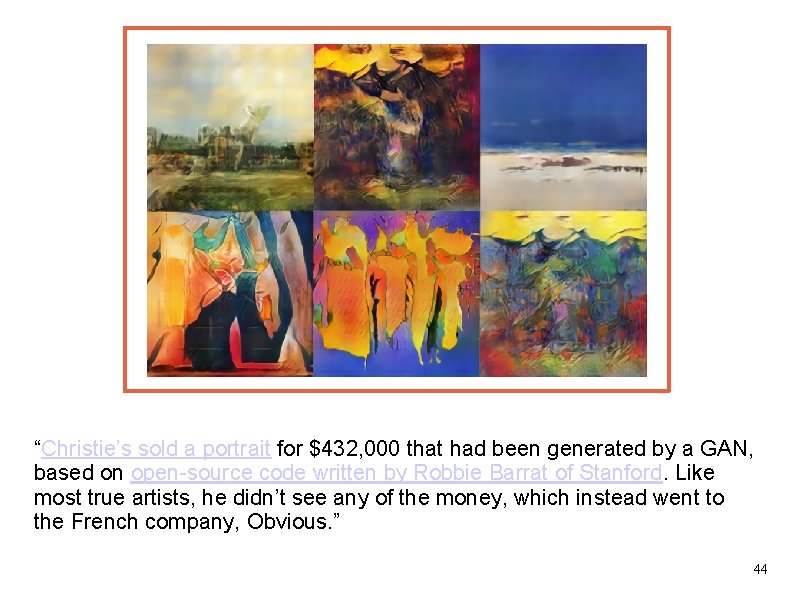

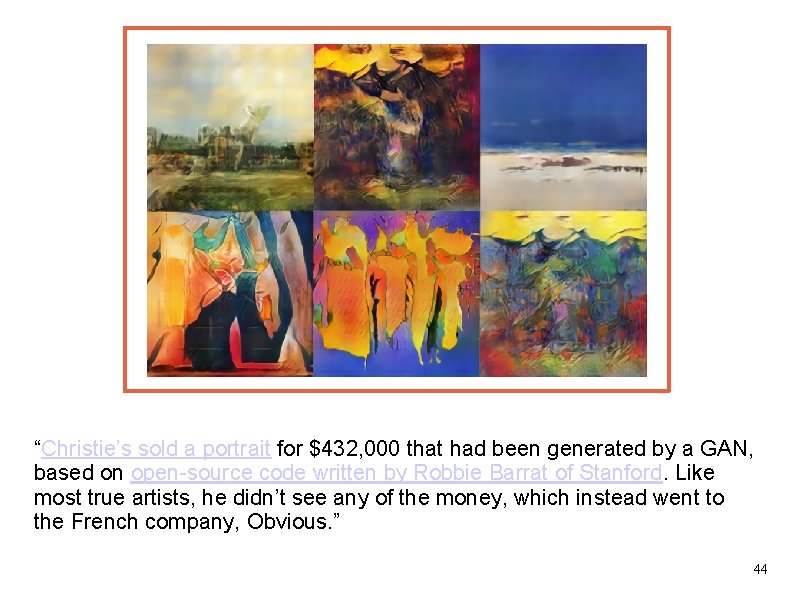

“Christie’s sold a portrait for $432,000 that had been generated by a GAN, based on open-source code written by Robbie Barrat of Stanford. Like most true artists, he didn’t see any of the money, which instead went to the French company, Obvious.”

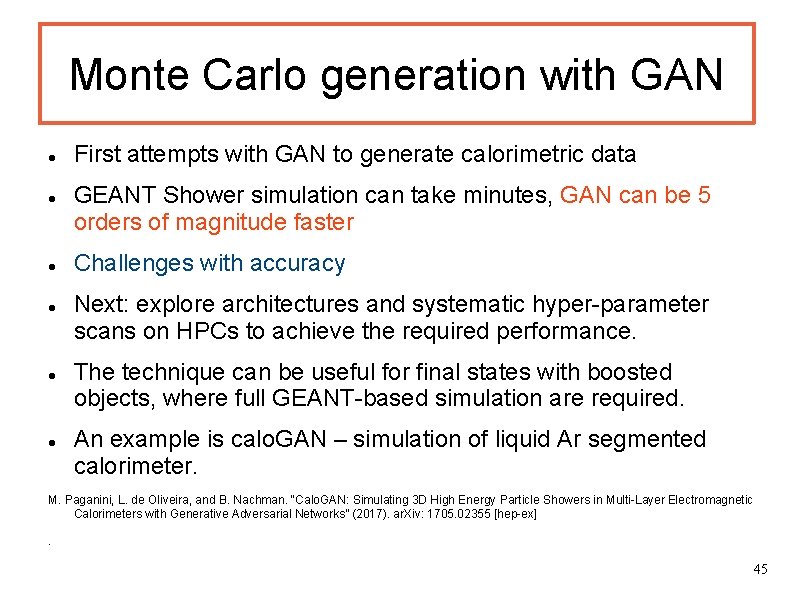

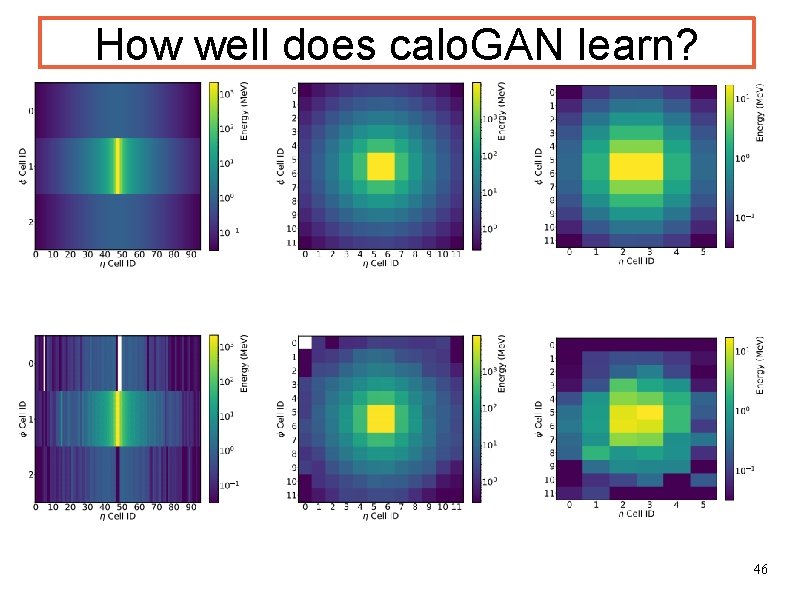

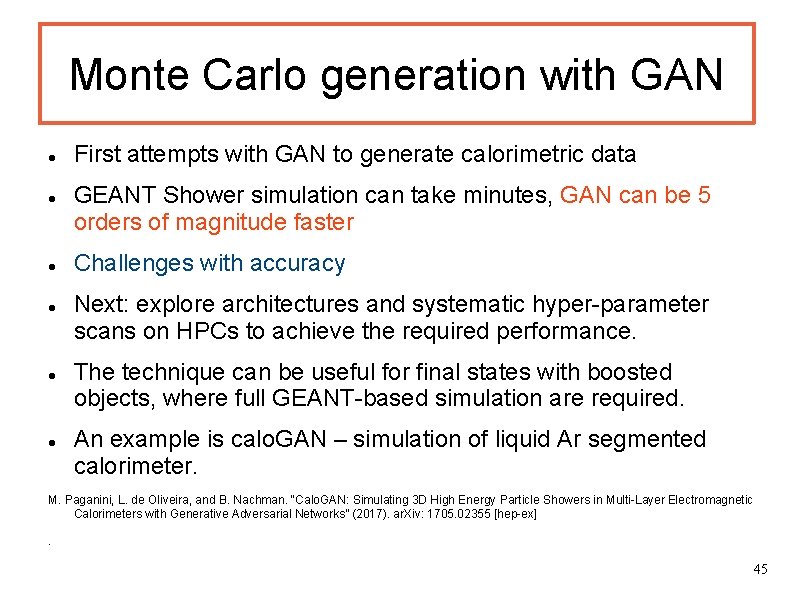

Monte Carlo generation with GAN. First attempts have been made to generate calorimetric data with GANs. GEANT shower simulation can take minutes, whereas a GAN can be 5 orders of magnitude faster; accuracy remains the challenge. Next steps: explore architectures and run systematic hyper-parameter scans on HPCs to achieve the required performance. The technique can be useful for final states with boosted objects, where full GEANT-based simulation is required. An example is CaloGAN, a simulation of a segmented liquid-argon calorimeter: M. Paganini, L. de Oliveira and B. Nachman, “CaloGAN: Simulating 3D High Energy Particle Showers in Multi-Layer Electromagnetic Calorimeters with Generative Adversarial Networks” (2017), arXiv:1705.02355 [hep-ex].

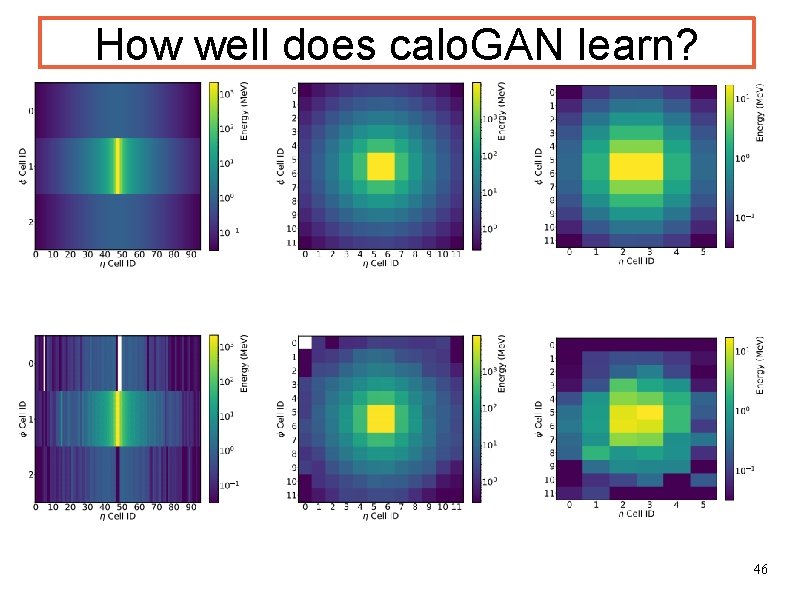

How well does CaloGAN learn?

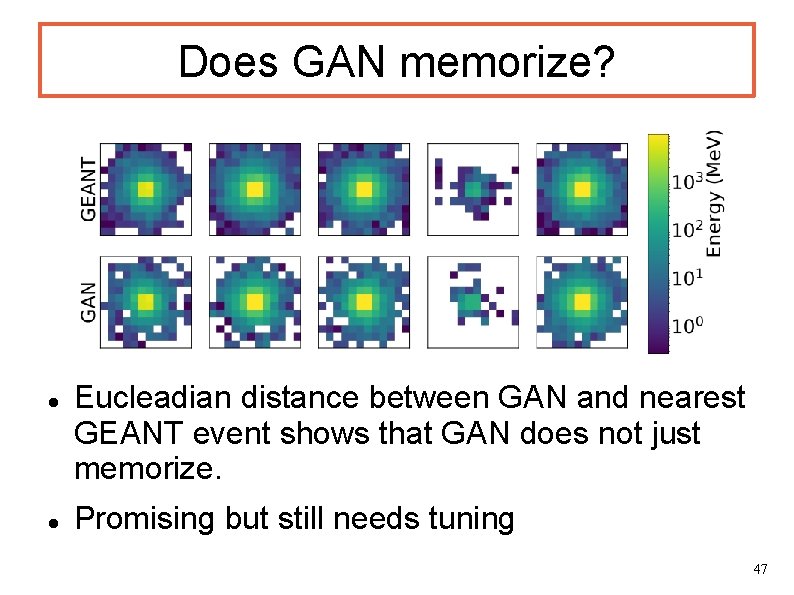

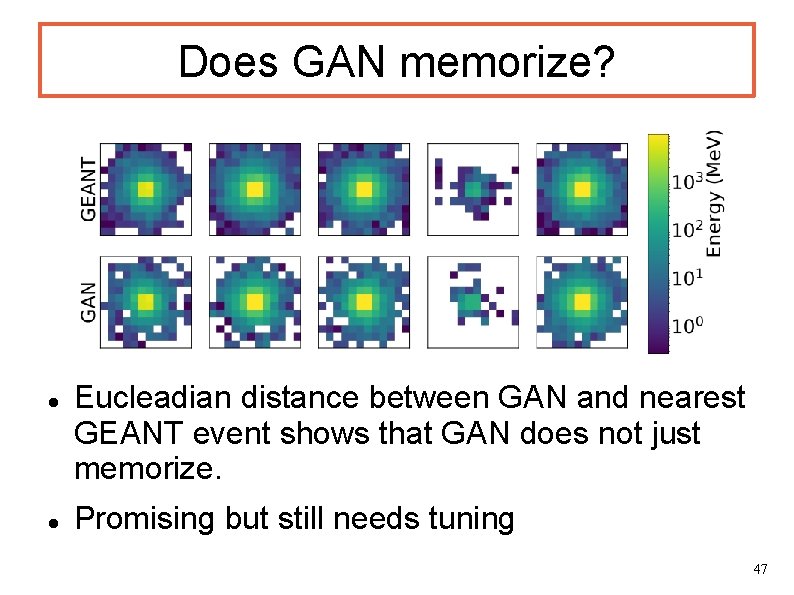

Does the GAN memorize? The Euclidean distance between each GAN-generated event and its nearest GEANT event shows that the GAN does not simply memorize the training sample. Promising, but it still needs tuning.
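The memorization test is a nearest-neighbour search: flatten each shower into a vector of cell energies and find, for every generated event, the distance to the closest training event. Distances near zero would mean copied events. This sketch uses toy exponential "showers" with a hypothetical 3 x 8 x 8 cell layout instead of real GEANT or GAN output.

```python
import numpy as np

def nearest_distances(generated, training):
    """For each generated event, the Euclidean distance to its closest training event.
    Broadcasting builds all pairs at once, which is fine for toy sample sizes."""
    g = generated.reshape(len(generated), -1)
    t = training.reshape(len(training), -1)
    diff = g[:, None, :] - t[None, :, :]
    return np.linalg.norm(diff, axis=2).min(axis=1)

rng = np.random.default_rng(3)
geant = rng.exponential(1.0, size=(200, 3, 8, 8))    # toy stand-in for simulated showers
memorized = geant[:10].copy()                         # a "GAN" that copies training events
novel = rng.exponential(1.0, size=(10, 3, 8, 8))      # a "GAN" producing new events
print(nearest_distances(memorized, geant).max())      # 0.0: memorization
print(nearest_distances(novel, geant).min() > 0)      # True
```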

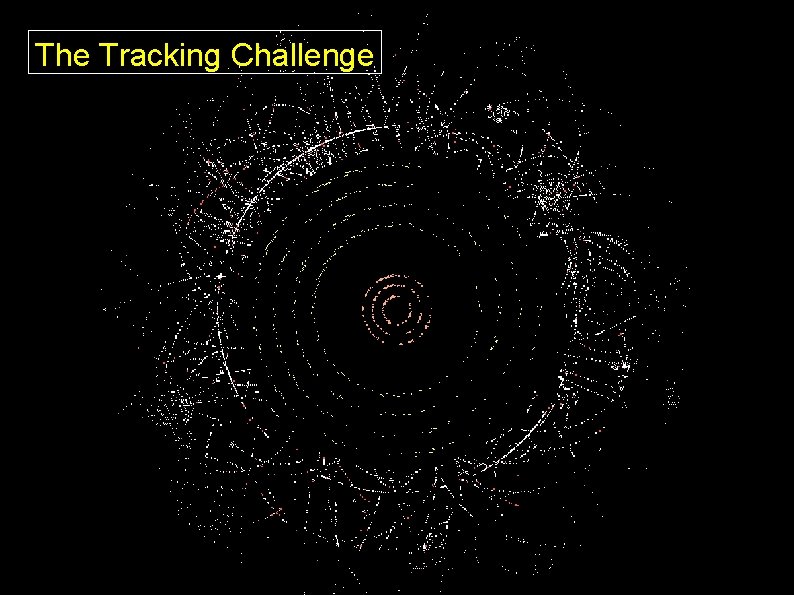

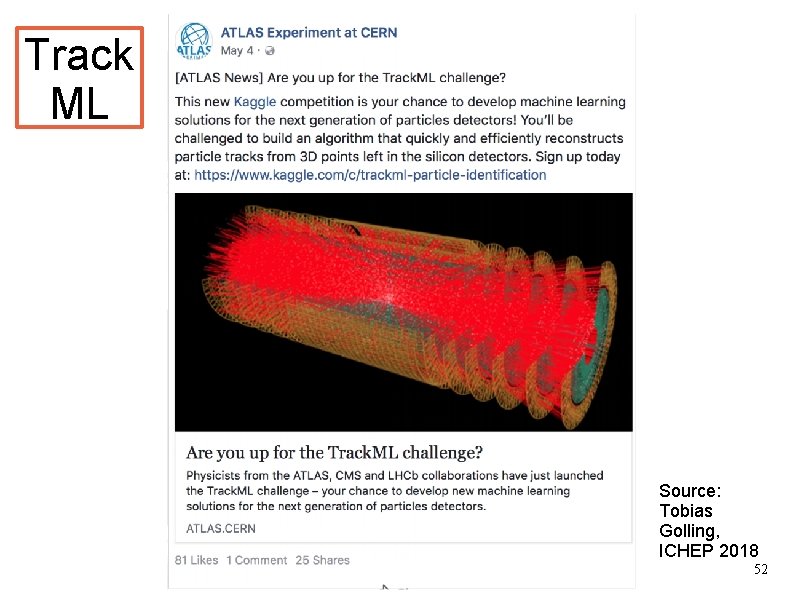

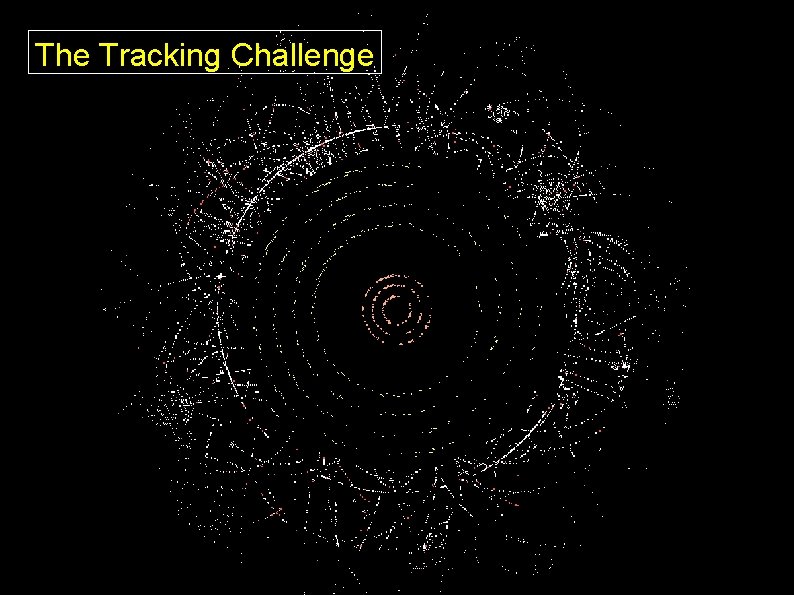

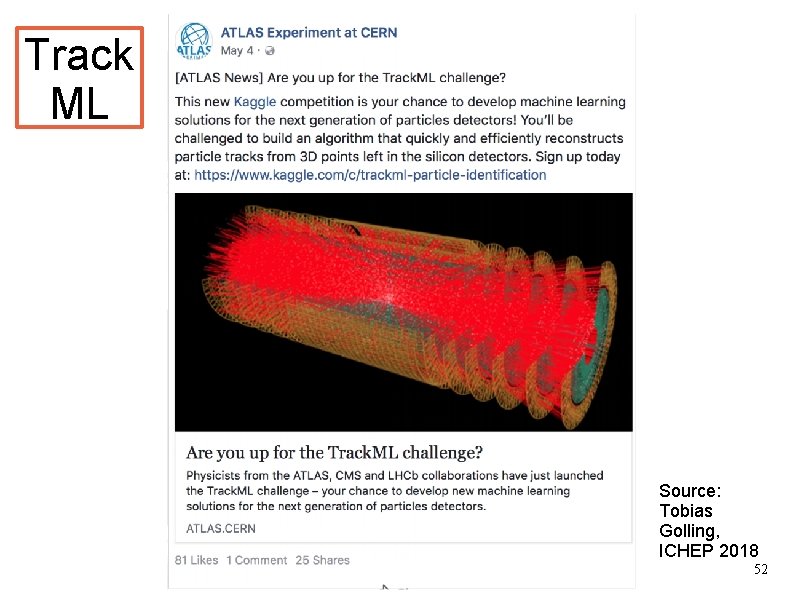

The Tracking Challenge.

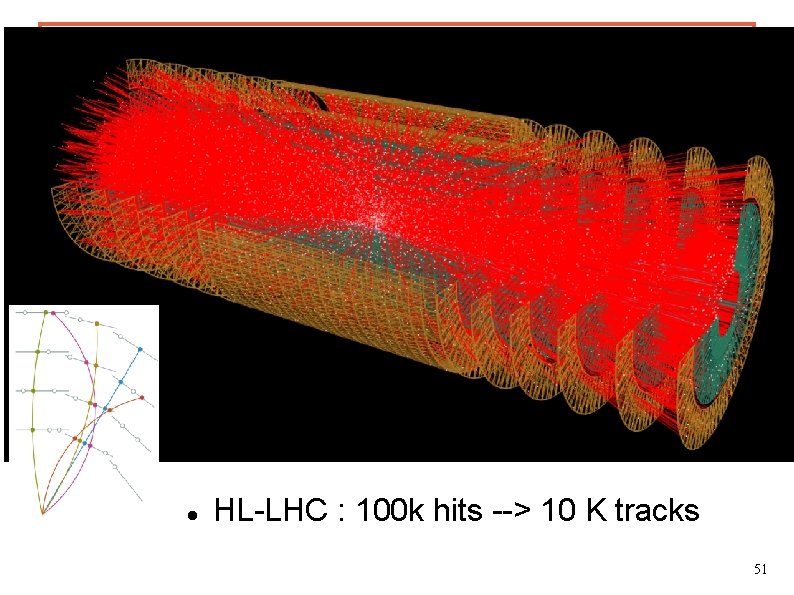

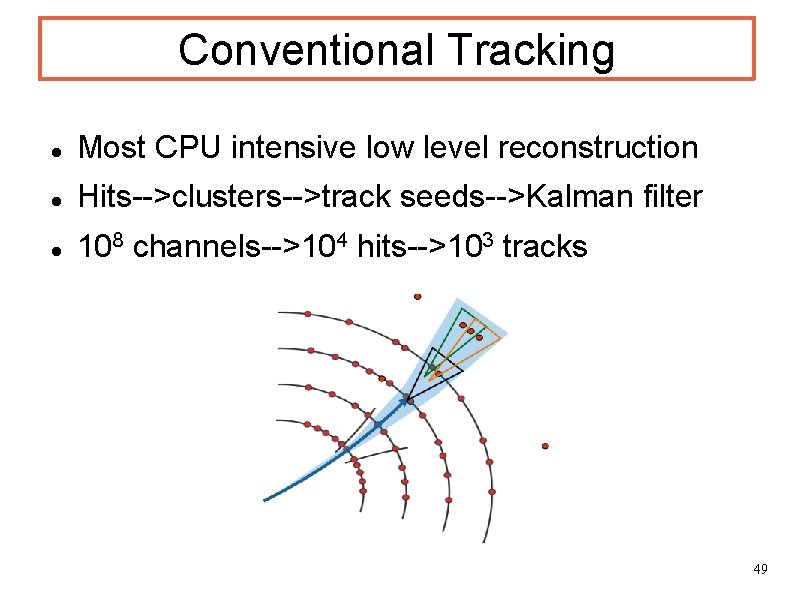

Conventional tracking. The most CPU-intensive low-level reconstruction step: hits → clusters → track seeds → Kalman filter. Scale: 10^8 channels → 10^4 hits → 10^3 tracks.
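The Kalman filter at the end of this chain alternates two steps layer by layer: predict the track state at the next layer, then update it with the measured hit. This minimal sketch assumes a straight-line track x(z) = x0 + tx·z (no magnetic field), a state vector of position and slope, no multiple-scattering process noise, and a hypothetical hit resolution `sigma`; real trackers use helix models and material effects.

```python
import numpy as np

def kalman_line_fit(z, x_meas, sigma=0.01):
    """Fit a straight track with a Kalman filter.
    State s = [x, tx]: position and slope at the current layer."""
    H = np.array([[1.0, 0.0]])       # each layer measures the position only
    R = np.array([[sigma ** 2]])     # measurement variance
    s = np.zeros(2)                  # initial guess
    P = np.eye(2) * 1e6              # very uncertain prior covariance
    z_prev = z[0]
    for zi, xi in zip(z, x_meas):
        # Predict: propagate the state along a straight line to this layer
        dz = zi - z_prev
        F = np.array([[1.0, dz], [0.0, 1.0]])
        s = F @ s
        P = F @ P @ F.T              # no process noise in this sketch
        # Update: pull the prediction toward the measured hit
        r = xi - H @ s               # residual
        S = H @ P @ H.T + R          # residual covariance
        K = P @ H.T @ np.linalg.inv(S)   # Kalman gain
        s = s + K @ r
        P = (np.eye(2) - K @ H) @ P
        z_prev = zi
    return s, P

# Toy track with exact hits on x = 0.5 + 0.1 z, one per layer
z = np.arange(10.0)
x = 0.5 + 0.1 * z
state, cov = kalman_line_fit(z, x)   # state at the last layer: [1.4, 0.1]
```

Because each step only touches one layer's hit, the filter scales linearly with the number of hits on a track; the combinatorial cost lies in forming the candidate seeds it starts from.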

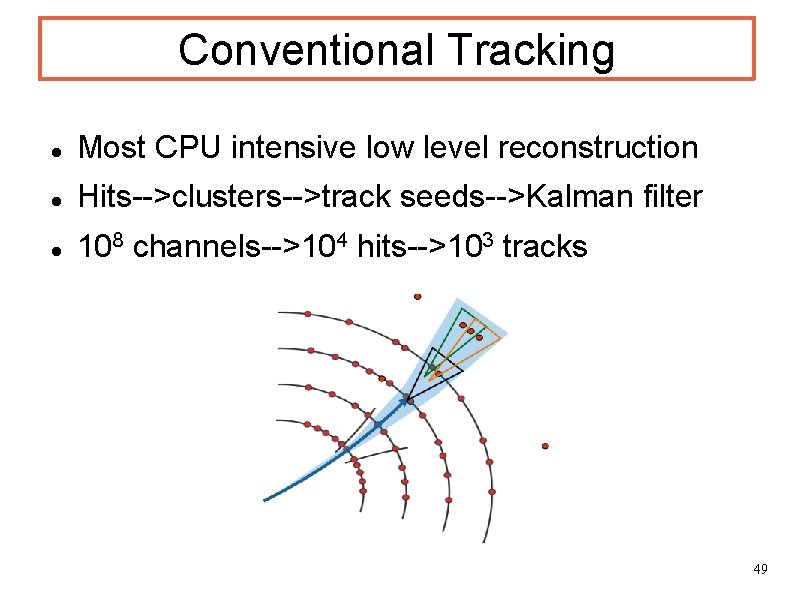

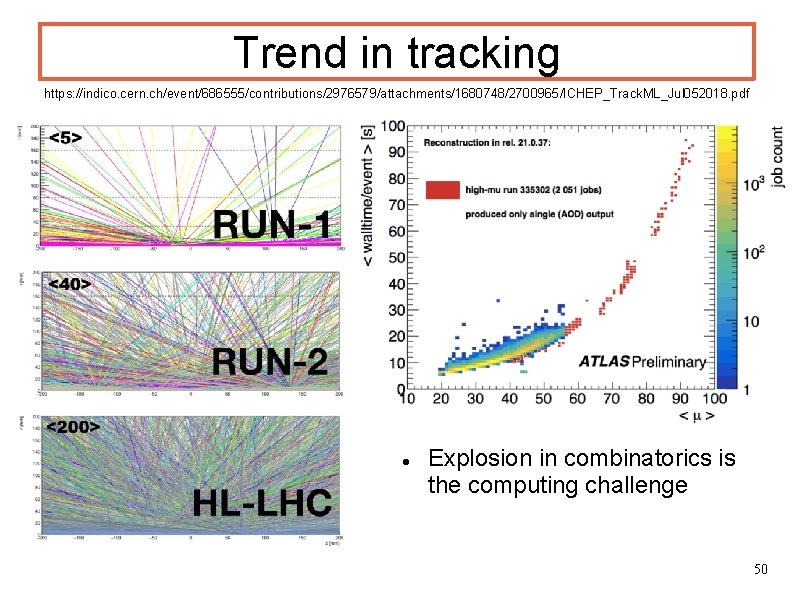

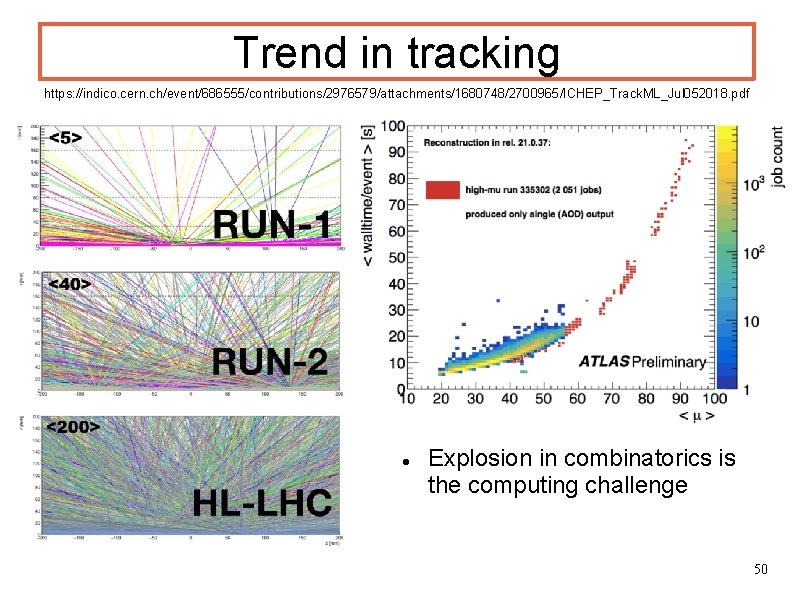

Trend in tracking (https://indico.cern.ch/event/686555/contributions/2976579/attachments/1680748/2700965/ICHEP_TrackML_Jul052018.pdf). The explosion in combinatorics is the computing challenge.

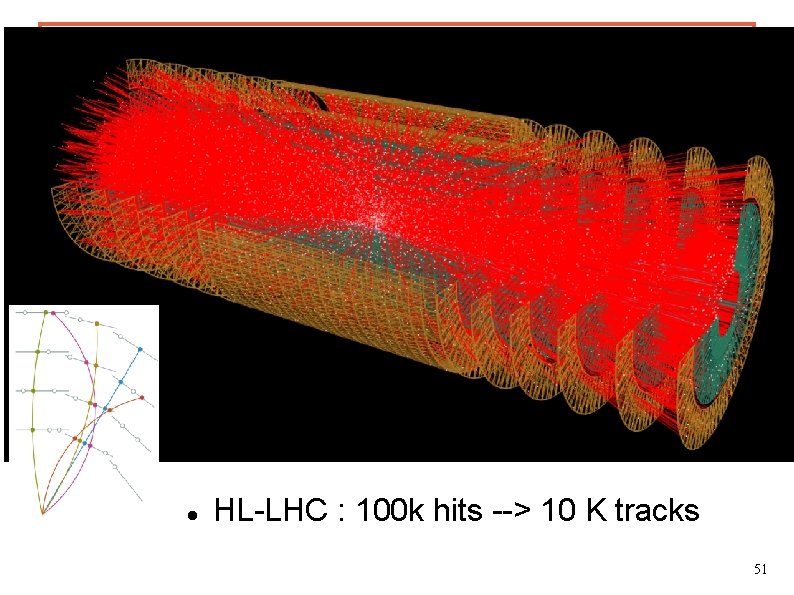

HL-LHC: 100k hits → 10k tracks.

TrackML. Source: Tobias Golling, ICHEP 2018.

Summary. A new era of machine learning applications in HEP started in 2012. Deep networks for image processing and natural language processing promise to become powerful tools for classification problems in HEP in the near future. There is much interesting pioneering work in the field, but it is still “preliminary”; more systematic studies and more interaction with the ML community are needed. Our knowledge is not deep yet, but we are deeply interested in this ongoing revolution in machine learning.

Collaborative activities. Direct collaboration between HEP researchers and computer scientists working in the area of machine learning is an important possible driver of innovation in the area of machine learning applications described in Section 2. It is important for high-energy physics to engage and collaborate with the academic community focused on new machine learning algorithms and applications, as they will naturally be interested in applying new ideas to the interesting and complex data provided by HEP.

Collaborative benchmark datasets: CERN open data portal.

Machine learning schools.

TMVA. The default ML package of the HEP community in the past decade, mainly used for ANNs and BDTs; it was used for the BDTs in the Higgs discovery analyses. It can produce ROC curves and many other performance plots for a set of chosen ML methods on a given problem. Is it giving way to a new generation: Keras, TensorFlow, Theano, XGBoost, ...? A. Hoecker et al., “TMVA: Toolkit for Multivariate Data Analysis”, PoS ACAT (2007) 040, arXiv:physics/0703039.
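A ROC curve like those TMVA produces can be computed directly from classifier scores: sort events by score and track signal and background efficiency as the cut is loosened. This is a generic NumPy sketch with hypothetical function names and toy scores, not TMVA's API.

```python
import numpy as np

def roc_curve(scores, labels):
    """Background and signal efficiency as the cut on the classifier score is loosened.
    Tied scores are handled one event at a time, which is fine for a sketch."""
    order = np.argsort(-np.asarray(scores, dtype=float))   # highest score first
    labels = np.asarray(labels)[order]
    sig_eff = np.cumsum(labels == 1) / np.sum(labels == 1)
    bkg_eff = np.cumsum(labels == 0) / np.sum(labels == 0)
    # Start the curve at (0, 0): a cut so tight that nothing passes
    return np.concatenate([[0.0], bkg_eff]), np.concatenate([[0.0], sig_eff])

def area_under_curve(x, y):
    """Trapezoidal integral of y(x)."""
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

# Toy scores: a perfect classifier ranks every signal event above every background event
bkg_eff, sig_eff = roc_curve([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0])
auc = area_under_curve(bkg_eff, sig_eff)   # 1.0 for perfect separation, 0.5 for random
```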

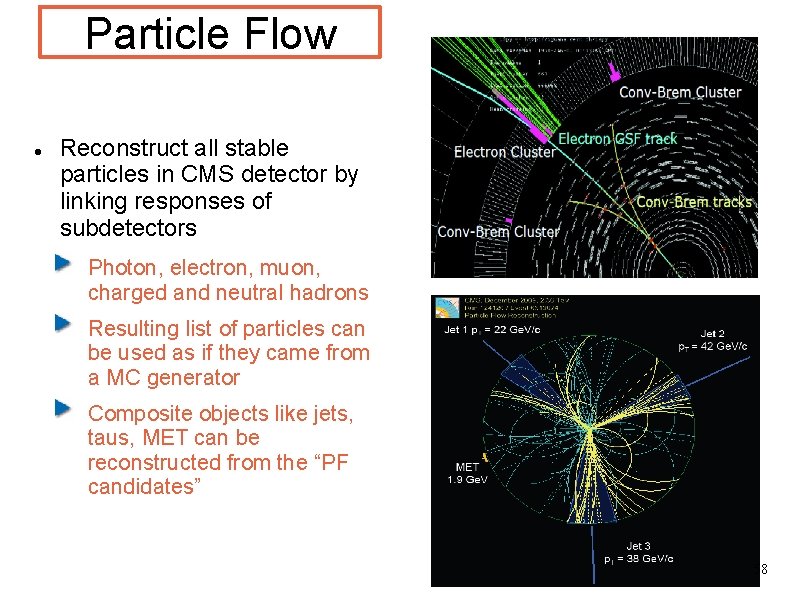

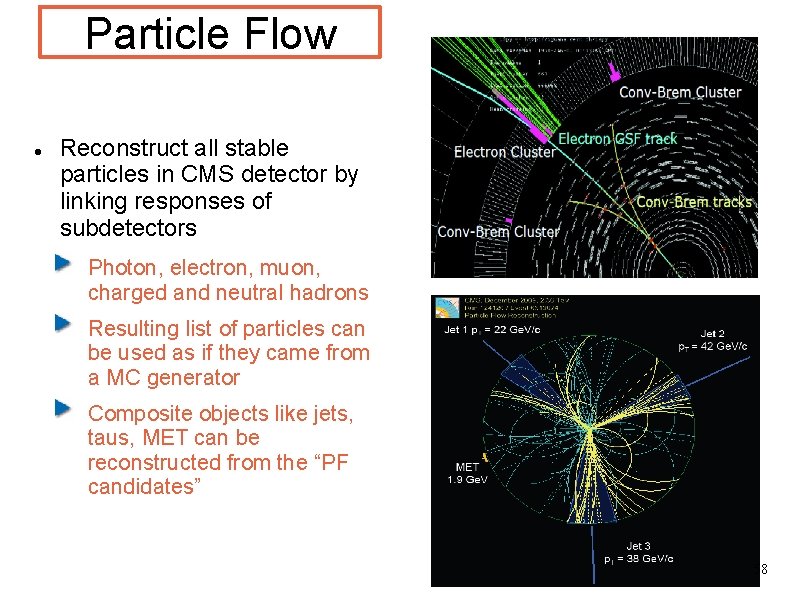

Particle Flow. Reconstructs all stable particles in the CMS detector by linking the responses of the subdetectors: photons, electrons, muons, and charged and neutral hadrons. The resulting list of particles can be used as if it came from an MC generator. Composite objects such as jets, taus and MET can be reconstructed from these “PF candidates”.

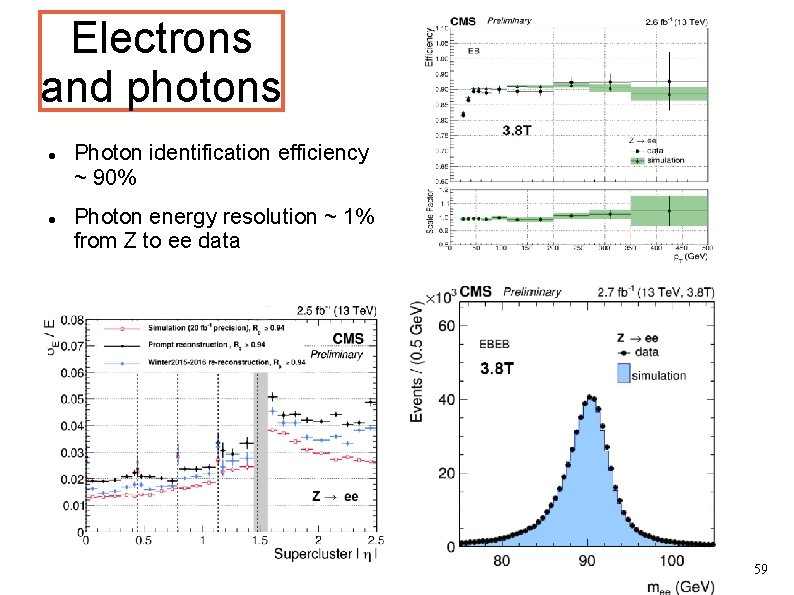

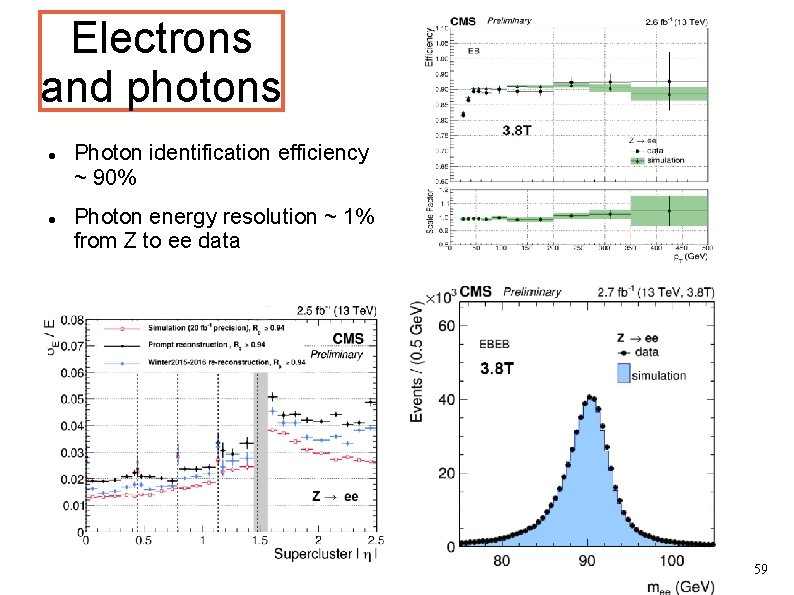

Electrons and photons. Photon identification efficiency ~90%. Photon energy resolution ~1%, measured from Z → ee data.