Machine Learning for Signal Processing Sparse and Overcomplete

- Slides: 132

Machine Learning for Signal Processing Sparse and Overcomplete Representations Bhiksha Raj (slides from Sourish Chaudhuri) Oct 14, 2014 1

Key Topics in this Lecture • Basics – Component-based representations – Overcomplete and Sparse Representations, – Dictionaries • Pursuit Algorithms • How to learn a dictionary • Why is an overcomplete representation powerful? Sparse and Overcomplete Representations 2

Representing Data Dictionary (codebook) Sparse and Overcomplete Representations 3

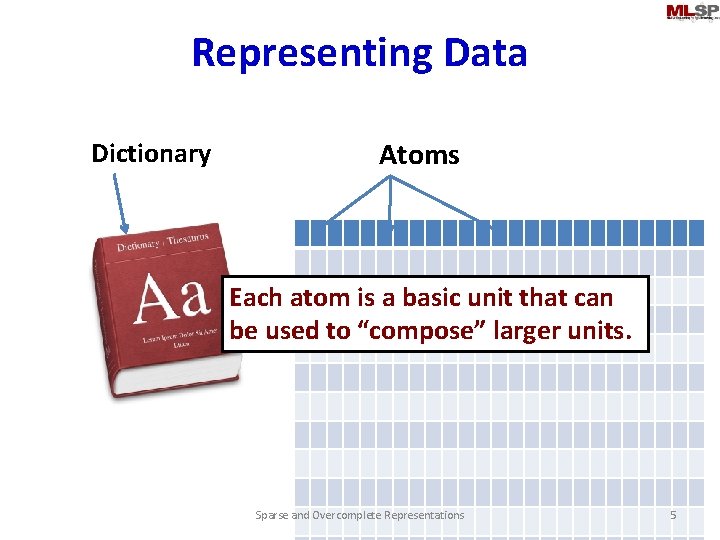

Representing Data Dictionary Atoms Sparse and Overcomplete Representations 4

Representing Data Dictionary Atoms Each atom is a basic unit that can be used to “compose” larger units. Sparse and Overcomplete Representations 5

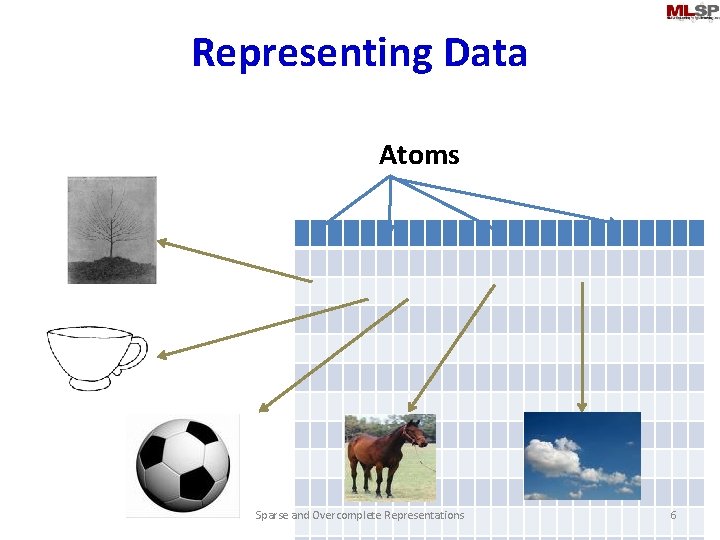

Representing Data Atoms Sparse and Overcomplete Representations 6

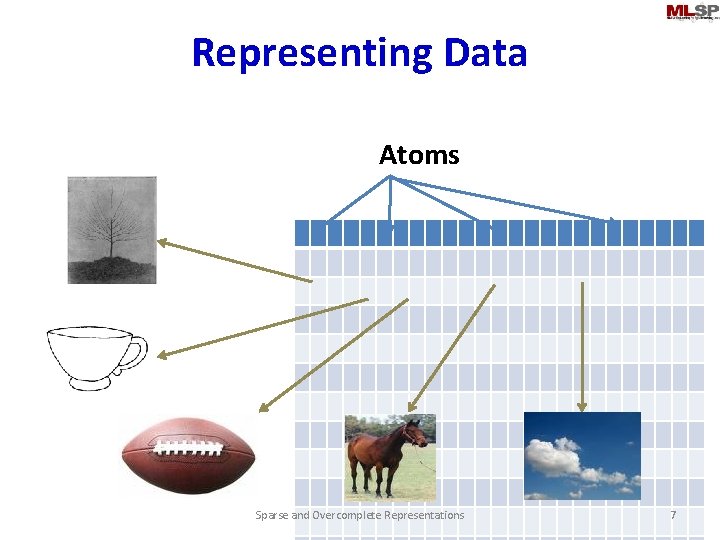

Representing Data Atoms Sparse and Overcomplete Representations 7

Representing Data Atoms Many such bases (concepts) Sparse and Overcomplete Representations 8

Representing Data Sparse and Overcomplete Representations 9

Representing Data Using concepts that we know… Sparse and Overcomplete Representations 10

Representing Data Using concepts that we know… Sparse and Overcomplete Representations 11

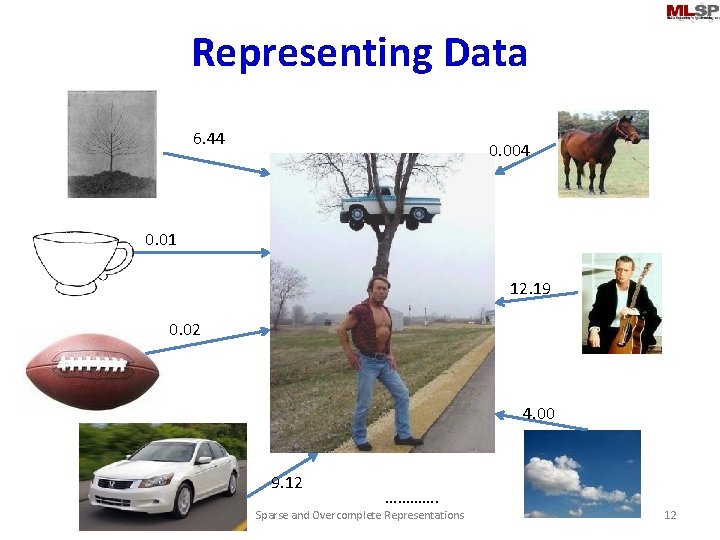

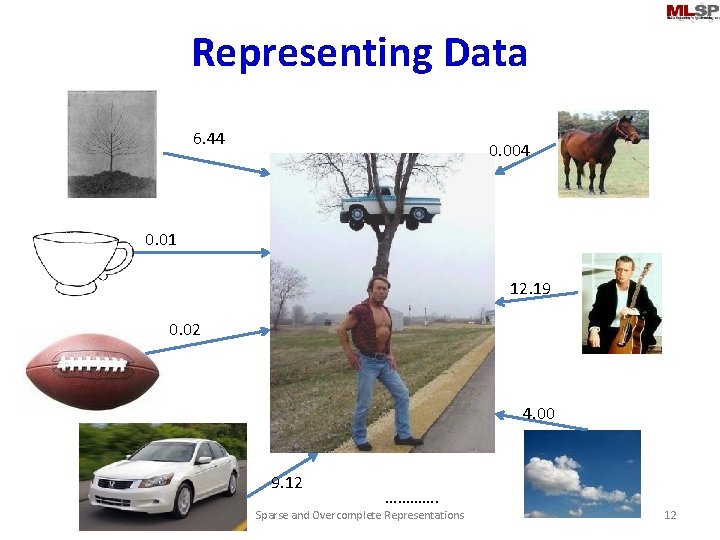

Representing Data 6. 44 0. 004 0. 01 12. 19 0. 02 4. 00 9. 12 …………. Sparse and Overcomplete Representations 12

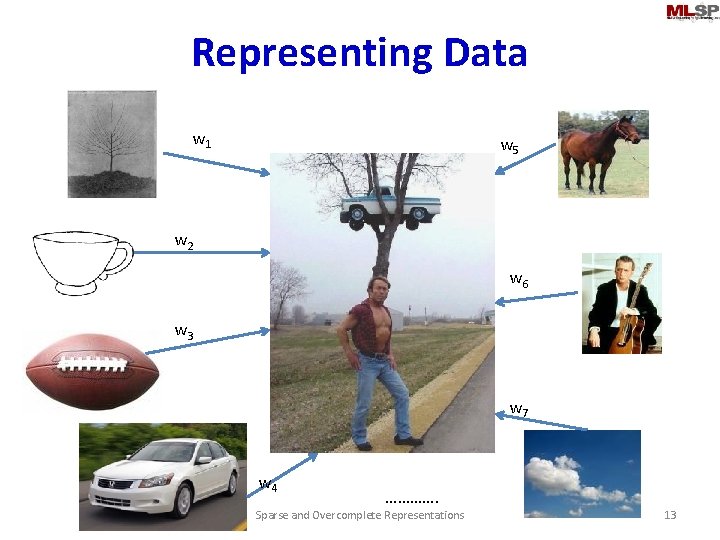

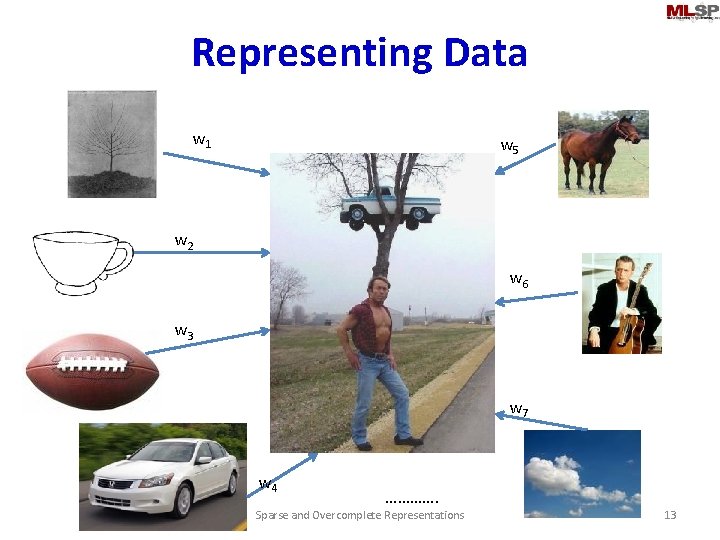

Representing Data w 1 w 5 w 2 w 6 w 3 w 7 w 4 …………. Sparse and Overcomplete Representations 13

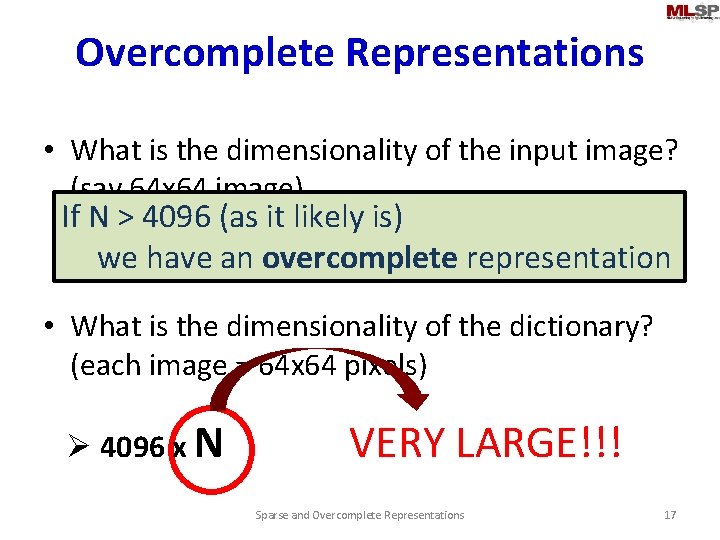

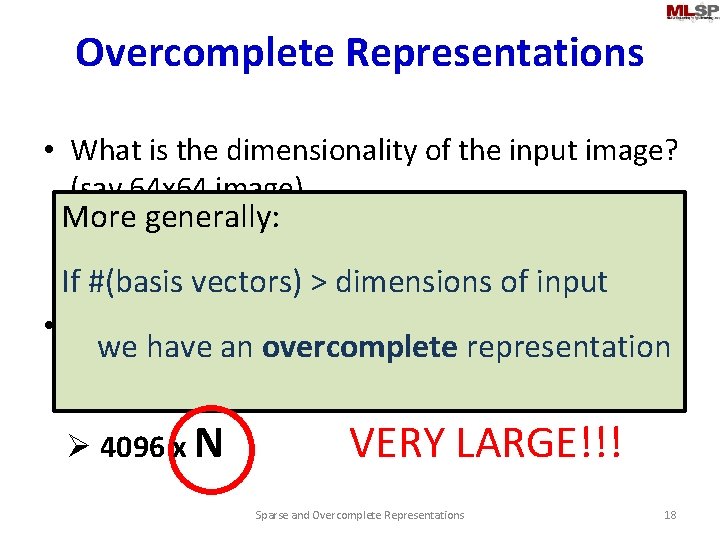

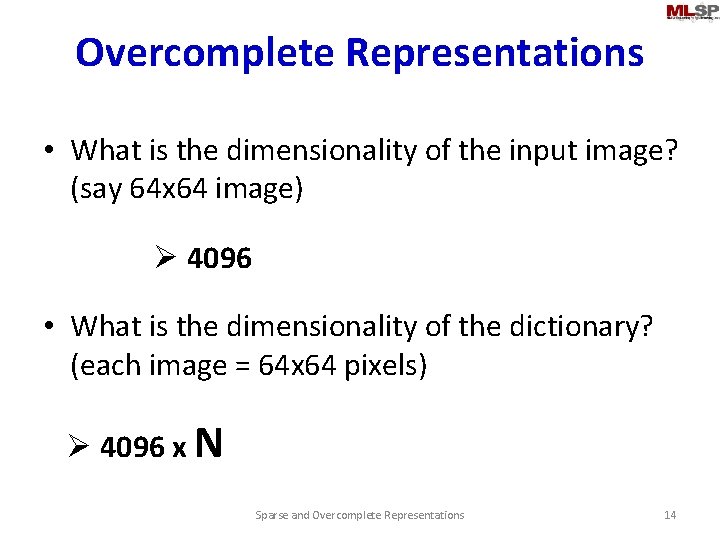

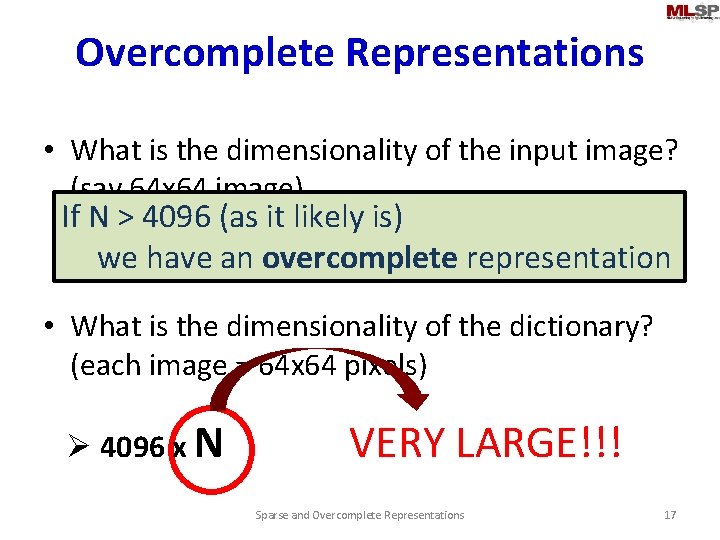

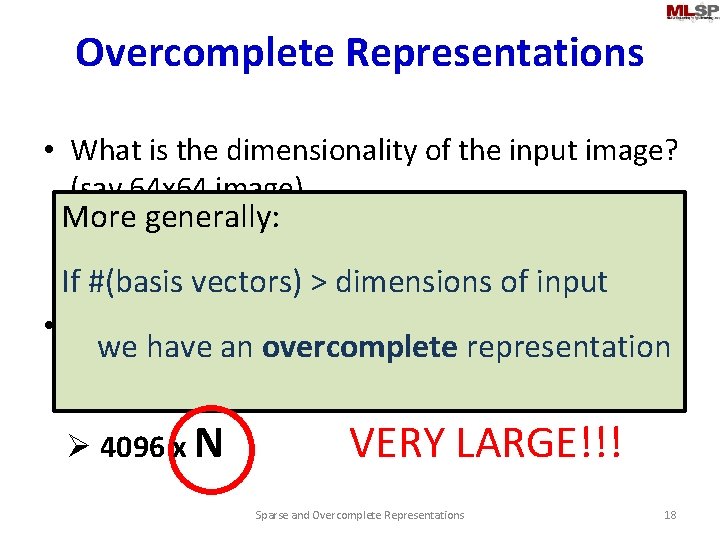

Overcomplete Representations • What is the dimensionality of the input image? (say 64 x 64 image) Ø 4096 • What is the dimensionality of the dictionary? (each image = 64 x 64 pixels) Ø 4096 x N Sparse and Overcomplete Representations 14

Overcomplete Representations • What is the dimensionality of the input image? (say 64 x 64 image) Ø 4096 • What is the dimensionality of the dictionary? (each image = 64 x 64 pixels) Ø 4096 x N ? ? ? Sparse and Overcomplete Representations 15

Overcomplete Representations • What is the dimensionality of the input image? (say 64 x 64 image) Ø 4096 • What is the dimensionality of the dictionary? (each image = 64 x 64 pixels) Ø 4096 x N VERY LARGE!!! Sparse and Overcomplete Representations 16

Overcomplete Representations • What is the dimensionality of the input image? (say 64 x 64 image) If N > 4096 (as it likely is) Ø 4096 we have an overcomplete representation • What is the dimensionality of the dictionary? (each image = 64 x 64 pixels) Ø 4096 x N VERY LARGE!!! Sparse and Overcomplete Representations 17

Overcomplete Representations • What is the dimensionality of the input image? (say 64 x 64 image) More generally: Ø 4096 If #(basis vectors) > dimensions of input • What is the dimensionality of the dictionary? we have an overcomplete representation (each image = 64 x 64 pixels) Ø 4096 x N VERY LARGE!!! Sparse and Overcomplete Representations 18

Representing Data = Sparse and Overcomplete Representations 19

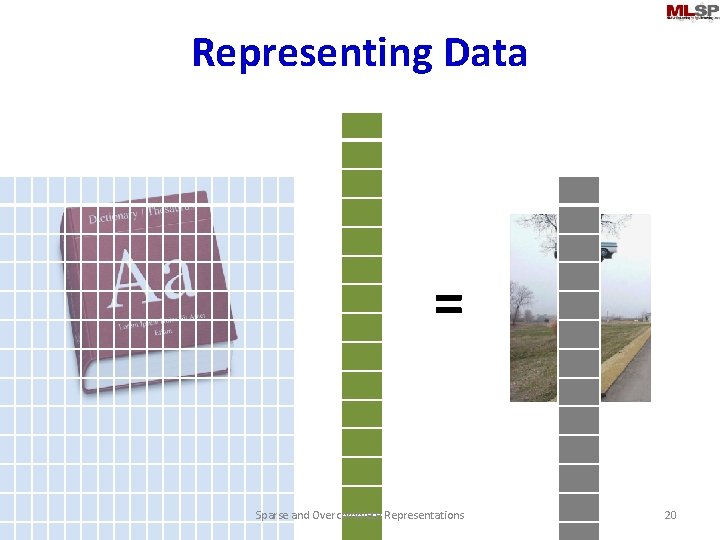

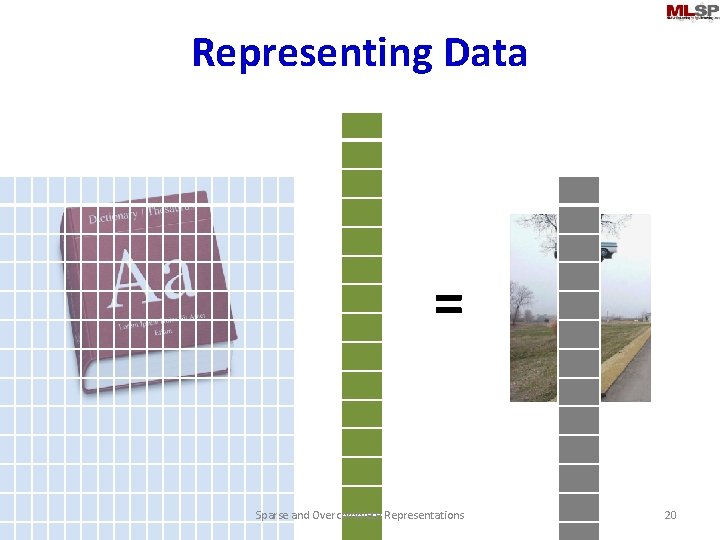

Representing Data = Sparse and Overcomplete Representations 20

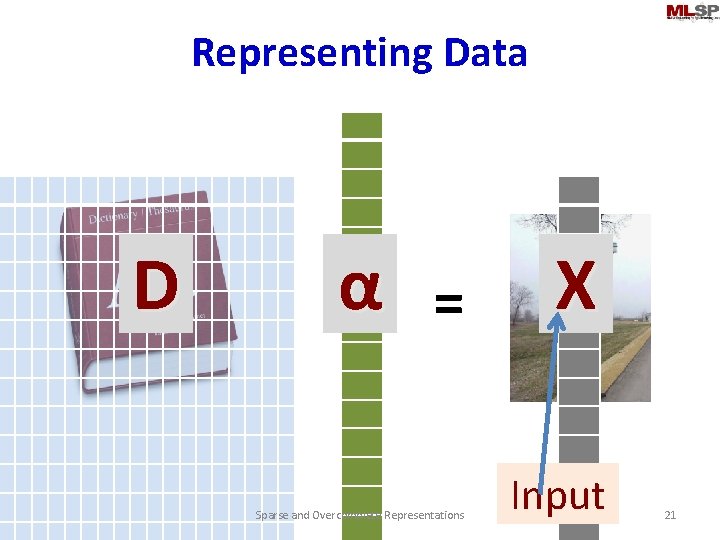

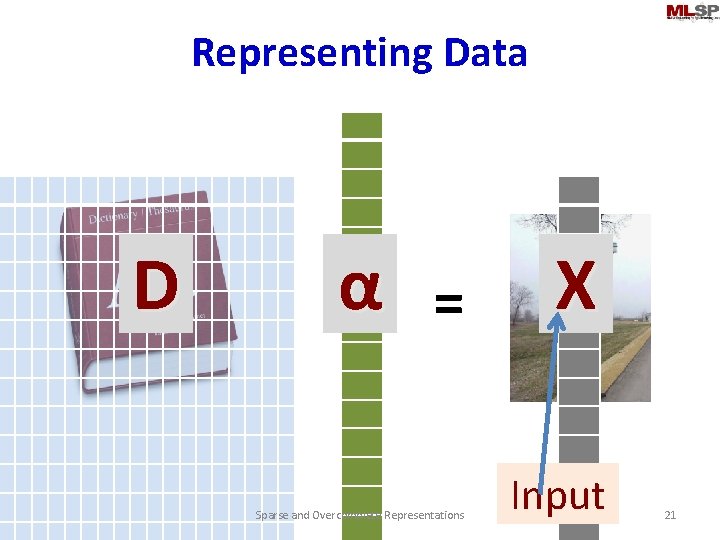

Representing Data D α = Sparse and Overcomplete Representations X Input 21

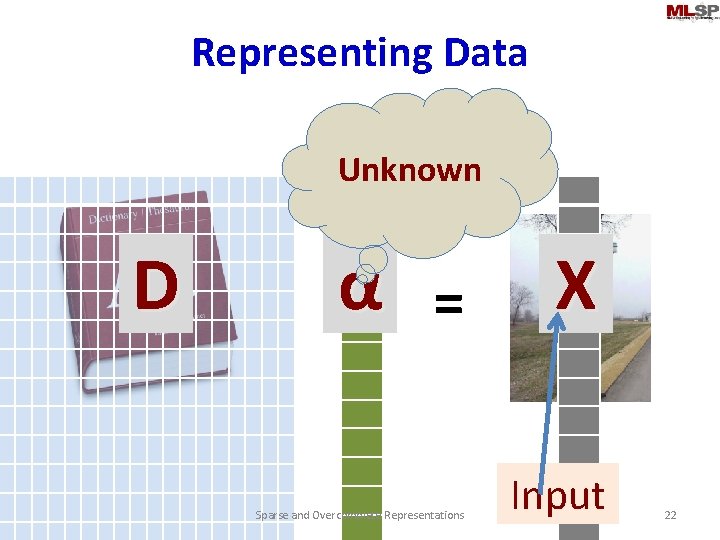

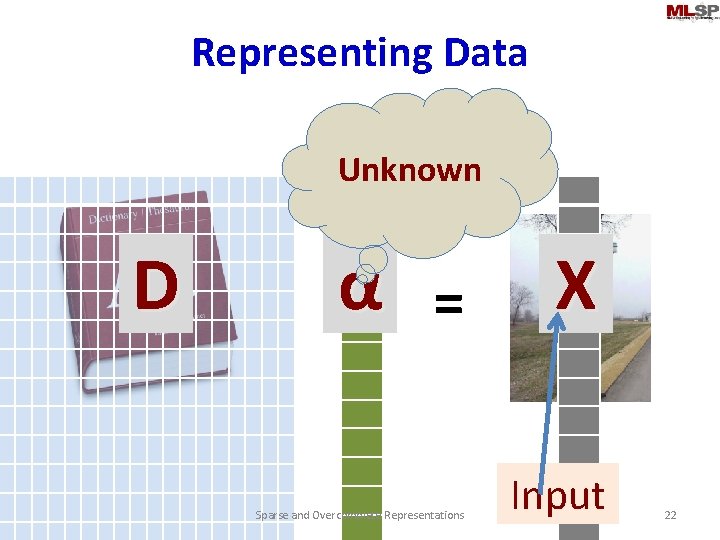

Representing Data Unknown D α = Sparse and Overcomplete Representations X Input 22

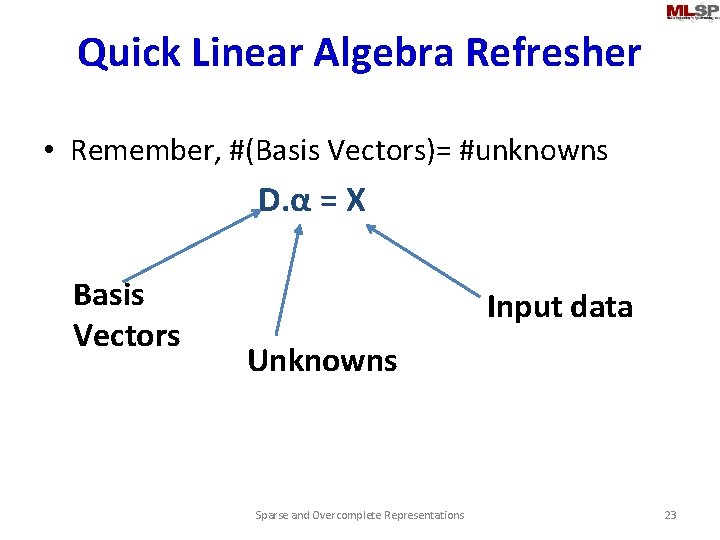

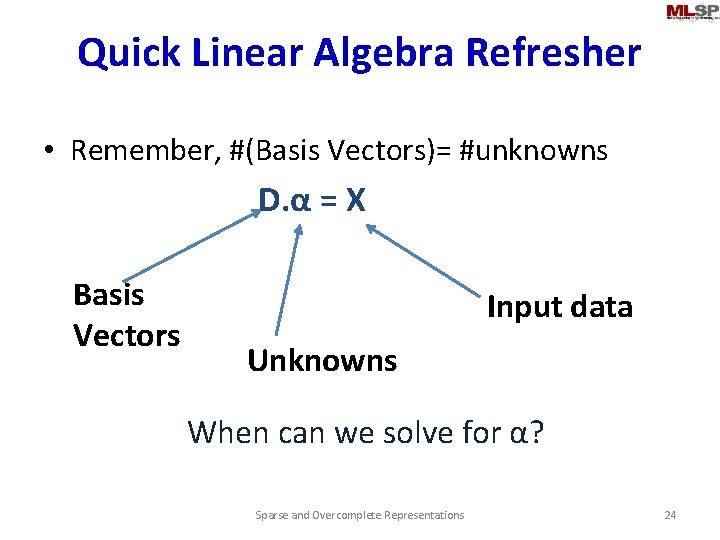

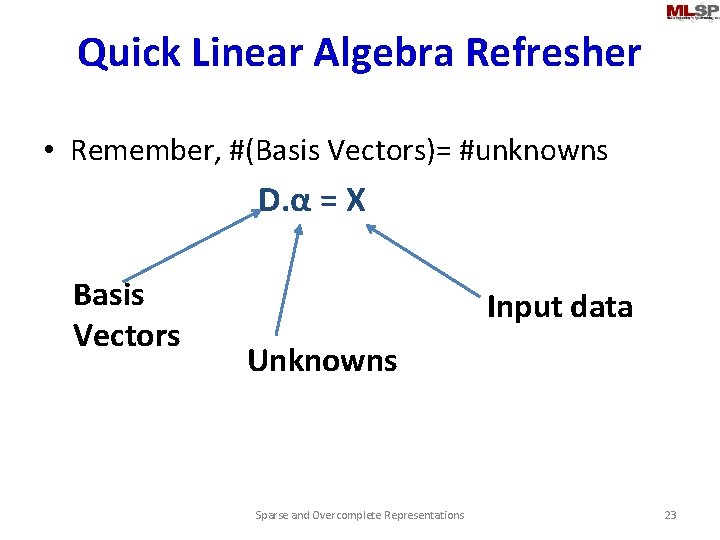

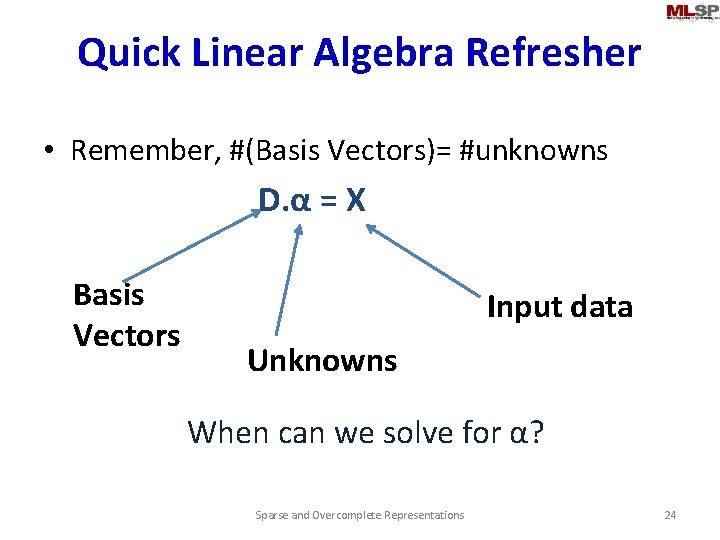

Quick Linear Algebra Refresher • Remember, #(Basis Vectors)= #unknowns D. α = X Basis Vectors Input data Unknowns Sparse and Overcomplete Representations 23

Quick Linear Algebra Refresher • Remember, #(Basis Vectors)= #unknowns D. α = X Basis Vectors Input data Unknowns When can we solve for α? Sparse and Overcomplete Representations 24

Quick Linear Algebra Refresher D. α = X • When #(Basis Vectors) = dim(Input Data), we have a unique solution • When #(Basis Vectors) < dim(Input Data), we may have no solution • When #(Basis Vectors) > dim(Input Data), we have infinitely many solutions Sparse and Overcomplete Representations 25

Quick Linear Algebra Refresher D. α = X • When #(Basis Vectors) = dim(Input Data), we have a unique solution • When #(Basis Vectors) < dim(Input Data), we may have no solution • When #(Basis Vectors) > dim(Input Data), we have infinitely many solutions Our Case Sparse and Overcomplete Representations 26

Overcomplete Representations #(Basis Vectors) > dimensions of the input Sparse and Overcomplete Representations 27

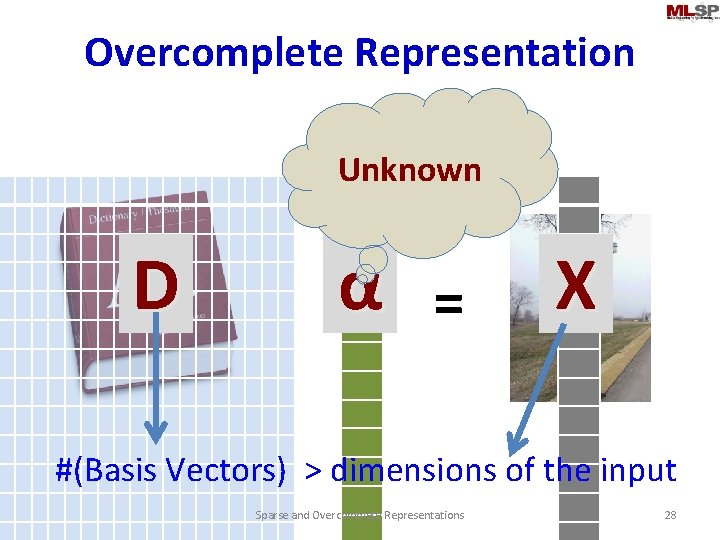

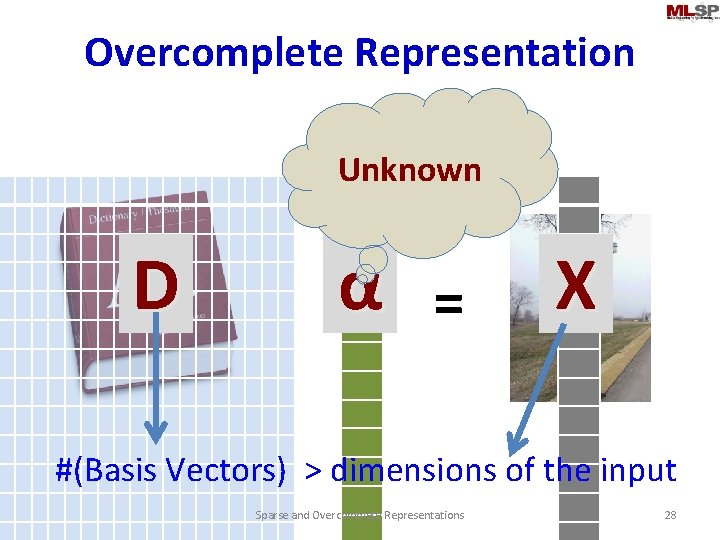

Overcomplete Representation Unknown D α = X #(Basis Vectors) > dimensions of the input Sparse and Overcomplete Representations 28

Overcomplete Representations • Why do we use them? • How do we learn them? Sparse and Overcomplete Representations 29

Overcomplete Representations • Why do we use them? – A more natural representation of the real world – More flexibility in matching data – Can yield a better approximation of the statistical distribution of the data. • How do we learn them? Sparse and Overcomplete Representations 30

Overcompleteness and Sparsity • To solve an overcomplete system of the type: D. α = X • Make assumptions about the data. • Suppose, we say that X is composed of no more than a fixed number (k) of “bases” from D (k ≤ dim(X)) – The term “bases” is an abuse of terminology. . • Now, we can find the set of k bases that best fit the data point, X. Sparse and Overcomplete Representations 31

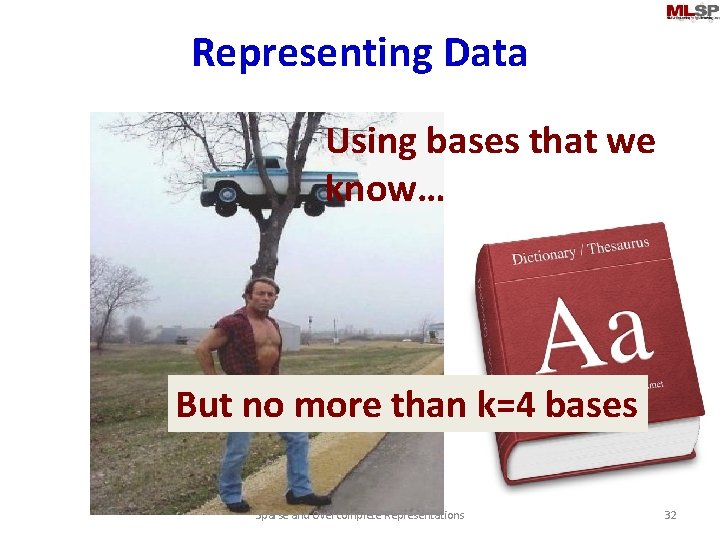

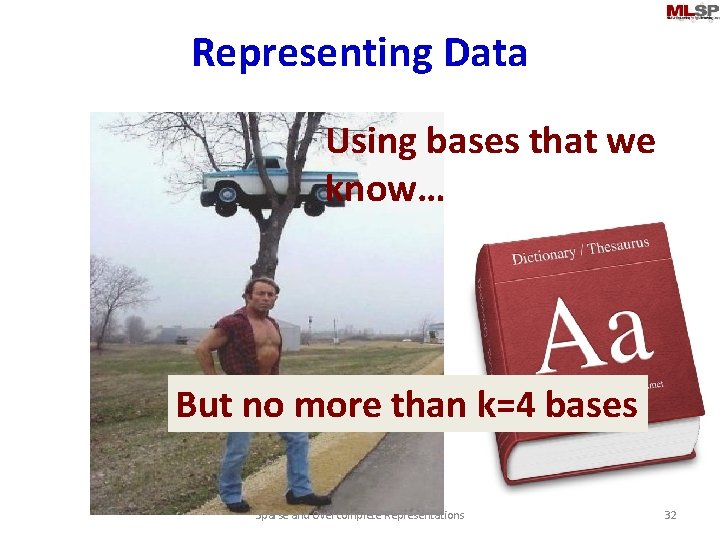

Representing Data Using bases that we know… But no more than k=4 bases Sparse and Overcomplete Representations 32

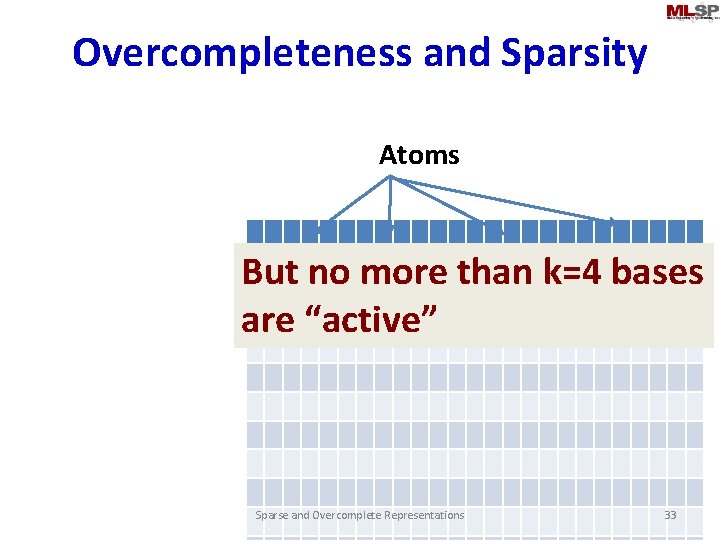

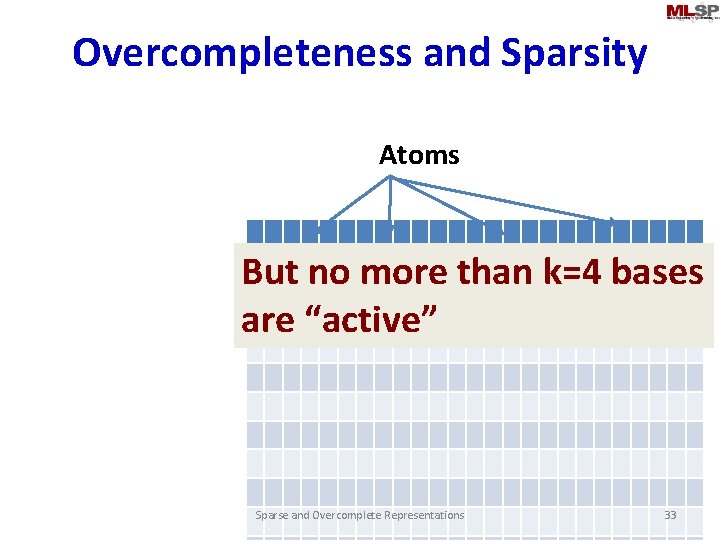

Overcompleteness and Sparsity Atoms But no more than k=4 bases are “active” Sparse and Overcomplete Representations 33

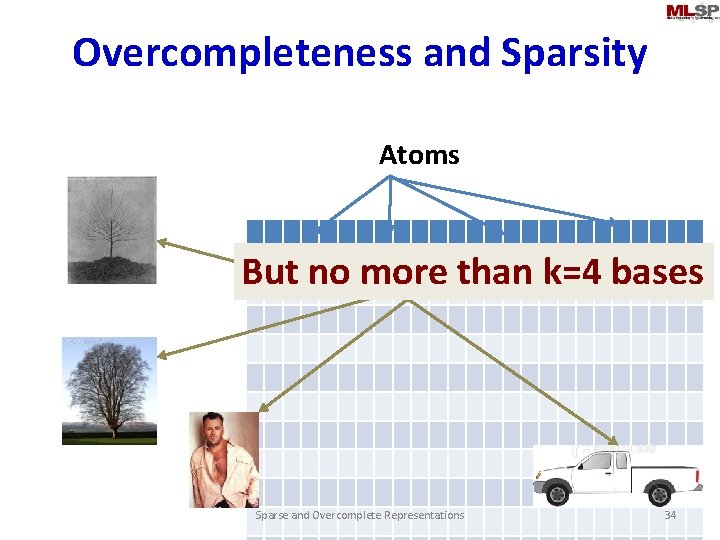

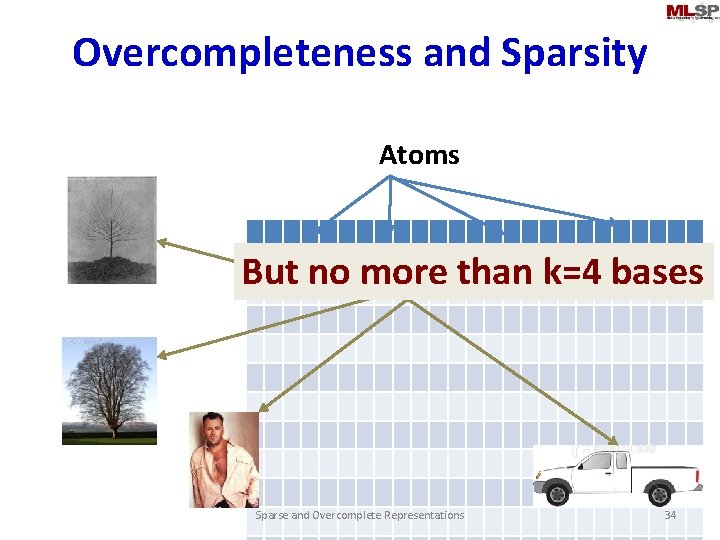

Overcompleteness and Sparsity Atoms But no more than k=4 bases Sparse and Overcomplete Representations 34

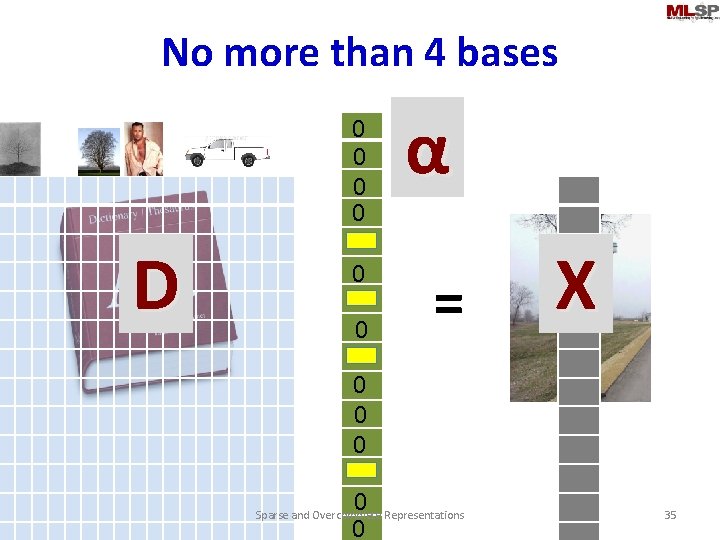

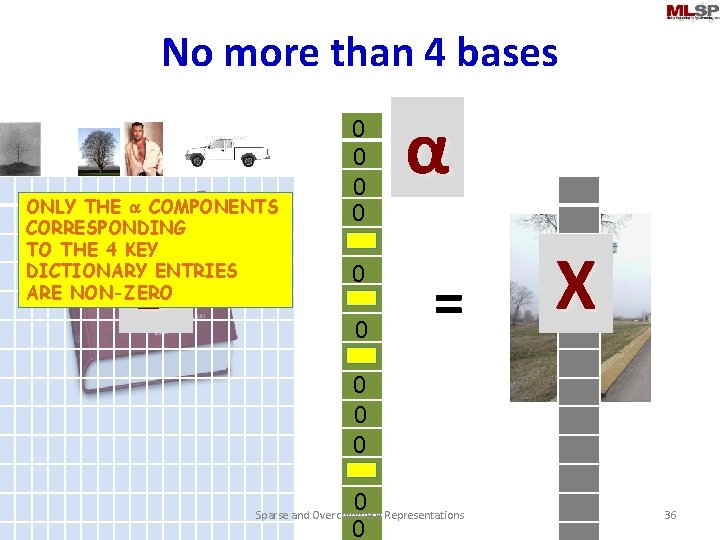

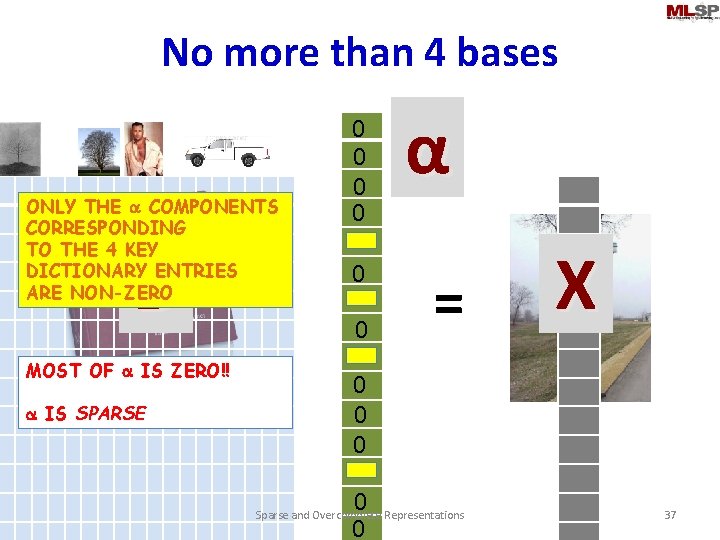

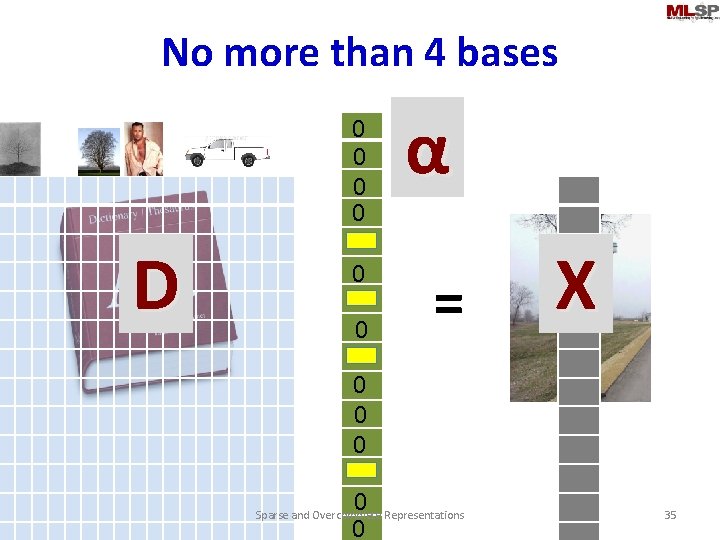

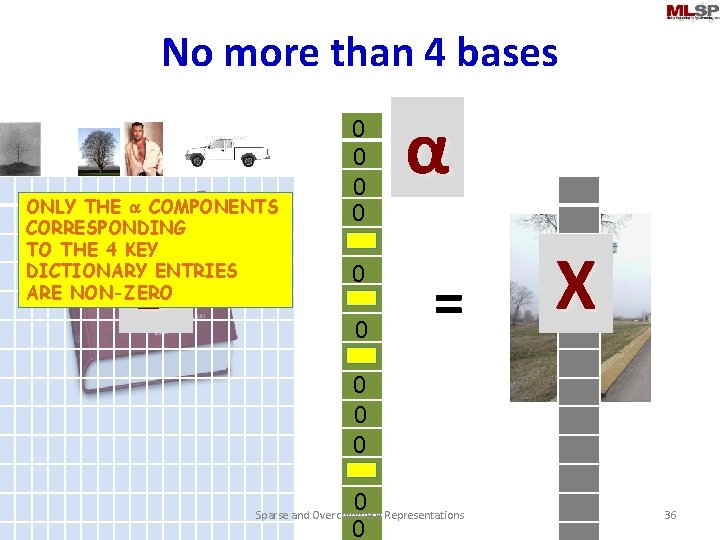

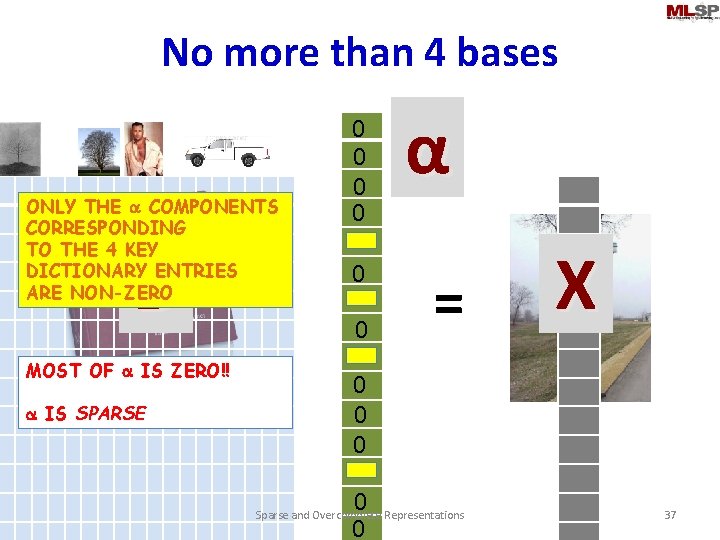

No more than 4 bases 0 0 D 0 0 α = X 0 0 0 Sparse and Overcomplete Representations 35

No more than 4 bases ONLY THE a COMPONENTS CORRESPONDING TO THE 4 KEY DICTIONARY ENTRIES ARE NON-ZERO D 0 0 0 α = X 0 0 0 Sparse and Overcomplete Representations 36

No more than 4 bases ONLY THE a COMPONENTS CORRESPONDING TO THE 4 KEY DICTIONARY ENTRIES ARE NON-ZERO D MOST OF a IS ZERO!! a IS SPARSE 0 0 0 α = X 0 0 0 Sparse and Overcomplete Representations 37

Sparsity- Definition • Sparse representations are representations that account for most or all information of a signal with a linear combination of a small number of atoms. (from: www. see. ed. ac. uk/~tblumens/Sparse. html) Sparse and Overcomplete Representations 38

The Sparsity Problem • We don’t really know k • You are given a signal X • Assuming X was generated using the dictionary, can we find α that generated it? Sparse and Overcomplete Representations 39

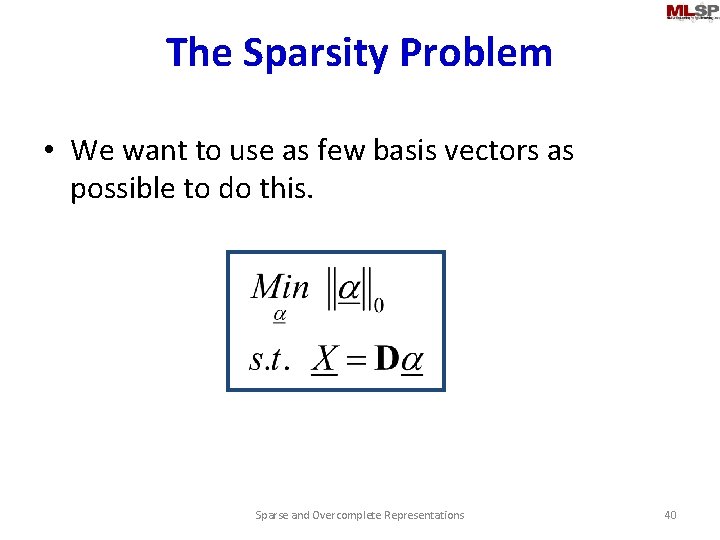

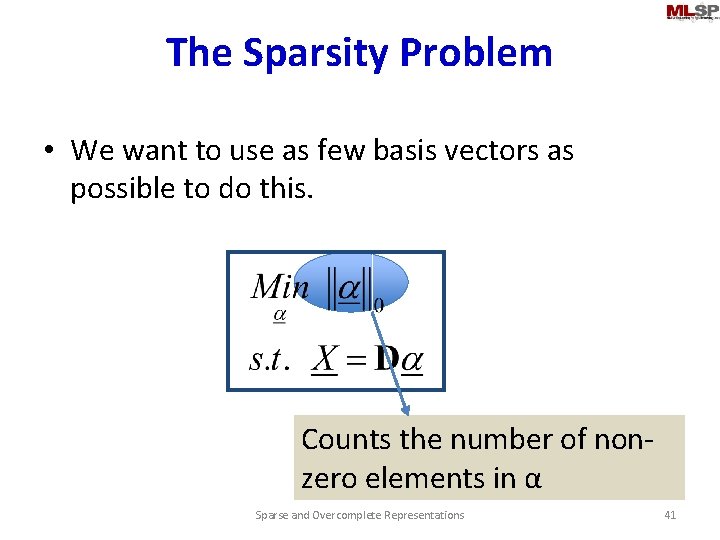

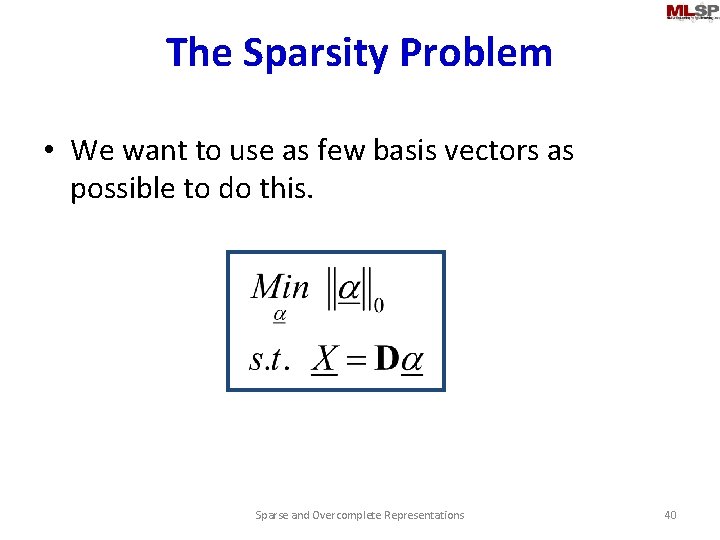

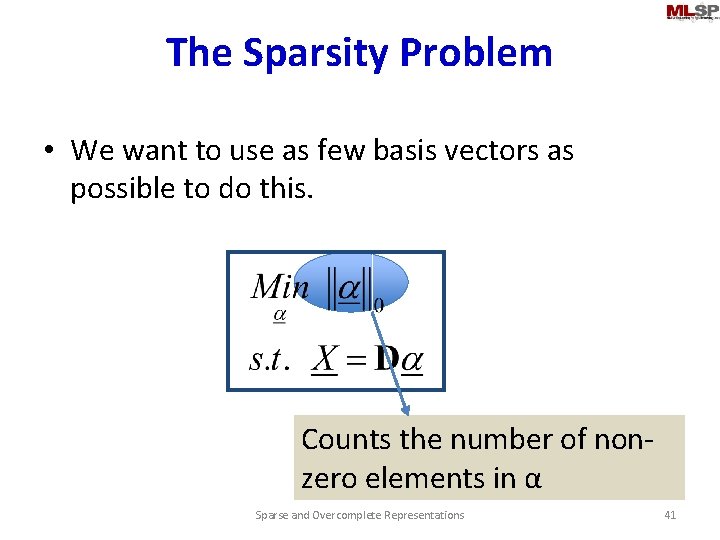

The Sparsity Problem • We want to use as few basis vectors as possible to do this. Sparse and Overcomplete Representations 40

The Sparsity Problem • We want to use as few basis vectors as possible to do this. Counts the number of nonzero elements in α Sparse and Overcomplete Representations 41

The Sparsity Problem • We want to use as few basis vectors as possible to do this. How can we solve the above? Sparse and Overcomplete Representations 42

Obtaining Sparse Solutions • We will look at 2 algorithms: – Matching Pursuit (MP) – Basis Pursuit (BP) Sparse and Overcomplete Representations 43

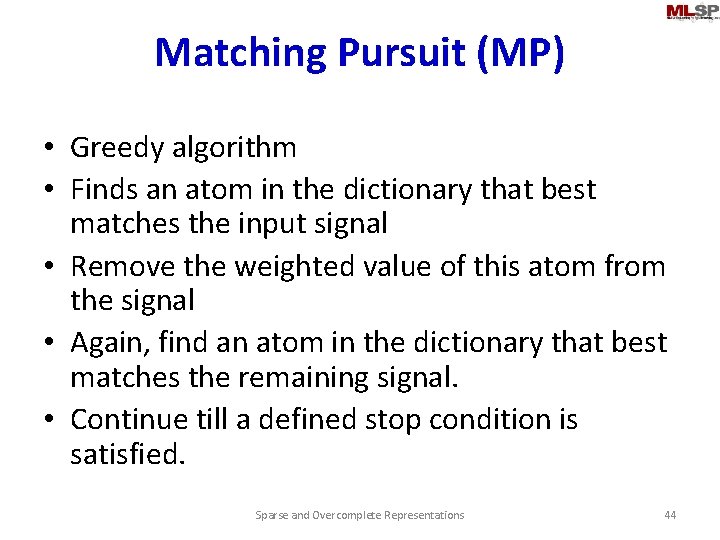

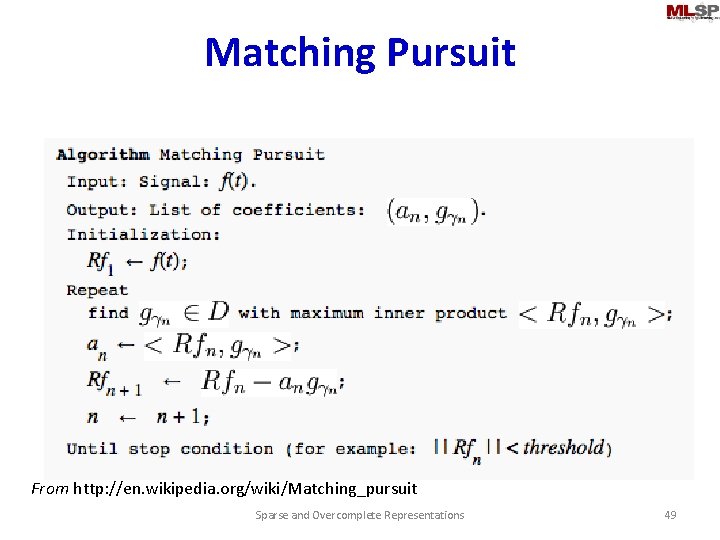

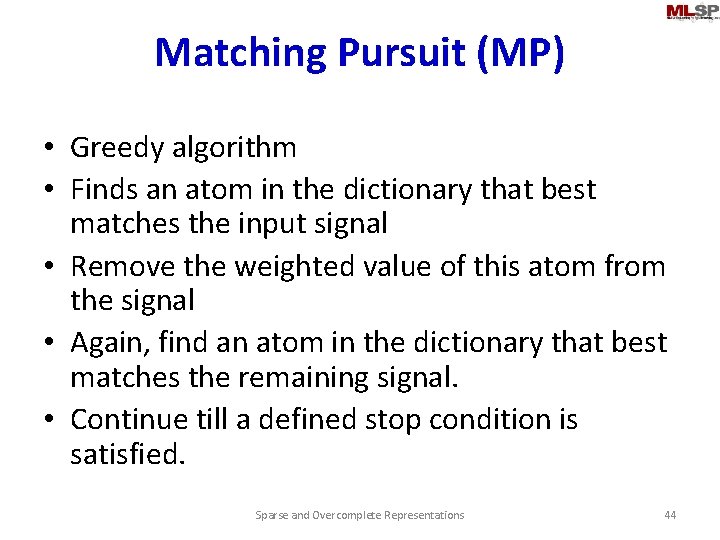

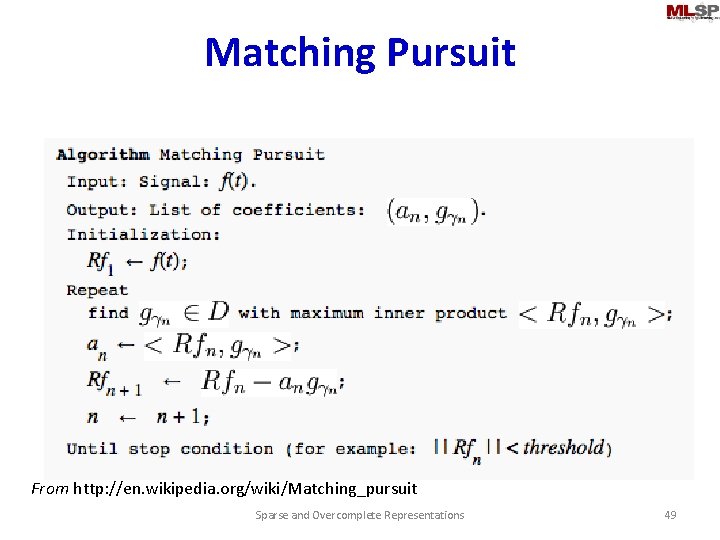

Matching Pursuit (MP) • Greedy algorithm • Finds an atom in the dictionary that best matches the input signal • Remove the weighted value of this atom from the signal • Again, find an atom in the dictionary that best matches the remaining signal. • Continue till a defined stop condition is satisfied. Sparse and Overcomplete Representations 44

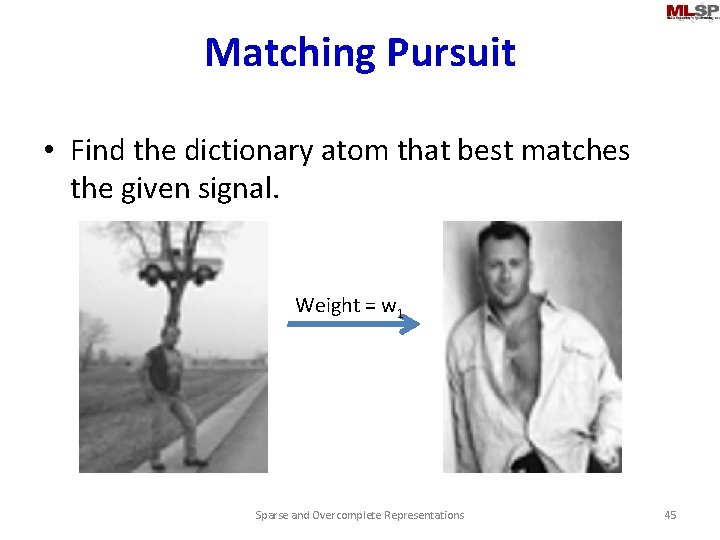

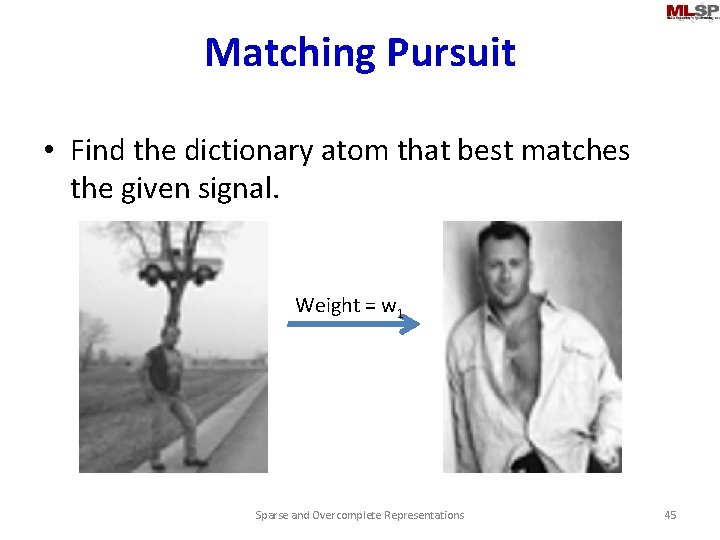

Matching Pursuit • Find the dictionary atom that best matches the given signal. Weight = w 1 Sparse and Overcomplete Representations 45

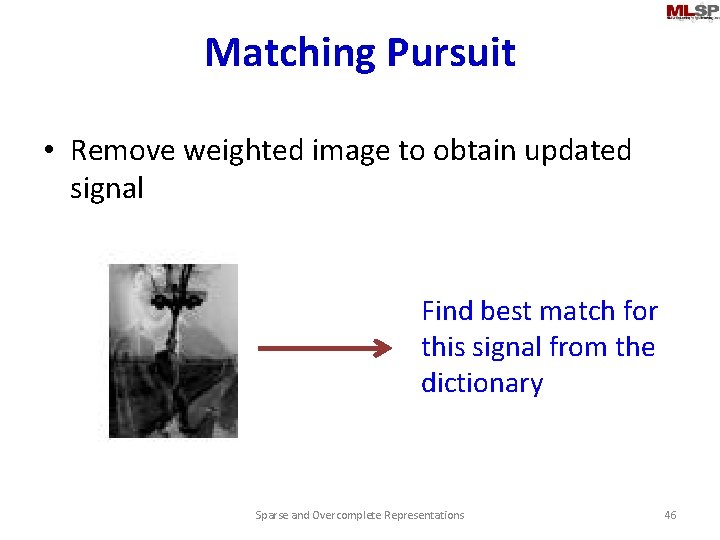

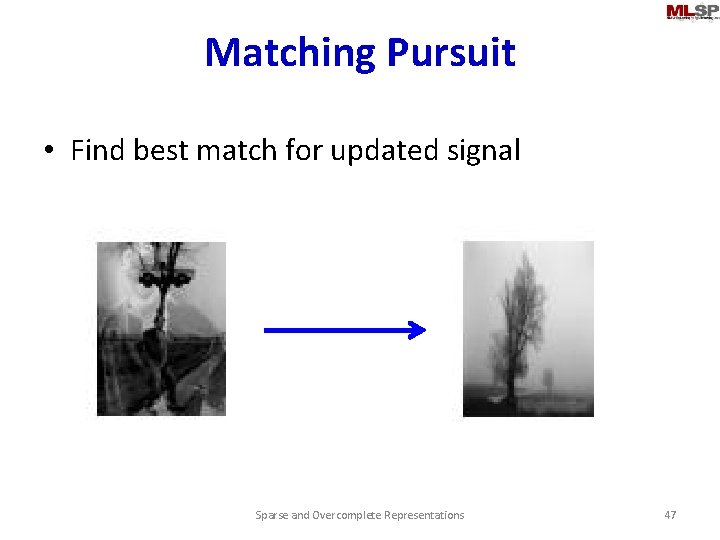

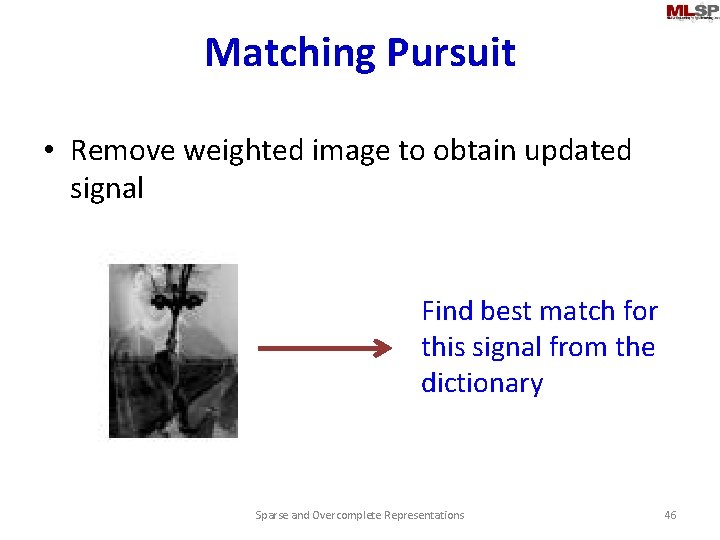

Matching Pursuit • Remove weighted image to obtain updated signal Find best match for this signal from the dictionary Sparse and Overcomplete Representations 46

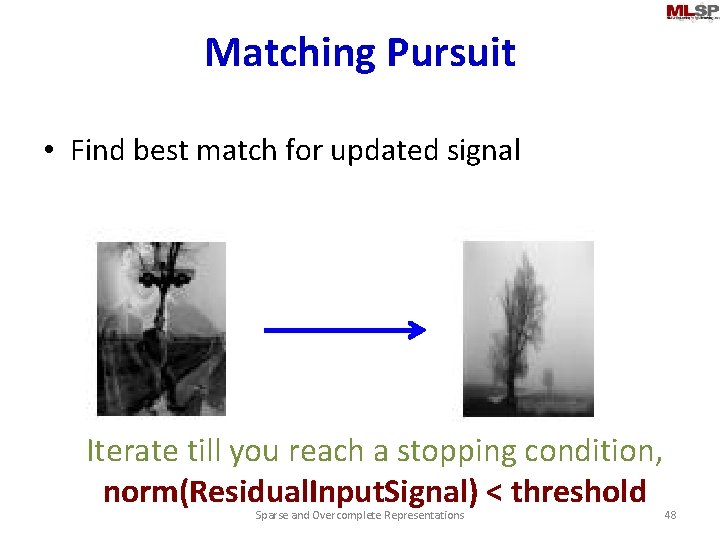

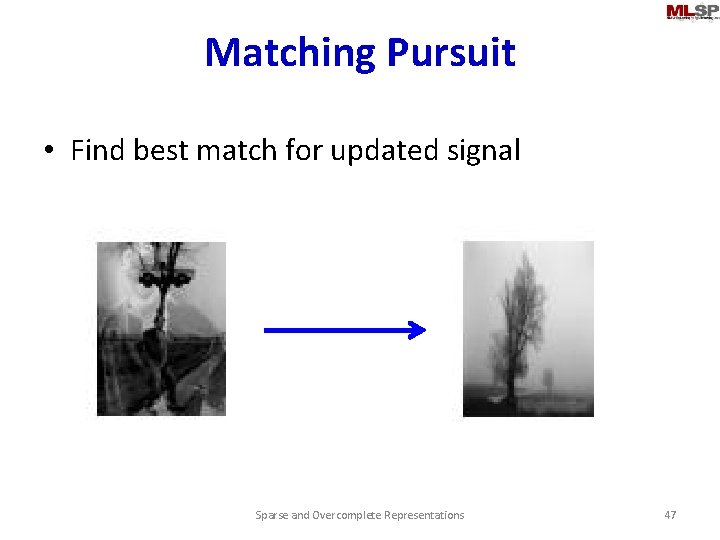

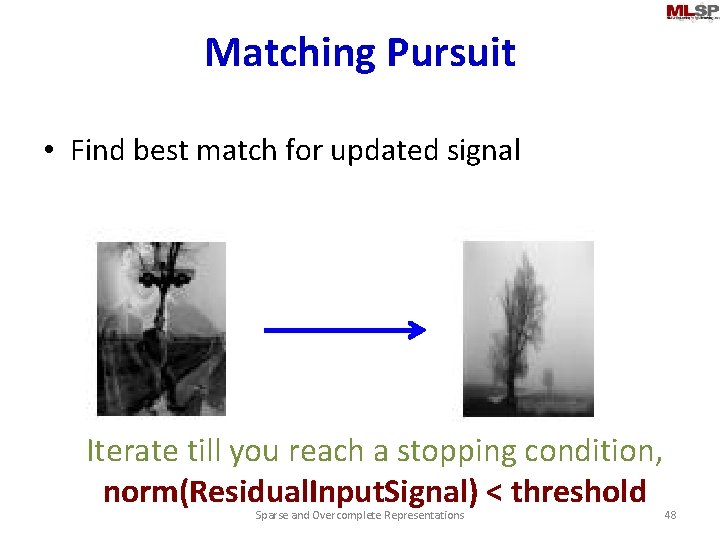

Matching Pursuit • Find best match for updated signal Sparse and Overcomplete Representations 47

Matching Pursuit • Find best match for updated signal Iterate till you reach a stopping condition, norm(Residual. Input. Signal) < threshold Sparse and Overcomplete Representations 48

Matching Pursuit From http: //en. wikipedia. org/wiki/Matching_pursuit Sparse and Overcomplete Representations 49

Matching Pursuit • Problems ? ? ? Sparse and Overcomplete Representations 50

Matching Pursuit • Main Problem – Computational complexity – The entire dictionary has to be searched at every iteration Sparse and Overcomplete Representations 51

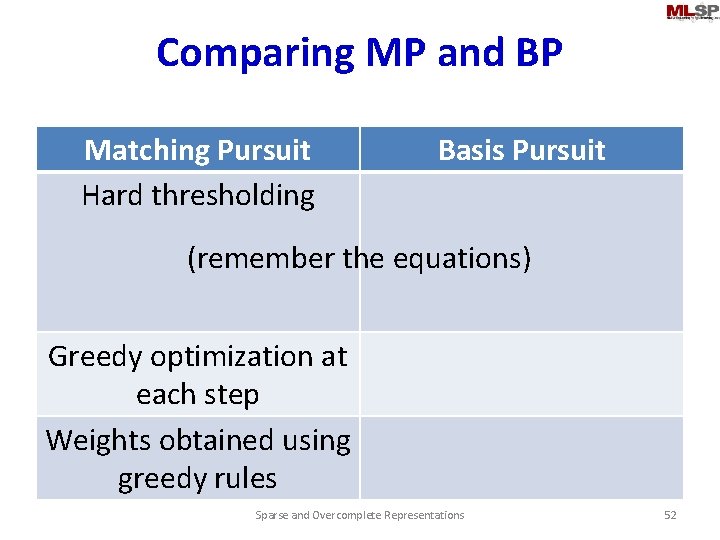

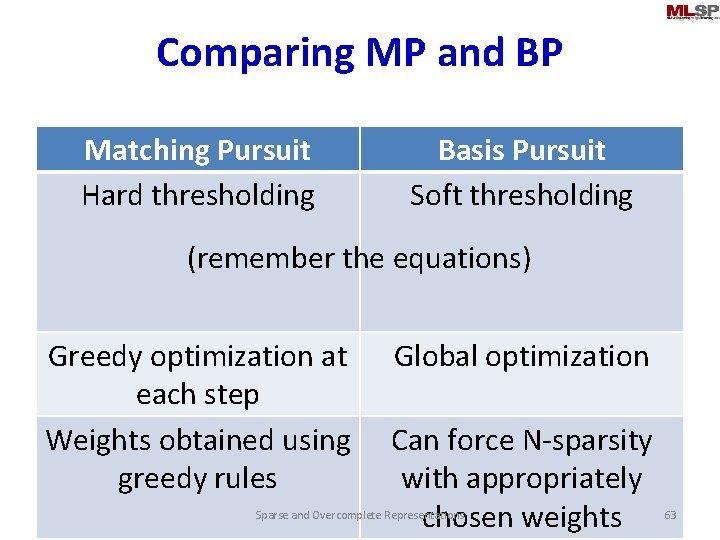

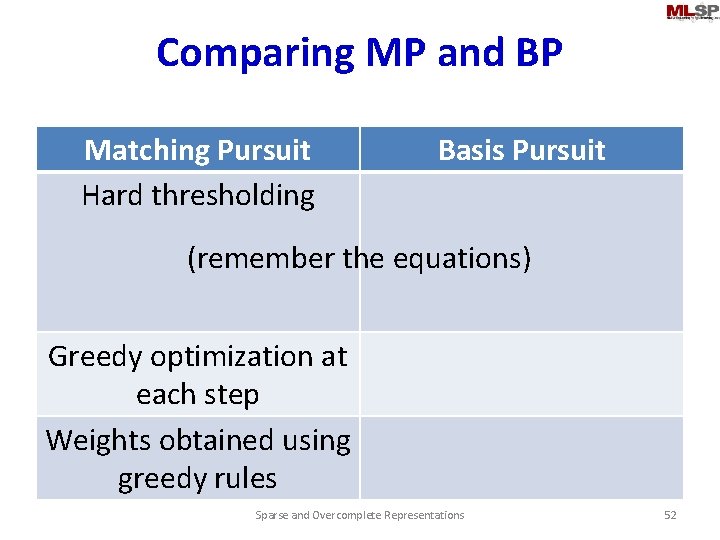

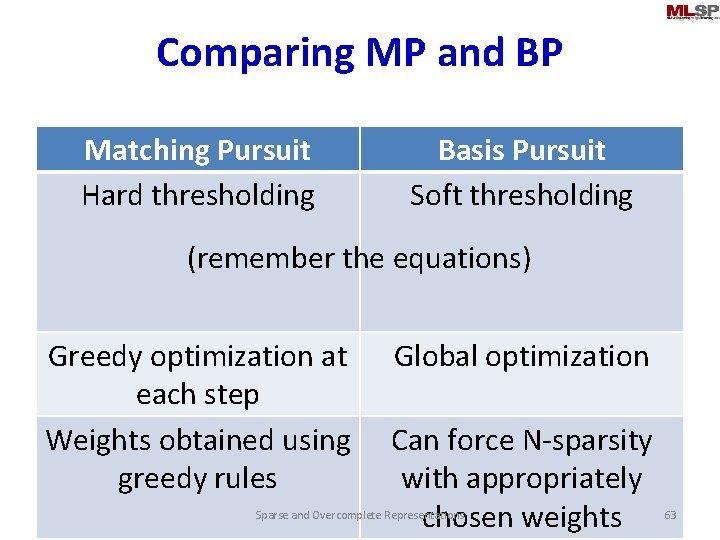

Comparing MP and BP Matching Pursuit Hard thresholding Basis Pursuit (remember the equations) Greedy optimization at each step Weights obtained using greedy rules Sparse and Overcomplete Representations 52

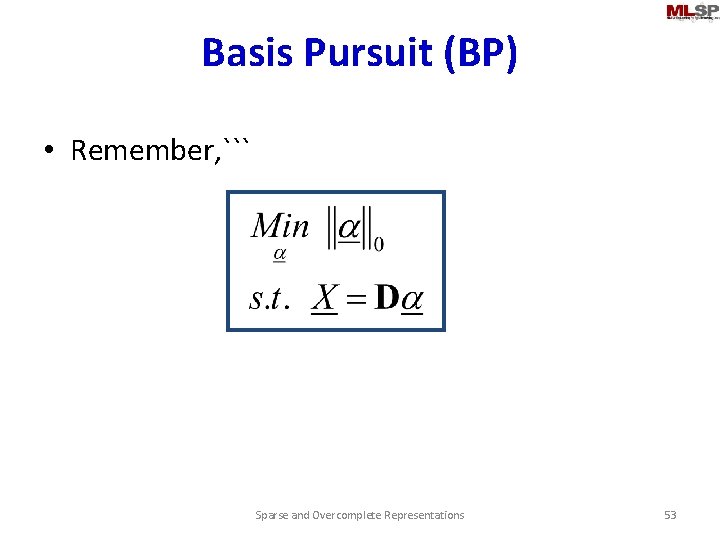

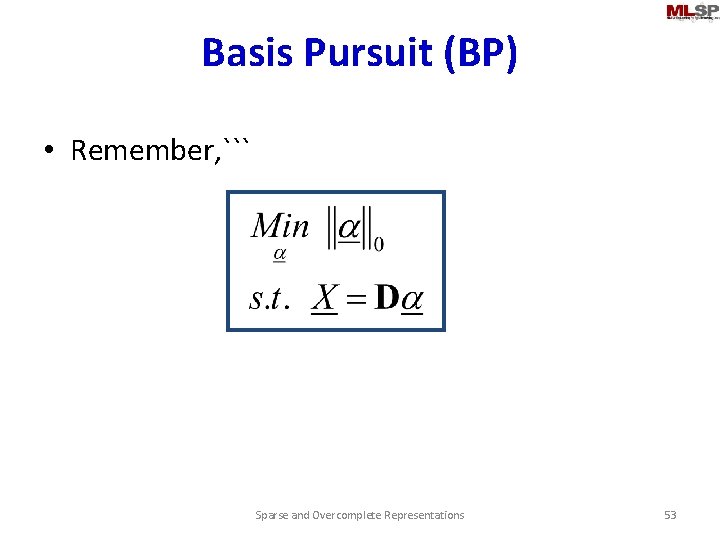

Basis Pursuit (BP) • Remember, ``` Sparse and Overcomplete Representations 53

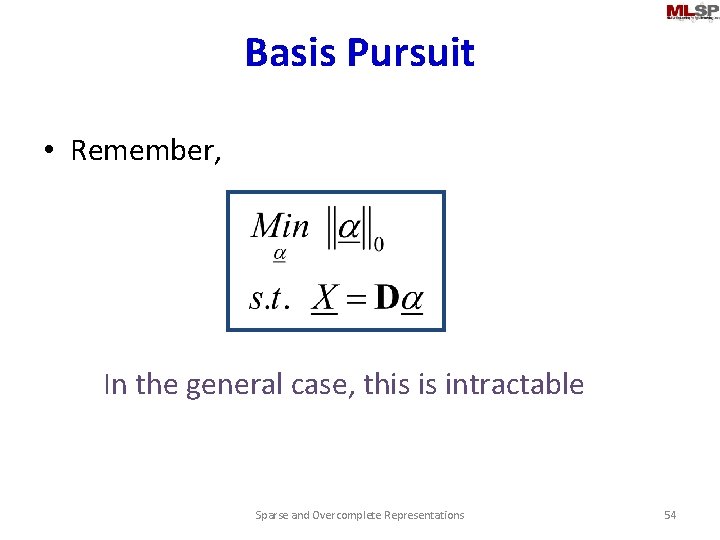

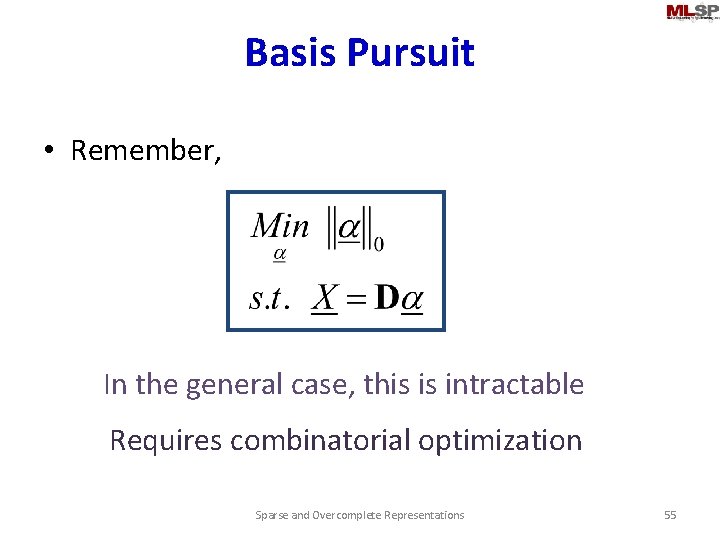

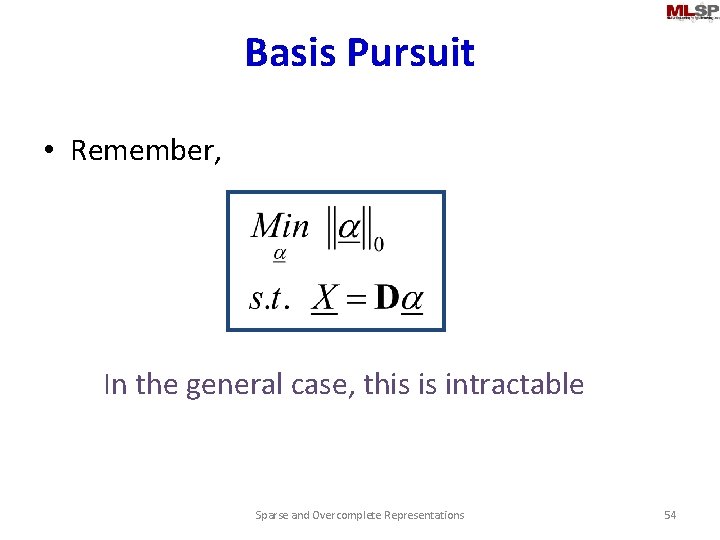

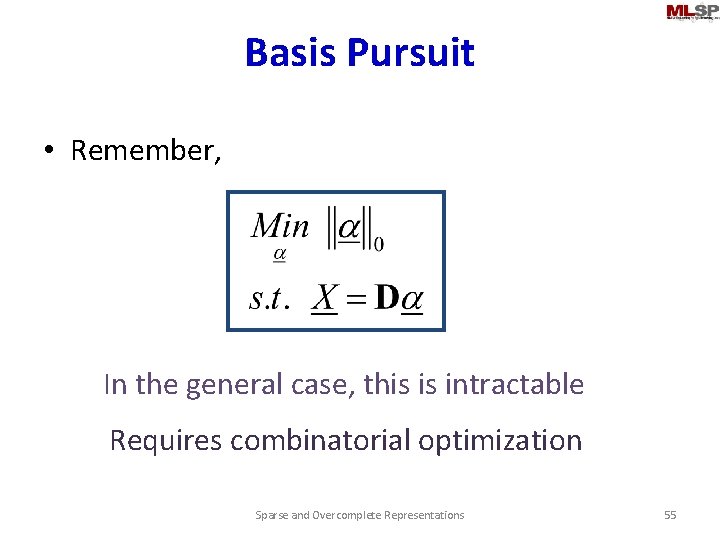

Basis Pursuit • Remember, In the general case, this is intractable Sparse and Overcomplete Representations 54

Basis Pursuit • Remember, In the general case, this is intractable Requires combinatorial optimization Sparse and Overcomplete Representations 55

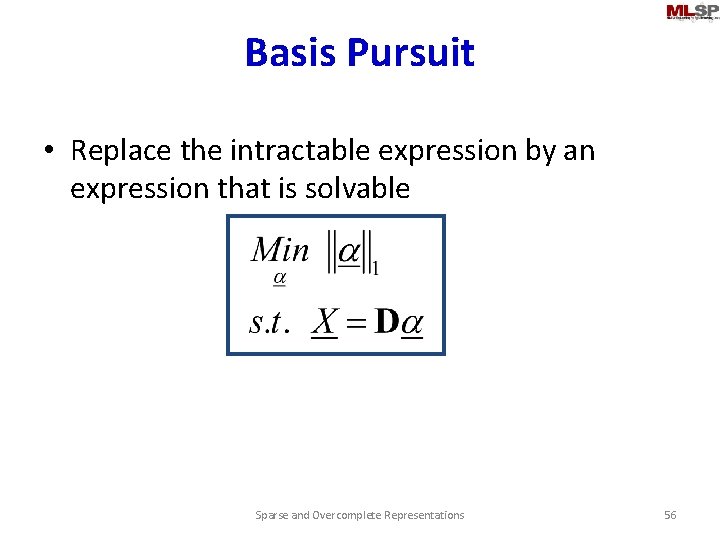

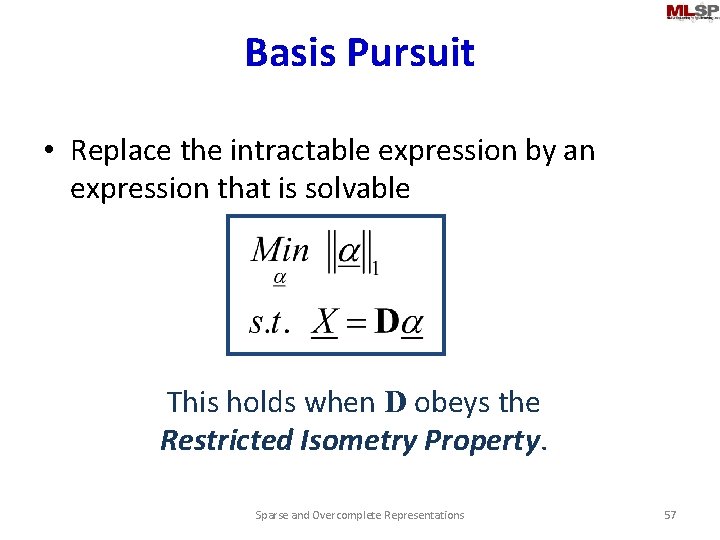

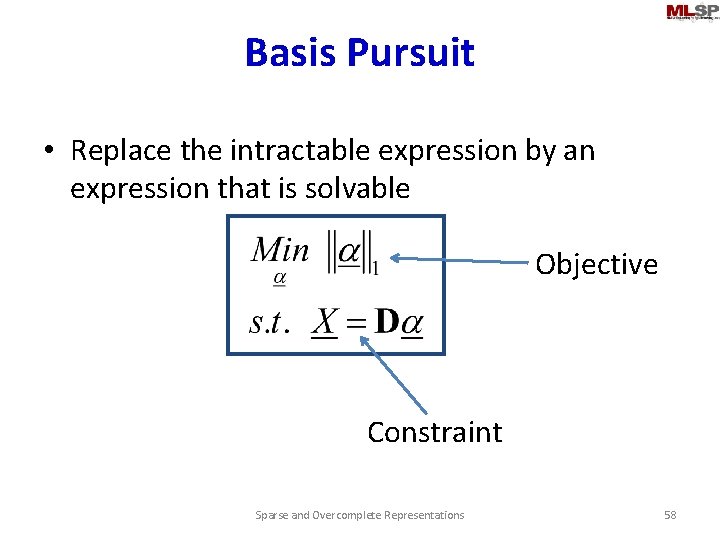

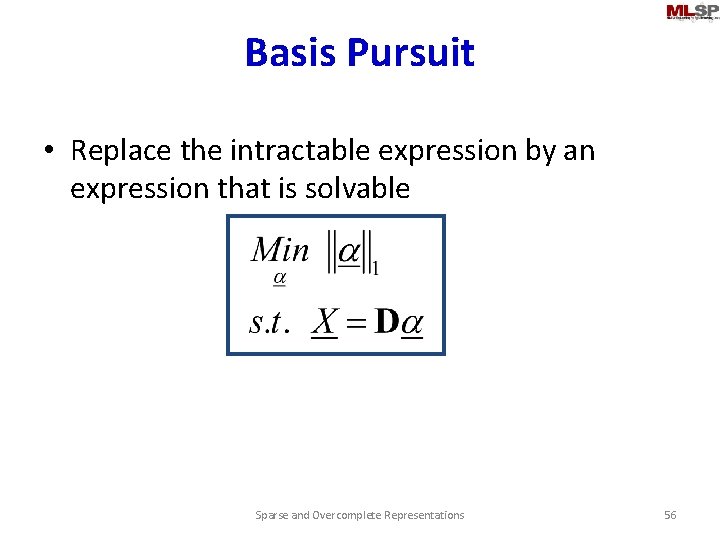

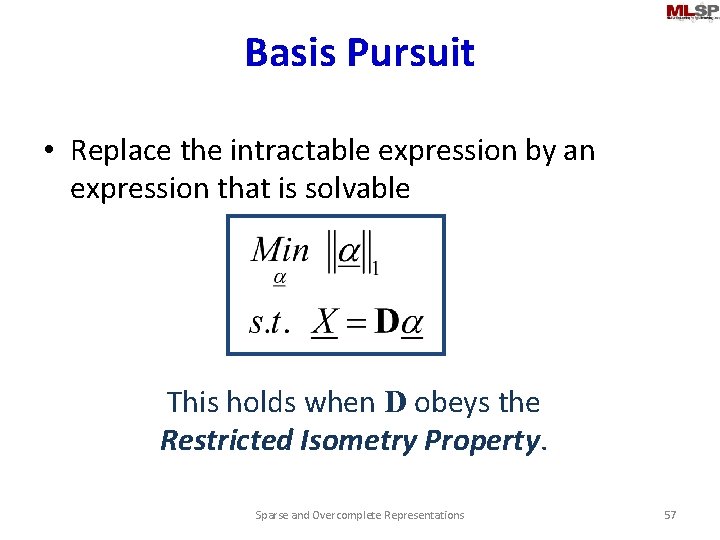

Basis Pursuit • Replace the intractable expression by an expression that is solvable Sparse and Overcomplete Representations 56

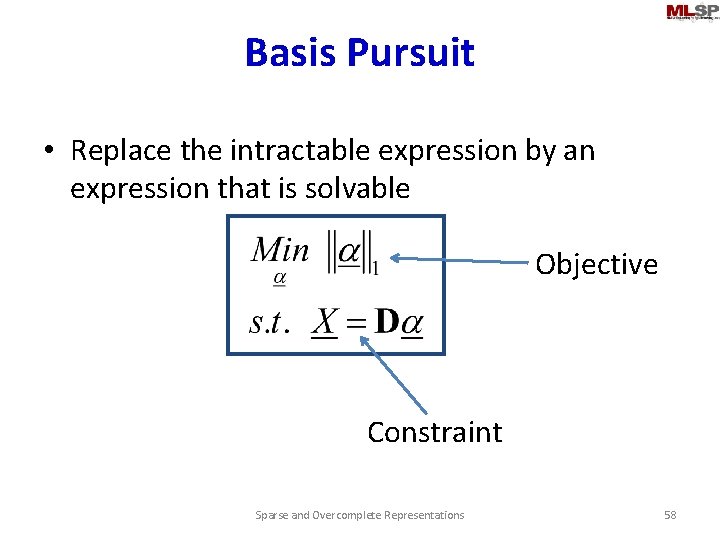

Basis Pursuit • Replace the intractable expression by an expression that is solvable This holds when D obeys the Restricted Isometry Property. Sparse and Overcomplete Representations 57

Basis Pursuit • Replace the intractable expression by an expression that is solvable Objective Constraint Sparse and Overcomplete Representations 58

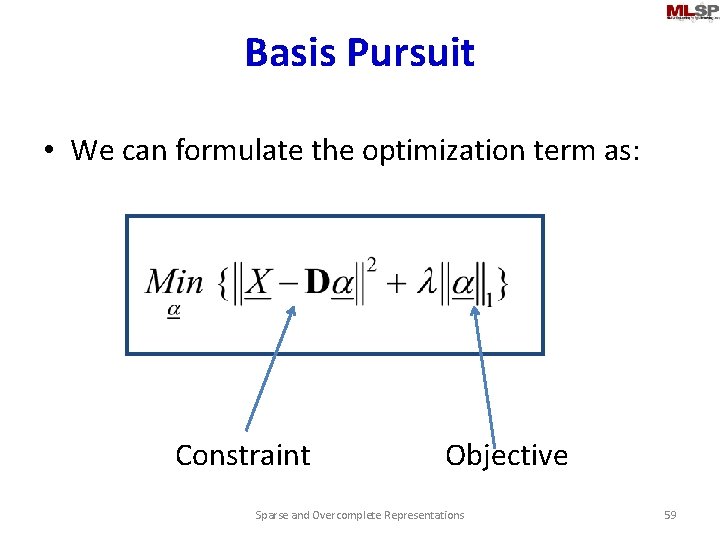

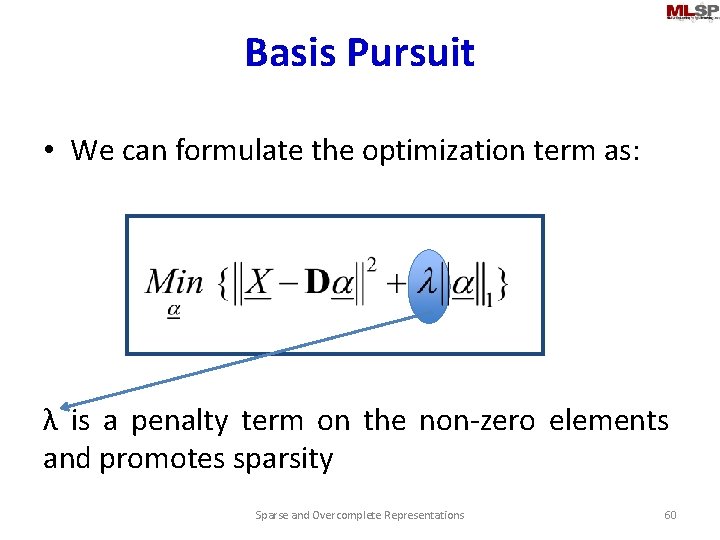

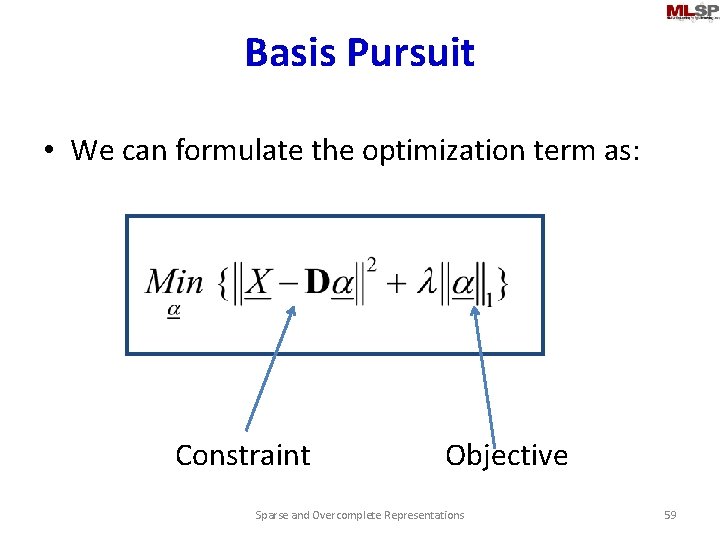

Basis Pursuit • We can formulate the optimization term as: Constraint Objective Sparse and Overcomplete Representations 59

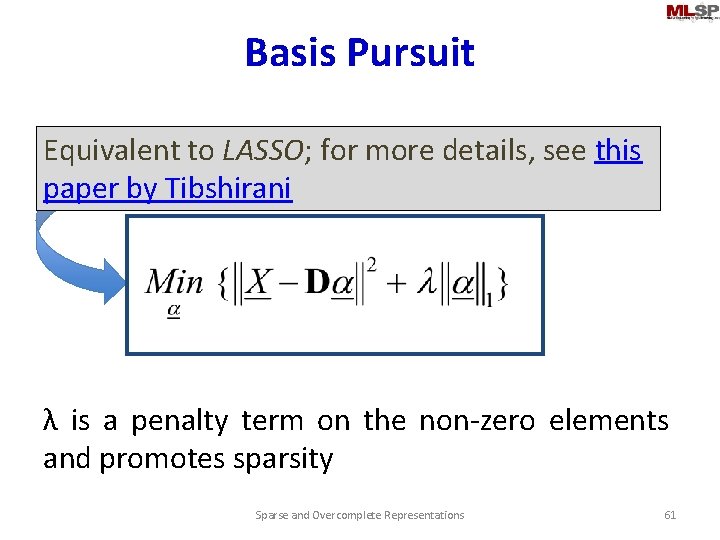

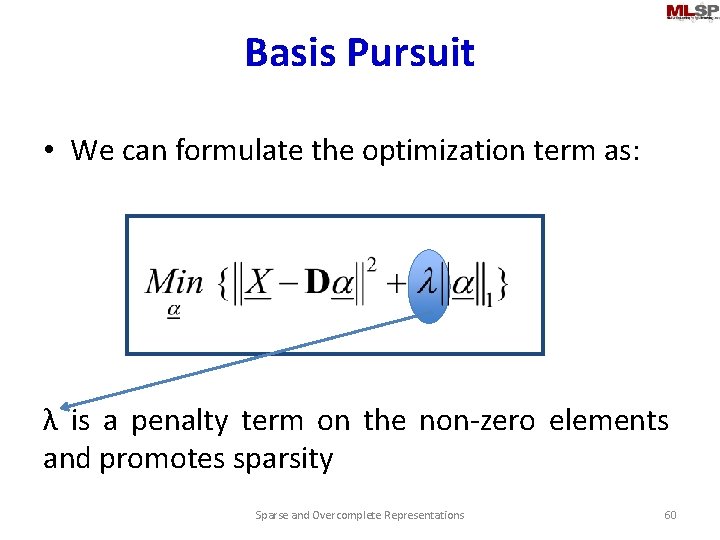

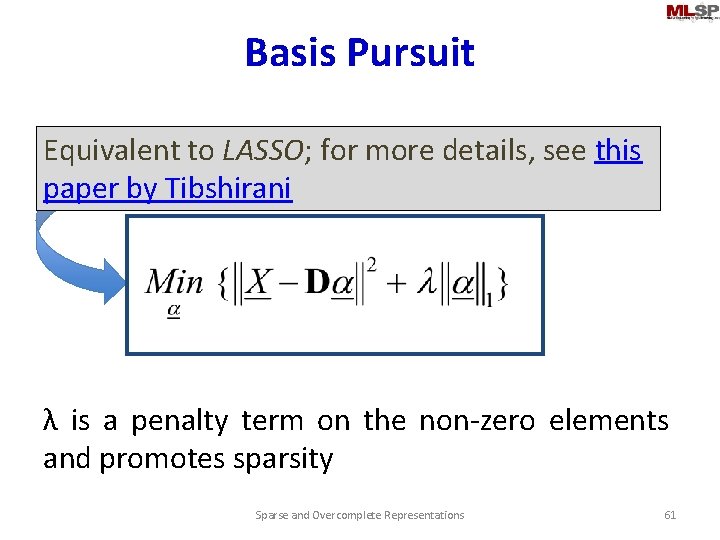

Basis Pursuit • We can formulate the optimization term as: λ is a penalty term on the non-zero elements and promotes sparsity Sparse and Overcomplete Representations 60

Basis Pursuit Equivalent to LASSO; the for optimization more details, term see this • We can formulate as: paper by Tibshirani λ is a penalty term on the non-zero elements and promotes sparsity Sparse and Overcomplete Representations 61

Basis Pursuit • There are efficient ways to solve the LASSO formulation. [Link to Matlab code] Sparse and Overcomplete Representations 62

Comparing MP and BP Matching Pursuit Hard thresholding Basis Pursuit Soft thresholding (remember the equations) Greedy optimization at each step Weights obtained using greedy rules Global optimization Can force N-sparsity with appropriately chosen weights Sparse and Overcomplete Representations 63

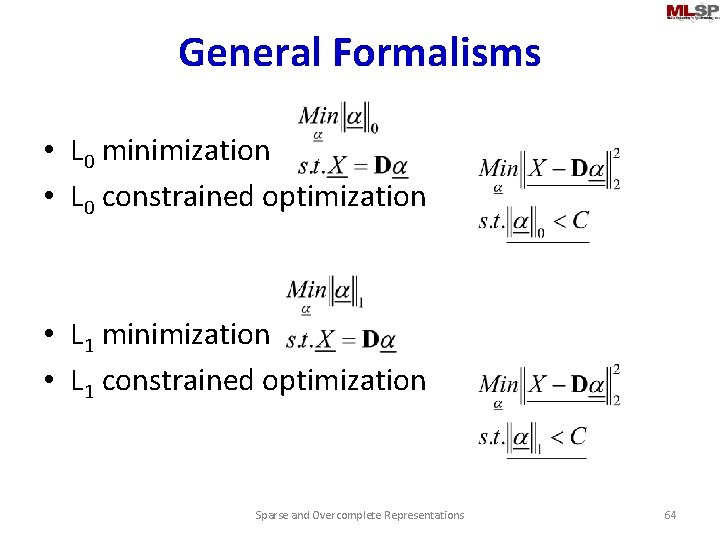

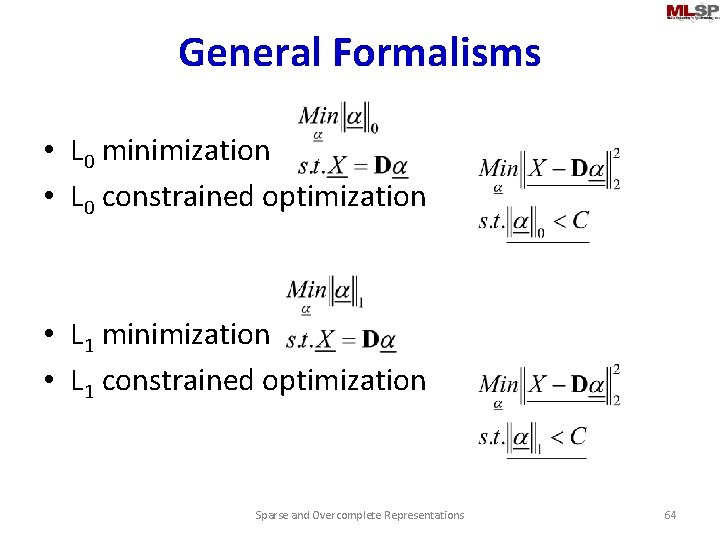

General Formalisms • L 0 minimization • L 0 constrained optimization • L 1 minimization • L 1 constrained optimization Sparse and Overcomplete Representations 64

Many Other Methods. . • • Iterative Hard Thresholding (IHT) Co. SAMP OMP … Sparse and Overcomplete Representations 65

Applications of Sparse Representations • Many many applications – Signal representation – Statistical modelling –. . • Two extremely popular signal processing applications: – Compressive sensing – Denoising Sparse and Overcomplete Representations 66

Compressive Sensing • Recall the Nyquist criterion? • To reconstruct a signal, you need to sample at twice the maximum frequency of the original signal Sparse and Overcomplete Representations 67

Compressive Sensing • Recall the Nyquist criterion? • To reconstruct a signal, you need to sample at twice the frequency of the original signal • Is it possible to reconstruct signals when they have not been sampled so as to satisfy the Nyquist criterion? Sparse and Overcomplete Representations 68

Compressive Sensing • Recall the Nyquist criterion? • To reconstruct a signal, you need to sample at twice the frequency of the original signal • Is it possible to reconstruct signals when they have not been sampled so as to satisfy the Nyquist criterion? • Under specific criteria, yes!!!! Sparse and Overcomplete Representations 69

Compressive Sensing • What criteria? Sparse and Overcomplete Representations 70

Compressive Sensing • What criteria? Sparsity! Sparse and Overcomplete Representations 71

Compressive Sensing • What criteria? Sparsity! • Exploit the structure of the data • Most signals are sparse, in some domain Sparse and Overcomplete Representations 72

Applications of Sparse Representations • Two extremely popular applications: – Compressive sensing – Denoising Sparse and Overcomplete Representations 73

Applications of Sparse Representations • Two extremely popular applications: – Compressive sensing – Denoising Sparse and Overcomplete Representations 74

Denoising • As the name suggests, remove noise! Sparse and Overcomplete Representations 75

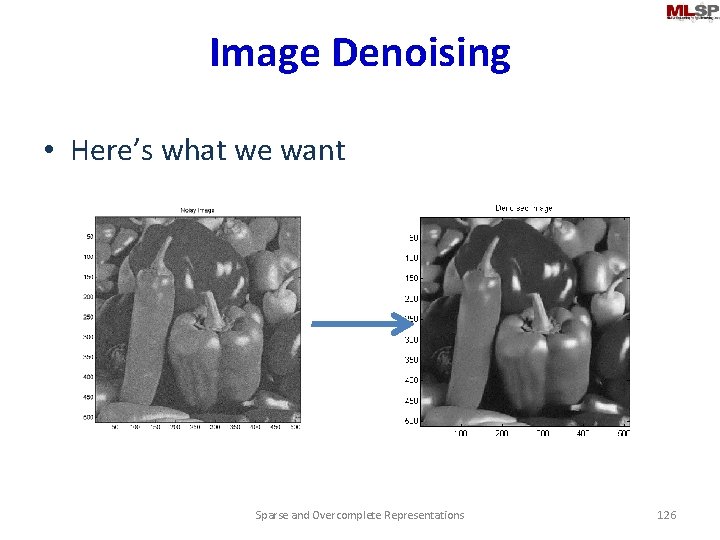

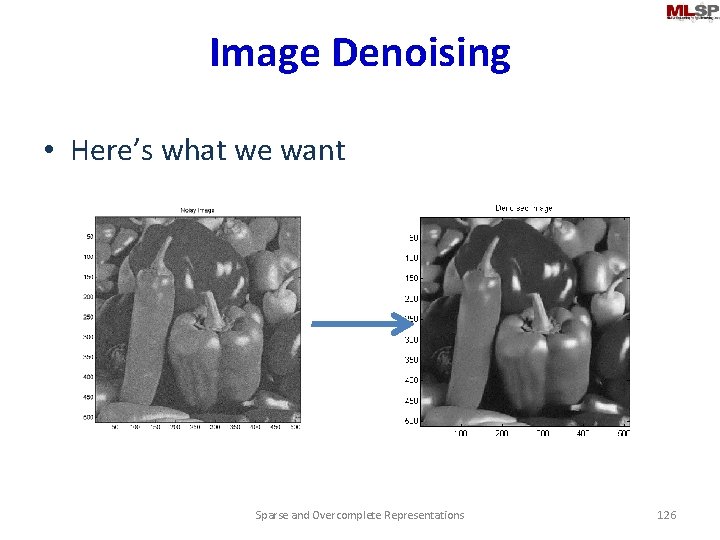

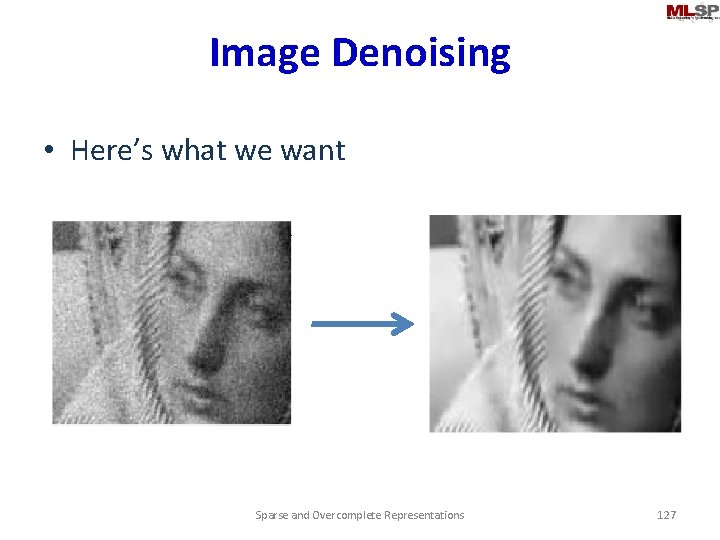

Denoising • As the name suggests, remove noise! • We will look at image denoising as an example Sparse and Overcomplete Representations 76

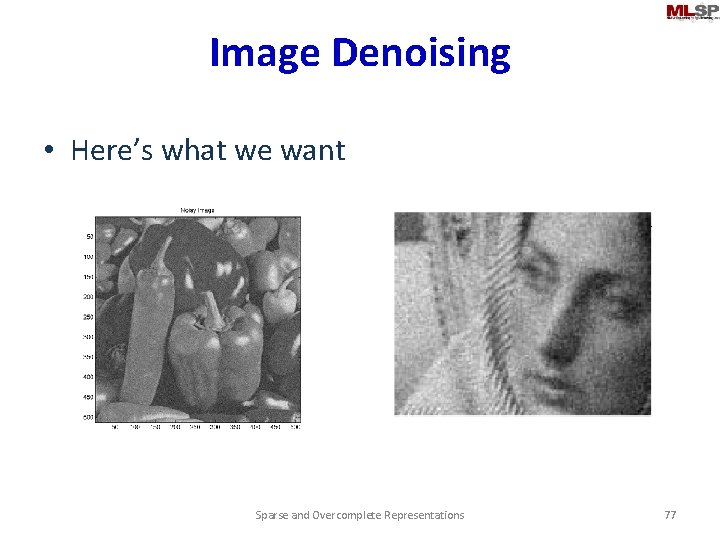

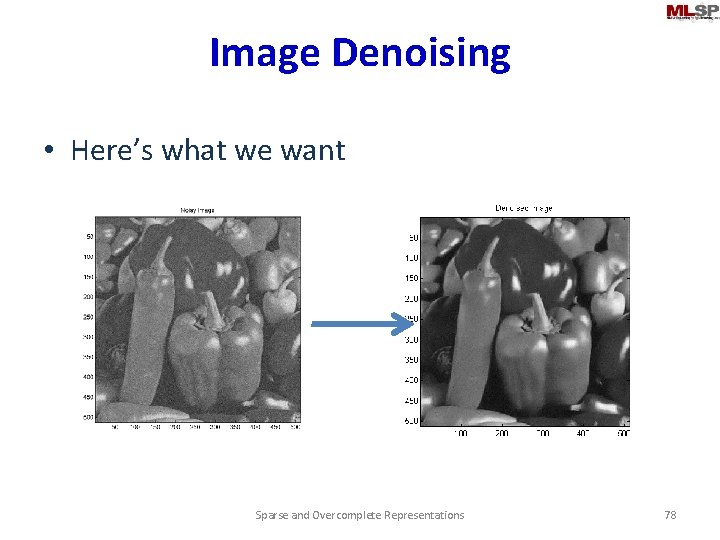

Image Denoising • Here’s what we want Sparse and Overcomplete Representations 77

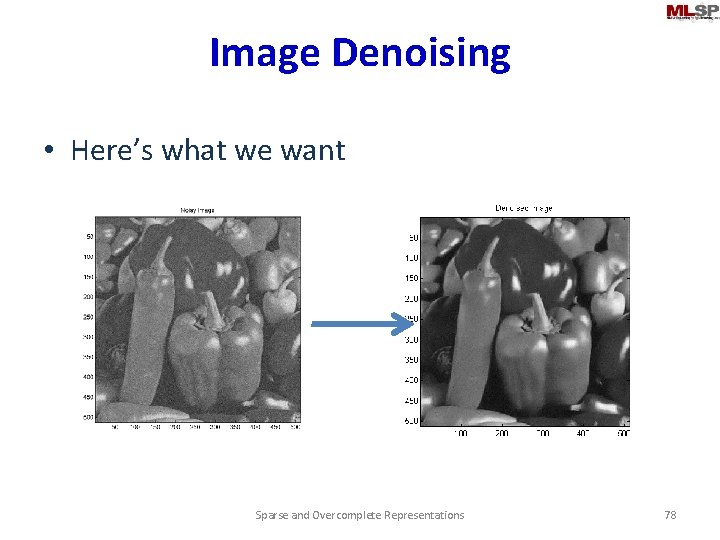

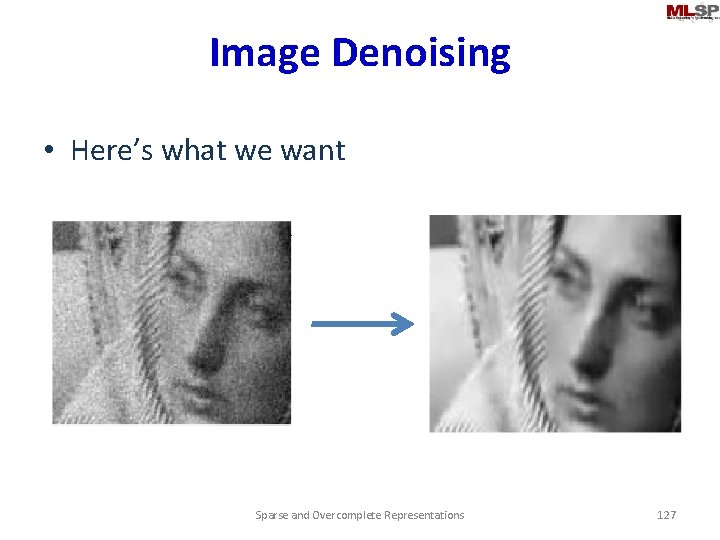

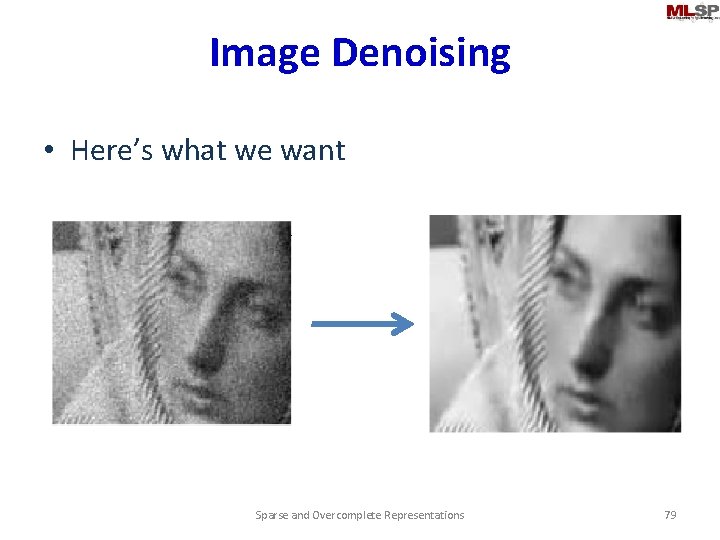

Image Denoising • Here’s what we want Sparse and Overcomplete Representations 78

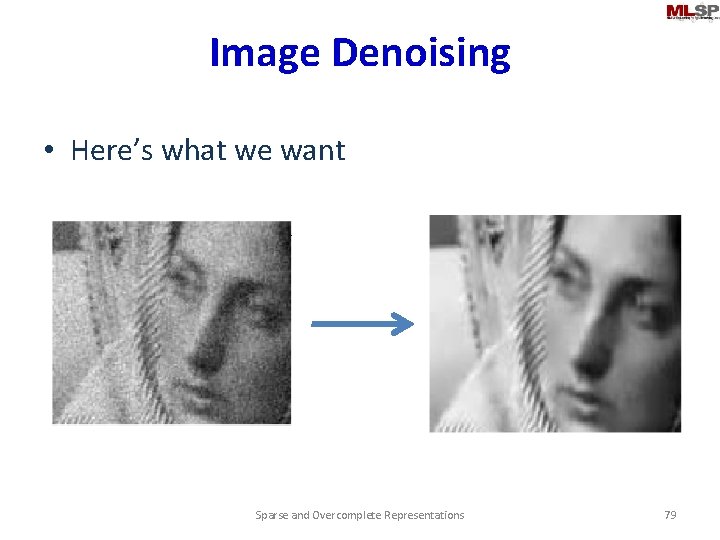

Image Denoising • Here’s what we want Sparse and Overcomplete Representations 79

Denoising • As the name suggests, remove noise! • We will look at image denoising as an example A more general take-away: How to learn the dictionaries Sparse and Overcomplete Representations 80

The Image Denoising Problem • Given an image • Remove Gaussian additive noise from it Sparse and Overcomplete Representations 81

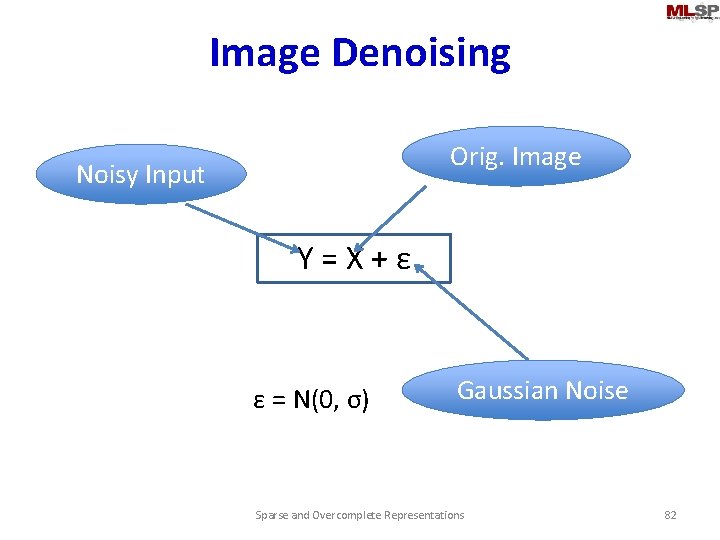

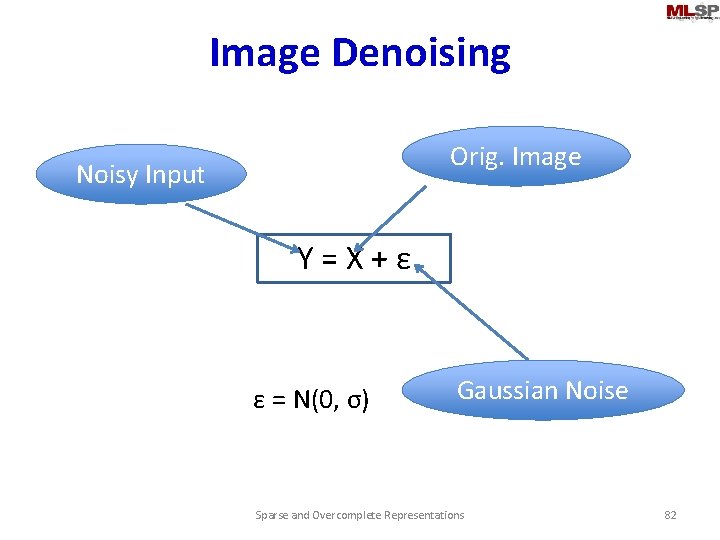

Image Denoising Orig. Image Noisy Input Y=X+ε ε = Ν(0, σ) Gaussian Noise Sparse and Overcomplete Representations 82

Image Denoising • Remove the noise from Y, to obtain X as best as possible. Sparse and Overcomplete Representations 83

Image Denoising • Remove the noise from Y, to obtain X as best as possible • Using sparse representations over learned dictionaries Sparse and Overcomplete Representations 84

Image Denoising • Remove the noise from Y, to obtain X as best as possible • Using sparse representations over learned dictionaries • Yes, we will learn the dictionaries Sparse and Overcomplete Representations 85

Image Denoising • Remove the noise from Y, to obtain X as best as possible • Using sparse representations over learned dictionaries • Yes, we will learn the dictionaries • What data will we use? The corrupted image itself! Sparse and Overcomplete Representations 86

Image Denoising • We use the data to be denoised to learn the dictionary. • Training and denoising become an iterated process. • We use image patches of size √n x √n pixels (i. e. if the image is 64 x 64, patches are 8 x 8) Sparse and Overcomplete Representations 87

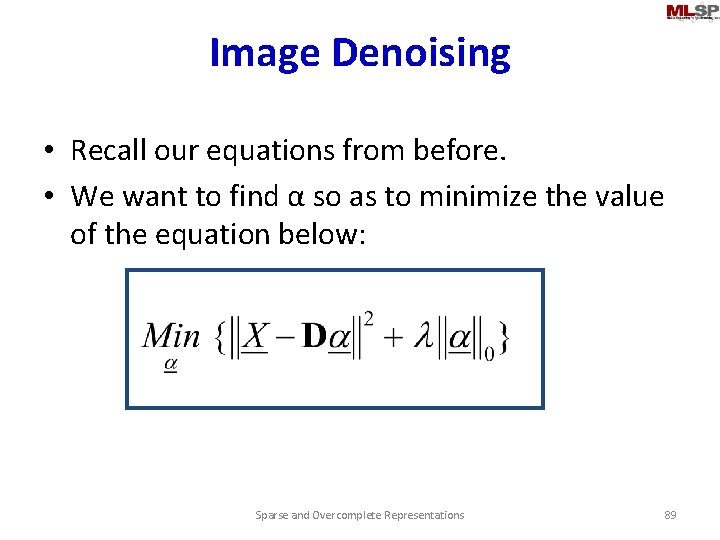

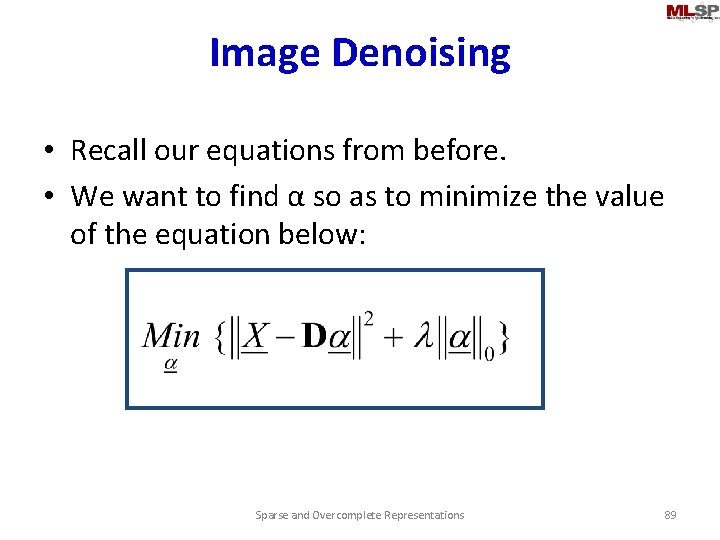

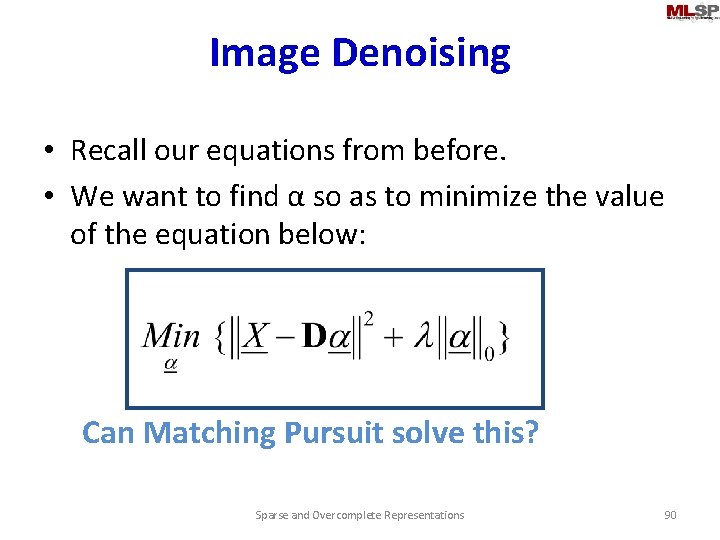

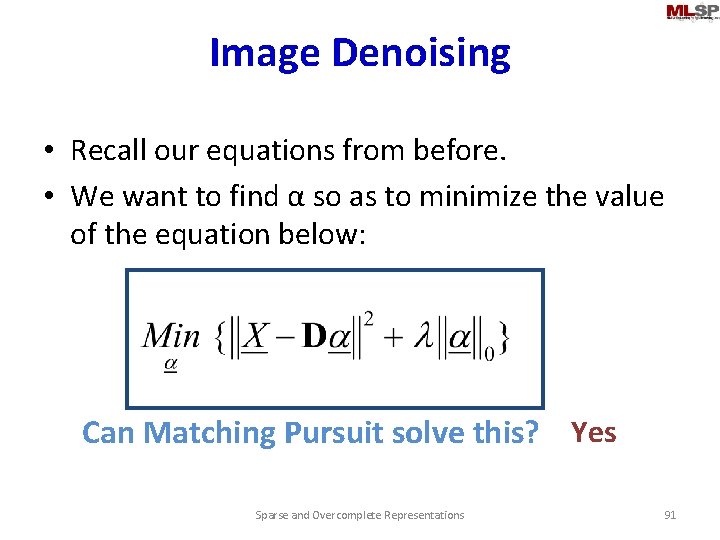

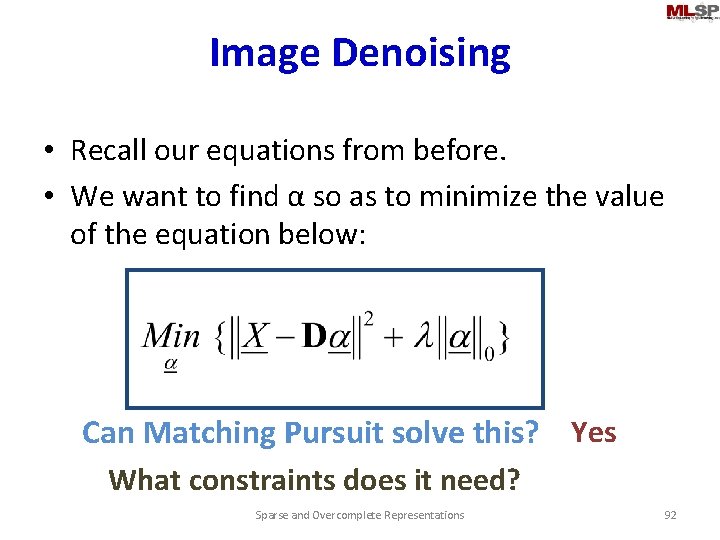

Image Denoising • The data dictionary D – Size = n x k (k > n) – This is known and fixed, to start with – Every image patch can be sparsely represented using D Sparse and Overcomplete Representations 88

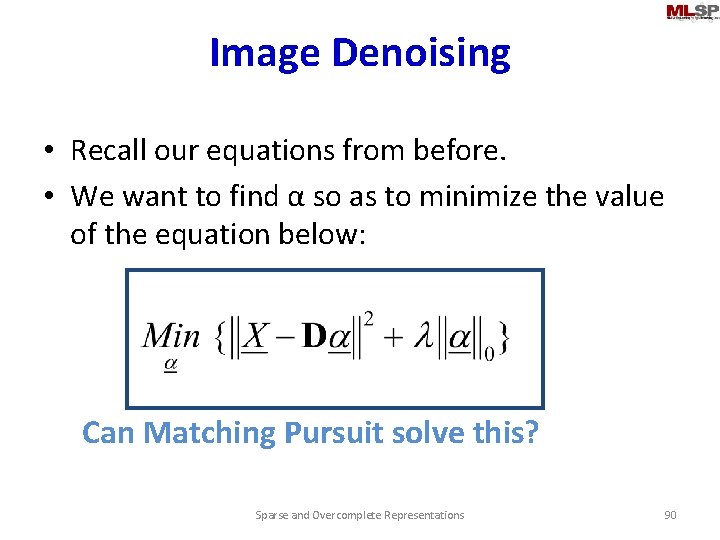

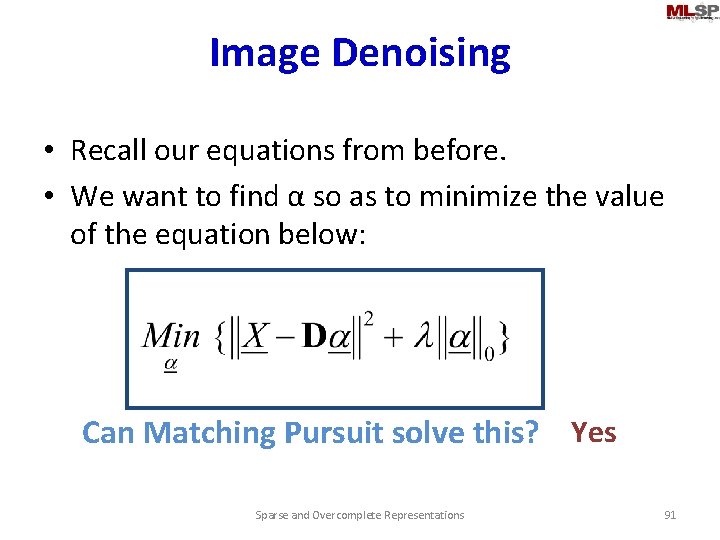

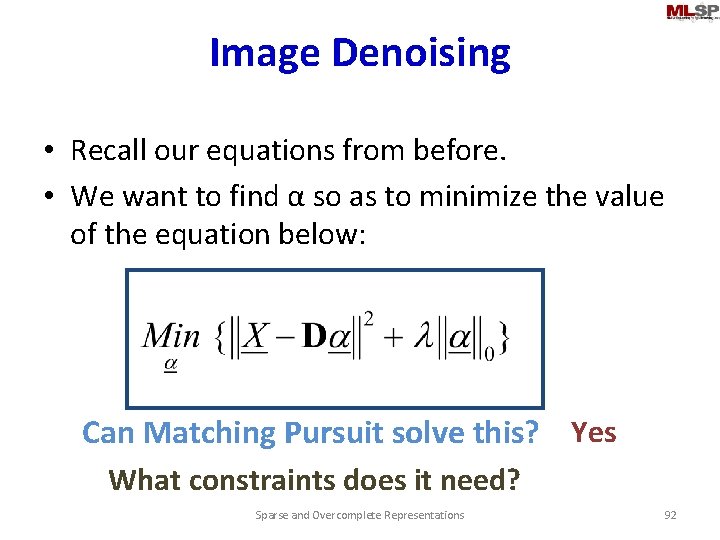

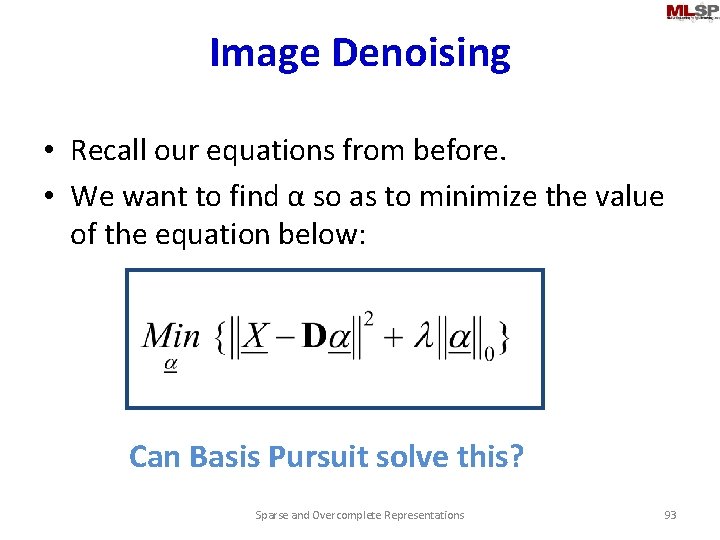

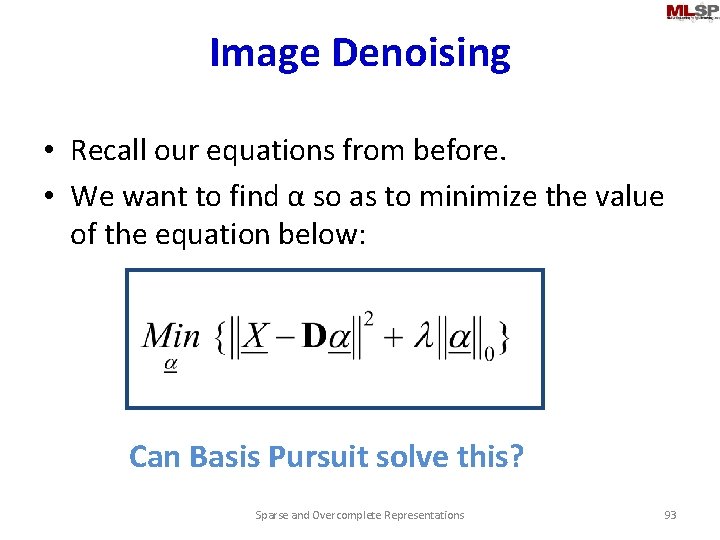

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Sparse and Overcomplete Representations 89

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Can Matching Pursuit solve this? Sparse and Overcomplete Representations 90

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Can Matching Pursuit solve this? Yes Sparse and Overcomplete Representations 91

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Can Matching Pursuit solve this? Yes What constraints does it need? Sparse and Overcomplete Representations 92

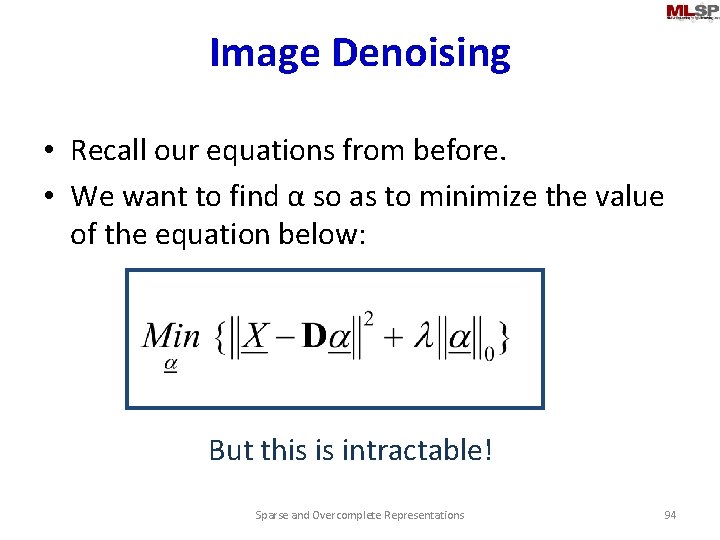

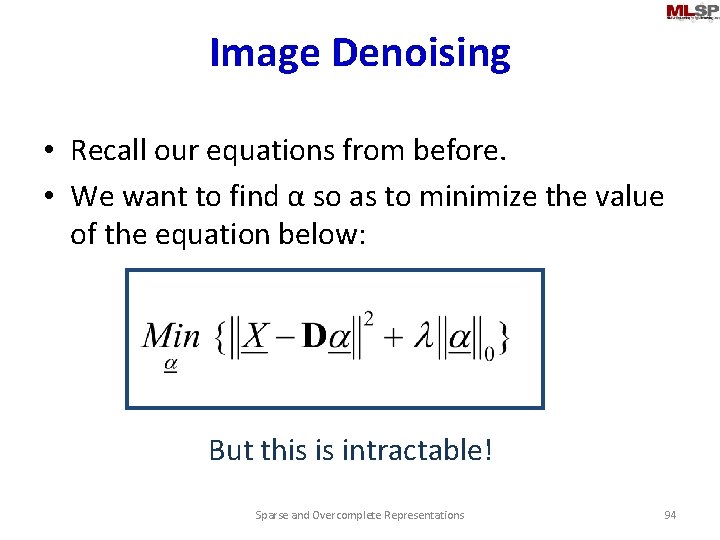

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Can Basis Pursuit solve this? Sparse and Overcomplete Representations 93

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: But this is intractable! Sparse and Overcomplete Representations 94

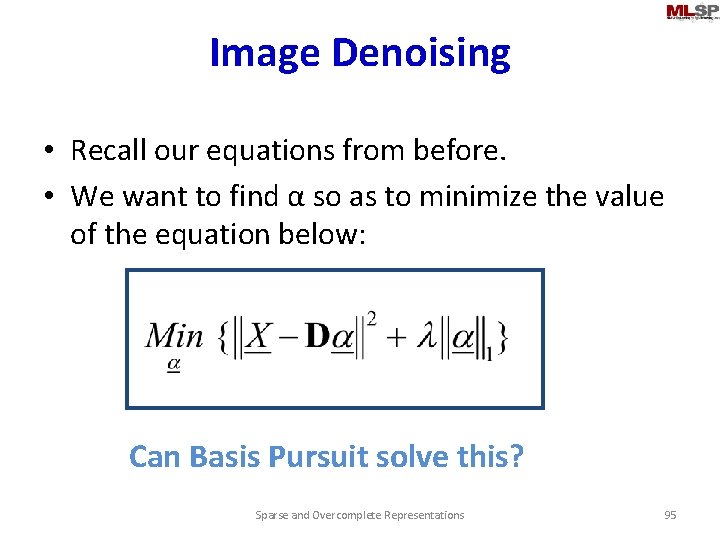

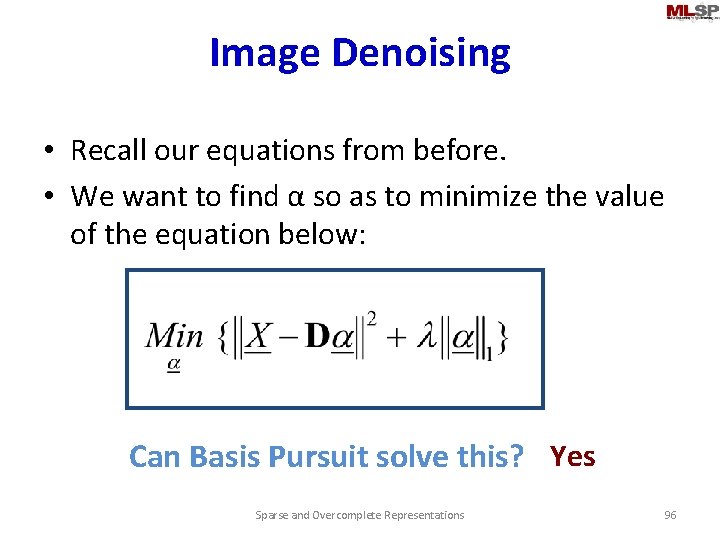

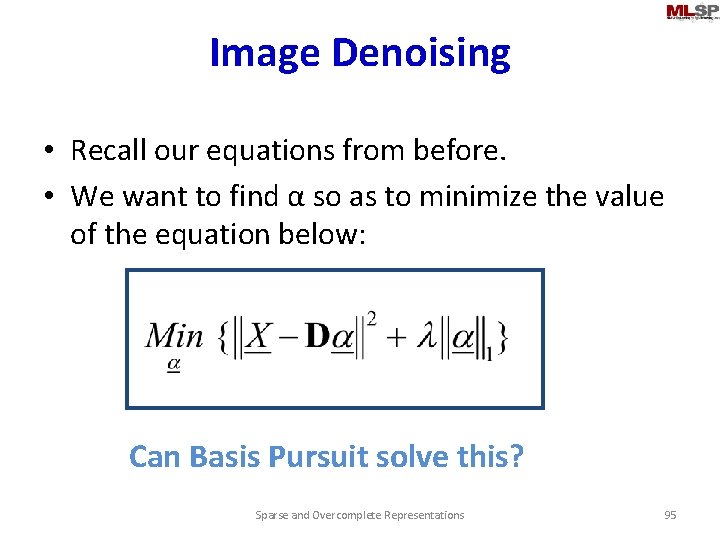

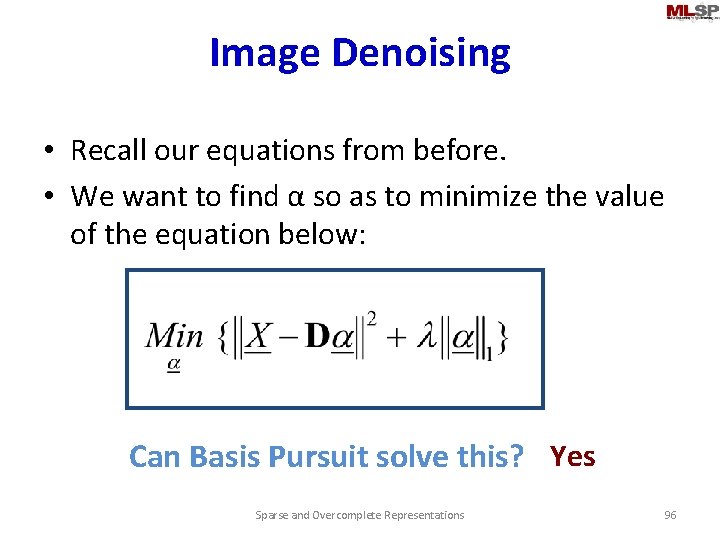

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Can Basis Pursuit solve this? Sparse and Overcomplete Representations 95

Image Denoising • Recall our equations from before. • We want to find α so as to minimize the value of the equation below: Can Basis Pursuit solve this? Yes Sparse and Overcomplete Representations 96

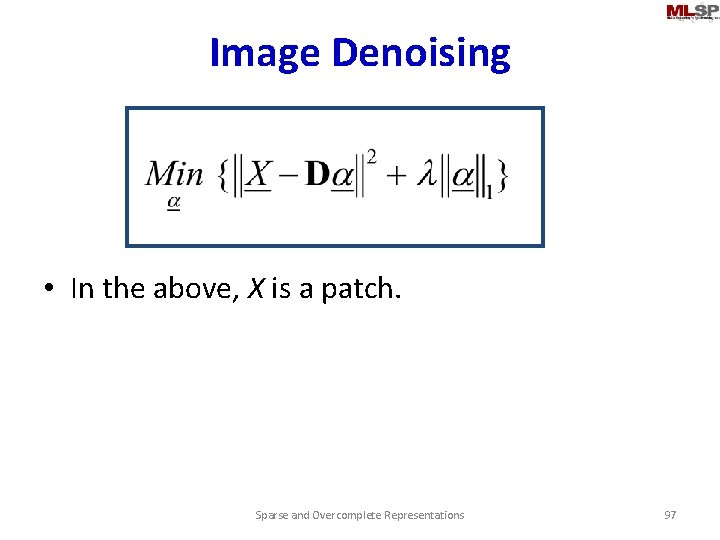

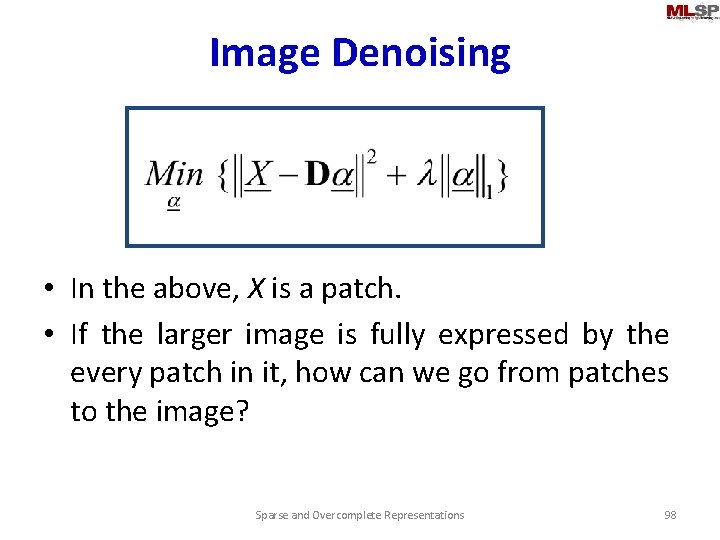

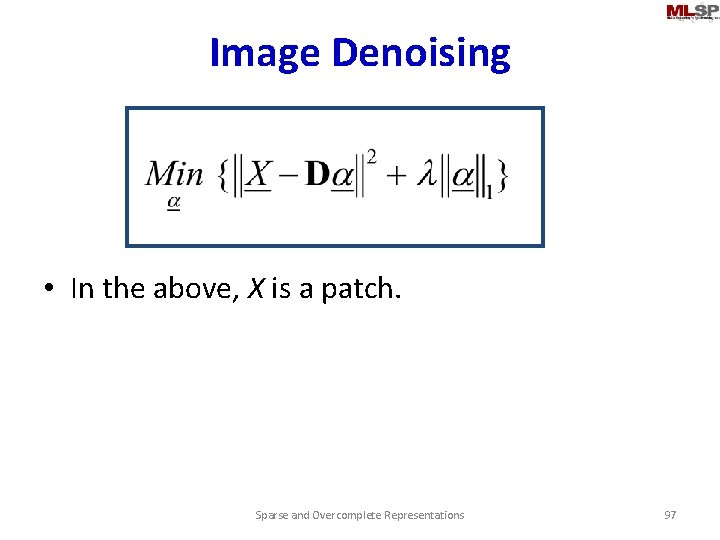

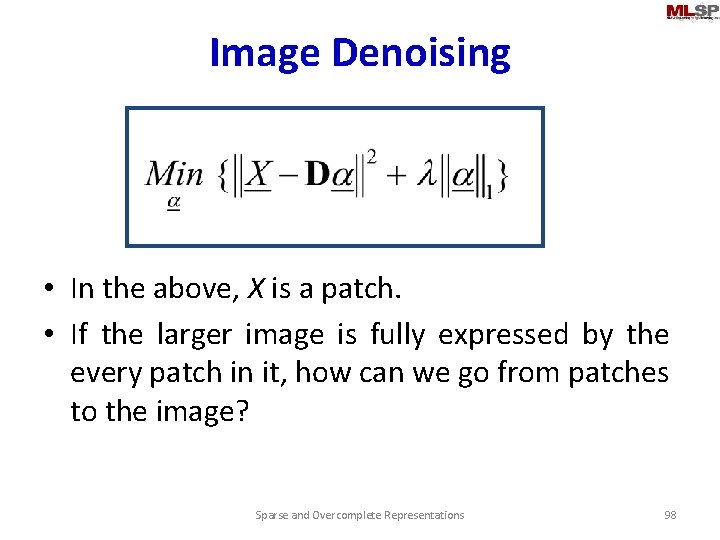

Image Denoising • In the above, X is a patch. Sparse and Overcomplete Representations 97

Image Denoising • In the above, X is a patch. • If the larger image is fully expressed by the every patch in it, how can we go from patches to the image? Sparse and Overcomplete Representations 98

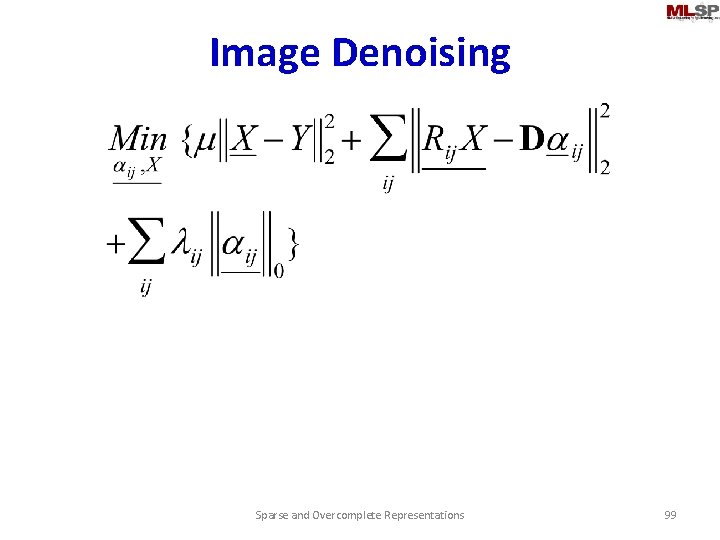

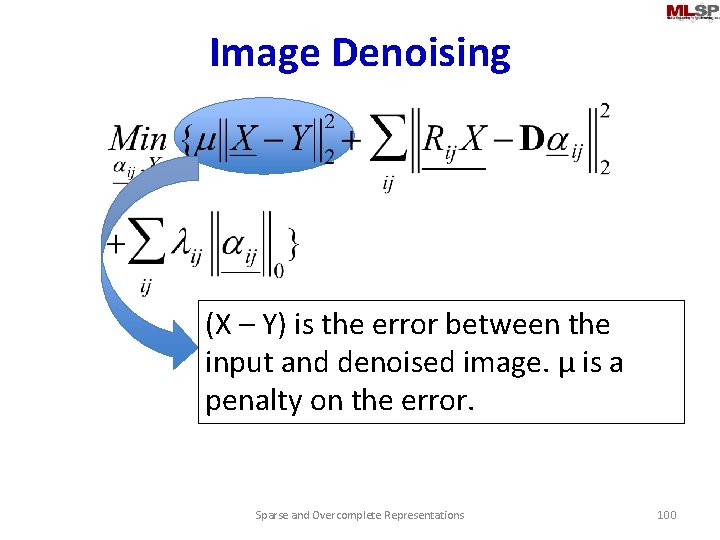

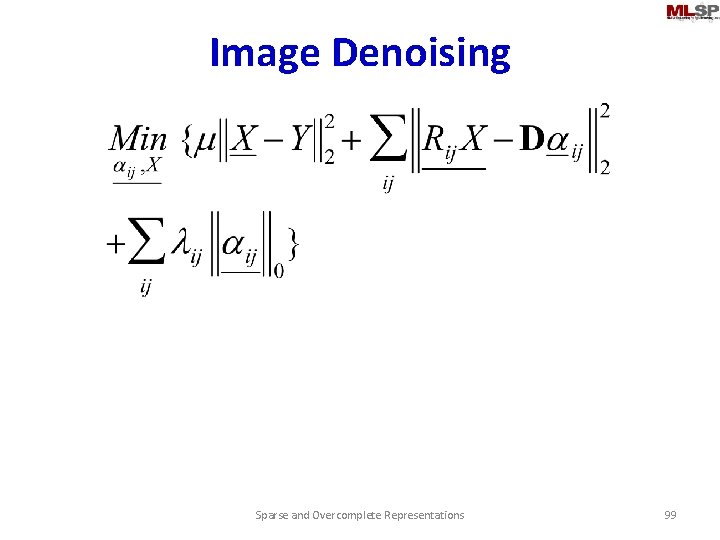

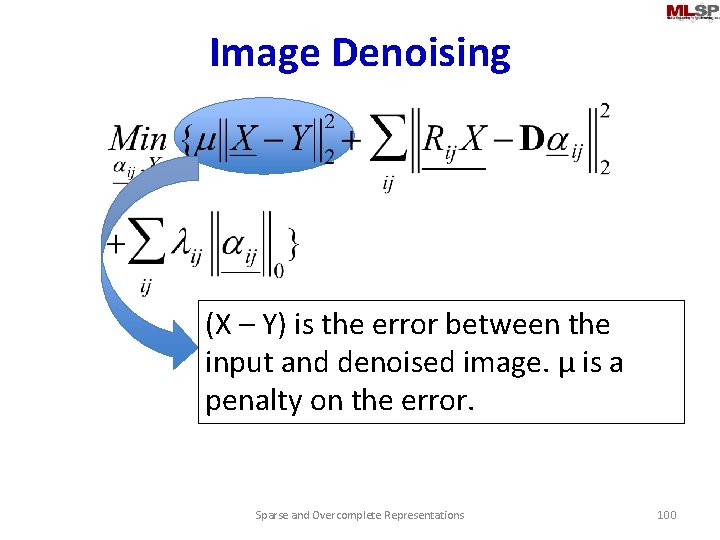

Image Denoising Sparse and Overcomplete Representations 99

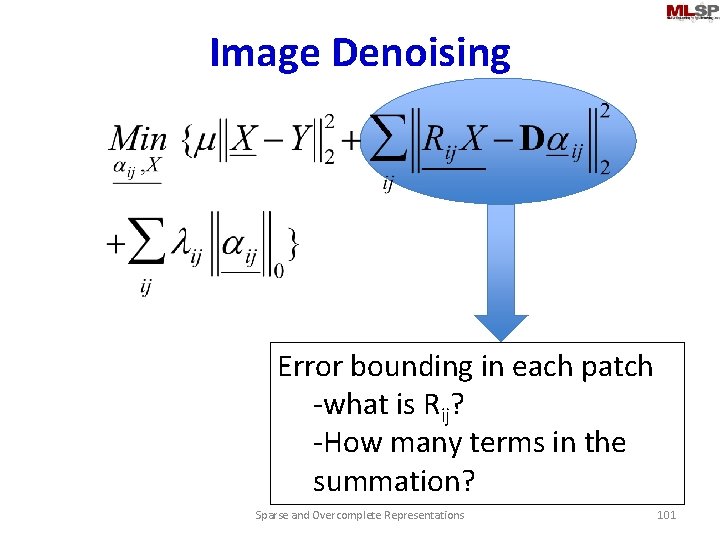

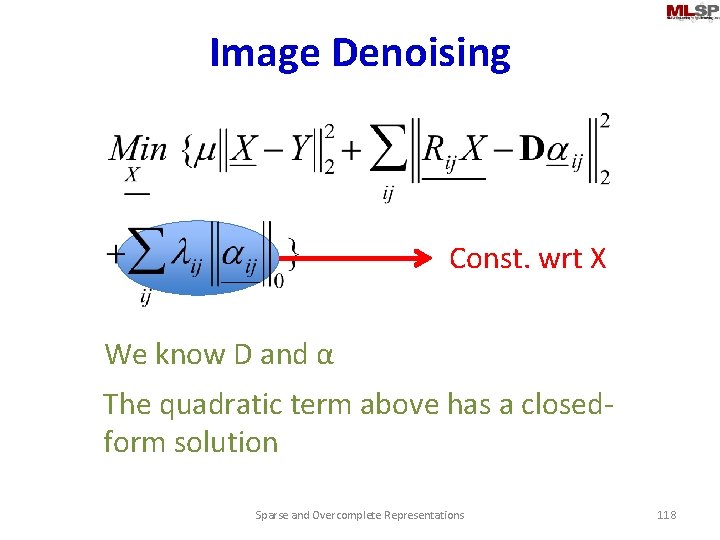

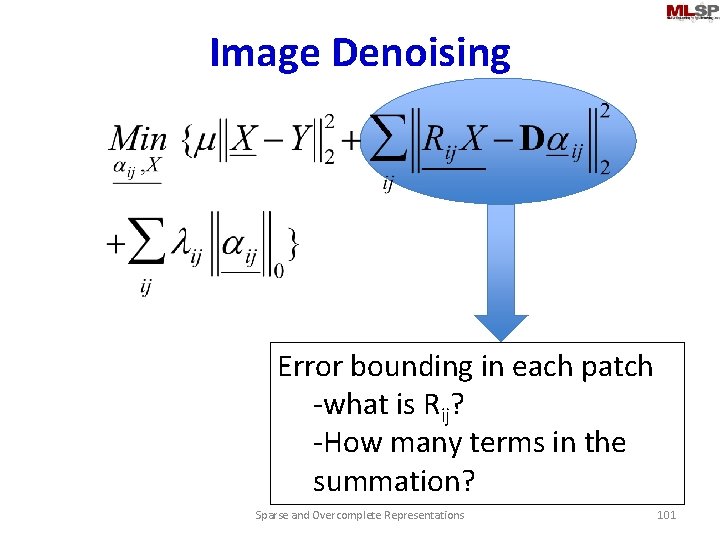

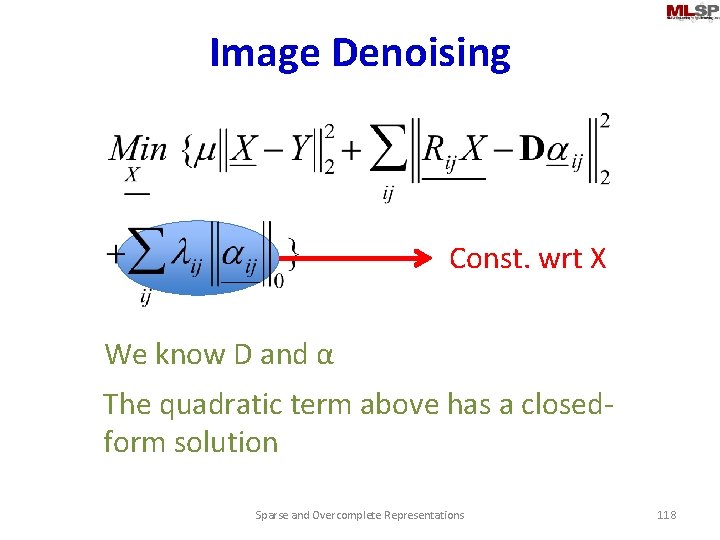

Image Denoising (X – Y) is the error between the input and denoised image. μ is a penalty on the error. Sparse and Overcomplete Representations 100

Image Denoising Error bounding in each patch -what is Rij? -How many terms in the summation? Sparse and Overcomplete Representations 101

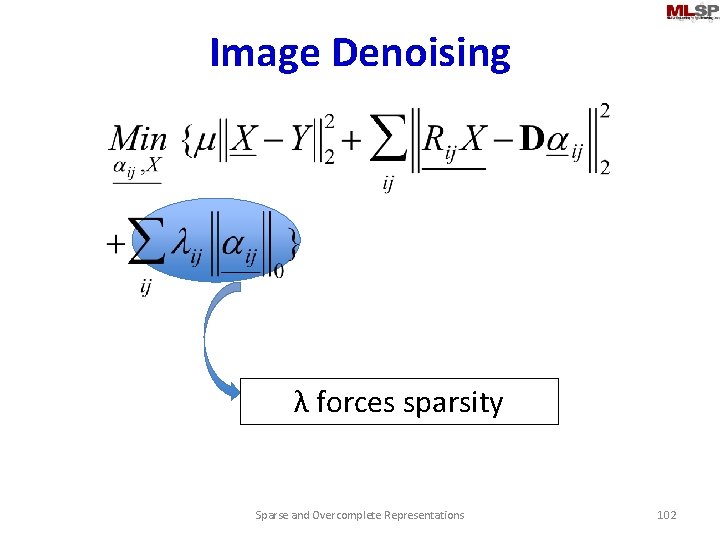

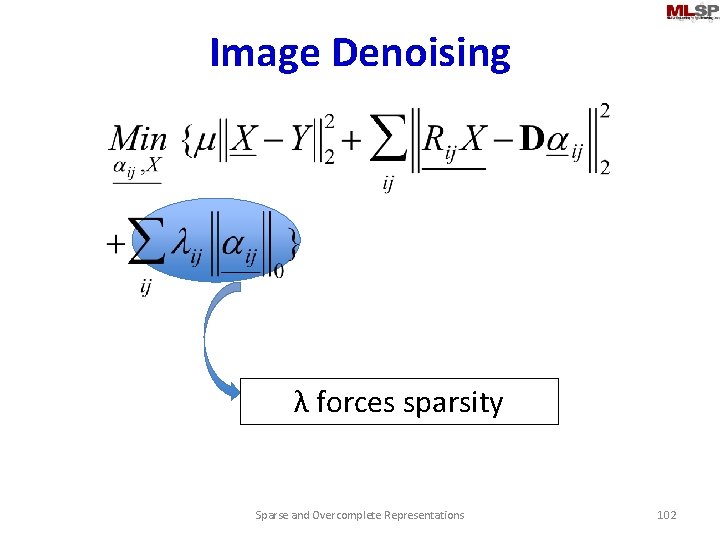

Image Denoising λ forces sparsity Sparse and Overcomplete Representations 102

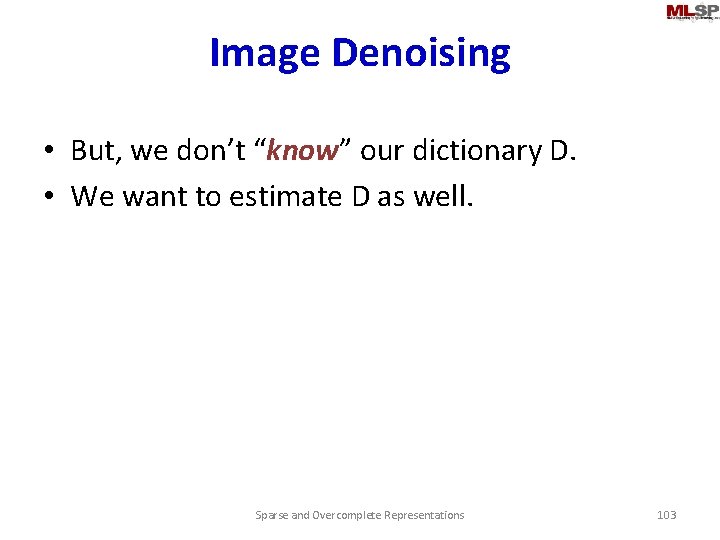

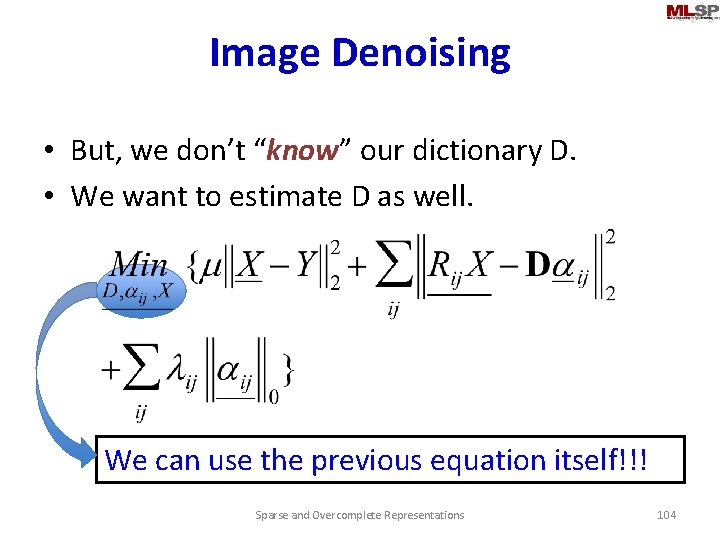

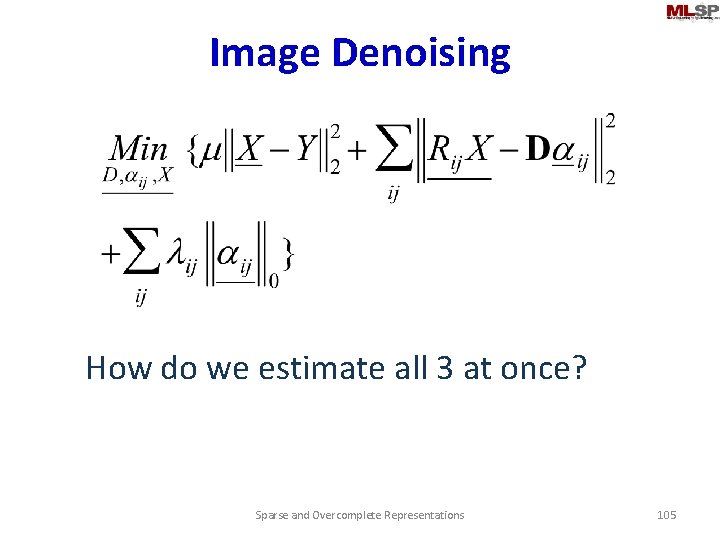

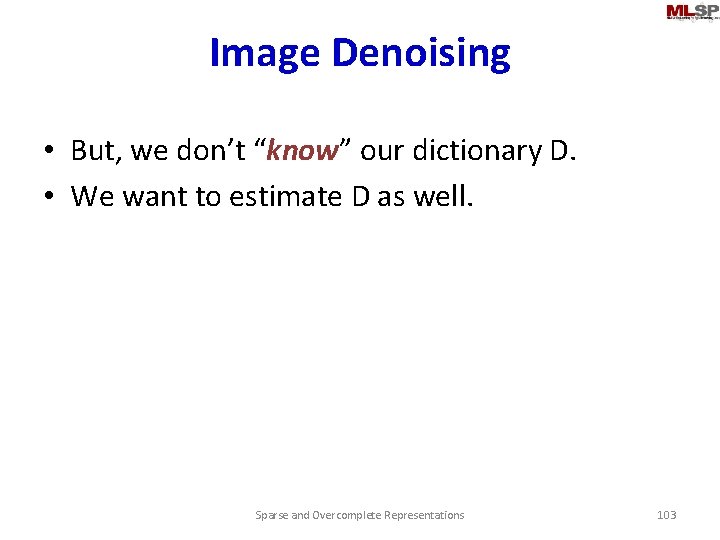

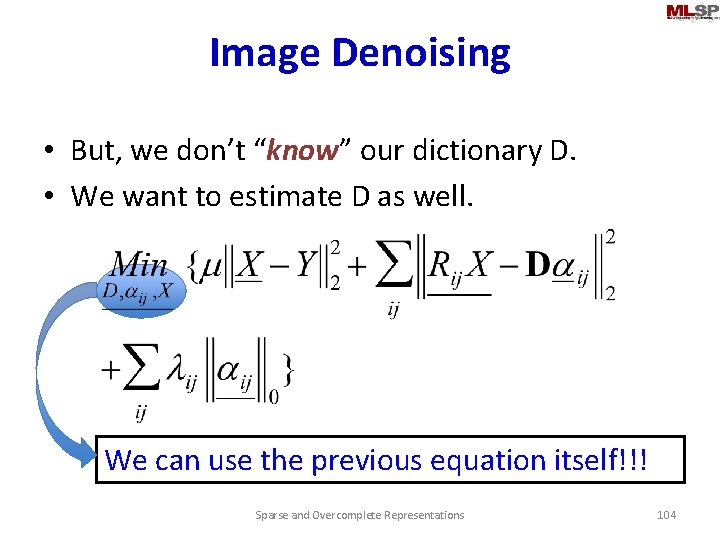

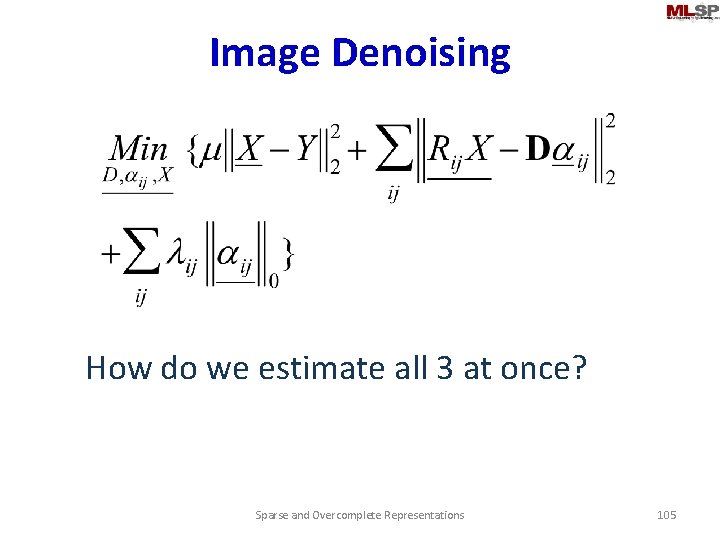

Image Denoising • But, we don’t “know” our dictionary D. • We want to estimate D as well. Sparse and Overcomplete Representations 103

Image Denoising • But, we don’t “know” our dictionary D. • We want to estimate D as well. We can use the previous equation itself!!! Sparse and Overcomplete Representations 104

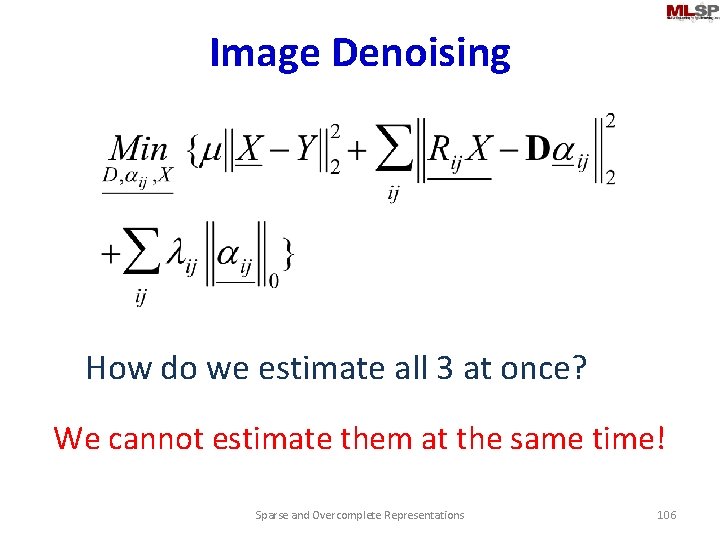

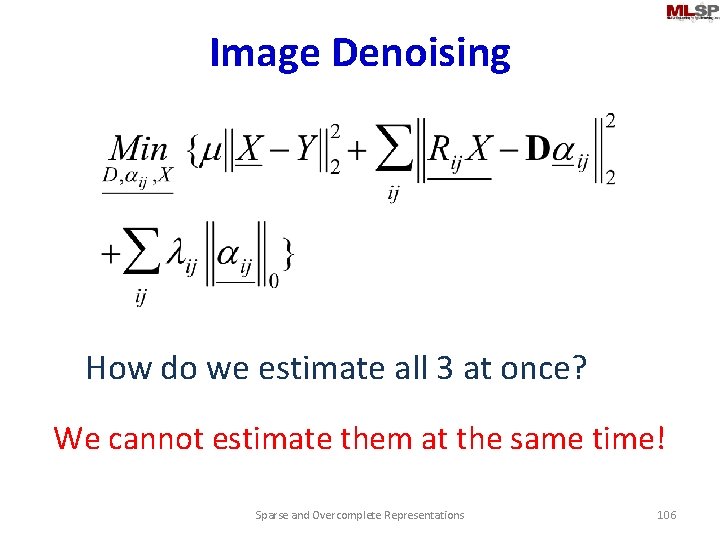

Image Denoising How do we estimate all 3 at once? Sparse and Overcomplete Representations 105

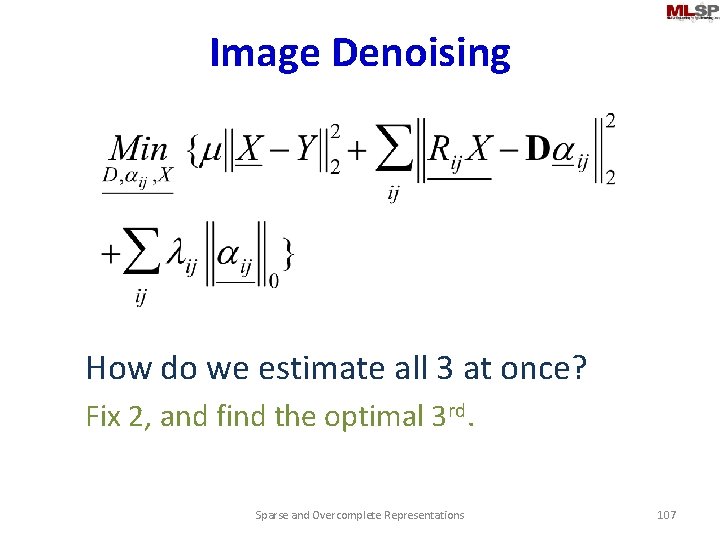

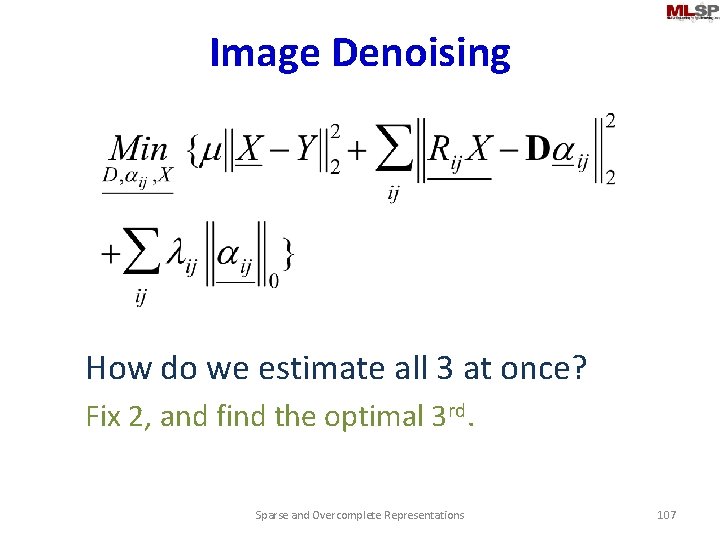

Image Denoising How do we estimate all 3 at once? We cannot estimate them at the same time! Sparse and Overcomplete Representations 106

Image Denoising How do we estimate all 3 at once? Fix 2, and find the optimal 3 rd. Sparse and Overcomplete Representations 107

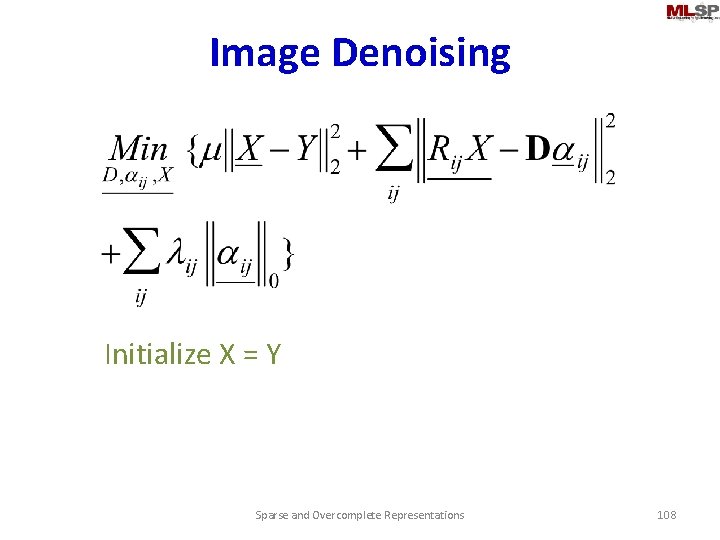

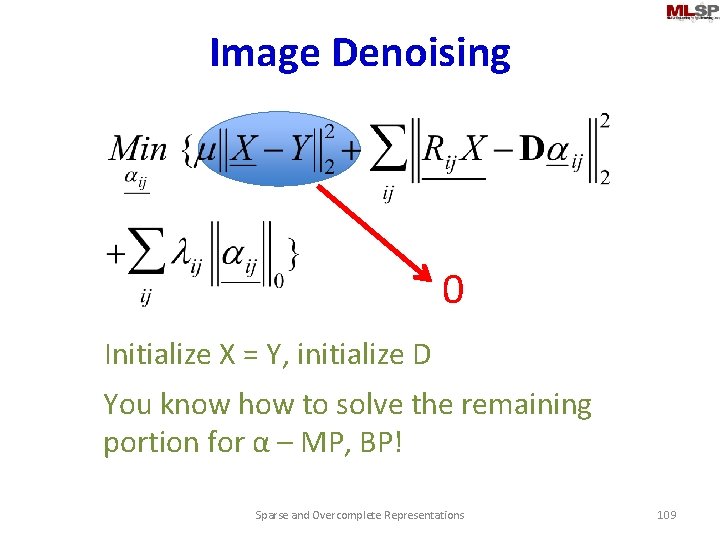

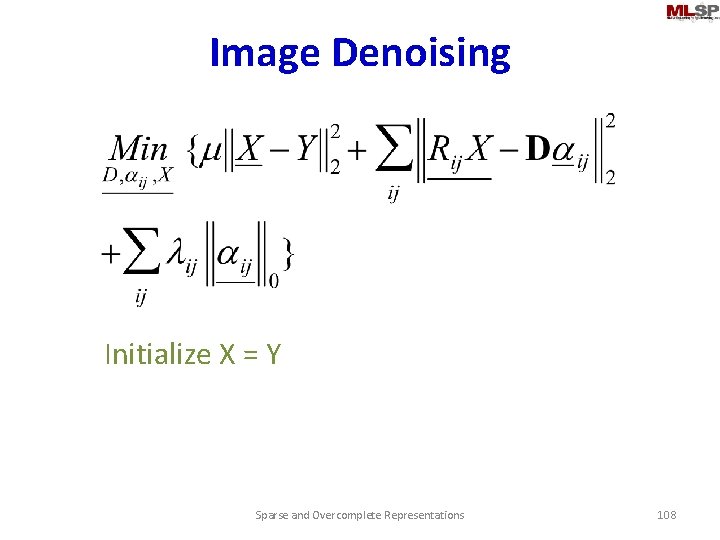

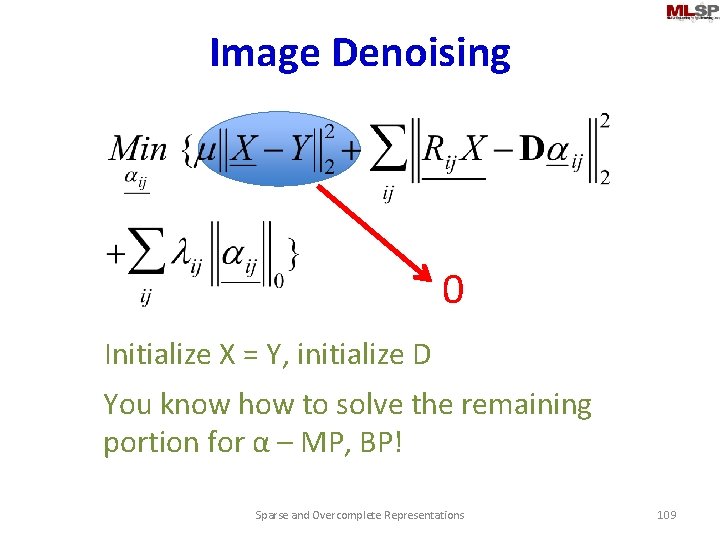

Image Denoising Initialize X = Y Sparse and Overcomplete Representations 108

Image Denoising 0 Initialize X = Y, initialize D You know how to solve the remaining portion for α – MP, BP! Sparse and Overcomplete Representations 109

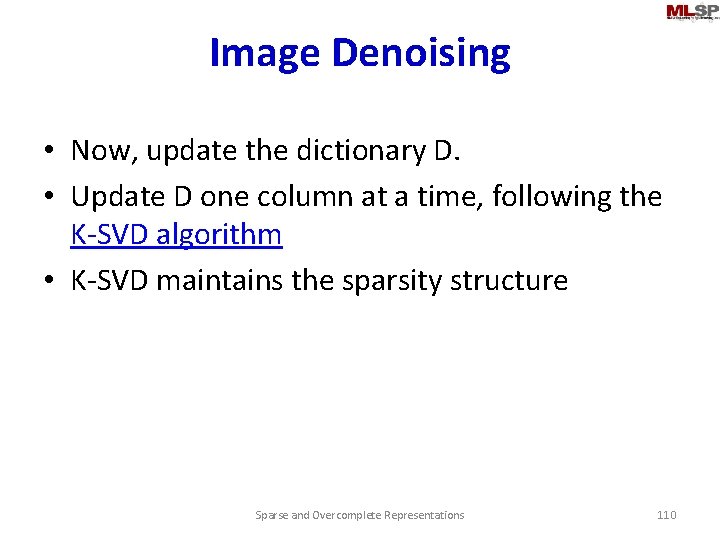

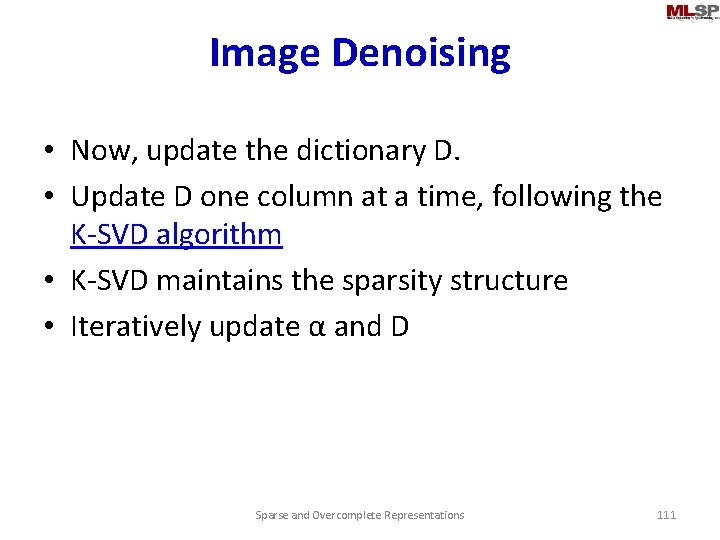

Image Denoising • Now, update the dictionary D. • Update D one column at a time, following the K-SVD algorithm • K-SVD maintains the sparsity structure Sparse and Overcomplete Representations 110

Image Denoising • Now, update the dictionary D. • Update D one column at a time, following the K-SVD algorithm • K-SVD maintains the sparsity structure • Iteratively update α and D Sparse and Overcomplete Representations 111

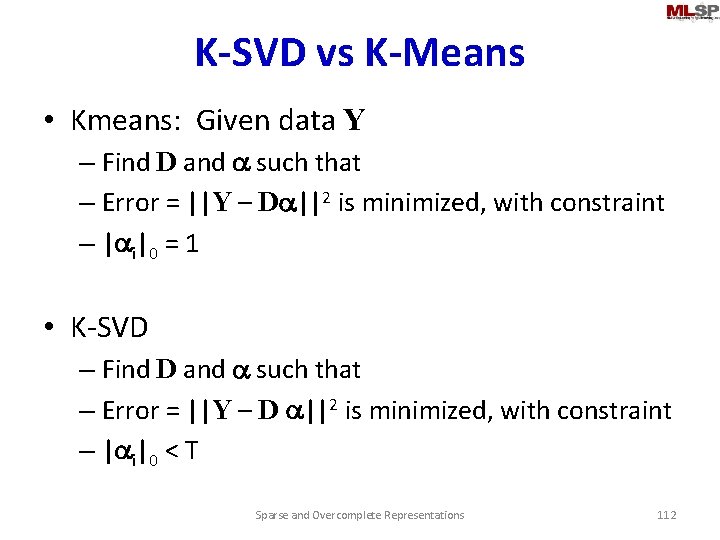

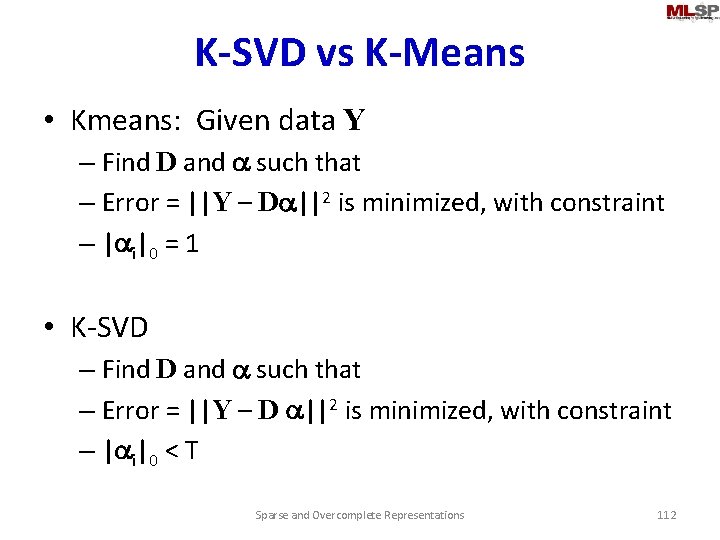

K-SVD vs K-Means • Kmeans: Given data Y – Find D and a such that – Error = ||Y – Da||2 is minimized, with constraint – |ai|0 = 1 • K-SVD – Find D and a such that – Error = ||Y – D a||2 is minimized, with constraint – |ai|0 < T Sparse and Overcomplete Representations 112

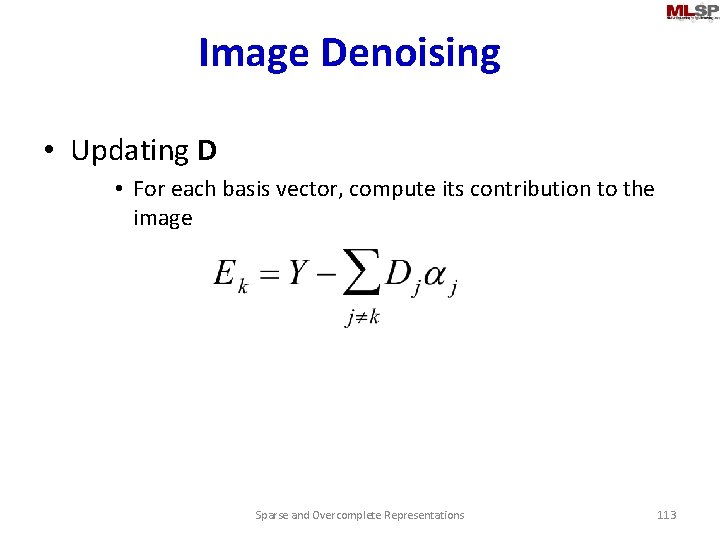

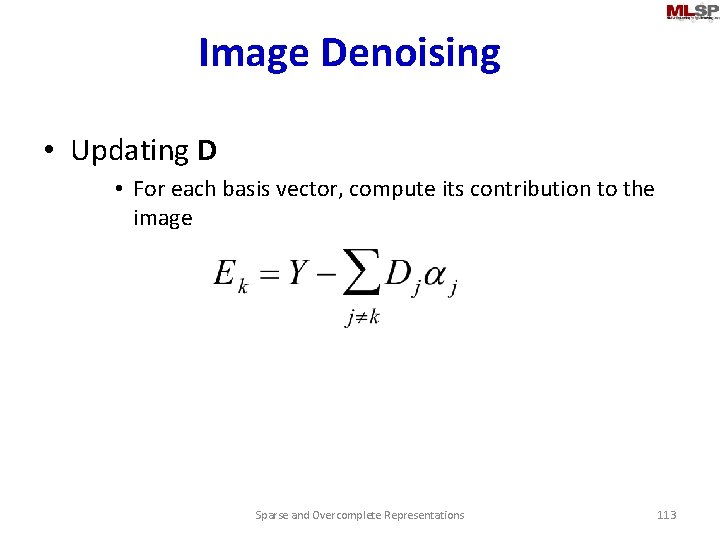

Image Denoising • Updating D • For each basis vector, compute its contribution to the image Sparse and Overcomplete Representations 113

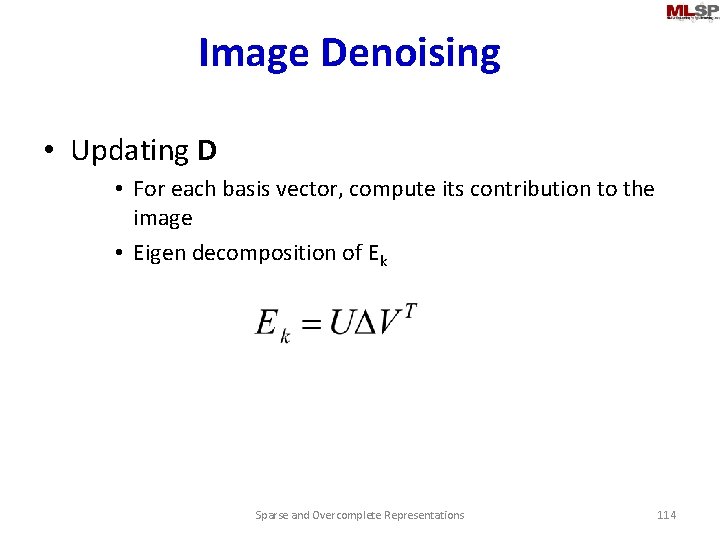

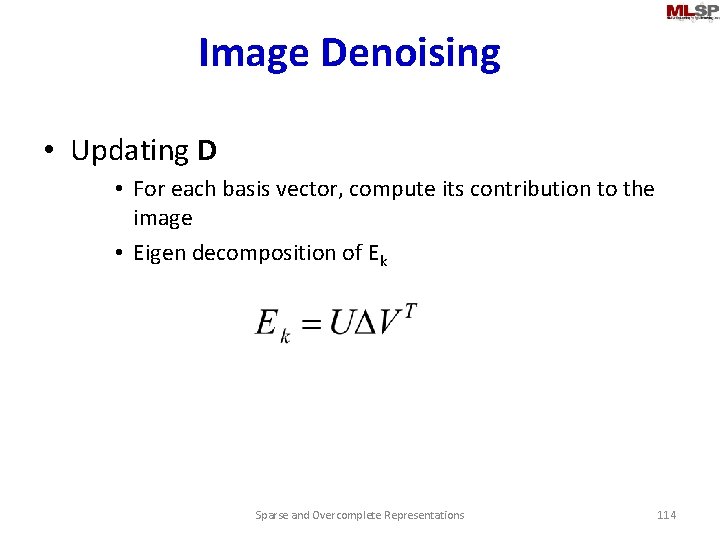

Image Denoising • Updating D • For each basis vector, compute its contribution to the image • Eigen decomposition of Ek Sparse and Overcomplete Representations 114

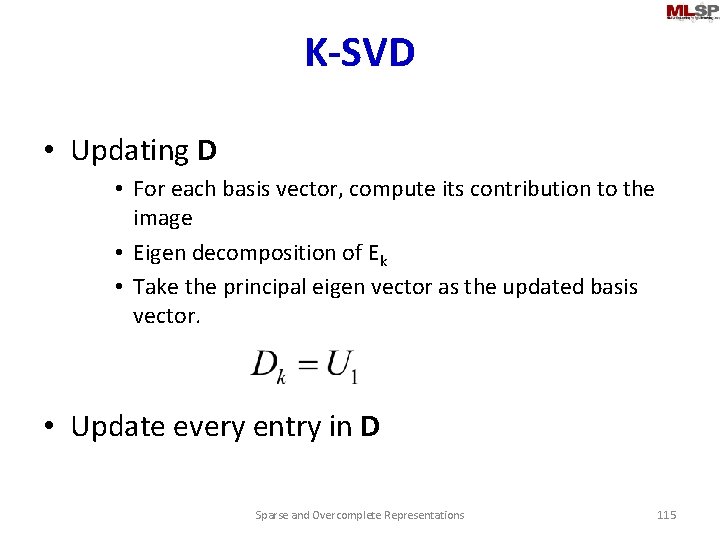

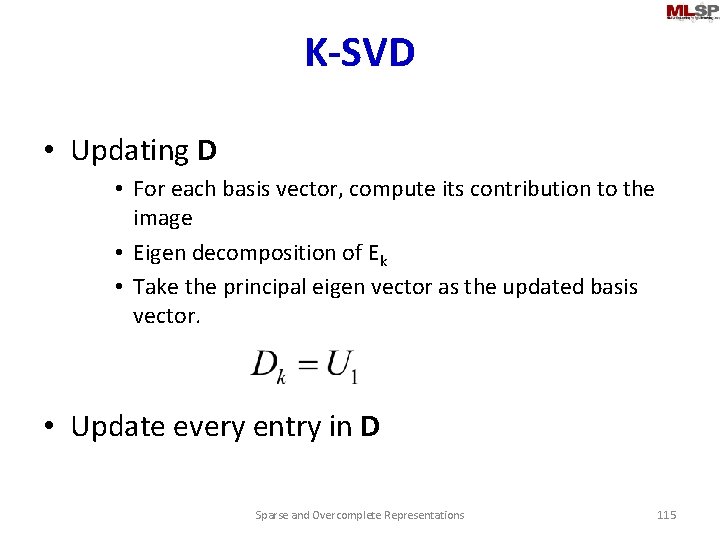

K-SVD • Updating D • For each basis vector, compute its contribution to the image • Eigen decomposition of Ek • Take the principal eigen vector as the updated basis vector. • Update every entry in D Sparse and Overcomplete Representations 115

K-SVD • • Initialize D Estimate a Update every entry in D Iterate Sparse and Overcomplete Representations 116

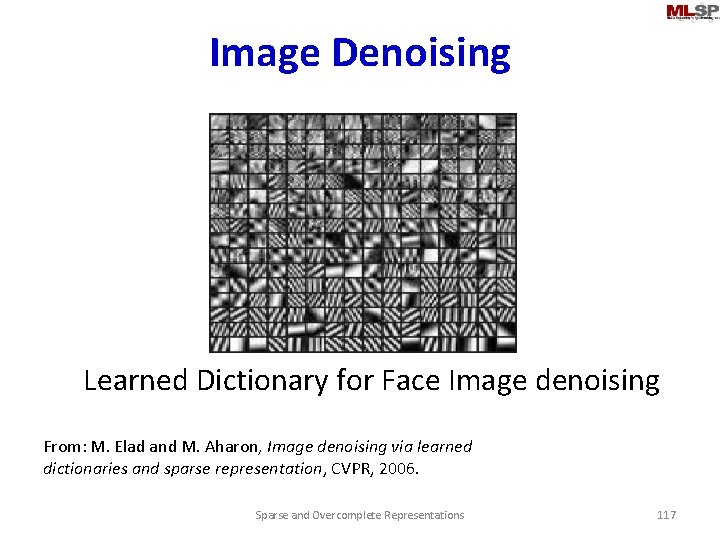

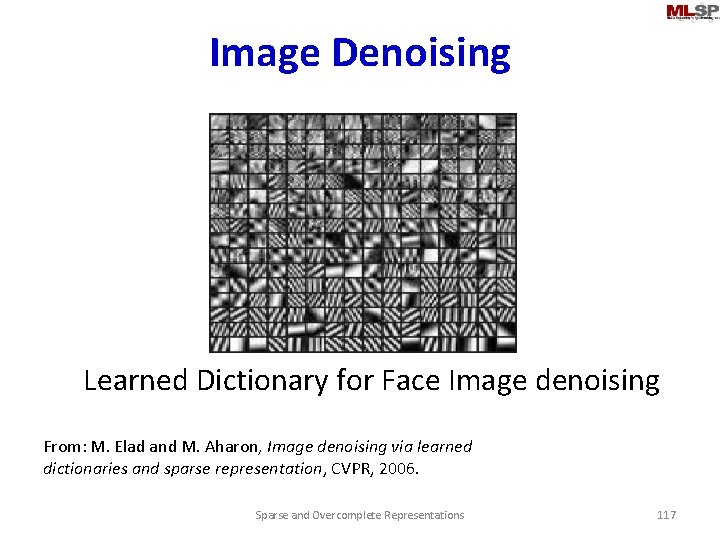

Image Denoising Learned Dictionary for Face Image denoising From: M. Elad and M. Aharon, Image denoising via learned dictionaries and sparse representation, CVPR, 2006. Sparse and Overcomplete Representations 117

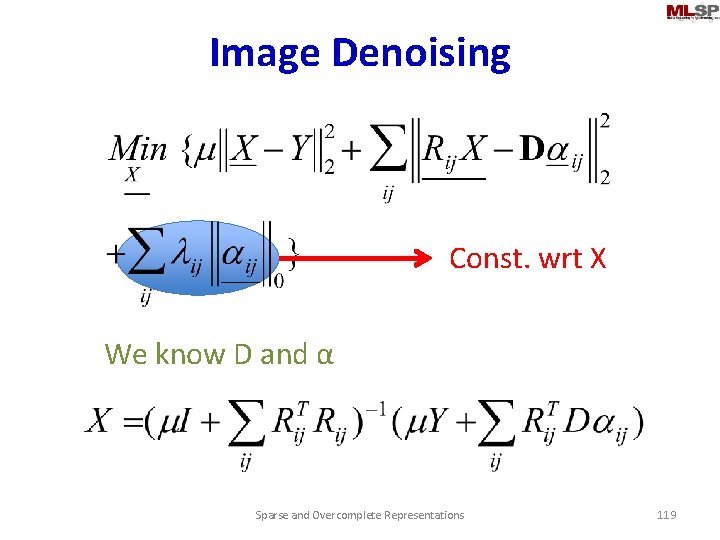

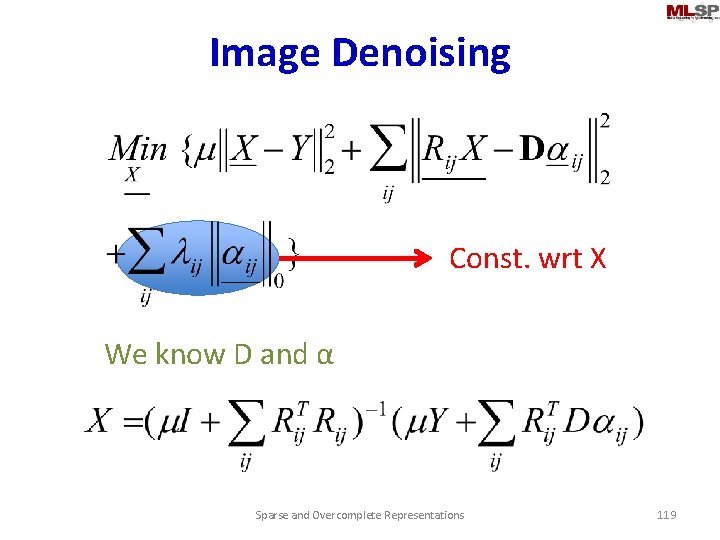

Image Denoising Const. wrt X We know D and α The quadratic term above has a closedform solution Sparse and Overcomplete Representations 118

Image Denoising Const. wrt X We know D and α Sparse and Overcomplete Representations 119

Image Denoising • Summarizing… We wanted to obtain 3 things Sparse and Overcomplete Representations 120

Image Denoising • Summarizing… We wanted to obtain 3 things Ø Weights α Ø Dictionary D Ø Denoised Image X Sparse and Overcomplete Representations 121

Image Denoising • Summarizing… We wanted to obtain 3 things Ø Weights α – Your favorite pursuit algorithm Ø Dictionary D – Using K-SVD Ø Denoised Image X Sparse and Overcomplete Representations 122

Image Denoising • Summarizing… We wanted to obtain 3 things Ø Weights α – Your favorite pursuit algorithm Ø Dictionary D – Using K-SVD Iterating Ø Denoised Image X Sparse and Overcomplete Representations 123

Image Denoising • Summarizing… We wanted to obtain 3 things Ø Weights α Ø Dictionary D Ø Denoised Image X- Closed form solution Sparse and Overcomplete Representations 124

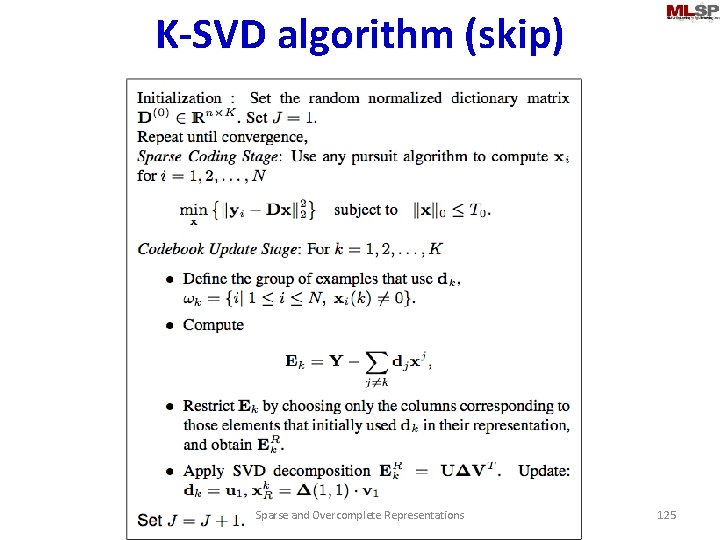

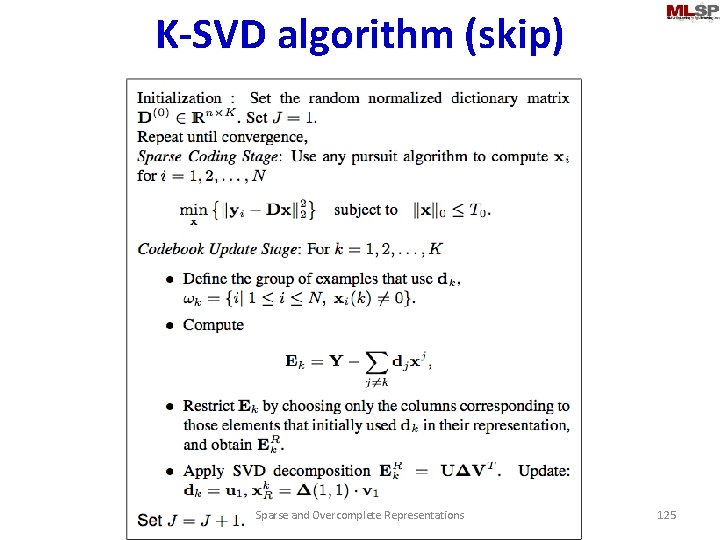

K-SVD algorithm (skip) Sparse and Overcomplete Representations 125

Image Denoising • Here’s what we want Sparse and Overcomplete Representations 126

Image Denoising • Here’s what we want Sparse and Overcomplete Representations 127

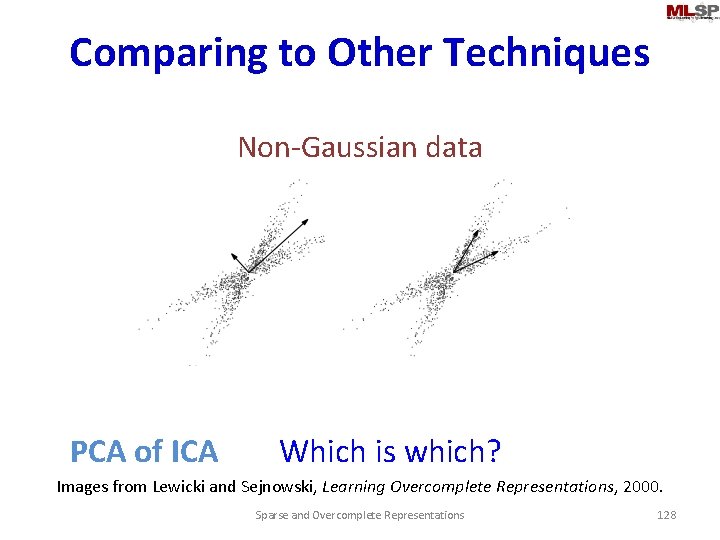

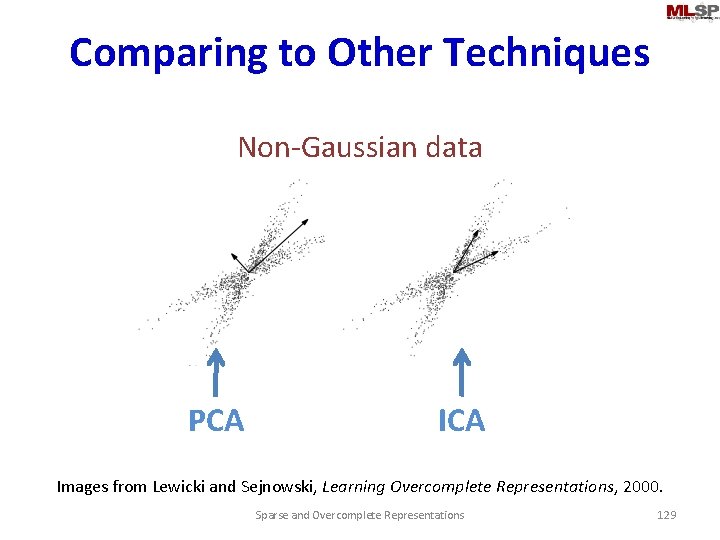

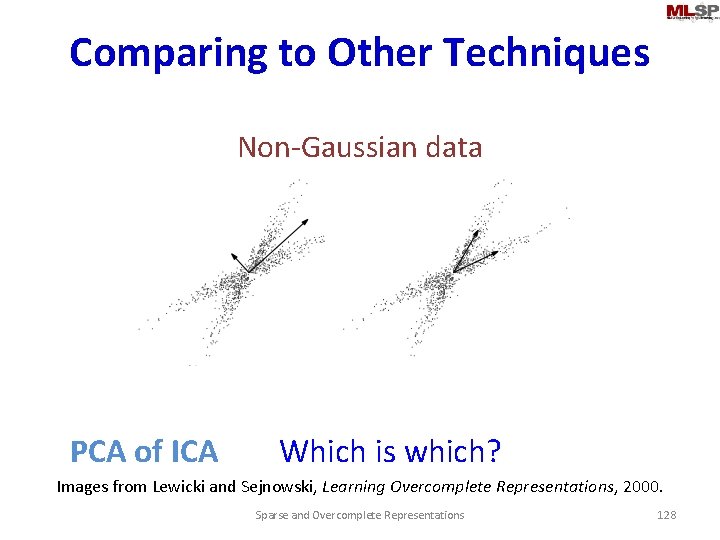

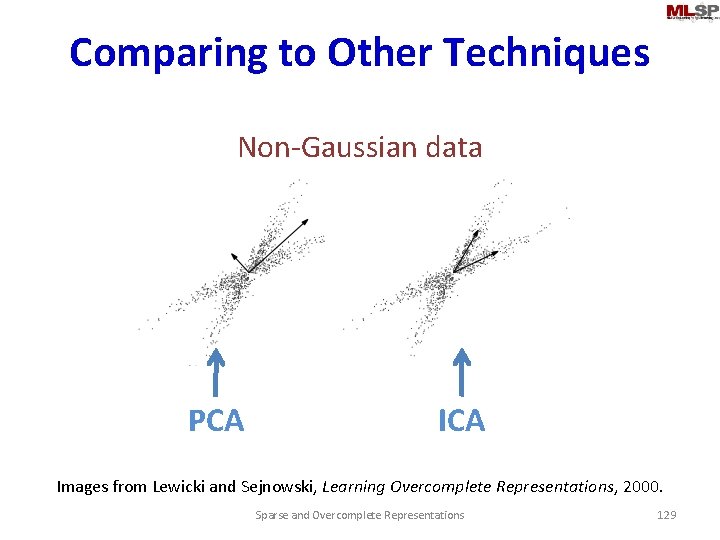

Comparing to Other Techniques Non-Gaussian data PCA of ICA Which is which? Images from Lewicki and Sejnowski, Learning Overcomplete Representations, 2000. Sparse and Overcomplete Representations 128

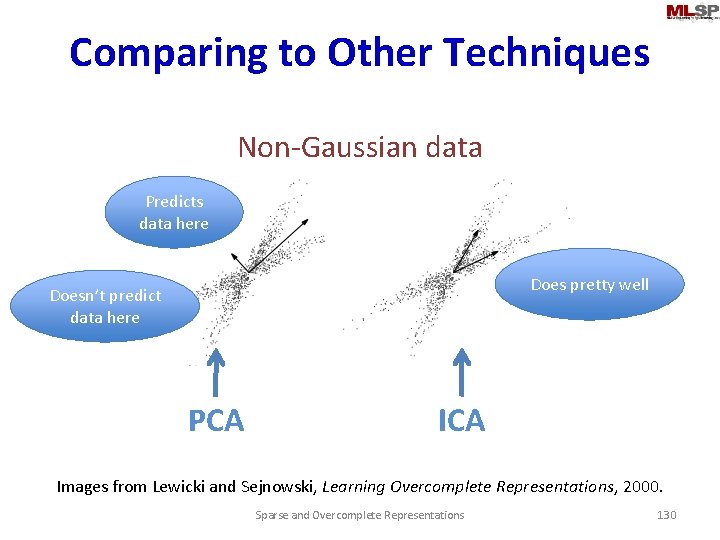

Comparing to Other Techniques Non-Gaussian data PCA Images from Lewicki and Sejnowski, Learning Overcomplete Representations, 2000. Sparse and Overcomplete Representations 129

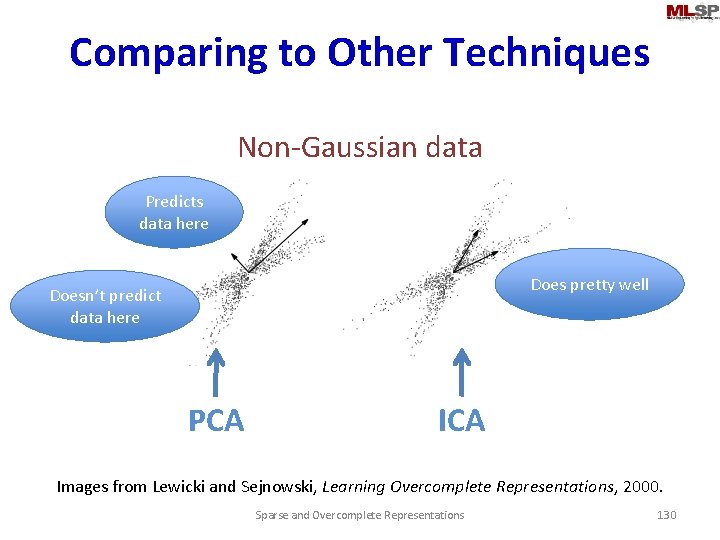

Comparing to Other Techniques Non-Gaussian data Predicts data here Does pretty well Doesn’t predict data here PCA Images from Lewicki and Sejnowski, Learning Overcomplete Representations, 2000. Sparse and Overcomplete Representations 130

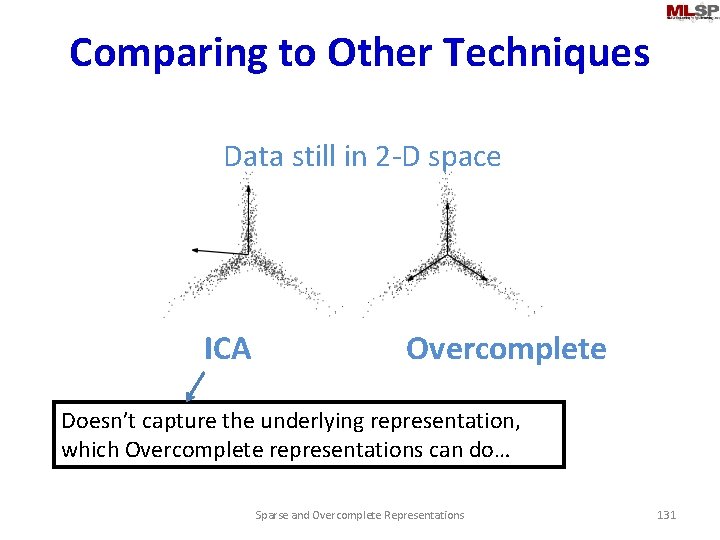

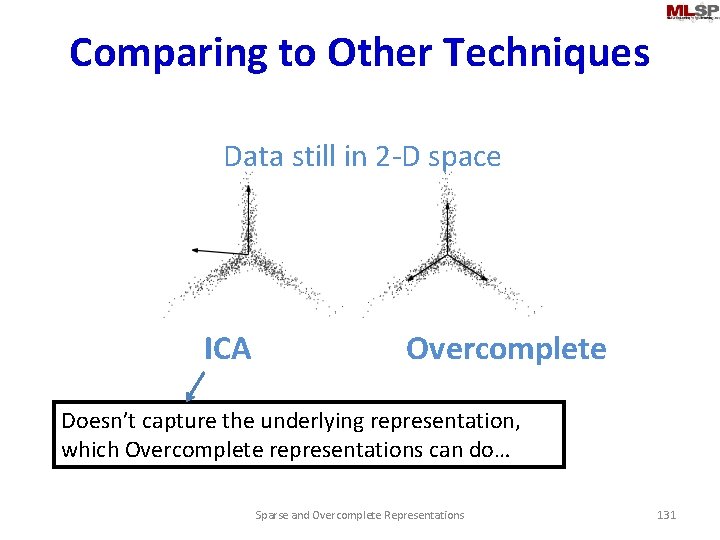

Comparing to Other Techniques Data still in 2 -D space ICA Overcomplete Doesn’t capture the underlying representation, which Overcomplete representations can do… Sparse and Overcomplete Representations 131

Summary • Overcomplete representations can be more powerful than component analysis techniques. • Dictionary can be learned from data. • Relative advantages and disadvantages of the pursuit algorithms. Sparse and Overcomplete Representations 132