Machine Learning and Motion Planning Dave Millman October 17, 2007

Machine Learning intro • Machine Learning (ML) – The study of algorithms which improve automatically through experience (Mitchell [M 97]) • General description – Data driven – Extracts information from data – Mathematically based • Probability, statistics, information theory, computational learning theory, optimization

A very small set of uses of ML – Text • Document labeling, part-of-speech tagging, summarization – Vision • Object recognition, handwriting recognition, emotion labeling, surveillance – Sound • Speech recognition, music genre classification – Finance • Algorithmic trading – Medical, Biological, Chemical, on and on…

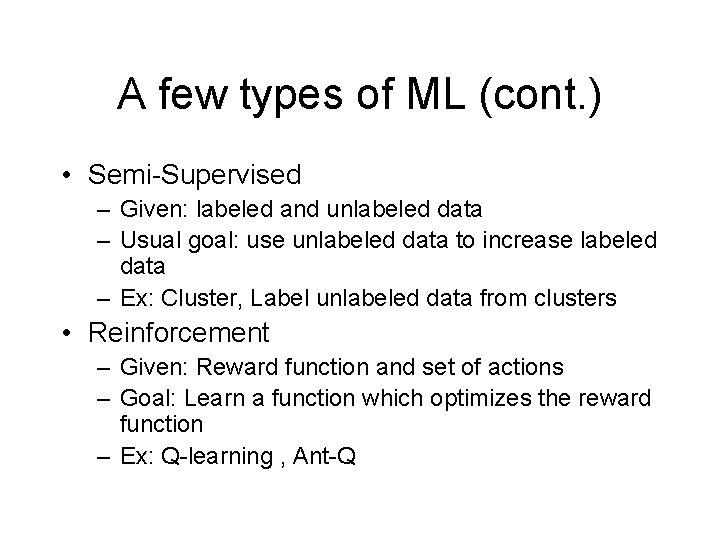

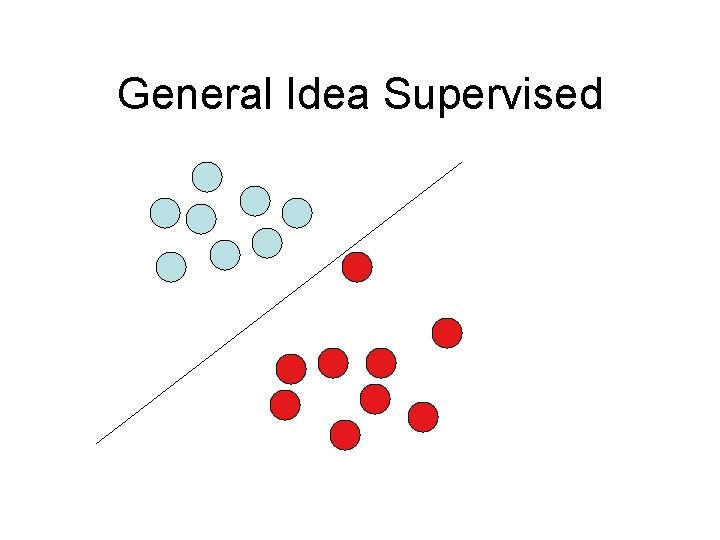

A few types of ML • Supervised – Given: labeled data – Usual goal: learn a function from inputs to labels – Ex: SVM, Neural Networks, Boosting, Nearest Neighbors, Decision trees • Unsupervised – Given: unlabeled data – Usual goal: cluster data, estimate probability distributions – Ex: k-means clustering, Gaussian mixture models

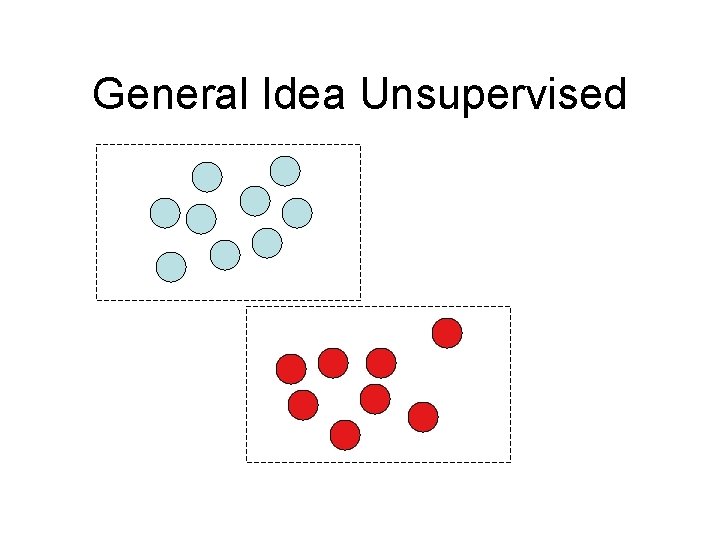

A few types of ML (cont.) • Semi-Supervised – Given: labeled and unlabeled data – Usual goal: use the unlabeled data to enlarge the labeled set – Ex: cluster all the data, then label unlabeled points from the labeled points in their cluster • Reinforcement – Given: reward function and set of actions – Goal: learn a policy (a mapping from states to actions) that maximizes the cumulative reward – Ex: Q-learning, Ant-Q
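The semi-supervised example on the slide (cluster, then label unlabeled data from the clusters) can be sketched as follows. This is a minimal sketch under our own assumptions: k-means (Lloyd's algorithm, with a deterministic farthest-first start) is the clustering method, and each cluster's unlabeled points take the majority label of that cluster's labeled points. Neither choice comes from the slide, and the function names are hypothetical.

```python
import math
from collections import Counter


def kmeans(points, k, iters=20):
    """Lloyd's k-means on 2-D points, started from farthest-first centers (assumed)."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest center.
        assign = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centers[c] = (sum(x for x, _ in members) / len(members),
                              sum(y for _, y in members) / len(members))
    return assign


def cluster_then_label(points, labels, k):
    """labels[i] is a class name, or None for an unlabeled point."""
    assign = kmeans(points, k)
    out = list(labels)
    for c in range(k):
        known = [lab for lab, a in zip(labels, assign) if a == c and lab is not None]
        if not known:
            continue  # no labeled point in this cluster: leave its members unlabeled
        majority = Counter(known).most_common(1)[0][0]
        for i, a in enumerate(assign):
            if a == c and out[i] is None:
                out[i] = majority
    return out


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels = ["a", None, None, "b", None, None]
print(cluster_then_label(points, labels, k=2))
```

With one labeled point per well-separated group, every unlabeled point inherits the label of its group, which is exactly the "increase labeled data" effect the slide describes.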

General Idea: Supervised

General Idea: Unsupervised

General Idea: Semi-Supervised

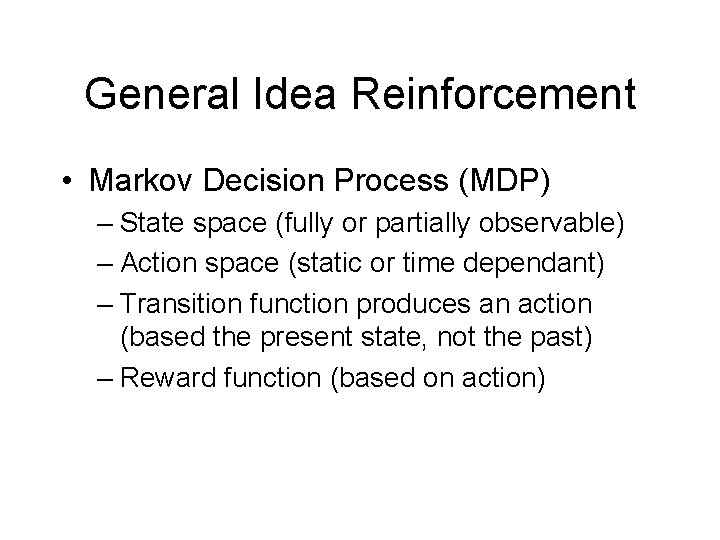

General Idea: Reinforcement • Markov Decision Process (MDP) – State space (fully or partially observable) – Action space (static or time-dependent) – Transition function: gives the next state from the present state and action (Markov property: it depends only on the present, not the past) – Reward function (based on the state and action)
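As a concrete illustration of the MDP ingredients, here is a hypothetical four-state corridor (not from the slides) with a deterministic transition function and a reward for reaching the goal. Value iteration, a standard dynamic-programming method that the slide does not mention, then computes state values and a greedy policy. The states, actions, and the discount factor 0.9 are arbitrary choices for the sketch.

```python
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
TERMINAL = 3  # goal state (assumed)


def transition(s, a):
    """Deterministic T(s, a) -> s'; depends only on the present state and action."""
    if s == TERMINAL:
        return s
    return max(0, s - 1) if a == "left" else min(TERMINAL, s + 1)


def reward(s, a):
    """R(s, a): 1 for the action that enters the terminal state, 0 otherwise."""
    return 1.0 if s != TERMINAL and transition(s, a) == TERMINAL else 0.0


def value_iteration(gamma=0.9, tol=1e-9):
    """Repeat V(s) <- max_a [R(s, a) + gamma * V(T(s, a))] until values stop changing."""
    V = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            if s == TERMINAL:
                continue  # terminal state keeps value 0
            best = max(reward(s, a) + gamma * V[transition(s, a)] for a in ACTIONS)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V


def greedy_policy(V, gamma=0.9):
    """Pick, in each non-terminal state, the action with the best one-step lookahead."""
    return {s: max(ACTIONS, key=lambda a: reward(s, a) + gamma * V[transition(s, a)])
            for s in STATES if s != TERMINAL}


V = value_iteration()
print(V, greedy_policy(V))
```

With γ = 0.9 the values come out as V(2) = 1, V(1) = 0.9, V(0) = 0.81, and the greedy policy moves right in every state.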

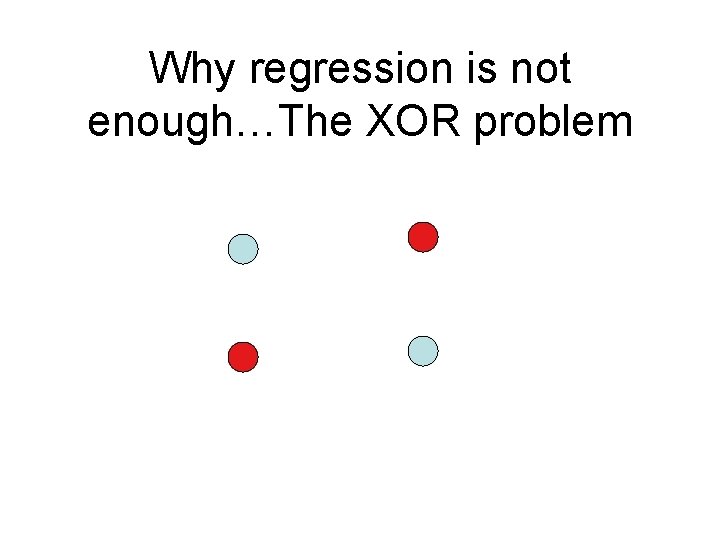

Why regression is not enough…The XOR problem
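A minimal sketch of the XOR problem, assuming "regression" here means a linear model fit by least squares: the best linear fit predicts 0.5 for every input, so it cannot separate the two classes. Adding the product feature x1·x2 (our illustrative fix; a multilayer network or a kernel method are other options) makes an exact fit possible, namely y = x1 + x2 − 2·x1·x2. The helper names are hypothetical.

```python
def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting (A square, nonsingular)."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w


def least_squares(features, y):
    """Minimize sum (phi . w - y)^2 via the normal equations (Phi^T Phi) w = Phi^T y."""
    d = len(features[0])
    A = [[sum(f[i] * f[j] for f in features) for j in range(d)] for i in range(d)]
    b = [sum(f[i] * t for f, t in zip(features, y)) for i in range(d)]
    return solve(A, b)


def predict(w, features):
    return [sum(wi * fi for wi, fi in zip(w, f)) for f in features]


XOR_X = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR_Y = [0, 1, 1, 0]

linear = [(1, x1, x2) for x1, x2 in XOR_X]               # bias, x1, x2
w_lin = least_squares(linear, XOR_Y)
pred_lin = predict(w_lin, linear)                         # 0.5 everywhere

product = [(1, x1, x2, x1 * x2) for x1, x2 in XOR_X]     # add the x1*x2 feature
w_prod = least_squares(product, XOR_Y)
pred_prod = predict(w_prod, product)                      # reproduces XOR exactly
print(w_lin, pred_lin, w_prod, pred_prod)
```

The linear fit yields weights (0.5, 0, 0), i.e., a constant prediction that carries no information about the class.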

![Slide: Textbook Q-Learning](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-11.jpg)

Textbook Q-Learning [MI 06] • Learning flocking behavior – N agents – Discrete time steps – Agent i, partner j – Define the Q-value $Q(s_t, a_t)$ – $s_t$: state at time t – $a_t$: action at time t
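The slide names Q-learning without spelling out the update. Below is a minimal sketch of the standard tabular one-step rule, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[\,r + \gamma \max_{a'} Q(s',a') - Q(s,a)\,]$, with ε-greedy action selection. The class name and the values of α, γ, and ε are our assumptions, not settings taken from [MI 06].

```python
import random
from collections import defaultdict


class QLearner:
    """Tabular Q-learning with epsilon-greedy exploration (illustrative sketch)."""

    def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.alpha = alpha          # learning rate (assumed value)
        self.gamma = gamma          # discount factor (assumed value)
        self.epsilon = epsilon      # exploration probability (assumed value)
        self.q = defaultdict(float)  # Q[(s, a)], 0.0 until first updated
        self.rng = random.Random(seed)

    def choose_action(self, s):
        """Explore with probability epsilon, otherwise take the greedy action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(s, a)])

    def update(self, s, a, r, s_next):
        """One-step Q-learning: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
        best_next = max(self.q[(s_next, b)] for b in self.actions)
        td_target = r + self.gamma * best_next
        self.q[(s, a)] += self.alpha * (td_target - self.q[(s, a)])


# Usage with the four flocking actions; the state 0 and reward 1 are made up.
learner = QLearner(["a1", "a2", "a3", "a4"], alpha=0.5, gamma=0.9, epsilon=0.0)
learner.update(0, "a4", 1.0, 0)
print(learner.q[(0, "a4")], learner.choose_action(0))
```

In the flocking setting each agent would keep its own table, with the state being its discretized distance to the partner.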

![Slide: Our textbook example](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-12.jpg)

Our textbook example – State of i • $[R] = \lfloor |i - j| \rfloor$, the distance between agent i and its partner j, rounded down – Actions for i • $a_1$: attract to j • $a_2$: parallel, positive orientation to j • $a_3$: parallel, negative orientation to j • $a_4$: repulsion from j

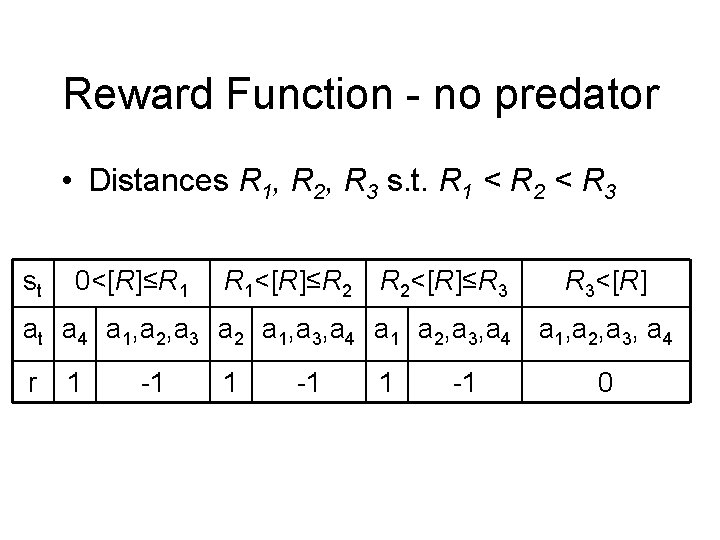

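Reward Function (no predator) • Distances $R_1$, $R_2$, $R_3$ such that $R_1 < R_2 < R_3$

| State $s_t$ | Action $a_t$ | Reward $r$ |
|---|---|---|
| $0 < [R] \le R_1$ | $a_4$ | 1 |
| $0 < [R] \le R_1$ | $a_1, a_2, a_3$ | -1 |
| $R_1 < [R] \le R_2$ | $a_2$ | 1 |
| $R_1 < [R] \le R_2$ | $a_1, a_3, a_4$ | -1 |
| $R_2 < [R] \le R_3$ | $a_1$ | 1 |
| $R_2 < [R] \le R_3$ | $a_2, a_3, a_4$ | -1 |
| $R_3 < [R]$ | $a_1, a_2, a_3, a_4$ | 0 |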
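The discretized state and the no-predator reward from these slides can be written as two small functions: within $R_1$ repulsion ($a_4$) earns 1, between $R_1$ and $R_2$ positive-parallel ($a_2$) earns 1, between $R_2$ and $R_3$ attraction ($a_1$) earns 1, every other action in those bands earns -1, and beyond $R_3$ every action earns 0. This is a sketch: the function names and the example thresholds are hypothetical, and treating $[R] = 0$ like the closest band is our assumption, since the slide only covers $0 < [R]$.

```python
import math

ATTRACT, PARALLEL_POS, PARALLEL_NEG, REPULSE = "a1", "a2", "a3", "a4"


def discretized_distance(pos_i, pos_j):
    """State of agent i: [R] = floor(|i - j|), the rounded-down distance to partner j."""
    return math.floor(math.dist(pos_i, pos_j))


def reward_no_predator(R, action, R1, R2, R3):
    """Reward for taking `action` in state [R] = R when no predator is present (R1 < R2 < R3)."""
    if R > R3:
        return 0                  # partner out of range: every action earns 0
    if R <= R1:                   # assumption: [R] = 0 is treated like the closest band
        rewarded = REPULSE
    elif R <= R2:
        rewarded = PARALLEL_POS
    else:
        rewarded = ATTRACT
    return 1 if action == rewarded else -1


# Example with made-up thresholds R1 = 2, R2 = 5, R3 = 8.
R = discretized_distance((0.0, 0.0), (3.0, 4.0))   # distance 5.0 -> state 5
print(R, reward_no_predator(R, PARALLEL_POS, 2, 5, 8))
```

Fed into a Q-learner, this reward pushes agents to keep a middle distance from their partner while moving in parallel, which is the flocking behavior the example aims for.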

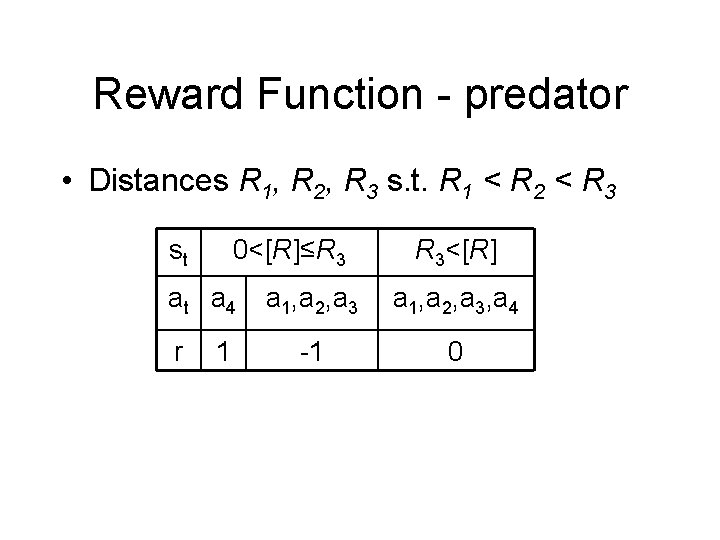

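Reward Function (predator) • Distances $R_1$, $R_2$, $R_3$ such that $R_1 < R_2 < R_3$

| State $s_t$ | Action $a_t$ | Reward $r$ |
|---|---|---|
| $0 < [R] \le R_3$ | $a_4$ | 1 |
| $0 < [R] \le R_3$ | $a_1, a_2, a_3$ | -1 |
| $R_3 < [R]$ | $a_1, a_2, a_3, a_4$ | 0 |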

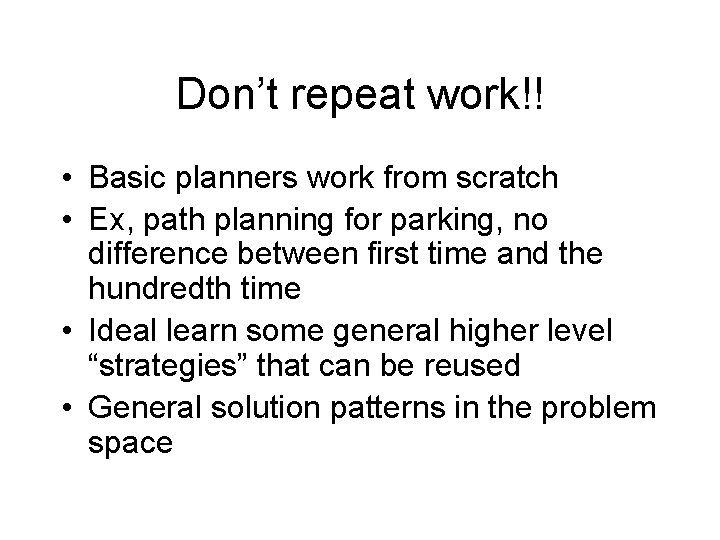

Don’t repeat work!! • Basic planners work from scratch • Ex: when path planning for parking, there is no difference between the first time and the hundredth time • Ideal: learn general, higher-level “strategies” that can be reused • General solution patterns in the problem space

![Slide: Viability Filtering](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-16.jpg)

Viability Filtering [KP 07] • Agent can “see”: perceptual information – Range-finder-like virtual sensors • Database of successful, perceptually parameterized motions – From its own experimentation or an external source • Database exploited for future queries – Search is guided by what has previously succeeded in similar situations

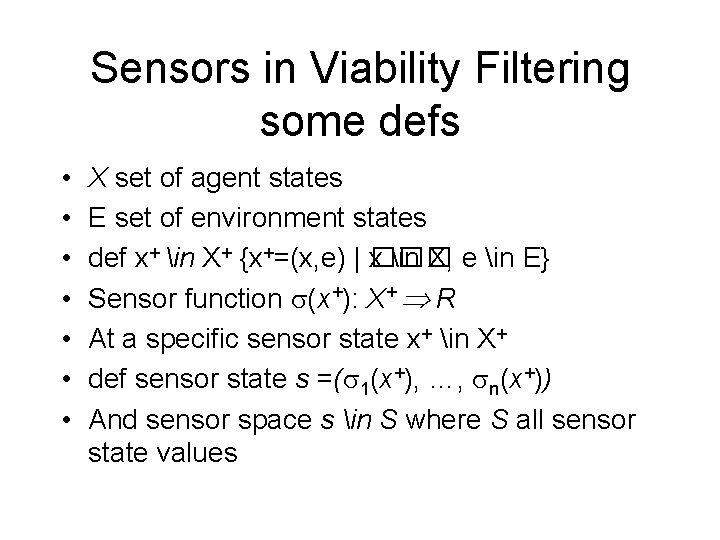

Sensors in Viability Filtering: some definitions
• $X$: set of agent states; $E$: set of environment states
• Augmented state: $x^+ \in X^+ = \{ (x, e) \mid x \in X,\ e \in E \}$
• Sensor function $\sigma_i(x^+) : X^+ \to \mathbb{R}$
• At a specific augmented state $x^+ \in X^+$, the sensor state is $s = (\sigma_1(x^+), \dots, \sigma_n(x^+))$, with $s \in S$, where $S$ is the space of all sensor-state values

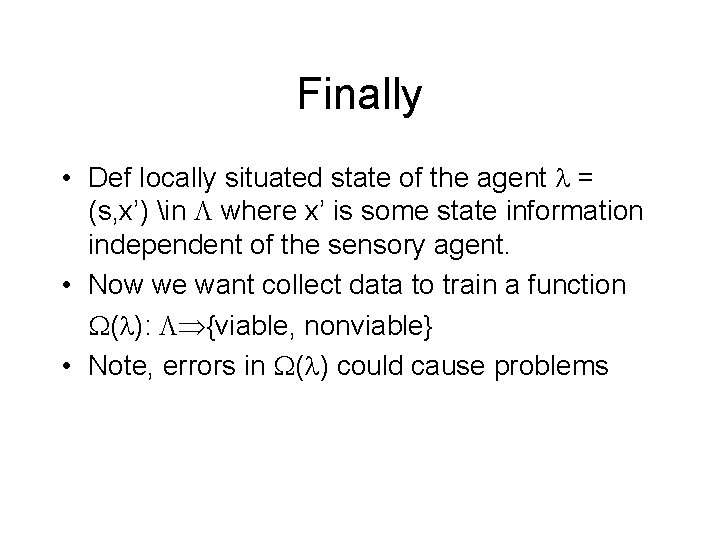

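Finally
• Define the locally situated state of the agent as $\lambda = (s, x') \in \Lambda$, where $x'$ is state information internal to the agent, independent of the sensors
• Now we want to collect data to train a function $V(\lambda) : \Lambda \to \{\text{viable}, \text{nonviable}\}$
• Note: errors in $V$ can cause problems; e.g., a viable state misclassified as nonviable is pruned, so the planner may miss a solution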

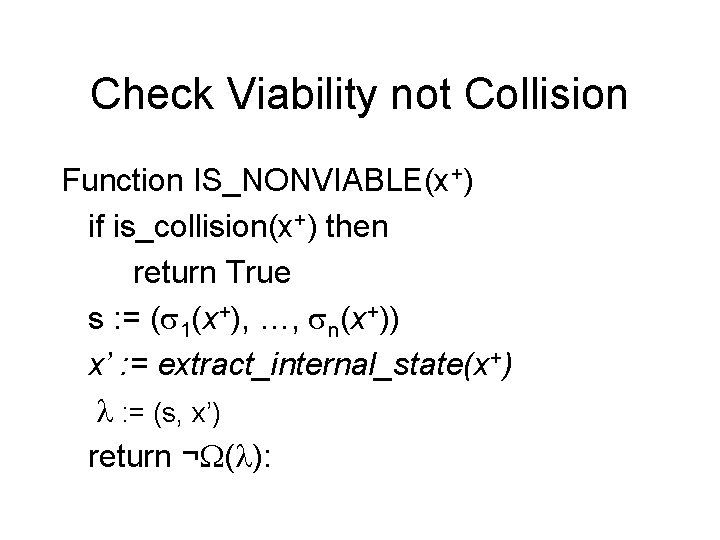

Check Viability, not Collision

    function IS_NONVIABLE(x⁺)
        if is_collision(x⁺) then return True
        s := (σ_1(x⁺), …, σ_n(x⁺))
        x′ := extract_internal_state(x⁺)
        λ := (s, x′)
        return ¬V(λ)
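A runnable version of IS_NONVIABLE, under heavy assumptions: a hypothetical 2-D point agent among circular obstacles, three range-finder sensors at fixed angles, speed as the only internal state $x'$, and a 1-nearest-neighbour lookup over a tiny hand-made labeled database standing in for the learned model $V$. Every name and number here is illustrative and none of it is taken from [KP 07]; only the control flow mirrors the pseudocode.

```python
import math
from dataclasses import dataclass, field


@dataclass
class AugmentedState:
    """x+ = (x, e): agent state x plus environment state e (hypothetical layout)."""
    x: float
    y: float
    heading: float   # radians
    speed: float
    obstacles: list = field(default_factory=list)  # e: circles as (cx, cy, radius)


SENSOR_ANGLES = (-0.5, 0.0, 0.5)  # ray directions relative to heading (assumed)
MAX_RANGE = 10.0


def is_collision(xp):
    return any((xp.x - cx) ** 2 + (xp.y - cy) ** 2 <= r * r for cx, cy, r in xp.obstacles)


def range_sensor(xp, offset):
    """sigma_i(x+): distance along one ray to the nearest circle, capped at MAX_RANGE."""
    dx, dy = math.cos(xp.heading + offset), math.sin(xp.heading + offset)
    best = MAX_RANGE
    for cx, cy, r in xp.obstacles:
        fx, fy = xp.x - cx, xp.y - cy
        b = fx * dx + fy * dy
        disc = b * b - (fx * fx + fy * fy - r * r)
        if disc >= 0:
            t = -b - math.sqrt(disc)  # nearer ray-circle intersection
            if 0 <= t < best:
                best = t
    return best


def extract_internal_state(xp):
    return (xp.speed,)


# Hypothetical labeled data: (s_1, s_2, s_3, speed) -> viable?
VIABILITY_DB = [
    ((10.0, 10.0, 10.0, 1.0), True),
    ((10.0, 5.0, 10.0, 1.0), True),
    ((10.0, 1.0, 10.0, 2.0), False),  # obstacle close ahead at high speed
]


def V(lam):
    """Stand-in for the learned model: label of the nearest stored example (True = viable)."""
    _, viable = min(VIABILITY_DB, key=lambda item: math.dist(item[0], lam))
    return viable


def is_nonviable(xp):
    if is_collision(xp):
        return True
    s = tuple(range_sensor(xp, a) for a in SENSOR_ANGLES)
    lam = s + extract_internal_state(xp)  # lambda = (s, x')
    return not V(lam)


blocked = AugmentedState(0.0, 0.0, 0.0, 2.0, [(1.5, 0.0, 0.5)])
print(is_nonviable(blocked))  # not yet colliding, but classified as nonviable
```

The point of the design is visible in `blocked`: the agent is not in collision, yet the filter rejects it early because similar past situations at that speed ended badly.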

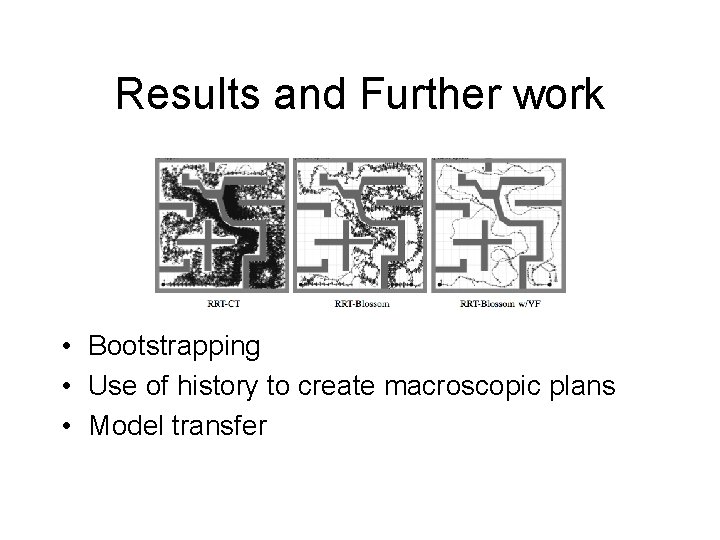

Results and Further work • Bootstrapping • Use of history to create macroscopic plans • Model transfer

![Slide: Training a Dog](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-21.jpg)

Training a Dog [B 02] • MIT Media Lab: a system in which the user interactively trains a virtual dog using “click training” • Uses acoustic patterns as cues for actions • Can be taught new cues based on different acoustic patterns • Can create new actions through state-space search • Simplified Q-learning based on animal-training techniques

Training a Dog (cont.) • Predictable regularities – Animals tend toward states that led to success – Within a small time window • Maximize use of supervisor feedback – Limit the state space by only looking at states that matter; e.g., if utterance u followed by action a produces a reward, then utterance u is important • Easy to train – Credit accumulation – A state-action pair can delegate credit to another state-action pair

![Slide: Alternatives to Q-Learning](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-23.jpg)

Alternatives to Q-Learning • Q-decomp [RZ 03] • Figure: a simple world with initial state $S_0$ and three terminal states $S_L$, $S_U$, $S_R$, each with an associated reward of dollars and/or euros; the discount factor is $\gamma \in (0, 1)$ [fig. from RZ 03] – A complex agent is modeled as a set of simpler subagents – Each subagent has its own reward function – An arbitrator decides the best action based on “advice” from the subagents
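The subagent/arbitrator split can be sketched as below: each subagent keeps its own $Q_j(s, a)$, the arbitrator picks $\arg\max_a \sum_j Q_j(s, a)$, and each subagent learns from its own reward with an on-policy (Sarsa-style) update that uses the action the arbitrator actually took next. That update choice reflects our reading of [RZ 03], which argues against each subagent running independent Q-learning; the class and function names and all numbers are hypothetical.

```python
from collections import defaultdict


class SubAgent:
    """A Q-decomposition subagent that learns Q_j(s, a) from its own reward r_j."""

    def __init__(self, alpha=0.1, gamma=0.9):
        self.q = defaultdict(float)
        self.alpha = alpha
        self.gamma = gamma

    def sarsa_update(self, s, a, r_j, s_next, a_next):
        # On-policy target: a_next is the arbitrator's chosen action,
        # not this subagent's own greedy choice.
        target = r_j + self.gamma * self.q[(s_next, a_next)]
        self.q[(s, a)] += self.alpha * (target - self.q[(s, a)])


def arbitrate(subagents, s, actions):
    """Arbitrator: choose the action with the largest summed advice sum_j Q_j(s, a)."""
    return max(actions, key=lambda a: sum(agent.q[(s, a)] for agent in subagents))


# Made-up advice in the dollars/euros world at S0 (actions: go left, up, right).
dollars, euros = SubAgent(), SubAgent()
dollars.q.update({("S0", "L"): 2.0, ("S0", "U"): 1.0, ("S0", "R"): 0.0})
euros.q.update({("S0", "L"): 0.0, ("S0", "U"): 2.0, ("S0", "R"): 2.0})
print(arbitrate([dollars, euros], "S0", ["L", "U", "R"]))  # "U": 1 + 2 beats 2 + 0 and 0 + 2
```

Neither subagent alone prefers U most strongly, yet it is the best compromise once both reward streams are counted, which is the role the slide gives the arbitrator.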

![Slide: Learning Behavior with Q-Decomp](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-24.jpg)

Learning Behavior with Q-Decomp [CT 06] • Q-Decomp as the learning technique • Reward function obtained by Inverse Reinforcement Learning (IRL) [NR 00] – Infers a reward function from an “expert’s” demonstrated behavior, so the agent learns to mimic that behavior

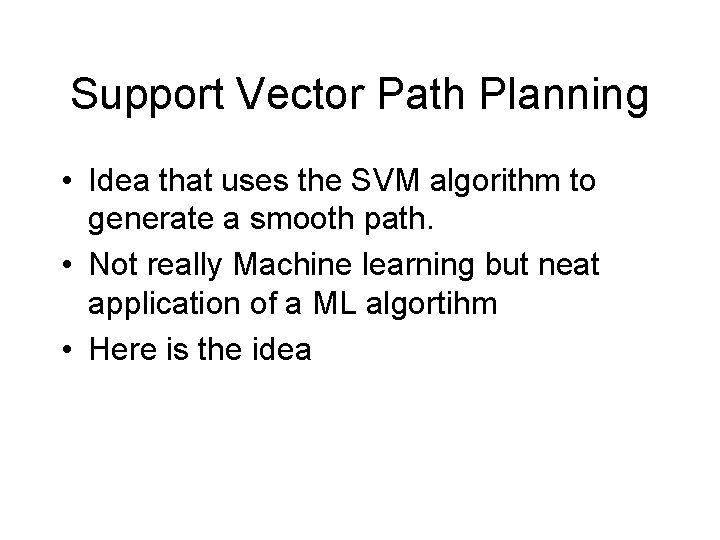

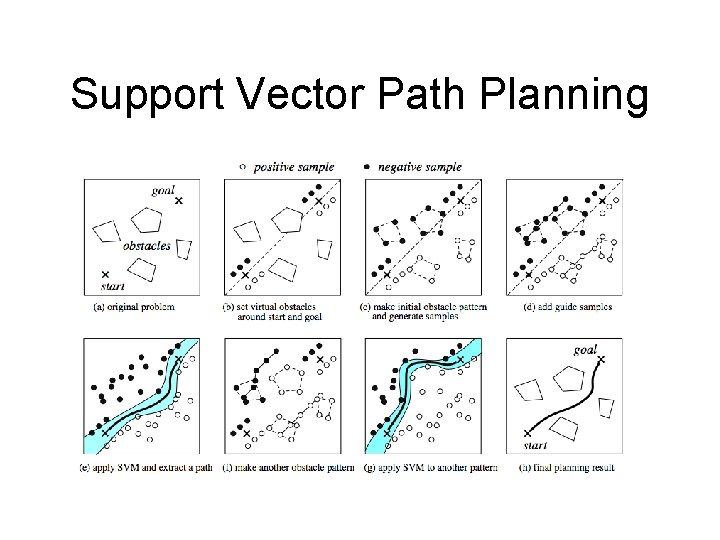

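Support Vector Path Planning [M 06] • Uses the SVM algorithm to generate a smooth path • Not really machine learning, but a neat application of an ML algorithm • The idea: obstacle points on the two sides of the route are treated as two classes, and the SVM decision boundary, which keeps maximal clearance from both, is used as the path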

Support Vector Path Planning

Videos • Robot learning to pick up objects – http://www.cs.ou.edu/~fagg/movies/index.html#torso_2004 • Training a Dog – http://characters.media.mit.edu/projects/dobie.html

![Slide: References](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-28.jpg)

References
- [NR 00] A. Y. Ng and S. Russell. Algorithms for inverse reinforcement learning. In Proc. 17th International Conf. on Machine Learning, pages 663-670. Morgan Kaufmann, San Francisco, CA, 2000.
- [B 02] B. Blumberg et al. Integrated learning for interactive synthetic characters. In SIGGRAPH '02: Proceedings of the 29th Annual Conference on Computer Graphics and Interactive Techniques, pages 417-426, New York, NY, USA, 2002. ACM Press.
- [RZ 03] S. J. Russell and A. Zimdars. Q-decomposition for reinforcement learning agents. In ICML, pages 656-663, 2003.
- [MI 06] K. Morihiro, T. Isokawa, H. Nishimura, and N. Matsui. Emergence of flocking behavior based on reinforcement learning. In Knowledge-Based Intelligent Information and Engineering Systems, pages 699-706, 2006.
- [CT 06] T. Conde and D. Thalmann. Learnable behavioural model for autonomous virtual agents: low-level learning. In AAMAS '06: Proceedings of the Fifth International Joint Conference on Autonomous Agents and Multiagent Systems, pages 89-96, New York, NY, USA, 2006. ACM Press.
- [M 06] J. Miura. Support vector path planning. In 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 2894-2899, 2006.
- [KP 07] M. Kalisiak and M. van de Panne. Faster motion planning using learned local viability models. In ICRA, pages 2700-2705, 2007.

![Slide: Machine Learning Ref](http://slidetodoc.com/presentation_image_h2/dc9cc792a66eb664eab40d3d16ded9fd/image-29.jpg)

Machine Learning Ref
- [M 07] Mehryar Mohri. Foundations of Machine Learning, course notes. http://www.cs.nyu.edu/~mohri/ml07.html
- [M 97] Tom M. Mitchell. Machine Learning. McGraw-Hill, 1997.
- [RN 05] S. Russell and P. Norvig. Artificial Intelligence: A Modern Approach. Prentice Hall Series in Artificial Intelligence. Englewood Cliffs, New Jersey, 1995.
- [CV 95] Corinna Cortes and Vladimir Vapnik. Support-vector networks. Machine Learning, 20, 1995.
- [V 98] Vladimir N. Vapnik. Statistical Learning Theory. Wiley, 1998.
- [KV 94] Michael J. Kearns and Umesh V. Vazirani. An Introduction to Computational Learning Theory. MIT Press, 1994.

- Slides: 29