
Machine Learning Algorithms in Computational Learning Theory

TIAN Shangxuan, HE Xiangnan, JI Kun, GUAN Peiyong, WANG Hancheng

25th Jan 2013

Outline 2

1. Introduction
2. Probably Approximately Correct Framework (PAC)
   - PAC Framework
   - Weak PAC-Learnability
   - Error Reduction
3. Mistake Bound Model of Learning
   - Mistake Bound Model
   - Predicting from Expert Advice
   - The Weighted Majority Algorithm
   - Online Learning from Examples
   - The Winnow Algorithm
4. PAC versus Mistake Bound Model
5. Conclusion
6. Q&A

Machine Learning 3

Machines cannot learn, but they can be trained.

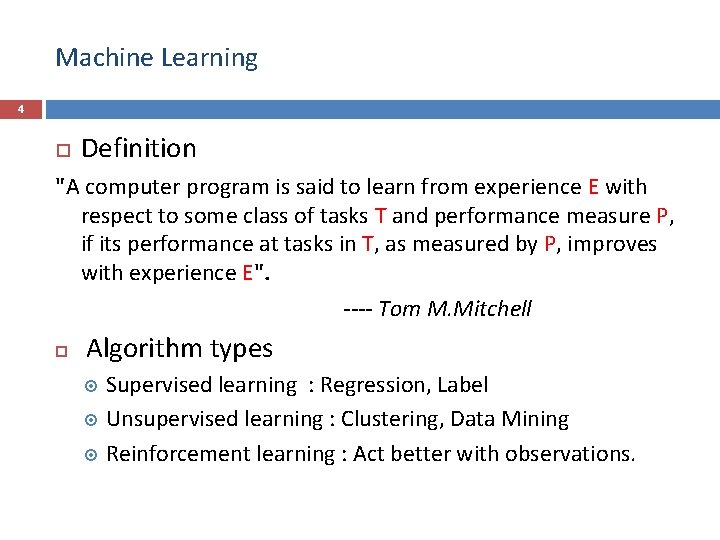

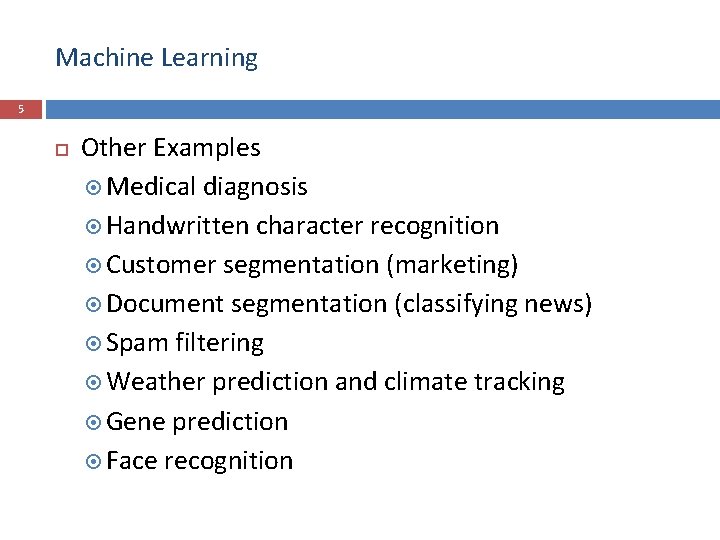

Machine Learning 4

Definition: "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E." (Tom M. Mitchell)

Algorithm types:

- Supervised learning: Regression, Label
- Unsupervised learning: Clustering, Data Mining
- Reinforcement learning: Act better with observations

Machine Learning 5

Other examples:

- Medical diagnosis
- Handwritten character recognition
- Customer segmentation (marketing)
- Document segmentation (classifying news)
- Spam filtering
- Weather prediction and climate tracking
- Gene prediction
- Face recognition

Computational Learning Theory 6

Why learning works:

- Under what conditions is successful learning possible and impossible?
- Under what conditions is a particular learning algorithm assured of learning successfully?

We need particular settings (models):

- Probably approximately correct (PAC)
- Mistake bound models

7 Probably Approximately Correct Framework (PAC) PAC Learnability Weak PAC-Learnability Error Reduction Occam’s Razor

PAC Learning 8

- PAC learning: any hypothesis that is consistent with a sufficiently large set of training examples is unlikely to be wrong.
- Stationarity: the future is like the past.
- Concept: an efficiently computable function of a domain. Function: $\{0, 1\}^n \to \{0, 1\}$.
- A concept class is a collection of concepts.

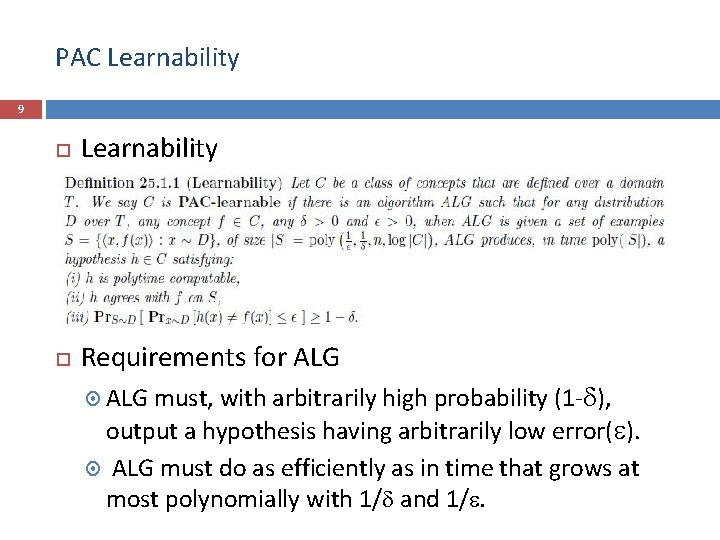

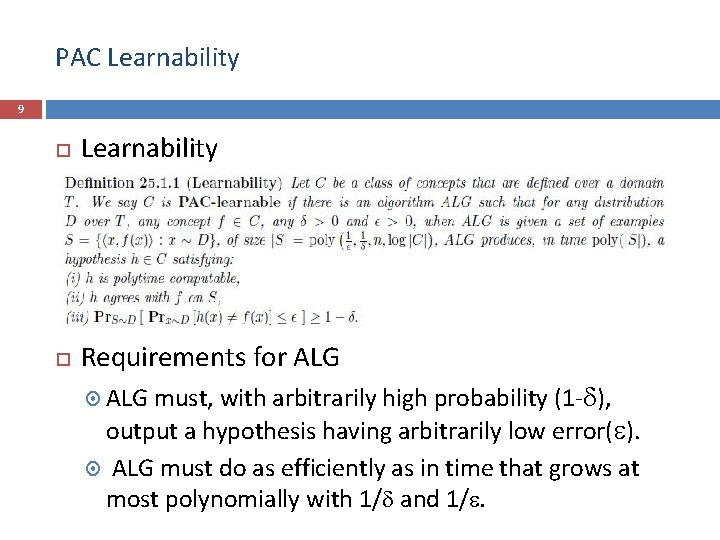

PAC Learnability 9

Learnability requirements for a learning algorithm ALG:

- ALG must, with arbitrarily high probability $(1 - \delta)$, output a hypothesis having arbitrarily low error $\varepsilon$.
- ALG must do so efficiently, in time that grows at most polynomially with $1/\delta$ and $1/\varepsilon$.

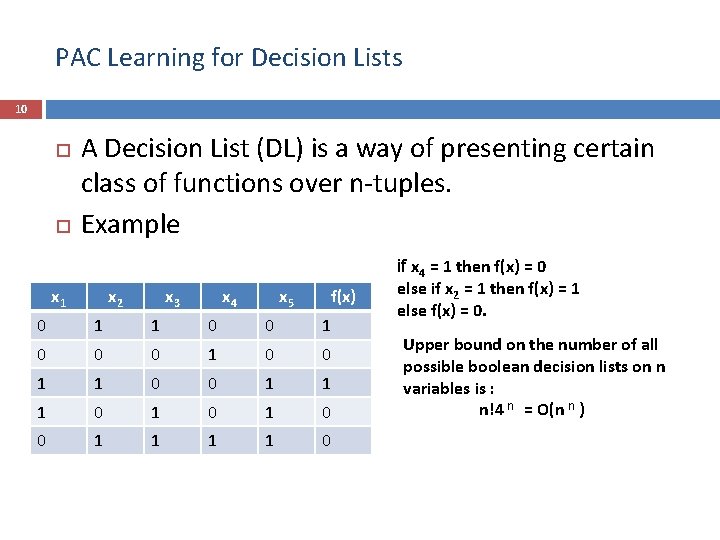

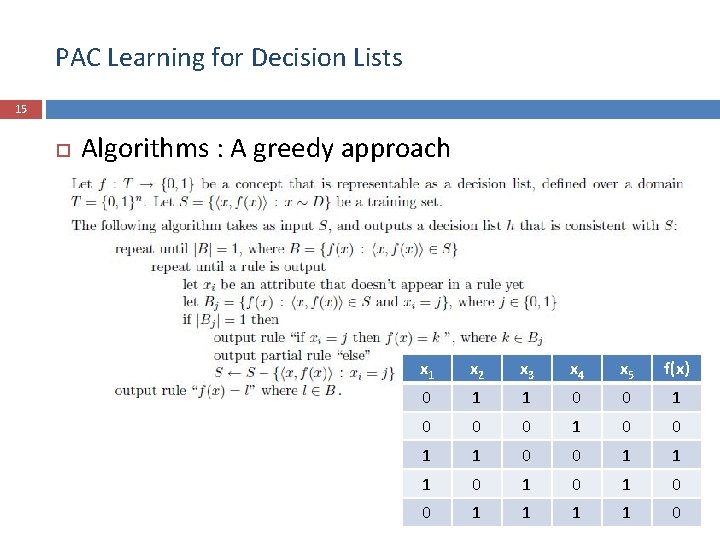

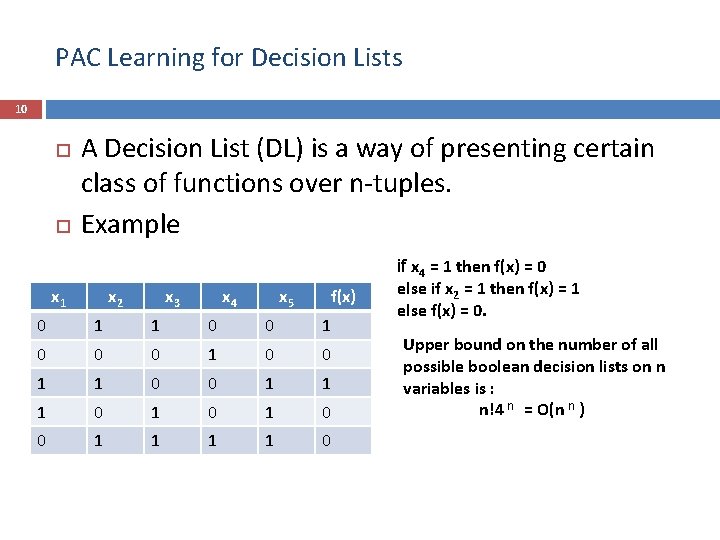

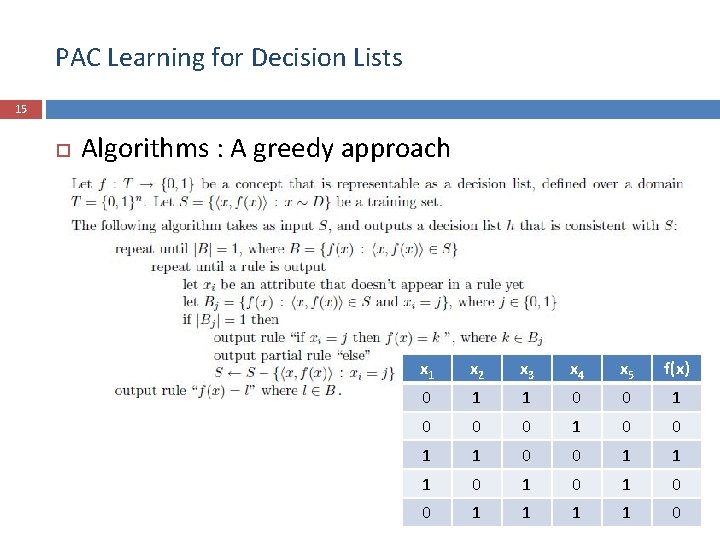

PAC Learning for Decision Lists 10

A Decision List (DL) is a way of representing a certain class of functions over n-tuples.

Example: if $x_4 = 1$ then $f(x) = 0$, else if $x_2 = 1$ then $f(x) = 1$, else $f(x) = 0$.

Example table (columns $x_1$ $x_2$ $x_3$ $x_4$ $x_5$ $f(x)$): 0 1 1 0 0 0 1 1 0 1 0 1 0

An upper bound on the number of all possible Boolean decision lists on n variables is $n!\,4^n = 2^{O(n \log n)}$.

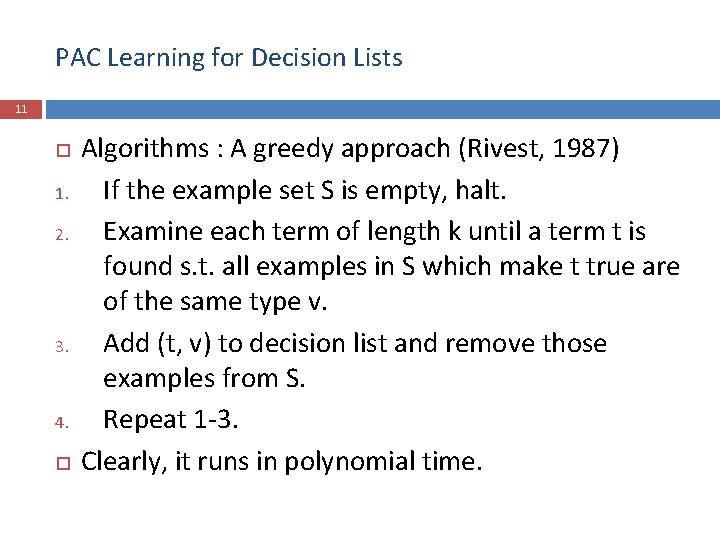

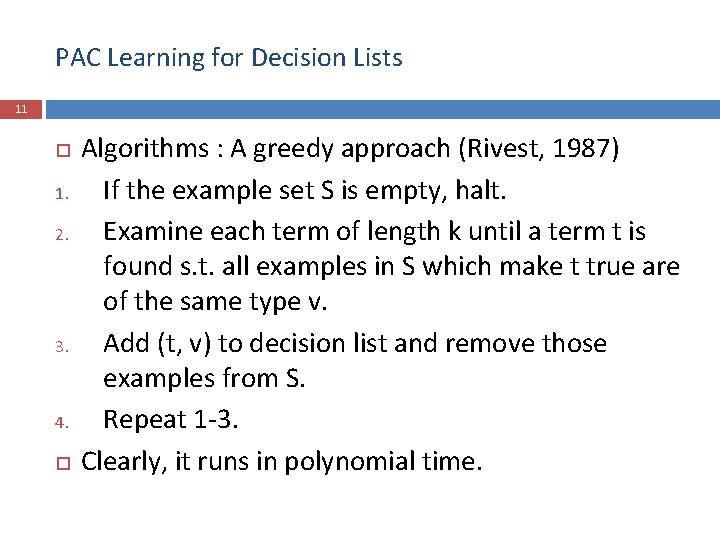

PAC Learning for Decision Lists 11

Algorithm: a greedy approach (Rivest, 1987)

1. If the example set S is empty, halt.
2. Examine each term of length k until a term t is found such that all examples in S which make t true are of the same type v.
3. Add (t, v) to the decision list and remove those examples from S.
4. Repeat steps 1-3.

For a fixed k there are only polynomially many candidate terms, and every pass removes at least one example from S, so the algorithm runs in polynomial time.
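The greedy procedure can be sketched in Python as follows. This is a minimal illustration rather than Rivest's original formulation: the representation of a term as a tuple of `(index, required_bit)` pairs, the use of terms of length at most k (including the empty, always-true term), and the function names `candidate_terms`, `satisfies`, `learn_decision_list` and `predict` are all assumptions made for the example.

```python
from itertools import combinations, product


def candidate_terms(n, k):
    """All conjunctions of at most k literals; a literal is (index, required_bit)."""
    terms = [()]  # the empty term is always true and can serve as a default rule
    for size in range(1, k + 1):
        for idxs in combinations(range(n), size):
            for bits in product((0, 1), repeat=size):
                terms.append(tuple(zip(idxs, bits)))
    return terms


def satisfies(term, x):
    """True if the bit vector x makes every literal of the term true."""
    return all(x[i] == bit for i, bit in term)


def learn_decision_list(examples, k=1):
    """Greedy learner; examples is a list of (bit_tuple, label) pairs."""
    remaining = list(examples)
    if not remaining:
        return []
    terms = candidate_terms(len(remaining[0][0]), k)
    decision_list = []
    while remaining:  # step 1: halt when S is empty
        for term in terms:  # step 2: search for a term covering one label only
            labels = {y for x, y in remaining if satisfies(term, x)}
            if len(labels) == 1:
                # step 3: record (t, v) and drop the covered examples
                decision_list.append((term, labels.pop()))
                remaining = [(x, y) for x, y in remaining if not satisfies(term, x)]
                break
        else:
            raise ValueError("no consistent decision list with terms of this length")
    return decision_list


def predict(decision_list, x, default=0):
    """Return the value of the first rule whose term x satisfies."""
    for term, value in decision_list:
        if satisfies(term, x):
            return value
    return default
```

Calling `learn_decision_list(data, k=1)` on examples labelled by a 1-decision list returns a list of `(term, value)` rules that is consistent with every example in `data`; if no such list exists for the chosen k, the sketch raises `ValueError` instead of looping forever.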

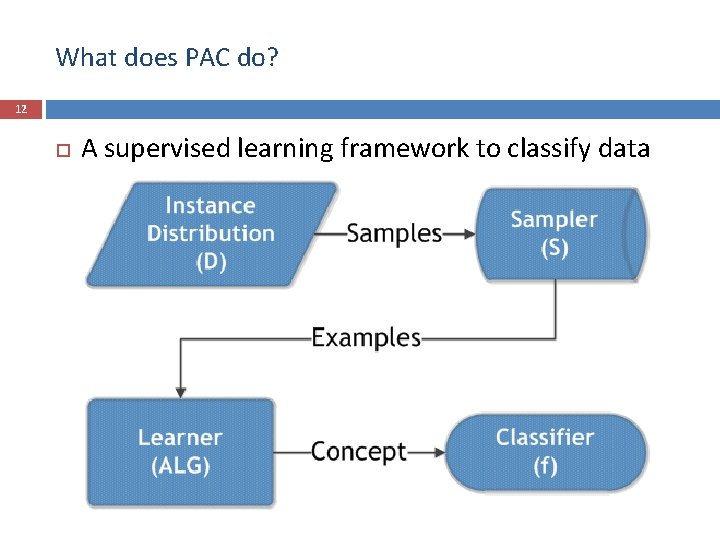

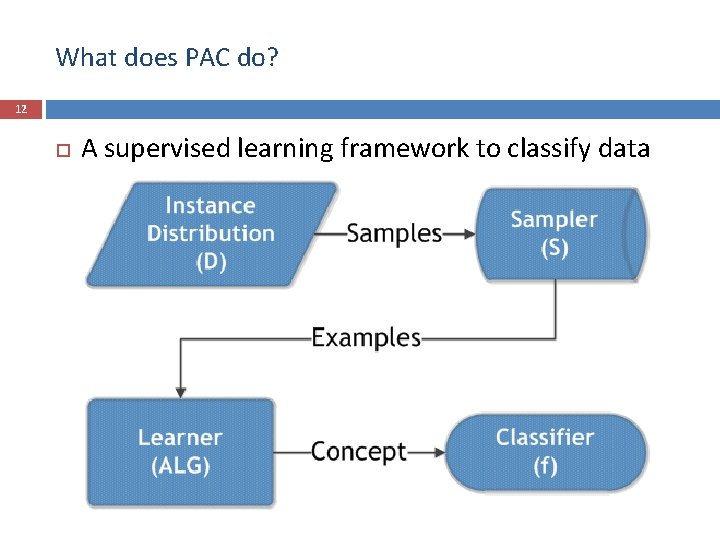

What does PAC do? 12 A supervised learning framework to classify data

How can we use PAC? 13

- Use PAC as a general framework to guide us on efficient sampling for machine learning
- Use PAC as a theoretical analyzer to distinguish hard problems from easy problems
- Use PAC to evaluate the performance of some algorithms
- Use PAC to solve some real problems

What are we going to cover? 14

- Explore what PAC can learn
- Apply PAC to real data with noise
- Give a probabilistic analysis of the performance of PAC

PAC Learning for Decision Lists 15

Algorithm: a greedy approach

Example data (columns $x_1$ $x_2$ $x_3$ $x_4$ $x_5$ $f(x)$): 0 1 1 0 0 0 1 1 1 0 1 0 0 1 1 0

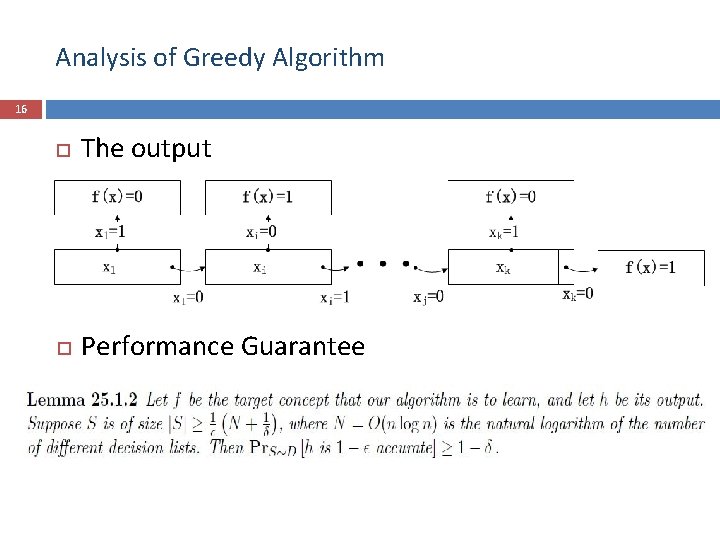

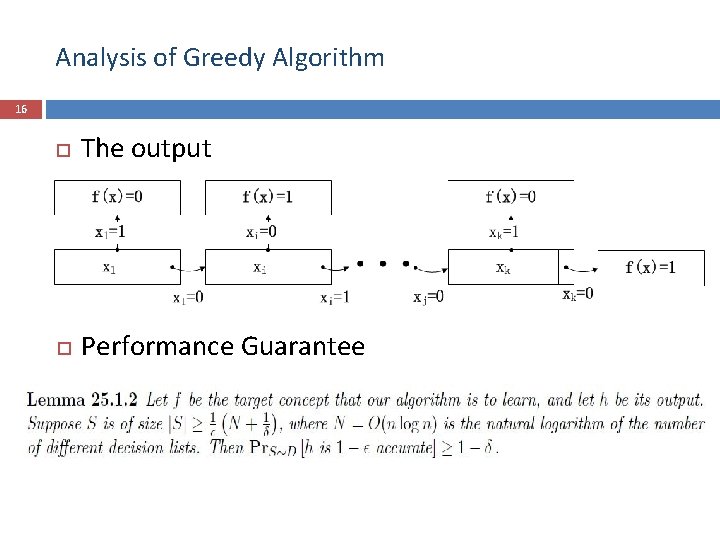

Analysis of Greedy Algorithm 16 The output Performance Guarantee

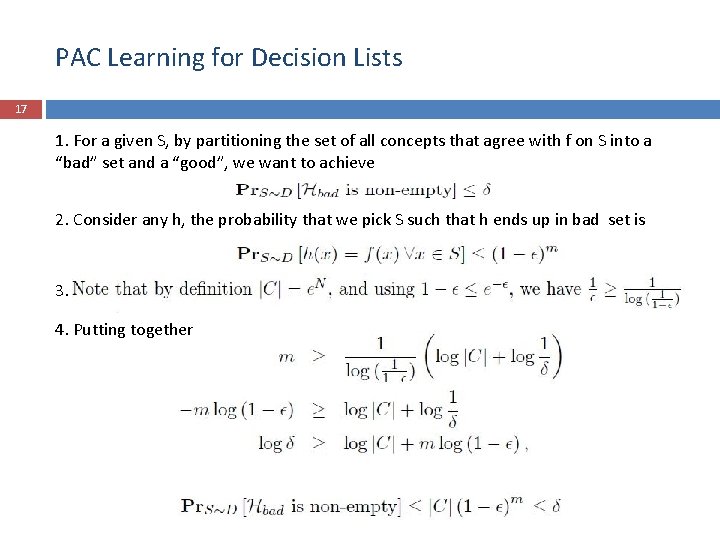

PAC Learning for Decision Lists 17

1. For a given S, by partitioning the set of all concepts that agree with f on S into a “bad” set and a “good” set, we want to achieve
2. Consider any h; the probability that we pick S such that h ends up in the bad set is
3.
4. Putting together
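The formulas for these steps are carried by the slide images. As a hedged sketch, the standard consistent-learner argument for a finite concept class runs as follows. The notation is assumed here rather than taken from the slides: $m = |S|$ is the number of independently drawn examples, $H$ is the class of candidate hypotheses (here, decision lists), and a hypothesis is called “bad” when its true error exceeds $\varepsilon$.

```latex
% A fixed bad hypothesis survives one random example with probability at most 1 - \varepsilon,
% so it agrees with all m examples with probability
\[
  \Pr_S\bigl[\, h \text{ is bad and consistent with } S \,\bigr]
  \;\le\; (1 - \varepsilon)^m \;\le\; e^{-\varepsilon m}.
\]
% Union bound over all hypotheses in H:
\[
  \Pr_S\bigl[\, \exists\, h \in H \text{ bad and consistent with } S \,\bigr]
  \;\le\; |H|\, e^{-\varepsilon m}.
\]
% Requiring the right-hand side to be at most \delta gives the sample size:
\[
  |H|\, e^{-\varepsilon m} \le \delta
  \quad\Longleftrightarrow\quad
  m \;\ge\; \frac{1}{\varepsilon}\Bigl( \ln |H| + \ln \frac{1}{\delta} \Bigr).
\]
```

With $|H| \le n!\,4^n$ for decision lists, $\ln |H| = O(n \log n)$, so under these assumptions a sample of size polynomial in $n$, $1/\varepsilon$ and $\ln(1/\delta)$ suffices.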

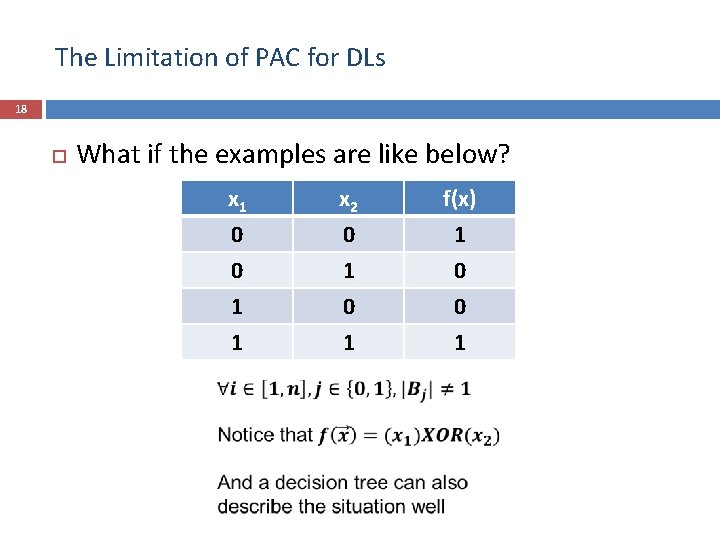

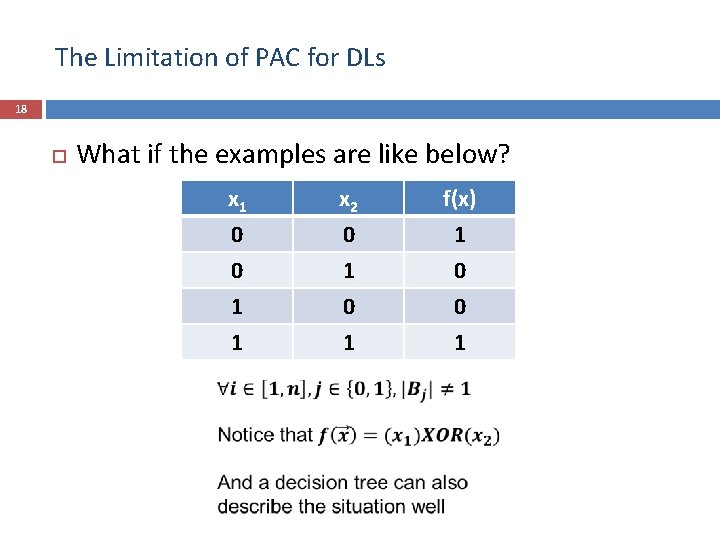

The Limitation of PAC for DLs 18

What if the examples are like below?

| $x_1$ | $x_2$ | $f(x)$ |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |

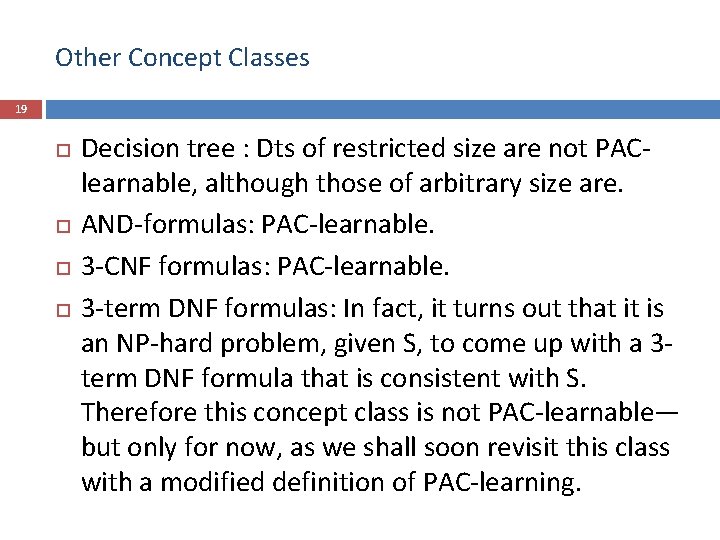

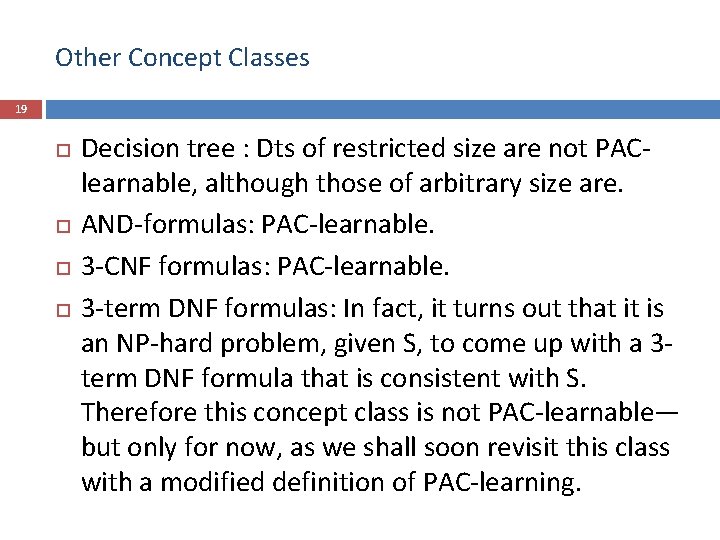

Other Concept Classes 19

- Decision trees: DTs of restricted size are not PAC-learnable, although those of arbitrary size are.
- AND-formulas: PAC-learnable.
- 3-CNF formulas: PAC-learnable.
- 3-term DNF formulas: it turns out to be an NP-hard problem, given S, to come up with a 3-term DNF formula that is consistent with S. Therefore this concept class is not PAC-learnable under the current definition (unless RP = NP), but only for now: we shall soon revisit this class with a modified definition of PAC-learning.

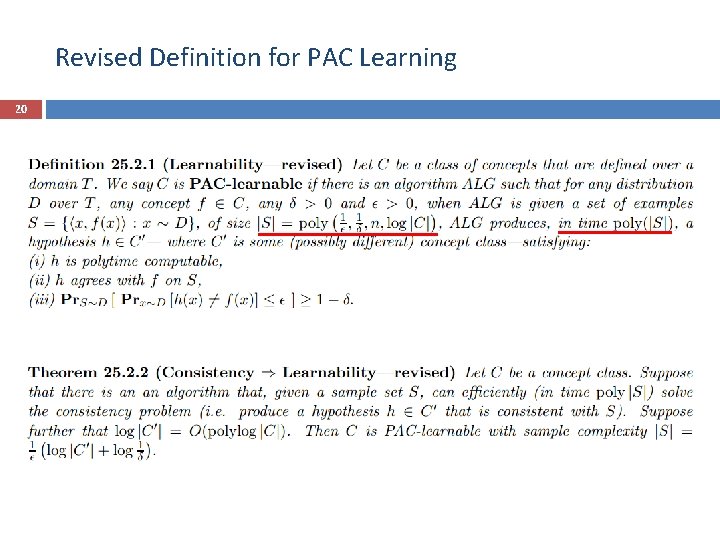

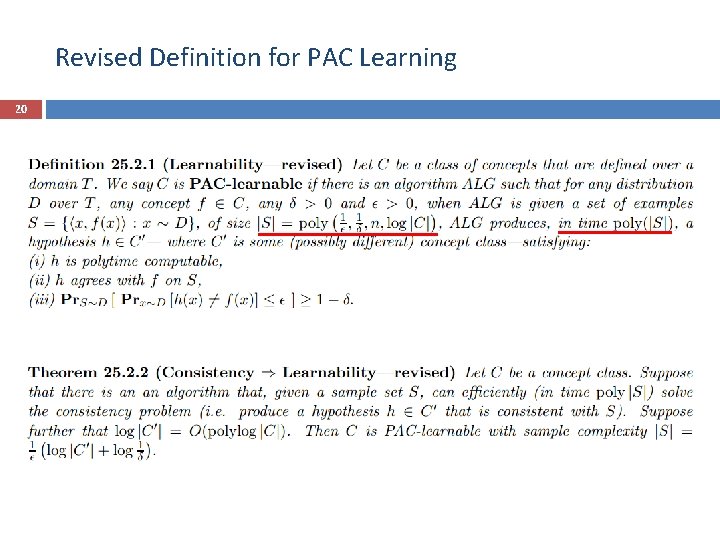

Revised Definition for PAC Learning 20

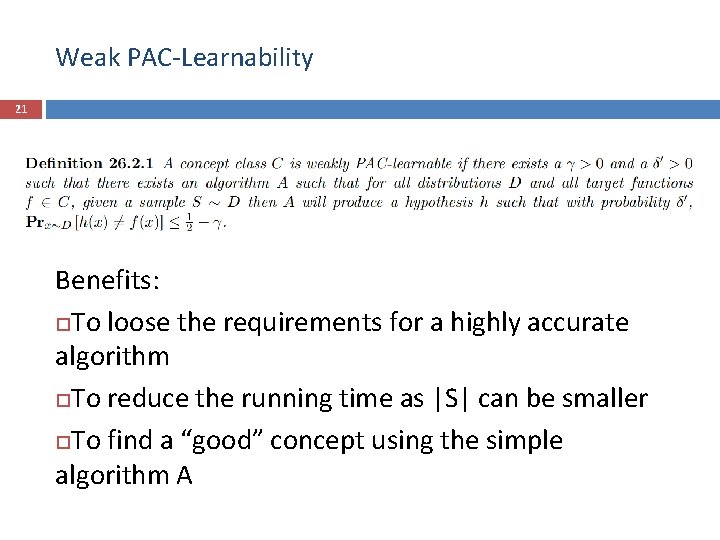

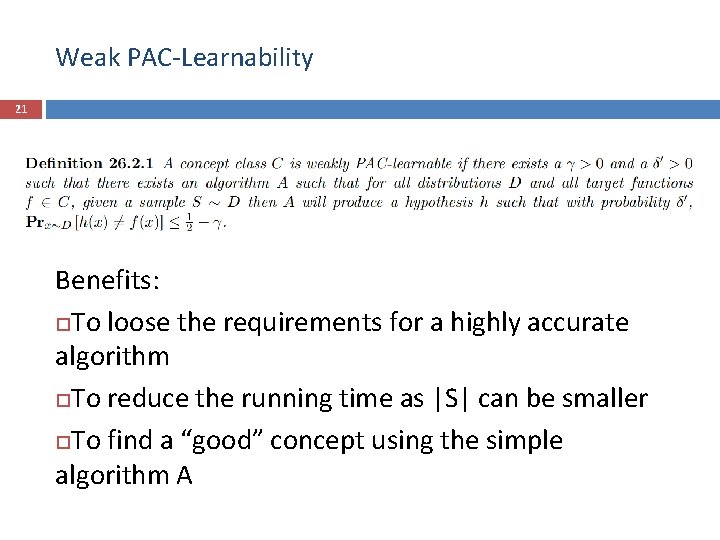

Weak PAC-Learnability 21

Benefits:

- To loosen the requirement for a highly accurate algorithm
- To reduce the running time, as |S| can be smaller
- To find a “good” concept using the simple algorithm A

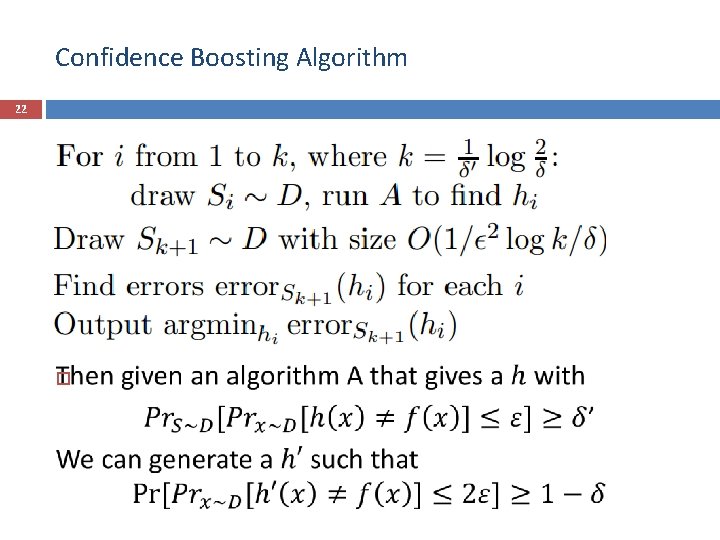

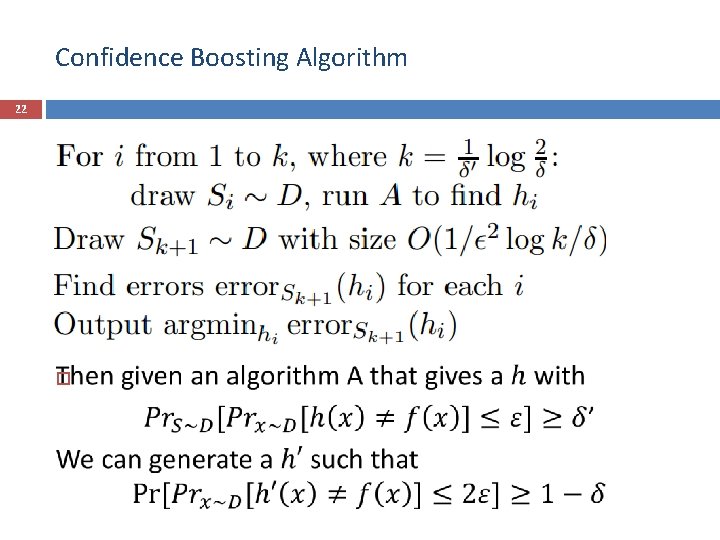

Confidence Boosting Algorithm 22

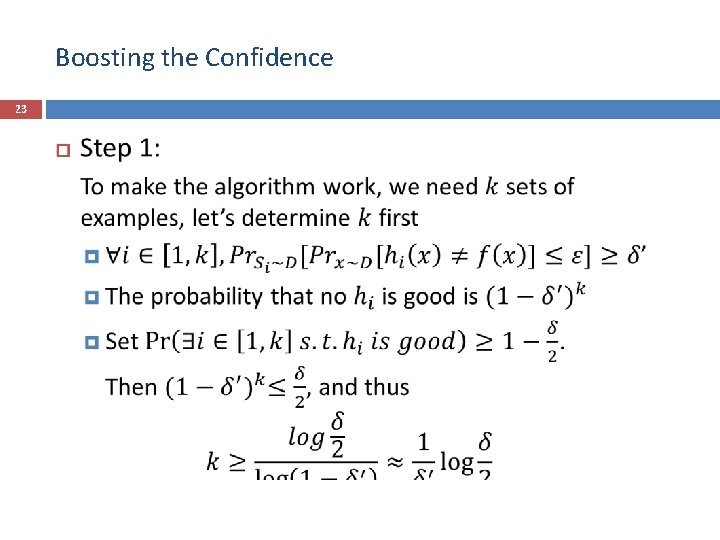

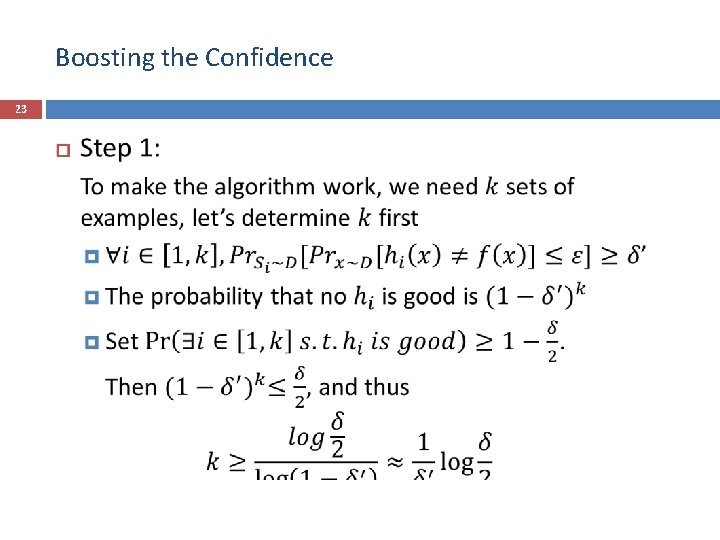

Boosting the Confidence 23

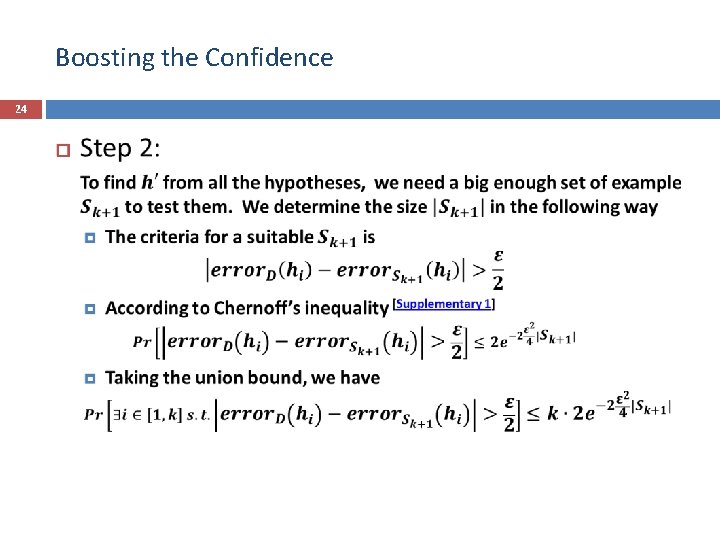

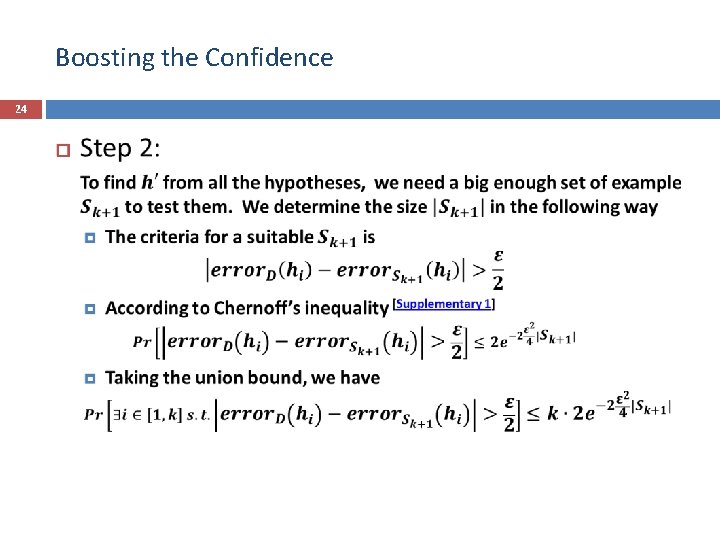

Boosting the Confidence 24

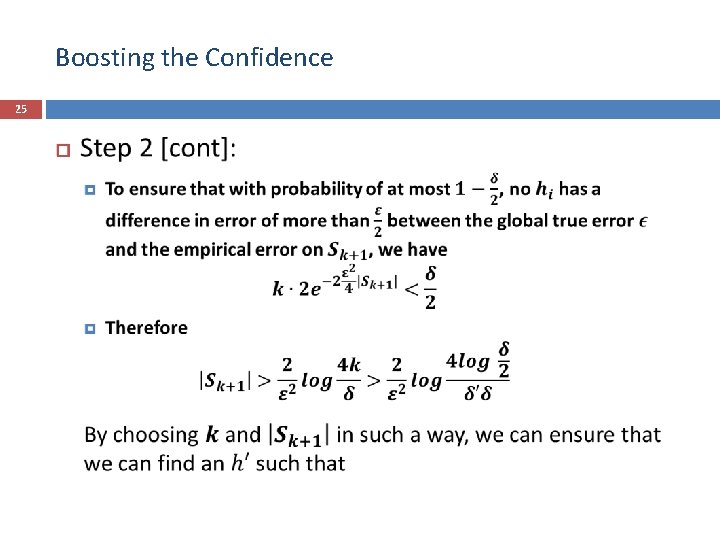

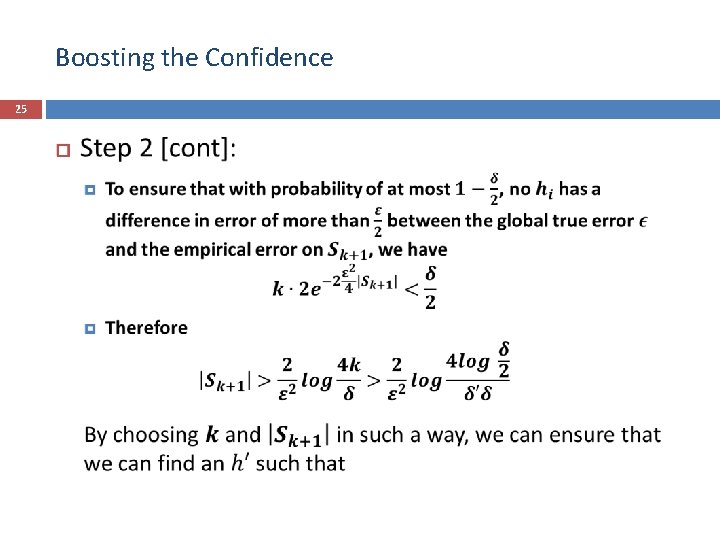

Boosting the Confidence 25

Error Reduction by Boosting 26

The basic idea exploits the fact that a weak learner can learn a little on every distribution; by combining the hypotheses from more iterations we can get a much lower error rate.

Error Reduction by Boosting 27

Detailed steps:

1. Some algorithm A produces a hypothesis that has an error probability of no more than $p = 1/2 - \gamma$ ($\gamma > 0$). We would like to decrease this error probability to $1/2 - \gamma'$ with $\gamma' > \gamma$.
2. We invoke A three times, each time with a slightly different distribution, and get hypotheses $h_1$, $h_2$ and $h_3$, respectively.
3. The final hypothesis then becomes $h = \mathrm{Maj}(h_1, h_2, h_3)$.

Error Reduction by Boosting 28

- Learn $h_1$ from $D_1$ with error p.
- Modify $D_1$ so that the total weight of the examples that $h_1$ marks incorrectly is 1/2; this gives $D_2$. Pick a sample $S_2$ from this distribution and use A to learn $h_2$.
- Modify $D_2$ so that it is concentrated on the examples where $h_1$ and $h_2$ disagree; this gives $D_3$. Pick a sample $S_3$ from this distribution and use A to learn $h_3$.
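The step from $D_1$ to $D_2$ can be illustrated with a small Python sketch over a finite set of weighted examples. The slide only requires that the examples $h_1$ gets wrong end up with total weight 1/2; rescaling each group proportionally, and the function name `reweight_half_wrong`, are assumptions made for this example.

```python
def reweight_half_wrong(weights, correct):
    """Build D2 from D1: give the examples h1 got wrong total weight 1/2.

    weights -- D1 as a list of non-negative weights summing to 1
    correct -- correct[i] is True when h1 labels example i correctly
    Assumption: weights are rescaled proportionally inside each group.
    """
    wrong_mass = sum(w for w, ok in zip(weights, correct) if not ok)
    right_mass = sum(w for w, ok in zip(weights, correct) if ok)
    if wrong_mass == 0 or right_mass == 0:
        raise ValueError("h1 must be wrong on some examples and right on others")
    return [
        0.5 * w / (right_mass if ok else wrong_mass)
        for w, ok in zip(weights, correct)
    ]


# Four equally weighted examples, h1 wrong only on the last one.
d2 = reweight_half_wrong([0.25, 0.25, 0.25, 0.25], [True, True, True, False])
```

Under $D_2$ the hypothesis $h_1$ is no better than a coin flip, so whatever $h_2$ learns from this distribution has to carry information that $h_1$ lacks.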

Error Reduction by Boosting 29

The total error probability of h is at most $3p^2 - 2p^3$, which is less than p when $p \in (0, 1/2)$. The proof of how to get this probability is shown in [1]. Thus there exists $\gamma' > \gamma$ such that the error probability of our new hypothesis is at most $1/2 - \gamma'$.

[1] http://courses.csail.mit.edu/6.858/lecture-12.ps
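To see how quickly the bound $3p^2 - 2p^3$ shrinks, the short Python sketch below evaluates it and counts how many times the three-hypothesis majority construction would have to be nested to push the bound under a target. The function names and the idea of applying the bound recursively are illustrative assumptions, not part of the slides.

```python
def boosted_error(p):
    """Upper bound on the error of Maj(h1, h2, h3) when each stage has error at most p."""
    return 3 * p ** 2 - 2 * p ** 3


def rounds_to_reach(p, target):
    """Number of nested applications of the bound needed to get from p down to target."""
    if not 0 <= p < 0.5:
        raise ValueError("p must lie in [0, 1/2)")
    if target <= 0:
        raise ValueError("target must be positive")
    rounds = 0
    while p > target:
        p = boosted_error(p)
        rounds += 1
    return rounds


# A weak learner with error 0.4 (gamma = 0.1) improves to a bound of about 0.352.
improved = boosted_error(0.4)
```

The map has fixed points at 0 and 1/2, which is why the construction helps only when the weak learner is strictly better than random guessing.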

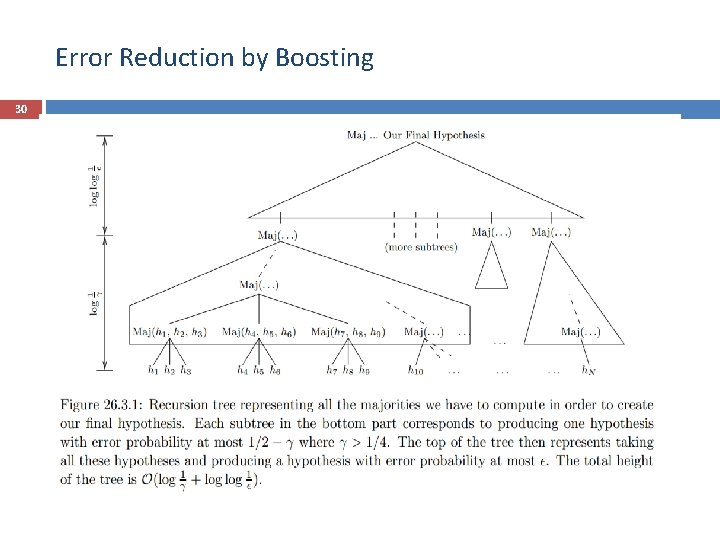

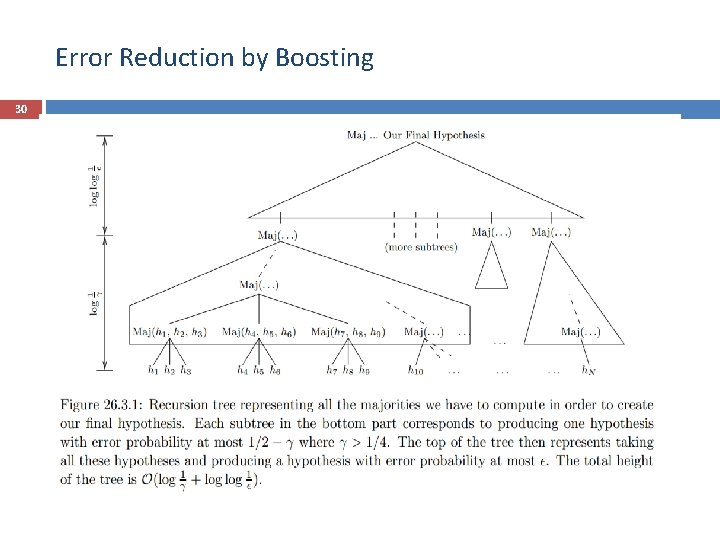

Error Reduction by Boosting 30

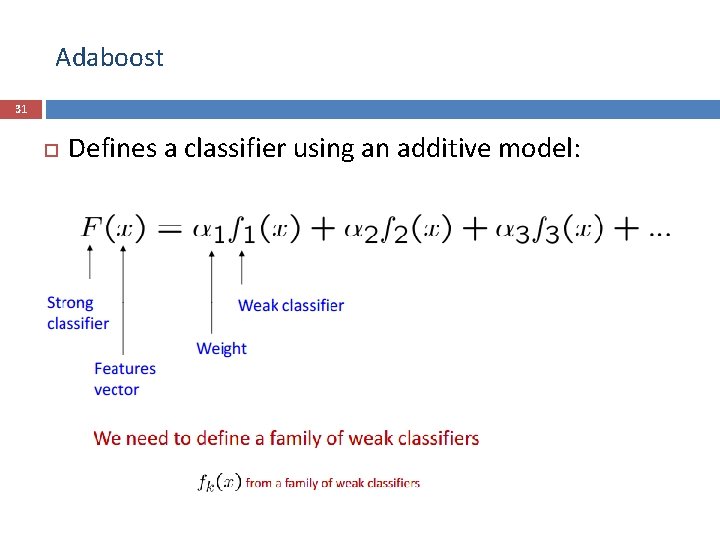

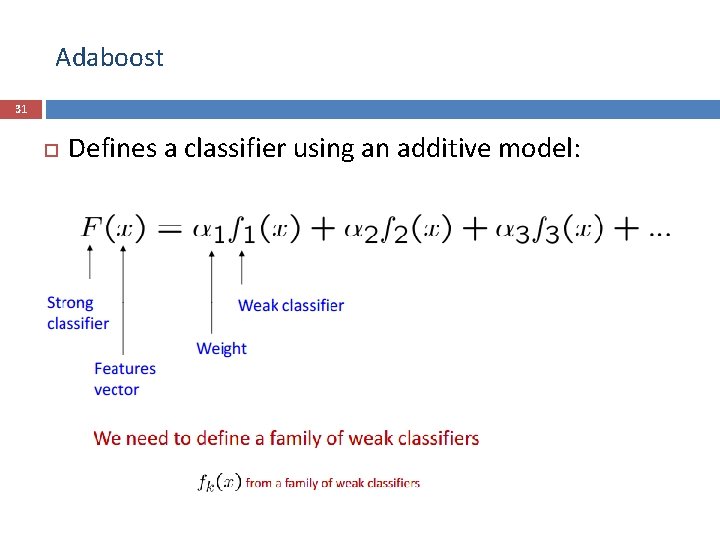

Adaboost 31 Defines a classifier using an additive model:

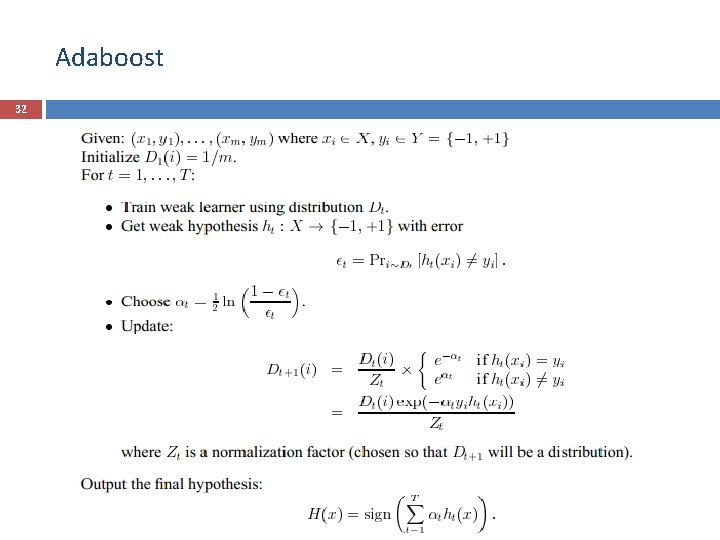

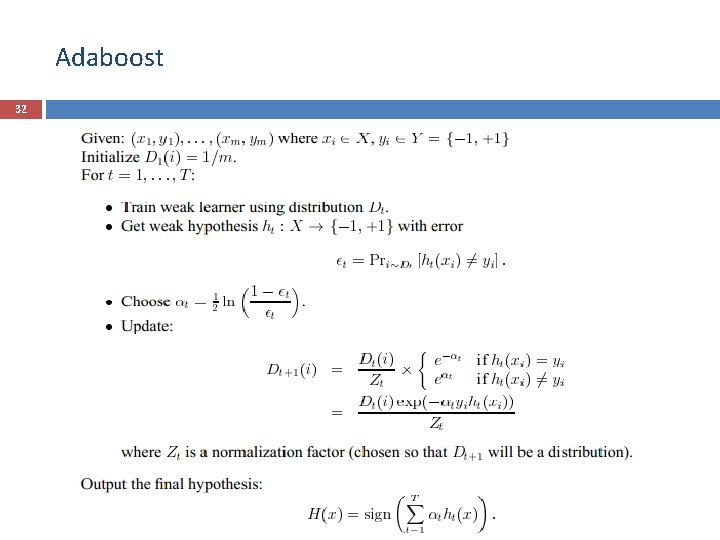

Adaboost 32

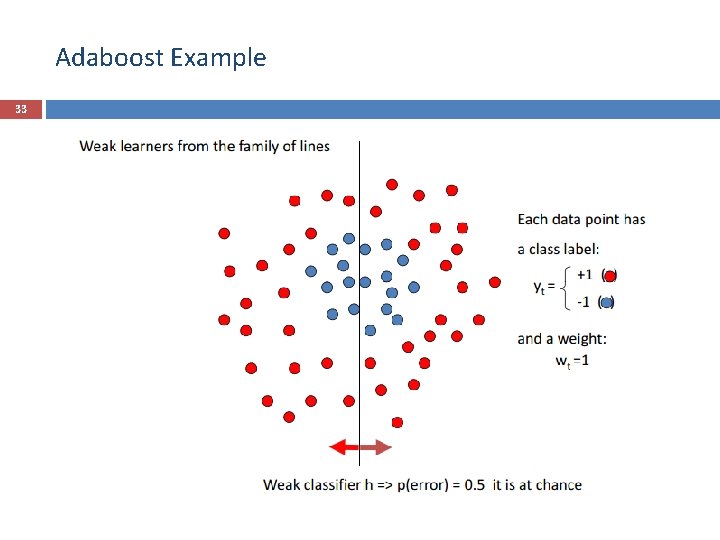

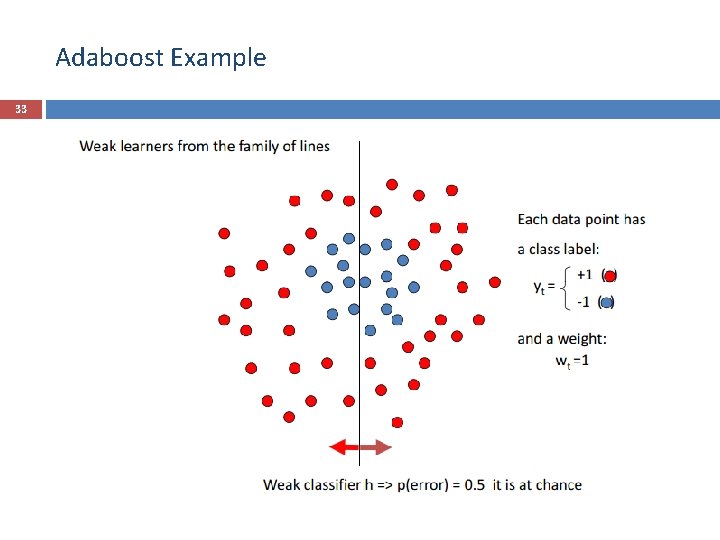
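The additive model and the update rules are carried by the slide images. As a rough sketch, the standard AdaBoost formulation with labels in {-1, +1}, classifier $\mathrm{sign}\bigl(\sum_t \alpha_t h_t(x)\bigr)$ and $\alpha_t = \tfrac{1}{2}\ln\frac{1 - \varepsilon_t}{\varepsilon_t}$ could look like this in Python. The decision-stump weak learner, the clamping of the weighted error, and all function names are illustrative assumptions, not taken from the slides.

```python
import math


def stump_predict(stump, x):
    """A stump (feature, threshold, polarity) outputs polarity when x[feature] > threshold."""
    feature, threshold, polarity = stump
    return polarity if x[feature] > threshold else -polarity


def best_stump(X, y, w):
    """Exhaustively pick the stump with the smallest weighted training error."""
    best, best_err = None, float("inf")
    for feature in range(len(X[0])):
        for threshold in sorted({x[feature] for x in X}):
            for polarity in (1, -1):
                stump = (feature, threshold, polarity)
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(stump, xi) != yi)
                if err < best_err:
                    best, best_err = stump, err
    return best, best_err


def adaboost(X, y, rounds=10):
    """Return a list of (alpha, stump) pairs; labels y must be in {-1, +1}."""
    n = len(X)
    w = [1.0 / n] * n  # start from the uniform distribution
    ensemble = []
    for _ in range(rounds):
        stump, err = best_stump(X, y, w)
        err = min(max(err, 1e-12), 1 - 1e-12)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Increase the weight of misclassified examples, decrease the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def predict(ensemble, x):
    """Sign of the weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1
```

No single stump can separate a one-dimensional pattern such as `+ + - - + +`, but a weighted vote of a few stumps can, which is the point of the additive model.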

Adaboost Example 33

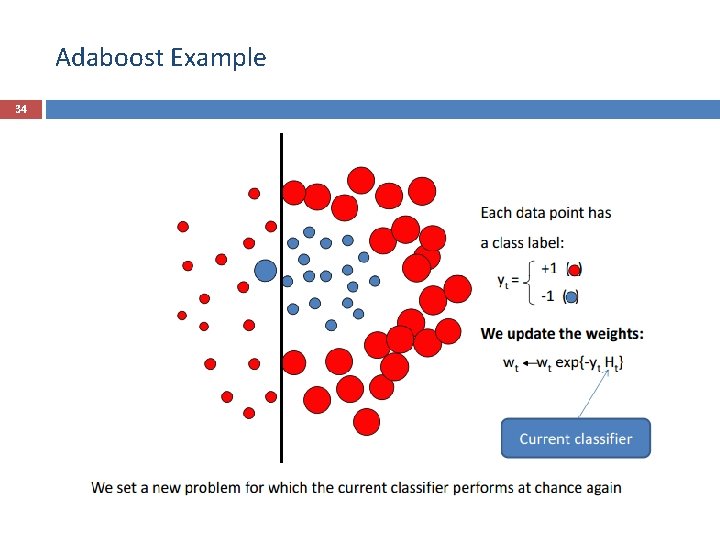

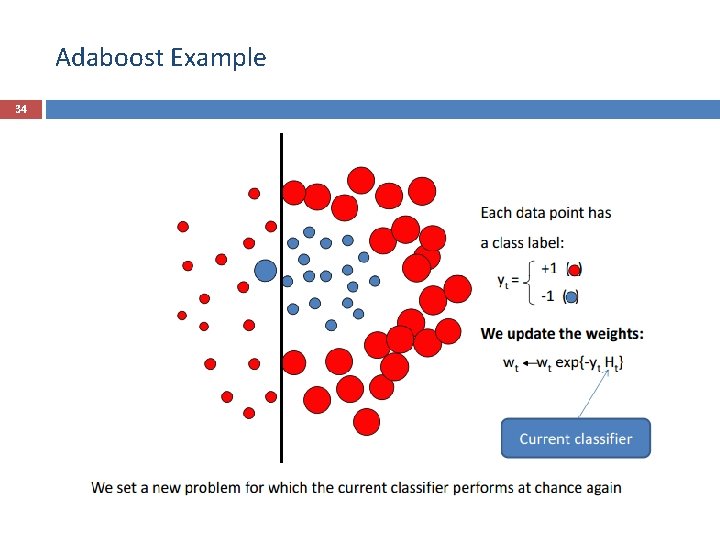

Adaboost Example 34

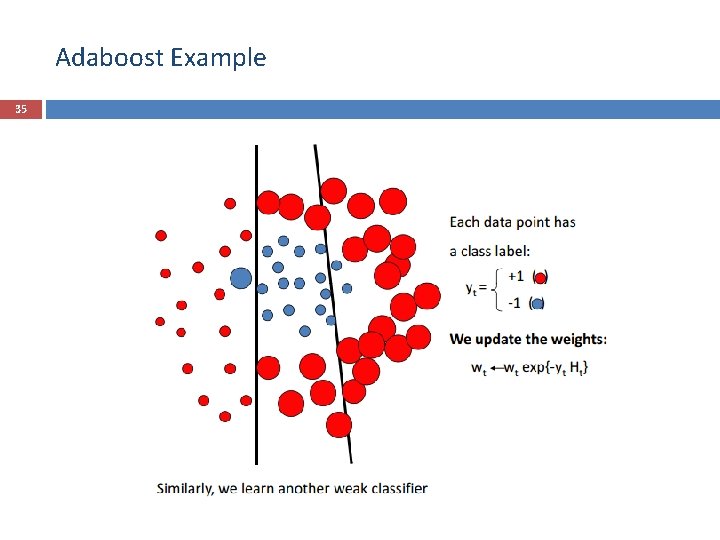

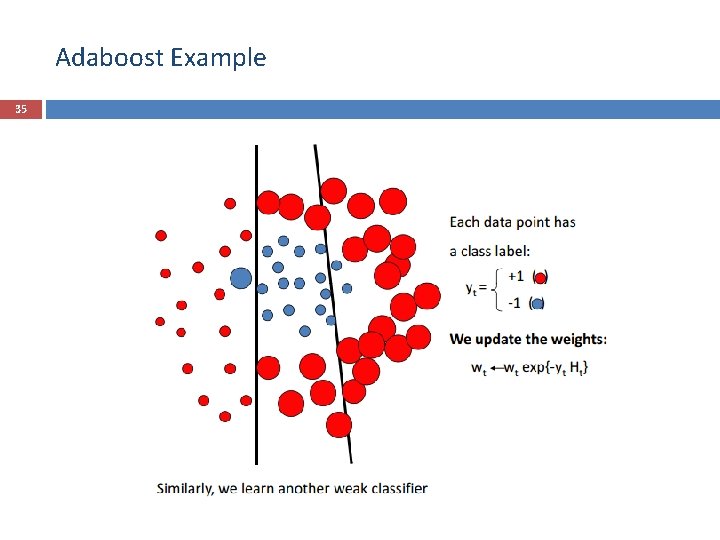

Adaboost Example 35

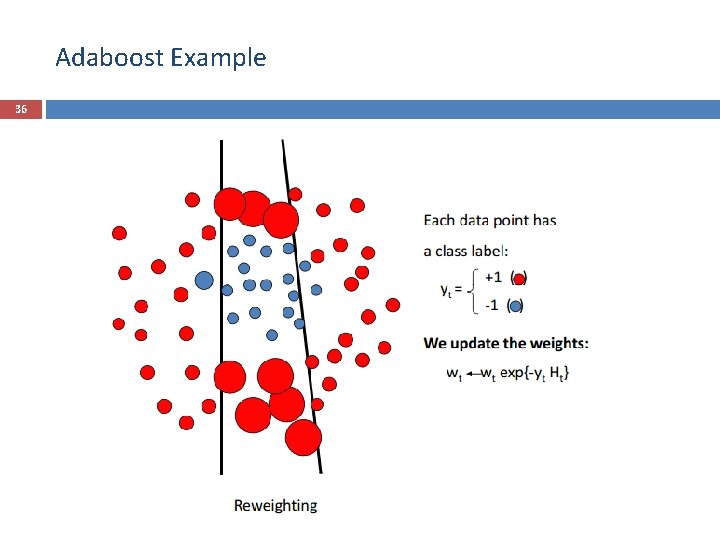

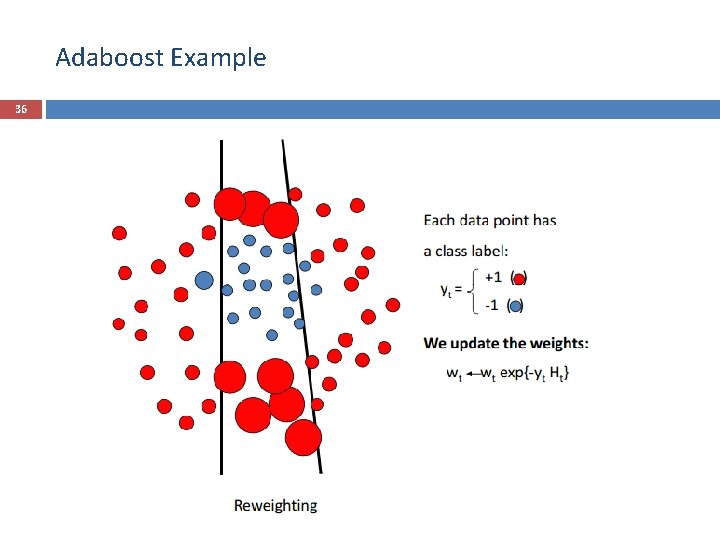

Adaboost Example 36

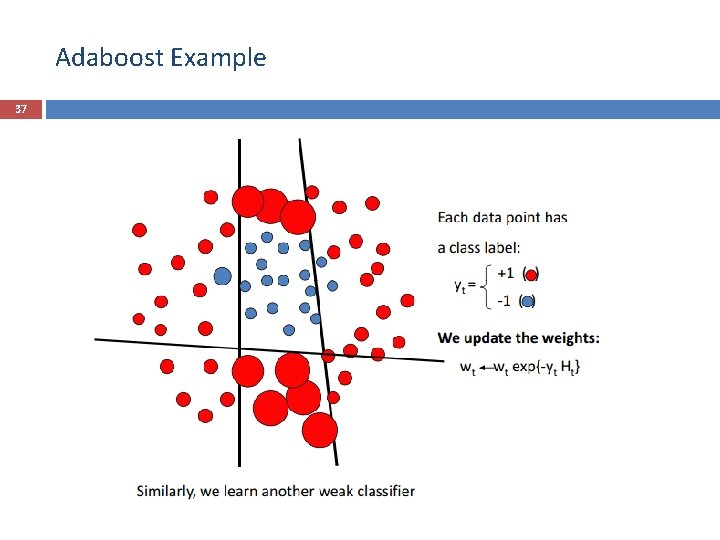

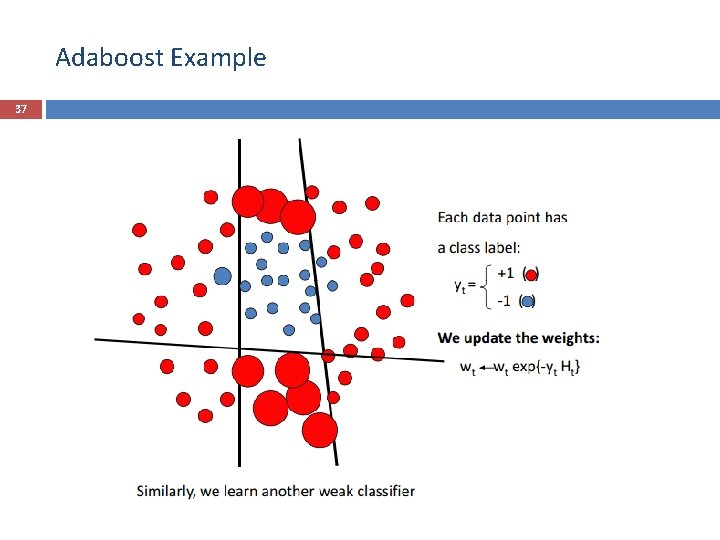

Adaboost Example 37

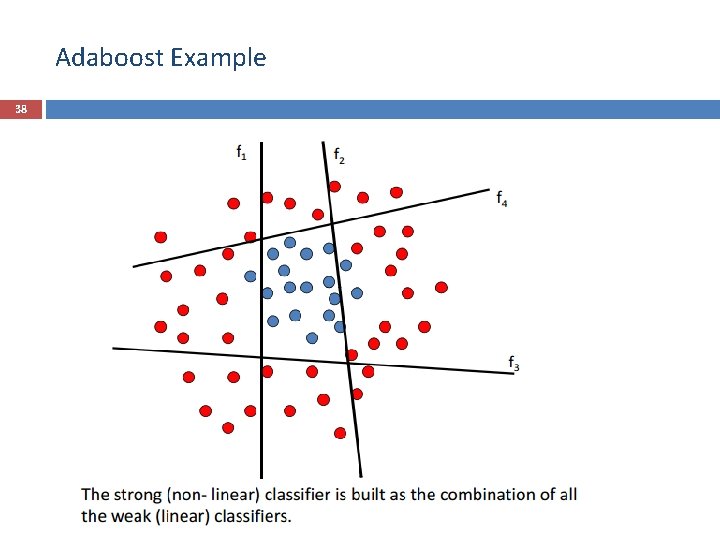

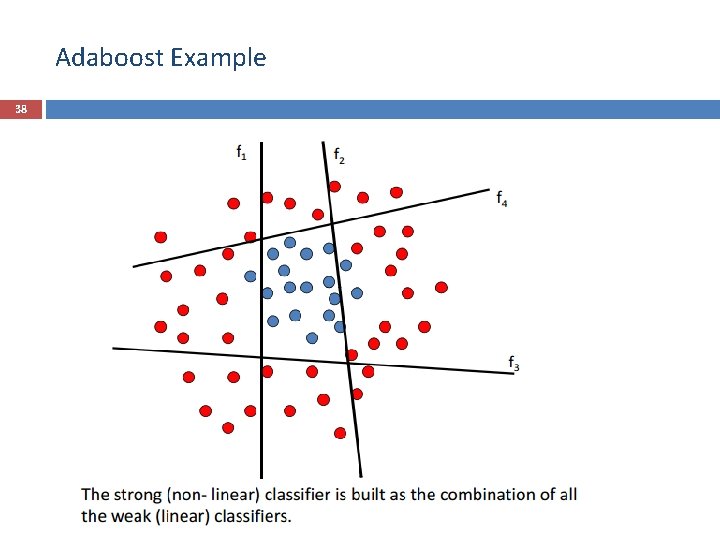

Adaboost Example 38

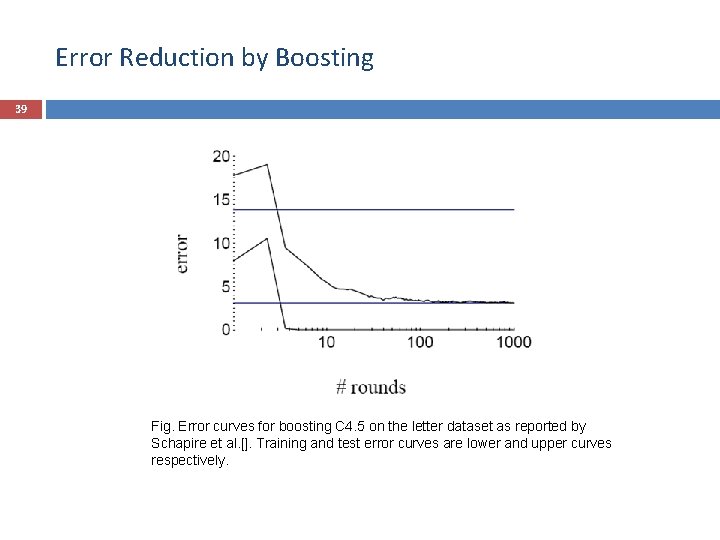

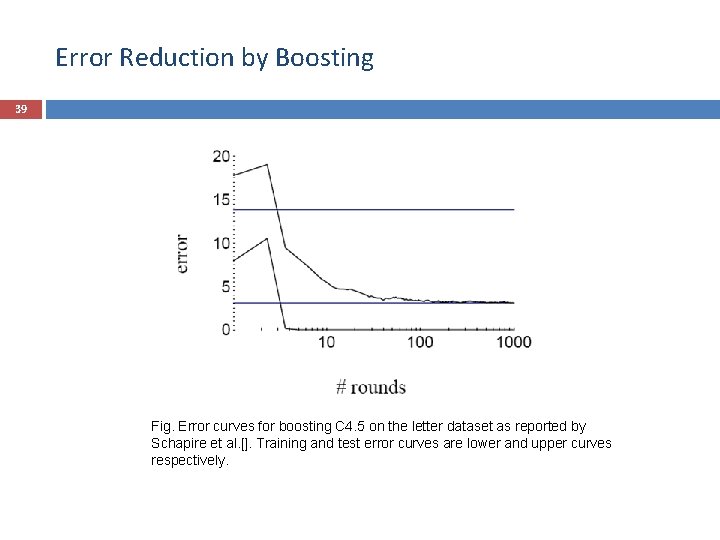

Error Reduction by Boosting 39

Fig. Error curves for boosting C4.5 on the letter dataset as reported by Schapire et al. []. Training and test error curves are the lower and upper curves, respectively.

PAC learning conclusion 40 Strong PAC learning Weak PAC learning Error reduction and boosting

41 Mistake Bound Model of Learning Mistake Bound Model Predicting from Expert Advice The Weighted Majority Algorithm Online Learning from Examples The Winnow Algorithm

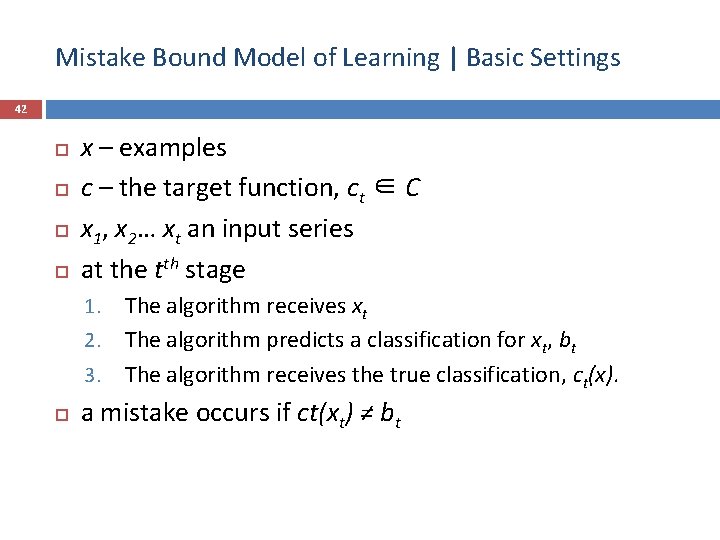

Mistake Bound Model of Learning | Basic Settings 42

- x: examples
- c: the target function, $c_t \in C$
- $x_1, x_2, \dots, x_t$: an input series

At the t-th stage:

1. The algorithm receives $x_t$.
2. The algorithm predicts a classification $b_t$ for $x_t$.
3. The algorithm receives the true classification $c_t(x_t)$.

A mistake occurs if $c_t(x_t) \ne b_t$.

Mistake Bound Model of Learning | Basic Settings 43

A hypothesis class C has an algorithm A with mistake bound M if, for any concept c ∈ C and for any ordering of examples, the total number of mistakes ever made by A is bounded by M.

Mistake Bound Model of Learning | Basic Settings 44 Predicting from Expert Advice The Weighted Majority Algorithm Online Learning from Examples The Winnow Algorithm

45 Predicting from Expert Advice The Weighted Majority Algorithm Deterministic Randomized Predicting from Expert Advice

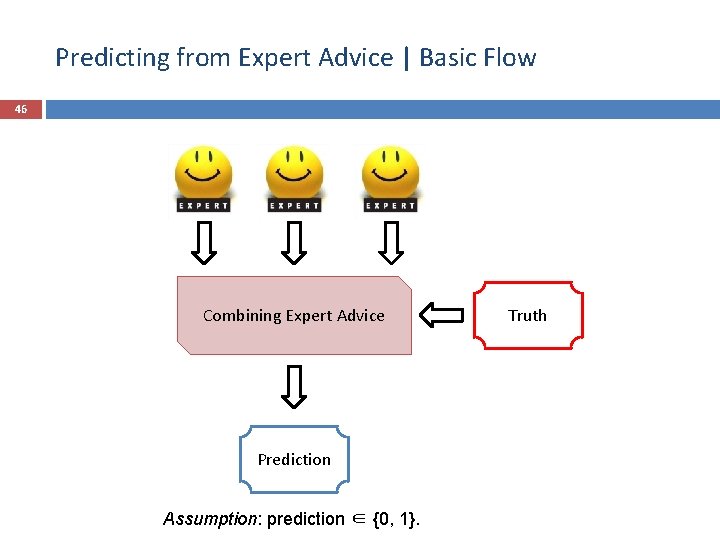

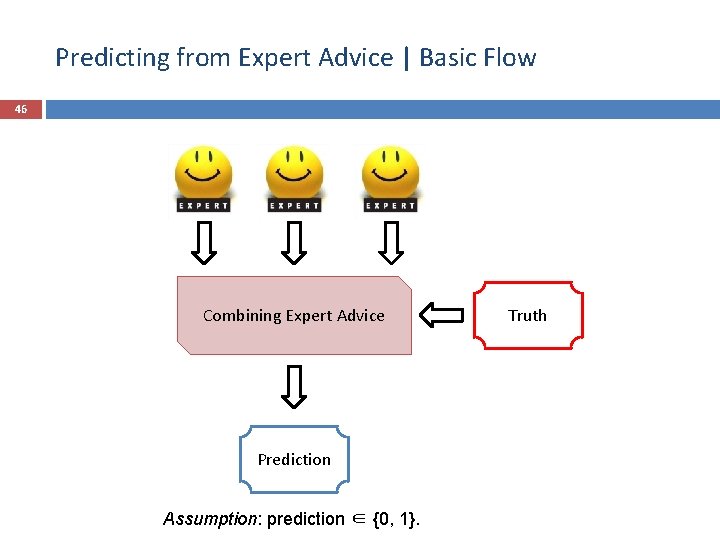

Predicting from Expert Advice | Basic Flow 46 Combining Expert Advice Prediction Assumption: prediction ∈ {0, 1}. Truth

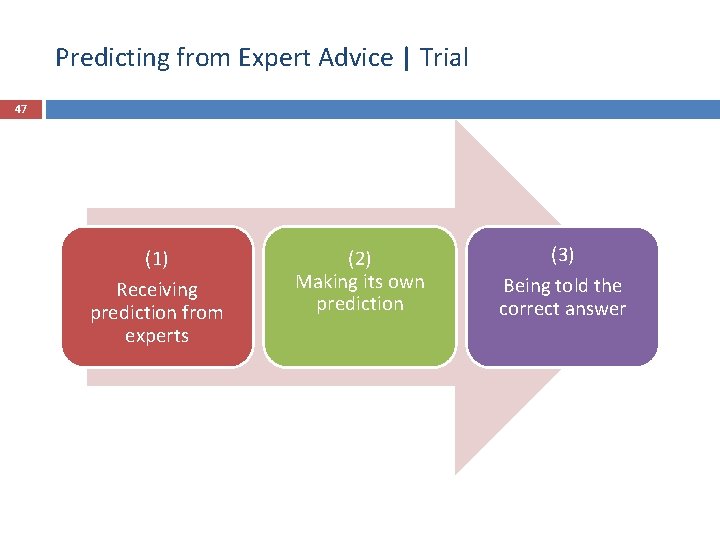

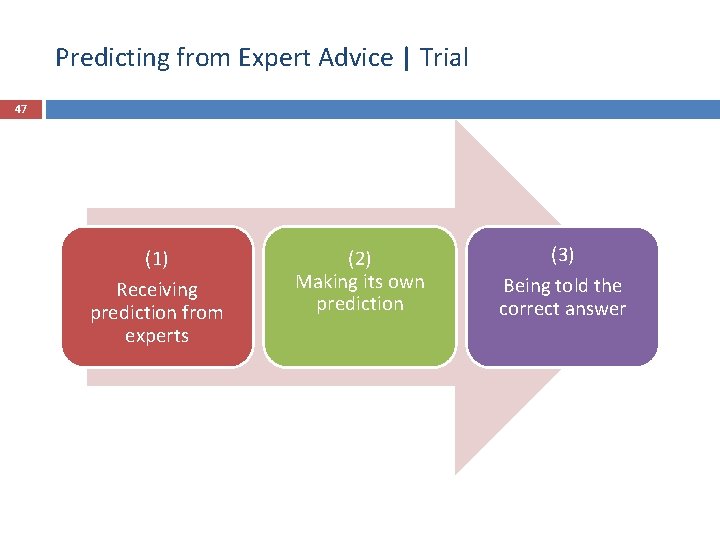

Predicting from Expert Advice | Trial 47 (1) Receiving prediction from experts (2) Making its own prediction (3) Being told the correct answer

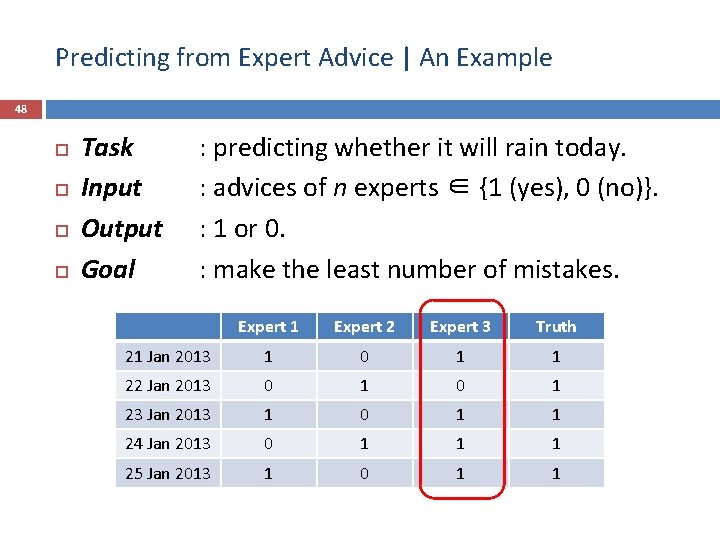

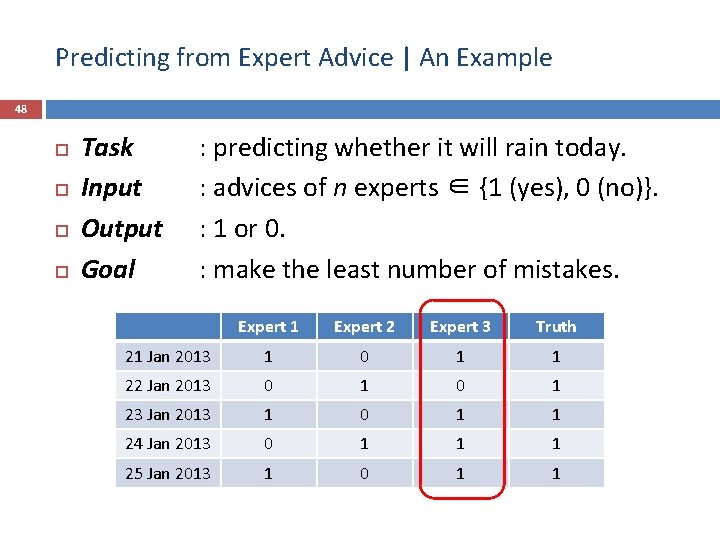

Predicting from Expert Advice | An Example 48

- Task: predicting whether it will rain today.
- Input: advice of n experts ∈ {1 (yes), 0 (no)}.
- Output: 1 or 0.
- Goal: make the least number of mistakes.

| Date | Expert 1 | Expert 2 | Expert 3 | Truth |
|---|---|---|---|---|
| 21 Jan 2013 | 1 | 0 | 1 | 1 |
| 22 Jan 2013 | 0 | 1 | 0 | 1 |
| 23 Jan 2013 | 1 | 0 | 1 | 1 |
| 24 Jan 2013 | 0 | 1 | 1 | 1 |
| 25 Jan 2013 | 1 | 0 | 1 | 1 |

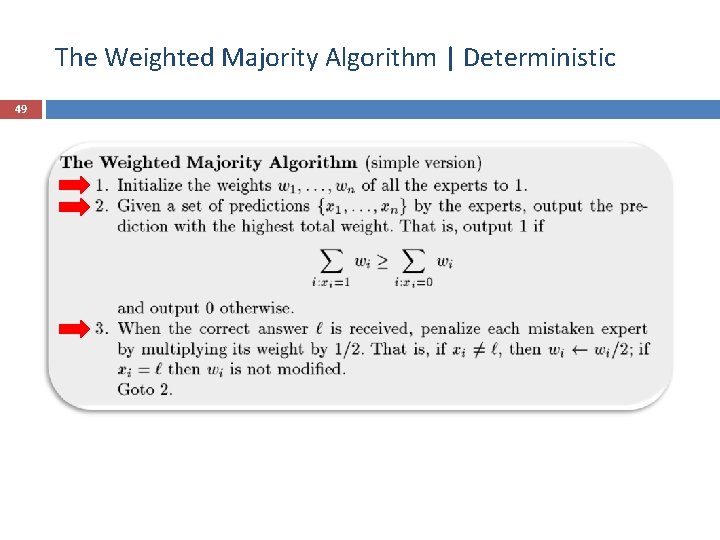

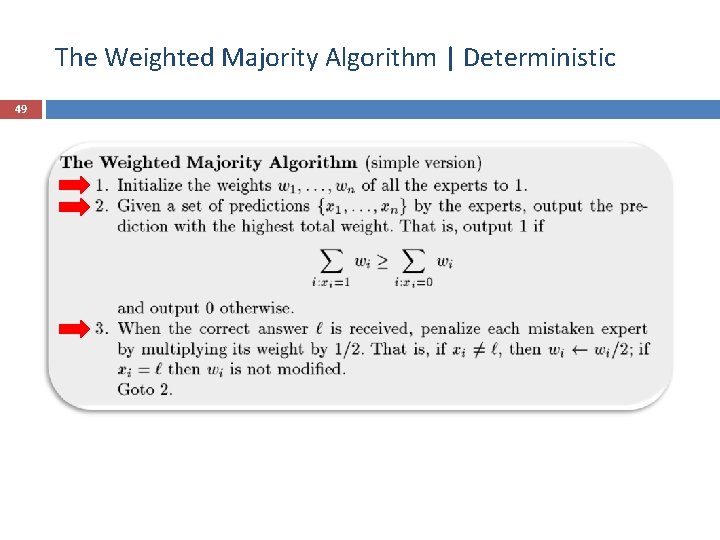

The Weighted Majority Algorithm | Deterministic 49

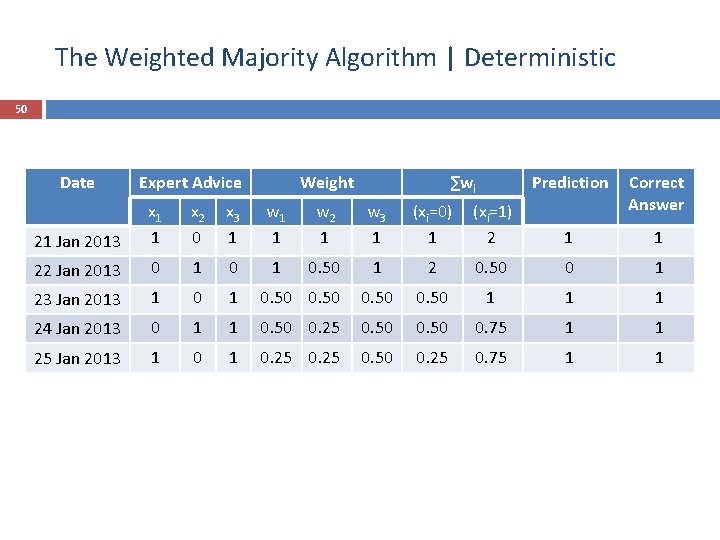

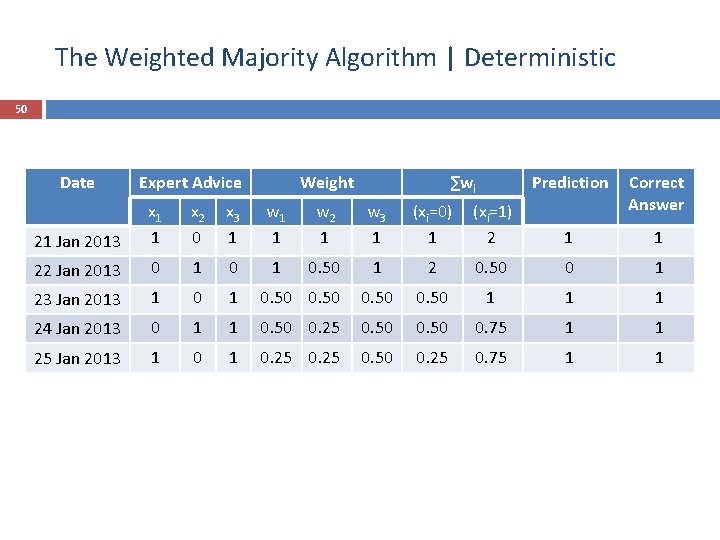

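The Weighted Majority Algorithm | Deterministic 50

| Date | $x_1$ | $x_2$ | $x_3$ | $w_1$ | $w_2$ | $w_3$ | $\sum w_i$ ($x_i = 0$) | $\sum w_i$ ($x_i = 1$) | Prediction | Correct answer |
|---|---|---|---|---|---|---|---|---|---|---|
| 21 Jan 2013 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 |
| 22 Jan 2013 | 0 | 1 | 0 | 1 | 0.50 | 1 | 2 | 0.50 | 0 | 1 |
| 23 Jan 2013 | 1 | 0 | 1 | 0.50 | 0.50 | 0.50 | 0.50 | 1 | 1 | 1 |
| 24 Jan 2013 | 0 | 1 | 1 | 0.50 | 0.25 | 0.50 | 0.50 | 0.75 | 1 | 1 |
| 25 Jan 2013 | 1 | 0 | 1 | 0.25 | 0.25 | 0.50 | 0.25 | 0.75 | 1 | 1 |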
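The trace for the rain example can be reproduced with a short Python sketch of the deterministic algorithm, assuming every expert that errs has its weight halved in each round and that ties go to predicting 1. The advice rows follow the rain example; the 22 Jan row `(0, 1, 0)` is inferred from the weight sums and should be treated as an assumption, as should the function name `weighted_majority`.

```python
def weighted_majority(advice_rows, truths, beta=0.5):
    """Deterministic Weighted Majority over binary expert advice.

    advice_rows -- one tuple of 0/1 predictions per round, one entry per expert
    truths      -- the correct answer for each round
    beta        -- factor applied to the weight of every expert that was wrong
    """
    weights = [1.0] * len(advice_rows[0])
    predictions = []
    for advice, truth in zip(advice_rows, truths):
        weight_for_1 = sum(w for w, a in zip(weights, advice) if a == 1)
        weight_for_0 = sum(w for w, a in zip(weights, advice) if a == 0)
        predictions.append(1 if weight_for_1 >= weight_for_0 else 0)
        # Penalize every expert whose advice disagreed with the truth.
        weights = [w * beta if a != truth else w for w, a in zip(weights, advice)]
    return predictions, weights


advice = [(1, 0, 1), (0, 1, 0), (1, 0, 1), (0, 1, 1), (1, 0, 1)]  # 21-25 Jan 2013
truths = [1, 1, 1, 1, 1]
predictions, final_weights = weighted_majority(advice, truths)
# predictions   -> [1, 0, 1, 1, 1]  (a single mistake, on 22 Jan)
# final_weights -> [0.25, 0.125, 0.5]
```

The weights returned are those after the 25 Jan update, so Expert 2 ends at 0.125 rather than the 0.25 it carried into that round.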

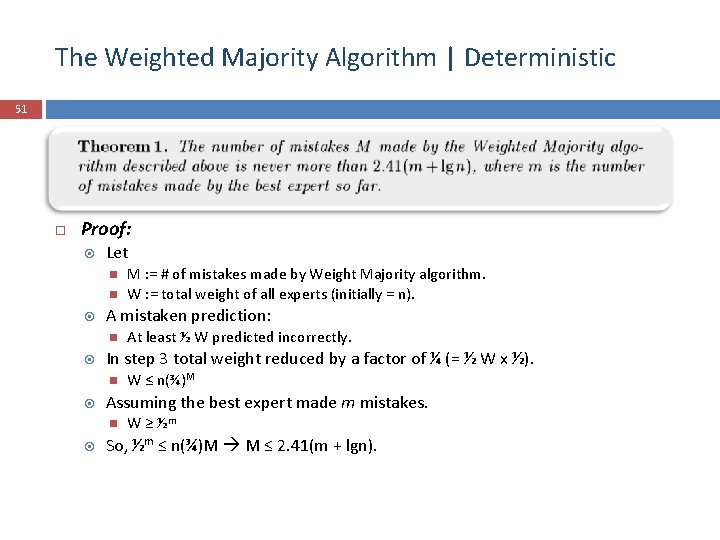

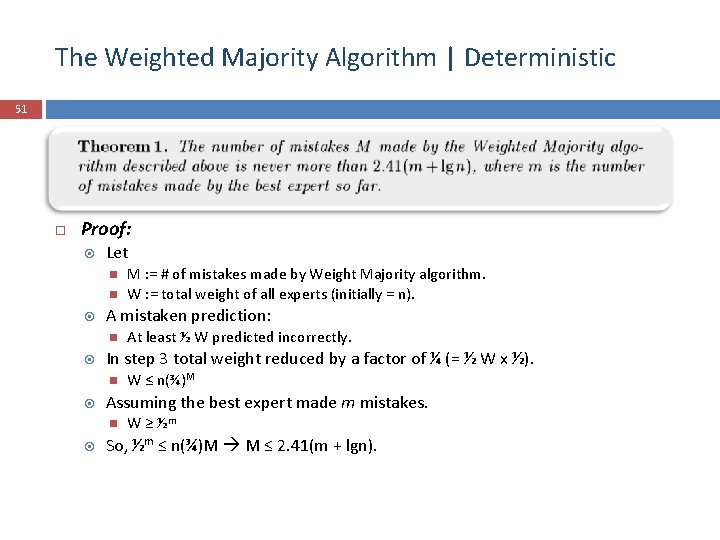

The Weighted Majority Algorithm | Deterministic 51

Proof: Let

- M := # of mistakes made by the Weighted Majority algorithm.
- W := total weight of all experts (initially W = n).

A mistaken prediction: at least ½W predicted incorrectly, so in step 3 the total weight is reduced by at least ¼ of W (= ½W × ½). After M mistakes, $W \le n(3/4)^M$.

Assuming the best expert made m mistakes, its weight is $(1/2)^m$, so $W \ge (1/2)^m$.

So $(1/2)^m \le n(3/4)^M$, which gives $M \le 2.41(m + \lg n)$.
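The last step of the proof is a matter of taking base-2 logarithms on both sides. Spelled out, with $\lg$ denoting $\log_2$ as in the bound above:

```latex
\[
  \Bigl(\tfrac{1}{2}\Bigr)^{m} \;\le\; n \Bigl(\tfrac{3}{4}\Bigr)^{M}
  \;\Longrightarrow\;
  -m \;\le\; \lg n - M \lg\tfrac{4}{3}
  \;\Longrightarrow\;
  M \;\le\; \frac{m + \lg n}{\lg (4/3)} \;\approx\; 2.41\,(m + \lg n),
\]
% since \lg(4/3) \approx 0.415 and 1 / 0.415 \approx 2.41.
```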

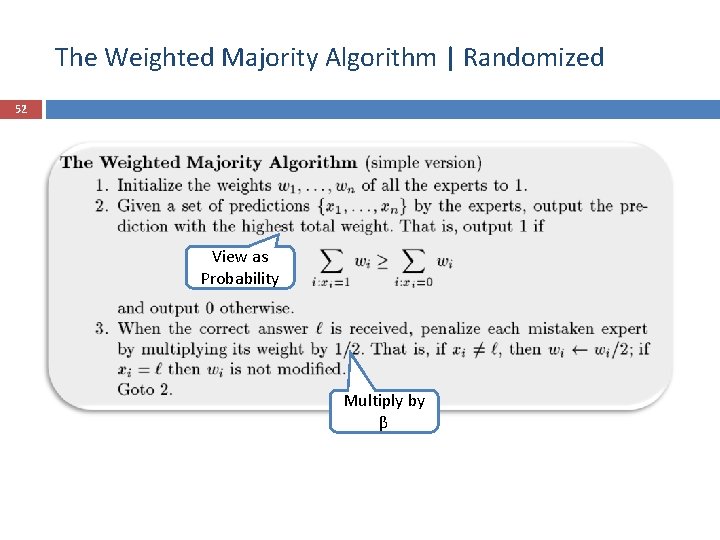

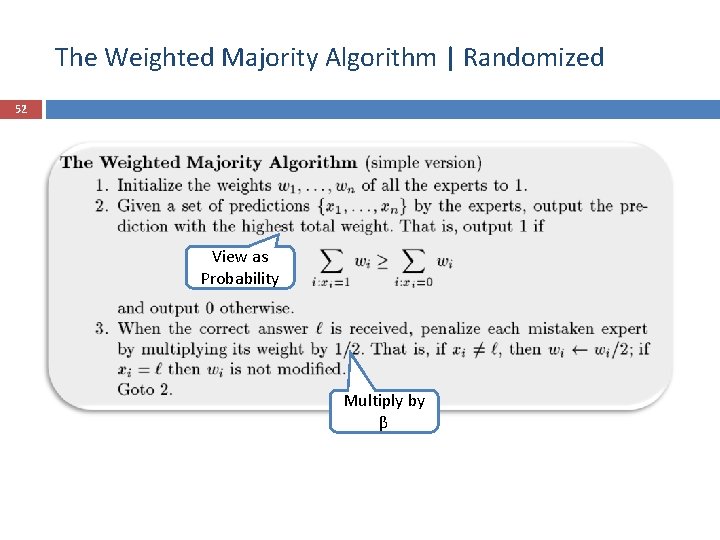

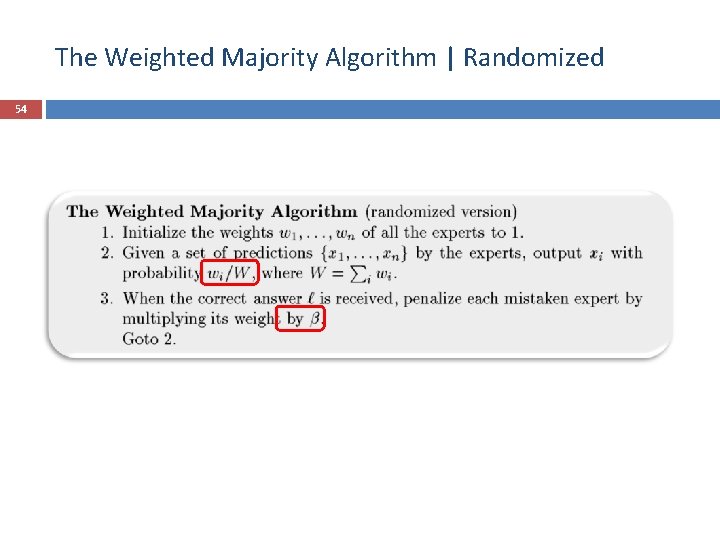

The Weighted Majority Algorithm | Randomized 52 View as Probability Multiply by β

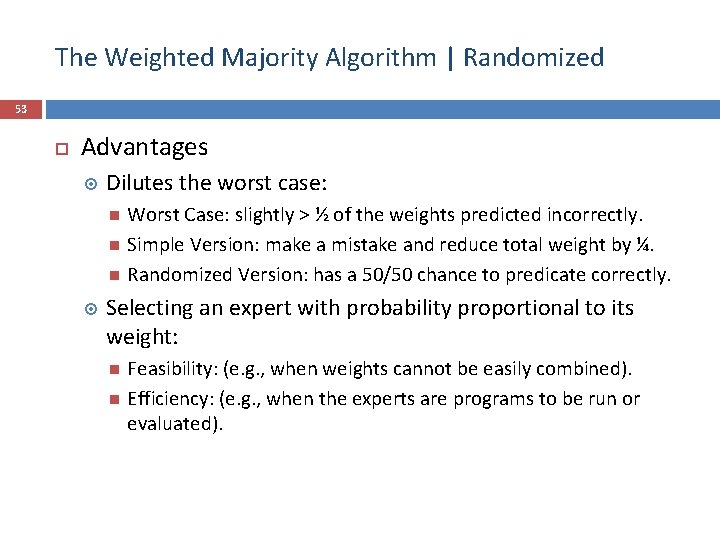

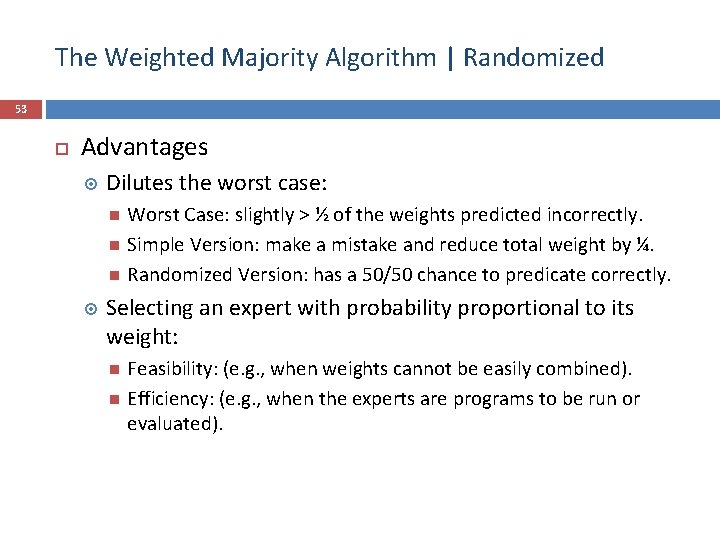

The Weighted Majority Algorithm | Randomized 53

Advantages:

- Dilutes the worst case:
  - Worst case: slightly more than ½ of the weight predicted incorrectly.
  - Simple version: makes a mistake and reduces the total weight by ¼.
  - Randomized version: has a 50/50 chance to predict correctly.
- Selecting an expert with probability proportional to its weight:
  - Feasibility (e.g., when weights cannot be easily combined).
  - Efficiency (e.g., when the experts are programs to be run or evaluated).

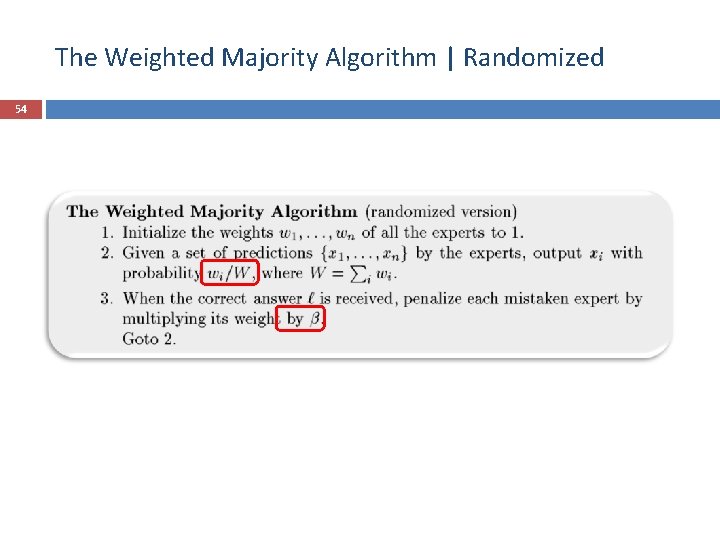

The Weighted Majority Algorithm | Randomized 54

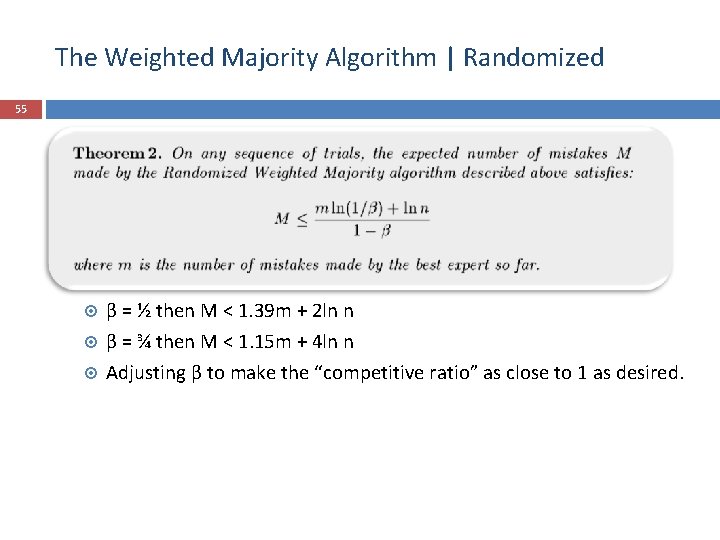

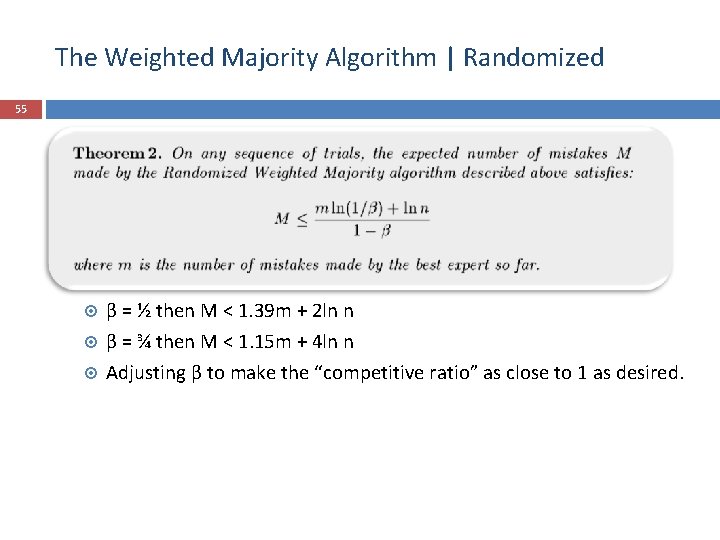

The Weighted Majority Algorithm | Randomized 55

- β = ½: M < 1.39m + 2 ln n
- β = ¾: M < 1.15m + 4 ln n

Adjusting β makes the “competitive ratio” as close to 1 as desired.
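Both lines are consistent with a bound of the general form $M \le \dfrac{m \ln(1/\beta) + \ln n}{1 - \beta}$. That general form is assumed here (it is the usual statement for the randomized algorithm and it reproduces the constants above); the Python sketch below simply evaluates it, and the function names are illustrative.

```python
import math


def rwm_bound_coefficients(beta):
    """Coefficients (a, b) such that the assumed bound reads M <= a*m + b*ln(n)."""
    if not 0 < beta < 1:
        raise ValueError("beta must lie strictly between 0 and 1")
    return math.log(1 / beta) / (1 - beta), 1 / (1 - beta)


def rwm_mistake_bound(m, n, beta):
    """Bound on the expected number of mistakes given the best expert's m mistakes."""
    a, b = rwm_bound_coefficients(beta)
    return a * m + b * math.log(n)


a_half, b_half = rwm_bound_coefficients(0.5)      # roughly (1.39, 2)
a_three_q, b_three_q = rwm_bound_coefficients(0.75)  # roughly (1.15, 4)
```

As β approaches 1 the coefficient on m approaches 1 while the coefficient on ln n grows, which is the trade-off behind tuning the competitive ratio.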

56 Mistake Bound Model of Learning Mistake Bound Model Predicting from Expert Advice The Weighted Majority Algorithm Online Learning from Examples The Winnow Algorithm

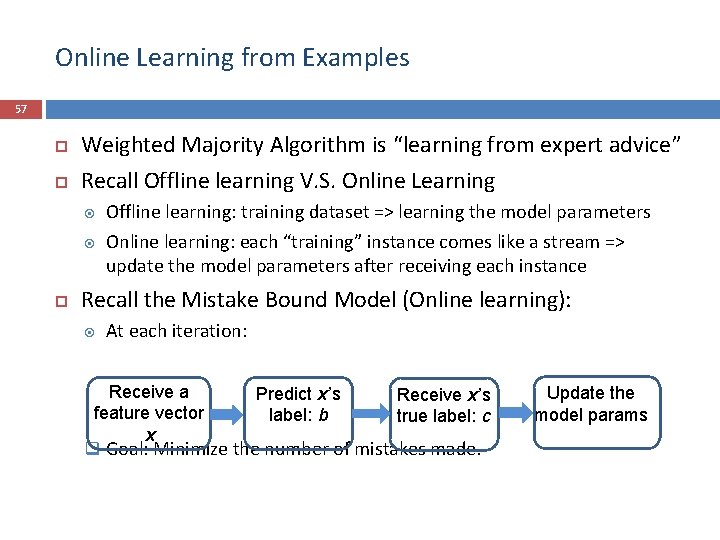

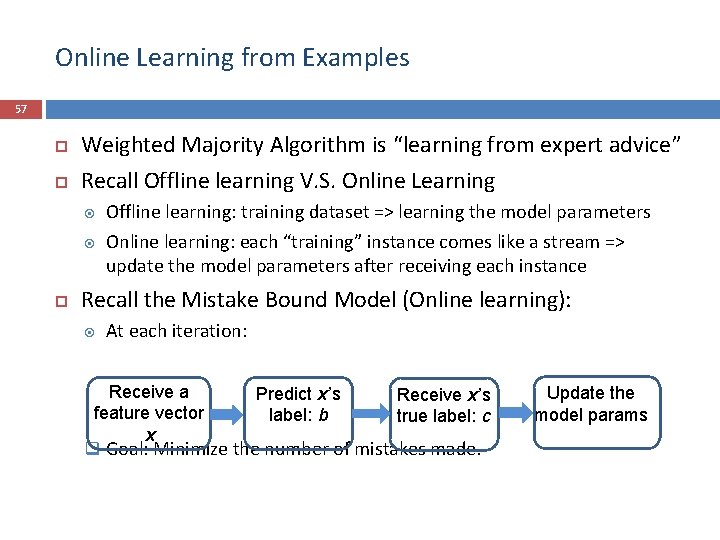

Online Learning from Examples 57

The Weighted Majority Algorithm is “learning from expert advice”.

Recall offline learning vs. online learning:

- Offline learning: training dataset => learn the model parameters.
- Online learning: each “training” instance arrives as a stream => update the model parameters after receiving each instance.

Recall the Mistake Bound Model (online learning). At each iteration:

1. Receive a feature vector x.
2. Predict x's label: b.
3. Receive x's true label: c.
4. Update the model params.

Goal: minimize the number of mistakes made.

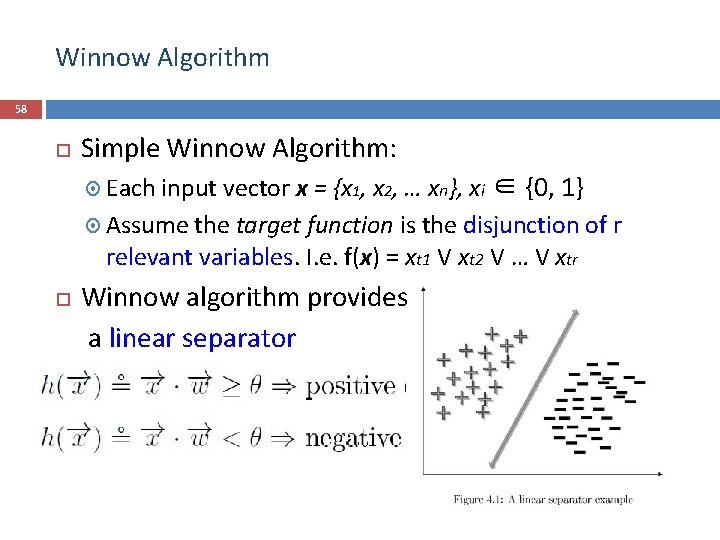

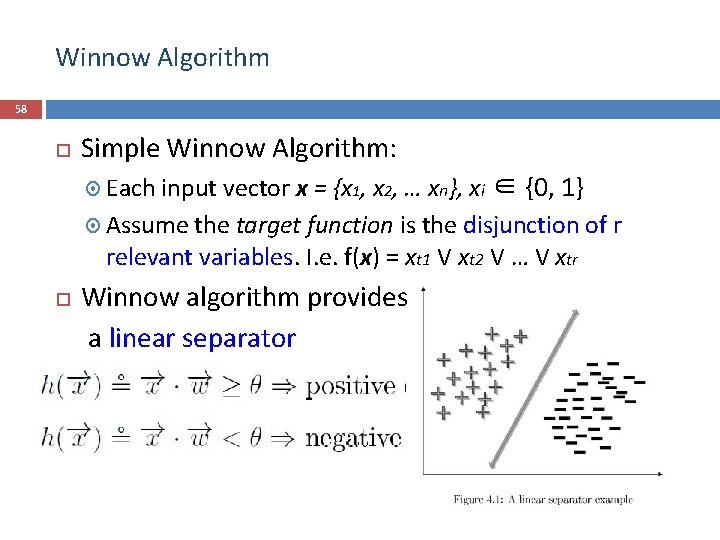

Winnow Algorithm 58

Simple Winnow Algorithm:

- Each input vector is $x = \{x_1, x_2, \dots, x_n\}$, $x_i \in \{0, 1\}$.
- Assume the target function is the disjunction of r relevant variables, i.e. $f(x) = x_{t_1} \lor x_{t_2} \lor \dots \lor x_{t_r}$.
- The Winnow algorithm provides a linear separator.

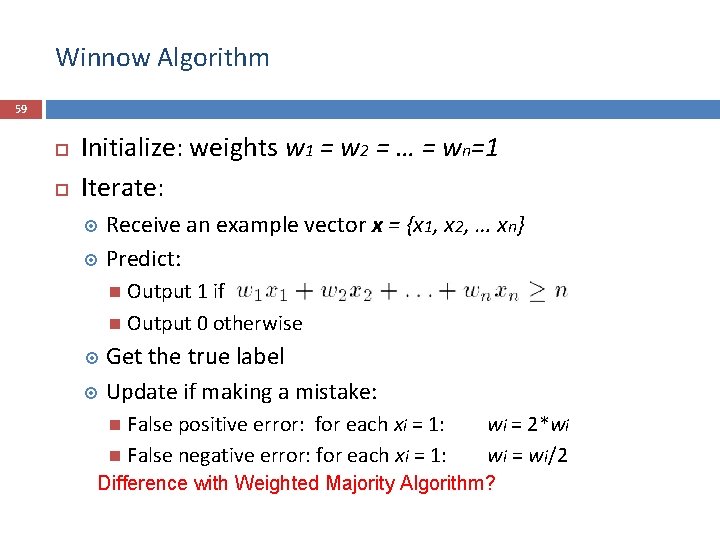

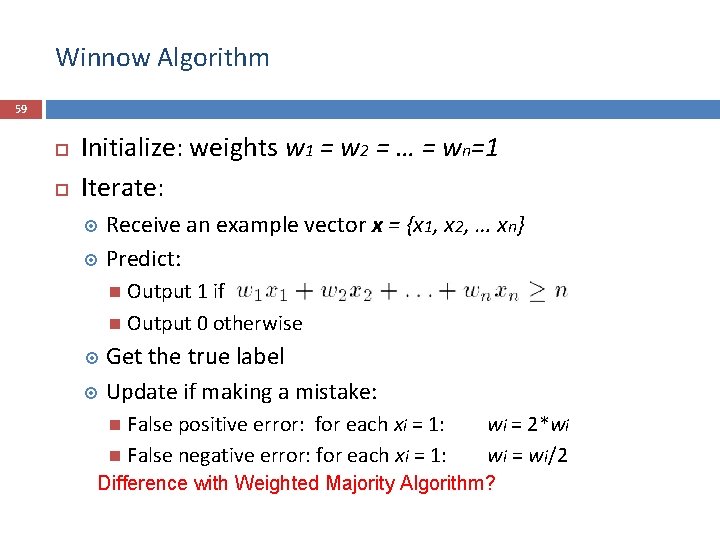

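Winnow Algorithm 59

Initialize: weights $w_1 = w_2 = \dots = w_n = 1$.

Iterate:

1. Receive an example vector $x = \{x_1, x_2, \dots, x_n\}$.
2. Predict: output 1 if $w \cdot x \ge n$; output 0 otherwise.
3. Get the true label.
4. Update if making a mistake:
   - False positive error (a mistake on a positive example: true label 1, predicted 0): for each $x_i = 1$: $w_i = 2 w_i$.
   - False negative error (a mistake on a negative example: true label 0, predicted 1): for each $x_i = 1$: $w_i = w_i / 2$.

Difference with the Weighted Majority Algorithm?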
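A minimal Python sketch of this loop is given below. It assumes the threshold is n (predict 1 when $w \cdot x \ge n$), doubles the weights of active variables after a mistake on a positive example and halves them after a mistake on a negative example; the function names and the way the example stream is passed in are illustrative choices.

```python
def winnow_predict(weights, x):
    """Predict 1 when the total weight of the active variables reaches n."""
    n = len(weights)
    return 1 if sum(w for w, xi in zip(weights, x) if xi == 1) >= n else 0


def winnow_train(stream, n):
    """Run simple Winnow over (x, label) pairs; return final weights and mistake count."""
    weights = [1.0] * n
    mistakes = 0
    for x, label in stream:
        if winnow_predict(weights, x) != label:
            mistakes += 1
            # Promote active variables on a missed positive, demote them on a missed negative.
            factor = 2.0 if label == 1 else 0.5
            weights = [w * factor if xi == 1 else w for w, xi in zip(weights, x)]
    return weights, mistakes


# Target: x1 OR x3 over n = 4 variables (indices 0 and 2 in the code).
examples = [((1, 0, 0, 0), 1), ((0, 1, 0, 1), 0), ((0, 0, 1, 0), 1), ((0, 0, 0, 0), 0)]
weights, mistakes = winnow_train(examples * 10, n=4)
```

Unlike Weighted Majority, the weights here belong to input variables rather than experts, and they are changed only in rounds where the algorithm itself makes a mistake.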

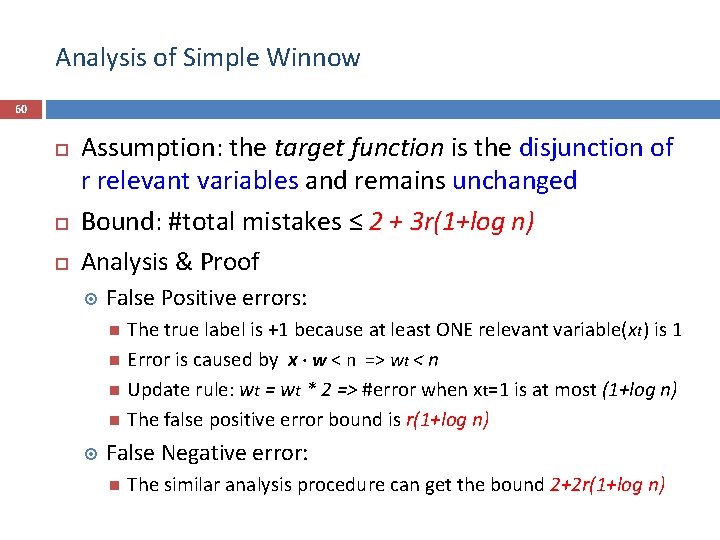

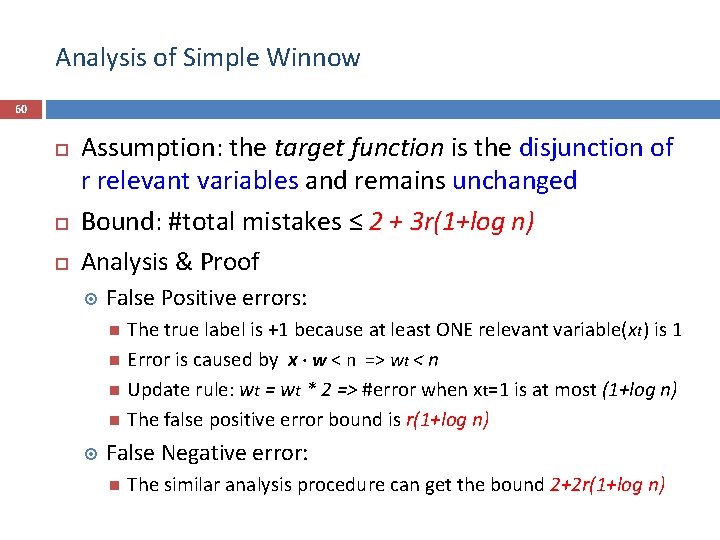

Analysis of Simple Winnow 60

Assumption: the target function is the disjunction of r relevant variables and remains unchanged.

Bound: # total mistakes ≤ $2 + 3r(1 + \log n)$

Analysis & proof:

- False positive errors (mistakes on positive examples):
  - The true label is +1 because at least ONE relevant variable ($x_t$) is 1.
  - The error is caused by $x \cdot w < n$, which implies $w_t < n$.
  - Update rule: $w_t = 2 w_t$, so the number of such errors when $x_t = 1$ is at most $(1 + \log n)$.
  - The false positive error bound is therefore $r(1 + \log n)$.
- False negative errors (mistakes on negative examples): a similar analysis gives the bound $2 + 2r(1 + \log n)$.

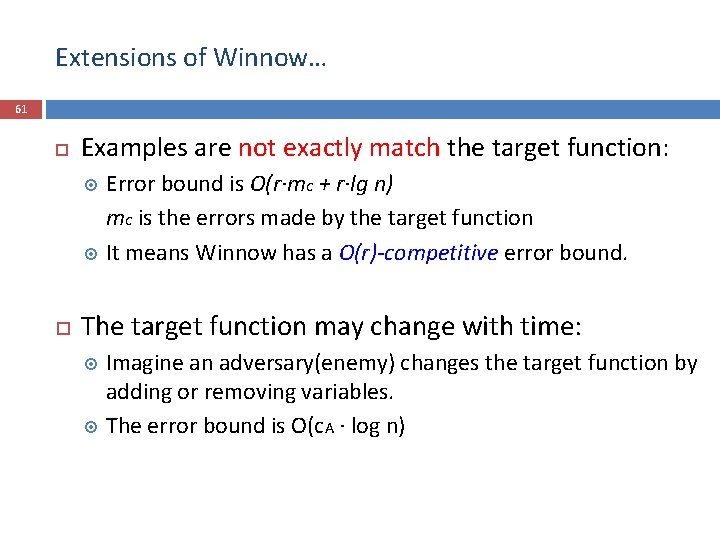

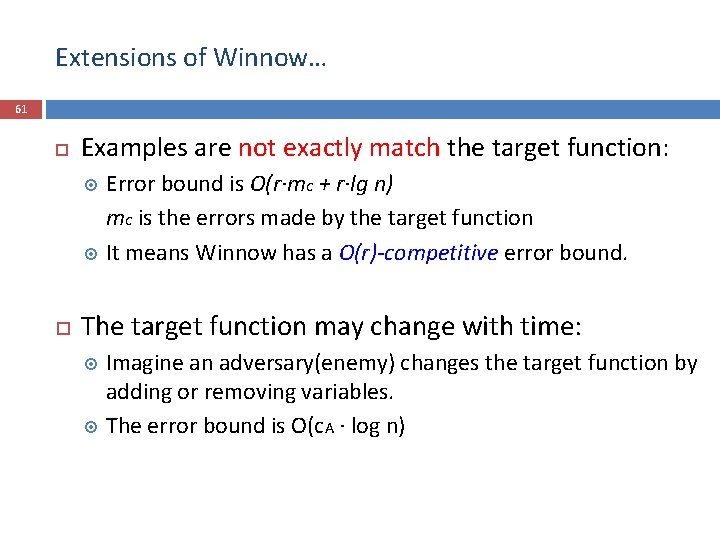

Extensions of Winnow… 61

- The examples do not exactly match the target function:
  - The error bound is $O(r \cdot m_c + r \cdot \lg n)$, where $m_c$ is the number of errors made by the target function.
  - It means Winnow has an O(r)-competitive error bound.
- The target function may change with time:
  - Imagine an adversary (enemy) changes the target function by adding or removing variables.
  - The error bound is $O(c_A \cdot \log n)$.

Extensions of Winnow… 62 The feature variable is continuous rather than boolean Theorem: if the target function is embedding-closed, then Winnow can also be learned in the Infinite-Attribute model By adding some randomness in the algorithm, the bound can be further improved.

PAC versus MBM (Mistake Bound Model) 63

- Intuitively, MBM is stronger than PAC:
  - MBM gives a deterministic upper bound on the number of errors.
  - PAC bounds the error only with a given probability.
- A natural question: if we know A learns some concept class C in the MBM, can A learn the same C in the PAC model?
- Answer: Of course! We can construct $A_{PAC}$ in a principled way [1].

Conclusion 64

- PAC (Probably Approximately Correct)
  - Easier than the MBM model, since examples are restricted to come from a distribution.
  - Strong PAC and weak PAC
  - Error reduction and boosting
- MBM (Mistake Bound Model)
  - Stronger bound than PAC: an exact upper bound on errors
  - 2 representative algorithms:
    - Weighted Majority: for online expert learning
    - Winnow: for online linear classifier learning
- Relationship between PAC and MBM

Q & A 65


References 66

[1] Tel Aviv University’s Machine Lecture: http://www.cs.tau.ac.il/~mansour/ml-course-10/scribe4.pdf

Machine Learning Theory

- http://www.cs.ucla.edu/~jenn/courses/F11.html
- http://www.staff.science.uu.nl/~leeuw112/soia.ML.pdf

PAC

- www.cs.cmu.edu/~avrim/Talks/FOCS03/tutorial.ppt
- http://www.autonlab.org/tutorials/pac05.pdf
- http://www.cis.temple.edu/~giorgio/cis587/readings/pac.html

Occam’s Razor

- http://www.cs.iastate.edu/~honavar/occam.pdf

The Weighted Majority Algorithm

- http://www.mit.edu/~9.520/spring08/Classes/online_learning_2008.pdf
- http://users.soe.ucsc.edu/~manfred/pubs/C50.pdf
- http://users.soe.ucsc.edu/~manfred/pubs/J24.pdf

The Winnow Algorithm

- http://www.cc.gatech.edu/~ninamf/ML11/lect0906.pdf
- http://stat.wharton.upenn.edu/~skakade/courses/stat928/lectures/lecture19.pdf
- http://www.cc.gatech.edu/~ninamf/ML10/lect0121.pdf

67 Supplementary Slides

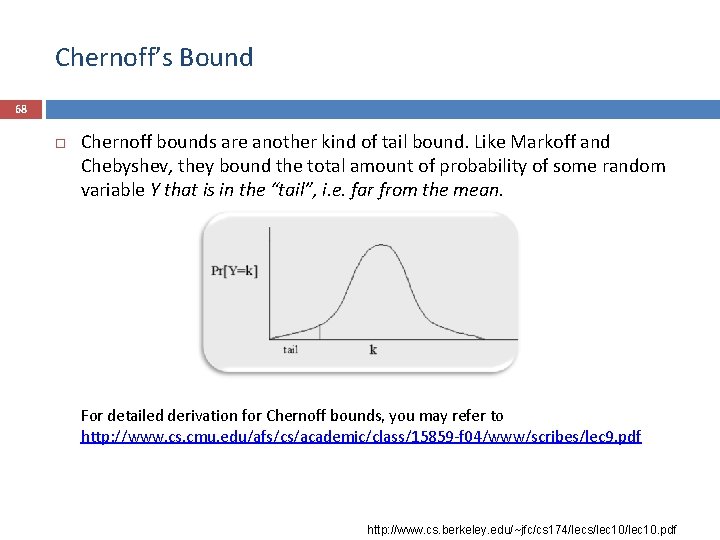

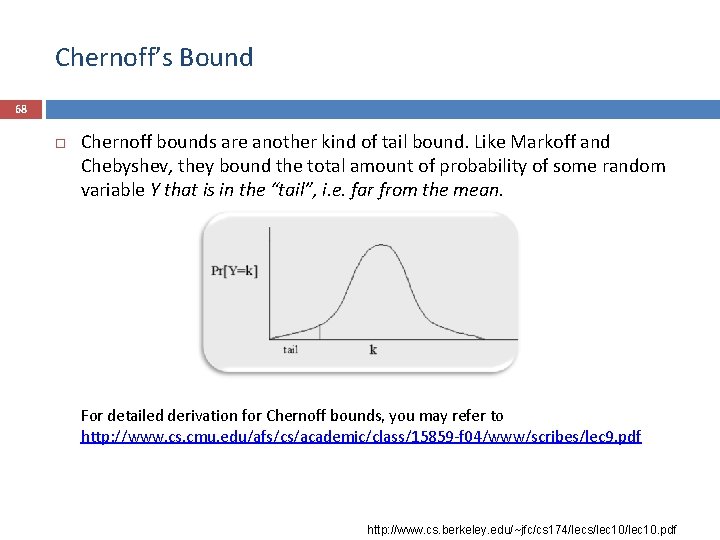

Chernoff’s Bound 68

Chernoff bounds are another kind of tail bound. Like the Markov and Chebyshev bounds, they bound the total amount of probability of some random variable Y that is in the “tail”, i.e. far from the mean.

For a detailed derivation of Chernoff bounds, you may refer to:

- http://www.cs.cmu.edu/afs/cs/academic/class/15859-f04/www/scribes/lec9.pdf
- http://www.cs.berkeley.edu/~jfc/cs174/lecs/lec10.pdf

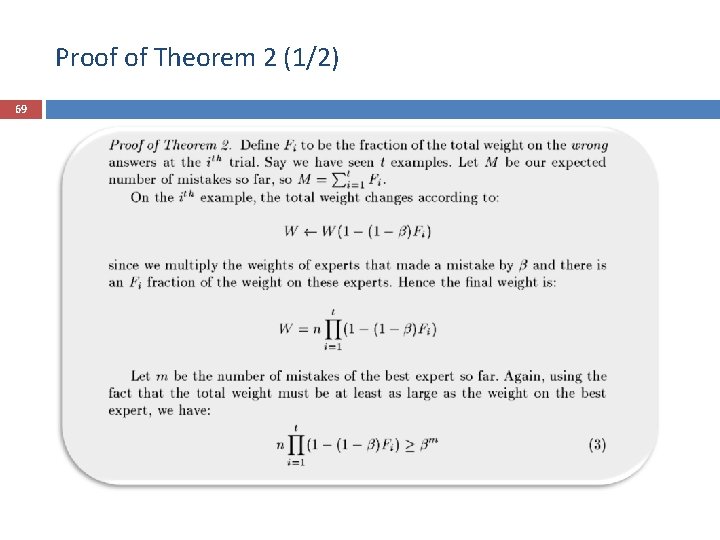

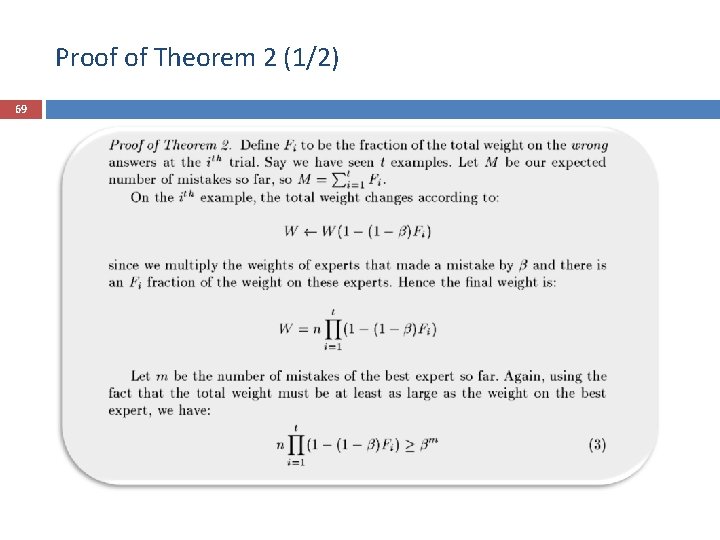

Proof of Theorem 2 (1/2) 69

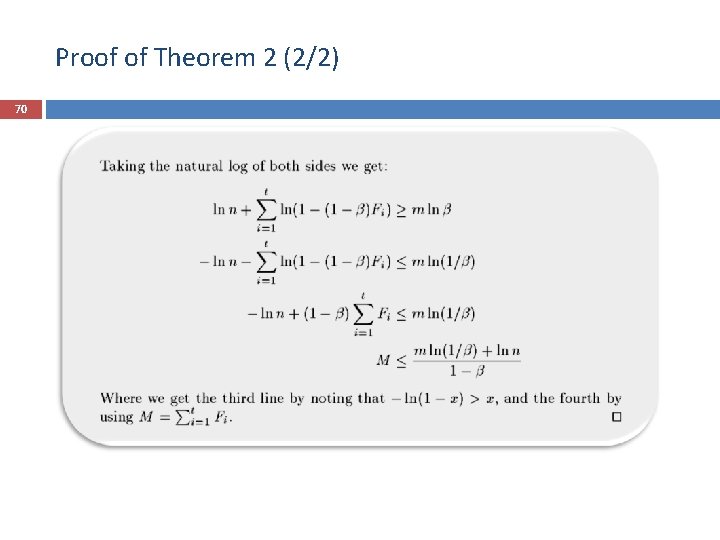

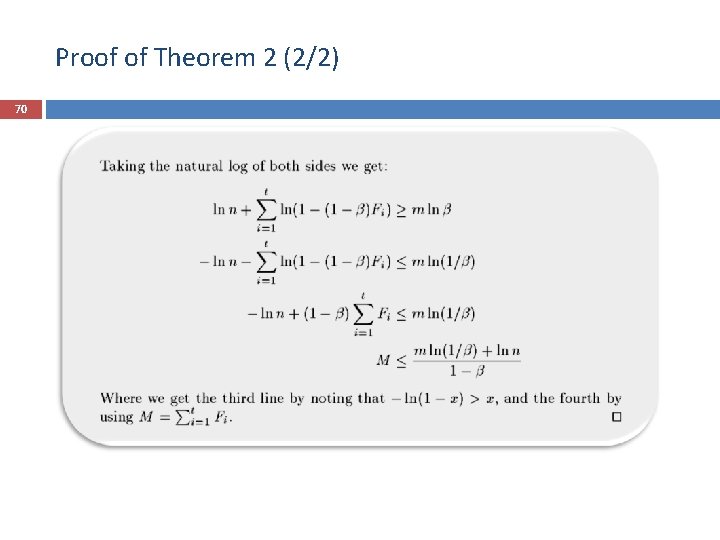

Proof of Theorem 2 (2/2) 70

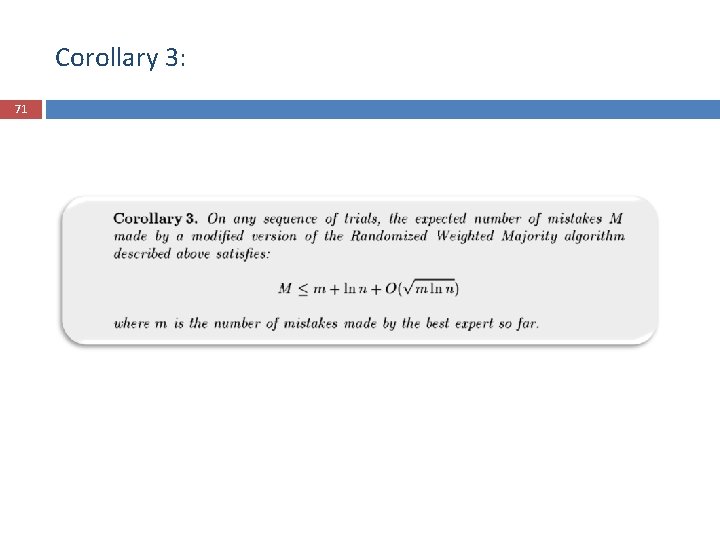

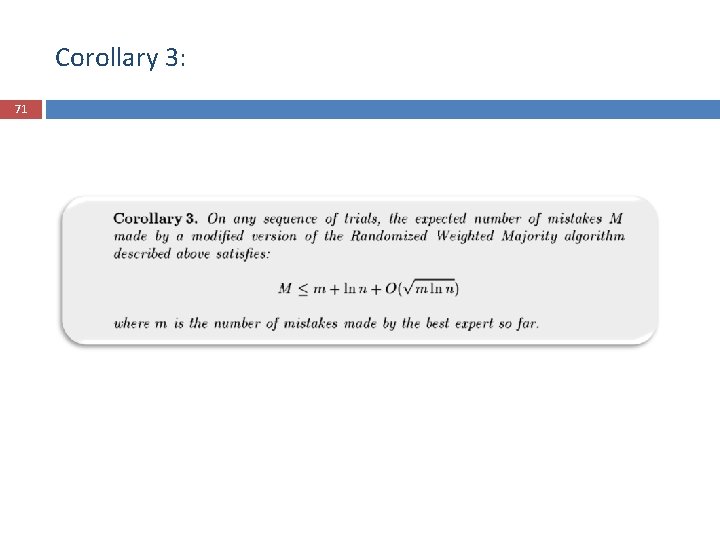

Corollary 3: 71