
Machine Learning: A Quick Look

Sources:
• Artificial Intelligence, Russell & Norvig
• Artificial Intelligence, Luger

By: Héctor Muñoz-Avila

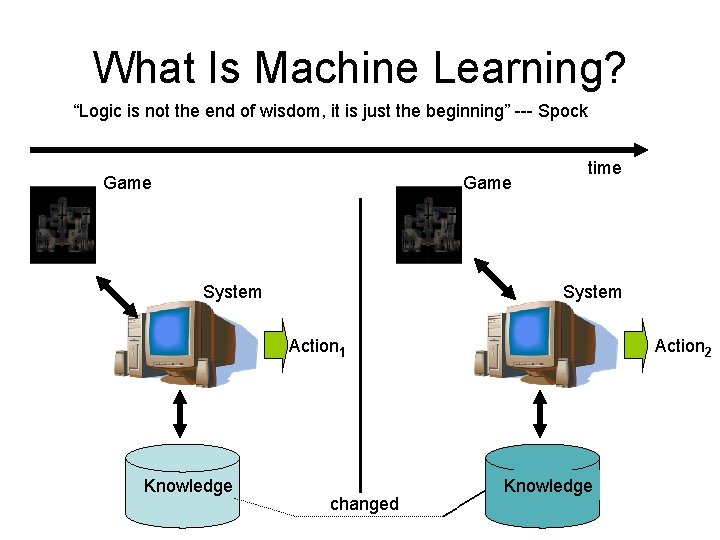

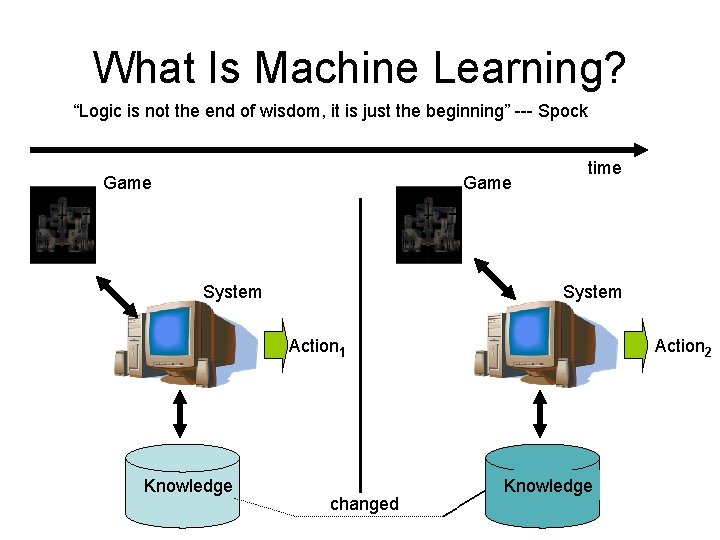

What Is Machine Learning?

“Logic is not the end of wisdom, it is just the beginning” (Spock)

[Diagram: over time, a game system with its knowledge produces Action 1; once the knowledge has changed, the system produces Action 2.]

Learning: The Big Picture

• Two forms of learning:
  – Supervised: the input and the output of the learning component can be perceived (for example, an experienced player acting as a friendly teacher)
  – Unsupervised: there is no hint about the correct answers of the learning component (for example, finding clusters in data)

Offline vs. Online Learning

• Online: during gameplay
  – Adapt to player tactics
  – Avoid repetition of mistakes
  – Requirements: computationally cheap, effective, robust, fast learning (Spronck 2004)
• Offline: between the end of a game and the start of the next
  – Devise new tactics
  – Discover exploits

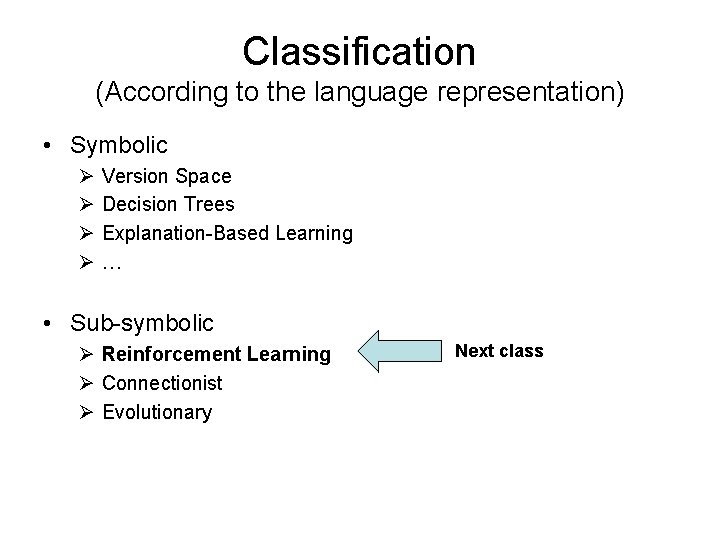

Classification (According to the language representation)

• Symbolic
  – Version Spaces
  – Decision Trees
  – Explanation-Based Learning
  – …
• Sub-symbolic
  – Reinforcement Learning
  – Connectionist
  – Evolutionary

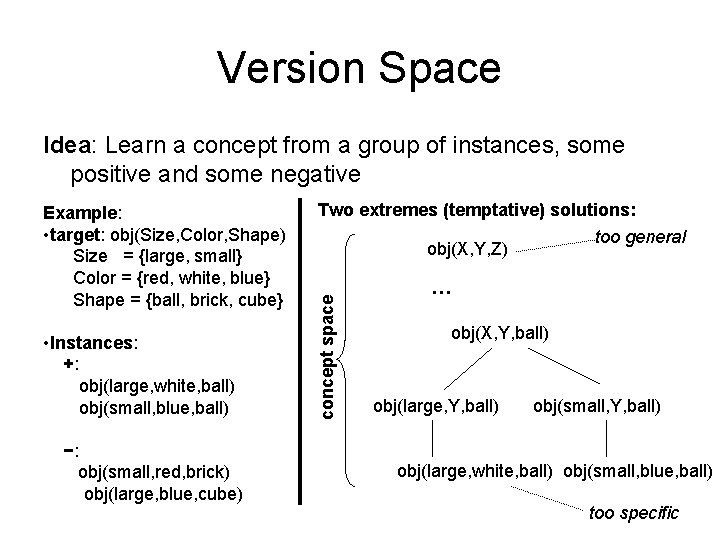

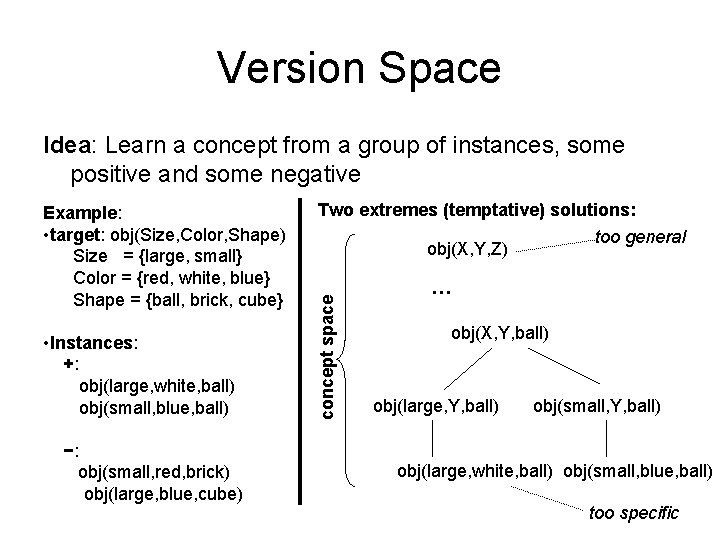

Version Space

Idea: learn a concept from a group of instances, some positive and some negative.

Example:
• Target: obj(Size, Color, Shape)
  – Size = {large, small}
  – Color = {red, white, blue}
  – Shape = {ball, brick, cube}
• Instances:
  – Positive (+): obj(large, white, ball), obj(small, blue, ball)
  – Negative (−): obj(small, red, brick), obj(large, blue, cube)

Two extreme (tentative) solutions bound the concept space, listed here from too general to too specific:
• obj(X, Y, Z) (too general)
• …
• obj(X, Y, ball)
• obj(large, Y, ball), obj(small, Y, ball)
• obj(large, white, ball), obj(small, blue, ball)
• … (too specific)

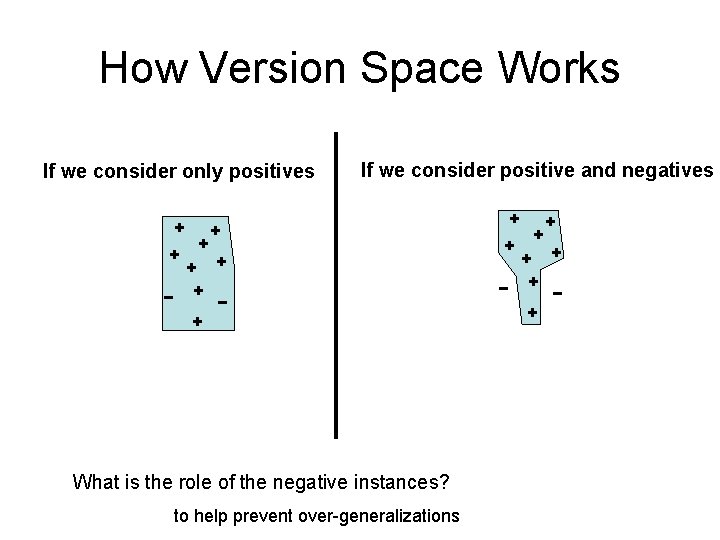

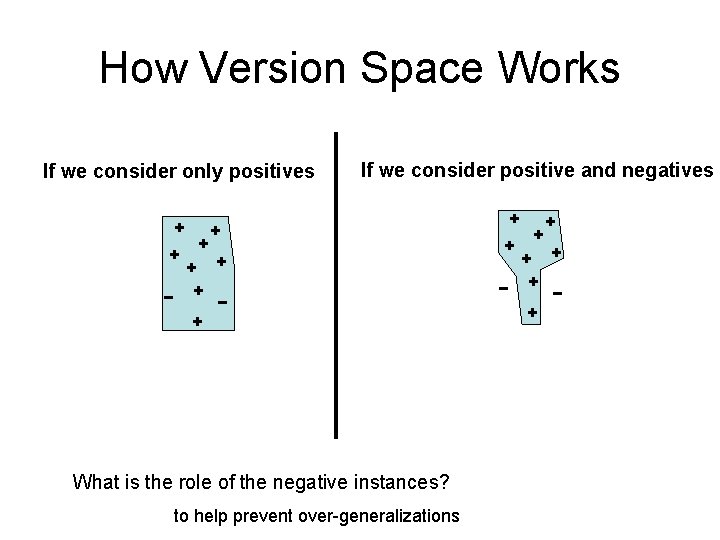

How Version Space Works

• If we consider only positives: [figure: positive (+) instances only]
• If we consider positives and negatives: [figure: positive (+) instances together with a negative (−) instance]

What is the role of the negative instances? To help prevent over-generalizations.
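The effect of the negative instances can be checked on the obj(Size, Color, Shape) example with a brute-force sketch. It assumes that hypotheses are purely conjunctive, that `"?"` stands for an unbound variable such as X, Y, or Z, and that enumerating every hypothesis is acceptable for a space this small. The names `covers` and `consistent` are illustrative, not taken from the slides.

```python
from itertools import product

SIZES = ["large", "small"]
COLORS = ["red", "white", "blue"]
SHAPES = ["ball", "brick", "cube"]
ANY = "?"  # plays the role of an unbound variable (X, Y, Z on the slide)

positives = [("large", "white", "ball"), ("small", "blue", "ball")]
negatives = [("small", "red", "brick"), ("large", "blue", "cube")]

def covers(hypothesis, instance):
    """True if every position is either a wildcard or an exact match."""
    return all(h == ANY or h == v for h, v in zip(hypothesis, instance))

def consistent(hypothesis, pos, neg):
    """A hypothesis must cover all positives and none of the negatives."""
    return (all(covers(hypothesis, p) for p in pos)
            and not any(covers(hypothesis, n) for n in neg))

# Every conjunctive hypothesis: each slot is a concrete value or a wildcard.
all_hypotheses = list(product(SIZES + [ANY], COLORS + [ANY], SHAPES + [ANY]))

only_positives = [h for h in all_hypotheses if consistent(h, positives, [])]
version_space = [h for h in all_hypotheses if consistent(h, positives, negatives)]

print(only_positives)  # includes the over-general obj(X, Y, Z)
print(version_space)   # the negatives rule obj(X, Y, Z) out
```

With positives alone, both obj(X, Y, ball) and obj(X, Y, Z) survive; adding the two negatives eliminates obj(X, Y, Z), which is the over-generalization the slide refers to.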

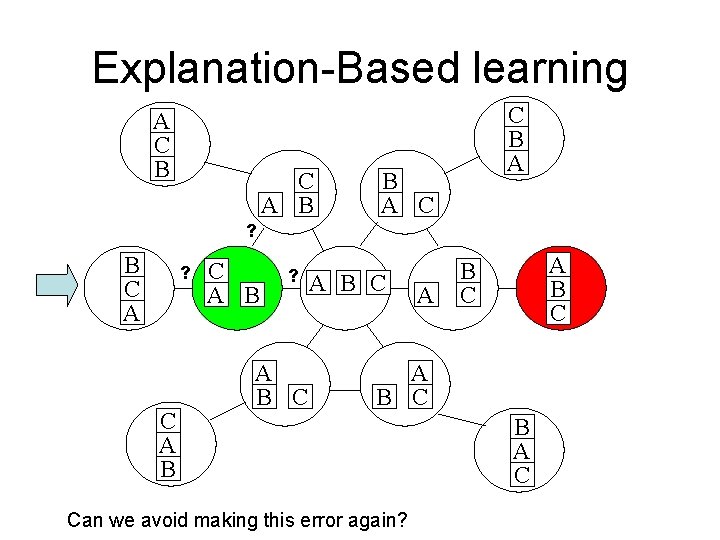

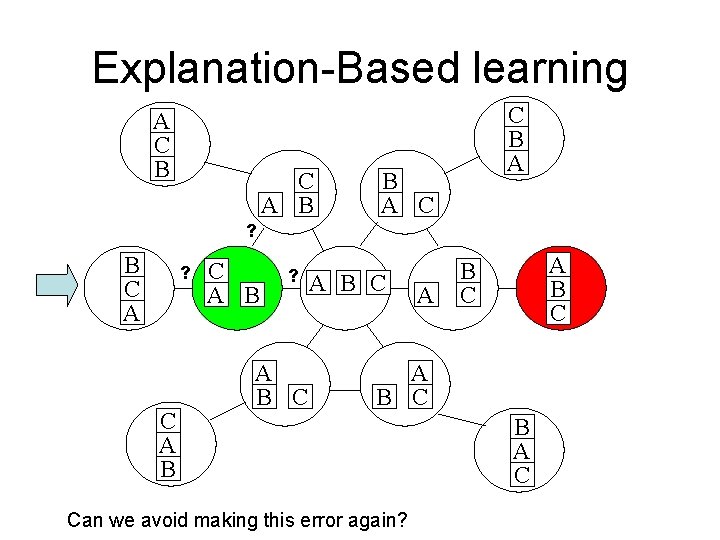

Explanation-Based learning

[Figure: a blocks-world search over stackings of blocks A, B, and C; several of the moves tried, marked “?”, turn out to be errors.]

Can we avoid making this error again?

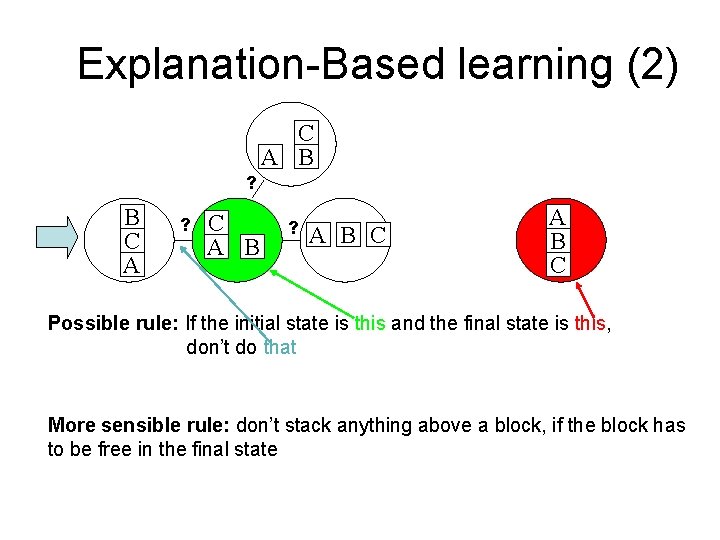

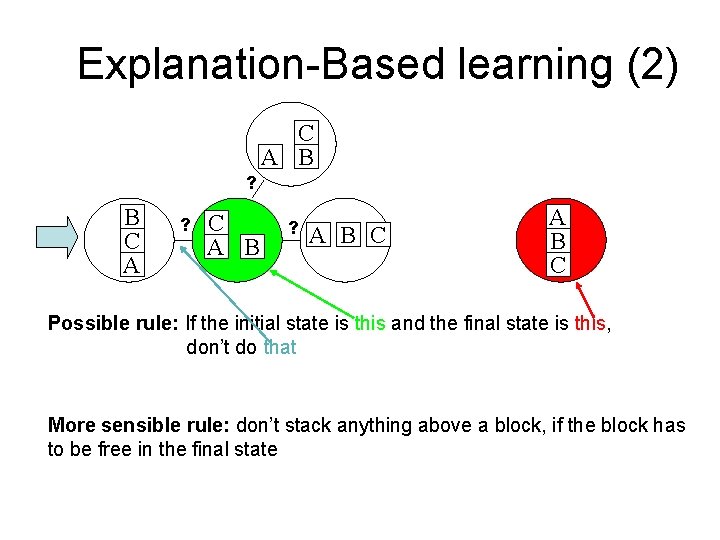

Explanation-Based learning (2)

[Figure: blocks-world configurations of A, B, and C, with the erroneous moves marked “?”.]

• Possible rule: if the initial state is this and the final state is this, don’t do that
• More sensible rule: don’t stack anything on top of a block if that block has to be free in the final state
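The more sensible rule can be written as a move filter. This is only a literal encoding of the rule under an assumed representation: the goal is a dictionary mapping each block to what it rests on, and a block "has to be free" if nothing rests on it in the goal. The function names and the goal configuration are hypothetical.

```python
def must_be_clear(block, goal_on):
    """A block has to be free in the final state if nothing rests on it there."""
    return block not in goal_on.values()

def move_allowed(destination, goal_on):
    """Learned rule: never stack a block onto one that must end up free."""
    if destination == "table":
        return True  # putting a block on the table never violates the rule
    return not must_be_clear(destination, goal_on)

# Hypothetical goal: A on B, B on C, C on the table, so only A must be free.
goal_on = {"A": "B", "B": "C", "C": "table"}

print(move_allowed("A", goal_on))      # stacking onto A is rejected
print(move_allowed("C", goal_on))      # stacking onto C is allowed
print(move_allowed("table", goal_on))  # moving to the table is allowed
```

Unlike the first rule, which only matches one specific pair of initial and final states, this check applies to any goal configuration expressed in the same form.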

Motivation #1: Analysis Tool

• Suppose that a gaming company has a database of runs with a beta version of the game: lots of data
• How can that company’s developers use this data to figure out good strategies for their AI?

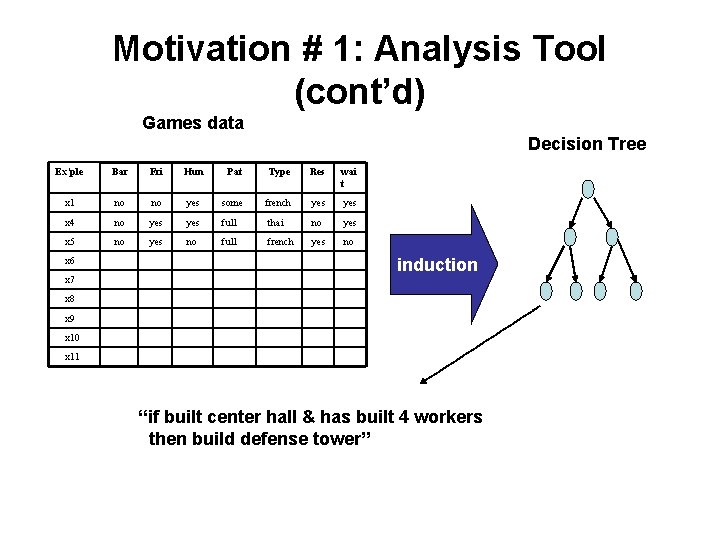

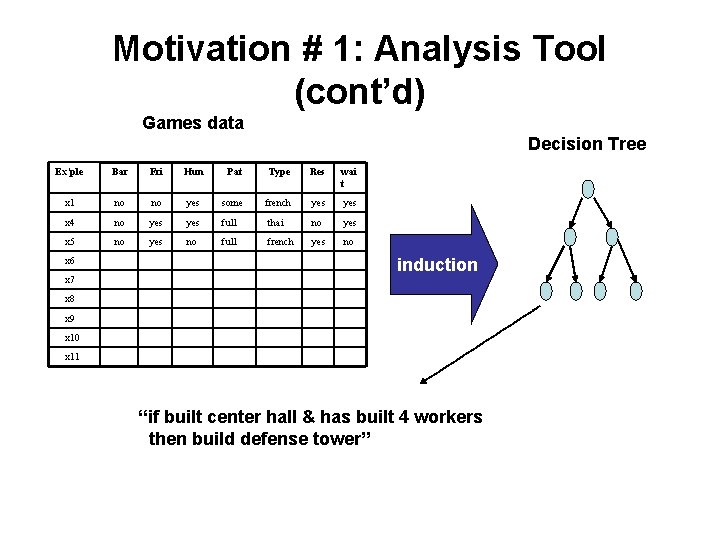

Motivation #1: Analysis Tool (cont’d)

[Figure: games data, shown as a table of examples (x1 to x11) with columns Ex’ple, Bar, Fri, Hun, Pat, Type, Res, and wait, is turned by induction into a decision tree.]

Example of a resulting rule: “if built center hall & has built 4 workers then build defense tower”

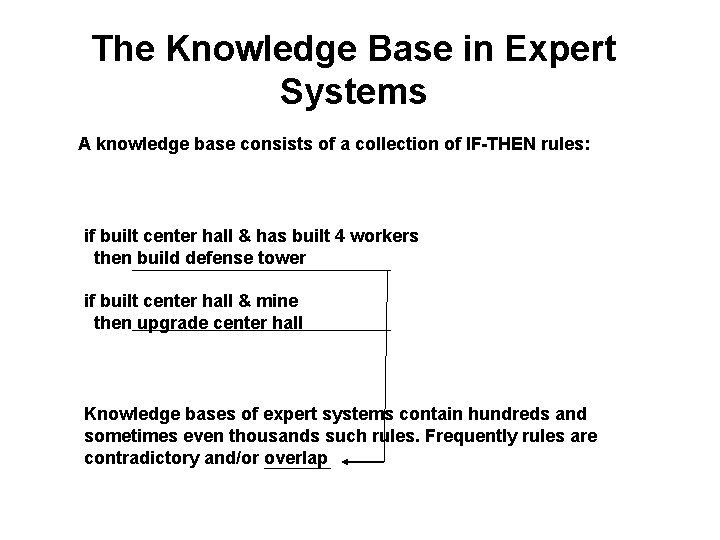

The Knowledge Base in Expert Systems

A knowledge base consists of a collection of IF-THEN rules:
• if built center hall & has built 4 workers then build defense tower
• if built center hall & mine then upgrade center hall

Knowledge bases of expert systems contain hundreds and sometimes even thousands of such rules. Frequently, rules contradict each other and/or overlap.
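A minimal sketch of such a knowledge base, assuming each rule is a set of conditions paired with an action and that a rule fires when all of its conditions are among the known facts. The fact strings mirror the two rules above; the function name `fire_rules` is illustrative.

```python
# Each rule: (set of conditions that must all hold, action to take).
RULES = [
    ({"built center hall", "has built 4 workers"}, "build defense tower"),
    ({"built center hall", "mine"}, "upgrade center hall"),
]

def fire_rules(facts, rules=RULES):
    """Return the action of every rule whose conditions are a subset of the facts."""
    return [action for conditions, action in rules if conditions <= facts]

print(fire_rules({"built center hall", "has built 4 workers"}))
print(fire_rules({"built center hall", "has built 4 workers", "mine"}))
```

In the second call both rules fire at once. This is the kind of overlap mentioned above, and a real system would need some policy for choosing among the competing actions.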

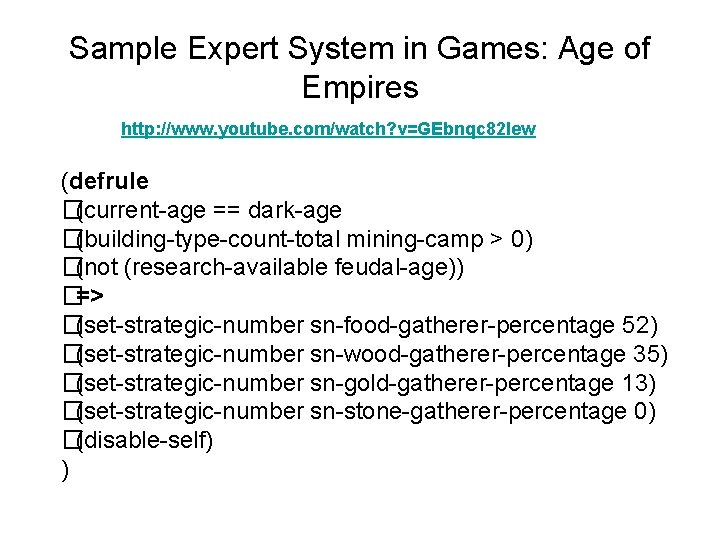

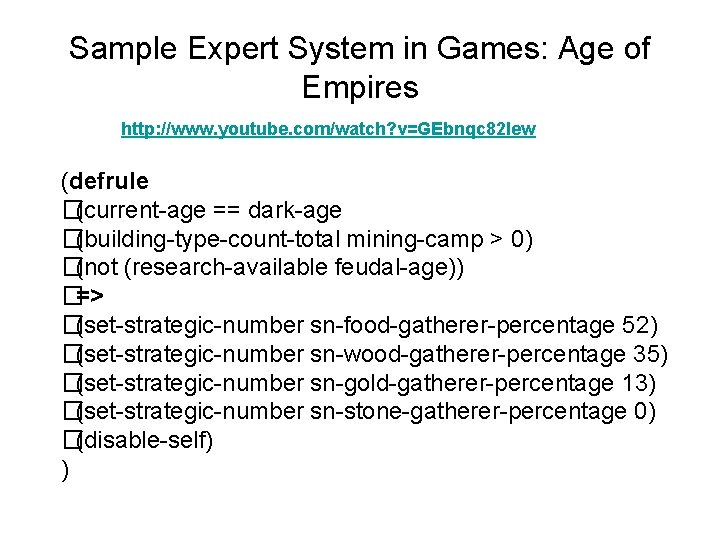

Sample Expert System in Games: Age of Empires

http://www.youtube.com/watch?v=GEbnqc82lew

    (defrule
      (current-age == dark-age)
      (building-type-count-total mining-camp > 0)
      (not (research-available feudal-age))
    =>
      (set-strategic-number sn-food-gatherer-percentage 52)
      (set-strategic-number sn-wood-gatherer-percentage 35)
      (set-strategic-number sn-gold-gatherer-percentage 13)
      (set-strategic-number sn-stone-gatherer-percentage 0)
      (disable-self)
    )

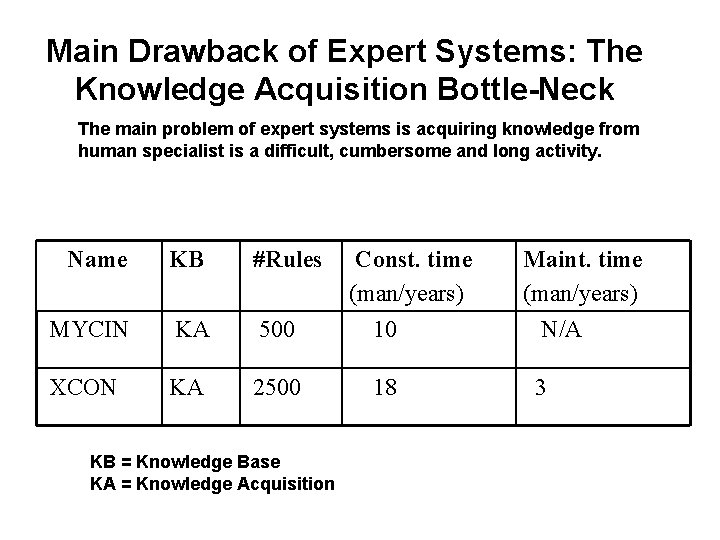

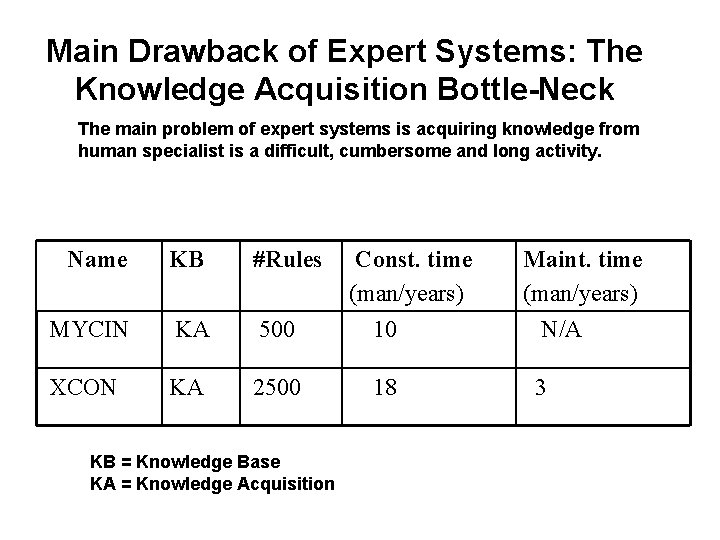

Main Drawback of Expert Systems: The Knowledge Acquisition Bottleneck

The main problem of expert systems is that acquiring knowledge from human specialists is a difficult, cumbersome, and lengthy activity.

| Name | KB | #Rules | Const. time (man-years) | Maint. time (man-years) |
|---|---|---|---|---|
| MYCIN | KA | 500 | 10 | N/A |
| XCON | KA | 2500 | 18 | 3 |

KB = Knowledge Base; KA = Knowledge Acquisition

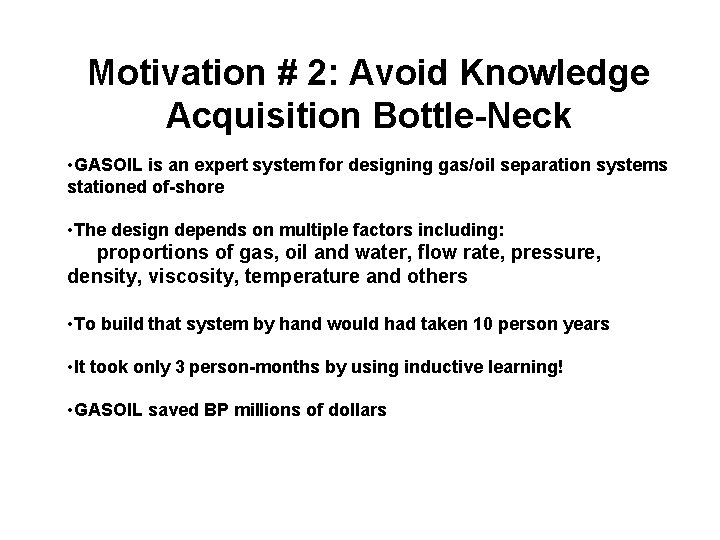

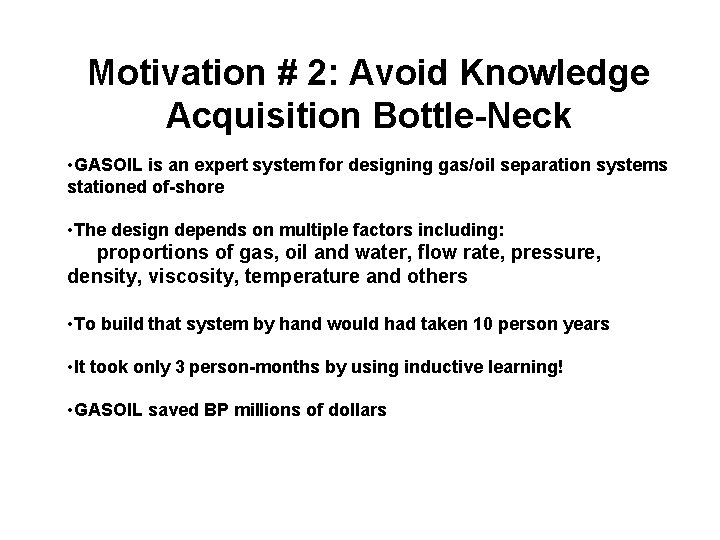

Motivation #2: Avoid the Knowledge Acquisition Bottleneck

• GASOIL is an expert system for designing gas/oil separation systems stationed offshore
• The design depends on multiple factors, including proportions of gas, oil, and water, flow rate, pressure, density, viscosity, temperature, and others
• Building that system by hand would have taken 10 person-years
• It took only 3 person-months using inductive learning!
• GASOIL saved BP millions of dollars

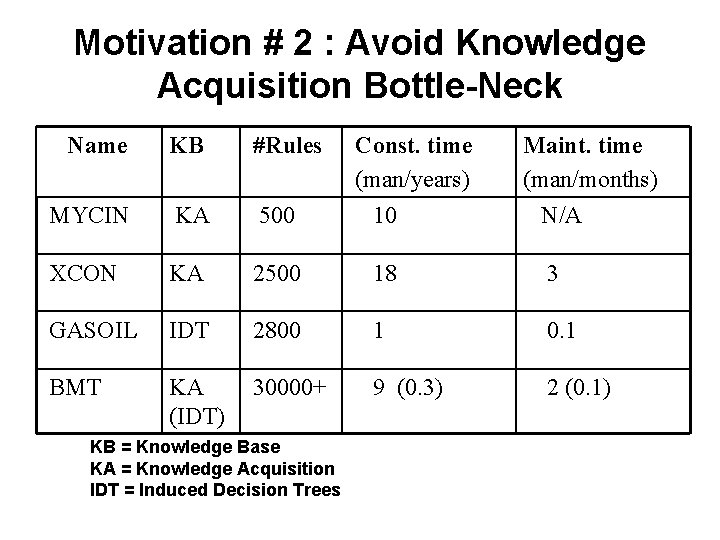

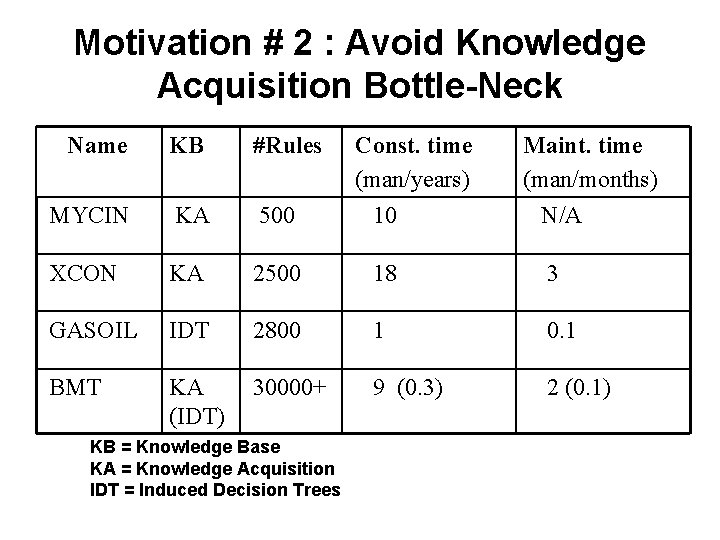

Motivation #2: Avoid the Knowledge Acquisition Bottleneck

| Name | KB | #Rules | Const. time (man-years) | Maint. time (man-months) |
|---|---|---|---|---|
| MYCIN | KA | 500 | 10 | N/A |
| XCON | KA | 2500 | 18 | 3 |
| GASOIL | IDT | 2800 | 1 | 0.1 |
| BMT | KA (IDT) | 30000+ | 9 (0.3) | 2 (0.1) |

KB = Knowledge Base; KA = Knowledge Acquisition; IDT = Induced Decision Trees

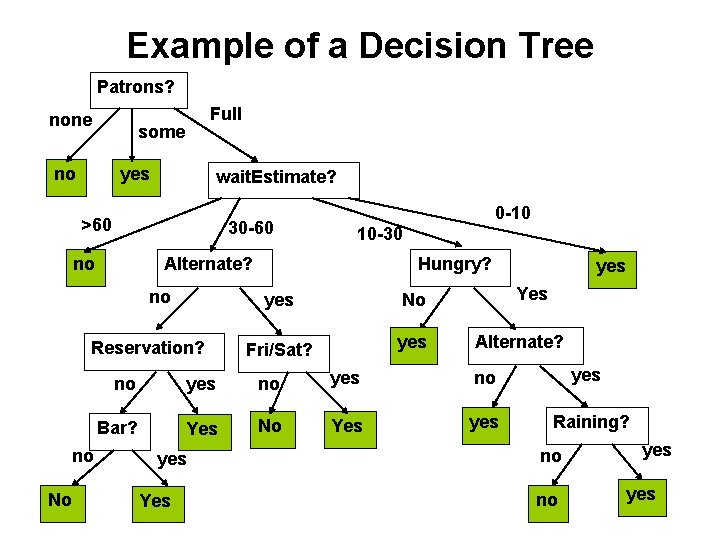

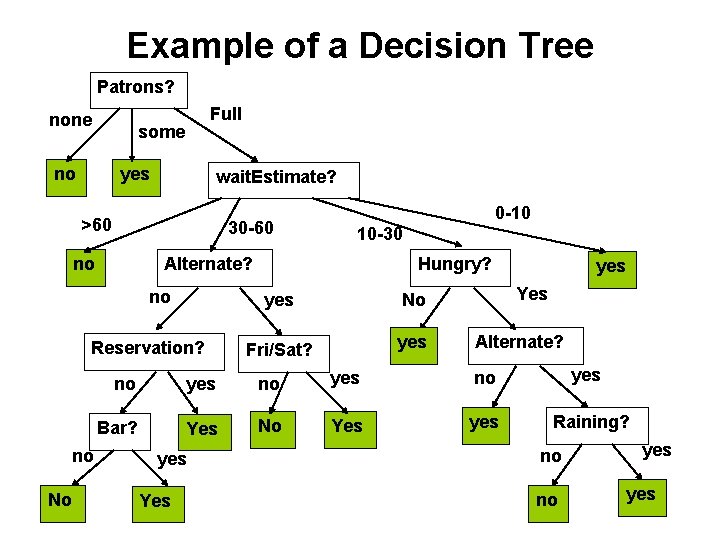

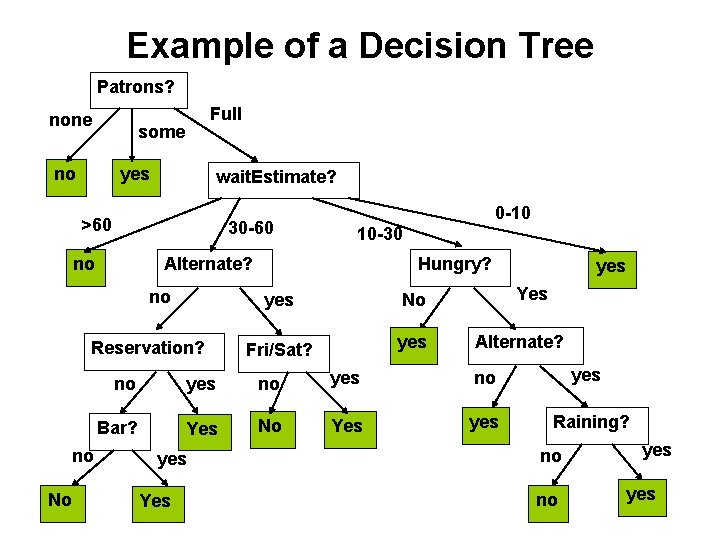

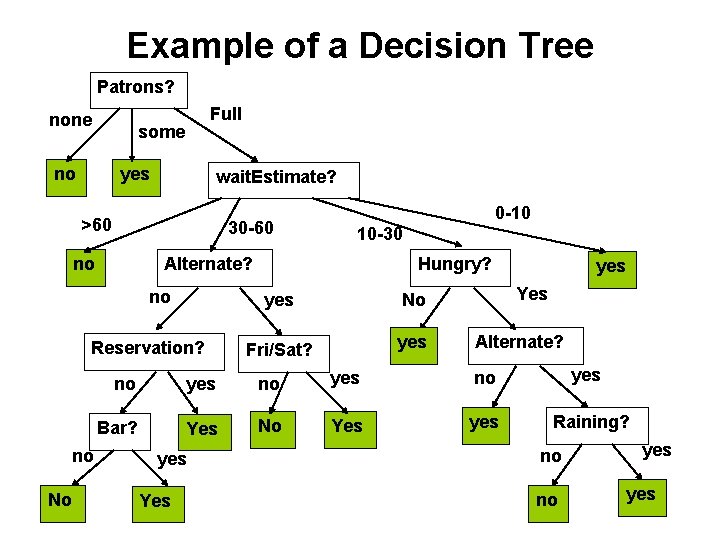

Example of a Decision Tree

    Patrons?
      none → no
      some → yes
      full → WaitEstimate?
        >60 → no
        30-60 → Alternate?
          no → Reservation?
            no → Bar?
              no → no
              yes → yes
            yes → yes
          yes → Fri/Sat?
            no → no
            yes → yes
        10-30 → Hungry?
          no → yes
          yes → Alternate?
            no → yes
            yes → Raining?
              no → no
              yes → yes
        0-10 → yes

Definition of a Decision Tree

A decision tree is a tree where:
• The leaves are labeled with classifications (if the classifications are “yes” or “no”, the tree is called a Boolean tree)
• The non-leaf nodes are labeled with attributes
• The arcs out of a node labeled with an attribute A are labeled with the possible values of the attribute A
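The definition maps directly onto a small data structure. The sketch below is one possible encoding, not a prescribed one: `Leaf` holds a classification, `Node` holds an attribute plus one branch per attribute value, and `classify` follows the arcs. The tree it builds is a simplified, hypothetical fragment of the restaurant example.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

@dataclass
class Leaf:
    label: str  # the classification, e.g. "yes" or "no"

@dataclass
class Node:
    attribute: str  # the attribute tested at this node
    branches: Dict[str, "Tree"] = field(default_factory=dict)  # one arc per value

Tree = Union[Leaf, Node]

def classify(tree, example):
    """Follow the arc matching the example's value until a leaf is reached."""
    while isinstance(tree, Node):
        tree = tree.branches[example[tree.attribute]]
    return tree.label

# Simplified, hypothetical Boolean tree (not the full restaurant tree).
tree = Node("Patrons", {
    "none": Leaf("no"),
    "some": Leaf("yes"),
    "full": Node("Hungry", {"no": Leaf("no"), "yes": Leaf("yes")}),
})

print(classify(tree, {"Patrons": "some"}))
print(classify(tree, {"Patrons": "full", "Hungry": "no"}))
```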

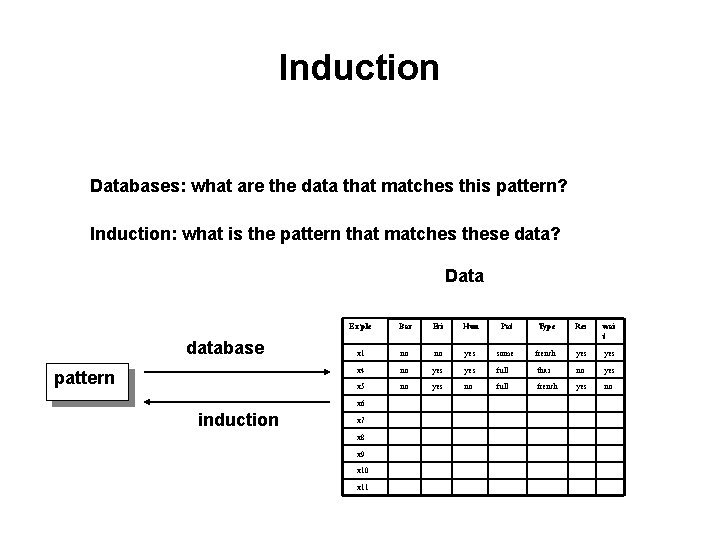

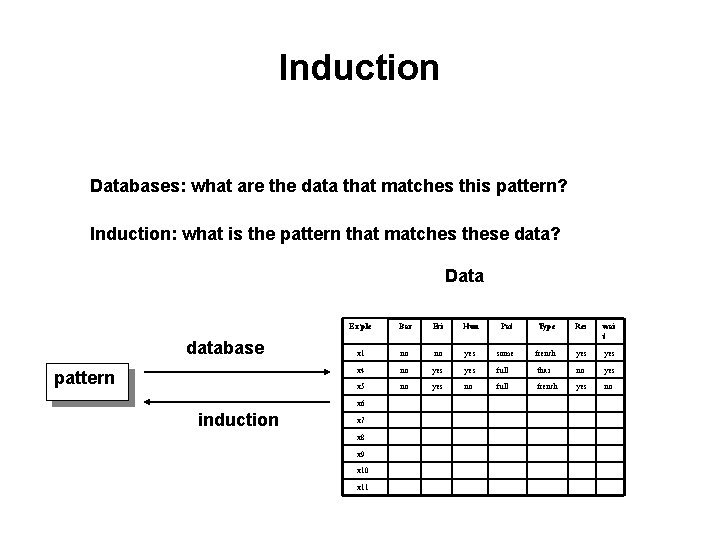

Induction

• Databases: what are the data that match this pattern?
• Induction: what is the pattern that matches these data?

[Figure: a database query goes from a pattern to the matching data; induction goes from the data (a table of examples x1 to x11 with columns Ex’ple, Bar, Fri, Hun, Pat, Type, Res, and wait) to a pattern.]

Induction of Decision Trees

• Objective: find a concise decision tree that agrees with the examples
• The guiding principle we are going to use is Ockham’s razor: the most likely hypothesis is the simplest one that is consistent with the examples
• Problem: finding the smallest decision tree is NP-complete
• However, with simple heuristics we can find a small decision tree (an approximation)

Induction of Decision Trees: Algorithm

1. Initially, all examples are in the same group
2. Select the attribute that makes the most difference (i.e., for each of the values of the attribute, most of the examples are either positive or negative)
3. Group the examples according to each value of the selected attribute
4. Repeat from step 2 within each group (recursive call)
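The steps above can be sketched as a short recursive function. The slide does not say how to measure "makes the most difference"; this sketch assumes the common choice of minimizing the weighted entropy of the resulting groups (equivalently, maximizing information gain). The six examples are hypothetical, and the tree is returned as nested `(attribute, {value: subtree})` tuples with plain strings as leaves.

```python
from collections import Counter
from math import log2

def entropy(examples):
    """Entropy of the class labels in a group of (features, label) pairs."""
    counts = Counter(label for _, label in examples)
    total = len(examples)
    return -sum(c / total * log2(c / total) for c in counts.values())

def split(examples, attribute):
    """Step 3: group the examples by their value for the attribute."""
    groups = {}
    for features, label in examples:
        groups.setdefault(features[attribute], []).append((features, label))
    return groups

def remainder(examples, attribute):
    """Weighted entropy left after splitting on the attribute (lower is better)."""
    return sum(len(g) / len(examples) * entropy(g)
               for g in split(examples, attribute).values())

def induce(examples, attributes):
    labels = [label for _, label in examples]
    # Stop when the group is pure or no attributes remain: emit a leaf.
    if len(set(labels)) == 1 or not attributes:
        return Counter(labels).most_common(1)[0][0]
    best = min(attributes, key=lambda a: remainder(examples, a))  # step 2
    rest = [a for a in attributes if a != best]
    return (best, {value: induce(group, rest)                     # step 4
                   for value, group in split(examples, best).items()})

# Hypothetical training data, loosely modeled on the restaurant domain.
examples = [
    ({"Patrons": "some", "Hungry": "yes"}, "yes"),
    ({"Patrons": "some", "Hungry": "no"}, "yes"),
    ({"Patrons": "none", "Hungry": "no"}, "no"),
    ({"Patrons": "none", "Hungry": "yes"}, "no"),
    ({"Patrons": "full", "Hungry": "yes"}, "yes"),
    ({"Patrons": "full", "Hungry": "no"}, "no"),
]
tree = induce(examples, ["Patrons", "Hungry"])
print(tree)
```

On this data Patrons is selected first, because two of its three groups are already pure, and Hungry is only needed to separate the examples in the "full" group.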

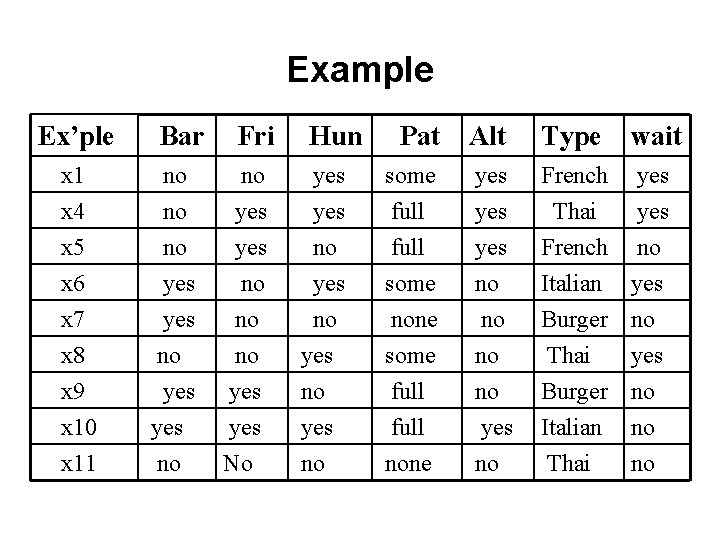

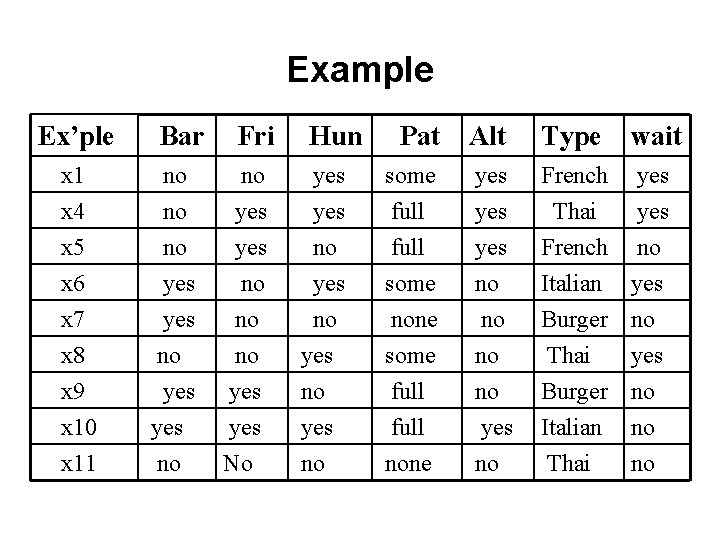

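Example

| Ex’ple | Bar | Fri | Hun | Pat | Alt | Type | wait |
|---|---|---|---|---|---|---|---|
| x1 | no | no | yes | some | yes | French | yes |
| x4 | no | yes | yes | full | yes | Thai | yes |
| x5 | no | yes | no | full | yes | French | no |
| x6 | yes | no | yes | some | no | Italian | yes |
| x7 | yes | no | no | none | no | Burger | no |
| x8 | no | no | yes | some | no | Thai | yes |
| x9 | yes | yes | no | full | no | Burger | no |
| x10 | yes | yes | yes | full | yes | Italian | no |
| x11 | no | no | no | none | no | Thai | no |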

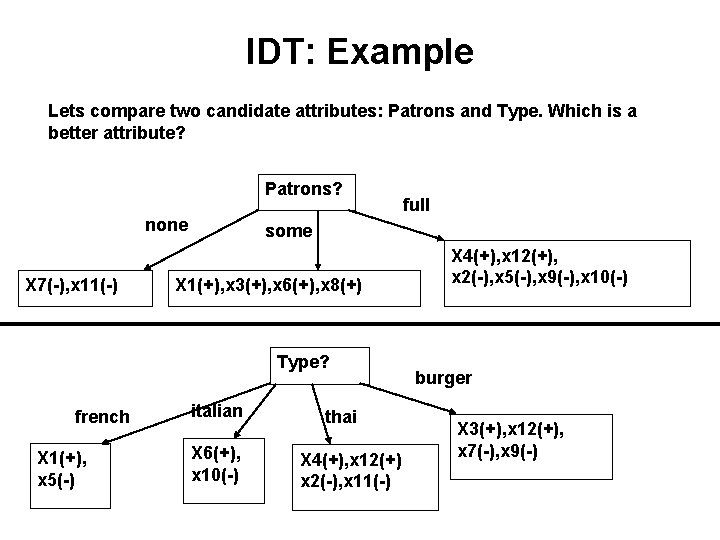

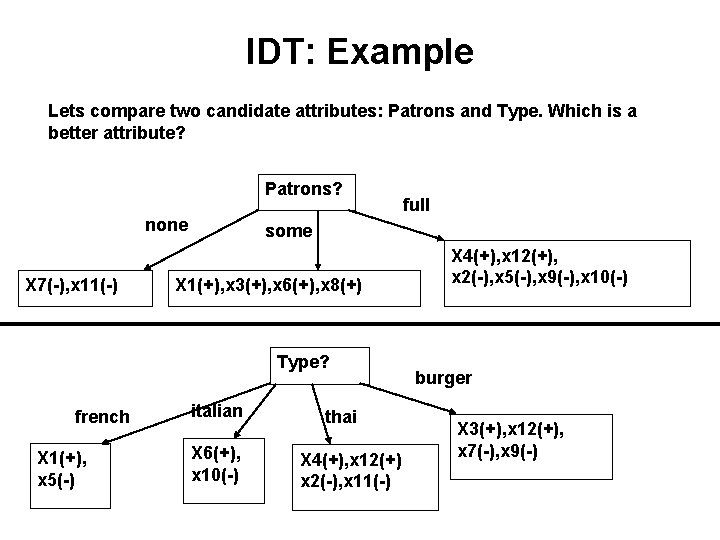

IDT: Example

Let’s compare two candidate attributes: Patrons and Type. Which is the better attribute?

Patrons?
• none: x7(-), x11(-)
• some: x1(+), x3(+), x6(+), x8(+)
• full: x4(+), x12(+), x2(-), x5(-), x9(-), x10(-)

Type?
• french: x1(+), x5(-)
• italian: x6(+), x10(-)
• thai: x4(+), x8(+), x2(-), x11(-)
• burger: x3(+), x12(+), x7(-), x9(-)
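One way to answer the question numerically is to compute the information gain of each split from the (+, -) counts in every branch. Using information gain is an assumption here, since the slide only asks which attribute is better. The counts are read off the two splits above.

```python
from math import log2

def entropy(pos, neg):
    """Entropy of a group with pos positive and neg negative examples."""
    total = pos + neg
    return -sum(n / total * log2(n / total) for n in (pos, neg) if n)

def gain(groups):
    """Information gain of a split given {value: (positives, negatives)}."""
    pos = sum(p for p, _ in groups.values())
    neg = sum(n for _, n in groups.values())
    total = pos + neg
    remainder = sum((p + n) / total * entropy(p, n) for p, n in groups.values())
    return entropy(pos, neg) - remainder

# (positives, negatives) per branch, counted from the slide.
patrons = {"none": (0, 2), "some": (4, 0), "full": (2, 4)}
type_ = {"french": (1, 1), "italian": (1, 1), "thai": (2, 2), "burger": (2, 2)}

print(round(gain(patrons), 3))  # about 0.541 bits
print(round(gain(type_), 3))    # no gain: every branch is an even split
```

Patrons is the better attribute: two of its branches are pure, whereas every branch of Type is split evenly between positive and negative examples, so splitting on Type gives no information.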

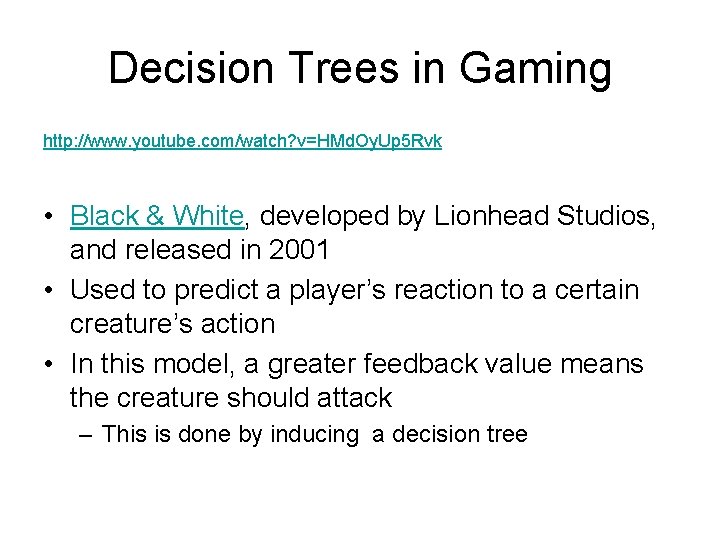

Decision Trees in Gaming

http://www.youtube.com/watch?v=HMdOyUp5Rvk

• Black & White, developed by Lionhead Studios and released in 2001
• Decision trees are used to predict a player’s reaction to a certain creature’s action
• In this model, a greater feedback value means the creature should attack
  – This is done by inducing a decision tree

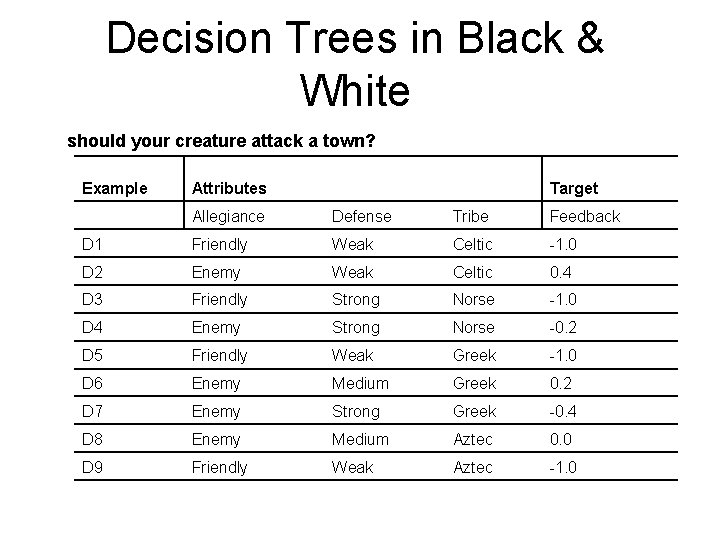

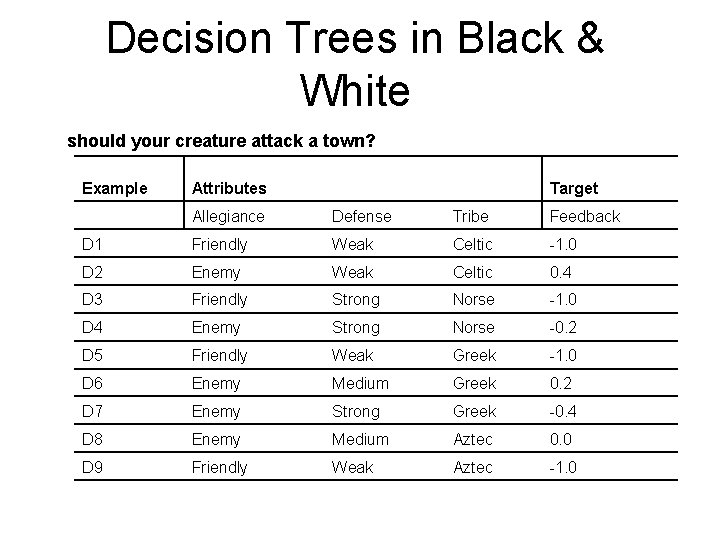

Decision Trees in Black & White

Should your creature attack a town?

| Example | Allegiance | Defense | Tribe | Feedback (target) |
|---|---|---|---|---|
| D1 | Friendly | Weak | Celtic | -1.0 |
| D2 | Enemy | Weak | Celtic | 0.4 |
| D3 | Friendly | Strong | Norse | -1.0 |
| D4 | Enemy | Strong | Norse | -0.2 |
| D5 | Friendly | Weak | Greek | -1.0 |
| D6 | Enemy | Medium | Greek | 0.2 |
| D7 | Enemy | Strong | Greek | -0.4 |
| D8 | Enemy | Medium | Aztec | 0.0 |
| D9 | Friendly | Weak | Aztec | -1.0 |

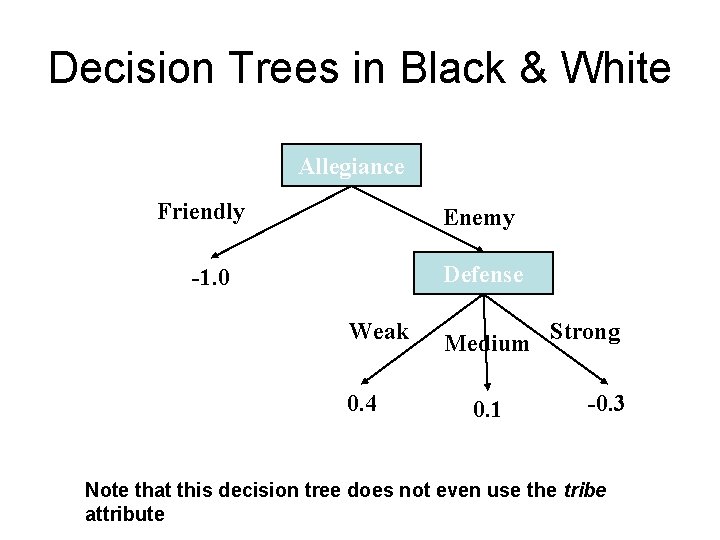

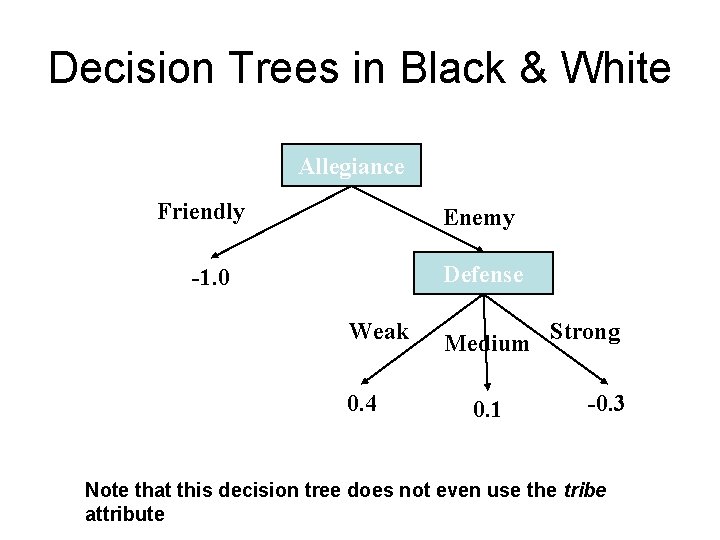

Decision Trees in Black & White

    Allegiance?
      Friendly → -1.0
      Enemy → Defense?
        Weak → 0.4
        Medium → 0.1
        Strong → -0.3

Note that this decision tree does not even use the Tribe attribute.
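The leaf values of this tree coincide with the average feedback of the training examples D1 to D9 that reach each leaf. The sketch below checks this, assuming that averaging is how the leaves were obtained (the slides do not state it). The helper name `leaf_key` is illustrative.

```python
from collections import defaultdict
from statistics import mean

# (Allegiance, Defense, Tribe, Feedback) for examples D1 to D9.
examples = [
    ("Friendly", "Weak", "Celtic", -1.0),
    ("Enemy", "Weak", "Celtic", 0.4),
    ("Friendly", "Strong", "Norse", -1.0),
    ("Enemy", "Strong", "Norse", -0.2),
    ("Friendly", "Weak", "Greek", -1.0),
    ("Enemy", "Medium", "Greek", 0.2),
    ("Enemy", "Strong", "Greek", -0.4),
    ("Enemy", "Medium", "Aztec", 0.0),
    ("Friendly", "Weak", "Aztec", -1.0),
]

def leaf_key(allegiance, defense):
    """Path to a leaf: Friendly is a leaf itself; Enemy is split further on Defense."""
    return (allegiance,) if allegiance == "Friendly" else (allegiance, defense)

groups = defaultdict(list)
for allegiance, defense, _tribe, feedback in examples:
    groups[leaf_key(allegiance, defense)].append(feedback)

# Average feedback per leaf, rounded to hide floating-point noise.
leaves = {key: round(mean(values), 2) for key, values in groups.items()}
print(leaves)
```

For example, the Medium leaf averages D6 and D8, (0.2 + 0.0) / 2 = 0.1, and the Strong leaf averages D4 and D7, (-0.2 + -0.4) / 2 = -0.3.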

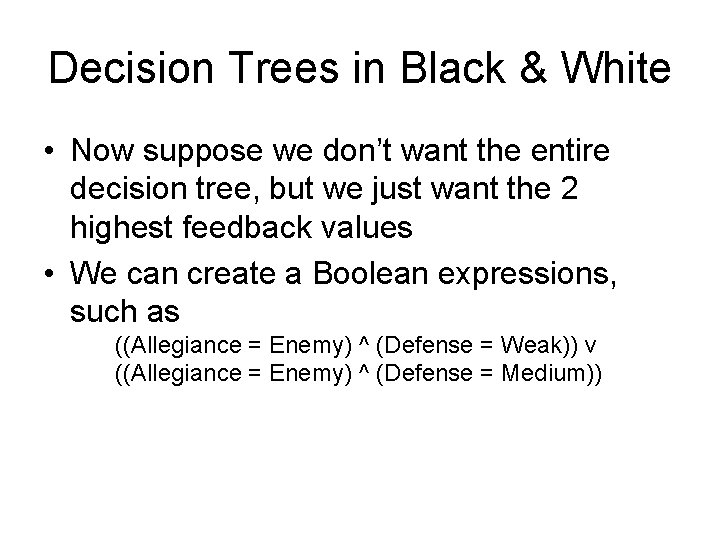

Decision Trees in Black & White

• Now suppose we don’t want the entire decision tree, but just the 2 highest feedback values
• We can create a Boolean expression, such as:
  ((Allegiance = Enemy) ∧ (Defense = Weak)) ∨ ((Allegiance = Enemy) ∧ (Defense = Medium))
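As a sketch, the Boolean expression can be derived from the leaves of the tree by keeping the two with the highest feedback. The dictionary layout, with `None` marking a leaf that does not test Defense, and the function name `should_attack` are assumptions made for illustration.

```python
# Leaves of the induced tree: (Allegiance, Defense or None) -> feedback.
leaves = {
    ("Friendly", None): -1.0,
    ("Enemy", "Weak"): 0.4,
    ("Enemy", "Medium"): 0.1,
    ("Enemy", "Strong"): -0.3,
}

# Keep the paths of the two leaves with the highest feedback values.
top_two = sorted(leaves, key=leaves.get, reverse=True)[:2]

def should_attack(allegiance, defense):
    """Disjunction over the kept paths; each path is a conjunction of tests."""
    return any(allegiance == a and (d is None or defense == d)
               for a, d in top_two)

print(top_two)
print(should_attack("Enemy", "Medium"))
print(should_attack("Enemy", "Strong"))
```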

Classification (According to the language representation)

• Symbolic
  – Version Space
  – Decision Trees
  – Explanation-Based Learning
  – …
• Sub-symbolic (next class)
  – Reinforcement Learning
  – Connectionist
  – Evolutionary