Machine Learning 2 Inductive Dependency Parsing Joakim Nivre

- Slides: 15

Joakim Nivre: Uppsala University, Department of Linguistics and Philology; Växjö University, School of Mathematics and Systems Engineering

Inductive Dependency Parsing

- Dependency-based representations …
  - have restricted expressivity but provide a transparent encoding of semantic structure.
  - have restricted complexity in parsing.
- Inductive machine learning …
  - is necessary for accurate disambiguation.
  - is beneficial for robustness.
  - makes (formal) grammars superfluous.

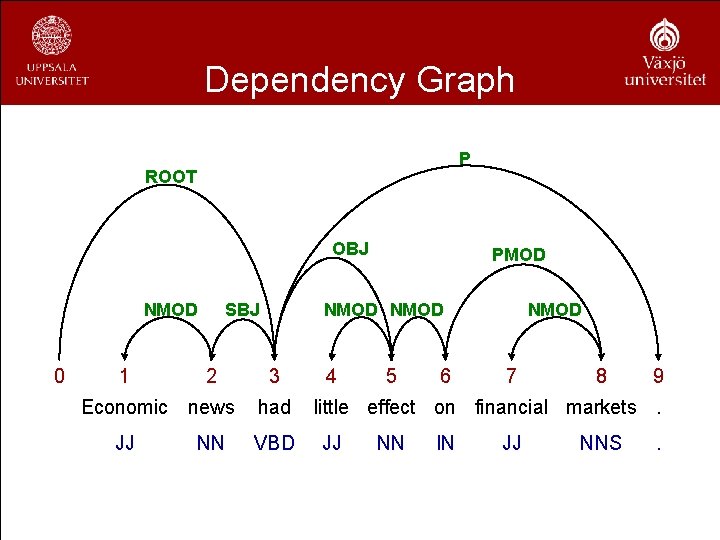

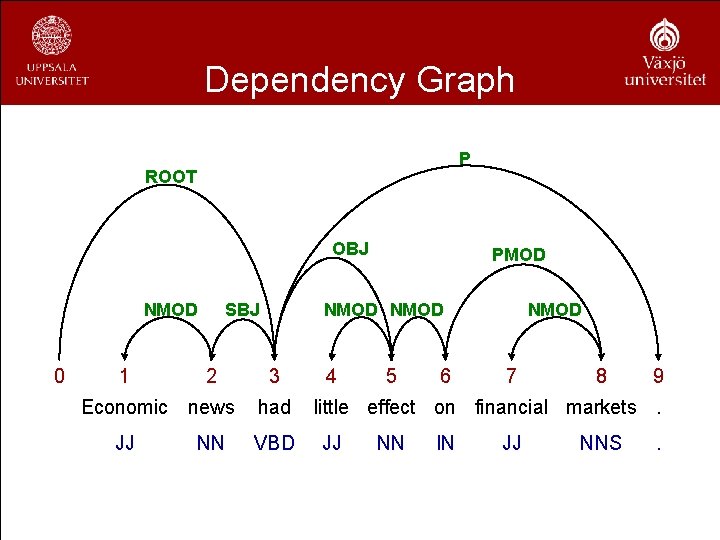

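Dependency Graph

| Position | Word | PoS |
|---|---|---|
| 0 | (artificial root) | |
| 1 | Economic | JJ |
| 2 | news | NN |
| 3 | had | VBD |
| 4 | little | JJ |
| 5 | effect | NN |
| 6 | on | IN |
| 7 | financial | JJ |
| 8 | markets | NNS |
| 9 | . | . |

Arc labels shown in the figure: ROOT, SBJ, OBJ, NMOD, PMOD, P.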
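As a minimal sketch, a graph like this one can be stored as one head index and one dependency label per token. The head indices below are an assumption: they follow the usual analysis of this example sentence, because the arcs themselves are only visible in the figure. The names `Token`, `SENTENCE`, and `is_tree` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    index: int   # position in the sentence, 1-based (0 is the artificial root)
    form: str    # word form
    pos: str     # part-of-speech tag
    head: int    # index of the head token (ASSUMED, not read off the slide text)
    deprel: str  # label of the arc from the head to this token


SENTENCE = [
    Token(1, "Economic", "JJ", 2, "NMOD"),
    Token(2, "news", "NN", 3, "SBJ"),
    Token(3, "had", "VBD", 0, "ROOT"),
    Token(4, "little", "JJ", 5, "NMOD"),
    Token(5, "effect", "NN", 3, "OBJ"),
    Token(6, "on", "IN", 5, "NMOD"),
    Token(7, "financial", "JJ", 8, "NMOD"),
    Token(8, "markets", "NNS", 6, "PMOD"),
    Token(9, ".", ".", 3, "P"),
]


def is_tree(tokens):
    """Return True if every token reaches the root (0) without a cycle."""
    heads = {t.index: t.head for t in tokens}
    for start in heads:
        seen = set()
        node = start
        while node != 0:
            if node in seen or node not in heads:
                return False
            seen.add(node)
            node = heads[node]
    return True


print(is_tree(SENTENCE))
```

Storing only `head` and `deprel` per token is enough because each token has exactly one incoming arc in a dependency tree.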

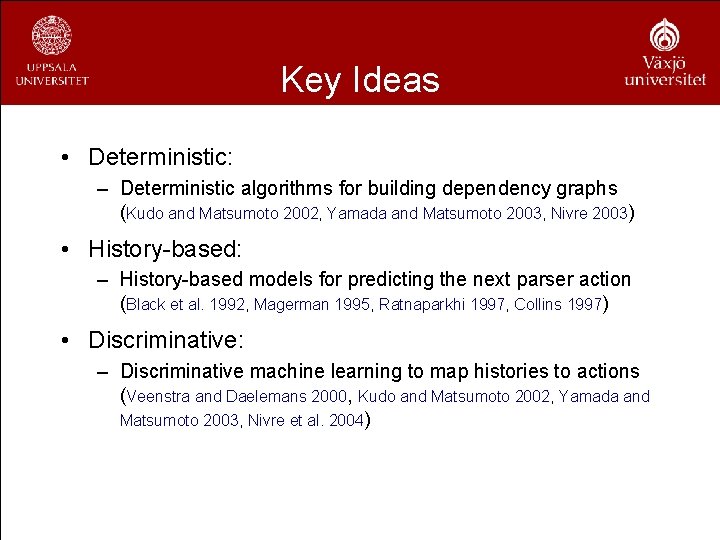

Key Ideas

- Deterministic:
  - Deterministic algorithms for building dependency graphs (Kudo and Matsumoto 2002, Yamada and Matsumoto 2003, Nivre 2003)
- History-based:
  - History-based models for predicting the next parser action (Black et al. 1992, Magerman 1995, Ratnaparkhi 1997, Collins 1997)
- Discriminative:
  - Discriminative machine learning to map histories to actions (Veenstra and Daelemans 2000, Kudo and Matsumoto 2002, Yamada and Matsumoto 2003, Nivre et al. 2004)

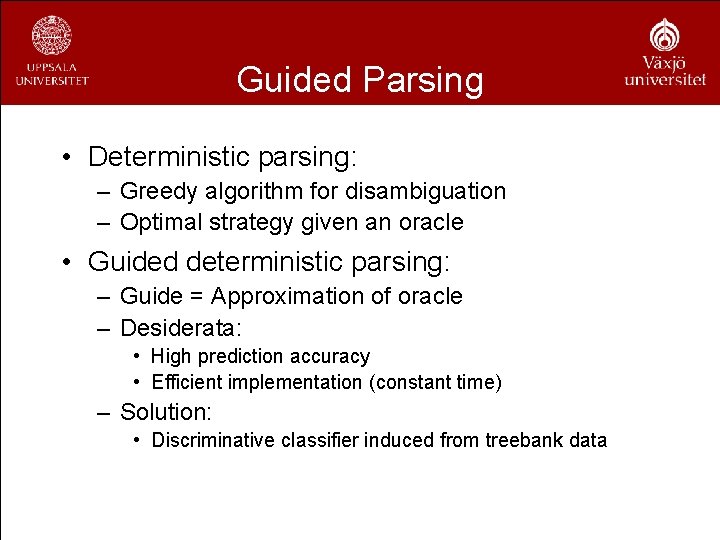

Guided Parsing

- Deterministic parsing:
  - Greedy algorithm for disambiguation
  - Optimal strategy given an oracle
- Guided deterministic parsing:
  - Guide = Approximation of oracle
  - Desiderata:
    - High prediction accuracy
    - Efficient implementation (constant time)
  - Solution:
    - Discriminative classifier induced from treebank data
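The idea of a guide can be sketched with a small greedy parser that asks a function for the next action at every step. This is an illustrative sketch, not MaltParser's implementation: it uses unlabeled arc-eager transitions in the style of Nivre (2003), and the names `parse`, `make_oracle`, and `GOLD_HEADS` are hypothetical. The gold heads are the assumed standard analysis of "Economic news had little effect on financial markets ."

```python
def parse(n_tokens, guide):
    """Greedy parsing: one guide call per transition, no backtracking."""
    stack, buffer, heads = [0], list(range(1, n_tokens + 1)), {}
    while buffer:
        action = guide(stack, buffer, heads)
        top, nxt = stack[-1], buffer[0]
        if action == "LEFT-ARC" and top != 0 and top not in heads:
            heads[top] = nxt              # next becomes the head of top
            stack.pop()
        elif action == "RIGHT-ARC":
            heads[nxt] = top              # top becomes the head of next
            stack.append(buffer.pop(0))
        elif action == "REDUCE" and top in heads:
            stack.pop()
        else:
            # SHIFT; also the fallback when a predicted action is not permitted
            stack.append(buffer.pop(0))
    return heads


def make_oracle(gold_heads):
    """Build a guide that reads the correct action off a gold tree (projective)."""
    def oracle(stack, buffer, heads):
        top, nxt = stack[-1], buffer[0]
        if top != 0 and gold_heads[top] == nxt:
            return "LEFT-ARC"
        if gold_heads[nxt] == top:
            return "RIGHT-ARC"
        # Reduce when next still needs an arc to or from a token deeper in the stack.
        if top in heads and any(
            gold_heads[nxt] == k or gold_heads.get(k) == nxt for k in stack[:-1]
        ):
            return "REDUCE"
        return "SHIFT"
    return oracle


# ASSUMED gold heads for the example sentence (token index -> head index).
GOLD_HEADS = {1: 2, 2: 3, 3: 0, 4: 5, 5: 3, 6: 5, 7: 8, 8: 6, 9: 3}

print(parse(9, make_oracle(GOLD_HEADS)) == GOLD_HEADS)
```

In guided parsing the oracle is replaced by a trained classifier with the same call signature, so the parsing loop itself stays unchanged.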

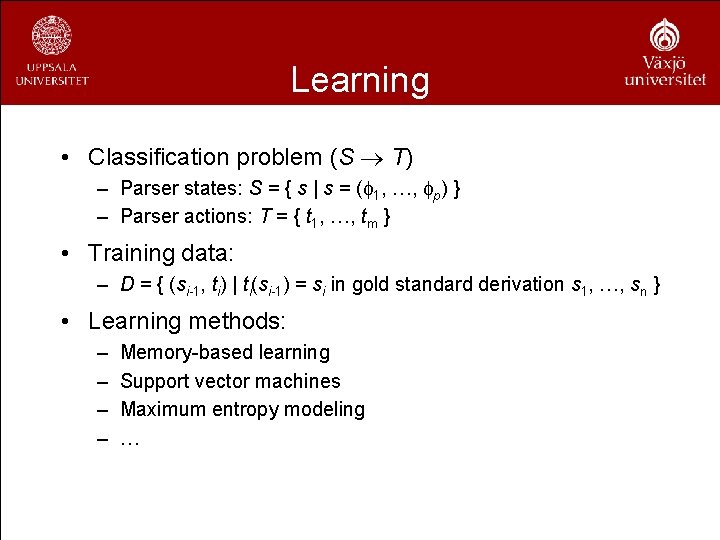

Learning

- Classification problem ($S \to T$):
  - Parser states: $S = \{ s \mid s = (\varphi_1, \ldots, \varphi_p) \}$
  - Parser actions: $T = \{ t_1, \ldots, t_m \}$
- Training data:
  - $D = \{ (s_{i-1}, t_i) \mid t_i(s_{i-1}) = s_i \text{ in gold standard derivation } s_1, \ldots, s_n \}$
- Learning methods:
  - Memory-based learning
  - Support vector machines
  - Maximum entropy modeling
  - …
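A rough sketch of the learning setup: states are feature tuples, each state is paired with the action that follows it in a gold derivation, and a memory-based learner classifies a new state by looking up its nearest stored neighbors. The function names and the toy instances are hypothetical, and the plain overlap metric stands in for the richer metrics a real memory-based learner offers.

```python
from collections import Counter


def training_pairs(states, actions):
    """Pair each state with the action applied to it in the derivation.

    states has one more element than actions: applying actions[i] to
    states[i] yields states[i + 1].
    """
    assert len(states) == len(actions) + 1
    return list(zip(states[:-1], actions))


def overlap_distance(a, b):
    """Number of feature positions on which two states differ."""
    return sum(x != y for x, y in zip(a, b))


def knn_predict(memory, state, k=1):
    """Majority vote over the k stored instances closest to the state."""
    nearest = sorted(memory, key=lambda pair: overlap_distance(pair[0], state))[:k]
    votes = Counter(action for _, action in nearest)
    return votes.most_common(1)[0][0]


# Toy memory (invented for illustration): states are (PoS of top, PoS of next).
MEMORY = [
    (("JJ", "NN"), "LEFT-ARC"),
    (("ROOT", "VBD"), "RIGHT-ARC"),
    (("VBD", "JJ"), "SHIFT"),
]

print(knn_predict(MEMORY, ("JJ", "NNS")))
```

Training a memory-based learner amounts to storing `D`; all generalization happens at prediction time through the distance function.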

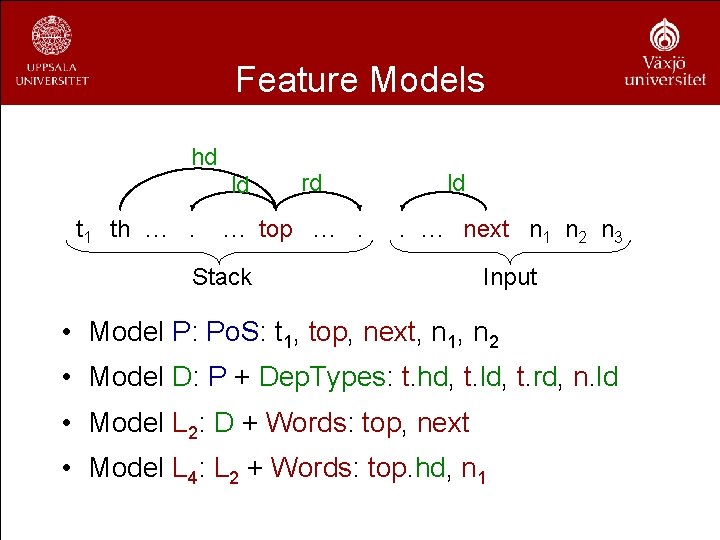

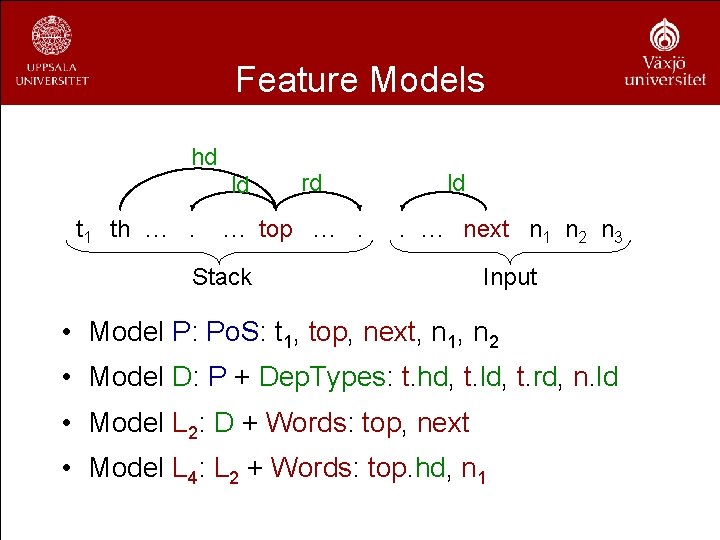

Feature Models

Figure labels: Stack (t1, th, top, with hd, ld, rd); Input (next, with ld; n1, n2, n3).

- Model P: PoS: t1, top, next, n1, n2
- Model D: P + DepTypes: t.hd, t.ld, t.rd, n.ld
- Model L2: D + Words: top, next
- Model L4: L2 + Words: top.hd, n1
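The four models can be read as nested feature extractors over a parser configuration. The sketch below is one possible reading, with assumptions stated plainly: `t.hd` is taken to be the dependency type linking top to its head, `t.ld` and `t.rd` the types of top's leftmost and rightmost dependents, `n.ld` the type of next's leftmost dependent, and `top.hd` the head token of top. The function names and the `NIL` filler are hypothetical.

```python
NIL = "NIL"  # hypothetical filler for positions that do not exist


def at(seq, i):
    """Return seq[i], or None when the index is out of range."""
    return seq[i] if -len(seq) <= i < len(seq) else None


def leftmost_dep(tok, head):
    deps = [d for d, h in head.items() if h == tok and d < tok]
    return min(deps) if deps else None


def rightmost_dep(tok, head):
    deps = [d for d, h in head.items() if h == tok and d > tok]
    return max(deps) if deps else None


def features(model, stack, buffer, pos, form, head, deprel):
    """Feature tuple for model 'P', 'D', 'L2' or 'L4'.

    pos, form: token index -> tag / word form
    head, deprel: arcs built so far (token index -> head index / label)
    """
    top, t1 = at(stack, -1), at(stack, -2)
    nxt, n1, n2 = at(buffer, 0), at(buffer, 1), at(buffer, 2)

    # Model P: part-of-speech features
    feats = [pos.get(t, NIL) for t in (t1, top, nxt, n1, n2)]
    if model in ("D", "L2", "L4"):
        # Model D: adds dependency type features
        targets = (top, leftmost_dep(top, head), rightmost_dep(top, head),
                   leftmost_dep(nxt, head))
        feats += [deprel.get(t, NIL) for t in targets]
    if model in ("L2", "L4"):
        # Model L2: adds the word forms of top and next
        feats += [form.get(t, NIL) for t in (top, nxt)]
    if model == "L4":
        # Model L4: adds the word forms of top's head and n1
        feats += [form.get(t, NIL) for t in (head.get(top), n1)]
    return tuple(feats)
```

Each model only appends to the previous one, so the feature vector grows from 5 (P) to 9 (D), 11 (L2), and 13 (L4) positions under this reading.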

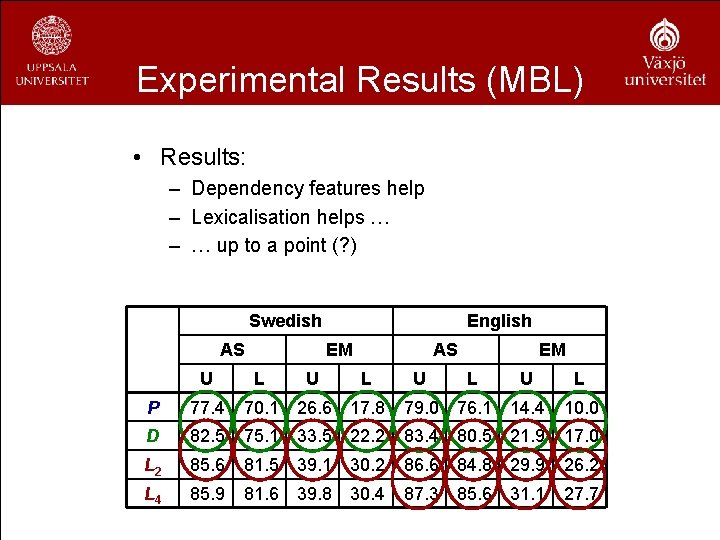

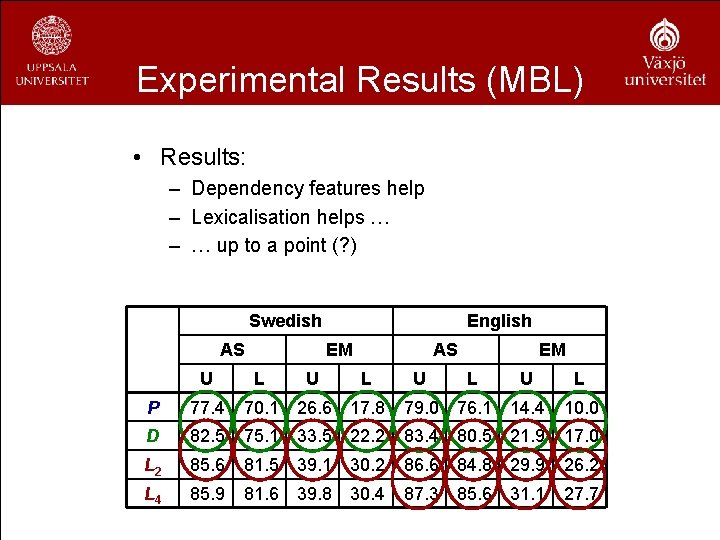

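Experimental Results (MBL)

- Results:
  - Dependency features help
  - Lexicalisation helps …
  - … up to a point (?)

AS = attachment score, EM = exact match; U = unlabeled, L = labeled.

| Model | Swedish AS U | Swedish AS L | Swedish EM U | Swedish EM L | English AS U | English AS L | English EM U | English EM L |
|---|---|---|---|---|---|---|---|---|
| P | 77.4 | 70.1 | 26.6 | 17.8 | 79.0 | 76.1 | 14.4 | 10.0 |
| D | 82.5 | 75.1 | 33.5 | 22.2 | 83.4 | 80.5 | 21.9 | 17.0 |
| L2 | 85.6 | 81.5 | 39.1 | 30.2 | 86.6 | 84.8 | 29.9 | 26.2 |
| L4 | 85.9 | 81.6 | 39.8 | 30.4 | 87.3 | 85.6 | 31.1 | 27.7 |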
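For orientation, the two metrics can be sketched as follows: attachment score counts tokens with the correct head (and, in the labeled variant, the correct label), while exact match counts sentences whose whole analysis is correct. This is a simplified illustration with hypothetical function names; it scores every token and does not reproduce any special treatment of punctuation that the original evaluation may have applied.

```python
def attachment_score(gold, pred, labeled=False):
    """Percentage of tokens with the correct head (and label if labeled).

    gold, pred: lists of sentences; each sentence is a list of
    (head, label) pairs, one per token.
    """
    correct = total = 0
    for g_sent, p_sent in zip(gold, pred):
        for (g_head, g_label), (p_head, p_label) in zip(g_sent, p_sent):
            total += 1
            if g_head == p_head and (not labeled or g_label == p_label):
                correct += 1
    return 100.0 * correct / total


def exact_match(gold, pred, labeled=False):
    """Percentage of sentences whose analysis is entirely correct."""
    def view(sent):
        return sent if labeled else [head for head, _ in sent]
    hits = sum(view(g) == view(p) for g, p in zip(gold, pred))
    return 100.0 * hits / len(gold)
```

Exact match is much stricter than attachment score, which is consistent with the EM columns being far lower than the AS columns in the table.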

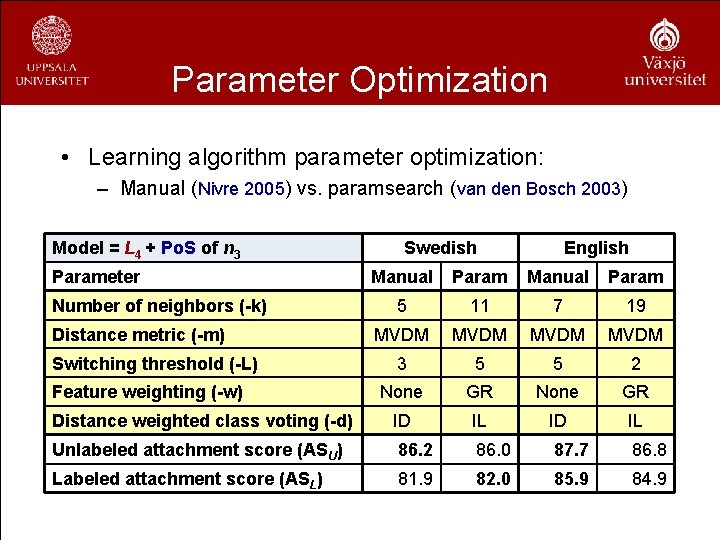

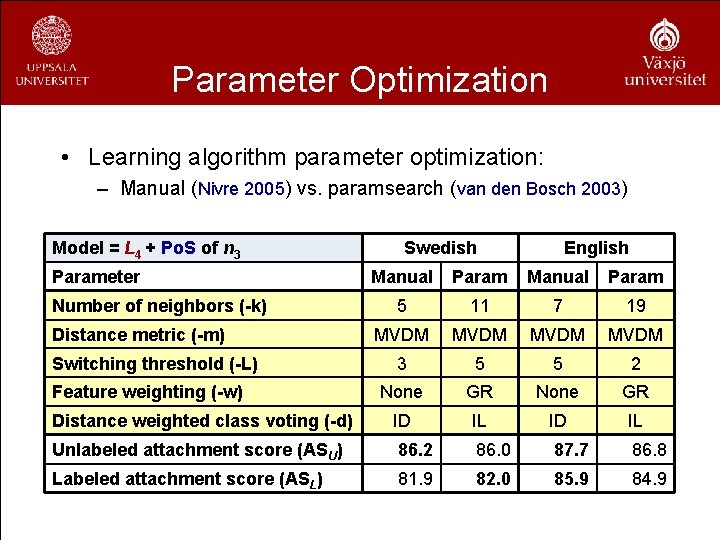

Parameter Optimization

- Learning algorithm parameter optimization:
  - Manual (Nivre 2005) vs. paramsearch (van den Bosch 2003)
- Model = L4 + PoS of n3

| Parameter | Swedish Manual | Swedish Param | English Manual | English Param |
|---|---|---|---|---|
| Number of neighbors (-k) | 5 | 11 | 7 | 19 |
| Switching threshold (-L) | 3 | 5 | 5 | 2 |
| Unlabeled attachment score (ASU) | 86.2 | 86.0 | 87.7 | 86.8 |
| Labeled attachment score (ASL) | 81.9 | 82.0 | 85.9 | 84.9 |

Parameters listed with fewer than four values:

- Distance metric (-m): MVDM
- Feature weighting (-w): None, GR
- Distance weighted class voting (-d): ID, IL

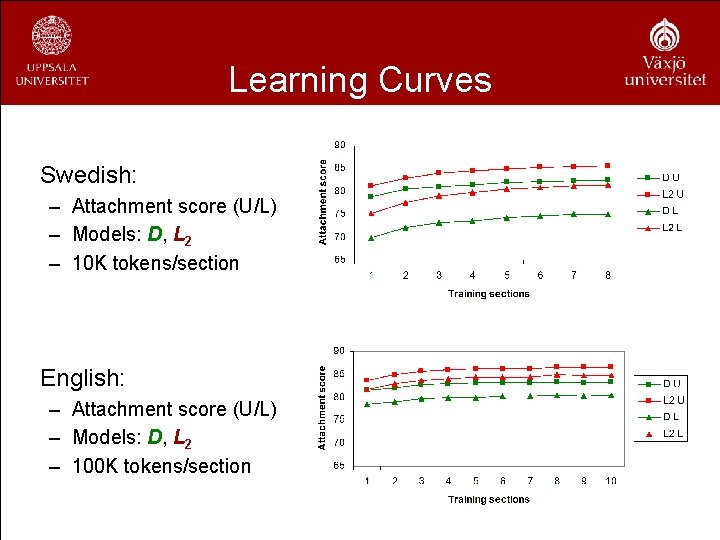

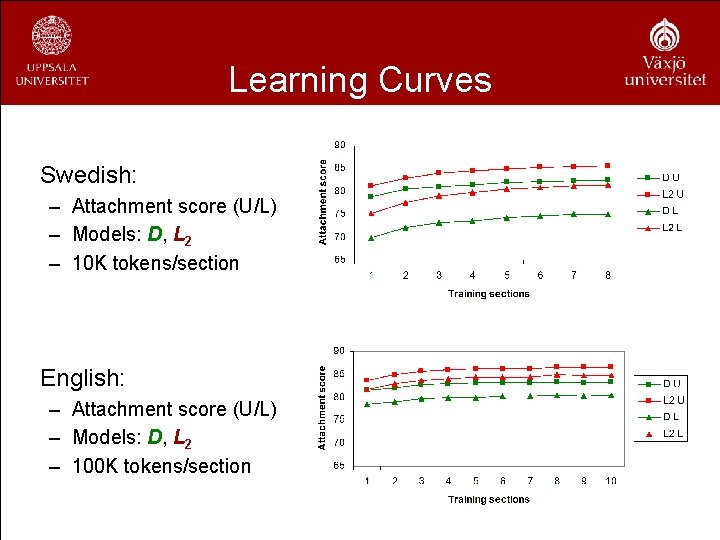

Learning Curves

- Swedish:
  - Attachment score (U/L)
  - Models: D, L2
  - 10K tokens/section
- English:
  - Attachment score (U/L)
  - Models: D, L2
  - 100K tokens/section

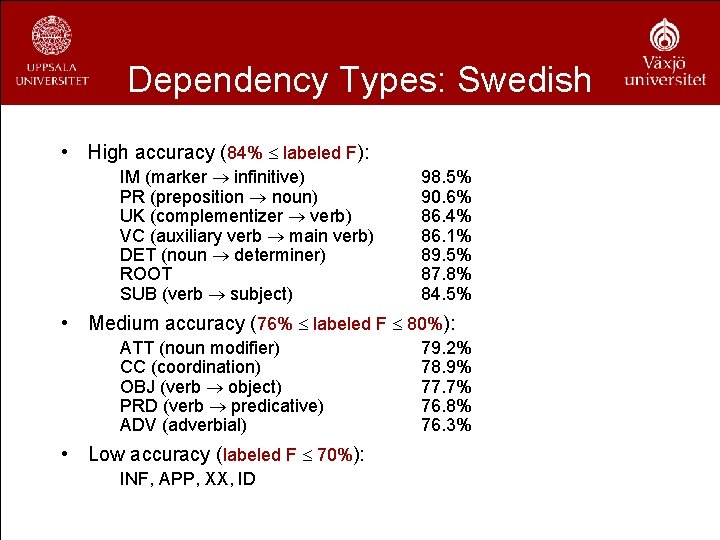

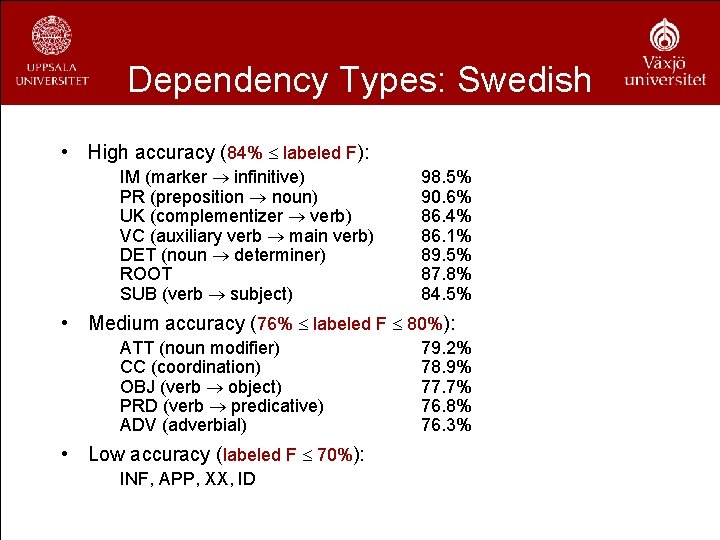

Dependency Types: Swedish

- High accuracy (84% ≤ labeled F):
  - IM (marker → infinitive): 98.5%
  - PR (preposition → noun): 90.6%
  - UK (complementizer → verb): 86.4%
  - VC (auxiliary verb → main verb): 86.1%
  - DET (noun → determiner): 89.5%
  - ROOT: 87.8%
  - SUB (verb → subject): 84.5%
- Medium accuracy (76% ≤ labeled F ≤ 80%):
  - ATT (noun modifier): 79.2%
  - CC (coordination): 78.9%
  - OBJ (verb → object): 77.7%
  - PRD (verb → predicative): 76.8%
  - ADV (adverbial): 76.3%
- Low accuracy (labeled F ≤ 70%):
  - INF, APP, XX, ID

Dependency Types: English

- High accuracy (86% ≤ labeled F):
  - VC (auxiliary verb → main verb): 95.0%
  - NMOD (noun modifier): 91.0%
  - SBJ (verb → subject): 89.3%
  - PMOD (preposition modifier): 88.6%
  - SBAR (complementizer → verb): 86.1%
- Medium accuracy (73% ≤ labeled F ≤ 83%):
  - ROOT: 82.4%
  - OBJ (verb → object): 81.1%
  - VMOD (verb modifier): 76.8%
  - AMOD (adjective/adverb modifier): 76.7%
  - PRD (predicative): 73.8%
- Low accuracy (labeled F ≤ 70%):
  - DEP (null label)
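Per-type figures like the ones on the two dependency type slides can be computed as an F-score per label. The sketch below makes an assumption about the exact definition: a token counts as a hit for a label only when both its head and its label are correct, precision is measured against the tokens predicted with that label, and recall against the tokens carrying it in the gold standard. The function name `labeled_f` is hypothetical.

```python
from collections import Counter


def labeled_f(gold, pred):
    """F-score (in percent) per dependency type.

    gold, pred: flat lists of (head, label) pairs, one per token.
    """
    n_gold, n_pred, n_hit = Counter(), Counter(), Counter()
    for (g_head, g_label), (p_head, p_label) in zip(gold, pred):
        n_gold[g_label] += 1
        n_pred[p_label] += 1
        if (g_head, g_label) == (p_head, p_label):
            n_hit[g_label] += 1

    scores = {}
    for label in set(n_gold) | set(n_pred):
        precision = n_hit[label] / n_pred[label] if n_pred[label] else 0.0
        recall = n_hit[label] / n_gold[label] if n_gold[label] else 0.0
        if precision + recall:
            scores[label] = 100.0 * 2 * precision * recall / (precision + recall)
        else:
            scores[label] = 0.0
    return scores
```

Reporting F per type rather than a single attachment score shows which relations drive the errors, which is what the high, medium, and low groupings summarize.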

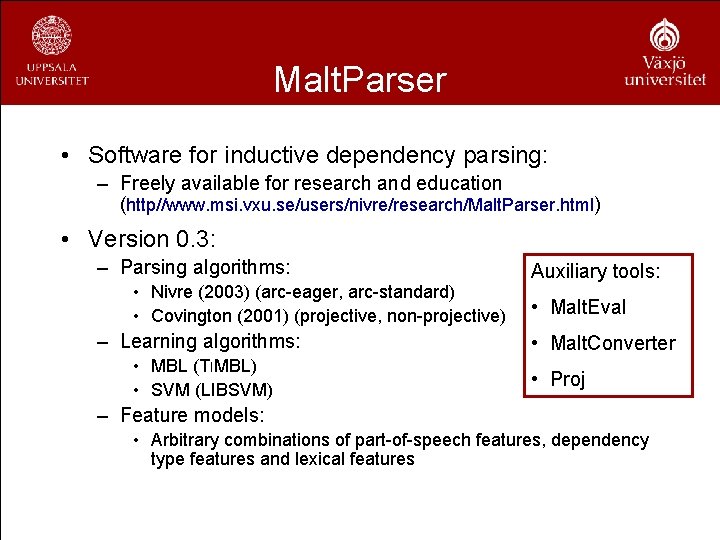

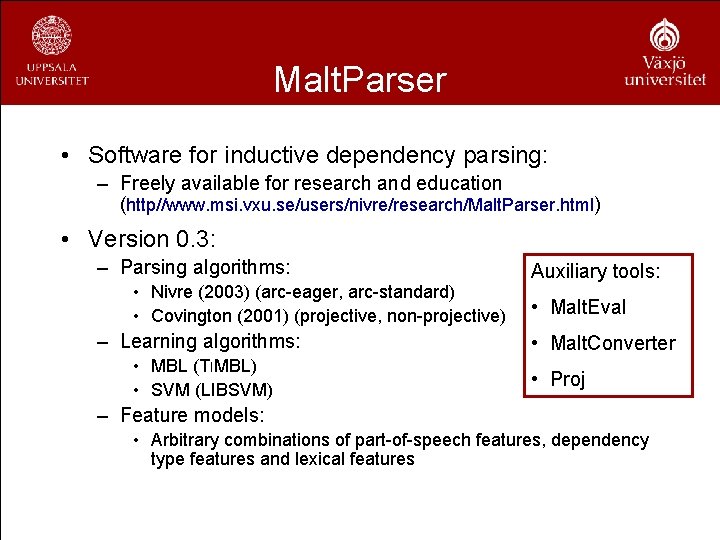

MaltParser

- Software for inductive dependency parsing:
  - Freely available for research and education (http://www.msi.vxu.se/users/nivre/research/MaltParser.html)
- Version 0.3:
  - Parsing algorithms:
    - Nivre (2003) (arc-eager, arc-standard)
    - Covington (2001) (projective, non-projective)
  - Learning algorithms:
    - MBL (TIMBL)
    - SVM (LIBSVM)
  - Feature models:
    - Arbitrary combinations of part-of-speech features, dependency type features and lexical features
- Auxiliary tools:
  - MaltEval
  - MaltConverter
  - Proj

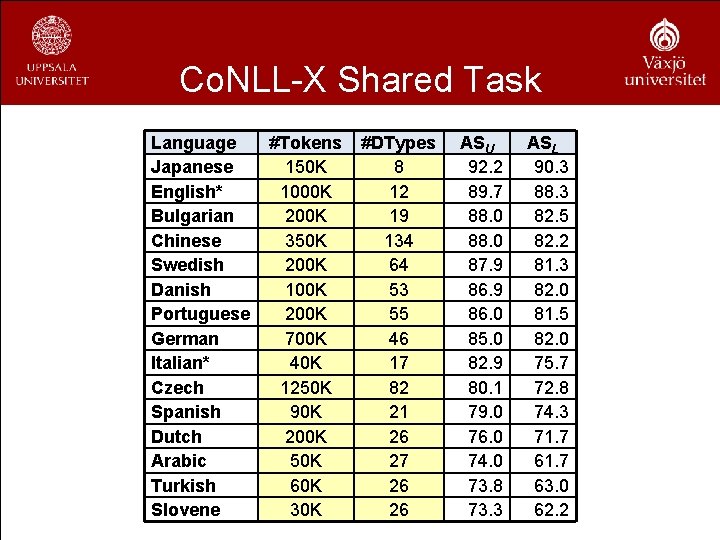

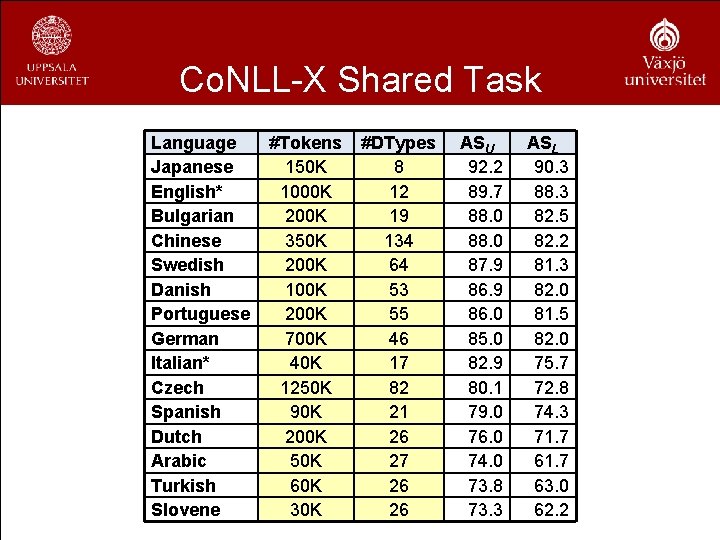

CoNLL-X Shared Task

| Language | #Tokens | #DTypes |
|---|---|---|
| Japanese | 150K | 8 |
| English* | 1000K | 12 |
| Bulgarian | 200K | 19 |
| Chinese | 350K | 134 |
| Swedish | 200K | 64 |
| Danish | 100K | 53 |
| Portuguese | 200K | 55 |
| German | 700K | 46 |
| Italian* | 40K | 17 |
| Czech | 1250K | 82 |
| Spanish | 90K | 21 |
| Dutch | 200K | 26 |
| Arabic | 50K | 27 |
| Turkish | 60K | 26 |
| Slovene | 30K | 26 |

ASU (13 values, in the order listed): 92.2, 89.7, 88.0, 87.9, 86.0, 85.0, 82.9, 80.1, 79.0, 76.0, 74.0, 73.8, 73.3

ASL (14 values, in the order listed): 90.3, 88.3, 82.5, 82.2, 81.3, 82.0, 81.5, 82.0, 75.7, 72.8, 74.3, 71.7, 63.0, 62.2

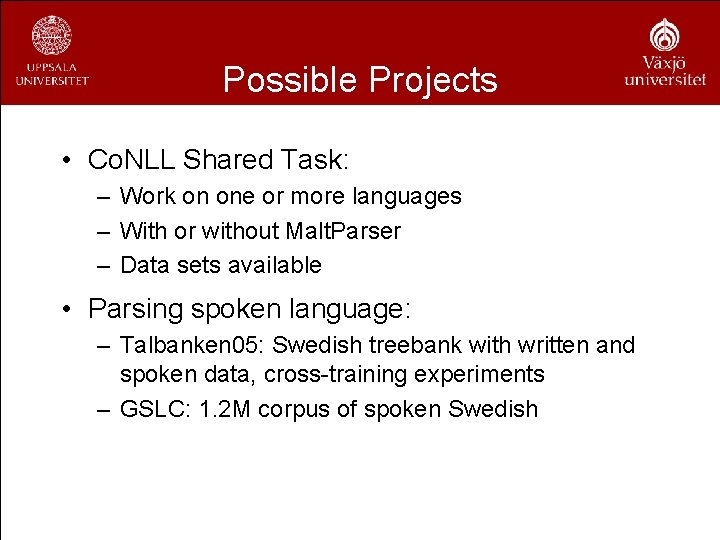

Possible Projects

- CoNLL Shared Task:
  - Work on one or more languages
  - With or without MaltParser
  - Data sets available
- Parsing spoken language:
  - Talbanken05: Swedish treebank with written and spoken data, cross-training experiments
  - GSLC: 1.2M corpus of spoken Swedish