Machine Learning 101: Intro to AI, ML, Deep Learning

- Slides: 22

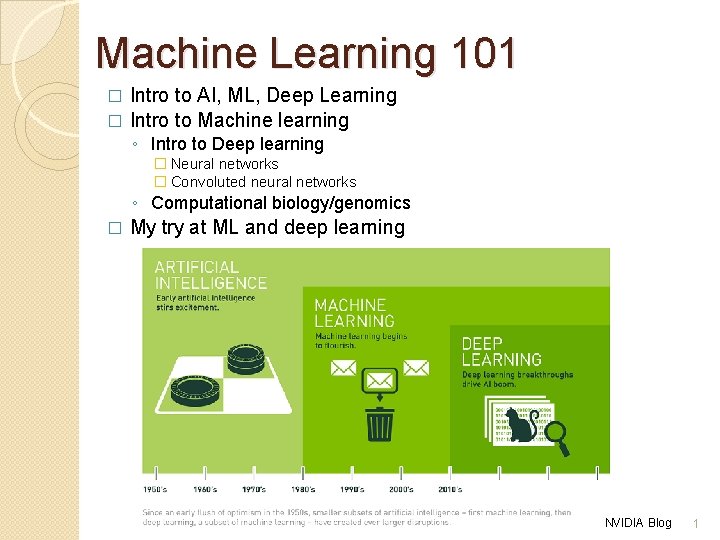

Machine Learning 101: Intro to AI, ML, Deep Learning

- Intro to machine learning
- Intro to deep learning
- Neural networks
- Convolutional neural networks
- Computational biology/genomics
- My try at ML and deep learning

Source: NVIDIA Blog

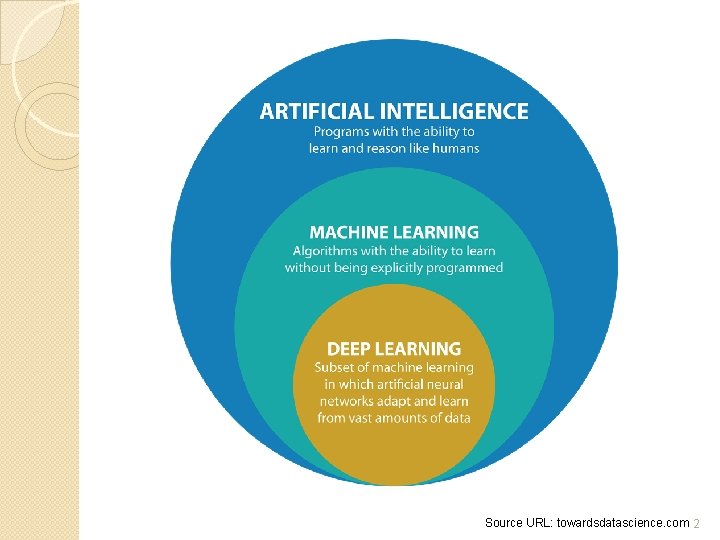

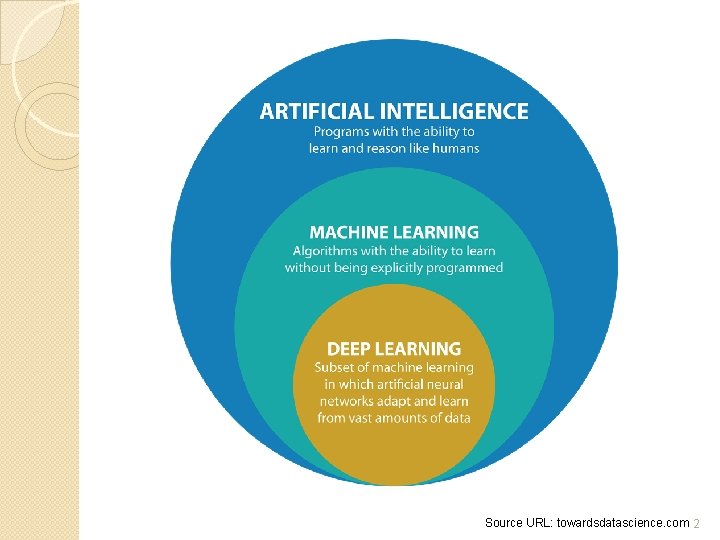

Source: towardsdatascience.com

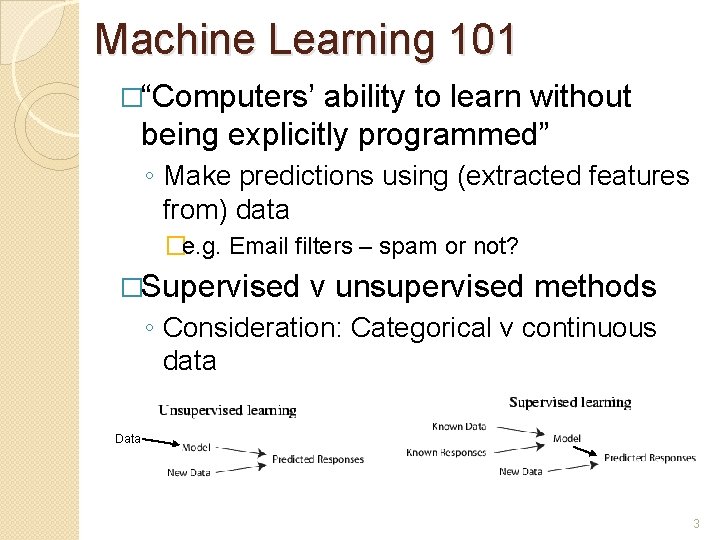

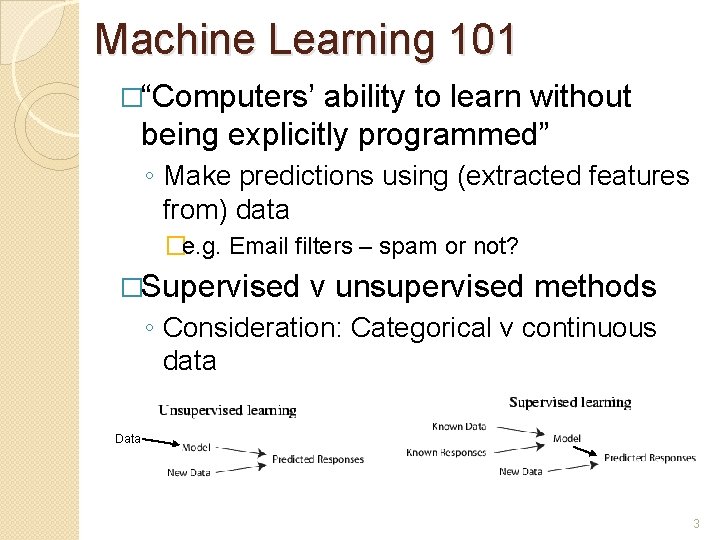

Machine Learning 101

- "Computers' ability to learn without being explicitly programmed"
  - Make predictions using (features extracted from) data
- E.g. email filters: spam or not?
- Supervised vs. unsupervised methods
  - Consideration: categorical vs. continuous data

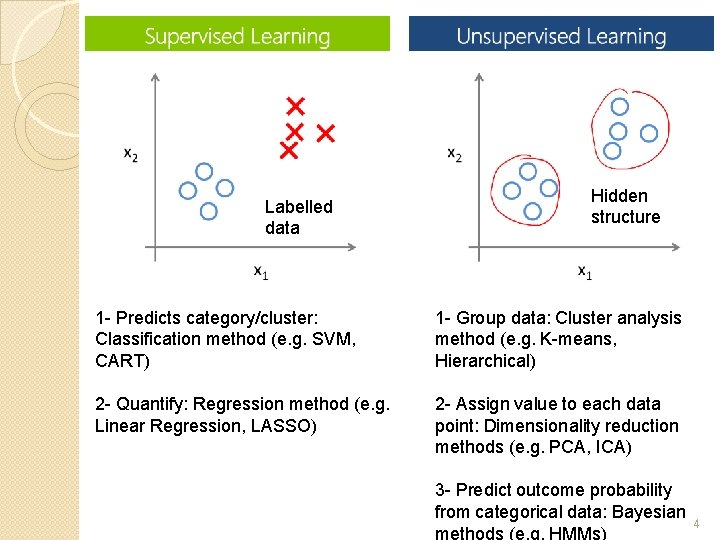

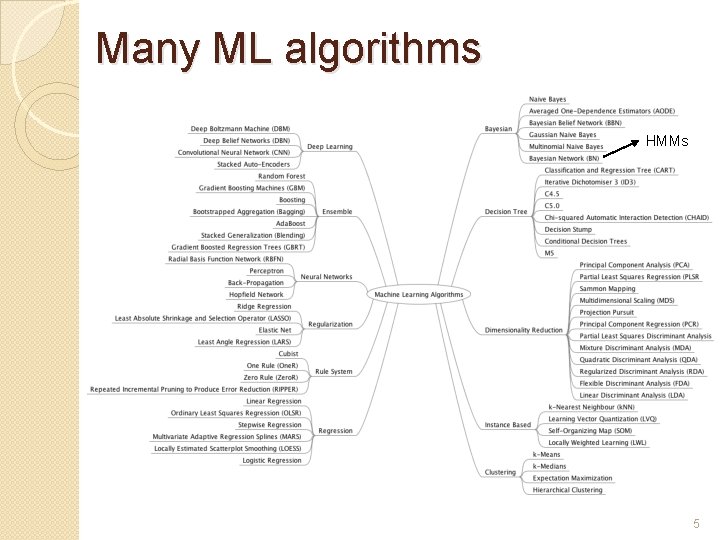

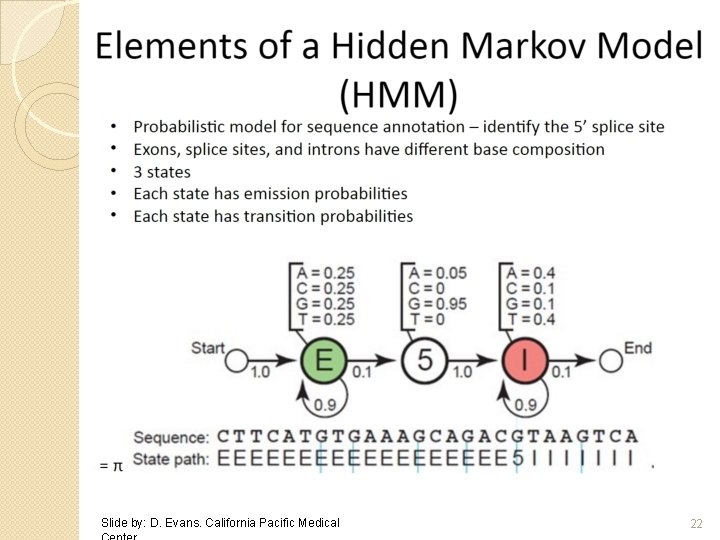

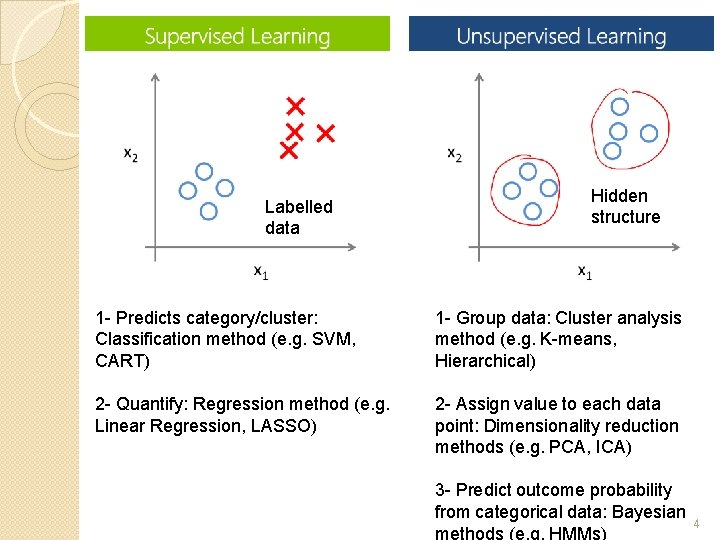

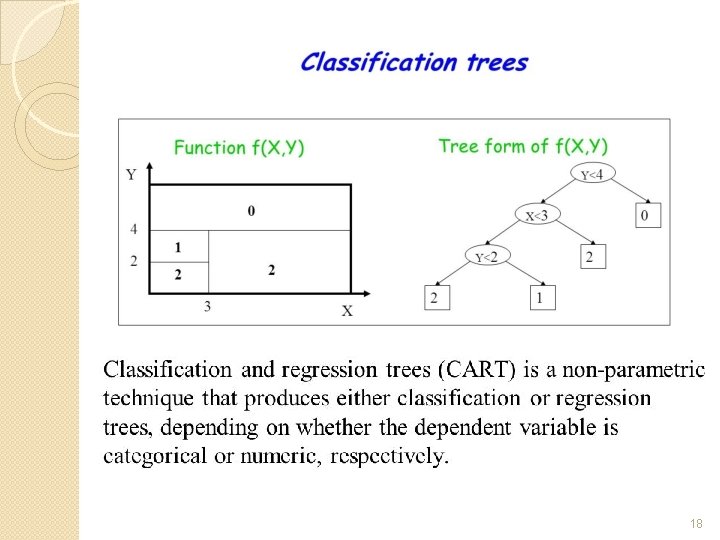

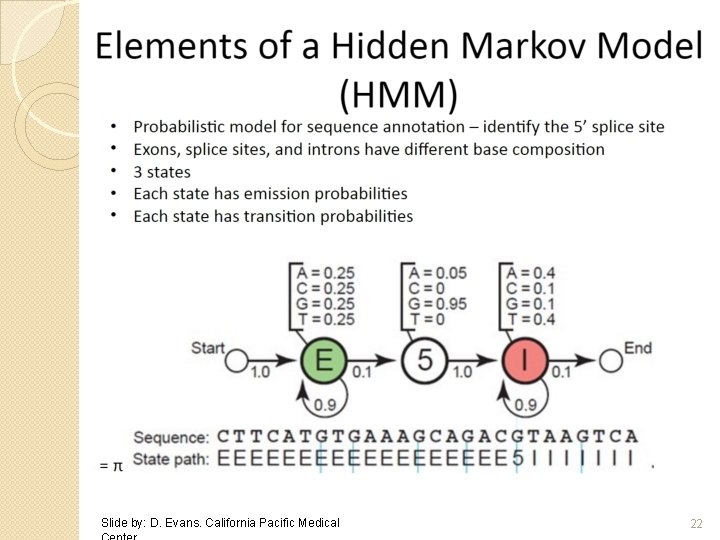

Labelled data (supervised):

1. Predict category/cluster: classification methods (e.g. SVM, CART)
2. Quantify: regression methods (e.g. linear regression, LASSO)
3. Predict outcome probability from categorical data: Bayesian methods (e.g. HMMs)

Hidden structure (unsupervised):

1. Group data: cluster analysis methods (e.g. K-means, hierarchical)
2. Assign value to each data point: dimensionality reduction methods (e.g. PCA, ICA)
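As a rough illustration of the split between labelled data and hidden structure, here is a minimal Python sketch that is not taken from the slides. A nearest-centroid classifier learns from labelled values, while a one-dimensional k-means groups the same values without any labels. The function names and the toy numbers are made up for this example.

```python
def fit_centroids(values, labels):
    """Supervised: average the values seen for each known label."""
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(label, []).append(value)
    return {label: sum(vs) / len(vs) for label, vs in groups.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def kmeans_1d(values, k=2, iterations=10):
    """Unsupervised: find k group centres without using any labels."""
    # Start from k evenly spaced points of the sorted data.
    centres = sorted(values)[:: max(1, len(values) // k)][:k]
    for _ in range(iterations):
        clusters = [[] for _ in centres]
        for value in values:
            nearest = min(range(len(centres)), key=lambda i: abs(centres[i] - value))
            clusters[nearest].append(value)
        # Move each centre to the mean of its cluster (keep it if the cluster is empty).
        centres = [sum(c) / len(c) if c else centres[i] for i, c in enumerate(clusters)]
    return sorted(centres)


# Toy data (hypothetical): two obvious groups of measurements.
values = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = ["low", "low", "low", "high", "high", "high"]

centroids = fit_centroids(values, labels)   # uses the labels
centres = kmeans_1d(values, k=2)            # ignores the labels
```

Both approaches end up with roughly the same two centres here, but only the supervised one can attach a name ("low" or "high") to a new data point.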

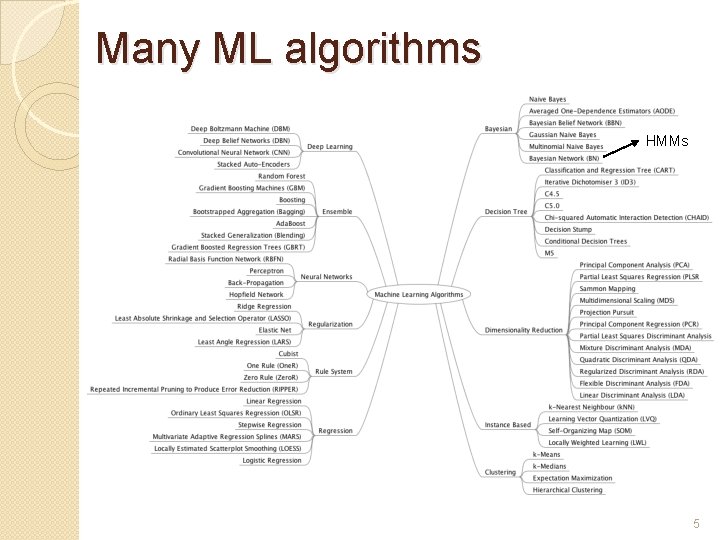

Many ML algorithms (labelled in figure: HMMs)

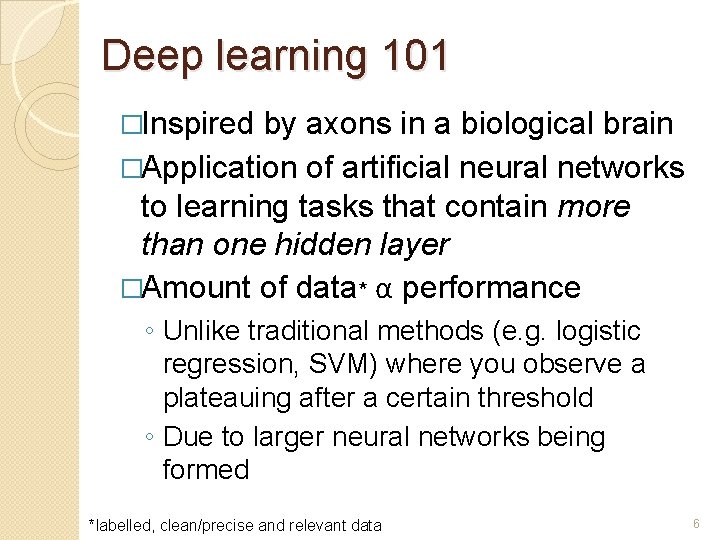

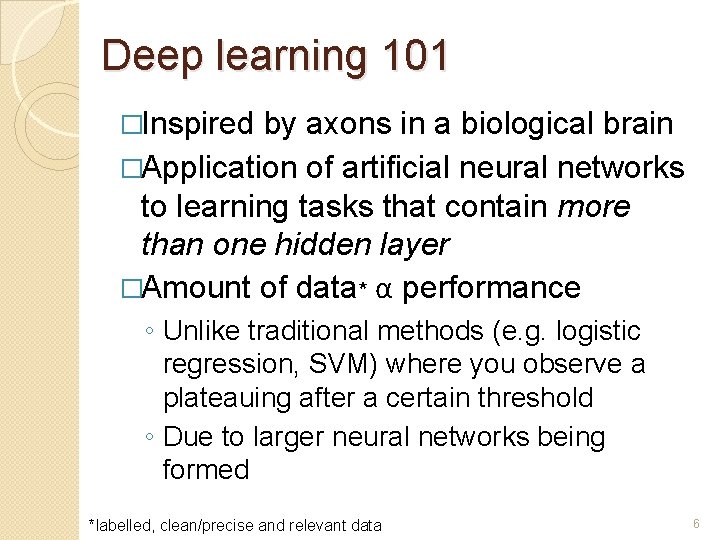

Deep learning 101

- Inspired by axons in a biological brain
- Application of artificial neural networks that contain more than one hidden layer to learning tasks
- Amount of data* ∝ performance
  - Unlike traditional methods (e.g. logistic regression, SVM), where performance plateaus after a certain threshold
  - Due to larger neural networks being formed

*Labelled, clean/precise and relevant data

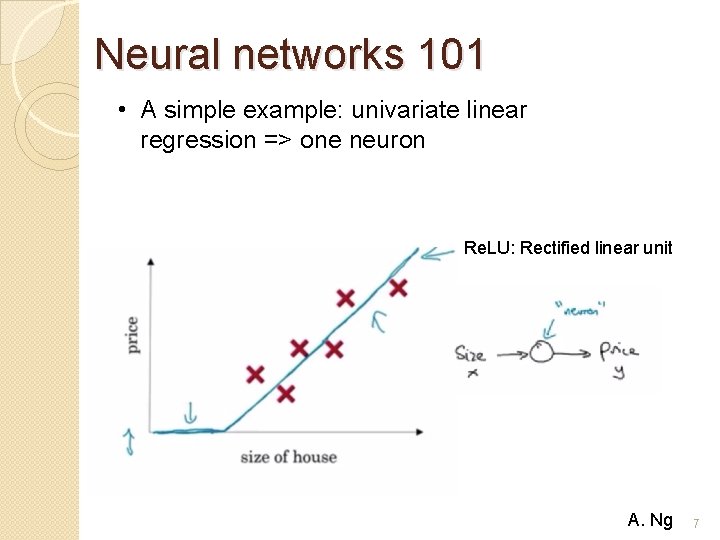

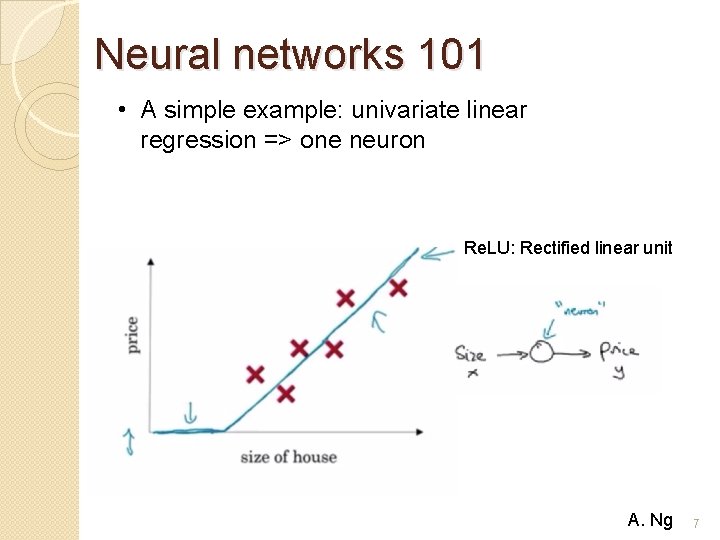

Neural networks 101

- A simple example: univariate linear regression => one neuron
- ReLU: rectified linear unit

Source: A. Ng
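A minimal sketch of the "one neuron" idea, assuming the neuron computes a weighted input plus a bias and passes it through a ReLU. The weight and bias values below are hypothetical and only chosen to make the arithmetic easy to follow.

```python
def relu(z):
    """Rectified linear unit: pass positive values through, clip negatives to 0."""
    return max(0.0, z)


def neuron(x, weight, bias):
    """One neuron with one input: a linear function followed by ReLU."""
    return relu(weight * x + bias)


# Hypothetical parameters: the output stays at 0 until x exceeds 25.
weight, bias = 2.0, -50.0
large_input = neuron(100.0, weight, bias)   # 2 * 100 - 50 = 150
small_input = neuron(10.0, weight, bias)    # 2 * 10 - 50 = -30, clipped to 0
```

The ReLU is what keeps the prediction from going negative for small inputs, which a plain straight-line fit would not do.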

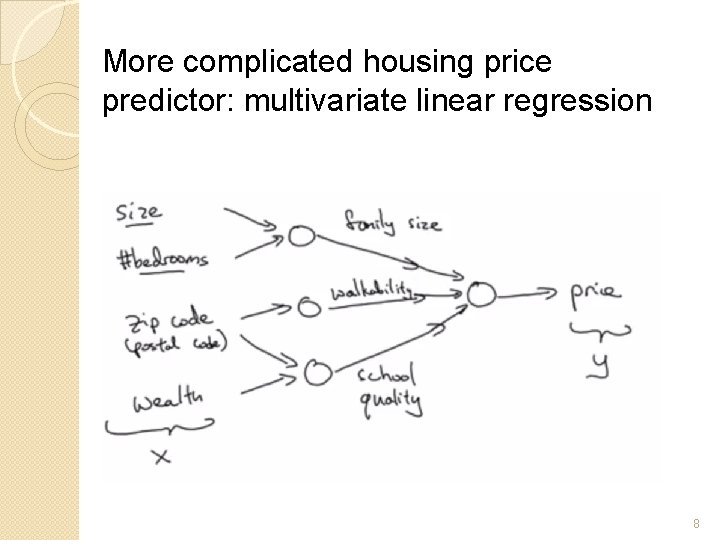

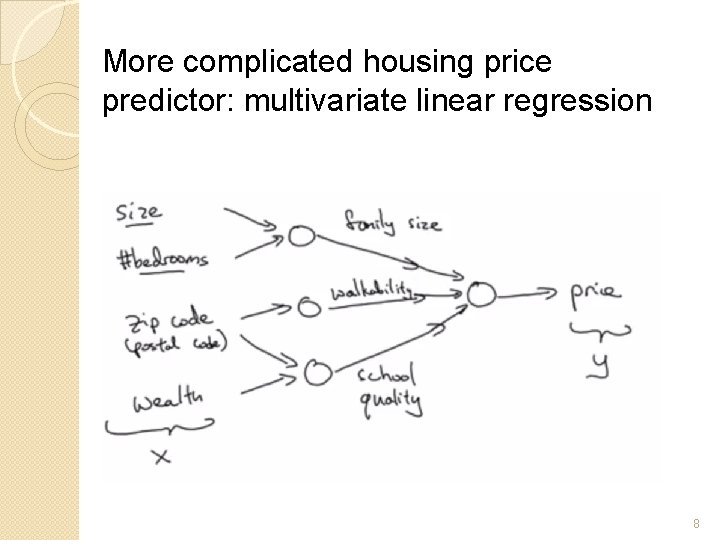

More complicated housing price predictor: multivariate linear regression
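One way to read the more complicated predictor is as several input features feeding a small layer of neurons whose outputs are combined into a price. The sketch below assumes that reading; the feature values, weights, and the two-neuron hidden layer are hypothetical and not taken from the slide.

```python
def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One layer: each row of weights defines one neuron over all inputs."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def predict_price(features, hidden_w, hidden_b, out_w, out_b):
    """Hidden ReLU layer followed by a linear output neuron."""
    hidden = dense(features, hidden_w, hidden_b, relu)
    return sum(w * h for w, h in zip(out_w, hidden)) + out_b


# Hypothetical example with two input features and two hidden neurons.
features = [2.0, 3.0]
hidden_w = [[1.0, 0.0],    # neuron 1 looks only at feature 1
            [0.0, -1.0]]   # neuron 2 looks only at feature 2 (negated)
hidden_b = [0.0, 0.0]
out_w, out_b = [10.0, 5.0], 1.0

price = predict_price(features, hidden_w, hidden_b, out_w, out_b)
# hidden = [2.0, 0.0], so price = 10 * 2.0 + 5 * 0.0 + 1.0 = 21.0
```

In a trained network the weights would be learned from data rather than written by hand.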

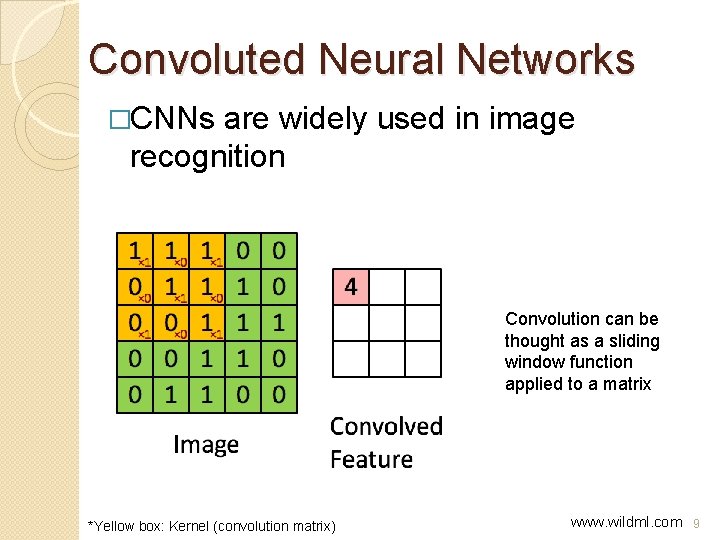

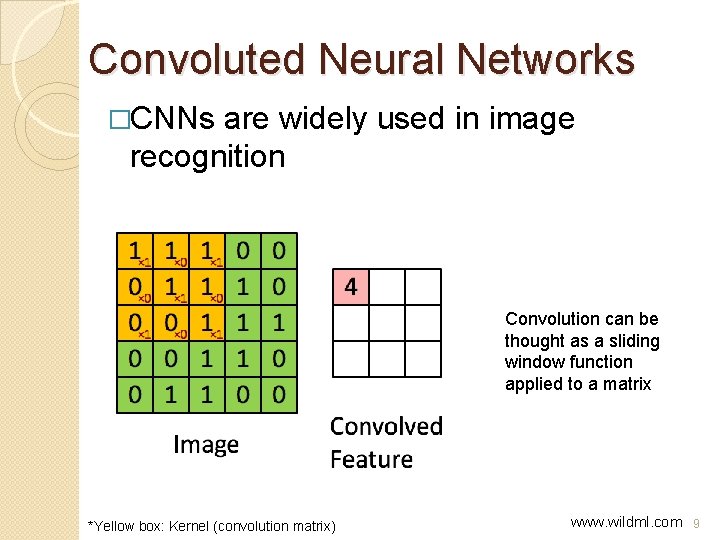

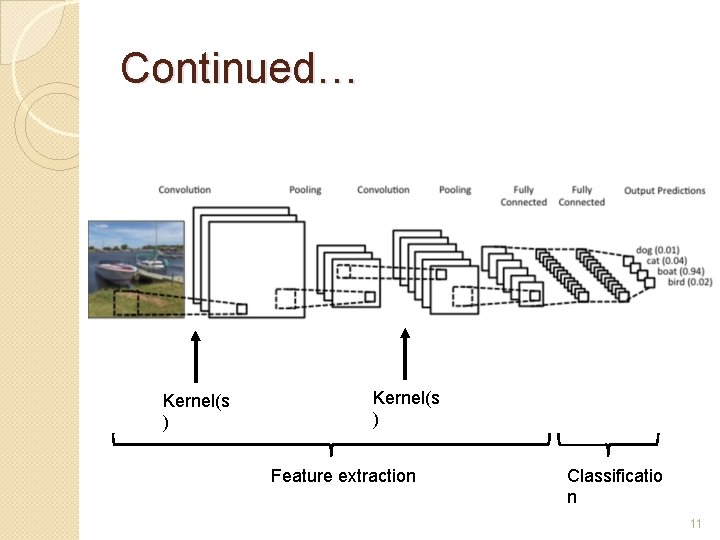

Convolutional Neural Networks

- CNNs are widely used in image recognition
- Convolution can be thought of as a sliding window function applied to a matrix

*Yellow box: kernel (convolution matrix)

Source: www.wildml.com
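The sliding window description can be sketched in a few lines of plain Python. This is an illustrative version only: it uses no padding and a stride of 1, and, as is common in CNN libraries, it does not flip the kernel. The image and kernel values are made up.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for top in range(out_h):
        row = []
        for left in range(out_w):
            total = 0
            # Multiply the window under the kernel by the kernel and add up.
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, 1]]

feature_map = convolve2d(image, kernel)
# Each output cell is top-left + bottom-right of a 2x2 window:
# [[1 + 5, 2 + 6], [4 + 8, 5 + 9]] = [[6, 8], [12, 14]]
```

A 3x3 image with a 2x2 kernel gives a 2x2 output, because the window only fits in four positions.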

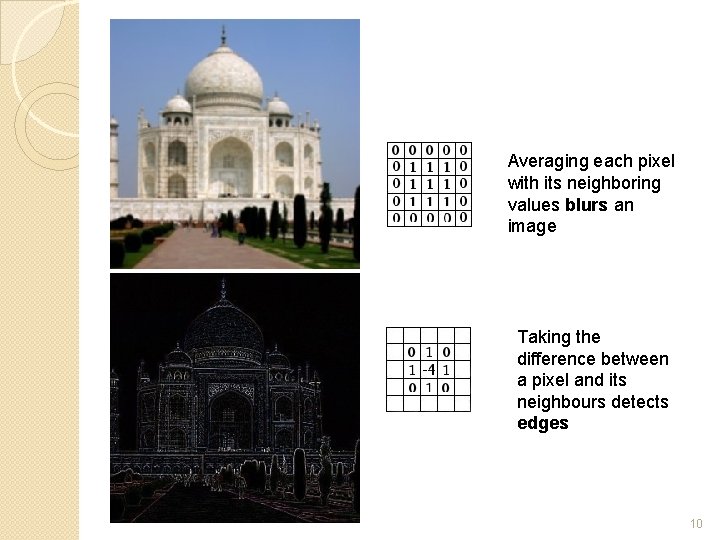

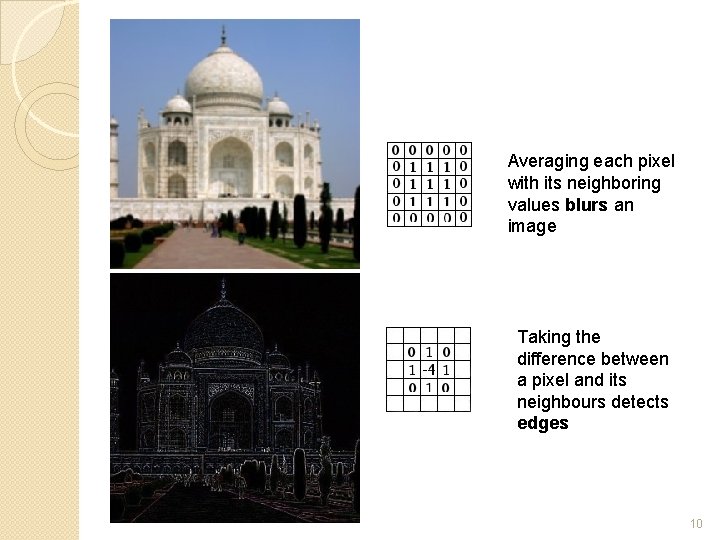

Averaging each pixel with its neighbouring values blurs an image. Taking the difference between a pixel and its neighbours detects edges.
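To make the two kernels concrete, here is a small sketch on a single row of pixels (one dimension instead of a full image, to keep it short). The pixel values and both kernels are hypothetical choices: a three-pixel average for blurring, and "twice the pixel minus its two neighbours" for edge detection.

```python
def slide(signal, kernel):
    """Apply a 1D kernel at every position where it fully fits."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


# One row of pixels with a sharp step from dark (0) to bright (10).
row = [0, 0, 0, 10, 10, 10]

blur_kernel = [1 / 3, 1 / 3, 1 / 3]   # average of a pixel and its two neighbours
edge_kernel = [-1, 2, -1]             # difference between a pixel and its neighbours

blurred = slide(row, blur_kernel)     # the step becomes a gradual ramp
edges = slide(row, edge_kernel)       # [0, -10, 10, 0]: non-zero only at the step
```

The blurred row rises in thirds instead of jumping, while the edge output is zero wherever neighbouring pixels are equal.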

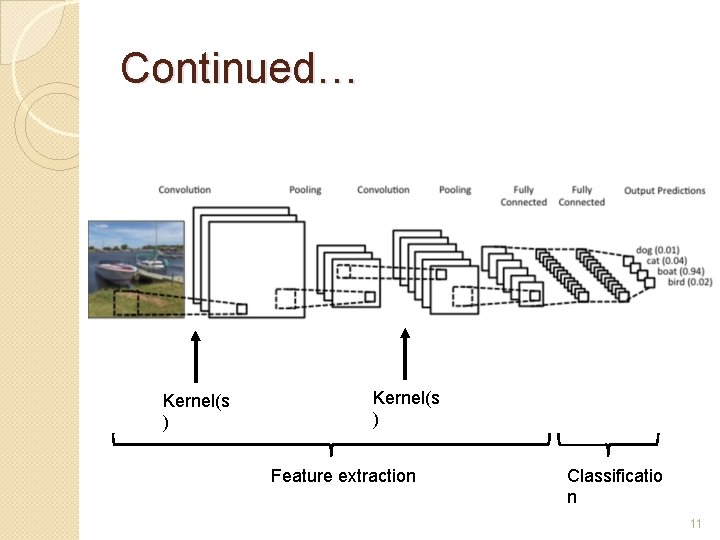

Continued…

Figure labels: kernel(s), feature extraction, classification

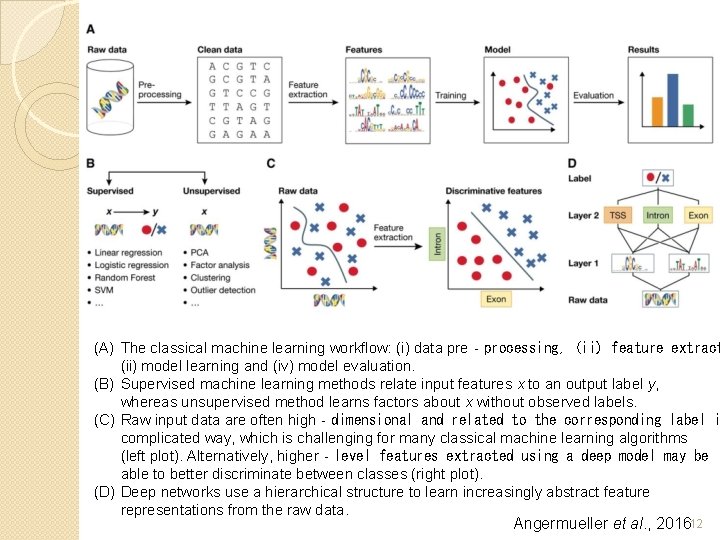

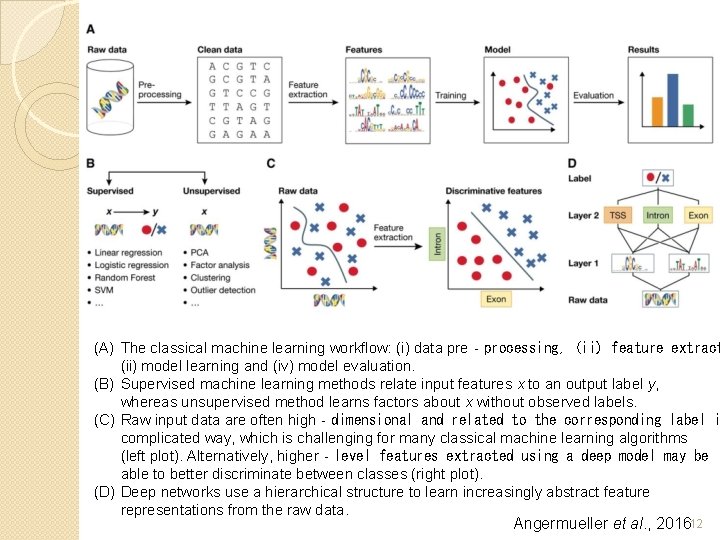

(A) The classical machine learning workflow: (i) data pre‐processing, (ii) feature extraction, (iii) model learning and (iv) model evaluation. (B) Supervised machine learning methods relate input features x to an output label y, whereas unsupervised methods learn factors about x without observed labels. (C) Raw input data are often high‐dimensional and related to the corresponding label in a complicated way, which is challenging for many classical machine learning algorithms (left plot). Alternatively, higher‐level features extracted using a deep model may be able to better discriminate between classes (right plot). (D) Deep networks use a hierarchical structure to learn increasingly abstract feature representations from the raw data.

Source: Angermueller et al., 2016

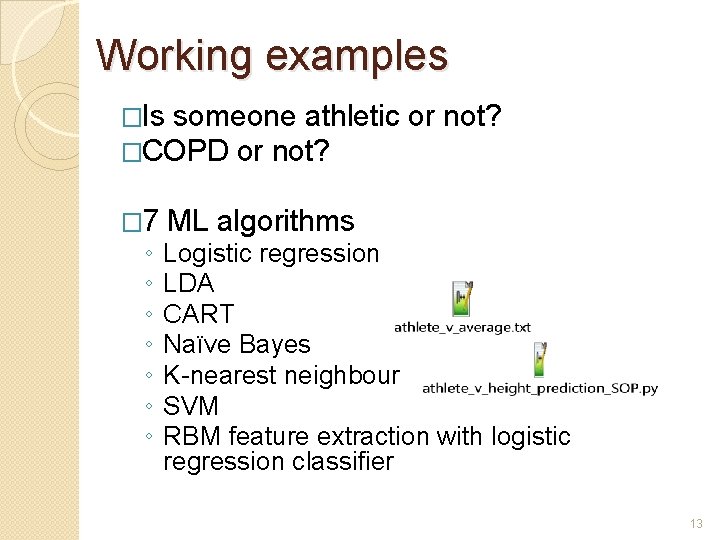

Working examples

- Is someone athletic or not?
- COPD or not?
- 7 ML algorithms
  - Logistic regression
  - LDA
  - CART
  - Naïve Bayes
  - K-nearest neighbour
  - SVM
  - RBM feature extraction with logistic regression classifier

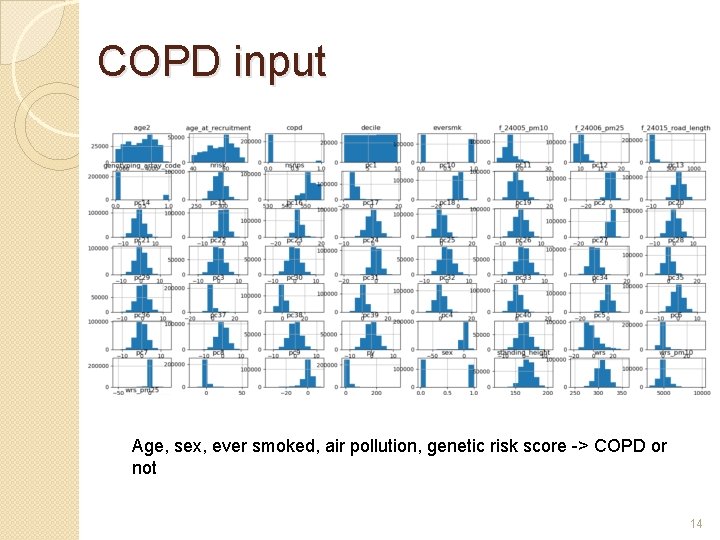

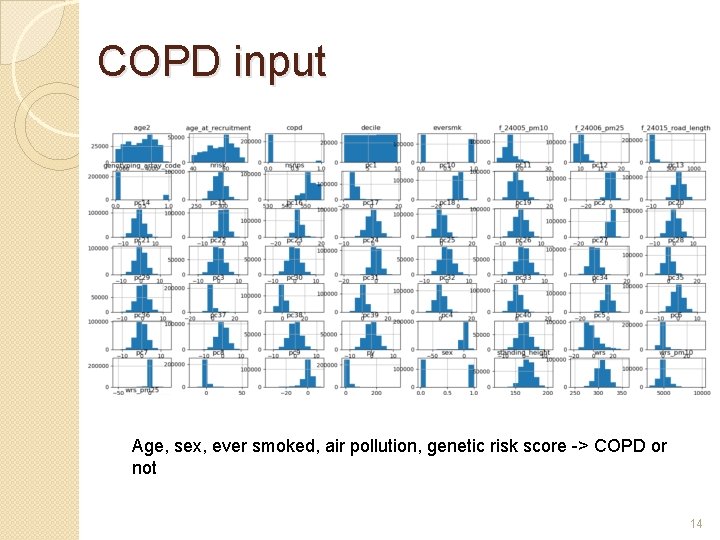

COPD input: age, sex, ever smoked, air pollution, genetic risk score -> COPD or not

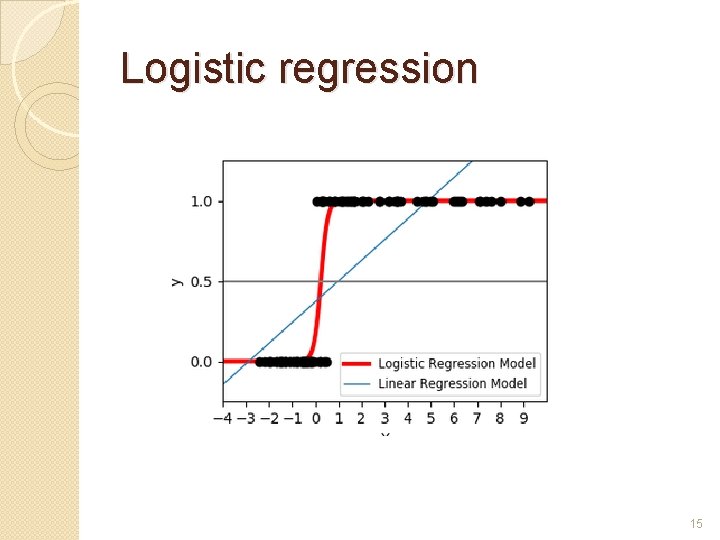

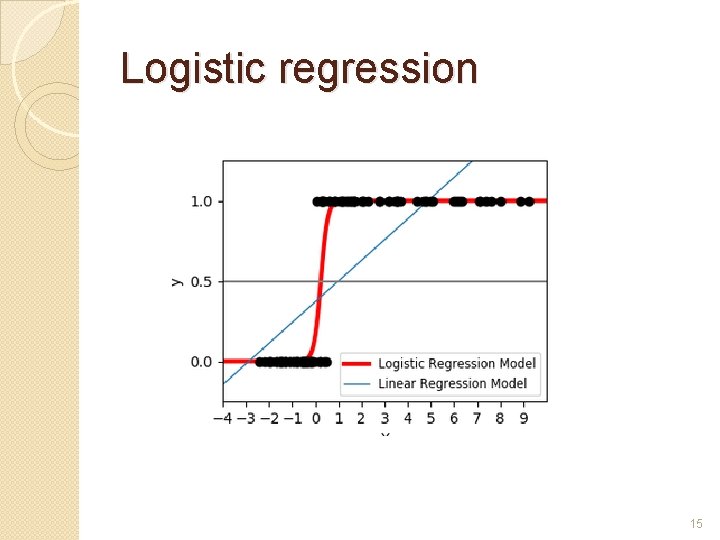

Logistic regression
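As a sketch of what a logistic regression classifier computes for the COPD example, the code below turns the five inputs into a probability with a weighted sum and a sigmoid. The weights, bias, and patient values are hypothetical placeholders, not fitted results from the slides; in practice they would be estimated from training data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def copd_probability(features, weights, bias):
    """Weighted sum of the inputs, passed through the sigmoid."""
    z = bias + sum(weights[name] * value for name, value in features.items())
    return sigmoid(z)


# Hypothetical weights, for illustration only.
weights = {
    "age": 0.03,
    "sex": 0.1,
    "ever_smoked": 1.2,
    "air_pollution": 0.4,
    "genetic_risk_score": 0.8,
}
bias = -4.0

smoker = {"age": 60, "sex": 1, "ever_smoked": 1,
          "air_pollution": 1.5, "genetic_risk_score": 0.5}
never_smoked = dict(smoker, ever_smoked=0)

p_smoker = copd_probability(smoker, weights, bias)
p_never = copd_probability(never_smoked, weights, bias)

# A common (but adjustable) rule: predict COPD when the probability exceeds 0.5.
predicted_copd = p_smoker > 0.5
```

With a positive weight on `ever_smoked`, the smoker always receives the higher probability when all other inputs are equal.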

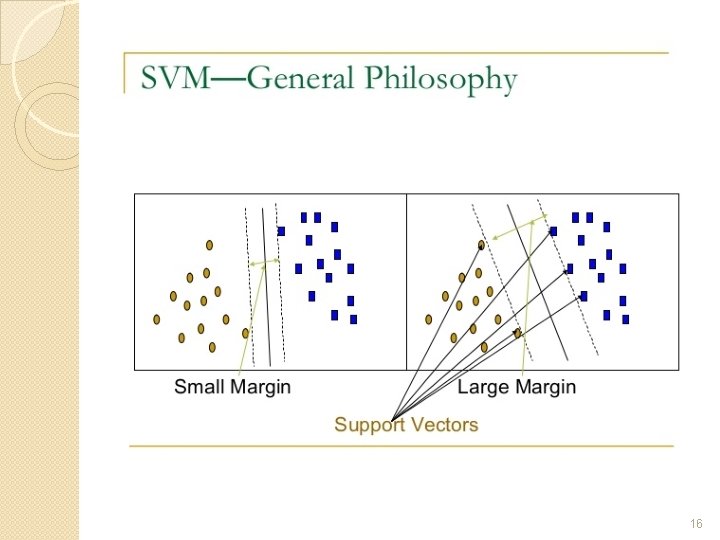

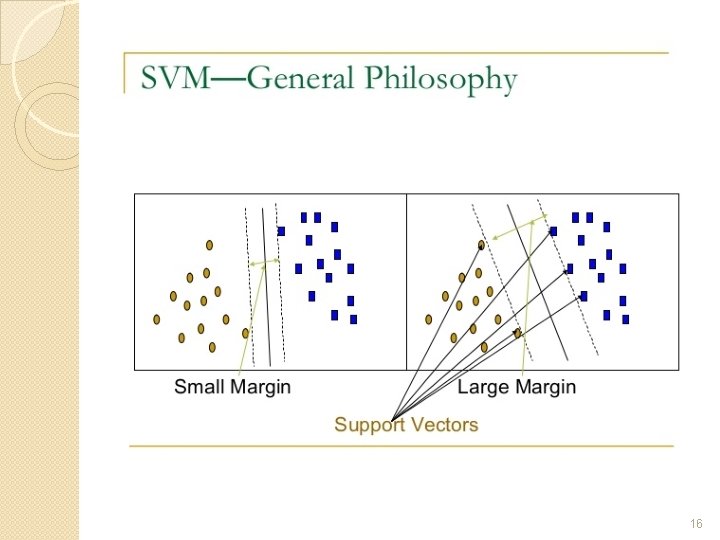

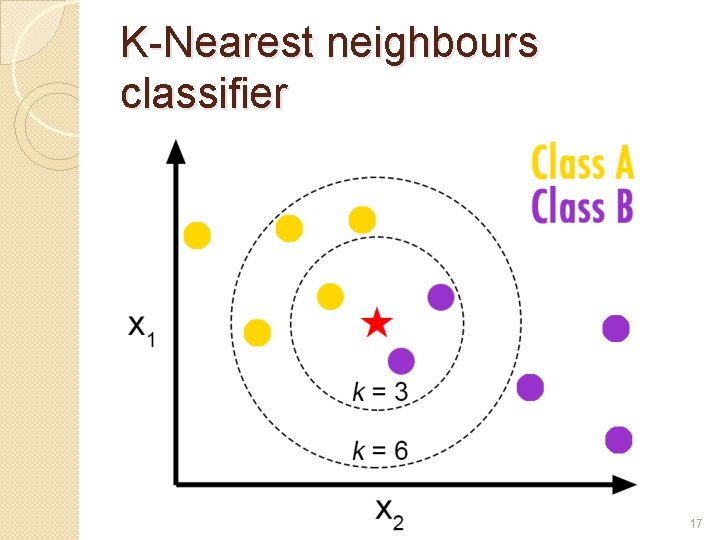

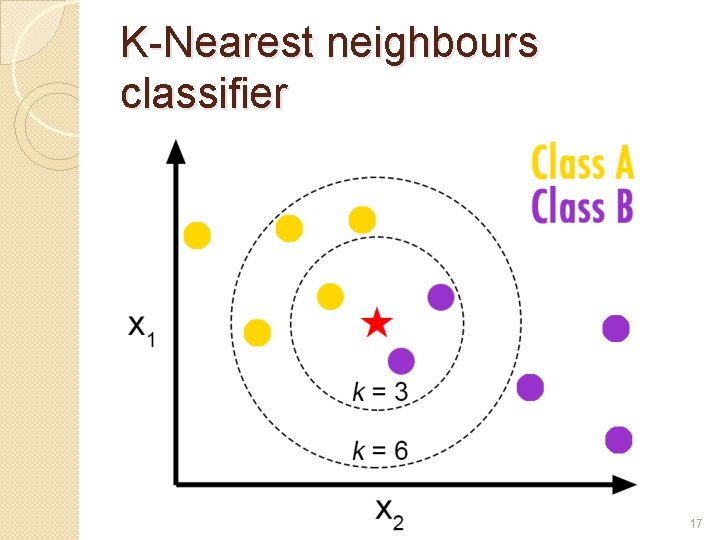

K-nearest neighbours classifier
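A minimal sketch of a k-nearest neighbours classifier, assuming Euclidean distance and a simple majority vote. The training points and labels are invented for illustration and do not come from the slides.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Label a query point by majority vote among its k closest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-feature training set with two classes.
train_points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
train_labels = ["no", "no", "no", "yes", "yes", "yes"]

near_first_group = knn_predict(train_points, train_labels, (2, 2))
near_second_group = knn_predict(train_points, train_labels, (8.5, 8.5))
```

There is no training step beyond storing the data; all the work happens at prediction time, and the choice of `k` controls how smooth the decision boundary is.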

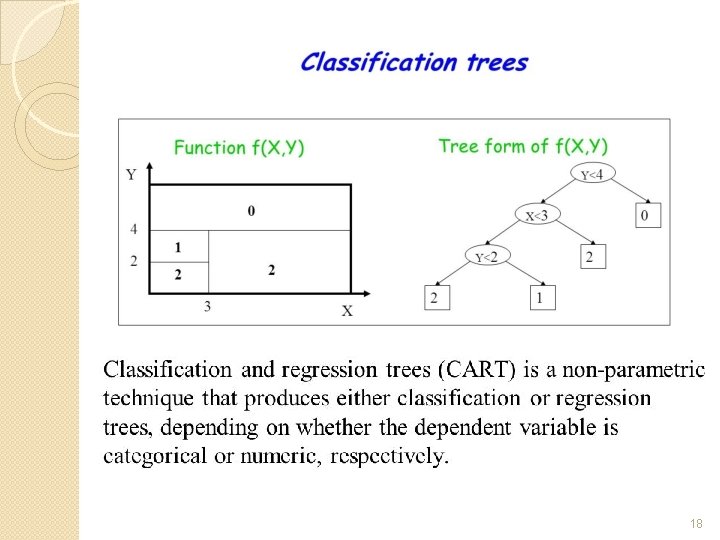

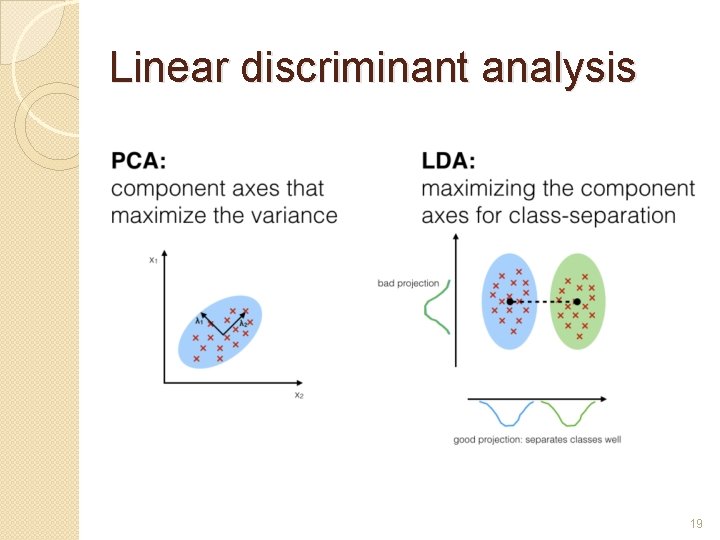

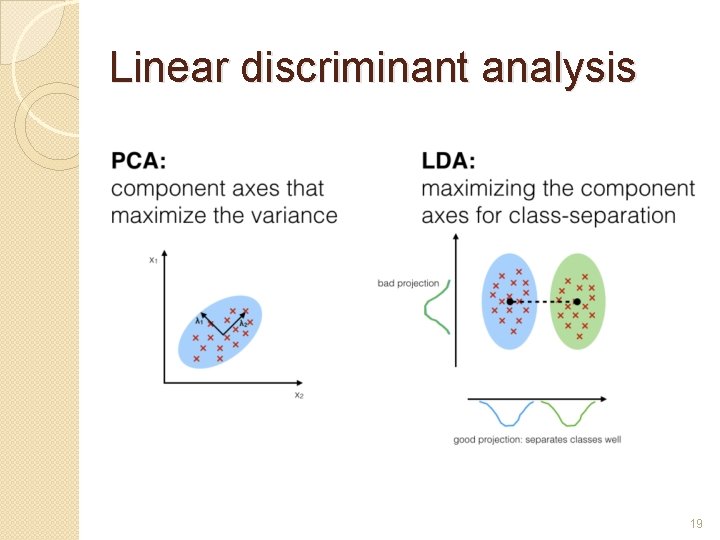

Linear discriminant analysis
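For intuition, linear discriminant analysis can be sketched with a single feature: each class gets its own mean, all classes share one pooled variance, and a new value goes to the class with the highest discriminant score. This is a simplified one-dimensional version with made-up data, assuming the pooled variance is non-zero; it is not the analysis shown in the figures.

```python
import math


def fit_lda_1d(values, labels):
    """Estimate per-class means and priors plus one shared (pooled) variance."""
    classes = sorted(set(labels))
    n = len(values)
    means, priors = {}, {}
    pooled_ss = 0.0
    for c in classes:
        xs = [v for v, label in zip(values, labels) if label == c]
        means[c] = sum(xs) / len(xs)
        priors[c] = len(xs) / n
        pooled_ss += sum((x - means[c]) ** 2 for x in xs)
    variance = pooled_ss / (n - len(classes))
    return means, priors, variance


def predict_lda_1d(model, x):
    """Pick the class with the largest linear discriminant score."""
    means, priors, variance = model

    def score(c):
        return (x * means[c] / variance
                - means[c] ** 2 / (2 * variance)
                + math.log(priors[c]))

    return max(means, key=score)


# Hypothetical data: class 0 centred on 2, class 1 centred on 8.
values = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
labels = [0, 0, 0, 1, 1, 1]

model = fit_lda_1d(values, labels)
below_midpoint = predict_lda_1d(model, 4.0)
above_midpoint = predict_lda_1d(model, 6.0)
```

With equal class sizes the decision boundary sits halfway between the two class means, here at 5.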

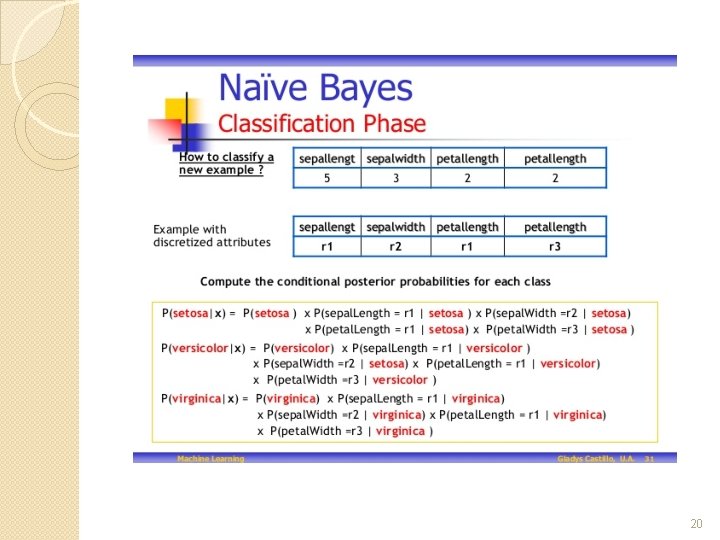

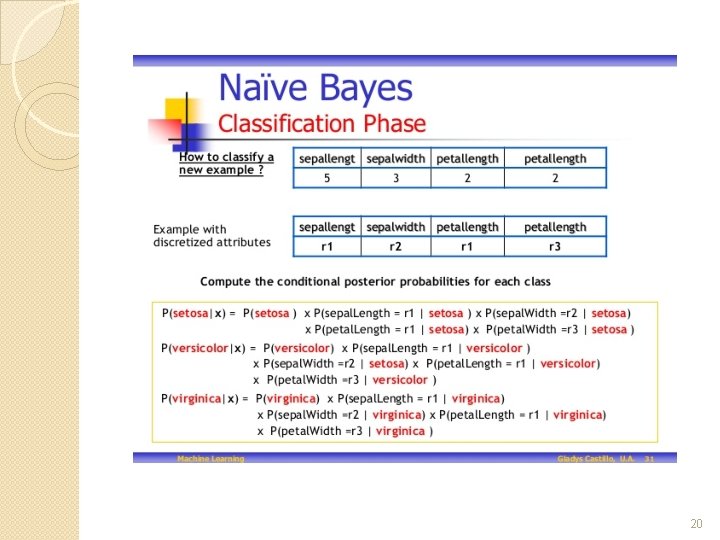

Appendix

Slide by: D. Evans, California Pacific Medical