Lynx: A Learning Linux Prefetching Mechanism For SSD Performance Model

- Slides: 16

Lynx: A Learning Linux Prefetching Mechanism For SSD Performance Model
Arezki Laga∗†, Jalil Boukhobza†, Michel Koskas∗, Frank Singhoff†
∗ Kode Software, Paris, France; † Univ. Bretagne Occidentale, UMR 6285, Lab-STICC, F-29200 Brest, France
2016 5th Non-Volatile Memory Systems and Applications Symposium

Outline
• Introduction
• Background
• Design
• Evaluation
• Conclusion

Introduction

Introduction(1/2)
• The Linux kernel includes a prefetching mechanism called Read-ahead, which is integrated at the Virtual File System (VFS) level.
• Read-ahead mainly assumes that most I/O workloads exhibit high spatial locality.
• Its objective is to both hide and reduce I/O latencies.

Introduction(2/2)
• Many studies show that I/O workloads increasingly exhibit random access patterns.
• In this work, we propose Lynx, a prefetching mechanism fully integrated into the Linux kernel. It relies on an SSD performance model to prefetch data sequentially and/or randomly, according to application I/O patterns.

Background

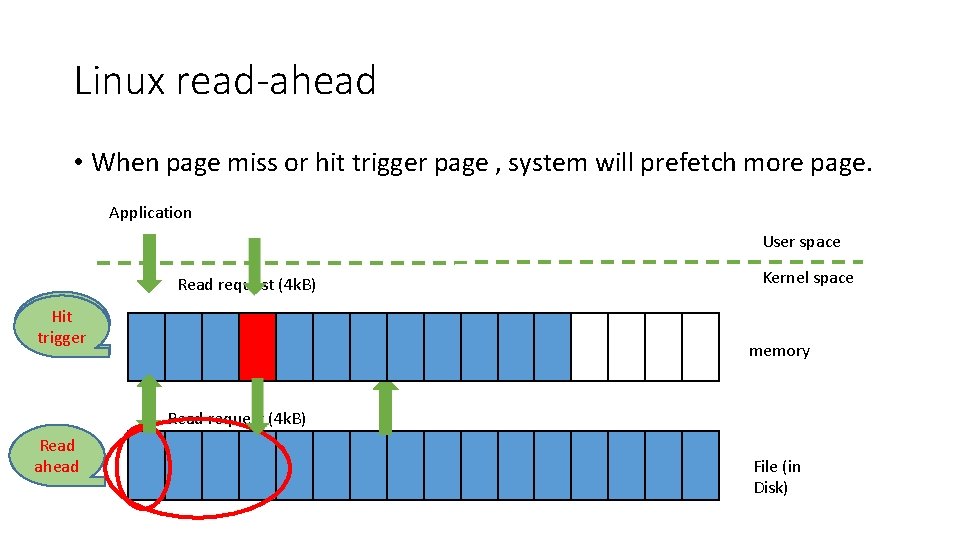

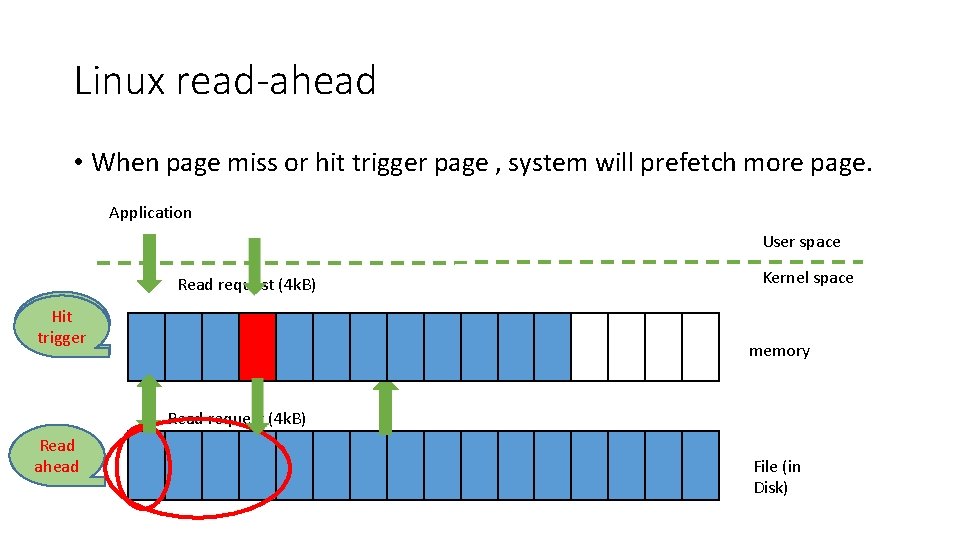

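Linux read-ahead
• When a read request causes a page miss, or hits a page marked as a read-ahead trigger, the kernel prefetches additional pages.
[Figure: the application in user space issues 4 KB read requests; in kernel-space memory each request results in a page hit, a miss, or a hit on a trigger page; misses and triggers start read-ahead from the file on disk.]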
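The trigger-based behaviour above can be illustrated with a toy simulator. This is a simplified sketch loosely modelled on on-demand read-ahead, not the kernel's code. `SimpleReadahead` and `simulate` are hypothetical names, the cache is never evicted, and the window starts at 4 pages and doubles up to 32 pages (32 × 4 KB = 128 KB, the usual default read-ahead size). The real kernel may grow small windows faster. The point is to show why read-ahead pays off under high spatial locality and gives almost nothing on a random trace.

```python
import random


class SimpleReadahead:
    """Toy model of trigger-based sequential read-ahead (not kernel code)."""

    def __init__(self, init_pages=4, max_pages=32):
        self.init_pages = init_pages  # window size after a miss (assumed)
        self.max_pages = max_pages    # cap on the window (assumed: 32 x 4 KB)
        self.cache = set()            # pages in the page cache (never evicted here)
        self.window = 0               # size of the most recent window, in pages
        self.next_start = 0           # first page after the most recent window
        self.trigger = None           # page whose hit starts the next read-ahead
        self.hits = 0
        self.misses = 0

    def _fetch(self, start, size):
        # Bring pages [start, start + size) into the cache.
        self.cache.update(range(start, start + size))
        self.next_start = start + size

    def read(self, page):
        if page in self.cache:
            self.hits += 1
            if page == self.trigger:
                # Hit on the trigger page: double the window and read further ahead;
                # the first page of the new window becomes the next trigger.
                self.window = min(2 * self.window, self.max_pages)
                start = self.next_start
                self._fetch(start, self.window)
                self.trigger = start
        else:
            self.misses += 1
            # Miss: read the missing page plus the rest of an initial window;
            # the page right after it becomes the trigger.
            self.window = self.init_pages
            self._fetch(page, self.window)
            self.trigger = page + 1


def simulate(trace, **kwargs):
    """Replay a page-access trace and return (hits, misses)."""
    ra = SimpleReadahead(**kwargs)
    for page in trace:
        ra.read(page)
    return ra.hits, ra.misses


if __name__ == "__main__":
    print(simulate(range(100)))  # sequential trace: (99, 1)
    rng = random.Random(0)
    random_trace = [rng.randrange(1_000_000) for _ in range(200)]
    print(simulate(random_trace))  # random trace: nearly every read misses
```

On the sequential trace only the first read misses. On the random trace the prefetched neighbours are almost never used, which is the gap Lynx targets.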

Design

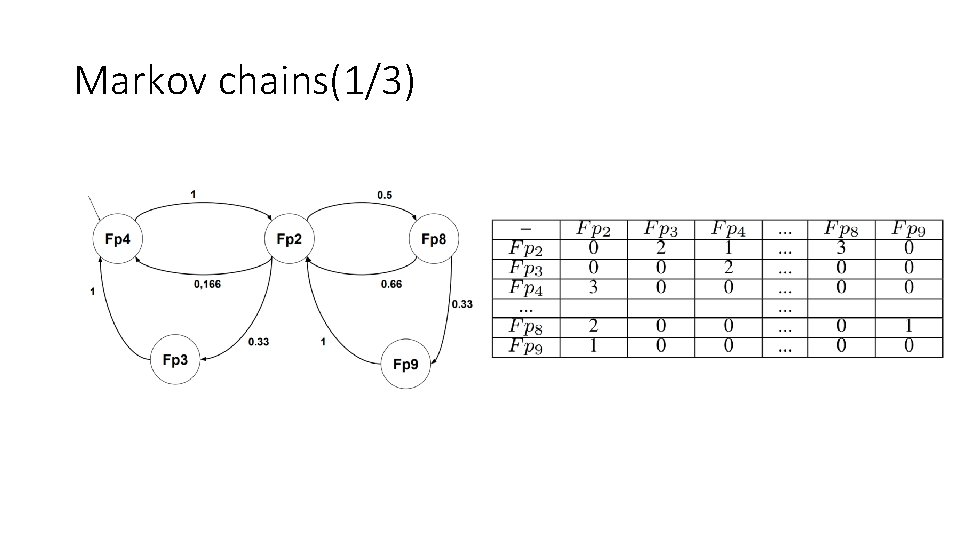

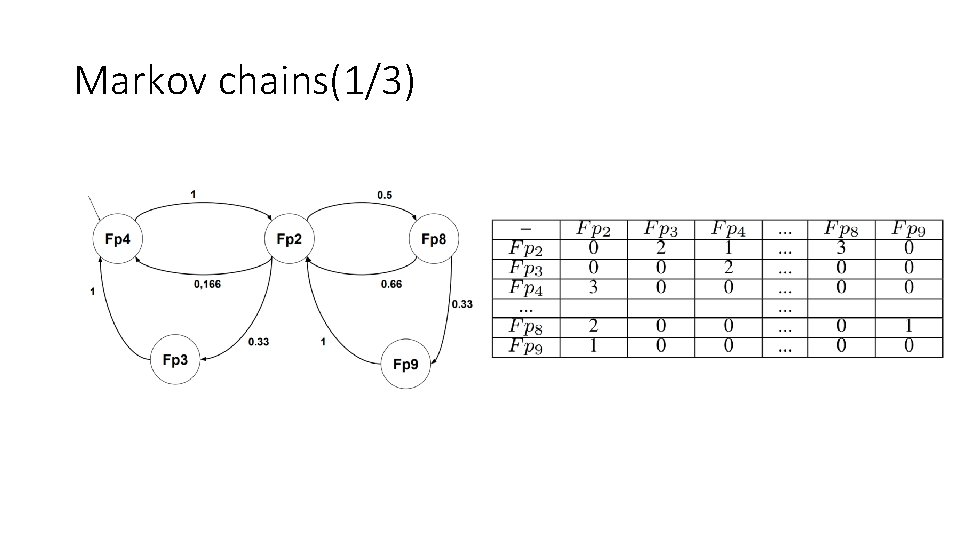

Markov chains(1/3)

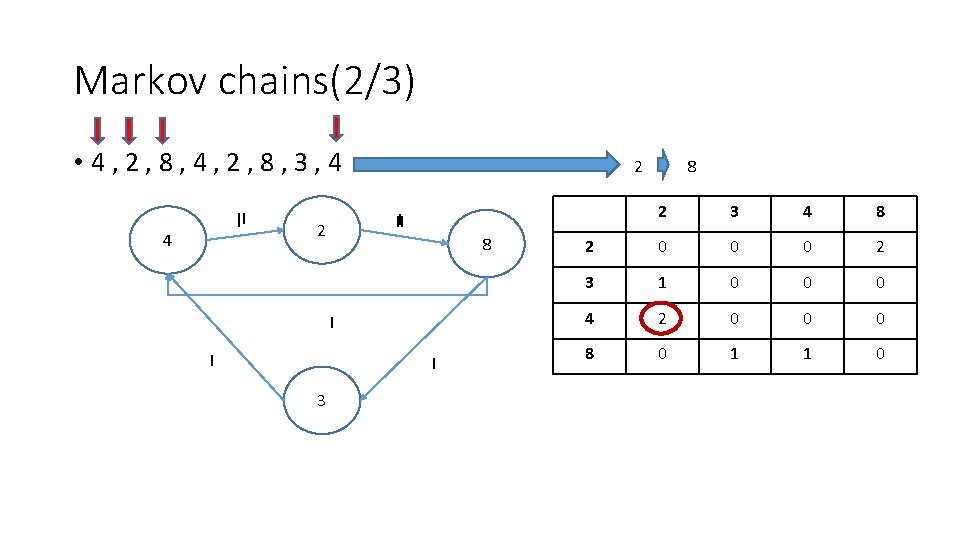

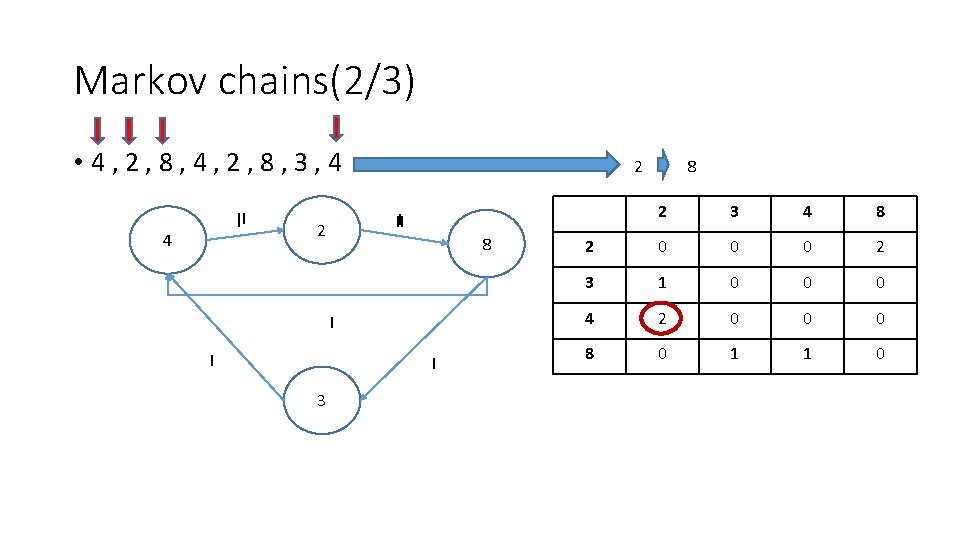

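Markov chains(2/3)
• Example file-page access sequence: 4, 2, 8, 3, 4
[Figure panels I, II and III: the Markov chain built over file pages 2, 3, 4 and 8]
• Transition-count matrix over pages 2, 3, 4 and 8:

| | 2 | 3 | 4 | 8 |
|---|---|---|---|---|
| 2 | 0 | 0 | 0 | 2 |
| 3 | 1 | 0 | 0 | 0 |
| 4 | 2 | 0 | 0 | 0 |
| 8 | 0 | 1 | 1 | 0 |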
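A minimal sketch of how such a transition-count matrix can be learned from a page-access trace. The function names are hypothetical. The eight-access trace `4, 2, 8, 3, 2, 8, 4, 2` is our own assumption, chosen because it reproduces exactly the counts on this slide when each row is read as the current page and each column as the next page.

```python
from collections import defaultdict


def build_chain(trace):
    """Count transitions current_page -> next_page in a page-access trace."""
    counts = defaultdict(lambda: defaultdict(int))
    for cur, nxt in zip(trace, trace[1:]):
        counts[cur][nxt] += 1
    # Convert to plain dicts so later lookups never create empty entries.
    return {cur: dict(succ) for cur, succ in counts.items()}


def transition_probabilities(counts):
    """Normalise each row of counts into next-page probabilities."""
    return {
        cur: {nxt: c / sum(succ.values()) for nxt, c in succ.items()}
        for cur, succ in counts.items()
    }


trace = [4, 2, 8, 3, 2, 8, 4, 2]  # hypothetical trace (assumption, see lead-in)
counts = build_chain(trace)
pages = sorted(set(trace))
for row in pages:
    print(row, [counts.get(row, {}).get(col, 0) for col in pages])
# 2 [0, 0, 0, 2]
# 3 [1, 0, 0, 0]
# 4 [2, 0, 0, 0]
# 8 [0, 1, 1, 0]
print(transition_probabilities(counts)[8])  # {3: 0.5, 4: 0.5}
```

Each node is a file page and each weighted edge a observed transition. Normalising a row gives the Markov transition probabilities out of that page.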

Design(3/3)
• When to prefetch: as soon as the node representing the currently accessed file page has an outgoing edge.
• How much to prefetch: this parameter is configurable in Lynx; in the performance evaluation it is set to one file page, since the SSD access latency is small enough.
• Lynx consists of four mechanisms:
  1. Learning mechanism
  2. Prediction mechanism
  3. Controlling the prediction accuracy
  4. User space control mechanism
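The "when" and "how much" rules above can be combined with learning in a single loop. This is a hedged sketch, not Lynx's kernel implementation. `MarkovPrefetcher` and its parameters are hypothetical. Picking the successors with the highest transition count is our assumption, and so is the accuracy rule: stop prefetching once fewer than half of the prefetched pages were used, checked after a warm-up of ten predictions. The slide only names accuracy control as a mechanism, not its policy.

```python
from collections import defaultdict


class MarkovPrefetcher:
    """Hypothetical Lynx-style predictor: learn edges, prefetch likely successors."""

    def __init__(self, prefetch_pages=1, min_accuracy=0.5, warmup=10):
        self.prefetch_pages = prefetch_pages  # "how much to prefetch" (1 page here)
        self.min_accuracy = min_accuracy      # assumed threshold
        self.warmup = warmup                  # predictions before accuracy is checked
        self.edges = defaultdict(lambda: defaultdict(int))  # page -> {next: count}
        self.prev = None                      # previously accessed page
        self.pending = set()                  # prefetched pages not yet accessed
        self.issued = 0                       # total pages prefetched
        self.useful = 0                       # prefetched pages later accessed
        self.enabled = True

    def access(self, page):
        """Record an access to `page` and return the list of pages to prefetch."""
        # 1. Learning: add or strengthen the edge prev -> page.
        if self.prev is not None:
            self.edges[self.prev][page] += 1
        self.prev = page

        # 3. Accuracy control: count useful prefetches, disable if too inaccurate.
        if page in self.pending:
            self.pending.discard(page)
            self.useful += 1
        if self.issued >= self.warmup and self.useful / self.issued < self.min_accuracy:
            self.enabled = False

        # 2. Prediction: only when the current node has an outgoing edge.
        successors = self.edges.get(page)  # .get does not create an empty node
        if not self.enabled or not successors:
            return []
        ranked = sorted(successors, key=successors.get, reverse=True)
        picks = ranked[: self.prefetch_pages]
        self.pending.update(picks)
        self.issued += len(picks)
        return picks


p = MarkovPrefetcher(prefetch_pages=1)
for page in [4, 2, 8, 3] * 5:  # a repeating, non-sequential pattern
    p.access(page)
print(p.issued, p.useful, p.enabled)  # 16 15 True
```

Nothing is prefetched during the first pass, because no node has an outgoing edge yet. From the second pass on every prediction is correct, even though the pattern is not sequential.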

Evaluation

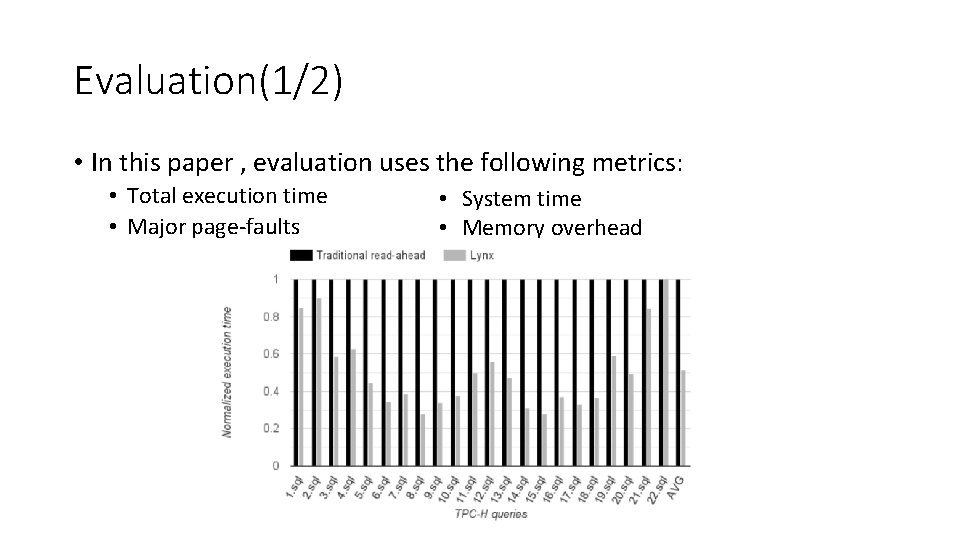

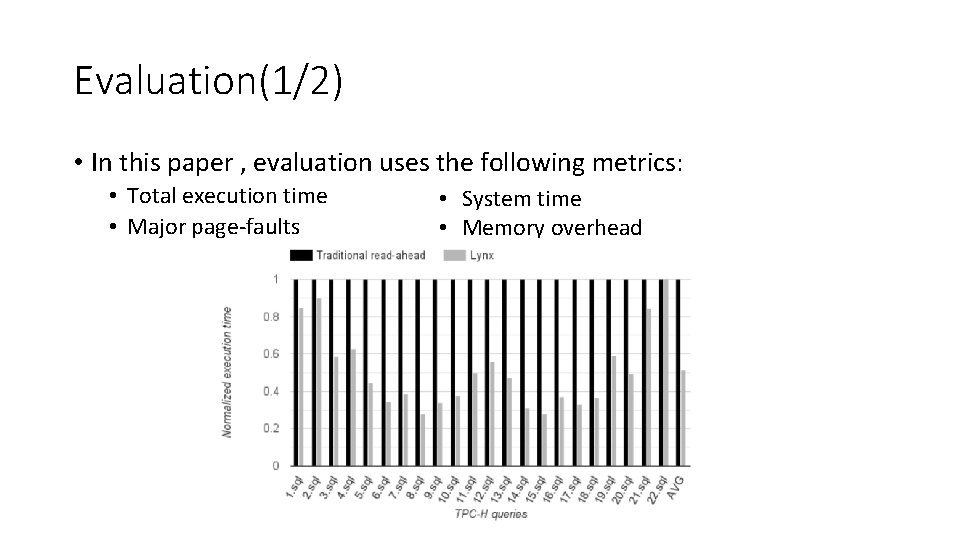

Evaluation(1/2)
• The evaluation uses the following metrics:
  • Total execution time
  • Major page faults
  • System time
  • Memory overhead
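The first three metrics can be collected from user space for a given workload. The sketch below uses Python's `resource.getrusage` (Unix only) and is not the paper's measurement harness. `measure` and `touch_mmapped_file` are hypothetical helpers. We assume an mmap-based workload because data fetched with plain `read()` calls is brought in by the kernel without the process taking page faults, so major faults would stay near zero. Memory overhead of the in-kernel state machine cannot be observed this way.

```python
import mmap
import resource
import time


def measure(workload, *args):
    """Run workload(*args); report wall time, major page faults and system time.

    getrusage(RUSAGE_SELF) covers the whole process during the call,
    so the counters include any other activity of the process.
    """
    before = resource.getrusage(resource.RUSAGE_SELF)
    start = time.perf_counter()
    workload(*args)
    elapsed = time.perf_counter() - start
    after = resource.getrusage(resource.RUSAGE_SELF)
    return {
        "total_time_s": elapsed,
        "major_page_faults": after.ru_majflt - before.ru_majflt,
        "system_time_s": after.ru_stime - before.ru_stime,
    }


def touch_mmapped_file(path, page_size=4096):
    """Map a non-empty file and touch one byte per 4 KB page (hypothetical workload)."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
        total = 0
        for offset in range(0, len(m), page_size):
            total += m[offset]  # first touch of an uncached page is a major fault
        return total


# Usage (path is a placeholder): print(measure(touch_mmapped_file, "/path/to/data"))
```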

Evaluation(2/2)
• Memory overhead: the total main memory allocated to build the state machine was measured at 94 MB, which is less than 1% of the file data size (10 GB).
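As a quick check of the "less than 1%" figure, assuming binary units (1 GB = 1024 MB):

```latex
\frac{94\ \text{MB}}{10\ \text{GB}}
  = \frac{94}{10 \times 1024}
  \approx 0.0092 = 0.92\%
```

With decimal units (1 GB = 1000 MB) the ratio is 94/10000 = 0.94%, also below 1%.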

Conclusion

Conclusion(1/1)
• This paper describes Lynx, a new prefetching mechanism that complements Linux read-ahead.
• Lynx learns I/O patterns and represents them as a Markov chain.
• Limitations of Lynx:
  • When two applications access the same file in an interleaved way, only one pattern is prefetched.
  • The size of the metadata.
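The interleaving limitation can be illustrated with a single per-file chain. This is a minimal sketch assuming both applications feed the same chain. The page numbers and helper name are hypothetical. The chain records one merged sequence, and none of either application's own transitions appear in it.

```python
def transitions(trace):
    """Set of (current_page, next_page) edges observed in a trace."""
    return {(a, b) for a, b in zip(trace, trace[1:])}


app_a = [0, 1, 2, 3]      # hypothetical pattern of application A
app_b = [10, 20, 30, 40]  # hypothetical pattern of application B
# Both applications read the same file, alternating one page at a time.
interleaved = [page for pair in zip(app_a, app_b) for page in pair]
learned = transitions(interleaved)

print(interleaved)                  # [0, 10, 1, 20, 2, 30, 3, 40]
print(transitions(app_a) & learned)  # set(): A's own edges were never learned
```

After page 0 the chain predicts page 10 (B's next page), not page 1. The learned pattern is therefore useful only if the exact same interleaving repeats.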