LSTM Long Short Term Memory Image Processing Seminar

- Slides: 55

LSTM Long Short Term Memory Image Processing Seminar Roy Franco IDC 2018

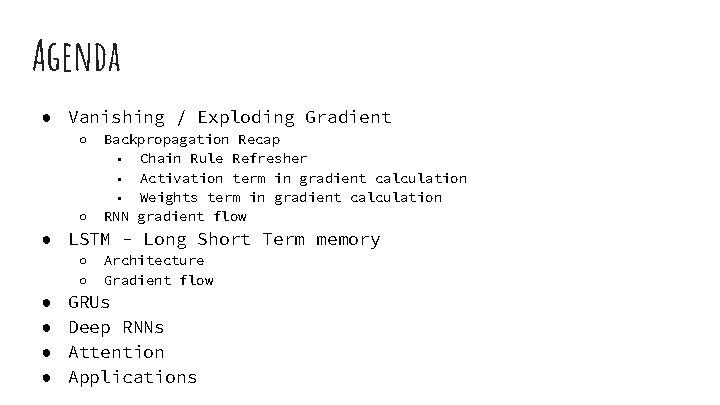

Agenda ● Vanishing / Exploding Gradient ○ ○ Backpropagation Recap ■ Chain Rule Refresher ■ Activation term in gradient calculation ■ Weights term in gradient calculation RNN gradient flow ● LSTM - Long Short Term memory ○ ○ ● ● Architecture Gradient flow GRUs Deep RNNs Attention Applications

Vanishing/Exploding Gradient

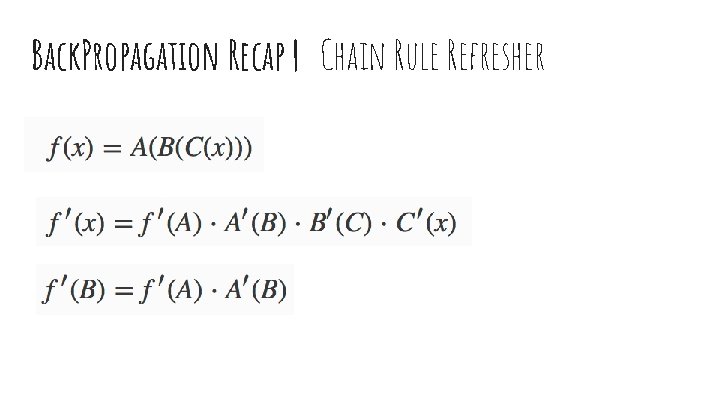

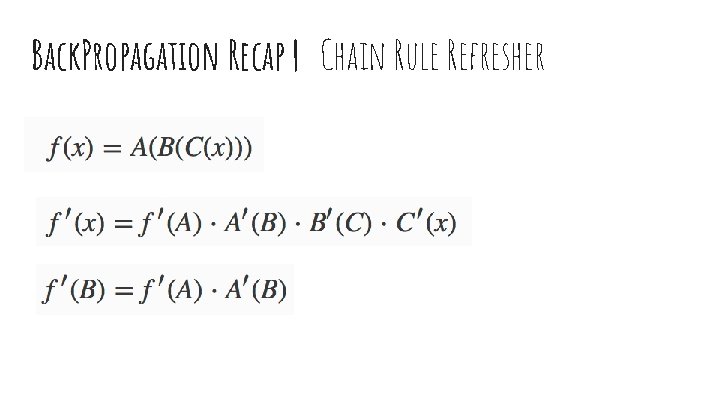

Back. Propagation Recap | Chain Rule Refresher

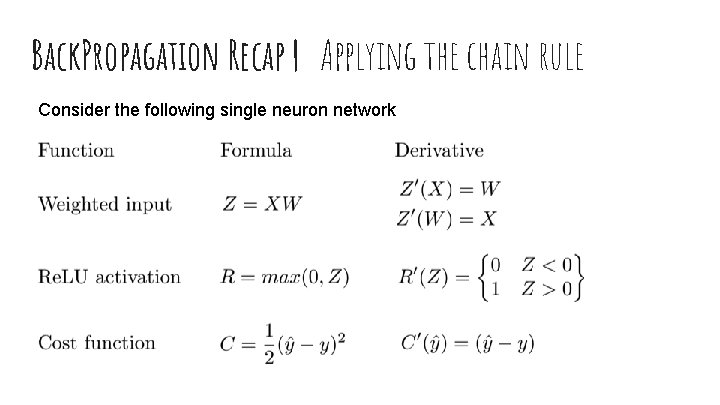

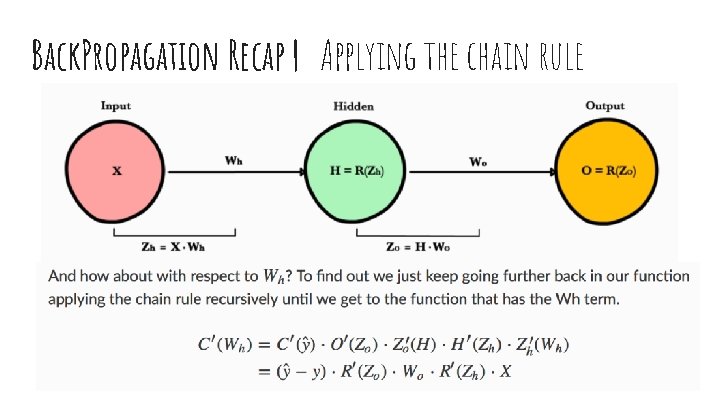

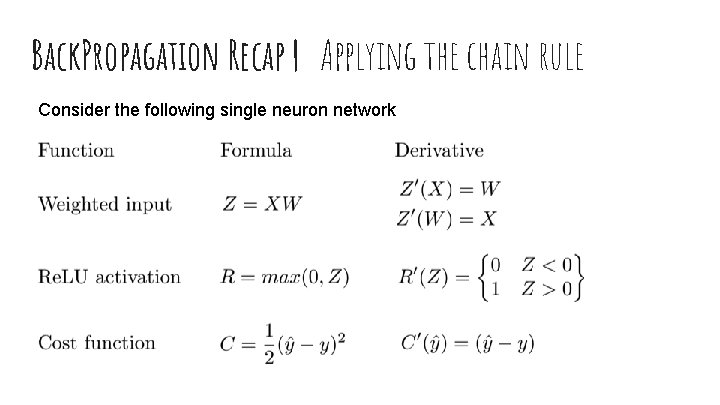

Back. Propagation Recap | Applying the chain rule Consider the following single neuron network

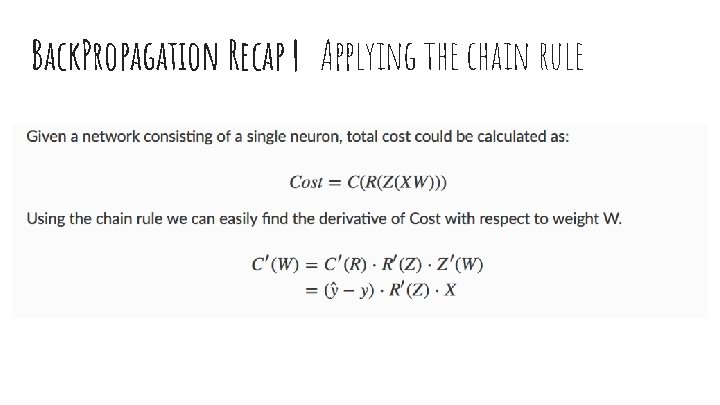

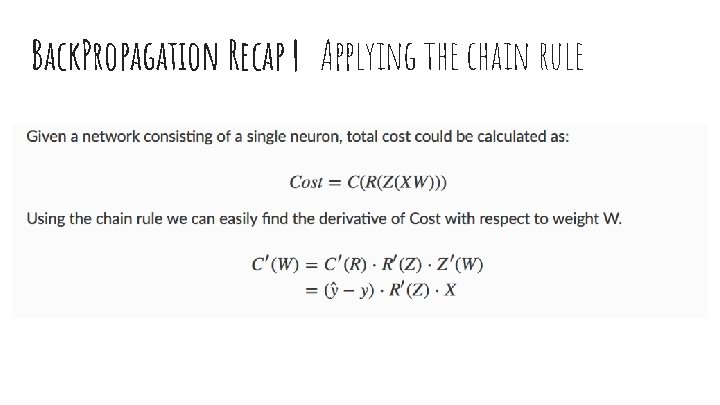

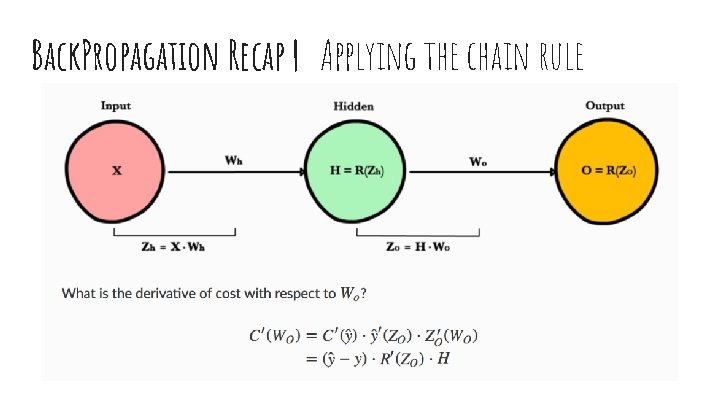

Back. Propagation Recap | Applying the chain rule

Back. Propagation Recap | Applying the chain rule

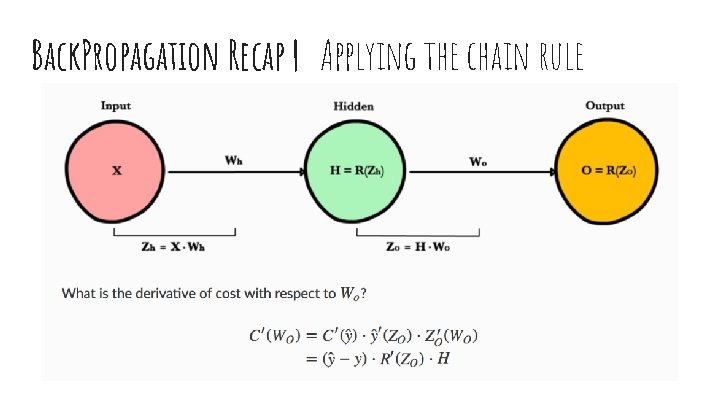

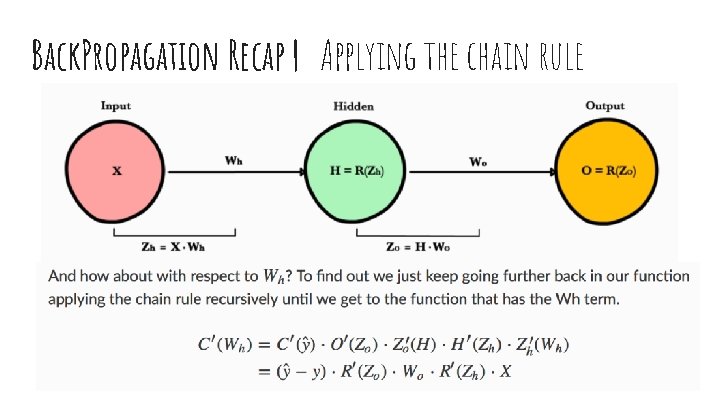

Back. Propagation Recap | Applying the chain rule

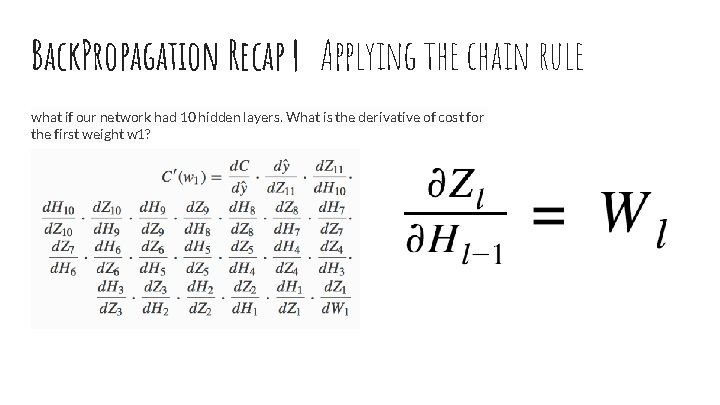

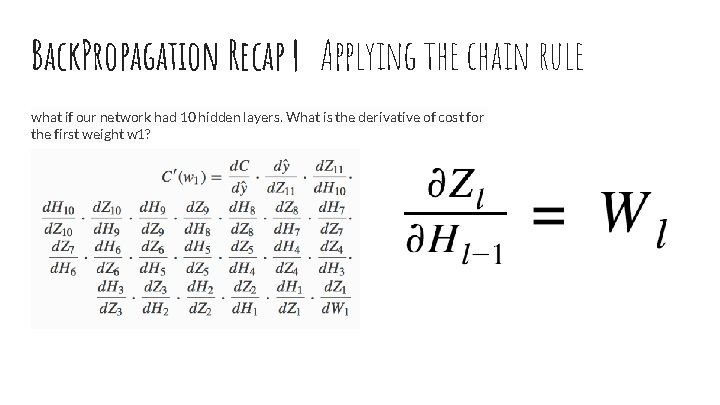

Back. Propagation Recap | Applying the chain rule what if our network had 10 hidden layers. What is the derivative of cost for the first weight w 1?

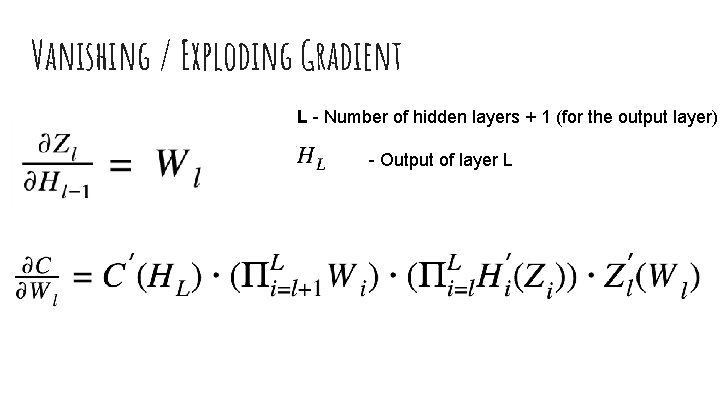

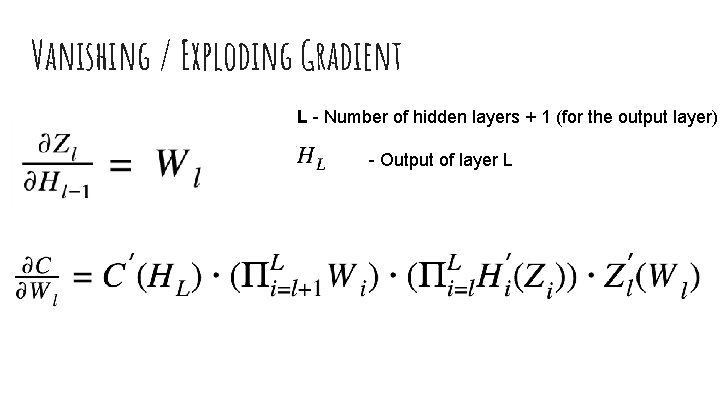

Vanishing / Exploding Gradient L - Number of hidden layers + 1 (for the output layer) - Output of layer L

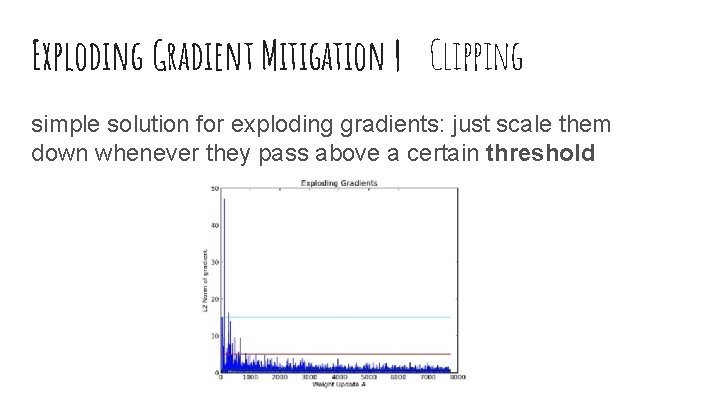

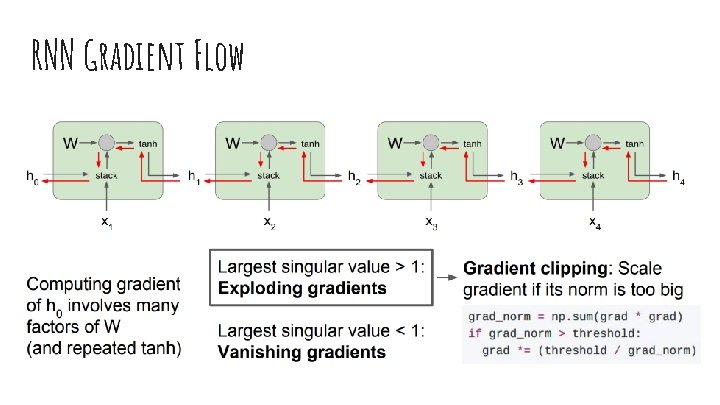

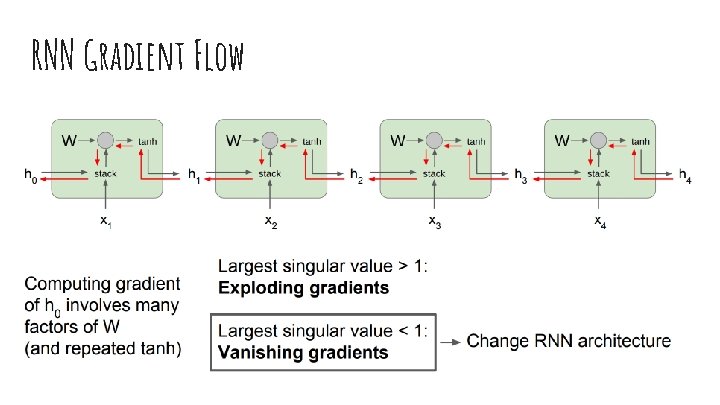

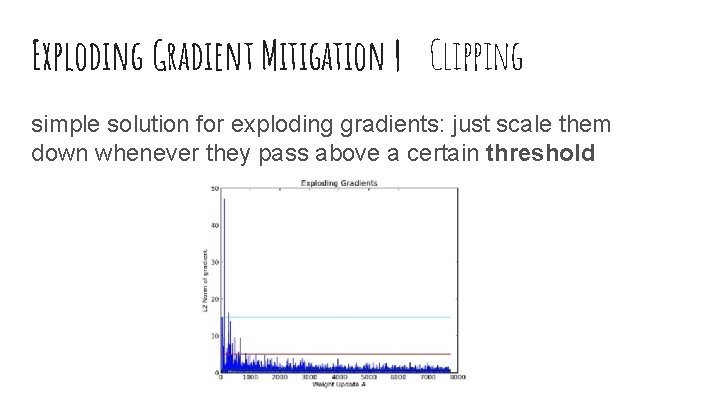

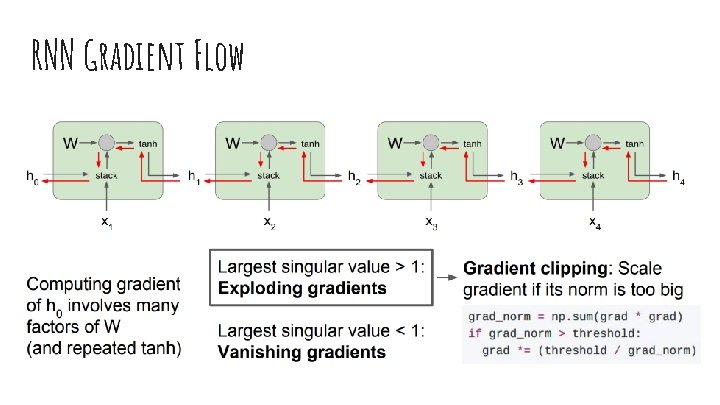

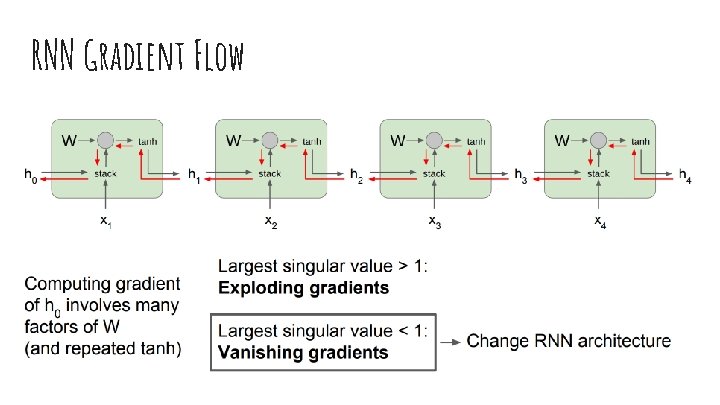

Exploding Gradient Mitigation | Clipping simple solution for exploding gradients: just scale them down whenever they pass above a certain threshold

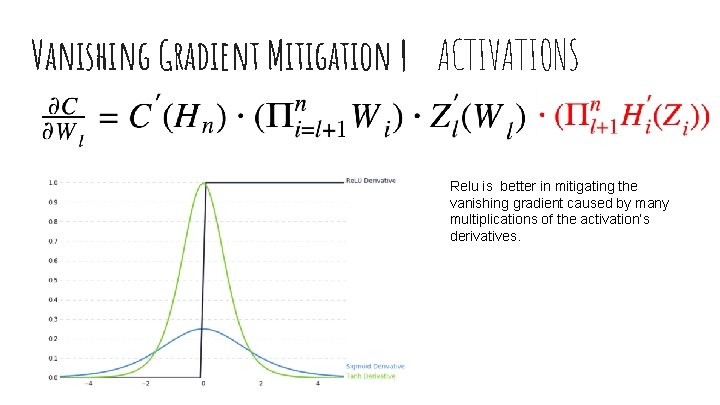

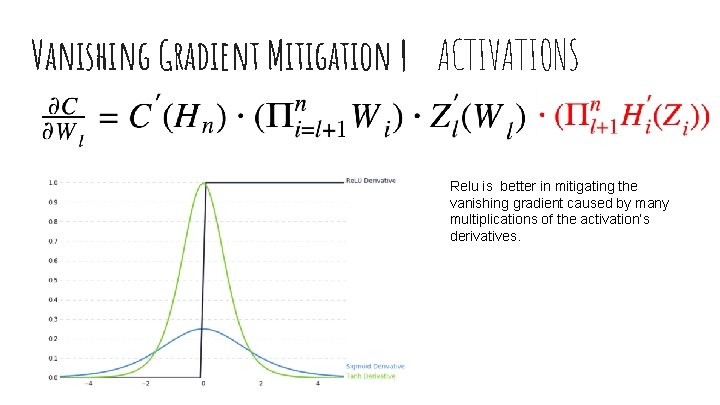

Vanishing Gradient Mitigation | ACTIVATIONS Relu is better in mitigating the vanishing gradient caused by many multiplications of the activation’s derivatives.

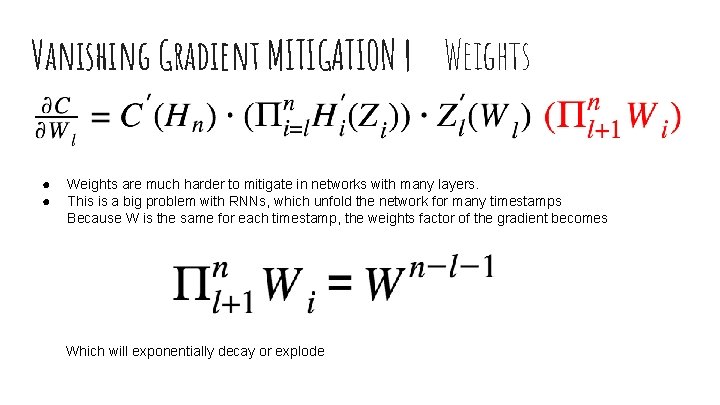

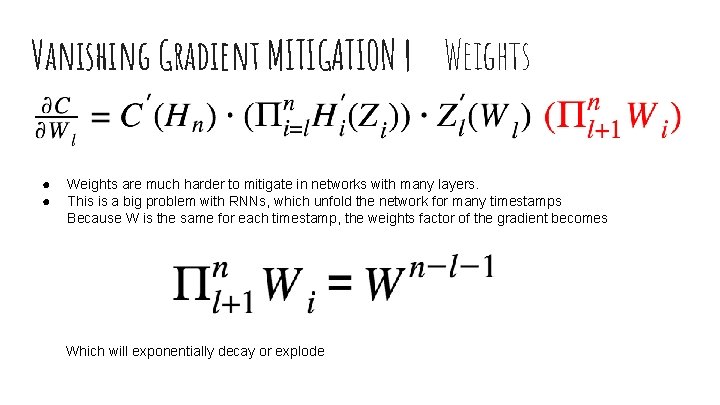

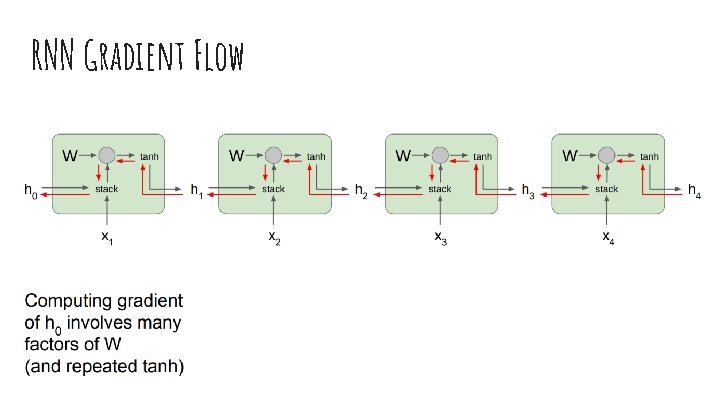

Vanishing Gradient MITIGATION | Weights ● ● Weights are much harder to mitigate in networks with many layers. This is a big problem with RNNs, which unfold the network for many timestamps Because W is the same for each timestamp, the weights factor of the gradient becomes Which will exponentially decay or explode

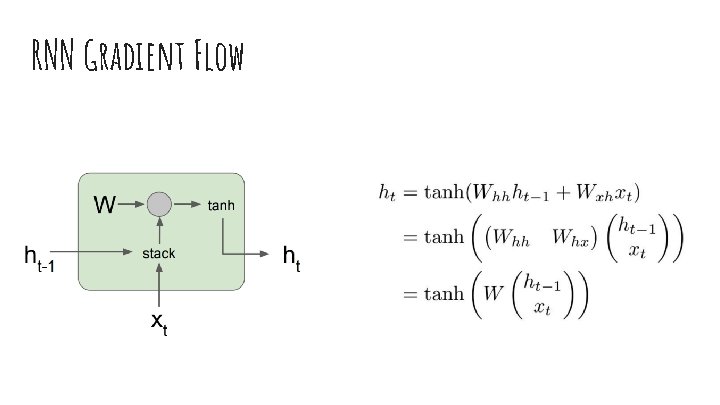

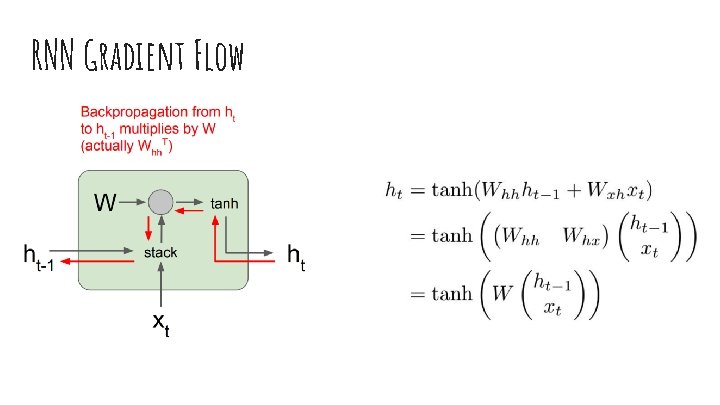

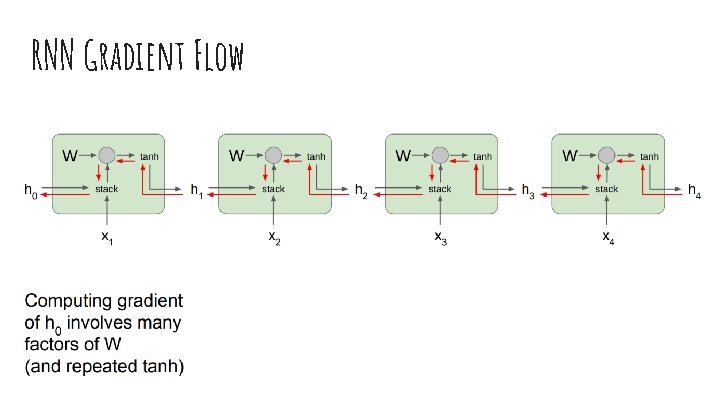

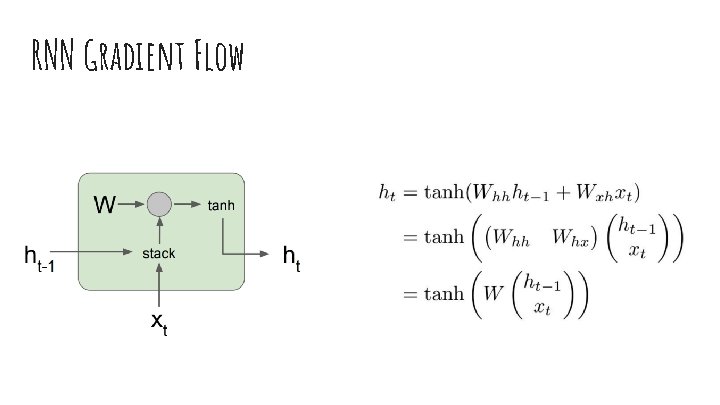

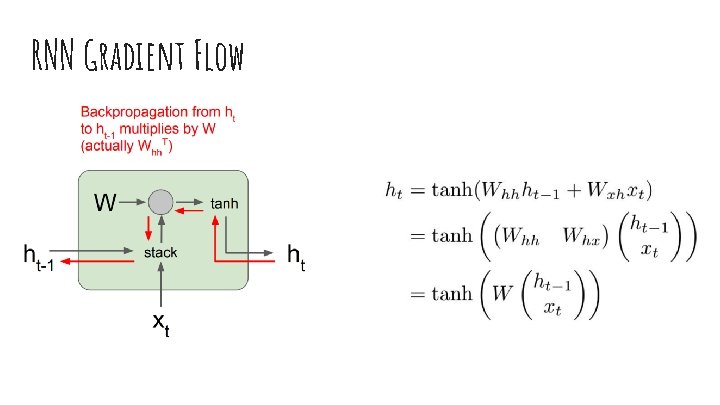

RNN Gradient Flow

RNN Gradient Flow

RNN Gradient Flow

RNN Gradient Flow

RNN Gradient Flow

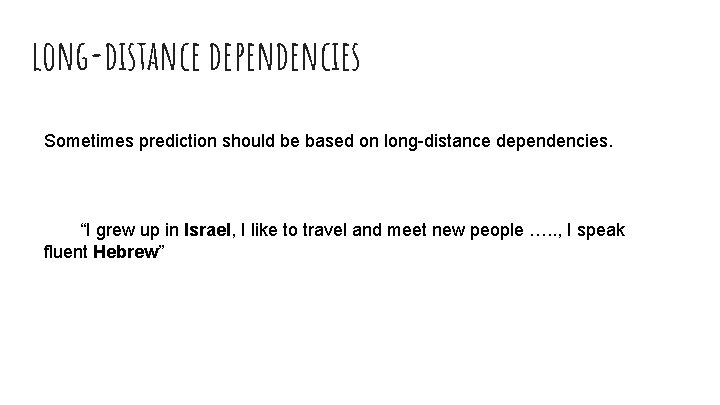

long-distance dependencies Sometimes prediction should be based on long-distance dependencies. “I grew up in Israel, I like to travel and meet new people …. . , I speak fluent Hebrew”

lstm Long Short Term Memory

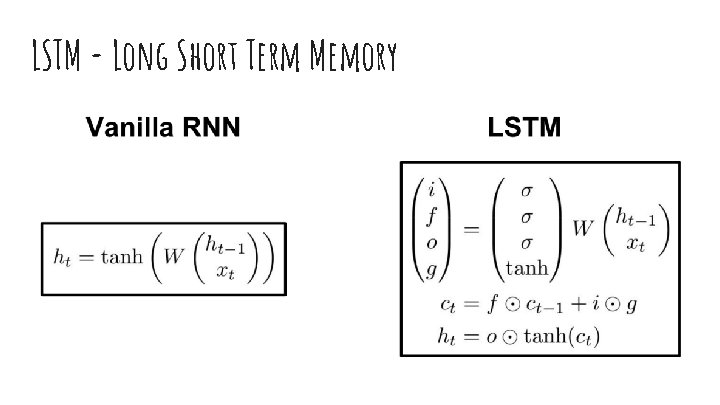

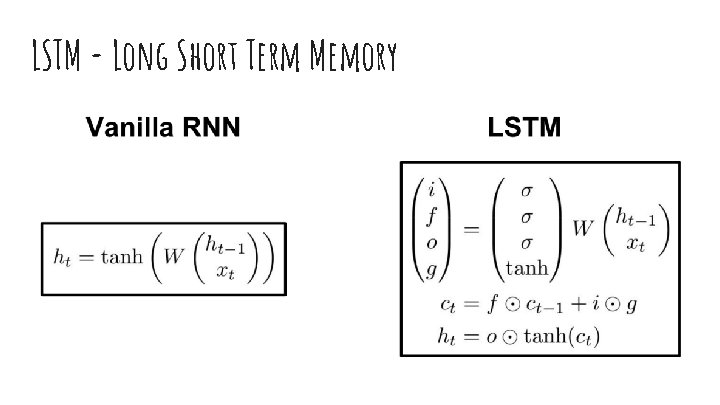

LSTM - Long Short Term Memory

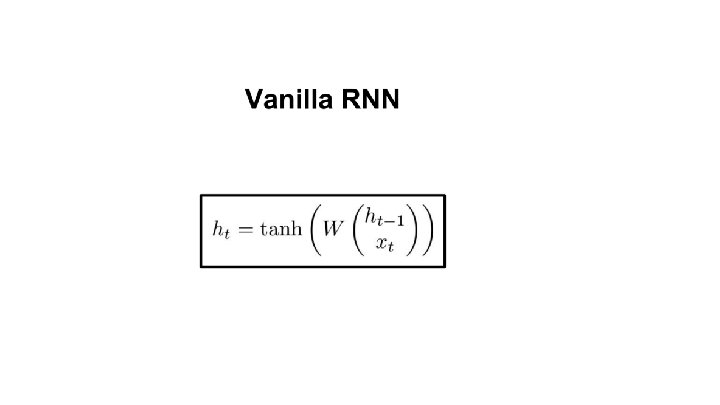

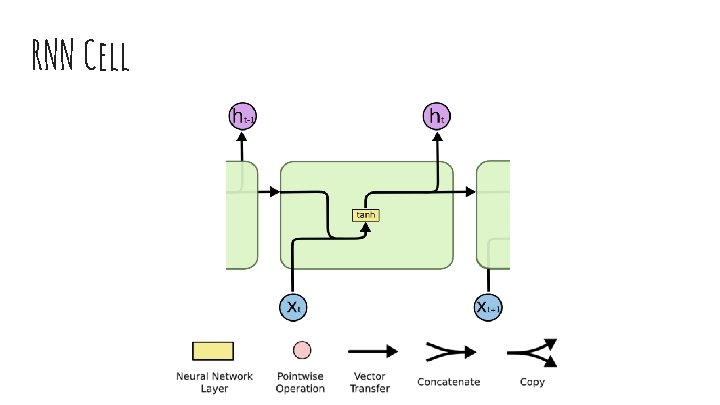

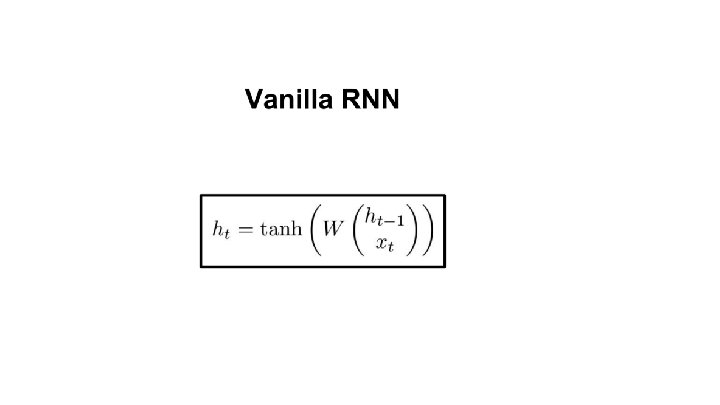

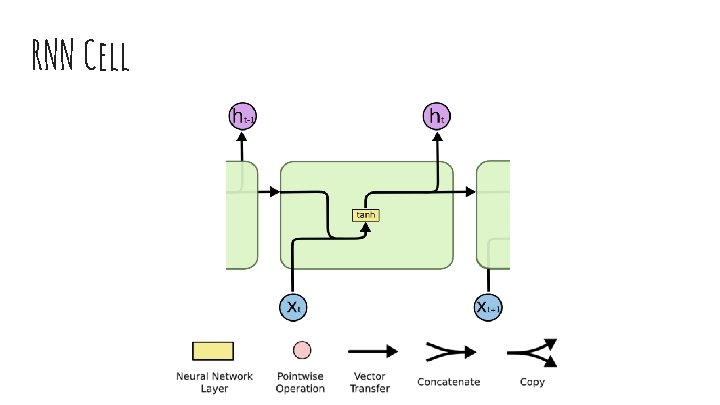

RNN Cell

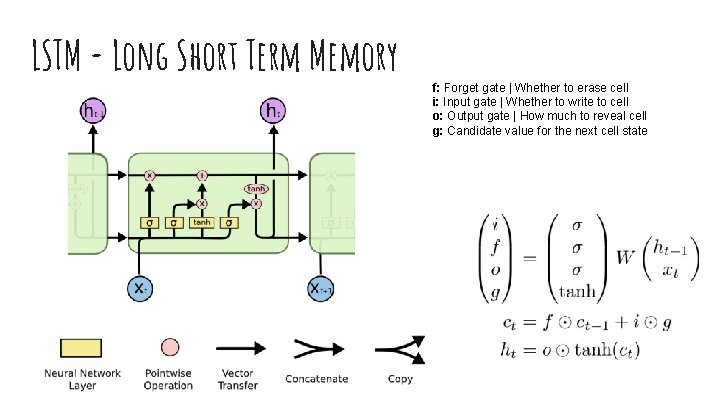

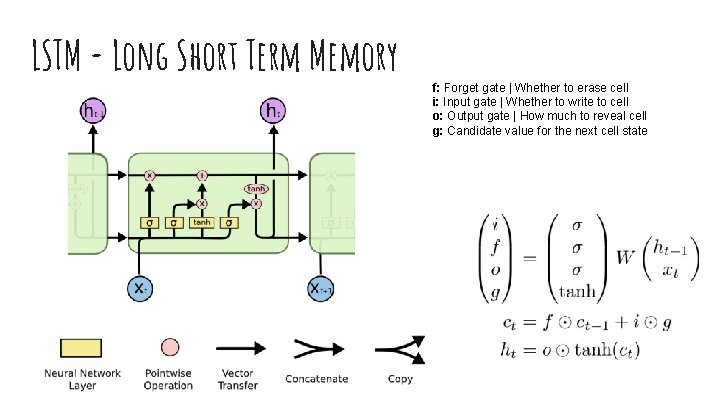

LSTM - Long Short Term Memory f: Forget gate | Whether to erase cell i: Input gate | Whether to write to cell o: Output gate | How much to reveal cell g: Candidate value for the next cell state

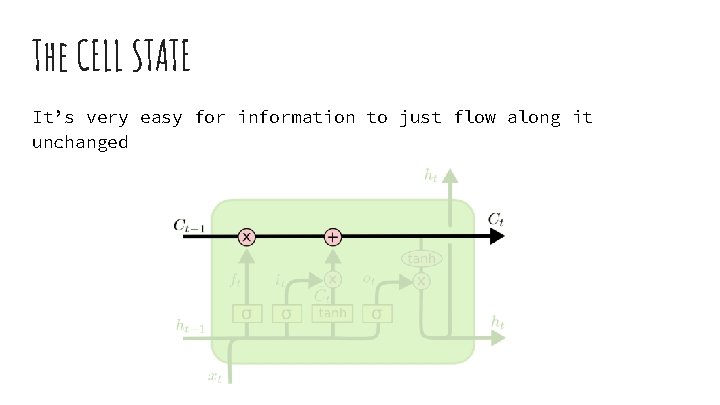

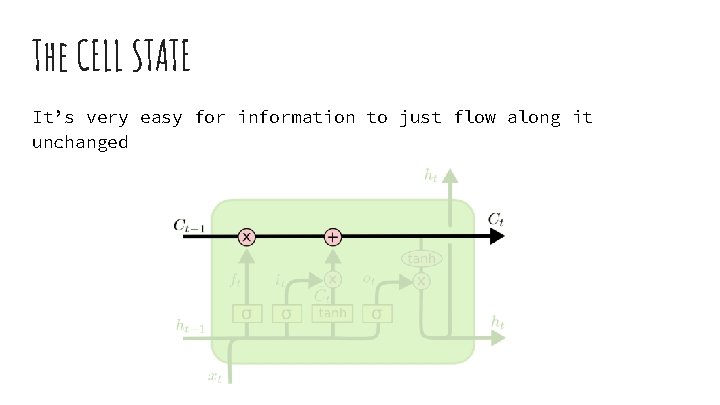

The CELL STATE It’s very easy for information to just flow along it unchanged

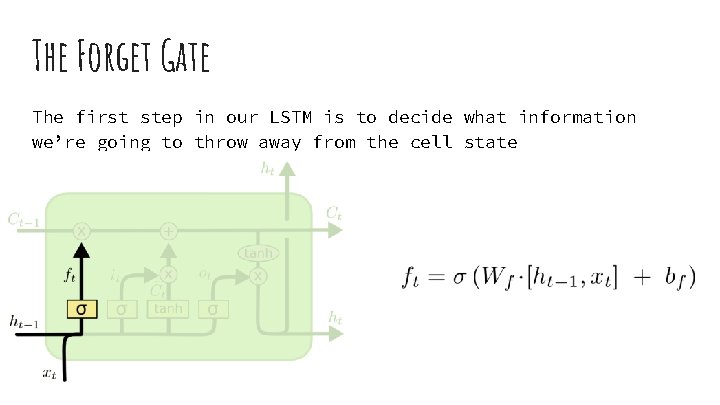

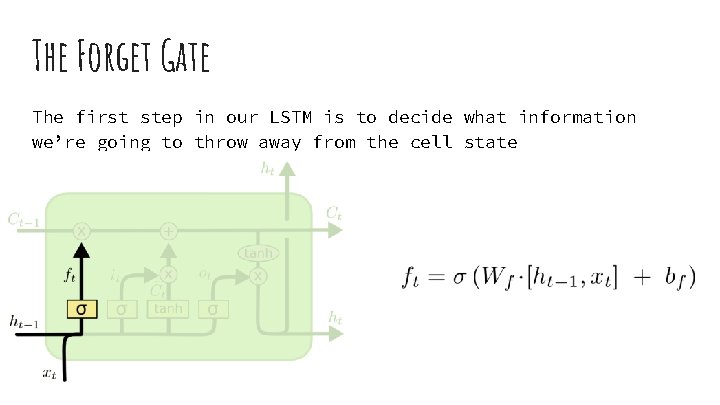

The Forget Gate The first step in our LSTM is to decide what information we’re going to throw away from the cell state

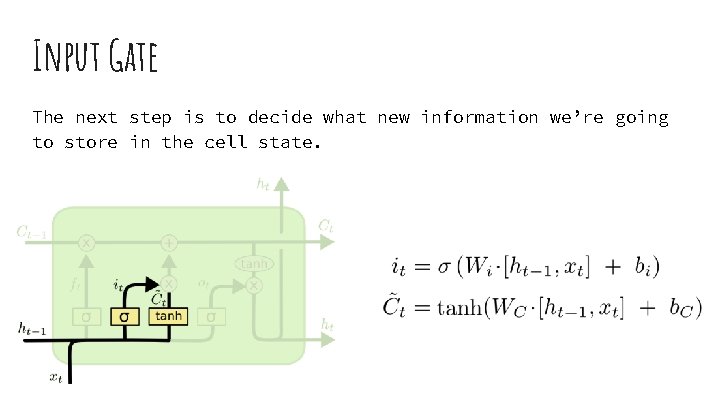

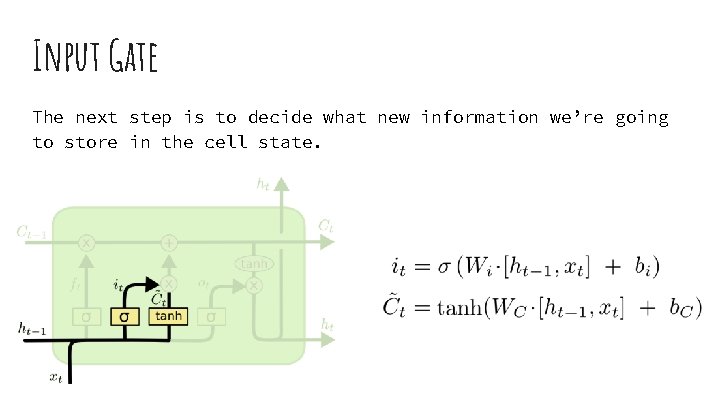

Input Gate The next step is to decide what new information we’re going to store in the cell state.

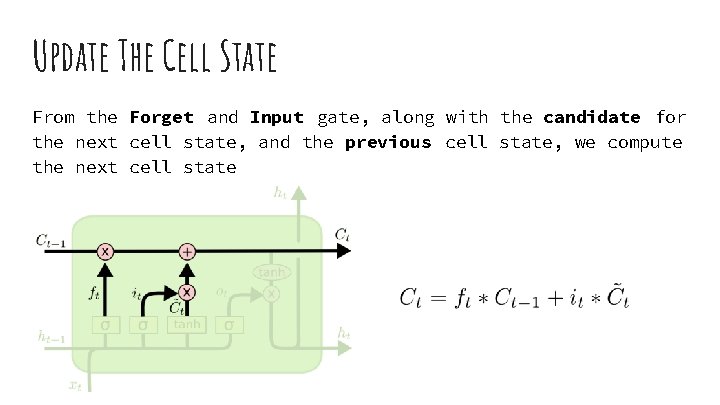

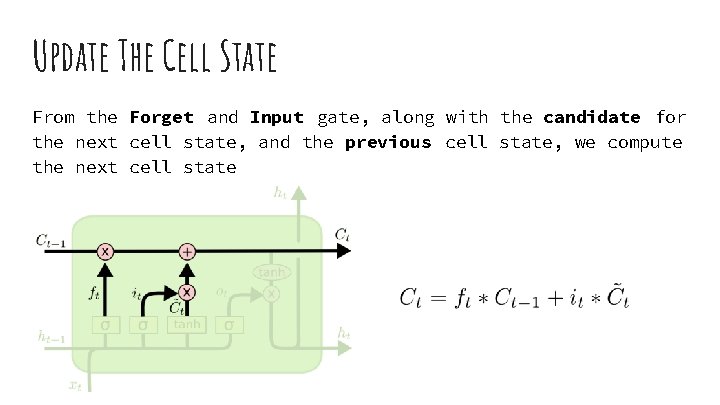

Update The Cell State From the Forget and Input gate, along with the candidate for the next cell state, and the previous cell state, we compute the next cell state

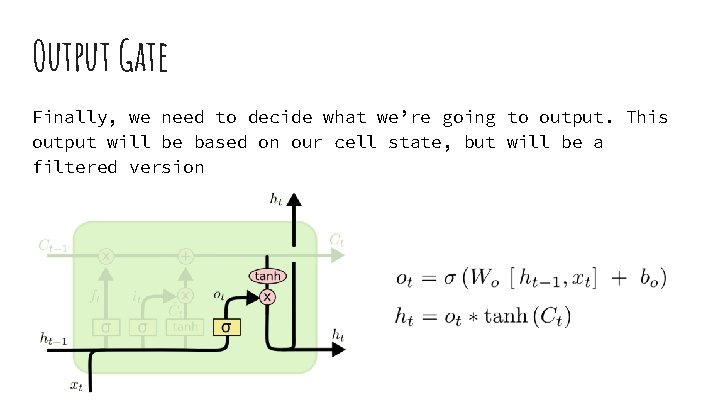

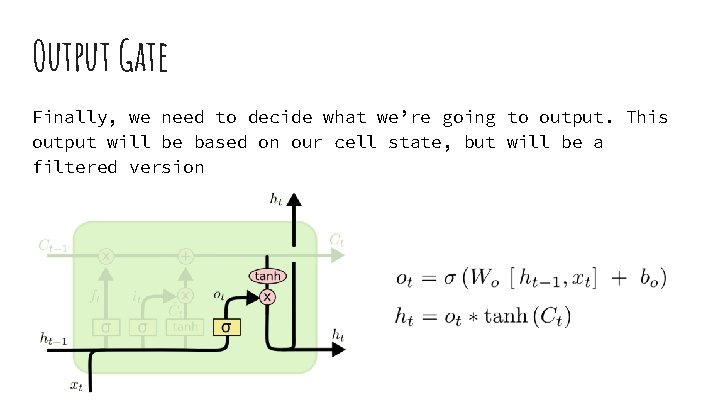

Output Gate Finally, we need to decide what we’re going to output. This output will be based on our cell state, but will be a filtered version

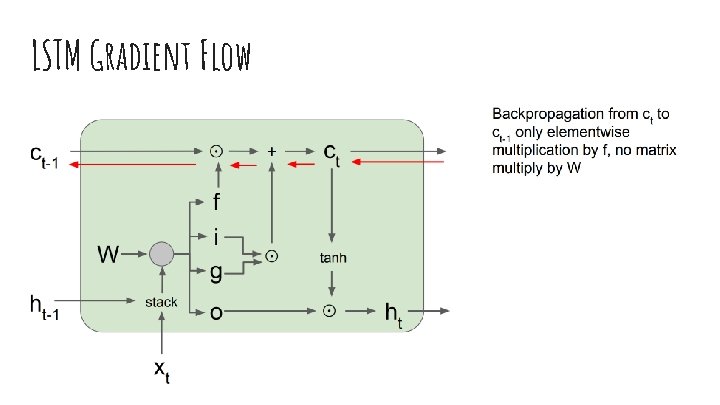

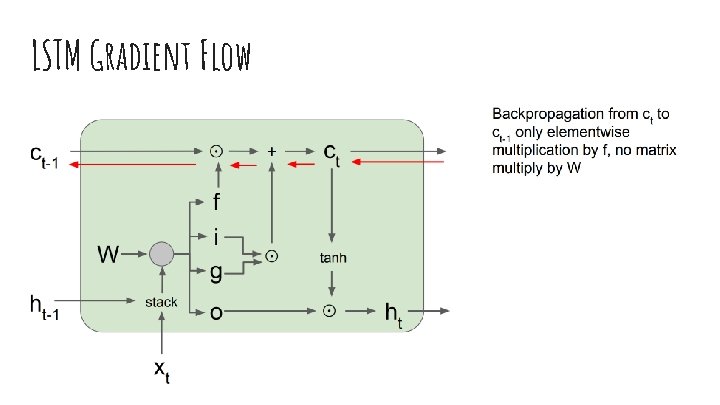

LSTM Gradient Flow

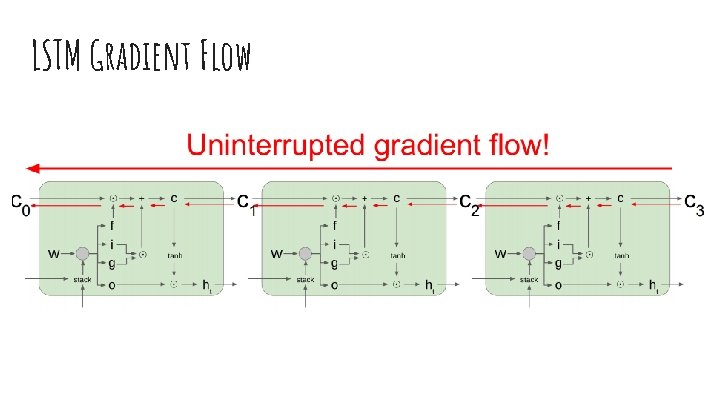

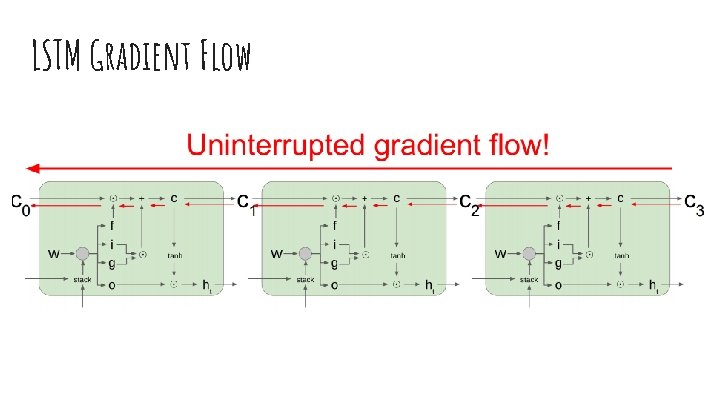

LSTM Gradient Flow

g. RU Gated Recurrent Unit

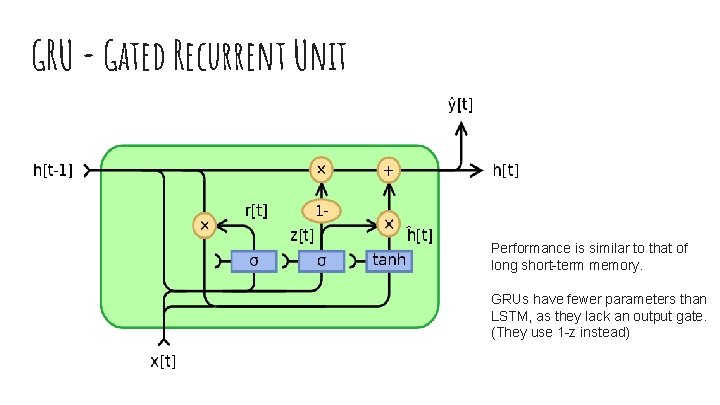

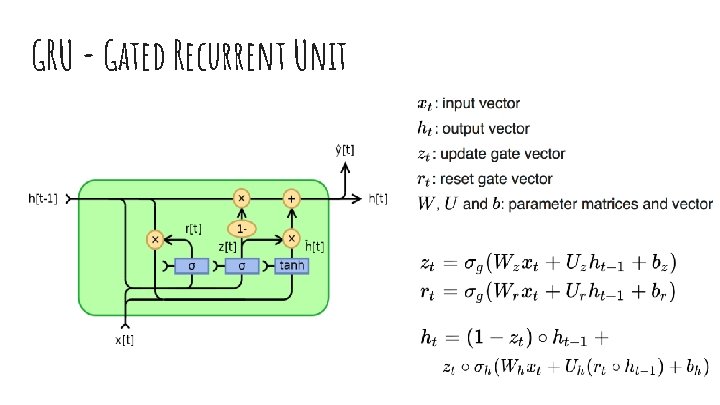

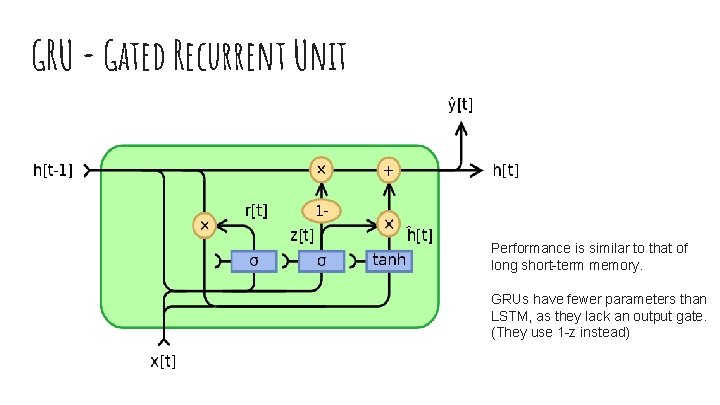

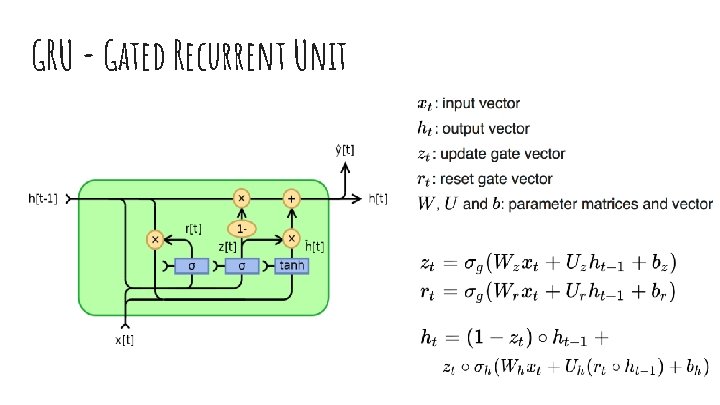

GRU - Gated Recurrent Unit Performance is similar to that of long short-term memory. GRUs have fewer parameters than LSTM, as they lack an output gate. (They use 1 -z instead)

GRU - Gated Recurrent Unit

Deep RNNs

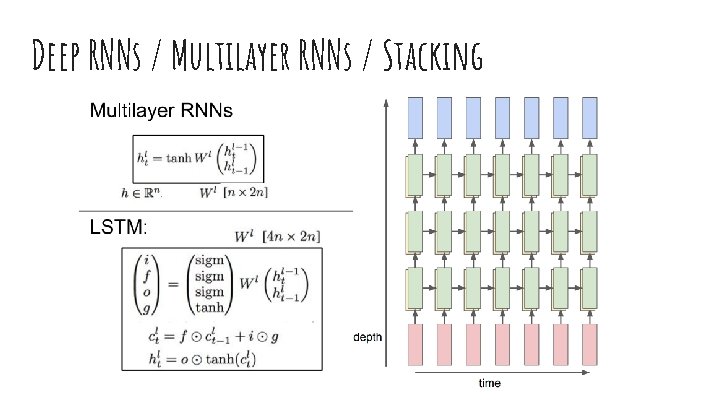

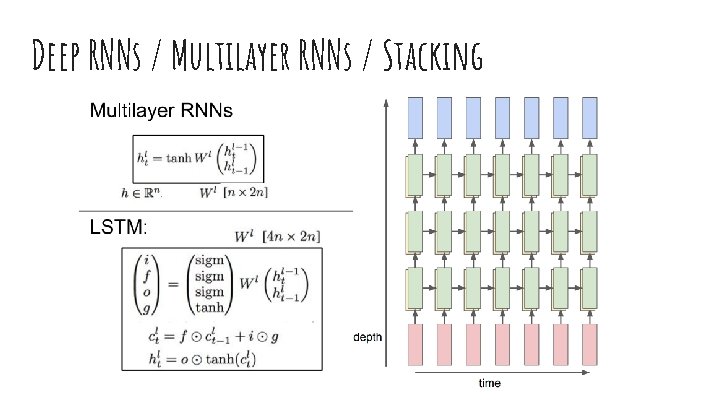

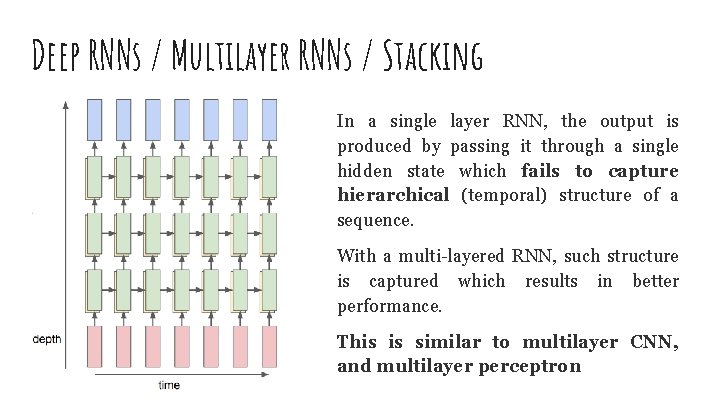

Deep RNNs / Multilayer RNNs / Stacking

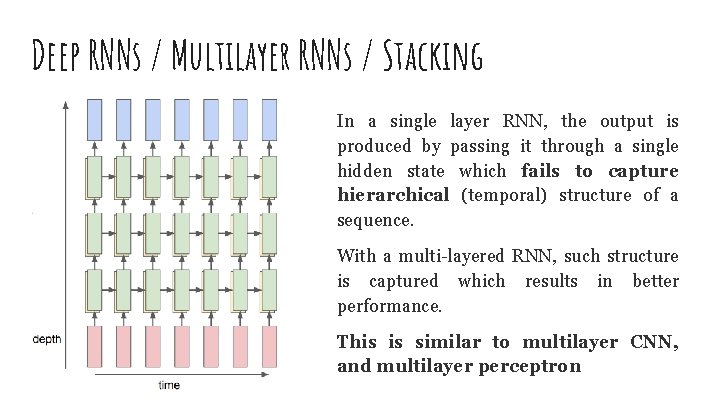

Deep RNNs / Multilayer RNNs / Stacking In a single layer RNN, the output is produced by passing it through a single hidden state which fails to capture hierarchical (temporal) structure of a sequence. With a multi-layered RNN, such structure is captured which results in better performance. This is similar to multilayer CNN, and multilayer perceptron

Attention

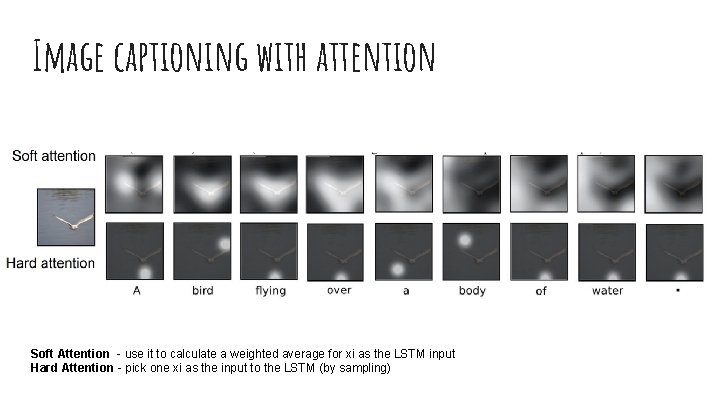

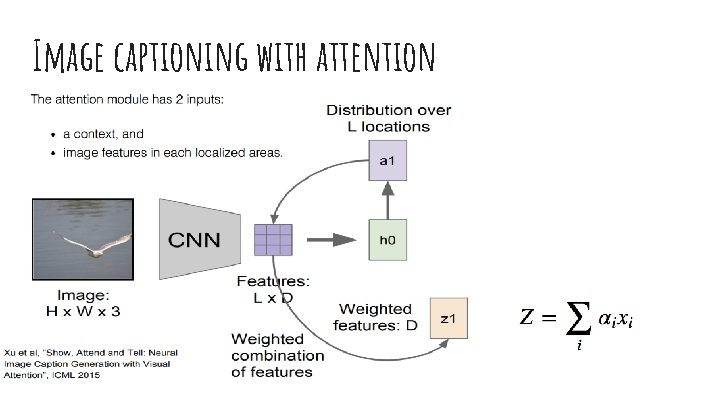

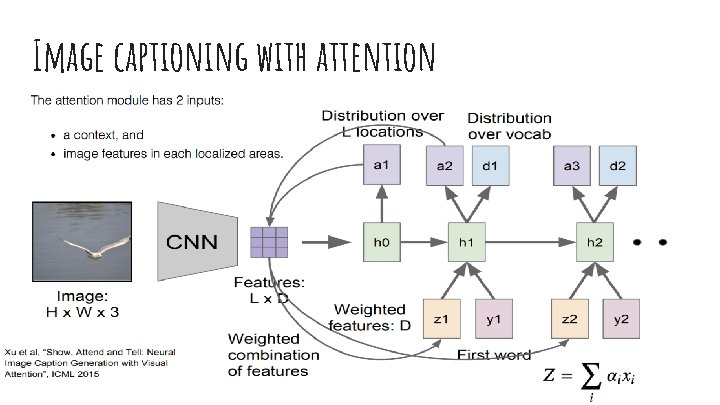

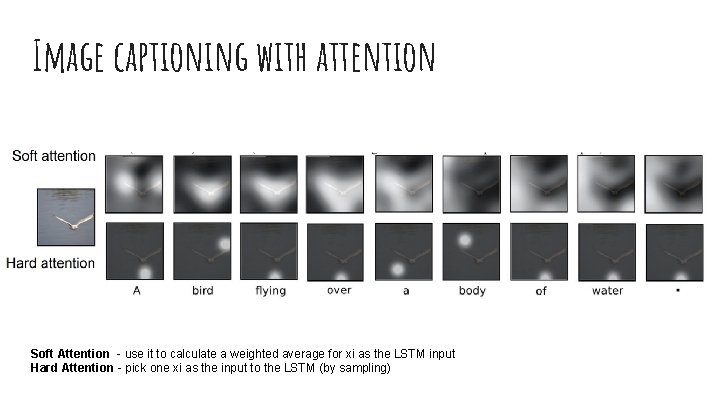

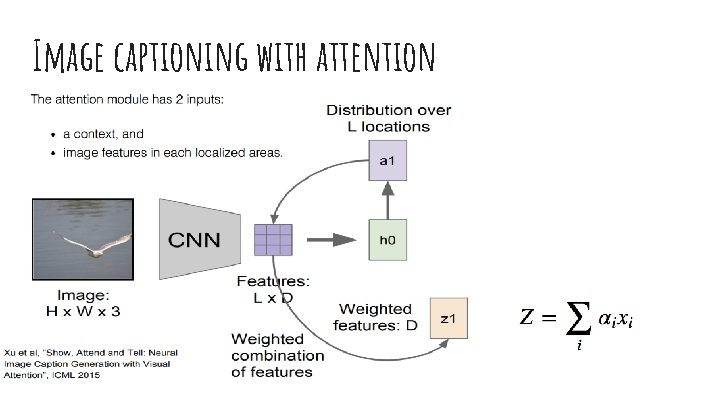

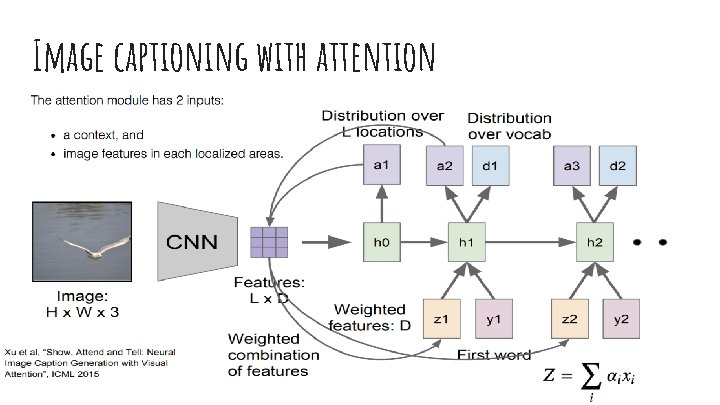

Image captioning with attention Soft Attention - use it to calculate a weighted average for xi as the LSTM input Hard Attention - pick one xi as the input to the LSTM (by sampling)

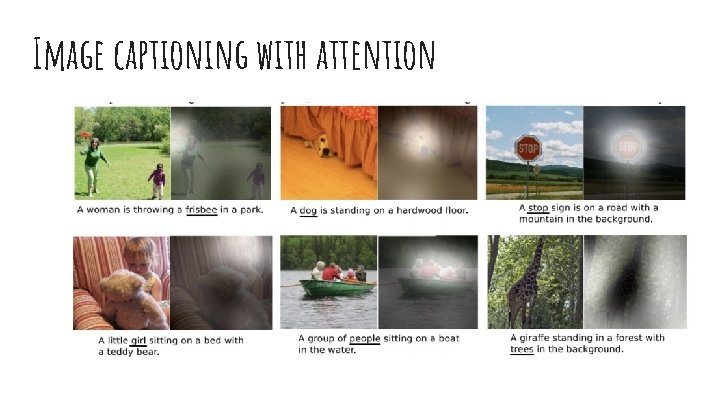

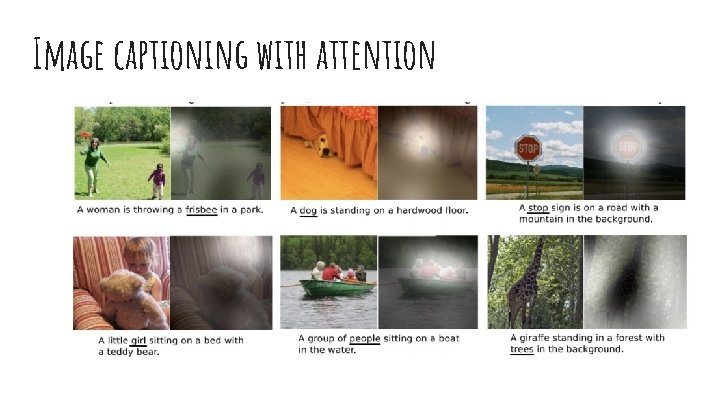

Image captioning with attention

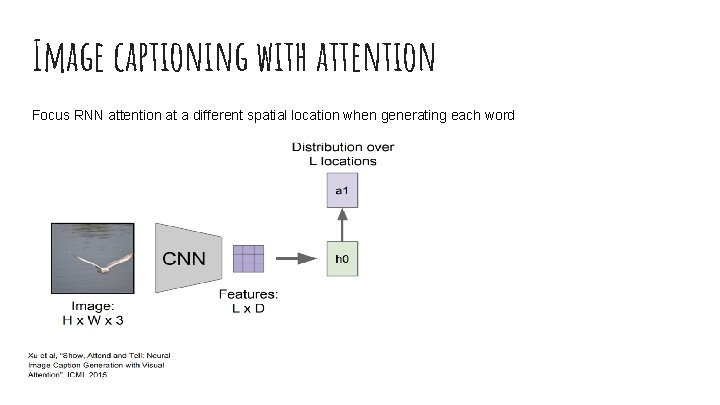

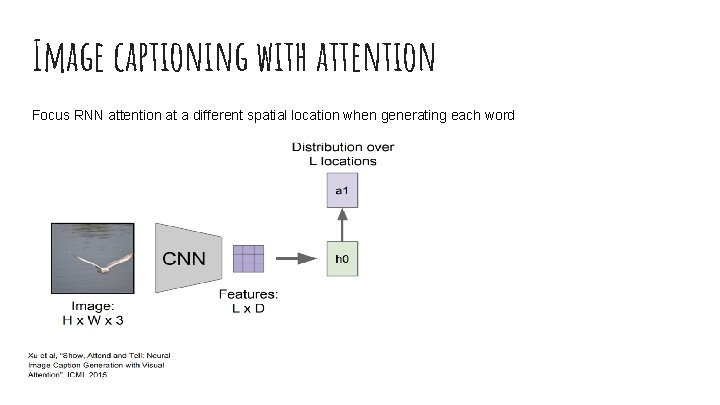

Image captioning with attention Focus RNN attention at a different spatial location when generating each word

Image captioning with attention

Image captioning with attention

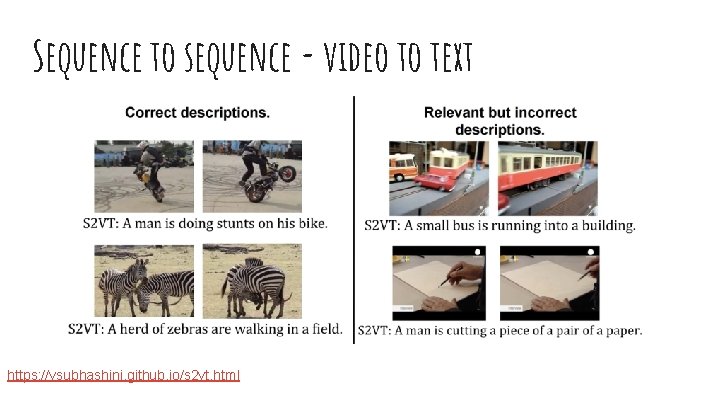

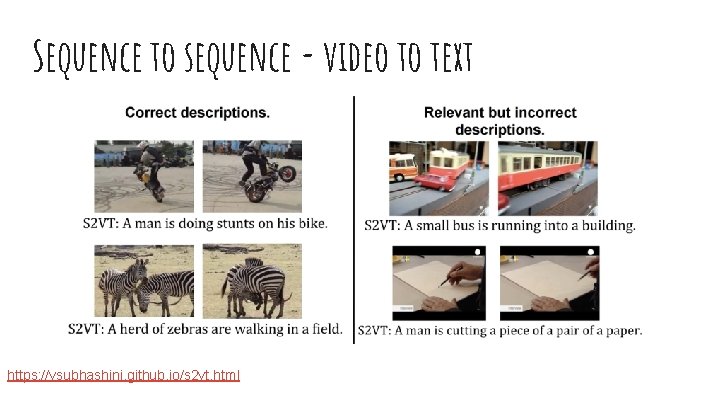

Sequence to sequence - video to text https: //vsubhashini. github. io/s 2 vt. html

Audio Classification MIT 6. S 094: Recurrent Neural Networks for Steering Through Time

Audio Classification MIT 6. S 094: Recurrent Neural Networks for Steering Through Time

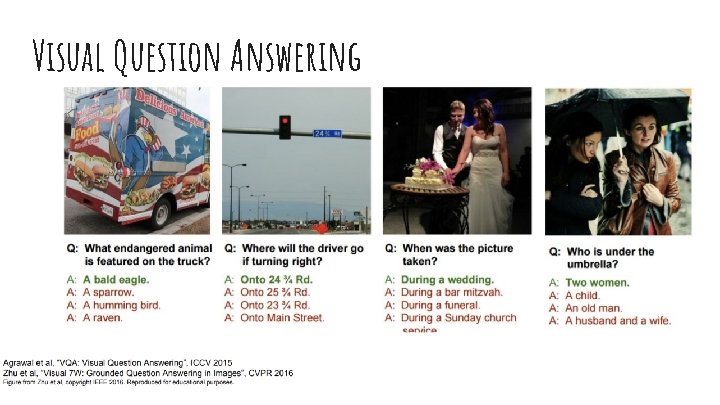

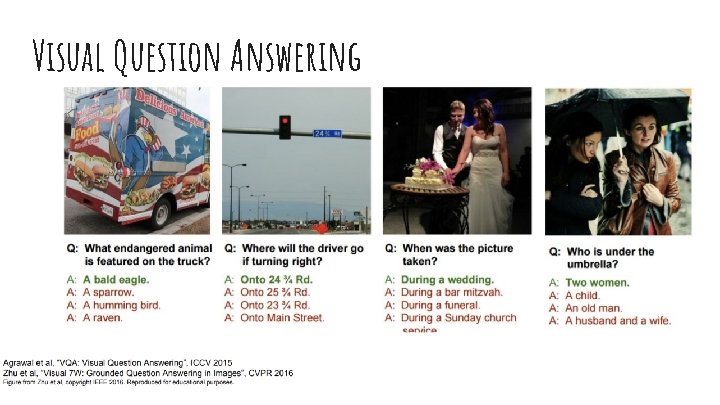

Visual Question Answering

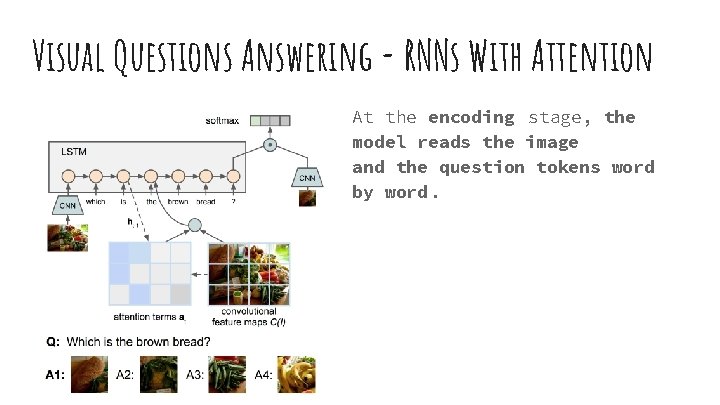

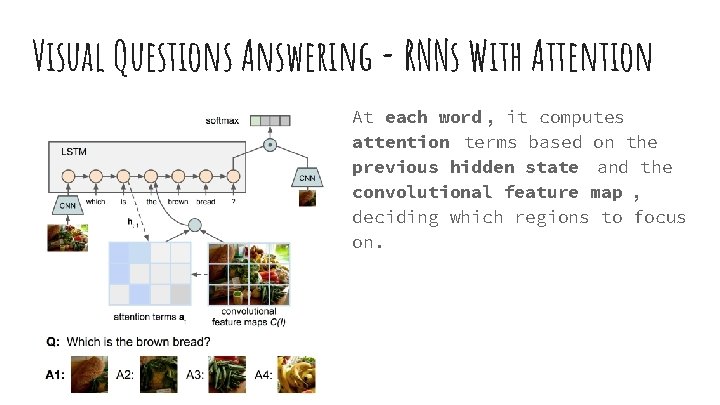

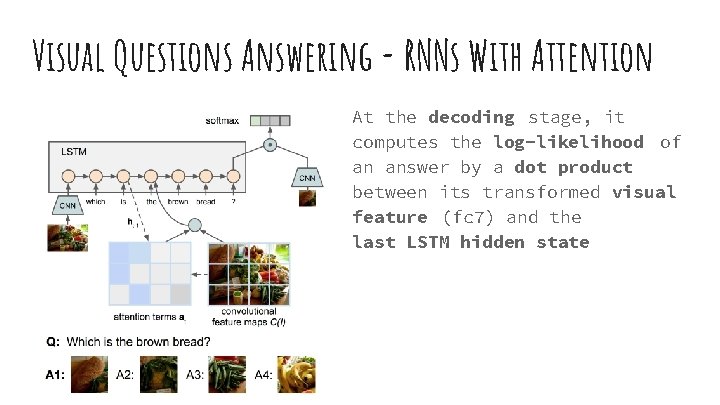

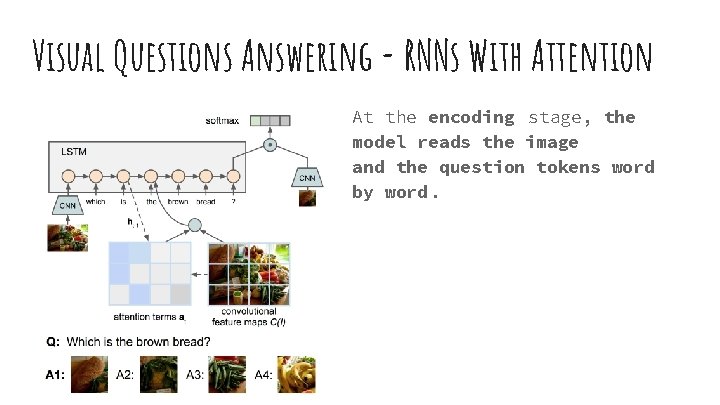

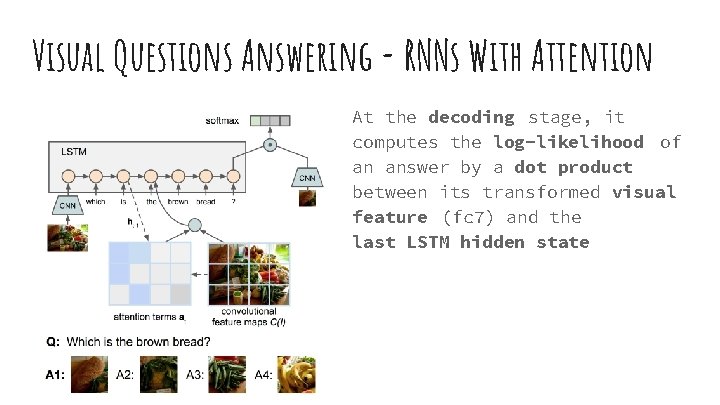

Visual Questions Answering - RNNs With Attention At the encoding stage, the model reads the image and the question tokens word by word.

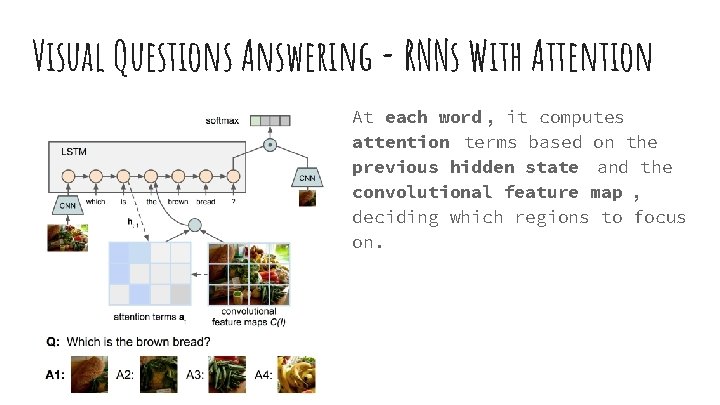

Visual Questions Answering - RNNs With Attention At each word , it computes attention terms based on the previous hidden state and the convolutional feature map , deciding which regions to focus on.

Visual Questions Answering - RNNs With Attention At the decoding stage, it computes the log-likelihood of an answer by a dot product between its transformed visual feature (fc 7) and the last LSTM hidden state

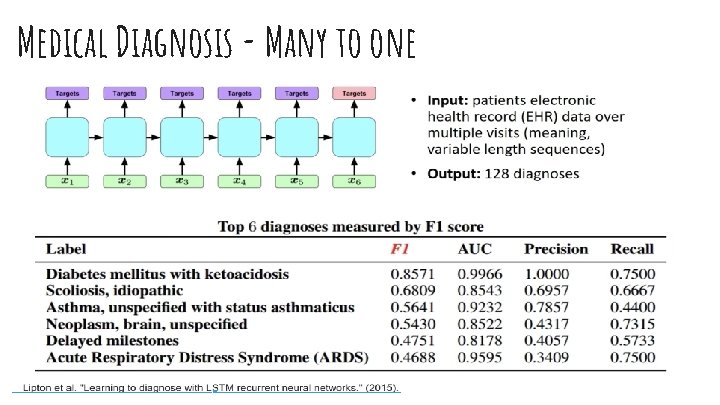

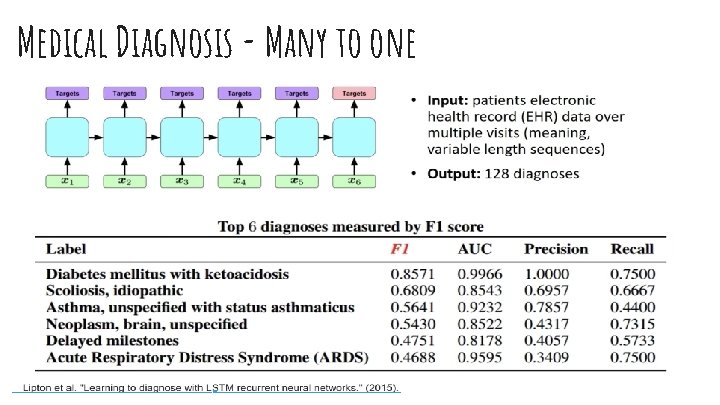

Medical Diagnosis - Many to one

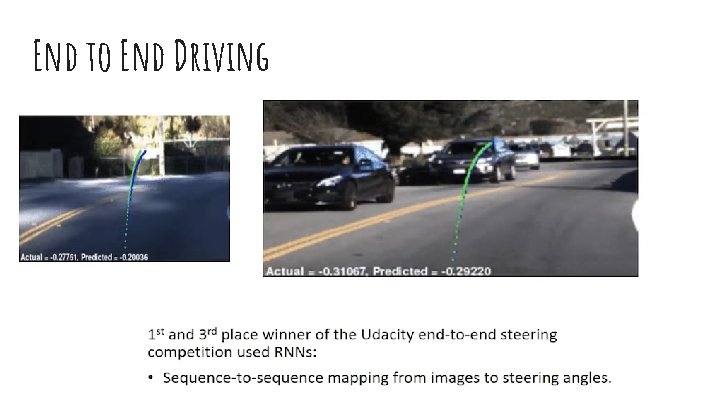

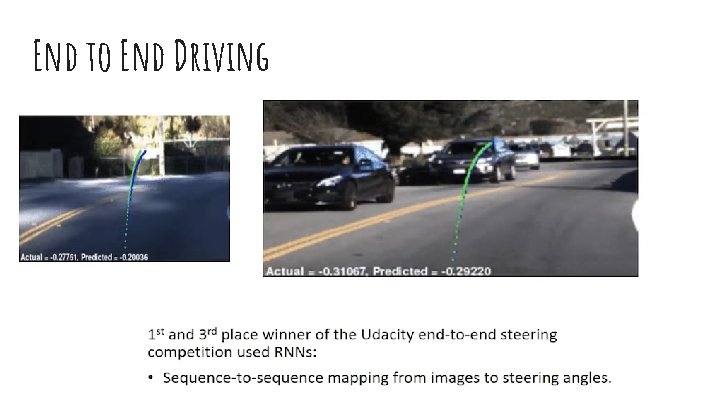

End to End Driving

End to End Driving MIT 6. S 094: Recurrent Neural Networks for Steering Through Time

Summary - Chain Rule Backpropagation Vanishing / Exploding Gradient LSTM GRU Attention Applications

References - Stanford CS 231 - Fei-Fei & Justin Johnson & Serena Yeung. Lecture 10 https: //deeplearning 4 j. org/lstm. html Coursera, Machine Learning course by Andrew Ng. https: //karpathy. github. io/2015/05/21/rnn-effectiveness/ https: //arxiv. org/pdf/1406. 6247. pdf Udacity - Deep learning, by Luis Serrano https: //medium. com/syncedreview/a-brief-overview-of-attention-mechanism-13 c 578 ba 9129 http: //vision. stanford. edu/pdf/Karpathy. ICLR 2016. pdf https: //highnoongmt. wordpress. com/2015/05/22/lisls-stis-recurrent-neural-networks-for-folk-music-generation/ NLP course (IDC) - Kfir Bar - NLM lecture https: //cs. stanford. edu/people/karpathy/deepimagesent/ http: //cs 231 n. stanford. edu/slides/2017/cs 231 n_2017_lecture 10. pdf http: //colah. github. io/posts/2015 -08 -Understanding-LSTMs/