LSST Science Platform Infrastructure: LSP Architecture – Michelle Butler


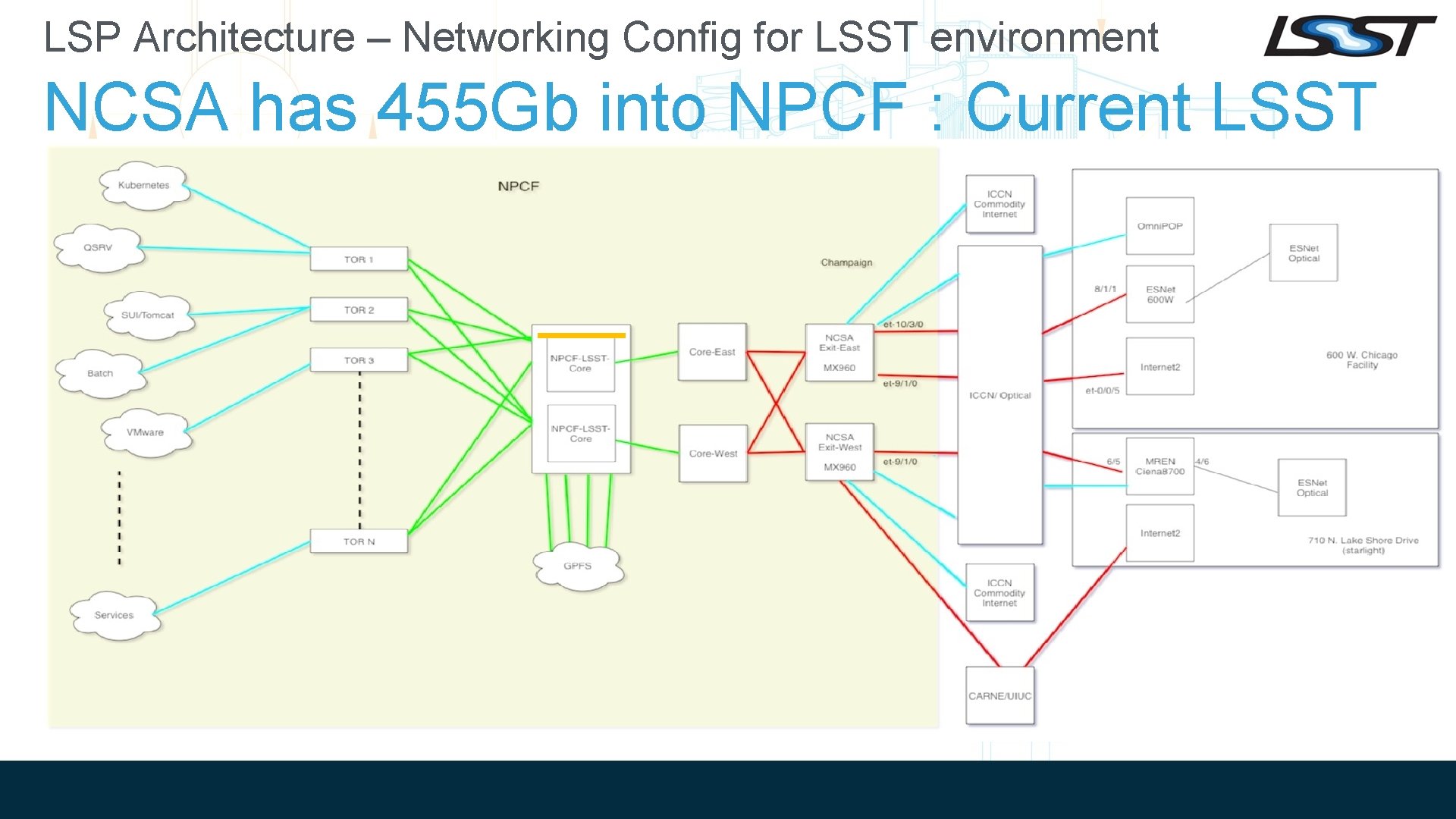

LSP Architecture – Networking Config for LSST Environment

Current LSST infrastructure: NCSA has 455 Gb/s of network capacity into NPCF.

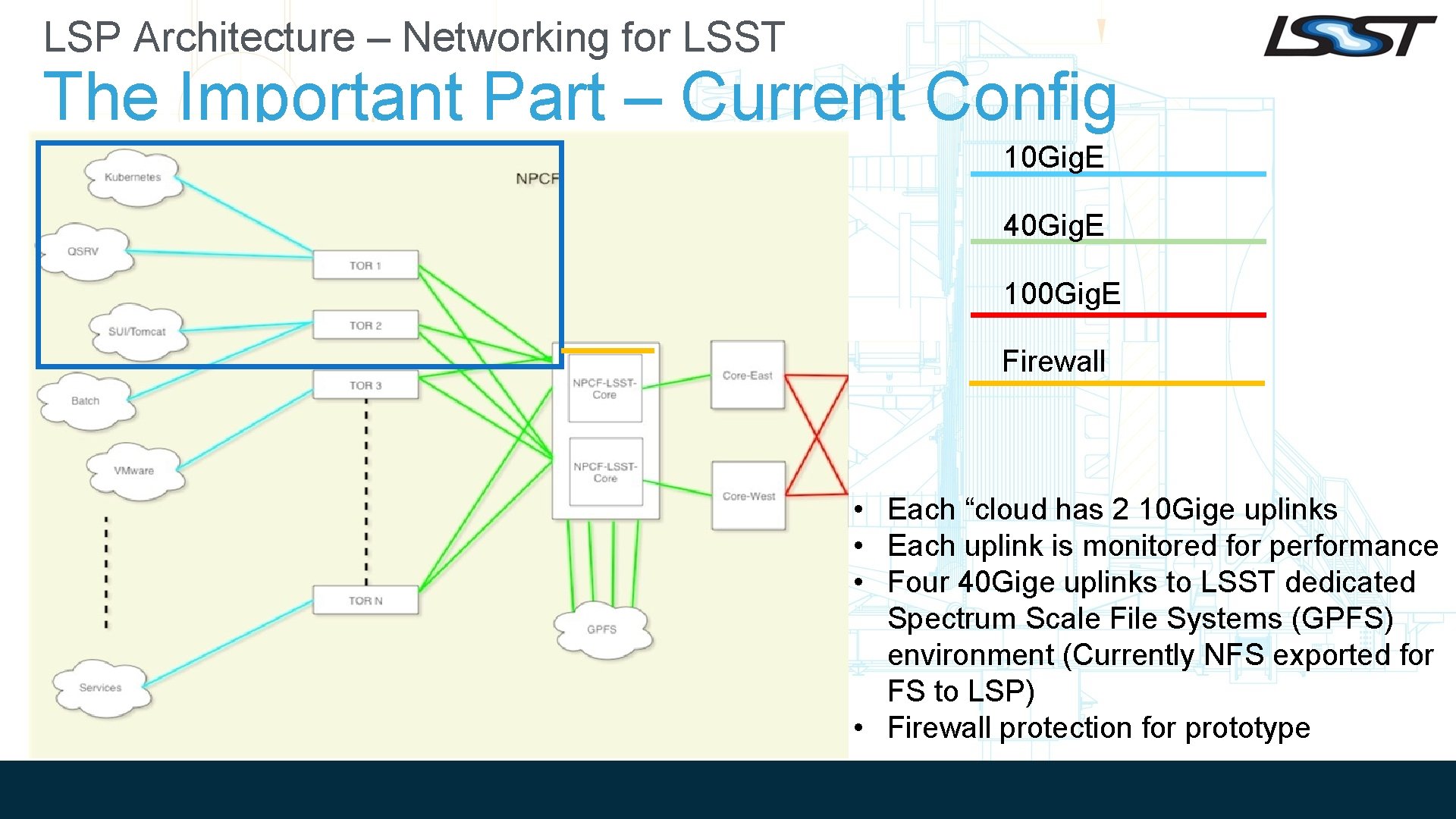

LSP Architecture – Networking for LSST

The important part: the current configuration (diagram legend: 10 GigE (prototype), 40 GigE, 100 GigE, firewall).

- Each "cloud" has two 10 GigE uplinks.
- Each uplink is monitored for performance.
- Four 40 GigE uplinks connect to the LSST-dedicated Spectrum Scale (GPFS) file system environment, which is currently NFS-exported to the LSP.
- Firewall protection for the prototype.
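As a rough illustration of what these uplink counts imply, the nominal aggregate capacity of each link group is the link count multiplied by the line rate. This is a hypothetical sketch: `LinkGroup` and the group labels are illustrative names, and real throughput will be lower once protocol overhead and contention are taken into account.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkGroup:
    """A set of identical uplinks (hypothetical helper, not part of the LSP)."""
    name: str
    count: int
    gbps_per_link: int

    @property
    def aggregate_gbps(self) -> int:
        # Nominal aggregate = number of links x per-link line rate (no overhead).
        return self.count * self.gbps_per_link


groups = [
    LinkGroup("per-cloud uplinks", 2, 10),  # two 10 GigE uplinks per "cloud"
    LinkGroup("GPFS uplinks", 4, 40),       # four 40 GigE uplinks to Spectrum Scale
]

for g in groups:
    print(f"{g.name}: {g.aggregate_gbps} Gb/s nominal")
```

Each cloud therefore has a nominal 20 Gb/s, while the GPFS environment sits behind a nominal 160 Gb/s.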

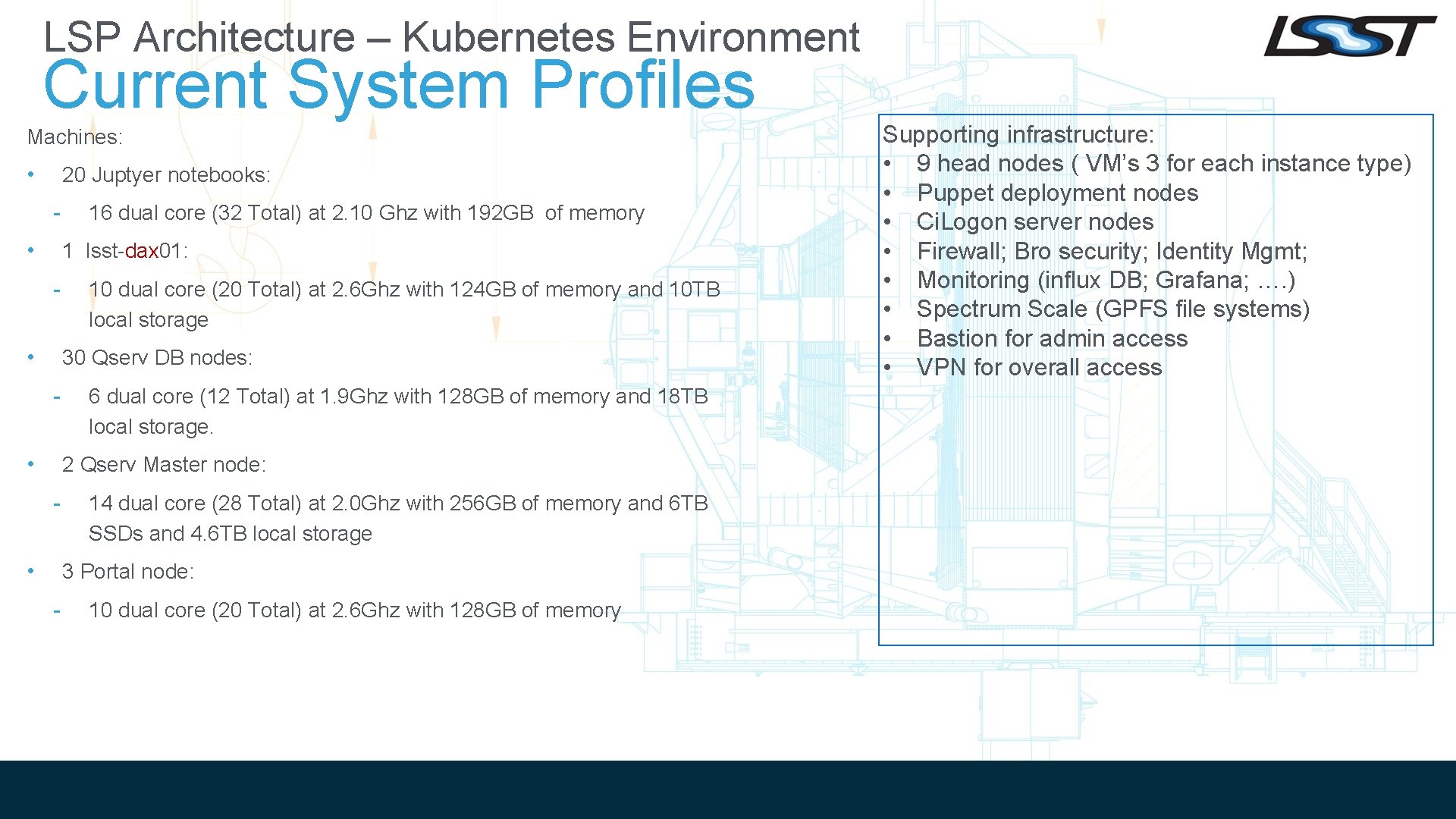

LSP Architecture – Kubernetes Environment

Current system profiles:

| Machines | Count | Cores (total) | Clock | Memory | Local storage |
|---|---|---|---|---|---|
| Jupyter notebook nodes | 20 | 16 dual core (32 total) | 2.10 GHz | 192 GB | n/a |
| lsst-dax01 | 1 | 10 dual core (20 total) | 2.6 GHz | 124 GB | 10 TB |
| Qserv DB nodes | 30 | 6 dual core (12 total) | 1.9 GHz | 128 GB | 18 TB |
| Qserv master nodes | 2 | 14 dual core (28 total) | 2.0 GHz | 256 GB | 6 TB SSD + 4.6 TB |
| Portal nodes | 3 | 10 dual core (20 total) | 2.6 GHz | 128 GB | n/a |

Supporting infrastructure:

- 9 head nodes (VMs, 3 for each instance type)
- Puppet deployment nodes
- CILogon server nodes
- Firewall; Bro security; identity management
- Monitoring (InfluxDB; Grafana; …)
- Spectrum Scale (GPFS file systems)
- Bastion for admin access
- VPN for overall access
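The machine list can be captured as a small inventory to sanity-check aggregate capacity. This sketch assumes that each "(N total)" figure counts logical CPUs per node; `NodeClass`, `INVENTORY`, and `totals` are hypothetical names, and the head nodes and other supporting VMs are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeClass:
    role: str
    count: int
    logical_cpus_per_node: int  # assumption: the slide's "(N total)" figure
    mem_gb_per_node: int


INVENTORY = [
    NodeClass("Jupyter notebook", 20, 32, 192),
    NodeClass("lsst-dax01", 1, 20, 124),
    NodeClass("Qserv DB", 30, 12, 128),
    NodeClass("Qserv master", 2, 28, 256),
    NodeClass("Portal", 3, 20, 128),
]


def totals(classes):
    """Return (nodes, logical CPUs, memory in GB) summed over all node classes."""
    nodes = sum(c.count for c in classes)
    cpus = sum(c.count * c.logical_cpus_per_node for c in classes)
    mem = sum(c.count * c.mem_gb_per_node for c in classes)
    return nodes, cpus, mem


print(totals(INVENTORY))  # (56, 1136, 8700)
```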

LSP Architecture – Prototype Config

Current node instances: 3 separate levels of prototyping. All user-facing nodes run on Kubernetes (K8).

- The LSST Data Facility (LDF) maintains 3 separate instances:
  - Stable: kept as stable as possible; testing of K8, software, ingress/egress, IdM, and configuration is kept to a minimum. Project staff find this instance useful, so the teams make a best effort to keep it stable.
  - Infrastructure (Int): for all sorts of testing and changes ("Wild West"). Its users are staff testing applications at all levels.
  - Admin: for LDF and LSP admins, specifically to test the underlying K8 software, e.g., new releases, new ingress/egress setups, and new GPFS configurations and clients.
  - Each instance includes all aspects of the LSP (Portal, API, Notebook).
- Qserv DB nodes:
  - A shared environment accessible to the stable and int instances.
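The instance layout can be summarized as a small configuration model. This is a hypothetical sketch: `INSTANCES`, `QSERV_CONSUMERS`, `components`, and `can_reach_qserv` are illustrative names, and the slide names only stable and int as Qserv consumers, so admin is modeled here as not reaching Qserv.

```python
# Every LDF instance runs all aspects of the LSP.
LSP_COMPONENTS = ("Portal", "API", "Notebook")

INSTANCES = {
    "stable": "minimal change; best-effort stability for project staff",
    "int": "all sorts of testing and changes",
    "admin": "LDF/LSP admin testing of the underlying K8 software",
}

# Assumption: only the two instances named on the slide share the Qserv DB nodes.
QSERV_CONSUMERS = frozenset({"stable", "int"})


def components(instance: str) -> tuple:
    """Return the LSP components deployed in a known instance."""
    if instance not in INSTANCES:
        raise KeyError(f"unknown instance: {instance}")
    return LSP_COMPONENTS


def can_reach_qserv(instance: str) -> bool:
    """True if the instance is one of the shared Qserv consumers."""
    return instance in QSERV_CONSUMERS
```

Keeping the shared Qserv access as an explicit set makes it easy to see which instances depend on the shared DB nodes.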

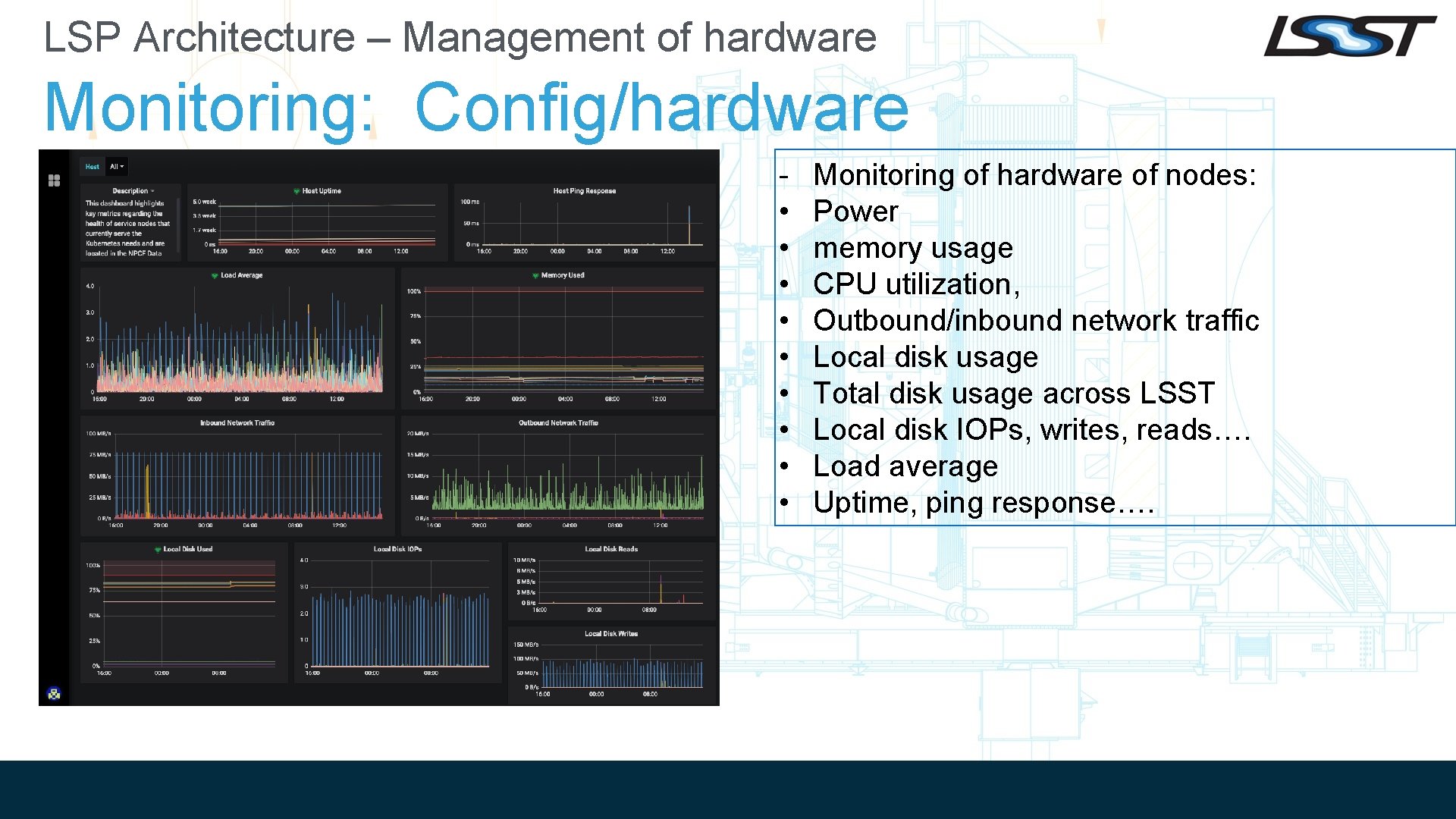

LSP Architecture – Management of Hardware

Monitoring (configuration/hardware) of node hardware:

- Power
- Memory usage
- CPU utilization
- Outbound/inbound network traffic
- Local disk usage
- Total disk usage across LSST
- Local disk IOPS, writes, reads, …
- Load average
- Uptime, ping response, …
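The slides list InfluxDB and Grafana for monitoring but do not say how node metrics are collected. As a minimal sketch under that gap, a few of the metrics above (load average, local disk usage) can be sampled with the Python standard library and formatted as InfluxDB line protocol (`measurement,tag=value field=value timestamp`). The measurement name `node_health`, the `host` tag, and the field names are hypothetical, and this formatter does no escaping, so tag values containing spaces, commas, or equals signs would need escaping first.

```python
import os
import shutil
import socket
import time


def to_line_protocol(measurement, tags, fields, ts_ns):
    """Format one point as InfluxDB line protocol (no character escaping)."""
    tag_str = ",".join(f"{k}={v}" for k, v in sorted(tags.items()))
    field_str = ",".join(f"{k}={v}" for k, v in sorted(fields.items()))
    head = f"{measurement},{tag_str}" if tag_str else measurement
    return f"{head} {field_str} {ts_ns}"


def sample(path="/"):
    """Sample load average (Unix only) and percent of local disk used at path."""
    load1, load5, load15 = os.getloadavg()
    disk = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return {
        "load1": load1,
        "load5": load5,
        "load15": load15,
        "disk_used_pct": round(100.0 * disk.used / disk.total, 2),
    }


if __name__ == "__main__":
    print(to_line_protocol("node_health", {"host": socket.gethostname()},
                           sample(), time.time_ns()))
```

All fields here are floats, so no integer `i` suffix is needed; the timestamp is in nanoseconds, InfluxDB's default precision.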

LSP Architecture – Lessons Learned So Far

What have we done/learned with this prototype?

- A good average for a user in the tutorials is 1 CPU/3 GB, but a normal staff user's average pod is ~2 CPU/6 GB, which lets each LDF K8 node support 16 staff users. The configuration also supports pods as large as 4 CPU/12 GB if users need them.
- Mounting options within the container are important; we use immutable file-system flags for important, unchanging data.
- Firewall protection has its good and bad sides:
  - Good: it keeps unexpected users out.
  - Bad: demos and workshops are a real pain.
  - Currently a VPN connection and 2FA are required unless connecting from an established LSST IP address.
- We created 3 different K8 instances to better accommodate changes in the different aspects:
  - admin for admin-specific testing, int for integration of any LSP changes, and stable for fewer changes;
  - stricter control of admin access in stable than in int, with future change control to roll software from int to stable.

Risks:

- NFS mount: moving to a native GPFS mount for performance and a better overall configuration.
- No root mount within the container/notebook is required.
- K8 is so new that tools for monitoring, management, and security are still catching up in the K8 community.
- Sharing file systems (/project and /scratch) is scary for NCSA, as it is not common in HPC.
- Scaling is not fully characterized yet, but this prototype testing will yield more knowledge.
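The per-node user count follows from whichever resource runs out first. This sketch assumes a Jupyter node exposes 32 logical CPUs and 192 GB (the notebook-node profile) and ignores any CPU or memory reserved for the OS and Kubernetes daemons; `pods_per_node` is a hypothetical helper.

```python
def pods_per_node(node_cpus, node_mem_gb, pod_cpus, pod_mem_gb):
    """Pods that fit on one node: the scarcer resource (CPU or memory) sets the limit."""
    return min(node_cpus // pod_cpus, node_mem_gb // pod_mem_gb)


# Assumed notebook-node capacity: 32 logical CPUs, 192 GB, no reservations.
NODE_CPUS, NODE_MEM_GB = 32, 192

for label, cpu, mem in [("tutorial", 1, 3), ("staff", 2, 6), ("large", 4, 12)]:
    n = pods_per_node(NODE_CPUS, NODE_MEM_GB, cpu, mem)
    print(f"{label}: {n} pods/node")
```

With these numbers, CPU rather than memory is the binding constraint for staff pods (32 // 2 = 16 versus 192 // 6 = 32), which matches the 16 staff users per node quoted above; 4 CPU/12 GB pods would fit 8 per node.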

LSP Architecture – Questions?

- Slides: 8